OpenAI Hires OpenClaw Developer Peter Steinberger: The Future of Personal AI Agents [2025]

Introduction: The Biggest AI Agent Acquisition Nobody Fully Understood Yet

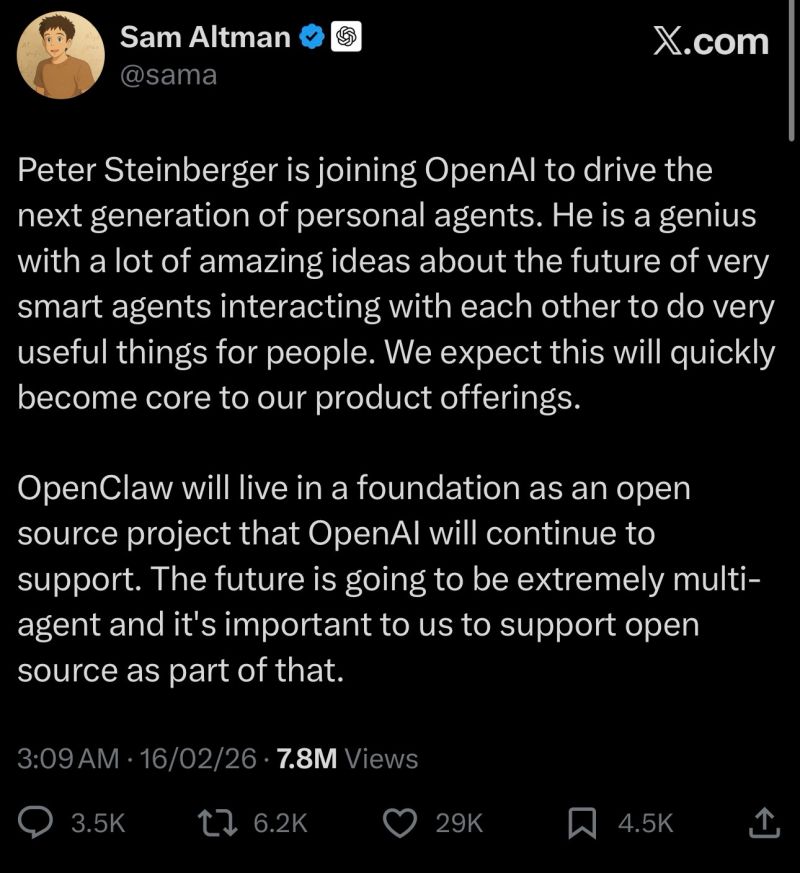

In early 2025, something significant happened in the AI world that flew under many people's radar. Sam Altman, CEO of OpenAI, announced via X that the company had hired Peter Steinberger, the developer behind OpenClaw, an increasingly popular open-source AI agent framework. The move wasn't about acquiring code or a team. It was about acquiring a person, a vision, and momentum.

Here's what made this hire different from a typical tech acquisition: OpenClaw didn't get absorbed into OpenAI's proprietary stack. Instead, Steinberger committed to keeping it independent, moving it to a foundation, and maintaining its open-source nature. OpenAI essentially hired the person while letting the project remain free and accessible to the world. That's unusually generous in an industry where acquisitions typically mean proprietary lock-in.

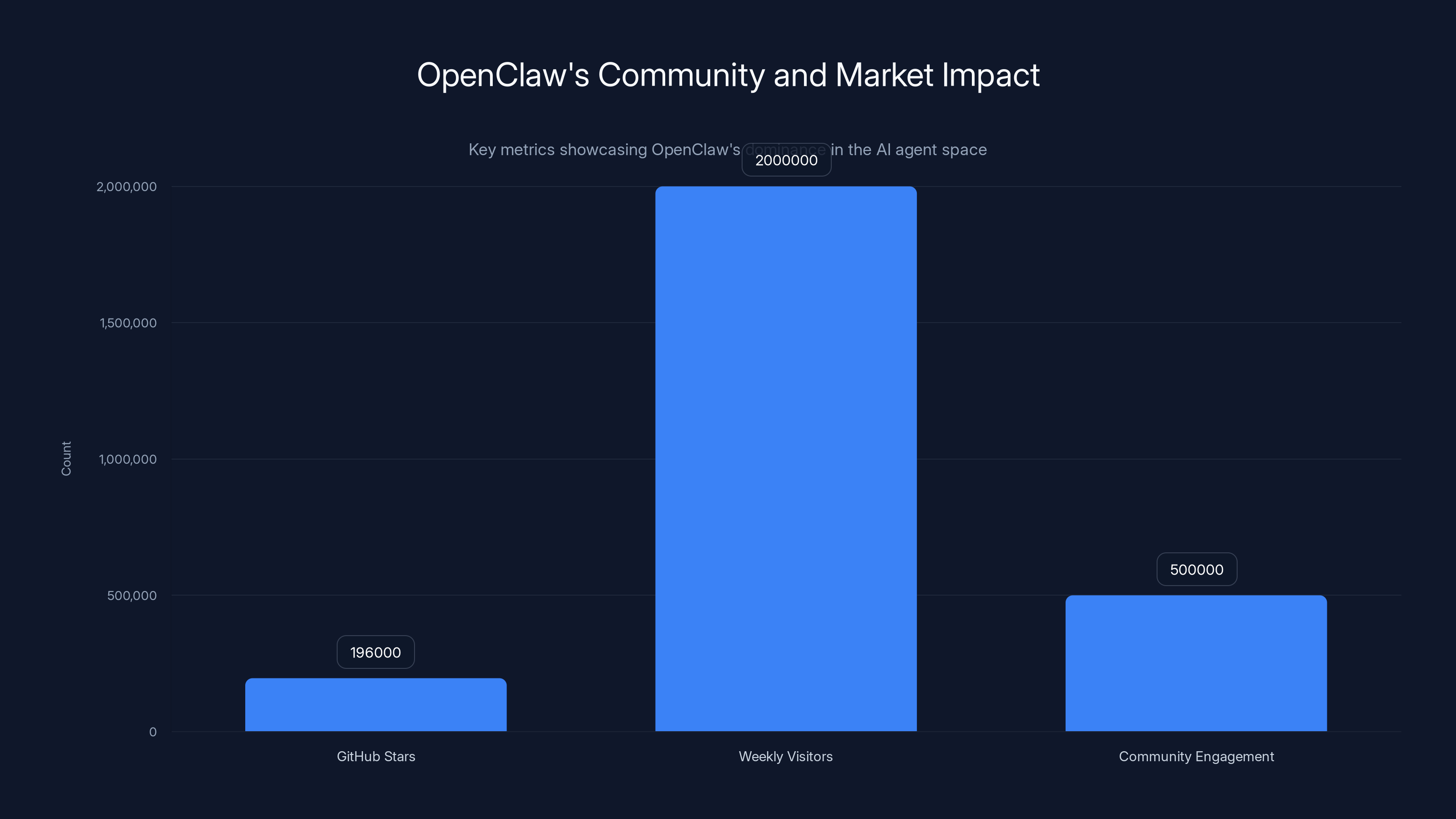

But why did this matter so much? Because by early 2025, OpenClaw had become something of a phenomenon in developer communities. The project boasted 196,000 GitHub stars, 2 million weekly visitors, and had become the de facto framework for building personal AI agents. People were using it to automate email inboxes, control smart home systems, write code, manage Spotify playlists, and handle tasks that previously required manual intervention or custom scripts.

The broader context: both OpenAI and Meta were reportedly in a bidding war for Steinberger's talent and project, with offers allegedly in the billions of dollars. The real draw wasn't OpenClaw's codebase. It was the community, the momentum, and Steinberger's vision for how AI agents should work in the real world. OpenAI won, and in doing so, signaled that personal AI agents are about to become a core focus for the company.

This isn't just another hiring announcement. This is a strategic bet on the future of human-computer interaction, a recognition that the next frontier isn't just better language models, but smarter systems that can act on our behalf, autonomously managing our digital lives.

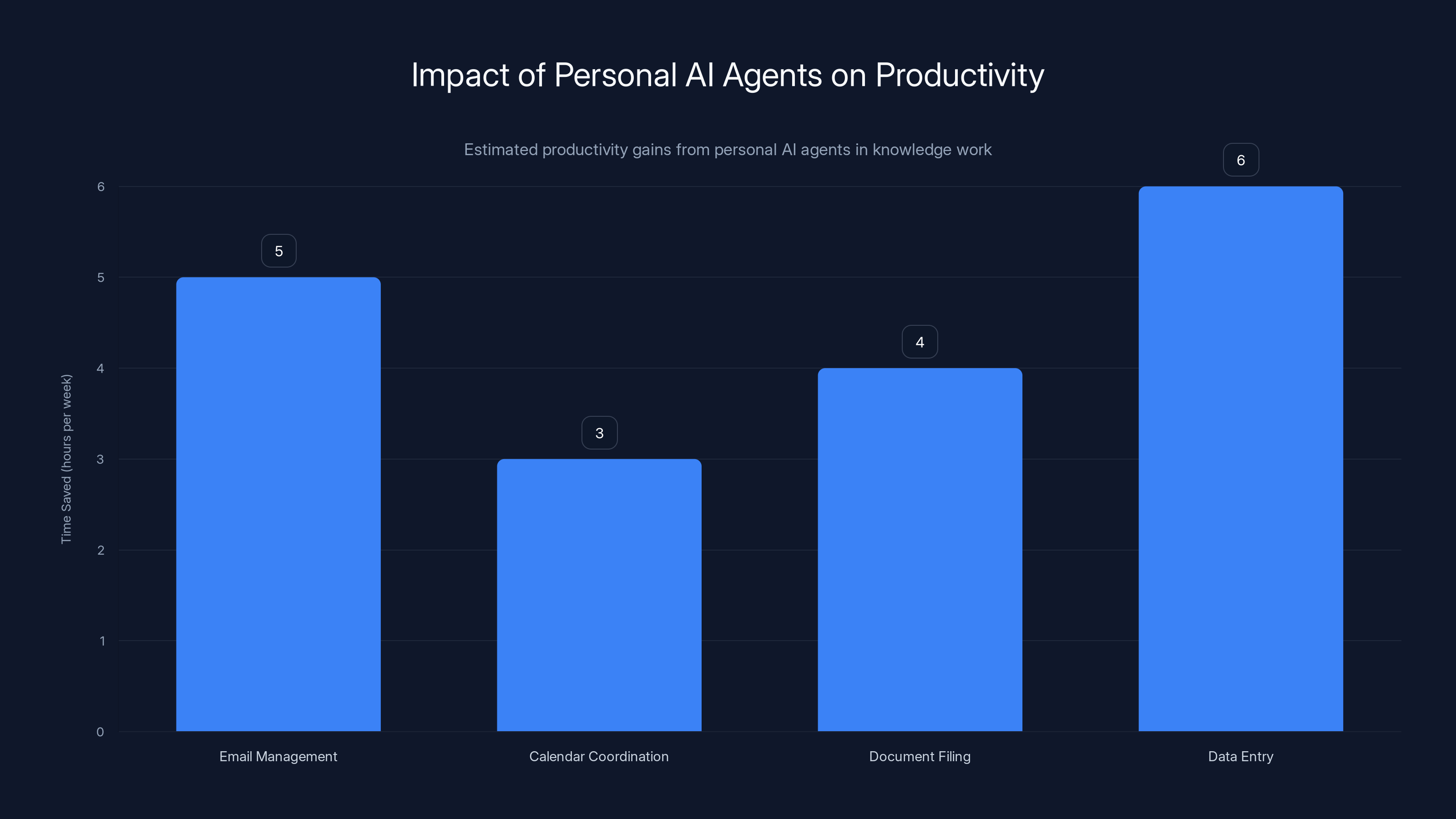

Estimated data suggests personal AI agents could save knowledge workers up to 18 hours per week by automating routine tasks, significantly boosting productivity.

TL;DR

- Who Got Hired: Peter Steinberger, creator of OpenClaw, joined OpenAI to lead the next generation of personal AI agents

- What OpenClaw Is: An open-source framework with 196,000 GitHub stars enabling users to build autonomous agents that control apps, email, smart homes, and more

- The Strategic Play: OpenAI and Meta were bidding billions for Steinberger and OpenClaw's community momentum, not its code

- What Happens Next: OpenClaw moves to a foundation to stay independent while Steinberger drives OpenAI's agent roadmap

- Why It Matters: Personal AI agents are becoming the next battleground in AI, with massive implications for productivity, automation, and how we interact with software

Personal agents can save significant time by automating routine tasks, with email management potentially reclaiming up to 7.5 hours weekly. Estimated data.

Understanding OpenClaw: What Actually Made It Special

Before we talk about why OpenAI wanted Peter Steinberger so badly, we need to understand what OpenClaw actually does and why developers fell in love with it.

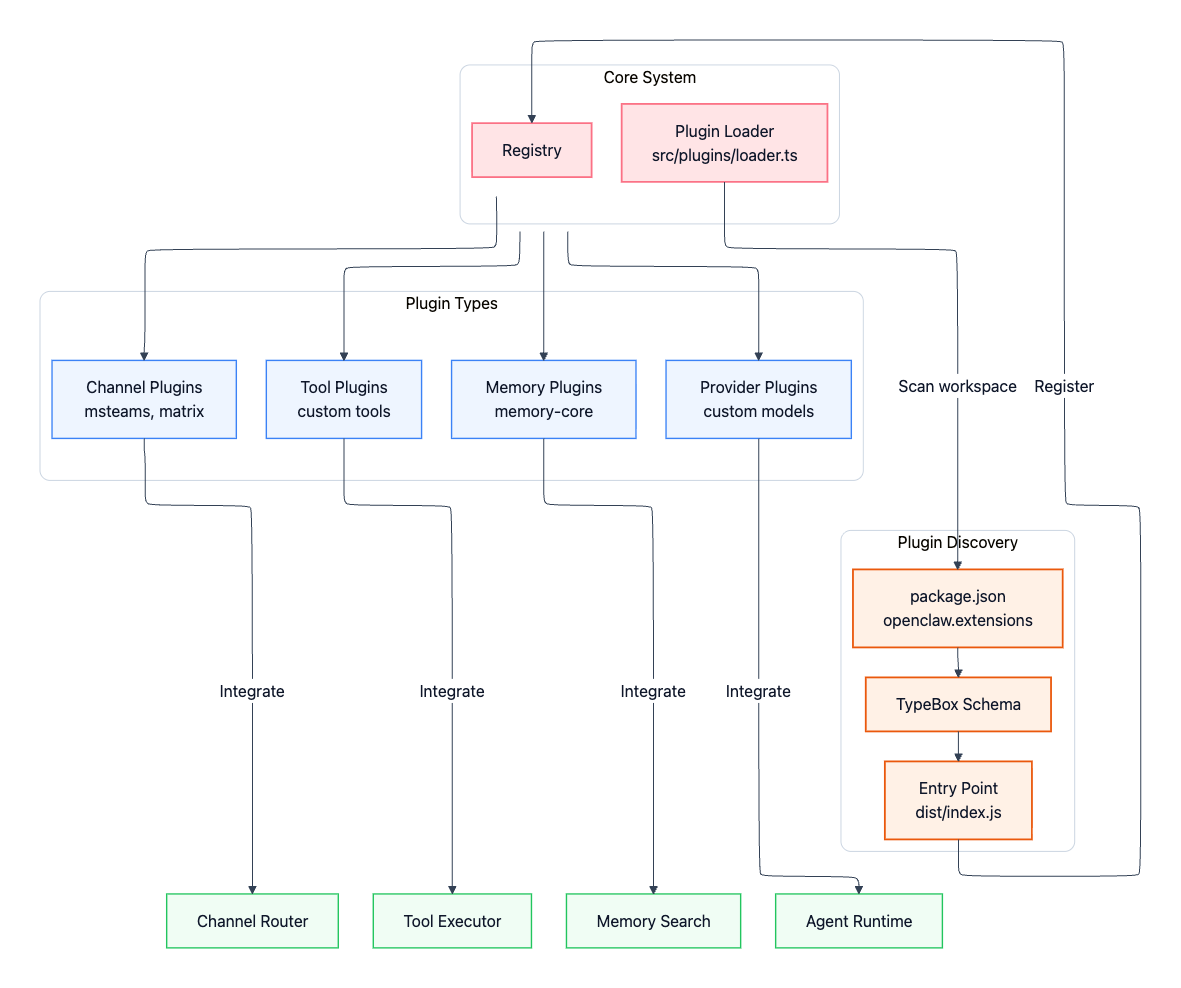

OpenClaw is a framework for building AI agents: autonomous programs that can interact with other applications on your behalf. Unlike traditional automation tools that require complex conditional logic or API integrations, OpenClaw uses large language models to understand what you want and figure out how to do it.

The concept isn't entirely new. Companies like Zapier and Make have been automating workflows for years. But they require you to define workflows explicitly: "If email arrives with keyword X, then do Y." OpenClaw flips this around. You describe the task in natural language, and the AI figures out how to accomplish it by controlling your applications.

Think of it like having a capable assistant who understands both your intent and the software you use. Tell the assistant "clean up my email inbox and mark newsletters for later," and it figures out the mechanics—opening Gmail, identifying newsletters, applying filters—without you writing a single line of code or creating a complex workflow.

The Technical Architecture That Changed Everything

What made OpenClaw technically superior to existing automation solutions was its architecture. The system didn't rely on parsing HTML or maintaining specific integrations for each application. Instead, it used computer vision combined with natural language understanding to interact with applications the way humans do: by reading screenshots and knowing where to click.

This approach, sometimes called "UI automation" or "agent-based RPA" (Robotic Process Automation), had a massive advantage: it worked with any application that had a visual interface. You didn't need official API support. You didn't need custom integrations built by OpenClaw's team. An agent could learn to use almost anything.

The technical stack leveraged state-of-the-art vision language models to analyze what's on screen, understand the context, and make decisions about what to do next. This required sophisticated prompt engineering, careful handling of state management, and robust error recovery—all things Steinberger and contributors had refined through months of development and real-world usage.
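The screenshot-driven loop described above can be sketched in a few lines. This is a minimal illustration, not OpenClaw's actual code: `run_agent`, `Action`, `FakeInbox`, and `stub_decide` are all hypothetical names, the "screenshot" is plain text so the example runs without a display, and the decision function is a stub standing in for a vision language model call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    kind: str         # "click" or "done" in this sketch
    target: str = ""  # label of the on-screen element to act on


def run_agent(goal: str,
              observe: Callable[[], str],
              decide: Callable[[str, str, List[Action]], Action],
              act: Callable[[Action], None],
              max_steps: int = 10) -> List[Action]:
    """Observe the screen, ask the model for one action, apply it, repeat."""
    history: List[Action] = []
    for _ in range(max_steps):  # step budget so a confused agent cannot loop forever
        screen = observe()
        action = decide(goal, screen, history)
        if action.kind == "done":
            break
        act(action)
        history.append(action)
    return history


class FakeInbox:
    """Toy stand-in for a real application window (assumption for the demo)."""

    def __init__(self) -> None:
        self.unread = ["newsletter: weekly digest", "invoice from ACME", "newsletter: sale"]
        self.archived: List[str] = []

    def screenshot(self) -> str:
        # A real agent would capture pixels; text keeps the sketch runnable.
        return "\n".join(self.unread)

    def apply(self, action: Action) -> None:
        if action.kind == "click" and action.target in self.unread:
            self.unread.remove(action.target)
            self.archived.append(action.target)


def stub_decide(goal: str, screen: str, history: List[Action]) -> Action:
    """Placeholder for a vision language model call (hypothetical)."""
    newsletters = [line for line in screen.splitlines() if line.startswith("newsletter")]
    return Action("click", newsletters[0]) if newsletters else Action("done")


inbox = FakeInbox()
history = run_agent("archive newsletters", inbox.screenshot, stub_decide, inbox.apply)
print(inbox.archived)
```

The step budget is the simplest form of the error recovery mentioned above: if the model keeps proposing actions that never finish the task, the loop stops instead of running indefinitely.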

Real-World Use Cases That Drove Adoption

OpenClaw's rise wasn't theoretical. Developers started building genuinely useful things with it, and word spread fast.

Developers used it to create agents that could write code, receiving natural language descriptions of features and outputting working code without human intervention. Others built inbox management agents that processed hundreds of emails automatically, categorizing, filing, and responding to routine messages. Some built shopping agents that could navigate websites, find products, compare prices, and complete purchases.

One fascinating category: personal assistant agents that integrated multiple applications. An agent might check your calendar, review your inbox, monitor your smart home sensors, and autonomously adjust your environment (dimming lights, closing blinds, adjusting temperature) based on your schedule and preferences.

The diversity of use cases was striking. Software engineers used it for code generation. Marketing teams used it for social media scheduling. Operations teams used it for customer support automation. The framework's flexibility meant that once you understood the basics, you could build almost anything.

What really drove adoption, though, was the developer experience. OpenClaw's documentation was excellent, the community was welcoming, and the framework was genuinely fun to experiment with. You could get your first agent running in minutes, not hours.

The Bidding War Nobody Saw Coming

By late 2024, both OpenAI and Meta realized they had a problem. The future of AI wasn't just about building better models. It was about building systems that could actually do useful work in the real world. Personal AI agents were becoming the obvious next frontier, and OpenClaw had already captured the mindshare and community trust.

According to reports, both companies made offers to Steinberger in the billions of dollars. This wasn't the kind of money normally offered for a single engineer or even a small team. It was the kind of valuation you see when entire companies are acquired. The difference: neither company was primarily interested in OpenClaw's codebase.

Why The Code Didn't Matter (Much)

This is crucial to understand. If OpenAI or Meta wanted the technology, they had the resources to build it themselves. OpenAI had world-class research teams. Meta had Llama and decades of AI expertise. Either company could've replicated OpenClaw's functionality in months.

What they couldn't replicate easily was OpenClaw's community. The 196,000 GitHub stars represented genuine developer enthusiasm. The 2 million weekly visitors meant tens of thousands of developers were actively using and building with OpenClaw. The project had reached that critical inflection point where it was becoming the default choice for a specific problem domain.

In venture capital and M&A, this dynamic is usually described as network effects, and it often leads to platform lock-in. Once enough developers choose your platform, other developers follow, creating a self-reinforcing cycle. Breaking that cycle requires acquiring the community itself, and the community follows the founder.

The Meta Factor

Meta's interest in the space made sense. The company had been investing heavily in AI agent research through its AI division. Meta was also competing with OpenAI across multiple fronts: language models, image generation, and increasingly, agent-based systems. Losing the OpenClaw acquisition to OpenAI meant Meta would be playing catch-up in the agent space, at least in terms of developer mindshare.

Meta had reportedly made offers in the billions as well, suggesting serious commitment to the space. But OpenAI won. The reasons likely relate to perception: developers already associated OpenAI with cutting-edge AI research and deployment at scale. Joining OpenAI positioned Steinberger and his work at what many developers saw as the center of AI innovation.

OpenAI's willingness to let OpenClaw remain independent and open-source probably also swayed the decision. Steinberger had built an open-source project on community principles. Joining a company that would have absorbed it into proprietary systems might have felt like a betrayal of that vision. OpenAI's offer to maintain OpenClaw's independence was likely the deciding factor.

OpenClaw has achieved significant traction with 196,000 GitHub stars and 2 million weekly visitors, highlighting its dominance in the personal agent development space. Estimated data for community engagement.

What Steinberger Actually Gets (And What OpenAI Gets Back)

Let's break down what this hiring decision means for both parties.

For Peter Steinberger

Steinberger gains access to OpenAI's resources, research capabilities, and distribution. OpenAI's API platform already powers thousands of applications. Steinberger's personal agent framework could potentially become the agent infrastructure that OpenAI recommends and supports.

He also gains runway and resources to develop personal agents to their fullest potential. Building agent frameworks is capital-intensive work: compute costs, research, testing, safety validation. Having OpenAI's backing means Steinberger doesn't have to worry about funding or sustainability. He can focus on the vision.

Maybe most importantly, Steinberger gains credibility and mainstream exposure. Being hired by Open AI is the kind of validation that opens doors in the AI industry. Conferences will invite him to speak. Journalists will cover his work. Investors will want to fund follow-on projects.

For OpenAI

OpenAI gets a smart engineer who's proven he can build developer communities and create tools people actually use. Steinberger isn't just a coder. He's demonstrated an understanding of developer needs, a knack for excellent documentation, and the ability to build community-driven projects that achieve critical mass.

More importantly, OpenAI gets Steinberger's vision for how personal agents should work. The company now has someone leading agent development who has hands-on experience building in the space, getting feedback from developers, and iterating based on real-world usage patterns.

This directly supports OpenAI's broader agent roadmap. Sam Altman has repeatedly discussed personal agents as the next frontier. Having Steinberger lead that effort ensures continuity between OpenAI's public vision and the technical reality of implementation.

There's also a defensive element. By hiring Steinberger, OpenAI prevents competitors from acquiring this talent and vision. The bidding war itself signals how valuable this human capital is in the agent space.

The Bigger Picture: Why Personal AI Agents Are About To Explode

To understand why OpenAI and Meta were willing to spend billions on Steinberger and OpenClaw, we need to zoom out and look at why personal AI agents are becoming so important.

The Productivity Crisis in Knowledge Work

Here's a problem that affects millions of knowledge workers: they spend more time managing information and routine tasks than doing actual work. Email management, calendar coordination, document filing, data entry, routine communications—these tasks consume hours daily and require conscious attention despite being completely automatable.

Traditional automation solutions haven't solved this because they're too rigid. They break when software updates change interfaces. They require technical expertise to set up. They can't handle nuance or exception cases.

Personal AI agents fix this. An agent can understand your needs conversationally, adapt to interface changes automatically, and handle edge cases with reasoning rather than brittle conditional logic. This unlocks productivity gains that automation has promised for decades but never quite delivered.

The Shift From Information Retrieval to Information Action

For the last fifteen years, AI progress focused on answering questions and providing information. Search engines improved. Question-answering systems emerged. Then large language models made information retrieval incredibly sophisticated.

But the bottleneck for most people isn't finding information anymore—it's acting on it. You know what emails matter. You know what tasks are important. You know what decisions need to be made. What you lack is time and energy to actually do these things.

Personal agents shift focus from "how do I find information" to "how do I act on information automatically." This is a subtle but massive shift in what AI systems need to do.

The Technical Infrastructure Finally Exists

Personal agents require several technical breakthroughs to work properly:

- Powerful language models that can understand complex tasks and reason about solutions

- Vision language models that can understand screens and interfaces

- Reliable APIs for applications that agents need to control

- Robust error handling that allows agents to recover from failures

- Cost-effective inference so running agents continuously doesn't become prohibitively expensive

As of 2025, all of these pieces exist. Language models like GPT-4 and its successors are sufficiently capable. Vision language models can reliably understand UI. Most applications have public APIs or support programmatic control. Inference costs have dropped dramatically.

For the first time in computing history, the technical foundations for personal agents are solid enough to make them practical at scale. This was true in 2024 when OpenClaw gained traction, and it's even more true in 2025.

The Developer Community Effect

Before OpenClaw, agent frameworks existed but hadn't achieved critical mass in the developer community. Anthropic's research on agentic AI was influential. LangChain provided tooling for building agent systems. But none had captured the imagination and enthusiasm that OpenClaw achieved.

OpenClaw's rapid growth signaled something important: developers were ready to build with agents, and the framework solved real problems they were facing. This community momentum became self-reinforcing. As more developers used OpenClaw, more examples and integrations emerged. As examples multiplied, adoption accelerated. By the time OpenAI and Meta were bidding for Steinberger, OpenClaw had achieved unmistakable mainstream momentum.

This is exactly the kind of community momentum that becomes hard to replicate or displace once established. It's why OpenAI's hiring of Steinberger and support for OpenClaw's independence was strategically brilliant: it preserved and strengthened the community while bringing its creator inside.

The alignment problem and security are rated as the most critical concerns for personal agents, highlighting the need for robust solutions. (Estimated data)

OpenAI's Agent Roadmap: What This Hire Signals

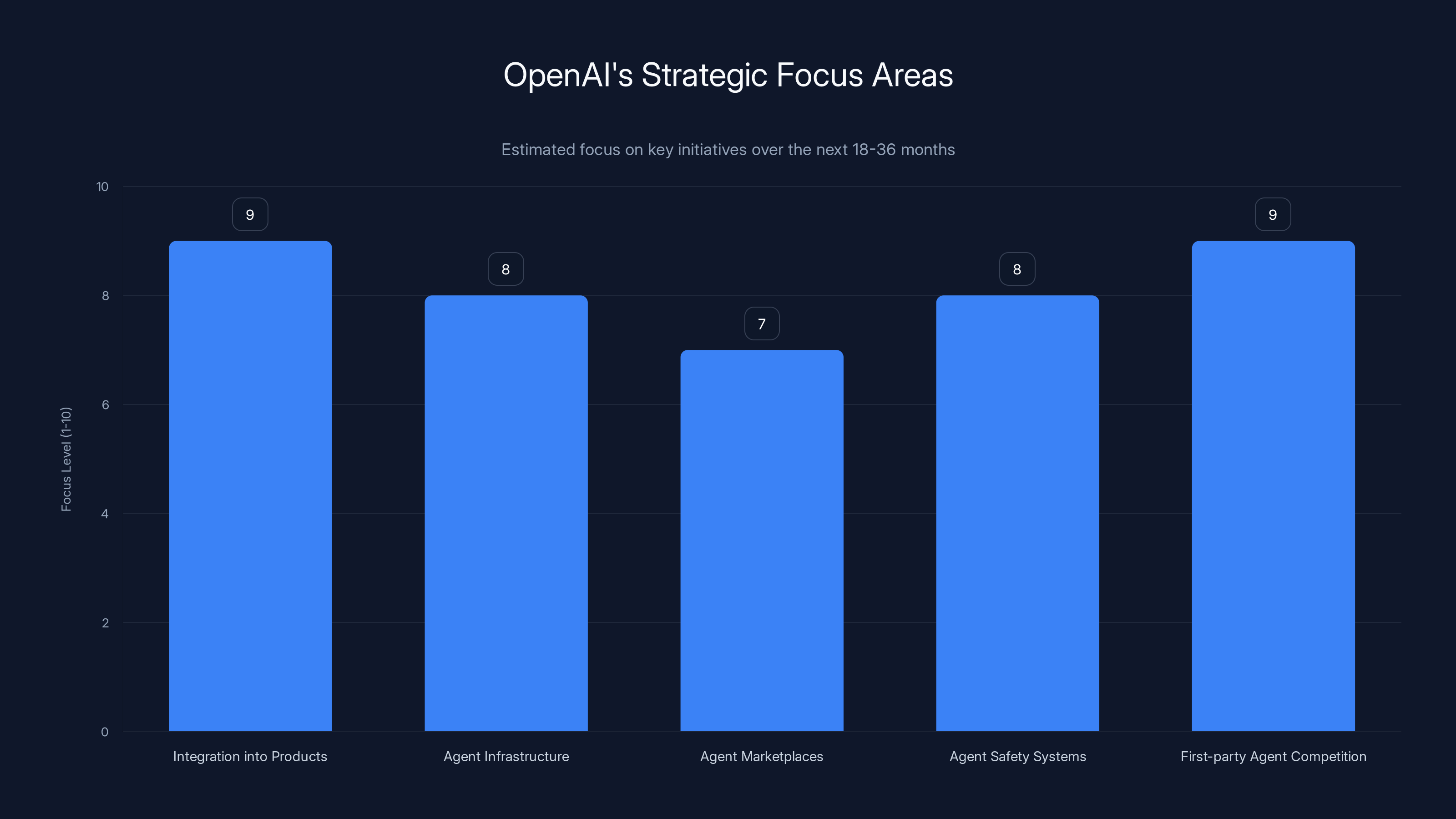

The hiring of Steinberger tells us a lot about OpenAI's actual priorities and plans for the next 18-36 months.

Personal Agents As Core Product, Not Side Feature

Some companies acquire talent and bury them in existing structures. OpenAI's public positioning of Steinberger suggests personal agents will be a central, visible part of the company's product roadmap. Sam Altman explicitly said Steinberger joined "to drive the next generation of personal agents." This isn't a sidelined research position; it's a driver's seat role.

What does this mean practically? It likely means OpenAI will be:

- Integrating agents directly into its products, making them first-class citizens alongside language models

- Building agent-specific infrastructure, like hosted agent execution, monitoring, and safety frameworks

- Creating agent marketplaces, allowing developers to share and monetize agents they've built

- Implementing agent safety systems, ensuring agents don't do harmful or unintended things

- Competing in the agent space, offering first-party agents for common tasks (email management, data analysis, customer support, etc.)

Each of these is a massive undertaking that requires deep expertise in agent systems. Bringing in Steinberger signals OpenAI's seriousness about executing on this vision.

The Foundation Move Is Critical

The fact that OpenClaw is moving to a foundation structure is remarkable in itself. Many tech acquisitions result in open-source projects being closed or neglected. OpenAI's decision to maintain OpenClaw as truly independent is unusual and significant.

This likely reflects Sam Altman's stated philosophy about open-source AI and developer ecosystems. Altman has repeatedly argued that open-source models and tools are important for the health of the AI industry, even when it creates competition for OpenAI.

For OpenClaw specifically, moving to a foundation means:

- The project can't be killed or abandoned if OpenAI's priorities shift

- The community maintains governance authority

- Competing companies (like Meta) can still contribute and benefit from improvements

- Developers retain confidence that the project has long-term stability

This is strategically brilliant because it doesn't just preserve OpenClaw; it strengthens the entire ecosystem that OpenAI benefits from. Developers will be more enthusiastic about building agent systems if they know the foundational tools remain independent and stable.

How OpenClaw Compares To Existing Agent Frameworks

To fully appreciate why OpenClaw became such a big deal, we need to understand how it compares to what existed before.

LangChain: The Existing Standard

LangChain had been the dominant framework for building agent systems. It provided abstractions for working with language models, managing prompts, handling tool use, and orchestrating workflows.

LangChain is powerful and has become the industry standard. But it requires developers to write significant code and explicitly define how agents should behave. It's more of a library that enables agent building than a framework that makes agent building intuitive.

Anthropic's Research Contributions

Anthropic published influential research on agentic AI systems, including work on tool use and agent safety. This research informed how many systems approach agent design. But Anthropic didn't initially release a comprehensive framework for building personal agents at scale.

Why OpenClaw Won

OpenClaw succeeded where others had good research or libraries because it solved the entire problem, not just parts of it. It offered:

- End-to-end solutions for common agent tasks

- Excellent developer documentation that made getting started easy

- Community examples showing how to build real things

- UI automation capabilities that worked with existing applications without API support

- Natural language interfaces that let non-technical people create agents

The combination of technical capability and developer experience created a flywheel effect that accelerated adoption.

OpenAI is likely to prioritize integrating personal agents into products and competing in the agent space, with significant focus on building infrastructure and safety systems. (Estimated data)

The Open Source Bet: Why OpenClaw Stays Independent

One of the most interesting aspects of this deal is OpenAI's commitment to keeping OpenClaw open-source and independent. This represents a bet on a specific vision of how the agent ecosystem should develop.

The Network Effects Argument

OpenAI likely believes that the agent ecosystem benefits from having an independent, community-driven foundation. When a tool is truly open-source and not controlled by a single company, it becomes neutral ground where competitors can collaborate.

Developers who might hesitate to build on a framework controlled by OpenAI will more enthusiastically build on an independent foundation. This creates more integrations, more examples, and more ecosystem value, which ultimately benefits OpenAI even though OpenAI doesn't directly control the project.

This is similar to how Linux became the dominant server operating system despite being open-source. The neutrality of open-source made it the obvious choice for enterprises that wanted to avoid vendor lock-in. The massive adoption created enormous ecosystem value that benefited companies like Red Hat and Canonical that built on top of Linux, even though they didn't control Linux itself.

The Credibility Angle

Keeping OpenClaw independent also protects OpenAI's credibility in the developer community. If OpenAI had absorbed OpenClaw and made it proprietary, developers would reasonably question whether OpenAI was making decisions for the common good or just for competitive advantage.

By preserving OpenClaw's independence, OpenAI demonstrates genuine commitment to open-source principles and developer freedom. This builds trust that carries over to OpenAI's other initiatives.

The Research Acceleration Angle

From a pure research perspective, an open-source framework gets contributions from thousands of developers and researchers worldwide. OpenAI could implement improvements in a closed environment, but it would benefit more from having the entire global developer community contributing ideas and improvements.

This is especially true for agent systems, which are still nascent technology. The best designs and approaches haven't been discovered yet. By keeping OpenClaw open-source and truly independent, OpenAI maximizes the chances that breakthrough ideas will emerge from the community.

What Personal Agents Actually Enable: Use Cases and Implications

Let's get concrete about what personal agents enable and why this matters.

Email and Communication Management

Imagine an agent that understands your email preferences, automatically categorizes incoming messages, identifies urgent items, drafts responses to routine inquiries, and summarizes long conversation threads.

This isn't just a nice-to-have. The average office worker spends 2+ hours daily on email, or roughly 10 hours over a five-day week. An agent that handles half or more of that routine email could reclaim 5-10 hours per week of productive time.

Agent frameworks like OpenClaw make this possible. An agent can:

- Read your email

- Understand the context and intent

- Identify the type of message (request, question, announcement, etc.)

- Determine appropriate actions (file, respond, escalate)

- Execute actions by controlling your email client
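The steps above map onto a small classify-then-act pipeline. The sketch below is illustrative only: `Email`, `classify`, `ACTIONS`, and `triage` are hypothetical names, and the keyword rules in `classify` stand in for the language model call a real agent would make. The final "execute" step is left as a returned plan so nothing touches a real mailbox.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Email:
    sender: str
    subject: str
    body: str


def classify(email: Email) -> str:
    """Keyword stub standing in for a language model classification call."""
    text = f"{email.subject} {email.body}".lower()
    if "unsubscribe" in text:
        return "newsletter"
    if "?" in text:
        return "question"
    if "please" in text:
        return "request"
    return "announcement"


# Message type -> action, mirroring the file / respond / escalate options above.
ACTIONS = {
    "newsletter": "file",
    "announcement": "file",
    "question": "respond",
    "request": "escalate",
}


def triage(inbox: List[Email]) -> List[Tuple[str, str, str]]:
    """Return (subject, message type, planned action) for each email."""
    plan = []
    for email in inbox:
        kind = classify(email)
        plan.append((email.subject, kind, ACTIONS[kind]))
    return plan


inbox = [
    Email("news@example.com", "Weekly digest", "Click unsubscribe to stop."),
    Email("pat@example.com", "Invoice", "Can you confirm the amount?"),
    Email("sam@example.com", "Access", "Please grant me access to the repo."),
]
plan = triage(inbox)
for row in plan:
    print(row)
```

Returning a plan instead of acting immediately keeps the decision step separate from the step that controls the email client, which makes the agent's choices easy to review before anything is changed.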

Data Analysis and Reporting

Businesses create hundreds of reports monthly. Most are routine—marketing reports showing website traffic, sales reports showing pipeline progress, operations reports showing system metrics.

Personal agents can automate these almost entirely. An agent configured for reporting can:

- Pull data from data sources (databases, APIs, spreadsheets)

- Analyze the data according to pre-defined criteria

- Generate written narratives explaining the data

- Create visualizations

- Distribute reports to stakeholders

Work that currently takes hours could run automatically overnight.
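As a rough sketch of the analyze-and-narrate portion of that pipeline, the example below summarizes a small traffic dataset and turns it into a sentence. The function names and the sample figures are invented for illustration; pulling from real data sources, charting, and distribution are left out.

```python
from statistics import mean
from typing import Dict


def summarize(visits_by_day: Dict[str, int]) -> dict:
    """Analyze the data according to fixed, pre-defined criteria."""
    return {
        "total": sum(visits_by_day.values()),
        "average": mean(visits_by_day.values()),
        "peak_day": max(visits_by_day, key=visits_by_day.get),
    }


def narrate(title: str, summary: dict) -> str:
    """Turn the summary into a one-sentence written narrative."""
    return (
        f"{title}: {summary['total']} visits in total, "
        f"averaging {summary['average']:.1f} per day, "
        f"with a peak on {summary['peak_day']}."
    )


# Made-up sample data standing in for a database or API pull.
traffic = {"Mon": 120, "Tue": 150, "Wed": 90}
summary = summarize(traffic)
report = narrate("Weekly traffic", summary)
print(report)
```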

Smart Home Integration

Control systems for smart homes are currently fragmented. Each device manufacturer (Philips Hue, Nest, etc.) offers a separate app and API. Creating sophisticated home automation requires integrating multiple systems, which is complex and error-prone.

A personal agent could abstract away this complexity. You'd describe what you want ("when I leave the office, prepare the house for evening") and the agent would figure out what calls to make to which systems to achieve your goal.

More ambitiously, agents could learn your preferences and anticipate your needs. Based on weather, calendar, and historical patterns, an agent could automatically adjust your home environment.

Customer Support and Service Automation

Companies currently use chatbots for customer support, but chatbots are limited to answering questions from predefined responses. Personal agents can actually help customers by controlling applications on their behalf.

A support agent could:

- Listen to a customer's problem

- Access the company's systems to understand the customer's account

- Identify and execute solutions

- Update systems to reflect the resolution

- Follow up to ensure satisfaction

This moves customer support from "answering questions" to "actually solving problems."

Software Development Assistance

Developers have long wanted AI that could actually write code. But AI code generation currently requires developers to review and test all generated code carefully.

Personal development agents could take this further. An agent could:

- Understand the requirements from natural language descriptions

- Write code

- Run tests automatically

- Debug failures and fix issues

- Deploy code to environments

This transforms AI from "code suggestion" to "autonomous coding teammate."
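The write, test, and fix steps form a loop that feeds test failures back into the next attempt. Here is a minimal sketch under stated assumptions: `build_until_green`, `stub_generate`, and `run_tests` are hypothetical names, the generator is a stub that returns a buggy function first and a corrected one once it receives feedback, and deployment is omitted.

```python
from typing import Callable, Optional, Tuple


def build_until_green(generate: Callable[[Optional[str]], str],
                      run_tests: Callable[[str], Optional[str]],
                      max_attempts: int = 3) -> Tuple[Optional[str], int]:
    """Generate code, test it, and retry with the failure message as feedback."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        code = generate(feedback)
        feedback = run_tests(code)  # None means every test passed
        if feedback is None:
            return code, attempt
    return None, max_attempts


def stub_generate(feedback: Optional[str]) -> str:
    """Stand-in for a model call: buggy first draft, fixed after feedback."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def run_tests(code: str) -> Optional[str]:
    """Run one check against the generated code.

    exec() is used only because the input here is a trusted stub;
    model-written code should run in a sandbox.
    """
    namespace: dict = {}
    exec(code, namespace)
    result = namespace["add"](2, 3)
    if result == 5:
        return None
    return f"add(2, 3) returned {result}, expected 5"


code, attempts = build_until_green(stub_generate, run_tests)
print(attempts)
```

The attempt cap matters in practice: without it, an agent that cannot satisfy the tests would keep generating and running code indefinitely.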

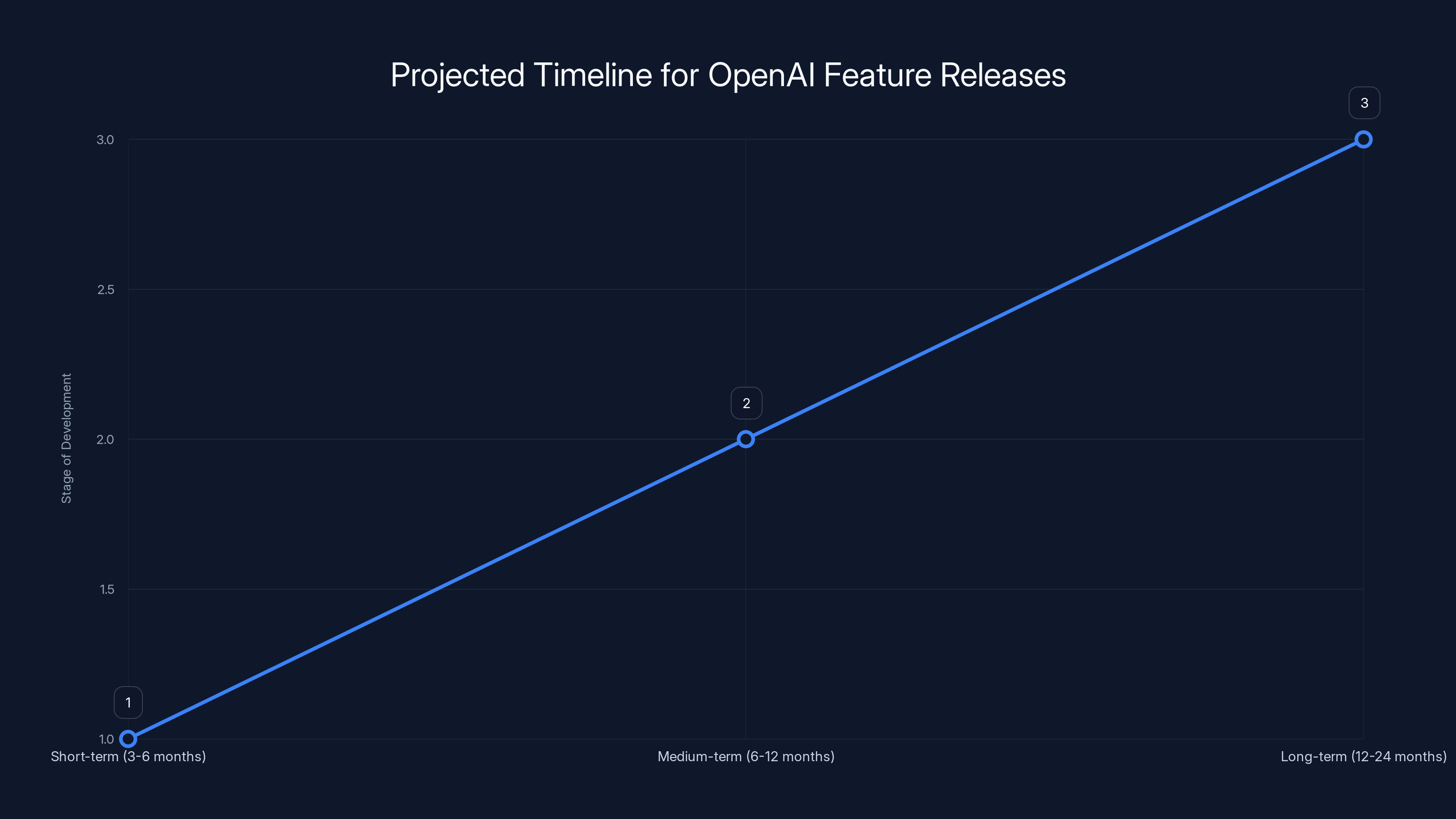

This timeline estimates when OpenAI's agent features will be announced, developed, and fully integrated. Estimated data based on typical development cycles.

The Safety and Ethics Considerations

With great capability comes great responsibility, and personal agents raise genuine safety and ethical concerns that OpenAI and the broader community need to address seriously.

Alignment Problem: Doing What You Want, Not What You Asked

One fundamental challenge: how do you ensure an agent does what you actually want, not just what you literally asked for?

Suppose you tell an agent "delete emails older than 6 months." The agent might literally delete all emails older than 6 months, including important ones you forgot about. Or it might reasonably infer you meant "delete promotional emails older than 6 months" based on context.

Getting this right requires agents to reason about your true intent, which is hard. It requires safety mechanisms that prevent agents from doing harmful things even when instructed.
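One common mitigation for the "delete emails older than 6 months" case is to separate planning from execution and require confirmation before anything destructive happens. The sketch below assumes a conservative default (promotional mail only) and uses hypothetical names throughout; it is one possible guard, not a description of how any particular agent framework handles this.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class Email:
    subject: str
    received: date
    promotional: bool


def plan_deletion(emails: List[Email], today: date,
                  max_age_days: int = 183,
                  promotional_only: bool = True) -> List[Email]:
    """Dry run: list what would be deleted without deleting anything."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        e for e in emails
        if e.received < cutoff and (e.promotional or not promotional_only)
    ]


def execute(emails: List[Email], plan: List[Email], confirmed: bool) -> List[Email]:
    """Apply the plan only after explicit confirmation; otherwise change nothing."""
    if not confirmed:
        return emails
    return [e for e in emails if e not in plan]


today = date(2025, 6, 30)
mailbox = [
    Email("Black Friday deals", date(2024, 11, 1), promotional=True),
    Email("Signed contract", date(2024, 10, 1), promotional=False),
    Email("Summer sale", date(2025, 6, 1), promotional=True),
]

cautious = plan_deletion(mailbox, today)                         # inferred intent
literal = plan_deletion(mailbox, today, promotional_only=False)  # literal reading
remaining = execute(mailbox, cautious, confirmed=True)
print([e.subject for e in remaining])
```

Showing the user the `cautious` and `literal` plans side by side makes the ambiguity in the instruction visible before the agent acts on either reading.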

OpenAI and the broader research community have been working on this problem, but it's not fully solved. As agents become more powerful, getting this right becomes more critical.

Transparency and Audit Trails

If an agent is managing your email, calendar, and finances, you need complete visibility into what the agent did and why. This requires detailed logging, audit trails, and the ability to understand the agent's reasoning.

Current agent frameworks often lack this transparency. OpenAI will likely need to invest heavily in agent explainability and auditing as part of its roadmap.
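At its simplest, an audit trail is an append-only record of what the agent did, to what, and why. The following sketch uses only the standard library; the `AuditLog` class and its field names are assumptions made for illustration, and a production system would also need tamper resistance and durable storage.

```python
import json
from datetime import datetime, timezone
from typing import List


class AuditLog:
    """Append-only record of agent actions with the stated reason for each."""

    def __init__(self) -> None:
        self._entries: List[dict] = []

    def record(self, action: str, target: str, reason: str) -> dict:
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "target": target,
            "reason": reason,
        }
        self._entries.append(entry)
        return entry

    def to_jsonl(self) -> str:
        """Serialize as JSON Lines, one entry per line, for later review."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self._entries)

    def for_target(self, target: str) -> List[dict]:
        """Answer 'what did the agent do to X, and why?'"""
        return [e for e in self._entries if e["target"] == target]


log = AuditLog()
log.record("archive", "email:weekly-digest", "matched newsletter pattern")
log.record("reschedule", "calendar:standup", "conflict with flight time")
print(log.to_jsonl())
```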

Security and Authorization

Personal agents need access to sensitive systems and data. This creates security risks. How do you ensure agents don't get compromised and maliciously repurposed? How do you control what information agents can access?

These are hard problems that security teams are just beginning to think seriously about.
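One widely used starting point is least-privilege scoping: the agent is granted an explicit set of permissions and every sensitive call checks them. The example below is a simplified sketch with invented scope names (`email:read`, `email:send`) and a hypothetical `ScopedAgent` class; real systems typically delegate this to OAuth scopes or a policy engine.

```python
from typing import Iterable, List


class ScopeError(PermissionError):
    """Raised when an agent attempts an action outside its granted scopes."""


class ScopedAgent:
    def __init__(self, name: str, granted_scopes: Iterable[str]) -> None:
        self.name = name
        self.granted = frozenset(granted_scopes)  # immutable after creation

    def _require(self, scope: str) -> None:
        if scope not in self.granted:
            raise ScopeError(f"{self.name} lacks scope {scope!r}")

    def read_inbox(self) -> List[str]:
        self._require("email:read")
        return ["Weekly digest", "Invoice"]  # placeholder data

    def send_email(self, to: str, body: str) -> str:
        self._require("email:send")
        return f"sent to {to}"


reader = ScopedAgent("inbox-summarizer", ["email:read"])
subjects = reader.read_inbox()

try:
    reader.send_email("pat@example.com", "hello")
    blocked = False
except ScopeError:
    blocked = True
print(blocked)
```

Even if a read-only agent is compromised, for example through a malicious email it processes, the damage is limited to what its scopes allow.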

Economic Implications

Massive automation of knowledge work raises obvious questions about employment and wealth distribution. If personal agents can do 50% of a knowledge worker's job automatically, what happens to labor markets?

This is less a technical problem and more a societal one, but OpenAI and other AI companies will face pressure to think about these implications.

How OpenAI Likely Plans To Compete in Agents

Now that Steinberger is on board, what competitive moves should we expect from OpenAI?

Direct Agent Integration

OpenAI will likely release agent functionality directly in ChatGPT and its API. Instead of requiring developers to build agents from scratch, OpenAI will offer pre-configured agents that can:

- Manage email

- Analyze data

- Control smart home devices

- Handle calendar and scheduling

- Draft communications

These first-party agents will serve as reference implementations and competitive offerings.

Agent Marketplace

OpenAI might create a marketplace where developers can share agents they've built. This would generate network effects: more agents attract more users, which attracts more developers, which creates more agents.

A marketplace also creates monetization opportunities. Developers could charge for premium agents. OpenAI could take a cut. This incentivizes developers to build increasingly sophisticated agents.

Fine-Tuning for Personal Agents

OpenAI offers fine-tuning for language models, allowing developers to adapt models to specific domains. A similar offering for agents could let organizations build custom agents tailored to their specific systems and workflows.

Fine-tuning agents would be more complex than fine-tuning language models, but it's a natural extension of OpenAI's current capabilities.

Safety and Compliance

OpenAI will likely invest heavily in safety systems for agents, ensuring they don't access unauthorized information, don't take harmful actions, and can be audited and controlled. This becomes a competitive differentiator. Enterprises will choose platforms that offer the strongest safety guarantees.

Enterprise Integration

OpenAI is already moving into enterprise with ChatGPT Enterprise and enterprise API options. Agents would likely extend this further, with enterprise-grade agent hosting, monitoring, and compliance features.

The Broader Implications For Developer Communities

The hiring of Steinberger and the commitment to OpenClaw's independence signal something important about how the AI industry is evolving.

Community-Driven Innovation Is Accelerating

OpenClaw succeeded because it was built by someone who understood developer needs and was responsive to community feedback. The project wasn't designed in a corporate boardroom. It emerged from actual developer use cases and problems.

This pattern is increasingly common in AI. Hugging Face succeeded by building tools for the ML community. LangChain succeeded by focusing on developer experience. OpenAI's hiring of Steinberger is essentially acknowledging: "We want to win in this space, and that requires integrating with community-driven innovation, not replacing it."

For developers, this means that tools built by developers for developers—and backed by major companies—are becoming the standard. This is good news for developer experience and ecosystem diversity.

Open Source Remains Strategically Important

OpenAI's decision to keep OpenClaw open-source is notable because it demonstrates that leading AI companies recognize open-source's importance even when they could afford to go proprietary.

The reasoning is straightforward: open ecosystems create more value and opportunity than closed ones. By supporting open-source, companies like OpenAI expand the total market and opportunity, which benefits them more than holding proprietary control of shrinking domains.

This suggests a future where AI development includes a healthy mix of:

- Proprietary, closed commercial AI systems

- Open-source models and tools

- Hybrid approaches where companies build proprietary systems on top of open foundations

Developer Leverage Is Increasing

The fact that two companies reportedly bid billions for a single developer and his project highlights something important: developer leverage is increasing. The scarcest resource in AI isn't compute or capital; it's expert developers who understand how to build systems people actually want to use.

This is great news for talented developers. It means opportunities are abundant, compensation is rising, and you have leverage in negotiations. If you build something the community loves, major companies will come courting.

For less experienced developers, it means now is the time to build expertise in emerging areas like agent systems. The market will reward that expertise handsomely.

Timeline: When We'll Actually See These Features

Let's be realistic about timelines. Hiring someone and actually shipping products are different things.

Short-term (Next 3-6 months)

Expect announcements, blog posts, and research papers about Open AI's agent roadmap. Steinberger will likely be introduced at Open AI's next developer conference. We'll get concrete details about what Open AI is building and when to expect it.

Open Claw will transition to its foundation structure. The project will continue evolving, but now with explicit support from Open AI, community leadership, and foundation governance.

Medium-term (6-12 months)

Open AI will likely release agent-specific features or products. This might be:

- Agent building tools in the OpenAI API

- Personal agents available in ChatGPT

- Documentation and guides for building agents

- Early-stage marketplace for agents

These won't be fully baked, but they'll be usable and will get developer feedback flowing back to the team.

Long-term (12-24 months)

With data from thousands of developers actually using agent systems, Open AI will ship more sophisticated and specialized agent tooling. This includes safety systems, fine-tuning capabilities, enterprise features, and integrations with popular services.

By late 2026 or early 2027, personal agents will likely be a major component of OpenAI's product offering, with OpenClaw serving as the open-source foundation.

What This Means For Your Organization

If you work with AI or are thinking about integrating agent systems into your workflow, Steinberger's hiring and Open Claw's future should matter to you.

If You're A Developer

Start experimenting with agent frameworks now. Open Claw is the obvious choice—it's well-documented, has active community support, and now has backing from a major company. Build a personal project using agents. Understand what's possible and what's difficult.

If you specialize in automation or AI systems, deep expertise in agent development will become increasingly valuable. Companies will be looking for developers who understand how to build reliable, safe agents.

If You're In Operations or Customer Service

Start thinking about which of your manual processes could be automated with agents. Email management, data entry, routine customer inquiries, report generation—all of these are candidates for agent automation.

The good news: you don't need to wait for Open AI to ship everything. Open Claw works today. You can start experimenting with agents now and build capabilities that will transfer directly to more sophisticated systems as the technology improves.
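One rough way to decide where to start is to estimate how many hours per week each process could plausibly hand off to an agent. The sketch below is a back-of-the-envelope heuristic, not a method from OpenClaw or OpenAI; the `Process` fields and every number in it are made-up placeholders to replace with your own measurements.

```python
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    runs_per_week: int
    minutes_per_run: float
    rule_based_share: float  # 0.0 to 1.0: fraction of runs needing no human judgment


def automatable_hours(process: Process) -> float:
    """Estimated weekly hours an agent could take over for this process."""
    total_minutes = process.runs_per_week * process.minutes_per_run
    return total_minutes * process.rule_based_share / 60


# Placeholder figures for illustration only.
candidates = [
    Process("email triage", runs_per_week=200, minutes_per_run=2.0, rule_based_share=0.6),
    Process("data entry", runs_per_week=60, minutes_per_run=5.0, rule_based_share=0.9),
    Process("report generation", runs_per_week=4, minutes_per_run=45.0, rule_based_share=0.5),
]

# Highest estimated savings first.
ranked = sorted(candidates, key=automatable_hours, reverse=True)
for process in ranked:
    print(f"{process.name}: {automatable_hours(process):.1f} h/week")
```

A ranking like this only tells you where the time goes. It says nothing about risk, so a process that touches sensitive data may still belong lower on the list.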

If You're Building AI Products

Agents are becoming table stakes for AI products. Users will increasingly expect systems to actually do things, not just answer questions. If your product doesn't have agent capabilities or at least a roadmap for them, you're falling behind.

The challenge: agent development is hard. Safety, reliability, and getting alignment right are non-trivial problems. But they're solvable problems, and solutions are emerging. Starting now, even with imperfect implementations, positions you ahead of competitors who wait for the perfect solution.

The Competitive Landscape Shifting

OpenAI's hiring of Steinberger changes the competitive dynamics of the AI market in important ways.

Meta Is Playing Catch-Up

Meta's loss here is significant. The company reportedly offered billions and still lost out. This doesn't mean Meta won't succeed in agent systems; it has the resources and the talent. But it is now entering the space without the community momentum that OpenClaw provides.

Meta will likely double down on Llama development, hoping that open models and strong capabilities attract developers. This could work, but it's a longer, slower path than acquiring an existing community.

Anthropic Remains Focused On Safety

Anthropic has been more cautious about agent systems, prioritizing safety research. This continues to differentiate them. Anthropic's models might not power agents as visibly as Open AI's, but Anthropic's research on agentic AI and safety will influence how responsible agent systems are built industry-wide.

Smaller AI Companies Need Differentiation

Smaller AI companies can't compete with Open AI's resources on agent infrastructure. But they can compete on specialization. An agent platform designed specifically for healthcare, finance, or legal work could succeed by going deeper on domain-specific safety, compliance, and integration.

The agent infrastructure becomes standardized (likely based on Open Claw and Open AI's offerings). The moat for other companies comes from domain expertise and specific workflows.

The Long Game: Personal Agents As Infrastructure

This hiring signals something even bigger: personal agents are becoming infrastructure, like web browsers or email clients.

Twenty years ago, companies realized that providing a good web browser was strategically important. Fifteen years ago, companies realized that providing good email clients mattered. Today, it's becoming clear that providing agent infrastructure will matter enormously.

Companies that control agent infrastructure have enormous leverage—they're essentially controlling how work gets automated. Open AI is positioning itself to be one of the primary providers of this infrastructure, supporting an open-source foundation to avoid vendor lock-in accusations.

This is a long-term play. The company is thinking in terms of years and decades, not quarters. That's the kind of thinking required to make bets of this size on a single engineer and project.

FAQ

What is Open Claw?

OpenClaw is an open-source framework that allows developers to build AI agents: autonomous programs that can interact with other applications and services on behalf of users. It uses large language models combined with vision capabilities to understand what's on a screen and control applications intelligently, without requiring explicit APIs or complex automation rules. The framework has gained significant traction with 196,000 GitHub stars and 2 million weekly visitors, making it the dominant platform for personal agent development.

What does Peter Steinberger do?

Peter Steinberger is the developer who created OpenClaw and recently joined OpenAI to lead the company's next generation of personal agent development. His hiring represents OpenAI's commitment to advancing personal agents as a core product area. Despite joining OpenAI, Steinberger is ensuring that OpenClaw remains independent and open-source by moving it to a foundation governance structure, which preserves the project's community-driven nature.

Why did Open AI pay billions for just one engineer?

OpenAI and Meta's reported multi-billion-dollar bidding war for Steinberger wasn't primarily about acquiring OpenClaw's code or technology; both companies could build similar functionality from scratch. The real value was Steinberger's proven ability to build developer communities, understand user needs deeply, and create tools that achieve critical adoption at scale. The community momentum (2 million weekly visitors, massive GitHub presence) and developer mindshare around OpenClaw made Steinberger worth pursuing.

How will Open Claw stay independent after Open AI hired its creator?

OpenClaw will transition to a foundation governance structure, similar to how projects like Linux operate independently despite contributions from large companies. OpenAI supports this arrangement because it creates stronger network effects: developers are more likely to invest in frameworks they know can't suddenly be made proprietary or abandoned. By keeping OpenClaw independent, OpenAI benefits from greater ecosystem adoption while positioning its own agent services as the premium commercial offering.

What personal agent use cases are most practical right now?

The most practical immediate applications include email and communication management (sorting, categorizing, drafting responses), data analysis and routine report generation, smart home integration and automation, customer support ticket handling, and repetitive data entry tasks. These areas have high manual overhead in most organizations and clear economic benefits from automation. Development assistance and software engineering automation are also viable but require more specialized agent configuration.

When will personal agents be available in ChatGPT or the OpenAI API?

While OpenAI hasn't released specific timelines, Steinberger's hiring and public positioning suggest agent features will roll out over the next 6-18 months. Expect initial releases within 6-12 months, likely starting with ChatGPT features and expanding to API capabilities. More sophisticated features like fine-tuning, marketplaces, and enterprise integration will likely follow over subsequent years. Organizations interested in agents can start experimenting with OpenClaw today without waiting for OpenAI's full product releases.

What are the safety concerns with personal agents?

Key safety challenges include alignment (ensuring agents do what you actually want, not just what you literally asked), transparency and auditability (being able to see and understand what an agent did and why), security (protecting against compromised agents that are repurposed maliciously), authorization (controlling which information and systems agents can access), and economic implications (labor market effects from automation). Open AI and the broader research community are actively working on these problems, and safety mechanisms will be critical components of commercial agent offerings.

How does OpenClaw compare to alternatives like LangChain?

LangChain is a powerful library for building agent systems but requires more code and explicit workflow definition. OpenClaw provides higher-level abstractions that make it easier to create functional agents quickly, includes UI automation capabilities that work with applications without API support, and features natural language interfaces for non-technical users. OpenClaw's community and documentation also make it more approachable for developers new to agent systems, which contributed to its rapid adoption and the eventual interest from major AI companies.

Should organizations build agents now or wait for Open AI's products?

Organizations should start experimenting now rather than waiting. OpenClaw is production-ready, well-documented, and will benefit from foundation support after transitioning. Early experimentation lets teams understand which processes benefit most from automation, identify challenges in their specific domain, and build institutional knowledge that will transfer directly to more sophisticated agent systems as OpenAI releases its products. By the time commercial products are fully mature, those teams will be ahead of competitors still evaluating the technology.

What happens to the AI agent market after this hiring?

Expect accelerated development of agent capabilities across the industry as other companies recognize agents as strategic. Meta will likely invest heavily in agent development despite losing the Steinberger bidding war. Anthropic will continue advancing agent safety research. Smaller companies will specialize in domain-specific agent applications. The overall market grows significantly as agents move from research to production systems, with Open Claw likely emerging as the foundational open-source platform similar to how Linux became the foundation of modern infrastructure.

Conclusion: The Agent Era Is Here

Peter Steinberger's hiring by Open AI isn't just a staffing announcement. It's a declaration that the era of personal AI agents is beginning, and companies are betting accordingly.

Open Claw captured developer imagination because it solved a real problem—automating routine tasks that consume time but require minimal actual intelligence. The framework worked, was well-documented, and had a welcoming community. That combination is rare enough to matter.

OpenAI's hiring of Steinberger, coupled with its commitment to keep OpenClaw independent, is strategically sophisticated. The company is signaling that it recognizes open-source communities as more valuable than proprietary control, at least in this domain. This builds credibility and ecosystem health in a way that would be impossible if OpenAI had absorbed OpenClaw into closed systems.

Looking ahead, expect personal agents to become as common as email clients or web browsers. They'll manage our communications, analyze our data, control our environments, and automate our workflows. The frameworks and companies that win in this space won't just be those with the best technology—they'll be those that best understand developer needs and build communities that attract talented builders.

Open Claw, with Steinberger now inside Open AI and the project backed by foundation support, is positioned to be a primary platform for this shift. Developers who understand how to build with agents will find enormous opportunities. Organizations that automate intelligently will unlock significant productivity gains. And the companies that provide agent infrastructure will accumulate power and influence.

The personal agent revolution isn't coming. It's already here. It's just not evenly distributed yet. But with Open AI's backing, Open Claw's community, and Steinberger's leadership, the distribution will accelerate rapidly.

If you haven't started experimenting with agents yet, now's the time. The technology is ready, the frameworks are mature, and the market momentum is unmistakable. Start small with Open Claw, understand what's possible, and prepare for a world where personal agents do your routine work automatically.

The future of AI isn't more intelligence—it's more agency. And that future is arriving faster than most people realize.

Key Takeaways

- OpenAI hired Peter Steinberger, creator of OpenClaw, a framework with 196,000 GitHub stars and 2 million weekly visitors, to lead personal agent development

- Both OpenAI and Meta reportedly bid billions for Steinberger and OpenClaw's community momentum, not primarily for the code itself, signaling the strategic importance of agent frameworks

- OpenClaw remains independent through a foundation structure, indicating OpenAI's belief that open-source tools create stronger network effects and ecosystem value

- Personal agents are becoming infrastructure, enabling automation of email management, reporting, smart homes, customer support, and software development

- Expect agent features to roll out over 6-18 months across ChatGPT and OpenAI's API, with marketplaces and enterprise offerings following in subsequent years

- Organizations should start experimenting with OpenClaw immediately rather than waiting for OpenAI's products to fully mature

- Developer expertise in agent systems will command premium compensation and opportunities as companies race to automate workflows

