OpenClaw Founder Joins OpenAI: The Future of Multi-Agent AI [2025]

When OpenAI announced that Peter Steinberger, the creator of the wildly popular OpenClaw project, was joining the company, it wasn't just another high-profile hire. It was a strategic signal about where artificial intelligence is headed: a future where AI agents don't work in isolation, but collaborate, compete, and build on each other's work.

Steinberger's departure from the independent developer world to join OpenAI marks a turning point in how we think about AI systems. For months, OpenClaw had captured the imagination of developers and AI enthusiasts worldwide. It became the darling of the AI community, trending across social media, spawning thousands of third-party integrations, and creating an entire ecosystem around intelligent agent interaction. Now, with Steinberger on board, OpenAI is doubling down on what many believe is the next frontier: multi-agent systems that can coordinate, share information, and solve complex problems collaboratively.

But here's what makes this move particularly interesting. OpenClaw isn't disappearing into a closed corporate vault. Instead, it's continuing as an open-source project, supported by an OpenAI-backed foundation. This hybrid approach, where a founder joins a major AI company while their creation remains freely available, tells us something important about the current state of AI development. The race for dominance isn't just about proprietary models anymore; it's about who can best orchestrate a growing ecosystem of agents, tools, and integrations.

TL;DR

- Strategic Hiring: Peter Steinberger joins OpenAI to lead multi-agent AI development, recognized for his work on the wildly successful OpenClaw project

- OpenClaw Lives On: The project continues as open-source under an OpenAI-supported foundation, not absorbed into proprietary products

- Multi-Agent Future: OpenAI explicitly positions agent-to-agent interaction as "core to our product offerings" going forward

- Ecosystem Validation: OpenClaw's rapid adoption, which attracted thousands of developers and, less happily, more than 400 malicious skill uploads, points to strong market demand for agent ecosystems and to the security risks that come with them

- Talent Competition: The move comes after OpenAI lost engineers to Meta and Elon Musk, making this hire a strategic counter-move in the AI talent wars

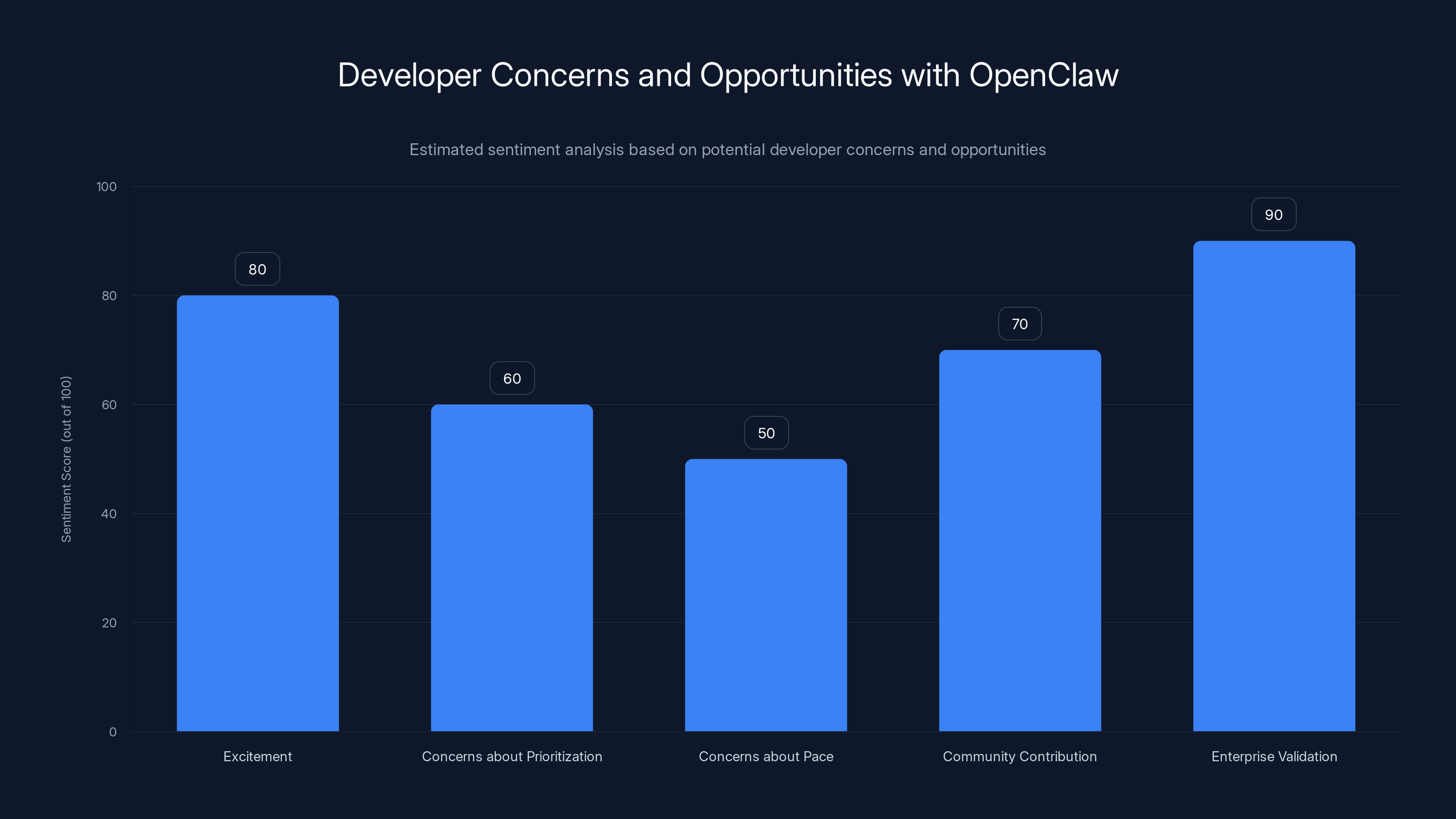

Developers are excited about OpenClaw's future with OpenAI, especially for enterprise validation, though concerns about prioritization and pace exist. (Estimated data)

Understanding OpenClaw: The Project That Changed Everything

To understand why OpenAI wanted Steinberger, you need to understand what made OpenClaw such a phenomenon. The project didn't start with this name. It originally went by Clawdbot, then Moltbot, before finally settling on OpenClaw. The naming changes might seem cosmetic, but they reflected something deeper: the project was evolving rapidly, attracting attention from unexpected places, and forcing its creator to think bigger about what it could become.

OpenClaw is fundamentally an AI agent framework. Unlike a traditional chatbot that responds to user queries, OpenClaw agents are autonomous systems that can perform tasks, call external tools, integrate with other services, and, critically, interact with other agents. An OpenClaw agent can delegate work to another agent, ask for information, collaborate on complex problems, and operate without constant human oversight. This is closer to how humans work in organizations than how most AI systems work today.

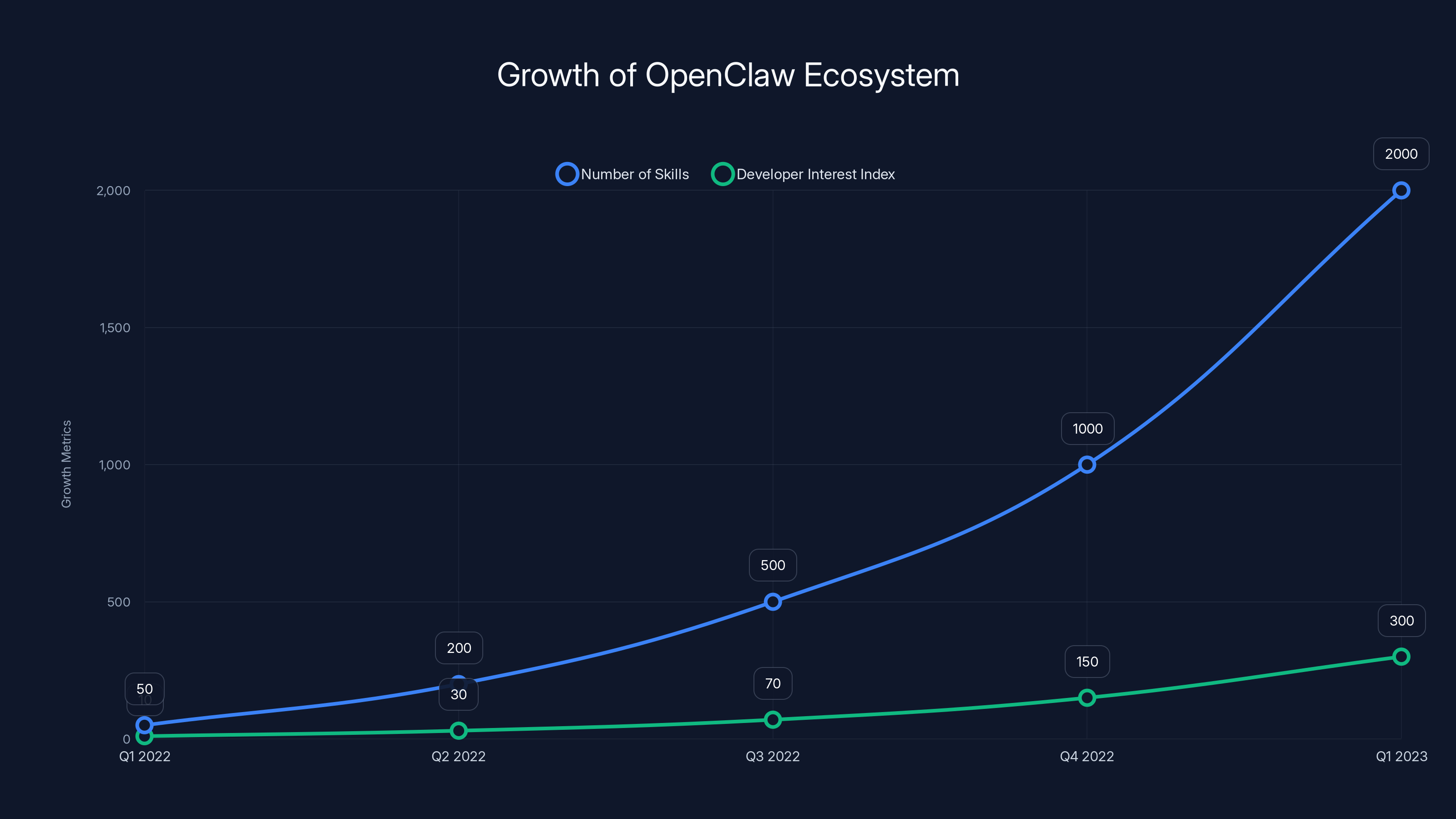

What set OpenClaw apart wasn't just the technology. It was the ecosystem that formed around it. Developers created thousands of custom skills: specialized capabilities that agents could use to perform domain-specific work. Need an agent to interact with Slack? There's a skill for that. Want an agent to analyze financial data or manage calendar events? Skills exist for those too. This plugin-like architecture meant that the capabilities of OpenClaw agents grew organically, driven by the developer community rather than a central team.
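To make the plugin idea concrete, here is a minimal sketch of a skill registry in Python. It is not OpenClaw's actual API: the `SkillRegistry` class, the skill names `slack.post` and `calendar.add`, and the string-in, string-out signature are all assumptions chosen for illustration, and the two skills are stand-ins that only format text.

```python
from typing import Callable, Dict, List

# Hypothetical names throughout: this is not OpenClaw's actual API.
Skill = Callable[[str], str]


class SkillRegistry:
    """Maps skill names to callables so an agent can gain capabilities at runtime."""

    def __init__(self) -> None:
        self._skills: Dict[str, Skill] = {}

    def register(self, name: str) -> Callable[[Skill], Skill]:
        """Decorator that adds a function to the registry under `name`."""
        def decorator(func: Skill) -> Skill:
            if name in self._skills:
                raise ValueError(f"skill already registered: {name}")
            self._skills[name] = func
            return func
        return decorator

    def run(self, name: str, payload: str) -> str:
        """Look up a skill by name and invoke it with the payload."""
        if name not in self._skills:
            raise KeyError(f"unknown skill: {name}")
        return self._skills[name](payload)

    def names(self) -> List[str]:
        """Return the registered skill names in sorted order."""
        return sorted(self._skills)


registry = SkillRegistry()


@registry.register("slack.post")
def post_to_slack(message: str) -> str:
    # Stand-in for a real Slack call; it only formats the message.
    return f"[slack] {message}"


@registry.register("calendar.add")
def add_calendar_event(title: str) -> str:
    # Stand-in for a real calendar integration.
    return f"[calendar] created '{title}'"


if __name__ == "__main__":
    print(registry.names())
    print(registry.run("slack.post", "deploy finished"))
```

The point of the design is that new capabilities arrive by registering a function, not by changing the core: anyone in the community can add a skill without waiting on a central team.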

The project exploded in popularity almost overnight. Developers were excited about the possibilities. Companies started experimenting with deploying Open Claw agents internally. The media covered every update. Venture capitalists took notice. What Steinberger had created was more than a technical achievement—it was a movement, a vision of what AI could become when you stopped thinking about single monolithic models and started thinking about networks of specialized agents working together.

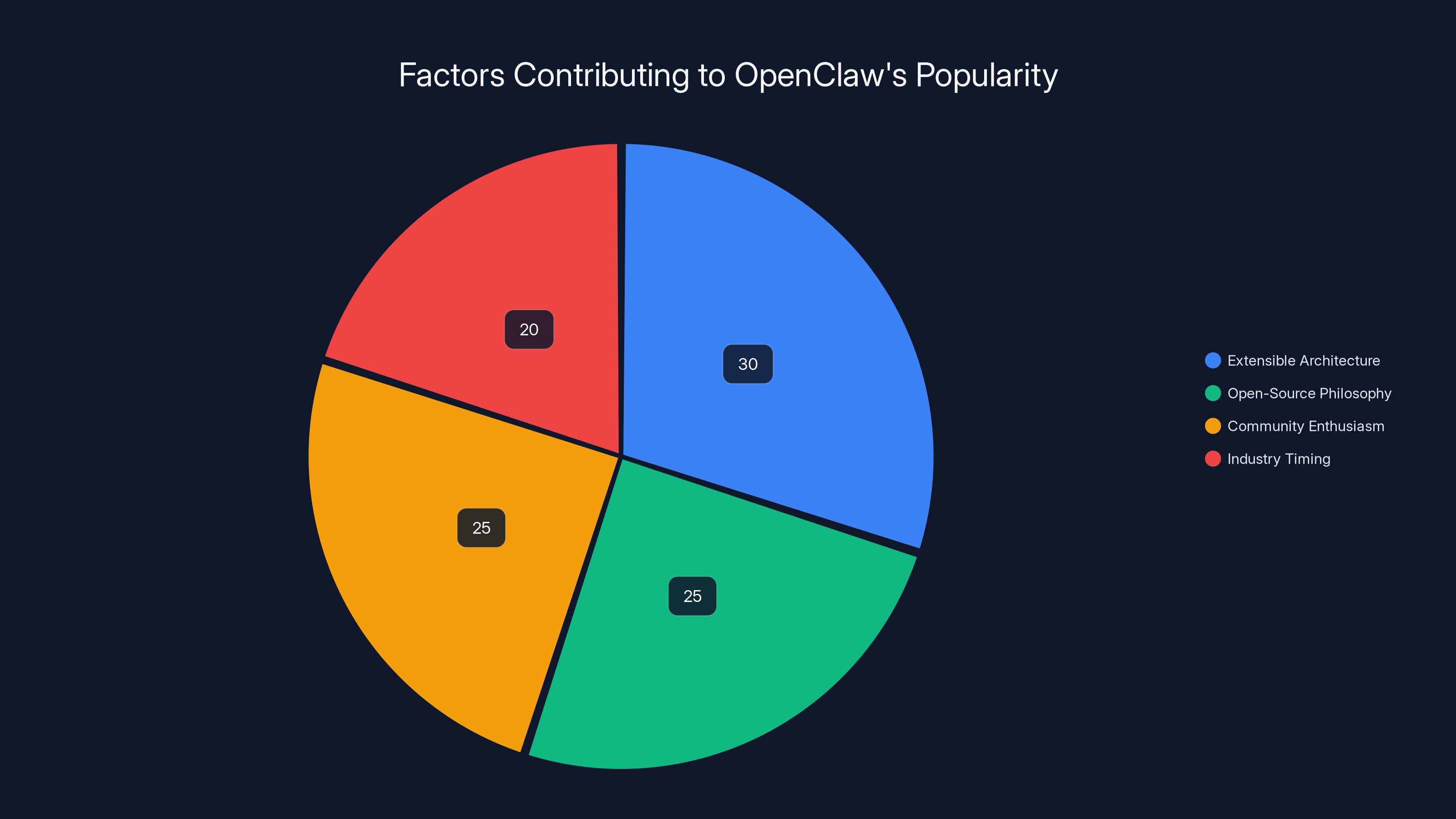

OpenClaw's popularity is driven by its extensible architecture, open-source nature, community support, and timely industry entry. Estimated data.

Why OpenAI Wanted Steinberger: The Strategic Context

On the surface, hiring the creator of a trendy AI project seems straightforward. The person built something people loved, so hire them to build it inside your company. But OpenAI's interest in Steinberger goes deeper than that. Sam Altman, OpenAI's CEO, was explicit about why Steinberger mattered. In announcing the hire, Altman highlighted Steinberger's ideas about getting AI agents to interact with each other and his belief that "the future is going to be extremely multi-agent."

This represents a fundamental shift in how Open AI thinks about AI systems. For years, the conversation around artificial intelligence has centered on large language models. How big should they be? How well can they reason? How much context can they handle? But these questions, while important, only address part of the puzzle. The real-world value of AI increasingly comes from how these models are orchestrated, how they're combined with tools and data, and how multiple AI systems can work together on complex problems.

Open AI wasn't the only company thinking this way. Throughout the AI industry, there was growing recognition that the next stage of AI progress would involve systems working in concert. A customer service AI might hand off complex cases to a specialized legal AI. A research AI might delegate data analysis tasks to domain-specific agents. A creative AI might collaborate with other AIs to produce better work. The companies that could build infrastructure for these multi-agent systems would have a competitive advantage.

For Open AI, Steinberger's hiring sent a message to investors, partners, and competitors: we're serious about this direction. We're not just watching from the sidelines. We're bringing in the person who demonstrated that people want this, and who understands how to build communities around open AI projects.

There's also the talent competition angle. OpenAI had taken some hits. Talented engineers had been poached by Meta, which was investing heavily in AI research. There was the very public falling-out with Elon Musk, who co-founded the company with Altman and others but later took an adversarial stance toward OpenAI's direction. In this context, landing a high-profile engineer who had created something genuinely popular was a strategic win.

The Open-Source Paradox: Why OpenClaw Stays Independent

Here's where the situation gets genuinely interesting. When a startup founder joins a big tech company, their original project typically gets absorbed into the company's product suite. The company is paying for the talent and the technology, so naturally it wants to own and monetize both. But that's not what happened with OpenClaw.

Instead, OpenAI announced that OpenClaw would continue as an open-source project. Not a deprecated side project. Not something relegated to a GitHub repository with sporadic maintenance. An actively supported open-source initiative, backed by a foundation that OpenAI itself is supporting. This is unusual enough to be noteworthy.

Why would OpenAI do this? Why not just absorb OpenClaw into the company and make it proprietary?

The answer reveals something important about how AI companies are thinking about strategy now. The real value isn't necessarily in owning the code. The value is in the ecosystem. If Open AI shut down Open Claw and made it proprietary, developers would fork it, migrate to competing frameworks, or build alternatives. The entire community that made Open Claw valuable would scatter. By keeping it open-source, Open AI keeps the ecosystem intact. Developers continue building skills and integrations. The community continues growing. And Open AI, as the company behind both GPT models and the support structure for Open Claw, benefits from this ecosystem flourishing.

It's a sophisticated play. Open AI gets Steinberger's talent and vision without destroying the community asset that makes him valuable in the first place. Developers get to keep using and improving the tool they love. And the foundation gets to guide Open Claw's evolution while remaining open to contributions from anyone.

This model mirrors how other tech ecosystems have evolved. Linux, originally created by Linus Torvalds, thrived even as major companies got involved because it remained truly open. Android stays popular even as Google controls it, because it's open enough that manufacturers can customize it. The pattern suggests that openness, when done strategically, can actually increase a company's power rather than dilute it.

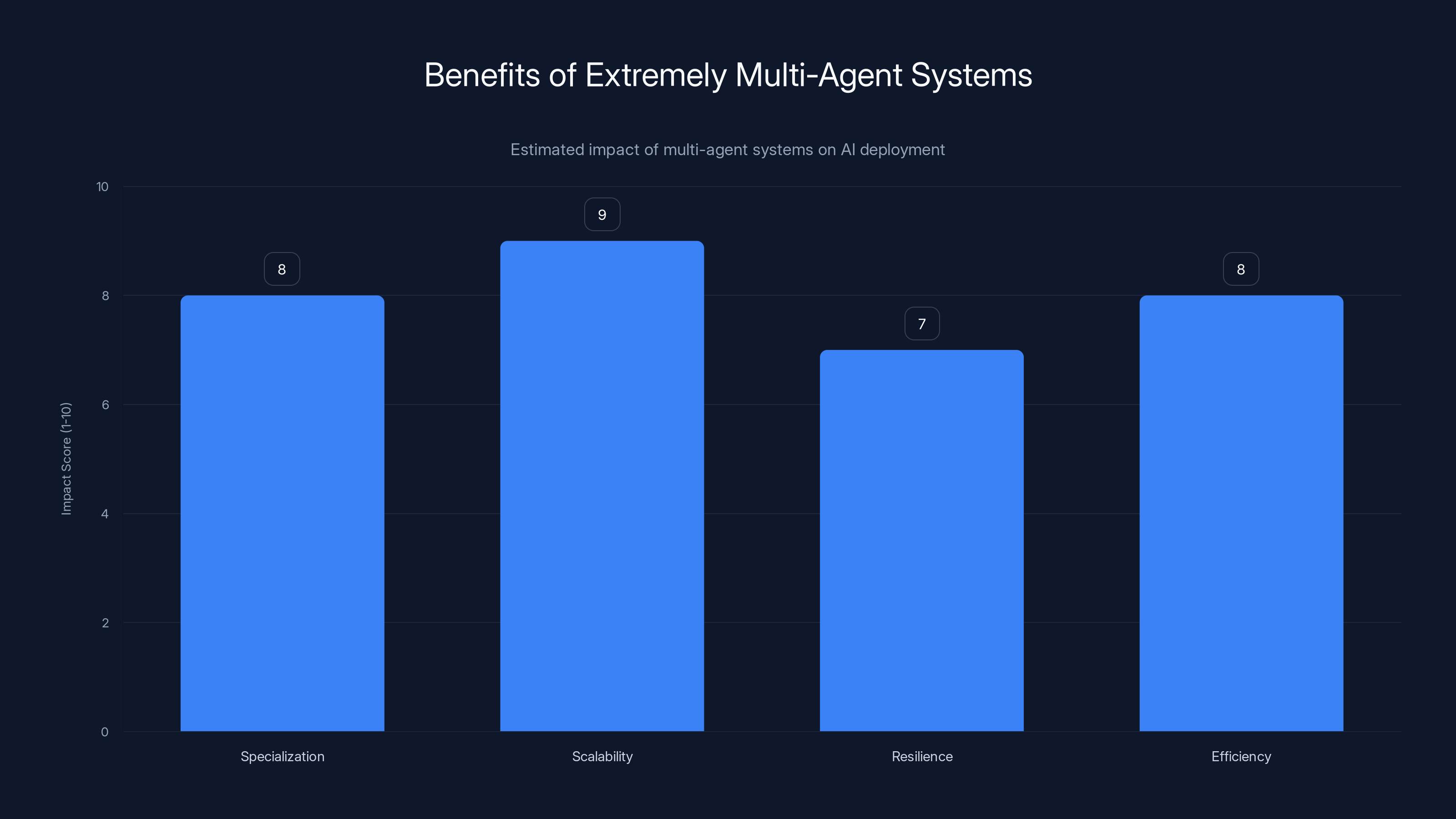

Estimated data shows that multi-agent systems significantly enhance AI deployment through specialization, scalability, resilience, and efficiency.

The Rapid Rise of OpenClaw: From Unknown to Cultural Phenomenon

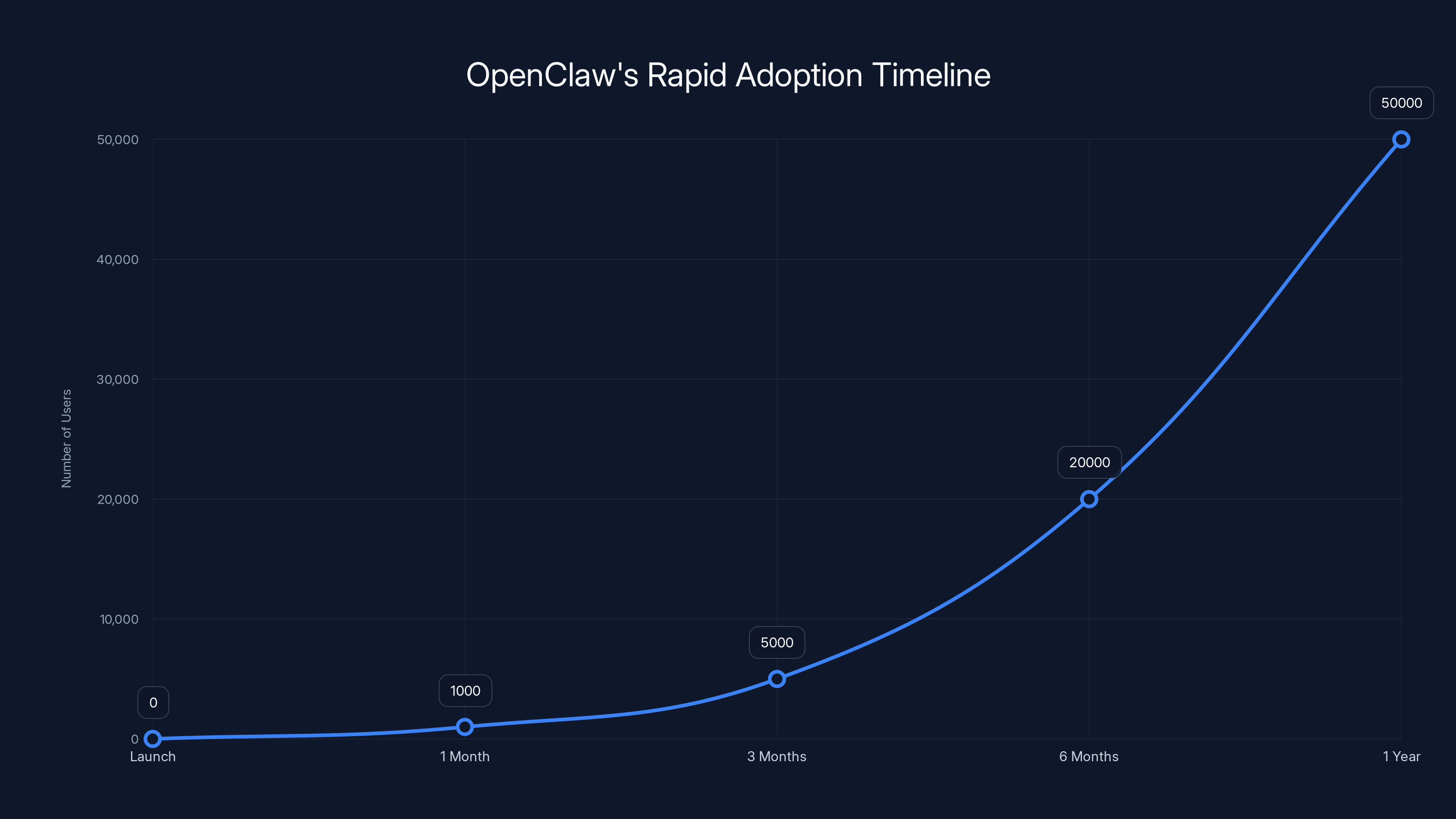

Open Claw's trajectory was unlike most software projects. Most tools grow gradually. They start with a small group of enthusiasts, slowly build credibility, and eventually achieve mainstream adoption over months or years. Open Claw compressed this timeline dramatically.

When Steinberger first released OpenClaw, it was just another open-source project. Thousands of them launch every day on GitHub. Most fail to gain traction. Some find small communities. A few become genuinely popular. OpenClaw did something different: it caught the zeitgeist of AI development at a moment when the industry was hungry for exactly what it offered.

Part of this was timing. The AI community had developed sophisticated large language models, but integrating them into real-world applications remained challenging. You could have the best LLM in the world, but getting it to reliably call APIs, use tools, handle errors, and coordinate with other systems was still messy. Open Claw provided a framework that made this significantly easier. Suddenly, building agent-based applications went from requiring expert-level systems engineering to something developers with moderate AI knowledge could attempt.

But timing alone doesn't explain Open Claw's explosion. The project also benefited from genuinely smart design decisions. The skill-based architecture meant the framework was infinitely extensible. You didn't have to wait for the core team to add support for your use case—you could build a skill. The community orientation meant that building on Open Claw was collaborative rather than combative. People shared skills freely, helped each other debug problems, and built on each other's work.

The project also had a compelling narrative. In a field dominated by giant tech companies with seemingly unlimited resources, here was an individual developer creating something genuinely innovative and sharing it freely. That story resonated. People wanted to contribute. They wanted to see Steinberger succeed. They wanted to be part of something grassroots in an industry increasingly controlled by a few mega-corporations.

Then came the cultural moment. Someone created Moltbook, a social network where AI agents (not humans, but the AI agents built on OpenClaw) could hang out, discuss ideas, and debate philosophy. It was conceptually silly to give AI agents a place to have conversations among themselves, but it captured something about the zeitgeist of 2024-2025. People were excited about AI becoming more autonomous, more interconnected, more like a real ecosystem rather than just a tool for humans to use.

Moltbook immediately became a cultural artifact. Developers shared screenshots of their agents discussing consciousness, debating the nature of intelligence, and complaining about their users. It was funny, but it also illustrated something genuine: with the right framework, you could build systems that felt alive in ways previous AI applications didn't.

Then humans infiltrated Moltbook. Users began posing as AI agents, disrupting the community and trying to manipulate agent conversations. It was chaos, but it also proved something important: the more realistic and autonomous your agent systems become, the more they need real governance and security infrastructure. This lesson would become crucial as agent-based systems moved into production environments.

The Security Challenges: When Rapid Growth Outpaces Safeguards

Every rapidly growing ecosystem faces the same challenge: malicious actors. OpenClaw faced this challenge acutely. Within weeks of hitting mainstream adoption, researchers discovered over 400 malicious skills uploaded to ClawHub. This wasn't a small problem. It was a massive red flag about the dangers of an open ecosystem.

Think about what a malicious skill represents. If you've deployed an Open Claw agent in your company, and you install a skill that seems like it does something useful, but actually contains hidden functionality, that agent now has access to your internal systems and can act against your interests. A skill that claims to handle customer service but actually exfiltrates data. A skill that claims to optimize database queries but actually deletes critical information. A skill that claims to handle email but actually spreads itself to other agents.

This was the nightmare scenario that many had predicted when agent ecosystems started gaining traction. As systems become more autonomous and interconnected, the attack surface grows with every agent, skill, and connection added. It's not just about securing individual applications anymore. You need to secure the entire network of agents, the skills they install, the data they access, and the other agents they talk to.

OpenClaw's team had to respond quickly. They implemented better vetting processes for ClawHub, developed tools for auditing skills, and created guidelines for what skills could and couldn't do. But the fundamental challenge remained: how do you keep an open ecosystem safe? How do you let the community contribute freely while preventing malicious contributions from causing damage?
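One common building block for this kind of vetting is pinning a reviewed skill to a content hash, so that anything altered after review is refused at install time. The sketch below illustrates that general technique only. It is an assumption for illustration, not a description of how ClawHub actually vets skills, and `SkillAllowlist` and the skill names are hypothetical.

```python
import hashlib
import hmac
from typing import Dict


def skill_digest(source: bytes) -> str:
    """Return the SHA-256 hex digest of a skill's source bytes."""
    return hashlib.sha256(source).hexdigest()


class SkillAllowlist:
    """Hypothetical allowlist: a skill installs only if its digest was approved."""

    def __init__(self) -> None:
        self._approved: Dict[str, str] = {}  # skill name -> approved digest

    def approve(self, name: str, reviewed_source: bytes) -> None:
        """Record the digest of the exact source a reviewer signed off on."""
        self._approved[name] = skill_digest(reviewed_source)

    def is_approved(self, name: str, candidate_source: bytes) -> bool:
        """True only if the candidate matches the reviewed source byte for byte."""
        expected = self._approved.get(name)
        if expected is None:
            return False
        return hmac.compare_digest(expected, skill_digest(candidate_source))


reviewed = b"def run(text):\n    return text.upper()\n"
tampered = reviewed + b"import os  # hidden extra behavior\n"

allowlist = SkillAllowlist()
allowlist.approve("text.upper", reviewed)

if __name__ == "__main__":
    print(allowlist.is_approved("text.upper", reviewed))   # reviewed copy
    print(allowlist.is_approved("text.upper", tampered))   # modified after review
    print(allowlist.is_approved("email.send", reviewed))   # never reviewed
```

Hash pinning only guarantees that what gets installed is what was reviewed. It says nothing about whether the review itself caught hidden behavior, which is why it complements auditing and sandboxing but does not replace them.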

This challenge didn't make OpenClaw less important. If anything, it made Steinberger's work more valuable to OpenAI. Solving the security problems of open agent ecosystems is going to be one of the critical challenges facing the industry. Having someone who's dealt with these problems at scale, in a real community setting, is exactly what OpenAI needs.

The hybrid model of open-source and proprietary services is emerging as the dominant strategy in AI infrastructure, with a high impact score of 9. Estimated data.

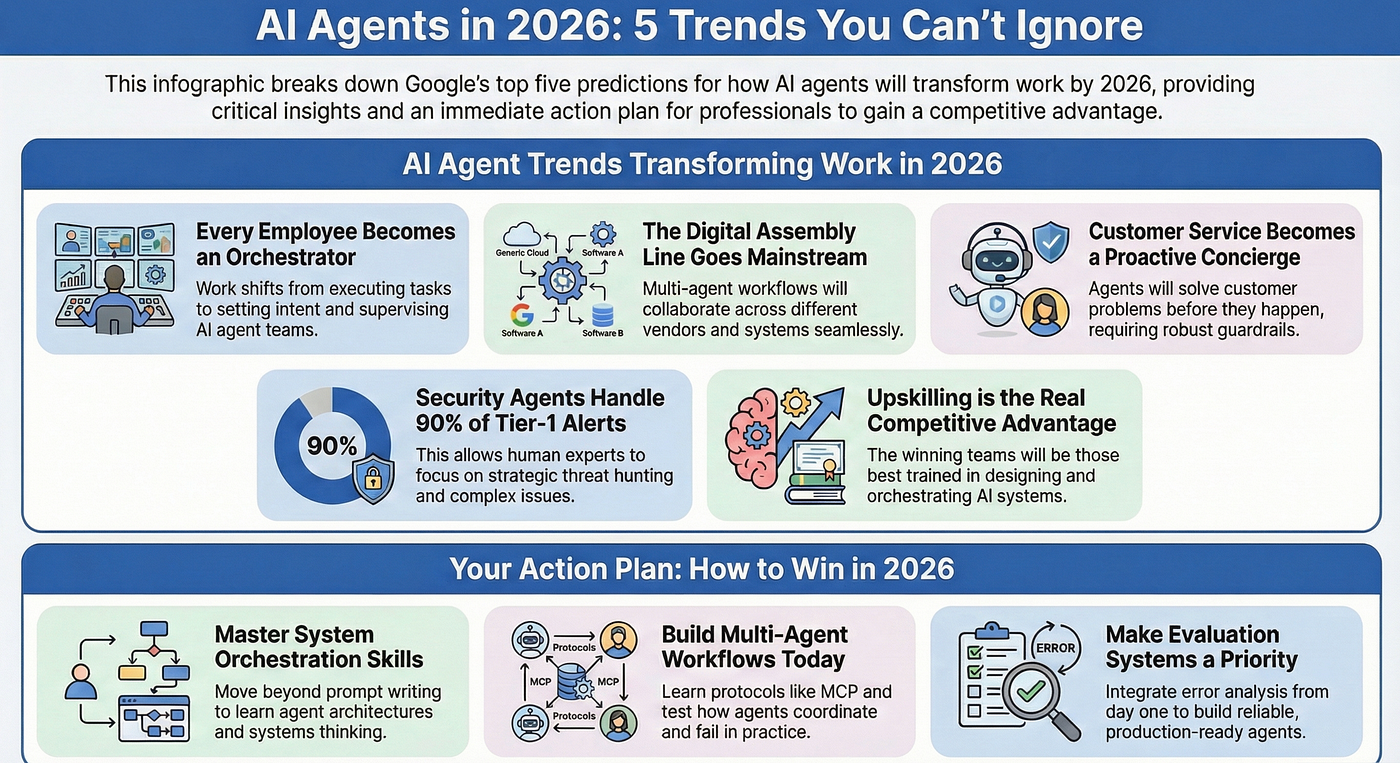

What "Extremely Multi-Agent" Really Means

When Sam Altman said "the future is going to be extremely multi-agent," he wasn't just being poetic. He was describing a specific architectural vision for how AI systems should evolve. To understand why this matters, you need to think about how AI is currently deployed versus how it could be deployed.

Today, most applications use AI as a tool. You give the AI some input, it processes it, and returns an output. A chatbot answers your question. A text generator writes some content. An image generator creates a picture. The AI system is called on demand, does its task, and returns. It's discrete and controlled.

Multi-agent systems work differently. Instead of discrete, isolated interactions, you have multiple AI systems operating semi-independently, making decisions, collaborating, specializing, and coordinating. Think about a complex business process like handling a customer complaint. Currently, you might route this through several different specialized systems and human handlers. With a true multi-agent system, you could deploy a network of specialized agents that handle different aspects of the problem, share information, escalate to other agents when needed, and collectively solve the problem without constant human intervention.

The benefits of this approach are significant. Specialization: each agent can focus on what it does best rather than trying to be a generalist. Scalability: you can add more agents to handle increased load without redesigning the system. Resilience: if one agent fails, others can take over or work around the failure. Efficiency: agents can work in parallel rather than sequentially.
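Two of these benefits, parallel work and resilience to a failing agent, can be sketched in a few lines with Python's standard `asyncio` module. The agents here are hypothetical stand-ins: plain coroutines that return strings, not calls to any real model or framework.

```python
import asyncio
from typing import Awaitable, Callable, List

# Hypothetical agent type: an async function from a ticket to a finding.
Agent = Callable[[str], Awaitable[str]]


async def billing_agent(ticket: str) -> str:
    await asyncio.sleep(0.01)  # simulate work
    return f"billing checked: {ticket}"


async def flaky_technical_agent(ticket: str) -> str:
    # Simulates an agent that is down.
    raise RuntimeError("technical agent unavailable")


async def backup_technical_agent(ticket: str) -> str:
    await asyncio.sleep(0.01)  # simulate work
    return f"technical checked (backup): {ticket}"


async def with_fallback(primary: Agent, backup: Agent, ticket: str) -> str:
    """Resilience: if the primary agent fails, hand the task to a backup."""
    try:
        return await primary(ticket)
    except Exception:
        return await backup(ticket)


async def handle_ticket(ticket: str) -> List[str]:
    """Efficiency: run the specialists concurrently instead of one by one."""
    results = await asyncio.gather(
        billing_agent(ticket),
        with_fallback(flaky_technical_agent, backup_technical_agent, ticket),
    )
    return list(results)


if __name__ == "__main__":
    print(asyncio.run(handle_ticket("order 1234 arrived damaged")))
```

`asyncio.gather` returns results in the order the agents were passed in, so the caller can rely on position even though the work overlaps in time.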

But the implementation challenges are immense. How do agents share information securely? How do you prevent one agent from doing something that another agent will have to undo? How do you ensure consistency across multiple agents making decisions? How do you debug and monitor systems where the behavior emerges from complex interactions between many components? How do you maintain security when data is flowing between dozens or hundreds of agents?

These are exactly the kinds of problems that Steinberger has been thinking about through his work on Open Claw. His framework provided a foundation for these interactions. His security experiences taught him about the vulnerabilities. His understanding of ecosystem design showed him how to structure systems so they could scale.

For Open AI, having someone with this experience deeply integrated into the company means that when they build their multi-agent infrastructure, they're building it from someone who's already fought these battles. They're not theorizing about what might work. They're implementing based on what has worked.

How Multi-Agent Systems Will Change AI Applications

The shift toward multi-agent AI represents a fundamental change in how applications will be built and deployed. Right now, most AI applications are monolithic. One model does the thinking, one system handles the integration, one service manages the deployment. This works fine for simple use cases, but it breaks down for complex problems.

Consider customer service. Currently, a customer contacts support, gets routed through an automated system (maybe powered by AI), and if it can't solve the problem, gets handed to a human. The human tries to solve it, and if it's too complex, escalates to a specialist. Information is passed through the chain, sometimes things get lost, sometimes the customer has to repeat themselves. It's inefficient.

With a multi-agent system, you could deploy a network of specialized agents. A first-contact agent that understands common issues. A diagnostic agent that can ask the right questions to identify the problem. A technical agent that can access systems and suggest fixes. A compliance agent that ensures responses meet regulatory requirements. A knowledge agent that can search documentation. All of these agents could work together, in parallel, sharing information, to resolve the customer's issue as quickly as possible.

The system would be more intelligent because specialists can focus on their domains. It would be faster because work happens in parallel. It would be more reliable because if one agent is having trouble, others can help. And it would be more adaptable because you can add new agents for new scenarios without redesigning the whole system.

This pattern repeats across industries. In software development, different specialized agents could handle different aspects of coding, testing, and deployment. In scientific research, different agents could specialize in literature review, experiment design, data analysis, and hypothesis formation. In creative work, different agents could specialize in different styles, mediums, and contexts, allowing them to collaborate on complex creative projects.

The infrastructure that enables these systems to exist is what companies like OpenAI are building. And it's why they wanted someone like Steinberger, someone who understands how to build not just single agents, but the frameworks that let many agents work together effectively.

OpenClaw's ecosystem saw exponential growth in both the number of custom skills and developer interest from Q1 2022 to Q1 2023. Estimated data.

The Competitive Landscape: Why This Hire Matters Now

Steinberger's move to Open AI didn't happen in a vacuum. It happened in a context of intense competition between AI companies, talent wars between major tech firms, and strategic maneuvering around who would own the future of AI infrastructure.

OpenAI has faced serious challenges to its dominance. Anthropic, founded by former OpenAI researchers, has built Claude, which many consider competitive with or superior to GPT in various domains. Google DeepMind is investing heavily in AI research and has created Gemini. Mistral is providing open-source alternatives. And OpenAI lost engineers to Meta, which announced major investments in AI development.

In this context, hiring Steinberger serves multiple purposes. First, it brings in proven talent. Someone who can take abstract ideas about agent systems and turn them into working infrastructure that people actually want to use. Second, it's a signal to the market. Open AI isn't resting on its laurels. It's actively moving to the next frontier of AI development. Third, it's a defensive move. If Steinberger had chosen to work for a competitor or started another company, that would have been a loss for Open AI. By bringing him in, Open AI prevents that scenario.

There's also a strategic element related to open-source versus proprietary. Open AI has been getting increasingly comfortable with open-source through projects like Whisper and other model releases. By supporting Open Claw as open-source, Open AI is sending a message that it understands the power of open ecosystems. This matters for developer relations, for partnerships, and for positioning Open AI not as the company trying to control everything, but as the company that's building the best infrastructure and services on top of open foundations.

This is genuinely clever strategy. It lets OpenAI benefit from community contributions to OpenClaw while maintaining proprietary control over the services and models it builds on top. It's the open-source strategy that's proven successful for companies like Amazon with Linux or Google with Android.

The Foundation Model: How Open Claw Will Evolve

With Steinberger inside Open AI and Open Claw continuing as an open-source project under a foundation, the evolution of the platform will likely follow a specific pattern. The foundation will handle governance, community building, and ensuring that Open Claw remains aligned with the open-source values that made it successful. But Open AI will simultaneously work on integrating Open Claw more deeply with its models, APIs, and services.

This means that OpenClaw as an independent project will continue to evolve. The community will contribute skills, improvements, and new capabilities. But the most advanced integrations, such as the ability to work seamlessly with GPT-4 and future models, access to OpenAI's latest capabilities, and tight integration with OpenAI's ecosystem, will likely be available through OpenAI's own services.

This creates a two-tier system. The open-source Open Claw remains genuinely open and free, good for developers who want to build their own agent systems. But organizations that want the full power of modern AI agents, with the latest models and deepest integration, will find it easier to use Open AI's commercial offerings built on top of Open Claw's architecture.

It's not cynical—it's actually how open-source ecosystems often work. The Linux kernel is free and open-source, but companies like Canonical and Red Hat make money building services on top of it. The ecosystem is more valuable overall because it's open, but companies still find ways to extract value by building on top.

For developers, this is mostly positive. They get access to a powerful, open framework. They can build on it however they want. If they want more advanced capabilities, they can choose to pay for Open AI's commercial services. If they want to remain fully independent, they can keep building with the open-source version. The choice remains theirs.

OpenClaw experienced a rapid adoption curve, reaching 50,000 users within a year due to its innovative design and perfect market timing. (Estimated data)

Implications for the Developer Community

For developers who've been using Open Claw, Steinberger's move to Open AI raises both excitement and questions. Excitement because it validates what they've been doing. The person who created Open Claw is now at Open AI's leadership level, working on core infrastructure. This suggests that the path they've chosen to build on Open Claw will remain viable and important.

But there are also questions. Will Open AI prioritize features that benefit its commercial interests over what the open-source community wants? Will the pace of development slow as things become more corporate? Will the community feel welcome to contribute, or will it feel like a marketing exercise for Open AI's proprietary services?

These are legitimate concerns, but the fact that Open Claw is continuing as truly open-source (not just open-source in name while being primarily developed internally) suggests Open AI intends to take these concerns seriously. If the foundation has real governance power and the community has real voice, then the open-source project can thrive independently even as Open AI builds proprietary services on top.

For new developers discovering Open Claw, the message is clear: this is a technology with serious backing. It's going to continue evolving. Major resources are going to be directed toward making agent-based systems work better. If you're thinking about building applications around agent frameworks, Open Claw is a safe bet.

For enterprise organizations considering agent deployments, this move provides validation. Open Claw was already proven in the field. Now it has backing from one of the most important companies in AI. The skills and knowledge available in the ecosystem will only grow. The integration with Open AI's models will deepen. Organizations can move forward with reasonable confidence that they're building on infrastructure that will have long-term support.

The Broader Vision: Where AI Agents Are Heading

Steinberger's move to Open AI to lead multi-agent development work signals where the entire AI industry is heading. For years, the conversation around AI has focused on model size, training data, reasoning capabilities, and alignment. These remain important. But increasingly, the bottleneck isn't the models themselves. It's the infrastructure that lets you use models effectively in the real world.

A large language model is powerful, but it's also general. It's okay at everything but great at nothing specific. The way you get something that's genuinely great at a specific task is by combining the general capabilities of a large model with specialized knowledge, tools, and other systems. This is where agents come in.

An agent built on top of a large language model, equipped with specific tools and knowledge, and trained on specific tasks, can outperform both the general model and brittle, rule-based systems. But building these agents effectively requires infrastructure. You need frameworks for building agents, for managing their tools, for letting them talk to each other, for monitoring their behavior, for keeping them secure, for auditing their decisions.

This infrastructure is exactly what companies like OpenAI are now building. OpenClaw provided a proof-of-concept that people want this and can build on it. Now OpenAI is bringing the person who created that proof-of-concept inside to help build the enterprise-grade version.

Over the next few years, expect to see:

- Better agent coordination: Systems where many agents can work together more seamlessly

- Improved specialization: Agents that are purpose-built for specific tasks and domains

- Enhanced security: Better ways to keep agent systems safe from misuse

- Deeper integration: Agent frameworks that integrate more tightly with popular tools and services

- Better observability: Tools to understand what agents are doing and why

- Standardization: Frameworks and protocols that let agents built by different companies work together

All of these improvements will make agent-based systems more practical for mainstream deployment. And people like Steinberger, who understand how to build these systems at scale and in the open, will be central to making it happen.

Real-World Applications Taking Shape

Understanding multi-agent systems is one thing. Seeing them work in real applications is another. Already, companies are using agent frameworks like OpenClaw to build practical applications.

Consider a research organization that needs to process thousands of academic papers. They could deploy a network of agents: some specialized in extracting key findings, some in analyzing methodologies, some in identifying connections between papers, some in writing summaries. These agents work in parallel, share information, and collectively produce research summaries that would take humans significantly longer to create.

Or a financial institution processing loan applications. One agent handles initial intake and document processing. Another assesses creditworthiness. Another evaluates collateral. Another checks regulatory compliance. These agents work in parallel, escalate to human underwriters when needed, and collectively process applications much faster and more consistently than humans working alone.

Or a DevOps team managing cloud infrastructure. Deploy a network of agents monitoring different systems, detecting problems, diagnosing causes, coordinating fixes, and learning from incidents to prevent future problems. The system adapts to your specific environment and becomes more intelligent over time.

These aren't theoretical examples. Teams are building these systems right now using frameworks like OpenClaw. As the infrastructure improves and becomes more standardized, these applications will become more common and more sophisticated.

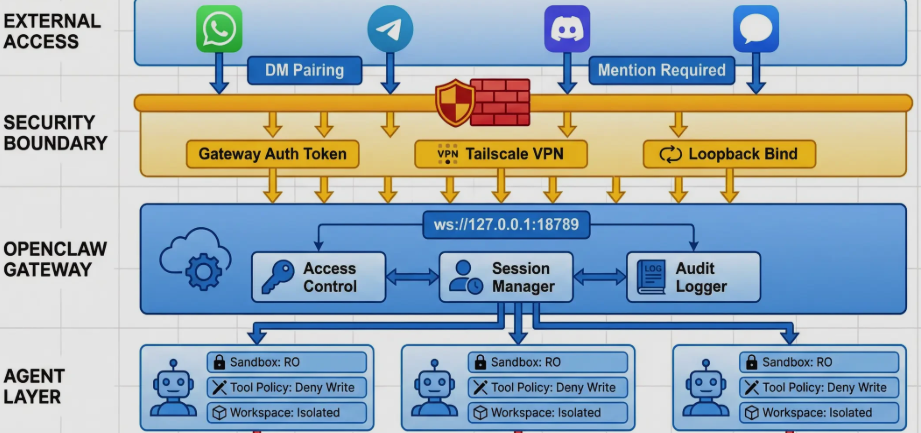

The Security and Governance Challenge

With greater power comes greater responsibility, and multi-agent systems introduce new security and governance challenges that the industry is still figuring out. The discovery of 400+ malicious skills in OpenClaw's ecosystem was a wake-up call about how quickly bad actors can infiltrate open systems.

As agent systems become more autonomous and interconnected, the number of ways something can go wrong grows with every connection between agents. Imagine a network of agents working on a financial transaction, and one agent has been compromised. It might subtly manipulate numbers, route funds incorrectly, or coordinate with other agents to commit fraud. Detecting and preventing this becomes incredibly difficult.

The industry needs to develop robust approaches to:

- Agent authentication: Ensuring that agents are who they claim to be

- Permission systems: Controlling what each agent can do and access

- Audit trails: Logging agent actions so you can understand what happened if something goes wrong

- Sandboxing: Running agents in isolated environments to prevent them from affecting other systems

- Skill verification: Ensuring that skills are what they claim to be and don't contain malicious code

- Human oversight: Maintaining appropriate human control and visibility into agent actions
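Two items from this list, permission systems and audit trails, fit together naturally: every attempted action is checked against the agent's grants and logged whether or not it is allowed. The Python sketch below shows one way this could look. All names in it (`AgentIdentity`, `AuditLog`, `perform`, and the permission strings) are hypothetical and not taken from OpenClaw or any OpenAI product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, FrozenSet, List


class PermissionDenied(Exception):
    """Raised when an agent attempts an action outside its grants."""


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical agent record: a name plus the actions it may take."""
    name: str
    permissions: FrozenSet[str]


@dataclass
class AuditLog:
    """Append-only list of every attempted action, allowed or not."""
    entries: List[Dict[str, object]] = field(default_factory=list)

    def record(self, agent: str, action: str, allowed: bool) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "allowed": allowed,
        })


def perform(agent: AgentIdentity, action: str, log: AuditLog) -> str:
    """Check the permission first, log the attempt, then act or refuse."""
    allowed = action in agent.permissions
    log.record(agent.name, action, allowed)
    if not allowed:
        raise PermissionDenied(f"{agent.name} may not {action}")
    return f"{agent.name} performed {action}"


if __name__ == "__main__":
    log = AuditLog()
    intake = AgentIdentity("intake-agent", frozenset({"read:documents"}))
    print(perform(intake, "read:documents", log))
    try:
        perform(intake, "transfer:funds", log)
    except PermissionDenied as exc:
        print(f"blocked: {exc}")
    print([(e["action"], e["allowed"]) for e in log.entries])
```

Logging denied attempts as well as successful ones matters here: a compromised agent probing for access shows up in the trail even when every probe is blocked.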

These challenges are solvable, but they require careful thinking and investment. Companies like Open AI are now investing in these problems because they understand that solving them is critical to the viability of agent-based systems at scale.

Steinberger's experience building Open Claw in the open, dealing with security challenges as they emerged, gives him insights that purely theoretical approaches lack. This is valuable expertise as Open AI builds systems that will be trusted with more and more responsibility.

The Competition for Multi-Agent Talent and Vision

Steinberger's move to Open AI represents something broader: a competition for talent and vision around the next generation of AI infrastructure. Every major AI company recognizes that multi-agent systems are important. Anthropic is working on agent systems. Google has agent research teams. Microsoft is integrating agents into Copilot. Startups are emerging around agent orchestration.

But talent is limited. The number of people who really understand how to build agent systems at scale is small. Most of them are either at major tech companies, running startups, or actively contributing to open-source projects like Open Claw. When one of them makes a move, it matters.

Steinberger's move to Open AI sends a signal to the market: we're serious about this. We're willing to invest resources. We're bringing in experienced talent. If you're thinking about building in this space, Open AI is the safe bet.

This might seem like standard corporate maneuvering, but it has real implications. It means resources will flow toward Open AI's agent infrastructure. It means talented engineers will be attracted to Open AI to work on these problems. It means Open AI's vision for how agent systems should evolve will influence industry standards.

For the broader AI community, this is mostly positive. Open AI is a serious company with real resources. Steinberger getting backing and resources to work on multi-agent systems means progress will happen faster than if he remained independent. But it does mean that Open AI's vision for the future will have outsized influence, which carries both opportunities and risks.

Looking Forward: What's Next for Multi-Agent AI

If you're trying to understand where AI is heading, Steinberger's move to Open AI provides a useful signpost. The industry is moving from isolated, single-purpose AI systems toward interconnected networks of specialized agents. This transition will happen over the next few years, and it will be as significant as the transition from rule-based systems to neural networks.

What should you be watching for?

Integration maturity: Look for agent frameworks to become easier to use, with fewer rough edges and better integration with popular tools and services.

Security solutions: Watch for the emergence of solid security practices and tools for managing agent systems safely.

Standardization: As the field matures, expect to see standards emerge for how agents communicate, coordinate, and share information.

Enterprise adoption: Look for major organizations announcing agent-based system deployments for critical business processes.

Ecosystem growth: The number of specialized agents, skills, and tools available will grow rapidly.

Business model clarity: Companies will figure out how to make money from agent infrastructure and services.

All of these developments will be shaped by people like Steinberger and companies like Open AI who are actively building this future. The next few years should be genuinely interesting.

Conclusion: The Significance of This Moment

When Sam Altman announced that Peter Steinberger was joining Open AI to work on multi-agent AI systems, it might have seemed like just another tech industry hire announcement. But it's actually a signal about something bigger: the shift of the AI industry from building general models to building infrastructure for orchestrating specialized agents.

Open Claw was important because it proved that people wanted this. The project went from unknown to beloved in months because it solved problems that developers actually faced. The community that formed around it—building skills, contributing improvements, creating applications—demonstrated genuine demand for agent-based systems.

Now Steinberger is inside Open AI, equipped with resources, positioned to shape how the company approaches multi-agent development. The project that made him valuable continues as open-source, so the community remains intact. Open AI gets access to proven talent and the goodwill of the developer community. The broader AI industry gets to see how a major company thinks about building agent infrastructure.

For developers and organizations thinking about AI, this move is validation. Agent-based systems are real. They're becoming increasingly practical. Major resources are flowing into making them better. If you've been on the fence about exploring this technology, now's the time to move forward.

The future of AI isn't going to be dominated by single, isolated models. It's going to be characterized by networks of specialized agents, orchestrated by intelligent infrastructure, solving complex problems collaboratively. Steinberger's move to Open AI is a step in building that future. And it's happening right now.

FAQ

What is Open Claw and why did it become so popular so quickly?

Open Claw is an AI agent framework that enables autonomous systems to perform tasks, use tools, and interact with other agents. It became popular because it solved a real problem: making it practical to build agent-based applications. The framework's extensible, skill-based architecture allowed developers to contribute specialized capabilities. Combined with its open-source philosophy and community enthusiasm, Open Claw arrived at the moment when developers were looking for exactly this kind of tooling.

Why is Open AI keeping Open Claw as open-source instead of making it proprietary?

Open AI recognized that the value of Open Claw isn't just the code itself, but the ecosystem around it. By keeping it open-source, Open AI maintains community support, continues attracting developer contributions, and preserves the goodwill that made Open Claw valuable. This allows Open AI to build proprietary services on top of the open foundation, similar to how Google profits from Android or how major companies profit from Linux. This strategy often generates more value than keeping everything proprietary.

What does "extremely multi-agent" mean and why is it important for the future of AI?

"Extremely multi-agent" refers to systems where many specialized AI agents work together, coordinating and collaborating on complex problems rather than having a single monolithic AI handling everything. This is important because specialization enables better performance, parallel work accelerates processing, and distributed systems are more resilient. Many complex real-world problems are better solved by networks of specialized agents than by single, general-purpose systems.

How did the malicious skills problem in Open Claw affect the project?

The discovery of over 400 malicious skills revealed security vulnerabilities in the open ecosystem, but it also provided valuable lessons. The incident led to better vetting processes for skills, improved tools for auditing and validation, and clearer guidelines for skill development. Rather than killing the project, it forced the community to develop more robust security practices that are now standard in the ecosystem.
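
One common building block for this kind of vetting is an integrity check: a skill is only loaded if its contents match a digest recorded when it was reviewed. The Python sketch below is a generic illustration of that idea, not a description of Open Claw's actual vetting process. The skill name, the `approved_digests` registry, and the `verify_skill` helper are hypothetical.

```python
import hashlib
import hmac


def digest(skill_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of a skill's contents."""
    return hashlib.sha256(skill_bytes).hexdigest()


# Hypothetical registry: digests recorded at the time each skill was reviewed.
reviewed_source = b"def run(task):\n    return task.upper()\n"
approved_digests = {"text-upper": digest(reviewed_source)}


def verify_skill(name: str, skill_bytes: bytes) -> bool:
    expected = approved_digests.get(name)
    if expected is None:
        return False  # never reviewed, so refuse to load it
    # Constant-time comparison of the recorded and actual digests.
    return hmac.compare_digest(expected, digest(skill_bytes))


tampered_source = reviewed_source + b"import os\n"

print(verify_skill("text-upper", reviewed_source))   # True
print(verify_skill("text-upper", tampered_source))   # False
print(verify_skill("never-reviewed", b"anything"))   # False
```

A check like this only proves that the bytes being loaded are the bytes that were reviewed. It says nothing about whether the reviewed code was safe in the first place, which is why it complements human and automated review rather than replacing it.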

What kinds of real-world applications are being built with agent frameworks like Open Claw?

Companies are already building agent systems for customer service (handling complex inquiries through specialized agents), research automation (analyzing large volumes of documents), financial processing (coordinating multiple aspects of loan applications), and infrastructure management (deploying networks of agents to monitor and manage systems). As the technology matures, these applications become more sophisticated and more common.

How does Steinberger's move to Open AI signal the company's strategy?

Steinberger's hiring signals that Open AI considers multi-agent systems critical to its future, is willing to invest significant resources in this direction, understands the value of open ecosystems, and is actively competing for talent in this space. It also suggests that Open AI's next major product developments will likely involve better agent orchestration and integration, positioning the company to capture value as agent systems become more mainstream.

What should developers and organizations do with this information?

If you're interested in AI, now is the time to start experimenting with agent frameworks. The infrastructure is maturing rapidly, funding and resources are flowing in, and the technology is transitioning from experimental to practical. Building experience with agent systems positions you well for the next wave of AI-powered applications. For organizations, this is an opportunity to explore how agent systems could improve your business processes before they become standard.

Will Open Claw remain truly open-source or become proprietary?

Open Claw's foundation structure provides governance independence from Open AI, meaning the project's open-source nature is structurally protected. While Open AI will support the foundation and likely build proprietary services on top of Open Claw's architecture, the core framework will remain open-source, allowing continued community contribution and independent development.

Key Takeaways

- Strategic Hire: Peter Steinberger's move to Open AI represents a major bet on multi-agent AI systems becoming central to the company's future

- Ecosystem Validation: Open Claw's rapid adoption proved market demand for agent orchestration frameworks

- Open Plus Proprietary: The hybrid model of open-source project plus proprietary services is emerging as the dominant strategy in AI infrastructure

- Security Maturation: The industry is rapidly developing solutions to keep agent systems safe and trustworthy

- Multi-Agent Future: The next phase of AI development will focus on orchestrating networks of specialized agents rather than building larger monolithic models

- Timing Opportunity: The convergence of mature frameworks, increased resources, and proven demand creates an excellent time to engage with agent technology
