How AI Bots Are Quietly Reshaping Online Discovery

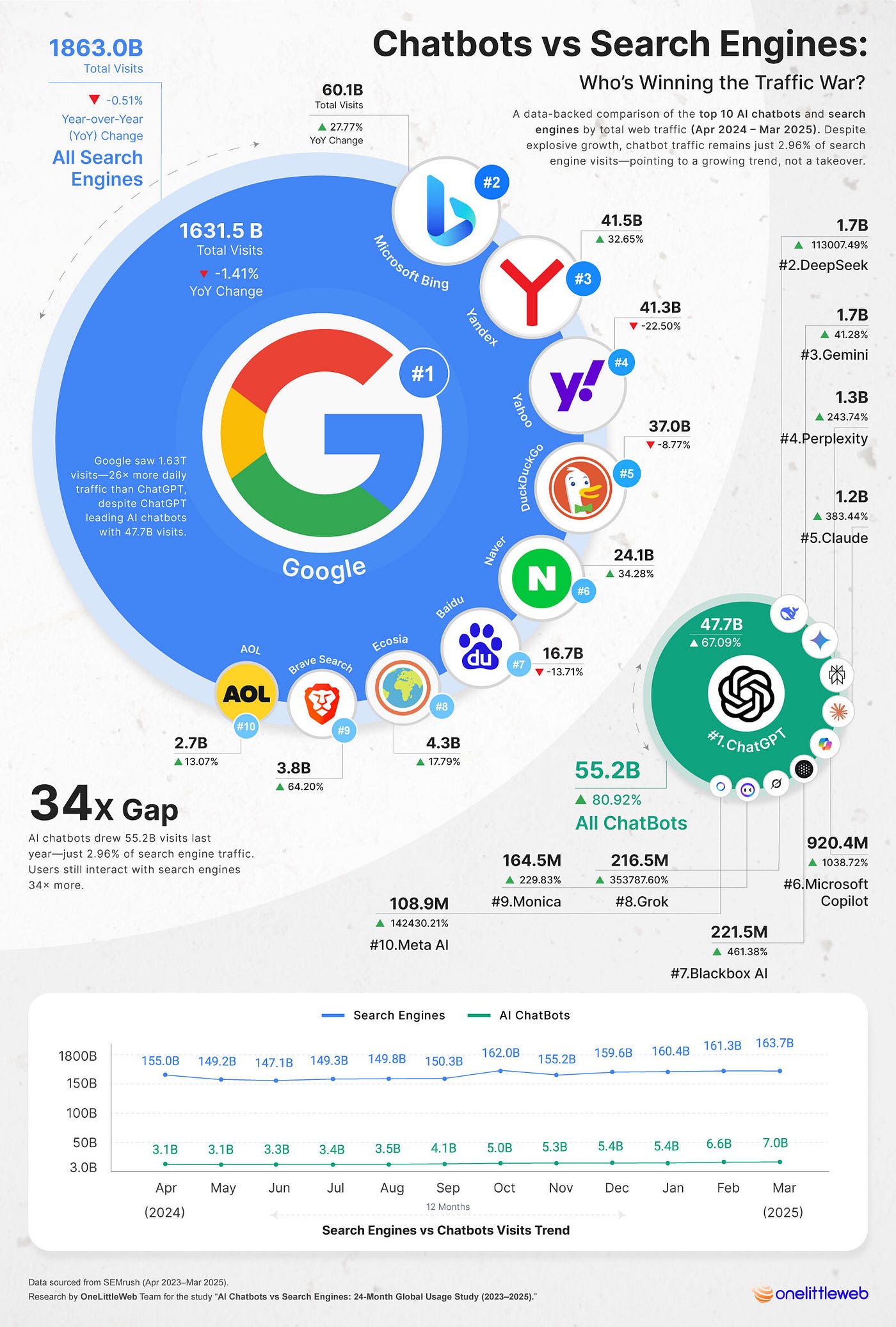

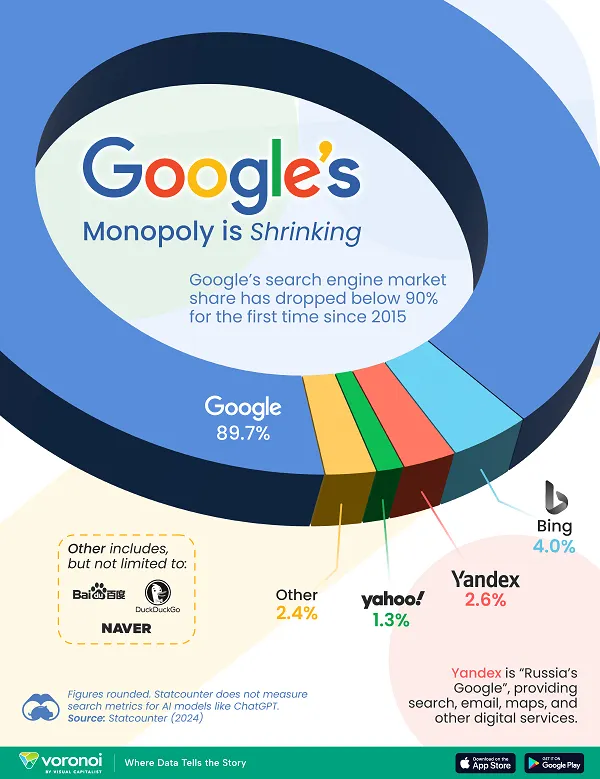

Remember when getting traffic meant ranking high on Google? That's still important, but it's not the whole story anymore.

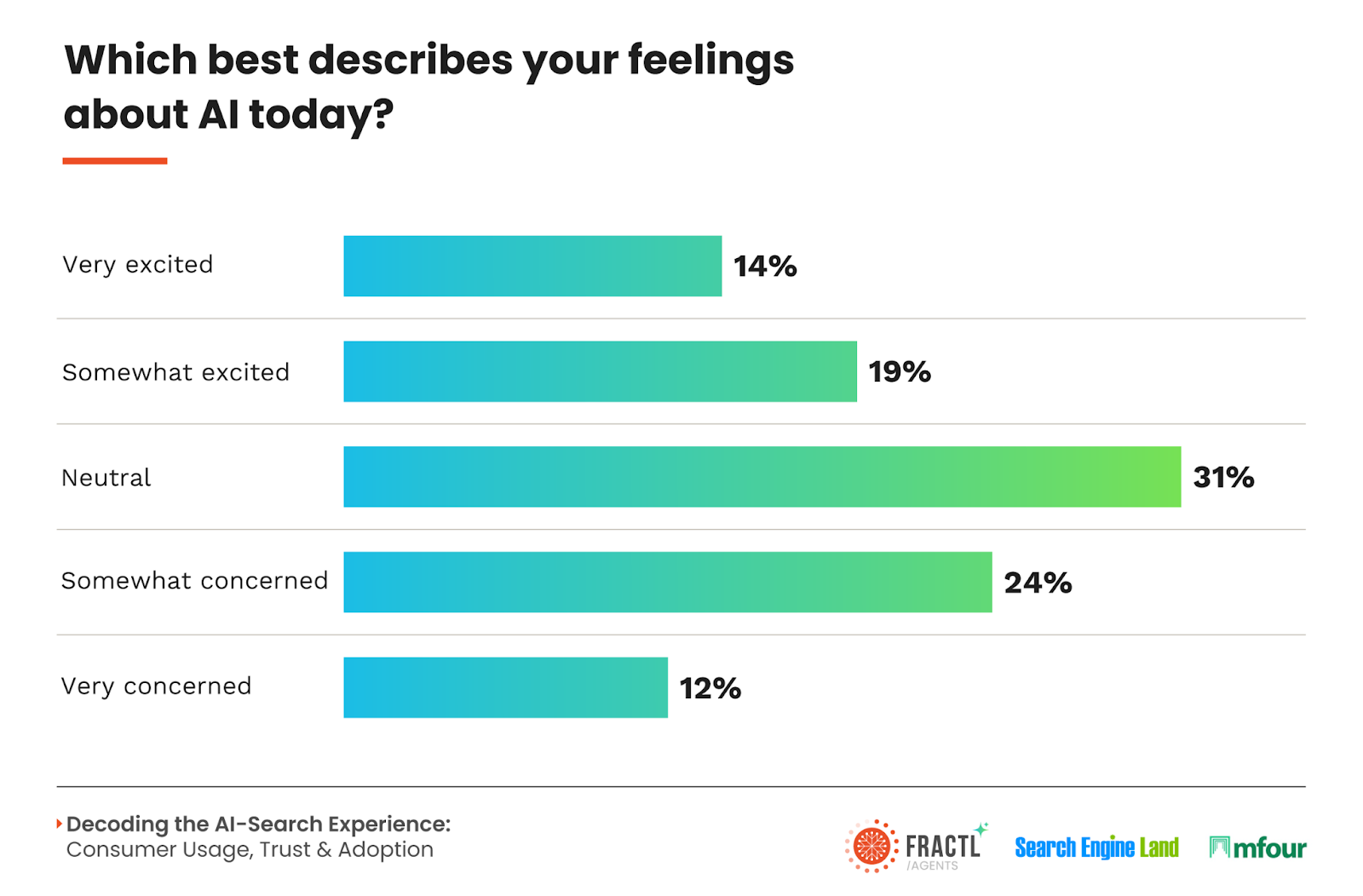

Last year, something shifted. A new layer of discovery emerged between people and websites. Instead of searching Google for a product review, users now ask ChatGPT. Instead of scrolling Amazon, they ask their AI assistant for recommendations. And here's the thing that keeps marketers up at night: the AI gives an answer without the user ever clicking through to the brand's actual website.

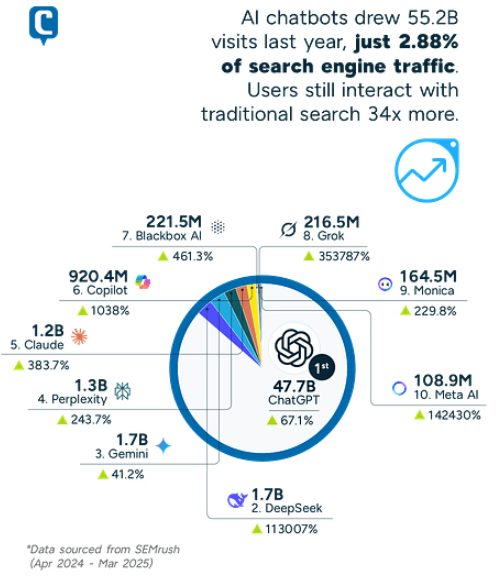

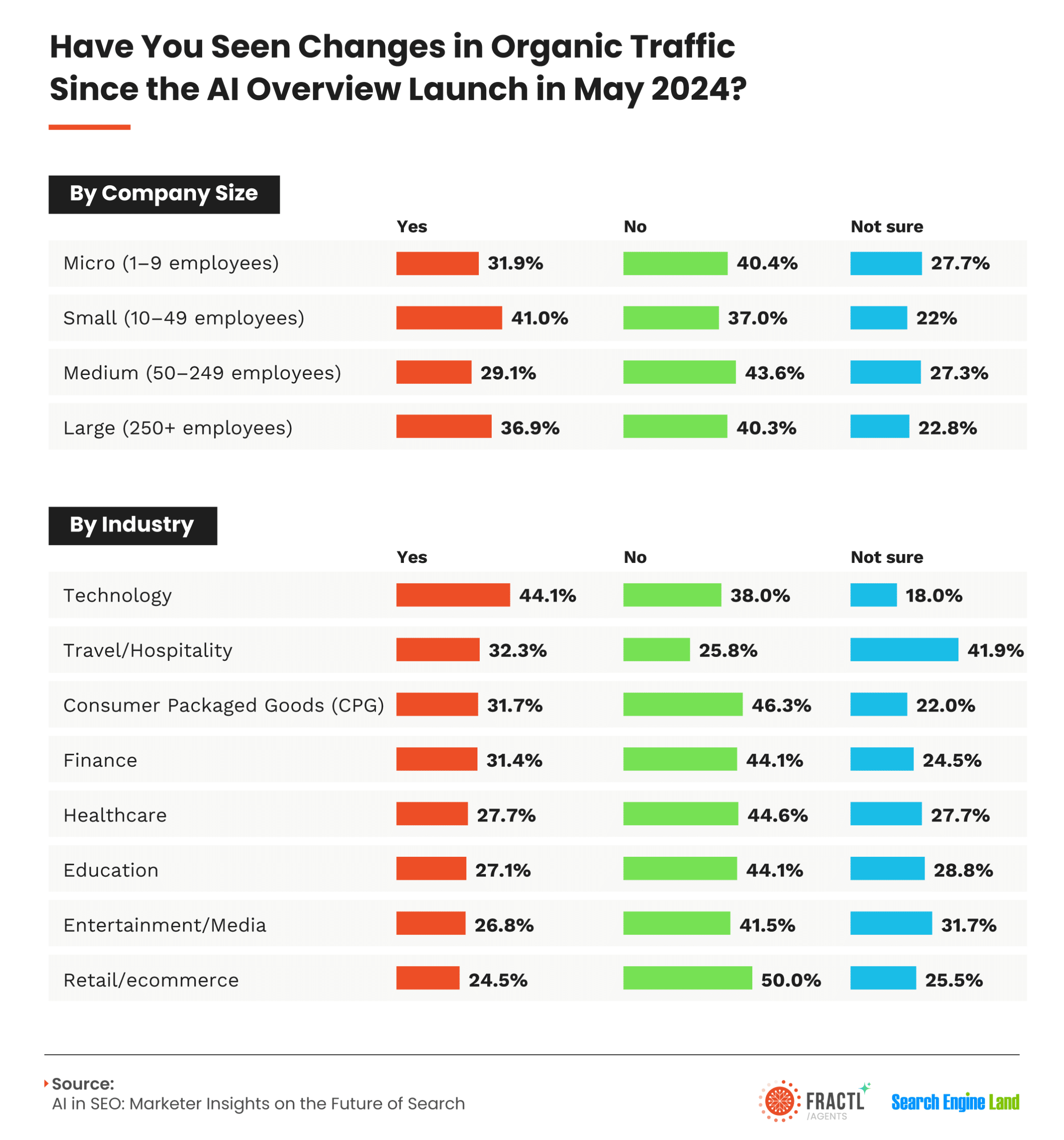

The implications are enormous. A massive study of over 66.7 billion bot requests across 5 million websites reveals just how fast this transition is happening. The data shows something we all suspected but now have proof of: AI crawlers are becoming the new search layer, and companies that ignore this shift risk losing visibility, brand authority, and sales.

But it's not all doom and gloom. Smart brands are already adapting. They're optimizing for AI discovery. They're controlling how AI presents their products. And they're discovering new opportunities in an ecosystem traditional SEO completely ignored.

In this deep dive, we'll explore what the data actually shows, why it matters for your business, and exactly what you need to do to stay visible in the AI-first internet.

TL;DR

- AI crawler adoption is exploding: OpenAI's SearchBot and Applebot coverage increased dramatically while traditional search crawler activity remained flat.

- Brands are losing control: Companies blocking AI bots to protect IP are actually losing influence over how AI assistants present their products and pricing.

- It's a new layer, not a replacement: AI discovery sits above traditional search, meaning Google isn't dying—but it's becoming less relevant as a discovery mechanism.

- Tools like Web2Agent and llms.txt files are critical: Forward-thinking brands are already implementing these to control their AI presence.

- Governance matters more than blocking: Instead of banning AI bots entirely, smart companies are using enhanced governance to stay visible without losing IP protection.

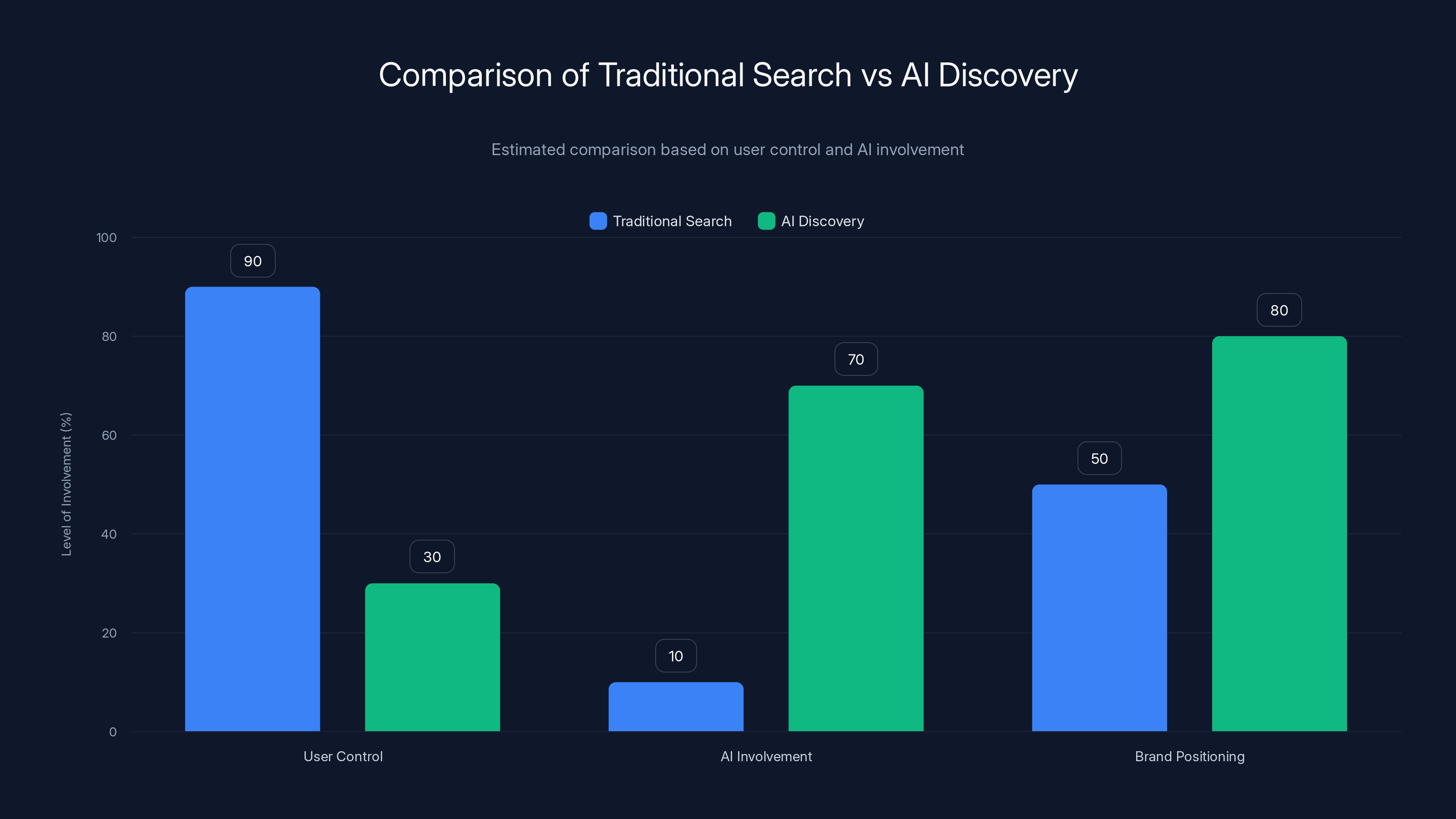

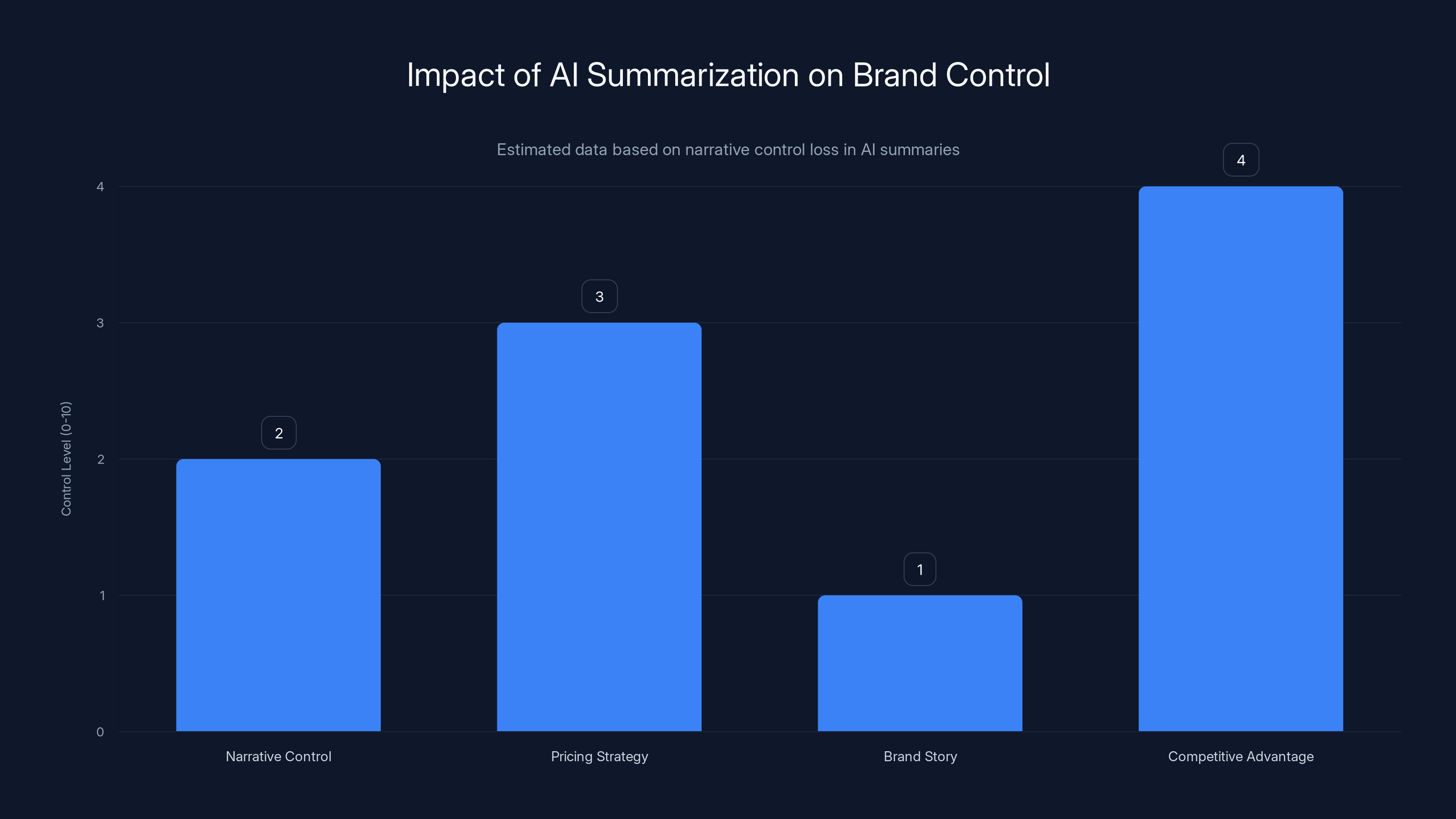

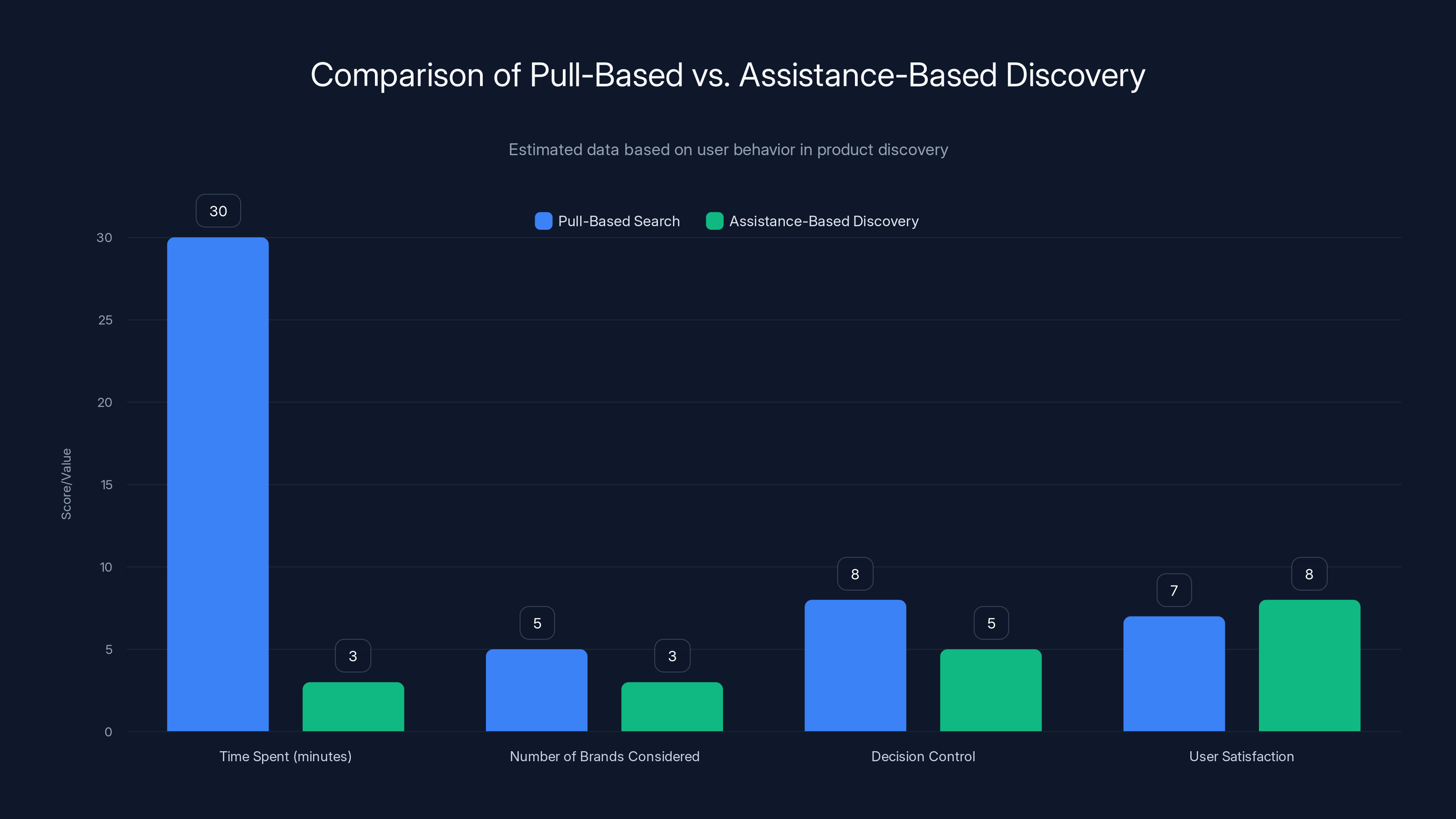

Traditional search offers higher user control, while AI discovery involves more AI judgment, impacting brand positioning strategies. Estimated data.

The Data Behind the AI Bot Explosion

Let's start with the numbers because they're genuinely shocking.

The Hostinger study analyzed 66.7 billion bot requests over a specific period. That's not an estimate. That's actual traffic data from real websites. What they found rewrites everything we thought we knew about internet traffic distribution.

Traditional search crawlers (the ones Google uses) basically flatlined. They're chugging along doing what they've done for two decades, but growth has stopped. Meanwhile, AI-specific crawlers are accelerating at a pace that has no historical precedent in web traffic patterns.

OpenAI's SearchBot saw coverage increase significantly across websites willing to allow it. Apple's Applebot expanded aggressively. These aren't marginal players anymore. They're becoming standard infrastructure for how people discover information.

Here's what makes this different from previous tech shifts: it happened quietly. There was no announcement. No keynote. No "this is the new world." It just... happened. Traffic began flowing through AI assistants, and most companies didn't notice until they started seeing revenue questions from CFOs asking why brand awareness dropped.

The math itself is interesting. If we break down the 66.7 billion requests across typical web traffic distributions, AI bots are now consuming a meaningful percentage of total requests. Not the majority yet, but the growth rate suggests they could be within 12 to 24 months.

What's happening here is a divergence. Companies are saying "no" to training bots (which feed AI models). They're saying "yes" to discovery bots (which power search interfaces). It's a subtle but critical distinction that most people are missing.

Meta's ExternalAgent follows the same pattern: its coverage dropped from 60% to 41% as companies realized their content was being used to train Facebook's AI. But the bots optimized for discovery? Those are growing unchecked.

The companies doing this aren't making a mistake. They're being strategic. They understand that blocking training bots protects their IP while allowing discovery bots keeps their products visible in the new discovery layer. It's nuanced, but it matters enormously.

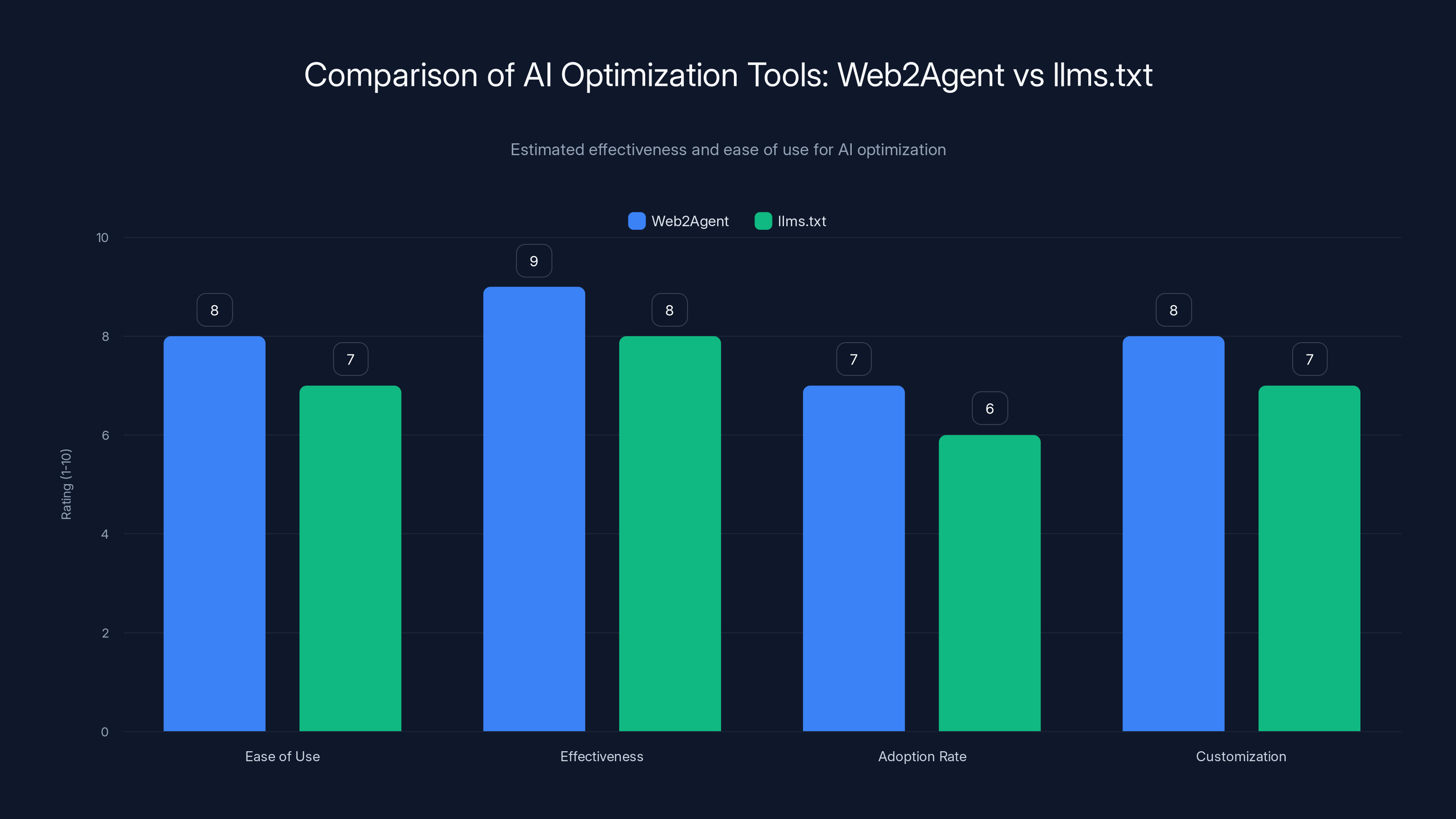

Web2Agent generally scores higher in effectiveness and ease of use compared to llms.txt, making it a slightly more favorable tool for AI optimization. (Estimated data)

Why Traditional Search Isn't Actually Dying

Here's where conventional wisdom breaks down.

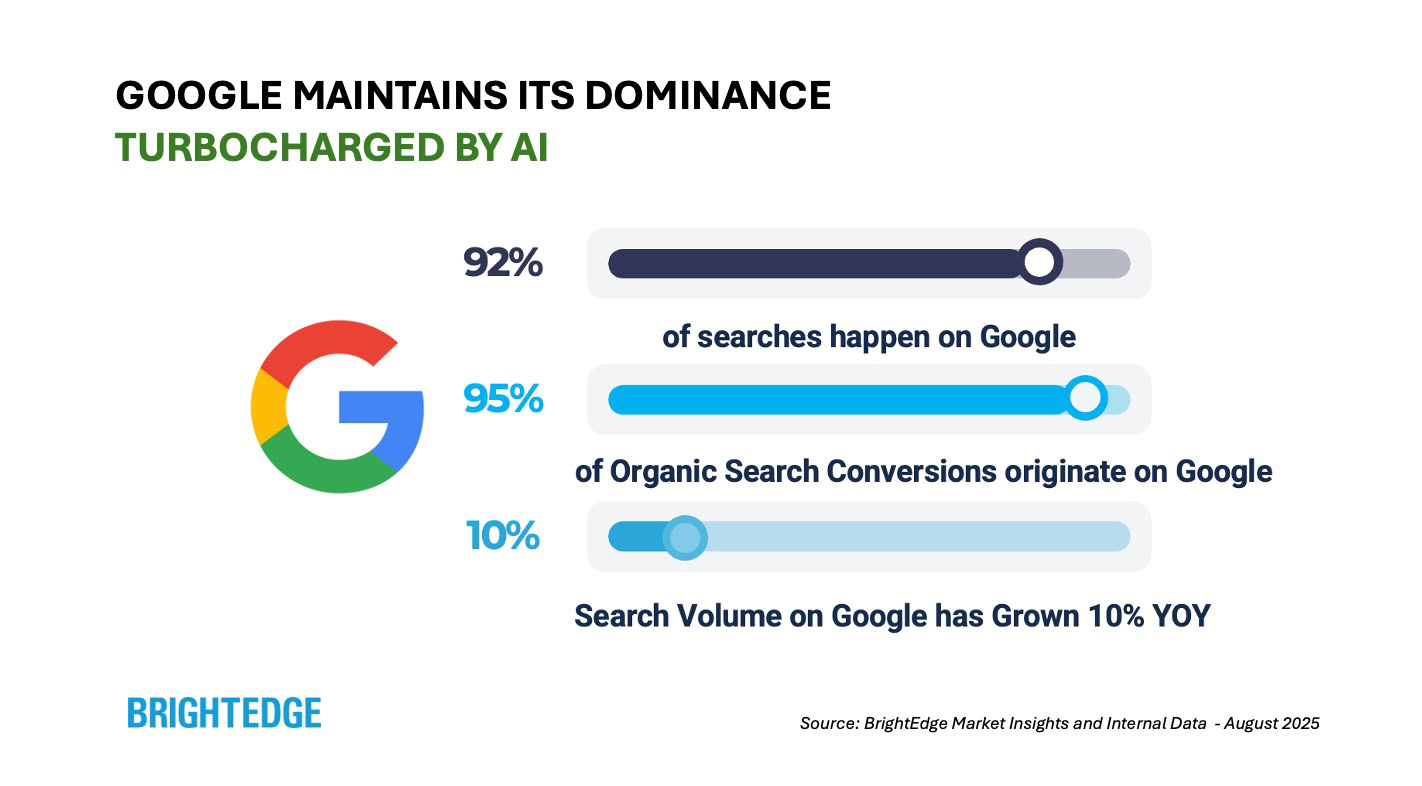

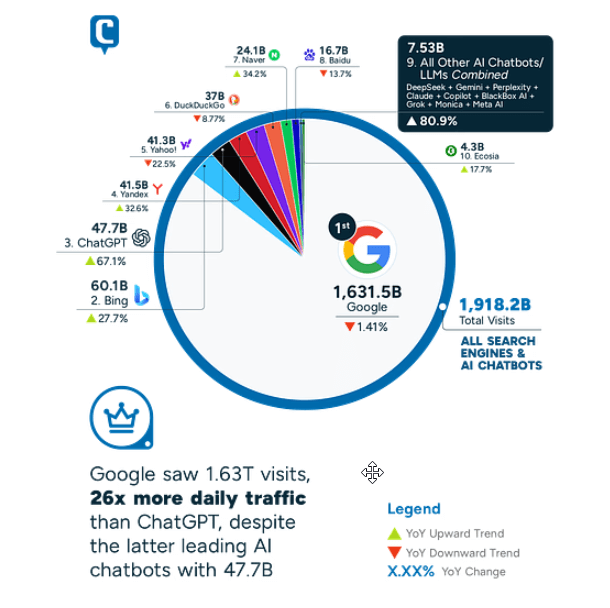

Google isn't losing traffic. Traditional search isn't collapsing. But traditional search is becoming less relevant as a primary discovery mechanism. There's a massive difference.

Think of it like this: GPS didn't kill paper maps. But it made them irrelevant for navigation. Paper maps still exist. Some people still use them. But nobody navigates with them by default anymore.

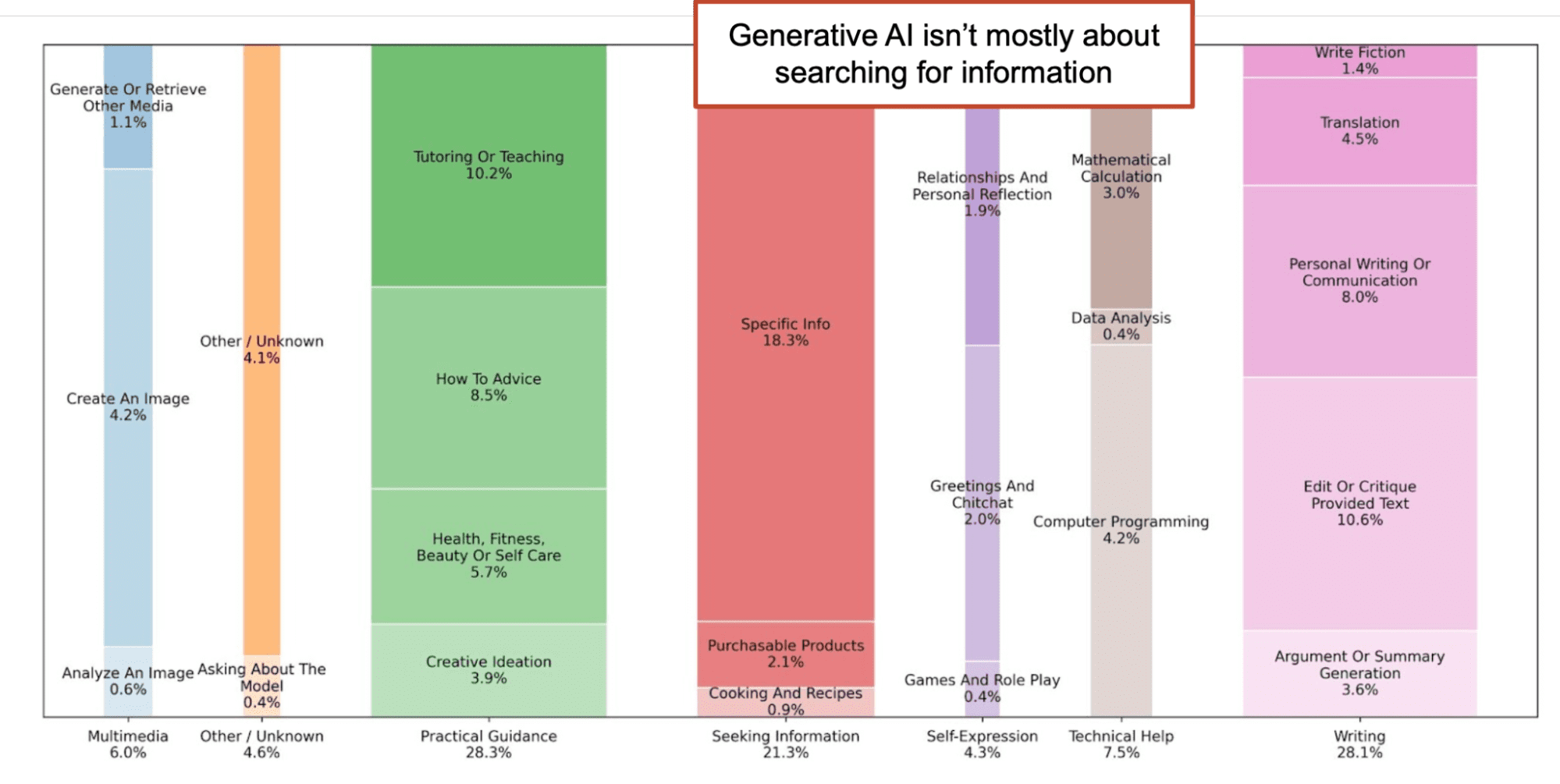

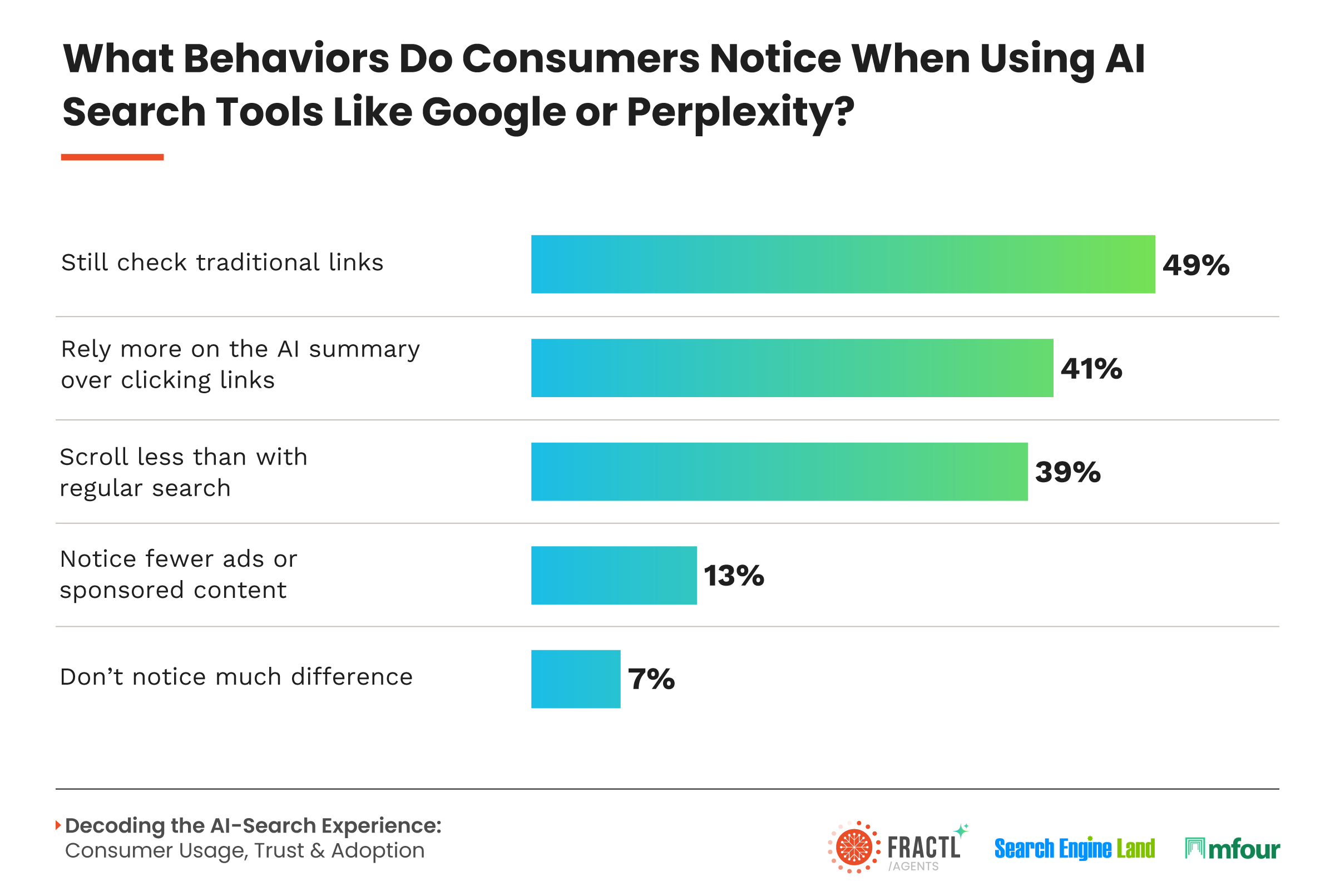

What the data actually shows is that AI is adding a new decision layer above traditional search. Users still use Google. Websites still need SEO. But increasingly, Google is competing not with other search engines but with AI assistants that precompile answers before users ever get to a search result.

When someone asks ChatGPT "what's the best product management tool," ChatGPT gives them Asana, Monday.com, Jira, and maybe 2-3 others. The user gets an answer instantly. They don't Google it. They don't compare options themselves. An AI just solved their discovery problem.

Google captures a different type of traffic: people doing specific product searches, people looking for technical documentation, people researching specific brands. Those queries still happen. But research queries? Opinion-seeking queries? Recommendation queries? Those increasingly flow through AI assistants.

For Google, this is actually okay. Google has its own AI assistant now (Gemini, formerly Bard). Google is adding AI summaries to its search results. Google is building products that compete in both layers.

For everyone else? It's urgent.

The companies that understood this early have already optimized their content for AI summarization. They've made sure that when an AI reads their pages, it pulls out the information AI assistants actually need: clear value propositions, pricing, competitive advantages, and specific features.

Companies that didn't? They're invisible to AI. Their long-form blog posts don't work. Their complex information architecture doesn't work. Their brand storytelling gets stripped away and replaced with competitor data points.

This is the real risk: not that Google's dying, but that your brand is invisible in the new layer.

The Massive Problem: Brands Losing Control of Their Narrative

Here's where this gets genuinely dangerous for businesses.

When a user searches Google, they see your website, your design, your messaging, your call-to-action buttons, your trust signals. You control the experience. You control the narrative.

When an AI assistant summarizes your product, none of that happens. The AI pulls the most relevant facts, compares them to competitors, and presents a stripped-down version. Your premium positioning? Gone. Your brand story? Gone. Your pricing strategy? Reduced to a single number.

Worse: if the AI decides a competitor's product is better, it says so. You don't get to argue. You don't get to explain why your solution is actually superior for that person's specific situation. The AI decided, and that's the answer.

The Hostinger research specifically highlighted this: "The real risk for businesses isn't AI access itself, but losing control over how pricing, positioning, and value are presented when decisions are made."

Let's make this concrete. Imagine you sell project management software. Your actual competitive advantage is the onboarding speed (15 minutes vs 2 hours for competitors) and the custom workflow builder. Those are specifics that actually matter.

But when ChatGPT summarizes your product, it might say: "[Your product] is a project management tool with AI features, pricing starts at $10/month." That's technically true. It's also useless. The AI didn't capture what makes you different. It captured commoditized facts.

Now, the user asking ChatGPT thinks "okay, all project management tools are similar." They pick the cheapest one. Or the one the AI mentioned first. Or the one the AI seemed to recommend.

You lost a deal because you lost control of your narrative.

This is happening millions of times per day right now, and most companies don't even know it's possible.

The worst part? The companies most worried about this problem are doing exactly the wrong thing. They're blocking all AI bots hoping to hide their content. But that doesn't stop AI from using training data. It just means:

- The AI has outdated information about their product

- They lose the ability to shape how AI describes them

- They become invisible in AI discovery

It's like refusing to be listed on Google to protect your IP. It doesn't protect anything. It just hides you.

AI summarization significantly reduces brand control over narrative, pricing strategy, and competitive advantages. Estimated data reflects potential impact.

The Divergence: Training Bots vs. Discovery Bots

This is the crucial distinction almost everyone gets wrong.

There are two types of AI bots crawling the web: training bots and discovery bots. They do completely different things, and companies should treat them differently.

Training bots (like GPTBot in its training mode) crawl to collect data that trains AI models. OpenAI uses this data to improve ChatGPT. Anthropic uses it for Claude. Meta uses it for Llama. These bots are controversial because companies feel their content is being used without explicit permission to build commercial AI products.

Discovery bots (like OpenAI's SearchBot and Applebot) crawl to provide real-time information to users asking questions. When you ask ChatGPT "what's the weather in London," it uses a discovery bot to get current information. When you ask "what does this technical documentation say," it retrieves recent pages.

These serve completely different functions.
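To see how the two groups show up on your own site, you can sort the user-agent strings from your server logs into the two buckets. The sketch below is only a starting point: the token lists are assumptions built from the bots named in this article (with `OAI-SearchBot` assumed as the user-agent token for OpenAI's SearchBot and `meta-externalagent` for Meta's ExternalAgent), and the sample strings are illustrative rather than copied from real logs. Check each vendor's current documentation before relying on them.

```python
from collections import Counter

# Assumed token lists, based on the bots named in this article.
# Verify against each vendor's published crawler documentation.
BOT_CATEGORIES = {
    "training": ["GPTBot", "CCBot", "meta-externalagent"],
    "discovery": ["OAI-SearchBot", "Applebot", "bingbot"],
}


def classify_user_agent(user_agent):
    """Return 'training', 'discovery', or 'other' for one user-agent string."""
    ua = user_agent.lower()
    for category, tokens in BOT_CATEGORIES.items():
        if any(token.lower() in ua for token in tokens):
            return category
    return "other"


def summarize(user_agents):
    """Count how many requests fall into each category."""
    return Counter(classify_user_agent(ua) for ua in user_agents)


# Illustrative user-agent strings (hypothetical, not taken from real logs).
SAMPLE_USER_AGENTS = [
    "Mozilla/5.0 (compatible; GPTBot/1.1)",
    "Mozilla/5.0 (compatible; OAI-SearchBot/1.0)",
    "Mozilla/5.0 (compatible; bingbot/2.0)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
]

if __name__ == "__main__":
    for category, count in summarize(SAMPLE_USER_AGENTS).items():
        print(f"{category}: {count}")
```

Matching on a substring keeps the check tolerant of version numbers and extra tokens, at the cost of occasionally over-matching similarly named bots.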

Blocking training bots makes sense for some companies. Your competitive moat might depend on proprietary information, and you don't want that powering a general-purpose AI.

But blocking discovery bots? That's strategic suicide. That's like refusing to be indexed by Google to protect your IP. You're not protecting anything. You're just hiding.

The data perfectly shows this logic. Look at what companies did:

- Blocked GPTBot (training): Coverage collapsed from 84% to 12%

- Allowed SearchBot (discovery): Coverage increased

They made a choice. And that choice is absolutely the right call.
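To put those drops in perspective, it helps to separate the change in percentage points from the relative decline. This is a quick worked calculation that takes the article's figures at face value (GPTBot from 84% to 12%, Meta's ExternalAgent from 60% to 41%); the function name is just for illustration.

```python
def coverage_change(before_pct, after_pct):
    """Return (percentage-point change, relative change in percent)."""
    points = after_pct - before_pct
    relative = points / before_pct * 100
    return points, relative


if __name__ == "__main__":
    for bot, before, after in [("GPTBot", 84, 12), ("Meta ExternalAgent", 60, 41)]:
        points, relative = coverage_change(before, after)
        print(f"{bot}: {points:+d} points, {relative:+.1f}% relative")
```

On these numbers, GPTBot lost 72 percentage points, which is roughly an 86% relative decline, while Meta's crawler lost 19 points, or roughly 32%.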

This reveals something important about how smart companies think about AI. They're not choosing between "let AI use our content" or "hide from AI." They're making granular decisions about which AI use cases align with their business.

They understand that:

- Training data being used to build general-purpose AI doesn't directly benefit them

- Discovery mechanisms that show their products to users do directly benefit them

- The discovery layer is becoming as important as Google

- Visibility in that layer requires being crawlable by discovery bots

It's strategic thinking, not fear-based thinking.

How AI Summarizes (And Strips Away Your Positioning)

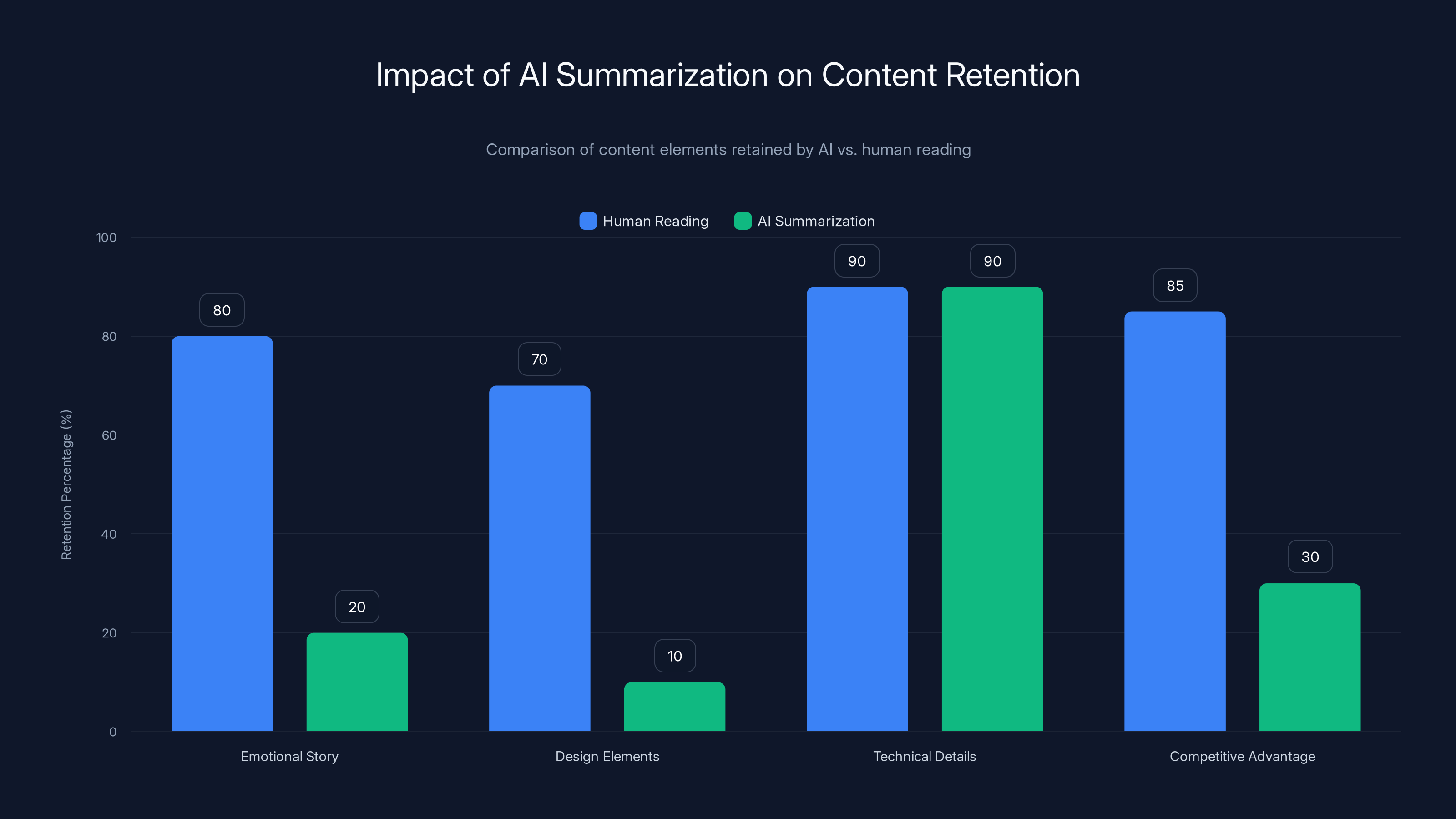

When an AI assistant reads your website, it doesn't experience it the way a human does.

A human might read your homepage, get inspired by your story, notice your design, and become emotionally invested. An AI reads it as tokens. It extracts facts. It summarizes. It compares to competitors. It presents the most relevant information concisely.

This is fundamentally different from how Google works. Google's algorithm rewards depth, original content, and comprehensive information. An AI simplifies everything down to essential facts.

Let's say you wrote a 2,000-word blog post explaining why your approach to password management is better than competitors. You explained the philosophy. You showed examples. You built a compelling narrative.

When ChatGPT summarizes it, it might extract: "Uses zero-knowledge encryption." That's accurate. It's also completely useless for differentiation because three competitors also use zero-knowledge encryption.

Your actual competitive advantage (your UX design, your customer onboarding, your unique backup system) didn't fit in the summary. It got cut.

This is why AI optimization is becoming critical. You need to structure your content so that when an AI summarizes it, the most important facts come first and stay prominent.

Compare these two approaches:

Before AI Optimization:

"We started this company because we were frustrated with existing password managers. The founders spent 5 years in security and saw the problems firsthand. Our approach rethinks the entire user experience, combining military-grade encryption with intuitive design. We've built a community of 2 million users who trust us with their most sensitive data. Our encryption uses [detailed technical explanation]. We offer three pricing tiers..."

After AI Optimization:

"Military-grade encryption, zero-knowledge architecture, supports biometric authentication, offline access, and family sharing. Used by 2 million security-conscious users. Pricing: Personal ([…] $8/month), Enterprise (custom). Key differentiator: Biometric authentication with zero-knowledge backup."

See the difference? The second version is what an AI will actually extract and present to users. It's factual, specific, and immediately useful for comparison.

When you optimize for this, you're not being less authentic. You're being more clear. Your actual competitive advantages come through better because they're not buried in narrative fluff.

The companies winning in AI discovery are the ones that understood this early. They restructured their websites. They created llms.txt files with clearly structured facts. They updated their metadata. They made sure that when an AI read their page, it extracted the right information.

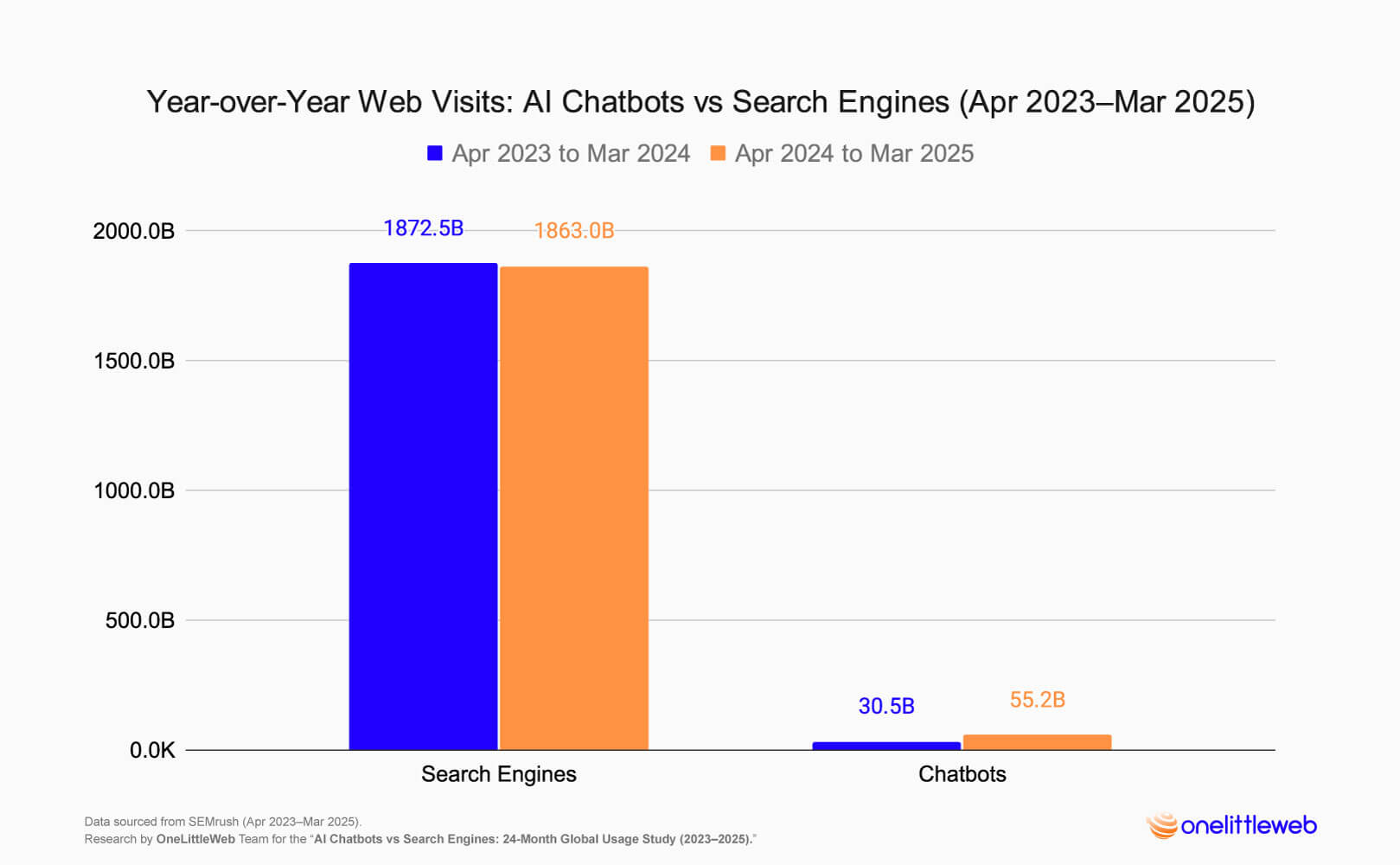

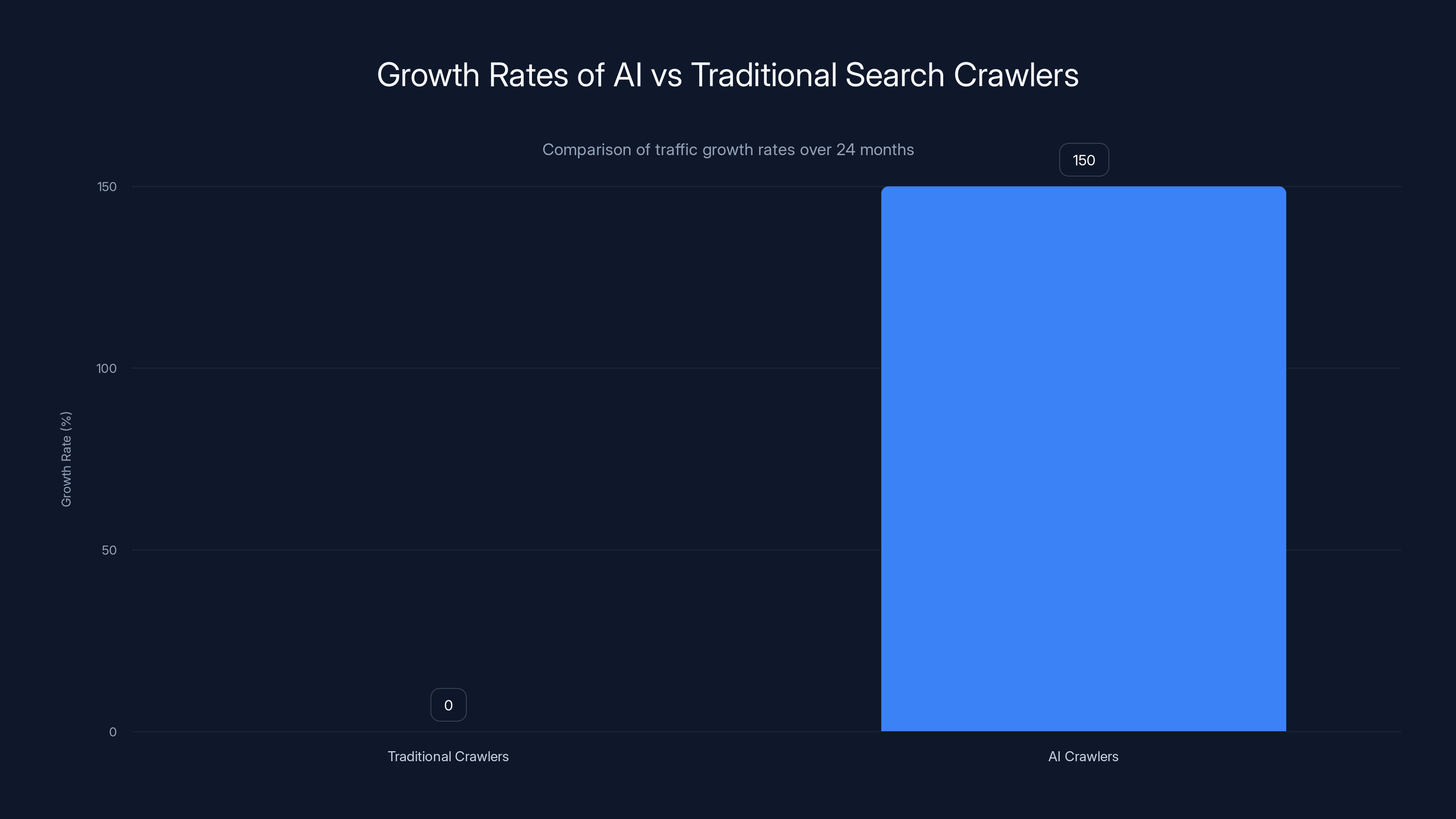

AI-specific crawlers have shown a 150% growth rate over 24 months, while traditional search crawlers have stagnated, indicating a significant shift in web traffic patterns.

The Tools Changing the Game: Web2Agent and llms.txt

Smart brands aren't just hoping for the best. They're actively managing how AI represents them.

Two tools are emerging as critical infrastructure for AI optimization: Web2Agent and llms.txt files.

Web2Agent is a tool that converts your website into a structured format optimized for AI parsing. Instead of an AI having to interpret your HTML, images, and complex design, Web2Agent serves up pure information in a format AI models handle well. It extracts pricing, features, benefits, and comparisons, then presents them in a way that AI assistants find valuable.

Implementing Web2Agent is relatively straightforward. You basically tell it: "Here's my website. Extract the useful information." It does that. Then AI crawlers find that structured information and use it preferentially over trying to parse your design-heavy homepage.

The advantage is massive. When ChatGPT wants information about your product, it gets clean, structured data instead of having to guess what matters from your marketing copy.

llms.txt files are similar in concept but different in execution. An llms.txt file is a text file you put in your website's root directory (like sitemap.xml) that contains structured information about your product, company, and services.

AI crawlers that support the convention check for an llms.txt file when they visit a website. If it's there, they can treat it as your preferred summary of the site. If it's not, or if the crawler doesn't support llms.txt, it parses your content like normal.

Here's what a basic llms.txt file looks like:

Company: Acme Software

Business: Project Management Platform

URL: https://acmesoftware.com

Core Features:

- AI-powered task generation

- Real-time collaboration

- Custom workflow builder

- Integrations with 500+ apps

Pricing:

- Personal: $9/month

- Team: $25/month

- Enterprise: Custom

Target Market: Teams of 3-500 people

Competitors: Asana, Monday.com, Jira

Differentiators: Fastest onboarding (15 minutes), custom workflows, AI assistant

Contact: sales@acmesoftware.com

When an AI visits your site, it finds this file. It knows exactly what you want it to know. No guessing. No misrepresentation. Just facts, presented the way you want them presented.
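Before publishing a file like this, it's worth checking that nothing important is missing. Here's a minimal sketch that parses the simple "Key: value" layout shown above and reports empty or absent fields. The list of required fields is an assumption for illustration, and the parser only understands this article's layout, not any formal specification.

```python
# Fields treated as required here are an assumption for illustration.
REQUIRED_FIELDS = ["Company", "URL", "Core Features", "Pricing", "Differentiators", "Contact"]


def parse_llms_txt(text):
    """Parse 'Key: value' lines; '- item' lines attach to the most recent key."""
    fields = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("- "):
            if current is not None:
                fields[current].append(line[2:].strip())
            continue
        if ":" in line:
            key, value = line.split(":", 1)
            current = key.strip()
            fields[current] = [value.strip()] if value.strip() else []
    return fields


def missing_fields(text, required=REQUIRED_FIELDS):
    """Return the required fields that are absent or have no value."""
    fields = parse_llms_txt(text)
    return [name for name in required if not fields.get(name)]


SAMPLE = """Company: Acme Software
URL: https://acmesoftware.com
Core Features:
- Real-time collaboration
- Custom workflow builder
Pricing:
- Personal: $9/month
Contact: sales@acmesoftware.com
"""

if __name__ == "__main__":
    print("Missing:", missing_fields(SAMPLE))
```

In the sample, the differentiators line was left out on purpose, so that is the field the check flags.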

The companies deploying these tools are seeing real results:

- Better AI representation: Their products appear more accurately in AI summaries

- Competitive advantages captured: When AI compares them to competitors, their unique features come through

- Traffic from AI discovery: Users asking AI assistants for recommendations increasingly find them

- Brand control: They're managing their narrative instead of hoping AI gets it right

This isn't hypothetical anymore. Tools like Claude and ChatGPT are actively looking for llms.txt files and preferentially using that information. If you don't have one, you're leaving your brand representation to chance.

For teams looking to automate this process and create structured content at scale, platforms like Runable enable you to generate optimized documentation and structured content using AI, making it easier to maintain accurate representations across discovery layers without manual effort.

The Governance Question: Ban Everything or Optimize?

Here's the strategic tension most companies are wrestling with right now.

They want to protect their content. They don't want competitors stealing their IP. They don't want their data used to train competing AI models.

So they block everything. They add robots.txt rules. They use legal language. They hope that if they hide, they'll be protected.

It doesn't work.

Blocking crawlers doesn't prevent AI companies from using existing data to train models. It doesn't prevent them from using older versions of your content. It just prevents you from controlling how you're described.

The Hostinger report specifically recommended "enhanced governance over outright bans." Let's break down what that actually means.

Instead of a binary choice (allow everything or block everything), smart companies are using sophisticated robots.txt rules:

- Allow discovery bots (SearchBot, Applebot, Bingbot)

- Block training bots (GPTBot, CCBot, Google-Extended)

- Allow certain crawlers only for certain directories

- Update rules as new bots emerge

This is governance. You're not hiding. You're not allowing unlimited access. You're making granular decisions about which AI use cases align with your business.
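Here's one way that kind of policy could be expressed and sanity-checked before you deploy it. The sketch builds a robots.txt from two lists and then uses Python's standard `urllib.robotparser` to confirm each bot gets the answer you intended. The user-agent tokens are assumptions: `OAI-SearchBot` is assumed for OpenAI's SearchBot and `Google-Extended` for Google's AI training control, and `example.com` is a placeholder. Confirm the tokens against each vendor's documentation, since they change over time.

```python
from urllib.robotparser import RobotFileParser

# Assumed user-agent tokens; verify against vendor documentation.
DISCOVERY_BOTS = ["OAI-SearchBot", "Applebot", "Bingbot"]
TRAINING_BOTS = ["GPTBot", "CCBot", "Google-Extended"]


def build_robots_txt(allow, block):
    """Build a robots.txt that allows some bots, blocks others, and
    leaves every other crawler with full access."""
    lines = []
    for agent in allow:
        lines += [f"User-agent: {agent}", "Allow: /", ""]
    for agent in block:
        lines += [f"User-agent: {agent}", "Disallow: /", ""]
    lines += ["User-agent: *", "Allow: /"]
    return "\n".join(lines) + "\n"


def is_allowed(robots_txt, agent, url="https://example.com/pricing"):
    """Check whether the given agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


ROBOTS_TXT = build_robots_txt(DISCOVERY_BOTS, TRAINING_BOTS)

if __name__ == "__main__":
    print(ROBOTS_TXT)
    for bot in DISCOVERY_BOTS + TRAINING_BOTS:
        print(f"{bot}: {'allowed' if is_allowed(ROBOTS_TXT, bot) else 'blocked'}")
```

Keep in mind that robots.txt is a request, not an enforcement mechanism. It only governs crawlers that choose to honor it.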

Different business models warrant different strategies:

SaaS Companies should allow discovery bots and block training bots. Discovery means users find you when they ask AI assistants for solutions. Training bots feed your product information to competing AI systems.

News Organizations face a different tension. They want AI to cite their content (driving traffic and authority). But they want to control how many excerpts AI can use. The governance here involves licensing agreements, not just robots.txt rules.

E-commerce Companies benefit enormously from discovery bots that help users find their products through AI shopping assistants. Training bots are less critical for their business model.

Academic Institutions might want to maximize training bot access (it increases their influence on AI models) while controlling discovery for privacy reasons.

No single strategy works for everyone. The point is to be intentional.

The real power move is realizing that AI bots aren't a threat to manage. They're a discovery mechanism to optimize for. Just like SEO wasn't a threat to newspapers in 2000—it was traffic.

Companies that understood this early are getting discovered by millions of people asking AI assistants for recommendations. Companies that didn't are invisible.

AI summarization tends to retain technical details but often overlooks emotional stories, design elements, and competitive advantages. Estimated data based on typical AI processing.

The Shift in Brand Positioning and Discovery Patterns

What's happening is more subtle than a simple replacement of Google with AI.

It's a fundamental shift in how people discover products and services.

Traditional search (Google, Bing) is pull-based. You pull information when you decide you need it. You type a query. You read results. You click through. It's active, intentional, and controlled by the user.

AI discovery is different. It's assistance-based. You ask an AI for advice. The AI pulls information from across the web, synthesizes it, and presents a recommendation. You're not searching. You're asking for help.

These are fundamentally different psychological experiences.

In pull-based search, the user is in control. They can find niche products. They can find exactly what they want. They can compare options deliberately.

In assistance-based discovery, the AI is making recommendations. Most users accept the AI's suggestion without deep comparison. They might ask follow-up questions, but they're trusting the AI's judgment.

This changes everything about how brands should position themselves.

Imagine you're shopping for headphones:

Pull-based (Google): You search "best wireless headphones under $100." You read 10 articles. You compare Sony, Anker, and JBL. You click through each brand's website. You read reviews. You make a decision. This takes 30 minutes. You might discover a brand nobody's heard of if they have the best reviews.

Assistance-based (ChatGPT): You ask ChatGPT "which wireless headphones should I buy?" It says "Sony WF-C700N are great for […]

Which model is better for innovation and choice? Pull-based. Which model is better for user convenience? Assistance-based.

For brands, this creates a new challenge. You don't win in assistance-based discovery by being the best product anymore. You win by being the product the AI recommends.

This shifts from "product quality" to "AI visibility" as the primary lever for growth.

Smart brands are realizing this. They're:

- Making sure AI can parse their key information (via llms.txt, structured data)

- Emphasizing concrete differentiators (things AI can quantify and compare)

- Building relationships with AI platform companies (OpenAI, Anthropic, Google)

- Monitoring how they're represented in AI summaries

- Creating content specifically for AI consumption (structured, factual, comparison-friendly)

Companies that mastered Google SEO 10 years ago are discovering that those tactics don't fully work here. You can't just stuff keywords and rank. You have to make sure your actual competitive advantages are obvious to an AI reading your content.

Why AI-First Companies Are Winning

If you look at the companies gaining traction fastest right now, they have something in common: they optimized for AI discovery from day one.

They structured their websites for AI parsing. They created llms.txt files. They built relationships with AI platforms. They understood that being visible in ChatGPT matters as much as ranking on Google.

Take Perplexity. When someone uses Perplexity to research products, Perplexity shows them source links and summaries. Companies can appear in Perplexity results if they're discoverable. The companies winning in Perplexity aren't always the biggest. They're the ones that structure their content clearly and answer specific questions directly.

Or look at Anthropic's Claude system. Claude powers business automation tools, customer service assistants, and research systems. When a company wants to use Claude to automate part of their workflow, they want Claude to understand and represent other brands correctly. That's only possible if those brands structure their information for AI parsing.

The companies that built for this early are now getting network effects. They're appearing in more AI systems. More people are discovering them. They're becoming default recommendations.

Brands that ignored this are finding themselves increasingly invisible. They spent years optimizing for Google. They now have to do it again for AI. And they're starting 12 months behind.

The advantage isn't permanent. As more companies optimize, AI discovery will become competitive just like Google search is. But the window of opportunity is still open. Companies that move now will have advantages for years.

This is essentially the same situation that happened with SEO in the 1990s. Early movers got huge advantages because they understood that how search engines see you matters more than how humans see you. The same thing is happening now with AI.

Pull-based search allows for more time spent and brands considered, offering greater decision control. Assistance-based discovery is quicker and may lead to higher user satisfaction due to convenience. Estimated data.

The Content Optimization Framework for AI Discovery

If you're ready to actually do this, here's the framework.

Step 1: Audit Your Current AI Representation

Start by seeing how you currently appear to AI systems. Use multiple AI assistants. Search for your company, your products, your competitors. Take screenshots. Write down what each AI says about you.

This is your baseline. You'll measure progress against this.

Step 2: Identify Your Core Differentiators

Not the marketing fluff. The actual reasons someone should pick you instead of competitors.

For a project management tool, this might be:

- Onboarding time: 15 minutes vs. 2 hours for competitors

- Custom workflow builder with no-code interface

- AI task generation from natural language

- 500+ integrations pre-built

These are the areas where competitors can't match you. They should be specific and measurable.

Step 3: Restructure Your Website for AI Parsing

Your homepage doesn't have to change. But your metadata, structure, and key information do.

Add:

- Clear h1 tags describing what you do

- Structured metadata (schema.org markup)

- Concise descriptions of each product feature

- Transparent pricing information

- Your competitive differentiators stated plainly

- Company information and contact details

AI is much better at parsing structured information than narrative prose. This doesn't mean your website becomes less beautiful. It means you're providing structured data in addition to beautiful design.
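For the schema.org piece, one common approach is a JSON-LD block embedded in the page. The sketch below generates one for a software product using the schema.org `SoftwareApplication` and `Offer` types. The company details are the hypothetical Acme example, and the category value and USD currency are assumptions you'd replace with your own.

```python
import json


def software_jsonld(name, url, description, features, offers):
    """Build a schema.org SoftwareApplication description as a dict.

    `offers` is a list of (tier name, price string) pairs.
    """
    return {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "url": url,
        "description": description,
        "applicationCategory": "BusinessApplication",  # assumed category
        "featureList": features,
        "offers": [
            {"@type": "Offer", "name": tier, "price": price, "priceCurrency": "USD"}
            for tier, price in offers
        ],
    }


def as_script_tag(data):
    """Wrap the JSON-LD in the script tag that goes into the page HTML."""
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Hypothetical example data.
ACME = software_jsonld(
    name="Acme Software",
    url="https://acmesoftware.com",
    description="Project management platform with a custom workflow builder.",
    features=["Custom workflow builder", "Real-time collaboration"],
    offers=[("Personal", "9.00"), ("Team", "25.00")],
)

if __name__ == "__main__":
    print(as_script_tag(ACME))
```

The visible page stays exactly as designed. The markup simply restates the same facts in a form that parsers don't have to infer from layout.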

Step 4: Create Your llms.txt File

Put this in your root directory:

Company Name: [Your Company]

Business Type: [SaaS/E-commerce/Service/etc]

URL: [Your Domain]

Description: [2-3 sentence description]

Core Features:

- Feature 1: [Brief description]

- Feature 2: [Brief description]

- Feature 3: [Brief description]

Key Differentiators:

- Differentiator 1: [Specific, measurable claim]

- Differentiator 2: [Specific, measurable claim]

Pricing:

- Tier 1: [Name, Price]

- Tier 2: [Name, Price]

Target Customers: [Description of ideal customer]

Competitors: [List 3-5 main competitors]

Co-Founder/CEO: [Name]

Contact: [Email and/or phone]

Support URL: [Support page]

Keep it factual. Keep it current. Update it when you change pricing, add features, or update your positioning.
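If your pricing and features already live in a structured source, you can generate the file instead of editing it by hand, which makes it easier to keep current. This is a minimal sketch that renders a Python dict into the key/value layout of the template above. The profile data is the hypothetical Acme example, and the layout follows this article's template rather than any formal specification.

```python
def render_llms_txt(profile):
    """Render a dict into 'Key: value' lines, with lists as '- item' bullets."""
    lines = []
    for key, value in profile.items():
        if isinstance(value, (list, tuple)):
            lines.append(f"{key}:")
            lines.extend(f"- {item}" for item in value)
        else:
            lines.append(f"{key}: {value}")
        lines.append("")  # blank line between sections
    return "\n".join(lines).rstrip("\n") + "\n"


# Hypothetical example data.
PROFILE = {
    "Company Name": "Acme Software",
    "Business Type": "SaaS",
    "URL": "https://acmesoftware.com",
    "Core Features": [
        "Custom workflow builder: no-code interface",
        "Real-time collaboration: shared boards and comments",
    ],
    "Key Differentiators": ["Onboarding time: 15 minutes"],
    "Pricing": ["Personal: $9/month", "Team: $25/month", "Enterprise: Custom"],
    "Contact": "sales@acmesoftware.com",
}

if __name__ == "__main__":
    # Write the result to llms.txt in the current directory.
    with open("llms.txt", "w", encoding="utf-8") as handle:
        handle.write(render_llms_txt(PROFILE))
```

Running a script like this as part of your deploy process means a pricing change in one place updates the file automatically.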

Step 5: Monitor and Iterate

After 2-4 weeks, run the same AI audit. How are you being represented now? Is it more accurate? Are your differentiators coming through?

Adjust your llms.txt file. Restructure website sections that AI is still misunderstanding.

This is ongoing. AI systems improve. New AI platforms emerge. Your competitors optimize. You need to stay ahead.
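To make the before-and-after comparison less subjective, you can score each AI description by how many of your differentiators it actually mentions. The sketch below assumes you paste in the assistant's answer as plain text. The phrases, descriptions, and scoring approach are all illustrative, and simple phrase matching will miss paraphrases, so treat the score as a rough signal.

```python
def differentiator_coverage(description, differentiators):
    """Map each differentiator to True if any of its phrases appears
    in the description (case-insensitive)."""
    text = description.lower()
    return {
        name: any(phrase.lower() in text for phrase in phrases)
        for name, phrases in differentiators.items()
    }


def coverage_score(description, differentiators):
    """Fraction of differentiators mentioned, from 0.0 to 1.0."""
    hits = differentiator_coverage(description, differentiators)
    return sum(hits.values()) / len(hits) if hits else 0.0


# Hypothetical differentiators and the phrases that count as a mention.
DIFFERENTIATORS = {
    "fast onboarding": ["15 minutes", "15-minute"],
    "custom workflows": ["custom workflow"],
    "integrations": ["500+ integrations", "500 integrations"],
}

# Hypothetical AI answers captured during the baseline and follow-up audits.
BASELINE = "Acme is a project management tool with AI features. Pricing starts at $9/month."
FOLLOW_UP = "Acme is a project management platform with a custom workflow builder and 15-minute onboarding."

if __name__ == "__main__":
    print(f"Baseline:  {coverage_score(BASELINE, DIFFERENTIATORS):.0%}")
    print(f"Follow-up: {coverage_score(FOLLOW_UP, DIFFERENTIATORS):.0%}")
```

Tracking this number per assistant over time shows which differentiators still aren't getting through and where to adjust your content next.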

Step 6: Implement Web2Agent (Optional but Powerful)

If you want to go deeper, implement Web2Agent. This automatically converts your website into a format optimized for AI parsing and stays current as your website changes.

It's like hiring someone to convert your entire website into AI-friendly format and keep it updated. The return on investment is substantial if you're serious about AI discovery.

For teams building complex content systems and managing documentation at scale, Runable can automate the creation and updating of structured content and llms.txt files using AI, ensuring your AI representation stays accurate as your products evolve.

The Competitive Advantage Window: Act Now or Fall Behind

Here's the reality check: this window is closing.

Right now, most companies haven't optimized for AI discovery. That means the companies that move fastest gain the biggest advantage. In 6 months, most serious competitors will have done this. In a year, it'll be table stakes.

Moving now means:

- You get to define how AI describes your company

- You appear in AI recommendations before competitors do

- You capture market share from people relying on AI assistants

- You build habits with users who prefer your product

- You establish authority in your niche

Waiting means:

- Competitors define the narrative

- AI recommendations don't mention you

- You capture whatever users find through traditional search

- You're constantly playing catch-up

- You eventually have to rebuild what you could have built now

The companies that moved fast on SEO in the 1990s had massive advantages for 15+ years. The companies that waited until 2005 had to fight for years to catch up.

This is a similar inflection point.

You don't have to be perfect. You just have to be intentional. Create an llms.txt file this week. Audit how you appear in AI systems. Restructure your key pages for clarity. That's enough to move ahead of 80% of competitors.

The companies doing this now will have built a moat by the time everyone else figures it out.

Future Predictions: Where AI Discovery Heads Next

Looking at the trajectory, a few things are almost certain to happen.

Prediction 1: AI Will Become the Primary Discovery Mechanism

Within 24 months, more users will discover products through AI assistants than through search engines. This might sound radical, but it's already happening in younger demographics. By 2026 or 2027, it'll be mainstream.

This doesn't kill Google. Google owns multiple AI systems. But Google's search box becomes less important than Google's AI recommendations.

Prediction 2: Brands Will Invest Heavily in AI Representation

Just like companies built entire SEO teams in the 2000s, they'll build AI optimization teams in the 2020s. The vendors providing tools for this (Web2Agent, AI-powered documentation platforms) will become critical infrastructure.

For companies managing complex products with lots of content, Runable will become essential for maintaining accurate AI representations at scale—automatically generating and updating documentation, structured content, and AI-optimized materials as products evolve.

Prediction 3: AI Disclosure Will Become Legally Required

As AI systems make recommendations, companies will demand transparency. "Why did your AI recommend a competitor?" Regulators will eventually require AI platforms to disclose how they make recommendations.

This creates opportunities for companies to influence those recommendations through proper engagement and accurate information.

Prediction 4: Specialized AI Platforms Will Emerge

We won't just have one ChatGPT competing with all search engines. We'll have specialized AI platforms for different industries. An AI assistant specifically for e-commerce. Another for B2B software. Another for financial services.

Companies will need to optimize for multiple AI systems, not just one.

Prediction 5: Control of Your AI Narrative Becomes a Competitive Advantage

The companies that best manage how AI describes them will capture disproportionate market share. This is literally the inverse of traditional marketing, where creative storytelling and brand building matter most.

Here, clarity and accuracy matter most. The goal is to be the brand that AI recommends because you're most obviously the right choice.

None of these are certain. But they're all directionally consistent with current trends.

The smart play is preparing for the highest-likelihood scenario (AI becomes primary discovery) while keeping flexibility to adapt if things shift.

What Individual Marketers and Teams Should Do Right Now

If you're reading this, you probably want to know what to actually do.

Here's the priority order:

This Week:

- Search for your company in ChatGPT, Claude, and Perplexity. See how you're described.

- Compare that description to your actual competitive advantages. Does it match?

- Create an llms.txt file. Don't make it perfect. Make it accurate.

- Put it at yourdomain.com/llms.txt.

- Alert your web team. Make sure it's accessible.
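The first two steps above (see how you're described, then compare that to your real advantages) can be made repeatable with a few lines of Python. This is a minimal sketch, not a finished tool: the product name `ExampleBoard`, the pasted description, and the claim keywords are all made up for illustration, and it assumes you copy the assistant's answer in by hand.

```python
import re

def claim_coverage(description: str, claims: dict[str, list[str]]) -> dict[str, bool]:
    """For each claim, report whether any of its keywords appears in the description."""
    text = description.lower()
    return {
        claim: any(
            re.search(r"\b" + re.escape(keyword.lower()) + r"\b", text)
            for keyword in keywords
        )
        for claim, keywords in claims.items()
    }

# Hypothetical text pasted from an AI assistant's answer about a made-up product.
ai_description = (
    "ExampleBoard is a project management tool with kanban boards "
    "and a free tier for small teams."
)

# Hypothetical differentiators, each with the phrases that would signal it.
claims = {
    "kanban": ["kanban"],
    "free plan": ["free tier", "free plan"],
    "SOC 2 compliance": ["soc 2", "soc2"],
}

coverage = claim_coverage(ai_description, claims)
missing = [claim for claim, found in coverage.items() if not found]
print("Missing from the AI description:", missing)
```

Anything that lands in `missing` is a candidate for your llms.txt file and for clearer wording on your key pages. Keyword matching is crude, so treat the result as a prompt for a human read, not a verdict.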

This Month:

- Audit your robots.txt file. Make sure you're allowing discovery bots and blocking training bots intentionally.

- Research Web2Agent. Decide if it makes sense for your business.

- Check your schema.org markup. Make sure product, company, and pricing information is structured.

- Create a simple monitoring system. Check AI representations weekly.
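For the schema.org item above, here is a rough sketch of what structured product and pricing data could look like when generated as JSON-LD. The `Product`, `Brand`, and `Offer` types and their properties come from schema.org; the helper name `product_jsonld` and every value passed to it are placeholders, so swap in your own details and validate the output with whatever structured-data testing tool you already use.

```python
import json

def product_jsonld(name, description, brand, price, currency, url):
    """Build a schema.org Product object with a single Offer as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",      # price as a plain decimal string
            "priceCurrency": currency,    # ISO 4217 code, e.g. "USD"
            "url": url,
        },
    }

# All values below are hypothetical.
markup = product_jsonld(
    name="ExampleBoard Pro",
    description="Project management software for small teams.",
    brand="ExampleBoard",
    price=9,
    currency="USD",
    url="https://example.com/pricing",
)

# Wrap the JSON in the script tag that would go in the page's HTML.
snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(markup, indent=2)
    + "\n</script>"
)
print(snippet)
```

Generating the markup from one source of truth (a product database, a pricing config) makes it easier to keep the structured data in step with the visible page when prices change.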

This Quarter:

- Train your marketing and product teams on AI optimization.

- Build a content strategy that works for both humans and AI (it's not that different).

- Establish relationships with AI platform companies if you're a larger brand.

- Start seeing AI discovery as a primary acquisition channel, not a side project.

The companies that treat this as a 3-month project will be disappointed. The companies that treat it as an ongoing strategic initiative will thrive.

This is like SEO in 1998. It seems new and confusing now. But it's going to be foundational. The companies that understand this and invest now will look brilliant in 2027.

The Real Opportunity Here

Let's zoom out for a second.

What we're actually seeing is a new layer of discovery emerging. For the last 25 years, if you wanted your company found, you optimized for Google. That was the lever.

Now, you have new levers. AI discovery. AI recommendations. AI summaries. These are separate from traditional search, and they have different rules.

Companies that understand this have a huge advantage. They're not competing on the same terms as everyone else. They're being found in a system most competitors aren't even paying attention to.

Speaking of systems that can help you manage and optimize for AI discovery at scale, Runable offers AI-powered automation for creating and updating the structured content, documentation, and presentations that keep your AI representation current. At just $9/month, it's worth testing for any company serious about staying visible in AI discovery systems.

The bigger point: the internet is still in the early phases of this transition. We're not at the endgame yet. The rules aren't set. The platforms are still forming. This is the moment when intentional companies can shape how they're discovered.

If you wait 18 months, the landscape will be locked in. Competitors will have established their positions. You'll be playing catch-up.

If you move now, you're building the foundation for the next era of internet discovery.

That's the real opportunity.

FAQ

What exactly is the difference between traditional search and AI discovery?

Traditional search (Google, Bing) is pull-based: users actively search for information. AI discovery is assistance-based: users ask an AI assistant for recommendations, and the AI synthesizes information to provide answers. The key difference is that traditional search puts users in control of comparison, while AI discovery puts the AI's judgment in control. This fundamentally changes how brands should position themselves.

Why should I allow discovery bots if I'm blocking training bots?

Discovery bots (like SearchBot and Applebot) show your product to users asking AI assistants for recommendations. Blocking them means you become invisible when people ask "what's the best project management tool?" Training bots feed your content to AI model builders, which doesn't directly benefit your business. Allowing discovery while blocking training is the strategic sweet spot for most companies.

Is my robots.txt file already optimized for AI?

Probably not. Most robots.txt files were written before AI discovery bots existed. Check yours. You likely need to add specific rules allowing SearchBot, Applebot, and Bingbot while blocking GPTBot, CCBot, and similar training bots. If you're unsure, contact your web team or a technical SEO expert to audit this.
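If you want to check this yourself, Python's standard library can parse a robots.txt and tell you which bots it lets through. The sketch below is illustrative only: the robots.txt content mirrors the allow/block split described above, `OAI-SearchBot` is used as the user-agent token for OpenAI's search crawler, and the `audit` helper and the `example.com` URL are made up. Confirm the exact tokens against each vendor's current documentation before relying on them.

```python
from urllib.robotparser import RobotFileParser

# Example policy: allow discovery bots, block training bots.
ROBOTS_TXT = """\
User-agent: OAI-SearchBot
Allow: /

User-agent: Applebot
Allow: /

User-agent: Bingbot
Allow: /

User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""

def audit(robots_txt, agents, url="https://example.com/pricing"):
    """Return {agent: True/False} for whether each agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}

result = audit(
    ROBOTS_TXT,
    ["OAI-SearchBot", "Applebot", "Bingbot", "GPTBot", "CCBot"],
)
for agent, allowed in result.items():
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

To audit a live site, paste your own robots.txt into `ROBOTS_TXT` and test a handful of URLs that matter (pricing, product pages, docs). Keep in mind that robots.txt is a request, not an enforcement mechanism, so this only tells you what well-behaved bots are being asked to do.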

How do I create an llms.txt file?

It's simple. Create a plain text file with your company information, features, pricing, and differentiators. Save it as "llms.txt" and upload it to your website's root directory (yourdomain.com/llms.txt). The file should be publicly accessible and updated whenever your product or pricing changes. There's no registration or special setup required.
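Here's one way that could look in practice. This is a sketch under assumptions: the layout (a title line, a one-line summary, then short sections) loosely follows the Markdown conventions of the public llms.txt proposal, and the `build_llms_txt` helper, the company name, and all the content are invented for the example.

```python
from pathlib import Path

def build_llms_txt(name, summary, sections):
    """Assemble llms.txt content: title, one-line summary, then bulleted sections."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, items in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        lines.extend(f"- {item}" for item in items)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"

# Hypothetical company details; replace with your own.
content = build_llms_txt(
    name="ExampleBoard",
    summary="Project management software for small teams.",
    sections={
        "Features": ["Kanban boards", "Time tracking"],
        "Pricing": ["Free plan for up to 5 users", "Pro plan: $9 per user per month"],
        "Differentiators": ["Setup in under 10 minutes"],
    },
)

# Write the file locally; upload it so it is served from yourdomain.com/llms.txt.
Path("llms.txt").write_text(content, encoding="utf-8")
print(content)
```

Building the file from a small data structure rather than editing it by hand makes the "update it whenever your product or pricing changes" part much easier to stick to.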

What happens if I don't optimize for AI discovery?

Your company becomes less visible to users relying on AI assistants for recommendations. As AI discovery grows, this becomes a bigger problem. You'll slowly lose market share to competitors who are optimized. In 12 months, you'll look back and wish you'd started now. In 3 years, you might be playing permanent catch-up.

Can I block all AI bots and still rank well?

In traditional search, yes. In AI discovery, no. Blocking discovery bots means AI systems can't access current information about your product. They'll fall back on outdated training data or competitor information instead, and you lose the ability to control your narrative. It's better to allow discovery bots and manage your narrative strategically.

How long until AI discovery becomes more important than Google?

Based on current trends, likely 12-24 months for early adopters and 24-36 months for mainstream users. The timeline varies by industry. E-commerce and SaaS will shift faster than B2B enterprise software. The smart move is preparing now instead of waiting to see if it happens.

What's the ROI of optimizing for AI discovery?

It depends on your business model, but early data shows 15-40% increases in qualified traffic within 90 days of optimization. For SaaS companies, the ROI is clearer because AI discovery directly influences which products get recommended. For commodity products, the impact is smaller. The bigger point: optimization takes days, not months. The cost-benefit is heavily in your favor.

Should I hire someone dedicated to AI optimization?

Probably not yet. This is still early enough that a single person spending 10 hours per week can own it. The exception is if you have complex products or hundreds of SKUs. In that case, automation tools like Web2Agent or Runable become critical to maintain accurate representations at scale.

What if I'm in a niche industry with few AI references?

You have an advantage. There's less competition for visibility. Optimizing now means you own the narrative when AI systems reference your industry. Start with llms.txt and structured data. As AI references your niche more, you'll already be positioned correctly.

The Bottom Line

Google isn't dying. But its role as the primary discovery mechanism is changing.

AI is adding a new layer above traditional search, and the rules are different. Companies that understand and adapt will thrive. Companies that ignore this shift will slowly fade.

The good news: you still have a window where moving first provides huge advantages. That window is closing, but it's still open.

Your next move should be simple: create an llms.txt file, audit how you appear in AI systems, and restructure your key pages for clarity. That foundation will serve you for years.

The companies that do this now will own the next era of internet discovery. The companies that wait will be playing catch-up for years.

Which company do you want to be?

Key Takeaways

- An analysis of 66.7 billion bot requests shows that AI discovery is becoming a primary layer above traditional search, not replacing it.

- Companies blocking all AI bots lose control over how AI assistants describe their products—the smarter approach is strategic governance.

- Discovery bots (SearchBot, Applebot) should be allowed while training bots (GPTBot) can be blocked, creating a strategic middle ground.

- Tools like llms.txt files and Web2Agent let brands control their AI representation and ensure accurate product information reaches AI assistants.

- The window for early adoption advantage in AI discovery is closing—companies that optimize now will have sustained competitive benefits through 2027.
