Jimmy Wales on Wikipedia Neutrality: Building Trust in a Post-Truth World

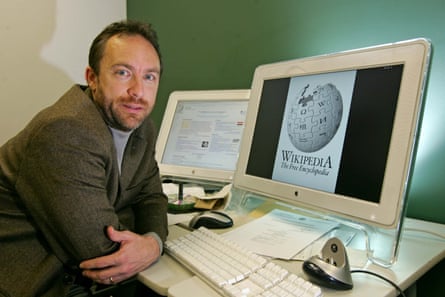

Jimmy Wales doesn't fit the mold of today's tech elite. No yacht, no MAGA dinners, no performative power plays. When most billionaire founders are chasing growth metrics and profit maximization, he's tinkering with Home Assistant to optimize his UK Wi-Fi setup. It's almost disarmingly normal, which is precisely what makes him unusual in an industry obsessed with disruption theater.

Yet Wales is anything but irrelevant. Wikipedia, the platform he co-founded 25 years ago, serves over 6 billion people annually. Every day, millions rely on it for information spanning everything from quantum physics to celebrity gossip to political controversies. It's arguably the most consequential non-profit tech platform ever built, and it operates on principles that directly contradict modern Silicon Valley orthodoxy: it prioritizes accuracy over engagement, neutrality over virality, and community over algorithms.

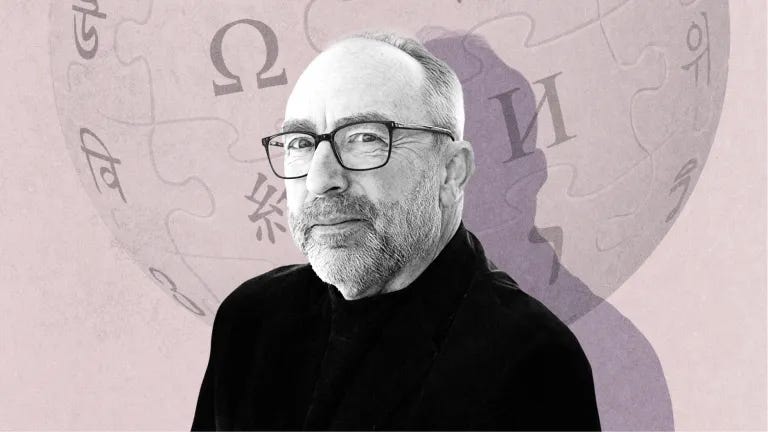

In recent months, Wales has been promoting his book, "The Seven Rules of Trust," which distills Wikipedia's unlikely survival strategy into a playbook for rebuilding institutional trust in a fractured society. It's timely. We're living through an era where facts themselves have become contested territory. Governments from Russia to Saudi Arabia have weaponized disinformation against Wikipedia. Conspiracy theorists attack its neutrality. AI companies scrape its data without permission. Billionaire-backed platforms deliberately spread falsehoods. Meanwhile, trust in institutions has cratered to historic lows.

Wales recently sat down for an extended conversation to discuss what it actually takes to maintain neutrality at scale, why he'll never personally edit Donald Trump's Wikipedia entry (spoiler: it would compromise everything Wikipedia stands for), and how the platform is preparing for an AI-dominated information landscape where bad actors are exponentially more capable of spreading fiction.

What emerges is a portrait of someone genuinely committed to a radical idea: that billions of people can collectively create reliable information without centralizing power, monetizing attention, or surrendering to political pressure. In 2025, that's not just countercultural. It's revolutionary.

TL;DR

- Wikipedia serves 6+ billion users annually by refusing to optimize for engagement or profit, instead prioritizing accuracy and editorial neutrality

- Jimmy Wales refuses to personally edit controversial pages like Donald Trump's because founder involvement would compromise the platform's neutrality principle and undermine trust

- Authoritarian governments and conspiracy networks actively target Wikipedia, requiring constant vigilance to prevent propaganda infiltration while maintaining openness to legitimate editing

- AI poses an existential threat to Wikipedia's model: both through unauthorized data scraping by LLM companies and the proliferation of AI-generated disinformation that Wikipedia volunteers must combat

- The neutrality mandate is Wikipedia's core strength, not a limitation—it's what allows billions of people from hostile political backgrounds to treat the platform as the closest thing to shared reality that exists online

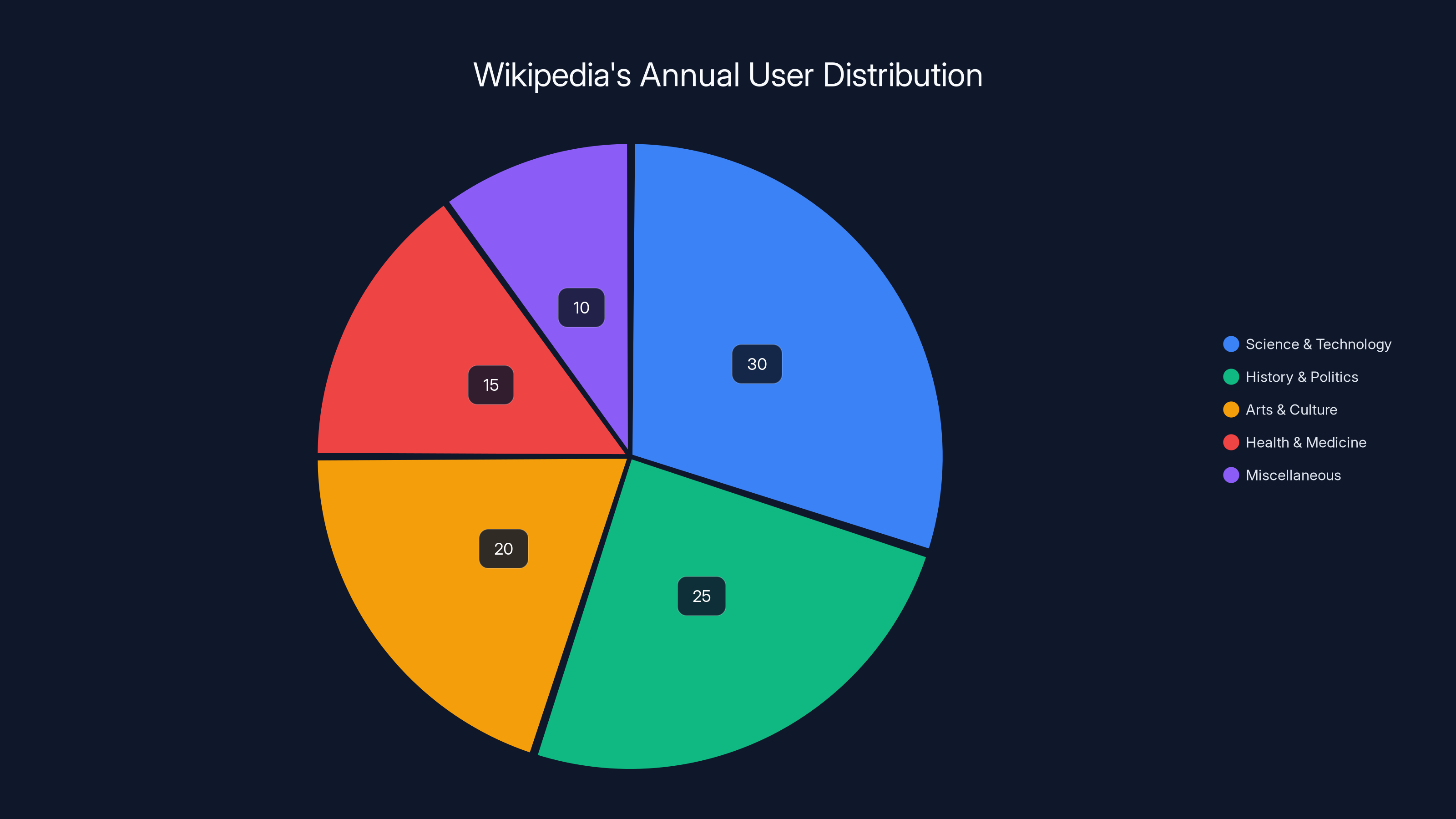

Estimated data shows that science and technology topics attract the largest share of Wikipedia's 6 billion annual users, highlighting the platform's role in providing reliable information across diverse fields.

The Accidental Billionaire Who Still Tinkers with Smart Home Code

Wales didn't set out to create the internet's most trusted reference material. In the late 1990s, he was running Bomis, a web search directory that operated more like an old-school Yellow Pages than a search engine. It was a competent business. It paid salaries. It wasn't revolutionary.

Then came the epiphany: what if you crowdsourced encyclopedia creation? What if you removed the gatekeepers, the credentialing requirements, the institutional barriers? What if you trusted people?

It sounds naive in retrospect. In 1999, it was considered laughably impossible. Academics predicted that anonymous internet randos would produce garbage. Critics argued that without institutional authority, Wikipedia would be overrun with bias, vandalism, and misinformation. They weren't wrong about the challenges—they just underestimated the power of community-driven editorial standards and the human desire to contribute to something meaningful.

Wales is noticeably uncomfortable with the "billionaire" label. He spends more time discussing Wikipedia's volunteer editor community than discussing his own wealth. He lives in London partly because the city allows him to maintain genuine friendships outside the tech bubble. When you ask him what he'd be doing if Wikipedia hadn't happened, he doesn't cite venture capital ambitions or serial entrepreneur fantasies. He says he'd probably be a programmer.

This is either genuine humility or masterful branding. Possibly both.

What's undeniable is that Wales has consistently rejected the startup playbook that made peers like Mark Zuckerberg and Elon Musk wealthy. Wikipedia operates as a non-profit. Its servers run on donations. It has no advertising. It doesn't track users. It doesn't manipulate behavior with algorithmic feeds. It doesn't sell data. Every design decision explicitly constrains growth and monetization potential.

In an era where tech leaders measure success by usage growth, revenue multiples, and stock price appreciation, Wikipedia measures success by accuracy, citation completeness, and community trust. The metrics are almost perversely anti-capitalist.

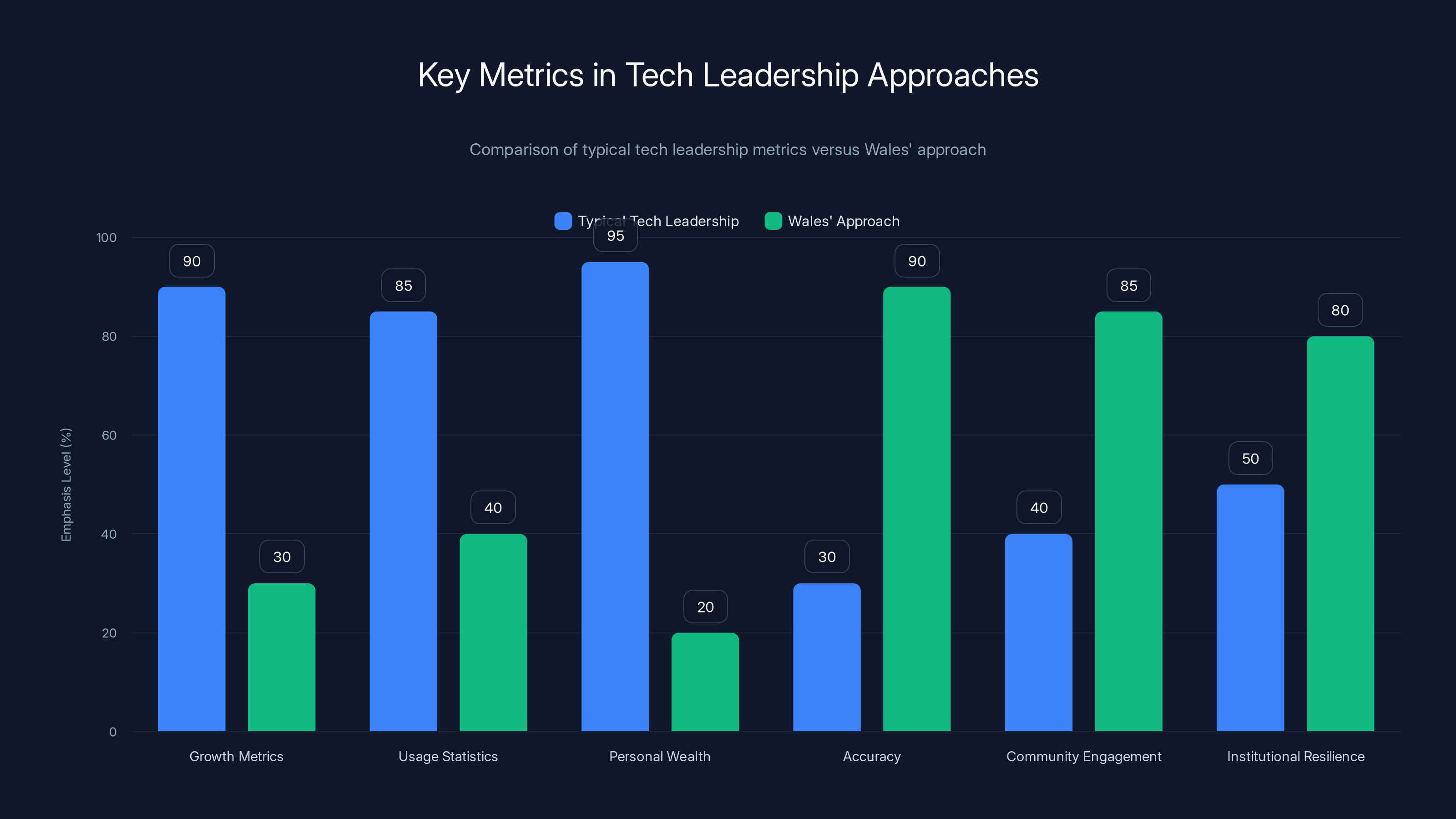

Wales' approach emphasizes accuracy, community engagement, and resilience over typical growth and wealth metrics, demonstrating a different model of tech leadership. Estimated data.

Why Neutral Point of View Isn't Weakness—It's the Entire Strength

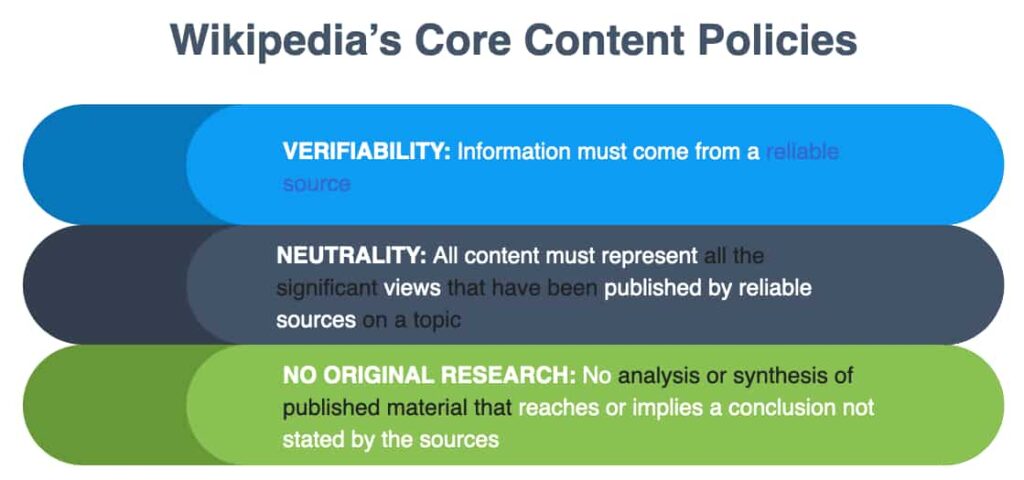

Wikipedia's foundational policy is called Neutral Point of View (NPOV). It's not neutrality as in "both sides are equally valid." It's not "false balance" where fringe theories get equal billing with established facts. Rather, it's a commitment to representing all legitimate, documented positions without editorial bias toward any particular faction.

Here's the nuance that most people miss: NPOV allows Wikipedia to state objective facts while acknowledging legitimate disagreement about their implications. On climate change, Wikipedia clearly documents the scientific consensus on human-caused warming. It also documents the existence of contrarian viewpoints—not to validate them, but to acknowledge that they exist and describe what their proponents actually argue. This is fundamentally different from false balance.

The brilliance of NPOV is that it creates something genuinely rare: a space where people with opposing political views can find shared factual ground. A conservative and a progressive might violently disagree about the policy implications of climate science, but they can both use Wikipedia's climate article as a reliable reference point. That shared reference point becomes increasingly valuable as wider culture fragments into competing epistemological camps.

Wales describes NPOV as the platform's immune system. When controversial figures or topics generate heated editing, the policy provides a framework for resolution that doesn't require Wikipedia administrators to make political judgments about whose side is "right." Instead, they evaluate which sources are authoritative, which claims are well-documented, and how to represent contested issues accurately.

This is why Wales refuses to personally edit controversial pages. If the founder started editing Trump coverage, it would instantly politicize the process. Rival editors could claim bias. The entire system of distributed editorial authority would collapse. Wales understands something that most tech leaders don't: sometimes the most powerful position is staying out.

The challenge is that NPOV requires genuine intellectual humility. It demands acknowledging complexity rather than reducing issues to simple narratives. It requires citing sources rather than relying on personal authority. In an age of increasing polarization and declining attention spans, this is countercultural. People don't want nuance. They want affirmation.

Yet this commitment to nuanced accuracy is what allowed Wikipedia to remain relatively trusted across the political spectrum, even as mainstream media outlets lost credibility with substantial portions of the population. When institutional authorities lose trust, Wikipedia's distributed model becomes more valuable, not less.

How Governments, Conspiracy Networks, and Bad Actors Target Wikipedia

Wikipedia's commitment to accuracy makes it a target. Governments that thrive on controlled information flows see Wikipedia as a threat. Conspiracy networks that depend on information ecosystems isolated from fact-checking view it as an adversary. Bad actors who benefit from confusion have every incentive to corrupt it.

The attacks are sophisticated and multifaceted. Russian state actors have attempted to manipulate Wikipedia coverage of Ukraine, Crimea, and Russian political figures. Saudi Arabia has reportedly targeted articles covering human rights. Chinese authorities have blocked Wikipedia entirely in mainland China. Right-wing conspiracy networks have waged coordinated campaigns to change climate science articles. During elections, bad actors attempt to inject false information about candidates, voting procedures, and election security.

Wales describes these attacks as inevitable consequences of Wikipedia's influence. The platform has become important enough that powerful actors now consider it worth attacking. This wasn't true in 2005. It is absolutely true now.

The defense mechanisms are mostly human-powered. Wikipedia relies on thousands of volunteer editors who monitor articles for vandalism, flag suspicious edits, and enforce editorial standards. It's fundamentally low-tech by contemporary standards: humans reading, evaluating, and discussing rather than algorithmic detection systems. This approach is slower than automated solutions, but it's more resistant to gaming. Bad actors can fool algorithms. They have a much harder time fooling experienced volunteer editors who know the subject matter deeply.

Wales is candid about limitations. Wikipedia doesn't have infinite resources. Its volunteer base, while substantial, has plateaued or declined in some areas. The platform can't monitor every article every second. Sophisticated state actors with significant resources could potentially corrupt specific articles if they were willing to invest enough effort. The question isn't whether Wikipedia can be attacked. The question is whether distributed community resistance can scale fast enough to catch and correct attacks before they spread.

Wikipedia's unique operational model emphasizes a non-profit approach, user privacy, and community-driven content, contrasting with typical for-profit tech companies. (Estimated data)

The AI Crisis: Both Scraping and Disinformation

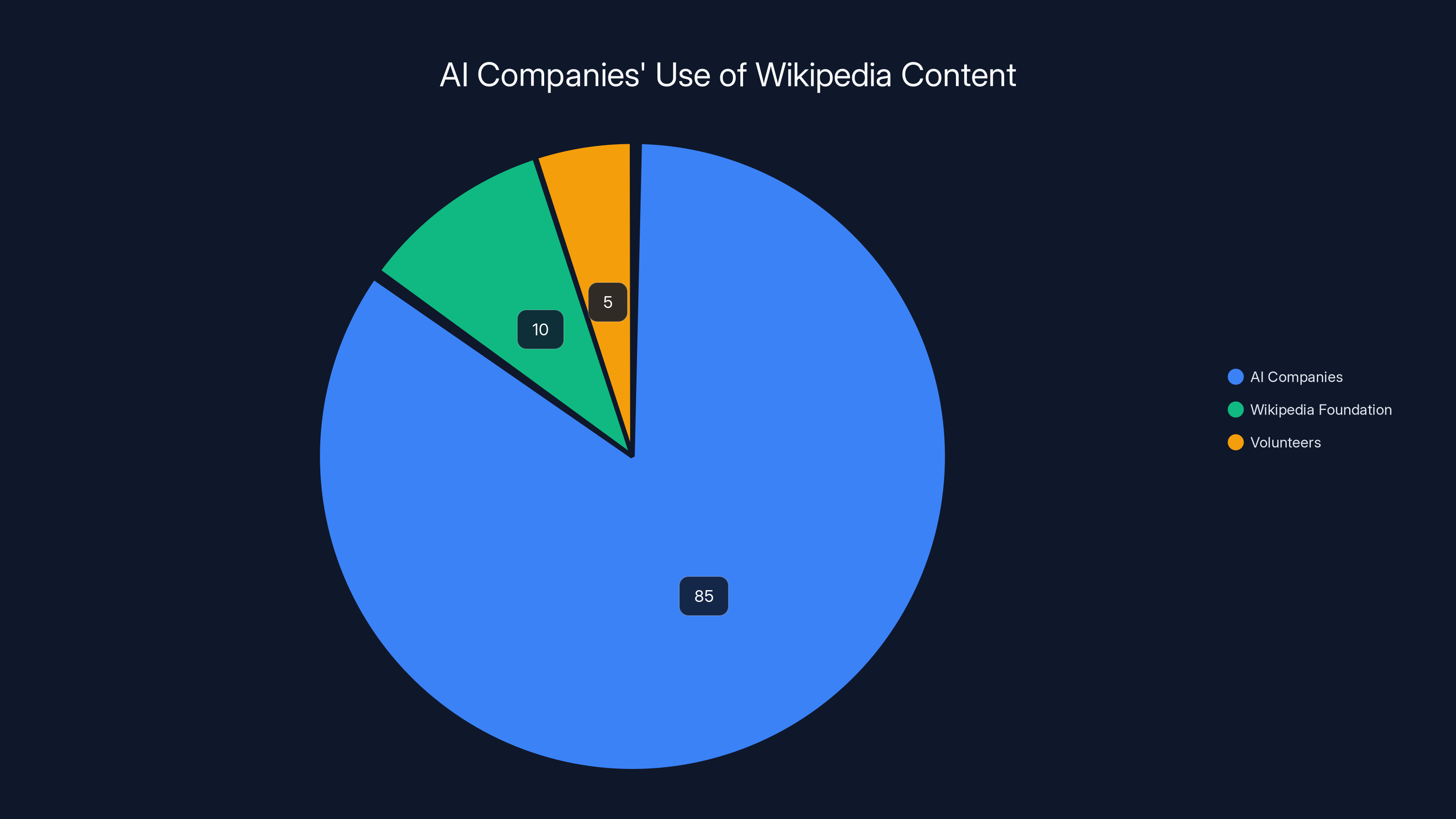

Wales is particularly concerned about AI's dual threat to Wikipedia. On one hand, major LLM companies have scraped Wikipedia data without permission to train language models. These companies profit from Wikipedia's collective intellectual property while contributing nothing back. On the other hand, those same LLMs are becoming incredibly effective at generating plausible-sounding disinformation that Wikipedia volunteers must continually identify and remove.

Consider the scraping issue first. Wikipedia's text is released under a Creative Commons Attribution-ShareAlike license, which technically permits reuse, including commercial reuse, as long as the source is attributed and derivatives are shared under the same terms. However, the scale and commercial intent of LLM training create a different dynamic than typical academic reuse. Billions of dollars in AI company valuations are partly built on Wikipedia content that volunteers created for free. The volunteers get nothing. The foundation gets nothing. Meanwhile, AI companies generate billions in market value.

Wales is exploring potential legal responses, but the problem is structural. Once content is published on the internet, controlling its use becomes nearly impossible. The ethical question isn't really about legality. It's about reciprocity. If AI companies benefit from Wikipedia, shouldn't they contribute to its maintenance and accuracy?

The disinformation angle is more immediately threatening. As AI-generated content becomes more convincing, malicious actors gain new capabilities. Someone could generate sophisticated fake sources and edit them into Wikipedia articles, or create entirely fabricated articles with realistic citation structures. Detecting these attacks requires either increasingly sophisticated automated detection (which has accuracy limitations) or human expertise (which is expensive and limited in supply).

Wales describes AI-generated disinformation as possibly the existential threat to Wikipedia's model. If bad actors can generate convincing false information faster than volunteers can identify and remove it, the platform's credibility collapses. The solution probably isn't banning AI. It's building better detection tools, increasing investment in volunteer communities, and perhaps requiring more rigorous source verification for certain categories of information.

This is where Wales' approach diverges sharply from most tech leaders. He's not looking for a purely technical solution. He's thinking about human capacity, community resilience, and distributed decision-making. These aren't sexy problems with clean technical fixes.

Trust Building vs. Trust Keeping: Why One Is Harder Than You Think

Early in our conversation, Wales was asked directly: what's harder, building trust or keeping trust? His answer was surprising. "They're kind of the same thing, but I think building trust is harder. Particularly these days, when we are in an era with such low trust that you have to get past people's skepticism."

This is counterintuitive. Most people assume trust maintenance is the challenge—that once you've earned credibility, the hard part is not blowing it. Wales sees it differently. Building initial trust when skepticism is the default state requires overcoming massive psychological resistance. People have been burned by institutions. They expect deception. They assume hidden agendas.

Wikipedia's solution is radical transparency. The platform publishes its editorial disputes openly. Article talk pages show exactly what editors disagree about and why. Edit histories are completely visible. Financial statements are public. Decision-making processes are documented. There's nowhere to hide.
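To make the "edit histories are completely visible" point concrete, here is a minimal sketch of how a reader might ask for an article's recent revisions through the public MediaWiki Action API. The helper name `revision_history_url` and the example article title are illustrative assumptions, not anything from Wales or the Wikimedia Foundation, and the sketch only builds the request URL rather than fetching it.

```python
from urllib.parse import urlencode

# Public MediaWiki Action API endpoint for English Wikipedia.
API_URL = "https://en.wikipedia.org/w/api.php"


def revision_history_url(title: str, limit: int = 5) -> str:
    """Build a URL requesting the most recent revisions of one article.

    Hypothetical helper for illustration; it performs no network access.
    """
    params = {
        "action": "query",        # standard read query
        "prop": "revisions",      # ask for revision metadata
        "titles": title,          # article to inspect
        "rvprop": "timestamp|user|comment",  # when, who, and the edit summary
        "rvlimit": limit,         # number of revisions to return
        "format": "json",
    }
    return f"{API_URL}?{urlencode(params)}"


url = revision_history_url("Climate change")
print(url)
```

Opening the printed URL in a browser should return JSON listing who changed the article, when, and with what edit summary, which is the same information shown on the article's "View history" tab.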

This transparency is uncomfortable. It exposes Wikipedia's imperfections, internal contradictions, and the reality that human volunteers sometimes make mistakes. A traditional institution would hide these things behind corporate communications and legal teams. Wikipedia puts them on display.

Yet this transparency is precisely what builds trust in an era of institutional skepticism. People can see that Wikipedia isn't hiding anything. Decisions about controversial content aren't made in backrooms. Anyone can verify sources. The process is visible, documented, and subject to community scrutiny.

Wales notes that trust-keeping follows naturally from this foundation. Once people internalize that Wikipedia operates with genuine transparency, they're much more forgiving of errors. "Part of trust is you can make mistakes and people forgive you because they do fundamentally trust you," he explains. This is a sophisticated understanding of how trust actually functions. Trust isn't about perfection. It's about demonstrating good faith through transparent process and willingness to acknowledge and correct mistakes.

In contrast, institutions that try to maintain trust through image management and carefully controlled messaging often trigger exactly the skepticism they're trying to prevent. People sense the PR operation and discount the message.

Estimated data shows AI companies gain the majority of benefits from Wikipedia content, while the foundation and volunteers receive minimal returns.

The Paradox of Neutrality in a Polarized World

Wales faces a genuine paradox: the more polarized the world becomes, the more valuable Wikipedia's neutrality principle becomes, yet also the more it's attacked. Both left and right have accused Wikipedia of bias. Conservatives argue it favors liberal sources. Progressives argue it overrepresents conservative voices in the name of balance. The two accusations pull in opposite directions, so both can't be entirely right.

Here's where understanding NPOV becomes crucial. The goal isn't to satisfy every group equally. The goal is to represent what reliable sources actually document, without editorial preference for any political faction. This means sometimes looking biased to people whose worldview is itself biased against reliable information.

For example, on controversial medical topics, Wikipedia reflects scientific consensus. To people who reject scientific consensus, this looks like bias. But Wikipedia isn't choosing political correctness over truth. It's saying: this is what authoritative sources document. If you disagree, cite better sources. Until then, scientific consensus governs.

Wales understands this creates a difficult communication challenge. Critics on all sides claim bias. Wikipedia can't win approval from everyone. The platform can only maintain consistency with its stated principles while continuing to improve citation quality and representation.

The deeper insight is that genuine neutrality sometimes looks biased to ideologically committed observers. Objectivity doesn't mean giving equal platform to true and false claims. It means accurate representation of what reliable sources document, with transparency about disputes. This requires intellectual sophistication to understand, which ironically makes it harder to defend politically.

Wikipedia's Volunteer Community: The Actual Engine

Wales often deflects credit toward Wikipedia's volunteer editors. He's not being falsely modest. The platform's quality genuinely depends on thousands of unpaid people who spend hours each week improving articles, catching vandalism, arbitrating disputes, and enforcing standards.

This is increasingly challenging to sustain. Wikipedia's volunteer editor count has plateaued or declined in recent years. The platform that once attracted enthusiastic contributors who saw themselves as building free knowledge infrastructure now struggles to recruit new editors. Potential contributors see hostile editing communities on contentious topics and decide the social cost isn't worth it.

Wales acknowledges this as perhaps Wikipedia's most serious long-term problem. The platform can survive technical challenges. It can adapt to AI threats through better detection and human oversight. But if the volunteer community continues shrinking, the entire model degrades. You can't verify sources and catch disinformation at scale without human expertise.

What makes people stay as Wikipedia editors despite difficult conditions? Wales suggests it's something intrinsic: the desire to contribute to something meaningful beyond personal financial reward. Wikipedia editors don't get paid. They don't get fame. They often face hostility from people invested in false narratives. Yet they continue because they believe in the mission.

This is almost quaint in contemporary terms. Most major platforms are built on monetized user engagement and algorithmic feedback loops designed to maximize addiction. Wikipedia's model asks: what if we created a platform where people contribute because they believe it matters? What if we made that the actual design principle rather than a side effect?

The challenge is scaling this intrinsic motivation in an era where online spaces are increasingly hostile and fractured. How do you maintain a community dedicated to shared truth when significant portions of the population deny that objective truth exists? This isn't really a technical problem. It's a civilizational question.

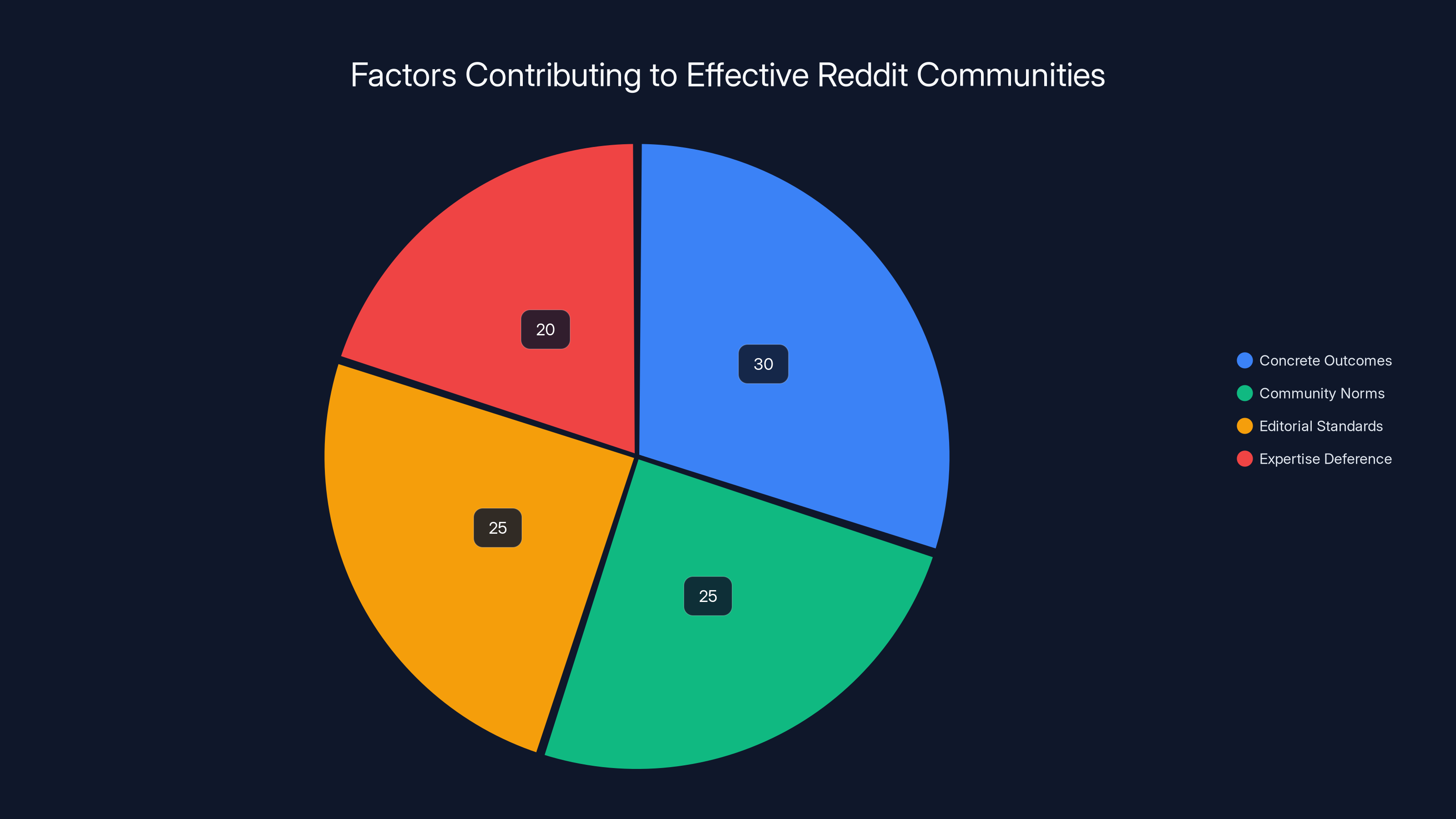

Estimated data shows that concrete outcomes and community norms are key factors in maintaining quality in Reddit communities, with editorial standards and expertise deference also playing significant roles.

When Founders Should Stay Out: The Trump Edit Case Study

This is where Wales' thinking becomes genuinely interesting. When you ask him whether he's ever personally edited Wikipedia articles, he's largely circumspect. But ask him whether he would ever edit coverage of Donald Trump, and you get an emphatic answer: absolutely not. "It makes me insane," he says.

The restraint here is remarkable. Wales has strong political opinions. He has the technical ability to edit Wikipedia. He has the social capital to influence what gets published. Yet he explicitly refuses to exercise these powers because doing so would corrupt the very thing that makes Wikipedia valuable.

If Wales started editing Trump coverage, it would instantly weaponize his involvement. Supporters would say he's spreading bias. Critics would claim he's using his platform inappropriately. The entire system of distributed editorial authority would crumble. Why should other editors accept community standards when the founder is apparently above them? If Wales can edit politically sensitive pages based on personal conviction, so can everyone else.

Wales understands that the power of Wikipedia comes precisely from the diffusion of authority. The most important protection against bias isn't any individual person's commitment to fairness. It's the fact that no single person controls outcomes. Decisions are made through community deliberation, source verification, and editorial consensus.

This is counterintuitive for someone accustomed to leadership models where authority flows from the top. Tech founders are trained to believe they should drive important decisions. Wales has explicitly rejected this. The more hands-off he remains from controversial editorial decisions, the more credible Wikipedia's neutrality becomes.

There's a lesson here for anyone building institutions or platforms that require community trust. Sometimes the most powerful thing a leader can do is voluntarily constrain their own power. This signals commitment to systems that work without depending on any individual's judgment.

Reddit, Personal Finance Communities, and Why Anonymous Communities Sometimes Work

When asked about his favorite websites, Wales mentions Reddit, particularly the personal finance subreddits. He describes being "amazed" by how thoughtful and helpful these communities are. This is interesting because Reddit is typically understood as a chaotic platform filled with misinformation and toxic behavior.

Yet Wales' observation is accurate. Certain Reddit communities have developed their own editorial standards and community norms that maintain reasonably high-quality discourse. Personal finance communities work because the stakes are concrete. If someone gives bad advice about investments, other community members see it immediately. Bad advice gets downvoted. Good advice gets shared.

This contrasts with communities organized around politics or ideology, where the consequences of misinformation are less immediately visible and more abstract. You can't definitively prove whether a political prediction was right or wrong in the moment.

Wales sees Reddit as a model that demonstrates how anonymous, decentralized communities can maintain quality standards when the community is organized around concrete outcomes rather than abstract ideology. This aligns with Wikipedia's model: people are more willing to defer to expertise and factual accuracy when they're collaborating on something practical.

The broader insight is that community norms can't be imposed from above. They have to emerge organically from repeated interaction and shared purpose. Wikipedia's editorial standards developed over years of community deliberation. They're not mandates from on high. They're standards that editors agreed to because they produced better outcomes.

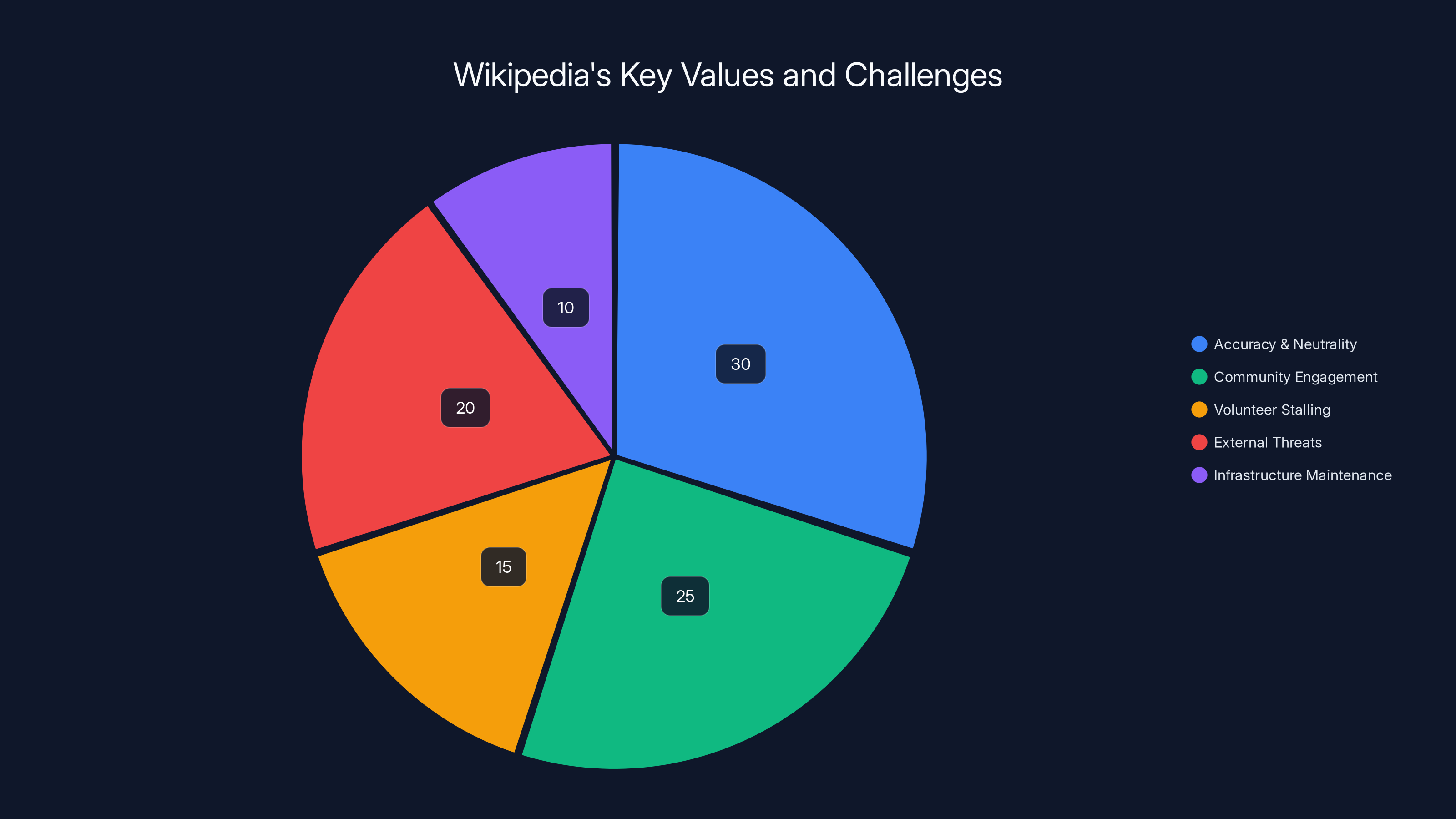

Wikipedia's commitment to accuracy and neutrality is its largest focus area, followed by community engagement. External threats and stagnating volunteer numbers are significant challenges. (Estimated data)

The Wi-Fi Obsession: Technical Thinking Applied to Infrastructure

There's something revealing about Wales spending his free time optimizing Home Assistant and his home Wi-Fi setup. It suggests someone who thinks deeply about infrastructure, systems, and how things actually work at a technical level.

Home Assistant is essentially a self-hosted smart home platform. You run it on your own hardware rather than relying on cloud services controlled by major tech companies. It's open source. You own your data. It aligns perfectly with Wales' apparent philosophy about technology: distributed authority, transparency, user control.

Some might dismiss this as nerdy hobby stuff. In reality, it's revealing about how Wales approaches problem-solving. He's not interested in magic solutions or purely technical fixes. He's interested in understanding systems deeply and optimizing them locally. This same approach applies to Wikipedia. Problems aren't solved through algorithmic interventions from above. They're solved through community capacity, distributed decision-making, and local optimization.

This is why his concerns about AI aren't about developing better AI systems to combat misinformation. They're about scaling human capacity to evaluate information and catch bad actors. The solution involves tools, certainly, but fundamentally depends on people understanding what they're looking at.

The "Seven Rules of Trust" and Wikipedia's Playbook for Rebuilding Institutional Faith

Wales' book distills Wikipedia's survival strategy into principles applicable beyond encyclopedias. While the full analysis belongs to the book itself, the underlying logic is worth examining.

The first principle is transparency. You can't build trust while hiding things. This seems obvious until you look at how most institutions operate. Government agencies restrict information. Corporations control their narrative. Tech platforms hide their algorithms. Wikipedia does the opposite. Everything is visible.

Second is accountability. If decisions matter, whoever makes them should be identifiable and answerable. This doesn't mean Wikipedia has perfect oversight of every individual editor. It means decisions are documented, open to deliberation, and subject to review.

Third is community participation. Trust can't be imposed. It has to be earned through repeated positive interaction. This requires creating space for people to participate in decisions that affect them.

Fourth is independence from commercial incentives. When financial interests distort judgment, trust erodes. Wikipedia's non-profit status removes obvious financial conflicts.

Fifth is commitment to expertise and evidence. Trust requires deference to reliable sources and documented facts. This sounds banal until you observe how many institutions make decisions based on ideology, political pressure, or financial self-interest rather than evidence.

Sixth is patience with complexity. Simple narratives build engagement faster. Complex truths build sustainable trust. Wikipedia's commitment to nuance reflects this principle.

Seventh is consistency. Trust builds through predictable behavior. Wikipedia's commitment to NPOV is maintained even when it's unpopular or inconvenient. This consistency signals that principles aren't situational.

These principles don't guarantee success. They require constant recommitment and are vulnerable to external pressure. But they create infrastructure that makes trust-building possible.

Wikipedia at 25: Still Growing, Still Under Attack, Still Committed to Facts

As Wikipedia enters its 26th year, the platform faces unprecedented challenges. The information environment is more hostile than ever. Disinformation campaigns are more sophisticated. AI capabilities create new attack surfaces. Meanwhile, volunteer engagement has stagnated, and the platform's funding model depends on periodic donation campaigns that generate uncertainty.

Yet Wikipedia remains arguably the most trusted information platform globally. In an era of institutional skepticism and epistemological fragmentation, it's increasingly valuable precisely because it refuses to optimize for engagement, profit, or political alignment.

Wales seems reasonably optimistic about Wikipedia's long-term survival, though not complacent. The platform has proven more durable than early critics predicted. Its principles are more powerful than observers realize. Its community, despite challenges, remains committed.

But sustainability isn't guaranteed. Wikipedia's future depends on sustaining volunteer communities, maintaining technical infrastructure against increasingly sophisticated attacks, and adapting to AI-driven information ecosystems while maintaining editorial integrity.

The stakes are genuinely high. As institutional authority fragments further and disinformation becomes cheaper to produce, Wikipedia's commitment to verifiable facts and collaborative truth-seeking becomes more important, not less. The platform represents a genuine alternative to centralized algorithmic platforms and institutional gatekeeping.

Whether that alternative can scale in an environment increasingly hostile to objective truth remains the open question.

The Broader Conversation: Tech Leadership in an Era of Low Trust

Wales' approach offers an implicit critique of contemporary tech leadership. Most tech billionaires measure success through growth metrics, usage statistics, and personal wealth accumulation. Wales measures success through accuracy, community engagement, and institutional resilience.

Most tech platforms optimize for engagement, which means optimizing for reactions that hold attention. Wikipedia optimizes for accuracy, which sometimes means less engaging content. Most tech companies defend their algorithms as proprietary secrets. Wikipedia publishes its editorial standards openly.

Most tech leaders claim visionary authority over their platforms. Wales has voluntarily constrained his own power. Most tech companies fight regulation. Wikipedia cooperates with governments and researchers studying online information flows.

None of this makes Wales' approach universally applicable. Not every problem can be solved through decentralized community action. Not every platform benefits from NPOV principles. Transparency sometimes conflicts with privacy protection. But Wales demonstrates that alternative models exist and can thrive.

In an era where tech platforms face increasing skepticism and regulatory scrutiny, and where public trust in institutions continues declining, Wales offers living proof that technology can be built differently. Not necessarily better for every purpose. But demonstrably more trustworthy, more durable, and more resistant to corruption when the stakes are accurate information.

FAQ

What is Wikipedia's Neutral Point of View policy?

Wikipedia's Neutral Point of View (NPOV) policy requires editors to represent all legitimate, well-documented positions on controversial topics without editorial bias, while clearly stating established facts and scientific consensus. It's not false balance between true and false claims. Rather, it's transparent representation of what reliable sources actually document, with acknowledgment of legitimate disputes and different interpretations of documented facts.

Why does Jimmy Wales refuse to personally edit Wikipedia articles about Donald Trump?

Wales refuses to edit politically sensitive pages because founder involvement would compromise Wikipedia's distributed editorial authority and independence. If the founder started making editorial decisions on controversial topics, it would instantly politicize those decisions and undermine the platform's credibility as a neutral institution. The power of Wikipedia comes from diffused authority where no single person controls outcomes—Wales preserves this by explicitly constraining his own editorial power.

How does Wikipedia defend itself against government disinformation campaigns and vandalism?

Wikipedia relies primarily on volunteer editors who monitor articles, flag suspicious edits, and enforce editorial standards through community deliberation. The platform also uses tools like Pending Changes (which holds edits from new or unregistered users on sensitive articles until an experienced reviewer approves them) and detailed edit histories that make coordinated attacks visible. Detection is largely human-powered rather than algorithmic because experienced editors understand subject-matter context better than automated systems can.

What is the dual threat Wikipedia faces from AI?

First, major LLM companies have scraped Wikipedia's content without permission to train language models, profiting from Wikipedia's freely created intellectual property while contributing nothing back. Second, as AI-generated disinformation becomes more convincing, malicious actors gain new capabilities to create sophisticated fake sources and embed false information into Wikipedia articles. Detecting AI-generated disinformation at scale requires either better automated detection tools or increased human expertise, and both present significant challenges.

Why does Wikipedia's volunteer editor community matter more than technical infrastructure?

Wikipedia's quality ultimately depends on humans reading, evaluating, and verifying information. You can't catch sophisticated disinformation through algorithms alone. You can't maintain appropriate citation standards without subject matter expertise. The platform's volunteer editor community provides this human capacity. As volunteer numbers plateau or decline, Wikipedia's ability to maintain quality and catch attacks degrades, making this arguably the platform's most serious long-term sustainability challenge.

Can Wikipedia survive in an era of increasingly polarized politics and bad-faith actors?

Wikipedia's survival depends on several factors working together: sustained volunteer engagement, adequate technical resources, international community commitment to shared factual standards, and public recognition of the platform's value as a trusted information source. The platform has proven more durable than early critics predicted, and it's increasingly valuable as institutional trust declines. However, sustainability is not guaranteed if volunteer engagement continues declining or if coordinated disinformation campaigns become significantly more sophisticated than current detection capabilities can handle.

What does the "Seven Rules of Trust" framework suggest about rebuilding institutional credibility?

Wales' framework emphasizes transparency (making all information visible), accountability (documenting decisions and making them reviewable), community participation (allowing people to influence decisions that affect them), independence from commercial incentives (removing obvious conflicts of interest), commitment to evidence (deferring to expertise and documented facts), patience with complexity (accepting nuance over simple narratives), and consistency (maintaining principles even when unpopular). These principles don't guarantee success but create infrastructure that makes sustainable trust-building possible.

How does Wikipedia's model compare to other online communities like Reddit?

Wikipedia and effective Reddit communities both demonstrate that decentralized groups can maintain quality standards through clear community norms and shared purpose. However, Reddit communities focused on concrete outcomes (like personal finance) maintain better quality than communities organized around ideology or abstract topics. Both platforms show that community standards work best when community members have repeated interaction, concrete stakes in outcomes, and shared commitment to accuracy.

The Bottom Line: Why Wikipedia Matters More Now Than Ever

Jimmy Wales' quiet refusal to exercise founder authority, his candid acknowledgment of Wikipedia's challenges, and his commitment to principles that constrain profit and power offer a striking contrast to Silicon Valley orthodoxy. He's not building the next unicorn. He's maintaining infrastructure for collective truth-seeking in an era increasingly hostile to objective reality.

Wikipedia represents something genuinely rare: a technological platform that prioritizes accuracy over engagement, community over algorithms, and long-term institutional integrity over short-term growth. It operates at massive scale, serving billions of users a year, while refusing the monetization and attention-optimization strategies that define contemporary tech.

The platform's success isn't inevitable. Authoritarian governments attack it. Conspiracy networks target it. AI threatens both its data and its editorial capacity. Volunteer engagement is stalling. The information environment is increasingly hostile to neutral, factual discourse.

Yet Wikipedia endures. It remains the closest thing to shared factual ground that billions of people from opposing political backgrounds can access. In that role, it's become almost alarmingly important. As institutions lose trust, as media fragments, and as algorithmic platforms radicalize their users, Wikipedia's commitment to accuracy and neutrality makes it increasingly valuable.

Wales understands this. His book, his principles, and his decision to remain deliberately absent from controversial editorial decisions all reflect deep thinking about how trust actually works. It's not about being perfect. It's about being transparent, accountable, and genuinely committed to something beyond personal benefit.

In 2025, that's a radical idea. Which is precisely why it matters.

Key Takeaways

- Wikipedia serves 6+ billion users annually by refusing to monetize engagement, instead prioritizing accuracy and editorial neutrality as core design principles

- Jimmy Wales voluntarily constrains his own founder authority on controversial pages like Donald Trump's article, understanding that distributed editorial control is Wikipedia's primary defense against bias and corruption

- Neutral Point of View (NPOV) isn't false balance—it's transparent representation of well-documented positions with clear distinction between established facts and legitimate disagreement

- AI presents dual threats to Wikipedia: unauthorized data scraping by LLM companies and the exponential increase in sophisticated AI-generated disinformation that volunteers must detect and remove

- Wikipedia's volunteer editor community is the platform's most critical resource and greatest vulnerability—stagnating editor numbers threaten long-term sustainability more than technical challenges

- Trust is built through radical transparency, accountability structures, community participation, and consistent commitment to principles even when unpopular—Wales' framework applies beyond Wikipedia to institutional credibility broadly

