Introduction: When Social Media Becomes Permanent

Imagine logging into Instagram and finding your entire account gone. No warning. No second chance. No clear explanation of what you did wrong. Your photos, messages, business connections, and years of memories vanish. For creators and business owners, it's worse. You lose your audience, your revenue stream, your entire digital livelihood.

This isn't hypothetical anymore.

Meta's Oversight Board just opened its doors to something unprecedented in its five-year history: examining whether permanent account bans are fair, transparent, and truly necessary. This matters way more than tech news headlines suggest. We're talking about the first real institutional challenge to Meta's ability to erase someone from the internet with minimal recourse.

The case involves a high-profile Instagram user who posted threats against journalists, hate speech, and explicit content. Meta didn't gradually escalate enforcement. Instead, the company went straight to permanent deletion. The Board wants answers: How does this decision happen? Who reviews it? What happens next if you disagree?

Here's why this is important to you, even if you've never violated a single community standard. This case sets precedent. The Board's recommendations could reshape how Meta handles millions of enforcement decisions across Facebook, Instagram, Threads, and WhatsApp. It could mean better appeals processes. It could mean transparency. Or it could mean nothing changes at all.

But first, let's understand what we're actually dealing with. Permanent bans are the nuclear option of content moderation. They're supposed to be rare, justified, and reserved for the worst of the worst. Except they're not always rare. And lately, users have been complaining that they're rarely transparent.

In 2024 and early 2025, stories exploded across social media about mass account bans with zero explanation. Facebook Groups got nuked. Individual accounts disappeared overnight. People with Meta Verified subscriptions—paid accounts supposedly offering extra support—discovered the premium service couldn't help them appeal a ban.

Meta's response has been inconsistent. Sometimes the company claims automated moderation flagged something. Sometimes human reviewers made the call. Sometimes Meta admits it was a mistake, sometimes not.

The Oversight Board's investigation could change this. Or it could reveal that Meta's approach is working exactly as designed: removing problematic users fast, without friction, and without full transparency. Let's dig into what's happening.

TL;DR

- First time ever: Meta's Oversight Board is examining permanent account bans in an institutional capacity, marking a significant shift in how social media platforms handle enforcement.

- The case: A high-profile Instagram user posted threats, hate speech, and explicit content, leading to permanent deletion without gradual enforcement escalation.

- What's at stake: Millions of users face potential permanent bans, but lack transparent processes, clear appeals, or meaningful recourse.

- The Board's limits: While influential, the Oversight Board can't force Meta to change broader policies—it can only recommend and overturn individual decisions.

- Impact timeline: Meta has 60 days to respond to the Board's recommendations, which could reshape account enforcement globally.

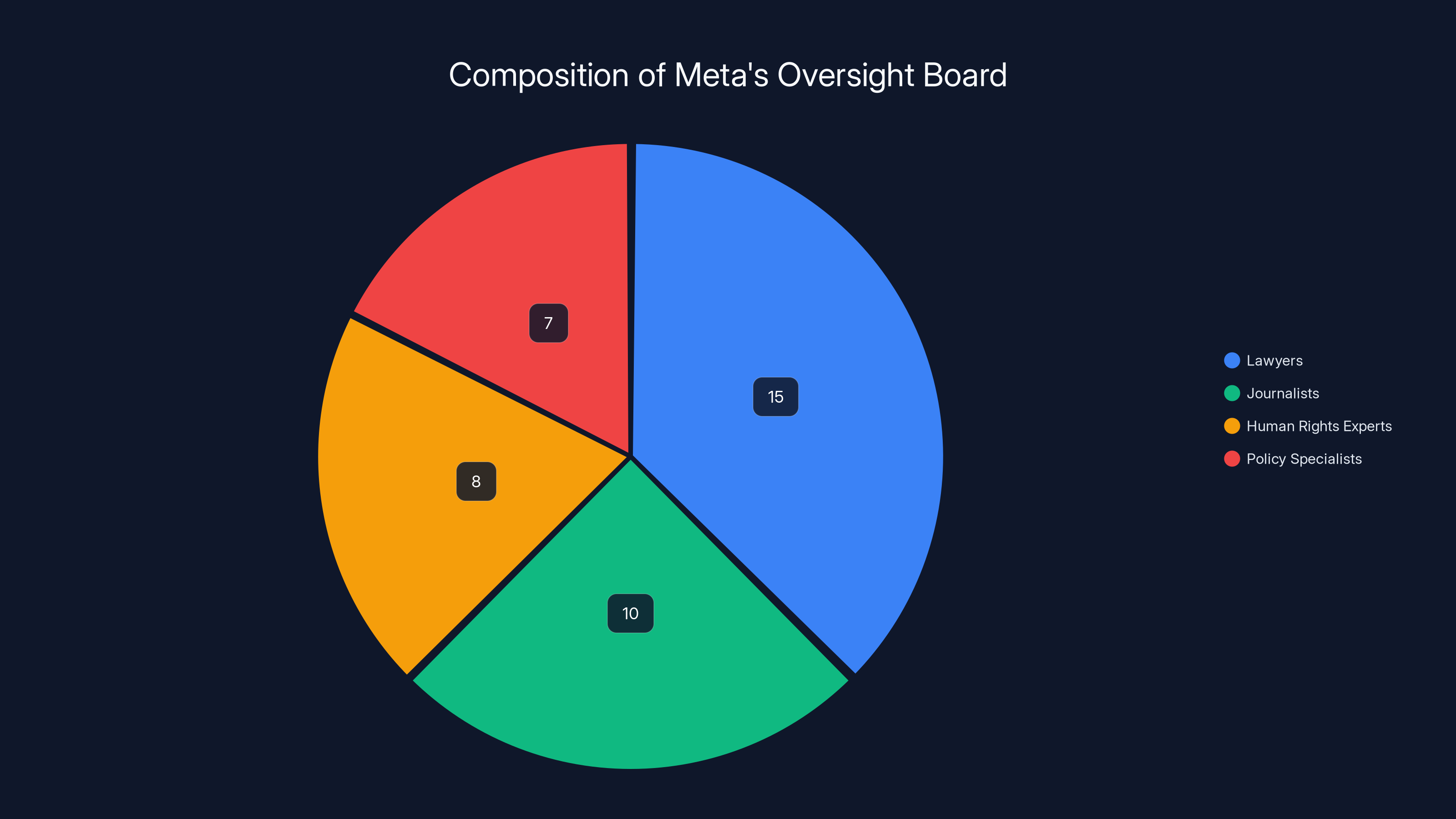

The Oversight Board consists of diverse professionals, with lawyers making up the largest group. Estimated data based on typical board composition.

Understanding Meta's Oversight Board: History and Scope

Meta's Oversight Board didn't spring up overnight. It was born from crisis.

Back in 2018 and 2019, Facebook faced relentless criticism over misinformation, Russian election interference, hate speech, and the Myanmar genocide facilitated by Facebook posts. Mark Zuckerberg got hammered in Congressional hearings. The company's content moderation decisions looked arbitrary and unaccountable.

In 2019, Meta announced it would create an independent board to review its most controversial content decisions. Think of it as a Supreme Court for social media, except the company that loses the case can still ignore the ruling if it really wants to.

The Board launched in 2020 with an initial group of 20 members, including journalists, human rights experts, constitutional lawyers, and policy specialists, under a charter that envisions growing to about 40. The mandate was clear: review difficult content moderation cases, issue binding decisions on specific posts or accounts, and offer policy recommendations.

But here's the catch that nobody talks about loudly enough. The Board can't investigate things on its own. It can't force Meta to refer cases. It can't override business decisions that don't involve content moderation. And critically, it was never designed to tackle systemic issues or permanent account removal.

Until now.

The Board's scope has historically been narrow and reactive. Users or Meta could refer specific content decisions. The Board would examine the post, apply Meta's stated policies, and decide if it violated community standards. If the post didn't violate standards, it stayed up. If it did, it came down. Simple.

Permanent bans were different. They weren't about individual pieces of content. They were existential consequences affecting entire accounts, years of data, and in many cases, professional livelihoods.

The organization itself says this is the first time it's focused specifically on permanent account bans in an institutional policy sense. That's significant. It means the Board has decided this issue is big enough, systemic enough, or problematic enough to warrant investigation beyond one random Instagram user.

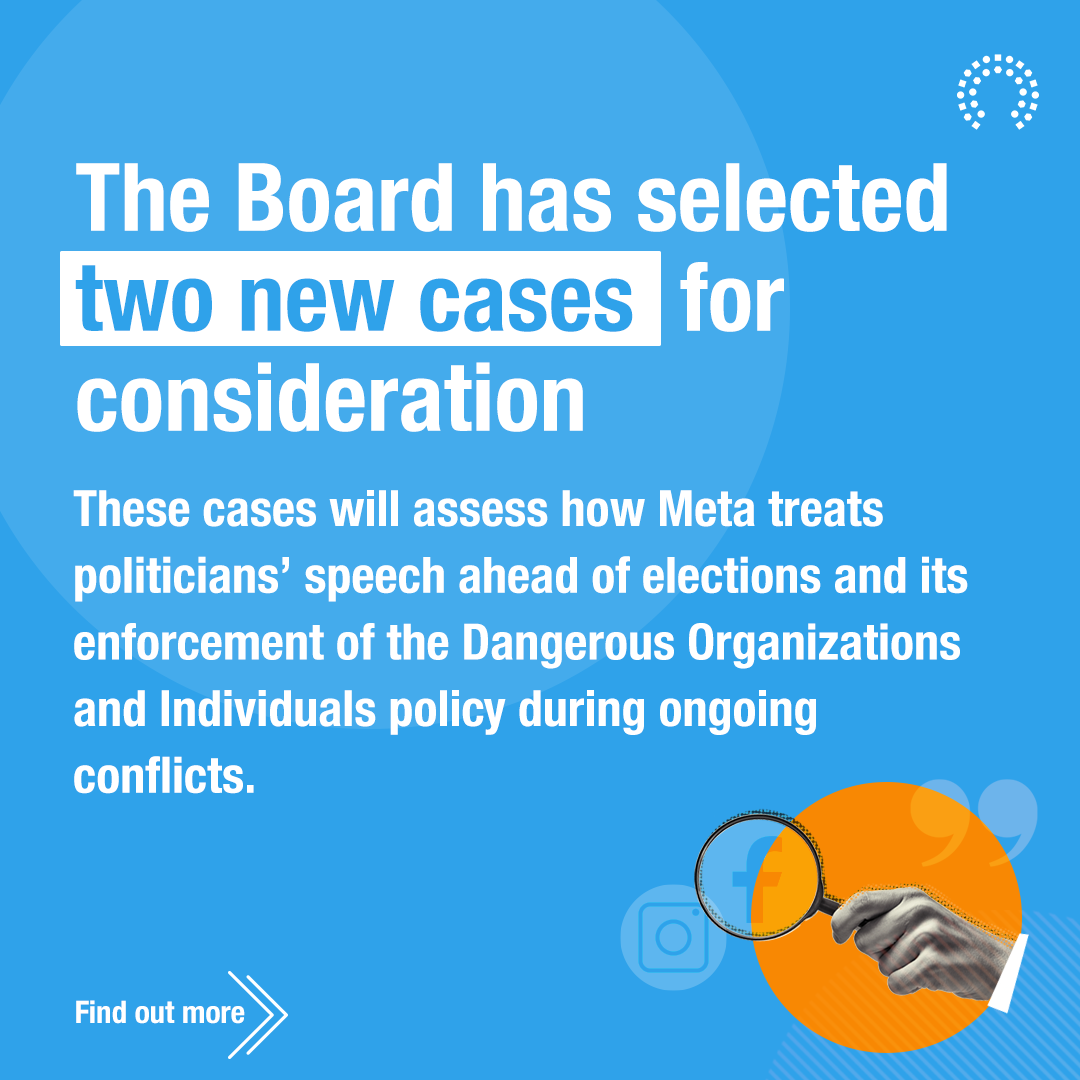

What makes this case unique is the scope of questions Meta is asking. The company isn't just asking, "Did this user violate policies?" It's asking the Board to help it figure out policy itself. How should permanent bans be processed fairly? How transparent should these decisions be? What tools work best to protect journalists from harassment? Are automated systems creating false positives?

These aren't technical questions. They're existential questions about power, accountability, and what Meta owes its users.

The Specific Case: High-Profile User, High Stakes

Meta's Oversight Board didn't choose a random person's account to examine.

The case involves a high-profile Instagram user who repeatedly violated Meta's Community Standards. We're not talking about one controversial post or a misunderstanding. The user repeatedly posted visual threats of violence against a female journalist, anti-gay slurs against politicians, content depicting sex acts, and allegations of misconduct against minorities.

This happened over time. The Board's materials referenced five specific posts made over the year before the permanent ban. That's important: we're not talking about a single egregious violation. This was a pattern.

But here's where it gets complicated. The account hadn't accumulated enough "strikes" to trigger Meta's automated ban system. Meta didn't follow its own escalation policy. Instead, a human or system at Meta decided this account was problematic enough to warrant permanent deletion.

When Meta makes that call, users rarely understand why. They don't get told which specific posts violated which policies. They don't get offered an appeal to human reviewers. They don't get a timeline or explanation. They just get locked out.

For a high-profile user with many followers, this creates additional complications. The person likely built a business or community around the account. Fans and customers lost access to them. Revenue streams ended. Messaging infrastructure disappeared.

Meta's position is straightforward: this user repeatedly created content that violates Community Standards. We're not obligated to host harmful content. If someone uses our platform to threaten journalists and post hate speech, we can remove their account. End of discussion.

But the Board's investigation suggests the conversation is more nuanced. Yes, the user violated policies. But did Meta follow fair procedures? Did it consider context? Did it explore warnings or temporary suspensions first? Could a journalist or public figure legitimately argue this was politically motivated or disproportionate?

More importantly: should permanent account deletion be an option Meta can exercise unilaterally, or should it require some form of review or appeal before going final?

These questions matter because what happens with this one account could shape how Meta treats millions of others. The Board's recommendations could establish precedent. And Meta has explicitly said it's open to hearing those recommendations.

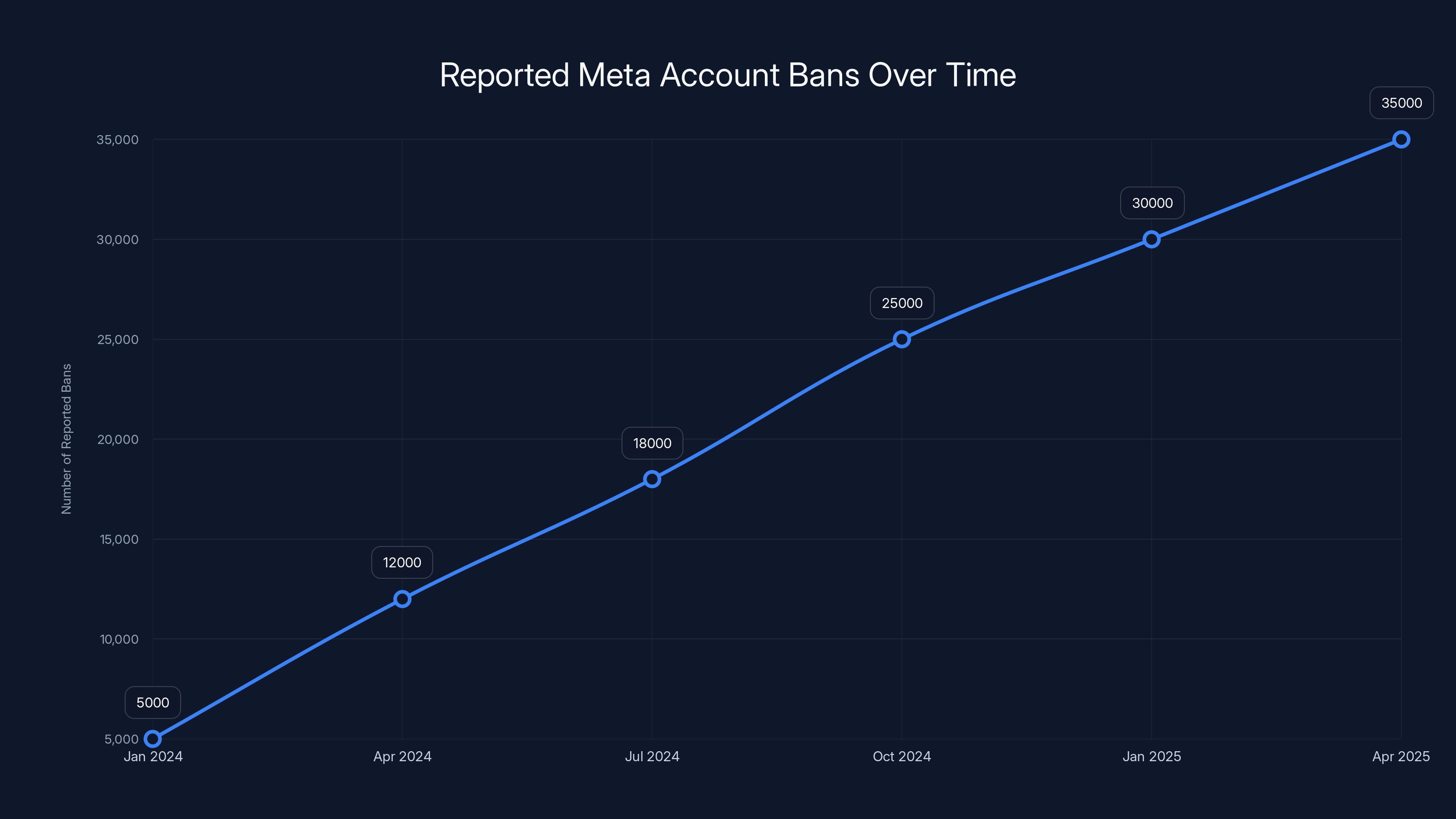

Estimated data shows a significant increase in reported Meta account bans from early 2024 to mid-2025, highlighting the growing concern over transparency and support issues.

The Broader Problem: Mass Account Bans and Missing Transparency

This case didn't emerge in a vacuum.

Throughout 2024 and into 2025, Meta users reported experiencing sudden, unexplained account bans at alarming rates. Facebook Groups got permanently disabled. Individual accounts vanished. The pattern was unsettling: users got no warning, no explanation, no meaningful way to appeal.

Some reported that Meta's automated moderation systems flagged them incorrectly. Others suspected shadow banning or algorithmic errors. Still others believed their accounts were targeted for political or ideological reasons, though they couldn't prove it.

What unified these complaints was the lack of transparency. Meta wouldn't explain what happened. The company's support infrastructure couldn't help. And most frustratingly, Meta Verified—the paid subscription tier—proved useless in these situations.

Meta Verified is positioned as premium support. For

This created a trust crisis. If you pay Meta for verified status and account protection, and then Meta bans your account anyway without explanation, what did you buy? What's the value of a paid service that doesn't protect you from the core risk?

The automation problem is real too. Meta has acknowledged that its moderation systems sometimes flag content incorrectly. An image might be detected as depicting violence when it actually shows something innocuous. A political post might trigger hate speech filters when it's legitimate political speech. These errors happen at scale, affecting millions of users.

But here's the policy question: when automation fails, whose job is it to fix the mistake? Should Meta be required to provide a free human review? Should users get to appeal? Should there be time limits on how long a ban stays active before an appeal must be heard?

Meta's current system says: we review appeals when we get them, but we're not obligated to do so quickly or thoroughly. The appeals process is opaque. You don't get to know why you were banned or what evidence Meta used to ban you. You submit an appeal into a void, and sometimes someone responds, and sometimes they don't.

The Oversight Board is asking if this system is acceptable.

Why Permanent Bans Are Different From Temporary Suspensions

There's a huge difference between a 24-hour timeout and permanent deletion, but Meta often treats them the same way.

Temporary suspensions are punishment with mercy built in. You get locked out for a day or a week, but you know when you get back. Your account, memories, and connections remain intact. You can adjust your behavior, cool off, and return. It's like detention in school—uncomfortable but ultimately survivable.

Permanent bans are digital capital punishment. They're not punishment with a sentence; they're execution. Your entire presence disappears. If you built a business on that account, it evaporates. If you're a creator with a million followers, they vanish. If you stored important messages or memories, they're gone.

For businesses and creators, permanent bans are particularly devastating. A photographer who built their portfolio on Instagram can't access their own images. A small business that used Facebook to reach customers loses their entire customer database. A musician with a following gets erased, along with their booking history.

Worse, there's no bankruptcy court for social media. You can't restart or recover what you lost. You don't get a reduced sentence for good behavior. It's final.

This is why the Oversight Board is focusing on permanent bans specifically. The stakes are different. A temporary suspension might be an acceptable enforcement tool even if the appeals process is imperfect. A permanent ban is an existential sanction that deserves a higher standard of review.

Meta's position has been that permanent bans are necessary to remove serial offenders or people who pose genuine safety risks. That's defensible. Some accounts legitimately need to be gone—the user is doxxing people, coordinating harassment campaigns, or inciting violence.

But that doesn't mean the process should be invisible or unappealable. Courts gave us trials for a reason. Even serious criminals get to present a defense. Why shouldn't users accused of serious violations get the same?

The Oversight Board's investigation is examining whether Meta has legitimate safety justifications for its permanent ban practices, and whether the process is fair enough to justify the severity of the outcome.

Meta's Key Questions for the Oversight Board

When Meta referred this case to the Oversight Board, it didn't just ask, "Was this ban justified?" Instead, the company posed several specific policy questions, signaling that it's genuinely uncertain about its own enforcement approach.

First, Meta asked: How can permanent bans be processed fairly? This is the core question. What does fairness look like? Does it mean users should get warning before permanent deletion? Should they get to appeal to human reviewers? Should there be a cooling-off period where the ban is temporary and renewable rather than final?

Second: How effective are Meta's current tools for protecting public figures and journalists from repeated abuse and threats of violence? This question suggests Meta knows its current approach is insufficient. Journalists and public figures get harassed constantly. Posts threaten their safety. But Meta's enforcement isn't stopping it. So either the tools don't work, or they're not being used consistently. The Board is being asked to figure out which.

Third: What are the challenges of identifying off-platform content? This is interesting because it suggests Meta is considering enforcement based on behavior outside Meta's platforms. If someone posts a threat on Twitter or their personal website, should Meta ban them from Instagram? Should the platform monitor external platforms for policy violations? This raises surveillance and privacy concerns that go beyond typical content moderation.

Fourth: Do punitive measures actually shape online behavior? This gets philosophical. Meta wants to know if banning people actually changes their conduct, or if they just go somewhere else and continue the same behavior on a different platform. If bans don't work, why use them? But if they do work, how do you measure that success?

Fifth: What are best practices for transparent reporting on account enforcement decisions? This is the question that cuts to the heart of user frustration. Meta wants the Board to tell it how transparent it should be about why it bans people. Should users get full explanations? Partial explanations? Just knowing they violated policies without specifics?

These aren't gotcha questions. They're genuine policy inquiries. Meta is asking the Board to help it figure out its own enforcement philosophy.

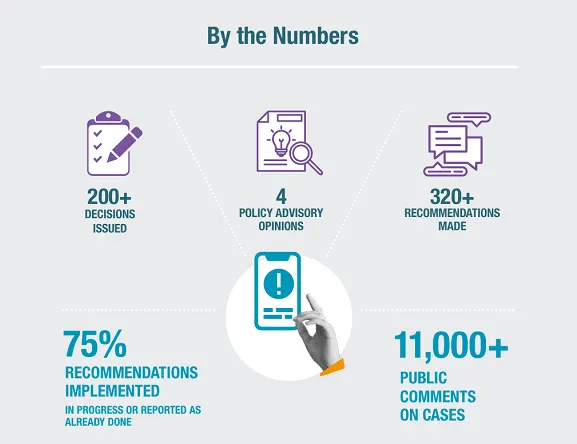

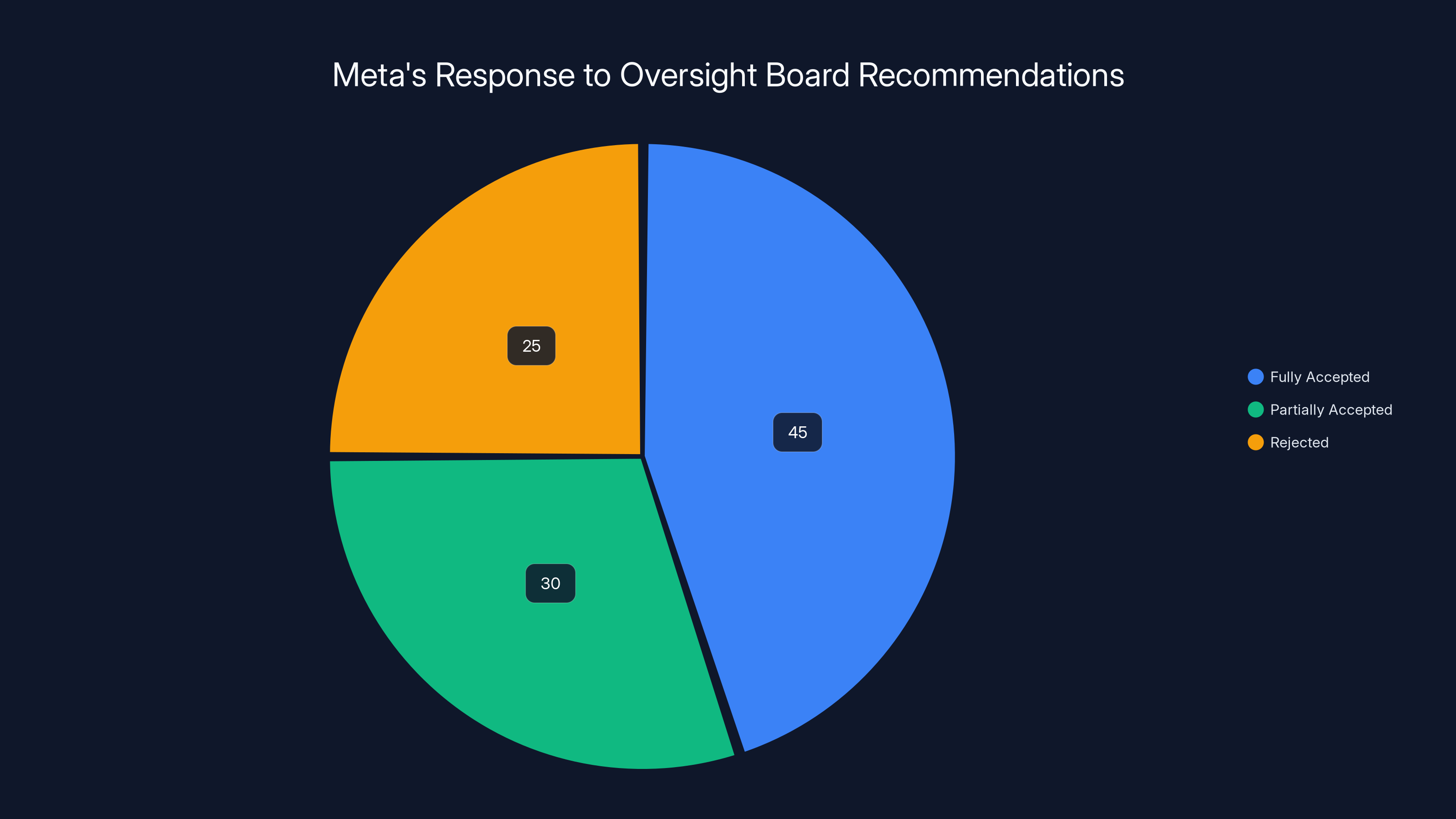

In 2024, Meta implemented 75% of the Oversight Board's policy recommendations, leaving 25% unaddressed. This highlights the Board's influence but also its limited enforcement power.

The Oversight Board's Limited Powers and Real Influence

Here's the uncomfortable truth: the Oversight Board can't force Meta to do anything.

It can recommend policies. It can overturn specific content moderation decisions. But it can't mandate that Meta implement those recommendations. It can't investigate systemic issues. It can't subpoena internal documents or compel testimony. It can't override business decisions that don't involve content moderation directly.

In 2024, Meta implemented about 75% of the Board's policy recommendations. That sounds good until you realize it means Meta ignored 25% of what the Board suggested. And the Board can't do anything about that. It has no enforcement mechanism.

Worse, Mark Zuckerberg isn't bound by the Board's decisions at all when it comes to company-wide policy changes. In early 2025, Meta unilaterally decided to relax hate speech restrictions on its platforms. It didn't consult the Oversight Board. Zuckerberg just announced it, and it happened.

So the Board's power is real but limited. It's like an ombudsman—it can investigate, recommend, and publish findings, but it can't force compliance. Companies often do what ombudsmen recommend because it looks good and maintains trust. But they're not legally obligated to.

That said, the Board's influence shouldn't be dismissed. When the Board publishes a decision, it gets media coverage. It creates accountability pressure. Users know they have a recourse mechanism, even if that mechanism is weak. And Meta clearly cares about appearing responsive to the Board's suggestions. The company keeps implementing most recommendations, which suggests there's at least some internal pressure to maintain the Board's legitimacy.

Moreover, the Board is independent. It's funded by Meta but operates autonomously. Its members don't work for Meta. The organization has published decisions that publicly criticized Meta's enforcement, which required courage and suggests genuine independence.

For permanent account bans specifically, the Board's influence could be significant. If the Board recommends that Meta provide better appeals processes, more transparency, or clearer escalation procedures, and Meta ignores those recommendations, the public relations cost could be substantial. Users would know they were denied a transparent appeals process. Journalists would report on it. The story would be: "Meta rejected its own Oversight Board's recommendation."

That's a cost Meta might not want to pay.

The Role of Automated Moderation in Permanent Bans

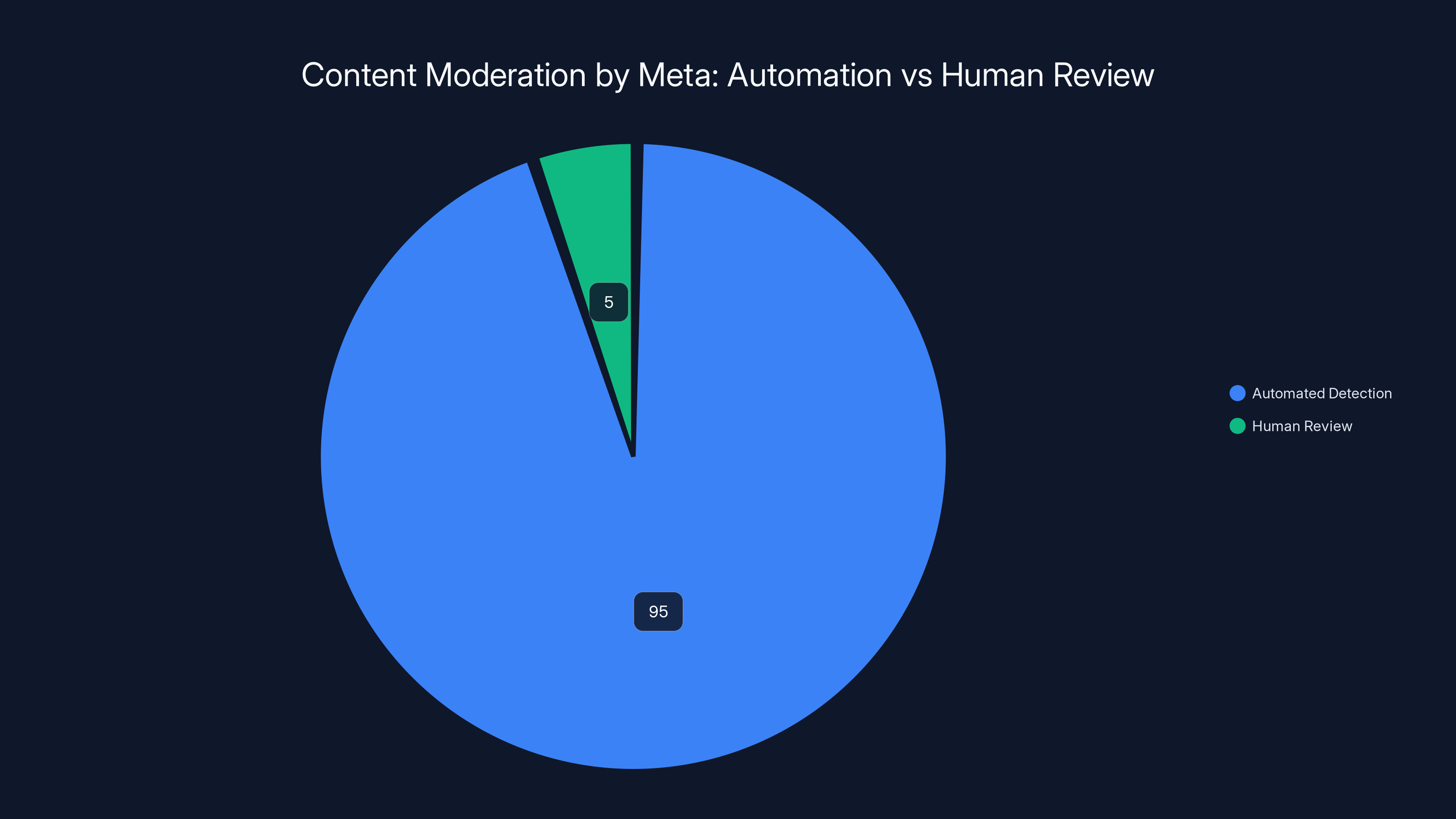

Meta doesn't have enough human moderators to review millions of content decisions every day. So automation does the heavy lifting.

Meta's AI systems can identify potential policy violations at remarkable scale. They flag content that might contain hate speech, violence, sexual material, or other violations. Meta claims that 98% of the hate speech removed from Facebook is detected before anyone reports it, caught by automation rather than by humans.

But automation has serious limits. AI can identify patterns, but it can't understand context the way humans can. A post saying "If I see you again, I'll break your legs" is clearly a threat. But a post saying "This political opponent should be tried for treason" is more ambiguous. Is it a call for violence? Is it hyperbolic political speech? Different humans might disagree.

Automated systems tend to default to caution. If there's any chance a post violates policy, they flag it. This creates false positives—legitimate content gets flagged as violating when it doesn't actually breach policies.

When human reviewers work as a secondary check, they can catch these errors. But here's the efficiency problem: if automation is removing or flagging 20 million pieces of content per day, human review of even a small percentage becomes impossible. Meta has tens of thousands of content reviewers worldwide, which sounds like a lot until you do the math against the volume of content.
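To make "do the math" concrete, here is a rough back-of-the-envelope sketch. The 20 million items per day is the hypothetical volume from the paragraph above. The reviewer headcount (20,000), the 8-hour shift, and the idea that every reviewer works only on this queue are assumptions chosen for illustration, not figures Meta has published.

```python
def seconds_per_item(items_per_day, reviewers, review_fraction, shift_hours=8.0):
    """Average seconds a reviewer can spend on each item when a given
    fraction of the daily flagged volume gets a human look."""
    items_to_review = items_per_day * review_fraction
    if items_to_review == 0:
        return float("inf")  # nothing to review, so no time pressure
    reviewer_seconds = reviewers * shift_hours * 3600
    return reviewer_seconds / items_to_review


ITEMS_PER_DAY = 20_000_000  # hypothetical volume used in the text
REVIEWERS = 20_000          # assumed headcount ("tens of thousands")

for fraction in (0.01, 0.10, 1.00):
    secs = seconds_per_item(ITEMS_PER_DAY, REVIEWERS, fraction)
    print(f"review {fraction:.0%} of items: about {secs:,.0f} seconds each")
```

Under these assumptions, reviewing every flagged item would leave each reviewer roughly half a minute per decision, which is not much time to weigh context. Changing any of the inputs changes the picture, so treat the output as an illustration of the trade-off rather than a measurement.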

This is where permanent bans become problematic with current systems. If automation flags an account repeatedly for violations, and no human ever reviews those flags carefully, the account might get banned based on algorithmic errors. Nobody checks if the AI misinterpreted context. Nobody considers whether the user had legitimate reasons for the flagged content.

The Oversight Board's investigation is examining whether this is happening, and if so, whether it's acceptable.

Comparing Meta's Approach to Other Platforms' Enforcement

Meta isn't the only platform struggling with permanent bans and transparency. But its approach is notably aggressive.

Twitter (now X) has banned accounts, including major political figures and journalists. But the company typically provided at least some explanation, even if the explanation was controversial. X's owner, Elon Musk, has been transparent about why certain accounts were removed.

TikTok removes accounts but generally follows clearer escalation paths. First, content gets flagged. Then the account gets warnings. Then temporary bans. Only persistent, egregious violations lead to permanent deletion.
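An escalation path like the one just described can be sketched as a simple ladder. This is a minimal illustration only: the `Action` names and the two thresholds are hypothetical and do not reflect any platform's actual strike counts or rules.

```python
from enum import Enum


class Action(Enum):
    WARNING = "warning"
    TEMPORARY_SUSPENSION = "temporary suspension"
    PERMANENT_BAN = "permanent ban"


# Hypothetical thresholds, chosen only to illustrate the ladder.
WARNING_LIMIT = 2      # fewer prior violations than this: warn
SUSPENSION_LIMIT = 5   # fewer prior violations than this: suspend


def next_action(prior_violations: int) -> Action:
    """Pick the enforcement step for a new violation based on how many
    confirmed violations the account already has."""
    if prior_violations < 0:
        raise ValueError("prior_violations cannot be negative")
    if prior_violations < WARNING_LIMIT:
        return Action.WARNING
    if prior_violations < SUSPENSION_LIMIT:
        return Action.TEMPORARY_SUSPENSION
    return Action.PERMANENT_BAN


for count in (0, 3, 6):
    print(count, "prior violations:", next_action(count).value)
```

The point of a ladder like this is predictability: a user can see which step comes next. Jumping straight to the last rung, as in the case before the Board, is exactly the kind of exception that a published ladder would force a platform to justify.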

YouTube has a well-documented appeals process. If your channel gets terminated, you can request a human review. Google has published detailed policies about what triggers channel termination. There's still secrecy, but there's also process.

Meta's approach, by comparison, has historically been more opaque. The company bans accounts, and users get generic messages about violating Community Standards. They can appeal, but the appeal goes into a system that might or might not review it carefully. Some bans get reversed on appeal (suggesting they were mistakes), while others stand (suggesting someone reviewed them and upheld the decision).

This inconsistency is exactly what the Oversight Board is investigating. If Meta has enforceable policies about permanent bans, those policies should be applied consistently. If they're not being applied consistently, why not? Is it human discretion? Is it automation errors? Is it bias?

The Board's recommendations could push Meta toward greater procedural consistency and transparency. That might mean Meta adopting clearer policies about what triggers permanent deletion. It might mean requiring human review before permanent bans go final. It might mean giving users more information about why they were banned.

Other platforms do some of these things already. The question is whether Meta will too.

Estimated data based on historical trends: Meta has historically implemented 75% of recommendations, with a mix of full and partial acceptance.

User Rights and Digital Citizenship in the Social Media Era

Underlying this entire discussion is a bigger question about user rights in the social media age.

When you create a Facebook or Instagram account, you agree to Meta's Terms of Service. That agreement states that Meta can terminate your account at any time for any reason. Legally, that's probably enforceable. The platform owns the infrastructure, and you don't have a constitutional right to use it.

But morally and practically, that's getting harder to defend.

For many people, social media accounts aren't optional luxury goods. They're essential infrastructure for social connection, professional networking, and business operation. If you're a freelancer, your Instagram following is your portfolio and client base. If you're an activist, your Facebook is your organizing tool. If you're a creator, your YouTube channel is your livelihood.

When a platform can delete that at will, without appeal, you've handed enormous power over your life to a corporation.

The Oversight Board's work touches on this issue. By examining whether permanent bans are fair and transparent, the Board is implicitly arguing that account deletion is significant enough to deserve procedural fairness. You're not just losing a service. You're losing digital property that has real value.

This connects to bigger conversations about digital citizenship. Some policy experts argue that major platforms have become quasi-public spaces, hosting as much speech and community as traditional public forums. If that's true, should they have the same obligations that governments have to respect speech rights and due process?

Meta would argue no. The company is private, not government. The First Amendment doesn't apply to private companies. They can set their own rules.

But users increasingly argue that the scale and centrality of platforms like Meta blur the distinction between private and public spaces. When 3 billion people use Facebook, the platform effectively functions as public infrastructure, even if it's privately owned.

The Oversight Board sits somewhere in this middle ground. It's not arguing that Meta must treat users like a government treats citizens. But it is arguing that Meta should have fair processes for consequential decisions like permanent account termination.

The 60-Day Response Timeline and What's Expected

Once the Oversight Board issues its recommendations, Meta has 60 days to respond.

That timeline is important because it means we're not waiting years for an answer. The Board's analysis will happen over several months (the Board typically takes time with its investigations), but once recommendations are issued, Meta has to respond publicly within 60 days.

That response could take several forms. Meta could accept all recommendations and commit to implementing them. The company could partially accept recommendations while arguing against others. Meta could reject recommendations entirely, which would require publicly explaining why.

The public response is crucial because it's where accountability happens. If Meta rejects the Board's recommendations on transparency, for example, that rejection becomes a news story. Users see that Meta chose opacity. The company faces pressure if that decision looks indefensible.

Historically, Meta has been responsive to Board recommendations. The 75% implementation rate suggests the company takes the Board seriously. But that doesn't mean Meta will implement every recommendation in this case.

Some recommendations might be expensive to implement. If the Board recommends that every permanent ban gets a human review before becoming final, that requires hiring more content moderation staff. That's a real cost.

Other recommendations might conflict with business goals. If the Board recommends that Meta be more transparent about enforcement, that transparency might reveal inconsistencies or bias in how the platform treats different users or political viewpoints. Meta might prefer to keep that hidden.

The Board can't force implementation, but it can publish Meta's response and reasoning. That creates transparency about the company's priorities and values. Sometimes, that pressure is enough to drive change.

Public Comment Period and the Importance of User Input

The Oversight Board is also soliciting public comments on this case.

That's significant because it opens the investigation to input beyond Meta's perspective and the Board members' own analysis. Users, civil rights groups, journalism organizations, and other stakeholders can submit comments explaining why they think permanent bans should or shouldn't be allowed, how the process should work, and what safeguards matter.

These comments matter because the Board pays attention to them. The organization regularly cites public input in its published decisions. When thousands of people submit comments explaining how Meta bans have damaged their lives, that resonates. The Board takes that seriously.

But there's a catch: these comments cannot be anonymous. You have to put your name on your submission.

That's a meaningful barrier, especially for people who fear retaliation from Meta. If you submit a critical comment under your real name, Meta can (and presumably does) see it. There's no legal retaliation Meta can undertake, but there are subtler forms of enforcement. Your content could get deprioritized. Your account could be flagged for additional review. You might get targeted with ads.

For people who depend on Meta platforms for their livelihoods, submitting a critical public comment carries risk. That's probably intentional on the Board's part—anonymity creates noise and reduces accountability. But it also suppresses dissenting voices from people who are most vulnerable to retaliation.

Despite that barrier, the Board does get substantial public input. Civil rights organizations submit comments. Journalists submit comments. Former employees who know Meta's systems submit comments. The Board publishes these comments (or summaries) alongside its decisions.

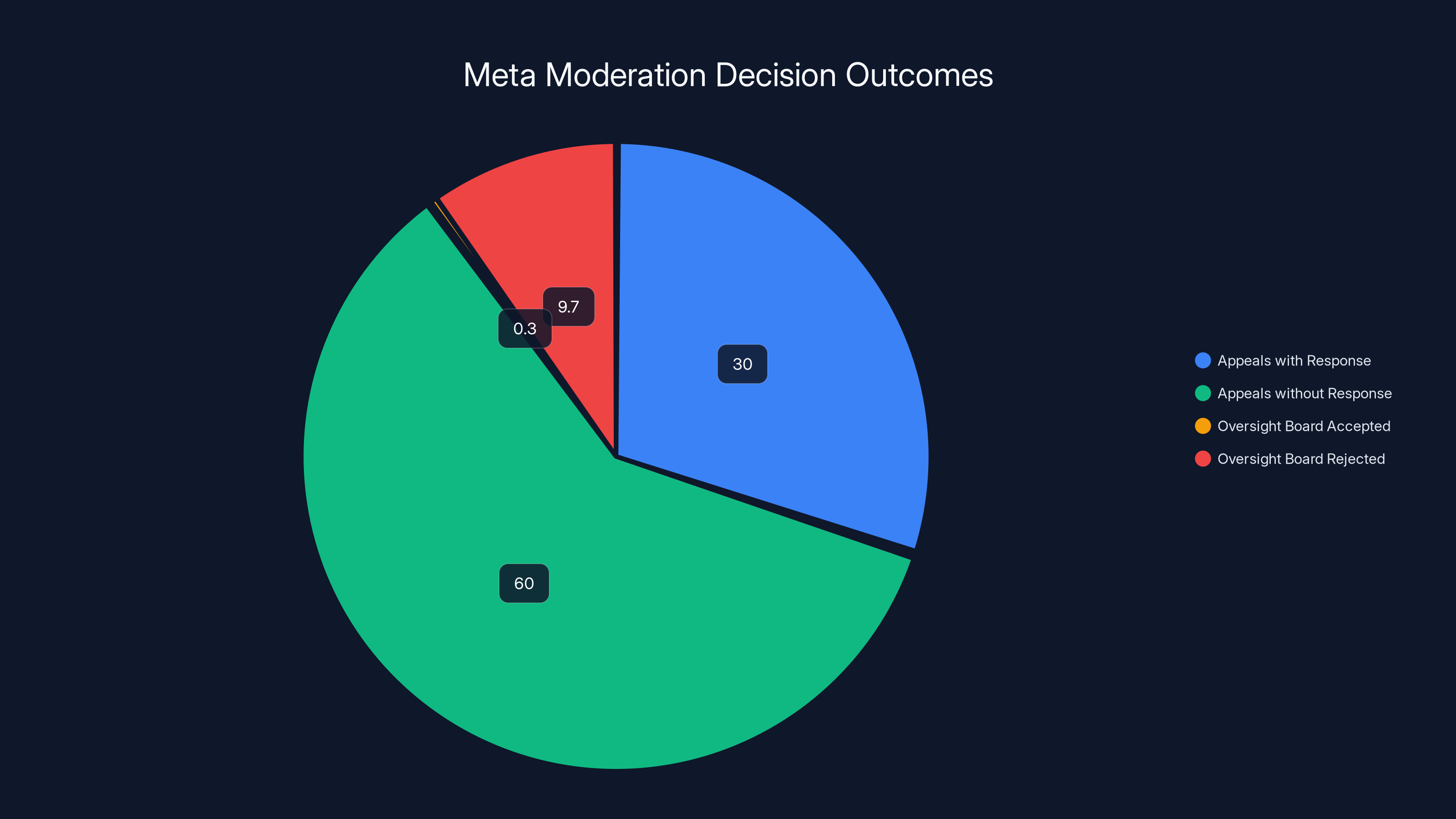

Estimated data shows that most appeals to Meta moderation decisions do not receive a response, while only 0.3% of cases are accepted by the Oversight Board, highlighting the rarity and thoroughness of their process.

How This Case Could Set Precedent

Permanent account bans aren't rare. Meta removes thousands of accounts every day, though the exact number isn't publicly disclosed.

If the Oversight Board recommends changes to how permanent bans work, those recommendations could affect millions of users going forward. That's precedent.

For example, if the Board recommends that users get clear explanations before permanent deletion, Meta might have to update its entire enforcement process. That means changing automated messages, requiring reviewers to document their reasoning, and potentially increasing the time between ban decision and implementation to allow for appeals.

Or if the Board recommends that permanent bans require human review before becoming final, Meta might need to hire thousands of additional content moderators globally. That's a significant business cost.

Or if the Board recommends that users get to appeal permanent bans through a secondary review process, that creates infrastructure Meta doesn't currently have. Implementing it would require development time and operational changes.

Meta knows this. That's probably why the company referred this case to the Board in the first place. Meta wants the Board to help shape what permanent bans should look like going forward. If the Board recommends something Meta is already doing, that's validation. If the Board recommends something new, that's permission to implement it—Meta can say, "Our Oversight Board recommended this, so we're doing it," rather than making a unilateral call that looks corporate and dictatorial.

From Meta's perspective, engaging with the Board on this issue is strategic. The company gets input that might improve its system. It gets independent endorsement for whatever changes it decides to make. And it gets credit for being responsive to oversight.

From the user perspective, it's an opportunity. If the Board recommends better appeals processes or more transparency, those are real improvements that could matter to millions of people.

The Broader Context: Meta's Recent Policy Shifts

This permanent bans case doesn't exist in isolation. It comes after several Meta policy changes that have altered the company's approach to content moderation.

In early 2025, Meta announced it would relax restrictions on hate speech. The company would allow more speech about sexuality, gender identity, and national origin. Meta justified this by saying the previous rules were too strict and suppressed legitimate political discourse.

Meta also changed its approach to fact-checking. The company moved away from third-party fact-checkers toward a Community Notes system similar to the one on X (formerly Twitter). Instead of Meta deciding what's false, the platform lets users weigh in on whether information is accurate.

Both changes suggest Meta is moving toward less content moderation, not more. The company seems to be embracing a hands-off approach where it only intervenes for the most egregious violations (hate speech, violence, illegal content).

But that creates tension with the permanent bans case. If Meta is generally reducing content moderation, why do permanent bans appear to be increasing? Is it because users are posting more egregious content? Or is it because automated systems are getting more aggressive?

The Oversight Board's investigation sits at this nexus. The Board is examining whether permanent bans are justified at all, while Meta simultaneously signals it wants less content moderation overall.

This could be leading toward a system where temporary suspensions are reduced, but permanent bans remain as the ultimate enforcement tool. Essentially: fewer restrictions on borderline content, but swift removal of the worst violations.

Or it could be leading toward the Board recommending that permanent bans need stronger justification and a clearer process, precisely because they would become Meta's main remaining enforcement tool.

We won't know until the Board releases its recommendations.

Financial and Business Implications

The permanent bans case has direct financial implications for Meta.

First, there's the cost of potential implementation. If the Board recommends changes to enforcement procedures, those changes might require hiring more staff, building new systems, or changing workflows. All of that costs money.

Second, there's reputational cost. Meta's stock price is influenced by user trust and regulatory perception. If the Oversight Board concludes that Meta's permanent bans are unfair or unjustified, that's a negative headline. The company's reputation suffers, which affects both user acquisition and advertiser confidence.

Third, there's the regulatory risk. Governments globally are considering regulating social media platforms more aggressively. If the Oversight Board finds that Meta's enforcement is problematic, regulators take note. That could influence future legislation or enforcement action against Meta.

On the flip side, if Meta proves cooperative with the Board's recommendations and implements changes quickly, that shows regulators that Meta is willing to self-regulate and respond to oversight. That could reduce regulatory pressure.

Meta's business model depends on the trust of both users and advertisers. The permanent bans case touches both. If users feel they could be erased from the platform arbitrarily, some will leave. If advertisers see that Meta can turn off accounts without explanation, they will worry about the stability of the accounts they use for marketing.

The Oversight Board's investigation and recommendations could address these concerns or exacerbate them, depending on what's recommended and how Meta responds.

[Chart: estimated share of content moderation actions handled by automated systems versus reviewed by humans. The estimate suggests automated systems handle the vast majority.]

The Challenges Meta Actually Faces

Understanding Meta's perspective matters too, even if you disagree with the company's approach.

Meta's moderation teams deal with genuinely difficult cases constantly. They have to balance free expression against platform safety. They have to respect global cultural differences while enforcing universal policies. They have to make decisions at scale with incomplete information.

Some permanent bans are absolutely justified. If someone is doxxing journalists, coordinating harassment campaigns, or inciting violence, removing them from the platform makes sense. Letting them continue operating creates harm.

The challenge is distinguishing between those clear cases and edge cases where the violation is real but not severe enough to deserve permanent deletion. Should a one-time threat against a public figure result in a permanent ban or a temporary suspension? Different people have different answers.

Meta also faces enforcement challenges that user-facing policies don't address. Bad actors operate on the platform constantly. They evade detection by using coded language, deleting posts after they go viral, or operating across multiple accounts. Enforcement requires constant escalation and adaptation.

Given those challenges, automatic permanent bans for repeat serious violations make operational sense. They scale better than trying to escalate every case proportionally. They remove bad actors faster. They signal strength to potential violators.

But operational convenience doesn't always align with fairness. The Oversight Board is asking Meta to prove that permanent bans serve legitimate purposes, not just operational ones.

What Happens After the Board's Recommendations?

Once the Oversight Board issues its recommendations (probably in spring or summer 2025), Meta has to respond publicly within 60 days.

The most likely scenarios:

Scenario 1: Meta accepts recommendations and commits to implementation. This is the best-case outcome for users and critics. Meta would announce that it's updating its permanent ban procedures to be more transparent, more fair, or more appealable. The company would set a timeline for implementation. That becomes the new standard for permanent bans.

Scenario 2: Meta partially accepts recommendations. The company commits to implementing some recommendations but argues against others, explaining its reasoning. This requires Meta to articulate why it won't accept certain recommendations, which creates accountability. Some improvements happen, but not all.

Scenario 3: Meta rejects recommendations or accepts them symbolically. Meta announces that it carefully considered the Board's input but maintains its current approach. The company might implement minor changes while avoiding the substantive recommendations. This creates negative coverage but doesn't directly harm the company.

Scenario 4: Meta agrees to implement but does so slowly. The company commits to changes but sets a multi-year implementation timeline. Some users and advocates see this as good faith. Others see it as stalling. The real change takes years to roll out, if it happens at all.

After Meta's response, the Oversight Board might issue follow-up reports. The organization might revisit the case in a year or two to assess whether Meta followed through. The Board might issue public statements criticizing Meta's response if it thinks the company is not taking recommendations seriously.

What won't happen: the Board can't force Meta to change its policies. Its rulings on individual cases are binding, but its policy recommendations are advisory. Ultimately, it's Meta's choice whether to implement them, and that's the actual limit of the Board's power.

The Broader Implication for Content Moderation

This case could reshape how platforms think about enforcement generally.

Right now, most platforms treat content moderation decisions as largely internal matters. Users can appeal, but appeals are opaque. Platforms decide what counts as harmful, and users have to accept that judgment.

The Oversight Board's investigation suggests a different model: platforms should justify enforcement decisions to external scrutiny. That scrutiny might reveal that enforcement policies are arbitrary, inconsistent, or biased. And if they are, platforms should change them.

That's a more transparent model. It's also more costly and slower. But it treats users as stakeholders whose interests matter, not just as sources of data to be monetized.

Other platforms watch what happens with Meta's Oversight Board. If the Board proves effective at creating meaningful change, other companies might create similar boards. If the Board's recommendations get ignored, competitors will conclude that external oversight is just PR and won't bother.

Meta is essentially running an experiment: can a company-funded but independent Oversight Board actually influence how a major tech platform operates? The answer shapes not just Meta's future, but the future of platform accountability industry-wide.

Lessons for Users: What to Do Now

If you rely on Meta platforms for your livelihood or community, you should take several precautions.

First, diversify your presence. Don't put all your content, audience, or business infrastructure on a single platform. Meta could delete your account for reasons you don't understand, and you need alternatives in place before that happens.

Second, back up your data. Exporting your full account history can be cumbersome, but Meta's "Download your information" tool lets you save your photos, videos, and posts. Do it regularly. If your account gets deleted, you'll have copies.

Third, understand the policies. Read Meta's Community Standards and Terms of Service carefully. Know what's prohibited and what's gray area. Think about what content might trigger enforcement.

Fourth, document interactions. If you get warnings or suspensions, take screenshots. Note dates and details. If you're permanently banned, that documentation helps you appeal to Meta or submit your case to the Oversight Board.

Fifth, engage with the appeals process. If you get banned, appeal through Meta's standard channels. Document everything. If you want to escalate to the Oversight Board, you'll need evidence of Meta's error or unfairness.

Sixth, consider paid services strategically. Meta Verified might be worth it if it gives you priority support, but understand its limits. Paid status doesn't protect you from permanent bans if Meta decides you've violated policies.

Seventh, connect with communities. Use other platforms and owned channels to maintain your audience. If Meta removes you, your audience can follow you elsewhere.

Where This Goes From Here

The permanent bans case is just one investigation, but it could be the beginning of a larger shift in how Meta and other platforms handle user enforcement.

The Board might recommend creating a new appeals tier specifically for permanent bans. It might recommend publishing aggregate data about bans by category and violation type. It might recommend training reviewers specifically on permanent ban decisions. It might recommend that Meta establish clear criteria for when permanent bans are appropriate versus other penalties.

Whatever the Board recommends, Meta has to respond. And users will know what the company decided. That transparency is valuable regardless of the outcome.

The case also signals that permanent account deletion is finally being treated as a serious policy issue. For years, users complained about bans with no recourse. Now an internationally recognized board is investigating whether the system is fair. That's progress, even if it's partial and slow.

For content creators, business owners, and communities that depend on Meta platforms, this investigation matters. The recommendations could make the platform more fair or more dangerous. The company's response will show whether Meta is genuinely responsive to oversight or just pretending.

We'll know more in a few months when the Board releases its recommendations. Until then, the investigation continues.

FAQ

What exactly is Meta's Oversight Board?

Meta's Oversight Board is an independent organization funded by Meta that reviews the company's most controversial content moderation decisions. It functions like a Supreme Court for social media, examining whether specific posts or accounts should be removed based on Meta's stated policies. The Board was designed to seat up to 40 members, though its actual roster has been closer to 20, including lawyers, journalists, human rights experts, and policy specialists. Its rulings on individual pieces of content or accounts are binding on Meta, and it also issues policy recommendations that Meta must consider but is not legally required to follow. Importantly, the Board is independent from Meta's day-to-day operations and publishes detailed decisions explaining its reasoning.

Why is this permanent bans case significant?

This is the first time in the Board's five-year history that it's focused specifically on permanent account bans as a policy matter. Permanent bans are different from temporary suspensions because they're not punishments with sentences; they're irreversible removals of entire accounts, which can devastate creators' businesses and destroy years of stored memories and connections. The case signals that permanent bans deserve higher scrutiny than individual content decisions because the consequences are so severe. Meta is explicitly asking the Board to help shape what permanent bans should look like going forward, suggesting the company is uncertain about its own policy in this area.

What did the user in the case actually do?

The user posted threats of violence against journalists, anti-gay slurs against politicians, explicit sexual content, and accusations of misconduct against minorities. These weren't isolated incidents but repeated violations over a year. However, the account hadn't accumulated enough automatic strikes to trigger Meta's automated ban system, so a human reviewer or Meta decision-maker chose permanent deletion rather than following the usual escalation process. That choice is what the Board is examining: was it justified? Was the process fair? Could the same decision happen to other users for less egregious violations?

Can Meta's Oversight Board force the company to change its policies?

No. The Board can recommend policies and overturn specific content decisions, but it cannot force Meta to implement recommendations. About 75% of the Board's previous recommendations have been implemented by Meta, suggesting the company takes the recommendations seriously, but Meta is legally free to ignore them. The real power of the Board is public accountability: if Meta rejects the Board's recommendations, that becomes a news story, and users see that Meta chose not to follow independent oversight advice. That reputational pressure sometimes drives companies to comply even when they're not legally required to.

What happens if your account is permanently banned?

If Meta permanently bans your account, you lose access to it immediately. Your profile, photos, messages, and connections all become inaccessible. If you try to create a new account with the same email or phone number, Meta will likely link it to the ban and remove it too. Your business or community assets disappear. You can appeal through Meta's standard appeals process, but that process is opaque: you may never be told exactly why you were banned, and your appeal might be rejected without explanation. For egregious violations (threats, illegal content, harassment), permanent bans might be justified. But for borderline cases, the lack of transparency and recourse is problematic.

How does this case affect other platforms like Twitter or YouTube?

This case doesn't directly affect other platforms, but it could set an informal industry precedent. If Meta's Oversight Board establishes that permanent bans require transparent processes and meaningful appeals, other platforms might face similar pressure from users and advocates. X, TikTok, and YouTube each run their own enforcement and appeals systems, with varying degrees of transparency, and none has an equivalent external review body. This case could raise expectations for all platforms about what constitutes fair enforcement. If Meta improves its procedures, that becomes the baseline standard that users expect elsewhere.

What's the timeline for the Board's decision?

The Oversight Board typically takes several months to investigate cases and issue decisions. Once the Board publishes recommendations, Meta has 60 days to respond publicly. So we're probably looking at a timeline of spring or summer 2025 for the Board's decision, and then Meta's response by summer or fall 2025. This isn't as fast as users might want, but it's actually quite rapid for institutional review processes. The public will get answers within a reasonable timeframe.

Can I submit a comment to the Oversight Board about this case?

Yes. The Oversight Board is soliciting public comments on the permanent bans issue. You can submit a comment explaining your perspective on whether permanent bans are justified, how the process should work, and what safeguards matter. The catch is that submissions cannot be anonymous—you have to use your real identity. That creates a barrier for people who fear retaliation, but it also makes the comment process more accountable. You can find information about submitting comments on the Oversight Board's official website.

If I've been permanently banned, can I appeal to the Oversight Board?

You can submit your case to the Oversight Board if you believe it meets their criteria for review. The Board only accepts a small fraction of cases referred to it (less than 1%), so approval is not guaranteed. But if you were permanently banned without explanation, if Meta's support couldn't help, or if you believe the ban was unjust, submitting a formal request to the Board is worth trying. The Board publishes detailed decisions, so even if your case isn't selected, you'll benefit from the general guidance the Board issues about permanent bans.

What's Meta Verified and does it help if I'm banned?

Meta Verified is a paid subscription (starting at roughly $12 to $15 per month for individuals in the US) that gives users a verified badge, account protection features, and priority support. However, several users have reported that Meta Verified didn't help them when they were permanently banned. The premium support couldn't reverse a ban or provide meaningful recourse. So while Meta Verified might offer some protections, permanent bans apparently exceed its scope. Treat it as a supplement to your strategy, not as insurance against permanent deletion.

Conclusion: Accountability in the Age of Digital Platforms

Meta's Oversight Board investigation into permanent account bans represents something important: the first institutional attempt to scrutinize one of social media's most severe enforcement actions.

Permanent bans are consequential. They're not like removing a post or suspending an account for a week. They're digital capital punishment. For creators and businesses, they're existence-threatening. For everyday users, they're permanent loss of photos, messages, and memories.

For too long, these decisions happened in black boxes. Meta would ban an account, users wouldn't understand why, and that was that. No transparency. No meaningful appeal. No real recourse. Just deletion.

The Oversight Board's investigation changes that dynamic. It forces Meta to articulate why permanent bans are necessary. It requires the company to justify the process. It opens space for users and advocates to argue that the current system is unfair.

Will the Board's recommendations actually change anything? That's uncertain. Meta implements most Board recommendations, but not all. The company might accept some suggestions while maintaining the core practices users are complaining about.

But here's what's already changed: the conversation. Users now have an official channel to weigh in on how permanent bans should work. The Board is asking questions that Meta hasn't been comfortable answering before. Journalists are covering the investigation. Policymakers are paying attention.

That's not nothing. It's the beginning of holding social media companies accountable for their most severe enforcement actions.

For users, the lesson is clear: you have more power than you think. The Oversight Board exists because people complained loudly enough that Meta decided to create it. You can engage with the appeals process. You can submit comments. You can document enforcement decisions. You can build communities outside of platforms. You can demand transparency.

For Meta, the lesson is equally clear: enforcement practices matter. They shape whether users trust the platform. They influence whether regulators step in. They determine whether talented creators and businesses are willing to depend on your infrastructure.

Getting permanent bans right—making them fair, transparent, and appealable—isn't just ethics. It's business. And the Oversight Board is going to make sure Meta understands that.

The investigation is ongoing. Meta's response will come in the coming months. Users are watching. The tech industry is watching. And now, for the first time, permanent bans are getting the institutional scrutiny they've deserved all along.

Key Takeaways

- Meta's Oversight Board is investigating permanent account bans for the first time, marking a significant shift toward scrutinizing social media's most severe enforcement actions.

- Permanent bans carry existential consequences for users, erasing years of memories, business assets, and digital identity without a transparent appeals process or meaningful recourse.

- The Board's recommendations could reshape enforcement policies affecting millions of users, though Meta retains final authority over implementation.

- Current enforcement processes lack transparency, with users receiving vague notifications, opaque appeals, and minimal explanation for permanent deletion decisions.

- Users should diversify their presence across platforms, maintain data backups, document enforcement decisions, and engage with appeals processes to protect themselves from permanent bans.
