The Unexpected Crisis Behind Apple's Smallest Mac

If you've tried buying a Mac mini lately, you might've noticed something's changed. Stock is getting harder to find. Wait times are stretching. Prices in some markets are creeping upward. It's not a product recall or a manufacturing defect. It's something way more interesting: artificial intelligence.

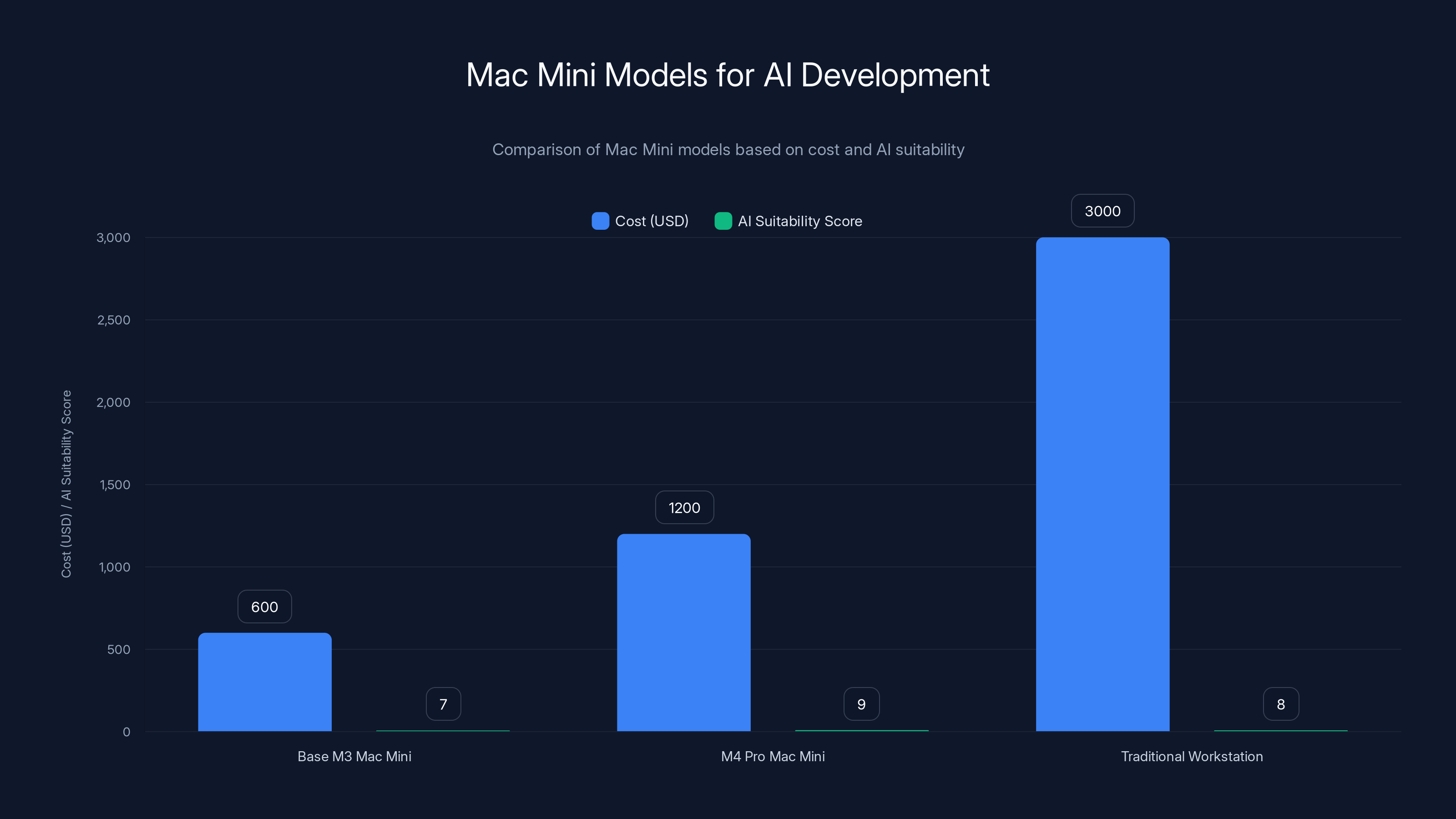

The Mac mini, that unassuming desktop computer sitting in the corner of design studios and on developer desks everywhere, has become unexpectedly popular. Not because it suddenly got better. Not because Apple's marketing suddenly found a new way to sell a tiny computer. The reason is simpler and more profound. Developers and AI teams discovered that the Mac mini's price-to-performance ratio makes it an ideal machine for running large language models, working with training datasets, and orchestrating AI workflows locally.

This is a story about how one product became a bottleneck for an entire industry that's moving faster than supply chains can keep up. It's about the collision between Apple's manufacturing capacity and an AI boom that nobody fully anticipated. And it's about what this means for you if you actually want to buy one.

Here's the thing: Apple designs products for consumers and creatives. Engineers and AI researchers? That's usually an afterthought. But the Mac mini turned out to be something unexpected. It's powerful enough for serious computational work. It's affordable enough that a small team can buy several units without blowing their budget. And its efficient Apple Silicon architecture means you can run inference on language models without incurring massive electricity costs. That combination created a quiet stampede.

Understanding the Mac Mini's Unexpected Appeal for AI

The Mac mini isn't marketed as a development machine. Apple doesn't run ads showing engineers training neural networks on Mac minis. The marketing focuses on creatives, remote workers, and people who want a compact desktop without the price tag of a 24-inch iMac. But starting around mid-2024, something shifted in developer communities.

When you run a large language model locally, you need serious hardware. You need fast processors. You need abundant memory. You need storage that won't bottleneck data loading. Historically, that meant spending thousands of dollars on a dedicated workstation.

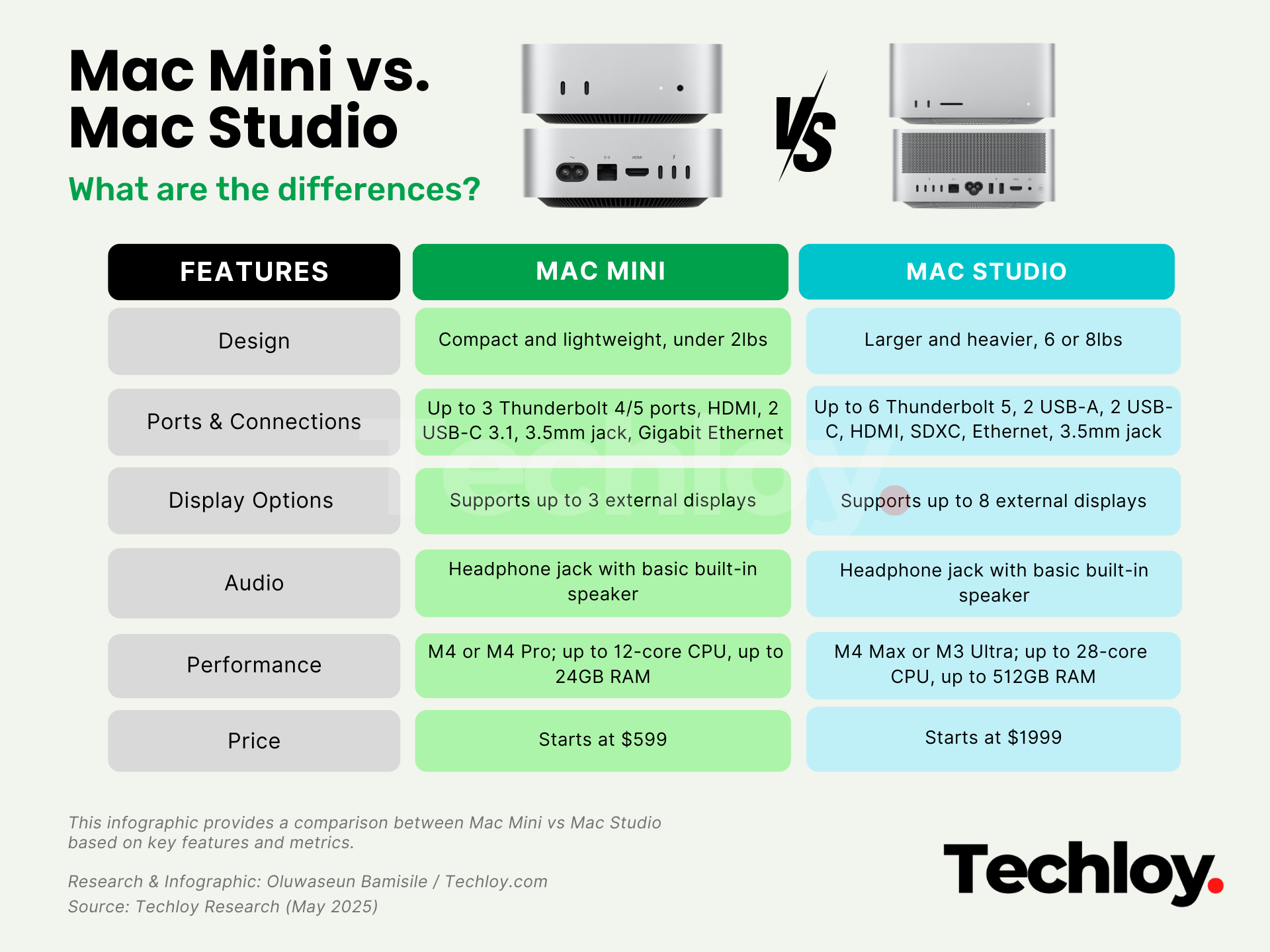

The base M4 Mac mini costs around $600.

AI researchers and machine learning engineers started noticing this. Reddit threads appeared. Discord communities formed. GitHub discussions filled with people sharing benchmarks showing Mac mini performance on various quantized models. Suddenly, the affordable Mac wasn't just for video editors anymore. It became the go-to machine for local AI development.

The appeal multiplied when you realized you could buy multiple units. Instead of one expensive workstation, you could buy three Mac minis and distribute inference tasks across them. You could run different models simultaneously. You could experiment with architectures without monopolizing a single expensive GPU. The economics shifted in favor of quantity over raw single-machine power.
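Distributing inference across several machines can start as simply as rotating requests between them. The sketch below is a minimal illustration, assuming each Mac mini runs an Ollama server on its default port, 11434 (Ollama listens only on localhost unless `OLLAMA_HOST` is set, for example to `0.0.0.0`). The hostnames `mini-1.local` and so on are hypothetical, and a real setup would add health checks and retries.

```python
import itertools
from typing import Iterator


class RoundRobinPool:
    """Rotate inference requests across several local model servers."""

    def __init__(self, hosts: list[str], port: int = 11434) -> None:
        if not hosts:
            raise ValueError("need at least one host")
        # Build the base URLs once; 11434 is Ollama's default port.
        self._urls: Iterator[str] = itertools.cycle(
            [f"http://{host}:{port}" for host in hosts]
        )

    def next_url(self) -> str:
        """Return the base URL of the next server in the rotation."""
        return next(self._urls)


# Hypothetical hostnames for three Mac minis on a local network.
pool = RoundRobinPool(["mini-1.local", "mini-2.local", "mini-3.local"])
for _ in range(4):
    print(pool.next_url())  # cycles mini-1, mini-2, mini-3, then mini-1 again
```

Round-robin ignores how busy each machine is. When prompt lengths vary widely, a policy that sends each request to the machine with the fewest outstanding requests usually balances load better.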

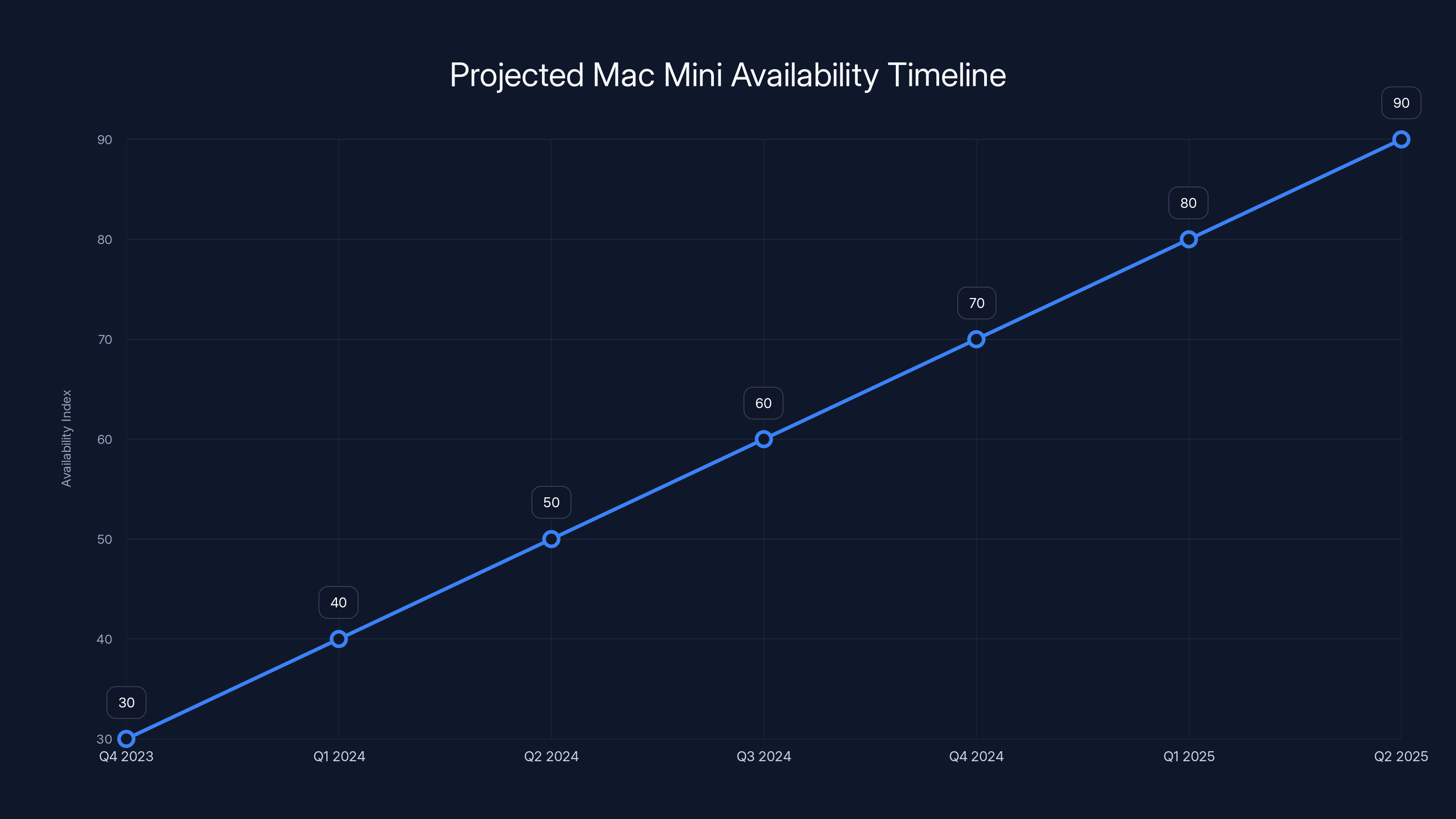

Mac mini availability is expected to gradually improve, potentially normalizing by mid-2025, assuming AI demand stabilizes. Estimated data based on supply chain analysis.

The Supply Chain Equation: Why Demand Exceeds Production

Apple builds Mac minis through contract manufacturers, primarily the Taiwan-based Foxconn. The supply chain is complex and interconnected with dozens of component suppliers. Apple doesn't suddenly increase production because one use case becomes popular. Manufacturing timelines stretch months in advance. You can't tell a factory, "Hey, developers need more Mac minis for AI work," and expect increased inventory next quarter.

Apple designs manufacturing capacity based on projected sales. The Mac mini has never been Apple's profit center. It's a product for niche audiences: developers, designers, folks building server clusters. Historical demand was stable and predictable. Then AI happened, and demand models broke.

Here's the core problem: when demand outpaces production, you have three options. You can raise prices. You can reduce the product's capabilities so it's cheaper and easier to manufacture. Or you can accept longer wait times and hope production catches up. Apple has effectively chosen option three: prices have held steady, but wait times have grown.

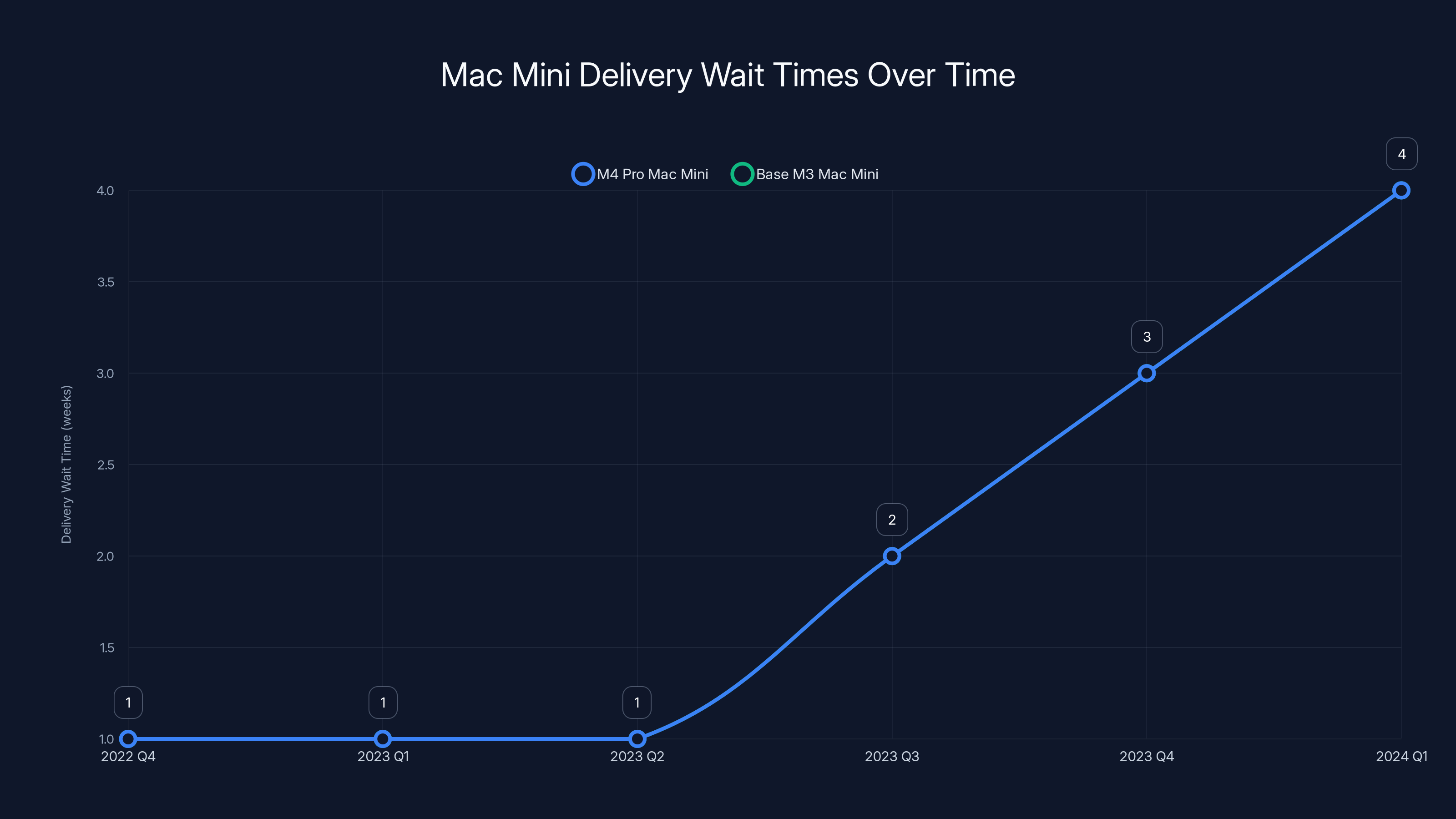

In late 2024, new M4 Pro Mac mini orders were showing delivery windows of 2-4 weeks in major markets. Base M4 models were hitting similar delays. This is unusual. Historically, Mac minis shipped same-day or next-day, and you could walk into an Apple Store, buy one, and leave with it. Now you're waiting.

The bottleneck isn't a single component. It's the cumulative effect of every step in the supply chain running at capacity. The chips come from TSMC. The enclosures come from one supplier. The power supplies come from another. Final assembly and testing happen at contract manufacturers' factories. When any step runs at 95% capacity with no buffer, delays ripple through the rest of the chain. And when demand spikes, you hit delays immediately.
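One way to see why a step running near full capacity has no slack is the textbook M/M/1 queue, where the average time a job waits in line is rho / (mu * (1 - rho)) for utilization rho and service rate mu. Real factories are not M/M/1 queues, so treat this as an illustration of the shape of the curve, not a model of Apple's supply chain. The service rate of one batch per day is an arbitrary assumption.

```python
def mm1_wait(utilization: float, service_rate: float) -> float:
    """Average time a job spends waiting in queue in an M/M/1 system.

    utilization: arrival rate / service rate (rho), must be in [0, 1).
    service_rate: jobs completed per unit time (mu).
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return utilization / (service_rate * (1 - utilization))


# Assume a station finishes one batch per day (mu = 1), so waits are in days.
for rho in (0.50, 0.80, 0.90, 0.95, 0.99):
    print(f"utilization {rho:.0%}: average queue wait {mm1_wait(rho, 1.0):.1f} days")
```

The curve is the point: moving from 90% to 95% utilization roughly doubles the average wait, and every additional point of demand near the top costs far more delay than the same point at 50%.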

Apple's manufacturing partners are already running near capacity on other products too. iPhones still dominate production. MacBook Airs and Pros need allocation. The iPad line requires resources. The Mac mini is smaller, less profitable, and lower priority when capacity becomes constrained. It doesn't mean Apple is ignoring the problem. It means solving it requires months of negotiation with suppliers, capital investment in new production lines, and careful coordination across a global supply network.

Mac mini prices are projected to remain stable through 2025, with a potential increase in 2026 if supply constraints persist. Estimated data based on market analysis.

How AI Training and Inference Changed Developer Hardware Decisions

To understand why developers suddenly care about Mac minis, you need to understand what changed in AI accessibility. A few years ago, running large language models locally was impractical for most developers. Model sizes were massive. Quantization techniques were immature. Consumer hardware simply wasn't powerful enough.

Then two things happened simultaneously. Model optimization improved dramatically, and Apple's processors became genuinely competitive for inference workloads. Projects like llama.cpp made it possible to run Llama models on consumer hardware with reasonable performance. Tools like Ollama abstracted away the complexity, making local model serving as simple as typing a command.
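To show how thin that abstraction is, the sketch below sends one prompt to a locally running Ollama server over its REST API using only Python's standard library. It assumes Ollama is listening on its default `localhost:11434` and that a model named `llama3.2` has already been pulled; substitute whichever model you actually have.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default endpoint


def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.2", timeout: float = 120.0) -> str:
    """Send the prompt and return the model's full text response."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With streaming disabled, Ollama returns the whole completion in "response".
    return body["response"]
```

Calling `generate("Explain unified memory in one sentence.")` returns the completion as a plain string. Disabling streaming keeps the example short; interactive apps usually leave streaming on so tokens appear as they are generated.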

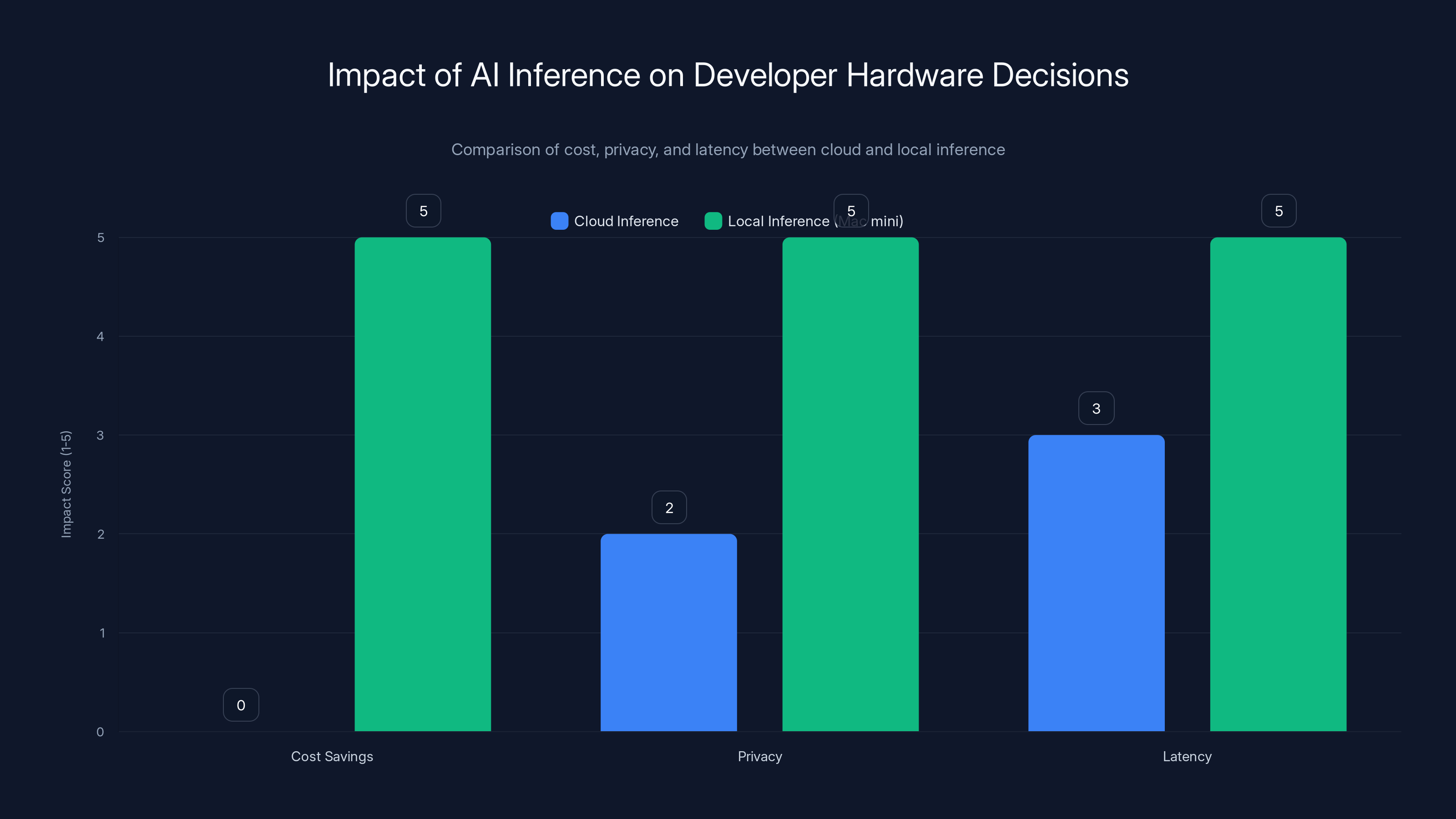

Suddenly, developers could run inference locally for fractions of a cent per request instead of paying cloud API providers. A developer running 10,000 inference calls per day could save hundreds of dollars monthly by running a local model on their Mac mini instead of hitting an API endpoint. Scale that to a team of five developers, and you're talking thousands of dollars in monthly savings.
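The savings claim is easy to sanity-check with your own numbers. The sketch below compares a month of API spending against a Mac mini's electricity plus its purchase price spread over three years. Every input is an assumption for illustration: 1,000 tokens per call, a hypothetical $1.00 per million tokens API price, a 30 W average draw, $0.15/kWh electricity, and a $600 machine amortized over 36 months.

```python
from dataclasses import dataclass


@dataclass
class Assumptions:
    calls_per_day: int = 10_000
    tokens_per_call: int = 1_000               # prompt + completion, assumed
    api_usd_per_million_tokens: float = 1.00   # hypothetical blended API price
    avg_watts: float = 30.0                    # assumed average draw under load
    usd_per_kwh: float = 0.15                  # assumed electricity rate
    hardware_usd: float = 600.0                # base Mac mini price
    amortize_months: int = 36
    days_per_month: int = 30


def monthly_api_cost(a: Assumptions) -> float:
    """API spend: total tokens per month times the per-token price."""
    tokens = a.calls_per_day * a.tokens_per_call * a.days_per_month
    return tokens / 1_000_000 * a.api_usd_per_million_tokens


def monthly_local_cost(a: Assumptions) -> float:
    """Local spend: round-the-clock electricity plus amortized hardware."""
    kwh = a.avg_watts / 1000 * 24 * a.days_per_month
    return kwh * a.usd_per_kwh + a.hardware_usd / a.amortize_months


a = Assumptions()
savings = monthly_api_cost(a) - monthly_local_cost(a)
print(f"API: ${monthly_api_cost(a):.2f}/mo, local: ${monthly_local_cost(a):.2f}/mo")
print(f"Savings per developer: ${savings:.2f}/mo; team of five: ${5 * savings:.2f}/mo")
```

With these inputs the API side comes to $300 a month and the local side to roughly $20, in line with "hundreds of dollars monthly" per developer. The sketch also quietly assumes one machine can keep up with 10 million tokens a day, which is about 116 tokens per second around the clock; check that against your model's measured throughput before trusting the result.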

But cost savings weren't the only factor. Privacy mattered. If you're building an application that processes sensitive data, sending it to a cloud API means trusting that provider with your information. Local inference on your own hardware means you control the data entirely. No transmission to a third party. No third-party logging. Far fewer compliance concerns. For enterprise customers especially, this was a game-changer.

Latency improved too. Cloud APIs have network overhead. You send a request, wait for the response, and handle retries if something fails. Local inference skips the network round trip entirely. For a small model, time to first token can drop from hundreds of milliseconds to tens of milliseconds. For interactive applications where responsiveness matters, this difference is significant.
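Latency depends heavily on model size, hardware, and network, so it's worth measuring rather than assuming. Here is a minimal timing harness, assuming you wrap each backend (local model or cloud API) in a zero-argument callable; `fake_backend` is a hypothetical stand-in that just sleeps.

```python
import math
import statistics
import time
from typing import Callable


def measure_latency_ms(call: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Time `call` repeatedly and summarize wall-clock latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    # Nearest-rank 95th percentile over the sorted samples.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def fake_backend() -> None:
    time.sleep(0.01)  # stand-in for a real request taking about 10 ms


print(measure_latency_ms(fake_backend, runs=10))
```

Running the same prompt through a local model and a cloud endpoint and comparing the two summaries shows the network overhead directly. For interactive use, the p95 figure often matters more than the median, because occasional slow responses are what users notice.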

Developers who were previously considering expensive GPU workstations or cloud infrastructure for AI experimentation suddenly realized a Mac mini could handle 80% of their use cases. The remaining 20% could use cloud APIs when necessary. But for the core development loop, local development on cheap hardware was suddenly viable.

The M-Series Architecture: Why Apple Silicon Excels at AI

Apple's M-series processors have a fundamental architectural advantage for AI workloads that most people don't fully appreciate. The traditional GPU-centric approach dominates cloud AI: throw a beefy NVIDIA GPU at the problem, and you can parallelize inference across thousands of processing cores. It works, but it's power-hungry and expensive.

Apple's approach is different. The M-series uses a unified memory architecture. Your CPU cores, GPU cores, media engines, and neural processing unit all share the same memory pool. This eliminates the copying overhead that plagues traditional GPU-based systems. You load data once into memory, and every processor can access it directly.

For inference specifically, this matters enormously. You load a quantized model into memory once, and every processor that needs it works on the same copy. In popular tools such as llama.cpp, the CPU handles tokenization and sampling while the GPU, through Apple's Metal API, does the heavy matrix multiplication. The Neural Engine is used by some frameworks, such as Core ML, rather than by every inference stack. Either way, nothing has to be copied between separate memory spaces. The efficiency is remarkable.

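Quantization, the technique of reducing model precision from 16- or 32-bit floating point to 8-bit or 4-bit, is what makes this practical. The accuracy cost of quantization comes from the number format, not the hardware, so Apple Silicon doesn't make it free. What the unified architecture adds is a GPU that can use most of the machine's memory for model weights. A 70-billion-parameter model quantized to 4 bits needs roughly 35GB for its weights alone, so running one realistically calls for a 48GB or 64GB M4 Pro configuration. With that much memory, aggressive quantization makes large models usable, and the quality degradation is acceptable for many applications.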
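A back-of-the-envelope estimate makes the memory math concrete: weight storage is roughly parameters × bits per weight ÷ 8 bytes. Real quantized files run somewhat larger because formats such as llama.cpp's GGUF also store scaling factors, and the KV cache grows with context length. The 8GB `reserved_gb` headroom below is an assumed placeholder for the OS, cache, and runtime, not a measured figure.

```python
def weight_footprint_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of model weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def fits(params: float, bits_per_weight: float, memory_gb: float,
         reserved_gb: float = 8.0) -> bool:
    """Rough check: do the weights fit after reserving memory for the OS,
    KV cache, and runtime? `reserved_gb` is an assumed placeholder."""
    return weight_footprint_gb(params, bits_per_weight) <= memory_gb - reserved_gb


for params, label in ((8e9, "8B"), (70e9, "70B")):
    for bits in (16, 8, 4):
        size = weight_footprint_gb(params, bits)
        print(f"{label} @ {bits}-bit: {size:.1f} GB "
              f"(fits 24 GB: {fits(params, bits, 24)}, fits 64 GB: {fits(params, bits, 64)})")
```

The pattern matches the paragraph above: an 8B model fits comfortably at any common precision, while a 70B model only becomes practical at around 4 bits on one of the larger memory configurations.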

Benchmarks reflect this. In the community benchmarks developers share, M-series processors punch above their weight class. They don't beat high-end NVIDIA GPUs on raw throughput. But they compete well on power efficiency and cost per token, and they run inference without specialized cooling or power infrastructure.
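Power efficiency is easiest to compare as tokens generated per joule (tokens per second divided by watts) and as electricity cost per million tokens. The throughput and wattage figures below are hypothetical placeholders, not benchmark results; substitute your own measurements.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    name: str
    tokens_per_sec: float  # measured generation throughput
    watts: float           # measured wall power while generating


def tokens_per_joule(m: Machine) -> float:
    """Tokens generated per joule of energy (1 W = 1 J/s)."""
    return m.tokens_per_sec / m.watts


def electricity_usd_per_million_tokens(m: Machine, usd_per_kwh: float) -> float:
    """Electricity cost to generate one million tokens."""
    seconds = 1_000_000 / m.tokens_per_sec
    kwh = m.watts * seconds / 3_600_000  # 1 kWh = 3.6 million joules
    return kwh * usd_per_kwh


# Hypothetical figures for illustration only.
machines = [
    Machine("compact desktop (hypothetical)", 30.0, 40.0),
    Machine("GPU workstation (hypothetical)", 90.0, 400.0),
]
for m in machines:
    print(f"{m.name}: {tokens_per_joule(m):.2f} tokens/J, "
          f"${electricity_usd_per_million_tokens(m, 0.15):.3f} per 1M tokens")
```

The faster machine can still win on raw throughput. This metric captures only efficiency, which is the axis the paragraph above says Apple Silicon competes on.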

For a development team, this combination is powerful. You don't need a separate AI server with its own power, cooling, and network requirements. You buy a Mac mini, put it on a desk, and it handles most inference workloads while consuming minimal electricity. The environmental impact is lower. The infrastructure complexity is lower. The cost is lower.

Mac mini models offer a cost-effective solution for AI development, with the M4 Pro providing high suitability for AI tasks at a competitive price. Estimated data.

Enterprise Adoption: When Startups and Institutions Take Notice

The supply crunch accelerated when enterprise adoption started happening. Individual developers discovering Mac minis was interesting. Startups and research institutions buying them in bulk was a different story. When a machine learning research lab buys 50 Mac minis instead of ordering 50 workstations, demand spikes immediately.

Small AI startups discovered that the Mac mini cluster approach was viable. Instead of buying expensive server hardware or renting cloud GPUs, they could build local inference clusters with Mac minis. Five machines could handle significant inference load. Ten machines could serve a production API. Twenty machines could replace a cloud infrastructure bill entirely.
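Whether five, ten, or twenty machines are enough is a throughput calculation. The sketch assumes you have measured sustained generation speed for one Mac mini on your model, and it keeps each machine below a target utilization (70% here, an assumed value) so requests don't queue up at peak. All workload numbers in the example are hypothetical.

```python
import math


def machines_needed(peak_requests_per_sec: float, tokens_per_request: float,
                    tokens_per_sec_per_machine: float,
                    target_utilization: float = 0.7) -> int:
    """Smallest machine count that serves peak load at the target utilization."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    demand = peak_requests_per_sec * tokens_per_request          # tokens/sec needed
    capacity = tokens_per_sec_per_machine * target_utilization  # usable tokens/sec each
    return max(1, math.ceil(demand / capacity))


# Hypothetical: 2 requests/sec at peak, 300 generated tokens each,
# and one machine sustaining 100 tokens/sec.
print(machines_needed(2, 300, 100))
```

This treats requests as perfectly spread across machines and ignores batching, which can raise per-machine throughput when several requests run concurrently. It's a starting estimate to refine with load tests, not a capacity plan.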

Universities jumped on this too. Computer science departments could outfit entire labs with Mac minis at a fraction of traditional cost. Graduate students working on AI research could use actual hardware instead of relying on cloud credits. The economics changed fundamentally.

This enterprise wave had a different effect than developer adoption. When one developer buys a Mac mini, it's a blip in global production. When a 50-person startup decides to buy 25 units, it's meaningful volume. When multiple universities place simultaneous orders, supply tightens noticeably.

Apple's sales and account teams also started paying attention. The Mac mini became part of conversations with enterprise customers. Instead of pointing them toward expensive server solutions, Apple reps could say, "Here's a simple, cost-effective option that handles your inference needs." It expanded the addressable market for a product that had previously been niche.

The challenge is that enterprise adoption happens in lumpy waves. One large order doesn't mean sustained demand. But multiple large orders, from multiple organizations, happening simultaneously, absolutely strains capacity. And because procurement timelines are long, these large orders get placed months in advance. By the time supply issues become visible to consumers, orders are already backed up.

Market Dynamics: When One Product Becomes a Constraint

The Mac mini shortage is creating unusual market dynamics. Secondary markets are active. Used Mac minis are selling for close to new prices. People are flipping new purchases. Build-to-order delays mean some buyers are getting creative about sourcing.

This creates interesting ripple effects. People who wanted Mac minis for legitimate reasons, maybe a small creative business or a developer who uses one as a workstation, are finding they can't buy one at a reasonable price or delivery window. Meanwhile, speculators and bulk buyers are hoarding inventory. The market isn't clearing efficiently.

Alternatives are becoming more appealing. Developers who might've bought Mac minis are looking elsewhere. Some are using cloud services more than they planned. Others are building clusters on Linux machines instead. A few are even revisiting Windows-based solutions. When you can't buy the product you want at the price you expected, your second choice becomes your first choice.

This is problematic for Apple's position in the developer community. The Mac mini was becoming a gateway product. A developer buys one for AI work, likes the experience, and eventually buys a MacBook Pro or iMac. Losing a developer at the entry point is costly long-term. It shifts them toward other ecosystems.

Apple's also losing credibility in conversations with enterprise customers. When you can't deliver the hardware you promised on a reasonable timeline, it undermines confidence. A startup evaluating whether to commit to Mac minis for infrastructure might decide that uncertainty isn't worth it and stick with more reliable supply chains, even if those options are more expensive.

Local inference on devices like Mac minis offers significant cost savings, enhanced privacy, and improved latency compared to cloud-based inference. Estimated data based on typical developer experiences.

The Supply Chain Response: When Manufacturers Scale

Apple's suppliers are mobilizing. Foxconn has indicated plans to increase Mac mini production. TSMC is increasing M-series allocation. Component suppliers are investing in additional capacity. But all of this takes time. New production lines require months to install and validate. Supply agreements take time to negotiate. Manufacturing ramp-up is gradual, not instantaneous.

The challenge is predicting demand accurately. If Apple over-invests in Mac mini production and demand falls back to baseline, they're stuck with excess capacity. If they under-invest, shortages persist. Getting the forecast right is critical, and forecasting something as unprecedented as AI-driven Mac mini demand is inherently uncertain.
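The over- versus under-investing trade-off is the classic newsvendor problem from operations research: if each unit of unmet demand costs `c_under` and each unit of idle capacity costs `c_over`, the capacity that minimizes expected cost sits at the c_under / (c_under + c_over) quantile of the demand forecast. This is a textbook simplification, not a description of how Apple plans, and the normally distributed forecast and cost ratios below are made up for illustration.

```python
from statistics import NormalDist


def newsvendor_capacity(mean_demand: float, std_demand: float,
                        c_under: float, c_over: float) -> float:
    """Capacity minimizing expected cost when demand ~ Normal(mean, std).

    c_under: cost per unit of demand you can't serve (lost margin, goodwill).
    c_over: cost per unit of capacity that goes unused.
    """
    critical_ratio = c_under / (c_under + c_over)
    return NormalDist(mean_demand, std_demand).inv_cdf(critical_ratio)


# Hypothetical forecast: 100k units/month with high uncertainty (std 30k).
for c_under, c_over in ((1, 1), (3, 1), (1, 3)):
    q = newsvendor_capacity(100_000, 30_000, c_under, c_over)
    print(f"c_under={c_under}, c_over={c_over}: plan ~{q:,.0f} units/month")
```

When shortages are expensive, for example because developers who can't buy switch platforms, the ratio rises and the plan moves above the forecast. The conservative approach described below corresponds to weighting idle capacity more heavily, which pushes the plan below the forecast. The more uncertain the forecast, the further apart those two choices land.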

Historically, Apple tends to be conservative with supply increases. The company prefers slightly constraining supply to avoid the appearance of over-production. Artificial scarcity is better marketing than clearance sales. But this philosophy works differently when the constraint blocks an emerging market. If developers can't buy Mac minis, they don't wait patiently. They find alternatives.

Some supply chain observers expect production to stabilize by Q2 or Q3 of 2025, assuming AI demand doesn't spike further. But that's contingent on demand plateauing, not accelerating. If large institutions continue placing bulk orders, or if AI use cases expand to new categories of users, the shortage persists.

Apple is also constrained by capital allocation. Every dollar spent increasing Mac mini production is a dollar not spent on MacBook or iPhone manufacturing. Strategically, iPhones are more important to Apple's bottom line, and the company won't compromise iPhone production to increase Mac mini supply. That puts a hard ceiling on how quickly Mac mini supply can grow.

Pricing Pressures: Will Mac Mini Costs Rise?

Interestingly, Mac mini prices have remained stable despite supply constraints. Apple isn't raising prices to balance supply and demand, which would be the textbook economic response. Holding prices constant during a shortage suggests either that Apple expects supply to increase or that it is deliberately letting scarcity manage demand.

However, pricing pressure exists elsewhere. Retailers in some markets are marking up Mac minis. Resale markets show premiums. If you buy a Mac mini and resell it on secondary markets, you might pocket $100-300 depending on configuration and market conditions. This gray market activity suggests room for official price increases without demand destruction.

Apple's likely thinking long-term. Raising prices on the Mac mini during a shortage would damage the product's value proposition and alienate developers and startups who are discovering the product as a cost-effective solution. Holding prices steady while supply gradually increases creates goodwill. The short-term revenue hit from not raising prices is offset by the long-term benefit of expanded market mindshare.

However, if supply remains constrained for many more months, price increases become more likely. Companies eventually have to manage scarcity, and price is the primary lever. Expect prices to remain stable through 2025, but revisit this assumption if shortages extend into late 2025 or 2026.

Estimated data shows a significant increase in delivery wait times for Mac mini models, from same-day to 4 weeks by late 2024, highlighting supply chain constraints.

The Ripple Effects: How Other Products Are Impacted

The Mac mini's popularity, and the shortage that followed, have secondary effects on related products. Some developers considering a MacBook Pro as their primary machine are instead buying a Mac mini to save money, deferring the MacBook purchase. This reduces MacBook sales volume. It's not massive, but when a shift affects thousands of units monthly, the impact adds up.

Desktop monitor and peripheral manufacturers are also affected. Mac mini buyers typically need external displays, keyboards, and mice. When Mac mini sales are constrained, peripheral sales decline slightly as a consequence. This is particularly noticeable in the premium monitor market where Apple ecosystem users disproportionately concentrate.

Apple's services are tangentially affected too. Fewer Mac sales mean fewer devices eligible for AppleCare+, fewer users in the Apple ecosystem, and slightly slower growth in the installed base. When installed-base growth slows, services growth tends to slow with it.

The startup ecosystem is experiencing the ripple effects most acutely. Founders building companies around local inference, distributed ML, or edge AI suddenly face hardware constraints they didn't anticipate. Some are pivoting to cloud-first strategies. Others are diversifying hardware platforms. A few are even building custom solutions instead of relying on Mac minis.

Alternative Solutions: What Developers Are Doing Instead

With Mac mini availability constrained, developers are exploring alternatives. Some are moving inference to cloud services they were trying to avoid. Others are building clusters on cheap Linux machines, accepting the operational overhead in exchange for availability. A few brave souls are exploring custom silicon solutions.

Cloud services have seen increased adoption as a direct result. OpenAI's API, Anthropic's Claude API, and other commercial endpoints are seeing more traffic from developers who would've preferred local inference but can't source the hardware. This is somewhat ironic for cloud providers: the Mac mini shortage is actually driving business back to them.

Linux-based alternatives are gaining ground too. Used to be, developers chose Macs for Unix-like development environments. Now they're choosing Linux servers explicitly for AI inference. Docker containers running quantized models on rented Linux instances are viable. It's more operationally complex, but availability is better than waiting for Mac minis.

For developers with larger budgets, high-end GPU solutions like NVIDIA's H100 or A100 clusters are still the standard for serious training work. But for inference, the Mac mini's price-to-performance filled a gap between cloud APIs and dedicated on-premise GPU hardware. With supply constrained, that gap is reopening as developers migrate back to cloud solutions out of necessity.

Global Availability: Regional Differences in Supply Constraints

The shortage isn't uniform globally. Some regions experience tighter constraints than others. The United States and Western Europe have steady but delayed supply. China has better availability, partly because AI adoption is happening faster there and supply chains are responding more aggressively. Emerging markets have even longer wait times due to lower priority in Apple's allocation.

This creates arbitrage opportunities for distribution networks. Some resellers are buying Mac minis in regions with better availability and selling into markets with shortages. This gray market activity is economically rational but frustrating for end users who face higher prices.

Apple's regional distribution strategy is also a factor. Different regions get different allocation percentages based on predicted demand and strategic importance. The United States, as Apple's largest market, gets higher allocation. But even the U.S. isn't immune to the shortage.

International buyers sometimes have to wait longer simply because they're not in a primary market. A developer in Singapore might wait six weeks where someone in San Francisco waits three weeks. This regional variance creates frustration and pushes some international customers toward alternatives.

When Will Supply Normalize? Timeline and Expectations

Forecasting when the shortage ends is speculative, but several scenarios are plausible. Optimistic scenario: production increases substantially by Q2 2025, and wait times drop to normal 1-2 week ranges by mid-2025. Moderate scenario: supply gradually loosens through 2025, with normalized availability by late 2025 or early 2026. Pessimistic scenario: if AI demand continues accelerating, constraints persist throughout 2025.

The key variable is demand trajectory. If AI adoption curves continue escalating, shortages persist regardless of production increases. If demand plateaus, supply increases will eventually catch up. Betting on plateau happening seems reasonable, but it's not guaranteed. AI development is moving fast, and the Mac mini's role in AI infrastructure is relatively new.

Apple hasn't officially commented on timelines or supply increases for Mac minis. The company rarely discusses specific product constraints publicly. Supply chain analysts estimate production capacity could increase 30-50% over the next 12 months, which would alleviate most current constraints but wouldn't eliminate all demand. Some friction would remain.

For potential buyers, the practical takeaway is: don't expect instant availability anytime soon, but also don't expect to wait six months. The shortage is real but not apocalyptic. Waiting 2-4 weeks is likely the baseline for new orders through at least mid-2025.

The Broader Picture: Why This Matters Beyond Mac Mini

The Mac mini shortage is a window into how rapidly the AI economy is disrupting assumptions about hardware and supply chains. The product nobody expected to be popular for AI suddenly is, and the supply chain can't keep up. This pattern will repeat with other products.

GPU manufacturers are facing similar pressures. NVIDIA saw demand accelerate faster than supply capabilities. Memory manufacturers are overwhelmed. Thermal solutions, power supplies, and cooling systems are all experiencing increased demand from AI applications. When an entire industry shifts toward a new infrastructure paradigm, supply chains strain.

The lesson is that AI adoption is happening faster than many companies anticipated. Suppliers are learning this lesson too. Next time demand spikes unexpectedly, they'll be more cautious about underestimating allocation. But that learning doesn't help Apple or developers facing this shortage right now.

For Apple specifically, it's a reminder that even niche products can become mainstream when use cases shift dramatically. The Mac mini was a known quantity with stable, predictable demand. One emerging technology disrupted that assumption. It won't be the last time.

What Buyers Should Do Right Now

If you're considering a Mac mini purchase, here's practical advice. First, assess whether you actually need one urgently. If you're experimenting with AI and could wait until supply normalizes, waiting is rational. You'll pay the same price with a shorter wait time.

If you need one for work or business reasons, order now and accept the wait. Two to four weeks is longer than Mac mini buyers are used to, but it's not an unreasonable delay. Set expectations accordingly and don't assume you'll receive it immediately.

Consider configuration carefully. Base M4 models have longer waits than M4 Pro models in some regions. If you have flexibility on specs, checking different configurations might reveal shorter availability on some options.

Explore refurbished options. Apple's refurbished store often has Mac minis with shorter delivery windows. You get the same warranty, same performance, and a lower price. It's a legitimate way to acquire the product faster.

If you're buying for a team or organization, contact Apple's business sales directly. They have different inventory channels and might be able to help with bulk orders better than retail channels.

Finally, don't pay markups on secondary markets unless the time cost of waiting exceeds the markup amount.
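That rule of thumb reduces to a one-line comparison. The sketch assumes you can put a rough dollar value on each week without the machine, such as lost billable work or cloud spend you'd otherwise incur; that per-week figure, like the markup and wait below, is hypothetical and yours to estimate.

```python
def should_pay_markup(markup_usd: float, weeks_saved: float,
                      cost_per_week_waiting_usd: float) -> bool:
    """Pay a reseller markup only if waiting would cost more than the markup."""
    return weeks_saved * cost_per_week_waiting_usd > markup_usd


# Hypothetical: a $250 markup that saves three weeks of waiting.
print(should_pay_markup(250, 3, 50))   # False: waiting costs $150 in total
print(should_pay_markup(250, 3, 120))  # True: waiting costs $360 in total
```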

Implications for the Mac Mini's Future

If the shortage persists and demand remains strong, Apple will eventually increase production. The company's strategic interest is in the Mac mini becoming a cornerstone of the developer and AI computing ecosystem. That requires reliable availability at stable prices.

Expect the M5 and subsequent generations to receive more manufacturing priority. Apple learns from supply constraints and adapts allocation over time. The Mac mini's role in AI infrastructure will inform future product development, feature allocation, and manufacturing investment.

There's also a chance Apple discontinues or refocuses the product if the developer market doesn't solidify. Temporary demand spikes sometimes disappear when initial enthusiasm fades. But early evidence suggests AI use cases for local inference are genuinely sustainable, not transient fads.

Long-term, expect Mac minis to become increasingly important to Apple's story. Not as a consumer product, but as a developer platform and AI infrastructure component. That positioning justifies manufacturing investment and supports the premium pricing that comes with Apple's brand.

FAQ

Why are Mac mini supplies so constrained right now?

Supply is constrained primarily due to unexpected demand from the AI community. Developers and organizations discovered that Mac minis offer exceptional price-to-performance for running language models and AI inference locally. Apple's manufacturing capacity, designed for stable historical demand, can't keep pace with this surge. Supply chain components from TSMC chips to enclosures all run at capacity, creating bottlenecks throughout production.

How is AI demand specifically driving Mac mini shortages?

Mac minis running Apple's M-series processors can execute AI inference workloads efficiently due to unified memory architecture and low power consumption. The combination of affordability (starting at $600) and AI-capable performance created a compelling value proposition for developers and startups. Teams that would've spent thousands on server hardware realized they could buy multiple Mac minis more cheaply, accelerating demand beyond Apple's production capacity.

When will Mac mini availability return to normal?

Most supply chain analysts expect gradually improving availability through 2025, with more normalized conditions potentially emerging in the second or third quarter. However, this assumes AI demand plateaus. If demand continues accelerating, constraints could persist longer. Apple hasn't provided official guidance, but the company is reportedly working with suppliers to increase production capacity by 30-50% over the next twelve months.

Should I buy a Mac mini now or wait for stock to normalize?

If you need one immediately for work or business purposes, order now and accept a 2-4 week wait. If you're experimenting or your timeline is flexible, holding off until stock normalizes may let you buy without a long delay. Consider checking Apple's refurbished inventory, which often has shorter delivery windows, or explore cloud-based alternatives if local AI infrastructure isn't mandatory.

Are Mac mini prices increasing due to the shortage?

Official retail prices have remained stable so far. Apple isn't using price increases to manage scarcity, likely to preserve the product's value proposition for developers. However, resale and gray market prices do carry premiums. Expect prices to stay level through 2025, but monitor this assumption if shortages extend significantly beyond that.

What are the best alternatives if I can't get a Mac mini?

Alternatives depend on your use case. For AI inference, cloud services like OpenAI's API or Anthropic's Claude API are viable. For developers wanting local infrastructure, Linux-based servers offer better availability. For enterprise needs, traditional server hardware or GPU clusters are established options. Each has different cost and operational complexity tradeoffs compared to the Mac mini.

Why doesn't Apple just increase production immediately?

Apple can't increase production instantly because manufacturing takes months to scale. Production lines need setup, component suppliers need to increase output, supply agreements need negotiation, and quality assurance requires testing. Additionally, every manufacturing dollar spent on Mac minis is a dollar not spent on higher-priority products like iPhones and MacBooks. Apple strategically allocates production capacity, and dramatic increases require capital investment decisions.

Will Mac mini prices drop once supply normalizes?

Historically, prices remain stable when supply normalizes. Apple rarely uses clearance or discounting on products. Once supply catches up to demand, prices likely stay at current levels. However, there's always a possibility of price changes with new product generations. Watch for announcements of M5 or later generations, which might include pricing adjustments.

Key Takeaways

- Mac mini availability is constrained by unexpected demand from AI developers and organizations seeking affordable local inference hardware

- Apple's M-series unified memory architecture makes Mac minis efficient at running quantized language models, particularly on power use and cost, even though traditional GPUs still lead on raw throughput

- Supply chain components from TSMC through manufacturing partners all run at capacity, creating bottlenecks that can't resolve quickly

- Developers are exploring alternatives including cloud APIs, Linux servers, and GPU clusters when Mac minis remain unavailable

- Analysts estimate production capacity could increase 30-50% over the next 12 months, but more normalized availability likely won't arrive until mid-to-late 2025
