Major Cybersecurity Threats & Digital Crime This Week: What You Need to Know

Every week, the cybersecurity landscape shifts under our feet. New vulnerabilities emerge. Criminal networks expand. Governments deploy surveillance tools at unprecedented scale. And this week was no exception: it was a masterclass in why staying informed about security threats matters more than ever.

Let me be direct: the stories breaking right now aren't just headlines for security professionals anymore. They're relevant to anyone with a digital life. From AI agents that can hijack your email to international crime syndicates operating with stunning sophistication, the scope of risk has widened dramatically.

This week alone brought us extraordinary developments across multiple threat vectors. An informant claimed that Jeffrey Epstein had employed a highly skilled hacker who exploited zero-day vulnerabilities in iOS, BlackBerry, and Firefox. A viral AI assistant called Open Claw captured millions of people's attention and exposed serious security trade-offs that most users don't understand. And Chinese authorities executed members of the Ming crime family, which ran scam compounds that generated an estimated $1.4 billion through fraud.

What ties these stories together? They reveal how modern crime, espionage, and state power operate in 2025. The tools are more sophisticated. The networks are more distributed. The stakes are higher. And the gap between public understanding and technical reality keeps widening.

I've spent the last week digging into each of these stories to understand not just what happened, but why it matters. Here's what you need to know.

TL;DR

- Jeffrey Epstein's "Personal Hacker": An informant told the FBI that Epstein employed someone with advanced iOS, BlackBerry, and Firefox exploits who sold zero-day vulnerabilities to governments and allegedly to Hezbollah. The claims have not been publicly verified.

- Open Claw AI Security Crisis: A viral AI agent drew more than 2 million visitors in one week, while researchers found hundreds of instances that users had accidentally exposed to the internet with full system access.

- Chinese Crime Family Executions: Authorities executed 11 members of the Ming crime family for operating Southeast Asian scam compounds that generated $1.4 billion in eight years using forced labor.

- Deepfake Sexual Abuse: "Nudify" technology is becoming increasingly accessible and sophisticated, posing escalating risks to millions of people.

- Immigration Enforcement AI: ICE deployed a Palantir AI system to process tip-line reports, along with facial recognition tools that scan both citizens and immigrants, raising significant privacy concerns.

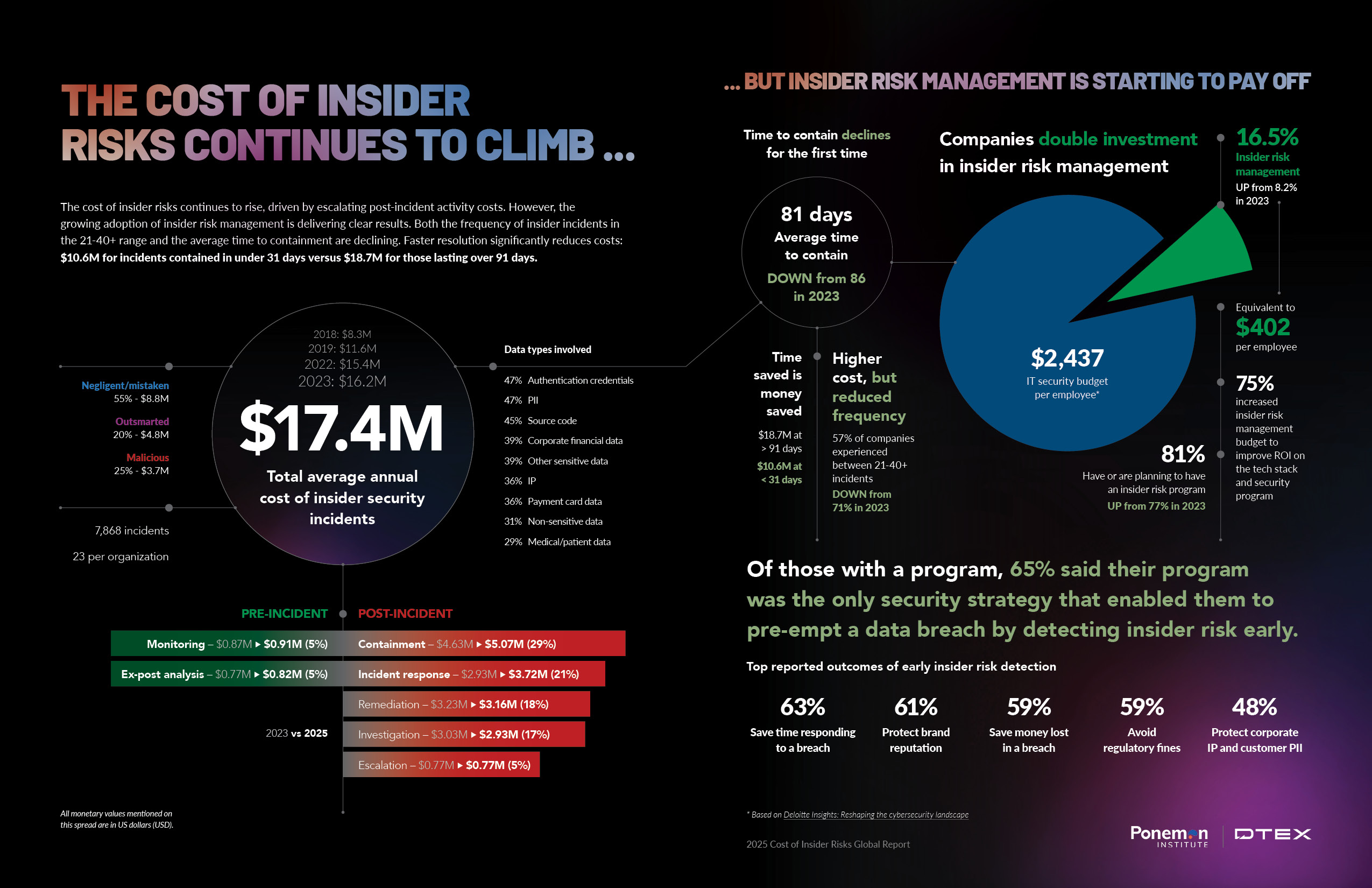

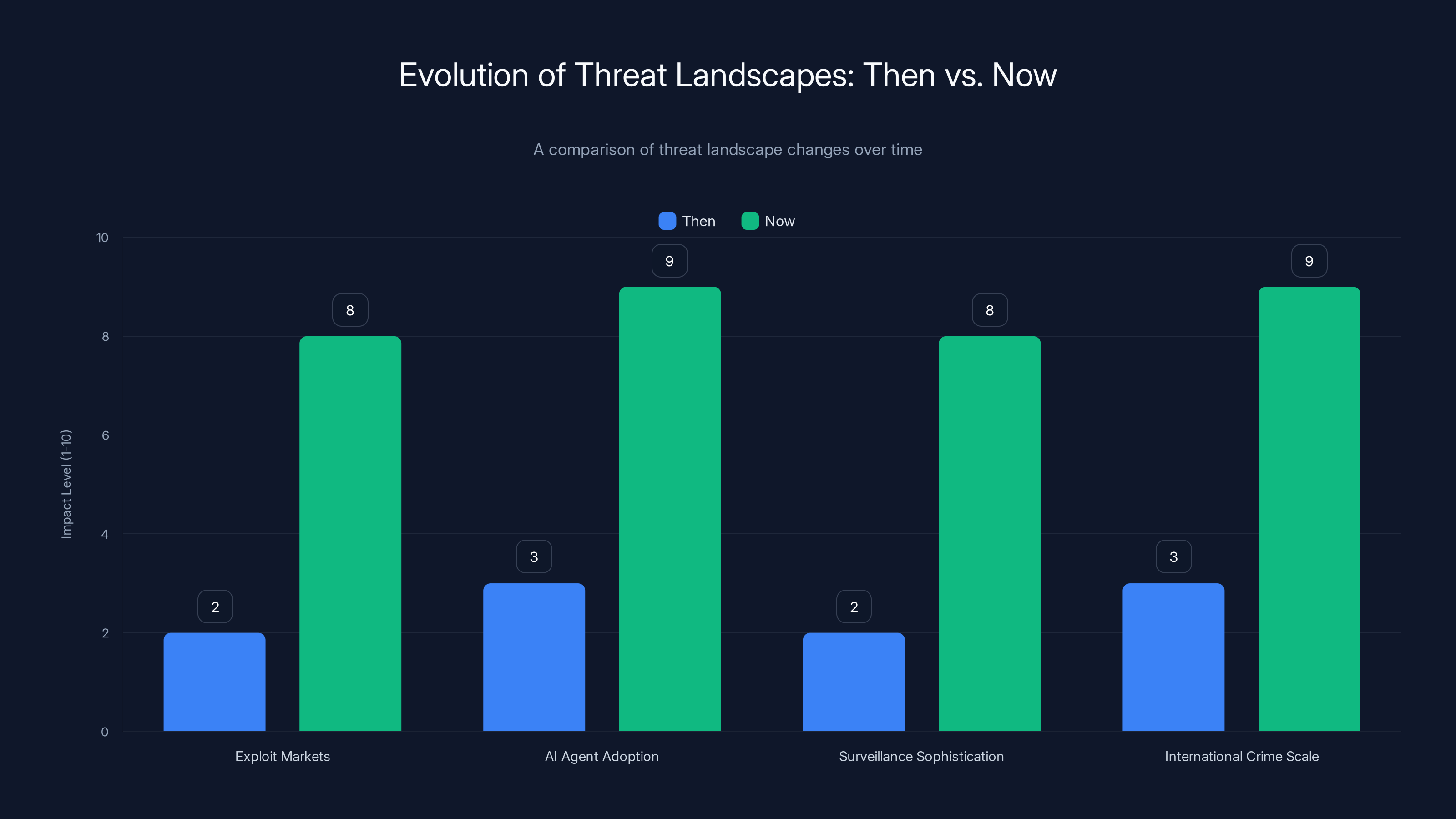

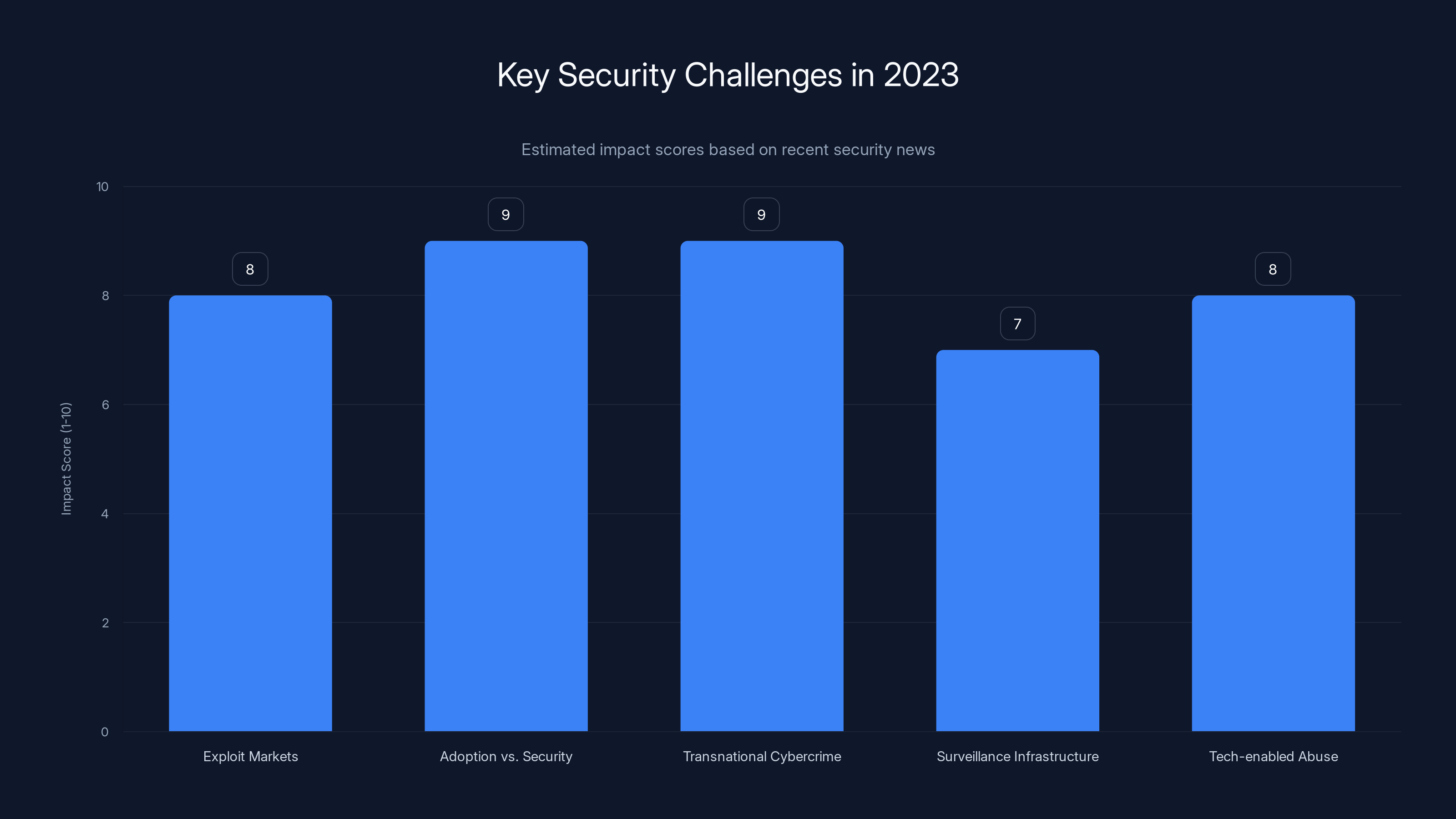

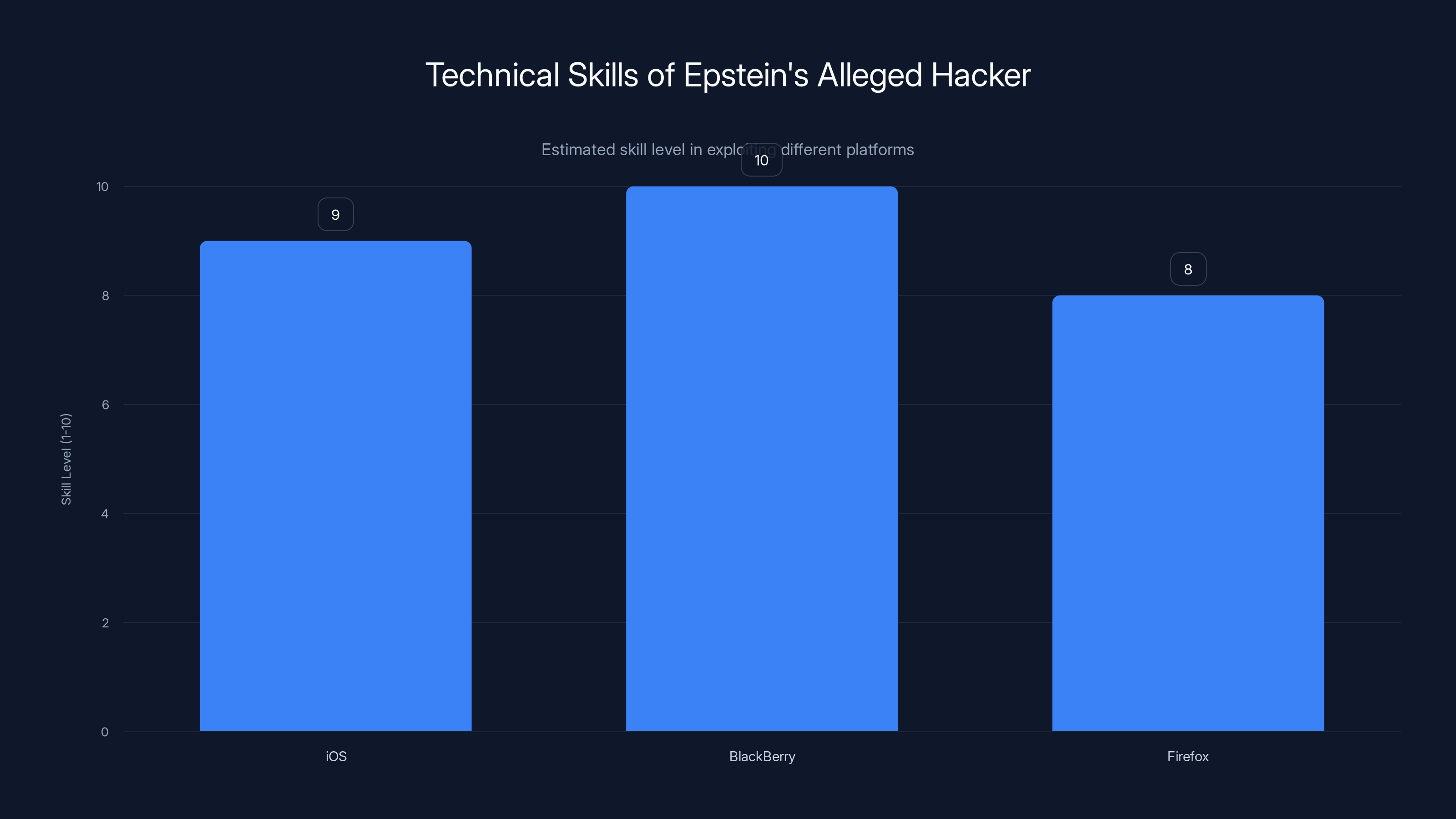

The threat landscape has evolved significantly, with exploit markets, AI adoption, surveillance, and international crime scaling up dramatically. Estimated data.

The Epstein "Personal Hacker" Revelation: What We Know

Let's start with what might be the most unexpected revelation this week. The U.S. Department of Justice released a document on Friday detailing a 2017 FBI interview in which an informant claimed Jeffrey Epstein had employed a personal hacker. This isn't internet rumor: the claim is part of the official investigative record, even though, as we'll see, the record doesn't establish whether it is true.

Here's what's significant: this wasn't just some script kiddie running basic attacks. According to the informant's account, this hacker had serious technical chops.

The Alleged Hacker's Background and Skills

The document describes someone allegedly born in southern Calabria, Italy, a region with historical ties to organized crime. But the informant's focus was on technical capabilities, not family background. The hacker supposedly specialized in discovering vulnerabilities in three specific targets: Apple's iOS mobile operating system, BlackBerry devices, and the Firefox browser.

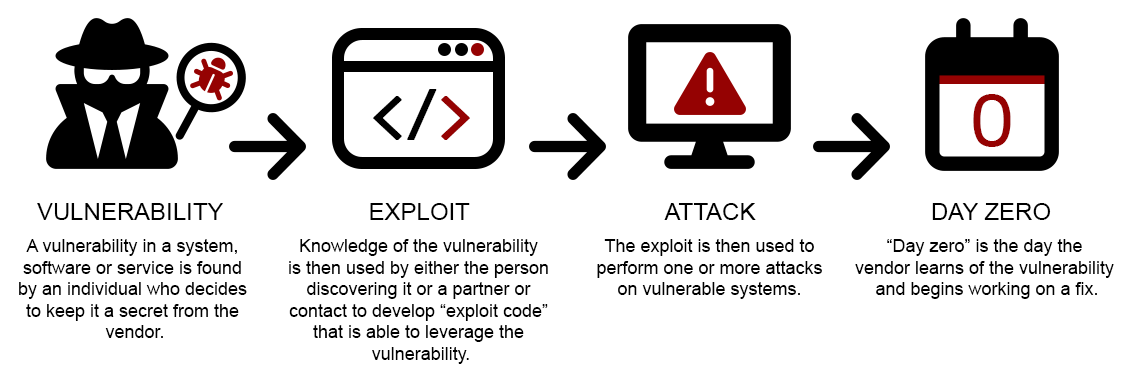

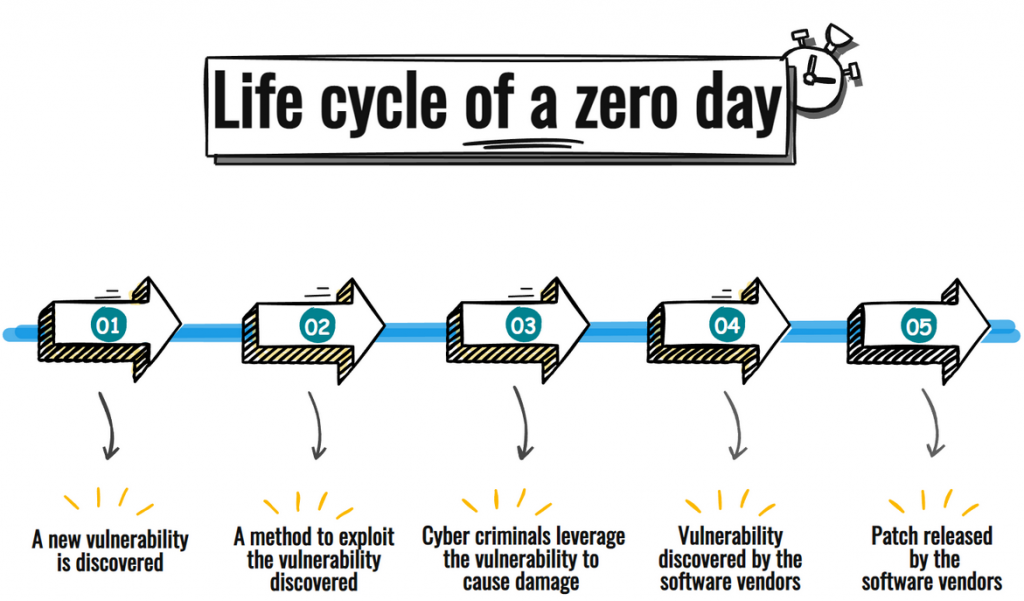

When the informant told the FBI that this person "was very good at finding vulnerabilities," the claim, if accurate, describes rare expertise. Finding zero-day vulnerabilities (bugs that vendors don't know about yet) requires deep technical knowledge, creative thinking, and serious skill. It's not something casual hackers do. It requires understanding operating system architecture, memory management, code execution flows, and exploit development.

The specificity matters here. iOS and BlackBerry weren't random targets. In 2017, those were two of the most security-conscious platforms in the market. BlackBerry in particular was considered a fortress by security standards—it was the phone of choice for government officials, military personnel, and anyone seriously concerned about being hacked. Finding exploits in BlackBerry wasn't just technically difficult. It was extremely valuable.

The Exploit Development and Sales Pipeline

What makes this story particularly interesting is what allegedly happened next: the hacker didn't keep these exploits. They sold them.

According to the informant, this person developed offensive hacking tools including exploits for unknown and unpatched vulnerabilities and sold access to multiple governments and other buyers. The list of buyers is striking: an unnamed central African government, the United Kingdom, the United States, and—most surprisingly—Hezbollah, a designated terrorist organization. The informant even reported that Hezbollah paid in cash. Literally "a trunk of cash."

This raises immediate questions about what Hezbollah wanted with iOS exploits. Were they trying to compromise devices of Israeli officials? American intelligence personnel? Business targets? The informant's account doesn't specify. But if the account is accurate, the fact that a sophisticated terrorist organization was willing to pay significant money for these exploits tells us something important: they saw real tactical value.

Critical Uncertainties

Here's what we don't know, and it matters significantly. The FBI document doesn't say whether the informant's account was accurate. It doesn't say whether investigators verified any of these claims. It doesn't provide the alleged hacker's name or any identifying information beyond the Calabria birthplace. And it doesn't explain why the informant had this information or how reliable they were.

It's possible the informant was completely accurate. It's also possible they were misremembering, exaggerating, or even fabricating details. Federal investigators have encountered all three scenarios countless times. The fact that a document exists doesn't automatically mean every claim in it is true.

What we do know is that zero-day exploit trading is a real, documented industry. Exploit brokers such as Zerodium and Exodus Intelligence operate openly, and Zerodium has publicly advertised payouts of more than $1 million for top-tier mobile exploit chains. Government agencies do buy exploits for intelligence gathering. And terrorist organizations have attempted to acquire hacking capabilities before.

So while this specific story has gaps and uncertainties, it fits within a real ecosystem of exploit development and sales that definitely exists.

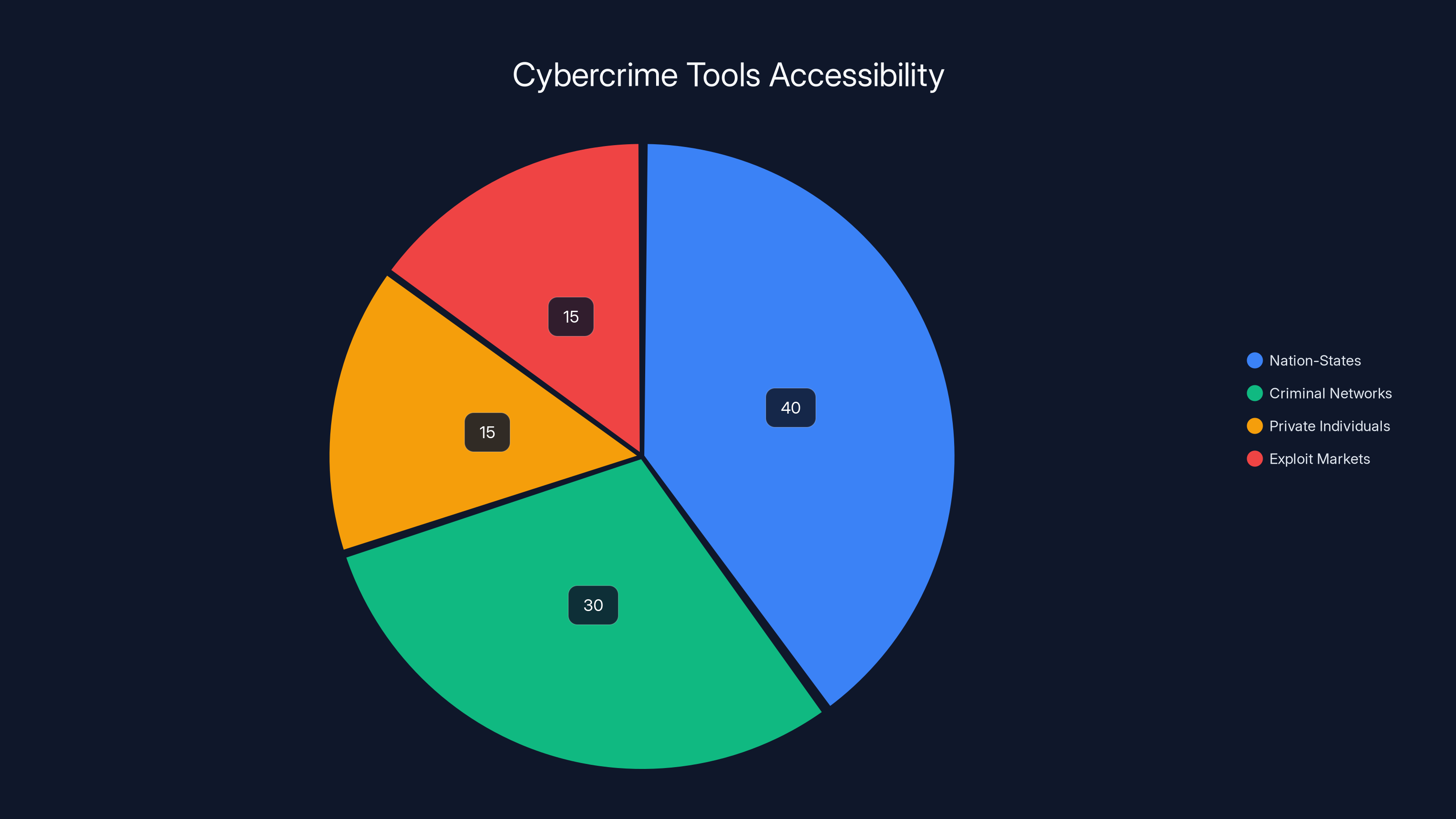

Estimated data suggests that while nation-states held the majority of advanced hacking tools in 2017, criminal networks and exploit markets also had significant access, highlighting the democratization of cyber capabilities.

Open Claw's Viral Rise and Hidden Security Dangers

Now let's shift gears to something happening right now, in real time. An AI assistant called Open Claw has exploded in popularity over the past week. If you're paying attention to AI developments, you've probably heard the hype. More than 2 million people have visited the project in seven days. Tech entrepreneurs are celebrating it. And security researchers are absolutely panicking.

Here's the core appeal: Open Claw is an AI agent that can automate tasks on your computer. You connect it to your online accounts—Gmail, Amazon, whatever—and it takes actions on your behalf. One entrepreneur told journalists: "I could basically automate anything. It was magical."

That magical feeling is exactly the problem.

How Open Claw Works and What It Can Do

Open Claw runs on your personal computer. It connects to other AI models via API and operates through a conversational interface. You describe what you want automated, and the system breaks it down into steps. Need to organize your inbox? Draft emails? Process orders? The system can handle it if you give it permission.

The technical architecture is actually clever. Rather than being a monolithic tool, Open Claw orchestrates multiple AI models—it might use one model for planning, another for execution, another for verification. This distributed approach makes the system more capable than any single model.

But here's where it gets dangerous: to access your accounts and take actions, the system needs credentials. You have to give it login information for Gmail, Amazon, and dozens of other services. From a convenience perspective, that's perfect. From a security perspective, it's a nightmare.

The Scope of the Security Problem

Within days of Open Claw's viral spread, security researchers identified "hundreds" of instances where users had exposed their systems to the internet with full access. Some had no authentication at all. Some were broadcasting their entire system's capabilities publicly, essentially saying: "Here's my digital life. Please hack me."

Let me paint a concrete scenario: Someone installs Open Claw. They connect it to Gmail, their bank's API, their AWS account, their corporate VPN. They expose the interface to the internet so they can access it from anywhere. They don't set a password because they're too impatient. Now, anyone scanning the internet for exposed services can find it. An attacker doesn't need to hack their password. They don't need to crack encryption. They just access the interface and ask it to transfer money, delete emails, download documents, whatever they want.
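The scenario above comes down to two missing controls: the control interface listens on a publicly reachable address, and it accepts requests without checking who sent them. The sketch below shows both controls using only Python's standard library. It is not Open Claw's configuration or code; the port number, the `ControlHandler` class, and the `is_authorized` helper are hypothetical, chosen only to illustrate the idea.

```python
import hmac
import secrets
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional

# Hypothetical shared secret. Generate it once and store it outside source code.
AGENT_TOKEN = secrets.token_urlsafe(32)


def is_authorized(auth_header: Optional[str], expected_token: str) -> bool:
    """Accept only an 'Authorization: Bearer <token>' header with the right token."""
    prefix = "Bearer "
    if not auth_header or not auth_header.startswith(prefix):
        return False
    supplied = auth_header[len(prefix):]
    # compare_digest is designed to resist timing attacks, unlike ==,
    # which can return early at the first mismatched character.
    return hmac.compare_digest(supplied.encode(), expected_token.encode())


class ControlHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if not is_authorized(self.headers.get("Authorization"), AGENT_TOKEN):
            self.send_response(401)  # reject before the agent ever sees the request
            self.end_headers()
            return
        # Hypothetical: hand the authenticated request to the agent here.
        self.send_response(202)
        self.end_headers()


def serve(port: int = 8787) -> None:
    # 127.0.0.1 (loopback) is reachable only from this machine. Binding to
    # "0.0.0.0" instead would accept connections on every network interface,
    # which is how an agent ends up visible to internet-wide scanners.
    HTTPServer(("127.0.0.1", port), ControlHandler).serve_forever()
```

For remote access, the safer pattern is usually to keep the loopback binding and reach the machine through an authenticated tunnel or VPN, rather than opening the agent's port to the internet.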

And the system, trained to be helpful, does it.

The problem is fundamental to AI agents. Unlike traditional software where you explicitly grant permission for specific actions, AI agents are designed to be flexible and adaptive. They infer what you probably want based on context. That flexibility is their strength. But it's also their security weakness.
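One way to restore the explicit-permission model is to put a default-deny gate between the agent and the actions it can take. The following is a minimal sketch with hypothetical action names: low-risk reads run automatically, consequential actions require a human to confirm, and anything not on either list is refused.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Action:
    name: str    # hypothetical action identifier, e.g. "send_email"
    target: str  # the account, address, or resource the action touches


# Hypothetical policy: reads run on their own, consequential actions need a human.
AUTO_ALLOWED = {"read_inbox", "summarize_thread"}
NEEDS_CONFIRMATION = {"send_email", "delete_email", "place_order"}


def gate(action: Action, confirm: Callable[[Action], bool]) -> bool:
    """Decide whether the agent may perform `action`. Unlisted actions are denied."""
    if action.name in AUTO_ALLOWED:
        return True
    if action.name in NEEDS_CONFIRMATION:
        return confirm(action)  # e.g. a prompt the user must click through
    return False  # default-deny: the agent cannot improvise new capabilities


def always_no(action: Action) -> bool:
    """Example confirmation callback that declines everything."""
    return False


print(gate(Action("read_inbox", "me@example.com"), always_no))    # True
print(gate(Action("send_email", "boss@example.com"), always_no))  # False
print(gate(Action("transfer_funds", "acct-123"), always_no))      # False
```

The key design choice is the final `return False`: an attacker who reaches the agent, or a prompt that talks it into something unexpected, still cannot trigger an action nobody listed.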

Why This Happened Despite Known Risks

This isn't the first time security researchers have warned about AI agent risks. There's been literature on this for years. But Open Claw's creators apparently prioritized ease of use over security. Or perhaps they underestimated how quickly users would adopt the tool before security practices caught up.

There's also a timing issue here. AI agents are genuinely new in terms of widespread adoption. Most people haven't developed mental models for thinking about agent security. With traditional software, users understand: "Don't give out your password." With agents, the concept is more abstract: "Don't expose your agent's control interface without authentication." For people excited about automation, that security consideration doesn't register emotionally until something goes wrong.

The viral adoption happened faster than security best practices could spread. That's becoming a pattern with popular AI tools.

Chinese Crime Family Executions: The Scale of Southeast Asian Scam Operations

Let's move to something that sounds like it's from a crime novel, but it's recent criminal justice news with major implications for transnational crime enforcement.

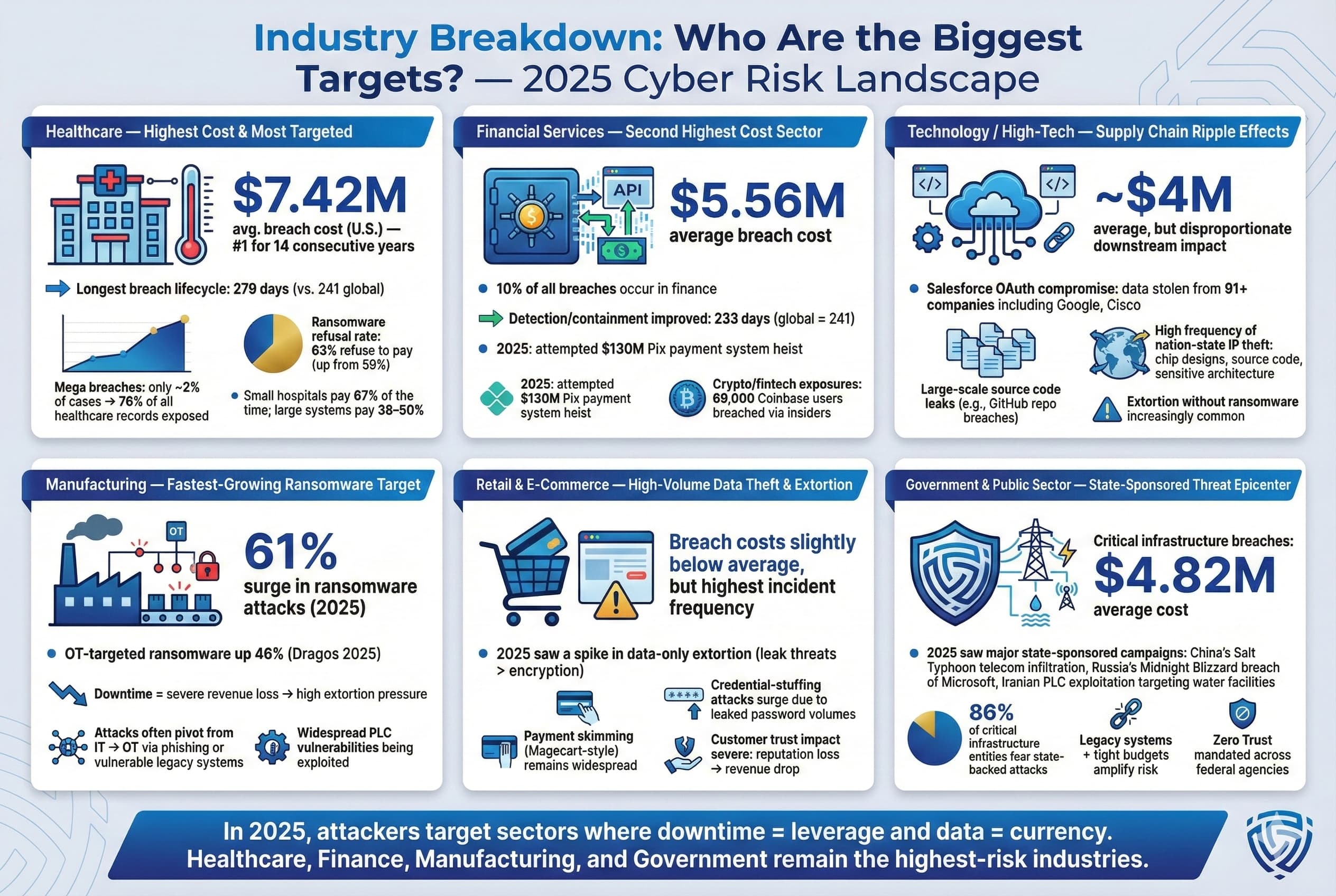

This week, Chinese authorities announced the execution of 11 members of the Ming crime family. These weren't random organized crime members. They were convicted of running scam compounds in Southeast Asia—specifically Myanmar—that generated extraordinary sums of money through fraud.

Here's the financial scale: between 2015 and 2023, the Ming family's criminal operations generated approximately $1.4 billion.
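To put that figure in perspective, here is the back-of-the-envelope arithmetic, assuming the total was spread evenly across the eight years. It almost certainly wasn't, so treat these as rough averages.

```python
# Reported total and period; the even spread across years is an assumption.
total_usd = 1.4e9
years = 8

per_year = total_usd / years
per_day = per_year / 365

print(f"about ${per_year / 1e6:,.0f} million per year")
print(f"about ${per_day:,.0f} per day")
```

That works out to roughly $175 million a year, or close to $480,000 every day.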

How the Scam Compound Infrastructure Works

These aren't simple operations. Scam compounds in Southeast Asia operate as sophisticated crime factories. They employ hundreds of workers, many of them trafficked and held against their will. The workers are organized into departments: some build fake profiles for romance fraud aimed at lonely people in developed countries, while others run cryptocurrency investment schemes.

The compounds have real infrastructure: servers, network connectivity, accounting departments, and management hierarchies. They retain lawyers and bribe officials in host countries. They coordinate across borders. Some workers operate from computers, running scripts and managing fake identities. Others handle phone-based social engineering, calling victims and building relationships under false pretenses.

The targets are diverse: vulnerable people looking for love, investors seeking cryptocurrency gains, business owners looking for deals. The approach is customized. For romance fraud, scammers build months-long relationships, gaining emotional trust before eventually requesting money for "emergencies." For investment fraud, they promise unrealistic returns and use sophisticated presentation materials that look legitimate.

What's particularly exploitative is the labor force. Many workers are trafficked into these compounds and held against their will. They face violence and threats. Escape attempts are punished. This isn't just about fraud—it's about human trafficking, forced labor, and organized crime on an industrial scale.

The Geographic and Criminal Network Context

Why Southeast Asia specifically? The region offers several strategic advantages for criminal enterprises: relatively weak governance in some areas, corruption that can be exploited, proximity to major financial flows, and geographic distance from enforcement in Western countries where victims are located.

Myanmar has been particularly affected. The 2021 military coup and the political instability that followed created governance vacuums that organized crime exploited. Compounds in Myanmar, and elsewhere in the region, including Cambodia, Laos, and Thailand, operate with relative impunity because local authorities are compromised, overwhelmed, or both.

What's notable about this week's executions is that they represent significant enforcement action. Chinese organized crime groups traditionally operated with relative protection from Chinese authorities. The fact that the Ming family members faced execution suggests either a genuine shift in enforcement priorities or an internal political calculation within China.

Separately, another 20 Ming family members were sentenced in September of the previous year. And members of a rival crime family, the Bai family, have also faced sentencing. This suggests a broader law enforcement campaign targeting these networks.

The Trafficking and Human Rights Dimension

One aspect that deserves more attention: these operations depend on human trafficking. WIRED's investigation, interviewing a trafficking victim codenamed "Red Bull," revealed the human reality behind these statistics. Victims are recruited, often through deceptive means, then held in compounds where they work under threat of violence.

Red Bull provided internal compound documents showing how operations were organized, how profits were managed, and how the system perpetuated itself. The documents revealed hierarchy, security protocols, and methods used to prevent escapes. They showed that operators understood they were committing serious crimes and took measures to avoid detection.

This victim reporting is crucial because it humanizes statistics. We hear "$1.4 billion in fraud" and it's an abstract number. But when you hear from someone who actually lived through the compounds, worked under threat, and escaped, the reality becomes visceral. These operations aren't just about money theft—they're about coercion, exploitation, and violation of human dignity.

The Ming crime family generated approximately $1.4 billion between 2015 and 2023.

The $40 Million Cryptocurrency Theft: An Unexpected Culprit

Cryptocurrency theft stories have become almost routine in security news. But this week's development was genuinely surprising. A major crypto theft—approximately $40 million—appears to have an unexpected alleged perpetrator.

Without naming specific individuals before facts are established, what's worth understanding is how cryptocurrency theft investigations often develop. Initially, investigators assume external attackers. They look for compromised wallets, stolen private keys, fraudulent transactions. But as investigation deepens, sometimes the finger points internally. Someone with legitimate access. Someone trusted.

This particular case fits that pattern. The alleged involvement is unexpected enough that it's attracted significant attention from security professionals and cryptocurrency observers. It reveals how even in systems designed to be trustless and transparent, social engineering and insider threat remain devastating vectors.

Lessons From Crypto Theft Patterns

Across all cryptocurrency thefts, certain patterns emerge consistently. Many involve compromised exchange accounts. Some involve theft from wallets with inadequate security. But a significant percentage—more than many realize—involve insider threats or social engineering attacks that compromise individuals with access to keys or systems.

The forensic work in cryptocurrency theft is specialized. Unlike traditional financial crime where transactions might be reversed, cryptocurrency transactions are often irreversible. Once funds leave a wallet, recovery is extremely difficult. Investigators must trace through blockchain transactions—which are pseudonymous but not anonymous—trying to identify where funds went, which exchanges they were moved to, and which individuals executed the transactions.
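The tracing process can be illustrated with a toy example. This sketch assumes a hypothetical, pre-extracted list of transfers and a hypothetical set of addresses already attributed to exchanges. Real investigations work from full blockchain data and commercial attribution datasets, and must also deal with mixers, cross-chain bridges, and wallets that split funds into many small amounts. The core idea is a breadth-first walk outward from the theft address until funds land somewhere that can be tied to an identity.

```python
from collections import deque

# Hypothetical, simplified transfer records: (sender, recipient, amount in coins).
TRANSFERS = [
    ("theft_wallet", "hop_1", 25.0),
    ("theft_wallet", "hop_2", 15.0),
    ("hop_1", "hop_3", 25.0),
    ("hop_2", "exchange_b_deposit", 15.0),
    ("hop_3", "exchange_a_deposit", 24.5),
]

# Hypothetical attribution data: addresses already linked to exchanges.
EXCHANGE_LABELS = {
    "exchange_a_deposit": "Exchange A",
    "exchange_b_deposit": "Exchange B",
}


def trace_to_exchanges(start, transfers, labels):
    """Breadth-first walk of outgoing transfers; return {exchange: path of addresses}."""
    outgoing = {}
    for sender, recipient, _amount in transfers:
        outgoing.setdefault(sender, []).append(recipient)

    found = {}
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        address = path[-1]
        if address in labels:
            # Funds reached a service likely to hold identity records; stop here.
            found[labels[address]] = path
            continue
        for nxt in outgoing.get(address, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return found


for exchange, path in trace_to_exchanges("theft_wallet", TRANSFERS, EXCHANGE_LABELS).items():
    print(f"{exchange}: {' -> '.join(path)}")
```

Exchanges matter because they are usually where pseudonymous funds meet real-world identity records, which is why investigators focus on finding the first hop into one.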

In cases where internal involvement is suspected, investigators look for transaction patterns that would be visible only to insiders, transaction timing that correlates with specific individuals' access windows, or transactions that use unusual security procedures in ways that suggest insider knowledge.
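The timing check in particular is simple to express. The sketch below uses entirely hypothetical access logs and transaction timestamps to count how many suspicious transactions fall inside each person's access windows. A high count is a lead for further investigation, not proof: shared credentials or a compromised account would produce the same pattern.

```python
from datetime import datetime

# Hypothetical access logs: when each person held a session with signing rights.
ACCESS_WINDOWS = {
    "employee_1": [(datetime(2025, 3, 1, 9), datetime(2025, 3, 1, 17))],
    "employee_2": [(datetime(2025, 3, 1, 22), datetime(2025, 3, 2, 2))],
    "employee_3": [(datetime(2025, 3, 1, 23), datetime(2025, 3, 1, 23, 30))],
}

# Hypothetical timestamps of the suspicious outgoing transactions.
SUSPICIOUS_TX_TIMES = [
    datetime(2025, 3, 1, 23, 15),
    datetime(2025, 3, 2, 0, 40),
    datetime(2025, 3, 2, 1, 5),
]


def overlap_counts(windows, tx_times):
    """Count, per person, how many transactions fall inside any of their windows."""
    counts = {}
    for person, spans in windows.items():
        counts[person] = sum(
            any(start <= t <= end for start, end in spans) for t in tx_times
        )
    return counts


counts = overlap_counts(ACCESS_WINDOWS, SUSPICIOUS_TX_TIMES)
for person, n in sorted(counts.items(), key=lambda kv: -kv[1]):
    print(f"{person}: {n}/{len(SUSPICIOUS_TX_TIMES)} transactions inside access window")
```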

This particular case may eventually clarify how insider threats operate in cryptocurrency environments. That clarity will be valuable for security teams trying to protect assets.

ICE's Expanded AI Surveillance Capabilities

Zooming out from specific incidents to systemic issues: Immigration and Customs Enforcement's expanding use of surveillance technology represents a significant privacy and civil liberties development that affects millions of people.

This week brought new details about ICE's deployment of AI systems for surveillance, specifically regarding an AI-powered Palantir system that's been in use since spring. Let's understand what this means and why it matters.

The Palantir AI Summary System

Palantir is a data integration and analysis company that works extensively with government agencies. The system deployed by ICE is designed to process tips from the ICE tip line—information provided by members of the public reporting suspected immigration violations or criminal activity.

Historically, tips would be manually reviewed by agents. The Palantir system uses AI to automatically summarize these tips, extract key information, and prioritize them. That's the ostensible purpose: increasing efficiency in reviewing reports.

But here's where it gets complex. The system doesn't just summarize neutral facts. It analyzes and categorizes information in ways that can introduce bias. If the AI system learns patterns that correlate certain characteristics—accent, name patterns, neighborhood—with immigration violations, it will flag cases with those characteristics more aggressively. This isn't necessarily because the system designers intended bias; it's because machine learning systems learn patterns in training data, and if training data contains bias (which historical law enforcement data typically does), the system replicates that bias.
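The mechanism can be shown with a deliberately oversimplified toy model. This is not how Palantir's system works, since its internals are not public; it only illustrates the general point that a model trained on skewed historical decisions reproduces the skew. The data is invented: one neighborhood is assumed to have been flagged three times as often in the past, for reasons unrelated to actual violations.

```python
from collections import Counter

# Hypothetical historical records: (neighborhood, was_flagged_in_the_past).
# "north" is assumed to have been over-policed, not to have more violations.
HISTORY = (
    [("north", True)] * 30 + [("north", False)] * 70
    + [("south", True)] * 10 + [("south", False)] * 90
)


def learn_flag_rates(records):
    """'Train' the simplest possible model: the past flag rate per neighborhood."""
    totals, flagged = Counter(), Counter()
    for neighborhood, was_flagged in records:
        totals[neighborhood] += 1
        flagged[neighborhood] += was_flagged  # True counts as 1, False as 0
    return {n: flagged[n] / totals[n] for n in totals}


rates = learn_flag_rates(HISTORY)
# Two otherwise identical new tips now get different priority scores
# purely because of where they come from.
print(rates)  # {'north': 0.3, 'south': 0.1}
```

Nothing in the code mentions bias, yet two identical tips from different neighborhoods receive different scores. More sophisticated models can learn the same pattern through proxies such as names or accents, which is harder to spot.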

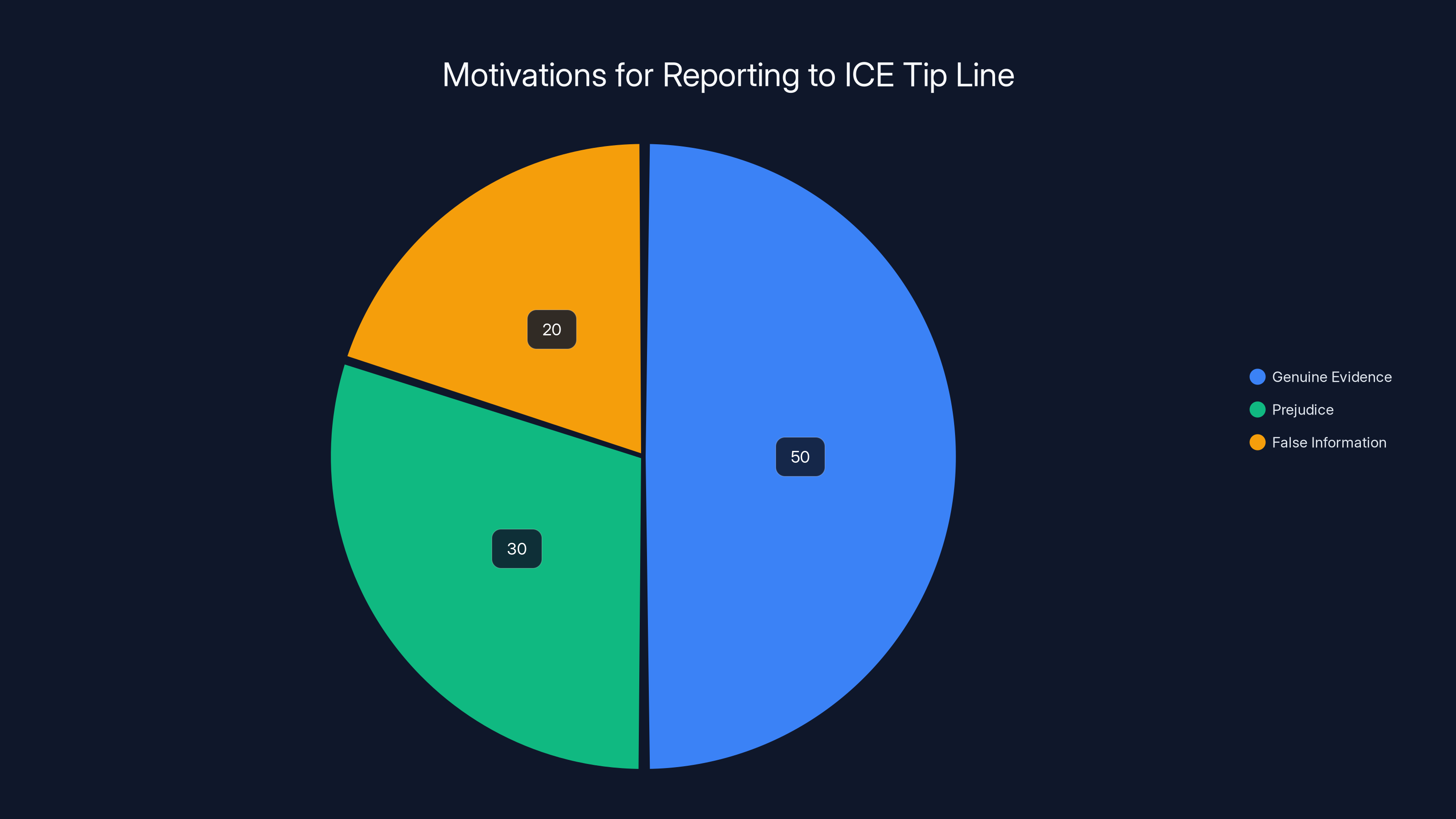

Additionally, the system processes tips from a surveillance tip line. Who calls in tips? People motivated to report something. But what motivates reporting? Sometimes genuine evidence of crime. Sometimes prejudice against specific groups. Sometimes false information to harass someone. The system treats all tips similarly, feeding them into the AI analysis engine.

Mobile Fortify and Facial Recognition Deployment

Separately, ICE has been using Mobile Fortify, a facial recognition application, to scan faces of individuals in the United States. The scope here is disturbing: "countless people" according to the documents, "including many citizens."

Facial recognition technology has documented accuracy problems, particularly for people of color and women. When deployed by law enforcement without these limitations being understood or communicated, innocent people get flagged for investigation based on algorithmic error.
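The scale of deployment makes even small error rates matter, because of the base-rate problem: when almost everyone scanned is not on a watchlist, false matches can outnumber true ones. The numbers below are hypothetical and are not Mobile Fortify's actual error rates, which the reporting does not give.

```python
def watchlist_outcomes(faces_scanned, on_watchlist, hit_rate, false_match_rate):
    """Expected true matches, false matches, and the share of matches that are wrong."""
    true_matches = on_watchlist * hit_rate
    false_matches = (faces_scanned - on_watchlist) * false_match_rate
    wrong_share = false_matches / (true_matches + false_matches)
    return true_matches, false_matches, wrong_share


# Hypothetical inputs: one million scans, 100 people genuinely on the list,
# a 99% chance of flagging each of them, and a 0.1% false match rate.
true_m, false_m, wrong = watchlist_outcomes(1_000_000, 100, 0.99, 0.001)
print(f"true matches:  {true_m:,.0f}")
print(f"false matches: {false_m:,.0f}")
print(f"share of matches that are wrong: {wrong:.0%}")
```

With these assumed figures, about 99 real targets are caught but about 1,000 other people are wrongly flagged, so roughly nine out of ten matches point at the wrong person, even though the tool is "99% accurate" at catching real targets. Error rates that are higher for some demographic groups make that burden fall unevenly as well.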

ICE's deployment appears to involve scanning faces in public or semi-public spaces, comparing them against watchlists or databases, and identifying people who match certain criteria. The system doesn't distinguish between citizens and non-citizens. It doesn't distinguish between people suspected of crimes and people simply going about their lives.

The integration of facial recognition into immigration enforcement creates a panopticon effect: anyone in public could be scanned, compared against databases, and flagged. This has chilling effects on First Amendment freedoms. People become less likely to attend protests, visit certain areas, or associate with specific communities if they know they'll be scanned and their presence recorded.

Commercial Tools for Government Surveillance

Perhaps most telling is the revelation that ICE is considering commercial tools for "ad tech and big data analysis" for law enforcement and surveillance purposes. This is outsourcing of surveillance capability to private companies.

When government agencies buy commercial surveillance tools originally designed for marketing and advertising, they're buying systems designed to manipulate and track behavior. These systems use sophisticated profiling, behavioral prediction, and persuasion techniques. Deployed for law enforcement, these capabilities become intrusive and dangerous.

And there's a commercial incentive structure here: the more government agencies buy surveillance tools, the more companies develop and refine them. The more refined they become, the more valuable they are to governments. It creates a feedback loop incentivizing sophisticated surveillance technology development.

The Legal and Judicial Response

This week, a federal judge delayed a decision on whether the Department of Homeland Security is using armed raids to pressure Minnesota into abandoning its sanctuary policies for immigrants. The fact that this question is even being asked suggests a recognition that ICE enforcement actions have become coercive in ways that raise constitutional concerns.

Minnesota has sanctuary policies that limit cooperation between state and local law enforcement and federal immigration authorities. Instead of working through legal channels, the argument goes, ICE is conducting armed raids specifically designed to pressure the state into abandoning these policies.

This is a fascinating legal question: Can law enforcement actions—even if technically legal individual actions—constitute coercion when deployed with clear intent to pressure policy changes? It's an unusual legal theory, but it reflects recognition that enforcement intensity has become a political tool.

The rapid adoption of new technologies and the industrial scale of cybercrime are among the highest impact challenges for security professionals in 2023. (Estimated data)

The Death of Alex Pretti and Post-Incident Politics

Context matters for understanding the current immigration enforcement climate. A federal immigration officer shot and killed 37-year-old Alex Pretti in Minneapolis last Saturday. Within minutes, before facts were established, Trump administration officials and right-wing influencers mounted a coordinated campaign characterizing Pretti as a "terrorist" and "lunatic."

This rapid narrative deployment—before investigation was complete, before the full context was understood—reveals how immigration enforcement has become politicized. Rather than allowing facts to emerge, political actors immediately weaponize incidents for advantage.

The actual circumstances: why was Pretti encountered? What was he doing? Did he pose a genuine threat? How did the interaction escalate to lethal force? These are investigative questions that take time to answer properly. But in the current environment, facts are secondary to narrative control.

What this reflects is an environment where immigration enforcement has become not just about enforcing laws, but about political dominance and intimidation.

Deepfake Sexual Abuse and "Nudify" Technology

Let's turn to another category of threat that's accelerating in sophistication and accessibility: deepfake sexual abuse material, specifically "nudify" technology.

The Technology and Its Capabilities

"Nudify" tools use neural networks to generate synthetic images—typically by taking clothed photos of people and generating images showing them in various states of undress. The technology has existed for several years, but what's changed is accessibility and sophistication.

Earlier versions required technical expertise and expensive hardware. Modern versions run on consumer computers. Some are available online. All you need is a photo and a few clicks.

The quality has also improved. Early deepfakes were obviously fake—you could see artifacts, distortions, errors. Modern versions are increasingly photorealistic. A casual observer might not immediately recognize them as synthetic. Some versions are good enough to fool automated content moderation systems.

The Scale of Abuse

Research this week found that the vast majority of deepfake sexual abuse material involves real women and girls who never consented to having their images created or shared. The psychological harm is severe: violation of consent, public humiliation, reputation damage, threat to employment, threat to relationships.

The targets are often minors. Teenagers have had explicit deepfakes of themselves generated and shared without consent. Young women have discovered explicit deepfakes circulating among peers or posted to social media.

What makes this different from traditional sexual harassment or abuse is the technological mediation. It's not a human attacker directly threatening someone. It's an automated tool creating fake abuse material that can be shared infinitely. It's hard to control distribution because it can be re-shared, modified, and disseminated through countless channels.

The Challenge of Legal and Practical Response

Legally, response has been limited. Some jurisdictions have criminalized non-consensual deepfake pornography. Others haven't. Even in jurisdictions with laws, enforcement is difficult because the tool creators claim neutrality—the tool isn't designed for abuse, it just has that capability.

Technically, detecting deepfakes is an ongoing arms race. Companies develop detection systems, deepfake creators improve their techniques to evade detection. Each advancement in deepfake quality makes detection harder.

Socially, there's a significant gap between the scale of the problem and public awareness. Many people don't realize that "nudify" tools exist or how easy they are to use. Victims often suffer in silence, embarrassed to report or uncertain who to report to.

The alleged hacker had high skill levels in exploiting iOS, BlackBerry, and Firefox, with BlackBerry being the most challenging due to its security reputation. (Estimated data)

The Bondu AI Stuffed Animal Vulnerability

Sometimes security vulnerabilities have an almost absurd quality to them. A research team discovered that an AI stuffed animal toy made by Bondu had its web console almost entirely unprotected. The console contained approximately 50,000 logs of chat interactions between the toy and children.

Think about what that means: a toy designed for kids, trained to be conversational and engaging, was logging conversations. Those conversations were stored on a web server. That web server was protected so inadequately that anyone with a Gmail account could access it.
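The reported symptom, that any Gmail account could get in, is consistent with a common mistake: treating authentication (proving who you are) as if it were authorization (checking that you're allowed in). Bondu's actual code has not been published, so the sketch below is a generic illustration with a hypothetical staff allowlist, not a reconstruction of their system.

```python
from typing import Optional

# Hypothetical allowlist of staff accounts that may read children's chat logs.
AUTHORIZED_STAFF = {
    "support-lead@toycompany.example",
    "privacy-officer@toycompany.example",
}


def can_view_logs_flawed(signed_in_email: Optional[str]) -> bool:
    # Authentication only: any account that completes a Google sign-in gets in.
    return signed_in_email is not None


def can_view_logs(signed_in_email: Optional[str]) -> bool:
    # Authentication plus authorization: the signed-in account must also be
    # on the explicit list of people allowed to see this data.
    return signed_in_email is not None and signed_in_email.lower() in AUTHORIZED_STAFF


print(can_view_logs_flawed("stranger@gmail.com"))           # True
print(can_view_logs("stranger@gmail.com"))                  # False
print(can_view_logs("Privacy-Officer@toycompany.example"))  # True
```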

What Was Exposed

The logs contained conversations between children and the AI system. Kids were talking to the toy about their lives, their feelings, their families. Some conversations were probably innocent. Others probably revealed sensitive information—family conflicts, insecurities, personal details.

Now, that information was accessible to anyone who knew to look. Anyone could read what children were saying to a toy designed to be trustworthy and confidential.

The Broader IoT Security Problem

This incident is one example of a much larger problem: the Internet of Things (IoT) security crisis. Consumer devices (toys, speakers, cameras, thermostats, appliances) increasingly have network connectivity and cloud storage. Many are developed by small companies with limited security expertise. Security is an afterthought.

The Bondu toy incident is particularly troubling because it involves children. There's an expectation that products marketed to kids will be secure and safe. But technical reality often falls far short of that expectation.

Companies argue that security and features are in tension: adding strong security makes products more expensive and harder to develop. That argument is weaker than it sounds. Good security practices implemented from the beginning, such as checking who is allowed to view stored data, don't significantly increase cost. The problem is that security is treated as optional rather than fundamental.

The Military Assessment of ICE Tactics

An active-duty military officer provided an analysis of ICE enforcement actions in Minneapolis, concluding that ICE is masquerading as a military force while using tactics that would be considered incompetent by military standards.

What's this about? ICE conducts armed raids with tactical gear, vehicles, and deployment patterns that look military-like. But the operational execution often falls short of military competence. Insufficient intelligence, poor planning, excessive force for objectives—these are tactics that real military personnel would recognize as problematic.

The military perspective is valuable because it applies expertise from a domain that does take tactical competence seriously. You don't survive in military combat operations with poor planning and careless execution. The fact that someone with that background is noting that ICE tactics would be dangerous in actual military context suggests a broader competence problem.

Estimated data suggests that while genuine evidence is the primary motivation for reporting, a significant portion of reports may stem from prejudice or false information.

Comparing Threat Landscapes: Then vs. Now

Zooming out, this week's news reveals how dramatically the threat landscape has changed over even the past few years.

Evolution of Exploit Markets

Twenty years ago, if you discovered a vulnerability, you published it and vendors patched it. Sophisticated actors would sometimes keep vulnerabilities secret, but those were mostly nation-states with state-level resources.

Now, there's an open market for exploits. Legitimate companies trade them. Criminal organizations traffic them. The barrier to entry for sophisticated hacking has dramatically lowered. You don't need to discover exploits yourself—you can buy them. The Epstein case, if accurate, reveals this ecosystem operating at least as far back as 2017.

AI Agent Adoption Velocity

When the iPhone launched in 2007, smartphones took years to achieve broad adoption. People were skeptical. Developers had to build the app ecosystem. Security best practices had to emerge.

With AI agents, adoption is happening at internet speed. Millions of users in a week. Security implications aren't being thought through because the adoption is too fast. By the time security practices catch up, critical mistakes have already been made.

Surveillance Scale and Sophistication

A decade ago, facial recognition was still widely seen as an emerging technology. Now it's deployed by law enforcement for mass surveillance. A decade ago, the idea of AI automatically analyzing millions of public tips to flag suspects would have seemed dystopian. Now it's actually happening.

The infrastructure for comprehensive surveillance is being built in real time, piece by piece: not as a coordinated strategy, but as individual agencies adopt whatever tools are available. The aggregate effect is surveillance at a previously unimaginable scale.

International Crime Scale

A generation ago, major crime operations were typically local or national. Today, groups like the Ming crime family operate across Southeast Asia, target victims worldwide, and run industrial-scale infrastructure. The Ming family's revenue, $1.4 billion over eight years (roughly $175 million a year on average), rivals that of legitimate businesses.

Digital infrastructure makes this scale possible. The same internet that makes a business global makes a criminal enterprise global. Victims and perpetrators can be on opposite sides of the world, connected only by digital systems.

What Security Practitioners Should Do

If you're responsible for security in any context—organizational, personal, or informational—what does this week's news mean for your practice?

Evaluate Your AI Tool Adoption

If your organization is considering deploying AI agents or similar tools, have security conversations before adoption. What data will the system access? What credentials will it need? How will you audit what it does? What security incidents could emerge?

Don't adopt tools first and think about security later. That's the path that leads to hundreds of exposed systems.
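One practical answer to "how will you audit what it does?" is to record every action an agent takes, both when it starts and when it finishes. The sketch below is a minimal, hypothetical Python example: the `audited` decorator, the in-memory `AUDIT_LOG`, and the `send_email` tool are illustrative names, not part of Open Claw or any specific agent framework, and a real deployment would write to durable, tamper-resistant storage rather than a list.

```python
from __future__ import annotations

import functools
import json
import time
from typing import Any, Callable

# Hypothetical in-memory sink; in practice, append to durable, tamper-resistant storage.
AUDIT_LOG: list[str] = []


def audited(tool_name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that logs each call to an agent tool before and after it runs."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            entry = {"tool": tool_name, "args": repr(args), "kwargs": repr(kwargs),
                     "ts": time.time(), "status": "started"}
            AUDIT_LOG.append(json.dumps(entry))
            try:
                result = func(*args, **kwargs)
            except Exception as exc:
                # Record the failure, then let the caller see the original error.
                AUDIT_LOG.append(json.dumps({**entry, "status": "error", "error": str(exc)}))
                raise
            AUDIT_LOG.append(json.dumps({**entry, "status": "ok"}))
            return result
        return wrapper
    return decorator


@audited("send_email")
def send_email(to: str, subject: str) -> str:
    """Placeholder tool; a real agent would call a mail API here."""
    return f"queued message to {to}: {subject}"
```

Calling `send_email("alice@example.com", subject="Invoice")` leaves two entries in `AUDIT_LOG`, one marked `started` and one marked `ok`. Logging the start separately means that, if the log is persisted outside the agent's process, an action that crashes mid-call still leaves a `started` entry with no matching outcome.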

Assume Credentials Are Vulnerable

The fact that so many Open Claw users exposed their credentials suggests people aren't thinking seriously about credential management. If you're giving an AI system—or any system—access to your accounts, treat that as equivalent to giving a house key to someone.

Use environment-specific credentials when possible. If a system needs Gmail access, create a Gmail account specifically for that system with limited permissions. Don't use your main Gmail account.
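To make the dedicated-account advice concrete, here is a hypothetical pre-flight check that flags an agent grant which uses a primary account or requests broader permissions than the task needs. The `AgentGrant` type and `review_grant` function are illustrative inventions; the scope strings follow the format Google documents for Gmail OAuth scopes, but check Google's current documentation before relying on them.

```python
from __future__ import annotations

from dataclasses import dataclass

# Scope strings in the format Google documents for Gmail OAuth; verify before use.
GMAIL_READONLY = "https://www.googleapis.com/auth/gmail.readonly"
GMAIL_SEND = "https://www.googleapis.com/auth/gmail.send"
GMAIL_FULL = "https://mail.google.com/"  # full mailbox access


@dataclass(frozen=True)
class AgentGrant:
    """Hypothetical record of the account and permissions an agent requests."""
    account: str
    scopes: frozenset[str]


def review_grant(grant: AgentGrant, allowed: set[str],
                 primary_accounts: set[str]) -> list[str]:
    """Return human-readable problems; an empty list means the grant looks scoped."""
    problems: list[str] = []
    if grant.account in primary_accounts:
        problems.append(f"{grant.account} is a primary account; use a dedicated one")
    # Any scope beyond the allowlist is broader than the task needs.
    for scope in sorted(grant.scopes - allowed):
        problems.append(f"scope not allowed: {scope}")
    return problems
```

An agent that requests full mailbox access on your main account triggers both warnings. The fix is the one described above: a dedicated account, limited to something like `GMAIL_READONLY` if the task only needs to read mail.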

Monitor Your Digital Presence

If there are photos of you online, assume they might be used to generate deepfakes. That's not paranoia; it's pattern recognition based on how common this abuse has become. Monitor for non-consensual explicit images, and use Google's removal request process for such imagery or other specialized services to get them taken down.

Understand What Agencies Can Access

If you travel internationally or live in areas with intensive enforcement activity, understand what surveillance systems you might be subject to. Facial recognition systems, location tracking, tip-line databases—these aren't hypotheticals. They exist. Know your rights and plan accordingly.

The Future of Threat Evolution

Looking forward, several trends from this week's news will likely intensify.

Exploit markets will continue scaling. Because governments openly buy exploits and criminal organizations openly trade them, these markets are likely to become more formalized and efficient.

AI agents will become increasingly capable and increasingly risky. As systems become more useful, adoption velocity will accelerate. Security practices will lag adoption. The gap between capability and safety will remain dangerous.

International crime will continue operating at industrial scale. The revenue potential is too high, the enforcement challenges too great, and the enabling technology too accessible for anything else to happen.

Surveillance infrastructure will continue proliferating, not necessarily as a coordinated plan, but because agencies deploy tools one at a time. The aggregate effect is the creeping normalization of mass surveillance.

FAQ

What is the significance of Jeffrey Epstein having a personal hacker?

The revelation suggests that high-profile criminals have access to sophisticated cyber capabilities, including zero-day exploits for critical platforms like iOS and BlackBerry. If accurate, this indicates that expertise in vulnerability discovery wasn't limited to nation-states in 2017—criminal networks could also acquire advanced hacking tools through exploit markets. This has implications for how we think about cybercriminal infrastructure and the accessibility of sophisticated hacking capabilities to wealthy individuals.

How does Open Claw work and why is it a security concern?

Open Claw is an AI agent that runs on your personal computer, connects to your online accounts like Gmail and Amazon, and automates tasks on your behalf based on conversational instructions. The security risk emerges because users must provide credentials for the system to access these accounts. Many early adopters exposed their systems to the internet without authentication, accidentally creating easy entry points for attackers who could control their accounts, steal data, or execute fraudulent transactions.
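The exposure pattern described here comes down to two missing safeguards: listening only on the local machine, and requiring authentication for anything reachable from elsewhere. The following Python sketch shows both in generic form. It assumes a hypothetical agent control server; the function names are illustrative, and nothing here reflects Open Claw's actual configuration options.

```python
from __future__ import annotations

import hmac
import ipaddress
from typing import Optional


def is_loopback(host: str) -> bool:
    """True only for loopback addresses such as 127.0.0.1 or ::1."""
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Unrecognized hostnames are treated as potentially exposed.
        return False


def check_startup(host: str, token: Optional[str]) -> None:
    """Refuse to start a control server on a non-loopback address without a token."""
    if not is_loopback(host) and not token:
        raise RuntimeError(f"refusing to listen on {host} without an auth token")


def authorized(header: Optional[str], token: str) -> bool:
    """Check an 'Authorization: Bearer <token>' header value in constant time."""
    prefix = "Bearer "
    if not header or not header.startswith(prefix):
        return False
    presented = header[len(prefix):].encode()
    return hmac.compare_digest(presented, token.encode())
```

With this check, binding to `0.0.0.0` without a token fails at startup instead of silently publishing the agent to the network, and `hmac.compare_digest` reduces the risk of leaking the token's contents through response timing.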

What are the implications of the Chinese crime family executions?

The execution of 11 Ming crime family members shows that China treats Southeast Asian scam compounds, which generate billions through fraud and human trafficking, as serious enough to warrant capital punishment. This signals both the scale of transnational cybercrime operations and potential shifts in how governments enforce against organized crime networks. It also highlights how criminal enterprises use forced labor and trafficking as foundational elements of their infrastructure.

How are deepfake sexual abuse images created and distributed?

"Nudify" tools use artificial neural networks to generate synthetic images that appear to show people in undressed states. Modern tools require only a photograph and a few clicks, making them accessible to people without technical expertise. Distribution occurs through social media, encrypted messaging, and peer-to-peer sharing networks. The psychological harm to victims is severe because the images can be shared infinitely, are difficult to remove from the internet, and create lasting reputation damage.

What is Palantir's role in ICE surveillance operations?

Palantir is a data integration company whose AI systems ICE has deployed to automatically summarize and categorize tips received through its tip line. Rather than relying solely on manual review by agents, the system uses machine learning to extract information and prioritize cases. This creates efficiency gains but also risks algorithmic bias: a system that learns patterns from historical law enforcement data can inherit the prejudices embedded in that data.

Why is facial recognition deployment by ICE controversial?

Facial recognition technology has documented accuracy issues, particularly affecting people of color and women. When deployed for immigration enforcement without transparency or adequate accuracy standards, innocent people—including U.S. citizens—can be flagged for investigation based on algorithmic error. The lack of distinction between citizens and non-citizens creates mass surveillance effects that chill freedoms of movement and association.
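Why do small error rates still flag innocent people? At surveillance scale, even a low false match rate produces many wrong hits, and when genuine targets are rare, wrong hits can vastly outnumber correct ones. The numbers in this Python sketch are hypothetical and chosen only to illustrate the base-rate arithmetic; they are not measured rates for any ICE or vendor system, and `flag_breakdown` is an illustrative helper.

```python
def flag_breakdown(scans: int, true_targets: int,
                   true_match_rate: float, false_match_rate: float):
    """Return (correct flags, wrong flags, share of flags that are wrong)."""
    innocents = scans - true_targets
    true_flags = true_targets * true_match_rate      # real targets correctly matched
    false_flags = innocents * false_match_rate       # innocent people wrongly matched
    return true_flags, false_flags, false_flags / (true_flags + false_flags)


if __name__ == "__main__":
    # Hypothetical scenario: 1,000,000 faces scanned, 100 genuine targets,
    # 99% chance of matching a real target, 0.1% chance of a false match.
    correct, wrong, share = flag_breakdown(1_000_000, 100, 0.99, 0.001)
    print(f"{correct:.0f} correct flags, {wrong:.0f} wrong flags "
          f"({share:.0%} of all flags are wrong)")
```

Under these assumed numbers, a system that is right 99.9% of the time on innocent faces still produces roughly 1,000 wrong flags against about 99 correct ones, so most people flagged are not the person being sought.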

What should individuals do to protect themselves from emerging threats?

Individuals should carefully evaluate AI systems and applications before granting them access to their accounts, use dedicated accounts and credentials rather than their main accounts, monitor their online photos and presence for potential deepfake abuse, understand what surveillance systems operate in their jurisdiction, and stay informed about the data practices of the tools and services they use. For organizations, security conversations should precede tool adoption rather than follow it.

How do scam compounds operate in Southeast Asia?

Scam compounds operate as crime factories with organized departments handling different fraud types: romance fraud, investment fraud, cryptocurrency schemes. Workers—often trafficked and coerced—manage fake online identities, build relationships with victims, and execute social engineering attacks. The operations employ hundreds of people, have formal hierarchies, and generate extraordinary revenue. They operate in countries with weak governance where corruption enables their activities.

What makes the Bondu toy security vulnerability particularly problematic?

The unprotected exposure of 50,000 conversations between children and an AI toy violates the expectation that toys marketed to children are secure and trustworthy. The incident reveals broader IoT security problems where small companies developing consumer devices prioritize features over security, resulting in inadequately protected systems that store sensitive personal information. This is particularly troubling when children's communications and personal details are exposed.

What is the long-term trajectory of cybercriminal capabilities?

Exploit markets are formalizing and scaling, making sophisticated hacking capabilities increasingly accessible. International crime operations are achieving industrial scale through cyber-enabled infrastructure. AI agent technology is advancing faster than security practices, creating widening gaps between capability and safety. Surveillance infrastructure is proliferating through individual tool deployments that collectively create mass monitoring capabilities. These trends suggest threats will accelerate in sophistication, scale, and accessibility.

Key Takeaways for Security Professionals

This week's security news reveals several critical patterns:

- Exploit markets are real and scaled: The alleged Epstein hacker story fits what security researchers have long known. There's a formal market for zero-day exploits, and sophisticated actors, including nations and criminal organizations, participate actively.

- Adoption velocity exceeds security readiness: Open Claw's growth to 2+ million users in one week shows that AI tool adoption outpaces security practices, leaving users vulnerable to self-inflicted breaches.

- Transnational cybercrime operates at industrial scale: The Ming family's $1.4 billion in fraud revenue shows that cyber-enabled crime has reached business-scale profitability, funding sophisticated operations across borders.

- Surveillance infrastructure is normalizing: Individual agency adoptions of facial recognition, AI analysis, and data aggregation tools are collectively creating comprehensive surveillance capabilities that affect millions.

- Technology-enabled abuse is accelerating: Deepfake sexual abuse material, compromised IoT devices, and similar threats show that abuse tools are becoming more accessible and more sophisticated at the same time.

Conclusion: Living in the New Threat Reality

The security landscape has fundamentally shifted. We're no longer in a world where cybercrime and digital threats are niche concerns managed by specialized professionals. We're in a world where major crime syndicates operate globally using cyber-infrastructure, where AI systems with millions of users are deployed without adequate security thought, where governments conduct mass surveillance through commercial tools, and where sexual abuse material can be synthetically generated and distributed at scale.

These aren't distant future scenarios. They're happening this week.

What strikes me most about this week's news is how normalized these threats are becoming. A high-profile criminal employing a skilled hacker: extraordinary on its face, yet it barely registers. An AI tool with millions of users and serious security problems: a viral moment, quickly accepted and adopted. A crime family running industrial-scale fraud for eight years: reported, then forgotten. Government surveillance at scale: a policy debate rather than a shocking revelation.

Normalization is the real danger. Once we accept that these threats exist, we stop being shocked. Shock is often what drives security improvements.

If you work in security, this week should crystallize your priorities. Assume that sophisticated exploit capabilities exist and are being actively traded. Assume that users will adopt powerful tools without thinking through security implications. Assume that international crime operations are increasingly capable. Assume that surveillance infrastructure will continue expanding.

Then build defenses accordingly.

For everyone else, the takeaway is simpler: security matters more than ever. The threats are real, they're scaling, and they affect normal people going about their lives. Think about what credentials you're giving to AI systems. Think about what photos of you are circulating online. Think about what surveillance systems you might encounter. Think about what information you're sharing.

Because the threats we discussed this week? They're only accelerating.
