Meta Closes 550,000 Accounts: Australia's Social Media Ban Impact and What It Means for Big Tech

In late 2024, Australia did something no other democracy had ever attempted. The country passed legislation banning social media for anyone under 16. It wasn't a polite suggestion or a gentle nudge toward better parenting. It was law, enforced with teeth: companies that don't comply face fines of up to AUD 49.5 million (about $33 million USD).

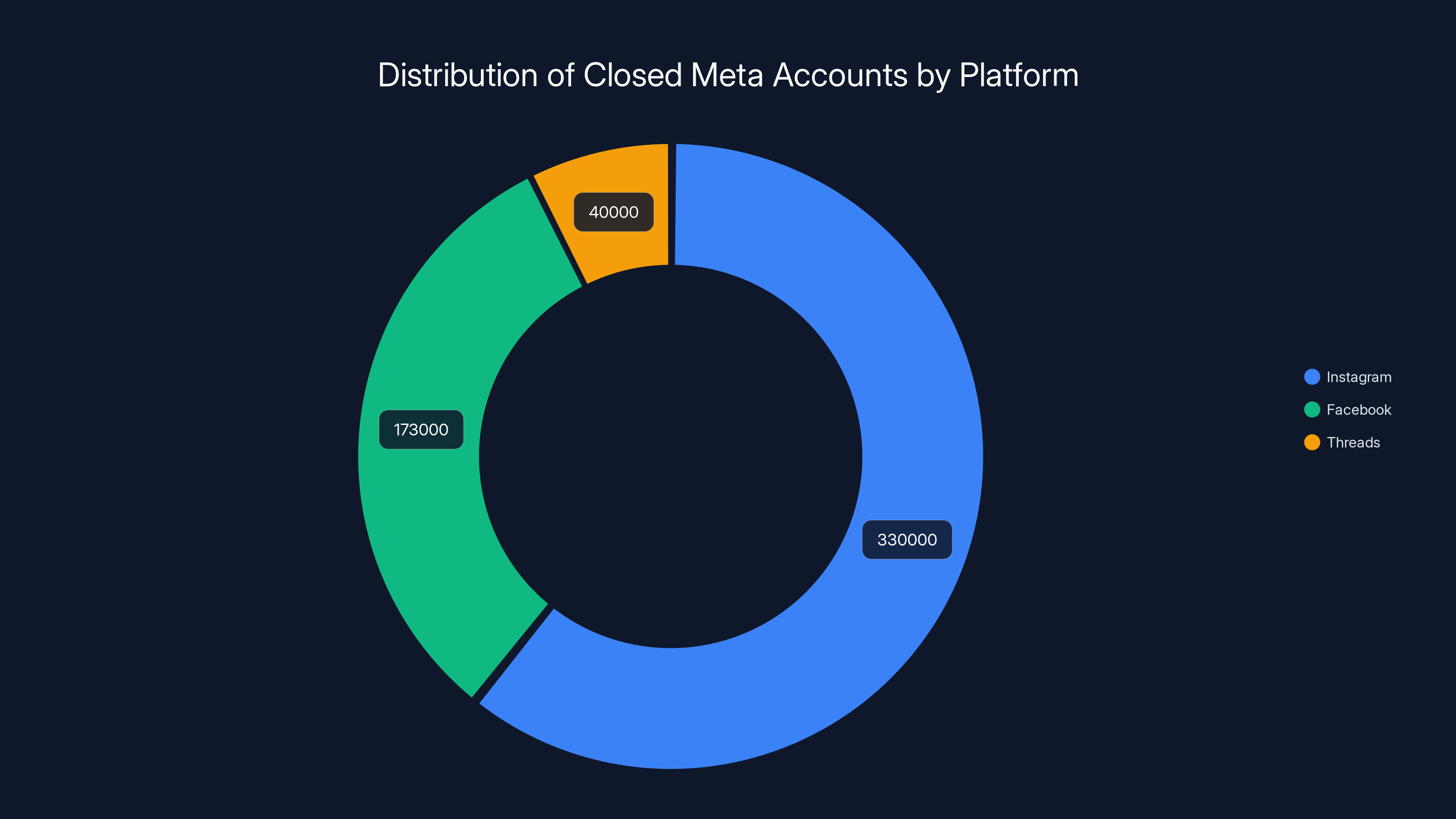

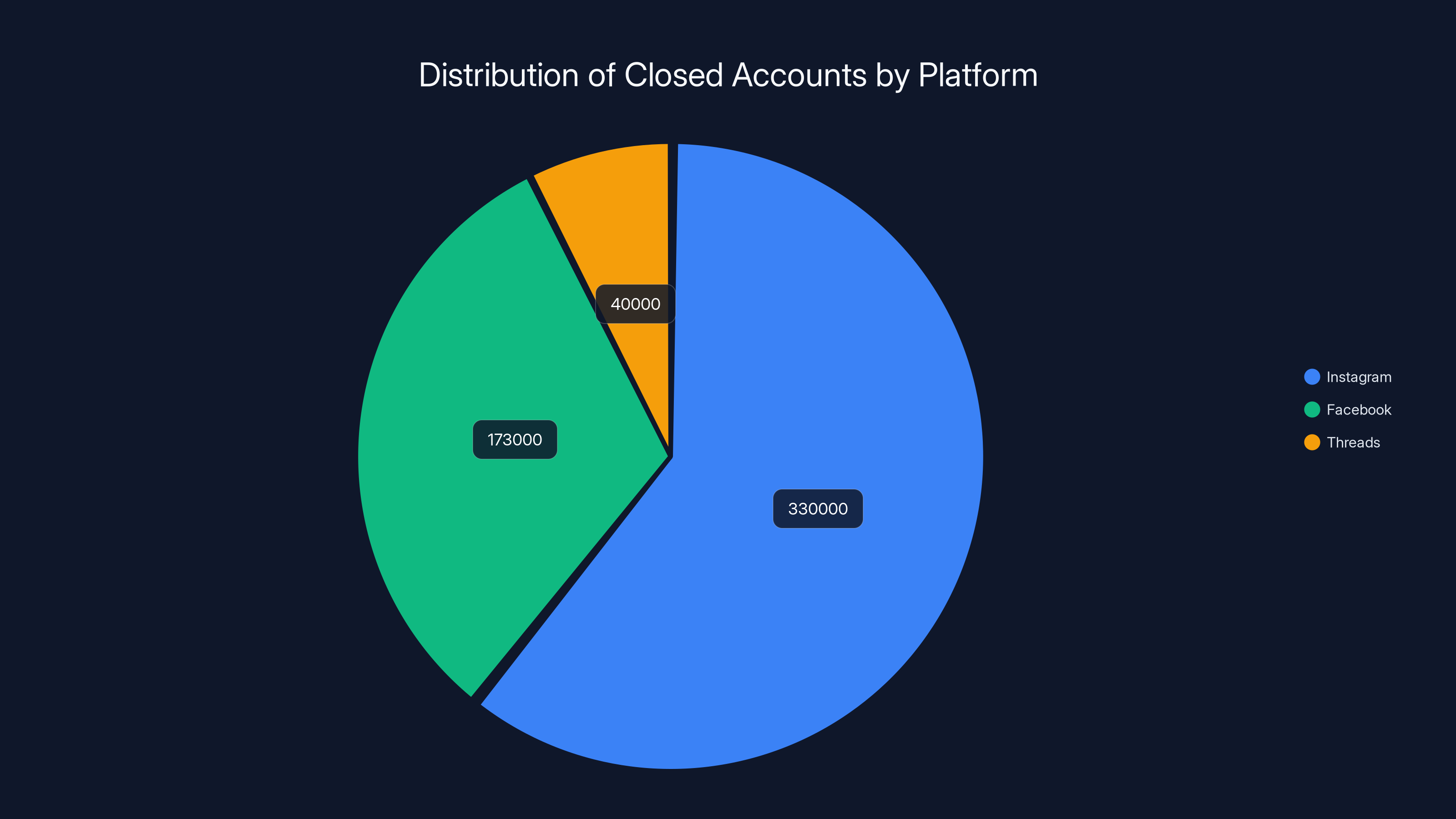

Within weeks of the ban taking effect on December 10, 2025, the real-world impact started showing up in corporate disclosures. Meta announced it had closed nearly 550,000 accounts deemed to belong to minors. That's not a typo. Half a million accounts. Breaking it down: 330,000 from Instagram, 173,000 from Facebook, and 40,000 from Threads.

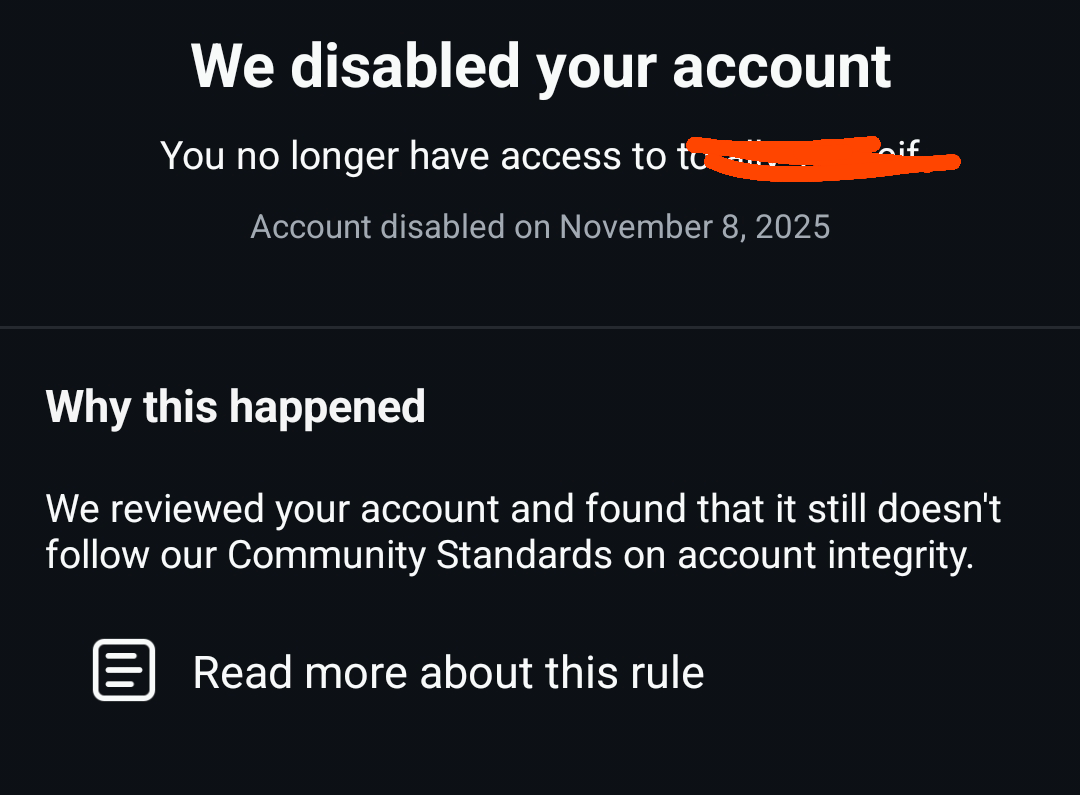

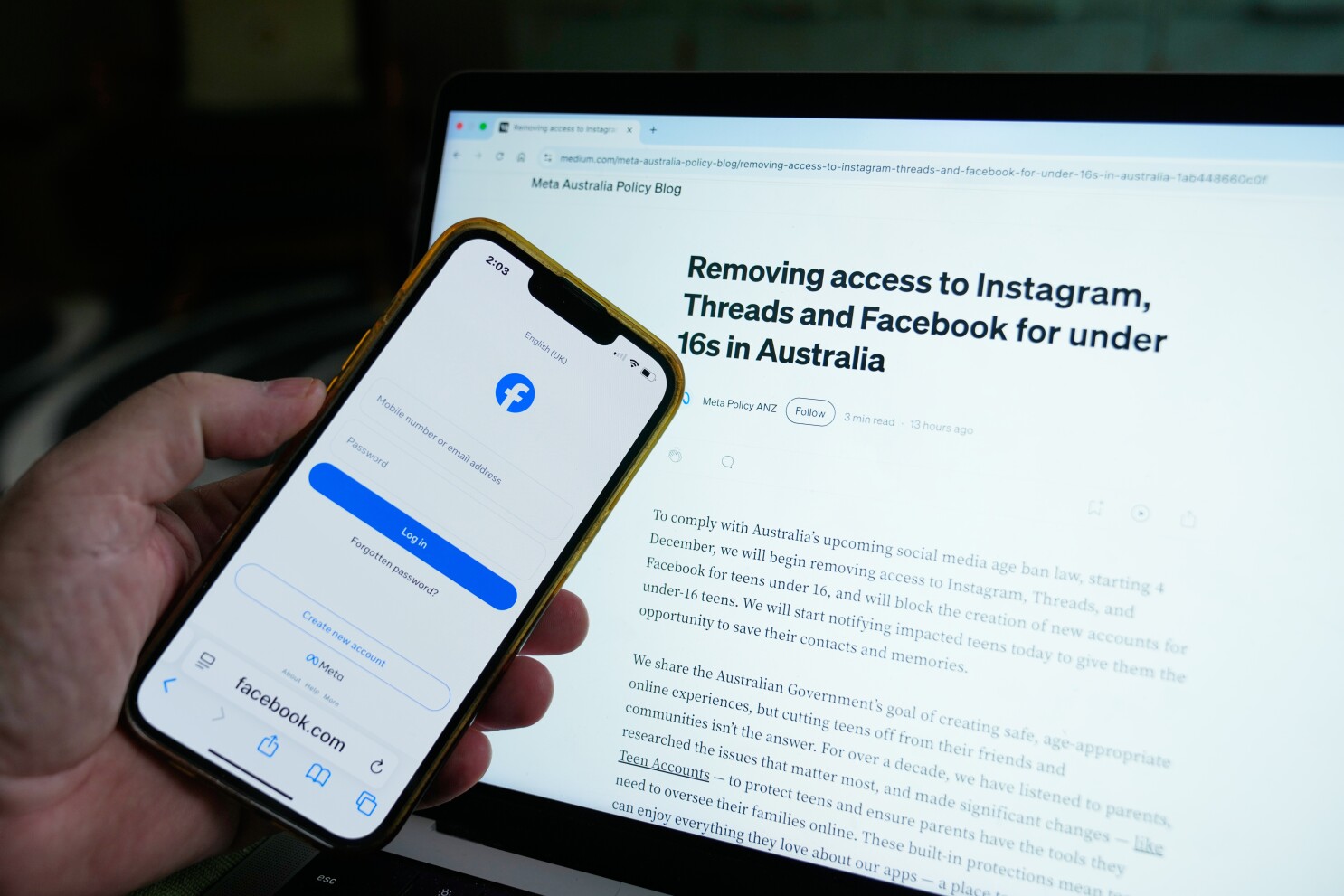

But here's where it gets interesting. Meta didn't just accept this quietly. The company posted grievances on Medium, basically saying, "Look, we tried, but this is messy, and we're concerned about privacy." The company acknowledged the challenge of determining age online without industry standards, hinting that its enforcement will always be imperfect.

This moment matters more than it initially seems. Australia's ban represents a fundamental shift in how democracies regulate technology. It's not about improving content moderation or fighting misinformation. It's governments saying: social media platforms are too risky for developing brains, full stop. No exceptions. No parental consent workarounds.

For the tech industry, this creates a cascading problem. If Australia can do it, what's stopping the European Union? Canada? The United Kingdom? Suddenly, Meta, TikTok, Snapchat, and X aren't just dealing with one market's rules. They're facing the possibility of fragmented regulations across dozens of countries, each with slightly different enforcement mechanisms and fines.

The real story here isn't about 550,000 accounts being deleted. It's about the first domino falling in what could become a global trend of age-gated social media. And it's forcing platforms to make impossible choices: comply with increasingly strict regulations or risk billions in fines.

Let's dig into what actually happened, why it matters, and what comes next.

TL;DR

- Meta closed 550,000 accounts in Australia following the country's first-of-its-kind under-16 social media ban that took effect December 10, 2025

- The ban affects 10 major platforms including Facebook, Instagram, TikTok, Snapchat, X, Reddit, and Threads, with fines up to $33 million USD for non-compliance

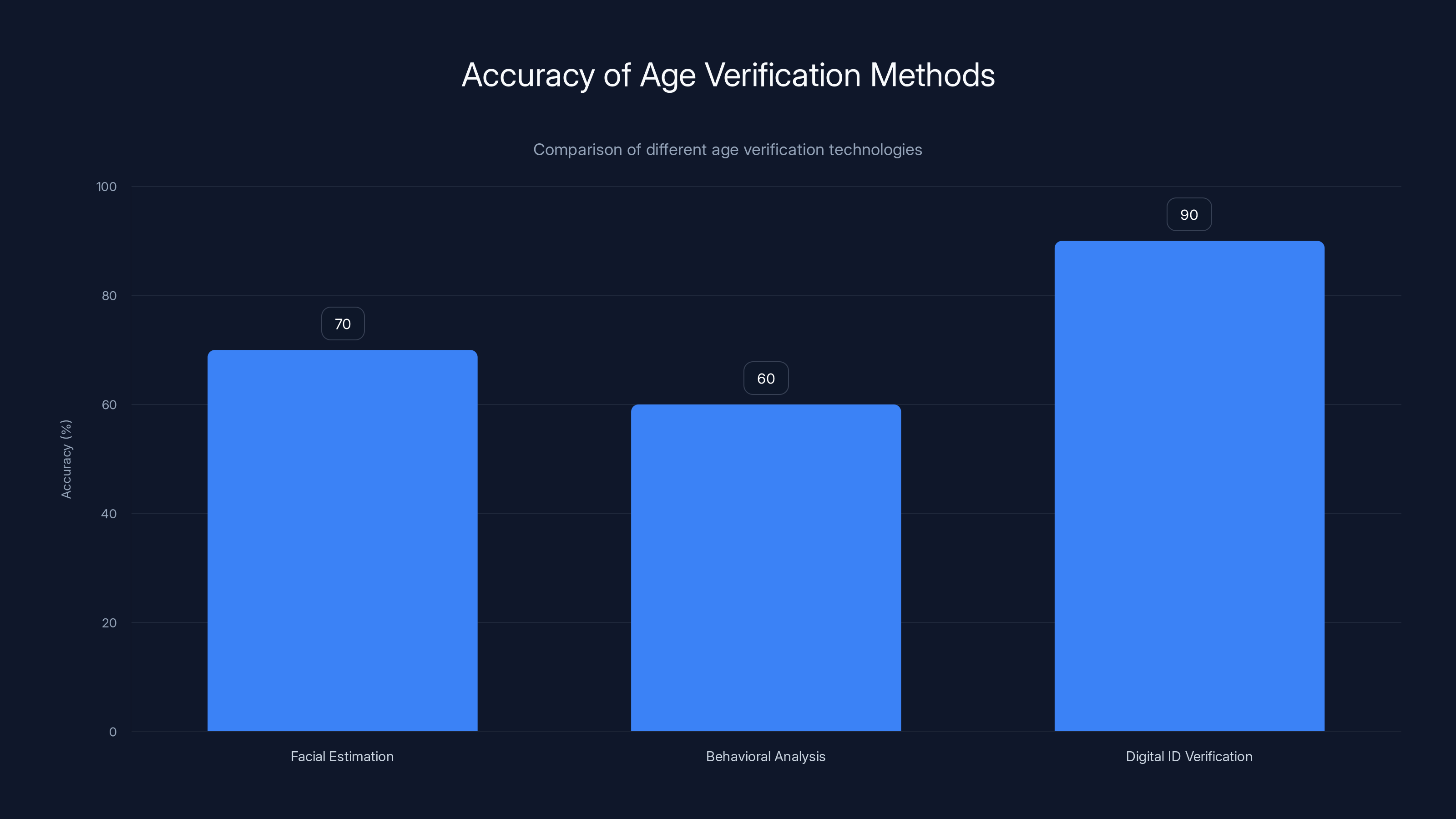

- Age verification is messy, with platforms using activity inference, facial age estimation, selfies, and other unreliable methods to identify minors

- Other platforms are resisting: Reddit sued the Australian government claiming it's not a social media site; Meta warned the ban drives teens to less regulated parts of the internet

- Global implications are significant: Australia's ban sets a precedent that could spread to the EU, UK, Canada, and other democracies, fundamentally reshaping social media regulation worldwide

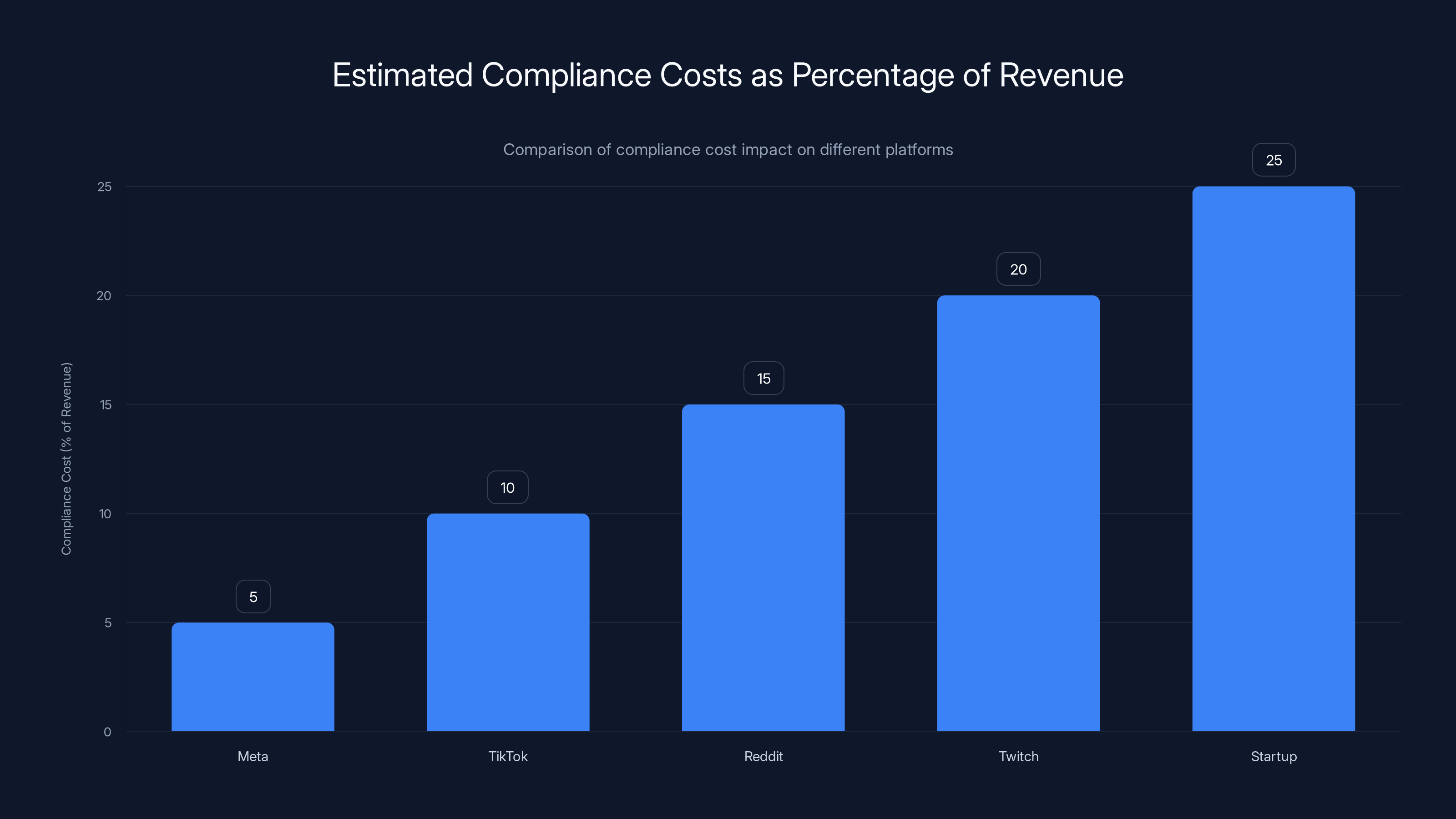

Estimated data shows that smaller platforms like Reddit, Twitch, and startups face higher compliance costs as a percentage of revenue, potentially impacting their viability.

Understanding Australia's Historic Social Media Ban

When Prime Minister Anthony Albanese announced Australia's social media ban in September 2024, he framed it as child protection. As The New York Times noted, the rhetoric was straightforward: social media harms young people's mental health, disrupts sleep, fuels anxiety, and creates dependency, so minors simply shouldn't have access.

The legislation passed with backing from both major parties: Labor and the Coalition voted yes, while the Greens opposed it. The public largely supported it too, with polls showing roughly 70% approval. No one wanted to be seen as defending unrestricted teen access to platforms designed to be addictive.

But the law itself is unusually blunt. It doesn't ask platforms to improve age verification or add safeguards. It requires them to take reasonable steps to stop anyone under 16 from holding an account, with no exception for parental consent. The responsibility falls entirely on the platforms, not on parents or schools or teens themselves.

The minimum age requirement kicked in on December 10, 2025. Platforms had to comply from that date or face enforcement. The eSafety Commissioner became the watchdog, with power to investigate and seek fines, as The Guardian highlighted.

What made this law globally significant was timing and precedent. No other major democracy had attempted an outright ban on social media for minors. The EU has the Digital Services Act, which imposed stricter rules on platforms but didn't ban services. The U.S. has Section 230, which shields platforms from liability for content their users post. Australia just said no.

The 10 platforms specifically targeted include the obvious ones (Facebook, Instagram, TikTok, Snapchat, and X) but also less obvious ones like Threads, Reddit, Twitch, YouTube, and the streaming site Kick. Each platform interprets the law differently based on what it considers "social media" versus other categories.

The fine structure is aggressive: up to AUD 49.5 million (about $33 million USD) for non-compliance.

Meta closed 550,000 accounts to comply with Australia's social media ban, with Instagram having the highest closures at 330,000 accounts.

Meta's Response: 550,000 Accounts Removed

Meta moved quickly. Within weeks of the ban's implementation, the company published a Medium post announcing account closures and outlining its compliance strategy. The numbers were striking: 330,000 Instagram accounts, 173,000 Facebook accounts, and 40,000 Threads accounts.

But Meta also signaled frustration. The company stated: "Ongoing compliance with the law will be a multi-layered process that we will continue to refine, though our concerns about determining age online without an industry standard remain."

Translation: We're complying, but it's imperfect and we're not happy about it.

Meta's approach to age verification involves multiple detection methods working in combination. First, there's behavioral analysis—looking at activity patterns, signup information, and engagement behavior to infer likely age. If someone's profile says they went to high school in 2019, they're probably not 12.

Second, there's digital ID verification, which some users can opt into. This is voluntary and uses technology partners who verify government-issued ID.

Third, there's facial age estimation—AI models that attempt to estimate age from profile photos or selfies. This is controversial and has accuracy issues, but it's part of the toolkit.
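To make the layering concrete, here is a minimal Python sketch of how the three signals described above might be combined into a single decision. Everything in it is hypothetical: the field names, the rule that a verified ID overrides every estimate, and the "review" outcome are illustrative assumptions, not Meta's actual system, which the company has not published.

```python
from dataclasses import dataclass
from typing import Optional

MIN_AGE = 16  # Australia's minimum account age under the ban


@dataclass
class AgeSignals:
    declared_age: int                        # age entered at signup
    inferred_age: Optional[float] = None     # hypothetical behavioral-model estimate
    facial_estimate: Optional[float] = None  # hypothetical facial age estimate
    id_verified_age: Optional[int] = None    # age confirmed by an opt-in ID check


def assess(signals: AgeSignals) -> str:
    """Return 'allow', 'review', or 'remove' for one account (illustrative rules only)."""
    # A verified ID, when present, overrides every estimate.
    if signals.id_verified_age is not None:
        return "allow" if signals.id_verified_age >= MIN_AGE else "remove"
    # A self-declared age under 16 needs no further evidence.
    if signals.declared_age < MIN_AGE:
        return "remove"
    # Any available estimate below the line sends the account to review,
    # where the user could be asked to verify their age.
    estimates = [e for e in (signals.inferred_age, signals.facial_estimate) if e is not None]
    if any(e < MIN_AGE for e in estimates):
        return "review"
    return "allow"


# Usage: a user who claims to be 19 but whose activity looks like a 14-year-old's.
print(assess(AgeSignals(declared_age=19, inferred_age=14.0)))  # review
```

In this sketch, estimates alone never remove an account; they only trigger review. That is one way a platform could limit the damage from false positives, at the cost of letting more accounts stay open while they are checked.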

The reality is that none of these methods are foolproof. Activity inference can be spoofed. Teens know how to lie on signup forms. Facial age estimation has documented bias issues and accuracy rates that vary widely depending on ethnicity and presentation.

Meta knows this. Which is why the company emphasized in its compliance statement that this will be an ongoing process requiring refinement. They're essentially saying, "We'll catch a lot of underage users, but definitely not all."

The 550,000 figure represents accounts Meta believed were operated by minors. But that's 550,000 identified and removed. How many slipped through? Meta hasn't said, and the number could be large. A teenager with basic deception skills can easily maintain an account: claim an older age, borrow a parent's ID for verification, or mimic older friends' activity patterns.

Meta's compliance strategy also includes user reporting and appeals. If your account gets flagged, you can provide age verification to fight the removal. This creates a workflow where Meta isn't just removing accounts unilaterally—it's asking teens to prove their age to keep access.

The Technology of Age Verification: Promise and Problems

Age verification seems straightforward in theory. Confirm someone's birth date. Check government ID. Problem solved. In practice, it's complicated by privacy, technology limitations, and the fundamental challenge that age verification was never designed into social media platforms.

Facial age estimation is a particularly contentious method. The technology uses machine learning models trained on large sets of labeled face images to predict age from visual features. Studies show these systems can be reasonably accurate on average, but accuracy drops for younger faces and varies across ethnic groups.

One major problem: fatigue, lighting, and camera angle all affect perceived age. A tired teenager in harsh lighting might appear older. Someone with youthful features in flattering light might appear younger. The margin of error creates false positives and false negatives.
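One way to cope with that margin of error is a buffer around the threshold: only estimates comfortably above or below the line are decided automatically, and everything in between falls back to another check. The sketch below assumes a three-year buffer around age 16; the function name and buffer size are hypothetical, and real vendors would tune such values to their own measured error rates.

```python
def facial_check(estimated_age: float, threshold: int = 16, buffer: float = 3.0) -> str:
    """Classify one facial age estimate.

    Estimates within `buffer` years of the threshold are too close to call,
    so they go to a fallback check instead of an automatic decision.
    """
    if estimated_age >= threshold + buffer:
        return "pass"
    if estimated_age < threshold - buffer:
        return "fail"
    return "fallback"


# A 15-year-old in harsh light, estimated at 20, still passes (a false negative).
print(facial_check(20.0))  # pass
# A 22-year-old with youthful features, estimated at 14, goes to fallback.
print(facial_check(14.0))  # fallback
```

A wider buffer catches more mistakes but pushes more legitimate users into the slower fallback path, so the buffer size is itself a trade-off between friction and accuracy.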

Behavioral analysis is more sophisticated but equally imperfect. Platforms analyze things like:

- Profile information (school names, graduation years, family relationships)

- Content consumption patterns (what types of videos they watch, posts they engage with)

- Interaction styles (language patterns, emoji usage, posting frequency)

- Device and connection patterns (location data, device age, OS version)

- Account creation details (signup IP, payment information, phone number)

The theory is that a 13-year-old's digital behavior is meaningfully different from a 23-year-old's. And broadly, that's true. But it's not reliable enough for binary enforcement. A 15-year-old who watches educational videos and uses sophisticated language might score as an adult. A 35-year-old gamer who posts frequently might score as a teen.
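A toy version of this kind of scoring shows why. The sketch below adds up hypothetical signal weights and squashes the total through a logistic function into a score between 0 and 1; the signal names, weights, and bias are invented for illustration and do not come from any platform.

```python
import math

# Hypothetical weights: positive values push toward "likely under 16".
WEIGHTS = {
    "lists_junior_school": 2.0,      # profile mentions a school for younger students
    "follows_mostly_teens": 1.5,     # follow graph dominated by teen accounts
    "teen_slang_and_emoji": 1.0,     # interaction-style signal
    "formal_language": -1.0,         # reads as adult
    "payment_method_on_file": -2.0,  # a card on file suggests an adult
}
BIAS = -1.0  # baseline tilt toward "adult" before any signal fires


def minor_score(signals: set[str]) -> float:
    """Logistic score between 0 and 1; higher means more likely under 16."""
    z = BIAS + sum(WEIGHTS[name] for name in signals if name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


# A 15-year-old who writes formally and uses a parent's card scores as an adult...
print(round(minor_score({"formal_language", "payment_method_on_file"}), 3))
# ...while a 35-year-old who follows teen creators and posts in slang scores as a teen.
print(round(minor_score({"follows_mostly_teens", "teen_slang_and_emoji"}), 3))
```

Both kinds of misclassification described above fall out of the same arithmetic, and any cutoff chosen on this score trades one error for the other.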

Digital ID verification is the most reliable method but has major privacy implications. It requires users to upload government identification—driver's licenses, passports, national ID cards. This data is extraordinarily sensitive. Who stores it? How's it encrypted? What's the breach risk? What happens if an identity theft service gets access to millions of government IDs?

Meta partners with third-party verification companies like Veriff and Jumio to handle digital ID. These companies specialize in identity verification and presumably have better security than Meta would. But it still creates another vector for data exposure.
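One way to shrink that exposure is data minimization: check the ID once, then keep only the yes/no answer. The sketch below assumes the verification step has already read a birth date from the document; the `AgeAttestation` record and the function names are hypothetical and do not describe how Veriff, Jumio, or Meta actually store results.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AgeAttestation:
    """The only record kept after an ID check: no name, photo, or birth date."""
    account_id: str
    is_16_or_older: bool
    checked_on: date


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed, minus one if this year's birthday hasn't arrived yet."""
    before_birthday = (today.month, today.day) < (birth_date.month, birth_date.day)
    return today.year - birth_date.year - int(before_birthday)


def attest_from_id(account_id: str, birth_date: date, today: date) -> AgeAttestation:
    # The birth date read from the ID is used here and then discarded;
    # only the yes/no outcome leaves this function.
    return AgeAttestation(account_id, age_on(birth_date, today) >= 16, today)


record = attest_from_id("acct-123", date(2010, 6, 15), date(2026, 6, 14))
print(record)
```

A breach of a store of such records would reveal which accounts passed an age check, but not the identity documents behind them.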

There's also the socioeconomic dimension. Digital ID verification requires government-issued identification. What about homeless youth? Undocumented immigrants? Kids from families without bureaucratic access to IDs? The ban potentially affects these populations differently.

This is why Meta's statement about lacking "industry standards" matters. Without a shared standard, each platform picks its own verification methods with different accuracy rates, so the user experience fragments and security becomes a patchwork.

Digital ID verification is the most accurate method with 90% accuracy, but it raises significant privacy concerns. Facial estimation and behavioral analysis are less accurate, with 70% and 60% accuracy respectively. Estimated data.

Other Platforms' Responses: Resistance and Workarounds

Meta might be complying, but other platforms are fighting back more aggressively.

Reddit filed a formal legal challenge to the ban, arguing that Reddit isn't actually a social media platform but a discussion forum or community site. The distinction might seem semantic, but Reddit's argument has substance: social media implies algorithmic feeds tailored to individual users and built around personal connections, while Reddit is organized into topic-based communities with volunteer moderation.

It's a clever legal argument, and it might work in court. But it also sidesteps the deeper question: even if Reddit isn't technically "social media," is it appropriate for under-16s? The platform hosts explicit content, conspiracy theories, and adult communities alongside educational spaces.

Reddit also raised privacy concerns about the ban's enforcement mechanism. The company warned that age verification methods required by the law could create "serious privacy and political expression issues." If users have to prove their age to use the platform, it creates linkage between identity and online activity. That's dangerous for political dissidents, LGBTQ+ youth, and others who need anonymity online.

TikTok has taken a quieter approach, implementing age verification but also lobbying behind the scenes. The company is the most directly affected since it's heavily used by teenagers. TikTok's parent company ByteDance is evaluating whether to accept the ban or fight it through legal channels.

Snapchat has implemented strict age gates, asking users to verify their age through a variety of methods. The company positioned itself as relatively compliant, though like Meta, imperfect compliance is inevitable.

X (formerly Twitter) has been less transparent about its approach. The platform has fewer teen users than Meta or TikTok, so compliance might be simpler. But X also has looser content moderation overall, which could make age verification enforcement harder.

YouTube is interesting because Google has framed its approach as compliant while leaning on the distinction between YouTube, which it positions as a general video-sharing platform, and YouTube Kids, which is built for children under 13. That distinction might shield YouTube Kids from the strictest enforcement even though YouTube itself is covered.

The broader pattern: platforms with primarily adult user bases or alternative architectures are fighting or minimizing compliance. Platforms like Meta and Snapchat, heavily used by teens, are implementing aggressive age gates. This creates an incentive for teenagers to migrate to less compliant platforms, exactly what Meta warned about.

Meta's Official Concerns: A Valid Argument?

In its Medium post about the ban, Meta raised several substantive criticisms. Understanding these is important because they're not just corporate whining—they touch on genuine policy trade-offs.

Isolation from Support Communities: Meta argued that removing teens from social media isolates them from valuable online communities. A teen struggling with depression might find support in mental health communities. An LGBTQ+ kid in a conservative area might find acceptance in online communities. A teen with niche interests, such as obscure games or rare hobbies, can connect with peers worldwide.

Remove social media access and you remove these lifelines. That's not entirely theoretical. Studies show online communities provide genuine mental health support for isolated teens. The ban doesn't distinguish between supportive communities and harmful ones.

Driving Teens to Unregulated Spaces: Meta pointed out that the ban might drive teens to less regulated parts of the internet. If Instagram is blocked, they'll find Discord servers, Telegram groups, WhatsApp communities, Reddit threads, and private forums. These spaces have even less moderation and oversight than mainstream platforms.

In some ways, this argument is self-serving. Meta is saying, "If you ban us, teens will go somewhere worse." But there's truth to it. Unregulated spaces are genuinely riskier for exploitation, misinformation, and harmful content.

Privacy and Verification Concerns: Meta noted the difficulty of determining age online without creating privacy risks. The company is correct that robust age verification requires either invasive surveillance (behavioral tracking) or sensitive data collection (government IDs). The ban forces platforms to choose between privacy and compliance.

Lack of Parental Authority: Meta pointed out that the ban removes parental choice. Some parents might want their mature 15-year-old to have Instagram access. The ban says no—it's a hard floor, not a guideline. Parents lose agency.

These aren't slam-dunk arguments against the ban. But they're not frivolous either. They highlight genuine policy trade-offs that Australian legislators might not have fully considered.

However, Meta's credibility on teen safety is weak. The company has repeatedly downplayed or suppressed research showing harms from its platforms. Internal documents (the Facebook Papers, revealed in 2021) showed Meta knew Instagram was harming teen body image and mental health. The company deprioritized these findings.

So when Meta warns about the ban's downsides, readers reasonably discount it. Yes, there might be trade-offs, but Meta has shown it prioritizes engagement metrics over teen safety. Its concerns deserve consideration, not trust.

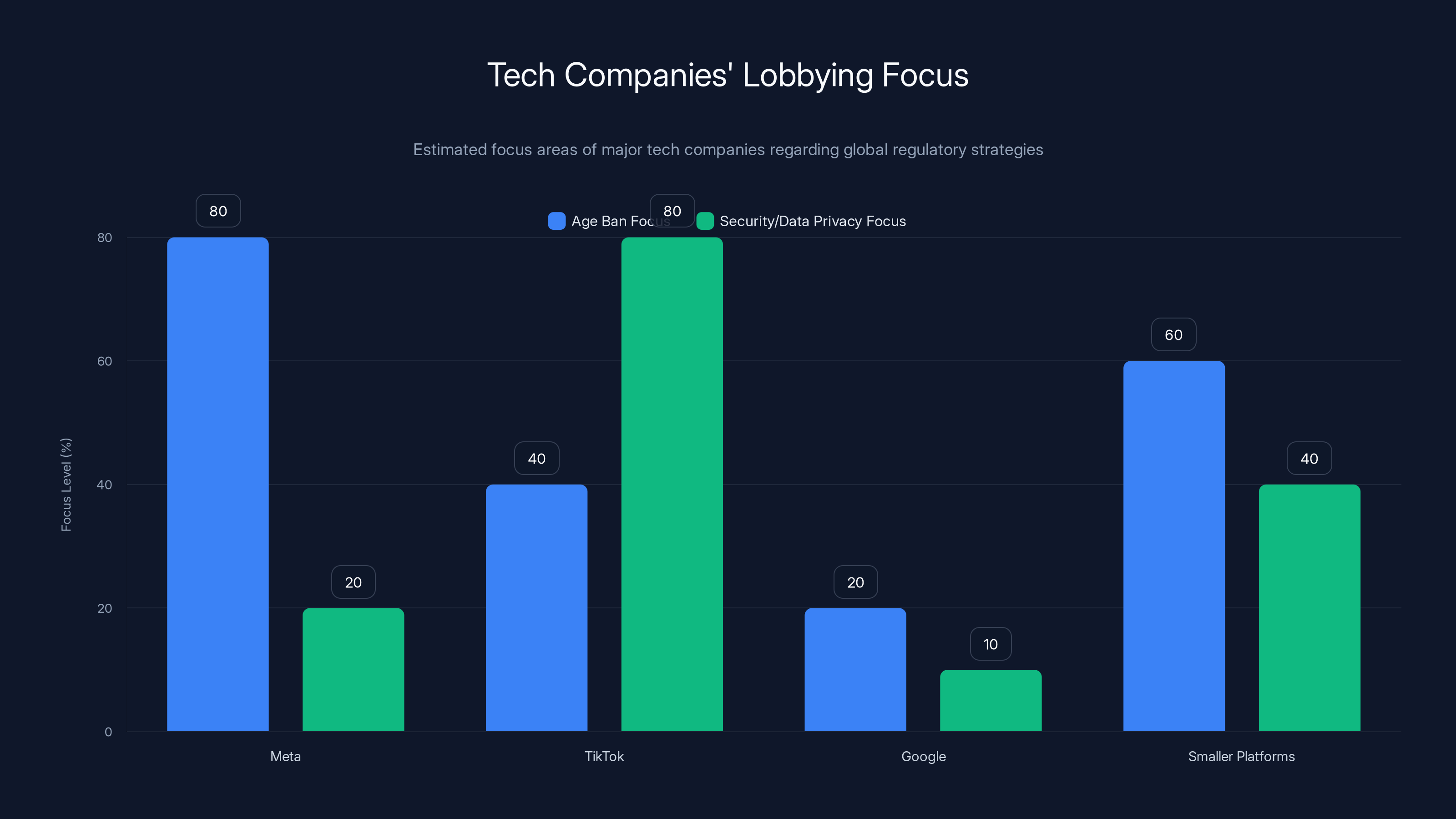

Meta is highly focused on lobbying against age bans, while TikTok is more concerned with security/data privacy issues. Google and smaller platforms have varied focuses. (Estimated data)

Global Implications: Is This the Beginning of a Regulatory Wave?

Australia's ban matters because it signals a possibility that other democracies might pursue similar legislation. Several countries are already exploring comparable approaches.

The European Union's Digital Services Act (DSA) doesn't ban social media for minors, but it imposes stricter requirements: platforms must ensure a high level of privacy, safety, and security for minors, may not show minors ads based on profiling, and face enhanced content moderation obligations. The EU is more regulatory-minded than Australia. If the Australian ban works without serious side effects, the EU might pursue something stronger.

The United Kingdom has expressed interest in age-appropriate design standards. The Online Safety Act 2023 gives the regulator, Ofcom, power to require age verification for certain content categories. A teen social media ban might be on the table for future legislation.

Canada's online harms bill is under development. There's appetite for stronger teen protections, though an outright ban seems less likely than Australia's.

The United States is unlikely to pass a federal social media ban due to First Amendment protections. But individual states (New York, Texas, California) have proposed age-verification laws for social media or content platforms. These are more narrow than Australia's ban but move in the same direction.

What makes Australia's ban exportable is that it's legally simple and philosophically appealing. It doesn't require nuanced implementation or expertise in content moderation. It's a bright-line rule: no minors. Platforms comply or face massive fines. Governments like simplicity.

But there are barriers to global adoption. Privacy concerns are real. Questions about government overreach are legitimate. In countries with weak rule of law, age verification requirements could be weaponized for surveillance. A government could mandate age verification, then use that data to track political dissidents.

There's also the question of enforceability. Australia has a relatively small population (26 million) and is a wealthy country with regulatory capacity. Can India enforce an age ban with 1.4 billion people? Can Brazil, with weaker institutional infrastructure?

But wealthy democracies? That's a different story. If the UK, Canada, or a few EU countries follow Australia, you'd have a fragmented global social media landscape. Different platforms in different countries. Some serving adults only, others with teen access, others completely banned depending on location.

The Economics of Compliance: What This Costs Platforms

Closing 550,000 accounts is economically significant for Meta. Not because each account generates enormous revenue—teen social media accounts have limited purchasing power. But because account growth is how Meta justifies its valuation.

Meta's stock price depends on user growth metrics. Losing 550,000 accounts, even teenage ones, signals a shrinking user base in an important market. When Meta reports quarterly earnings, it has to explain missing accounts.

Beyond the direct user loss, there's the compliance cost. Implementing age verification systems requires engineering resources, new features, customer support capacity, and legal work. Meta likely spent millions just building out the compliance infrastructure.

And it's ongoing. The company will need to continuously refine age detection, respond to appeals, update systems as new spoofing techniques emerge. It's not a one-time cost—it's permanent infrastructure.

For smaller platforms, the burden is disproportionate. TikTok can probably absorb the engineering cost. Reddit or Twitch might struggle more. A startup platform couldn't operate profitably if forced to implement expensive age verification from day one.

This creates a regulatory moat. Established platforms can afford compliance costs. New entrants can't. The ban potentially entrenches Meta, Google, and other giants by raising barriers to competition.

There's also the lost advertising revenue. If Meta can't serve teenagers in Australia, it loses the advertising value of that segment. Teen engagement is valuable to advertisers. Losing it is real economic harm.

But here's the thing: platforms will pass these costs to users and advertisers. Subscription services become more expensive. Advertising rates rise. The economic burden spreads across consumers rather than staying concentrated on platforms.

For Meta specifically, Australia is a small market: 26 million people and a mature, already expensive digital advertising market. The loss of 550,000 accounts is significant but not catastrophic. If similar bans hit the EU or North America, the impact would be massive, potentially $10-20 billion in annual revenue across all affected platforms.

That's the real risk that makes platforms fight these bans so hard. One country setting a precedent is manageable. A dozen countries with similar rules would force a fundamentally different business model.

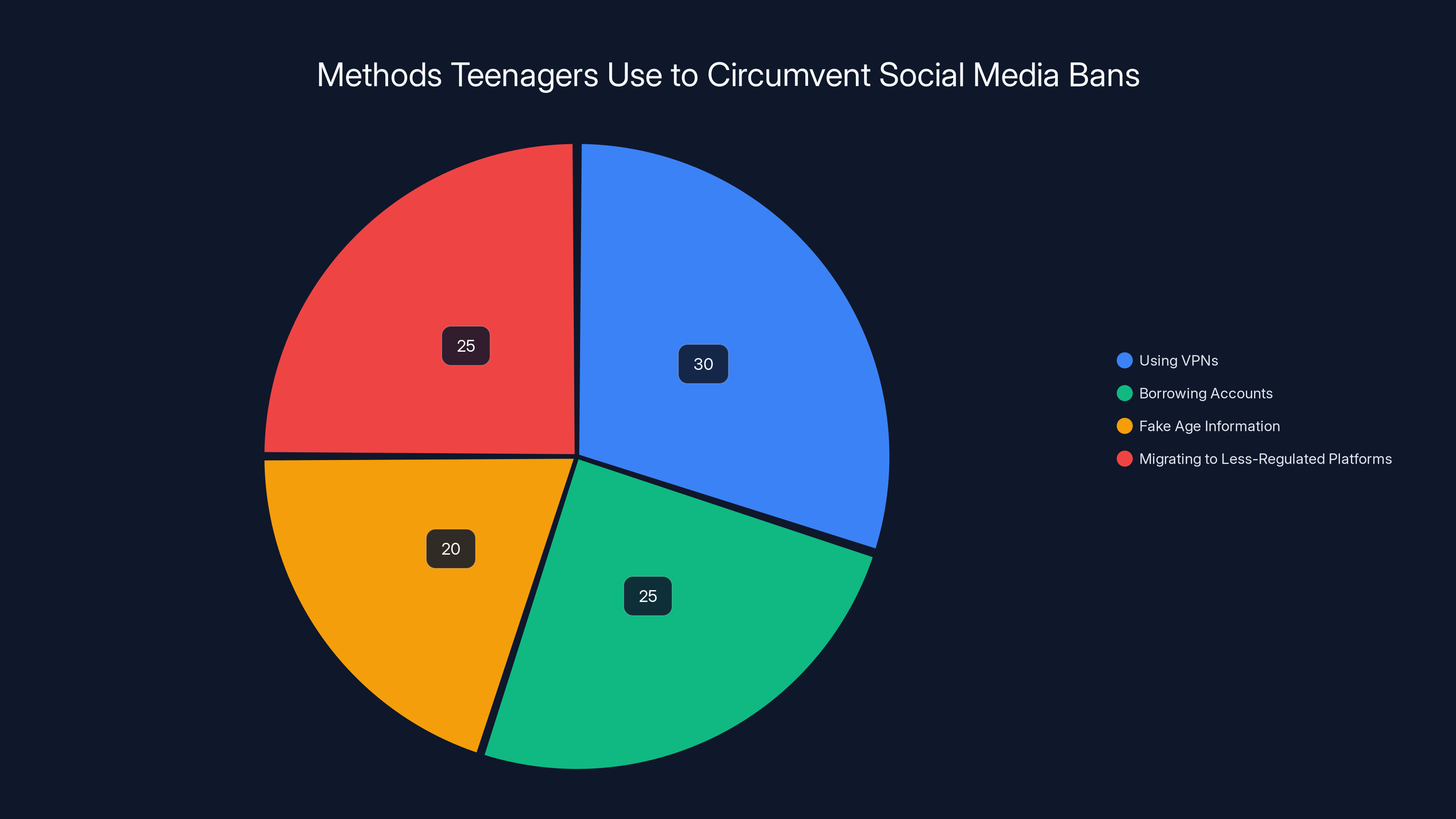

Estimated data suggests that using VPNs and migrating to less-regulated platforms are the most common methods teenagers use to bypass social media bans in Australia.

How Teenagers Are Circumventing the Ban

Here's the uncomfortable truth: many Australian teenagers are still using Instagram, Facebook, and TikTok. The ban's enforcement is imperfect, and teenagers are clever.

The most obvious workaround is using VPNs. If a platform detects someone's location as Australia based on IP address, a VPN can mask that and make it appear they're in another country. Detection is possible but not foolproof.
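Because a VPN only changes the apparent IP address, a platform can compare it against other location hints. The sketch below assumes two such hints, the SIM card's country and the device's region setting; both are hypothetical inputs chosen for illustration, and the rule treats an account as in scope if any signal points to Australia.

```python
from typing import Optional


def assess_location(
    ip_country: str,
    sim_country: Optional[str] = None,
    device_region: Optional[str] = None,
) -> tuple[bool, bool]:
    """Return (in_scope, vpn_suspected) from ISO country codes such as "AU" or "US"."""
    other_signals = {c for c in (sim_country, device_region) if c is not None}
    in_scope = ip_country == "AU" or "AU" in other_signals
    # A foreign IP alongside an Australian SIM or device setting is a classic VPN pattern.
    vpn_suspected = ip_country != "AU" and "AU" in other_signals
    return in_scope, vpn_suspected


print(assess_location("US", sim_country="AU"))    # (True, True)
print(assess_location("US", device_region="US"))  # (False, False)
```

A Wi-Fi-only tablet with its region set to the U.S. and a VPN running produces `(False, False)`, which is exactly why this kind of detection is possible but not foolproof.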

Another workaround is borrowing accounts. Use an older sibling's or friend's account to maintain access. Platforms can detect suspicious location changes or activity patterns, but it's not reliable.

Fake age information also works. Platforms have to balance aggressive verification (which creates privacy invasion and false positives) against lighter verification (which allows spoofing). Most choose lighter verification. A teenager can claim to be 18 on signup and face low follow-up verification.

Migrating to less-regulated platforms is another pattern. Discord, Telegram, WhatsApp, and private subreddits don't have the same age enforcement. If Instagram becomes inaccessible, teens move to these alternatives.

This creates an unintended consequence: Australian teenagers might move from regulated platforms (with content moderation, reporting systems, some safety features) to unregulated platforms with fewer protections. Mission accomplished?

One fascinating implication: the ban might reduce reported harms on platforms without actually reducing real-world harms. Teens still get exposure to harmful content—they just get it on less-monitored platforms. From a public health perspective, the outcome might be worse, not better.

The Australian government is banking on the assumption that reduced access to social media reduces harms more than regulatory alternatives. But it's an untested hypothesis at scale. We'll know in 2-3 years whether teen mental health metrics improve, worsen, or stay the same.

Privacy Paradox: Security vs. Freedom in Age Verification

The deep problem with age verification isn't technical—it's philosophical. Accurate age verification requires either invasive surveillance or sensitive data collection. You can't have strong privacy AND strong age verification. You have to choose one.

This is the privacy paradox that Meta highlighted. If you want to reliably verify age, you need to:

- Track behavioral data extensively (what people watch, who they follow, when they're online, what devices they use)

- Collect biometric data (facial recognition, voice analysis)

- Require government ID uploads (storing identity documents on servers)

- Create linkage between online identity and government identity (you're no longer anonymous)

Any of these alone raises privacy concerns. All together, they create surveillance infrastructure that governments could weaponize.

For dissidents, LGBTQ+ youth, and others who need online anonymity, the privacy cost is severe. You can't safely use social media in Australia because you have to prove who you are.

This is a legitimate concern, not corporate concern-trolling. Privacy organizations have raised the same issue. The Electronic Frontier Foundation (a digital rights nonprofit) has warned about age verification as a surveillance tool.

But the countervailing concern is real too: social media genuinely does cause harm to some teenagers. Eating disorders, depression, anxiety, sleep disruption—these are documented harms with real prevalence.

So democracies face a genuine dilemma: allow potentially harmful platforms with privacy, or restrict access with surveillance? There's no good answer. Australia chose to restrict with surveillance. Other countries might choose differently.

What's likely to emerge is a compromise: light age verification with high false positive/negative rates, but relatively little privacy invasion. Platforms will implement behavioral analysis and activity inference without requiring ID uploads or facial recognition for most users.

This means the ban won't be airtight. Teenagers will slip through. But it will be good enough to satisfy the government while preserving relative privacy. It's not perfect, but perfect was never realistic.


Legal Precedent and Constitutional Questions

Australia's ban raises interesting constitutional questions, even within Australia's system. The country doesn't have a written bill of rights like the U.S., but the High Court has recognized an implied freedom of political communication in its Constitution.

There are three areas where the ban faces legal vulnerability: freedom of expression, right to education, and right to communication.

Freedom of Expression: Does restricting social media access burden the implied freedom of political communication? Challengers might argue that social media is a medium for expression, and banning it for a segment of the population limits their expressive capacity. This argument is relatively weak because the ban is age-based, not content-based. It's not saying "no expressing these ideas." It's saying, "You're too young." Courts generally allow age-based restrictions.

Right to Education: Does social media contribute to education? One could argue yes, since social media enables learning communities, access to educational content, and skill-building. But this argument is also weak because the government could point to alternative educational platforms (actual educational websites, institutional platforms, etc.).

Right to Communication: This is the strongest argument. Humans have an inherent right to communicate with others. Banning social media restricts that. But again, it's age-based, not absolute, so courts might allow it.

The more likely legal challenge in Australia is about executive power and specificity. Did the government exceed its regulatory authority? Are the fines proportional to violations? These are administrative law questions, not constitutional ones.

For platforms like Reddit, the legal strategy is clearer. Challenge the government's classification of Reddit as "social media." If successful, Reddit escapes the ban entirely. If unsuccessful, the precedent makes it harder for other platforms to find exemptions.

Globally, the ban might face World Trade Organization challenges. If other democracies implement similar bans, trade law specialists worry about violations of services trade agreements. But this seems unlikely to succeed—governments can restrict digital services for public health reasons.

The deeper precedent is about regulatory authority. If Australia's ban stands without being overturned on legal grounds, it signals to other democracies that age-based social media bans are legally sustainable. That's more important than the specific economic or privacy impacts.

The Role of Research: What Do Studies Actually Say About Teen Social Media?

The legislative push behind Australia's ban relies on research suggesting social media harms teenagers. But what does the evidence actually show?

The research is genuinely mixed. Some studies show correlations between heavy social media use and depression, anxiety, sleep disruption, and body image issues. Other studies show correlations between social media use and social connection, community support, and reduced isolation.

The correlation challenge: Most research shows correlation, not causation. Does Instagram cause depression, or do depressed teens use Instagram more to seek connection? Both probably. But the direction of causality matters for policy.

The dosage question: Some harm might come from excessive use (4+ hours daily), while moderate use (30 minutes to 1 hour) might be neutral or beneficial. The ban doesn't distinguish between dosages. It's absolute.

The vulnerable population specificity: The research often finds that harms concentrate among vulnerable populations, such as teens with pre-existing mental health conditions, those experiencing bullying, and those with body image concerns. Banning everyone might be overtreatment for the majority who use platforms without serious harm.

Meta's point (however self-serving) has some merit: the research doesn't clearly justify an absolute ban. It justifies restrictions on excessive use, algorithmic amplification of harmful content, certain features (like public comments on minors' posts), or targeted protections for vulnerable users.

The strongest evidence supports targeted restrictions, not blanket bans. But blanket bans are simpler to enforce and more legally defensible. So Australia chose simplicity over nuance.

There's also publication bias in the research. Studies finding harms get published more readily than studies finding no effects. This creates an inflated impression of social media's negative impact in the academic literature.

For policymakers, the responsible approach would be funding more research while implementing light-touch interventions—age verification without surveillance, transparency requirements, algorithm audits. The ban is a sledgehammer for a problem requiring a scalpel.

But governments rarely choose nuance when simple answers are politically available. If the public wants action on teen social media harms, and a ban is simple and popular, politicians will vote for it regardless of what the research precisely says.

Industry Alternatives: What Could Work Better?

Instead of banning social media for all teenagers, consider these alternatives that other democracies are exploring:

Algorithmic Transparency: Require platforms to explain how their algorithms work and allow users to opt for chronological feeds instead of engagement-optimized algorithms. This addresses the concern that platforms deliberately amplify engaging-but-harmful content. Teens could use social media but without the addictive algorithm.
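The difference between the two feed types comes down to the sort key. In the sketch below, `predicted_engagement` stands in for a hypothetical ranking-model score; a chronological feed simply ignores it and sorts by timestamp.

```python
from dataclasses import dataclass
from operator import attrgetter


@dataclass
class Post:
    post_id: str
    timestamp: int               # seconds since the epoch
    predicted_engagement: float  # hypothetical model output in [0, 1]


def build_feed(posts: list[Post], chronological: bool) -> list[str]:
    """Order post IDs newest-first, or by predicted engagement, per the user's choice."""
    key = attrgetter("timestamp" if chronological else "predicted_engagement")
    return [p.post_id for p in sorted(posts, key=key, reverse=True)]


posts = [
    Post("outrage-clip", timestamp=100, predicted_engagement=0.9),
    Post("friend-update", timestamp=300, predicted_engagement=0.1),
    Post("club-notice", timestamp=200, predicted_engagement=0.5),
]
print(build_feed(posts, chronological=True))   # ['friend-update', 'club-notice', 'outrage-clip']
print(build_feed(posts, chronological=False))  # ['outrage-clip', 'club-notice', 'friend-update']
```

The same posts reach the user either way; only the ordering changes, which is why an opt-in chronological feed is a comparatively cheap intervention.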

Age-Appropriate Features: Limit certain features for young users—no autoplay, no infinite scroll, restricted comment visibility, no algorithmic recommendations. Design platforms differently for different age groups.
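Age-appropriate design can be expressed as a per-age feature configuration. The sketch below is hypothetical: the feature flags mirror the ones listed above, and the cutoff of 18 is an assumed policy choice, not a rule from any specific law.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSet:
    autoplay: bool
    infinite_scroll: bool
    public_comments: bool
    algorithmic_recommendations: bool


DEFAULT = FeatureSet(autoplay=True, infinite_scroll=True,
                     public_comments=True, algorithmic_recommendations=True)
RESTRICTED = FeatureSet(autoplay=False, infinite_scroll=False,
                        public_comments=False, algorithmic_recommendations=False)


def features_for(age: int, restricted_below: int = 18) -> FeatureSet:
    """Pick a feature set by age band; the cutoff is a hypothetical policy parameter."""
    return RESTRICTED if age < restricted_below else DEFAULT


print(features_for(15))
```

Keeping the policy in data rather than scattered through product code makes it easier to audit and to adjust if research points to a different cutoff.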

Mandatory Verification with Privacy Protection: Require age verification using privacy-preserving techniques (zero-knowledge proofs, decentralized verification) instead of surveillance-heavy methods. Verify age without linking identity to accounts.
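The property these techniques aim for is that the platform learns only "over 16: yes or no" and nothing about who the user is. The sketch below illustrates that property with a much simpler stand-in: an HMAC-signed age claim from a hypothetical verification provider. It is not a zero-knowledge proof. Because HMAC relies on a shared secret, a real deployment would more likely use public-key signatures or a genuine zero-knowledge scheme, plus expiry and replay protection.

```python
import hashlib
import hmac
import json
import secrets

# Hypothetical key shared by an age-verification provider and a platform.
SHARED_KEY = secrets.token_bytes(32)


def issue_token(over_16: bool, key: bytes = SHARED_KEY) -> str:
    """Provider side: sign a bare age claim with no name, ID number, or birth date."""
    # The random nonce makes every token unique; it carries no identity.
    claim = json.dumps({"over_16": over_16, "nonce": secrets.token_hex(8)}, sort_keys=True)
    tag = hmac.new(key, claim.encode(), hashlib.sha256).hexdigest()
    return claim + "." + tag


def verify_token(token: str, key: bytes = SHARED_KEY) -> bool:
    """Platform side: accept only a correctly signed claim that says over 16."""
    claim, _, tag = token.rpartition(".")
    expected = hmac.new(key, claim.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag, expected):
        return False  # forged or tampered token
    return json.loads(claim)["over_16"] is True


print(verify_token(issue_token(True)))   # True
print(verify_token(issue_token(False)))  # False
```

Flipping `false` to `true` inside a token breaks the signature, so a minor can't simply edit the claim; the platform never sees anything beyond the single boolean.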

Educational Requirements: Teach digital literacy in schools: how algorithms work, how to recognize misinformation, how to protect privacy, how to notice signs of dependency. Informed users may self-moderate more effectively than a ban can force them to.

Parental Controls: Give parents actual tools to monitor, limit, and manage teen social media use without platforms harvesting data. Parental consent instead of government bans.

Liability Reform: Make platforms liable for specific harms (algorithmic promotion of self-harm content, child exploitation material, illegal activity) without making them liable for all user behavior. Incentivize safety without requiring blanket bans.
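As a rough illustration of the age-appropriate features option, the sketch below gates a few of the named features (autoplay, infinite scroll, public comments, algorithmic recommendations) on a user's age. The `FeatureSet` class, the `features_for_age` function, and the adult cutoff of 18 are hypothetical choices made for this example. They are not any platform's real settings or any law's requirement.

```python
from dataclasses import dataclass


# Hypothetical feature set; names are illustrative, not a real platform's settings.
@dataclass(frozen=True)
class FeatureSet:
    autoplay: bool
    infinite_scroll: bool
    public_comments: bool
    algorithmic_feed: bool  # False means a chronological feed


ADULT_FEATURES = FeatureSet(autoplay=True, infinite_scroll=True,
                            public_comments=True, algorithmic_feed=True)

# Younger users get the restricted preset: no autoplay, no infinite scroll,
# no public comments, and a chronological feed instead of recommendations.
TEEN_FEATURES = FeatureSet(autoplay=False, infinite_scroll=False,
                           public_comments=False, algorithmic_feed=False)


def features_for_age(age: int, adult_age: int = 18) -> FeatureSet:
    """Return the feature preset for a user of the given (verified or estimated) age."""
    if age < 0:
        raise ValueError("age must be non-negative")
    return TEEN_FEATURES if age < adult_age else ADULT_FEATURES


print(features_for_age(15))
```

Keeping the policy as two fixed presets makes it easy to audit, which is part of the appeal of feature-level rules over blanket bans. The hard part, as with a ban, is still knowing the user's age in the first place.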

Each of these alternatives has trade-offs. Algorithmic transparency requires platforms to reveal competitive secrets. Parental controls might reduce privacy if platforms share data with parents. Age-appropriate features could reduce product consistency.

But they're less likely to create unintended consequences than a blanket ban. They're also easier to undo if research shows they're ineffective.

Australia had the opportunity to implement one of these alternatives. Instead, it chose the broadest possible intervention. That will be the real test: not whether the ban reduces social media use (it obviously will), but whether it actually improves teen mental health and whether the unintended consequences outweigh the benefits.

Global Tech Response: Industry Lobbying and Regulatory Strategy

Platforms aren't just complying passively with Australia's ban. They're also using it as a test case for understanding global regulatory trends and building defenses against similar legislation elsewhere.

Meta has been particularly vocal, not just about Australia but about the broader regulatory environment. The company is lobbying governments in other countries to avoid age bans. Its argument: bans don't work, create privacy risks, drive teens to unregulated platforms, and are impossible to enforce fairly. Instead, it urges lawmakers to adopt more targeted rules along the lines of the EU's Digital Services Act (DSA).

TikTok is in a different position. The company is already facing potential bans in the U.S. and UK, not for age reasons but for national security/data privacy concerns. Australia's age ban is secondary to these existential threats. But TikTok is still paying attention: if age bans spread, combined with security bans, the company might exit certain markets entirely.

Google's position hinges on how YouTube is classified. The company has argued that YouTube is a video platform rather than social media and should be treated differently from Facebook, Instagram, and TikTok. The distinction matters to Google because a narrower classification would spare it the strictest enforcement.

Smaller platforms (Discord, Reddit, Snapchat) are pursuing both compliance and legal challenges. They want to know whether the ban will spread before investing in expensive compliance infrastructure.

The broader industry strategy is to make age bans seem impractical. Platforms highlight the technical challenges, privacy costs, and unintended consequences. If they can convince legislators that bans don't work, they can push for lighter-touch regulations instead.

But they're fighting an uphill battle. Public opinion favors protecting kids, regardless of technical challenges. And once one democracy implements a ban successfully (even imperfectly), other democracies will follow.

What's emerging is a two-tier system: democracies like Australia implementing tough bans and age verification, and others like the U.S. taking lighter regulatory approaches. Platforms are adapting to operate in both environments: strict in some markets, relatively open in others.

For smaller markets and developing countries, the picture is murkier. Many don't have the regulatory capacity to enforce age bans or the economic leverage to negotiate exemptions. Compliance will be inconsistent, creating opportunities for platforms to manipulate enforcement.

Looking Forward: Will Other Countries Follow Australia's Lead?

The critical question: does Australia's ban become a global template or remain an outlier?

Several factors will determine this:

Success metrics: If teen mental health improves significantly in Australia over the next 2-3 years, other countries will follow. If metrics stay flat or worsen, countries will be skeptical. We'll have a clear answer by 2027.

Implementation challenges: If Australia faces massive evasion (most teens still access platforms), enforcement costs explode, and privacy concerns escalate, other countries will avoid similar bans. But if Australia makes the system work reasonably well at acceptable cost, the precedent is powerful.

Economic impact: If platforms don't actually leave Australia or significantly reduce investment, the risk of retaliation seems manageable. But if Meta or TikTok threatens to exit countries implementing bans, that's powerful leverage.

Political context: Left-leaning governments in the EU and UK are more likely to implement bans than right-leaning governments (which tend to favor free markets and lighter regulation). As political winds shift, regulatory appetite changes.

Tech alternatives: If new social media platforms emerge specifically designed for younger users with safety built in, they might displace the current dominant platforms. That could reduce pressure for bans.

My prediction: 3-5 countries will implement similar bans within the next 5 years. Likely candidates are the UK, Canada, and possibly a few EU countries. But the U.S. will likely never implement a national ban due to First Amendment concerns. China and other authoritarian countries will use age bans as cover for broader censorship.

The outcome is a fragmented global social media landscape where different regions have different age requirements. This might actually be beneficial—it lets democracies experiment with different approaches and observe which work best.

The Deeper Question: Are We Solving the Right Problem?

Underlying Australia's ban is an implicit question: what's the appropriate relationship between teenagers and social media?

The ban assumes the answer is "none." Teenagers shouldn't use social media at all.

But maybe the real answer is more nuanced. Teenagers should use social media, but differently than adults. With different tools, different algorithms, different features, different privacy protections.

The problem is that platforms have zero incentive to build "teen social media." They want one product that maximizes engagement for all ages. A teen-specific version would be less engaging (by design, to reduce addiction). Less engaging means less advertising revenue.

So platforms won't voluntarily build safer social media for teenagers. They need regulation forcing the issue.

Australia chose to regulate through age gating. Other democracies might regulate through feature requirements, algorithmic restrictions, or liability laws.

But the underlying regulatory goal should be: teenagers can use social media, but in ways that minimize documented harms. That's a more nuanced goal than banning entirely, but it requires more sophisticated regulation.

Australia took the simple approach. We'll learn whether simple approaches work better than nuanced ones.

FAQ

What is Australia's social media ban?

Australia's social media ban is legislation that prohibits anyone under 16 from accessing social media platforms including Facebook, Instagram, TikTok, Snapchat, X, YouTube, Reddit, Twitch, BeReal, and Bluesky. The law passed in December 2024, took effect December 10, 2025, and requires platforms to enforce the restriction or face fines of up to A$49.5 million. It's the first age-based social media ban implemented by a major democracy.

How does Meta determine if someone is underage?

Meta uses multiple methods to identify underage users: behavioral analysis (examining activity patterns and profile information), digital ID verification (users can voluntarily upload government ID), facial age estimation (AI models attempting to estimate age from photos), and reported accounts. The company combines these signals to flag likely underage accounts, which are then removed or suspended. However, none of these methods is foolproof, and evasion is possible through VPNs, borrowed accounts, or false age information at signup.
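Meta has not published how it weighs these signals. The sketch below only illustrates the general idea of combining several weak signals into a single decision. The `AgeSignals` fields, the weights, and the 0.6 threshold are all hypothetical, and treating a verified ID as decisive is a simplifying assumption.

```python
from dataclasses import dataclass
from typing import Optional

MIN_AGE = 16  # Australia's minimum age for covered platforms


@dataclass
class AgeSignals:
    """Hypothetical per-account signals; real systems use richer, undisclosed inputs."""
    stated_age: int                   # age given at signup (easily falsified)
    id_verified_age: Optional[int]    # from a voluntary government-ID check, if any
    facial_estimate: Optional[float]  # age estimated from a selfie, if any
    behavior_minor_prob: float        # 0..1 output of a hypothetical behavioral model
    reported_as_minor: bool           # flagged by another user


def likely_underage(s: AgeSignals, threshold: float = 0.6) -> bool:
    # Assumption: a verified ID overrides every other signal.
    if s.id_verified_age is not None:
        return s.id_verified_age < MIN_AGE

    # Otherwise, add up weaker signals into a score (illustrative weights only).
    score = 0.4 * s.behavior_minor_prob
    if s.facial_estimate is not None and s.facial_estimate < MIN_AGE:
        score += 0.35
    if s.reported_as_minor:
        score += 0.2
    if s.stated_age < MIN_AGE:
        score += 0.25
    return score >= threshold


# An account with a stated age of 18 but a young facial estimate and teen-like activity.
print(likely_underage(AgeSignals(18, None, 14.0, 0.7, False)))
```

Raising the threshold wrongly removes fewer adults but lets more minors through, which is the same imperfect-enforcement trade-off described above, in miniature.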

Why did Meta close 550,000 accounts?

Meta identified these accounts as likely belonging to users under 16 based on age verification signals. The company was attempting to comply with Australia's legal requirement that platforms prevent underage access. The 330,000 Instagram, 173,000 Facebook, and 40,000 Threads accounts represented users Meta's systems flagged as minors. However, many underage users likely maintain accounts by successfully spoofing age verification checks.
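The per-platform figures add up to slightly less than the rounded headline number, which is why the total is reported as "nearly" 550,000. A quick check using only the figures above:

```python
# Account closures reported by Meta for Australia, by platform.
closures = {"Instagram": 330_000, "Facebook": 173_000, "Threads": 40_000}

total = sum(closures.values())
print(f"Total: {total:,}")  # Total: 543,000

# Each platform's share of the total closures.
for platform, count in closures.items():
    print(f"{platform}: {count / total:.1%}")
# Instagram: 60.8%
# Facebook: 31.9%
# Threads: 7.4%
```

Instagram alone accounts for roughly three in five of the closures.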

What are the privacy concerns with age verification?

Accurate age verification requires either extensive behavioral surveillance (tracking activity, device information, connections) or sensitive biometric collection (facial recognition, government ID uploads). Both approaches create privacy risks. Behavioral tracking creates detailed profiles of teen activity. Biometric collection and ID uploads expose government identification to storage and breach risks. For marginalized teens who need online anonymity for safety, these privacy costs are especially concerning.

Can teenagers still access social media in Australia?

Yes, teenagers can access social media using various workarounds: VPNs to mask location, borrowed accounts from older users, false age information at signup, or migrating to less-regulated platforms like Discord or Telegram. The ban reduces access but doesn't eliminate it. Platforms must balance aggressive verification (which intrudes on privacy) against light verification (which is easy to spoof). Most platforms choose lighter verification, meaning many teen accounts slip through.

What will happen if other countries implement similar bans?

If multiple democracies implement age-based social media bans, platforms will face fragmented regulatory requirements across different markets. This increases compliance costs, creates different user experiences by region, and potentially drives teenagers toward less-regulated platforms. However, it also allows democracies to experiment with different regulatory approaches and learn which are most effective at reducing harms while minimizing unintended consequences.

Is the ban constitutional in Australia?

Australia's ban faces potential constitutional challenges under the implied freedom of political communication, along with administrative-law arguments about proportionality. However, courts traditionally allow age-based restrictions more readily than content-based restrictions. The strongest legal challenges will likely come from platforms like Reddit arguing they're not "social media" and thus exempt. Courts will need to define what constitutes social media versus other digital platforms.

What are experts saying about whether the ban will work?

Experts are divided. Some public health researchers support age-restricted access as a harm-reduction strategy, noting research linking heavy social media use to mental health issues. Other researchers point out that the evidence for causation is weak, that the correlation could just as plausibly run the other way (depressed teens seeking connection online), and that bans might drive teenagers to less-regulated platforms. Privacy and digital rights experts warn about the surveillance infrastructure created by age verification. Technologists note that enforcement is imperfect and evasion is inevitable.

How much does age verification cost platforms?

The cost varies significantly. Meta likely spent several million dollars implementing age verification infrastructure for the Australian market. For smaller platforms, compliance costs could represent 10-20% of annual revenue, potentially making their business models unviable. Ongoing costs are permanent—platforms must continuously refine detection methods, respond to appeals, and update systems as new evasion techniques emerge. This creates a regulatory moat where established players can afford compliance but startups cannot.

What are teenagers doing to get around the ban?

Common workarounds include using VPNs to appear as though they're accessing from outside Australia, using older siblings' or friends' accounts, providing false age information at signup, and switching to less-regulated platforms like Discord, Telegram, WhatsApp, or private Reddit communities. Platforms can detect some workarounds (suspicious location changes, activity patterns, simultaneous account access) but detection is imperfect. The most effective workaround is likely simple: lie about your age on signup, since platforms rarely verify claims without additional signals.

The Broader Conversation

Australia's decision to ban social media for under-16s represents a fundamental shift in how democracies approach tech regulation. Instead of trying to make platforms safer, Australia essentially said: these services are too risky for teenagers, full stop.

It's a legitimate policy position, and reasonable people disagree about whether it's justified. The research on social media harms is real but modest. The privacy costs of enforcement are substantial. The unintended consequences (teenagers moving to less-regulated platforms) are genuine concerns.

But the precedent matters more than the specifics. Once one democracy implements an age ban successfully, others will follow. The question isn't whether the Australian ban is perfect. It's whether it works well enough and creates enough positive precedent to spread globally.

If it does, we're entering a new era in which social media companies operate under fundamentally different rules by region. That shift carries real economic weight, raises privacy questions, and changes how teenagers experience the internet.

Over the next 2-3 years, watch four metrics: teen mental health outcomes in Australia, the practical evasion rate (what percentage of Australian teens still access banned platforms), the spread of similar legislation to other democracies, and whether platforms adjust their business models in response.

Those metrics will tell us whether Australia stumbled onto an effective policy or created a regulatory nightmare with minimal benefit. For now, we're watching the first major test of whether democracies can actually regulate social media effectively, rather than just trying to make it slightly safer around the edges.

The answer will shape how billions of teenagers experience the internet for decades to come.

Key Takeaways

- Meta closed nearly 550,000 accounts across Instagram, Facebook, and Threads to comply with Australia's first-of-its-kind under-16 social media ban, which took effect December 10, 2025

- Ten major platforms face fines of up to A$49.5 million (about US$33 million) for non-compliance, creating immediate economic pressure to enforce age restrictions

- Age verification technology is fundamentally flawed, requiring either invasive behavioral tracking or sensitive biometric data collection—creating a privacy paradox with no perfect solution

- Teenagers are circumventing the ban through VPNs, borrowed accounts, false age information, and migration to less-regulated platforms like Discord and Telegram

- Australia's ban sets a global precedent that could spread to the EU, UK, Canada, and other democracies within 3-5 years, fundamentally reshaping social media regulation worldwide

- The ban's real success depends on whether it measurably improves teen mental health outcomes—if it does, replication is likely; if outcomes remain flat, other countries will resist similar legislation
