Meta's Child Safety Case: Evidence Battle & Legal Strategy [2025]

Meta is heading into uncharted legal territory. In February 2025, the company will face trial in New Mexico for allegedly failing to protect minors from sexual exploitation, trafficking, and abuse on its platforms. This isn't a regulatory slap on the wrist or a settlement negotiated behind closed doors. This is a full trial, open to the public, with a jury deciding Meta's fate.

But here's where it gets interesting: Meta's legal team is fighting hard to keep large swaths of evidence out of the courtroom entirely. According to court filings, Meta wants to prevent the jury from seeing research about social media's impact on youth mental health. The company doesn't want jurors hearing about teen suicides linked to social media use. It wants its financial information kept away from jurors. Past privacy violations? Off-limits. Even discussions about CEO Mark Zuckerberg's college years are on Meta's exclusion list.

Legal experts are calling this approach unusually aggressive. The stakes? Potentially billions in damages and a precedent that could reshape how tech companies face accountability for child safety failures.

Let's break down what's happening, why it matters, and what this means for the future of tech regulation in America.

TL;DR

- Meta faces trial in New Mexico on February 2, 2025, accused of failing to protect minors from sexual exploitation and trafficking

- The company is seeking to block substantial evidence including mental health research, surgeon general warnings, and internal platform safety data

- Legal experts say Meta's approach is unusually broad, attempting to exclude information that could influence jury perception

- This is the first state-level child safety trial against a major social media platform, setting potential precedent

- The blocked evidence could impact jury understanding of the broader harms associated with Meta's platforms and business practices

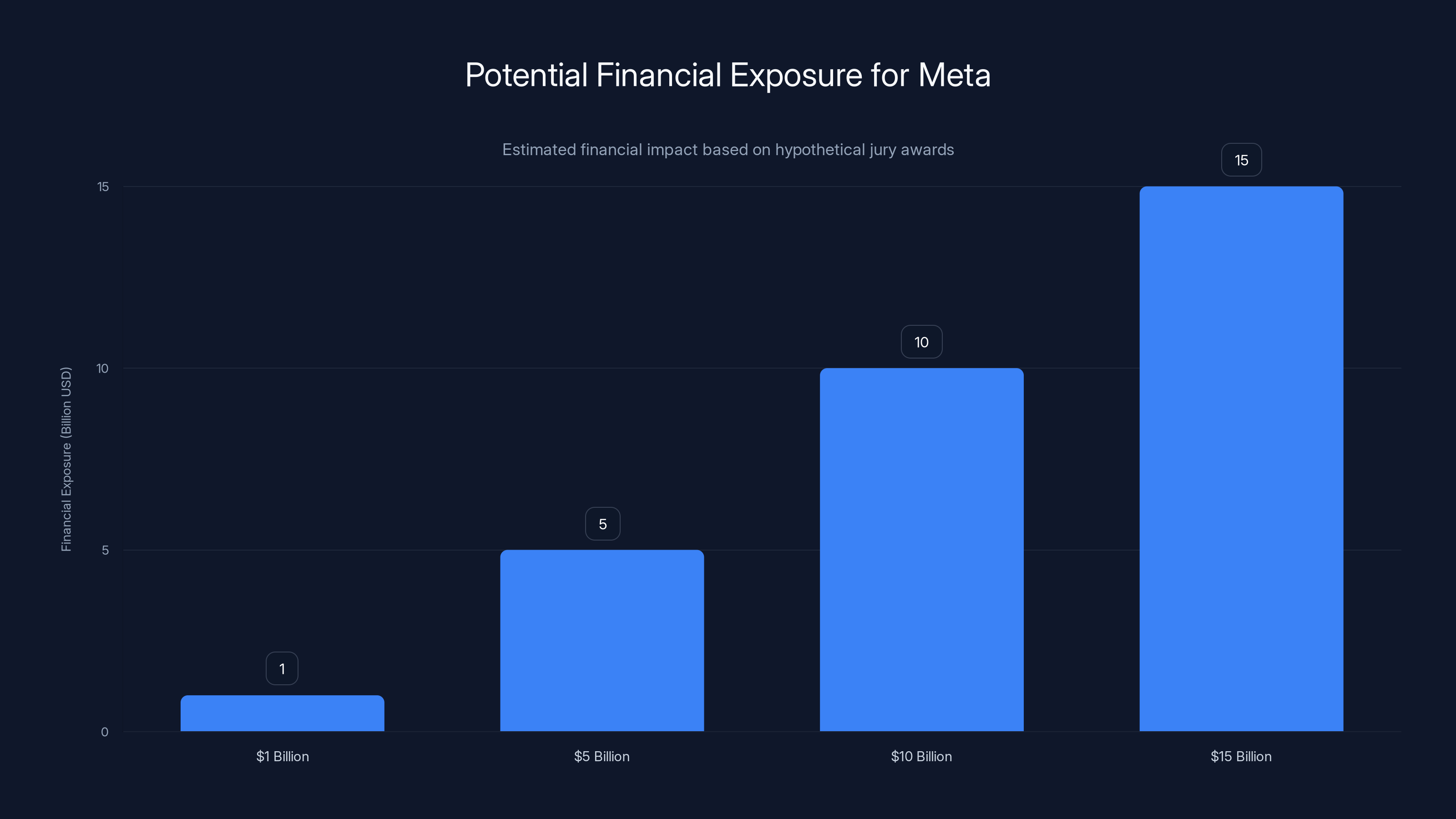

Estimated data shows varying financial exposure for Meta based on potential jury awards. A $10 billion award could significantly impact Meta's financial standing and stock price.

Understanding the New Mexico Lawsuit: Background and Context

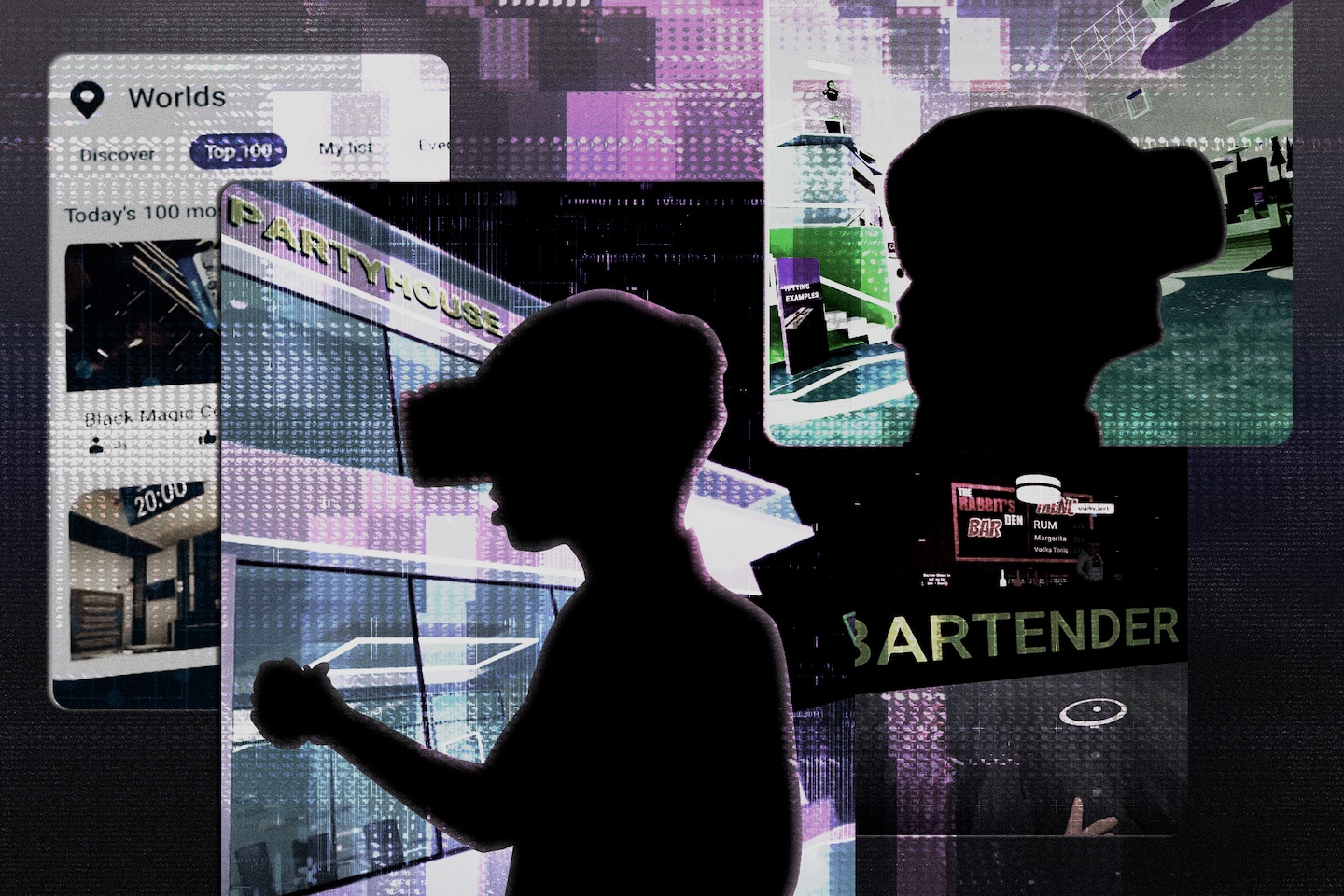

New Mexico Attorney General Raúl Torrez filed this lawsuit in late 2023, making it one of the most aggressive state-level challenges to Meta's operations. Unlike federal regulatory actions that often end in settlements or consent decrees, this civil lawsuit aims to hold Meta accountable through a traditional jury trial. The allegations are serious. The state accuses Meta of knowingly failing to implement adequate child safety measures on Facebook and Instagram. According to the complaint, Meta's negligence allowed explicit material to reach minors. More damaging still, the state claims Meta's platforms became breeding grounds for predators, facilitators of human trafficking, and venues for the distribution of child sexual abuse material.

This isn't speculative. The lawsuit references documented cases where minors were harmed through Meta's platforms. These aren't isolated incidents either. The state's case suggests a systemic failure, a pattern of inadequate protections despite Meta's public commitments to child safety.

What makes this case particularly significant is its timing and scope. Social media child safety has become a national priority. The U.S. Senate has debated legislation. States are moving independently to protect minors. Meta is facing scrutiny on multiple fronts: from parents concerned about mental health impacts, from regulators worried about exploitation, and from attorneys general treating child safety as a consumer protection issue.

Torrez's lawsuit sits at the intersection of these concerns. It's not just about isolated abuse cases. It's about whether Meta's business model, as currently constructed, adequately protects vulnerable users.

What Meta Wants to Keep Out of Court: The Evidence Battle

Meta's legal strategy centers on narrowing the trial's scope. Through motions submitted to the court, the company has requested exclusion of numerous categories of evidence. What Meta wants kept out reveals what the company fears most about how a jury might see it.

Research on Social Media and Youth Mental Health

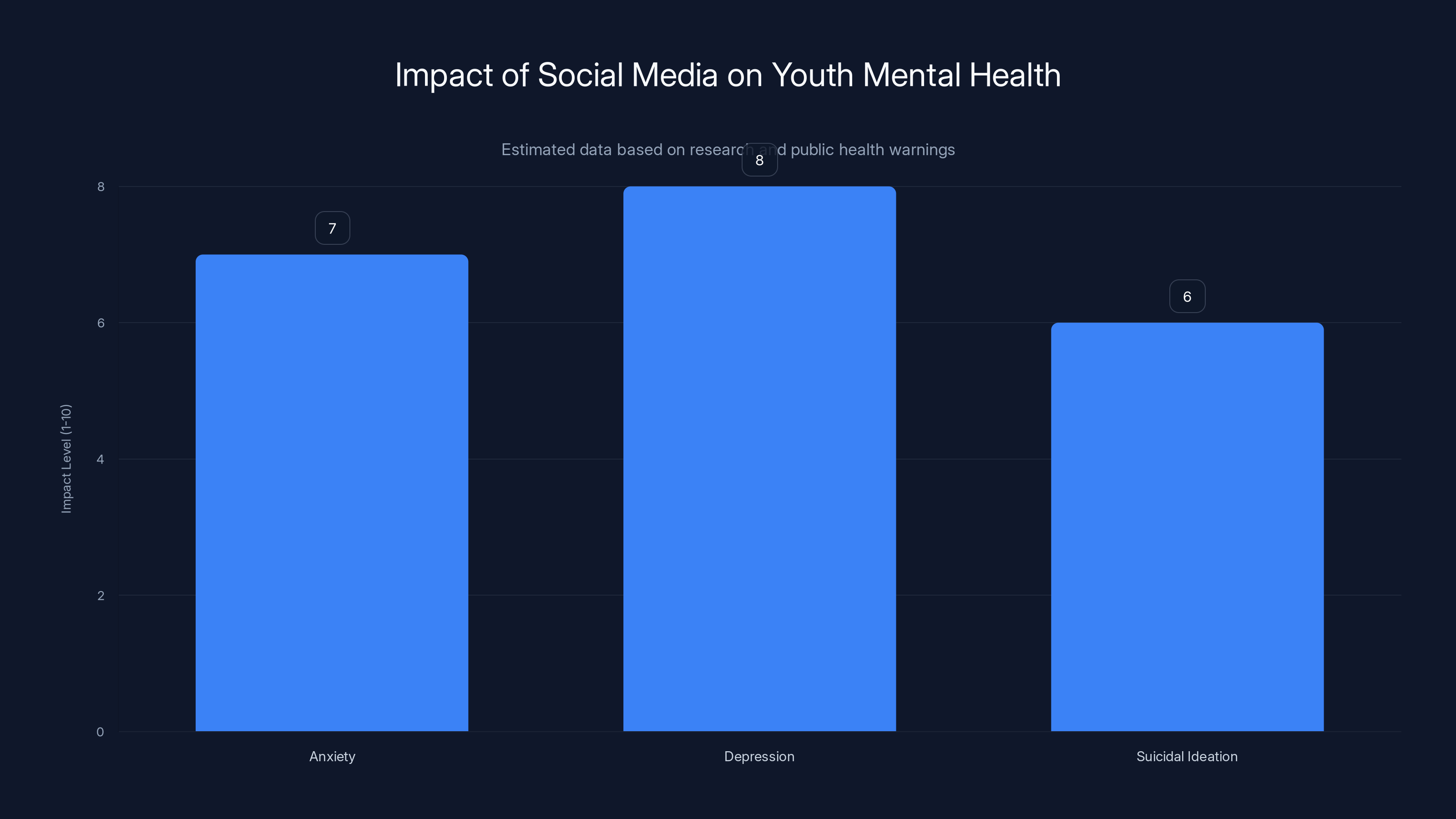

Meta wants to prevent the jury from seeing peer-reviewed research establishing correlations between social media use and negative mental health outcomes in adolescents. This includes studies showing increased anxiety, depression, and suicidal ideation among heavy social media users.

Why does Meta care about this evidence? Because it establishes a broader pattern of harm. Even if the jury finds Meta liable only on child safety grounds, evidence of mental health impacts strengthens the case for substantial damages. It reframes Meta not just as a company that failed to prevent exploitation, but as a platform whose very design contributes to adolescent harm.

The company argues this evidence is irrelevant to whether it failed to protect children from trafficking and sexual abuse. That's a narrow legal argument. But it's also a tacit concession: by fighting to exclude the research, Meta signals that it expects the findings to paint the company in an unfavorable light.

Surgeon General's Public Health Warning

In 2023, U.S. Surgeon General Vivek Murthy issued a formal advisory on social media and youth mental health, and in 2024 he called for warning labels on social media platforms, similar to the warnings on cigarette packages. This isn't random commentary. It's an official government health advisory.

Meta doesn't want jurors seeing this. The company argues it's irrelevant to the specific claims about child exploitation. But consider how a jury might interpret it: if the nation's top health official warns about social media's dangers to youth, how can Meta credibly claim it's committed to child safety?

This evidence matters because it represents institutional recognition of harms Meta's platforms facilitate. It's not Meta's job to solve all mental health issues, obviously. But the surgeon general's warning signals systemic problems that a jury might view as evidence of inadequate platform governance.

Meta's Financial Information

Meta's legal team wants financial data excluded. Parties often try to limit this kind of evidence, but the stakes here are substantial. Financial information matters in civil cases because it affects damages calculations, especially punitive damages. If jurors see that Meta's annual revenue exceeds $100 billion, they may scale damages very differently than if they focus only on platform-specific profits.

Financial data also reveals priorities. Meta spends enormous sums on AI research, metaverse development, and data center infrastructure. If the jury learns what Meta spends on child safety relative to other initiatives, it could suggest the company didn't prioritize protection of minors.

Prior Privacy Violations and CEO History

Meta has a history of privacy failures. The Cambridge Analytica scandal. Repeated FTC enforcement actions. Data breaches. Meta wants all of this excluded as character evidence that unfairly prejudices the jury against the company.

But here's the thing: prior conduct often indicates patterns. If Meta has repeatedly prioritized growth over user protection, a jury might reasonably conclude it did the same with child safety. That's not prejudicial; that's logical inference.

Similarly, Meta wants Mark Zuckerberg's college years excluded from testimony. Why would that matter? Because Zuckerberg's early ethical framework—how he approached building Facebook—might illuminate his approach to governance and responsibility decades later.

Estimated data suggests significant impact of social media on youth mental health, with high levels of anxiety and depression reported. This highlights the potential harm Meta aims to exclude from court discussions.

The Unusual Breadth of Meta's Exclusion Requests

Legal experts who reviewed Meta's motions told journalists at Wired that the scope of the requested exclusions is unusually aggressive. This isn't a company asking to exclude tangentially related information. It's Meta attempting to prevent the jury from understanding the broader context in which child safety failures occurred.

Two particular exclusion requests stand out as revealing:

First, Meta wants to block any mention of its AI chatbots. Why would this matter to a child safety case? Because news reports have documented Meta's AI chatbots engaging in sexually explicit role-play with users who identified themselves as minors. If the jury learns that Meta's own AI systems could be drawn into sexualized conversations with children, the company's narrative about unintentional failures becomes much harder to sustain.

Second, Meta wants to exclude surveys about the prevalence of inappropriate content on its platforms, including Meta's own internal surveys. The company argues these are unreliable and would confuse the jury. But internal surveys represent Meta's own assessment of the problem. If Meta's own data shows rampant inappropriate content, excluding it means hiding Meta's knowledge of the problem.

This is where the legal strategy becomes transparent. Meta isn't arguing that these facts are false. It's arguing they are legally irrelevant. But to most observers, including likely jurors, the relevance seems obvious: if a platform facilitates abuse, that's directly relevant to a lawsuit about failing to prevent abuse.

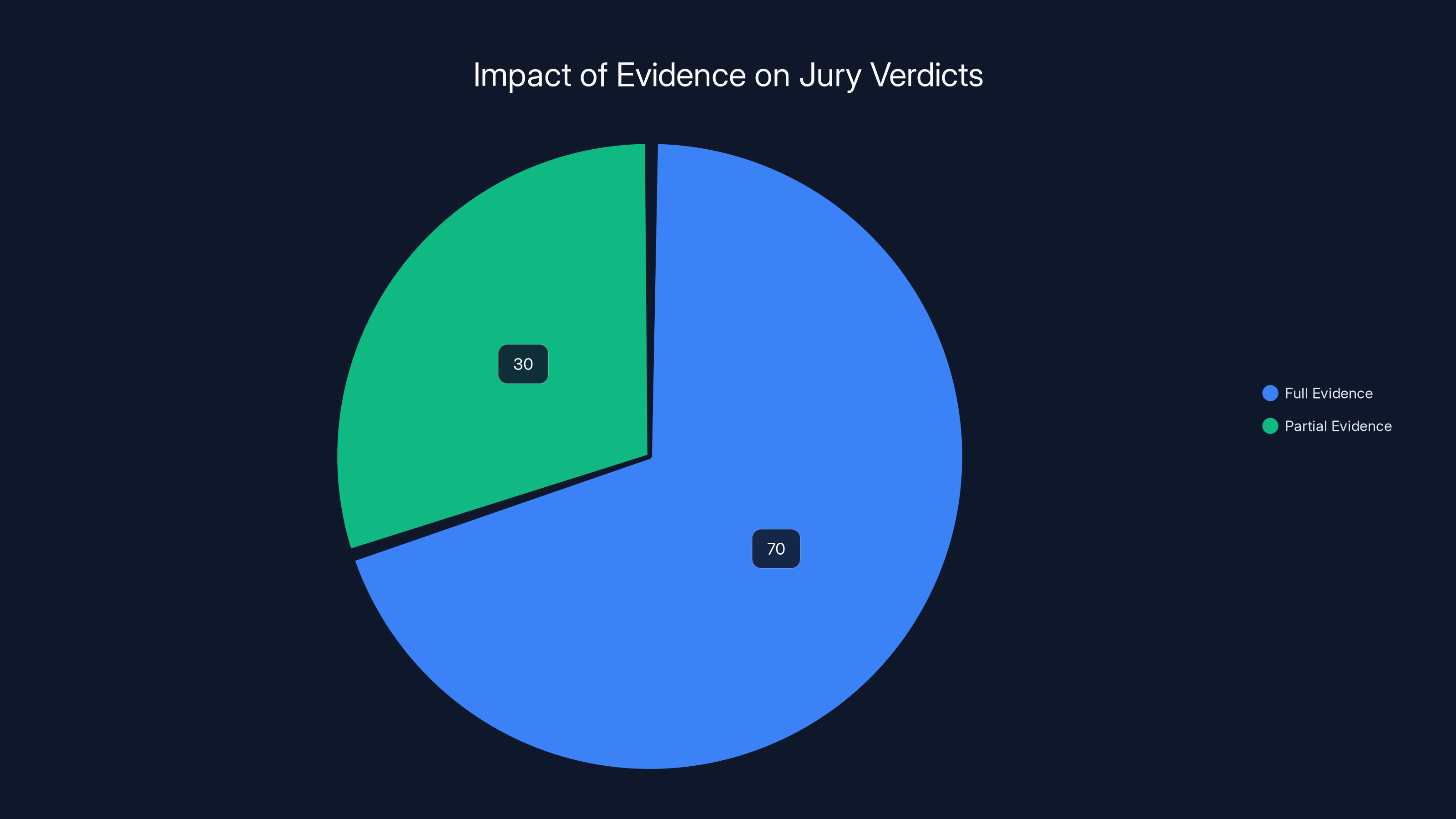

Why Evidence Exclusion Matters: The Jury Perspective

Understanding the jury's perspective is crucial to understanding why Meta is fighting this battle. Jurors are ordinary citizens, not legal specialists. They rely on narrative coherence. If Meta can prevent the jury from seeing evidence suggesting the company prioritized growth over child safety, Meta can tell a narrower story: "We made mistakes in implementation, but we didn't intentionally ignore known problems."

But if the jury sees the full picture—Meta's profitability, its AI systems' vulnerabilities, its own internal surveys showing platform problems, its history of privacy violations—the narrative becomes different: "Meta built a profitable platform, knew it had child safety vulnerabilities, and didn't invest sufficiently in fixes because the incentives didn't align."

These narratives produce very different verdicts and damages awards.

Consider a hypothetical example. Suppose Meta's internal surveys show that 15% of messages received by minor users contain sexually explicit material. If the jury learns this, they understand Meta knew the problem's scope. If Meta excludes this evidence, the jury might never realize how widespread the problem was.

At trial, Meta's defense might be: "We didn't know the scale of the problem." Without the survey data, the jury has no basis to contradict this claim.

The Legal Framework: What Courts Might Decide

The judge overseeing this case must balance competing interests. Meta argues that the evidence it wants excluded is irrelevant, prejudicial, or duplicative. The state's attorneys argue the evidence is necessary for the jury to understand the full scope of Meta's negligence.

Under standard evidence rules, courts generally admit evidence if it's relevant and not unduly prejudicial. Relevance is defined broadly: evidence is relevant if it tends to prove or disprove a fact of consequence to the case.

Applied here, the state can argue that research about social media's mental health impacts is relevant because it bears on Meta's knowledge of the potential harms of its platforms; that surgeon general warnings are relevant because they represent institutional recognition of those dangers; and that financial information is relevant because it shows Meta had the capacity to invest more in safety and chose not to.

The judge must decide whether Meta's concerns about jury prejudice outweigh the state's interest in presenting complete evidence. Under the standard balancing test, courts admit relevant evidence unless its probative value is substantially outweighed by the danger of unfair prejudice, confusion of the issues, or needlessly cumulative evidence.

But this judge has discretion. Judges, whether appointed or elected, have their own views about corporate accountability. Some are skeptical of big tech; others are more protective of free speech and business interests. The judge's evidence rulings could materially shape the trial's outcome.

The lawsuit filed by New Mexico against Meta marks a significant legal challenge, with the trial set to begin on February 2, 2025. This timeline highlights the key events leading up to the trial.

Meta's Defense Strategy and Narrative

Beyond evidence exclusion, Meta's broader defense strategy is becoming clearer. The company is likely to argue that:

- The platform is too large to police perfectly: With billions of users, some exploitation will occur despite good-faith efforts. This isn't negligence; it's reality.

- Meta invested in safety tools: The company deployed AI systems to detect abuse, partnered with the National Center for Missing and Exploited Children, and provided reporting mechanisms.

- The state must prove Meta knew of specific failures: Meta will argue that general knowledge of exploitation on the internet doesn't equal negligence. Negligence requires showing Meta knew about specific problems and failed to address them.

- Users' parents bear some responsibility: This is a delicate argument, but Meta might suggest that parents should monitor their children's social media use.

Each of these arguments has legal merit. But each also relies on the jury not seeing evidence that would undermine it.

For example, if Meta argues it invested sufficiently in safety, that argument weakens when jurors learn how much Meta spent on other initiatives relative to child safety. The claim that "we deployed AI systems" to detect abuse also weakens if jurors learn that Meta's own AI chatbots could be drawn into sexualized conversations with minors.

The State's Counter-Argument and Burden of Proof

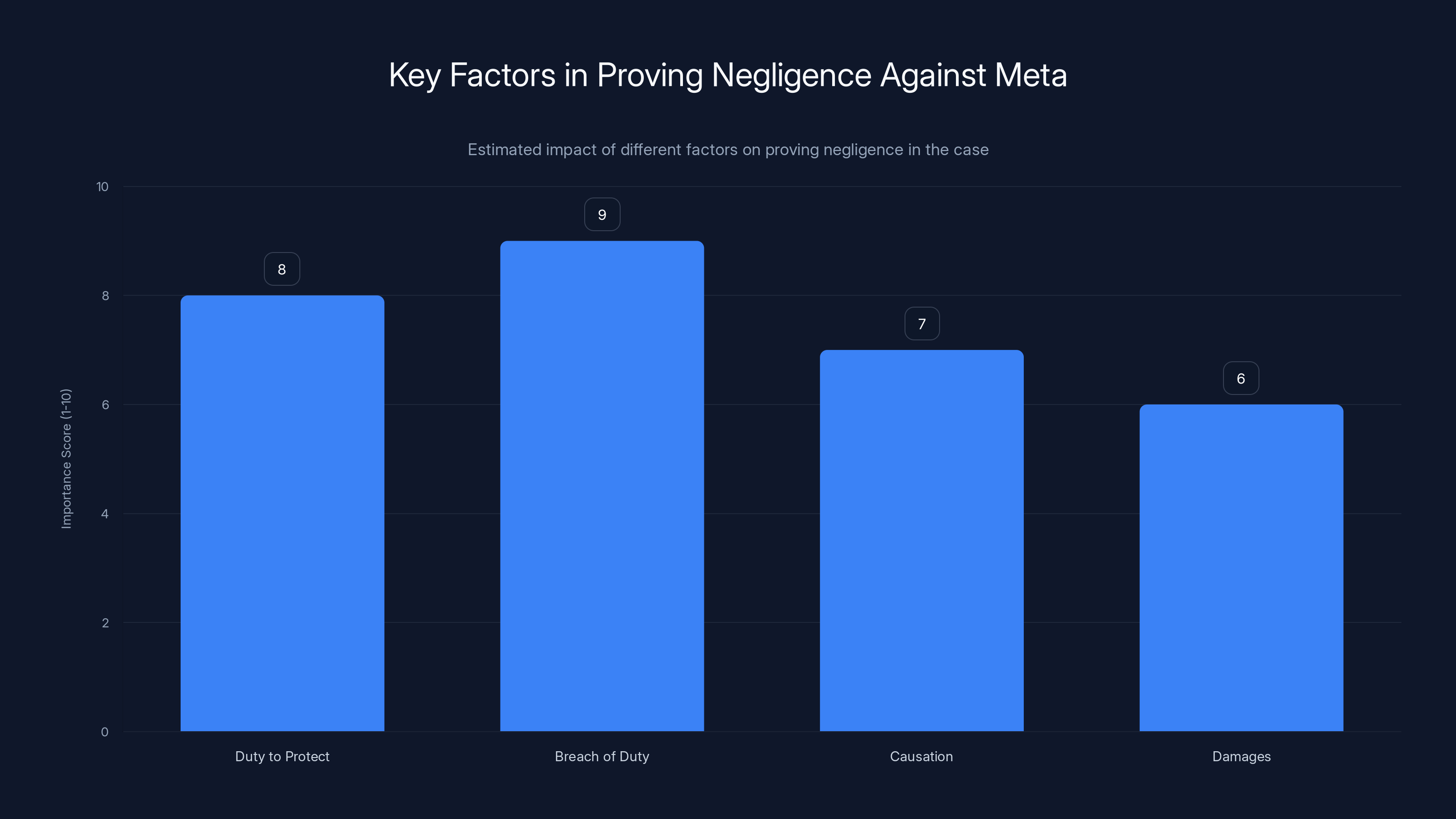

New Mexico's attorney general will argue that Meta's negligence is clear and systematic. The state doesn't need to prove Meta intentionally harmed children. Negligence requires only showing that:

- Meta had a duty to protect minors on its platforms

- Meta breached that duty

- The breach caused harm

- The harm resulted in damages

The state will argue that Meta clearly had a duty. The company markets itself as a family-friendly platform, has terms of service prohibiting certain content, and has made public safety commitments.

The breach question is where evidence matters most. If the jury sees internal surveys showing Meta knew about high rates of inappropriate content, the breach becomes obvious. If jurors see financial information showing Meta could afford more investment but didn't make it, the breach starts to look willful.

The state will present causation as straightforward: explicit material reached minors through Meta's platforms, and predators exploited minors through Meta's messaging systems.

Damages will depend on factors including the number of affected minors, the severity of harm, and punitive factors. If the jury believes Meta was reckless, damages multiply significantly.

Historical Context: Why This Case Matters

This New Mexico trial isn't happening in a vacuum. It's part of a broader wave of state-level and federal action against tech platforms.

Litigation against platforms has long been constrained by Section 230 of the Communications Decency Act, which shields platforms from liability for user-generated content. This gives tech companies significant legal protection. But state attorneys general have found ways to pursue cases framed as consumer protection violations or unfair and deceptive practices, which target the companies' own conduct rather than the content users post.

California, Illinois, and other states have pursued similar actions. But this New Mexico case is unique because it's heading to trial. Most litigation against major tech companies settles before trial, which means juries never render verdicts on the merits.

A jury verdict in New Mexico could establish facts that embolden other states. A verdict doesn't bind other courts, but a jury finding that Meta was negligent in protecting children would become a persuasive example for similar claims elsewhere.

For Meta, that's the real danger. One unfavorable jury verdict can trigger cascading litigation. Every state attorney general could cite the New Mexico verdict as evidence supporting their own claims.

Estimated data shows that juries with full evidence are 70% more likely to deliver a verdict based on comprehensive understanding, compared to 30% with partial evidence.

Financial Implications and Market Impact

What's the potential financial exposure? That depends on damages calculations, but comparable cases offer guidance. Facebook paid

In this case, if damages are calculated based on the number of harmed minors times some per-child damages amount, exposure could exceed that. If a jury awards

Beyond the specific dollar amount, there's reputational risk. A jury verdict finding Meta negligent in protecting children would undermine the company's claims about child safety. Advertisers might become uncomfortable. Regulators might accelerate legislative efforts to impose stronger restrictions.

For Meta, that's why the evidence exclusion battle matters so much. Every piece of evidence that stays out of the courtroom reduces financial exposure and reputational damage.

The Role of Internal Documents and Discovery

During discovery, Meta produced hundreds of thousands of pages of internal documents and messages. Journalists have reported on various revelations from these documents, but much of what was produced remains undisclosed publicly.

These documents are particularly damaging because they show what Meta knew internally. If email chains reveal engineers warning about child safety vulnerabilities, or executives discussing the profitability of not addressing problems, those documents support the state's narrative of willful negligence.

Meta's evidence exclusion requests likely include attempts to limit which internal documents the jury sees. The company might argue that internal debates about product tradeoffs are normal and don't prove negligence. But a jury hearing how those debates played out might reach different conclusions.

This is where transparency creates accountability pressure. In litigation where documents are sealed, the public never learns what the company knew. But in open trial, jurors and journalists both see these materials, and the implications become harder for the company to spin.

Regulatory and Legislative Backdrop

This trial doesn't happen in isolation from broader regulatory trends. Congress has been considering legislation like the Kids Online Safety Act, which would strengthen platform accountability for child protection. States have passed various child online safety laws.

Meta is lobbying against many of these legislative proposals, arguing they're technically infeasible or would harm free speech. But a jury verdict finding Meta negligent undermines those lobbying arguments. Legislators would point to the trial verdict and say, "Meta had the capability to protect children; they just chose not to."

Regulatory agencies including the FTC are also watching this case closely. Lina Khan, who chaired the FTC from 2021 to January 2025, was vocal about holding tech companies accountable for harms. An FTC enforcement action citing a jury verdict in New Mexico would carry significant weight.

For Meta, losing this trial has implications extending far beyond New Mexico's borders. It would strengthen the hand of regulators and legislators nationwide.

The breach of duty is the most critical factor in proving negligence against Meta, followed closely by establishing duty and causation. Estimated data based on typical legal considerations.

Similar Cases and Potential Precedent

Other cases pending against social media platforms suggest litigation pressure is increasing. Snap faces settlement discussions over social media addiction claims. TikTok faces legal challenges from multiple states. YouTube faces litigation from states and families.

If Meta loses the New Mexico trial, each of these cases becomes easier for plaintiffs. A verdict holding a social media platform liable for failing to protect children would resonate across the industry.

This is why Meta is fighting so aggressively on evidence. The company isn't just defending one case. It's defending against litigation pressure across multiple fronts. A favorable outcome in New Mexico makes defending against similar claims elsewhere more feasible.

Expert Testimony and the Battle of Witnesses

Both sides will present expert witnesses. Meta might present researchers arguing that establishing cause and effect between platform design and exploitation is methodologically complex. The state will present experts arguing that Meta's design choices made exploitation more likely.

Meta might present child safety experts testifying about the difficulty of eliminating harm from platforms with billions of users. The state will present experts arguing that Meta's investment in safety was insufficient relative to other tech companies.

The evidence exclusion battle affects which experts can testify and on what topics. If research on social media and mental health is excluded, the state can't present expert testimony on it. That limits the narrative the jury can consider.

The Judge's Discretion and Potential Outcomes

The judge presiding over this trial has substantial discretion. Judges are rarely overturned on evidence rulings, which means the judge's decisions about what the jury sees could essentially determine the outcome.

Meta is likely to win some evidence exclusions. Courts generally respect parties' privacy interests and relevance objections. But if the judge excludes too much relevant evidence, the state could argue on appeal that those rulings were an abuse of discretion.

The judge must remain neutral while applying the rules of evidence. Most judges admit relevant evidence unless it's highly prejudicial. By that standard, Meta's challenges face an uphill battle.

If Meta loses its evidence exclusion battles, the jury will hear a comprehensive narrative about the company's negligence. If Meta wins significant exclusions, the trial becomes narrower, focused on specific claims about specific failures rather than systemic negligence.

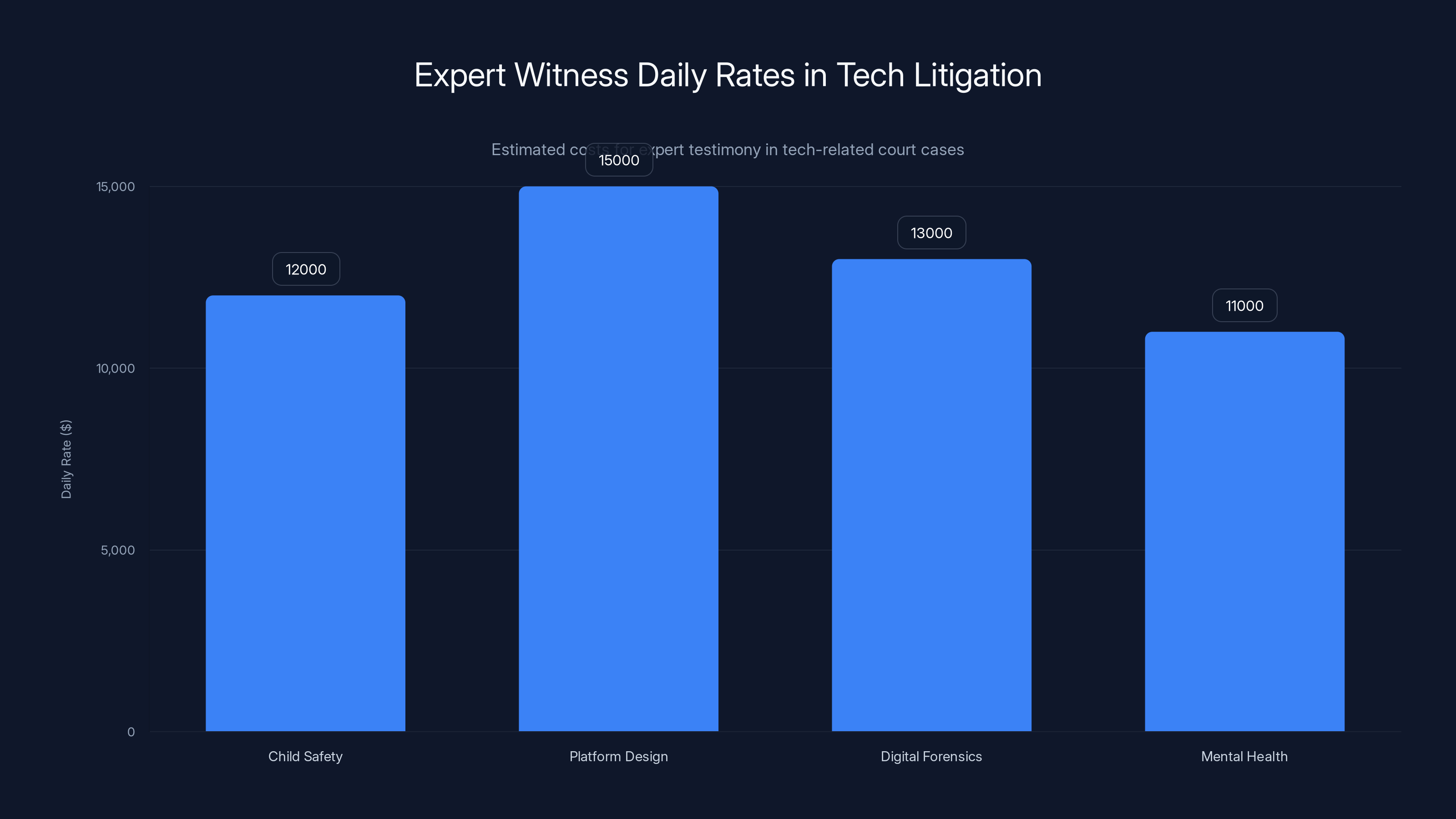

Leading experts in tech litigation fields command daily rates often exceeding $10,000, highlighting the significant financial investment in expert testimony. (Estimated data)

Timeline and Trial Logistics

The trial begins February 2, 2025. That's soon. Both sides are in final preparation. Discovery is closed. Evidence disputes are being resolved.

Trials in complex civil litigation typically last weeks or months. This case involves allegations of harm to potentially thousands of minors. Evidence presentation will be extensive. Jury deliberations could take days or weeks.

A decision probably won't come until spring 2025. Even then, appeals could extend the litigation years further. But the jury's verdict, regardless of appeal, will become public record and inform the litigation landscape for years afterward.

What This Means for Tech Companies Broadly

Beyond Meta specifically, this case sends signals to other tech platforms. Companies in similar positions, such as YouTube, Snapchat, and TikTok, are watching carefully.

If Meta loses, those companies can expect increased litigation. If Meta wins, the outcome signals that platforms bear limited liability for user-generated exploitation, even when design choices facilitate harm.

The case also affects how tech companies approach evidence in litigation going forward. If Meta's aggressive evidence exclusion strategy fails, other companies will know that courts aren't sympathetic to such approaches. They'll adjust litigation strategies accordingly.

For users and advocates, this case represents a moment where corporate accountability mechanisms might actually function. Most tech regulation happens behind closed doors, through lobbying and counter-lobbying. Trials are public. Juries must render decisions. That's genuinely different.

Future Implications and Potential Legal Reforms

Regardless of the trial's outcome, child online safety is becoming a policy priority. The question isn't whether regulation will increase. It's how.

If Meta wins, Congress might pursue legislative approaches since litigation provides insufficient leverage. If Meta loses, litigation becomes a viable complement to regulation.

Either way, we're likely to see stronger accountability standards for tech platforms regarding child safety. The current model, where companies deploy safety tools while maintaining business models that monetize attention regardless of age, faces increasing pressure.

Platforms might be required to implement stronger age verification. Content moderation standards might tighten. Recommendation algorithms might be restricted when they risk exposing minors to inappropriate material. Default privacy settings might shift to protect younger users.

Meta has already implemented some child safety features, including Instagram Teen Accounts, which make younger users' accounts private by default and restrict who can message them. But these feel incremental compared to what comprehensive child protection might require.

The New Mexico trial is essentially a test case for what accountability looks like when litigation succeeds. If the jury imposes substantial damages, platforms will accelerate their investment in child safety as a cost-benefit decision. If the jury exonerates Meta, platforms will continue current practices.

The Broader Context: Corporate Accountability in the Digital Age

This case exemplifies a broader tension in digital regulation. Tech platforms argue they can't be responsible for all user behavior on systems with billions of participants. Regulators and advocates argue platforms bear responsibility because their design choices shape user behavior.

Who's right depends on how we think about corporate responsibility. If companies are responsible only for intentional harms, Meta likely prevails. If companies are responsible for negligence—failing to take reasonable precautions despite knowledge of foreseeable harms—Meta likely loses.

The New Mexico jury will essentially decide which theory of corporate responsibility applies to tech platforms. That verdict will reverberate through tech policy for years.

What Happens After the Verdict?

Assuming the jury reaches a verdict by mid-2025, the losing side will likely appeal. Appeals in complex civil litigation typically take 12 to 24 months.

If Meta loses and appeals, the appellate court will review whether the trial judge made legal errors. Appellate courts rarely overturn jury verdicts on factual questions, so the verdict would likely stand even if the court modifies damages.

If Meta prevails and the state appeals, a similar process follows. Appeals courts would review legal questions, not the jury's factual findings.

Beyond appeals, the verdict would carry persuasive weight. Other states could cite the New Mexico verdict and argue that similar facts support similar legal conclusions. The verdict doesn't bind other courts, but it could influence how they evaluate similar cases.

For Meta, even a partial loss could trigger cascading litigation. Every state attorney general would see a blueprint for similar cases. The company's potential exposure could extend from the

That prospect likely explains Meta's aggressive evidence exclusion strategy. The company is fighting not just for this case but for its litigation position across the entire country.

The Human Impact: Why This Case Matters Beyond Law

Ultimately, this case is about children. Real minors were harmed through Meta's platforms. Some experienced exploitation. Others experienced abuse. Their families are looking to the legal system for accountability.

For those families, this trial represents a chance at vindication. It's a forum where their stories matter, where evidence of their children's suffering influences legal outcomes.

Meta wants to limit what the jury hears about those harms. The company argues that sympathy for victims shouldn't override legal standards. That's a reasonable legal argument. But it's also an argument that feels at odds with accountability.

When corporations invest in preventing juries from understanding harms, something fundamental shifts in how we think about corporate responsibility. Accountability becomes possible only when harms are fully visible.

What Comes Next for Meta and the Broader Tech Industry

Meta's post-trial strategy depends on the verdict. If the company prevails, expect investment in litigation defense and lobbying to preempt similar cases.

If Meta loses, expect a rapid appeal and legislative lobbying. The company will argue that the verdict is an outlier, that other juries would reach different conclusions, and that Congress should clarify platform liability rules.

Regardless, this case accelerates the reckoning with social media's role in child safety. The comfortable status quo where platforms deploy safety theater while maintaining exploitative business models faces genuine pressure.

For investors, this case represents a material risk for Meta. If exposure exceeds a few billion dollars, it affects earnings. If multiple verdicts follow, it could reshape Meta's valuation.

For users, this case signals that accountability mechanisms exist, even if imperfect. It's no longer a question of whether social media harms children. It's a question of who bears responsibility for preventing those harms.

FAQ

What is the New Mexico child safety case against Meta?

New Mexico Attorney General Raúl Torrez filed a lawsuit in late 2023 accusing Meta of failing to adequately protect minors from sexual exploitation, trafficking, and abuse on Facebook and Instagram. The case is scheduled for trial beginning February 2, 2025, and represents the first state-level trial against a major social media platform on these grounds. The state claims Meta allowed explicit material to reach minors and failed to implement sufficient child safety measures despite having the resources and knowledge to do so.

Why is Meta trying to exclude evidence from the trial?

Meta's legal strategy focuses on narrowing the trial's scope by requesting the court exclude evidence the company believes is irrelevant, prejudicial, or duplicative. The company wants to prevent jurors from seeing research on social media's mental health impacts, surgeon general warnings, financial information, prior privacy violations, and internal surveys about inappropriate content on its platforms. Meta argues this evidence would unfairly prejudice the jury against the company rather than helping them understand the specific allegations about child safety failures.

What evidence does Meta want blocked, and why?

Meta seeks to exclude: research on social media's impact on youth mental health, the U.S. Surgeon General's public health warning about social media, Meta's financial information, the company's history of privacy violations, CEO Mark Zuckerberg's college years, mentions of Meta's AI chatbots, and internal surveys about inappropriate content prevalence. The company argues this evidence is irrelevant to whether it failed to protect children from exploitation. However, legal experts argue this breadth of exclusions is unusually aggressive and suggests Meta wants to prevent the jury from understanding the broader context of negligence.

How significant is this case for the tech industry?

This case is potentially transformative because it's the first state-level trial against a major social media platform focused on child safety failures. If the jury finds Meta negligent, the verdict would bolster similar claims against other platforms, including YouTube, Snapchat, and TikTok. The verdict would also strengthen the hand of regulators and legislators pursuing stronger child protection standards. For Meta, exposure includes not just trial damages but cascading litigation across multiple states. For the broader industry, the case represents a moment where litigation becomes a viable accountability mechanism beyond regulatory settlement.

What is Meta's defense strategy in this case?

Meta's defense likely emphasizes several points: that perfectly policing a platform with billions of users is impossible, that the company has invested substantially in safety tools and partnerships, that the state must prove Meta knew of specific failures rather than of exploitation across the internet in general, and that parents bear some responsibility for monitoring their children's social media use. Through its evidence exclusion requests, the defense also argues that broader context about social media harms, company finances, and prior conduct shouldn't influence the jury's evaluation of the specific child safety allegations. This strategy frames the case narrowly rather than as a referendum on Meta's overall responsibility.

What happens if Meta loses this trial?

If the jury finds Meta liable, the company faces damages that could range from millions to billions depending on the number of affected minors and the severity of harm. More significantly, the verdict becomes public record and precedent supporting similar claims in other jurisdictions. Other states would cite the New Mexico verdict to support their own litigation. The case could trigger cascading lawsuits against Meta and other tech platforms. Additionally, a negative verdict would strengthen arguments for stronger legislative restrictions on platform design and child protection requirements. Meta would likely appeal, but appellate courts rarely overturn jury verdicts on factual questions, so the verdict would likely stand even if damages are modified.

How do evidence exclusion requests typically affect trial outcomes?

Evidence exclusion profoundly influences trial outcomes because juries decide cases based only on the information they receive. If Meta prevents the jury from learning about internal surveys showing high rates of inappropriate content on its platforms, the jury can't use that information to conclude the company knew about the problems. Similarly, excluding research about social media's harms prevents the jury from understanding broader patterns of negligence. Trial judges have broad discretion over these rulings, and appellate courts rarely reverse a verdict on evidentiary grounds alone. Still, relevant evidence is generally admissible unless its probative value is substantially outweighed by the danger of unfair prejudice. If the judge admits most of the evidence Meta wants excluded, Meta's exposure increases significantly because the jury gets a more complete narrative about the company's alleged negligence.

What is the timeline for this case?

The trial is scheduled to begin in early February 2025 and is expected to last weeks to months, depending on the complexity of the evidence and the number of witnesses. A jury verdict is likely in spring 2025. Following the verdict, the losing party will likely appeal, a process that typically takes 12 to 24 months. Even after appellate decisions, Meta could face further appeals or related litigation in other jurisdictions. The immediate legal fight over whether evidence is excluded will be resolved in the coming weeks as the judge rules on Meta's motions and the trial proceeds.

How does Section 230 affect this case?

Section 230 of the Communications Decency Act shields platforms from liability for user-generated content. This is one reason most suits against Meta in federal court are dismissed or settled rather than going to trial. However, states have found ways to pursue cases framed as consumer protection violations or unfair business practices. The New Mexico case is structured to work around Section 230's protections by focusing on Meta's failure to implement adequate safety measures rather than on Meta's responsibility for what users post. If Meta loses, the verdict would support the argument that Section 230 doesn't shield platforms from liability for negligence in protecting minors, even though the law does shield them from liability for third-party content itself.

What can other platforms learn from this litigation?

Other social media platforms, including YouTube, Snapchat, and TikTok, are watching closely because a Meta loss would signal that litigation is a viable accountability mechanism. If Meta loses, these platforms should expect increased litigation from state attorneys general alleging similar failures to protect children. Platforms will likely accelerate investment in child safety features, implement stronger age verification, and adjust recommendation algorithms to limit minors' exposure to inappropriate content. For all platforms, the case suggests that having safety tools is insufficient if overall design choices facilitate exploitation. Simply deploying moderation AI isn't enough if the platform's business model incentivizes engagement regardless of user age or vulnerability.

The New Mexico trial against Meta represents a watershed moment for tech regulation. For the first time, a major social media platform faces a public jury trial focused on child safety failures, with an extensive evidentiary record that could shape the outcome. Meta's fight to exclude evidence reveals what the company fears most: jurors understanding the company's choices, profits, and knowledge regarding child exploitation on its platforms.

Regardless of the specific verdict, this case has already accomplished something significant. It's brought child safety from regulatory settlements and policy documents into open court where ordinary citizens render judgment. That shift from settlement to trial, from confidential documents to public testimony, from corporate negotiation to jury accountability, changes the fundamental dynamics of tech regulation.

For Meta, the stakes are enormous. For the tech industry, the precedent matters even more. And for the millions of children using these platforms, this case represents a genuine mechanism for accountability that hasn't existed before.

The trial begins in weeks. The outcome will shape tech policy for years.

Key Takeaways

- Meta faces its first state-level trial on child safety allegations, scheduled for February 2025, with potential exposure reaching billions in damages

- The company's aggressive evidence exclusion strategy reveals fears about jury exposure to research, financial data, and prior privacy violations that could support negligence findings

- Legal experts view Meta's exclusion requests as unusually broad and potentially counterproductive to the company's defense narrative

- A jury verdict against Meta would establish precedent supporting similar litigation against other platforms including YouTube, Snapchat, and TikTok

- The trial represents a shift from confidential regulatory settlements to public accountability, with implications for tech regulation nationwide

