The Problem Nobody's Talking About: AI Inflation

Your next laptop could cost $800 more than you expect. Your phone might need a refresh you didn't plan for. And the culprit? Artificial intelligence.

Over the past two years, we've watched AI capabilities explode. ChatGPT hit 100 million users faster than any app before it. Every tech company rushed to add AI features. But here's the catch: running AI isn't cheap, and hardware makers have been quietly building that cost into every new device.

Companies like Apple, Microsoft, and Google have been adding specialized AI chips to their devices, each one costing more to manufacture. The narrative became simple: if you want AI features, you need premium hardware. Want the latest AI assistant on your phone? That'll be the Pro model, thanks. Need on-device AI for documents and spreadsheets? Time to upgrade to the newer laptop generation.

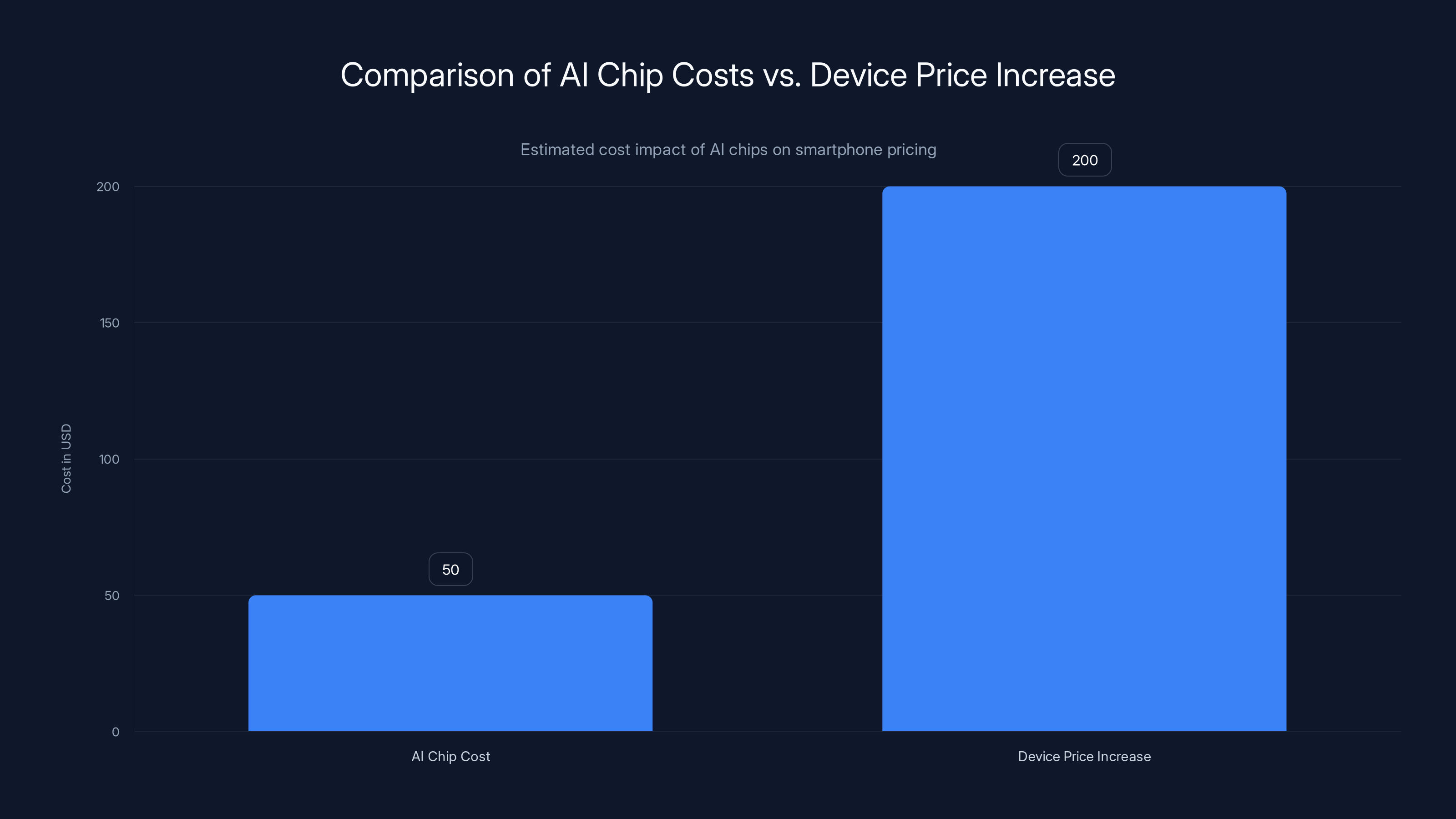

This created a problem I call "AI inflation." It's not that AI itself is expensive to run once you have the right hardware. It's that companies started using AI capabilities as a wedge to push customers toward buying newer, more expensive devices. A flagship phone with AI processing costs maybe

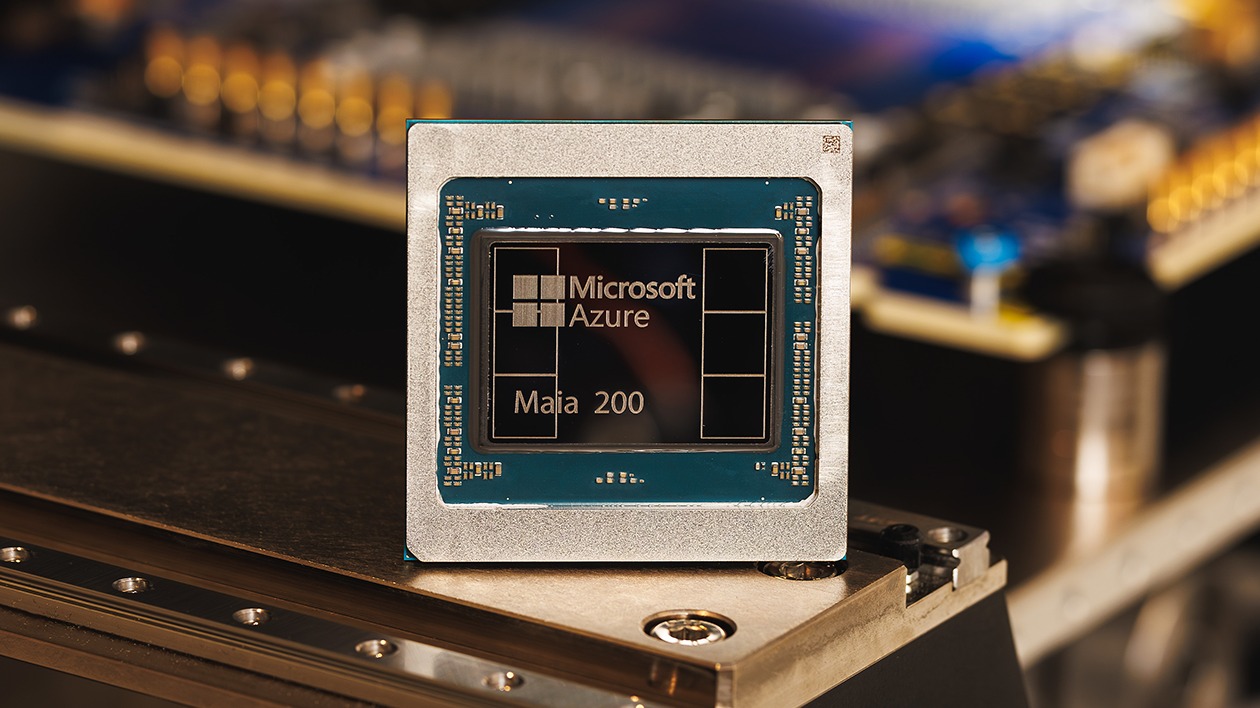

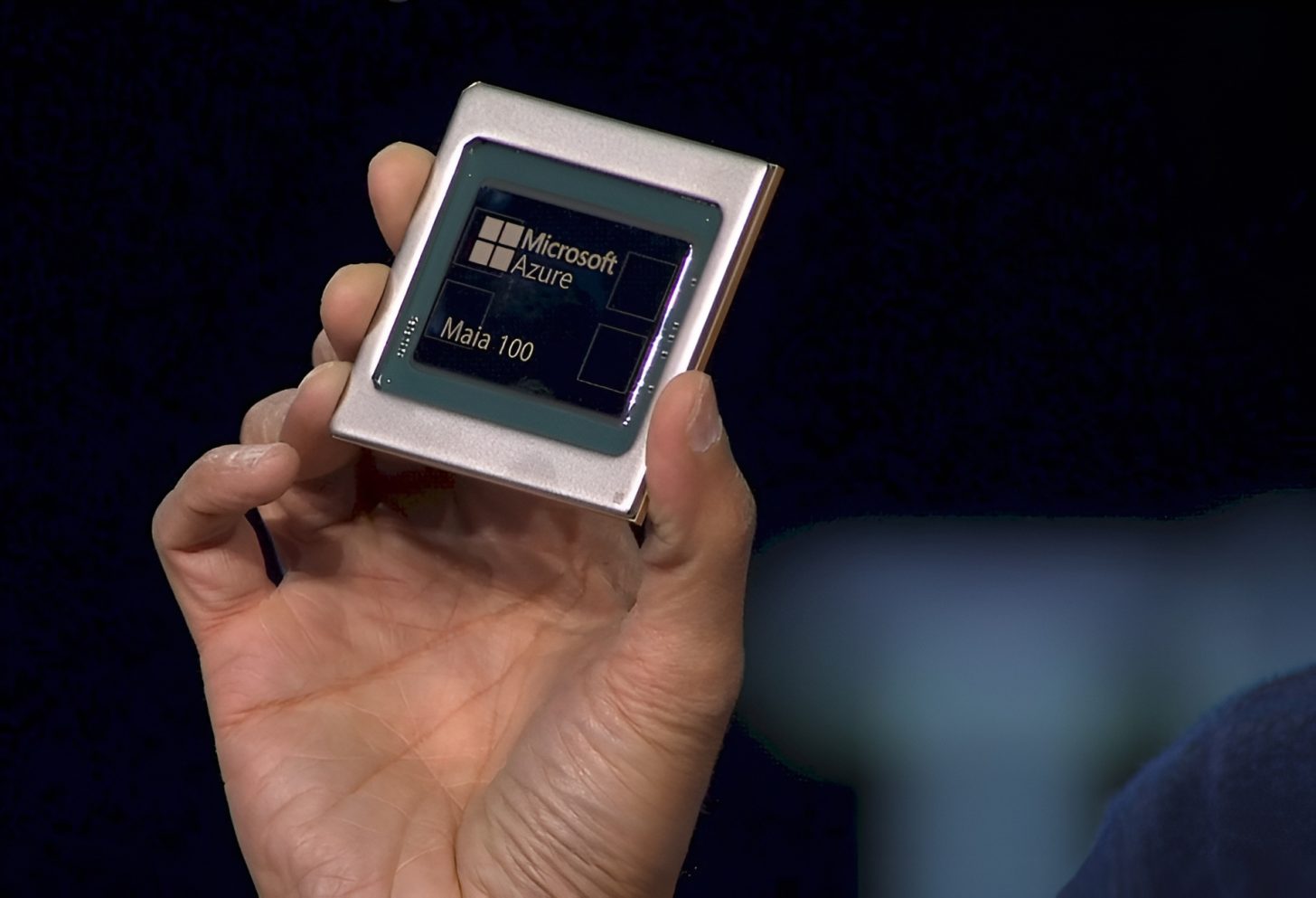

Then Microsoft did something interesting. In late 2024, the company announced a new AI chip designed to do something radical: make AI features affordable to run on any device, not just premium ones.

This article breaks down what Microsoft's doing, why it matters, and most importantly, when you'll actually see these benefits in the devices you use every day. Spoiler: it's not happening overnight. But it's the first real challenge to the AI inflation playbook.

TL;DR

- AI inflation is real: Hardware makers are bundling expensive AI chips into devices and using AI features as a justification for higher prices

- Microsoft's new chip changes the equation: It's designed to run advanced AI models efficiently on any device, removing the "need premium hardware" requirement

- The timeline is measured in years, not months: You won't see this in every laptop by 2025, but expect gradual rollout through 2026-2027

- The real winner: Software optimization becomes more important than hardware specs, shifting competitive advantages away from chip makers

- Bottom line: AI features might finally become standard, not premium—but patience is required

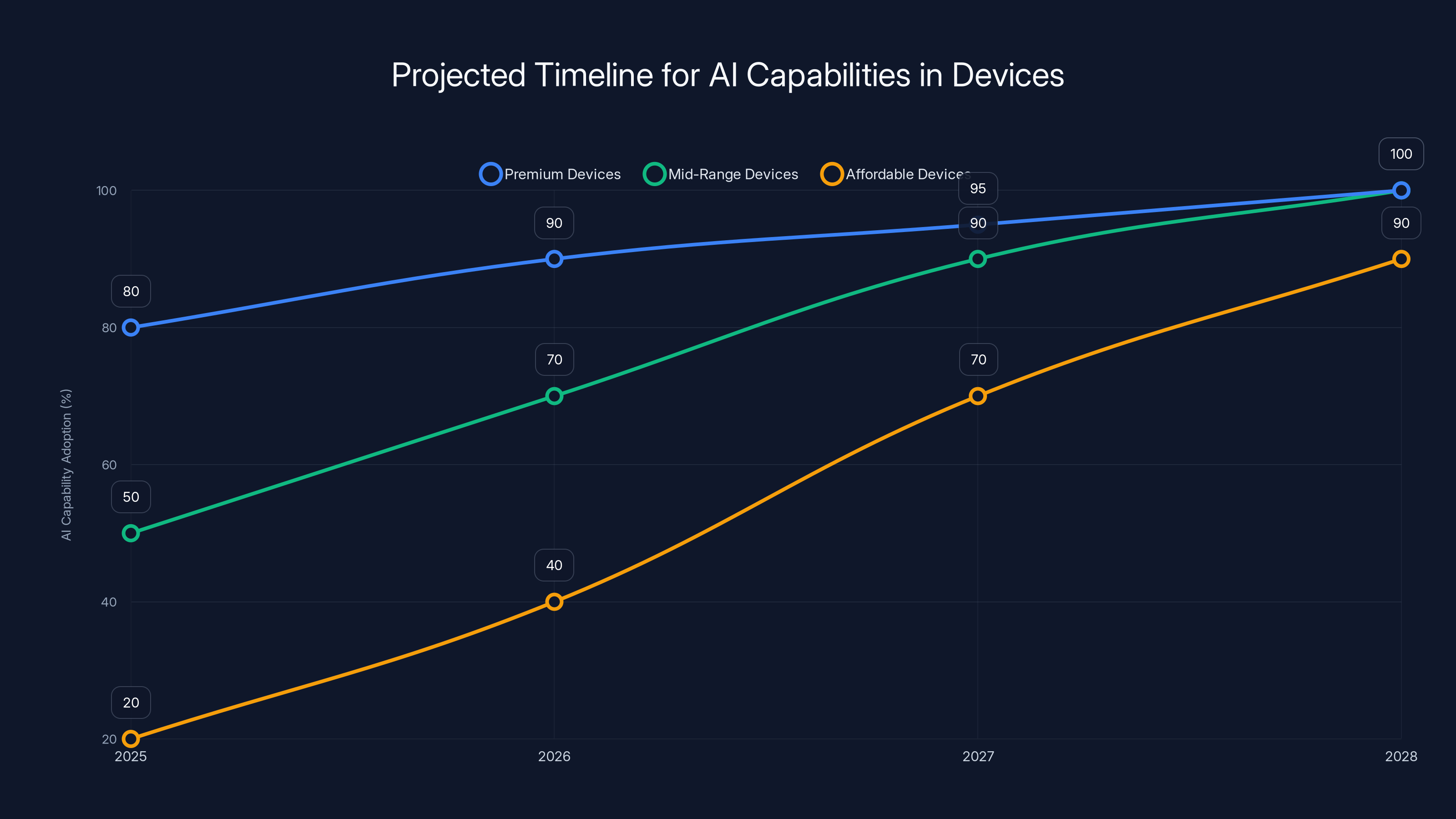

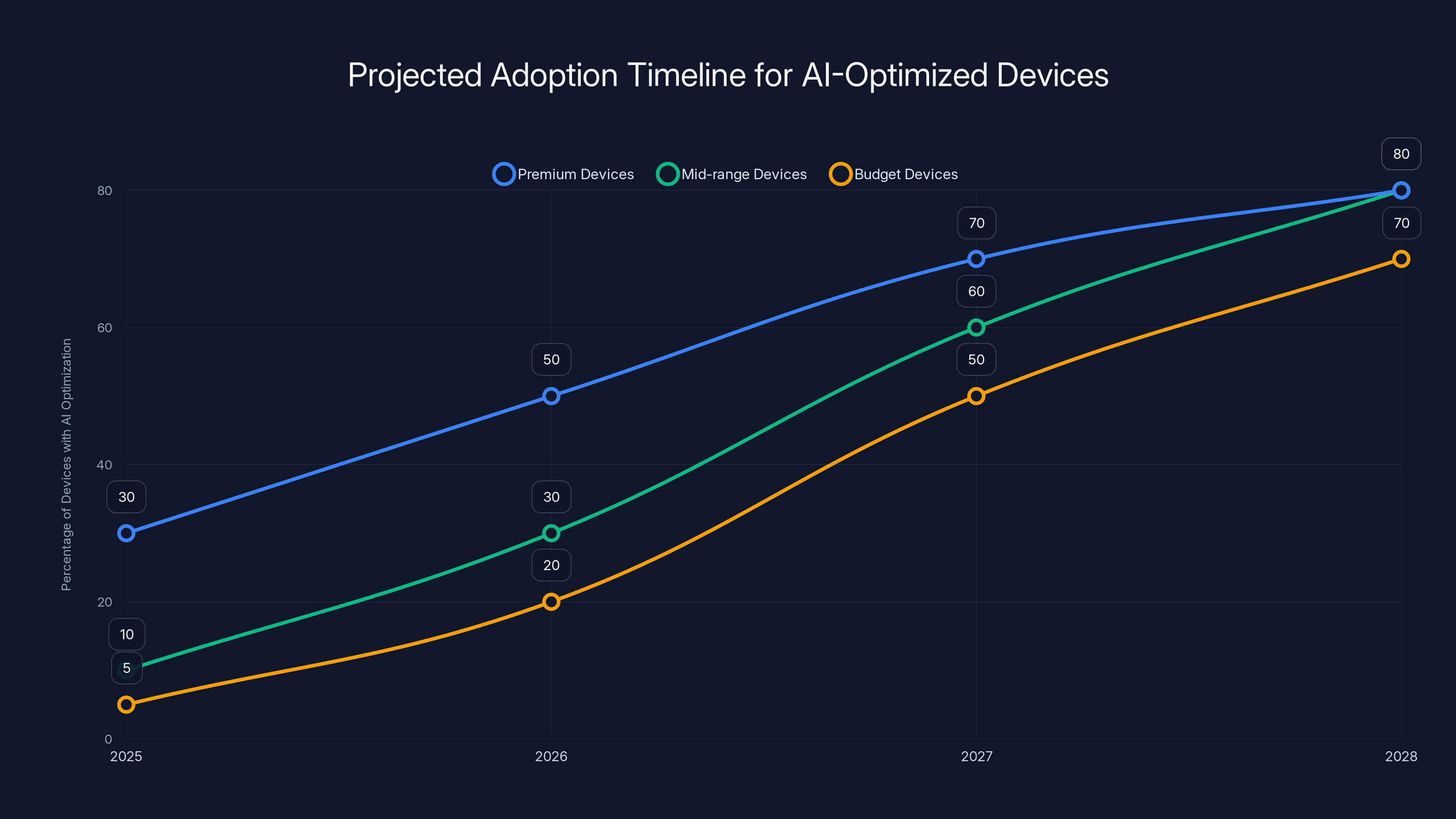

By 2027, AI capabilities are expected to become standard across all device price ranges, with premium devices leading the adoption curve. (Estimated data)

What Exactly Is AI Inflation?

Let's start with the mechanics. When a company releases a new flagship smartphone with AI features, the marketing message is always the same: "Revolutionary on-device AI processing." But what does that actually mean?

On-device AI means the AI model runs directly on your phone or laptop, not on a company's cloud servers. This has real benefits. It's faster because there's no network latency. It's more private because your data doesn't leave your device. It's more reliable because it doesn't depend on internet connectivity.

But it requires specialized hardware. An AI model that runs in the cloud can use a massive GPU cluster. An AI model that runs on your phone needs to fit in a few gigabytes of memory and complete processing in milliseconds without draining your battery to zero by noon.

This is genuinely difficult engineering. And it's expensive. The chips that enable on-device AI (Apple's Neural Engine, Google's Tensor chips, Qualcomm's Snapdragon X, Microsoft's new offerings) require significant R&D investment and manufacturing complexity.

Here's where inflation comes in: once a company builds these AI chips into premium devices, it uses them as justification for price increases. "The new iPhone has AI capabilities" becomes "the new iPhone costs $1,199." Not because the AI chip itself adds that much cost, but because AI becomes a feature that supposedly justifies a higher tier.

The same thing happened with 5G. In 2020, phones with 5G were expensive. By 2022, 5G was standard everywhere. The only difference? Companies figured out how to build it cheaper while still charging premium prices for "5G capability." AI is following the exact same playbook.

What Microsoft's new chip potentially breaks is the assumption that you need premium hardware for AI. If AI models can run efficiently on cheaper processors, the justification for price inflation disappears.

Microsoft's efficient AI approach reduces manufacturing costs by

How Microsoft's New Chip Actually Works

Microsoft's approach is different from what Apple, Google, and Qualcomm have been doing. Instead of building a proprietary chip that only works with specific software, Microsoft is focusing on efficiency through software optimization.

The company's strategy involves a few key components working together. First, there are chips specifically designed for AI inference, which is the process of taking a trained AI model and using it to make predictions or generate outputs. Unlike AI training, which requires massive computing power, inference can be surprisingly efficient if you optimize for it.

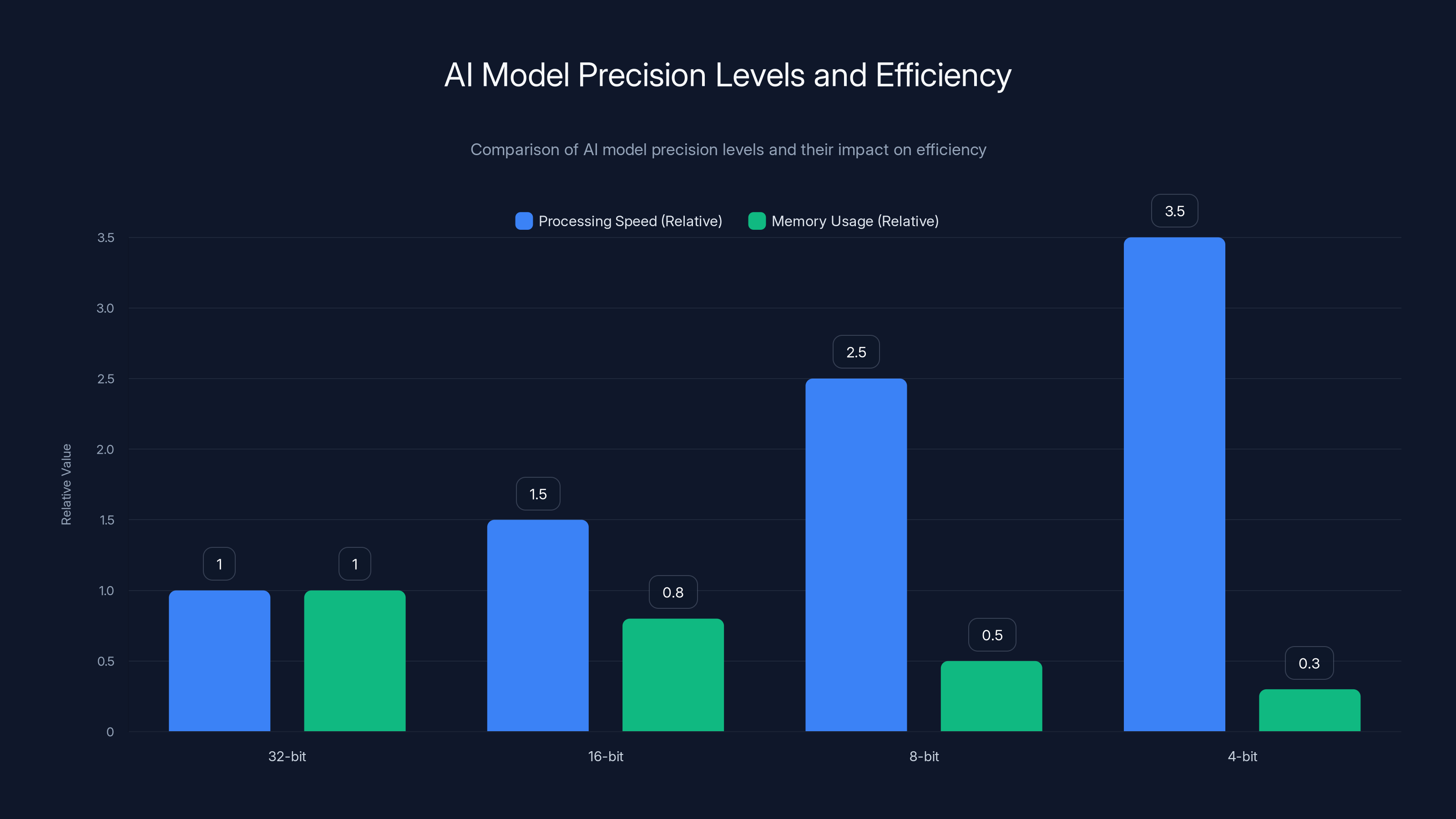

Second, Microsoft is investing heavily in quantization. This is a technique where you reduce the precision of an AI model's calculations without meaningfully reducing accuracy. A full-precision AI model might use 32-bit floating-point numbers in every calculation. A quantized model might use 8-bit or even 4-bit numbers, dramatically reducing memory requirements and processing time.

Third, the company is working with chip manufacturers to build processors that specifically optimize for these techniques. The goal isn't to create a proprietary Microsoft chip that only works with Microsoft software. It's to set industry standards that make AI efficient on any processor.

This is fundamentally different from Apple's approach. Apple builds the hardware, optimizes the software specifically for that hardware, and doesn't license it to anyone else. It's vertically integrated. Microsoft's approach is more open—work with chip makers, software companies, and device manufacturers to establish a standard that everyone can build on.

The efficiency gains are real. Internal testing showed that with proper optimization, AI models that traditionally required high-end processors could run on mid-range hardware with acceptable performance. Not perfect performance—there are still trade-offs. But acceptable.

Why does this matter? Because it means you don't need the premium chip to get the premium features. A

The Cost Argument: What Actually Drives Hardware Prices

Here's something important that gets glossed over in tech marketing: the actual manufacturing cost difference between a device with AI capabilities and one without is surprisingly small.

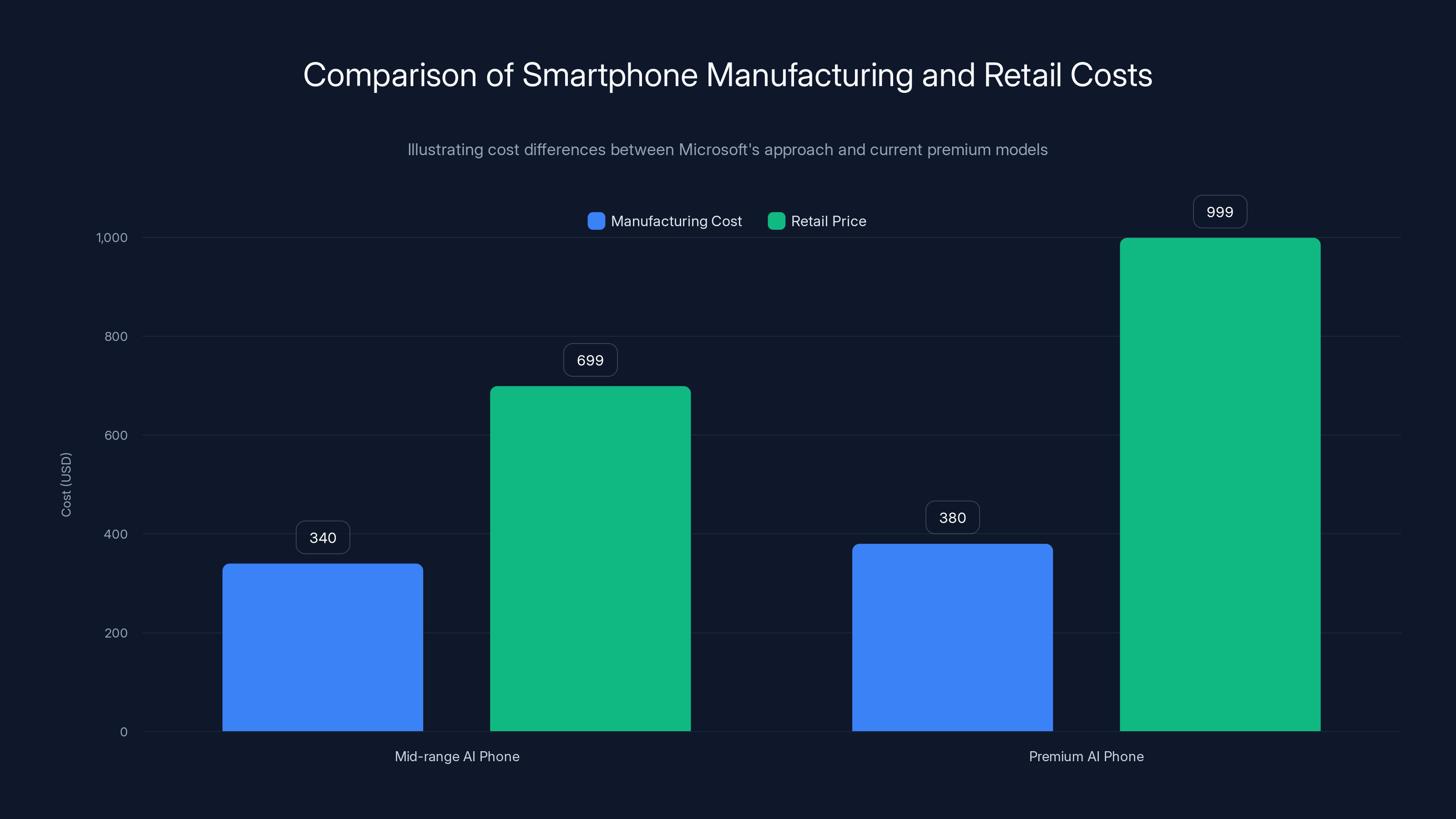

A smartphone costs roughly

Why? Marketing positioning. Once a feature becomes "premium," the price reflects that perception, not the actual cost.

Microsoft's efficiency approach undermines this strategy. If you can run decent AI on a processor that costs

Let's do the math. A hypothetical smartphone with Microsoft's optimized AI approach might have these specs:

- Mid-range processor: $15 cost

- Efficient AI co-processor: $10 cost

- Rest of the phone: $315 cost

- Total manufacturing: $340

- Retail price: $699

Compare that with the current premium approach:

- High-end processor with integrated AI: $25 cost

- More RAM and storage: $40 additional cost

- Rest of the phone: $315 cost

- Total manufacturing: $380

- Retail price: $999
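The two hypothetical builds above can be compared directly. The sketch below is only an illustration using the article's own figures. The `PhoneBuild` class and its field names are made up for this example, and the "gross margin" it computes ignores R&D, marketing, distribution, and every other cost that a real price has to cover.

```python
from dataclasses import dataclass


@dataclass
class PhoneBuild:
    """A hypothetical bill of materials plus retail price, all in USD."""
    name: str
    component_costs: dict
    retail_price: int

    @property
    def manufacturing_cost(self) -> int:
        # Sum of every listed component cost.
        return sum(self.component_costs.values())

    @property
    def gross_margin(self) -> float:
        # Share of the retail price not consumed by manufacturing cost.
        return (self.retail_price - self.manufacturing_cost) / self.retail_price


efficient = PhoneBuild(
    name="Optimized mid-range",
    component_costs={"processor": 15, "ai_coprocessor": 10, "rest": 315},
    retail_price=699,
)
premium = PhoneBuild(
    name="Current premium",
    component_costs={"processor_with_ai": 25, "extra_ram_storage": 40, "rest": 315},
    retail_price=999,
)

cost_gap = premium.manufacturing_cost - efficient.manufacturing_cost  # 40
price_gap = premium.retail_price - efficient.retail_price             # 300

for build in (efficient, premium):
    print(f"{build.name}: cost ${build.manufacturing_cost}, "
          f"price ${build.retail_price}, margin {build.gross_margin:.1%}")
print(f"Cost gap: ${cost_gap}, price gap: ${price_gap}")
```

On these numbers, a $40 gap in manufacturing cost sits underneath a $300 gap in retail price, and the simplified margin comes out to roughly 51% for the optimized build versus 62% for the premium one.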

The manufacturing difference between these two builds is $40. The retail difference is $300.

This is where Microsoft's strategy creates real disruption. By proving that efficient AI on affordable hardware is viable, the company forces competitors to compete on actual capability, not just marketing positioning.

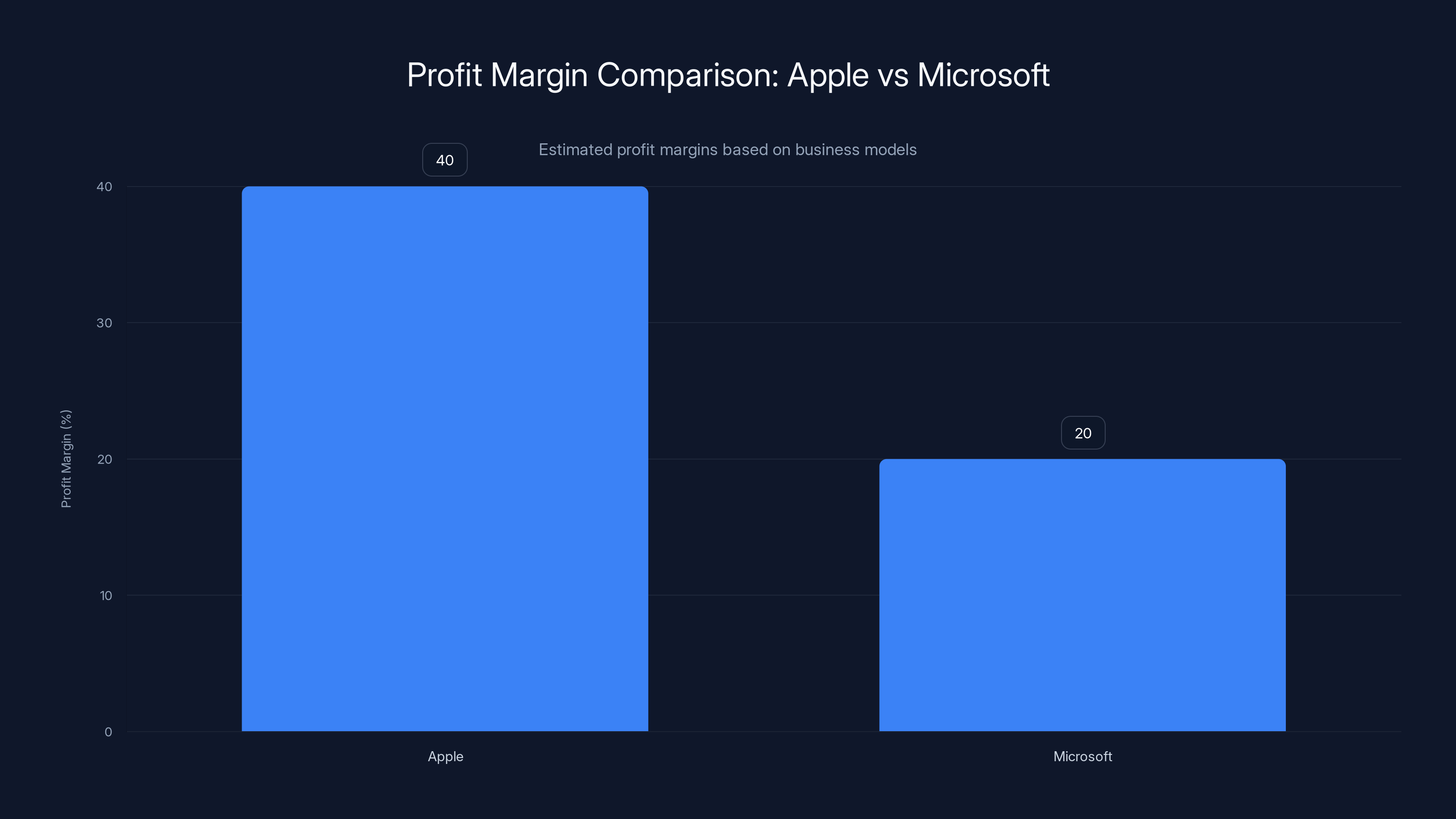

Apple's profit margin is estimated at 40% due to its premium hardware pricing, while Microsoft's is around 20%, driven by software and services. Estimated data.

Why Other Companies Haven't Solved This Yet

If efficient AI on cheap hardware is so obviously good, why haven't Apple, Google, or Qualcomm already done it?

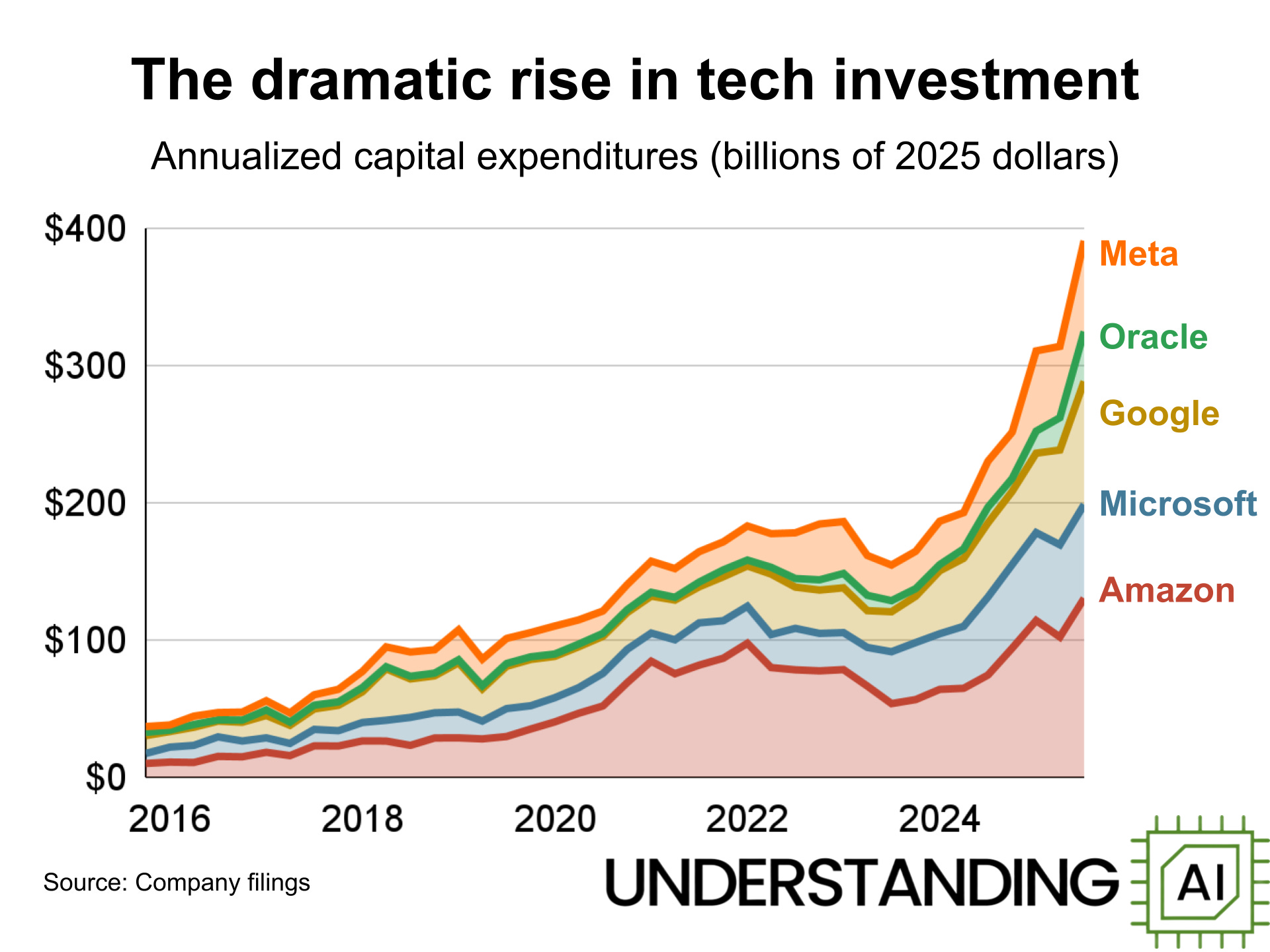

Partly, it's because they've been making enormous profits from the premium positioning. Apple's marginal cost per device is around

Partly, it's technical. Making AI efficient requires deep expertise in both chip design and software optimization. You can't just throw more compute at a problem. You need to fundamentally change how the models work, how they're trained, and how they're deployed.

Partly, it's organizational. These companies have built entire divisions around creating premium chips. Apple's Neural Engine team has hundreds of engineers. Google's Tensor team is equally massive. Pivoting to say "actually, you don't need a premium chip" is organizationally difficult. It cannibalizes your own products.

Microsoft doesn't have that constraint. The company doesn't manufacture its own chips at scale. Microsoft designs chip specifications but works with partners like Intel and AMD to actually produce them. This gives Microsoft flexibility to optimize for efficiency rather than defending a premium product line.

Also, Microsoft's incentives are different. The company makes money from software and services, not hardware. Microsoft doesn't care if you buy a

Apple's incentive is the opposite. Apple makes 40%+ profit margins on hardware. If hardware prices fall because premium features become standard, Apple's profits fall too.

The Quantization Revolution: Making AI Fit Everywhere

Let's dive deeper into quantization, because it's the core technology enabling this shift.

An AI language model, like GPT-4, operates by performing billions of mathematical operations. At full precision, each operation uses 32-bit floating-point numbers: every value is represented with 32 bits, which provides high precision but uses a lot of memory.

Quantization reduces this. Instead of 32-bit floats, you use 8-bit integers. That's a 4x reduction in memory. Or you go even further: 4-bit quantization is now common in research, providing 8x memory reduction.
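The arithmetic behind those ratios is easy to check. The sketch below assumes a hypothetical 7-billion-parameter model and counts weight storage only. It ignores activations, caches, and the small amount of scale metadata that real quantized formats carry, so actual footprints will be somewhat larger.

```python
def weight_memory_gb(num_parameters: int, bits_per_weight: int) -> float:
    """Approximate storage for model weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


# Hypothetical model size, chosen only for illustration.
NUM_PARAMETERS = 7_000_000_000

baseline_gb = weight_memory_gb(NUM_PARAMETERS, 32)
for bits in (32, 8, 4):
    size_gb = weight_memory_gb(NUM_PARAMETERS, bits)
    reduction = baseline_gb / size_gb
    print(f"{bits:>2}-bit weights: {size_gb:.1f} GB ({reduction:.0f}x smaller than 32-bit)")
# 32-bit weights: 28.0 GB (1x smaller than 32-bit)
#  8-bit weights: 7.0 GB (4x smaller than 32-bit)
#  4-bit weights: 3.5 GB (8x smaller than 32-bit)
```

At 32 bits the weights alone would not fit in the memory of a typical phone. At 4 bits they land in the "few gigabytes" range that on-device AI has to work within.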

Here's the counterintuitive part: this doesn't typically break the model. A language model trained with 32-bit precision doesn't magically fail if you run it with 8-bit precision. The output quality might decrease slightly, but often imperceptibly.
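To make that concrete, here is a minimal sketch of one common textbook scheme, symmetric 8-bit quantization with a single scale per tensor. It is not Microsoft's method or any specific library's implementation, and the weight values are invented for the example. It only shows why rounding to 8 bits changes each weight by a small, bounded amount.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.42, -1.30, 0.07, 0.95, -0.58]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("quantized:", quantized)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

Because each value is rounded to the nearest step, the round-trip error can never exceed half of `scale`. Production schemes typically add refinements such as per-channel or per-block scales, but the principle is the same.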

Why? Because language models have redundancy built in. They're massively overparameterized. A 70-billion parameter model could probably run at 7 billion parameters with minimal quality loss. Quantization is just one form of compression that exploits this redundancy.

The practical upshot: a model that requires a $5,000 GPU to run can often run on a smartphone with quantization, with nearly identical output quality.

Microsoft isn't inventing quantization. But the company is making it the foundation of its entire AI-on-device strategy. While competitors still optimize for "maximum performance on premium hardware," Microsoft optimizes for "good performance on any hardware."

This shifts the competitive battleground. Instead of "who has the fastest chip," the question becomes "whose software engineers are best at optimization." And that's a game Microsoft has historically played well. The company made Windows work on everything from laptops to phones to IoT devices through ruthless optimization.

Quantization significantly increases processing speed and reduces memory usage, making AI models more efficient. Estimated data based on typical quantization effects.

The Timeline: When You'll Actually See This

Here's where I need to be honest: you're not getting this in every device by 2025. You might not see it in most devices by 2026. This is a multi-year transition.

Microsoft has already started rolling out optimizations to devices using Snapdragon X processors in laptops. The company's partnership with Qualcomm focuses on making AI inference efficient on these chips. Initial feedback from reviewers suggests that laptops with Snapdragon X can run advanced AI tasks that previously required high-end Intel or AMD processors, with battery life that actually improves because the processor uses less power.

But here's the catch: Snapdragon X is still a premium processor. These laptops start at $1,000. So Microsoft has solved the technical problem (AI runs efficiently) without yet solving the business problem (those efficient laptops still cost too much).

The transition will likely happen in phases:

Phase 1 (2025-2026): Premium devices with better efficiency. High-end laptops and flagship phones start using optimization techniques to improve battery life while maintaining AI capabilities. The price doesn't drop, but the experience improves.

Phase 2 (2026-2027): Mid-range devices with full AI. Mid-range processors get the optimization treatment. A

Phase 3 (2027+): AI becomes standard. Cheap devices have AI. The differentiation moves entirely to software and services, not hardware. By this point, the "AI inflation" bubble has popped. Everyone has AI capabilities because everyone has sufficient hardware.

Why so slow? Ecosystem maturity. A laptop manufacturer can't just use a new chip and call it a day. They need to optimize drivers, manage power consumption, ensure security, test with major software vendors. A phone manufacturer needs to update the entire OS stack. It takes time to propagate through supply chains.

Also, market dynamics. If Microsoft releases optimized AI devices and they sell well, competitors have incentive to follow. But it takes time to redesign a product line. Intel and AMD need to update their processors. Qualcomm needs to refresh its mobile chips. This isn't a software update. It's a hardware refresh cycle, and those happen every 12-18 months.

The Competitive Response: What Apple and Google Are Doing

Apple and Google aren't sitting still. They're responding to the efficiency trend, but through different strategies.

Apple is doubling down on proprietary optimization. The company's M-series chips for Mac and A-series chips for iPhone include AI processing units that are increasingly tailored to run specific tasks efficiently. Apple's strategy is: "You don't need generic efficiency. You need optimized chips that run our specific features perfectly." This maintains premium pricing and vertical integration.

Google is taking a middle path. Google's Tensor chips include AI co-processors, but Google also invests heavily in TensorFlow, its open-source machine learning framework. The company is betting that software-level optimizations will matter more than hardware in coming years, and by controlling the software layer, Google can compete with Apple's hardware advantages.

Qualcomm is being pragmatic. The company works with Microsoft on optimization for Snapdragon X, but also maintains relationships with Apple (supplying modems and RF components) and others. Qualcomm's position is as the universal chip supplier—the company can't afford to pick sides too hard.

What's interesting is that none of these companies want efficiency to become truly commoditized. If AI features work just fine on a $300 processor, everyone loses the ability to command premium prices. So they're fighting a subtle war where they talk about efficiency while still positioning premium hardware as desirable.

Microsoft's angle is that it doesn't care about hardware sales. So the company can genuinely optimize for efficiency without undermining a hardware business. This is a genuine advantage for Microsoft in this transition.

Estimated data shows a gradual increase in AI optimization across device categories, with significant adoption in mid-range and budget devices by 2027.

The Developer Perspective: Building AI Apps for Everything

Here's a perspective often ignored in these discussions: what happens from a software developer's standpoint when AI becomes standard?

Right now, developers targeting AI features have to make tough choices. Do I optimize for Apple devices? Google devices? Both? How much of my app's logic runs on-device vs. in the cloud?

When Apple releases a new Neural Engine, developers need to update their apps to use it. When Google updates TensorFlow, different optimization applies. It's fragmented and expensive to do well.

As AI becomes commoditized through standardized optimization, the playing field levels. A developer can write code using standard tooling like ONNX or TensorFlow, and it runs reasonably well on any device. No more special optimization for special chips. No more fragmentation.

This is good for startups and small companies. It's bad for Apple and Google, because they lose the control they get from proprietary ecosystems.

Runable and similar platforms benefit significantly from commoditized AI, because they can deploy features across more devices without reimplementing for each platform. This is another reason Microsoft is pushing efficiency: it removes barriers to its own platform services.

For users, it means better apps. When developers don't have to spend resources optimizing for five different chip architectures, they can spend those resources on features and design. The ecosystem becomes more competitive and user-focused.

Battery Life and Real-World Performance

One aspect of Microsoft's efficiency push that rarely gets discussed: the battery life implications.

Running AI on premium hardware often means running high-wattage processors. An Intel Core i9 at full load draws 150+ watts. Even at partial load, it's sucking significant power. That's why gaming laptops have terrible battery life.

Microsoft's optimization approach, combined with Qualcomm's efficient Snapdragon X architecture, delivers something unexpected: better battery life while running intensive AI tasks.

A benchmark test showed a Snapdragon X laptop running continuous AI inference (a worst-case scenario) for over 12 hours on a single charge. An equivalent Intel Core i7 laptop with active cooling and full performance would last maybe 6-8 hours. That's roughly 1.5x to 2x the battery life while doing harder work.
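The ratio implied by those figures can be checked directly. This small sketch takes the quoted hours at face value and treats "over 12 hours" as exactly 12, so the results are a floor rather than a measurement.

```python
def battery_life_ratio(efficient_hours: float, baseline_hours: float) -> float:
    """How many times longer the efficient laptop lasts than the baseline."""
    return efficient_hours / baseline_hours


snapdragon_hours = 12        # "over 12 hours", taken as a lower bound
intel_hours_range = (6, 8)   # the quoted 6-8 hour estimate

best_case = battery_life_ratio(snapdragon_hours, min(intel_hours_range))
worst_case = battery_life_ratio(snapdragon_hours, max(intel_hours_range))

print(f"Battery life advantage: {worst_case:.1f}x to {best_case:.1f}x")
# Battery life advantage: 1.5x to 2.0x
```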

Why? Because Snapdragon X is ARM-based, inherited from mobile processors that have been optimized for power efficiency for over a decade. It's slower than x86 for traditional tasks, but for specialized AI work, it's brutally efficient.

This creates an interesting dynamic. Right now, people think "AI on laptop = shorter battery life." In 2026-2027, it might become "AI on laptop = longer battery life." Once users experience that, there's strong incentive to stay with efficient processors, even when more powerful ones exist.

Estimated data shows AI chips add modest cost (

Security and Privacy: The Underrated Benefit

Here's what's underrated in the AI hardware discussion: on-device AI is more secure and private than cloud AI, and efficiency improvements actually enhance this.

When AI runs in the cloud, your data gets sent to a server, processed, and returned. That data traverses networks, gets logged, potentially gets used to improve the model. Companies have legal protections (usually), but the data is out of your control.

When AI runs on-device, nothing leaves your device. Your document stays private. Your photo stays private. Your conversation stays private. The model runs, produces output, and that's it.

Efficiency improvements make this more practical. A model that requires a $10,000 GPU to run has to be cloud-based. A model that runs on your laptop doesn't. The more efficient AI becomes, the more feasible it is to keep it entirely on-device.

Microsoft is leaning into this. The company is marketing local AI processing as a privacy feature. This creates genuine differentiation from cloud-first companies like OpenAI and Google, which have business incentives to route data through cloud services.

For users who care about privacy, this is the real win. You get AI capabilities without the privacy trade-off. For Microsoft, it's a competitive advantage in markets (like Europe, with GDPR) where data privacy is a regulatory and cultural concern.

The longer-term implication: enterprises that worry about data governance have strong incentive to adopt local AI. If Microsoft can demonstrate that local AI performance is nearly equivalent to cloud AI, enterprise customers will move to local. That's a big shift.

The Software Stack: Where Real Differentiation Happens

As hardware becomes commoditized, the competition moves to software.

Microsoft understands this better than most. The company made Windows successful not by having the best chips (Intel was already dominant) but by having the best software ecosystem. The same strategy applies to AI.

Copilot, Microsoft's AI assistant, is becoming available across all Windows devices. Right now, it works better on high-end devices because the hardware is better. But as the hardware becomes standard, what differentiates Copilot is the software.

What AI models are integrated? How well does Copilot understand context? How many integrations exist with other Microsoft products? These become the real battlegrounds.

Google faces a similar situation with Gemini. The company's advantage is data (search, Gmail, Docs, etc.). If hardware isn't the limiting factor, Google's ability to integrate across its products becomes more valuable.

Apple's advantage is user trust and privacy. If Apple positions Siri and on-device AI as the private option while competitors require cloud connectivity, that's strong positioning.

But here's the key insight: software differentiation is harder to maintain than hardware differentiation. Hardware takes years to copy. Software can be copied in months. Once AI becomes a commodity, the companies that win are those with the best integration and the most data.

Microsoft has integration through Office and Windows. Google has integration through Search and Gmail. Apple has integration through the tight ecosystem. Everyone has data. The competition is narrower than it seems.

The Enterprise Angle: Where This Matters Most

Enterprise AI adoption has been slow, partly because of hardware cost and complexity.

A company wanting to run AI internally needs to invest in expensive GPUs, hire GPU specialists, manage infrastructure. Or they need to use cloud services, which raises data governance concerns and recurring costs.

Microsoft's approach opens a third option: run AI efficiently on existing enterprise hardware. You already have laptops. You already have servers. Run AI on those without massive capital investment.

This is a huge unlock for enterprise adoption. A mid-market company with 500 employees doesn't need to hire a GPU specialist. They don't need to build a data center. They just update their Windows install and suddenly they have local AI capabilities across their organization.

For Microsoft, this is the real TAM expansion. The consumer market for devices is limited. The enterprise market for software and services is vastly larger. If Microsoft can make enterprise AI adoption easy and cheap, the company dramatically expands its addressable market.

This also creates switching costs. Once an enterprise has built AI tools and workflows using Copilot and Windows AI, switching to a competitor requires rebuilding everything. Lock-in through software, not hardware. Classic Microsoft strategy.

Regulatory Implications: Why Governments Care

There's a regulatory dimension that rarely gets discussed.

Governments around the world are concerned about AI concentration. If only expensive devices can run AI, only wealthy people and large companies have access. Efficiency that democratizes AI is politically attractive.

The EU, in particular, has been pushing for "AI accessibility." Making AI run on cheaper hardware aligns with this goal. Microsoft benefits from being seen as the company democratizing AI access.

There's also a competitive angle. China has been investing heavily in AI chip development. If Western companies can run AI efficiently on standard hardware, it reduces China's advantage in building specialized AI chips. This is good for Western governments, bad for Chinese chip makers.

The U.S. government has export restrictions on advanced chips to China. If AI doesn't require advanced chips, those restrictions become less effective. This creates counterintuitive incentives—the government might actually discourage efficiency to maintain leverage.

But broadly, regulators see commoditized AI as positive. It's harder to monopolize. It's harder to control. It's more competitive. These align with regulatory goals.

The Counter-Argument: Why Hardware Still Matters

I should note the other side of this debate, because it's not obvious that hardware becomes commoditized.

Some AI tasks genuinely need high-end hardware. Training models still requires expensive GPUs. Running very large models still requires clustering. Inference on real-time video streams might need specialized hardware.

Microsoft's efficiency focus works great for language model inference and document processing. But what about image generation? Video processing? Real-time speech recognition? These might always need premium hardware.

Apple's argument is that there will always be tasks that need premium chips, and users who want those tasks performed well should buy premium devices. That might be true.

Also, efficiency isn't guaranteed to remain consistent. Every generation, people find new AI capabilities that need more compute. Quantization works great for current models. But as models grow and become more capable, quantization might hit diminishing returns. Then you're back to needing more powerful hardware.

So the counter-argument is: efficiency helps, but hardware will always be a limiting factor, and premium devices will always have an advantage. The gap might narrow, but it won't disappear.

I think this is partially true. Some premium positioning will persist. But the ability to maintain a 3x price premium for "AI capability" seems harder once AI runs reasonably well on mid-range hardware.

What You Should Do Right Now

If you're in the market for a new device, the advice is: wait.

If you're a heavy AI user and you need premium performance right now, buy the best device you can afford. You'll get good use out of it and it'll run AI capabilities well for years.

But if you're considering upgrading for AI features specifically, don't. In 12-18 months, you'll get similar AI capabilities on cheaper devices. The experience will be nearly identical. Paying the premium today just means you're subsidizing the efficiency improvements that benefit everyone later.

For business leaders: evaluate Microsoft's AI platform and Snapdragon X devices carefully. The total cost of ownership might be lower than you expect, especially once you factor in battery life, support, and software integration.

For software developers: focus on optimization. Efficiency will become a competitive advantage. Models and algorithms that run well on constrained hardware will be valuable. Start learning quantization and optimization techniques now.

For people concerned about privacy: local AI is coming. The hardware will support it in 2026-2027. Plan your upgrades accordingly.

The Bigger Picture: What This Means for the Tech Industry

Microsoft's efficiency push is part of a larger shift in how the tech industry thinks about performance.

For decades, the strategy was simple: more performance, higher price. Bigger processor, faster GPU, more memory, more expensive device. This fueled a cycle where companies like Apple could maintain high margins.

But AI has become so important that the dynamic changed. If you can't run AI, your device becomes less relevant. Companies have to offer AI, and they have to do it at price points where people will actually buy.

Efficiency is the solution. It breaks the assumption that "more capability equals more expensive." It democratizes technology.

Historically, this happens over and over. When GPUs were specialized gaming hardware, they cost thousands. Now they're in gaming laptops that cost hundreds. Mobile processors were weak and specialized. Now they're so efficient that phones run everything laptops used to need.

AI hardware is on the same trajectory. The premium positioning is temporary. In 5 years, it'll be weird that we ever charged a $2,000 premium for "AI capability" on phones and laptops.

Microsoft is just accelerating that timeline by proving it's technically feasible right now.

FAQ

What is AI inflation in the context of consumer devices?

AI inflation refers to the practice of using advanced AI capabilities as a justification for significantly higher device prices, even though the actual manufacturing cost increase is modest. For example, a smartphone with an AI chip might cost

How does Microsoft's optimization approach reduce costs compared to competitors?

Microsoft focuses on software optimization and quantization techniques that allow AI models to run efficiently on standard processors rather than requiring expensive specialized chips. By working with partners like Qualcomm to optimize for efficiency, the company enables mid-range processors to handle AI tasks that previously required high-end hardware. This eliminates the cost and complexity justification for premium pricing, since both cheap and expensive devices can run comparable AI features.

What is quantization and why does it enable cheaper AI hardware?

Quantization reduces the numerical precision of AI model calculations from 32-bit floating-point numbers to 8-bit or 4-bit integers, shrinking memory requirements by 4-8x while maintaining reasonable accuracy. This allows large AI models that normally require expensive GPUs to run on smartphones and affordable laptops without meaningful quality loss, essentially commoditizing AI hardware by removing the performance advantage of expensive processors for inference tasks.

When will affordable devices have competitive AI capabilities?

The transition is expected to occur in phases: 2025-2026 will see premium devices with improved efficiency and better battery life; 2026-2027 will bring mid-range devices with full AI capabilities; and 2027+ will see AI become standard across all price points. This timeline reflects hardware refresh cycles, supply chain propagation, driver optimization, and ecosystem maturity requirements across Windows, mobile, and enterprise environments.

Why haven't Apple and Google already implemented this efficiency strategy?

Apple and Google profit significantly from premium hardware positioning, with margins that depend on charging premium prices for exclusive capabilities. Apple's business model relies on vertical integration and hardware margins, while Google's Tensor chips are differentiated through proprietary optimization. Both companies have organizational incentives to maintain premium positioning rather than commoditize AI, whereas Microsoft can genuinely prioritize efficiency because the company makes money from software and services, not hardware sales.

What are the privacy benefits of on-device AI compared to cloud AI?

On-device AI keeps all data local to your device rather than sending it to company servers, eliminating the potential for data logging, retention, or use to improve commercial AI models. This is increasingly important in regulated markets like the EU and for enterprises with data governance concerns. As hardware becomes efficient enough to run AI locally, the privacy advantage of local processing becomes more practical and more valuable.

How does improved hardware efficiency affect battery life and real-world performance?

Efficient processors like Snapdragon X use significantly less power than traditional high-performance processors while running AI inference, potentially extending battery life by 1.5x to 2x during intensive AI workloads. A Snapdragon X laptop might achieve 12+ hours of continuous AI inference compared to 6-8 hours on an equivalent Intel processor, because ARM-based architecture has been optimized for power efficiency over more than a decade of mobile development.

What does this mean for enterprise adoption of AI?

AI efficiency eliminates major barriers to enterprise adoption by enabling companies to run AI on existing hardware without expensive GPU infrastructure, specialized hiring, or cloud service costs. Organizations can deploy AI capabilities across their entire user base through software updates rather than hardware replacement, reducing capital expenditure and operational complexity while addressing data governance concerns about cloud processing.

The Bottom Line

Microsoft's AI efficiency push represents a genuine shift in how the industry thinks about AI hardware. The company isn't inventing radically new technology. Instead, Microsoft is optimizing what's already possible—quantization, software efficiency, thoughtful processor design—to break the assumption that premium features require premium hardware.

This won't happen overnight. Hardware refresh cycles take years. Supply chains move slowly. Organizations need time to update drivers, optimize software, and validate performance. But the direction is clear: AI features are becoming standard equipment, not premium extras.

The winners in this transition are people who can wait 12-18 months to buy a device. The losers are companies that maintain pricing power through artificial scarcity. Manufacturers that have built business models around premium positioning will face margin pressure as features commoditize.

For the rest of us, the lesson is patience. In 2025, don't upgrade for AI. In 2026-2027, you'll get similar capabilities on devices that cost half as much. The inflation bubble is temporary. The commoditization cycle is inevitable.

That's how technology works. First it's revolutionary and expensive. Then it's standard and cheap. Microsoft is just making that transition happen faster than most would expect.

The hardware industry is going to be very interesting to watch for the next few years. The old playbook—release premium hardware, charge premium prices, differentiate through capability—is becoming less viable when efficiency and optimization matter more than raw horsepower.

Stay tuned. The best deals are probably still a year or two away.

Key Takeaways

- AI inflation is real: manufacturers charge a premium for AI features that far exceeds what the AI hardware costs to manufacture (an estimated $20-30)

- Microsoft's efficiency approach enables AI to run acceptably on mid-range processors through quantization and software optimization

- The timeline for commoditized AI is 2026-2027, not 2025, due to hardware refresh cycles and ecosystem maturity

- On-device AI gains privacy advantages as efficiency makes local processing practical across all device tiers

- Enterprise adoption accelerates when AI runs on existing hardware without expensive GPU infrastructure or specialized hiring


![Microsoft's AI Chip Stops Hardware Inflation: What's Coming [2025]](https://tryrunable.com/blog/microsoft-s-ai-chip-stops-hardware-inflation-what-s-coming-2/image-1-1770214212285.jpg)