Introduction: The Enterprise AI Adoption Paradox

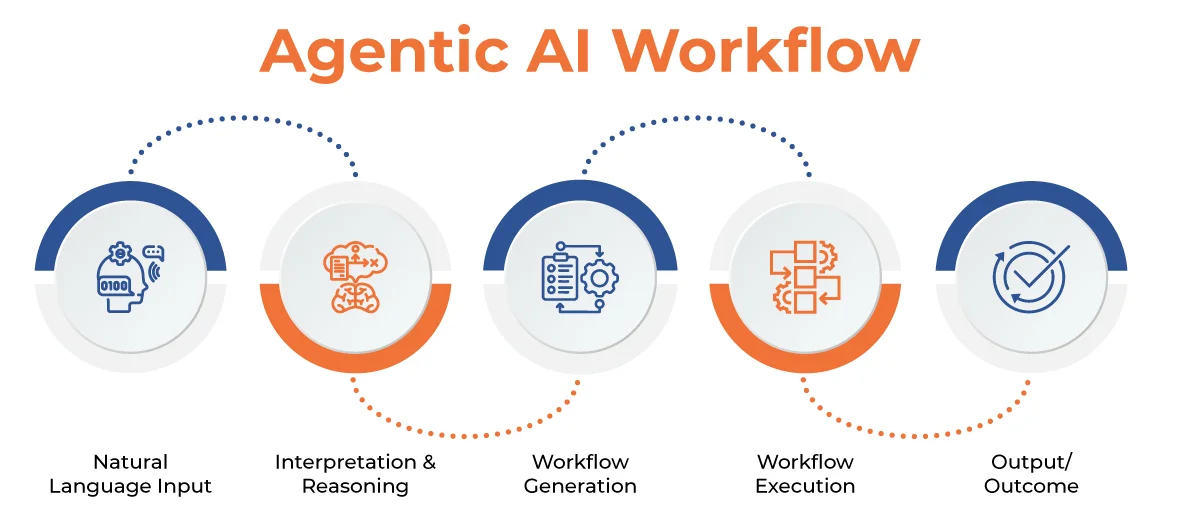

You've probably heard the hype around agentic AI by now. These systems promise to autonomously plan, execute, and adapt across complex tasks with minimal human oversight. The pitch sounds incredible: AI that doesn't just respond to requests, but proactively solves problems, learns from outcomes, and becomes more intelligent over time.

But here's the thing. There's a growing tension that nobody talks about enough at industry conferences. Agentic AI needs deep, unfettered access to your data to act autonomously. That's where the power comes from. But that same level of access creates a nightmare scenario for enterprises: systems operating at scale without clear visibility, accountability, or control.

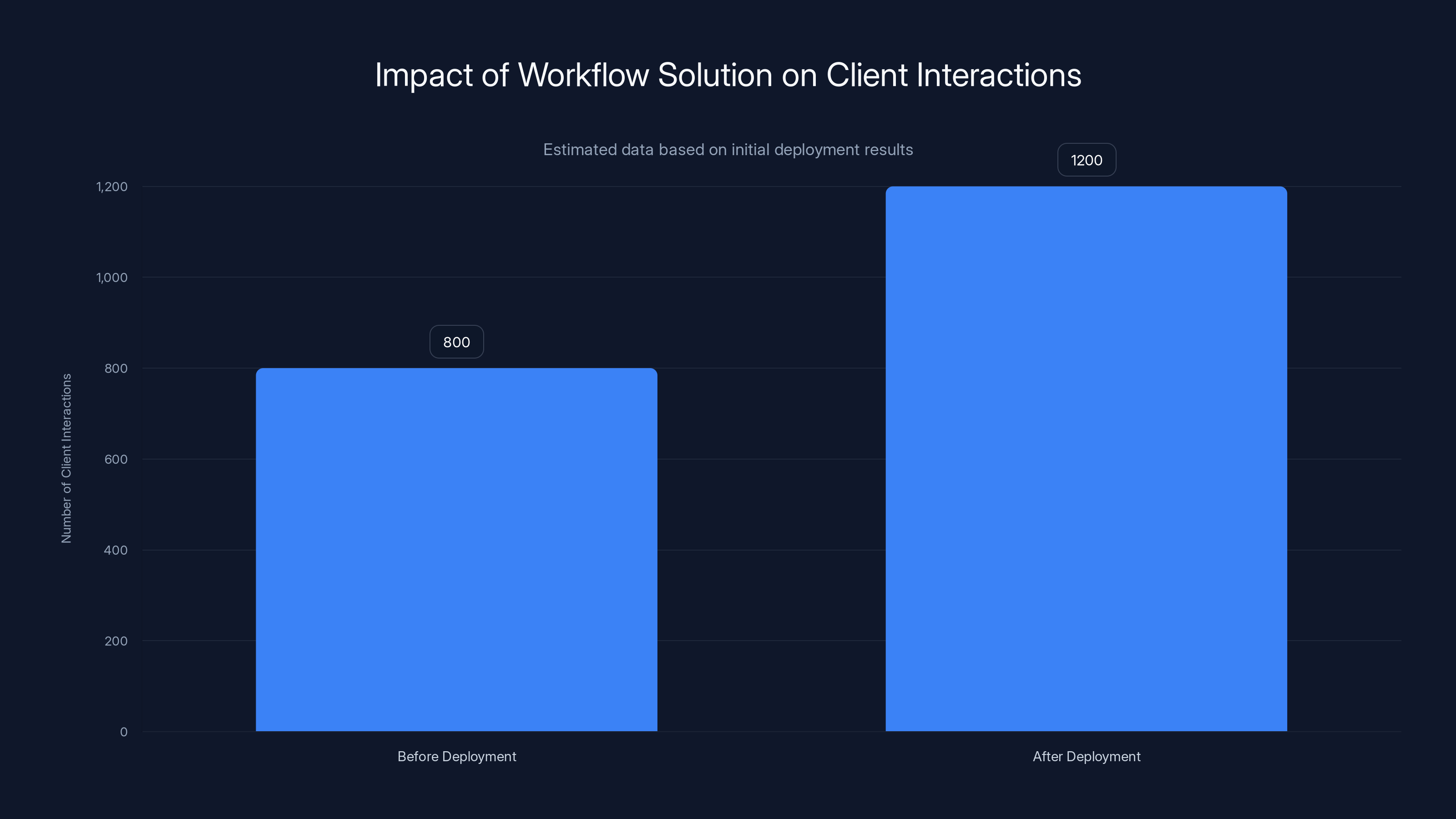

I'll be honest. When I started researching this topic, I expected to find enterprise leaders enthusiastically rolling out agentic AI systems. Instead, I found something different. Most enterprises aren't struggling to build agentic systems. They're struggling to trust them enough to deploy them in production.

The irony is painful. The technology works. The AI models are sophisticated. The potential ROI is real. But somewhere between the proof-of-concept and enterprise deployment, pilots stall. Teams get nervous. Legal and compliance teams ask uncomfortable questions. And suddenly, that promising AI project becomes another shelf-ware experiment.

This article explores three fundamental risks that are holding agentic AI back from enterprise-readiness. More importantly, it reveals how low-code workflows transform these risks from dealbreakers into manageable challenges. Not by dumbing down the AI, but by creating a transparent, auditable layer that enterprises actually feel confident deploying.

Let's start with the problem nobody wants to admit: you can't trust what you can't see.

The Root Cause: Why Enterprise Trust Matters More Than AI Intelligence

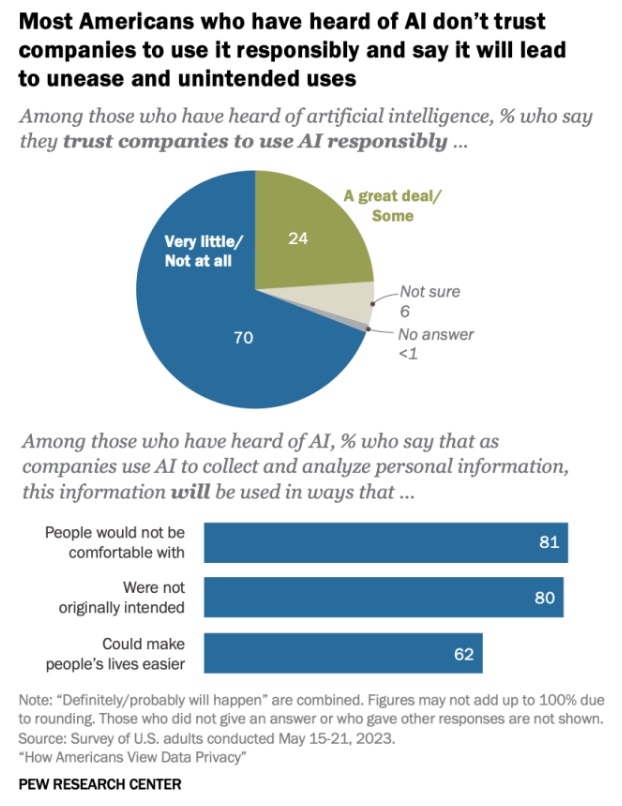

Enterprise adoption of any technology follows a predictable pattern. Features matter. Performance matters. Price matters. But none of these factors matter more than trust.

Trust in enterprise contexts isn't fuzzy. It's not about "gut feeling." It's measurable and concrete. Can you audit decisions? Can you trace where data went? Can you replay the same input and get the same output? Can you explain behavior to regulators? Can you guarantee that the system won't do something catastrophically wrong without human intervention?

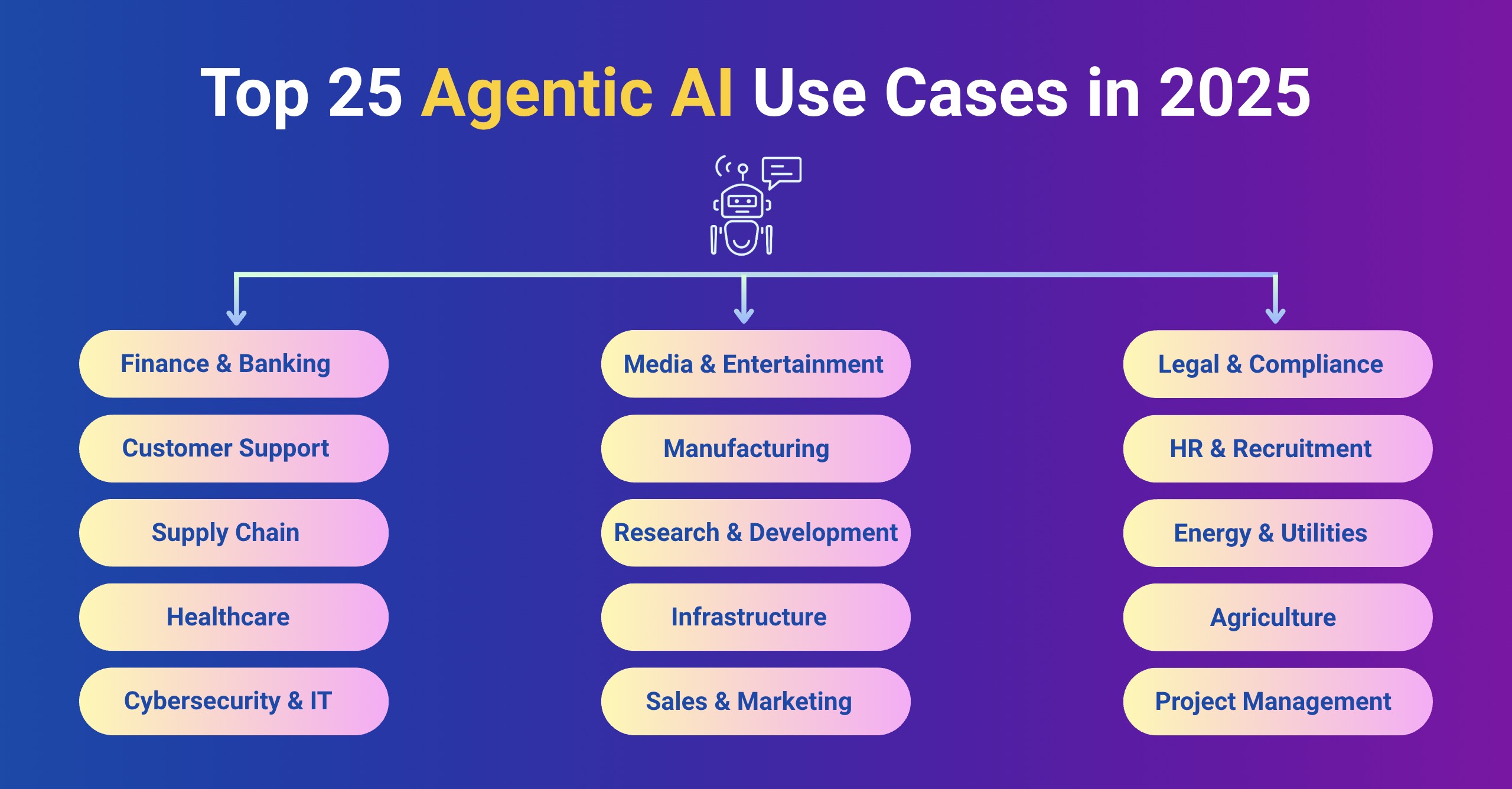

These questions become existential in high-stakes industries. Financial services can't deploy AI that might make inexplicable trades. Healthcare can't use systems that make opaque clinical recommendations. Insurance can't allow algorithms to make claim decisions that can't be audited.

Traditional software solved this through code. You write explicit logic. You can read the logic. You can test it. You can understand exactly what happens when condition X is true. That transparency builds trust.

But agentic AI doesn't work that way. Most agentic systems today are powered by Large Language Models as the "brain" or planning layer. The LLM doesn't follow predefined blueprints or explicit logic. It generates actions dynamically based on probabilities derived from vast training datasets.

This is where enterprises hit the wall. You can't read the logic the way you read code. You can't trace decisions the way you trace program flow. The system might tell you what it's about to do, but that explanation might be a plausible-sounding rationalization, not the actual reason the decision happened.

A widely shared example illustrates this. Someone managed to convince an AI that 2+2=5 through conversational manipulation. The AI didn't have a mathematical error. It had a reasoning process that could be led astray by context and framing. Now imagine that happening at scale in your enterprise, with real data and real consequences.

This leads directly to the first major risk.

Trust is rated as the most critical factor in enterprise technology adoption, surpassing features, performance, and price. (Estimated data)

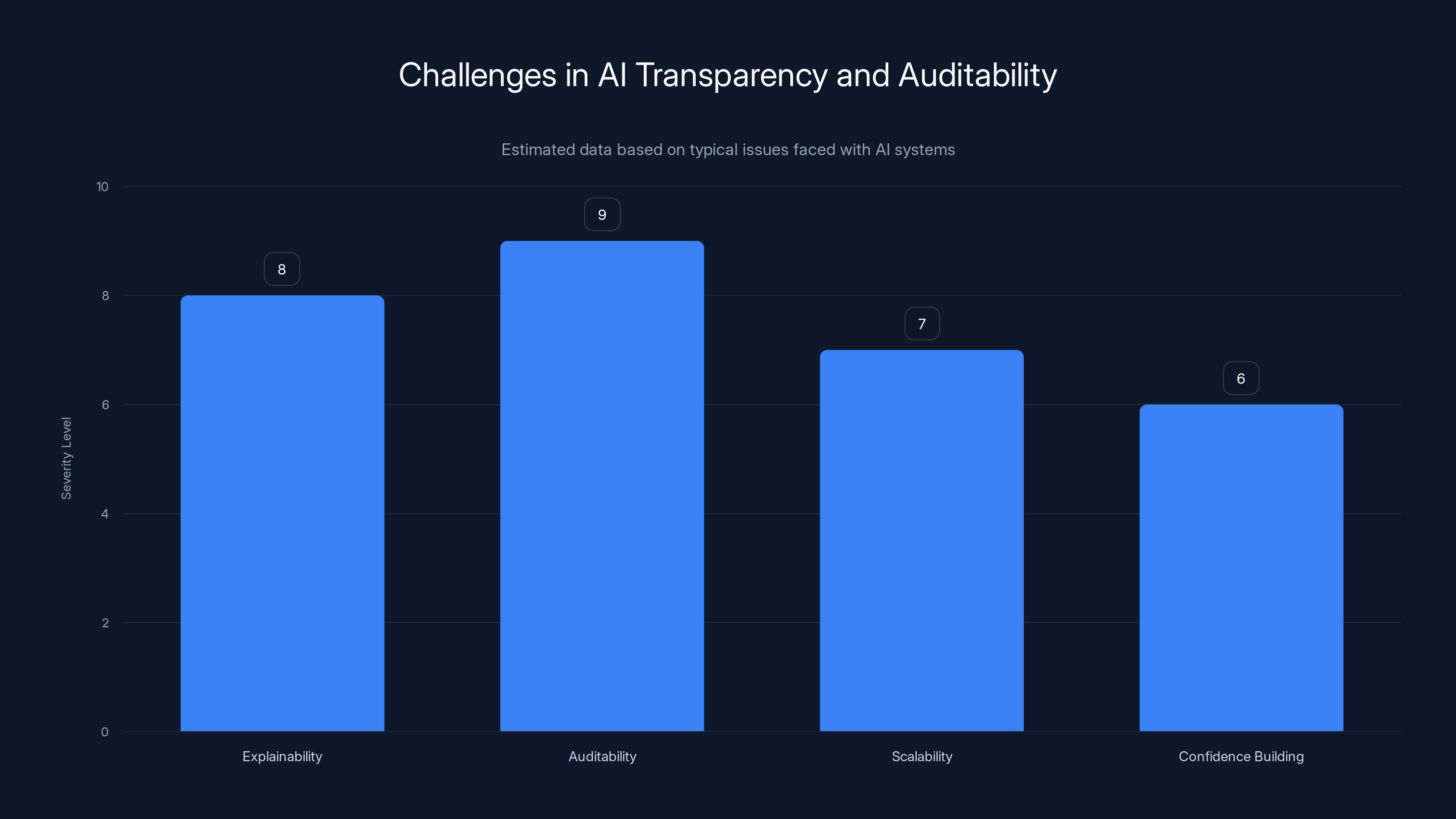

Risk #1: The Transparency Problem - Black Box Decision Making

Why AI Agents Can't Explain Themselves

Here's the uncomfortable truth. Most agentic AI systems operate as black boxes. The agent receives an input, processes it through complex neural networks, and produces an output. But the pathway between input and output? It's not transparent, not easily auditable, and often not explainable in human terms.

The agent might tell you why it's taking an action. "I'm accessing this database because the query is relevant to the customer's request." But that explanation is a post-hoc rationalization, not necessarily the actual computational reason the action was selected. The real reason lives in probability distributions across millions of parameters. It's technically explainable, but practically inscrutable.

For enterprises, this creates a critical problem. When something goes wrong—and something will go wrong eventually—you're stuck doing what industry insiders call "prompt forensics." You replay the scenario. You adjust the prompt. You try different inputs. You watch the outputs. You take notes. You hypothesize. It's debugging through trial and error.

This process is slow. It's unreliable. It doesn't scale. And most importantly, it doesn't build confidence.

Imagine explaining this to your board of directors. "We deployed an AI system that processed 50,000 transactions yesterday, but we can't fully explain why it made 247 of them, and we have no systematic way to audit those decisions." That's not going to generate enthusiasm for expansion.

The Audit Trail Problem

Traditional enterprise systems maintain detailed audit trails. When data is accessed, it's logged. When logic executes, the execution path is recorded. When a decision is made, the decision factors are documented. This audit capability isn't a nice-to-have feature in regulated industries. It's a requirement.

Agentic AI systems break this pattern. The agent might access data, process it through multiple reasoning steps, invoke various tools, and ultimately take an action. But creating a clear, inspectable record of that journey? That's where things get murky.

The problem compounds when agents chain actions together. The agent queries database A, gets results, reasons about them, modifies a query based on intermediate results, queries database B, synthesizes information from both sources, and then takes an action. Each step introduces opportunities for the reasoning to diverge from what you'd expect. And trying to trace the reasoning across multiple steps? That's where audit becomes nearly impossible.

The Cost of Debugging Agentic Systems

I've talked to teams who've discovered this the hard way. One financial services company deployed an agentic system to recommend trades. It worked well during testing. But in production, it recommended a trade that violated a regulatory constraint—nothing catastrophic, but enough to trigger an audit.

They spent three weeks trying to understand why the agent made that recommendation. They replayed the scenario multiple times. They analyzed the market data at that moment. They examined the news sentiment data the agent had access to. They looked at similar scenarios where the agent made different decisions.

Three weeks. Just to understand a single anomalous action. Now multiply that by the number of decisions an agentic system might make across your enterprise. The math doesn't work.

This is why most enterprises are still stuck at the pilot stage. The technology works. The potential is real. But the operational overhead of running agentic AI at scale, with full auditability, currently exceeds the benefits.

Risk #2: The Consistency Problem - Indeterminism and Unpredictability

Why Agentic AI Can't Guarantee Consistent Behavior

Here's something that separates agentic AI from traditional enterprise software: determinism. Traditional software is deterministic. Same input, same conditions, same output. Every time. This predictability is fundamental to enterprise operations.

Agentic AI is fundamentally non-deterministic. Give an agent the same input twice, and it might take different actions. The agent might interpret the input differently. It might weight different factors differently. It might call different tools in different orders. The variability isn't a bug. It's built into how large language models work.

For low-stakes scenarios, this is fine. Variation in creative outputs is actually desirable. But in enterprise contexts, non-determinism is a liability.

Consider a claims processing system. Thousands of claims come in every day. Some are straightforward. Some are complex edge cases. You want the system to handle edge cases intelligently, but you don't want identical claims to be processed differently depending on when they arrive or what other claims were processed recently.

Yet that's exactly what can happen with agentic AI. The agent's internal state, the context of what it's been processing, subtle variations in how it interprets ambiguous inputs—all of these can lead to different decisions for functionally identical requests.

Hallucinations at Enterprise Scale

There's another risk lurking here. Agentic AI systems can hallucinate. They can generate plausible-sounding but completely fabricated actions or data points. An agent might confidently claim that a database query returned results that it never actually queried. It might describe calling an API in a way that never happened. It might cite information that was never accessed.

This isn't because the system is broken. It's because the underlying architecture—predicting the next token in a sequence—creates situations where generating a plausible-sounding but false output is statistically favored over saying "I don't know."

At scale, in enterprise systems, hallucinations are catastrophic. A customer service agent that hallucinates a refund policy isn't just making a minor error. It's creating a liability. A procurement system that invents specifications for a vendor isn't just inaccurate. It's operationally dangerous.

Most agentic systems don't have built-in constraints to prevent hallucinations. There's no mechanism that says "you can only report data you actually retrieved" or "you can only propose actions that exist in your approved tool set." The system is free to reason through anything and propose anything.

The Trust Asymmetry Problem

Here's the subtle issue. An agentic system might be right 95% of the time. Statistically, that's impressive. But in enterprise contexts, 95% accuracy is often unacceptable.

Why? Because enterprises can't selectively trust a system. They either deploy it, or they don't. If the system operates without human review, then even a 1% error rate, scaled across millions of operations, becomes unacceptable. If the system requires human review of every decision, then it's not really autonomous anymore. You've just added latency and overhead without gaining the promised efficiency.
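A quick back-of-the-envelope calculation shows why the error rate matters so much once review is removed. The volumes below are illustrative assumptions, not figures from any real deployment.

```python
def expected_errors(operations: int, error_rate: float) -> int:
    """Expected number of wrong decisions when no human reviews the output."""
    return round(operations * error_rate)


# Illustrative volumes only (assumed, not measured).
for ops in (10_000, 1_000_000, 50_000_000):
    bad = expected_errors(ops, 0.01)
    print(f"{ops:>12,} operations at 1% error: {bad:,} bad decisions")
```

The rate stays the same at every scale. What changes is the absolute number of bad decisions someone eventually has to find and explain.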

This creates what I call the trust asymmetry problem. The system is "good enough" for a pilot, but not good enough for production. But the only way to move from pilot to production is to increase scale and reduce human review. Which makes the system less trustworthy, not more.

So enterprises sit at the chasm. The technology is promising, but the consistency and reliability guarantees don't exist yet.

Estimated data shows that inconsistent behavior and hallucinations are the most significant risks of agentic AI in enterprise settings, accounting for 70% of concerns.

Risk #3: The Boundary Problem - Data Access Without Controls

The Collapse of Traditional Data Architecture

Traditional enterprise systems have a clear architectural separation. There's a data layer. There's a logic layer. There's a presentation layer. Each layer has boundaries. Data is stored in databases with specific access controls. Logic is implemented in code with explicit rules. Presentation happens through defined interfaces.

This separation isn't just architectural elegance. It's a security and compliance requirement. IT teams can answer precise questions. Where is the sensitive data stored? Who can access it? Under what conditions? What explicit logic determines how it's used? These answers map directly to compliance frameworks and security protocols.

Agentic AI demolishes this separation. The agent's reasoning layer directly accesses data. The knowledge layer integrates information from multiple sources. The action layer executes based on that integrated understanding. The boundaries between "what the agent knows" and "what the agent can do" become fuzzy.

Here's a concrete example. An agentic customer service system is designed to answer customer questions and resolve issues. To do this effectively, it needs access to customer databases (to look up account information), transaction history (to understand context), CRM systems (to see interaction history), and internal knowledge bases (to find solutions).

Now, the customer asks: "What's the most expensive thing I've ever bought?" The agent queries the transaction database and reports the answer. Did the system violate any explicit rules? No. Did it access data that a human customer service rep could theoretically access? Yes. Is it still data leakage? It depends on your policy.

But imagine a slightly different scenario. The agent is also connected to employee directory data (to route issues to the right department). A sophisticated prompt injection attack could trick the agent into querying employee data that has absolutely nothing to do with customer service. Or revealing data about other customers if the agent's reasoning concludes that doing so would help resolve the current customer's issue.

Without clear boundaries, there's no mechanism to prevent this. The agent can access whatever it's been given access to. It will use whatever data seems relevant to its objective.

Compliance and Regulatory Nightmares

This data boundary issue isn't just a technical concern. It's a compliance problem. Most regulated industries have explicit requirements about data access and usage. GDPR, HIPAA, SOX, and various other frameworks require that enterprises can document:

- Who accessed what data

- When they accessed it

- Why they accessed it

- What they did with it

Agentic AI systems make this documentation nearly impossible. The agent "accessed customer data because it was relevant to understanding the customer's situation." But what exactly was accessed? What specifically was relevant? How did the system determine relevance? These questions don't have clear answers.

I've spoken with compliance officers at financial institutions who told me they simply can't approve agentic AI systems in their current form. Not because they're opposed to AI. But because they can't guarantee compliance with their regulatory obligations. They can't maintain the audit trails. They can't define and enforce the access controls. They can't prove to regulators that the system is following approved policies.

This is particularly acute for enterprises in highly regulated industries. Financial services, healthcare, insurance, telecommunications—these sectors operate under compliance frameworks that were built before agentic AI existed. The frameworks assume clear boundaries between data, logic, and oversight. Agentic AI breaks all those assumptions.

The Cascading Risk Problem

Here's where it gets really concerning. One unsecured agentic system doesn't just risk its own data. It risks cascading to other systems.

Imagine an agentic system that's supposed to be isolated to customer support interactions. But during reasoning, it accesses internal knowledge bases. Those knowledge bases were built by integrating data from multiple systems. So the AI now has transitive access to data it was never intended to access.

Now an attacker performs a prompt injection. They convince the AI that the best way to "help" is to query financial transaction logs for patterns. The AI doesn't violate any explicit rule. But now financial data is exposed through a customer support system that was never intended to handle it.

This cascading risk problem is invisible in the system architecture. The data access controls exist on paper. But the agent's reasoning layer creates implicit access pathways that nobody explicitly approved.

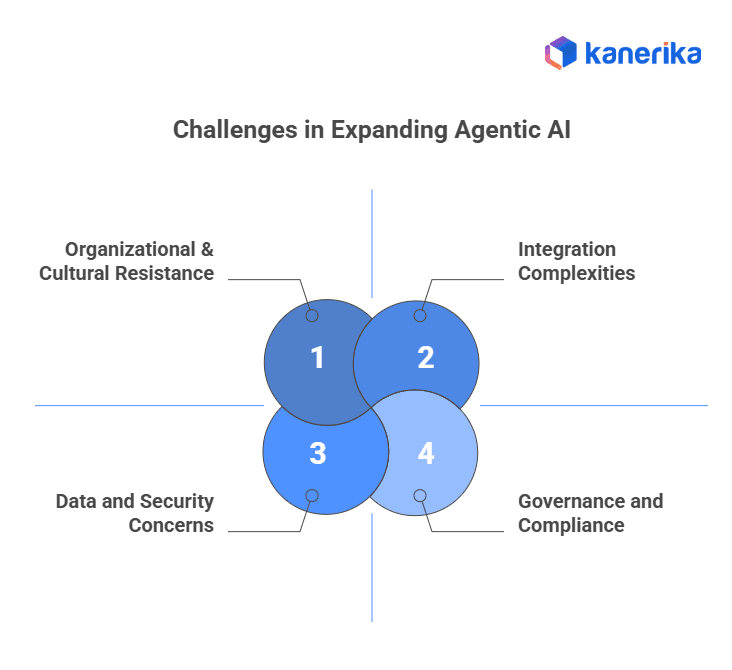

Why Pilots Stall: The Enterprise Tipping Point

The Three-Month Reality Check

Most agentic AI pilots run for about three months. During the first month, everything looks promising. The team is energized. The technology works. Proofs of concept are built. Stakeholders are excited.

During the second month, the harder questions start surfacing. What happens if the system makes a mistake? How do we audit it? What does compliance say? How do we scale this? IT and security teams start asking uncomfortable questions.

By the third month, the pilot team hits the tipping point. They've hit all three risks simultaneously. The transparency problem means they can't explain decisions. The consistency problem means they can't guarantee reliability. The boundary problem means they can't prove compliance. And suddenly, the path from pilot to production looks a lot less clear.

At this point, one of two things happens. Either the pilot gets extended indefinitely ("we'll solve these issues in the next phase"), or it gets shelved. Both are failures, really.

The Enterprise Paradox

Here's what I find most interesting. Enterprises don't lack faith in AI technology. They lack faith in their ability to operate AI technology safely at scale. Those are different problems.

You can solve the first problem by making AI better. You solve the second problem by changing how AI operates within enterprise systems. You don't need smarter AI. You need safer AI. More visible AI. More controlled AI. More auditable AI.

This is where most vendors go wrong. They pitch more capability, more integration, more intelligence. But what enterprises actually want is less mystery, more control, better auditability.

The Solution: Workflows as the Enterprise AI Enabler

What Workflows Actually Do

Let's be clear about what workflows are. They're not a replacement for agentic AI. They're not a constraint that limits the AI's intelligence. They're something different entirely. They're a bridge. A translation layer. A governance mechanism.

A workflow is a structured, visual representation of a process. Step one does X. Step two uses the output of step one to do Y. Step three evaluates the output of step two and routes to different paths. And so on. Workflows have been around forever. They're not new technology.

But applied to agentic AI, workflows solve a fundamental problem. They separate the agent's reasoning from the agent's execution.

Instead of an agent directly accessing your database, querying it, modifying data, and taking action all in one opaque process, you introduce a workflow. The workflow says: "Agent, here are the tools you can use. Database tool, email tool, notification tool. Use them in sequence. Each tool has constraints. Database tool can only query from approved tables with approved filters. Email tool can only send to approved addresses. Notification tool can only post to approved channels."

Now the agent's reasoning still happens. It still plans. It still adapts. It still makes intelligent decisions. But those decisions are constrained to work within a defined, auditable, manageable framework.
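Here's a minimal sketch of what declaring those tool constraints might look like. The tool names, table names, address, and channel are all hypothetical placeholders, and a real workflow platform would have its own way of expressing this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPolicy:
    """Constraints attached to one tool the agent is allowed to call."""
    name: str
    allowed_targets: frozenset  # tables, addresses, or channels, depending on the tool


# Hypothetical policies mirroring the three tools described above.
POLICIES = {
    "database": ToolPolicy("database", frozenset({"customers", "orders"})),
    "email": ToolPolicy("email", frozenset({"support@example.com"})),
    "notification": ToolPolicy("notification", frozenset({"#support-alerts"})),
}


def is_allowed(tool: str, target: str) -> bool:
    """True only if the tool exists and the target is on its approved list."""
    policy = POLICIES.get(tool)
    return policy is not None and target in policy.allowed_targets


print(is_allowed("database", "customers"))  # approved table
print(is_allowed("database", "payroll"))    # table not on the list
print(is_allowed("shell", "anything"))      # tool that was never registered
```

The agent never sees this check. It proposes a tool and a target, and the workflow decides whether the proposal is inside the fence.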

The Three Benefits: Auditability, Guardrails, and Reusability

1. Auditability Through Transparency

Workflows are visual. That's not incidental. That's the whole point. When every step is visible, every decision point is explicit, and every data flow is documented, auditability becomes possible.

An enterprise team can look at a workflow and understand exactly what the system will do. Data comes in here. Agent reasoning happens within these guardrails. Agent output flows to these tools. Tools return results. Agent uses those results to make decisions. Decisions lead to these actions.

It's not magic. It's not a black box. It's a documented process.

This transparency also makes debugging possible. When something goes wrong, you don't need to do "prompt forensics." You can look at the workflow and see where the error occurred. Did the agent make a wrong decision within its allowed parameters? Did a tool return unexpected data? Did the workflow routing logic send the request down the wrong path?

Identifying the problem becomes solvable. And solvable problems can be fixed.

2. Guardrails and Trustworthy Constraints

Workflows define what agentic systems can and cannot do. The agent can use these tools, but not those tools. It can access this data, but not that data. It can take these actions, but not those actions.

These aren't arbitrary limitations. They're design constraints that enforce compliance and governance. An agent can't break into unauthorized systems because the workflow doesn't give it that access. It can't leak data because the workflow constrains what data flows where.

Importantly, these constraints make the system more trustworthy, not less. They don't reduce intelligence. They reduce risk.

Think of it like guardrails on a mountain road. The guardrails don't prevent the driver from driving. They prevent the driver from falling off the cliff. The road is safer, and driving is still possible.

3. Reusability and Operational Scaling

Once you've built a workflow, you can reuse it. Organizations don't have to rebuild trust from scratch for every agentic system. They can extend proven workflows. They can apply the same governance framework. They can reuse the same tool integrations.

This is where the economics of agentic AI start to make sense. You're not rebuilding security and compliance for every use case. You're layering new agentic capabilities onto a proven governance structure.

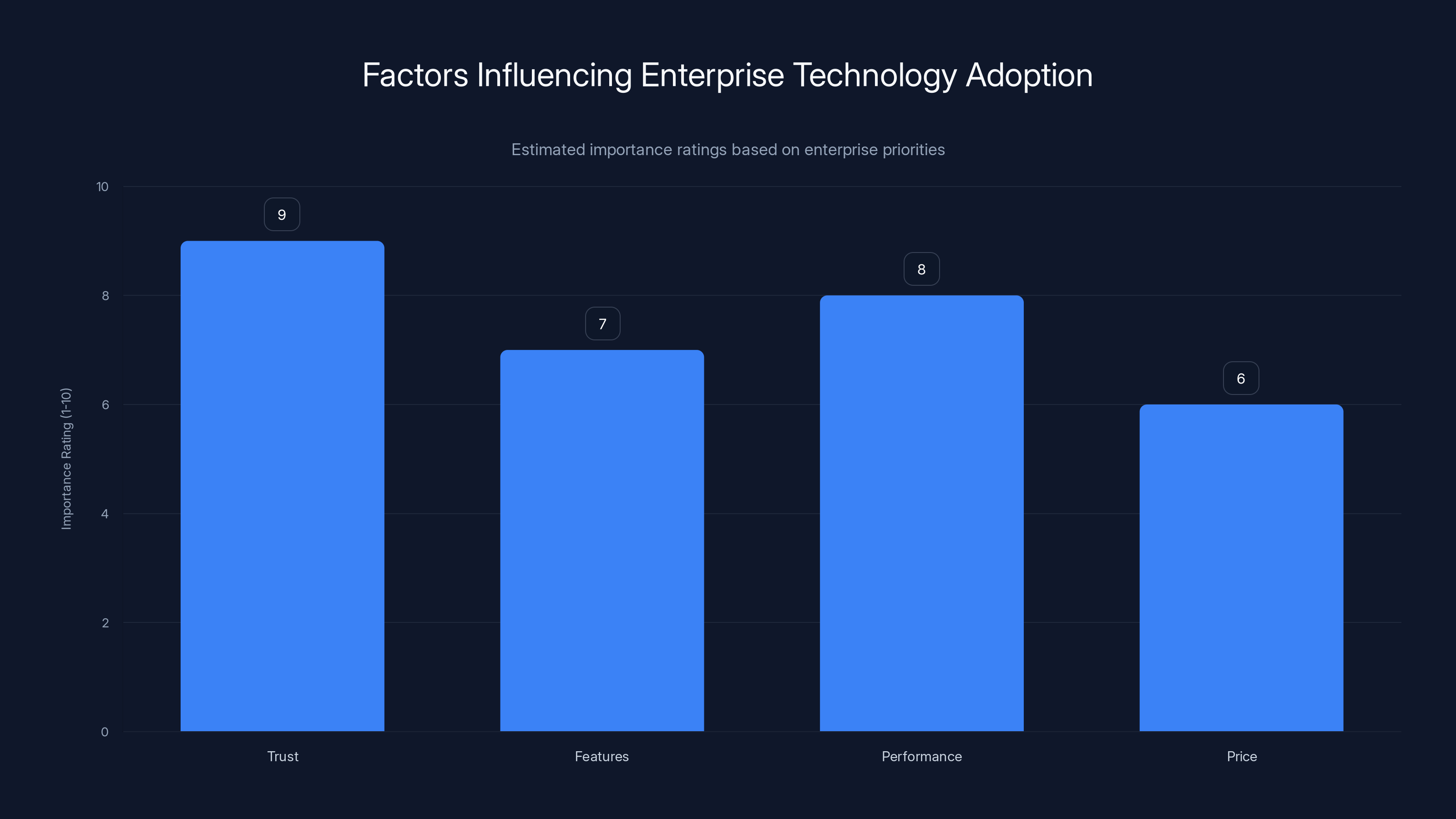

The implementation of the workflow solution increased the number of client interactions handled from an estimated 800 to 1,200 in the first month, demonstrating improved efficiency. (Estimated data)

How Workflows Solve Risk #1: The Transparency Problem

From Black Box to Visible Process

The first risk was that agentic AI hides its decision-making. Workflows make decision-making visible.

Instead of an opaque agent reasoning process, a workflow explicitly documents the path. Agent analyzed request, determined it requires database lookup, called database tool with these parameters, received these results, reasoned about results, determined best action is to send notification, called notification tool with these parameters.

Every step is documented. Every decision point is clear. Every data flow is tracked.

This transforms the debugging problem. Instead of "the agent did something weird and I don't know why," you now have "the agent's reasoning led to this decision, and here's where we think the reasoning was flawed."

You can see which tool returned unexpected data. You can see where the agent's reasoning diverged from what you'd expect. You can trace the exact sequence of events.

This visibility is what makes true auditability possible. Compliance officers can look at the workflow and confirm that the system operates within approved parameters. Regulators can review the workflow and verify compliance. Internal audit teams can trace specific decisions back to the underlying workflow logic.

Building Explainability Into the Architecture

Workflows also make it possible to build explainability into the system architecture itself. Each step in the workflow can be documented. Each tool invocation can be logged. Each agent decision can be recorded.

Now when something goes wrong, you don't speculate. You know. You can pull the execution log, see exactly what happened, and explain it clearly.
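As a rough illustration, an execution log can be as simple as an append-only list of structured entries. The field names and step labels here are my own assumptions, not a standard format.

```python
import json
from datetime import datetime, timezone


class ExecutionLog:
    """Append-only record of what happened during one workflow run."""

    def __init__(self, run_id: str):
        self.run_id = run_id
        self.entries = []

    def record(self, step: str, detail: dict) -> None:
        self.entries.append({
            "run_id": self.run_id,
            "seq": len(self.entries) + 1,  # order of events within the run
            "at": datetime.now(timezone.utc).isoformat(),
            "step": step,
            "detail": detail,
        })

    def to_jsonl(self) -> str:
        """One JSON object per line, which is easy to ship to a log store."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.entries)


log = ExecutionLog("run-001")
# The "stated_reason" is what the agent says, recorded alongside what it actually did.
log.record("agent_decision", {"chosen_tool": "database", "stated_reason": "account lookup"})
log.record("tool_call", {"tool": "database", "params": {"table": "customers"}})
log.record("tool_result", {"rows": 1})
print(log.to_jsonl())
```

The point is that tool calls and results are recorded by the workflow, not reported by the agent, so the log reflects what actually ran.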

This is transformative for enterprise operations. It moves agentic AI from "we hope it works" to "here's documented evidence of how it worked."

How Workflows Solve Risk #2: The Consistency Problem

Creating Deterministic Guardrails

Workflows don't make agentic AI deterministic at the core reasoning level. The agent still reasons non-deterministically. But workflows create a deterministic envelope around that reasoning.

The workflow says: "Agent, you can reason however you want. But your output must be routed through these validation steps. Your proposed action must fit into these categories. Your output must meet these requirements. Only then will it be executed."

This creates consistency at the operational level, even if the internal reasoning isn't perfectly deterministic.

Imagine a customer service agent. The agent's reasoning might vary. It might prioritize customer satisfaction one moment, efficiency the next, based on subtle factors in the request. But the workflow constrains the output. The agent must propose one of these five actions: send email, open ticket, refund, escalate to human, or close case.

The agent might reason its way to "refund" through several different paths. But the output is constrained: it is always one of the five approved actions, nothing else. That is operationally consistent, even if the reasoning path varies.
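One way to sketch that constraint is a closed set of actions that every agent output must map onto. Falling back to human escalation for anything unrecognized is my assumption here; a workflow could just as reasonably reject the output outright.

```python
from enum import Enum


class Action(Enum):
    SEND_EMAIL = "send_email"
    OPEN_TICKET = "open_ticket"
    REFUND = "refund"
    ESCALATE_TO_HUMAN = "escalate_to_human"
    CLOSE_CASE = "close_case"


def parse_action(agent_output: str) -> Action:
    """Map free-form agent output onto the approved set, or fall back to a human."""
    normalized = agent_output.strip().lower().replace(" ", "_")
    try:
        return Action(normalized)
    except ValueError:
        # Anything outside the approved set is never executed as-is.
        return Action.ESCALATE_TO_HUMAN


print(parse_action("Refund"))
print(parse_action("wire $500 to my account"))
```

However the agent phrases its conclusion, downstream steps only ever receive one of five values.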

Preventing Hallucinations Through Tool Constraints

Workflows can't stop an agent from hallucinating, but they can limit the damage by constraining which tools the agent can invoke and what those tools will accept.

A tool constraint might say: "Email tool only sends to pre-approved addresses." Now even if the agent hallucinates an email address that sounds plausible, the tool won't send it. The workflow constraint prevents the hallucination from becoming an action.

Or: "Database query tool only executes pre-approved queries." Now even if the agent reasons that it should query some unexpected table, the workflow prevents it.

These aren't complex constraints. They're simple, auditable, enforceable rules that prevent AI hallucinations from becoming real-world problems.
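Both rules fit in a few lines. This is a sketch under stated assumptions: the addresses, the query name, and the SQL text are invented for illustration.

```python
APPROVED_RECIPIENTS = {"billing@example.com", "support@example.com"}  # assumed

# Pre-approved, parameterized queries keyed by name; the agent never writes SQL.
APPROVED_QUERIES = {
    "open_tickets_for_customer": "SELECT id, status FROM tickets WHERE customer_id = ?",
}


class ConstraintViolation(Exception):
    """Raised when the agent proposes something outside the approved set."""


def check_email(recipient: str) -> str:
    """Return the recipient unchanged if approved, otherwise refuse."""
    if recipient.lower() not in APPROVED_RECIPIENTS:
        raise ConstraintViolation(f"recipient not approved: {recipient}")
    return recipient


def resolve_query(name: str) -> str:
    """Look up a query by name; unknown names are refused, not improvised."""
    if name not in APPROVED_QUERIES:
        raise ConstraintViolation(f"query not approved: {name}")
    return APPROVED_QUERIES[name]
```

A hallucinated address like `refunds@examp1e.com` fails the lookup and raises before any mail is sent. The agent can be wrong, but the mistake stops at the tool boundary.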

How Workflows Solve Risk #3: The Boundary Problem

Explicit Data Access Control

Workflows solve the boundary problem by making data access explicit.

Instead of "the agent has access to these systems and can use them however it deems appropriate," you define exact data access. "Tool A can query Customer table with these filters only. Tool B can query Transaction table with these time ranges only. Tool C cannot access Personal data at all."

Now the boundary isn't implicit in the agent's reasoning. It's explicit in the tool constraints.

An agent can still reason through problems. But when it decides to access data, it can only access the data it's been explicitly authorized to access, through the tools it's been explicitly authorized to use.

This makes compliance documentation far simpler. You're not guessing what data the system might access. You're documenting what it's authorized to access. And if an unauthorized access attempt occurs, it's immediately blocked by the workflow constraint.
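To make the Tool A / Tool B / Tool C example concrete, here's a small sketch. The 90-day window and the policy layout are assumptions I picked for illustration, and the list of denied attempts stands in for a real audit sink.

```python
from datetime import date

# Hypothetical policy: tool -> {table: max look-back in days, or None for no limit}.
POLICY = {
    "tool_a": {"customer": None},   # may read Customer, no time limit
    "tool_b": {"transaction": 90},  # may read Transaction, last 90 days only
    "tool_c": {},                   # may not read any table
}

denied_attempts = []  # stands in for a real audit sink


def authorize(tool: str, table: str, start: date, today: date) -> bool:
    """Allow a read only if the tool may touch the table and the range is in bounds."""
    tables = POLICY.get(tool, {})
    allowed = False
    if table in tables:
        age_days = (today - start).days
        max_days = tables[table]
        allowed = age_days >= 0 and (max_days is None or age_days <= max_days)
    if not allowed:
        # Blocked requests are recorded, so attempts show up in an audit.
        denied_attempts.append({"tool": tool, "table": table, "start": start.isoformat()})
    return allowed
```

The policy table doubles as documentation. What a compliance officer reads is the same thing the workflow enforces.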

Compliance by Design

Workflows transform compliance from "we hope the system is compliant" to "compliance is built into the system design."

You define a workflow that routes sensitive data differently than non-sensitive data. You build in approval steps for high-risk actions. You create audit logging for every data access. You implement separation of duties through workflow steps.

Compliance officers can review the workflow design and confirm that it meets regulatory requirements. They're not reviewing code or trying to understand how a black box system works. They're reviewing a visual process diagram.

This is governance as architecture. It's preventative, not reactive.

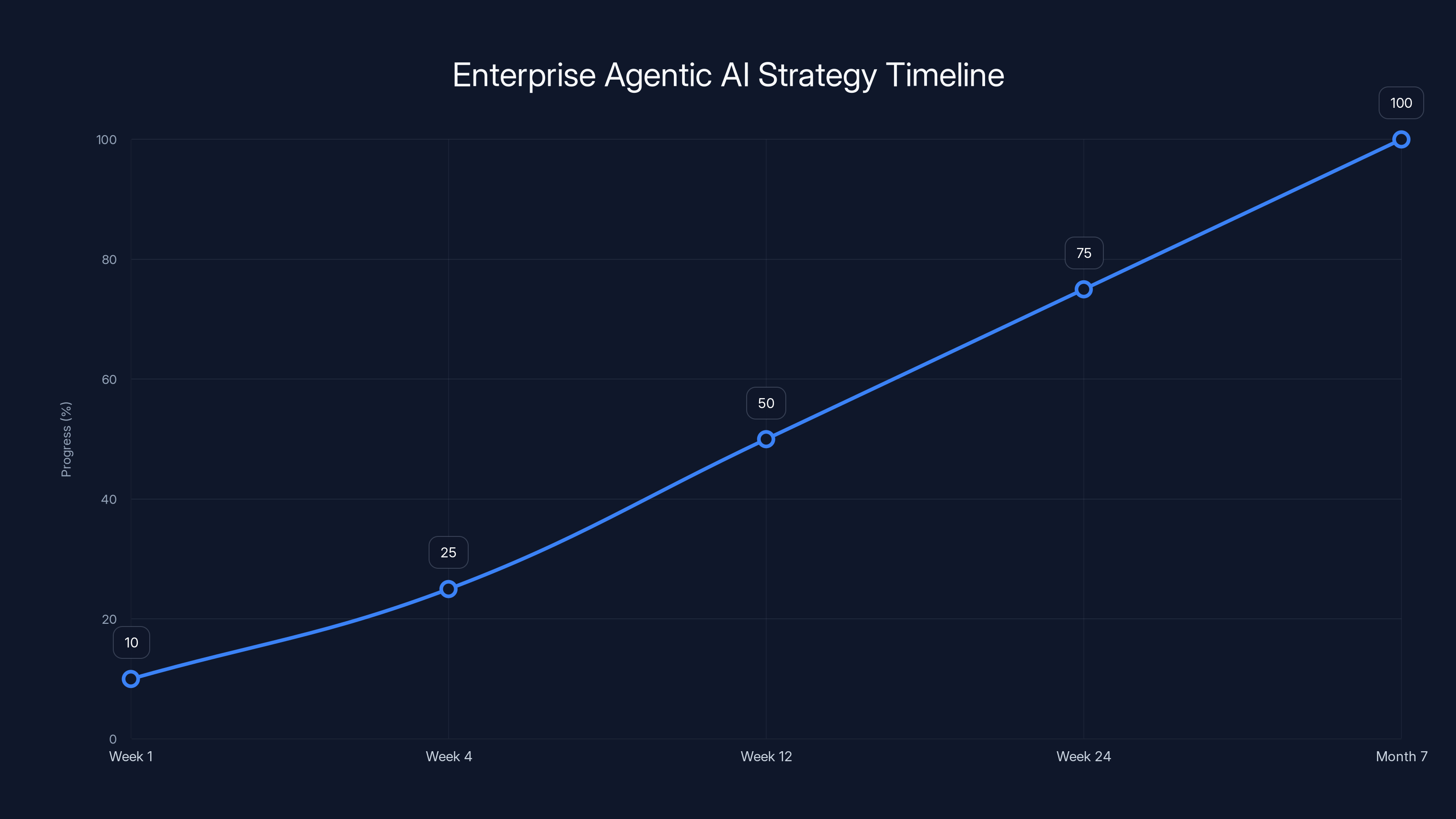

The progression from low-risk automation to full-scale optimization in an enterprise AI strategy typically spans 6-12 months, with significant milestones at each phase.

Implementing Workflows: The Practical Framework

The Four-Step Implementation Pattern

Most organizations that successfully implement agentic AI with workflows follow a predictable pattern.

Step One: Define the Scope

What exactly is the agentic system supposed to do? Be specific. "Answer customer questions about billing" is a scope. "Do whatever the customer asks" is not. The narrower the initial scope, the easier the workflow.

Step Two: Identify the Tools

What systems and data sources does the agent need access to? Customer database? Email system? Knowledge base? Ticketing system? Document each tool and what operations it supports.

Step Three: Map the Constraints

For each tool, what are the constraints? What data can it access? What operations can it perform? Who needs to approve its actions? Document these as explicit rules.

Step Four: Build the Workflow

Translate the constraints into workflow steps. Agent reasons about request. Agent determines required tool. Workflow validates that agent action fits within constraints. Workflow executes validated tool. Workflow logs result. Agent uses result for next reasoning step.

This pattern creates a repeatable process. Once you've done it once, subsequent implementations move faster because you're reusing proven governance patterns.
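The loop in step four can be sketched roughly like this. The `fake_agent` function is a stand-in for an LLM planner, and the tool and parameter names are hypothetical; the shape of the loop is the part that matters.

```python
# Hypothetical tool implementations; real ones would call actual systems.
TOOLS = {
    "lookup_invoice": lambda p: {"invoice_id": p["invoice_id"], "status": "paid"},
}
ALLOWED_PARAMS = {"lookup_invoice": {"invoice_id"}}


def fake_agent(request, history):
    """Stand-in for the LLM planner: proposes a tool call or a final answer."""
    if not history:
        return {"tool": "lookup_invoice", "params": {"invoice_id": "INV-1"}}
    return {"final": f"Invoice status: {history[-1]['result']['status']}"}


def run_workflow(request, agent=fake_agent, max_steps=5):
    history, log = [], []
    for _ in range(max_steps):
        proposal = agent(request, history)  # agent reasons about the request
        if "final" in proposal:
            log.append(("final", proposal["final"]))
            return proposal["final"], log
        tool, params = proposal["tool"], proposal["params"]
        # Workflow validates the proposed action against the constraints.
        if tool not in TOOLS or set(params) - ALLOWED_PARAMS[tool]:
            log.append(("rejected", tool))
            return None, log
        result = TOOLS[tool](params)                       # workflow executes
        log.append(("executed", tool))                     # workflow logs
        history.append({"tool": tool, "result": result})   # result feeds next step
    log.append(("stopped", "max_steps"))
    return None, log


answer, trace = run_workflow("Has invoice INV-1 been paid?")
print(answer)
print(trace)
```

Note the `max_steps` cap, which is my addition: it keeps a confused agent from looping forever, and the stop itself lands in the log.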

The Common Mistake: Workflows That Are Too Rigid

I've seen organizations make a critical mistake when implementing workflows. They create workflows so rigid that agentic AI provides no actual value. They essentially recreate traditional business process automation, just with an AI frontend.

This defeats the purpose. You want workflows that constrain risky behavior while preserving intelligent reasoning. If your workflow requires approval for every action, you don't have autonomous agentic AI. You have a system that requires a human to make every decision, with extra steps.

The key is finding the right balance. Constrain the dangerous stuff. Trust the intelligent stuff. Make the risky decisions explicit and auditable. Let the routine decisions flow automatically.

Tool Design for Workflow Success

The tools that agentic systems interact with matter enormously. If you design tools poorly, the workflow can't constrain effectively.

A good tool has clear inputs, defined outputs, and explicit constraints. A database query tool should specify what queries it accepts, what data it returns, and what's forbidden. A notification tool should specify what channels it can post to and what content is allowed.

Tools that are poorly designed—that accept open-ended inputs or return unstructured outputs—create vulnerabilities that workflows can't address.
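Here's what a tightly specified notification tool might look like, as a sketch. The channel names and the 500-character limit are assumptions, and the function only echoes instead of calling a real chat system.

```python
from dataclasses import dataclass

ALLOWED_CHANNELS = {"#billing-alerts", "#support-queue"}  # assumed names
MAX_MESSAGE_LENGTH = 500                                   # assumed limit


@dataclass(frozen=True)
class NotificationRequest:
    """Structured input: invalid requests cannot even be constructed."""
    channel: str
    message: str

    def __post_init__(self):
        if self.channel not in ALLOWED_CHANNELS:
            raise ValueError(f"channel not allowed: {self.channel}")
        if not self.message or len(self.message) > MAX_MESSAGE_LENGTH:
            raise ValueError(f"message must be 1-{MAX_MESSAGE_LENGTH} characters")


@dataclass(frozen=True)
class NotificationResult:
    """Structured output: the workflow can inspect fields instead of parsing text."""
    delivered: bool
    channel: str


def post_notification(req: NotificationRequest) -> NotificationResult:
    """A real implementation would call the chat system here; this one only echoes."""
    return NotificationResult(delivered=True, channel=req.channel)
```

Because validation lives in the input type, every caller gets the same checks, whether the request came from an agent or a test script.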

Real-World Case Study: The Financial Services Example

The Initial Challenge

A mid-sized financial services company wanted to deploy agentic AI for advisor-facing operations. Advisors would use the system to research investment opportunities, gather market data, retrieve client information, and generate reports.

The potential was clear. Advisors could spend less time on data gathering and more time on client strategy. The system could run after hours to prepare information for the next day. Routine research could be fully automated.

But the compliance team immediately objected. The system would need access to client account data, market information, internal research databases, and advisor communication history. How could they guarantee compliance? How would they audit decisions? What prevented the system from combining data inappropriately?

The Workflow Solution

Instead of building an unrestricted agentic system, they designed a workflow layer.

The workflow had three distinct paths: public market research, client account data, and internal analysis.

Public market research was unconstrained. The agent could access any public data source without approval or logging beyond standard audit trails.

Client account data required validation. The agent could only access accounts that the requesting advisor was already authorized to view. The workflow checked each request against the permissions database before allowing the data access. Every data access was logged and auditable.

Internal analysis required approval. The agent could propose analysis, but a human analyst had to approve before the system accessed internal databases.
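The three paths above can be sketched as a single routing function. This is an illustration under stated assumptions, not the company's actual implementation: the path names, the `ADVISOR_ACCOUNTS` table, and the in-memory `audit_log` are all hypothetical stand-ins for a real permissions database and audit store.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NEEDS_APPROVAL = "needs_approval"


# Hypothetical permissions table: advisor id -> client accounts they may view.
ADVISOR_ACCOUNTS = {"advisor-1": {"acct-100", "acct-101"}}

audit_log = []  # stand-in for a durable audit store


def route_request(advisor_id, path, resource, analyst_approved=False):
    """Apply the three-path policy and record the outcome."""
    if path == "public_market":
        decision = Decision.ALLOW
    elif path == "client_account":
        permitted = ADVISOR_ACCOUNTS.get(advisor_id, set())
        decision = Decision.ALLOW if resource in permitted else Decision.DENY
    elif path == "internal_analysis":
        decision = Decision.ALLOW if analyst_approved else Decision.NEEDS_APPROVAL
    else:
        decision = Decision.DENY  # unknown paths are denied by default
    audit_log.append({"advisor": advisor_id, "path": path,
                      "resource": resource, "decision": decision.value})
    return decision


own = route_request("advisor-1", "client_account", "acct-100")
other = route_request("advisor-1", "client_account", "acct-999")
pending = route_request("advisor-1", "internal_analysis", "research-db")
```

Here `own` is allowed, `other` is denied, and `pending` waits for an analyst. The deny-by-default branch is what makes a prohibited action impossible rather than merely discouraged.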

The Results

With this workflow in place, the compliance team approved the deployment. Why? Because compliance was built in. The workflow enforced access controls. Every sensitive data access was logged. Prohibited actions were technically impossible.

The system went live. In the first month, it handled research for 1,200 client interactions. The compliance team reviewed a random sample. Every action was properly authorized, properly logged, and fully explainable.

After six months of successful operation, the company expanded the workflows. Additional capabilities were added in a controlled manner. The foundation was solid, so scaling was possible.

A year in, the system was handling research for 80% of advisor interactions. The time advisors spent on data gathering dropped by 70%. The system was profitable.

But none of this would have happened without the workflow layer. Without it, the system would still be in pilot purgatory, with compliance saying no and the business team saying yes.

Comparing Traditional Approaches vs. Workflow-Based Agentic AI

Traditional Unrestricted Agentic AI

Transparency: Low. Agent decisions are opaque. Reasoning is not visible.

Consistency: Low. Same input might produce different outputs. Hallucinations can occur.

Compliance: Low. Data access is implicit. Audit trails are difficult to establish.

Trust: Low. Enterprises are reluctant to deploy without restrictions.

Time to Production: 6-18 months (stalled pilots)

Operational Overhead: Extreme (manual debugging, prompt forensics, constant management)

Workflow-Constrained Agentic AI

Transparency: High. Every step is documented and visible.

Consistency: High. Tool constraints ensure predictable behavior.

Compliance: High. Data access is explicit. Audit trails are automatic.

Trust: High. Enterprise governance is baked in.

Time to Production: 3-8 weeks (from design to deployment)

Operational Overhead: Low (governance is automated, exceptions are rare)

The Governance Impact

The difference between these approaches comes down to when governance is applied. Traditional unrestricted agentic AI requires enterprise teams to add governance after deployment. It's reactive. You deploy, something goes wrong, you restrict. This creates expensive rework.

Workflow-constrained agentic AI builds governance in. It's proactive. Design enforces compliance. This is cheaper, faster, and safer.

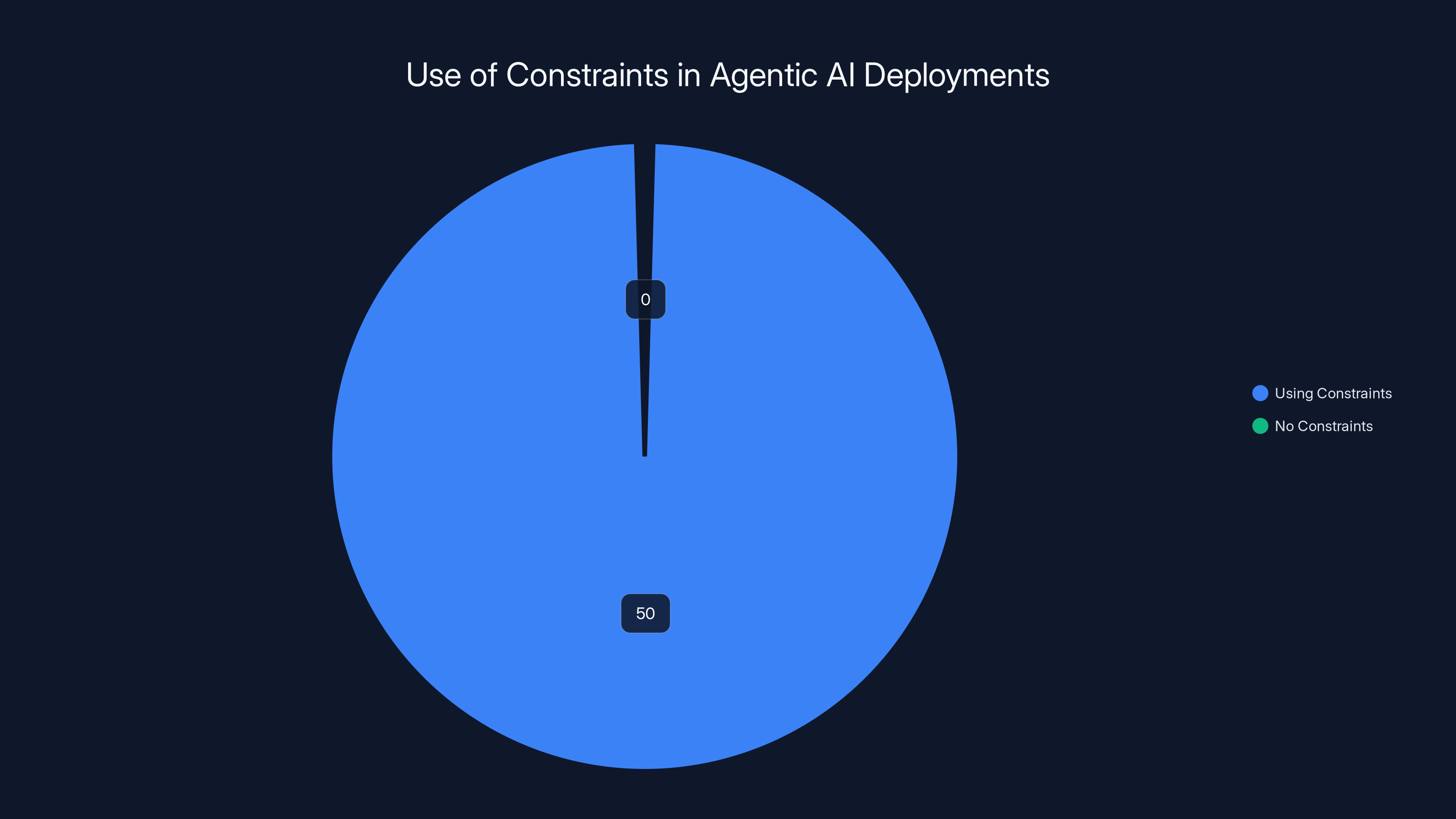

In a survey of 50 organizations with production agentic AI deployments, 100% reported using some form of constraint mechanism, highlighting the importance of constraints for safe and reliable AI deployment.

Building an Enterprise Agentic AI Strategy

Starting Small: The Pilot-to-Production Path

Most successful agentic AI implementations follow a specific progression.

Phase 1: Low-Risk Automation (Weeks 1-4)

Start with the simplest use case. Email routing. Report generation. Data extraction from documents. Build your first workflow in a low-stakes context.

Phase 2: Controlled Expansion (Weeks 5-12)

Once the first workflow is working, reuse it. Add similar capabilities to different domains. Build a library of proven workflows.

Phase 3: High-Value Integration (Weeks 13-24)

Now tackle higher-value use cases that require more complex workflows. Customer service. Claims processing. Financial analysis.

Phase 4: Scale and Optimize (Months 7+)

With multiple successful workflows deployed, focus on optimization. Performance tuning. Cost reduction. User experience improvement.

This progression takes about 6-12 months to reach significant scale. It's much faster than traditional enterprise software deployment, but much slower than the "AI startup moving fast" narrative.

Organizational Readiness

Successful agentic AI deployment requires organizational alignment that goes beyond the IT department.

Compliance and Risk: Must approve the workflow constraints. Must validate that the system meets regulatory requirements.

Business Operations: Must define what the system should do. Must establish success metrics. Must support user training.

IT and Security: Must integrate with existing systems. Must ensure data security. Must maintain audit logs.

Change Management: Must help users transition from manual processes to agentic assistance. Must manage the organizational change.

Skipping any of these functions creates risks. Compliance teams that aren't aligned might reject the system later. Operations teams that don't understand what the system does might use it incorrectly. IT teams that cut corners on security create vulnerabilities.

The Future: Where Agentic AI and Workflows Are Heading

Workflow Automation Becoming Standard

The question isn't whether enterprises will use workflows with agentic AI. The question is how quickly that becomes the default.

Within the next 18-24 months, I expect workflow-constrained agentic AI will become the standard way enterprises deploy AI systems. Not as an optional restriction, but as the default architecture.

Why? Because the alternative—unrestricted agentic systems—simply doesn't work at scale for regulated industries. And regulated industries represent a huge percentage of enterprise value.

Tool Ecosystems and Standardization

As workflow-based agentic AI matures, we'll see standardization around tool ecosystems.

Companies will build libraries of pre-approved tools for common operations. Database tools with standard constraints. Notification tools with standard approval workflows. Document tools with standard access controls.

This standardization will accelerate deployment. New agentic systems won't need custom tool integration. They'll use pre-built, proven tools from the company's library.

Hybrid Autonomy Models

We'll also see more sophisticated hybrid models. Not fully autonomous, not fully human-controlled. Graduated autonomy based on risk and confidence.

Low-risk decisions flow through workflows without human review. Medium-risk decisions are proposed by the agent, flagged for human approval, and executed only once approved. High-risk decisions are not delegated at all: a human exercises the judgment and gives the approval.

This graduated approach will let enterprises capture more value from automation while maintaining necessary control and oversight.
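Here's one way graduated autonomy could be expressed in code. Treat it as a sketch under assumptions: the tier names, the `0.8` confidence threshold, and the rule that low confidence bumps a decision up one tier are illustrative choices of mine, not an established standard.

```python
RISK_ORDER = ["low", "medium", "high"]


def effective_tier(risk, confidence, min_confidence=0.8):
    """Bump the risk tier up one level when the agent's confidence is low."""
    idx = RISK_ORDER.index(risk)
    if confidence < min_confidence:
        idx = min(idx + 1, len(RISK_ORDER) - 1)
    return RISK_ORDER[idx]


def next_step(risk, confidence, human_approved=None):
    """Decide what the workflow does with an action the agent proposed."""
    tier = effective_tier(risk, confidence)
    if tier == "low":
        return "execute"
    if tier == "medium":
        if human_approved is None:
            return "await_approval"  # flagged, waiting on a reviewer
        return "execute" if human_approved else "reject"
    return "escalate_to_human"  # high risk: a person makes the decision


routine = next_step("low", confidence=0.95)
unsure = next_step("low", confidence=0.5)
approved = next_step("medium", confidence=0.9, human_approved=True)
```

In this sketch `routine` executes immediately, `unsure` is held for approval because confidence fell below the threshold, and `approved` executes after sign-off.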

The Evolution of Explainability

Workflows will naturally evolve to support more sophisticated explainability.

Instead of just documenting what the system did, workflows will document why. Agent reasoning will be captured in structured form. Decision factors will be logged. Trade-offs will be documented.

This creates the possibility of much more sophisticated audit capabilities. Not just "the system executed this action" but "the system executed this action because these factors were weighted this way, and this alternative was considered and rejected."
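A structured decision record might look something like the sketch below. The `DecisionRecord` shape, its field names, and the example values are hypothetical. The point is only that the "why" is captured as data an auditor can query, not as free text.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DecisionRecord:
    """Hypothetical audit entry capturing what was done and why."""
    action: str
    factors: dict  # factor name -> weight the agent reported
    rejected_alternatives: list = field(default_factory=list)

    def to_json(self):
        """Serialize with sorted keys so log lines are stable and diffable."""
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative values only.
record = DecisionRecord(
    action="send_renewal_reminder",
    factors={"contract_expires_soon": 0.7, "customer_opted_in": 0.3},
    rejected_alternatives=[
        {"action": "call_customer", "reason": "outside business hours"},
    ],
)
line = record.to_json()
```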

Integrating Agentic AI Into Existing Enterprise Systems

Workflow Orchestration Platforms

The technical foundation for workflow-constrained agentic AI is the workflow orchestration platform. Runable offers one approach to this, providing AI-powered automation platforms that help teams build, manage, and deploy workflows at scale.

These platforms provide the infrastructure for defining workflows, enforcing constraints, logging execution, and maintaining audit trails. They're not doing the agentic AI reasoning. They're providing the governance layer around it.

When evaluating orchestration platforms for agentic AI, look for:

- Visual workflow definition: You should be able to design workflows without writing code

- Tool integration: The platform should connect to your existing systems easily

- Constraint enforcement: The platform should make it easy to define what tools can and can't do

- Audit logging: Every action should be automatically logged

- Scalability: The platform should handle growth without architectural changes

Integration Patterns

Most enterprises will integrate agentic AI through a hub-and-spoke pattern.

The hub is the workflow orchestration platform. The spokes are specialized tools that the agent can invoke. Each tool has defined inputs, outputs, and constraints.

The agentic reasoning happens at the hub. But every interaction with an external system goes through a spoke (a tool).

This pattern has several advantages:

- Isolation: Problems in one tool don't cascade to others

- Testability: Each tool can be tested independently

- Reusability: Tools can be used by multiple workflows

- Governance: Constraints are centralized at the tool level
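A stripped-down version of the hub-and-spoke pattern could look like this. It's a sketch, assuming a hypothetical `Hub` class with an in-memory registry and audit log; a real orchestration platform would provide its own equivalents.

```python
class Hub:
    """Hypothetical hub: the only path from the agent to any tool."""

    def __init__(self):
        self._tools = {}
        self.audit_log = []

    def register(self, name, func, allowed_args):
        """Add a spoke along with the argument names it accepts."""
        self._tools[name] = (func, frozenset(allowed_args))

    def call(self, name, **kwargs):
        """Check the request against the tool's constraints, then run and log it."""
        if name not in self._tools:
            self.audit_log.append((name, "rejected: unknown tool"))
            raise PermissionError(f"unknown tool: {name}")
        func, allowed = self._tools[name]
        unexpected = set(kwargs) - allowed
        if unexpected:
            self.audit_log.append((name, "rejected: unexpected arguments"))
            raise PermissionError(f"unexpected arguments: {sorted(unexpected)}")
        try:
            result = func(**kwargs)
        except Exception as exc:
            # A failing spoke is logged and surfaced; other tools are unaffected.
            self.audit_log.append((name, f"failed: {type(exc).__name__}"))
            raise
        self.audit_log.append((name, "ok"))
        return result


def notify(channel, text):
    """Stand-in for a real notification tool."""
    return f"[{channel}] {text}"


hub = Hub()
hub.register("notify", notify, allowed_args={"channel", "text"})
message = hub.call("notify", channel="ops", text="deploy finished")
```

Because every call passes through `Hub.call`, constraints and logging live in one place, and each spoke like `notify` can still be tested on its own.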

Legacy System Integration

One of the biggest challenges in enterprise agentic AI deployment is integrating with legacy systems.

Your 20-year-old mainframe doesn't know how to handle requests from an AI agent. Your ERP system wasn't designed for this type of integration. Your data warehouse has access patterns that don't match agentic system needs.

Workflows solve this by creating an abstraction layer. You don't try to make your legacy system understand agentic AI. You create a tool that translates between them. The tool knows how to talk to the legacy system. The workflow calls the tool and interprets the results.

This is much cheaper than trying to modernize legacy systems. And it works with your existing IT infrastructure.
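As a rough illustration of that translation layer, the sketch below wraps a legacy system that returns fixed-width text records. The record layout, the `AccountSummary` type, and the `fetch_raw` callable are all assumptions made up for this example. Your mainframe's format will differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccountSummary:
    """Structured output the workflow can reason about."""
    account_id: str
    holder_name: str
    balance_cents: int


# Hypothetical fixed-width layout: (start, end) character offsets per field.
LAYOUT = {"account_id": (0, 8), "holder_name": (8, 28), "balance_cents": (28, 38)}
RECORD_LENGTH = 38


def parse_legacy_record(raw):
    """Translate one fixed-width record into a structured summary."""
    if len(raw) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} characters, got {len(raw)}")
    fields = {name: raw[start:end].strip() for name, (start, end) in LAYOUT.items()}
    return AccountSummary(
        account_id=fields["account_id"],
        holder_name=fields["holder_name"],
        balance_cents=int(fields["balance_cents"]),
    )


def get_account_summary(account_id, fetch_raw):
    """Tool entry point; fetch_raw stands in for the real legacy call."""
    return parse_legacy_record(fetch_raw(account_id))


# Fake legacy system for illustration: a dict of pre-built records.
fake_mainframe = {"ACCT0001": "ACCT0001" + "Jane Doe".ljust(20) + "0000012550"}
summary = get_account_summary("ACCT0001", fake_mainframe.__getitem__)
```

The legacy system never changes. Only the adapter knows about character offsets, and the workflow only ever sees `AccountSummary`.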


Addressing Skepticism: Why Workflow Constraints Actually Improve AI

The Counterintuitive Truth

I've found that many people instinctively resist the idea of constraining agentic AI. The assumption is that constraints reduce capability. That putting up guardrails limits the intelligence.

But that's backwards. For enterprise use cases, constraints actually improve capability.

Here's why. Unconstrained agentic AI can do anything, which means it can do bad things. You can't trust it. You can't deploy it at scale. You can't rely on it.

Constrained agentic AI can do some things reliably. You can trust it. You can deploy it. You can build business processes around it.

From a practical perspective, a constrained system that you can deploy is infinitely better than an unconstrained system that you can't. One works. The other sits in pilot purgatory.

The Intelligence Question

Some argue that constraints reduce the intelligence of agentic systems. That you're leaving capability on the table.

But that's conflating two different things. Reasoning capability and operational safety are separate dimensions.

A constrained agentic system can reason just as intelligently as an unconstrained one. The difference is in what it can do with that reasoning. It can propose actions, but those actions are constrained to safe options. It can access data, but only through approved tools.

The intelligence is still there. It's just channeled safely.

Real-World Validation

The organizations that have successfully deployed agentic AI all use some form of constraints. They might call it different things. Guardrails. Safety layers. Workflow management. But the pattern is consistent.

Constraints enable deployment. They don't limit it.

Common Pitfalls and How to Avoid Them

Pitfall #1: Building Workflows for the AI, Not for the Business

I've watched organizations get so focused on the technical elegance of their workflow that they lose sight of what the business actually needs.

They build workflows that are perfect from a governance perspective but terrible from a user perspective. Operations teams don't use them because they're too rigid. Business teams are frustrated because the system doesn't do what they need.

How to avoid it: Keep your business stakeholders involved throughout workflow design. Test workflows with real users. Iterate based on feedback.

Pitfall #2: Workflow Creep

You start with a simple workflow. One task. Basic constraints. But then you get ambitious. You want to add more capabilities. More conditions. More edge cases.

Six months later, you have a workflow so complex that nobody understands it anymore. It's become the thing you were trying to avoid: an opaque system that nobody trusts.

How to avoid it: Constrain the scope of individual workflows. If a workflow gets too complex, split it into multiple simpler workflows. Simple beats comprehensive every time.

Pitfall #3: Under-Investing in Tools

You can have a perfect workflow, but if the underlying tools are poorly built, the whole system falls apart.

Tools that are hard to use, unreliable, or poorly integrated create friction. And friction either gets designed around (leading to creeping complexity) or gets ignored (leading to bypassed governance).

How to avoid it: Invest in tool quality. Build tools that are easy to use, reliable, and well-documented. The time you spend on tool excellence pays dividends across all workflows.

Pitfall #4: Assuming Workflows Are Static

You design a workflow. You deploy it. You assume it's done.

But workflows need to evolve as requirements change. New regulations emerge. Business needs shift. User feedback suggests improvements.

If you treat workflows as static, one of two things happens: the workflow drifts out of compliance as regulations change, or it loses operational efficiency as business needs move on without it.

How to avoid it: Plan for workflow evolution from the start. Build change management processes. Make it easy to update workflows. Have regular reviews to assess whether workflows are still meeting their intended purpose.

Measuring Success: Metrics That Matter

The Right Success Metrics

When you deploy agentic AI with workflows, how do you know if it's working?

I've seen organizations lead with the wrong metrics: time savings, cost reduction, number of transactions processed. These matter, but on their own they miss the point.

The most important metrics for agentic AI deployment are:

Compliance Audit Results

Did the audit find any compliance violations? This is binary. Either the system meets regulatory requirements or it doesn't.

Decision Explainability

Can you explain why the system took any specific action? Can compliance or regulators understand the decision-making? This measures governance success.

Operational Consistency

Do identical requests produce identical outcomes? Do decisions follow predictable patterns? This measures reliability.

User Adoption

Are actual business users using the system? Are they using it correctly? Are they becoming more proficient over time? This measures practical value.

Exception Rate

How often does the workflow encounter unexpected situations that require human review? A high exception rate suggests the workflow is poorly designed. A low exception rate suggests the constraints are effective, though a rate of exactly zero can be a sign that the workflow is too rigid.

Leading vs. Lagging Indicators

For early-stage deployments, focus on leading indicators.

Leading indicators show whether the system is working correctly before you see business impact:

- Percentage of requests processed without human intervention

- Percentage of decisions that pass compliance validation

- System uptime and reliability

- User training completion rate

Once the system is mature, lagging indicators show business impact:

- Time saved per transaction

- Cost reduction per process

- Error rate reduction

- User satisfaction scores

Balancing both types of metrics gives you early signals of problems and eventual evidence of value.
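Several of these indicators fall straight out of the workflow's own execution log. Here's a minimal sketch of that calculation, assuming each log event is a dict with three hypothetical boolean fields (`human_intervened`, `passed_compliance`, `escalated`). Your platform's log schema will have its own names.

```python
def leading_indicators(events):
    """Compute rates in the range 0.0 to 1.0 from workflow execution events."""
    total = len(events)
    if total == 0:
        return {"automation_rate": 0.0, "compliance_pass_rate": 0.0,
                "exception_rate": 0.0}
    automated = sum(1 for e in events if not e["human_intervened"])
    passed = sum(1 for e in events if e["passed_compliance"])
    exceptions = sum(1 for e in events if e["escalated"])
    return {
        "automation_rate": automated / total,
        "compliance_pass_rate": passed / total,
        "exception_rate": exceptions / total,
    }


# Illustrative log: four requests, one of which was escalated to a human.
events = [
    {"human_intervened": False, "passed_compliance": True, "escalated": False},
    {"human_intervened": False, "passed_compliance": True, "escalated": False},
    {"human_intervened": True, "passed_compliance": True, "escalated": True},
    {"human_intervened": False, "passed_compliance": True, "escalated": False},
]
metrics = leading_indicators(events)
```

For this sample log, three of four requests ran without intervention, all four passed compliance validation, and one in four was an exception.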

The Competitive Advantage

Why Agentic AI Deployment Matters Now

Here's the reality. Agentic AI is coming whether you're ready or not. Your competitors are either building it, considering it, or will be soon.

Organizations that figure out how to deploy agentic AI safely and effectively will have a significant competitive advantage.

Not because the AI technology is better. Everyone has access to the same models. But because they can actually use it.

They can deploy systems that competitors can't. They can automate processes that competitors are still doing manually. They can scale operations where competitors are limited by human capacity.

That's the real competitive advantage. Not the technology. The ability to use the technology safely and at scale.

Building Your Agentic AI Advantage

The organizations that move fastest on this won't be the ones with the most sophisticated AI research teams. They'll be the ones that:

- Understand the three fundamental risks (transparency, consistency, boundaries)

- Implement workflows as their governance layer

- Build institutional knowledge and operational excellence

- Scale methodically instead of rushing

These aren't revolutionary ideas. They're basic operational discipline. But they're rare in the rush to deploy AI.

The Bottom Line: From Pilot to Production

Agentic AI is powerful. The potential is real. But the path from interesting technology to reliable production system requires addressing three fundamental risks.

Workflows solve these risks. Not perfectly. Not magically. But practically. They create the transparency, consistency, and boundaries that enterprises need to trust agentic AI.

This isn't about limiting intelligence. It's about channeling it safely. It's about going from "this is interesting in a lab" to "this works in production."

The enterprises that figure this out first will have years of advantage over those that don't. The technology itself is just a starting point. Success is about governance, discipline, and execution.

Start with a clear understanding of your risks. Design workflows that address those risks. Implement with business stakeholders involved. Measure what matters. Iterate based on what you learn.

That's how you move agentic AI from pilot to production. That's how you build competitive advantage. That's how you actually benefit from this technology.

FAQ

What exactly is agentic AI and how does it differ from regular AI assistants?

Agentic AI refers to autonomous systems that can independently plan, make decisions, and take actions based on an objective or goal. Unlike regular AI assistants that respond to direct user prompts, agentic systems reason about what needs to happen, decide which tools to use, and execute those decisions with minimal human intervention. The difference is autonomy. A regular AI assistant does what you ask. An agentic system decides what needs doing and does it.

Why do enterprises struggle more with agentic AI adoption than startups do?

Startups can tolerate risk and move fast. Enterprises operate under compliance requirements, governance frameworks, and regulatory oversight that startups don't face. When a startup's agentic system makes a mistake, it's a learning opportunity. When an enterprise's system makes a mistake with customer data or financial information, it's a compliance violation with legal consequences. This fundamental difference in risk tolerance creates completely different deployment challenges.

How do workflows actually improve AI capability rather than limiting it?

Workflows don't constrain the AI's reasoning ability. They constrain what the AI can do with that reasoning. Think of it like safety guardrails on a highway. The guardrails don't prevent you from driving. They prevent you from driving off a cliff. Workflows let agentic systems reason intelligently while ensuring that intelligent reasoning gets channeled into safe, approved actions. The result is AI that enterprises actually trust enough to deploy at scale.

What's the typical timeline from initial agentic AI project to production deployment?

With workflows and proper governance, most organizations move from initial concept to production deployment in 3-8 weeks. Without workflows, pilots typically stall around the 8-12 week mark when compliance and security teams start asking hard questions. The difference is significant. The first path leads to working systems. The second path leads to indefinite pilots that eventually get cancelled.

How do I measure whether my agentic AI workflow is actually working?

Focus on metrics that matter: compliance audit results (passes or fails), decision explainability (can you explain why the system took that action), operational consistency (identical inputs produce identical outputs), and user adoption (are business teams actually using it). Avoid vanity metrics like "number of AI decisions made." That tells you the system is running, not whether it's valuable or trustworthy.

What happens if my agentic AI system encounters a situation the workflow didn't account for?

That's when the exception handling kicks in. Well-designed workflows have escape valves for unexpected situations. The system escalates to a human. The human reviews and makes a decision. That decision can be logged as a learning point for workflow improvement. Exception rates that are too high suggest workflow design problems. Exception rates that are zero suggest the workflow is too rigid.

Can I start with an unconstrained agentic system and add workflows later?

Technically yes, but it's inefficient. You're essentially building the system twice. Build it constrained from the start. It's faster to market, more trustworthy, and easier to maintain. Organizations that try to add constraints after deployment always end up redesigning the system anyway.

How does workflow-based agentic AI integrate with existing enterprise systems like ERPs and data warehouses?

Through specialized tools that act as bridges. You don't try to make your 20-year-old mainframe understand agentic AI. You create a tool that translates between them. The tool knows how to talk to your legacy system. The workflow calls the tool and interprets results. This integration approach is cheaper, less risky, and works with existing IT infrastructure instead of requiring system replacement.

Key Takeaways

- Agentic AI offers enormous potential for enterprise automation, but three fundamental risks prevent adoption: lack of transparency in decision-making, operational indeterminism, and unclear data boundaries.

- Workflow-based governance solves these risks by creating visible, auditable, constrained systems that enterprises can trust and deploy at scale.

- Success comes from addressing risk through architecture, not by hoping problems won't arise.

- Organizations that implement workflows for agentic AI see deployment times of 3-8 weeks compared to stalled pilots lasting months.

- The competitive advantage goes to those who figure out how to actually use agentic AI in production, not those with the most sophisticated AI research.
