Nvidia's $100 Billion OpenAI Investment: Separating Fact from Speculation in 2025

The technology industry thrives on dramatic narratives, and few stories capture headlines quite like the rumored dissolution of a multi-billion-dollar partnership between two of the most influential companies in artificial intelligence. In late January 2025, reports surfaced suggesting that Nvidia CEO Jensen Huang had begun scaling back his company's commitment to a $100 billion investment in OpenAI, a deal announced with considerable fanfare just months earlier, in September 2024. These reports claimed that internal friction, strategic misalignment, and concerns about competing AI platforms were driving a wedge between the two companies.

Yet when asked directly about these claims, Huang dismissed them entirely, calling reports of partnership strain "nonsense" and doubling down on his commitment to OpenAI. This public contradiction raised fundamental questions: What's actually happening between these two AI powerhouses? How serious was the original investment commitment? What changed in the roughly four months between the announcement and the reported cooling-off period? And perhaps most importantly, what do these developments reveal about the competitive dynamics, investment strategies, and infrastructure needs within the rapidly evolving AI ecosystem?

This analysis examines the Nvidia-OpenAI partnership from multiple angles: the original deal structure, the reported tensions, the broader competitive landscape, and what this means for developers, enterprises, and the future of AI infrastructure. Rather than accepting headlines at face value, we'll dig into the business logic, the technical requirements, and the strategic incentives that underpin one of technology's most consequential relationships.

The Nvidia-OpenAI partnership represents more than a simple financial transaction. It reflects deep interdependencies between chip manufacturing, AI model development, and cloud computing infrastructure that will shape how artificial intelligence evolves over the next decade. Understanding the nuances of this relationship, and the forces that could strain or strengthen it, is essential for anyone building on AI platforms, investing in tech infrastructure, or trying to anticipate the next phase of the AI revolution.

The Original Deal: What Nvidia and OpenAI Actually Announced

The September 2024 Announcement Details

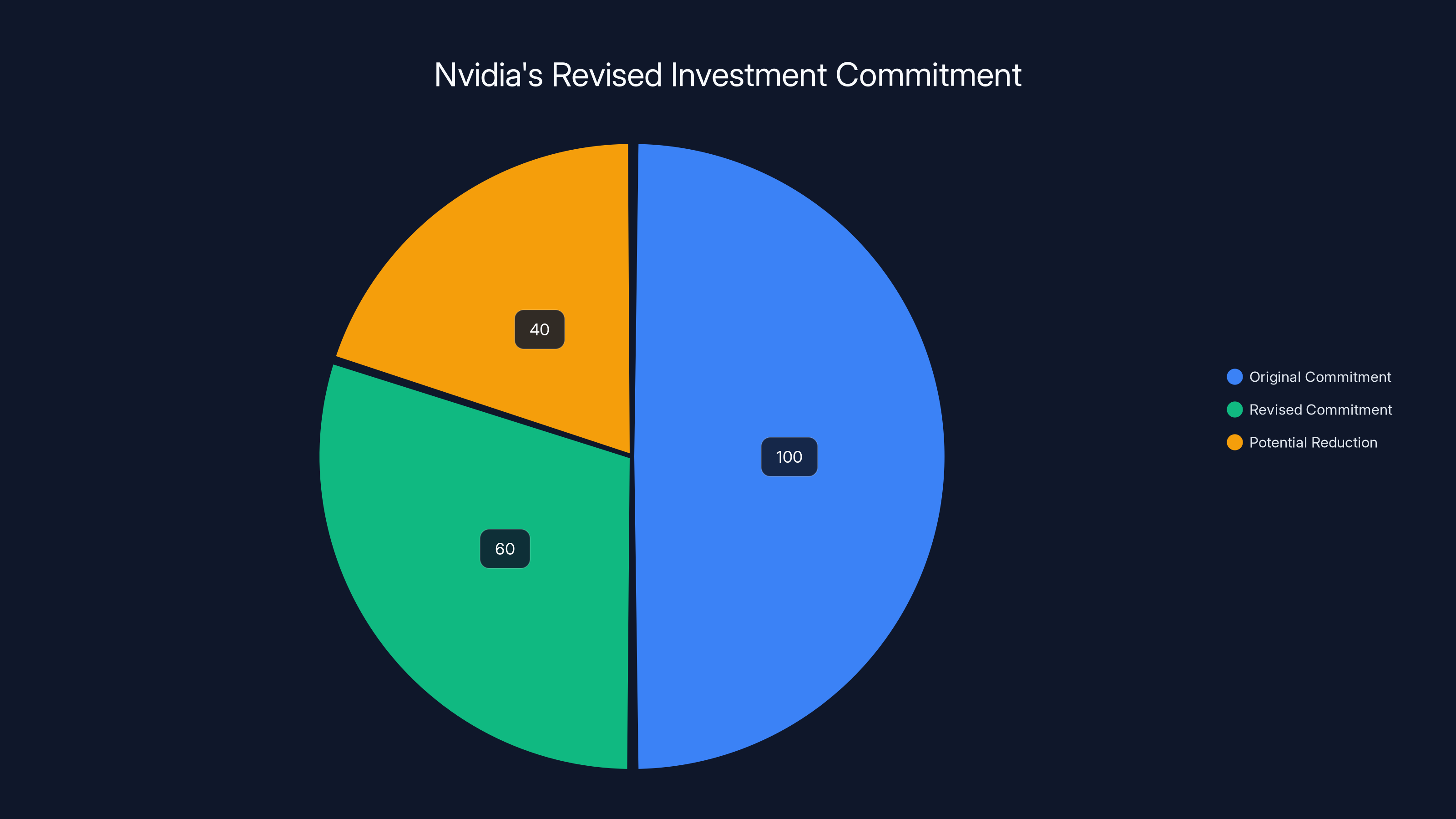

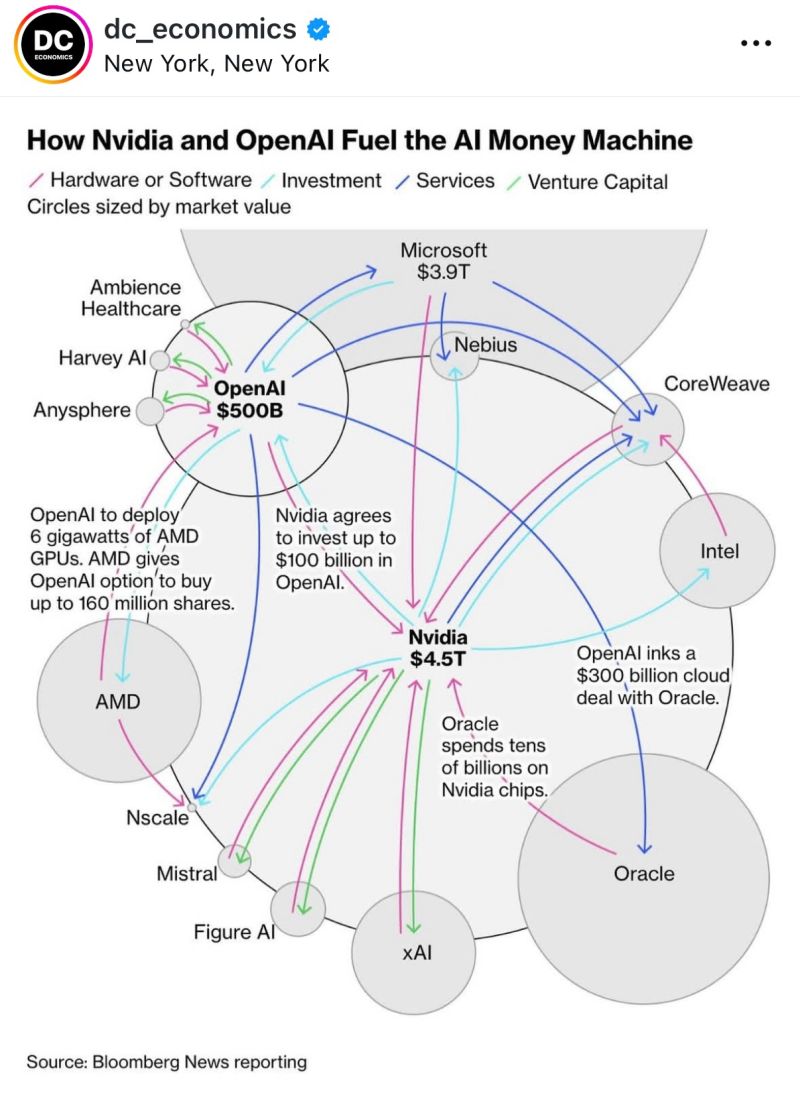

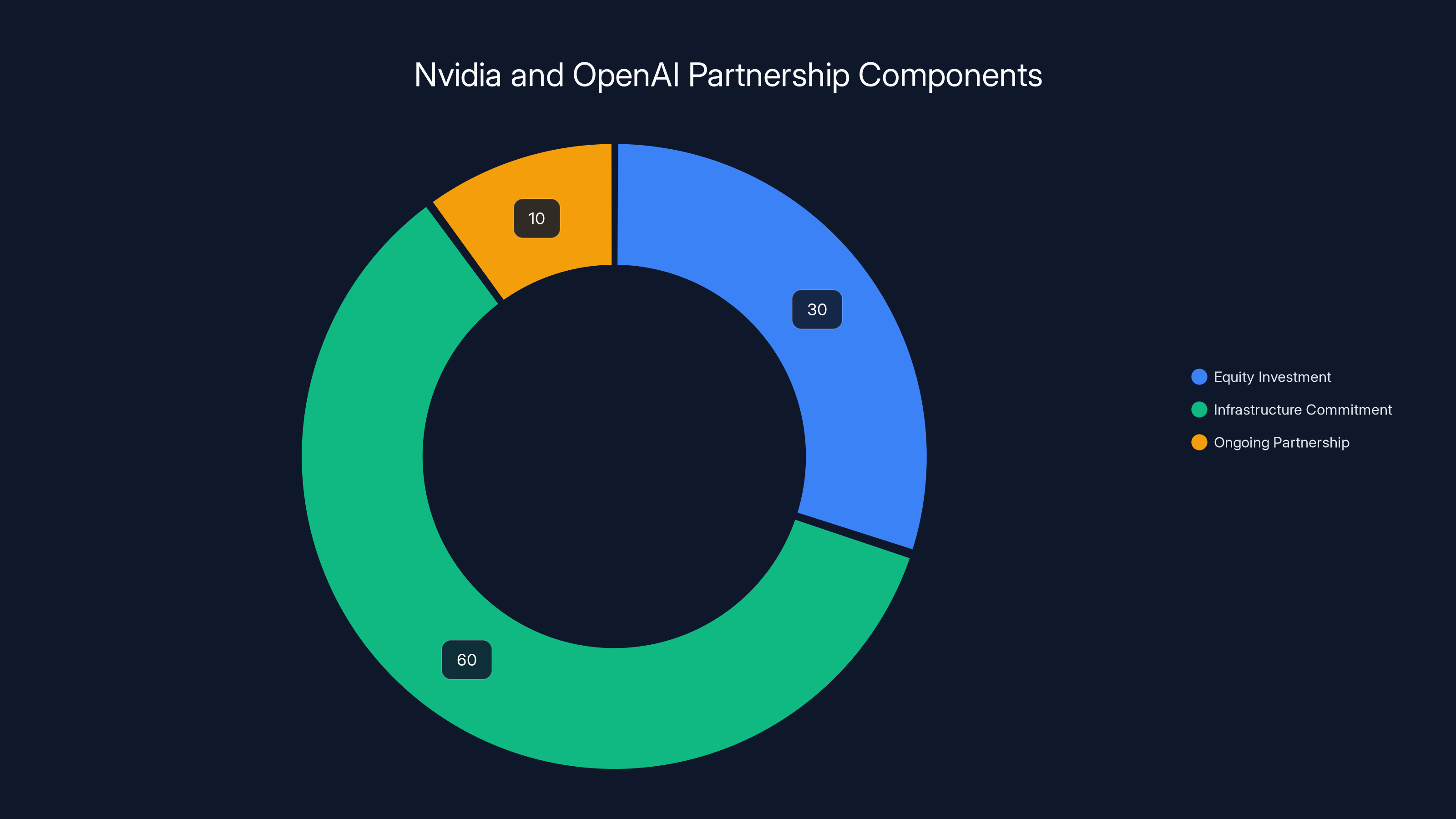

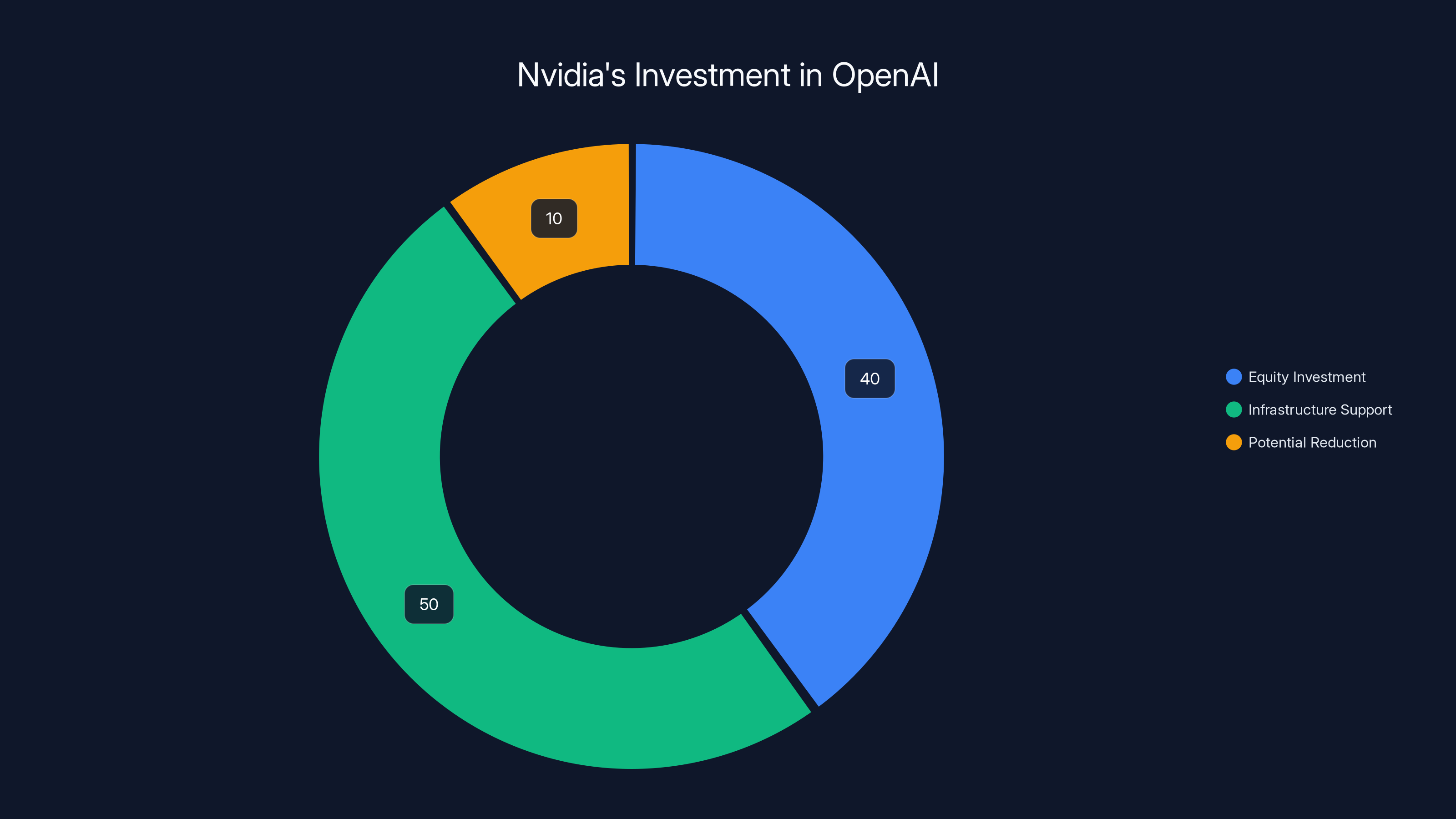

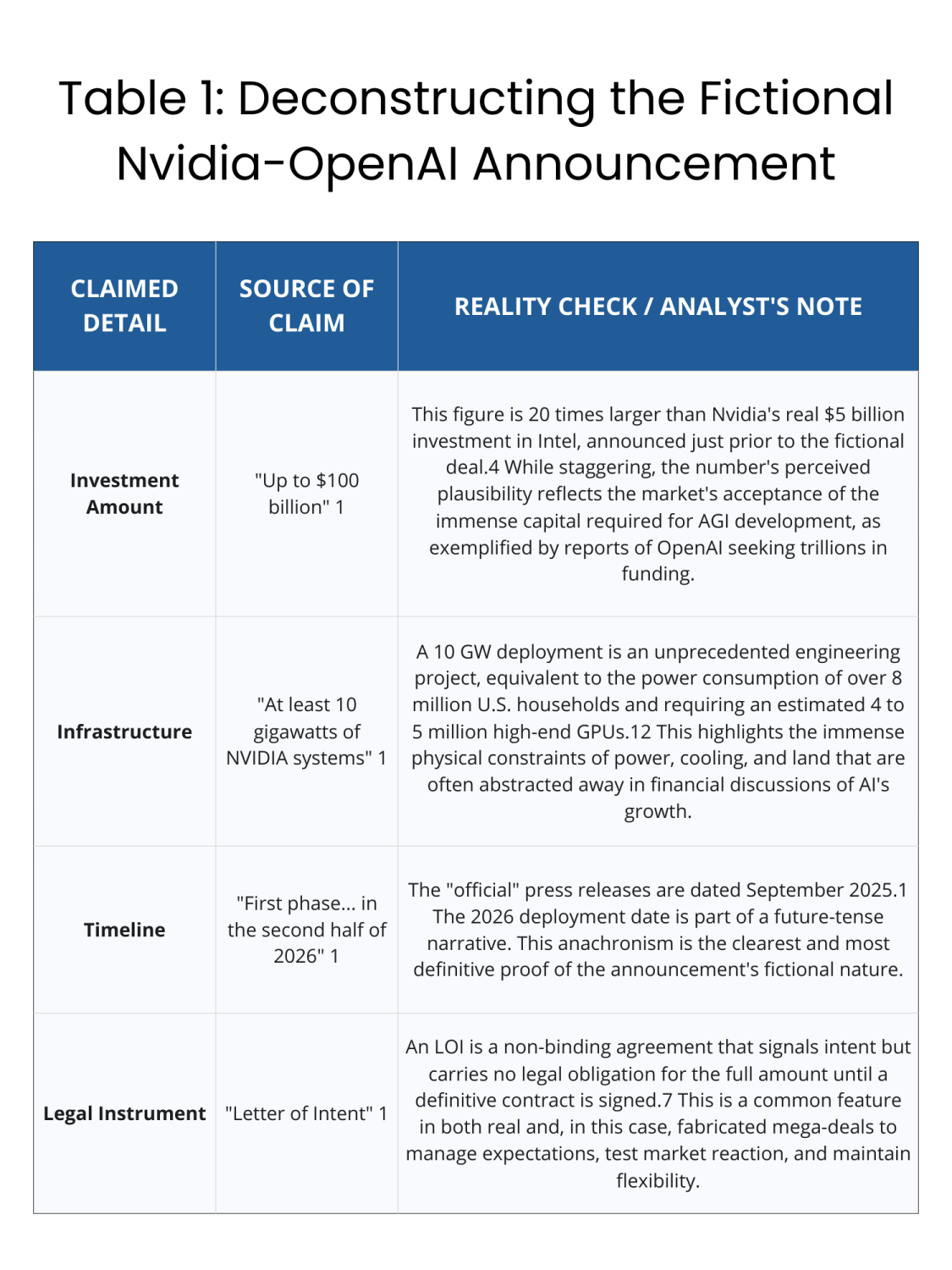

In September 2024, Nvidia and OpenAI announced a partnership framework that captured the imagination of the tech industry. The headline figure of $100 billion in investment immediately dominated coverage, but the actual structure of the deal was more nuanced than a simple equity check. The arrangement comprised three primary components: an equity investment from Nvidia into OpenAI, a commitment to build computing infrastructure specifically for OpenAI's needs, and an ongoing partnership structure for providing specialized hardware and support.

The equity investment component represented Nvidia's direct financial stake in OpenAI's future success. By taking an ownership position, Nvidia aligned its financial incentives with OpenAI's growth and profitability, as an equity investor typically does. However, the actual equity percentage Nvidia would receive was never publicly disclosed, leaving room for interpretation about the true valuation implied by the deal.

The infrastructure component of the deal was arguably more significant than the equity investment itself. Nvidia committed to supporting the construction of 10 gigawatts of computing capacity specifically configured for OpenAI's operations. To contextualize this scale: the gigawatt figure measures the electrical power the facilities draw, and it corresponds to an enormous amount of processing power. For perspective, a typical large data center consumes between 100 and 500 megawatts of power. Ten gigawatts would therefore power somewhere between 20 and 100 data centers of that size, each capable of training and running the most advanced AI models available.
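The data center comparison above is simple division, and a minimal sketch makes the range explicit. The 100 and 500 megawatt figures are the article's own rough bounds for a large data center; the function name and variable names are illustrative only.

```python
# Rough check: how many large data centers fit in a 10 GW power budget?
# The per-site figures (100-500 MW) are the article's approximate bounds,
# not measured values for any specific facility.
TOTAL_CAPACITY_MW = 10 * 1000  # 10 gigawatts expressed in megawatts


def data_center_count(total_mw: float, per_site_mw: float) -> float:
    """Number of sites of a given power draw that fit in the total budget."""
    return total_mw / per_site_mw


low_estimate = data_center_count(TOTAL_CAPACITY_MW, 500)   # largest sites
high_estimate = data_center_count(TOTAL_CAPACITY_MW, 100)  # smaller sites
print(f"{low_estimate:.0f} to {high_estimate:.0f} data centers")
```

The result depends entirely on the assumed per-site power draw, which is why the estimate spans a fivefold range.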

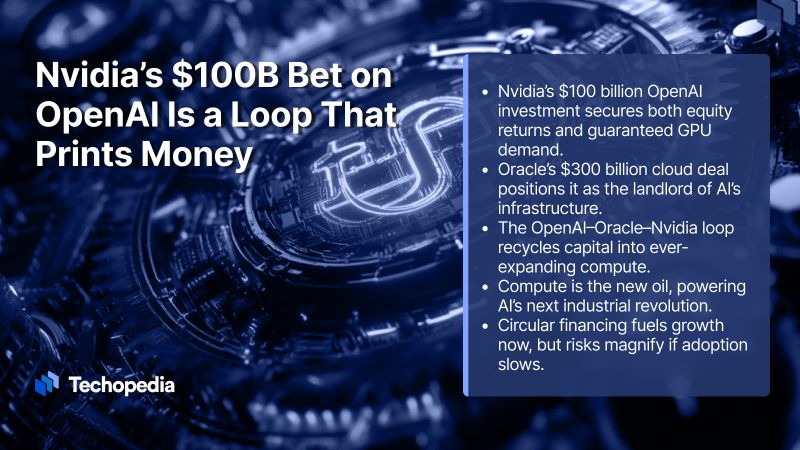

For Nvidia, this infrastructure commitment was the more economically significant piece of the arrangement. Building specialized computing infrastructure for a customer as important as OpenAI would generate sustained hardware revenues for Nvidia over many years. Every AI model trained by OpenAI would run on Nvidia chips; every inference request from millions of users would flow through Nvidia's processors. This created a recurring revenue stream that could eventually exceed the value of a one-time equity investment.

Why This Deal Made Sense Strategically

From Nvidia's perspective, the partnership with OpenAI served multiple strategic objectives simultaneously. First, it provided an anchor customer commitment: a strong signal that one of the world's most demanding AI companies would continue purchasing Nvidia's most advanced and profitable chips. In the intensely competitive AI chip market, securing long-term commitments from major customers provided revenue predictability and justified Nvidia's continued investment in next-generation processor development.

Second, the partnership demonstrated Nvidia's dominance in AI infrastructure. By publicly partnering with OpenAI, the most visible AI company in the world, Nvidia reinforced the narrative that its chips were essential for building advanced AI systems. This had cascading effects throughout the industry: it encouraged other companies to also purchase Nvidia chips, created competitive pressure for alternative chip makers, and solidified Nvidia's position as the gatekeeper of AI progress.

Third, the equity investment gave Nvidia optionality regarding OpenAI's commercialization path. As OpenAI considered various business model approaches (whether to emphasize subscriptions, API access, or enterprise licensing), Nvidia could influence these decisions and ensure they benefited Nvidia's own bottom line. An ownership stake meant Nvidia had a voice in strategic decisions.

From OpenAI's perspective, the arrangement solved immediate and pressing problems. The company needed massive amounts of computing power to develop, train, and serve its AI models. The cost of purchasing and operating this infrastructure independently would have required either enormous capital raising or accepting lower performance in its product offerings. By securing infrastructure support from Nvidia, OpenAI could focus its fundraising on operational expenses and R&D rather than capital expenditures.

Additionally, the partnership with Nvidia provided legitimacy and stability. OpenAI's relationships with traditional cloud providers like Microsoft and Amazon were complex: these companies competed with OpenAI in some areas while supporting it in others. Nvidia, as a pure infrastructure play, had simpler incentives. The company benefited directly from OpenAI's success and from the scale at which OpenAI operated.

The Wall Street Journal reported that Nvidia might reduce its investment in OpenAI from

Understanding the Reported Tension Points

The Wall Street Journal's Central Claims

In January 2025, the Wall Street Journal published an investigation revealing that discussions between Nvidia and OpenAI had shifted significantly from the original September announcement. The reporting made several specific claims that, if accurate, suggested material changes to the partnership:

First, the WSJ reported that Nvidia was "looking to scale back its investment," implying that the company was reconsidering the magnitude of capital it would deploy. This contrasted sharply with the headline $100 billion figure, suggesting instead that Nvidia might commit "tens of billions of dollars" rather than the originally announced amount. For context, tens of billions still represents an enormous commitment, but if the final figure landed at, say, 50-70% of the original, it would materially change the nature of the partnership.

Second, the reporting indicated that Huang had begun emphasizing the non-binding nature of the original commitment. This distinction matters legally and strategically. A binding commitment creates contractual obligations that, if breached, could expose Nvidia to litigation. A non-binding commitment, sometimes called a "term sheet" or "agreement in principle," creates moral and reputational pressure but doesn't force either party to complete the transaction. If Huang was publicly highlighting the non-binding nature of the deal, it suggested Nvidia was preserving its optionality—maintaining the right to walk away if circumstances changed.

Third, the WSJ reported that Huang had expressed private concerns about competitive threats. Specifically, the reporting mentioned that Huang worried about competitors like Anthropic and Google developing alternative approaches to AI infrastructure and model training. If OpenAI faced serious competitive pressure from other well-funded AI companies, the economic calculus for Nvidia's investment might shift. Rather than OpenAI enjoying unchallenged dominance in the AI market, Nvidia could be hedging its bets by diversifying its partnerships across multiple AI companies.

Fourth, the reporting suggested that the two companies were actively "rethinking their relationship." This phrase implied ongoing negotiations about the terms, structure, and magnitude of the partnership. The WSJ characterized these discussions as focused on defining the actual equity investment amount and the specific infrastructure commitments Nvidia would make.

Alternative Explanations for the Reported Shift

While the WSJ's reporting was specific and sourced, the business community offered several plausible alternative explanations for why the partnership terms might be evolving:

Due diligence maturation: When companies announce major deals in principle, they often haven't completed detailed financial and operational due diligence. The months between September and January would have allowed both companies' finance and operations teams to dig into details that might not have been apparent during initial negotiations. Perhaps the due diligence revealed complexities, additional costs, or technical challenges that warranted adjusting the deal structure.

Regulatory scrutiny: The AI industry faced increasing regulatory attention globally during this period. Governments were examining AI companies' market power, data practices, and competitive dynamics. If regulators were investigating Nvidia's market dominance in AI chips or scrutinizing the competitive implications of Nvidia providing infrastructure exclusively to OpenAI, both companies might have realized they needed to modify the arrangement to satisfy regulatory concerns.

Capital deployment efficiency: Companies constantly optimize how they deploy capital. For Nvidia, investing heavily in building infrastructure specifically for OpenAI might have been less efficient than other uses of capital, such as manufacturing more chips that could be sold to multiple customers, investing in R&D for next-generation processors, or acquiring complementary technologies. As Nvidia's leadership reviewed financial models, they might have concluded that a scaled-back version of the OpenAI partnership allowed more efficient capital deployment.

Market competition and hedging: The reported concerns about Anthropic and Google suggested that Nvidia might be reconsidering whether betting so heavily on OpenAI made strategic sense. If other AI companies were developing competitive products and securing funding, Nvidia could spread its risk by supporting multiple AI companies rather than concentrating so heavily on one.

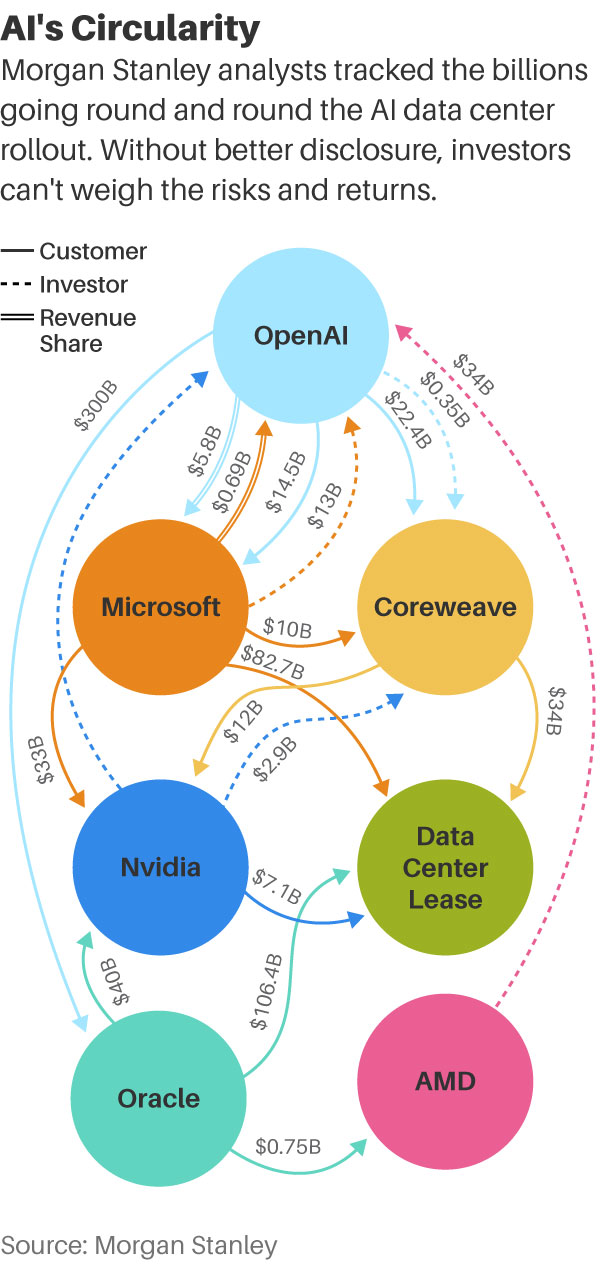

Estimated data suggests that the infrastructure commitment represents the largest portion of Nvidia's $100 billion partnership with OpenAI, highlighting its strategic importance.

Jensen Huang's Defense and the Credibility Question

Huang's Direct Response to the Reports

When asked about the WSJ reporting during a visit to Taipei, Jensen Huang directly contradicted the claims in several key ways. Rather than confirming that Nvidia was scaling back its commitment, Huang stated that Nvidia would "definitely participate" in OpenAI's funding round and would "invest a great deal of money" in the company. He described OpenAI as "such a good investment" and praised the company's team and capabilities, stating that OpenAI was "one of the most consequential companies of our time."

These statements directly challenged the core thesis of the WSJ reporting. Where the Journal suggested cooling and retrenchment, Huang emphasized enthusiasm and commitment. Where the reporting described tensions and reassessment, Huang's comments suggested confidence in OpenAI's direction and Nvidia's commitment.

However, Huang notably declined to specify the exact amount Nvidia would invest, instead deferring to OpenAI CEO Sam Altman to announce the fundraising details. This strategic ambiguity is worth examining. By refusing to commit to a specific number, Huang preserved flexibility. If Nvidia ultimately invested

Evaluating the Credibility of Both Narratives

When a high-profile CEO publicly denies specific reporting, observers face a credibility puzzle. Is the CEO being truthful and the reporting flawed? Is the reporting accurate and the CEO defending his company's reputation? Or does a more nuanced truth exist somewhere between these extremes?

Several factors support the credibility of Huang's defense:

The incentive to participate in OpenAI's funding round remains strong. Nvidia's business fundamentally depends on AI adoption accelerating and scaling globally. OpenAI, as one of the most visible and influential AI companies, drives consumer awareness and enterprise adoption of AI broadly. Supporting OpenAI's growth continues to serve Nvidia's interests, even if the specific terms are being negotiated.

Huang's enthusiastic language matches broader market trends. By January 2025, the AI market had matured beyond the peak hype of 2023. Serious companies and investors were demonstrating that AI systems could be deployed profitably and at scale. Huang's confidence in OpenAI reflected not blind enthusiasm but rather evidence of market adoption and value creation.

Refusing to specify amounts is standard CEO behavior. Executives routinely avoid discussing exact financial terms in public settings because these details are subject to ongoing negotiations, regulatory review, and investor relations considerations. Huang's refusal to commit to specific numbers is consistent with how savvy executives handle similar questions.

However, several factors suggest the WSJ's reporting captured genuine tensions or at minimum significant refinements to the original deal:

Market context for the reports. The WSJ didn't publish speculative reporting; the story was sourced to people familiar with the discussions. For sources to express skepticism about the partnership to the Journal, even anonymously, there had to be genuine conversations happening between the companies that suggested adjustments were under consideration.

The need for a public defense. The original commitment was non-binding, so its terms were open to question, and the fact that Huang felt compelled to emphasize Nvidia's commitment suggests questions had been raised. If the partnership remained completely solid, there would have been no reason to publicly defend it. The fact that Huang was addressing the concerns at all suggests they had some credibility in the market.

The logical evolution of deal terms. Major partnerships typically do evolve between the announcement phase and execution. The $100 billion figure was likely an aspirational maximum rather than a guaranteed minimum. As the companies engaged in detailed planning, adjustments to the amount, timing, and structure would be entirely normal.

The Broader Context: OpenAI's Fundraising Needs

The $100 Billion Funding Round

To properly understand the Nvidia-OpenAI relationship, it's essential to examine OpenAI's fundraising activities in parallel. In late 2024 and early 2025, OpenAI was actively fundraising for a massive new funding round. Various reports suggested the company was seeking to raise as much as $100 billion, a round that would place OpenAI among the most valuable privately held companies in the world.

This fundraising round served multiple purposes for OpenAI. First, it funded the company's enormous operational expenses. Training advanced AI models, operating inference infrastructure for millions of users, and paying world-class AI researchers and engineers costs billions of dollars annually. OpenAI's burn rate (the speed at which the company spends cash) had likely grown substantially as the company scaled product usage and expanded its research capabilities.

Second, the fundraising provided capital for infrastructure investment. While Nvidia was supporting infrastructure development, OpenAI's own capital was necessary for everything else: real estate for offices and data centers, power infrastructure, networking equipment, cooling systems, and the thousands of non-chip elements required to operate world-scale AI systems.

Third, a successful fundraising round validated OpenAI's business model and growth trajectory. Investors participating in a

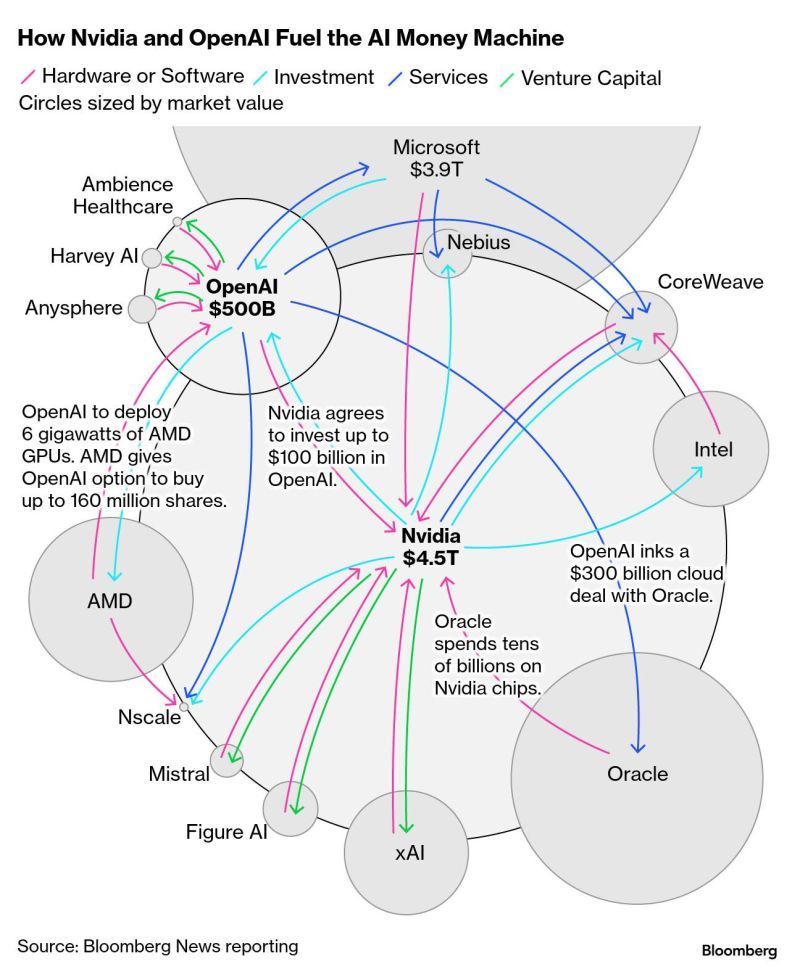

The Investor Consortium Assembling Around OpenAI

According to various reports, multiple technology giants were discussing potential investments in OpenAI's fundraising round. These included Amazon, Microsoft, SoftBank, and Nvidia. The participation of multiple large investors was significant because it suggested OpenAI was not entirely dependent on any single source of capital or infrastructure support.

Microsoft, which had invested heavily in OpenAI previously and had exclusive rights to use OpenAI's technology for certain Azure services, was expected to participate. However, the involvement of Amazon, SoftBank, and potentially other investors suggested OpenAI was diversifying its funding sources and relationships. This diversification actually aligned with the concerns Huang reportedly expressed: if OpenAI could get infrastructure support from multiple providers rather than being entirely dependent on Nvidia, the company would be in a stronger negotiating position.

For Nvidia, being one of several investors rather than the sole infrastructure partner had implications. On one hand, it diluted Nvidia's control and influence over OpenAI's strategic decisions. On the other hand, it reduced Nvidia's risk by ensuring OpenAI wouldn't be so dependent on a single relationship that any friction could jeopardize OpenAI's operations.

How This Affected the Nvidia-OpenAI Dynamics

OpenAI's ability to raise capital from multiple sources actually strengthened its negotiating position with Nvidia. If OpenAI had been entirely dependent on Nvidia for infrastructure support and capital, the company would have been in a weak position to negotiate better terms or to diversify its chip suppliers. However, with backing from Amazon, Microsoft, and SoftBank, OpenAI could credibly threaten to reduce its dependence on Nvidia or to develop alternative infrastructure partnerships.

This dynamic likely informed the reported shift in Nvidia-OpenAI negotiations. Rather than a partnership where Nvidia was the essential, irreplaceable provider of infrastructure, the relationship became more of a negotiated deal between two relatively powerful parties. Both had alternatives: Nvidia could invest in other AI companies, and OpenAI could seek infrastructure from other sources or partners.

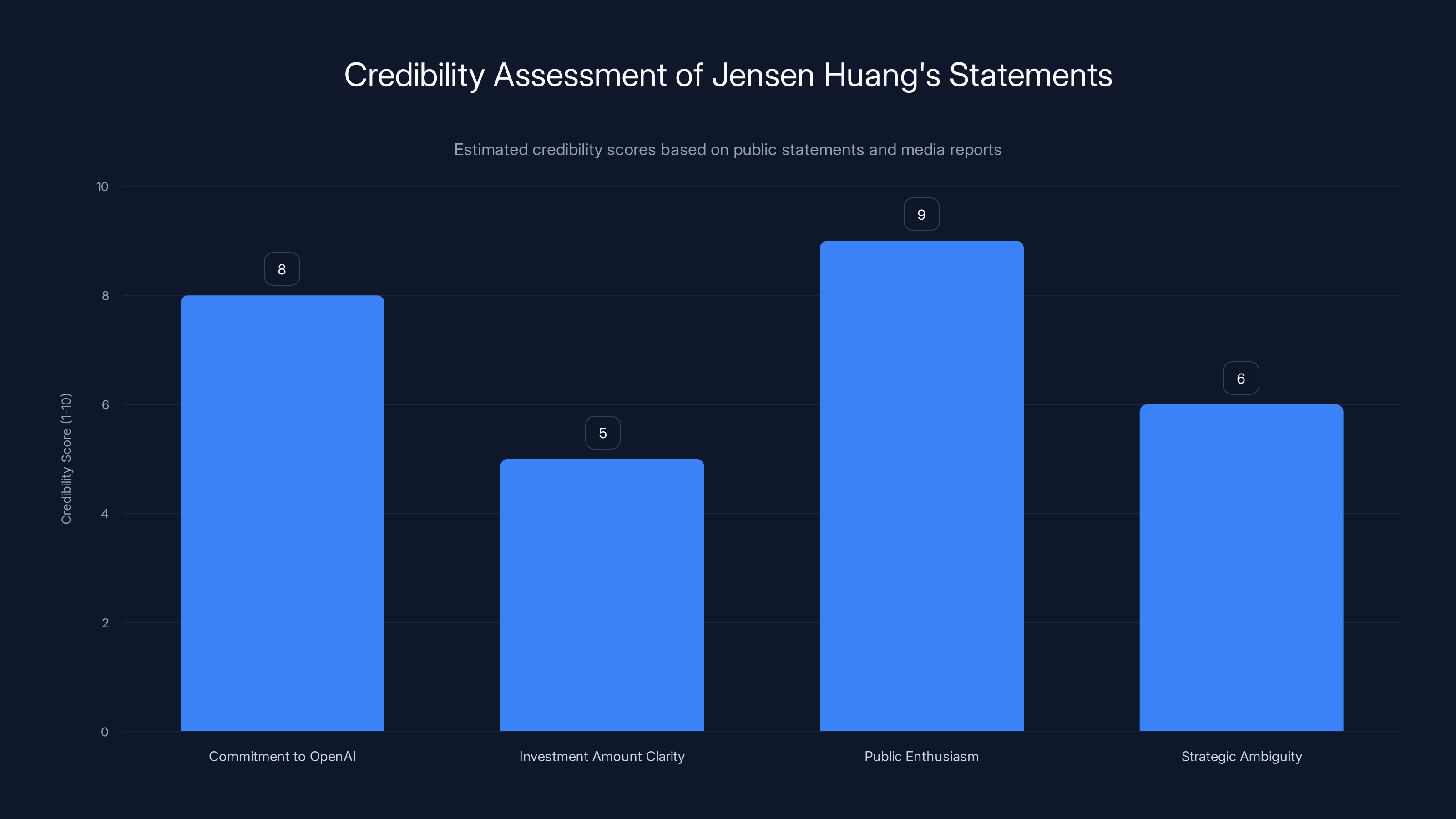

Estimated credibility scores suggest strong public enthusiasm and commitment to OpenAI, but strategic ambiguity about the investment amount.

Competitive Pressure: Anthropic, Google, and Other Rivals

The Rise of Anthropic as a Serious Competitor

During the period when Nvidia and OpenAI were discussing their partnership, Anthropic emerged as a formidable competitor to OpenAI. Founded by former OpenAI researchers including Dario and Daniela Amodei, Anthropic developed Claude, an AI model that many users and companies considered competitive with OpenAI's GPT models in various capabilities.

Anthropic's success was more than technological; it was also financial. The company raised over $5 billion in funding from leading investors, including Google. This funding provided Anthropic with the resources to build infrastructure, hire top talent, and rapidly iterate on its AI models. Importantly, Anthropic had relationships with multiple infrastructure providers: not just Nvidia, but also Google's custom AI chips and other options.

For Nvidia, Anthropic's rise posed a specific challenge. If Anthropic succeeded in developing advanced AI capabilities while using a diverse set of chips and infrastructure providers, it would demonstrate that OpenAI didn't have a monopoly on AI leadership. This could reduce the premium Nvidia could charge for its chips, increase competition in the AI chip market, and ultimately reduce Nvidia's market power.

The concern Huang reportedly expressed about Anthropic wasn't necessarily that OpenAI couldn't compete. Nvidia's business depended on the entire AI industry scaling rapidly, and if well-funded competitive alternatives existed, the industry would grow faster and more broadly. Nvidia's dominance in chips also meant that, regardless of which AI company "won" the competition, Nvidia's chips would be essential to all of them. This is why Huang's reported concern about Anthropic was somewhat puzzling: Anthropic's success actually meant more AI infrastructure demand, which benefited Nvidia.

Google's Competitive Positioning

Google represented an even more complex competitive threat than Anthropic. Google had developed Gemini, a multimodal AI model that competed directly with OpenAI's offerings. More significantly, Google had the resources, infrastructure, and expertise to potentially reduce its dependence on Nvidia for AI chip needs. Google had invested billions in developing its own custom AI chips (TPUs) and had announced plans to expand these capabilities.

If Google succeeded in building advanced AI capabilities using primarily its own chips rather than Nvidia's, the strategic implications would be enormous. Google would demonstrate an alternative path to AI dominance that didn't rely on Nvidia as a critical infrastructure provider. While Nvidia would still benefit from Google's purchases, the company's negotiating leverage with other AI companies would be reduced.

Further, Google had relationships with OpenAI's other investors and could potentially influence OpenAI's strategic direction. Google Cloud provided some of OpenAI's infrastructure services, and Google had made investments in other AI companies. This meant Google had multiple leverage points with OpenAI beyond just competition in models.

How Competitive Concerns Affected the Nvidia Deal

The competitive landscape emerging in late 2024 and early 2025 actually provided legitimate reasons for both Nvidia and OpenAI to reassess their partnership. From Nvidia's perspective, if OpenAI faced serious competition and the overall AI market's growth rate slowed, the economics of a massive infrastructure investment might shift. Nvidia could achieve better returns by investing capital in other companies or other uses.

From OpenAI's perspective, if Anthropic and Google were making significant advances, OpenAI needed to ensure it could continue innovating rapidly. This might require different infrastructure approaches, different partnerships, or different capital deployment strategies. Reassessing the Nvidia deal in light of competitive developments made sense for both parties.

The Technical Reality: Why Nvidia's Chips Remain Essential

The Constraints of AI Chip Alternatives

Despite all the talk of competition and alternative chip providers, the technical reality remained clear: Nvidia's chips were the dominant choice for training and deploying large-scale AI models. The company's CUDA software ecosystem, driver maturity, developer familiarity, and raw performance created a deep moat around Nvidia's position in the market.

Alternative chip providers did exist. Google's TPUs offered competitive performance for certain workloads. AMD was developing more capable GPU alternatives. Other startups were building custom chips for AI. However, none of these alternatives had achieved the level of maturity, ecosystem support, and proven reliability that Nvidia's chips offered.

For companies like OpenAI, switching away from Nvidia chips would require significant investment and risk. The company would need to retrain its engineering teams, rewrite portions of its software stack, potentially accept performance reductions, and face uncertainty about whether the alternative chips would remain competitive over time. Unless Nvidia raised prices to extortionate levels or stopped innovating, the switching costs for OpenAI would likely be prohibitive.

This technical reality underlay all the Nvidia-OpenAI partnership negotiations. Both companies understood that they were dependent on each other and that neither could easily find a replacement. Nvidia needed OpenAI as a major customer and as a symbol of its dominance in AI. OpenAI needed Nvidia's chips to remain competitive in the AI market. Given this mutual dependence, some level of partnership was all but inevitable.

The Infrastructure Requirements of Advanced AI

To understand why Nvidia chips were so essential, it helps to understand the scale of infrastructure required for advanced AI systems. Training a state-of-the-art large language model like GPT-4 or Claude requires enormous amounts of computing power. The training process can take months and consume the equivalent of thousands of GPU-years of computation.
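To make the "GPU-years" unit concrete, here is a minimal sketch of the conversion. One GPU-year is one GPU running for one year. The cluster size and duration below are hypothetical illustration values, not figures reported for GPT-4, Claude, or any other model.

```python
def gpu_years(num_gpus: int, training_days: float) -> float:
    """Convert a training run into GPU-years (one GPU running for one year)."""
    return num_gpus * training_days / 365.0


# Hypothetical run: 20,000 GPUs working for 90 days (assumed values).
example = gpu_years(num_gpus=20_000, training_days=90)
print(f"{example:,.0f} GPU-years")  # prints "4,932 GPU-years"
```

Under these assumed inputs, a three-month run on a large cluster already lands in the thousands of GPU-years the paragraph describes.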

Once a model is trained, deploying it at scale for millions of users creates equally enormous infrastructure demands. Every time a user submits a prompt to ChatGPT, OpenAI must run an inference operation on that prompt, which involves processing it through billions of neural network parameters. At OpenAI's scale, this meant handling millions of simultaneous inference requests globally.

The infrastructure required to support this scale involved not just the chips themselves, but the entire ecosystem surrounding them. Nvidia's software stack, driver technology, and optimization for AI workloads made the company's chips substantially more efficient for these tasks than alternatives. The 10 gigawatts of infrastructure Nvidia committed to building would be a small portion of the total infrastructure OpenAI needed, but it would represent the highest-quality, most-optimized computing capacity available.

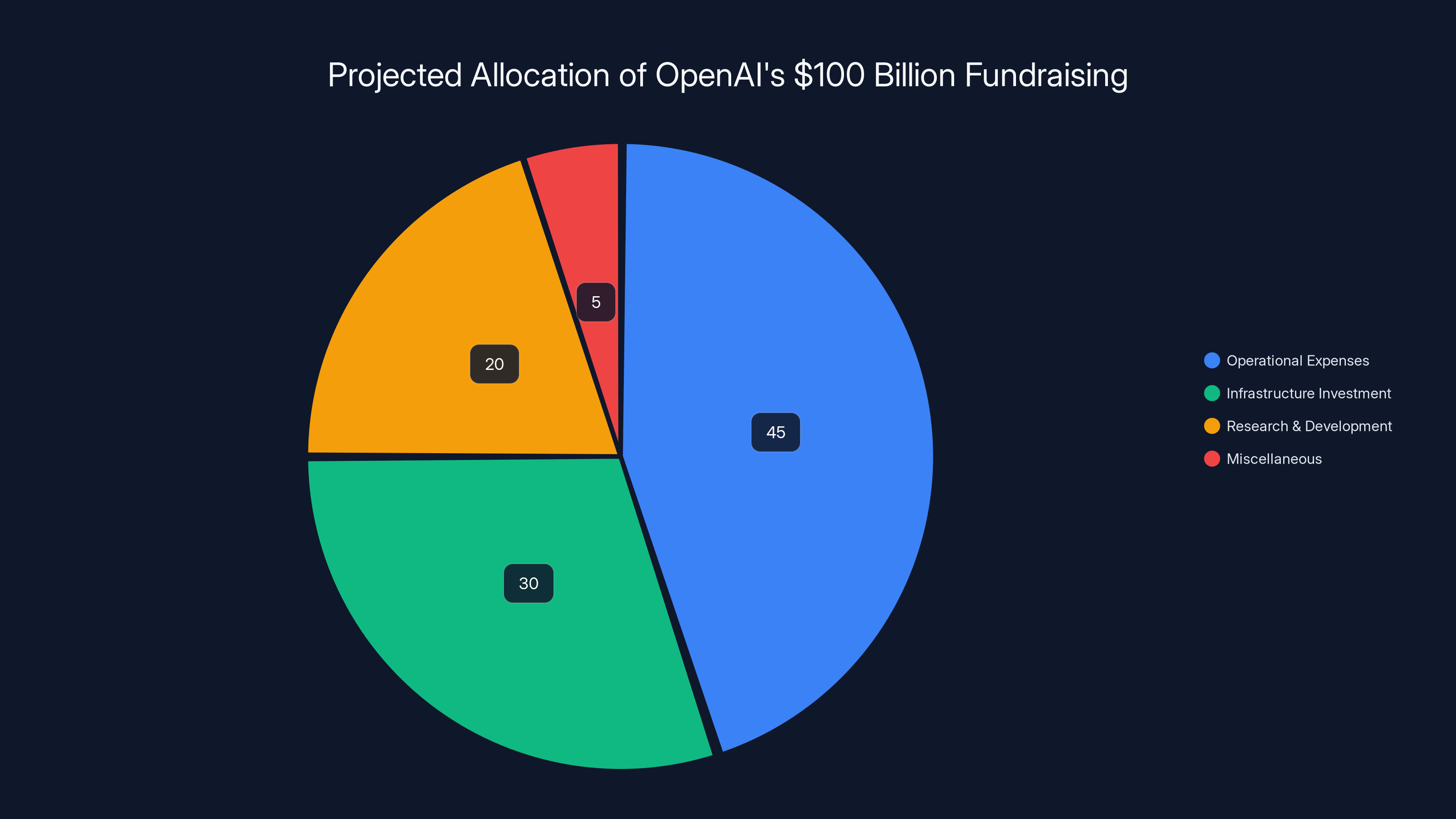

Estimated data shows that a significant portion of OpenAI's $100 billion fundraising is likely allocated to operational expenses and infrastructure investment, supporting its growth and scalability.

The Business Model Question: How This Investment Differs from Traditional Venture Capital

Infrastructure Investment vs. Traditional Equity Stakes

A key aspect of the Nvidia-OpenAI partnership that deserves deeper examination is the distinction between the infrastructure investment component and the pure equity investment component. Traditional venture capital firms invest capital in exchange for equity, meaning partial ownership of a company. In return, they gain influence over strategic decisions, the right to exit profitably when the company achieves success, and the potential for substantial returns on their investment.

Nvidia's approach was somewhat different. While Nvidia did take an equity stake in OpenAI, the more economically significant component of the deal was the infrastructure commitment. Rather than building infrastructure that Nvidia would own and then rent to OpenAI, Nvidia was committing to deploy capital and resources specifically for OpenAI's use. This created a revenue stream for Nvidia that looked more like a long-term customer relationship than a traditional venture investment.

This distinction mattered for several reasons. First, the infrastructure revenue was more predictable and certain than equity returns. If Nvidia deployed chips and infrastructure for OpenAI's operations, Nvidia would receive revenue regardless of whether OpenAI's business achieved the valuations that venture investors were expecting. Even if OpenAI's valuation didn't reach anticipated levels, OpenAI would still need to operate its infrastructure and pay Nvidia for the services provided.

Second, the infrastructure investment created a different type of leverage for Nvidia. Rather than influence over strategic decisions (which comes with traditional equity stakes), Nvidia gained operational leverage. OpenAI would become dependent on Nvidia's infrastructure working reliably and efficiently. This dependence could potentially be leveraged in future negotiations.

Third, the infrastructure commitment provided Nvidia with a way to support a major customer while maintaining something of an arm's-length relationship. Rather than becoming a deep strategic partner with governance rights, Nvidia could position itself as a critical infrastructure provider, a role that was valuable but somewhat separate from OpenAI's core business decisions.

The Return Profile of Infrastructure Investment

Nvidia's expected return profile from the infrastructure investment would depend on several variables:

Capacity utilization: If OpenAI deployed the 10 gigawatts of infrastructure and ran it at high utilization rates (meaning the chips were being used most of the time), Nvidia would maximize revenue. If utilization was lower, Nvidia's revenue would suffer. The pricing structure of the deal, whether Nvidia charged per compute-hour, per model-training run, or by some other metric, would determine how much of OpenAI's growing computational demand would flow to Nvidia as revenue.

Technology upgrade cycles: Nvidia releases new, more powerful GPU generations roughly every 18-24 months. If the original infrastructure built in 2024-2025 became outdated, OpenAI might need to refresh it with newer chips. This created recurring revenue opportunities for Nvidia. However, if competitors developed superior alternatives, OpenAI might diversify away from Nvidia chips.

Competitive pricing dynamics: As competition in AI chips intensified, the prices Nvidia could charge for its products would likely decline. The infrastructure investment had to be profitable at declining price points, or Nvidia needed to maintain enough performance leadership that it could sustain premium pricing despite competition.

These dynamics meant Nvidia's infrastructure investment was not a simple, straightforward bet on OpenAI's success. Rather, it was a complex wager on OpenAI's growth trajectory, Nvidia's ability to maintain competitive advantages in chips, industry-wide infrastructure demands, and pricing dynamics. Depending on how these factors played out, either a more optimistic or a more pessimistic view of the partnership's value could be justified.
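The interaction between utilization and declining prices can be sketched with a toy model. Everything here is an assumption for illustration: the deal's actual pricing structure was not disclosed, so the function name, the "capacity units," the starting price, and the 10% annual price decline are all hypothetical.

```python
def infrastructure_revenue(capacity_units: float, utilization: float,
                           price_per_unit: float, annual_price_decline: float,
                           years: int) -> list[float]:
    """Yearly revenue when price falls by a fixed fraction each year.

    All inputs are hypothetical; the real deal's pricing was never disclosed.
    """
    revenue = []
    price = price_per_unit
    for _ in range(years):
        # Revenue scales with the share of capacity actually in use.
        revenue.append(capacity_units * utilization * price)
        # Competitive pressure lowers the price for the following year.
        price *= 1 - annual_price_decline
    return revenue


# Same capacity and prices, two assumed utilization levels.
high_use = infrastructure_revenue(100, 0.9, 1.0, 0.10, years=3)
low_use = infrastructure_revenue(100, 0.5, 1.0, 0.10, years=3)
print(f"High utilization total: {sum(high_use):.1f}")
print(f"Low utilization total:  {sum(low_use):.1f}")
```

In this sketch, revenue is proportional to utilization in every year, so the same build-out earns far less when it sits partly idle, and the price decline compounds the shortfall over time.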

Market Impact and Investor Reactions

How Tech Industry Observers Interpreted the Reports

When the Wall Street Journal's reporting about potential scaling back of the Nvidia-OpenAI deal became public, it rippled through the technology investment community. Observers parsed the details for clues about broader trends in AI infrastructure spending, competitive dynamics, and the maturation of the AI market.

Some investors interpreted the reported tension as a sign that the initial $100 billion figure had been aspirational rather than realistic. In major deal announcements, companies sometimes release headline numbers that reflect potential upside scenarios rather than committed minimums. The idea that actual negotiations might result in a smaller commitment wasn't surprising—it was how complex deals typically evolved.

Other observers saw the reports as evidence that the AI infrastructure market was shifting from a period of unbounded growth assumptions to a more rational assessment of actual needs and returns. During the peak hype phase of 2023-2024, investors and companies had assumed that AI infrastructure spending would grow exponentially and indefinitely. By January 2025, as actual adoption data emerged, the growth curve still looked steep, but more measured than the most optimistic extrapolations.

A third interpretation viewed the reports as evidence that Nvidia's market dominance—while still substantial—was not as unshakeable as had been believed. If even Open AI, one of Nvidia's most important customers, was reassessing the infrastructure partnership, perhaps other companies would do the same. This interpretation suggested the AI chip market would become more competitive, with customers having genuine alternatives to Nvidia.

Stock Market and Analyst Responses

The reports about the Nvidia-Open AI partnership didn't move technology stock prices dramatically, largely because investors had already internalized much of the narrative. Nvidia's stock price reflected assumptions about the company's dominance in AI chips, but it wasn't based entirely on the specific Open AI deal. Nvidia's dominance extended to many other AI companies, and the company had diversified revenue streams beyond any single customer.

Analysts' reactions to the reports tended to be measured. Most recognized that partnerships between major companies frequently evolved from their initial announcement terms as the companies engaged in detailed execution planning. Rather than viewing the reported adjustments as failures, analysts saw them as normal business negotiations. The key question for investors was whether Nvidia and Open AI would ultimately execute a deal that maintained Nvidia's strategic position in the AI ecosystem—and on that measure, the answer appeared to be yes.

Some analysts actually saw the reported shift as positive for Nvidia. If Nvidia had over-committed to a massive infrastructure build-out that didn't achieve high utilization, the company's returns would suffer. A more measured, phased approach that tracked with actual demand growth could be more profitable and more sustainable for Nvidia in the long term.

Nvidia's $100 billion commitment to OpenAI is estimated to be divided into equity investment, infrastructure support, and potential reductions. Estimated data.

The Role of Regulatory Scrutiny in Partnership Adjustments

Antitrust Concerns and Competition Policy

While the immediate reporting about the Nvidia-Open AI partnership focused on business strategy and internal disagreements, the broader regulatory environment also helps explain why the deal might have evolved. During late 2024 and early 2025, governments globally were examining the concentration of power in AI markets, the role of dominant chip makers, and potential anticompetitive practices.

A deal in which Nvidia invested $100 billion in Open AI while also providing exclusive infrastructure support raised questions that regulators might examine. Was Nvidia using its dominance in chips to unfairly advantage Open AI against competitors? Were Open AI and Nvidia acting as de facto partners in ways that excluded other AI companies from fair access to infrastructure? Could Nvidia leverage its infrastructure investment to gain improper influence over Open AI's business decisions?

These questions didn't necessarily mean that the Nvidia-Open AI partnership was illegal or anticompetitive. However, companies might modify deal structures to preempt regulatory concerns before they became more serious. By scaling back the infrastructure investment and ensuring that Open AI remained independent and sourced infrastructure from multiple providers, both companies could reduce regulatory scrutiny.

Regulatory Pressure on Data Center Consolidation

Another regulatory concern involved the concentration of computing infrastructure and who had access to it. If a small number of companies controlled most of the world's AI infrastructure, this created potential concerns about competition and innovation. Regulators and policymakers in the US, EU, and other jurisdictions were examining whether the current infrastructure allocation patterns were healthy for competition.

Nvidia's commitment to build 10 gigawatts of infrastructure specifically for Open AI could be viewed as creating further consolidation—putting more computing power under the control of two companies that dominated their respective markets. By scaling back or restructuring the infrastructure commitment, Nvidia and Open AI could demonstrate greater openness to competition and broader infrastructure access.

The Practical Reality: How Infrastructure Buildout Works

The Timeline and Phasing of Infrastructure Deployment

One aspect of the reported partnership adjustment that often goes overlooked is the fundamental timeline question. Building 10 gigawatts of infrastructure isn't something that happens instantly. Even with massive capital and commitment, the actual deployment would take years and would proceed in phases.

When Nvidia and Open AI announced their partnership in September 2024, they weren't committing to having all 10 gigawatts operational immediately. Instead, they would likely have outlined a multi-year buildout plan, with certain milestones and phases. Early phases might be smaller in scale, allowing both companies to verify that the infrastructure was performing as expected before committing to larger deployments.

By January 2025, as initial phases were being planned in detail, both companies might have realized that the phased approach looked different than the original concept. Perhaps the early phases should be smaller, allowing for more flexibility. Perhaps demand projections had shifted. Perhaps technical considerations suggested different infrastructure configurations. These kinds of refinements during execution planning are entirely normal.
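The phasing logic described above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the phase sizes, the power draw per accelerator, the power usage effectiveness (PUE) overhead, and the names `Phase`, `accelerators_supported`, and `cumulative_plan` are all hypothetical and are not drawn from any disclosed plan.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    gigawatts: float  # facility power allotted to this phase (hypothetical)


def accelerators_supported(gigawatts: float, watts_per_accelerator: float, pue: float) -> int:
    """Rough count of accelerators that a facility power budget can run.

    PUE (power usage effectiveness) is total facility power divided by IT
    power, so dividing by it removes cooling and other overhead.
    """
    if watts_per_accelerator <= 0 or pue < 1.0:
        raise ValueError("watts_per_accelerator must be > 0 and pue must be >= 1")
    it_power_watts = gigawatts * 1e9 / pue
    return int(it_power_watts // watts_per_accelerator)


def cumulative_plan(phases, watts_per_accelerator, pue):
    """Return (phase name, cumulative gigawatts, cumulative accelerators) per phase."""
    plan = []
    total_gw = 0.0
    for phase in phases:
        total_gw += phase.gigawatts
        plan.append(
            (phase.name, total_gw, accelerators_supported(total_gw, watts_per_accelerator, pue))
        )
    return plan


if __name__ == "__main__":
    # Hypothetical split of a 10 GW target into three phases of growing size.
    phases = [Phase("phase 1", 1.0), Phase("phase 2", 3.0), Phase("phase 3", 6.0)]
    # 1500 W per accelerator (including its share of server power) and a PUE
    # of 1.25 are assumed values for illustration only.
    for name, gw, count in cumulative_plan(phases, watts_per_accelerator=1500.0, pue=1.25):
        print(f"{name}: {gw:.0f} GW cumulative, roughly {count:,} accelerators")
```

Starting with a small first phase keeps the early commitment modest; later phases can be resized or deferred if demand projections or technical requirements change, which is the kind of flexibility the reported refinements would provide.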

The Complexity of Building AI Infrastructure

Building world-scale AI infrastructure is not a straightforward process. It requires coordinating:

- Power infrastructure: Securing reliable, abundant electricity from power plants or renewable sources

- Cooling systems: Managing the enormous heat generated by running millions of GPUs simultaneously

- Networking: Building high-speed connections between different parts of the infrastructure and to the internet

- Real estate: Securing suitable locations for data centers that have space, power, and connectivity

- Supply chain: Ensuring consistent supply of chips, switches, servers, and other hardware

- Personnel: Hiring and training engineers to design, build, and operate the infrastructure

- Compliance and permitting: Navigating regulatory approvals, environmental reviews, and local government requirements

Each of these elements involves complexity, potential delays, and cost variations. As Nvidia and Open AI moved from announcing a partnership in principle to planning the actual buildout, they would have encountered specific technical and logistical challenges. Refining the partnership to match reality rather than aspirational targets would be a natural response.

Anthropic, with $5 billion in funding, is a strong competitor to OpenAI and Google. Google's larger funding and market influence highlight its significant position in the AI industry. (Estimated data)

Alternative Platform Approaches: Considering Runable and Other Tools

While the Nvidia-Open AI infrastructure partnership focuses on large-scale, specialized AI infrastructure, it's worth noting that the broader AI ecosystem has diversified significantly. For developers and organizations building AI applications who don't require the absolute cutting-edge capabilities of systems like GPT-4, alternative approaches have emerged that might offer different advantages.

For teams seeking cost-effective AI automation without the need to develop custom infrastructure, platforms like Runable offer AI agents for content generation, workflow automation, and document processing. At just $9/month, such platforms provide an alternative approach that abstracts away infrastructure complexity entirely. While Runable operates at a completely different scale than Open AI's systems, it represents the broader trend of AI capabilities becoming more accessible and affordable for everyday business use.

The infrastructure questions Nvidia and Open AI are wrestling with—who owns the chips, how infrastructure should be shared, what the economic model should be—increasingly have parallels in the application layer. For many use cases, developers don't need to worry about gigawatts of infrastructure; they can simply use APIs and platforms that handle the infrastructure complexity on their behalf. This democratization of AI access is ultimately what validates the infrastructure investments that companies like Nvidia and Open AI are making.

The Broader Implications for AI Development and Competition

What the Nvidia-Open AI Dynamic Reveals About AI Market Structure

Regardless of the specific terms that Nvidia and Open AI ultimately agreed to, the negotiation process itself reveals important structural dynamics in the AI market. The partnership between Nvidia (the dominant infrastructure provider) and Open AI (the most visible AI company) was never going to be a simple customer-vendor relationship. Both companies have too much power, too much at stake, and too many alternative options for the relationship to be one-sided.

The dynamic that emerged, with public confidence in the partnership alongside private negotiations about scaled-back terms, is actually quite rational. Both companies benefit from being seen as strategically aligned, since this supports their narratives and market positions. However, both also benefit from maintaining negotiating leverage and flexibility. The public positioning lets them sustain the partnership narrative while private discussions ensure they're not over-committing.

This dynamic will likely characterize major partnerships in the AI industry more broadly. Companies will announce partnerships that sound massive and strategic, but actual execution will involve significant negotiation and refinement. This isn't a sign of failure or fundamental misalignment; it's how sophisticated business partnerships work when the parties have significant leverage and options.

The Shift from AI Hype to AI Realism

The Nvidia-Open AI partnership, viewed more broadly, represents the maturation of the AI market from hype cycle to reality. In 2023, when ChatGPT was new and AI seemed to have unlimited potential, companies announced partnerships with enormous headline numbers and grand ambitions. By late 2024 and early 2025, as actual costs, technical challenges, and competitive dynamics became clearer, partnerships were being refined to match reality.

This isn't negative for the AI industry. In fact, it's healthy. A market that's transitioning from irrational exuberance to rational assessment of value and returns is a market that will have sustainable growth. Companies that over-invest in infrastructure they don't need or that make commitments they can't sustain will ultimately underperform. By adjusting partnerships to match actual needs and realistic projections, Nvidia and Open AI are positioning themselves for sustainable competitive success.

Timeline of Events: Key Moments in the Nvidia-Open AI Partnership

The Partnership Announcement Phase

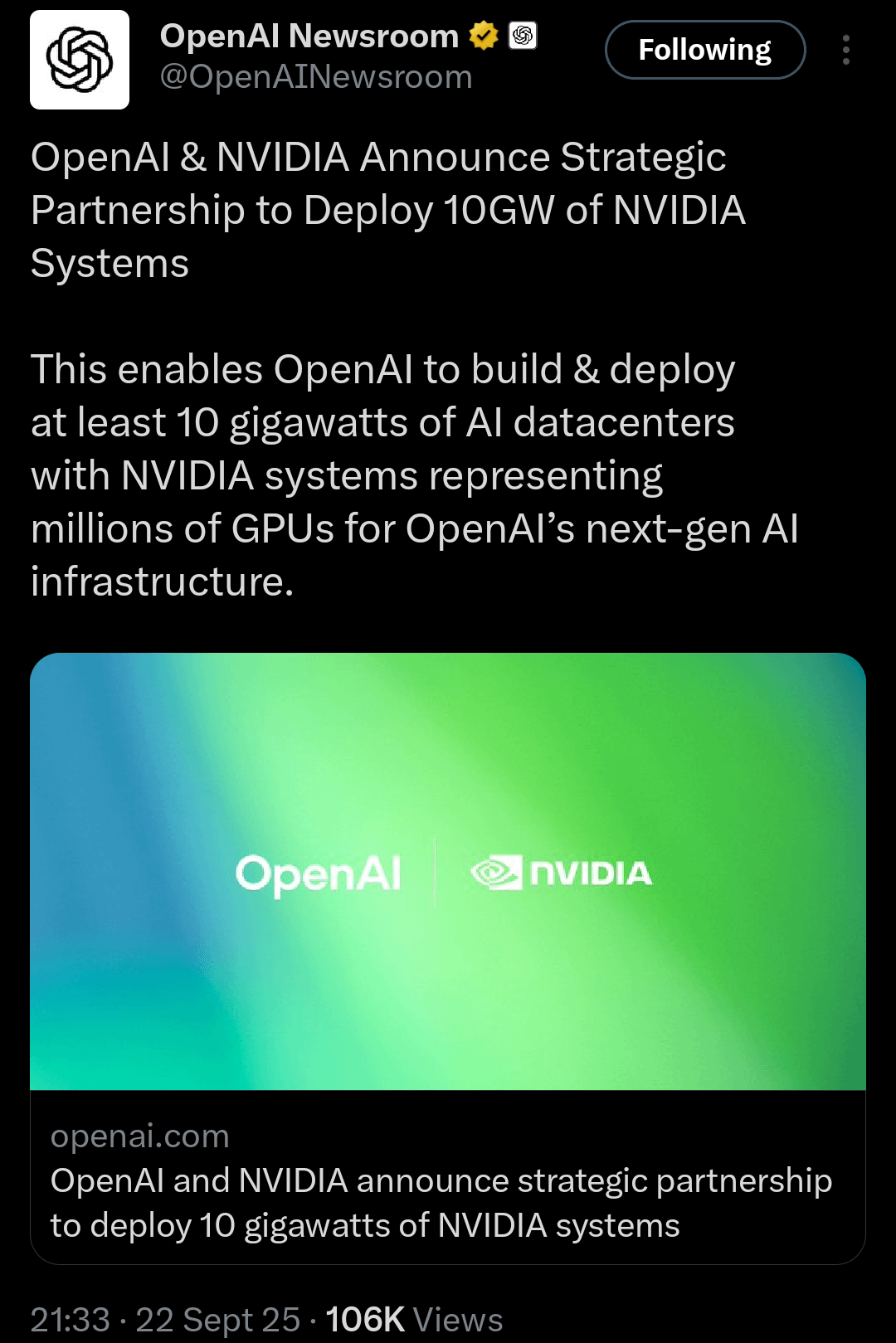

September 2024: Nvidia and Open AI announce partnership framework involving up to $100 billion in Nvidia investment and commitment to build 10 gigawatts of infrastructure. Media coverage focuses on the massive headline number and the strategic significance of the two companies joining forces.

September-December 2024: Both companies begin detailed planning and due diligence. Engineering teams from Nvidia and Open AI start coordinating on infrastructure specifications. Finance teams work through deal terms and structure. The initial enthusiasm begins to encounter real-world complexity.

The Reporting and Verification Phase

Late January 2025: Wall Street Journal publishes detailed reporting suggesting Nvidia is scaling back its commitment and that internal discussions between the companies have shifted from the original terms. The reporting cites sources with knowledge of the discussions and provides specific details about concerns Huang has expressed.

Early February 2025: Jensen Huang responds to the reporting, stating that claims about partnership strain are "nonsense" and reaffirming Nvidia's commitment to Open AI. He emphasizes that Nvidia will "definitely participate" in Open AI's funding round and will "invest a great deal of money."

Early 2025 (ongoing): The actual financial and structural details of the Nvidia-Open AI partnership remain largely undisclosed. Both companies benefit from ambiguity about the exact terms while they continue executing on the partnership.

The Path Forward: What to Watch

Key Milestones to Indicate Partnership Health

To assess whether the Nvidia-Open AI partnership remains on track despite the reported tensions, observers should monitor several key indicators:

Infrastructure deployment milestones: If Open AI announces that specific infrastructure projects are proceeding on schedule, with Nvidia chips being deployed at scale, this would confirm that the partnership is advancing. Conversely, delays or cancellations of infrastructure projects would suggest ongoing problems.

Equity investment completion: When Open AI completes its fundraising round and discloses the investors who participated, Nvidia's actual investment amount will become public. This will provide concrete evidence of the partnership's scope.

Technical collaboration announcements: If Nvidia and Open AI announce joint technical achievements, software optimizations, or infrastructure innovations, this would indicate active partnership and alignment at the engineering level.

Competitive developments: Whether Open AI continues to rely exclusively on Nvidia chips or begins diversifying to other providers would indicate whether the partnership's technical foundations remain strong.

The Longer-Term Strategic Questions

Beyond the immediate partnership status, several longer-term strategic questions will shape the trajectory of Nvidia-Open AI dynamics:

Will infrastructure consolidation increase or decrease? If AI infrastructure becomes increasingly consolidated under a few major providers (Nvidia for chips, hyperscalers like Amazon and Microsoft for hosting), this could make the industry more efficient but also create potential competitive and regulatory concerns.

Can alternative chip providers gain meaningful market share? If Google's TPUs, AMD's GPUs, or new startups develop genuinely competitive alternatives to Nvidia, this would reshape the economics of AI infrastructure and reduce Nvidia's leverage in negotiations.

How will competing AI companies navigate infrastructure partnerships? If Anthropic, Google, and other AI companies build successful business models while maintaining more independence from Nvidia, this could establish alternative patterns for how the industry is organized.

Conclusion: The Nvidia-Open AI Partnership in Context

The Nvidia-Open AI partnership announcement in September 2024 captured headlines with its massive $100 billion figure and the strategic significance of two dominant companies joining forces. By January 2025, reports suggested the partnership was being refined, and possibly scaled back, prompting a public denial from Jensen Huang and raising substantive questions about the partnership's health and direction.

The reality, viewed more objectively, is that both the initial announcement and the subsequent refinement are entirely consistent with how sophisticated, complex partnerships between major technology companies actually work. When companies announce major deals in principle, they're committing to a broad strategic direction but not necessarily to every detail of the ultimate arrangement. The months between announcement and execution involve detailed due diligence, technical planning, market assessment, and negotiation about specific terms.

What's most important about the Nvidia-Open AI partnership, regardless of whether the final deal is closer to

For companies and developers building AI applications, the Nvidia-Open AI partnership has important implications. The quality of AI infrastructure matters for performance, cost, and reliability. The relationships between infrastructure providers, AI companies, and end-users will shape the next phase of AI development. Companies should be thinking about their own infrastructure strategies—whether to rely on hyperscalers' offerings, to develop custom solutions, or to use alternative platforms that abstract away infrastructure complexity.

The reported friction and refinement of the Nvidia-Open AI partnership also serves as a reminder that the AI industry is maturing from the hype phase toward a more realistic assessment of value, costs, and returns. This maturation is ultimately healthy for the industry and for the companies involved. Nvidia and Open AI, despite any reported tensions, remain strategically aligned on the fundamental reality that building advanced AI systems requires enormous amounts of specialized computing power—something Nvidia is uniquely positioned to provide.

The partnership's evolution from its initial announcement to its current form reflects not weakness or failure, but rather the normal process of translating strategic vision into operational reality. As the AI industry continues to scale and mature, similar partnerships will continue to evolve, and the specific terms will continue to be negotiated based on actual market conditions, technical progress, and competitive developments.

FAQ

What is the Nvidia-Open AI partnership?

The Nvidia-Open AI partnership announced in September 2024 represents a strategic alliance in which Nvidia commits to investing up to $100 billion in Open AI and building 10 gigawatts of computing infrastructure specifically for Open AI's AI model development and deployment. The partnership combines a direct equity investment with an infrastructure support component designed to ensure Open AI has access to cutting-edge GPU technology as the company scales its operations.

Why did Jensen Huang deny reports of partnership slowdown?

Jensen Huang publicly rejected Wall Street Journal reporting that suggested Nvidia was scaling back its Open AI investment, calling the claims "nonsense." Huang's response emphasized that Nvidia would "definitely participate" in Open AI's funding round and invest "a great deal of money," though he declined to specify exact amounts. His denial was intended to reassure the market that Nvidia remained committed to Open AI despite the reported tension between the companies.

What were the specific concerns reported between Nvidia and Open AI?

According to Wall Street Journal reporting, Nvidia CEO Jensen Huang raised several concerns about the partnership, including: the non-binding nature of the original commitment, potential competition from companies like Anthropic and Google developing AI capabilities, and questions about whether Open AI's business strategy would deliver sufficient returns on Nvidia's infrastructure investment. The companies were reportedly rethinking their relationship, with discussions focusing on reducing the investment from $100 billion to potentially tens of billions instead.

How much is Nvidia actually investing in Open AI?

The exact amount of Nvidia's investment in Open AI has not been publicly disclosed. While the partnership announced a framework involving up to $100 billion, Jensen Huang's statements suggest the actual investment may be scaled differently than initially announced. Huang deferred to Open AI CEO Sam Altman to announce the specific fundraising details, indicating that the terms are still being finalized.

What does the infrastructure component of the deal involve?

Nvidia committed to supporting the construction and operation of 10 gigawatts of computing infrastructure specifically configured for Open AI's needs. For context, a gigawatt is a unit of electrical power rather than of compute, so the figure describes how much power the facilities would draw: a single gigawatt is enough to run dozens of world-class data centers. This infrastructure would provide Open AI with specialized GPU capacity for both training advanced AI models and serving millions of inference requests from end-users globally.

Why might Nvidia want to scale back its Open AI investment?

Potential reasons for Nvidia's reported reconsideration include: the emergence of competitive AI companies like Anthropic and Google as viable alternatives in the market, questions about whether Open AI's business model would deliver sufficient returns to justify a $100 billion investment, the complexity and cost of actually building the proposed infrastructure, and regulatory concerns about the competitive implications of such a deep partnership. Additionally, Nvidia might achieve better returns by investing capital in other companies or deploying chips to multiple customers rather than concentrating so heavily on Open AI.

How does this partnership affect other AI companies?

The Nvidia-Open AI partnership has implications for other AI companies in several ways. It reinforces Nvidia's position as the essential infrastructure provider for advanced AI development, but it also demonstrates that companies like Anthropic and Google can develop competitive AI capabilities while sourcing from diverse infrastructure providers. The partnership also influences the broader question of whether AI infrastructure will become increasingly consolidated or whether multiple providers will compete for customer relationships.

Is the Nvidia-Open AI partnership still happening?

Based on Jensen Huang's public statements, the partnership between Nvidia and Open AI is continuing. Huang confirmed that Nvidia will "definitely participate" in Open AI's current funding round and will invest substantial capital in the company. While the specific terms and magnitude may differ from the original $100 billion announcement, both companies appear committed to maintaining their strategic relationship and infrastructure collaboration.

What alternatives exist to Nvidia's infrastructure for AI companies?

Alternative infrastructure providers include Google's TPUs (tensor processing units), which are competitive for certain AI workloads; AMD's graphics processors, which are developing more mature AI capabilities; custom silicon developed by major cloud providers; and emerging startups building specialized AI chips. However, Nvidia's CUDA ecosystem, software maturity, and proven track record continue to make the company's GPUs the dominant choice for most large-scale AI development despite these alternatives.

How does this partnership relate to Open AI's broader fundraising efforts?

The Nvidia-Open AI partnership is one component of Open AI's broader capital raising strategy. Open AI is simultaneously raising capital from multiple sources including Amazon, Microsoft, SoftBank, and others. By diversifying its funding sources, Open AI reduces its dependence on any single provider and strengthens its negotiating position with Nvidia and other partners. This diversification likely contributed to the reported refinement of the Nvidia partnership, as Open AI demonstrated it could access capital and infrastructure from multiple providers.

Key Takeaways

- Nvidia-OpenAI partnership, announced in September 2024, involved up to $100B in investment and a 10 gigawatt infrastructure commitment, but terms evolved during execution planning

- Wall Street Journal reported partnership was being scaled back, with actual investment potentially tens of billions rather than $100 billion

- Jensen Huang dismissed scaling-back reports as nonsense but declined to specify exact investment amounts, preserving negotiating flexibility

- Competitive pressure from Anthropic, Google, and other AI companies informed partnership reassessment and justified more cautious infrastructure commitment

- Technical reality remains that Nvidia chips are essential for large-scale AI development; no viable alternatives have achieved comparable ecosystem maturity

- Partnership refinement reflects normal business negotiation, not fundamental misalignment; sophisticated companies rarely execute deals on exactly the terms initially announced

- Regulatory scrutiny regarding market concentration and antitrust concerns may have influenced both companies' willingness to refine the partnership

- Alternative platforms like Runable offer different approaches to AI automation for teams not requiring hyperscale infrastructure capabilities

- Infrastructure buildout timelines, supply chain complexity, and phased deployment naturally lead to adjustments from initial partnership framework

- Partnership serves as indicator that AI industry is maturing from hype cycle to realistic assessment of costs, returns, and competitive dynamics

![Nvidia's $100B OpenAI Investment: Reality vs. Reports [2025]](https://tryrunable.com/blog/nvidia-s-100b-openai-investment-reality-vs-reports-2025/image-1-1769882811297.jpg)