OpenAI's Former Sales Leader Joins Acrew: Building Defensive Moats for AI Startups

Introduction: When AI Market Expertise Becomes Venture Capital Gold

The venture capital landscape is undergoing a profound transformation as seasoned operators from the world's most influential AI companies move into investment roles. This movement represents more than a series of career changes: it reflects a fundamental shift in how emerging companies navigate an increasingly competitive artificial intelligence market. Aliisa Rosenthal, who served as OpenAI's first sales leader for three years, recently joined Acrew Capital as a general partner, bringing hard-won knowledge about enterprise AI adoption, competitive positioning, and the future of AI-powered applications.

Rosenthal's journey from sales leadership at OpenAI to venture capital investing offers valuable lessons for founders, investors, and technology leaders trying to understand where the next wave of AI innovation will emerge. During her tenure at OpenAI, she scaled the enterprise sales organization from just two people to hundreds and witnessed firsthand the launch of transformative products like DALL·E, ChatGPT, ChatGPT Enterprise, and Sora. This experience put her in a unique position to observe how enterprises think about AI adoption, where the gaps lie between organizational aspirations and practical deployment, and, most critically, how emerging startups can build defensible competitive advantages in a market where the largest AI labs continue to expand their offerings.

The timing of this transition is particularly significant. The AI industry has matured considerably since ChatGPT's viral success in late 2022. Initial euphoria about AI's transformative potential has given way to more pragmatic assessments of where value can actually be created. Enterprise organizations are struggling with real implementation challenges: how to integrate AI into existing workflows, how to manage the quality of outputs, how to handle data security and compliance, and how to measure return on investment. At the same time, competition has intensified dramatically. Well-funded AI research labs like OpenAI, Anthropic, Google DeepMind, and others keep releasing increasingly capable models, and the barrier to entry for building AI applications has dropped significantly.

Rosenthal's transition to venture capital comes at a moment when founders badly need this kind of insider perspective. Entrepreneurs building on top of foundation models face a fundamental question: What happens when the model providers decide to build my application themselves? This existential risk has haunted the AI startup ecosystem since ChatGPT's release, and it has only become more acute as OpenAI, Google, Anthropic, and other labs have shown their willingness to expand vertically into the application layer. Her perspective on how to answer this question will be a valuable resource for the portfolio companies she works with at Acrew Capital.

This article provides an in-depth analysis of Rosenthal's transition, the strategic insights she brings to venture capital, and the broader implications for how AI startups should position themselves in an era of increasingly capable foundation models. We'll examine her thesis on defensible competitive advantages, explore the specific moats she identifies as viable for emerging companies, and consider how her perspective aligns with or challenges conventional wisdom in the AI startup ecosystem.

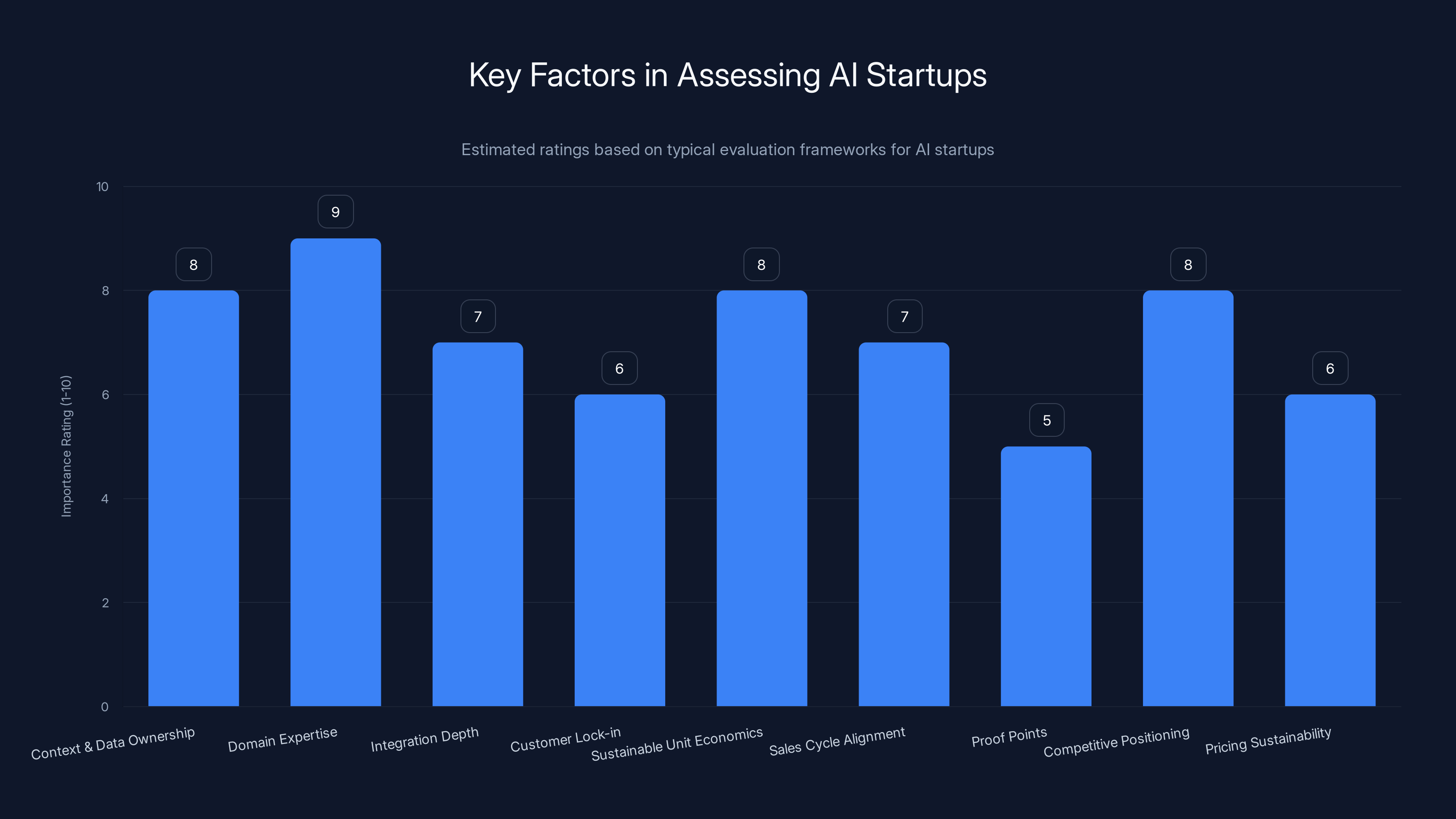

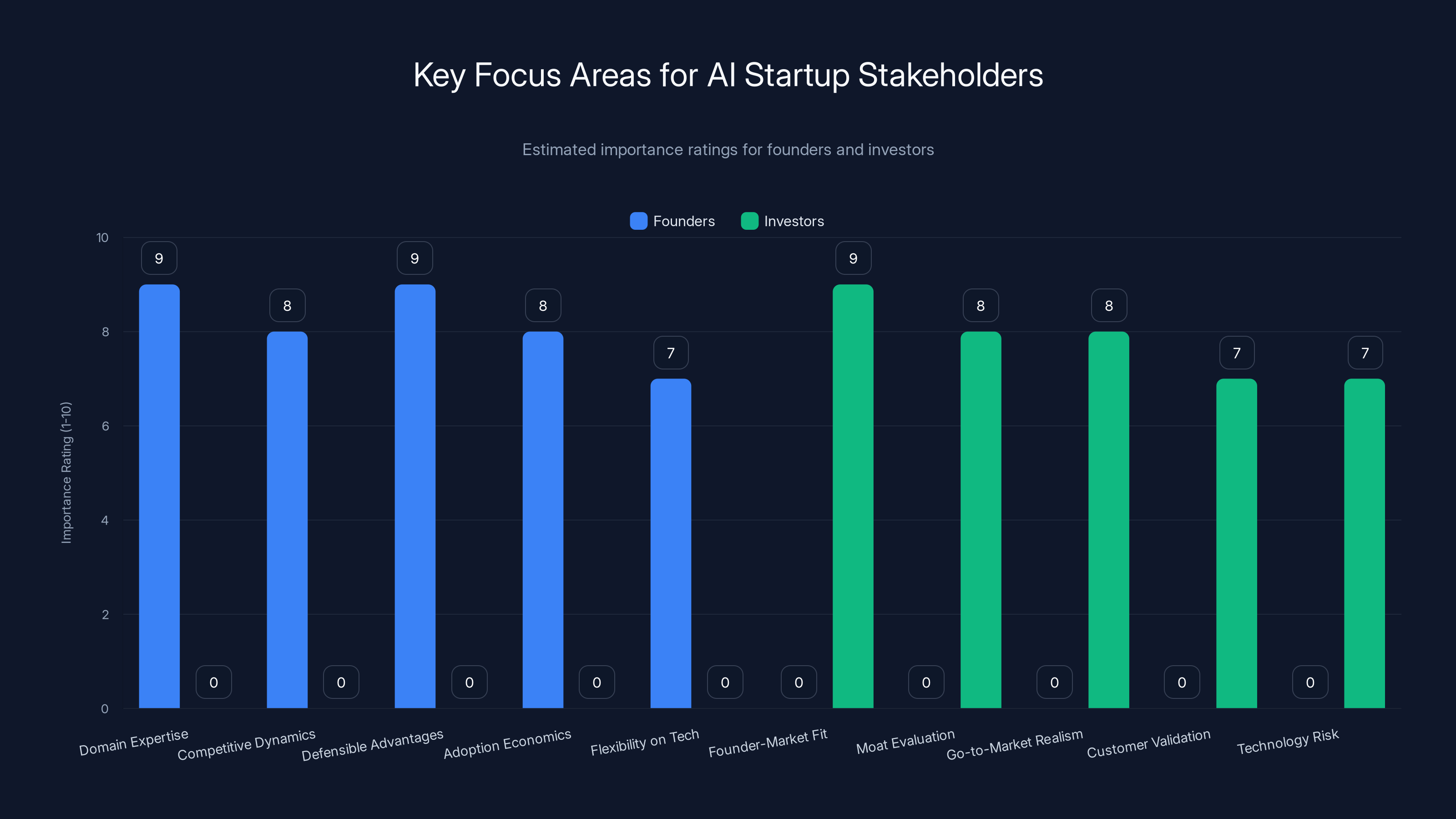

Key factors in assessing AI startups are rated based on their importance, with Domain Expertise and Context & Data Ownership being the most critical. (Estimated data)

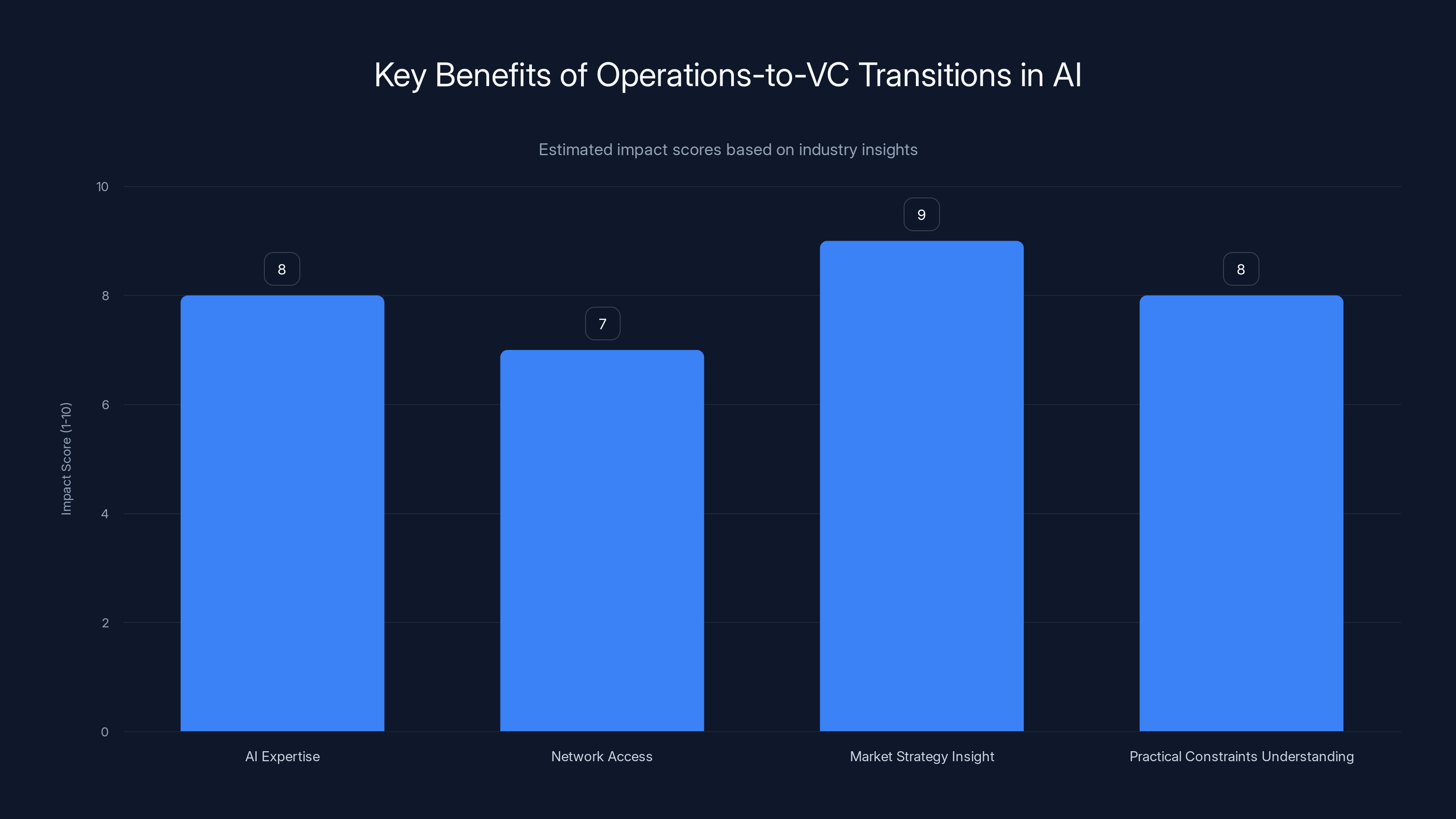

The Case for Operations-to-VC Transitions in AI

Why AI Expertise from Model Providers Is Particularly Valuable

The transition of talented operators from leading AI companies into venture capital is a meaningful trend in the industry. Peter Deng, who previously served as OpenAI's head of consumer products, made a similar move to Felicis Ventures roughly a year before Rosenthal joined Acrew. Since then, Deng has reportedly been "crushing it," securing significant allocations in hot early-stage companies like LMArena and Periodic Labs. This pattern suggests that venture firms see tremendous value in people who deeply understand how the leading AI labs operate, what they are likely to build next, and where the gaps in their strategies lie.

The advantage isn't merely about having a Rolodex, though the network of OpenAI alumni certainly doesn't hurt. Rather, it's about possessing a calibrated understanding of what's actually possible with current technology. Many venture capitalists and founders operate with somewhat romanticized or outdated notions of what AI can accomplish in real enterprise environments. They may read research papers or product announcements and extrapolate forward without fully understanding the practical constraints that organizations face when trying to implement these capabilities at scale. Someone like Rosenthal, who spent three years watching enterprises attempt to adopt AI solutions, absorbing their feedback, understanding their hesitations, and observing what actually drives purchasing decisions, possesses a different kind of intelligence that's extraordinarily valuable.

Furthermore, operators from OpenAI have watched go-to-market strategy for AI products evolve in real time. Rosenthal saw OpenAI experiment with different approaches to enterprise adoption, learned which messaging resonated with different buyer personas and which pricing structures made sense, and came to understand the gap between what organizations think is possible with AI and what they can actually deploy today. This empirical understanding of market dynamics, gleaned from hundreds of enterprise conversations, is far more valuable than any consultant's report or analyst forecast.

The Growing Precedent for This Career Path

Rosenthal's move to Acrew Capital isn't happening in isolation. There is now a growing precedent for senior OpenAI executives and staff to move into seed-stage investing. The OpenAI alumni network has become mature and distributed enough that multiple cohorts of early employees are exploring other career paths. Some have founded companies, such as Anthropic and Safe Superintelligence, while others have explicitly chosen the investment side.

This trend reflects a few dynamics. First, compensation in venture capital, particularly at promising firms like Acrew, can match or exceed packages at AI labs, especially as firms raise larger funds and build track records. Second, investing appeals to operators who want broad exposure to the industry and the chance to work with many companies rather than going all-in on a single technical or product vision. Third, there is something psychologically appealing about a move that applies deep expertise in a new way: Rosenthal can use everything she learned at OpenAI without starting from scratch at a new company or narrowing her focus to a single vertical.

Understanding the AI Startup Moat Question: The Existential Challenge

The Core Threat: Will Model Makers Simply Build Everything?

When Rosenthal was asked directly whether OpenAI would "just build everything and put every company out of business," her answer showed the nuanced thinking venture capital needs to hear. She acknowledged that OpenAI is remarkably active across multiple domains: consumer products, enterprise software, hardware devices. Yet she also suggested that total vertical integration is neither technically feasible nor strategically sensible for a company like OpenAI. This distinction is crucial for founders trying to understand where they can credibly compete.

The threat of disintermediation is very real. Numerous startups were founded on the assumption that they could build valuable applications on top of OpenAI's API. Some have built meaningful businesses. Many others have watched nervously as OpenAI added features directly to ChatGPT or ChatGPT Enterprise that replicated their core value proposition. The platform risk is not theoretical; it has been demonstrated repeatedly over the past two years.

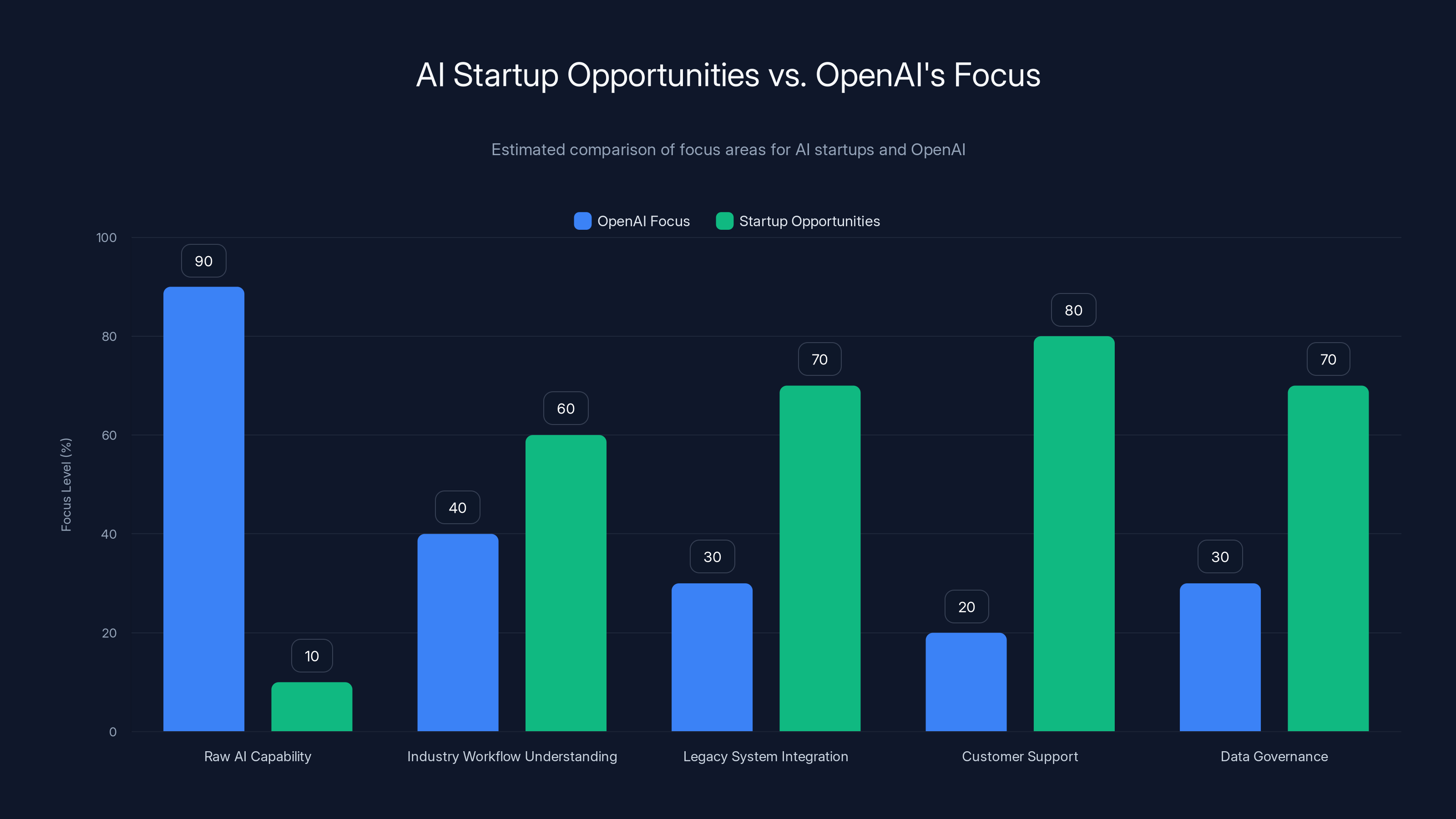

However, Rosenthal's observation that OpenAI won't pursue every potential enterprise application points to a critical insight: distribution and support capacity are real constraints, even for the most capable AI labs. OpenAI can build remarkably sophisticated AI capabilities, but building a complete enterprise application involves far more than raw model performance. It requires understanding specific industry workflows, building integrations with legacy systems, providing customer support and training, managing data governance concerns, and maintaining relationships with buyers who may have complex procurement processes and risk requirements.

This is where startup opportunities actually emerge. Startups are not going to outcompete OpenAI on raw intelligence or capability. Rather, they can win by going deep in specific domains: understanding the workflow better, building the integrations customers actually need, and providing a customer experience that fits how real organizations operate.

The Difference Between Possible and Deployable

One of Rosenthal's most insightful observations is the distinction between what's theoretically possible with AI and what organizations can actually deploy in production environments. She notes: "There's a really large gap that I am very optimistic can be filled." This gap exists for multiple reasons. Organizations don't have the technical expertise to fine-tune models effectively. They're concerned about data privacy and don't want to send sensitive information to public APIs. They need their AI solutions to work with specific data formats or legacy systems. They require explainability or auditability that general-purpose models don't provide by default. They need SLA guarantees, dedicated support, and contractual commitments that startups can often provide more flexibly than massive companies.

This gap between possibility and deployment represents the actual market for AI startups. The companies that will thrive are those that recognize this distinction and build products and services that bridge it. They're not trying to outcompete OpenAI on model capability. Instead, they're taking the capability that's available (either through APIs or open-source models) and wrapping it in the domain expertise, integrations, interfaces, and support structures that real organizations need.

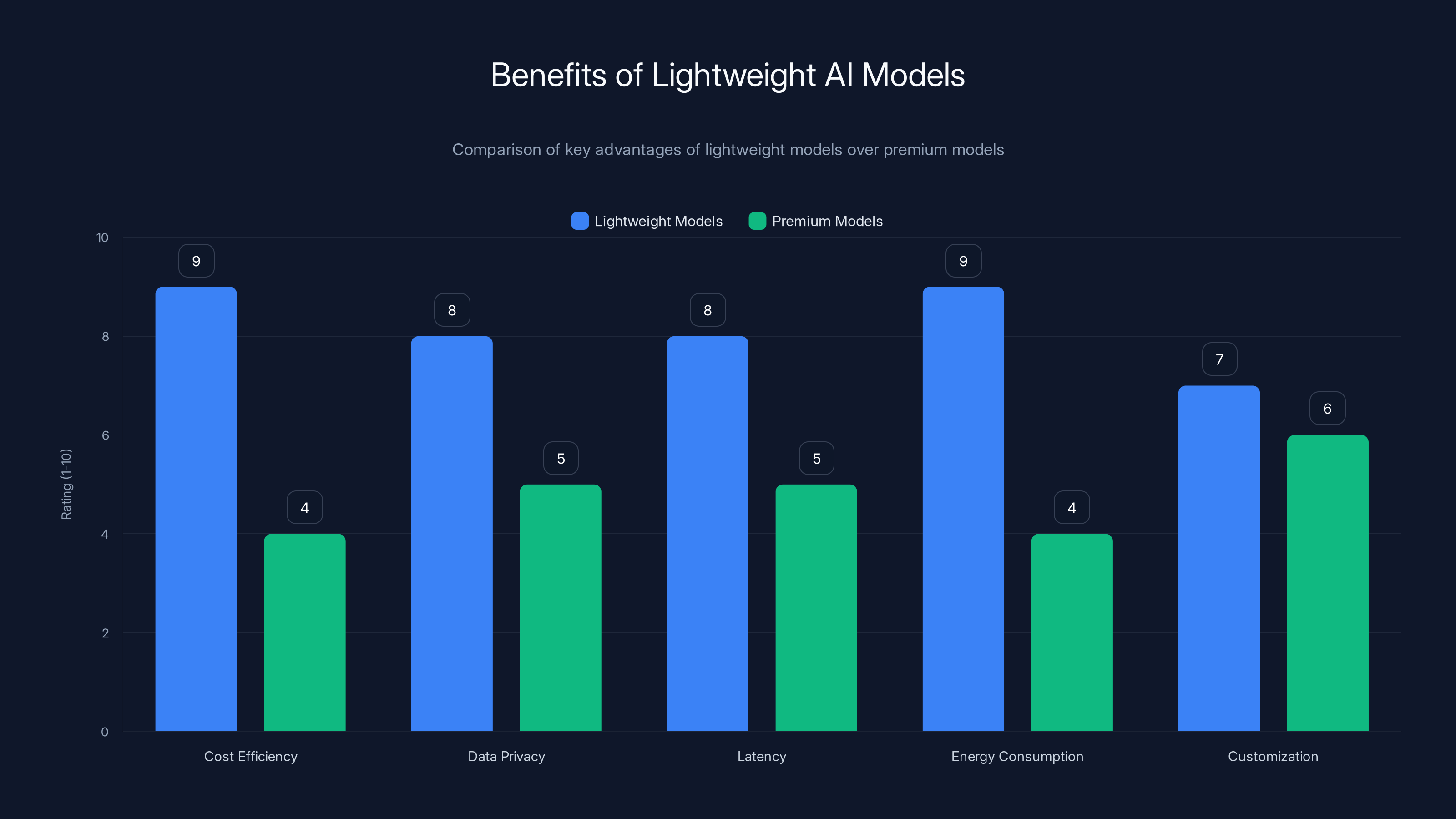

Lightweight models offer significant advantages in cost efficiency, data privacy, and energy consumption compared to premium models. (Estimated data)

Building Defensible Moats: Context, Specialization, and Application Layers

The Context Moat: Why Information Management Becomes Competitive Advantage

Rosenthal identifies context as one of the most promising sources of defensible competitive advantage for AI startups. Specifically, she emphasizes the importance of what gets stored in a model's context window—the informational substrate that the AI leverages when processing requests and generating responses. This insight reflects deep understanding of how enterprise AI actually creates value.

Context is dynamic, adaptable, and scalable in ways that base model capabilities are not. As AI applications mature, the competitive advantage increasingly comes not from having access to the smartest model, but from having access to the best information and being able to leverage it effectively. A startup that builds an application focused on, say, customer service automation doesn't win because it has access to a smarter language model than its competitors. It wins because it has accumulated superior knowledge about that customer's specific customer base, support issues, preferred responses, compliance requirements, and historical patterns. This contextual information becomes increasingly valuable over time as the application handles more requests and learns more about the customer's specific situation.

Moreover, context is something that enterprises can actually own and control. They can feed their own proprietary data into context windows. They can ensure that only approved information is available to the AI system. They can maintain audit trails. This is fundamentally different from base model capability, which is controlled by the lab that trained the model. From a strategic perspective, enterprises prefer to own and control the sources of competitive advantage when possible.
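To make the ownership point concrete, here is a minimal Python sketch of assembling context only from enterprise-approved documents while logging which ones were used for each request. The `Document` and `AuditEntry` types, the `approved` flag, and the character budget are hypothetical illustrations, not any particular product's design.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    approved: bool  # hypothetical flag set by the enterprise's own review process


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    request_id: str
    doc_ids: tuple[str, ...]


def build_context(request_id: str, documents: list[Document],
                  audit_log: list[AuditEntry], max_chars: int = 2000) -> str:
    """Join approved documents into prompt context and record which ones were used."""
    selected: list[Document] = []
    used = 0
    for doc in documents:
        if not doc.approved:
            continue  # unapproved material never reaches the model
        if used + len(doc.text) > max_chars:
            break  # stop once the rough character budget would be exceeded
        selected.append(doc)
        used += len(doc.text)
    audit_log.append(AuditEntry(
        timestamp=datetime.now(timezone.utc).isoformat(),
        request_id=request_id,
        doc_ids=tuple(d.doc_id for d in selected),
    ))
    return "\n\n".join(d.text for d in selected)


docs = [
    Document("policy-1", "Refunds are issued within 30 days.", approved=True),
    Document("draft-7", "Unreleased pricing notes.", approved=False),
]
log: list[AuditEntry] = []
print(build_context("req-001", docs, log))  # → Refunds are issued within 30 days.
print(log[0].doc_ids)                       # → ('policy-1',)
```

Because the filtering and logging live in the application rather than in the model, the enterprise keeps control over both what the AI sees and the record of what it saw.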

Rosenthal notes that the industry is moving beyond basic RAG (Retrieval-Augmented Generation) toward more sophisticated approaches like context graphs. Traditional RAG involved retrieving relevant information from a knowledge base and including it in the prompt to the language model. Context graphs represent a more structured approach where information is organized as a graph of connected entities and relationships, allowing for richer reasoning and more sophisticated information retrieval. This evolution suggests that as the field matures, companies that build superior context engineering capabilities will develop increasingly defensible advantages.

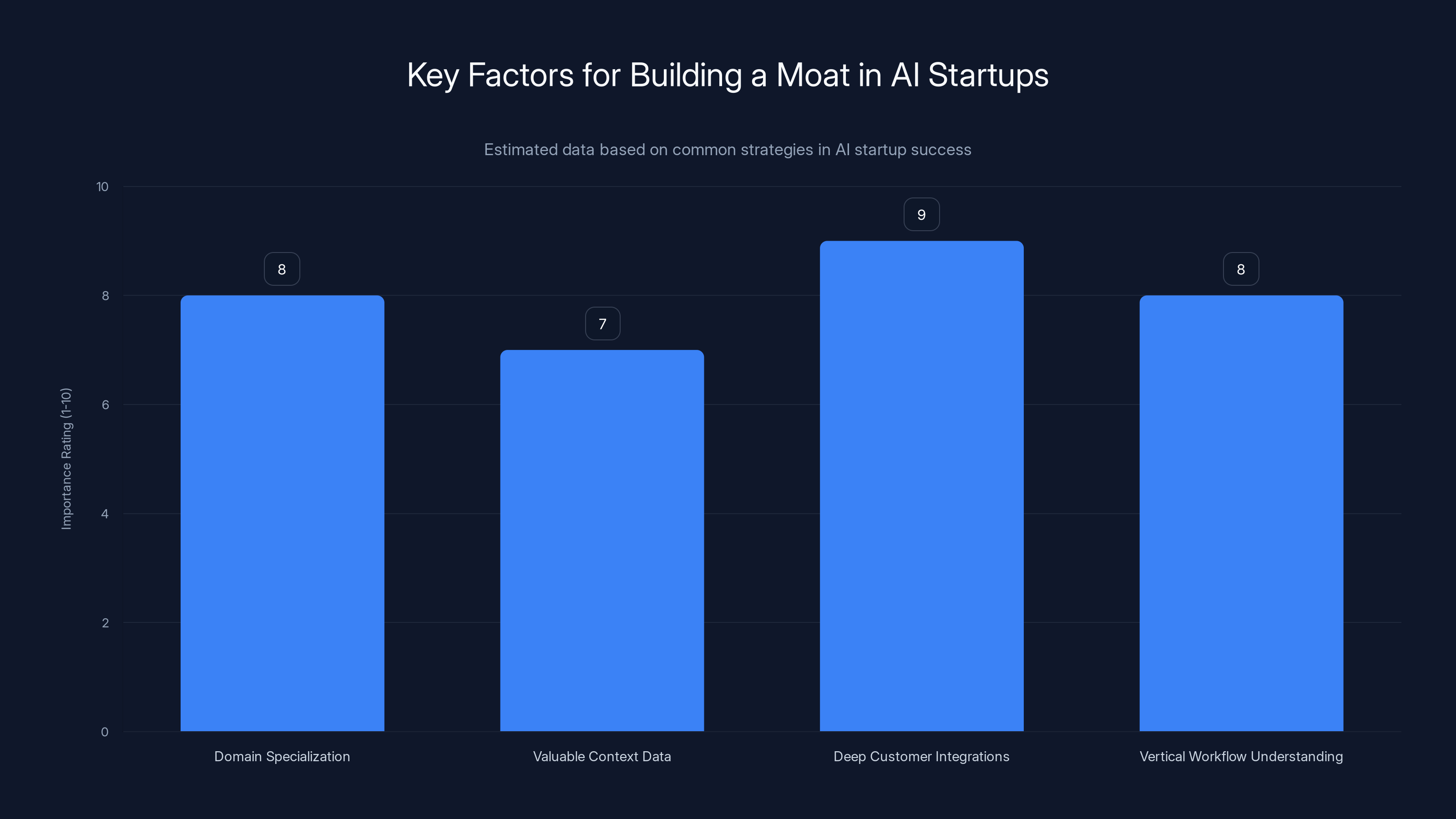

The Specialization Moat: Vertical Focus as Differentiation

Rosenthal emphasizes that one of the clearest moats for enterprise AI startups is specialization. Rather than building horizontal AI tools that claim to work for everyone (a strategy that invites direct competition with model providers), successful startups should focus deeply on specific verticals or use cases. A company that becomes the leading AI solution for legal document analysis, for example, can build deep expertise in legal workflows, compliance requirements, and the specific document formats that lawyers work with. This specialization creates multiple sources of competitive advantage: deeper domain understanding than horizontal competitors, better integrations with vertical-specific systems, and greater trust among customers who know the company has deep expertise in their world.

This is not a new insight in software, but it's particularly important in the AI era because of the tendency to view AI as a horizontal technology that works everywhere. While AI is indeed powerful and broadly applicable, the actual creation of value still requires deep vertical understanding. The startups that will thrive are those that recognize that AI is a tool that becomes far more powerful when combined with domain expertise, not a standalone competitive advantage.

The Application Layer Moat: Building Where Users Actually Work

Rosenthal explicitly states: "Where I'm really excited to invest is on the application layer." This reveals her conviction that while the most cutting-edge work happens at the foundation model layer, the most defensible and valuable businesses are being built at the application layer. This makes intuitive sense: foundation models are advancing quickly, becoming more commoditized, and increasingly accessible, which puts their margins under pressure. Applications that help enterprise employees work more efficiently, by contrast, serve a customer need that will exist regardless of which foundation model powers them.

The application layer advantage is compounded by switching costs and user adaptation. If an enterprise deploys an AI-powered application and 500 employees become trained on how to use it, switching to an alternative requires not just re-purchasing software, but also training those employees on new workflows. This creates genuine lock-in that makes applications more defensible than infrastructure.

Rosenthal is particularly interested in startups with "interesting use cases" that leverage AI to improve how people work. This focus reflects mature thinking about where startup value actually accrues in the AI era. It's not in racing for the next research breakthrough. It's in taking the breakthroughs that exist and making them useful for real human beings trying to accomplish real work.

The Economics of Model Costs and Lightweight Inference

Challenging the Premium Model Assumption

A counterintuitive thesis that Rosenthal brings to her investment strategy is the opportunity in cheaper, lighter-weight models. The conventional wisdom in some startup circles is that you want to use the most capable foundation models available—GPT-4, Claude 3.5, Gemini 2—because they produce the best outputs. But Rosenthal identifies a significant market opportunity in models that may not top the leaderboards but are nonetheless "very useful" and dramatically more affordable.

This insight reflects real economics. The cost structure of serving API calls to increasingly large models is pushing some use cases toward infeasibility or requiring startups to accept terrible unit economics. A startup might build an application that genuinely helps users but find that the cost of inference consumes so much of customer lifetime value that the business model breaks down. By contrast, a startup that can solve the same customer problem with a smaller, more efficient model—perhaps even a fine-tuned smaller model—can maintain healthy margins while pricing competitively.
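The margin argument is simple arithmetic. The sketch below computes gross margin for one customer under two assumed per-request inference costs; every price and volume is a made-up placeholder for illustration, not a real provider rate.

```python
def gross_margin(price_per_month: float, requests_per_month: int,
                 cost_per_request: float, other_cogs: float = 0.0) -> float:
    """Share of monthly revenue left after inference and any other cost of goods sold."""
    inference_cost = requests_per_month * cost_per_request
    return (price_per_month - inference_cost - other_cogs) / price_per_month


# Hypothetical customer: pays $100/month and sends 20,000 requests/month.
PRICE, VOLUME = 100.0, 20_000

large_model = gross_margin(PRICE, VOLUME, cost_per_request=0.004)   # assumed premium-model cost
small_model = gross_margin(PRICE, VOLUME, cost_per_request=0.0005)  # assumed small-model cost

print(round(large_model, 2))  # → 0.2
print(round(small_model, 2))  # → 0.9
```

Under these assumed numbers, the same product keeps about 20% of revenue on the larger model and about 90% on the smaller one, which is the kind of gap that decides whether the business model holds up.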

There are also practical advantages to smaller models beyond just cost. They can run on-device or on-premises, addressing data privacy concerns. They have lower latency, which matters for real-time applications. They consume less computational energy, which increasingly matters to large enterprises with sustainability commitments. They're easier for startups to control and modify through fine-tuning, potentially allowing for better customization to specific use cases.

This thesis suggests that the market for inference-optimized models and the applications built on them is still in early stages. As enterprises become more sophisticated about AI adoption and move beyond proof-of-concept experiments, cost optimization and efficiency become increasingly important. Startups that build for this requirement rather than assuming unlimited access to the most expensive models may find themselves in attractive positions.

The Fine-Tuning and Specialization Strategy

Related to the cost thesis is the strategic opportunity of fine-tuning smaller models for specific domains. While large, general-purpose models are remarkable feats of engineering, there are diminishing returns to scale beyond a certain point for many specific applications. A smaller model that's been fine-tuned on domain-specific data can often outperform a much larger general-purpose model on tasks within that domain.

For AI startups, this represents an opportunity to build defensible advantages through domain-specific model optimization. Rather than competing directly with OpenAI or Google on raw model capability, a startup might train or fine-tune a smaller model specifically for, say, medical coding, patent analysis, or financial compliance. The result can be a model that outperforms larger general-purpose models on that specific task while being cheaper to serve and easier to integrate into domain-specific applications.

This strategy also has the advantage of reducing vendor lock-in risk. A startup that builds its business entirely on OpenAI's API is fundamentally dependent on OpenAI's decisions about pricing, feature development, and availability. A startup that maintains its own specialized models (or works with partners who do) has more independence. This independence itself can be a moat: customers may prefer to work with vendors who aren't entirely dependent on a third-party provider.
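One common way to preserve that independence is a thin, provider-agnostic interface between the application and its models. The Python sketch below assumes a hypothetical `TextModel` protocol and two stub classes standing in for a hosted API and a startup's own fine-tuned model; none of these names refer to a real SDK, and the stubs simply echo the prompt.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    name: str

    def complete(self, prompt: str) -> str:
        ...


class HostedModelStub:
    """Stand-in for a third-party API client; a real one would make a network call."""

    name = "hosted"

    def __init__(self, available: bool = True):
        self.available = available

    def complete(self, prompt: str) -> str:
        if not self.available:
            raise ConnectionError("hosted provider unavailable")
        return f"[hosted] {prompt}"


class InHouseModelStub:
    """Stand-in for a startup's own fine-tuned model on its own infrastructure."""

    name = "in-house"

    def complete(self, prompt: str) -> str:
        return f"[in-house] {prompt}"


def complete_with_fallback(models: list[TextModel], prompt: str) -> tuple[str, str]:
    """Try models in priority order; return (model name, output) from the first that succeeds."""
    errors = []
    for model in models:
        try:
            return model.name, model.complete(prompt)
        except ConnectionError as exc:
            errors.append(f"{model.name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


models = [HostedModelStub(available=False), InHouseModelStub()]
print(complete_with_fallback(models, "Summarize this contract."))
# → ('in-house', '[in-house] Summarize this contract.')
```

With this shape, changing vendors means changing the priority list rather than rewriting the application.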

Enterprise AI Adoption: The Reality Gap

What Enterprises Actually Understand About AI Capabilities

Rosenthal's extensive experience with enterprise customers gave her visibility into a critical insight: there is a massive gap between what enterprises understand AI can do and what it can actually do given their constraints and current technology. This gap goes in both directions. Some enterprises overestimate AI's current capabilities: they've read about AI breakthroughs and imagine that their most complex problems can now be solved with ChatGPT. Other enterprises drastically underestimate what's possible: they haven't integrated AI into their thinking about how to improve their operations.

This information asymmetry creates market opportunity. Enterprises that understand the true boundaries of current AI capability, and know how to deploy it effectively within those boundaries, gain competitive advantage. Startups that help enterprises understand what's actually possible (no more, no less) and guide them toward realistic applications will build trust and loyalty.

The gap also explains why startups have an advantage over large, slow-moving incumbents in AI adoption. A nimble startup can experiment, fail fast, and learn what works in specific domains. A large company with established processes and risk-management procedures moves more slowly, but ultimately has deeper resources to deploy. The startups that win will be those that use their speed to develop domain expertise before larger competitors realize those domains are worth competing in.

The Customer Education Imperative

Rosenthal's observation that "enterprises still don't understand how much AI can do for them" points to a crucial role that successful AI startups must fill: customer education. This isn't just about marketing. It's about helping customers understand what's genuinely possible in their specific context, what isn't possible, what would require custom work versus off-the-shelf capabilities, and what the actual ROI timeline looks like.

Customers who feel educated about the realistic potential of AI are far more likely to deploy solutions effectively and expand usage over time. Customers who feel misled or oversold on AI's capabilities become frustrated and reduce their usage. The startups that win in enterprise AI are those that excel at this education function—they help customers maintain realistic expectations while still identifying genuine opportunities to improve how they work.

Operators transitioning to VC roles bring significant AI expertise, market strategy insights, and practical understanding of constraints, which are highly valued in venture capital. (Estimated data)

The Role of Context Graphs and Advanced AI Architecture

Moving Beyond RAG: The Next Generation of AI Context Management

Rosenthal identifies context graphs as a particular area where she expects significant innovation in 2025 and beyond. Traditional RAG (Retrieval-Augmented Generation) has been the dominant approach for giving language models access to specific knowledge. RAG works by retrieving relevant documents or passages from a knowledge base and including them in the model's context window. While RAG represents a major improvement over using only the model's training data, it has limitations. Document retrieval can miss relevant information if it's worded differently than the query. RAG doesn't capture relationships between pieces of information. It doesn't maintain state across multiple interactions.
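A toy version of the retrieve-then-prompt loop makes both the mechanism and the wording problem visible. This sketch scores passages by word overlap, a deliberately crude stand-in for the embedding similarity most RAG systems use; the passages, stopword list, and function names are hypothetical.

```python
import re

# Hypothetical knowledge base and a tiny stopword list, for illustration only.
PASSAGES = [
    "Refunds are issued within 30 days of purchase.",
    "Enterprise plans include a dedicated support manager.",
    "Invoices are sent on the first business day of each month.",
]
STOPWORDS = {"a", "an", "are", "do", "how", "i", "is", "my", "of", "on",
             "the", "to", "what", "when", "within"}


def tokenize(text: str) -> set[str]:
    """Lowercase word set with a few common function words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return up to k passages that share the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(p)), p) for p in passages]
    scored = [item for item in scored if item[0] > 0]  # drop passages with no overlap
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Place retrieved passages in the context window ahead of the question."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages))
    return f"Context:\n{context}\n\nQuestion: {query}"


print(retrieve("When are invoices sent?", PASSAGES))
# → ['Invoices are sent on the first business day of each month.']
print(retrieve("How do I get my money back?", PASSAGES))
# → []
```

The refund passage answers the second question but shares no words with it, so it is never retrieved. Embedding-based retrieval narrows this kind of miss, but it still treats passages as independent pieces of text rather than capturing relationships between facts.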

Context graphs address these limitations by organizing information as a graph of entities and their relationships rather than as documents or passages. This allows for more sophisticated retrieval (finding related information by traversing the graph rather than relying only on text similarity to the query), better reasoning about relationships, and more coherent responses that take multiple pieces of connected information into account.
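The traversal idea can be sketched with a small in-memory graph. The triples below are invented toy data, edges are treated as one-directional, and `neighborhood_facts` is a hypothetical helper rather than any specific context-graph product; it performs a breadth-first walk that collects every fact within a fixed number of hops of a starting entity.

```python
from collections import deque

# Invented toy data: (subject, relation, object) triples.
TRIPLES = [
    ("Acme Corp", "has_contract", "Contract 42"),
    ("Contract 42", "includes", "99.9% uptime SLA"),
    ("Contract 42", "renews_on", "June 30"),
    ("Acme Corp", "primary_contact", "J. Rivera"),
    ("Globex", "has_contract", "Contract 77"),
]


def build_graph(triples):
    """Adjacency list: subject -> list of (relation, object) edges."""
    graph = {}
    for subj, rel, obj in triples:
        graph.setdefault(subj, []).append((rel, obj))
    return graph


def neighborhood_facts(graph, start, max_hops=2):
    """Breadth-first walk from `start`, collecting facts up to `max_hops` edges away."""
    facts = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for rel, obj in graph.get(node, []):
            facts.append(f"{node} {rel} {obj}")
            if obj not in seen:
                seen.add(obj)
                queue.append((obj, depth + 1))
    return facts


graph = build_graph(TRIPLES)
for fact in neighborhood_facts(graph, "Acme Corp"):
    print(fact)
```

A question about Acme's uptime guarantee reaches the SLA fact through the contract node, two hops away, even though "Acme Corp" never appears in the same sentence as the SLA; unrelated entities such as Globex stay out of the context.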

The important insight Rosenthal makes is that this area is not yet fully solved. There's significant remaining work in developing the infrastructure, techniques, and architectures that will make context graphs truly practical for enterprise applications. This represents a genuine innovation frontier, and startups that develop better context graph technologies may build valuable moats.

Memory and Reasoning Beyond Pattern Recognition

Rosenthal notes that significant technical challenges remain in the area of memory and reasoning. Current language models are fundamentally pattern-matching systems—they're extraordinarily sophisticated at recognizing patterns in training data and extrapolating those patterns, but they don't truly "remember" in the way humans do, and they don't engage in logical reasoning in the way formal systems do.

Improving these capabilities is not just a theoretical exercise. Enterprise applications require different behavior from consumer applications. A customer service AI that can remember previous interactions with a customer and reason about how they relate to current requests would be dramatically more valuable than one that starts from scratch with each interaction. A financial analysis AI that can reason through complex logical chains rather than just pattern-matching would be more trustworthy for high-stakes decisions.
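At the application level, a simple form of memory is to store recent interactions per customer and feed them back into the context on the next request. The `InteractionMemory` class below is a hypothetical sketch of that pattern; it does not change how the model itself works, and the prompt format is an assumption for illustration.

```python
from collections import defaultdict, deque


class InteractionMemory:
    """Keeps the most recent interactions per customer so a new request isn't handled from scratch."""

    def __init__(self, max_turns_per_customer: int = 5):
        # Each customer gets a bounded queue; the oldest turn drops off when it is full.
        self._turns = defaultdict(lambda: deque(maxlen=max_turns_per_customer))

    def record(self, customer_id: str, request: str, resolution: str) -> None:
        self._turns[customer_id].append((request, resolution))

    def prompt_for(self, customer_id: str, new_request: str) -> str:
        # .get avoids creating an empty entry for customers with no history.
        history = self._turns.get(customer_id, ())
        lines = [f"Earlier: {req} -> {res}" for req, res in history]
        lines.append(f"Now: {new_request}")
        return "\n".join(lines)


memory = InteractionMemory(max_turns_per_customer=2)
memory.record("cust-1", "late delivery", "refund issued")
memory.record("cust-1", "wrong size", "exchange sent")
print(memory.prompt_for("cust-1", "delivery late again"))
```

The bounded queue keeps the context small; a richer design might summarize older turns instead of discarding them.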

For startups, this points to a significant frontier. Building better memory and reasoning capabilities—either at the model level or at the application level through clever architecture—could yield genuine competitive advantages. This is not an area where we've hit diminishing returns or maturity. There's still fundamental work to be done.

Acrew Capital: The Platform and Partnership

Lauren Kolodny's Vision for AI Investing

Rosenthal is joining Acrew Capital as a general partner working alongside founding partner Lauren Kolodny. Understanding the firm's positioning helps explain what Rosenthal is joining. Acrew has positioned itself as a thoughtful investor in AI applications and infrastructure, with a thesis that focuses on where genuine value is being created rather than chasing hot trends. Rosenthal's addition brings complementary expertise to the partnership: Kolodny's investment experience and pattern recognition about what makes a good business, combined with Rosenthal's insider knowledge of how AI model providers actually work and what enterprises need.

This partnership structure—pairing operational expertise with investment expertise—is increasingly common in venture capital and reflects recognition that both skill sets are necessary for success. Investors who've built businesses understand what founders are going through. Operators who transition to investing bring pattern recognition and networks that investors without operating experience lack.

The Expanded OpenAI Alum Network as an Advantage

Rosenthal explicitly notes that she'll be working with the OpenAI alum network to source deals. As OpenAI has grown from a small research organization into a company of significant scale, its alum network has expanded dramatically. Many OpenAI alumni have founded startups, some of which have raised significant funding at high valuations. Anthropic is the most obvious example: founded by OpenAI alumni, it has become a major AI lab in its own right, with a valuation well above $5 billion. Safe Superintelligence is another OpenAI alum-founded company that has attracted significant investor interest despite being early-stage.

For Rosenthal, this network is both a source of deal flow and a source of advantage in evaluating deals. She can speak to people who were colleagues of founders. She understands the culture and incentives of the organization where the founding team trained. She can assess technical founders' credibility and capability based on what she knows about their work at OpenAI.

The Buyer-Investor Network: A Unique Advantage

Bridging the Model Providers and Enterprise Customers

Perhaps Rosenthal's most valuable asset for her role at Acrew is her deep relationships with enterprise customers and decision-makers. She spent three years as OpenAI's sales leader, which means she's had hundreds of conversations with enterprise buyers (CIOs, technology leaders, business executives) about how they think about AI, what they're trying to accomplish, what concerns keep them up at night, and what they're actually willing to pay for.

This buyer network is extraordinarily valuable for venture capital for several reasons. First, when evaluating AI startups, having direct access to potential customers who can evaluate whether the startup's product actually solves real problems is invaluable. Second, these relationships can be activated as customer references or early adopters for Acrew portfolio companies. Enterprise deals often move slowly, but an introduction from a trusted buyer who understands AI can dramatically accelerate sales cycles. Third, understanding deeply how enterprise buyers think about software purchasing decisions helps Rosenthal evaluate whether a startup's go-to-market strategy is realistic and whether its product-market fit thesis holds up to scrutiny.

The Information Advantage in Enterprise AI

Rosenthal possesses information that most venture capitalists don't have. She knows which industries are farthest along in AI adoption and which are still in early stages. She knows which use cases enterprises have actually tried to implement and found valuable, and which seem theoretically promising but haven't worked out in practice. She knows what budget cycles look like, what approval processes are required, and what proof points enterprises need to justify AI investments.

This information advantage is valuable when assessing startups that claim to have solved enterprise AI problems. A venture capitalist without this experience might be impressed by a startup's pitch about how it's using AI to improve supply chain management. Rosenthal can immediately contextualize that claim: Is this solving a problem enterprises actually care enough about to pay for? Is the go-to-market strategy realistic given how software procurement works in that industry? What are the real barriers to adoption?

Estimated data suggests startups have opportunities in areas like industry workflow understanding and customer support, where OpenAI's focus is less intense.

The Competitive Landscape: Who Wins in the AI Era

The Model Providers' Strategy and Limitations

Understanding OpenAI's strategy, and by extension the strategy of other major model providers, is crucial for understanding where AI startups can succeed. Rosenthal's insider perspective reveals that while model providers are indeed expanding into multiple domains, they're not attempting to build every possible application. This is partly a resource allocation question (building and supporting a software application is genuinely different from building a model), partly a strategic focus question (trying to be everything to everyone often results in being nothing to anybody), and partly a market structure question (the software application market is enormous and not necessarily the highest-margin business).

Model providers' primary advantage—the ability to build incredibly capable models—doesn't necessarily translate to dominance in every software application. A software application has to solve a specific customer problem, integrate with specific workflows, provide specific features and user experiences, and maintain specific relationships with customers. Building increasingly powerful models doesn't automatically mean you can do all of that better than specialists.

The Tier-1 AI Startup Opportunity

The companies that are best positioned to win are those that understand this dynamic and position themselves accordingly. Tier-1 AI startups will likely be those that: (1) solve genuinely important problems for paying customers, (2) build defensible advantages through domain expertise or data ownership rather than model capability, (3) maintain flexibility about which models they use (neither dependent on proprietary APIs nor locked into a particular open-source model), and (4) focus obsessively on customer success and expansion rather than just customer acquisition.

Rosenthal's investment thesis at Acrew will likely focus on identifying founders and companies with these characteristics. She's looking for teams that understand enterprise needs deeply, that have plausible strategies for building competitive advantages that will survive for years, and that have realistic go-to-market strategies that account for how enterprises actually buy software.

Strategic Implications for AI Startup Founders

Lessons From Rosenthal's Insights

Founders building AI startups today should pay close attention to the themes that Rosenthal emphasizes. First, competition with model providers is not your primary strategic challenge. Your primary challenge is solving customer problems better than non-AI alternatives or better than generalist AI solutions. Second, specialization and context ownership are more defensible than trying to be broadly applicable. Third, efficiency (both in model costs and in the user experience of your application) matters more than most founders initially appreciate.

Fourth, the gap between what's theoretically possible with AI and what customers can actually deploy is real and represents opportunity. Startups that excel at bridging this gap—through integration, customization, support, and customer education—will win. Fifth, the relationship between your startup and the underlying models is strategic. Being completely dependent on a single API or model is riskier than most founders acknowledge. Sixth, enterprise customers are simultaneously more sophisticated about AI (having experimented with it) and more frustrated (having tried things that didn't work) than they were two years ago. This requires a different sales and success approach than was needed in early 2023.

Building for Longevity, Not Just the Current Moment

Rosenthal's perspective, informed by watching how rapidly the AI landscape has evolved, suggests that founders should build for longevity rather than for today's model capabilities. Assume that the models available in 2027 will be different from, and better than, those available today. Assume that OpenAI, Google, and other providers will continue expanding into new domains. Assume that competition will intensify and that more money will be raised for AI startups in promising areas.

Given these assumptions, what competitive advantages can you build that will still be valuable in three years? The answer lies in domain expertise, customer relationships, understanding of workflows, and the ability to maintain and evolve products as the underlying technology evolves. These are harder to build than temporary technical advantages, but they're more defensible.

Future Trends in Enterprise AI: What Rosenthal Expects

The Evolution of Context and Memory

Rosenthal explicitly predicts that 2025 will see "new approaches—the idea of context and memory" becoming increasingly important. This suggests she expects significant innovation in how AI systems maintain state, remember interactions, and leverage information effectively. This is a prediction worth taking seriously because it comes from someone who spent three years watching how enterprises actually use AI.

The implication is that enterprise AI applications that are built on solid architecture for context management and memory—rather than just stringing together API calls to language models—will age better and deliver more value over time. Startups that invest in getting this right early will have advantages over those that take shortcuts.
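As a minimal sketch of what an architecture for memory can mean in practice, the hypothetical `MemoryStore` below keeps facts per customer in a JSON file so they survive across sessions and are prepended to the next prompt, instead of every model call starting from scratch. The class name, file layout, and prompt wording are illustrative assumptions, not any vendor's API; a production system would add retrieval, expiry, and access control.

```python
import json
import tempfile
from pathlib import Path


class MemoryStore:
    """Hypothetical per-user memory persisted to a JSON file between sessions."""

    def __init__(self, path: str) -> None:
        self.path = Path(path)
        # Reload earlier sessions' memories if the file already exists.
        self.data: dict[str, list[str]] = (
            json.loads(self.path.read_text()) if self.path.exists() else {}
        )

    def remember(self, user_id: str, fact: str) -> None:
        # Keep each fact once per user and write through to disk immediately.
        facts = self.data.setdefault(user_id, [])
        if fact not in facts:
            facts.append(fact)
            self.path.write_text(json.dumps(self.data, indent=2))

    def recall(self, user_id: str, limit: int = 5) -> list[str]:
        # Return up to `limit` of the most recently stored facts.
        return self.data.get(user_id, [])[-limit:]

    def build_prompt(self, user_id: str, question: str) -> str:
        # Prepend remembered facts so a stateless model call still has continuity.
        context = "\n".join(f"- {fact}" for fact in self.recall(user_id))
        return f"Known context about this customer:\n{context}\n\nQuestion: {question}"


path = Path(tempfile.mkdtemp()) / "memory.json"
MemoryStore(str(path)).remember("acme", "Uses Salesforce as the system of record")
# A new instance simulates a later session that reads the persisted state.
print(MemoryStore(str(path)).build_prompt("acme", "Draft a renewal email."))
```

The design choice worth noting is that state lives outside the model: swapping the underlying model leaves the accumulated memory intact.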

The Maturation of AI Procurement and Integration

Rosenthal notes that enterprises have a "really large gap" between what they understand AI can do and what they can actually deploy. Over time, this gap will close as enterprises gain more experience, as software becomes easier to integrate and deploy, and as standards emerge for how to handle security, compliance, and governance around AI. The startups that help enterprises navigate this maturation process—through consulting, implementation support, integration, and managed services—are likely to find lucrative markets.

Chart (estimated data): founders should focus on domain expertise and defensible advantages, while investors prioritize founder-market fit and moat evaluation.

Comparing AI Platform Approaches: Context Strategies

Different AI startups and platforms are taking notably different approaches to managing context, which will significantly influence their competitive positioning over the next few years.

| Approach | Advantages | Limitations | Best For |
|---|---|---|---|
| Basic RAG with vector embeddings | Simple to implement, works well for document retrieval | Limited relationship reasoning, poor at nuanced queries | Document search, QA systems |
| Context graphs | Rich relationship modeling, sophisticated reasoning | Requires careful knowledge engineering, more complex | Complex enterprise domains |
| Fine-tuned models with domain data | Specialized performance, reduced API costs | Requires training data and expertise, less flexible | Narrow, high-value applications |
| Hybrid multi-model approach | Flexibility, resilience, cost optimization | Complexity, routing decisions, integration overhead | Large enterprises with diverse needs |
| Agent-based systems with memory | Persistent state, complex task chains, human-in-the-loop review | Higher latency, more complex orchestration | Knowledge work automation |

Each approach has strategic implications for startups. Those that choose the right approach for their specific use case and customer segment will build stronger competitive positions than those that over-engineer or under-engineer their context management strategy.
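To make the first row of the table concrete, here is a minimal sketch of basic retrieval for RAG. As a stand-in for learned vector embeddings it uses term-frequency vectors and cosine similarity from the standard library; a real system would swap in an embedding model and a vector index. The documents and function names are hypothetical. The limitation listed in the table is visible directly: each chunk is scored in isolation, so nothing links related chunks to one another.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    # Lowercased bag-of-words counts; a stand-in for a learned embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Rank every chunk independently against the query and keep the top k.
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str]) -> str:
    # Place the retrieved chunks in the prompt ahead of the question.
    context = "\n\n".join(retrieve(query, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are issued within 30 days of purchase.",
    "Enterprise plans include single sign-on and audit logs.",
    "Our office is closed on public holidays.",
]
print(retrieve("Do enterprise plans have audit logs?", docs, k=1))
# ['Enterprise plans include single sign-on and audit logs.']
```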

The Talent and Knowledge Migration Paradox

Why OpenAI's Loss of Talent Actually Strengthens the Ecosystem

One might initially view Rosenthal's departure from OpenAI as a loss for the company. From a broader industry perspective, however, it's a sign of ecosystem health. When talented people from leading companies move into roles where they can help other entrepreneurs succeed, the whole ecosystem benefits. Rosenthal can now help multiple portfolio companies avoid mistakes, accelerate their growth, and navigate challenges she understands deeply. This multiplier effect, in which one person's expertise helps dozens of companies, is arguably more valuable to the ecosystem than that person's output at a single company, however important that output is.

Moreover, people like Rosenthal leaving OpenAI to pursue new opportunities signals that OpenAI has developed enough depth to lose talented individuals without catastrophic impact. It signals that OpenAI employees believe there are valuable opportunities outside the company itself. And it signals that the AI ecosystem is mature enough to offer meaningful paths for talented people other than joining a handful of labs.

The Information Flow and Innovation Acceleration

Rosenthal's transition to venture capital also facilitates information flow in the ecosystem. As a general partner at Acrew, she'll see dozens of emerging AI startups, understand their approaches, observe what works and what doesn't, and synthesize what she learns. Over time, this gives her a view of how the industry is evolving that she couldn't have had even with a front-row seat at OpenAI. She'll bring this knowledge back to her portfolio companies, creating a feedback loop in which good ideas propagate faster and bad approaches are identified and corrected sooner.

Practical Investment Thesis: What Rosenthal Will Look For

The Founder Signal

Based on Rosenthal's background and experience, we can infer some of the founder signals she's likely to value highly. She'll prioritize founders with direct experience in the domains they're trying to serve. A founding team that includes someone who spent years in legal services is more credible to her when they pitch a legal AI application than a team that just thinks law is an interesting domain. She'll value founders who understand the distinction between what's theoretically possible and what's practically deployable. She'll be skeptical of teams that assume OpenAI or Google won't eventually build their application.

She'll look for founders who are thoughtful about their positioning relative to larger players. Not defensive or fearful, but clear-eyed about competitive dynamics. She'll likely value teams that have already found initial customers and demonstrated that they can solve real problems, even if the problem is being solved in a scrappy, partially manual way. She'll be interested in teams that can articulate a clear moat that will be defensible for multiple years, not just for the next 12 months.

The Market Signal

Rosenthal is looking for companies serving markets where enterprise customers have genuine, acute problems that they're currently struggling to solve. She's particularly interested in verticals where she has observed significant pain during her time at OpenAI—areas where she saw enterprises getting excited about possibilities but struggling with implementation. She's skeptical of horizontal platform plays and much more interested in vertical applications and domain-specific solutions.

She's looking for markets where the competitive landscape isn't already crowded with well-funded AI startups. While some competition is good (it validates the market), she's interested in positions where there's room for a focused team to build a defensible position before the market becomes saturated.

The Product Signal

Products that Rosenthal will likely find interesting are those that are clearly better at something specific than the available alternatives—whether those alternatives are legacy software, generalist AI chatbots, or partially manual processes. She's not interested in products that only work because they're using the newest, most expensive model. She's interested in products that solve customer problems efficiently and sustainably.

Chart (estimated data): deep customer integrations and an understanding of vertical workflows are rated as highly important strategies for AI startups building moats.

Competitive Analysis: Other VCs in the AI Startup Space

Rosenthal is joining Acrew Capital at a moment when many venture capital firms are explicitly competing for AI startup investments. Understanding the competitive landscape for investor talent provides context for why Rosenthal's hire is significant.

Several major venture capital firms have brought in operators from AI labs and AI-focused companies. Sequoia, Andreessen Horowitz (a16z), and others have explicitly built teams around AI investing. However, Acrew's explicit thesis around AI applications and infrastructure, combined with Kolodny's demonstrated expertise in this space, creates a distinctive positioning. Adding Rosenthal to this team provides a unique angle: deep insider knowledge of how OpenAI operates and thinks, combined with extensive enterprise customer relationships.

This combination is difficult for most other venture capital firms to replicate. While they might bring in other operators from AI labs, few have the specific combination of sales leadership experience (understanding go-to-market) and extensive enterprise customer relationships (understanding buyer behavior) that Rosenthal brings.

The Regulatory and Governance Implications

How Context and Data Ownership Intersect with Regulation

Rosenthal's emphasis on context as a defensible moat has interesting implications for regulatory and governance concerns around AI. To the extent that enterprises retain control over the data and information in their AI systems' context, they maintain some degree of control over, and visibility into, how those systems behave. This is more defensible from a governance perspective than depending entirely on proprietary models run by external providers.

As regulation around AI becomes increasingly sophisticated and requirements around explainability, auditability, and data governance become more stringent, startups that have built their architectures with these concerns in mind will be better positioned. Rosenthal, having worked with enterprises on adoption, likely understands these governance concerns deeply.

Enterprise Compliance and Data Privacy

Startups that make it easy for enterprises to comply with data privacy regulations, to maintain audit trails of what their AI systems are doing, and to ensure that sensitive data doesn't leak will have significant competitive advantages. These concerns are not theoretical—they're actual blockers that prevent enterprises from deploying AI solutions today. Startups that solve these problems, in addition to providing core functionality, multiply their addressable market.
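One common way to make an audit trail tamper-evident is hash chaining: each log entry stores a hash of the previous entry, so editing or deleting any record breaks verification from that point on. The sketch below applies the idea to AI interactions using SHA-256 from Python's standard `hashlib`. The record fields and the `model-a` label are illustrative assumptions; a real deployment would also need durable storage, access control, and retention policies that this sketch omits.

```python
import hashlib
import json
import time


class AuditLog:
    """Append-only, hash-chained log of AI interactions (illustrative sketch)."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes identically.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, user: str, prompt: str, response: str, model: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "ts": time.time(),
            "user": user,
            "model": model,
            "prompt": prompt,
            "response": response,
            "prev_hash": prev,
        }
        # The entry's hash covers its content plus the previous entry's hash.
        entry = {**record, "hash": self._digest(record)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check each link back to its predecessor.
        prev = self.GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev_hash"] != prev or self._digest(record) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("analyst@example.com", "Summarize contract X", "Summary ...", "model-a")
log.append("analyst@example.com", "List renewal dates", "Dates ...", "model-a")
print(log.verify())  # True
log.entries[0]["response"] = "edited after the fact"
print(log.verify())  # False
```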

Lessons for Developers and Engineering Teams

Building for Context and Memory

For developers building AI applications, Rosenthal's emphasis on context and memory as moats points to specific technical directions worth pursuing. Rather than building applications that rely entirely on the base capabilities of language models (or worse, that rely on techniques that will become obsolete as models improve), developers should focus on building sophisticated context management layers. This might involve:

- Building knowledge graphs that capture relationships between pieces of information relevant to your application

- Implementing persistent memory systems that maintain state across interactions

- Creating specialized retrieval systems tailored to your domain rather than relying on generic vector similarity search

- Developing fine-tuning pipelines that specialize models for your specific use cases

- Building systems that maintain audit trails and explainability for enterprise customers
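Of the items in this list, fine-tuning pipelines tend to be the least visible work. A minimal sketch of one step, turning expert-reviewed domain examples into training data, is below. It assumes a chat-style JSONL format (one JSON object per line with a `messages` list of `role`/`content` pairs), which several hosted fine-tuning services accept; check your provider's documentation for the exact schema. The input field names, system prompt, sample data, and filtering rules are all hypothetical.

```python
import json

SYSTEM_PROMPT = "You are an assistant for contract review at a law firm."  # hypothetical


def to_training_lines(examples: list[dict]) -> list[str]:
    """Convert reviewed Q/A pairs into chat-format JSONL lines.

    Each input is assumed to look like {"question": ..., "answer": ..., "approved": bool}.
    Unapproved, incomplete, and duplicate examples are dropped.
    """
    seen: set[tuple[str, str]] = set()
    lines: list[str] = []
    for ex in examples:
        q = ex.get("question", "").strip()
        a = ex.get("answer", "").strip()
        if not ex.get("approved") or not q or not a:
            continue  # only expert-approved, complete pairs become training data
        key = (q.lower(), a.lower())
        if key in seen:
            continue  # exact duplicates would overweight a single example
        seen.add(key)
        record = {
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": q},
                {"role": "assistant", "content": a},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return lines


raw = [
    {"question": "Does this NDA include a non-solicit clause?", "answer": "Yes, in section 7.", "approved": True},
    {"question": "Does this NDA include a non-solicit clause?", "answer": "Yes, in section 7.", "approved": True},
    {"question": "Summarize the indemnity terms.", "answer": "", "approved": True},
    {"question": "Is the term auto-renewing?", "answer": "Yes, for one year.", "approved": False},
]
jsonl = to_training_lines(raw)
print(len(jsonl))  # 1
```

Writing the returned lines to a `.jsonl` file, one per line, produces the upload artifact; the filtering step is where domain experts' review becomes part of the moat.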

These technical investments are less flashy than chasing the latest model releases, but they create more sustainable competitive advantages.

Integrations and Workflow Automation

Rosenthal's observation about the gap between theoretical possibility and practical deployment suggests that developers who excel at integrations and workflow automation will build valuable products. Enterprise customers aren't looking for AI for AI's sake. They're looking for AI that fits seamlessly into how they actually work. This means investing in integrations with the systems they use daily, building interfaces that feel natural within their workflows, and creating automation that reduces friction without introducing new friction.

The Next Wave: What 2025-2026 Will Look Like

Model Consolidation and Specialization

Based on Rosenthal's insights and the trajectory of the AI industry, we can anticipate several developments over the next 18-24 months. First, the market for foundation models will likely see some consolidation. The number of well-funded, capable foundation model companies will probably decrease as competition for computational resources intensifies and the capital required to compete increases. At the same time, we'll likely see a proliferation of specialized models fine-tuned for specific domains, so the split between general-purpose models and domain-specialized models will become more pronounced.

Application Layer Maturation

Second, the application layer will mature significantly. Early AI applications were often literal chatbot wrappers around language models. Over the next few years, successful applications will be increasingly sophisticated, leveraging context graphs, persistent memory, specialized models, and deep domain integration. The "AI wrapper" approach will be increasingly recognized as insufficient for building defensible businesses.

Enterprise Procurement Evolution

Third, how enterprises procure and deploy AI will continue evolving. Procurement teams will become more sophisticated. RFPs will become more specific. Enterprises will move from proof-of-concept experiments to production deployments, which requires different capabilities from vendors (reliability, support, security, compliance). Startups that help enterprises navigate this transition will find significant opportunities.

The Rise of AI Infrastructure for Enterprises

Fourth, we'll see the emergence of more sophisticated AI infrastructure specifically designed for enterprise use cases. This includes context management systems, fine-tuning platforms, compliance and governance tools, and integration platforms. Startups that build these foundational tools will serve the larger ecosystem of AI startups and enterprises.

Case Study Synthesis: Learning From Early AI Successes

Without singling out specific companies, the general patterns among successful AI startups align closely with Rosenthal's investment thesis. Companies that have achieved strong product-market fit and significant customer traction typically share several characteristics:

- Clear domain focus: They serve a specific vertical or use case rather than attempting to be broadly applicable

- Understanding of integration requirements: They've spent time understanding how their solution fits into existing workflows and systems

- Reasonable unit economics: They can solve customer problems without requiring prohibitively expensive model API calls

- Defensible differentiation: Their advantage isn't primarily that they're using the latest model, but that they're using AI effectively in a context-specific way

- Strong customer relationships: They've invested heavily in understanding customer needs and maintaining customer success

- Flexibility on technology choices: They're not locked into proprietary technologies; they can evolve their technology stack as the landscape changes

These patterns suggest that Rosenthal's investment thesis is grounded in realistic assessment of what's actually working in the market.

Actionable Frameworks for Assessing AI Startups

The Moat Evaluation Framework

When evaluating whether an AI startup has defensible competitive advantages, consider:

Context and Data Ownership: Does the startup have a clear path to accumulating valuable context or data that customers control and that provides competitive advantage? Or is the advantage primarily derived from temporary access to latest models?

Domain Expertise: Does the team demonstrate genuine expertise in the domain they're serving, or are they applying general AI skills to problems they don't deeply understand?

Integration Depth: Have they invested in understanding and integrating with the systems and workflows their customers actually use, or are they building generic solutions?

Customer Lock-in: Once a customer starts using their product, are there switching costs that make alternatives less attractive? Or could a customer switch to competitors with minimal friction?

Sustainable Unit Economics: Can they solve customer problems profitably, or does their current approach require prohibitively expensive model calls?
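The unit-economics question often reduces to simple arithmetic. The sketch below estimates one customer's monthly model spend from token volumes and per-million-token prices, then the resulting gross margin. Every number (prices, token counts, request volume, monthly revenue) is a hypothetical placeholder chosen to show the calculation, not a quote from any provider.

```python
def monthly_model_cost(requests: int, in_tokens: int, out_tokens: int,
                       in_price_per_m: float, out_price_per_m: float) -> float:
    """Model spend in dollars from per-request token counts and per-million-token prices."""
    per_request = (in_tokens * in_price_per_m + out_tokens * out_price_per_m) / 1_000_000
    return requests * per_request


def gross_margin(revenue: float, model_cost: float, other_cogs: float = 0.0) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - model_cost - other_cogs) / revenue


# Hypothetical customer: 20,000 requests/month, 3,000 input and 500 output tokens each,
# at $3 per million input tokens and $15 per million output tokens.
cost = monthly_model_cost(20_000, 3_000, 500, in_price_per_m=3.0, out_price_per_m=15.0)
print(round(cost, 2))                          # 330.0
print(round(gross_margin(2_000.0, cost), 3))   # 0.835
```

Rerunning the same function with a cheaper model's prices, or with usage multiplied tenfold, shows quickly whether the margin survives growth.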

The Go-to-Market Evaluation Framework

Assess whether the startup's go-to-market strategy is realistic:

Sales Cycle Alignment: Have they honestly assessed how long it takes to sell to their target customers? Enterprise sales cycles are typically 6-9 months or longer. SMB sales cycles are shorter but still non-trivial.

Proof Points: Do they have actual customers willing to serve as references, or are they still in proof-of-concept phase with potential customers?

Competitive Positioning: Can they clearly articulate how they differentiate from: (a) incumbent solutions, (b) other AI startups serving the same market, and (c) the possibility that large model providers will eventually build a competing solution?

Pricing Sustainability: Is their pricing strategy sustainable given how enterprise budgets work and what customers perceive as fair value? (This is particularly important given the rapid decrease in model API costs over time.)

Broader Implications for the AI Ecosystem

The Transition From Lab to Commercial Deployment

Rosenthal's move into venture capital reflects the broader transition of AI from a primarily academic and research endeavor to a commercial one. As the question shifts from "will this be possible?" to "how do we make this valuable for paying customers?", the insights of people like Rosenthal, who understand both cutting-edge capabilities and the messy realities of customer adoption, become increasingly valuable.

This transition has implications for how the industry should structure itself. In this era, the most valuable thing investors can contribute isn't necessarily pattern matching on research papers or technical benchmarks. It's understanding the practical, commercial reality of how enterprises adopt and benefit from AI.

The Convergence of AI and Traditional Software Markets

As AI matures, the competitive dynamics in AI-adjacent software markets are beginning to resemble traditional software markets in interesting ways. The companies winning in enterprise software over the past 20 years have typically won through some combination of better product, better integrations, better customer success, and lower total cost of ownership. The same dynamics are now playing out in AI software. This suggests that patterns from traditional software success—build strong customer relationships, focus on customer success, integrate deeply with customers' existing systems, maintain efficiency—remain relevant in the AI era.

Critical Uncertainties and Risk Factors

Model Capability Evolution Uncertainty

One substantial uncertainty facing everyone in this space, including Rosenthal, is the rate at which model capabilities will continue to improve. If models improve at a slower pace than expected, the relative importance of specialization and domain expertise increases—this benefits startups. If models improve dramatically faster, it might reduce the moat available to startups unless they can maintain advantages in other dimensions (context, domain expertise, customer relationships, integration depth).

Regulatory Uncertainty

Regulation around AI remains unsettled, and different jurisdictions are taking different approaches. If regulation becomes very onerous, it could raise barriers to entry and thus favor startups with compliance expertise. If regulation stays permissive overall but heavily restricts certain uses of AI, it could shift which applications are viable.

Commercial Market Development Uncertainty

While everyone in the AI industry believes that AI will be transformative, the actual pace at which enterprises deploy AI and the actual economic value created remains uncertain. If the pace of adoption accelerates significantly, the market opportunity for startups expands. If adoption is slower than expected, it might compress the window of opportunity for startups to establish themselves before larger competitors enter.

Recommendations for Different Stakeholder Groups

For Founders Building AI Startups

- Develop genuine domain expertise: Don't just apply AI to problems. Understand the domain deeply enough that you could credibly advise customers on how to approach their challenges.

- Be realistic about competitive dynamics: Understand that model providers are not your primary competitors for most enterprise use cases. Your primary competition is the status quo (how customers solve the problem today) and other startups solving similar problems.

- Build defensible advantages through context and integration: Rather than trying to compete on model capability, focus on unique context, better integrations, and deeper customer understanding.

- Invest in understanding customer adoption economics: Understand the full cost to customers of adopting your solution (not just software cost, but training, integration, and change management) and ensure your value proposition justifies that investment.

- Maintain flexibility on technology choices: Don't lock yourself into proprietary technologies or single model providers. Build architectures that allow you to evolve as the underlying technology evolves.
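One way to keep technology choices flexible is to route every model call through a small interface you own, so providers can be swapped or chained as fallbacks without touching application code. The sketch below shows the pattern with Python's `typing.Protocol`. The two provider classes are hypothetical stubs standing in for real SDK calls, which are deliberately not shown, and the names `provider-a` and `provider-b` are placeholders.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface the application depends on, instead of any one vendor SDK."""

    name: str

    def complete(self, prompt: str) -> str: ...


class FlakyProvider:
    # Hypothetical stub that simulates an outage; a real class would wrap an SDK call.
    name = "provider-a"

    def complete(self, prompt: str) -> str:
        raise TimeoutError("provider-a unavailable")


class BackupProvider:
    # Hypothetical stub for a second vendor or a self-hosted open-weight model.
    name = "provider-b"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] answer to: {prompt}"


def complete_with_fallback(models: list[ChatModel], prompt: str) -> tuple[str, str]:
    """Try each model in order; return (model name, output) from the first that succeeds."""
    errors: list[str] = []
    for model in models:
        try:
            return model.name, model.complete(prompt)
        except Exception as exc:  # in practice, catch the specific errors your SDKs raise
            errors.append(f"{model.name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


used, output = complete_with_fallback([FlakyProvider(), BackupProvider()], "Summarize Q3 churn.")
print(used)  # provider-b
```

Because application code depends only on `ChatModel`, changing the order of the list (for example, trying a cheaper model first) is a configuration decision rather than a rewrite.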

For Investors Evaluating AI Startups

- Look for founder-market fit: Do founders have genuine expertise in the markets they're serving? Have they spent time understanding the problems they're solving?

- Evaluate the moat: What defensible advantages will this company have in 3-5 years, not just in the next 12 months? Is it truly defensible against well-funded competitors and potential model provider competition?

- Assess the go-to-market realism: Is the timeline for customer adoption realistic? Is the pricing model sustainable given competitive and technology evolution?

- Look for customer validation: Have they achieved actual customer adoption, or are they still in proof-of-concept phase?

- Understand the technology risk: Is their current approach dependent on specific models or technologies that might become obsolete? Or are they building in a way that's robust to technology evolution?

For Enterprise Customers Evaluating AI Solutions

- Be honest about your capabilities: What can your team actually manage in terms of AI systems? What requires partner support?

- Focus on specific problems: Don't try to deploy AI broadly. Focus on specific, high-value problems where you can measure and demonstrate ROI.

- Prioritize vendor stability and support: Early-stage startups can be risky. Ensure you're getting the support and guarantees you need.

- Invest in your context and data: Focus on collecting and organizing the high-quality data and context that will make your AI systems valuable.

- Plan for evolution: The solutions you deploy today will need to evolve over time. Choose vendors and architectures that allow for this evolution.

Conclusion: The Significance of Insider Perspectives in VC

Aliisa Rosenthal's transition from OpenAI's first sales leader to general partner at Acrew Capital represents more than an individual career move. It's a meaningful signal about the maturation of the AI industry and the evolution of venture capital. As AI moves from research frontier to commercial reality, the investors and advisors who will be most valuable are those who understand both the cutting-edge capabilities of AI and the practical, messy reality of how enterprises actually adopt and benefit from these technologies.

Rosenthal brings three unique assets to her role at Acrew: (1) insider knowledge of how OpenAI operates and what it's likely to build next, (2) extensive understanding of how enterprise customers think about AI, what problems they're trying to solve, and what misconceptions they harbor, and (3) deep relationships with enterprise decision-makers who will ultimately be the customers of the startups she invests in. These assets don't just make her a better investor for the portfolio companies she works with. They make her valuable to the entire AI ecosystem by helping to accelerate the maturation and professionalization of AI adoption.

For founders, investors, and enterprises navigating the AI landscape, Rosenthal's public insights offer valuable guidance. Build defensible advantages through context, specialization, and domain expertise rather than trying to compete directly on model capability. Recognize the gap between what's theoretically possible and what enterprises can actually deploy, and focus on bridging that gap. Maintain realistic expectations about competition from model providers while recognizing that they won't build every application. Focus on customer success and sustainable unit economics rather than chasing the latest models. And above all, remember that successful AI applications are ultimately about solving real customer problems effectively, not about deploying the most sophisticated AI technology.

The next wave of valuable AI companies will be built by founders who understand these principles deeply and who execute flawlessly on them. Investors like Rosenthal will accelerate this development by helping founders navigate the path from promising technology to sustainable, profitable business. For the AI industry as a whole, this represents genuine progress toward the commercialization and maturation that will ultimately prove AI's true value to the economy and to society.

The precedent set by Rosenthal's transition also matters for the broader ecosystem. As other talented operators from leading AI companies make similar moves—either to venture capital or to starting startups or to leading other organizations—they bring with them empirical understanding of how AI development and commercialization actually work. This knowledge diffusion accelerates the entire ecosystem, helping more companies avoid mistakes and identify opportunities faster. In a competitive landscape that's moving with remarkable speed, this acceleration may be as important as any individual startup's success.

For those watching the AI industry develop, Rosenthal's insights about context graphs, the importance of specialization, the challenges of enterprise adoption, and the remaining technical problems in memory and reasoning offer valuable signals about where the next wave of innovation will emerge. These aren't the predictions of someone outside looking in. These are observations from someone who has been at the center of the AI revolution and is now positioned to help guide the next chapter of that story. For founders, investors, and enterprises, paying attention to her thesis and approach is worthwhile. The companies she helps to build and advise over the next few years will likely define significant portions of the AI application landscape for the decade ahead.

FAQ

What is the significance of Aliisa Rosenthal joining Acrew Capital?

Aliisa Rosenthal's transition from being OpenAI's first sales leader to a general partner at Acrew Capital is significant because she brings insider knowledge of how leading AI labs operate, combined with extensive experience understanding enterprise customer needs and adoption challenges. This combination of operational expertise and investor perspective is relatively rare and positions her to help portfolio companies navigate the complex landscape of AI commercialization more effectively than investors without this background.

How does the concept of "moat" apply to AI startups?

In AI startups, a moat refers to defensible competitive advantages that protect a company from being outcompeted by larger players (like OpenAI or Google) or other startups. Rather than trying to compete on raw model capability, successful AI startups build moats through domain specialization, ownership of valuable context data, deep customer integrations, and understanding of specific vertical workflows. These advantages are harder for larger companies to replicate because they require deep domain expertise rather than just access to better models.

What is the context graph that Rosenthal mentions as an emerging opportunity?

A context graph is an advanced approach to organizing and managing information for AI systems that moves beyond traditional Retrieval-Augmented Generation (RAG). Instead of storing information as separate documents, context graphs organize information as interconnected entities and relationships, allowing AI systems to reason more effectively about how different pieces of information relate to each other. This enables richer reasoning, better information retrieval, and more coherent responses that account for multiple related pieces of information. Rosenthal identifies this as an area where significant innovation is still needed and where startups could build valuable competitive advantages.
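A minimal sketch of the idea: facts are stored as subject-relation-object triples indexed under both entities, and retrieval walks outward from the entity named in a question, so related facts that share no keywords with the question can still reach the prompt. The class name, entities, relations, and two-hop default are illustrative assumptions; production systems would typically use a graph database plus an entity-linking step that this sketch replaces with exact string matching.

```python
from collections import defaultdict, deque


class ContextGraph:
    """Tiny in-memory graph of (subject, relation, object) facts."""

    def __init__(self) -> None:
        # Maps each entity to (neighbor entity, fact sentence) pairs.
        self.edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        fact = f"{subject} {relation} {obj}"
        # Index the fact under both endpoints so traversal can start from either entity.
        self.edges[subject].append((obj, fact))
        self.edges[obj].append((subject, fact))

    def neighborhood(self, start: str, max_hops: int = 2) -> list[str]:
        """Breadth-first walk from an entity, collecting facts within max_hops."""
        facts: list[str] = []
        seen = {start}
        queue = deque([(start, 0)])
        while queue:
            node, depth = queue.popleft()
            if depth == max_hops:
                continue  # do not expand beyond the hop limit
            for neighbor, fact in self.edges.get(node, []):
                if fact not in facts:
                    facts.append(fact)
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append((neighbor, depth + 1))
        return facts


g = ContextGraph()
g.add("Acme Corp", "has contract", "MSA-2023")
g.add("MSA-2023", "governed by", "Delaware law")
g.add("Acme Corp", "managed by", "J. Rivera")
# A question about Acme's governing law reaches the contract's terms via two hops.
for fact in g.neighborhood("Acme Corp"):
    print(fact)
```

With `max_hops=1` the governing-law fact is not returned, which is exactly the relationship a document-by-document similarity search can miss.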

Why is there a gap between what enterprises think AI can do and what it can actually deploy?

Enterprises often overestimate what's immediately possible with AI because they read about research breakthroughs and cutting-edge demonstrations without fully understanding the practical constraints. These constraints include integration challenges with legacy systems, data privacy and security requirements, regulatory compliance needs, the need for customization to specific workflows, insufficient training data, and the reality that many use cases require human-in-the-loop approaches rather than fully automated AI systems. Additionally, many organizations lack the internal technical expertise to implement AI solutions without external help. Successful startups bridge this gap by helping enterprises understand realistic applications and providing the integrations and customization necessary to actually deploy AI effectively.

How should AI startups position themselves relative to large model providers like OpenAI?

Rather than trying to compete directly with model providers on capability, AI startups should position themselves as specialists in specific domains or verticals. This might involve (1) serving specific industries or use cases that model providers won't prioritize, (2) building superior integrations with industry-specific systems and workflows, (3) maintaining focus on customer success and support rather than spreading resources broadly, (4) developing domain-specific knowledge and context that makes their solutions more valuable in their niche, or (5) using smaller, more cost-effective models for their applications rather than the most expensive frontier models. The goal is to be irreplaceable to customers in a specific context rather than competing for general-purpose AI capability.

What role do enterprise customer relationships play in AI startup success?

Enterprise customer relationships are crucial for several reasons. First, they provide direct feedback on what problems are actually worth solving and whether your proposed solution genuinely helps. Second, early customers become reference customers and case studies that help future sales. Third, understanding how enterprises buy software, what their procurement processes are, and what concerns keep their decision-makers awake at night informs realistic go-to-market strategy. Fourth, enterprises often need customization and ongoing support, which builds switching costs and stickiness. Rosenthal's value to Acrew Capital partly derives from her extensive relationships with enterprise customers who can evaluate whether startup solutions actually solve real problems.

How is the AI startup market different from previous software market cycles?

The AI startup market has some similarities to previous software cycles but also meaningful differences. Like previous waves, successful companies will likely win through better products, better customer relationships, and more efficient unit economics. However, the AI era is different in that (1) the underlying technology is evolving extraordinarily rapidly, making bets on specific technologies risky, (2) there's a real possibility that well-funded labs will eventually compete in your space, requiring defensible advantages beyond technology, and (3) many enterprises are still in early learning phases about what AI can accomplish, creating both opportunity and risk around overhype and underwhelming initial results. Founders should build as if the core technology will change while the importance of domain expertise and customer relationships remains constant.

What investment opportunities does Rosenthal identify beyond foundation models?

Rosenthal explicitly states she's most excited about investments in the application layer—companies building solutions that help enterprise employees work more efficiently. Beyond applications, she's interested in (1) companies building infrastructure for context management and memory systems, (2) startups focused on fine-tuning and optimizing smaller models for specific domains rather than always using the most powerful models, (3) companies that help enterprises overcome the gap between theoretical possibility and practical deployment, and (4) solutions that address compliance, governance, and data privacy concerns that prevent enterprises from deploying AI more broadly. These areas offer opportunities that don't require competing directly on model capability.

How should enterprises decide which AI solutions to adopt?

First, enterprises should identify specific, high-value problems where AI could help (rather than trying to deploy AI broadly across the organization). Second, they should evaluate whether proposed solutions genuinely understand their specific workflows and integrate with their existing systems. Third, they should look for vendors who provide ongoing support, training, and success metrics rather than just selling and disappearing. Fourth, they should be honest about their own team's capabilities: do they have the expertise to implement and manage these solutions internally, or do they need vendor support? Fifth, they should pilot with a manageable scope rather than attempting to transform their entire organization at once. Finally, they should invest in gathering and organizing the high-quality data and context that will make their AI systems valuable.

What does Rosenthal's career transition suggest about the maturity of the AI industry?

Rosenthal's transition from operational leadership at a major AI lab to venture capital suggests that the AI industry is maturing in several ways. First, the field is shifting from fundamental research and capability development toward commercialization and practical business building. Second, enough knowledge has now accumulated about what works and what doesn't in AI commercialization that it has become valuable to capture and share across multiple companies. Third, the ecosystem recognizes that building successful AI businesses requires different expertise than building AI models: you need domain expertise, customer understanding, integrations, support, and business discipline. Fourth, OpenAI's alumni network has grown large enough that former employees are moving into meaningful roles elsewhere, a sign that the ecosystem no longer revolves around a single company.

What are the most likely risks for AI startups based on Rosenthal's perspective?

Based on Rosenthal's insights, the most significant risks for AI startups include (1) building applications on foundation models without developing defensible advantages that will survive technology changes, (2) targeting use cases that model providers will eventually build themselves, (3) failing to understand the practical constraints enterprises face when adopting AI, (4) building solutions that depend on prohibitively expensive model API calls, making unit economics unworkable, (5) overestimating what enterprises can actually deploy given their existing technical capabilities, (6) underestimating the time and effort required for enterprise adoption and customer success, and (7) failing to develop the domain expertise that would make their solutions meaningfully better than generalist alternatives. Startups that recognize and mitigate these risks by building defensible moats, maintaining efficient unit economics, understanding enterprise constraints, and developing genuine domain expertise will be better positioned for success.

Key Takeaways

- Aliisa Rosenthal's transition from OpenAI sales leader to Acrew Capital GP reflects the commercialization phase of the AI industry, where operational expertise becomes as valuable as technical capability

- AI startups build defensible moats through context ownership (information control), vertical specialization (deep domain expertise), and application-layer innovation—not through competing on base model capability

- A significant gap exists between what enterprises believe AI can accomplish and what they can practically deploy, representing the actual market opportunity for startups to address

- Context graphs and advanced memory systems represent an emerging innovation frontier where startups can build competitive advantages over generic AI applications

- Model providers will continue expanding into multiple domains but won't build every enterprise application, creating sustainable opportunity for focused startups with defensible strategies

- Enterprise customer relationships and understanding of procurement processes matter more than access to frontier models for long-term startup success

- Smaller, more efficient models optimized for specific use cases may deliver better unit economics and customer value than always using the most powerful available models

- Founders should build for technology evolution and changing competitive landscapes rather than assuming current model capabilities or competitive positions will remain static
