Nvidia's Push to Become the Android of Robotics: What It Means for the Future [2025]

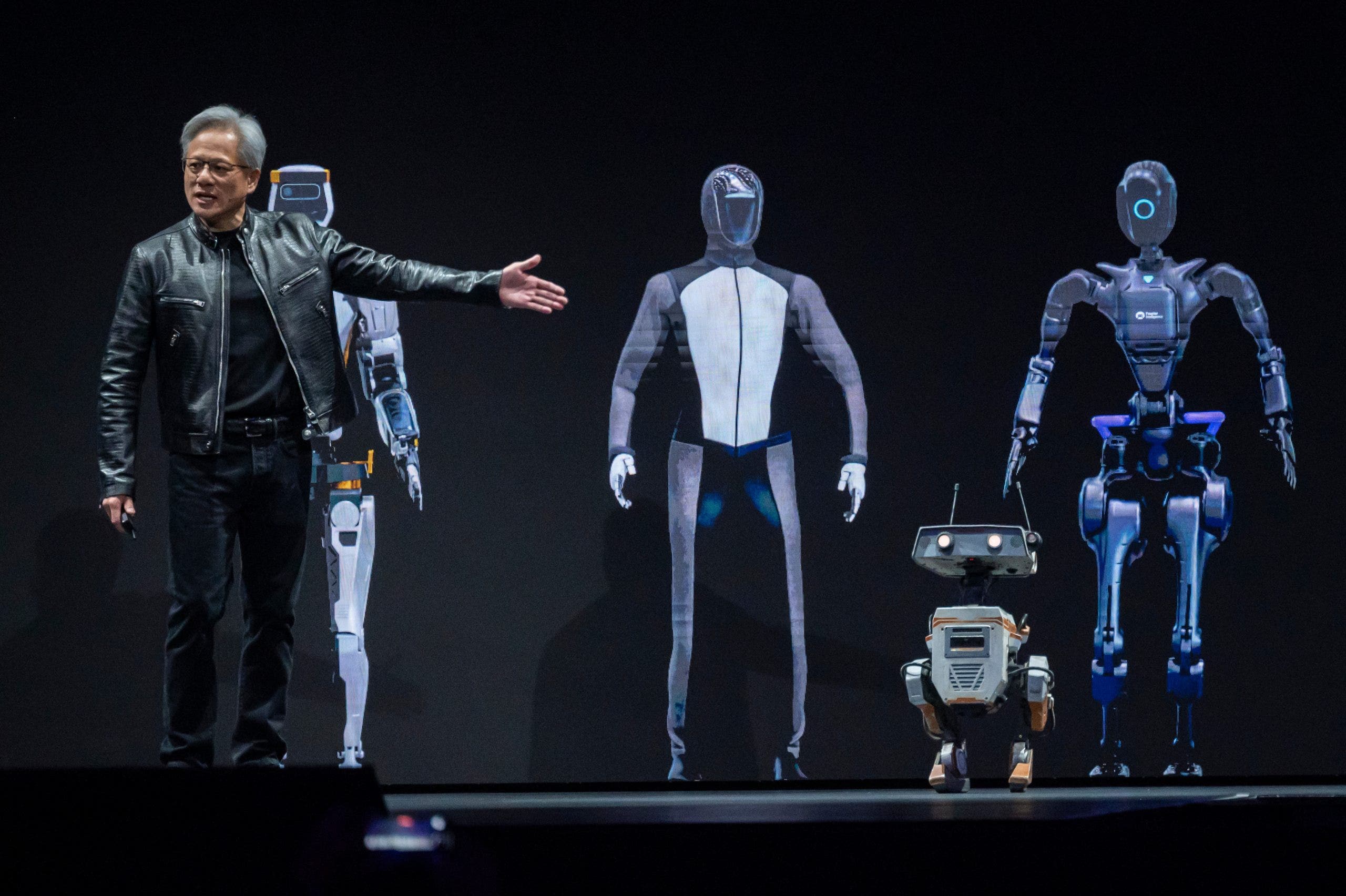

Last week, Nvidia dropped something massive at CES 2026. Not another GPU announcement. Not another AI model. A complete, end-to-end robotics platform. And here's what caught my attention: they're positioning it exactly like Android positioned itself in mobile.

Nvidia isn't trying to build every robot. They're trying to become the foundation that everyone else builds on top of. The underlying layer. The operating system. The invisible infrastructure.

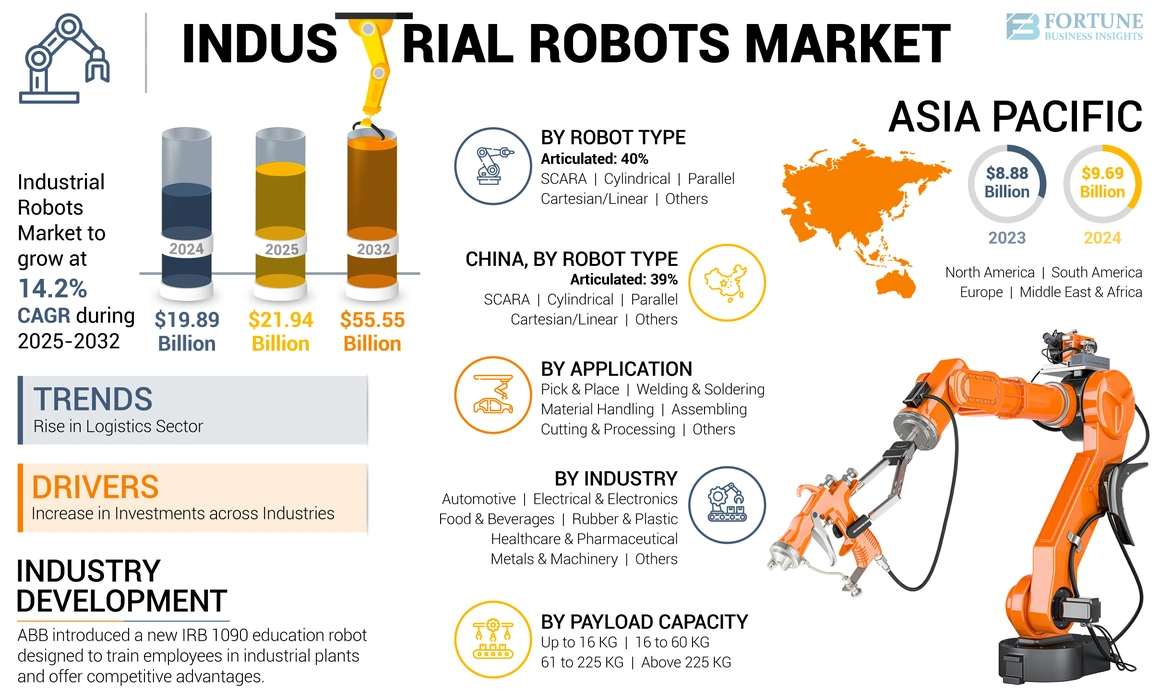

This matters because robotics has been fragmented forever. Boston Dynamics builds bipedal walkers. Tesla focuses on humanoids. Smaller startups struggle with simulation, training data, edge deployment, and hardware compatibility. There's no unified stack. No standard way to train a robot for task A, then adapt it for task B. No common platform.

That's changing. And if Nvidia pulls it off, we're looking at a shift as significant as Android was for mobile. Potentially bigger, actually, because robots operate in the physical world. Getting this wrong means broken hardware, missed timelines, and wasted billions in robotics development.

Let me walk you through what Nvidia actually announced, why it matters, and where this is heading.

The Core Problem Nvidia is Solving

Robotics development today is brutally inefficient. Here's what the process looks like for most companies.

You start with a hardware platform: maybe a Boston Dynamics Spot, a Tesla Bot prototype, or a custom humanoid. Then you collect data. Lots of it. Thousands of hours of robot interaction, human demonstrations, trial and error. You build a simulation environment because testing everything in the real world takes forever and costs a fortune. Every crash, every dropped object, every failed grasp is a setback.

Then you train a model. Ideally a generalist model that can learn one task and apply concepts to similar tasks. But most models are narrow. They're trained for one specific environment, one robot morphology, one set of objects. Transfer learning barely works. The moment you change the robot's joints, the gripper design, or the workspace, you're starting over.

Finally, you deploy. And deployment is where everything breaks. Models that worked perfectly in simulation fail catastrophically on real hardware. Latency issues. Sensor noise. Physical variability. Small differences in how a robot's actuators behave compound into massive failures.

Estimates from the past few years suggest most robotics companies spend 60-70% of their development time not on innovation, but on this pipeline. On simulation. On data labeling. On infrastructure. On making one robot's code work on another robot's hardware.

This is the problem Nvidia is solving.

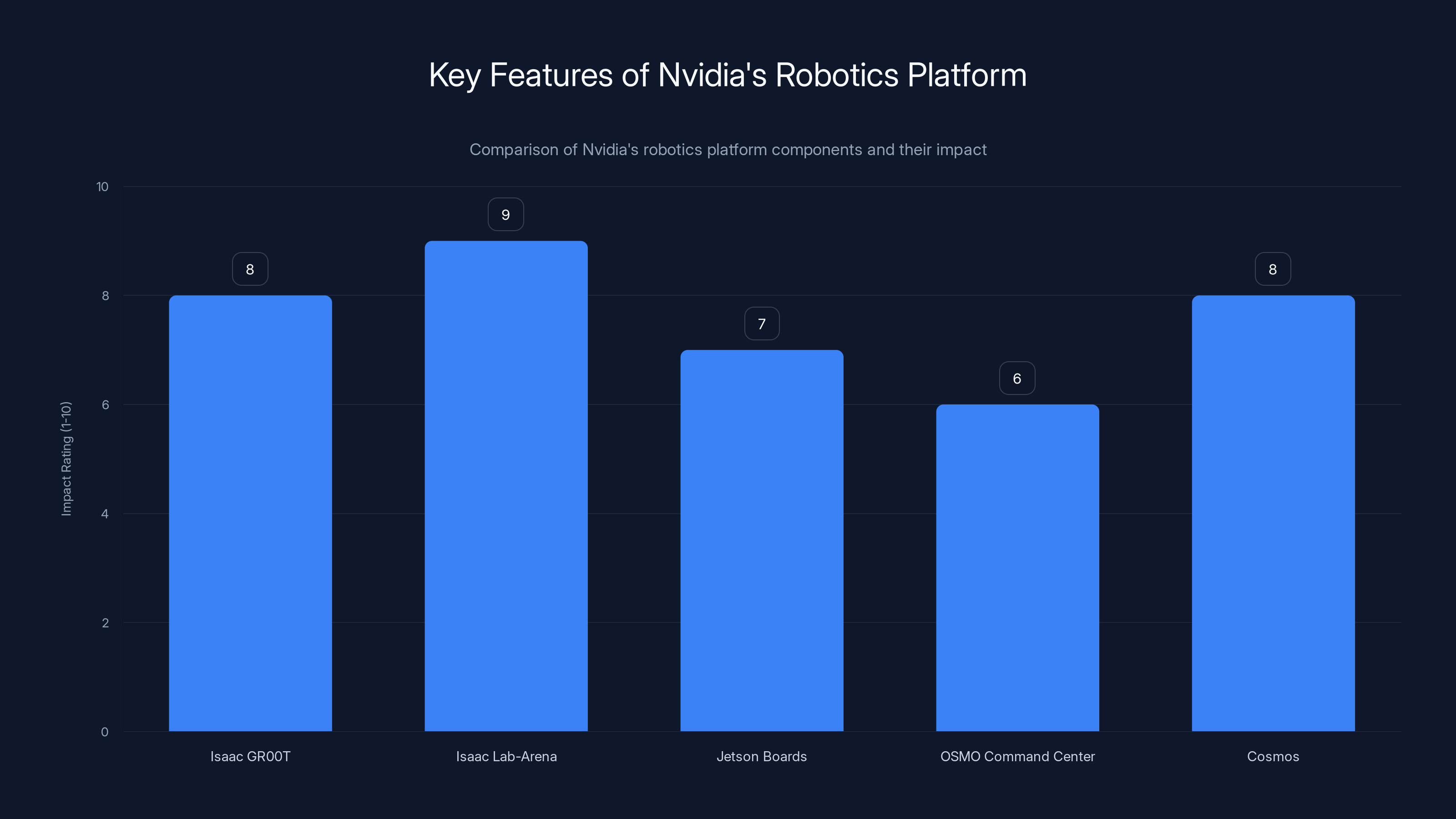

Isaac Lab-Arena and Isaac GR00T are rated highly for their impact on standardizing simulations and enabling generalization across robot morphologies. Estimated data.

Foundation Models: The Brain Layer

At the center of Nvidia's ecosystem are four new foundation models. And I need to be specific here because there's a real architecture underneath.

Cosmos Transfer 2.5 and Cosmos Predict 2.5 are world models. Think of them as predictive engines that understand physics. You feed them video of a robot interacting with objects. The model learns to predict what happens next. What does gravity do? How do objects collide? What happens when a gripper closes?

That might sound simple, but it's the foundation for everything else. If a model can predict the physical world accurately, it can simulate environments without needing a hand-coded physics engine. It can generate synthetic training data. It can let you test thousands of robot behaviors in parallel without running actual robots.

This is huge because synthetic data is cheap. Real data from robots is expensive. If you can generate realistic synthetic data with a world model, you've just dramatically reduced the cost of robot training.

Cosmos Reason 2 is a vision language model. It takes raw sensory input from a robot—camera feeds, depth sensors, maybe lidar—and generates reasoning about what it sees. Not just "object detected." More like "I see a fragile ceramic cup inside a cardboard box. The box is partially open. There's packing material on the left side. If I reach with my left gripper and tilt 15 degrees, I can probably grab the cup without damaging the packing material."

That's reasoning with visual understanding. It's a step beyond simple object detection. The model has to understand spatial relationships, material properties, physics constraints, and risk.

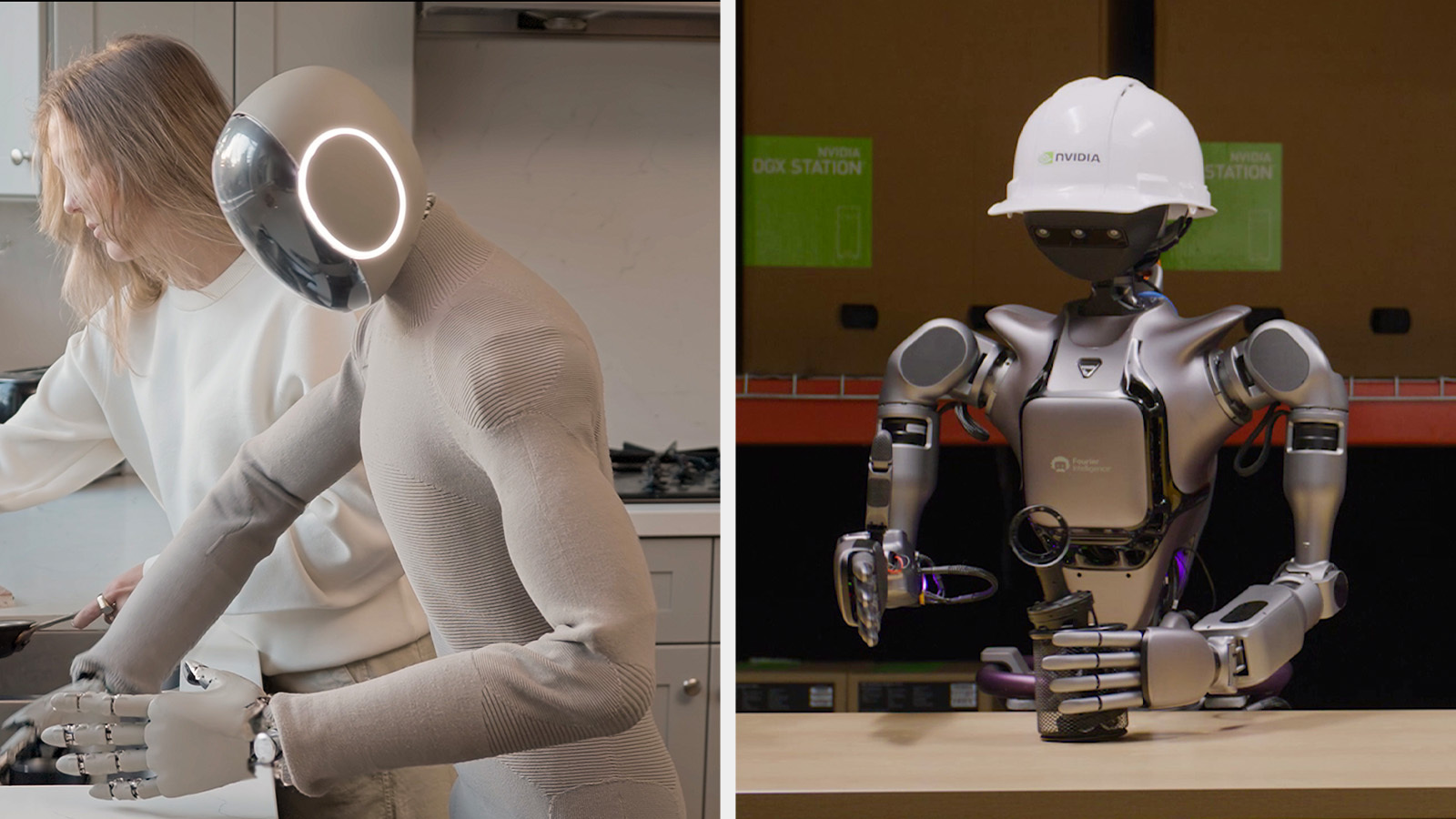

Isaac GR00T N1.6 is the big one. GR00T stands for Generalist Robot 00 Technology, and it's positioned as the flagship vision-language-action model for humanoid robots. Here's the architecture: Cosmos Reason 2 serves as its perception brain. It takes camera input, understands the scene, and feeds that understanding into GR00T. GR00T then outputs whole-body motor commands.

Whole-body control is important. Older models controlled individual limbs: move the arm, move the legs, each independently. GR00T coordinates everything. The left arm can manipulate an object while the legs keep walking. The robot can adjust its body angle while grasping. The model reasons about spatial coordination instead of individual servo commands.

The advantage is compounding. A humanoid robot with coordinated full-body control can do tasks that single-limb robots simply can't. Climbing. Complex manipulation. Navigation through tight spaces.
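The perception-to-action flow described above can be sketched as two interfaces chained in a single control step. Everything here is hypothetical: `SceneUnderstanding`, `WholeBodyCommand`, `control_step`, and the stub models are illustrative names, not Nvidia's GR00T or Cosmos APIs. The point is structural: one model call yields one command that covers legs and arms together, rather than separate per-limb controllers.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class SceneUnderstanding:
    """What a perception model (Cosmos Reason 2's role in the text) hands downstream."""
    summary: str
    target_xyz: tuple[float, float, float]  # target position in the robot frame, meters


@dataclass(frozen=True)
class WholeBodyCommand:
    """One command covering every joint at once: arms, torso, and legs."""
    joint_targets: dict[str, float]  # joint name -> target angle in radians


class PerceptionModel(Protocol):
    def understand(self, camera_frame: bytes) -> SceneUnderstanding: ...


class ActionModel(Protocol):
    def act(self, scene: SceneUnderstanding, instruction: str) -> WholeBodyCommand: ...


def control_step(perception: PerceptionModel, policy: ActionModel,
                 camera_frame: bytes, instruction: str) -> WholeBodyCommand:
    """Perceive the scene, then emit a single coordinated whole-body command."""
    scene = perception.understand(camera_frame)
    return policy.act(scene, instruction)


# Hypothetical stand-ins so the sketch runs without any real model.
class StubPerception:
    def understand(self, camera_frame: bytes) -> SceneUnderstanding:
        return SceneUnderstanding("cup on table", (0.6, 0.1, 0.8))


class StubPolicy:
    def act(self, scene: SceneUnderstanding, instruction: str) -> WholeBodyCommand:
        x, y, _ = scene.target_xyz
        # Step toward the target while the arm reaches: both in the same command.
        return WholeBodyCommand({
            "left_hip_pitch": 0.2 * x,
            "right_hip_pitch": -0.2 * x,
            "right_shoulder_pitch": 0.5,
            "right_shoulder_yaw": y,
        })


cmd = control_step(StubPerception(), StubPolicy(), b"", "pick up the cup")
print(sorted(cmd.joint_targets))
```

Swapping the stubs for real models would not change `control_step`; that separation between perception and action is what lets one policy sit on top of different perception front ends.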

These models aren't proprietary. They're available on Hugging Face. Open source. Downloadable. The strategy here is clear: Nvidia wants developers building on top of these models. They want ecosystem lock-in through usefulness, not legal restrictions.

Simulation: The Training Multiplier

Building foundation models is one piece. The other piece is simulation. And Nvidia announced Isaac Lab-Arena, which is basically a unified testing ground for all of this.

Here's what simulation usually looks like for robotics teams. You have your physics engine: maybe Gazebo, maybe PyBullet, maybe MuJoCo. You have your robot's URDF file, which describes the physical structure. You write environments. You write tasks. You manually create scenarios for testing. You run everything locally on your desktop because cloud simulation adds latency.
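URDF is plain XML, so the "physical structure" it encodes is easy to inspect. Here's a minimal sketch using only Python's standard library. The two-joint toy arm is made up for illustration, and only URDF's `robot`, `link`, `joint`, `parent`, `child`, and `limit` elements are used.

```python
import xml.etree.ElementTree as ET
from typing import List

# A toy two-joint arm; real URDFs also carry inertial, visual, and collision data.
URDF = """
<robot name="toy_arm">
  <link name="base"/>
  <link name="upper_arm"/>
  <link name="forearm"/>
  <joint name="shoulder" type="revolute">
    <parent link="base"/>
    <child link="upper_arm"/>
    <axis xyz="0 0 1"/>
    <limit lower="-1.57" upper="1.57" effort="20" velocity="2.0"/>
  </joint>
  <joint name="elbow" type="revolute">
    <parent link="upper_arm"/>
    <child link="forearm"/>
    <axis xyz="0 1 0"/>
    <limit lower="0.0" upper="2.5" effort="10" velocity="2.0"/>
  </joint>
</robot>
"""


def describe_joints(urdf_text: str) -> List[dict]:
    """Return name, type, parent/child links, and position limits for each joint."""
    root = ET.fromstring(urdf_text.strip())
    joints = []
    for joint in root.findall("joint"):
        limit = joint.find("limit")
        joints.append({
            "name": joint.get("name"),
            "type": joint.get("type"),
            "parent": joint.find("parent").get("link"),
            "child": joint.find("child").get("link"),
            "limits": (float(limit.get("lower")), float(limit.get("upper")))
            if limit is not None else None,
        })
    return joints


for j in describe_joints(URDF):
    print(j["name"], j["type"], j["parent"], "->", j["child"], j["limits"])
```

Every team writes some version of this glue against its own robot description, which is part of the fragmentation the next paragraph describes.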

It's fragmented. Every company does it slightly differently. Benchmarking is impossible because nobody agrees on what a benchmark even is.

Isaac Lab-Arena consolidates all of this. It includes established benchmarks like LIBERO (language-conditioned manipulation), RoboCasa (household tasks), and RoboTwin (bimanual manipulation with generated digital twins). It runs efficiently on consumer hardware. It integrates with the foundation models. And critically, it gives everyone a standard framework.

Why does standardization matter? Because it turns robotics into a competitive problem, not an infrastructure problem. Companies stop spending cycles building simulation infrastructure and start competing on algorithms. On task design. On model quality. On actual innovation.
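The value of a shared benchmark is easiest to see in code: every policy runs the same tasks, with the same seeds, scored by the same metric. Below is a minimal sketch of that idea. `evaluate`, the toy tasks, and the float-in/float-out policy signature are all hypothetical; this is not the Isaac Lab-Arena API.

```python
import random
from typing import Callable, Dict

# A policy maps an observation (here just a float) to an action (a float).
Policy = Callable[[float], float]
# A task runs one seeded episode of a policy and reports success.
Task = Callable[[Policy, int], bool]


def reach_task(policy: Policy, seed: int) -> bool:
    """Toy task: the action must land within 0.05 of a random target in [-1, 1]."""
    rng = random.Random(seed)
    target = rng.uniform(-1.0, 1.0)
    return abs(policy(target) - target) < 0.05


def hold_zero_task(policy: Policy, _seed: int) -> bool:
    """Toy task: given a zero observation, the action must stay near zero."""
    return abs(policy(0.0)) < 0.05


def evaluate(policy: Policy, tasks: Dict[str, Task], episodes: int = 100) -> Dict[str, float]:
    """Same tasks, same seeds, same success metric for every policy compared."""
    return {
        name: sum(task(policy, seed) for seed in range(episodes)) / episodes
        for name, task in tasks.items()
    }


tasks = {"reach": reach_task, "hold_zero": hold_zero_task}
exact = evaluate(lambda obs: obs, tasks)
damped = evaluate(lambda obs: 0.9 * obs, tasks)
print("exact:", exact)
print("damped:", damped)
```

Because the seeds are fixed, two teams running `evaluate` on their own policies get numbers that can be compared directly, which is the property a common benchmark is meant to provide.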

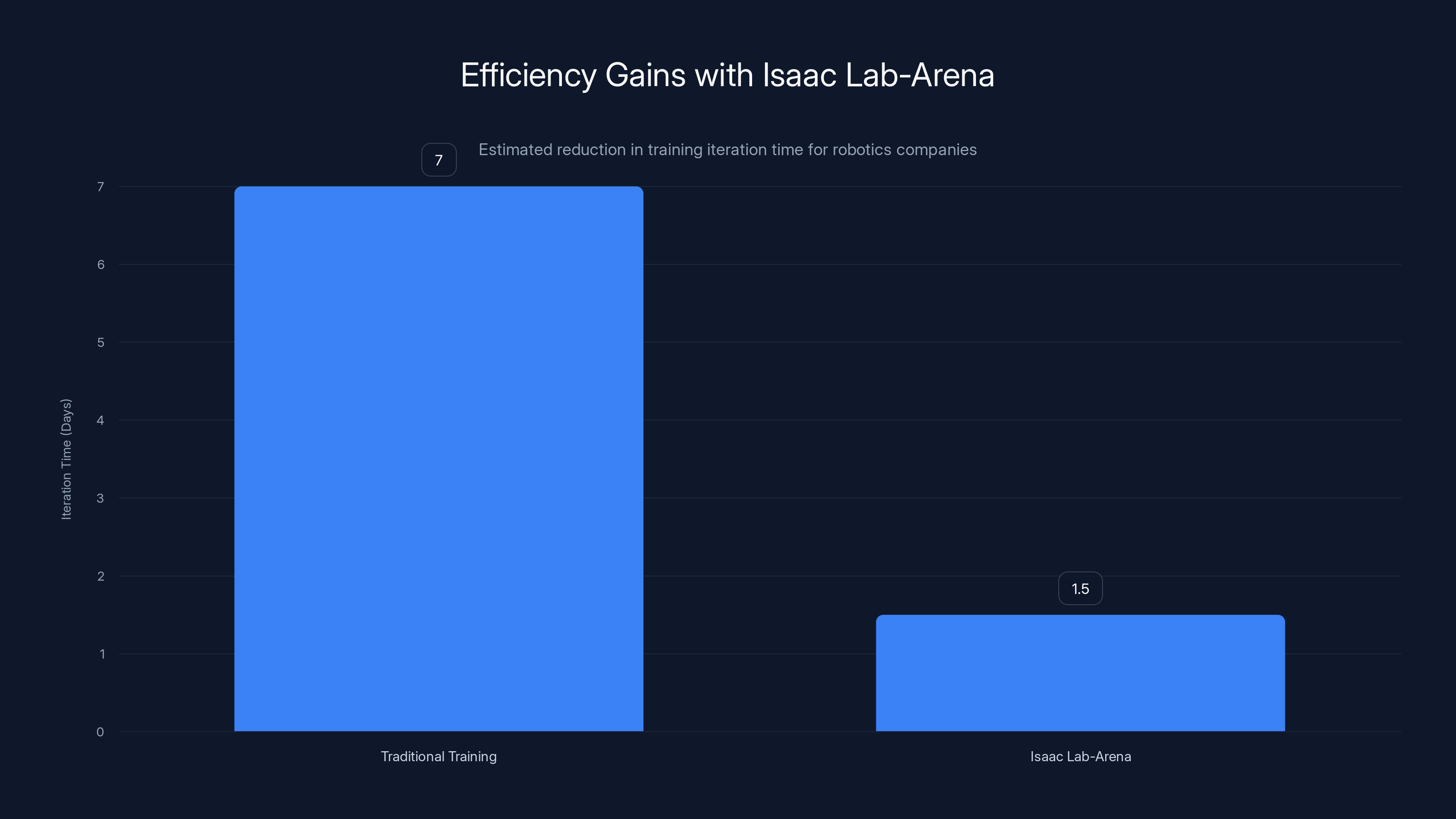

The efficiency gains are real. A company using Isaac Lab-Arena for training might reduce iteration time by 40-50%. Instead of training a model for a week on expensive hardware, you train in simulation for a day, then finetune on real robots for a few hours.

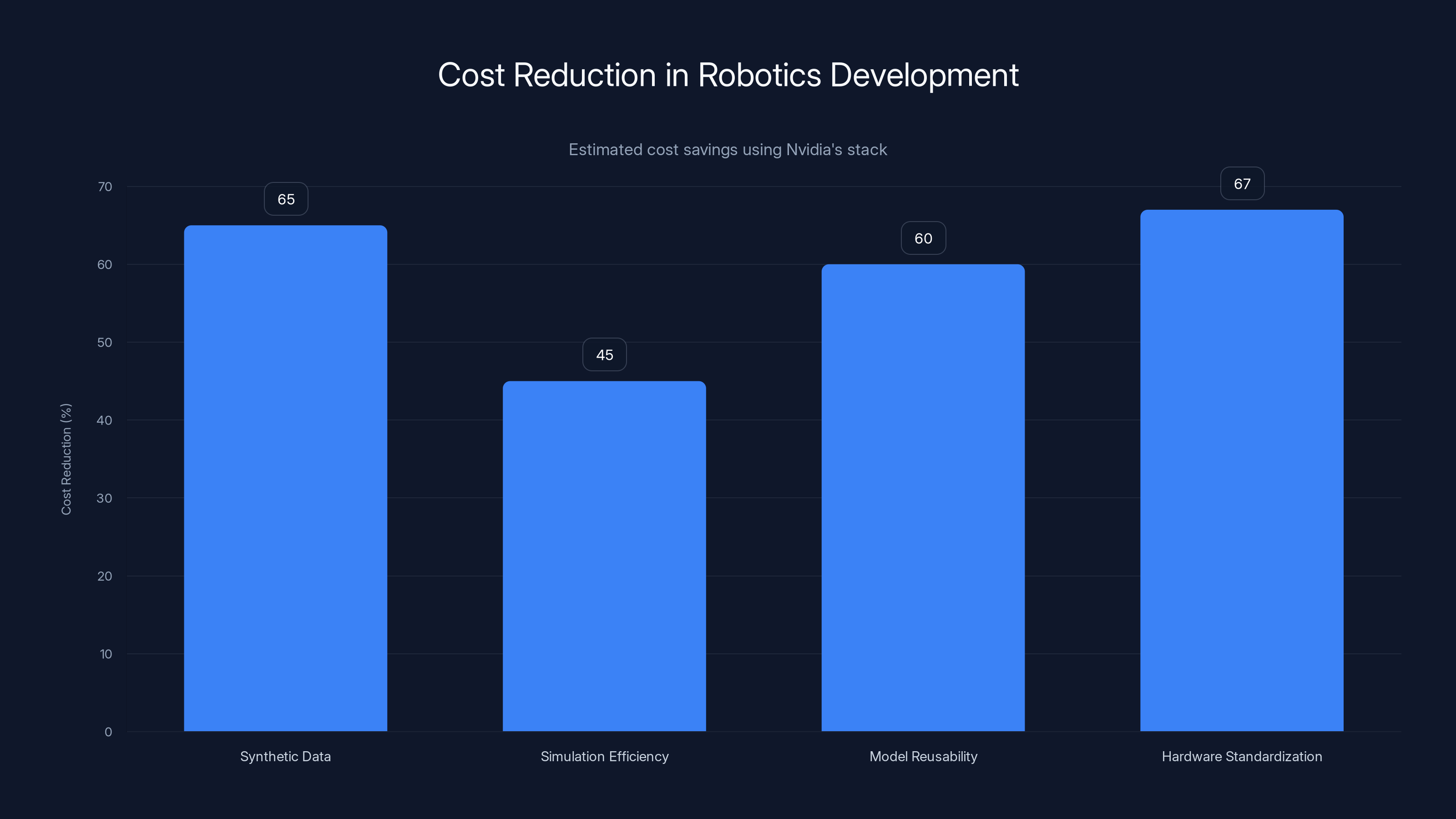

Nvidia's stack offers significant cost reductions across various areas, with synthetic data generation and hardware standardization providing the highest savings. Estimated data.

The Hardware Layer: Jetson and Edge Deployment

Foundation models and simulation are cloud problems. But robots operate on the edge. They can't phone home to Nvidia's data centers every time they need to make a decision. Latency kills real-time control. So Nvidia announced new edge hardware.

The Jetson T4000 is the new high-end option. It's a Blackwell-based GPU with 1200 teraflops of AI compute, 64GB of memory, and power efficiency at 40-70 watts. That's the key: it can run a full vision-language-action model locally on the robot.

Think about what this enables. A humanoid robot with a Jetson T4000 onboard can run Isaac GR00T directly. No cloud connection needed. No network round trip. The robot perceives, reasons, and acts in real time, all onboard.

That's a constraint-changing move. Previously, edge hardware generally couldn't run models of this size fast enough for real-time control, so inference had to happen in the cloud. Now it doesn't.
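The latency argument is simple arithmetic. A control loop running at f Hz has 1000/f milliseconds per cycle, and everything, meaning inference plus any network round trip, has to fit inside it. Here's a sketch with assumed numbers: the 50 Hz rate, 15 ms onboard inference, and 40 ms cloud round trip are illustrative guesses, not measured Jetson T4000 or GR00T figures.

```python
def fits_control_budget(control_hz: float, inference_ms: float,
                        network_rtt_ms: float = 0.0) -> bool:
    """True if one perceive-reason-act cycle fits inside one control period."""
    period_ms = 1000.0 / control_hz
    return inference_ms + network_rtt_ms <= period_ms


# Assumed, illustrative numbers: a 50 Hz loop leaves a 20 ms budget per cycle.
onboard = fits_control_budget(control_hz=50, inference_ms=15)
cloud = fits_control_budget(control_hz=50, inference_ms=8, network_rtt_ms=40)
print(f"onboard fits: {onboard}, cloud fits: {cloud}")
```

Even with faster inference in the data center, the round trip alone blows the budget in this example. That gap is why onboard compute matters for real-time control.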

Jetson boards have been dominant in robotics for years. Tesla uses them. Boston Dynamics uses them. Most startups use them. By making the Jetson pipeline better—easier to deploy models, better libraries, tighter integration with Isaac tools—Nvidia increases the switching cost for using something else. You're already building on Jetson. Why switch?

This is how Android won. They didn't charge phone makers for the OS. They made the OS so good, so free, so well-integrated with their other services that rejecting it wasn't rational.

The Developer Ecosystem: LeRobot and Hugging Face Integration

Nvidia announced a deepened partnership with Hugging Face. LeRobot is Hugging Face's robotics framework. It's open-source. It lets developers train and share robot models without needing Nvidia hardware. But the new integration connects LeRobot directly into Nvidia's Isaac and GR00T pipelines.

What this does is connect two massive developer communities. Nvidia counts 2 million robotics developers in its ecosystem; Hugging Face counts 13 million AI builders. The integration means both groups can work with the same tools and models.

Hugging Face released Reachy 2, an open-source humanoid that now works directly with Jetson Thor. The implication: developers can use the same model architecture across different hardware platforms. Train on one robot. Deploy on another. The abstraction layer works.

This is ecosystem strategy. Nvidia isn't trying to be the only robotics company. They're trying to be the foundation that all robotics companies build on. Hugging Face brings developer distribution. Brings legitimacy in the open-source community. Brings models. Brings a reputation for not restricting technology.

Combined, they're creating a network effect. More developers use Nvidia tools because Hugging Face developers do. More Hugging Face developers use Nvidia tools because it integrates with robotics. Both communities grow. Switching costs increase.

Why the Android Comparison Actually Fits

Android's dominance in mobile wasn't inevitable. In 2008, nobody was sure Android would win. iPhone was slick. Windows Mobile had enterprise support. BlackBerry was the obvious choice for businesses.

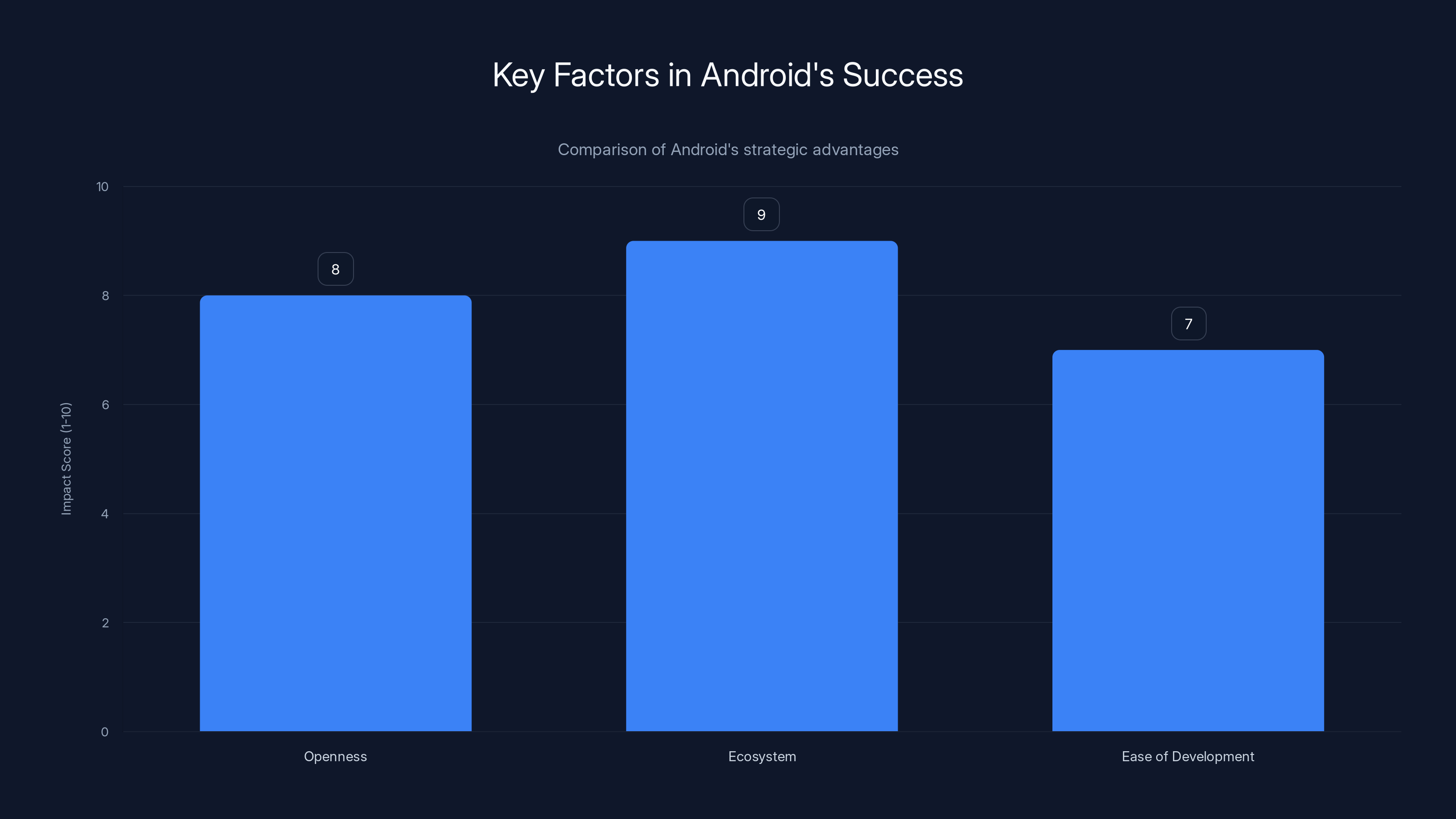

Android won because of three things:

First, Google made it open-source. Manufacturers could fork it. Customize it. Make it their own. Samsung didn't have to build its own OS; it could use Android and differentiate on hardware. That openness was revolutionary. It made Android adoptable.

Second, Google built an ecosystem around it. Not just the OS. Maps. Gmail. Play Store. YouTube integration. Services. The OS was the hook, but the ecosystem was the lock-in. You couldn't get all those services as well on other platforms.

Third, Google made it incredibly easy to build for. Good documentation. Development tools. Developer conferences. Consistent APIs. You could build an app once and deploy it across thousands of Android phones with different manufacturers, different RAM, different processors. That consistency was valuable.

Nvidia is following the exact same playbook with robotics.

Openness: Foundation models on Hugging Face. Isaac Lab-Arena is open-source. LeRobot integration is open. Developers can fork it. Customize it. Build proprietary layers on top if they want.

Ecosystem: Foundation models that work across robot morphologies. Simulation that works with multiple environments. Robots from multiple manufacturers, all built on Jetson. Integration with Hugging Face and its massive community. You're not locked into Nvidia robots. You're locked into Nvidia software.

Ease of development: Unified pipeline from simulation to training to deployment. Benchmarks. Pre-trained models. Tools that abstract away hardware complexity. A developer can start with GR00T, finetune it for a specific task, and deploy it to a dozen different robot platforms without rewriting code.

That's Android for robotics.

Using Isaac Lab-Arena can reduce training iteration time by approximately 40-50%, allowing companies to focus more on innovation rather than infrastructure. (Estimated data)

The Competitive Landscape and Early Adoption Signals

Where's the evidence this is working? Robotics is the fastest-growing category on Hugging Face right now. Nvidia's models are leading downloads. Real robotics companies are using Nvidia's tech.

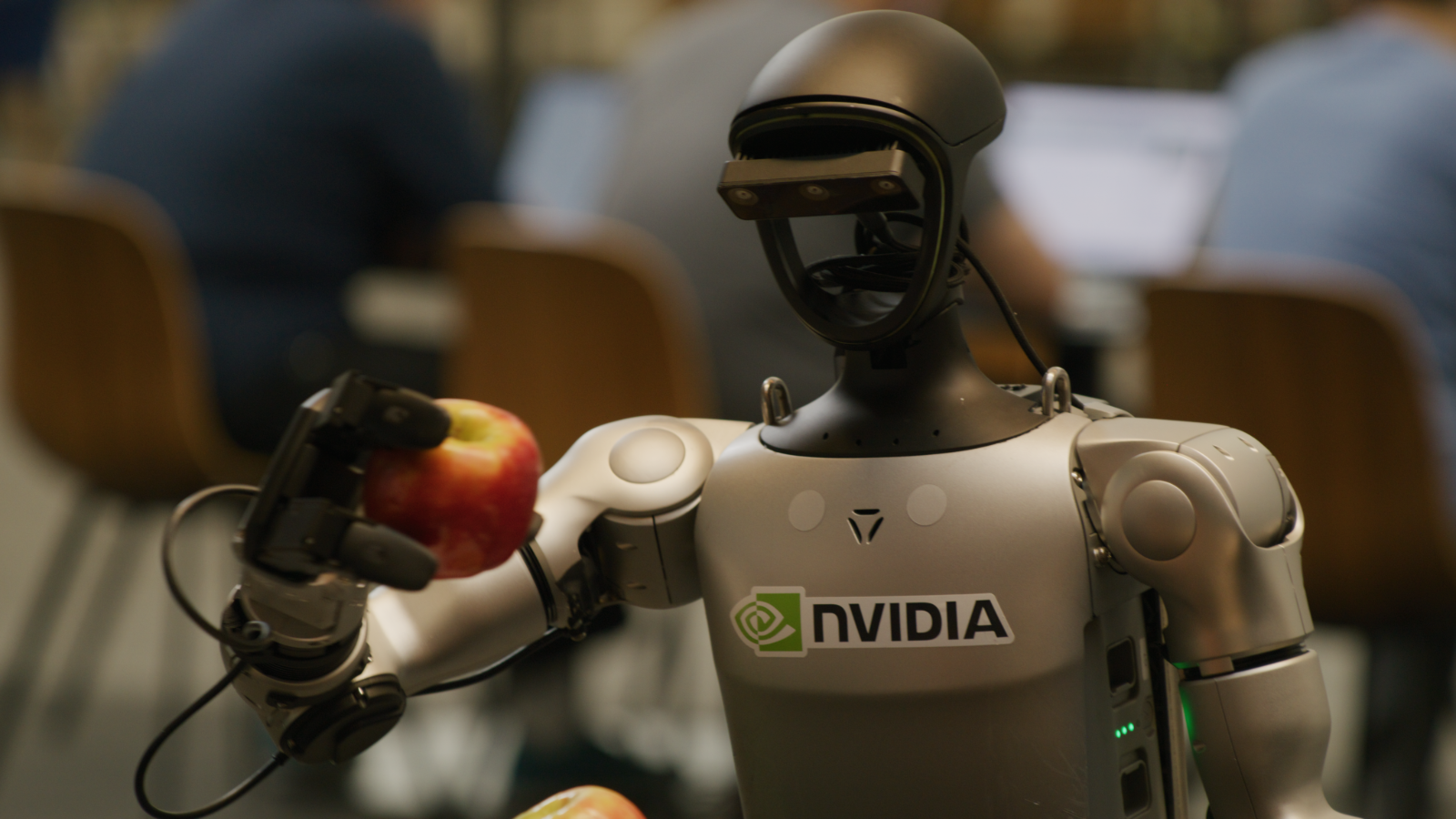

Boston Dynamics uses Nvidia hardware and is exploring Nvidia's foundation models. Tesla's humanoid development likely involves Jetson hardware. Caterpillar is using Nvidia's robotics stack for autonomous equipment. Franka Robotics, NEURA Robotics, and dozens of others have announced Nvidia integration.

This isn't required. These companies could build their own simulation environments. Develop proprietary models. Use alternative edge hardware. But they're choosing Nvidia because the integrated stack is better than the alternative.

There's inertia here. Engineers at these companies already know Jetson. They've already built on Isaac tools. Switching costs are real. And if Isaac Lab-Arena becomes the standard benchmark, there's competitive pressure to use it. If 80% of robots in the industry are benchmarked on Isaac Lab-Arena, running your tests there becomes necessary just to compare favorably.

Economic Implications: Cost Reduction at Scale

Let's talk about the economic impact of this stack.

Robotics development is capital-intensive. Hardware costs are high. Data collection is expensive. Simulation infrastructure is expensive. Compute for training is expensive. Most companies estimate robotics development at

Nvidia's stack reduces these costs significantly.

Synthetic data generation: Instead of collecting thousands of hours of real robot data, world models generate synthetic data. Cost reduction: 60-70%.

Simulation efficiency: Isaac Lab-Arena is optimized for consumer hardware. No need for expensive cloud simulation infrastructure. Cost reduction: 40-50%.

Model reusability: Instead of training a model from scratch for each task, finetune foundation models. Cost reduction: 50-70%.

Hardware standardization: Using Jetson means you're buying a standard product with economies of scale. If you were building custom edge hardware, costs would be 2-3x higher.
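The per-category figures don't add up to one overall number by themselves. The total saving depends on how a budget splits across categories. Here's a sketch of that weighted combination. The budget shares are invented for illustration, and the reductions are the midpoints of the ranges quoted above.

```python
# Assumed share of a development budget per category (must sum to 1.0).
BUDGET_SHARE = {
    "data": 0.30,
    "simulation": 0.20,
    "model_training": 0.30,
    "edge_hardware": 0.20,
}

# Midpoints of the reductions quoted above. Edge hardware: custom boards at
# 2-3x the cost (midpoint 2.5x) means a standard module costs 1/2.5 as much.
REDUCTION = {
    "data": 0.65,
    "simulation": 0.45,
    "model_training": 0.60,
    "edge_hardware": 1 - 1 / 2.5,
}


def remaining_cost(shares: dict, reductions: dict) -> float:
    """Fraction of the original budget left after per-category reductions."""
    assert abs(sum(shares.values()) - 1.0) < 1e-9, "shares must sum to 1"
    return sum(share * (1 - reductions[k]) for k, share in shares.items())


left = remaining_cost(BUDGET_SHARE, REDUCTION)
print(f"remaining: {left:.1%}, overall reduction: {1 - left:.1%}")
```

Under these assumed shares the budget shrinks to about 41.5% of its original size. A team whose spending skews toward categories with smaller reductions would see a smaller overall saving.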

Combined, a company that previously needed

This cost reduction is transformative. It moves robotics from an "only well-funded companies can compete" industry to a "startups can compete" industry. It democratizes access.

The Training Pipeline: From Cloud to Edge

Let me walk through how this actually works in practice. The pipeline is:

Phase 1: Synthetic data generation. You start with Cosmos Transfer 2.5. Feed it videos of robot interactions. It learns physics. Then generate millions of synthetic robot-environment interactions. Different robot morphologies. Different tasks. Different environments. All synthetic. All cheap.

Phase 2: Foundation model pretraining. Take those millions of interactions. Train Isaac GR00T on them. You end up with a model that understands how robots interact with the world generically. It's not trained for any specific task. It's trained on the physics and dynamics of robotic interaction.

Phase 3: Task-specific finetuning. Now you have a real task. Let's say: grasp a randomly oriented coffee mug from a cluttered kitchen countertop. Collect a few thousand examples of this specific scenario, either real demonstrations or synthetic ones generated in simulation. Finetune GR00T. A few hours of training. Maybe a day.

Phase 4: Sim-to-real transfer. Deploy the finetuned model to a real robot. The model has never run on this physical hardware. But because it was pretrained broadly and finetuned in simulation, it should generalize. The first few real-world attempts might fail. But the model learns quickly. After 50-100 real interactions, it's performing well.

Phase 5: Continuous deployment and improvement. The robot runs on edge hardware. Every interaction is captured. Shared back to the cloud. Combined with data from thousands of other robots. Used to improve the next version of the foundation model.
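Phases 2 through 4 rest on one idea: pretrain on abundant synthetic data, then adapt with a small amount of real data without throwing away what pretraining learned. Here's a toy linear sketch of that idea in NumPy. It is not GR00T's training recipe. Here "pretraining" is least squares on simulator data, and "finetuning" is least squares on six real samples with an L2 penalty that pulls the weights toward the pretrained ones. The dimensions, noise levels, and penalty strength `lam` are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 8

# Hypothetical "true" mapping on the real robot, and a simulator that is close but off.
w_real = rng.normal(size=dim)
w_sim = w_real + 0.3 * rng.normal(size=dim)

# Phases 1-2: plenty of cheap synthetic data, generated from the simulator.
X_syn = rng.normal(size=(5000, dim))
y_syn = X_syn @ w_sim + 0.01 * rng.normal(size=5000)
w_pre, *_ = np.linalg.lstsq(X_syn, y_syn, rcond=None)

# Phases 3-4: only a handful of real interactions (fewer samples than parameters).
X_real = rng.normal(size=(6, dim))
y_real = X_real @ w_real + 0.01 * rng.normal(size=6)


def finetune(w0, X, y, lam):
    """Minimize ||X w - y||^2 + lam * ||w - w0||^2 (closed form)."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y + lam * w0)


w_ft = finetune(w_pre, X_real, y_real, lam=1.0)

# Held-out "real-world" evaluation.
X_test = rng.normal(size=(1000, dim))
y_test = X_test @ w_real


def mse(w):
    return float(np.mean((X_test @ w - y_test) ** 2))


print(f"pretrained only: {mse(w_pre):.3f}, after finetuning: {mse(w_ft):.3f}")
```

With fewer real samples than parameters, fitting the real data alone is underdetermined. Anchoring to the pretrained weights is what makes six samples useful. A larger `lam` trusts the simulator more.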

This pipeline is the real innovation. Not any individual component. The entire flow from cloud training to edge deployment, with feedback loops.

It's designed for scale. One company trains a model. Thousands of companies deploy it. Thousands of robots generate data. That data improves the model. Better model helps all robots. That's how you get exponential improvement.

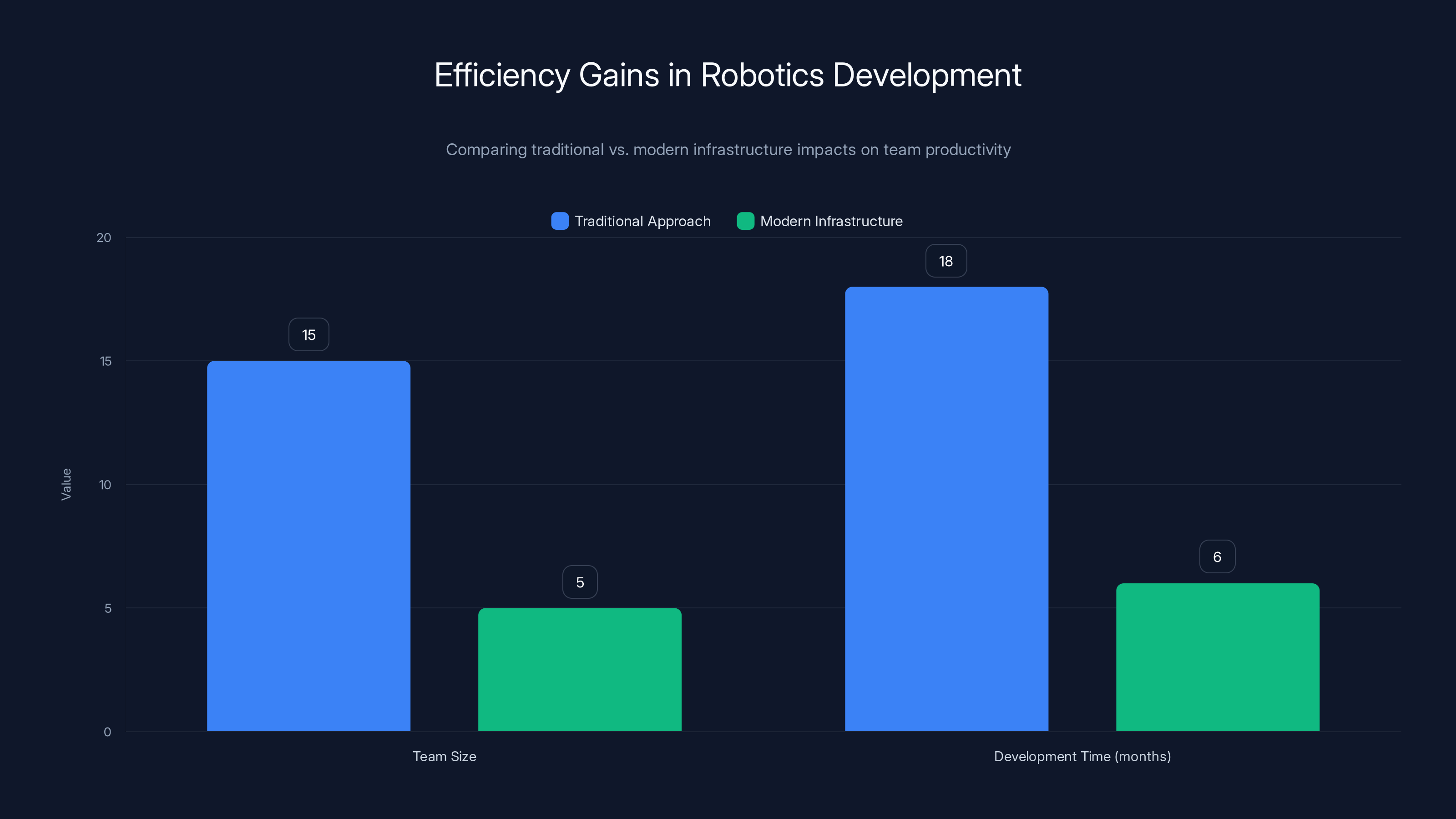

Estimated data shows modern infrastructure allows a smaller team to achieve in 6 months what a larger team took 18 months to accomplish, highlighting significant efficiency gains.

Generalization Across Robot Morphologies

Here's a technical detail that matters: the models are designed to generalize across different robot bodies.

Traditional approaches train a model for a specific robot. A Boston Dynamics Spot model won't work on a Tesla Bot. Different morphology. Different sensors. Different actuators.

But foundation models trained broadly can transfer across morphologies. Isaac GR00T, because it was trained on millions of interactions from dozens of different robots, understands the abstract concept of "grasping" independent of which gripper is doing it.

This is non-obvious. It requires:

Morphology-agnostic representations: The model doesn't output joint angles directly. It outputs abstract actions like "close gripper with X force." Different robots interpret that command using their own hardware API.

Sensor-agnostic perception: The vision model doesn't assume a specific camera. It works with RGB-D, thermal, lidar, or multi-camera setups.

Task-centric learning: Instead of learning "how to move servo 3 to position 45 degrees," it learns "how to grasp objects in various configurations."
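The first requirement above, abstract actions that each robot interprets through its own hardware layer, is essentially an adapter pattern. Here's a sketch in which one hypothetical `Grasp` command drives two different grippers. The class names and calibration constants are invented for illustration and do not describe GR00T's actual action space.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


@dataclass(frozen=True)
class Grasp:
    """Abstract action a morphology-agnostic policy might emit."""
    force_newtons: float


class GripperAdapter(Protocol):
    def to_hardware(self, action: Grasp) -> Dict[str, float]: ...


class ParallelJawAdapter:
    """Two-finger gripper driven by motor current (hypothetical calibration)."""
    AMPS_PER_NEWTON = 0.05
    MAX_AMPS = 2.0

    def to_hardware(self, action: Grasp) -> Dict[str, float]:
        amps = min(action.force_newtons * self.AMPS_PER_NEWTON, self.MAX_AMPS)
        return {"finger_motor_current_a": amps}


class SuctionAdapter:
    """Suction cup whose holding force is set by vacuum level (hypothetical)."""
    CUP_AREA_M2 = 0.0005  # roughly a 25 mm diameter cup

    def to_hardware(self, action: Grasp) -> Dict[str, float]:
        # Force = pressure difference * area, so required vacuum = F / A (Pa -> kPa).
        return {"vacuum_kpa": action.force_newtons / self.CUP_AREA_M2 / 1000.0}


command = Grasp(force_newtons=20.0)
for adapter in (ParallelJawAdapter(), SuctionAdapter()):
    print(type(adapter).__name__, adapter.to_hardware(command))
```

The policy only ever produces `Grasp`. Supporting a new gripper means writing one adapter, not retraining the model.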

The math here is representation learning: creating embeddings in which different robot morphologies map to similar feature spaces for the same task.
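One classical way to make "different morphologies map to similar feature spaces" concrete is orthogonal Procrustes alignment. Given paired embeddings of the same task states from two encoders, you find the rotation that best maps one onto the other. This is a swapped-in textbook technique used purely for illustration, not a description of how GR00T learns its representations, and the data below is synthetic.

```python
import numpy as np


def procrustes_align(source: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Orthogonal R minimizing ||source @ R - target|| (Frobenius norm).

    Rows are paired samples: the same task state embedded by two robots' encoders.
    """
    u, _, vt = np.linalg.svd(source.T @ target)
    return u @ vt


rng = np.random.default_rng(1)
dim = 4

# Embeddings of 50 task states from robot A's encoder (hypothetical data).
emb_a = rng.normal(size=(50, dim))

# Pretend robot B's encoder sees the same states in a rotated feature space.
true_rotation, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
emb_b = emb_a @ true_rotation + 0.01 * rng.normal(size=(50, dim))

R = procrustes_align(emb_a, emb_b)
print("alignment error:", float(np.linalg.norm(emb_a @ R - emb_b)))
```

Once the two spaces are aligned, a task head trained on robot A's embeddings can read robot B's embeddings through `R`. Learned cross-embodiment models aim for this effect without an explicit alignment step.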

In practice, this means:

- Train a model on Boston Dynamics robots

- It transfers to humanoids with minimal finetuning

- It works on industrial arms with a few examples

- New robots created next year can use it without retraining from scratch

This is the compounding advantage of foundation models. Every new robot benefits from the training that every previous robot generated.

The Simulation-to-Reality Gap and How Cosmos Closes It

One of robotics' oldest problems is the sim-to-real gap. A robot that works perfectly in simulation might completely fail in the real world.

Why? Physics engines are approximations. Sensors are noisy. Actuators have friction and backlash that simulators don't model perfectly. Environmental variations—temperature, humidity, dust—affect real robots but are invisible in simulation.

Cosmos world models help close this gap through a different approach. Instead of trying to make simulation more realistic (which is computationally expensive and requires constant tuning), Cosmos learns from actual robot videos what the real world looks like.

You collect a dataset of real robot videos. Cosmos Predict 2.5 learns to predict the next frame. It's learning a compression of the real world's dynamics. When you then train a robot policy in Cosmos-generated environments, the policy is training in a space much closer to reality.

This is probabilistic. It's not perfect. But it's often better than traditional physics simulators for policy training because it's learned from real data.

The innovation is using learned world models instead of hand-engineered physics engines for policy training. It's a shift in how simulation works.
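Cosmos learns from video with a large neural network, but the underlying idea fits in a few lines: fit a model of "what happens next" to recorded data, then roll a plan out inside it. Here's a deliberately tiny sketch that assumes linear dynamics and a low-dimensional state instead of video frames. `A_true`, `B_true`, and the noise level are made up.

```python
import numpy as np

rng = np.random.default_rng(2)

# Unknown "real" dynamics: x_next = A x + B u (a 2-state, 1-input toy system).
A_true = np.array([[1.0, 0.1], [0.0, 0.95]])
B_true = np.array([[0.0], [0.1]])

# "Recorded robot data": states, actions, and noisy next states.
X = rng.normal(size=(500, 2))
U = rng.normal(size=(500, 1))
X_next = X @ A_true.T + U @ B_true.T + 0.001 * rng.normal(size=(500, 2))

# Learn the world model from data: solve [X U] @ W ~= X_next by least squares.
W, *_ = np.linalg.lstsq(np.hstack([X, U]), X_next, rcond=None)
A_hat, B_hat = W[:2].T, W[2:].T


def rollout(A, B, x0, actions):
    """Predict a trajectory by repeatedly applying a one-step model."""
    states = [x0]
    for u in actions:
        states.append(A @ states[-1] + B @ u)
    return np.array(states)


# Imagine a 20-step action sequence entirely inside the learned model.
plan = [np.array([0.5])] * 20
predicted = rollout(A_hat, B_hat, np.zeros(2), plan)
actual = rollout(A_true, B_true, np.zeros(2), plan)
print("max rollout error:", float(np.abs(predicted - actual).max()))
```

No physics equations were written by hand for the learned model; `A_hat` and `B_hat` come entirely from data. Real world models replace the linear fit with a neural network and the state vector with video.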

Safety, Cost, and the Scaling Economics

Here's an angle that doesn't get discussed enough: Nvidia's stack makes robotics safer and more economical to scale.

Traditional robotics development requires lots of real-world testing. Robots fall. Robots break things. Robots injure people if safety isn't perfect. This creates liability, cost, and slow iteration.

Isaac Lab-Arena lets you test millions of scenarios in simulation before anything physical happens. You can test edge cases, failures, and recovery behaviors entirely digitally. Only when you're confident does the policy go to real robots.

This reduces:

- Robotics R&D costs: Less physical testing, fewer broken robots

- Liability risk: More extensively tested policies before deployment

- Development time: Faster iteration in simulation

- Scaling costs: When deploying to 1,000 robots, they all benefit from the work done in simulation
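Testing edge cases and failures entirely digitally usually means sweeping randomized scenarios and collecting the failures before any hardware is at risk. Here's a minimal sketch with a textbook friction-grasp check standing in for a physics simulator. The parameter ranges and the fixed 10 N grip force are assumptions chosen for illustration, and none of this is Isaac Lab-Arena code.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Scenario:
    friction: float      # gripper-object friction coefficient
    mass_kg: float       # object mass
    grip_force_n: float  # squeeze force applied by each finger


def grasp_holds(s: Scenario, g: float = 9.81) -> bool:
    """Two-finger friction grasp holds if 2 * mu * F_grip >= m * g."""
    return 2 * s.friction * s.grip_force_n >= s.mass_kg * g


def sweep(n: int, seed: int = 0) -> List[Scenario]:
    """Sample randomized scenarios and return the ones where the grasp fails."""
    rng = random.Random(seed)
    failures = []
    for _ in range(n):
        s = Scenario(
            friction=rng.uniform(0.2, 1.0),
            mass_kg=rng.uniform(0.1, 2.0),
            grip_force_n=10.0,  # the fixed "policy" under test
        )
        if not grasp_holds(s):
            failures.append(s)
    return failures


failed = sweep(10_000)
print(f"failure rate: {len(failed) / 10_000:.1%}")
# With grip force fixed, the lowest friction-to-mass ratio has the smallest holding margin.
print("worst case:", min(failed, key=lambda s: s.friction / s.mass_kg))
```

The failure list tells you which regions of the scenario space need a different policy, such as a higher grip force for heavy, slippery objects, before a single real robot drops anything.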

For a company deploying 1,000 humanoid robots across warehouses, the difference is massive. Instead of testing each robot individually (which would take months), test in simulation, then deploy. Total time: weeks instead of months.

This is the economic moat. Companies that adopt this stack earlier will deploy faster, iterate faster, and improve faster than competitors using traditional approaches.

The Jetson T4000 significantly improves AI compute and memory while maintaining power efficiency compared to previous models. Estimated data.

Open Source Strategy and Competitive Positioning

Nvidia could have locked all of this down. Proprietary models. Closed simulators. Private APIs. Instead, they went open source.

Why?

Network effects: More developers using these tools means more companies building on Nvidia hardware. More companies building robotics products using Nvidia tech. More robotics companies buying Nvidia chips.

Standard setting: By open-sourcing the benchmarks, Nvidia influences how the entire industry measures progress. Isaac Lab-Arena becomes the standard. That's power.

Avoiding lock-in backlash: If Nvidia locked everything down, robotics companies would invest in alternatives. Open source eliminates that pressure. You can use Nvidia tools without feeling trapped.

Community contributions: Open-source tools get better faster. Developers contribute. Bug reports. New features. Nvidia's engineers can't do all that alone.

This is different from past Nvidia strategies. They've typically been proprietary. CUDA is proprietary in important ways. But for robotics, an emerging field without a clear winner, open source is smarter.

It's the same calculation Google made with Android. You're not trying to lock people in. You're trying to be so useful that locking them out would be irrational.

Future Roadmap: Where This Goes

The stack Nvidia announced at CES 2026 is the foundation. What comes next?

More specialized foundation models: GR00T is for humanoids. But there will be models for quadrupeds. Models for industrial arms. Models for mobile manipulation platforms. Each trained on thousands of robots of that type.

Better world models: Cosmos Predict 3.0 will likely handle longer-horizon predictions. More accurate physics. Multi-modal inputs. Your world model won't just predict "what happens next frame." It will predict "what happens next 10 seconds if I execute this action sequence."

Continual learning: Robots deployed in the field will continuously improve. Every robot's experience feeds back into better models. Robots deployed in 2026 will be better than robots deployed in 2025, just from the accumulated field data.

Cross-embodiment learning: Instead of training a model for a single body, train it to work across humans and robots. We might see humanoid robots learning from human videos, picking up human movement efficiency, and applying those patterns to robotic movement.

Integration with large language models: GR00T + GPT-4 (or equivalent). A robot that can understand complex language instructions, reason about their feasibility, and execute them. "Walk to the kitchen and get me a coffee" becomes a natural instruction a robot can understand.

Real-time adaptation: Models that update in milliseconds as they learn new environments. A robot deployed in a new warehouse adapts to the specific layout without retraining.

The next 3-5 years of this roadmap will determine whether Nvidia actually becomes "the Android of robotics." If they execute, this becomes the dominant platform. If they stumble, competitors will fill the gap.

Comparing to Alternatives: Why This Stack Wins

Nvidia isn't the only player trying to create a robotics platform. But their approach has structural advantages.

Tesla's approach: Build proprietary robots. Control the entire stack. The advantage: perfect integration. The disadvantage: nobody else can use your models or tools. You're building a robot empire, not a platform.

Google's approach: Fund individual robotics startups. Share research. No unified platform. The advantage: diverse approaches. The disadvantage: fragmentation. No standard. Hard to compare progress.

Open-source approaches: Various startups building piecemeal solutions. Some open robotics simulation platforms. Some model architectures. The advantage: freedom. The disadvantage: nothing cohesive. Nothing at the scale of Nvidia's stack.

Nvidia's advantage is integration. Foundation models + simulation + edge hardware + developer tools + ecosystem partnerships. It's all designed to work together. You don't need to stitch together five different tools from five different companies.

For companies building robotics products, that integration is valuable. It reduces development time. Reduces risk. Reduces cost.

Estimated data shows that Android's openness and ecosystem were the most impactful factors in its success, closely followed by ease of development.

The Broader AI-to-Physical-World Trend

Nvidia's robotics push is part of a larger trend: AI moving off cloud servers into the physical world.

For the past 5 years, AI meant language models and image generation. Cloud-based. Server-based. Remote.

But AI in robotics is different. It has to work in real time. It has to be resilient to sensor noise. It has to work in constrained environments where you can't rely on cloud connectivity.

This is why companies like OpenAI are building robotics teams. Why Meta is investing in embodied AI. Why every major tech company is suddenly interested in robots.

Robotics is where AI becomes material. Literally. It doesn't just generate text or images. It controls things. It affects the physical world.

Nvidia is positioning itself as the infrastructure layer for this transition. From AI on servers to AI in robots.

That's a bigger market than data centers. It's every factory, every warehouse, every delivery fleet, every construction site, every autonomous vehicle, every space exploration mission.

If Nvidia becomes the standard platform for that, the revenue and influence implications are enormous.

Practical Implementation: What This Means for Roboticists

If you're a robotics engineer or startup founder, what does this mean?

Access: You now have access to foundation models that previously required millions in R&D. Download Isaac GR00T. Finetune it for your task. Deploy it. No need to train from scratch.

Benchmarking: You have standardized benchmarks for comparing your approach to others. Did you improve manipulation accuracy by 5%? Run it on Isaac Lab-Arena. Compare to published baselines. That's meaningful progress you can communicate.

Simulation: You don't need to spend 6 months building a custom simulation environment. Use Isaac Lab-Arena. It has the tools and benchmarks built-in.

Hardware: Use Jetson boards that Nvidia optimizes for. You're buying a commodity product with mature tooling. Or use alternatives if you prefer, though integration with Nvidia tools won't be as seamless.

Community: Join 2 million robotics developers using these tools. Share models. Share datasets. Collaborate.
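A shared benchmark only helps if the comparison is sound. Whether a 5-point gain in success rate is real depends on how many trials back it up. Here's a sketch using a standard two-proportion z-test. The 70% and 75% success rates and the trial counts are hypothetical, and this is generic statistics rather than anything Isaac Lab-Arena reports.

```python
from math import erf, sqrt


def two_proportion_z(successes_a: int, trials_a: int,
                     successes_b: int, trials_b: int) -> float:
    """z statistic for the difference between two success rates (pooled SE)."""
    p_a, p_b = successes_a / trials_a, successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    se = sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    return (p_b - p_a) / se


def two_sided_p(z: float) -> float:
    """Two-sided p-value from the standard normal CDF."""
    return 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))


# Hypothetical results: baseline at 70% success vs. a new method at 75%,
# measured with 200 and then 2,000 trials per method.
for successes_a, successes_b, trials in ((140, 150, 200), (1400, 1500, 2000)):
    z = two_proportion_z(successes_a, trials, successes_b, trials)
    print(f"trials={trials}: z={z:.2f}, p={two_sided_p(z):.4f}")
```

The same five-point improvement is indistinguishable from noise at 200 trials per method (z around 1.1) but clearly significant at 2,000 (z around 3.5).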

The practical impact: a team of 5 engineers might do in 6 months what previously took 15 engineers 18 months. That's an estimate, not a measured result, but it reflects the leverage good infrastructure provides.

The Critical Unknown: Will This Actually Work?

I should be clear: foundation models for robotics are still early. GR00T exists. It's real. But it's not deployed at massive scale yet. It's not proven that you can train a model on 10,000 hours of diverse robot data and deploy it to new robots without extensive finetuning.

There are unsolved problems:

Generalization limits: How well does a model trained on humanoids work on quadrupeds? Current approaches suggest not well. You might need morphology-specific models after all.

Long-horizon planning: Most current models work for 30-second tasks. What about hour-long tasks? Multi-step planning? That's harder.

Real-world robustness: Lab results rarely transfer perfectly to real robotics. Dust. Temperature variations. Hardware drift. These affect real robots constantly.

Safety and verification: How do you guarantee a deployed robot won't break things or hurt people? Testing simulations aren't reality. This is an open problem.

Nvidia's stack doesn't solve these overnight. But it provides the infrastructure to solve them faster.

If generalization improves in 2026 such that models trained on 5,000 robots work on new robots with 10% less real-world data collection, that's a win. If it compounds, the advantage becomes decisive.

The Timeline: When Does This Matter?

Nvidia announced this at CES in January 2026. When do we see real impact?

2026: Early adoption. Leading robotics companies integrate Isaac tools. First generation of GR00T-based robots deployed. Results are mixed but promising.

2027: Momentum builds. More robotics startups founded using Nvidia's stack as the foundation. Development times drop 20-30% compared to startups using old approaches. Ecosystem effects start.

2028-2029: Nvidia's stack becomes de facto standard. Most new robotics companies build on it. Most new robots run Jetson hardware. The network effect locks in. Switching becomes expensive.

2030+: Mature platform. Foundation models improve yearly from accumulated data. Robotics development is commoditized. Anyone can build a robot by assembling Nvidia components and finetuning Nvidia models.

This timeline assumes Nvidia executes well and competitors don't leapfrog. Both are big assumptions.

Critical Insights for Industry Watchers

If you're tracking AI and robotics, here's what matters:

Nvidia is shifting from GPU company to robotics infrastructure company. This is a big strategic move. It diversifies revenue. It increases switching costs across customers who need both compute and robotics tools.

Open source is a strategic weapon. Nvidia's openness isn't kindness. It's ecosystem strategy. Same as Google with Android. Same as Meta with PyTorch. Openness increases adoption. Adoption creates network effects. Network effects create moats.

Simulation matters more than many realize. The Cosmos + Isaac Lab-Arena combination might be more important than the foundation models. Good simulation lets you iterate faster. Faster iteration compounds.

We're at an inflection point in robotics. Before 2024, robotics was AI-skeptical. Build physical systems. Optimize mechanics. Be skeptical of AI hype. After 2024, it's flipped. Foundation models, simulation, end-to-end learning. This is the new playbook.

The companies that adopt this playbook fastest will pull ahead. Those clinging to old approaches will find themselves uncompetitive.

Conclusion: The Android Moment for Robotics

Nvidia's push to become "the Android of robotics" is the most strategically sound move in AI infrastructure since Google released TensorFlow.

It's not that Nvidia invented any breakthrough technology at CES 2026. Foundation models exist. Simulation tools exist. Edge hardware exists. The breakthrough is integration. Creating a unified platform where everything works together seamlessly.

That's harder than any individual technology. It requires deep understanding of customer pain points. Deep partnerships. Willingness to open-source core technology. Patient capital to build ecosystem effects.

Nvidia has all four. And they're executing.

If they succeed, the impact is enormous. Robotics development becomes faster, cheaper, and more accessible. The barrier to entry drops.

This is how platforms work. Android didn't invent mobile. It made mobile accessible to everyone. Nvidia isn't inventing robotics. But they're making robotics accessible to everyone.

That's the real win. And that's why this announcement matters more than the technical details. It's not about one model or one simulation tool. It's about positioning Nvidia as the foundation of the next era of robotics.

The companies that build on that foundation fastest will shape what robots look like for the next decade. Nvidia isn't building those robots. They're building the platform those robots run on.

That's Android. That's power. That's why robotics companies from Boston Dynamics to NEURA to Tesla's bot team are paying attention.

FAQ

What exactly is Nvidia's robotics platform?

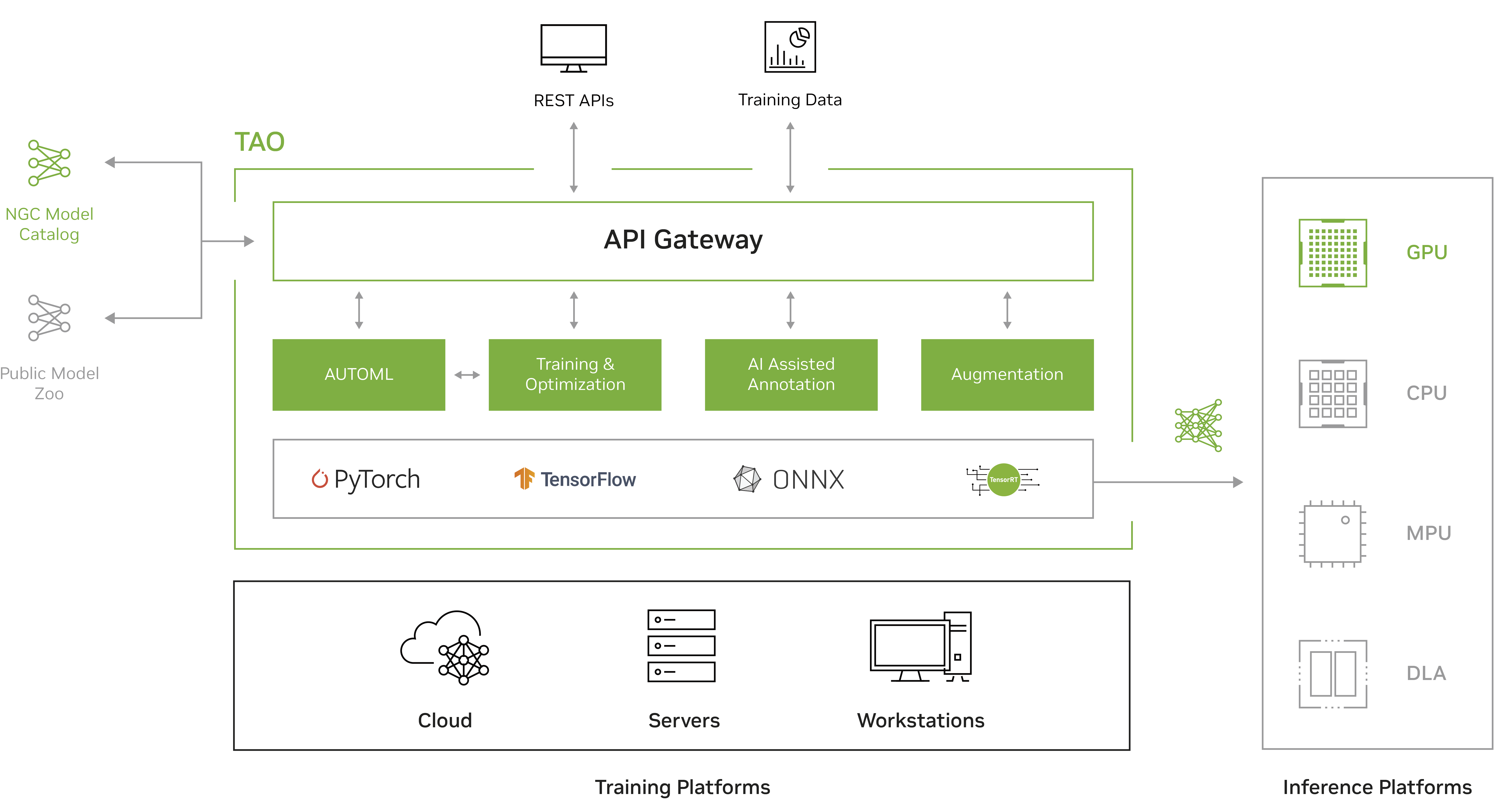

Nvidia's robotics platform is a complete stack combining foundation models (Isaac GR00T, Cosmos), simulation tools (Isaac Lab-Arena), edge hardware (Jetson boards like the T4000), and developer tools (OSMO command center). It's designed to let robotics companies train models in simulation and deploy them to real robots without building infrastructure from scratch.

How does Nvidia's approach differ from building proprietary robots?

Nvidia isn't building robots themselves. They're building the platform other companies build robots on top of. It's like Android: Google doesn't make most Android phones, but provides the operating system and tools that manufacturers use. Nvidia is trying to be the infrastructure layer rather than the robot manufacturer.

Can robots trained on GR00T work on different robot hardware?

This is the key innovation. GR00T is trained on diverse robot morphologies, so models show some generalization across different robots, though with limits. A model trained primarily on humanoids will work better on humanoids than quadrupeds. But the foundation makes transfer learning much cheaper than training from scratch.
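The "reuse the foundation, adapt a small part" idea is easier to see in a toy example. The sketch below is hypothetical and is not GR00T's architecture or API. It assumes a frozen shared encoder (`shared_encoder`) that stays fixed, plus a tiny per-robot linear head (`LinearHead`) that is the only part trained on a handful of demonstrations from a "new robot". The target mapping is made up for illustration.

```python
import random
from typing import List, Tuple

# Toy illustration (hypothetical, not GR00T's architecture or API): a frozen,
# shared encoder is reused across robots; only a small per-robot head is trained.


def shared_encoder(obs: List[float]) -> List[float]:
    """Stand-in for a pretrained backbone. It is never updated below."""
    return [obs[0] + obs[1], obs[0] - obs[1]]


class LinearHead:
    """Small embodiment-specific head: maps shared features to one joint command."""

    def __init__(self, in_dim: int) -> None:
        self.w = [0.0] * in_dim
        self.b = 0.0

    def predict(self, feats: List[float]) -> float:
        return sum(wi * fi for wi, fi in zip(self.w, feats)) + self.b

    def fit(self, data: List[Tuple[List[float], float]],
            lr: float = 0.05, epochs: int = 500) -> "LinearHead":
        # Plain SGD on squared error; only the head's weights and bias change.
        for _ in range(epochs):
            for obs, target in data:
                feats = shared_encoder(obs)
                err = self.predict(feats) - target
                self.w = [wi - lr * err * fi for wi, fi in zip(self.w, feats)]
                self.b -= lr * err
        return self


# 20 demonstrations for a hypothetical new robot whose command is 3*o0 + o1 + 0.5.
rng = random.Random(0)
demos = []
for _ in range(20):
    o = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    demos.append((o, 3 * o[0] + o[1] + 0.5))

head = LinearHead(in_dim=2).fit(demos)
print(head.predict(shared_encoder([0.3, -0.2])))
```

Supporting a second robot would mean training another `LinearHead` while `shared_encoder` stays untouched. That is the cost saving in miniature. The caveat from the answer above still applies: the approach only works when the shared features actually capture what the new robot needs.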

What makes Isaac Lab-Arena important for the ecosystem?

Isaac Lab-Arena standardizes simulation benchmarks and environments. Instead of every robotics team building custom simulators, they use a shared standard. This lets teams focus on algorithm improvement rather than infrastructure. It also creates industry-standard benchmarks for comparing approaches fairly.
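What "standardized benchmark" buys you is that every team runs the same tasks, seeds, step budget, and success threshold. The minimal sketch below is hypothetical and is not the Isaac Lab-Arena API. It uses a made-up one-dimensional "reach" task, with `TASKS`, `SEEDS`, and the two example policies invented for illustration. The point is that any policy plugged into `evaluate` gets a directly comparable score.

```python
import random
from typing import Callable, Dict

# Hypothetical harness (not the Isaac Lab-Arena API): shared tasks, seeds,
# step budget and success threshold make scores comparable across teams.
Policy = Callable[[float], float]  # maps position error -> velocity command

TASKS: Dict[str, float] = {"reach_pos": 0.5, "reach_neg": -0.8, "reach_near": 0.2}
SEEDS = [0, 1, 2, 3, 4]
MAX_STEPS = 50
TOLERANCE = 0.05
MAX_SPEED = 0.1


def run_episode(policy: Policy, goal: float, seed: int) -> bool:
    rng = random.Random(seed)  # fixed seed -> identical start state for every team
    pos = rng.uniform(-1.0, 1.0)
    for _ in range(MAX_STEPS):
        # Clip the command so no policy can exceed the shared speed limit.
        action = max(-MAX_SPEED, min(MAX_SPEED, policy(goal - pos)))
        pos += action
        if abs(goal - pos) < TOLERANCE:
            return True
    return False


def evaluate(policy: Policy) -> Dict[str, float]:
    """Success rate per task over the shared seed set."""
    return {
        name: sum(run_episode(policy, goal, s) for s in SEEDS) / len(SEEDS)
        for name, goal in TASKS.items()
    }


def proportional(err: float) -> float:
    return 0.5 * err  # move a fraction of the way toward the goal


def idle(err: float) -> float:
    return 0.0  # baseline that never moves


print(evaluate(proportional))
print(evaluate(idle))
```

Because the harness owns the seeds and limits, two teams reporting `evaluate` results are measuring the same thing. That is the fairness argument in the answer above.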

How does synthetic data from Cosmos help with robot training?

Cosmos world models learn physics from real robot videos, then generate synthetic training data for policy learning. This is cheaper than collecting real robot data and reduces the expensive hardware testing phase. A team can generate millions of synthetic training examples for the cost of recording a few weeks of real robot interaction.
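The real-data-to-synthetic-data loop can be shown at toy scale. Cosmos models video, so this is a deliberately simplified stand-in rather than how Cosmos works. The sketch assumes a made-up one-dimensional system with linear dynamics. It fits a "world model" by least squares on 50 expensive real transitions, then uses that model to generate 10,000 cheap synthetic transitions. All names and numbers are hypothetical.

```python
import random
from typing import List, Tuple

Transition = Tuple[float, float, float]  # (state, action, next_state)


def collect_real(n: int, seed: int = 0) -> List[Transition]:
    """Stand-in for a small, expensive real-robot dataset (made-up dynamics)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x, u = rng.uniform(-1, 1), rng.uniform(-1, 1)
        x_next = 0.9 * x + 0.5 * u + rng.gauss(0.0, 0.01)  # sensor noise
        data.append((x, u, x_next))
    return data


def fit_world_model(data: List[Transition]) -> Tuple[float, float]:
    """Least-squares fit of next_state ~ a*state + b*action (2x2 normal equations)."""
    sxx = sum(x * x for x, _, _ in data)
    suu = sum(u * u for _, u, _ in data)
    sxu = sum(x * u for x, u, _ in data)
    sxy = sum(x * y for x, _, y in data)
    suy = sum(u * y for _, u, y in data)
    det = sxx * suu - sxu * sxu
    a = (sxy * suu - sxu * suy) / det
    b = (sxx * suy - sxu * sxy) / det
    return a, b


def generate_synthetic(model: Tuple[float, float], n: int,
                       seed: int = 1) -> List[Transition]:
    """Roll the learned model forward on random states/actions: no hardware needed."""
    a, b = model
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        x, u = rng.uniform(-1, 1), rng.uniform(-1, 1)
        out.append((x, u, a * x + b * u))
    return out


real = collect_real(50)
model = fit_world_model(real)
synthetic = generate_synthetic(model, 10_000)
print(model, len(synthetic))
```

The leverage comes from the ratio: a small real dataset calibrates the model, and the model then produces as much training data as you can afford to compute. The catch is that synthetic data is only as good as the world model. Errors in the fitted dynamics carry straight into every generated example.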

Will this actually make robotics development significantly faster and cheaper?

Based on industry estimates, the combination of foundation models, simulation, and edge hardware could reduce robotics R&D costs by 60-70% compared to building everything from scratch. Development timelines could compress from 18 months to 6 months for teams adopting the full stack effectively.
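A quick sanity check on those figures: going from 18 months to 6 months is roughly a 67% shorter timeline, which sits inside the quoted 60-70% cost range. The snippet below only does that arithmetic on the article's estimates and does not validate them. The $10M baseline budget is a hypothetical number chosen for illustration.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`."""
    return 100.0 * (before - after) / before


baseline_budget = 10_000_000  # hypothetical R&D budget in USD, illustration only

for cut in (0.60, 0.70):
    remaining = baseline_budget * (1 - cut)
    print(f"{cut:.0%} cut -> ${remaining:,.0f} remaining")

print(f"18 -> 6 months is a {percent_reduction(18, 6):.1f}% shorter timeline")
```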

What are the limitations of foundation models for robotics?

Generalization across very different robot morphologies is still limited. Long-horizon planning (tasks longer than 30 seconds) is challenging. Real-world robustness in varying conditions remains an open problem. Safety verification for deployed robots isn't solved. These are areas where even Nvidia's platform requires additional development work.

How does the partnership with Hugging Face strengthen Nvidia's position?

Hugging Face brings 13 million AI developers to the robotics ecosystem through integration with LeRobot. It provides distribution and legitimacy in open-source communities. Nvidia gains access to a larger developer base, while Hugging Face users gain access to Nvidia's robotics infrastructure. The partnership creates network effects that benefit both.

Is this really comparable to Android's impact on mobile?

The parallel is strategic positioning, not guaranteed outcome. Both focus on openness, ecosystem development, and becoming the default platform. But robotics faces different challenges: physical constraints, safety requirements, hardware diversity. Android's success suggests the approach works, but robotics will have different scaling curves.

What should robotics companies do in response to Nvidia's announcement?

Startups should seriously evaluate building on Nvidia's stack rather than custom infrastructure. It reduces time-to-market and integrates with an ecosystem of 2 million developers. Incumbent robotics companies should ensure their systems work well with Nvidia tools to stay compatible as the ecosystem matures. Investors should expect robotics companies using Nvidia's platform to move faster and cheaper than those building from scratch.


Key Takeaways

- Nvidia announced a full-stack robotics platform at CES 2026 including foundation models (GR00T, Cosmos), simulation (Isaac Lab-Arena), and edge hardware (Jetson T4000)

- The strategy mirrors Android's approach: providing an open, integrated platform that becomes the default for an entire industry rather than building proprietary products

- Per industry estimates, foundation models could cut robotics R&D costs by 60-70% and compress development timelines from 18 months to 6 months by enabling reusable, transferable AI capabilities

- Isaac Lab-Arena standardizes simulation and benchmarking across the industry, eliminating the need for companies to build custom infrastructure and enabling fair competitive comparison

- The Hugging Face partnership connects 13 million AI developers with 2 million roboticists, creating network effects that strengthen ecosystem lock-in through usefulness rather than restrictions
