OpenAI's President Just Made the Most Controversial AI Industry Donation Ever

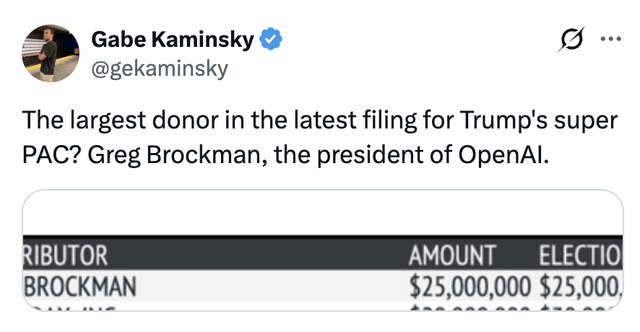

Greg Brockman, the President and cofounder of OpenAI, did something most tech leaders avoid like a security breach: he became one of 2025's biggest individual political donors. Together with his wife Anna, Brockman gave

On paper, this is a straightforward tech-executive political donation. In reality, it's creating an earthquake inside Silicon Valley and forcing uncomfortable conversations about AI's role in politics, corporate governance, and whether AI companies should play kingmaker in presidential campaigns. As reported by The Verge, this donation has led to debates about the ethical implications of such political involvement by AI companies.

Let's be clear about what happened. In September 2024, the Brockmans donated

That's

The rationale Brockman provides is straightforward: AI development is the most important mission facing humanity, and politicians willing to support rapid AI development deserve funding. His public statements position these donations as personal choices driven by conviction, not corporate strategy. But inside OpenAI, employees aren't convinced. They're asking uncomfortable questions about whether Brockman's political spending reflects corporate priorities, whether it contradicts OpenAI's stated values, and whether a company claiming to develop AI for humanity should have its president bankrolling specific political candidates.

This situation reveals something fundamental about AI's collision with democratic institutions, corporate ethics, and tech industry power dynamics.

The Money Trail: From Apolitical Tech Leader to Major Donor

Greg Brockman didn't start as a political player. He's an engineer, not a political operative. His background is deep technical work: he was the fourth employee at OpenAI and helped build the technical foundation that made ChatGPT possible. For most of his career, he stayed out of politics entirely.

Then, around 2023-2024, something shifted. Brockman began increasing his political engagement and donations. He started attending policy events, meeting with government officials, and eventually making the leap to significant campaign contributions. By early 2024, he was clearly positioning himself as a political voice for the AI industry.

In interviews, Brockman has articulated a clear justification for his giving. He believes public sentiment toward AI has turned negative. Survey data supports this observation. The Pew Research Center found that Americans are increasingly concerned about AI's societal impacts, and this skepticism extends across demographics. Brockman interprets this as a crisis requiring urgent political action.

His logic follows a specific trajectory. AI development, he argues, is crucial for global competitiveness and human flourishing. Politicians willing to support rapid AI development—even when it's unpopular—deserve financial support. Trump, in his view, has positioned himself as genuinely pro-AI development. Therefore, supporting Trump's political efforts is actually a mission-aligned choice.

This reasoning makes sense if you accept Brockman's foundational premise: that rapid, minimally-regulated AI development is unambiguously good. But that premise is contested, even among AI researchers.

Inside OpenAI, multiple researchers have indicated privately that Brockman's political spending goes beyond what's necessary for the company's business interests. One OpenAI researcher, speaking anonymously, said: "I personally think Greg's political donations probably go beyond that. I think there's no decision ever that everyone at OpenAI agrees with." This quote hints at deeper internal disagreement about whether Brockman is advancing OpenAI's interests or pursuing his own political vision.

The donations are notable for another reason: they represent a sharp departure from how most tech executives handle political spending. Silicon Valley's traditional approach is cautious, bipartisan, and designed to avoid alienating employees or customers. Brockman's approach is bold, directional, and unapologetically aligned with a specific candidate.

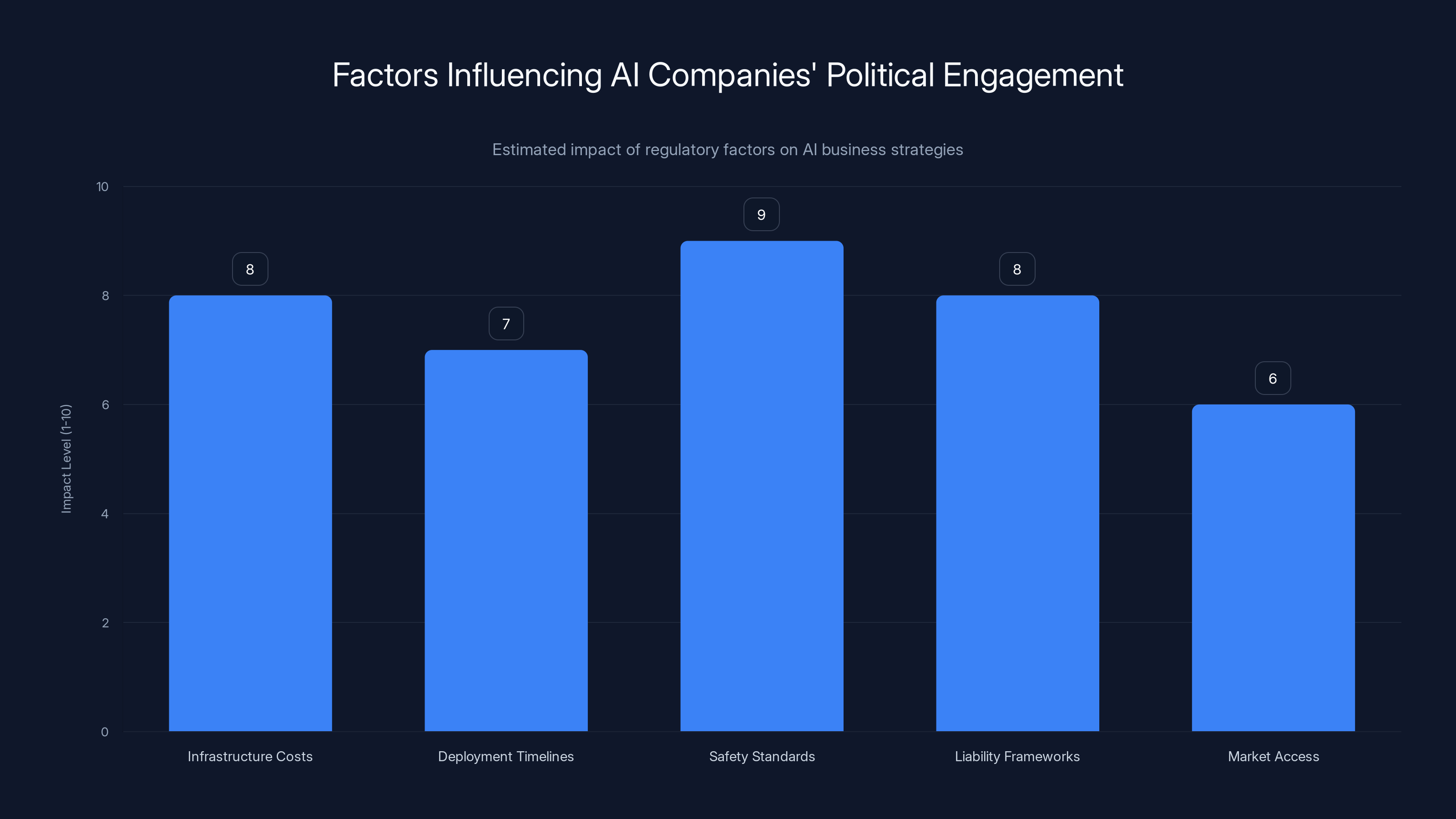

Estimated data suggests safety standards and liability frameworks have the highest impact on AI companies' political engagement strategies.

Why This Matters: AI Development and Political Power

The significance of Brockman's donations extends far beyond the dollar figures. These contributions highlight a fundamental tension in AI development: who gets to decide how AI technology develops, and what influence should money have on that decision-making process?

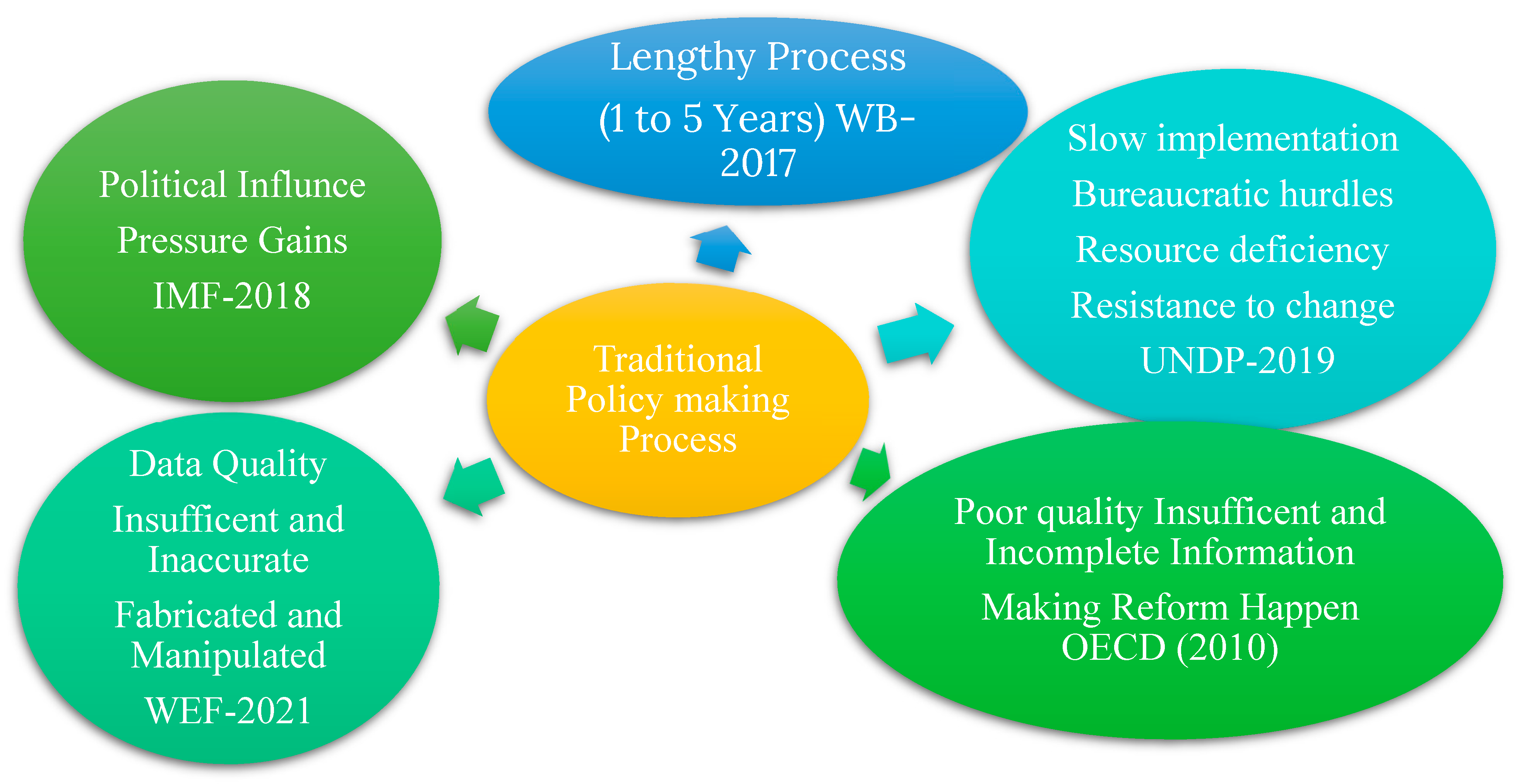

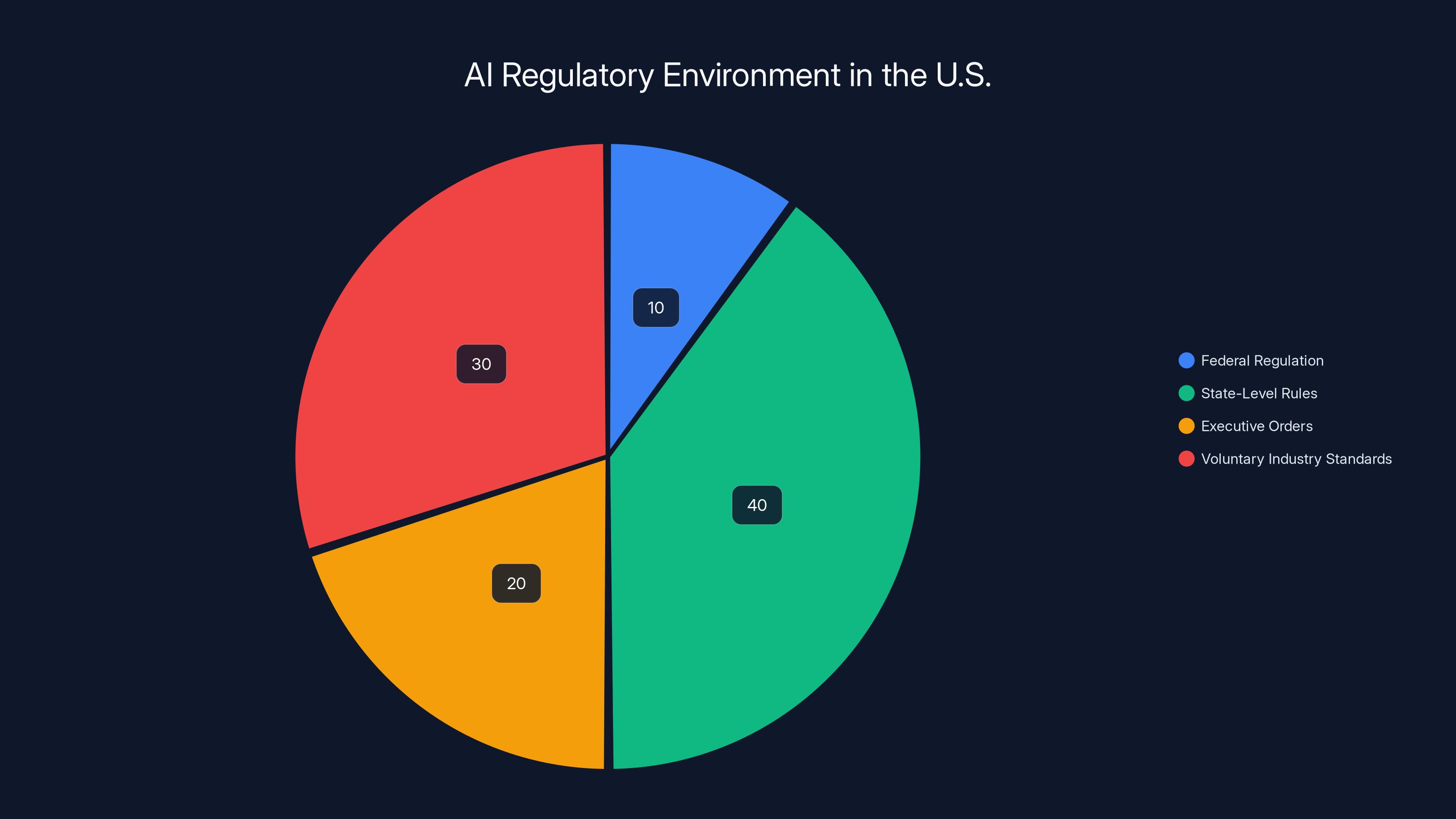

AI development currently operates in a complex regulatory environment. There's no comprehensive federal AI regulation in the United States. Instead, there are scattered state-level rules, executive orders, and voluntary industry standards. This regulatory uncertainty creates incentives for tech leaders to engage politically. They want to shape the rules before those rules are written.

Trump's administration, in its second term starting January 2025, has explicitly positioned itself as pro-AI development and anti-regulation. Trump's AI Action Plan, announced in late 2024, includes commitments to streamline permitting for data centers (a key infrastructure need for large AI models) and challenge state-level AI regulations that create "patchwork" compliance burdens for companies. According to Fox News, this plan has been well-received by many in the tech industry.

From OpenAI's perspective, this is business-friendly policy. Data center permitting reform directly affects the infrastructure costs required to train and run large language models. Challenges to state-level regulation could prevent fragmented rules that make it harder to deploy AI systems consistently across different markets.

Brockman frames his donations as separate from corporate interests. OpenAI's official statement says the Brockmans' donations reflect their personal focus on AI as a defining issue, not corporate strategy. But this distinction is difficult to maintain in practice. When the President of a major AI company donates tens of millions to a political campaign, it's hard for observers to separate personal conviction from corporate interest.

The practical effect of Brockman's donations is to increase Trump's political resources and, simultaneously, to signal OpenAI's alignment with Trump's pro-development agenda. Whether this reflects a conscious corporate strategy or a personal conviction separate from corporate interests is difficult to parse.

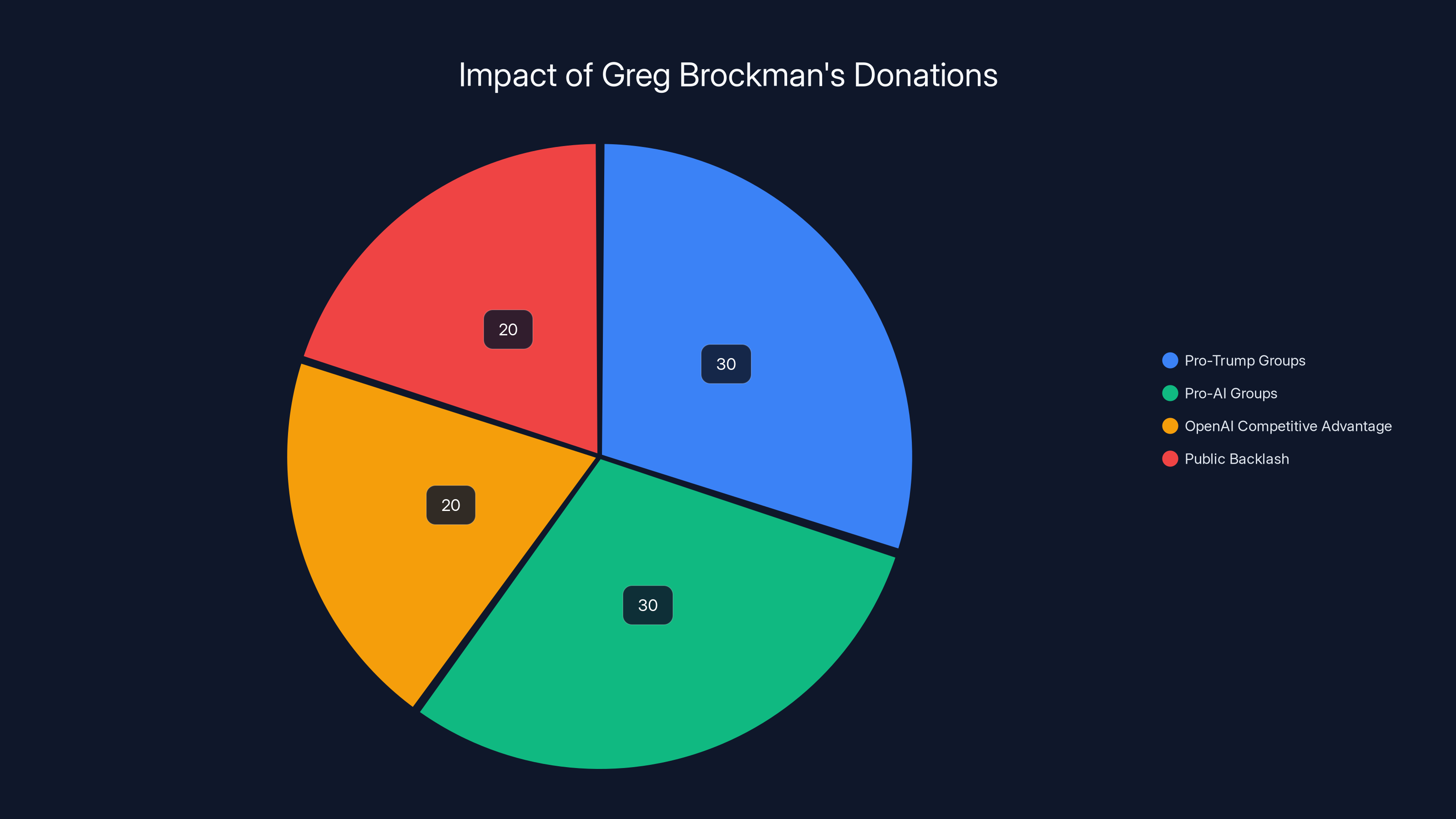

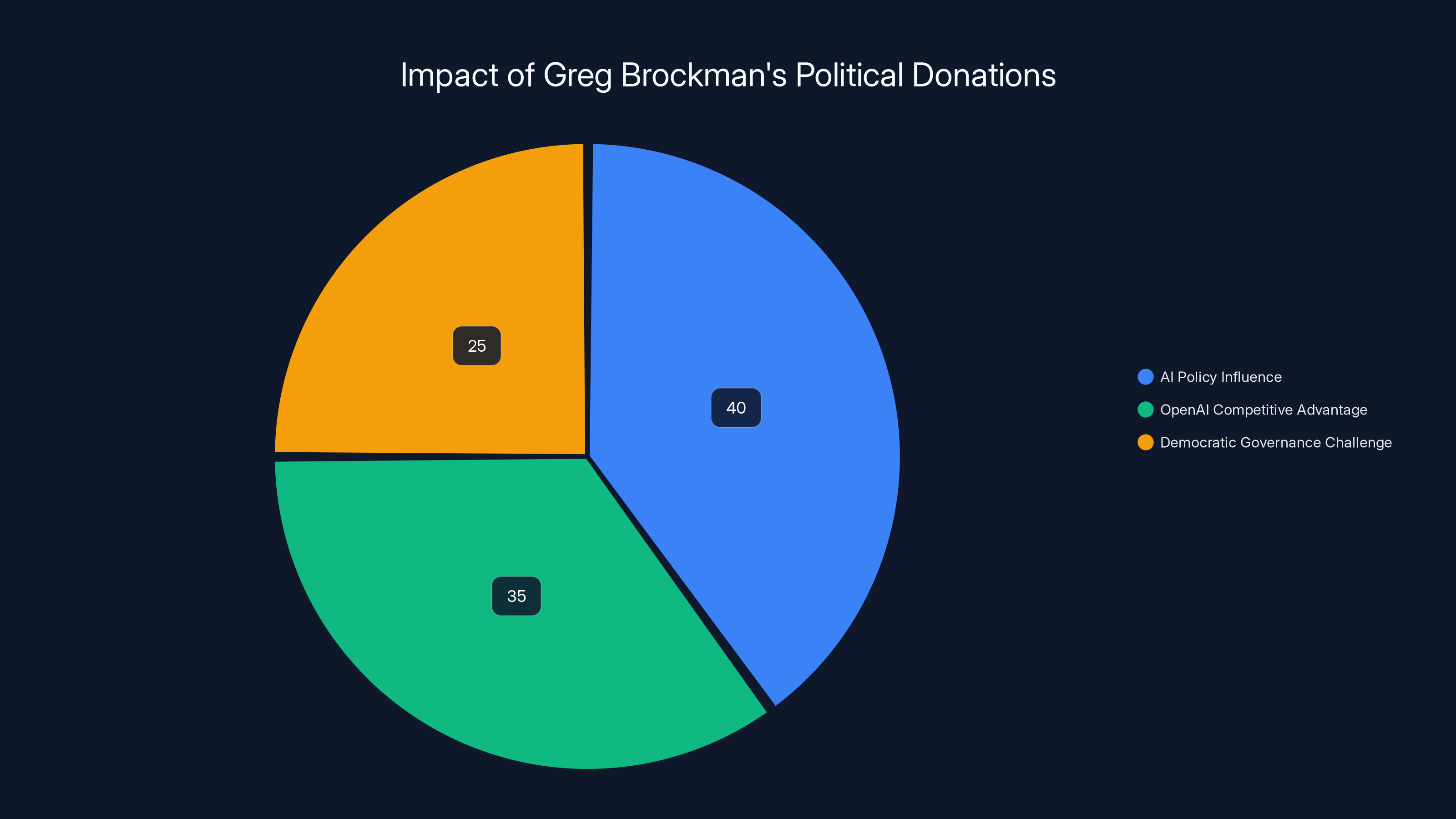

Greg Brockman's $75M donations are estimated to equally support pro-Trump and pro-AI groups, with significant influence on OpenAI's competitive advantage and public backlash. Estimated data.

The QuitGPT Backlash: When Corporate Leadership Meets Public Opinion

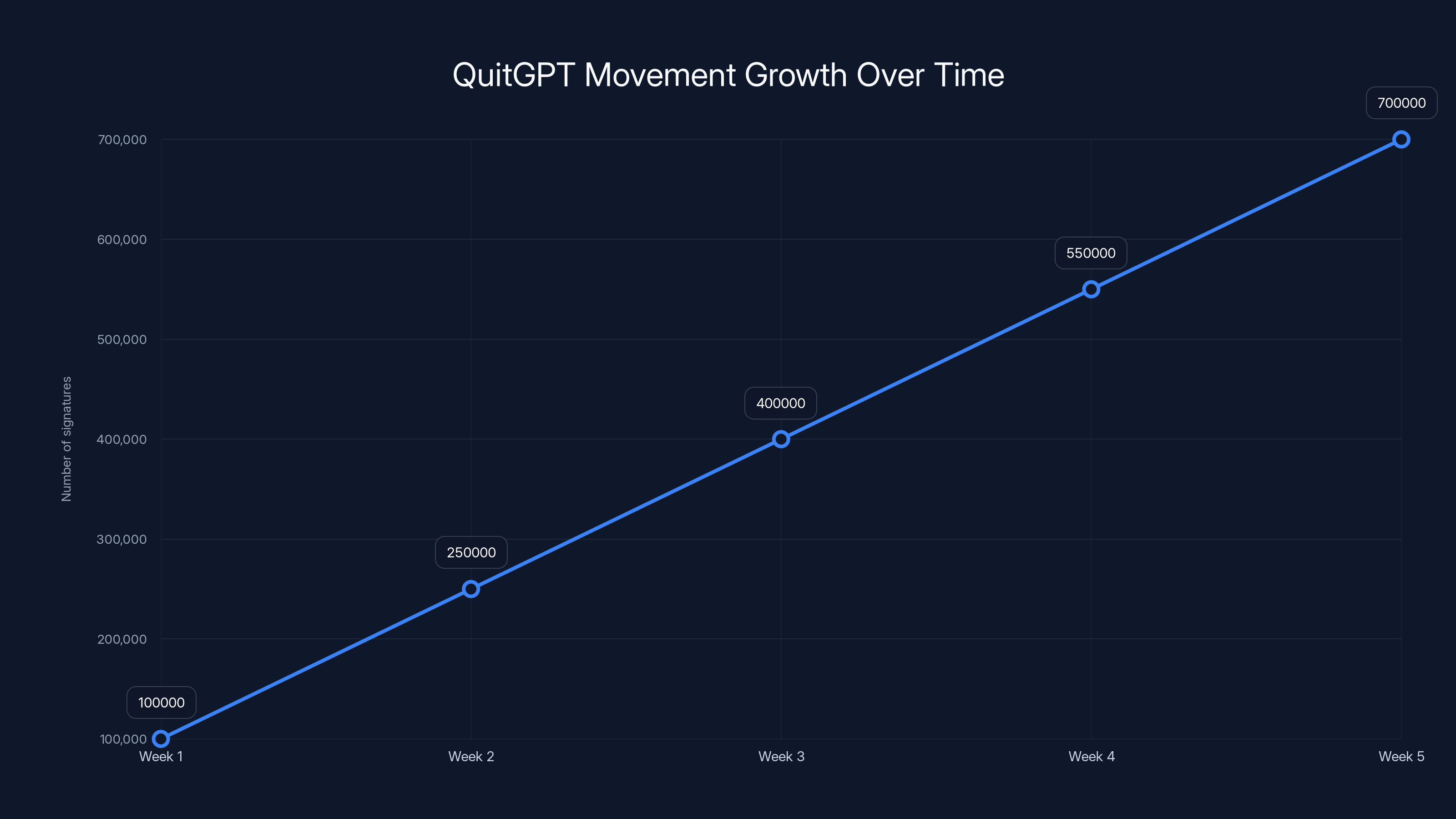

Breakfast came with unexpected news for OpenAI's PR team: a grassroots movement called QuitGPT began circulating in early 2025, asking people to cancel their ChatGPT subscriptions in response to Brockman's Trump donation.

The QuitGPT petition and pledge process went viral with surprising speed. Within weeks, over 700,000 people had shared the pledge online or signed it directly. The momentum accelerated when actor Mark Ruffalo announced on Instagram that he was joining the movement. Suddenly, OpenAI's leadership faced a scenario that Silicon Valley dreads: public consumer revolt against the company.

QuitGPT isn't the first subscription cancellation campaign against a tech company. We've seen similar movements against Meta, Twitter (now X), and other platforms. But QuitGPT is notable because it's specifically targeting an AI company's leadership over political donations, not over a product feature or user experience issue.

The campaign mechanics are straightforward. Supporters are encouraged to pause or cancel ChatGPT Plus subscriptions and explain the reason. They pledge to resume when OpenAI's leadership changes course or when they're satisfied with changes to the company's governance. The implicit message is clear: OpenAI's customers can enforce accountability through their wallets.

This represents a significant risk for OpenAI. ChatGPT's free tier is massive (hundreds of millions of users), but the revenue model depends on paid subscriptions. ChatGPT Plus costs $20/month and represents a growing revenue stream as the company expands internationally. At that price, even a small percentage reduction in a large paid subscriber base translates to millions of dollars in lost recurring revenue.
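The revenue exposure described above is simple arithmetic. Here is a minimal sketch in Python. Only the $20/month price comes from the text. The subscriber count and cancellation rate are purely hypothetical placeholders, since the article gives no paid-subscriber figure, and the function name is illustrative.

```python
def monthly_revenue_loss(paid_subscribers: int,
                         cancel_basis_points: int,
                         price_usd: int = 20) -> int:
    """Estimate monthly recurring revenue lost to cancellations.

    cancel_basis_points is the share of paid subscribers who cancel,
    in basis points (100 basis points = 1%). Integer math avoids
    floating-point rounding surprises.
    """
    cancelled = paid_subscribers * cancel_basis_points // 10_000
    return cancelled * price_usd


# HYPOTHETICAL inputs, chosen only to illustrate the scale:
# 10 million paid subscribers and a 1% cancellation rate.
loss_per_month = monthly_revenue_loss(10_000_000, 100)
loss_per_year = loss_per_month * 12

print(f"Monthly loss: ${loss_per_month:,}")  # Monthly loss: $2,000,000
print(f"Annual loss:  ${loss_per_year:,}")   # Annual loss:  $24,000,000
```

Under these assumed inputs, a 1% cancellation rate would cost about $2 million per month. The actual figure depends entirely on the real subscriber base and on how many pledges turn into cancellations, neither of which is reported here.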

Brockman's response to QuitGPT has been minimal. He hasn't directly addressed the movement or engaged with the underlying concerns. Instead, he's focused on restating his original position: these donations are mission-aligned, they're personal choices, and they reflect his genuine belief that AI development is crucial for humanity.

But the backlash reveals something important about AI's relationship with public opinion. AI companies claim to be developing technology for human benefit. When the company's leaders appear to prioritize political alignment with specific candidates, it undercuts that narrative. It makes it harder for consumers and employees to believe the stated mission.

The Employee Perspective: Internal Dissent at OpenAI

QuitGPT is one manifestation of the controversy. But there's another important reaction happening inside OpenAI itself. Employees, particularly in research roles, have expressed private and public concerns about Brockman's political donations.

Aidan Clark, OpenAI's VP of Research (Training), posted on X (formerly Twitter) expressing disagreement with some of Brockman's political positions. Clark's post was notable for stating that he doesn't believe "by helping make OpenAI succeed I am helping make Trump succeed." This signals internal debate about whether working at OpenAI implies complicity in Brockman's political choices.

Other employees have raised concerns privately but refused to go on record. Several researchers indicated that while they understand OpenAI needs to work with government officials, they believe Brockman's political spending exceeds what's necessary. They worry it represents a personal political agenda, not corporate strategy.

This internal dissent matters because it creates a tension in recruitment and retention. AI talent is competitive. Researchers have options. If they believe their employer's leadership is funding causes they oppose, they may choose to work elsewhere. This isn't purely speculative—multiple tech companies have faced employee departures over leadership political stances and corporate values alignment.

OpenAI attempted to address this by explicitly stating that the donations are personal, not corporate. But this distinction doesn't fully resolve employee concerns. When the President of a company donates massive amounts to a specific political campaign, it shapes how the company is perceived externally. Employees are associated with leadership decisions, whether they personally support them or not.

Moreover, the framing of donations as "personal" rather than corporate becomes complicated when the President is using his position to fund political campaigns that directly affect his industry. Is a donation truly personal when the donor's company stands to benefit from the political outcome?

The QuitGPT movement rapidly gained traction, reaching over 700,000 signatures within five weeks. Estimated data based on campaign trends.

Trump, AI Policy, and the Tech Industry's Changing Relationship

To understand why Brockman's donations are strategically significant, we need to understand Trump's relationship with AI policy and the broader tech industry's positioning around 2025.

Trump has explicitly positioned his administration as pro-AI development. His AI Action Plan includes several concrete commitments that benefit AI companies. First, he pledged to streamline federal permitting for data centers. This is important because large AI model training requires enormous computing resources and power infrastructure. Data centers are expensive to build and often face local zoning opposition. Federal permitting reform could reduce timelines and costs.

Second, Trump committed to challenging state-level AI regulations. States like California, Colorado, and New York have passed or are considering AI-specific regulations targeting algorithmic decision-making, biometric surveillance, and other AI applications. These regulations create compliance complexity for national companies. Trump's position is that such regulations should be preempted by federal policy, or eliminated entirely.

Third, Trump has signaled openness to relaxed AI safety guidelines and reduced regulatory oversight of AI development. His statements suggest he believes excessive caution about AI development harms American competitiveness relative to China and Europe.

For companies like OpenAI, this is extremely favorable policy positioning. Less regulation means faster deployment timelines and fewer compliance costs. Data center permitting reform directly reduces infrastructure spending. Federal preemption of state regulations eliminates the "patchwork" problem of managing multiple regulatory regimes.

But here's where it gets complicated. Trump's administration is also characterized by unpredictability and dramatic policy shifts. Early in Trump's second term, the relationship between Silicon Valley and the Trump administration was tested by ICE (Immigration and Customs Enforcement) incidents that resulted in deaths of civilians during immigration enforcement operations.

When ICE agents killed Renee Nicole Good and Alex Pretti in January 2025, it created a moral and political crisis for tech companies that support Trump's immigration policies. Some tech executives, including OpenAI's Sam Altman, expressed concern about the incidents while maintaining support for Trump's overall agenda. Others, including Anthropic CEO Dario Amodei and Google DeepMind's Chief Scientist Jeff Dean, spoke out more directly against the incidents.

Brockman, notably, declined to directly comment on the ICE incidents. Instead, he offered a general statement that "AI is a uniting technology, and can be so much bigger than what divides us today." This non-response itself became notable—it suggested unwillingness to directly address the moral dimension of the political alignment he had funded.

The Regulatory Calculus: Why AI Companies Engage Politically

Why do AI companies invest heavily in political engagement at all? The answer lies in the regulatory environment and the massive stakes involved in AI policy decisions.

AI regulation affects multiple dimensions of business: computational infrastructure costs, deployment timelines, safety requirement standards, liability frameworks, and market access. A single regulatory change can shift the entire economics of AI development and deployment.

Consider data center permitting. Building a data center for training large language models requires enormous capital investment, environmental review, and infrastructure coordination. Permitting can take years. If federal policy streamlined this process, it could reduce project timelines by months or years and cut costs by hundreds of millions of dollars across the industry.

Or consider algorithmic liability. If the government implements strict liability frameworks for AI-caused harms, companies would need to invest heavily in safety testing and insurance. If liability remains limited, companies can deploy more aggressively. This distinction affects product roadmaps, testing budgets, and go-to-market strategies.

AI companies know that policy decisions made now will shape their competitive position for decades. They understand that early political engagement can influence which regulations get written and how they're enforced. This creates strong incentive for political involvement.

Brockman's donations should be understood in this context. They're not aberrant behavior by an unusually political tech executive. They're the logical extension of AI companies' interest in shaping the regulatory environment that governs their business.

The question is one of scale and transparency. Is $75 million in donations the appropriate level of political spending? Are the donors being transparent about the business interests motivating the donations? Are the donations consistent with the company's stated values of benefiting humanity?

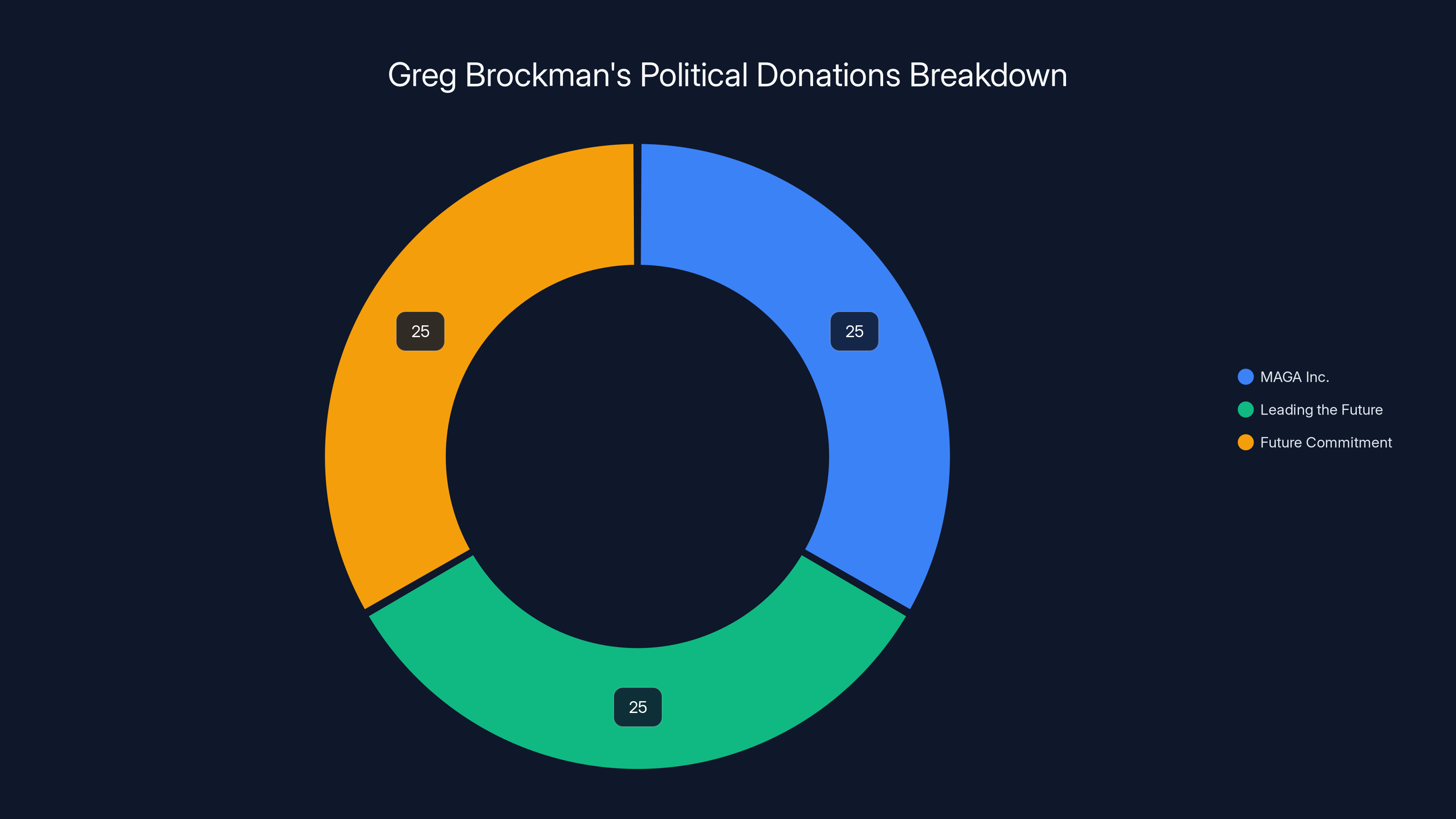

Greg Brockman donated $75 million to political campaigns, split equally between MAGA Inc., Leading the Future, and a future commitment to the latter.

The Humanity Frame: When Corporate Interests Align with Universal Missions

Brockman's primary argument for his donations is that AI development is so important to humanity that supporting pro-AI politicians is a mission-aligned choice that transcends corporate interest.

In interviews, Brockman has been explicit about this framing. "This mission," he said, referring to developing beneficial AI for all humanity, "is bigger than companies, bigger than corporate structures. We are embarking on a journey to develop this technology that's going to be the most impactful thing humanity has ever created. Getting that right and making that benefit everyone, that's the most important thing."

This is a powerful narrative. It positions political donations not as business strategy, but as existential commitment. If AI development really is humanity's most important challenge, then supporting politicians who prioritize it becomes a moral obligation, not a corporate decision.

But this framing has significant tensions embedded in it. First, it assumes that rapid, minimally-regulated AI development is unambiguously good for humanity. This assumption is contested. Many AI researchers, ethicists, and policy experts argue that governance, safety testing, and thoughtful regulation are essential for beneficial AI development. By funding politicians who oppose such measures, Brockman may actually be working against the stated goal of beneficial AI.

Second, the humanity frame obscures the concrete business benefits Brockman's donations provide to OpenAI. Yes, supporting pro-AI politicians serves a universal mission. But it also directly benefits OpenAI's competitive position through favorable regulatory policy. The two aren't mutually exclusive, but the framing elides the second dimension entirely.

Third, if AI development for humanity is truly the priority, why specifically fund Trump rather than supporting multiple candidates or a broader pro-AI coalition? Brockman's donations are highly concentrated toward Trump and his political ecosystem. This suggests the choice isn't purely about AI development, but also about which political leader will create the most favorable conditions for companies like OpenAI.

The humanity framing becomes further complicated when considering alternative uses for $75 million. The same money could fund AI safety research, support academic AI ethics programs, invest in global AI access initiatives, or fund policy research on responsible AI governance. These uses would also serve the stated mission of beneficial AI development. Instead, the money is going to political campaigns.

The Competition Dimension: Why This Matters for Other AI Companies

Brockman's donations don't happen in isolation. They occur within a competitive context where multiple AI companies are vying for market position, regulatory advantage, and government relationships.

OpenAI isn't the only major AI company. Anthropic, Google DeepMind, Meta's AI research division, Microsoft's AI investments, and smaller companies like Perplexity are all developing competitive AI systems. They're also all interested in favorable regulatory policy.

What distinguishes Brockman's approach is its directness and scale. While other tech executives make political donations, few match Brockman's level of commitment to a specific candidate. This creates competitive asymmetry. If Trump's policies favor OpenAI over competitors, Brockman's donations will have purchased significant competitive advantage.

Anthropic, for example, has taken a different approach. Dario Amodei, Anthropic's CEO, has been more cautious in political engagement. He's focused on policy advocacy around AI safety and governance rather than supporting specific candidates. This positions Anthropic differently in Trump's regulatory environment—less aligned politically, but potentially more focused on safety-oriented policy.

Google and Meta have massive resources for political engagement across multiple parties and candidates. They hedge their bets through broader political support. OpenAI, by contrast, has chosen a more concentrated bet.

This dynamic matters because it shows how campaign finance shapes the AI industry's development. The candidate who receives Brockman's donations has the resources to shape policy in ways that favor OpenAI. Competitors without similar resources, or with different political alignments, operate at a disadvantage.

This is a challenge for democratic governance. Should one tech executive's political donations have such significant influence over AI policy? Should the future of AI development be shaped by which company's leader can write the biggest checks?

Greg Brockman's $75 million donations are estimated to heavily influence AI policy (40%), provide a competitive advantage to OpenAI (35%), and challenge democratic governance (25%). Estimated data.

The Employee Dilemma: Personal Conviction vs. Corporate Identity

From an employee's perspective, working at OpenAI now involves an implicit political dimension that didn't exist before Brockman's donations.

Employees at AI companies often choose their employers based on mission alignment. OpenAI's stated mission is to develop safe, beneficial AI that benefits all humanity. Researchers accept lower salaries than they could get at Google or Meta because they believe in the mission.

When the company's President donates tens of millions to a specific political candidate, it changes the moral calculus for employees. They must now decide: Do I personally support this candidate? If not, does my employment at OpenAI constitute complicity in political activity I oppose? Does my work contribute to a company whose leadership has taken a specific political stance?

This becomes even more complicated for international employees. OpenAI employs researchers from around the world. Some come from countries with fraught relationships with Trump's America. Working for a company whose leadership is funding Trump's political campaign creates a different kind of discomfort.

Moreover, there's a signaling effect. When the President of OpenAI donates massively to Trump, it sends a message about what the company and its leadership value. It signals that rapid AI development is prioritized over concerns about immigration policy, climate policy, or other Trump administration positions.

The statement "I don't agree with (some) of his politics, but I also don't believe by helping make OpenAI succeed I am helping make Trump succeed (in fact often the opposite!)" came from Aidan Clark, not from Brockman himself. This distinction is important. Brockman hasn't publicly acknowledged that some employees disagree with his political choices specifically. He's responded to internal criticism by reiterating that disagreement is normal and that the company has always been truth-seeking.

But acknowledging that disagreement exists and engaging substantively with why employees might object to his donations are different things. Brockman hasn't done the latter.

The Global Implications: AI Development and Democratic Governance

Brockman's donations are domestic US political activity, but the implications extend globally. AI development is a geopolitical issue. The United States, China, and Europe are all competing for AI leadership. Policy decisions made in Washington affect global AI development trajectories.

If Trump's policies, supported in part by Brockman's donations, prioritize rapid US AI development with minimal regulation, it affects how AI develops globally. It influences whether safety considerations are built into AI systems from the beginning or added later. It affects whether AI governance is democratic and transparent or concentrated in the hands of tech companies.

For countries outside the US, Brockman's political engagement represents a US tech executive funding a presidential campaign that affects global AI governance. This is a legitimacy problem. Democratic processes should determine AI policy, not the campaign contributions of individual tech leaders.

Moreover, if rapid, unregulated AI development in the US creates problems—AI-caused discrimination, environmental impacts, labor displacement—those problems won't be confined to US borders. They'll affect countries worldwide.

The global tech industry is watching how the US handles AI governance during Trump's second term. Regulatory decisions made now will shape industry norms internationally. If policy is shaped primarily by tech executive campaign contributions rather than democratic deliberation, it sets a concerning precedent.

Estimated data shows state-level rules and voluntary industry standards hold significant influence in the U.S. AI regulatory environment due to the absence of comprehensive federal regulation.

The Transparency Question: Should Political Spending Be Disclosed?

Currently, campaign finance law requires disclosure of large donations, but with significant loopholes. Super PAC donations (which is how Brockman gave to MAGA Inc.) are reported to the FEC, but the personal identities of donors sometimes remain obscured through corporate entities or LLCs.

In Brockman's case, the donations were public relatively quickly. WIRED's investigation and reporting made the story visible. But many large political donations remain opaque until journalists or researchers dig through FEC filings.

For public companies like Google or Meta, there's some pressure for transparency around corporate political spending. Shareholders can demand to know how their investment is being used for political purposes. But for executives at those companies making personal donations, disclosure depends on FEC rules, which vary.

For OpenAI, which is privately held (though with complex corporate structures), there's minimal transparency requirement. Brockman's donations are disclosed because federal law requires it, but OpenAI itself faces no requirement to disclose or discuss them.

This creates a transparency gap. Employees at OpenAI may not know about Brockman's donations until they hear about them in the media. Customers are even less likely to know that their ChatGPT subscriptions might be indirectly supporting a company whose leadership funds political campaigns.

There's a case for enhanced transparency. If AI companies are developing technology that affects billions of people, shouldn't those people have visibility into the political commitments of the companies' leaders? Shouldn't employees have clear information about their employer's political activities?

Some argue this is business as usual, that executives have always made political donations, and there's no reason to treat tech executives differently. Others contend that AI's power and influence justify different transparency standards.

The Future of AI Governance and Corporate Political Power

Brockman's donations are a data point in a larger trend: AI companies' increasing willingness to engage directly in political processes to shape regulatory outcomes.

We're likely to see more of this. As AI's economic importance grows, companies will invest more heavily in political engagement. As regulatory uncertainty increases, political engagement becomes more valuable. As AI policy becomes salient in campaigns and elections, tech executives will see political donations as legitimate business strategy.

The question is whether democratic institutions can accommodate this level of tech industry political power while maintaining democratic legitimacy.

Historically, industries have faced this challenge. The financial industry's political donations increased dramatically after deregulation became a key issue. The pharmaceutical industry invests heavily in political engagement to shape drug policy. The fossil fuel industry's political spending is a model (and cautionary tale) of how industry donations can capture regulatory policy.

AI is different in scale and scope. AI affects nearly every aspect of human activity. A tech executive's political donations that shape AI policy affect decisions about technology that touches healthcare, criminal justice, employment, education, and more.

Looking forward, there are several potential developments. First, we may see more tech executives making large political donations, creating a political arms race within the industry. Companies that don't engage politically might find themselves at regulatory disadvantage.

Second, there's likely to be increasing pressure for transparency and limits on tech executive political spending. Employee activism, consumer backlash, and shareholder activism might lead to corporate policies restricting political donations or requiring disclosure.

Third, regulatory frameworks may evolve to address tech industry political power more directly. Congress might implement rules limiting campaign finance from executives at AI companies, requiring corporate disclosure of executive political spending, or establishing stronger safeguards against political capture of AI policy.

None of these developments is assured. Tech industry lobbying is powerful and effective. But the visibility of Brockman's donations and the resulting backlash suggest that tech executives' political engagement is increasingly subject to scrutiny.

Lessons for Tech Leadership and Corporate Values

Brockman's approach offers several lessons, both cautionary and illustrative, for tech leadership.

First, there's significant risk in positioning corporate mission as justification for personal political decisions that affect the company. The line between personal choice and corporate action blurs when the person making the choice is the company's public face and highest-ranking executive. Employees, customers, and the public naturally connect the executive's actions to the company's values.

Second, when there's internal disagreement about a leadership decision, acknowledging that disagreement directly and engaging with the underlying concerns is more effective than restating the original decision. Brockman's approach of reiterating that disagreement is normal and that the company values truth-seeking doesn't actually address why employees are concerned. It comes across as dismissive.

Third, large-scale political engagement requires a stronger articulation of how the decision serves the stated mission. Brockman justified his donations through the importance of AI development, but didn't directly address why supporting Trump was the best way to advance that mission, or why alternative approaches wouldn't work better.

Fourth, transparency matters more in a polarized political environment. When a tech leader makes large political donations, stakeholders will speculate about motivations and business implications. Proactive transparency about the reasoning, the decision-making process, and engagement with disagreement is more effective than waiting for the press to break the story.

Finally, there's significant risk in positioning a specific political candidate as essential to a mission that's framed as universal and humanitarian. This inherently makes the mission appear politically partisan, even if the donor's intentions are genuinely universal.

The Broader Context: Tech Industry Political Engagement in 2025

Brockman's donations aren't happening in isolation. They're part of a broader pattern of tech industry political engagement during Trump's second term.

Most major tech companies have already established relationships with Trump's administration. The administration has signaled openness to AI development and skepticism of some tech regulation (antitrust enforcement, data privacy rules). This creates alignment between tech interests and Trump policy positions.

At the same time, there's a competing narrative about tech companies and Trump. Some executives express genuine concern about Trump policies on immigration, trade, and authoritarianism. These tensions are real. Tech executives face a dilemma: how to preserve business relationships with a Trump administration while maintaining credibility as corporate leaders who respect democratic norms and human rights.

Brockman's approach is to lean fully into the business relationship. He's funding Trump's political campaign, which signals strong support and may position OpenAI well for favorable regulatory treatment during Trump's second term.

But it also positions OpenAI as explicitly aligned with Trump, which may have long-term implications if Trump's administration faces legitimacy crises or if political power shifts again. The tech industry's historical playbook is to hedge bets across multiple political parties. Brockman's approach is more concentrated and riskier.

The Humanity Mission Revisited: Can It Survive Political Alignment?

OpenAI's founding mission is to develop artificial general intelligence (AGI) that benefits all humanity. This mission is genuinely expansive. It's not about making money for shareholders or creating profit for a company. It's about developing a technology that could reshape human civilization.

For this mission to be credible, it needs to transcend political partisanship. It needs to be grounded in genuine concern for human welfare, not in advantage for specific political factions.

When the company's President donates tens of millions to a specific political candidate, it's harder to maintain that claim of universality. Even if the donations are framed as personal choices, they affect how the company is perceived. They suggest that the company's leadership has stronger political commitments than commitment to the ostensible mission of developing beneficial AI for all humanity.

This isn't to say tech leaders shouldn't be political. They should engage in democracy. But there's a meaningful difference between supporting candidates whose positions align with business interests and funding those candidates at an extraordinary scale while claiming that the work is above politics.

Brockman's donations suggest a particular vision of what it means to develop AI for humanity: rapid development, minimal regulation, and support from sympathetic political leadership. This is one vision among many. Others might argue that beneficial AI requires more caution, more safety testing, more public engagement, and more diverse political support.

The challenge is that Brockman's political donations privilege his vision over others within the company and the broader AI research community. Money translates to political power, and political power shapes what AI development looks like.

If OpenAI is genuinely committed to developing AI for all humanity, it might need to reckon with how its leadership's political choices affect that mission's credibility.

What's Next: The Trajectory of AI Politics and Corporate Power

We're in the early stages of watching how AI companies and their leaders shape democratic processes through political engagement. Brockman's donations are a high-profile example, but they won't be the last.

As AI's economic importance grows, we'll likely see more tech executives making large political donations. As regulatory stakes rise, the incentives for political engagement increase. As AI becomes more salient in elections and policy debates, tech leaders will see themselves as having legitimate roles to play in political processes.

The question is whether democratic institutions will adapt to accommodate this level of tech industry political power or whether new regulations will limit it.

From OpenAI's perspective, the immediate challenge is managing the fallout from Brockman's donations. The QuitGPT backlash is real. Employee concerns are legitimate. The company's mission of beneficial AI for all humanity is harder to defend when the President is funding specific political campaigns.

Longer term, OpenAI and other AI companies will need to decide how much political engagement they're willing to undertake and whether that engagement supports or undermines their stated missions.

For Brockman personally, the donations have already shaped his public profile. He's no longer just an AI technologist and company executive. He's a political donor, a Trump supporter, and a figure of controversy in AI industry debates. That positioning has consequences for his credibility on questions of beneficial AI development.

The choices we make now about AI governance, corporate political power, and the relationship between tech companies and democracy will shape AI's development for decades to come. Brockman's donations are a data point in that larger narrative.

TL;DR

- Greg Brockman donated $75M to pro-Trump and pro-AI political groups, marking a massive shift from his previously apolitical approach to tech leadership and corporate governance.

- The donations are officially personal, but they benefit OpenAI competitively by funding politicians who support regulatory policies that favor AI company growth and reduce compliance costs.

- OpenAI employees and external observers question whether the donations exceed what's necessary for business strategy and instead reflect Brockman's personal political conviction about AI's importance.

- QuitGPT, a consumer backlash movement calling for ChatGPT cancellations, reached 700K+ supporters, demonstrating real risk to OpenAI's business from its leadership's controversial political activities.

- The donations reveal how AI company leaders now shape democratic processes and regulatory outcomes through campaign finance, raising questions about whether tech executives should have this much influence over AI governance.

FAQ

Why did Greg Brockman donate $75 million to political campaigns?

Brockman has stated that he made these donations because he believes AI development is the most important mission facing humanity. He argues that politicians willing to support rapid AI development deserve financial backing, even if it's unpopular. He donated

How did this affect OpenAI employees and customers?

The donations created significant internal dissent at OpenAI. Multiple employees, including VP of Research Aidan Clark, publicly expressed disagreement with some of Brockman's political positions. Externally, the QuitGPT movement emerged as a grassroots consumer backlash, with over 700,000 people signing pledges to cancel ChatGPT subscriptions in response to the donations. Actor Mark Ruffalo publicly joined the movement, amplifying visibility.

Is this Brockman's money or OpenAI's money?

Brockman and his wife made the donations with their personal wealth, not company funds. OpenAI officially states the donations are personal choices and don't reflect the company's politics. However, this distinction becomes complicated when the company's President uses his position to fund political campaigns that directly benefit the company's regulatory interests. The boundary between personal conviction and corporate benefit isn't always clear.

What specific Trump policies benefit OpenAI's business?

Trump's AI Action Plan includes commitments to streamline federal data center permitting (reducing infrastructure costs), challenge state-level AI regulations that create compliance burdens, and maintain pro-development regulatory approaches. These policies directly benefit AI companies like OpenAI by reducing costs and regulatory constraints on AI deployment and development. This creates tension with Brockman's claim that his donations are purely mission-driven rather than business-motivated.

Do other AI companies make similar political donations?

While tech executives have historically made political donations, the scale and concentration of Brockman's giving are unusual. He has focused his donations heavily on a single candidate and political direction, whereas many tech companies hedge bets across multiple parties. Anthropic CEO Dario Amodei has taken a more cautious approach, focusing on policy advocacy rather than candidate support. This creates a competitive asymmetry in which Brockman's political support may give OpenAI regulatory advantages that other AI companies don't have.

What does QuitGPT want OpenAI to do?

QuitGPT supporters are calling for OpenAI to change its leadership's political direction or to demonstrate a change in company values and governance. The movement leverages consumer choice (canceling subscriptions) to exert pressure on the company's leadership. The implicit message is that customers can enforce accountability by withdrawing financial support, creating business consequences for political decisions they oppose.

Why is this concerning from a democratic governance perspective?

The donations raise questions about whether tech executives should have enough political resources to significantly shape policy affecting their own industries. When one company's leader can donate $75 million to influence political outcomes, it concentrates power in individual billionaires rather than distributing it through democratic processes. This is especially concerning for AI, which affects nearly every aspect of human activity. Policy decisions shaped by campaign contributions rather than broader democratic deliberation raise legitimacy concerns.

Could this hurt OpenAI's business long-term?

Yes, there are several long-term risks. Employee recruitment and retention could suffer if researchers don't want to work for a company whose leadership has taken specific political stances. Customer backlash like QuitGPT could reduce revenue if significant numbers of subscribers cancel. International expansion could face challenges in countries with different political alignments. Finally, if political power shifts away from Trump, the regulatory advantages Brockman is funding could disappear, leaving OpenAI without the expected policy benefits.

Conclusion: Mission, Money, and Democratic Power

Greg Brockman's $75 million in political donations represent something genuinely new in tech industry history. It's not the first time a tech executive has made large political donations. But it may be the first time one has invested this much money this directly in shaping policy for an emerging technology that the executive's company is developing.

The situation forces uncomfortable questions about the relationship between corporate power, political influence, and democratic governance. When a single tech executive can donate tens of millions to shape policy affecting his industry, it concentrates decision-making power in ways that challenge democratic ideals.

Brockman's framing of his donations as mission-driven, in support of AI development for all humanity, is compelling at first glance. But it obscures the practical reality that his donations also benefit OpenAI competitively. Rapid, minimally regulated AI development is good for OpenAI's business. Supporting politicians who prioritize this approach serves both mission and business interests. Brockman's donations blend the two so thoroughly that separating them becomes impossible.

Internally, OpenAI employees have signaled discomfort with the arrangement. Externally, consumer backlash through QuitGPT demonstrates that customers believe there's a connection between leadership's political choices and the company's values. These reactions suggest that framing corporate mission and personal political conviction as separate doesn't work when the person holding the conviction is the company's public face.

Longer term, AI industry political engagement will likely increase. As AI's economic and geopolitical importance grows, so will the incentives for tech companies and their leaders to shape regulatory outcomes through political engagement. The question isn't whether this will happen—it already is. The question is whether democratic institutions will accommodate this new reality or whether regulations will evolve to limit tech industry political power.

Brockman's donations are an early signal of what AI industry politics might look like in the coming decade: direct, large-scale, and increasingly tied to specific political outcomes. How society responds to this will shape not just OpenAI's future, but the broader relationship between tech companies, political power, and democratic governance.

The stakes extend far beyond one company or one executive. They're about who gets to decide how transformative AI technology develops and what influence money should have on those decisions. Those are fundamentally democratic questions, and how we answer them will matter for decades to come.

Key Takeaways

- Greg Brockman's $75M political donations represent an unprecedented scale of tech executive political engagement to shape AI regulation

- The donations created measurable backlash: 700K+ QuitGPT supporters, internal employee dissent, and customer risk to OpenAI's business

- Trump's AI Action Plan directly benefits OpenAI through data center permitting reform and state regulation preemption, creating business motivation for Brockman's political support

- The gap between Brockman's framing (mission-driven donations) and reality (donations benefiting his company's regulatory interests) fuels employee skepticism and customer backlash

- AI industry political engagement is likely to increase significantly, raising systemic questions about whether democratic institutions can accommodate this level of tech company influence over policy


![OpenAI's Greg Brockman's $25M Trump Donation: AI Politics [2025]](https://tryrunable.com/blog/openai-s-greg-brockman-s-25m-trump-donation-ai-politics-2025/image-1-1770925268748.jpg)