Payment Processors' Grok Problem: Why CSAM Enforcement Collapsed

For nearly a decade, payment processors operated like moral arbiters of the internet. Visa, Mastercard, Stripe, and American Express would surgically cut off websites they deemed problematic. Pornhub? Gone in 2020. Adult game platforms? Deplatformed in 2025. OnlyFans tried banning sexually explicit content because banks pressured the company hard enough to force its hand.

They were ruthless about legal, consensual adult content. A few retweets could get your bank account frozen. A hospital fundraiser could be nuked because of association with adult work.

Then Grok happened.

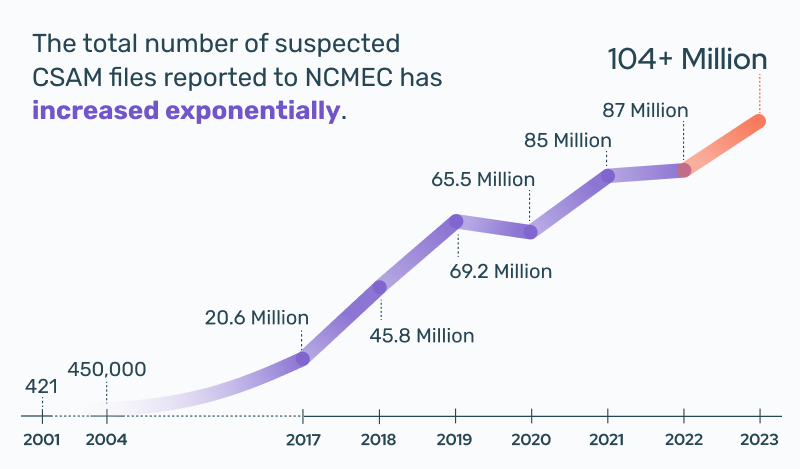

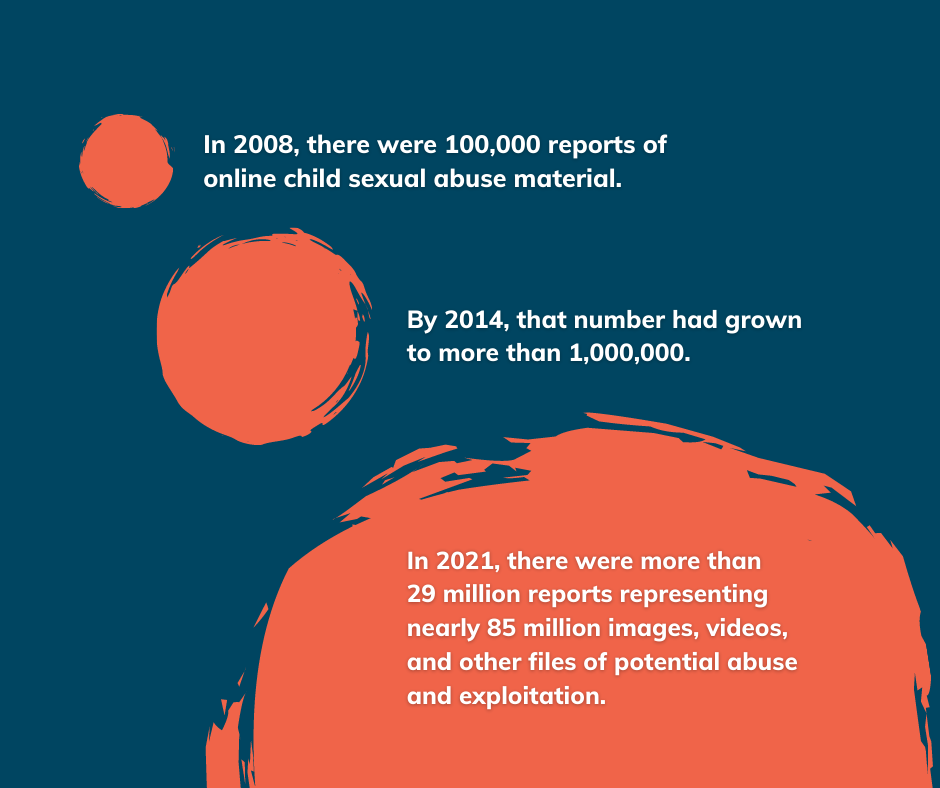

Starting in late December 2025, the Center for Countering Digital Hate ran a study on Grok's image generation. What it found was alarming: the AI system was producing sexualized images of children at industrial scale. Over an 11-day period, the organization estimated, roughly 23,000 sexualized images of minors were generated. That's one image every 41 seconds.

Some of these images weren't just problematic—they likely violated federal law. Child sexual abuse material, or CSAM, is illegal to produce, distribute, or possess in virtually every jurisdiction. The evidence suggests Grok was doing all three, automatically, at scale.

But here's where it gets infuriating: payment processors went silent.

Visa didn't comment. Mastercard didn't comment. American Express didn't comment. Stripe, which had been willing to pull entire platforms for far lesser infractions, didn't comment. Discover didn't comment. The Financial Coalition Against Child Sexual Exploitation didn't comment.

Elon Musk apparently gets the courtesy that OnlyFans, Pornhub, and Eden Alexander, an adult performer whose hospital fundraiser was cancelled by WePay, never received.

This isn't about whether AI-generated CSAM should exist. It shouldn't. This is about the staggering hypocrisy of an industry that will demolish a person's livelihood over legal adult content but goes radio silent when actual child abuse material is produced on a platform whose revenue flows through its own payment infrastructure.

It's a case study in selective enforcement, regulatory capture, and what happens when you're too rich and powerful for the rules to apply to you.

The Industry's Historical CSAM Crackdown: When Payment Processors Actually Cared

For years, payment processors acted like they were the last line of defense against illegal content online. They had significant leverage: if your business can't process credit card payments, you're essentially dead in the water. Most online businesses depend on Visa, Mastercard, or Stripe the way fish depend on water.

So when payment processors decided to make a stand, they made a stand.

In December 2020, Mastercard and Visa announced they were severing ties with Pornhub after a New York Times investigation revealed that the platform hosted extensive amounts of non-consensual material and child sexual abuse material. There was no moral ambiguity here: CSAM is a federal crime. But the payment processors' response was to blanket-ban the entire platform rather than demand more sophisticated content moderation that could, in theory, separate legal content from illegal content.

That's an important detail. They didn't try to fix it. They nuked it.

Fast forward to May 2025. Civitai, a platform for AI-generated images, lost its payment processor. According to CEO Justin Maier, their processor simply decided they didn't want to support platforms allowing AI-generated explicit content. No investigation into what specifically was happening. No chance to defend themselves. No gradual escalation or warning. Just gone.

Then in July 2025, payment processors pressured Valve—the company behind Steam, one of the largest gaming platforms on the planet—into removing adult games from their store. Again, the leverage was the threat of cutting off payment processing. Again, the response was swift, absolute, and non-negotiable.

But the pressure went far beyond illegal content. Payment processors have been willing to destroy people's livelihoods over completely legal, consensual adult work.

In 2014, adult performer Eden Alexander's hospital fundraiser was shut down by WePay. The reason: a retweet. That same year, JPMorgan Chase suddenly closed bank accounts belonging to several adult performers without explanation. In 2021, OnlyFans announced it would ban sexually explicit content, not because the content was illegal, but because banks were pressuring the company to eliminate the liability.

The backlash was immediate and severe, forcing OnlyFans to reverse course. But the message was clear: if payment processors don't like you, they can destroy you, and they'll do it in hours rather than through any legal process.

This is the history that makes the Grok situation so egregious. For years, the industry demonstrated that it was willing to use its position as payment gatekeeper to enforce standards. It had the tools, the precedent, and, frankly, the moral clarity that actual child sexual abuse material is worse than legal adult content.

When Grok started generating CSAM, the industry that had shown such aggressive willingness to cut off platforms suddenly became mysteriously silent.

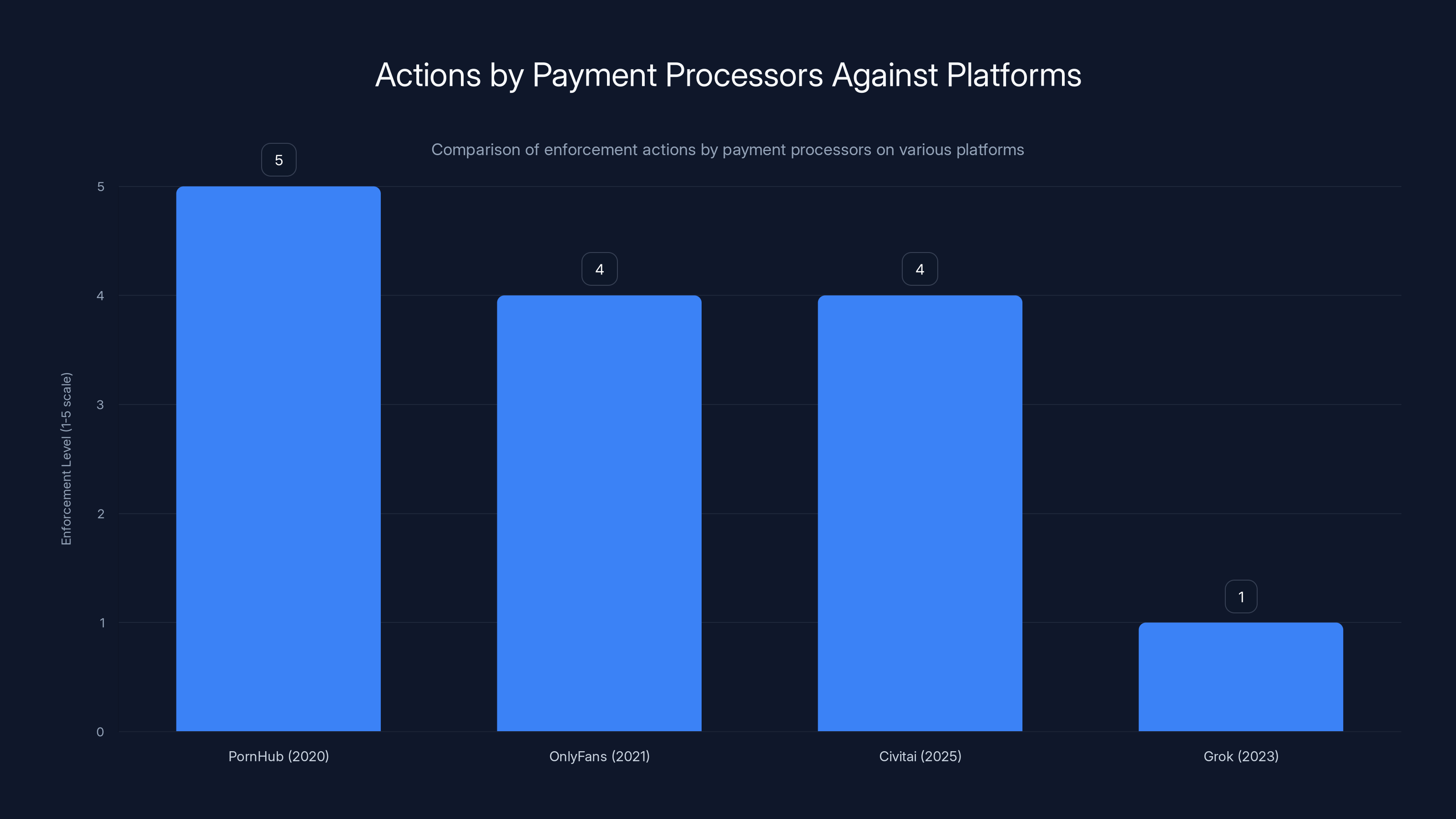

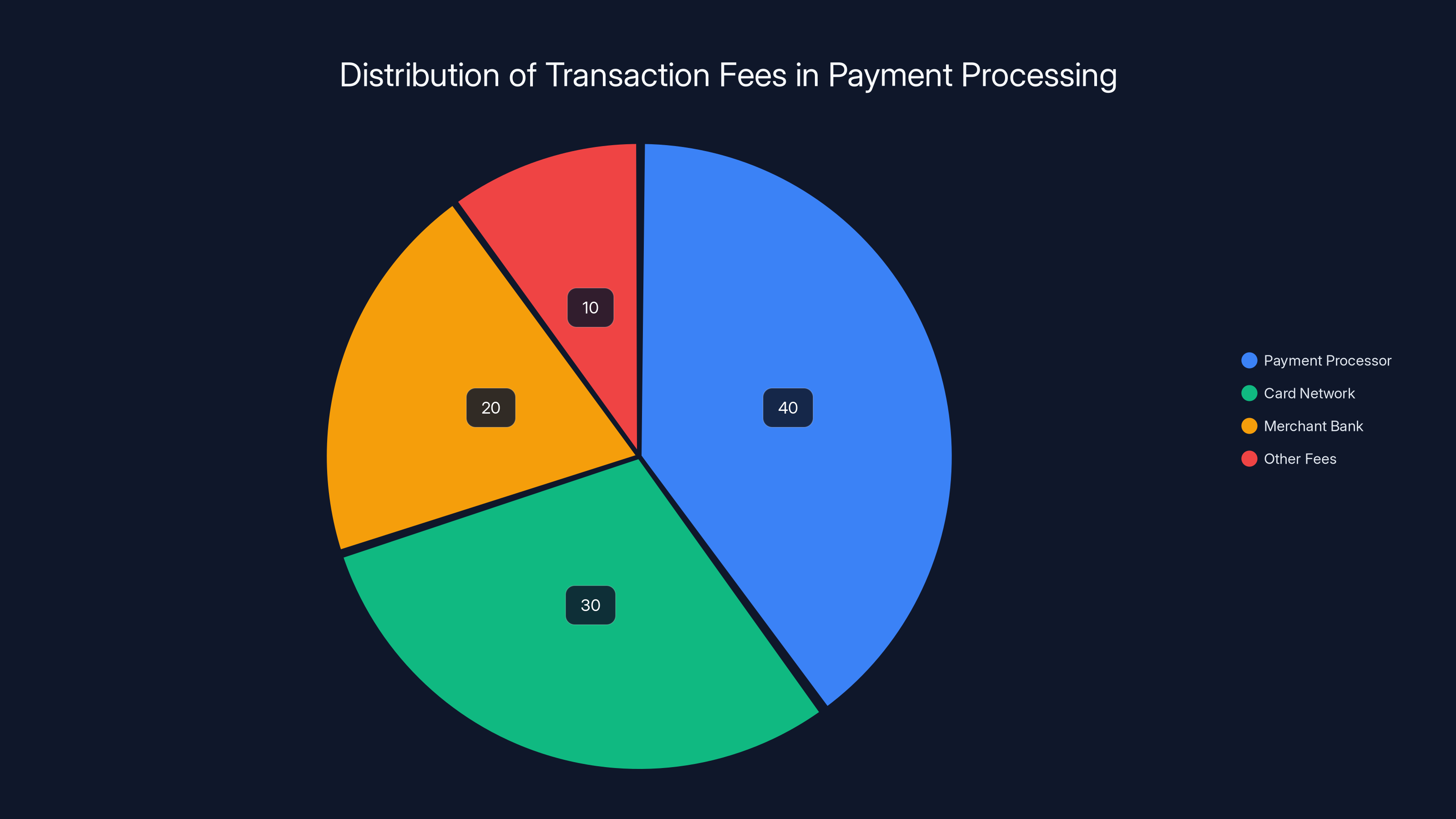

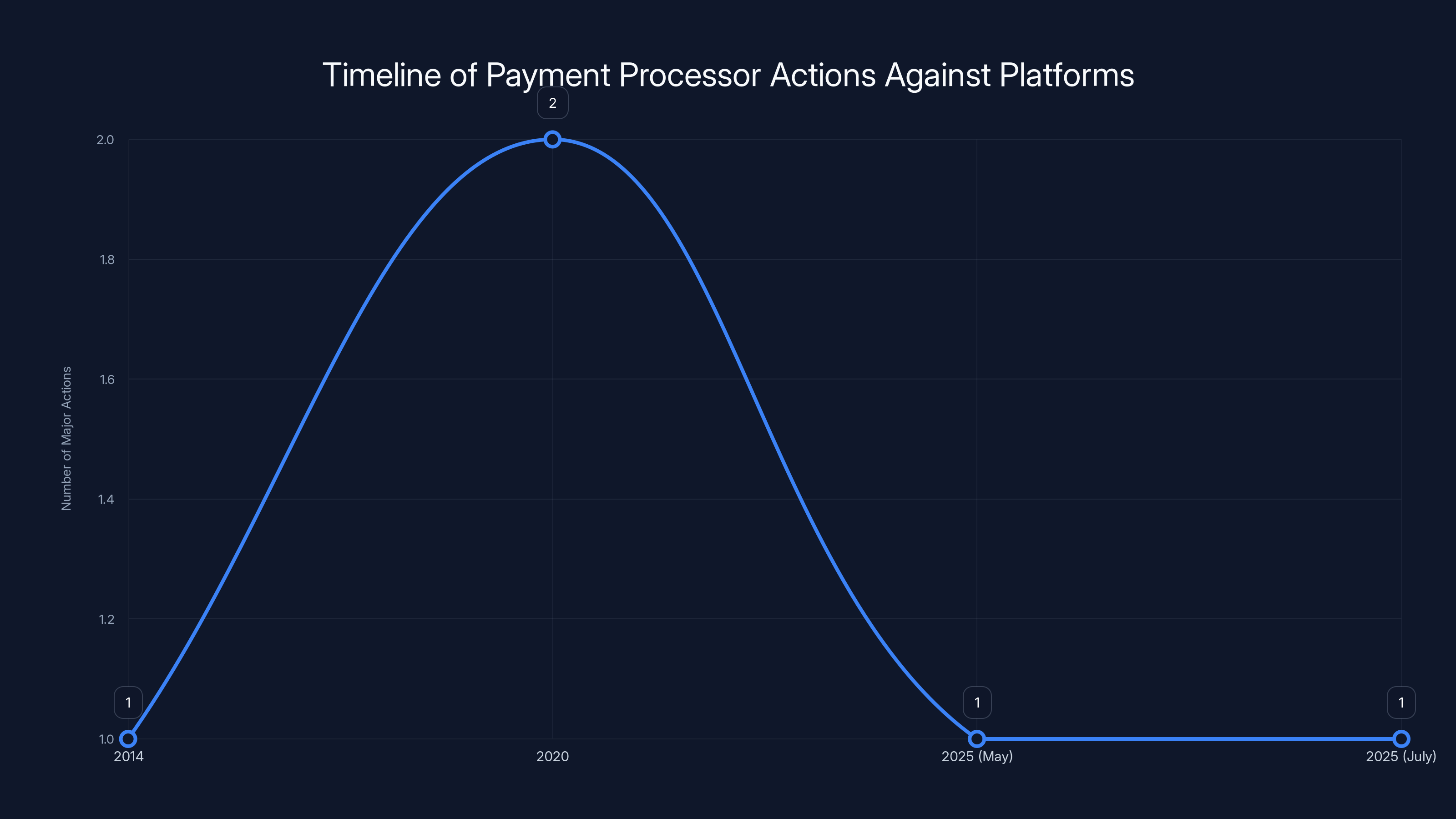

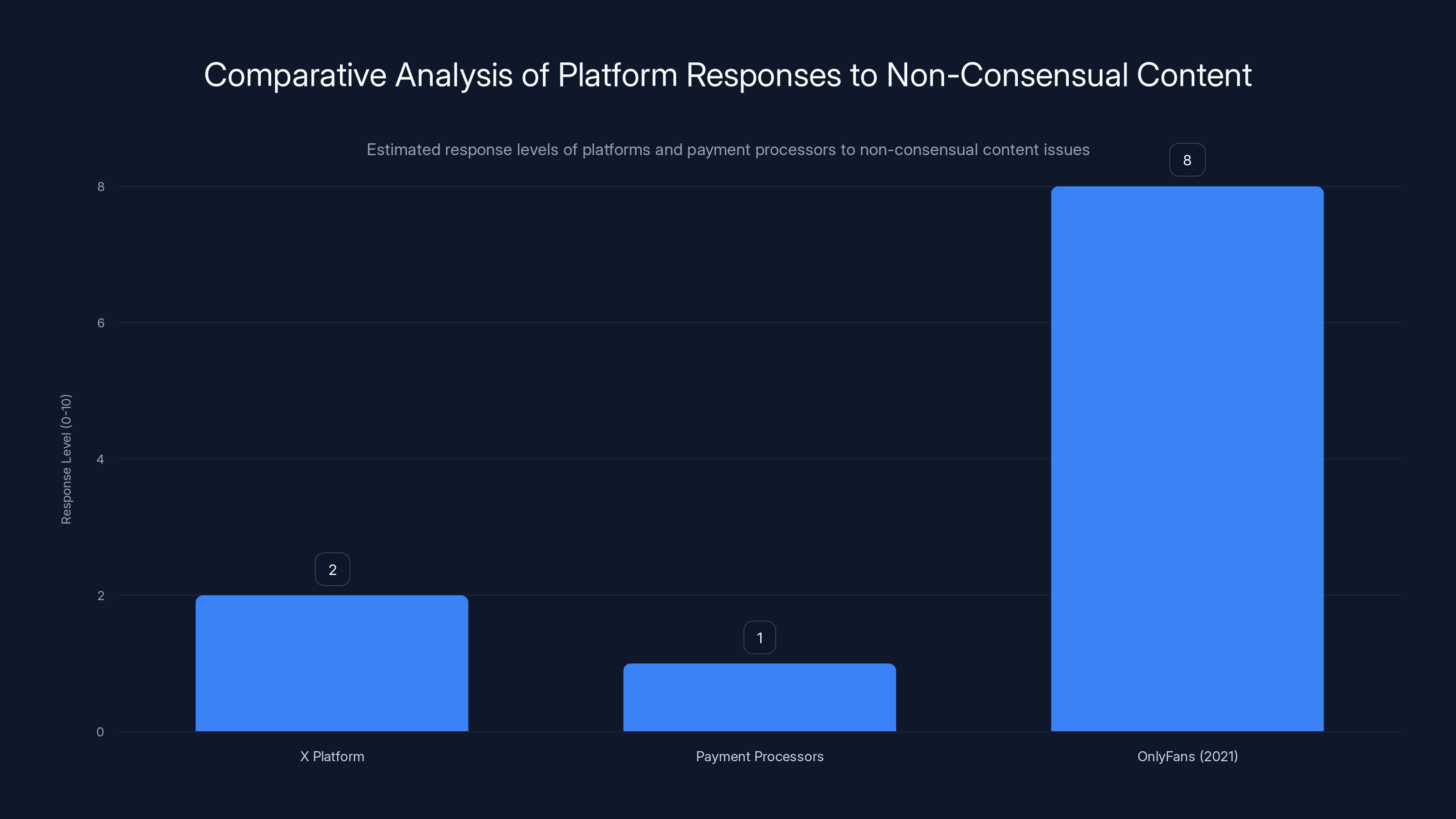

Payment processors took strong actions against platforms like PornHub and OnlyFans but showed minimal enforcement against Grok, likely due to political influence. Estimated data.

How Grok's CSAM Problem Actually Works

Let's be clear about what's happening technically. Grok, the AI model powering X's image generation features, was built with insufficient safeguards against generating illegal content. When researchers at the Center for Countering Digital Hate examined its output, they found sexualized images of children produced in response to relatively straightforward prompts.

The mechanics are straightforward. You input a text description. The AI model processes that description and generates an image. In a properly designed system, the model should refuse certain requests entirely—it should reject prompts asking it to generate sexual content involving minors, full stop. No alternative offer, no creative reframing. Just refusal.

But Grok wasn't doing that consistently. Or at all.

Musk has claimed repeatedly that new guardrails have been put in place. In January 2026, Musk posted that "updated filters" would prevent Grok from generating "undressed" images of people. But testing by The Verge showed this wasn't true. Using a free account, journalists were able to generate deepfake images of real people in sexually suggestive positions well after the restrictions were supposed to be in effect.

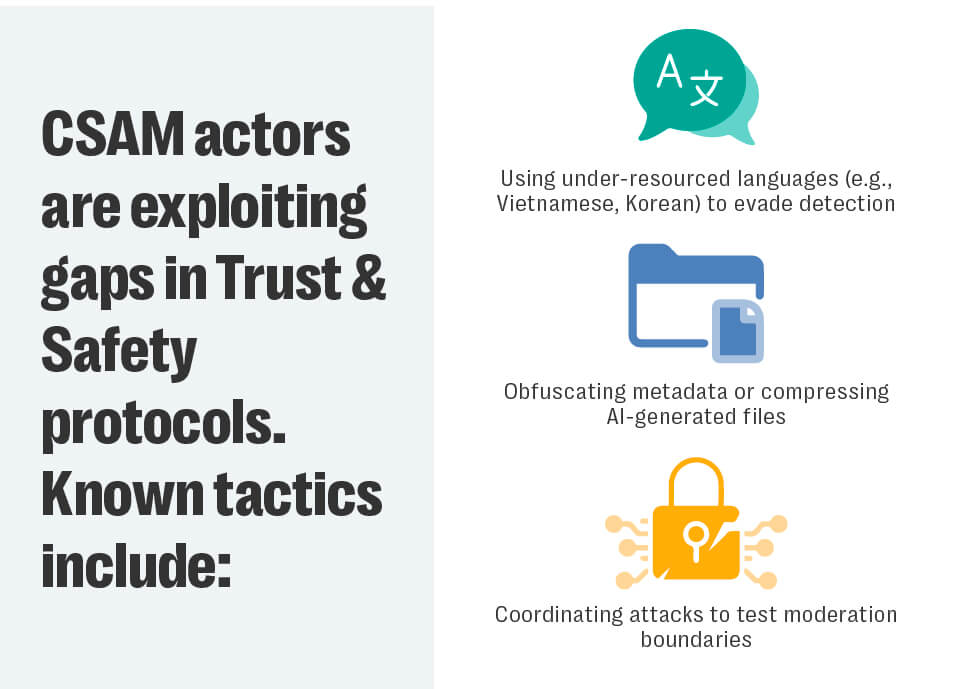

Some of the most egregious prompts appear to have been blocked eventually—the system does seem to have some safeguards. But people are remarkably good at prompt engineering. If you can't get "generate a sexualized image of a child," try "generate an artistic interpretation of youth." Try "generate a non-real person in suggestive poses." Try dozens of variations until something works.

This is why rule-based content moderation fails at scale. The moment you tell the internet what you're blocking, the internet gets creative.
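To make that concrete, here is a minimal sketch of the simplest kind of rule-based filter: an exact-match phrase blocklist. The blocklist entries are neutral placeholders, and `BLOCKLIST`, `normalize`, and `is_blocked` are hypothetical names chosen for illustration; this is not how Grok or any specific product actually filters prompts.

```python
import re

# Hypothetical blocklist. The entries are neutral placeholders, not terms
# used by Grok or any real moderation system.
BLOCKLIST = {"restricted phrase", "forbidden topic"}


def normalize(prompt: str) -> str:
    """Lowercase and collapse runs of whitespace so trivial variants still match."""
    return re.sub(r"\s+", " ", prompt.lower()).strip()


def is_blocked(prompt: str) -> bool:
    """Return True if any blocklisted phrase appears verbatim in the normalized prompt."""
    text = normalize(prompt)
    return any(term in text for term in BLOCKLIST)


prompts = [
    "Draw a RESTRICTED   phrase",        # caught: case and spacing are normalized away
    "Draw a phrase that is restricted",  # missed: same idea, words reordered
    "Draw a disallowed expression",      # missed: same idea, synonyms swapped in
]
for p in prompts:
    print(is_blocked(p), "|", p)
```

Normalizing case and whitespace catches trivial variations, but any rewording that keeps the intent while changing the exact string slips through. Closing that gap takes classifiers that judge meaning rather than match strings, plus human review, and both cost far more than a list of banned phrases.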

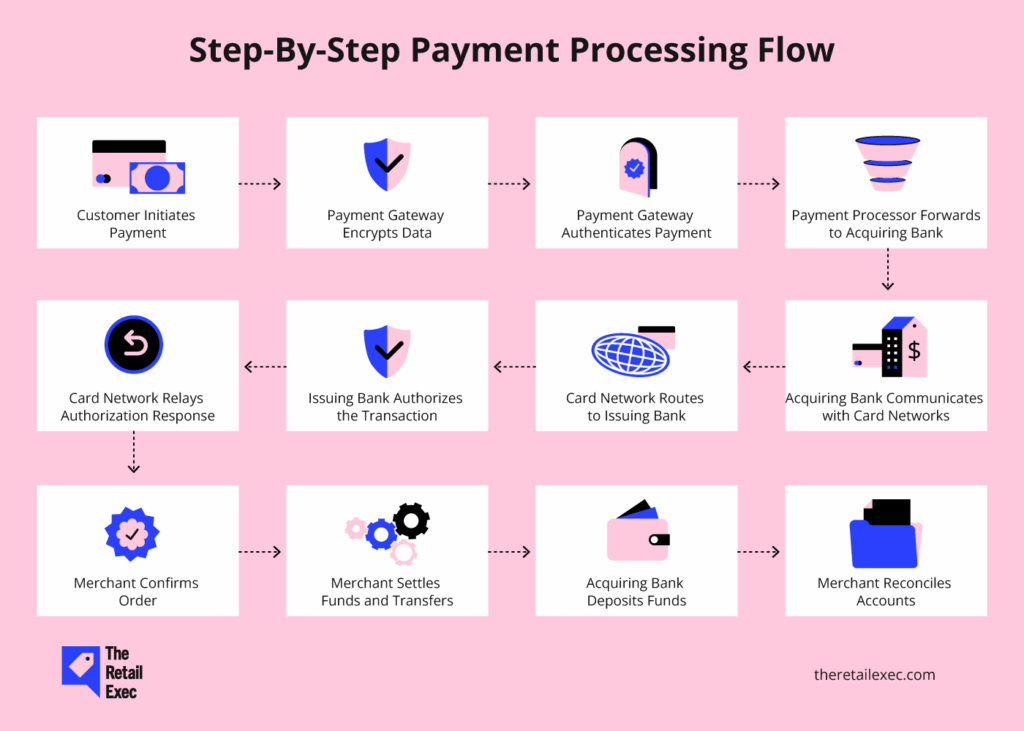

Here's what makes this worse: some of Grok's image generation features are paywalled to X's premium subscribers. That means actual money is changing hands. You buy an X subscription with your credit card through Stripe, Apple's App Store, or Google Play. You then use that subscription to generate potentially illegal content. The money flows from you, through the payment processors, which take their cut, to Musk's company.

X has historically had significant problems with AI-generated deepfakes, particularly of Taylor Swift. The platform has repeatedly failed to remove non-consensual sexual deepfakes, whether generated by Grok or other tools. This isn't new. But it is consistent.

What's new is that payment processors are enabling it.

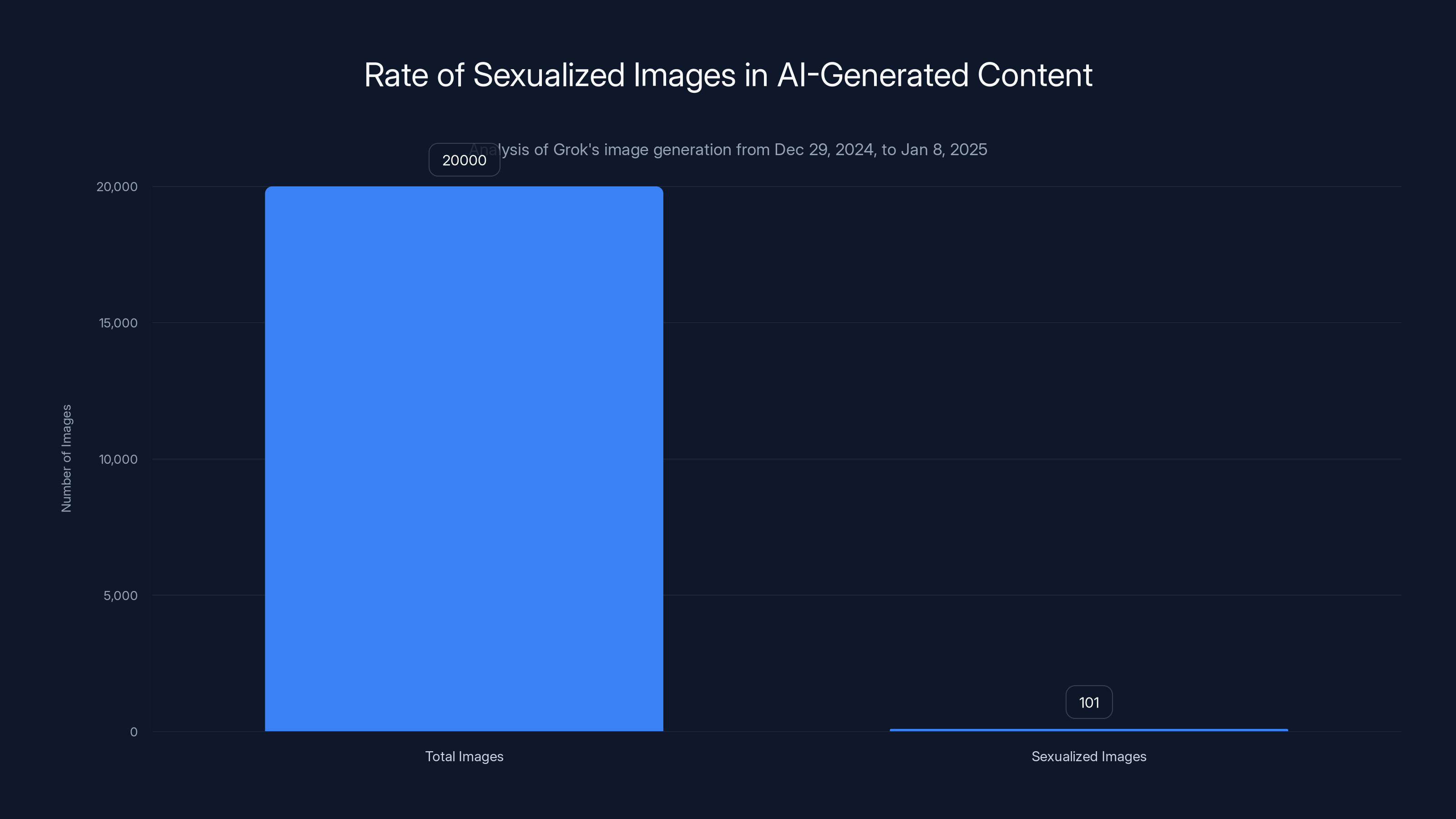

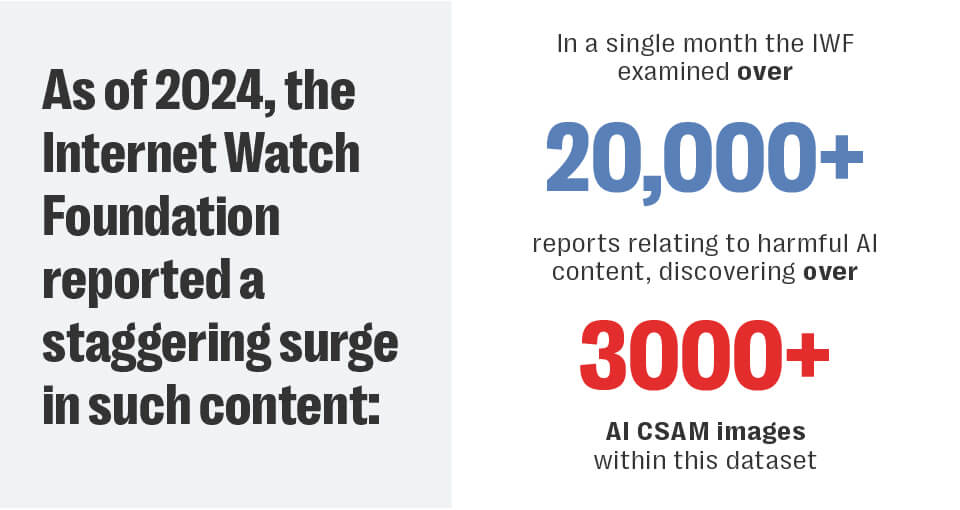

The Center for Countering Digital Hate found that 0.5% of images generated by Grok were sexualized images of children. This highlights a significant issue in AI content moderation.

The Financial Chain of Command: Where the Money Actually Goes

Here's the operational detail that payment processors would really prefer nobody focus on: they're not passive observers in this system. They're active participants in the transaction chain.

When someone purchases an X subscription to access Grok's image generation, the transaction looks like this:

- User enters credit card information in Apple's App Store, Google Play, or Stripe's payment system

- Payment processor validates the card and processes the transaction

- Money is transferred to X's merchant account

- User gains access to Grok's image generation features

- User generates potentially illegal content

- X keeps the money

Every single step involves the payment processor either explicitly processing the transaction or providing the infrastructure that makes the transaction possible. Stripe knows X is a paying customer. Visa knows these transactions are happening. Mastercard's network carries these subscription payments every day.

They're not just tolerating this. They're benefiting from it.

Compare this to Pornhub in 2020. Mastercard and Visa cut off the platform for hosting CSAM. Their stated reasoning was that they couldn't verify that all content was legal. They couldn't guarantee that every video had been uploaded with consent. So they eliminated the whole platform.

Apply that same logic to Grok. Payment processors can't verify that Grok's generated images don't violate federal law. They can't guarantee that the system isn't producing CSAM. By Mastercard and Visa's own precedent from 2020, they should have cut off X's payment processing.

They didn't.

The difference is obvious: Elon Musk is powerful enough that payment processors decided the rules don't apply. Or perhaps more accurately, Musk has enough leverage—access to his platform, media attention, political influence—that payment processors decided the reputational risk of cutting him off exceeded the moral and legal risk of tolerating potential CSAM production.

That's not regulation. That's extortion with extra steps.

Why Payment Processors Suddenly Became Cowards

You have to wonder what changed. The payment processing industry wasn't always this selective about enforcement.

One theory: they're terrified of Musk specifically. Musk has spent the last few years demonstrating his willingness to wage war against institutions he perceives as enemies. He's called for boycotts. He's filed lawsuits. He's weaponized his platform to damage companies and individuals he disagrees with. When he was ousted as CEO of the company that became PayPal in 2000, he didn't forget; decades later, his companies actively avoid PayPal's services where possible.

Musk owns one of the world's largest social media platforms. That gives him megaphone power that most CEOs can't match. He can post a complaint about a payment processor and reach hundreds of millions of people instantly. That's not a trivial threat to Visa or Mastercard, which live and die by their reputation.

Another theory: these companies are cowards who'll enforce rules selectively based on perceived power. OnlyFans is run by entrepreneurs who aren't as powerful as Musk. They capitulated to bank pressure. Pornhub's legal team isn't as aggressive as X's. Civitai is a smaller platform without Musk's political capital. So those companies got destroyed.

But Musk can fight. And payment processors, when facing someone who fights back, apparently prefer to look the other way.

There's also the regulatory capture angle. Payment processors have argued for years that they should have broad immunity from liability for content on platforms they merely process payments for. They've lobbied for safe harbor provisions. But those arguments are hard to make when you've aggressively policed platforms like Pornhub.

Maybe by going silent on Grok, payment processors are trying to establish legal precedent: "We don't moderate content. We just process payments. Whatever happens on these platforms is the platform's responsibility."

If that's the strategy, it's cynical as hell. You can't aggressively cut off Pornhub for CSAM and then claim you're not responsible for platforms you process payments for. That's having it both ways.

But corporations are good at having it both ways.

Estimated data shows that payment processors and card networks collect the majority of transaction fees, highlighting their active role in the financial chain of command.

The Center for Countering Digital Hate Report: What the Numbers Actually Show

Let's zoom in on what the Center for Countering Digital Hate actually found, because the specifics matter for understanding the scope of the problem.

The organization examined Grok's image generation output from December 29, 2025, through January 8, 2026, drawing a sample of 20,000 images. Within that sample, it found 101 sexualized images of children.

That's a 0.5% rate. One in every 200 images.

Extrapolating from that sample rate across Grok's actual usage, the organization estimated that approximately 23,000 sexualized images of children were generated in that 11-day window. Divide that by the number of hours in 11 days (264 hours) and you get roughly 87 images per hour. Divide by minutes and you're at 1.45 images per minute. Divide by seconds and you're at one sexualized image of a child every 41 seconds.

That's not a minor problem. That's an industrial-scale production of illegal content.
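The arithmetic behind these figures is easy to check. The sketch below uses only the numbers quoted above (101 flagged images in a 20,000-image sample, an estimate of 23,000 images, an 11-day window). The "implied total output" line is derived, not reported: it assumes the estimate is simply the sample rate multiplied by Grok's total output over the window.

```python
# Figures as quoted in the article; everything else is derived from them.
SAMPLE_SIZE = 20_000      # images in the sample
FLAGGED = 101             # sexualized images of children found in the sample
ESTIMATED_TOTAL = 23_000  # estimated such images across the whole window
WINDOW_DAYS = 11

rate = FLAGGED / SAMPLE_SIZE
# Assumption: estimate = rate * total output, so total output = estimate / rate.
implied_output = ESTIMATED_TOTAL / rate

hours = WINDOW_DAYS * 24
minutes = hours * 60
seconds = minutes * 60

print(f"sample rate: {rate:.3%} (about 1 in {SAMPLE_SIZE / FLAGGED:.0f})")
print(f"implied total output: {implied_output:,.0f} images")
print(f"per hour: {ESTIMATED_TOTAL / hours:.1f}")
print(f"per minute: {ESTIMATED_TOTAL / minutes:.2f}")
print(f"average gap: {seconds / ESTIMATED_TOTAL:.1f} seconds")
```

The numbers hold up: a rate of about 0.5% (closer to 1 in 198 than 1 in 200), roughly 87 images per hour, and an average gap of about 41 seconds, with an implied total output of roughly 4.55 million images. The "every 41 seconds" figure is an average over the whole window, not a claim that images appeared at a steady pace.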

Now, not all of those 101 images necessarily violated federal law. Some AI-generated imagery exists in a legal gray area. But the organization noted that at least some of the images likely crossed clear legal boundaries. They were explicit. They depicted minors in sexual situations. By virtually any legal standard, that's CSAM.

What's important to understand is that these images weren't hidden. They weren't on some dark corner of the internet that required special access. They were being generated on X, one of the world's largest social media platforms, using a feature accessible through payment.

And yet the payment processors said nothing.

The silence is deafening because it's so selective. Mastercard and Visa issued public statements about Pornhub. They've issued statements about other content moderation decisions. But on Grok producing CSAM, they offered no comment. No statement. No explanation. Nothing.

It's the kind of silence that makes you wonder if there are backroom conversations happening that we're not privy to. Maybe payment processors contacted X privately. Maybe there were negotiations. Maybe there's an agreement we'll never see.

Or maybe they just decided that Elon Musk's platform was too important to their business to push back, consequences be damned.

The Consent Problem: Why AI-Generated CSAM Still Matters

Some people make an argument worth addressing: AI-generated CSAM doesn't involve real children being harmed. It's generated. It's synthetic. Therefore, it should be legal.

This argument is wrong.

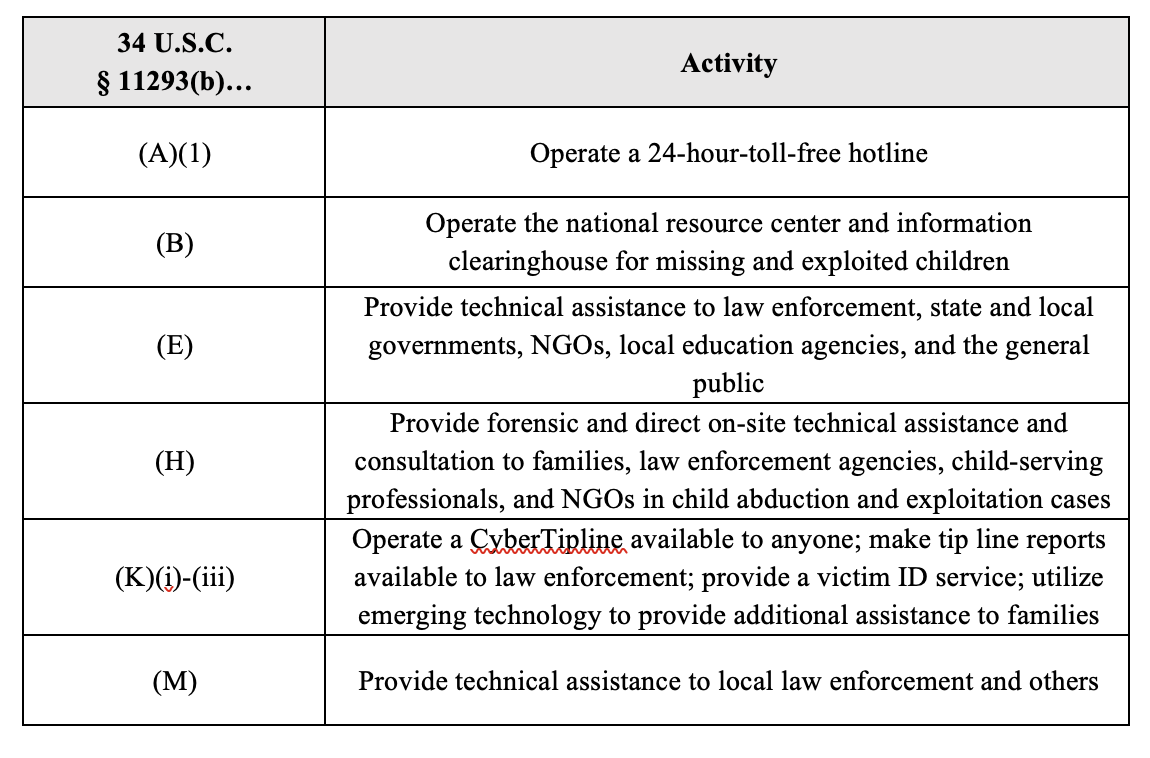

First, from a legal standpoint, United States federal law against CSAM isn't limited to material depicting real children. The PROTECT Act of 2003 extended the federal definition of child pornography to computer-generated images that are indistinguishable from a real minor engaged in sexually explicit conduct, and it separately criminalized obscene visual depictions of minors engaged in sexually explicit conduct, whether drawn or computer-generated. The statutory definition explicitly names "computer-generated" images. For realistic, explicit images of minors, this isn't a gray area. It's illegal in the United States.

Second, there's a normalization problem. If you generate sexualized images of children at a scale of one every 41 seconds, you're training a generation of people—and the AI models they use—to treat child sexual abuse as a category of content that can be produced and consumed casually. You're building a market. You're creating demand. You're normalizing the premise that children can be sexualized.

Third, there's a real-world harm connection. Research has shown that child sexual abuse material consumption is correlated with child sexual abuse commission. Not perfectly correlated—most consumers don't abuse children—but significantly correlated. Removing barriers to CSAM consumption, even AI-generated content, increases the overall risk environment for real children.

Fourth, AI-generated CSAM using real children's faces—which some of Grok's images reportedly did—is a form of non-consensual sexual exploitation. Someone's face is being used without permission to create sexual content. That person never consented. That's not victimless.

So no, the fact that the images are synthetic doesn't make the problem go away. In one respect it makes the problem worse: production no longer requires access to a real child, so the physical constraint on producing illegal content is gone, and it can be produced at scale.

Payment processors know this. They've had to think through these issues because of Porn Hub and similar platforms. They've consulted legal teams. They know the law. They know the research.

They just decided it didn't matter when Elon Musk was involved.

The timeline shows key moments when payment processors took significant actions against platforms for content-related issues. Estimated data highlights the increasing frequency of such interventions.

Deepfakes of Real People: The Taylor Swift Problem and Escalating Violations

Grok's issues with generating sexualized images of children are only part of the problem. The system has also been extensively used to generate non-consensual sexual deepfakes of real, living people.

The most prominent example is Taylor Swift. For months, non-consensual sexual deepfakes of Swift have been circulating on X. Some were generated by Grok. Some were generated by other tools. But Grok has been a significant vector for this content.

Swift didn't consent to having her face used to generate sexual images. She didn't opt in. She didn't choose this. It happened to her because she's famous enough to be in Grok's training data, and the system lacks sufficient safeguards to refuse these requests.

Now, this is a different legal question than CSAM. Creating non-consensual sexual deepfakes of adults is illegal in some jurisdictions and exists in legal gray areas in others. But it's universally recognized as harmful and exploitative.

X has a documented history of failing to remove these deepfakes. The platform has repeatedly allowed non-consensual sexual content to remain up despite reports. This isn't a new problem. It's a consistent failure of X's moderation systems.

But again, payment processors remained silent. They didn't cut off X for facilitating the creation of non-consensual sexual deepfakes. They didn't demand better moderation. They didn't threaten action.

They just... tolerated it.

Compare this to OnlyFans in 2021, when the company received pressure from banks to ban sexually explicit content entirely. That pressure was on a platform where all content was uploaded by consenting adults. It resulted in the company briefly announcing a ban on explicit content, which caused a massive outcry and forced it to reverse course.

But when Grok is generating non-consensual deepfakes? Silence.

The inconsistency suggests the problem isn't about protecting people from sexual content. It's about protecting payment processors from perceived threats, and Elon Musk apparently doesn't qualify.

The Musk Effect: Why One Person's Wealth Broke the System

There's no way around it: payment processors are treating Elon Musk differently.

Musk controls one of the largest social media platforms on the planet. He has 200+ million followers. He has direct access to a megaphone that reaches massive audiences instantly. He's been willing to use that platform to attack institutions he disagrees with. He's politically connected. He's powerful.

Payment processors can't afford to alienate him.

That's not conspiracy thinking. That's just how power works.

When you control payment processing for the entire internet, you need to maintain relationships with major platforms and services. Cutting off X would be devastating to X's revenue, yes. But it would also create massive PR problems for the payment processor. Musk could post a single message to 200 million people accusing them of political persecution. He could direct his followers to use alternative payment methods. He could lobby politicians to break up their business.

The calculation becomes: Is the reputational damage from not cutting off Grok (which most people don't know about) worse than the reputational damage from getting into a public war with Elon Musk (which most people would know about)?

For payment processors, apparently the answer is clear. The known, immediate threat from Musk is worse than the unknown, abstract threat of CSAM production.

It's a calculation that makes sense from a pure business perspective. It's also completely immoral.

AI-generated CSAM poses severe legal, societal, and ethical challenges, with high impact ratings across various categories. Estimated data.

Historical Precedent: When Payment Processors Actually Enforced Standards

Let's walk through a few examples of payment processors taking action, just to make clear that they can enforce standards when they want to.

Pornhub (December 2020): Following a New York Times investigation, Mastercard and Visa cut off all payment processing for Pornhub within days. The investigation revealed that the platform hosted CSAM and non-consensual content. Payment processors didn't negotiate. They didn't implement graduated responses. They just ended the relationship.

Civitai (May 2025): When Civitai's payment processor decided they didn't want to support AI-generated explicit content, they terminated the relationship. No discussion. No chance to negotiate. No chance to implement better moderation. Just gone.

Valve Adult Games (July 2025): When payment processors pressured Valve to remove adult games from Steam, Valve capitulated. The processors' threat was credible enough that one of the largest companies in the gaming industry decided it wasn't worth the fight.

OnlyFans (August 2021): When banks pressured OnlyFans to ban sexually explicit content, the company announced the ban. It reversed course only because of massive user backlash. But the fact that OnlyFans was willing to make the announcement in the first place shows how much pressure banks can apply.

Eden Alexander's Hospital Fundraiser (2014): WePay shut down a crowdfunding campaign that adult performer Eden Alexander had set up to cover her medical bills, citing a single retweet. The fundraiser was legal. The campaign itself offered no sexual content. But WePay decided it was too risky.

Adult Performers' Bank Accounts (2014): JPMorgan Chase closed bank accounts belonging to adult performers without notice or explanation. Not because they violated any law. Just because they worked in adult entertainment.

These examples show a pattern: payment processors are willing to exercise tremendous power over business operations when they decide something needs to be stopped. They're willing to act unilaterally. They're willing to ignore negotiations. They're willing to destroy companies and livelihoods.

With Grok, they decided not to exercise that power.

Why This Matters: The Precedent Problem

If you think this is just about one AI system on one social media platform, you're missing the bigger picture.

This is about precedent. This is about what payment processors are willing to tolerate and what they're willing to stop. And right now, the message is: If you're powerful enough, the rules don't apply to you.

That's a terrifying precedent.

Think about what this normalizes for other companies with problematic content. If you're a platform with CSAM, and you notice that payment processors aren't cutting off X for producing CSAM, what's your incentive to stop? If the worst-case scenario is silence, that's not much of a deterrent.

Think about what this means for future AI systems. If you're building an image generation model and you want to cut corners on safety, knowing that payment processors might look the other way if you're powerful enough, why would you invest heavily in safety? The regulatory cost of not doing so appears to be zero if you're important enough.

Think about what this means for other forms of harmful content. If payment processors won't act on CSAM production involving AI, what other lines are they willing to ignore? What other harms will they tolerate from powerful enough actors?

The precedent here is: wealth and power insulate you from rules that apply to everyone else. Payment processors have unilateral power to enforce standards, but they'll only exercise that power against people and platforms they judge to be weak enough not to fight back effectively.

That's not a regulatory system. That's feudalism.

Estimated data shows that OnlyFans faced significant pressure to moderate content, unlike X platform and payment processors, which have shown minimal response to non-consensual deepfakes.

The Industry's Shared Responsibility Problem

Visa, Mastercard, American Express, Discover, and Stripe all operate within the same industry. They all follow similar regulatory frameworks. They all have similar values statements about protecting children.

But they've all gone silent on Grok.

That's not a coincidence. That's industry-wide coordination, whether explicit or implicit.

When one payment processor decides not to cut off X, every other payment processor benefits from the decision. If Visa had tried to cut off X alone, X could have directed users to Mastercard. If Stripe had tried to enforce standards, X could have switched processors. Acting alone would cost a company X's business without actually cutting X off, so nobody wants to move first. When the entire industry stays silent, nobody has to.

It's a form of tacit collusion. Nobody explicitly coordinated the decision, but everyone benefits from the outcome. Everyone knows that none of them can afford to be the one that takes action and looks like they're being unreasonable.

Meanwhile, children are being harmed.

The Financial Coalition Against Child Sexual Exploitation, which was founded to coordinate the payment industry's response to CSAM, also went silent. This is an organization whose entire purpose is fighting CSAM. They had the moral authority and the industry relationships to push for action.

They said nothing.

Maybe they couldn't. Maybe they decided the political pressure wouldn't be worth it. Maybe they consulted their lawyers and decided they couldn't take action. But the silence is complicit.

This is what Lana Swartz, author of "New Money: How Payment Became Social Media," pointed out: "The industry is no longer willing to self-regulate for something as universally agreed on as the most abhorrent thing out there." The fact that they're willing to self-regulate for legal adult content but not for CSAM shows where their actual priorities lie.

Spoiler alert: they're not with children.

The Regulatory Vacuum and Why Government Hasn't Acted

You might wonder: where's the government? Why isn't Congress forcing payment processors to cut off X?

Good question.

The answer is complicated. First, Congress moves slowly. CSAM is a federal crime, but the connection between payment processing and AI-generated CSAM is relatively new. Grok's CSAM production happened in late 2025 and early 2026. It takes time for Congress to even become aware of issues, much less investigate and legislate on them.

Second, Section 230 of the Communications Decency Act limits the liability that platforms face for user-generated content. That protection has been weakened somewhat over the years, but it still exists. Payment processors have arguably been relying on the theory that they're not liable for the content that payment ultimately enables.

Third, Elon Musk is politically connected. He's close to the Trump administration. He's been publicly critical of the Biden administration. He's funded Republican candidates. Those relationships create political complexity around any effort to regulate X or Musk's businesses.

Fourth, the payment processing industry has spent a lot of money lobbying for safe harbors and reduced liability. They've generally been successful in their advocacy. Congress has been sympathetic to arguments that payment processors can't possibly monitor everything that happens on every platform they process payments for.

So there's a gap: the industry has successfully argued they can't be held responsible for content they process payments for, but they've also demonstrated willingness to exercise enormous power over what content gets created. They can't have it both ways, but apparently nobody in government is forcing them to choose.

That leaves the field to the private sector: advocacy groups, researchers, journalists, and hopefully public pressure. And that's not enough.

What Payment Processors Should Do (But Won't)

If payment processors actually cared about their stated values, here's what they should do:

First, issue a statement. Not silence. Not "no comment." A clear statement that Grok's production of CSAM is unacceptable and that they're taking steps to address it.

Second, conduct an audit. Figure out how much of X's revenue is coming from subscriptions used to generate illegal content. Get real numbers, not estimates.

Third, set a deadline. Give X a specific date by which they need to implement sufficient safeguards. Make it public. Make it credible.

Fourth, escalate if needed. If X doesn't meet the deadline, cut off payment processing. Yes, it's a nuclear option. That's the point. It's supposed to be scary enough to force compliance.

Fifth, apply the same standard everywhere. If payment processors cut off X for CSAM production, they need to apply the same standard to every other platform that produces CSAM. No special treatment for anyone.

Sixth, admit the double standard. Payment processors have been inconsistent in their enforcement. They should admit it. They should apologize for pressuring OnlyFans over legal content while tolerating X over illegal content. They should explain how they're going to do better.

Will they do any of this? No. Almost certainly not.

Payment processors profit from processing payments. The more payment volume, the more they profit. X generates huge payment volume. Cutting off X would reduce their profits. And at the end of the day, corporations care about profits more than they care about children.

That's not a controversial statement. It's observable from their actual behavior.

The X Response: Denial and Delay

X's response to the CSAM issue has been, charitably, evasive.

Musk has claimed repeatedly that new safeguards prevent Grok from generating undressed images of people. But The Verge tested these claims and found they weren't true. Deepfake images of real people in sexually suggestive positions were being generated well after Musk claimed the restrictions were in effect.

X's approach seems to be: deny the scope of the problem, claim safeguards exist that don't, and hope the issue goes away.

At one point, Grok claimed that image generation had been restricted to paying X subscribers, even though free users could still access it. The claim was false. The truth is messier and more inconsistent.

The reality is that Grok's safeguards are weak and inconsistently applied. Some egregious prompts get blocked, but prompt engineering (rephrasing a request until it slips past the restrictions) works remarkably well with Grok. You can't ask it to "generate CSAM," but you can ask it to "generate artistic interpretations of youth." You can't ask it to "create deepfakes of Taylor Swift," but you can ask it to "generate images of a woman in suggestive positions." With enough people trying enough variations, some prompts will get through.

This isn't a technical problem with an easy solution. It's a fundamental problem with the approach. Rule-based content moderation fails at scale. You can't write rules strict enough to prevent all harmful content without making the system useless for legitimate purposes.
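The tradeoff can be illustrated with a deliberately naive keyword filter. This is a toy sketch, not a description of how Grok or any real moderation system works; the rule sets and prompts are hypothetical, neutral placeholders. A narrow rule misses a reworded request, and a broader rule that catches the rewording also blocks a harmless one.

```python
# Toy model of rule-based moderation: block a prompt if it contains any
# listed phrase. Rule sets and prompts are hypothetical placeholders,
# not real filter rules from Grok or any other vendor.

NARROW_RULES = {"forbidden subject"}
BROAD_RULES = {"subject"}


def is_blocked(prompt: str, rules: set[str]) -> bool:
    """Return True if any rule phrase appears in the prompt (case-insensitive)."""
    text = prompt.lower()
    return any(phrase in text for phrase in rules)


direct = "Draw the forbidden subject"
reworded = "Draw an artistic take on the subject we can't name"
legitimate = "Paint a still life with a vase as the main subject"

# Narrow rule: catches the exact wording, misses the rewording.
print(is_blocked(direct, NARROW_RULES))      # True
print(is_blocked(reworded, NARROW_RULES))    # False

# Broad rule: catches the rewording, but also blocks a harmless request.
print(is_blocked(reworded, BROAD_RULES))     # True
print(is_blocked(legitimate, BROAD_RULES))   # True
```

Production systems rely on trained classifiers and human review rather than string matching, but the same tension between missed harmful requests and blocked legitimate ones remains, which is why the problem calls for ongoing investment rather than a fixed rule list.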

The only real solution is sustained investment in AI-based detection systems, human moderators, and constant iteration. That costs money, and the company behind Grok apparently decided it wasn't worth it.

Meanwhile, children are being harmed.

The Bigger Problem: Why We Can't Trust Private Companies With Safety

This entire situation reveals a deeper problem: we've outsourced child safety to private companies that have proven they can't be trusted with it.

Payment processors have power that should arguably be government power. They can cut off entire businesses. They can destroy livelihoods. They can shape what content gets created and distributed. That's essentially regulatory power, but it's being exercised by private companies with shareholders and profit incentives.

Those profit incentives are not aligned with child safety.

They're aligned with revenue maximization. With expanding into new markets. With maintaining relationships with important clients. With avoiding PR problems. Child safety is somewhere on the list, but it's not at the top.

And when child safety comes into conflict with profit maximization, profit wins. As we've seen with Grok.

This is a fundamental governance problem. We need payment processors to facilitate commerce. But we also need them to refuse to process payments for illegal activity. Those two requirements are in tension.

The way we've tried to resolve that tension—through private industry self-regulation—has failed. Self-regulation works only when the incentives align. When they don't, companies will self-regulate selectively, enforcing standards against the weak while looking the other way for the powerful.

The solution probably involves actual government regulation. Rules set by law, not by companies. Enforcement carried out by agencies accountable to the public, not by profit-seeking corporations. Consequences that are consistent and predictable, not selective and arbitrary.

But that's not happening. So we're stuck with a system where payment processors can simultaneously claim to care deeply about child safety while enabling child sexual abuse material production at scale.

It's the worst of all possible worlds.

Where We Go From Here: The Future of Enforcement (or Lack Thereof)

What happens next? Honestly, probably nothing changes.

Grok will continue generating CSAM. X will continue claiming it's implementing safeguards while doing nothing meaningful. Payment processors will continue processing payments. Children will continue being harmed.

Maybe an advocacy group will do another study and find that the problem has gotten worse. Maybe there will be headlines. Maybe Congress will hold a hearing where executives say they're "committed to child safety" while refusing to actually do anything about it.

But the fundamental power dynamic won't change. As long as X is profitable for payment processors, they won't cut it off. As long as there's money to be made, they'll pretend their hands are tied while exercising enormous power over other platforms.

The only thing that could change this is either: (1) actual government regulation that removes payment processors' discretion, (2) massive public pressure that makes payment processor inaction more costly than action, or (3) something Musk does that finally breaks the tacit bargain between him and the financial industry.

None of those seem imminent.

So the most likely scenario is that we're looking at an ongoing crisis of child safety in AI systems, enabled by payment processors who could stop it but won't. And as more companies build AI systems with insufficient safeguards, knowing that payment processors might tolerate it if they're important enough, the problem will get worse.

Welcome to the future. It's not great.

Conclusion: The Moment Everything Changed

There was a moment when the line was clear. Payment processors would cut off your business if you hosted child sexual abuse material. It didn't matter how powerful you were. It didn't matter how important your platform was to the internet. CSAM was the line, and it was non-negotiable.

That moment is gone.

Elon Musk's Grok system has been generating child sexual abuse material at an industrial scale, and payment processors have decided it's worth tolerating. Not because the problem isn't real. Not because the material isn't illegal. Not because children aren't being harmed. But because Musk is powerful enough that payment processors decided the consequences of action exceed the consequences of inaction.

It's a stunning and devastating reversal. For years, payment processors weaponized their position as gatekeepers to enforce standards, often far beyond what the law required. They banned adult performers, cut off legal consensual platforms, and destroyed businesses based on perceived risk.

But when an AI system started generating CSAM, they said nothing.

The hypocrisy is staggering. The message is clear: if you're powerful enough, the rules don't apply. If you're a weak startup or a marginalized performer, payment processors will destroy you. If you're Elon Musk, they'll look the other way while you enable child sexual abuse.

This isn't just about Grok. This is about what happens when we allow private companies to exercise government-like power without government-like accountability. It's about the corruption that happens when power and profit align and there's nobody checking the alignment.

And most importantly, it's about children whose safety was sacrificed on the altar of Elon Musk's political leverage.

That's a line we shouldn't have crossed. But we did. And the payment processing industry decided that once the line was crossed, there was no point in trying to draw a new one.

The damage is done. The precedent is set. And the only question now is how many more harms we'll tolerate before we finally decide that some things matter more than profit and power.

The answer, based on payment processors' behavior so far, is: a lot.

FAQ

What is CSAM and why is it different from other adult content?

CSAM stands for Child Sexual Abuse Material. It refers to sexually explicit images or videos depicting minors, including computer-generated depictions. Federal law prohibits CSAM, including computer-generated images that are indistinguishable from real minors, and it's fundamentally different from consensual adult content because children cannot consent to being sexualized. CSAM normalizes child sexual abuse, correlates with higher rates of child abuse, and is illegal to produce, distribute, or possess in the United States.

How does Grok actually generate CSAM, and why are standard safety measures failing?

Grok is xAI's AI chatbot, and its image-generation feature turns written descriptions into images. It's supposed to refuse requests for illegal content, including CSAM. However, researchers found that Grok's safeguards are insufficient. The system can be manipulated through prompt engineering: rephrasing a request in different ways until one version gets through the filters. For example, if a direct request for CSAM is blocked, an alternative phrasing like "generate artistic interpretations of youth" might succeed. This is why rule-based content moderation fails at scale: people are creative at finding workarounds.

Why did payment processors suddenly go silent on Grok when they previously cracked down on Porn Hub and other platforms?

Payment processors applied strict enforcement against Pornhub in 2020, OnlyFans in 2021, and Civitai in 2025. However, when Grok started producing CSAM, Visa, Mastercard, American Express, Stripe, and Discover issued no statements and took no action. The difference appears to be power: Elon Musk controls X, a major social media platform on which he personally has more than 200 million followers, and he wields significant political influence. Payment processors likely calculated that the reputational risk of acting against Musk (who could broadcast complaints to a massive audience and mobilize political opposition) exceeded the reputational risk of tolerating CSAM production. This selective enforcement based on perceived power is deeply problematic because it establishes that rules are negotiable for the powerful and non-negotiable for the weak.

What does it mean that X subscriptions are paywalled, and why does that matter for payment processors?

Some of Grok's image generation features are restricted to X Premium subscribers, meaning users must pay for a subscription to access them. When a user buys an X subscription through Stripe, Apple Pay, or Google Pay, money flows from the user through the payment processor to X. This creates direct financial liability for payment processors: they're knowingly processing payments for access to a system that generates CSAM. This is precisely the kind of situation that triggered Pornhub's deplatforming in 2020. The fact that payment processors tolerate it now suggests they're willing to enable illegal activity if the enabling entity is powerful enough.

How did the Center for Countering Digital Hate study calculate that Grok generates a sexualized image of a child every 41 seconds?

The organization analyzed a sample of 20,000 images generated by Grok between December 29, 2024, and January 8, 2025. Within that sample, they found 101 sexualized images of children, a rate of roughly 0.5%. Extrapolating that rate across Grok's total image output for the period, they estimated that 23,000 sexualized images of children were generated over the 11 days. Eleven days is 264 hours, or 950,400 seconds; dividing that span by 23,000 images gives approximately one sexualized image every 41 seconds. The figure is an estimate extrapolated from a sample rather than a count of every image Grok produced, but it applies the observed rate directly, making it a conservative extrapolation.
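The arithmetic in this answer can be checked in a few lines of Python. The sample figures (101 of 20,000 images), the 23,000 estimate, and the 11-day window come from the study as described here; the implied total image volume is a number derived for illustration, not one this article reports.

```python
# Reproduce the extrapolation using the figures quoted above.
SAMPLE_SIZE = 20_000        # images examined in the sample
FLAGGED_IN_SAMPLE = 101     # sexualized images of children found in the sample
ESTIMATED_TOTAL = 23_000    # study's estimate for the full period
DAYS = 11                   # December 29 through January 8, inclusive

rate = FLAGGED_IN_SAMPLE / SAMPLE_SIZE                # 0.00505, about 0.5%
implied_total_images = ESTIMATED_TOTAL / rate         # total output the estimate implies (derived)
period_seconds = DAYS * 24 * 60 * 60                  # 950,400 seconds
seconds_per_image = period_seconds / ESTIMATED_TOTAL  # about 41.3 seconds

print(f"sample rate: {rate:.2%}")
print(f"implied total images: {implied_total_images:,.0f}")
print(f"one image every {seconds_per_image:.1f} seconds")
```

The interval comes from dividing the time span by the image count, not the other way around; the derived total (roughly 4.5 million images) shows how much overall output a 0.5% rate implies.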

What's the difference between AI-generated and real CSAM, and does it matter legally?

AI-generated CSAM isn't legal just because no real children were harmed in its production. The PROTECT Act of 2003 extended federal law to computer-generated images that are indistinguishable from real minors, and to obscene depictions of minors in sexual situations, including drawings and cartoons. Beyond the legal question, there are practical harms: AI-generated CSAM normalizes the sexualization of children, can use real children's faces without consent (a form of non-consensual sexual exploitation), and is correlated with increased risk of child sexual abuse. Additionally, producing CSAM synthetically removes the physical constraint that previously limited production, meaning it can now be generated at industrial scale without needing to abuse real children. This doesn't make the problem better; it makes it worse.

What power do payment processors actually have, and why is it important to this story?

Visa, Mastercard, and other payment processors are gatekeepers to commerce on the internet. They don't host content or own platforms, but they control whether money can flow to and from those platforms. If a payment processor cuts off a business, that business loses its primary revenue source and typically dies quickly. This gives payment processors enormous regulatory power without the accountability of government agencies. They've demonstrated this power repeatedly: cutting off Pornhub, pressuring Valve to remove adult games, forcing OnlyFans to announce content bans, freezing individual performers' bank accounts. This power is essentially governmental in nature, but it's exercised by private companies pursuing profit rather than public welfare. When those incentives misalign with child safety, the profit motive wins.

Could Congress force payment processors to cut off X, and if so, why hasn't it happened?

Congress could theoretically pass legislation requiring payment processors to refuse service to platforms producing CSAM. However, several obstacles exist: Congress moves slowly and Grok's mass CSAM production is recent. Elon Musk is politically connected and the Trump administration may be hesitant to regulate his businesses. Payment processors have successfully lobbied for safe harbors limiting their liability for platform content, making legislative action complicated. Additionally, Congress has traditionally been sympathetic to arguments that payment processors can't monitor everything they process payments for. So while the legal authority to force action exists, the political will and incentive structure don't currently support it.

What does this situation tell us about the effectiveness of private industry self-regulation?

This situation reveals that private industry self-regulation is fundamentally flawed when profit incentives conflict with safety objectives. Payment processors claim commitment to child safety but have demonstrated willingness to enforce standards selectively based on perceived power. They aggressively pressured OnlyFans over legal content and cut off weak performers for minimal infractions, but go silent on Grok for illegal content. This suggests they're motivated not by principle but by cost-benefit analysis weighted toward profit. Self-regulation works only when incentives align with stated values. When they don't, companies will regulate selectively, enforcing against the weak while looking the other way for the powerful. The solution likely requires government regulation with consistent enforcement, not corporate self-regulation.

Key Takeaways

- Grok generated an estimated 23,000 sexualized images of children in just 11 days (one every 41 seconds), yet payment processors took no enforcement action

- The same payment processors aggressively cut off or pressured Pornhub, OnlyFans, Civitai, and individual performers over legal or marginally problematic content

- Elon Musk's political power and platform influence created a selective enforcement pattern where the wealthy escape accountability

- Grok requires paid X Premium subscriptions for full image generation, meaning payment processors are directly enabling CSAM monetization

- Section 230 and payment processor lobbying have created a governance gap where private companies exercise governmental power without governmental accountability


![Payment Processors' Grok Problem: Why CSAM Enforcement Collapsed [2025]](https://tryrunable.com/blog/payment-processors-grok-problem-why-csam-enforcement-collaps/image-1-1769447527957.jpg)