Introduction: When a Doorbell Becomes a Neighborhood Watchdog

There's a moment that every tech company dreads. It's when customers realize that the product they've invited into their home might be doing more than advertised. For Amazon-owned Ring, that moment arrived in early 2025, months after the company announced a partnership with Flock Safety, a surveillance company with deep ties to law enforcement.

Within weeks, the backlash was swift and damaging. Ring users were publicly destroying their cameras. Online communities erupted with calls to boycott. A Super Bowl ad featuring the company's new Search Party feature ignited fears of mass surveillance networks sweeping through neighborhoods. And then, just as quickly as it began, Amazon pulled the plug entirely.

But here's what makes this story worth examining: it reveals something fundamental about the direction technology is heading and how public pressure can still change corporate decisions. This isn't just about Ring and Flock. It's about the broader tension between convenience and privacy, between the smart home we're building and the surveillance infrastructure we're inadvertently creating.

The Ring-Flock partnership cancellation happened quietly compared to the initial announcement. The company released a brief statement saying the integration would require "significantly more time and resources than anticipated." But that's corporate speak. What really happened was a calculated decision that the reputational damage outweighed the integration benefits.

Understanding why this happened, how it happened, and what it means for the smart home industry requires looking at several interconnected pieces: Ring's evolving relationship with law enforcement, the growing sophistication of AI-powered surveillance, the specific concerns around Flock Safety itself, and the mechanics of how social media campaigns can force major tech companies to reverse course.

Let's start with the basics. Ring isn't a surveillance company by original design. It started as a simple video doorbell. But over the past decade, it's evolved into a sophisticated home security ecosystem with facial recognition, AI-powered threat detection, and now features that can scan entire neighborhoods.

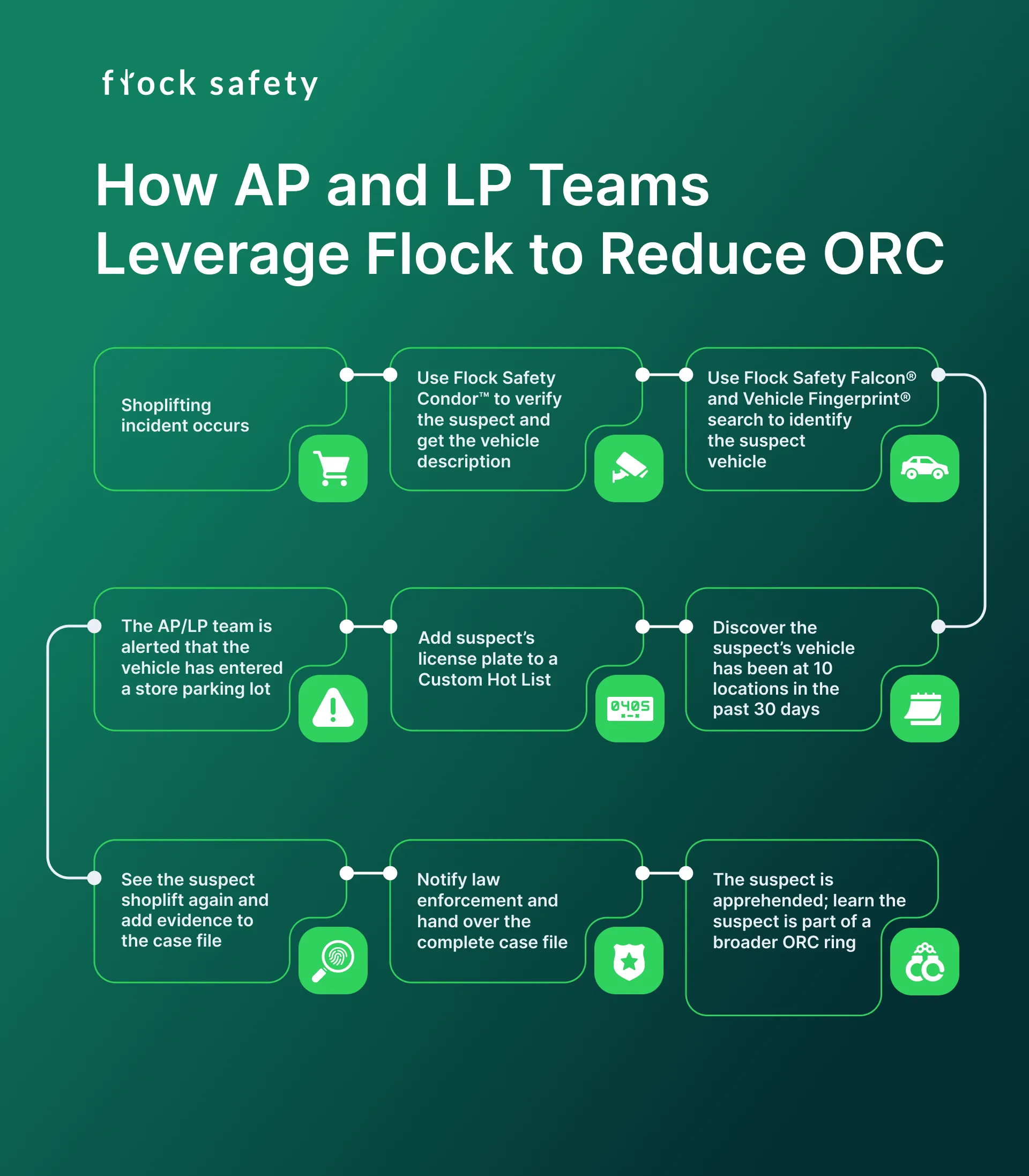

Meanwhile, Flock Safety operates networks of surveillance cameras across thousands of U.S. cities. These aren't Ring cameras. They're dedicated cameras mounted on utility poles, in parking lots, and on building facades. Flock's software ties them together into a searchable database that law enforcement can query. The company claims this helps solve crimes faster. Critics argue it creates a mass surveillance network that disproportionately impacts communities of color and threatens civil liberties.

When Ring announced the partnership in October 2024, the plan was straightforward: allow law enforcement agencies to access Ring customer videos through Flock's network. This would've been optional for users, but it would've fundamentally changed the nature of Ring's ecosystem from a home security tool to part of a larger surveillance apparatus.

What Amazon didn't anticipate was just how much the public would care. And that oversight cost them.

The Partnership That Nobody Asked For

Ring's move toward law enforcement integration didn't happen overnight. The company has been carefully cultivating relationships with police departments since around 2016, when Ring began its Request for Assistance program. This program allowed police to ask Ring users to voluntarily share footage from their cameras.

On the surface, this sounds reasonable. If a crime happens on your street, wouldn't you want to help catch the perpetrator? In practice, though, critics raised serious concerns. The program required no warrant. Police could ask for video from any address in a neighborhood, and Ring would help distribute the request. This meant Ring was essentially acting as a broker between law enforcement and its users, without explicit consent from those users whose footage might be requested.

Consumer advocacy groups like the Electronic Frontier Foundation pushed back hard. They pointed out that the Fourth Amendment protects against unreasonable searches, and while the program was technically voluntary, the power dynamic created subtle coercion. If police are asking for your footage, many people feel obligated to comply, warrant or not.

Ring eventually shut down the Request for Assistance program and replaced it with Community Requests, which was supposed to be more restricted. But the damage to public trust was already done. The company had established itself as willing to work directly with law enforcement in ways that didn't require traditional legal oversight.

Enter Flock Safety. The company had built something genuinely powerful: a network of thousands of surveillance cameras that could be searched by law enforcement almost instantly. Need to find a car that drove past a crime scene? Flock could search their entire database across multiple cities. Need to identify a person who walked through a parking lot? The company's AI could help with that too.

For Ring, the partnership seemed strategically logical. Ring could offer law enforcement a new tool while maintaining its commitment to what the company called "community safety." For Flock, the partnership would've expanded their network into millions of private homes. Ring's doorbell and home security cameras would've effectively become part of Flock's searchable surveillance database.

But there was one problem: Flock Safety already had a troubled reputation in certain communities.

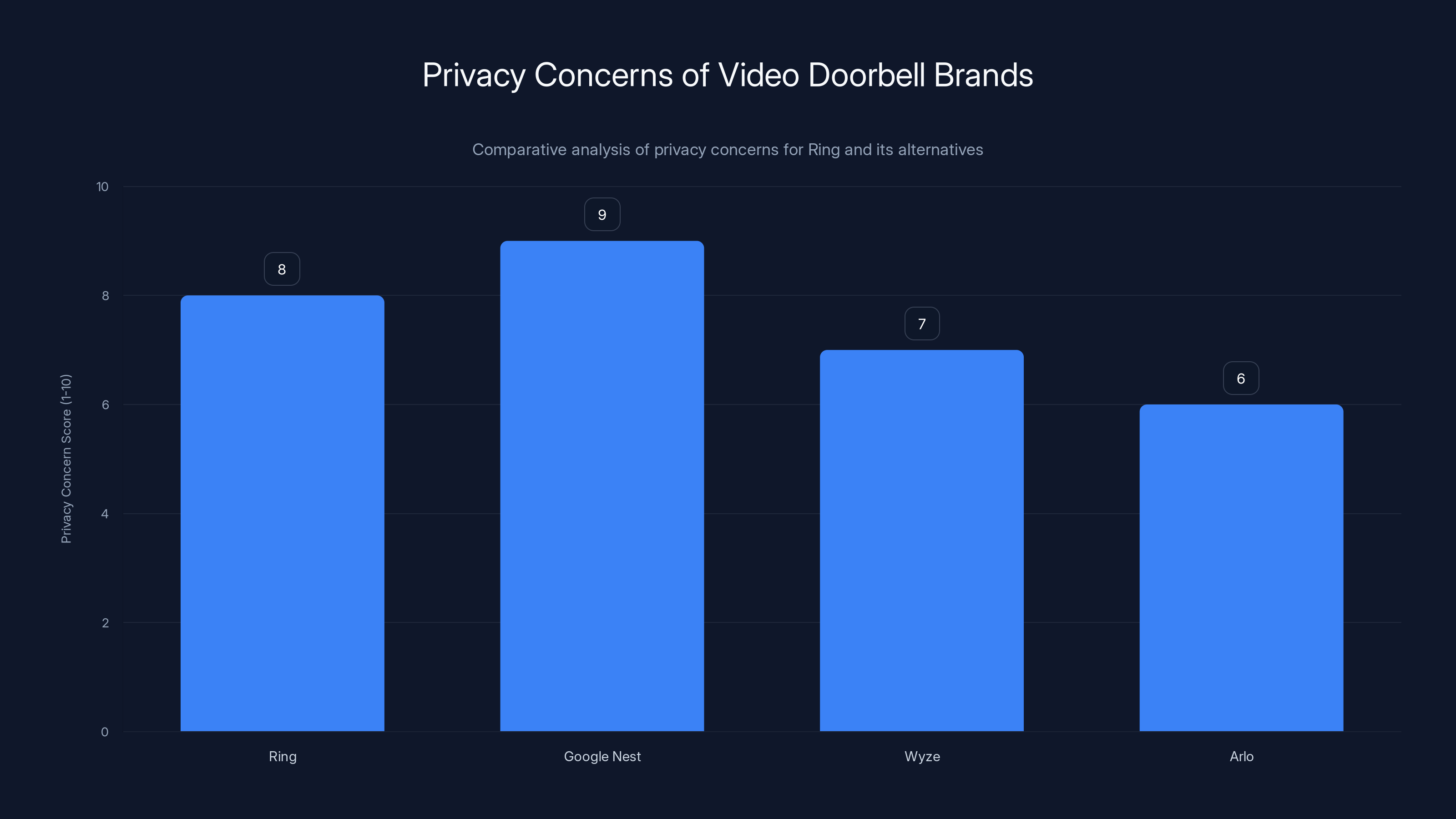

Ring and Google Nest have higher privacy concern scores due to aggressive data collection and surveillance partnerships. Estimated data based on typical privacy issues.

Flock Safety's Controversial History

Flock Safety isn't a household name like Ring or Amazon. But in certain circles—particularly immigrant communities, civil liberties organizations, and communities that have experienced heavy-handed enforcement—Flock's name carries serious weight.

The company operates camera networks in cities across the United States. These aren't optional home security cameras. They're permanent fixtures in public spaces, operated by Flock, searchable by law enforcement with minimal oversight. Some cities have installed thousands of these cameras as part of public safety initiatives.

But here's where things get controversial. Multiple reports suggested that Flock had allowed Immigration and Customs Enforcement (ICE) to access its surveillance network. If that's true, it means that someone could've been identified through Flock's database and targeted for deportation based on their location data captured by these cameras.

For immigrant communities already wary of government surveillance, this is an existential threat. It's not theoretical. ICE has demonstrated a willingness to conduct workplace raids, set up airport checkpoints, and carry out neighborhood sweeps. If Flock's cameras could be used to identify and locate undocumented immigrants, the company's network transforms from a crime-fighting tool into something closer to a deportation weapon.

Ring users, particularly in immigrant communities, immediately understood the implications. If Ring integrated with Flock, then Ring cameras in their homes could potentially be searched by federal agents looking for undocumented residents. That's not acceptable to many people, regardless of the company's assurances that integration would be "optional."

Flock's official position is that they comply with all legal requests from law enforcement. That includes ICE. The company argues that this is simply how the legal system works: if a government agency has probable cause or a warrant, it can request data. But critics point out that the Fourth Amendment standard is not one or the other. It requires a warrant that is itself based on probable cause. If Flock is handing over data to ICE without holding requests to that standard, the company may be enabling unconstitutional searches.

None of this was widely known outside policy and civil liberties circles until Ring announced the partnership. Then, suddenly, millions of Ring users who had invited the company into their homes realized that their cameras might eventually connect to networks they didn't want to be part of.

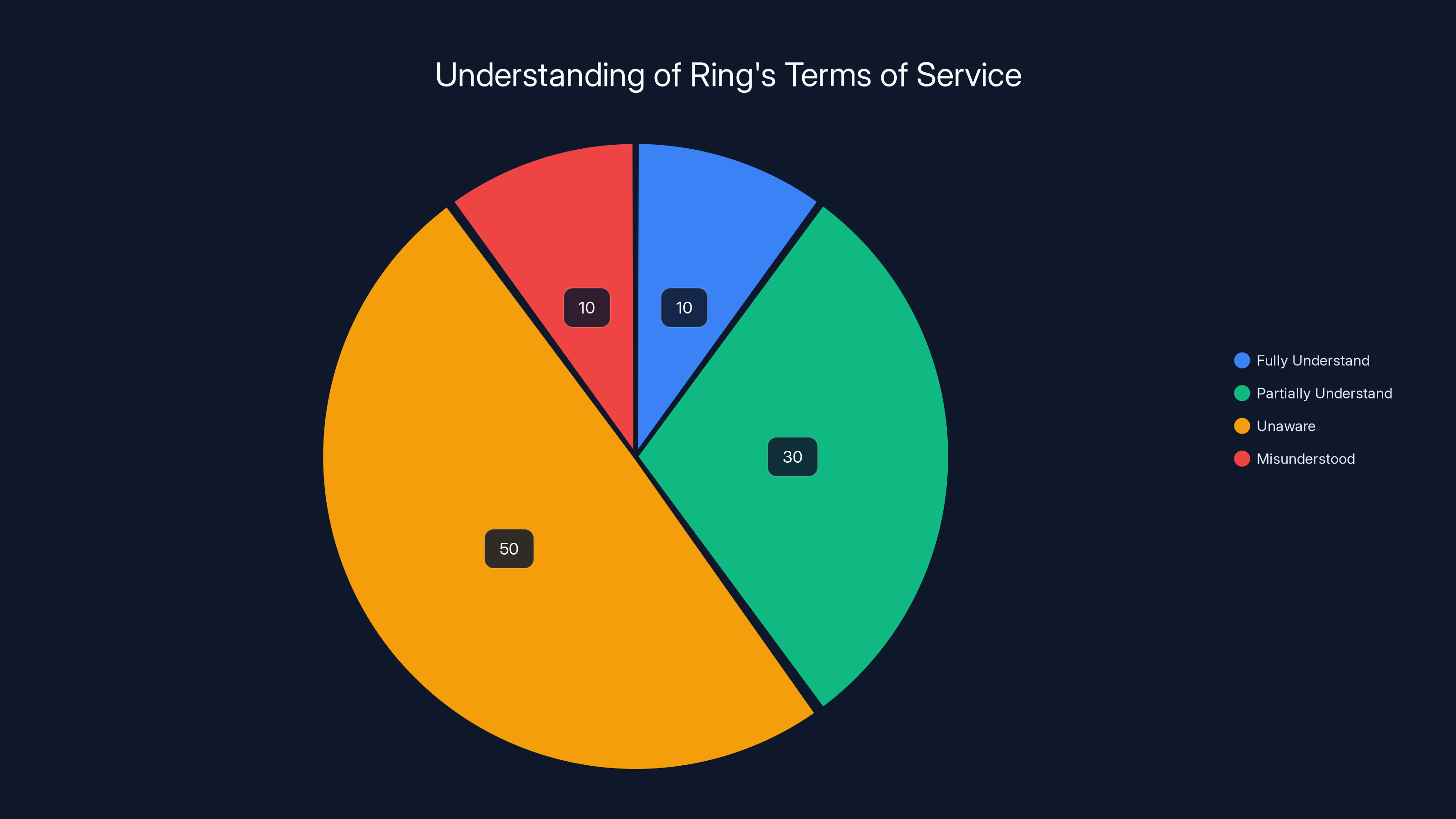

Estimated data suggests that a majority of Ring users are unaware or misunderstand the full implications of the terms of service, highlighting a gap in meaningful consent.

The Super Bowl Ad Disaster

Ring's leadership clearly didn't anticipate the backlash. If they had, they probably wouldn't have aired a Super Bowl commercial for their new Search Party feature right in the middle of the controversy.

The ad was meant to be heartwarming. It showed Ring cameras in a neighborhood detecting and tracking a lost dog named Toast, with neighbors helping reunite the dog with its owner. The message was clear: Ring's AI technology can help communities solve problems. Isn't that great?

Instead, what viewers saw was something far more unsettling. They saw dozens of Ring cameras positioned throughout a neighborhood, all scanning the streets in perfect synchronization. They saw artificial intelligence processing video feeds to identify and track a subject. They saw the technological foundation for a mass surveillance network.

The ad became instantly memetic. Social media users shared edited versions showing the same technology being used to track people instead of dogs. The implication was obvious: if Ring's AI can identify and locate a lost dog, why couldn't it identify and locate a person? And if all these cameras are networked together, who controls them?

Ring insisted that Search Party is designed only to find lost animals and that the feature explicitly cannot identify or locate people. But the damage was done. The ad had visualized exactly what civil liberties advocates had been warning about: the infrastructure for neighborhood-scale surveillance already exists. It's installed in millions of homes. It's AI-powered. And it's just one feature update away from being weaponized.

The timing couldn't have been worse. This ad aired while the Flock partnership was still active, while protests were ongoing, and while Senator Ed Markey was preparing an open letter calling on Amazon to abandon its facial recognition features entirely.

Several things happened simultaneously after the ad aired. Ring users on social media posted videos of themselves destroying their Ring devices. Some users announced they were removing Ring cameras from their homes and switching to competitors. Others mocked Ring's assurances about privacy, pointing out that the company's own product demonstrations showed exactly what many feared: comprehensive neighborhood surveillance.

The online conversation shifted from academic debates about privacy to visceral, emotional reactions. People didn't want to be part of this. They felt deceived. They realized that by installing a Ring camera, they might've participated in building something they didn't consent to.

The Political Pressure Campaign

While social media users were destroying Ring devices and expressing outrage online, something else was happening behind the scenes: political pressure.

Senator Ed Markey of Massachusetts has been Ring's most consistent critic in Congress. Markey has a long track record of questioning the company's privacy practices and its relationship with law enforcement. As the Flock controversy escalated, Markey didn't just issue a statement. He wrote an open letter to Amazon CEO Andy Jassy directly calling for the company to cancel its facial recognition features.

Markey's letter was significant because it represented official government concern. When a U.S. Senator publicly calls on a major corporation to abandon a specific product feature, it carries weight. It signals that regulators are watching. It suggests that there might be legislative consequences if the company doesn't respond.

But Markey wasn't alone. Various civil liberties organizations, immigrant rights groups, and privacy advocates added their voices. These weren't just fringe critics. Many of these organizations have legitimate influence with lawmakers and media outlets.

The combination of social media pressure, public outcry, and official political concern created what researchers call a "reputational crisis." For Amazon, Ring's parent company, the situation was becoming untenable. Every day the partnership remained active, more customers expressed their intention to switch. Every day, more negative press coverage accumulated. Every day, the company's brand took more damage.

More importantly, there was the possibility of legislative action. If Ring continued expanding its surveillance capabilities in partnership with companies like Flock, Congress might decide to regulate the company. That could mean restrictions on what Ring can do, requirements for explicit consent before data sharing, warrants required for law enforcement access, or other limitations that would significantly impact Ring's business model.

From a purely business perspective, canceling the Flock partnership became the rational choice. Yes, the partnership might've offered long-term benefits. But those benefits weren't worth the immediate reputational damage, the loss of customers, and the political attention that might lead to unfavorable regulations.

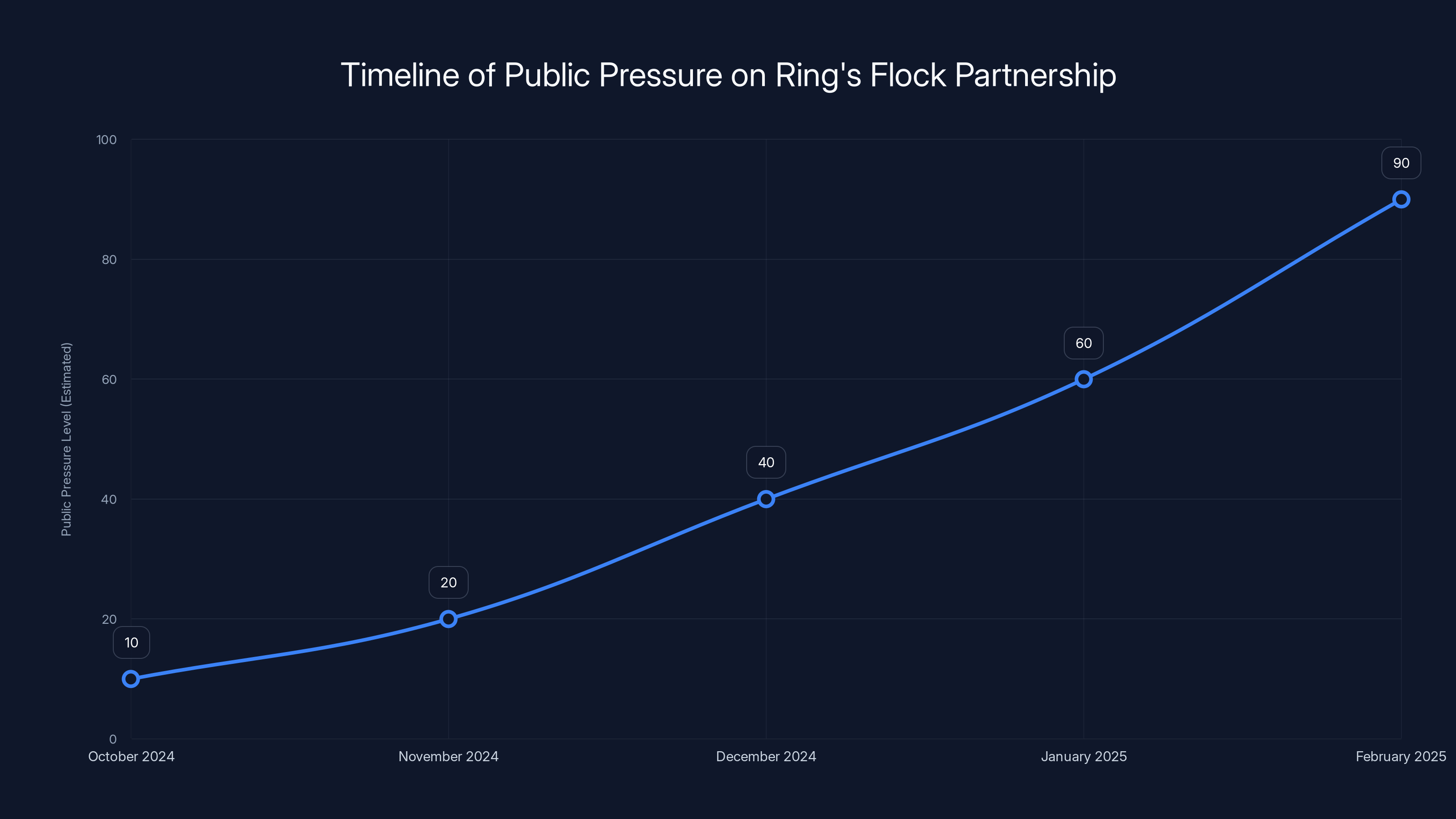

The timeline shows a gradual increase in public pressure from October 2024 to February 2025, peaking after the Super Bowl ad and Senator Markey's intervention. Estimated data.

Familiar Faces: The Facial Recognition Feature That Started It All

The Flock partnership would've been controversial enough on its own. But it arrived in the context of another Ring feature that was already generating significant concern: Familiar Faces.

Familiar Faces is Ring's facial recognition feature. When it detects someone at your door, instead of just alerting you to "a person at your door," it can identify whether that person has been seen before. If you've taught the system to recognize your family members, delivery drivers, or friends, the alert will say "Mom at the door" instead of "unknown person at the door."
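The alert behavior described above can be sketched in a few lines. This is only an illustration of the idea, not Ring's implementation: the face IDs, the `ENROLLED` table, and the `alert_text` function are all hypothetical names, and the sketch assumes some upstream recognizer has already produced a matched ID (or nothing).

```python
from typing import Optional

# Hypothetical enrolled profiles: face ID -> label chosen by the camera owner.
ENROLLED = {"face-001": "Mom", "face-002": "Delivery driver"}


def alert_text(matched_face_id: Optional[str],
               enrolled: dict[str, str] = ENROLLED) -> str:
    """Return notification text for a person detected at the door.

    `matched_face_id` is whatever the (assumed) recognizer returned,
    or None when no enrolled face was matched.
    """
    label = enrolled.get(matched_face_id) if matched_face_id else None
    if label is None:
        return "Unknown person at the door"
    return f"{label} at the door"
```

Even in this toy version, producing a friendly label requires storing a table of known faces, which is the part critics focus on.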

On paper, this sounds like a useful feature. Who wouldn't want to know if a family member has arrived home? In practice, though, facial recognition technology is controversial for several important reasons.

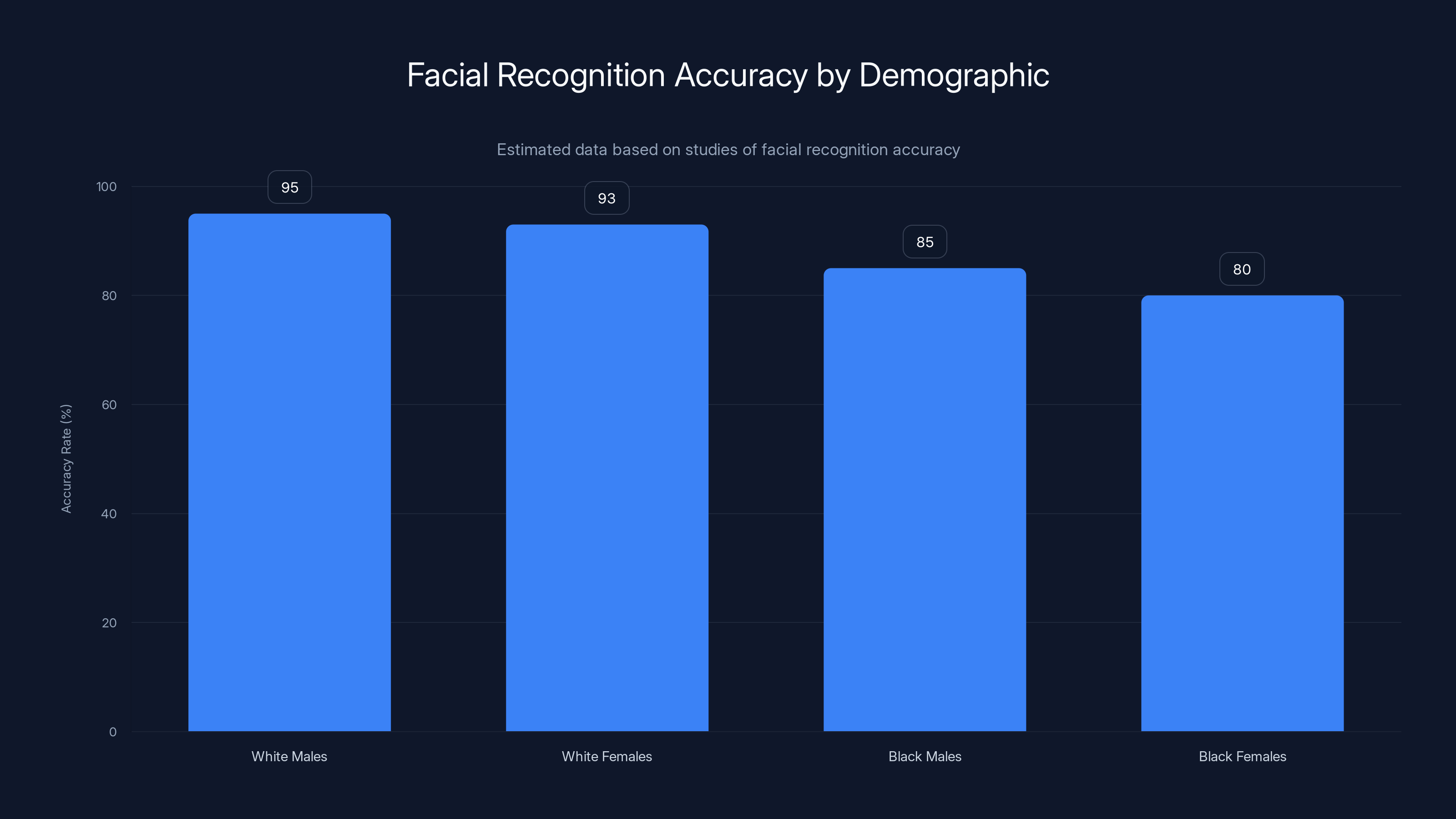

First, facial recognition is not perfectly accurate, and the errors it makes are not random. Studies have consistently shown that facial recognition systems are less accurate at identifying people with darker skin tones, and least accurate for women with darker skin. This means that if Ring's Familiar Faces feature is based on flawed facial recognition technology, it could systematically misidentify people based on race. That's not just a technical problem. That's a civil rights problem.
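One way to make "the errors are not random" concrete is to compute the error rate separately for each demographic group instead of reporting a single overall accuracy number. The sketch below is a minimal illustration of that kind of audit. The function name, the group labels, and the sample records are all invented for the example; the numbers are synthetic and are not measurements of any real system.

```python
from collections import defaultdict


def misidentification_rates(records):
    """Compute the error rate per group.

    `records` is an iterable of (group, was_correct) pairs, one per
    identification attempt. Returns {group: fraction of attempts that failed}.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, was_correct in records:
        totals[group] += 1
        if not was_correct:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Synthetic records, made up purely to exercise the function.
sample = (
    [("group_a", True)] * 9 + [("group_a", False)] * 1
    + [("group_b", True)] * 7 + [("group_b", False)] * 3
)
rates = misidentification_rates(sample)
```

With this made-up sample, overall accuracy is 80 percent, which hides the fact that one group sees three times as many errors as the other. That gap is what a bias audit is meant to surface.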

Second, facial recognition creates what privacy advocates call a "chilling effect." If people know they're being identified by face, they may change their behavior. People may avoid certain neighborhoods if they know they'll be identified there. Activists may reconsider attending protests if they know their faces will be recorded and stored. Free movement and free assembly are increasingly difficult in a world where every face is catalogued and searchable.

Third, facial recognition data can be weaponized. Law enforcement can request facial recognition data without a warrant. Unauthorized users can gain access to facial databases through hacking or insider threats. Authoritarian regimes have used facial recognition to target dissidents and minority groups. It's not paranoid to worry about the long-term implications of storing facial recognition data for millions of people.

When Senator Markey called for Ring to abandon Familiar Faces entirely, he was addressing this underlying concern. He wasn't arguing that Ring customers shouldn't be able to identify family members. He was arguing that the specific technology chosen to do this identification—facial recognition—carries risks that outweigh the benefits.

Ring's response was to defend the feature. The company pointed out that Familiar Faces is opt-in, meaning users have to actively enable it. The company also stated that facial recognition data is stored locally on the Ring device, not in the cloud, which means it's theoretically less vulnerable to unauthorized access.

But even if those assurances are true, the fundamental concern remains. A facial recognition system, even if optional and locally stored, still creates the infrastructure for identification and tracking. Combined with other Ring features like Search Party and integrated with networks like Flock, that infrastructure becomes something approaching comprehensive neighborhood surveillance.

Even as an opt-in feature, Familiar Faces remained controversial. For many users, the very existence of Ring's facial recognition capabilities was reason enough to remove the company's products from their homes.

How the Backlash Unfolded: A Timeline of Pressure

Ring's cancellation of the Flock partnership didn't happen suddenly. It followed a clear escalation of public pressure over several months. Understanding the timeline helps explain how much momentum built up against the company.

The partnership was announced in October 2024. Initially, there wasn't massive public reaction. The announcement was largely covered in tech and policy circles, not mainstream news. Most Ring users probably didn't even hear about it.

But over the following weeks, the story percolated through civil liberties organizations, immigration advocacy groups, and tech-focused social media communities. People started sharing information about Flock Safety, its relationship with ICE, and the implications of Ring's integration. These conversations happened primarily on platforms like Twitter, Reddit, and niche forums, not mainstream television.

By early February 2025, as the Super Bowl approached, the story started breaking into broader media coverage. The Washington Post, The Verge, and other major outlets published articles examining Ring's relationship with law enforcement and Flock's history with ICE. These articles reached wider audiences and validated the concerns that had been brewing online.

Then came the Super Bowl ad. The timing, from a PR perspective, was catastrophic. The ad aired while concerns about the Flock partnership were already building. It served as visual evidence of exactly what critics had been warning about. Within hours of the ad airing, social media was flooded with mocking responses, destruction videos, and calls for boycotts.

Senator Markey sent his open letter shortly after. This was significant because it elevated the discussion from consumer complaint to official government concern. Once a U.S. Senator is publicly calling for a company to change its practices, media coverage intensifies. Regulatory attention increases. The problem becomes harder to ignore.

Ring issued statements defending the partnership and the Search Party feature. But these defensive statements likely made things worse, not better. For every statement Ring released, activists and journalists found new angles to criticize. The company seemed tone-deaf to legitimate privacy concerns, which fed the narrative that Ring didn't care about its users.

The week after the Super Bowl was particularly brutal for Ring's reputation. Tech news outlets were covering the story daily. Civil liberties organizations were issuing formal statements opposing the Flock partnership. Immigration advocacy groups were warning their communities about the risks. Ring's customer service was reportedly overwhelmed with inquiries from people wanting to remove their devices.

By mid-February, it was clear to Amazon's leadership that the partnership couldn't survive. The company announced the cancellation in a carefully worded statement that emphasized this was a "joint decision" with Flock and that the integration would require "significantly more time and resources than anticipated."

But the real reason was obvious to anyone paying attention: the company's leadership realized that continuing the partnership would cause more damage to Ring's brand, market position, and customer relationships than any benefit the partnership could provide.

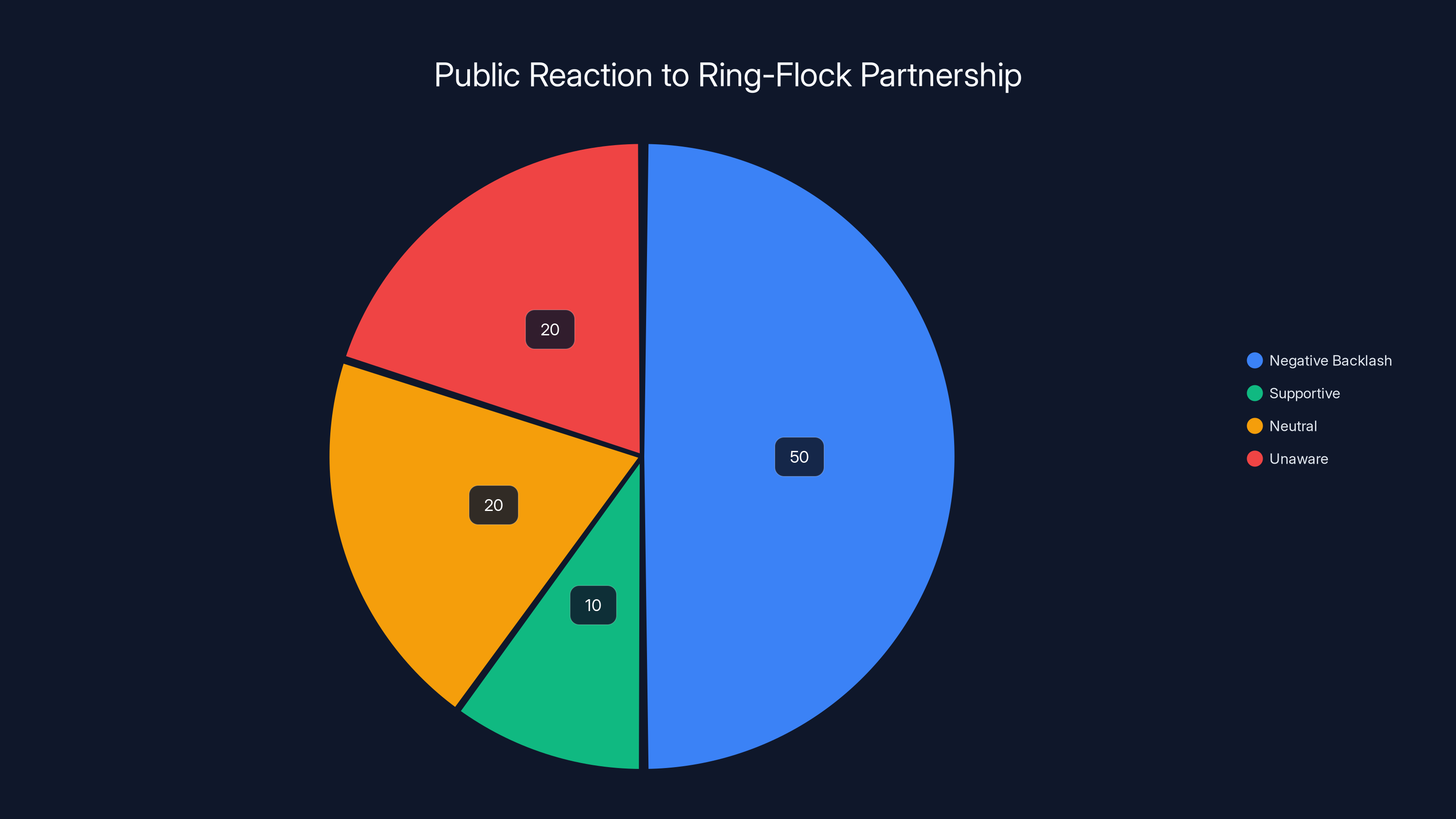

Estimated data shows a significant negative backlash (50%) against the Ring-Flock partnership, highlighting public concern over privacy and surveillance.

The Broader Implications: What This Means for Smart Home Privacy

The Ring-Flock cancellation is significant for reasons that go far beyond Ring and Flock. It reveals something important about how tech companies operate and what it takes to force them to change course.

First, it shows that public pressure still works. In an era when tech companies seem increasingly insulated from criticism, Ring's decision proves that massive, organized public opposition can still change corporate behavior. This is important because it suggests that consumers aren't entirely powerless, even when facing companies as large and powerful as Amazon.

But it also reveals the inadequacy of relying on public pressure as a privacy protection mechanism. Ring shouldn't have announced the Flock partnership in the first place. The company should have realized in advance that integrating with a surveillance company with ties to ICE would be controversial. That it took weeks of public outcry to change course suggests that Ring's privacy considerations are not as robust as they should be.

Second, the cancellation highlights the tension between the "smart home" narrative and the surveillance reality. Tech companies promote smart home devices as convenient, helpful, and privacy-respecting. Users can lock and unlock their doors remotely. They can see if their packages have been delivered. They can check on their kids or pets while away from home.

But each of these conveniences requires cameras, microphones, and AI analysis. And those capabilities, once built into homes, can be repurposed for surveillance at scale. Ring's own products demonstrate this. The company has successfully built the technical infrastructure for comprehensive neighborhood surveillance. The question isn't whether it's possible anymore. The question is whether it's appropriate.

Third, the incident raises questions about the adequacy of existing regulatory frameworks. Ring operates in a largely unregulated space. There's no federal law specifically requiring Ring to get permission before sharing camera footage with law enforcement. There's no law preventing Ring from integrating with surveillance companies like Flock. There's no law mandating that facial recognition systems be audited for bias.

Instead, companies like Ring operate under the assumption that if they don't explicitly break the law, they can do whatever seems strategically beneficial. It took overwhelming public pressure to change Ring's mind, not legal obligation. That's a fragile foundation for privacy protection.

Fourth, the incident reveals the specific vulnerability of people in immigrant communities. If Flock shares data with ICE, as multiple reports suggested, its network becomes surveillance infrastructure that can be used for immigration enforcement. Ring's willingness to partner with Flock suggests the company either didn't fully understand these implications or didn't consider them significant. Either way, the incident demonstrates that tech companies' privacy considerations often don't adequately account for the needs and vulnerabilities of the most at-risk communities.

Finally, the incident serves as a case study in how surveillance infrastructure spreads. No one company built a comprehensive surveillance network through a single decision. Rather, each company makes reasonable-seeming decisions in isolation. Ring creates a useful doorbell camera. Flock builds a helpful law enforcement tool. When these systems integrate, suddenly a surveillance network exists that nobody explicitly consented to. The sum of many reasonable decisions becomes something unreasonable in aggregate.

Ring's Law Enforcement Strategy: A Broader Pattern

The Flock partnership didn't exist in isolation. It's part of a broader strategy by Ring to position itself as essential to law enforcement and community safety.

Ring has spent years cultivating relationships with police departments. The company funds police foundations. It sponsors law enforcement conferences. It provides Ring cameras to police departments at discounted rates. Through programs like Community Requests, Ring has made it easy for law enforcement to leverage the company's installed base of millions of cameras.

From Ring's perspective, this strategy makes business sense. If police departments depend on Ring cameras as an investigative tool, police will recommend Ring to their communities. Police will encourage neighborhoods to install Ring cameras. Ring becomes not just a private home security device but also a public safety tool.

But from a civil liberties perspective, this strategy is problematic. It transforms Ring from a device that individuals choose to install for their own security into a piece of critical infrastructure that law enforcement depends on. Once law enforcement is dependent on Ring's network, Ring has leverage over law enforcement. And law enforcement has leverage over Ring users.

The Flock partnership represented the logical endpoint of this strategy. Instead of just making it easy for law enforcement to request footage from individual Ring cameras, Ring would integrate directly with Flock's network. Ring would become part of the official law enforcement surveillance infrastructure.

Ring's statements about this partnership emphasized community safety and voluntary participation. But the core concern was always about power. Once Ring integrated with Flock, law enforcement agencies wouldn't just be able to request video from specific Ring users. They'd be able to conduct broad searches across Ring's entire network in their jurisdiction, identifying people and vehicles without individual warrants.

That's fundamentally different from the traditional law enforcement model, where officers had to go to specific people and ask for their video. In the Flock-integrated model, law enforcement could conduct what amounts to mass surveillance, with Ring's infrastructure making it possible.

Ring's cancellation of the partnership suggests that the company's leadership understands some of these concerns, at least at the reputational level. But whether Ring has fundamentally changed its approach to law enforcement cooperation remains unclear. The company continues to operate Community Requests. The company hasn't committed to refusing all law enforcement requests. Ring's basic architecture still makes it possible for law enforcement to access its network.

What the Flock cancellation really shows is that Ring was willing to go further than public opinion would tolerate. The company tested the boundaries of what it could do. When it discovered those boundaries were closer than it expected, the company stepped back. But that doesn't mean Ring has abandoned its strategy of becoming essential to law enforcement. It just means the company will approach that strategy more carefully in the future.

Facial recognition systems show varying accuracy across demographics, with lower accuracy for darker skin tones, particularly among women. Estimated data based on typical findings.

The Technical Reality: What Integration Would Have Enabled

To fully understand why the Flock partnership was so concerning, it's worth examining exactly what the technical integration would have enabled.

Flock Safety operates a network of fixed surveillance cameras in cities across the United States. These cameras capture continuous video and send it to Flock's servers, where AI analyzes the footage to identify and track vehicles and people.

Ring operates a network of doorbell cameras, security cameras, and other devices installed in millions of homes. Ring's cameras are distributed across neighborhoods, mounted on the sides of homes where they point at streets, driveways, and yards.

If Ring integrated with Flock, the two networks would essentially merge. Law enforcement could search across both Ring's distributed network of home cameras and Flock's network of public cameras. A person could be tracked not just through Flock's public cameras but through a combination of public surveillance and private home cameras.

Let's say law enforcement was investigating a crime that occurred on a particular street. With Flock alone, they could search Flock's cameras on that street and nearby areas. With Ring-Flock integration, they could additionally search every Ring camera installed in the neighborhood, potentially tracking a suspect's movements through residential areas.

Or imagine a law enforcement agency trying to identify someone based on a photo or description. With Flock alone, they might find video of that person on a utility pole camera. With Ring-Flock integration, they could potentially track that person's movements across an entire neighborhood by accessing Ring cameras at multiple homes.
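The scenarios above come down to a change in which cameras fall inside a search. The toy model below illustrates only that change in scope. It is not based on either company's software: the `Camera` record, the `"public"` and `"home"` network labels, and the street names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    camera_id: str
    network: str  # "public" (pole-mounted) or "home" (doorbell); illustrative labels
    street: str


def searchable_cameras(cameras, street, networks):
    """Return IDs of cameras on `street` that belong to an allowed network."""
    return [
        c.camera_id
        for c in cameras
        if c.street == street and c.network in networks
    ]


# Made-up inventory for one small neighborhood.
cameras = [
    Camera("pole-1", "public", "Elm St"),
    Camera("door-1", "home", "Elm St"),
    Camera("door-2", "home", "Elm St"),
    Camera("pole-2", "public", "Oak Ave"),
]

# Without integration, only the public network is in scope.
before = searchable_cameras(cameras, "Elm St", {"public"})
# With integration, home cameras on the same street join the search.
after = searchable_cameras(cameras, "Elm St", {"public", "home"})
```

In this example the same query goes from one camera to three without any new hardware being installed. The only thing that changes is the set of networks the search is allowed to touch.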

Ring has developed sophisticated AI capabilities that could support these searches. The company's algorithms can recognize vehicles, identify people, and track movements across multiple cameras. These capabilities were designed for home security. But they're equally applicable to law enforcement surveillance.

Combine Ring's AI with Flock's network infrastructure, and you create something approaching comprehensive neighborhood-scale surveillance. A person couldn't enter or exit a neighborhood with Ring cameras without potentially being tracked. Activists couldn't gather without being identified. Vulnerable populations couldn't move about without leaving a searchable digital trail.

This isn't speculative. Other surveillance companies have already demonstrated this capability. Companies like Clearview AI have shown that comprehensive identification across camera networks is technically feasible. Ring and Flock would've created a similar system, but with much wider geographic coverage because Ring cameras are installed in private homes.

The key difference between Ring-Flock integration and other surveillance systems is that Ring cameras are in private homes. This creates a unique legal and ethical question: if law enforcement can search surveillance systems in public spaces, should they also be able to search surveillance systems in private homes? Most people's intuitive answer is no. The Fourth Amendment is supposed to protect the home from unreasonable government intrusion.

But once Ring cameras integrate with Flock, the distinction between public and private surveillance becomes blurred. A Ring camera on a private home still captures images of public streets. Should law enforcement be able to search those public street images without involving the homeowner? What if the Ring camera captures images of people and vehicles on private property adjacent to the public street?

These legal questions would've eventually ended up in court. By canceling the partnership, Ring avoided the legal and regulatory battles that the integration would've triggered.

Public Response: The Destruction of Ring Devices

One of the most visible manifestations of the public backlash against Ring's Flock partnership was the destruction of Ring devices. Online, users posted videos of themselves smashing Ring cameras with hammers, throwing them in the trash, or physically dismantling them.

This wasn't just vandalism or political theater. For many of these users, removing Ring from their homes was a principled stand against surveillance infrastructure. These people had invited Ring into their homes thinking it was a private security tool. When they discovered that Ring was planning to integrate with law enforcement networks, they felt betrayed.

The destruction videos served an important function in the broader backlash. They were visceral proof that Ring's customer base was unhappy. They generated viral social media engagement. They forced mainstream media outlets to cover the story more extensively. They demonstrated to other companies considering similar surveillance integrations that public backlash can be severe.

But the destruction videos also revealed something about consumer activism in the digital age. Unlike boycotts or organized campaigns, destruction videos are individual actions that collectively create an impression of massive discontent. No coordinating organization orchestrated these videos. But through social media sharing and algorithmic amplification, they collectively conveyed the sense that Ring customers were in rebellion.

For Amazon's leadership, these videos represented a tangible signal that the Flock partnership was damaging the Ring brand. It's one thing for advocacy organizations to issue statements opposing a partnership. It's another thing entirely for your paying customers to publicly destroy your products.

Some of the people destroying Ring devices switched to competitor products like Google Nest or Wyze. Others decided to rely on traditional home security without internet-connected cameras. Still others began questioning whether they needed video doorbells at all.

This customer churn was likely a significant factor in Ring's decision to cancel the Flock partnership. The company realized that continuing with the partnership would lead to more customer defection. Even if the Flock integration never launched, the mere existence of the partnership was damaging Ring's business.

In this sense, the destruction videos and social media campaigns were effective activism. They converted abstract privacy concerns into concrete business problems. They forced a corporation to change its behavior not through regulation or legal action, but through direct customer pressure.

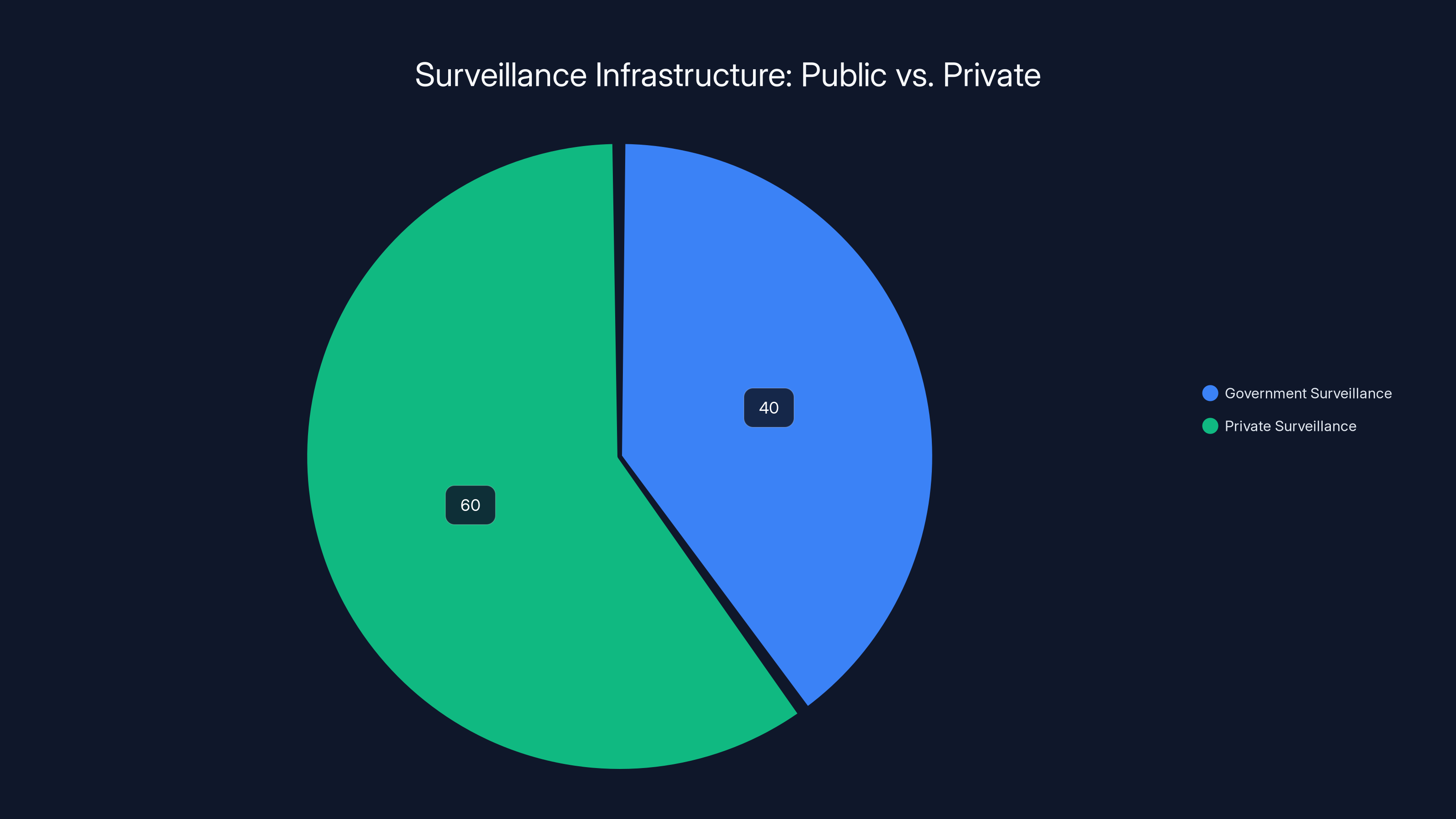

Estimated data shows that private surveillance systems now constitute a larger portion of the surveillance infrastructure in the U.S., highlighting the shift towards privatization.

The Question of Consent: Did Ring Users Know What They Were Agreeing To?

One of the most fundamental issues raised by the Flock partnership and broader Ring surveillance capabilities is the question of consent. When people install Ring cameras, what exactly are they consenting to?

Ring's terms of service are lengthy and complex, like most tech company terms of service. In theory, they specify what Ring can do with user data. But in practice, most people don't read these terms. They install Ring for the obvious benefit, a video doorbell, without fully understanding the broader surveillance ecosystem they're participating in.

Even if someone reads Ring's terms of service carefully, those terms are not static. Companies update terms regularly to reflect new capabilities and partnerships. Ring could have updated its terms to allow Flock integration without explicitly notifying users. Many people would never have realized that the device they thought was just a home security tool was now part of a law enforcement surveillance network.

This is the paradox of digital consent. Companies want legal protection by claiming users consented to their practices. But meaningful consent requires understanding what you're consenting to. Most people installing a Ring camera don't understand that they're potentially participating in a neighborhood-scale surveillance infrastructure. They don't understand the implications for immigrant communities if the device integrates with ICE-connected systems. They don't understand how facial recognition could eventually be applied to their families and neighbors.

The Flock partnership would've pushed this tension to its breaking point. Ring would've been claiming that integrating with Flock fell within the scope of what users consented to when they installed the camera. But most users would've had no idea that their home camera was being integrated with external law enforcement networks.

Senator Markey and other privacy advocates have argued that consent in this context is meaningless. If people don't understand what they're consenting to, they haven't really consented. The fact that they clicked "agree" doesn't change that fundamental problem.

Ring's cancellation of the Flock partnership doesn't solve this consent problem. The company still operates Community Requests, which allows law enforcement to request video from Ring users. Ring still owns facial recognition technology that could theoretically be applied at scale. Ring still operates an infrastructure that law enforcement depends on.

The question of meaningful consent remains unresolved. At best, the Flock cancellation shows that Ring recognizes there are limits to what its customers will tolerate. But it doesn't suggest that Ring has fundamentally rethought its approach to the broader question of how its devices can and should be used.

Regulatory Landscape: What Happens Next?

The Ring-Flock cancellation occurred against a backdrop of increasing regulatory interest in surveillance technology. Federal lawmakers, state legislators, and city councils are all beginning to examine how surveillance systems are regulated, how much data companies can collect, and what limitations should apply to law enforcement access to that data.

At the federal level, Senator Markey has introduced legislation addressing facial recognition and biometric surveillance. Other lawmakers have proposed bills requiring warrants for law enforcement access to private surveillance systems. The FTC has begun investigating major tech companies' privacy practices.

At the state level, several states have passed or proposed legislation limiting law enforcement's access to surveillance technologies. California, for example, has restricted how law enforcement can use facial recognition. Other states are considering similar restrictions.

At the local level, some cities have banned government use of facial recognition entirely. Other cities are requiring community approval before police can use certain surveillance technologies.

The company likely canceled the partnership partly because of public pressure, but also because the regulatory environment is shifting. If the partnership had proceeded and generated sustained legal challenges, Ring and Amazon might've faced unfavorable court decisions or aggressive regulatory action.

By canceling the partnership proactively, Ring likely hoped to forestall regulatory action. The company could point to the cancellation as evidence that it responds to public concerns and doesn't need to be forced to change through regulation.

But this strategy may not work. The fact that Ring was willing to attempt the Flock integration in the first place suggests that, absent public pressure, the company would continue pursuing similar surveillance integrations. Lawmakers and regulators may decide that relying on public pressure to constrain companies' surveillance ambitions is insufficient. They may decide that explicit legal restrictions on how surveillance companies can operate are necessary.

The Ring-Flock case will likely be cited in future regulatory discussions as an example of why surveillance technologies need oversight. It shows both the risks of unconstrained surveillance integration and the power of public pressure to force change. Regulators will probably use this case to argue for proactive rules rather than reactive responses to each new controversial partnership.

Community Requests: Ring's Remaining Law Enforcement Portal

While much of the discussion has focused on the canceled Flock partnership, Ring still operates a significant law enforcement cooperation program called Community Requests. This program remains controversial and represents the company's ongoing commitment to law enforcement integration.

Community Requests allows local law enforcement agencies to submit requests for video from Ring customers. Unlike the old Request for Assistance program, which Ring shut down, Community Requests comes with some additional safeguards. Ring says the program is more restricted and transparent.

But the fundamental issue remains. Law enforcement can request video from Ring customers, and Ring facilitates that request. While the system is nominally voluntary, many Ring customers feel pressure to comply when police request their footage. If police are asking for your video, it can feel like you don't have a real choice whether to share it.

Community Requests is available to thousands of police departments across the country. This means that in many neighborhoods, law enforcement has a ready channel to a network of Ring cameras. To find out who walked past a particular house, to trace a suspect's movements, or to locate a missing person, police can send a request to Ring users in the area and wait for footage to come back.

Ring claims this helps solve crimes faster. The company points to cases where Community Requests helped law enforcement catch criminals or find missing people. These are compelling examples of surveillance technology creating real benefits for public safety.

But they come at a cost. Surveillance technology that can help find a criminal can also be used to track activists, identify undocumented immigrants, or monitor people involved in lawful but stigmatized activities. The same capability that solves crimes can be weaponized against vulnerable populations.

The Flock partnership cancellation drew attention to these concerns, but Community Requests remains largely unexamined. It's less flashy than integrating with an external surveillance company, so it receives less public attention. But from a civil liberties perspective, Community Requests raises very similar concerns.

Ring didn't make any significant changes to Community Requests after the Flock backlash. The company continues operating the program essentially as it was before. This suggests that Ring views the Flock cancellation as damage control, not as a fundamental reconsideration of how the company should relate to law enforcement.

What Customers Should Do: Evaluating Ring and Alternatives

For people who own Ring cameras or are considering purchasing one, the Flock partnership cancellation raises important questions. What does it mean for Ring's future? Should people trust Ring to respect their privacy? Are there better alternatives?

First, the cancellation shows that Ring responds to public pressure. If you have concerns about how Ring is operating, making those concerns public can influence the company's decisions. But this also suggests that Ring's privacy practices aren't deeply embedded in the company's core values. They're driven by market pressure and reputation management.

Second, the Flock partnership revealed that Ring is exploring aggressive surveillance integrations. The company was willing to partner with a company that has reportedly given ICE access to its camera network. That speaks to Ring's strategic thinking and suggests that the company may attempt similar partnerships in the future, potentially with less public attention.

Third, Ring's core technology, distributed home cameras with AI analysis, creates infrastructure that can be repurposed for surveillance. The concern isn't necessarily Ring's current leadership; it's that future leadership may have different priorities. Surveillance infrastructure, once built, is difficult to dismantle.

If you currently own Ring devices, you have several options. You could continue using them but disable features like Community Requests (assuming Ring provides that option). You could remove the devices entirely. Or you could keep them and hope that public pressure continues to constrain Ring's behavior.

If you're considering purchasing a video doorbell or security camera, you should evaluate alternatives. Google Nest, Wyze, and other companies offer similar products. However, these companies have their own privacy concerns. Google is known for aggressive data collection. Any product connected to the internet raises potential privacy issues.

The key is to ask: what features do you actually need, and what are you willing to trade privacy for? Do you need video doorbell capability? Do you need facial recognition? Do you need cloud storage of videos? Each feature potentially expands your privacy exposure.

Some people have concluded that the risks of internet-connected home cameras outweigh the benefits. They've switched to security systems that don't use cloud storage or that operate on local networks only. Others have decided they don't need video doorbell capability at all.

The Ring-Flock incident should prompt anyone using these devices to think carefully about what they're participating in. You're installing cameras that create a record of everyone who comes to your house. That footage could potentially be accessed by law enforcement. It could potentially be hacked. It could potentially be sold to data brokers. The convenience of seeing who's at your door might not be worth the privacy trade-off.

The Surveillance Landscape: How This Fits Into a Broader Pattern

The Ring-Flock partnership cancellation is important not because it solved a problem, but because it highlights a broader problem that remains unresolved: the expansion of surveillance infrastructure across American cities and neighborhoods.

For decades, surveillance was primarily a government function. Police departments operated cameras. Federal agencies operated networks. But increasingly, surveillance is becoming privatized. Private companies like Ring, Flock, Clearview AI, and others are building surveillance networks that rival or exceed government systems in scope.

This privatization of surveillance creates unique problems. Private companies aren't subject to the same legal constraints as government agencies. The Fourth Amendment protects you from unreasonable government searches, but it doesn't protect you from private companies collecting information about you. Private companies can collect, store, and sell surveillance data with minimal legal oversight.

Flock Safety operates surveillance cameras that are accessible by law enforcement, but Flock is a private company. Ring operates surveillance cameras in millions of homes, with the company deciding what law enforcement can access. These are private surveillance systems that have been integrated into law enforcement practices.

The Flock cancellation shows one mechanism through which these private systems are expanding: partnerships. Ring and Flock weren't forced into a partnership by government mandate. They chose to integrate because they thought it would be strategically beneficial. This creates a system where private companies are voluntarily expanding surveillance, integrating with law enforcement, and creating infrastructure for mass surveillance.

The problem is that once this infrastructure exists, it's very difficult to constrain. Even if Ring cancels the Flock partnership, the underlying capability remains. Ring still operates millions of cameras. Ring still has channels through which law enforcement can request footage. Ring still has AI capabilities that could be applied at scale.

The only real solution is to establish legal constraints on how surveillance infrastructure can be developed and used. This probably requires federal legislation addressing: what data companies can collect, what entities can access that data, what legal process is required for law enforcement access, and what oversight mechanisms exist.

But that legislation hasn't been passed yet. In the absence of legal constraints, companies like Ring are free to pursue whatever surveillance integrations seem strategically beneficial, subject only to public pressure and market pressure.

The Ring-Flock incident shows that public pressure can work. But it's not a reliable or permanent solution. The next company with surveillance capabilities might not face the same backlash. The next partnership might occur with less public attention. The next surveillance integration might proceed without incident.

Regulation, not public pressure, is the long-term solution. The Ring-Flock cancellation should prompt lawmakers to act, not reassure them that the problem has been solved.

The Path Forward: What Happens to Surveillance Technology?

The Ring-Flock cancellation is a moment, not an endpoint. It's a pause in the expansion of surveillance infrastructure, not a reversal. Understanding what comes next requires thinking about the broader trajectory of surveillance technology and how companies will continue to develop it.

First, Ring will almost certainly pursue other law enforcement partnerships, just with more careful public relations planning. The company's basic approach won't change. Ring views itself as a tool for public safety, and public safety increasingly involves law enforcement. The company will continue to facilitate access to its network.

Second, other companies will learn from Ring's experience and pursue surveillance integrations more quietly. Instead of announcing partnerships publicly, they might bury them in terms of service updates or roll out features gradually, without drawing public attention.

Third, surveillance technology will continue to improve. AI will get better at identifying people and vehicles. Cameras will become higher resolution. Data analysis will become faster and more comprehensive. The technical capability for comprehensive surveillance is advancing regardless of what any individual company does.

Fourth, fragmentation in the surveillance space will probably continue. Rather than one integrated network, we'll see multiple competing networks: Ring, Nest, Wyze, Flock, and others, each with their own law enforcement integrations and data-sharing policies. This fragmentation could be positive in that it prevents any one company from having too much power. But it could also be negative in that it creates more total surveillance infrastructure.

The question facing society is not whether surveillance technology will continue advancing. It will. The question is what constraints we want to place on how that technology is developed and used.

The Ring-Flock incident shows that public pressure can constrain companies. But it's a blunt instrument, dependent on awareness, organization, and timing. The next big surveillance integration might not attract the same attention. The next company might have better public relations. The narrative might be different.

What's really needed is clear regulatory frameworks. These frameworks should establish: what data can be collected from private cameras, who can access that data, what legal process is required, what audit mechanisms exist, and what penalties apply to violations.

Some of this regulation is beginning to happen at the state and local level. But federal action is probably necessary to create consistent standards and ensure that privacy protection doesn't depend on which state you live in.

The Ring-Flock cancellation is a victory for privacy advocates in the short term. But it should also be a warning about how fragile privacy protection is when it relies on public pressure rather than legal requirements. As surveillance infrastructure continues to expand, that fragility will become increasingly problematic.

FAQ

What was Ring's partnership with Flock Safety supposed to do?

Ring planned to integrate its doorbell and home security cameras with Flock Safety's surveillance network, allowing law enforcement agencies to search across both systems simultaneously. This would've enabled police to track people and vehicles through a combination of public surveillance cameras and private home cameras across entire neighborhoods without individual warrants for each search.

Why did Ring cancel the Flock partnership?

Ring canceled the partnership after weeks of intense public backlash, including social media campaigns, customer destruction of Ring devices, and political pressure from Senator Ed Markey and civil liberties organizations. The Super Bowl ad for Ring's Search Party feature sparked widespread concerns about mass surveillance, and Amazon's leadership determined that continuing with the partnership would cause more reputational and business damage than any potential benefit.

What is Flock Safety's connection to ICE and immigration enforcement?

Multiple reports have indicated that Flock Safety allowed Immigration and Customs Enforcement (ICE) to access its surveillance camera network. For immigrant communities, this created significant concern that Ring's integration with Flock could enable their location to be tracked for immigration enforcement purposes. While Flock maintains it complies with lawful law enforcement requests, the potential for surveillance data to be weaponized for deportation made the partnership extremely controversial in immigrant advocacy circles.

What is Ring's Familiar Faces facial recognition feature?

Familiar Faces is Ring's AI-powered facial recognition technology that identifies people at your door based on stored facial data. Instead of alerting you to "a person at the door," it can say "Mom at the door" if you've trained the system to recognize family members. While presented as a convenience feature, facial recognition technology raises significant civil rights concerns, including reduced accuracy for people with darker skin tones and the creation of searchable facial databases.

Is Ring still working with law enforcement through Community Requests?

Yes. While Ring canceled its partnership with Flock Safety, the company continues operating Community Requests, a program that allows local law enforcement to request video footage from Ring customers. This program remains controversial because, while nominally voluntary, it effectively gives law enforcement access to a vast network of private home cameras without necessarily requiring a warrant for each request.

What happened with Ring's Super Bowl ad and why was it controversial?

Ring's Super Bowl 2025 ad showed its Search Party feature detecting and tracking a lost dog through multiple Ring cameras in a neighborhood. The ad visualized exactly what privacy advocates had been warning about: comprehensive neighborhood-scale surveillance with coordinated cameras scanning the streets. Critics pointed out that the same technology could be used to track and identify people, not just lost animals, raising fears about the infrastructure for mass surveillance that Ring had already built.

What are safer alternatives to Ring if I'm concerned about surveillance and privacy?

Alternatives include local-storage security systems that don't use cloud cameras, non-connected video doorbells, or traditional security systems without internet connectivity. Companies like Wyze and Google Nest offer connected alternatives, but each has its own privacy concerns. The safest approach is to assess what features you actually need and whether the convenience justifies the privacy trade-offs involved in internet-connected surveillance systems.

Could the Ring-Flock integration happen with a different company in the future?

Absolutely. Ring's cancellation of the specific Flock partnership doesn't prevent the company from pursuing similar surveillance integrations with other companies or from expanding its own surveillance capabilities. The incident suggests Ring will likely pursue such integrations more carefully to avoid public backlash, but the company's fundamental strategic interest in surveillance infrastructure suggests more partnerships are likely.

Conclusion: The Unsolved Problem of Surveillance Infrastructure

The Ring-Flock partnership cancellation is worth celebrating as a victory for public pressure and privacy advocacy. Large numbers of people took notice and expressed concern. And a major corporation changed course. That's not nothing.

But it's also important not to overstate what was accomplished. Ring didn't fundamentally change its approach to law enforcement. The company didn't commit to refusing surveillance integrations in the future. Ring didn't dismantle its facial recognition technology or shut down Community Requests. The company simply stepped back from one specific partnership that became too controversial to continue.

The underlying problem remains. Ring has built surveillance infrastructure across millions of American homes. That infrastructure is integrated with law enforcement. That infrastructure is AI-powered and constantly improving. That infrastructure is deployed in a regulatory vacuum where private companies make decisions about surveillance capabilities with minimal legal oversight.

The Flock partnership represented an escalation of this problem. It would've taken the surveillance infrastructure Ring had built and made it officially part of law enforcement practice. It would've transformed Ring from a company that facilitates law enforcement access to one that actively partners with law enforcement surveillance systems.

That escalation was stopped. But without new legal frameworks and regulatory oversight, the next escalation is probably coming. The next company with surveillance capabilities might pursue similar integrations. Or Ring might return to the negotiating table with Flock or a competitor after the public attention dies down.

The real solution requires three things. First, public awareness and pressure must continue. Companies respond to business and reputational concerns. If customers continue to demand privacy protection, companies have incentives to provide it.

Second, regulatory oversight must develop. Federal legislation should establish clear rules about what surveillance companies can and cannot do, what legal process must be followed before law enforcement can access surveillance data, and what penalties apply to violations.

Third, civil society institutions must develop to contest surveillance infrastructure. Privacy advocacy organizations, immigrant rights groups, civil liberties organizations, and other groups must continue pushing back against surveillance expansion.

None of these alone is sufficient. But together, they create the conditions for meaningful privacy protection in an increasingly surveilled society.

The Ring-Flock incident shows that change is possible. Enough people cared about privacy to push back against a major corporation. That public engagement is the foundation for more comprehensive privacy protection in the future.

What happens next depends on whether that momentum continues.

Key Takeaways

- Ring canceled its Flock Safety partnership after weeks of public pressure, customer device destruction, and political intervention from Senator Ed Markey

- The integration would have created unprecedented neighborhood-scale surveillance by linking private home cameras with law enforcement networks

- Flock Safety's reported integration with ICE created specific concerns for immigrant communities about being tracked for deportation enforcement

- Ring's Super Bowl 2025 ad for Search Party visualized neighborhood-scale camera tracking, igniting broader concerns about the surveillance infrastructure the company had already built

- While the Flock cancellation represents a public pressure victory, Ring continues operating Community Requests and other law enforcement cooperation programs

- Meaningful privacy protection requires regulatory frameworks, not just public pressure, as surveillance technology continues to advance

