Seedance 2.0 Sparks Hollywood Copyright War: What's Really at Stake [2026]

You probably haven't heard of Seedance 2.0 yet, but Hollywood certainly has. And they're furious.

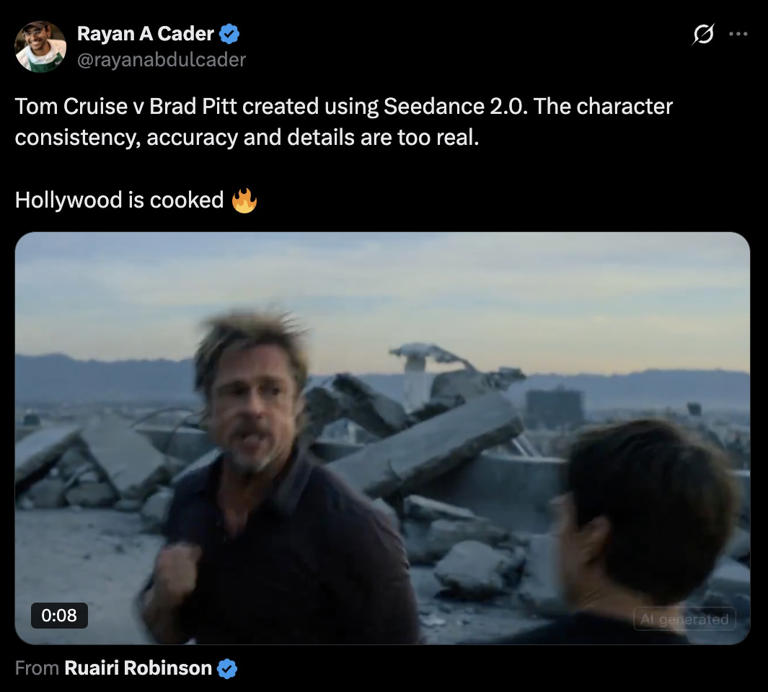

In February 2026, ByteDance, the Chinese tech giant behind TikTok, quietly launched what might be the most controversial AI video generator since OpenAI's Sora. Within hours, the platform exploded across social media with videos of Tom Cruise fighting Brad Pitt, Spider-Man battling Iron Man, and Baby Yoda driving a sports car. All generated from simple text prompts. None of it authorized.

The reaction was immediate and brutal. The Motion Picture Association demanded that ByteDance "immediately cease its infringing activity." Disney sent a cease-and-desist letter. SAG-AFTRA condemned it. The Human Artistry Campaign, a coalition of creative-industry groups that includes Hollywood unions, called it "an attack on every creator around the world."

But here's what most people got wrong about this story: it's not really about whether AI can generate videos. It's about a fundamental question that the entertainment industry, tech companies, and governments are still struggling to answer. Where's the line between innovation and theft?

This article digs into what actually happened with Seedance 2.0, why it terrifies Hollywood, what the legal landscape looks like, and what comes next for AI video generation in the real world.

TL;DR

- Seedance 2.0 launched without copyright safeguards, enabling users to create videos of copyrighted characters and scenarios within hours

- Multiple Hollywood studios sent cease-and-desist letters, with Disney claiming ByteDance was engaged in a "virtual smash-and-grab of Disney's IP"

- The Motion Picture Association issued formal demands, accusing ByteDance of unauthorized use of "U.S. copyrighted works on a massive scale"

- Legal precedent remains murky: AI copyright cases are still working their way through the courts, with no clear guidance on what counts as fair use

- The real issue goes deeper than one product: This reflects a collision between two competing visions of AI development and intellectual property rights

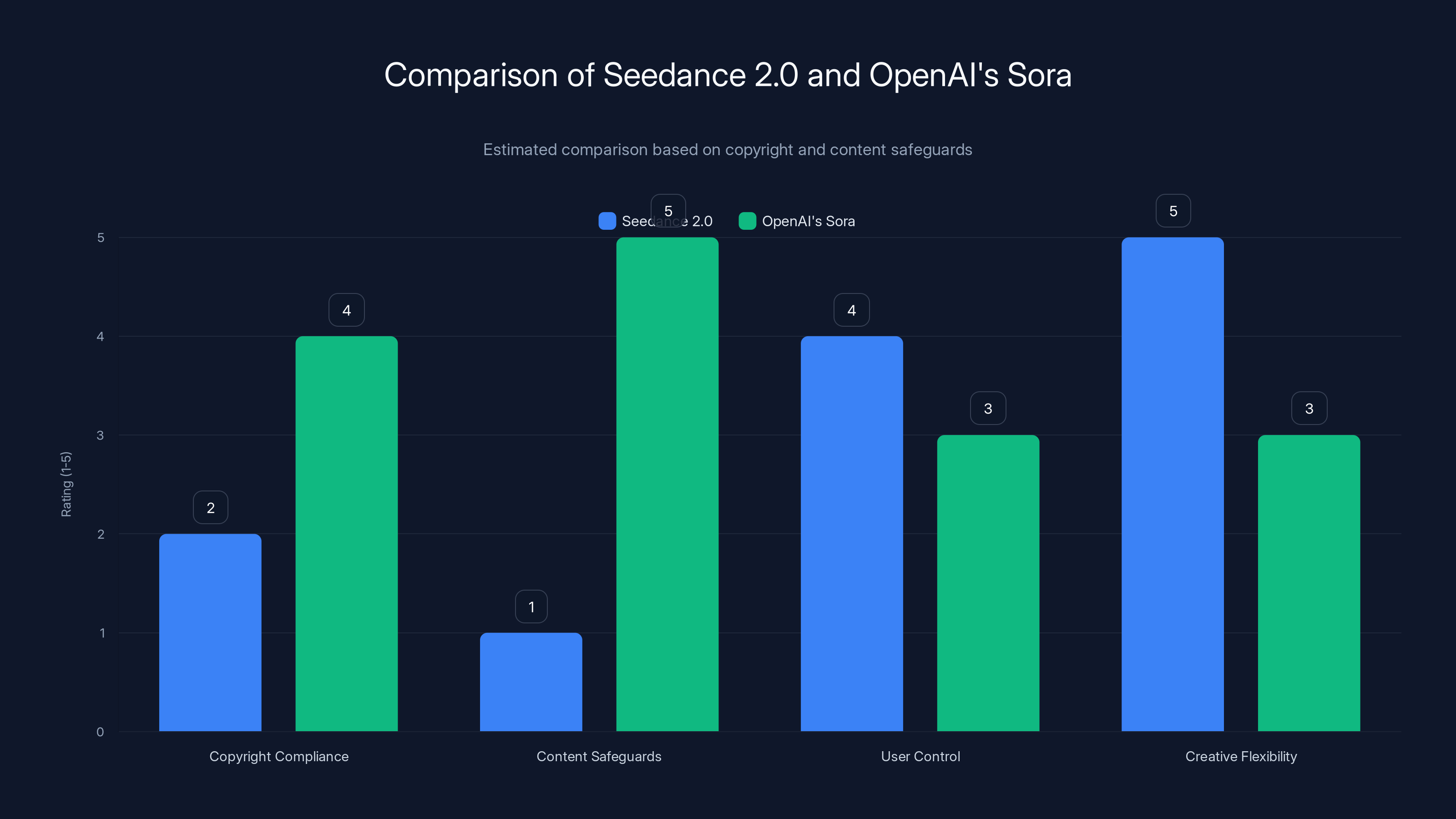

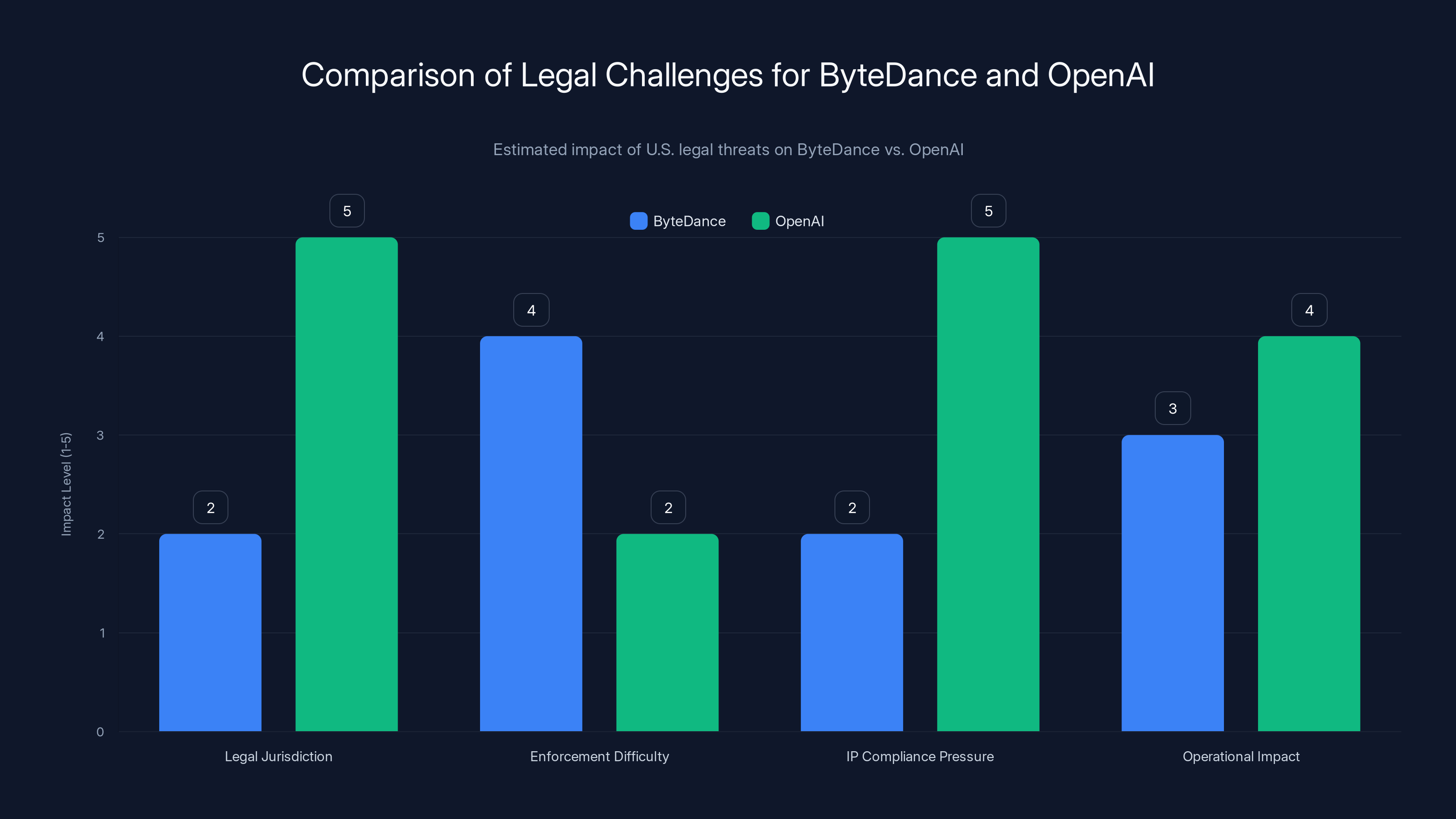

Seedance 2.0 excels in creative flexibility but falls short on copyright compliance and content safeguards compared to OpenAI's Sora. Estimated data.

What Exactly Is Seedance 2.0?

Let's start with the basics, because the technology itself matters as much as the controversy around it.

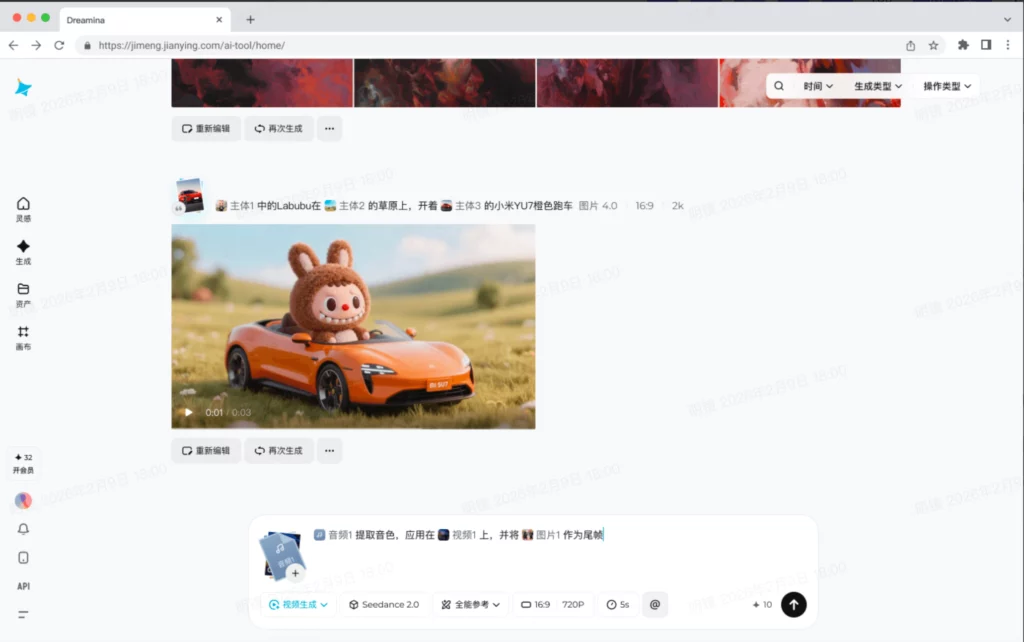

Seedance 2.0 is an AI video generator built by ByteDance, the company behind TikTok, Douyin (TikTok's Chinese equivalent), and CapCut (a widely used video editing app). Think of it as a text-to-video engine. You type a description, such as "Tom Cruise fighting Brad Pitt in slow motion" or "Spider-Man swinging through New York," and the AI generates a video matching that description.

The technical approach is similar to other text-to-video models like OpenAI's Sora or Google's Veo. The system was trained on massive amounts of video data, learning to recognize patterns between text descriptions and visual content. When you give it a prompt, it uses those learned patterns to synthesize a new video from scratch.

Currently, Seedance 2.0 is limited to 15-second videos. It's being rolled out in phases: first to users of ByteDance's Jianying app in China, then to global users of CapCut. The company hasn't released detailed technical specifications, but based on available demos, the quality is genuinely impressive. The generated videos have coherent motion, reasonable physics, and recognizable subjects.

Here's the key technical detail that matters: Seedance 2.0 appears to have minimal to no content filtering. You can ask it to generate videos of real celebrities. You can ask it to recreate scenes from copyrighted movies. You can ask it to generate content that imitates the style and subject matter of copyrighted work. And it will attempt to do exactly that.

Compare this to OpenAI's Sora, which was released with significant restrictions. Sora blocks requests for real people's likenesses. It won't generate videos that appear to violate copyrights. It includes watermarks. There's a whole review process.

Seedance 2.0 launched with essentially none of that. That doesn't look like an oversight; it appears to have been a deliberate choice.

Sora emphasizes strong safeguards and copyright protections, while Seedance 2.0 prioritizes rapid public release with minimal initial restrictions. Estimated data based on described philosophies.

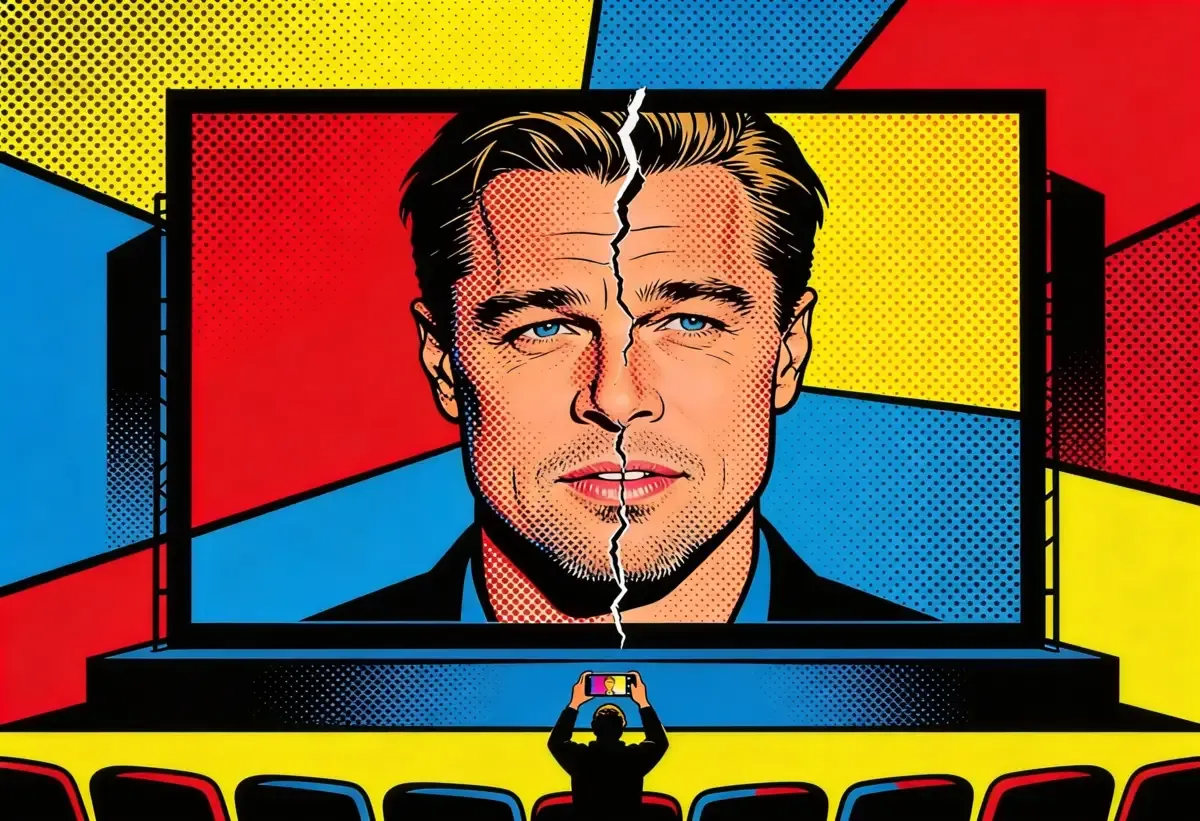

Why Hollywood Freaked Out (And Rightfully So)

Within a single day of Seedance 2.0's launch, Hollywood's worst fears materialized.

An X user posted a video showing Tom Cruise and Brad Pitt in a confrontation. The caption read simply: "generated with a 2 line prompt in seedance 2." This wasn't a deepfake in the traditional sense—not a face swap of existing footage. This was a completely synthesized video of two of the world's biggest movie stars, generated from text, showing them doing something neither actually did.

The implications hit like a shockwave through the entertainment industry.

Rhett Reese, screenwriter of "Deadpool" and "Deadpool 2," responded with a line that captured the existential dread: "I hate to say it. It's likely over for us."

Within hours, more evidence emerged. Seedance 2.0 users had generated videos featuring Disney characters. Spider-Man appeared in original scenarios. Darth Vader showed up in new scenes. Baby Yoda, officially known as Grogu from "The Mandalorian," appeared in situations the character never experienced.

These weren't isolated incidents. They were proof of concept that Seedance 2.0 could generate derivative works based on copyrighted characters and intellectual property without any authorization.

For Hollywood studios, this represented a category-5 threat. Let's break down why:

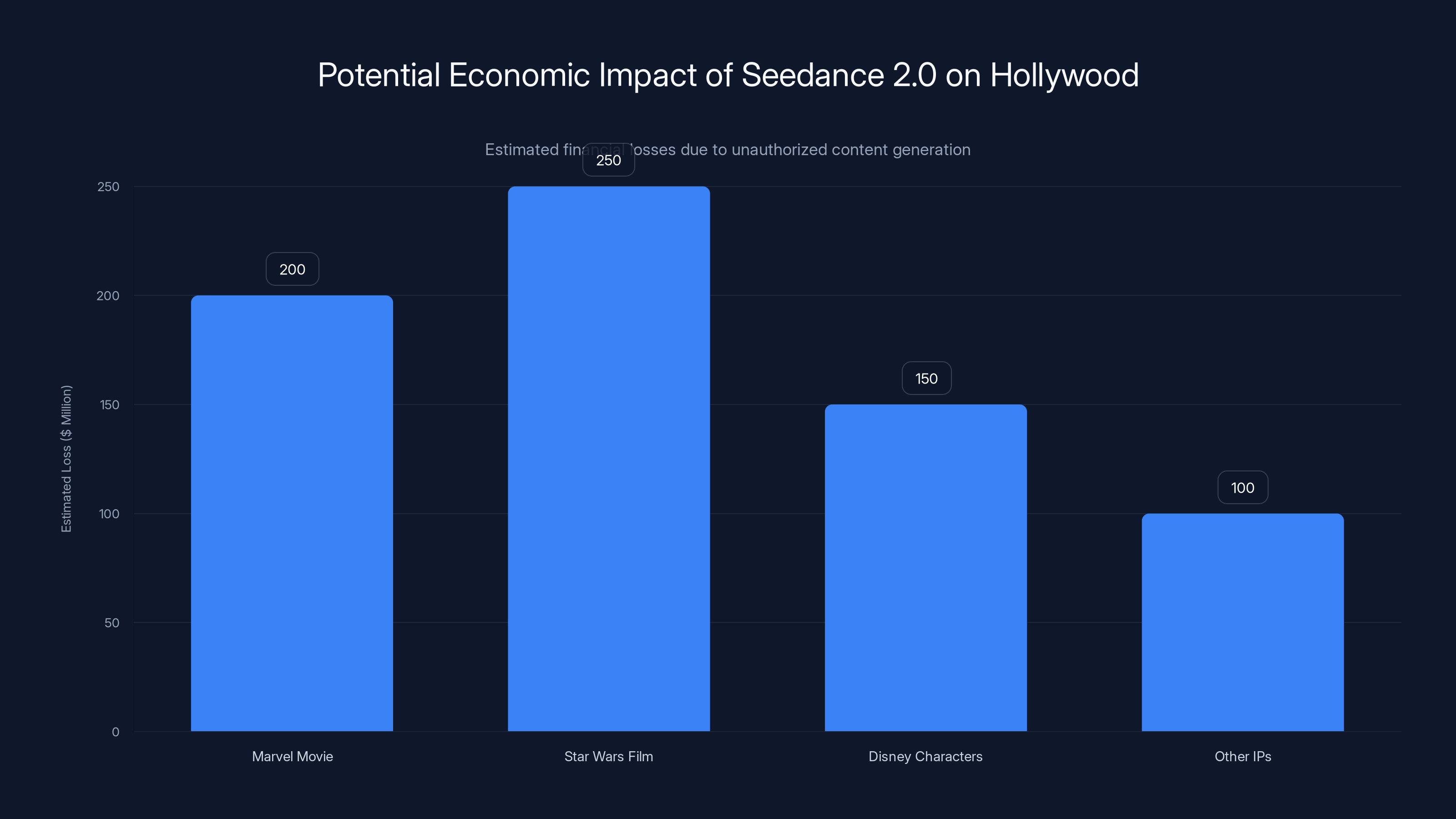

First, the economic threat. Studios spend hundreds of millions of dollars on intellectual property. A Marvel movie costs

Second, the consent problem. None of these actors consented to appear in these videos. None of the studios authorized their characters or story worlds to be used this way. This isn't like fan art, which has existed for decades in a legal gray area. This is industrial-scale, automated generation of derivative works without permission.

Third, the scale problem. Deepfakes of celebrities have existed for years, but they've been niche. Creating a convincing deepfake requires technical skill, significant computational resources, and hours of work. Seedance 2.0 makes this accessible to anyone with a text prompt and internet access. What was once a rare occurrence could become commonplace in minutes.

Fourth, the precedent problem. If Seedance 2.0 succeeds without meaningful consequences, it sets a precedent. Every other AI company will follow suit. Why add safeguards if your competitors don't?

Hollywood's response wasn't hysteria. It was pattern recognition based on decades of experience with new technologies that disrupted intellectual property.

The Legal Cease-and-Desist Barrage

Within days, the legal threats started flying.

Disney sent ByteDance a cease-and-desist letter accusing the company of a "virtual smash-and-grab of Disney's IP." The letter claimed ByteDance was "hijacking Disney's characters by reproducing, distributing, and creating derivative works featuring those characters."

The Motion Picture Association, representing major studios including Disney, Warner Bros., Paramount, Sony, and others, issued a formal statement from CEO Charles Rivkin. The language was unambiguous: "In a single day, the Chinese AI service Seedance 2.0 has engaged in unauthorized use of U.S. copyrighted works on a massive scale. By launching a service that operates without meaningful safeguards against infringement, ByteDance is disregarding well-established copyright law that protects the rights of creators and underpins millions of American jobs."

SAG-AFTRA, the actors' union, weighed in: "SAG-AFTRA stands with the studios in condemning the blatant infringement enabled by ByteDance's new AI video model Seedance 2.0."

The Human Artistry Campaign, a coalition of creative-industry organizations (including Hollywood unions and guilds) formed to push back against AI threats to creative workers, called Seedance 2.0 "an attack on every creator around the world."

Now here's where it gets interesting: legally speaking, these letters are opening moves in a game whose rules are still being written.

The cease-and-desist letters themselves aren't binding. They're formal notices saying "stop doing this, or we will sue." The real question is whether those lawsuits would succeed.

Under U.S. copyright law, creating a derivative work without permission is infringement. A Seedance 2.0 video of Spider-Man, a character owned by Marvel (a Disney company) whose film rights are licensed to Sony, would appear to infringe that copyright. But here's where it gets murky: did the AI model infringe, or did the user? If I request a video of Spider-Man, am I the infringer, or is ByteDance?

Traditional copyright law doesn't have a clear answer to this question because it was written for a world where the creator and the distributor were usually the same entity. A movie studio made movies and distributed them. A publisher published books. Copyright infringement meant they were the ones doing the unauthorized copying.

But with generative AI, there's a separation. ByteDance built the model (which was trained on data). Users generate the content (which may infringe copyrights). This creates a chain of potential liability questions that courts haven't fully resolved.

Seedance 2.0 poses a significant economic threat to Hollywood, potentially undermining investments in major IPs like Marvel and Star Wars by enabling unauthorized content creation. Estimated data.

The Training Data Problem

There's another legal issue lurking beneath the surface, and it might ultimately be more important than the end-user generation problem.

How was Seedance 2.0 trained? What data went into building this model?

For text-to-video models to work well, they need to be trained on massive amounts of video data. That training data teaches the model what visual patterns correspond to what descriptions. If you want a model that can generate videos of Spider-Man, it probably learned from videos containing Spider-Man.

Where does that data come from? The internet. YouTube. Movie clips. Licensed video databases. Potentially proprietary footage.

When OpenAI trained Sora, there was significant discussion about where the training data came from. The company has been somewhat vague about the specifics, but there was at least discussion and pushback. With Seedance 2.0, ByteDance has been even less transparent.

This matters legally because there's a separate category of copyright infringement that applies to training data itself. If ByteDance used copyrighted movies, TV shows, or clips to train Seedance 2.0 without permission, that could be infringement in its own right, separate from what users do with the model.

Hollywood's argument is essentially: "Even if we ignore what users do with this tool, the fact that you built it using our copyrighted material without permission is illegal."

The counterargument from ByteDance would likely invoke fair use. Fair use is a legal doctrine that allows limited use of copyrighted material for purposes like criticism, commentary, teaching, or research. Some AI companies argue that training AI models on copyrighted data is fair use because it's for a transformative purpose (creating new models and capabilities).

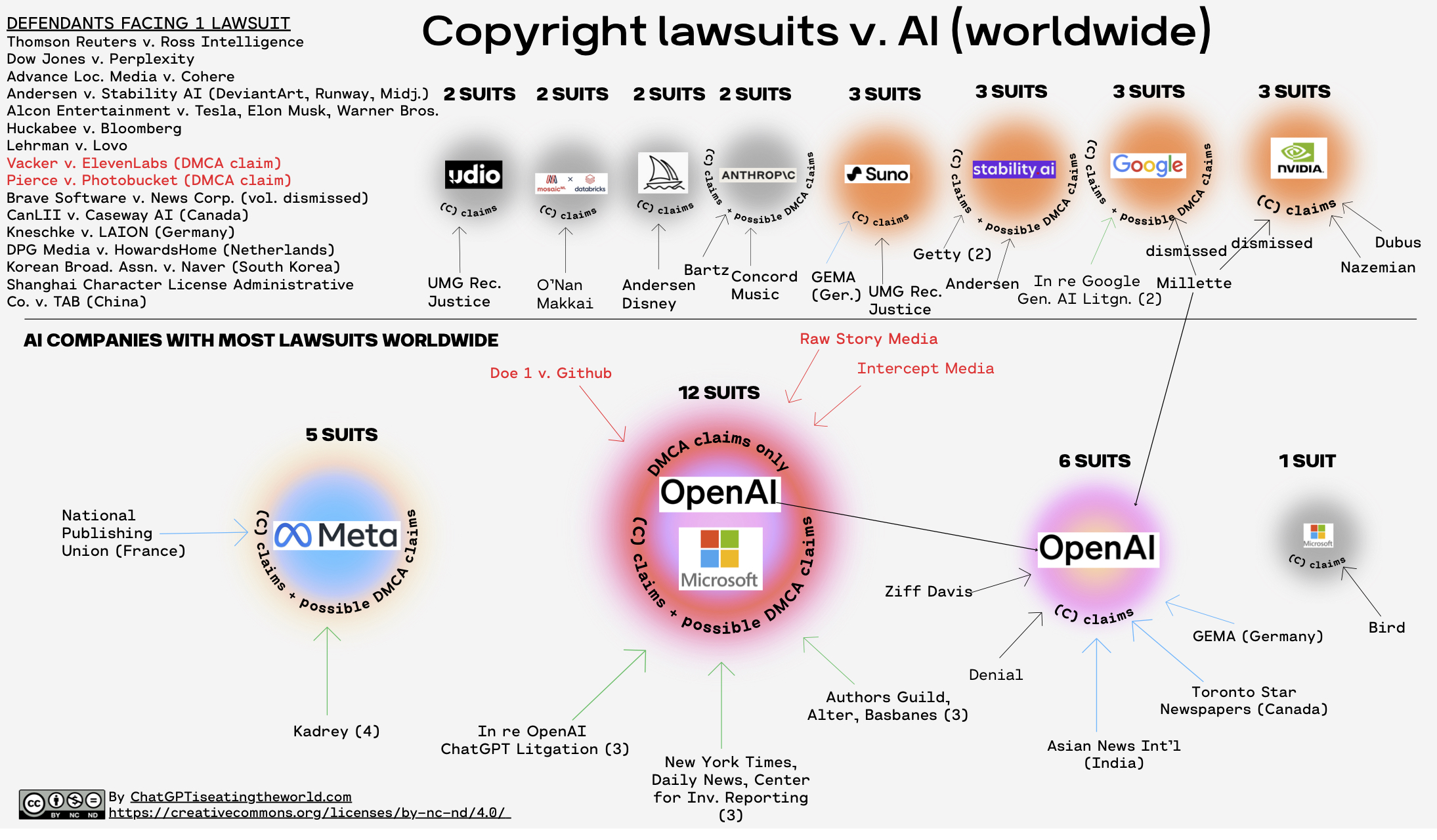

Courts haven't definitively ruled on this yet. There are active lawsuits between copyright holders and AI companies over training data, but no final verdicts. The New York Times is suing OpenAI and Microsoft. Authors including John Grisham and Jodi Picoult are suing OpenAI. These cases could take years to resolve.

What we know: if ByteDance trained Seedance 2.0 on copyrighted Hollywood movies without permission, that's almost certainly going to be part of the legal dispute.

Comparing Seedance 2.0 to Sora: A Study in Different Philosophies

To understand what makes Seedance 2.0 uniquely controversial, it helps to compare it to OpenAI's Sora, which OpenAI unveiled in February 2024 and opened to paying ChatGPT subscribers in December 2024.

Both are text-to-video models. Both generate short videos from text prompts. Both represent genuine technical achievements. But they took fundamentally different approaches to safety and copyright concerns.

OpenAI's Sora: The Cautious Approach

When OpenAI released Sora, it did so carefully. The tool wasn't immediately available to the public. Instead, it went to a limited group of "red teamers"—security researchers and content creators who were tasked with finding ways to misuse it.

Sora includes built-in safeguards. You cannot generate videos of real, identifiable people. The system rejects these requests. You cannot generate videos that appear to violate copyrights. There's a watermarking system that marks generated content. OpenAI reviewed training data and attempted to exclude certain categories of problematic material.

When Sora eventually became available to paying customers, it was with clear terms of service that restricted use. You can't use it to generate misleading content. You can't use it to impersonate real people. You agree that you're liable if your generated content violates anyone's rights.

Is Sora perfect? No. Are there ways users might still generate problematic content? Probably. But the intent was clearly to build safeguards that would reduce (though not eliminate) the risk of copyright infringement and deepfakes.

Seedance 2.0: The Move-Fast Approach

Seedance 2.0 took a different philosophical approach. Rather than building safeguards first and then releasing to a limited audience, ByteDance built a tool that appears to have minimal restrictions and launched it immediately to a mass audience.

This could be interpreted charitably: maybe ByteDance believes that restricting AI capabilities based on speculative future harms is unnecessary. Maybe they believe that the right way to handle copyright concerns is through legal mechanisms, not technical restrictions. Maybe they believe that users should be trusted to use tools responsibly.

Or it could be interpreted cynically: maybe ByteDance calculated that by launching without restrictions and to a massive Chinese user base first, they could establish the tool's utility and market share before any legal action could stop them. Maybe they knew that enforcement against a Chinese company would be difficult and slow. Maybe they were making a bet that by the time legal consequences arrived, the AI would already be widely used and difficult to shut down.

Without insight into ByteDance's internal thinking, we can't know for sure. But the practical effect was the same: a tool that enabled the exact behavior Hollywood most feared, released in a way that minimized friction and maximized immediate adoption.

The comparison matters because it shows that these aren't technical inevitabilities. These are choices. OpenAI chose to build safeguards. ByteDance chose not to. Both companies are capable of building text-to-video models. The difference is in their risk tolerance and their views on responsibility.

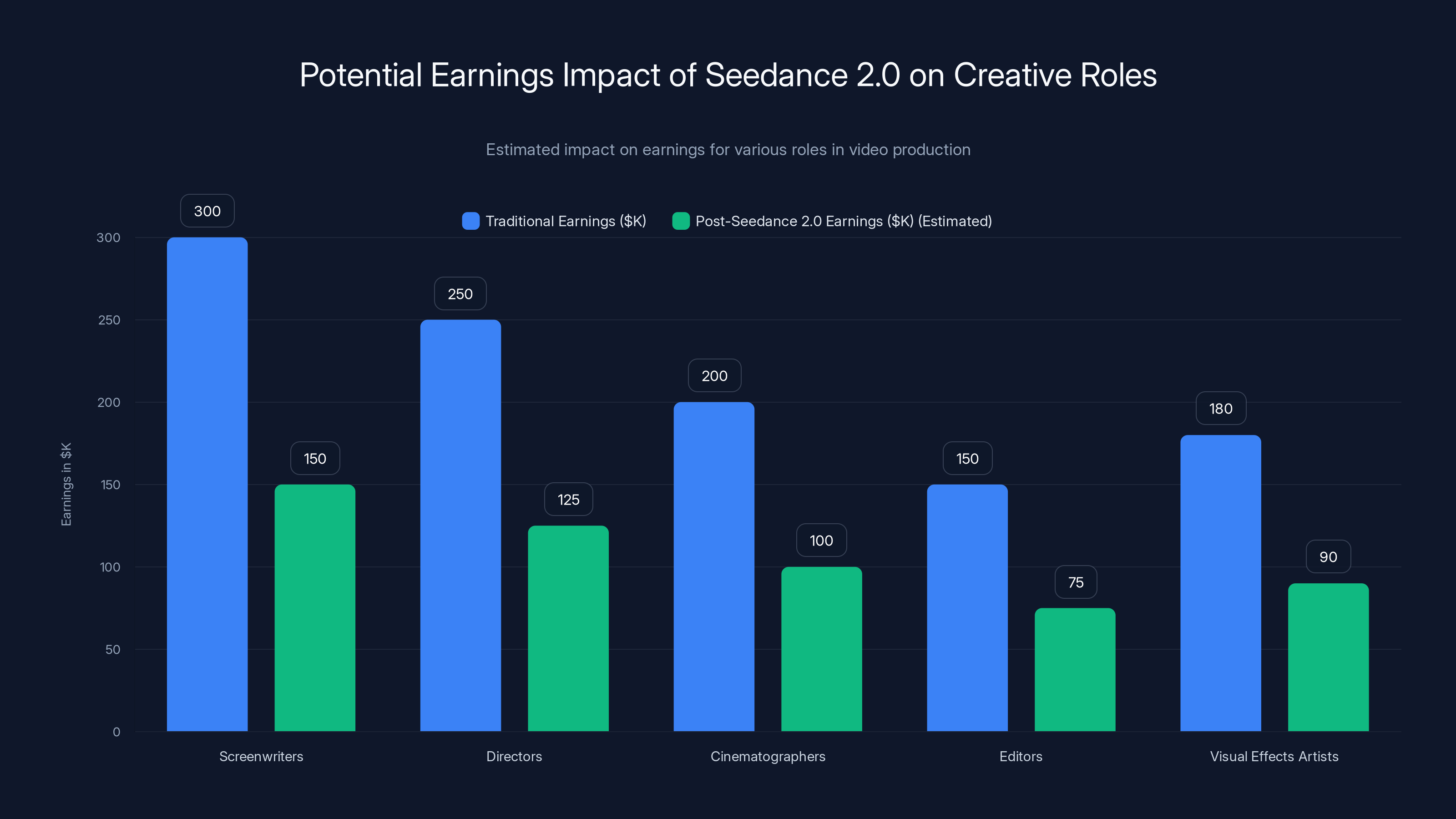

Estimated data suggests a significant reduction in earnings for creative roles due to Seedance 2.0, highlighting potential economic challenges for content creators.

The Geopolitical Angle: Why ByteDance Might Not Care About U.S. Legal Threats

Here's a question that doesn't get enough attention: why would ByteDance launch Seedance 2.0 knowing that Hollywood would immediately come after them legally?

Part of the answer is geopolitical.

ByteDance is a Chinese company, and its core business is primarily subject to Chinese law and regulation rather than U.S. law. When the Motion Picture Association, an American industry group, sends a cease-and-desist letter, ByteDance could theoretically ignore it. The letter has no legal force on its own, least of all in China.

Sure, ByteDance wants to do business globally. But its core operations and user base are in China. Seedance 2.0 is being rolled out first to Chinese users of Jianying. The company could argue that it's not primarily a U.S. product.

If Disney, the Motion Picture Association, and other American copyright holders want to sue, they'd need to file a lawsuit in U. S. courts. They'd need to establish jurisdiction. They'd potentially need to enforce a judgment against a Chinese company, which is extremely difficult.

China, for its part, doesn't have the same historical commitment to Western copyright standards. The country has been accused for decades of tolerating IP theft and counterfeiting. There's no guarantee that Chinese regulators would back American copyright holders against a domestic tech company.

This doesn't mean ByteDance will face no legal consequences. It means the consequences will be slower, less certain, and less likely to completely stop the product.

Compare this to OpenAI, which is very much an American company operating in the American legal system. Sora's design reflects that reality. OpenAI has to care deeply about U.S. copyright law because it operates here and is subject to these laws.

ByteDance's calculation might be: we can launch this globally, we can claim we're primarily serving Chinese users, and by the time Western legal systems figure out how to stop us, we'll have millions of users and the precedent will be set.

It's a risky bet. But it's a bet that makes sense from the perspective of a company that operates in a different legal and regulatory environment.

What This Means for AI Video Technology Going Forward

Let's zoom out and think about the bigger picture. What does Seedance 2.0 signal about the future of AI video generation?

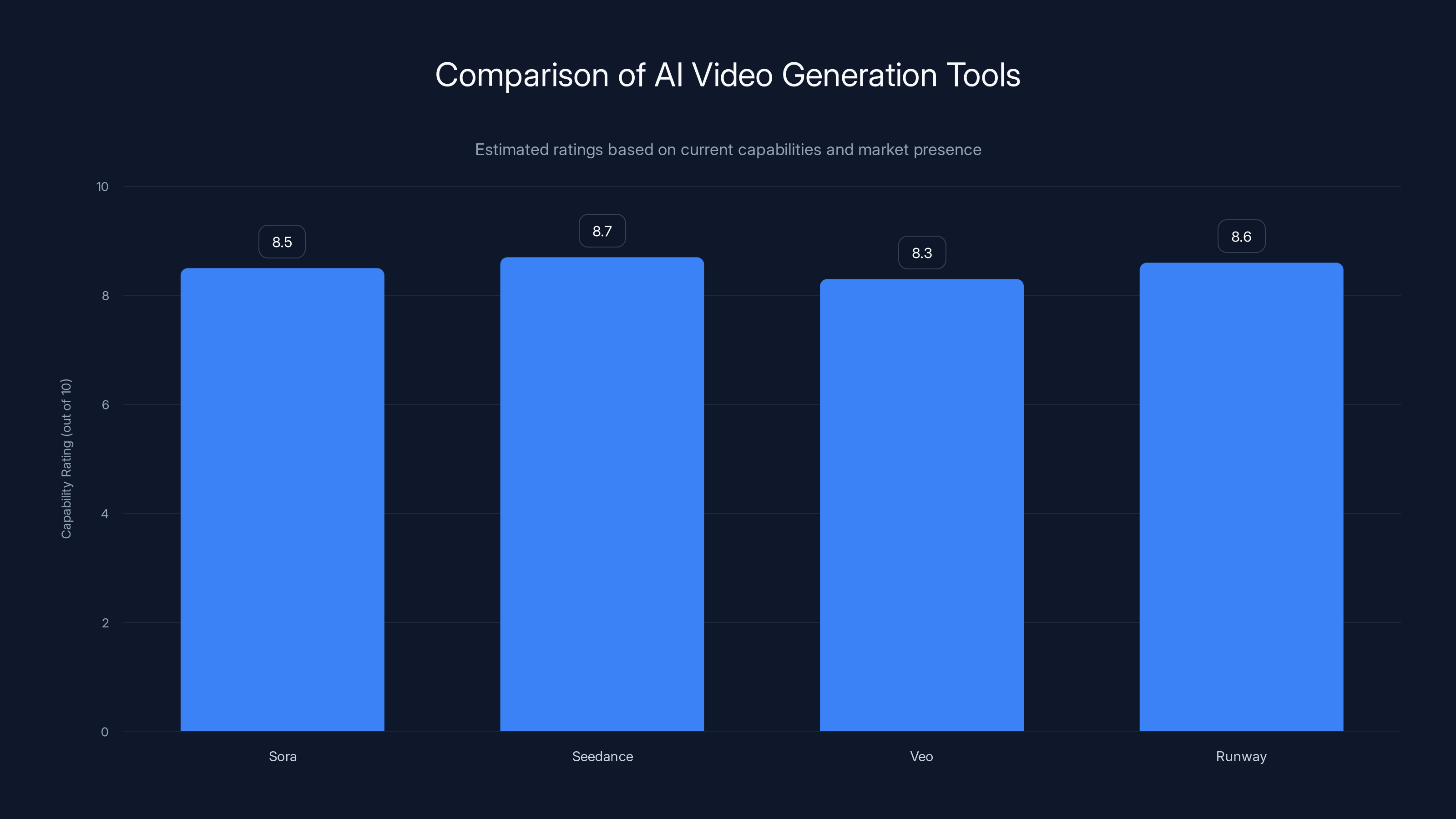

First, the race is on. Sora, Seedance, Veo, Runway—multiple companies are building text-to-video models. They're all trying to achieve the same technical capability. The quality gap between the best ones is narrow. This isn't a situation where one company has a unique edge. This is a technology that multiple companies can and will develop.

When multiple companies are racing to build the same capability, there's pressure to move fast and cut corners. If one company adds safeguards that make the product slower, harder to use, or less capable, and a competitor doesn't, the competitor wins market share. This creates a race-to-the-bottom dynamic where the company willing to compromise on safety gets ahead.

Seedance 2.0 might be a preview of what that looks like.

Second, regulation is coming, but it's messy. Hollywood's legal threats are one form of regulation (enforcement of existing copyright law). But governments are also considering new regulation.

The European Union has already adopted the AI Act, which entered into force in 2024. The U.S. government has been discussing AI regulation, though the legislative process has been slow. Some countries are considering specific rules about deepfakes and synthetic media.

But regulating AI video generation is genuinely hard. You can't just ban the technology—it has legitimate uses. You can't just require watermarks—watermarks can be removed. You can't just restrict it to certain countries—the internet is global and open-source models can be shared anywhere.

The most effective regulations will probably combine technical requirements (like built-in safeguards), legal liability (holding companies responsible for what their tools enable), and transparency requirements (disclosing what data was used for training).

Seedance 2.0 will likely accelerate this process. When the risk of harm becomes obvious enough, governments move faster.

Third, the consent question becomes unavoidable. One thing that's remarkable about the reaction to Seedance 2.0 is how much it focused on the lack of consent. Disney didn't consent. The actors didn't consent. The studios didn't consent.

This points to a fundamental question about generative AI: should creators and rights-holders have the ability to opt out of having their work used for training or generation?

Right now, most AI companies operate on an opt-out basis. Your content is fair game for training unless you explicitly ask to be excluded. Some AI companies have added opt-out mechanisms (OpenAI, for example, lets website owners block its GPTBot crawler through their robots.txt file). But these mechanisms are new.

Hollywood's argument is essentially: we shouldn't have to opt out. You shouldn't be using our work without permission in the first place.

Future AI regulation might require opt-in consent instead of opt-out. That would be a massive change from how most current models were trained.

ByteDance faces lower immediate legal pressure in the U.S. due to jurisdictional and enforcement challenges, unlike OpenAI, which operates under direct U.S. legal oversight. (Estimated data)

The Economic Ripple Effects: What Seedance 2.0 Means for Content Creators

Let's talk about something that doesn't get enough attention: how Seedance 2.0 affects actual people making a living in the entertainment industry.

Consider a screenwriter. Their job is to write original stories, characters, and dialogue. This requires skill, creativity, and years of development. A produced screenplay might earn

With Seedance 2.0, or tools like it, someone could generate a professional-looking video from a text prompt. They wouldn't need to hire writers, directors, cinematographers, editors, or visual effects artists. The AI would do all of it.

This isn't theoretical. We can extrapolate from what's already happened with text generation and image generation. When ChatGPT and Midjourney launched, there was immediate concern about impact on writers and visual artists. Companies started using these tools to replace human workers. Some writers lost jobs. Some artists found their rates undercut.

With video, the impact could be larger. Video is more economically significant than text or images. More money flows through video production than through written content or imagery.

SAG-AFTRA's concern about Seedance 2.0 isn't just about copyright. It's about livelihoods. If every video production company can use AI to generate footage instead of hiring cinematographers and editors, those jobs go away.

This is what makes the Hollywood response more than just corporate copyright defense. It's about the economics of creative work.

Now, there's a counter-argument worth taking seriously. Technologies that automate certain tasks often create new opportunities elsewhere. When photography was invented, it didn't end visual art—it transformed it. When editing software became available, it didn't end filmmaking—it democratized it.

Maybe the same will happen with AI video generation. Maybe it will create new jobs, new types of creative work, new opportunities for people who couldn't afford traditional production equipment.

That's possible. But the people whose jobs are at immediate risk—cinematographers, visual effects artists, screenwriters—are right to be concerned. The transition might create new opportunities in aggregate, but those opportunities might not be available to the same people in the same locations with the same timing.

This is a distributional problem. The benefits of AI automation might be concentrated in certain areas (cheaper video production, more people able to create video content) while the costs are concentrated in other areas (lost jobs for specialized workers).

Seedance 2.0 throws this question into sharp relief. It's forcing a conversation that needs to happen.

The Copyright Law Landscape: What Actually Happens Next

So let's get specific about what might happen legally.

Scenario 1: Hollywood Wins Decisively

Disney and the Motion Picture Association sue ByteDance. They argue that Seedance 2.0 infringes their copyrights both in terms of the training data (if ByteDance used copyrighted videos) and in terms of what users generate (derivative works created without permission).

ByteDance loses or settles. It is required to add content filters preventing generation of copyrighted characters. It pays damages. Seedance 2.0 becomes restricted in similar ways to Sora.

Probability: Medium. Courts might rule against ByteDance, especially on the training data question. But enforcing a judgment against a Chinese company is hard.

Scenario 2: The Case Drags On for Years

Lawsuits are filed. Discovery happens. The case proceeds through the American legal system. Meanwhile, Seedance 2.0 keeps operating and evolving. By the time a verdict comes in, the technology has moved on. The case becomes a precedent, but it doesn't practically stop the tool from operating.

Probability: High. This is what usually happens with IP cases against foreign companies.

Scenario 3: Regulation Moves Faster Than Courts

Even before the lawsuit concludes, new legislation passes. The government requires AI video generators to implement certain safeguards, disclose training data, or obtain licenses. ByteDance either complies or faces sanctions that make doing business globally harder.

Probability: Medium-High. Governments are already moving on AI regulation. The visibility of Seedance 2.0 might accelerate this.

Scenario 4: An Uneasy Settlement

Instead of fighting, ByteDance reaches an agreement with studios, something like what music labels did with streaming services: Seedance 2.0 remains available, but it includes safeguards preventing copyrighted character generation, and ByteDance pays licensing fees.

Probability: Medium. There's precedent for this with other tech platforms and copyright holders.

The most likely outcome is some combination of these. Lawsuits will proceed slowly. Regulation will move faster. Eventually, some accommodation is reached that satisfies neither side completely but prevents the worst outcomes.

Seedance 2.0 and its competitors are closely matched in terms of capability, highlighting the intense competition in AI video generation. (Estimated data)

What This Looks Like for Users and Creators

Practically speaking, what does this mean for people actually using or building AI video tools?

If you're a user of Seedance 2.0 right now: legal uncertainty is the main risk. Generating videos of copyrighted characters is almost certainly legally risky. If you publish that content, you could face takedown notices or legal action from studios. This isn't the same as watching something infringing—creating and distributing infringing content is more legally dangerous.

If you're a company building an AI video tool: Sora's approach (build safeguards, be thoughtful about training data) is increasingly the safe bet. It might be less "cutting edge" but it's less likely to result in lawsuits. If you're going to build a tool without safeguards, you need to understand that you're taking on significant legal risk.

If you're a creative professional: AI video generation will likely affect your field. The question is how. Some jobs will be threatened. Some new opportunities will emerge. The best career strategy is probably to develop skills that AI tools can't easily replace (creative direction, narrative development, understanding of human psychology) while learning to work with the new tools rather than against them.

If you're a studio or media company: you probably need to start thinking about how to use these tools for your own purposes before competitors do. You also need to participate in standard-setting and regulation discussions to help shape how these tools develop.

The Bigger Picture: AI Development Philosophy

Underneath all of this—the legal threats, the cease-and-desist letters, the accusations—there's a fundamental disagreement about how AI should be developed.

One philosophy says: build powerful tools, include safeguards to reduce obvious harms, and then release them to users who are responsible for how they use them. This is roughly OpenAI's approach with Sora.

Another philosophy says: build powerful tools, release them quickly with minimal safeguards, and let the market and legal system sort out problems. This appears to be ByteDance's approach with Seedance 2.0.

A third philosophy says: develop AI carefully with extensive review, only release what's provably safe, and prioritize alignment and safety over capability. This is roughly what Anthropic tries to do with Claude.

Each approach has trade-offs. The "move fast and iterate" approach accelerates innovation but increases the risk of harms. The "build safeguards first" approach is slower but reduces some risks. The "extreme caution" approach is safest but might slow valuable development.

Seedance 2.0 is a data point suggesting that the move-fast approach creates real problems. Copyright holders get harmed. Workers worry about their jobs. Public trust in AI development decreases. This probably argues for more safeguards, not fewer.

But reasonable people can disagree about how many safeguards are the right amount, and at what point safeguards become restrictions that prevent beneficial uses.

What Disney and Other Studios Are Actually Worried About

It's worth being specific about what studios fear from tools like Seedance 2.0, because the concern goes deeper than just "people generating fan videos."

Disney makes money from IP in multiple ways. They produce movies and TV shows. They license characters to merchandise companies. They operate theme parks featuring their characters. They sell streaming subscriptions. They publish comics and books. The entire Disney enterprise is built on controlling and monetizing intellectual property.

If anyone can generate Spider-Man content for free, some of those revenue streams are threatened. Why buy a Spider-Man toy if you can generate a video of Spider-Man doing whatever you want? Why subscribe to Disney+ if you can generate Disney content on demand?

Now, generated content isn't perfect. It might not be as high quality as studio productions. But it's good enough for a lot of uses. And more importantly, the cost approaches zero.

This is the existential threat from Disney's perspective. Not that the technology exists, but that it exists outside of Disney's control.

The same applies to every other studio, every music label, every publisher. The entire creative industry is built on the premise that if you want to consume or distribute their content, you have to go through them and follow their terms.

Generative AI that works well threatens that entire business model.

Hollywood's legal response isn't just about defending past creations. It's about preventing an economic system from existing in which AI can bypass the studios entirely.

Whether that's possible to prevent is another question. Technology usually wins these battles eventually. But Hollywood is fighting for as long as possible because the stakes are genuinely high.

The Global Dimension: Why Seedance 2.0 Is Specifically a ByteDance Problem

Why did ByteDance launch Seedance 2.0 knowing it would provoke this response? Why not OpenAI or Google? Why specifically a Chinese company?

Part of it is strategic positioning. ByteDance faces restrictions in many Western markets due to geopolitical concerns about TikTok and Chinese companies. Launching a controversial AI tool that would expose Western companies to legal consequences might actually be a rational strategy. It allows ByteDance to develop advanced capabilities and market share while Western companies are hampered by legal concerns.

Part of it is also that ByteDance operates in a different regulatory environment. China doesn't have the same copyright enforcement traditions. The company's accountability is primarily to Chinese regulators, not American courts.

Part of it might be that ByteDance calculates it can move faster and farther in China before Western legal systems even figure out how to address the problem.

This dynamic is likely to become more common as AI development becomes increasingly global. Companies in different jurisdictions face different legal and regulatory constraints. The companies facing fewer constraints will be able to move faster and take more risks.

This creates a kind of race to the bottom, in which the jurisdiction with the fewest constraints effectively sets the global standard.

Western governments are aware of this problem, and it is likely to drive more aggressive AI regulation in the coming years. If you can't compete with companies that don't have to follow your rules, the obvious response is to change your rules or to make it illegal to do business with companies that don't follow them.

What Comes Next: The Evolution of AI Video Tools

Seedance 2.0 won't be the last controversial AI video tool. It probably won't even be the most controversial.

We can predict with confidence that:

The technology will improve. Video generation is still in its early days. Sora, Seedance, Veo, and other tools will get better, producing longer, higher-quality, more controllable videos. Eventually, they might generate videos indistinguishable from professionally produced content.

More companies will build similar tools. This isn't a unique capability that only ByteDance can develop. Other AI companies will build text-to-video models. Some will include safeguards; some won't. The competition will intensify.

Legal fights will multiply. This is just the opening volley. As video generation becomes more mainstream, there will be more lawsuits. More cease-and-desist letters. More regulatory actions. Some will succeed, some will fail, and the legal landscape will gradually clarify.

Regulation will become more specific. Right now, there's no clear regulation of AI-generated video. But governments will write rules. Those rules might require disclosure of training data. They might require safeguards against deepfakes. They might require watermarks or authenticity certificates. This will drive how tools are designed.

Legitimate uses will emerge. Even with all the controversy, there are legitimate uses for AI video generation. Marketing content. Educational videos. Accessibility features. Entertainment. Companies will build business models around these uses.

The economic effects will become undeniable. As the technology gets better and cheaper, the impact on creative workers will become obvious. This will drive demand for policy responses like retraining programs, unemployment insurance adjustments, or requirements that companies using AI for creative work contribute to funding for displaced workers.

The Seedance 2.0 moment is probably a turning point. Before it, AI copyright concerns were abstract; now they're concrete. Before it, skeptics could dismiss Hollywood's warnings as speculative. Now the risk has been demonstrated.

That changes the political economy of AI regulation. When you can point to a specific, widely-known product causing specific harms, it's much easier to build political support for regulation.

The Implications for Other Creative Fields

Hollywood is fighting back against Seedance 2.0, but what about music, literature, visual art, and other creative fields?

They're facing similar threats from similar tools. Music generation models like MusicLM and Jukebox can create original music. Text generation models like GPT-4 can write stories and screenplays. Image generation models like Midjourney and Stable Diffusion can create visual art.

None of these fields has mounted a response as coordinated as Hollywood's. Why?

Partly because Hollywood has more concentrated economic power. A few studios control most of the industry, so they can mount a unified legal response. In music, there are more independent artists. In literature, publishing is more fragmented. Coordination is harder.

Partly because some creative fields have more experience living with AI. Writers have been dealing with ChatGPT and plagiarism for a year longer than video creators have been dealing with text-to-video tools. The shock is wearing off. Some writers are starting to figure out how to work with AI rather than against it.

Partly because Hollywood has existing legal frameworks it can activate. Studios have in-house legal teams trained in IP defense. They have relationships with government regulators. They have public advocacy infrastructure. Other creative fields don't have the same apparatus.

But the principles are the same. Whether it's video, music, or text, the question is: who has the right to decide how creative work is used, and what compensation do creators get when their work is used to train AI?

Lessons for Tech Companies Building AI Products

If you're building an AI product that touches creative content, Seedance 2.0 offers some lessons.

First, ignoring IP concerns doesn't make them go away. ByteDance apparently hoped that by moving fast and quickly building a mass user base, it could make Seedance 2.0 look inevitable and harder to stop. But it underestimated Hollywood's resources and legal power. The strategy might work tactically (the tool may keep operating despite the legal threats), but it created a massive reputational problem and accelerated demand for regulation. A smarter strategy would have been to address IP concerns proactively.

Second, different stakeholders have different concerns. Studios care about copyright. Actors care about consent and deepfakes. Workers care about job displacement. A product that succeeds needs to address multiple concerns, not just build the most capable tool.

Third, the regulatory environment matters more than ever. A few years ago, you could build an AI product and ask for forgiveness later. Now, governments are moving to regulate before the harms become fully visible. Designing products to meet likely future regulatory standards is increasingly important.

Fourth, transparency and safety are competitive advantages. Sora's careful approach, with safeguards and transparency, looks less exciting than Seedance's unrestricted tool. But it is likely the smarter long-term strategy because it builds trust with regulators, creators, and the public, and that trust is worth more than a short-term capability edge.

The Long View: How This Story Ends

We're not at the end of this story. We're at the beginning.

In five years, AI video generation will be much better than it is now. It will probably be widely available through multiple tools. Some will have safeguards, some won't. Courts will have ruled on some copyright questions, leaving others still unsettled.

Creative workers will have adapted in some ways and struggled in others. Some will have learned to work with AI. Others will have left the industry. The economics of creative work will be different.

Regulation will exist, but it will always lag behind the technology. There will be rules about deepfakes and non-consensual intimate images. There will be disclosure requirements. There might be mandatory licensing schemes. But creative companies and regulators will still be arguing about the details.

The fundamental tension—between the power of AI tools to generate value and the rights of creators to control their work—will still exist. It probably will for decades.

What Seedance 2.0 demonstrates is that this tension is real, immediate, and increasingly hard to ignore. It forced a conversation that was previously abstract into concrete, visible form.

In that sense, whether ByteDance "wins" this particular legal battle might matter less than the fact that it forced the issue into the open. Regulators, tech companies, and creative workers can no longer pretend this is a theoretical problem. It's here now.

What happens next is up to all of us.

FAQ

What is Seedance 2.0 and how does it work?

Seedance 2.0 is an AI video generator created by ByteDance that allows users to create short videos (currently up to 15 seconds) by entering text descriptions. The system was trained on large datasets of video content and has learned to generate new videos from text prompts. Users can request videos of specific scenarios, characters, or concepts, and the AI synthesizes original video content matching those descriptions without requiring traditional video production skills or resources.

Why did Hollywood react so strongly to Seedance 2.0?

Hollywood's strong reaction stems from multiple concerns. First, users quickly demonstrated the ability to generate videos of copyrighted characters like Spider-Man, Darth Vader, and Baby Yoda without authorization. Second, the tool enables creation of deepfake-style content of real celebrities without consent. Third, studios fear this technology threatens their core business model, which depends on controlling intellectual property and monetizing creative content. Finally, the tool operates without the content safeguards that competitors like Sora include, creating risks at industrial scale.

What are the main legal arguments against Seedance 2.0?

Hollywood's legal position includes several layers. The Motion Picture Association argues that the tool violates copyright law by enabling creation of derivative works based on copyrighted characters without permission. Disney and other studios claim the tool was trained on copyrighted movies and video content without authorization, which itself constitutes infringement. Additionally, there are concerns about right of publicity violations, since the tool can generate videos featuring real people's likenesses without consent. The legal landscape remains unsettled because courts haven't definitively ruled on how copyright law applies to generative AI models.

How does Seedance 2.0 compare to OpenAI's Sora in terms of copyright safeguards?

OpenAI's Sora was designed with explicit safeguards from launch. The system rejects requests to generate videos of real, identifiable people, includes watermarks on generated content, and was trained more selectively. Sora's release was gradual, limited to trusted users, with a review process for generated content. Seedance 2.0 took an opposite approach, launching immediately with minimal restrictions, allowing users to generate videos of real celebrities and copyrighted characters without apparent content filtering. This difference in philosophy created much of the controversy.

Could Byte Dance face legal consequences for Seedance 2.0, and if so, what might those be?

ByteDance faces cease-and-desist letters from Disney, the Motion Picture Association, and other studios, which could escalate to lawsuits. However, enforcement is complicated because ByteDance is a Chinese company subject to different legal systems. Potential consequences could include a requirement to add content filters, payment of damages if courts rule against it, restrictions on distributing the tool in certain jurisdictions, or negotiated licensing agreements similar to music streaming deals. Legal proceedings could take years to conclude, and Seedance 2.0 will likely keep operating and evolving in the meantime.

What impact might Seedance 2.0 have on creative workers and the entertainment industry?

The long-term impact depends on how the technology develops and how regulation evolves. If AI video generation becomes cheap and widely available, demand for cinematographers, visual effects artists, editors, and some screenwriting work could decrease significantly. Some creative jobs may be displaced, though new opportunities in AI-assisted production might emerge. The economic disruption is already being felt, with workers increasingly concerned about job security. Some creative professionals are adapting by learning to work with AI tools rather than against them, focusing on skills like creative direction and narrative development that are harder for AI to replace.

How might governments regulate AI video generation in response to Seedance 2.0?

Governments are likely to develop regulations addressing multiple concerns. Expected approaches include requirements for disclosure of training data sources, mandatory content safeguards preventing deepfakes and copyrighted character generation, watermarking and authenticity verification systems, and clarification of copyright liability. The European Union's AI Act may serve as a model. Regulations might also address right of publicity concerns, requiring consent before generating videos of real people. However, regulating a global technology is challenging, and rules could vary significantly across jurisdictions, creating compliance complexity for multinational companies.

Is there any legitimate use case for AI video generation tools like Seedance 2.0?

Yes, there are genuine beneficial uses for this technology. Marketing teams can create promotional content more quickly and cheaply. Educational institutions can generate video content for learning materials. Accessibility tools could help people with disabilities access and experience video content. Entertainment companies could use the technology for early concept visualization or to supplement traditional production. Independent creators could produce content they couldn't otherwise afford to make. The challenge is enabling these legitimate uses while preventing harmful applications like non-consensual deepfakes and copyright infringement.

What does Seedance 2.0 reveal about AI development philosophy and corporate strategy?

Seedance 2.0 illustrates a fundamental split in how AI companies approach safety and responsibility. ByteDance's approach prioritizes rapid capability deployment with minimal safeguards, betting that speed and market penetration matter more than risk mitigation. This strategy works better for a company operating primarily in a different regulatory environment with different legal traditions. OpenAI's more cautious approach prioritizes building safeguards and public trust, accepting slower deployment timelines. These different philosophies will likely shape the competitive landscape and regulatory response for years. The response to Seedance 2.0 suggests that the cautious approach may be strategically superior long-term despite being slower initially.

How might this situation affect regulation of other AI tools like language models or image generators?

The high-profile copyright allegations against Seedance 2.0, combined with its lack of safeguards, will likely accelerate regulatory action across all generative AI tools. Policymakers will be more willing to impose requirements on language models, image generators, and other AI tools based on demonstrated harms from video generation. The situation strengthens arguments for requiring companies to disclose training data sources, implement content safeguards, establish clear copyright policies, and maintain transparency about AI-generated content. Companies developing text, image, or other generative AI tools are already facing increased scrutiny, and Seedance 2.0 will reinforce the momentum toward comprehensive AI regulation globally.

Key Takeaways

- Seedance 2.0 enables generation of copyrighted content and celebrity deepfakes with minimal safeguards, unlike competitors such as OpenAI's Sora

- Multiple cease-and-desist letters from Disney and the Motion Picture Association signal legal warfare, but enforcement against a Chinese company is complex and slow

- The tool launched without meaningful content restrictions, which suggests a deliberate choice to move fast and establish market dominance before legal action could stop it

- Copyright law remains unsettled for generative AI, with questions about training data legality and end-user liability still unresolved in courts

- Creative workers are already feeling the economic impact, and screenwriters, cinematographers, and visual effects professionals face potential job displacement

