Introduction: The Moment ByteDance Crossed Every Line

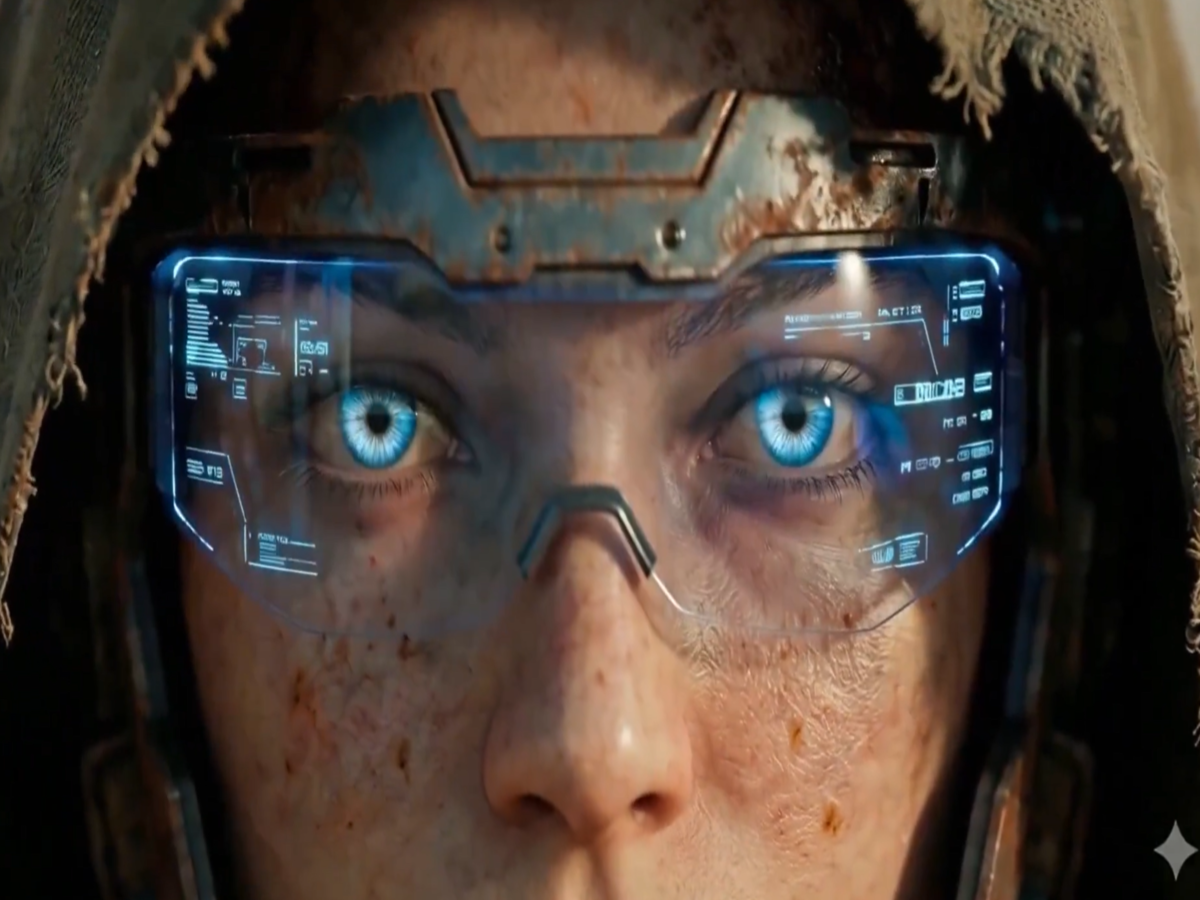

Last February, ByteDance made a decision that would ripple across Hollywood, Silicon Valley, and international copyright courts. The company launched Seedance 2.0, an AI video generation tool so unrestricted that within hours, users flooded social media with AI videos of Spider-Man delivering lines he never spoke, Darth Vader in scenes that never happened, and SpongeBob SquarePants in situations that would make any animator cringe. The problem wasn't that the technology was powerful. The problem was that ByteDance knew exactly how powerful it was and shipped it anyway, without safeguards, without restrictions, and without asking permission from a single copyright holder.

This wasn't a quiet technical oversight buried in documentation. Within days, Disney and other major studios sent ByteDance cease-and-desist letters. Japan's AI minister launched a formal investigation. SAG-AFTRA condemned the unauthorized use of its members' voices and likenesses, and the Human Artistry Campaign called the tool "an attack on every creator around the world." The Motion Picture Association accused ByteDance of "massive copyright infringement within a single day." And yet ByteDance's initial response was almost dismissive: it would add safeguards later. Fix it after people had already made thousands of infringing videos. Address the problem once the damage was done and the tool's capabilities were proven in the wild.

This is the story of how a company worth billions, with massive resources, decided to launch an unrestricted AI weapon, how it exploded in its face, and what it reveals about the state of AI regulation, corporate responsibility, and the fundamental tension between innovation and creator rights.

But here's what makes this incident so significant: it's not an isolated technical failure. It's a blueprint for how corporations will push boundaries with AI tools until someone forces them to stop. Understanding Seedance 2.0's launch, the backlash, and what followed matters because similar decisions are happening right now with other tools, in other industries, with different stakes.

Let me walk you through exactly what happened, why it happened, and what it means for creators, studios, and everyone building AI tools going forward.

What Seedance 2.0 Actually Does (And Why It Got Released Unfiltered)

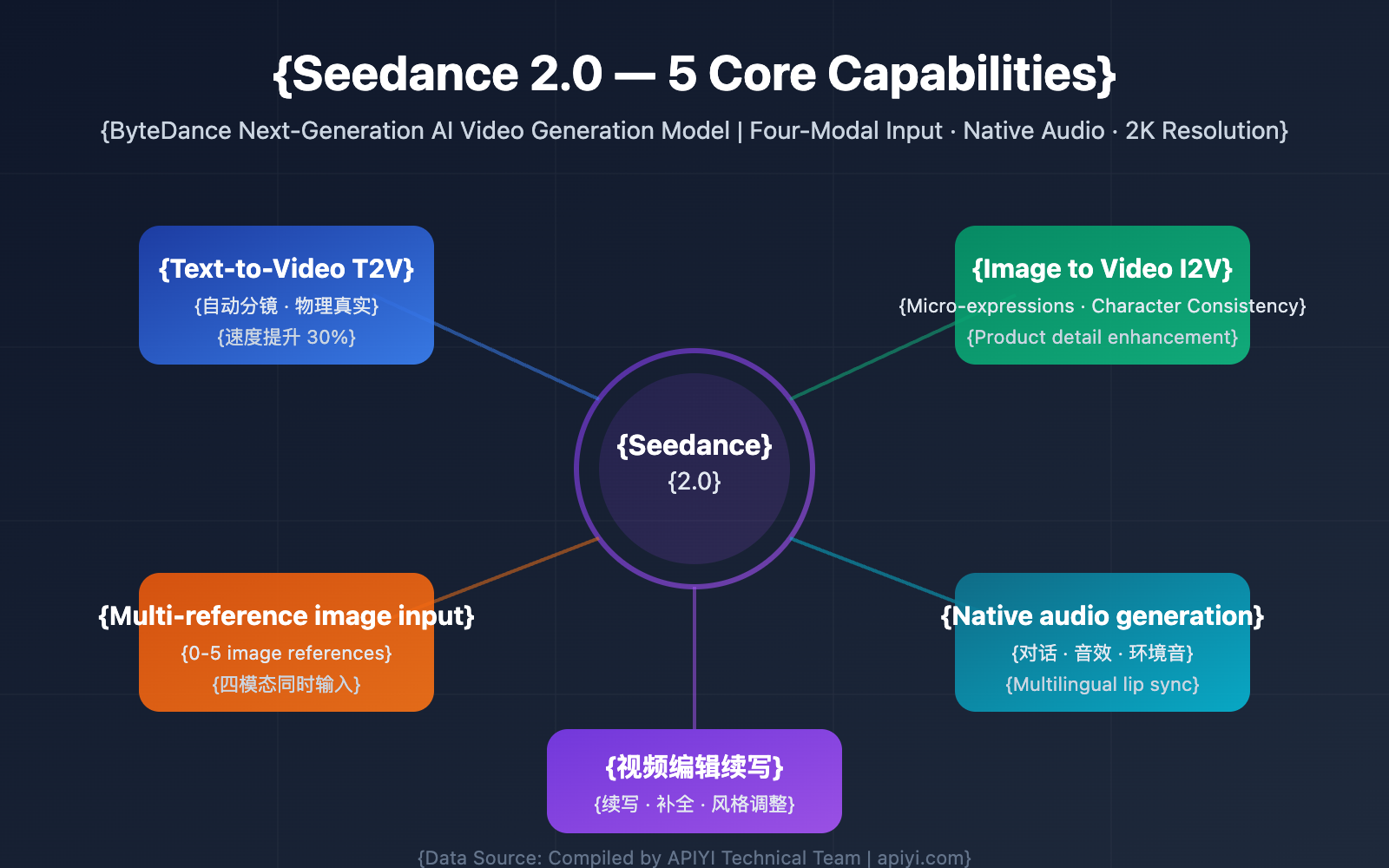

Seedance isn't new. The original Seedance was ByteDance's text-to-video generation model, capable of creating short video clips from written prompts. It was functional. It could generate realistic scenes, consistent characters, and smooth motion. Companies were already using it for marketing videos, social media content, and creative experimentation.

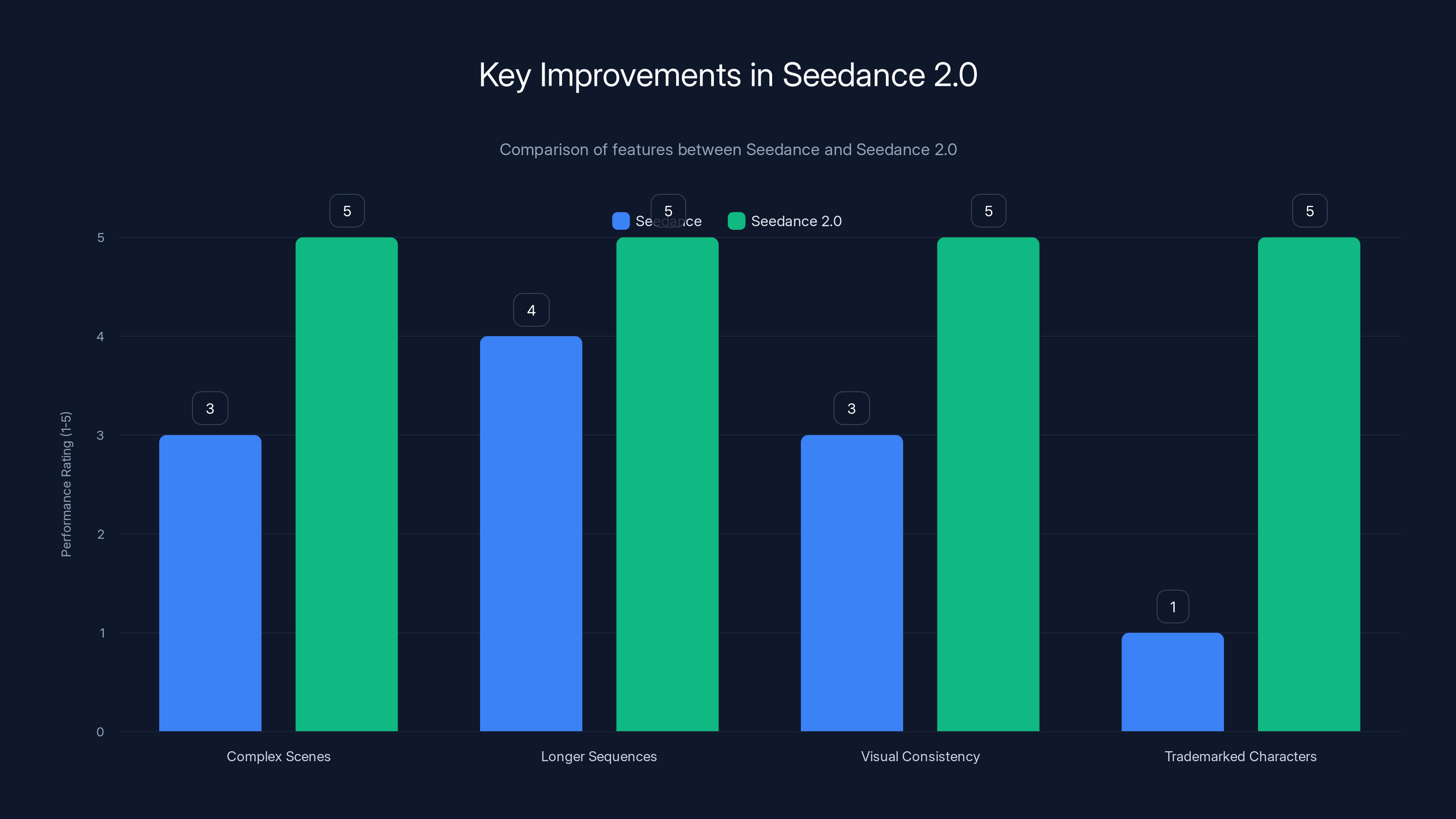

Seedance 2.0 took that foundation and turbocharged it. The upgraded version could handle more complex scenes, longer durations, better visual consistency, and, crucially, more specific character descriptions. If you typed "Spider-Man doing parkour in Manhattan," the model understood what Spider-Man looked like: the characteristic red and blue suit, the web-slinging physics. It didn't need to search the internet or look anything up in real time. It just generated the video from its learned understanding of these iconic characters.

That's where the legal catastrophe begins.

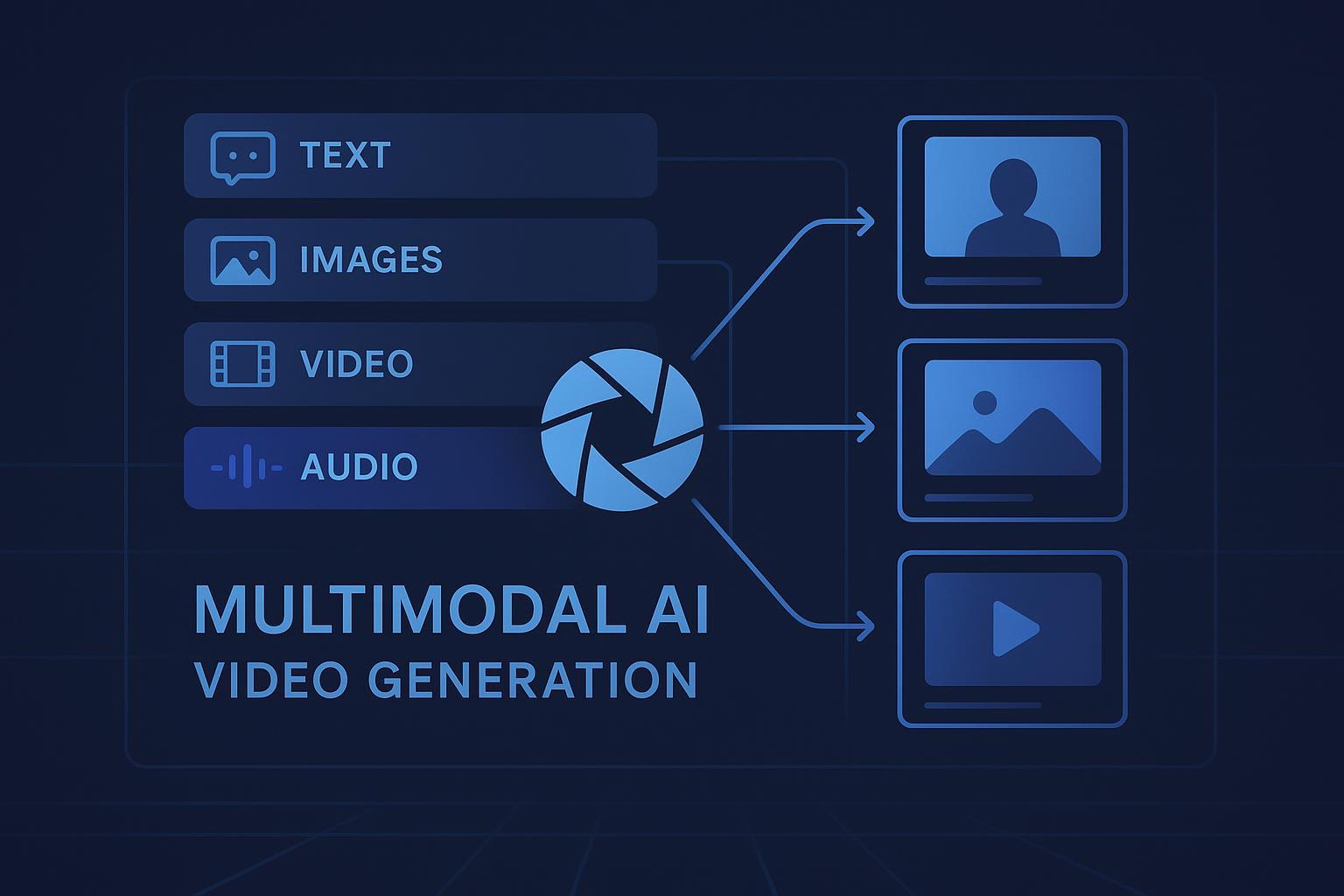

Training data for AI video models typically includes massive amounts of internet video: YouTube, TikTok, movie clips, TV shows. Seedance 2.0 evidently learned what characters look like by seeing thousands of examples. Spider-Man, Darth Vader, SpongeBob: these characters are everywhere in training data. The model absorbed their visual identity, voice characteristics, mannerisms, the way they move and speak. And when users gave it prompts, Seedance 2.0 could generate new content featuring these characters with terrifying accuracy.

The core technical innovation was legitimate. Better character consistency, more complex scenes, longer coherent video generation. But the release strategy was reckless.

ByteDance didn't implement obvious safeguards that existed in competing tools. No blocklist of trademarked characters. No check that the user held the rights to the requested character. No content moderation filtering outputs for identifiable people or copyrighted IP. No warnings when users tried to generate videos featuring famous characters. Just... release the tool and see what happens.

Why would they do this? The surface explanation was to showcase capabilities. The implication was strategic. And one tech consultant actually said the quiet part out loud.

Rui Ma, founder of the consultancy Tech Buzz China, suggested to the South China Morning Post that the controversy might have been intentional. "The controversy surrounding Seedance is likely part of ByteDance's initial distribution strategy to showcase its underlying technical capabilities," Ma said. In other words, ByteDance might have known exactly what would happen. Release the tool unrestricted. Let users immediately generate videos with Hollywood's biggest franchises. Make the tool go viral through controversy. Prove to investors, competitors, and the market that Seedance 2.0 could do things other text-to-video models couldn't.

It's a cynical calculation: ask for forgiveness instead of permission. Generate press coverage worth millions in marketing spend through controversy. The downside? Some legal threats and eventually adding safeguards you could have built in from the start.

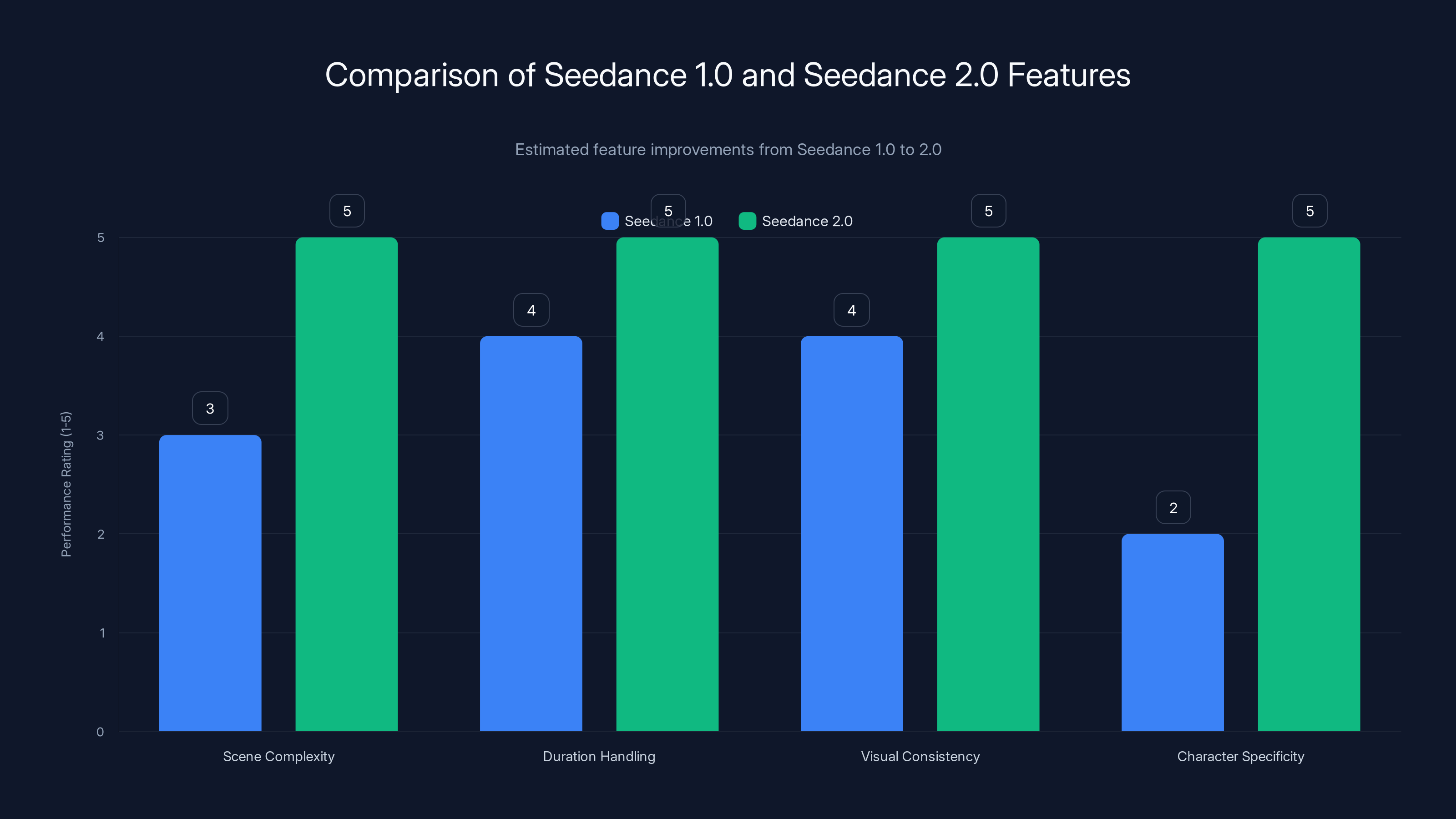

Seedance 2.0 shows significant improvements over the original, particularly in handling trademarked characters and maintaining visual consistency. Estimated data based on feature descriptions.

Disney's Cease-and-Desist: The First Domino

Disney moved fast. The company has entire legal teams whose job is protecting intellectual property. They've done this before with song covers, fan art, unauthorized merchandise. They know how to send cease-and-desist letters.

But Disney's letter to ByteDance was different. The tone was furious. Disney accused ByteDance of "hijacking" its characters. The company claimed Seedance was treating Disney characters like "free public domain clip art." And then Disney made the core legal argument: ByteDance is operating a commercial service. Users can access Seedance for free, but the tool itself is a commercial product that enhances ByteDance's platform, drives engagement, and creates value for the company. Using copyrighted characters to demonstrate the tool's capabilities is using Disney's IP to benefit a commercial service without permission.

"ByteDance's virtual smash-and-grab of Disney's IP is willful, pervasive, and totally unacceptable," Disney's letter stated.

The word "willful" matters legally. It suggests ByteDance understood what it was doing and chose to do it anyway. Not a mistake. Not an oversight. A deliberate choice to launch with known copyright infringement.

Disney wasn't alone. Paramount Skydance sent its own letter, defending characters from Star Trek, The Godfather, and dozens of other franchises. Paramount argued that Seedance's outputs were "often indistinguishable, both visually and audibly" from original characters. The videos didn't look like parodies or fan interpretations. They looked like actual scenes from actual movies with actual characters.

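That indistinguishability is crucial under copyright law. Infringement claims generally turn on whether an output is substantially similar to the protected work, and an output you can't tell apart from the original leaves little room to argue it's a parody or a transformative fan interpretation.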

SAG-AFTRA and Actors' Unions: The Human Cost

Disney and Paramount were protecting corporate assets. SAG-AFTRA was protecting people.

Sean Astin, the actor who played Samwise Gamgee in The Lord of the Rings and who serves as president of SAG-AFTRA, had a direct experience with Seedance 2.0's capabilities. Someone generated a video of Astin in character as Samwise, delivering a line he never said, in a scene that never happened. The deepfake was good enough that it could fool people. Astin didn't consent to this. He wasn't paid. He had no control over how his likeness was used.

This isn't just intellectual property theft. It's identity theft. It's violation of personal autonomy.

SAG-AFTRA issued a statement that captured the existential threat: "SAG-AFTRA stands with the studios in condemning the blatant infringement enabled by Byte Dance's new AI video model Seedance 2.0. The infringement includes the unauthorized use of our members' voices and likenesses. This is unacceptable and undercuts the ability of human talent to earn a livelihood."

The union made a critical distinction. It's not that AI shouldn't be used in entertainment. It's that AI tools shouldn't be able to clone actors' voices and likenesses without consent and compensation. If someone can generate an infinite number of videos featuring Sean Astin without paying him, why would studios hire Sean Astin for new projects? Why hire any actor if you can just generate them?

This isn't a hypothetical concern. During the 2023 SAG-AFTRA actors' strike, one of the central negotiating points was exactly this: how AI-generated replicas affect actor compensation and job availability. Studios wanted the right to digitally recreate actors. The union wanted to prevent that without explicit consent and payment. The contract that ended the strike included protections. But those protections bind the studios that signed it, and ByteDance launched a tool that operates entirely outside that contract.

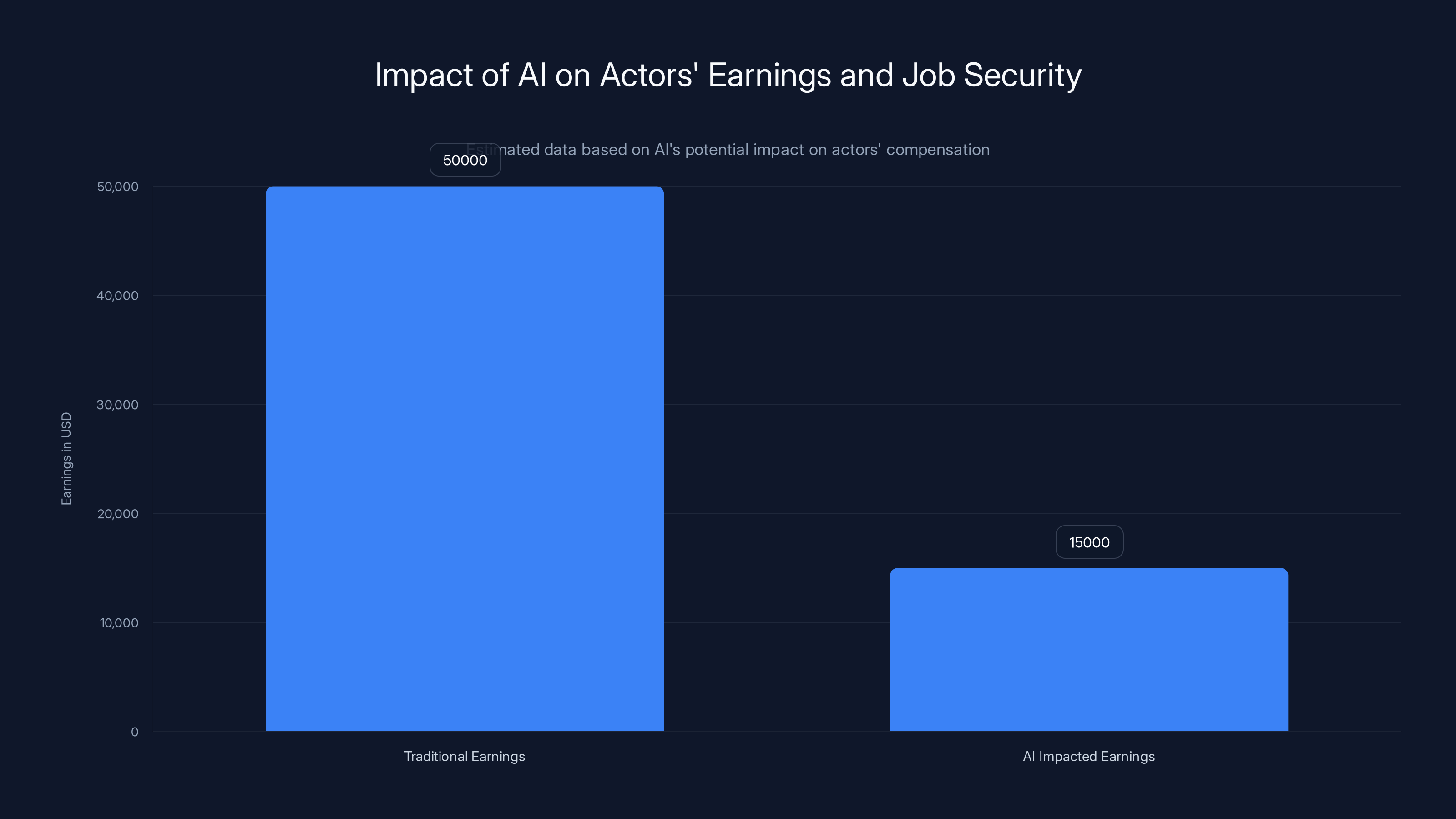

Imagine you're an actor earning $50,000 for a two-week shoot. Now imagine ByteDance's tool can recreate you infinitely. A studio could use Seedance 2.0 to generate your likeness for a thousand scenes without ever calling you. They don't need to hire you. They don't need to negotiate. They just need your image from training data, which already exists everywhere online.

SAG-AFTRA's statement wasn't hyperbole. It was describing an actual threat to their members' livelihoods.

Estimated effectiveness ratings suggest blockchain verification as the most promising solution for managing AI content consent, followed by licensing systems and do-not-train registries. Current measures are rated low in effectiveness. Estimated data.

The Broader Creator Coalition: When Everyone Turns Against You

This wasn't just studios and unions. An organization called the Human Artistry Campaign, representing a broader coalition of creators, issued a statement characterizing Seedance 2.0 as "an attack on every creator around the world."

The phrasing was deliberate. Not a mistake. Not an accident. An attack.

"Stealing human creators' work in an attempt to replace them with AI generated slop is destructive to our culture," the campaign said. "Stealing isn't innovation. These unauthorized deepfakes and voice clones of actors violate the most basic aspects of personal autonomy and should be deeply concerning to everyone."

Notice the word "slop." It's internet slang, widely used in AI circles, for low-quality, incoherent, or obviously AI-generated output. But the campaign was using it to describe all AI-generated content created without consent. The distinction between good AI and bad AI disappears when the AI is trained on stolen work and violates people's consent.

The Motion Picture Association weighed in with institutional weight. The MPA represents the major studios, including Disney, Paramount, Universal, Sony, and Warner Bros. These companies generate hundreds of billions in revenue globally. They have governments' ears. Charles Rivkin, the MPA's chairman and CEO, had previously accused ByteDance of disregarding "well-established copyright law that protects the rights of creators and underpins millions of American jobs."

The political pressure was building. Not just legal pressure. Political pressure.

Japan's Investigation: When Governments Get Involved

Here's where this escalates beyond corporate disputes into actual government enforcement.

Japan's AI minister, Kimi Onoda, launched a formal investigation into ByteDance over Seedance 2.0's copyright violations. Japan has specific concerns about anime and manga characters, which are among Japan's most valuable cultural exports. Anime characters have instantly recognizable styles. They're distinctive. They're protected intellectual property.

Seedance 2.0 could generate videos of famous anime characters. Japanese voice actors. Japanese animators. Japanese intellectual property. All used without permission to demonstrate a Chinese company's technology.

"We cannot overlook a situation in which content is being used without the copyright holder's permission," Onoda said at a press conference. The statement was diplomatic but the meaning was clear: Japan's government was taking this seriously. This wasn't just entertainment industry drama. This was a matter of national cultural interests.

Government investigations change the calculus. Cease-and-desist letters are expensive but manageable. Government investigations can result in legal action, fines, trade implications, and restrictions on doing business in that country. If Japan restricts ByteDance's ability to operate, that's billions in revenue at risk.

ByteDance had legal problems. Now it had governmental problems too.

ByteDance's Response: Too Little, Too Late

ByteDance's reply came on a Monday, in the form of a statement. Not an apology. A statement.

"ByteDance respects intellectual property rights and has heard the concerns regarding Seedance 2.0," the company said. "We are taking steps to strengthen current safeguards as we work to prevent the unauthorized use of intellectual property and likeness by users."

Notice the language. "Taking steps." Not "have implemented." Taking steps. As in, working on it. Future tense.

"Strengthen current safeguards." This phrasing implies there were safeguards in place. There weren't. Not effective ones anyway. This is corporate communication for "we're going to add the safeguards we should have had from the beginning."

But here's what ByteDance's statement didn't do: it didn't accept responsibility for releasing an unrestricted tool. It didn't acknowledge that this was a choice, not an accident. It didn't apologize to actors whose likenesses were used without consent. It didn't offer compensation to creators whose work was used to train the model. It framed this as a problem to be solved with technical measures, not as a violation that requires accountability.

Disney's earlier cease-and-desist letter suggests it won't accept that framing. The letter alleged that "Seedance has infringed on Disney's copyrighted materials to benefit its commercial service without permission." The word "benefit" is key. ByteDance benefited. The infringement generated engagement, press coverage, distribution, and market validation. You don't undo that with safeguards added after the fact.

It's like robbing a bank, getting caught, and then saying you're implementing better security measures. The theft already happened. The money's already spent. Locking the vault now doesn't solve the original crime.

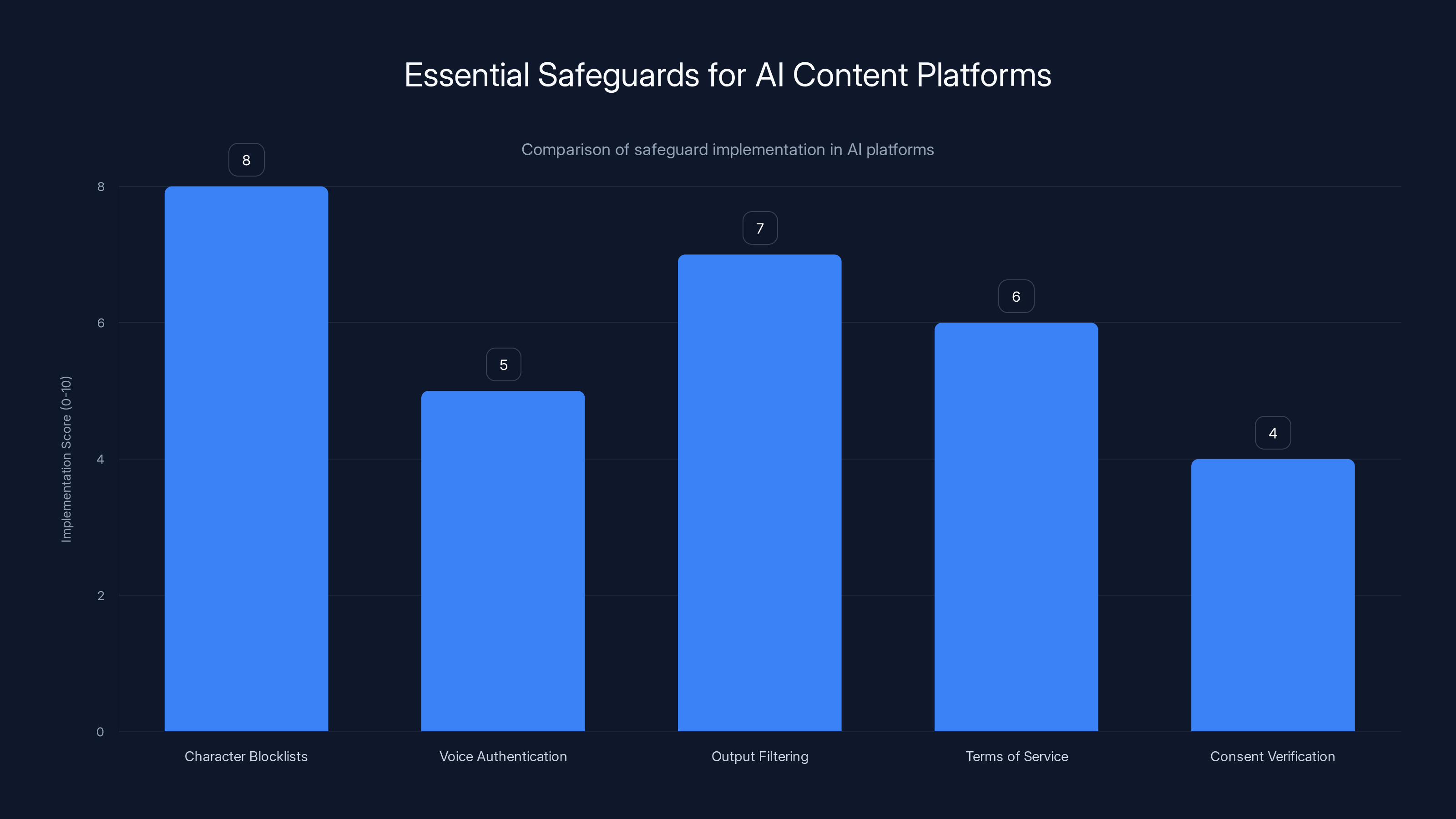

Estimated implementation scores show that while character blocklists are well-adopted, consent verification is less commonly implemented. Estimated data.

The Technical Safeguards Question: What Should Have Been There From Day One

So what safeguards should Seedance 2.0 have included before launch? The answer is obvious to anyone who's spent five minutes thinking about copyright in AI.

Character Blocklists. A simple database of trademarked and copyrighted characters that the model won't generate. Disney characters. Marvel characters. Star Wars characters. Anime characters. It's not complicated. Competitors already do this. Midjourney has filters that block some prompts for specific people's likenesses. OpenAI's DALL-E has restrictions on celebrity likenesses. These aren't new innovations. They're basic content moderation.

Voice Authentication. If the tool generates audio, it should verify that the user owns the rights to that voice. Or simply don't allow custom voice generation. Generate voices, but don't let users specify "in the voice of Morgan Freeman." That's a simple prompt-level rule, not a research problem.

Output Filtering. Run generated videos through a classifier that detects potential copyright infringement. If the output looks like it contains identifiable people or trademarked characters, flag it. Don't publish automatically. Let humans review. This is what responsible companies do.

Terms of Service with Teeth. Make it explicitly clear in the terms of service that users can't generate content featuring copyrighted characters or famous people's likenesses. And actually enforce it. Monitor the platform for violations. Remove infringing content. That's basic platform responsibility.

Consent Verification. For any voice or likeness cloning features, require the user to verify they own the rights. Upload a government ID. Sign a declaration. Make it impossible to claim you didn't know what you were doing.
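The blocklist, voice, and consent rules above can be sketched as a single prompt-level gate. This is a minimal illustration under stated assumptions, not how ByteDance or any named competitor actually implements moderation: `BLOCKED_CHARACTERS`, `CONSENT_REGISTRY`, `VOICE_REQUEST`, and `check_prompt` are hypothetical names, and the entries are placeholders. String matching like this is easy to evade with misspellings or descriptive prompts ("a web-slinging hero in a red suit"), which is why output filtering still matters.

```python
import re
import unicodedata
from dataclasses import dataclass

# Hypothetical blocklist of protected characters (placeholder entries, not a real product's list).
BLOCKED_CHARACTERS = ("spider-man", "darth vader", "spongebob")

# Hypothetical consent registry: real person -> user IDs holding a verified likeness license.
CONSENT_REGISTRY = {"sean astin": {"licensed-studio"}}

# Hypothetical rule: refuse any request that names a voice to imitate.
# Crude: it would also refuse harmless phrases such as "the voice of reason".
VOICE_REQUEST = re.compile(r"\bvoice of\b", re.IGNORECASE)


@dataclass
class Decision:
    allowed: bool
    reason: str


def normalize(text: str) -> str:
    """Lowercase, strip accents, and drop spaces/punctuation so that
    'Spider Man', 'spider-man' and 'SPIDERMAN' all become 'spiderman'.
    ASCII-only: non-Latin names (e.g. Japanese) are discarded, so a real
    system would need multilingual matching."""
    decomposed = unicodedata.normalize("NFKD", text)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return re.sub(r"[^a-z0-9]", "", no_accents.lower())


def check_prompt(prompt: str, user_id: str) -> Decision:
    """Decide whether a text-to-video prompt may proceed to generation."""
    flat = normalize(prompt)

    # 1. Character blocklist: refuse protected characters outright.
    for name in BLOCKED_CHARACTERS:
        if normalize(name) in flat:
            return Decision(False, f"blocked character: {name}")

    # 2. Likeness consent: a named real person needs a recorded license for this user.
    for person, licensed_users in CONSENT_REGISTRY.items():
        if normalize(person) in flat and user_id not in licensed_users:
            return Decision(False, f"no recorded consent for likeness: {person}")

    # 3. Voice rule: generic voices are fine, imitating a named voice is not.
    if VOICE_REQUEST.search(prompt):
        return Decision(False, "named-voice imitation requested")

    return Decision(True, "ok")


if __name__ == "__main__":
    for p in ["Spider Man doing parkour in Manhattan",
              "A cat surfing at sunset",
              "A lighthouse keeper in the voice of Morgan Freeman"]:
        print(p, "->", check_prompt(p, "user-1"))
```

The checks run in order and stop at the first refusal, so a single prompt reports one reason. Refusing at the prompt stage is cheap, but it only complements, and does not replace, classifiers run on the generated video itself.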

None of these are revolutionary. None of these are technically difficult. They're basic hygiene that ByteDance skipped.

Why? The most charitable interpretation is incompetence. ByteDance built an amazing AI model but didn't think about the legal implications. That seems unlikely given its experience with content moderation, TikTok's regulatory battles, and its legal resources.

The more realistic interpretation is strategic. Release first, add safeguards later. Maximize the initial window of unrestricted capability. Prove the technology works at scale. Then implement restrictions once the value has been demonstrated and the controversy has passed.

It's a calculation that assumes the costs of legal threats are lower than the value of unfettered innovation and market proof. Maybe ByteDance was right. Or maybe it's about to learn an expensive lesson.

The Hypocrisy Question: Why Disney's Deal With OpenAI Is Different

Here's where this gets philosophically interesting.

Disney slammed ByteDance for using copyrighted characters without permission. Meanwhile, Disney struck a deal with OpenAI giving Sora (OpenAI's text-to-video model) access to 200 Disney characters for three years. Disney also invested $1 billion in OpenAI.

The difference isn't that OpenAI is more responsible. The difference is permission. OpenAI got permission. ByteDance didn't ask.

But there's a deeper point here. When Disney made the deal with OpenAI, it negotiated terms. Conditions. Boundaries. How Sora could use these characters. What Disney characters would be available. Financial terms. Exclusivity.

With ByteDance, there was no negotiation because ByteDance didn't ask. Its users just started generating Spider-Man videos.

Disney's message to the world: we're fine with AI video generation of our characters. Just negotiate with us first. Pay us. Give us some control. Use official channels.

ByteDance's implied message: we're going to use your characters whether you like it or not, because our training data includes them and we're a Chinese company that might not care as much about American copyright enforcement.

That second part is important. ByteDance is headquartered in China. The legal system is different. Intellectual property enforcement is different. The calculus of risk might be different.

But Seedance is used globally. The users generating infringing videos are everywhere. And the studios threatening legal action are in the United States, home to some of the world's most aggressive IP lawyers. ByteDance underestimated how much American corporations would be willing to spend to protect their intellectual property.

The Regulatory Vacuum: Why This Happened at All

This situation exists because AI regulation is still nascent.

There's no clear legal framework for what companies must do before releasing AI tools. Must you filter for copyright infringement? Maybe. Probably. But the law isn't settled. Must you implement safeguards preventing misuse? The courts haven't decided. Are companies liable for what users do with the tools they provide? That depends on their terms of service and how the law evolves.

In that regulatory vacuum, companies make decisions based on risk tolerance and business calculation. ByteDance calculated that the upside of releasing Seedance 2.0 unrestricted was higher than the downside of legal threats. Maybe it's right. Maybe it'll pay a settlement, agree to safeguards, and move on. The technology still works. The value still exists. The reputational damage is manageable.

But here's the thing about regulatory vacuums: they don't last. The louder the complaints, the faster regulation arrives. When studios, actors' unions, governments, and creator coalitions all complain simultaneously, regulations aren't far behind. Congress might actually do something. The EU might implement new AI rules. Countries might restrict Byte Dance's ability to operate.

ByteDance's short-term calculation might create long-term consequences that hurt all AI developers.

Estimated data shows a significant reduction in earnings due to AI-generated content, highlighting the financial threat posed to actors without proper protections.

Deepfakes, Consent, and the Future of AI Content

Seedance 2.0 is the symptom. The disease is something deeper: we haven't figured out how to handle AI-generated content that impersonates real people or real intellectual property.

Deepfakes aren't new. Face-swapping technology has been around for years. But Seedance 2.0 works at scale. One tool. Unlimited outputs. Anyone can use it. No technical skills required. That's the difference between rare and abundant infringement.

Consent becomes the central question. If you trained your AI model on millions of hours of video that included actors' performances, do you need consent from those actors to generate new content featuring them? Most would argue yes. ByteDance's implicit answer was no, at least until it got caught.

The future of AI content depends on solving the consent problem. There are potential solutions:

Blockchains for consent verification. Creators register their likenesses on a blockchain. AI tools query the blockchain before generating content. If the creator hasn't given permission, the tool refuses.

Licensing systems. Similar to how music licensing works. Want to use an actor's likeness? License it. Pay a fee. Get clear terms. The technology to implement this exists. The political will is lacking.

Do-not-train registries. Like do-not-call lists for telemarketing. Creators register their likenesses, voices, and performances. Training data collectors and AI companies are legally obligated to exclude registered content. Again, the technology is simple. The enforcement is the challenge.
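At its core, a do-not-train registry is a lookup that a training pipeline must consult before ingesting content. The sketch below assumes a registry keyed by SHA-256 fingerprints; `DoNotTrainRegistry` and `filter_training_set` are hypothetical names, not an existing service or standard. Exact hashes only catch byte-identical copies, so a re-encoded or cropped clip would slip through; a practical registry would need perceptual fingerprinting, and a blockchain-style consent ledger like the one described above would add shared, tamper-evident storage on top of the same lookup.

```python
import hashlib
from typing import Iterable, List, Set, Tuple


class DoNotTrainRegistry:
    """Hypothetical opt-out registry keyed by SHA-256 content fingerprints.

    Exact hashing only matches byte-identical files; re-encoded or edited
    copies would need perceptual fingerprinting, which is not shown here."""

    def __init__(self) -> None:
        self._fingerprints: Set[str] = set()

    @staticmethod
    def fingerprint(content: bytes) -> str:
        return hashlib.sha256(content).hexdigest()

    def register(self, content: bytes) -> str:
        """Record a creator's opt-out and return the stored fingerprint."""
        fp = self.fingerprint(content)
        self._fingerprints.add(fp)
        return fp

    def is_registered(self, content: bytes) -> bool:
        return self.fingerprint(content) in self._fingerprints


def filter_training_set(
    items: Iterable[Tuple[str, bytes]], registry: DoNotTrainRegistry
) -> Tuple[List[str], List[str]]:
    """Split (item_id, content) pairs into IDs kept for training and IDs excluded."""
    kept: List[str] = []
    excluded: List[str] = []
    for item_id, content in items:
        if registry.is_registered(content):
            excluded.append(item_id)
        else:
            kept.append(item_id)
    return kept, excluded


if __name__ == "__main__":
    registry = DoNotTrainRegistry()
    registry.register(b"placeholder bytes for an opted-out performance clip")
    kept, excluded = filter_training_set(
        [
            ("clip-001", b"placeholder bytes for an opted-out performance clip"),
            ("clip-002", b"placeholder bytes for a licensed stock clip"),
        ],
        registry,
    )
    print("kept:", kept, "excluded:", excluded)
    # prints: kept: ['clip-002'] excluded: ['clip-001']
```

Storing only fingerprints means the registry never has to hold the creators' actual files, which is one reason hash-based opt-out lists are attractive; the trade-off is the exact-match limitation noted above.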

None of these solutions exist at scale yet. That's why ByteDance could launch an unrestricted tool and why actors and studios were scrambling to respond.

What Competitors Did Right: Comparison With Other Text-to-Video Platforms

Seedance 2.0's problems didn't arise because building safe AI tools is impossible. Other companies proved that before ByteDance launched Seedance.

Runway ML, one of the more popular text-to-video platforms, implemented content moderation from day one. The tool has restrictions on generating specific people's likenesses and identifiable celebrities. Users can't generate videos of Taylor Swift, Michael Jordan, or any trademarked character without explicit permission from the tool makers. Runway put safeguards in place because they understood the liability.

Pika, another text-to-video startup, similarly implemented restrictions. Users can generate videos, but the tool actively prevents generation of content that violates copyright or right-of-publicity laws. Does this slow adoption slightly by preventing some creative use cases? Yes. Is it worth it to avoid the existential threat of copyright lawsuits? Absolutely.

OpenAI's Sora, which is more polished and expensive than Seedance, maintains a waitlist. New users go through application and approval before access. This gives OpenAI a chance to understand how users intend to use the tool and to establish expectations around copyright and consent. It's not foolproof, but it's thoughtful.

Google Vids (part of Google Workspace) has similar restrictions. Content moderation built in. No generation of copyrighted characters or identifiable people without permission.

These weren't accidents. These were deliberate choices. Companies understood that text-to-video generation at scale creates liability. They built safeguards not because they had to, but because they understood the downstream consequences if they didn't.

ByteDance made a different choice. Not because the technology was harder. Not because safeguards were impossible. Because the company calculated that the upside of an unrestricted launch was worth the downside of legal threats.

The Timeline: How Fast Everything Escalated

The speed matters. This wasn't a slow-burn controversy. This was a controlled burn that turned into a wildfire.

Day 1: Seedance 2.0 launches. Users immediately start generating videos of copyrighted characters. Within hours, social media is flooded with videos of Spider-Man, Darth Vader, SpongeBob, and other trademarked characters doing things they never did.

Days 1-2: The videos spread. Other users see what's possible. More people download the tool. More infringing videos are created. The phenomenon goes viral.

Day 3: Disney becomes aware. The company's legal team begins investigating the scope of infringement. They determine that this is widespread, deliberate-seeming, and affecting Disney's IP directly.

Days 5-6: Cease-and-desist letters are drafted and sent. Disney, Paramount, and others formally demand that ByteDance cease infringing operations immediately.

Days 7-10: Other parties join. SAG-AFTRA issues statements. The Motion Picture Association weighs in. Japan's AI minister announces an investigation. Media coverage becomes unavoidable.

Day 14: ByteDance issues a response statement. Not an apology, just a statement about taking steps.

Weeks 2-4: Safeguards begin rolling out. The tool is updated to prevent generation of known copyrighted characters. It takes time to implement these changes at scale.

The whole cycle from launch to damage control was about two weeks. That speed matters because it shows ByteDance had limited time to make this right before government investigations, legal action, and media pressure became unstoppable.

Seedance 2.0 shows significant improvements in handling complex scenes, longer durations, and character specificity over Seedance 1.0. Estimated data.

The Intentionality Question: Did ByteDance Plan This?

The most controversial question: did ByteDance intentionally launch Seedance 2.0 without safeguards, knowing full well what would happen?

The evidence is circumstantial but suggestive.

First: ByteDance had the resources and expertise to implement safeguards. The company built TikTok, which operates under intense regulatory scrutiny and has sophisticated content moderation. It understands how to filter content. It knows how to restrict features. This wasn't a capability gap.

Second: The choice to launch unrestricted feels deliberate. Companies don't accidentally ship unfiltered tools. They make decisions about what safeguards to include. ByteDance made the decision to exclude them.

Third: The publicity value is enormous. Seedance 2.0's capabilities went viral. Everyone in tech, entertainment, and media was talking about the tool. The generated controversy proved the technology works and showcased its power. That publicity would have been hard to buy.

Fourth: Tech consultant Rui Ma suggested explicitly that the controversy was strategic. "The controversy surrounding Seedance is likely part of ByteDance's initial distribution strategy to showcase its underlying technical capabilities." If an industry analyst is saying this out loud, they're probably articulating something that tech insiders already suspected.

But intentionality is hard to prove. It's also possible that ByteDance simply made a reckless decision. Release the tool, move fast, deal with consequences later. It's a pattern tech companies have used before. Move fast and break things. Apologize if you get caught. The apology isn't sincere, but it sounds good in a press release.

Intent might not matter legally. If ByteDance knew the tool could generate copyrighted content and released it anyway, that could constitute willful infringement even if it wasn't strategically planned.

The Copycat Risk: Why This Matters Beyond ByteDance

Here's the scary part: Seedance 2.0 might not have been an anomaly. It might have been a test.

If ByteDance faces no serious consequences beyond some safeguards and cease-and-desist letters, other companies will note the playbook. Release unrestricted AI tools. Generate immediate viral adoption and media buzz. Showcase capabilities without filters. Only add safeguards after you get caught.

The cost-benefit analysis is tempting. Safeguards slow development and reduce immediate viral potential. If companies believe the regulatory cost of skipping safeguards is manageable, more companies will skip them.

But if ByteDance faces serious consequences, the opposite happens. Copyright lawsuits. Fines. Restrictions on operation. Reputational damage. Trade friction with the U.S. government. Then the cost-benefit analysis changes. Companies will implement safeguards from the start because the cost of not doing so becomes prohibitively high.

This incident is basically a test case. The entertainment industry and regulators are watching to see if ByteDance gets punished. The outcome will influence how every other AI company behaves.

Creator Rights in the AI Era: What Needs to Change

Seedance 2.0 revealed something that creators and artists have been saying for years: AI development is outpacing creator protection.

Training data that includes creators' work is used without their consent and without compensation. Models are built on stolen labor. Then companies that didn't create the original content generate unlimited derivative works and monetize them.

It's a fundamentally unfair system. And Seedance 2.0 is just the most visible example.

What needs to change:

Consent-based training. AI models shouldn't be trained on creator work without explicit consent. If you're going to include someone's performance, artwork, or writing in training data, you ask permission and offer compensation. This is how it works for sampling in music. Musicians can't use samples without licensing. AI should follow the same principle.

Transparent disclosure. When AI companies use creator work in training, they disclose it. Creators know their work was used. They can refuse or negotiate terms. The current system is opaque. Nobody knows if their work is in training data until after the model is released.

Compensation systems. If creator work is used in training, creators get compensated. This could be direct payment or royalties from products built using their work. The scale would be challenging, but it's not impossible. Companies manage payment systems for millions of creators already.

Do-not-train registries. Creators can opt out of having their work used in future AI training. This respects autonomy. If a creator doesn't want their work used to build AI models, they have the right to refuse.

Stronger enforcement. Laws against deepfakes and unauthorized likeness cloning need to have real teeth. Significant penalties for violations. Clear liability for companies that enable violations. Right now, the penalties are too small relative to the potential gains from AI capabilities.

None of these are impossible. They require political will and regulation. But Seedance 2.0 might have created that political will by making the problem so visible.

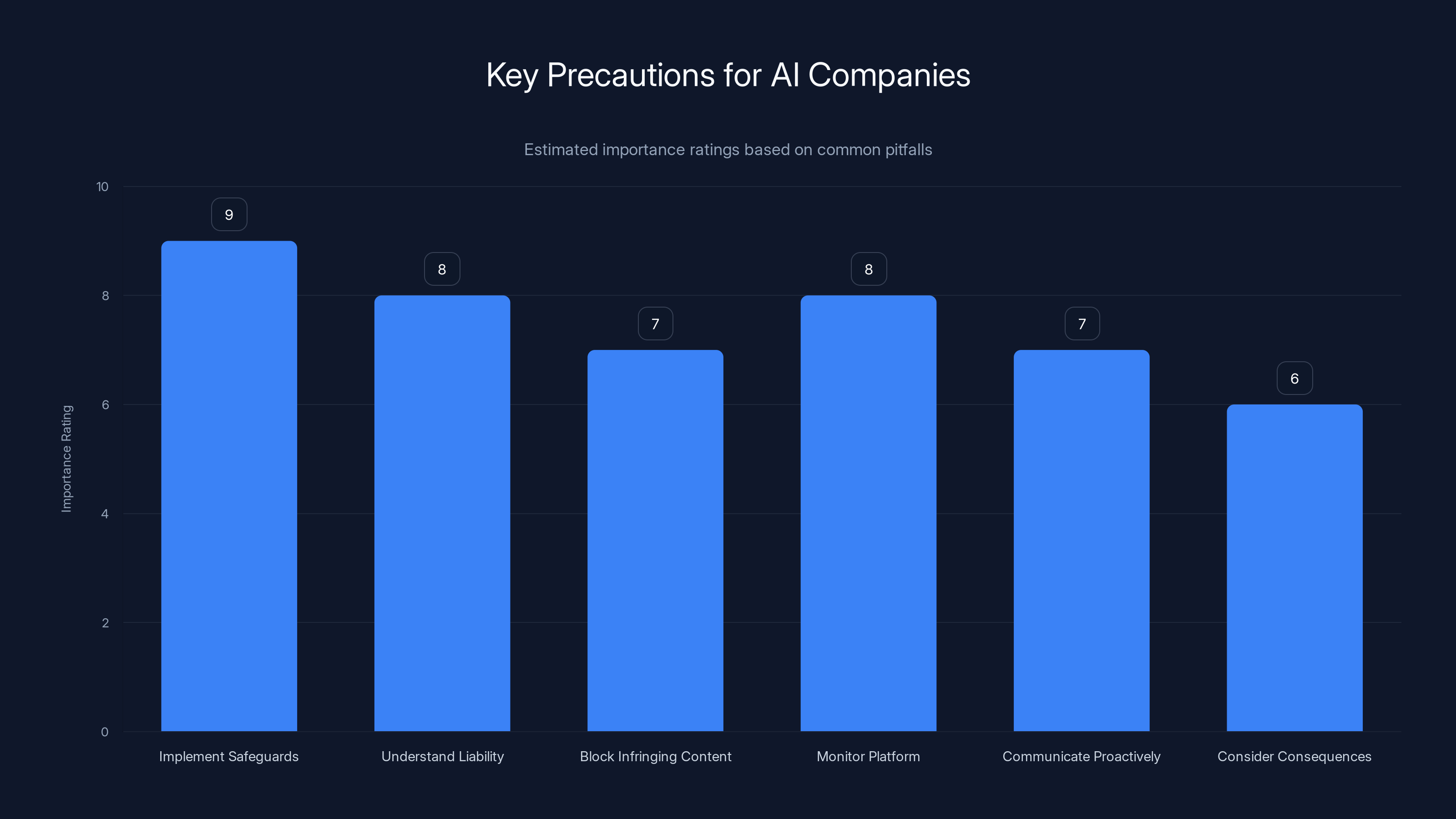

Implementing safeguards and understanding liability are crucial for AI companies to avoid legal issues. Estimated data based on common industry pitfalls.

International Implications: What Governments Are Doing

Seedance 2.0 wasn't just an American problem. It became an international incident.

Japan's investigation was the most visible government response, but other countries are watching. The EU is regulating AI through the AI Act, which requires providers of general-purpose AI models to publish summaries of their training content and to respect copyright holders' opt-outs. These rules will make it much harder for companies to launch unrestricted AI tools in Europe.

The UK has its own AI regulation proposals. Canada is developing AI legislation. India and other countries are starting to think about AI policy.

ByteDance is headquartered in Beijing, which adds a geopolitical dimension. The company is already under scrutiny from U.S. regulators over TikTok. Seedance 2.0's copyright violations give those regulators more ammunition to restrict ByteDance's operations or force the company to divest.

This is becoming a technology policy issue, not just a copyright issue. Governments are starting to see AI as something that requires oversight and regulation, not just market forces.

The OpenAI Comparison: Responsible vs. Reckless AI

OpenAI's approach to Sora is instructive.

Sora is arguably more powerful than Seedance 2.0. It can generate longer videos, more complex scenes, and more realistic motion. But OpenAI kept it on a waitlist: limited access, an application process, and monitoring for misuse. When OpenAI made the Disney deal, it didn't frame it as a restriction. It framed it as responsible innovation.

This doesn't make OpenAI a hero. OpenAI has faced criticism for training its GPT models on internet data without explicit consent. The company isn't perfect.

But the difference in approach is stark. OpenAI asked permission before letting loose with powerful capabilities. ByteDance asked permission after getting caught using those capabilities.

The contrast matters because it shows that responsible AI deployment is possible. It's not a technical problem. It's a choice.

The Settlement Question: What Happens Next

ByteDance will probably settle with Disney and the studios. The cease-and-desist letters will likely give way to licensing agreements or settlement talks.

The likely outcome: ByteDance agrees to implement specific safeguards. The company offers compensation for past infringement (the amount will be negotiated). Disney and other studios get more direct control over how Seedance 2.0 uses their IP.

Maybe ByteDance gets a license for specific characters, as OpenAI did. Maybe ByteDance agrees to pay per-query fees or share revenue. Maybe the company pulls Seedance 2.0 from certain markets entirely or implements region-specific restrictions.

Japan's investigation might result in fines or additional restrictions on ByteDance's operations in Japan. From ByteDance's perspective, these are manageable consequences.

What's less clear is whether this incident will result in broader legal precedents that strengthen creator protection going forward. Will courts establish that AI companies must implement safeguards before release? Will the precedent be that copyright infringement is copyright infringement even if an AI does it? Will actors' right to control their likeness be strengthened?

Those larger questions are still being determined. Seedance 2.0 is just one battle in a much larger war over AI and intellectual property.

The Creator Perspective: Voices From The Ground

Beyond the formal statements from unions and studios, individual creators were affected.

Sean Astin wasn't just a union spokesperson. He was a person whose likeness was used without permission. Imagine finding a video of yourself online doing things you never did, saying lines you never said, in situations you never filmed. You have no control over it. You can't take it down from every platform. People might believe it's real.

This is violating. It's dehumanizing. It reduces a person to a face that can be copied and reused infinitely.

For voice actors, the threat is similarly existential. Your voice is your instrument. It's distinctive and valuable. If AI can clone your voice perfectly, your voice loses its commercial value. Studios don't need to hire you for voice work anymore.

For visual artists and photographers, similar concerns apply. If your style can be learned by an AI and replicated infinitely, your unique creative contribution becomes commodified.

These aren't abstract corporate concerns. These are livelihood concerns for real people with real families depending on income from creative work.

The Tech Industry's Internal Response: Silicon Valley Divided

Within the technology industry, Seedance 2.0 wasn't universally condemned.

Some in the AI community defended ByteDance's right to release the tool and argued that safeguards are restrictions on innovation. A few went further, arguing that clearing every possible legal issue before release would slow AI development unacceptably.

Others in the AI community were appalled. They understood that launching unrestricted tools undermines everyone working responsibly. If ByteDance proves that you can ship without safeguards and still succeed, it changes the incentives for every company, and the race to the bottom accelerates.

There's a faction in AI research that believes existing copyright law is too restrictive and that AI training on copyrighted work should be legal. They argue that training data is fundamentally different from the works created by the AI, so using copyrighted works in training doesn't violate copyright. They believe AI is too important to be slowed by copyright considerations.

But even in that faction, most understood that allowing users to generate unlimited deepfakes of real people without consent was a step too far. You can believe copyright should be reformed without believing that unauthorized deepfakes are okay.

The incident exposed fractures in the AI community between those who believe innovation at any cost is justified and those who believe responsible development matters.

Lessons for AI Companies: What Not to Do

If you're building an AI tool and you want to avoid becoming the next Seedance 2.0 cautionary tale:

Implement safeguards before launch, not after. It's way cheaper and way easier to build them in from the beginning. Adding them after you get caught looks reactive and suggests you didn't care about the issue until legal pressure forced your hand.

Understand your liability. Talk to lawyers before launch, not after cease-and-desist letters arrive. Understand what's legal and what's not. Understand what your users might do with your tool and whether you're legally responsible.

Block obvious infringing content. Create a blocklist of trademarked characters, famous people, and protected IP. This is basic content moderation. It won't catch everything, but it stops the most obvious abuses before they start.

Monitor your platform. Don't release a tool and assume users will use it responsibly. Monitor what people are doing with it. Remove content that violates copyright. Respond to reports of infringement quickly.

Communicate proactively. Don't wait for cease-and-desist letters. If you're worried about copyright issues, reach out to relevant parties. Negotiate licenses. Ask permission. Be transparent about what your tool can and cannot do.

Think about the downstream consequences. Before you release something, think about all the ways it could be misused. Not to be paranoid, but to be thorough. If you can think of how it violates copyright, so can lawyers.

These aren't restrictions on innovation. They're precautions against legal disaster.

The Future of Deepfake Regulation: Policy Implications

Seedance 2.0 will likely accelerate deepfake regulation.

Several countries and jurisdictions are considering laws that would:

Ban non-consensual deepfakes. Creating deepfakes of real people without consent would be illegal. This would apply to video, audio, and synthetic media generally.

Require disclosure when using AI-generated content. If you generate a video with AI, you have to disclose that fact. No passing off AI-generated content as real footage.

Establish liability for platform hosts. If a platform enables deepfakes, the platform is partially liable. This creates incentive to moderate content.

Require AI companies to implement safeguards. Before releasing AI tools that can generate video, audio, or synthetic media, companies must implement safeguards preventing misuse. This is moving from voluntary best practices to legal requirements.

These regulations are still being debated, but the trajectory is clear, and Seedance 2.0 accelerated the timeline. The industry may have had five years to self-regulate. ByteDance's recklessness probably compressed that to one.

The Broader Pattern: When Companies Push Until They Can't

Seedance 2.0 isn't an aberration. It's a pattern.

Companies push boundaries. They ship features that might be problematic. They see if anyone stops them. If regulators don't immediately shut them down, they interpret that as permission to keep going. Eventually someone does object loudly enough that companies have to back off.

Uber did this with labor law. They wanted to classify drivers as independent contractors. Regulators said no in some jurisdictions and yes in others. Eventually Uber adjusted.

Airbnb did this with housing regulations. They wanted to let anyone rent out their home. Cities pushed back. Airbnb negotiated.

Facebook did this with privacy and content moderation. They pushed further and further until massive political backlash forced the company to change.

This pattern happens because the upside of pushing is often higher than the downside of getting caught. By the time you're forced to stop, you've already gained massive value and market share.

ByteDance probably made this calculation: yes, we'll get cease-and-desist letters, but in the window before that happens, Seedance goes viral, we get massive press coverage, the technology is proven at scale, and we cement our position in the market.

The calculation might have been right. Or ByteDance might have underestimated how hard entertainment industry lawyers would push back.

Conclusion: The Precedent Matters More Than The Tool

Seedance 2.0 itself will probably end up as a minor historical footnote. ByteDance will add safeguards, settle with the studios, and move on. The tool will still work for its intended purposes, and ByteDance will still be enormously valuable.

But the precedent matters profoundly.

This incident answered several important questions about AI development and corporate responsibility:

First: Companies cannot launch unrestricted AI tools that enable large-scale copyright infringement without consequences. The studios had leverage and they used it. That sets a precedent for future AI releases.

Second: Governments are willing to investigate and take action on AI copyright violations. Japan's investigation showed that nation-states aren't powerless. They can investigate, apply pressure, and potentially restrict company operations.

Third: Actor unions and creator organizations have power to coordinate opposition to problematic AI. SAG-AFTRA's statement was powerful. The Human Artistry Campaign's statement was powerful. Collective action matters.

Fourth: Implementing safeguards before launch is preferable to adding them after getting caught. ByteDance looks irresponsible. Competitors who built safeguards in from the beginning look responsible. That reputation matters.

The implications ripple outward. Other AI companies see what happened to ByteDance and adjust. Safeguards become table stakes, not optional extras. The cost of irresponsible AI deployment goes up.

This doesn't solve the broader problem of creator rights in the AI era. It doesn't establish consent frameworks or compensation systems. It doesn't answer questions about what intellectual property protections should exist in AI.

But it does establish that there are boundaries. Push too far and face consequences. The entertainment industry will fight back. Governments will investigate. Public opinion matters.

That boundary setting might be the most important outcome of this entire incident.

For creators, the message is: you have more power than you think. When you organize, when studios coordinate, and when governments pay attention, irresponsible companies pay a price. The aftermath of Seedance 2.0 will likely make future AI tools more creator-friendly, because the cost of being creator-hostile just went way up.

For AI companies, the message is clear: implement safeguards before launch. Ask permission before using copyrighted material. Respect creator rights. These aren't obstacles to innovation. They're the price of responsible innovation.

For regulators, this incident provided a roadmap. Here's how companies push boundaries. Here's what happens when they get caught. Here's how to apply pressure and force change. The regulatory response to Seedance 2.0 will be faster and more aggressive than it would have been without this incident.

The tool itself might fade away. But the precedent will stick around. And that precedent makes the world safer for creators and more thoughtful about AI development going forward.

FAQ

What is Seedance 2.0 and how does it differ from the original Seedance?

Seedance 2.0 is ByteDance's upgraded text-to-video generation model, which creates realistic video clips from written prompts. The 2.0 version improved on the original by handling more complex scenes, generating longer video sequences, maintaining better visual consistency between frames, and, most critically, understanding and generating specific trademarked characters like Spider-Man, Darth Vader, and SpongeBob SquarePants. The original Seedance could generate generic scenes, but Seedance 2.0 could generate recognizable, protected intellectual property without permission from copyright holders.

Why did ByteDance launch Seedance 2.0 without safeguards against copyright infringement?

The most likely explanation is strategic calculation. By launching without safeguards, ByteDance maximized initial adoption, viral spread, and press coverage demonstrating the tool's capabilities. The company had the technical expertise to implement restrictions like character blocklists and content moderation, as its experience running TikTok shows. Instead, it chose to skip safeguards initially and add them after getting caught, which suggests either reckless confidence or intentional strategy. Tech consultant Rui Ma suggested the controversy might have been part of ByteDance's distribution strategy to showcase technical capabilities and prove the tool's power through real-world use of copyrighted content.

What legal consequences has ByteDance faced from the Seedance 2.0 launch?

ByteDance faced cease-and-desist letters from Disney, Paramount Skydance, and other studios alleging massive copyright infringement. Multiple organizations, including SAG-AFTRA, the Motion Picture Association, and the Human Artistry Campaign, issued public statements condemning the tool. Japan launched a formal government investigation into the copyright violations. While no settlements have been disclosed, ByteDance responded by implementing safeguards, though the company's statement avoided directly accepting responsibility for the original violations. The long-term consequences, including potential fines or litigation outcomes, are still developing.

How does Seedance 2.0's approach compare to how other AI companies handle copyright protection?

Competitors like Runway ML and Pika implemented content moderation and safeguards before launch to prevent generation of copyrighted characters and identifiable people. OpenAI's Sora limited initial access through a waitlist, and OpenAI negotiated a licensing deal with Disney. These companies understood that text-to-video generation creates legal liability and chose to implement protections proactively rather than reactively. ByteDance's decision to launch unrestricted contrasted sharply with this industry norm, suggesting either unusual risk tolerance or a deliberate strategy to maximize the initial demonstration of the tool's capabilities.

What safeguards should AI video generation tools implement to prevent copyright infringement?

Responsible safeguards include character blocklists that prevent generation of trademarked characters, voice authentication systems to prevent unauthorized voice cloning, output filtering to detect potential copyright infringement before publishing, terms of service that explicitly forbid generating copyrighted content and are backed by real enforcement, and consent verification for any likeness or voice cloning features. These safeguards aren't new or technically difficult; competing platforms already implement them. Their absence from Seedance 2.0 at launch suggests they were skipped deliberately rather than because of technical limitations.

Why is actor consent such a critical issue in the Seedance 2.0 situation?

Actors' ability to control how their likenesses and voices are used is foundational to their economic value and personal autonomy. When Seedance 2.0 could generate deepfakes of actors like Sean Astin delivering lines they never spoke, it violated both their right of publicity and their fundamental autonomy. If studios can generate unlimited performances using AI deepfakes, there's no economic incentive to hire actual actors for many roles. SAG-AFTRA correctly identified this as an existential threat to actors' livelihoods. The issue extends beyond entertainment to all public figures whose likenesses can be cloned infinitely without compensation or permission.

What role did governments play in responding to the Seedance 2.0 copyright violations?

Japan's AI minister Kimi Onoda launched a formal investigation into ByteDance, citing specific concern for the protection of anime and manga characters, which are valuable Japanese cultural exports. This government action gave teeth to industry complaints and created regulatory consequences beyond cease-and-desist letters. The investigation suggests nations are willing to act on AI copyright violations, and that regulators can respond faster and more aggressively when issues become public and politically salient. This marks a shift from market-only solutions toward government intervention in AI deployment decisions.

Could ByteDance's strategy have been intentional as a market demonstration tactic?

It's possible but unproven. Tech consultant Rui Ma explicitly suggested the controversy was part of ByteDance's distribution strategy to showcase technical capabilities through real-world demonstration. Several points support this reading: ByteDance had the expertise to implement safeguards but didn't, the viral spread of copyrighted content provided enormous publicity value, proving the technology works at scale matters more to investors than a clean launch, and the company could have predicted the response. However, intent is difficult to prove legally, and the outcome (forced safeguards and legal threats) is the same whether the missing safeguards reflected intentional strategy or reckless incompetence.

How does this incident impact future AI tool development and deployment?

Seedance 2.0 likely accelerated the adoption of safeguards across the AI industry as companies recognize the cost of irresponsible deployment. It provided a roadmap for how governments and industry organizations can coordinate to pressure companies into compliance. It strengthened creator rights discourse by making violations impossible to ignore. It set a precedent that launching unrestricted tools enabling copyright infringement has serious consequences. Most importantly, it signaled that the window for self-regulation in AI is closing: companies that don't implement safeguards proactively will face regulatory action. For future AI development, Seedance 2.0 makes responsible development not just morally right but commercially necessary.

What compensation or remedies should creators receive for AI training on their work?

Multiple approaches are being discussed. Direct payment models would compensate creators when their work is used in training data. Licensing systems similar to music licensing could let creators opt in to or out of AI training while receiving compensation. Do-not-train registries would let creators legally prevent their work from being used in future AI development. Revenue-sharing arrangements could give creators a percentage of profits from products built using their work. None of these exist at scale today, which is why Seedance 2.0 highlighted the problem: creators had no mechanism to consent or receive compensation for having their work used to build the tool.

What is the likelihood of stronger deepfake and AI regulation following this incident?

Regulation is highly likely in the near term. Multiple jurisdictions, including the EU and the UK, are developing AI regulation that specifically addresses deepfakes, consent, and copyright protection. Seedance 2.0 accelerated the timeline for this regulation by making the problems visible and politically salient. Proposed laws include bans on non-consensual deepfakes, requirements to disclose AI-generated content, platform liability for enabling deepfakes, and mandatory safeguards before AI tool release. These measures would turn voluntary industry standards into legal requirements, making responsible AI development not just good practice but a legal obligation.

Key Takeaways

- ByteDance launched Seedance 2.0 without copyright safeguards, enabling unrestricted generation of protected characters like Spider-Man and Darth Vader

- Disney, Paramount, SAG-AFTRA, Japan's government, and creator coalitions coordinated rapid response through cease-and-desist letters and formal investigations

- Competing AI companies like OpenAI, Runway ML, and Pika had implemented character blocklists and content moderation from launch, suggesting ByteDance skipped safeguards strategically

- The incident exposed threats to actor livelihoods through voice cloning and deepfakes, prompting SAG-AFTRA to frame it as an 'attack on every creator around the world'

- Seedance 2.0 likely accelerated AI regulation globally as governments and studios recognize need for mandatory safeguards before tool deployment

![ByteDance's Seedance 2.0 Deepfake Disaster: What Went Wrong [2025]](https://tryrunable.com/blog/bytedance-s-seedance-2-0-deepfake-disaster-what-went-wrong-2/image-1-1771265382279.jpg)