Deepfake Detection Deadline: Instagram and X Face an Impossible Challenge [2025]

Somewhere in a Meta office, someone just realized they have nine days to solve a problem the entire tech industry has been struggling with for years. India's new deepfake detection mandate arrives February 20th, 2025, and it's not asking for effort or best practices. It's demanding results. Specific, measurable, enforceable results.

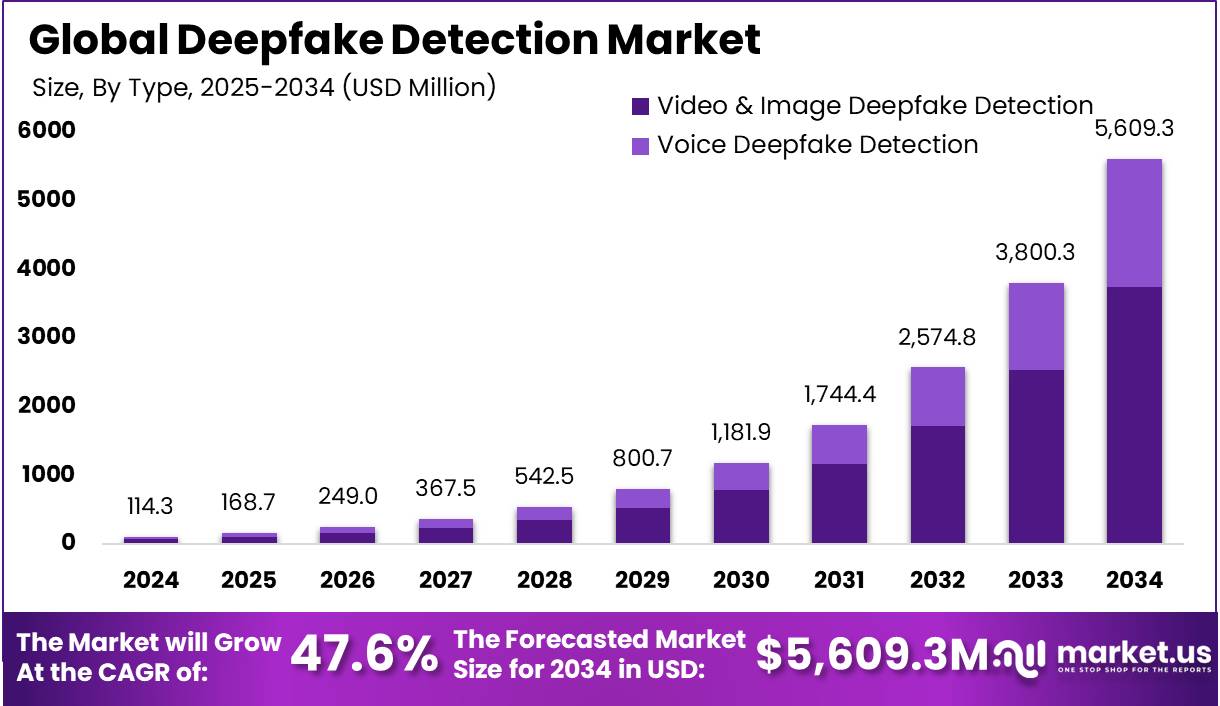

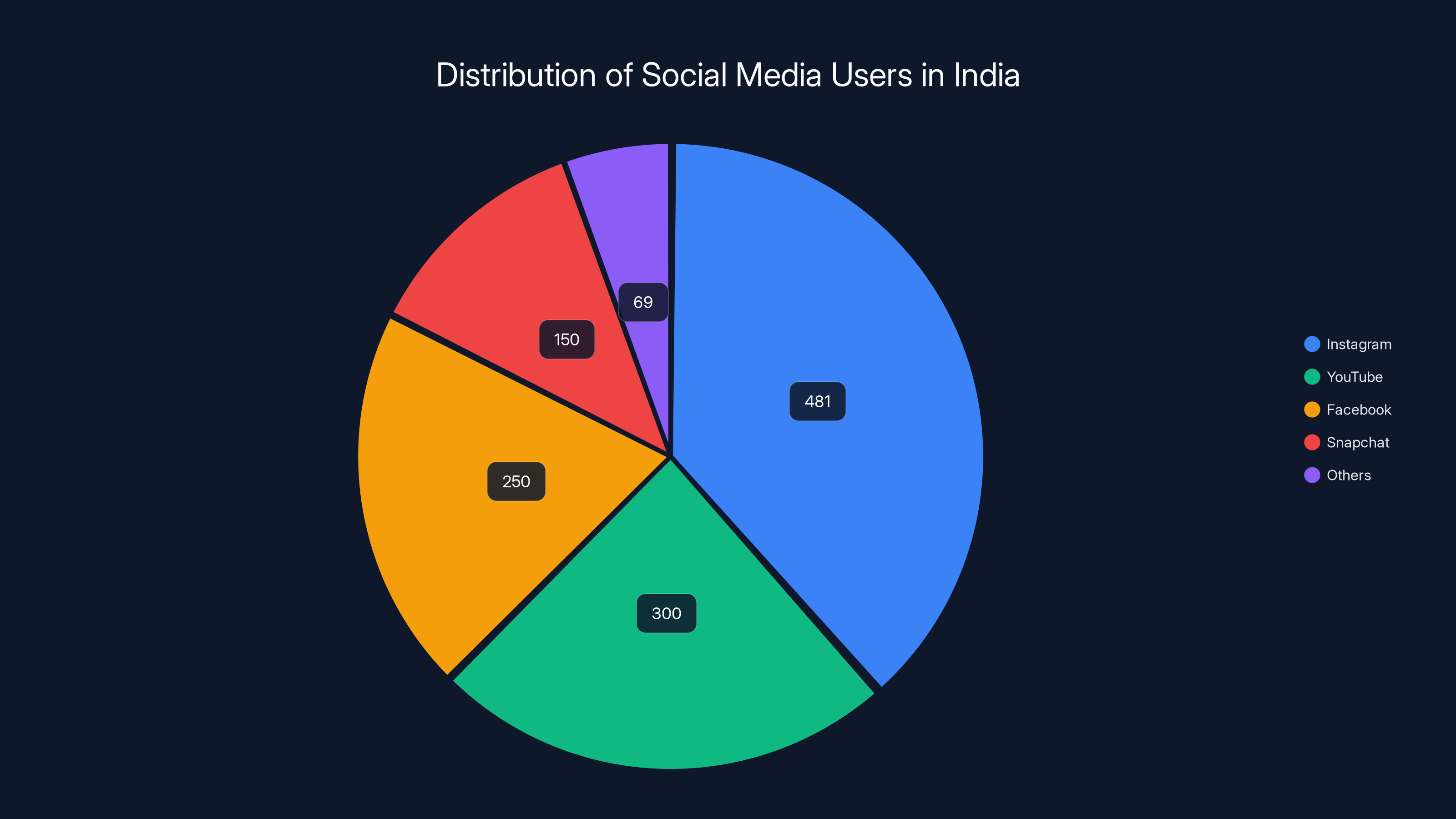

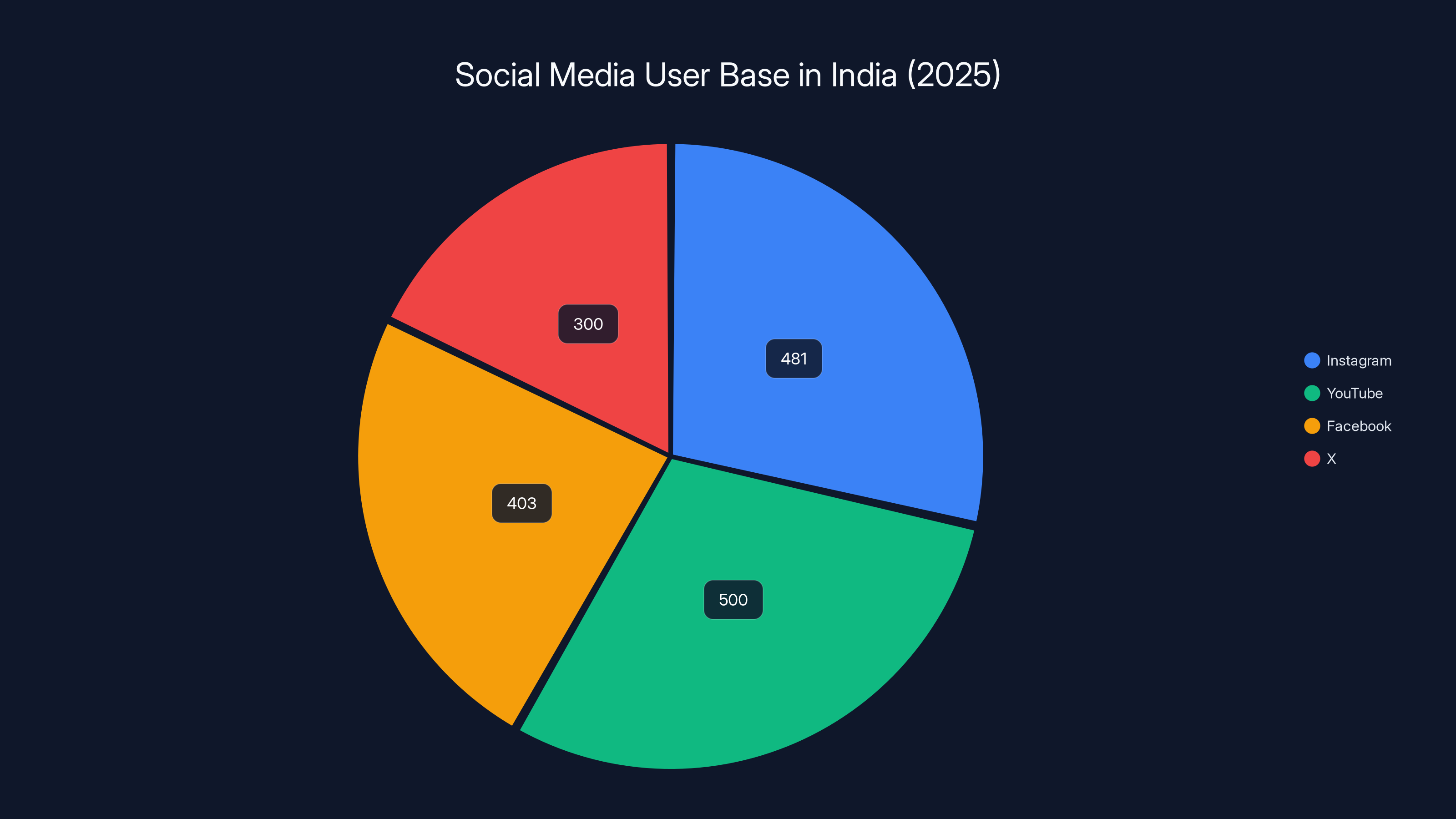

Let's be clear about what just happened. On February 11th, 2025, India amended its Information Technology Rules with requirements that social media platforms deploy what the government calls "reasonable and appropriate technical measures" to detect, remove, and label AI-generated or manipulated content. That sounds reasonable until you realize the tech doesn't work reliably yet. Instagram has 481 million users there. YouTube has 500 million. Facebook has 403 million. India is X's third-largest market. These aren't niche markets where platforms can experiment quietly. They're massive, real-time testing grounds for detection systems that frankly don't exist at the necessary scale.

The clock is running. Platforms that haven't implemented any labeling systems at all now have just over a week to do it. Platforms using content credentials standards need to figure out how to prevent metadata stripping and maintain detection at scale. Everyone needs to hit a three-hour removal deadline for illegal deepfakes—down from the current 36-hour standard. This isn't a request for improvement. This is a legal requirement with real enforcement teeth.

But here's the thing that keeps AI researchers up at night: the best methods we currently have for detecting deepfakes are already failing at scale. The technology that companies like Meta, Google, and Microsoft have said works perfectly in controlled tests crumbles the moment you release it into the wild. It misses obvious fakes, flags authentic content as manipulated, and struggles with new generation techniques that emerge monthly.

India isn't asking platforms to do something they've already mastered. It's asking them to do something nobody has actually solved yet, and it's asking them to do it by next week.

TL;DR

- February 20th deadline: India requires all AI-generated content to be labeled and illegal deepfakes removed within three hours

- Detection crisis: Current deepfake detection technology only works in controlled labs, fails at scale, and can't handle new generation methods

- The metadata problem: Content credentials (C2PA) are supposed to track synthetic content but strip easily during uploads and don't cover open-source AI models

- Impossible timeline: Platforms need days to do what would normally take months of development, testing, and refinement

- Global implications: India's 1 billion internet users make this a critical test case that could force worldwide changes in how platforms handle synthetic content

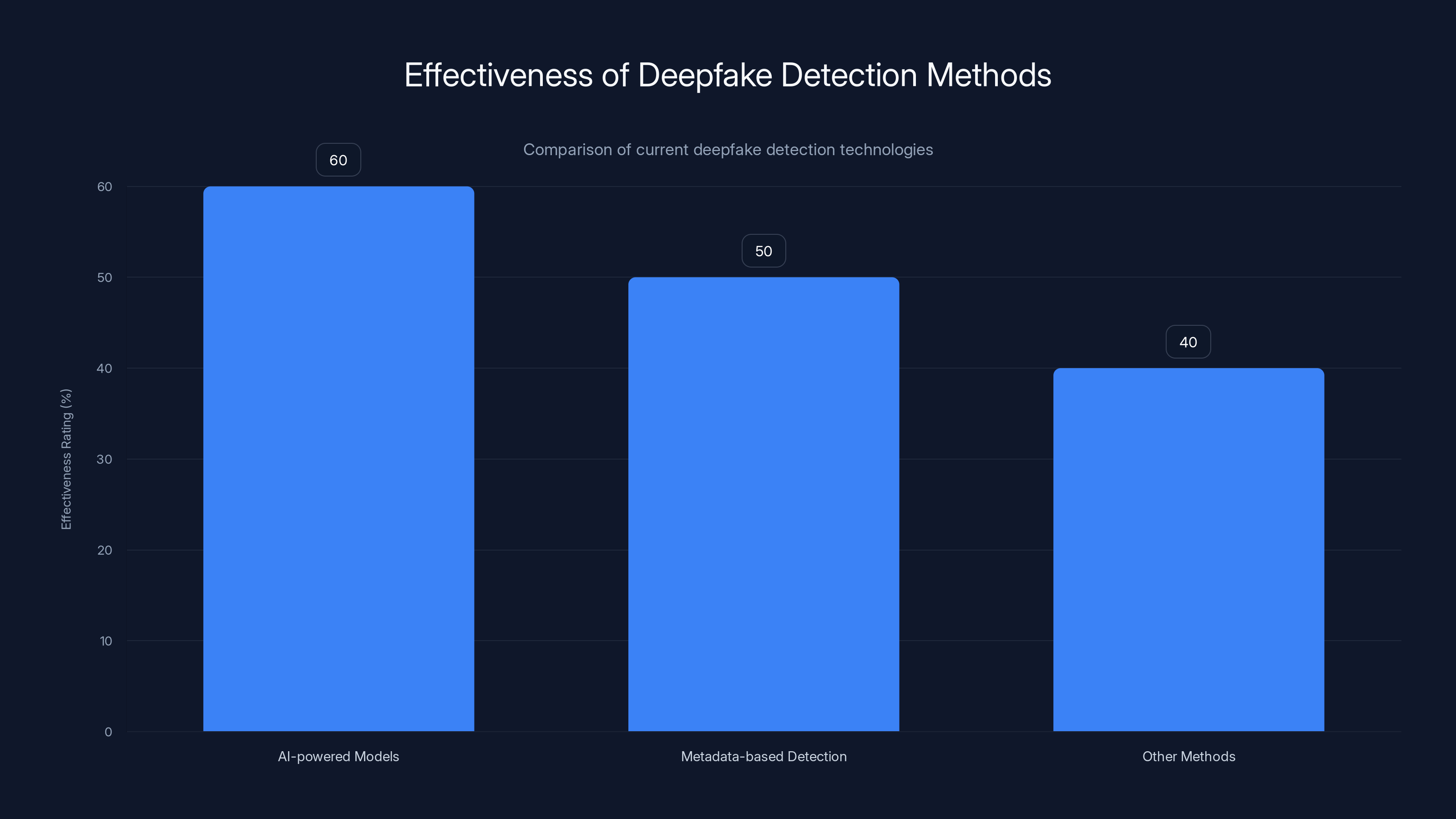

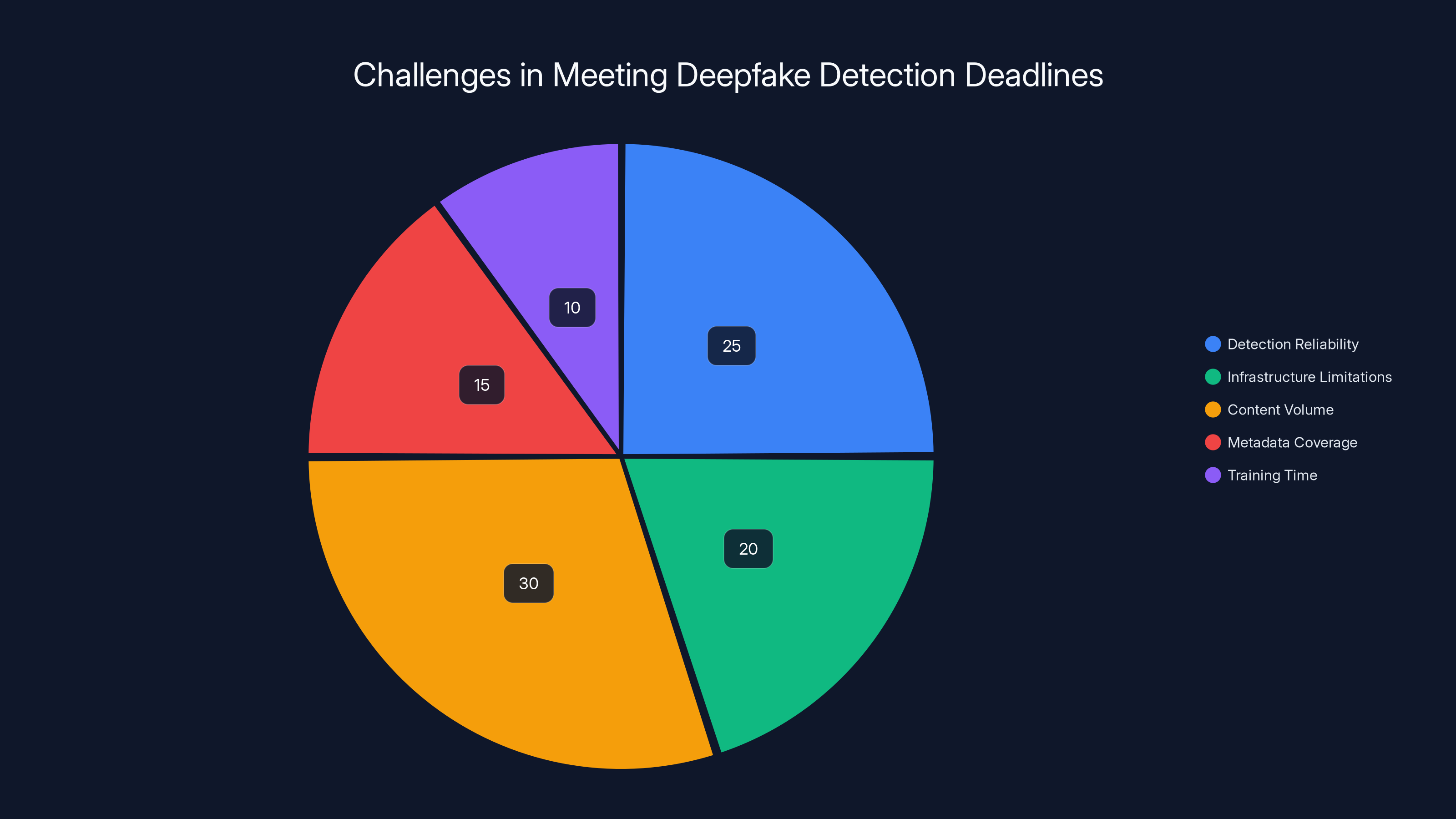

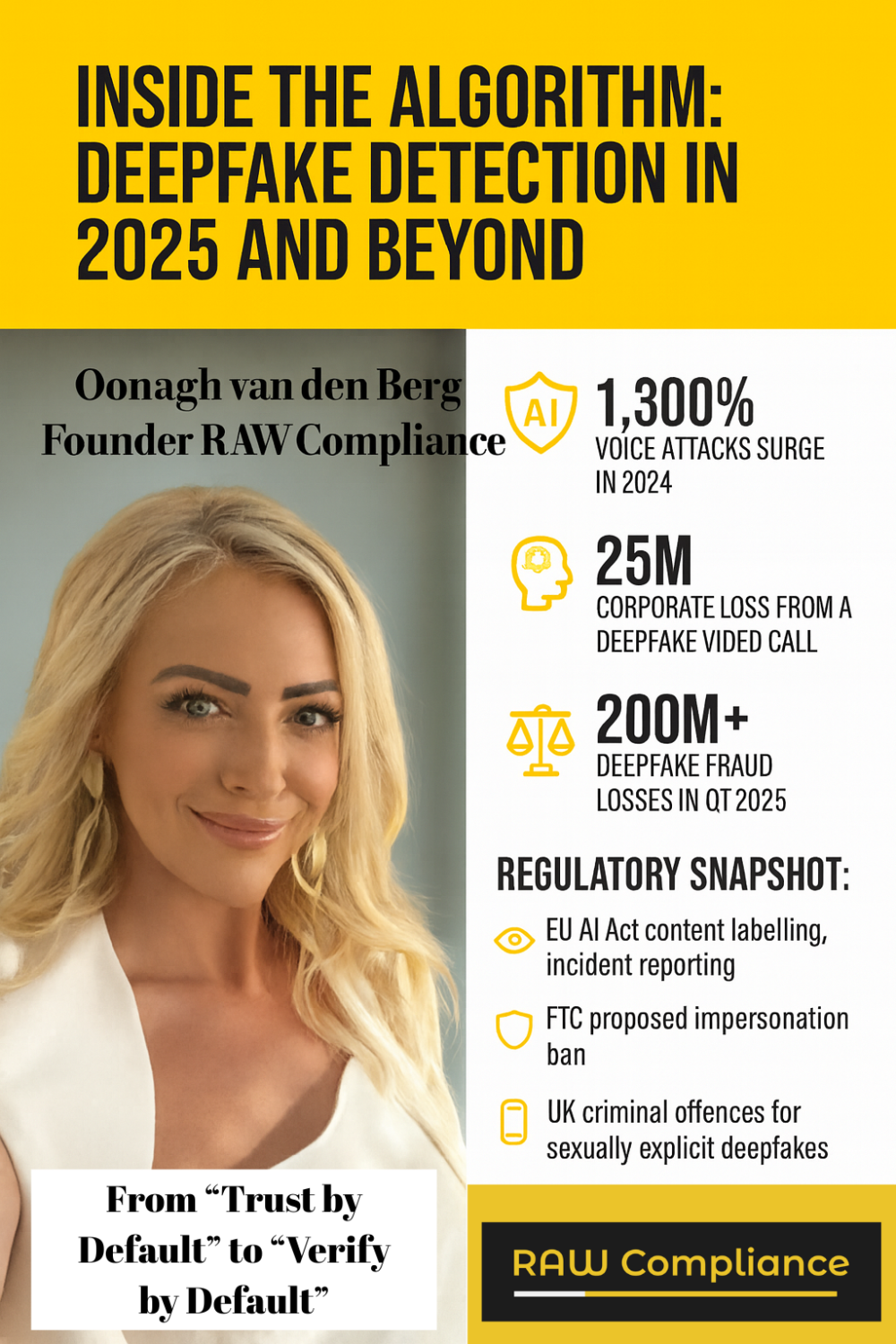

AI-powered models, while effective in controlled environments, struggle in real-world applications. Metadata-based detection is promising but addresses different issues. Estimated data.

Why India's Deepfake Mandate Actually Matters

India isn't usually the first place you'd expect radical tech regulation to land. The country is known for being a massive growth market where tech companies want to succeed, which means they're usually willing to tolerate ambiguous rules and move fast. But deepfakes have become a political emergency there in a way they haven't quite reached in Western democracies yet.

The numbers tell the story. India has over 1 billion internet users, and that number skews dramatically young. The average age of an Indian internet user is significantly lower than in North America or Europe, which means the country is dealing with a generation that grew up with AI tools as normal infrastructure, not a novelty. When you combine that with rapid smartphone adoption, affordable data plans, and minimal friction to creating synthetic content, you get a perfect storm for deepfake proliferation.

More importantly, India has experienced what other countries are just beginning to worry about: deepfakes weaponized at scale for political manipulation, non-consensual intimate imagery, and fraud. The problem isn't theoretical there. It's happening in real time across 500 million social media accounts.

That's why the government acted. But in acting quickly, they've created a regulatory trap that nobody can escape from.

When a platform like Instagram, with 481 million Indian users, implements a deepfake detection system, it's not experimenting with a small subset. It's rolling out technology to nearly half of India's roughly 1 billion internet users, one of the world's fastest-growing online populations. If the detection system is too aggressive, millions of legitimate videos get flagged. If it's too lenient, millions of dangerous deepfakes slip through.

India isn't a test market here. It's ground zero.

The Political Pressure Behind the Timeline

Someone looking at this situation from the outside might wonder why India set a deadline so impossibly tight. The answer is political. Deepfakes have been used to manipulate elections, spread religious violence, and create non-consensual intimate videos at a scale that's become undeniable. Waiting six months for platforms to develop proper detection systems isn't politically viable when people are being harmed right now.

The government set a deadline that sounds reasonable to legislators but impossible to engineers. Nine days to implement systems that take months to build properly. It's the same pattern you see in every tech regulation crisis: politicians set timelines based on political pressure, not technical reality, and then companies scramble to either comply imperfectly or risk massive fines.

India hasn't explicitly stated what fines look like, but based on similar regulations in the EU and other jurisdictions, non-compliance could mean app store removal, massive financial penalties, or both. That's enough pressure to force every major platform to attempt something, even if that something isn't fully baked.

Why This Matters Beyond India

The real significance of India's deepfake mandate isn't just for Indian users. It's that India is big enough to force global platforms into compliance mode, and whatever systems they build there will likely get deployed everywhere else.

When Meta implements a deepfake detection system for Instagram's 481 million Indian users, that system doesn't stay in India. Platform engineering works at scale. If they build infrastructure to detect and label synthetic content in India, they'll deploy it globally because the cost of maintaining separate systems is higher than the cost of one global implementation.

So India's deadline is effectively a global deadline. It's forcing the entire industry to make a choice: build imperfect detection systems quickly or risk massive regulatory consequences.

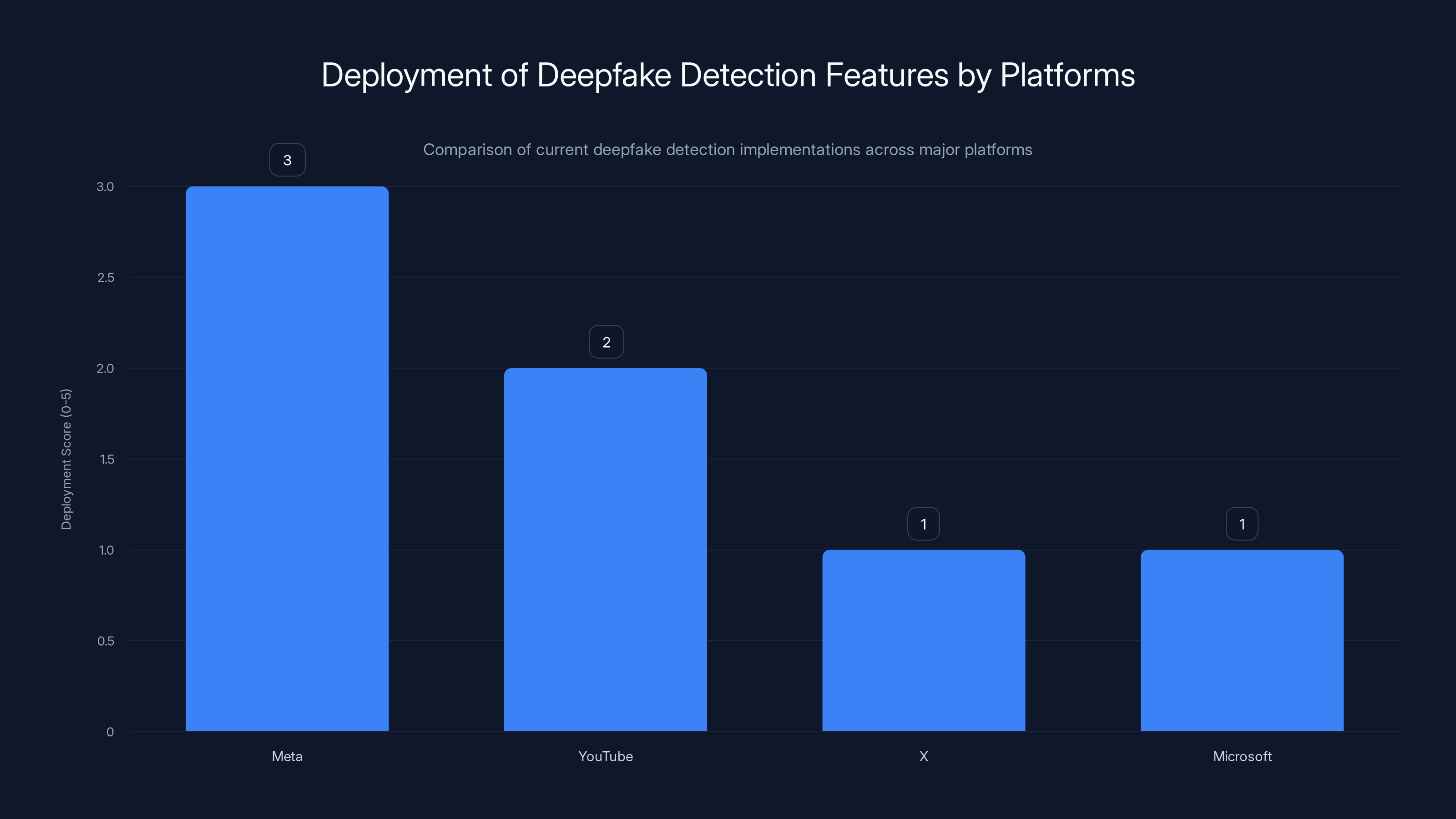

Meta leads with partial deployment of detection features, while X and Microsoft lag behind with minimal or research-level efforts. Estimated data.

The Current State of Deepfake Detection Technology

Let's talk about what actually exists right now in terms of deepfake detection. It's not as reassuring as the tech industry claims.

The honest assessment: current deepfake detection falls into roughly three categories, and none of them work reliably at scale.

Category one is AI-powered detection models. These are neural networks trained on thousands of fake videos to recognize the patterns that AI generation leaves behind. They work in controlled benchmarks, sometimes achieving 90%+ accuracy on test datasets. But in the wild, they fail constantly. They overfit to the specific generation techniques used in their training data, then new tools like Sora or upcoming models use slightly different techniques and suddenly the detection model is useless. It's like training a spam filter on 2024 spam messages and wondering why it doesn't catch 2025 spam.

The biggest problem: these models are expensive to run. Processing a single minute of video through state-of-the-art detection can take multiple minutes of compute time on high-end hardware. Now multiply that by the tens of thousands of hours of video that Instagram's hundreds of millions of Indian users upload every day, and you're looking at an infrastructure bill that even Meta would struggle to justify.

Category two is metadata-based detection, which is where the industry has pinned most of its hopes. This is the C2PA (Content Credentials) system that major tech companies have adopted. The idea is elegant: when you create or edit content with supported tools, the system embeds invisible metadata describing exactly what happened. An AI model that generated an image would have metadata saying "generated by Midjourney at 3:47 PM on February 15th, 2025." An edited photo would have metadata saying "edited in Photoshop with these specific changes applied."

Metadata-based detection is fantastic in theory. In practice, it solves a completely different problem than the one platforms actually face.

Here's why: metadata only exists if the content was created with a tool that embeds C2PA metadata. Open-source tools like Stable Diffusion? No metadata. Deepfake apps like Reface or Face Swap? They generally don't embed it. Cheap tools built by people with no interest in compliance? No metadata.

C2PA covers maybe 30% of professionally-created synthetic content and almost none of the amateur stuff. It's like requiring cars to have black boxes that broadcast their speed, then acting surprised when people use older cars without the technology.

Making it worse: C2PA metadata is trivially easy to remove. It can be stripped accidentally during file uploads as platforms compress or re-encode content. Some users deliberately remove it. The new Indian regulations specifically prohibit platforms from allowing metadata removal, but that's a bit like banning gravity. You can't prevent something that happens during routine technical operations.

Category three is human moderation, which is the only system that actually works reliably right now. Trained moderators watching videos, reading reports, evaluating context, and making judgment calls. It works because humans can understand context, recognize local cultural references, and apply nuance.

But it's slow and expensive. Meeting the three-hour removal deadline that India's regulations impose would require moderation teams at a scale no platform currently staffs. For Instagram alone, processing deepfake reports within three hours for 481 million Indian users would mean building review capacity well beyond what it has today.

The AI Detection Arms Race

There's a deeper problem that nobody's really talking about, and it's going to get worse fast. Deepfake generation is improving exponentially while detection is barely keeping pace.

Every few weeks, a new generative AI model comes out that's better at creating convincing video than the previous one. These tools aren't getting incrementally better; they're getting categorically better. Six months ago, a model's output could still be picked apart by anyone who knew what to look for. Models released last month produce video that fools nearly everyone.

Meanwhile, detection systems get trained on historical data. By the time a detection model is deployed, the generation techniques it was trained to detect are already obsolete. It's like trying to fight an enemy that gets stronger every week while your weapons get weaker.

This is why companies like Meta have started investing in provenance (metadata) systems instead of detection. They've essentially admitted that pure detection can't win an arms race against generation technology. But provenance only works if everyone participates, and the problem is nobody has to.

What Platforms Are Actually Doing Right Now

Let's look at what Meta, Google, X, and other platforms have actually deployed. Spoiler: it's not much.

Meta has integrated C2PA metadata reading across Instagram, Facebook, and WhatsApp. When content includes C2PA credentials indicating it's synthetic, the platform adds a label saying "AI info." The label is small, easily missed, and doesn't prevent the content from spreading. Studies have shown that people often don't notice these labels, and when they do, they don't fully understand what they mean.

More importantly, Meta's system only works for content that includes C2PA metadata. The deepfakes that are actually causing harm—created with open-source tools, deepfake apps, or locally-built systems—often have no metadata at all. So Meta's detection system effectively ignores the problem it's supposed to solve.

YouTube added labels to AI-generated content in 2023, but the system is similarly limited. You can manually disclose that your video is AI-generated through the platform's UI, and YouTube will add a label. But nothing forces disclosure, and nothing automatically detects undisclosed synthetic content.

X hasn't implemented significant deepfake detection at all. The company removed its deepfake labeling system months ago, citing concerns about accuracy. For a platform that's become famous for bot problems and information manipulation, the lack of synthetic content detection is conspicuous.

Microsoft has been working on detection at the research level, but nothing public-facing has really been deployed at scale.

The Infrastructure Challenge

Building deepfake detection at scale isn't just a software problem. It's an infrastructure problem.

Instagram processes roughly 95 million posts daily. YouTube receives 500 hours of video uploaded every minute. Facebook handles messages, images, and videos from billions of users. Even if you had perfect detection technology, running it on all this content would require computational infrastructure that's staggering.

Let's do some math. A modern deepfake detection model might take 5 minutes of GPU time to analyze a 1-minute video. Instagram's users upload roughly 24,000 hours of video daily to Reels alone. That's 24,000 × 60 minutes = 1.44 million minutes of video per day. At 5 minutes of GPU time per minute of video, you need 7.2 million GPU-minutes of compute per day. That's roughly 5,000 GPUs running 24/7 just to screen Instagram video uploads in India.
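The arithmetic above can be checked with a short sketch. Both inputs are the article's own rough estimates (24,000 hours of video per day and 5 GPU-minutes per minute of video), not measured figures, and the function name `gpus_needed` is made up for illustration.

```python
# Back-of-envelope GPU estimate using the figures quoted in the text.
# Both constants are the article's estimates, not measurements.
HOURS_OF_VIDEO_PER_DAY = 24_000
GPU_MINUTES_PER_VIDEO_MINUTE = 5
MINUTES_PER_DAY = 24 * 60  # one GPU supplies 1,440 GPU-minutes per day


def gpus_needed(hours_per_day: float, gpu_min_per_video_min: float) -> float:
    """Return how many GPUs must run around the clock to keep up with uploads."""
    video_minutes = hours_per_day * 60
    gpu_minutes = video_minutes * gpu_min_per_video_min
    return gpu_minutes / MINUTES_PER_DAY


video_minutes = HOURS_OF_VIDEO_PER_DAY * 60
gpu_minutes = video_minutes * GPU_MINUTES_PER_VIDEO_MINUTE
gpus = gpus_needed(HOURS_OF_VIDEO_PER_DAY, GPU_MINUTES_PER_VIDEO_MINUTE)

print(f"{video_minutes:,} video-minutes per day")
print(f"{gpu_minutes:,} GPU-minutes per day")
print(f"{gpus:,.0f} GPUs at 100% utilization")
```

The result assumes every GPU is busy every minute of the day. Real fleets run below full utilization and need headroom for upload spikes, so the practical number would be higher.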

The cost? At current GPU pricing, that's roughly

So platforms have to make a choice: deploy detection systems that are expensive and imperfect, or don't deploy them and face regulatory consequences. India's deadline hasn't really given them a third option.

Estimated data shows that content volume and detection reliability are the biggest challenges in meeting deepfake detection deadlines.

The Content Credentials Trap

C2PA (Content Credentials) was supposed to be the solution. Adobe, Meta, Microsoft, and other tech companies funded it. The idea was revolutionary: embed metadata at the point of creation describing exactly what happened to the content. No more guessing whether something is real or fake. The metadata would tell you.

But in practice, C2PA has become a trap rather than a solution.

Why Content Credentials Don't Work at Scale

C2PA works perfectly when every link in the chain participates. Photoshop adds metadata. Your phone's camera adds metadata. Every platform you upload to preserves metadata. Everyone wins.

But the moment anyone in the chain doesn't participate, the whole system breaks. Open-source image generation tools don't add C2PA metadata. Mobile apps built by people in other countries don't care about content credentials. Amateur deepfake creators definitely don't add metadata announcing "Hey, this is fake and I made it."

So you end up with a system where legitimate professionals who want to be transparent have their content properly credentialed, while the actual bad actors using open-source tools have no metadata at all. C2PA effectively labels the honest people while letting the dishonest ones roam free.

Making it worse, C2PA metadata is fragile. It gets stripped during routine platform operations. A video uploaded to Instagram gets transcoded for mobile viewing, and suddenly the metadata is gone. An image gets reposted across X, and the chain of provenance breaks. Users download and re-upload content, and any metadata history evaporates.

India's new regulations prohibit platforms from allowing metadata to be removed. But that's like prohibiting water from being wet. It happens during normal technical operations. A platform can't prevent file compression from stripping metadata without fundamentally breaking how video and image processing work.
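A toy model makes the fragility concrete. This is not the C2PA format or any real C2PA library: `Asset`, `attach_manifest`, and `naive_transcode` are hypothetical names, and the "manifest" is a plain dictionary bound to the bytes by a SHA-256 hash. Real manifests are signed and considerably more elaborate. The sketch only shows why a pipeline that re-encodes media loses provenance unless it deliberately carries it over and re-binds it.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class Asset:
    content: bytes                   # stand-in for encoded image or video bytes
    manifest: Optional[dict] = None  # stand-in for an attached provenance record


def attach_manifest(content: bytes, tool: str) -> Asset:
    """Attach a toy provenance record bound to the content by its hash."""
    digest = hashlib.sha256(content).hexdigest()
    return Asset(content, {"tool": tool, "sha256": digest})


def naive_transcode(asset: Asset) -> Asset:
    """Simulate a pipeline that re-encodes the media and copies nothing else."""
    reencoded = asset.content[::2]   # placeholder for lossy re-encoding
    return Asset(reencoded, manifest=None)


def provenance_status(asset: Asset) -> str:
    """Classify the asset as 'valid', 'missing', or 'mismatch'."""
    if asset.manifest is None:
        return "missing"
    if hashlib.sha256(asset.content).hexdigest() != asset.manifest["sha256"]:
        return "mismatch"
    return "valid"


original = attach_manifest(b"frame-data-" * 100, tool="hypothetical-generator")
transcoded = naive_transcode(original)
# Copying the old record onto re-encoded bytes does not help: the hash no longer matches.
copied_over = Asset(transcoded.content, manifest=original.manifest)

print(provenance_status(original))
print(provenance_status(transcoded))
print(provenance_status(copied_over))
```

In this model, preserving provenance through transcoding means the platform itself has to issue a fresh record for the new bytes, which is extra engineering at every processing step rather than something that happens for free.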

The Interoperability Problem

Another issue: C2PA metadata isn't universal yet. Different platforms read it differently. A video that includes C2PA metadata on Adobe's platform might lose some information when it goes to Instagram, and lose more when it gets shared to TikTok.

India's deadline requires platforms to implement C2PA reading and preservation by February 20th. That's not enough time to test whether different systems will actually interoperate reliably. Platforms could deploy C2PA support that technically complies with the regulation but doesn't actually preserve metadata across the ecosystem.

And that's assuming every platform cooperates. X probably won't implement C2PA by the deadline. TikTok definitely won't, since they're based in China and have their own content moderation approach. So Indian users will be able to post C2PA-protected content that strips its metadata the moment it crosses platform boundaries.

C2PA isn't a solution to India's deepfake problem. It's a band-aid that looks good in boardrooms but doesn't actually solve anything in practice.

The Three-Hour Removal Deadline Problem

India's regulations require platforms to remove illegal deepfakes within three hours of being discovered or reported. That sounds reasonable until you think about the mechanics of actually doing that.

Let's walk through what needs to happen:

- A user reports deepfake content

- The report gets flagged in the platform's moderation queue

- A human reviewer (or AI system) evaluates whether it's actually a deepfake and whether it violates law

- A decision is made about removal

- The content is actually removed from all caches and backups

Under current systems, this takes 24-36 hours for most platforms. The ones that are fastest take about 12 hours. Three hours is not currently achievable at scale for any platform.
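The workflow above can be sketched as a queue ordered by time left before the three-hour window closes. This is a minimal illustration, not any platform's real moderation system: the `Report` class and the `triage` function are hypothetical, and the sketch covers only the queueing step, not evaluation, removal, or record keeping.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

REMOVAL_DEADLINE = timedelta(hours=3)  # the window described in the text


@dataclass
class Report:
    report_id: str         # hypothetical identifier
    reported_at: datetime  # when the user filed the report


def time_remaining(report: Report, now: datetime) -> timedelta:
    """Time left before the removal window closes (negative if already missed)."""
    return report.reported_at + REMOVAL_DEADLINE - now


def is_overdue(report: Report, now: datetime) -> bool:
    return time_remaining(report, now) < timedelta(0)


def triage(reports: list, now: datetime) -> list:
    """Order open reports so the ones closest to (or past) the deadline come first."""
    return sorted(reports, key=lambda r: time_remaining(r, now))


now = datetime(2025, 2, 20, 12, 0)
queue = [
    Report("a", now - timedelta(hours=1)),
    Report("b", now - timedelta(hours=4)),
    Report("c", now - timedelta(minutes=170)),
]

for report in triage(queue, now):
    flag = "OVERDUE" if is_overdue(report, now) else "open"
    print(report.report_id, time_remaining(report, now), flag)
```

Even this trivial queue shows the pressure: a report that waits 170 minutes for a reviewer leaves ten minutes for the decision, the removal, and the paperwork.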

It gets even harder: you can't just remove the content. You have to document the removal, keep records for potential law enforcement review, notify the user, and handle appeals. Three hours doesn't give you time to do any of that properly.

India's three-hour deadline would require hiring enough moderation staff to handle Indian reports continuously, 24/7. Current scaling suggests you'd need roughly 2,000-3,000 additional moderation staff per platform just for India. That's a massive hiring surge for employees who often work in difficult conditions, have high burnout rates, and aren't currently being trained on deepfake identification.

More realistically, platforms will probably try to solve this with automation. They'll deploy AI systems to automatically flag potential deepfakes and remove them on suspicion. This leads to a different problem: massive false positive rates. Legitimate content gets removed, users appeal, and the three-hour deadline becomes meaningless because you're too busy handling false positive appeals to process new reports.

The Appeal Mechanism Gap

Where the three-hour deadline really breaks down is appeals. If a platform removes content and that removal is wrong, users need some mechanism to appeal and get their content restored.

But three hours leaves no room for appeals. A platform has to decide to remove content, remove it, and only then handle an appeal if the user says the removal was wrong. Most appeal processes take days or weeks because they require human review, so platforms enforcing three-hour removals would quickly be buried in appeal requests.

India's regulations don't explicitly address what happens with appeals, which suggests either the regulators didn't think this through or they expect platforms to just accept that false positives happen. Neither is good.

India's social media landscape is led by YouTube with 500 million users, followed by Instagram with 481 million and Facebook with 403 million. Estimated data highlights the vast reach of these platforms.

Open-Source Models and the Enforcement Gap

Here's a problem that won't go away, no matter how much infrastructure platforms deploy: open-source generative AI tools.

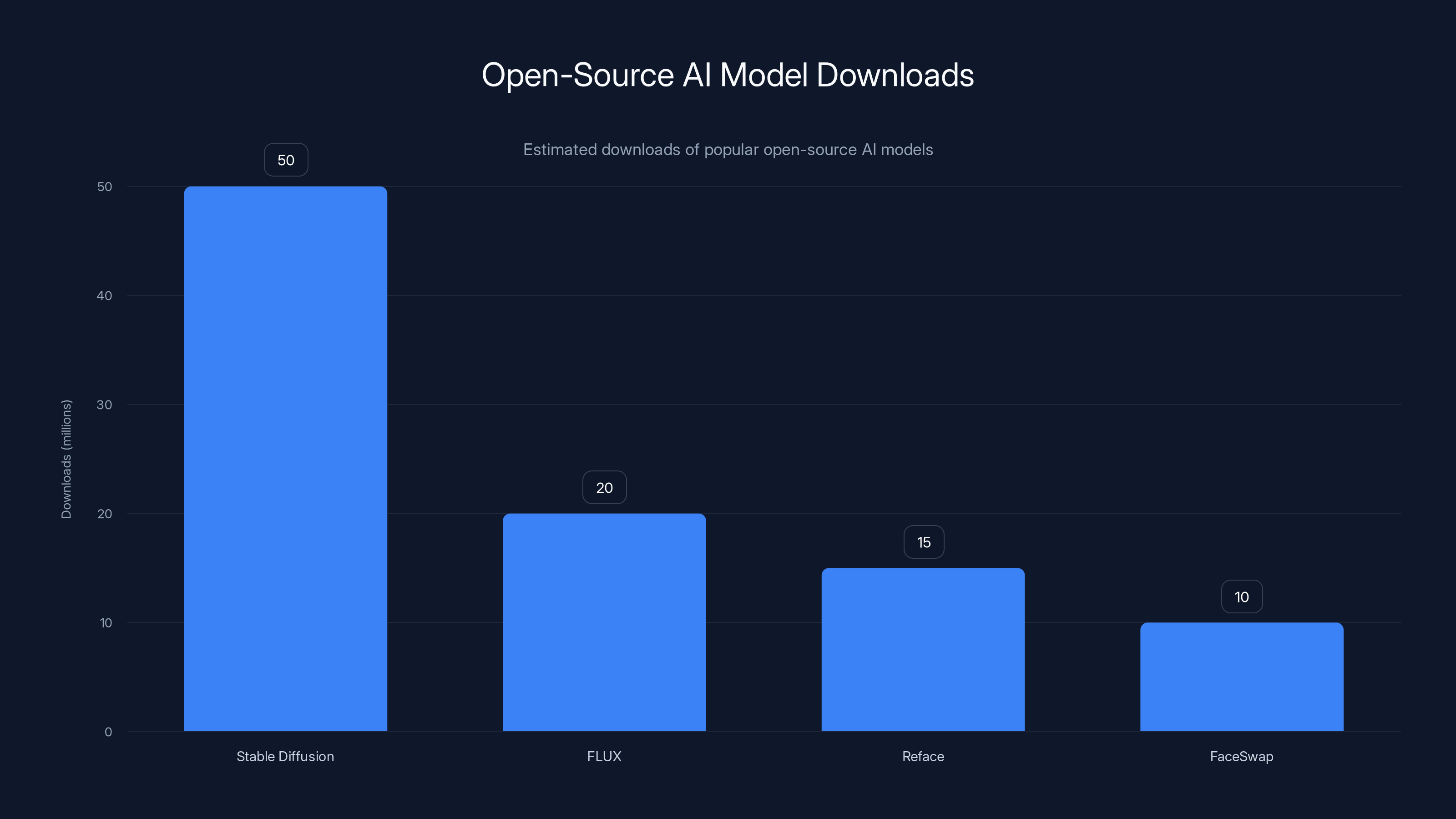

Models like Stable Diffusion, FLUX, and others are freely available, run locally, and don't communicate with any platform. You can generate an image or video entirely on your laptop, then upload it to Instagram. The platform has no way of knowing it came from an open-source model. No metadata exists. No detection system can look at the file and be confident it's synthetic.

Consumer face-swap apps make the gap even wider. Reface, Face Swap, and similar apps generally don't implement C2PA metadata or other detection signals. Many are built by developers with little stake in platform regulations, often based outside India's jurisdiction.

So platforms face an impossible situation: they're supposed to detect and remove all deepfakes, but a significant and growing portion of deepfakes are created with tools that leave zero signals that they're synthetic. The best you can do is rely on human reports, which means your detection is actually just report processing.

Building Custom Models

Where this gets even more complicated: it's trivial for someone to build a custom deepfake model trained on specific local data. You don't need fancy infrastructure. Run code on a $1,000 laptop for a week, train a model on thousands of images of a political opponent, and you've got a tool that can generate convincing deepfakes of that person.

Platforms can't detect the existence of custom-built models. They can only detect the output. And detecting output gets harder as generation technology improves.

India's regulations seem to assume that deepfakes are created by professional actors with bad intentions, and if you just detect and label them, the problem is solved. But reality is much messier. Deepfakes are created by teenagers, political operatives, criminals, revenge porn creators, and people experimenting with new tools. Some of them are sophisticated. Most are just people with free tools and bad judgment.

Detecting and removing all of those requires either perfect automated detection (which doesn't exist) or absurdly large moderation teams (which platforms won't hire). You're left with a regulation that looks good on paper but can't actually be enforced in practice.

What Platforms Will Actually Do by February 20th

Let's be realistic about what's actually going to happen when the deadline arrives.

Meta will probably expand its existing C2PA labeling system and claim compliance. They'll add some extra labels, maybe improve the detection slightly, and announce that they've deployed "advanced AI content detection for synthetic content." The system will still only catch a fraction of deepfakes, but it'll technically follow the letter of the law.

Google will likely do something similar with YouTube, possibly implementing some automatic flagging and requiring users to disclose AI-generated content. It will also probably claim compliance and move on.

X might just do the bare minimum, possibly implementing a disclosure system where users can mark content as synthetic. Whether it will actually detect undisclosed deepfakes is unclear.

None of these will actually solve the deepfake problem. They'll just create a veneer of compliance.

The Real Outcome: Imperfect Compromise

What's most likely is that platforms will deploy systems that are technically compliant but practically limited. They'll remove some deepfakes, label some synthetic content, and claim they've met the regulation. Meanwhile, deepfakes will continue spreading, just slightly slower than before.

India will probably accept this because the alternative is banning these platforms entirely, which would harm their local creator economies and internet infrastructure. There will be headlines about "India Cracks Down on Deepfakes" and "Tech Giants Deploy AI Detection for Synthetic Content." The regulation will technically be in place.

But behind the scenes, platforms will be quietly admitting that the deepfake problem is harder than they thought, the deadline was impossible, and their solutions don't actually work at scale. And they'll start lobbying for regulations that are less prescriptive and more flexible, while pouring resources into research for better detection technology that might actually work.

That research is important, because the current state of deepfake detection is genuinely inadequate for the problem at hand.

Stable Diffusion leads with an estimated 50 million downloads, highlighting the widespread use of open-source AI models. (Estimated data)

The Detection Technology That Actually Works

If C2PA doesn't work and AI detection fails at scale, what actually works for identifying deepfakes?

Honestly? Context. Human judgment. Lateral thinking.

Forensic analysts look at behavioral inconsistencies, lighting that doesn't match, background details that are wrong. They check whether the video makes sense given what they know about the person depicted. They look at the metadata, even though it can be spoofed. They use multiple tools and techniques rather than trusting a single detection system.

This is why high-profile deepfakes used for political manipulation often get caught, not by detection technology, but by journalists and investigators who notice something is off. Their methods are decades-old fraud investigation techniques applied to video.

For platforms at scale, this translates to: you need humans in the loop. You need trained moderators who understand deepfakes, who can recognize the tells that current AI can't yet reliably detect. This is expensive, difficult work, and it's the closest thing we have to reliable deepfake detection.

Biological Signal Detection

One emerging approach is looking at biological signals. AI-generated faces often have subtle flaws in how pupils respond to light, how blood perfuses through skin, micro-expressions that don't quite match the macro emotions being expressed.

Researchers have shown you can detect some deepfakes by analyzing these biological signals. But the technique requires specialized hardware or very high-quality video. It works in controlled settings. It doesn't work with compressed TikTok videos shot on phones.

And here's the kicker: as generation technology improves, it's learning to fake these biological signals too. The arms race continues.

Behavioral Analysis

Another technique is behavioral analysis. Deepfakes often have flaws in how they move or gesture. The head turns in slightly unnatural ways. The hand movements are slightly off. The cadence of speech doesn't match the lip movements perfectly.

Computer vision systems can be trained to recognize these patterns. But again, this works on isolated, high-quality videos. It doesn't work reliably when the video is compressed, noisy, or shot in poor lighting.

Watermarking and Cryptographic Approaches

Some researchers are exploring cryptographic watermarking that's embedded at generation time. This is different from C2PA metadata—it's actually part of the image itself, harder to remove without degrading quality.

The idea is that when you generate an image with a particular tool, that tool embeds a watermark that proves it came from that tool and proves who created it. Platforms could then detect these watermarks as evidence of synthetic content.

But this requires universal adoption. Every tool would need to embed watermarks, and watermarks would need to survive platform processing. Neither of these things is guaranteed.
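The survival requirement is easy to see with a deliberately fragile toy. The sketch below hides bits in the least significant bit of each pixel value, then applies a crude stand-in for lossy compression. Least-significant-bit embedding is the simplest and weakest watermarking scheme, chosen here only because it fits in a few lines. It is not what the researchers mentioned above are building, and the function names are made up for illustration.

```python
def embed(pixels: list, bits: list) -> list:
    """Write one watermark bit into the least significant bit of each pixel."""
    out = list(pixels)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & ~1) | bit
    return out


def extract(pixels: list, n_bits: int) -> list:
    """Read the least significant bit of the first n_bits pixels."""
    return [p & 1 for p in pixels[:n_bits]]


def lossy_requantize(pixels: list, step: int = 4) -> list:
    """Crude stand-in for lossy compression: snap values down to multiples of step."""
    return [(p // step) * step for p in pixels]


pixels = [200, 13, 77, 154, 91, 36, 248, 5]  # made-up 8-bit grayscale values
watermark = [1, 0, 1, 1, 0, 0, 1, 0]         # made-up payload

marked = embed(pixels, watermark)
recovered_clean = extract(marked, len(watermark))
recovered_after = extract(lossy_requantize(marked), len(watermark))

print("intact copy:      ", recovered_clean == watermark)
print("after requantize: ", recovered_after == watermark)
```

Robust schemes spread the signal across the image so that it survives this kind of processing, but that robustness is exactly the property that has to be demonstrated against every platform's compression pipeline before a watermark counts as evidence.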

Why India's Deadline Will Actually Force Progress

Here's where it gets interesting. India's deadline is terrible, and the technology isn't ready, and platforms can't actually comply fully. But all of that pressure might actually push the industry forward faster than it would have moved otherwise.

When you have a hard deadline and a real threat of enforcement, companies stop researching and start deploying. They invest in infrastructure, hire people, and build systems. Some of those systems will fail. Some will have to be scrapped. But some will actually work better than the research systems they replaced.

The pressure also forces a different kind of innovation. Instead of trying to build perfect detection, companies will build better systems for combining multiple signals: C2PA metadata when available, behavioral analysis, biological signal detection, human moderation, user reports. No single system works. But layering multiple imperfect systems starts to become actually useful.
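One simple way to layer imperfect signals is a noisy-OR combination, sketched below. Everything here is an assumption for illustration: the signal names, the scores, the thresholds, and the premise that each detector reports an independent probability that the content is synthetic. Real detectors are correlated and poorly calibrated, so treat this as a shape for the idea rather than a working policy.

```python
from typing import Optional


def combine_signals(signals: dict) -> float:
    """Noisy-OR: probability that at least one available signal is correct.

    Each value is a score in [0, 1], or None when the signal is unavailable
    (for example, no provenance metadata on the file). Assumes independence.
    """
    p_all_wrong = 1.0
    for score in signals.values():
        if score is None:
            continue
        p_all_wrong *= 1.0 - score
    return 1.0 - p_all_wrong


def route(score: float, review_at: float = 0.5, remove_at: float = 0.95) -> str:
    """Hypothetical thresholds: queue for human review well before auto-removal."""
    if score >= remove_at:
        return "remove"
    if score >= review_at:
        return "human_review"
    return "allow"


example = {
    "provenance_metadata": None,  # stripped or never present
    "behavioral_analysis": 0.4,
    "biological_signals": 0.3,
    "user_reports": 0.5,
}

combined = combine_signals(example)
print(round(combined, 2), route(combined))
```

No single score in the example crosses the review threshold on its own, but together they do, which is the practical argument for layering: weak signals become actionable when combined, and the human reviewer stays in the loop for the final call.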

Regulatory Pressure as Innovation Driver

Historically, impossible deadlines have driven innovation. The GDPR seemed impossible to comply with in 2016. Companies deployed systems anyway, and now privacy is better (not perfect, but better). The medical device regulations in the US seemed absurdly strict. Companies built better processes, and medical devices became safer.

India's deepfake regulations might play the same role. They're impossible to comply with perfectly, but they force platforms to invest in detection, labeling, and moderation infrastructure that they probably wouldn't have prioritized otherwise.

The outcome won't be perfect deepfake detection by February 20th. But it might be better detection systems across the industry by the end of 2025, and even better systems by 2026.

The Global Spillover Effect

Whatever India forces platforms to build, they'll deploy globally. That's the thing about platform engineering: maintaining separate systems in different countries is expensive. It's easier to deploy one global solution and accept that it's probably too strict for some places and too lenient for others.

So if Instagram builds better deepfake detection for India, Instagram users everywhere will benefit. If X finally implements deepfake removal systems to comply in India, X users everywhere get better moderation. The deadline becomes a forcing function for global improvement.

That's probably not what India's regulators intended, but it's what will likely happen.

Estimated data shows YouTube as the largest platform with 500 million users, followed by Instagram and Facebook, highlighting the scale of the deepfake detection challenge in India.

What Happens When Systems Fail

We should talk about what actually happens when these systems inevitably fail to work as intended.

Scenario one: Platforms deploy detection systems that are too aggressive. Legitimate videos get flagged as deepfakes and removed. Creators get their content taken down. Appeals take days or weeks to resolve (remember, the three-hour deadline is for removal, not appeals). Creators lose followers, lose income, lose trust in the platform.

This is probably what will happen. AI-powered detection systems tuned for this job tend to have high false positive rates, and that's by design. They trade precision for recall: they'd rather flag ten real videos as fake than miss one actual deepfake.
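The effect of that design choice depends heavily on how rare deepfakes are among all uploads. The sketch below works through one scenario with invented numbers: one million uploads, one deepfake per thousand, a detector that catches 95% of fakes, and a 1% false positive rate. None of these figures come from a real platform or a real detector.

```python
def confusion_counts(total: float, prevalence: float,
                     recall: float, false_positive_rate: float) -> tuple:
    """Return (true positives, false positives, false negatives)."""
    fakes = total * prevalence
    real = total - fakes
    true_pos = fakes * recall
    false_neg = fakes - true_pos
    false_pos = real * false_positive_rate
    return true_pos, false_pos, false_neg


def precision(true_pos: float, false_pos: float) -> float:
    """Share of flagged items that really are deepfakes."""
    return true_pos / (true_pos + false_pos)


# All four inputs are hypothetical.
tp, fp, fn = confusion_counts(
    total=1_000_000, prevalence=0.001, recall=0.95, false_positive_rate=0.01
)

print(f"deepfakes caught:      {tp:,.0f}")
print(f"deepfakes missed:      {fn:,.0f}")
print(f"real videos flagged:   {fp:,.0f}")
print(f"precision:             {precision(tp, fp):.1%}")
```

Under these assumptions the detector flags roughly ten authentic videos for every deepfake it catches, even though it is wrong about only 1% of real content. When the thing you are looking for is rare, a small false positive rate still produces a large pile of wrongly flagged uploads.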

Scenario two: Platforms deploy detection systems that are too lenient. Obvious deepfakes stay online. They spread, cause harm, and by the time humans catch them, thousands of people have already seen them. The regulation fails to actually prevent harm.

This is also probably what will happen, just at lower scale. Most deepfakes will slip through, especially ones created with new tools that detection systems weren't trained on.

The Litigation Risk

When these systems inevitably fail, there's litigation risk. If Instagram falsely flags someone's video as a deepfake and removes it, and that person loses a job or suffers reputational damage as a result, they could sue. If Instagram fails to remove an actual deepfake that causes harm, the person harmed could also sue.

Platforms are suddenly exposed to legal liability they didn't have before the regulation. That's going to make them very defensive. Expect lots of legal disclaimers, very conservative removal policies, and extended appeal processes despite the three-hour deadline.

The Creator Economy Impact

People making a living through content creation on Instagram, YouTube, and TikTok are about to experience a shock.

If deepfake detection systems are too aggressive, legitimate AI-assisted content gets flagged. Someone using AI tools to create backgrounds, fix lighting, or improve production value suddenly finds their video gets removed or labeled as synthetic. That's a massive problem for creators who've built their workflows around AI tools.

The regulation doesn't distinguish between deepfakes (malicious, non-consensual fake videos) and AI-assisted content creation (legitimate use of tools to improve production quality). To an algorithm, they might look the same. So you get collateral damage.

Creators will probably need to start disclosing which parts of their content used AI tools, even when it's just background removal or color grading. This will become a standard practice, which might actually be good for transparency but will also add friction to content creation workflows.

Professional vs. Amateur Split

Professional creators who invest in C2PA-compliant tools will be fine. They'll disclose everything, properly embed metadata, and their content will be protected. Amateur creators using free tools won't have those options, and their content might get caught up in false positives.

This creates an incentive structure where professional creators move toward compliant tools, and amateur creators either stop using AI tools or switch to tools that explicitly don't embed detection signals. Neither is great for platform health.

Possible Solutions Nobody's Really Talking About

If current deepfake detection technology isn't adequate for India's deadline, what could actually work?

Verification Layers

Instead of trying to detect deepfakes automatically, platforms could implement verification layers. Want to share a video of a political candidate? Verify through multiple methods that it's real: cryptographic signature, metadata chain, biometric verification of the person in the video. This is expensive and friction-heavy, but it would actually work for high-stakes content.

For most content, you wouldn't need verification. Only content that's flagged as potentially sensitive would go through the process. This trades off some friction for actual security.
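To picture the cryptographic-signature piece of such a layer, here's a simplified sketch. It is not how any platform or the C2PA specification actually does it. It uses an HMAC with a shared key from Python's standard library as a stand-in for a real digital signature, and `SENSITIVE_TAGS`, `needs_verification`, `sign_content`, and `verify_content` are hypothetical names.

```python
import hashlib
import hmac

# Hypothetical tags that would route content into the verification flow.
SENSITIVE_TAGS = frozenset({"election", "political-candidate"})


def needs_verification(tags):
    """Only content touching a sensitive tag goes through verification."""
    return bool(set(tags) & SENSITIVE_TAGS)


def sign_content(content: bytes, key: bytes) -> str:
    """Sign the SHA-256 digest of the file.

    Assumption: HMAC stands in for a real (asymmetric) signature scheme.
    """
    digest = hashlib.sha256(content).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_content(content: bytes, key: bytes, signature: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    return hmac.compare_digest(sign_content(content, key), signature)


key = b"demo-key-for-illustration-only"
original = b"raw video bytes"
signature = sign_content(original, key)

original_ok = verify_content(original, key, signature)
tampered_ok = verify_content(original + b" edited", key, signature)

print("original verifies:", original_ok)
print("edited copy verifies:", tampered_ok)
```

Any change to the bytes makes verification fail. That's the point for tamper detection, but it also means a re-encoded copy would need to be re-signed. A real deployment would more likely use asymmetric signatures so that verifiers never hold the signing key.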

Trusted Creator Verification

Another approach: verified creators get special status. If you're a journalist, a politician, a public figure, you go through a verification process. Your account gets marked as verified. Your videos are trusted by default. Deepfake detection only applies to unverified accounts.

This isn't perfect, but it protects high-stakes content while allowing amateur creators freedom to experiment.

Incentive Structures

Instead of trying to detect and remove all deepfakes, platforms could change incentives. They could reward creators who disclose when they use AI tools, penalize creators who produce non-consensual intimate content, and mark content from accounts with a history of spreading misinformation as potentially unreliable.

This is softer than detection and removal, but might actually be more effective than algorithms that get fooled easily.

Community-Powered Verification

Let users help identify deepfakes. Implement a Reddit-like voting system where users flag potentially manipulated content, and if enough users agree, the platform adds a warning label. This crowdsources detection and distributes the work.

It's not perfect, but it's cheaper than hiring millions of moderators and can sometimes be more accurate than algorithms.
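Here's a rough sketch of how a vote-based warning label could work. The class name, the one-vote-per-user rule, and both thresholds (`min_flags`, `min_share`) are assumptions chosen for illustration, not a description of any existing platform feature.

```python
from collections import defaultdict


class FlagTracker:
    """Collects user votes and decides when to show a warning label."""

    def __init__(self, min_flags=5, min_share=0.6):
        self.min_flags = min_flags    # minimum number of "manipulated" votes
        self.min_share = min_share    # minimum share of voters who agree
        self._votes = defaultdict(dict)  # content_id -> {user_id: bool}

    def vote(self, content_id, user_id, looks_manipulated):
        # One vote per user per item; a repeat vote overwrites the old one.
        self._votes[content_id][user_id] = bool(looks_manipulated)

    def should_label(self, content_id):
        votes = self._votes.get(content_id, {})
        flags = sum(votes.values())
        if flags < self.min_flags:
            return False
        return flags / len(votes) >= self.min_share


tracker = FlagTracker(min_flags=3, min_share=0.6)
for user in ("a", "b", "a"):          # user "a" votes twice, counted once
    tracker.vote("video-1", user, True)
print(tracker.should_label("video-1"))  # two distinct flags: below min_flags

tracker.vote("video-1", "c", True)
print(tracker.should_label("video-1"))  # three of three voters agree
```

Requiring both a minimum count and a minimum share keeps a handful of early votes from labeling a video on their own. It also lets later disagreement pull the label back off.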

The Global Deepfake Arms Race

Let's zoom out and look at the bigger picture. India's deadline is just one battle in a much larger war: the race between deepfake generation technology and detection technology.

Right now, generation is winning. Every few months, a new model comes out that's better at generating convincing fakes. Sora can create realistic video. Stable Video Diffusion can generate cinematic sequences. ElevenLabs can clone voices. FLUX can generate images that are hard to tell apart from photographs.

Meanwhile, detection is still struggling with problems that researchers thought were solved five years ago. This is the fundamental asymmetry: creating a deepfake takes a few minutes and costs nothing. Detecting it requires custom-built technology and human expertise.

The Economic Incentive Structure

Why is this happening? Economics. There's enormous venture capital funding flowing into generative AI. Tools that let anyone create impressive content are valuable and profitable. Tools for detecting deepfakes? Less clear business model. Less funding. Slower progress.

India's regulation is trying to force balance by making detection a legal requirement. Platforms have to invest in it or face consequences. That's the right approach, but it only works if platforms actually can invest effectively. If the technology doesn't exist even with enough funding, regulation just forces bad implementations.

The Regulation Acceleration Pattern

We've seen this pattern before. New technology emerges, grows unchecked for years, then suddenly faces regulation. Regulation forces investment in safety, detection, or prevention systems. Sometimes those investments work. Sometimes they don't.

With deepfakes, we're at the regulation phase. India's deadline is an early attempt to force the industry into the "investment and solution" phase. Whether it succeeds depends on whether adequate detection technology can actually be built with enough resources and time.

Early signs aren't great. But the pressure might create breakthroughs that pure research funding couldn't achieve.

The Data Privacy and Consent Layer

There's another dimension to this that doesn't get talked about enough: consent and privacy.

Deepfakes require training data. To create a convincing deepfake of someone, you need images or videos of that person. For public figures, that data is freely available. For private individuals, deepfake creators often scrape social media, dating apps, or video platforms without consent.

India's regulations focus on detection and removal. They don't address the consent issue. But they probably should. Someone whose face is being used to create deepfakes without permission is being harmed, and that harm happens before the deepfake is even posted to a platform.

Privacy by Design

What if platforms required consent verification before allowing images of identifiable people to be downloaded in bulk? What if there was a registry of people who've opted out of having their likeness used for training AI models? What if scrapers faced penalties for violating these restrictions?

These aren't perfect solutions, but they address the root cause (lack of consent for data use) rather than just the symptom (deepfakes on platforms).

But implementing this would require international cooperation and coordination between platforms and regulators. India's deadline doesn't address this at all.

Looking Forward: What 2025 Actually Brings

Let's predict what actually happens in the next year, assuming India's deadline holds.

February 20, 2025: Platforms announce compliance. They've deployed detection systems, labeling systems, and moderation improvements. Technically, they're compliant. Functionally, they've only partially solved the problem.

March-April 2025: Users and researchers start documenting false positives. Legitimate videos get flagged. Creators complain. Appeals backlogs grow.

May 2025: Some platforms adjust their detection thresholds to reduce false positives. This increases false negatives. Deepfakes slip through more often.

Summer 2025: India conducts audits of platform compliance. They find that detection systems are inadequate. They threaten enforcement but don't follow through immediately because the alternative (shutting down platforms) is too disruptive.

Late 2025: Platforms announce improved detection systems, better moderation, and new initiatives. They start investing in better R&D.

2026: Real progress. Better detection systems exist, not because they're perfect, but because platforms finally invested serious resources.

This assumes India's regulators have realistic expectations and are willing to work with platforms rather than just punishing them. It also assumes no major new deepfake generation technology that completely breaks existing detection systems.

Neither assumption is guaranteed.

FAQ

What is a deepfake exactly?

A deepfake is a synthetic media file (video, audio, or image) created using deep learning AI techniques to alter or completely fabricate content. The term originally referred to highly realistic face-swapped videos, but now covers any AI-generated or AI-manipulated content that mimics real people or events. Deepfakes range from obvious parodies to nearly indistinguishable forgeries that can deceive viewers into believing fake events actually occurred.

How does deepfake detection technology work?

Deepfake detection uses several approaches: AI-powered models analyze videos for patterns that reveal AI generation (like unnatural eye movements or inconsistent lighting), metadata-based systems check for embedded content credentials that prove when and how content was created, and behavioral analysis examines movement patterns and facial expressions for unnaturalness. Most effective detection combines multiple techniques because no single method is reliable enough alone, and detection is constantly racing against improving generation technology that creates increasingly convincing fakes.

Why is India's February 20th deadline impossible to meet?

The deadline is impossible because current deepfake detection technology doesn't work reliably at scale, platforms need to process millions of pieces of content daily, the existing infrastructure doesn't support three-hour removal times for all reported content, and much synthetic content is created with open-source tools that leave no detectable signals. Additionally, metadata systems like C2PA only cover a fraction of actual deepfakes, and properly training moderation teams takes months that platforms don't have.

What is C2PA and why doesn't it solve the deepfake problem?

C2PA (the Coalition for Content Provenance and Authenticity) publishes the metadata standard behind Content Credentials, which records how digital content was created or edited and, in theory, helps platforms verify authenticity. However, it only works when creators use C2PA-compliant tools, which excludes open-source generative AI models and deepfake apps that actively refuse to participate. Additionally, C2PA metadata is easily stripped during routine platform operations like video compression or file re-uploads, making it fragile in real-world usage.

What's the real impact of India's deepfake regulation on global platforms?

India's 1 billion internet users make the country a critical market where platforms can't ignore regulations. Any systems built for India compliance will likely deploy globally since maintaining separate infrastructure is expensive. This forces worldwide changes in deepfake detection and content labeling, but also risks exporting overly aggressive AI detection systems that create false positives affecting legitimate content creators everywhere.

Can platforms actually hire enough moderators to meet the three-hour removal deadline?

No, not in nine days. The current moderation workforce isn't trained for deepfake detection, new hires would need months of training, and hiring thousands of moderators across India in a week is logistically impossible. Platforms will attempt to automate with AI systems instead, which will create high false positive rates as AI detection systems struggle with reliability at this scale and speed.

What happens to creator economies when deepfake detection systems launch?

Creators using AI tools for legitimate purposes (background removal, color grading, voice enhancement) risk having content flagged as synthetic and removed. Professional creators with C2PA-compliant tools will be protected, but amateur creators using free tools lack this option. This creates incentives for creators to disclose AI usage or switch to tools that don't embed detection signals, adding friction to content creation workflows and potentially dividing the creator economy into verified and unverified tiers.

Why does deepfake generation continue to improve while detection struggles?

Generation technology receives massive funding from venture capital and major tech companies, creating strong economic incentives for improvement. Detection systems have weaker business models and less funding, so progress is slower. Additionally, detecting deepfakes is arguably a harder problem than generating them: a detector has to keep up with every new generation technique, including tools it was never trained on. That asymmetry means generation will likely stay ahead of detection until significant breakthroughs occur in detection technology.

The Bottom Line

India's deepfake detection deadline arrives in nine days, and the technology that platforms would need in order to fully comply doesn't exist yet. This isn't a minor implementation challenge. This is a fundamental problem with current state-of-the-art deepfake detection, labeling, and removal systems.

Meta, Google, and other platforms will deploy something. They'll probably claim compliance. But here's an honest assessment: what they deploy will be inadequate for the actual problem at hand. It might catch some obvious deepfakes. It will definitely create false positives that harm legitimate creators. It won't significantly reduce deepfake spread.

But here's what might actually matter: pressure forces change. Impossible deadlines drive innovation. The regulation is badly timed and inadequately thought through, but it's forcing platforms to invest in infrastructure they should have built years ago.

A year from now, deepfake detection will be better than it is today. Not perfect. Not adequate for stopping all deepfakes. But measurably better. That's something.

India's regulation might fail in the short term. But it might succeed in the long term by forcing the kind of investment and engineering effort that pure market incentives never created.

Key Takeaways

- India's February 20th deepfake mandate requires content labeling and three-hour removal, but detection technology isn't ready for deployment at this scale

- Current deepfake detection methods fail at scale: AI models overfit to training data, C2PA metadata strips during platform operations, and open-source tools leave zero signals

- Platforms will likely deploy technically compliant but practically inadequate systems, creating false positives that harm legitimate creators while missing actual deepfakes

- India's 1 billion internet users make this the world's most critical test case for deepfake regulation, forcing global platforms into early compliance attempts

- The regulatory pressure may accelerate real progress in detection and moderation infrastructure that pure market incentives haven't motivated, with measurable improvements likely by late 2025
