Social Media Safety Ratings System: What the New Mental Health Standards Mean for Platform Accountability [2025]

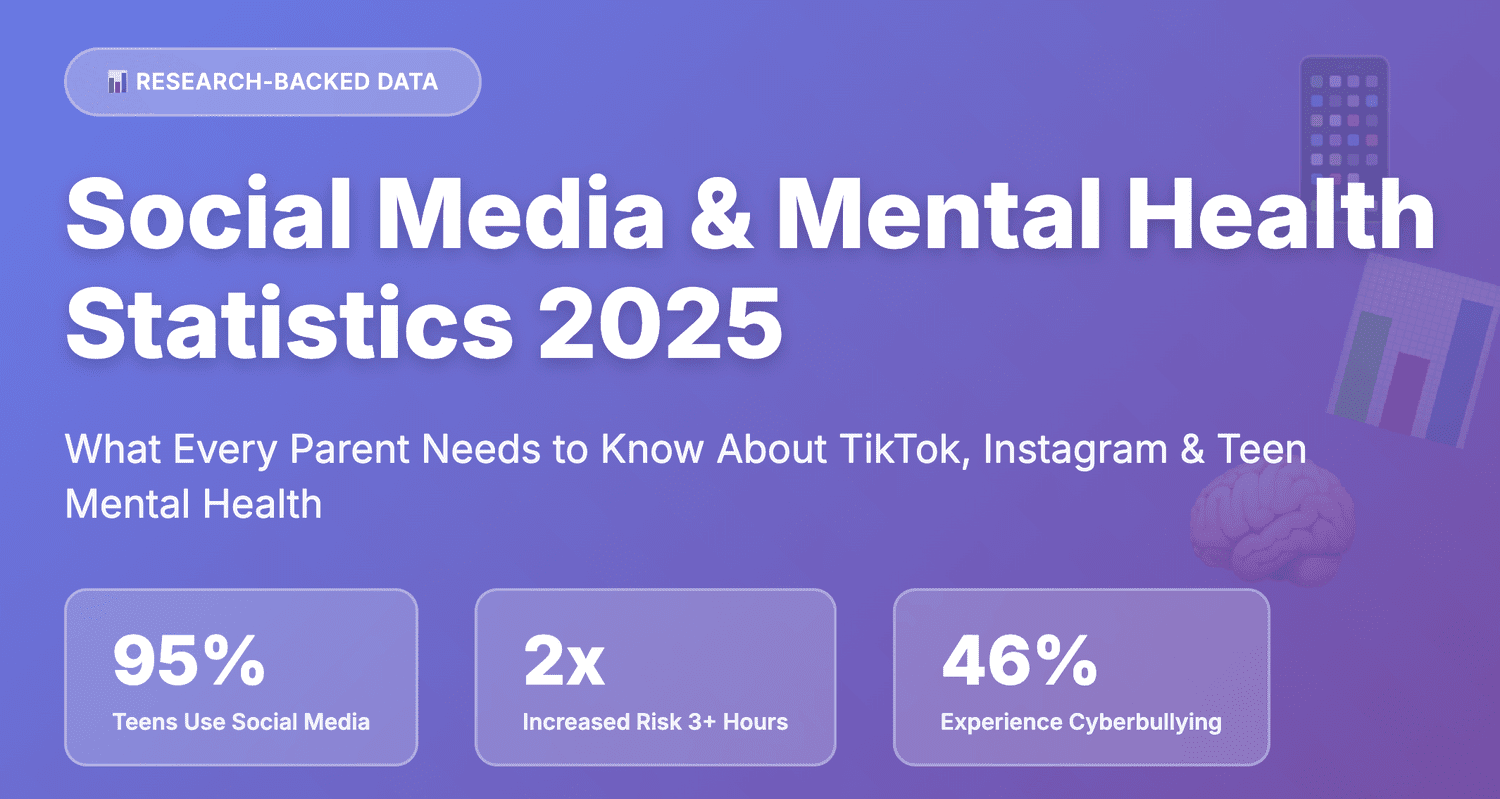

Something interesting just happened in the world of social media regulation. Instead of waiting for government mandates, major platforms like Meta, TikTok, Snap, and YouTube voluntarily agreed to be graded on how well they protect teen mental health. No lawsuits forced this. No legislation required it. They just... signed up.

The program is called Safe Online Standards (SOS), and it's backed by the Mental Health Coalition. Think of it like a nutrition label for social media—a transparent scoring system that tells parents, teens, and researchers exactly how safe these platforms are when it comes to adolescent mental health.

But here's what makes this wild: the highest rating they can get is called "use carefully." Not "safe." Not "healthy." But "use carefully." That tells you something about what these experts actually think about social media and teen wellbeing.

This article breaks down exactly what the SOS initiative is, how it works, which companies participate, what the ratings actually mean, and whether any of this stuff matters in the real world. We're talking about the future of platform accountability, the politics of "voluntary" compliance, and what happens when mental health experts get a seat at the table.

TL;DR

- What It Is: The Safe Online Standards (SOS) initiative is a new external rating system that grades social platforms on teen mental health protection

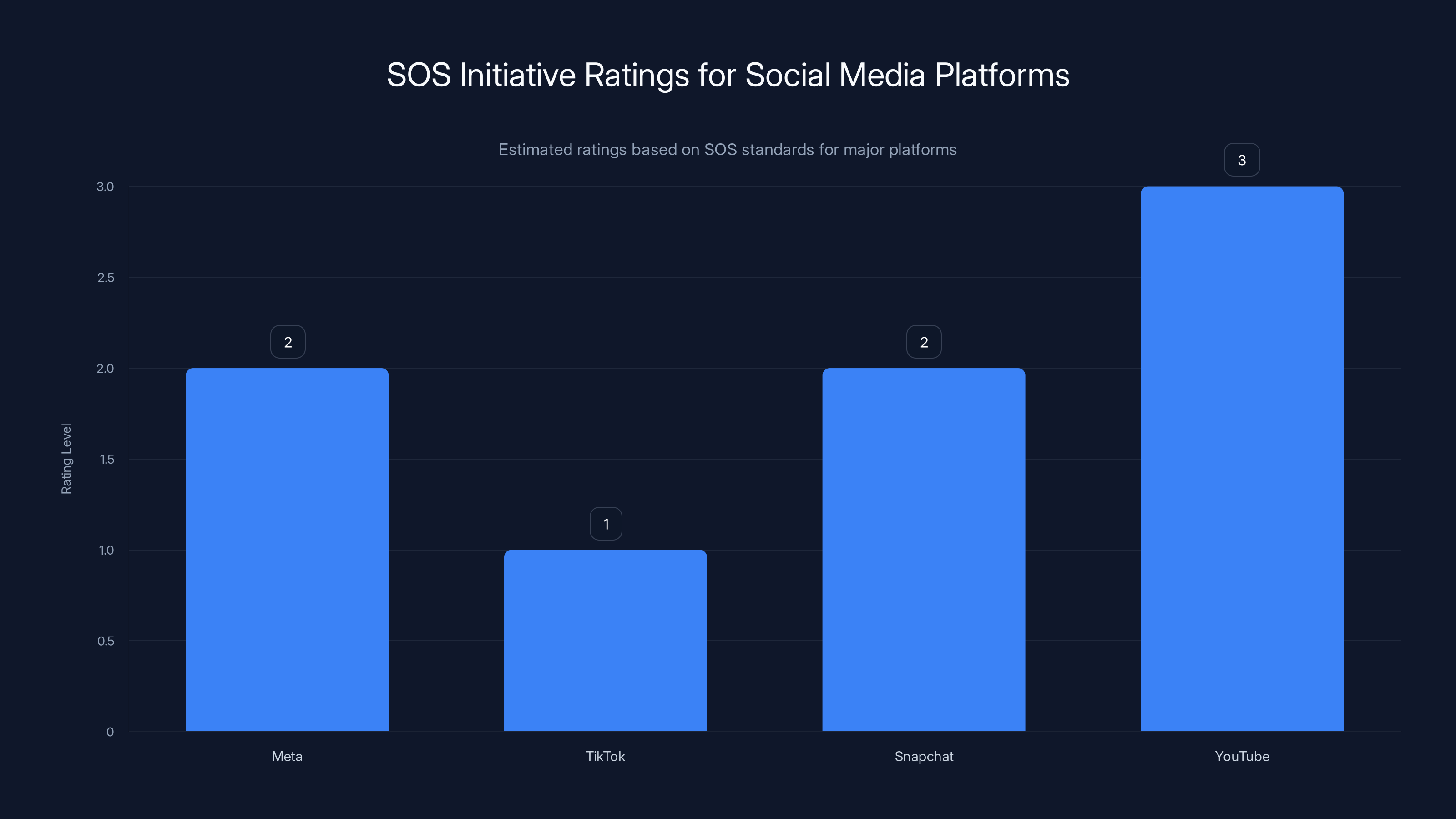

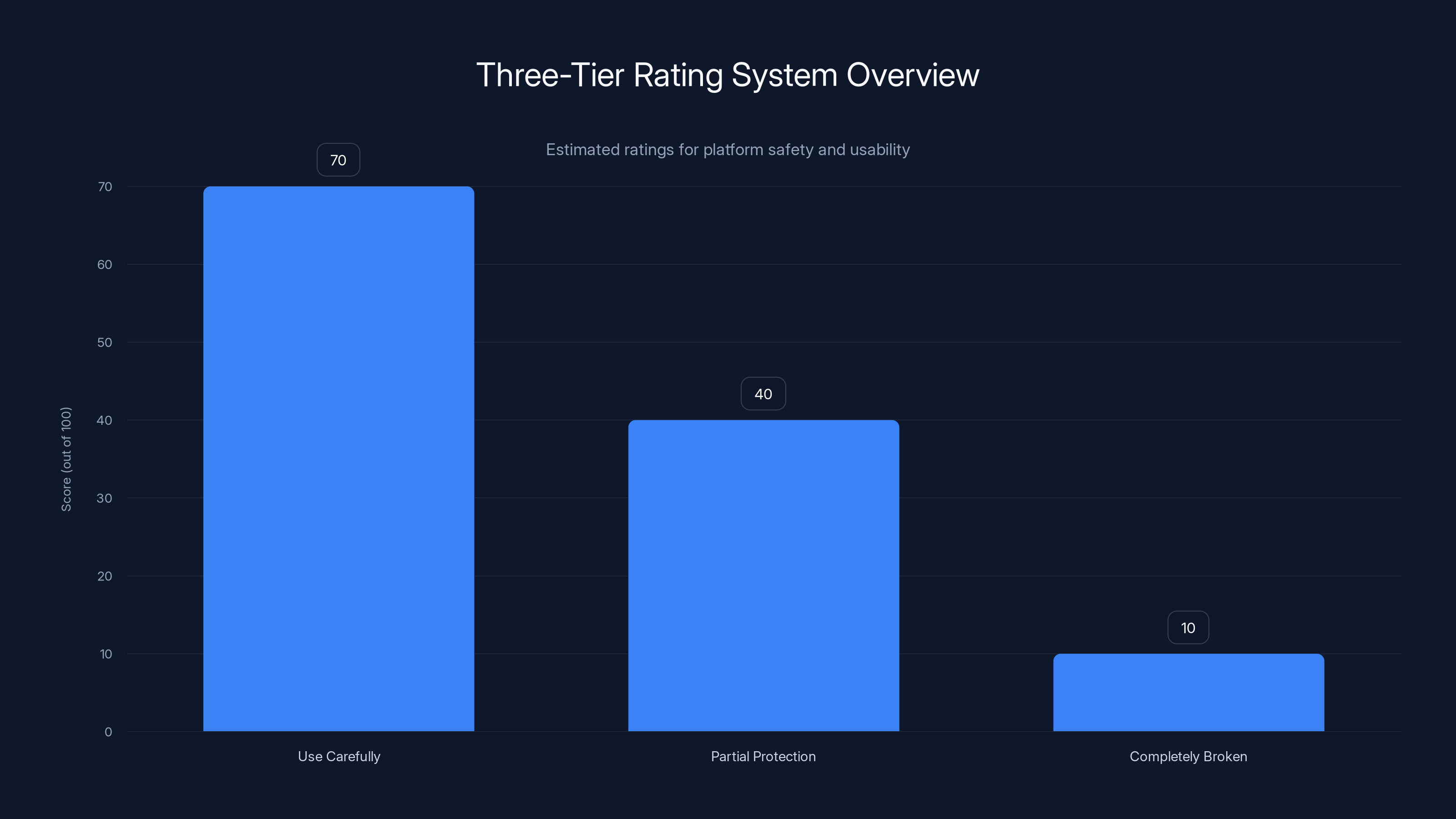

- The Ratings: Three tiers exist: "use carefully" (best), "partial protection" (middle), and "does not meet standards" (worst)

- Who's In: Meta, TikTok, YouTube, Snap, Discord, Roblox, and others are voluntarily submitting for evaluation

- What Gets Graded: Platform policies, content moderation, reporting tools, privacy settings, parental controls, and harmful content filters

- The Catch: Even the "best" rating basically says "this could still harm your kid, so be careful." It's not an endorsement

Estimated data shows Meta and Snapchat with 'partial protection', TikTok with 'does not meet standards', and YouTube with 'use carefully'.

Understanding the Safe Online Standards Initiative

The Mental Health Coalition launched the SOS initiative because social media platforms weren't being held accountable for teen mental health impacts. Government regulation moves slow. Lawsuits take years. Public pressure gets forgotten. But a transparent, expert-backed grading system? That creates immediate, measurable accountability.

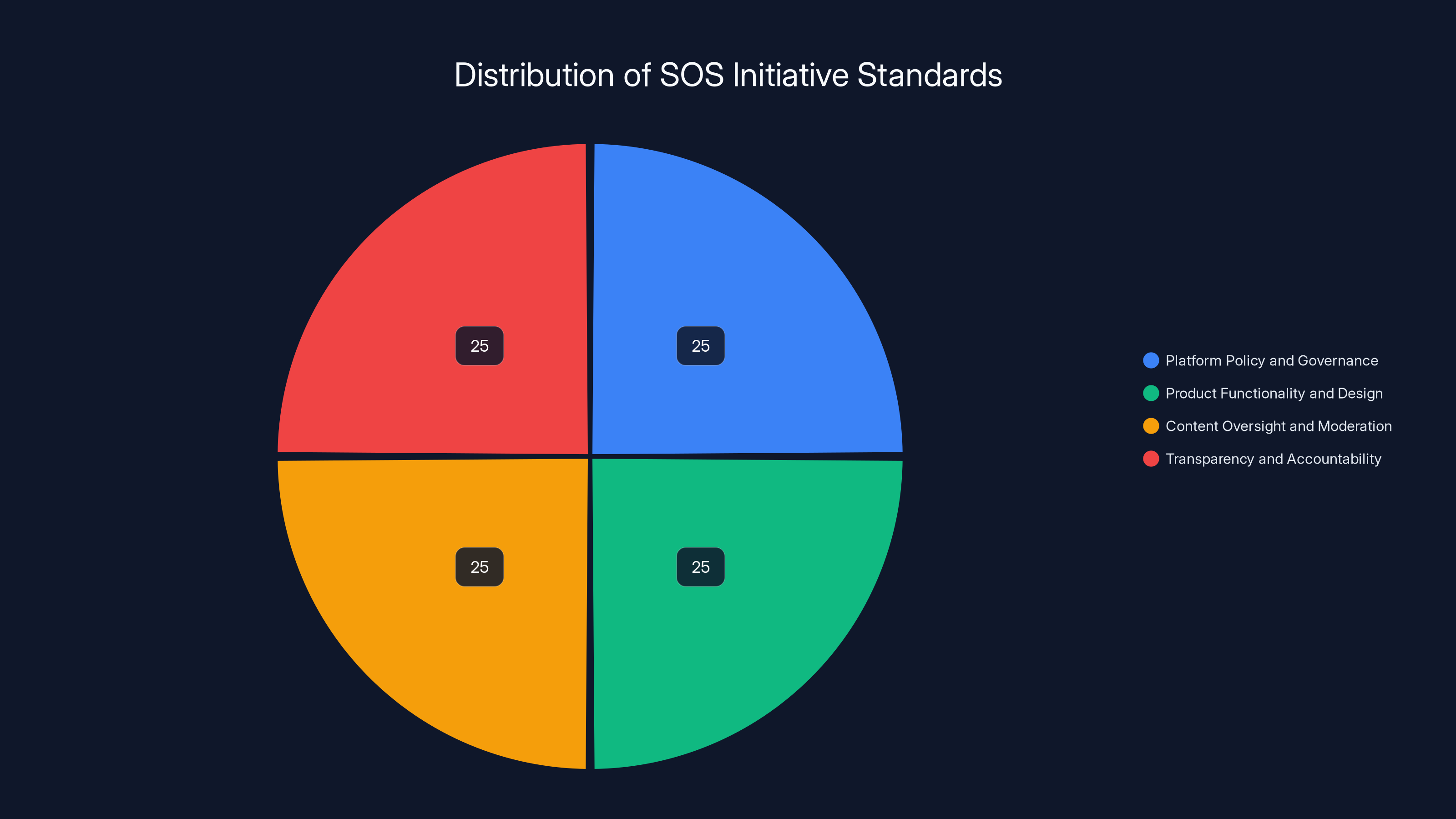

The initiative comprises roughly two dozen standards covering four major areas: platform policy and governance, product functionality and design, content oversight and moderation, and transparency and accountability. These aren't vague suggestions. They're specific, measurable criteria that experts can actually evaluate.

Dr. Dan Reidenberg, Managing Director of the National Council for Suicide Prevention, leads the SOS initiative. That's not some random name—he's been working on suicide prevention for decades. His team didn't just pull standards out of thin air. They studied what actually matters for teen mental health, looked at what the best platforms were already doing, and created a benchmark.

The evaluation process works like this: platforms voluntarily submit documentation on their policies, tools, and product features. An independent panel of global experts reviews everything. Then the platforms get a rating. The whole thing is designed to be public, transparent, and independent of industry influence. Sounds good on paper. The execution is where it gets interesting.

The SOS initiative standards are evenly distributed across four major areas, ensuring a comprehensive approach to evaluating social media platforms.

The Three-Tier Rating System Explained

The SOS initiative uses three ratings. Most people assume "three tiers" means "good, okay, bad." In this case, it means "maybe okay, kind of broken, or completely broken."

The "Use Carefully" Rating

This is the highest achievable rating. Before you get excited, understand what that actually means. "Use carefully" doesn't mean "safe" or "healthy" or "good for your kid." It means the platform met minimum standards for protecting teens from the worst stuff.

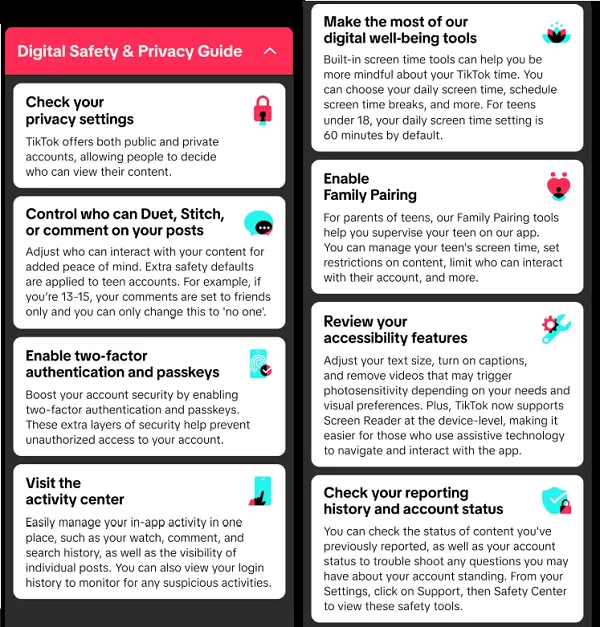

The specific requirements for "use carefully" include: reporting tools that are accessible and easy to use, privacy and safety functions that are clear and easy to set (especially for parents), platform features and filters that reduce exposure to harmful or inappropriate content, and clear policies about suicide and self-harm content. There's also a requirement for parental controls and for the platform to take action on reported harmful content within a reasonable timeframe.

Compliant platforms get a blue badge they can display. That badge is basically a seal that says "we tried." It's not a guarantee. It's not an endorsement. It's more like a participation trophy for actually showing up to the mental health game.

When you break down what "use carefully" actually requires, it's not revolutionary. Reporting tools should be easy to find? That's table stakes. Parental controls should exist? Most platforms have had that for years. Content filters should reduce exposure to harmful stuff? That's literally the bare minimum of content moderation.

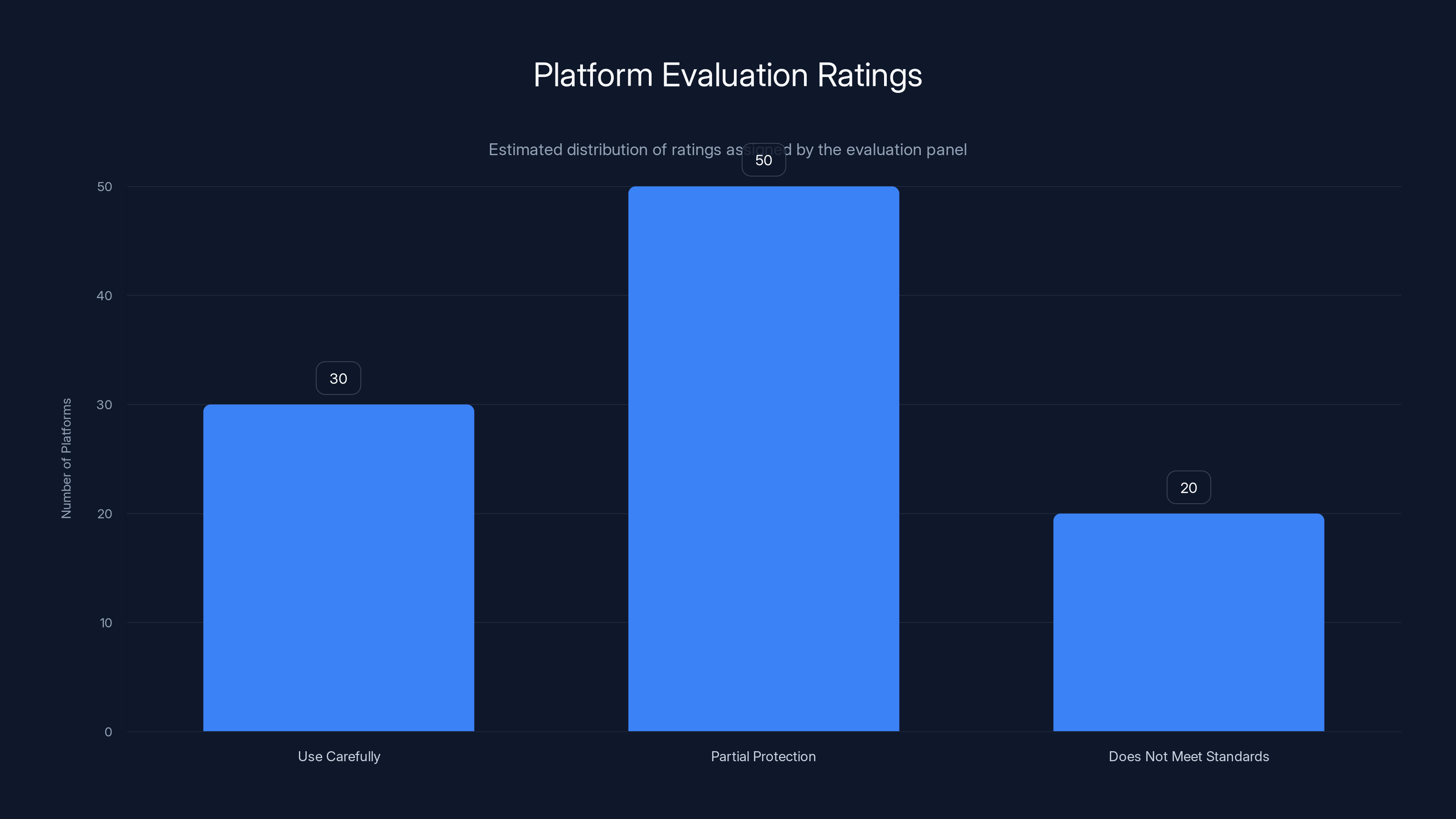

The "Partial Protection" Rating

This middle tier means some safety tools exist, but they're hard to find or use. Maybe the platform has a reporting mechanism, but you need to click through seventeen menus to find it. Maybe they have parental controls, but they're buried in settings and don't actually work well. Maybe they moderate some harmful content, but inconsistently.

"Partial protection" is what you see with platforms that are trying but not really trying. They have the infrastructure, but they didn't invest in making it user-friendly. Or they created the tools years ago and haven't updated them as the platform evolved.

The "Does Not Meet Standards" Rating

This is what happens when a platform basically fails. Filters and content moderation don't reliably block harmful or unsafe content. Reporting tools are so hard to use that nobody uses them. Privacy settings are confusing or non-existent. Parental controls either don't work or don't exist.

Historically, this is where platforms that see suicide and self-harm content spread without intervention would land. Or platforms where minors can easily encounter predatory behavior and the company doesn't care. Or gaming platforms where kids are routinely exposed to harassment.

The Two Dozen Standards That Actually Get Evaluated

The twenty-odd standards covering four categories sound abstract. Let's get specific about what experts are actually checking.

Policy and Governance Standards

First, platforms need clear policies about minimum age requirements and age verification. They need to publish how they handle content related to suicide and self-harm. They need transparency reports on how often they remove content, how quickly they act, and what the appeals process looks like.

Governance matters too. Is there actually a person accountable for mental health? Or is it buried under the legal department? Does the platform have a process for updating policies when new research emerges? Are there advisory boards that include mental health experts, not just lawyers?

The governance angle is where most tech companies fall down. They'll hire a head of safety, but that person reports to legal. They'll create policies, but they won't change them even when evidence shows the policy is creating harm. They'll say they care about teen mental health while simultaneously designing features that maximize engagement (which often means more harmful content exposure).

Product Functionality and Design Standards

This is where the rubber meets the road. Does the platform allow users to easily customize their feeds? Can you turn off auto-play? Can you limit notifications? Can you see what data the platform collects on you? Can you actually delete your content and your account?

Also, does the platform actively promote mental health content? If a teen searches for help with depression, does the platform direct them to resources? Or does it direct them to more depressing content because that's what keeps them scrolling?

Parental controls are a big one. Can a parent actually monitor who their teen is talking to? Can they set screen time limits that the teen can't bypass? Can they see what content their teen is viewing? Or are parental controls an afterthought that got added because regulators were asking questions?

The design standards also cover age-appropriate features. Some features that work fine for 18-year-olds are harmful for 13-year-olds. Does the platform actually enforce that? Or do they treat everyone the same and hope parents monitor their kids?

Content Oversight and Moderation Standards

This is the hard part. Every platform claims they have good moderation. But do they actually catch harmful content? And if they catch it, do they remove it fast enough to prevent harm?

The standards include: identifying and removing content that encourages self-harm or suicide, removing content that promotes eating disorders, removing content that promotes drug use to minors, and moderating harassment and bullying. The standard isn't "get everything." It's "have a reliable system that gets most of it, and gets it fast."

The specificity here matters. Platforms don't get points for saying "harassment is bad." They get evaluated on whether their actual enforcement catches harassment and removes it. That's why TikTok and Meta are interesting case studies—they have billions of hours of content to moderate and have been caught letting harmful content stay up.

Transparency and Accountability Standards

This is where Meta's relationship with the Mental Health Coalition gets weird. If you want to evaluate a platform fairly, you need them to be honest about what's happening. But platforms have every incentive to hide data that makes them look bad.

The standards require platforms to publish transparency reports. How much content gets flagged? How much gets removed? How many accounts get suspended? What's the appeal rate? What percentage of appeals succeed? If a platform can't answer these questions, or won't answer them, that's a red flag.
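To make those questions concrete, here's a minimal sketch of how the headline ratios could be computed from a transparency report. Everything in it is an assumption for illustration: the `TransparencyReport` fields, the `summarize` helper, and the numbers are made up, and the SOS initiative may define or weight these metrics differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TransparencyReport:
    """Hypothetical report fields; real reports may use different names."""
    flagged: int         # items flagged by users or automation
    removed: int         # flagged items that were removed
    appeals: int         # removals that were appealed
    appeals_upheld: int  # appeals that succeeded


def ratio(part: int, whole: int) -> Optional[float]:
    """Return part / whole, or None when the denominator is zero."""
    return part / whole if whole else None


def summarize(report: TransparencyReport) -> dict:
    """Compute the ratios a reader would want from the raw counts."""
    return {
        "removal_rate": ratio(report.removed, report.flagged),
        "appeal_rate": ratio(report.appeals, report.removed),
        "appeal_success_rate": ratio(report.appeals_upheld, report.appeals),
    }


# Illustrative numbers only, not data from any real platform.
example = TransparencyReport(flagged=1000, removed=400, appeals=50, appeals_upheld=10)
summary = summarize(example)
print(summary)
```

A `None` in the output is the interesting case: it means the platform reported a zero denominator, so the ratio can't be computed at all.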

They also need to commit to third-party audits. Independent researchers should be able to study the platform and publish findings. Some platforms are cool with this. Others... less cool.

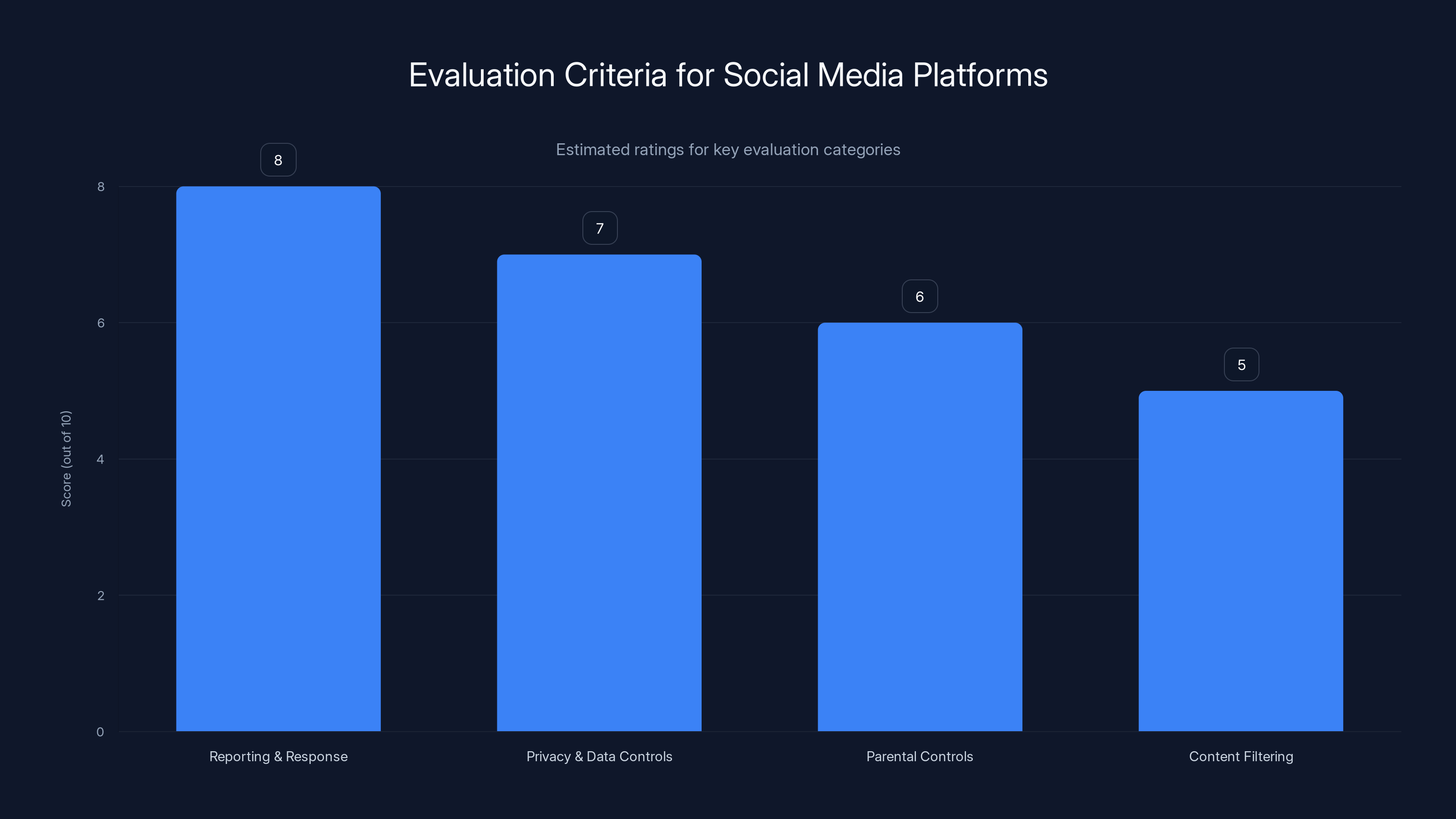

Estimated data shows that Reporting & Response systems are typically rated higher than Content Filtering in platform evaluations.

Why Meta, TikTok, and Other Platforms Are Participating

Here's the thing nobody talks about: these platforms are participating because regulation is coming anyway, and they'd rather shape the rulebook themselves.

Meta has been facing lawsuits over how Instagram harms teen mental health. Project Mercury, an internal Meta research effort that began in 2020, found damaging effects on users' mental health. Instead of fixing the problems, Meta buried the data. Last year, that cover-up became public, and now Meta is on trial in California over child harm from addictive products.

TikTok is fighting for its existence in the US. A law that could ban the app is pending. Showing that TikTok is voluntarily participating in mental health oversight makes the company look responsible. It's a PR move, but it's also an acknowledgment that the company needs to appear more regulated.

YouTube (owned by Google) wants to get ahead of criticism about harmful content exposure to minors. Snap has been rebuilding its reputation after years of being the platform for sext-sharing teens. Discord faced serious child endangerment concerns and has been beefing up age verification. Roblox got accused of not protecting kids well enough.

Every one of these platforms is participating because doing so is better than the alternative: being forced into compliance by regulation, legislation, or litigation.

But here's where it gets complicated. The Mental Health Coalition lists Meta as a "creative partner." That means Meta had input into how the standards were created. Meta has influenced the rulebook. That's... a conflict of interest that the organization publicly acknowledges but doesn't seem to think is a problem.

The Meta Partnership That Raises Questions

Meta and the Mental Health Coalition have a history together. In 2021, the MHC said it would work with Facebook and Instagram to destigmatize mental health during COVID-19. In 2022, they published research (with Meta's support) showing that mental health content on social media reduces stigma and encourages people to seek help.

In 2024, the MHC partnered with Meta on the "Time Well Spent Challenge," a campaign asking parents to have "meaningful conversations" with teens about healthy social media use. The framing is interesting: instead of questioning whether teens should be on social media at all, the campaign focused on keeping them on-platform in a "time well spent" way.

That same year, they partnered again to establish Thrive, a program where tech companies can share data about content that violates self-harm or suicide guidelines. Sounds good, but it's also a program Meta controls. Meta decides what data to share and how.

The Mental Health Coalition lists Meta as a creative partner on its website. The MHC is now evaluating Meta's safety practices. Do you see the potential problem?

This isn't necessarily scandal-grade corruption. It's not like Meta is bribing the MHC. It's more subtle. When you work closely with a company, when they support your research, when they fund your initiatives, there's an implicit understanding: don't bite the hand that feeds you.

Would the MHC give Meta a "does not meet standards" rating? Probably not. Would they give a competitor a "does not meet standards" rating for the exact same issues Meta has? Maybe. That asymmetry is what makes the relationship problematic.

Estimated data suggests that most platforms receive a 'Partial Protection' rating, indicating room for improvement in meeting safety standards.

Other Companies Participating in SOS

Meta, TikTok, YouTube, and Snap get the headlines, but other platforms are participating too.

Discord

Discord is a chat and voice platform popular with gamers and communities. It's not a traditional social network, but it hosts communities where harmful content can spread. The platform has faced serious accusations about child endangerment. Predators were using Discord to groom minors. The platform responded by beefing up age verification and moderation, but trust was damaged.

Participating in SOS is a chance for Discord to rebuild that trust. They'll be evaluated on whether they actually protect minors in communities, whether they can identify and remove grooming attempts, and whether their moderation keeps up with the scale of the platform.

Roblox

Roblox is a game creation platform where millions of kids spend time. It's also been accused of not protecting children well enough. Inappropriate interactions between adults and minors have happened on the platform. The company has implemented safety features, but there's always a question about whether they're enough.

Roblox participating in SOS means being evaluated on: whether they can identify adults attempting to contact minors inappropriately, whether they enforce age-appropriate content, and whether their moderation scales with the millions of user-generated games on the platform.

Other Platforms

Multiple platforms beyond the ones named above are participating. We'll see evaluations for smaller social networks, gaming platforms, and messaging apps. The goal is to create a universal benchmark so parents and teens can compare platforms across the entire social media landscape, not just TikTok vs. Instagram.

What Gets Evaluated in Each Category

Let's get granular about what an expert panel actually looks at when they evaluate a platform. This is where the standards become concrete.

Reporting and Response Systems

When a teen finds harmful content (suicide encouragement, self-harm instructional videos, eating disorder tips, bullying, grooming), can they report it? Where's the report button? How many clicks to get there? What happens after they report it?

The standard requires the button to be easy to find and easy to use. A good system has the report button visible in obvious places: next to the content, in the user's menu, in comments. A bad system buries it three menus deep or doesn't have one at all.

After reporting, what's the timeline for the platform to respond? If a teen reports a video promoting suicide, how long before it gets removed? Hours? Days? Weeks? The SOS standards evaluate whether the platform has a process that actually works.

Privacy and Data Controls

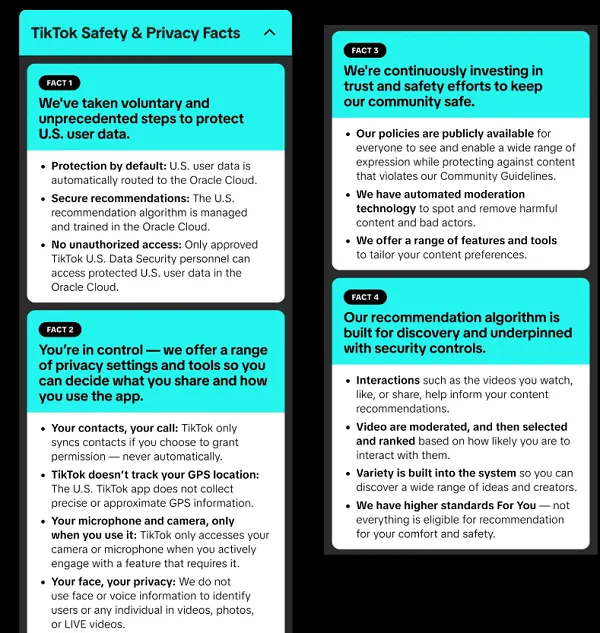

Platforms collect massive amounts of data on teens: who they talk to, what they watch, how long they spend on features, where they are, what they search for. The standard requires that teens (and their parents) can understand what data is being collected and can control it.

Can you see what data the platform has on you? Can you download it? Can you delete it? Can you turn off tracking? Can you keep your location private? These aren't optional nice-to-haves. They're fundamental to protecting teen privacy.

For parental controls, the bar is: parents should be able to see what their teen is doing on the platform and set limits. That means parent accounts that can view the teen's activity, see who they're communicating with, and set screen time limits that can't be easily bypassed.

Content Filtering and Recommendations

The algorithm is the hidden culprit in social media harm. Platforms are designed to show you more of what keeps you engaged. If you watch a sad video, the algorithm assumes you want more sad videos. If you watch eating disorder content once, suddenly your feed is full of it.

The standard requires: platforms should have filtering mechanisms that reduce exposure to harmful content, the algorithm should not deliberately recommend harmful content, and teens should have the ability to customize what the algorithm shows them.

This is where many platforms fail. They have filters for certain categories, but the recommendation algorithm still promotes engagement-maximizing content (which is often the most harmful). Customization exists, but it's buried in settings.

Age Verification and Age-Appropriate Design

Platforms have minimum age requirements (typically 13, sometimes 16). But anybody can sign up and lie about their age. The standard requires: platforms should implement reasonable age verification, content should be age-appropriate for the stated audience, and features should be restricted based on age.

Age verification is a spectrum. Some platforms just have a birthdate field (useless). Others require ID verification (stronger) or carrier verification (better). The requirement isn't "catch every kid under 13." It's "make a reasonable effort and update your process as you discover new ways people bypass it."

Age-appropriate design means if a 13-year-old is using a feature, the content they see should be different from what an 18-year-old sees. Recommendations should differ. Content moderation standards should differ. Most platforms treat everyone the same and hope moderation catches the really bad stuff.

Harmful Content Moderation

Here's what the platform actually needs to remove or restrict: content promoting or encouraging self-harm or suicide, content providing instructional information on self-harm or suicide, content romanticizing or celebrating suicide victims, content promoting eating disorders, content providing instructions for eating disorder behaviors, content promoting drug use to minors, harassment and bullying content, and grooming behavior.

The standard isn't "catch everything." It's "have a reliable process that catches most of it and removes it quickly." A reliable process might be: a combination of automated filters and human review, with response times measured in hours not days, and consistent enforcement across the platform.

The catch: most platforms use automated systems that are imperfect. They catch obvious stuff but miss context. A teen posting "I want to die" might be joking, might be serious, might be lyrics from a song. The system can flag it, but then what? Does a human review it? Or does the filter automatically remove it?
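One way to picture the "flag it, then what?" question is a triage step that sits between the automated filter and the human reviewers. The sketch below is a guess at the general shape, not how any named platform works: the `triage` function, the `Action` names, and both thresholds are assumptions made up for illustration.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


# Hypothetical thresholds, chosen only for illustration.
REMOVE_AT = 0.95   # classifier is near-certain the content is harmful
REVIEW_AT = 0.50   # ambiguous: a person should look at the context


def triage(risk_score: float) -> Action:
    """Map an automated classifier's risk score (0.0 to 1.0) to an action."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0.0 and 1.0")
    if risk_score >= REMOVE_AT:
        return Action.REMOVE
    if risk_score >= REVIEW_AT:
        return Action.HUMAN_REVIEW
    return Action.ALLOW


# An ambiguous post like "I want to die" might score mid-range
# and land in the human review queue instead of being auto-removed.
for score in (0.99, 0.70, 0.10):
    print(score, triage(score).value)
```

The design choice that matters is the middle band. Set it too narrow and the filter makes judgment calls it isn't good at; set it too wide and the human queue backs up.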

The 'use carefully' tier scores highest, indicating basic compliance with safety standards, while 'does not meet standards' reflects minimal safety features. Estimated data based on typical platform assessments.

How the Evaluation Process Actually Works

Think of SOS as a platform health inspection. Instead of a single auditor visiting once a year, an independent panel of global experts does a deep evaluation based on documentation the platforms submit.

Platforms voluntarily submit documentation on their policies, tools, and product features. This is self-reported data. The platform fills out forms about their policies, provides screenshots of their tools, explains how their moderation works. It's like a student writing their own recommendation for college.

An independent panel reviews the documentation. The panel comprises mental health experts, researchers, advocates, and technologists. They're looking for: Is the documentation credible? Does the evidence support what the platform claims? Have any credible third-party reports contradicted these claims?

The evaluation considers public information too. If there are news reports about a platform failing to remove harmful content, that's data the evaluators have. If academic research found that a platform's moderation is inconsistent, that's relevant. If parents and teens complain that certain features are harmful, the panel can weigh that.

Based on the documentation and independent evidence, the panel assigns a rating: "use carefully," "partial protection," or "does not meet standards." They publish the rating with a detailed report explaining the reasoning.

The process is designed to be transparent. You can read why a platform got the rating it got. You can challenge the rating if you think the evaluation was unfair. You can demand that the panel revisit a rating if new evidence emerges.

In practice, this depends entirely on whether the panel is actually independent and whether they're willing to give bad ratings to powerful platforms. That's the open question right now.

The Business Incentives Behind Voluntary Compliance

You might wonder: why would platforms voluntarily submit to evaluation when they could just ignore it? The answer is regulatory capture and legal risk mitigation.

Regulation is coming. Congress has proposed multiple bills to protect teen privacy and limit harmful content on social media. States are passing their own laws. The UK has the Online Safety Act. The EU has the Digital Services Act. Around the world, governments are forcing platforms to be more accountable.

Platforms would rather be evaluated on their own terms than wait for government regulation. With SOS, they're shaping the standards that will become the baseline. If they cooperate now, they can argue to regulators: "Look, we're already doing this. You don't need to pass a new law." It's a pre-emptive move to prevent stricter regulation.

There's also reputational risk. If a platform refuses to participate in SOS, what message does that send? "We don't want to be evaluated on teen safety?" Bad look. It's better to participate, get a "use carefully" rating, and show you're taking it seriously.

Litigation is another factor. Meta is currently on trial in California over child harm. If the trial results in a big judgment against the company, that opens the door for other lawsuits. Showing that Meta is voluntarily participating in mental health oversight and taking it seriously could help in court. The defense becomes: "We care about teen mental health. We're actively participating in independent evaluations. Here's what we're doing to address this issue."

The incentive structure is: it's cheaper to voluntarily agree to SOS standards now than to deal with regulation, litigation, and PR disasters later. Platforms aren't doing this out of altruism. They're doing it because the alternative is worse.

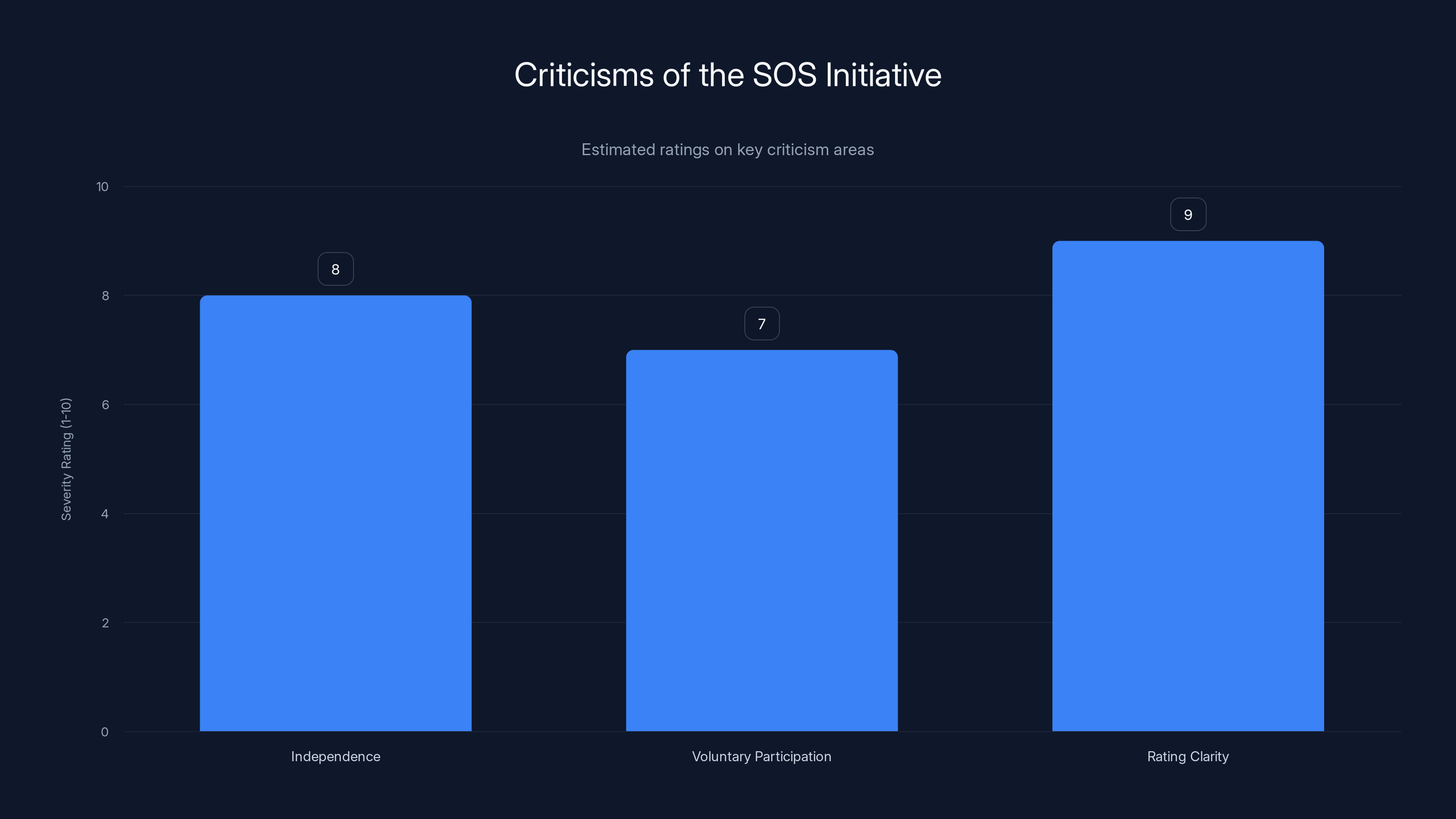

Estimated data shows that rating clarity is the most severe criticism of the SOS Initiative, followed by independence and voluntary participation concerns.

Criticisms of the SOS Initiative

The program is well-intentioned, but it has real limitations. Let's talk about what actually matters.

Independence Questions

The Mental Health Coalition's relationship with Meta is the big problem. When an organization evaluating a company has been financially supported by that company, is the evaluation truly independent? The MHC says yes. Skeptics say the appearance of conflict is itself a problem.

Independence also depends on panel composition. If the panel is mostly academics from universities that receive Meta funding, is it independent? If some panel members have consulting relationships with tech companies, does that bias their evaluation?

The SOS initiative needs to publish its panel composition and require disclosure of any financial relationships. Otherwise, the ratings won't have credibility.

Voluntary Participation

Participation is voluntary. That means platforms that are worst at protecting teen mental health might not participate. If TikTok got a "does not meet standards" rating, you'd hear about it. But what if TikTok just refused to participate? Then we'd never know.

The standards only apply to companies willing to be evaluated. That leaves out smaller platforms, niche apps, and any platform that thinks it can't pass the evaluation. It also means the most accountable companies are the ones big enough to hire compliance teams. Small competitors might be locked out.

The Ratings Are Broad

"Use carefully" doesn't mean much. It's so broad that multiple companies with different safety practices could get the same rating. That defeats the purpose of a rating system. Users can't compare platforms based on SOS ratings if every major platform gets "use carefully."

The solution would be detailed subcategories: reporting systems get a rating, moderation gets a rating, privacy controls get a rating. Then you could compare platforms on specific features. But that makes the system more complex and harder to communicate to non-technical people.
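Here's a rough sketch of what a per-category scorecard could look like as data. It is not something SOS publishes: the platform names, the category names, the ratings, and the `compare` helper are all hypothetical. Only the three tier labels come from the initiative.

```python
# Tiers ordered worst to best, using the three SOS rating names.
TIERS = ["does not meet standards", "partial protection", "use carefully"]

# Made-up platforms and ratings, purely for illustration.
SCORECARDS = {
    "Platform A": {
        "reporting": "use carefully",
        "moderation": "partial protection",
        "privacy": "use carefully",
    },
    "Platform B": {
        "reporting": "partial protection",
        "moderation": "partial protection",
        "privacy": "does not meet standards",
    },
}


def compare(first: str, second: str) -> dict:
    """For each category, name the platform with the higher tier, or 'tie'."""
    result = {}
    for category, first_tier in SCORECARDS[first].items():
        second_tier = SCORECARDS[second][category]
        diff = TIERS.index(first_tier) - TIERS.index(second_tier)
        result[category] = first if diff > 0 else second if diff < 0 else "tie"
    return result


print(compare("Platform A", "Platform B"))
```

With a single overall rating, both of these made-up platforms could plausibly end up in the same tier. Broken out by category, the difference in privacy controls is visible.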

No Enforcement

The SOS ratings are informational. There's no enforcement mechanism. If a platform gets "does not meet standards," what happens? Nothing. The platform can't be fined. It can't be shut down. The only punishment is reputational damage.

Some would argue that's enough. Reputation matters. If a platform gets a bad rating, parents will tell other parents. Media will cover it. The company will face pressure to improve.

But historically, that kind of voluntary compliance fails. Companies improve until the public forgets about the problem. Then they regress back to maximizing engagement at the expense of safety.

The Standards Are Behind the Curve

Mental health research is constantly evolving. New harms emerge. New strategies for coping with social media develop. The standards are based on research, but research moves faster than policy.

The SOS initiative should be updated yearly based on new evidence. But updating standards is bureaucratic and slow. By the time SOS standards catch up to the research, the research will have moved on to new concerns.

Missing Standards

The twenty-some standards don't cover everything that matters. What about algorithmic amplification of harmful content? What about the business model itself (advertising revenue based on engagement time)? What about feature design that's deliberately addictive?

These aren't accidents. These are design choices. But they're hard to measure and hard to regulate. SOS focuses on what's easy to measure: moderation response times, reporting tool accessibility, parental control features. It ignores the systemic issues that make platforms profitable but harmful.

What the Ratings Actually Mean for Teens and Parents

Let's translate what these ratings mean in practical terms.

"Use carefully" means: this platform has implemented basic safeguards, but that doesn't mean it's safe. Teens can still encounter harmful content. Algorithms can still amplify bad stuff. Predators can still use the platform. The rating means the company is trying, not that the problem is solved.

For parents, "use carefully" means: your teen can use this, but you need to stay involved. Check in about what they're seeing. Set time limits. Review their followers and friends. These platforms are designed to be engaging (addictive). Even with safeguards, they can cause harm.

"Partial protection" means: don't use this if there's an alternative. The company has some safeguards, but they're not effective. Harmful content probably reaches your teen. The platform probably isn't doing enough to prevent harm.

"Does not meet standards" means: avoid this platform if possible. The company has shown it won't prioritize your teen's safety. Harmful content is prevalent. Predators are present. The platform isn't taking it seriously.

The key insight: even the best rating ("use carefully") is a warning, not an endorsement. It's an acknowledgment that social media is inherently risky, and the best you can do is reduce (not eliminate) that risk.

The Role of Parental Controls and Monitoring

The SOS standards emphasize parental controls for good reason. At a certain point, technology can only do so much. The real protection comes from parents who are involved.

Good parental controls let you: set screen time limits, see who your teen is communicating with, review what content they're viewing, restrict certain features, and set purchase approval requirements.

The challenge: teens are smart. If you set strict controls, they'll find workarounds. They'll use a friend's phone. They'll download a different app. They'll hide their activity.

So parental controls are only one part. The other part is relationship. Teens who trust their parents and feel they can talk about online problems are less likely to hide risky behavior. Parental controls that feel oppressive create secrecy. Parental controls that feel reasonable can work.

The SOS standards require parental controls to exist, but they can't require parents to be involved in their teens' lives. That's the missing piece. The best platform safety in the world can't substitute for a parent who cares and maintains a relationship with their teen.

How Content Moderation Works in Practice

Understanding moderation helps you understand why the SOS standards focus on it.

Platforms use three layers: automated filters, human moderators, and user reports. Automated filters catch obvious stuff: spam, known child sexual abuse material, clear threats of violence. They're imperfect but necessary because the scale is too large for humans alone.

Human moderators review flagged content, user reports, and edge cases. Does this video really glorify eating disorders, or is it someone sharing their recovery story? Does this comment constitute bullying, or is it friendly banter between friends?

User reports help platforms identify content that slipped through. If a teen reports something, a human should look at it. If the report is valid, the content should be removed quickly.

The challenge: teens interact with each other. Sometimes they tease. Sometimes they're mean. The moderation system needs to distinguish between normal teen behavior and actual harm. That requires judgment, which automated systems can't do well.

Platforms struggle with this constantly. Remove too much content and you're censoring normal communication. Remove too little and you're ignoring harm. The SOS standards require a reliable system that catches harmful content consistently.

What "reliable" means varies. For self-harm content, it means catching most of it and removing it quickly (hours, not days). For harassment, it might be slower (a few days) if it's ambiguous. For grooming behavior, it should be immediate.

Meta handles billions of posts per day. TikTok's volume is similar, and YouTube's is even larger. Human moderators can't review everything. Automated systems catch an estimated 70-80% of obviously harmful content, which means 20-30% of even the obvious cases get through initially and depend on human review or user reports.
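
To put the 70-80% catch rate in concrete terms, here is a small back-of-the-envelope calculation. The daily volume of one million obviously harmful posts is a hypothetical round number chosen for illustration, not a figure reported by any platform.

```python
def missed_by_automation(obvious_harmful_posts: int, catch_rate: float) -> int:
    """Obviously harmful posts that the automated layer fails to catch."""
    return round(obvious_harmful_posts * (1 - catch_rate))


# Hypothetical daily volume of obviously harmful posts (an assumption, not real data).
DAILY_OBVIOUS_HARMFUL = 1_000_000

for rate in (0.70, 0.80):
    missed = missed_by_automation(DAILY_OBVIOUS_HARMFUL, rate)
    print(f"catch rate {rate:.0%}: {missed:,} posts left for humans and user reports")
```

Under that assumption, between 200,000 and 300,000 harmful posts per day would still need a human moderator or a user report before anyone acts on them.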

The Future of Platform Accountability

SOS is an interesting experiment, but it's not the end of platform regulation. It's likely the beginning.

Governments are watching. If SOS works—if it produces meaningful ratings that improve platform practices—it becomes a model for regulation. Congress might legislate that platforms must be evaluated on SOS standards. The UK might incorporate SOS into their Online Safety framework. The EU might reference it in Digital Services Act enforcement.

If SOS fails, becoming a rubber stamp that always gives major platforms "use carefully" ratings, governments are likely to step in with more aggressive regulation.

The likely future is hybrid: voluntary industry standards (SOS) plus government regulation (new laws) plus litigation (civil suits) plus public pressure. All of these together will force platforms to take teen mental health more seriously.

Tech companies will adapt by building better safety features, implementing more aggressive moderation, and improving transparency. They'll argue it's to comply with SOS and regulations. The real reason is risk mitigation: it's cheaper to improve now than deal with regulation and lawsuits later.

The winners will be the platforms that move fast on safety. The losers will be platforms that resist change and wait for legal pressure. The teens who benefit will be those with parents involved enough to know about SOS ratings and make informed choices.

What Experts and Mental Health Organizations Say

The Mental Health Coalition is just one voice. Other organizations have different perspectives.

The American Psychological Association has published research on social media harm to teens, particularly around anxiety, depression, and sleep disruption. They recommend parental involvement and platform accountability. SOS aligns with their recommendations but doesn't go as far as some experts would like.

The 988 Suicide & Crisis Lifeline (formerly the National Suicide Prevention Lifeline) supports SOS because moderating suicide and self-harm content is critical. Its focus is one specific harm: suicide. SOS addresses that but also covers eating disorders, harassment, and other mental health concerns.

Children's advocacy organizations like Common Sense Media have emphasized that SOS is a step forward but not a solution. They want more aggressive government regulation and more platform accountability. SOS is voluntary compliance, which is better than nothing but not as protective as legal requirements.

Tech companies, unsurprisingly, support SOS as an alternative to government regulation. Meta, Google, and others prefer industry standards to legislative mandates. They have more control over standards they shape themselves.

Academic researchers studying teen mental health and social media have mixed reactions. Some see SOS as a step toward accountability and transparency. Others worry it's too weak and gives platforms cover to avoid stronger regulation.

How Platforms Are Preparing for Evaluation

Major platforms are already preparing for SOS evaluation. This means hiring compliance officers, documenting policies, building new features, and improving existing ones.

Meta has hired teams focused on teen safety. They've implemented age-gating for certain features, improved parental controls, and updated their content moderation policies. Whether these changes are sufficient for a "use carefully" rating is the question the evaluators will answer.

TikTok has been strengthening its safety features, particularly around content exposure for young users. Its Family Pairing feature lets parents link their account to their teen's and manage safety settings. But questions remain about algorithmic recommendations of potentially harmful content.

YouTube has implemented Restricted Mode (which tries to filter mature content) and YouTube Kids (a separate app for younger users). They also have extensive content policies on harmful content. The question is whether implementation matches policy.

Snap has implemented parental controls and transparency tools. They focus on the fact that Snapchat is ephemeral (messages disappear), which they argue reduces harm compared to platforms where content is permanent.

Discord and Roblox are implementing age verification and improved moderation to prepare for evaluation. Both platforms need to show they've addressed past criticism about inadequate child safety measures.

The Broader Context of Social Media Regulation

SOS doesn't exist in a vacuum. It's part of a broader shift toward regulating social media, particularly around teen protection.

In the US, multiple bills have been proposed, including the Kids Online Safety Act (KOSA) and the Children and Teens' Online Privacy Protection Act (COPPA 2.0). These would mandate platform accountability and give the FTC more power to regulate social media.

In Europe, the Digital Services Act requires platforms to be transparent about how they moderate content and protect minors. Compliance requires documentation and auditing, similar to SOS but with legal enforcement.

In the UK, the Online Safety Act 2023 (formerly the Online Safety Bill) creates legal obligations for platforms to protect users, including minors, from harmful content. Violations can result in fines of up to £18 million or 10% of global annual revenue, whichever is greater.

Other countries are following similar patterns. Australia, Canada, and others are developing frameworks to hold platforms accountable for harm to young people.

SOS fits into this ecosystem as a voluntary industry standard that sets a baseline. If platforms meet SOS standards, they can argue they're complying with best practices. But governments can (and probably will) require more than the SOS baseline.

The likely endgame is that SOS standards become legislated requirements: meet SOS or face legal penalties. That's why platforms are taking participation seriously. It's cheaper to shape the standards now than to fight regulation later.

Recommendations for Parents, Teens, and Educators

If you're a parent trying to protect your teen, here's what to do:

Understand the SOS Ratings: Once platforms get their ratings, read the detailed evaluation. Don't just look at the badge. Understand why a platform got the rating it got. What areas did it struggle with? What areas is it strong in?

Have Conversations: Don't just set rules. Talk to your teen about what they're seeing on social media, how it makes them feel, and what they should do if they encounter harmful content. Teens who feel they can talk to parents about online problems are safer than teens who hide their activity.

Use Parental Controls Reasonably: Implement controls, but be transparent about it. Explain why you're setting limits. Don't be oppressive. Trust builds safety.

Monitor Content, Not Time: Instead of obsessing over screen time, pay attention to what content your teen is engaging with. What accounts do they follow? What are they watching? This is more predictive of harm than how long they spend on the app.

Know the Warning Signs: Watch for withdrawal from offline activities, anxiety or depression, sudden changes in behavior, obsession with appearance or body image, or talk of self-harm. These are red flags that social media might be causing harm.

Educators and counselors: Use SOS ratings as a teaching tool. Teach teens to evaluate platforms themselves. Discuss why platforms have these policies. Help them think critically about social media design and its effects on their mental health.

FAQ

What is the Safe Online Standards initiative?

The Safe Online Standards (SOS) initiative is a voluntary external rating system created by the Mental Health Coalition to evaluate how well social media platforms protect adolescent mental health. The initiative comprises roughly twenty standards covering platform policies, functionality, content moderation, and transparency. Platforms that participate submit documentation to an independent expert panel, which assigns one of three ratings: "use carefully" (best), "partial protection" (middle), or "does not meet standards" (worst). The goal is to create transparent, measurable accountability for platform safety practices.

How does the evaluation process work?

Participating platforms voluntarily submit documentation on their policies, tools, and features. An independent panel of global mental health experts, researchers, and technologists reviews this documentation along with any public information about the platform's actual practices. The panel then assigns a rating based on whether the platform meets the SOS standards. The entire process is designed to be transparent, with detailed reports explaining the reasoning behind each rating. Importantly, platforms provide self-reported data, which is then evaluated against independent evidence and scrutiny.

What are the benefits of the SOS initiative?

The benefits include increased transparency about platform safety practices, a measurable benchmark parents and teens can use to compare platforms, incentives for platforms to improve safety features, and a framework that could inform future regulation. The initiative also creates accountability without government mandates, which allows it to adapt more quickly to new research and evolving harms. Additionally, participating platforms can demonstrate to regulators that they're already meeting best practices, potentially avoiding stricter legislation.

Why did Meta, TikTok, and other major platforms agree to participate?

Major platforms are participating largely due to regulatory and legal pressures. Government regulation of social media is coming, and platforms prefer shaping industry standards to being subject to government mandates. Meta faces lawsuits over child harm from its products, making voluntary participation in safety evaluation a risk mitigation strategy. TikTok is dealing with potential bans and wants to appear responsible. All platforms face reputational risk if they refuse to be evaluated on teen safety, so participation is economically rational even without genuine safety commitments.

What does "use carefully" actually mean as a rating?

Despite being the highest achievable rating, "use carefully" does not mean the platform is safe. It means the platform has implemented basic safeguards and meets minimum standards for protecting adolescent mental health. Teens can still encounter harmful content, algorithms can still promote problematic material, and predators can still use the platform. The rating is an acknowledgment that the company is trying and has reduced (but not eliminated) risk. Parents should interpret it as: you can allow your teen to use this platform, but you need to stay involved and monitor their activity.

What if a platform refuses to participate in SOS?

Platforms that refuse to participate will face reputational damage and will lack the "use carefully" badge that shows safety engagement. This could harm adoption, particularly among safety-conscious parents. Additionally, refusing to participate could be used as evidence of non-cooperation in future litigation. However, the SOS initiative has no legal enforcement mechanisms, so technically a platform could refuse and face no direct legal penalty—only market and reputational consequences.

How do the SOS standards address content about self-harm and suicide?

The SOS standards specifically require that platforms identify and remove content encouraging self-harm or suicide, remove instructional content that provides methods for self-harm, and prevent the promotion of eating disorders. Platforms must also have processes to catch this content quickly and respond within a reasonable timeframe (hours, not days). This is a core area of evaluation because these specific harms are particularly acute for adolescents and have clear, measurable impacts on mental health and safety.

Are the SOS standards based on research?

Yes, the standards were developed based on mental health research and expert consultation. Dr. Dan Reidenberg, Managing Director of the National Council for Suicide Prevention, leads the initiative. However, it's important to note that research on social media harm is still evolving, and new evidence about platform effects on teen mental health continues to emerge. This means the standards may become outdated relatively quickly and should be updated regularly as research advances.

How does SOS relate to government regulation?

SOS is a voluntary industry standard, not a legal requirement. However, it likely serves as a bridge between voluntary compliance and government regulation. As more evidence shows that voluntary standards are insufficient, governments may legislate that platforms must meet SOS standards or face penalties. The EU Digital Services Act and the UK Online Safety Act already move in this direction, creating legal requirements for platform accountability. SOS may eventually become incorporated into formal regulations, particularly if it proves effective at improving platform practices.

What can parents do with SOS information?

Parents should use SOS ratings as one data point in deciding whether to allow their teen to use a platform, but not the only one. Read the detailed evaluation report, not just the badge. Discuss the platform with your teen, understand what content they're seeing, and set reasonable boundaries. Use SOS ratings to start conversations about platform design and mental health. Ultimately, parental involvement and open communication about online experiences are more protective than any platform rating.

The Social Media Safety Ratings System represents a significant step toward platform accountability. But accountability through voluntary ratings only goes so far. The real protection comes from informed parents, engaged teens who understand how platforms work, and continued pressure from researchers, regulators, and the public to prioritize mental health over engagement metrics. The SOS initiative is important. Just don't mistake "use carefully" for "safe."

Key Takeaways

- The Safe Online Standards (SOS) initiative creates a voluntary three-tier rating system for social media platforms based on teen mental health protection measures

- Even the highest "use carefully" rating is a warning, not an endorsement, acknowledging that social media inherently carries risk

- Major platforms participate in SOS due to regulatory and legal pressures, making it a strategic move to shape standards before stricter government regulation arrives

- SOS standards evaluate four key areas: platform policy and governance, product functionality and design, content moderation, and transparency and accountability

- The Meta and Mental Health Coalition partnership raises legitimate independence questions about whether evaluations can be objective when a company being rated influences the standards
