The Convergence of Space and Artificial Intelligence: Understanding SpaceX's xAI Acquisition

In a move that defied conventional industry logic, Elon Musk announced that SpaceX, his rocket company, would acquire xAI, his artificial intelligence venture. This acquisition represents far more than a simple corporate consolidation: it signals a fundamental reimagining of how computational infrastructure might be deployed in the coming decade. The announcement caught many industry observers off guard, primarily because it seemed counterintuitive: a launch provider acquiring an AI company. Yet the strategic rationale beneath the surface reveals an ambitious, albeit controversial, vision for the future of computing and space exploration.

The merger creates what Musk describes as "the most ambitious, vertically integrated innovation engine on (and off) Earth." This phrase carries significant weight in understanding the acquisition's true purpose. Vertical integration in technology typically means controlling the entire value chain from raw materials through manufacturing to end-user delivery. In this case, SpaceX would control both the delivery mechanism (rocket launches and space operations) and the computational workload (AI infrastructure), theoretically creating synergies that neither company could achieve independently.

To contextualize this acquisition within the broader technology landscape, we must recognize the extraordinary resource demands of modern artificial intelligence systems. Training large language models requires enormous quantities of electricity, specialized hardware, and sophisticated cooling systems. Current global AI data centers draw approximately 15-20 gigawatts of electrical power, a figure projected to double or triple by 2030 as AI adoption accelerates across industries. This computational hunger represents both an opportunity and a challenge: an opportunity for innovative infrastructure solutions, and a challenge for energy availability and environmental sustainability.

The xAI component of this equation brings proven AI development capabilities. The company has already developed Grok, an AI assistant positioned as an alternative to ChatGPT and Claude, with particular emphasis on handling controversial or sensitive topics. Prior to SpaceX's acquisition, xAI had been integrated with X (formerly Twitter), the social media platform that Musk acquired in 2022. This existing operational integration provides immediate synergies and established workflows that would ease the consolidation process.

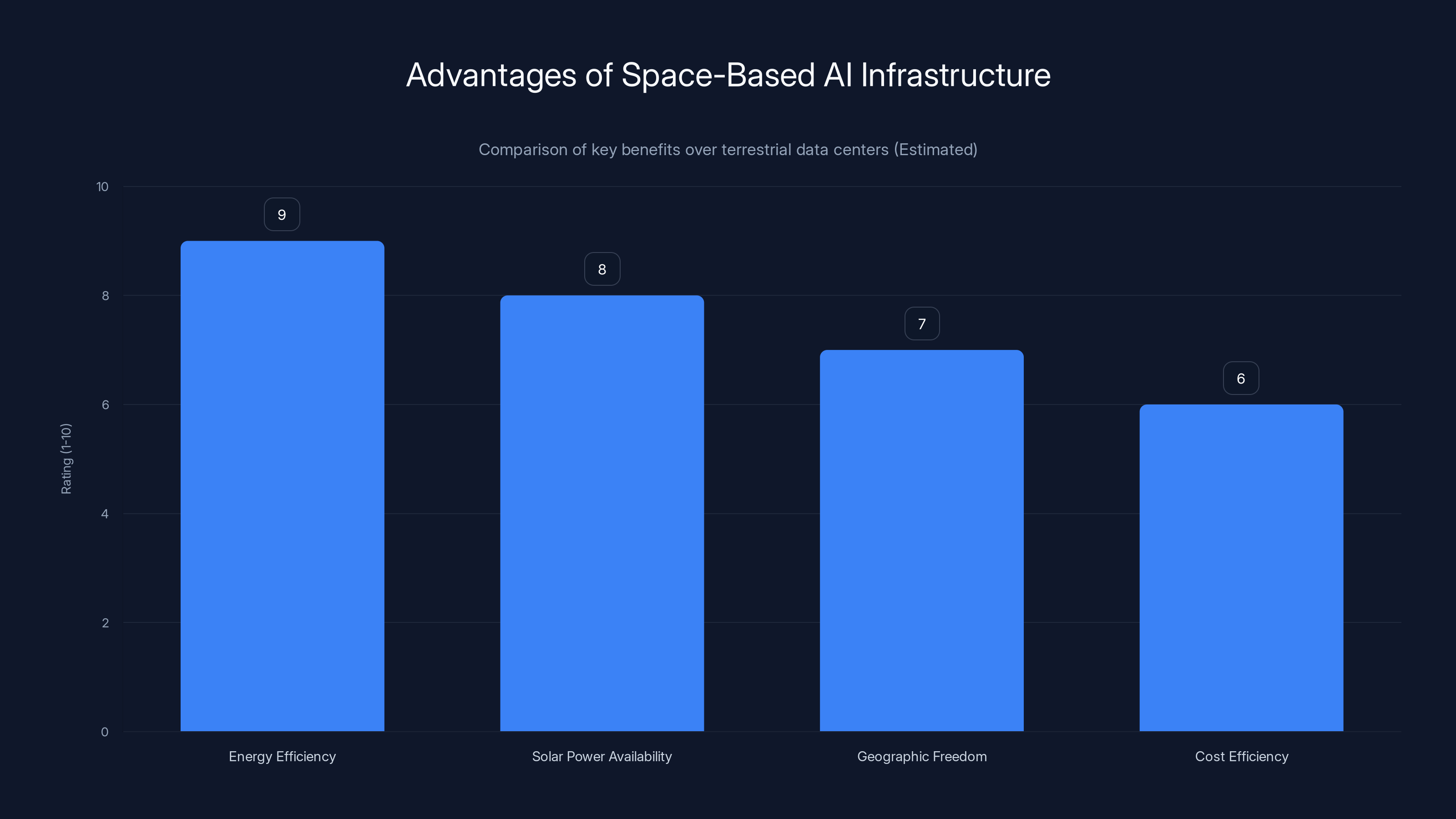

What makes this acquisition particularly noteworthy is the explicit stated goal: building AI data centers in space. This concept exists at the intersection of theoretical possibility and practical challenge. Space-based infrastructure could theoretically benefit from abundant solar power, minimal cooling requirements due to radiative cooling into the vacuum of space, and freedom from terrestrial real estate constraints. However, launching and maintaining data centers in orbit presents unprecedented engineering challenges, cost considerations, and regulatory hurdles.

The strategic implications extend beyond mere infrastructure efficiency. By vertically integrating, the combined entity keeps launch scheduling in-house, avoiding conflicts with commercial customers and ensuring priority access to launch capacity for its own infrastructure needs. Simultaneously, xAI gains access to a capability no other AI company possesses: the ability to deploy infrastructure literally beyond the bounds of traditional geography. This creates a competitive moat that would be extraordinarily difficult for competitors to replicate.

For developers and engineering teams evaluating their own infrastructure strategies, this acquisition provides a case study in how vertical integration can create advantages in capital-intensive, technology-driven industries. The consolidation demonstrates that control over fundamental infrastructure components—whether launch vehicles, space operations, or computational resources—can become a decisive strategic asset in competitive markets.

The Technology Behind the Merger: How SpaceX and xAI Complement Each Other

Understanding why SpaceX acquiring xAI makes strategic sense requires examining the specific technical capabilities each organization brings to the consolidated entity. While surface-level observers might see incompatible domains, the technical alignment is more sophisticated than it initially appears.

SpaceX's Infrastructure Capabilities

SpaceX has become the world's leading commercial launch provider through continuous innovation and cost reduction. The Falcon 9 rocket has achieved what was once considered impossible: reliable, rapid reusability of orbital-class launch vehicles. The company launches dozens of missions annually and is developing Starship, a super-heavy-lift vehicle aimed at establishing regular, low-cost access to orbit and beyond. This proven operational capability represents the foundation upon which space-based AI infrastructure becomes feasible.

The company's launch frequency is unprecedented. SpaceX conducts more orbital launches annually than the rest of the world combined, providing unparalleled capacity to deploy new infrastructure into orbit. For context, in 2024, SpaceX conducted well over 100 orbital launches, a figure that has been steadily increasing. This launch tempo provides the operational foundation for deploying satellites, space stations, and other infrastructure at scale.

Beyond launch capability, SpaceX operates the Starlink satellite constellation, which currently comprises over 6,000 operational satellites providing global broadband connectivity. This existing space infrastructure ecosystem includes ground stations, control systems, and operational expertise spanning orbital mechanics, satellite operations, and space-based network infrastructure. The company has demonstrated the ability to manage thousands of simultaneous space assets, maintain reliable operations despite challenging conditions, and continuously evolve systems through iterative improvements.

xAI's Computational Capabilities

xAI brings proven expertise in large language model development, training infrastructure management, and AI system optimization. The company developed Grok specifically to demonstrate capability in training models that can handle diverse and challenging content domains. This required building substantial infrastructure for model training, fine-tuning, and deployment.

Developing and deploying large language models demands expertise across multiple technical domains. Model architecture specialists design the neural network structures that enable efficient learning and inference. Training engineers optimize the process of exposing models to vast datasets and adjusting parameters through backpropagation algorithms. Infrastructure engineers ensure that the computing clusters remain stable under extreme computational loads, with cooling systems managing heat dissipation and power management systems distributing electricity efficiently.

xAI's existing expertise in these domains becomes a critical input for the consolidated SpaceX entity. Rather than SpaceX building AI capabilities from scratch, it gains immediate access to proven talent, established processes, and technical knowledge that typically takes years to develop.

The Synergistic Integration Points

The technical synergies emerge when examining specific integration points. First, payload optimization: SpaceX understands how to design spacecraft and configure cargo to minimize mass while maximizing functionality. AI data centers designed with space deployment in mind would need to operate efficiently within strict weight, power, and thermal constraints. SpaceX's expertise in optimizing every kilogram of payload becomes directly applicable to space-based AI infrastructure design.

Second, power generation and thermal management: Space-based infrastructure benefits from continuous solar power (with brief eclipse periods) and direct radiative cooling to the vacuum of space. However, converting solar energy efficiently and managing thermal dissipation requires specialized systems. SpaceX's experience managing power systems on Starlink satellites and other space infrastructure directly applies to powering AI computational systems in orbit.

Third, network connectivity and data transmission: Starlink provides a proven system for transmitting data from space to Earth and vice versa. Space-based AI data centers would require reliable, high-bandwidth connectivity to transmit training data, model updates, and inference results. SpaceX's existing satellite internet infrastructure could be adapted and optimized for this purpose, creating a fully integrated system from computational hardware through data transmission.

Fourth, operational infrastructure and ground stations: Operating complex space-based systems requires extensive ground infrastructure, including command centers, monitoring systems, and emergency response capabilities. SpaceX has built this operational infrastructure for managing thousands of Starlink satellites and conducting frequent launches. This expertise transfers directly to managing space-based AI infrastructure.

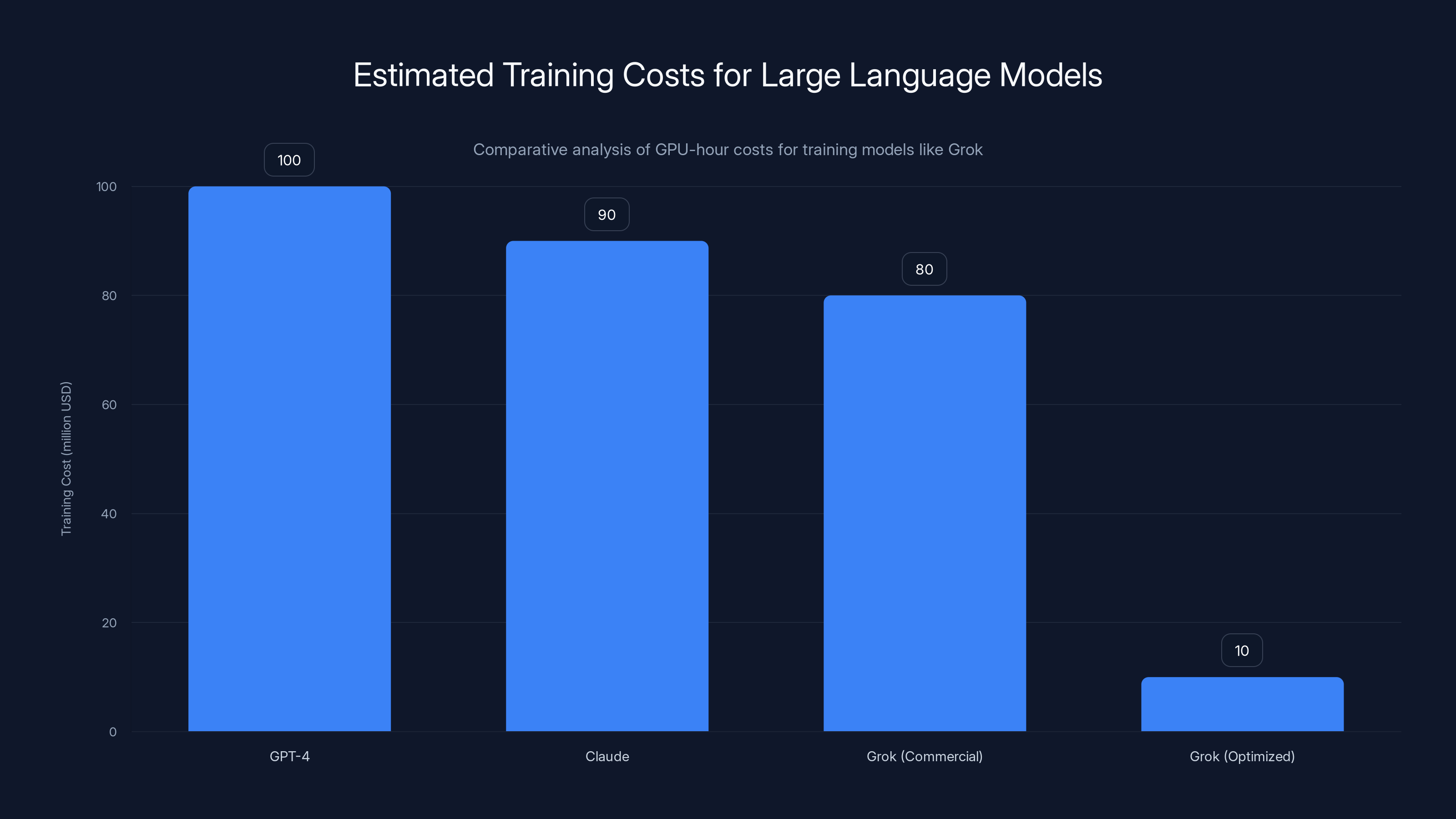

Estimated data shows Grok's optimized infrastructure significantly reduces training costs compared to commercial rates for similar models.

Space-Based Data Centers: The Technical Vision and Practical Challenges

The ambitious goal of deploying AI data centers in space represents a fundamental shift in how computational infrastructure might be conceived. This section examines both the theoretical advantages of space-based computing and the substantial engineering challenges that must be overcome.

Theoretical Advantages of Orbital Computing

Space offers several unique environmental characteristics that could benefit computational infrastructure. The vacuum of space provides a heat sink with a temperature approaching absolute zero (approximately -270°C or -454°F). Computing hardware dissipates substantial waste heat during operation. While the vacuum prevents convective cooling (since no air exists), it enables radiative cooling where heat radiates directly from spacecraft surfaces to space as electromagnetic radiation.

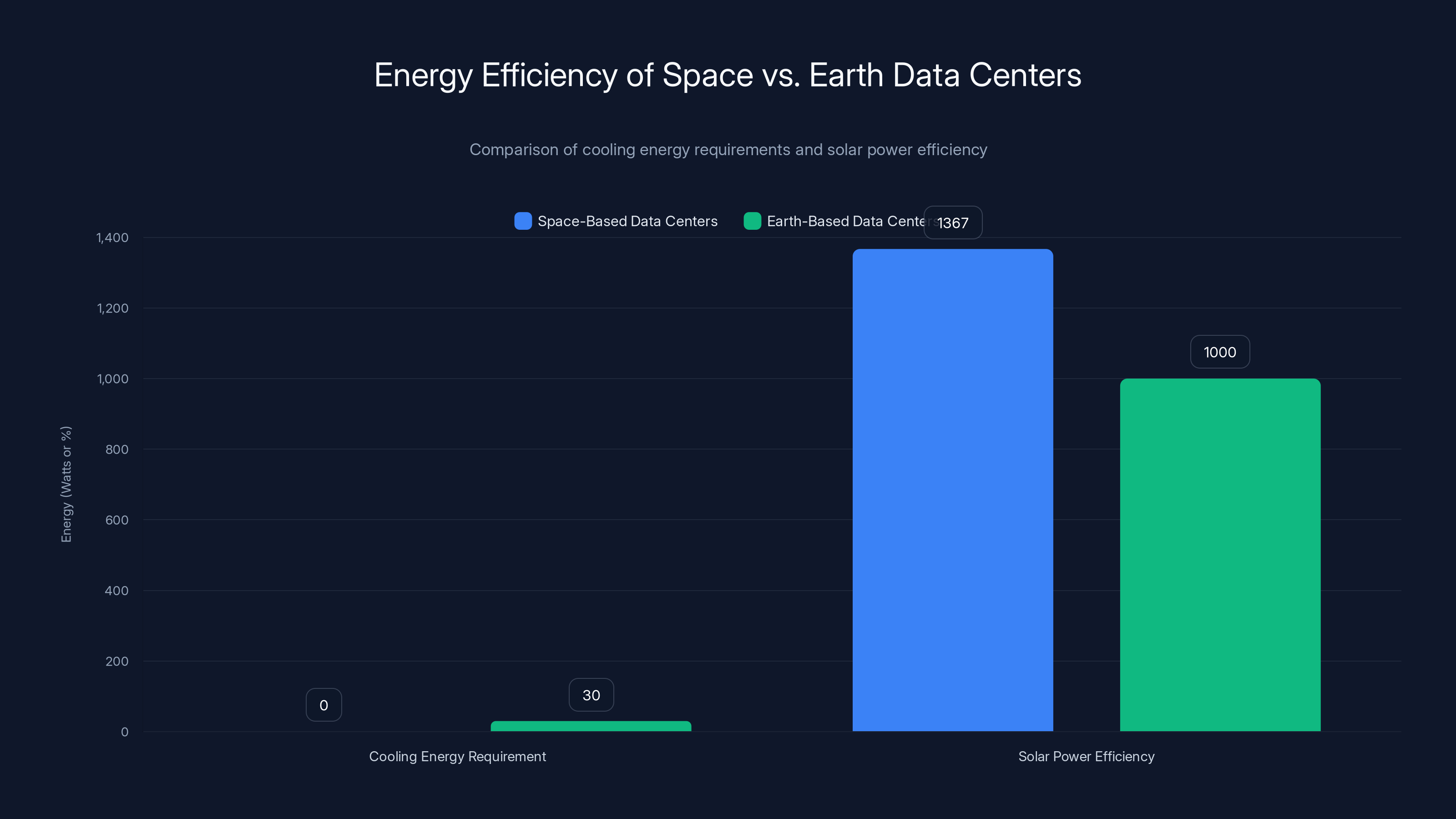

This radiative cooling mechanism could dramatically reduce the energy required for data center operations. Terrestrial data centers spend approximately 20-40% of total energy consumption on cooling systems that remove heat generated by processors and networking equipment. In space, passive radiative cooling could reduce this cooling energy to nearly zero, translating to substantial overall energy savings.
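The arithmetic behind this claim can be checked directly. The sketch below is illustrative only: the function name and the `residual_cooling` parameter are assumptions introduced here, and it treats the 20-40% cooling share quoted above as the only quantity that changes.

```python
def energy_savings_fraction(cooling_share: float, residual_cooling: float = 0.0) -> float:
    """Fraction of total facility energy saved when cooling energy shrinks.

    cooling_share: fraction of total energy spent on cooling (0.20-0.40 in the text).
    residual_cooling: fraction of the original cooling energy still required
        (hypothetical parameter; 0.0 models the idealized "nearly zero" case).
    """
    for name, value in (("cooling_share", cooling_share),
                        ("residual_cooling", residual_cooling)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return cooling_share * (1.0 - residual_cooling)


# Idealized case for both ends of the quoted range.
for share in (0.20, 0.40):
    saved = energy_savings_fraction(share)
    print(f"cooling share {share:.0%} -> up to {saved:.0%} of total energy saved")
```

Pumped coolant loops and radiator deployment mechanisms would likely still draw some power, so a nonzero `residual_cooling` is probably closer to reality than the idealized case.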

Additionally, solar power availability in space differs fundamentally from Earth-based solar installations. Earth-orbiting spacecraft receive sunlight for most of each orbit, interrupted only by Earth's shadow during orbital eclipse periods (up to roughly 35 minutes of a 90-minute low Earth orbit, and far less in some sun-synchronous orbits, depending on altitude and orbital geometry). Sunlight above the atmosphere delivers approximately 1,367 watts per square meter (known as the solar constant), compared to approximately 1,000 watts per square meter on Earth's surface under ideal conditions; a panel converts only a fraction of that into electricity, set by its cell efficiency. Moreover, space-based solar collection systems need not account for weather or atmospheric interference, and in suitable orbits they avoid long nights. These factors reduce Earth-based solar output by significant percentages.
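To see how eclipse time and cell efficiency cut into the solar constant, here is a minimal sketch of orbit-averaged electrical power. The 30% cell efficiency and the 30-minute eclipse are assumed round numbers rather than figures from the announcement, and the function assumes the panel always faces the Sun while lit and ignores degradation.

```python
SOLAR_CONSTANT_W_M2 = 1367.0  # irradiance above the atmosphere, as quoted in the text


def orbit_average_power_w(area_m2: float, efficiency: float,
                          eclipse_min: float, period_min: float,
                          irradiance_w_m2: float = SOLAR_CONSTANT_W_M2) -> float:
    """Electrical power averaged over one orbit for a Sun-pointing panel."""
    if period_min <= 0 or not 0.0 <= eclipse_min <= period_min:
        raise ValueError("eclipse_min must lie between 0 and period_min")
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    sunlit_fraction = 1.0 - eclipse_min / period_min
    return irradiance_w_m2 * area_m2 * efficiency * sunlit_fraction


# Hypothetical case: 1 m^2 panel, 30% efficient cells, 30 min eclipse per 90 min orbit.
avg = orbit_average_power_w(area_m2=1.0, efficiency=0.30, eclipse_min=30, period_min=90)
print(f"orbit-average output: {avg:.1f} W per square meter of panel")
```

The usable average is well below the headline irradiance figure, which is why eclipse fraction and cell efficiency belong in any comparison with terrestrial solar.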

These factors combine to suggest that space-based computing could achieve substantially better energy efficiency than terrestrial alternatives. For AI applications where computational resources scale with workload demands, energy efficiency directly translates to reduced operational costs and environmental impact.

The Infrastructure Challenges

Despite theoretical advantages, deploying functional AI data centers in space presents unprecedented engineering challenges. First, launch economics: Current launch costs vary dramatically by provider and payload class. SpaceX's Falcon 9 costs approximately

Starship development aims to reduce launch costs to approximately $100 per kilogram—a 15-20 times improvement over current Falcon 9 rates. At such costs, space deployment becomes economically feasible, but achieving this cost reduction represents one of the most ambitious engineering challenges in aerospace history. Starship development remains in active testing phases with substantial uncertainties regarding timelines and final cost achievements.
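The two figures in this paragraph, the $100 per kilogram target and the 15-20 times improvement, imply a range for the current per-kilogram rate. The sketch below just makes that arithmetic explicit; the 100,000 kg payload mass is a hypothetical value chosen for illustration, not a figure from the source.

```python
STARSHIP_TARGET_USD_PER_KG = 100.0   # target quoted in the text
IMPROVEMENT_FACTORS = (15, 20)       # "15-20 times improvement" quoted in the text


def implied_current_rate_range() -> tuple[float, float]:
    """Per-kilogram rate implied by the target and the improvement factors."""
    low, high = IMPROVEMENT_FACTORS
    return (STARSHIP_TARGET_USD_PER_KG * low, STARSHIP_TARGET_USD_PER_KG * high)


def launch_cost_usd(mass_kg: float, usd_per_kg: float) -> float:
    """Launch cost for a payload of the given mass at a flat per-kilogram rate."""
    if mass_kg < 0 or usd_per_kg < 0:
        raise ValueError("mass and rate must be non-negative")
    return mass_kg * usd_per_kg


HYPOTHETICAL_MASS_KG = 100_000  # assumed module mass, for illustration only
low_rate, high_rate = implied_current_rate_range()
print(f"implied current rate: ${low_rate:,.0f}-${high_rate:,.0f} per kg")
print(f"at target rate:  ${launch_cost_usd(HYPOTHETICAL_MASS_KG, STARSHIP_TARGET_USD_PER_KG):,.0f}")
print(f"at implied rate: ${launch_cost_usd(HYPOTHETICAL_MASS_KG, low_rate):,.0f}"
      f"-${launch_cost_usd(HYPOTHETICAL_MASS_KG, high_rate):,.0f}")
```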

Second, radiation and reliability: Space-based computing hardware experiences constant exposure to cosmic rays and solar radiation. Electronics in space experience higher failure rates than equivalent hardware on Earth. Critical systems require radiation-hardened components, redundancy for fault tolerance, and repair/replacement strategies. For AI data centers where thousands of computing nodes operate simultaneously, accounting for radiation-induced failures requires sophisticated systems design and operational procedures.
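One way to reason about redundancy is a k-of-n model: launch n nodes so that at least k are still working after some period. The sketch below is a simplified illustration that assumes failures are independent and equally likely per node, which radiation events such as solar storms may well violate. The node counts and the 5% failure probability are hypothetical.

```python
from math import comb


def prob_at_least_k_working(n: int, k: int, p_fail: float) -> float:
    """Probability that at least k of n independent nodes are still working.

    Exact binomial sum. Suitable for up to roughly a thousand nodes; larger
    fleets would need a log-space or normal approximation to avoid overflow.
    """
    if not 0 <= k <= n:
        raise ValueError("k must satisfy 0 <= k <= n")
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be between 0 and 1")
    p_ok = 1.0 - p_fail
    return sum(comb(n, i) * p_ok**i * p_fail**(n - i) for i in range(k, n + 1))


# Hypothetical: 100 nodes required, each with a 5% chance of failing in the period.
REQUIRED = 100
for spares in (0, 5, 10):
    p = prob_at_least_k_working(REQUIRED + spares, REQUIRED, p_fail=0.05)
    print(f"{spares:2d} spares -> P(at least {REQUIRED} working) = {p:.4f}")
```

Each added spare is launch mass that does no work unless something fails, so the spare count becomes a trade between availability and cost per kilogram.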

Third, scalability and modularity: Building effective space-based infrastructure requires designing modular systems that can be deployed incrementally. Adding additional computing capacity would require launching additional modules, staging them in orbit, and integrating them into existing systems. This requires standardized interfaces, automated docking and integration systems, and the ability to manage heterogeneous hardware generations operating simultaneously.

Fourth, thermal management at scale: While space provides an excellent heat sink, radiative cooling systems for large data centers require careful design. Heat must be transported from compute modules to radiative surfaces using heat pipes and thermal interfaces. For a data center generating megawatts of heat (comparable to terrestrial installations of significant size), designing adequate thermal pathways within mass constraints represents a substantial engineering problem.
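The scale of the radiator problem follows from the Stefan-Boltzmann law, under which a surface radiates heat in proportion to its area, its emissivity, and the fourth power of its temperature. The sketch below estimates radiator area for a given heat load. The 300 K radiator temperature, 0.9 emissivity, and 3 K sink are assumptions chosen for illustration, and the model ignores sunlight and Earth's infrared heating of the radiator, so real designs would need more area.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_w: float, radiator_temp_k: float = 300.0,
                     emissivity: float = 0.9, sink_temp_k: float = 3.0) -> float:
    """One-sided radiator area needed to reject heat_w to a cold sink."""
    if heat_w < 0:
        raise ValueError("heat_w must be non-negative")
    if not 0.0 < emissivity <= 1.0:
        raise ValueError("emissivity must be in (0, 1]")
    if radiator_temp_k <= sink_temp_k:
        raise ValueError("radiator must be hotter than the sink")
    net_flux_w_m2 = emissivity * STEFAN_BOLTZMANN * (radiator_temp_k**4 - sink_temp_k**4)
    return heat_w / net_flux_w_m2


# One megawatt of waste heat under the assumed conditions.
area = radiator_area_m2(1e6)
print(f"{area:,.0f} m^2 of radiator per megawatt (idealized, one-sided)")
```

Under these assumptions one megawatt needs roughly 2,400 square meters of radiating surface. Because the flux scales with the fourth power of temperature, running the radiator hotter shrinks the area quickly, at the cost of hotter electronics or a heat pump.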

Musk's Broader Vision for Space-Based Infrastructure

Musk's announcement that space-based data centers would "fund and enable self-growing bases on the Moon, an entire civilization on Mars and ultimately expansion to the Universe" reflects a vision where computational infrastructure becomes foundational to space exploration ambitions. This perspective suggests that space-based AI infrastructure serves dual purposes: directly generating revenue through computational services, while simultaneously providing the infrastructure that enables ambitious space exploration programs.

This vision implies a different economic model than traditional commercial space ventures. Rather than data centers being a secondary benefit of space exploration, they become a primary revenue stream funding exploration ambitions. A self-sustaining cycle could theoretically emerge where space-based computational resources generate revenue that funds further infrastructure development, supporting ever-more ambitious space programs.

While this vision captures imaginative possibility, the engineering and business challenges remain substantial. Technologies for managing this complexity would require innovations across multiple domains: advanced launch systems, radiation-hardened computing, autonomous systems management, and new approaches to space-based manufacturing and infrastructure maintenance.

Space-based AI infrastructure offers significant advantages, including up to 40% energy savings and strategic positioning for space expansion. Estimated data based on projected benefits.

The Competitive Landscape: How This Acquisition Reshapes Industry Dynamics

SpaceX's acquisition of xAI must be understood within the broader competitive context of the AI infrastructure market. Multiple large technology companies have invested billions in data center expansion and AI infrastructure development. This acquisition introduces a new competitive axis: control over fundamental infrastructure components.

Existing Competitive Dynamics in AI Infrastructure

Current leaders in AI infrastructure include major cloud providers (Amazon Web Services, Microsoft Azure, Google Cloud), semiconductor manufacturers (NVIDIA, AMD, Intel), and dedicated AI infrastructure companies. These organizations compete primarily on computational capacity, energy efficiency, and services built upon computing resources.

Amazon, Microsoft, and Google each operate hundreds of thousands of servers across data centers distributed around the globe. These companies control substantial portions of AI computational capacity, enabling them to offer cloud-based AI services to customers worldwide. Their advantages stem from capital availability, operational expertise, and integrated services ecosystems.

NVIDIA dominates GPU supply for AI applications, giving the company substantial leverage over AI infrastructure planning and pricing. NVIDIA's H100 and newer Blackwell GPUs represent the cutting edge of AI computing hardware, with supply constraints frequently driving infrastructure planning decisions across the industry.

SpaceX's Potential Competitive Advantages

The consolidated SpaceX-xAI entity introduces competitive advantages unavailable to other players. Launch capability: Unlike any other AI infrastructure company, SpaceX can deploy computing infrastructure to space without depending on external launch providers. This eliminates a fundamental constraint that other companies face. Additionally, SpaceX controls launch scheduling and prioritization, enabling it to optimize deployment timing for its own infrastructure.

Energy efficiency potential: If space-based infrastructure achieves theoretical energy efficiency improvements, SpaceX could offer computational services at substantially lower costs than terrestrial alternatives, creating a powerful competitive advantage in price-sensitive markets.

Vertical integration: By controlling launch capability, space operations, AI infrastructure, and satellite communications, SpaceX creates barriers to competitive entry. Competitors cannot easily replicate this integrated ecosystem without substantial capital investments and multi-decade development timelines.

Market positioning: Operating space-based infrastructure positions SpaceX as a uniquely capable infrastructure provider, potentially enabling premium pricing for distinctive capabilities unavailable through other channels.

Competitive Responses and Industry Evolution

Existing AI infrastructure providers are unlikely to pursue identical strategies. The capital requirements, technical challenges, and multi-year timelines make space-based infrastructure inaccessible to most organizations. Instead, expect competitive responses focusing on alternative innovations:

Terrestrial efficiency improvements: Companies like Meta, Google, and Amazon will intensify efforts to improve data center efficiency, potentially adopting advanced cooling technologies, custom silicon, and novel architectures to compete with space-based alternatives.

Strategic partnerships with launch providers: Competitors might develop partnerships with SpaceX or other launch providers, potentially gaining access to space-based infrastructure without full vertical integration. However, such arrangements would lack the strategic control SpaceX maintains through direct ownership.

Specialized computing for specific applications: Rather than competing head-to-head on general computational capacity, competitors might specialize in specific AI applications, creating differentiated offerings that don't rely on absolute lowest-cost infrastructure.

Distributed and edge computing: Competitors might emphasize edge computing and distributed inference, reducing reliance on centralized data center infrastructure and the associated economics that favor space-based alternatives.

Understanding the xAI-X Integration: The Existing Corporate Structure

To fully comprehend SpaceX's acquisition of xAI, it's essential to understand the prior integration between xAI and X (formerly Twitter). This existing relationship provided operational experience and demonstrated synergies that informed SpaceX's acquisition decision.

The Prior xAI-X Merger (2025)

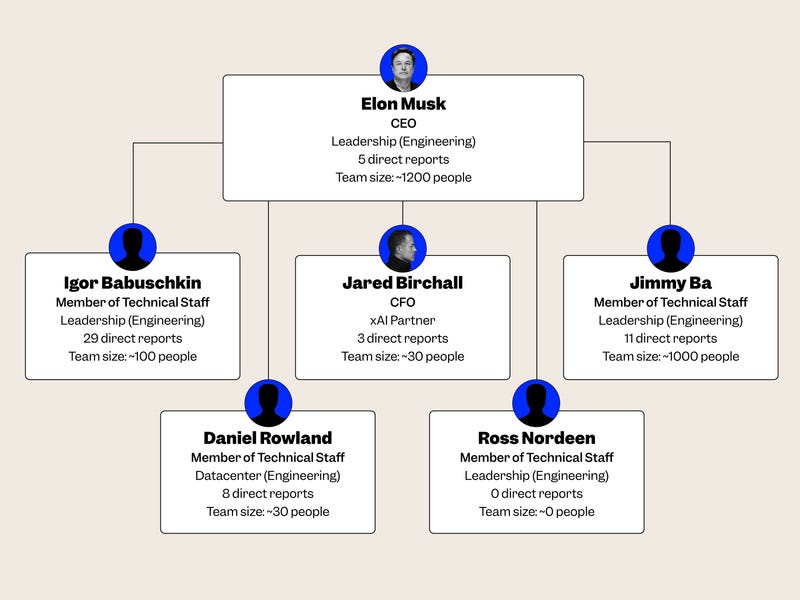

In March 2025, xAI and X merged, consolidating the artificial intelligence company with Elon Musk's social media platform. This earlier consolidation revealed several important patterns and created precedent for the current SpaceX-xAI acquisition.

The xAI-X integration made operational sense for multiple reasons. First, X's massive user base, hundreds of millions of monthly active users, provided a potential distribution channel for AI products. Grok, xAI's AI assistant, could be integrated directly into X's platform, giving the AI product immediate exposure and a ready user base.

Second, X's data assets became available for xAI's AI development. The social media platform generates vast quantities of real-time data from user interactions, posts, and content signals. This data could inform AI model training, helping Grok better understand contemporary language, emerging topics, and user preferences.

Third, operational consolidation reduced redundancy. Rather than maintaining separate infrastructure, teams, and management structures, the consolidated entity could operate more efficiently with unified leadership and integrated systems.

The Broader Corporate Structure: SpaceX Consolidation

The acquisition of xAI by SpaceX represents the next step in Musk's consolidation strategy. The organizational structure would theoretically look as follows:

- SpaceX (parent company)
  - Launch and space operations division
  - xAI division (including existing X integration)
  - Starlink division (satellite operations)
  - Space-based infrastructure development

This structure consolidates multiple divisions under unified leadership, theoretically enabling better resource allocation and strategic coordination across domains. However, organizational integration presents substantial challenges, particularly integrating cultures and maintaining operational focus across diverse businesses.

Synergies and Challenges in the Consolidated Structure

The consolidated SpaceX-xAI entity creates potential synergies:

Data integration: Starlink's global network could provide data collection capabilities informing AI development. User behavior patterns, network usage, and operational metrics could train AI systems.

Infrastructure deployment: SpaceX's launch capability enables xAI to deploy computing infrastructure globally, including to space-based platforms.

Customer base expansion: SpaceX's commercial and government customers could access AI services, creating new revenue streams for xAI.

Capital efficiency: Consolidated financing and operational budgets reduce overhead and improve capital allocation efficiency.

Conversely, significant challenges emerge:

Cultural integration: Rocket companies and AI startups operate with different cultural norms, risk tolerances, and decision-making frameworks. Integration requires skillful management to preserve each unit's strengths while achieving consolidation benefits.

Focus and expertise: Consolidating fundamentally different technical domains under unified leadership requires ensuring that expertise and focus in each domain are maintained. Risk exists that integration efforts distract from operational excellence in core domains.

Regulatory complexity: SpaceX operates under various regulatory frameworks involving launch licensing, export controls, and national security considerations. Integrating an AI company introduces additional regulatory complexity around AI governance, data security, and export controls on computing technology.

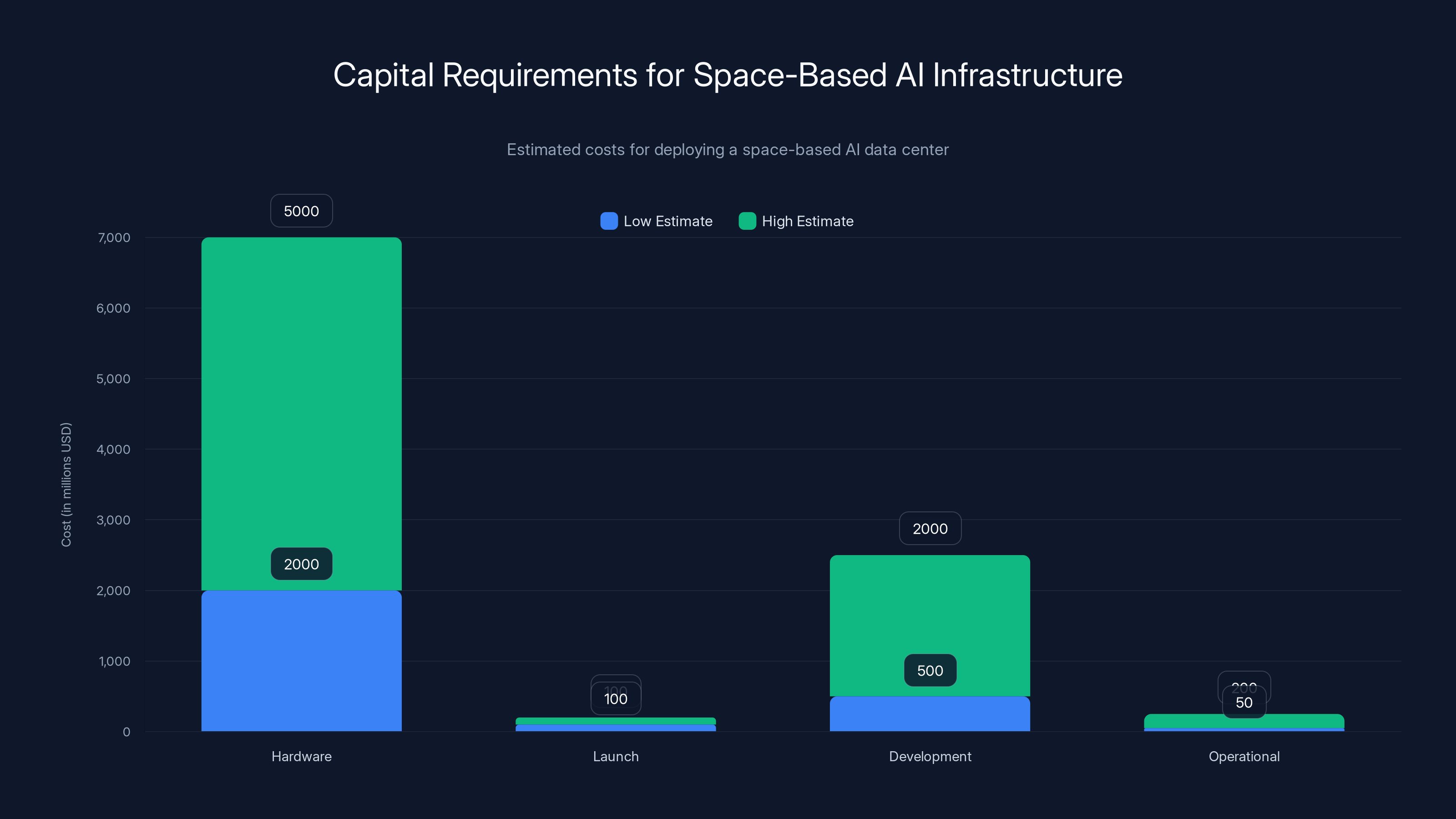

Estimated data shows that cumulative capital requirements for a space-based AI data center could range from

Space-Based Infrastructure Development: Technical Roadmap and Timeline Considerations

Moving from acquisition announcement to operational space-based AI data centers requires a substantial technical roadmap spanning multiple years. Understanding the likely development phases provides insight into realistic timelines and interim capabilities.

Phase 1: Design and Planning (Year 1-2)

Initial phases would focus on detailed engineering design for space-based infrastructure. This includes:

Hardware design: Custom-designing data center modules optimized for space deployment. This includes processor selection, cooling system design, power management, and structural engineering. Organizations typically require 12-24 months for initial design phases.

Thermal analysis: Detailed thermal modeling to ensure heat generated by computing hardware can be effectively radiated to space through passive systems. Computational fluid dynamics simulations and thermal analysis constitute critical design activities.

Reliability engineering: Designing systems with appropriate redundancy and fault tolerance for space operations. Radiation tolerance analysis, component derating, and failure mode analysis inform design decisions.

Software infrastructure: Developing operating systems, middleware, and management software capable of operating autonomously in space with minimal Earth-based intervention.

Phase 2: Prototype Development (Year 2-4)

Following initial design, prototype systems would be developed and tested. This phase includes:

Terrestrial testing: Building prototype systems and subjecting them to environmental testing (thermal vacuum, vibration, electromagnetic compatibility) to validate designs before space deployment.

Pilot launches: Initial small-scale deployments to validate key technologies. Early launches would likely test individual components or small prototype systems rather than full-scale infrastructure.

Operational validation: Early space-based systems would operate at reduced capacity while teams validate and refine operational procedures.

This phase typically requires 24-36 months for technology maturation and initial validation.

Phase 3: Deployment Scale-Up (Year 4-6)

Once prototype systems demonstrate viability, scaling production and deployment becomes feasible. This includes:

Manufacturing scale-up: Transitioning from prototypes to production quantities. Establishing supply chains, manufacturing processes, and quality assurance systems.

Deployment cadence acceleration: Increasing launch frequency for space-based infrastructure modules, potentially leveraging Starship's planned high launch cadence.

Capability expansion: Expanding from initial proof-of-concept systems to broader computational capacity and capability.

At this phase, assuming successful earlier development, initial revenue-generating operations could begin, though likely at limited scale.

Phase 4: Full-Scale Operations (Year 6+)

Full-scale operations would involve deploying computational capacity sufficient to serve meaningful customer workloads and internal SpaceX applications. This phase represents operational maturity where the system generates substantial revenue and becomes a competitive factor in AI infrastructure markets.

Critical Technology Dependencies

This timeline depends critically on several foundational technologies:

Starship development and cost reduction: Space deployment becomes economically feasible primarily if Starship achieves targeted cost reductions. Current projections aim for $100 per kilogram to orbit, though achieving these targets remains uncertain.

Radiation-hardened computing advances: More capable radiation-hardened processors would improve computational capacity and reduce costs. Current radiation-hardened processors lag commercial capability by multiple generations, limiting computational efficiency for space-based systems.

Thermal management innovations: Advanced heat pipe technology, thermal interface materials, and passive cooling systems optimized for space environments would improve system efficiency and reduce complexity.

Autonomous operations software: Space-based infrastructure must operate with minimal Earth-based intervention. Advanced autonomy systems, fault detection, and self-healing capabilities would improve reliability and reduce operational overhead.

Financial Implications and Investment Considerations

Analyzing the financial dimensions of SpaceX's xAI acquisition reveals both the scale of investment required and potential returns that justify the capital commitment.

Capital Requirements for Space-Based Infrastructure

Estimating capital requirements for space-based AI infrastructure deployment involves multiple cost components:

Hardware manufacturing: Building and qualifying computing hardware modules for space deployment involves design, manufacturing, and testing costs. Assuming production of thousands of modules over a multi-year deployment, hardware costs might range from $2,000-5,000 per kilogram depending on complexity and radiation hardening requirements.

Launch costs: Even at reduced costs from Starship development, launching infrastructure into orbit represents substantial expense. At

Development and engineering: Building entirely new spacecraft, systems, and operational procedures requires substantial engineering investment. Industry precedent suggests aerospace development programs cost $500 million to several billion dollars depending on scope.

Operational infrastructure: Ground stations, control centers, and operational support systems require development and deployment. These costs might range from $50-200 million depending on scale and capabilities.

Cumulative capital requirements: A comprehensive space-based AI data center deployment spanning 5-10 years might require $5-20 billion in cumulative capital investment. This represents a substantial commitment, though SpaceX's funding position (with government contracts, Starlink revenue, and investor support) potentially enables this scale of investment.
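The cost components listed above can be combined into a rough range. The sketch below is a back-of-envelope illustration: the hardware, development, and operations ranges come from this section and the $100 per kilogram launch rate is the Starship target cited elsewhere in the article, while the deployed mass and the $3 billion reading of "several billion" are assumptions introduced here.

```python
HARDWARE_USD_PER_KG = (2_000.0, 5_000.0)   # range quoted in the text
OPERATIONS_USD = (50e6, 200e6)             # range quoted in the text
DEVELOPMENT_LOW_USD = 500e6                # lower bound quoted in the text


def capex_range_usd(total_mass_kg: float,
                    launch_usd_per_kg: float = 100.0,     # Starship target, not yet achieved
                    development_high_usd: float = 3e9     # assumed value for "several billion"
                    ) -> tuple[float, float]:
    """Low and high cumulative capital estimates for a given deployed mass."""
    if total_mass_kg < 0:
        raise ValueError("total_mass_kg must be non-negative")
    hw_low, hw_high = HARDWARE_USD_PER_KG
    ops_low, ops_high = OPERATIONS_USD
    low = total_mass_kg * (hw_low + launch_usd_per_kg) + DEVELOPMENT_LOW_USD + ops_low
    high = total_mass_kg * (hw_high + launch_usd_per_kg) + development_high_usd + ops_high
    return low, high


# Hypothetical deployment of 2,000 tonnes of hardware.
low, high = capex_range_usd(2_000_000)
print(f"estimated capital: ${low / 1e9:.2f}B to ${high / 1e9:.2f}B")
```

In this model hardware cost per kilogram dominates once launch reaches the target rate, so the total is far more sensitive to radiation-hardening choices than to the launch price.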

Potential Revenue Models and Return Scenarios

Generated returns depend on multiple factors: computational pricing in space-based systems, market demand for such services, and competitive positioning.

Direct computational services: Offering computing capacity to external customers through a cloud-like service model. Assuming competitive pricing of

Internal utilization: Using space-based infrastructure for internal xAI applications (training, inference) and Starlink-related computing reduces external revenue but improves overall system ROI by reducing operational costs for core business functions.

Infrastructure premium pricing: If space-based computing enables distinctive capabilities (extreme energy efficiency, specific reliability characteristics), premium pricing might apply, potentially commanding $0.50-1.00 per GPU-hour rather than commodity pricing.

Multi-decade revenue streams: Unlike one-time hardware sales, infrastructure services generate recurring revenue across decades of operation. A 20-year operational life provides extended payback periods for capital investments.
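The revenue models above can be turned into a simple payback estimate. The sketch below uses the $0.50-1.00 per GPU-hour premium range from this section; the fleet size, utilization, and the choice of $5 billion (the low end of the capital range given earlier) are hypothetical inputs, and the calculation ignores operating costs, hardware replacement, and discounting.

```python
HOURS_PER_YEAR = 8760


def annual_revenue_usd(gpu_count: int, price_per_gpu_hour: float,
                       utilization: float) -> float:
    """Yearly revenue from selling GPU-hours at a flat price."""
    if gpu_count < 0 or price_per_gpu_hour < 0:
        raise ValueError("gpu_count and price must be non-negative")
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return gpu_count * HOURS_PER_YEAR * utilization * price_per_gpu_hour


def simple_payback_years(capex_usd: float, yearly_revenue_usd: float) -> float:
    """Years of revenue needed to cover capital cost, ignoring opex and discounting."""
    if yearly_revenue_usd <= 0:
        raise ValueError("yearly revenue must be positive")
    return capex_usd / yearly_revenue_usd


# Hypothetical fleet: 100,000 GPUs at 70% utilization against $5B of capital.
for price in (0.50, 1.00):
    revenue = annual_revenue_usd(100_000, price, utilization=0.70)
    years = simple_payback_years(5e9, revenue)
    print(f"${price:.2f}/GPU-hour -> ${revenue / 1e6:,.1f}M per year, payback {years:.1f} years")
```

Comparing the payback figure against the 20-year operational life mentioned above gives a quick sense of how much margin the model leaves for operating costs and hardware refresh.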

Risk Factors and Uncertainties

Several substantial risks could affect the financial viability of space-based infrastructure:

Technology uncertainty: Space-based infrastructure remains unproven at any scale. Technical failures during development could require substantial redesign and delay, extending timelines and increasing costs substantially.

Cost reduction uncertainty: Starship's cost reduction targets represent aspirational goals. Failure to achieve targeted costs would impair economic viability significantly. If launch costs remain at

Market demand uncertainty: Demand for space-based AI computing depends on market adoption and competitive positioning. If terrestrial alternatives improve sufficiently or if SpaceX faces unexpected competition, demand might not materialize as anticipated.

Regulatory risks: Space-based infrastructure operates in complex regulatory environments involving export controls, orbital debris mitigation, spectrum allocation, and other factors. Regulatory changes could affect feasibility or costs significantly.

Space-based data centers could achieve nearly zero cooling energy requirements through radiative cooling, while also benefiting from higher solar power efficiency than Earth-based centers. (Estimated data for cooling energy requirements)

Strategic Positioning in Global AI Development

The Space X-x AI acquisition must also be understood within the context of global competition in artificial intelligence development and deployment. It positions Space X in ways that carry significant geopolitical and competitive implications.

The Global AI Infrastructure Landscape

Artificial intelligence development has become a strategic priority for governments and large technology companies worldwide. The computational infrastructure required for training and deploying AI models has become a critical economic and strategic asset.

United States: Major cloud providers (Amazon, Microsoft, Google) and technology companies (Meta, Apple, Tesla) have collectively invested hundreds of billions of dollars in AI infrastructure. However, most infrastructure remains terrestrial and geographically constrained.

China: Major technology companies (Alibaba, Baidu, Tencent) and the government have invested heavily in AI infrastructure. However, regulatory restrictions on access to advanced semiconductors (through US export controls) constrain development capabilities.

European Union: European companies and governments have invested in AI development, though typically with smaller capital bases and more dispersed efforts compared to US and Chinese initiatives.

Emerging markets: Countries worldwide recognize AI's strategic importance and invest in domestic capabilities, though typically at smaller scales than major technology powers.

Space X's Distinctive Position

The Space X-x AI consolidation creates a uniquely positioned entity with advantages not available to other organizations:

Infrastructure independence: By controlling launch capability, Space X gains independence from terrestrial constraints that affect other providers. No competitor can force Space X to stop deploying infrastructure by controlling data center real estate or power supply.

Geopolitical neutrality: Space-based infrastructure physically operates beyond national borders. While orbital mechanics dictate that some locations receive better coverage, no nation can seize or inspect orbiting hardware the way it can a terrestrial data center on its territory. This neutrality is partial, however: under the Outer Space Treaty, the state of registry retains jurisdiction over a space object, so Space X's systems would remain subject to US law.

Technological leadership: Successful deployment of space-based AI infrastructure would position Space X as the technological leader in a distinctive category, creating asymmetric competitive advantages.

Domestic strategic value: For the United States government, Space X's AI infrastructure initiative has strategic value in maintaining technological leadership and reducing dependence on potentially vulnerable terrestrial infrastructure.

Global Implications and Competitive Responses

This acquisition is likely to stimulate competitive responses from other nations and organizations:

Accelerated international space programs: Other nations might accelerate space infrastructure development to avoid over-dependence on US-controlled space-based AI infrastructure.

Domestic AI infrastructure investment: Countries worldwide might increase investment in terrestrial AI infrastructure to maintain independence and strategic autonomy.

Satellite-based computing initiatives: Other organizations might pursue satellite-based computing approaches, creating competitive alternatives to Space X's systems.

Regulatory frameworks: Governments worldwide might establish regulatory frameworks governing space-based infrastructure access, data sovereignty, and other factors to protect national interests.

The Reality of Current x AI Operations: From Grok to Space-Based Infrastructure

Before examining future capabilities, it's important to understand x AI's current operational status and the maturity of its AI systems. This provides context for assessing the feasibility of ambitious space-based infrastructure plans.

Grok: Current Capabilities and Positioning

x AI's primary product is Grok, an AI assistant positioned as an alternative to existing large language models. Grok differentiates through its willingness to engage with controversial topics and its access to real-time data from X (formerly Twitter). The model demonstrates competent performance on standard AI benchmarks, though precise comparative positioning remains proprietary.

Grok's development required substantial infrastructure investment. Training large language models demands computational resources on the scale of millions of GPU hours. A model comparable in scale to GPT-4 or Claude might require 20-50 million GPU-hours for initial training, equivalent to roughly

x AI's ability to develop Grok demonstrates legitimate AI infrastructure and talent capabilities. The company successfully built training infrastructure, managed model development, handled the complexity of integrating real-time data, and deployed services to users. This operational experience directly transfers to the Space X consolidation.
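As a rough illustration of what a GPU-hour budget of this size means in calendar time, the sketch below converts the 20-50 million GPU-hour range quoted above into wall-clock days. The cluster size of 10,000 GPUs and the assumption of perfect utilization are hypothetical; they are not figures from x AI.

```python
def training_days(gpu_hours, gpu_count, utilization=1.0):
    """Wall-clock days to spend a GPU-hour budget on a cluster.

    utilization is the fraction of time the GPUs do useful training work.
    """
    if gpu_count <= 0 or not 0 < utilization <= 1:
        raise ValueError("gpu_count must be positive and utilization in (0, 1]")
    return gpu_hours / (gpu_count * utilization) / 24


# Range from the text: 20-50 million GPU-hours.
# Hypothetical cluster: 10,000 GPUs running at full utilization.
for budget in (20e6, 50e6):
    days = training_days(budget, gpu_count=10_000)
    print(f"{budget / 1e6:.0f}M GPU-hours on 10,000 GPUs: {days:.0f} days")
```

Lower utilization or a smaller cluster stretches the schedule proportionally, which is one reason training infrastructure of this kind is capital intensive.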

The Controversial Elements: CSAM Generation

Like most advanced AI systems, Grok has encountered challenges with content moderation and safety. Specifically, reports indicated that Grok was capable of generating child sexual abuse material (CSAM) if explicitly requested. This represents a significant technical and ethical challenge for AI systems, as preventing such outputs requires careful system design and testing.

x AI responded to these reports by noting that Grok includes safeguards against generating illegal content, though some reports suggested these safeguards could be bypassed through specific prompting techniques. This vulnerability highlights the ongoing challenge that AI developers face in ensuring systems refuse harmful requests while maintaining capability and usability.

This controversy highlights an important operational reality: advanced AI systems present ongoing safety challenges that require active management. Space X's consolidation of x AI will need to address these issues alongside infrastructure development.

Operational Integration Challenges

x AI is already combined with X (formerly Twitter) following their 2025 merger. The consolidation with Space X therefore requires managing three distinct organizational units (Space X, x AI, and X) with different operational contexts and requirements. This complexity presents management challenges that shouldn't be underestimated.

Successful consolidation requires:

- Maintaining operational independence: While enabling synergies, consolidated organizations must maintain operational focus and expertise in each domain. Aerospace engineering, AI development, and social media operations require different skill sets, risk tolerances, and decision-making frameworks.

- Managing financial flows: Consolidation creates complexity in allocating costs, pricing inter-company services, and managing overall financial performance across diverse business units.

- Talent retention: Technology companies depend on specialized talent. Integration risks include key employees departing if they perceive organizational changes as detrimental to their work domains.

- Strategic focus: Leadership bandwidth becomes constrained when managing multiple complex organizations simultaneously. Ensuring adequate attention to each domain remains challenging.

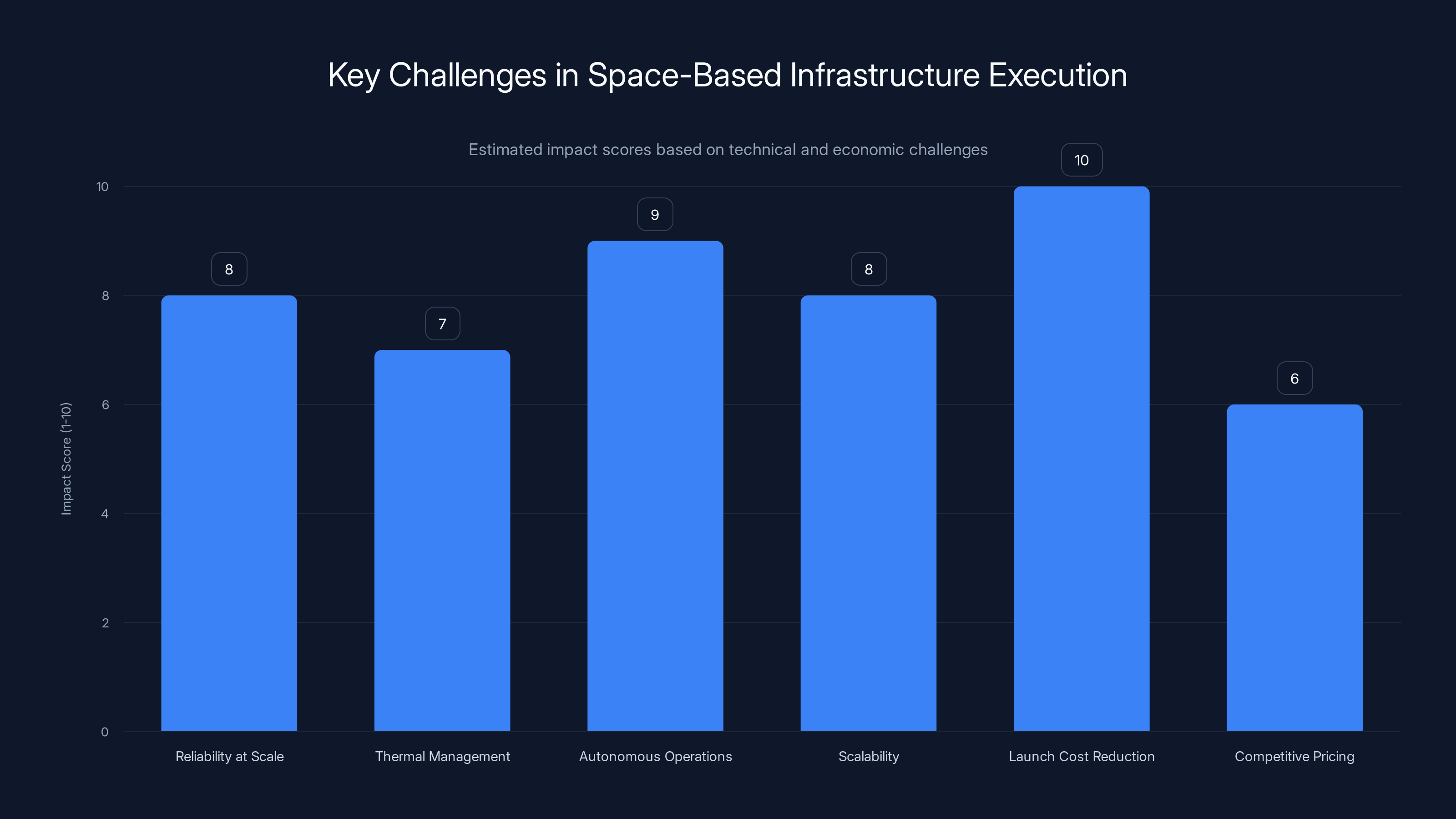

The most significant challenge is achieving launch cost reduction, with an impact score of 10, highlighting its critical role in the project's economic viability. (Estimated data)

Regulatory Frameworks Governing Space-Based Infrastructure

Developing and operating space-based AI infrastructure requires navigating complex regulatory frameworks spanning multiple jurisdictions and domains.

Space Law and Orbital Debris Mitigation

Space operations are governed by the Outer Space Treaty (1967), subsequent international agreements, and the national licensing regimes that implement them. Key requirements relevant to space-based infrastructure include:

Debris mitigation requirements: Orbital objects must be designed to minimize creation of long-lived orbital debris. This constrains system design and requires active debris removal plans or de-orbiting strategies.

Frequency coordination: Satellite communications require coordination through the International Telecommunication Union (ITU) and national regulators to prevent interference. Space-based infrastructure transmitting data to Earth requires allocated spectrum.

Registration and licensing: Space objects must be registered with national authorities. Licensing typically requires governmental approval and oversight.

Liability provisions: International law establishes liability for space object damage. Insurance and responsibility frameworks must be established.

Export Controls and Technology Transfer

AI computing systems and space technologies are subject to export controls in most countries. The US Export Administration Regulations (EAR) and International Traffic in Arms Regulations (ITAR) restrict technology transfer to certain destinations and entities.

These controls present operational challenges for space-based infrastructure:

Equipment restrictions: Advanced semiconductors used in AI systems may be subject to export controls, limiting global deployment options.

Foreign partnerships: Collaborating with international partners on space-based infrastructure could trigger regulatory constraints.

Access restrictions: Customers in certain jurisdictions might be restricted from accessing space-based computational resources due to export control provisions.

These regulatory frameworks operate alongside normal commercial regulations, creating complex compliance requirements.

Data Sovereignty and Privacy Considerations

Space-based AI data centers would process and store data for customers potentially worldwide. Data protection and sovereignty regulations (such as GDPR in Europe and CCPA in California) impose requirements regarding data location and processing:

Data localization: Some jurisdictions require that personal data be processed or stored within national boundaries. Space-based infrastructure fundamentally operates beyond national boundaries, creating tension with these requirements.

Regulatory jurisdiction: Which nation's laws govern operations in space? International treaties provide some clarity, but specific regulatory application remains complex.

Access by governments: Governments may seek data access through legal processes. Space-based infrastructure could complicate jurisdictional claims and government access requests.

Resolving these issues requires clear operational policies and potentially new regulatory frameworks specific to space-based infrastructure.

Comparative Analysis: Alternative Approaches to AI Infrastructure Innovation

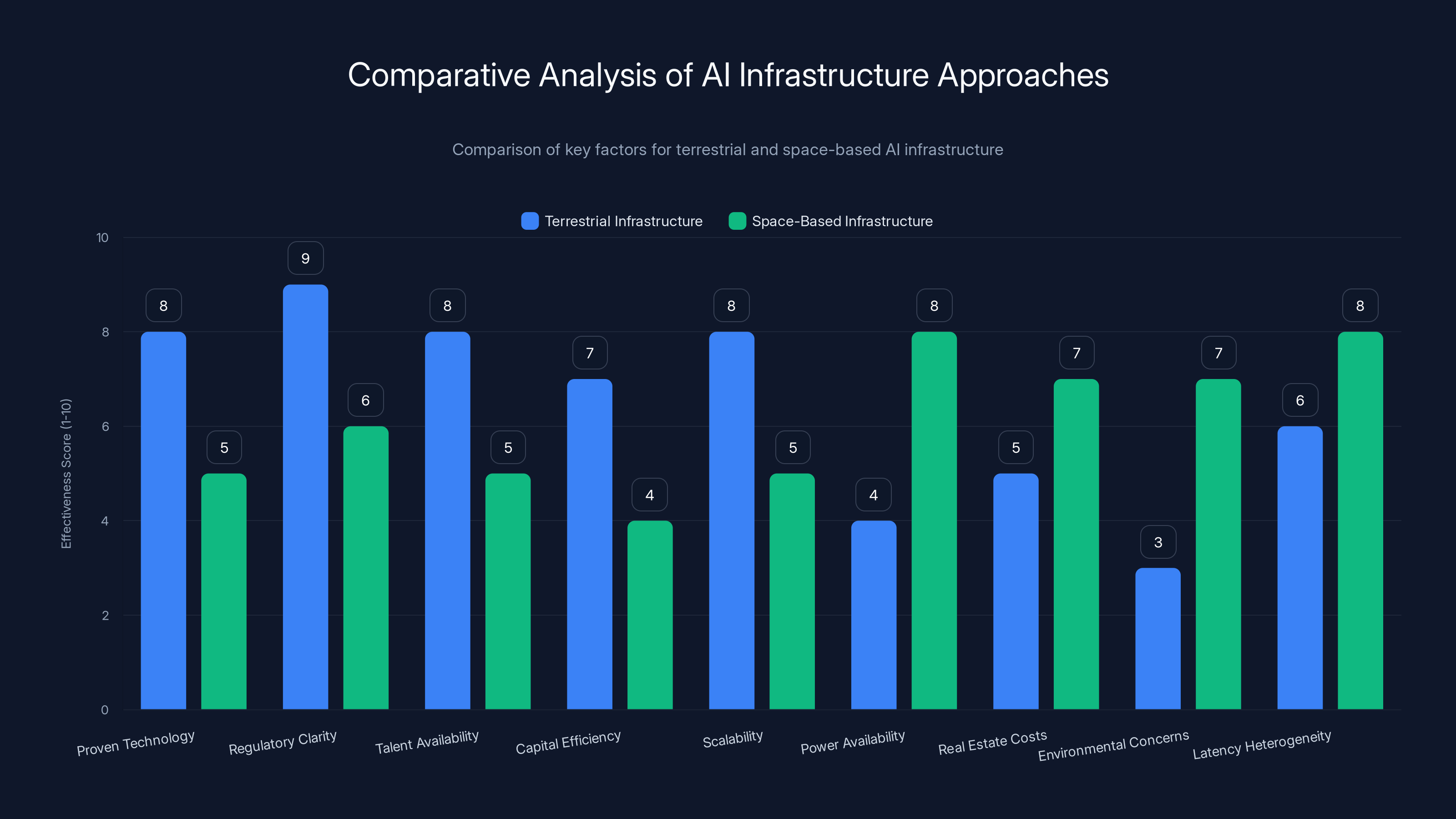

Space X's space-based approach represents one possible path to AI infrastructure innovation. Alternative approaches pursued by competitors offer different advantages and challenges.

Distributed Terrestrial Infrastructure

Many organizations pursue distributed data center strategies, establishing facilities globally to reduce latency and improve resource availability. This approach offers advantages:

Proven technology: Terrestrial data centers represent mature, well-understood infrastructure.

Regulatory clarity: Operating data centers in specific jurisdictions provides regulatory clarity and local compliance.

Talent availability: Data center operations benefit from established labor markets and existing expertise.

Capital efficiency: Terrestrial construction often costs less per unit capacity than space-based alternatives, particularly for large-scale deployment.

Scalability: Adding additional terrestrial capacity requires construction and deployment, but the process is well-established and proven.

However, terrestrial infrastructure faces constraints:

Power availability: Scaling computational infrastructure requires proportional growth in electrical power availability. Many regions face power constraints limiting expansion.

Real estate costs: Data center real estate represents a significant ongoing cost in many regions, particularly where power is available and reliable.

Environmental concerns: Terrestrial data centers generate substantial heat requiring cooling systems, consuming water and energy in ways that create environmental impact.

Latency heterogeneity: Distributing infrastructure globally improves average latency but creates latency variability affecting performance-sensitive applications.

Custom Silicon and Architectural Innovation

Many organizations pursue custom silicon development (like Apple's chips, Google's TPUs, Amazon's Trainium chips) and novel architectural approaches to improve computational efficiency. This strategy offers:

Efficiency improvements: Custom silicon optimized for AI workloads can achieve 2-5x efficiency improvements compared to general-purpose processors.

Cost reduction: More efficient systems require less electrical power and cooling, reducing operational costs substantially.

Performance optimization: Custom architectures can be optimized for specific workloads, improving performance for target applications.

This approach doesn't require space-based deployment and represents a more accessible innovation path for most organizations.

Edge Computing and Distributed Inference

Alternatively, organizations pursue edge computing strategies deploying AI models closer to data sources and users, reducing reliance on centralized infrastructure. This approach offers:

Latency reduction: Processing data at edge locations reduces latency for latency-sensitive applications.

Privacy improvement: Edge processing can improve privacy by processing sensitive data locally rather than transmitting to centralized infrastructure.

Network efficiency: Reducing data transmission requirements improves network efficiency and reduces bandwidth costs.

Distributed resilience: Distributed systems are inherently more resilient to single-point failures.

Edge computing complements rather than replaces centralized infrastructure but represents an important architectural trend.

Quantum Computing Speculation

While still largely experimental, quantum computing represents a potentially transformative alternative to conventional computing for specific problem classes. However, quantum computing faces substantial technical challenges and practical timelines remain highly uncertain (estimated 10+ years for practical advantages in most AI applications).

Terrestrial infrastructure scores higher in regulatory clarity and talent availability, while space-based solutions offer better scalability and environmental benefits. Estimated data based on typical industry insights.

Implementation Timeline and Development Roadmap

Given the complexity of consolidating Space X and x AI while simultaneously developing space-based infrastructure, realistic timelines require multiple years of development. This section outlines a credible implementation roadmap.

Year 1 (2025-2026): Organization Integration and Design Foundation

Q1-Q2 (2025): Immediate integration activities focus on organizational structure establishment, reporting relationships, and initial resource allocation. Cross-organizational working groups form to identify integration opportunities and synergies. Initial design studies begin for space-based infrastructure with rough-order-of-magnitude cost and schedule estimates.

Q3-Q4 (2025): Design specifications mature for proof-of-concept systems. System-level architecture decisions establish whether to pursue small prototype modules or larger prototype systems. Environmental testing schedules and supplier identification proceed. Organization integration continues with potential restructuring to eliminate redundancy.

2026: Detailed design specifications finalize. First developmental modules progress toward fabrication. Ground testing infrastructure (thermal vacuum chambers, vibration tables, electromagnetic compatibility laboratories) is established or contracted. Prototype manufacturing begins.

Milestones:

- Consolidated organization structure operational

- Detailed design specifications released

- First developmental hardware under construction

- Test infrastructure established

- Critical path suppliers identified and engaged

Years 2-3 (2026-2028): Prototype Development and Validation

2026-2027: Prototype systems complete manufacturing and undergo environmental testing. Initial test articles verify thermal, structural, and electrical performance. Operational software development accelerates with initial versions supporting prototype systems.

Testing activities:

- Thermal vacuum testing validating radiative cooling performance

- Vibration testing confirming structural integrity

- Radiation testing assessing component tolerance

- Electromagnetic compatibility testing ensuring systems operate in space environment

2027-2028: First operational deployment of prototype systems to orbit. Initial launches deploy small prototype modules to low Earth orbit. Systems operate at limited capacity while teams validate operational procedures and refine performance estimates.

Milestones:

- Prototype systems complete environmental testing

- First space-based prototype operational

- Operational procedures validated

- Performance metrics demonstrating space viability

Years 3-5 (2027-2030): Pilot Deployment and Production Scale-Up

2027-2029: Following prototype success, deployment accelerates to larger systems and multiple modules. Manufacturing transitions from low-rate pilot production to higher-rate production. Operational experience from prototype systems informs production design refinements.

Deployment activities:

- Multiple prototype systems deployed to validate consistent performance

- Production manufacturing of operational modules begins

- Early revenue-generating operations commence with limited customer workloads

- Operational procedures mature based on accumulated experience

2029-2030: Production rate continues increasing with multiple launches annually deploying substantial infrastructure capacity. Systems begin serving meaningful customer workloads while supporting internal Space X and x AI applications.

Milestones:

- Operational systems deployed at meaningful scale

- Revenue generation from computational services

- Production manufacturing at sustainable scale

- Customer satisfaction and repeat business established

Year 5+ (2030 and beyond): Full-Scale Operations and Expansion

2030+: Space-based infrastructure reaches operational maturity with substantial computing capacity in orbit. Operations become routine with regular maintenance, upgrades, and capacity expansions. The system becomes a competitive factor in AI infrastructure markets and a strategic asset for Space X's space exploration ambitions.

Long-term operations:

- Continuous deployment of new capacity maintaining cutting-edge performance

- Technology refreshes replacing aging systems with improved versions

- Expansion of capabilities beyond initial proof-of-concept

- Integration with lunar and Mars exploration infrastructure as originally envisioned

Alternative Platforms and Solutions for AI Infrastructure

While Space X pursues space-based infrastructure, alternative platforms and solutions exist for organizations seeking AI infrastructure capabilities. Understanding these alternatives provides context for evaluating the competitive landscape.

For teams evaluating AI infrastructure platforms, Runable offers a cost-effective alternative providing AI-powered automation and content generation capabilities at $9/month. While Runable focuses on AI agents for document generation, presentations, and workflow automation rather than raw computational infrastructure, it serves developers and teams seeking to implement AI capabilities without deploying complex infrastructure. This approach suits teams prioritizing quick implementation and operational simplicity over absolute computational scale.

Cloud infrastructure providers (AWS, Azure, Google Cloud) remain the dominant approach for most organizations. These platforms offer:

- Proven, scalable infrastructure

- Established compliance and security frameworks

- Global data center networks

- Integrated AI services and tools

- Pay-as-you-go economics

Specialized AI infrastructure companies (Lambda Labs, Crusoe Energy, CoreWeave) provide alternative approaches optimized specifically for AI workloads, often with a focus on energy efficiency or cost reduction for particular workload classes.

Custom infrastructure development by large organizations (Meta, Google, Amazon) pursuing in-house solutions provides maximum control and optimization for specific use cases, though requiring substantial capital investment and specialized expertise.

Each approach offers distinct advantages depending on organizational requirements, capital availability, and technical sophistication. Space X's approach represents the most ambitious alternative, targeting fundamentally different constraints and opportunities than terrestrial approaches.

Challenges and Uncertainties in Execution

While Space X's acquisition of x AI and the subsequent pursuit of space-based infrastructure represent ambitious plans, substantial challenges and uncertainties could affect execution and outcomes.

Technical Challenges

Reliability at scale: Operating thousands of computing nodes in space simultaneously presents unprecedented reliability challenges. Space environment factors (radiation, thermal cycling, micrometeorite impacts) require systems designed for substantially longer operational life and higher reliability than terrestrial systems. Achieving adequate reliability while controlling costs remains uncertain.

Thermal management: Despite space's advantages as a heat sink, radiative cooling systems for large data centers require careful design and validation. Performance degradation or failures in thermal systems could render entire modules inoperable, with limited repair options.
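To give a sense of scale for the radiator design problem, here is a minimal sketch that sizes an ideal radiator with the Stefan-Boltzmann law. The 1 MW heat load, the radiator temperatures, and the emissivity of 0.9 are illustrative assumptions, and the model ignores absorbed sunlight, Earth infrared, and view-factor losses, so real radiators would need to be larger.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def radiator_area_m2(heat_watts, temp_kelvin, emissivity=0.9, sink_kelvin=0.0):
    """Single-sided radiating area needed to reject heat_watts.

    Uses P = emissivity * SIGMA * A * (T^4 - T_sink^4). A sink temperature
    of 0 K is an idealization of deep space.
    """
    net_flux = emissivity * SIGMA * (temp_kelvin**4 - sink_kelvin**4)
    if net_flux <= 0:
        raise ValueError("radiator must be hotter than its sink")
    return heat_watts / net_flux


# Hypothetical module: 1 MW of waste heat.
for temp in (300.0, 350.0):
    area = radiator_area_m2(1e6, temp)
    print(f"1 MW at {temp:.0f} K needs about {area:,.0f} m^2 of radiator")
```

Because radiated power scales with the fourth power of temperature, running the radiator hotter shrinks it considerably, but the electronics must then tolerate higher operating temperatures.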

Autonomous operations: Space-based infrastructure must operate with minimal ground intervention because ground-station contact can be intermittent and command links add latency (light-travel time alone is only a few milliseconds round-trip in low Earth orbit, but roughly 240 ms at geostationary altitude, before routing and processing delays). Developing autonomous systems capable of detecting and responding to failures without ground intervention remains challenging.
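The physical floor on command latency can be estimated from light-travel time alone. The sketch below assumes a ground station directly beneath the satellite and uses two illustrative altitudes (about 550 km for a low Earth orbit and 35,786 km for geostationary orbit); real round-trip times are higher once slant range, relay hops, routing, and processing are included.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum


def round_trip_ms(altitude_km):
    """Minimum round-trip light time in milliseconds for a satellite overhead."""
    return 2 * altitude_km / C_KM_PER_S * 1000


# Illustrative altitudes, not figures from the acquisition announcement.
for name, altitude in (("low Earth orbit", 550.0), ("geostationary", 35_786.0)):
    print(f"{name} ({altitude:,.0f} km): {round_trip_ms(altitude):.1f} ms round-trip")
```

The physical delay is small in low orbits, so the case for autonomy rests at least as much on contact gaps and the impossibility of hands-on repair as on raw signal delay.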

Scalability: Expanding from prototype systems to operationally meaningful capacity requires solving numerous technical challenges simultaneously. Managing hundreds or thousands of modules, coordinating their operations, and ensuring reliable performance becomes exponentially more complex at larger scales.

Economic Uncertainties

Launch cost reduction: The entire economic case depends on Starship achieving cost reduction targets. Failure to achieve targeted $100/kg costs would substantially impair viability. Development delays extending launch cost reduction timelines would defer revenue generation significantly.
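The dependence on launch cost can be sketched by amortizing the launch bill over the GPU-hours a module delivers. The $100/kg target comes from the text; the 50 kg of launched mass per GPU (covering power, radiators, and structure), the 5-year service life, the 70% utilization, and the $1,000/kg comparison case are hypothetical values chosen only to show the sensitivity.

```python
HOURS_PER_YEAR = 24 * 365


def launch_cost_per_gpu_hour(cost_per_kg, mass_per_gpu_kg, life_years, utilization):
    """Launch cost in dollars attributed to each GPU-hour sold."""
    launch_cost = cost_per_kg * mass_per_gpu_kg
    billable_hours = life_years * HOURS_PER_YEAR * utilization
    return launch_cost / billable_hours


# Hypothetical module parameters (not from the text).
MASS_PER_GPU_KG = 50.0
LIFE_YEARS = 5
UTILIZATION = 0.70

# $100/kg is the target cited in the text; $1,000/kg is a hypothetical miss.
for cost_per_kg in (100.0, 1_000.0):
    share = launch_cost_per_gpu_hour(cost_per_kg, MASS_PER_GPU_KG, LIFE_YEARS, UTILIZATION)
    print(f"${cost_per_kg:,.0f}/kg adds about ${share:.2f} per GPU-hour")
```

With these assumed inputs, launch adds about $0.16 per GPU-hour at the target cost and about $1.63 at ten times that cost. A tenfold miss would therefore add more per GPU-hour than a price of $1.00 per GPU-hour could cover.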

Competitive pricing pressure: Even if Space X achieves superior energy efficiency, intense competition in AI infrastructure could limit pricing power. Prices might be driven to commodity levels through competition, reducing margin opportunities.

Market demand uncertainty: Demand for space-based computing depends on market adoption by customers. Insufficient demand could result in underutilization of deployed infrastructure, impacting financial returns.

Capital requirements exceeding projections: Complex aerospace development programs historically experience cost overruns. Initial estimates for space-based infrastructure deployment might substantially underestimate actual requirements.

Organizational Challenges

Integration complexity: Successfully consolidating Space X, x AI, and existing X operations requires managing diverse organizational cultures and technical domains. Integration missteps could result in operational friction, talent loss, or strategic misalignment.

Talent retention: Both rocket companies and AI companies depend on specialized talent. Organizational changes could trigger departures of key personnel, degrading technical capabilities.

Strategic focus: Leadership bandwidth becomes constrained managing multiple complex organizations. Diversions of attention from core aerospace and AI businesses could impact existing operations.

Coordination challenges: Aligning three independent organizations toward common objectives requires effective communication and coordination mechanisms that might be challenging to establish and maintain.

Regulatory and Political Uncertainties

Export control changes: Tightening of export controls on AI computing technology could restrict global deployment and limit market opportunities.

International agreements: New international agreements governing space-based infrastructure access and data sovereignty could impose operational constraints.

Government relationships: Maintaining positive relationships with government agencies involved in space oversight, national security, and technology policy remains essential. Political changes could alter the regulatory environment substantially.

Competitive concerns: Other nations might view Space X's space-based infrastructure as a strategic concern, potentially leading to diplomatic tensions or restrictive policies.

The Broader Vision: Space-Based Infrastructure Beyond AI

While this article focuses on AI infrastructure, Musk's stated vision extends beyond computation to ambitious space exploration objectives. Understanding this broader context provides perspective on strategic motivations.

Musk has consistently articulated a vision for human expansion into space, including lunar bases and Martian colonization. This vision requires infrastructure that terrestrial approaches cannot provide: transportation capacity for moving people and cargo, habitat systems for space-based living, and resource utilization systems for extracting and using space-based materials.

AI infrastructure fits into this broader vision as an enabling technology. Autonomous systems for managing remote habitats, analyzing resources, coordinating operations, and supporting human decision-making all require substantial computational capabilities. Space-based infrastructure positioned where it's needed (in orbit, on lunar surface, eventually on Mars) would enable these applications more effectively than Earth-based alternatives.

From this perspective, Space X's acquisition of x AI and pursuit of space-based infrastructure represents strategic positioning for long-term space expansion objectives. Revenue generated from space-based AI services could fund exploration ambitions, creating a self-sustaining cycle where commercial operations fund increasingly ambitious space programs.

Whether this vision proves practically achievable remains uncertain. Space expansion at the scale Musk envisions requires technological breakthroughs beyond current capabilities and represents a multi-decade or multi-century endeavor. However, the strategic consistency between space-based AI infrastructure and broader space expansion objectives demonstrates coherent long-term thinking about how infrastructure investments align with ultimate objectives.

Conclusion: Strategic Inflection Point for Space-Based Computing

Space X's acquisition of x AI represents a significant strategic decision with implications extending far beyond either organization individually. This consolidation signals serious commitment to developing space-based AI infrastructure at scale, marking a potential inflection point in how computational infrastructure might be conceived and deployed.

The technical case for space-based computing is plausible in principle: superior energy efficiency, novel operational characteristics, and potential for vertically integrated deployment would create genuine competitive advantages if successfully executed. However, the approach remains unproven at scale, and realizing these advantages requires overcoming substantial technical, economic, and organizational challenges.

The financial case depends fundamentally on achieving Starship cost reduction targets and developing reliable, autonomous systems capable of operating at scale in the harsh space environment. Neither accomplishment is assured, and both require sustained investment across multiple years with significant technical risk.

The competitive implications are substantial. If Space X successfully develops space-based infrastructure, the company gains competitive advantages unavailable to terrestrial-focused alternatives. However, competitors are unlikely to pursue identical strategies, instead focusing on alternative innovations (custom silicon, distributed edge computing, efficiency improvements) that address similar underlying challenges through different approaches.

Organizationally, consolidating Space X and x AI while simultaneously pursuing ambitious infrastructure development represents extraordinary complexity. Success requires managing integration challenges while maintaining focus on core aerospace and AI competencies. The track record of complex technology consolidations is mixed, with substantial execution risk present.

For the broader technology industry and society, Space X's space-based AI infrastructure initiative represents an important experiment. Success could fundamentally change how computational infrastructure is conceptualized and deployed. Failure would demonstrate that certain ambitious visions exceed practical feasibility given current technology and economics. Either outcome provides valuable information for future technology strategy and investment decisions.

The next 3-5 years will prove determinative. Prototype deployment and initial validation will demonstrate whether space-based infrastructure is technically and economically viable at meaningful scale. Success on prototypes would likely trigger acceleration of deployment and competitive responses from other organizations. Failure would redirect focus toward alternative infrastructure approaches and raise questions about the broader feasibility of space-based computing.

Ultimately, Space X's x AI acquisition reflects Elon Musk's characteristic approach to ambitious technology challenges: identifying fundamental constraints (terrestrial real estate, power availability, latency), imagining solutions transcending those constraints (space-based infrastructure), and organizing resources to pursue implementation despite substantial uncertainty. History suggests this approach generates either extraordinary breakthroughs or expensive failures, with little middle ground. Investors and observers should monitor developments carefully as this ambitious initiative progresses.

FAQ

What is the strategic purpose of Space X acquiring x AI?

Space X acquired x AI to create a vertically integrated innovation engine combining rocket launch capabilities with AI infrastructure development. The primary strategic goal is enabling space-based AI data centers that leverage space's natural advantages (radiative cooling, abundant solar power, freedom from terrestrial constraints) while using Space X's unparalleled launch capability for deployment and Starlink's satellite network for connectivity.

How would space-based data centers actually function in orbit?

Space-based data centers would operate similarly to terrestrial facilities but optimized for the space environment. Computing modules would use radiative cooling (directly radiating heat to space) rather than convective cooling, eliminating power-intensive cooling systems. Solar panels would provide continuous power except during brief eclipse periods. Starlink satellites would provide high-bandwidth data transmission to Earth-based networks. Ground stations would manage command and control with systems operating autonomously due to communication latency limitations.
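How brief the eclipse periods are depends on the orbit chosen. As a rough sketch, the code below computes the orbital period and the worst-case eclipse fraction for a circular orbit, using a cylindrical Earth-shadow model with the Sun lying in the orbital plane. The 550 km altitude is an illustrative assumption; orbits with a more favorable Sun geometry spend less time in shadow.

```python
import math

MU_EARTH = 398_600.4418  # Earth's gravitational parameter, km^3 / s^2
R_EARTH = 6_371.0        # mean Earth radius, km


def orbital_period_min(altitude_km):
    """Period of a circular orbit in minutes (Kepler's third law)."""
    a = R_EARTH + altitude_km
    return 2 * math.pi * math.sqrt(a**3 / MU_EARTH) / 60


def max_eclipse_fraction(altitude_km):
    """Worst-case fraction of each orbit spent in Earth's shadow.

    Assumes a cylindrical shadow and the Sun in the orbital plane.
    """
    a = R_EARTH + altitude_km
    return math.asin(R_EARTH / a) / math.pi


altitude = 550.0  # hypothetical low Earth orbit altitude, km
period = orbital_period_min(altitude)
fraction = max_eclipse_fraction(altitude)
print(f"period: {period:.1f} min, worst-case eclipse: {fraction * period:.1f} min per orbit")
```

Any time spent in shadow has to be covered by batteries or by throttling workloads, which feeds back into module mass and cost.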

What are the primary advantages of space-based AI infrastructure?

Key advantages include improved energy efficiency (radiative cooling in space could eliminate the 20-40% of terrestrial data center power consumption that goes to cooling), continuous solar power availability without weather interference, freedom from terrestrial geographic constraints and real estate costs, potential for premium pricing based on distinctive capabilities, and strategic positioning for long-term space expansion objectives. These efficiencies could translate to 30-50% lower computational costs compared to terrestrial alternatives if launch costs decrease as projected.

What are the major technical challenges in deploying space-based infrastructure?

Critical challenges include achieving adequate radiation hardening for computing hardware in the harsh space environment, designing passive thermal systems capable of dissipating megawatt-scale heat loads, developing autonomous operations software that lets systems detect and respond to failures without ground intervention, managing micrometeoroid and orbital debris risks, and scaling reliable operations from prototypes to thousands of simultaneous modules. Each challenge requires novel engineering solutions with uncertain timelines.
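To give a sense of what megawatt-scale passive heat dissipation implies, the radiator area can be estimated from the Stefan-Boltzmann law, P = εσA(T⁴ − T_sink⁴). The sketch below is a first-order estimate only: the emissivity, radiator temperature, and the idealized zero-temperature sink are assumptions chosen for illustration, and it ignores absorbed sunlight, Earth infrared, and view-factor losses, all of which would increase the required area.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(
    heat_w: float,
    radiator_temp_k: float,
    emissivity: float = 0.9,    # assumed surface emissivity
    sink_temp_k: float = 0.0,   # idealized deep-space sink (assumption)
) -> float:
    """Radiating area needed to reject heat_w watts purely by radiation."""
    if heat_w <= 0:
        raise ValueError("heat_w must be positive")
    if not 0 < emissivity <= 1:
        raise ValueError("emissivity must be in (0, 1]")
    # Net radiated flux per square metre of radiating surface.
    net_flux = emissivity * STEFAN_BOLTZMANN * (
        radiator_temp_k**4 - sink_temp_k**4
    )
    if net_flux <= 0:
        raise ValueError("radiator must be hotter than the sink")
    return heat_w / net_flux


if __name__ == "__main__":
    heat = 1.0e6  # 1 MW of waste heat, illustrative
    for temp_k in (300.0, 350.0, 400.0):
        area = radiator_area_m2(heat, temp_k)
        print(f"{temp_k:.0f} K radiator: {area:,.0f} m^2 of radiating surface")
```

Because radiated power scales with the fourth power of temperature, running the radiator hotter shrinks the required area sharply, which is why the allowable operating temperature of the hardware matters so much in this kind of design.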

How does the cost of launching infrastructure to space affect economic viability?

Launch costs are the primary determinant of viability. Current Space X Falcon 9 costs (~

What timeline is realistic for operational space-based infrastructure deployment?

Reasoning from aerospace industry precedent, a realistic timeline involves prototype design and development (2025-2026), prototype manufacturing and testing (2026-2027), initial prototype deployment (2027-2028), production manufacturing and small-scale deployment (2028-2029), and meaningful operational scale (2030+). This implies 5-7 years minimum before space-based systems generate substantial revenue. Compressing this timeline raises the risk of technical failures; stretching it delays returns and prolongs capital requirements.

How does this acquisition affect the competitive landscape in AI infrastructure?

Space X gains potential competitive advantages through control of distinctive infrastructure (launch capability, space-based positioning) unavailable to other providers. However, competitors are unlikely to pursue identical space-based strategies, instead focusing on terrestrial innovations (custom silicon, distributed architecture, efficiency improvements). If Space X's initiative succeeds, it establishes a new competitive category; if it fails, the outcome demonstrates limitations of space-based approaches. Most competitive impact remains years away pending prototype validation.

What regulatory challenges affect space-based infrastructure development?

Multiple regulatory frameworks apply: international space law (the Outer Space Treaty) governing space operations, export controls (EAR/ITAR) restricting technology transfer, orbital debris mitigation requirements limiting long-lived orbital objects, frequency coordination for satellite communications, data sovereignty regulations (GDPR, CCPA) constraining where data may be processed, and various national licensing requirements. Navigating these intersecting frameworks requires substantial compliance expertise and potentially new regulatory accommodation for novel infrastructure.

How does space-based AI infrastructure connect to Musk's broader space exploration vision?

The acquisition fits into a coherent long-term strategy where space-based infrastructure serves dual purposes: generating revenue through computational services while enabling space exploration through abundant computational resources available where needed. Revenue from AI services could fund exploration ambitions, creating self-sustaining cycles. Autonomous systems required for remote base management, resource processing, and habitat operations all require computational capabilities. This vision encompasses lunar bases, Martian civilization, and eventual expansion throughout the solar system.

What role does the existing x AI-X integration play in Space X's consolidation?

The prior x AI-X merger created operational precedent and demonstrated synergies between distinct organizations under Musk's control. X's user base (hundreds of millions) provides distribution channels for AI products, X's data informs AI model training, and consolidated operations reduce redundancy. This integration model likely informed Space X's acquisition decision and suggests how x AI might be integrated into broader Space X operations. The consolidated entity now controls AI development, social media distribution, launch infrastructure, and satellite communications—creating distinctive cross-business synergies.

What metrics should investors monitor to assess progress toward space-based infrastructure?

Key performance indicators include: Starship development progress and cost reduction demonstrations, prototype module manufacturing milestones and testing results, orbital deployment timeline achievements, computational performance measurements from prototype systems, customer interest and early revenue generation, personnel retention and talent hiring in critical domains, and technical publications demonstrating innovative solutions to engineering challenges. Progress across these metrics would indicate whether the initiative remains on track toward viability.

Key Takeaways

- Space X's acquisition of x AI represents strategic consolidation combining launch capability with AI infrastructure, targeting space-based computational systems with superior energy efficiency and distinctive competitive positioning

- Space-based data centers offer theoretical advantages (radiative cooling, continuous solar power, geographic freedom) but face substantial technical challenges requiring 5-7 years minimum for prototype validation and meaningful operational deployment

- Economic viability depends almost entirely on Starship achieving targeted cost reductions to ~$100 per kilogram; failure to achieve these targets would impair space deployment economics substantially

- Organizational integration of Space X, x AI, and existing X operations presents substantial complexity requiring skillful management to preserve operational excellence while achieving consolidation synergies

- Regulatory frameworks governing space operations, export controls, and data sovereignty create compliance complexity requiring sophisticated management across intersecting jurisdictions

- The acquisition signals serious long-term strategic commitment to space-based infrastructure as enabling technology for ambitious space exploration objectives

- Competitive responses from other organizations will likely focus on terrestrial innovations rather than space-based mimicry, creating differentiated but parallel competitive dynamics

- Success would establish a new competitive category and infrastructure paradigm; failure would demonstrate practical limitations of space-based computing for AI applications

- Industry observers should monitor prototype development and initial deployment milestones (2026-2028) as determinative evidence of technical and economic feasibility
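The takeaway above about the ~$100 per kilogram target can be made concrete with simple arithmetic: launch cost per unit of deployed compute scales linearly with the price per kilogram. In the sketch below, only the $100/kg figure comes from the article. The specific mass of 10 kg per kilowatt and the higher price scenarios are hypothetical placeholders, not estimates for any real system.

```python
def launch_cost_per_kw_usd(
    specific_mass_kg_per_kw: float, cost_per_kg_usd: float
) -> float:
    """Launch cost attributable to each kilowatt of deployed compute."""
    if specific_mass_kg_per_kw <= 0 or cost_per_kg_usd <= 0:
        raise ValueError("inputs must be positive")
    return specific_mass_kg_per_kw * cost_per_kg_usd


# Hypothetical: total module mass (compute, solar, radiators, structure)
# per kilowatt of compute. Not a figure from the article.
HYPOTHETICAL_SPECIFIC_MASS_KG_PER_KW = 10.0

# Price scenarios in USD per kilogram. Only the first comes from the article.
PRICE_SCENARIOS_USD_PER_KG = {
    "article target": 100.0,
    "5x target (hypothetical)": 500.0,
    "10x target (hypothetical)": 1000.0,
}

if __name__ == "__main__":
    for label, price in PRICE_SCENARIOS_USD_PER_KG.items():
        cost = launch_cost_per_kw_usd(
            HYPOTHETICAL_SPECIFIC_MASS_KG_PER_KW, price
        )
        print(f"{label}: ${cost:,.0f} launch cost per kW of compute")
```

The linear relationship is the point: any shortfall against the per-kilogram target passes through one-for-one to deployment cost, so the assumed module mass per kilowatt matters as much as the launch price itself.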
