Introduction: The SSD Memory Crisis Nobody's Talking About

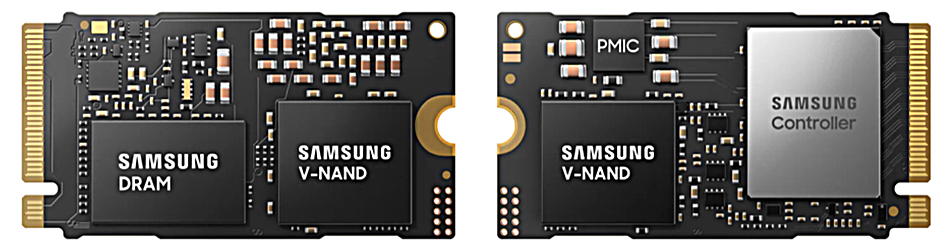

Here's the thing. Your SSD isn't just storing data. It's also storing a massive instruction manual that explains where every single piece of data lives. This manual lives in expensive DRAM sitting right next to the drive's controller. And as SSDs get bigger, the manual grows with them, until it becomes a bloated, costly problem.

David Flynn, the founder of Hammerspace, was in Korea recently pitching something radical: what if we just got rid of the entire manual?

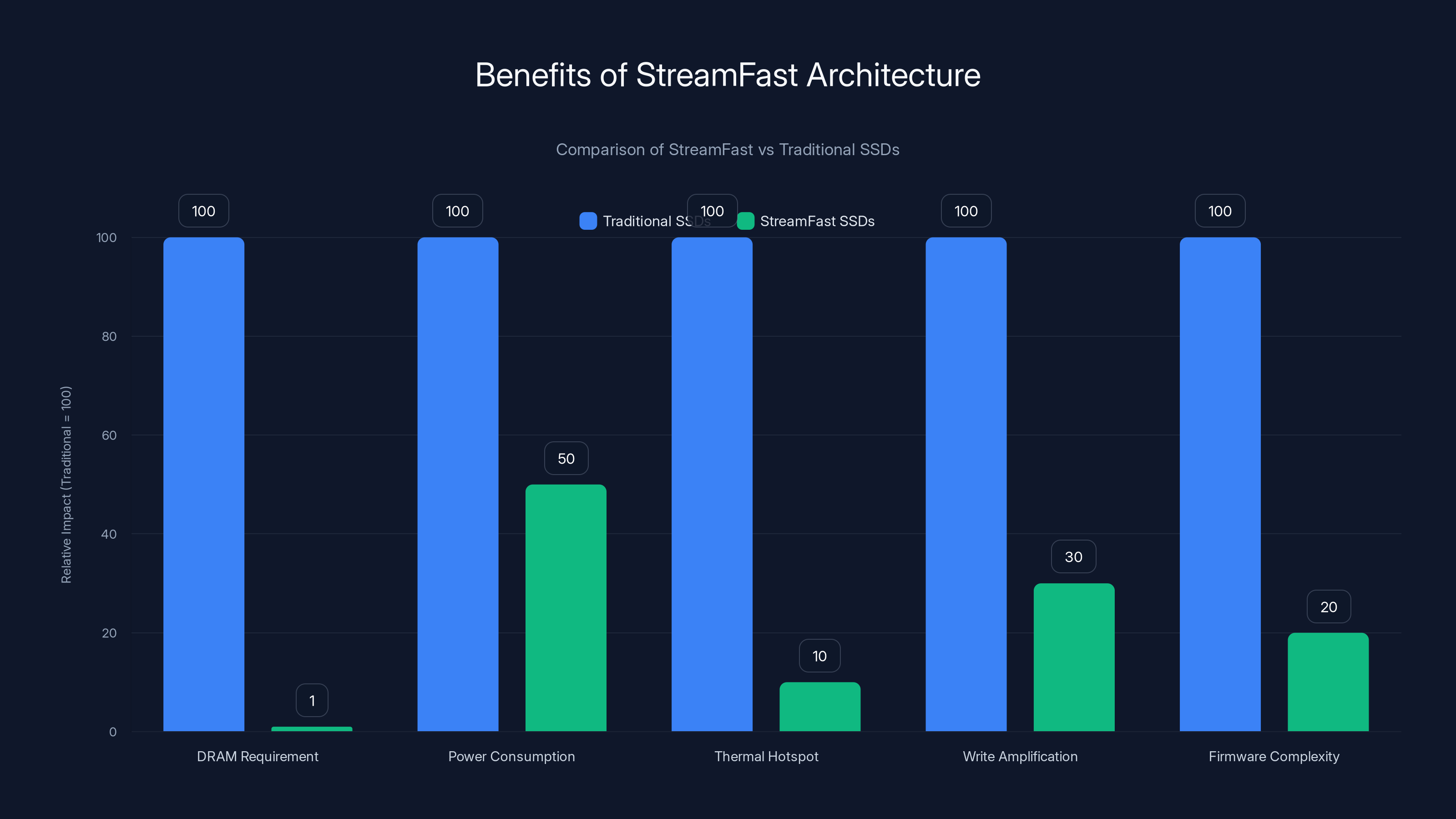

Stream Fast is a new SSD architecture concept that removes two components found in most modern drives: the Flash Translation Layer (FTL) and the controller DRAM. In exchange, it promises dramatically simpler storage, lower power consumption, and a thousand-fold reduction in memory overhead.

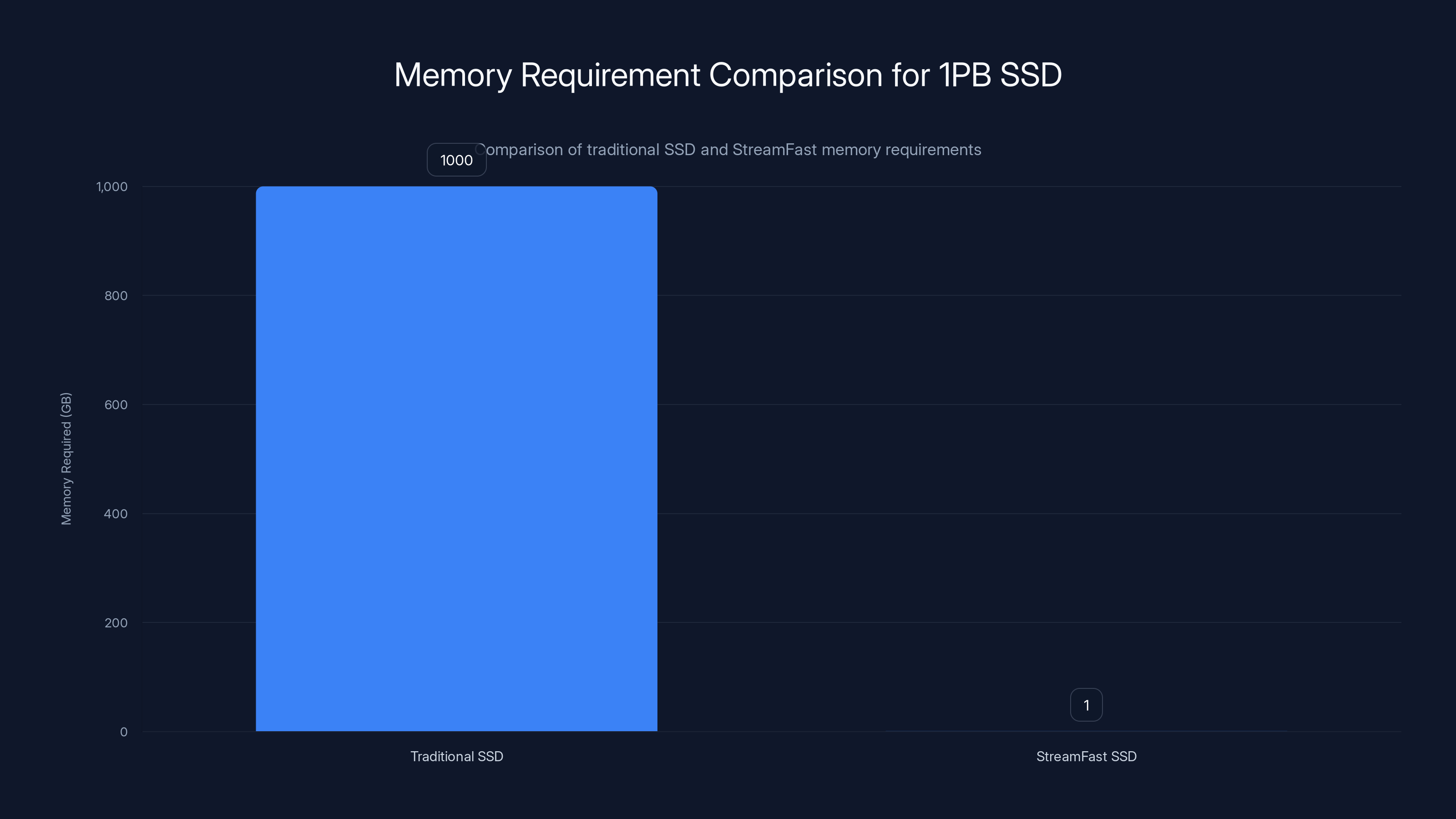

We're talking about a storage revolution that could reshape how enterprises build data centers, how AI companies manage their infrastructure, and how we think about storage scaling itself. The numbers alone are staggering. Instead of needing a terabyte of DRAM to manage a petabyte SSD, Stream Fast needs just a gigabyte. That's not an optimization. That's a fundamental rethink of what an SSD should be.

But here's where it gets interesting. This isn't just academic. Stream Fast is being developed through the Open Flash Platform group, with backing from major flash memory manufacturers. SK Hynix is reportedly involved. The technology is moving from concept to potential reality.

Understanding Stream Fast means understanding why modern SSDs are built the way they are, where the current architecture fails, what a post-FTL world actually looks like, and why this matters for the storage industry's future. Let's dig in.

TL;DR

- FTL Memory Overhead: Traditional SSDs require 1 byte of DRAM for every kilobyte of flash, meaning a petabyte drive needs a terabyte of controller memory

- Stream Fast Solution: Eliminates the FTL entirely, reducing overhead to 1 byte per megabyte, a thousand-fold improvement

- The Mechanism: Uses sequential device-assigned addresses instead of mapping tables, letting the file system interact directly with flash

- Practical Gains: Cooler, more reliable drives with lower power consumption and simpler construction

- Industry Impact: Addresses the DRAM shortage crisis and enables new architectures for AI, cloud, and enterprise storage

Stream Fast cuts controller DRAM requirements by roughly 99.9% (a thousand-fold reduction), lowers power consumption, minimizes thermal hotspots and write amplification, and simplifies firmware.

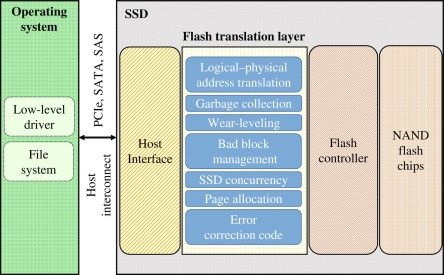

What Is the Flash Translation Layer and Why Does It Consume So Much Memory?

The Flash Translation Layer isn't new. It has been a core part of flash storage since the earliest flash-based drives, well before SSDs became consumer devices. But most people don't understand what it does or why it's necessary.

Flash memory, at its core, is weird. You can't just overwrite data in place the way you can on a traditional hard drive. Flash is written in pages but can only be erased in much larger blocks, so changing data means writing the new version somewhere else and cleaning up the old copy later. Each block also survives only a limited number of erase cycles; wear it out and it dies. This creates a fundamental problem: how do you manage all of this invisibly to the host operating system?

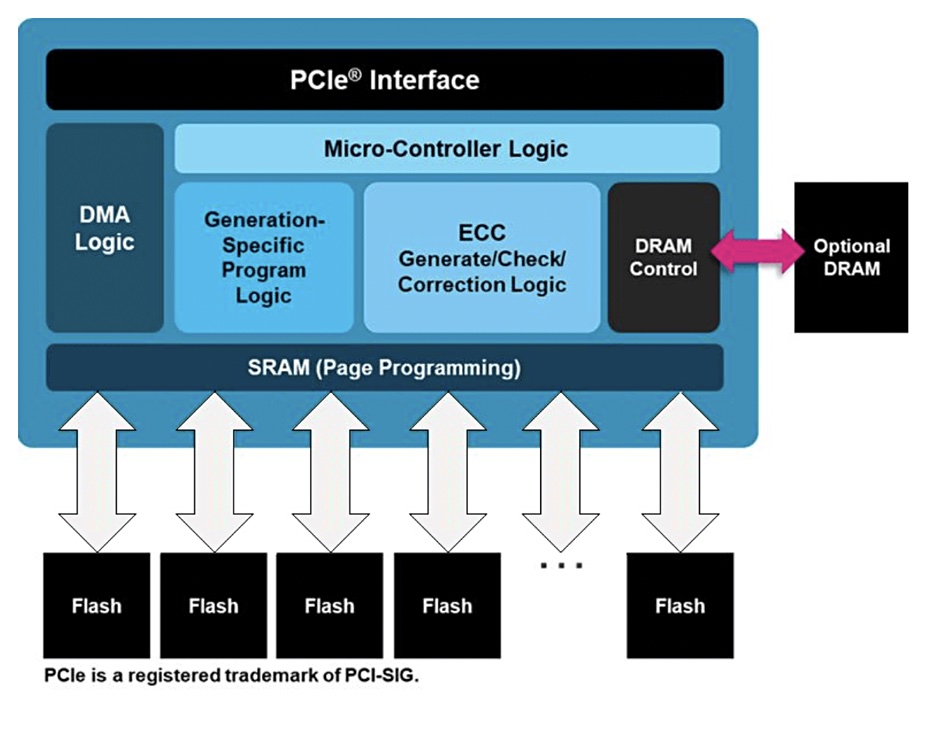

That's what FTL solves. It sits between the file system and the actual flash hardware, translating logical addresses (the addresses your computer thinks data lives at) into physical addresses (where data actually lives on the flash chips). It also handles wear leveling, making sure no single block gets hammered with writes until it dies prematurely.

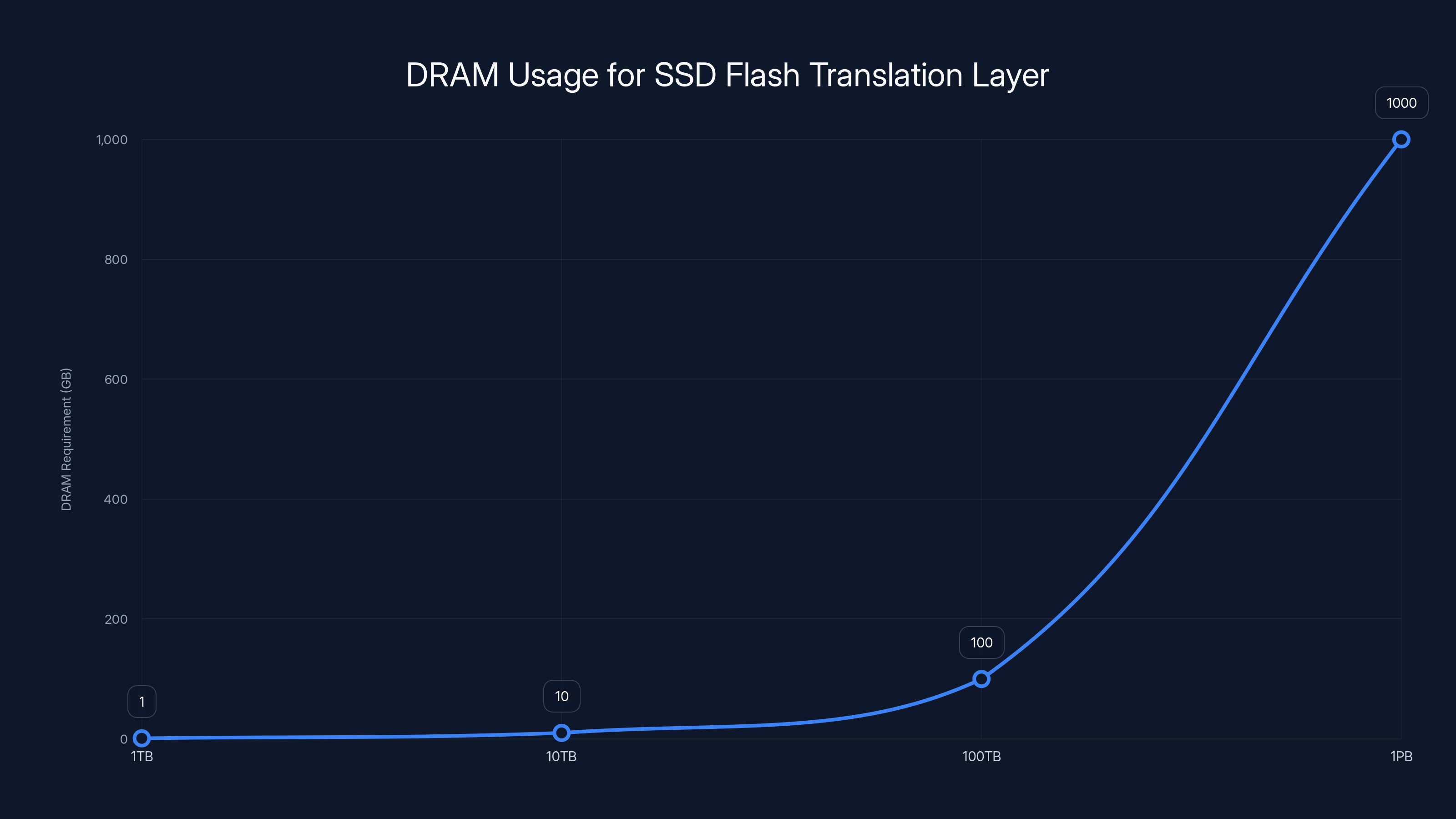

But here's the catch. To do this translation instantly, every single address mapping has to live in RAM. For a 1TB SSD, that's roughly 1GB of DRAM. For a 10TB drive, it's 10GB. For a 100TB drive, it's 100GB. For a petabyte drive, it's a terabyte.

David Flynn breaks it down simply: "It takes one byte of RAM for every kilobyte of flash on the SSD."
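Where does the one-byte-per-kilobyte rule come from? A common page-level FTL design keeps one mapping entry for every 4 KiB logical page, and a 4-byte entry per 4 KiB page works out to roughly one byte per kilobyte. The sketch below assumes exactly that layout; real controllers differ in page size, entry width, and compression, so treat it as back-of-envelope arithmetic rather than any vendor's table format.

```python
# Back-of-envelope size of a page-level FTL mapping table.
# Assumed layout (illustrative, not any specific drive):
# one 4-byte entry for every 4 KiB logical page.
PAGE_SIZE = 4 * 1024   # bytes of flash covered by one mapping entry
ENTRY_SIZE = 4         # bytes per entry (a 32-bit physical page number)
TiB = 1024 ** 4
GiB = 1024 ** 3


def ftl_table_bytes(capacity_bytes: int) -> int:
    """DRAM needed to hold one mapping entry per logical page."""
    pages = capacity_bytes // PAGE_SIZE
    return pages * ENTRY_SIZE


for tib in (1, 10, 100, 1024):  # 1 TiB up to 1 PiB
    table = ftl_table_bytes(tib * TiB)
    print(f"{tib:>5} TiB of flash -> {table // GiB:>5} GiB of mapping table")

# Ratio: ENTRY_SIZE / PAGE_SIZE = 4 / 4096, i.e. about 1 byte per KiB of flash.
```

One caveat the simple ratio hides: a 32-bit entry can only address 2^32 pages, which is 16 TiB at 4 KiB per page, so larger drives need wider entries or coarser mapping units.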

This becomes a massive problem at scale. You're not just buying flash memory. You're buying expensive, power-hungry DRAM just to manage that flash. The DRAM isn't even exposed to the user. It's stuck on the controller board, generating heat, consuming power, and taking up physical space.

This controller DRAM also becomes a thermal hotspot. Constant memory access draws power, and that power turns into heat. The drive needs cooling, cooling costs still more power, and the drive as a whole becomes less efficient.

The problem grows in lockstep with capacity, and that linear scaling becomes painful in the petabyte and multi-petabyte realm. Modern data centers are demanding bigger and bigger SSDs. Cloud providers want storage that's cheap per gigabyte. But the math breaks down when you need to allocate massive amounts of expensive DRAM just to manage larger flash arrays.

And then the DRAM crisis hit. Memory makers shifted production toward the high-bandwidth memory (HBM) that Nvidia and AMD need for their AI accelerators. Suddenly, SSD manufacturers couldn't source controller DRAM at reasonable prices. The system that worked fine when DRAM was cheap and plentiful started looking unsustainable.

Stream Fast directly attacks this architectural limitation by asking a radical question: what if we don't need the FTL at all?

The Core Problem with Modern SSD Architecture

When you zoom out and look at modern SSDs holistically, you realize they're solving a problem that became obsolete about fifteen years ago.

The reason SSDs needed the FTL was because file systems were designed for hard drives. Hard drives present a simple interface to the operating system: fixed-size blocks, random access, in-place overwrites, and effectively unlimited write cycles per block. File systems assumed this model. Windows, Linux, and macOS all built their file systems around these assumptions.

SSDs came along and pretended to be hard drives. The SSD controller translated the file system's requests into actual flash operations. This abstraction made SSDs backwards compatible with existing operating systems. You could drop an SSD into an old computer and it just worked.

But this abstraction layer came with immense overhead. The FTL has to track millions or billions of individual address mappings. Modern SSDs use sophisticated algorithms to manage this. Some use hierarchical mapping to reduce RAM overhead. Some keep the full mapping table on NAND and cache only the hot portion in a small DRAM, or borrow host memory through the NVMe Host Memory Buffer feature. But all of these are Band-Aids on a fundamentally wasteful architecture.
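The "cache part of the table in DRAM, keep the rest on NAND" approach can be modeled as a least-recently-used cache in front of a full table that lives on flash, in the spirit of demand-based FTL designs from the research literature. The `CachedMappingTable` class, the drive size, and the 1% cache ratio below are illustrative assumptions, and the model ignores writes and dirty-entry flushing. It shows the catch: every cache miss costs an extra flash read, so a small, hot working set is cheap while uniformly random access misses almost every time.

```python
import random
from collections import OrderedDict


class CachedMappingTable:
    """Read-only model: LRU cache of logical->physical entries in front of a
    full mapping table that is assumed to live on NAND."""

    def __init__(self, flash_table, cache_entries):
        self.flash_table = flash_table    # stands in for the NAND-resident table
        self.cache = OrderedDict()        # the small DRAM slice
        self.cache_entries = cache_entries
        self.misses = 0                   # each miss costs an extra flash read

    def lookup(self, lpn):
        if lpn in self.cache:
            self.cache.move_to_end(lpn)   # mark as most recently used
            return self.cache[lpn]
        self.misses += 1
        ppn = self.flash_table[lpn]       # fetch the entry from "flash"
        self.cache[lpn] = ppn
        if len(self.cache) > self.cache_entries:
            self.cache.popitem(last=False)  # evict the least recently used entry
        return ppn


# Hypothetical drive: 200,000 logical pages, DRAM cache sized for 1% of entries.
flash_table = {lpn: lpn * 2 for lpn in range(200_000)}
hot = CachedMappingTable(flash_table, cache_entries=2_000)
rand = CachedMappingTable(flash_table, cache_entries=2_000)
rng = random.Random(1)
for i in range(50_000):
    hot.lookup(i % 1_000)                  # small working set that fits in cache
    rand.lookup(rng.randrange(200_000))    # uniform random across the whole drive

print(f"hot working set miss rate: {hot.misses / 50_000:.1%}")
print(f"uniform random miss rate:  {rand.misses / 50_000:.1%}")
```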

Flynn's insight is simple but profound: what if the file system knew it was talking to flash? What if we built file systems that were native to how flash actually works?

This isn't new thinking in academic circles. Researchers have been publishing about FTL-less file systems for years. But actually building and deploying this at scale, with compatibility for existing applications, is a different challenge entirely.

Stream Fast's approach is to make this architectural shift practical. Instead of hiding flash complexity behind abstraction, Stream Fast pushes intelligence up to the file system level, where it's actually easier to manage.

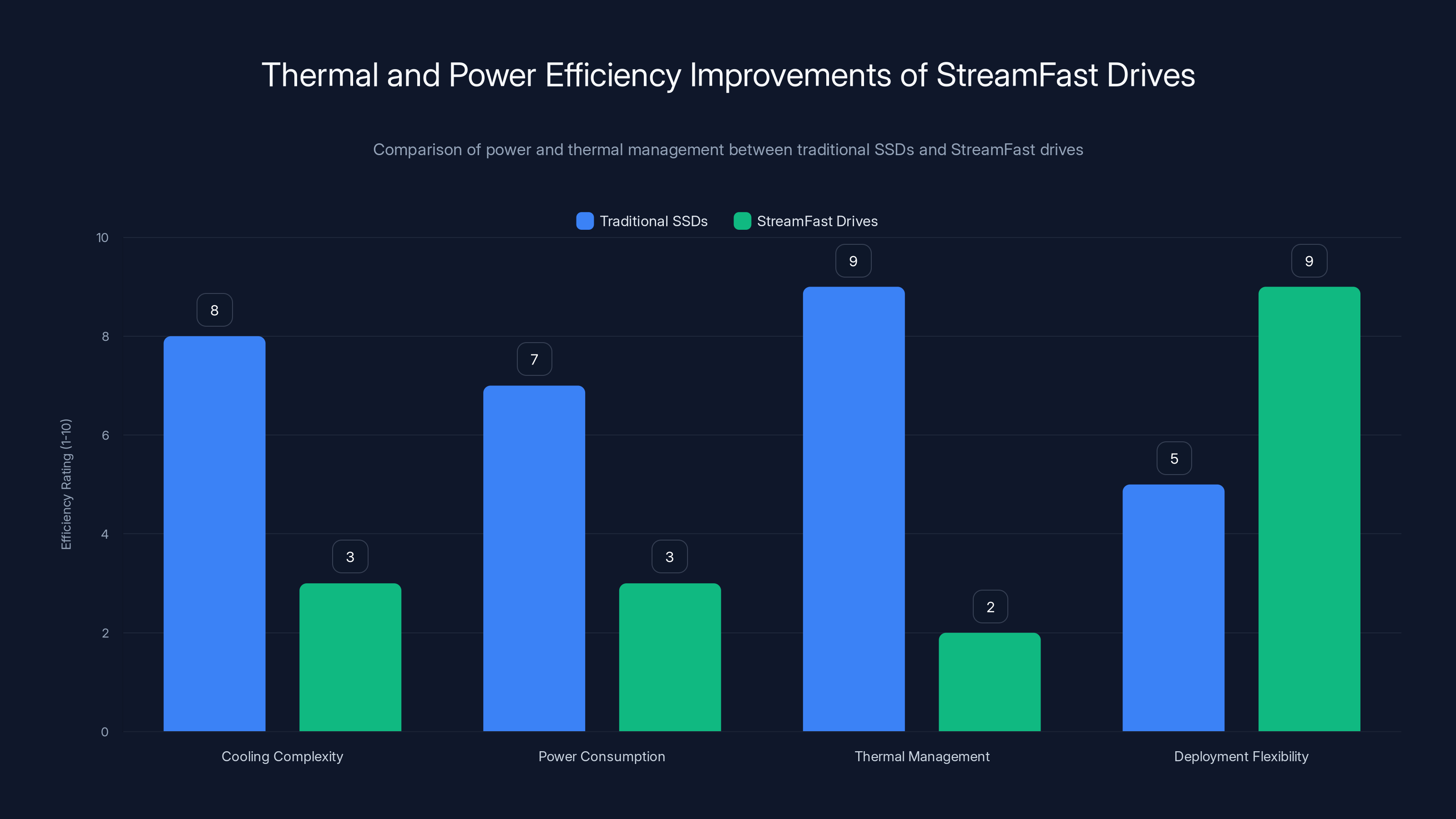

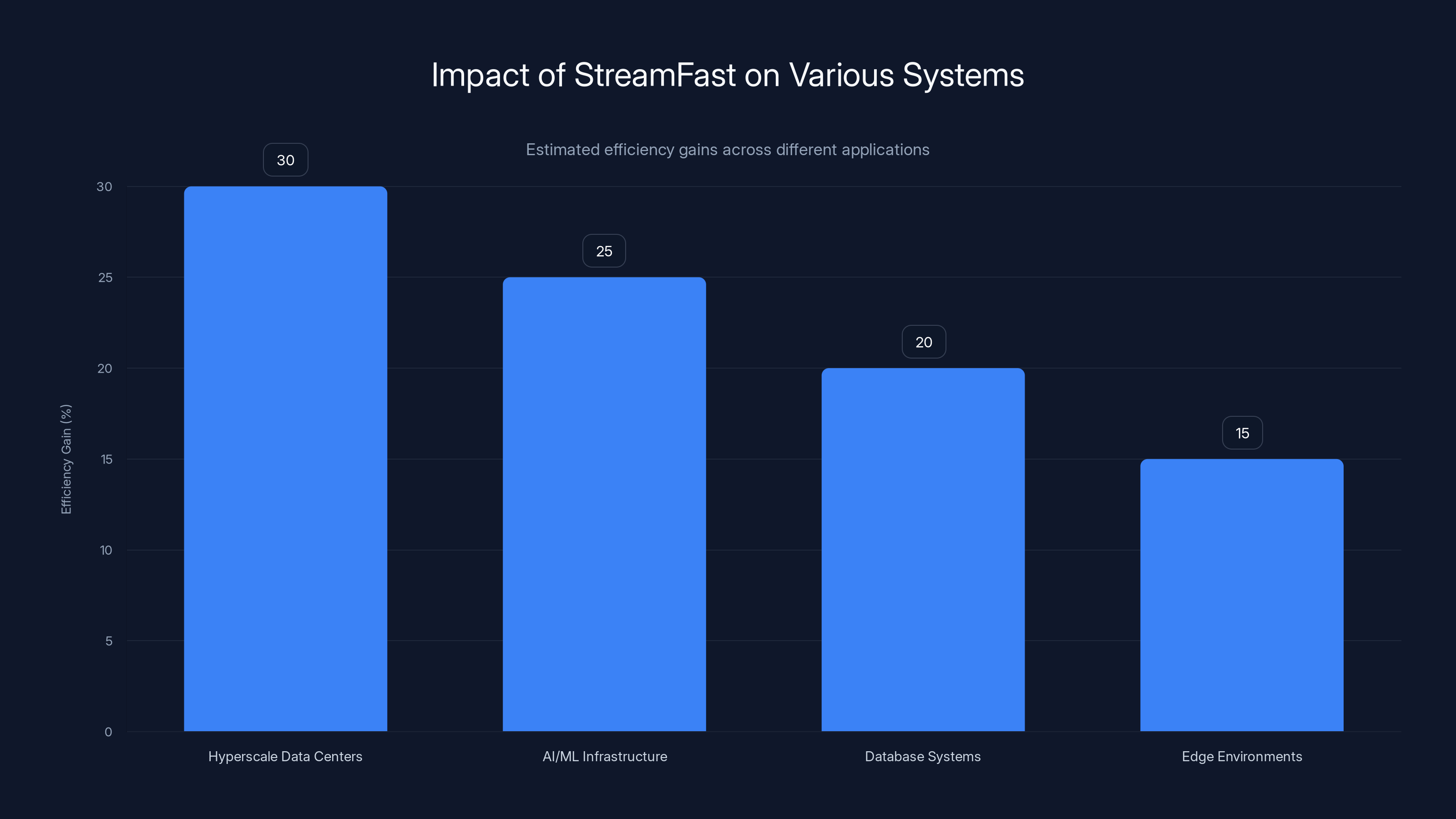

Stream Fast drives are expected to improve power and thermal efficiency, reducing cooling complexity and power draw while increasing deployment flexibility. Estimated data based on described benefits.

How Stream Fast Actually Works: The Sequential Address Model

Understanding Stream Fast requires thinking differently about how data flows through a storage system.

In traditional SSDs, the flow is messy. Data comes in random order from the host. The FTL has to figure out where to put each piece of data, track all those placements in a massive table, update that table constantly, and pray the DRAM doesn't lose power before writing everything back.

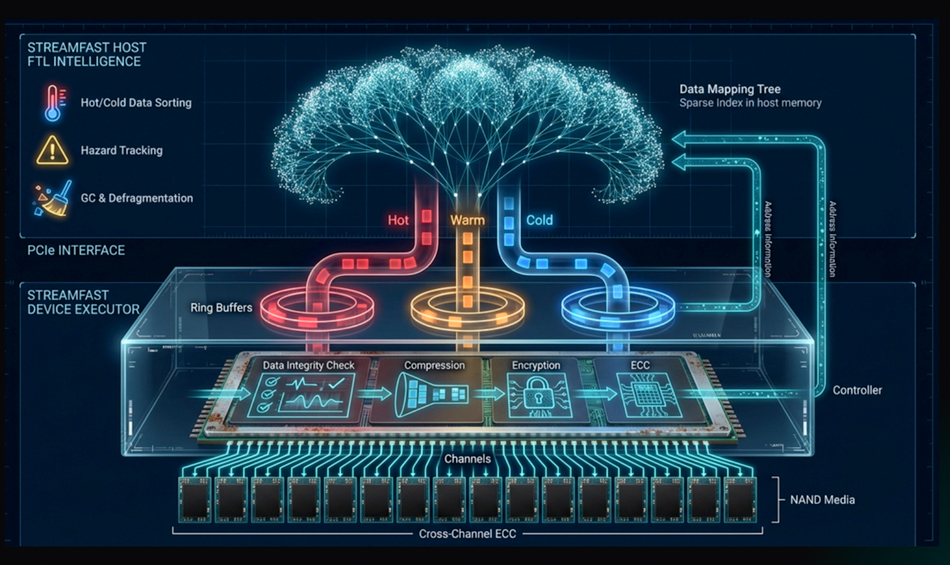

Stream Fast inverts this model. Instead of random placement with tracking, Stream Fast uses sequential device-assigned addresses.

Here's how it works in practice. Data arrives at the SSD in streams. Instead of mapping each incoming block to a physical location it then has to remember, the SSD appends the incoming stream sequentially to flash, page after page. As it does this, it assigns sequential addresses to that data stream. Then it returns those addresses to the host file system.

The key insight is that the host file system now knows exactly where its data lives. There's no hidden mapping table. There's no translation layer. The file system can talk directly to the flash using these sequential addresses.

Flynn explains it this way: "The magic is that the device assigns sequential addresses to arbitrary strings of data that are streamed to the device."

This creates a fundamental shift in responsibility. Instead of the SSD controller managing all mapping complexity, the file system manages it. And the file system already runs on the host, with gigabytes of system RAM at its disposal, rather than on the SSD controller. For the drive, the overhead essentially disappears.
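To make the inverted model concrete, here is a toy in-memory device that appends whatever it receives and hands back the address it chose, paired with a host that records those addresses. The `StreamDevice` and `Host` classes, and the choice to model an address as a byte offset into a single ever-growing log, are simplifying assumptions; this is not a published Stream Fast interface.

```python
class StreamDevice:
    """Toy device: appends data to one log and returns the address it assigned."""

    def __init__(self):
        self.log = bytearray()

    def append(self, data: bytes) -> int:
        addr = len(self.log)          # the device picks the next sequential address
        self.log += data
        return addr

    def read(self, addr: int, length: int) -> bytes:
        return bytes(self.log[addr:addr + length])


class Host:
    """Toy file system: keeps the name -> (address, length) map on the host side."""

    def __init__(self, device: StreamDevice):
        self.device = device
        self.extents = {}

    def write_file(self, name: str, data: bytes) -> None:
        addr = self.device.append(data)          # the host never chooses an address
        self.extents[name] = (addr, len(data))   # it only remembers where data landed

    def read_file(self, name: str) -> bytes:
        addr, length = self.extents[name]
        return self.device.read(addr, length)


host = Host(StreamDevice())
host.write_file("a.txt", b"hello")
host.write_file("b.txt", b"stream")
print(host.extents)             # {'a.txt': (0, 5), 'b.txt': (5, 6)}
print(host.read_file("b.txt"))  # b'stream'
```

Note that the host here keeps one entry per file extent rather than one per 4 KiB page. That coarser granularity is one plausible reason a host-side map can be far smaller than an FTL table, though the exact accounting behind Flynn's figure isn't spelled out here.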

But sequential writes alone don't solve everything. What happens when data gets deleted or updated? How do you handle overwrites without destroying the sequential model?

Stream Fast handles this through something Flynn calls stream replaying. Because writes are sequential, the host can replay the stream after a failure. The file system maintains a log of all write operations. If something goes wrong, you don't need to track individual address mappings. You just replay the write log.

This is more similar to how modern databases work (with write-ahead logging) than how traditional SSDs work. It's a conceptual shift that's more aligned with how modern software actually operates.
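Stream replay can be modeled as rebuilding the host's map from an ordered log of write records. The `WriteRecord` format below is a hypothetical stand-in (name, device-assigned address, length), and deletions, which would need their own record type, are left out. Because records are applied strictly in order and later writes supersede earlier ones, replaying the same log always produces the same map.

```python
from typing import NamedTuple


class WriteRecord(NamedTuple):
    name: str      # file the data belongs to
    addr: int      # address the device assigned at write time
    length: int    # bytes written


def replay(log: list[WriteRecord]) -> dict[str, tuple[int, int]]:
    """Rebuild name -> (addr, length) by applying records in write order."""
    extents = {}
    for rec in log:
        extents[rec.name] = (rec.addr, rec.length)   # a newer write wins
    return extents


log = [
    WriteRecord("a.txt", 0, 5),
    WriteRecord("b.txt", 5, 6),
    WriteRecord("a.txt", 11, 8),   # a.txt rewritten; the copy at address 0 is now dead
]
print(replay(log))   # {'a.txt': (11, 8), 'b.txt': (5, 6)}
```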

The Math Behind the Memory Reduction

Let's talk numbers, because the memory reduction is genuinely staggering.

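Traditional SSD formula for controller DRAM:

$$\text{DRAM}_{\text{FTL}} = \frac{\text{flash capacity}}{1\ \text{KB}} \times 1\ \text{byte}$$

For a 1 petabyte (PB) SSD:

$$\text{DRAM}_{\text{FTL}} = \frac{10^{15}\ \text{bytes}}{10^{3}\ \text{bytes}} \times 1\ \text{byte} = 10^{12}\ \text{bytes} = 1\ \text{TB}$$

Stream Fast formula for controller memory:

$$\text{RAM}_{\text{SF}} = \frac{\text{flash capacity}}{1\ \text{MB}} \times 1\ \text{byte}$$

For the same 1PB SSD:

$$\text{RAM}_{\text{SF}} = \frac{10^{15}\ \text{bytes}}{10^{6}\ \text{bytes}} \times 1\ \text{byte} = 10^{9}\ \text{bytes} = 1\ \text{GB}$$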

That's a thousand-to-one improvement. Not theoretical. Not a press release. An actual mathematical reduction from 1TB of expensive DRAM to 1GB.

Flynn's specific words: "With the Stream Fast file system, it's a byte of RAM for every megabyte of flash."
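The two ratios are easy to compare side by side. This sketch applies the article's figures as quoted (one byte per kilobyte for a conventional FTL, one byte per megabyte for Stream Fast) in decimal units, including a 100PB cluster like the one discussed below; it models the quoted ratios, not measurements from any shipping drive.

```python
# Apply the article's two ratios (decimal units):
#   conventional FTL: 1 byte of DRAM per KB of flash
#   Stream Fast:      1 byte of RAM per MB of flash (as quoted by Flynn)
KB, MB, GB, TB, PB = 10**3, 10**6, 10**9, 10**12, 10**15


def ftl_dram(capacity: int) -> int:
    return capacity // KB


def stream_fast_ram(capacity: int) -> int:
    return capacity // MB


for label, capacity in [("1 TB drive", TB), ("1 PB drive", PB), ("100 PB cluster", 100 * PB)]:
    ftl, sf = ftl_dram(capacity), stream_fast_ram(capacity)
    print(f"{label:>14}: FTL {ftl / GB:>9,.0f} GB | Stream Fast {sf / GB:>7,.3f} GB | {ftl // sf}x less")
```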

To understand why this matters, consider current enterprise SSD specifications. Following the one-gigabyte-per-terabyte rule, a modern enterprise NVMe SSD with 3.2TB capacity carries on the order of 3-4GB of controller DRAM. And that DRAM costs far more per gigabyte than the SSD as a whole does.

Remove that requirement and suddenly SSDs become much cheaper to manufacture. You're not just saving the DRAM cost. You're saving power delivery complexity. You're saving thermal management. You're saving PCB real estate. You're removing one of the main constraints on scaling to larger capacities.

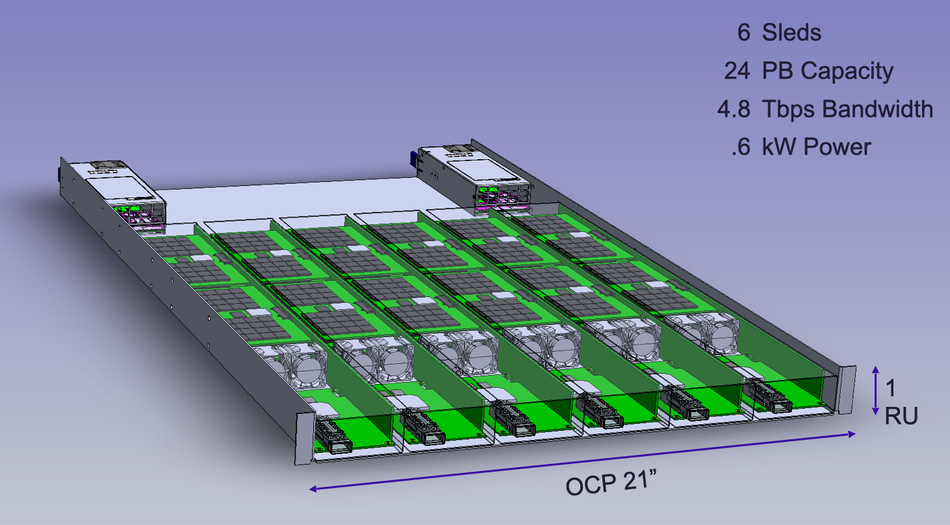

For a data center operator, this is transformational. A 100PB storage cluster using traditional SSDs would need roughly 100TB of controller DRAM spread across hundreds or thousands of drives, depending on their capacity. Stream Fast cuts that to 100GB, removing nearly 100TB of expensive, power-hungry memory from your storage infrastructure.

Thermal and Power Efficiency Improvements

The memory reduction creates secondary benefits that are almost as important as the primary benefit.

Traditional SSDs generate significant heat, and the controller DRAM contributes a meaningful share of it. Memory access is power-intensive, and constant address lookups mean constant RAM traffic. The controller board becomes a thermal hotspot.

Stream Fast drives should run noticeably cooler because they eliminate a major source of controller board heat. Less heat brings several cascading improvements.

First, cooling becomes simpler. Enterprise SSDs often rely on airflow, heatsinks, or thermal pads, and some extreme environments use liquid cooling. Stream Fast drives should need less thermal management, which would let operators pack them more densely.

Second, power consumption drops significantly. The controller no longer needs constant high-speed RAM access. The drive consumes less power per read and write operation. For data centers with thousands of drives, this compounds into massive efficiency gains.

Flynn specifically mentioned that Stream Fast targets "cooler drives, lower power use, and simpler high-capacity storage."

Lower power consumption has practical implications beyond just efficiency. It means:

- Smaller power supplies in storage systems

- Reduced cooling infrastructure costs

- Lower data center power bills

- Better performance at power-constrained deployments

- Improved reliability (cool components last longer)

- Ability to deploy in sealed or even orbital data centers

Flynn mentioned this: "The cooler, simpler drives could fit power-limited environments, including sealed or even orbital data centers."

Orbital data centers sound like science fiction. But they're not. Multiple companies are exploring putting data centers in orbit, where power comes from a limited solar budget and heat can only be shed by radiating it into space. Traditional SSDs with large DRAM footprints are a poor fit for those environments. Stream Fast changes that equation.

Stream Fast could offer significant efficiency gains, with hyperscale data centers estimated to see improvements of up to 30%. Estimated data.

Reliability and Durability Benefits

Removing hardware also increases reliability, and not only because fewer components mean fewer failure points. In practice, the FTL and controller DRAM are sources of complexity that create failure modes of their own.

Flynn states it directly: "This simplifies the construction of the SSD to the point where it's much more reliable."

Why? Several reasons.

First, fewer components means fewer places for things to fail. The controller DRAM can have bit errors. The FTL firmware can have bugs. These complexity sources simply vanish with Stream Fast.

Second, the recovery model is simpler. Traditional SSDs have elaborate recovery mechanisms because the address mapping tables can become corrupted. If the FTL doesn't match the actual data on the flash, you're in trouble. Stream Fast doesn't have this problem. The file system maintains the mapping.

Third, power loss scenarios are handled better. One of the scariest scenarios for SSDs is losing power while updating the FTL. If you crash mid-operation, the mapping tables can become inconsistent with the actual data. Stream Fast avoids this by keeping all mapping information on the host side.

This has practical implications for data integrity. Enterprise storage demands RAID protection, checksumming, and other protection mechanisms. These all become more reliable when you're not fighting complexity in the SSD controller.

The File System Side of Stream Fast

Stream Fast doesn't exist in isolation. It requires file systems that understand and can work with the sequential address model.

This is where the concept gets interesting because it's not just about changing the SSD hardware. It's about changing how operating systems interact with storage.

A Stream Fast-aware file system would need to:

- Understand sequential writes - Instead of assuming random placement, optimize writes as sequential streams

- Manage address mapping - Maintain the mapping table that the SSD controller no longer maintains

- Handle address reassignment - When data is overwritten or deleted, manage the flow of new sequential addresses

- Implement wear leveling - The file system now owns wear leveling, not the controller

- Support recovery - Use the write log to recover from failures
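Two of those duties, handling address reassignment and owning wear leveling, can be sketched together: the host tracks which extents are still live in each erase segment, reclaims the segment with the fewest live extents, and opens the least-worn free segment next. The `SegmentManager` class, the greedy victim policy, and the choice to ignore segment capacity are assumptions for illustration; real log-structured file systems use more refined policies, such as cost-benefit cleaning.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    erase_count: int = 0
    live: set = field(default_factory=set)    # extents still valid in this segment


class SegmentManager:
    """Hypothetical host-side bookkeeping over the device's erase segments.
    Segment capacity is ignored; the host decides when to seal a segment."""

    def __init__(self, num_segments: int):
        self.segments = [Segment() for _ in range(num_segments)]
        self.free = set(range(num_segments))
        self.location = {}                    # extent name -> segment index
        self.open = self._open_least_worn()

    def _open_least_worn(self) -> int:
        # Wear leveling: reuse the free segment with the fewest erases.
        seg = min(self.free, key=lambda s: self.segments[s].erase_count)
        self.free.remove(seg)
        return seg

    def write(self, name: str) -> int:
        old = self.location.get(name)
        if old is not None:
            self.segments[old].live.discard(name)   # overwrite: old copy is now dead
        self.segments[self.open].live.add(name)
        self.location[name] = self.open
        return self.open

    def seal_and_open(self) -> None:
        self.open = self._open_least_worn()

    def clean_one(self) -> int:
        # Greedy cleaning: reclaim the closed segment with the fewest live extents.
        # Assumes at least one closed segment exists.
        closed = [i for i in range(len(self.segments))
                  if i not in self.free and i != self.open]
        victim = min(closed, key=lambda i: len(self.segments[i].live))
        for name in list(self.segments[victim].live):
            self.write(name)                        # relocate survivors
        self.segments[victim].erase_count += 1      # the device erases the segment
        self.free.add(victim)
        return victim


mgr = SegmentManager(num_segments=4)
for name in ("a", "b", "c"):
    mgr.write(name)
mgr.seal_and_open()
mgr.write("a")            # overwrite two of the three extents
mgr.write("b")
victim = mgr.clean_one()  # the first segment now holds only "c"
print(victim, mgr.location, mgr.segments[victim].erase_count)
```

After cleaning, the reclaimed segment carries one more erase than its peers, so the next `seal_and_open()` will prefer a less-worn segment.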

The good news? Modern file systems already have most of these capabilities. Technologies like copy-on-write (used in Btrfs and ZFS), write-ahead logging (common in databases), and block mapping already exist in mature form.

Stream Fast essentially moves these file system concepts into the primary position, rather than having them as add-ons.

This also opens up interesting possibilities for optimization. File systems could now make decisions about data placement that controllers never could. A file system could assign sequential addresses based on expected access patterns. It could co-locate frequently accessed data. It could reorganize data based on application behavior.
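One way a file system could exploit that freedom is to route data into separate append streams by expected lifetime, so data that tends to be rewritten together lands together and whole segments become garbage at once. The `LifetimeRouter` class and its rewrite-count heuristic are hypothetical; real systems might use application hints, file types, or access statistics instead.

```python
from collections import Counter


class LifetimeRouter:
    """Hypothetical heuristic: data rewritten repeatedly is 'hot', the rest 'cold'."""

    def __init__(self, hot_threshold: int = 2):
        self.writes_seen = Counter()
        self.hot_threshold = hot_threshold
        self.streams = {"hot": [], "cold": []}   # each list models one append stream

    def write(self, name: str) -> str:
        self.writes_seen[name] += 1
        stream = "hot" if self.writes_seen[name] >= self.hot_threshold else "cold"
        self.streams[stream].append(name)        # append sequentially to that stream
        return stream


router = LifetimeRouter()
for name in ["log", "photo", "log", "log", "backup", "log"]:
    router.write(name)
print(router.streams)
```

Here the first write of `log` lands in the cold stream and its later rewrites go to the hot one, while `photo` and `backup` stay cold.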

Flynn's company, Hammerspace, specializes in file system innovation. They understand the nuances of making file systems work at scale. Their expertise in this area is likely crucial for making Stream Fast practical.

Compatibility Challenges and Transition Strategy

Here's the honest part: Stream Fast isn't a drop-in replacement for existing SSDs.

Your current operating system expects SSDs to present the traditional interface. If you plugged in a Stream Fast drive without the appropriate file system support, it wouldn't work. You'd need:

- Operating system support for Stream Fast file systems

- File system drivers that understand sequential addressing

- Application compatibility (though most apps interact through standard APIs)

- Data migration tools

This is non-trivial. But it's not insurmountable.

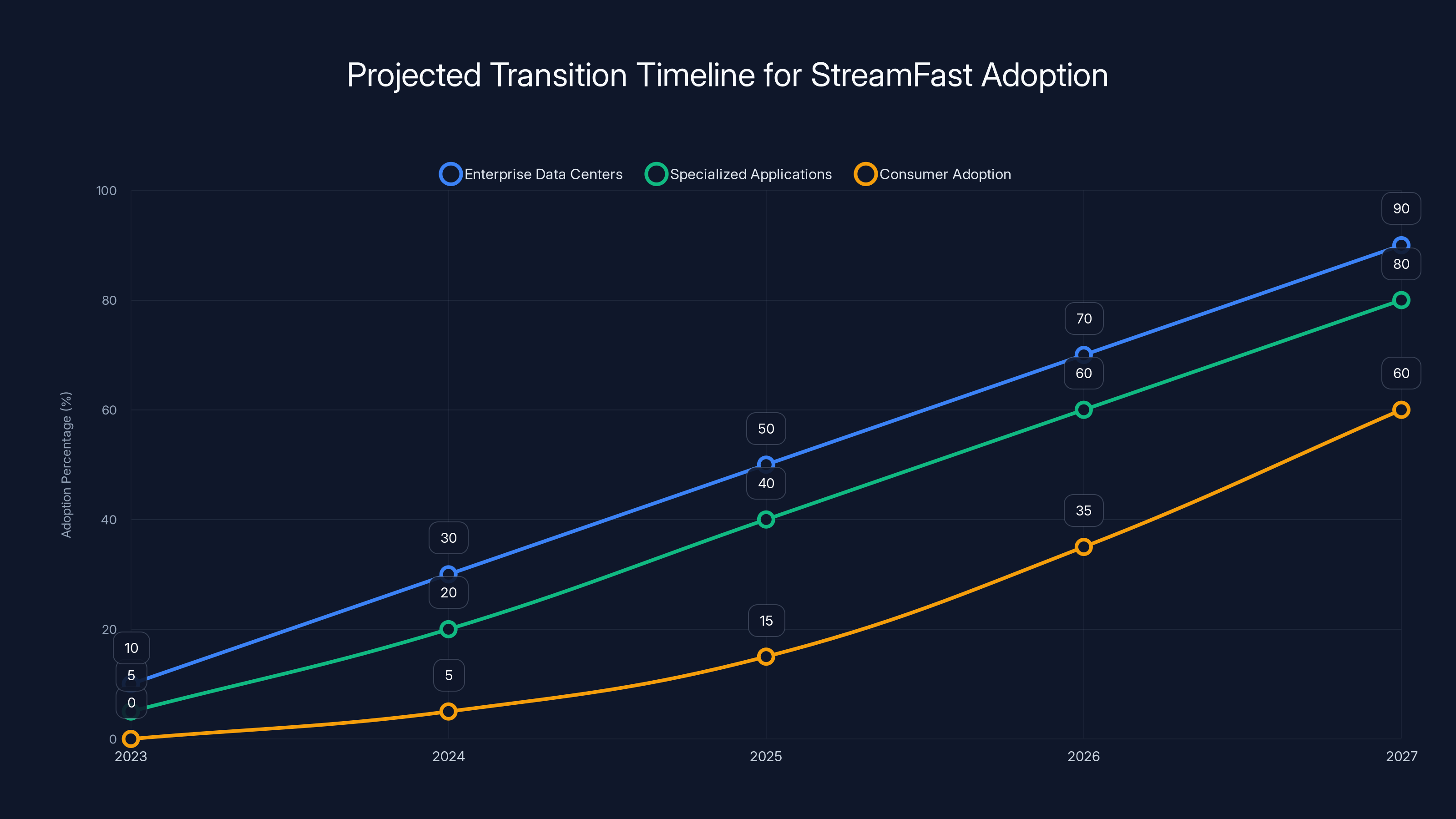

The transition strategy would likely look like:

Phase 1: Enterprise Data Centers - Stream Fast would debut in controlled environments where operators have deep storage knowledge. Cloud providers, financial institutions, research facilities would be early adopters.

Phase 2: Specialized Applications - Databases, data warehouses, and AI training systems would move to Stream Fast early because they're I/O-intensive and benefit most from the efficiency gains.

Phase 3: Consumer Adoption - Operating systems would integrate Stream Fast support gradually. Linux would probably get support first. Windows and macOS would follow. By the time consumers encountered them, they'd just work.

The timeline for this transition is likely measured in years. But it's happening through the Open Flash Platform group, which includes major manufacturers. This isn't a fringe academic idea. It's industry-backed work.

Stream Fast SSDs require only 1GB of memory for a 1PB SSD, compared with 1,000GB (1TB) for traditional SSDs: a thousand-to-one improvement.

Write Amplification and Performance Implications

One of the most problematic aspects of traditional SSDs is write amplification. Every time the file system wants to write data, the FTL might actually write it multiple times internally due to garbage collection, wear leveling, and consolidation operations.

A single write from the host might result in 3, 4, or even 10 actual writes to the flash. This compounds power consumption and reduces drive lifespan.

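Stream Fast directly attacks write amplification because it eliminates device-level garbage collection and most consolidation overhead. Space still has to be reclaimed, but that job moves to the file system, which knows which data is actually dead.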

Flynn mentions this explicitly: "Removing the FTL also cuts write amplification and reduces heat."

How? Sequential writes naturally minimize garbage collection. When you write data sequentially, you fill blocks in order, and when a block fills, you move to the next one. As long as data tends to be invalidated in roughly the order it was written, whole blocks die together and there's little scattered live data left to consolidate.

This creates a performance feedback loop:

- Sequential writes reduce write amplification

- Lower write amplification means fewer actual flash operations

- Fewer flash operations means less controller load

- Less controller load means faster response times

- Faster response times improve application performance

For workloads that are already sequential (and many database and analytical workloads are), this could mean significant performance improvements, not just efficiency gains.

The trade-off is that truly random write workloads might perform differently: overwrites scattered across the address space still leave dead data in partially filled blocks, and something has to reclaim it. But modern applications, especially cloud-scale systems, tend to be optimized for sequential I/O anyway. The abstraction of random I/O was always more of a convenience than a necessity.
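A toy simulation illustrates both halves of this argument. It models a log-structured device with greedy garbage collection (reclaim the block with the fewest valid pages) and 25% of physical capacity held in reserve, then compares overwriting data in the order it was written with overwriting pages at random. The geometry and the GC policy are arbitrary assumptions, not Stream Fast's actual placement or reclamation logic.

```python
import random


def simulate_waf(workload, num_blocks=64, pages_per_block=64,
                 logical_pages=3072, host_writes=100_000, seed=0):
    """Toy log-structured flash with greedy garbage collection.
    Returns write amplification = flash page writes / host page writes."""
    rng = random.Random(seed)
    valid = [set() for _ in range(num_blocks)]  # logical pages still valid per block
    where = {}                                  # logical page -> block holding it
    free = list(range(num_blocks))
    open_block, fill, flash_writes = free.pop(), 0, 0

    def program(lpn):
        nonlocal open_block, fill, flash_writes
        if fill == pages_per_block:             # open block is full: take a fresh one
            open_block, fill = free.pop(), 0
        old = where.get(lpn)
        if old is not None:
            valid[old].discard(lpn)             # the previous copy becomes garbage
        valid[open_block].add(lpn)
        where[lpn] = open_block
        fill += 1
        flash_writes += 1

    def collect():
        closed = [b for b in range(num_blocks) if b != open_block and b not in free]
        victim = min(closed, key=lambda b: len(valid[b]))  # fewest valid pages
        for lpn in list(valid[victim]):
            program(lpn)                        # relocation costs extra flash writes
        free.append(victim)                     # victim is erased and reusable

    for i in range(host_writes):
        while len(free) < 2:                    # keep a spare block for relocations
            collect()
        if workload == "sequential":
            lpn = i % logical_pages             # overwrite in the order data was written
        else:
            lpn = rng.randrange(logical_pages)  # overwrite pages at random
        program(lpn)
    return flash_writes / host_writes


print(f"sequential overwrites: WAF = {simulate_waf('sequential'):.2f}")
print(f"random overwrites:     WAF = {simulate_waf('random'):.2f}")
```

With in-order overwrites, every block the collector picks is already fully invalid, so nothing is relocated and write amplification stays at exactly 1.0. Random overwrites leave partially valid blocks whose survivors must be copied before erasure, pushing the ratio above 1.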

DRAM Crisis Context: Why This Timing Matters

Stream Fast isn't just a good idea in a vacuum. It's a direct response to a specific, ongoing crisis in the semiconductor industry.

The DRAM shortage isn't new. We've had multiple DRAM shortages over the years. But the current situation is structurally different. Nvidia's and AMD's AI accelerators are absorbing enormous volumes of high-bandwidth memory (HBM), and HBM is where the margins are. The general-purpose DRAM that SSD controllers need has become a lower-priority product.

This creates a supply crunch. SSD manufacturers need large amounts of controller DRAM, but memory manufacturers are diverting capacity toward HBM for GPUs. The economics don't work anymore: SSD controller DRAM is too expensive relative to the value it provides.

Stream Fast solves this by removing the dependence on large amounts of expensive DRAM. Instead of waiting for DRAM supply to balance out (which might take years), Stream Fast provides an architectural alternative.

Flynn explicitly connected the dots, linking FTL overhead to the wider DRAM crisis, in which manufacturers are moving capacity toward high-bandwidth memory for GPUs from companies like Nvidia and AMD.

This is why industry players are interested. It's not just about optimization. It's about solving a genuine supply chain problem that's affecting storage industry margins and capabilities.

The Open Flash Platform and Industry Coordination

Stream Fast isn't being developed by a single company in isolation. It's being developed through the Open Flash Platform (OFP) group, which includes major flash memory manufacturers and storage companies.

This coordination is crucial. A new SSD architecture requires work across multiple layers:

- Flash memory manufacturers need to optimize for sequential workloads

- Controller manufacturers need to simplify their designs

- File system developers need to implement Stream Fast-aware logic

- Operating system vendors need to provide driver support

- Cloud providers and data center operators need to adopt and deploy

No single company can drive this alone. Flynn was in Korea recently, suggesting active collaboration with SK Hynix (a major flash memory manufacturer). This is how architectural shifts actually happen.

The Open Flash Platform is positioning itself as the coordinating body for next-generation flash storage architecture. They're thinking beyond just NVMe and considering how flash should evolve as capacities scale into petabytes.

Hammerspace, with Flynn's expertise, is contributing the file system knowledge to make this practical. Other OFP members are handling the controller and flash optimization sides.

This ecosystem approach is important because it prevents any single player from gatekeeping the technology. If Stream Fast becomes industry standard, it benefits everyone, not just one vendor.

The transition to Stream Fast SSDs is projected to occur over several years, with enterprise data centers leading adoption, followed by specialized applications and eventually consumer markets. Estimated data.

Real-World Applications and Use Cases

Stream Fast's benefits are most apparent in specific, high-impact scenarios.

Hyperscale Data Centers

Cloud providers operate massive storage systems. Amazon, Microsoft, Google, and Alibaba each manage exabytes of data. For these operations, the efficiency gains from Stream Fast compound enormously.

Consider a data center with 100,000 drives averaging 10TB each. At one byte of DRAM per kilobyte of flash, switching from traditional SSDs to Stream Fast could eliminate roughly 1 petabyte of controller DRAM. That means substantial power, cooling, and acquisition savings, and at hyperscale those savings could translate into nine-figure improvements to operating margins.

AI and Machine Learning Infrastructure

AI training requires moving massive amounts of data. Models train faster when I/O isn't a bottleneck. Stream Fast's expected gains in I/O efficiency, and potentially lower latency, would benefit training workloads directly.

Plus, AI infrastructure is power-constrained. Data center operators power-limit their deployments to manage cooling. Every watt saved in storage is a watt that can go to GPUs. Stream Fast directly improves this utilization.

Database and Data Warehouse Systems

Systems like Snowflake, BigQuery, and Redshift are I/O intensive. They read and write massive amounts of data. The efficiency improvements from Stream Fast could improve query performance and reduce operational costs.

Many of these systems already use sequential workload patterns. Stream Fast would optimize for their actual access patterns rather than fighting against the FTL complexity.

Edge and Constrained Environments

Flynn specifically mentioned sealed and orbital data centers. These are power-limited environments where traditional SSDs are impractical. Stream Fast opens new deployment possibilities.

Edge computing, remote research stations, submarines, and yes, space-based data centers could all use storage that doesn't require massive power and cooling infrastructure.

Storage Systems with High Capacity Requirements

As SSDs push toward multi-petabyte capacities, the FTL memory overhead becomes prohibitive. Stream Fast makes extreme capacity practical. You could imagine future drives with 10PB or 100PB capacity on a single SSD. These would be impractical with traditional FTL. They're feasible with Stream Fast.

Performance Characteristics and Trade-offs

Stream Fast isn't purely beneficial. Understanding the trade-offs is important.

What Stream Fast is Great For:

- Sequential workloads (databases, data warehouses, analytics)

- High-capacity drives (petabyte-scale storage)

- Power-constrained environments (mobile, edge, orbital)

- Write-intensive workloads (reduced write amplification)

- Throughput-focused applications

Where Stream Fast Has Challenges:

- Truly random workloads might perform differently than optimized FTL controllers

- Applications expecting traditional block device semantics need adaptation

- Legacy systems require file system updates

- Initial adoption means smaller ecosystem and fewer optimizations

However, modern storage workloads are already heavily sequential. Even applications that appear random are often sequentially optimized at the file system or database layer. The theoretical challenge of random workloads is less significant practically.

Competitive Landscape and Alternative Approaches

Stream Fast isn't the only attempt to rethink SSD architecture, but it's among the most practical.

Academic research has explored FTL-less designs for years. Papers on object-based storage, key-value storage, and direct flash file systems exist in abundance. But academic designs don't necessarily work at scale with existing applications.

Other industry approaches include:

- Hybrid FTL approaches - Reducing DRAM requirements through clever algorithms. Hitachi and Samsung have patents on various optimizations.

- NAND-cached FTL - Using cheaper NAND instead of DRAM for mapping tables. This reduces cost but doesn't eliminate the fundamental inefficiency.

- Disaggregated storage - Separating the control plane from the data plane, using metadata services. This works but is complex operationally.

- SMR (Shingled Magnetic Recording) alternatives - Some are exploring SMR-like concepts for NAND. These reduce random write performance.

Stream Fast is distinctive because it:

- Cuts the DRAM requirement by three orders of magnitude rather than trimming it at the margins

- Maintains reasonable performance characteristics

- Leverages existing file system technology

- Has industry backing and a clear path to adoption

Competitively, Stream Fast isn't about being superior in one narrow metric. It's about being a more fundamentally sound architecture that works better as capacities scale.

The Flash Translation Layer requires significant DRAM, scaling linearly with SSD capacity. For a 1TB SSD, 1GB of DRAM is needed, while a 1PB drive requires 1TB of DRAM. Estimated data based on typical FTL requirements.

Technical Implementation Details and Controller Simplification

From a controller firmware perspective, Stream Fast massively simplifies the required logic.

Traditional SSD controller firmware is genuinely complex software. It has to:

- Maintain FTL tables and manage updates

- Implement wear leveling algorithms

- Handle garbage collection

- Manage NAND operations with their electrical complexities

- Implement ECC (error correction)

- Handle power loss recovery

- Support various NAND types and revisions

Stream Fast controllers only need to:

- Manage sequential write placement

- Assign addresses and return them to the host

- Implement ECC

- Handle the physical NAND operations

- Support basic error recovery

Remove FTL logic, wear leveling, and complex garbage collection, and controller firmware becomes dramatically simpler. Simpler firmware means:

- Fewer bugs

- Faster development cycles

- Lower firmware size (more space for other features)

- Easier validation and testing

- More resources available for other optimizations

This is a significant shift. Modern SSD firmware is measured in megabytes. Stream Fast firmware would likely be much smaller, and the reduced complexity should improve operational stability and reliability.

Timeline and Commercialization Path

Flynn was cagey about specifics when asked about partnerships: "Can't talk about specifics of our partnerships yet, but stay tuned."

This suggests active negotiations and development, but not public announcements. Industry insiders are likely further along than press releases indicate.

A reasonable timeline might look like:

2025 - Technical specifications finalized, early partnerships disclosed, file system integration underway

2026-2027 - First commercial deployments with hyperscale cloud providers, integration with Linux kernels

2028-2029 - Broader adoption in enterprise, Windows and macOS support, consumer awareness

2030+ - Mainstream adoption, most new SSDs ship with Stream Fast variants

This is speculative, but architectural transitions in storage historically take 3-5 years from announcement to mainstream adoption. SATA-to-NVMe transitions took roughly this timeframe.

The fact that industry coordination is happening through OFP and that major flash manufacturers are involved suggests serious commitment. This isn't a technology that will languish in academic papers.

DRAM Ecosystem Impact

Stream Fast's widespread adoption would have significant ripple effects through the DRAM market.

Currently, SSD controller DRAM represents a meaningful portion of DRAM capacity. If that demand evaporates, DRAM manufacturers lose revenue. However, the shift of DRAM capacity toward high-bandwidth memory and AI applications is already happening. Stream Fast essentially acknowledges this shift and works around it.

From a supply chain perspective, Stream Fast could ease tensions. SSD manufacturers could get the DRAM they need more easily because they wouldn't need as much. DRAM manufacturers could focus on higher-margin HBM products. The market rebalances.

This is actually beneficial for the entire semiconductor ecosystem. Resources flow toward higher-value applications. The economics improve for everyone.

Security and Data Protection Considerations

Moving address mapping from the SSD controller to the file system changes security implications.

With traditional SSDs, address mapping is opaque to the operating system. The file system doesn't know exactly where its data lives. This provides a layer of abstraction.

With Stream Fast, the file system maintains address mappings. This creates both benefits and considerations:

Benefits:

- File system can implement custom encryption and security policies

- Address mapping is part of the file system, not a black box

- Easier to verify data integrity throughout the stack

- Better integration with application-level security

Considerations:

- Requires file systems with strong security properties

- Address mapping becomes attack surface that needs protection

- Requires secure recovery mechanisms

- Legacy file systems without security hardening shouldn't be used

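For enterprise deployment, this means using file systems like ZFS or Btrfs that provide strong checksumming, paired with encryption. ZFS has native encryption. Btrfs is typically layered on dm-crypt/LUKS. Both are copy-on-write designs that already manage their own block placement, which makes them natural candidates for the level of control Stream Fast provides.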
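The next example makes the checksumming point concrete. It is a minimal Python sketch, loosely in the spirit of ZFS, which stores each block's checksum in the pointer that references it. It assumes the host mapping is made of hypothetical `BlockPointer` entries holding the device-assigned address, the length, and a SHA-256 digest. A plain dict stands in for the drive. It is an illustration only, not how ZFS, Btrfs, or Stream Fast actually lay out their metadata.

```python
import hashlib
from dataclasses import dataclass


class ChecksumError(Exception):
    """Raised when data read back does not match the checksum in its pointer."""


@dataclass(frozen=True)
class BlockPointer:
    # Hypothetical host-side mapping entry (illustrative, not a real on-disk format).
    address: int      # address the SSD assigned when the data was written
    length: int       # number of bytes written at that address
    checksum: bytes   # SHA-256 digest of the data, kept with the mapping


def make_pointer(address: int, data: bytes) -> BlockPointer:
    """Record where the data landed together with a digest of its contents."""
    return BlockPointer(address, len(data), hashlib.sha256(data).digest())


def verified_read(device: dict, ptr: BlockPointer) -> bytes:
    """Read through the mapping and refuse to return data that fails verification.

    `device` is a plain dict (address -> bytes) standing in for the drive.
    """
    data = device[ptr.address][: ptr.length]
    if hashlib.sha256(data).digest() != ptr.checksum:
        raise ChecksumError(f"checksum mismatch at address {ptr.address}")
    return data
```

A bare checksum detects corruption. It does not stop deliberate tampering by someone who can also rewrite the pointer. That is why such designs chain checksums up to trusted root metadata, or use keyed MACs and authenticated encryption.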

This could be a long-term security improvement. Less trust rests on a black-box SSD controller, and more rests on open-source file system code that is widely reviewed.

Comparative Analysis: Stream Fast vs. Traditional SSD Architecture

Let's do a side-by-side comparison of how these two approaches handle the same operations:

| Operation | Traditional SSD | Stream Fast SSD |
|---|---|---|
| Write Operation | Host requests write; controller finds a free block via FTL lookup, writes data, updates FTL table, returns confirmation | Host sends sequential data stream; controller appends to sequential log and returns the assigned address; host maintains mapping |
| Read Operation | Host requests data at a logical address; controller uses FTL to translate to a physical address, reads from flash, returns data | Host requests data using the sequential address assigned during write; controller reads from flash at that address, returns data |
| Address Mapping Storage | ~1 GB controller DRAM per TB of flash (1 byte per KB) | Minimal controller memory (~1 MB per TB of flash, at 1 byte per MB); the file system keeps the address mapping in host RAM |
| Wear Leveling | Handled by controller FTL algorithm | Handled by file system aware of sequential model |
| Garbage Collection | Continuous background operation, causes write amplification | Simplified, triggered by sequential block management |
| Power Consumption | High (constant DRAM access, continuous GC) | Lower (minimal controller operations) |
| Thermal Output | High (DRAM and controller activity) | Lower (simplified operations) |
| Recovery from Crash | Verify FTL consistency, potentially recover from backup | Replay write log, deterministic recovery |

This comparison suggests why Stream Fast could be architecturally attractive for modern workloads, especially at scale.
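The write and read rows of the table can be sketched as two toy controllers in Python. Everything here is hypothetical and heavily simplified: one page per write, no erase blocks, and no real garbage collection. The point is only where the mapping state lives. The FTL-style controller keeps a logical-to-physical table. The Stream Fast-style controller keeps little more than a write pointer and hands each assigned address back to the host.

```python
class FtlController:
    """Toy page-mapped FTL: the controller owns a logical-to-physical table."""

    def __init__(self, num_pages: int) -> None:
        self.flash = [None] * num_pages
        self.l2p = {}         # LBA -> physical page; this table lives in controller DRAM
        self.next_free = 0
        self.invalid = set()  # stale pages that garbage collection must reclaim later

    def write(self, lba: int, data: bytes) -> None:
        if self.next_free >= len(self.flash):
            raise RuntimeError("no free pages; garbage collection needed")
        ppa = self.next_free  # FTL picks a free physical page
        self.next_free += 1
        self.flash[ppa] = data
        old = self.l2p.get(lba)
        if old is not None:
            self.invalid.add(old)  # overwrite is out-of-place: old page becomes garbage
        self.l2p[lba] = ppa        # update the mapping table

    def read(self, lba: int) -> bytes:
        return self.flash[self.l2p[lba]]  # translate, then read


class StreamController:
    """Toy Stream Fast-style controller: append only and return the address."""

    def __init__(self, num_pages: int) -> None:
        self.flash = [None] * num_pages
        self.write_pointer = 0  # essentially the only placement state it keeps

    def append(self, data: bytes) -> int:
        if self.write_pointer >= len(self.flash):
            raise RuntimeError("log full")
        addr = self.write_pointer
        self.flash[addr] = data
        self.write_pointer += 1
        return addr  # the host records this address in its own mapping

    def read(self, addr: int) -> bytes:
        return self.flash[addr]  # no translation step on the device
```

In the Stream Fast case the host, not the controller, holds something like `host_map[lba] = controller.append(data)`. That is the responsibility shift the table describes.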

Future Storage Evolution Post-Stream Fast

Stream Fast might not be the final form of SSD architecture. It's a major step forward, but it opens doors to further innovation.

Potential future evolutions include:

Computational Storage - Embedding processing capabilities near the storage. Stream Fast's simplified architecture makes this easier. Imagine SSDs that run analytics queries directly on stored data.

Disaggregated Storage - Separating control, metadata, and data planes across the network. Stream Fast's simplified control plane would work well in disaggregated architectures.

Persistent Memory Integration - Using persistent memory (PMEM) alongside flash. Stream Fast's file system-centric model works well with mixed memory hierarchies.

Quantum-resistant Encryption - Building cryptographic protections against quantum threats. Easier to implement when you own the address mapping logic.

AI-driven Optimization - File systems could use machine learning to predict access patterns and optimize data placement. Requires file system control of placement, which Stream Fast enables.

Stream Fast isn't the end of storage evolution. It's removing a major architectural constraint, enabling these future innovations.

FAQ

What is Stream Fast and how does it differ from traditional SSDs?

Stream Fast is a new SSD architecture concept that eliminates the Flash Translation Layer (FTL) and controller DRAM. It replaces them with a file-system-centric design that uses sequential, device-assigned addresses. Traditional SSDs require a massive mapping table (1 byte of DRAM per kilobyte of flash). Stream Fast reduces memory overhead to just 1 byte per megabyte of flash, a thousand-fold improvement. This simplification makes SSDs more efficient and cooler, and it enables much larger drives without proportional memory overhead.
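The thousand-fold figure follows directly from the two ratios quoted in that answer. Here is a short Python check. It uses decimal units, as drive capacities are usually quoted, with 1 byte per KB for a traditional FTL and 1 byte per MB for Stream Fast as given in this article.

```python
# Decimal (SI) units, matching how drive capacities are usually quoted.
KB, MB, GB, TB, PB = 10**3, 10**6, 10**9, 10**12, 10**15


def ftl_dram_bytes(flash_bytes: int) -> int:
    # Traditional FTL rule of thumb: 1 byte of mapping DRAM per KB of flash.
    return flash_bytes // KB


def stream_fast_bytes(flash_bytes: int) -> int:
    # Stream Fast figure quoted in this article: 1 byte per MB of flash.
    return flash_bytes // MB


def reduction_percent(flash_bytes: int) -> float:
    # Share of mapping memory saved by moving from 1 B/KB to 1 B/MB.
    return 100 * (1 - stream_fast_bytes(flash_bytes) / ftl_dram_bytes(flash_bytes))


def human(n: int) -> str:
    # Render a byte count in the largest decimal unit that fits.
    for unit, size in (("PB", PB), ("TB", TB), ("GB", GB), ("MB", MB), ("KB", KB)):
        if n >= size:
            return f"{n / size:g} {unit}"
    return f"{n} B"


if __name__ == "__main__":
    for label, capacity in (("1 TB", TB), ("1 PB", PB)):
        ftl, sf = ftl_dram_bytes(capacity), stream_fast_bytes(capacity)
        print(f"{label} drive: FTL {human(ftl)} vs Stream Fast {human(sf)} "
              f"({ftl // sf}x, {reduction_percent(capacity):.1f}% less)")
```

For a 1 PB drive this works out to 1 TB versus 1 GB of mapping memory, a 99.9% reduction.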

How does the sequential address model in Stream Fast actually work?

Instead of random data placement with controller-managed address translation, Stream Fast writes incoming data sequentially to flash and assigns sequential addresses to each data stream. The SSD returns these addresses to the host file system, which maintains the address mapping. Because writes are sequential, the file system can replay the write stream after failures without needing massive address lookup tables. This shifts complexity from the SSD controller to the file system, running on a host that already has abundant RAM available.
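Here is a minimal Python sketch of this model under stated assumptions. The device, a hypothetical `AppendOnlyDevice`, simply appends and returns the byte address it assigned. Each log record carries a small header naming the file and offset, so the host can rebuild its mapping by replaying the log. This article does not describe Stream Fast's actual record format, so the names and layout below are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    # Hypothetical log record: a small header (file, offset) plus the payload.
    file_id: int
    file_offset: int
    data: bytes


class AppendOnlyDevice:
    """Hypothetical stand-in for a Stream Fast drive: it only appends, and it
    returns the byte address it assigned to each write."""

    def __init__(self) -> None:
        self.log: dict[int, Record] = {}  # address -> record, in write order
        self.write_pointer = 0            # next byte address to assign

    def append(self, record: Record) -> int:
        addr = self.write_pointer
        self.log[addr] = record
        self.write_pointer += len(record.data)
        return addr

    def read(self, addr: int) -> bytes:
        return self.log[addr].data


def write(dev: AppendOnlyDevice, mapping: dict, file_id: int,
          file_offset: int, data: bytes) -> int:
    """Host side: send the data and remember the address the device hands back."""
    addr = dev.append(Record(file_id, file_offset, data))
    mapping[(file_id, file_offset)] = addr
    return addr


def replay(dev: AppendOnlyDevice) -> dict:
    """Rebuild the host mapping after a crash by scanning the log in write order.

    A later record for the same (file, offset) supersedes an earlier one,
    so the rebuilt mapping is deterministic.
    """
    mapping = {}
    for addr, rec in dev.log.items():
        mapping[(rec.file_id, rec.file_offset)] = addr
    return mapping
```

`replay` visits records in write order and lets later records win. Running it after losing the in-memory mapping therefore reproduces the mapping the host held before, which is the deterministic recovery property described above.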

What are the main benefits of Stream Fast architecture?

Stream Fast offers several major benefits:

- It reduces controller DRAM requirements by roughly 99.9% (from terabytes to gigabytes for petabyte-scale drives).
- It dramatically lowers power consumption.
- It eliminates a primary thermal hotspot.
- It reduces write amplification.
- It simplifies controller firmware, which means fewer bugs and higher reliability.
- It enables deployment in power-constrained environments like sealed or orbital data centers.

For hyperscale operations, these improvements compound into substantial cost savings on power, cooling, and hardware acquisition.

Why is Stream Fast relevant now, given the DRAM shortage?

Stream Fast directly addresses the current semiconductor market imbalance where manufacturers are prioritizing high-bandwidth memory for GPU applications over general-purpose DRAM for SSD controllers. By eliminating the dependency on large amounts of expensive controller DRAM, Stream Fast provides an alternative solution to the SSD industry's supply chain challenges. The timing is particularly relevant because DRAM constraints are pushing SSD manufacturers to seek architectural alternatives, making this the right moment for a fundamental rethink.

Will Stream Fast SSDs work with my current computer and operating system?

Stream Fast requires file system support to function properly. Your existing operating system (Windows, macOS, Linux) would need updates to support Stream Fast-aware file systems. Early deployments will occur in enterprise data centers and cloud environments where operators control software deployment. Consumer adoption would come later, as operating systems integrate Stream Fast support. Linux will likely get support first, followed by Windows and macOS. Once supported, Stream Fast drives would function transparently to applications.

How does Stream Fast handle reliability and data protection differently?

Stream Fast should improve reliability in two ways. It eliminates hardware complexity that can fail (controller DRAM and FTL logic). It also moves address mapping to file systems that already implement sophisticated checksumming and error detection. The write-log replay mechanism provides deterministic recovery from crashes without needing to verify complex FTL consistency. Security could also improve, because you rely less on a closed-source SSD controller and more on openly auditable file system code. Full protection, however, requires enterprise-grade file systems like ZFS or Btrfs.

When will Stream Fast SSDs be commercially available?

Stream Fast is currently in development through the Open Flash Platform group, with backing from major flash manufacturers and reported involvement from SK Hynix. Based on typical architecture transition timelines, initial commercial deployments in hyperscale cloud environments could occur by 2026-2027, with broader enterprise adoption by 2028-2029. These are estimates, since the industry is still in active development and partnership negotiations. That major flash manufacturers are coordinating suggests real commitment to shipping this technology within the next 2-4 years.

How does Stream Fast affect SSD performance compared to traditional drives?

Stream Fast should improve performance for workloads that write sequentially, such as log-structured databases, data warehouses, and analytics, which make up a large share of modern enterprise storage traffic. Reduced write amplification and simpler controller operations can provide faster response times and higher throughput. Many applications issue random I/O at the application level that the file system then turns into sequential writes. For these workloads, Stream Fast should perform equivalently or better. Truly random workloads would see different performance characteristics, but modern storage stacks already optimize heavily for sequential I/O.

What role do file systems play in Stream Fast architecture?

File systems become the intelligence layer in Stream Fast architecture. They maintain the address mappings that traditional SSD controllers would otherwise hold, implement wear leveling algorithms, manage data placement, and handle recovery from failures. This requires file systems with sophisticated capabilities like journaling, copy-on-write semantics, and checksumming. Systems like ZFS and Btrfs already implement these features and would be natural candidates for Stream Fast adoption. This represents a fundamental shift in responsibility from hardware controllers to software file systems.
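Wear-aware block allocation is one example of logic that would move into the file system. The minimal Python sketch below has the host track erase counts and always hand out the least-worn free erase block. The `WearAwareAllocator` class is hypothetical. It is not how ZFS or Btrfs allocate space today. Real wear leveling also has to migrate cold data off lightly worn blocks, which this sketch omits.

```python
import heapq


class WearAwareAllocator:
    """Hypothetical host-side allocator: always hand out the least-worn free block."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks
        # Min-heap of (erase_count, block_id); ties go to the lower block id.
        self.free = [(0, b) for b in range(num_blocks)]
        heapq.heapify(self.free)

    def allocate(self) -> int:
        if not self.free:
            raise RuntimeError("no free erase blocks; reclaim space first")
        _count, block = heapq.heappop(self.free)
        return block

    def release(self, block: int) -> None:
        # Called once garbage collection has emptied a block: erase it
        # (bumping its wear counter) and return it to the free pool.
        self.erase_counts[block] += 1
        heapq.heappush(self.free, (self.erase_counts[block], block))
```

A heap keeps each allocation at O(log n). Because blocks re-enter the pool with their updated erase count, a recently erased block goes to the back of the line behind less-worn blocks.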

How does Stream Fast compare to other proposed SSD architecture improvements?

Stream Fast is more radical than incremental FTL optimizations, which only partially reduce DRAM overhead. It also goes further than NAND-cached approaches, which use cheaper NAND instead of DRAM but keep the fundamental inefficiency. It competes with academic proposals for FTL-less storage. It distinguishes itself through a practical implementation path, industry backing, and its reliance on existing file system technology rather than entirely new systems. Stream Fast aims to bridge the gap between academic theory and commercial viability.

What are the implications of Stream Fast for data center operators?

Data center operators would benefit in several ways:

- Large reductions in power consumption, which directly lower electricity bills.
- Elimination of major thermal hotspots, which reduces cooling infrastructure costs.
- Simpler and more reliable storage systems.
- The ability to deploy storage in previously impractical power-constrained environments.

For hyperscale operators managing hundreds of thousands of drives, these benefits could compound into very large operational savings. This is why hyperscale cloud providers are likely to be early adopters.

Conclusion: The Storage Architecture Shift We've Been Waiting For

Stream Fast represents something rare in storage technology: a fundamental architectural rethinking that's both theoretically sound and practically implementable.

For decades, SSDs have hidden their true nature behind the abstraction of a traditional hard drive interface. This abstraction made SSDs compatible with existing systems, but it created massive inefficiency. As SSDs scale to petabyte capacities and DRAM becomes supply-constrained, that inefficiency is becoming unsustainable.

David Flynn's insight is simple but profound. Stop pretending SSDs are hard drives. Build storage architecture that's native to how flash actually works, letting file systems that are already sophisticated enough to handle this complexity do the heavy lifting.

The mathematics are compelling. A thousand-fold reduction in memory overhead isn't a rounding error. It's transformational. It enables storage designs that are simpler, cooler, more reliable, and fundamentally more scalable than anything possible with traditional FTL architecture.

The timing is favorable. DRAM supply is constrained. Data centers demand efficiency. Power limits are becoming real constraints. Petabyte-scale storage is becoming necessary, not theoretical. All these pressures push toward Stream Fast adoption.

The path forward is clear too. Industry coordination through the Open Flash Platform, backing from major flash manufacturers, and partnerships (reportedly including SK Hynix) suggest this isn't a research project that might never ship. This is technology that's actively being developed for commercial deployment.

Will Stream Fast completely replace traditional SSDs? Probably not immediately. Transitions always take time. But for new deployments, especially at scale, Stream Fast could become the obvious choice within a few years.

The storage industry has been working within architectural constraints for so long that we forgot to question the constraints themselves. Stream Fast removes those constraints. Everything else follows from that.

As the semiconductor industry continues its painful rebalancing toward AI and high-bandwidth memory, storage technologies like Stream Fast provide viable alternative paths forward. Rather than competing for scarce DRAM, storage evolves away from DRAM dependency entirely.

That's not incremental improvement. That's how fundamental technologies actually change.

Key Takeaways

- Stream Fast eliminates FTL and controller DRAM, reducing memory overhead by roughly 1000x (from 1 byte per KB to 1 byte per MB of flash)

- Sequential addressing model shifts responsibility from SSD controller to file system, leveraging already-available host RAM

- Dramatically improves power efficiency and thermal characteristics, enabling deployment in power-constrained environments

- Addresses critical DRAM shortage by removing dependence on expensive controller memory

- Open Flash Platform backing suggests a serious path to commercialization within 2-4 years
