The AI Productivity Paradox: Why 89% of Firms See No Real Benefit [2025]

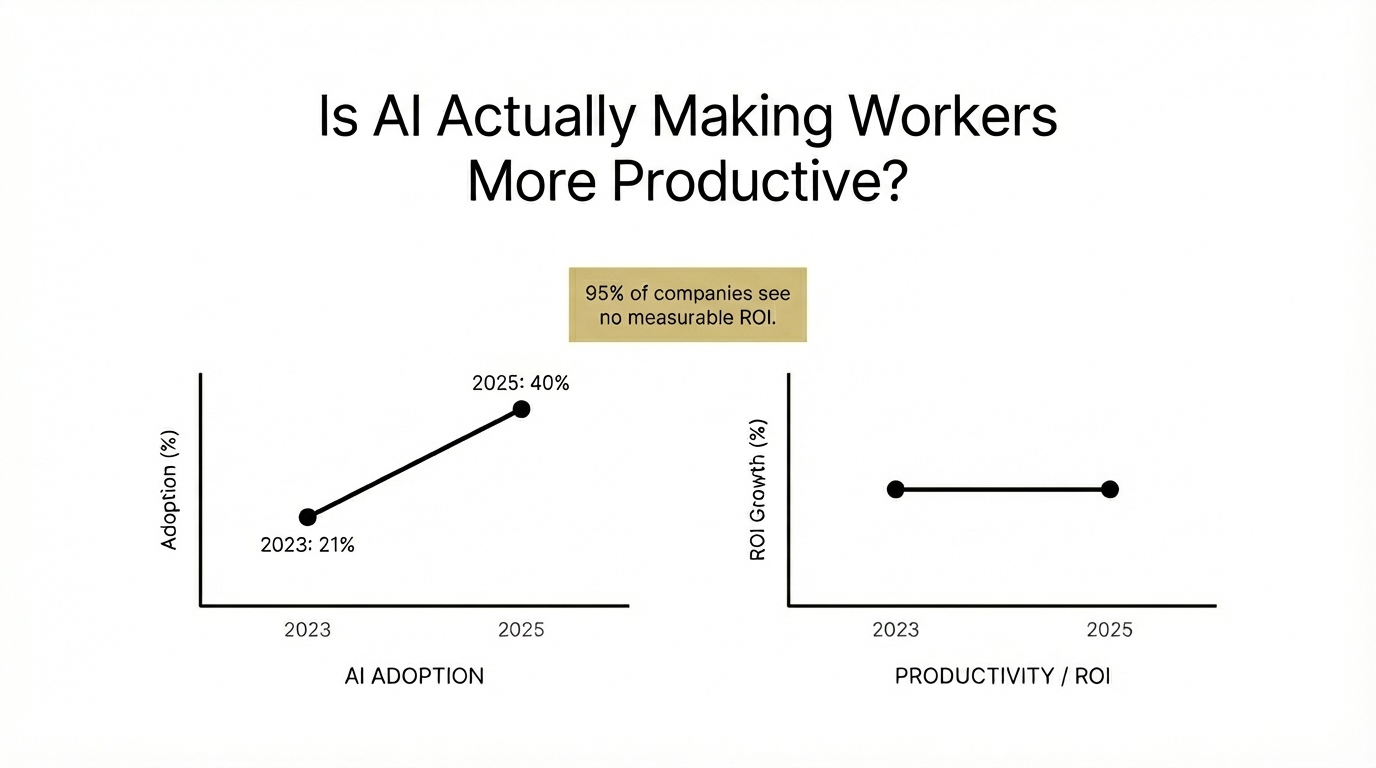

You've probably heard the pitch a hundred times by now. Artificial intelligence is going to transform your workplace. It'll automate the boring stuff, boost productivity through the roof, and make your team unstoppable. Companies have certainly believed it—they're pouring billions into AI tools, infrastructure, and training.

But here's where it gets awkward: the vast majority of them aren't seeing the payoff.

A major study from the National Bureau of Economic Research surveyed roughly 6,000 C-suite executives across the US, UK, Germany, and Australia. The findings are sobering. While 69% of firms now use AI in some capacity, 89% reported absolutely zero improvement in productivity over the past three years. Read that again. Nearly nine out of ten firms reported nothing measurable in return.

This isn't a niche problem. We're talking about companies with the resources to implement enterprise-grade AI systems, competent IT teams, and clear business objectives. Yet the productivity gains that consultants promised? They haven't materialized. The job losses everyone feared? They haven't happened either. And perhaps most telling, most executives aren't surprised anymore—they've recalibrated expectations downward.

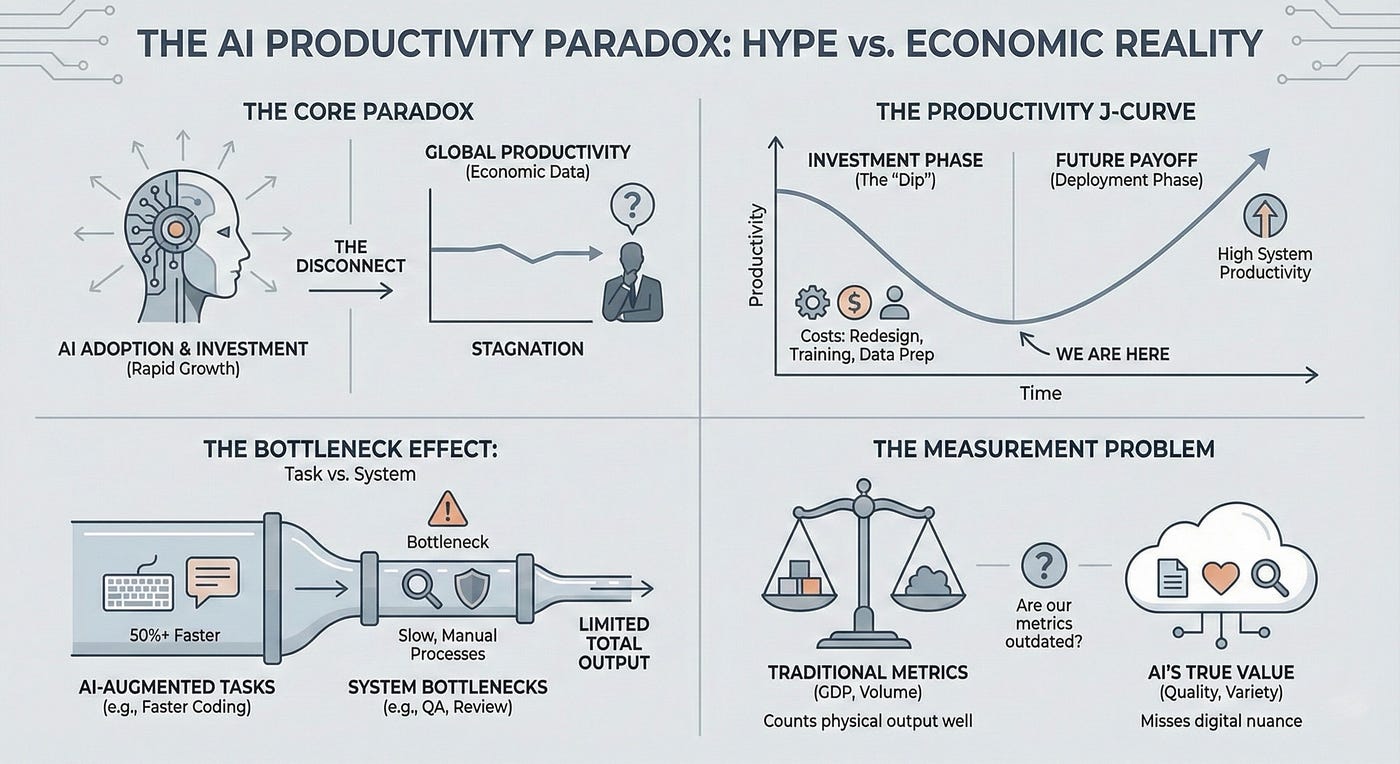

So what's actually going on? Why is AI adoption running at record highs while productivity improvements sit at near-zero? The answer isn't that AI doesn't work. It's that most companies have no idea how to actually use it.

TL;DR

- 69% of firms use AI, but 89% see zero productivity gains over three years

- Text generation, data processing, and visual content remain the top use cases

- Only 25% of companies expect small productivity gains, while 60% expect nothing

- 90% report no job losses, contradicting fears of mass automation

- Realistic projections: 1.4% productivity boost and 0.7% employment reduction over next three years

- Bottom Line: AI adoption outpaces actual value realization by a massive margin

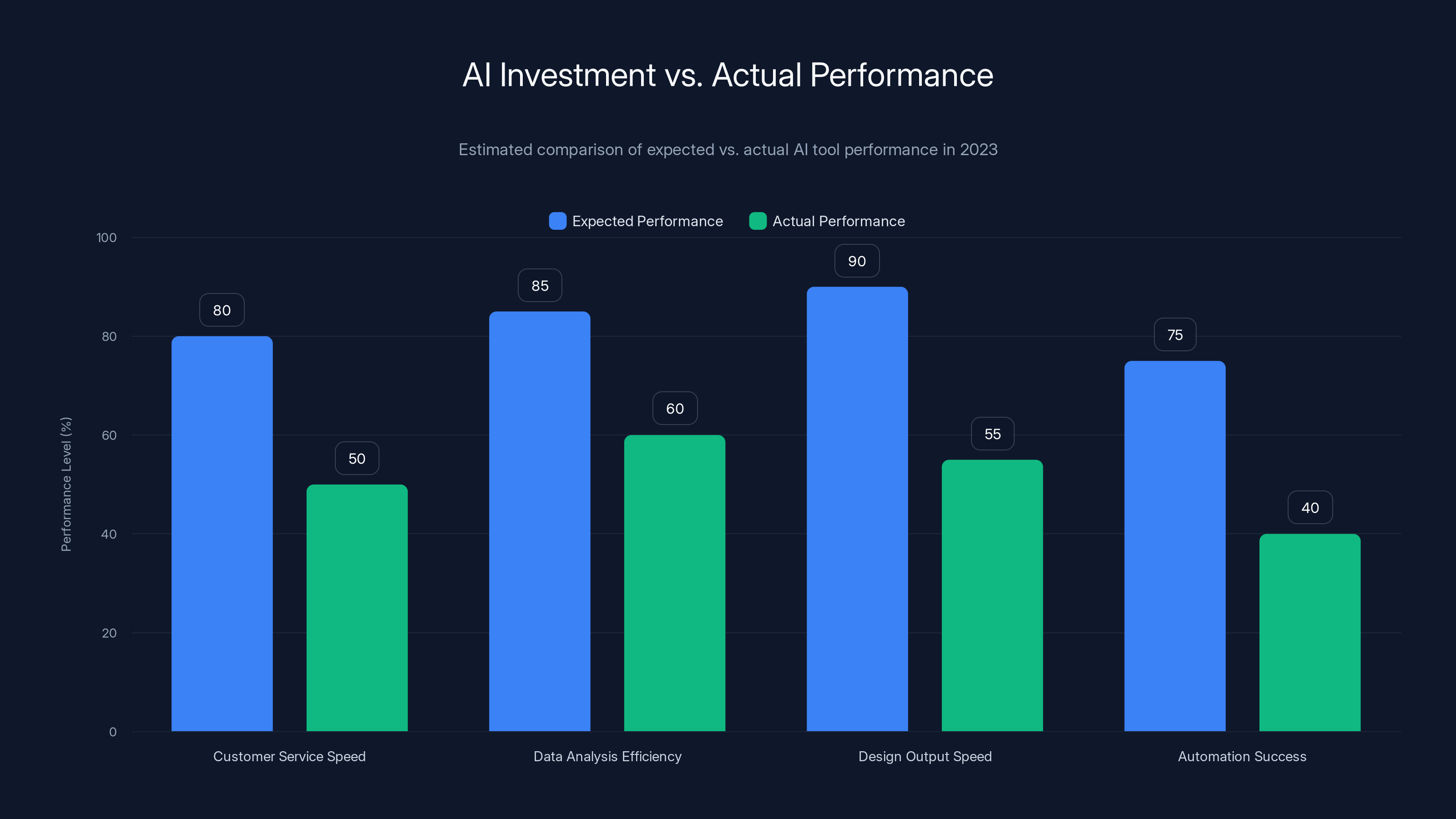

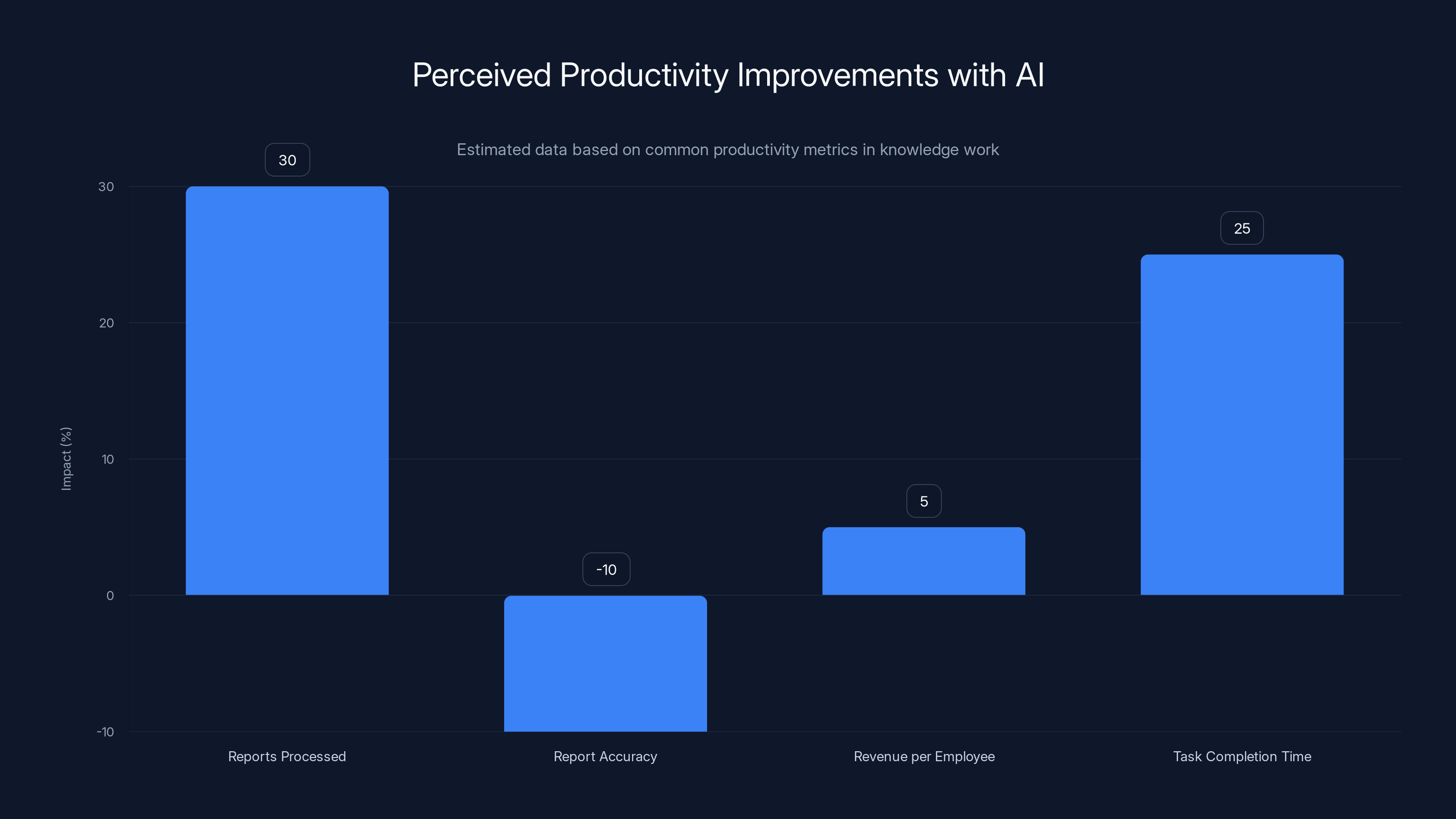

Estimated data shows a significant gap between expected and actual performance of AI tools in various business areas, highlighting the challenges in realizing AI's potential.

The Disconnect Between AI Investment and Actual Results

Let's set the scene. It's 2023. Your CFO comes into the quarterly meeting with a presentation deck full of AI opportunities. She cites industry reports, analyst predictions, and competitor moves. The tone is clear: we need to move fast or fall behind.

So you approve the budget. Six figures, maybe seven. You license some AI platforms, hire a consultant to help with "AI strategy," and start rolling things out. Your team gets trained. Some people are excited. Others are skeptical.

Then nothing happens.

Not nothing-nothing. You implemented the tools. People are using them. But they're not working the way the vendor promised. Customer service isn't dramatically faster. Analysts aren't churning through data any quicker. Designers aren't producing polished mockups five times faster. The stuff that was supposed to be automated? It still requires human oversight and cleanup.

This is the actual AI landscape right now, and it's why the numbers are so damning. Companies aren't failing because they chose bad vendors or made implementation mistakes (though plenty have). They're struggling because AI is much harder to extract value from than the marketing suggests.

The gap between AI's theoretical capability and its practical performance in real work environments is enormous. An AI language model can generate text, sure. But it generates it wrong 30% of the time. Someone still needs to read it, check it, fix it. That's not automation—that's using AI as a first draft tool. Which is fine! Except you can't build a business case around "it makes our first drafts 20% faster." That's not a $200K investment.

What's really happening is that companies adopted AI tools optimistically, discovered they require more work than expected, and kept using them anyway because they'd already paid for them. The productivity gains never came. But the tools stuck around.

The Current State of AI Adoption: What Companies Are Actually Doing

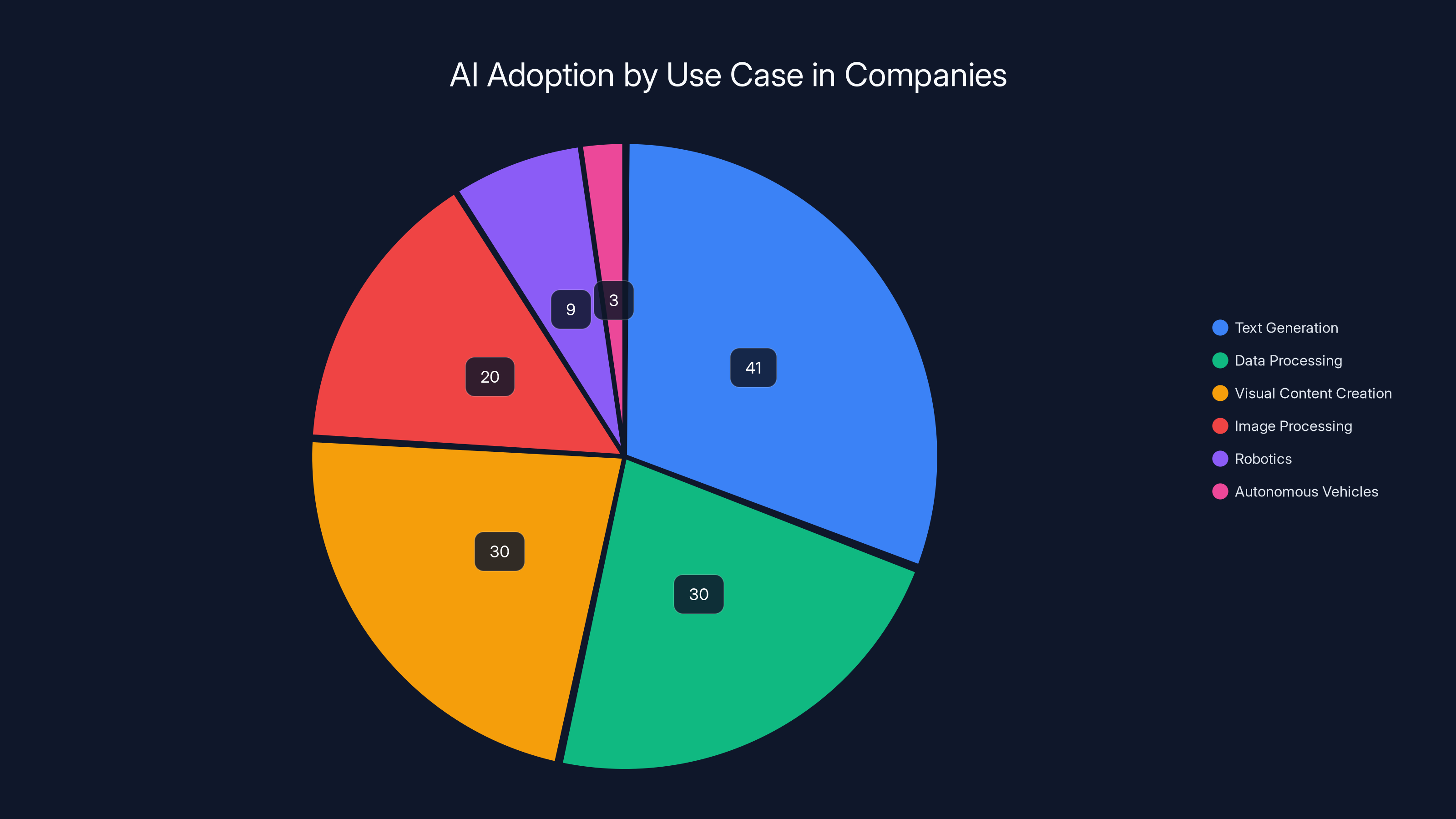

So what are the 69% of firms that use AI actually using it for?

The survey breaks it down pretty clearly. Text generation is the leader at 41% of companies. That means ChatGPT-style tools: writing emails, generating marketing copy, drafting documentation. The appeal is obvious. It's easy to implement, immediately useful (even if not always accurate), and visible to employees.

Data processing comes in at 30%. This is where companies pull structured information from databases, spreadsheets, or logs and use AI to find patterns, clean datasets, or generate summaries. This one actually tends to deliver real value because data doesn't lie the way text can. If you're using AI to find anomalies in transaction logs or flag suspicious patterns, it either works or it doesn't.

Visual content creation and image processing come in at roughly 30% and 20% respectively. You've probably seen this in action: designers using AI to generate marketing imagery, teams using tool suites to enhance photos, engineers using computer vision to inspect products. Again, the value is visible but the workflow integration is clunky.

Then there's everything else—robotics at 9%, autonomous vehicles at 3%, and dozens of other specialized use cases at low adoption rates. These require significant infrastructure changes and usually only make sense for specific industries or problem types.

The critical insight here is that most AI adoption is concentrated in the easy-to-implement, easy-to-see, but hard-to-prove-ROI categories. Text generation tools deployed fast and widely. Data processing requires more expertise but shows clearer results. Visual tools are popular with creative teams but often require significant workflow redesign to extract actual productivity gains.

What's conspicuously absent? Deep workflow automation. The kind of AI integration that would actually replace entire business processes. The kind of thing that would show up in productivity metrics.

There are good reasons for this. Deep automation requires process redesign, change management, and custom integration work. It's expensive. It's risky. It requires organizational buy-in. Text generation tools require a free account and some curiosity. Guess which one companies prefer?

Text generation leads AI adoption at 41%, followed by data processing and visual content creation at 30% each. Robotics and autonomous vehicles have lower adoption rates.

Why Productivity Remains Flat Despite Widespread Adoption

This is the question that keeps researchers and consultants awake at night. Why isn't AI delivering?

The answer has multiple layers, and none of them is "AI doesn't work."

First, there's the implementation problem. Most companies deployed AI tools without fundamental process redesign. You can't just drop ChatGPT into a workflow that was designed for humans and expect magic. An analyst who used to spend 6 hours writing a report still needs to spend 4 hours reviewing the AI output, editing it, and adding judgment. You've saved 2 hours maybe, not 6. You've created a new job category (AI-output reviewer) and changed the role of the analyst. But productivity in measurable terms? Unclear.

Second, there's the expectation mismatch. Executives expected AI to be a substitute for human workers. Engineers and managers know better: AI is better thought of as a capability enhancer. A translator who learns to use AI-powered translation memory can work faster, but still needs linguistic expertise. A coder using GitHub Copilot can write faster, but still needs to understand system design. You're not replacing the skill; you're making skilled people slightly more productive. And "slightly" is not enough to move aggregate productivity metrics.

Third, there's the quality problem. AI outputs require verification. That verification work is often underestimated. A machine learning model that's 95% accurate still requires someone to identify and correct the 5% of errors. If that error-catching and correction work takes almost as long as creating the output from scratch, you've made things slower, not faster.
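The break-even point here is easy to put into numbers. What follows is a minimal sketch, assuming a simple model in which every AI output gets reviewed and a fraction of outputs also needs a correction. The function name `net_minutes_saved` and every minute value are illustrative assumptions, not figures from the study.

```python
def net_minutes_saved(scratch_min, review_min, error_rate, fix_min):
    """Minutes saved per item when AI drafts and a human reviews.

    Hypothetical model: every item is reviewed, and a fraction
    `error_rate` of items also needs a correction taking `fix_min`.
    A negative result means the AI-assisted path is slower.
    """
    ai_path_min = review_min + error_rate * fix_min
    return scratch_min - ai_path_min


# Illustrative inputs only: a 60-minute task with 95%-accurate AI output.
quick_review = net_minutes_saved(scratch_min=60, review_min=20, error_rate=0.05, fix_min=60)
slow_review = net_minutes_saved(scratch_min=60, review_min=58, error_rate=0.05, fix_min=60)

print(f"Quick review: {quick_review:.0f} minutes saved per item")
print(f"Slow review:  {slow_review:.0f} minutes saved per item")
```

With these made-up inputs, a 20-minute review leaves a healthy saving, while a 58-minute review tips the result negative. The accuracy figure is the same in both cases; the review time is what decides the outcome.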

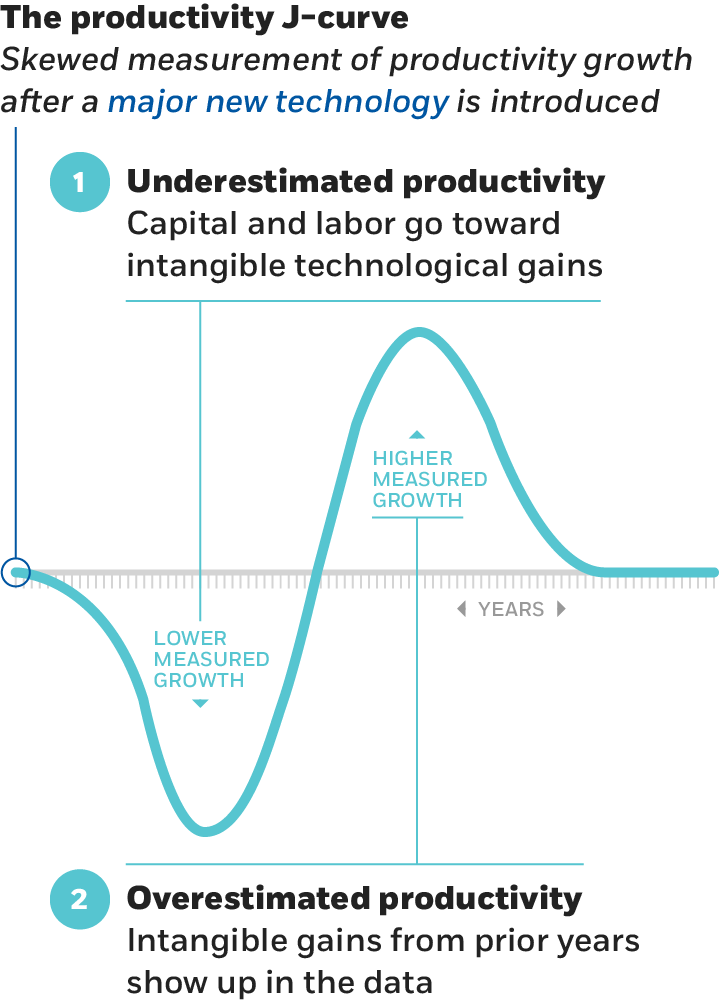

Fourth, there's organizational disruption. AI deployment requires training, process changes, and team reorganization. During a 6-to-12 month deployment, productivity typically drops as people learn new tools and processes. By the time the learning curve flattens, you're back to baseline. The productivity gain, if it exists, only shows up after 18+ months. Most studies look at 3-year windows, and firms that adopted partway through that window may not have cleared the learning curve yet, so it's possible that gains are coming but are not yet visible.

Fifth, and most important, there's the measurement problem. How do you actually measure productivity in knowledge work? Revenue per employee? Hours worked? Tasks completed? Customer satisfaction? Each metric tells a different story. A customer service team using AI chatbots might handle 30% more inquiries with the same headcount, but customer satisfaction might drop 15% because the AI doesn't actually solve problems as well as humans. What's the productivity gain there? It depends on what you measure.

Take away all these layers and you're left with a simple truth: AI adoption has been fast, but thoughtful implementation has been slow. Companies bought tools before they understood their problems. They deployed technology before redesigning workflows. They expected automation without doing the integration work that automation requires.

The study captures this perfectly. 69% adoption. 89% seeing no improvement. It's not that AI failed. It's that most companies haven't actually implemented AI properly yet. They've bought AI tools. They haven't transformed their work.

Employment Impact: Fears vs. Reality

Here's something that surprised executives when the results came out: AI isn't eliminating jobs the way everyone feared.

90% of companies reported no change in employment over the past three years despite AI adoption. Zero. Nada. The automation wave that was supposed to make white-collar workers obsolete? Hasn't happened.

This aligns with what economic history tells us. New technologies displace specific roles but create new ones. The ATM cut the number of tellers each branch needed, but it also enabled banks to open more branches, because operating a branch became much cheaper. More branches meant more banking jobs overall. Spreadsheets were going to destroy accounting. Instead, they enabled financial analysis to become more sophisticated, and accountants shifted to higher-value work.

AI is following the same pattern. A customer service team using AI chatbots doesn't shrink to zero agents. It shifts from "answer customer questions" to "train and monitor AI, handle edge cases that AI can't solve, improve customer satisfaction." Different work. Same number of people, maybe.

Looking forward, companies predict 0.7% employment reduction over the next three years. That's meaningful but not catastrophic. For every thousand employees, companies expect to reduce headcount by 7 people. At the same time, they expect productivity to rise by 1.4%. So they're expecting to get more output from fewer people, but only marginally on both fronts.

The fear narrative isn't happening because replacing humans with AI is actually expensive and disruptive. A person doing customer service costs a company

Companies would love to replace expensive workers with cheap AI. But it turns out that running a business on AI that's right 95% of the time is harder than it sounds. Humans forgive AI mistakes less than they forgive human mistakes. Customers get frustrated fast.

So instead of wholesale job elimination, we're seeing job transformation. People moving from repetitive tasks into oversight and judgment roles. That's not no change—it's meaningful change. But it's not the dramatic displacement everyone feared.

The Text Generation Gold Rush (And Its Limitations)

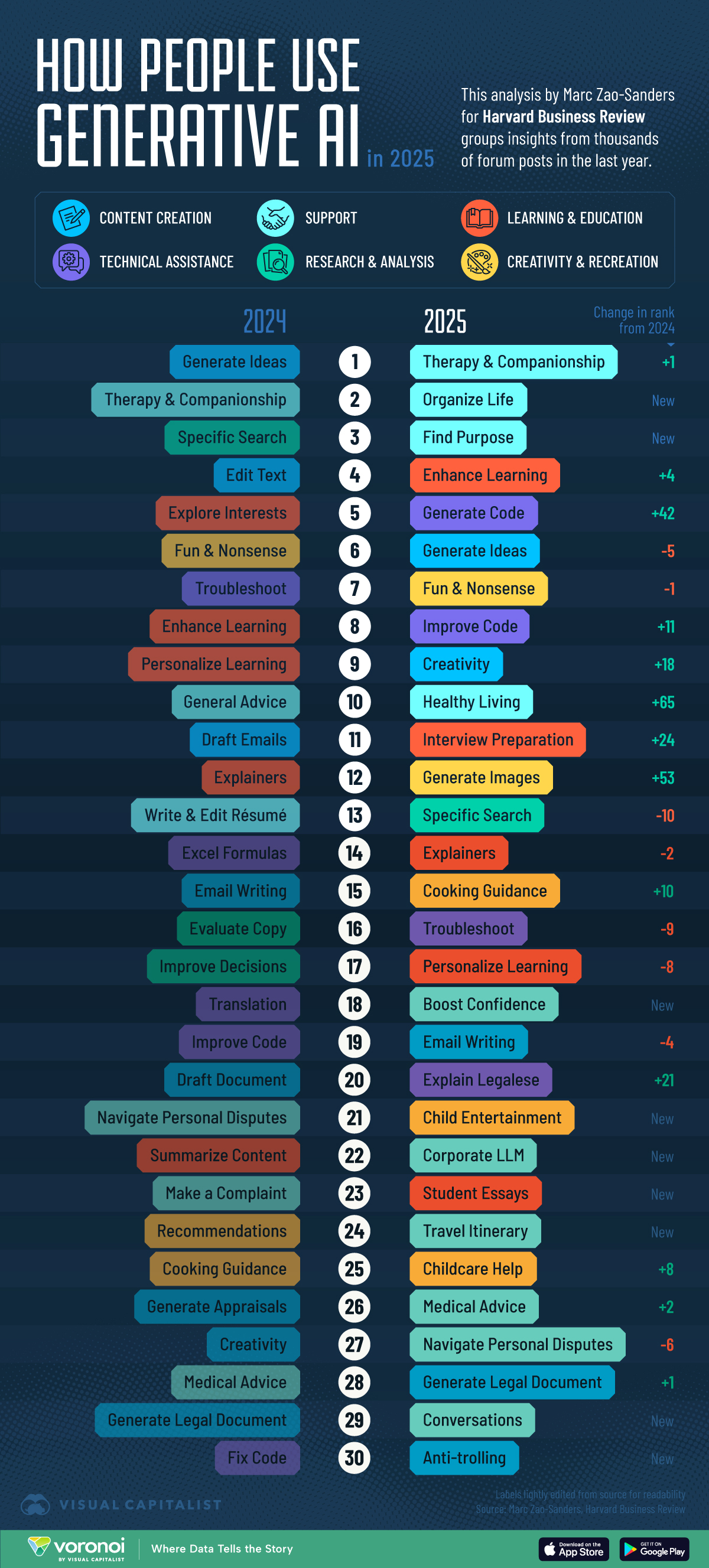

Let's talk specifically about text generation, since 41% of firms are using it. This is where most of the visible AI activity is happening.

The appeal is straightforward. You can prompt ChatGPT or Claude to write an email, draft marketing copy, outline a strategy document, generate a customer support response, or summarize a meeting. The output is often pretty good. Sometimes it's excellent. Frequently it's plausible but wrong. And yet, people keep using it because it's genuinely faster than starting from a blank screen.

But here's what happens in practice. Your marketing team starts using an AI writing tool. The tool generates social media copy, email templates, and blog post outlines. Great, right?

Wrong. Someone still has to read every output. Someone has to check for factual errors (AI hallucinates constantly). Someone has to verify that brand voice comes through. Someone has to make sure the tone matches the audience. Someone has to ensure the information is accurate.

So the marketer who used to spend 4 hours writing a piece of copy now spends 2 hours reviewing AI output. You've saved 2 hours per piece. Over a hundred pieces a year, that's maybe 200 hours saved. If that marketer makes

Scale that across a team of five people. You've saved

The math gets even worse when you factor in the fact that AI-generated text often requires more editing than it appears. The writing might be grammatically perfect but tonally off. It might be factually accurate but missing context. It might hit the word count but miss the actual point.

What's really happening is that AI is shifting work, not eliminating it. A writer using AI still writes. They just do more of it, with more review burden. They're more productive in output volume but possibly less productive in output quality.

Where text generation actually does work well is in first drafts and templates. Using AI to generate 80% of a first draft that you then shape to perfection? That's a valid workflow. But it requires a different mindset than what most companies bring. They're treating AI as a tool that produces final output. It doesn't.

Despite fears, AI adoption has not led to significant job losses in the past three years, with companies projecting only a 0.7% reduction in employment over the next three years, alongside a 1.4% productivity increase.

Data Processing: Where AI Actually Delivers

If there's one area where AI is genuinely outperforming expectations, it's data processing. About 30% of companies using AI put it to work on data analysis tasks.

This is where AI feels different from text generation. A machine learning model trained to identify fraudulent transactions either catches fraud or it doesn't. You can measure accuracy. You can measure false positives and false negatives. You can calculate ROI.

When a financial services company deploys AI to flag suspicious transactions, the results are quantifiable. The model catches 95% of actual fraud. It generates 5% false positives that analysts review in 5 minutes. Before AI, analysts caught maybe 60% of fraud through rule-based systems, and each review took 20 minutes.

Now do the math with deliberately round, illustrative numbers: 100,000 fraudulent transactions per month (yes, that's a lot of fraud). The AI system catches 95,000 of them and generates 5,000 false positives at 5 minutes each = 417 hours of review work. Assume the old system caught 60,000 and generated 40,000 false positives at 20 minutes each = 13,333 hours of review work.

Going from 13,333 hours to 417 hours is the real deal. That's productivity improvement you can measure in the budget spreadsheet.
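The review-hours arithmetic can be reproduced in a few lines. This is a small sketch using the illustrative figures quoted above; the helper name `review_hours` is an assumption made for the example.

```python
def review_hours(flagged_items, minutes_per_review):
    """Analyst hours needed to review a batch of flagged transactions."""
    return flagged_items * minutes_per_review / 60


# Figures from the illustrative scenario above, not real company data.
ai_hours = review_hours(5_000, 5)        # AI system: 5,000 flags at 5 minutes each
legacy_hours = review_hours(40_000, 20)  # rule-based system: 40,000 flags at 20 minutes each

hours_saved = legacy_hours - ai_hours
reduction_pct = 100 * hours_saved / legacy_hours

print(f"AI review load:     {ai_hours:,.0f} hours/month")
print(f"Legacy review load: {legacy_hours:,.0f} hours/month")
print(f"Reduction:          {reduction_pct:.1f}%")
```

Keeping flag volume and minutes per review as separate inputs shows that the saving comes from both at once: fewer items to look at, and less time spent on each.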

The reason data processing works better than text generation is that data is objective. The model is evaluated against ground truth. Either a transaction is fraudulent or it's not. Either a customer will churn or they won't. Either equipment will fail in the next 30 days or it won't. You can measure accuracy. You can measure cost-benefit. You can tie AI deployment directly to business outcomes.
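Measuring against ground truth usually means counting four outcomes and deriving a couple of ratios from them. Here is a minimal sketch in plain Python, assuming binary labels where `True` means fraudulent. The sample lists are made up for illustration and the function names are not from any particular library.

```python
def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for binary labels."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a and p)          # fraud, flagged
    fp = sum(1 for a, p in pairs if not a and p)      # legitimate, flagged
    fn = sum(1 for a, p in pairs if a and not p)      # fraud, missed
    tn = sum(1 for a, p in pairs if not a and not p)  # legitimate, not flagged
    return tp, fp, fn, tn


def precision_recall(tp, fp, fn):
    """Precision: share of flags that were real. Recall: share of real cases caught."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Made-up ground truth and model flags (True = fraudulent).
actual = [True, True, True, True, False, False, False, False, False, False]
predicted = [True, True, True, False, True, False, False, False, False, False]

tp, fp, fn, tn = confusion_counts(actual, predicted)
precision, recall = precision_recall(tp, fp, fn)

print(f"TP={tp} FP={fp} FN={fn} TN={tn}")
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Recall corresponds to the "catches 95% of fraud" style of claim, while false positives drive the review workload. Text generation has no equivalent of these counts, which is the point of the comparison.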

Compare that to text generation, where "good copy" is subjective. Did the email template increase clickthrough rate? Who knows—maybe the audience changed. Did the blog outline improve search rankings? Possibly, but you'd need a controlled test to verify.

So when you see the survey saying 89% of companies aren't seeing productivity improvements, a lot of that is the text generation drag pulling down the averages. The companies using AI for data processing are probably seeing real gains. The companies using AI for text generation are seeing marginal gains, if any.

Visual Content Creation: The Capability Versus Integration Gap

About 30% of companies are deploying AI for visual content creation, and this category deserves its own analysis because it shows the full spectrum of AI outcomes.

Image generation tools like DALL-E, Midjourney, and Stable Diffusion are genuinely impressive. You type "a minimalist dashboard for a fintech app in dark mode" and you get back four usable image options in seconds. Before AI, someone would spend 2-4 hours working with a designer to iterate through mockups. Or a designer would spend that time creating mockups from scratch.

So there's real productivity gain potential here. A startup creating marketing materials can generate dozens of variations in an afternoon instead of a week.

But here's where it gets complicated. The AI images are just okay. They're not perfect. The perspective might be slightly off. The proportions might not be quite right. The hands—oh god, the hands. AI is still learning hands and faces, and experienced designers immediately spot the problems.

So what actually happens? A designer uses AI to generate rough concepts. Then the designer spends time editing those concepts because they're not perfect. Is that faster than starting from scratch? For some designers, yes. For others, it's actually slower because now they need to both know design and know how to engineer prompts to get concepts worth editing.

The real productivity win comes if you fundamentally redesign the workflow. Instead of "designer makes perfect comps," you shift to "designer generates AI concepts, stakeholders choose direction, designer refines." That's faster. But it requires changing how decisions are made and involving stakeholders earlier. That's organizational work, not technology work.

Most companies implemented the AI tool without the workflow redesign. So they saw the capability but not the productivity gain. The image generation works. People use it. But it didn't cut design time in half the way executives hoped.

The Expectations Reset: From Automation Dreams to Incremental Improvement

Something interesting happened between 2023 and 2025. Expectations changed.

In 2023, the narrative around AI was revolutionary. This technology will transform work. Automate entire job categories. Increase productivity by 40-50%. Executives believed it. Investors certainly did.

Now, in 2025, the survey results tell a different story. While only 25% of companies expect small productivity gains and 12% expect large gains over the next three years, the overall tone has shifted from "AI will transform everything" to "AI will probably help a bit, if we figure out how to use it."

This is actually healthy. The conversation has matured from hype to reality. Companies are no longer waiting for AI to magically solve their problems. They're asking harder questions: How do we actually integrate this? What processes need to change? Who owns the implementation? What are the actual costs?

The 60% of companies expecting no further impact over the next three years? That's not pessimism. That's realism. It's companies saying "we've deployed AI, we've learned it doesn't solve everything, and we're not expecting magic next year either."

But there's an optimistic flip side. The same research found that 63% of companies expect no employment reduction, with only 26% expecting job losses. That's companies saying "we trust we'll figure this out without firing a third of our workforce." There's confidence in that.

Over the next three years, the survey predicts productivity improvements of 1.4% on average, with employment reduction of 0.7%. These are modest numbers. They're also probably realistic. A 1.4% productivity boost is noticeable but not revolutionary. For a company with 1,000 employees, that's the equivalent of adding 14 people's worth of output without adding 14 people. That's worth doing, but it requires getting AI implementation right.
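To see what these percentages mean for a given workforce, the conversion is a one-liner each way. A quick sketch follows; the function name is an assumption, and the 1,000-employee company is the same hypothetical used above.

```python
def project_headcount_effects(employees, productivity_gain_pct, employment_cut_pct):
    """Translate survey-style percentages into people-equivalents."""
    extra_output_fte = employees * productivity_gain_pct / 100
    fewer_people = employees * employment_cut_pct / 100
    return extra_output_fte, fewer_people


# Survey projections applied to a hypothetical 1,000-person company.
extra_fte, fewer = project_headcount_effects(1_000, 1.4, 0.7)

print(f"Extra output equivalent to {extra_fte:.0f} people")
print(f"Expected headcount reduction: {fewer:.0f} people")
```

Swapping in your own headcount makes the scale concrete. The model is strictly proportional, so a 200-person firm gets one fifth of these figures.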

The employment reduction of 0.7%—7 fewer people per thousand—probably represents natural attrition and some early retirement rather than layoffs. You're losing people to retirements and voluntary moves, not forced displacement. That matches the real-world experience where companies using AI have simply been more cautious about hiring new people while ramping AI tools.

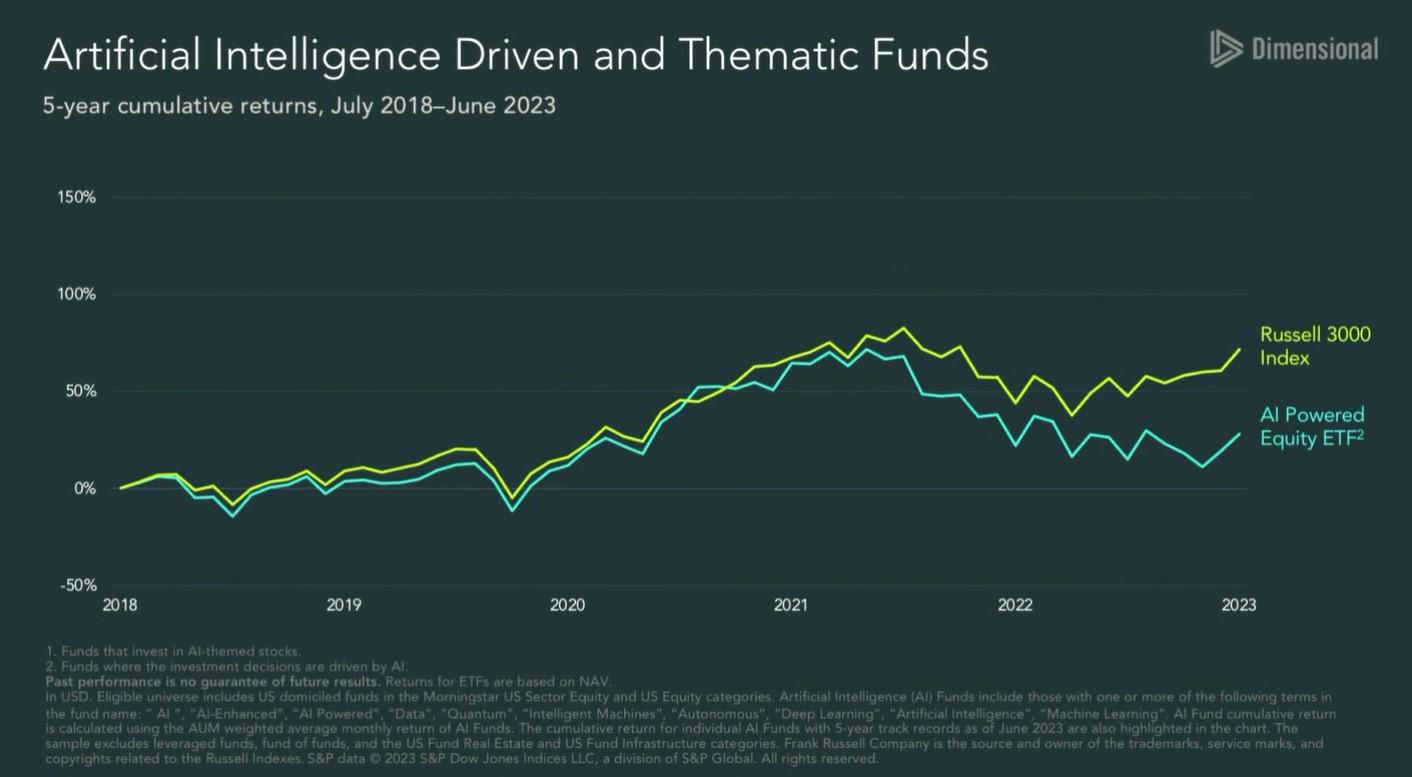

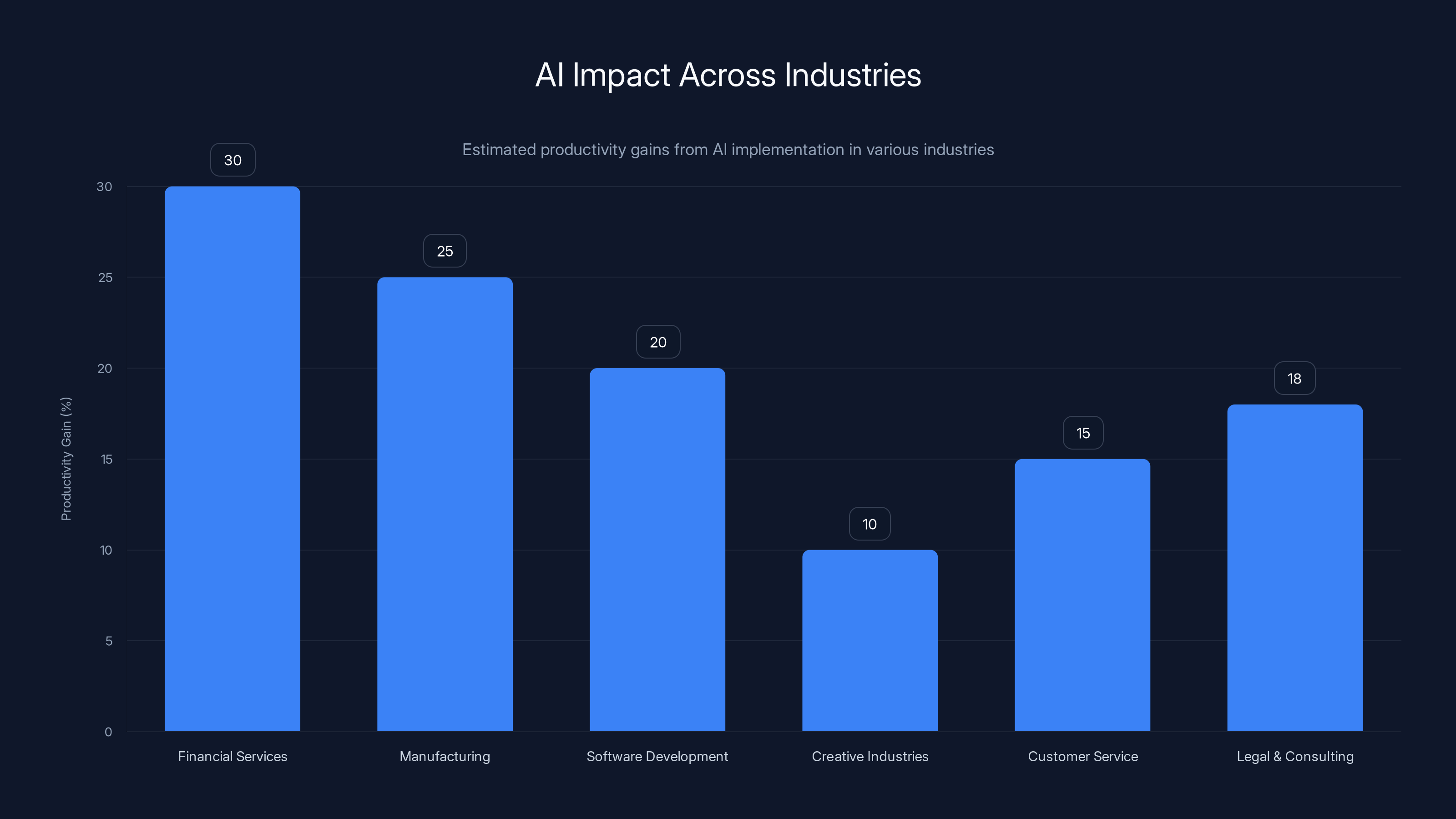

Financial services and manufacturing show the highest estimated productivity gains from AI, while creative industries see the least improvement. (Estimated data)

The Real Problem: AI Deployment Without Process Design

If you distill the survey findings into one root cause, it's this: companies are deploying AI without fundamentally rethinking how work gets done.

AI is a tool. But most organizations treat it like a feature. You add a chatbot feature to your website. You integrate an AI feature into your design software. You enable an AI feature in your email client. Then you expect productivity improvements.

That's not how technology transformation works. When email first hit companies, productivity didn't improve because someone sent emails slightly faster. Productivity improved because email fundamentally changed how organizations coordinate. You could collaborate across time zones asynchronously. You could keep written records. You could include multiple stakeholders in a conversation.

But that required organizational change. It required building new email workflows, creating standards for response time, adjusting meeting culture. Some companies that adopted email poorly actually saw productivity drop because they were dealing with email chaos—no standards, everything urgent.

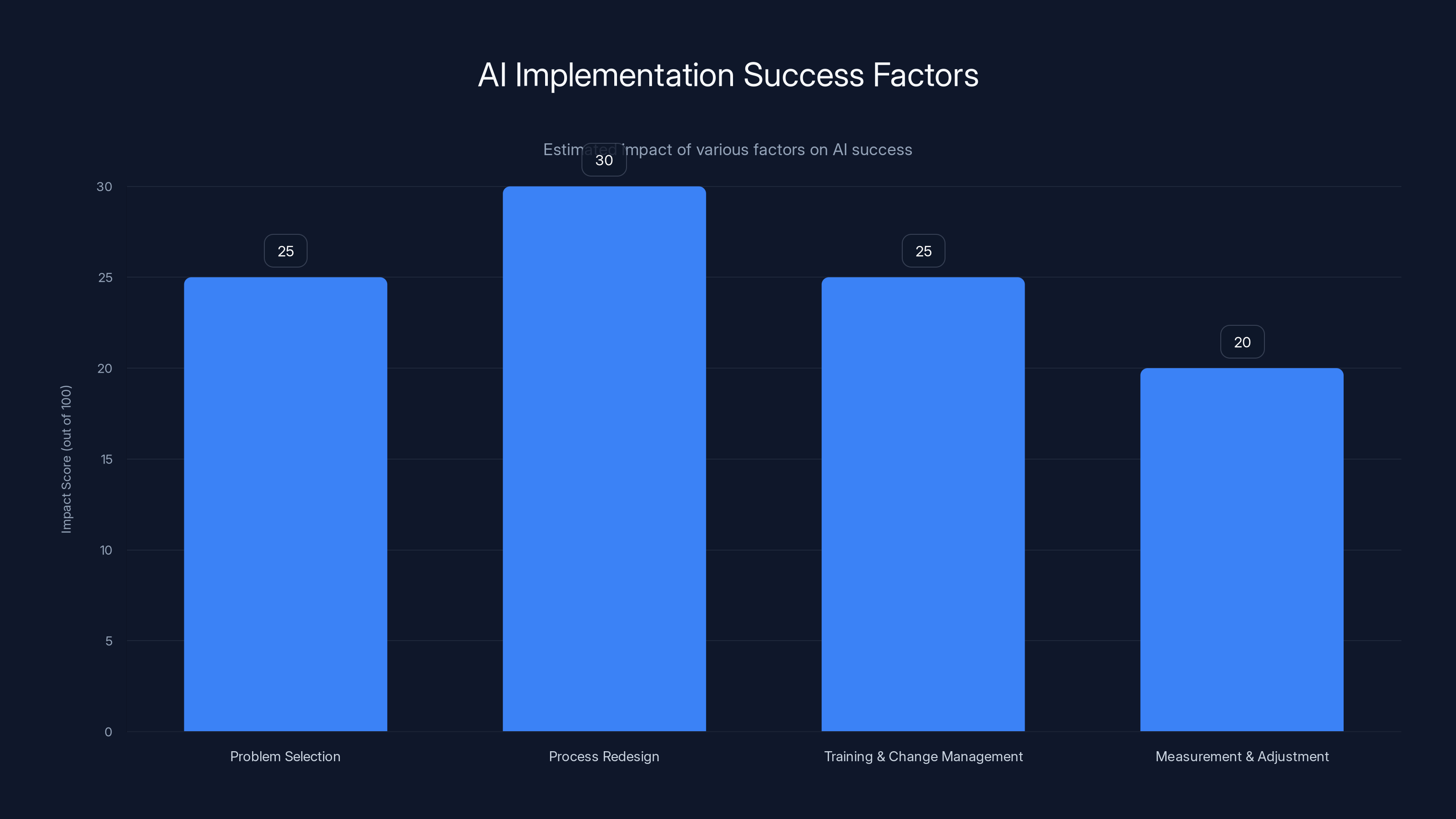

AI is the same. The companies that will eventually see real productivity gains are the ones that:

1. Identify specific problems that AI can solve better than the current process.

2. Redesign the workflow around AI's actual capabilities (not what the vendor promised).

3. Train people thoroughly on both the AI tool and the new process.

4. Iterate and refine based on actual results.

5. Measure outcomes systematically.

Most companies are doing maybe step 1. They identify that AI could help with text generation or data processing. Then they skip steps 2-5 and wonder why productivity hasn't improved.

There's another dimension to this. Organizational readiness matters enormously. A company with sophisticated operations, solid data infrastructure, and a culture of measurement can implement AI effectively. A company with fragmented systems, no data standards, and a culture of "we've always done it this way" will struggle.

The survey caught companies at different stages of maturity. Some of that 69% using AI are sophisticated implementations. Others are pilot projects that didn't scale. The 89% seeing no improvement might be distributed across the full spectrum, but probably concentrated in companies with weaker operational maturity.

Industry Variations: Where AI Makes the Most Sense

One thing the survey hints at but doesn't deeply explore: different industries are getting different value from AI.

Financial services and insurance, which use data-heavy processes extensively, probably show better results than the overall average. A fraud detection AI, claims processing automation, or risk analysis tool delivers measurable value. These are industries where AI fits naturally into existing processes.

Manufacturing is probably on the upside too. Predictive maintenance AI that prevents equipment breakdowns is a clear business case. Quality control AI that catches defects is another. These are measurable improvements.

Software development might be showing unexpected value. A developer using GitHub Copilot or similar actually does write more code, faster. The code quality might be slightly lower (requiring more review), but output volume definitely increases. That's a real productivity win in an industry that's built on throughput metrics.

But creative industries—marketing, advertising, design, content creation—are probably dragging the averages down. This is where AI text generation and image creation are most widely deployed but least successful at improving actual productivity. The outputs require heavy editing. The workflows don't naturally accommodate "first draft AI." The subjective nature of quality makes measurement hard.

Customer service is interesting. AI chatbots are everywhere. But they're not actually reducing agent headcount at most companies because agent conversations are shifting toward harder problems (the easy stuff goes to the chatbot, the hard stuff goes to humans). So you get efficiency gains, not headcount reduction. Productivity in one sense (more conversations handled per agent) but not in others (fewer total agents).

Legal and consulting probably show mixed results. AI tools are great at legal research and document review, which are measurable wins. But client-facing strategic work—where the real value lives—doesn't improve much. AI handles 30% of the work (research and prep), but the critical 70% (judgment and strategic thinking) still requires the human expert. So productivity improves incrementally, not transformatively.

The lesson here is that AI outcomes are sector-specific. You can't just apply generic analysis to all 6,000 companies surveyed. A 2% productivity improvement in financial services is realistic. A 20% improvement in creative work is a fairy tale. The average of 1.4% predicted over three years probably reflects this variation.

Cost Reality: What Companies Actually Spent

The survey doesn't deeply analyze costs, but we can infer them from the deployment patterns. If 69% of companies are using AI, what are they actually buying?

Software licenses: $10K-100K+ annually depending on scale. Tools like ChatGPT for Business, Claude API, and specialized AI platforms add up fast once you're running them across an organization.

Integration work: $50K-500K+ depending on depth. This is the consultants, the custom development, the system configuration work that actually makes AI useful rather than just installed.

Infrastructure: $5K-200K+ annually. Cloud compute costs for running models, data storage, API costs. These can balloon quickly if you're not monitoring usage.

People: This is the big one. Someone needs to champion the AI initiative. Teams need training. You might hire AI specialists or data scientists. Internal cost for change management and training could easily be $100K-500K for a meaningful rollout.
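Adding up the four ranges gives a rough sense of scale. This is a back-of-the-envelope sketch that treats every range as a first-year cost, which is an assumption, since only some of the figures above are stated as annual. The dictionary keys are illustrative labels. The "+" on each upper bound is open-ended, so the high total is not a ceiling.

```python
# (low, high) in USD, copied from the inferred ranges above.
# Treating all four as first-year costs is an assumption for illustration.
cost_ranges = {
    "software_licenses": (10_000, 100_000),
    "integration_work": (50_000, 500_000),
    "infrastructure": (5_000, 200_000),
    "people_and_training": (100_000, 500_000),
}

low_total = sum(low for low, _ in cost_ranges.values())
high_total = sum(high for _, high in cost_ranges.values())

print(f"Combined first-year range: ${low_total:,} to ${high_total:,}+")
```

This is plain addition of the ranges listed here, not a figure reported by the survey.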

So a mid-sized company putting serious effort into AI implementation is probably spending

Against what returns? If productivity improves 0% and you spent

But if half your implementations show minimal returns while others show decent gains, the portfolio average stays flat. Which is exactly what the survey found: 89% seeing no improvement means 11% presumably seeing some improvement.

This is the secret that nobody wants to say out loud: many of those AI investments are sunk costs. The companies have already spent the money. The tools are already deployed. They're hoping the next version is better, or they'll eventually figure out a use case that works. But they're not expecting ROI.

Estimated data shows AI can increase report processing by 30% but may decrease accuracy by 10%. Task completion time improves by 25%, while revenue per employee sees a modest 5% gain.

The Measurement Problem: How Do You Actually Define Productivity?

Here's the thing that none of the survey responses can capture fully: productivity is incredibly hard to measure in knowledge work.

For a factory making widgets, productivity is simple. Widgets per hour per worker. But for an accounting team using AI to process expense reports, what's the metric?

Number of reports processed per day? Then the AI tools probably do help. But what about report accuracy? What if AI-processed reports have slightly more errors requiring follow-up? Is processing 30% more reports worth 10% more errors?

Revenue per employee? That's even harder. Did revenue increase because of AI, or because the market improved? Did revenue per employee improve if revenue stayed flat but headcount didn't increase?

Time to complete tasks? This is what most companies measure. An expense report that took 4 hours to process now takes 3 hours. That's a 25% reduction in processing time, or about 33% more reports per hour. But the company might still need the same number of people for expense processing because the volume has grown. So the productivity metric is up, but the cost is the same. Did productivity improve, or did it just allow scale?
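Even the time-based metric depends on which way you frame it. Here is a short sketch of the 4-hour-to-3-hour example, expressed both as time saved and as output per hour. The function names are assumptions made for the illustration.

```python
def time_reduction_pct(old_hours, new_hours):
    """Percentage of time saved per task."""
    return 100 * (old_hours - new_hours) / old_hours


def throughput_gain_pct(old_hours, new_hours):
    """Percentage increase in tasks completed per hour."""
    return 100 * (old_hours / new_hours - 1)


saved = time_reduction_pct(4, 3)
gained = throughput_gain_pct(4, 3)

print(f"Time per report down {saved:.0f}%")
print(f"Reports per hour up {gained:.1f}%")
```

The same change reads as 25% or roughly 33% depending on the denominator, which is one more reason two companies with identical results can report different "productivity gains."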

This is why the survey probably captures real patterns even though measurement is messy. Companies know whether they're doing more with the same people (productivity improved), the same with the same people (no improvement), or less with the same people (productivity declined). Even if precise measurement is hard, the direction is clear.

When 89% of companies report no improvement, they're saying: "We deployed AI, we measured what we measure, and we're not getting better." That's a strong signal regardless of how they calculated it.

But it also means some of that 89% might be missing productivity improvements that are real but invisible. A manager using AI-powered analytics to make better decisions might improve team performance by 5%, but that improvement shows up in quarterly results six months later, not in a productivity metric. By the time it's visible, people have forgotten about the AI deployment.

Change Management: The Unglamorous Truth About AI Implementation

Here's what nobody in Silicon Valley wants to talk about: most AI implementations fail not because the technology doesn't work, but because organizations aren't designed to adopt it.

Your company has workflows built on decades of practice. People know how to do their jobs. The processes work, even if they're not optimal. Then you introduce AI and everything becomes uncertain.

Will this AI tool actually save me time, or create more work? Can I trust the output? What happens if something goes wrong? Will I become obsolete?

These questions don't have good answers in most organizations. So people keep using the old process because it's familiar, while the AI tool sits unused. Or they use it grudgingly for easy tasks and abandon it for important work.

Change management is the hardest part of AI implementation, and it's also the least glamorous. It's not about the technology. It's about helping people understand why change matters, training them thoroughly, giving them time to adapt, and measuring results to show them it's working.

Most companies skip this or minimize it. They assume that because the AI tool is easy to use, people will just adopt it. Wrong. Easy to use doesn't mean easy to integrate into workflows you've perfected.

The companies seeing real AI benefits probably had:

- Clear leadership sponsorship

- Dedicated change management resources

- Honest expectations about what would improve

- Realistic timelines (12-18 months, not 3 months)

- Measurement from day one

- Willingness to redesign processes, not just add AI

Most didn't have all of these. That's a big reason why the productivity numbers are so underwhelming.

The Future Outlook: What Should We Realistically Expect?

The survey's prediction of 1.4% productivity improvement over the next three years is probably in the right ballpark. Here's why.

AI technology is improving rapidly. The models get better every six months. The tools become easier to integrate. The ecosystem becomes more sophisticated. We'll probably have better results in 2027 than in 2025.

But organizational capability to use AI is lagging. Most companies are still learning. They're still running experiments. They're still figuring out which use cases work. That learning curve will flatten out. The companies that have done AI implementation well will get better at doing it faster. The ones that have failed will either try again or give up.

Overall, 1.4% productivity improvement seems reasonable. It's not transformative. It's not nothing. For most organizations, that improvement is meaningful but not business-model-changing.
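To put that three-year figure on a yearly scale, here is a minimal sketch that converts a cumulative change into a compound annual rate. It assumes the change compounds evenly each year, which the survey does not state, and the function name `annualized_rate` is just an illustrative choice.

```python
def annualized_rate(total_change: float, years: float) -> float:
    """Convert a cumulative fractional change into a compound annual rate.

    Assumption: the change compounds evenly each year. The survey does not
    say how the change is distributed; this only rescales the figure.
    """
    if years <= 0:
        raise ValueError("years must be positive")
    return (1.0 + total_change) ** (1.0 / years) - 1.0


# Survey projections quoted in the article, as fractions over three years.
productivity_per_year = annualized_rate(0.014, 3)
employment_per_year = annualized_rate(-0.007, 3)

print(f"Productivity: {productivity_per_year:.2%} per year")
print(f"Employment:   {employment_per_year:.2%} per year")
```

Under that assumption, the projection works out to a bit under half a percent of productivity growth per year, and roughly a quarter of a percent of employment reduction per year.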

Where you might see higher improvements: industries with heavy data processing, manufacturing, software development, and financial services. 2-4% improvement is possible if implementation is good.

Where you'll see lower improvements: creative industries, strategy/consulting, fields requiring deep human judgment. Improvements might be 0-1% because AI handles easy parts but not the valuable parts.

The employment reduction of 0.7% should probably be understood as "not a massive job displacement." That doesn't mean no disruption. It means that on average, across large organizations, headcount doesn't drop much. But within specific roles and departments, there will be significant changes. The company as a whole hires fewer new people. Specific roles shrink. New roles emerge. The net effect is modest, but the local turbulence is real.
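The gap between a small net change and large local changes can be sketched with a little arithmetic. The headcounts below are invented purely for illustration (they are not survey data), and the role names and the `net_and_gross_change` helper are hypothetical.

```python
# Hypothetical headcounts by role before and after an AI rollout.
# These numbers are invented to illustrate the arithmetic, not taken
# from the survey.
headcount_before = {
    "support": 200,
    "data_entry": 100,
    "ai_oversight": 0,
    "engineering": 700,
}
headcount_after = {
    "support": 180,
    "data_entry": 85,
    "ai_oversight": 20,
    "engineering": 708,
}


def net_and_gross_change(before: dict, after: dict) -> tuple:
    """Return (net %, gross %) headcount change relative to the starting total.

    Net change lets gains and losses cancel out; gross change sums the
    absolute change in every role, so it captures the local turbulence.
    """
    total_before = sum(before.values())
    roles = set(before) | set(after)
    deltas = {role: after.get(role, 0) - before.get(role, 0) for role in roles}
    net = sum(deltas.values()) / total_before * 100
    gross = sum(abs(delta) for delta in deltas.values()) / total_before * 100
    return net, gross


net_pct, gross_pct = net_and_gross_change(headcount_before, headcount_after)
print(f"Net change:   {net_pct:.1f}%")
print(f"Gross change: {gross_pct:.1f}%")
```

In this made-up example the company shrinks by 0.7% overall, yet 6.3% of the starting headcount is affected by a role growing or shrinking. That is the kind of difference a company-wide average hides.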

Effective AI implementation hinges on selecting the right problems, redesigning processes, investing in training, and ongoing measurement. Estimated data.

What This Means for Your Organization

If you're a leader trying to figure out an AI strategy, the survey results should tell you something important: there's no autopilot for AI success.

You can't just buy tools and expect productivity improvement. You have to actively redesign work. You have to invest in training and change management. You have to measure results. You have to iterate.

If you're a knowledge worker worried about AI, the good news is that the productivity improvements are marginal. You're probably not getting replaced. Your job might change—you might spend more time on AI oversight and less time on routine work. But that's often a better job, not a worse one.

If you're an investor or analyst, the survey confirms what you probably already suspected: the AI productivity story is slower and harder than the narrative suggests. Big returns will come, probably, but they'll take years. Companies that get 2-3 years ahead in AI implementation will have a real advantage. But the across-the-board productivity revolution? Still waiting for it.

If you're an AI vendor, the message is clear: your product might be wonderful, but your customer success depends on their ability to redesign work around it. Free trials and quick implementations don't cut it. You need to deeply integrate with customer operations.

Bridging the Gap: Where AI Can Actually Deliver Value

Okay, so the picture is sobering. 89% of companies seeing no productivity improvement. That's the status quo.

But it also means there's massive opportunity for the companies that figure it out. They'll have a competitive advantage. So how do you actually get AI to work?

Start with a specific problem, not technology. Not "we want to use AI" but "we need to process customer requests 40% faster" or "we're missing fraud that costs us $500K annually." Pick something measurable where success is obvious.

Then assess whether AI can actually solve it. AI is genuinely good at some problems: identifying patterns in data, generating first drafts, processing images, and extracting information from documents. Other problems require human judgment and context that AI struggles with.

If AI makes sense, redesign the process around AI's actual capabilities. Don't bolt AI into your existing workflow. Fundamentally reconsider how the work flows. Who gets the AI output first? How does it move through your organization? Where is human judgment critical? Design the process accordingly.

Invest heavily in training and change management. Probably 30-40% of your AI implementation budget should go here. Help people understand the change. Get their feedback. Iterate. Don't assume they'll automatically adopt it.

Measure from day one. Before deployment, establish what you're measuring and what success looks like. After deployment, measure regularly and honestly. If it's not working, adjust or pivot. Don't waste resources on a bad implementation hoping it gets better.

Give it time. Real productivity improvements probably take 12-18 months to show up. Plan for initial disruption and adjustment. If you see improvement by month 18, that's success.

These aren't novel recommendations. They're how any technology transformation works. But they're not what most companies are doing. Most are buying tools and hoping. That's why 89% see no improvement.

The Consultant's Play: Selling Solutions to Unfixed Problems

One thing the survey doesn't address, but which is worth noting: this environment is perfect for AI consultants.

Your company has spent

That might be the right move. Some companies genuinely need outside expertise. But there's also an incentive misalignment. A consultant makes money whether your AI implementation succeeds or fails. If it fails, you hire them to fix it. If it succeeds, you hire them to expand it. Either way, they win.

So when evaluating consultant recommendations, push back. Ask them specifically: "What will change? What was different last time? Why should we expect different results?" If they can't answer clearly, they're probably overselling.

The best consultants will tell you that some AI implementations should be killed, not fixed. Some problems shouldn't be solved with AI. Some processes shouldn't be automated. But that kind of consultant gives you bad news, so they're less likely to get hired.

Why Survey Timing Matters: Measuring at the Inflection

There's one more thing to keep in mind when interpreting these results. The survey covers the last three years: 2022-2025.

Most of 2022 was pre-ChatGPT (the tool launched at the end of November 2022), and AI was mostly a set of niche tools for data scientists. 2023 was the ChatGPT explosion, when everyone started experimenting. 2024-2025 is the period of maturation and integration.

So the survey is measuring a window where AI adoption was extremely rapid but thoughtful integration was lagging. It's possible that companies deploying AI in 2022 (the sophisticated ones) show better results, while companies deploying in early 2023 (the trend-chasers) show worse results.

By 2025, some companies have figured out what works. They're seeing improvements. But they're still a minority. The survey captures this in the aggregate: 11% seeing benefits, 89% not yet.

Over the next three years (2025-2028), this distribution will probably shift. More companies will figure it out. The 89% will shrink. Not because the technology improved dramatically—it will, but not that much—but because the organizational capability improved. Companies will get better at AI implementation.

So the 1.4% average productivity improvement predicted for 2025-2028 may turn out to be conservative. It might be 2% by then. Maybe 2.5% if the ecosystem matures nicely.

But it won't be the 20-40% improvements that some executives daydreamed about. The technology will improve. Adoption will improve. But there are real limits to what AI can do in knowledge work, and those limits will increasingly become obvious.

The Honest Conclusion: AI Is Useful, But Not Revolutionary (Yet)

Let's wrap this up.

The survey tells a clear story: AI adoption is widespread (69% of companies), but value realization is lagging (89% see no improvement). This isn't because AI doesn't work. It's because most companies haven't figured out how to actually use it in ways that improve their business.

The companies that will eventually win are the ones that:

- Pick specific problems where AI genuinely helps

- Redesign workflows around AI's actual (not theoretical) capabilities

- Invest heavily in change management

- Measure honestly and adjust based on results

- Give the implementation 18+ months

That's harder than buying tools. It's also less exciting than the "AI will transform work" narrative. But it's where productivity improvements actually come from.

The good news: there's real opportunity here. Most companies are doing this poorly. The companies that do it well will have a competitive advantage. Your team could be one of them.

The realistic news: don't expect magic. Expect 1-2% productivity improvements over 18 months if implementation is solid. Expect disruption during the transition. Expect some workflows to fundamentally change and some to stay largely the same.

The honest news: a lot of this AI investment is not paying off right now. Some of it will eventually. Some of it won't. That's normal for technology adoption—you have winners and losers and a lot of middle ground.

We're in the middle ground now. The hype is fading. The real work is starting. The productivity improvements, when they come, will be hard-won and specific to companies that earned them.

That's not as exciting as "AI will transform work." But it's closer to the truth.

FAQ

What does the survey actually measure?

The National Bureau of Economic Research surveyed approximately 6,000 C-suite executives (CEOs, CFOs, and other senior leaders) across the US, UK, Germany, and Australia. They were asked about AI adoption, productivity impacts, employment changes, and future expectations. The survey covered a three-year lookback period and a three-year forward projection.

Why are 89% of companies not seeing productivity improvements if AI is so powerful?

AI is powerful in specific applications, but most companies have deployed AI tools without fundamentally redesigning their workflows. They've added AI to existing processes rather than reimagining processes around what AI can actually do. Additionally, many AI deployments require human verification and oversight that offset the time savings from automation. Finally, knowledge work productivity is inherently hard to measure, so improvements might be happening but not visible in the metrics companies track.

Is AI actually eliminating jobs, or was that just hype?

The survey shows 90% of companies experienced no employment change over three years despite AI adoption, with predictions of only 0.7% employment reduction going forward. This suggests that AI is transforming jobs (shifting work from routine to oversight tasks) rather than eliminating them wholesale. The fear of massive job displacement was probably overblown, though specific roles within organizations are certainly being affected.

Which AI use cases are actually delivering productivity improvements?

Data processing and analysis show the strongest returns because they produce measurable, objective outcomes. Fraud detection, predictive maintenance, and pattern recognition in large datasets deliver clear business value. Text generation shows promise but requires significant workflow redesign to realize productivity gains. Visual content creation is somewhere in the middle: capable, but requiring integration work.

How long does it actually take to see AI productivity improvements?

Based on successful implementations, productivity improvements typically don't appear until 12-18 months after deployment. This window covers initial disruption, learning curve, process redesign, and stabilization. Companies expecting improvements in 3-6 months are usually disappointed. Most realistic deployments plan for 18 months before meaningful results.

Should we abandon AI if we're not seeing immediate returns?

Not necessarily, but honestly assess whether your implementation approach is sound. If you bought tools without process redesign and change management, you should redesign your approach, not give up on AI entirely. However, if you've invested properly in implementation for 12+ months and still see no improvement, it might be time to pivot to a different use case where AI is more likely to help.

What's the most common mistake companies make with AI implementation?

Treating AI as a feature rather than a business transformation. Companies buy the tools and expect productivity improvement to follow automatically. In reality, productivity improvement requires process redesign, training, change management, and measurement. If you're not doing all of those things, you won't see results. The technology is just the easiest part.

How should we measure AI productivity improvements?

Define specific metrics before you deploy anything. Don't measure "did we use AI" but rather "did this specific outcome improve." For a customer service team, it might be "calls resolved per agent." For an accounting team, it might be "days to close the books." For a design team, it might be "designs completed per month." The metric should be directly tied to the problem you're solving and measurable with existing data.
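As a rough sketch of what "did this specific outcome improve" can look like in practice, the snippet below compares a baseline period with a post-deployment period for a single metric. The weekly figures are hypothetical, and `percent_change` is an illustrative helper, not part of any particular analytics tool.

```python
from statistics import mean


def percent_change(baseline: list, after: list) -> float:
    """Percent change in the average of a metric between two periods."""
    if not baseline or not after:
        raise ValueError("both periods need at least one observation")
    baseline_avg = mean(baseline)
    if baseline_avg == 0:
        raise ValueError("baseline average is zero; percent change is undefined")
    return (mean(after) - baseline_avg) / baseline_avg * 100.0


# Hypothetical weekly "calls resolved per agent" for a customer service team.
# The baseline is recorded BEFORE deployment so there is something to compare to.
baseline_weeks = [40, 42, 38, 40]
post_deploy_weeks = [43, 44, 45, 44]

change = percent_change(baseline_weeks, post_deploy_weeks)
print(f"Calls resolved per agent changed by {change:.1f}%")
```

The design point is the order of operations: the baseline has to exist before the tool goes live, otherwise there is nothing honest to compare against. A real evaluation would also need enough weeks of data to separate the effect of the tool from normal week-to-week variation.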

What does this survey mean for the future of AI in the workplace?

The survey suggests that widespread AI productivity improvements will happen, but more slowly and with more difficulty than the hype suggested. Companies that thoughtfully implement AI with proper change management and process redesign will pull ahead. The lazy deployments (tools without workflow changes) will struggle. Over the next 3-5 years, a clearer separation between successful and unsuccessful AI implementations will become visible.

If most companies aren't seeing returns, why keep using AI?

Many companies are continuing to use AI because they've already invested in it (sunk cost), because they're hoping improvements will come later, because they see competitors using it and don't want to fall behind, or because specific teams are finding specific value even if the organization as a whole isn't. Additionally, some improvements are real but hard to measure (better decision-making, fewer errors), so the business case isn't obvious even if value is being created.

Wrapping It All Up

The data is clear, even if it's not what executives hoped for. AI is everywhere, but productivity improvements are nowhere. That gap represents both a warning and an opportunity.

The warning: if you're deploying AI without serious change management and process redesign, you're probably wasting money. You won't see returns. You'll join the 89%.

The opportunity: if you're willing to do the hard work—redesigning workflows, investing in training, measuring results honestly, iterating based on what you learn—you can be part of the 11% that's actually seeing benefits. And as AI technology continues improving over the next few years, that advantage compounds.

AI isn't magic. It's a tool that amplifies what you're already doing. If you're doing knowledge work poorly without AI, you'll do it poorly with AI, just faster. If you're doing knowledge work well and you redesign around what AI is actually good at, you'll see real improvements.

The choice is yours. The hype cycle is ending. The real work is just starting. And the companies that succeed won't be the ones that bought the most AI tools. They'll be the ones that had the patience, rigor, and organizational capability to actually use them effectively.

That's not exciting. It's also true.

Key Takeaways

- 69% of firms use AI tools, but 89% report zero productivity improvement over three years—a massive gap between adoption and actual value realization

- Text generation (41%), data processing (30%), and visual content (30%) dominate AI use cases, but only data processing shows consistently strong ROI

- Employment has remained flat despite AI deployment (90% no change), contradicting fears of mass job displacement; realistic projections show only 0.7% employment reduction over next three years

- Most AI implementation failures stem from deploying tools without fundamental workflow redesign and change management—companies treat AI as a feature, not a transformation

- Realistic productivity improvements take 12-18 months minimum, and the survey's average projection is only 1.4% over three years; most organizations should expect roughly 0% if process redesign is skipped

- Companies that want AI benefits should plan to invest heavily in change management (roughly 30-40% of the implementation budget), not just software licenses; skipping this hidden cost is a big reason why 89% see no improvement

