Introduction: The Vibe Coding Revolution Met Reality

There's a moment every founder gets drunk on: shipping something real in 48 hours. Not a prototype. Not a demo. A production tool with real users. The vibe coding revolution promised this to everyone, and honestly, it delivered.

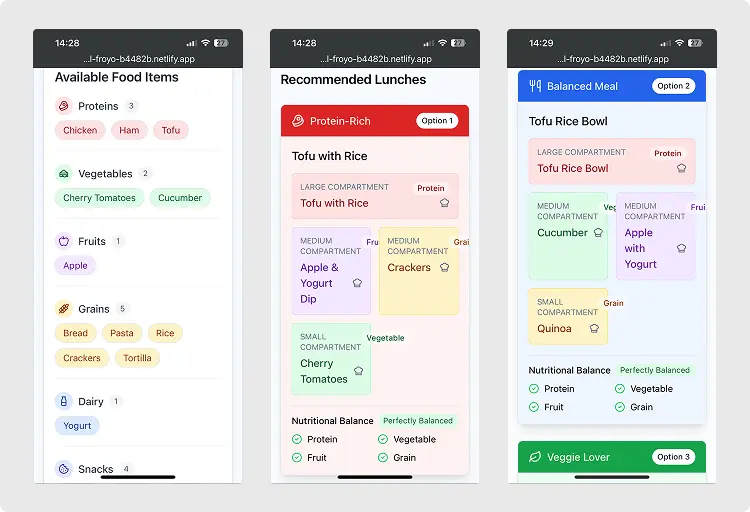

I'm a true believer in this movement. Our team built 12+ AI-powered applications using rapid development frameworks, and the numbers are genuinely staggering. These aren't hobby projects or weekend experiments. We're talking about 800,000+ total uses across production tools. Ten thousand founders used our AI research agents to pick the right AI tools for their stacks. Three thousand VC pitch decks got reviewed by our systems. Nearly 1,000 founders got introduced to venture capitalists through our platform.

This is real software. It's generating real value. It's helping real people make better decisions. And I would absolutely do this again.

But here's what the "I built a SaaS in 4 hours" content wave isn't telling you, and what I wish someone had been honest about with me upfront: every single one of those apps needs real maintenance every single day. Not every week. Not every sprint. Every day.

I don't write this to scare you away from vibe coding. I write this because the gap between the hype and the reality is where founders get hurt. The ones who understand this trade-off and embrace it? They're building something that compounds. The ones expecting "set and forget" software from rapid development? They're about to learn a hard lesson.

Let me break down what I've actually learned shipping AI applications at scale, what the real time commitment looks like, and how to think about it if you're considering this path.

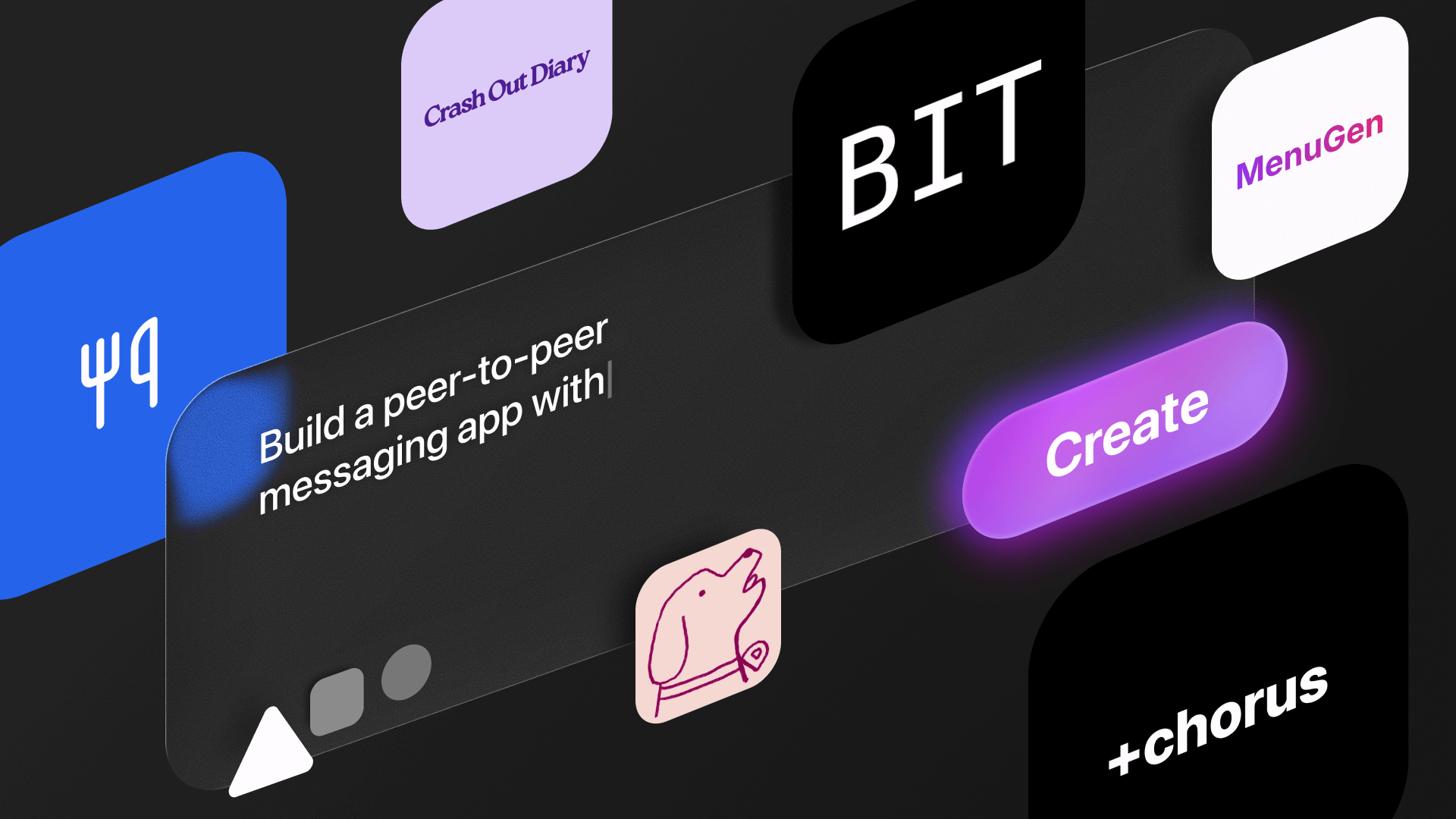

The Vibe Coding Explosion: What Actually Happened

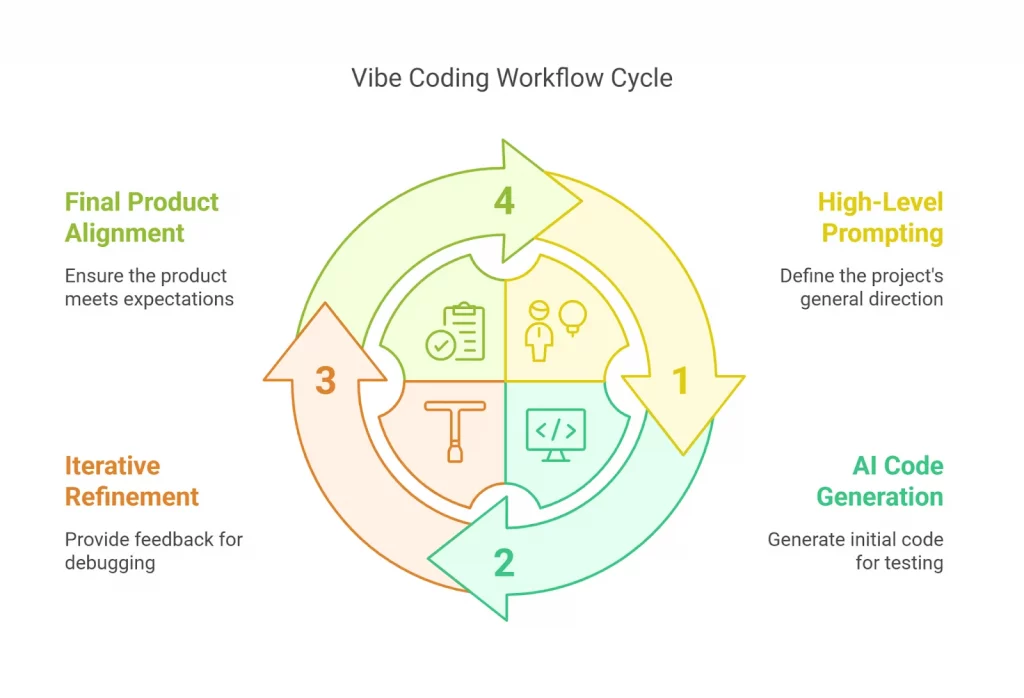

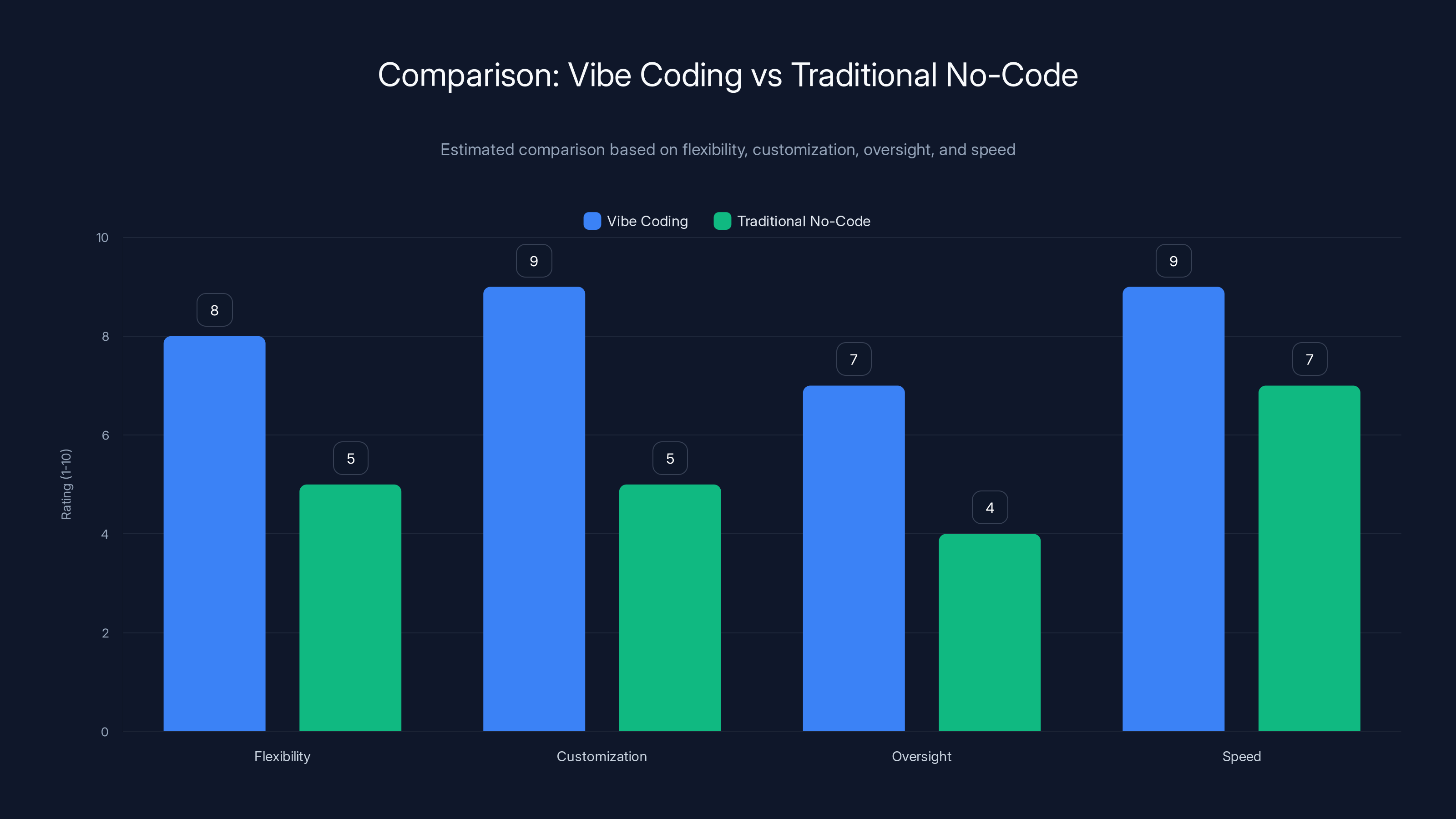

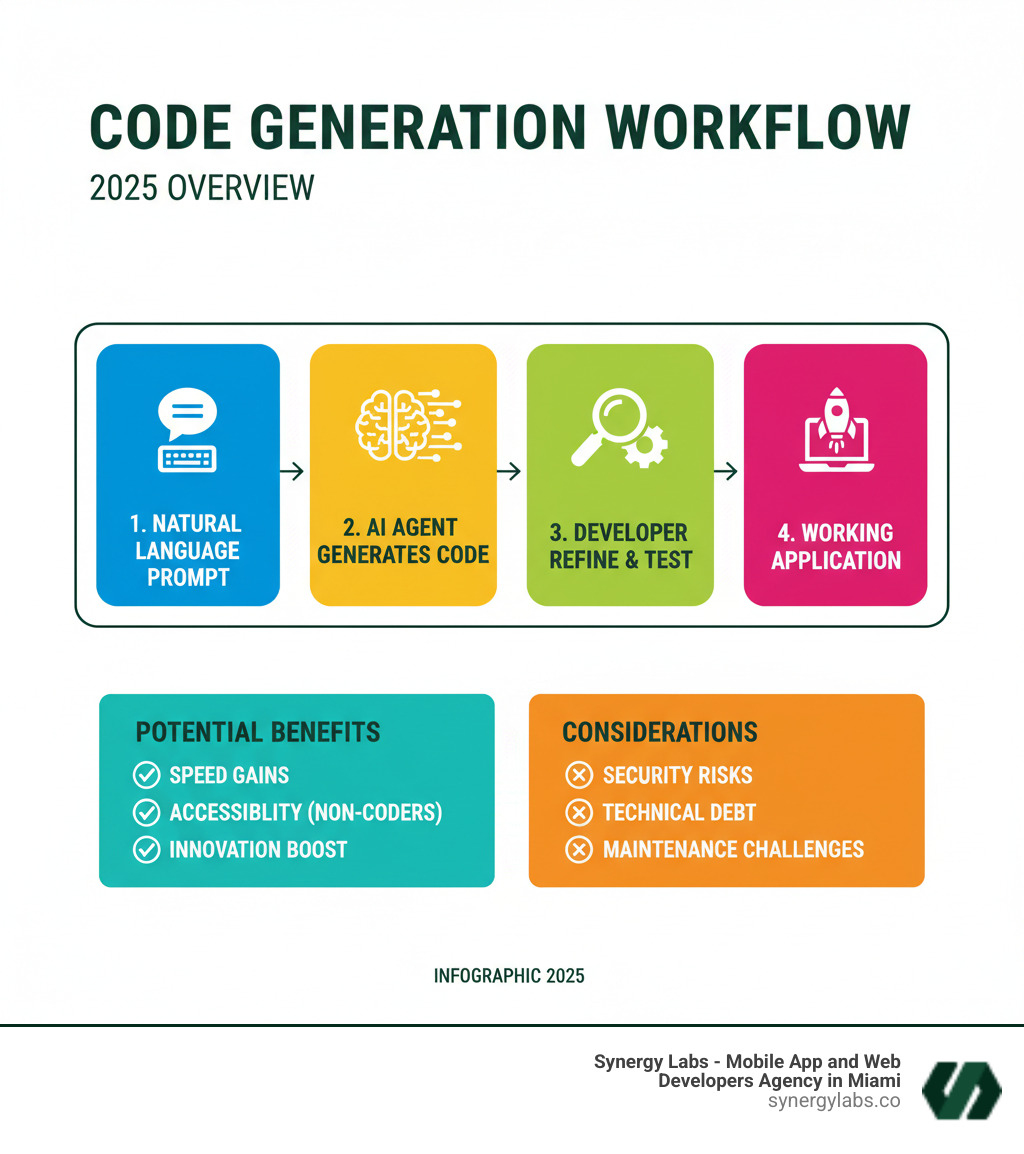

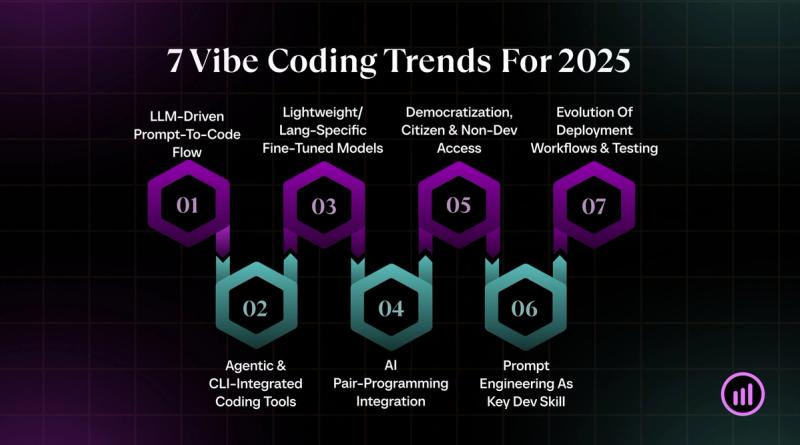

Vibe coding represents a fundamental shift in how software gets built. Instead of months of traditional development, product managers can now iterate on ideas in days or hours. The basic concept: use AI agents and visual builders to generate application logic, interfaces, and workflows without writing production code from scratch.

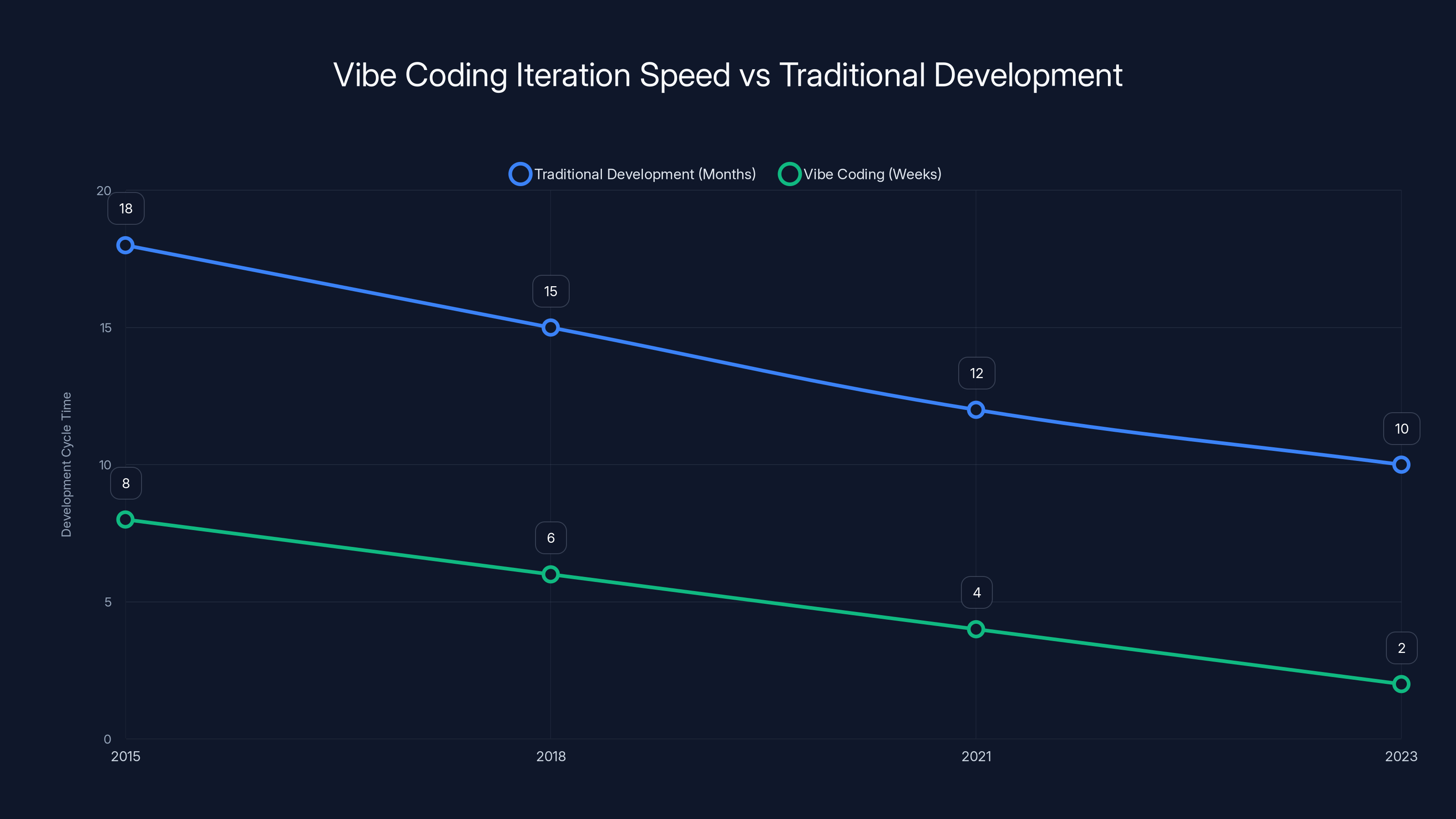

The results we've seen are genuinely impressive. We didn't hire a traditional product team and wait 18 months. We released tools, got feedback from users, improved them, and released new versions. All in weeks. What would have required a five-person engineering team in 2015 now happens with one person iterating fast and thinking hard.

But here's the subtle trick that catches everyone: vibe coding makes shipping easy. It doesn't make shipping at scale easy. These are completely different problems.

When we talk about 800,000 uses, we're not talking about 800,000 isolated interactions with a tool that never changes. We're talking about a living, evolving product that users interact with multiple times per month. They expect it to get better. They expect new features. They expect bugs to get fixed within hours, not weeks.

The speed advantage isn't that you build something and you're done. The speed advantage is that you can respond to users, market conditions, and AI capability improvements in days instead of months. But that only works if you're actually there to respond.

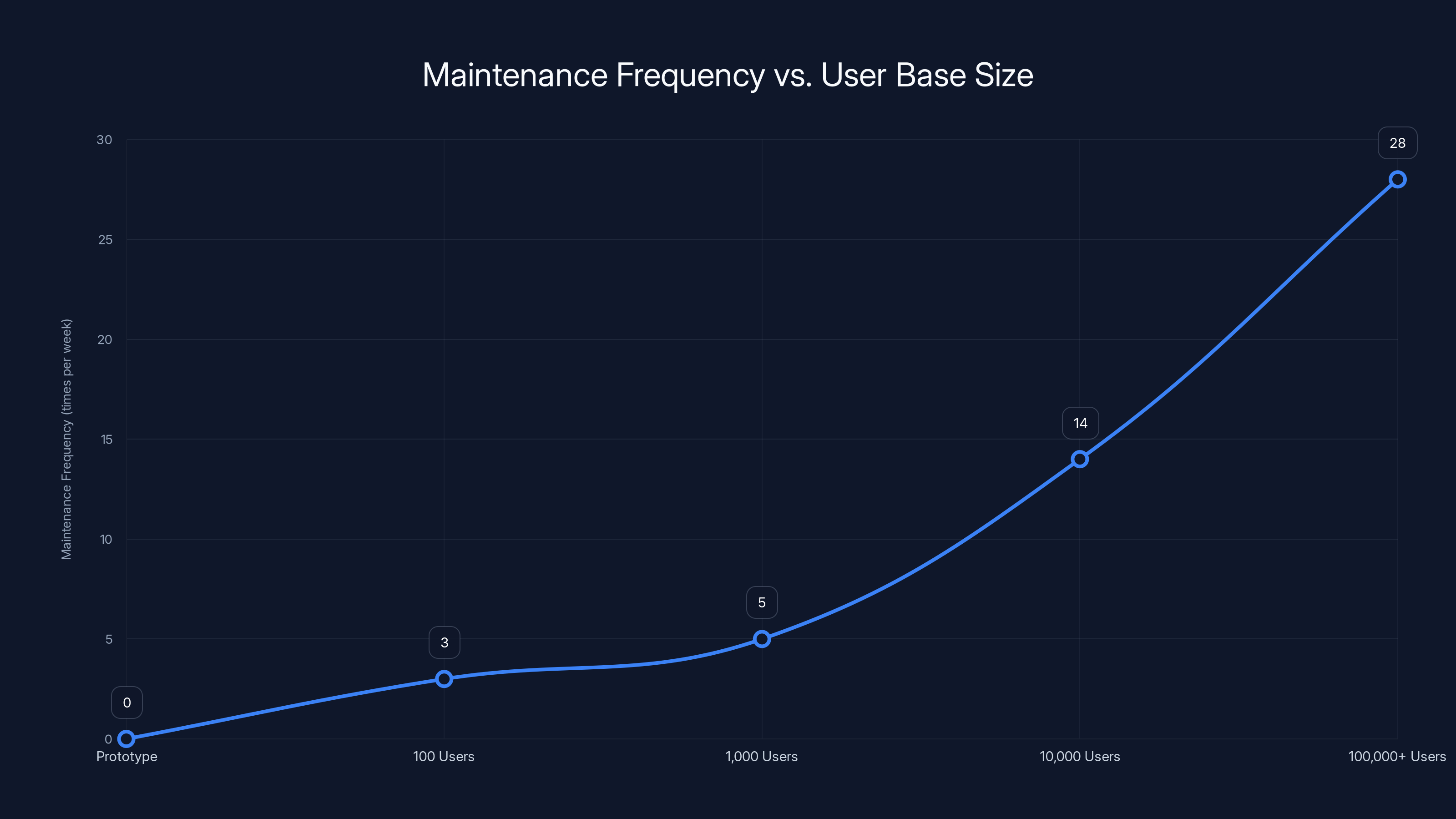

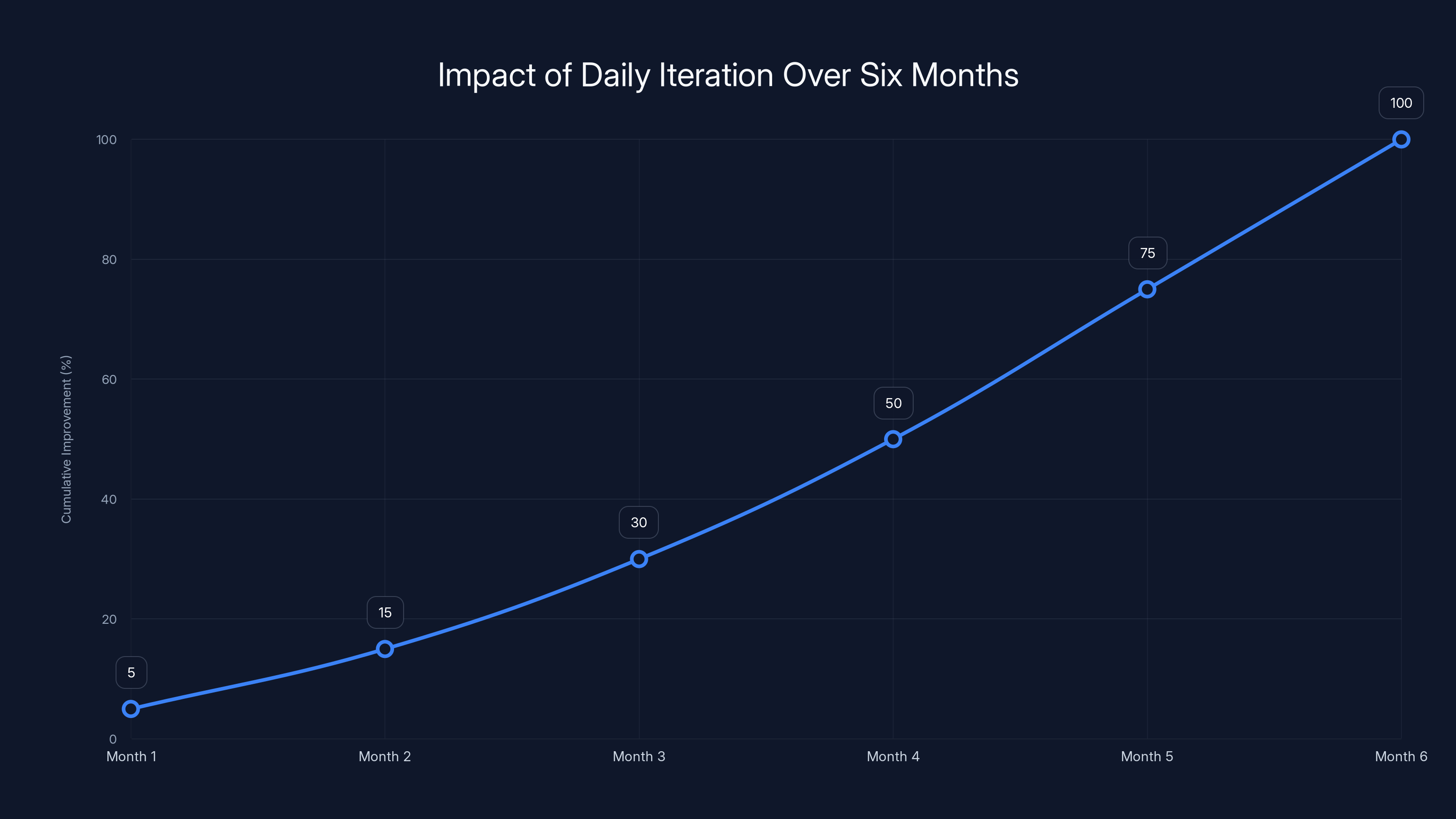

As user base size increases, the frequency of maintenance required grows significantly, highlighting the challenges of scaling from prototype to production. Estimated data.

The Prototype Trap: Where Everyone Gets Caught

There's a massive gap between a proof-of-concept and production software at scale, and almost nobody talks about this honestly.

A prototype requires almost zero maintenance. You spin something up, demo it, post about it on social media, maybe 100 people try it, and you're done. If something breaks, five people notice. If a feature doesn't work perfectly, it doesn't matter because the prototype was never promised as a finished product anyway.

Production at 100 users? You're probably fine checking in a few times a week. The user base is small enough that you can handle edge cases manually. If someone hits a bug, you can reach out directly and fix it. The feedback loop is tight but manageable.

Production at 1,000 users? Now you're checking in several times a week. Patterns start emerging. You notice that 30 people are hitting the same edge case. You see that one workflow is frustrating 2% of your users, but that's still 20 people complaining.

Production at 10,000 users? You're checking in multiple times daily. You've moved from "fixing bugs as they get reported" to "preventing problems before they happen." You're watching usage patterns. You're seeing which features are working and which are confusing people.

Production at 100,000+ uses? It's daily, non-negotiable maintenance. You're not doing it because you're a perfectionist. You're doing it because the cost of not doing it is measurable user churn and reputation damage.

This is the prototype-to-production trap. Almost everyone building with vibe coding starts at the prototype level and then acts shocked when scaling requires different behavior.

The Daily Time Tax: 30 to 60 Minutes Minimum, Plus Mental Overhead

Let me be specific about what maintenance actually looks like for our 12+ applications at scale.

Some days it's 30 minutes. Some days it's 60. On bad days, something breaks and it's 90 minutes. But that raw time commitment is actually the smaller problem.

The bigger drain is constant mindshare. You're always thinking about the apps. What feedback came in overnight? Are there any error spikes I haven't seen? That request from a user last week about handling CSV files differently: should I implement it? Claude just released a new model that's 30% faster for this task. Should I update the system prompt? A competitor launched something that does one thing slightly better. How do I leapfrog them?

With traditional software, you can compartmentalize. You work on a feature for two weeks, you ship it, and then you context-switch. You can go four days without thinking about it while you focus on the next thing.

With vibe coded apps at scale, they live in your head rent-free. The iteration cycles are so fast that there's always something you could be improving, and the feedback loops are immediate enough that you feel the drag of not improving it.

Some days you ship something new. Some days you optimize a workflow because you noticed 15 people struggling with it. Some days you rewrite a system prompt because Claude got better at reasoning and your tool should take advantage of that. Some days you just monitor to make sure everything is working, but you're thinking about optimizations the whole time.

This isn't paranoia. This is the reality of products where users expect AI-powered tools to continuously improve. Your competition isn't standing still. The capabilities are expanding every month. If you're not updating your products to take advantage of new capabilities, you're getting lapped.

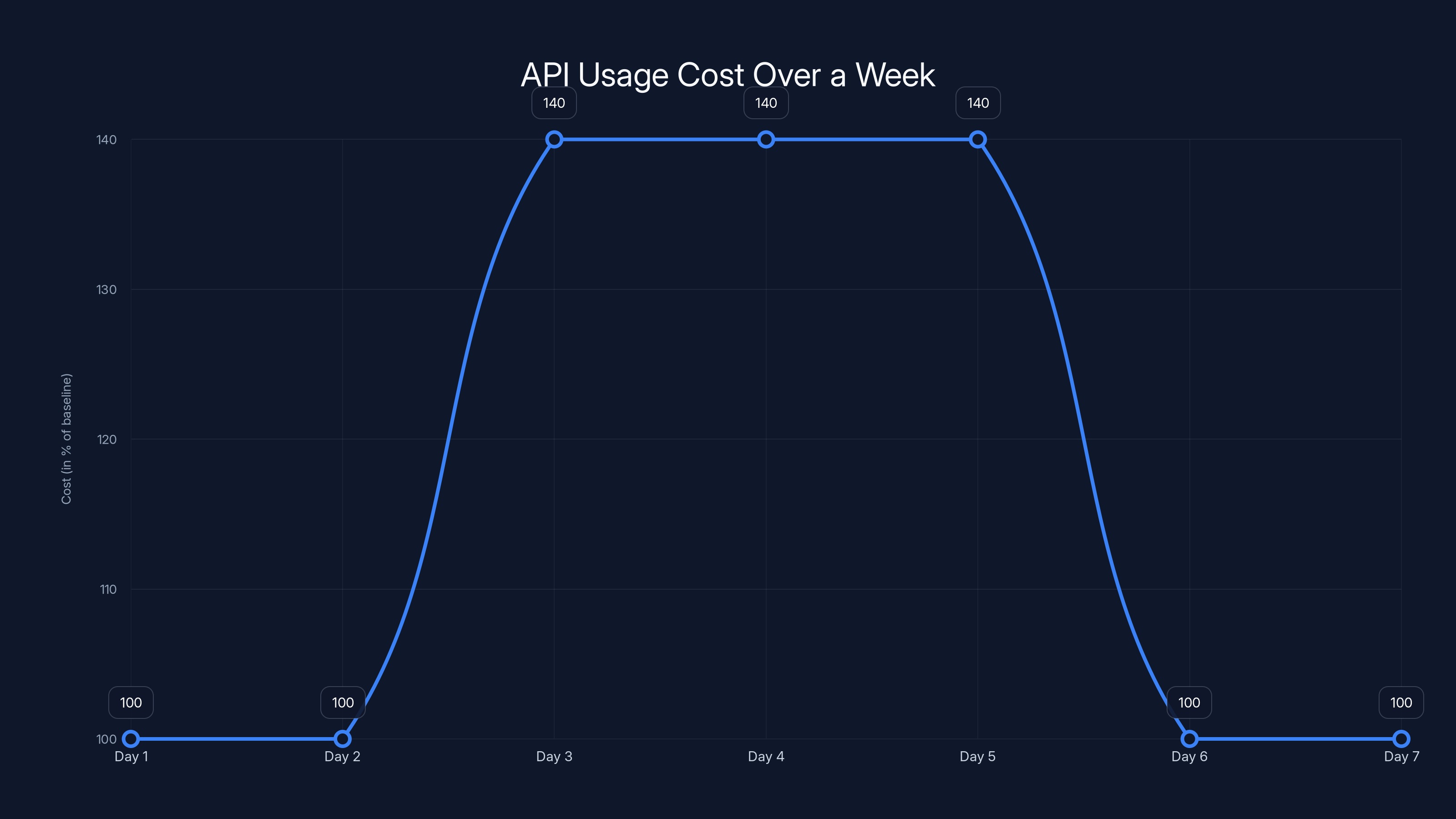

The chart shows a sudden 40% spike in API usage cost on Day 3, which remained elevated for three days before returning to baseline. Estimated data.

Why It's Not Just About Things Breaking

Here's what surprised me: the day-to-day maintenance isn't primarily about bugs. Stuff breaks sometimes, sure. APIs change. Edge cases surface. Users do things you didn't anticipate. But these are actually the smaller portion of the work.

The bigger work is evolution. When you're building with AI at the core, you're not building static software. You're building something that needs to get smarter, handle more cases, and improve constantly just to stay competitive.

A vibe coded app isn't a house you build once and then maintain. It's more like a garden. And gardens don't stay beautiful without daily attention.

I noticed this first with our AI research agent. When GPT-4 came out, the same prompt that worked fine on GPT-3.5 suddenly needed tweaking. When they released vision capabilities, we could suddenly help users do things they couldn't do before. When retrieval-augmented generation got better, we could improve accuracy.

Every single one of these improvements required active work. Not big architectural work, usually. But smart, focused iterations.

The same thing happens with user feedback. You release a feature, and 200 people use it. You notice that 50 of them are hitting it in a way you didn't anticipate. That's not a bug exactly. The feature is working as designed. But there's an opportunity to handle that use case better. Do you ignore it because the feature is "done"? Or do you spend an hour iterating?

If you ignore it, you've just made a conscious decision to let your product get worse relative to what it could be. That's fine if you're okay with slow decline. Most people building at scale aren't okay with that.

The Real Calculus: What Different Scales Require

Here's the honest math that I wish I'd seen written down clearly when I started:

Prototype or Demo Phase: Near-zero maintenance. You're probably spending more time writing Twitter threads about it than maintaining it. Great for validation. Great for investor conversations. Totally fine if it breaks for a few users.

Early Production (50-500 Users): A few hours per week, probably 3-5. This is manageable alongside other work. You're probably building one new feature for every three sessions of maintenance and optimization. The leverage is excellent. You can still do this part-time or alongside other responsibilities.

Scaling Production (500-5,000 Users): 10-15 hours per week minimum. This is starting to require serious commitment. You're probably spending 40% of your time on maintenance, improvements, and iteration. The new feature velocity slows down because you're also keeping a bigger user base happy.

Scaled Production (5,000+ Users): 30-60 minutes daily, minimum. Across seven days, that's about 3.5 hours per week at the lower end and 7 hours at the upper end, more when something breaks. But here's the thing: it's not concentrated time. It's distributed throughout the day. You're monitoring in the morning, responding to a critical thing at lunch, pushing a small optimization in the afternoon, and checking analytics before bed.

This is the commitment that most people downplay. It's not the "epic engineering project" that requires a focused sprint. It's the "living with your product" type of maintenance that never really stops.

Why the Speed Advantage Is Still Real (Even With Daily Maintenance)

I want to be crystal clear about something: despite the daily maintenance requirement, vibe coding is still an absolutely incredible lever for building software fast.

In a traditional scenario, building 12 production applications to 10,000+ users each would require a team of 15-20 engineers working for 18-24 months. The coordination overhead alone would be massive. Feature prioritization would take weeks. Shipping iterations would require multiple rounds of review and testing.

We did this with a small team, working quickly, because we could iterate in days. Yes, we maintain these apps daily. But the total time investment is still fractional compared to the traditional path.

The leverage is still there. It's just different leverage than the "build once, ship once, never touch it again" fantasy that some content creators are selling.

The leverage is in idea-to-user speed. It's in responding to feedback in days instead of months. It's in being able to take advantage of new AI capabilities as they emerge. It's in the ability to run A/B tests and iterate instead of guessing what users want.

That's a real competitive advantage. It's just not the "no-code means no work" advantage that gets sold in the Twitter threads.

Vibe coding significantly reduces development cycle times, enabling rapid iteration and response to user feedback. Estimated data.

The Evolution Treadmill: Staying Ahead of Capability Improvements

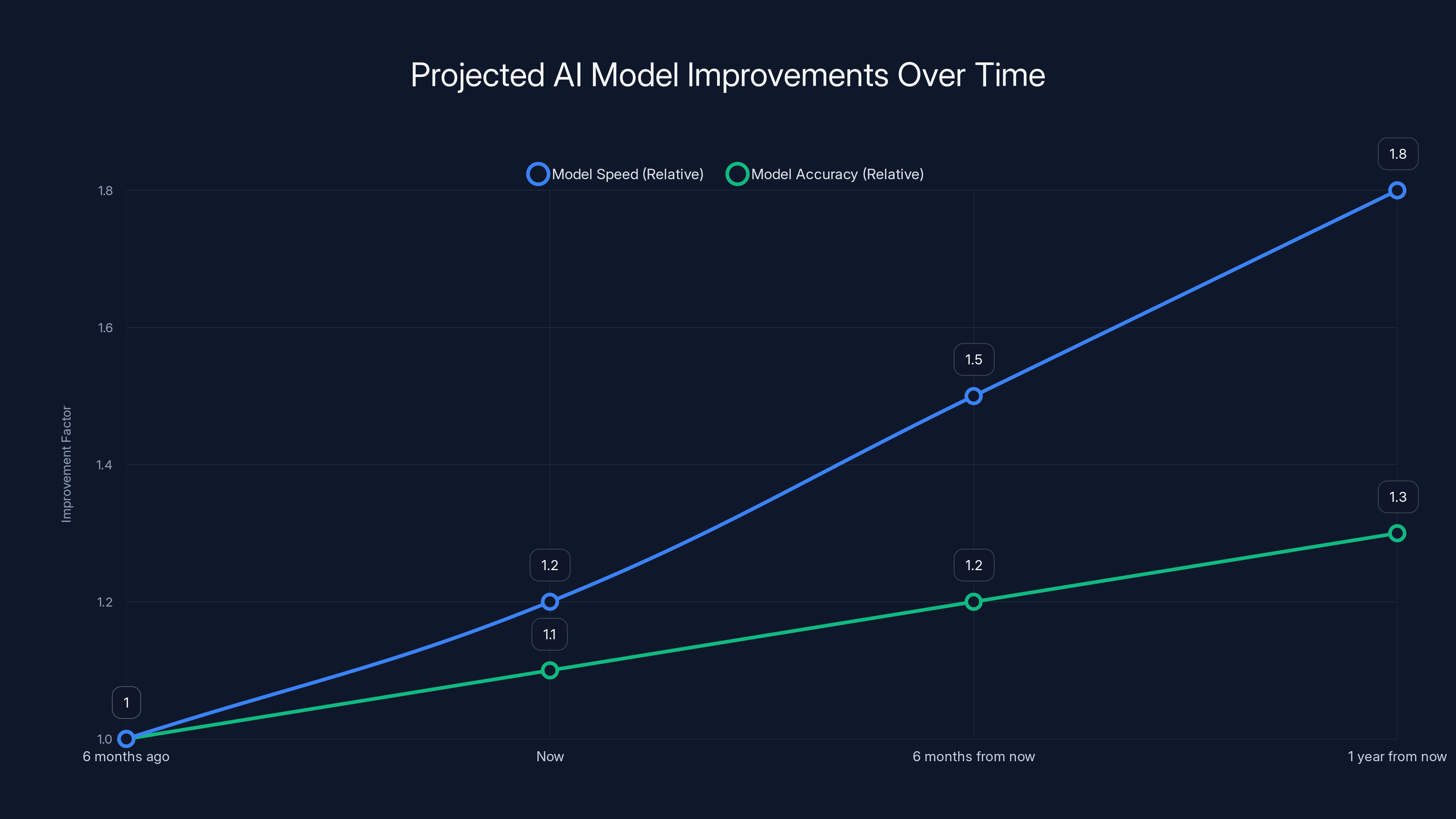

One thing that accelerates the maintenance cycle is the sheer speed at which AI capabilities are improving.

When you build with AI at the core, you're implicitly betting on the underlying model staying valuable. But the models aren't static. They improve. Sometimes dramatically.

Six months ago, a certain capability required custom logic and orchestration. Now the base model handles it natively. Do you leave your system prompt alone because it's "working"? Or do you update it to take advantage of better capabilities and simpler code?

Six months from now, there will probably be models that are 50% faster and 20% more accurate. Will your system prompts be optimized for those? Or will you have systems designed for 2024 that don't fully leverage 2025 capabilities?

This is the treadmill nobody talks about. You're not just maintaining. You're constantly optimizing for improving tools. It's not a bug-fixing treadmill. It's a capability-chasing treadmill.

The good news: this is how you stay ahead. The bad news: it requires continuous attention.

The Feedback Loop Problem: Data Overload and Decisions

When you have 800,000 uses, you're not getting feature requests once a week. You're getting patterns in real time.

Three hundred people used this feature in a way you didn't expect. Fifty people abandoned the tool at this exact step. One hundred users asked for this specific capability. A competitor released something that does this thing slightly better.

Every single day, you have this data. And every single day, you have to make decisions about what matters and what doesn't.

With a small user base, you can ignore noise. With a large user base, noise becomes pattern. And patterns demand decisions.

Are you responding to actual user needs or optimizing for the wrong metrics? That's a decision you have to make, and it requires paying attention. You can't check in once a week and hope the right things surfaced. You need to be present enough to see the signal in the noise.

This is why daily maintenance isn't optional at scale. It's about being present enough to make good decisions about where to invest your limited time and attention.
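
To make "fifty people abandoned the tool at this exact step" concrete, here is a minimal Python sketch of the kind of check that surfaces it. It is an illustration under assumptions, not a description of any particular analytics stack: the function name `step_dropoff` and the step names in the usage note are hypothetical, and it assumes you can already count how many users reached each step of a flow.

```python
from typing import List, Sequence, Tuple


def step_dropoff(step_counts: Sequence[Tuple[str, int]]) -> List[Tuple[str, float]]:
    """Compute the fraction of users lost between consecutive steps.

    `step_counts` is an ordered list of (step_name, users_who_reached_it).
    The result pairs each step (except the last) with the share of its
    users who never reached the following step.
    """
    dropoffs: List[Tuple[str, float]] = []
    for (name, reached), (_, next_reached) in zip(step_counts, step_counts[1:]):
        # Guard against division by zero for steps nobody reached.
        lost = (reached - next_reached) / reached if reached else 0.0
        dropoffs.append((name, lost))
    return dropoffs


def worst_step(step_counts: Sequence[Tuple[str, int]]) -> Tuple[str, float]:
    """Return the step with the largest drop-off (needs at least two steps)."""
    return max(step_dropoff(step_counts), key=lambda item: item[1])
```

Called with something like `[("upload", 200), ("review", 150), ("export", 120)]`, it would point at the upload step, where a quarter of users stop. Deciding whether that loss matters is still the judgment call described above; the code only makes the pattern visible.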

Infrastructure Stability: The Invisible Maintenance Layer

Beyond features and iterations, there's the infrastructure layer that almost nobody mentions.

Your AI-powered app depends on API services. OpenAI, Anthropic, maybe some other providers. These services go down sometimes. They hit rate limits. They change pricing models. They deprecate features.

You're not directly operating the servers, sure. But you're responsible for monitoring that your apps are working. You're responsible for failing gracefully when an API goes down. You're responsible for managing costs when usage spikes.
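
As one illustration of handling an API outage gracefully, here is a minimal Python sketch of retry-with-backoff plus provider fallback. Everything in it is an assumption for the sake of the example: `call_with_fallback` is a hypothetical helper, and each "provider" is just a function you would write yourself to wrap whichever SDK you use. It does not call any real vendor API.

```python
import time
from typing import Callable, Optional, Sequence


def call_with_fallback(
    providers: Sequence[Callable[[str], str]],
    prompt: str,
    retries: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Try each provider in order, retrying failures with exponential backoff.

    Returns the first successful response, or None if every provider
    fails on every attempt. `sleep` is injectable so tests can skip waiting.
    """
    for provider in providers:
        for attempt in range(retries + 1):
            try:
                return provider(prompt)
            except Exception:
                # Wait 0.5s, 1s, 2s, ... before retrying the same provider.
                # After the final attempt, fall through to the next provider.
                if attempt < retries:
                    sleep(base_delay * (2 ** attempt))
    return None
```

A `None` result is the signal to show users a clear "try again shortly" message instead of a stack trace. In a real app you would likely catch only the specific timeout and rate-limit exceptions your SDK raises, not every `Exception`.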

Our VC research tool uses the Claude API. One week, we got a burst of usage and the cost spiked 40%. We didn't notice until three days in. Had I been checking daily, we would've caught it and adjusted pricing or throttling immediately. We caught it eventually, but by then we had already wasted money.

That's the infrastructure maintenance layer. It's not exciting. It doesn't show up in product improvements. But it's real work, and it happens every day.
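
A cost spike like the one described above is the kind of thing a very small daily check can catch. The following is a minimal Python sketch under stated assumptions: `cost_spike` is a hypothetical helper, the 7-day window and 25% threshold are arbitrary illustrative defaults, and it assumes you can export one total cost figure per day from your provider's billing data.

```python
from statistics import mean
from typing import Optional, Sequence


def cost_spike(
    daily_costs: Sequence[float],
    window: int = 7,
    threshold: float = 0.25,
) -> Optional[float]:
    """Compare the latest day's cost against the trailing average.

    `daily_costs` is ordered oldest to newest; the last value is "today".
    Returns the fractional increase over the average of the previous
    `window` days if it exceeds `threshold`, otherwise None.
    """
    if len(daily_costs) < window + 1:
        return None  # not enough history to form a baseline
    *history, today = daily_costs[-(window + 1):]
    baseline = mean(history)
    if baseline <= 0:
        return None  # avoid dividing by a zero or negative baseline
    increase = (today - baseline) / baseline
    return increase if increase > threshold else None
```

Run once a day and wired to an email or chat alert, a check like this would flag a 40% jump on the first day instead of the third. The threshold is a trade-off: set it too low and normal weekday variation will trigger it constantly.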

AI models are projected to become 50% faster and 30% more accurate over a year. Estimated data.

Cost Management: The Hidden Daily Task

Related to infrastructure maintenance: cost management becomes a daily task when you're running multiple AI-powered apps at scale.

Each feature improvement might cost a little more to run. Each new capability might require calling different APIs. Each usage spike hits your bill immediately.

You can't just "set and forget" your cost structure. You're making micro-decisions constantly:

Should we use GPT-4 or GPT-4o for this workflow? Will the cheaper model work? Is the speed difference worth the cost difference? Should we cache certain responses? Should we rate-limit aggressive users? Should we implement cost-per-user pricing tiers?

These aren't quarterly strategy discussions. These are daily operational realities when you're running live AI services.
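
Of the questions above, "should we cache certain responses?" is the easiest to sketch. Here is a minimal in-memory version in Python, offered as an illustration only. `ResponseCache` is a hypothetical class, and `generate` stands in for whatever function you already use to call a model; no real provider API is assumed.

```python
import hashlib
from typing import Callable, Dict


class ResponseCache:
    """Reuse responses for identical (model, prompt) pairs.

    `generate` is any function taking (model, prompt) and returning text.
    Hit and miss counters make it easy to see whether caching is paying off.
    """

    def __init__(self, generate: Callable[[str, str], str]) -> None:
        self._generate = generate
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, model: str, prompt: str) -> str:
        # Hash model and prompt together so the same prompt sent to a
        # different model is cached separately.
        key = hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = self._generate(model, prompt)
        self._store[key] = result
        return result
```

This only makes sense for requests where a repeated answer is acceptable, such as lookups over the same document. A production version would also need expiry and a size limit, which this sketch leaves out.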

The Context-Switching Toll: Mental Energy

I've left this for nearly the end because it's the part that's hardest to quantify but most important to understand.

Maintaining 12 applications means your mind is partially occupied by 12 different contexts. The switching itself isn't intense. The problem is that you never fully switch away.

You can't enter that deep focus state where you're doing creative work on a new idea for three hours straight without thinking about something else. Because there's always a background process running that says "could I be improving something?"

For some people, this is paralyzing. For me, it's actually energizing most days. I genuinely like iterating on things. I like seeing usage patterns shift based on small improvements. I like the challenge of staying ahead.

But I'd be lying if I said it didn't have a cost. Some days, I'd like to just focus on one thing and forget about the other 11 applications. But that's not an option if you care about the quality of the product.

This mental overhead is real, it's significant, and it's not captured in time-tracking metrics.

Who Should Actually Vibe Code: The Honest Assessment

Given all this, I want to be very clear about when vibe coding is the right call and when it isn't.

Vibe coding is incredible for:

Internal tools that only your team uses. You can move fast, iterate freely, and maintenance is a team responsibility spread across people who understand the domain deeply.

Validation and MVP work. If you're testing whether an idea has legs, vibe coding lets you get real user feedback in weeks instead of months.

Products where you're comfortable being the primary custodian. If you're building something and you're okay with daily involvement, the speed advantage is unmatched.

Feature additions to existing products. If you have a successful product and want to add AI-powered features quickly, vibe coding can work great. The core product already has users and you're adding capability.

Personal productivity tools. If it's something you use yourself, daily maintenance isn't a burden. It's just using the thing you built.

Vibe coding is probably not the right choice for:

Products where you want to build something and hand it off. If you're planning to outsource operations entirely, you're still going to need someone checking in on the product daily. That might be a contractor or a hire, but the daily maintenance cost exists either way.

Multiple unrelated products where you want to spread your attention. 12 applications is a lot. If you're trying to maintain 12 unrelated products, the cognitive load is real. In my case, they all lived on the same platform and shared infrastructure, which helped significantly.

Products where reliability is absolutely critical. If one user losing data is unacceptable, you need more robust error handling and monitoring than you can do with casual daily maintenance.

Products with complicated compliance requirements. Healthcare, finance, regulated industries. These need more rigor than rapid iteration provides, even with daily oversight.

Vibe coding offers higher flexibility and customization compared to traditional no-code platforms, but requires more oversight. Estimated data based on typical characteristics.

The Honest Time Allocation

Let me break down what a typical day actually looks like with these 12+ applications:

Morning (15 minutes): Check monitoring dashboards. Any errors overnight? Any usage spikes that indicate a problem? Skim the feedback that came in.

Mid-morning (20-30 minutes): Based on what I saw in monitoring, either investigate a specific issue or plan one optimization.

Lunch or afternoon (10-20 minutes): Implement the optimization or fix. This could be rewriting a system prompt, adjusting a parameter, or implementing a small feature.

Evening (5-10 minutes): Final check that everything is working as expected.

On days where something is broken or there's a bigger problem to solve, it stretches to an hour or more. On days where everything is running smoothly and feedback is minimal, some of those blocks shrink or get skipped, and it might be just 30 minutes.

But it's every day. Weekends included, though weekends sometimes get a pass if nothing is actively on fire.

That's not "no-code means no work." That's just being honest about the commitment.

What Would Happen If I Stopped: The Degradation Timeline

I sometimes wonder what would happen if I just stopped maintaining the applications. Not shut them down. Just stopped iterating, stopped monitoring, stopped optimizing.

Week one: Nobody would notice. Everything would keep working.

Week two: A few edge cases would emerge. Minor issues that aren't breaking but are starting to create friction.

Week three: Feedback would accumulate. Users would start asking about features they expected to see. Competitors with newer AI capabilities would start looking comparatively better.

Month two: Usage would start to decline. Not dramatically, but noticeably. Users would migrate to tools that are actively being improved.

Month three: What was once a valuable product would start to feel stale. The AI capabilities that were cutting-edge two months ago are now standard.

Month four and beyond: The product would slowly rot. It would still work technically, but it would no longer be competitive or particularly valuable.

This is the implicit contract you sign when you launch something at scale. You're committing to not letting it rot. That requires daily attention.

The Compounding Advantage of Daily Iteration

But here's the flip side that makes it all worth it:

Daily iteration compounds in ways that quarterly planning never could.

Six months of daily, small improvements adds up to something dramatically better than what existed six months ago. I'm not talking about big feature launches. I'm talking about the cumulative impact of:

Small UX improvements that reduce friction by 5% each. After 10 of those, compounding leaves you with about 40% less friction.

Optimizations to system prompts that make the AI 10% better at understanding user intent. Compound that over three months and suddenly the tool is noticeably more capable.

Integrations and workflows that didn't exist before, implemented quickly because you could iterate fast.

Small price or positioning changes based on real user data instead of guesses.
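
The arithmetic behind that first item is worth spelling out, because the gains multiply, not add. A minimal Python sketch follows; the function names are illustrative, and the "three 10% improvements" case in the note below is an assumed example, not a measured result.

```python
def compounded_reduction(per_step: float, steps: int) -> float:
    """Total fractional reduction after `steps` successive cuts of `per_step`.

    Each cut applies to what is left after the previous one, so ten 5%
    cuts remove less than 50% in total.
    """
    return 1 - (1 - per_step) ** steps


def compounded_gain(per_step: float, steps: int) -> float:
    """Total fractional gain after `steps` successive improvements of `per_step`."""
    return (1 + per_step) ** steps - 1


if __name__ == "__main__":
    print(f"Ten 5% friction cuts: {compounded_reduction(0.05, 10):.1%} less friction")
    print(f"Three 10% gains:      {compounded_gain(0.10, 3):.1%} better")
```

Ten 5% reductions leave 0.95 to the tenth power of the original friction, or about 60%, which is where the roughly 40% figure comes from. Three successive 10% gains, if that is how the prompt improvements land, would compound to about 33%.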

This is where the real competitive advantage lives. Not in shipping something once. In being able to ship continuously and let the compound growth accumulate.

Daily iteration leads to significant cumulative improvements over time, with a projected 100% improvement after six months. Estimated data.

The Psychological Aspect: Ownership and Accountability

There's a psychological dimension to this that's worth acknowledging.

When you're building vibe coded apps and you're the person maintaining them, there's a different sense of ownership. These aren't someone else's problem. They're your problem. Your users are depending on them. If something breaks, you're going to see the feedback. You feel it.

For some people, that's incredibly motivating. You're directly connected to impact. You see a problem, you fix it, users benefit, and you feel good about it.

For other people, that level of direct accountability is exhausting. They'd prefer to build something, hand it off, and move on.

Neither is wrong. But it's important to know which category you fall into before you commit to building at scale.

I'm clearly in the first category. The direct feedback loop energizes me. But I know plenty of brilliant people who'd hate this rhythm. For them, a different model would be better.

Would I Do It Differently Knowing What I Know Now?

Absolutely not. I would do it exactly the same way, and I would do more of it.

Yes, I maintain these applications every single day. Yes, that's a real commitment. Yes, it's a cost that almost nobody mentions in the "I built a SaaS in 4 hours" content wave.

But the impact has been staggering. We've helped thousands of founders make better decisions about their AI tooling. We've facilitated introductions between founders and investors that might not have happened otherwise. We've created tools that are actively being used to solve real problems.

Would all of that have been possible with traditional software development? Theoretically yes. But would it have happened as fast, or with as much user feedback integrated into the decisions, or with as much iteration based on real usage patterns? Almost certainly not.

The speed-to-impact is the real superpower. The daily maintenance is just the cost of that superpower.

And I'm building more. If I could maintain 24 well-chosen applications at this quality level, I would. Not 12. 24. The leverage is still there. The maintenance cost would increase, sure. But the impact would scale.

The Founder's Dilemma: Maintenance vs. Growth

Here's the tricky part that I'm still figuring out:

As these applications scale and daily maintenance starts to take more time, at what point does it make sense to hire someone to help with the maintenance? At what point do I hand off some of the operational responsibility?

Right now, I'm comfortable doing it myself. The 30-60 minutes a day is part of my work rhythm. But if usage scaled another 10x, that would probably change. At 8 million uses instead of 800,000, something has to give.

Do I hire someone to handle day-to-day maintenance while I focus on new product ideas? Do I bring in a product manager who's responsible for monitoring feedback and suggesting iterations? Do I just accept slower iteration cycles and less frequent updates?

Each choice has tradeoffs. Hire someone and you're giving up some of the speed-to-decision that makes this whole approach work. Don't hire someone and you hit a ceiling on how many applications you can maintain well.

This is the other conversation that almost nobody is having. The vibe coding revolution is real. It's powerful. But at some point, you hit scaling problems that require different solutions. And it's worth thinking about those in advance.

The Competitive Pressure Is Real

One thing that drives a lot of the daily maintenance is competitive pressure.

When you're building tools that are easy to copy and easy to improve, the competitive surface area is wide. Someone else can build a similar tool in days. Someone else can take your idea, add a feature you missed, and launch it in weeks.

If you're checking in on your application once a month, that's plenty of time for someone to build something better and steal your users.

If you're checking in daily, you see competitive threats faster. You see where users are struggling faster. You can respond faster.

This is why the daily maintenance isn't optional for products that matter. It's not paranoia. It's just keeping up with the pace of the market.

Building a Vibe Coding Company vs. Building in Vibe Code

There's an important distinction I want to highlight:

There's a difference between building a company where the product is built in vibe code (which is what I'm doing) and building a company that sells vibe coding as a service.

I'm not building a "no-code platform." I'm not selling vibe coding tools. I'm using vibe coding to build applications that solve specific problems for specific users.

This matters because the constraints are different. I can iterate quickly. I can cut corners on some things because my users understand that iteration is happening. I don't need to maintain backwards compatibility with ancient API versions because I control the entire product.

If I were building a platform that other people used to build things, the maintenance burden would be different. I'd need more stability. More documentation. More consideration for breaking changes.

So when you're thinking about whether vibe coding is right for your situation, also think about what you're building. Is it an application you're using to serve users? Or is it infrastructure that other people depend on?

The Role of Monitoring and Alerts

One thing that helps with the daily maintenance burden: good monitoring and alerting.

If you're manually checking everything every day, you'll burn out. But if you have systems that alert you to problems, you can be reactive to what actually matters instead of scanning everything.

We use error tracking, usage monitoring, cost tracking, and user feedback aggregation. That means when something actually needs attention, we know about it immediately. The other 90% of the time, everything is fine and we can focus on improvements instead of firefighting.

This is something that people building very quickly sometimes skip. They're so focused on shipping that they don't set up basic monitoring. Then they're maintaining by accident instead of by design.

If you're going to commit to vibe coding at scale, commit to monitoring at scale too. It makes the daily maintenance manageable instead of stressful.
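
For a sense of how little code "basic monitoring" can start with, here is a minimal Python sketch that turns a raw error log into a short list of issues worth looking at. It is a sketch under assumptions: `recurring_errors` is a hypothetical helper, the error signatures are whatever labels your error tracker produces, and the threshold of five users is arbitrary.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def recurring_errors(
    events: Iterable[Tuple[str, str]],
    min_users: int = 5,
) -> List[Tuple[str, int]]:
    """Find errors that affect many distinct users.

    `events` is a stream of (user_id, error_signature) pairs. Returns
    (error_signature, distinct_user_count) for every signature hit by at
    least `min_users` different users, most widespread first.
    """
    users_by_error: Dict[str, Set[str]] = defaultdict(set)
    for user_id, signature in events:
        # A set ignores repeat hits by the same user, so one person
        # retrying ten times does not look like ten affected people.
        users_by_error[signature].add(user_id)
    flagged = [
        (signature, len(users))
        for signature, users in users_by_error.items()
        if len(users) >= min_users
    ]
    # Sort by user count descending, then by name for stable output.
    return sorted(flagged, key=lambda item: (-item[1], item[0]))
```

Counting distinct users instead of raw error volume is a deliberate choice here: it separates "30 people are hitting the same edge case" from one noisy session, which is the distinction that decides where the day's maintenance time goes.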

Building a Team Around Vibe Coded Applications

As these applications scale, the question becomes: how do you build a team around them?

Traditional software would have engineers working on each product. But vibe coded applications don't need engineers in the same way. They need:

People who understand the problem domain and can identify where improvements would matter most. That's usually the founder, but it doesn't have to be.

People who can write good system prompts and understand how AI responds to different inputs. That's more of a product/design skill than engineering.

People who can monitor and identify patterns in user behavior. That's more of a data or product analytics skill.

People who can implement small iterations and tweaks. That can be junior technical people, not necessarily senior engineers.

When I think about scaling the maintenance burden, this is what I think about. Not hiring engineers. Hiring people who have specific skills for working with AI-powered applications.

This is a new skill set and it's not clear yet what the career path looks like. But it's the right way to think about scaling instead of treating vibe coded applications like traditional software that just needs more engineers.

The Long-Term Sustainability Question

Here's the question I can't quite answer yet:

Is maintaining 12 applications at this intensity sustainable indefinitely? Or is this the sprint phase and I'll eventually need to slow down?

Right now, it energizes me. The feedback loop is tight. The impact is visible. The work doesn't feel like work.

But I also know that I have a finite amount of energy and attention. At some point, the question becomes: do I want to maintain 12 applications forever? Or do I want to ship new things?

If I want to keep shipping new applications, then at some point I need to hand off maintenance of the existing ones to other people. And that's a different skill set than rapid iteration.

I don't have the answer yet. But it's the right question to think about if you're going down this path.

The Broader Lesson: Different Constraints, Different Solutions

The vibe coding revolution is real. The speed advantage is real. The ability to build things that would have been impossible before is real.

But the maintenance burden is also real. The daily commitment is real. The need to stay engaged and iterate is real.

When you're building applications at scale with AI at the core, you're not getting some magical reduction in the work required to maintain software. You're just redistributing the work.

Traditional software: more work upfront to ship, but lower ongoing maintenance burden.

Vibe coded applications: much less work upfront to ship, but higher ongoing maintenance burden because you're running at such a fast iteration cycle.

Neither is better. They're just different tradeoffs.

The people who thrive with vibe coding are the ones who understand this tradeoff and embrace it. The people who struggle are the ones expecting "no-code means no work."

If you love iteration and feedback loops and continuous improvement, vibe coding is going to feel amazing.

If you want to build something once and maintain it minimally, vibe coding is going to feel like a treadmill.

Both perspectives are valid. Just know which one you are before you commit.

FAQ

What exactly is vibe coding?

Vibe coding is a development approach where you use AI-powered tools and visual builders to generate application logic, interfaces, and workflows rapidly without writing traditional production code. The "vibe" refers to describing what you want the application to do conversationally or visually, and letting AI interpret that into functional software. It's not no-code—it's different code, written by AI based on your direction, that still requires human oversight and iteration.

How is vibe coding different from traditional no-code platforms?

Traditional no-code platforms provide pre-built components and visual workflows that you assemble like Lego blocks. Vibe coding uses AI agents to generate custom logic based on conversational prompts or visual descriptions, allowing much more flexibility and customization. The tradeoff is that vibe coded applications often require more active oversight because they're more dynamic and less standardized than pre-built components.

Can I really ship an application in 48 hours with vibe coding?

Yes, you can ship something functional and working in 48 hours. But there's a critical distinction between a prototype that works and a production application that scales. A prototype might be 48 hours. A production application at 100+ users that actually delivers value requires significantly more work, including the daily maintenance discussed in this article. The 48-hour narrative is true for validation, but it's misleading for production expectations.

What does daily maintenance actually mean for a vibe coded app?

Daily maintenance includes: monitoring error rates and usage patterns, responding to user feedback, iterating on system prompts or workflows based on observed patterns, optimizing for new AI capabilities, managing costs, and ensuring the application stays competitive. It's typically 30-60 minutes per day distributed throughout the day, plus mental overhead of thinking about improvements. It's not emergency firefighting most days—it's active stewardship.
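To make the monitoring part of that list concrete, here is a minimal sketch of a daily check over error rates and costs. Everything in it is an assumption for illustration: the `DailyStats` shape, the `daily_flags` helper, and the 2% and $50 thresholds are hypothetical, not what our tools actually run.

```python
from dataclasses import dataclass


@dataclass
class DailyStats:
    """One day of metrics for one app (hypothetical shape)."""
    app: str
    requests: int
    errors: int
    cost_usd: float


def daily_flags(stats, max_error_rate=0.02, max_cost_usd=50.0):
    """Return human-readable warnings for one app's daily stats.

    The default thresholds are placeholders; tune them per app.
    """
    flags = []
    # Guard against division by zero on days with no traffic.
    error_rate = stats.errors / stats.requests if stats.requests else 0.0
    if error_rate > max_error_rate:
        flags.append(
            f"{stats.app}: error rate {error_rate:.1%} exceeds {max_error_rate:.1%}"
        )
    if stats.cost_usd > max_cost_usd:
        flags.append(
            f"{stats.app}: cost ${stats.cost_usd:.2f} exceeds ${max_cost_usd:.2f}"
        )
    return flags


if __name__ == "__main__":
    today = [
        DailyStats("deck-review", requests=1000, errors=50, cost_usd=10.0),
        DailyStats("tool-picker", requests=400, errors=2, cost_usd=12.5),
    ]
    for stats in today:
        for flag in daily_flags(stats):
            print(flag)
```

A check like this only covers the mechanical part of the routine. Reading user feedback and deciding what to change still takes a person.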

At what scale does the daily maintenance commitment become necessary?

The maintenance commitment scales roughly with user volume. At 100 users, you might check in a few times per week. At 1,000 users, several times per week. At 10,000+ users or significant usage volume, daily maintenance becomes necessary to catch problems, patterns, and optimization opportunities before they impact user experience. The inflection point is different for different applications, but somewhere in the 1,000-5,000 user range is when daily attention becomes important.

Should I hire someone to maintain my vibe coded apps, or should I do it myself?

That depends on your situation and preferences. If you enjoy iteration and direct user feedback, maintaining the apps yourself preserves the speed-to-decision that makes vibe coding powerful. If you want to move on to new projects, you can hire someone to manage day-to-day operations, but they need to understand how to work with AI-powered applications—this isn't the same as traditional software engineering. You might want to do both: maintain core strategic decisions while delegating operational monitoring to someone else.

Is vibe coding sustainable long-term?

Vibe coding is absolutely sustainable long-term, but the sustainability model changes as applications scale. In the early phase, you can maintain multiple applications yourself while shipping new features rapidly. As applications mature and user bases grow, you either need to hire operational support, slow down new feature velocity, or both. The leverage is still there at scale—you're getting more done with smaller teams than would be possible traditionally—but it's not "set and forget" at any scale with active users.

What's the biggest mistake people make with vibe coding?

The biggest mistake is treating a prototype as production and expecting zero ongoing maintenance. You can ship fast, but that speed applies to development, not to maintenance. People who thrive with vibe coding understand that shipping quickly just means you start the maintenance and iteration cycle sooner. People who struggle are the ones who expected to "build it once and forget about it."

Can vibe coding replace traditional software development entirely?

Not entirely, but it replaces a significant portion of it for many types of applications. It's particularly powerful for applications where iteration speed matters, where the domain is well-understood enough that AI can handle it, and where the founder is willing to actively maintain the product. It's less suitable for applications requiring absolute reliability or extensive compliance oversight. The future isn't "vibe coding replacing everything"—it's "traditional development and vibe coding coexisting for different use cases."

How do you keep a vibe coded app competitive as AI capabilities improve?

You stay engaged with the product and actively iterate as new capabilities emerge. When a new model or feature gets released, you evaluate whether it makes your application better. This usually means updating system prompts, changing which APIs you call, or reworking workflows. This is a significant part of the daily maintenance mentioned in this article—staying ahead of capability curves so your product doesn't become technically outdated. This is why "set and forget" doesn't work with AI-powered applications specifically.
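One way to make "evaluate whether it makes your application better" less of a gut call is to keep a small fixed set of test cases and compare the current setup against the candidate. The sketch below is an assumption, not a description of our tooling: `pass_rate`, `should_switch`, the 5-point `min_gain` threshold, and the stub generators are all hypothetical, and a real version would wrap whatever model API you actually call.

```python
def pass_rate(generate, cases):
    """Fraction of cases whose output passes its check.

    generate: callable taking a prompt string and returning a string.
    cases: list of (prompt, check) pairs, where check(output) -> bool.
    """
    if not cases:
        return 0.0
    passed = sum(1 for prompt, check in cases if check(generate(prompt)))
    return passed / len(cases)


def should_switch(current, candidate, cases, min_gain=0.05):
    """True if the candidate beats the current setup by at least min_gain."""
    return pass_rate(candidate, cases) - pass_rate(current, cases) >= min_gain


if __name__ == "__main__":
    # Stub generators standing in for "old prompt/model" and "new prompt/model".
    def current(prompt):
        return "A"

    def candidate(prompt):
        return prompt.upper()

    cases = [
        ("a", lambda out: out == "A"),
        ("b", lambda out: out == "B"),
    ]
    print(should_switch(current, candidate, cases))
```

The value of a fixed case set is that every new model or prompt change gets judged against the same bar, so a switch is a measured decision instead of a reaction to a launch announcement.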

What's the ROI on maintaining vibe coded apps daily?

The ROI is substantial compared to traditional development. Maintaining 12 applications at significant scale might require 1-2 people with daily involvement. The equivalent in traditional software would require a much larger team spread across multiple applications. The tradeoff is that you're compressing timeline and team size in exchange for ongoing active management. For applications generating real value, the math typically works out strongly in vibe coding's favor, but it requires honest assessment of the maintenance burden.

Key Takeaways

- As the user base grows, maintenance has to happen more often; that is the real challenge of scaling from prototype to production.

- A prototype requires almost zero maintenance.

- Once real users depend on the app, maintenance is daily and non-negotiable.

