The Reddit Post That Fooled Everyone

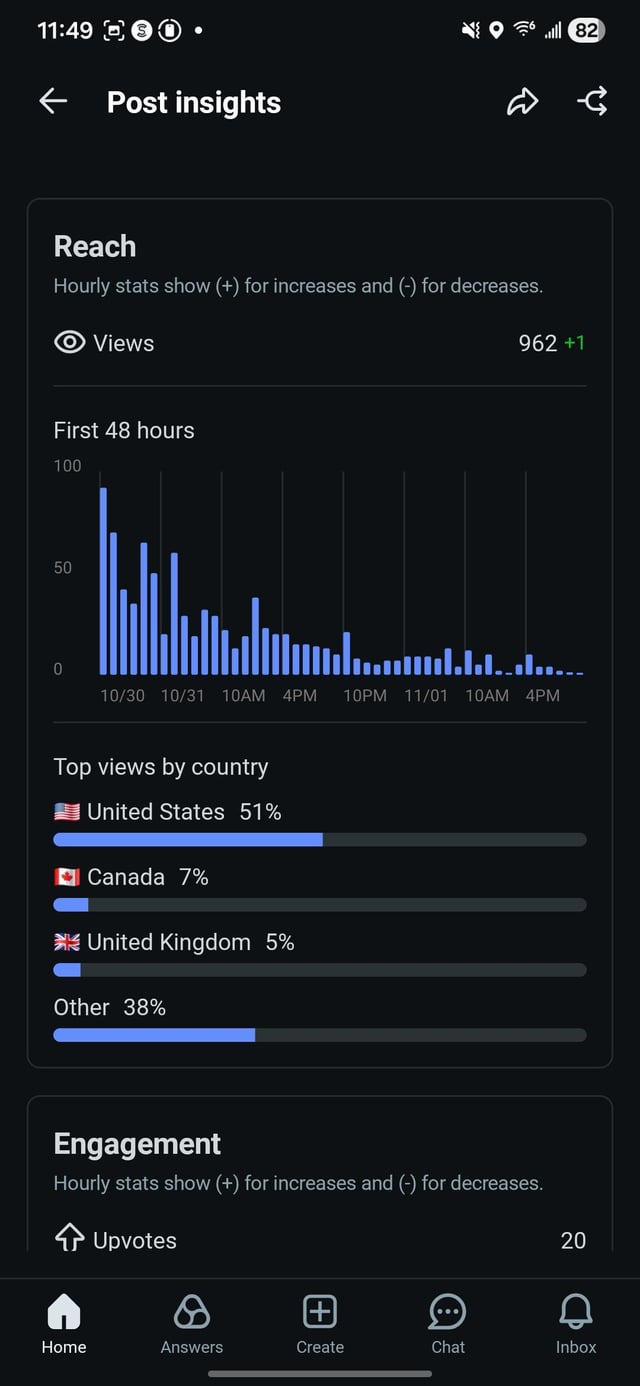

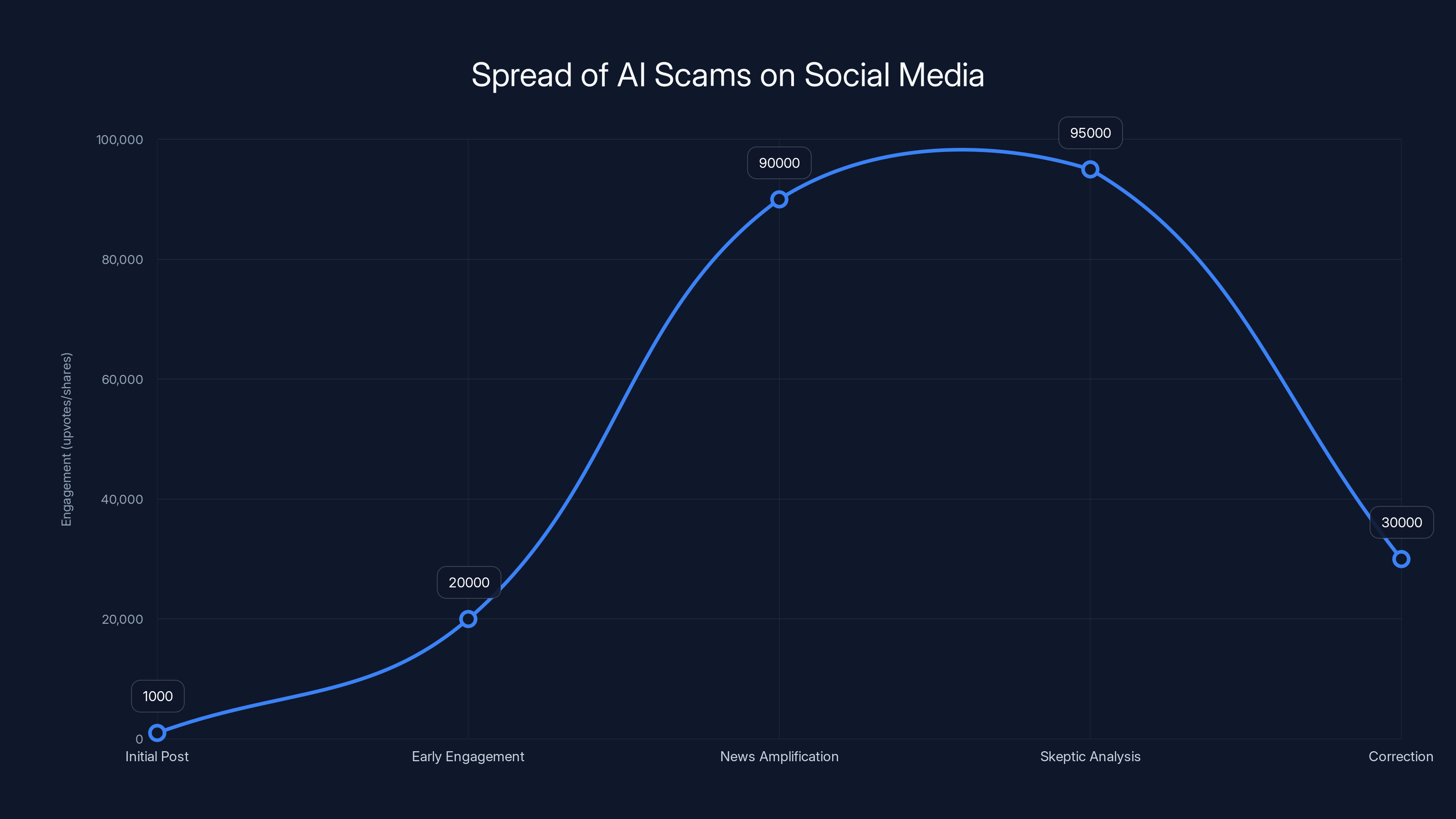

In early January, a Reddit post dropped that seemed like the ultimate whistleblower confession. Some anonymous software engineer claiming to work at a "major food delivery app" spilled the dirt on everything you'd suspect they're doing wrong. Delayed orders on purpose. Calling workers "human assets." Exploiting drivers' desperation for quick cash. The post hit 90,000 upvotes in just four days.

Then something weird happened. Journalists started asking basic questions, and the whole thing collapsed like a house of cards made of AI output.

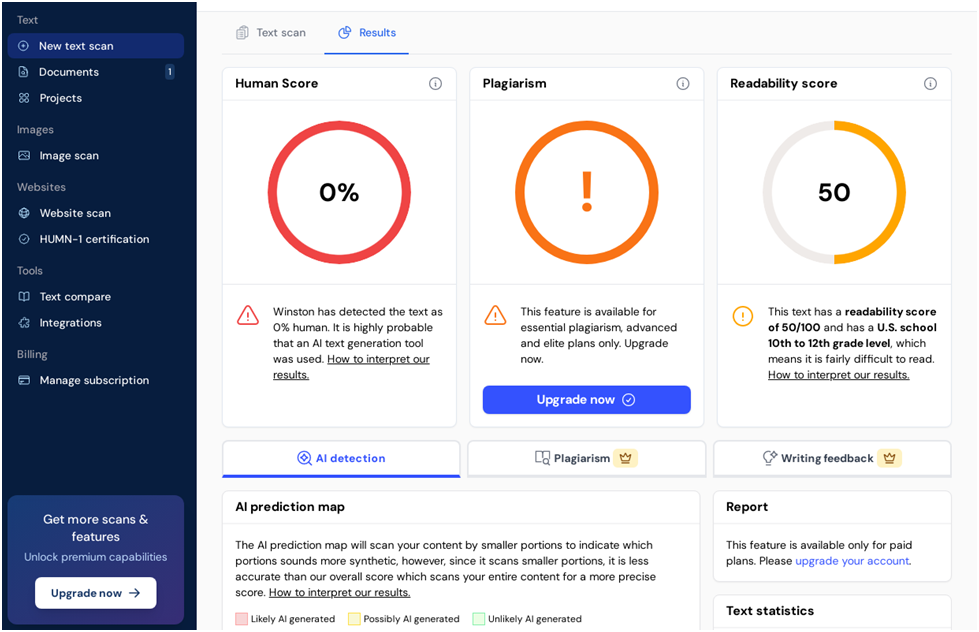

The confession was AI-generated. Not just suspected. Confirmed through multiple detection tools, image analysis, and the poster's increasingly nervous behavior when pressed for proof. What started as a damning insider account turned into a case study in how convincing AI has become at fabricating narratives that tap into what people already believe.

But here's the kicker: just because the post was fake doesn't mean the exploitation it described isn't real. Food delivery companies have a documented track record of exactly the kind of worker mistreatment the fake whistleblower claimed. So while the AI scam fell apart, it revealed something genuine about an industry built on worker extraction.

How We Know It Was AI

Let's talk about the forensics. The Reddit post itself was 586 words of supposedly confidential confession. Seems specific. Seems detailed. Seems like something an actual engineer would write.

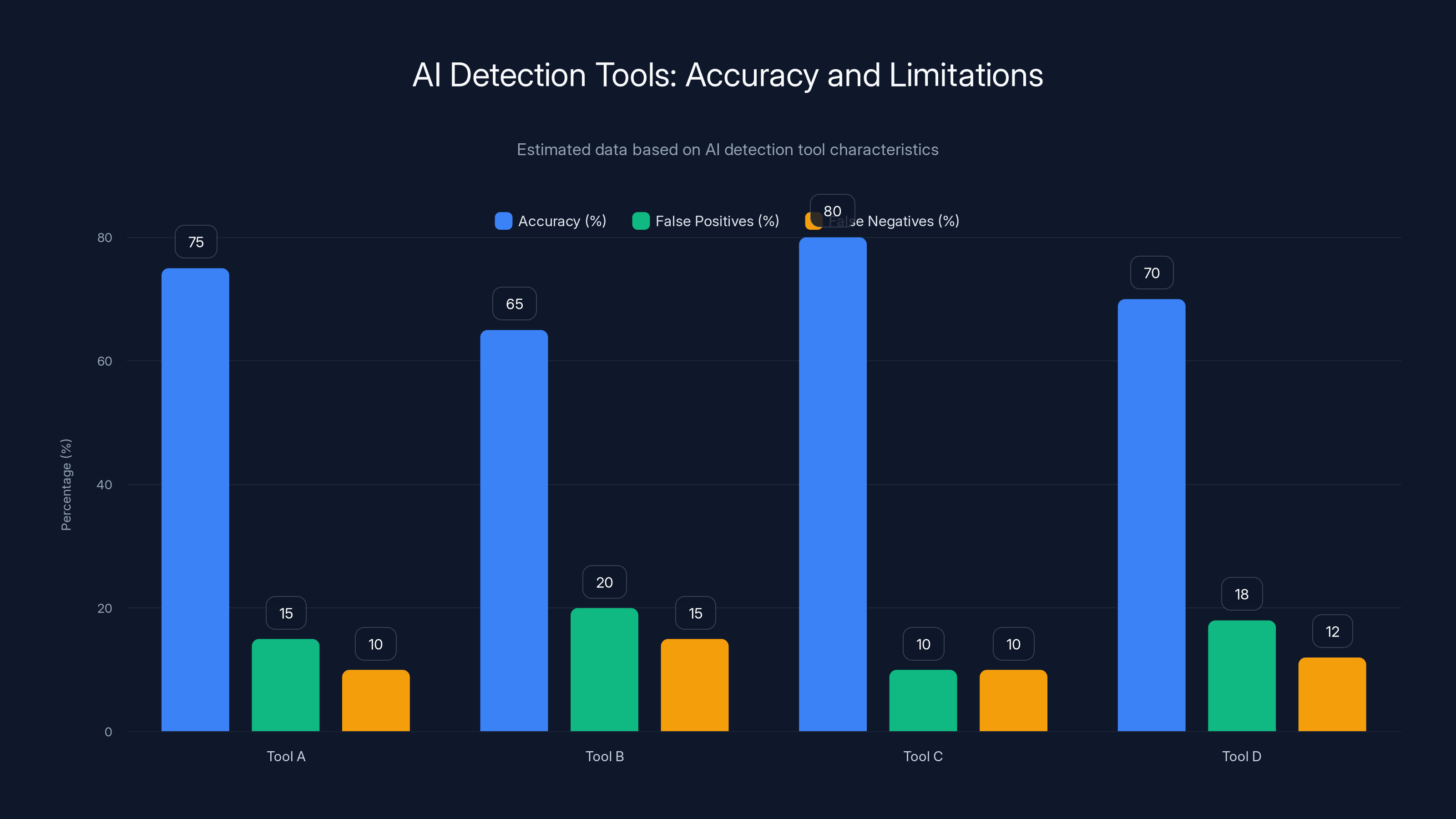

The Verge and other outlets ran the text through multiple AI detection tools. This is where it gets interesting, because different detectors gave different answers. Copyleaks flagged it as likely AI. So did GPTZero, Pangram, Claude, and Google's Gemini. But ZeroGPT and QuillBot said it looked human-written. ChatGPT basically said "maybe."

This inconsistency matters because it shows why people believed it. AI detection tools aren't perfect. They're improving, but they're still probabilistic, not deterministic. A post can legitimately fool some tools while triggering others.

But then came the smoking gun: the employee badge.

The anonymous poster claimed to be a senior software engineer and provided what looked like an Uber Eats employee badge as proof. The image showed the Uber Eats logo, two blacked-out boxes (presumably covering the employee name and photo), and the title "senior software engineer."

Here's the problem: Uber Eats-branded employee badges don't actually exist. Uber employees carry Uber badges, not Uber Eats badges. That's a detail most AI models wouldn't know to correct.

When Google's Gemini analyzed the image, it identified several red flags. The words were slightly misaligned. The coloration was warped at the edges of the green border. The overall composition had the telltale signs of AI image generation or editing. Gemini flagged it immediately as inauthentic.

Other journalists got similar treatment. Casey Newton from Platformer and Hard Fork received the same fake badge. Alex Shultz from Hard Reset got shown an allegedly internal Uber document, but when he started asking verification questions, the poster deleted their Signal account. The whole operation had the hallmark pattern of a scammer: aggressive in the initial pitch, evasive under scrutiny, and willing to burn the whole thing down rather than provide real proof.

Gemini was the most effective tool, scoring 95% in detecting AI-generated content, highlighting the variance in tool accuracy. Estimated data.

Why Everyone Believed It (And Why That Matters)

Here's the uncomfortable truth: this AI scam worked because it tapped into something people already knew or suspected to be true.

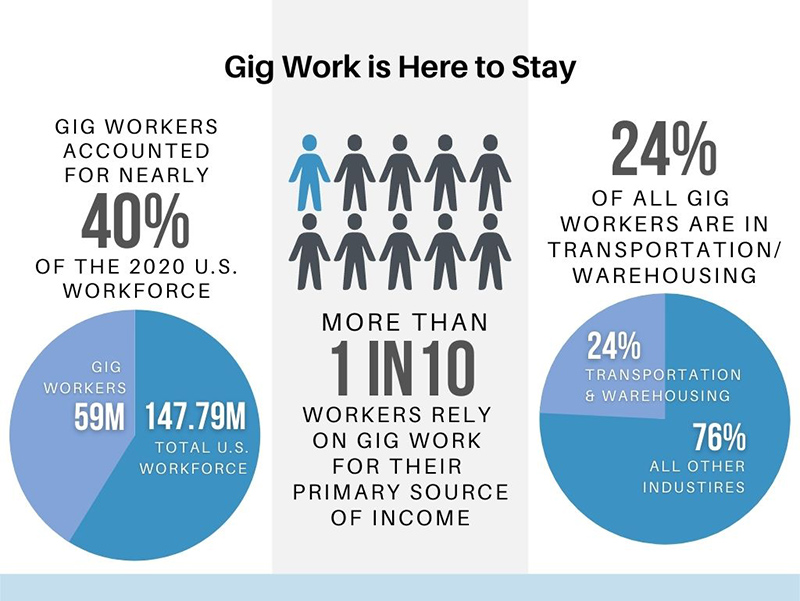

Food delivery companies have spent over a decade earning a reputation for exploiting workers. This isn't speculation. It's documented in lawsuits, regulatory filings, investigative reports, and worker testimonies. When DoorDash went public in 2020, its own IPO filing detailed how it classifies drivers as independent contractors to avoid paying benefits, minimum wages, or unemployment insurance. Uber had similar disclosures.

The model itself is extractive by design. Gig workers bear the costs (vehicle maintenance, insurance, fuel, phone service) while the platform keeps commission. A 2019 MIT study found that some gig workers were earning less than minimum wage when you factored in expenses. Workers have reported waiting unpaid between deliveries, getting deactivated without explanation, and competing with surge pricing that compresses their earnings.

So when someone posted a detailed confession about calling workers "human assets" and deliberately exploiting their desperation? People believed it because the foundation was already laid. The industry's actual practices made the AI fabrication feel plausible.

This is why AI scams targeting skeptical, informed audiences can be particularly effective. The scammer doesn't need to convince anyone the company is ethical. They just need to confirm what people already suspect, add specific details that sound authentic, and let the audience fill in the blanks with their existing beliefs.

The psychology is worth understanding: When information aligns with your prior beliefs and expresses those beliefs in a way that feels emotionally true, you're less likely to scrutinize it aggressively. The fake post didn't ask anyone to believe something counterintuitive. It just dressed up existing knowledge in the garb of insider confession.

Estimated data shows that average earnings for food delivery workers often fall below state and federal minimum wage standards, highlighting the economic challenges they face.

The Red Flags You Should Have Caught

In retrospect, there were signals that should have triggered skepticism, even before the technical analysis kicked in.

The anonymity without sacrifice. Real whistleblowers typically reveal themselves to credible outlets and work with journalists to verify their claims independently. The original Reddit post was just a wall of text with no follow-up mechanism, no verification pathway, and no way to fact-check specific claims. The poster could make any allegation and face zero accountability.

The comprehensive indictment. Whistleblower complaints tend to focus on specific incidents or practices the person directly witnessed or participated in. This confession covered everything: algorithmic manipulation, worker exploitation, customer deception, and internal culture. It's the kind of omniscient critique that an AI trained on thousands of articles about gig economy problems might produce.

The perfect narrative arc. The post followed a classic structure: innocent-sounding job description, escalating revelations of unethical behavior, emotional appeals about worker suffering, and a call to action (people should know about this). It's basically a persuasive essay masquerading as testimony.

The vague specificity. Details like "calls workers human assets" or "exploits their desperation" are specific enough to feel authentic but vague enough that they couldn't be directly fact-checked. You can't disprove that someone used those phrases internally. But you also can't prove it.

The defensive response to scrutiny. When journalists asked basic questions like "can you provide corroborating evidence?" or "can we verify this independently?", the poster got nervous, offered obviously fake proof, and then disappeared. Legitimate whistleblowers typically anticipate these questions and have answers prepared.

How AI Generation Creates Convincing Fakes

Understanding how the original post and badge were likely created helps you spot similar scams in the future.

Text generation: Modern language models like ChatGPT, Claude, and Gemini can write convincing prose in nearly any voice or style. Ask the model to write a Reddit confession from a senior engineer at a food delivery startup, specify the concerns (worker exploitation, algorithmic manipulation, customer fraud), and set the tone (insider knowledge, moral outrage, but anonymized for self-protection), and you get exactly what we saw.

The text would pass basic plagiarism checks because it's original. It would fool casual readers because it's coherent and detailed. It might even fool some AI detectors because modern language models are quite good at mimicking human writing patterns. The key indicator AI detectors might catch: excessive use of certain phrase structures, slightly mechanical transition sentences, or an absence of the kind of personal idiosyncrasies that characterize human writing.

Image generation: Creating a fake employee badge used to require graphic design skills or Photoshop expertise. Now you can describe the badge you want to Midjourney, DALL-E, or Stable Diffusion and have an image in 30 seconds. The model doesn't know that Uber Eats badges don't exist. It just knows that badges have certain aesthetic properties (corporate logo, identification photo, name, title) and generates something that looks plausible.

The giveaway is in the details: warped text, misaligned elements, subtle color inconsistencies, and impossible spatial relationships. AI image generators still struggle with text rendering and geometric precision. That's why Gemini spotted the issues immediately.

The narrative package: The scammer likely used AI to generate the initial post, created a fake badge with generative AI, and maintained the anonymous account with just enough responsiveness to maintain credibility. When pressed, they offered the obvious fake (the badge) because it's what they had.

The whole operation probably took a few hours and cost essentially nothing. No technical skill required beyond knowing which tools to use. No expertise in the food delivery industry needed, because the models were trained on plenty of gig economy criticism. No deep knowledge of how badges work, because the scammer was banking on reviewers trusting the visual rather than the substance.

Estimated data shows varying accuracy and error rates among AI detection tools, highlighting their limitations and the challenge of achieving certainty.

The Response from Companies and Why It Mattered

Once the AI nature became apparent, both DoorDash and Uber Eats issued denials. And here's where it gets interesting: their denials actually mattered, because the alternative (staying silent) would have implied something to hide.

DoorDash CEO Tony Xu posted on X: "This is not DoorDash, and I would fire anyone who promoted or tolerated the kind of culture described in this Reddit post." The language is careful. He's not saying the concerns about gig economy exploitation are unfounded. He's saying this specific post doesn't describe DoorDash's practices.

Uber also denied the allegations and confirmed what the technical analysis suggested: Uber Eats-branded employee badges don't exist in its system. It's a small thing, but it's exactly the kind of operational detail a real insider would know and get right.

The company responses were necessary but insufficient. They addressed the specific fabrication without engaging with the underlying truth that motivated people to believe it. Yes, the post was AI-generated. Yes, it was likely a scam designed to either rack up engagement or test how effectively AI disinformation could spread. But the companies operating food delivery apps do have a documented history of worker exploitation. A denial of the fake doesn't erase the real.

What made the company responses effective: They were fast. They were specific. They pointed to verifiable facts (badge authenticity) rather than just claiming innocence. Uber didn't say "we'd never do that." Uber said "here's a factual detail that proves this is fake." That's the right strategy.

The Broader Pattern of AI Misinformation

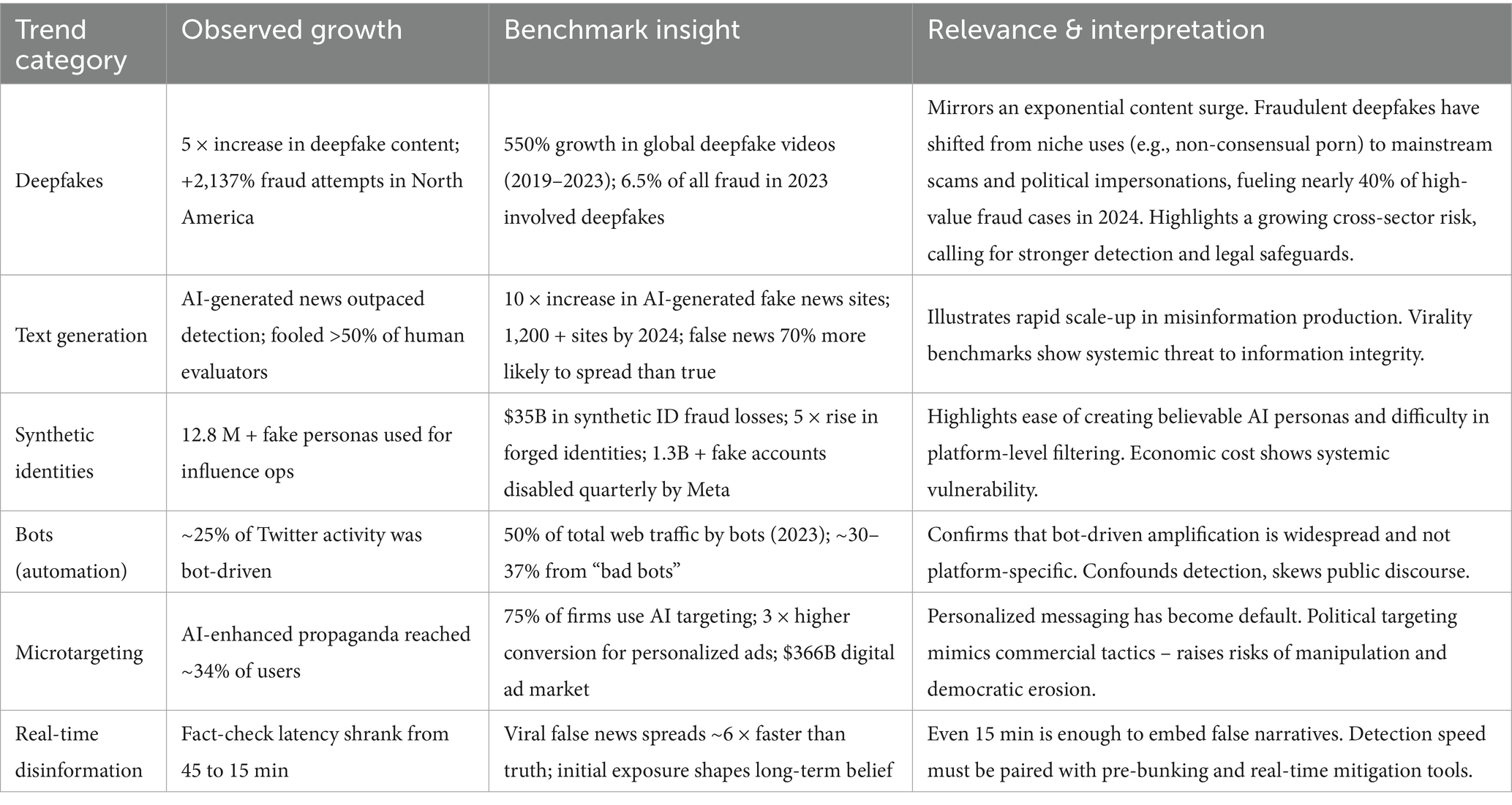

This Reddit scam is part of a growing trend, not an isolated incident. As AI models have improved, so has the ability to generate convincing disinformation at scale.

The economics are terrifying. Before AI, creating convincing misinformation required human effort: hiring writers, building fake personas, managing multiple accounts, and coordinating across platforms. The return on investment was often questionable unless you were a nation-state or well-funded operation.

With AI, the marginal cost drops to nearly zero. Generate a thousand Reddit confessions, seed them across different subreddits, let the algorithm surface the most engaging ones, and suddenly you have a disinformation operation that costs less than a used car.

The specificity increases believability. Early AI text generators were obviously mechanical and repetitive. Modern models are genuinely good at varying sentence structure, mimicking authentic voice, and including specific details that make claims feel grounded. A post that says "we manipulate algorithm and treat workers bad" is obviously AI-written. A post that describes a specific internal culture where workers are called "human assets" and exploited through psychological manipulation of desperation feels like actual testimony.

Detection is getting harder, not easier. This might surprise you, but AI detectors may actually become less reliable as models improve. The reason: better language models are harder to distinguish from human writing. If an AI is trained to write exactly like a human, how do you detect it without having access to metadata (which AI was used, when it was generated, etc.)?

Copyleaks and GPTZero are trying to identify patterns that differ from human writing. But as models get better at mimicking humans, those patterns disappear. Some researchers suggest the problem is fundamentally unsolvable: if an AI can write indistinguishably from humans, then no statistical test can reliably detect it without accessing metadata.

The remedy is human verification, not software. You can't detect this fake through statistical analysis of the text. You detect it by doing basic journalism: asking for corroborating evidence, verifying the source, checking claims against what you already know, and being skeptical when someone makes sweeping allegations without submitting to verification.

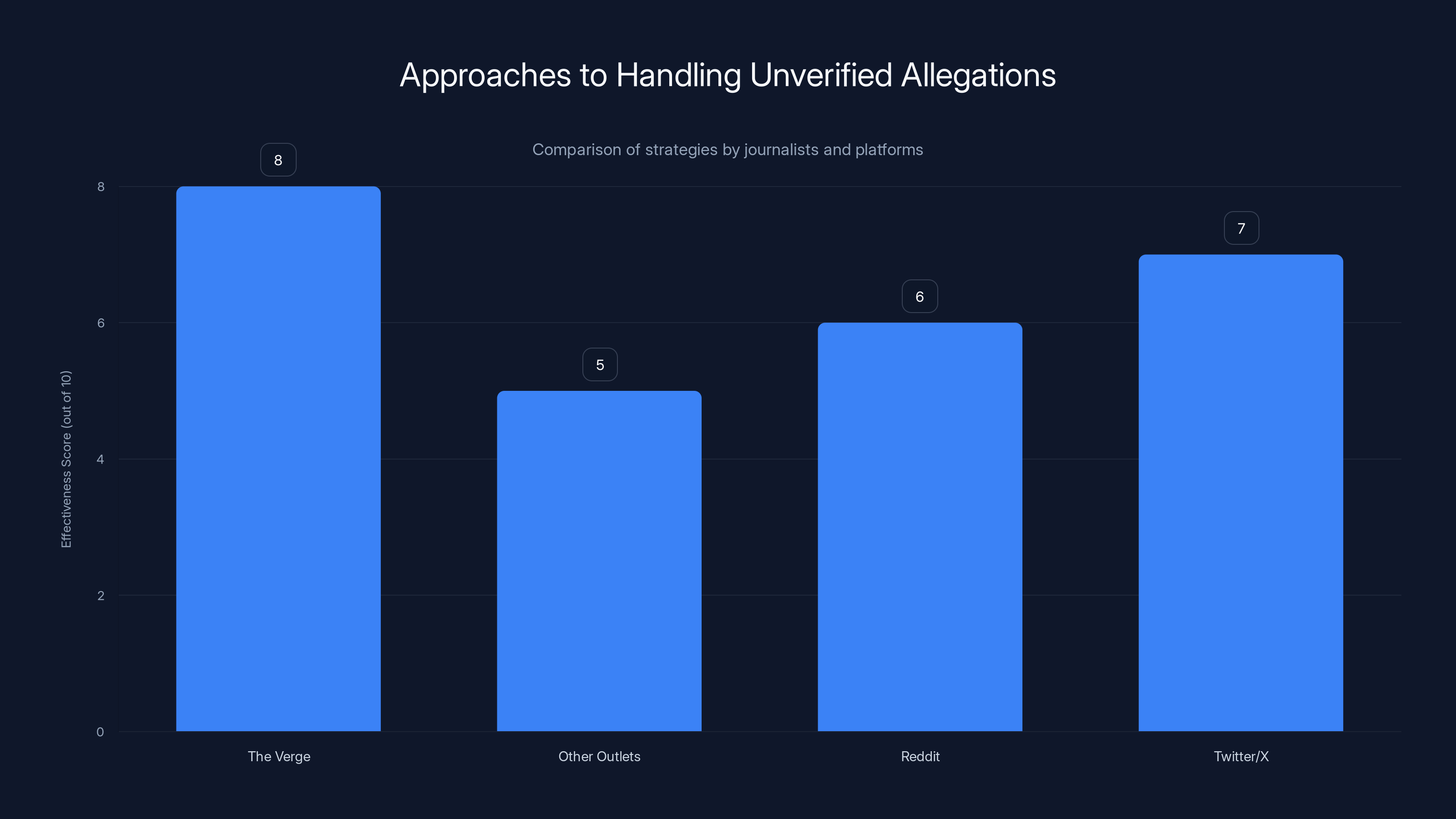

The Verge's approach to verifying and correcting unverified allegations is considered the most effective, while other outlets and platforms like Reddit and Twitter/X have varying levels of effectiveness. Estimated data.

Food Delivery Worker Exploitation: The Real Story

While the Reddit post was fake, the underlying concern it addressed is entirely real. Food delivery companies have built a business model that depends on worker exploitation.

Let's define terms here. Exploitation in this context means workers bear the economic risk and cost of the operation while the platform captures most of the value. Here's how it works in practice:

A driver uses their own vehicle (average cost around

Meanwhile, the platform takes a commission on every order (typically 15-30% depending on the region and restaurant) and charges customers delivery fees and service fees. The platform's actual costs are marginal: servers running the app, customer support, and payment processing. The massive fixed costs (vehicle, insurance, fuel) are externalized to workers.
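To make the cost-shifting concrete, here is a small calculation comparing a headline hourly figure with net pay once vehicle costs and unpaid waiting time are counted. All inputs are hypothetical round numbers chosen for illustration, not figures from any study mentioned in this piece, and bundling fuel, maintenance, depreciation, and insurance into one per-mile cost is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Shift:
    """One delivery shift. Every number used with this class below is hypothetical."""
    gross_pay: float      # platform pay plus tips for the shift, in dollars
    active_hours: float   # hours spent picking up and dropping off orders
    waiting_hours: float  # unpaid hours spent waiting for the next offer
    miles_driven: float
    cost_per_mile: float  # assumed bundle: fuel, maintenance, depreciation, insurance


def headline_hourly_pay(shift: Shift) -> float:
    """Pay per active hour, ignoring expenses and waiting time."""
    return shift.gross_pay / shift.active_hours


def net_hourly_pay(shift: Shift) -> float:
    """Pay per hour actually worked, after subtracting vehicle expenses."""
    expenses = shift.miles_driven * shift.cost_per_mile
    total_hours = shift.active_hours + shift.waiting_hours
    return (shift.gross_pay - expenses) / total_hours


if __name__ == "__main__":
    shift = Shift(gross_pay=120.0, active_hours=4.0, waiting_hours=2.0,
                  miles_driven=60.0, cost_per_mile=0.50)
    print(f"headline: ${headline_hourly_pay(shift):.2f}/h")  # headline: $30.00/h
    print(f"net:      ${net_hourly_pay(shift):.2f}/h")       # net:      $15.00/h
```

In this made-up shift, the same work looks like $30 an hour or $15 an hour depending on whether expenses and waiting time are counted. That difference is the cost the business model leaves with the worker.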

A 2019 study by researchers at MIT and conducted with gig workers found that many were earning

In response, many cities and states have tried to regulate this. California passed Proposition 22 in 2020, which attempted to mandate some benefits while maintaining contractor status; it was challenged in court for years before the California Supreme Court upheld it in 2024. New York City set minimum pay standards for app-based ride-hail drivers in 2019 and a minimum pay rate for app-based delivery workers in 2023. Other cities followed.

But the regulatory landscape remains fragmented. Workers in some regions have some protections. Workers in others have essentially none. The companies have consistently fought stronger regulations because those regulations cut into their margins.

This is the context that made the fake Reddit post believable. Not because the specific allegations were likely (they probably weren't), but because the industry's actual practices are bad enough that additional exploitation wouldn't have been shocking.

How AI Scams Like This Get Traction

Understanding the mechanics of how this particular scam propagated helps you recognize similar ones in the future.

Reddit's algorithm favors engagement. Reddit doesn't algorithmically promote posts the way Twitter or TikTok does, but it does sort by upvotes. Posts that get early upvotes continue to get visibility. The fake confession likely got initial traction because:

- It was posted in a relevant subreddit (probably r/news, r/technology, or something similar)

- People upvoted it because it aligned with their priors about food delivery companies

- Visibility increased, which brought more upvotes, which increased visibility further

- By the time skeptics could analyze it, it already had 90,000 upvotes and top-of-feed placement
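Reddit's current ranking code isn't public, so the sketch below uses the "hot" formula from Reddit's formerly open-source codebase to illustrate the feedback loop in the list above; the live site very likely uses something different. The formula takes the base-10 logarithm of the net score and adds a recency term, so each tenfold increase in votes is worth the same as being posted 45,000 seconds (12.5 hours) later. That means a post's first 10 net upvotes count as much as the next 90, which is why early momentum matters so much.

```python
import math
from datetime import datetime, timedelta, timezone

# Reference timestamp (December 2005) used by the historical open-source "hot" sort.
REDDIT_EPOCH = 1134028003


def hot(ups: int, downs: int, posted: datetime) -> float:
    """Historical Reddit 'hot' score: log-scaled net votes plus a recency bonus."""
    score = ups - downs
    # Each factor of 10 in net votes adds 1.0 to the score.
    order = math.log10(max(abs(score), 1))
    sign = 1 if score > 0 else -1 if score < 0 else 0
    # Every 45,000 seconds (12.5 hours) of newer posting time also adds 1.0.
    seconds = posted.timestamp() - REDDIT_EPOCH
    return round(sign * order + seconds / 45000, 7)


if __name__ == "__main__":
    t = datetime(2026, 1, 2, tzinfo=timezone.utc)
    print(hot(100, 0, t) - hot(10, 0, t))    # roughly 1.0
    print(hot(1000, 0, t) - hot(100, 0, t))  # roughly 1.0
    # A 10-vote post 12.5 hours newer ties with a 100-vote post.
    print(hot(10, 0, t + timedelta(seconds=45000)), hot(100, 0, t))
```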

This is a recurring problem with social media: negative information spreads faster than corrections. The original post gets momentum before verification can catch up.

News outlets amplified it. Multiple journalists and publications reported on the confession before fully vetting it. This makes sense on one level: if it's real, it's a major story about worker exploitation. Ignoring it would be negligent. But the rush to report creates a problem: the story gets credibility from being reported, even if that credibility is undeserved.

The Verge did the reporting right: they tried to verify independently, they ran the text through detection tools, they analyzed the badge image, and they reported their findings when the post turned out to be fake. Not all outlets handled it as carefully.

The timeline creates deniability. By the time the fake was definitively exposed as AI-generated, the original post had already generated thousands of shares, hundreds of news mentions, and had been discussed on podcasts and in conversations. The correction reaches a fraction of the audience the original reached. The fake does its damage before the truth catches up.

There's no penalty for the scammer. The anonymous poster faced no consequences. They deleted their account and disappeared. There's no mechanism to hold them accountable. No lawsuit, no prosecution, no way to recover reputational damage from the false allegations. This creates an asymmetric situation where scammers can operate with impunity.

Estimated data shows a decline in average hourly earnings for gig economy workers from

Spotting AI Scams: A Practical Guide

You don't need to be a technical expert to identify suspicious posts, fake confessions, and AI-generated content. Here are specific signals that should make you skeptical.

Anonymous sources without verification pathways. If someone is making serious allegations and offering only anonymity with no way to verify their claims, that's a red flag. Legitimate whistleblowers typically work with journalists, provide corroborating evidence, or reveal themselves when push comes to shove. Scammers stay anonymous and disappear when pressed.

Emotional appeals without specifics. AI models are good at generating emotional language but sometimes struggle with specific, verifiable details. A post that says "they treat workers horribly" is vague. A post that says "on March 15th, at our San Francisco office, a manager told me..." is specific. The more emotional the language and the vaguer the details, the more suspicious you should be.

Perfection in narrative structure. Human testimony is messy. It's nonlinear. It includes tangents and corrections and moments where the speaker realizes they're leaving something out. AI-generated confessions tend to be more structurally sound. They flow logically from setup to revelation to conclusion. They're essentially essays masquerading as personal testimony.

Evasiveness about proof. When journalists asked for corroboration, the poster either offered obviously fake proof (the badge) or disappeared. Real whistleblowers might be cautious about revealing too much, but they typically have a strategy for verification. They work with legal counsel. They gather documentation. They're thoughtful about how and when to come forward. Scammers just panic when scrutiny arrives.

Details that don't check out. The Uber Eats badge was the giveaway because it's a factual detail you can verify. Uber employees don't carry Uber Eats badges. Period. Before trusting major allegations, check whether the operational details described would actually work the way the source claims.

Timing that's suspiciously convenient. This scam dropped right at the beginning of January, a time when news cycles were slower and people were more prone to sharing viral content. Genuine whistleblowers don't typically strategize around news cycle patterns, but scammers do.

Lack of identifying information. Even anonymously, credible sources often provide some verifiable detail: the department they worked in, the time period they were employed, their role, something that could be corroborated by someone with knowledge of the company. This post provided almost nothing verifiable.

The Difference Between Fake Posts and Real Exploitation

This distinction matters enormously because the fake post, while false, has created a perverse incentive structure.

Companies like DoorDash and Uber can now point to the fake confession and say "see, this is the kind of misinformation that gets spread about us." They can use it to dismiss legitimate concerns about worker exploitation as hysteria or exaggeration.

This is the real damage the AI scam does. Not that people believed false allegations. The damage is that it gives ammunition to bad actors to dismiss real problems as AI-generated hysteria.

The food delivery industry does have genuine issues with worker classification, minimum compensation, and benefits. These have been documented in academic research, confirmed by worker testimony, addressed in regulatory action, and acknowledged in company filings. The regulatory pushback in places like New York City and California confirms that these problems are real and serious enough to warrant legal intervention.

The fake Reddit confession doesn't change any of that. But it does make it easier for companies to dismiss worker advocates as credulous believers in scams.

This is a known pattern with disinformation: introduce a fake version of a true concern, watch people believe it, then discredit them for falling for the fake. The real problem survives intact, but advocates have to spend time and credibility recovering from the scam.

Estimated data shows how AI scams gain traction rapidly, with initial engagement leading to significant amplification before corrections can mitigate the spread.

What AI Detection Tools Actually Tell You

The inconsistency in AI detection results on the Reddit post deserves deeper examination because it reveals the limitations of automated detection.

How detection tools work: AI detectors typically look for statistical patterns that differ from human writing. They might analyze:

- Sentence length variation (do sentence lengths follow a typical human distribution?)

- Vocabulary diversity (do word choices show variety or repetition?)

- Punctuation patterns (do punctuation choices match human patterns?)

- Perplexity and burstiness metrics (how predictable is the next token?)

- Linguistic markers that differ between human and AI text
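The first two signals in the list above are easy to approximate. The sketch below computes a burstiness proxy (the coefficient of variation of sentence length) and a type-token ratio for vocabulary diversity. It is illustrative only: the naive sentence splitter is an assumption, real detectors rely on trained models, and perplexity requires a language model that this sketch doesn't include.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word counts per sentence, using a naive split after ., !, or ?."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [len(s.split()) for s in sentences if s]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length: population stdev / mean.

    Higher values mean sentence lengths vary more from one sentence to the next."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def type_token_ratio(text: str) -> float:
    """Distinct words divided by total words (a crude vocabulary-diversity measure)."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0


if __name__ == "__main__":
    uniform = "The app sets the pay. The app sets the rules. The app sets the pace."
    varied = ("Pay dropped. Nobody on the team could explain why the numbers "
              "kept sliding all quarter. Weird.")
    print(burstiness(uniform), burstiness(varied))
    print(type_token_ratio(uniform), type_token_ratio(varied))
```

Neither number settles anything by itself. Plenty of human writing is uniform, and a model can be prompted to vary its rhythm, which is one reason detectors built on signals like these disagree.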

Why the inconsistency? Different detectors use different algorithms and training data. Some are trained on older AI models and might not detect newer ones. Some are overly aggressive and flag borderline content. Some are too lenient. None are 100% accurate.

The problem with false confidence. When a detector says "this is probably AI-generated," it's providing a probability, not certainty. If six detectors say AI and two say human, what does that mean? It probably means the text has enough ambiguous patterns that statistical analysis alone can't determine origin. It doesn't mean the text is definitely fake.

This is why the image analysis was more definitive. Gemini flagged the badge as AI-generated based on specific, visual errors. Those errors aren't probabilistic. Either the text is warped (it is) or it isn't. Either the badge style doesn't match Uber's actual badge design (it doesn't) or it does.

The timeline problem. Detection tools trained on older AI text (from 2022 or early 2023) are increasingly ineffective against newer models. As language models improve and learn to mimic human writing more closely, the statistical signatures that detection tools rely on disappear.

Some researchers argue the problem is fundamentally unsolvable. If an AI can write identically to humans, then no statistical test can distinguish them without additional context (like metadata about when the text was generated). The conversation is shifting from "can we detect AI?" to "should we assume everything unverified is potentially AI-generated?"

The Responsibility of Journalists and Platforms

This incident raises questions about how journalists and platforms should handle unverified allegations, especially when those allegations align with public sentiment.

The journalist's dilemma: If the Reddit post had been real, not reporting on it would have buried a major story about worker exploitation. Journalists have a responsibility to report on credible allegations of wrongdoing, especially when they come with inside details. But they also have a responsibility to verify before amplifying.

The solution The Verge employed is the gold standard: report on the claim, immediately work to verify it independently, and when you discover it's fake, prominently report that finding. Don't bury the correction. Make it part of the story.

Other outlets either reported the claim and then quietly deleted coverage once the fakery was revealed, or they reported on the verification failure but didn't give it as much prominence as the original claim. That asymmetry is the problem.

The platform's responsibility: Reddit doesn't fact-check posts or remove content just for being unverified allegations. They rely on community moderation and downvoting for quality control. This works reasonably well for obvious spam or illegal content, but it's terrible at catching sophisticated scams that align with community sentiment.

Reddit's algorithm could surface verification status alongside viral posts. When a claim goes viral, the platform could flag it as "unverified" or "flagged for verification" and prevent it from reaching the top of the feed until verification attempts have been made. This would slow the spread of disinformation without requiring the platform to actively censor.
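The labeling idea above could work roughly like the rule sketched below. This is a hypothetical policy, not anything Reddit actually runs: the field names, the velocity threshold, the rank floor, and the assumption that posts making allegations can be identified at all (by moderators or a classifier) are all illustrative. The post stays up; it just carries a label and can't occupy the top slots until someone has tried to verify it.

```python
from dataclasses import dataclass
from enum import Enum


class Label(Enum):
    NONE = "none"
    UNVERIFIED = "unverified"


@dataclass
class Post:
    post_id: str
    upvotes_per_hour: float        # engagement velocity
    is_allegation: bool            # assumed to be set by moderators or a classifier
    verification_attempted: bool   # has anyone tried to confirm the claims?


def apply_policy(post: Post, rank: int,
                 viral_threshold: float = 500.0,
                 best_rank_until_checked: int = 25) -> tuple[Label, int]:
    """Return the post's label and adjusted feed rank (1 = top of feed).

    Hypothetical rule: a fast-rising allegation with no verification attempt is
    labeled and kept out of the top (best_rank_until_checked - 1) slots, but
    never removed."""
    is_viral = post.upvotes_per_hour >= viral_threshold
    if is_viral and post.is_allegation and not post.verification_attempted:
        return Label.UNVERIFIED, max(rank, best_rank_until_checked)
    return Label.NONE, rank


if __name__ == "__main__":
    confession = Post("abc123", upvotes_per_hour=2000.0,
                      is_allegation=True, verification_attempted=False)
    print(apply_policy(confession, rank=1))
```

The design choice here is to slow a claim down rather than judge it: the rule never decides whether the post is true, only whether anyone has checked yet.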

Twitter/X tries something similar with community notes, but community notes themselves can become a battleground where different groups fight over what's true. The system isn't perfect, but it's better than pure community voting.

The media's responsibility: News outlets amplifying the Reddit post without independent verification contributed to its spread. Once the fakery was discovered, the original story had already saturated the discourse. A correction typically reaches only a fraction of the people who saw the original claim.

One solution: news outlets could adopt a higher bar for reporting on unverified allegations, especially when those allegations come from anonymous sources with no verification pathway. If something is genuinely important, waiting 24 to 48 hours to verify it won't change the story significantly, but it could prevent the spread of elaborate scams.

Future-Proofing Yourself Against AI Scams

As AI continues to improve, more convincing scams will emerge. Here's how to build resilience.

Develop media literacy habits. Before believing a viral claim:

- Check whether it's been reported by established news outlets (they've had time to verify)

- Look for responses from the alleged subjects (do they deny specific claims or just deny generally?)

- Search for similar claims from independent sources (has this been documented before?)

- Notice whether the source provides verifiable details or stays vague

- Ask whether the claim requires you to believe something counterintuitive or just confirms what you already suspect

That last point matters: you should be more skeptical of claims that confirm your existing beliefs, not less. It's exactly when you feel the information is obviously true that you're most likely to skip verification.

Understand the economic incentives. Who benefits if people believe this claim? The Reddit poster clearly benefits from engagement (upvotes, attention, potentially metrics they can monetize elsewhere). News outlets benefit from reporting a sensational story. Your own social media engagement benefits from sharing outrageous content.

Companies have incentives to deny allegations regardless of truth. These incentive structures don't tell you what's true, but they tell you where to be skeptical.

Demand better from platforms. If you use Reddit, Twitter, or other platforms, engage with verification features when available. Use community notes on Twitter to flag unverified claims. Report obvious scams. Downvote posts that spread easily debunked misinformation.

The algorithm is optimized for engagement, not accuracy. You have to actively counteract that optimization.

Expect more of this. As AI gets better and faster, the volume of AI-generated content will increase dramatically. Some of it will be used for legitimate purposes (automation, efficiency). Some will be used for scams, disinformation, and exploitation.

The incident The Verge reported isn't an isolated case. It's a preview. Every major social platform will face situations like this. The question isn't whether this will happen again. It's how you'll respond when it does.

The Real Problem: Worker Exploitation Continues

While everyone was focused on whether the Reddit post was real, the actual problem it addressed continued unabated.

Food delivery workers across major US cities are still classified as independent contractors. They still bear vehicle costs, insurance costs, and fuel costs. They still wait unpaid between deliveries. Outside the few places with minimum pay rules, they still have no guaranteed minimum wage, and nearly everywhere they lack employee benefits and job security.

In fact, conditions have arguably gotten worse. As more competition entered the gig economy (Amazon Flex, Instacart, DoorDash, Uber Eats, Grubhub), workers have been pitted against each other, driving down rates. Some drivers reported making $12-15 per hour after expenses in 2024, down from slightly higher rates in 2022.

Regulatory efforts have achieved mixed results. New York City's minimum pay rate for delivery workers started at $17.96 per hour in 2023 and has been raised since, but workers have reported that app changes, such as limits on when they can log on, blunt its effect. California's Proposition 22 survived its court challenges when the California Supreme Court upheld it in 2024, so the contractor model it protects remains in place.

Paradoxically, the fake Reddit confession is useful here. It shows what real, documented worker exploitation looks like when dramatized by AI. Strip away the sensationalism of the fake post, and what's left is basically accurate: food delivery companies do structure their operations to minimize their own costs and shift as much expense as possible onto workers.

They don't need to explicitly call workers "human assets" because their business model does it implicitly. The algorithms that determine which orders get offered to which drivers, when workers get deactivated, and how ratings affect earning opportunity are sophisticated forms of worker control, no explicit language required.

The real scandal is that this happens at all. The fake scandal was in the specific details the AI fabricated. The genuine scandal has been documented in tax filings, academic studies, worker interviews, and regulatory proceedings. It just doesn't trend on Twitter the same way a "whistleblower confession" does.

What Companies Are Actually Doing About It

In response to regulatory pressure and worker advocacy, food delivery companies have made some changes. Whether those changes are meaningful is debatable.

Benefits programs for contractors. DoorDash, Uber Eats, and others now offer some benefits to contractors who work enough hours. These typically include discounted health insurance (the worker still pays premiums), accident insurance if they're hurt while working, and vehicle maintenance discounts.

These are meaningful but limited. They don't include paid time off, sick leave, unemployment insurance, or the other benefits traditional employees receive. They're also conditional on working a certain number of hours, which means benefits effectively get cut off when the algorithm stops offering orders.

Transparency initiatives. Some companies now disclose earnings potential before drivers accept orders. This is progress: drivers can make more informed decisions. But it doesn't change the fundamental structure where platforms set commission rates and workers have no bargaining power.

Minimum pay in regulated markets. In cities with minimum pay requirements, companies have largely complied while finding ways to minimize the impact on their margins. Some shifted more of workers' pay onto tips (which are more visible to customers and more psychologically effective), some raised commission rates to compensate, and some reduced the number of available orders. The regulations work, but only regionally: workers have an incentive to prefer regulated cities, while companies have an incentive to shift capacity to unregulated areas.

Lobbying against stronger regulation. Even as these changes rolled out, food delivery companies spent heavily on lobbying against stronger worker protections. They've funded ballot initiatives, hired lobbyists in state capitals, and built coalitions with restaurant groups to argue that stricter regulations would drive prices up or reduce service.

These lobbying efforts have been partially successful. Proposed federal gig worker standards have gone nowhere. State-level efforts have been fought intensely. International regulators (particularly in Europe) have moved faster than US regulators, with some countries effectively reclassifying gig workers as employees despite company resistance.

The asymmetry is clear: When regulation is light, companies maximize extraction. When regulation is implemented, companies adapt to minimize the cost of compliance. Workers haven't seen dramatic improvement in compensation or job security even as companies have posted record revenues.

What Happens Next: AI Scams Will Get Worse

This Reddit incident will not be the last AI-generated fake to go viral. In fact, you should expect more of them, faster and more elaborate.

As language models improve and become easier to access, the barriers to creating convincing text diminish. In the coming months, image generation will likely improve enough to make detection even harder. Video synthesis is advancing rapidly, and AI-generated videos that fool casual observers are not far off.

The economic incentives haven't changed. Someone could spend 30 minutes and $10 in API costs to generate 100 Reddit confessions about different companies. Upload them to different accounts, seed them across different subreddits, and wait. Even if 95% are detected as fakes immediately, 5 of them might go viral before the fakery is discovered.
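The arithmetic behind that estimate is simple enough to check. Here is a minimal sketch that takes the paragraph's hypothetical figures ($10 for 100 posts, 95% caught) as inputs. `scam_economics` is a name invented for illustration.

```python
import math


def scam_economics(posts: int, total_cost: float, detection_rate: float) -> tuple[int, float]:
    """Return (posts that survive detection, cost per surviving post).

    Inputs are hypothetical; detection_rate is the fraction of posts
    caught immediately.
    """
    survivors = round(posts * (1 - detection_rate))
    cost_per_survivor = total_cost / survivors if survivors else math.inf
    return survivors, cost_per_survivor


survivors, unit_cost = scam_economics(posts=100, total_cost=10.0, detection_rate=0.95)
print(survivors, unit_cost)
```

Under these assumptions, 5 posts survive at $2 each. Even a detector that catches 99% of posts leaves one survivor for the same $10, so better detection alone barely changes the scammer's incentive.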

The cost-to-reward ratio is terrible for society but great for the scammer. That's the fundamental problem.

Platforms will respond slowly. Reddit, Twitter, and others will implement better AI detection, watermarking, and verification systems. But they'll also want to avoid heavy-handed censorship that limits legitimate expression. The result will be imperfect systems that catch some scams and miss others.

Detection will become less reliable as generation improves. The arms race between AI generators and AI detectors will accelerate. Generators will be trained to evade detectors. Detectors will be updated to catch the new evasion tactics. This cycle will repeat.

Verification will become the bottleneck. Eventually, the solution to AI scams won't be better detection tools. It'll be better verification systems. Journalists will need to implement independent verification, platforms will implement community verification, and users will develop stronger skepticism of unverified claims.

But verification is slow. It's expensive. It doesn't generate engagement. Social media algorithms don't favor it. So we'll see a growing gap between what's verifiable and what's believed.

Key Takeaways: What This Reveals About AI, Media, and Labor

The viral Reddit food delivery scam teaches several important lessons.

First, to most people, AI-generated content is often indistinguishable from human-written content. Detection tools exist but aren't reliable. Platform verification systems are inconsistent. The only reliable detection method is human journalism: asking questions, demanding evidence, and checking facts independently.

Second, AI scams work because they confirm existing beliefs. People believed the confession because food delivery companies do exploit workers. The fake details were believable because the underlying premise was true. This is a recipe for effective disinformation: find a real problem, describe it dramatically, add specific false details, and distribute widely.

Third, media responses matter. The Verge handled this well by reporting the story and then giving the correction equal prominence. Other outlets didn't. The difference in coverage shows how much journalists' decisions shape what people believe.

Fourth, platform design affects what spreads. Reddit's algorithm surfaces engaging content regardless of accuracy. This incentivizes sensationalism and rewards scammers. Better platform design would slow viral spread long enough for verification to occur.
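Why does slowing spread matter so much? A toy model makes the point. It assumes, unrealistically, that a post's audience keeps doubling at a fixed rate until a fact-check lands (real spread eventually saturates). The doubling times, the 24-hour verification delay, and the function name are all hypothetical.

```python
def reach_before_verification(initial_viewers: int, doubling_hours: float,
                              verification_hours: float) -> int:
    """Viewers reached before a fact-check lands, under pure exponential growth."""
    return round(initial_viewers * 2 ** (verification_hours / doubling_hours))


# Hypothetical post seen by 100 people, verified 24 hours later.
fast = reach_before_verification(100, doubling_hours=2, verification_hours=24)
slow = reach_before_verification(100, doubling_hours=4, verification_hours=24)
print(fast, slow)
```

Doubling the doubling time halves the exponent, so in this model reach before verification drops from 409,600 to 6,400 viewers. Friction compounds, which is the case for designing platforms to slow viral spread rather than relying on after-the-fact corrections.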

Fifth, the underlying problems remain. Food delivery worker exploitation is real and documented. The fake confession doesn't change that. But it does give companies ammunition to dismiss real concerns as hysteria.

Finally, you can develop resilience. Media literacy isn't complicated. Wait before sharing. Check sources. Notice when claims confirm your existing beliefs. Demand verifiable details. These habits won't make you immune to scams, but they'll substantially reduce your likelihood of being fooled.

The Reddit scam is a preview of what the next few years will look like online. AI-generated content will become ubiquitous. Scams will become more sophisticated. The solution isn't technology. It's skepticism, verification, and patience.

FAQ

What is the Reddit food delivery AI scam?

In early January 2025, an anonymous Reddit user posted a 586-word confession claiming to be a senior software engineer at a major food delivery company, alleging worker exploitation, order manipulation, and unethical business practices. The post received 90,000 upvotes before being identified as AI-generated through text analysis, image authentication, and journalist verification. The poster provided a fake employee badge created with generative AI and disappeared when questioned about evidence.

How did investigators prove the Reddit post was AI-generated?

Multiple AI detection tools (Copyleaks, GPTZero, Pangram, Claude, and Gemini) flagged the 586-word post as likely AI-generated, though some detectors reported it as human-written, showing the inconsistency of current detection methods. The crucial evidence came from the employee badge image: Google's Gemini identified AI generation artifacts including misaligned text, warped coloration, and impossible design choices. Uber later confirmed that Uber Eats-branded employee badges don't exist in its system, so the badge could not have been authentic. When journalists pressed for corroborating evidence, the poster became evasive and eventually deleted their Signal account.

Why did people believe the fake confession so easily?

The post tapped into genuine, documented worker exploitation in the food delivery industry. Food delivery companies have a documented track record of treating drivers as independent contractors without benefits, minimum wage guarantees, or job security. A 2019 MIT study found delivery workers earning $8-11 per hour after factoring in vehicle, insurance, and fuel costs. Because the underlying concern was real, people were more likely to believe the specific allegations without demanding verification. AI scams exploit this tendency by confirming existing beliefs with dramatic details.

What red flags indicate an AI-generated confession or scam?

Red flags include: anonymity without verification pathways, perfect narrative structure (like an essay rather than rambling testimony), emotional language paired with vague details, evasiveness when asked for proof, lack of verifiable operational details, and suspicious timing. Genuine whistleblowers typically provide some verifiable information, work with credible journalists, and demonstrate willingness to face scrutiny. Scammers panic when pressed and often disappear rather than provide evidence.

How does this relate to real food delivery worker exploitation?

The fake post drew engagement precisely because real exploitation in the food delivery industry is documented. Workers bear all operational costs (vehicle, insurance, fuel) while platforms keep the commission revenue and classify workers as contractors. Since 2019, various cities including New York have implemented minimum pay standards ($17.96/hour in NYC 2024-2025), but protections remain geographically fragmented. The scam doesn't change the underlying reality, but it gives companies ammunition to dismiss legitimate worker concerns as hysteria.

What can I do to avoid being fooled by AI scams like this?

Develop these practices: wait 24 hours before sharing viral claims, check whether established news outlets have reported and verified the story, look for responses from alleged subjects that address specific rather than general denials, search for independent corroboration of key facts, notice whether claims confirm your existing beliefs (be more skeptical in these cases), and demand verifiable details from anonymous sources. These habits won't guarantee immunity to scams, but they substantially reduce your vulnerability.

Will AI detection tools prevent future scams?

Unfortunately, current AI detection tools have fundamental limitations. As language models improve and learn to mimic human writing more closely, statistical detection becomes less reliable. Some researchers argue the problem is theoretically unsolvable: if an AI writes identically to humans, no statistical test can distinguish them without metadata. The solution is shifting from technical detection to human verification: journalists checking facts independently, platforms implementing community verification, and individuals developing healthy skepticism of unverified claims.
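The "theoretically unsolvable" argument can be stated precisely with a standard bound from hypothesis testing. Assume a detector sees only the text, human text is drawn from a distribution $P$, AI text from a distribution $Q$, and each is equally likely a priori. Then no detector's accuracy can exceed:

```latex
\Pr[\text{detector is correct}] \le \frac{1}{2} + \frac{1}{2}\,\mathrm{TV}(P, Q),
\qquad
\mathrm{TV}(P, Q) = \sup_{A} \lvert P(A) - Q(A) \rvert .
```

If a model writes exactly like humans, then $P = Q$, so $\mathrm{TV}(P, Q) = 0$ and the best possible detector does no better than a coin flip. Metadata such as watermarks falls outside this bound because it changes what the detector gets to observe.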

How can platforms reduce the spread of AI-generated misinformation?

Platforms could implement several strategies without heavy-handed censorship. These include: flagging unverified viral claims to slow their spread until verification occurs, implementing community verification systems like Twitter's Community Notes, requiring anonymous sources making serious allegations to provide some verifiable detail, and designing algorithms to prioritize verified information over engagement metrics. However, any system that reduces engagement will face pressure from advertisers and stakeholders. The current incentive structure favors viral spread over accuracy.
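The first of those strategies, flagging unverified claims to slow their spread, can be sketched as a ranking rule that demotes posts rather than removing them. Everything here is hypothetical: the `Post` fields, the `ranking_score` function, and the 0.25 penalty are illustrative assumptions, not any platform's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Post:
    engagement: float       # hypothetical signal, e.g. upvotes per hour
    makes_allegation: bool  # serious claim about a named company or person
    verified: bool          # corroborated by a moderator, journalist, or note


def ranking_score(post: Post, unverified_penalty: float = 0.25) -> float:
    """Engagement-based score, damped for unverified allegations.

    unverified_penalty is a hypothetical tuning knob: 1.0 disables the
    damping, and smaller values slow the spread of unverified claims more.
    The post is never removed, only ranked lower.
    """
    if post.makes_allegation and not post.verified:
        return post.engagement * unverified_penalty
    return post.engagement


posts = {
    "cat photo": Post(engagement=900.0, makes_allegation=False, verified=False),
    "anonymous confession": Post(engagement=2000.0, makes_allegation=True, verified=False),
    "corroborated report": Post(engagement=1200.0, makes_allegation=True, verified=True),
}

# Rank posts from highest to lowest damped score.
for name in sorted(posts, key=lambda n: ranking_score(posts[n]), reverse=True):
    print(name, ranking_score(posts[name]))
```

With these inputs the corroborated report ranks first (1200.0), the cat photo second (900.0), and the unverified confession last (500.0), even though the confession had the most raw engagement. The post stays visible but spreads more slowly while verification catches up. That is the trade-off the paragraph describes between slowing misinformation and avoiding censorship.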

What's the difference between detecting AI and preventing AI scams?

Detection aims to identify whether specific content was AI-generated through statistical analysis or artifacts. Prevention aims to stop scams from being believed in the first place through better verification, media literacy, and platform design. Detection is becoming less reliable as AI improves. Prevention (through verification and skepticism) will likely remain effective longer. The shift in focus from detection to prevention reflects the emerging consensus that technological solutions alone won't solve this problem.

How has this incident changed how news outlets handle viral allegations?

The most important change is recognizing the asymmetry between viral spread and correction: false claims spread to millions in hours while corrections reach a fraction of those people. This has led some outlets to adopt higher verification standards before reporting on unverified allegations from anonymous sources, especially when those allegations align with public sentiment. The Verge's approach—reporting the allegation, immediately investigating, and prominently reporting the fraud when discovered—represents best practices but isn't universally adopted. The gap between best practices and actual practice remains significant.


![The Viral Food Delivery Reddit Scam: How AI Fooled Millions [2025]](https://tryrunable.com/blog/the-viral-food-delivery-reddit-scam-how-ai-fooled-millions-2/image-1-1767640218216.jpg)