The Great AI Brain Drain: Understanding the Thinking Machines Exodus

Less than a year after Thinking Machines Lab launched, the artificial intelligence world witnessed a significant talent migration that sent shockwaves through the nascent AI startup ecosystem. Barret Zoph and Luke Metz, two prominent cofounders of the fledgling lab, announced their departure to rejoin OpenAI, the company they had left roughly a year earlier. This development represents far more than a simple personnel change. It signals deeper structural challenges facing AI startups that try to compete with well-funded incumbents, and it highlights the extraordinary gravitational pull that established AI firms like OpenAI exert on elite technical talent.

The departure of these cofounders, along with additional staff member Sam Schoenholz, struck a considerable blow to Thinking Machines Lab, which had positioned itself as an ambitious independent research and development laboratory under the leadership of Mira Murati, the former Chief Technology Officer of OpenAI. What makes this exodus particularly noteworthy is the timing and the context—these departures occurred within months of the lab's founding, raising critical questions about the viability of autonomous AI research organizations competing outside the protection of major technology conglomerates.

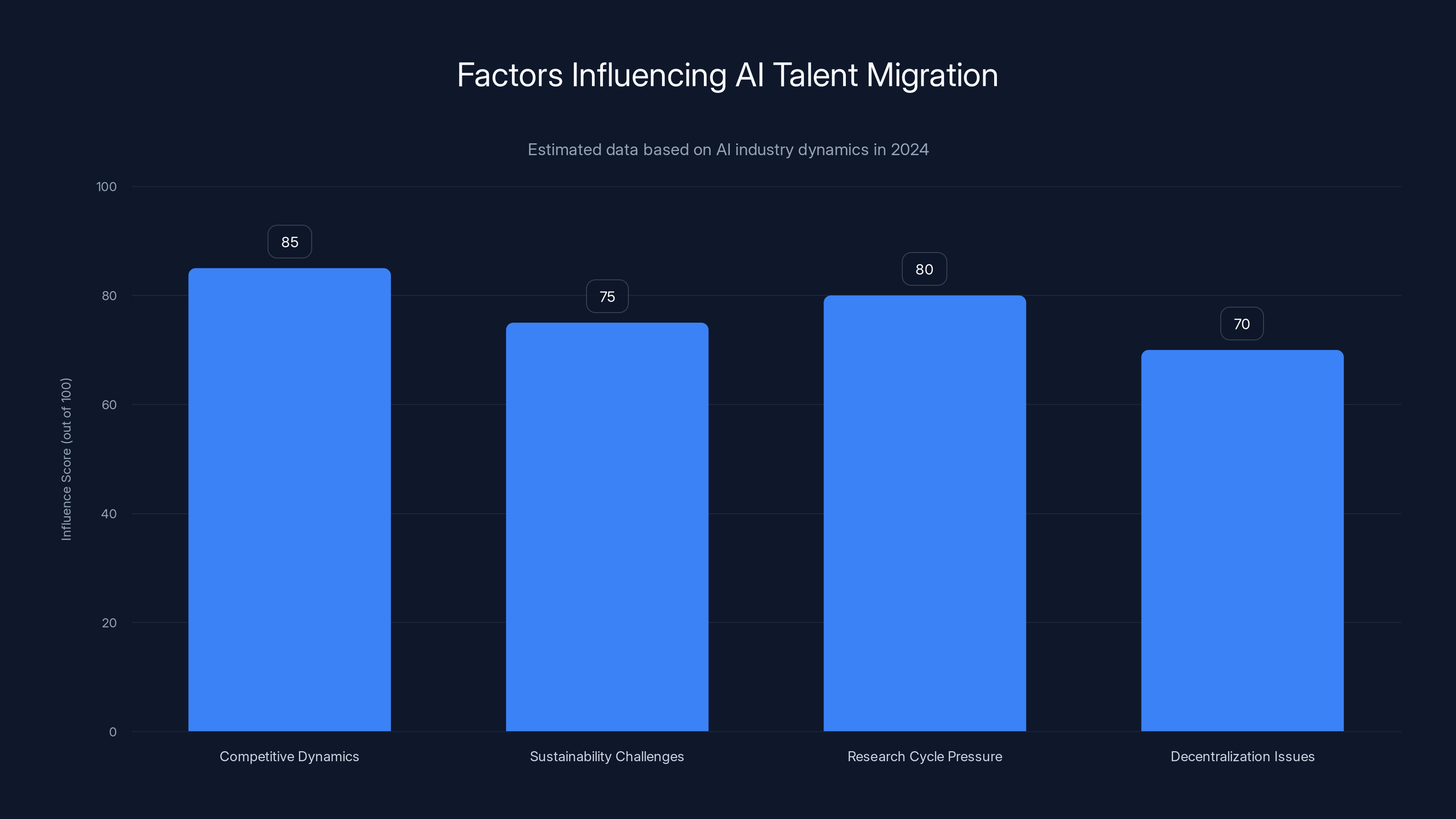

Understanding this development requires examining multiple interconnected factors: the competitive dynamics of the AI talent market, the sustainability challenges facing AI startups, the acceleration of research and product cycles that may overwhelm smaller organizations, and the broader implications for the future of decentralized AI development. This article provides a comprehensive analysis of the Thinking Machines Lab situation, explores the structural factors driving these decisions, and examines what this means for the broader AI industry landscape.

The narrative surrounding the departures remains contested, and different stakeholders interpret them differently. Some observers point to internal management challenges and allegations of confidentiality breaches. Others suggest that the opportunity to work on OpenAI's frontier projects simply proved irresistible. Both narratives may contain elements of truth. Understanding the full picture requires examining the evidence, the context, and the incentive structures that shape decision-making at the highest levels of AI research and development.

The Timeline: How It Unfolded

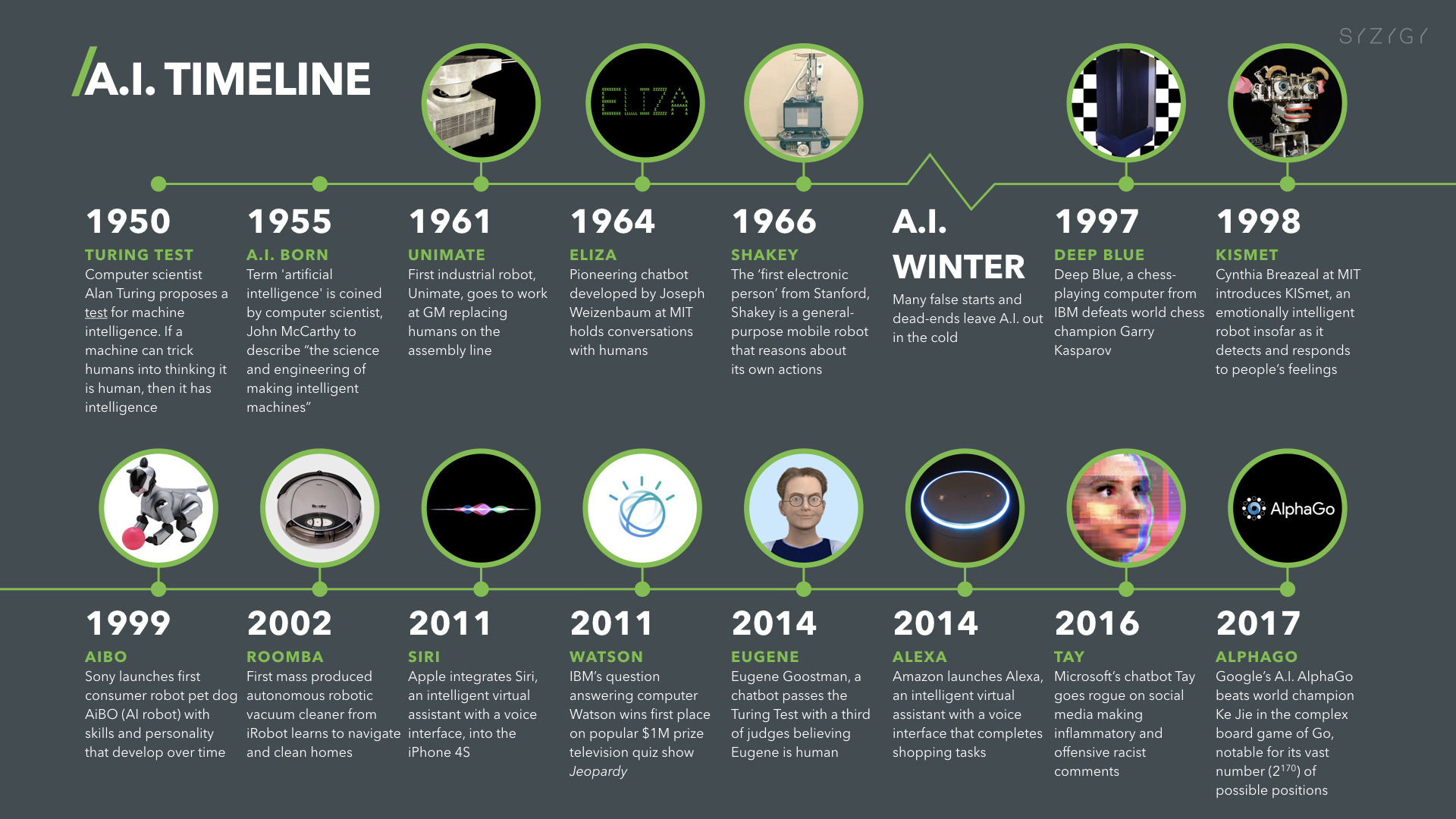

The departure sequence followed a compressed timeline. According to an announcement by Fidji Simo, OpenAI's CEO of Applications, Barret Zoph told Thinking Machines CEO Mira Murati on Monday that he was considering leaving. By Thursday of the same week, OpenAI had officially announced his return, along with Luke Metz's. Such a rapid turnaround suggests that the move was not improvised and may have involved significant behind-the-scenes coordination and negotiation.

What transpired between Monday and Thursday became a subject of intense speculation and competing narratives. Technology reporter Kylie Robison initially reported that Zoph had been terminated for "unethical conduct," a characterization that immediately prompted questions about what specific actions warranted such serious allegations. Sources connected to Thinking Machines suggested that Zoph had shared confidential company information with competitors—a serious breach of trust that would justify immediate termination in any research-focused organization, particularly one handling proprietary AI research.

However, OpenAI framed the situation very differently. Simo's memo to OpenAI staff stated that OpenAI did not share Thinking Machines' concerns about Zoph. This implied that conduct Thinking Machines considered damning looked different from OpenAI's vantage point. The gap between the two accounts leaves the actual events and motivations ambiguous, a pattern common in high-stakes personnel transitions involving sensitive intellectual property and proprietary information.

Context: The Formation of Thinking Machines Lab

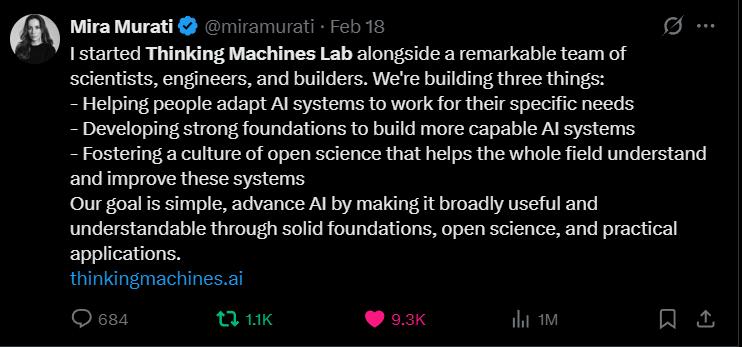

To understand the significance of these departures, it's essential to recognize the ambitious vision that prompted these individuals to leave OpenAI in the first place. After leaving OpenAI, Mira Murati, Barret Zoph, and Luke Metz announced the formation of Thinking Machines Lab in early 2025 as an independent AI research organization. Their exit marked a notable moment: senior figures breaking away from the dominant player to establish what they positioned as a more nimble, focused research entity.

Mira Murati brought particular credibility to the venture. As OpenAI's Chief Technology Officer, she had been instrumental in the development of major models and represented continuity with OpenAI's technical excellence. Zoph and Metz brought complementary expertise in machine learning research and systems development, collectively representing decades of accumulated knowledge in neural networks, model optimization, and scaling techniques. For observers watching the AI landscape, this announcement suggested that elite technical talent could still establish competitive alternatives to OpenAI.

The founding of Thinking Machines Lab also reflected broader industry sentiment that there existed meaningful opportunities for differentiation outside of OpenAI's organizational structure. The founders presumably believed they could move faster, explore novel research directions with greater autonomy, and build a culture aligned with their vision for advancing AI capabilities. These aspirations mirrored narratives that had driven similar ventures in other technology domains, where founders broke away from incumbents to pursue their own visions.

The Competitive Dynamics of AI Talent Retention

Why Talent Gravitates Toward OpenAI

The return of Zoph, Metz, and Schoenholz to OpenAI illuminates the structural advantages that incumbent AI firms maintain in talent competition. OpenAI possesses multiple gravitational forces that pull elite researchers back into its orbit, factors that extend far beyond salary compensation. Understanding these forces requires examining the multidimensional value proposition that OpenAI offers to elite technical talent in the AI field.

First and foremost, OpenAI commands access to computational resources at a scale that few independent organizations can match. Training state-of-the-art language models requires enormous quantities of GPU and specialized processor capacity, sophisticated infrastructure for distributed training, and the engineering expertise to manage these systems efficiently. A single large-scale model training run can consume thousands of GPUs over extended periods, representing expenditures that reach tens of millions of dollars. Independent startups attempting to compete directly with OpenAI on model development must either raise exceptional capital or find alternative approaches that don't require parity-level computational resources.
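A rough back-of-the-envelope estimate makes this scale concrete. The inputs below are purely hypothetical assumptions chosen for illustration, not reported figures for any actual training run: 10,000 GPUs running for 90 days at an assumed cloud price of $2 per GPU-hour.

```latex
\begin{aligned}
\text{GPU-hours} &= 10{,}000 \text{ GPUs} \times 90 \text{ days} \times 24 \,\tfrac{\text{h}}{\text{day}} = 21{,}600{,}000 \\
\text{Compute cost} &= 21{,}600{,}000 \text{ GPU-h} \times \$2/\text{GPU-h} = \$43.2 \text{ million}
\end{aligned}
```

Under these assumptions, compute alone for a single run lands in the tens of millions of dollars. That figure excludes staff, data, failed experiments, and inference costs.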

Beyond computational infrastructure, OpenAI maintains significant advantages in data acquisition, curation, and licensing. The company has invested substantial resources in assembling training datasets that represent comprehensive slices of human knowledge and creative output. This data infrastructure, once established, generates increasing returns—it enables more effective model training, which in turn enables the collection and incorporation of additional valuable data. For researchers intent on advancing the frontier of large language models, working within an organization that controls access to optimal training data represents a meaningful competitive advantage.

The organizational capability at OpenAI also constitutes a compelling draw. The company has built teams of engineers, researchers, and product specialists who have collectively solved thousands of technical problems associated with scaling AI systems. This accumulated expertise manifests in systems for distributed training, inference optimization, fine-tuning methodologies, safety evaluation frameworks, and deployment infrastructure. Researchers rejoining OpenAI gain immediate access to these capabilities, sparing them the years of development time required to build equivalent systems from scratch.

The Talent Retention Challenge for Startups

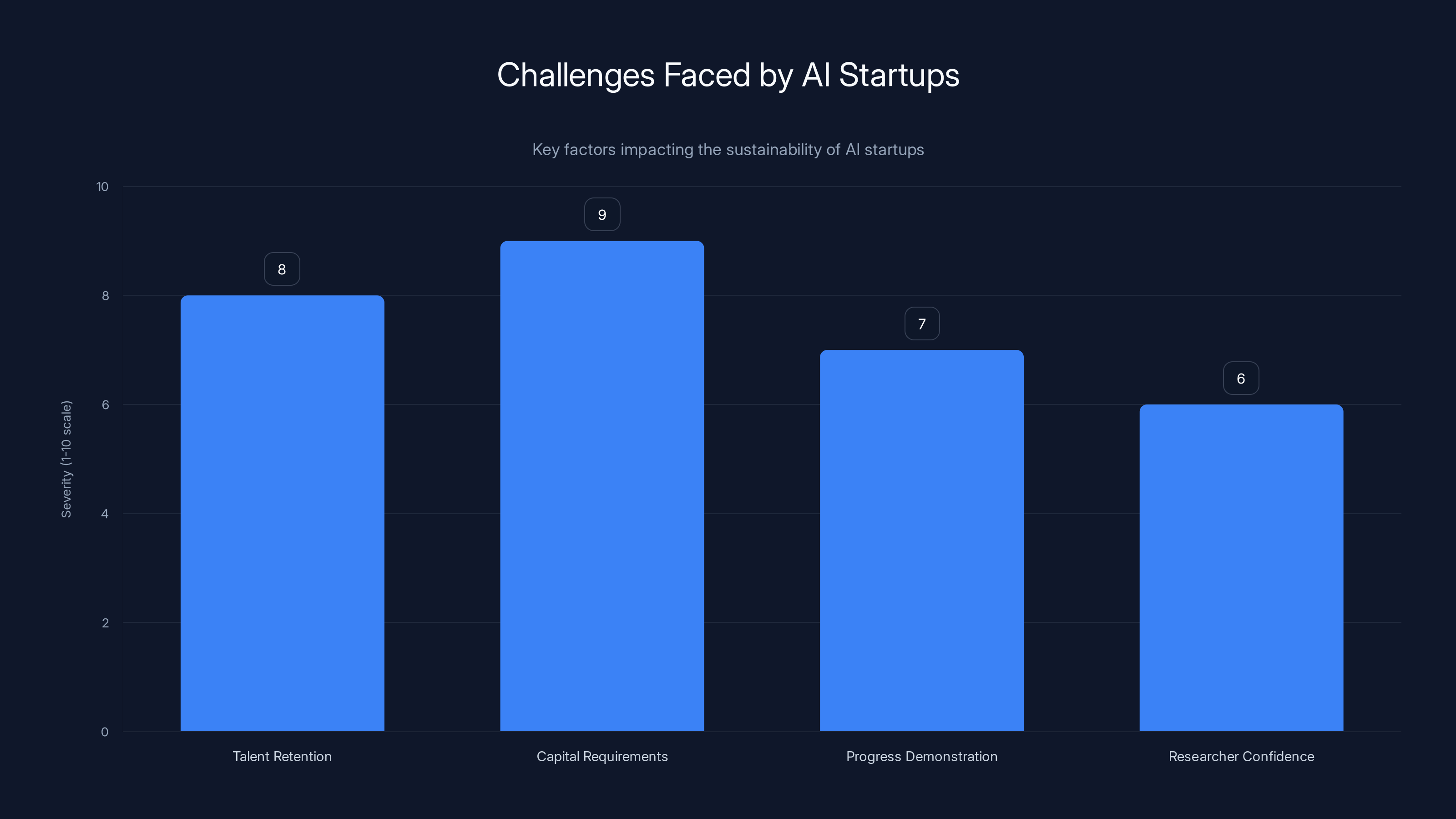

Thinking Machines Lab confronted the same fundamental challenge that affects many technically ambitious startups: the difficulty of sustaining elite talent retention when competing against dominant incumbents with superior resources. While the founders brought exceptional credibility and vision, the organization lacked several critical advantages that would enable it to retain ambitious researchers who might otherwise consider their options.

The financial compensation model presents one significant challenge. Although private companies can offer equity with potentially substantial upside, the timeline to realized value remains uncertain and lengthy. Researchers who leave OpenAI, a company with established revenue streams and clear pathways to profitability, accept meaningful financial risk in exchange for equity stakes in a nascent organization. This trade-off becomes particularly acute when the startup faces headwinds or when the timeline to significant achievement extends beyond initial expectations.

Beyond compensation, the pace of progress and magnitude of computational resources available to researchers shape their research trajectories significantly. At OpenAI, researchers can propose ambitious projects with confidence that the organization possesses the resources to execute them. In a startup context, even well-funded operations must make difficult prioritization decisions, constraining the scope of projects that can be pursued simultaneously. For researchers whose primary motivation involves advancing the frontier of AI capabilities, these constraints can feel restrictive relative to the freedom available at better-resourced incumbents.

The geographic and organizational dynamics of working at a startup also differ materially from incumbent firms. OpenAI maintains a concentrated team in San Francisco with tight integration between researchers, engineers, and product specialists. This density facilitates rapid iteration and cross-functional collaboration. Thinking Machines Lab, as an emerging organization, would require time to build equivalent coordination mechanisms. This difference may seem subtle but significantly impacts the velocity at which researchers can test ideas, receive feedback, and move projects forward.

The Network Effects of Leading Organizations

Beyond tangible resources, OpenAI benefits from network effects that make it increasingly attractive to new talent and collaborators. The company's prominence in AI research, the visibility of its products like ChatGPT, and its central position in industry conversations create an environment where researchers can advance their reputations through association and achievement. Publishing research from OpenAI, speaking at conferences, or being identified as working on OpenAI's priorities generates credibility within the AI research community.

This dynamic creates a self-reinforcing cycle: elite researchers want to work at the organization where the most visible cutting-edge work happens, which in turn attracts additional elite researchers, which enables more visible cutting-edge work. Breaking this cycle requires startups to offer something sufficiently differentiated or compelling that it outweighs the reputational advantages of association with incumbent firms.

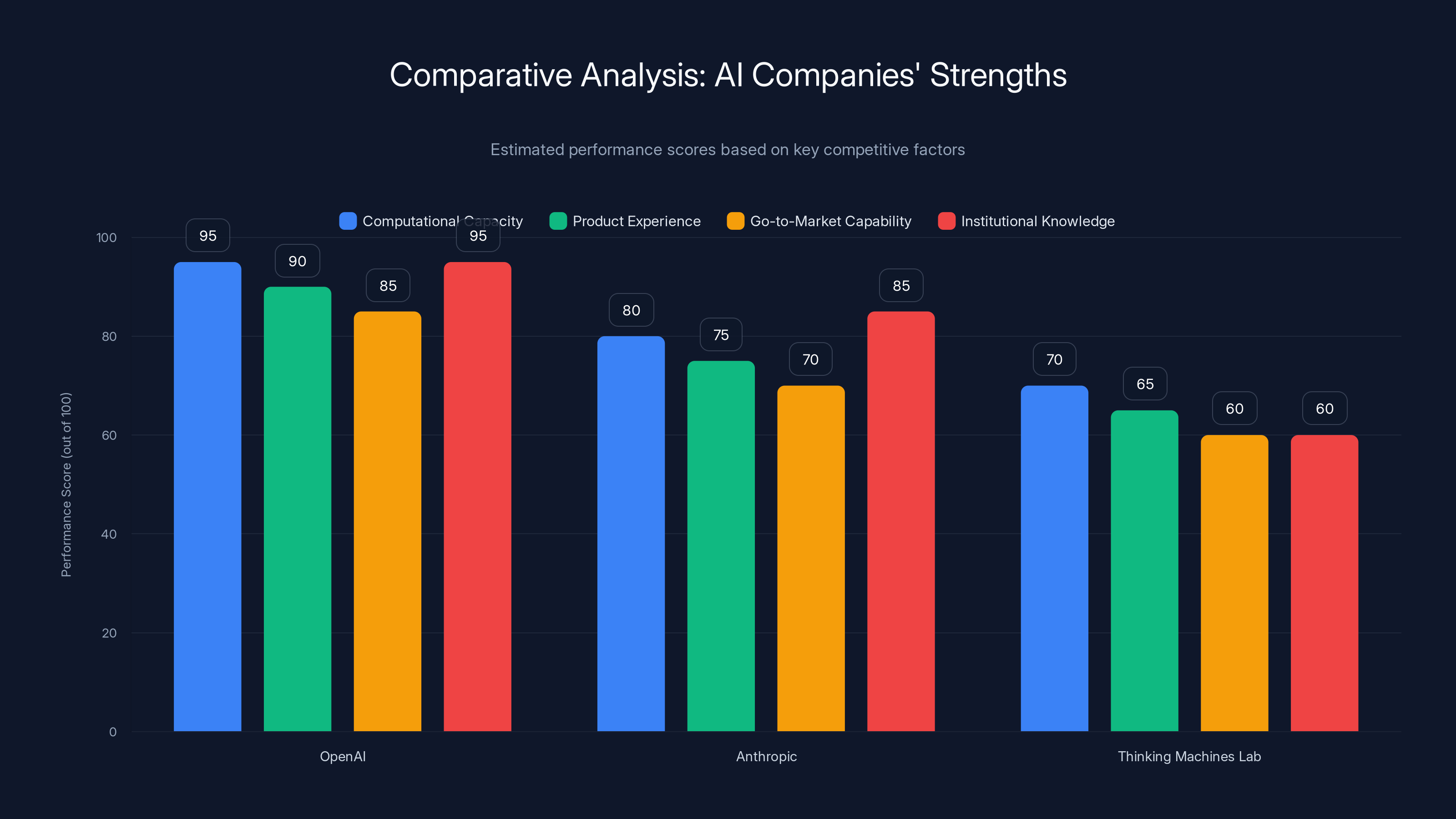

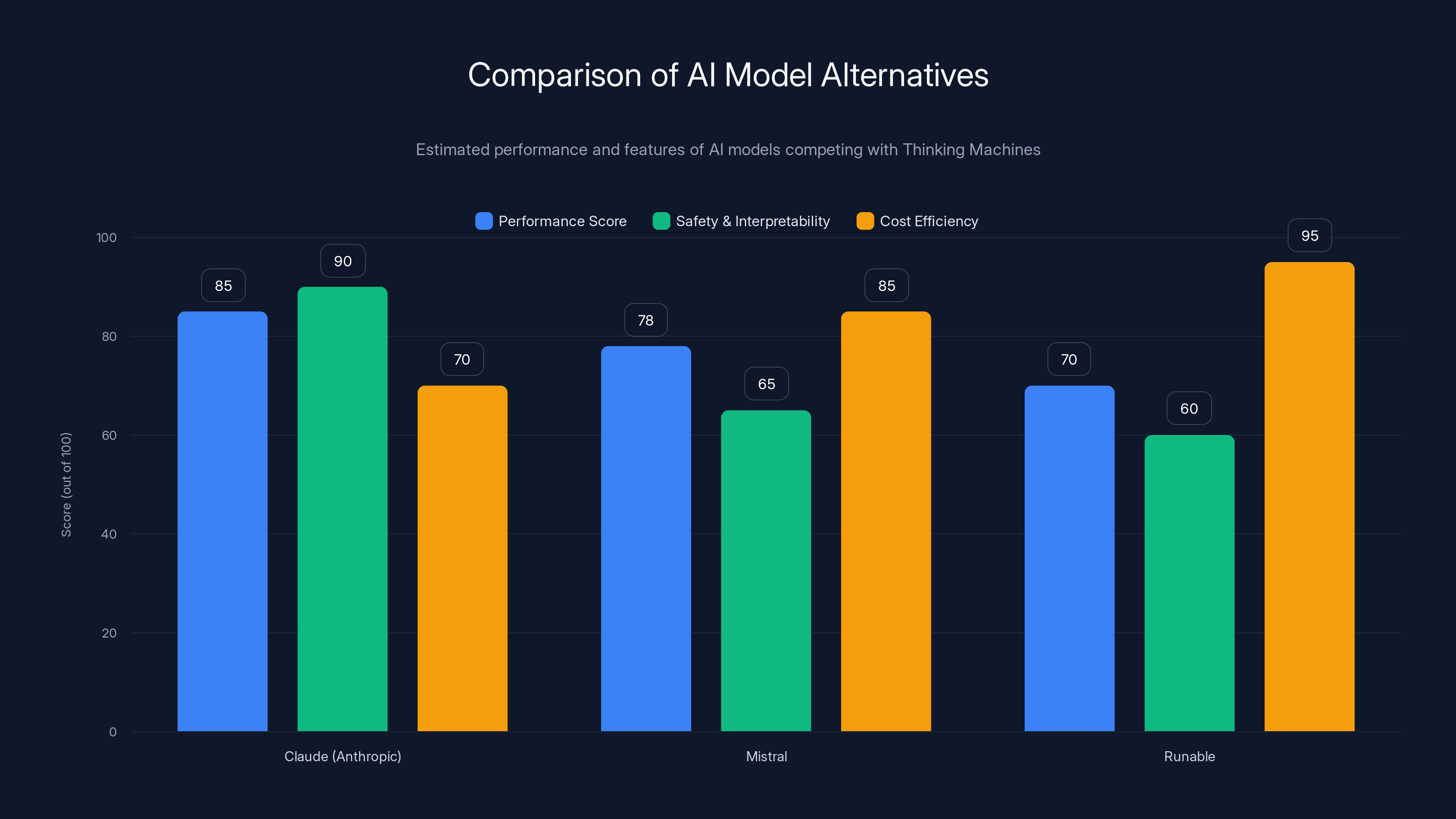

OpenAI leads in computational capacity, product experience, and institutional knowledge, giving it a competitive edge over Anthropic and Thinking Machines Lab. (Estimated data)

The Confidentiality Question: Unpacking the Allegations

What the Allegations Suggest About Startup Risks

The allegations that Barret Zoph shared confidential company information with competitors point to a particular vulnerability that technology startups face when hiring from incumbent firms. Research-stage companies, in particular, operate with information asymmetry—their progress, strategic direction, and technical approaches often remain shielded from external view. When founders with recent experience at competitor firms join a startup, they bring not only expertise but also detailed knowledge of how those competitors operate, what they're pursuing, and what directions they've decided against.

While most researchers exercise appropriate judgment about what information they carry over from previous roles, the temptation to apply lessons learned elsewhere can become acute at a startup. A researcher familiar with approaches tried at OpenAI might casually mention that OpenAI had explored a particular direction and found it unpromising, saving the startup significant time and resources. Yet such references, even in informal settings, technically involve sharing information about OpenAI's research directions and decisions.

The allegations suggest that Zoph may have ventured beyond such casual references into active communication with competitors about Thinking Machines' work. If accurate, such conduct would represent a serious breach of trust, particularly given that Thinking Machines was operating in direct competition with OpenAI in the large language model research space. The startup depended on maintaining confidentiality about its progress and technical approaches; compromising that confidentiality would directly undermine its competitive position.

Interpreting OpenAI's Response

OpenAI's statement that it did not share Thinking Machines' concerns about Zoph admits of multiple interpretations. One reading suggests that OpenAI doubted the severity of the alleged misconduct—perhaps viewing the confidentiality breach as less consequential than Murati did, or considering the allegations unsubstantiated. Another reading suggests that OpenAI simply did not care about the allegations, viewing them as less relevant than other factors like Zoph's technical capabilities or his value to OpenAI's research agenda.

A third interpretation focuses on the legal and contractual dimensions. If Zoph had been fired by Thinking Machines based on alleged confidentiality breaches, OpenAI's willingness to rehire him despite those allegations suggests either confidence in his vindication or an assessment that the competitive advantages of hiring him outweighed the reputational risks of doing so. This interpretation reflects a cold calculation: whatever happened at Thinking Machines, bringing Zoph back to OpenAI eliminates a potential competitor and secures his talents for OpenAI's own projects.

The Broader Pattern: Why This Matters

The Zoph situation illuminates a particular structural challenge for AI startups founded by people departing from leading organizations. These founders are inherently conflicted. They possess knowledge that could benefit competitors, and sharing it with a former employer may feel, from their perspective, like simply returning information to its source. They may also be tempted to leverage that knowledge in ways they do not fully recognize as problematic. This tension becomes more acute when a startup struggles or when a founder questions whether leaving was the right choice.

For investors and other stakeholders in AI startups, these dynamics suggest the importance of careful management of information boundaries when hiring from competitors. Non-compete agreements and confidentiality provisions become even more critical, as does building organizational cultures where researchers understand the importance of maintaining boundaries between previous employers' confidential information and current work.

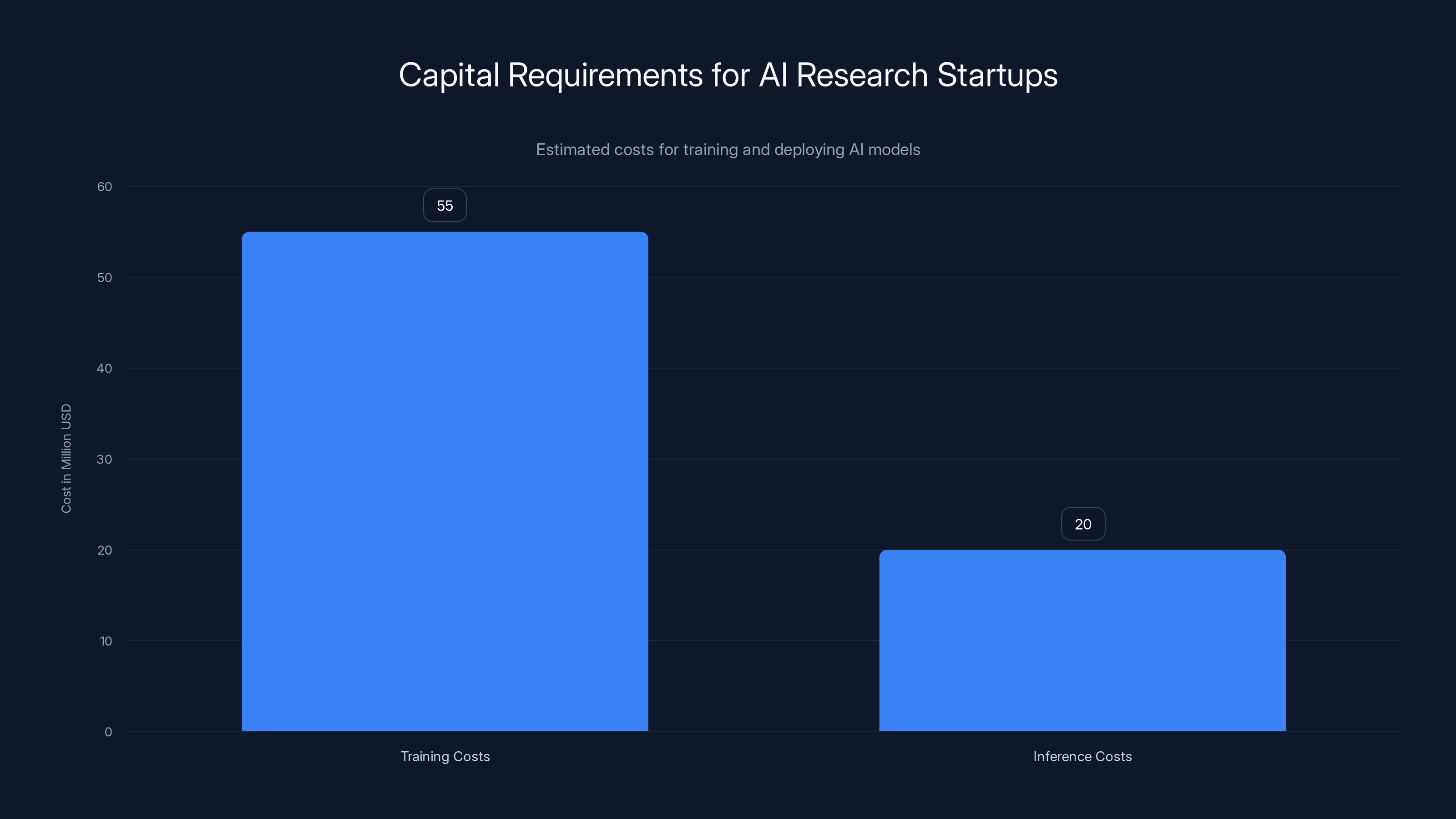

The Structural Economics of AI Research Startups

Capital Requirements and Competitive Economics

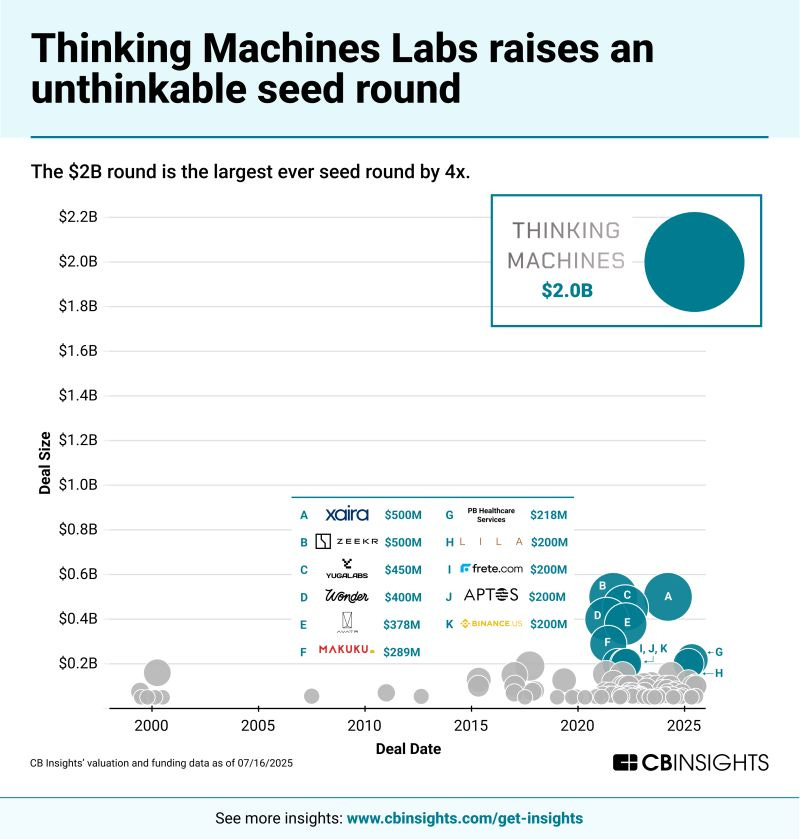

The departure of Thinking Machines Lab's cofounders cannot be fully understood apart from the underlying economics of AI research and development in the current market environment. Unlike software startups, which can achieve meaningful scale with minimal capital investment, AI research organizations require sustained access to enormous computational resources, specialized talent, and substantial runway to produce results that justify their existence.

Training a competitive large language model now costs between

The competitive economics favor established organizations that can distribute fixed research costs across numerous products and revenue streams. OpenAI can invest billions in model development knowing that these investments feed multiple products: ChatGPT, GPT-4, API access, enterprise offerings, and future applications. A startup must recover the same research costs from a much narrower product base, which makes the hurdle rate for research investment substantially higher.
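The amortization argument can be put in simple terms. If a fixed research cost C is shared across n products, each product must carry C/n of it. The values below are hypothetical and chosen only to illustrate the ratio: C = $1 billion, with four incumbent product lines compared against a single startup product.

```latex
\text{burden per product} = \frac{C}{n}
\qquad
\frac{\$1\text{B}}{4} = \$250\text{M} \;\;\text{(incumbent)}
\qquad
\frac{\$1\text{B}}{1} = \$1\text{B} \;\;\text{(startup)}
```

In this stylized case, the same research bill weighs four times as heavily on the single-product startup's one offering.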

The Timeline Problem: When Progress Slows

The pace of AI progress has accelerated dramatically, with major capability breakthroughs occurring roughly every 6-18 months in the large language model space. This acceleration creates a particular challenge for startups: any lag in progress becomes increasingly visible and potentially demoralizing. Thinking Machines Lab launched with the presumption that its leadership and focus would enable rapid progress, but if early results suggested progress at a slower pace than OpenAI was achieving, this would immediately create doubt about the venture's viability.

Researchers departing in this context face a rational calculation: if the startup appears to be lagging behind the state-of-the-art by 6-12 months, is the equity stake—which depends on eventual success—sufficiently valuable to justify remaining at a lower-resourced organization? At OpenAI, by contrast, researchers can see cutting-edge progress happening continuously, providing daily validation that they've chosen correctly. This creates an enormous psychological advantage for incumbents.
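One stylized way to frame this calculation is to treat the equity stake as an expected value. This is a simplifying model, not a description of how any of the researchers involved actually decided, and every number in it is a hypothetical assumption. Here p is the probability that the startup succeeds, s is the researcher's ownership share, and V is the company's value at exit.

```latex
\mathbb{E}[\text{equity}] = p \cdot s \cdot V
\qquad\text{e.g.}\qquad
0.10 \times 0.5\% \times \$10\text{B} = \$5\text{M}
```

Visible lag behind the state of the art lowers a researcher's estimate of p. That shrinks the expected value of staying even if s and V are unchanged, while the compensation and resources on offer at an incumbent stay roughly fixed.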

Capital Efficiency and Scaling Challenges

Thinking Machines Lab, like all AI research startups, would have needed to demonstrate a path to capital efficiency—a way of achieving competitive AI capabilities at lower cost than incumbents. This might have involved novel training approaches, more efficient architectures, clever data selection, or specialization in particular domains where comprehensive general-purpose models weren't necessary. Without a clear demonstration of capital efficiency, the startup faced an existential challenge: raising enough capital to compete with incumbents on their preferred grounds (brute computational force) was likely impossible, yet investors would struggle to justify funding if the startup lacked a differentiated path forward.

Comparative Analysis: Thinking Machines Lab vs. Competitors

OpenAI's Position and Advantages

OpenAI is widely regarded as a leader in the large language model space, with its GPT model series, including the multimodal GPT-4o, among the most capable systems available. The company maintains several structural advantages that other organizations, including Thinking Machines Lab, find difficult to overcome. These advantages extend beyond computational capacity to encompass product experience, go-to-market capability, and institutional knowledge accumulated over years of scaling challenges.

OpenAI's revenue streams, including ChatGPT Plus subscriptions and API access, generate cash that helps fund ongoing research. That revenue reduces, though does not eliminate, the company's dependence on external capital raises. This financial position enables longer time horizons and more patience with research directions that might take years to bear fruit. A startup must either convince investors of near-term paths to revenue or deplete its balance sheet pursuing long-term research bets.

The company's institutional experience with safety evaluation, alignment research, and deployment at scale provides advantages less visible to outsiders but critical for building next-generation systems. The infrastructure for evaluating model behavior, identifying failure modes, and adjusting training approaches represents accumulated problem-solving across thousands of implementation decisions. New organizations must rediscover many of these insights independently.

Anthropic: A Contrasting Trajectory

While Thinking Machines Lab struggled to retain its founding team, Anthropic provides a contrasting example of an AI research startup that has achieved some success competing against OpenAI. Dario and Daniela Amodei founded Anthropic in 2021. It assembled a team of researchers who had left OpenAI and has pursued a differentiated approach emphasizing constitutional AI and safety-oriented research. The company has raised significant capital (over $5 billion) and has developed Claude, a competitive large language model that differentiates partly on safety properties and partly on performance.

Anthropic's success relative to other AI startups likely stems from several factors: differentiated positioning around safety and alignment, sustained capital inflows that have enabled long-term research timelines, patient capital from investors willing to fund research-stage operations, and leadership that has resisted the temptation to cut corners or compromise on the organization's founding vision. The company has achieved this while operating independently and maintaining a research focus rather than becoming consumed by product pressures.

Despite Anthropic's relative success, the company faces ongoing challenges in recruiting and retaining elite talent, particularly researchers who question whether Anthropic can ultimately compete with OpenAI's superior computational resources and progress velocity. The firm must continuously convince researchers that its differentiated approach and patient research timelines justify forgoing the advantages available at well-resourced incumbents.

Other Emerging Players and Their Strategies

A number of other organizations have attempted to compete in the large language model space with varying degrees of success. Examples include Mistral, focused on open-source model development; xAI, pursuing alternative approaches to capability and alignment; and various corporate ventures from major technology firms. Each represents a different strategy for competing in the AI landscape. None has yet achieved a dominant position that clearly rivals OpenAI, though some have attracted significant capital and talent.

The survival and success of these ventures depends heavily on finding differentiated positioning rather than competing directly with OpenAI on general-purpose model capability. Mistral's focus on efficiency and open-source approaches, for instance, targets a different segment of the market than OpenAI's proprietary, closed-source offerings. Similarly, specialized applications targeting particular industries or domains offer paths to success that don't require parity-level general-purpose AI capability.

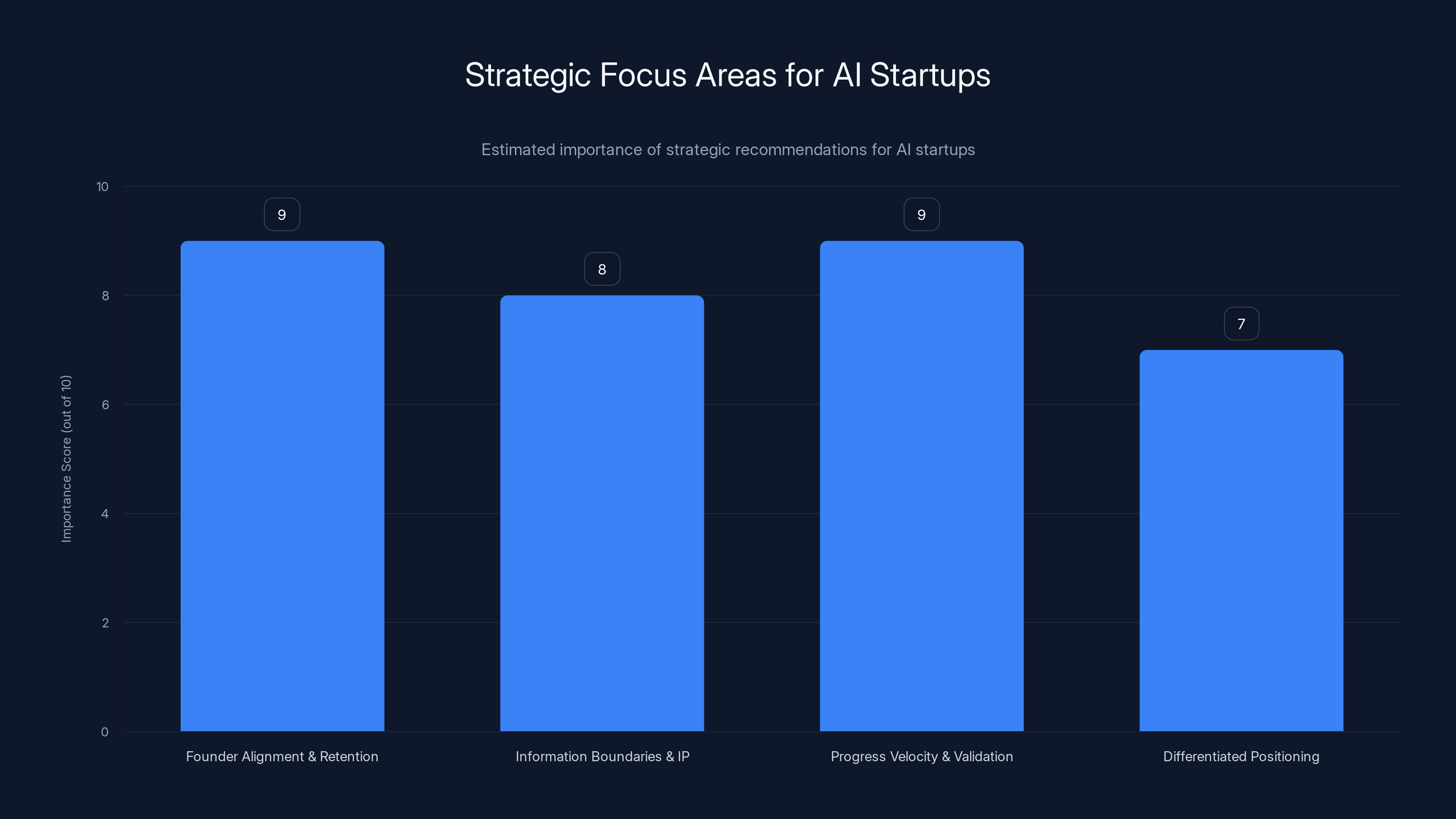

AI startups face significant challenges, with capital requirements and talent retention being the most severe. Estimated data highlights the need for strategic differentiation.

Talent Market Dynamics in 2025: Lessons from the Exodus

The Premium on Execution Capability

The departures from Thinking Machines Lab highlight the increasing premium that the AI talent market places on execution capability and demonstrated ability to ship meaningful results. Researchers willing to join startups require evidence that the organization possesses the ability to move from ideas to implementation at pace. A startup with exceptional vision but uncertain execution capability will struggle to retain researchers, who can rapidly assess whether progress is happening at expected velocity.

OpenAI demonstrates world-class execution capability—the organization ships new models, features, and products on a regular cadence. This regular demonstration of capability reinforces researcher confidence that they can pursue ambitious projects and see them realized. Thinking Machines Lab, lacking a track record, would have needed to demonstrate comparable execution velocity from its early stages to overcome the gravitational pull of OpenAI. If the organization faced unexpected challenges or delays in its initial research and development, these would immediately become visible to researchers evaluating their decision to join.

The Role of Founder Credibility

Mira Murati's credibility as a former OpenAI CTO provided crucial initial attractiveness to Thinking Machines Lab, signaling that elite talent could expect to work on cutting-edge problems with a proven leader. However, credibility alone proves insufficient to retain talent over time; founders must also demonstrate execution capability and successful navigation of the myriad challenges that emerge during a startup's scaling phase.

Zoph's departure signaled that even Murati's credibility and the promise of working on frontier problems were insufficient to retain elite talent once the opportunity arose to return to an incumbent with superior resources. Founder credibility therefore appears to matter enormously for initial recruitment but becomes less decisive as researchers assess on-the-ground execution capability.

The Countervailing Forces: Why Some Startups Succeed

While the Thinking Machines exodus suggests difficulty for AI startups, some research organizations have succeeded in attracting and retaining elite talent despite competing with OpenAI. Organizations like Anthropic and others have achieved this through several mechanisms: differentiated research vision that attracts researchers not fully aligned with OpenAI's approach, patient capital that enables long-term research timelines, compelling narratives about the importance of particular research directions (especially safety and alignment), and organizational culture that prioritizes research autonomy and publication.

Additionally, some researchers want to build diverse centers of AI development rather than concentrate elite talent in a single organization. They view strengthening alternatives to the dominant labs as advancing broader societal goals around decentralizing AI development. This motivation, while not universal, can partially offset the advantages available at dominant incumbents.

The Strategic Implications: What This Means for OpenAI

Acquiring Talent Rather Than Building It

OpenAI's decision to rehire Zoph, Metz, and Schoenholz—and to absorb talent from competitor organizations more broadly—reflects a strategic posture favoring rapid talent acquisition over organic hiring. This approach makes sense for an organization attempting to maintain dominance in a rapidly evolving technological frontier. Rather than investing years in developing new researchers, OpenAI sources proven talent from competitive ventures.

This strategy works particularly well because departing talent brings not only existing capabilities but also external perspective on what competitors are attempting and alternative approaches to problems that OpenAI is pursuing. A researcher returning from another organization can contribute ideas influenced by different problem-solving approaches and research directions, potentially accelerating OpenAI's progress.

However, this strategy also carries risks. Talent acquired from competitors may harbor subtle allegiances or knowledge from their previous organizations that they struggle to compartmentalize. Additionally, a pattern of aggressively recruiting talent from competitors might eventually create reputational consequences or incentivize competitors to implement more stringent non-compete agreements and confidentiality protections.

The Timing of Talent Returns

The fact that Zoph and Metz departed and then returned within roughly a year suggests timing and optionality entered into their original decision. Rather than viewing the departure as permanent, these individuals may have framed the Thinking Machines opportunity as a temporary exploration that they could exit if circumstances changed. This framing reduces the commitment intensity associated with the original departure, essentially treating the Thinking Machines experience as an opportunity to explore alternative approaches while maintaining the option to return if the experiment proved less promising than expected.

OpenAI's acceptance of their return likely reflects an understanding that these individuals' departures represented exploration rather than fundamental disaffiliation. The organization likely maintained relationships with departing talent, creating channels through which they could re-engage if opportunities presented themselves. This relational approach proves superior to hostile departures that burn bridges permanently.

Implications for OpenAI's Research Agenda

The acquisition of Zoph and Metz likely reflects OpenAI's assessment that these researchers bring specific capabilities relevant to problems the organization is currently prioritizing. Zoph, in particular, has published influential research on model architecture and scaling, including neural architecture search and sparse mixture-of-experts models such as Switch Transformers. His rehiring suggests that OpenAI may be actively working on problems related to model scaling and efficiency, domains where his expertise would provide direct value.

The return of these researchers enables OpenAI to consolidate intellectual talent around its core research mission and likely accelerates progress on whatever problems OpenAI is prioritizing. For any organization attempting to compete with OpenAI, this represents a concerning development—the organization just acquired additional elite talent, making competition even more difficult.

Investing in founder alignment and retention, along with maintaining progress velocity and external validation, is critical for AI startups. Estimated data.

Organizational Challenges and Culture: The Human Dimension

Managing Departures and the Morale Question

For Thinking Machines Lab, the departure of cofounders represents a significant morale challenge. Remaining employees must process what the departures signal about the venture's trajectory and their own decision to join. If the founders are leaving, what does that imply about their confidence in eventual success? Conversely, if the founders are leaving because they've been drawn back to OpenAI by opportunity rather than because of fundamental doubts about Thinking Machines, this signals something different—that the venture faces an ongoing challenge retaining talent that has attractive outside options.

Organizations typically experience cascading departures when founders leave, particularly if the departures are early enough in the venture's lifecycle that the organization hasn't yet achieved significant milestones that might reinforce staying. Researchers at Thinking Machines likely entered with expectations that the founders would remain engaged and committed; early departures violate those expectations and can trigger rapid reassessment of whether to continue at the organization.

The Leadership and Confidence Question

Mira Murati now faces the challenge of maintaining Thinking Machines Lab's trajectory and credibility despite losing two core cofounders. Her decision to fire Zoph rather than accept his resignation suggests she's taking a firm stance on ethical conduct and confidentiality, which could be positive for organizational culture. However, the departure of Metz and Schoenholz may indicate that Murati's authority and vision are insufficient, without the support of her cofounders, to retain elite researchers through periods of difficulty or uncertainty.

The organization's future depends substantially on Murati's ability to demonstrate that Thinking Machines Lab's path forward offers sufficient promise to retain and attract elite talent. This requires accelerating progress, securing additional capital, and articulating a vision so compelling that it outweighs the attractions of established organizations with superior resources.

The Broader AI Industry Landscape: Consolidation vs. Fragmentation

Momentum Toward Consolidation

The Thinking Machines exodus illustrates broader trends in the AI industry favoring consolidation around dominant players. As the barrier to competitive success rises—due to increasing capital requirements, computational resource needs, and talent acquisition costs—the number of viable independent organizations declines. We may be witnessing the emergence of a landscape where OpenAI, Anthropic, and perhaps a few other well-capitalized ventures survive as meaningful research organizations, while others struggle or are acquired by larger technology firms.

This consolidation trajectory mirrors patterns in other technology domains. The search engine space consolidated around Google; the social network space around Facebook; and cloud computing around AWS, Google Cloud, and Azure. In each case, the ability of early movers to accumulate resources and lock in talent created barriers to competition that most startup ventures could not overcome. The AI space may follow a similar pattern, with OpenAI occupying a comparably dominant position.

Counterarguments and Mechanisms for Maintained Competition

However, several factors might prevent complete consolidation in the AI space. First, different applications require different models optimized for specific purposes, creating room for specialized competitors. Anthropic's success demonstrates that differentiation around safety and alignment can support a viable competitor even against OpenAI's resources. Similarly, models optimized for efficiency, specific languages, or particular domains might enable smaller organizations to serve niches that dominant incumbents choose not to prioritize.

Second, regulatory pressure might eventually require decentralization of advanced AI development across multiple organizations to prevent excessive concentration of power and capability. Governments and regulators, awakening to the societal implications of dominant AI organizations, might implement policies that actively support competitor development or prevent consolidation beyond certain thresholds.

Third, capital has flowed abundantly to AI ventures, and deep-pocketed investors remain willing to fund organizations with differentiated visions or compelling founder teams. This capital abundance can support ventures that wouldn't survive in capital-constrained environments. As long as venture capital continues to view AI as a transformational domain justifying outsized investments, organizations like Thinking Machines Lab will find capital available to support their missions.

Figure: Comparison of AI models on performance, safety, and cost efficiency. Claude leads on safety, Mistral on cost efficiency, and Runable on affordability for automation solutions (estimated data).

Lessons for Founders and Future AI Ventures

Founder Retention and Equity Incentives

Thinking Machines Lab's experience highlights the importance of founder retention mechanisms and meaningful equity incentives that align founder interests with long-term organizational success. Had Zoph and Metz held sufficient equity stakes, with vesting schedules tied to a multi-year commitment, the financial incentive to depart might have been weaker. No equity structure can indefinitely bind individuals determined to return to better-resourced organizations, but a thoughtful structure can align incentives and reduce the temptation to leave when alternative opportunities emerge.

Founders should also establish clear governance mechanisms and expectations about decision-making during difficult periods. When challenges emerge—as they inevitably do in any startup—founders without aligned decision-making frameworks may find themselves disagreeing about paths forward. Clear governance structures reduce the likelihood that difficult periods trigger founder departures.

Differentiation as Essential

AI ventures attempting to compete with OpenAI cannot do so by attempting to replicate OpenAI's approach or match its resources. Instead, successful ventures require clear differentiation—either through focused specialization in particular domains or applications, through differentiated approaches to key research problems, or through organizational positioning that appeals to researchers and customers with specific values. Anthropic's focus on safety and constitutional AI provides such differentiation; other ventures might similarly identify distinctive positioning.

Managing Expectations and Progress Velocity

Startups must manage internal expectations about progress velocity and external expectations about competitive standing. If a venture positions itself as pursuing cutting-edge large language model development on par with OpenAI, any perceived lag will demoralize staff and may damage fundraising efforts. Setting realistic expectations about research timelines and competitive position helps ensure that actual progress is viewed as success rather than disappointment.

Building Stakeholder Buy-In

Successful ventures require genuine stakeholder buy-in to the mission and approach. Early founder departures suggest insufficient stakeholder alignment. Founders should invest significant effort in ensuring that key team members, investors, and board members genuinely believe in the venture's approach and have assessed the risks appropriately. This reduces the likelihood that initial difficulties trigger departures driven by loss of confidence.

Comparative Technology Solutions: Thinking Machines' Alternatives

What Competitors Are Offering

As Thinking Machines Lab navigates its challenges following the cofounders' departures, it must contend with an increasingly crowded competitive landscape. Various organizations have developed alternative approaches to large language model development and deployment that offer different value propositions. Understanding these alternatives illuminates the options available to teams seeking AI research and development capabilities outside of OpenAI.

Claude, developed by Anthropic, represents a sophisticated alternative to OpenAI's models, emphasizing constitutional AI principles and safety-oriented development. The model performs comparably to GPT-4 on many benchmarks while offering different trade-offs around safety, interpretability, and refusal behavior. For teams prioritizing safety-oriented AI development, Claude offers a credible alternative.

Mistral's open-weight models provide efficiency-focused alternatives that enable organizations to run competitive models on more constrained hardware. For teams seeking to reduce inference costs or maintain control over their AI infrastructure, Mistral's approach offers meaningful advantages relative to closed-source alternatives.

For Teams Seeking AI Automation Solutions

Beyond frontier model development, numerous platforms now offer AI automation capabilities at various price points and feature completeness levels. For organizations seeking AI-powered document generation, workflow automation, or content creation, platforms like Runable offer cost-effective alternatives. Starting at $9/month, Runable provides AI agents for automated document generation, presentation creation, and workflow automation without requiring the computational infrastructure demands of training frontier models from scratch.

Teams pursuing AI automation and productivity tools benefit from evaluating multiple options based on their specific use cases. OpenAI's API and model access remain powerful tools for organizations building custom applications, while specialized platforms like Runable provide purpose-built solutions for common tasks like content generation and workflow automation. The choice between options depends on specific requirements, budget constraints, and the desired degree of customization versus convenience.

For developers and startups building modern applications, Runable's AI agents for automated workflows provide an accessible entry point to AI-powered productivity gains without requiring deep machine learning expertise or significant computational infrastructure. Similarly, organizations seeking AI-powered presentation and document generation capabilities might consider both specialized tools and custom solutions built on top of foundational models.

Figure: Competitive dynamics and research cycle pressures appear to be major factors in AI talent migration, giving established firms like OpenAI a strong pull (estimated data).

The Future of AI Startups: 2025 and Beyond

Evolving Capital Models

As the AI industry matures, capital allocation patterns are likely to shift. The period in which almost any team with credible founders could raise money for large language model development is probably drawing to a close. Future capital will increasingly flow toward ventures with clear differentiation, demonstrated traction, or innovative approaches to reducing the capital intensity of AI development. This shift will make fundraising harder for ventures lacking clear paths to success but may ultimately create healthier economics for those that do secure funding.

The Role of Open Source and Community Development

Open-source model development, championed by organizations such as Mistral, has emerged as an alternative path to frontier AI development outside closed-source incumbents. This path lowers the capital required by enabling community contribution, and it appeals to researchers and organizations that value transparency and control. The next phase of AI development may see continued growth in open-source alternatives that compete with closed-source incumbents on dimensions beyond raw capability.

Regulatory and Policy Implications

Governments and regulatory bodies worldwide are increasingly focused on AI governance, with implications for how companies structure their operations and research. Future AI ventures should anticipate regulatory requirements around transparency, safety evaluation, and responsible deployment. Organizations that proactively engage with regulatory concerns and build safety considerations into their development approaches from the start may gain a competitive advantage as regulatory frameworks crystallize.

The Possibility of Sustained Competition

Despite consolidation pressures, meaningful competition in AI likely persists in specialized domains and applications. General-purpose model development may see consolidation around dominant players, but specific applications—legal AI, medical AI, scientific discovery tools, creative applications—may support diverse competitive ecosystems. Ventures focused on applications rather than foundational models may find more sustainable paths to success than those competing directly with OpenAI on general-purpose capability.

Analyzing the Narratives: What Really Happened?

The Confidentiality Breach Interpretation

The allegation that Barret Zoph shared confidential information with competitors provides one narrative framework for understanding the departures. Under this interpretation, Zoph's breach violated the trust that Murati and the organization had placed in him, justifying immediate termination. This narrative frames the departures as driven by fundamental ethical violations and organizational dysfunction—a startup unable to maintain basic information security cannot succeed in competitive environments where intellectual property represents core value.

This interpretation gains credence from the seriousness of confidentiality concerns in AI research contexts, where months of model development work can be undermined by disclosure of training approaches, architecture decisions, or performance data. If Zoph had indeed shared such information, Thinking Machines Lab would face immediate damage to its competitive position. The question becomes whether the damage derived from the breach itself or from discovery of the breach and subsequent loss of Zoph's services.

The Opportunity-Driven Departure Interpretation

An alternative narrative frames the departures as primarily opportunity-driven rather than ethics-driven. Under this interpretation, Zoph may have maintained positive regard for Murati and genuine belief in Thinking Machines Lab, but the chance to return to OpenAI, perhaps to work on a particularly compelling research problem or with preferred colleagues, simply proved too attractive to refuse. On this reading, the confidentiality allegations would serve as Murati's rationalization for the departure, a way of framing an essentially voluntary exit as a forced termination.

This interpretation assumes that confidentiality concerns existed but may have been incidental to the core decision. Zoph may have inadvertently referenced OpenAI research or casually discussed Thinking Machines directions with OpenAI colleagues, which Murati chose to characterize as serious breaches to justify termination rather than accepting Zoph's voluntary departure. Under this interpretation, OpenAI's statement that it doesn't share Murati's concerns reflects genuine belief that the confidentiality concerns are overblown rather than indifference to ethical misconduct.

Synthetic Interpretation: Multiple Truths

A more nuanced interpretation suggests that both narratives contain elements of truth. Zoph may have shared information that, technically, should have remained confidential, a kind of boundary violation that is common in knowledge-intensive fields where researchers naturally draw on their previous experience. Murati, in turn, may have viewed these violations more seriously than they merited, particularly because any information sharing would have been salient in a context where she was already struggling to retain Zoph in a venture that, by that point, appeared less promising than initially expected.

In this synthetic interpretation, the sequence of events might have unfolded as follows. Zoph began having doubts about Thinking Machines Lab's trajectory or his role within it, doubts perhaps exacerbated by organizational challenges, slower-than-expected progress, or conflicts with Murati about research direction. In this context of incipient doubt, Zoph may have had interactions with OpenAI colleagues (perhaps recruiting conversations) that touched on Thinking Machines' work. Murati discovered these interactions and, recognizing that Zoph was considering departure, chose to preempt his voluntary exit by firing him for confidentiality violations, thereby preserving organizational dignity and potentially weakening Zoph's position in any negotiations with OpenAI.

OpenAI, aware of these circumstances and unconcerned by the confidentiality allegations, simply welcomed Zoph back. This interpretation is consistent with the publicly reported facts: Murati's firm stance on confidentiality, Zoph's departure, and OpenAI's decision to rehire him despite the allegations.

Strategic Recommendations for Affected Parties

For Thinking Machines Lab and Similar Ventures

For Thinking Machines Lab and other AI startups navigating similar challenges, several strategic recommendations emerge from analysis of this situation. First, ventures should invest heavily in founder alignment and retention mechanisms, including equity structures, governance clarity, and regular check-ins about satisfaction with organizational trajectory. This prevents surprises and creates space for candid conversations about concerns before they trigger departures.

Second, ventures should establish clear policies around information boundaries and intellectual property, ensuring that all team members understand what information is confidential and why. Regular training and reinforcement of these principles reduce inadvertent violations and create clarity if violations do occur.

Third, ventures should focus relentlessly on progress velocity and external validation. Regular demonstrations of progress, through published research, product releases, performance benchmarks, or other visible achievements, reinforce team confidence in the venture's trajectory. A lack of external validation leaves a vacuum for doubt and makes team members more receptive to recruitment by competitors.

Fourth, ventures should develop differentiated positioning rather than attempting to compete directly with OpenAI on general-purpose model capability. This enables meaningful progress and external validation against realistic competitive sets rather than constant comparison with an incumbent that commands vastly superior resources.

For OpenAI and Incumbent Organizations

OpenAI's strategy of acquiring talent from competitor ventures makes strategic sense in the current environment but warrants thoughtful management. The organization should be prepared for reputational consequences from a pattern of aggressively recruiting talent from competitors, and should consider whether such talent ultimately proves as valuable as it appears during recruitment.

Additionally, OpenAI might consider investing in ecosystem health through deliberate partnerships with promising startups, technology transfer arrangements, or acquisition strategies. Entirely eliminating competition through talent acquisition may eventually attract regulatory scrutiny or create resentment among founders and investors who view OpenAI as predatory. More collaborative engagement with the broader ecosystem might serve OpenAI's long-term interests.

For Investors and Stakeholders

Investors in AI ventures should carefully assess founder commitment and retention mechanisms before deploying capital. Red flags include founders maintaining optionality relative to previous employers, unclear founder alignment on research direction or business strategy, or organizational structures that don't effectively incentivize multi-year founder commitment.

Additionally, investors should be realistic about the competitive dynamics in large language model development. Ventures attempting to compete directly with OpenAI on general-purpose model capability face extremely challenging odds. More realistic assessments focus on whether a venture can achieve sustainable competitive advantage through specialization, differentiation, or more efficient approaches.

FAQ

What is the Thinking Machines Lab exodus?

The Thinking Machines Lab exodus refers to the departure of cofounders Barret Zoph and Luke Metz, along with researcher Sam Schoenholz, who left the fledgling AI research organization to rejoin OpenAI. The departures occurred within months of the lab's founding, representing a significant setback for the venture and raising questions about the sustainability of independent AI research organizations competing with well-resourced incumbents.

Why did Barret Zoph leave Thinking Machines Lab?

The exact reasons for Zoph's departure remain contested, with multiple narratives emerging from different stakeholders. Thinking Machines Lab alleged that Zoph was terminated for sharing confidential company information with competitors, while OpenAI's statement suggested that the organization did not share these concerns about Zoph. Alternative interpretations suggest that Zoph's departure was primarily driven by opportunity (the chance to return to OpenAI and work on compelling research problems) rather than ethics-driven termination, though confidentiality boundary violations may have contributed to the breakdown.

What are the implications of the exodus for AI startups?

The exodus illustrates several challenges facing AI startups attempting to compete with OpenAI: the difficulty of retaining elite talent when well-resourced incumbents offer superior resources and opportunities, the enormous capital requirements for frontier AI research, the importance of demonstrating near-term progress and execution capability, and the challenge of maintaining researcher confidence when organizational trajectory appears less promising than initially expected. These factors suggest that independent AI research ventures must differentiate through specialization rather than attempting to compete on general-purpose model capability.

How does Thinking Machines Lab compare to Anthropic in terms of viability?

While both organizations were founded by former OpenAI employees and face competition from OpenAI, Anthropic has achieved greater success in retaining talent and progressing on its research mission. This likely reflects several factors: Anthropic's differentiated positioning around safety and constitutional AI, sustained capital inflows from patient investors, leadership that has maintained focus on the organization's founding vision, and arguably better management of organizational dynamics and talent retention. Thinking Machines Lab, by contrast, has struggled with early talent departures and questions about organizational trajectory, though it remains too early to judge the venture's ultimate viability.

What role did confidentiality concerns play in the departures?

Confidentiality concerns emerged as a significant factor in the departures, with allegations that Zoph shared confidential information with competitors. While OpenAI's public statement suggested the organization did not share Thinking Machines' concerns about these allegations, the underlying issues point to broader challenges facing startups: the difficulty of maintaining information boundaries when hiring talent from competitors, the reality that even well-intentioned researchers may inadvertently reference previous employers' work, and the risk that startup founders and employees will face temptation to leverage previous experience in ways that violate confidentiality. These concerns are particularly acute in AI research contexts where intellectual property represents enormous value.

What alternatives exist for teams seeking AI research capabilities?

Teams seeking AI research and development capabilities have several options beyond OpenAI. Anthropic's Claude offers a competitive large language model emphasizing safety and constitutional AI principles. Mistral provides open-source model alternatives optimized for efficiency. For organizations seeking AI automation and productivity capabilities rather than foundational model development, platforms like Runable offer cost-effective solutions starting at $9/month, providing AI agents for document generation, presentation creation, and workflow automation. Other specialized platforms and models target specific domains or applications, offering alternatives to general-purpose incumbents. The optimal choice depends on specific requirements, budget constraints, and desired customization levels.

How might regulatory changes affect the AI startup landscape?

Increasing regulatory focus on AI governance, safety, and responsible development will likely shape the future landscape for AI ventures. Startups that proactively engage with regulatory concerns, build safety considerations into development approaches from the start, and maintain transparency about their research directions may gain a competitive advantage as regulatory frameworks crystallize. Conversely, ventures that view regulation as purely constraining rather than opportunity-creating may struggle as policy frameworks become more established. Additionally, regulatory requirements might eventually support competition by preventing dominant incumbents from acquiring all promising startups or maintaining exclusive control over critical resources.

What is the significance of founder credibility in attracting and retaining talent?

Founder credibility—derived from previous experience, published research, or demonstrated accomplishment—plays a crucial role in attracting initial talent to new ventures. Mira Murati's role as former OpenAI CTO provided Thinking Machines Lab with credibility that enabled recruitment of elite researchers. However, credibility alone proves insufficient to retain talent over time. Researchers rapidly assess on-the-ground execution capability, progress velocity, and organizational trajectory. If these factors appear unfavorable, initial credibility proves insufficient to prevent departures. Founders must therefore invest heavily in demonstrating execution capability and progress after attracting initial talent through credibility.

How does computational resource access affect AI startup competitiveness?

Computational resource access represents one of the most significant barriers to competitive AI research and development. Training state-of-the-art large language models requires billions of dollars' worth of GPU and specialized processor capacity. Even well-capitalized startups find it challenging to match the computational resources available to incumbent organizations like OpenAI that operate with billion-dollar budgets. This structural advantage means that startups must either: (1) raise exceptional capital to match incumbent computational capacity, (2) differentiate through more efficient approaches that require fewer computational resources, or (3) focus on specialized applications that don't require parity-level general-purpose model capability. Most successful startups pursue option 2 or 3 rather than attempting option 1.

Conclusion: Navigating the AI Landscape in 2025 and Beyond

The departure of Thinking Machines Lab's cofounders represents far more than a simple personnel shuffle or organizational setback. The exodus illuminates fundamental structural challenges facing AI startups in an environment of rapid technological progress, enormous capital requirements, and increasing consolidation around dominant players. Understanding these dynamics helps stakeholders—whether founders, investors, researchers, or customers—navigate the AI landscape more effectively and make decisions aligned with their interests and values.

The competitive dynamics revealed by the Thinking Machines situation suggest that the AI industry is likely moving toward a landscape where general-purpose foundation model development concentrates among a small number of well-resourced organizations like OpenAI, Anthropic, and perhaps a few others. This concentration reflects the economic realities of frontier model development—the capital intensity, computational resource demands, and time-to-market pressures simply overwhelm most startup operations.

However, this consolidation around general-purpose models does not imply that all viable opportunities for independent AI organizations disappear. Significant value creation opportunities emerge in specialized applications, domain-specific models, AI-powered productivity tools, and organizational solutions that leverage foundation models from incumbents. Teams and organizations focused on these applications rather than competing directly on model development may find more sustainable paths to success.

For researchers and founders contemplating their career choices in this environment, the Thinking Machines situation offers several lessons. First, evaluate opportunities based on execution capability and realistic assessment of competitive positioning, not just founder credibility or intellectual appeal. Second, recognize that maintaining independent research organizations requires either differentiated positioning that doesn't directly compete with incumbents, exceptional capital resources and patience, or some combination of these factors. Third, understand that well-resourced incumbents will continue to acquire talent from ventures through direct recruitment; build organizational culture and mission alignment strong enough to retain talent despite these pressures.

For investors considering AI ventures, the exodus suggests the importance of realistic assessment of competitive positioning and capital requirements. Ventures attempting to compete with OpenAI on general-purpose model capability face extremely challenging odds. More promising opportunities likely emerge in specialized applications, efficiency-focused alternatives, safety-oriented research, or domain-specific solutions.

For customers and organizations seeking AI capabilities, the competitive dynamics mean that multiple credible alternatives to OpenAI increasingly exist. Evaluating options based on specific requirements, whether prioritizing cost-effectiveness, safety properties, specific capabilities, or customization, leads to better decisions than defaulting to the largest incumbents. For teams seeking AI-powered productivity and automation capabilities, cost-effective alternatives like Runable's AI automation platform provide accessible entry points that don't require the overhead of managing custom integrations or the costs of premium enterprise offerings.

The AI landscape will continue evolving rapidly, with new organizations emerging, market leaders potentially shifting, and regulatory frameworks crystallizing around governance and safety requirements. The Thinking Machines Lab exodus demonstrates that even exceptionally credentialed founders face structural challenges in competing with well-resourced incumbents, but it also suggests that alternative approaches and specialized positioning offer viable paths forward for well-executed ventures.

For stakeholders watching these developments, the key takeaway is this: the AI industry is consolidating around dominant players in general-purpose model development, but meaningful competition and value creation will continue in applications, specialization, and differentiated approaches. Success requires realistic assessment of competitive positioning, focus on execution capability rather than vision alone, and willingness to pursue differentiated paths rather than attempting to compete on incumbents' preferred terrain.

As we move deeper into 2025, the competitive dynamics established by the Thinking Machines exodus will likely intensify—creating both challenges for startups and opportunities for investors, customers, and researchers who navigate the landscape strategically and realistically.

Key Takeaways

- Thinking Machines Lab founders Barret Zoph and Luke Metz returned to OpenAI within months of launching the independent AI research venture, illustrating structural challenges facing AI startups

- OpenAI holds gravitational advantages through computational resources, data infrastructure, and institutional and organizational capability that smaller ventures struggle to replicate

- The confidentiality allegations point to the risk of information-boundary violations in knowledge-intensive fields, highlighting startup vulnerabilities when hiring talent from competitors

- AI research and development increasingly demands capital and computational resources at a scale that favors incumbent organizations, driving industry consolidation

- Successful AI startups must pursue differentiation through specialization, efficiency focus, or safety-oriented research rather than competing directly on general-purpose model capability

- Founder credibility attracts initial talent but proves insufficient for long-term retention without demonstrated execution capability and progress velocity

- Alternative AI platforms and solutions like Runable offer cost-effective approaches for teams seeking AI automation without requiring frontier model development capabilities

- The future AI landscape will likely consolidate around dominant incumbents for general-purpose models while supporting specialized competitors in applications and domain-specific solutions

- Investors and founders navigating AI opportunities should assess competitive positioning realistically rather than attempting direct competition with well-resourced incumbents

- Regulatory engagement and early adoption of safety-oriented development approaches may provide competitive advantages for AI ventures as governance frameworks crystallize
