TL;DR

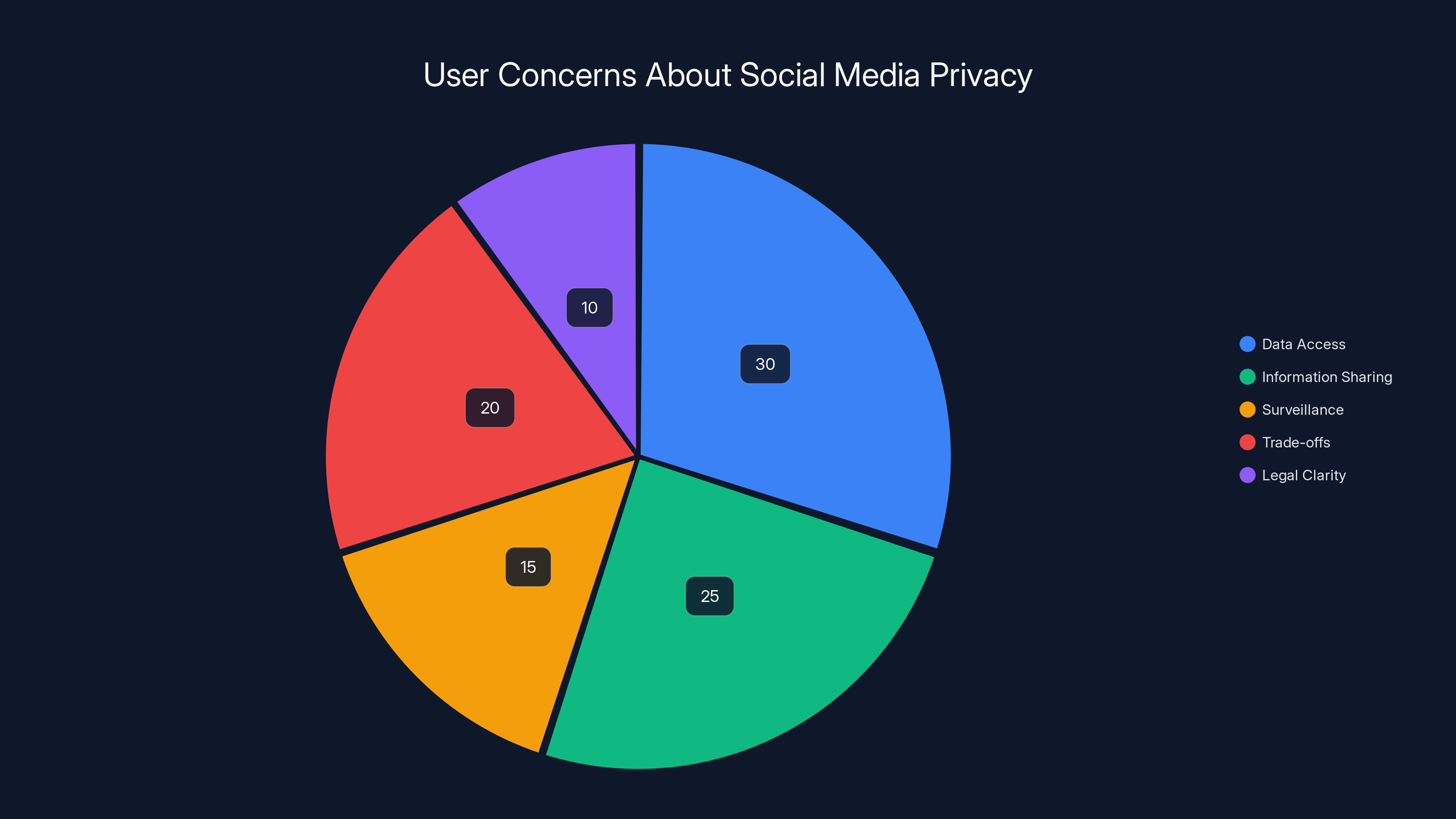

- TikTok isn't secretly collecting immigration data: The policy language refers to information users voluntarily share in videos, not hidden surveillance, as clarified in TechCrunch's analysis.

- State privacy laws created the disclosure: California's CCPA and CPRA legally require companies to list "sensitive information" categories, as detailed in Jackson Lewis's insights.

- Citizenship and immigration status were added in 2023: California Governor Gavin Newsom signed AB-947 into law specifically expanding sensitive data definitions, as reported by IAPP.

- Other social media platforms include similar disclosures: Many keep their explanations vague, while TikTok spelled the categories out, which backfired, according to Mashable.

- The real concern is legitimate: Data shared on social platforms can theoretically be accessed by governments, especially in authoritarian countries, as discussed in a Human Rights Watch feature.

What's Actually Happening With TikTok's Data Policy?

Let's start with what didn't happen. TikTok isn't actively scanning your immigration documents or collecting data about your citizenship behind the scenes. That's not what's going on here, and I want to be clear about that from the start.

What actually happened is far less dramatic and, honestly, more boring from a scandal perspective. TikTok updated its terms of service, and like many app companies, it included a lengthy section explaining what kinds of data it might process. Somewhere in that section, buried in legalese, was a reference to immigration status alongside racial origin, sexual orientation, and other sensitive information. Users scrolling through TikTok spotted this language and, given the current political climate, understandably freaked out.

The issue is that the way TikTok worded this disclosure sounds way more sinister than the reality. When you see a privacy policy listing "citizenship or immigration status" as something the platform might collect, your brain immediately pictures TikTok secretly gathering information about who's documented and who isn't. That's not unreasonable. But that's not actually what the policy says TikTok is doing.

The real story here is about how privacy law has become so specific, so granular, and so divorced from how normal people talk that it creates panic where none should exist. It's also a story about how app makers are trying to be legally compliant while accidentally making themselves look terrible in the process.

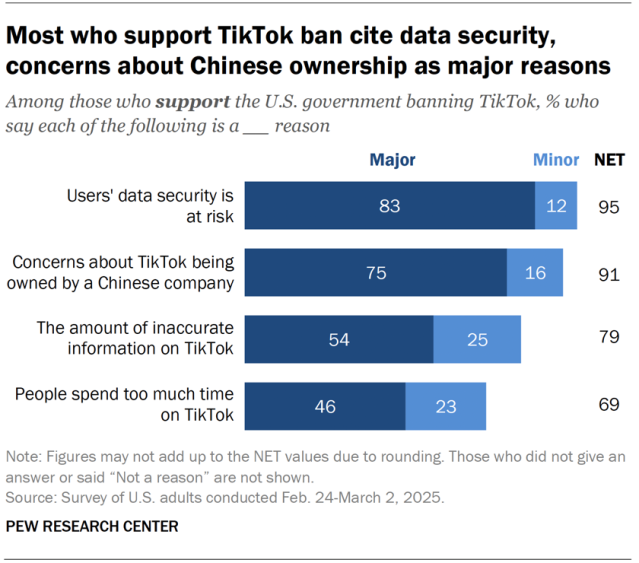

The timeline shows the progression of California's privacy laws, from the CCPA in 2018 to the CPRA in 2020, and the addition of AB-947 in 2023, which expanded the definition of sensitive information to include immigration status.

The Legal Framework Behind "Sensitive Information"

This whole thing traces back to California, specifically to state privacy legislation that's become the standard for the entire US tech industry. Here's how we got here.

California passed the California Consumer Privacy Act, or CCPA, in 2018. It was groundbreaking at the time because it gave consumers actual rights over their personal data. The CCPA required companies to disclose what data they collect, why they collect it, and what they do with it. Simple enough on paper.

Then came the California Privacy Rights Act, the CPRA, which voters approved in 2020 and which expanded everything. The CPRA didn't just tighten rules. It created a special category called "sensitive personal information" and said that if a company collects sensitive info, it needs to be extra transparent about it. That list included things like Social Security numbers, financial account information, precise geolocation, and a few other categories.

The list didn't stay fixed, though. Lawmakers could add new categories to it, and this is where immigration status comes in.

In October 2023, California Governor Gavin Newsom signed Assembly Bill 947, or AB-947, into law. This bill specifically added "citizenship or immigration status" to the definition of sensitive personal information under California privacy law. Why? Because lawmakers recognized that in a climate where immigration enforcement is political and contentious, information about someone's immigration status is genuinely sensitive. Someone with vulnerable immigration status might face real harm if that information leaked or was shared with the wrong parties.

Once AB-947 passed, companies doing business in California had to update their policies. They had to acknowledge that they might process information about citizenship and immigration status. And here's the key part: they had to do this whether they were actually collecting that data or not.

Why? Because under privacy law, "processing" data includes situations where users themselves share that data with the platform. If someone posts a TikTok about their immigration journey, TikTok is technically "processing" immigration-related information. It doesn't matter that the user volunteered it. The data exists on the platform, and the platform is legally responsible for how it handles it.

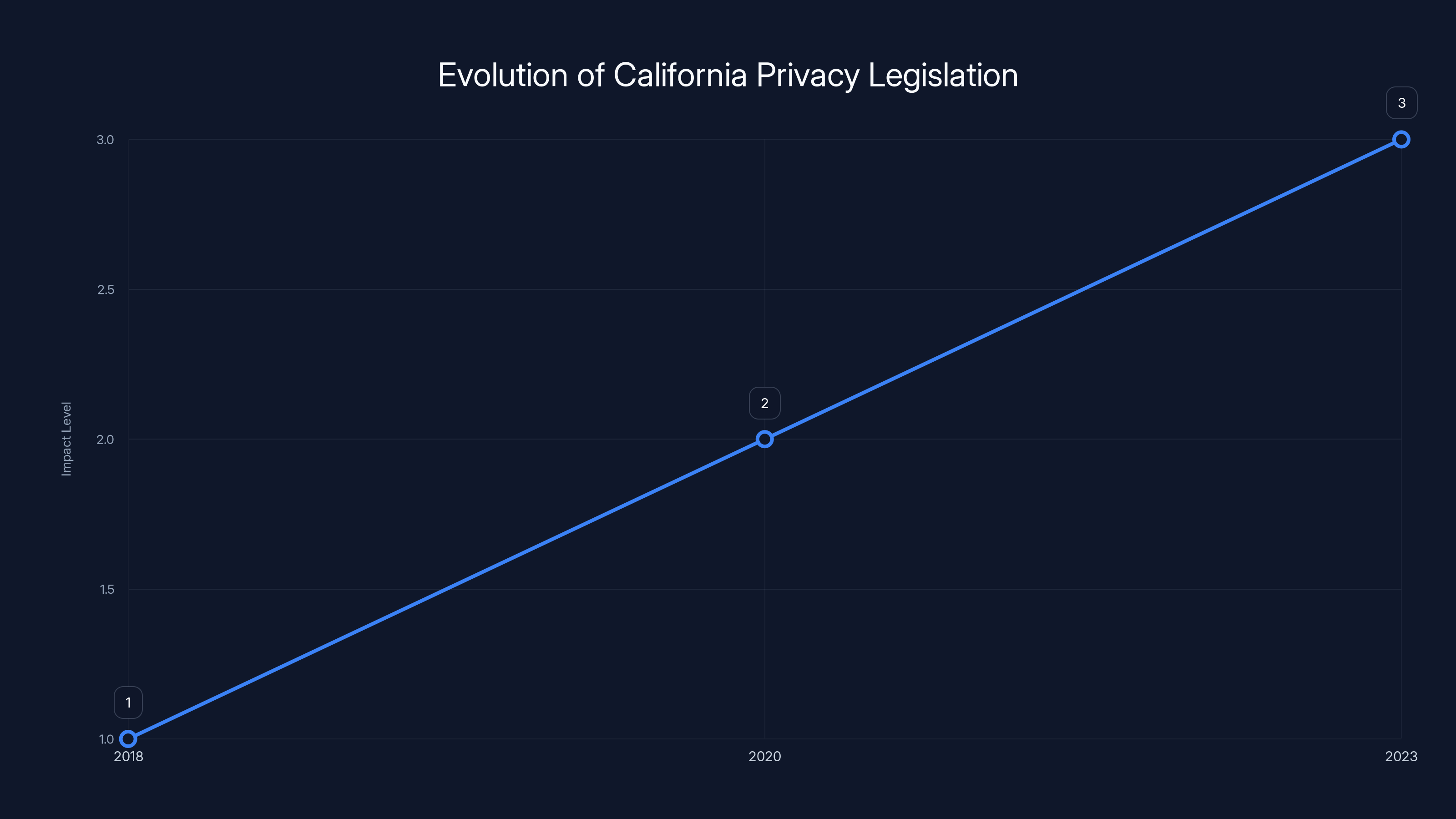

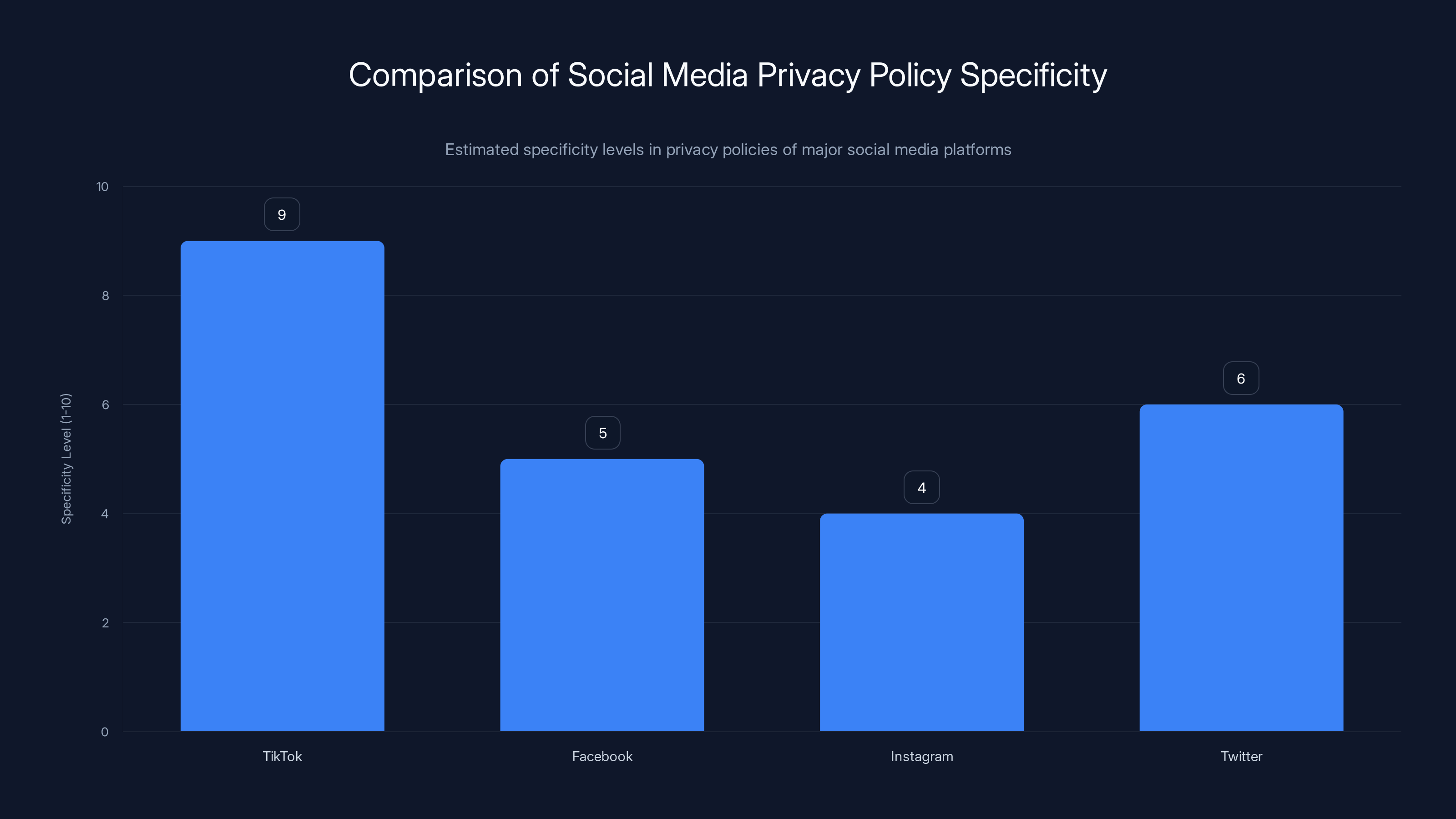

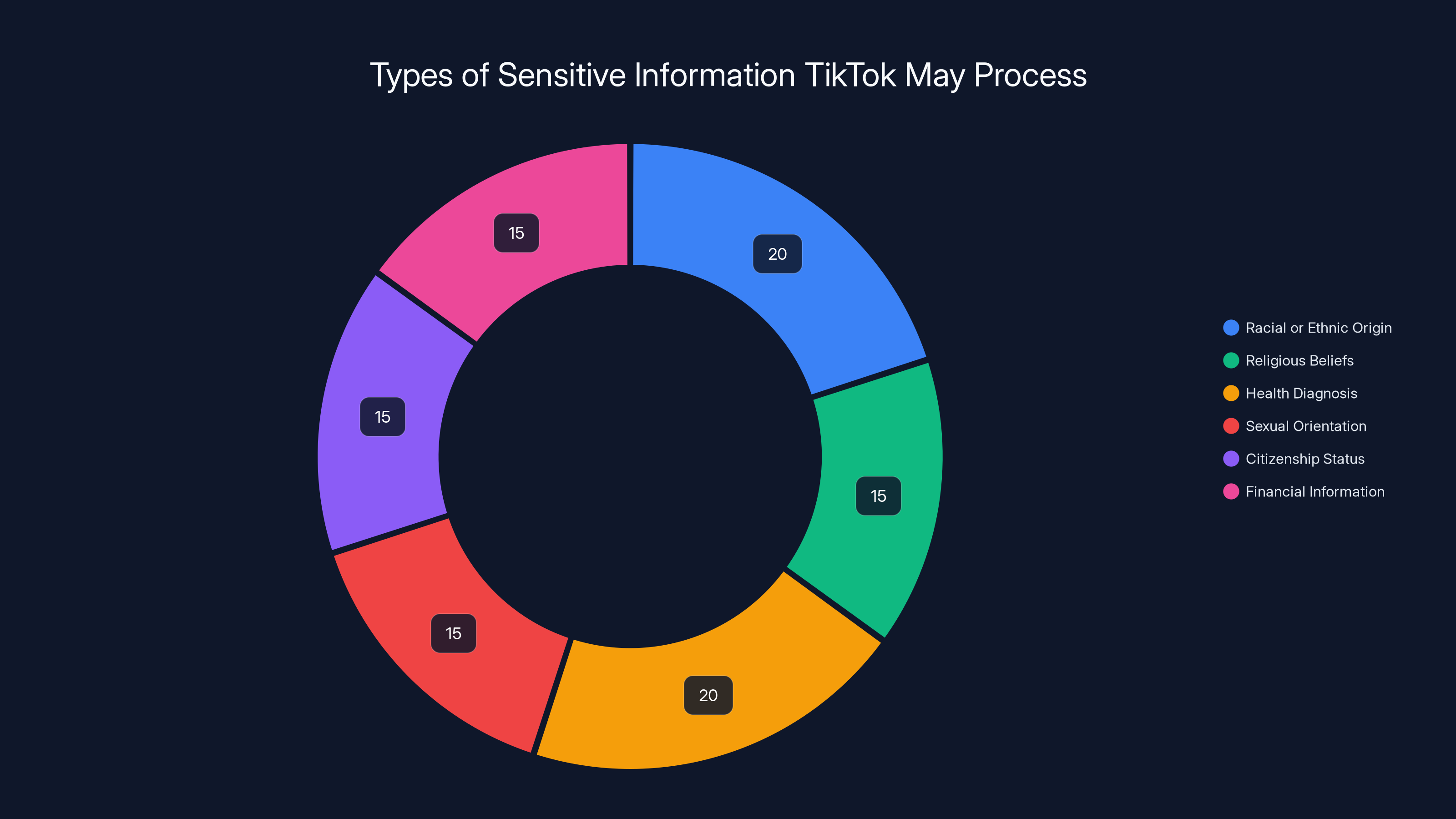

TikTok has a higher explicitness rating in its sensitive data disclosures compared to other major platforms. Estimated data based on narrative context.

Why TikTok Listed These Categories So Explicitly

Now, here's where TikTok's approach gets interesting, and where it backfired.

When you read TikTok's updated privacy policy, it doesn't just say "We comply with California privacy law." Instead, it actually lists out the specific categories. Racial or ethnic origin. Sexual orientation. Transgender or nonbinary status. Religion. Mental and physical health. Financial information. And yes, citizenship or immigration status.

This is what spooked people. But this approach wasn't reckless or malicious. It was actually an attempt at transparency. TikTok's legal team presumably thought: "If we spell out exactly what categories we're referring to, users and regulators will have clarity. We're not being vague. We're being specific."

The problem is that specificity doesn't always equal clarity, especially in privacy policy writing. One privacy lawyer I consulted explained it well: these policies are written for regulators and litigators, not for humans. When you list out "citizenship or immigration status" alongside "sexual orientation" and "health diagnosis," it reads as comprehensive data collection. It reads as invasive. It reads as scary.

Other social media companies handle this differently. Facebook, for example, keeps its sensitive data disclosures more generic. Instagram doesn't spell out every single category. Twitter has similar policies but describes them in more abstract language. TikTok took the more explicit route, thinking clarity would help. Instead, it created a situation where millions of users read the policy for the first time and thought something suspicious was happening.

The timing didn't help either. These disclosures were required because of the TikTok deal that came together in late 2024 and early 2025. The platform had to restructure its operations because of legal and political pressure. That restructuring triggered new transparency requirements. Users who normally never read the privacy policy suddenly saw notifications about terms changes and started digging in. That's when they found this language.

Understanding What "Processing" Actually Means

Here's the crucial distinction that almost nobody making panic posts on social media understands: processing information is not the same as collecting information.

Imagine you post a TikTok video where you talk about your experiences as an undocumented immigrant navigating the US job market. You share your story voluntarily. That video exists on TikTok's servers. The platform now has information related to immigration status, because you put it there.

TikTok's policy, when it says the platform "processes" information about immigration status, is acknowledging this reality. It's saying: if you share content that includes sensitive information, we (the platform) are technically handling that data. We're storing it, we might transcribe it, we're definitely moderating it, we might use it for recommendations, and so on.

This is completely different from TikTok actively seeking out your immigration status and adding it to some database. TikTok isn't asking for this information. It's not fishing for it. It's saying that if and when users share it, TikTok will handle it according to applicable law.
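The distinction can be made concrete with a small sketch. The Python below is purely illustrative: `SignupForm`, `StoredPost`, and their fields are hypothetical names invented for this example, not TikTok's actual data model. It shows how a platform can end up holding sensitive information without ever asking for it.

```python
from dataclasses import dataclass, fields


@dataclass
class SignupForm:
    """Fields a hypothetical platform actively asks for (collection)."""
    username: str
    email: str
    birth_year: int


@dataclass
class StoredPost:
    """Content a user chooses to upload; the platform stores it (processing)."""
    author: str
    caption: str


def solicited_fields() -> set[str]:
    """Names of the fields the platform explicitly requests at sign-up."""
    return {f.name for f in fields(SignupForm)}


# The hypothetical sign-up form never asks about immigration status...
asks_for_status = "immigration_status" in solicited_fields()

# ...but a user may volunteer it in a post, which the platform then stores.
post = StoredPost(
    author="example_user",
    caption="Sharing my immigration journey and what I learned.",
)
holds_related_content = "immigration" in post.caption.lower()

print(asks_for_status)        # False
print(holds_related_content)  # True
```

The point of the sketch is only that the second value can be true while the first stays false: nothing was requested, yet something sensitive is now sitting in storage and has to be handled lawfully.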

Privacy lawyers point out that this kind of disclosure is necessary because the law requires it. Companies have to be transparent about what sensitive information they might handle. They can't just say "We collect personal data" without getting more specific, because regulators wouldn't accept that. The law demands that companies identify sensitive information by name.

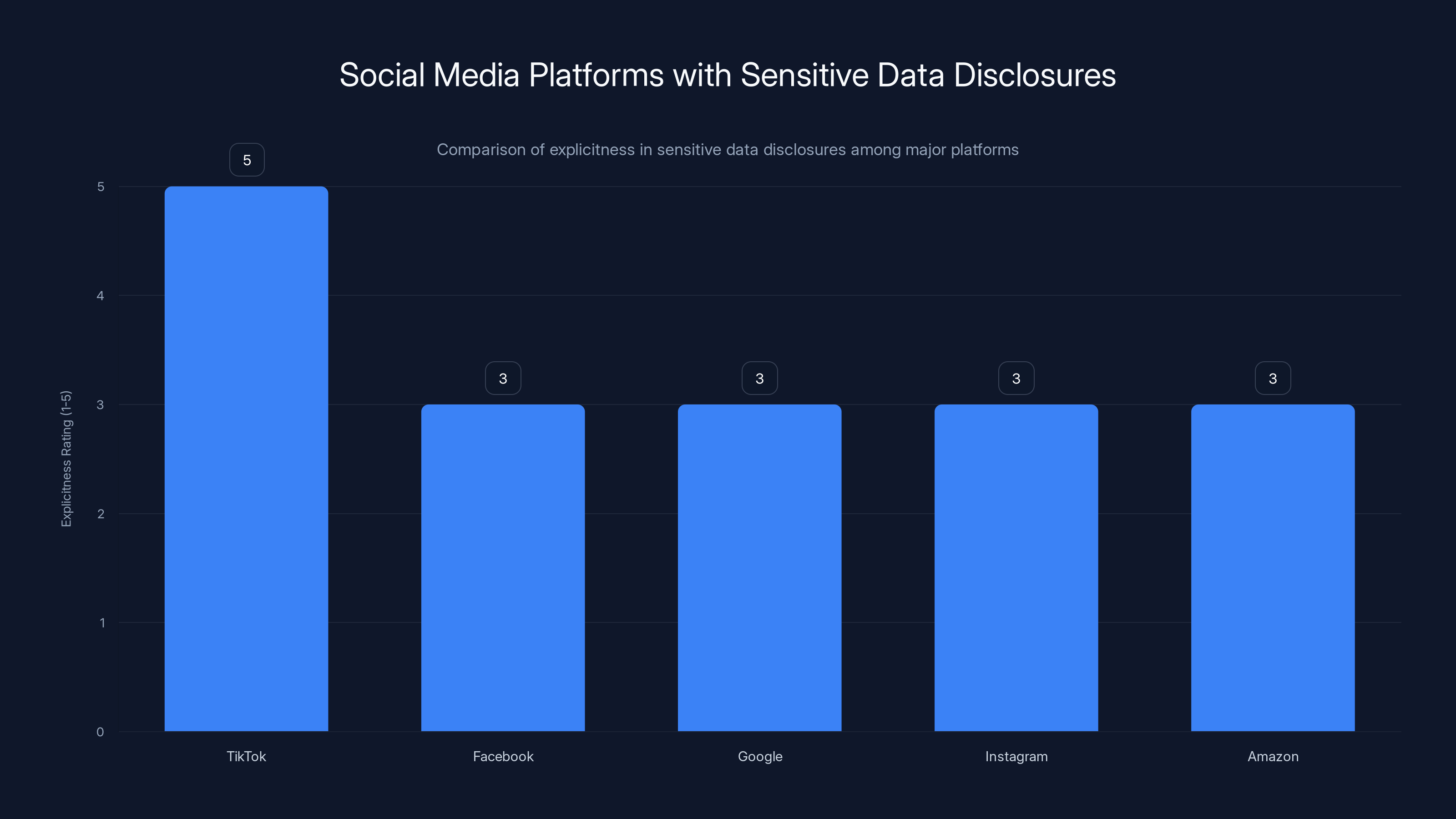

Estimated data shows that data access and information sharing are the top concerns for social media users in 2025, highlighting the need for clarity and informed decision-making.

How Other Platforms Handle Similar Disclosures

If you're thinking "Why doesn't every other app have this problem?" the answer is that most of them do have similar policies. They're just less explicit about them.

Meta (Facebook's parent company) has privacy policies that acknowledge processing sensitive information. So does Google. So does Amazon. LinkedIn, YouTube, Snapchat: they all have policies covering sensitive data categories. But they typically describe these categories in more abstract terms or in smaller subsections of their privacy documents.

The difference with TikTok is visibility. TikTok decided to list things out. That made the policy easier to understand for lawyers and regulators, but it made it look worse to regular users who encountered it for the first time. If TikTok had written "we may process health information and other sensitive categories as defined by applicable law," nobody would have noticed. But TikTok spelled it out, and the specificity created alarm.

Another factor: many US tech companies have been handling these kinds of disclosures for years. Users are somewhat accustomed to seeing them. But the TikTok policy disclosure was new and very visible because of the deal restructuring. It was impossible to miss. Millions of users who had never read a privacy policy before suddenly had one shoved in their faces with notification after notification.

Some companies actually do something different: they have tiered policies. They include a simple, plain-English summary upfront, then link to the detailed legal version for people who want more. A few platforms are experimenting with visual privacy dashboards that show you exactly what data types they process without making you read 50 pages of legal text. These approaches take more work, but they avoid the "oops, everyone thinks we're spying on immigration status" problem.

The Real Concern: Government Access and Geopolitics

Now, here's where this situation gets genuinely complicated. The panic over TikTok's immigration status language, while somewhat misdirected, does touch on a real and legitimate concern. That concern just isn't about what TikTok is actively collecting.

The actual risk is this: what information does TikTok have access to, and what could happen if a government demanded it?

TikTok, owned by the Chinese company ByteDance, operates in a complex geopolitical situation. The US government has worried for years about whether Chinese authorities could compel TikTok to hand over data about American users. There's no confirmed evidence this has happened, but it's theoretically possible. China's national security laws give the government broad authority to demand corporate data. The US government, for its part, wants access to TikTok's data too, under different legal frameworks.

So the question becomes: if millions of Americans have shared sensitive information on TikTok (whether immigration status, health information, location data, or other sensitive stuff), what happens if a government requests that data? What happens if it leaks or gets hacked?

This is where the immigration status concern becomes real. If you're an undocumented immigrant and you've posted about it on TikTok, that information exists somewhere in TikTok's systems. In a normal scenario with a normal company and normal government requests, legal protections apply. But we're not in a normal scenario. Immigration enforcement has become increasingly aggressive and politicized. In that context, having your immigration status information available to a major tech platform, which might face pressure from governments, is genuinely risky.

TikTok's policy disclosure, ironically, makes this risk visible. It's saying "we might handle sensitive information." That's true. And for people whose sensitive information is immigration status, that's worth knowing about, and the risks are worth understanding.

The problem is that instead of having a sophisticated conversation about data governance, government requests, and geopolitical risk, people saw the words "immigration status" in a privacy policy and immediately thought "TikTok is spying on me." Both concerns are worth discussing, but they're not the same thing.

TikTok's privacy policy is notably more specific than its competitors, which may lead to perceptions of invasiveness. (Estimated data)

Privacy Policy Language and the Communication Problem

There's a genuine communication failure here, and it's not entirely TikTok's fault. The fault is structural. Privacy laws have become so specific and so detailed that compliance requires listing out things in ways that sound alarming to non-lawyers.

Take the CCPA's definition of sensitive personal information. It includes "sexual life or sexual orientation." That's a specific, legally defined category. Companies have to acknowledge they might process this information. That's the law. But when you write it in a policy, it sounds like the company is actively interested in your sex life, which is creepy. The reality is more benign: if you post a video coming out as gay, or if you post about your dating life, that information exists on the platform.

Similarly, "mental or physical health diagnosis." If you post a TikTok about your anxiety or your depression or your diabetes management, TikTok's system now contains health information. The company has to acknowledge this. But the policy language makes it sound like TikTok is secretly screening for health problems.

The solution isn't to hide these categories or be vague about them. That would violate the law and actually harm transparency. The solution is better communication about what privacy policy language actually means. Most platforms should include brief explanations like: "This category covers information you voluntarily share in your content. We're required to acknowledge that we process this information if and when you share it."
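As a rough sketch of what that could look like, the Python below pairs each sensitive category with the suggested plain-language note. The category list is taken from the policy language quoted later in this article; the `render_summary` function and the output layout are assumptions made for illustration, not anything a platform is known to ship.

```python
# Categories as quoted from the policy language in this article.
SENSITIVE_CATEGORIES = [
    "racial or ethnic origin",
    "national origin",
    "religious beliefs",
    "mental or physical health diagnosis",
    "sexual life or sexual orientation",
    "status as transgender or nonbinary",
    "citizenship or immigration status",
    "financial information",
]

# The plain-language explanation suggested in the paragraph above.
PLAIN_NOTE = (
    "This category covers information you voluntarily share in your content. "
    "We're required to acknowledge that we process this information "
    "if and when you share it."
)


def render_summary(categories: list[str]) -> str:
    """Return a plain-text summary with one explained entry per category."""
    lines = []
    for category in categories:
        lines.append(f"- {category.capitalize()}: {PLAIN_NOTE}")
    return "\n".join(lines)


summary = render_summary(SENSITIVE_CATEGORIES)
print(summary)
```

Nothing in the legal list changes here. The same eight categories are still named, but each one arrives with an explanation attached instead of standing alone.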

A few companies are starting to do this. Apple includes plain-English explanations alongside its legal privacy policies. DuckDuckGo publishes simplified versions of its privacy practices. These approaches don't violate privacy law, but they do make compliance less scary and more understandable.

The Political Context That Made This Blow Up

Timing is everything, and the timing here was particularly bad for TikTok.

The panic happened during a specific moment: the Trump administration had recently taken office in 2025, and immigration enforcement was in the news constantly. Immigration and Customs Enforcement, or ICE, was conducting high-profile operations. Entire industries were organizing boycotts and economic blackouts to protest immigration enforcement. The political temperature around immigration was at a high point.

In that climate, seeing a major social media platform's policy mention immigration status felt particularly menacing. Users weren't reading the policy in a neutral environment. They were reading it in a moment of heightened political anxiety about immigration and government overreach. That context shaped how the language was interpreted.

Historically, privacy policies are boring and nobody reads them. But when there's a dramatic deal restructuring and millions of notifications go out, suddenly people are paying attention. Add in a politically charged moment, and you have the perfect storm for a policy disclosure to be misinterpreted.

This is partly why TikTok's approach, while legally clear, was risky from a communication standpoint. In a neutral political environment, spelling out sensitive information categories is fine. In a moment of political tension around a specific category (in this case, immigration status), it's asking for trouble.

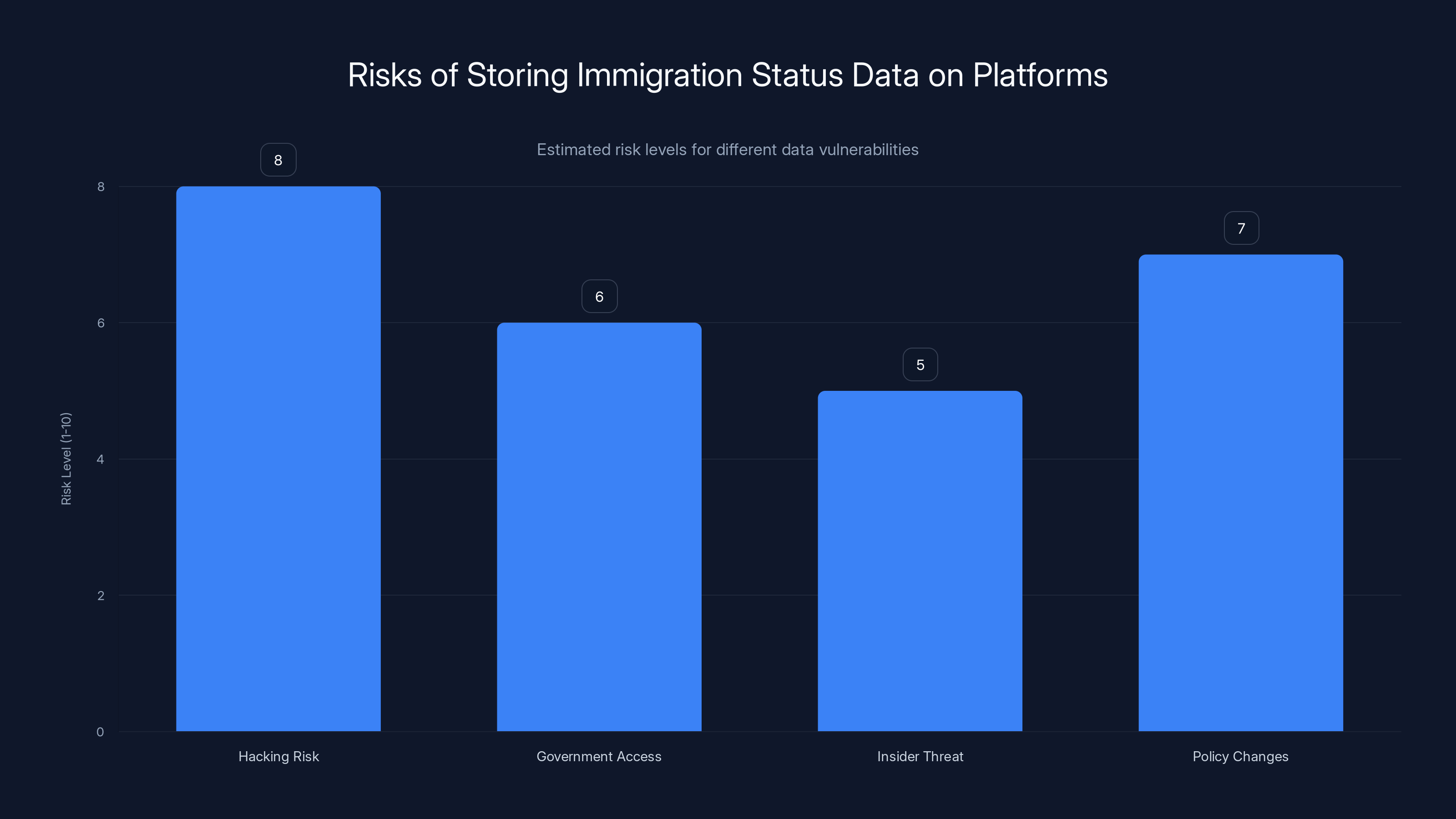

Hacking and policy changes pose the highest risks for immigration status data on platforms. Estimated data based on typical concerns.

How Data Can Actually Be Used in Immigration Enforcement

Let's talk about the scenario that legitimately scares people: could the government use social media data for immigration enforcement?

It's already happened to some degree. Immigration enforcement agencies have subpoenaed social media platforms for user data, though these cases are usually limited and legally challenging. There have been instances where ICE requested user data from platforms, and some platforms complied with valid legal orders. In most cases, platforms fought the requests or required warrants or other legal process.

But here's where it gets complicated. The more detailed and comprehensive the data a platform has, the more useful it could theoretically be for government purposes. If a platform knows someone's location, their contacts, their stated immigration status, their employment information, and everything else about them, that's incredibly valuable for enforcement purposes. Even without direct government requests, that data could be compromised by hacks, leaks, or insider threats.

For people with vulnerable immigration status, this is a genuine risk calculation. Using TikTok and sharing personal information means accepting that risk. That's not necessarily a reason not to use the platform, since millions of people use it daily, but it's worth understanding.

The counterargument is that every major tech platform has similar risks. Google knows where you are, what you search, and who you contact. Facebook knows your relationships, locations, and interests. You're taking on data risk by using any of these platforms. The question isn't whether TikTok is uniquely dangerous, but whether the benefits of using it outweigh the risks. That's a personal decision.

What TikTok Actually Says About Data Processing

Let's look at what TikTok's policy actually says, word for word, without the panic.

The policy states that TikTok may process information from user content or surveys, including information about "racial or ethnic origin, national origin, religious beliefs, mental or physical health diagnosis, sexual life or sexual orientation, status as transgender or nonbinary, citizenship or immigration status, or financial information."

Then it adds a crucial phrase: "in accordance with applicable law."

That phrase is key. TikTok isn't saying it will process this information however it wants. It's saying it will process it according to applicable law, which includes the privacy protections built into the CCPA, the CPRA, and other regulations. It's basically saying "if we handle this information, we'll follow all the rules about how we can use it."

The policy also explicitly references the CCPA, showing that TikTok understands the legal framework. It's not being vague about the law it's following. It's naming it.

When you read the full context, the policy is actually TikTok being transparent about its legal obligations. It's saying "we might handle sensitive information in accordance with law." That's not a confession. That's compliance.

TikTok's policy outlines several types of sensitive information it may process, with each category representing a portion of potential data handled, all in compliance with applicable laws. Estimated data.

California's Privacy Law Changes That Triggered All This

The entire disclosure happened because California expanded its definition of sensitive information in late 2023. Let's understand that change because it's central to why this is happening now.

When the original CCPA passed in 2018, it didn't single out immigration status, and the sensitive personal information category that the CPRA later added didn't explicitly list it either. The law was written before immigration became as politically charged as it is now. By 2023, with immigration enforcement becoming increasingly intense and politicized, California lawmakers decided they needed to explicitly protect immigration status information.

AB-947 did that. It added citizenship and immigration status to the definition of sensitive personal information. Once that law took effect, any company doing business in California had to update its policies to acknowledge this. Not because they were secretly processing immigration data, but because the law now explicitly covered that category.

This created a cascading effect. Once California defined immigration status as sensitive information, companies started listing it in their policies. Users who had never paid attention to privacy policies suddenly started reading them. And they saw immigration status listed right there, which triggered concern.

It's a good reminder of how privacy law works: the law doesn't just regulate company behavior, it also regulates company communication. The law says "you have to tell people if you might process this type of information." Once the law names a specific type—immigration status—companies have to name it too. They can't keep it vague. And that specificity, while legally correct, creates communication problems.

The Larger Question: Should Social Media Even Have This Data?

All of this raises a bigger question that's worth asking: should TikTok (or any social platform) have access to sensitive information like immigration status in the first place?

The answer is complicated. These platforms don't ask for immigration status information. They don't require it. But they do allow users to post it, and once it's posted, the platform stores it, processes it, and can theoretically access it.

One argument is that social media should be designed to minimize the collection and retention of sensitive data. Instead of letting users post anything and storing everything, platforms could limit what's retained or implement stronger privacy protections. Some privacy advocates argue for "data minimization," where companies only keep the bare minimum data they actually need.

But social media platforms are built on the opposite principle: maximize data collection and retention because that data is valuable for advertising, recommendations, and other business purposes. The tension between making platforms work well (which requires data) and protecting privacy is central to social media's business model.

For users, the practical reality is this: if you share sensitive information on a social platform, that information exists on their servers. You can't fully take that back. So your options are: don't share sensitive information online, or share it and accept the risks. Many people choose to share anyway because the value of connecting with communities and expressing themselves outweighs the privacy risks.

How Other Companies Disclose Similar Information

As I mentioned earlier, TikTok isn't alone in having policies that acknowledge processing of sensitive information. But most companies handle the communication differently.

Meta's privacy policies do reference sensitive data, but the language is more abstract. Meta talks about "sensitive information" as a category without always listing specific examples. Google's policies are similarly broad. Amazon acknowledges processing sensitive data but doesn't list every category. Microsoft's policies reference compliance with regulations like the CCPA without getting specific about every data type.

The result is that users don't panic about Facebook or Google in the same way they panicked about TikTok, even though those platforms potentially have similar or more extensive data about users. It's not because they're doing something less invasive. It's because they're communicating about it differently.

There are some platforms trying to do better. Runable and other newer AI automation platforms are starting to include more transparent privacy disclosures and plain-English explanations. But most legacy social media companies stick with the abstract approach.

TikTok's mistake, if we can call it that, was trying to be more transparent and specific. That transparency backfired when the political context made certain categories seem scarier than they should be.

Immigration Status and Data Vulnerability: Real Risks

While TikTok isn't secretly collecting immigration status data, there are genuine risks worth discussing about having that data on any platform.

First, there's the hacking risk. If you post about your immigration status and TikTok stores that information, what happens if the company gets hacked? Data breaches exposing immigration status information could harm people. Major platforms have been hacked before, and their data has been compromised. The more sensitive information they store, the more damage a breach could cause.

Second, there's the government access issue. Legal requests, valid subpoenas, and emergency government requests can compel platforms to hand over user data. The standards for these requests vary. Some require warrants. Some require less. Depending on who the government is and what laws apply, the risk level varies. But it's not zero.

Third, there's the insider threat. Employees at tech companies sometimes access or leak user data. A disgruntled employee with access to immigration status information could theoretically leak it. This has happened before at various companies.

Fourth, there's the question of what happens if company policies change or companies get acquired. If TikTok is restructured or sold to another company, what happens to the data it holds? Acquiring companies have different privacy practices. Data that was handled carefully at one company might be handled differently at another.

These aren't hypothetical concerns. They're real risks that apply to any sensitive information stored on any platform. The question is whether the benefits of using the platform outweigh these risks. For immigration-vulnerable people, that's a risk calculation worth making consciously.

Privacy Law's Unintended Consequences

This whole situation is actually a perfect example of how privacy law, while well-intentioned, can create unintended consequences.

Privacy laws like the CCPA and CPRA are designed to protect people by requiring companies to be transparent about data practices. That's good. But the laws force this transparency through very specific, very detailed category listings. The laws say "you must identify sensitive information by these specific categories." Companies comply by listing the categories. Users see the lists and panic.

It's not that the companies are doing anything wrong. It's that the legal framework forces a type of communication that creates fear. The law is trying to protect people, but the way it does that is by making privacy policies scarier.

One solution would be for privacy law to require not just disclosure of what data might be processed, but also clear explanation of how it's protected and what the actual risks are. Instead of just listing categories, companies would have to explain: "We might process immigration status information if you share it, and here's how we protect it, here's when we delete it, and here's what government processes we comply with."

Another solution would be for the platforms themselves to voluntarily communicate better. They could include plain-English summaries. They could include visualizations. They could explain the difference between what they collect and what they might process through user content. Some are starting to do this, but not TikTok.

The broader lesson is that privacy protection and clear communication often require more than just following the law. They require thinking about how the law translates to user understanding and user behavior. TikTok followed the law by listing categories. But it didn't think about how users would interpret seeing those categories, especially in a politically charged moment.

What Users Should Actually Be Doing

If you're someone who was concerned about TikTok's privacy policy, here's what's actually worth doing.

First, understand that the presence of immigration status in the policy doesn't mean TikTok is targeting you. It means if you share that information, TikTok will handle it according to law.

Second, think about what information you're actually sharing on social media. Are you posting about sensitive topics? About your immigration status, your health, your location, your relationships? If yes, understand that you're trusting the platform with that information. That's a choice, and it has risk.

Third, use privacy settings. Most platforms allow you to control who sees your posts. You can make posts private, visible only to friends, or public. Using privacy settings limits the exposure of sensitive information.

Fourth, delete old posts occasionally. The longer sensitive information sits on a platform's servers, the more time it has to be hacked, leaked, or accessed by government. Periodically clearing old posts reduces that risk.

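Fifth, use a VPN if you're concerned about location tracking. If you're posting about sensitive topics and worried about geographic tracking, a VPN masks your IP address, which makes it harder for the platform to infer where you are. It doesn't hide location you grant through GPS permissions or reveal in the content itself, so check those too.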

Sixth, pay attention to who can access your account. Use strong passwords, enable two-factor authentication, and be careful about what apps you give access to your social media account.

None of this means you should or shouldn't use TikTok. That's a personal decision. But if you do use it and you're sharing sensitive information, these steps can reduce your risk.
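Steps four and six lend themselves to a small sketch. The Python below assumes you already have a list of your own posts with timestamps (for example, from a data export you have parsed yourself); the `Post` class, the 180-day threshold, and the function names are all hypothetical. It flags posts old enough to review for deletion and generates a random password with the standard library's `secrets` module.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Post:
    """A hypothetical record of one of your own posts."""
    post_id: str
    created_at: datetime


def posts_older_than(posts: list[Post], days: int, now: datetime) -> list[Post]:
    """Return posts created more than `days` days before `now`."""
    cutoff = now - timedelta(days=days)
    return [p for p in posts if p.created_at < cutoff]


def new_password(num_bytes: int = 24) -> str:
    """Generate a random URL-safe password from `num_bytes` random bytes."""
    return secrets.token_urlsafe(num_bytes)


# Fixed reference time so the example is reproducible.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
posts = [
    Post("a1", datetime(2024, 1, 15, tzinfo=timezone.utc)),
    Post("b2", datetime(2025, 5, 20, tzinfo=timezone.utc)),
]

to_review = posts_older_than(posts, days=180, now=now)
print([p.post_id for p in to_review])  # ['a1']
print(len(new_password()) >= 24)       # True
```

The sketch only identifies candidates. Actually deleting a post still happens through the platform's own interface, and a generated password belongs in a password manager rather than in a script.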

The Future of Privacy Disclosures

This whole incident probably won't be the last time privacy policy language creates panic. In fact, we'll likely see more of it as privacy laws evolve and become more specific.

The trend in privacy legislation is toward more detailed, more specific requirements. The EU's General Data Protection Regulation is incredibly detailed about data handling. Various US states are passing privacy laws with specific categories and requirements. As these laws proliferate, companies will be required to acknowledge more and more specific data types.

If companies keep communicating these requirements by just listing categories, we'll keep having situations where users see scary language and panic. But if companies get better at explaining what the disclosures actually mean, we might avoid some of this.

There's also a possibility that privacy law itself evolves in response to situations like this. Legislators might recognize that requiring specific category listings creates more confusion than clarity. They might require companies to explain what the categories actually mean. Or they might shift toward requiring companies to demonstrate how they protect sensitive information rather than just listing categories.

Whatever happens, the dynamic is clear: privacy law is increasingly important, increasingly detailed, and increasingly visible to regular users. The legal battles of the future won't just be about what companies do with data. They'll be about how companies talk about what they do with data.

Key Takeaways About Immigration Status and Data Privacy

Let me summarize the important points about this whole situation.

One: TikTok's policy language about immigration status doesn't reflect secret data collection. It reflects legal disclosure requirements under California privacy law.

Two: Those legal requirements exist because California added immigration status to the definition of sensitive information in 2023. Companies had to update their policies accordingly.

Three: The communication failure is real. TikTok listed specific sensitive categories without explaining that these refer to information users voluntarily share. That lack of clarity created unnecessary panic.

Four: Other platforms have similar policies but communicate about them differently, which is why they don't trigger panic.

Five: The underlying concern about data access and government enforcement is legitimate. If you share sensitive information on social platforms, you're trusting those platforms with that information. That carries risk.

Six: The practical response is to understand what information you're sharing, use privacy settings, and make conscious decisions about what you post on social media.

Seven: Privacy law is becoming increasingly specific and detailed, which will likely create more situations like this unless companies improve their communication.

FAQ

What does it mean when TikTok says it processes immigration status information?

It means that if users post content mentioning their immigration status, TikTok's systems store and handle that information. This doesn't mean TikTok is actively collecting immigration status data or scanning for it. Rather, the company acknowledges that user-generated content might contain immigration information, and says it will process that information according to applicable privacy laws.

Why did TikTok add immigration status to its privacy policy in 2025?

TikTok was required to add it because California's AB-947, signed into law in October 2023, explicitly added citizenship and immigration status to the definition of sensitive personal information under the California Consumer Privacy Act, as amended by the California Privacy Rights Act. Companies covered by the law must disclose the categories of sensitive information they might process, and TikTok updated its policy to comply with this requirement.

Is TikTok secretly collecting data about my immigration status?

No. TikTok isn't actively scanning for or requesting immigration status information. The policy disclosure is about what information TikTok might handle if users voluntarily share it. If you post a video about your immigration experiences, TikTok's systems store that content. The policy is acknowledging this reality, not revealing a hidden practice.

Do other social media platforms have similar sensitive data disclosures?

Yes. Facebook, Google, Instagram, Amazon, and other major platforms all have policies that acknowledge they might process sensitive information. The difference is that many of these companies keep their disclosures more abstract or less visible, which is why they haven't triggered the same level of concern as TikTok's more explicit listing of categories.

What actually is sensitive personal information under California law?

Under the California Consumer Privacy Act, as amended by the California Privacy Rights Act, sensitive personal information includes Social Security and other government ID numbers, financial account information combined with access credentials, precise geolocation, racial or ethnic origin, citizenship or immigration status, religious or philosophical beliefs, union membership, genetic data, biometric data used for identification, health information, and information about sex life or sexual orientation. Some other state privacy laws also list transgender or nonbinary status. Companies must handle this information with extra protections and disclose when they process it.

Could TikTok or other platforms be compelled to share immigration status data with government agencies?

Theoretically yes, though there are legal protections. If government agencies submit valid legal requests with warrants or subpoenas, platforms can be compelled to provide data. However, platforms often challenge such requests or require proper legal process. The existence of sensitive information on a platform does make it potentially available for government access, which is why privacy advocates recommend minimizing what sensitive information you share on social media.

What should I do if I'm concerned about my data on TikTok?

Consider these steps: understand that your sensitive information is only on the platform if you voluntarily shared it; use privacy settings to control who sees your posts; delete old posts periodically; use strong passwords and two-factor authentication; be careful about what third-party apps you authorize; and if you're extremely concerned about privacy, limit the sensitive information you share on social platforms. You can also request that TikTok delete your account, which should remove the data you've uploaded.

Will other platforms start disclosing sensitive information the way TikTok did?

Maybe. As privacy laws become more detailed and more specific, companies might shift toward more explicit category listings to ensure compliance and avoid legal challenges. However, many platforms will likely continue using more abstract language because it doesn't trigger user panic. The trend will probably be toward companies finding better ways to communicate about sensitive information without creating unnecessary concern.

How does TikTok protect sensitive information once it's on the platform?

TikTok uses encryption, access controls, and other security measures to protect user data, though the company doesn't publicly detail all of its security practices. The company says it follows "applicable law," which includes requirements under the CCPA and CPRA. However, no security system is perfect, and sensitive information on any platform carries some risk of breach, hacking, or government access.

What is the difference between sensitive personal information and regular personal information under California law?

Regular personal information is any information that identifies or could reasonably be linked to a consumer, like names, email addresses, or browsing history. Sensitive personal information is a subset that includes especially private details like health information, immigration status, sexual orientation, financial accounts, and other categories that get extra legal protections. Companies must disclose when they collect sensitive information, and consumers have the right to limit how it is used and disclosed.

Understanding the Bigger Picture: Data, Privacy, and Your Rights

This whole TikTok situation, while confusing, actually reveals something important about how tech regulation works in 2025. Privacy law is evolving, becoming more specific, and increasingly visible to regular users. The language of privacy policies is becoming a public concern rather than just a legal formality.

For TikTok users specifically, the key takeaway isn't that you should panic or abandon the platform. It's that you should understand the trade-offs. Social media platforms operate by trading convenience and connection for data access. They provide free services in exchange for the ability to collect, process, and sometimes sell information about your behavior. If you use these platforms, you're accepting that trade-off.

For immigration-vulnerable people specifically, that trade-off has additional weight. Your data could theoretically be used against you if it ends up in the wrong hands. That's not fear-mongering. That's a realistic risk assessment. Whether that risk is worth the benefits of using social media is a personal decision that only you can make.

What's important is that you make that decision consciously, with full information about what's actually happening. You're not being secretly surveilled for your immigration status. TikTok isn't building a database of undocumented immigrants. But if you post about your life and your experiences on TikTok, and those posts include references to your immigration status, then yes, that information exists on their servers, and yes, theoretically it could be accessed by various parties under various circumstances.

That's not unique to TikTok. That's how social media works. The conversation we should be having isn't "Should I panic about TikTok's privacy policy?" but rather "What information do I want to share on social media, and what are the risks of sharing it?" Those are two very different questions.

The privacy policy panic was a communication failure, not a revelation of secret practices. But it did surface a legitimate conversation about data, privacy, immigration, and risk. That's worth thinking through carefully.
