TikTok Settles Social Media Addiction Lawsuit: What It Means for Digital Wellness [2025]

When the news broke that TikTok had settled a major social media addiction lawsuit just hours before jury selection was supposed to begin, it felt like watching a high-stakes poker game where one player suddenly folded. But here's the thing: this wasn't about weakness. It was a calculated move by one of the world's most powerful platforms to avoid a trial that could have set a devastating precedent for the entire social media industry.

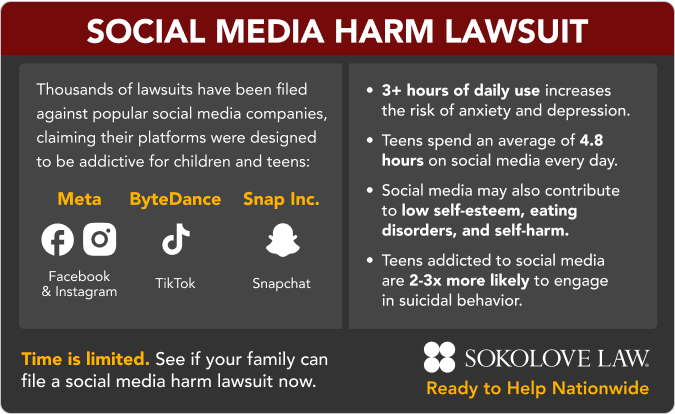

The settlement, quietly negotiated and announced with minimal fanfare, represents a critical turning point in how regulators, courts, and society are thinking about digital addiction. For years, social media companies operated in a kind of legal gray zone, where addiction concerns were treated like background noise from concerned parents and researchers. Now, with TikTok stepping back from the courtroom and Snap having settled about a week earlier, the narrative is shifting. These aren't niche concerns anymore. They carry billions of dollars in potential liability.

What makes this moment genuinely significant isn't just the settlement itself. It's what comes next. Meta and YouTube are still scheduled to face trial. The case that started with one California woman's lawsuit against major tech platforms has now become the opening battle in what looks like a sustained legal offensive against social media business models fundamentally designed around engagement above all else.

Let's dig into what happened, why it matters, and what this tells us about the future of digital platforms and user protection.

TL;DR

- TikTok settles addiction lawsuit hours before jury selection, avoiding public testimony and damages exposure

- Meta and YouTube remain defendants in the case originally brought by a California woman in 2023

- Snap settled about a week earlier with undisclosed terms, suggesting industry-wide legal pressure

- Addiction mechanisms are documented with research showing variable rewards, infinite scroll, and notification systems drive compulsive use

- $9 billion+ in potential liability makes settlements economically rational despite legal precedent risks

- Regulatory environment shifting toward algorithmic transparency and design accountability standards

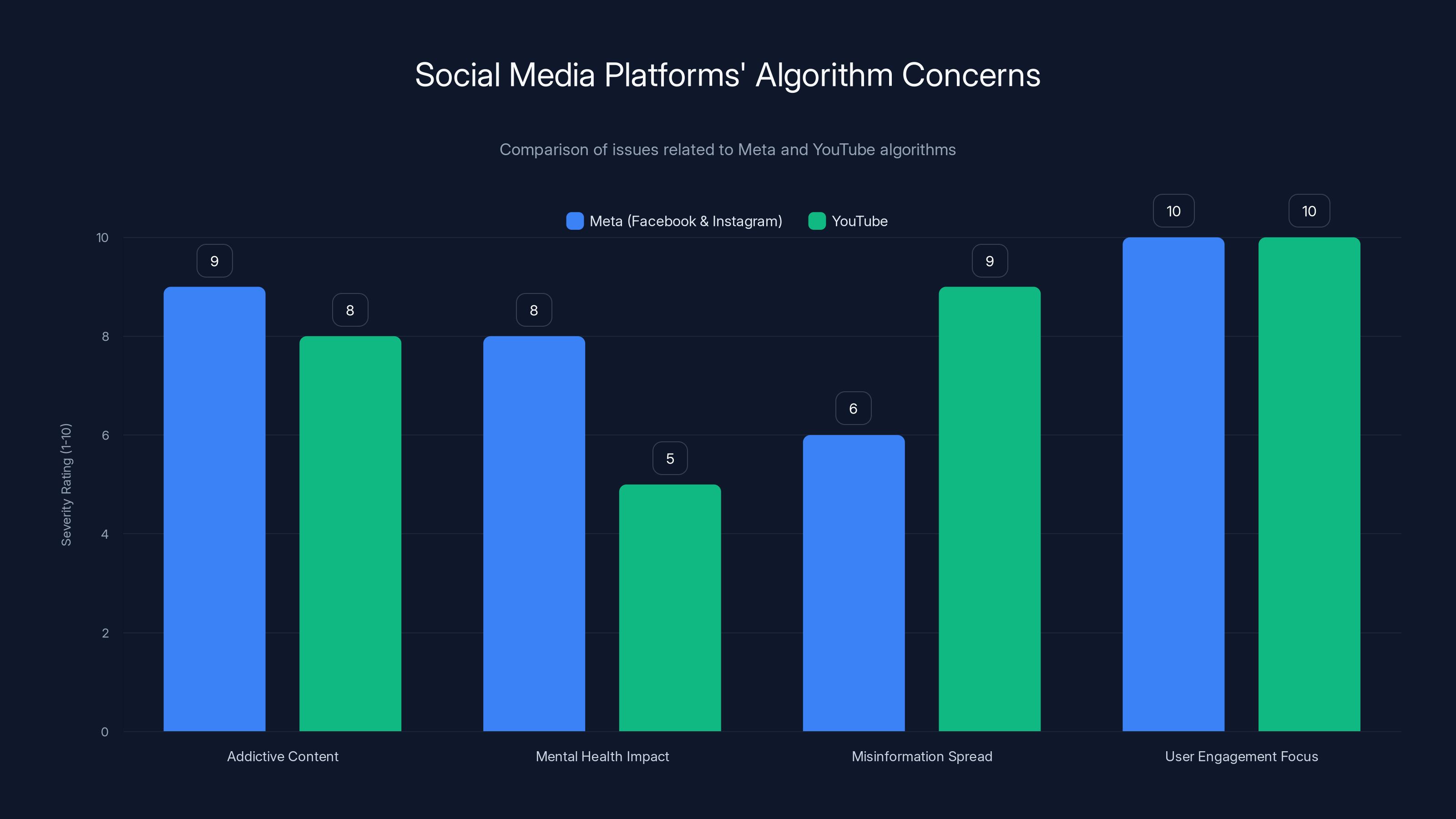

Meta's algorithms are particularly criticized for their impact on mental health and addictive content, while YouTube's focus is on misinformation spread. Both platforms prioritize user engagement.

The Case That Started Everything: Understanding the Lawsuit

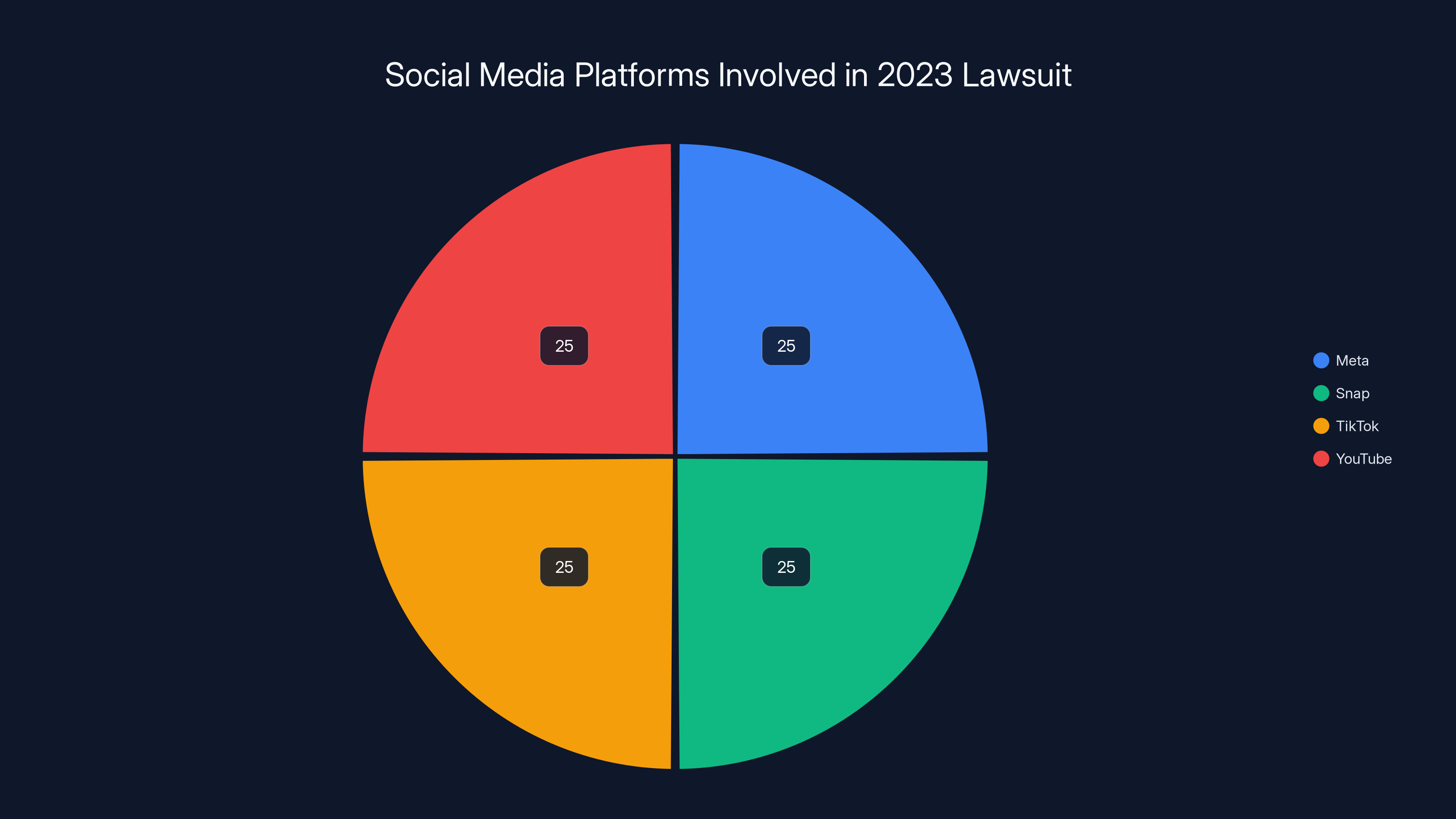

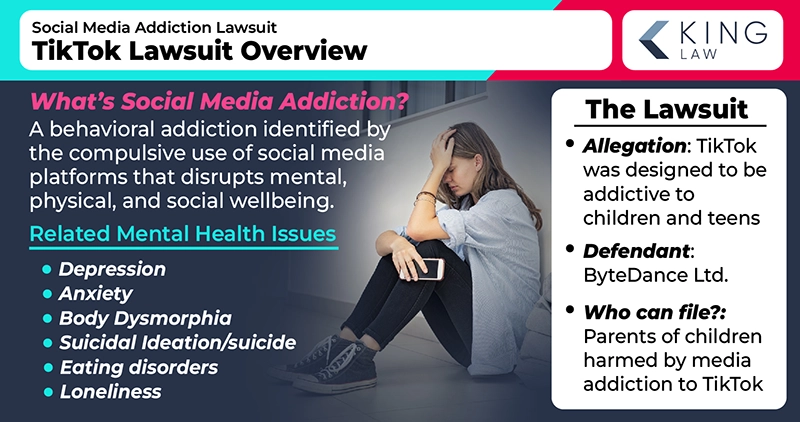

To understand why TikTok's settlement matters, you need to know where this lawsuit actually came from. In 2023, a California woman identified in legal documents only as "K.G.M." filed suit against four major social media platforms: Meta, Snap, TikTok, and YouTube. Her core allegation was deceptively simple: these platforms deliberately designed their apps to be addictive, and that addiction had caused her serious harm as a child.

This wasn't a lone-voice complaint. The plaintiff's legal team, led by prominent attorney Mark Lanier, built a case modeled on tobacco litigation. Just as tobacco companies had engineered nicotine delivery to maximize addiction, the argument went, social media platforms had engineered algorithmic feeds, notifications, and reward mechanics to maximize engagement and time spent in the app.

What made this lawsuit fundamentally different from previous tech regulation attempts was its framing. This wasn't about privacy or data security, which are complex and technical. This was about addiction, which is something jurors understand intuitively. It's hard for a regular person to wrap their head around machine learning algorithms or data brokers. It's easy to understand: "Your app made my kid unable to put the phone down."

The judge handling the case took it seriously enough to order major executives to testify. Mark Zuckerberg, Meta's CEO, is expected to take the witness stand. So is Adam Mosseri, who heads Instagram. Neal Mohan, YouTube's CEO, is also likely to testify.

Let that sink in. These executives, who rarely face public scrutiny on the business practices that generate their companies' revenue, may be forced to explain under oath why their platforms use certain design patterns. Why the infinite scroll? Why the personalized recommendations? Why the notification systems that pull people back in multiple times per day?

TikTok and Snap, by contrast, chose to settle before any of their own executives faced that kind of public questioning.

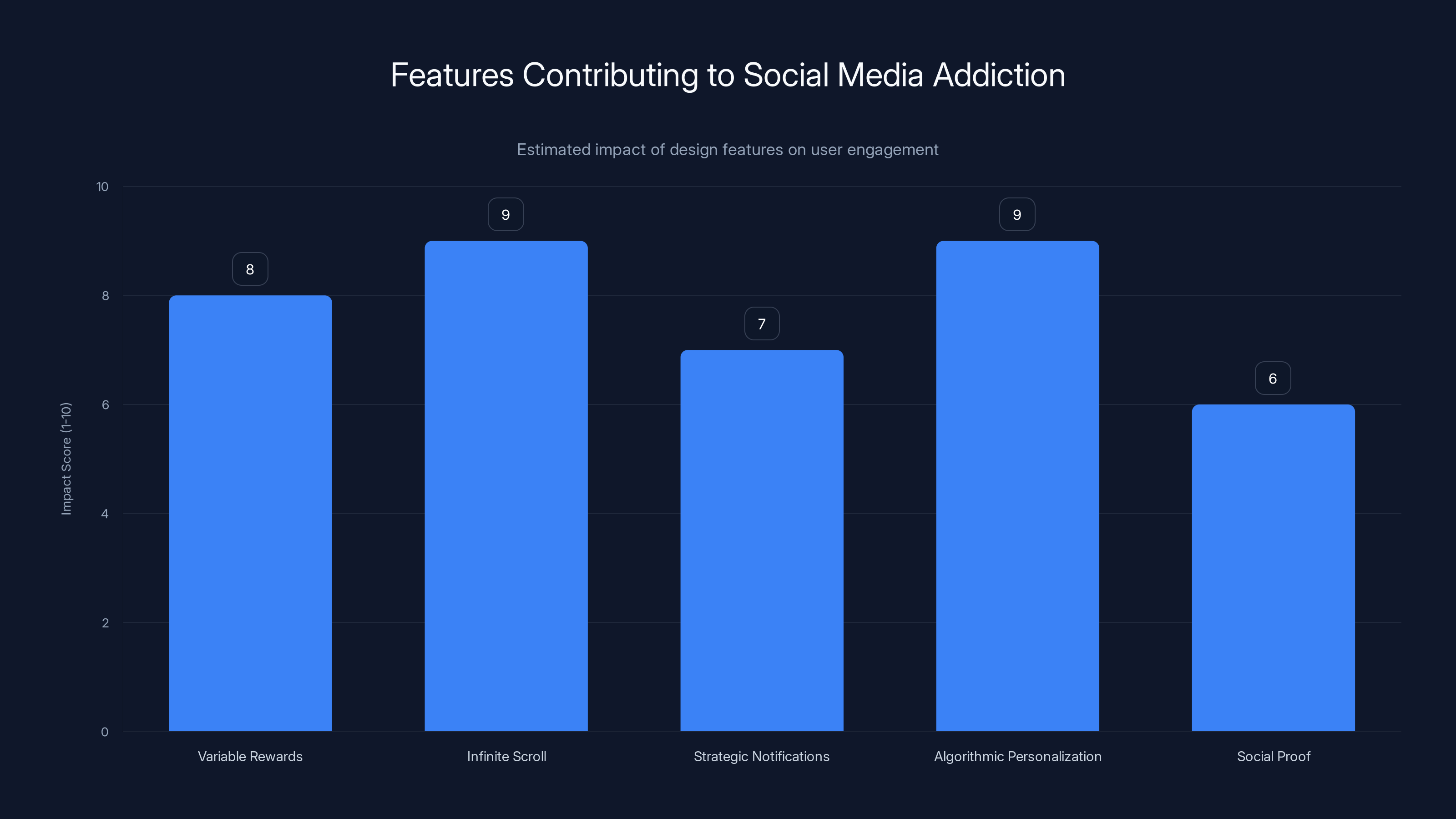

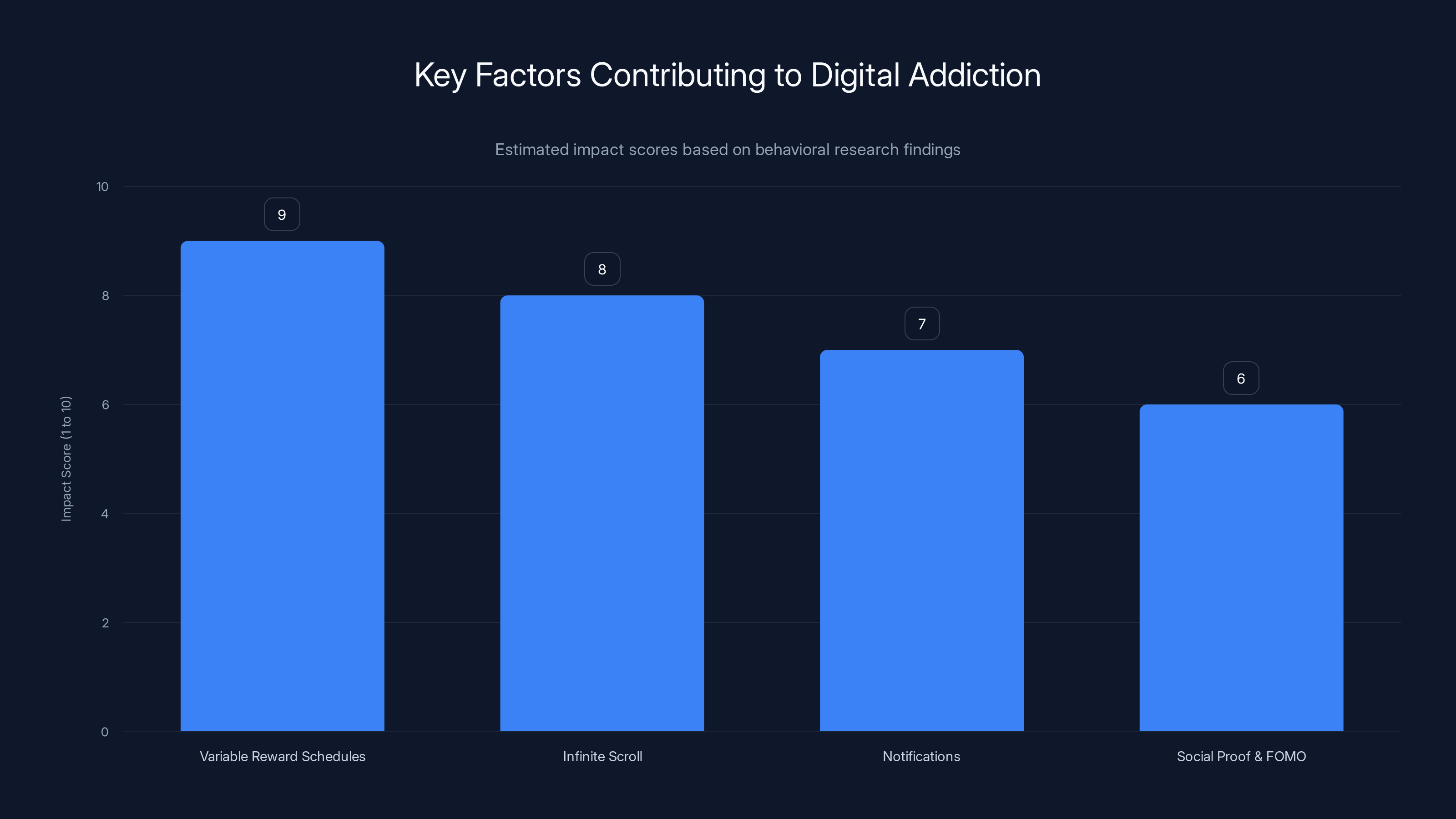

Design features like infinite scroll and algorithmic personalization have the highest impact on creating addictive experiences on social media platforms. (Estimated data)

Why TikTok Settled: The Economics of Legal Risk

Let's be brutally honest about why TikTok settled. It wasn't because the company suddenly discovered its app had problems. It's because the litigation math didn't work anymore. This is cold calculation dressed up as a "good resolution," to use Mark Lanier's diplomatic phrasing.

Consider what TikTok would have faced at trial. First, there's the testimony issue. Internal documents showing exactly how the algorithm works, what engagement metrics TikTok optimizes for, and what the company knows about addiction mechanics in its user base would likely have been put in front of the jury. These documents almost certainly exist. Every social media company has teams analyzing "session length," "daily active users," "return rate," and other engagement metrics.

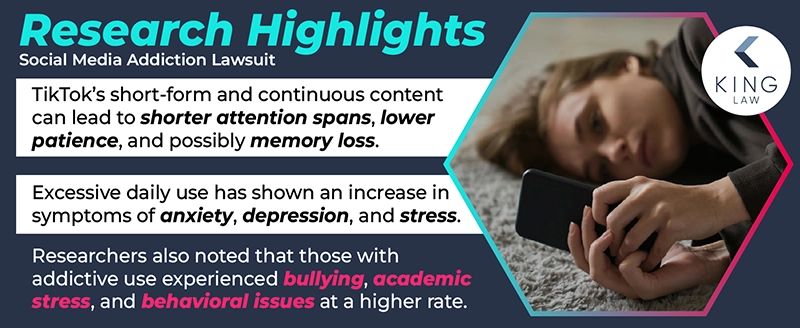

Then there's the expert witness problem. TikTok would have had to defend its design against neuroscientists and addiction researchers who have spent the last decade documenting how social media platforms activate the same reward pathways in the brain that gambling and drugs do. The research here is solid. We're not talking about fringe science. We're talking about peer-reviewed studies showing that notifications trigger dopamine responses, that variable reward schedules (the core of how social media feeds work) closely resemble slot machine mechanics, and that teenagers are particularly vulnerable because the prefrontal cortex doesn't fully mature until the mid-20s.

Third, there's the damages question. If K.G.M. won, what would she be entitled to? That's complicated, but the discovery process would have forced TikTok to disclose the total number of teenage users, how much time they spend in the app, correlation data between usage and mental health outcomes, and internal estimates of harm. That information could then be used in class action suits and state-level litigation.

So from TikTok's perspective, settling before trial made financial and reputational sense. Settlements stay largely private. Trials create public records, transcripts, and documented admissions that other plaintiffs can cite in their own cases. TikTok gets to avoid all of that in exchange for a check.

But here's what's critical: settling doesn't make the problem go away. It just clears the field for the next battle.

The Domino Effect: Why Snap Settled First

About a week before TikTok's settlement announcement, Snap reached its own settlement in the same case. The timing matters because it sends a signal: these companies are taking this threat seriously enough to pay to make it disappear.

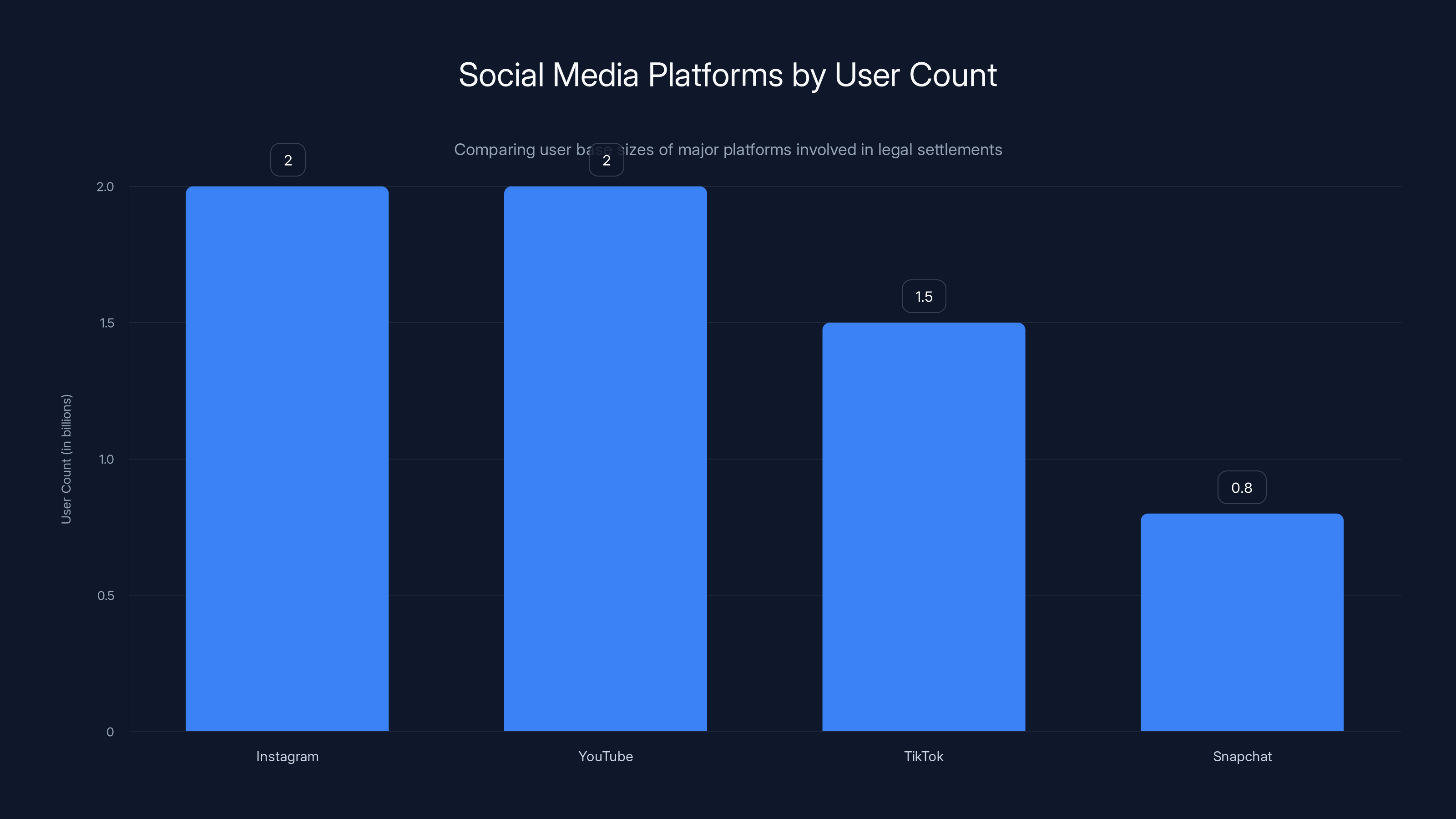

Snap's settlement is particularly interesting because Snapchat isn't the largest platform by user count. Instagram has over 2 billion users. TikTok has over 1.5 billion. YouTube has over 2 billion. Snapchat has around 800 million. Yet Snap still chose to settle. Why? Because Snapchat's user base skews younger than TikTok's, and younger users mean greater potential liability for addiction claims. Snapchat's core feature is ephemeral messaging with Snap Streaks, a mechanic specifically designed to encourage daily consecutive usage. From a legal standpoint, Snapchat's addiction mechanisms might be easier to defend in some ways (shorter message exposure) but harder in others (the Streaks system is explicitly designed to create compulsion).

Both Snap and TikTok settling before trial puts Meta and YouTube in an awkward position. They're now the only major defendants still facing courtroom exposure. That's actually a strategic disadvantage. Why? Because the case against them will now proceed with their competitors having already settled. There won't be comparative testimony about industry-wide practices. Testimony will focus specifically on Meta and YouTube, without the dilution of hearing about similar practices at TikTok and Snap.

Meta faces particular exposure because of Instagram, which arguably has more sophisticated personalization algorithms than any other platform. When the algorithm recommends content, it's often designed to maximize "engagement," which translates to continued scrolling. Meta knows this. The company has already weathered a major crisis over Instagram's impact on teenage mental health, and the documents released during Frances Haugen's whistleblower revelations made clear that Meta has extensive internal research on these harms.

YouTube faces its own challenges. The YouTube recommendation algorithm has been extensively studied, and critics have long argued that it can steer viewers toward increasingly extreme content. While that's not identical to an addiction argument, it's a related problem that could come up in testimony.

The lawsuit filed by K.G.M. in 2023 targeted four major platforms equally: Meta, Snap, TikTok, and YouTube, each accused of designing addictive apps. (Estimated data)

What the Research Actually Says About Digital Addiction

Before we talk about what happens next in the legal arena, it's worth understanding what the scientific evidence actually shows. This matters because it's the foundation of the entire lawsuit.

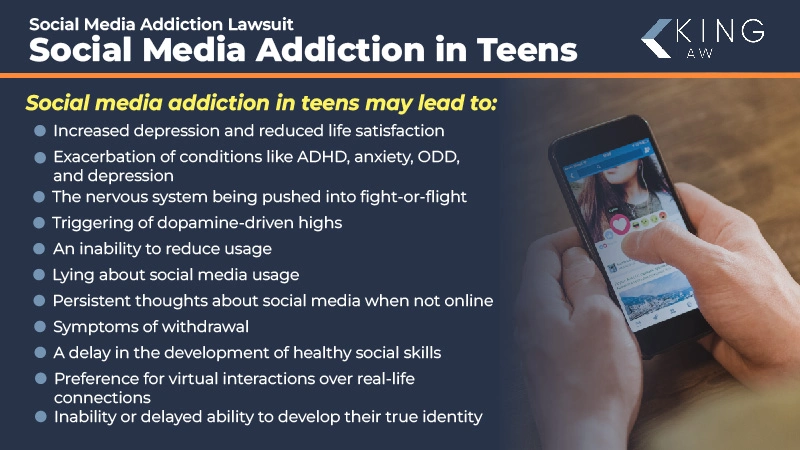

First, some definitions. The closest formal entry in the DSM-5 is "Internet Gaming Disorder," and even that is listed in Section III as a condition requiring further study rather than as an official diagnosis. It describes persistent and recurrent use of the internet to play games, leading to clinically significant impairment or distress. Social media addiction isn't in the DSM-5 at all, though it's being studied and has been proposed for future inclusion.

However, the behavioral and neurological mechanisms are well-documented. Here's what research shows:

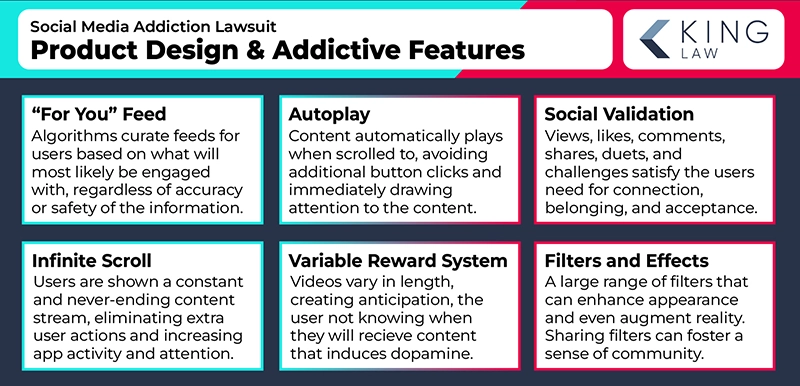

Variable Reward Schedules: When you scroll through TikTok's "For You" page, you don't know what you're going to see next. Sometimes it's hilarious. Sometimes it's boring. Sometimes it's mesmerizing. That unpredictability is the whole point. It mirrors what's called a "variable ratio reward schedule," which in B.F. Skinner's experiments produced especially high and persistent rates of responding. Slot machines use variable ratio schedules. TikTok's feed works on a similar principle.
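
To make that contrast concrete, here's a toy Python simulation (an illustration of the reinforcement concept, not a model of TikTok's actual feed) comparing a fixed-ratio schedule, which rewards every fifth action, with a variable one that rewards after an unpredictable number of actions averaging five. The function names are hypothetical, and the variable schedule is implemented as a "random-ratio" schedule, where each action independently pays off with probability 1/5, a common laboratory stand-in for Skinner's VR schedules.

```python
import random

# Hypothetical toy model of two reinforcement schedules; not any platform's code.


def fixed_ratio(n_actions: int, ratio: int) -> list[bool]:
    """Fixed-ratio schedule: reward exactly every `ratio`-th action."""
    return [(i + 1) % ratio == 0 for i in range(n_actions)]


def variable_ratio(n_actions: int, mean_ratio: int, seed: int = 0) -> list[bool]:
    """Random-ratio schedule: each action pays off with probability 1/mean_ratio,
    so rewards arrive after an unpredictable number of actions averaging mean_ratio."""
    rng = random.Random(seed)
    return [rng.random() < 1 / mean_ratio for _ in range(n_actions)]


def gaps_between_rewards(rewards: list[bool]) -> list[int]:
    """How many actions it took to earn each reward, counted from the previous one."""
    gaps, count = [], 0
    for rewarded in rewards:
        count += 1
        if rewarded:
            gaps.append(count)
            count = 0
    return gaps


if __name__ == "__main__":
    fr = gaps_between_rewards(fixed_ratio(10_000, 5))
    vr = gaps_between_rewards(variable_ratio(10_000, 5))
    print(f"fixed:    mean gap {sum(fr) / len(fr):.2f}, range {min(fr)}-{max(fr)}")
    print(f"variable: mean gap {sum(vr) / len(vr):.2f}, range {min(vr)}-{max(vr)}")
```

Both schedules pay off at roughly the same average rate. The difference is predictability: every fixed-ratio gap is 5, while the variable gaps range from a single action to many, and that uncertainty is what a slot machine, or a feed, trades on.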

Infinite Scroll: There's no natural endpoint to a social media feed. In the old days of social media (or in email), you'd eventually reach the bottom. Now, feeds load endlessly. This removes a natural stopping point that would normally trigger decision-making about whether to continue using the app.
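
Here's a minimal sketch of why the stopping point disappears, assuming a typical cursor-paginated feed API (the `Page` type and both fetch functions below are hypothetical, not any platform's real API). A chronological feed eventually returns no next cursor, so the client's scroll loop ends on its own; a recommendation feed can always produce another page, so the loop only ends when the user decides to stop.

```python
from dataclasses import dataclass
from itertools import islice
from typing import Callable, Iterator, Optional

# Hypothetical cursor-paginated feed API, for illustration only.


@dataclass
class Page:
    items: list[str]
    next_cursor: Optional[int]  # None signals "you've reached the bottom"


def chronological_page(posts: list[str], cursor: int, size: int) -> Page:
    """Finite feed: walks a fixed list of posts and eventually returns no cursor."""
    end = cursor + size
    return Page(posts[cursor:end], end if end < len(posts) else None)


def recommended_page(candidates: list[str], cursor: int, size: int) -> Page:
    """Endless feed: always returns another page and another cursor.

    A real recommender would rank fresh candidates on every request; cycling
    through a fixed list is a simplification.
    """
    items = [candidates[(cursor + i) % len(candidates)] for i in range(size)]
    return Page(items, cursor + size)


def scroll(fetch: Callable[[list[str], int, int], Page],
           source: list[str], size: int = 3) -> Iterator[str]:
    """Client scroll loop: keep requesting pages until the server stops sending cursors."""
    cursor: Optional[int] = 0
    while cursor is not None:
        page = fetch(source, cursor, size)
        yield from page.items
        cursor = page.next_cursor


if __name__ == "__main__":
    posts = [f"post{i}" for i in range(7)]
    print(list(scroll(chronological_page, posts)))  # ends after post6
    # The endless feed never ends on its own, so cap it at 20 items here.
    print(list(islice(scroll(recommended_page, posts), 20)))
```

The client code is identical in both cases. The only thing that changes is whether the server ever says "that's all," which is why removing the bottom of the feed is a design decision rather than a technical necessity.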

Notifications: Every like, comment, share, or follower notification is thought to deliver a small dopamine hit. The platforms understand this, which is why notifications are timed strategically. Research suggests that notifications are often batched and sent at times when the user is most likely to re-engage with the app.
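
As a rough illustration of that kind of timing logic, the hypothetical Python sketch below picks the hour with the best historical open rate and bundles pending alerts into a single push for that hour. It's an assumption-laden toy (the function names and log format are invented here), not a description of how any particular platform schedules notifications.

```python
from collections import defaultdict

# Hypothetical notification-timing logic, for illustration only.


def best_send_hour(open_log: list[tuple[int, bool]]) -> int:
    """Return the hour (0-23) with the highest historical open rate.

    open_log holds (hour_sent, was_opened) pairs for past notifications.
    """
    sent: dict[int, int] = defaultdict(int)
    opened: dict[int, int] = defaultdict(int)
    for hour, was_opened in open_log:
        sent[hour] += 1
        opened[hour] += int(was_opened)
    return max(sent, key=lambda h: opened[h] / sent[h])


def batch_for_delivery(pending: list[str], hour: int) -> dict:
    """Bundle pending alerts into a single push scheduled for `hour`."""
    return {"hour": hour, "count": len(pending), "preview": pending[:2]}


if __name__ == "__main__":
    log = [(8, False), (8, True), (21, True), (21, True), (13, False)]
    hour = best_send_hour(log)
    print(hour)  # prints 21: opened 2 of 2 at 21:00 vs 1 of 2 at 8:00
    print(batch_for_delivery(["new like", "new comment", "new follower"], hour))
```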

Social Proof and FOMO: Seeing how many likes or views content received creates status anxiety. Missing out on trending content creates FOMO. Both are powerful motivators that drive repeated checking behavior.

Algorithmic Personalization: The more you use the platform, the better the algorithm gets at predicting what will keep you engaged. This creates a feedback loop where the app becomes increasingly tailored to your specific vulnerabilities and interests, making it harder to disengage.
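
That feedback loop can be sketched with a simple epsilon-greedy recommender, a standard multi-armed bandit technique used here as a stand-in (real platforms use far more complex models, and nothing below is any company's actual system). The simulated user's engagement probabilities are hypothetical. The recommender starts with no knowledge, learns which category earns the most engagement, and then shows that category almost exclusively.

```python
import random

# Hypothetical epsilon-greedy feed recommender, for illustration only.


def simulate_feed(engage_prob: dict[str, float], steps: int = 5000,
                  epsilon: float = 0.1, seed: int = 1) -> dict[str, int]:
    """Learn which content category keeps a simulated user engaged.

    engage_prob is the simulated user's hidden chance of engaging with each
    category. Returns how many times each category was shown.
    """
    rng = random.Random(seed)
    cats = list(engage_prob)
    shows = {c: 0 for c in cats}
    hits = {c: 0 for c in cats}
    for _ in range(steps):
        if rng.random() < epsilon:
            choice = rng.choice(cats)  # explore: show a random category
        else:
            # Exploit: pick the best observed engagement rate.
            # Unseen categories score 1.0 so each one gets tried at least once.
            choice = max(cats, key=lambda c: hits[c] / shows[c] if shows[c] else 1.0)
        shows[choice] += 1
        if rng.random() < engage_prob[choice]:  # did the simulated user engage?
            hits[choice] += 1
    return shows


if __name__ == "__main__":
    user = {"news": 0.1, "sports": 0.2, "comedy": 0.6}
    print(simulate_feed(user))
```

With the hypothetical user above, the vast majority of the 5,000 recommendations end up being comedy, the category with the highest engagement rate; only the 10% exploration budget keeps anything else in the feed. The system never "decides" to narrow the feed. Optimizing for engagement does that on its own.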

Neuroscientific research shows that these mechanisms activate the same reward centers in the brain (particularly the nucleus accumbens and ventral tegmental area) that are activated by gambling, drugs, and other behavioral addictions. Brain imaging studies have shown comparable patterns of activity.

What's crucial is that these mechanisms aren't accidental. They're intentional. Social media platforms are explicitly optimized for engagement because engagement = ad impressions = revenue. The business model requires addiction. You can't have a platform that generates $100+ billion in annual ad revenue if users only casually check it once or twice a day. The platform has to maximize daily active users, session length, and return frequency.

The Meta and YouTube Problem: Why the Trial Remains Dangerous

With TikTok and Snap settled, Meta and YouTube now face a trial that could establish legal precedent. And frankly, their situation is precarious.

Meta's Vulnerability: Meta owns both Facebook and Instagram. Internal documents that emerged from the Frances Haugen revelations showed that Meta conducted extensive research on Instagram's impact on teenage girls' mental health. That research, much of it based on internal surveys, found that Instagram made body image problems worse for many teen girls and linked its use to anxiety, depression, and disordered eating among some users. More damaging still, Meta's executives knew about this research and didn't act on it in ways that would have significantly reduced engagement.

Those documents are now public. They're fair game for the plaintiff's lawyers. During trial, Meta executives will likely be questioned about why they didn't implement changes that their own researchers recommended. That's not an easy position to defend. It's hard to argue that your platform isn't addictive when your own research suggests otherwise.

Furthermore, Instagram's algorithm is particularly aggressive. It's been heavily optimized for engagement over safety. The algorithm knows exactly what content will make a user scroll for hours. It has millions of data points about each user's vulnerability to different types of content. This isn't speculation. This is documented through years of reporting by tech journalists and researchers.

YouTube's Vulnerability: YouTube's recommendation algorithm has been extensively studied and critiqued. The algorithm is known for recommending increasingly extreme content, sometimes leading users down rabbit holes of misinformation or harmful content. While that's slightly different from an addiction argument, it's related. The mechanism is the same: the algorithm is optimized to keep people watching.

YouTube's watch time metric is public. Users can see their own statistics on how many hours they've watched. YouTube doesn't discourage this. In fact, the platform's analytics encourage creators to optimize for watch time, which creates a feedback loop where content is increasingly designed to keep viewers glued.

During trial, YouTube executives will be asked to explain the recommendation algorithm's design. They'll be asked why the algorithm sometimes recommends harmful content. They'll be asked about internal estimates of how much watch time is driven by algorithmic recommendation versus organic user behavior. These are questions that are very hard to answer in ways that don't implicate the company in deliberately creating addictive systems.

The Class Action Risk: If Meta or YouTube loses this case, the damages could be enormous. A class action lawsuit encompassing all teenage users of these platforms over a certain time period could involve hundreds of millions of people. Even if damages per person are modest, the total could be billions of dollars.
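
The arithmetic is simple multiplication, which is why even small per-person awards add up quickly. With purely illustrative figures (not drawn from any filing or published estimate), a class of 100 million people each receiving $50 would look like this:

```latex
D_{\text{total}} = N \times d = \left(1 \times 10^{8}\right) \times \$50 = \$5 \times 10^{9} = \$5\ \text{billion}
```

Here $N$ is the number of class members and $d$ the hypothetical per-person award; doubling either one doubles the total.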

More importantly, a loss would establish legal precedent that social media platforms can be held liable for harm caused by addictive design. That precedent would then be used in dozens of other lawsuits. Every state's attorney general could bring cases. Plaintiffs' attorneys would file class actions. The entire legal landscape would shift.

Estimated data shows that expert witness challenges (40%) and testimony/internal documents (35%) were significant factors in TikTok's decision to settle, with potential damages also playing a role (25%).

The Broader Regulatory Context: Where This Fits

TikTok's settlement doesn't happen in a vacuum. It's part of a much broader regulatory movement against social media companies.

State-Level Litigation: Multiple states' attorneys general have already filed lawsuits against Meta and Snap. New Mexico's attorney general brought a case alleging that Facebook and Instagram have facilitated harm to children. Other states have similar cases in motion. These cases are separate from the addiction lawsuit, but they're part of the same movement.

Federal Legislative Efforts: Congress has been working on social media regulation bills for years. None has become law yet, but the legislative appetite for action is real. Bills like the Kids Online Safety Act (KOSA) would impose requirements on platforms to protect minors and could create new liability for addictive design practices.

International Regulation: Europe's Digital Services Act is already in effect. It imposes obligations on platforms to assess and mitigate risks, including those related to child protection. The UK's Online Safety Act became law in 2023. Australia has passed legislation setting a minimum age of 16 for social media accounts. The regulatory environment globally is shifting toward requiring platforms to prove they're not causing harm.

Academic and Medical Pushback: Major medical organizations including the American Academy of Pediatrics and American Psychological Association have issued statements about the risks of social media use to young people. The U.S. Surgeon General issued an advisory on social media and youth mental health. This isn't niche anymore. Mainstream medicine is flagging these issues.

In this context, TikTok's settlement is a rational move, but it's also an acknowledgment that the regulatory environment has fundamentally shifted. Companies can no longer rely on legal gray zones. They're now expected to prove they're protecting users, not just argue that they're not explicitly breaking laws.

What the Settlement Actually Means

Here's what we know and don't know about TikTok's settlement:

What We Know: TikTok reached a settlement with undisclosed terms. It happened just hours before jury selection. Mark Lanier said they were "pleased" with it and that it was a "good resolution." That language suggests the plaintiff got something meaningful, not a nuisance settlement.

What We Don't Know: The settlement amount. Whether it includes mandatory design changes. Whether TikTok agreed to any specific safeguards for minor users. Whether there are non-disparagement clauses. Whether the plaintiff agreed to sealed terms or public disclosure.

The fact that terms are undisclosed is actually significant. In some cases, companies insist on confidential settlements to prevent the amounts from being public (since high settlements suggest admission of liability, even if officially it's a settlement without admission of wrongdoing). In other cases, plaintiffs push for public disclosure to amplify their victory.

TikTok likely pushed for confidentiality. Why would it? Because even if the settlement amount is $50 million, if that becomes public, it signals to other plaintiffs' attorneys that TikTok thinks these cases are worth that amount. Every plaintiff's attorney would then ask for similar amounts in their cases. Secrecy keeps the signal from spreading.

The Design Changes Question: The really critical question is whether TikTok agreed to change anything about how the app works. Did it agree to implement time limits? Did it agree to reduce push notifications? Did it agree to change the algorithm? We don't know. But the timing of the settlement suggests that TikTok wanted to avoid trial badly enough that it might have agreed to something.

Consider: if TikTok had simply paid money without making any changes, what's the point? The app would still work the same way. The addiction mechanisms would still be there. Why would the plaintiff's attorney call that a "good resolution"? Unless they negotiated something beyond money.

Snapchat, with the smallest user base of 800 million, settled first due to its younger demographic and potential liability for addiction claims. Estimated data.

The Precedent Problem: Why This Trial Matters

Let's focus on what happens next with Meta and YouTube. Jury selection for their trial is scheduled to begin in Los Angeles, and the stakes are genuinely high.

Precedent Risk: If Meta or YouTube loses, it establishes that social media companies can be held liable for harm caused by addictive design. Currently, that's legally uncertain. Companies hide behind Section 230 of the Communications Decency Act, which provides broad legal protection for user-generated content platforms. But addiction mechanics aren't user-generated content. They're platform design.

A loss would clarify that users (or their representatives) can hold platforms accountable for how the platform is designed, not just for what users post. That's a fundamental shift in liability.

Damages Precedent: A loss would also establish what damages might look like. If the jury awards significant damages to one plaintiff, it suggests what damages might be in larger class actions. Some estimates suggest that if Meta or YouTube lost, they could face class action exposure worth tens of billions of dollars.

Design Precedent: A loss might force the winning company to make specific changes to how their platform operates. Imagine a court order requiring Meta to modify Instagram's algorithm to reduce engagement optimization. That would be revolutionary. It would force Meta to choose between profitability and compliance. (Spoiler: they'd probably comply, but it would reduce their stock price.)

The Defense Strategy: How Meta and YouTube Will Argue

Meta and YouTube have a few possible defenses, and understanding these matters because they'll likely appear in any expert testimony:

The Free Choice Argument: "Users choose to use our platforms. Nobody is forced. If users find them addictive, they can stop." This is weak legally, particularly for minors, because minors have diminished legal capacity. But it's a starting position.

The Technology Neutrality Argument: "All engaging experiences—movies, books, games, even conversation—can feel compelling. We're not unique in creating engaging experiences." True, but not really a defense. The issue isn't whether the experience is engaging. It's whether the platform deliberately engineered the engagement in ways that exploit psychological vulnerabilities.

The Design Necessity Argument: "Our design choices are necessary for the platform to work. You can't have a social network without an algorithm. You can't have an algorithm without optimizing for relevance. Engagement is a proxy for relevance." There's something to this, but it's not really a defense either. It's saying: "Yes, we designed it this way, and we couldn't do it differently." The question is whether they tried.

The Competitor Parity Argument: "Everyone does this. We're not unique. If we're liable, so are all the others." With TikTok and Snap having settled, this argument is actually weaker. It's basically saying: "We're so confident in our legal position that we're willing to go to trial even though our competitors settled." That's either brave or foolish.

None of these defenses are particularly strong when you're facing a jury of regular people who use social media, understand the experience of compulsive scrolling, and have probably worried about their own kids' screen time.

Variable reward schedules have the highest impact on digital addiction, followed by infinite scroll, notifications, and social proof/FOMO. Estimated data based on behavioral research.

What This Means for Platform Design Going Forward

Regardless of how the Meta and YouTube trial resolves, the settlement signals something important about the future of social media platform design: the era of "maximize engagement above all else" is ending.

We're moving toward a new model where platforms will need to balance engagement with user welfare. This might mean:

Time Limits: Platforms might implement daily usage limits, either mandatory or as an optional feature that's easy to access and use.

Notification Reduction: Platforms might reduce or eliminate push notifications, or make the algorithms that decide when to send them more conservative.

Algorithmic Transparency: Platforms might expose more information about how recommendations are made, allowing users and regulators to audit for problematic patterns.

Content Moderation for Harm: Platforms might more aggressively remove or reduce the visibility of content that's known to trigger addictive usage patterns.

Minor Protections: Platforms might implement special rules for minor users, like mandatory time limits, reduced algorithmic recommendations, or different notification patterns.

None of this is certain. But the regulatory environment and legal landscape are making it increasingly likely.

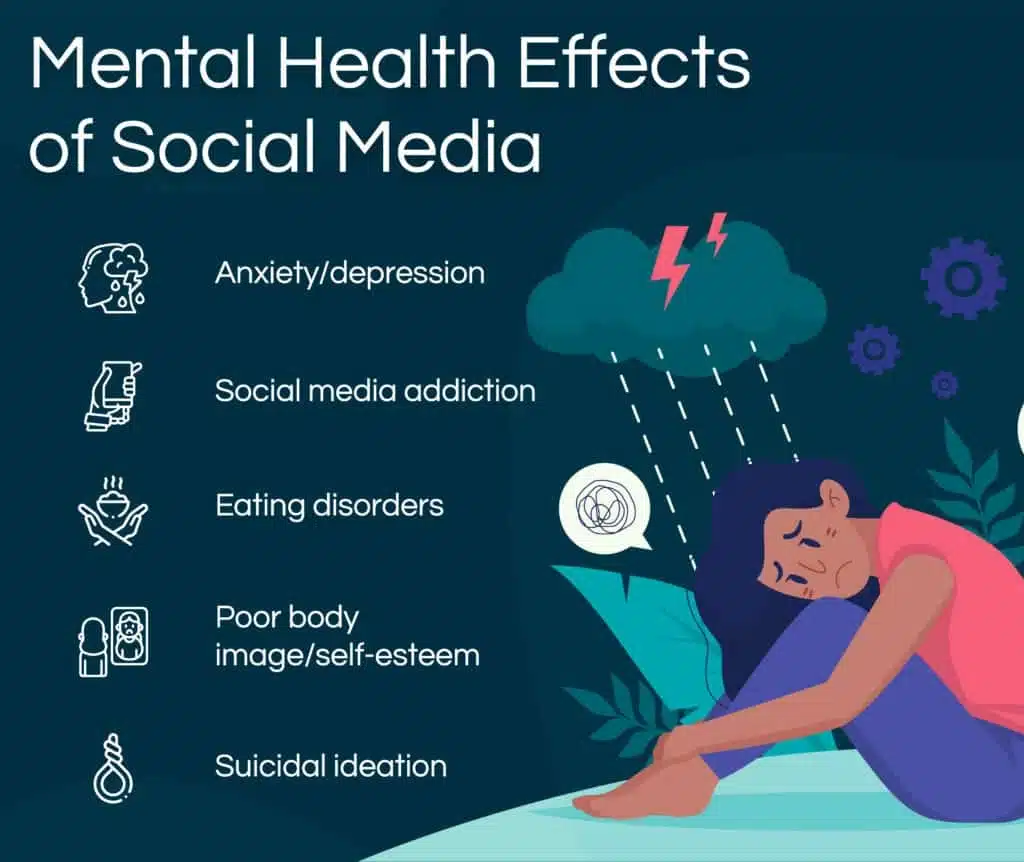

The Mental Health Impact: Why This Matters Beyond Law

It's easy to get lost in the legal and business implications of these settlements. But the actual reason the lawsuit exists is worth keeping in mind: real harm to real people.

The correlation between social media use and teenage depression, anxiety, eating disorders, and body image problems is well-documented. The research doesn't always show causation (some people who are already struggling seek out social media), but it's strong enough that major medical organizations have issued warnings.

For some teenagers, social media becomes genuinely addictive. They experience withdrawal symptoms when they can't access it. They compulsively check notifications. They experience anxiety when they can't use the app. For these individuals, the settlement isn't abstract legal maneuvering. It's about whether platforms have any responsibility to reduce the mechanisms that make that addiction possible.

A teenage girl checking Instagram 50 times per day isn't doing that because she's weak-willed. She's doing it because the app is deliberately engineered to trigger that behavior. The psychology is real. The neurobiology is real. The harm is real.

What Happens to Meta and YouTube: Possible Outcomes

As we head into the actual trial with Meta and YouTube as defendants, there are several possible outcomes:

Outcome 1 (Settlement): Meta or YouTube could decide, once jury selection begins or during the trial, that the legal risk is too high and settle like their competitors did. This seems possible, particularly if early trial testimony goes badly for the defendants.

Outcome 2 (Verdict for the Defendant): The jury could decide that while social media platforms might be engaging, they're not deliberately designed to be addictive, or they don't believe the addiction caused the plaintiff measurable harm. This is possible but seems unlikely given the evidence.

Outcome 3 (Verdict for the Plaintiff): The jury could find that the platforms are deliberately designed to be addictive and that caused the plaintiff harm. This would be major and would likely trigger an immediate appeal. But it would also establish precedent that other plaintiffs could rely on.

Outcome 4 (Mistrial): Some issue with the trial process could result in a mistrial, requiring a new trial later.

Many observers expect a settlement before or during trial. But if the case goes to completion, a verdict for the plaintiff would be extraordinary and genuinely transformative for the tech industry.

The Bigger Picture: Tech Regulation and Accountability

Zoom out from this one case, and you see a much larger movement toward holding tech companies accountable for the impacts of their products.

For years, tech companies operated with a kind of regulatory immunity. Section 230 protected them from liability for user-generated content. Privacy law was fragmented and weak. Product liability law didn't quite apply. Regulatory agencies lacked resources and technical expertise.

All of that is changing. Regulators are getting smarter. Legislatures are passing laws. Courts are allowing cases to proceed. International regulatory movements are creating pressure.

TikTok's settlement is a small acknowledgment of this shift, but it's a meaningful one. The company is admitting that the legal risk of defending itself in court is now too high. That's a shift from the historical position of tech companies, which has been to fight aggressively on legal grounds while changing nothing operationally.

What Users Can Do Right Now

While these legal cases work their way through the system, individual users aren't powerless. There are concrete steps you can take:

Understand Your Usage: Check your screen time statistics. Know how much time you're actually spending on social media. The platforms make this easy to access. Most people are surprised by the numbers.

Use Built-In Tools: Most platforms now offer time limit features, notification controls, and usage insights. Turn on these features. Make notification rules stricter. Set daily time limits.

Modify Your Feeds: Unfollow accounts that make you feel bad. Mute keywords. Customize your algorithm by being intentional about what you engage with. The algorithm learns from your behavior. Make it learn that you want better content.

Take Real Breaks: Schedule phone-free time. Actual time when the phone is in another room. These breaks are harder to commit to than you'd think, which is actually evidence that the apps are working as designed. But they're important.

For Parents: Monitor your kids' usage. Have conversations about social media. Help them understand that these apps are designed to be engaging. That's not a moral failing on their part; it's intentional platform design.

The Road Ahead: What to Watch For

As this situation develops, here are the critical things to watch:

Meta and YouTube Trial Timeline: The trial is in Los Angeles. It's likely to get media coverage, particularly if major executives testify. Watch for how those executives perform under questioning.

Other State Cases: States like New Mexico are pursuing separate cases against Meta. Watch for settlements or victories that might accelerate action elsewhere.

Legislative Movement: Congress might finally pass legislation like KOSA or other regulation. This would be more significant than any individual lawsuit because it would set standards across all platforms.

International Precedent: Watch the UK's implementation of the Online Safety Act and the EU's enforcement of the Digital Services Act. If those jurisdictions successfully force platforms to change, it creates pressure for U.S. regulation.

Platform Design Changes: Whether Meta or YouTube makes voluntary changes to reduce addictive mechanics. This is the real indicator of whether the legal pressure is working.

Why This Matters: Beyond the Courtroom

At its core, this case is about a fundamental question: what responsibility do platforms have for how their products affect people's minds and behavior?

For the first decade or so of social media, the answer was basically "none." Platforms were just neutral conduits. Users were responsible for managing their own usage.

That answer doesn't hold up anymore. We know too much about how these platforms work. We know they're not neutral. We know they're deliberately engineered to maximize engagement. We know that engagement often comes at the cost of user wellbeing.

The question now is whether the legal and regulatory system will force platforms to balance engagement optimization with user welfare. Or whether platforms will continue to prioritize profit above all else, accepting legal settlements as a cost of doing business.

TikTok's settlement suggests the company chose the latter. But the pressure is building, and eventually, that pressure usually creates change.

FAQ

What is social media addiction?

Social media addiction refers to the compulsive use of social media platforms despite negative consequences, characterized by persistent urges to check notifications, scroll feeds, and return to the app throughout the day. It involves psychological dependence on the engagement rewards (likes, comments, follows) that these platforms deliver. It is not yet a formal clinical diagnosis, but related behavioral addictions have gained official recognition: the American Psychiatric Association's DSM-5 lists internet gaming disorder as a condition for further study, and the World Health Organization's ICD-11 includes gaming disorder. Researchers argue that problematic social media use follows similar patterns of activation in the brain's reward centers.

How do platforms like TikTok and Instagram deliberately create addictive experiences?

Platforms use several deliberate design mechanisms to maximize engagement and encourage compulsive use. Variable reward schedules (unpredictable content that creates anticipation), infinite scroll (eliminating natural stopping points), strategic notifications timed for maximum re-engagement, algorithmic personalization that learns what content keeps users engaged longest, and social proof mechanisms (likes and follower counts) all combine to create compelling, habit-forming experiences. These aren't accidental design choices but rather intentional optimizations that directly serve each platform's revenue model, which depends on advertising and therefore on maximizing the time users spend in the app.

Why did TikTok settle the addiction lawsuit?

TikTok settled to avoid the legal and reputational risks of a public trial. A trial would have exposed internal documents about how the platform's algorithm works, forced executives to testify about addiction mechanics, and potentially established legal precedent that platforms can be held liable for harm caused by addictive design. Settlement allows TikTok to resolve the case confidentially while avoiding the risk of a jury verdict that could trigger massive class action lawsuits and force design changes. The timing, right before jury selection, suggests the company calculated that settlement costs less than trial risk.

What happens now that Meta and YouTube are the remaining defendants?

Meta and YouTube now face a trial that could set important legal precedent. They must defend against claims that their platforms are deliberately designed to be addictive and that this design caused harm. The fact that their competitors (TikTok and Snap) settled strengthens the plaintiff's position by suggesting that even tech companies consider these cases serious enough to pay to resolve them. Internal documents disclosed by former Meta product manager and whistleblower Frances Haugen could be particularly damaging, as they suggest the company knew about harms and didn't fully address them. A verdict for the plaintiff could potentially expose Meta and YouTube to billions in class action liability and force them to fundamentally change how their algorithms operate.

What does brain science say about whether social media is addictive?

Neuroscientific research suggests that social media engagement activates the same reward pathways in the brain (particularly the nucleus accumbens and ventral tegmental area) that are involved in gambling, drug use, and other addictions, and brain imaging studies have found comparable patterns of neural activation. The variable reward schedule used by social media feeds closely resembles slot machine mechanics: B. F. Skinner found that variable-ratio reinforcement produces high, steady rates of behavior that are especially resistant to extinction. Teenagers are particularly vulnerable because their prefrontal cortex, which governs impulse control and risk assessment, continues developing into the mid-20s. These documented neurobiological and behavioral mechanisms help explain why people struggle to use these platforms in moderation.

What design changes might platforms be forced to make?

Platforms could be required to implement mandatory or easily accessible daily usage limits, reduce or eliminate push notifications, provide algorithmic transparency so users understand why content is being recommended, modify algorithms to reduce engagement optimization in favor of user welfare, and implement special protections for minor users such as mandatory time limits, reduced algorithmic recommendations, or different notification patterns. Some jurisdictions, particularly in Europe under the Digital Services Act, are already beginning to require platforms to demonstrate they've assessed and mitigated risks to user wellbeing. A legal loss or regulatory pressure could accelerate these changes industry-wide.

How has the regulatory environment changed?

The regulatory environment has shifted dramatically toward holding platforms accountable. The U.S. Surgeon General issued an advisory on social media and youth mental health. Medical organizations including the American Academy of Pediatrics have issued statements about risks. Congress has considered bills like the Kids Online Safety Act that would impose new requirements. The EU's Digital Services Act is already in effect, imposing obligations to assess and mitigate risks. The UK's Online Safety Bill became law as the Online Safety Act in 2023. Multiple states' attorneys general have filed lawsuits against social media companies. This multi-pronged pressure from medical authorities, legislatures, courts, and international bodies signals that the era of regulatory immunity for tech companies is ending.

What can I do if I'm concerned about my own social media use?

You can check your screen time statistics in your phone's settings (Screen Time on iOS, Digital Wellbeing on Android) or in the usage dashboards that most major apps provide. Set daily time limits using built-in tools. Modify notification settings to receive fewer interruptions. Customize your algorithm by following accounts that add value and muting or unfollowing those that don't. Use website blockers or phone features that restrict app access during certain hours. Take regular breaks from social media entirely. For parents concerned about children, have conversations about how social media is designed to be engaging, monitor usage, and help establish healthy boundaries. Understanding that these apps are deliberately engineered to be compelling is the first step to using them more intentionally.

Conclusion

TikTok's settlement isn't an ending. It's barely a beginning. What it represents is a fundamental shift in how the legal system, regulators, and society view social media companies and their responsibility for the impacts of their products.

For years, these companies operated under the assumption that they were neutral platforms, that they bore no responsibility for how their products affected people's behavior and mental health. That assumption is no longer legally or socially sustainable. Regulators understand that platforms aren't neutral. They're deliberately engineered systems designed to maximize engagement, often at the cost of user wellbeing.

The settlement signals that even TikTok, one of the most aggressive platforms in terms of engagement optimization, calculated that defending itself in court wasn't worth the risk. That's a massive shift. It suggests the company recognizes that the legal landscape has changed.

What happens next will depend largely on how the Meta and YouTube trial unfolds. A verdict for the plaintiff would be revolutionary and would force dramatic changes across the industry. A settlement would represent continued legal pressure. Either way, the era of unaccountable engagement optimization is ending.

For users, the critical takeaway is that you're not weak-willed if you find it hard to moderate your social media usage. These platforms are deliberately engineered to be addictive. That's not a personal failing. It's intentional platform design. Understanding that is the first step toward using these tools more intentionally rather than being used by them.

The regulatory framework is shifting. The legal system is shifting. But individual behavior change still matters. Whether or not courts force platforms to make changes, you can modify how you interact with them. Set limits. Use privacy and notification controls. Be intentional about what content you engage with. Take breaks.

The path forward likely includes both regulatory action and individual responsibility. Both are important. The settlements are just the opening move in a much longer game about what responsibility tech companies have for their impacts on human behavior and wellbeing.

Key Takeaways

- TikTok settled a major social media addiction lawsuit just hours before jury selection, avoiding public testimony and precedent-setting trial

- Meta and YouTube remain the only major defendants, facing a trial that could establish legal precedent that platforms can be held liable for addictive design

- Research shows social media platforms deliberately use variable reward schedules, infinite scroll, and algorithmic personalization to maximize engagement at the cost of user wellbeing

- The global regulatory environment is shifting toward holding platforms accountable, with legislation in Europe already in effect and Congressional action pending in the U.S.

- Individual users can take concrete steps to manage social media use including setting time limits, modifying notifications, and customizing their algorithmic feeds
