Introduction: What the Documents Really Show

Last week, the tech industry got caught red-handed. Not in some dramatic whistleblower moment, but in something arguably worse: its own emails and internal presentations, now public as part of a massive legal battle.

Thousands of internal documents from Meta, TikTok, YouTube, and Snap were released ahead of major trials brought by school districts, state attorneys general, and parents alleging that these platforms deliberately designed products to addict teenagers. The documents paint a picture that's uncomfortable for Big Tech: these companies knew exactly what they were doing when it came to teen users, discussed the risks openly in closed-door meetings, and weighed those risks against business opportunity.

Here's what makes this different from the usual tech industry drama. We're not talking about leaked emails or rumors. We're talking about official documents produced as part of court-ordered discovery, reviewed by The Tech Oversight Project and independently verified by major news outlets. These aren't speculation. They're the internal conversations these companies thought would never become public.

The trials themselves are historic. This is the first time tech companies are being sued at this scale by government entities and schools over claims that their products harm young users. The first trial kicks off in June. On the Monday these documents dropped, a federal judge heard arguments over the scope of the trials; that ruling will determine what evidence gets considered and how broad the legal exposure really is.

But beyond the legal theater, these documents reveal something crucial about how Big Tech actually thinks about teenagers. They reveal conversations about teen recruitment, retention strategies, the mental health risks they tracked internally, and how they calculated whether addressing those risks was worth the business cost. This isn't theory anymore. These are their own words.

The documents span nearly a decade, from 2016 to recent years, capturing a period when teen social media use exploded and when platforms shifted from viewing young users as a future opportunity to viewing them as a critical growth engine. What you'll find is that the companies didn't stumble into teen addiction. They actively built systems designed to keep young users engaged, all while tracking evidence that those systems were causing harm.

TL;DR

- Internal documents show platforms strategically recruited teens as a core growth priority, with Meta prioritizing "teen growth" and Google noting "kids under 13 are the fastest-growing Internet audience"

- Companies tracked harmful effects while designing addictive features, including awareness of mental health risks, depression links, and sleep disruption from autoplay and infinite scroll

- Risk vs. reward calculations were made explicitly, with executives deciding that business growth outweighed addressing safety concerns for younger users

- Platforms used psychological tactics targeting adolescent vulnerabilities, including likes, notifications, and algorithmic recommendation systems designed specifically for teen retention

- The trials represent a historic accountability moment, with the first trial starting in June 2025 and potentially reshaping how platforms design products for young audiences

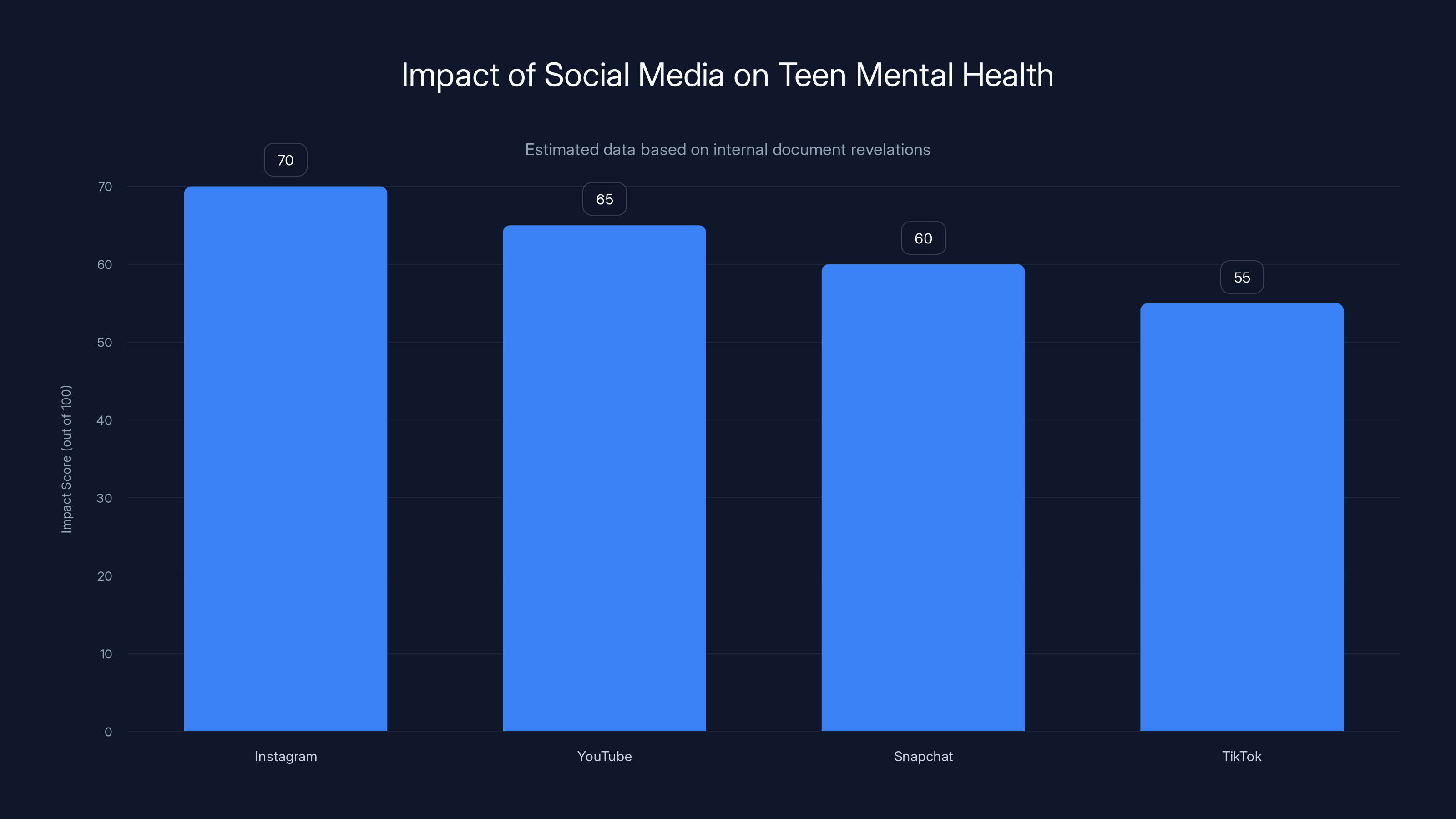

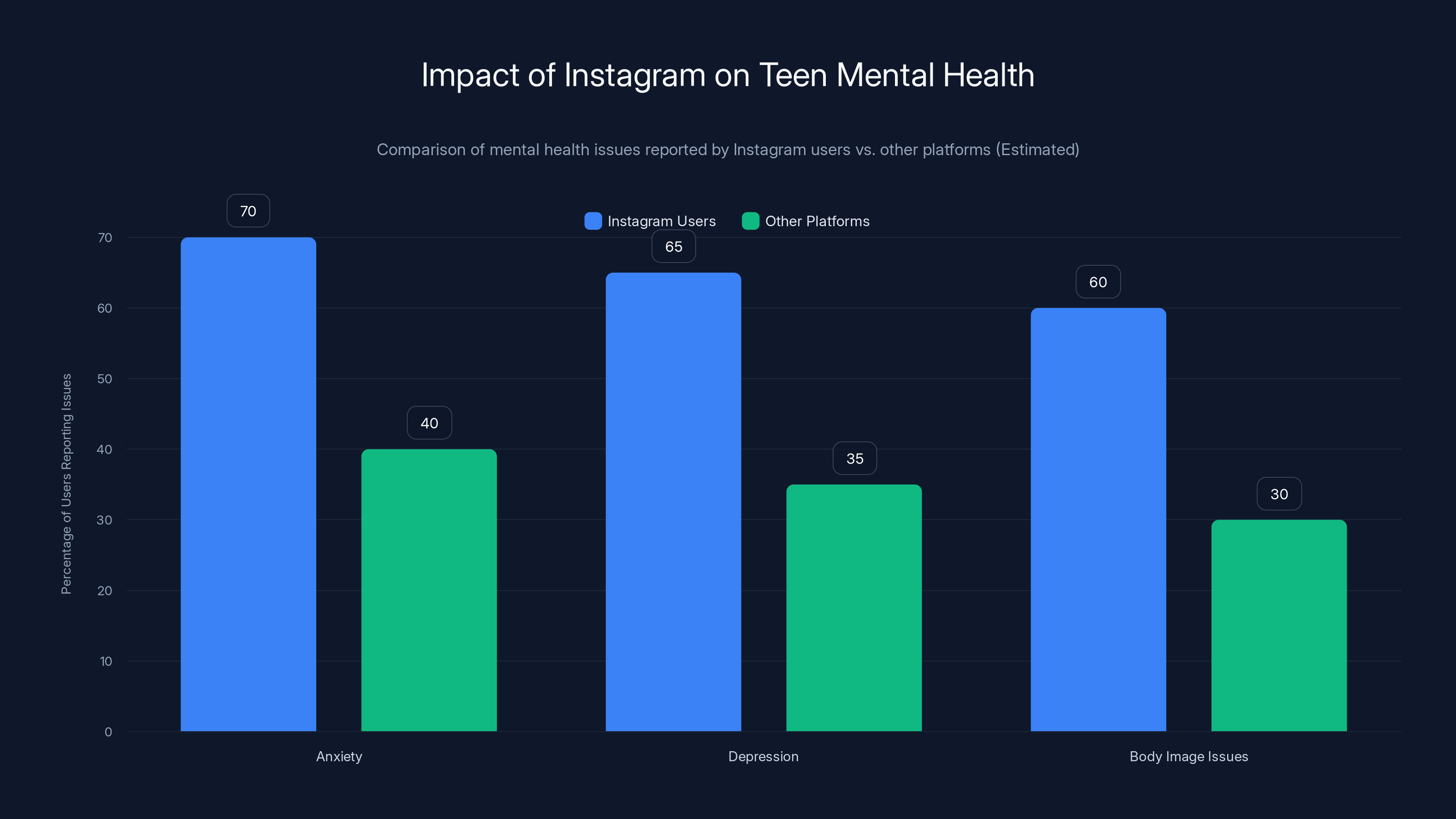

Estimated data suggests Instagram has the highest negative impact on teen mental health, followed by YouTube, Snapchat, and TikTok. These platforms prioritize engagement over safety.

The Teen Gold Rush: Why Social Media Companies Became Obsessed with Young Users

There's a moment in every tech company's lifecycle when growth flattens. User saturation hits. Engagement plateaus. What do you do? You go younger. That's the story of social media in the 2015-2017 period, and the documents show exactly how it played out in executive-level decision-making.

Meta's playbook is the clearest example. In a December 2016 email flagged "FYI: Teen Growth!!", a Meta executive told Guy Rosen, then-head of growth, that "Mark has decided that the top priority for the company in H1 2017 is teens." This wasn't a vague statement. These were literal C-suite marching orders to make teen recruitment the primary business objective for the entire first half of 2017.

Why? Because adult growth was slowing down. By 2016, Facebook's core user base wasn't growing explosively anymore. The teenage market, though, offered something different: lifetime value. Get someone at 14, keep them engaged for the next 50 years, and the math becomes extraordinary. Plus, teenagers spend more time on social platforms than any other demographic. They're more likely to create content, more likely to engage with ads, more likely to invite friends. They're not just users. They're growth multipliers.

Google saw the same opportunity. A November 2020 internal slide titled "Solving Kids is a Massive Opportunity" explicitly stated that "Kids under 13 are the fastest-growing Internet audience in the world." The deck noted that family users "lead to better retention and more overall value." The strategy was elegant: get schools to adopt Chromebooks, then leverage that relationship to drive YouTube adoption and subsequent Google product purchases down the line. One Google spokesperson later claimed YouTube doesn't market directly to schools, but the internal documents tell a different story. The company strategically targeted the educational market as a gateway to youth adoption.

Snap and TikTok followed similar logic. TikTok's algorithm was specifically designed to be addictive to young users. The platform's internal understanding of teen psychology was sophisticated. They knew what retention mechanisms worked. They deployed them aggressively.

The business case for teen recruitment is worth understanding. A teenager acquired at 14 can generate value for decades. They develop platform habits that stick. They're less price-sensitive than adults. They create the content that attracts other users. And critically, their engagement metrics are higher. They spend more time, create more content, and drive more social connections than adult users. The lifetime customer value calculation makes the entire teen strategy mathematically rational, at least from a pure profit standpoint.

What the documents show is that this wasn't an accident or a byproduct of launching features. It was deliberate. It was prioritized. It was measured. And the companies knew exactly what they were doing.

How Meta Planned to Turn Teens Into "Smaller, Cooler" Content Creators

Meta's internal strategy for teen engagement went beyond just recruitment. The company thought deeply about how to make Facebook and Instagram appealing to teenagers who were rapidly losing interest in their parents' platform.

The insight was simple but revealing: teenagers didn't want their parents watching them. Many had already moved to Instagram partly for that reason. What they wanted was something smaller, more private, more experimental. Meta knew this because teenagers had literally told them by inventing "Finsta": fake Instagram accounts where teens could post unfiltered content to small groups of actual friends without fear of adult judgment.

Instead of letting this behavior exist on other platforms, Meta decided to formalize it. Internal documents show the company proposed introducing a "private mode" for Facebook that would mimic what teenagers loved about Finsta: "smaller audiences, plausible deniability, and private accounts." The genius here is that Meta would own the private behavior instead of losing it to competitors.

The company also developed a "teen ambassador program" for Instagram, essentially turning popular teenagers into brand advocates. Give them tools, status, and recognition, and they'll promote the platform to their peers. It's a classic growth hack, but its application to teens shows how strategically Meta thought about adolescent psychology and social dynamics.

What's particularly telling is that Meta recognized the risk in these moves. Emails from 2016 discussing the short-lived under-21 app "Lifestage" show executives debating whether to inform school administrators about the app's launch. One concern: how would they verify that only actual teenagers were using it? The potential for predators to impersonate minors was obvious. But the solution—adding account verification and safety checks—was considered less important than maintaining the app's "cool factor" by keeping it secret from adults.

One internal email captures the tension perfectly: "[W]e can't enforce against impersonation/predators/press if we don't have a way to verify accounts." Knowing this fundamental safety vulnerability, the company launched the app anyway. That's not negligence. That's a calculated risk decision.

The Lifestage story is particularly important because it shows Meta's actual hierarchy of concerns. Teen engagement and growth came first. Safety mechanisms came later, if at all. The app was eventually shut down, but not because of safety concerns. It was killed because it didn't gain traction with users. The safety risk was apparently acceptable for growth potential, but user adoption numbers determined its fate.

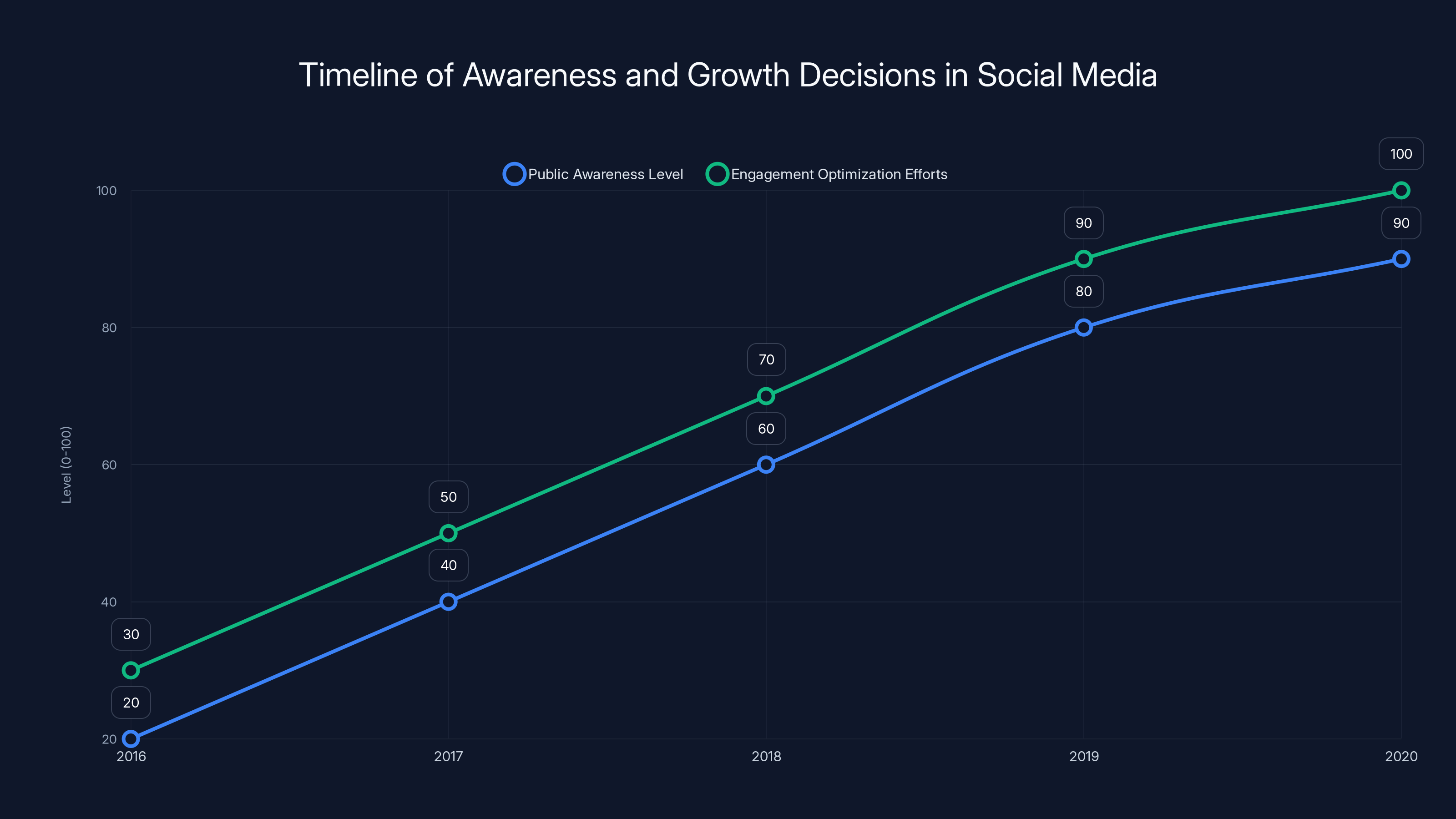

The timeline shows that from 2016 to 2020, public awareness of social media's negative impacts on teens increased significantly, yet companies continued to enhance engagement optimization efforts. Estimated data.

The Instagram Problem: How Meta Doubled Down Despite Its Own Research

Meta's internal documents reveal a fascinating contradiction about Instagram and teen mental health. The company had data showing that Instagram was making teenagers feel worse. The platform comparison research was stark: Instagram users reported higher rates of anxiety, depression, and body image issues compared to other social platforms. This isn't speculation. Meta had the data. They knew it.

But instead of pulling back, instead of redesigning the platform to reduce harm, Meta leaned in. The company increased investment in Instagram's algorithmic recommendations. They expanded the features most correlated with mental health problems, like Stories and the Like button. They made the platform more addictive, not less.

Why? Because Instagram was also Meta's most valuable property. It was driving advertising revenue, user growth, and retention. The teenagers suffering from anxiety were also the teenagers generating billions in ad value. Addressing the mental health issue would have meant reducing engagement. That wasn't going to happen.

The internal documents show Meta commissioning research into the Instagram-mental health link, then watching executives discuss the PR risk if that research became public. They weren't trying to solve the problem. They were trying to manage the optics of the problem. When internal research confirmed that Instagram was linked to body image issues and eating disorders in teenage girls, the response wasn't to redesign the algorithm. It was to figure out how to explain it away.

One particularly damaging memo notes that "We make body image worse for 1 in 3 teen girls." That's not a hypothesis. That's Meta's own research. And the response was to discuss how to contextualize the finding rather than how to fix it. When a company has that data and makes that choice, it's not collateral damage. It's a decision.

What makes this especially damaging is that Meta had the technical ability to reduce harm. They could have deprioritized comparison-based content. They could have limited algorithmic recommendations that push toward extreme body types. They could have added friction to features that drive obsessive checking. They could have done many things. But none of those actions would have maximized engagement and revenue. So they didn't do them.

Google's "Time Well Spent" Problem: When YouTube Knew Autoplay Was Harming Kids

Google's documents tell a similar story but with a different mechanism. YouTube's autoplay feature—where videos automatically play one after another without user interaction—was internally known to be problematic for young viewers. A 2018 Google deck titled "Digital Wellness Overview - YT Autoplay" explicitly states that "Tech addiction and Google's role has been making the news and has gained prominence since 'time well spent' movement started."

Translation: Google knew that its products were being designed to be addictive, the public was becoming aware of this, and the company was monitoring the PR risk. The response wasn't to remove autoplay or redesign how it worked. The response was to monitor how the public conversation evolved and prepare messaging around it.

Google's acknowledgment is interesting because it shows that tech companies weren't operating in ignorance. They understood concepts like "time well spent." They knew that their product design was being criticized for creating addictive experiences. They had internal discussions about digital wellness. But knowing something and acting on it are different things.

YouTube's autoplay feature is brilliantly designed for engagement but devastating for young users who lack the impulse control to stop watching. A teenager who intends to watch one 5-minute video ends up consuming 90 minutes of content because the next video starts automatically. The platform harvests attention systematically, and Google knew it was doing so.

What's revealing is that Google had alternatives. The company could have required explicit clicks to play the next video. It could have added time warnings. It could have limited autoplay for users under 18. Instead, Google researched the problem, documented the harm, and kept the system as-is because it was too profitable to change.

The "Digital Wellness" framing in Google's documents is particularly clever. By positioning autoplay as a potential risk while maintaining the feature, Google created the appearance of responsibility without implementing actual changes. It's a common corporate strategy: acknowledge the problem, commission research, discuss solutions, change nothing. The public sees the acknowledgment. The bottom line benefits from the inaction.

Snap's Evolving Strategy: From Secret Features to Ephemeral Pressure

Snap's documents tell a more complex story. The company wasn't as focused on formal teen recruitment as Meta and Google, but Snapchat's product design was arguably more psychologically sophisticated in targeting young users.

Snap understood something crucial: teenagers value ephemerality. Messages that disappear, stories that vanish after 24 hours, content that isn't permanent—this resonates deeply with how adolescents want to present themselves. Snap built an entire platform around this insight. The company wasn't hiding it. The product design itself communicated this value directly to teens.

Snap's documents also show internal awareness of the addictive potential of notification systems. The company tracked how push notifications affected user engagement. Like every social platform, Snap optimized notification timing and content to maximize return visits. The research was sophisticated: they knew which notification types caused which behaviors, how frequency affected habituation, and the breaking point at which users disabled notifications entirely.

What's different about Snap compared to Meta is transparency. Snap generally wasn't hiding its teen focus as much as leaning into it openly. The company positioned itself as the teen alternative to Facebook. But the underlying mechanisms—algorithmic ranking, notification systems, addictive feedback loops—were identical to competitors.

Snap's Discover feature (equivalent to Facebook's News Feed) was algorithmically ranked to maximize watch time. The company's research showed that certain content types drove longer session duration and more frequent returns. This informed the algorithmic ranking. Is this different from Facebook's approach? Not really. The mechanism is the same. The only difference is that Snap owned its teen positioning more explicitly.

Snap also faced documented challenges around user safety. The company's Stories feature enabled direct messaging between strangers, creating opportunities for grooming and exploitation. Internal discussions show Snap was aware of these risks. The response involved some safety features, but their implementation was carefully calibrated so as not to reduce engagement. This is the pattern across all platforms: safety as a secondary consideration.

Implementing these safety measures could reduce engagement metrics by 5-20%, impacting profitability. Estimated data.

TikTok's Algorithm: Designed for Addiction or Just Effective Engagement?

TikTok's documents are the most revealing about algorithmic design intent because they show less concern about hiding motivation. TikTok built its entire recommendation algorithm around maximizing watch time. The company's internal research showed what content types kept viewers engaged longest, which hooks worked best for different age groups, and how to sequence content to prevent scrolling away.

TikTok's algorithm is genuinely more sophisticated than competitors'. The platform doesn't rely heavily on social connections (like Instagram or Snapchat). Instead, it uses pure content matching: "Based on what you watched, here's the next video we predict you'll like." This approach removes the friction of needing followers or friends. You don't scroll past irrelevant content from accounts you follow. The algorithm shows you exactly what it thinks you want.

For teenagers, this is particularly effective. The algorithm learns incredibly quickly. After 10 videos, TikTok has a reasonable model of what content engages you. After 100 videos, the model is sophisticated. The platform becomes increasingly personalized, which increases retention.
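To make the "content matching" idea concrete, here is a minimal sketch of generic content-based ranking. It is not TikTok's actual system, which the documents don't describe at the code level. It assumes each video carries a small, hypothetical topic vector; the user's profile is the average of the vectors of videos already watched, and the next video is the unwatched one with the highest cosine similarity to that profile. Every video watched changes the profile, which is one simple way a recommender can personalize quickly without relying on followers or friends.

```python
import math

# Hypothetical topic weights per video: (dance, comedy, fitness).
# Illustrative values only, not real data.
CATALOG = {
    "dance_1":   (1.0, 0.1, 0.0),
    "dance_2":   (0.9, 0.2, 0.1),
    "comedy_1":  (0.1, 1.0, 0.0),
    "fitness_1": (0.0, 0.1, 1.0),
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def profile(watched):
    """User profile: element-wise mean of the topic vectors of watched videos."""
    vecs = [CATALOG[v] for v in watched]
    return tuple(sum(col) / len(vecs) for col in zip(*vecs))

def next_video(watched):
    """Return the unwatched video most similar to the user's profile."""
    user = profile(watched)
    candidates = [v for v in CATALOG if v not in watched]
    return max(candidates, key=lambda v: cosine(user, CATALOG[v]))

print(next_video(["dance_1"]))  # prints dance_2
```

Note that nothing in this loop asks whether the viewer should keep watching; it only asks what they are most likely to watch next.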

TikTok's documents show internal tracking of session duration metrics by user age. The company knew how long teenagers spent per session, how frequently they returned, and which content categories drove highest engagement for different age groups. This data informed algorithm tuning.

What's important here is that TikTok wasn't trying to be deceptive about the algorithm's purpose. The company was transparent internally that the goal was engagement maximization. There's no conspiracy, just effective engineering. The algorithm does what it's designed to do: keep users watching. The fact that it's particularly effective with teenagers appears to be a feature, not a bug.

The psychological angle matters though. Teenagers' brains are still developing, particularly the prefrontal cortex, which governs impulse control and long-term consequence evaluation. An algorithm optimized for immediate engagement is hitting users at exactly the developmental stage where they're most vulnerable to its effects. That's not accidental design. It's not hidden in the code. But it's also not being counteracted. The algorithm targets the age group most susceptible to its mechanisms.

The Safety vs. Scale Tradeoff: How Companies Calculated the Cost of Protection

Across all these documents, one pattern emerges repeatedly: every platform understood the safety tradeoffs they were making. The companies didn't stumble into problems. They identified problems, calculated the cost of solutions, and decided growth was worth more than safety.

This is visible in concrete decisions. Meta could have limited teen recruitment to older teens (16+). The company knows younger teens experience more mental health harm from its products. But limiting the market would have cost billions in lifetime value. So Meta kept recruiting 13-year-olds.

Google could have disabled autoplay by default for accounts flagged as young users. YouTube has the technical capability to do this. The feature could still exist—users could turn it on voluntarily. But the default matters because most users never change defaults. So YouTube keeps autoplay on for everyone.

Snap could have limited notifications per day for young users, preventing the push-driven checking behavior that drives dependency. The company understands this mechanism. But limiting notifications would reduce engagement metrics. So Snap sends notifications optimally for engagement regardless of user age.

TikTok could have deprioritized extremely engaging content for young users, deliberately creating a less compelling product for minors. But that would be business suicide in a competitive market. So TikTok doesn't do this.

What these decisions share is rationality. From a business perspective, these companies are doing exactly what shareholders expect. Maximize growth. Maximize engagement. Maximize monetization. Safety features reduce all three. So safety features get implemented only when forced by regulation or reputation risk.

The documents show this calculation explicitly in some cases. Meta discussed the Lifestage launch knowing about predator risks, but judged the growth opportunity worth more than the safety risk until user adoption proved disappointing. Google discussed YouTube autoplay knowing about addiction risks, but kept the feature because engagement metrics drove advertising revenue.

This isn't malice. It's incentive structures. When a company's stock price is tied to user engagement, and when algorithms can be demonstrated to increase engagement, and when increasing engagement creates psychological dependency, companies will use those algorithms. The market rewards them for doing so.

Mental Health Evidence: What Did Companies Know?

The internal documents reveal that Meta, Google, Snap, and TikTok had significantly more sophisticated understanding of mental health impacts than they publicly acknowledged. The companies commissioned research. They tracked metrics. They monitored longitudinal outcomes. And the data was concerning.

Meta's research, which leaked to the public before these court documents emerged, showed Instagram's particular harm to teenage girls. The company's own research unit found links between Instagram use and body image dissatisfaction, eating disorders, and suicidal ideation. These weren't marginal effects. The company reported that the platform made body image worse for substantial percentages of teen girls.

Google's research on YouTube documented time consumption patterns and attention spans. The company tracked how autoplay changed session duration and frequency. The data showed that algorithmic recommendations increased watch time compared to chronological feeds. This was presumably intentional, but it also meant the recommendations were driving more compulsive viewing than the alternatives.

Snap and TikTok's documents show less public evidence of mental health research, but both companies tracked engagement metrics that correlate with mental health harm. Notification frequency, session duration, daily active user returns—these are all proxy metrics for dependency. The companies knew what they meant.

What's crucial is that knowing something differs from addressing it. Meta knew. Google knew. Snap and TikTok almost certainly knew. But knowledge didn't change product design because product design changes would reduce the metrics that determine company value.

The mental health question matters legally because it determines whether harm was foreseeable and preventable. If companies knew about risks and did nothing, that's different from creating harm unknowingly. The documents establish that companies knew. The next question is whether that knowledge creates liability.

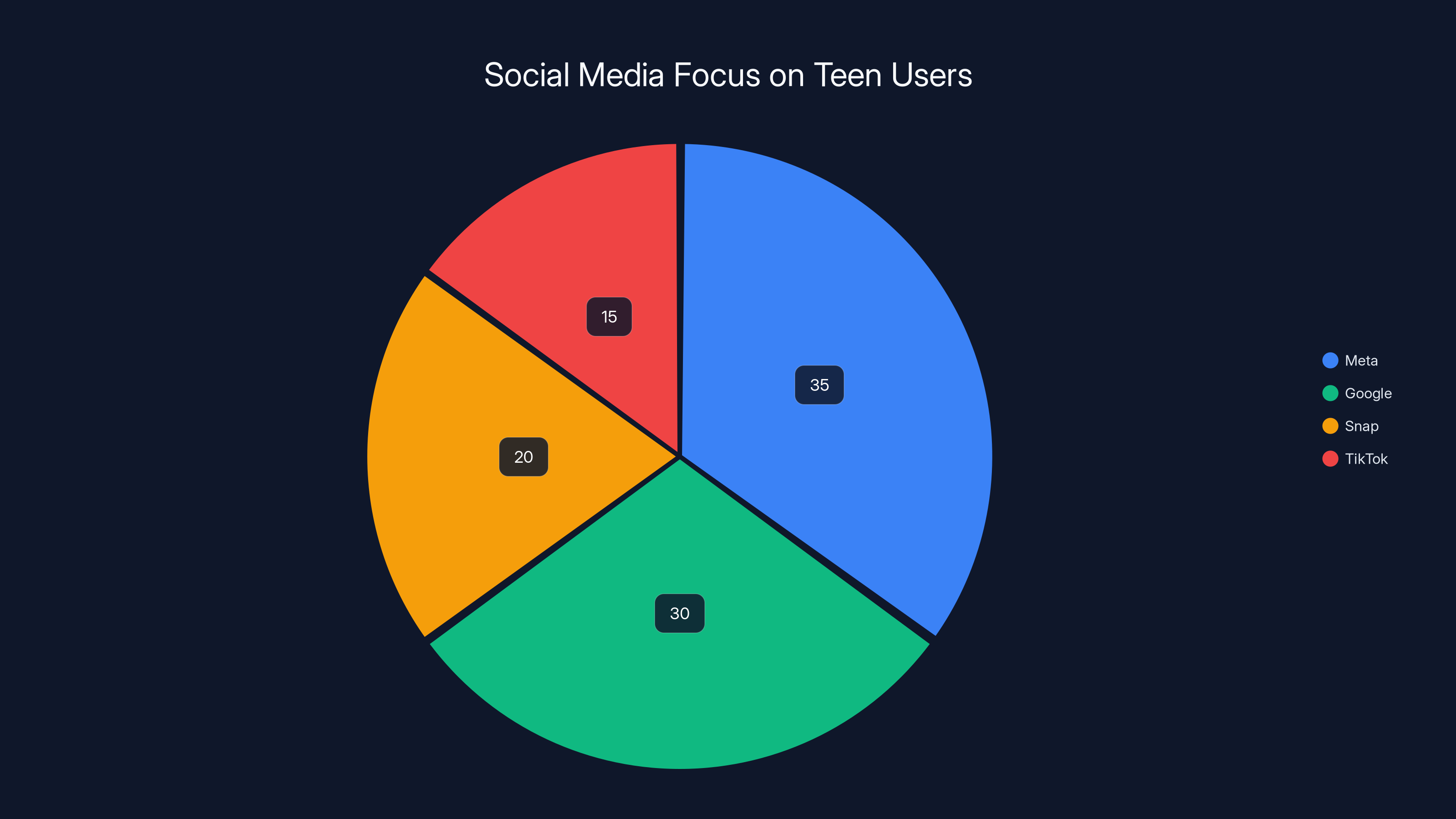

Estimated data shows Meta and Google leading the focus on teen users during the 2015-2017 period, driven by the potential for long-term engagement and growth.

The Competitor Dynamics: Why No One Could Afford to De-Prioritize Teen Engagement

An important context from these documents is competitive pressure. Meta couldn't simply decide to be responsible and deprioritize teen engagement because Snapchat and TikTok would capture that market. TikTok couldn't make its algorithm less addictive because competitors would exploit that advantage.

This is a classic prisoner's dilemma. If all platforms agreed to limit teen engagement and design safer experiences, all would benefit from reduced liability and improved reputation. But if one platform honored that agreement while others ignored it, the compliant platform would lose. So everyone defects from the safer outcome.
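The prisoner's dilemma logic can be checked with a small worked example. The payoffs below are invented for illustration and do not come from the documents; they only follow the standard ordering (defecting against a cooperator > mutual cooperation > mutual defection > cooperating alone). Two hypothetical platforms each choose "restrain" (safer teen design) or "engage" (maximize engagement). The sketch computes each side's best response and the pure-strategy Nash equilibria.

```python
# Hypothetical payoffs (illustrative units, not real figures).
# PAYOFFS[(a, b)] = (payoff to platform A, payoff to platform B)
PAYOFFS = {
    ("restrain", "restrain"): (3, 3),  # both safer: less liability, better reputation
    ("restrain", "engage"):   (0, 5),  # A restrains alone and loses teen users to B
    ("engage",   "restrain"): (5, 0),  # A captures the users B gave up
    ("engage",   "engage"):   (1, 1),  # race to the bottom
}
STRATEGIES = ("restrain", "engage")

def best_response(opponent_choice):
    """Platform A's payoff-maximizing choice given B's choice."""
    return max(STRATEGIES, key=lambda s: PAYOFFS[(s, opponent_choice)][0])

def nash_equilibria():
    """Strategy pairs where neither platform gains by switching alone."""
    result = []
    for a in STRATEGIES:
        for b in STRATEGIES:
            a_ok = PAYOFFS[(a, b)][0] >= max(PAYOFFS[(x, b)][0] for x in STRATEGIES)
            b_ok = PAYOFFS[(a, b)][1] >= max(PAYOFFS[(a, y)][1] for y in STRATEGIES)
            if a_ok and b_ok:
                result.append((a, b))
    return result

for b in STRATEGIES:
    print(f"If B plays {b!r}, A's best response is {best_response(b)!r}")
print("Equilibria:", nash_equilibria())
# Equilibria: [('engage', 'engage')]
```

Under these assumed numbers, "engage" is the best response no matter what the rival does, so the only equilibrium is mutual engagement, even though mutual restraint would leave both platforms better off.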

The documents show platforms being aware of each other's strategies. Meta explicitly positioned Facebook and Instagram against Snapchat and TikTok. Google positioned YouTube against TikTok and Instagram. Each company knew what competitors were doing and optimized accordingly. When a competitor launched a feature that drove engagement, pressure existed to match it.

This competitive dynamic is important because it explains why individual corporate responsibility failed. Even if a CEO personally wanted to make safer products, market forces would punish the company. Users would migrate to competitors. Ad-supported growth would slow. Stock prices would fall.

What this means is that competitive markets may be inherently unable to self-regulate around harms that correlate with engagement. If the harm correlates with the metric driving business value, competition ensures no player can afford to mitigate it. This is why regulation becomes necessary. Market forces alone won't produce safer outcomes if safety reduces the metrics that drive profitability.

The documents reveal this dynamic clearly. Companies aren't debating whether to be responsible. They're tracking what competitors do and doing the same thing. The market is the mechanism forcing the race to the bottom.

The PR Strategy: Acknowledging Risks While Maintaining the Status Quo

What the documents reveal about corporate communication is almost as damaging as what they reveal about product design. Companies weren't denying that problems existed. They were managing perception while maintaining harmful products.

Meta's response to criticism involved creating webpages responding to "FAQs about litigation" that list research showing "other factors" impact teen mental health. This is technically true. Mental health is multifactorial. But the rhetorical move is clear: acknowledge that multiple factors matter, therefore our platform's contribution is ambiguous, therefore we're not responsible.

Google's approach with the "Digital Wellness Overview" was similar. Commission research on problems, discuss solutions, release the discussion publicly as evidence of responsible thinking, implement minimal actual changes. The appearance of responsibility without behavioral change.

Snap positioned itself as more transparent about its teen focus while avoiding explicit discussion of addiction mechanisms. TikTok emphasized parental controls and educational content while maintaining an algorithm optimized for engagement.

What's telling is that none of these companies publicly advocated for regulations that would actually constrain their business models. If they genuinely believed their own responsibility claims, they'd support legal requirements to implement the safety measures they admit are possible. Instead, companies lobbied against meaningful restrictions while releasing statements about their commitment to safety.

This pattern—acknowledge, discuss, release educational materials, avoid actual constraints—is what happens when companies want the reputation benefits of responsibility without the business costs. It's a strategic communication approach, not evidence of genuine commitment.

The litigation itself forces a different level of honesty. Companies can't control how documents are presented in court. They can't frame findings with contextual caveats. Emails and internal presentations just appear as evidence. This is why these documents are valuable. They bypass the PR layer and reveal actual thinking.

The Historical Timeline: When Awareness Intersected with Growth Decisions

The documents span nearly a decade, revealing how teen-targeting strategies evolved and intensified as companies became aware of harms. This timeline matters because it suggests companies doubled down on teen engagement even as evidence of harm accumulated.

2016 marks a critical year. This is when Meta made teen growth the literal top priority. It's also around when internal research was beginning to document Instagram's mental health impacts. The timing suggests the company knew about problems while accelerating teen recruitment.

2017-2018 saw increased discussion of addiction and mental health impacts across all platforms. This coincided with increased public awareness through media coverage and advocacy campaigns. Yet product designs became more engaging, not less. Features optimized for engagement increased. Recommendation algorithms improved. Autoplay expanded.

By 2019-2020, the evidence was overwhelming. Meta's own research showed Instagram's correlation with mental health harm. Google had documented autoplay effects. Academic research was published showing social media's negative effects on teens. Public awareness was rising. Yet none of the major platforms fundamentally changed their approach to teen engagement.

From 2020 onward, the companies increased investment in what they branded as "wellness features." Screen time timers were introduced. Content warnings were added. Parental control tools were offered. But these features were optional, defaulted off, and didn't reduce the core mechanisms driving engagement. It was the classic pattern: acknowledge, discuss, implement performative solutions, maintain engagement optimization.

The timeline reveals that awareness didn't reduce harmful behavior. Instead, companies became more sophisticated at managing the perception of harm while maintaining the mechanisms producing that harm. This is legally significant because it suggests deliberateness. Companies didn't change course because profits depended on maintaining their existing approach.

Instagram users report significantly higher rates of anxiety, depression, and body image issues compared to users of other platforms. Estimated data based on internal research insights.

The Legal Implications: What These Documents Mean for the Trials

These internal documents become the foundation for the upcoming trials. Plaintiffs will use them to establish that companies knew about harms, calculated the business trade-offs, and chose growth over safety. This is the definition of negligence and potentially gross negligence depending on how courts interpret the evidence.

The documents are particularly damaging because they show deliberate choice rather than accidental harm. Companies didn't stumble into addictive design. They understood addiction mechanisms, researched their effects, and maintained them because they drove engagement. That's not a gray area legally. That's understanding a risk and proceeding anyway.

Meta's challenge is that its own internal research on Instagram's mental health effects is extremely difficult to contextualize as anything other than knowledge of harm. The company discovered the link empirically. The company discussed it. The company did nothing to address it because addressing it would reduce engagement. That's a challenging position to defend.

Google's challenge is slightly different because YouTube's autoplay is presented as a "feature," not a bug. But the company's internal discussions acknowledging that autoplay drives addiction, and that the addiction is caused by algorithmic design rather than user preference, undermine the defense that autoplay is just what users want. If users wanted endless video watching, they'd have invented it themselves. The fact that Google has to engineer it suggests market demand doesn't support it. That engineering choice is what creates liability.

Plaintiffs will likely succeed in establishing knowledge of harm. The harder question is whether that knowledge creates liability, given that users choose to use the platforms and that harms are not certain (not all teens suffer mental health consequences). But the documents make it clear that companies understood the probability of harm and judged the risk acceptable given the business benefits.

One key legal issue is whether teen users can be considered a "captive" audience in the sense that they lack the cognitive development to resist engineered persuasion systems. If courts rule that teenagers cannot meaningfully consent to products designed to exploit adolescent brain development, liability becomes much clearer. The documents support this interpretation by showing companies' research into adolescent psychology and their deliberate use of that knowledge in design decisions.

The documents also establish that safer alternatives were technically and financially feasible. Meta could have implemented better safeguards. Google could have disabled autoplay by default. Snap could have limited notifications. TikTok could have deprioritized engagement-maximizing content for teens. That these alternatives were available but not implemented suggests deliberate choice. And deliberate choice with knowledge of harm is what creates legal exposure.

What Happens Next: The June 2025 Trial and Beyond

The first trial is scheduled for June 2025. This will be the initial major test case, with more trials expected to follow. The outcome could reshape how social media companies design products for young users.

If courts rule in favor of plaintiffs, the implications are substantial. Companies could be required to redesign platforms to reduce engagement optimization for teen users. Algorithms could be constrained in how they target young users. Features identified as particularly harmful (like autoplay, infinite scroll, or engagement-based notifications) could be restricted for accounts under 18.

Beyond individual platform changes, a verdict for plaintiffs would likely trigger regulatory response. Legislators already considering restrictions on teen social media would gain political momentum. States could pass laws implementing the restrictions that courts impose. Federal regulation becomes more likely.

Companies are already preparing. Meta is investing heavily in AI tools to detect and remove illegal content. It's also continuing to invest in features that appear to address safety while maintaining engagement optimization. TikTok is emphasizing that it's primarily a creator platform, not addictive social media. Google is marketing YouTube's educational value.

But the documents now exist in the public record, and companies can't undo that. Investors now know the internal conversations happened. The business community knows that companies knowingly designed addictive products for teenagers. That knowledge affects company valuations and the regulatory environment.

The documents are also globally significant. Other countries are watching these trials to inform their own regulatory approaches. Europe is already implementing stricter rules around teen social media. China has strict limits on teen gaming time. The United States trials will influence how the world's democracies think about digital regulation for young users.

The Broader Context: Is Individual Corporate Responsibility Even Possible?

These documents reveal a deeper question about whether individual corporate responsibility is possible in competitive markets. If one company designs safer products while competitors optimize for engagement, the company offering safer products loses market share. Users migrate to more engaging platforms. Revenue declines. Stock price falls.

This creates a systemic problem. No single company can solve it through responsible behavior because market mechanisms punish responsibility. The solution requires either regulation (a level playing field that requires all companies to implement safety measures) or changed business models (moving away from engagement-based advertising).

The documents suggest that company leaders understood this dynamic. They weren't debating whether to be responsible. They were tracking what competitors did and matching those strategies. The market was the mechanism driving race-to-the-bottom behavior.

This has implications for how we think about corporate responsibility generally. Asking companies to self-regulate around harms that correlate with profitability is asking them to sacrifice shareholder value. Market incentives don't reward that behavior. Regulatory structures need to change the incentives themselves.

What the social media documents reveal is that markets alone won't produce safe products for teens. Markets will produce engaging products. If engagement is harmful to teens, markets will produce harmful products. The solution isn't better corporate leadership. It's changed business models or regulatory constraints that make safety profitable.

The companies themselves have inadvertently made this case. Their internal discussions reveal that they understand the problems, understand the solutions, but choose not to implement those solutions because profitability depends on maintaining engagement optimization. Individual responsibility failed. Systemic change is required.

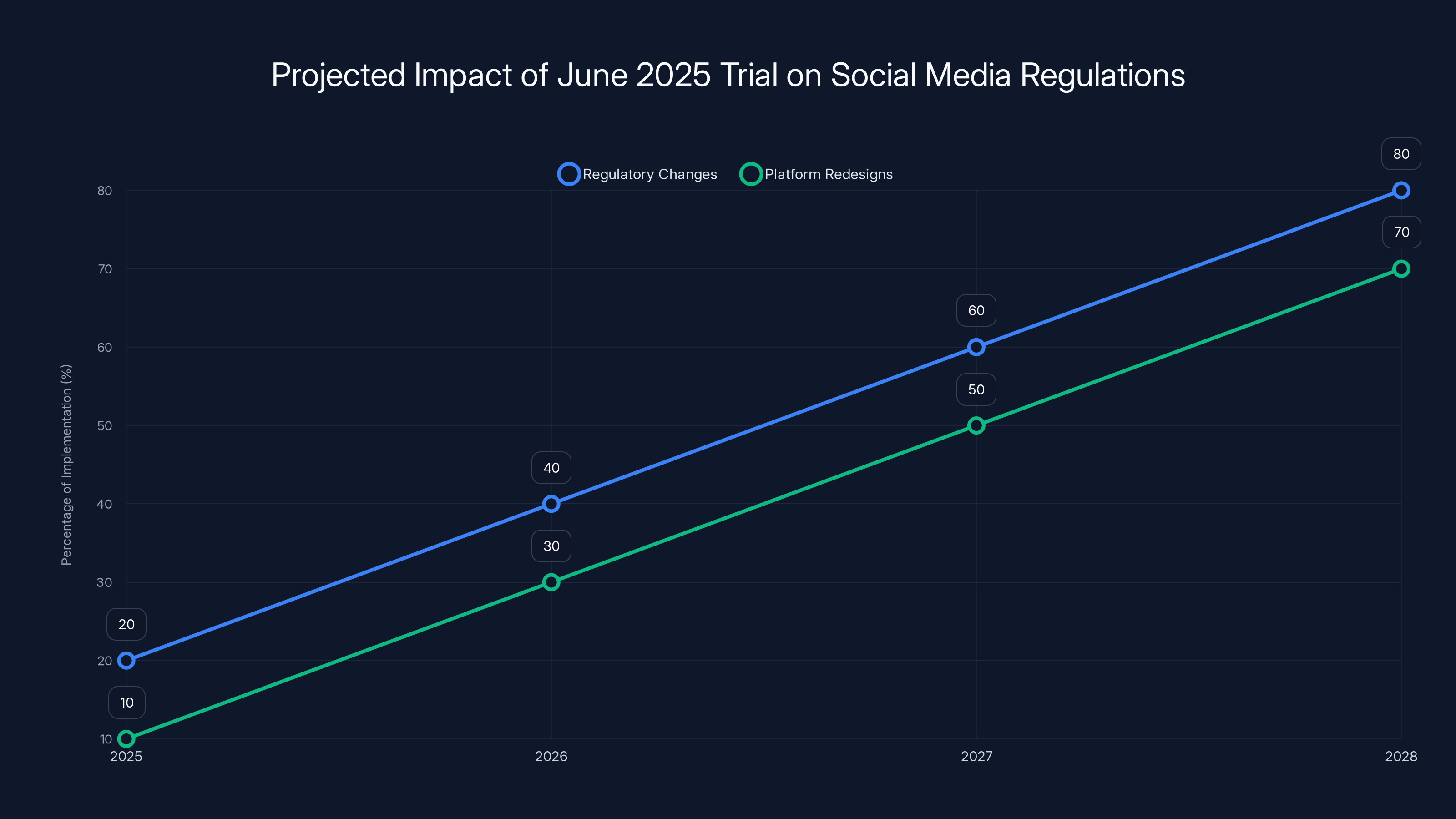

Estimated data shows a significant increase in regulatory changes and platform redesigns following the June 2025 trial, potentially reshaping social media for young users.

Implications for Users and Parents: What Teenagers and Families Should Know

For families, these documents should inform decisions about platform use. The companies have revealed that they understand how their products affect teens and have chosen to maintain those products anyway. That knowledge should factor into parental decisions about screen time, platform access, and digital literacy education.

Teenagers themselves should understand that platforms are designed to keep them engaged. This isn't a moral judgment. It's an empirical fact revealed by company documents. Knowing that design is intentional helps develop some resistance to it. Recognition that notifications, recommendations, and engagement metrics are engineered choices, not organic features, creates mental space for more critical engagement with platforms.

Parents should also understand that technical solutions (screen time limits, app restrictions) address symptoms but not root causes. The platforms are engineered to overcome those solutions. A more effective approach involves digital literacy education that helps teens understand why platforms are designed the way they are and what mechanisms are being used to influence their behavior.

Families should also be aware that not all platforms are equally problematic. The documents suggest that TikTok's pure algorithmic approach (not relying on social connections) is particularly effective at driving engagement. Instagram's comparison-focused design is particularly harmful for body image. YouTube's autoplay is particularly problematic for attention span. Different platforms have different harm profiles. Being aware of those differences can inform more targeted choices.

Beyond individual family decisions, these documents suggest the need for collective action. Parents and educators should understand that expecting companies to act responsibly on their own won't work, because market incentives don't reward safety. Only regulatory structures can create a level playing field where safety doesn't disadvantage the companies that implement it.

The Activist Perspective: What Advocates Are Demanding

Advocacy organizations like the Tech Oversight Project are using these documents to push for specific regulatory changes. The demands include:

- Algorithmic transparency: Requiring platforms to disclose how recommendation algorithms work and how they affect different age groups

- Engagement metrics restrictions: Prohibiting notification-based engagement optimization for users under 18

- Data privacy: Restricting collection of behavioral data on minors

- Default safety settings: Requiring safety-first defaults for young users rather than optional features

- Independent auditing: Regular third-party audits of how platforms affect teen mental health

These demands are directly informed by what the documents reveal about platform design. Activists are essentially asking for regulatory enforcement of the safety measures that companies know are technically feasible but choose not to implement.

Some advocates argue for more radical changes, including separate product versions designed specifically for teens with fundamentally different business models (perhaps ad-free, engagement-capped, or human-moderated rather than algorithmically sorted).

The documents give advocates powerful ammunition. They can point to companies' own understanding of harms and ask: if you know this is harmful, why aren't you implementing the obvious solutions? The companies' answer, that engagement optimization drives profitability, becomes the case for regulation. Markets can't solve problems where the problem correlates with profitability. Regulation can.

What Companies Could Do (But Won't Without Regulation)

The documents reveal that safer designs are possible. Companies have researched solutions. Engineers have proposed alternatives. But implementation hasn't happened because safety reduces engagement metrics and therefore profitability.

Concrete changes platforms could make:

- Algorithmic guardrails for minors: Deprioritize engagement-maximizing content for accounts under 18. Show chronological feeds by default. Require an explicit opt-in to enable algorithmic recommendations.

- Notification constraints: Limit notifications per day for young users. Disable push notifications for accounts under 18 by default. Require conscious opt-in to notification engagement mechanisms.

- Session timers with enforcement: Implement mandatory breaks after 2-hour sessions for users under 18. After the break, require re-authentication to continue. Make these enforced, not optional.

- Comparison content restrictions: Deprioritize content that drives comparison and FOMO for young users. Reduce emphasis on likes, shares, and follower counts. Show content by interest rather than by social comparison metrics.

- Sleep protection: Disable notifications between 11 PM and 7 AM for accounts under 18. Remove platform access during those hours entirely.

- Transparency tools: Show users which features are designed to maximize engagement and why. Provide real-time data on time spent and on the comparison metrics that would otherwise have been displayed.

None of these changes is technically infeasible. Companies already have the infrastructure to implement them. The obstacle is the business model: these changes would reduce engagement metrics, which would reduce advertising revenue, growth rates, and stock prices.

This is why regulation is required. Companies won't implement these changes voluntarily because markets reward engagement optimization. But once regulations require these changes across the industry, the competitive disadvantage disappears. All companies face the same constraints. The playing field is level. Companies can implement safety while maintaining competitive position.

The documents make this case implicitly. They reveal that companies understand the problems and understand the solutions. What's missing is the regulatory requirement that makes solutions profitable instead of penalizing them.

The Innovation Question: Can Safer Platforms Exist?

One challenge to aggressive regulation is the fear that constraining engagement optimization will kill the platforms that billions of users depend on. If algorithmic recommendations are removed, will users migrate to competitors? If notifications are limited, will daily active users decline? Will the platforms remain viable?

The evidence suggests that safer platforms can be viable, though potentially less profitable. The existence of platforms like Discord (community-based), Mastodon (federation-based), and BeReal (authenticity-focused) shows that engagement-optimized algorithms aren't required for successful social platforms.

The question is whether platforms built on different models can scale to billion-user bases. That's genuinely uncertain. But the documents provide no evidence that engagement optimization is required for platforms to exist. Instead, they show that engagement optimization is required for platforms to achieve maximum profitability.

There's a difference. A platform could remain viable, though much less profitable, if engagement optimization were removed. Companies are choosing maximum profitability over that viable but less optimized alternative. From a business perspective, that makes sense. From a social welfare perspective, it's less clear that maximum profitability should take priority over teen mental health.

Regulation changes this calculation. If engagement optimization is prohibited, no company can choose it, so the competitive disadvantage of forgoing it disappears. Viable (but less profitable) platforms emerge. That outcome is achievable through regulatory constraint rather than corporate voluntarism.

Looking Forward: What Regulation Might Look Like

Based on the documents and the momentum building around teen safety, likely regulatory approaches include:

- Age-gated access: Requiring meaningful age verification for social platforms, with different rules for under-13, 13-16, and 16+ users.

- Algorithm regulation: Requiring platforms to explain how algorithms work and limiting certain types of recommendations for young users.

- Data restrictions: Limiting collection and use of behavioral data on minors. Requiring parental consent for data use.

- Feature restrictions: Prohibiting certain features (like Stories, Reels, or TikTok's algorithm) for under-13 users.

- Transparency requirements: Requiring independent audits of platform effects on teen mental health. Publishing results publicly.

- Liability changes: Reducing liability protections for platforms when they knowingly design features that harm minors.

The documents make the case for each of these regulations because they reveal that companies have this knowledge and aren't acting on it. Regulation becomes the policy tool to align company incentives with social welfare.

Europe's approach with the Digital Services Act provides a template. The regulation requires platforms to explain the main parameters of their recommendation systems, requires the largest platforms to offer at least one feed option not based on profiling, and imposes duties of care around harmful content. Future U.S. regulations will likely follow a similar pattern but with greater specificity around teen protections.

The documents will influence what those regulations look like because they provide evidence of specific harms and specific design choices that caused those harms. Legislators can point to the documents and say: "We know algorithms drive engagement addiction in teens. We know platforms know this. We're requiring alternatives." The documents make the regulatory case concrete rather than abstract.

FAQ

What are the main revelations from these internal documents?

The internal documents from Meta, Google, Snap, and TikTok reveal that these companies deliberately recruited teenagers as a growth priority while simultaneously tracking evidence that their platforms caused mental health harm. The documents show executives made conscious decisions to prioritize engagement metrics over safety because engagement drives profitability. Companies like Meta were aware that Instagram damaged body image in teenage girls and that the platform made mental health worse for substantial percentages of users, yet they continued to optimize for engagement rather than safety.

How did social media companies approach teen recruitment?

Platforms approached teen recruitment as a strategic business imperative. Meta made "teen growth" the explicit top priority in 2017. Google positioned "kids under 13" as "the fastest-growing Internet audience in the world" and strategically targeted schools to drive adoption. TikTok engineered an algorithm specifically optimized for adolescent engagement patterns. Snap built an entire product around features teenagers had already invented. All companies understood that recruiting teens early created lifetime value—a user acquired at 14 could generate profits for 60+ years. The recruitment wasn't accidental. It was deliberate business strategy with explicit C-suite authorization.

What did the documents reveal about mental health impacts?

The documents show that companies researched and documented mental health harms their platforms caused but chose not to address them meaningfully. Meta's internal research found that Instagram worsened body image for one in three teenage girls and linked Instagram use to eating disorders and suicidal ideation. Google documented that YouTube's autoplay feature increased watch time beyond what users voluntarily chose, understanding that this drove addiction patterns. Snap tracked notification effects on engagement and habituation patterns. TikTok optimized algorithms knowing how they affected different age groups. The key finding is that companies didn't stumble into these harms. They measured them, understood them, and maintained their products anyway because addressing the harms would reduce engagement metrics.

Why didn't companies simply make their platforms safer?

Companies didn't make platforms safer because doing so would reduce the engagement metrics that determine company profitability. Reducing notifications would cut daily active users. Removing algorithmic recommendations would cut session duration. Disabling autoplay would cut watch time. Deprioritizing comparison-focused content would cut engagement. Every safety improvement comes at the cost of engagement metrics. Since company valuations depend on engagement metrics, individual companies can't afford to implement safety measures that competitors don't also implement. This creates a prisoner's dilemma where market forces push toward race-to-the-bottom behavior despite all companies understanding the harms they're causing. Regulation that imposes the same constraints on all competitors simultaneously is required to break this dynamic.

What are the likely outcomes of the trials?

The first trial begins in June 2025. If courts rule in favor of plaintiffs, the implications are substantial. Companies could face liability for knowingly designing addictive products for teens. Regulatory response would likely follow, with new laws restricting how platforms can target young users. Specific regulatory approaches being discussed include algorithmic transparency requirements, restrictions on engagement-based notifications for minors, mandatory safety defaults instead of optional features, and data collection limitations. Even if individual trials produce mixed results, the documents' public release has already affected investor perception and regulatory momentum. Multiple states have already passed teen protection laws without waiting for federal action, suggesting that regulatory momentum exists independent of trial outcomes.

How do these documents affect my decision about whether teens should use these platforms?

The documents should inform family decisions by revealing that platforms are deliberately engineered to maximize engagement in ways that correlate with mental health harm. This doesn't mean banning platforms entirely, but it means approaching them with realistic understanding that features like notifications, algorithmic recommendations, and engagement metrics are intentional design choices aimed at driving addiction-like behavior. Parents might consider limiting access to particularly problematic platforms, supervising the time spent, and focusing on digital literacy education that helps teens understand why platforms are designed the way they are. Families should also recognize that technical solutions (screen time limits) address symptoms but not root causes since platforms are engineered to overcome those solutions. The most effective approach involves helping teens understand the mechanisms and making conscious choices rather than relying on technology restrictions alone.

What regulation is most likely to emerge from this?

Regulation emerging from this litigation will likely include several components. Age-gated access requirements will probably mandate meaningful age verification and potentially different rules for different age groups (under 13, 13-16, 16+). Algorithm regulation will require platforms to disclose how recommendation systems work and possibly restrict certain recommendation types for minors. Data restrictions will likely limit behavioral data collection on minors and require parental consent. Feature restrictions may prohibit certain engagement-maximizing features for young users. Transparency requirements will likely mandate independent audits of platform effects on teen mental health with public reporting. Finally, liability protections may be reduced when companies knowingly design features that harm minors. Europe's Digital Services Act provides a template for how these regulations might be structured, though U.S. regulations will likely include greater specificity around youth protections.

Are there social media platforms designed specifically to be safer for teens?

Yes, though they're smaller and less widely adopted than major platforms. Platforms like Discord (community-based), Mastodon (federation-based), BeReal (authenticity-focused), and Bluesky (open protocol-based) take different approaches that don't depend on algorithmic engagement maximization. They're generally less addictive but also have smaller user bases. The question isn't whether safer platforms can exist (they demonstrably can), but whether they can scale to billions of users and whether viable business models exist outside of engagement-based advertising. The answer to the first question is genuinely uncertain. On the second, the evidence suggests that less profitable but still viable platforms are possible, though companies will choose maximum profitability over safer design whenever markets allow them that choice.

What can individual users do to protect themselves?

Individual protection requires a multi-layered approach. Understanding how platforms are designed to maximize engagement creates awareness of the mechanisms being used. Setting deliberate limits on app usage time, disabling notifications, and opting out of algorithmic recommendations (where possible) reduces exposure to engagement-maximizing features. Seeking out communities and platforms that don't depend on addictive algorithms provides alternatives. Supporting regulatory efforts that constrain engagement optimization benefits everyone by removing the competitive disadvantage of implementing safety measures. Finally, developing digital literacy (understanding why platforms are designed the way they are and recognizing the difference between authentic user preference and engineered persuasion) is perhaps the most valuable protection, because it enables critical engagement with any platform rather than dependence on specific technical solutions.

How do these documents compare to prior revelations about social media companies?

These documents are more concrete and carry more legal weight than prior revelations because they were produced as part of court-ordered discovery. Prior leaks and revelations came from whistleblowers or investigative journalists working with incomplete information. These documents come from companies' own files and are presented as evidence in formal litigation. That means companies can't dispute their authenticity or context in the same way they could with leaked information. Additionally, these documents span nearly a decade and come from multiple companies simultaneously, showing that the pattern of knowing about harms and maintaining engagement optimization wasn't unique to any single company. It was industry-wide practice. That breadth makes the case for systemic problems rather than individual corporate failures.

What happens if regulation significantly constrains teen social media access?

If regulation severely constrains teen social media, multiple outcomes are possible. Platforms might introduce genuinely safer versions designed for young users with different business models (subscription-based rather than advertising-based, or with fundamentally different engagement mechanisms). Smaller platforms with different designs might grow if larger platforms are required to constrain teen-targeting features. International divergence might increase, with different regulatory regimes in different countries producing different platform landscapes. There's also a possibility that regulation overcorrects and prevents useful communication tools from reaching teenagers, which would be an unintended consequence. The optimal outcome would involve regulation sophisticated enough to address the most problematic mechanisms while preserving platforms' utility for social connection and self-expression. Whether regulation can achieve that balance remains an open question.

Conclusion: The End of Plausible Deniability

These internal documents represent a historic moment. They mark the end of plausible deniability. Tech companies can no longer claim they didn't understand the harms their products caused or didn't have the capability to address them. The documents establish both knowledge and capability. What remains is accountability.

For decades, social media companies have operated in a gray zone between innovation and exploitation. They've claimed to be neutral platforms simply responding to user preference. They've positioned themselves as engines of connection and communication. They've donated to nonprofits focused on teen safety while engineering products designed to addict teenagers. They've funded research showing mental health harms while explaining those harms through unmeasurable factors like "social comparison" and "FOMO" rather than their own design choices.

The documents undermine all of this. They show that companies understood exactly what they were building. They researched the effects. They calculated the trade-offs. They chose growth anyway. That's not unfortunate collateral damage from useful platforms. That's calculated harm in service of profit.

What happens next will determine whether this becomes a turning point or just another moment of temporary accountability before normal business resumes. The trials matter. The regulatory momentum matters. But more fundamentally, a shift in how we think about responsibility matters.

For too long, we've accepted that innovation requires some harm. That growth demands sacrifice. That you can't have powerful tools without dangerous side effects. The documents suggest that's not actually true for social media. The danger isn't incidental to the tool. It's central to the business model. The addiction is the product, not a side effect.

Unwinding that requires acknowledging that market forces alone won't produce safe platforms. Regulation that changes the incentive structure is required. That means rules that make safety profitable instead of expensive. That means requirements that apply to all competitors simultaneously so no company faces disadvantage for implementing protections.

The documents make the case for that regulation concretely. They show companies understanding problems, possessing solutions, and choosing not to implement those solutions because profit depends on maintaining harms. That's the definition of negligence at scale. And that's what the courts will decide in the coming trials.

For teenagers themselves, the documents reveal something important: the platforms you use are not neutral spaces where you're just hanging out with friends. They're engineered systems designed to keep you engaged as long as possible because your engagement is the product being sold. Understanding that design, being aware of the mechanisms being used to influence your behavior, and making conscious choices about your relationship with these platforms becomes an act of resistance. Not against the platforms themselves, which serve valuable purposes, but against the engineered addiction baked into their design.

The era of ignorance is over. The documents are public. Everyone involved in social media—users, parents, companies, regulators, legislators—now knows what's actually happening. The only question remaining is what we do about it. The trials will answer part of that question. The regulatory process will answer another part. But the real answer lies in the collective choice of billions of users deciding whether engineered addiction is acceptable. That choice hasn't been made yet. The documents have simply given us enough information to make it consciously.

Key Takeaways

- Internal documents prove Meta, Google, Snap, and TikTok deliberately prioritized teen recruitment as a core business strategy while knowing about mental health harms

- Companies possessed the technical capability to implement safety measures but chose engagement optimization instead because safety measures reduce profitability

- Meta's research found Instagram worsened body image for 1 in 3 teen girls and linked the platform to eating disorders and suicidal ideation

- Competitive market dynamics create a prisoner's dilemma that pushes all platforms toward race-to-the-bottom behavior regardless of individual company responsibility

- The June 2025 trials represent a historic moment of accountability, with the potential to reshape platform design and trigger a global regulatory response


![Social Media Companies' Internal Chats on Teen Engagement Revealed [2025]](https://tryrunable.com/blog/social-media-companies-internal-chats-on-teen-engagement-rev/image-1-1769447307833.jpg)