UK's Online Safety Act Forces Major Adult Content Platform Offline: What You Need to Know

In early 2026, one of the internet's most visited websites made a dramatic decision that sent shockwaves through both the adult content industry and digital privacy advocates. Aylo, the parent company behind Pornhub and other major adult platforms, announced it would cut off access to UK users rather than keep complying with the age verification requirements mandated by the Online Safety Act. This wasn't a bug or a temporary glitch: it was a calculated business decision that highlights the growing tension between child protection legislation and personal privacy in the digital age.

The move, effective February 2, 2026, represents a significant escalation in the ongoing battle between tech companies and governments over how to regulate online content. While regulators frame age verification as a simple safety measure, platforms argue it's a dangerous privacy overreach that forces adults to hand over sensitive personal data just to access legal content. The UK case offers a window into how these conflicts will likely play out globally as more jurisdictions attempt to implement similar laws.

What makes this situation so fascinating isn't just the immediate impact on millions of UK users—it's what it reveals about the fundamental incompatibility between privacy protection and age verification technology. When a platform as massive as Pornhub chooses to abandon an entire country's market rather than comply, it signals something deeper: either the technology doesn't work as promised, or the privacy risks genuinely outweigh the benefits.

This article breaks down what actually happened, why Aylo made this decision, what it means for internet regulation globally, and what happens next for UK users and the broader digital privacy landscape.

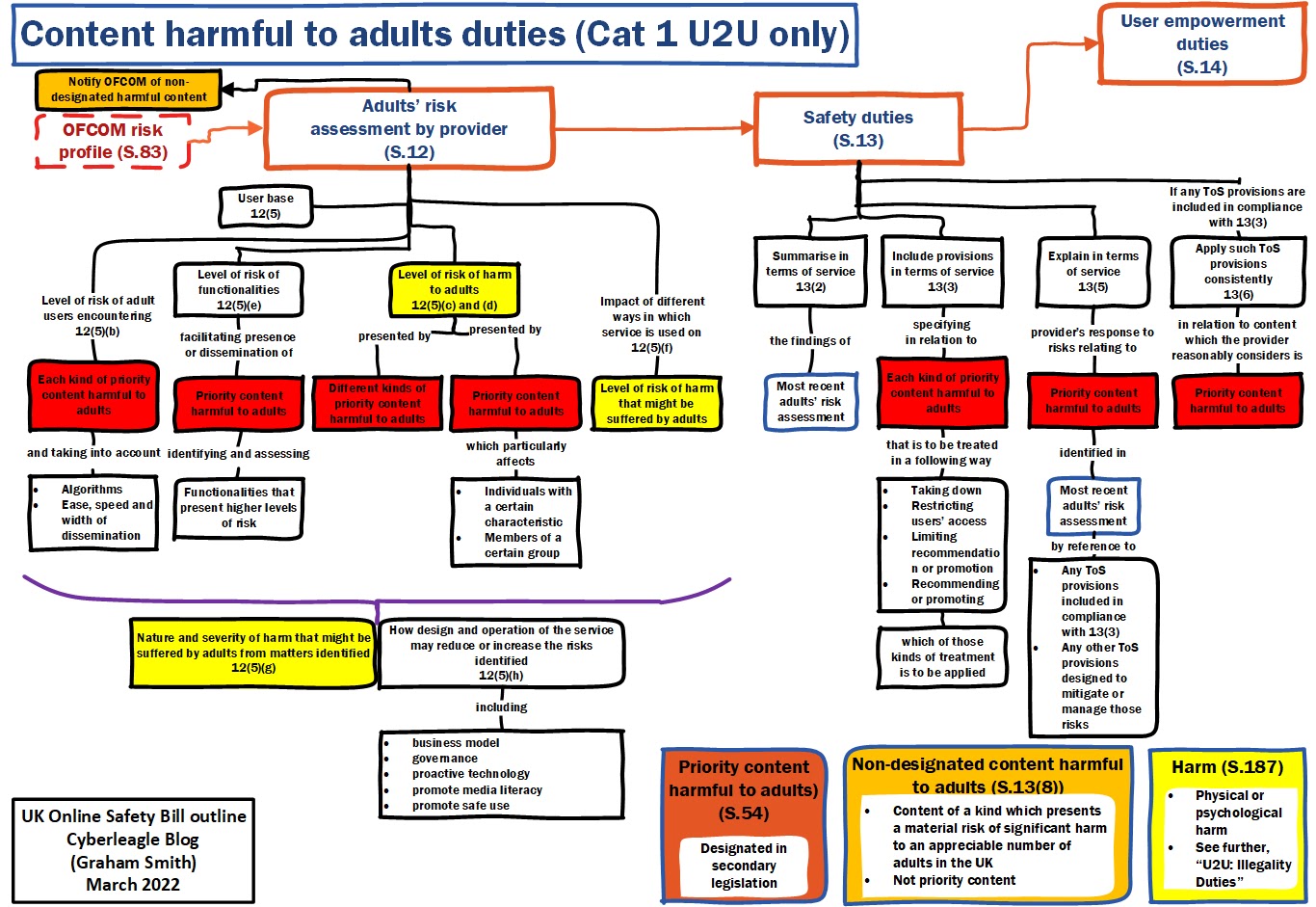

The Online Safety Act: Understanding UK's Internet Regulation Attempt

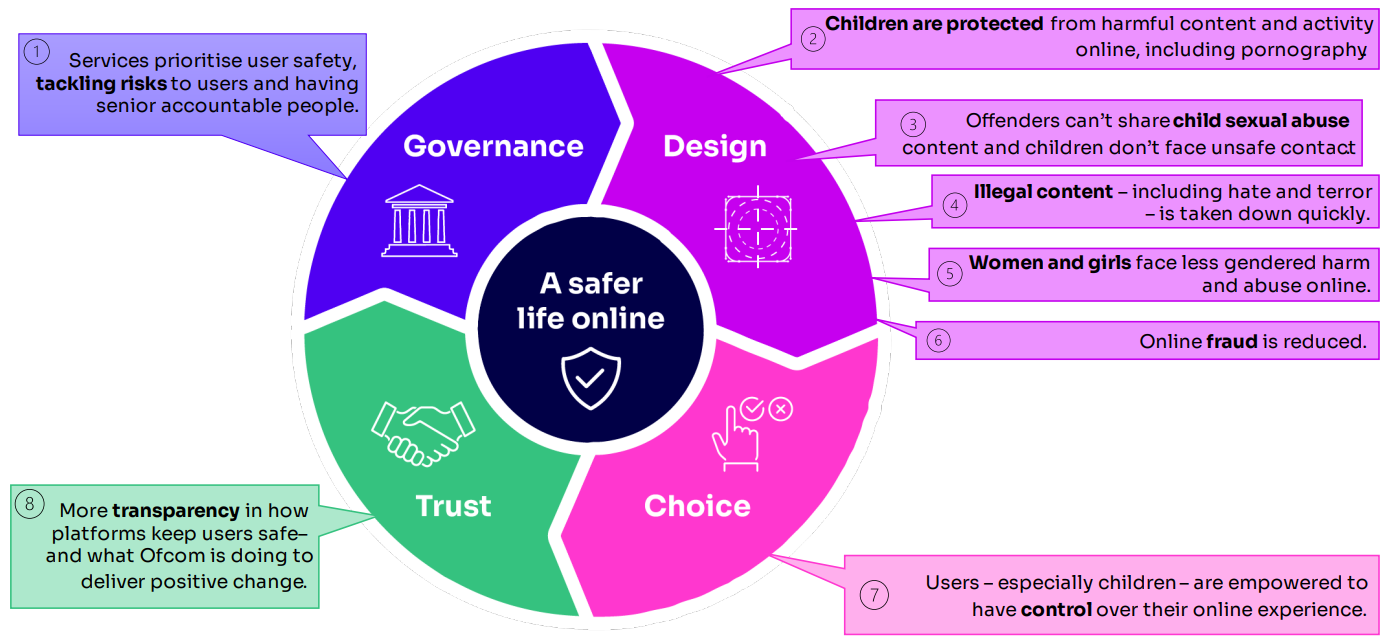

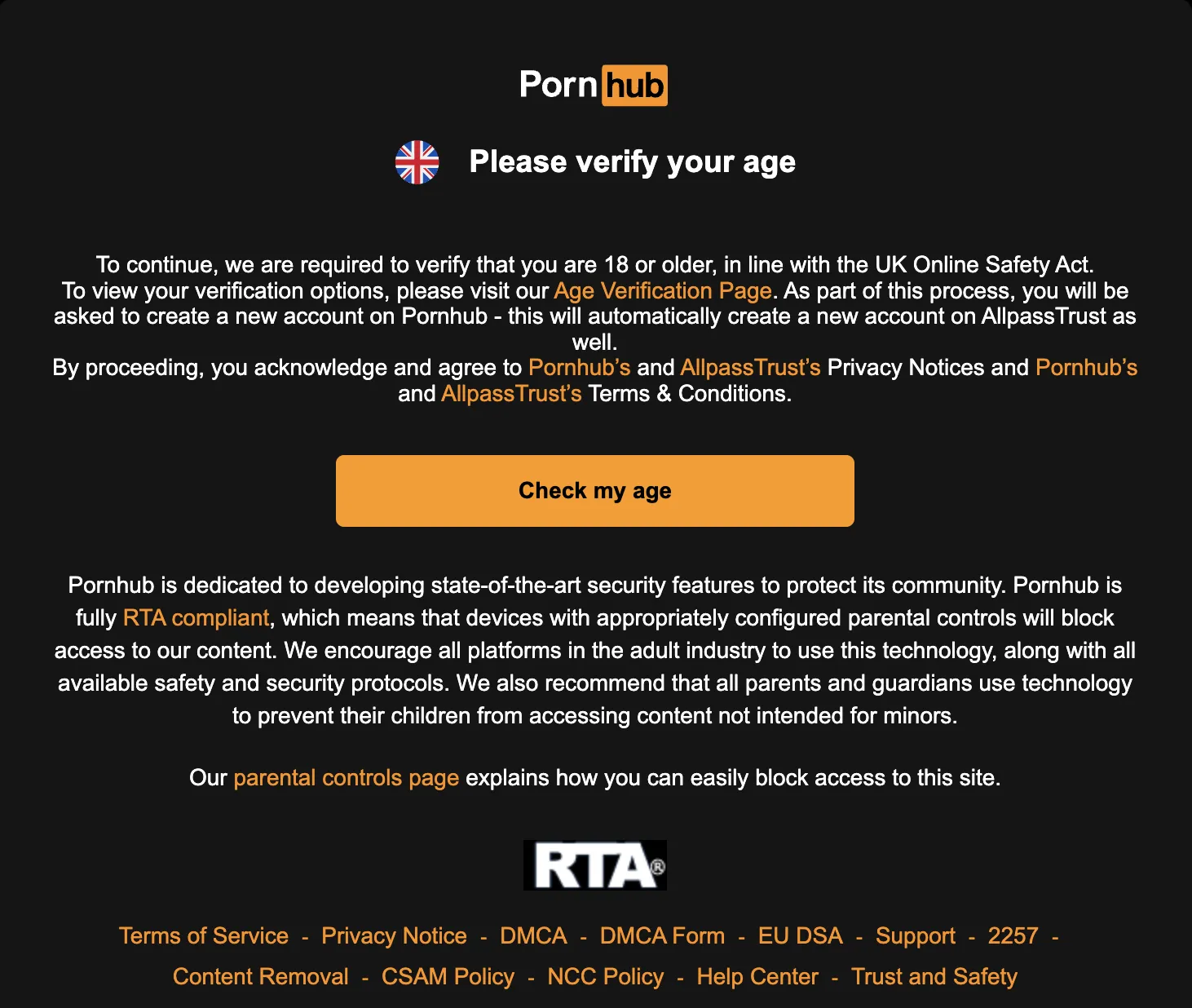

The Online Safety Act (OSA) represents one of the world's most ambitious attempts to regulate internet content and protect children from harmful material. Passed by the UK Parliament and coming into enforcement in phases, the law touches everything from social media moderation to age verification for adult content. For adult websites specifically, the OSA mandates that platforms implement age verification technology before displaying any sexually explicit material to users.

On paper, the logic seems straightforward. Kids shouldn't access pornography. Age verification prevents that. Problem solved. In practice, the situation is far messier.

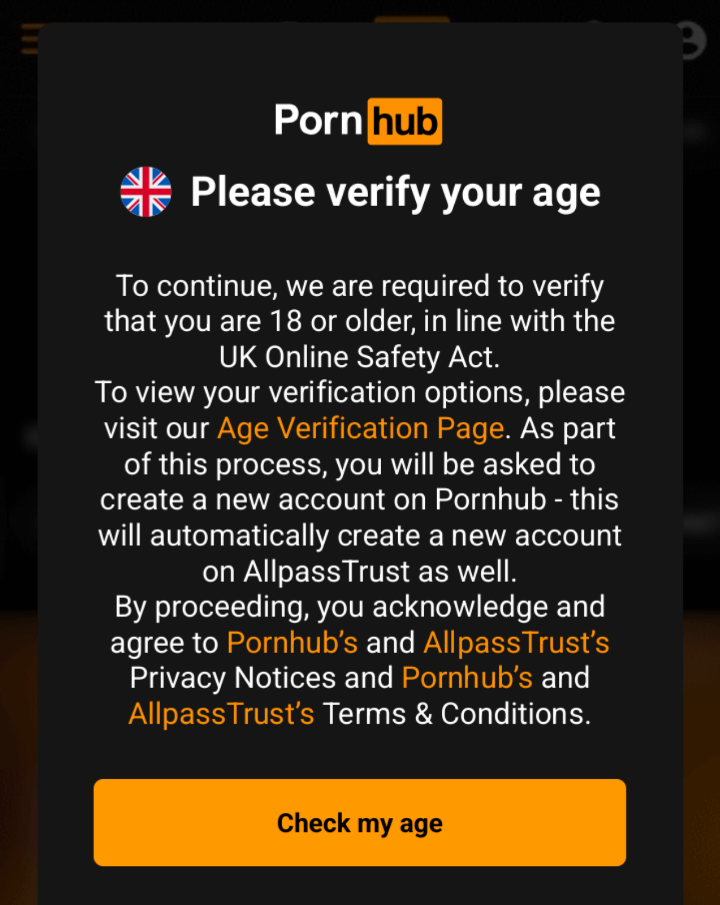

The OSA technically gave adult platforms two options: implement age verification systems or block access from UK IP addresses. For Aylo, which operates some of the internet's highest-traffic sites, UK users are only a small percentage of the global audience but still account for millions of daily visits. The company spent six months attempting to comply with the first option, implementing age verification on Pornhub and its sister sites. But after half a year of real-world experience, Aylo concluded the entire framework was fundamentally flawed.

The company's official statement pulls no punches: after six months of compliance, they believe the OSA has failed to achieve its stated objective of restricting minors' access to adult content. More than that, Aylo argues the framework has done the opposite of what it intended—pushing young people toward "darker, unregulated corners of the internet" rather than keeping them away from adult material. This claim matters because it suggests that even good-faith compliance with the law might actually harm child safety.

Ofcom, the UK's communications regulator responsible for enforcing the OSA, has pushed back against Aylo's interpretation. According to Ofcom, the regulator has taken "strong and swift action" against non-compliant porn sites, launching investigations into more than 80 platforms and issuing at least one £1 million fine. Ofcom also notes that the law explicitly allows platforms to choose the blocking option if they prefer, and suggests that device-level age verification technology (rather than cloud-based identity checks) might provide an alternative path forward.

This disagreement between the platform and the regulator reveals something important: both sides are making claims about what works and what doesn't, but there's surprisingly little independent data showing which approach actually protects children while respecting privacy. The OSA essentially became a live experiment in how to regulate adult content, and the initial results suggest the experiment needs serious rethinking.

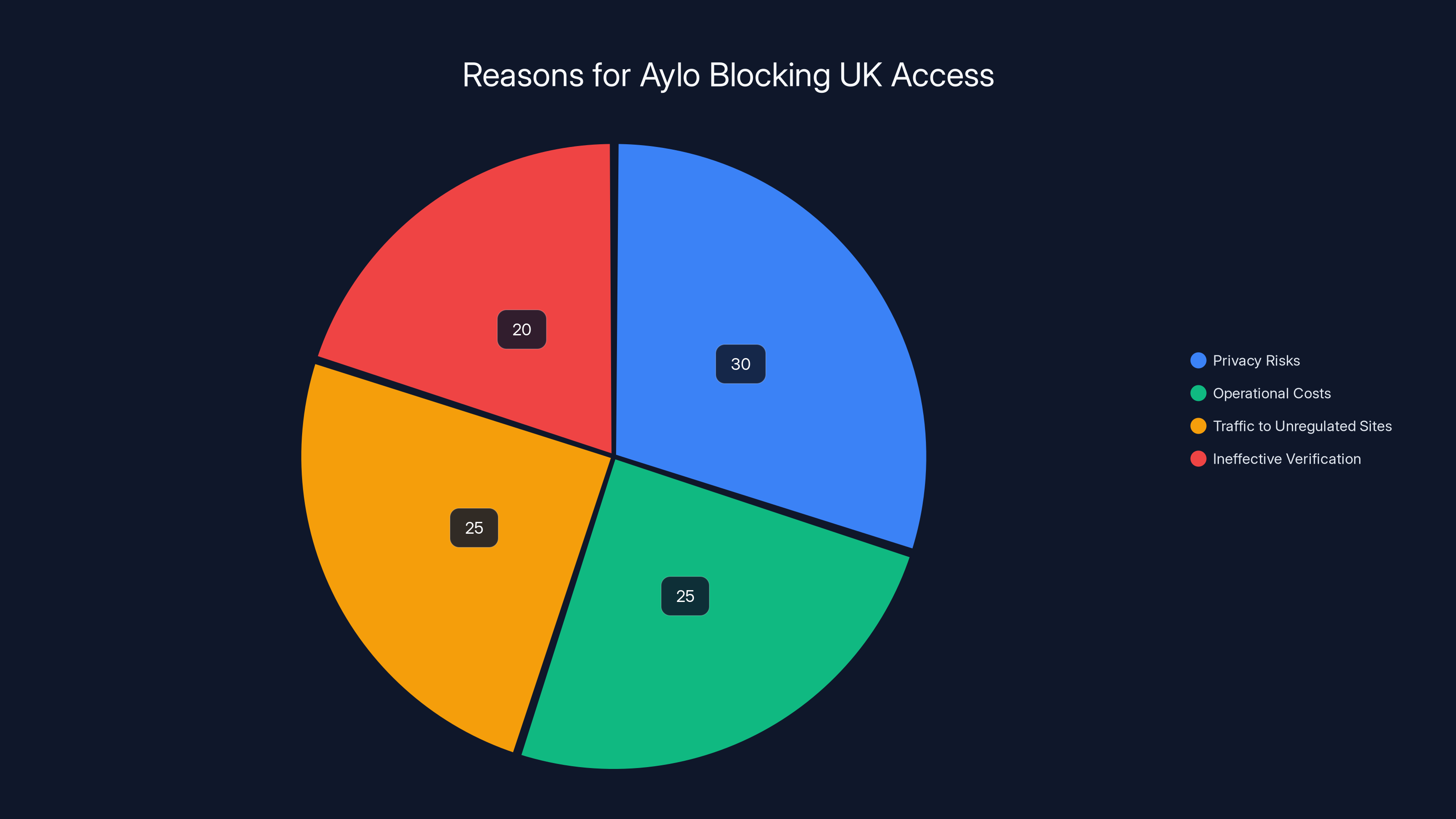

Aylo's decision to block UK access was influenced by privacy risks (30%), operational costs (25%), traffic to unregulated sites (25%), and ineffective verification (20%). Estimated data.

Age Verification Technology: The Privacy-Security Trade-off Problem

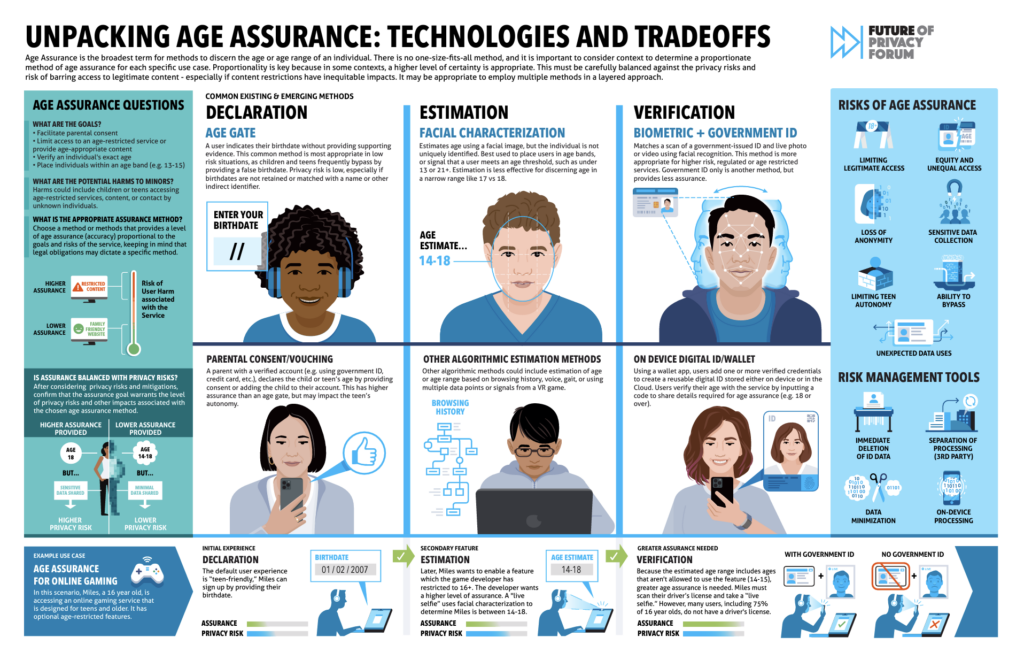

Underlying this entire conflict is a fundamental question about how age verification technology works and whether it can ever be implemented safely. When you hear "age verification," you might imagine a simple system that just checks a birthdate. The reality is far more intrusive.

Many of the systems deployed to comply with the OSA are cloud-based and ask users to upload government-issued ID documents, such as driver's licenses, passports, or national ID cards. (The Act does not prescribe a single method, and Ofcom's guidance also accepts options such as facial age estimation and credit card checks, but document-based checks illustrate the privacy problem most clearly.) The platform or its verification provider then checks the document's authenticity and extracts the user's date of birth. In theory, the platform only needs to store whether the person is old enough (true/false). In practice, the data collection process creates massive privacy risks.
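To make the "store only true/false" idea concrete, here is a minimal Python sketch of data minimization. It assumes a separate, hypothetical document-checking step has already read a date of birth from the ID. The names `VerificationRecord`, `age_on`, and `verify_and_minimize` are illustrative and do not describe any real provider's system. The point is structural: the record the platform keeps has no field for a name, address, or document number.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class VerificationRecord:
    """Everything the platform retains: who, pass/fail, and when. No ID data."""
    user_id: str
    is_adult: bool
    checked_on: date

def age_on(dob: date, today: date) -> int:
    """Completed years between a date of birth and a given day."""
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)

def verify_and_minimize(user_id: str, extracted_dob: date, today: date,
                        threshold: int = 18) -> VerificationRecord:
    # extracted_dob comes from a HYPOTHETICAL upstream document check.
    # The document image, name, address, and number are never passed in,
    # so this function has nothing else it could store.
    return VerificationRecord(user_id, age_on(extracted_dob, today) >= threshold, today)

record = verify_and_minimize("user-123", date(2008, 3, 1), date(2026, 2, 2))
print(record.is_adult)  # False: this person turns 18 on 1 March 2026
```

This minimization only happens at the end of the pipeline. Everything upstream, where the document itself is transmitted and inspected, still handles the full set of personal data.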

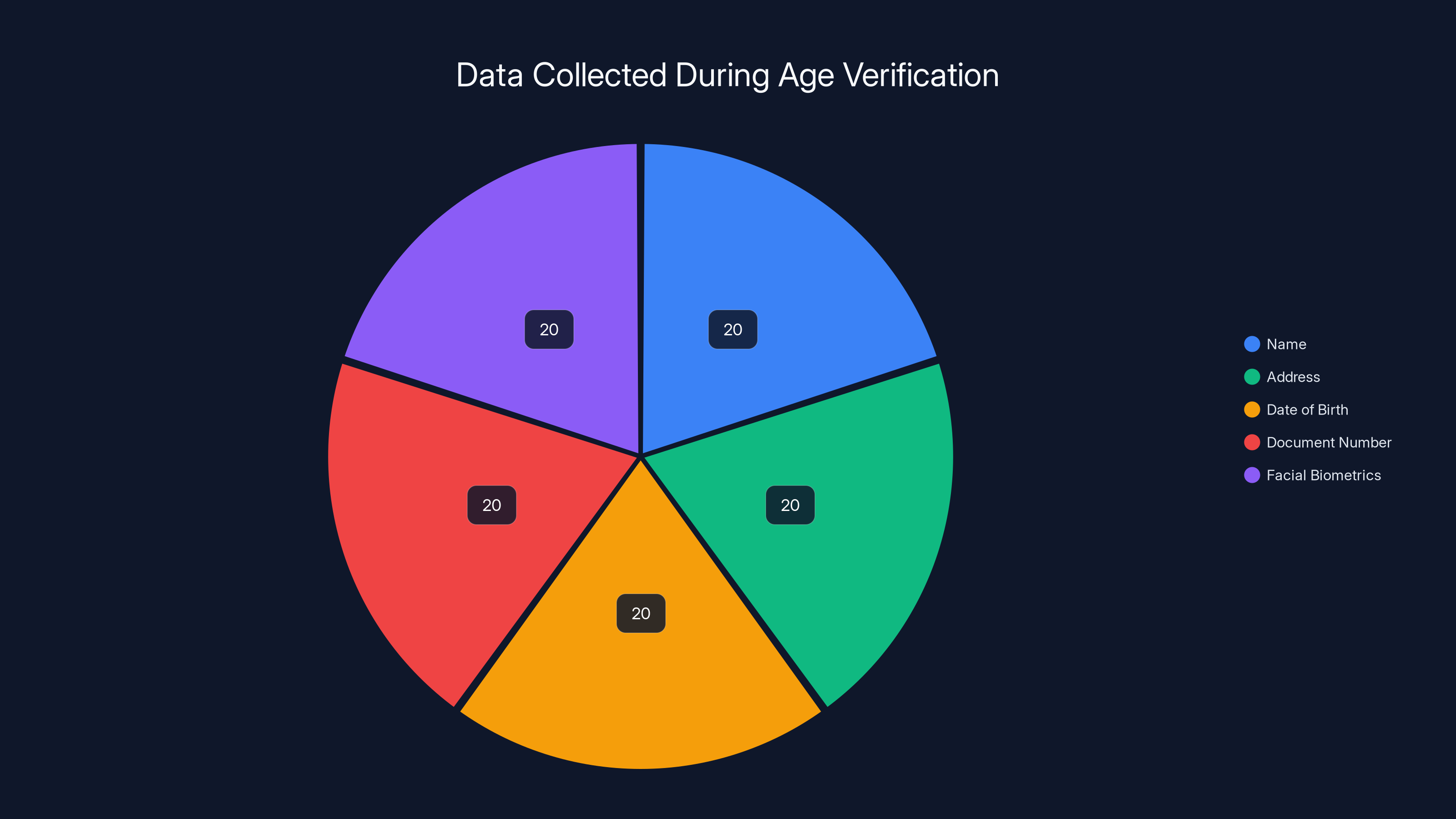

Consider what happens during the verification process: your name, address, date of birth, document number, and facial biometrics (if it's a photo ID) all get transmitted to third-party verification services. These services might be legitimate companies with security practices, or they might be whoever the platform contracts with—sometimes companies with questionable track records. Every additional touchpoint in the data chain creates additional vulnerability.

Adult platforms have long been attractive targets for attackers precisely because their users badly want their data kept private, which makes that data valuable for extortion. Pornhub Premium subscribers were affected when a security incident at Mixpanel, a third-party analytics provider, exposed data about them. The stolen information included email addresses, locations, videos watched, search keywords, and timestamps of activity. Imagine if ID documents had been exposed alongside that data.

Aylo's position is that age verification creates a paradox: to protect children, you must collect massive amounts of adult data, which then becomes an attractive target for criminals. The company claims this isn't theoretical scaremongering—it's based on their operational experience watching how platforms with aggressive data collection get targeted.

Moreover, there's a behavioral aspect worth considering. If age checks shut young people out of mainstream platforms, they are unlikely to simply stop looking. Instead, they might seek out unregulated alternatives such as peer-to-peer file sharing, darknet communities, or sketchy VPN services. From a harm-reduction perspective, Aylo's argument has some merit. A regulated platform with content moderation, copyright protection, and basic safety features might be preferable to whatever teenagers find in truly unregulated spaces.

The counterargument from regulators is that people simply won't care that much—they'll either verify (accepting the privacy trade-off) or move on. But that assumes rational decision-making and perfect information. In reality, many adults might simply use VPNs or proxy services, rendering the age verification pointless while adding another layer of complexity to internet infrastructure.

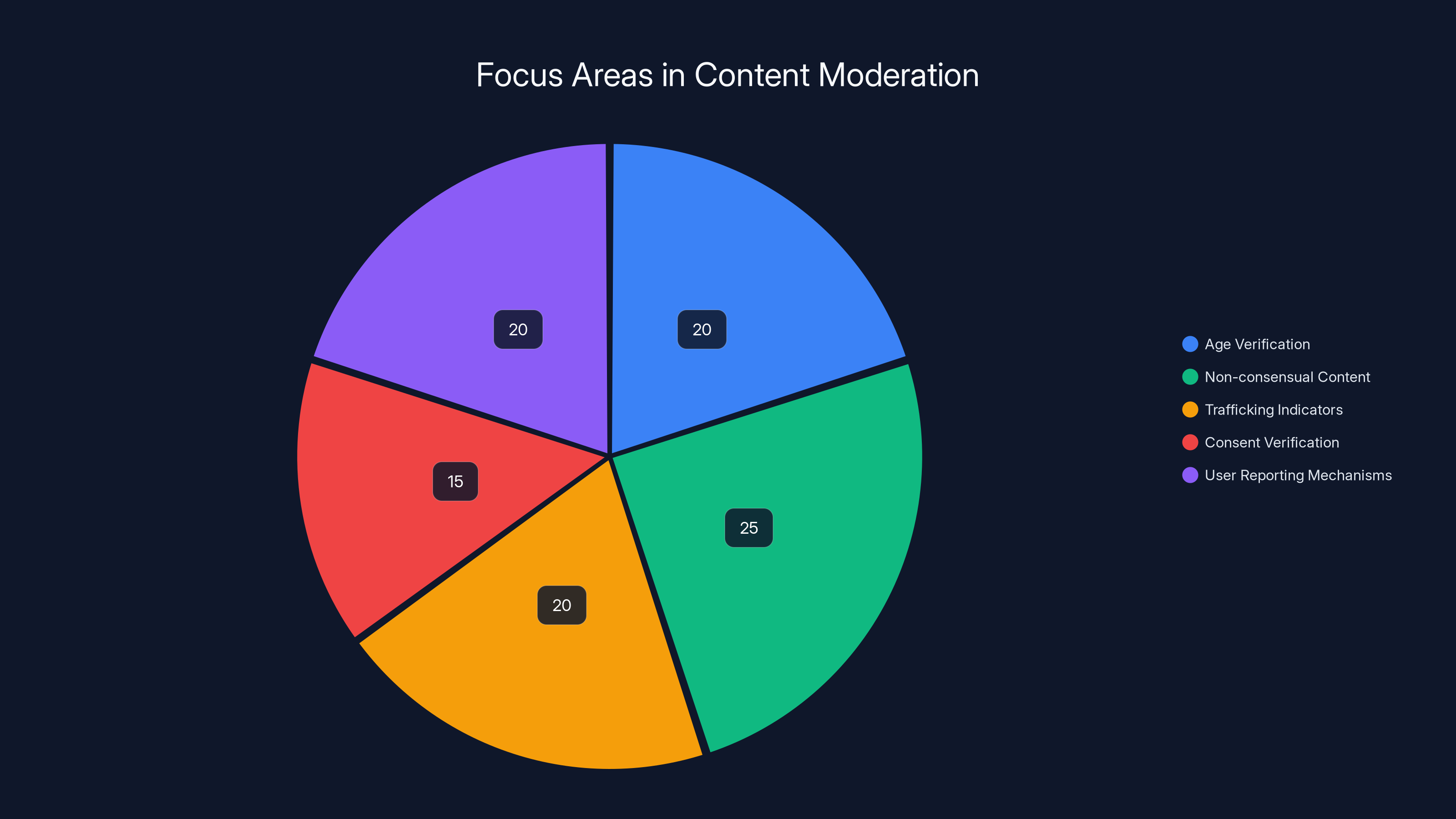

Estimated data shows that while age verification is a visible focus, significant attention is also needed for non-consensual content and trafficking indicators. Estimated data.

Aylo's Global Compliance Strategy: The Pattern of Retreat

The UK decision didn't happen in isolation. Aylo has been navigating age verification requirements in the United States for years, and their pattern suggests a clear preference: when faced with age verification mandates, block access rather than comply. The company has already restricted access to its platforms in multiple US states that require age verification for adult content. These include states like Utah, where legislation passed with broad support but faced immediate practical implementation challenges.

This strategy reveals something important about Aylo's actual priorities and constraints. If the company believed age verification was flawed but fixable, it might lobby for better standards or invest in developing privacy-preserving alternatives. Instead, it consistently chooses to exit markets entirely. This suggests either (a) it genuinely believes age verification poses unacceptable risks, or (b) it has determined that losing a market is less costly than implementing systems it considers problematic.

Likely the answer is both. Adult platforms operate on different economics than other websites. They face constant payment processor disputes, deplatforming pressure, and regulatory hostility. This hostile environment means they have limited capacity to invest in complex compliance infrastructure. When a government adds new regulatory burdens, platforms calculate the cost-benefit and often decide to walk away.

For Aylo specifically, its size gives it more options than smaller competitors have. Blocking entire countries is costly: it means abandoning users, advertising revenue, and subscription income. But for a platform of Aylo's size, it might still be the most cost-effective option compared to building and maintaining age verification infrastructure across multiple jurisdictions with different standards.

Smaller platforms have less ability to make this choice. They either comply or disappear entirely. The UK decision therefore has a selective impact: the largest platforms exit the market, while smaller (and potentially less scrupulous) competitors might attempt to comply, or might ignore the law and accept fines as a cost of business.

This creates a perverse outcome where regulation intended to protect children might actually drive users toward less-reputable platforms with worse content moderation, worse data protection, and worse user safety practices. Ofcom disputes this characterization, but they've offered little evidence that their enforcement actions have actually made children safer—only that they've launched investigations and issued fines.

The Global Precedent: Age Verification Creeping Worldwide

Why does a UK decision about one adult website matter? Because it signals what happens when age verification requirements spread to other jurisdictions—and they absolutely are spreading. The EU has discussed similar mandates, Australia passed its Online Safety (Basic Online Safety Expectations) framework which touches on age verification, and at least a dozen US states have active legislation or legislative proposals requiring age verification for adult content.

If Aylo's approach becomes the dominant strategy, we could see a fragmented internet where major platforms simply exit certain countries entirely rather than comply with local regulations. That's not purely hypothetical—it's already happening. Some platforms have retreated from Russia, some from Turkey, some from Australia. As regulatory complexity increases, more platforms will make similar decisions.

Conversely, if platforms did comply universally, you'd see massive centralization of identity verification data. A handful of verification providers would control which data about which adults accessing which content gets stored where. That concentration creates both security risks (valuable target for hackers) and surveillance risks (potential government access to the data).

Neither outcome is great. The blocking outcome means less access to regulated content and potential net harm to child safety (due to traffic diversion). The compliance outcome means massive privacy implications for adults. Regulators and platforms are essentially at an impasse where both positions have legitimate downsides.

What's missing from the debate is any serious attempt to develop better alternatives. Device-level age verification (as Ofcom mentioned) could theoretically work—verifying age once at the OS level rather than per-website. But that requires coordination between platforms, device manufacturers, and regulators that hasn't materialized. Standards bodies like the World Wide Web Consortium have discussed age verification technology, but there's no consensus on implementation.

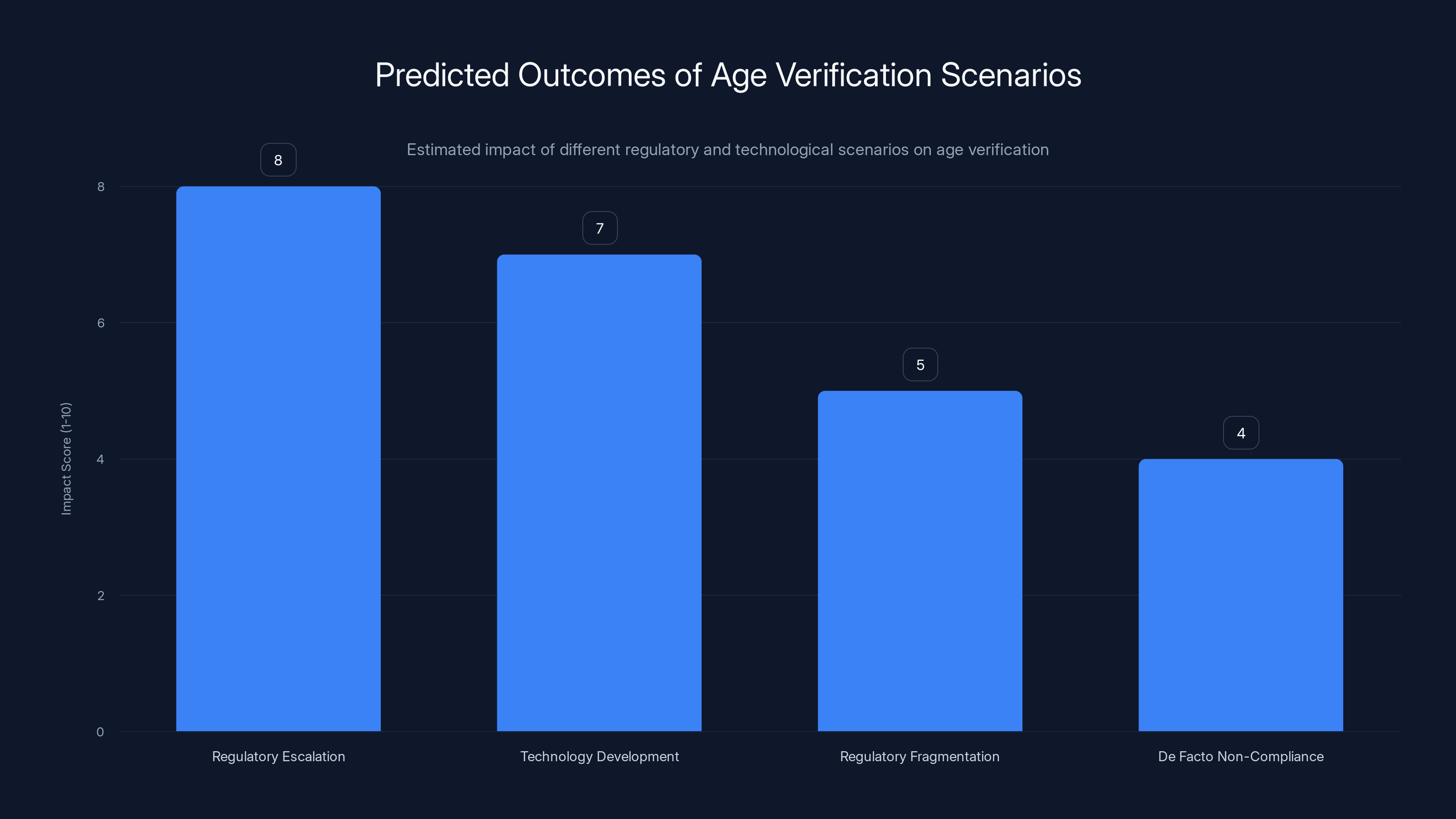

Estimated data: Regulatory Escalation is predicted to have the highest impact on age verification, while De Facto Non-Compliance has the least.

What Actually Happens to UK Users: Practical Impact

Let's talk about the immediate, practical consequences. From February 2, 2026, visitors from UK IP addresses who do not already have a verified account see a block page when they try to reach Pornhub and sister sites such as RedTube. The restriction isn't technically sophisticated: it relies on geographic blocking using IP geolocation databases. Users can bypass it with VPNs, but that creates its own problems. VPN usage might violate some platforms' terms of service, and it adds latency and potential security concerns.

Existing users who completed age verification before the deadline retain access through their accounts. Aylo could in principle keep this arrangement running indefinitely. But from a business and liability perspective, maintaining a separate class of verified accounts creates ongoing regulatory exposure. If a regulator demands proof that only verified users are reaching the platform from the UK, keeping that distinction auditable becomes a continuing compliance burden.
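The access rule described here blocks visitors by IP location but lets previously verified accounts through. It can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Aylo's implementation. The lookup table is a hypothetical stand-in for a commercial IP-geolocation database, and the prefixes are reserved documentation ranges rather than real UK addresses.

```python
import ipaddress
from typing import Optional

# HYPOTHETICAL lookup table standing in for a commercial IP-geolocation
# database. The prefixes are reserved documentation ranges (RFC 5737),
# not real UK or French allocations.
GEO_TABLE = {
    ipaddress.ip_network("203.0.113.0/24"): "GB",
    ipaddress.ip_network("198.51.100.0/24"): "FR",
}
BLOCKED_COUNTRIES = {"GB"}

def country_for(ip: str) -> Optional[str]:
    """Return the country code for an address, or None if it is not in the table."""
    addr = ipaddress.ip_address(ip)
    for network, country in GEO_TABLE.items():
        if addr in network:
            return country
    return None

def allow_access(ip: str, has_verified_account: bool) -> bool:
    """Block requests geolocated to a blocked country unless the visitor is
    signed in to an account that passed age verification before the cutoff."""
    if country_for(ip) in BLOCKED_COUNTRIES:
        return has_verified_account
    return True

print(allow_access("203.0.113.7", has_verified_account=False))  # False: blocked
print(allow_access("203.0.113.7", has_verified_account=True))   # True: existing verified account
```

A VPN defeats this check simply by making the request appear to come from an address that maps to a different country. That is why IP-based blocking is easy to bypass.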

For content creators who have audiences in the UK, this is disruptive. Performers who monetize through Pornhub lose access to that revenue stream from UK viewers. Some might migrate to different platforms or seek alternative monetization. Others might face real financial hardship. The regulation was never intended to harm sex workers, but that's one of its actual effects.

For users, the immediate impact ranges from mild inconvenience to significant privacy concerns depending on how they choose to respond. Some will use VPNs, which adds a security layer (good) but also routes traffic through VPN providers (who might log activity) and violates platform terms (which might result in account suspension). Others will find alternative platforms, which might offer fewer safety features, worse content moderation, or sketchy business practices.

The regulatory theory is that blocking will protect children by limiting access. The practical theory—at least according to Aylo—is that it will push people toward worse alternatives. Neither side has conducted rigorous empirical research on this question, and the six months of data Aylo cited apparently convinced them that harm, not protection, resulted from their compliance attempts.

Ofcom's Counter-Arguments: The Regulator's Perspective

Ofcom, the UK communications regulator, stands by the OSA and disputes Aylo's characterization of its enforcement track record. According to Ofcom, the regulator has actively investigated more than 80 porn sites and issued fines (specifically mentioning a £1 million penalty) with more coming. Ofcom also rejects the premise that age verification necessarily harms privacy, suggesting that device-level verification or other technical alternatives exist that the industry hasn't seriously pursued.

There's an interesting asymmetry in these arguments. Aylo makes empirical claims about what they observed: after six months of compliance, they believe child safety hasn't improved and privacy has been harmed. Ofcom makes forward-looking claims: device-level solutions exist and should be explored. But neither side points to systematic research showing which approach actually works.

Ofcom's claim about enforcement is particularly interesting. They say they've fined porn sites and launched 80+ investigations. Aylo counters that only 4chan was fined. This discrepancy might reflect different timing—Ofcom might be counting investigations launched, while Aylo counts actual completed fines. Or it might reflect that investigations take time and haven't yet resulted in fines beyond the one mentioned.

The regulatory theory behind OSA is that the combination of age verification requirements plus fines for non-compliance will eventually push all platforms to comply. It's a straightforward regulatory approach: set a requirement, investigate violations, fine offenders, watch compliance increase. The problem is implementation at scale. Investigating 80+ sites takes resources. Fining individual sites doesn't change the economics for large platforms. And blocking a country is, from Aylo's perspective, cheaper than the ongoing compliance burden.

Ofcom's suggestion about device-level age verification is intriguing but not well developed in their public statements. How would device-level verification work? Which devices support it? How do you prevent people from spoofing their age at the device level? These questions matter because if device-level verification is actually feasible, it would solve several problems simultaneously—it would be more private (the website doesn't see ID documents), more effective (harder to bypass than website-level checks), and less likely to drive platforms into blocking mode.

But device-level solutions remain immature. Turning them into a dependable standard would require coordination between Apple, Google, Microsoft, and other device manufacturers, and that coordination has not yet materialized. Ofcom's suggestion that industry should "get on with that if they can evidence it is highly effective" puts the burden on platforms to develop technology that benefits regulators and child safety advocates but that platforms have no inherent incentive to build.

Aylo's decision to exit markets is driven by a combination of regulatory compliance costs, market size considerations, and pressures from payment processors and deplatforming. Estimated data.

The Data Breach Risk: Why Platforms Fear Age Verification Data

Aylo's core security argument deserves deeper examination. The claim isn't just theoretical: it's based on actual incident history. Pornhub was exposed to a data breach through Mixpanel, an analytics provider used by the platform. The exposed data included highly sensitive information: subscriber email addresses, locations, videos watched, search keywords, and activity timestamps.

Now imagine if government ID documents had been included in that breach. Users would have faced exposure of their identity alongside their pornography consumption patterns. For some users, this could mean serious real-world harms: employment consequences, family relationship damage, security vulnerabilities (identity theft), or even threats from extremist groups.

This isn't speculative. There have been incidents where adult website users faced extortion or harassment when their viewing habits were exposed. Adding identity information to those breaches would amplify those harms significantly. From a data security perspective, storing ID documents on behalf of adult website users creates a liability that most legitimate companies would try to avoid.

There's also the question of data broker risk. Once ID verification services process documents, that data could theoretically be sold, breached, or leaked by the verification service itself. These third-party services are subject to general data protection law (in the UK, the UK GDPR). They are not subject to the sector-specific regimes that govern banks or, in the US, healthcare providers under HIPAA. In effect, they are private companies handling extremely sensitive information with comparatively little dedicated oversight.

Aylo's position is that the privacy risk is too high relative to the actual child safety benefit. If evidence showed that age verification dramatically reduced minors' exposure to adult content and that the privacy protections were bulletproof, the calculation might be different. But the evidence from OSA's first six months apparently suggested neither condition was met: minors still found ways to access content, and privacy risks remained substantial.

The Behavioral Economics: Do People Actually Care About Age Verification?

Underlying this entire debate is an assumption that might not be true: that most people will comply with age verification requirements. Behavioral economics gives reasons for doubt. People often choose convenience over privacy, but they also often choose privacy over inconvenience. When a requirement delivers both less convenience and less privacy, compliance is likely to fall sharply.

Consider the incentives from a young person's perspective (hypothetically): access to pornography is desirable, but not so desirable that you'd hand over government ID to a random website. The result is that they'll use a VPN, lie about their age, or simply access the content through a different platform. From a harm-reduction perspective, that last option might be the worst—they end up on smaller, less-moderated platforms with worse content controls and potentially worse actors involved.

From an adult's perspective, the incentives are different but similarly problematic. Many adults will grudgingly comply with age verification if it means accessing the platforms they prefer. But some will use VPNs or switch platforms, and others will simply reduce their consumption of the content. Whether that last group is harmed (they wanted access to legal content and lost it) or helped (they wanted to reduce consumption and now have a convenient excuse) depends on your normative views.

What the data probably doesn't show is that young people massively reduced their porn consumption because of age verification. They found alternative methods. Some probably accessed more harmful content as a result of searching harder (driven to seedier parts of the internet). Others might have actually reduced their consumption, either because the friction was enough to dissuade them or because they made a conscious choice not to attempt verification.

Without rigorous studies tracking actual consumption patterns before and after age verification requirements, we're left with both sides making claims that are plausible but unproven.

Age verification systems often collect a wide range of personal data, each contributing equally to privacy risks. Estimated data.

Content Moderation and Platform Responsibility: The Bigger Picture

The age verification debate often overshadows a larger conversation about what adult platforms are actually responsible for. Pornhub, for instance, has faced years of criticism not just about access by minors, but about the platform hosting non-consensual content, content involving trafficking, revenge porn, and other genuinely harmful material.

Age verification is a highly visible response to the "minors accessing adult content" problem. But many of the actual harms, such as trafficking, exploitation, and non-consensual content, can occur regardless of who's accessing the platform. A 25-year-old watching exploitative content is just as problematic from a harm perspective as a 15-year-old, and an age check does nothing to address it. Those harms fall under the OSA's separate illegal-content duties. The age verification requirement itself is narrowly focused on age, not on content quality or consent.

Aylo would probably argue that age verification requirements distract from more important work: content moderation, removing non-consensual material, verifying consent from performers, and building safe reporting mechanisms for users who encounter problematic content. But governments and advocacy groups focused on child safety often prefer age-based restrictions because they're easy to mandate through legislation, even if they're not the most effective at reducing actual harms.

This creates a misalignment between regulatory action and actual harm reduction. A platform could be 100% compliant with age verification requirements and still host massively harmful content. Conversely, a platform with excellent content moderation might be deemed non-compliant simply because it hasn't implemented age verification technology.

The UK's approach with OSA did attempt to address broader content safety issues beyond age verification, but the age verification requirement became the most visible and controversial component. When Pornhub withdrew from the UK market, the conversation focused on access restrictions rather than on whether content moderation might have been a more effective priority.

International Implications: What Other Countries Will Do

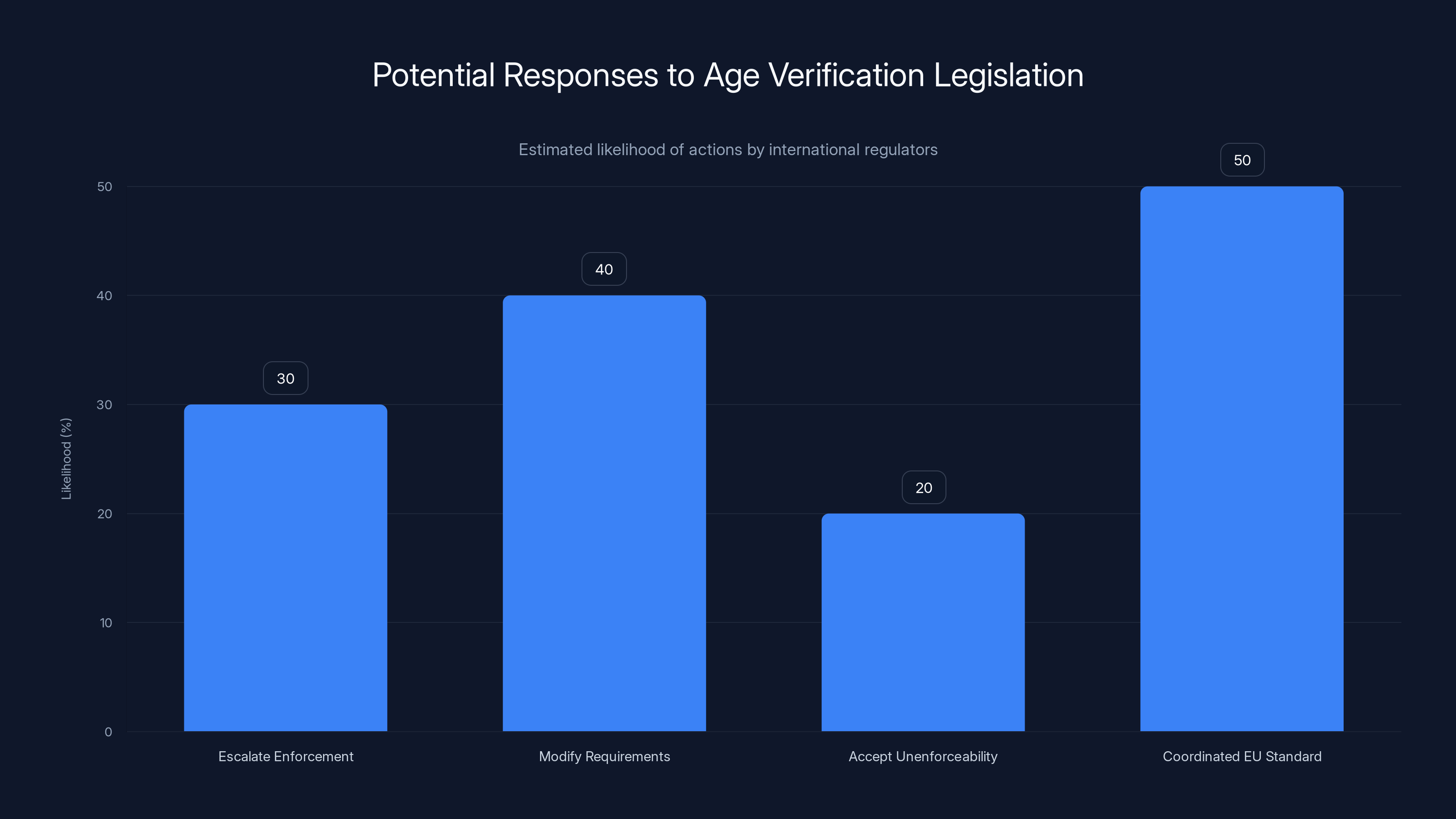

The UK's OSA and Aylo's response will likely influence how other jurisdictions approach similar legislation. If major platforms consistently choose to block countries rather than comply with age verification mandates, regulators will face a choice: escalate enforcement, modify requirements, or accept that their legislation is effectively unenforceable against large platforms.

Escalating enforcement might mean reaching beyond fines. Regulators could seek orders requiring payment providers, advertisers, or internet service providers to withdraw their services, or they could hold corporate executives personally liable. But these tactics work better against platforms that depend on specific jurisdictions. For a global platform with revenue streams worldwide, losing one country's access might be preferable to facing broader enforcement threats.

Modifying requirements might mean moving away from ID-based age verification toward alternative approaches. Device-level verification, issuing government ID verification codes that users can employ across platforms, or a radically simplified process (just asking users to confirm they're over 18 without actually verifying) could all reduce the privacy burden while maintaining some age restriction.

Accepting that legislation is unenforceable is the politically difficult option but might be the most realistic. Many laws are designed assuming good-faith compliance even when enforcement is impractical. Jaywalking laws, traffic violations, and numerous others rely on voluntary compliance. Age verification requirements might eventually reach a similar equilibrium where most platforms ignore them and face occasional fines as a cost of business.

The EU, which tends toward aggressive regulation of tech companies, will likely attempt a coordinated approach where multiple member states require similar age verification standards. This might create enough market pressure that platforms can't simply block one jurisdiction (as Aylo did with UK). A coordinated EU standard affecting hundreds of millions of users would be harder for platforms to ignore. But it would also increase privacy risks proportionally—more states mean more data storage points and more opportunities for breaches.
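The point that "more storage points mean more breaches" can be made precise with a simple model. Assume, purely for illustration, that each of n separate data stores has the same independent probability p of being breached in a given year. The chance that at least one is breached is then 1 - (1 - p)^n, which rises quickly with n. The 2% figure below is hypothetical. Real breach risks are neither equal nor independent.

```python
def prob_at_least_one_breach(p_per_store: float, n_stores: int) -> float:
    """P(at least one of n independent stores is breached), each with probability p.
    Computed as 1 minus the chance that every store stays safe."""
    if not 0.0 <= p_per_store <= 1.0:
        raise ValueError("p_per_store must be between 0 and 1")
    if n_stores < 0:
        raise ValueError("n_stores must be non-negative")
    return 1.0 - (1.0 - p_per_store) ** n_stores

# HYPOTHETICAL 2% annual breach chance per store; 27 = one store per EU member state.
for n in (1, 5, 27):
    print(f"{n:>2} stores: {prob_at_least_one_breach(0.02, n):.1%}")
```

With these made-up inputs, the chance of at least one breach climbs from 2% for a single store to roughly 42% for 27 stores.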

Australia's approach has been somewhat different, focusing on blocking or restricting access to certain services rather than on age verification per se. The US is fragmented, with some states passing age verification requirements while others haven't. That patchwork forces platforms to choose, state by state, between complying and blocking. So far, Aylo has generally chosen to block access in the states that require verification.

Estimated data suggests that a coordinated EU standard is the most likely response to age verification challenges, followed by modifying requirements.

Alternative Technologies: What Could Replace ID-Based Verification

If ID-based age verification is genuinely as problematic as Aylo claims, what alternatives exist? The answer might be: not many good ones currently, but several possibilities worth exploring.

Device-Level Verification: Operating systems could implement age verification at the OS level. A parent or guardian could set the device's age-of-use rating, and websites could query that setting instead of demanding individual verification. This would be more private (no ID documents involved), more effective (harder to circumvent), and less fragmented across websites. The downsides: it requires coordination between multiple device manufacturers, doesn't work if someone's using someone else's device, and still involves some data collection (albeit at a different point).
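Here is a rough sketch of the data flow in a device-level scheme. The `AgeBracket` values and the `gate_adult_content` function are hypothetical and are not any operating system's actual API. The sketch only shows that the website receives a coarse age bracket set on the device, never a name, birthdate, or ID document.

```python
from enum import Enum

class AgeBracket(Enum):
    """HYPOTHETICAL age ranges a device might expose; not a real OS API."""
    UNDER_13 = "under_13"
    TEEN_13_17 = "13_17"
    ADULT_18_PLUS = "18_plus"
    UNKNOWN = "unknown"  # no setting configured, or the user declined to share

def gate_adult_content(device_bracket: AgeBracket) -> str:
    """Decide what a site does using only the bracket the device reports."""
    if device_bracket is AgeBracket.ADULT_18_PLUS:
        return "show"
    if device_bracket is AgeBracket.UNKNOWN:
        return "fallback_check"  # fall back to some other age-assurance method
    return "block"

print(gate_adult_content(AgeBracket.TEEN_13_17))  # block
```

The sketch also shows the weakness noted above: the website trusts whatever bracket the device reports, so a shared or borrowed device passes on its owner's setting.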

Government-Issued Digital IDs: Some countries are developing digital versions of government ID that individuals can control and selectively share parts of. A user could share only their age (as a certified boolean) without revealing their name, address, or document number. This would require government investment and coordination, which partly explains why such schemes are not yet widely available. But it's technically feasible and would solve many privacy issues.
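Selective disclosure of this kind is usually built from salted hash commitments, the approach taken in the IETF's Selective Disclosure for JWTs (SD-JWT) work. The Python sketch below is a simplified, hypothetical illustration of that idea, not a conforming implementation. The issuer commits to every claim, the holder opens only `age_over_18`, and the verifier cannot read the other claims. To stay within the standard library, an HMAC key stands in for the issuer's public-key signature. As a result, this toy verifier shares the issuer's secret, which real schemes avoid.

```python
import hashlib
import hmac
import json
import secrets
from typing import Any, Dict, Tuple

# ASSUMPTION: an HMAC key stands in for the issuer's signature so the sketch
# needs only the standard library. Real credentials use public-key signatures,
# so verifiers never hold the issuer's secret.
ISSUER_KEY = secrets.token_bytes(32)

def _digest(salt: str, name: str, value: Any) -> str:
    """Salted hash commitment to one claim."""
    return hashlib.sha256(json.dumps([salt, name, value]).encode()).hexdigest()

def _sign(digests: list) -> str:
    return hmac.new(ISSUER_KEY, json.dumps(digests).encode(), hashlib.sha256).hexdigest()

def issue(claims: Dict[str, Any]) -> Tuple[dict, dict]:
    """Issuer: commit to every claim and sign only the list of digests."""
    openings = {name: (secrets.token_hex(16), value) for name, value in claims.items()}
    digests = sorted(_digest(salt, name, value) for name, (salt, value) in openings.items())
    return {"digests": digests, "sig": _sign(digests)}, openings

def present(credential: dict, openings: dict, reveal: str) -> dict:
    """Holder: send the signed digests plus the opening of ONE claim."""
    salt, value = openings[reveal]
    return {"credential": credential, "disclosed": [salt, reveal, value]}

def verify(presentation: dict) -> Dict[str, Any]:
    """Verifier: check the signature, then check the opened claim was committed."""
    cred = presentation["credential"]
    if not hmac.compare_digest(_sign(cred["digests"]), cred["sig"]):
        raise ValueError("bad issuer signature")
    salt, name, value = presentation["disclosed"]
    if _digest(salt, name, value) not in cred["digests"]:
        raise ValueError("claim was not issued")
    return {name: value}

credential, openings = issue(
    {"name": "A. Example", "birth_date": "1990-04-01", "age_over_18": True})
print(verify(present(credential, openings, "age_over_18")))  # {'age_over_18': True}
```

The random salts matter. Without them, a verifier could guess common values such as birth dates and hash them to test against the digests.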

Third-Party Adult Verification Services: Instead of each platform running its own verification, a trusted third party could issue age-verification tokens that users could carry across websites. The token proves age without proving identity. Users would need to verify with the third party once, then could use the token everywhere. This still creates a data concentration problem (the third party becomes a single point of failure) but reduces the number of companies holding sensitive data.
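A reusable age token can be sketched in a similar way. In this hypothetical Python example, a verification service mints a token whose payload holds only an `over_18` claim and an expiry time, and any participating website can check it. `SERVICE_KEY`, `mint_token`, and `accept_token` are illustrative names. A shared HMAC secret again stands in for a real public-key signature, which in practice would let websites verify tokens without being able to forge them.

```python
import base64
import hashlib
import hmac
import json

# HYPOTHETICAL demo secret. A real service would sign with a private key and
# publish the public key, so websites could verify but not mint tokens.
SERVICE_KEY = b"demo-only-secret"

def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")

def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))

def mint_token(now: int, ttl_seconds: int = 30 * 24 * 3600) -> str:
    """Run once by the verification service after the user proves their age.
    The payload holds an age claim and an expiry, and no identity."""
    payload = json.dumps({"over_18": True, "exp": now + ttl_seconds}).encode()
    sig = hmac.new(SERVICE_KEY, payload, hashlib.sha256).digest()
    return _b64(payload) + "." + _b64(sig)

def accept_token(token: str, now: int) -> bool:
    """Run by any participating website before showing restricted content."""
    try:
        payload_b64, sig_b64 = token.split(".")
        payload, sig = _unb64(payload_b64), _unb64(sig_b64)
    except ValueError:  # wrong shape or invalid base64 (binascii.Error)
        return False
    expected = hmac.new(SERVICE_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, sig):
        return False
    claims = json.loads(payload)
    return claims.get("over_18") is True and now < claims["exp"]

token = mint_token(now=1_700_000_000)
print(accept_token(token, now=1_700_000_600))  # True: valid and unexpired
```

One design consequence is worth noting. A bearer token like this proves that someone passed a check, not that the person presenting it did. It can therefore be shared or resold unless it is bound to a device or session.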

Metadata Analysis: Some researchers have explored using behavioral signals (account age, payment history, etc.) to estimate user age without requiring explicit verification. This would be less invasive than ID checks but potentially less accurate. It's also ethically questionable to be judging people's age through their behavior without their explicit consent.

Mesh Verification Networks: Theoretically, a distributed network of verification nodes could verify age claims without requiring ID documents to be stored anywhere permanently. This is technologically complex and probably impractical at scale, but the theoretical approach would minimize privacy risks while maintaining verification capability.

None of these alternatives is deployed at scale yet. Device-level verification faces coordination challenges. Government digital IDs are years away in most countries. Third-party verification services raise new data concentration concerns. Metadata analysis is ethically questionable. Mesh networks are speculative.

This gap between what we need (effective age verification without massive privacy risks) and what exists (privacy-invasive ID checks or blocking) explains why the debate is so contentious. Both existing options have serious downsides, and the alternatives aren't ready yet.

The Content Creator Perspective: Sex Workers and Performers Affected

One perspective often missing from the age verification debate is that of content creators themselves—the people who produce the material being regulated. For many, platforms like Pornhub provide primary or supplementary income. Blocking entire countries directly harms these creators by eliminating a revenue stream.

A performer with a global audience might see 10-15% of their views come from UK users. When that country gets blocked, the revenue tied to those views disappears, so income falls by roughly the same share if earnings track views. For creators with modest incomes, this could mean significant financial hardship. For more successful creators, it might be noticeable but manageable. But the aggregate effect across thousands of creators is substantial: it's a tax on creative workers to fund child safety initiatives that evidence suggests might not actually work.
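The arithmetic is simple but worth spelling out. This Python sketch assumes, as a simplification, that a creator's revenue scales linearly with views. The £2,000 monthly income in the usage example is hypothetical.

```python
def uk_block_loss(monthly_revenue: float,
                  uk_share_low: float = 0.10,
                  uk_share_high: float = 0.15) -> tuple:
    """Low and high estimates of monthly revenue lost when UK views vanish,
    assuming revenue is proportional to views (a simplifying assumption)."""
    return monthly_revenue * uk_share_low, monthly_revenue * uk_share_high

low, high = uk_block_loss(2_000)  # hypothetical creator earning £2,000 a month
print(f"Estimated monthly loss: £{low:,.0f} to £{high:,.0f}")  # £200 to £300
```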

Sex workers and adult content creators often have limited alternatives for income. Many face employment discrimination if their involvement in sex work becomes known. Reducing their income opportunities through geographic blocking has real consequences for their economic security. Some might migrate to different platforms or different business models (moving to custom content creation, subscription services, etc.), but that's not trivial—it requires rebuilding audiences and changing entire business models.

Interestingly, creators themselves have mixed opinions on age verification. Some see it as a legitimacy play: platforms that verify age appear more professional and might attract higher-paying users. Others see it as an invasion of privacy that they're forced to facilitate on behalf of the platforms they publish through. The debate about whether age verification helps or hurts sex workers as a group is complex and probably varies by individual circumstances.

When Aylo decided to block the UK, they presumably calculated that the revenue loss from UK users was acceptable. But that calculation doesn't just affect the company's bottom line—it affects every content creator on their platform who had revenue tied to UK users. That's a real material consequence of the age verification debate that regulatory discussions don't always acknowledge.

Payment Processing and De-platforming: The Real Enforcement Mechanism

One detail worth highlighting: the actual enforcement of age verification requirements isn't primarily through fines for non-compliance. It's through payment processors. Companies like Visa, Mastercard, and Stripe have their own policies around adult content and age verification. A platform that's non-compliant with government regulations becomes a liability for payment processors, because handling its payments could expose the processors themselves to regulatory scrutiny.

This means the real pressure on platforms comes not from government fines but from payment processor threats. The fines imposed so far have been small relative to platform revenue, even though the Online Safety Act allows penalties of up to £18 million or 10% of qualifying worldwide revenue, whichever is greater. If Visa decides not to process payments for a platform, that platform effectively can't operate, regardless of what users want or what the law technically requires.

Payment processor policies are private corporate decisions, not government regulations. They're arguably more powerful than government mandates because they're harder to challenge or negotiate with. A platform can fight a government fine in court. It can appeal regulatory decisions. But if Visa cuts off processing, the platform's business essentially ends immediately.

Aylo's decision to block the UK might have been influenced partly by payment processor pressure—either actual threats or the anticipation that payment processors would demand compliance. Interestingly, payment processors have been less aggressive about blocking adult platforms than they once were, partly due to advocacy by sex workers' rights groups about the harms of de-platforming. But the potential for processor-based enforcement remains the most powerful tool in the regulatory arsenal.

This creates a multi-layered enforcement system where government mandates are backed by payment processor policy, which is backed by corporate discretion rather than legal requirement. It's functionally equivalent to regulation but happens through private actors, which means less due process and less opportunity for public debate.

Privacy Advocacy Perspectives: Why Civil Liberties Groups Are Concerned

Beyond platform concerns about data security, civil liberties and privacy advocacy organizations have raised significant concerns about age verification requirements. Organizations like the Electronic Frontier Foundation, Privacy International, and various digital rights groups have published detailed critiques of ID-based age verification mandates.

Their core argument: collecting ID documents from millions of adults accessing legal content creates a dangerous precedent. If governments can mandate age verification for adult content, what's to stop them from mandating similar data collection for other "sensitive" content? Political speech? Religious material? News sources? Once you establish that government can force collection of identity data for accessing legal content, you've created a system that could theoretically be extended far beyond its original purpose.

Historically, privacy advocates have been right to worry about scope creep. Laws passed for one purpose often get repurposed for others. A system built to verify age for pornography could be repurposed to track access to abortion information, political speech, or other sensitive content. In countries with less robust free speech protections than the UK, the risks are even more significant.

Some privacy advocates also raise concerns about the databases used for age verification. If these databases are compromised or if they're eventually accessed by government agencies for law enforcement purposes (with warrants or without), the consequences could be severe. A data breach exposing adult content consumption alongside personal identity would be catastrophic for privacy.

Privacy organizations generally support alternatives like device-level verification that don't require ID documents, or strong legal restrictions on how age verification data can be used and stored. But they're skeptical that such protections would hold up indefinitely against government pressure or corporate misuse.

These aren't fringe concerns. They're based on historical precedent and reasonable projection of how systems designed for one purpose often drift toward surveillance purposes over time. The debate between child protection advocates (who want age verification) and privacy advocates (who oppose it) is fundamentally about whether we should trade individual privacy for collective safety—and different people have different answers to that question.

What Happens Next: Predictions and Uncertainties

Predicting the future of age verification regulation is difficult because multiple actors with different incentives are involved. But several scenarios seem plausible.

Scenario 1: Regulatory Escalation: The UK and other governments respond to Aylo's blocking by increasing fines, threatening payment processor regulation, or taking more aggressive enforcement action. Platforms invest in compliance infrastructure because ignoring the requirement becomes too costly. Eventually, age verification becomes standard, with better privacy protections developed over time. Outcome: Platforms comply and ages get verified, with some privacy harm that regulation keeps manageable. Risk: Systems get repurposed for surveillance.

Scenario 2: Technology Development: Alternative verification methods are developed (device-level, government digital IDs, etc.) that offer age verification with less privacy invasion. Platforms adopt these alternatives. Regulators accept them as compliance. Outcome: Age verification persists but with better privacy protections. Risk: Alternative technologies take years to develop and deploy; compliance gaps meanwhile.

Scenario 3: Regulatory Fragmentation: Different jurisdictions require different systems. Platforms can't economically comply with every variation. They either block multiple countries (shrinking addressable markets) or leave the business entirely (reduced innovation and potential negative externalities). Outcome: Fragmented internet with geographic restrictions. Risk: Harms to users, creators, and platforms; potential unintended consequences.

Scenario 4: De Facto Non-Compliance: Platforms ignore age verification requirements, accept occasional fines as a cost of doing business, and continue operating as before. Users bypass restrictions with VPNs when needed. Outcome: Regulation exists but is largely unenforced. Risk: The harms regulators wanted to prevent continue, and the regulation's legitimacy is called into question.

Scenario 5: Market Contraction: Rather than deal with regulatory complexity, large platforms increasingly exit the adult content business. Smaller, less scrupulous platforms fill the gap. Outcome: The adult industry becomes less regulated, less safe, and potentially more exploitative. Risk: Unintended consequences worse than the original problem.

The most likely outcome probably involves elements of multiple scenarios: some regulatory escalation in some jurisdictions, some technology development moving forward slowly, some geographic blocking by platforms, and a slow-moving regulatory evolution as evidence accumulates about what actually works.

The next few years will likely see more jurisdictions attempt similar legislation, more platforms develop responses, and more debate about whether age verification is actually effective. The UK case will be closely watched as a test case: does blocking actually harm child safety by pushing users to worse platforms, or does it work as intended?

Until someone conducts rigorous empirical research on these questions, both sides of the debate will continue arguing based on theory and assumption rather than evidence.

Lessons for Digital Regulation: Key Takeaways

The Pornhub UK situation offers lessons about digital regulation more broadly. First, regulations that require platforms to collect sensitive personal data will face strong opposition from privacy advocates and platforms with data security concerns. Regulators can mandate compliance, but they can't force platforms to accept the regulatory burden—blocking is always a potential response.

Second, child safety is an important goal, but regulation designed to achieve it must actually work empirically. Good intentions don't guarantee good outcomes. A regulation that forces data collection to protect children but ends up pushing more people toward worse alternatives has failed by its own measure. Any age verification requirement should be accompanied by research measuring whether it actually reduces harm.

Third, one-size-fits-all regulations don't work when technology companies operate globally. Different jurisdictions will require different approaches, and platforms must either develop complex compliance systems or make blocking decisions. Coordination between regulators could reduce this problem, but it requires international cooperation that's rare for internet regulation.

Fourth, platforms are not obligated to operate in every jurisdiction. When the regulatory burden becomes too high, blocking is a rational business response. This creates a different kind of harm (denying access to legal content) that regulators should weigh against the harm they're trying to prevent.

Fifth, the privacy concerns raised by platforms and privacy advocates are legitimate and worth taking seriously. Collecting ID documents from adults to protect children is a trade-off with real costs. Better alternatives (device-level verification, government digital IDs, etc.) don't exist yet but should be actively developed rather than assuming current approaches are optimal.

Sixth, enforcement by payment processors and other private actors is often more powerful than government regulation. Understanding these hidden enforcement mechanisms is essential for understanding how regulation actually works. Laws might mandate one thing, but payment processors might enforce something different.

These lessons apply beyond adult content regulation. They apply to privacy-invasive regulations generally, to age verification for other content types, and to internet regulation more broadly. Regulators who ignore them risk creating laws that don't achieve their intended goals while imposing real costs on users, creators, and platforms.

Conclusion: The Unresolved Tension Between Safety and Privacy

Aylo's decision to block Pornhub in the UK represents more than a business decision by one company. It's a signal about the fundamental tension between child safety and digital privacy that regulators worldwide will have to grapple with in coming years. There's no easy answer where both values are fully respected. Every approach involves trade-offs.

ID-based age verification offers real protection (some young people will decide not to attempt access rather than hand over ID documents), but it comes with genuine privacy costs and potential for surveillance misuse. Blocking offers privacy protection (no ID documents collected) but harms users who want legal access and potentially drives them to worse alternatives. Device-level verification could theoretically solve both problems, but the technology doesn't exist yet and requires coordination between companies and governments that hasn't materialized.

What we know after Aylo's decision: major platforms will not accept unlimited data collection in the name of child protection. Regulators can force the issue through fines and payment processor pressure, but platforms can always choose to exit rather than comply. This gives platforms veto power over regulation, which isn't ideal but is the reality of the current internet infrastructure.

What we don't know: whether age verification actually works at reducing harm to children. Aylo claims it doesn't, pointing to diverted traffic and privacy concerns. Ofcom disputes this but hasn't provided independent evidence. A rigorous study measuring actual changes in youth access to adult content and actual changes in privacy risks would be valuable, but such research hasn't been conducted or published.

Moving forward, the question isn't whether Aylo's decision is right or wrong. It's whether regulators will respond by (1) accepting that age verification won't be universal and adjusting their expectations, (2) developing alternative approaches that don't require ID documents, or (3) escalating enforcement to make non-compliance more costly than blocking. Each path has different implications for users, creators, platforms, and privacy.

The UK's Online Safety Act represented ambitious regulation of internet content. The Pornhub blocking shows that ambition hits real limits when platforms make different calculations about costs and benefits. Future regulations—whether in the UK, EU, US, or elsewhere—will need to account for these limits or risk creating rules that look good on paper but fail in practice or have unintended consequences worse than the problems they're meant to solve.

The conversation about how to protect children online is important and necessary. But it needs to happen with eyes open to the actual trade-offs involved and with willingness to develop better solutions than ID-based age verification. Until then, we're stuck in a debate where both sides have legitimate points and neither side has a solution that doesn't create new problems.

FAQ

What is the UK Online Safety Act and why does it require age verification?

The Online Safety Act is comprehensive legislation designed to protect users, particularly children, from harmful online content. For adult websites specifically, it mandates age verification to prevent minors from accessing sexually explicit material. The law essentially requires websites to verify users are over 18 before displaying age-restricted content, with non-compliance resulting in fines and regulatory action.

Why did Aylo choose to block UK access instead of implementing age verification?

Aylo's decision was based on operational experience after six months of compliance attempts. The company argued that age verification failed to meaningfully reduce minor access while creating significant privacy risks through collection of sensitive identity documents. Additionally, Aylo believed the requirement was driving traffic toward unregulated platforms with worse safety practices. From a cost-benefit perspective, blocking an entire country became preferable to maintaining ID verification infrastructure.

How does age verification technology work and what are the privacy concerns?

Cloud-based age verification typically requires users to upload government-issued ID documents (driver's licenses, passports, national IDs). The system verifies document authenticity and extracts the date of birth. Privacy concerns include: the collection and storage of highly sensitive biometric and identity data, vulnerability to data breaches (with real consequences for users if exposed alongside viewing habits), reliance on third-party verification services with questionable security practices, and the precedent it sets for mandatory identity collection to access legal content.
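The final step described above, turning an extracted date of birth into an over-18 decision, is simple arithmetic. What matters for privacy is what gets kept afterward. The minimal Python sketch below assumes the document has already been authenticated and its fields extracted (neither step is shown, and the field names are hypothetical). It computes the decision and returns only a yes/no result, in the spirit of the storage restrictions privacy advocates call for.

```python
from datetime import date


def is_at_least(date_of_birth: date, today: date, threshold: int = 18) -> bool:
    """True if someone born on date_of_birth is at least `threshold` years old today."""
    # Subtract a year if this year's birthday hasn't arrived yet.
    birthday_passed = (today.month, today.day) >= (date_of_birth.month, date_of_birth.day)
    age = today.year - date_of_birth.year - (0 if birthday_passed else 1)
    return age >= threshold


def minimized_result(extracted_fields: dict, today: date) -> dict:
    """Return only the yes/no outcome; the caller can then discard the extracted fields."""
    return {"over_18": is_at_least(extracted_fields["date_of_birth"], today)}


if __name__ == "__main__":
    # Hypothetical fields, as if extracted from an uploaded ID document.
    fields = {"name": "Jane Example", "date_of_birth": date(2007, 6, 15),
              "document_number": "X1234567"}
    print(minimized_result(fields, today=date(2025, 6, 14)))  # {'over_18': False}
    print(minimized_result(fields, today=date(2025, 6, 15)))  # {'over_18': True}
```

Whether a real provider actually discards the source document is a policy and auditing question. Code like this only shows that the website itself never needs more than one bit.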

Will other countries follow the UK's age verification approach?

Multiple jurisdictions are considering similar legislation. The EU is discussing coordinated age verification standards, Australia has ongoing regulatory efforts, and numerous US states have proposed or passed age verification requirements for adult content. However, Aylo's blocking response suggests platforms may increasingly choose market exit over compliance, potentially leading to geographic fragmentation of the internet. International coordination between regulators might force broader compliance than individual country requirements.

What alternatives to ID-based age verification exist?

Several alternatives have been proposed but not yet implemented at scale: device-level verification (implemented at the operating system level to avoid per-website ID checks), government-issued digital IDs that selectively share only age information, third-party verification services that issue age-verification tokens, and behavioral analysis using account history and payment data. Each alternative has trade-offs between effectiveness, privacy protection, and implementation complexity. Device-level solutions would require coordination between device and operating-system makers that hasn't materialized.

How are content creators and sex workers affected by age verification requirements?

Content creators on adult platforms depend on global audiences for income. Geographic blocking from the UK or other jurisdictions directly reduces revenue for performers. Some creators see age verification as legitimizing platforms, while others view it as a privacy invasion they're forced to facilitate. The aggregate effect across thousands of creators is a substantial reduction in income opportunities. Platforms' decisions about compliance or blocking have direct economic consequences for individuals dependent on adult platform income.

What does Ofcom say about Aylo's claims?

Ofcom, the UK regulator, disputes Aylo's characterization on multiple points. The regulator claims it has actively enforced the Online Safety Act, investigating over 80 porn sites and issuing fines (specifically mentioning a £1 million penalty) with more enforcement coming. Ofcom also suggests that device-level age verification technology could provide effective alternatives to ID-based systems without requiring the industry to abandon age verification entirely. The discrepancy between Aylo's and Ofcom's claims about enforcement suggests either different timelines or different counting methodologies.

What are the security risks of storing ID documents for age verification?

Historically, adult websites have been targeted by sophisticated attackers because the data is valuable (users want viewing habits kept private). Pornhub itself experienced a data breach through a third-party analytics provider that exposed subscriber information including emails, locations, and viewing patterns. If government ID documents had been included in that breach, users would have faced identity theft risks, employment consequences, and potential threats from extremist groups. The more sensitive data stored alongside behavioral data, the more valuable the target becomes to criminals.

Could young people simply use VPNs to bypass the UK age verification block?

Yes, VPNs and other anonymizing tools could technically bypass geographic blocking. However, using VPNs to circumvent platform terms of service can result in account suspension or banning. Additionally, many users don't know how to use VPNs, and some worry about security risks from VPN services. From a harm-reduction perspective, the effort required to use a VPN means some young people will simply move to alternative platforms rather than attempt circumvention, potentially accessing less moderated or less safe content.

What do privacy advocates say about age verification requirements?

Organizations like the Electronic Frontier Foundation and Privacy International raise concerns that age verification creates a dangerous precedent for mandatory identity collection to access legal content. Their arguments: scope creep could eventually extend similar requirements to political speech, religious material, or other sensitive content; compromised databases could enable government surveillance; and historical experience shows laws passed for one purpose often drift toward other purposes over time. Privacy advocates generally support alternatives like device-level verification that avoid centralized identity data collection.

Is age verification actually effective at protecting children from adult content?

There is insufficient independent evidence to definitively answer this question. Aylo claims that after six months of implementation, age verification failed to meaningfully reduce minor access and drove traffic toward unregulated alternatives. Ofcom disputes this characterization but hasn't published independent data showing effectiveness. No rigorous academic studies appear to measure actual changes in youth exposure to adult content or actual changes in privacy harms. The regulatory effectiveness of age verification remains empirically unproven despite being legally mandated.

TL;DR

- Aylo blocks Pornhub in UK: Instead of complying with age verification mandates from the Online Safety Act, Aylo chose to restrict all UK access effective February 2, 2025, claiming that 6 months of enforcement showed the law failed to reduce minor access while creating privacy risks.

- Core debate: Age verification requires collection of sensitive identity documents (government IDs) to verify age, creating data breach vulnerabilities and privacy risks, while its effectiveness at preventing youth access remains unproven.

- Regulatory disagreement: Ofcom (the UK regulator) claims it has fined porn sites and launched 80+ investigations, while Aylo argues only one site faced enforcement and diverted traffic has harmed child safety by pushing users toward unregulated platforms.

- Privacy concerns are serious: Platforms worry that mandatory identity collection creates surveillance infrastructure that could eventually be repurposed, and historical data breaches show adult website users face real-world harms if viewing data is exposed alongside identity information.

- No perfect solution exists yet: Alternative technologies like device-level verification could solve both problems (safety + privacy) but don't exist at scale and would require coordination between device manufacturers and regulators that hasn't happened.

- Global precedent matters: Other jurisdictions including the EU, Australia, and multiple US states are considering similar age verification requirements, so the UK case will influence how online regulation evolves worldwide.

- Bottom line: The Pornhub UK blocking reveals the fundamental tension between child safety and digital privacy: regulators can mandate data collection, but platforms can choose market exit, and no approach protects both values simultaneously without creating new problems.

Key Takeaways

- Aylo blocked Pornhub in the UK on February 2, 2025 rather than implement age verification, citing privacy risks and ineffectiveness after 6 months of compliance

- Age verification requires collecting sensitive government ID documents, creating data breach vulnerabilities and surveillance concerns that extend beyond child safety

- Disagreement between Aylo and Ofcom shows regulatory uncertainty: regulators claim enforcement is active while platforms claim only minimal fines have been issued

- Alternative technologies like device-level or government-issued digital age verification could theoretically solve both safety and privacy problems but don't exist at scale yet

- When regulation becomes too burdensome, major platforms have the market power to choose geographic blocking over compliance, fragmenting the global internet

- Privacy advocates raise legitimate concerns that mandatory ID collection for accessing legal content sets dangerous precedents for government surveillance infrastructure

- Content creators and sex workers face direct financial harm when platforms block entire countries due to regulatory pressure

- The UK case will likely influence how other jurisdictions approach age verification, making this a critical test case for digital regulation globally

