Pornhub's UK Shutdown: Age Verification Laws, Tech Giants, and Digital Censorship [2025]

On February 2, the world's largest adult content platform simply stopped accepting new users in the United Kingdom. No dramatic announcement. No press conference. Just a digital door slam that locked out millions of potential new visitors.

Pornhub didn't do this because of a sudden change in morality. The company, owned by Ethical Capital Partners (ECP) through its subsidiary Aylo, took this action as a protest against what it calls a "flawed" age verification system mandated by the UK's Online Safety Act. The company argues the law creates an illusion of child protection while doing almost nothing to actually protect children from explicit content online, as reported by Biometric Update.

This move represents far more than one adult site pulling out of a market. It's a watershed moment in the ongoing tension between government regulation, corporate responsibility, and the practical reality of digital age verification. The decision exposes critical gaps in how democracies are trying to protect minors from adult content, and it raises uncomfortable questions about whether the tech giants—Apple, Google, and Microsoft—should be forced to solve a problem that their own platforms enable.

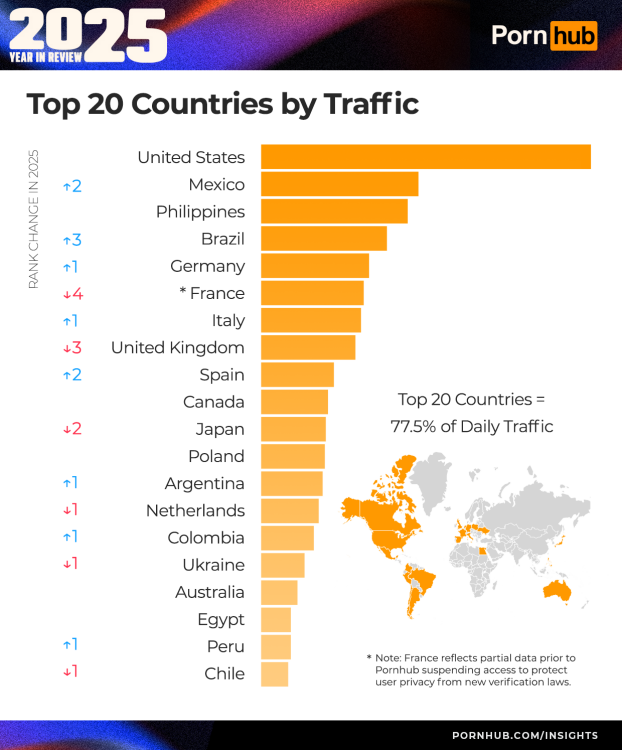

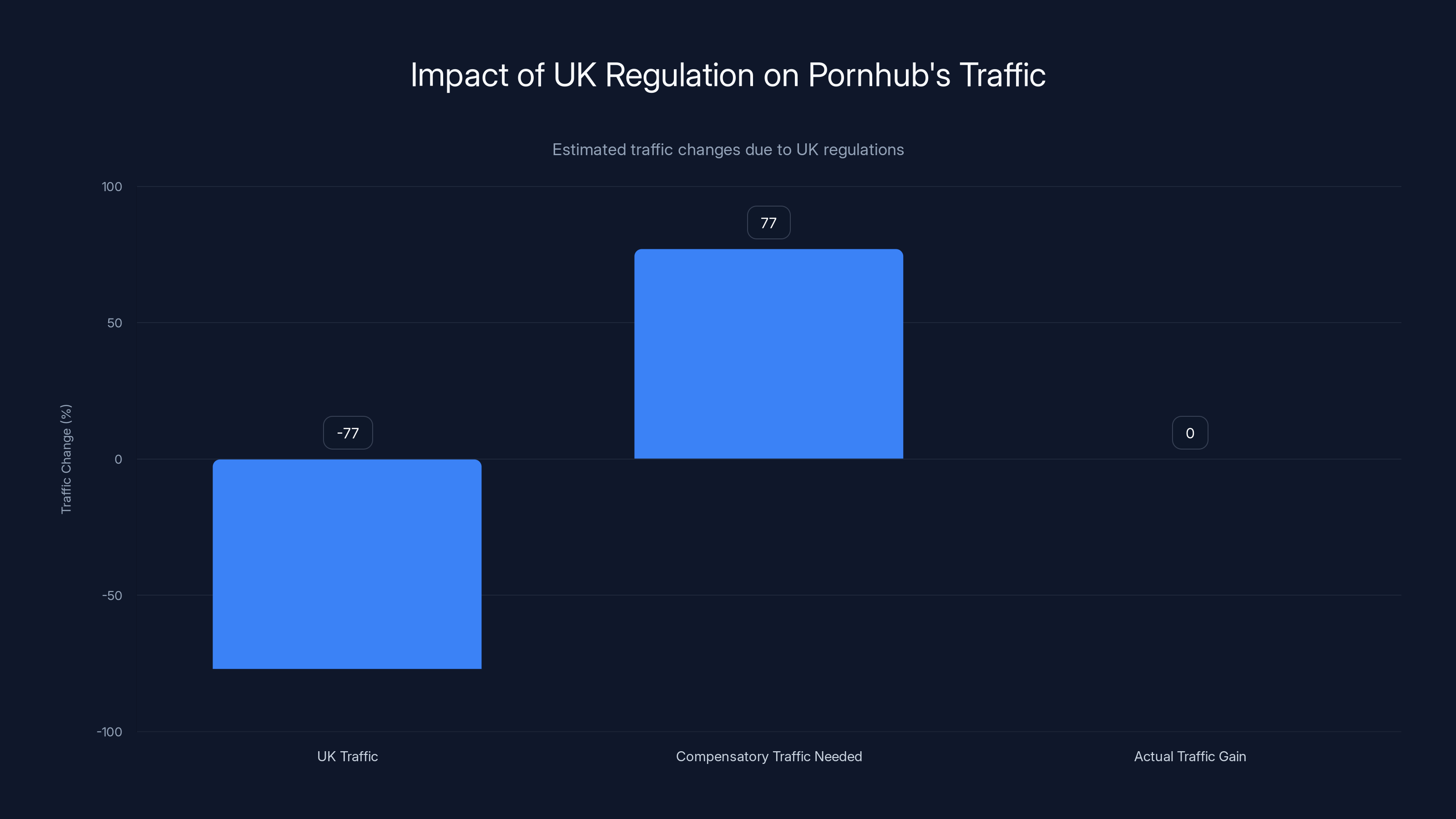

Since the Online Safety Act provisions kicked in last July, Pornhub's UK traffic dropped 77 percent. The company watched its audience evaporate. But rather than quietly absorb the hit, Pornhub's leadership decided to make a statement: if the UK wants meaningful age verification, it needs to force tech companies to build it at the device level, not at the application level.

The irony is sharp. A pornography company is now the loudest voice arguing for better child safety online. And they might actually have a point.

What Happened: The UK's Online Safety Act and Age Verification Mandate

The Online Safety Act wasn't written to target adult websites specifically. The legislation, which became law in October 2023 and whose age-verification duties took effect in July 2025, represents the UK government's ambitious attempt to regulate the entire internet within its borders. Think of it as a sweeping modernization of digital responsibility laws, covering everything from social media algorithms to content moderation to, yes, pornography.

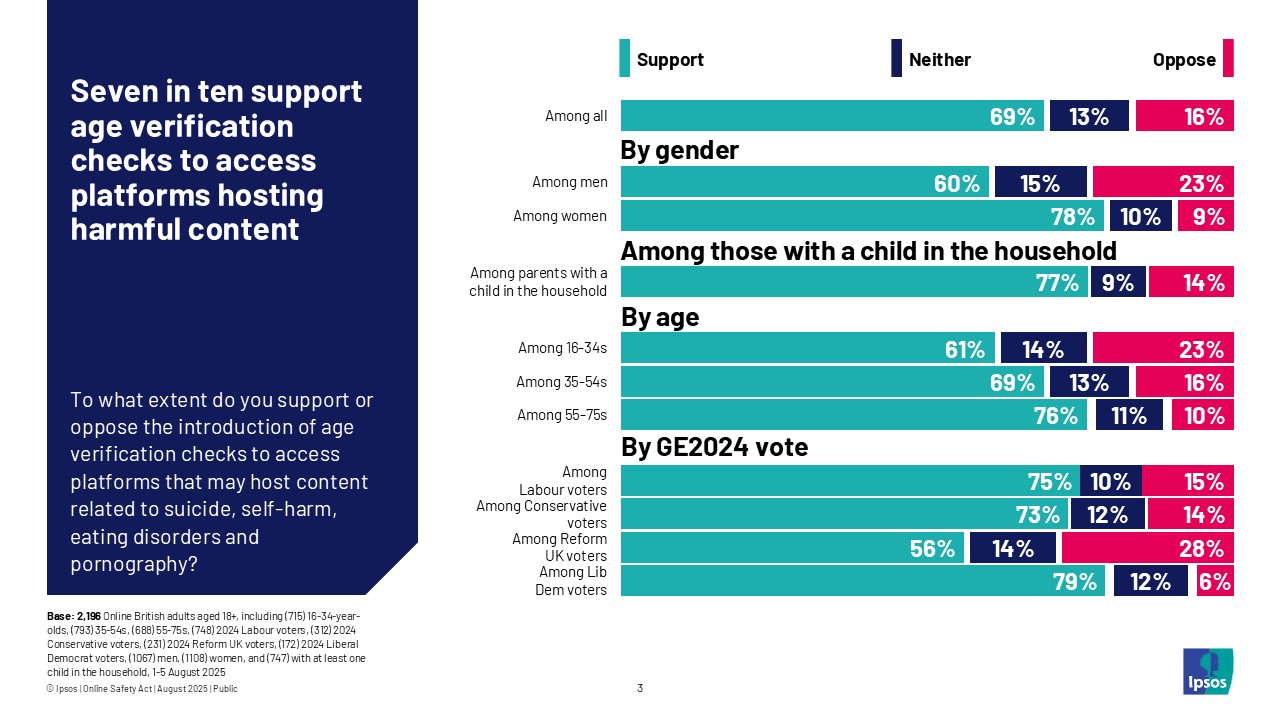

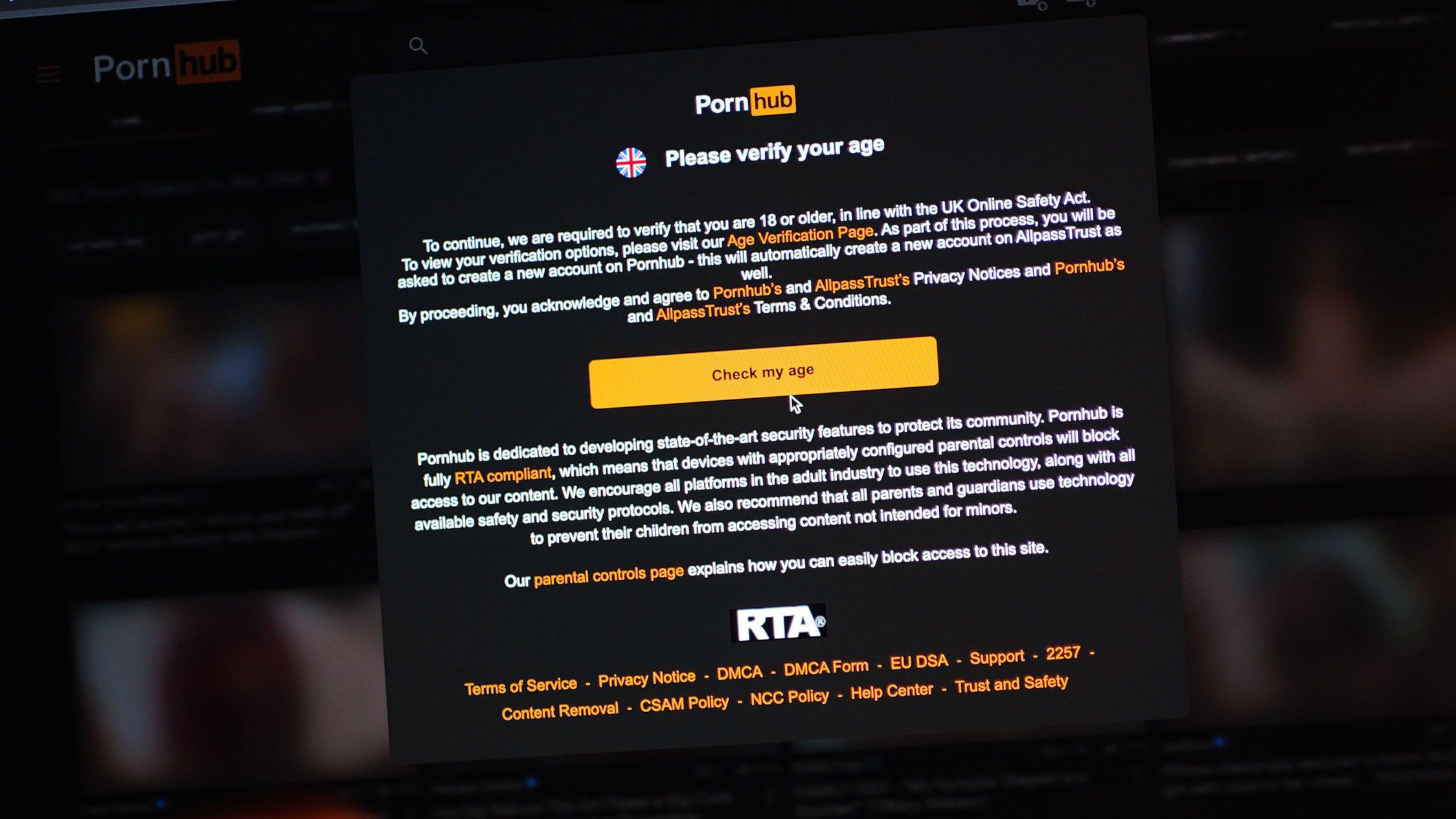

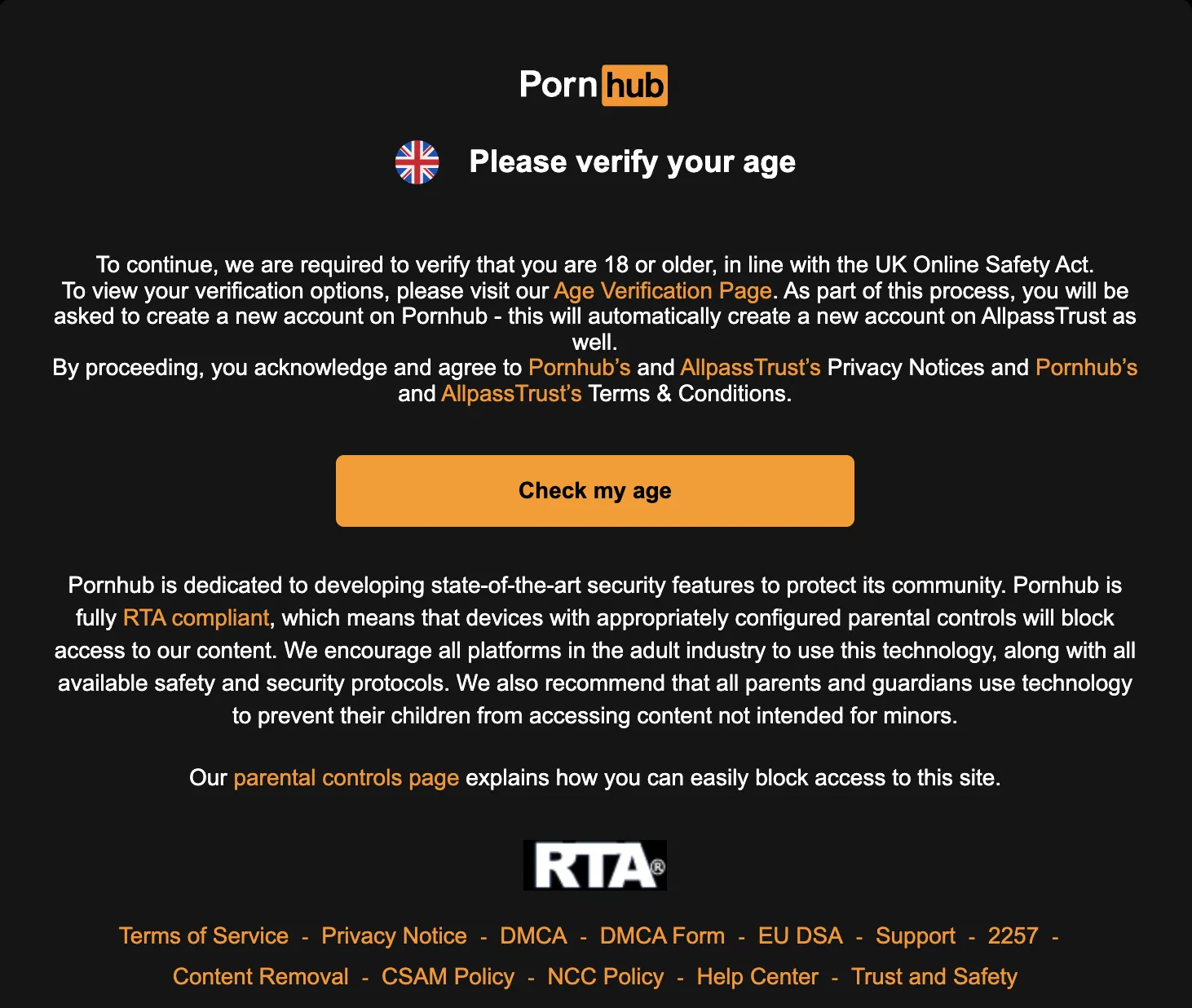

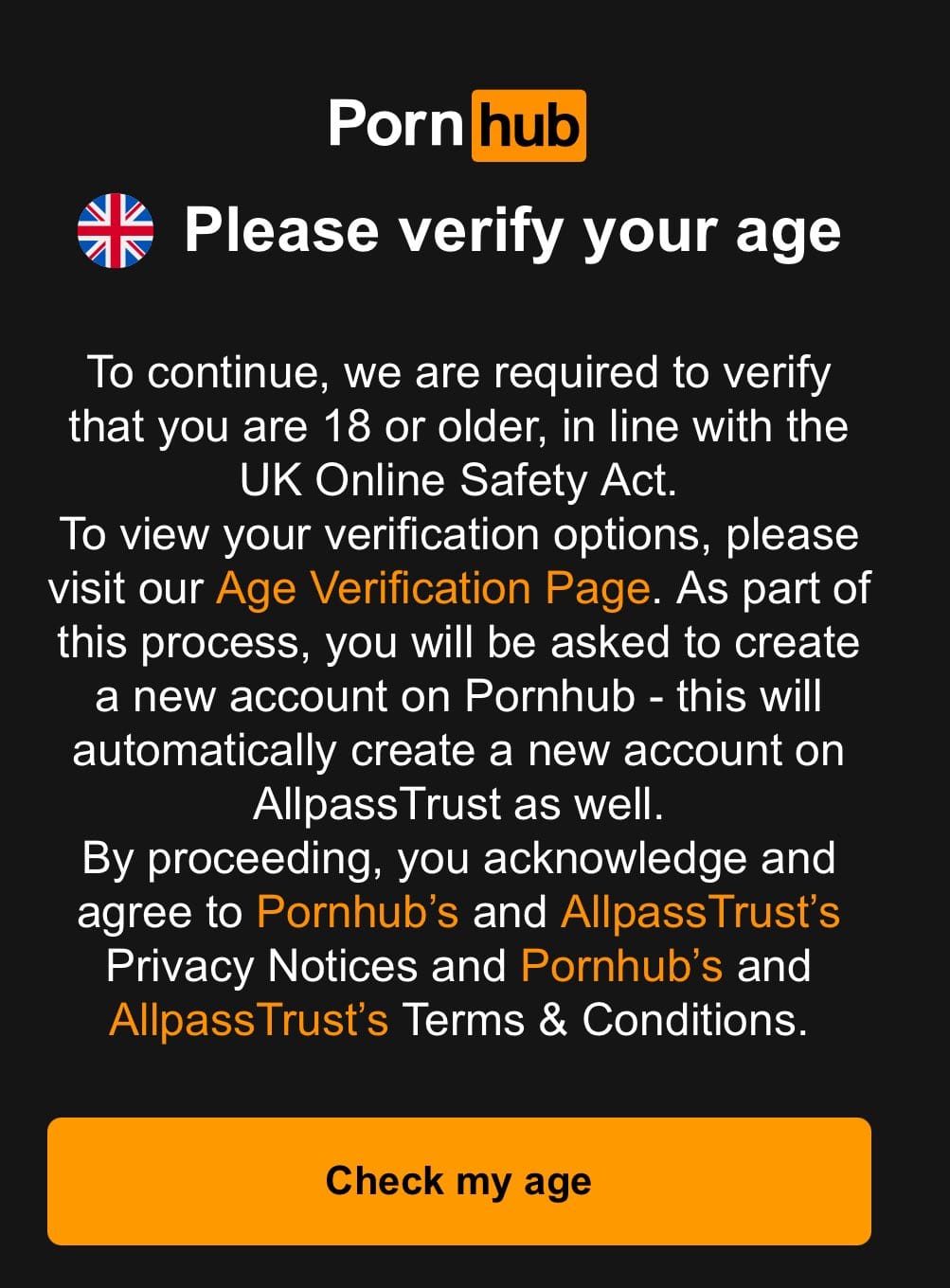

The age verification requirement is just one piece. It requires adult websites to verify that users are 18 or older before granting access to content. On its surface, this seems reasonable: adults can access adult content, minors cannot. The regime anticipates six mechanisms for verifying age: age-estimating face scans, ID document uploads, credit card checks, knowledge-based challenges, mobile network operator verification, and checks run through established third-party age verification providers.

But here's where reality diverges from intention. Implementing these requirements costs money. Real money. A small porn site with limited resources faces the same regulatory burden as a massive platform like Pornhub. For smaller operators, the economic calculation is simple: compliance costs exceed potential revenue from UK users, so they don't comply.

Pornhub, by contrast, has the resources to implement compliance systems. The company built sophisticated age verification infrastructure. It's expensive, inconvenient for users, and according to Pornhub's own data, it works: only users who have already registered and completed age verification can now access the site from the UK.

But the company discovered something troubling during its presentation to stakeholders in February. Of the top 10 Google search results for "free porn" in the United Kingdom, six sites were non-compliant with the age verification laws. Six out of ten. That's a 60 percent failure rate among the most visible results.

Moreover, Pornhub's own research found that thousands of adult sites continue operating in the UK without any age verification whatsoever. These sites face minimal enforcement action. They have little incentive to comply. And so the result is perverse: the largest, most professional platform that actually complies with the law sees its traffic collapse, while smaller, less scrupulous sites continue operating freely.

Estimated data shows a balanced distribution of age verification methods mandated by the UK Online Safety Act, with ID document uploads being slightly more prevalent.

The 77 Percent Collapse: What the Numbers Tell Us

That 77 percent drop in UK traffic isn't just a statistic. It's a measurement of how dramatically age verification changed user behavior.

Consider what users face now. If you're in the UK and want to access Pornhub, you can't just type the URL and start browsing. Instead, you encounter a registration wall. You need to create an account. Then you need to verify your age. The platform offers several options: upload a government ID, submit to a face scan that estimates your age, provide a credit card number, answer knowledge-based questions, or use a mobile network operator verification.

For returning users who had already completed verification, this was a minor inconvenience. They could log back in and continue browsing. But for new users, the friction is substantial. Some percentage of potential new users look at these requirements and think, "This is too much hassle." They move on.

But 77 percent? That number suggests something deeper than friction. It suggests that much of the lost traffic came from casual, untargeted browsing, exactly the kind of traffic that evaporates when you introduce barriers. Part of the drop may also be a measurement effect: UK users who now connect through VPNs appear in the statistics as traffic from other countries, so they disappear from the UK figures without necessarily leaving the site.

The traffic collapse also reflects the fact that the UK is a developed nation with strong internet literacy and alternative content sources readily available. Users didn't stop seeking adult content. They simply found it elsewhere—on non-compliant sites, through VPNs, or on platforms with weak or non-existent age verification.

This is the core of Pornhub's argument: the law created a compliance burden that only responsible companies could afford to meet, making those companies less competitive than their irresponsible rivals. It's a dynamic sometimes described as a "compliance trap."

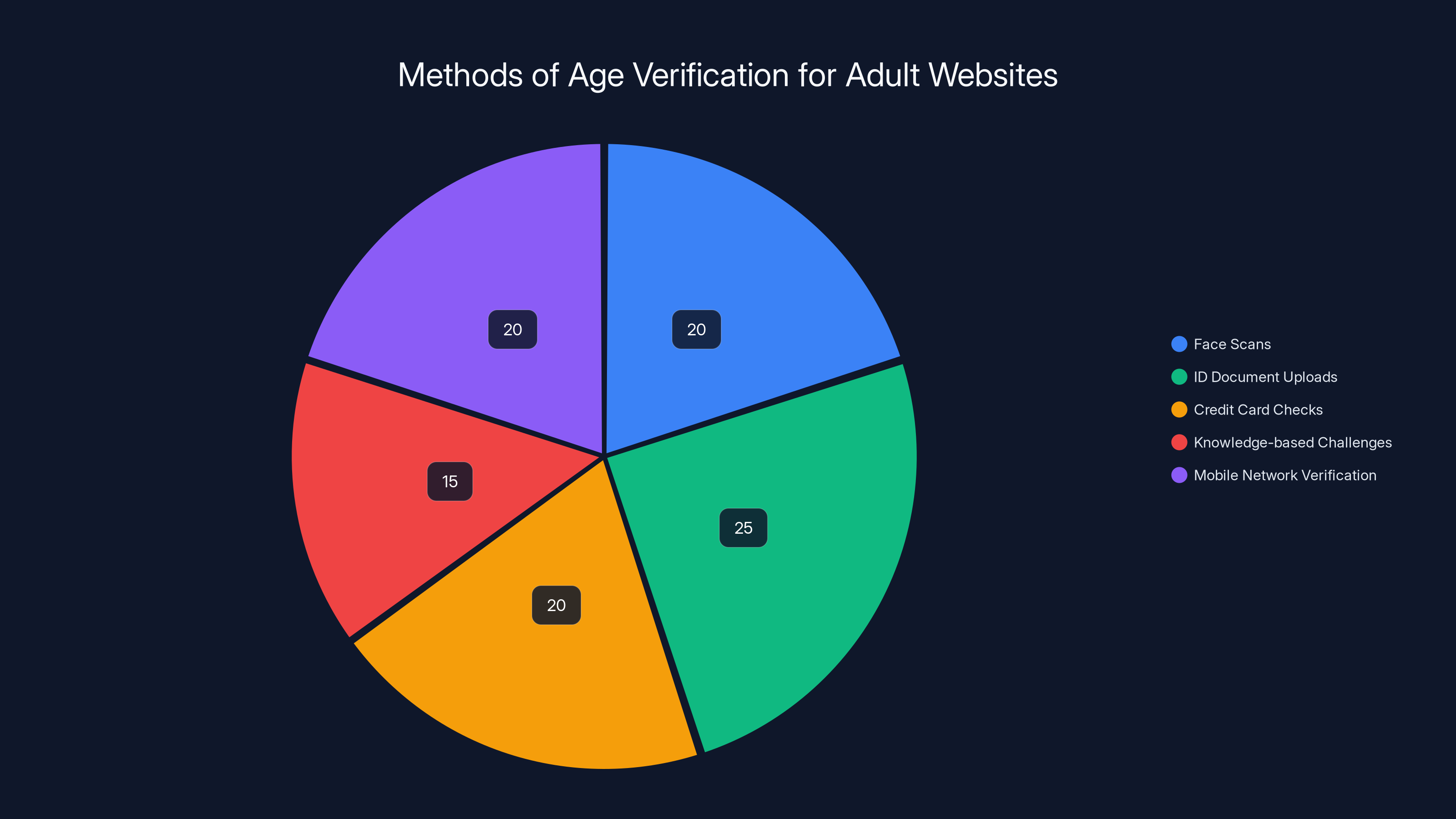

Estimated data shows Apple and Google each hold 35% influence over digital content access, with Microsoft at 20%. Others make up the remaining 10%.

Device-Based vs. Application-Based Age Verification: Why the Distinction Matters

During Pornhub's February presentation, Solomon Friedman, vice president of compliance at Ethical Capital Partners, made a crucial distinction that sits at the heart of this entire debate: the difference between device-based and application-based age verification.

Application-based age verification is what Pornhub currently uses. The adult site itself is responsible for verifying that each individual user is 18 or older. The verification data—whether it's biometric information from a face scan, personally identifiable information from an ID upload, or financial data from a credit card check—flows through the adult website's systems.

This creates obvious privacy and security concerns. Users must trust that the adult website will handle sensitive personal data responsibly. They must believe the company won't sell their information to third parties, won't experience a data breach that leaks their identity alongside their porn browsing history, and won't cooperate with law enforcement in ways that could expose their lawful but private sexual preferences.

Device-based age verification works entirely differently. Instead of verifying age at the application level, age verification happens at the device level—on your phone, tablet, or computer. The actual verification data stays on your device. The adult website doesn't receive personally identifiable information. It receives only a digital signal: yes, this device has been verified as belonging to an adult, or no, it hasn't.
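The data flow described above can be sketched in a few lines of Python. Everything in it is hypothetical: `HypotheticalOSAttester`, `DeviceProfile`, and the token format are illustrations, not any real Apple, Google, or Microsoft interface. An HMAC from the standard library stands in for the public-key signature a real scheme would more likely use; that substitution is why the site verifies through the attester object rather than on its own. The point is what crosses the wire: one yes/no claim and a nonce the site chose, nothing else.

```python
import hashlib
import hmac
import json
import secrets
from dataclasses import dataclass
from datetime import date


def age_on(dob: date, today: date) -> int:
    """Whole years between dob and today."""
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)


@dataclass
class DeviceProfile:
    # Stays on the device; never sent to websites.
    date_of_birth: date
    verified_by_os: bool  # hypothetical: checked once during device setup


class HypotheticalOSAttester:
    """Stand-in for an OS-level age signal. Not a real vendor API."""

    def __init__(self) -> None:
        self._key = secrets.token_bytes(32)  # secret held by the OS vendor

    def _sign(self, claim: dict) -> str:
        payload = json.dumps(claim, sort_keys=True).encode()
        return hmac.new(self._key, payload, hashlib.sha256).hexdigest()

    def issue(self, profile: DeviceProfile, today: date, nonce: str) -> dict:
        # Collapse everything the device knows into a single yes/no claim.
        over_18 = profile.verified_by_os and age_on(profile.date_of_birth, today) >= 18
        claim = {"over_18": bool(over_18), "nonce": nonce}
        return {"claim": claim, "sig": self._sign(claim)}

    def check(self, token: dict) -> bool:
        # Constant-time comparison, so a forged signature can't be guessed byte by byte.
        return hmac.compare_digest(self._sign(token["claim"]), token["sig"])


def site_allows_entry(attester: HypotheticalOSAttester, token: dict, nonce: str) -> bool:
    # The site learns one bit plus its own nonce: no name, ID scan, or face image.
    return (
        attester.check(token)
        and token["claim"]["nonce"] == nonce
        and token["claim"]["over_18"] is True
    )


if __name__ == "__main__":
    attester = HypotheticalOSAttester()
    nonce = secrets.token_hex(8)  # issued fresh by the site for each visit
    adult = DeviceProfile(date(1990, 5, 1), verified_by_os=True)
    token = attester.issue(adult, date(2026, 2, 2), nonce)
    print(site_allows_entry(attester, token, nonce))  # prints True
```

The nonce ties each token to one visit, so a token copied from an adult's device can't simply be replayed on a child's.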

This approach has profound advantages. Users don't have to trust individual websites with their biometric data or government ID information. The verification happens once, at the device level, and then works across all websites and applications that the device user accesses. Parents can set age restrictions on their children's devices. Teenagers can't simply switch to a different site that hasn't implemented verification.

But here's the problem: device-based age verification requires cooperation from the companies that control the devices themselves. Apple controls iOS. Google controls Android. Microsoft controls Windows. Unless these companies build age verification into their operating systems, device-based age verification remains a theoretical ideal rather than a practical solution.

Pornhub and Aylo have been pushing for exactly this. In November, the company sent formal letters to Apple, Google, and Microsoft, urging them to implement device-based age verification across their operating systems. The response? Silence, according to Pornhub's presentation.

Publicly, the companies have deflected the issue. Microsoft pointed toward a policy proposal suggesting age verification should be applied at the service level. Apple responded with its child online safety report, emphasizing that web content filters are turned on by default for users under 18. Google argued that adult entertainment apps aren't available on its app store and that companies like Aylo need to invest in their own tools to meet legal obligations.

In other words, each tech giant essentially said, "Not our problem. The adult website should handle it."

The Tech Giant Dilemma: Why Apple, Google, and Microsoft Hold the Keys

Here's where the argument becomes genuinely interesting, because Pornhub is essentially right about one fundamental fact: the tech giants do control the infrastructure through which adult content reaches users, yet they've largely opted out of responsibility for age verification.

Consider the practical reality. A 14-year-old in London wants to access pornography. They open Safari on their iPhone and search for porn. App Store age ratings don't apply to websites, and unless a parent has set the phone up as a child's account with web content filtering, nothing at the OS level checks the user's age before the browser loads an adult site.

Alternatively, the same 14-year-old could use Google search on an Android device. Google search doesn't require age verification. It returns results for adult websites, many of which don't comply with age verification laws. The user taps one result, and they're in.

Now, Pornhub had to invest significant resources to build age verification systems that comply with UK law. A smaller site, by contrast, looks at the cost of compliance and the fact that Google search will still direct users to non-compliant alternatives, and decides compliance isn't worth the investment.

The tech giants benefit from this dynamic. They can claim they're not responsible for the content served through their platforms—they're just neutral pipes. They can point to child safety features like content filters and parental controls. But they can also avoid the expensive infrastructure investments that would actually make age verification work.

If Apple, Google, and Microsoft each implemented robust device-based age verification, the landscape would change overnight. Websites would check a device-level signal instead of running their own application-level verification. A 14-year-old's device wouldn't be verified as belonging to an adult, so adult websites would be inaccessible. Period.

This would actually accomplish what the Online Safety Act intends to accomplish. It would dramatically reduce minors' access to adult content. It would do so through a privacy-respecting mechanism that doesn't require website-by-website personal data collection. It would level the playing field between compliant and non-compliant websites.

But it would require the tech giants to make a choice that doesn't seem in their immediate commercial interest. Device-based age verification would mean changing their operating systems. It would mean potential friction with some users. It would mean explicitly positioning their platforms as enforcers of content restrictions rather than neutral providers of access.

So they haven't done it. And until they do, Pornhub's argument—that application-level age verification is a flawed, unequal, and ultimately ineffective approach—remains largely unanswered.

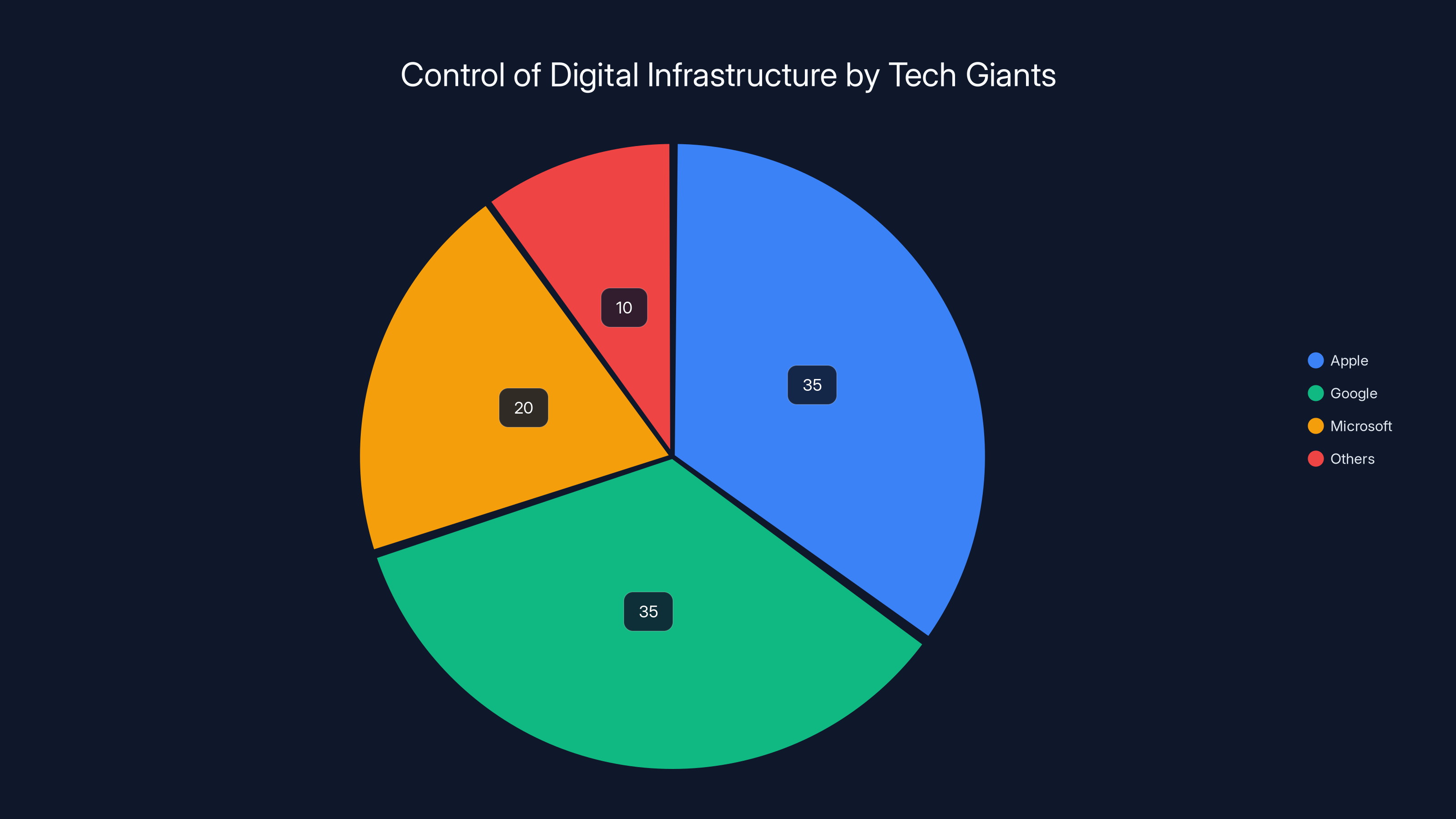

Pornhub experienced a 77% drop in UK traffic due to regulation; maintaining ad revenue would have required offsetting growth from other regions, which did not occur. Estimated data.

The Global Spread: Age Verification Laws Beyond the UK

The UK isn't alone in this regulatory experiment. In the United States, 25 states have implemented their own age verification requirements for adult websites. Pornhub has pulled out of the majority of those states, just as it has pulled out of the UK, according to Built In.

But here's an interesting detail: the US remains Pornhub's top source of traffic. One reason is that roughly half the states have no age verification law at all; another is that many Americans use VPNs to circumvent geographic restrictions. A user in Florida can connect to a VPN server in California, making their traffic appear to originate from a state without age verification requirements. The site becomes accessible again.

VPNs represent perhaps the most obvious failure of application-level age verification. A teenager with basic technical knowledge and access to free or cheap VPN software can bypass most age verification systems. They can make their device appear to be in a jurisdiction where the adult website operates without restrictions.

This is the catch-22 of geographic-based enforcement: the verification requirements often trigger based on location detection, which is trivially easy to circumvent with a VPN, as noted by VPN Overview.
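To make the weakness concrete, here is a minimal Python sketch of location-triggered gating. The lookup table, region codes, and function names are hypothetical (real sites use commercial IP-geolocation databases), and the address ranges are reserved documentation blocks rather than real users. The key detail is that the server only sees the address a connection arrives from.

```python
import ipaddress
from typing import Optional

# Hypothetical geolocation table. These are reserved documentation ranges
# (RFC 5737), used here only for illustration.
GEO_TABLE = {
    ipaddress.ip_network("198.51.100.0/24"): "US-FL",
    ipaddress.ip_network("203.0.113.0/24"): "US-CA",
}

# Illustrative set of regions where the site has decided to require age checks.
REGIONS_REQUIRING_CHECK = {"GB", "US-FL"}


def region_for(ip: str) -> Optional[str]:
    addr = ipaddress.ip_address(ip)
    for network, region in GEO_TABLE.items():
        if addr in network:
            return region
    return None  # address not in the table


def needs_age_check(client_ip: str) -> bool:
    # Behind a VPN, client_ip is the VPN exit node, not the user's home connection.
    # Unknown regions fall through to "no check" (the gate fails open).
    return region_for(client_ip) in REGIONS_REQUIRING_CHECK


if __name__ == "__main__":
    home_ip = "198.51.100.23"    # the user's own connection in Florida
    vpn_exit_ip = "203.0.113.7"  # same user, routed through a California VPN server
    print(needs_age_check(home_ip))      # prints True
    print(needs_age_check(vpn_exit_ip))  # prints False
```

A site could fail closed instead, demanding checks from any address it can't place, or block known VPN ranges. Either choice adds friction for adults and likely still misses a determined teenager who finds a server that isn't on the list.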

Other countries are watching and learning. Australia implemented age verification requirements. Canada is considering them. The European Union is contemplating similar regulations as part of its broader push to govern online content. Each jurisdiction is discovering the same pattern: implementing meaningful age verification is expensive, enforcement is difficult, and compliant companies face competitive disadvantages against non-compliant competitors.

What's not yet clear is whether any government will take the step that Pornhub has been advocating for: mandating that device manufacturers implement age verification at the operating system level. Such a mandate would represent a significant intrusion into device functionality and would raise substantial privacy and freedom concerns. But it might be the only way to make age verification actually work.

For now, the pattern is: regulation creates requirements, responsible companies comply and lose market share, non-compliant companies flourish, and the stated goal of protecting minors from adult content remains largely unmet.

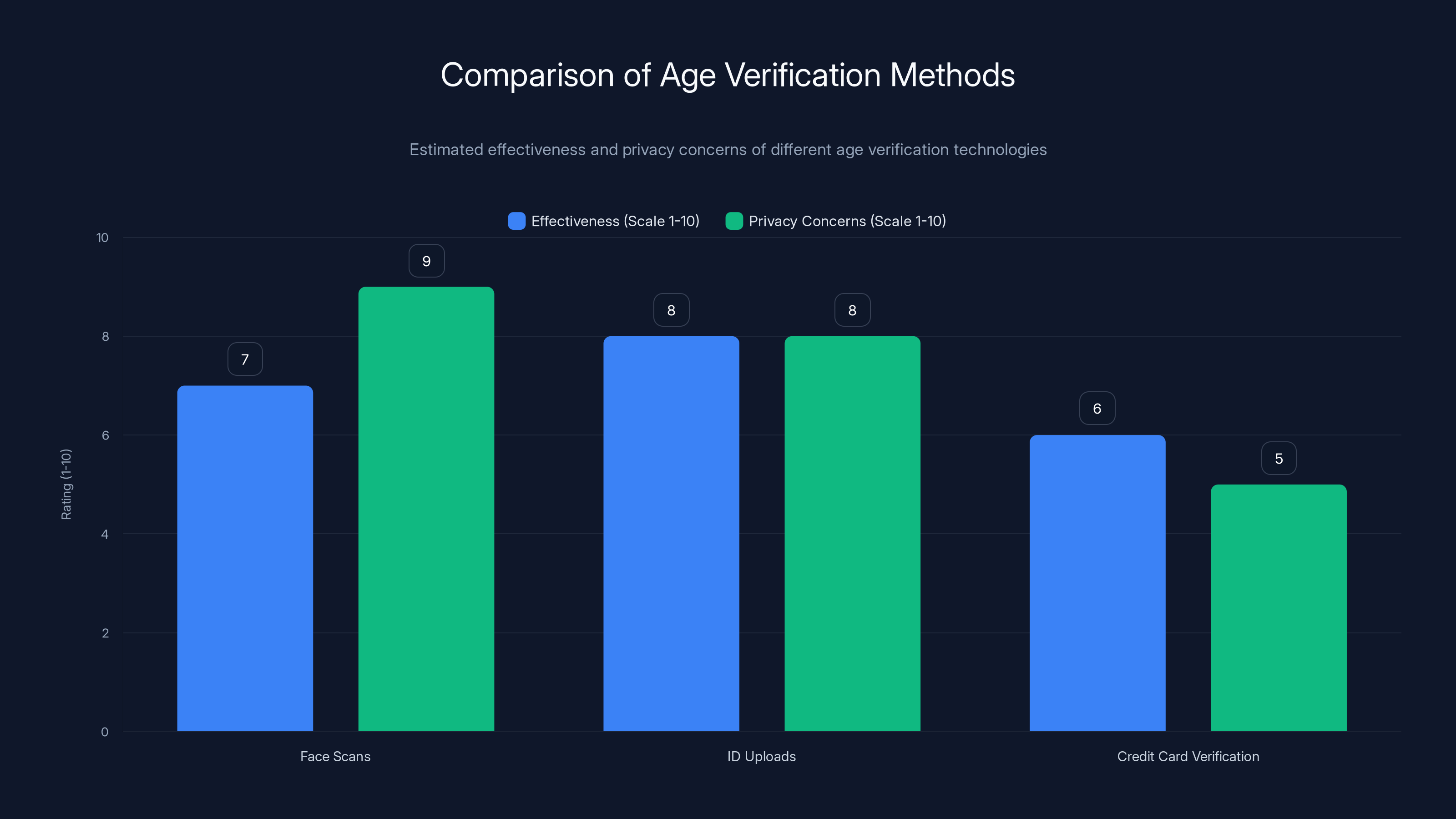

Age Verification Technology: Face Scans, ID Uploads, and Biometric Concerns

Let's examine the actual technology that Pornhub and other compliant sites now use, because the implementation details matter for understanding why age verification is so fraught.

Face scanning is perhaps the most discussed mechanism. Age-estimating face scans use machine learning models trained to infer age from facial features. A user points a camera at their face, the system analyzes the image, and returns an age estimate. If the estimate suggests the person is 18 or older, they're granted access.

Sounds straightforward, right? Except that facial analysis technology has well-documented demographic bias issues. Research has consistently shown that facial recognition systems are more accurate for lighter-skinned faces than darker-skinned faces. Age estimation models have similar problems, and their errors cut both ways: they may underestimate the age of younger-looking adults, locking them out, and overestimate the age of older-looking teens, letting them in.
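One common way to manage estimation error is to require the estimate to clear the legal age by a margin and send everyone else to a second method. The Python sketch below assumes a seven-year buffer (a challenge age of 25, borrowed from the UK retail "Challenge 25" practice); the names and the buffer value are illustrative, not any provider's actual settings.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    FALLBACK = "fallback"  # route the user to a second method, e.g. an ID check


LEGAL_AGE = 18


def face_scan_decision(estimated_age: float, buffer_years: float = 7.0) -> Decision:
    """Accept the estimate only if it clears the legal age by a safety margin.

    buffer_years is an assumption: 7 gives a challenge age of 25. A larger buffer
    lets fewer teenagers through on an overestimate, but sends more genuine adults
    to the fallback, disproportionately those the model tends to underestimate.
    """
    if estimated_age >= LEGAL_AGE + buffer_years:
        return Decision.ALLOW
    return Decision.FALLBACK


if __name__ == "__main__":
    for estimate in (31.0, 24.5, 16.2):
        print(estimate, face_scan_decision(estimate).value)
```

The buffer doesn't remove bias; it moves the cost. Adults in groups where the model's error is larger hit the fallback more often and end up handing over an ID anyway.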

Moreover, a face scan is a biometric identifier. Even if it's only used to estimate age, it's still capturing and analyzing unique physical characteristics. That raises privacy concerns that go well beyond simple age verification.

ID document uploads create different problems. Users photograph or scan their government-issued ID—a passport, driver's license, or national ID card—and upload it to the website. The website verifies that the ID is valid and that the person in the photo matches the current user.

This requires the adult website to temporarily store sensitive personal data. Government ID documents contain a wealth of information: full name, address, date of birth, often biometric data like a photo or even a fingerprint depending on the country. If the website experiences a data breach, all of this information becomes exposed.

Credit card verification works by attempting a small transaction or by requesting the user to provide credit card details. The logic is simple: you must be an adult to have a credit card account, so credit card possession proves adulthood.

Except that's not quite true. Teenagers can have credit cards in some jurisdictions, and in others, parents might provide their cards for their children's use. More fundamentally, requiring a credit card creates a financial barrier that disproportionately affects people without banking access—a significant portion of the population in many countries.

Knowledge-based challenges ask users questions that only adults would be expected to know the answers to—questions about historical events, cultural touchstones, or similar. These are easy to defeat if you have access to Google and a few minutes of time.

Mobile network operator verification works with telecom companies to confirm that a phone number belongs to a billing account held by an adult. This is arguably one of the more effective mechanisms, though it still has limitations and privacy implications.
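A minimal sketch of the shape of a mobile-operator check, assuming a hypothetical operator lookup: the records, numbers, and function name are illustrative, not any real operator's API. The site sends a phone number and gets back a single answer. The sketch also shows the limitation mentioned above: the check vouches for the person who pays the bill, not the person holding the phone.

```python
from typing import Optional

# Hypothetical operator-side records: phone number -> is the billing-account holder 18+?
# The numbers come from Ofcom's 07700 900xxx range, reserved for fictional use.
OPERATOR_RECORDS = {
    "+447700900001": True,   # adult on their own contract
    "+447700900002": False,  # account holder under 18
    "+447700900003": True,   # parent's contract, handset used by their 14-year-old
}


def mno_age_check(phone_number: str) -> Optional[bool]:
    """Answer "is the account holder an adult?" without revealing who they are.

    Returns True or False, or None when the operator has no record for the
    number, in which case the site would need a different method.
    """
    # Only this one value leaves the operator; name, address, and date of birth stay put.
    return OPERATOR_RECORDS.get(phone_number)


if __name__ == "__main__":
    for number in ("+447700900001", "+447700900002", "+447700900003", "+447700900999"):
        print(number, mno_age_check(number))
```

The third number passes even though a 14-year-old is using the handset. That is the gap: the operator only knows who holds the account.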

The broader point: none of these mechanisms is perfect. Each creates privacy concerns, potential for bias, costs for implementation, and opportunities for circumvention. Yet the Online Safety Act essentially mandated that websites use some combination of these imperfect tools.

Face scans and ID uploads are estimated to be more effective but raise higher privacy concerns compared to credit card verification. Estimated data.

Enforcement and Compliance: The Missing Piece

One of the most striking aspects of Pornhub's protest is that the company actually complied with the law. The company invested resources, implemented age verification systems, and is now blocking new UK users rather than allowing them to access content without verification.

In other words, Pornhub is following the rules. And for following the rules, it's being commercially punished.

Meanwhile, thousands of non-compliant sites continue operating in the UK without meaningful enforcement action. Why? Because enforcement requires resources. The UK's Office of Communications (Ofcom) is the regulatory body responsible for overseeing the Online Safety Act, but Ofcom doesn't have unlimited capacity.

Taking action against non-compliant websites requires identifying them, documenting the non-compliance, issuing warnings, and potentially pursuing enforcement action. This takes time and money. Meanwhile, compliant companies like Pornhub are losing revenue while non-compliant companies continue profiting.

This dynamic amounts to a race to the bottom. The compliant players are selected against (they lose market share) while non-compliant players are selected for. Over time, the market shifts toward non-compliance, and the stated regulatory goal (protecting minors from adult content) becomes harder to achieve, not easier.

Ofcom has indicated that enforcement action is coming, but the pace matters. If enforcement is too slow relative to the market's ability to adjust, the regulation will ultimately fail to achieve its stated objectives.

The Social Media Dimension: Google Images, Twitter, Reddit, and Unregulated Platforms

During Pornhub's presentation, Solomon Friedman raised a point that deserves more attention: explicit content on social media and search platforms isn't effectively regulated by age verification laws focused on adult websites.

Consider Google Images. Search for virtually any common sexual term, and you'll find explicit content—thumbnails of sexually explicit images cached by Google's indexing systems. A minor with basic search ability can find this content in seconds, without any age verification whatsoever.

Twitter (now X) has adult content moderation policies, but enforcement relies heavily on user reporting and automated detection. A minor can follow accounts that post explicit content. They can see it in their feed.

Reddit has subreddit communities dedicated to explicit content, some of which are relatively accessible without substantial friction.

TikTok's algorithm can surface sexually suggestive content to younger users.

YouTube has explicit music videos and other sexual content that technically violates terms of service but remains accessible and often appears in recommendations.

None of these platforms is a dedicated adult website. Some of them fall under the Act's broader child-safety duties, but they are general platforms that happen to contain adult content, not the adult-only sites the age verification debate has centered on. Yet minors encounter explicit content on these platforms regularly and easily.

Friedman's argument is that if the UK genuinely wanted to protect minors from adult content, the regulatory approach would need to extend to all platforms where adult content appears—which means, essentially, the entire internet. This is practically infeasible unless you have device-based age verification that can filter content across all websites and applications.

It's a version of the classic regulatory problem: you can regulate the obvious, easy targets (adult-only websites), but if you don't also regulate the harder targets (general platforms with adult content), you haven't actually solved the problem. You've just created competitive disadvantages for the easy targets while leaving the hard targets unaffected.

So Pornhub's withdrawal from the UK also highlights a deeper failure: age verification laws that focus only on dedicated adult websites are incomplete regulatory solutions. They protect some minors from some adult content while leaving many other pathways open.

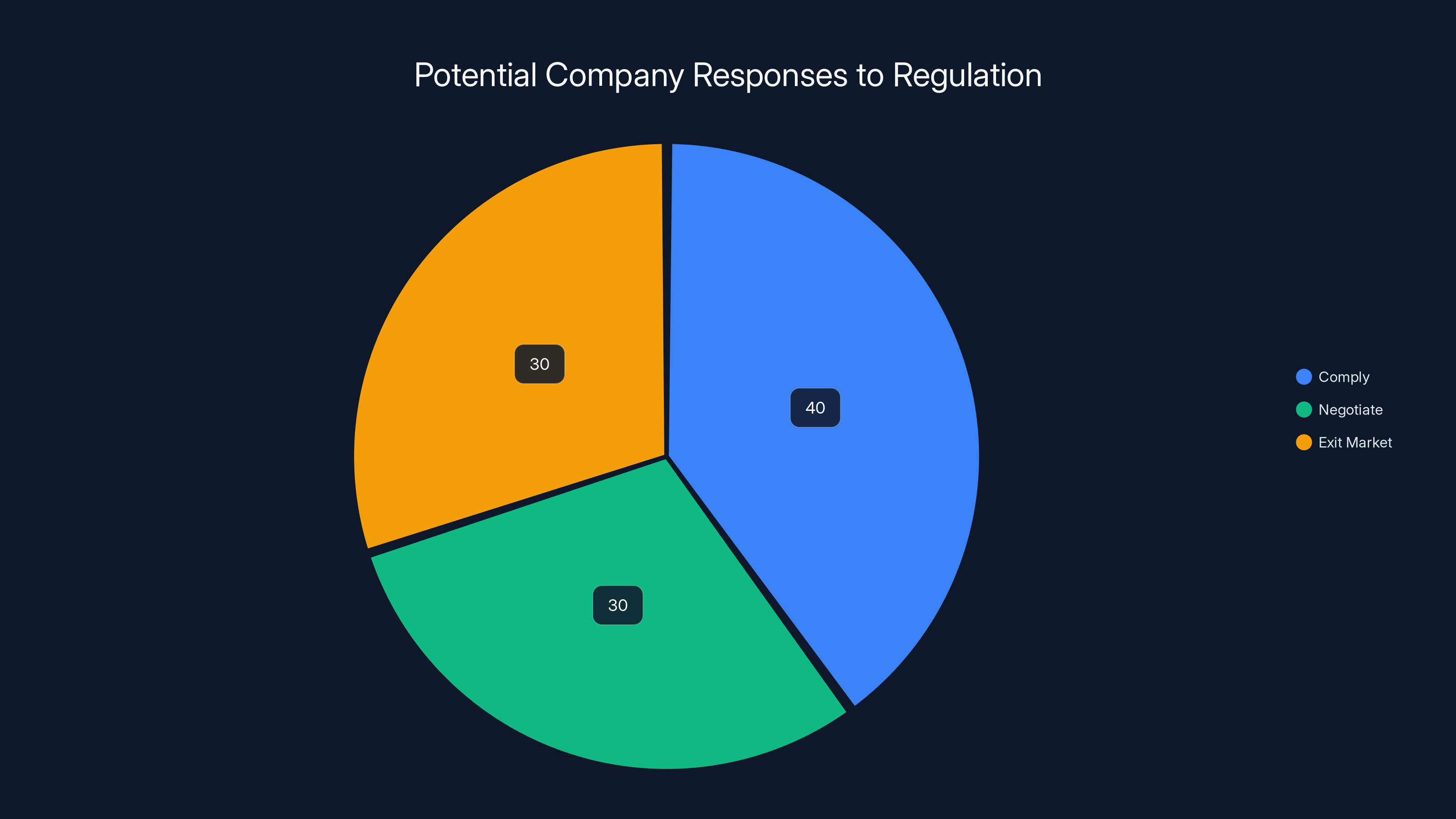

Estimated data shows that companies may equally choose to comply, negotiate, or exit markets when faced with challenging regulations.

Pornhub's Business Model and Profitability Under Regulation

To understand why Pornhub made this decision, it's worth considering the company's business model and how regulation affects profitability.

Pornhub is a free platform. The site generates revenue primarily through advertising. The more traffic, the more advertising impressions, the more revenue. The second revenue stream is premium content and premium subscriptions.

A 77 percent drop in UK traffic translates directly into a 77 percent drop in ad impressions from UK users. To keep total ad revenue flat, traffic from other regions would have to grow enough to replace those lost impressions; how much growth that takes depends on how large a share of total traffic the UK represented.

That's not happening. The UK is a developed country with high internet penetration. The traffic losses in the UK aren't being made up elsewhere. They're just losses.
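How much growth elsewhere would actually be needed? If the UK was a share s of total traffic and UK traffic fell by a fraction d, total traffic stays flat only if non-UK traffic grows by s·d / (1 - s). A minimal Python sketch; the UK shares used are hypothetical, since the source doesn't report Pornhub's actual figure.

```python
def required_growth_elsewhere(uk_share: float, uk_drop: float = 0.77) -> float:
    """Fractional growth needed from non-UK traffic to keep total traffic flat.

    uk_share: the UK's share of total traffic before the drop (0 < uk_share < 1).
    uk_drop:  fractional fall in UK traffic (0.77 for the reported 77 percent).
    """
    if not 0 < uk_share < 1:
        raise ValueError("uk_share must be strictly between 0 and 1")
    lost = uk_share * uk_drop       # lost traffic, as a fraction of the old total
    return lost / (1.0 - uk_share)  # spread over the remaining non-UK traffic


if __name__ == "__main__":
    # Hypothetical UK shares, for illustration only.
    for share in (0.05, 0.10, 0.50):
        growth = required_growth_elsewhere(share)
        print(f"UK share {share:.0%}: other regions must grow {growth:.1%}")
```

Only if the UK had been half of all traffic would other regions need to grow by a full 77 percent. At a 10 percent share the requirement is closer to 9 percent, which is smaller but still has to come from somewhere.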

Additionally, implementing age verification systems has costs. The company needs to pay for the technology, maintain it, handle customer service inquiries related to verification failures, and manage the data security requirements of storing (or having third-party providers store) sensitive personal information.

So Pornhub faces a choice: continue operating in the UK under these conditions, accepting both the massive traffic loss and the ongoing compliance costs, or simply stop accepting new UK users.

The company chose the latter. And in doing so, it's making a very pointed statement: the regulatory framework is so flawed that compliance is economically irrational. Better to exit the market than to participate in a broken system.

This is significant because Pornhub is arguably the most responsible actor in the adult content space. The company has the resources to comply with regulations. Other companies don't. If Pornhub finds compliance unprofitable, smaller companies will find it impossible.

The outcome: fewer responsible adult websites, more unregulated alternatives, and minimal impact on minors' actual access to adult content.

The Precedent: What Pornhub's Exit Means for Future Regulation

Pornhub's decision to block new UK users is already having ripple effects. It serves as a precedent that even the world's largest adult website will exit markets rather than comply with what it views as flawed age verification requirements.

This sends a clear message to other companies, both in the adult content space and beyond. When a company faces regulation that it perceives as economically destructive and ineffective, market exit becomes a viable strategy.

The precedent also strengthens Pornhub's negotiating position. The company has demonstrated that it's willing to sacrifice immediate revenue to make a political point. This makes the company's demands harder to dismiss: when it says device-based age verification is necessary, it's backed by concrete action.

For regulators, the precedent is more troubling. Pornhub's exit suggests that regulation can't simply be imposed on unwilling companies. Companies have options: they can comply, they can negotiate, or they can exit.

Some will argue that Pornhub is simply trying to avoid compliance and using child safety as cover. There's a chance that's partially true. But the company's arguments about the inadequacy of application-level age verification aren't wrong. The data about non-compliant sites still operating freely is accurate.

Future regulators considering age verification requirements need to think carefully about what Pornhub has demonstrated: if regulation creates a system where compliant players lose and non-compliant players win, compliant players will eventually exit.

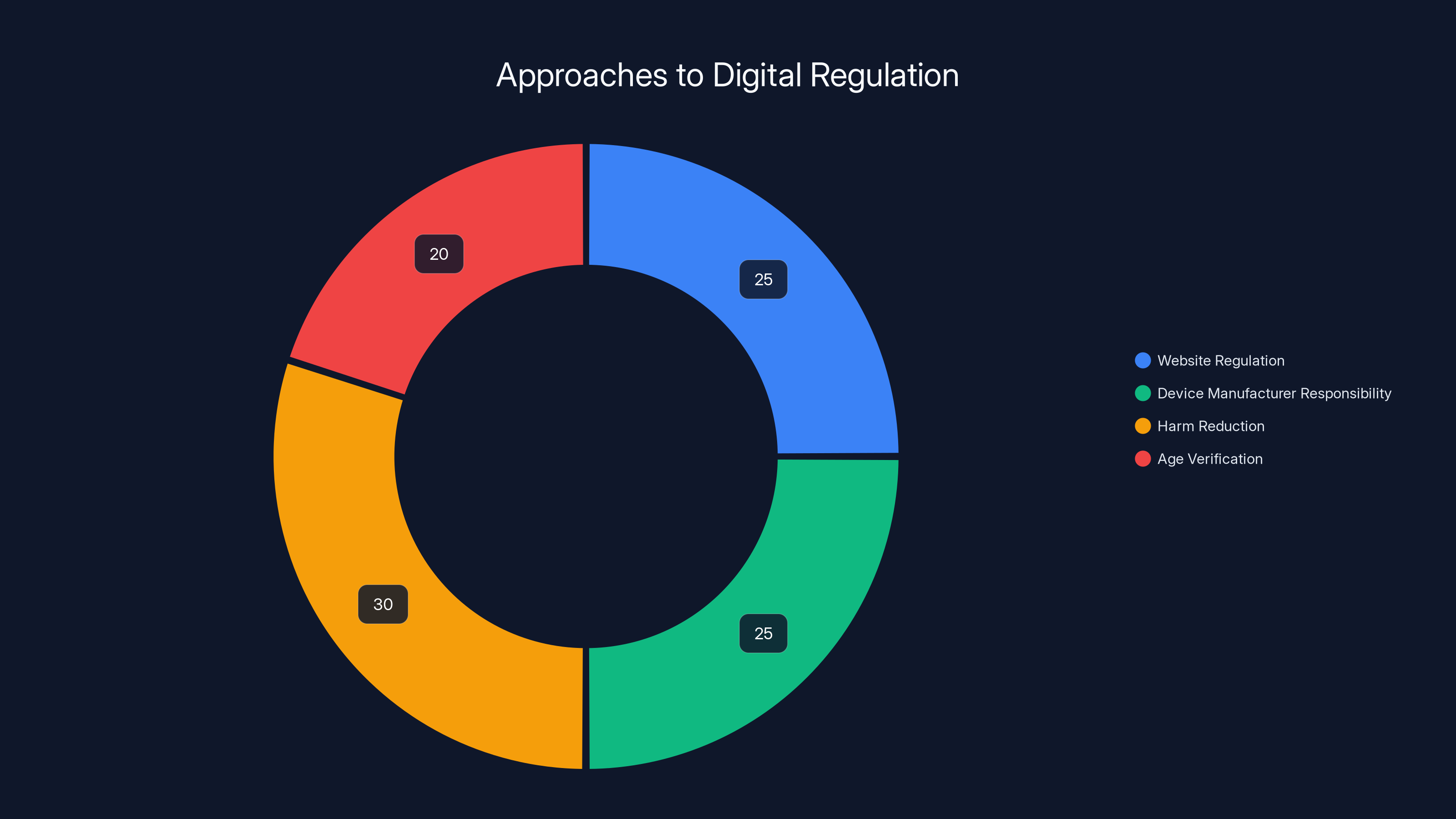

Estimated data: Harm reduction strategies are gaining focus over traditional regulation methods, reflecting a shift towards education and targeted enforcement.

The Bigger Picture: Digital Regulation and Corporate Responsibility

The Pornhub situation is ultimately a case study in how digital regulation works in the real world, with all its messiness and unintended consequences.

Governments want to protect minors from adult content. That's a legitimate goal shared across the political spectrum. But implementing effective protection at scale is harder than it sounds.

You can regulate websites, but websites are global. You can require age verification, but age verification is expensive and unreliable. You can try to enforce compliance, but enforcement requires sustained resources that might not be available.

Alternatively, you can push the responsibility to device manufacturers. Force Apple, Google, and Microsoft to implement age verification at the operating system level. This would likely be far more effective, but it requires tech companies to rebuild parts of their operating systems and take on an enforcement role they have so far avoided, and it invites concerns about surveillance and control.

Or you can accept that perfect protection is impossible and focus on harm reduction: better education for minors about online risks, digital literacy programs, media literacy initiatives, and targeted enforcement against the worst offenders rather than trying to eliminate access entirely.

Pornhub's protest is highlighting the gaps between what regulation intends to achieve and what it can realistically achieve. The company is arguing, implicitly, that the current approach is failing on its own terms.

Whether Pornhub is acting out of genuine concern for regulatory effectiveness or out of economic self-interest is perhaps less important than the fact that its arguments have merit. The Online Safety Act, as currently designed and enforced, seems unlikely to substantially reduce minors' access to explicit content while imposing significant costs on compliant platforms.

What's Next: Future Directions for Age Verification

So where does this leave us? What's likely to happen next?

In the short term, expect more adult websites to follow Pornhub's lead and exit UK and US markets where age verification costs exceed anticipated revenue. The market will continue shifting toward non-compliant alternatives. Enforcement will likely accelerate, with Ofcom and state attorneys general pursuing legal action against non-compliant sites.

But enforcement alone won't solve the problem. You can take down a website, but another one will pop up elsewhere on the internet within weeks.

The more interesting question is whether tech giants will eventually be forced to implement device-based age verification. The pressure is building. Pornhub has explicitly called for it. Other regulated industries are likely to join the chorus. If governments can't effectively regulate harmful content through application-level requirements, they may eventually mandate device-level controls.

Such a mandate would be controversial. It would raise privacy concerns, questions about surveillance, and debates about who controls access to what content on your own device. But it might be the only way to actually achieve the stated regulatory goal of reducing minors' access to adult content.

In the longer term, the Pornhub situation might catalyze a broader rethinking of how democracies regulate digital platforms. The current approach—trying to impose legal obligations on service providers while leaving device manufacturers unregulated—appears to be failing. Future regulation might be more aggressive in mandating platform-level responsibility from the companies that control the underlying infrastructure.

Why This Matters: Beyond Adult Content

It would be a mistake to treat the Pornhub situation as isolated to the adult content space. The issues it raises extend far beyond pornography.

Consider misinformation. Governments want to reduce the spread of false information, particularly about health and politics. But misinformation spreads across platforms, and no single platform can police all content perfectly. The application-level regulation approach fails here too.

Consider hate speech and radicalization. Platforms are expected to moderate harmful content, but the definition of harmful varies by jurisdiction and evolves over time. Application-level moderation is expensive and inconsistent.

Consider gambling addiction. Young people can access online gambling platforms. Should they be regulated with age verification? Most countries think so, at least for some forms of gambling.

Consider cyberbullying and contact from strangers. These harms happen on platforms, but they're hard to prevent without device-level parental controls.

The Pornhub situation is a test case for a larger regulatory problem: how do democracies protect vulnerable populations from harmful content and interactions when that content and those interactions occur across globally distributed platforms that operate beyond any single government's complete control?

The answer might ultimately require device-level controls that let parents and individuals choose what content and interactions they want to permit or block. It might require transparency about algorithmic recommendations. It might require fundamental changes to how platforms operate.

But application-level regulation imposed on service providers while device manufacturers remain unregulated? That approach appears to be reaching the limits of its effectiveness, as Pornhub has just demonstrated.

The Privacy-Protection Trade-off

One final consideration: effective age verification requires some form of identity verification, which means collecting personal data. This creates a tension between child protection and privacy.

Some people argue that robust age verification is worth the privacy cost: if verifying users' ages keeps minors away from adult content, that is a reasonable trade.

Others argue the opposite: privacy is fundamental, and trading privacy for content control sets a dangerous precedent. Once governments can mandate age verification, what prevents them from mandating verification for other activities, other content categories, other purposes?

Europe, with its strong privacy culture and the GDPR, seems to worry more about this trade-off than other regions. The UK, which left the EU but retained the GDPR in domestic law as the UK GDPR, has tried to balance child protection with privacy concerns.

The result is that UK age verification requirements explicitly contemplate privacy-respecting methods like mobile network operator verification, which can confirm age without transmitting personally identifiable information to the website.

But even these methods involve some data collection, and smaller companies may not have the infrastructure to implement the most privacy-respecting approaches.

So the tension remains: you can have effective age verification or strong privacy protection, but achieving both at scale is difficult.

Pornhub's decision to stop accepting new UK users, after months of complying with this system, reflects in part its frustration at balancing these competing values while non-compliant competitors simply ignore the same constraints.

International Implications: Learning from the UK Experiment

The UK's Online Safety Act is being watched carefully by regulators around the world. How it succeeds or fails will influence how other countries approach digital regulation.

Australia, which has introduced its own age verification rules, is watching these outcomes closely. In Canada, legislation proposing similar requirements has been introduced in Parliament. The EU is considering whether to adopt comparable approaches.

Each jurisdiction will learn from the UK's experience. If age verification laws demonstrably fail to protect minors while driving compliant businesses out of the market, other countries might opt for different approaches. If the laws succeed in actually reducing minors' access to adult content, expect rapid adoption elsewhere.

Early evidence, much of it drawn from Pornhub's own figures, suggests the former is more likely. The first months of the Online Safety Act's age verification provisions have produced a massive shift toward non-compliant services and away from regulated ones. Whether this ultimately protects minors is an open question.

What seems clear is that pure application-level regulation, without corresponding device-level controls or meaningful enforcement of non-compliance, will continue to fail in achieving its stated objectives.

Conclusion: The Uncomfortable Truth About Digital Regulation

Pornhub's decision to block new UK users is, on its surface, about age verification and the adult content industry. But it points toward a broader uncomfortable truth: the straightforward regulatory approaches that governments typically use aren't well-suited to digital problems.

You can't effectively regulate a global medium by imposing rules on individual service providers when those providers face international competition and can exit jurisdictions if compliance becomes unprofitable. You can't protect vulnerable populations from harmful content without addressing the infrastructure through which that content is accessed—the devices, browsers, and operating systems that enable the access.

Pornhub is essentially saying: "We tried. We spent the money, built the systems, implemented the verification. It doesn't work. The non-compliant competitors continue thriving. Our business is punished for following the rules. Fix the system or accept that your regulation is failing."

That's a difficult message for regulators to hear. But it's hard to argue that Pornhub is wrong.

The path forward probably requires tech giants to implement device-level controls, probably requires international coordination to prevent regulatory arbitrage, and probably requires accepting that perfect protection is impossible and focusing instead on harm reduction.

The Pornhub situation is unlikely to be the last time a major platform protests digital regulation by exiting a market. It may, however, be the moment when regulators start taking seriously the suggestion that device-level solutions, not application-level ones, are the only way to actually achieve digital protection goals at scale.

FAQ

What is the UK Online Safety Act and how does it apply to adult websites?

The UK Online Safety Act received Royal Assent in October 2023 and is designed to regulate online content and protect users from harmful material. For adult websites specifically, the law requires age verification before users can access adult content, and Ofcom began enforcing these age checks in July 2025. Users must verify they are 18 or older through mechanisms like face scans, ID document uploads, credit card checks, knowledge-based challenges, or mobile network operator verification. The law aims to prevent minors from accessing sexually explicit material while imposing compliance obligations on adult websites.

Why did Pornhub block new UK users?

Pornhub began blocking new UK users on February 2, in protest against what the company views as a flawed age verification system. After implementing age verification to comply with the Online Safety Act, Pornhub saw its UK traffic drop 77 percent. The company says that while it invested substantial resources in compliance, thousands of non-compliant adult websites remain accessible in the UK with minimal enforcement action. Pornhub argues this creates an unfair situation in which responsible companies lose market share to non-compliant competitors, and that the law ultimately fails to protect minors from adult content.

What is the difference between device-based and application-based age verification?

Application-based age verification, which is currently used by compliant websites like Pornhub, requires each website to verify user age individually. This means users must share personal data—biometric information, government ID details, or financial information—with each adult website. Device-based age verification works differently, placing verification at the operating system level on phones, tablets, or computers. Age verification happens once at the device level, and the device is then granted or denied access to adult content across all websites without requiring users to share personal information with individual sites. Device-based verification would require implementation by Apple, Google, and Microsoft.
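To make the architectural difference concrete, here is a minimal Python sketch of the two models. Everything in it is hypothetical: `Site`, `application_level`, `device_level`, and the birth-year check are illustrative stand-ins, not any real operating system or website API. What it shows is where personal data ends up: under the application-level model every site stores the user's ID, while under the device-level model the check happens once and sites receive only a yes/no signal.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """A hypothetical adult website that records whose ID documents it holds."""
    name: str
    stored_ids: set = field(default_factory=set)


def is_adult(birth_year: int, current_year: int) -> bool:
    # Simplification: compares years only and ignores birth month and day.
    return current_year - birth_year >= 18


def application_level(user_id: str, birth_year: int, sites: list, year: int) -> list:
    """Each site runs its own check, so each site ends up holding the user's ID."""
    decisions = []
    for site in sites:
        site.stored_ids.add(user_id)  # the ID document is handed to this site
        decisions.append(is_adult(birth_year, year))
    return decisions


def device_level(birth_year: int, sites: list, year: int) -> list:
    """The device checks age once; each site receives only a yes/no signal."""
    device_says_adult = is_adult(birth_year, year)  # the single check, on the device
    return [device_says_adult for _ in sites]  # no ID data is sent to any site


def sites_holding_id(user_id: str, sites: list) -> int:
    """Count how many sites store this user's personal data."""
    return sum(1 for site in sites if user_id in site.stored_ids)


if __name__ == "__main__":
    app_sites = [Site(f"site-{i}") for i in range(5)]
    application_level("alice", 1990, app_sites, 2025)
    print(sites_holding_id("alice", app_sites))  # 5

    device_sites = [Site(f"site-{i}") for i in range(5)]
    device_level(1990, device_sites, 2025)
    print(sites_holding_id("alice", device_sites))  # 0
```

Both models reach the same access decision; the difference is that five sites hold the user's ID in the first case and none in the second. A real device-level scheme would also have to stop the yes/no signal from being spoofed, which this sketch deliberately ignores.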

Why don't Apple, Google, and Microsoft implement device-based age verification?

Despite calls from Pornhub and other advocates, tech giants have not implemented device-based age verification. Apple, Google, and Microsoft argue that individual service providers like adult websites should be responsible for their own compliance. They cite existing safety features like content filters and parental controls. However, implementing device-level age verification would require significant infrastructure changes to their operating systems and would position these companies as enforcers of content restrictions. There's no clear commercial incentive for them to do this, and it would likely face resistance from users concerned about privacy and surveillance. Without regulatory mandates, these companies appear unlikely to voluntarily implement such systems.

How effective is the Online Safety Act at protecting minors from adult content?

Early evidence suggests the Online Safety Act has had limited effectiveness at actually protecting minors from adult content while imposing significant costs on compliant platforms. Research presented by Pornhub found that six of the top ten Google search results for "free porn" in the UK link to non-compliant websites. Thousands of adult sites continue operating without age verification while compliant companies like Pornhub experience massive traffic losses. This creates a perverse outcome where regulation punishes responsible actors while leaving non-compliant competitors unaffected. Unless enforcement significantly accelerates or device-level verification is implemented, the law appears unlikely to achieve its stated protective objectives.

Are other countries implementing similar age verification requirements?

Yes, age verification for adult websites is becoming a global trend. In the United States, 25 states have implemented age verification requirements, though Pornhub has pulled out of the majority of those states. Australia has implemented comparable age verification laws. Canada is considering similar legislation. The European Union is exploring whether to adopt comparable approaches as part of broader digital regulation. However, each jurisdiction is discovering the same pattern: implementing meaningful age verification is expensive, enforcement is difficult, and companies have options to exit markets if compliance becomes unprofitable. The global effectiveness of age verification laws may depend on whether governments can coordinate internationally and whether tech companies are eventually mandated to implement device-level solutions.

What happens to minors' access to adult content if compliant websites exit a market?

When compliant, regulated websites like Pornhub exit a market, minors' overall access to adult content does not decrease; it shifts to non-compliant alternatives. Minors can still search for adult content through Google, access it on social media platforms like X (formerly Twitter) or Reddit, or use VPNs to circumvent geographic restrictions. They can find explicit content on YouTube, TikTok, and other general platforms where adult content exists but isn't systematically age-gated. The practical effect of age verification laws that only target dedicated adult websites is that responsible websites exit, non-compliant alternatives continue operating freely, and minors' overall access to adult content remains largely unchanged. This is why advocates argue that device-level verification is necessary to actually reduce minors' exposure to explicit material.

What is regulatory adverse selection and how does it apply to age verification laws?

Regulatory adverse selection is a dynamic in which regulation inadvertently selects against compliant companies and in favor of non-compliant ones. In the context of age verification, it works like this: compliant websites invest in expensive verification systems, lose traffic (77 percent, in Pornhub's UK case), and become less profitable. Non-compliant websites avoid these costs and keep profiting. Over time, the market shifts toward non-compliance as compliant players exit and non-compliant players expand. Regulation intended to protect users instead creates market conditions in which protection is less available, not more. This is the core of Pornhub's argument: the current age verification approach has created regulatory adverse selection that defeats its own purpose.

How do VPNs undermine age verification requirements?

VPNs (virtual private networks) let users mask their actual geographic location and appear to be accessing the internet from a different country or state. Since age verification laws often depend on geographic detection to determine which rules apply, users in restricted jurisdictions can use VPNs to appear as if they are in unrestricted areas. A user in the UK can connect to a VPN server in a U.S. state without age verification laws, where Pornhub still operates without an age check. A teenager in a U.S. state with age verification laws can use a VPN to access the same content as someone in a state without such laws. VPNs are widely available and often free or inexpensive, putting them within easy reach of tech-savvy teenagers. This is a fundamental limitation of geographically based enforcement: it cannot stop motivated individuals with basic technical knowledge from circumventing the restrictions.
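The weakness is easiest to see in code. The minimal Python sketch below assumes a site that decides whether to demand an age check purely from the region its IP geolocation reports; the region labels, the `AGE_CHECK_REQUIRED` table, and the helper functions are hypothetical and do not describe any real site's logic or any real legal map.

```python
from dataclasses import dataclass

# Hypothetical rule table (illustrative labels, not a legal map): does the site
# demand an age check from visitors who appear to be in this region?
AGE_CHECK_REQUIRED = {
    "UK": True,
    "US_STATE_WITH_LAW": True,
    "US_STATE_WITHOUT_LAW": False,
}


@dataclass
class Connection:
    real_region: str  # where the user physically is; the site never sees this
    exit_region: str  # where the IP address reaching the site geolocates to


def region_seen_by_site(conn: Connection) -> str:
    # The site can only geolocate the IP address it receives. Through a VPN,
    # that is the VPN server's address, not the user's own.
    return conn.exit_region


def must_verify_age(conn: Connection) -> bool:
    region = region_seen_by_site(conn)
    # Unknown regions default to requiring a check (a cautious assumption).
    return AGE_CHECK_REQUIRED.get(region, True)


if __name__ == "__main__":
    direct = Connection(real_region="UK", exit_region="UK")
    via_vpn = Connection(real_region="UK", exit_region="US_STATE_WITHOUT_LAW")
    print(must_verify_age(direct))   # True
    print(must_verify_age(via_vpn))  # False
```

Nothing in `must_verify_age` can consult `real_region`, and that is the whole problem: the rule is enforced against the network path, not against the person.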

What privacy concerns exist with age verification systems?

Age verification systems create substantial privacy concerns depending on their implementation method. Face-scanning systems use biometric data and raise concerns about facial recognition bias and the storage of unique physical identifiers. ID document uploads require websites to collect and secure sensitive personal information including government ID numbers, addresses, and sometimes biometric data from the documents themselves. Credit card verification creates financial records linking individuals to adult website access. Even mobile network operator verification, considered more privacy-respecting, still involves telecom companies collecting data about users accessing adult content. Data breaches at websites or service providers could expose sensitive information linking users to adult content consumption. Privacy advocates argue that some of these trade-offs may not be justified, especially when the effectiveness of the verification in actually protecting minors remains questionable.

Key Takeaways

- Pornhub began blocking new UK users on February 2 in protest against the Online Safety Act's age verification requirements, after a 77% drop in UK traffic

- Application-level age verification creates regulatory adverse selection where compliant companies lose to non-compliant competitors, defeating regulatory goals

- Tech giants Apple, Google, and Microsoft control device infrastructure but resist implementing device-based age verification, leaving application-level verification as the only option

- Age verification laws in 25 U.S. states and Australia, along with proposals in Canada and the EU, suggest this issue will define the future of digital regulation

- VPNs and non-compliance circumvent current age verification approaches, suggesting device-level verification is necessary for any meaningful protection of minors
