UStrive Security Breach: A Deep Dive Into the Data Exposure Crisis Affecting Thousands of Students [2025]

When a nonprofit designed to mentor and support high school and college students inadvertently exposed the personal information of nearly a quarter-million users to any logged-in visitor, it revealed a troubling gap in how we protect young people online. UStrive, previously known as Strive for College, found itself at the center of a significant data security incident that raised uncomfortable questions about authentication, data architecture, and corporate responsibility.

The breach wasn't the result of a sophisticated hacker attack or zero-day exploit. Instead, it stemmed from a fundamental architectural flaw in how the platform queried and delivered user data. A vulnerable Amazon-hosted GraphQL endpoint allowed any logged-in user of the platform to view streams of sensitive information, including full names, email addresses, phone numbers, and in some cases gender and birth dates. For a mentoring platform serving vulnerable youth populations, this wasn't just a technical problem; it was a violation of trust.

What makes this incident particularly troubling is how it unfolded. A security researcher discovered the vulnerability and reported it through TechCrunch, which alerted the company. Yet weeks passed before meaningful action occurred. The organization's initial response raised further concerns: whether it would properly notify affected users, whether it could even determine if malicious actors had accessed the exposed data, and whether it had ever conducted a proper security audit. These weren't rhetorical questions. They were fundamental to understanding the scope of damage and the organization's commitment to preventing future incidents.

This article examines the UStrive security breach from every angle: the technical vulnerability that enabled it, the data that was exposed, the timeline of events, the organization's response (and lack thereof), the regulatory implications, and most importantly, what this incident teaches us about protecting young people in digital spaces. Whether you're building educational technology, managing user data, or simply concerned about online safety for minors, this case study offers critical lessons.

Why This Matters Beyond the Headlines

Mentoring platforms occupy a unique position in the youth ecosystem. They're trusted with contact information, educational backgrounds, personal goals, and sometimes deeply personal information shared during mentoring relationships. When these platforms fail, the damage extends beyond the immediate data exposure. There's the erosion of trust in institutions designed to help. There's the practical risk of harassment, identity theft, or social engineering attacks against vulnerable populations. And there's the regulatory and legal liability that compounds with every day the vulnerability remains unaddressed.

UStrive serves young people, often from underrepresented backgrounds: precisely the populations that deserve the highest level of protection. Intentional or not, the exposure happened on its watch.

The Technical Foundation: Understanding GraphQL Vulnerabilities

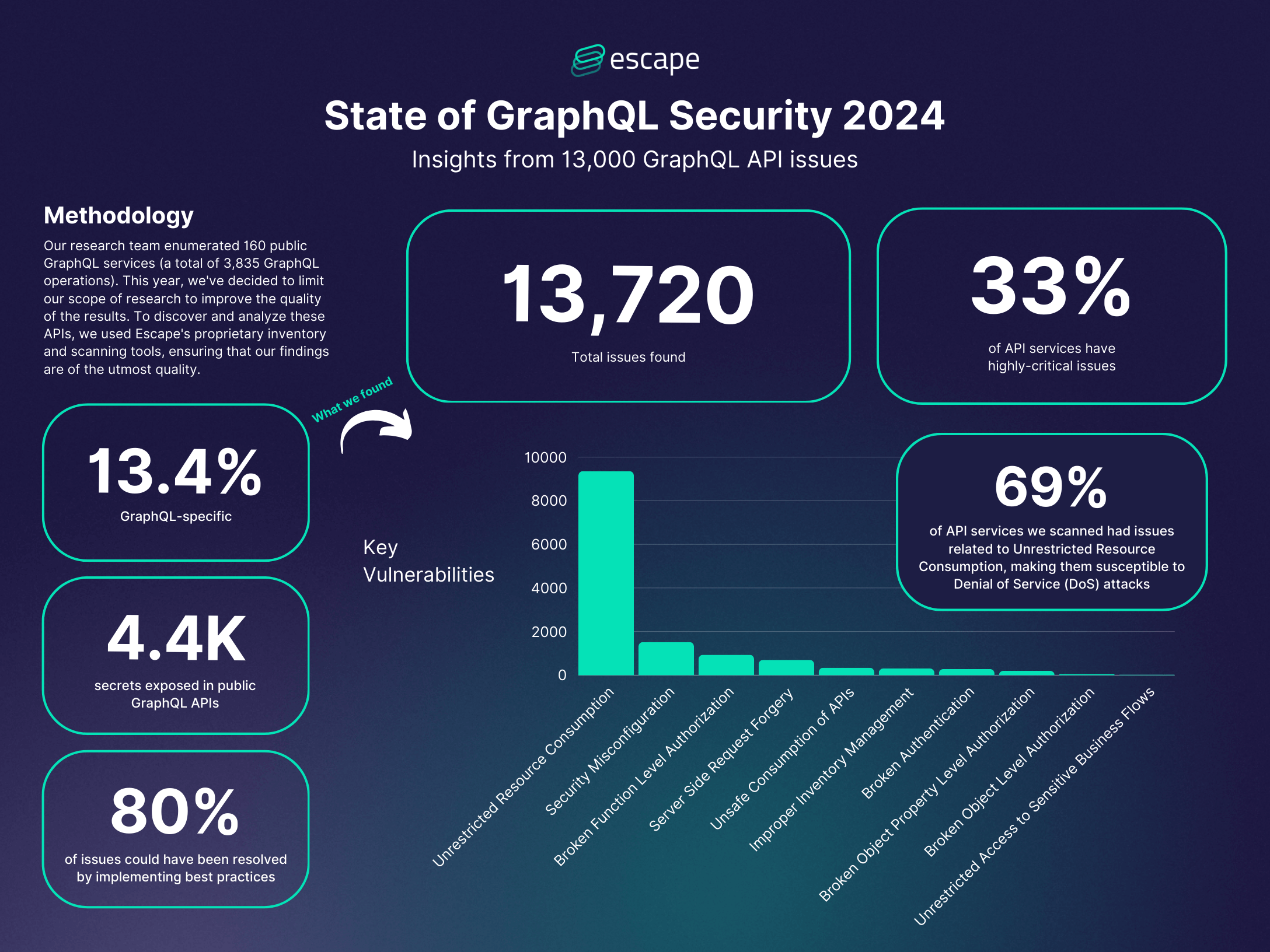

To understand how UStrive's security lapse happened, you need to understand GraphQL and how it can fail when implemented without proper safeguards. GraphQL is a query language developed by Facebook (now Meta) that allows clients to request exactly the data they need from a server. It's become increasingly popular because it's more flexible than traditional REST APIs, and it can reduce over-fetching of unnecessary data.

The appeal is obvious to developers. Instead of hitting multiple endpoints or receiving bloated responses with fields you don't need, you craft precise queries that return exactly what you want. It's elegant. It's efficient. And when done wrong, it's catastrophic.

How GraphQL Works in Theory

In a properly secured GraphQL implementation, the server validates every incoming query against the schema and runs it through resolvers. A resolver is the function that fetches the data for a particular field, which makes it the natural place to enforce permissions. Before returning information about another user's profile, the resolver (or an authorization layer wrapped around it) checks: Is the requesting user authenticated? Are they authorized to view this specific user's data? If the answer to either question is no, the request fails.
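To make the resolver's role concrete, here is a minimal Python sketch of an authorization check placed inside a resolver for a hypothetical `user(id: ID!)` field. It deliberately avoids any GraphQL library; `Context`, `resolve_user`, the in-memory `USERS` store, and the admin override are illustrative assumptions, not UStrive's schema or code.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical in-memory store standing in for the real database.
USERS = {
    "u1": {"id": "u1", "name": "Student A", "email": "a@example.org"},
    "u2": {"id": "u2", "name": "Student B", "email": "b@example.org"},
}


class NotAuthenticated(Exception):
    """Raised when the request carries no valid login."""


class NotAuthorized(Exception):
    """Raised when a logged-in user asks for data they may not see."""


@dataclass
class Context:
    """Per-request context; viewer_id is None for anonymous requests."""
    viewer_id: Optional[str]
    is_admin: bool = False


def resolve_user(context: Context, user_id: str) -> Optional[dict]:
    """Resolver for a hypothetical `user(id: ID!)` field.

    It fetches the record only after answering both questions from the text:
    is the caller logged in, and may this caller see this particular user?
    """
    if context.viewer_id is None:
        raise NotAuthenticated("login required")
    if context.viewer_id != user_id and not context.is_admin:
        raise NotAuthorized("cannot read another user's profile")
    return USERS.get(user_id)
```

Because the check lives in the resolver, it runs no matter which page, app, or script issued the query.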

The power of GraphQL also introduces complexity. Because the query language is so flexible, developers must be extremely careful about what data they expose and to whom. A misconfigured GraphQL endpoint can inadvertently allow users to traverse relationships they shouldn't see. A student asking for their own profile information might also be able to ask for profiles of all mentors, or all other students, or specific fields that should be private.
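A sketch of how a list field can avoid that kind of traversal, assuming a hypothetical `profiles` field and a simple mentor/student relationship: rather than returning every row, the resolver keeps only rows the viewer is related to and strips each one down to the fields that relationship allows. The policy table, field names, and data are invented for illustration.

```python
from typing import Optional

# Hypothetical records; "mentor_id" links a student to their assigned mentor.
PROFILES = [
    {"id": "s1", "role": "student", "name": "Ana", "email": "ana@example.org",
     "birth_date": "2008-04-02", "mentor_id": "m1"},
    {"id": "s2", "role": "student", "name": "Ben", "email": "ben@example.org",
     "birth_date": "2007-09-15", "mentor_id": "m2"},
    {"id": "m1", "role": "mentor", "name": "Mia", "email": "mia@example.org",
     "birth_date": None, "mentor_id": None},
]

# Fields each relationship may see (an illustrative policy, not UStrive's).
VISIBLE_FIELDS: dict[str, set[str]] = {
    "self": {"id", "role", "name", "email", "birth_date", "mentor_id"},
    "mentor_of": {"id", "role", "name", "email"},
}


def relationship(viewer_id: str, profile: dict) -> Optional[str]:
    """Classify how the viewer relates to a profile; None means no access."""
    if profile["id"] == viewer_id:
        return "self"
    if profile.get("mentor_id") == viewer_id:
        return "mentor_of"
    return None


def resolve_profiles(viewer_id: str) -> list[dict]:
    """Resolver for a hypothetical `profiles` list field."""
    results = []
    for profile in PROFILES:
        rel = relationship(viewer_id, profile)
        if rel is None:
            continue  # unrelated users are left out entirely
        allowed = VISIBLE_FIELDS[rel]
        results.append({k: v for k, v in profile.items() if k in allowed})
    return results
```

Asked as mentor `m1`, this returns a reduced record for mentee `s1` (no birth date) and the mentor's own full record; student `s2` is not returned at all.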

How UStrive's Implementation Failed

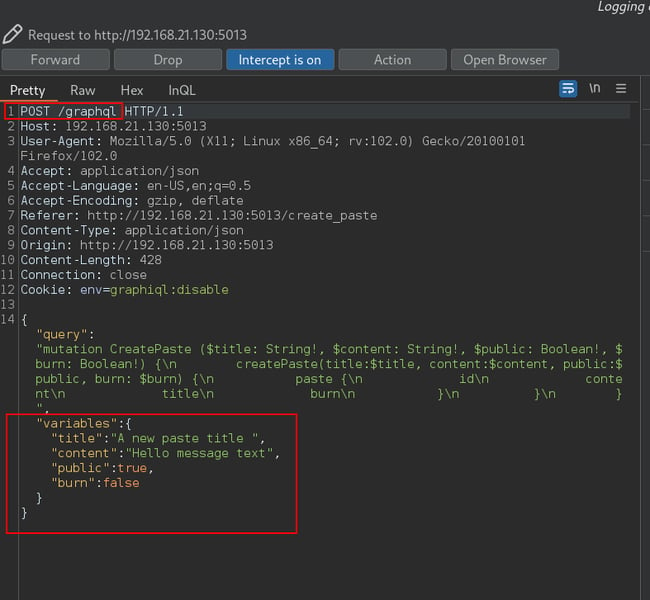

In UStrive's case, the vulnerability manifested as an insufficiently restricted GraphQL endpoint. The technical details matter here because they illuminate a common category of mistakes. When a user navigated the platform's interface (viewing profiles, looking at mentoring relationships, or exploring features), their browser made GraphQL queries to fetch the necessary data. These queries came from logged-in sessions, but the server did not check, query by query, whether the requester was authorized to see the records being returned.

The security researcher who discovered the flaw demonstrated this by examining network traffic through their browser's developer tools. They could see the exact GraphQL queries being executed and the responses containing sensitive information. More troublingly, they could manually craft queries that the endpoint would answer, bypassing whatever authorization checks existed in the user interface.

This is a critical distinction. UStrive's web interface might have had proper access controls. If you logged in as Student A, you probably couldn't click a button to see Student B's phone number. The interface enforced permissions. But the underlying API didn't. The interface was a lock on the door, but the actual vault had no protection.

It's like a library that prevents you from seeing other patrons' reading history on the computer terminal they provide, but stores all reading history in plaintext in a database anyone can access through the network. The security isn't in the interface. It's missing from the actual data storage and retrieval layer.
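The gap between interface and API can be shown in a few lines. This toy Python model, with entirely hypothetical data and function names, mirrors the pattern described above: the endpoint checks only that a session is valid and returns full records, while the page displays names alone.

```python
# Toy model of the flaw: the UI hides fields, the API does not.
# All names and data here are hypothetical.

RECORDS = [
    {"name": "Student A", "email": "a@example.org", "phone": "555-0101"},
    {"name": "Student B", "email": "b@example.org", "phone": "555-0102"},
]


def api_all_users(session_valid: bool) -> list[dict]:
    """Vulnerable endpoint: checks only that the caller is logged in."""
    if not session_valid:
        raise PermissionError("login required")
    return RECORDS  # every field of every user goes over the wire


def render_directory(session_valid: bool) -> list[str]:
    """The web UI only displays names, which makes the page look safe."""
    return [r["name"] for r in api_all_users(session_valid)]


# What the browser page shows:
print(render_directory(True))  # ['Student A', 'Student B']
# What anyone with developer tools or a script can read from the same response:
print([r["phone"] for r in api_all_users(True)])  # ['555-0101', '555-0102']
```

Hiding the phone column in `render_directory` changes nothing about what `api_all_users` sends; the fix has to happen on the server.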

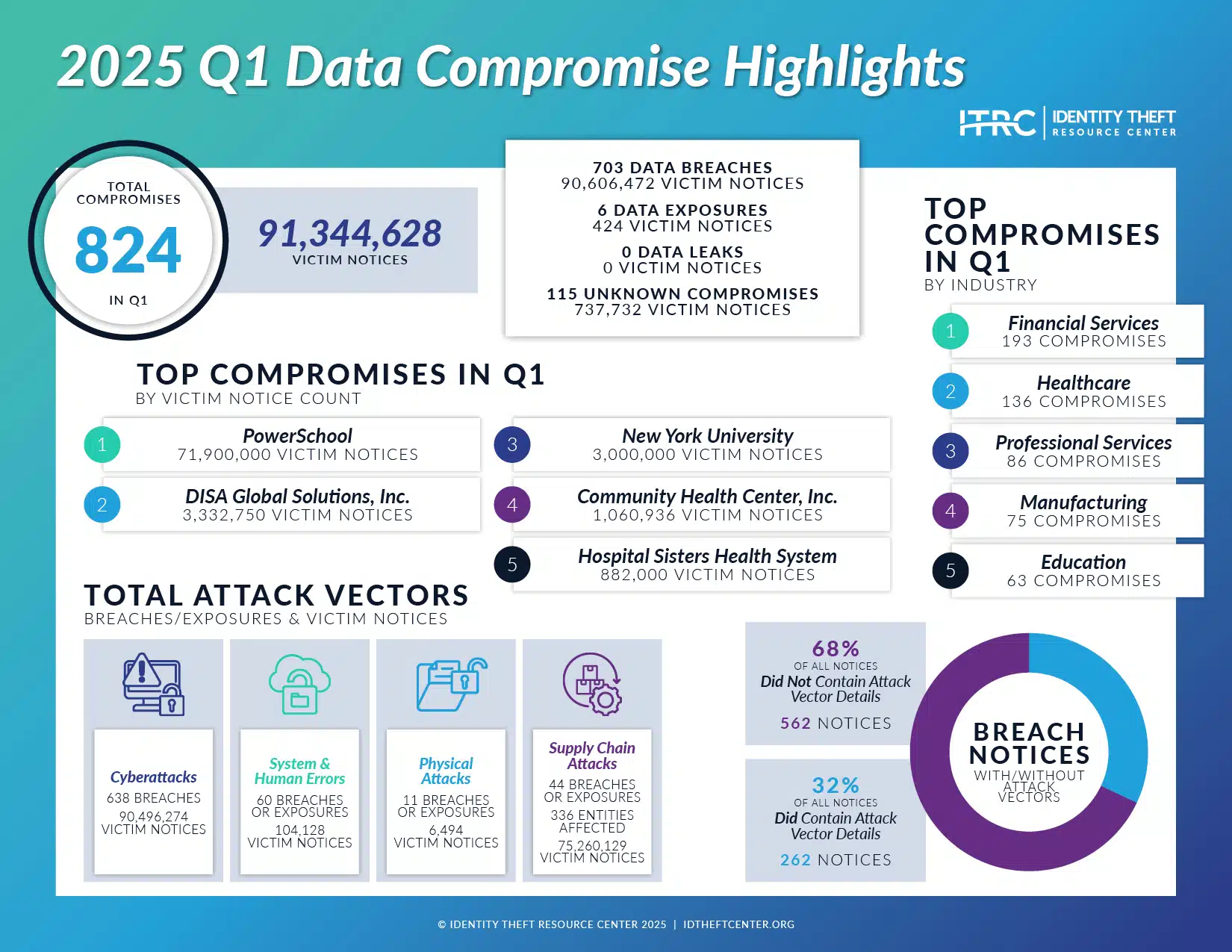

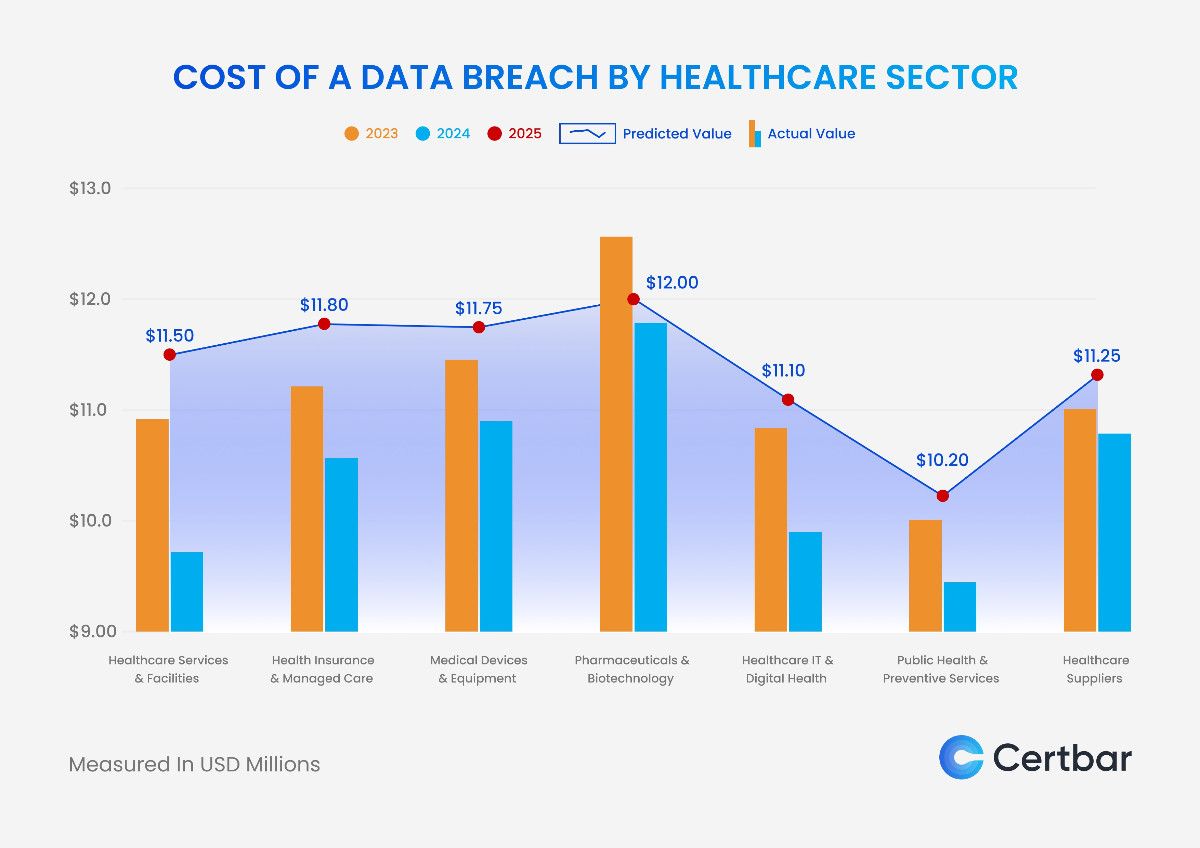

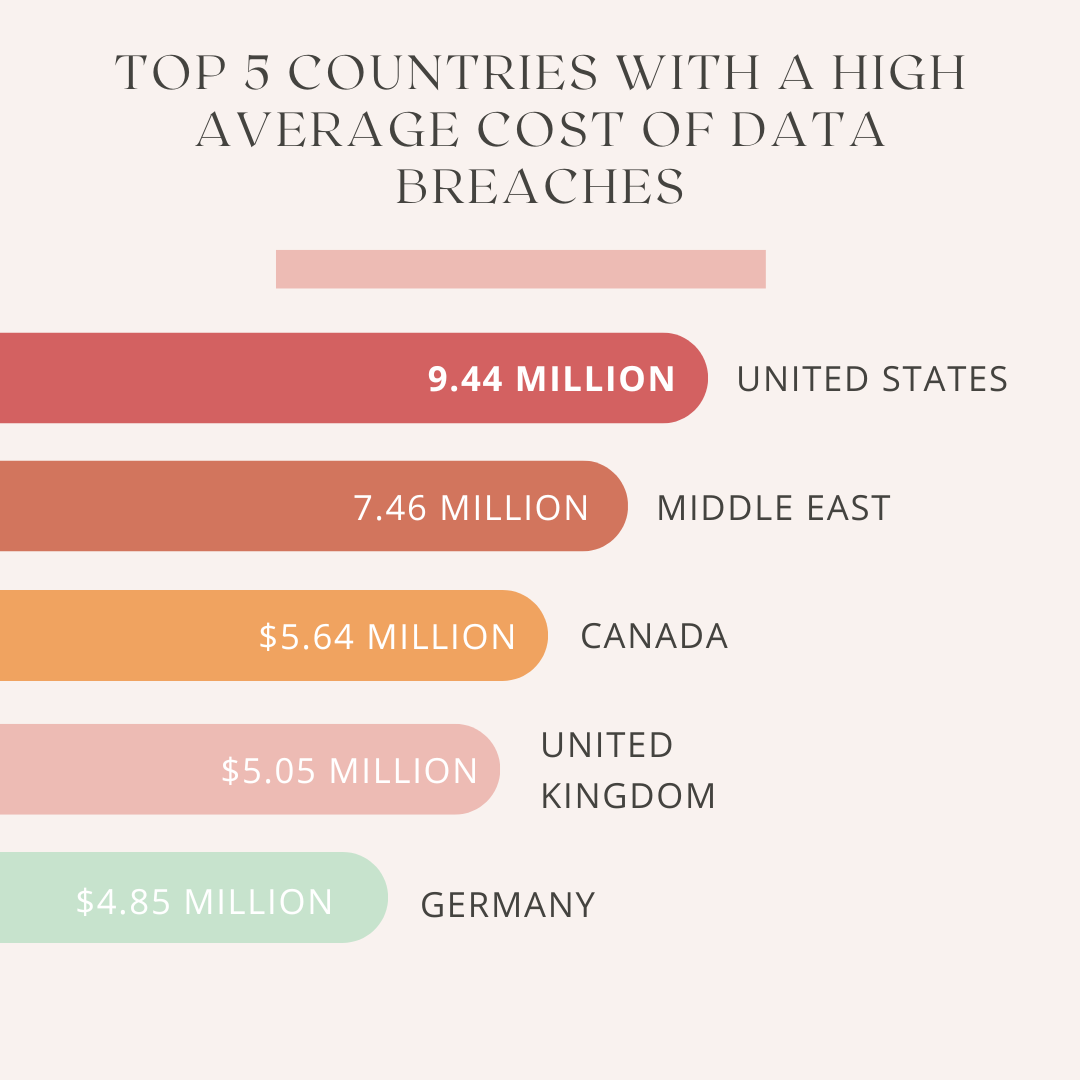

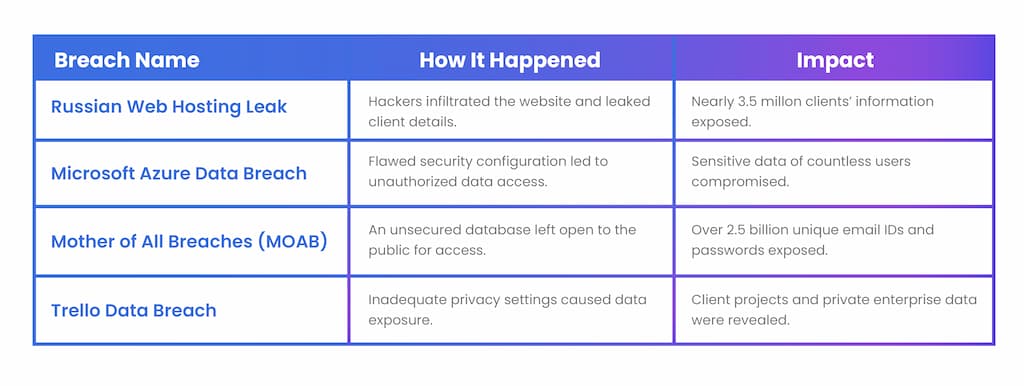

The chart estimates potential penalties and requirements under various regulations affecting UStrive, highlighting the impact of FTC COPPA settlements such as the $5.7 million Musical.ly (now TikTok) case. Estimated data.

The Exposure: What Data Was Actually Accessible

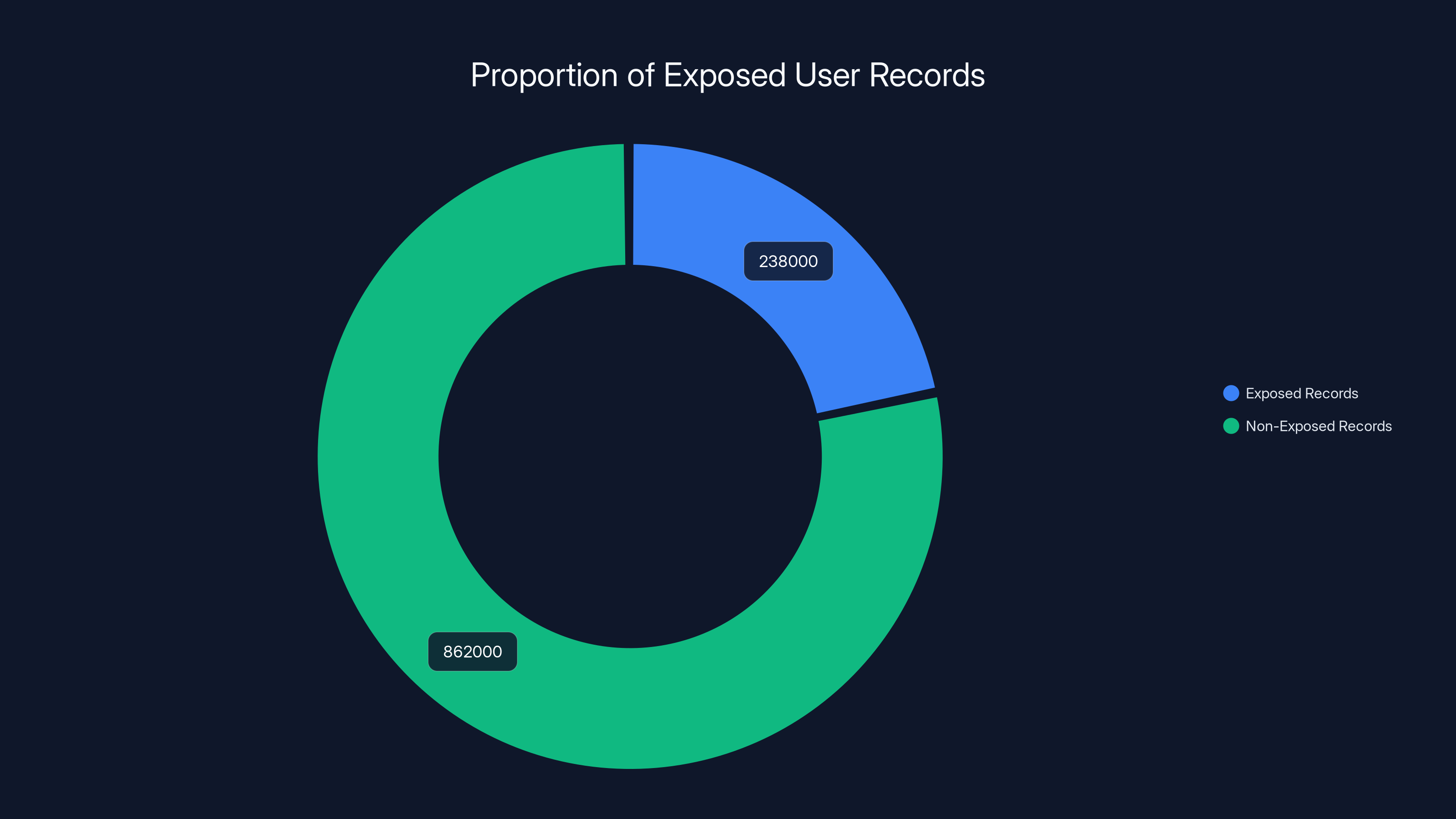

When the researcher discovered the vulnerability, they found at least 238,000 user records exposed. That's a substantial number. UStrive says over 1.1 million students have used its platform over its history, so the exposed records amount to roughly 22% of that historical total. How many of the exposed accounts were still active at the time of discovery is unclear.
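As a rough check on that share, using the two reported figures (both approximate: "at least" 238,000 exposed records and "over" 1.1 million historical users):

```latex
\frac{238{,}000}{1{,}100{,}000} \approx 0.216 \approx 22\%
```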

The exposed data included:

Universally exposed across all records:

- Full names

- Email addresses

- Phone numbers

- Profile information

- Account creation and modification timestamps

Selectively exposed depending on user configuration:

- Gender

- Date of birth

- Educational institution

- Grade level or college year

- Goals and biographical information provided by the student

- Mentoring relationship data

- Interaction history

The differential in data exposure is important. Some users had provided little information beyond what account creation required; others had completed comprehensive profiles. As a result, the amount of sensitive information exposed varied from user to user, and the actual damage varied accordingly.

Why This Data Is Particularly Sensitive

On a typical social network, exposed profile information is problematic. On a mentoring platform serving minors, it's dangerous. Here's why:

Full names combined with email addresses and phone numbers enable targeted contact. A bad actor could use this information to impersonate mentors, contact students directly, or attempt to build false relationships. The personal nature of mentoring relationships creates psychological vulnerability. A student might be more willing to trust someone claiming to be a mentor or program staff, because people in those roles are presumed to have been vetted by the platform.

Gender and birth date information, when combined with names and contact details, creates a comprehensive profile for identity theft, harassment, or exploitation. The concern isn't hypothetical. Law enforcement and child safety organizations have extensively documented how publicly exposed information about minors is weaponized.

Educational institution information allows targeting of specific schools or grade levels. Someone looking to reach high school students from a particular district now has a clean list of email addresses and phone numbers. This is precisely the kind of targeting that makes the information dangerous.

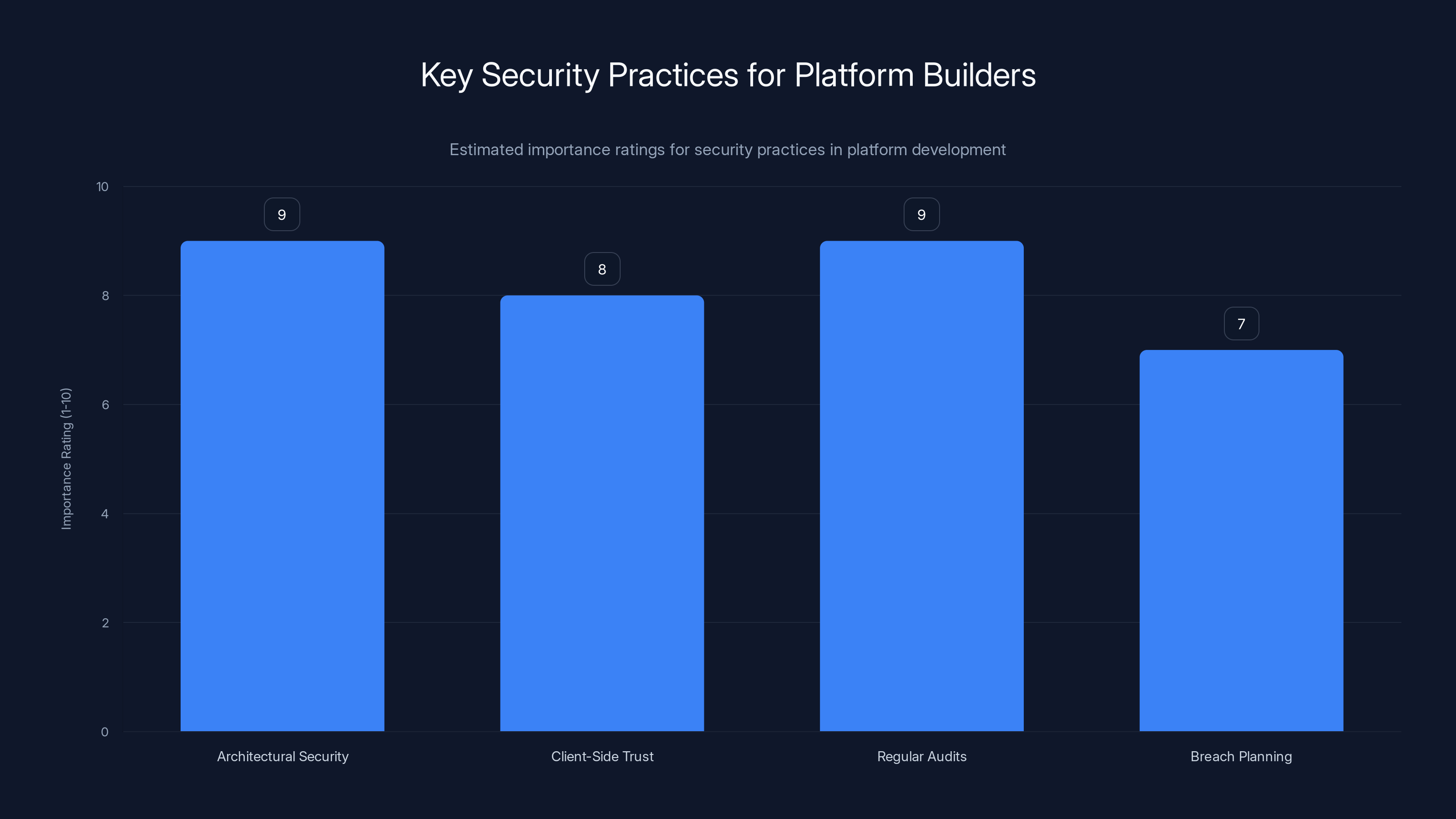

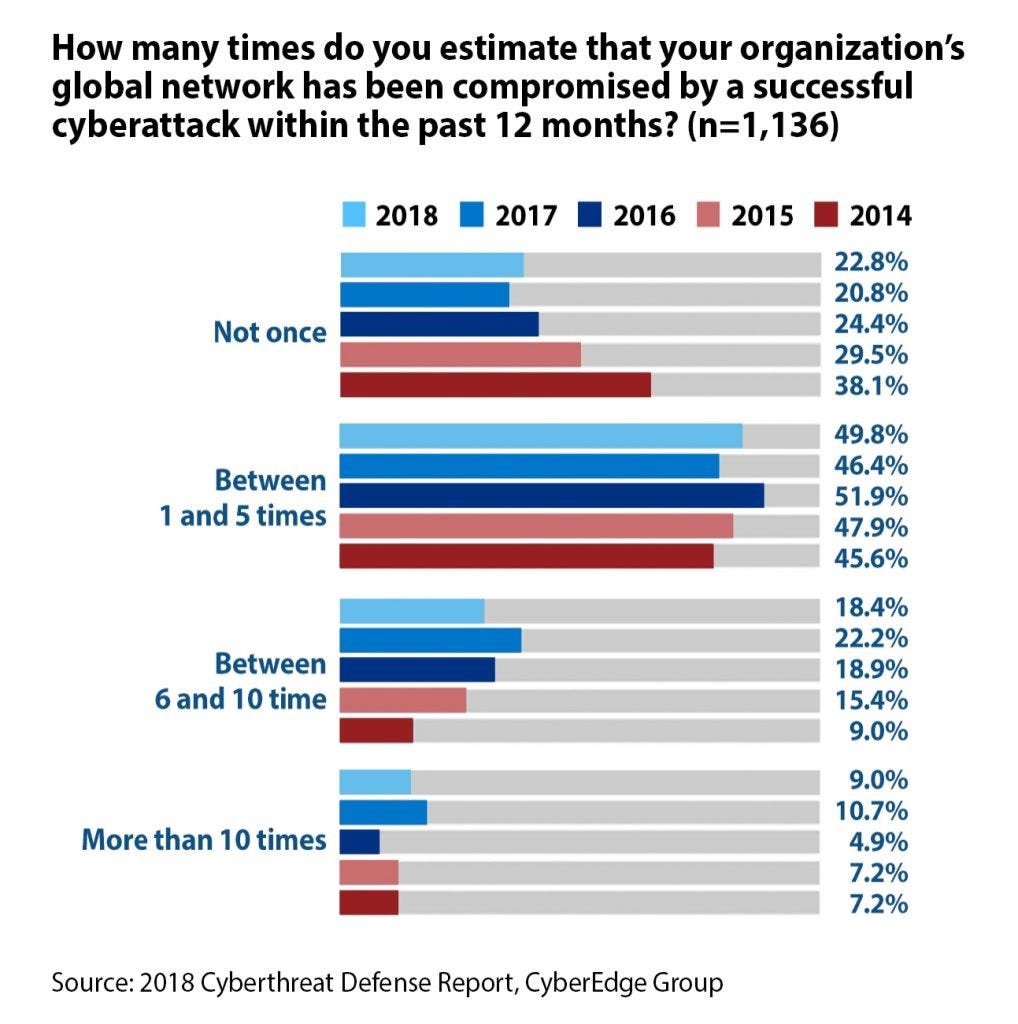

Estimated data suggests that prioritizing speed over security is the most severe vulnerability in EdTech platforms, followed closely by limited budgets and lack of expertise.

The Discovery and Timeline: When Did Things Go Wrong

The security researcher who discovered the vulnerability contacted TechCrunch on January 20, 2026. They'd identified the flaw through legitimate means: creating an account, examining network traffic, and confirming they could access other users' information through the exposed GraphQL endpoint. This wasn't hacking in the criminal sense. This was responsible security research.

TechCrunch confirmed the vulnerability independently by creating their own test account and verifying the data exposure. They then contacted UStrive's executives and informed them of the issue. This is where the timeline becomes concerning.

The Company Response

Initially, UStrive's legal representative, John D. McIntyre of a Virginia law firm, told TechCrunch that the company couldn't fully respond because they were in litigation with a former software engineer. This is an odd response to a security vulnerability affecting hundreds of thousands of people, but it's not unique in corporate crisis management. Companies sometimes use legal proceedings as a reason to minimize public communication.

TechCrunch pressed harder, asking McIntyre directly: Can you fix this by a certain date? Will you notify users? McIntyre didn't respond to follow-ups.

Meanwhile, UStrive's CTO, Dwamian Mcleish, sent a brief email stating the exposure had been "remediated." No timeline was provided. No details about what was fixed or when. No mention of user notification. When TechCrunch asked follow-up questions about whether the company planned to notify users, whether they could determine if malicious access had occurred, and whether they'd conducted security audits, Mcleish didn't respond further.

UStrive's founder, Michael J. Carter, declined to comment entirely.

This response pattern—minimal acknowledgment, no transparency, avoidance of accountability questions—is unfortunately common in corporate data breach responses. From a public relations standpoint, it's a disaster. From a legal standpoint, it might be strategic (though that strategy seems flawed). From a user safety standpoint, it's negligent.

The Remediation Claim

When a company claims they've "remediated" a vulnerability, what does that actually mean? It could mean they've patched the GraphQL endpoint to require proper authorization checks. It could mean they've removed the vulnerable endpoint entirely and rebuilt it. It could mean they've done a quick fix that addresses the symptom but not the underlying architectural problem. Without details, the claim is meaningless.

For a mentoring platform serving young people, you'd expect a robust remediation that includes:

- Complete security audit of the infrastructure

- Implementation of authorization checks at every data access point

- Testing to ensure no other endpoints have similar vulnerabilities

- Notification to all affected users

- Credit monitoring or identity protection services for users whose sensitive information was exposed

- Clear communication about what went wrong and what's changed

None of this was confirmed to have happened.

The Regulatory Landscape: What Laws Apply Here

UStrive operates in a complex regulatory environment because it serves minors. The exposure triggers obligations under multiple legal frameworks, each with different requirements and penalties.

Children's Online Privacy Protection Act (COPPA)

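COPPA is the primary federal regulation governing how online services collect and use personal information from children under 13. It applies to operators of sites and services directed at children under 13, or that knowingly collect personal information from them; because UStrive primarily serves high school and college students, COPPA would reach only whatever data it knowingly held about younger users. The rule's definition of "operator" also excludes nonprofits that fall outside the FTC's Section 5 jurisdiction, so whether it covers UStrive at all is an open question. Where COPPA does apply, it requires verifiable parental consent before collecting personal information from children, clear privacy policies, and reasonable security safeguards.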

A security vulnerability that exposed personal information would fall short of COPPA's "reasonable security" requirement. The FTC has historically interpreted this to mean security practices appropriate to the sensitivity of the data and the size of the organization. For an organization serving vulnerable youth at UStrive's scale, the bar is higher than it might be for a tiny startup.

Violations can result in civil penalties, and the FTC has increasingly pursued these cases. In 2019, Musical.ly (now TikTok) agreed to pay $5.7 million to settle FTC allegations that it violated COPPA, at the time the largest civil penalty obtained in a COPPA case. That precedent matters.

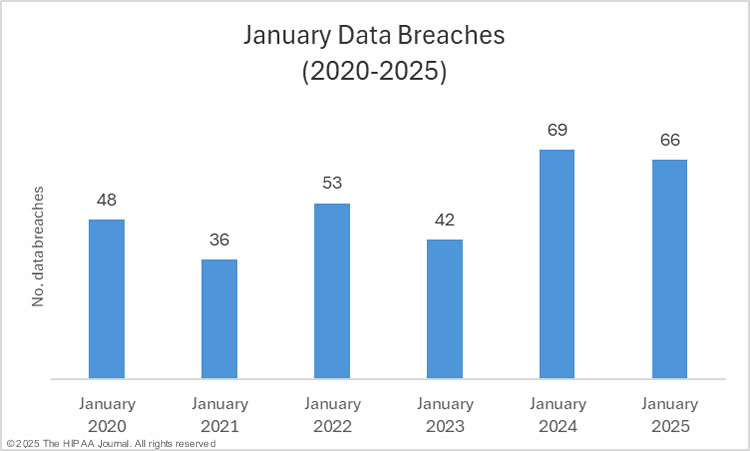

State Data Breach Notification Laws

All 50 U.S. states have data breach notification laws requiring companies to notify residents if their personal information is compromised. The specifics vary by state, but the general framework is:

- Determine what information was exposed

- Determine which states the affected individuals live in

- Notify those residents in a timely manner (usually "without unreasonable delay" or within 30-60 days)

- Provide information about what happened and recommended steps to protect themselves
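The first three steps are largely bookkeeping, and that bookkeeping might look like the Python sketch below: it groups affected users by state and attaches a notify-by date. The 30-day default is only a placeholder taken from the low end of the range above; `WINDOW_DAYS_BY_STATE`, `plan_notifications`, and the record layout are hypothetical, and real per-state deadlines have to come from counsel.

```python
from collections import defaultdict
from datetime import date, timedelta

# PLACEHOLDER windows, not real statutory deadlines. Actual per-state rules
# differ and must come from legal counsel before anything is sent.
DEFAULT_WINDOW_DAYS = 30
WINDOW_DAYS_BY_STATE: dict[str, int] = {}  # hypothetical: fill in after legal review


def plan_notifications(affected: list[dict], discovered: date) -> dict[str, dict]:
    """Group affected users by state of residence and attach a notify-by date."""
    groups: dict[str, list[str]] = defaultdict(list)
    for user in affected:
        # Users with no recorded state still need notice; keep them visible.
        groups[user.get("state") or "UNKNOWN"].append(user["email"])

    plan = {}
    for state, emails in sorted(groups.items()):
        window = WINDOW_DAYS_BY_STATE.get(state, DEFAULT_WINDOW_DAYS)
        plan[state] = {
            "count": len(emails),
            "notify_by": discovered + timedelta(days=window),
            "emails": emails,
        }
    return plan
```

Keeping an explicit `UNKNOWN` bucket matters: users whose state was never recorded are the easiest ones to silently skip.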

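UStrive has users across the country. Whether each state's law is triggered depends on how that state defines "personal information": many definitions center on a name combined with identifiers such as a Social Security number, driver's license number, or financial account credentials, so names, emails, phone numbers, and birth dates alone may not qualify everywhere. Where the laws do apply and UStrive hasn't provided breach notifications, it is likely violating them in multiple jurisdictions. The penalties vary but can include fines per violation and attorney general enforcement actions.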

Family Educational Rights and Privacy Act (FERPA)

FERPA governs how educational institutions handle student records. UStrive itself isn't a school, but it maintains educational information about students. If UStrive's data is integrated with school systems or if the information qualifies as "educational records" under FERPA, additional obligations apply.

At minimum, the exposure of information about students' educational goals, mentoring relationships, and academic status raises FERPA concerns if schools are involved.

Industry Standards and Best Practices

Beyond regulatory requirements, there are industry standards for protecting youth data. Organizations serving children are expected to implement:

- Encryption of sensitive data both in transit and at rest

- Regular security audits and penetration testing

- Access controls limiting who can see what data

- Monitoring systems that detect unusual data access patterns

- Incident response plans that include user notification

- Privacy impact assessments before deploying new features

The GraphQL vulnerability suggests UStrive wasn't meeting these standards. A proper security audit would have identified it before a researcher stumbled across it through basic network inspection.
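Of the standards listed above, monitoring for unusual access patterns is the one most directly relevant here, since bulk reads of other users' records are exactly what an exposed endpoint invites. Below is a minimal sliding-window sketch in Python; the thresholds, the `AccessMonitor` class, and the idea of calling it from each resolver are assumptions for illustration, not a description of any particular product.

```python
from collections import defaultdict, deque


class AccessMonitor:
    """Flags viewers who read many *other* users' records in a short window.

    max_distinct and window_seconds are hypothetical tuning values.
    """

    def __init__(self, max_distinct: int = 50, window_seconds: float = 60.0):
        self.max_distinct = max_distinct
        self.window = window_seconds
        # viewer id -> deque of (timestamp, target id), oldest first
        self.events: dict[str, deque] = defaultdict(deque)

    def record(self, viewer_id: str, target_id: str, now: float) -> bool:
        """Log one read; return True if the viewer should be flagged."""
        if viewer_id == target_id:
            return False  # reading your own profile is normal
        q = self.events[viewer_id]
        q.append((now, target_id))
        # Drop reads that have aged out of the sliding window.
        while q and q[0][0] <= now - self.window:
            q.popleft()
        distinct = {target for _, target in q}
        return len(distinct) > self.max_distinct
```

A flag would typically feed an alert or a temporary block rather than a silent log entry; tuning the threshold against normal mentor and staff behavior is the hard part.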

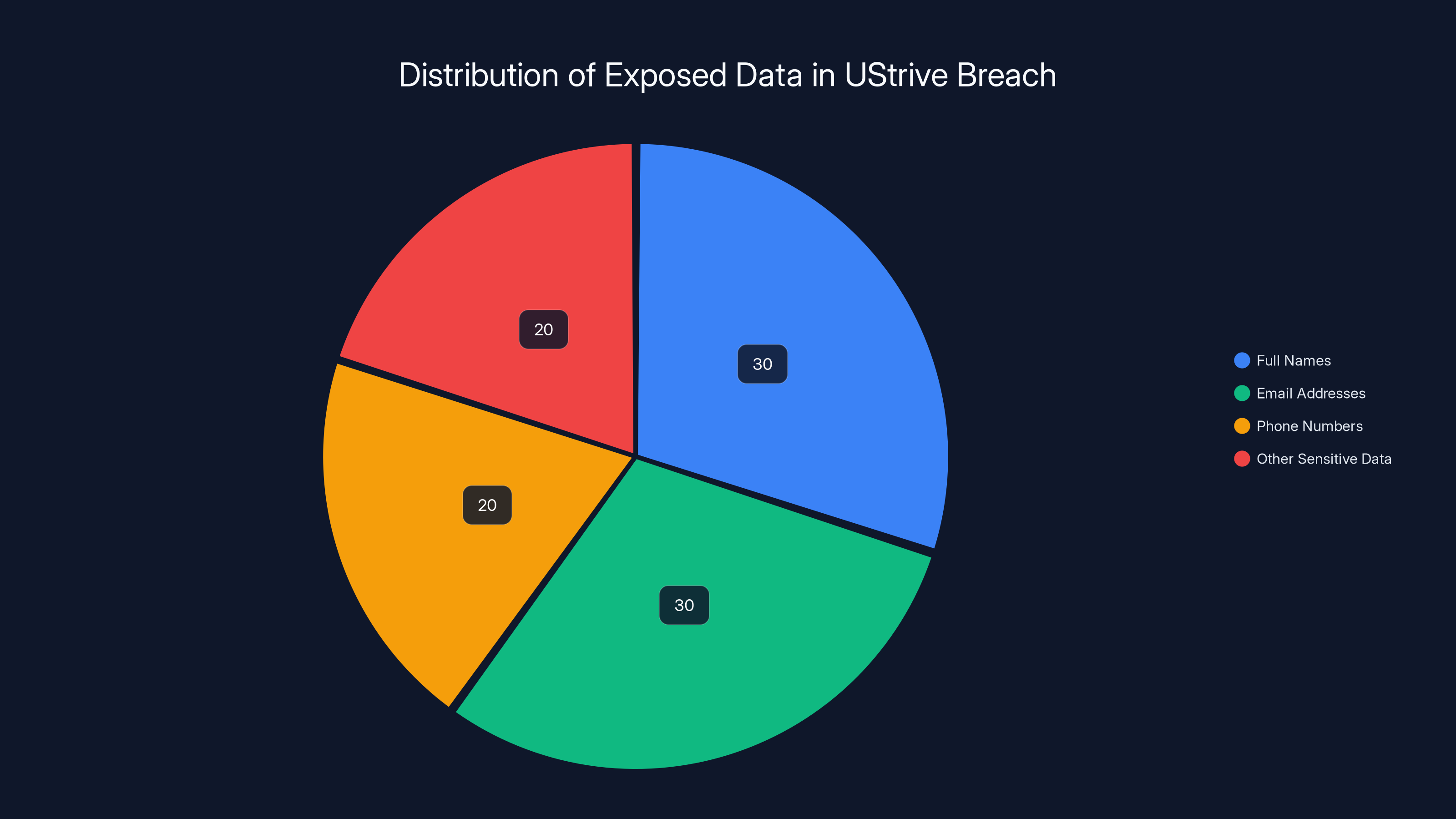

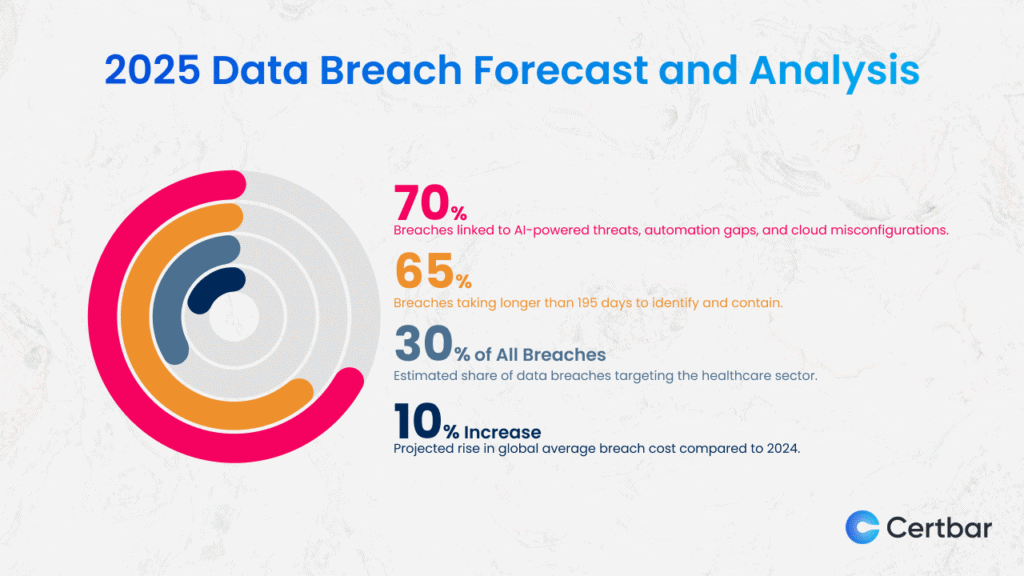

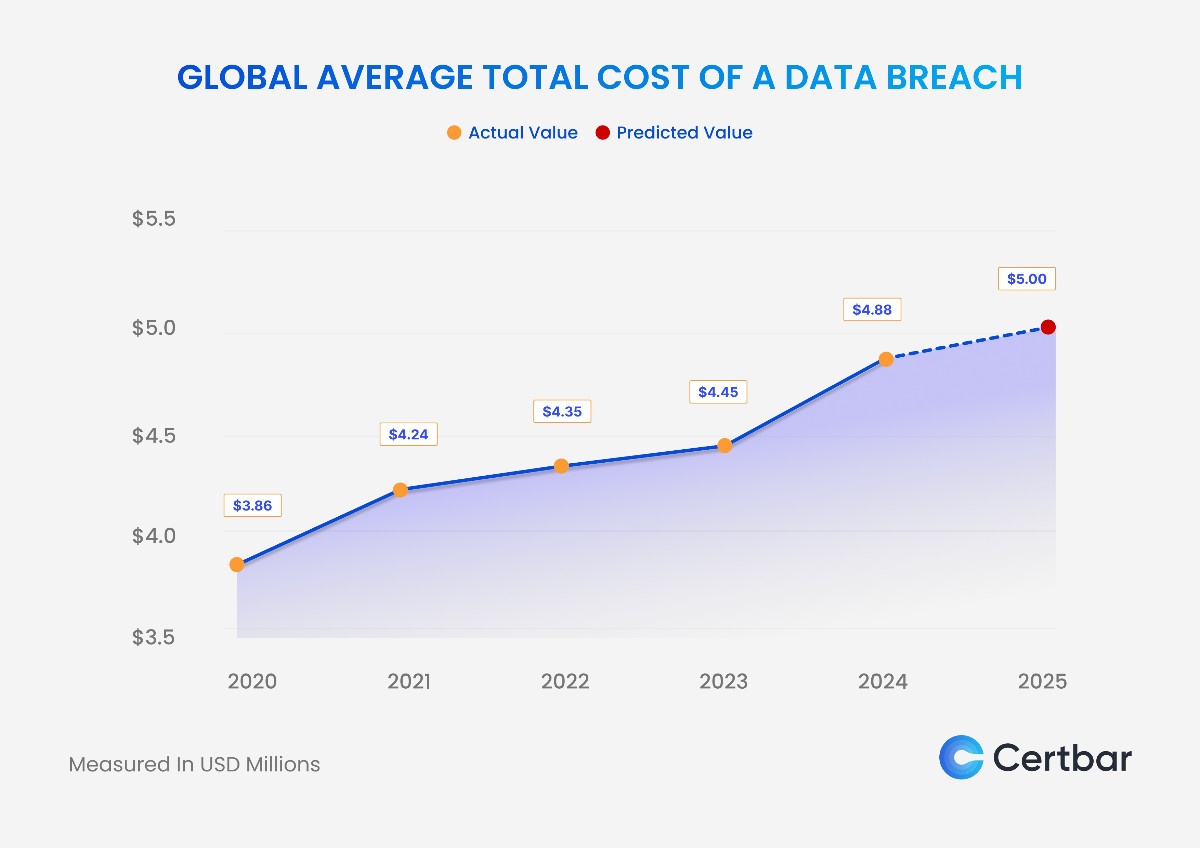

Estimated data shows that full names and email addresses were the most exposed data types, each accounting for 30% of the breach, followed by phone numbers and other sensitive data at 20% each.

Architectural Lessons: How Not to Build User-Facing Platforms

The UStrive incident illustrates several fundamental mistakes in platform architecture. These aren't new mistakes, but they're persistent ones that companies keep making.

Mistake 1: Trusting the Client

The biggest architectural failure was apparently trusting that users would only make requests through the web interface. This is a Security 101 failure. Users don't always use your interface. They use developer tools, mobile apps, custom scripts, and API calls. If your security depends on users being good and only using the interface you designed, your security is an illusion.

Proper architecture assumes every request is potentially malicious and validates accordingly at the server level. Every resolver should check permissions. Every query should be validated. Every response should exclude unauthorized data.
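One way to validate queries, in addition to per-resolver checks, is an allowlist of known queries, often called persisted queries or trusted documents: the server executes only queries the real front end was built with. The Python sketch below identifies queries by a SHA-256 hash of their whitespace-normalized text. The approved query and function names are hypothetical, and in production the client usually sends just the hash; hashing the received text here is a simplification.

```python
import hashlib


def query_id(query: str) -> str:
    """Identify a query by the SHA-256 of its whitespace-normalized text."""
    normalized = " ".join(query.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# Queries the real front end ships with, registered at build time (hypothetical).
APPROVED_QUERIES = {
    query_id("query Me { me { name email } }"),
}


def validate_query(query: str) -> None:
    """Reject any query the front end never sends, e.g. one hand-crafted in devtools."""
    if query_id(query) not in APPROVED_QUERIES:
        raise PermissionError("query not on the approved list")
```

An allowlist shrinks the attack surface, but it does not replace authorization: an approved query such as `user(id: $id)` can still be sent with someone else's ID, so the resolver must check permissions regardless.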

Mistake 2: Insufficient Separation of Concerns

Authentication and authorization are different concerns. Authentication answers the question: Is this person really who they claim to be? Authorization answers the question: Is this authenticated person allowed to access this specific data?

UStrive apparently had authentication working (you needed to log in to access the API). But they failed at authorization (being logged in didn't mean you could only see your own data). These are separate problems requiring separate solutions.
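The distinction can be kept explicit in code by making the two checks separate functions that fail differently. This is a toy sketch: the HMAC-signed token, `SECRET_KEY`, and the single `read_profile` rule are assumptions chosen to keep the example self-contained; a real system would use an established session or token library with expiry.

```python
import hashlib
import hmac
from typing import Optional

SECRET_KEY = b"demo-only-secret"  # hypothetical; load from a secret store in practice


def _sign(user_id: str) -> str:
    return hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_token(user_id: str) -> str:
    """Toy session token: '<user_id>.<HMAC signature>' with no expiry."""
    return f"{user_id}.{_sign(user_id)}"


def authenticate(token: str) -> Optional[str]:
    """Authentication: who is this? Returns the user id, or None if forged."""
    user_id, _, sig = token.rpartition(".")
    if not user_id:
        return None
    if hmac.compare_digest(sig.encode("utf-8"), _sign(user_id).encode("utf-8")):
        return user_id
    return None


def authorize(viewer_id: str, action: str, owner_id: str) -> bool:
    """Authorization: may this authenticated user do this to this record?"""
    return action == "read_profile" and viewer_id == owner_id


def get_profile(token: str, owner_id: str) -> str:
    viewer = authenticate(token)
    if viewer is None:
        return "401 Unauthorized"  # authentication failed
    if not authorize(viewer, "read_profile", owner_id):
        return "403 Forbidden"  # logged in, but not allowed to see this record
    return f"profile of {owner_id}"
```

A forged token fails in `authenticate` with 401; a valid token for `u1` asking for `u2` passes authentication and is still refused by `authorize` with 403, which is the check that was reportedly missing.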

Mistake 3: Inadequate Security Testing

Finding this vulnerability required:

- Creating a test account

- Examining network traffic in browser developer tools

- Crafting manual GraphQL queries

- Checking if the server would answer

This is basic security testing. Any competent security audit would have caught this in minutes. The fact that it existed undetected suggests either UStrive never conducted a security audit, or if they did, it was superficial.
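Those steps are easy to automate. The Python sketch below assumes a hypothetical `handler(viewer_id, user_id)` that, in a real test, would send the GraphQL request as the given test account; here two in-memory handlers stand in, one mirroring the flaw and one fixed. The check acts as each test account and asks for every other account's record.

```python
from typing import Callable, Optional

# Hypothetical seeded test accounts and their records.
DB = {"a": {"email": "a@example.org"}, "b": {"email": "b@example.org"}}

Handler = Callable[[str, str], Optional[dict]]


def vulnerable_handler(viewer_id: str, user_id: str) -> Optional[dict]:
    # Mirrors the flaw: being logged in is treated as permission to read anyone.
    return DB.get(user_id)


def fixed_handler(viewer_id: str, user_id: str) -> Optional[dict]:
    # Returns a record only to its owner.
    return DB.get(user_id) if viewer_id == user_id else None


def find_cross_account_leaks(handler: Handler, accounts: list[str]) -> list[tuple[str, str]]:
    """As each test account, request every *other* account; report any that answer."""
    leaks = []
    for viewer in accounts:
        for target in accounts:
            if viewer != target and handler(viewer, target) is not None:
                leaks.append((viewer, target))
    return leaks
```

`find_cross_account_leaks(vulnerable_handler, ["a", "b"])` reports both cross-account reads, while the fixed handler reports none. Run against a staging environment in CI, a check like this turns the researcher's manual steps into a regression test.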

For a platform that has served 1.1 million young people, the absence of regular security audits is shocking. This should be a requirement of the nonprofit's governance and a responsibility of any board overseeing youth-serving organizations.

Mistake 4: Inadequate Data Minimization

GraphQL makes it easy to ask for lots of data, but that doesn't mean you should store and expose lots of data. UStrive apparently maintained comprehensive profiles including sensitive information like birth dates. Some of this information might not be necessary for the core mentoring function.

Proper data architecture limits what information is collected, what's retained, and how long it's kept. Personal data should be collected only for specific purposes and deleted when no longer needed. A mentoring platform doesn't need birth dates and gender in a way that justifies the risk.
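A sketch of data minimization at signup, assuming (for illustration only) that the mentoring workflow needs just name, email, school, and goals, and that the only use for a birth date is knowing whether the user is a minor. `NEEDED_FIELDS`, `minimize_signup`, and the 18-year cutoff are hypothetical choices.

```python
from datetime import date

# Fields the mentoring workflow actually uses (hypothetical list for illustration).
NEEDED_FIELDS = {"name", "email", "school", "goals"}


def age_on(birth_date: date, today: date) -> int:
    """Whole years between birth_date and today."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def minimize_signup(form: dict, today: date) -> dict:
    """Keep only needed fields; replace the raw birth date with a derived flag.

    The is_minor flag can drive safeguards (e.g. consent flows) without the
    platform ever storing the full date of birth.
    """
    record = {k: v for k, v in form.items() if k in NEEDED_FIELDS}
    record["is_minor"] = age_on(form["birth_date"], today) < 18
    return record
```

The trade-off is that a stored `is_minor` flag goes stale as users age; keeping only a birth year, or re-asking at intervals, are alternatives with their own costs. Either way, fields outside the list, such as gender, never reach storage.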

Corporate Responsibility and User Notification

One of the most troubling aspects of the UStrive incident was the company's apparent reluctance to notify affected users. UStrive never committed to notifying the 238,000+ exposed users that their information was compromised.

This raises an important question: What do companies owe users when their data is exposed?

The Legal Obligation

As discussed earlier, state data breach notification laws generally require notification, typically "without unreasonable delay." With the vulnerability reported in January, a reasonable interpretation is that notification should follow within days or weeks, not months or never.

If UStrive hasn't notified affected users, they're likely in violation of state laws. The question is whether regulators will enforce.

The Ethical Obligation

Beyond legal requirements, companies have ethical obligations to users, especially when serving minors. Notification serves practical purposes:

- It allows users to change passwords and secure their accounts

- It enables users to monitor their accounts for suspicious activity

- It helps users understand what information is at risk

- It provides information on protective measures like credit monitoring

- It gives users agency in protecting themselves

Refusing to notify users denies them all these benefits. It's paternalistic ("we'll handle this, you don't need to worry") and disempowering. It also suggests the company isn't confident in its response or remediation.

The Practical Reality

In practice, corporate responses to breaches often depend on:

- Whether notification is legally mandated in the affected states

- The company's insurance requirements

- The company's public relations strategy

- The likelihood of user class-action lawsuits

- The company's financial capacity to fund notification and credit monitoring

UStrive is a nonprofit. Its financial resources are limited. Notifying hundreds of thousands of users and offering credit monitoring is expensive. This might explain the reluctance, though it doesn't excuse it.

Approximately 20% of UStrive's historical user records were exposed, highlighting a significant data breach. Estimated data based on available information.

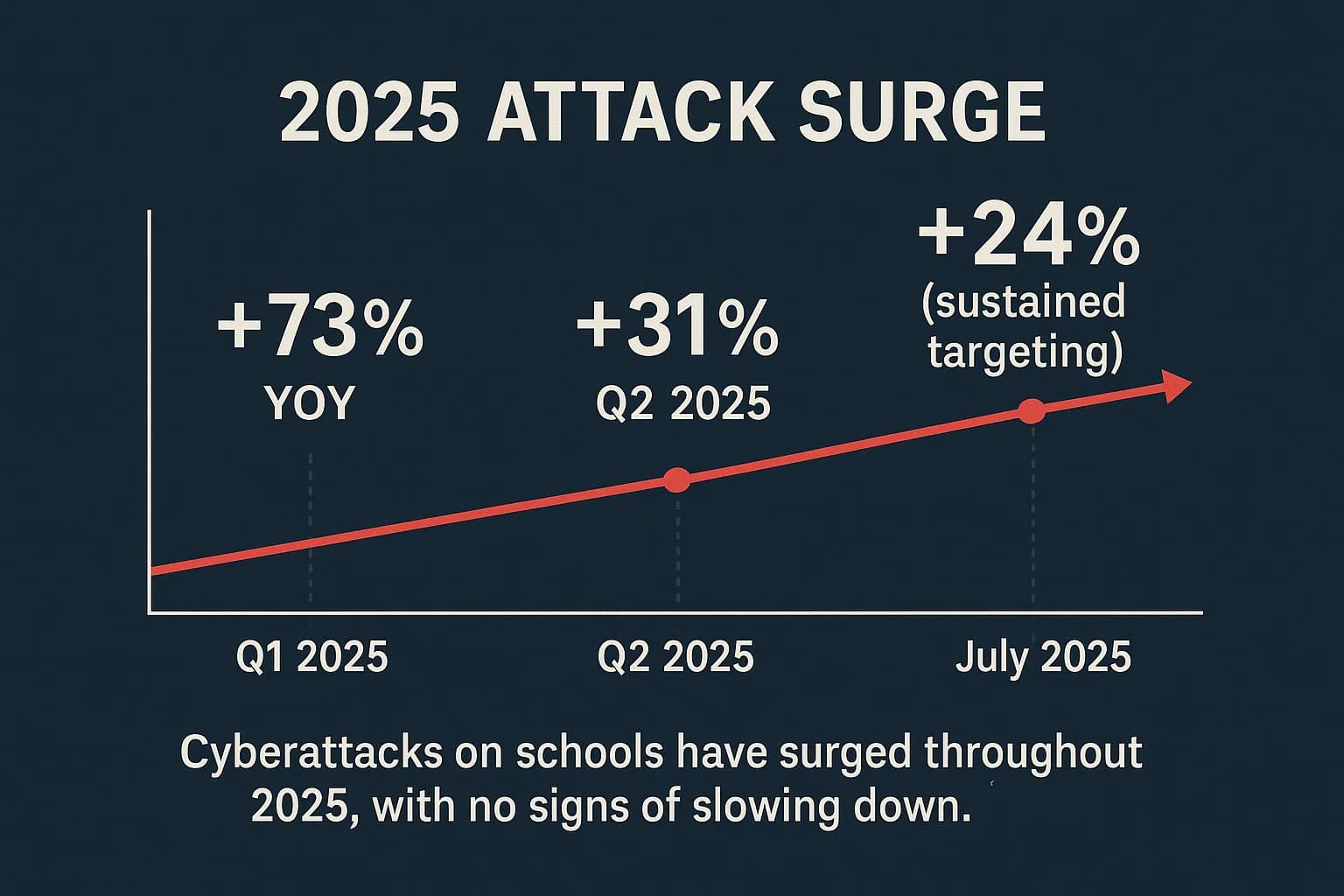

The EdTech Context: Why Educational Platforms Are Particularly Vulnerable

The UStrive breach isn't isolated. EdTech companies (educational technology platforms) have experienced numerous security incidents in recent years. There are structural reasons for this.

The Speed-Over-Security Problem

Educational startups and nonprofits often prioritize rapid development and feature deployment over security hardening. The thinking is: we're helping students, we're a nonprofit, we're not a target for serious attackers. This is dangerously naive.

Bad actors specifically target platforms serving minors because the data is valuable and the targets are less protected than corporate systems. Treating security as something you add later rather than building from the start is a recipe for incidents like UStrive's.

Limited Budgets and Expertise

Nonprofits typically have smaller budgets than for-profit companies. A nonprofit might have one or two engineers doing everything from feature development to infrastructure management. Security audits, penetration testing, and security engineers are expensive. So nonprofits often skimp.

But this is a false economy. The cost of a security audit is tiny compared to the cost of a breach. UStrive now faces potential regulatory scrutiny, possible lawsuits, reputational damage, and remediation costs. A $20,000 security audit conducted annually would very likely have caught this flaw.

Regulatory Gaps

There's no federal mandate requiring EdTech companies to conduct security audits or meet specific security standards. COPPA requires "reasonable security," but reasonable is vague. Some states are tightening requirements, but enforcement is inconsistent.

This creates perverse incentives. Companies that don't take security seriously operate without apparent consequence until they're caught. Companies that invest in security bear costs their competitors don't. Regulatory clarity and enforcement would help level the playing field.

The Myth of Small-Scale Anonymity

Some platforms assume they're too small to be targeted. UStrive isn't huge, but it's not tiny either. 1.1 million users is substantial. More importantly, scale doesn't determine attack likelihood. Attackers scan thousands of targets looking for low-hanging fruit. The question isn't "are we a likely target?" but "are we a possible target?" If the answer is yes, you need proper security.

Lessons for Developers and Platform Builders

If you're building any kind of platform that collects user data, the UStrive incident offers critical lessons.

Lesson 1: Security Is Architectural, Not Peripheral

You can't add security to an insecure system. You have to build it in from the start. That means:

- Authorization checks at every layer

- Data minimization principles guiding data collection

- Encryption as default, not option

- Regular security testing throughout development

- Security review as part of the code review process

If you're using modern frameworks and libraries, security tools are often built in. GraphQL frameworks, for instance, have libraries specifically for authorization. Using them properly is essential.

Lesson 2: Trust Nothing Coming From the Client

Your web interface is a convenience for legitimate users. It's not a security boundary. Never trust that users will only make requests through your interface or follow the rules your interface enforces.

Instead, implement server-side validation and authorization that would work even if users bypassed your interface entirely. A user with a REST client or curl or a custom script should be unable to access data they're not authorized to see, regardless of what interface they're using.

Lesson 3: Audit Regularly and Seriously

Conduct security audits regularly. Not the checkbox kind where you hire someone who agrees with everything and produces a report. The serious kind where you bring in experienced security engineers who actively try to break your system.

For companies serving minors, annual audits should be mandatory. For large platforms, quarterly or ongoing audits are appropriate. The audit should be independent, rigorous, and the results should inform actual changes, not just be filed away.

Lesson 4: Plan for Breaches Before They Happen

Assuming you'll never have a security incident is naive. Better to assume you will and plan accordingly. That means:

- Incident response procedures documented and tested

- Clear notification procedures and templates ready

- Credit monitoring services identified and pre-negotiated

- Communication strategies prepared

- Regular backups and disaster recovery testing

When an incident does occur, responding well is as important as prevention. UStrive's poor response compounded the damage of the breach.

Lesson 5: Treat Youth Data With Special Care

If your platform serves minors, implement additional protections:

- Comply with COPPA and state laws in spirit, not just to the letter

- Conduct privacy impact assessments before deploying features

- Limit data collection to what's actually needed

- Implement age-appropriate privacy controls

- Have a child safety officer or team responsible for youth protection

- Engage with child safety organizations and experts

Young people are more vulnerable to exploitation and identity theft. They deserve the highest level of protection.

Security practices such as architectural security and regular audits are rated highly important for platform builders. Estimated data based on typical industry emphasis.

The Broader Implications: Trust in Digital Youth Services

When a mentoring platform fails to protect youth data, the damage extends beyond the immediate exposure. It undermines trust in digital youth services broadly.

Young people need mentorship, especially those from underrepresented communities. Digital platforms can scale mentoring in ways in-person services can't. But only if those platforms are trustworthy.

UStrive's breach and response raise questions that parents, educators, and youth advocates ask:

- How can we verify that the platforms we direct young people to are secure?

- What's our recourse if a platform fails?

- Who's responsible for ensuring youth data is protected?

- What are young people's rights when their data is exposed?

These aren't technical questions. They're governance and accountability questions. They matter because the answers determine whether digital youth services can fulfill their mission or become vectors for harm.

Industry Trends: Is This Getting Better or Worse

EdTech security incidents haven't decreased. If anything, they've increased as more platforms come online and more data is collected. Several trends are worth noting:

The Rise of API-Based Vulnerabilities

As platforms have moved toward API-driven architecture and modern web frameworks, vulnerabilities have shifted. REST and Graph QL APIs create new attack surfaces if not properly secured. Many developers learn API security through incidents rather than proactive training.

Increased Regulatory Attention

Federal and state regulators are paying more attention to youth data. The FTC has brought more cases against EdTech companies. States are tightening privacy laws. But enforcement remains inconsistent and penalties aren't always sufficient to change corporate behavior.

Growing Third-Party Dependencies

Most platforms rely on third-party services for authentication, data storage, analytics, and other functions. Each third party introduces additional risk. Supply chain attacks and compromised dependencies are increasingly common. A single vulnerability in a widely used library can affect thousands of platforms.

The Professionalization of Youth Data Markets

Unfortunately, there's a growing market for youth data. Dark web markets value it highly. Sophisticated actors target youth-focused platforms specifically. This raises the stakes for organizations like UStrive. They're not just protecting data from script kiddies. They're protecting against determined attackers with financial incentives.

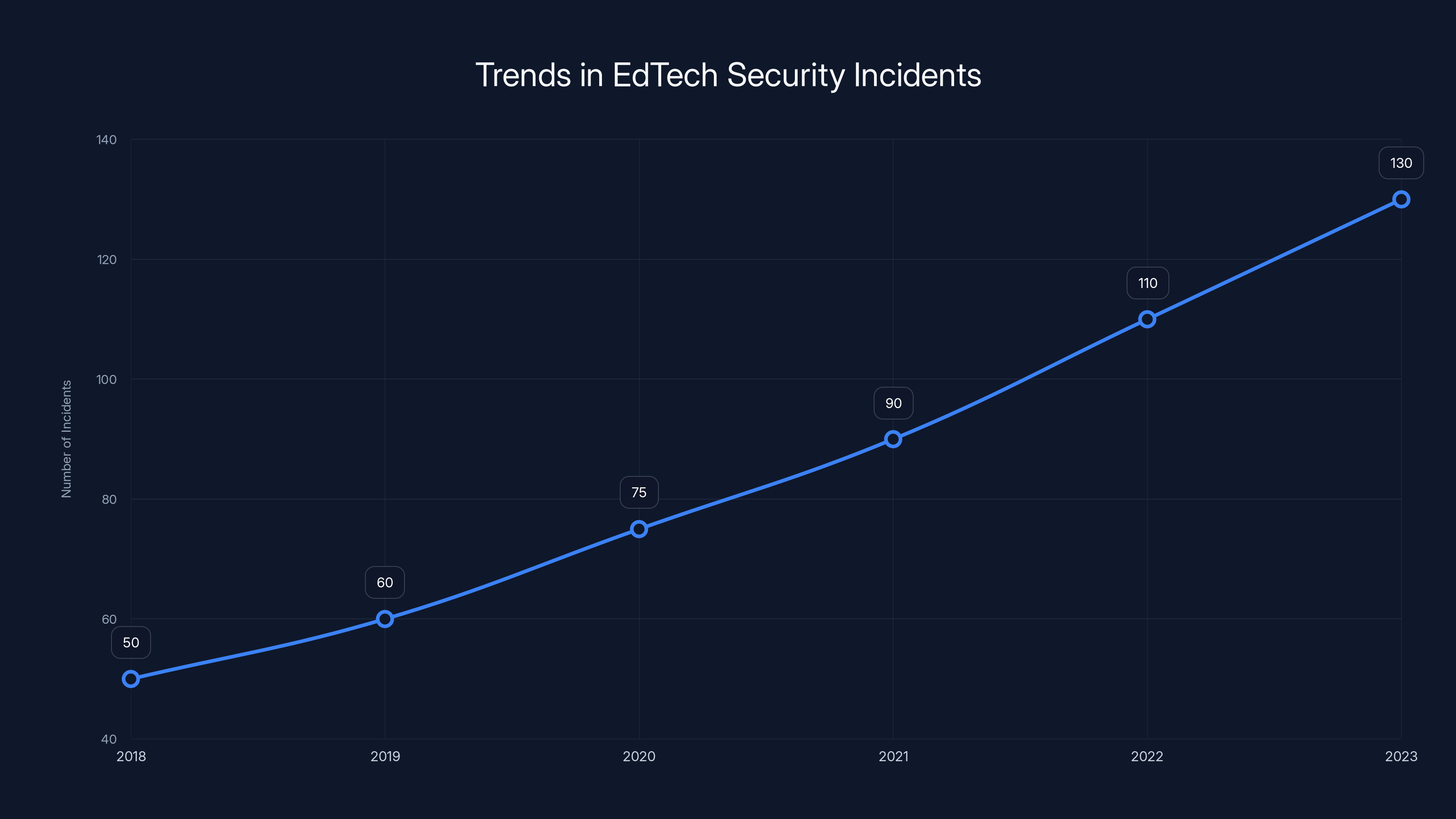

The number of EdTech security incidents has been increasing steadily from 2018 to 2023, highlighting the growing challenges in securing educational platforms. Estimated data.

What UStrive Should Have Done (And Can Still Do)

The incident has already happened, and the damage is done. But UStrive still has opportunities to handle the aftermath better than they have so far.

Immediate Actions

First, conduct and publish a comprehensive security audit. Not internal, not by a friendly firm. Bring in a respected, independent security firm and let them dig. Share the results with regulators and users (after consulting with legal counsel about protective privilege).

Second, notify all affected users. Clearly explain what happened, what data was exposed, what steps the company has taken to prevent recurrence, and what steps users should take to protect themselves. Offer credit monitoring or identity protection services. Make the notification actionable, not minimizing.

Third, implement proper remediation. Fix the GraphQL endpoint, yes, but more importantly, conduct a comprehensive review of authorization throughout the platform. Are there other endpoints with similar problems? Are there other architectural vulnerabilities? Fix them all.

Fourth, establish a board-level security oversight committee. Youth-serving organizations should have governance structures that prioritize security. This means a board committee that meets regularly, receives security reports, and has authority to require changes.

Longer-Term Actions

Beyond immediate response, UStrive should:

- Commit to annual independent security audits

- Implement a bug bounty program inviting external researchers to find problems

- Hire a Chief Security Officer or equivalent responsible for security strategy

- Implement security training for all developers

- Establish a culture where security is not a compliance requirement but a core value

- Engage with child safety organizations and incorporate their recommendations

- Be transparent about security in the platform's marketing and communications

These actions don't undo the breach, but they can prevent future incidents and signal to users, regulators, and stakeholders that the organization takes youth protection seriously.

Comparative Analysis: How Other Platforms Handled Similar Incidents

UStrive's response can be instructive when compared to how other platforms have handled breaches.

The Facebook Model (Reactive)

Facebook has experienced numerous privacy incidents over the years. Its typical response pattern has been: deny or minimize, investigate slowly, announce a fix months later, face regulatory scrutiny, settle. This approach protects the company short-term but damages trust long-term.

The Slack Model (Transparent)

When Slack discovered that some of its users' files had been exposed due to a technical issue, the company notified users promptly, explained what happened clearly, offered mitigation steps, and provided detailed information about the fix. This is closer to a best-practice response.

The Apple Model (Proactive)

Apple has consistently positioned privacy and security as core brand values. While this doesn't necessarily translate into fewer vulnerabilities, it does mean Apple tends to invest heavily in security and to respond seriously to incidents. The branding also creates pressure: because Apple markets itself on privacy, a poor response would be harder to hide.

UStrive would benefit from learning from the Slack and Apple approaches rather than replicating Facebook's model.

Regulatory Futures: What Might Change

The UStrive incident comes amid a shifting regulatory environment, and several regulatory changes are likely in the coming years.

Potential Federal Youth Privacy Law

There's been discussion of federal legislation creating consistent youth privacy requirements across all platforms and states. Such legislation would likely:

- Establish baseline security requirements for youth-serving platforms

- Require data breach notifications without exception

- Create meaningful penalties for violations

- Give youth privacy enforcement teeth

If passed, such a law would have direct implications for platforms like UStrive.

State-by-State Movement

In the absence of federal legislation, states are tightening requirements. California's CCPA and new privacy laws in other states are setting higher bars. Some states are specifically addressing youth privacy.

The patchwork of state laws is complex, but the trend is clear: expectations for youth data protection are increasing.

Enforcement Escalation

Regulators are increasingly willing to pursue EdTech companies for security failures. The FTC has brought cases, state attorneys general are investigating, and private lawsuits are common. This enforcement raises both the stakes and the cost of security failures.

Personal Implications: What Users Should Do

If you or your child uses a platform that experiences a data breach, several steps are appropriate:

- Request details: Contact the company asking specifically what data was exposed, when, and what steps they've taken

- Monitor accounts: Watch your accounts for suspicious activity. Check credit reports for fraudulent activity

- Change passwords: Use a strong, unique password for the platform and any other services that share the same credentials

- Enroll in credit monitoring: If offered, take advantage of any credit monitoring or identity protection services

- Document everything: Keep records of all communications with the company

- Report to regulators: If the company fails to notify you or provides an inadequate response, report it to your state's attorney general and the FTC

- Consider class action: If lawsuits are filed, consider whether joining them makes sense

- Share your experience: Tell others what happened so they can protect themselves

Vigilance is especially important for parents of minors whose data was exposed. Stolen youth data is valuable and can be used in identity theft schemes that go undetected for years.

Building Trust in EdTech: A Path Forward

The UStrive incident is a setback for EdTech as a whole. Platforms that haven't experienced breaches worry about being next. Young people and families worry about their safety online. Regulators worry about enforcement.

Moving forward requires action across multiple stakeholders:

For Platforms

Take security seriously. Invest in it. Test it regularly. Be transparent about incidents. Notify users promptly. Implement accountability structures that prioritize youth protection over convenience or speed.

For Regulators

Create clear rules. Set reasonable but meaningful security standards. Enforce consistently. Make penalties sufficient to change behavior. Provide guidance to help platforms comply.

For Parents and Educators

Know what platforms young people are using. Understand privacy policies and data practices. Ask hard questions about security. Be willing to vote with your feet if platforms don't meet standards.

For Researchers and Advocates

Continue finding and reporting vulnerabilities responsibly. Push for better practices. Hold platforms accountable. Advocate for stronger protections.

Conclusion: Lessons Learned and Accountability Ahead

The UStrive security breach represents a failure at multiple levels. There was a technical failure in platform architecture. There was a governance failure in not conducting regular security audits. There was a response failure in not transparently notifying users. And there is an accountability failure: regulators have not yet acted with enough force to prevent similar incidents.

For the young people whose data was exposed, the incident creates practical risk. Email addresses and phone numbers can be targeted for harassment or identity theft. Birth dates and gender information can be used to craft convincing social engineering attacks. The exposure happened months ago (in January 2026), and if accounts haven't been properly secured since, the risk window is still open.

For the EdTech industry broadly, the incident should serve as a wake-up call. Young people deserve platforms that prioritize their protection. Mentoring platforms serve particularly vulnerable populations who depend on the trust relationship the platform facilitates. Breaking that trust through inadequate security is a serious failure.

The question now is whether UStrive and the broader EdTech industry will learn the lessons this incident teaches. Will platforms take security more seriously? Will investors and nonprofit boards demand security as a core requirement? Will regulators enforce existing laws and create new ones? Will young people and families get the transparency and protection they deserve?

Until those questions are answered affirmatively, incidents like UStrive's will continue happening. The next vulnerable platform is already being built. The next researcher will find the next vulnerability. The next notification (or lack thereof) will happen. The cycle continues until the incentives change.

Changing those incentives requires action from all stakeholders. The technical solutions exist. The best practices are documented. What's missing is the will to implement them consistently. The UStrive incident should provide that will.

FAQ

What exactly was exposed in the UStrive breach?

The breach exposed personal information for at least 238,000 UStrive users, including full names, email addresses, phone numbers, and, for some users, gender, birth dates, educational institution, grade level, and mentoring relationship information. This data was accessible to any logged-in user through a vulnerable GraphQL endpoint, meaning anyone with an account could view other users' sensitive information.

How did the UStrive security vulnerability occur?

The vulnerability stemmed from a misconfigured Amazon-hosted GraphQL endpoint that lacked proper authorization checks. While the web interface prevented users from viewing other users' profiles through normal navigation, the underlying API let any authenticated user craft GraphQL queries and retrieve sensitive data about other users. The issue illustrates the critical difference between client-side restrictions and server-side authorization.
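The difference can be shown with two versions of a resolver. In this minimal Python sketch, which assumes a hypothetical in-memory `USERS` table and a hypothetical rule that a profile is readable only by its owner or a matched mentor, the first function checks only that a viewer is logged in, mirroring the flaw described above, while the second enforces authorization on the server for every request.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


class AuthorizationError(Exception):
    """Raised when a viewer asks for data they may not see."""


@dataclass
class User:
    id: str
    name: str
    email: str
    phone: str
    mentor_ids: Set[str] = field(default_factory=set)  # hypothetical: mentors matched with this user


# Hypothetical in-memory stand-in for the user table.
USERS: Dict[str, User] = {
    "s1": User("s1", "Student One", "s1@example.com", "555-0101", {"m1"}),
    "s2": User("s2", "Student Two", "s2@example.com", "555-0102"),
}


def resolve_user_vulnerable(viewer_id: str, user_id: str) -> User:
    # Only checks that someone is logged in; relies on the UI to hide other profiles.
    if not viewer_id:
        raise AuthorizationError("not authenticated")
    return USERS[user_id]


def resolve_user_checked(viewer_id: str, user_id: str) -> User:
    # Authorization runs on the server for every request, whatever client sent it.
    if not viewer_id:
        raise AuthorizationError("not authenticated")
    target = USERS[user_id]
    # Hypothetical rule: only the owner or a matched mentor may read the record.
    if viewer_id != target.id and viewer_id not in target.mentor_ids:
        raise AuthorizationError("viewer may not read this user")
    return target


if __name__ == "__main__":
    # Any logged-in account can read another student's record through the unchecked resolver.
    print(resolve_user_vulnerable("s2", "s1").email)  # s1@example.com
    try:
        resolve_user_checked("s2", "s1")
    except AuthorizationError as exc:
        print("denied:", exc)  # denied: viewer may not read this user
```

Because the check lives in the resolver, it applies no matter which client sends the query, whether the official web app or a hand-crafted request.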

What is GraphQL, and why was it vulnerable in UStrive's case?

GraphQL is a query language that lets clients request exactly the data they want from a server. That flexibility is its strength, but it also demands careful security implementation. UStrive's vulnerability occurred because its GraphQL endpoint didn't check whether a user was authorized to access specific data before returning it. This is a common mistake when developers rely on the user interface to enforce permissions instead of implementing authorization at the API level.
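Because a GraphQL client chooses which fields to request, authorization also has to apply per field, not only per object. This minimal Python sketch assumes a hypothetical `FIELD_POLICY` table in which names are visible to the owner and matched mentors while contact details and birth dates are visible only to the owner; any field without a declared policy is denied by default.

```python
from typing import Dict, Iterable, Set

# Hypothetical policy: the relationship a viewer needs before each field is returned.
FIELD_POLICY: Dict[str, str] = {
    "id": "matched",
    "name": "matched",
    "email": "owner",
    "phone": "owner",
    "birthDate": "owner",
}


def relationship_levels(viewer_id: str, owner_id: str, matched_mentors: Set[str]) -> Set[str]:
    """Return every relationship level the viewer holds toward the record's owner."""
    if viewer_id == owner_id:
        return {"owner", "matched"}  # owners can see everything a matched mentor can
    if viewer_id in matched_mentors:
        return {"matched"}
    return set()


def resolve_fields(viewer_id: str, record: Dict[str, str], requested: Iterable[str],
                   matched_mentors: Set[str]) -> Dict[str, str]:
    """Return only the requested fields the viewer may read (deny by default)."""
    levels = relationship_levels(viewer_id, record["id"], matched_mentors)
    allowed: Dict[str, str] = {}
    for name in requested:
        required = FIELD_POLICY.get(name)  # None for fields with no declared policy
        if required is not None and required in levels and name in record:
            allowed[name] = record[name]
    return allowed


if __name__ == "__main__":
    # Hypothetical sample record, not real user data.
    student = {"id": "s1", "name": "Student One", "email": "s1@example.com",
               "phone": "555-0101", "birthDate": "2010-01-01"}
    print(resolve_fields("m1", student, ["name", "phone"], {"m1"}))  # {'name': 'Student One'}
    print(resolve_fields("s2", student, ["name", "email"], {"m1"}))  # {}
```

Silently omitting unauthorized fields is one design choice; a server could instead return `null` plus an error for each denied field so that clients can tell a missing value from a forbidden one.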

Did UStrive notify users about the breach?

There is no confirmation that UStrive notified affected users, which is problematic under state data breach notification laws. UStrive's leadership declined to commit to notifying users, stating only that the vulnerability had been "remediated" without providing details or a timeline. This lack of transparency denies users the information they need to make informed decisions about their own data.

What laws apply to the UStrive breach?

Several legal frameworks may apply, including the Children's Online Privacy Protection Act (COPPA) if any users were under 13, state data breach notification laws that require notification within specific timeframes, the Family Educational Rights and Privacy Act (FERPA) if school information is involved, and industry standards for protecting youth data. Violations can result in FTC enforcement, state attorney general actions, and civil litigation.

What should platforms do to prevent similar breaches?

Platforms should implement authorization checks at the API resolver level, not just in the user interface. They should conduct regular independent security audits, implement data minimization practices, deploy encryption for sensitive data, test security assumptions, maintain incident response plans, and treat youth data with special care. For platforms serving minors, annual security audits should be mandatory, and security should be a board-level governance priority.
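Data minimization can start at signup by storing only what the service needs. The Python sketch below is hypothetical: it assumes the platform needs a name, an email address, and a grade level, plus a record of whether the user was under 13 at signup (the COPPA threshold mentioned above), so it derives that flag and discards the full birth date and phone number instead of keeping them.

```python
from datetime import date
from typing import Dict, Optional


def age_on(born: date, today: date) -> int:
    """Whole years between born and today."""
    had_birthday = (today.month, today.day) >= (born.month, born.day)
    return today.year - born.year - (0 if had_birthday else 1)


def minimize_signup(form: Dict[str, str], today: date) -> Dict[str, object]:
    """Keep only the fields this hypothetical platform needs; drop everything else.

    The precise birth date is used once to derive a flag and is never stored.
    """
    born = date.fromisoformat(form["birthDate"])
    grade: Optional[str] = form.get("gradeLevel")
    return {
        "name": form["name"],
        "email": form["email"],
        "gradeLevel": grade,
        "under13AtSignup": age_on(born, today) < 13,
    }


if __name__ == "__main__":
    submitted = {"name": "Student One", "email": "s1@example.com", "phone": "555-0101",
                 "birthDate": "2013-06-01", "gradeLevel": "7"}
    # phone and birthDate are absent from the stored record
    print(minimize_signup(submitted, date(2026, 1, 15)))
```

Which fields a mentoring service genuinely needs is a product decision; the point is that data never stored cannot be exposed by a later API flaw.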

What can users do if their data was exposed in the breach?

Users should contact UStrive requesting details about the exposure, change their passwords on the platform and anywhere they reused credentials, monitor their accounts for suspicious activity, check credit reports regularly, take advantage of any offered credit monitoring, document all communications with the company, and consider reporting the incident to their state attorney general and the FTC if the company fails to provide adequate notification or remediation.

Is UStrive still safe to use?

UStrive stated that it had remediated the vulnerability, but it has disclosed little about what was fixed or how. Users should understand that the company experienced a significant security failure, responded without transparency, and hasn't provided clear evidence of comprehensive remediation. Until more transparency emerges, users should be cautious about the amount of sensitive information they provide and monitor their accounts carefully.

Why didn't UStrive notify users about the breach?

Unfortunately, UStrive never clearly explained its reasoning. Possible explanations include legal strategy (avoiding an admission of wrongdoing), financial limitations (the nonprofit may not have been able to afford notification and credit monitoring), minimization of the incident's seriousness, or prioritization of legal concerns over user safety. The lack of transparency makes it impossible to know which explanation applies, which is itself a governance problem.

What are the risks if my child's data was exposed?

Risks include identity theft (birth dates combined with names and email enable synthetic identity fraud), targeted harassment or social engineering (bad actors could contact your child impersonating mentors), data broker sales (exposed information often ends up for sale on dark web markets), and future exploitation. Parents should monitor their children's accounts, teach them about privacy and security, and be alert for unusual contact attempts.

Key Takeaways

- UStrive's GraphQL endpoint lacked proper authorization checks, allowing any logged-in user to access other users' sensitive information including names, emails, phone numbers, and birth dates

- At least 238,000 user records were exposed, with vulnerable youth and their data at particular risk of exploitation and identity theft

- The company did not transparently notify affected users, which may violate COPPA, FERPA, and state data breach notification laws

- The root cause was architectural: security was enforced only in the UI, not at the API level, a critical mistake that violates API security fundamentals

- EdTech platforms serving minors require heightened security standards, regular audits, and governance structures that prioritize youth protection over convenience
