Humans& AI Coordination Models: The Next Frontier Beyond Chat [2025]

Chatbots have mastered question-answering. They can summarize documents, solve equations, write code, and generate marketing copy faster than humans can think. But ask one to help a team of ten people decide on a logo, and suddenly all that intelligence falls apart.

That's where most AI tools hit a wall. They're built for one person, one question, one answer. But the real work of modern organizations isn't happening in isolation. It's happening in rooms (and Zoom calls) where people with competing priorities need to make decisions together, track progress across weeks or months, and keep everyone aligned when contexts shift daily.

This gap between individual AI intelligence and collective human coordination is what Humans&, a freshly funded startup, believes is the next frontier for artificial intelligence. The company just closed a massive $480 million seed round and is building something fundamentally different from the chat-based models that dominate today's AI landscape.

The founding team reads like a who's who of AI research. Eric Zelikman, the CEO, spent time at xAI. Andi Peng came from Anthropic. Other founders bring experience from Meta, OpenAI, and DeepMind.

But this isn't just another well-funded startup with a good PR story. The team is tackling a real, measurable problem that's been largely invisible in the current AI boom: how to build models that understand social intelligence, group dynamics, and the messy work of real collaboration.

Let's dig into what Humans& is actually building, why it matters, and what it means for the future of AI in the workplace.

TL;DR

- New AI frontier: Humans& is shifting focus from question-answering models to foundation models built for team coordination and collaboration

- $480 million seed: The startup raised a record seed round from top-tier VCs who believe coordination AI is the next major paradigm shift

- Social intelligence focus: Unlike ChatGPT or Claude, Humans& models are designed to understand group dynamics, competing priorities, and long-term decision tracking

- No product yet: The company is designing the product and model in parallel, suggesting this is truly novel territory with no clear playbook

- Enterprise and consumer: Potential applications span workplace collaboration platforms, communication tools, and team productivity workflows

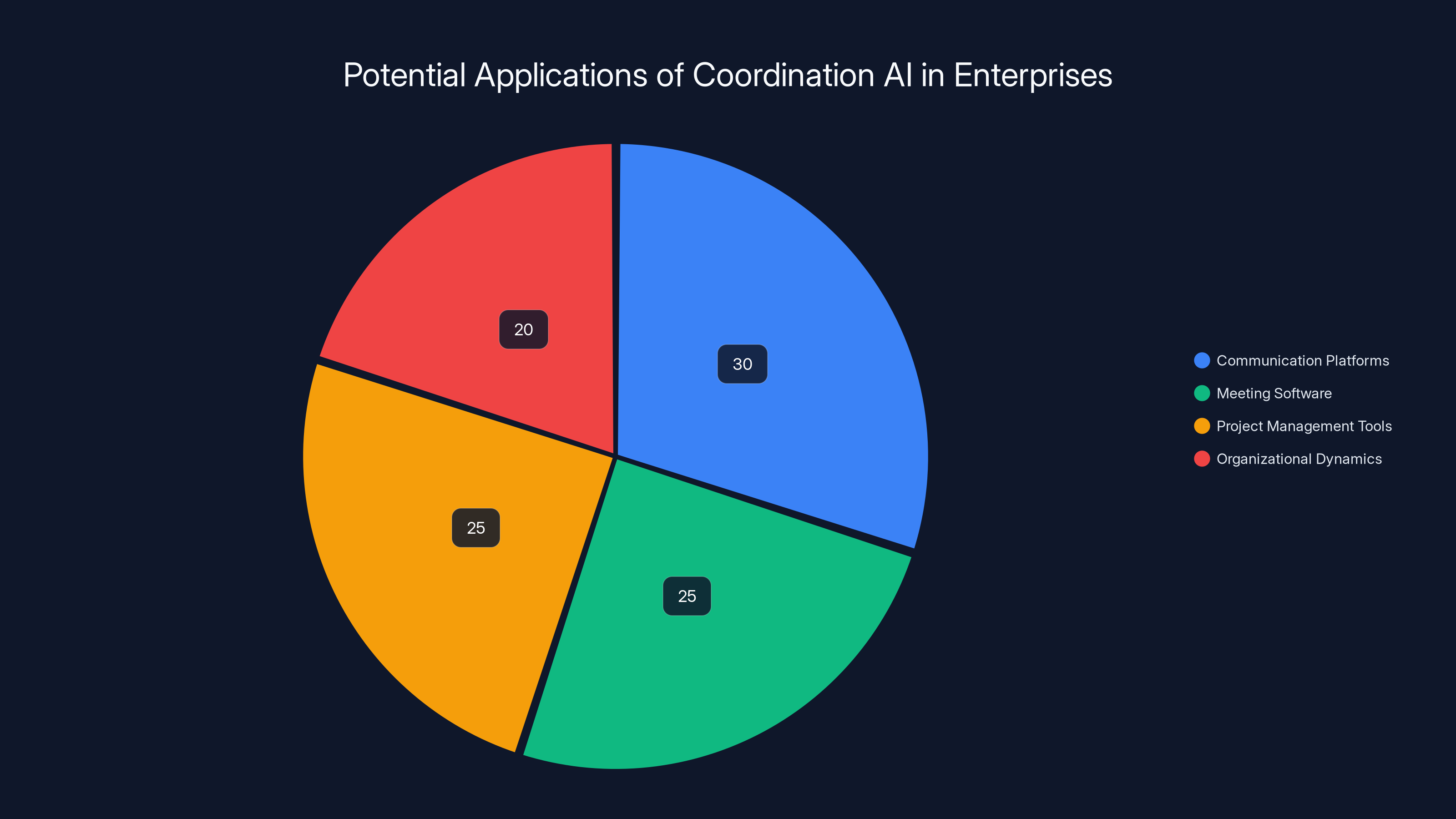

Coordination AI could significantly enhance communication platforms (30%) and meeting software (25%), with notable applications in project management (25%) and organizational dynamics (20%). Estimated data.

Why AI Coordination Is the Missing Piece

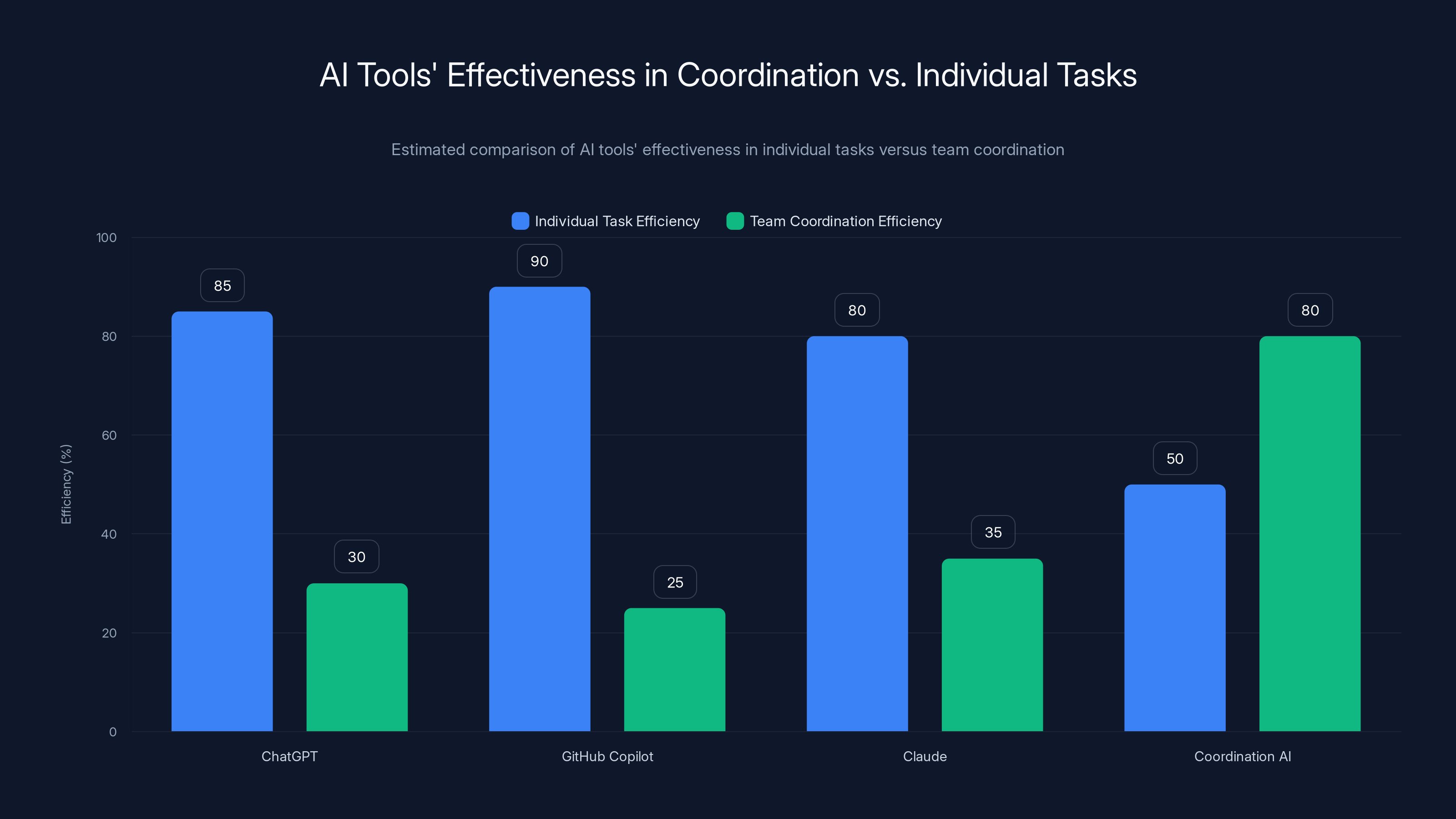

The first wave of AI disruption has been about efficiency for individuals. A marketer uses ChatGPT to write ad copy. An engineer uses GitHub Copilot to complete functions. A manager uses Claude to summarize meeting notes.

But organizations don't run on individual tasks stacked on top of each other. They run on coordination.

Consider what happens when a team needs to make a real decision. Not a technical problem with a right answer, but a judgment call where people have different views. Should we pivot the product roadmap? Which vendor should we choose? What tone should our brand voice use?

Traditionally, someone sends a calendar invite. Twenty people join a video call. Each person argues their position. The conversation spirals. No one's listening because they're preparing their rebuttal. Finally, the most senior person in the room decides, and half the team leaves feeling unheard.

Now imagine an AI that could actually help with this. Not by making the decision, but by understanding what each person cares about, surfacing the real points of disagreement, asking clarifying questions that make implicit assumptions explicit, and tracking the reasoning over time so people remember why the decision was made three months later when someone questions it.

That's not a chatbot task. That's a coordination problem.

The current generation of models was trained on the internet, which is mostly one person writing to an audience. There's no training signal for what it looks like when a team changes their mind together, or when someone says something that shouldn't be taken literally because context matters.

This blind spot exists across nearly every AI product on the market right now. Slack integrated AI, but it mostly summarizes threads. Notion added AI, but it still writes individual documents. Google Workspace has AI, but it generates presentations one user at a time.

None of them are solving for the actual coordination problem: helping groups of people work together more effectively.

This is the opening Humans& is trying to exploit. The first company to build a foundation model specifically designed for group coordination could own an enormous piece of the AI value stack.

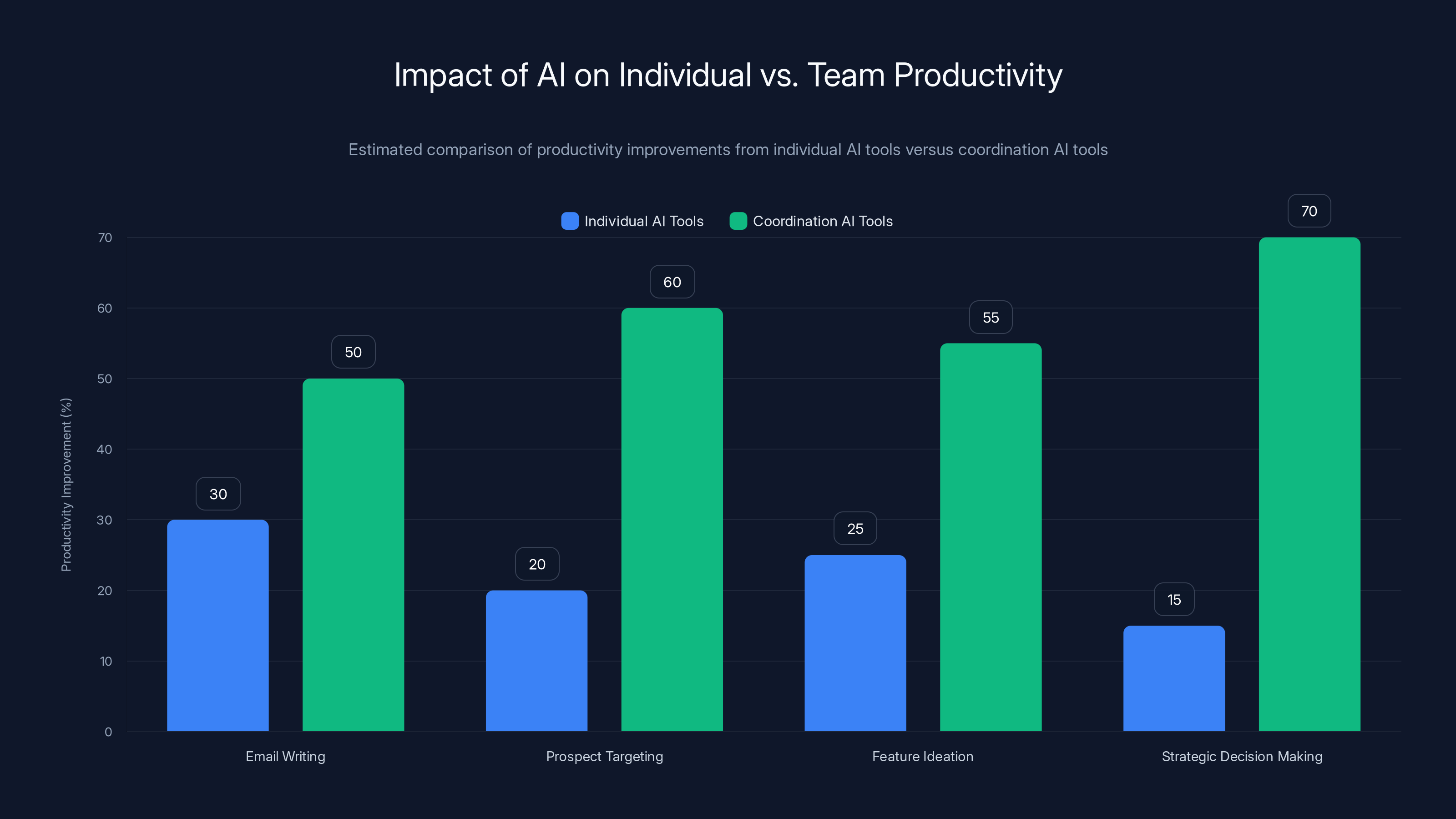

Coordination AI tools provide significantly higher productivity improvements compared to individual AI tools, especially in strategic decision making. Estimated data.

The Humans& Vision: Beyond Single-User Intelligence

When Zelikman talks about what Humans& is building, he returns to a specific scenario: a group trying to decide on a logo.

It sounds mundane. But it's actually the perfect example of why existing AI tools fail at coordination.

Today, if a team uses AI to help with a logo decision, it might go like this:

- Someone asks ChatGPT to "suggest five logo concepts for our brand"

- ChatGPT generates five mediocre concepts

- The team discusses them, but the AI has no way to understand why people like or dislike certain approaches

- No shared reasoning emerges. Some people prefer minimalism, others want something bold, and nobody's clear on what the brand actually stands for

- Eventually someone picks one and half the team resents the choice

With a coordination-focused model, the workflow could look entirely different.

The AI might start by asking questions that help the group articulate their underlying values: What are we trying to communicate? Who's our audience? What do our competitors look like? What will make us feel proud when we see this logo five years from now?

Crucially, these questions wouldn't be generic. They'd be informed by what the AI already knows about the company's positioning, the team's previous decisions, and the constraints they're working within. The AI would ask in a way that feels like a colleague asking clarifying questions, not a bot running through a script.

As people share perspectives, the model would surface points of genuine disagreement versus points that are just using different language. It would keep a persistent record of the reasoning so that when people argue about the logo six months later (they will), there's a clear audit trail of why the decision was made.
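Humans& hasn't said how its model would keep this record. As a hypothetical sketch of what a "persistent record of the reasoning" might look like in software, the Python below stores each decision with its rationale and the concerns people raised, and can answer "why did we decide this?" months later. The `Decision` and `DecisionLog` names, their fields, and the sample data are invented for illustration; they are not anything Humans& has published.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Decision:
    """One group decision (hypothetical schema for illustration only)."""
    topic: str
    outcome: str
    decided_on: date
    rationale: list[str]
    # Concerns people raised, kept on record even if they were outvoted.
    concerns: dict[str, str] = field(default_factory=dict)


class DecisionLog:
    """Append-only audit trail: decisions are added, never edited in place."""

    def __init__(self) -> None:
        self._entries: list[Decision] = []

    def record(self, decision: Decision) -> None:
        self._entries.append(decision)

    def history(self, topic: str) -> list[Decision]:
        """Every decision on a topic, oldest first, so reversals stay visible."""
        return sorted(
            (d for d in self._entries if d.topic == topic),
            key=lambda d: d.decided_on,
        )

    def why(self, topic: str) -> str:
        """Explain the latest decision on a topic and the reasons given for it."""
        entries = self.history(topic)
        if not entries:
            return f"No recorded decision on {topic!r}."
        latest = entries[-1]
        reasons = "; ".join(latest.rationale)
        return f"{latest.outcome} (decided {latest.decided_on.isoformat()}): {reasons}"


# Usage: record the logo decision, then ask why it was made.
log = DecisionLog()
log.record(Decision(
    topic="logo",
    outcome="Bold wordmark",
    decided_on=date(2025, 3, 4),
    rationale=["Audience skews young", "Minimalist logos blend in with competitors"],
    concerns={"Dana": "Bold might read as loud", "Lee": "Fine with either"},
))
print(log.why("logo"))
```

Keeping the log append-only is what makes it an audit trail: if the team revisits the logo, the new decision is added as a new entry, and `history("logo")` still shows the earlier choice and the reasons behind it.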

Most importantly, the model would understand that the goal isn't to generate the best logo. The goal is to make a decision that the team can commit to together and that reflects their collective thinking.

This requires a fundamentally different training paradigm than what OpenAI or Anthropic built their models on.

The Training Problem: Why Current Models Fail at Social Intelligence

Here's the core technical challenge Humans& needs to solve: current foundation models optimize for two things.

First, how much users immediately like a response. Second, how likely the model is to answer the question it received correctly.

These metrics make sense for ChatGPT. You ask a question, you get an answer, you rate whether you liked it. Repeat millions of times and the model learns to generate responses people find helpful.

But social coordination doesn't work that way. When you're in a group decision, you don't immediately know if a particular response was good. You only know if the group made a decision they can stick with and that they understand.

Moreover, asking the right questions in a group setting is fundamentally different from answering questions in isolation. A good question might make someone uncomfortable because it challenges an assumption they didn't realize they were making. It might surface disagreement that wasn't explicit. It might slow down the decision-making process in a way that feels bad in the moment but leads to better outcomes.

None of this is rewarded by the training objectives that made GPT-4 so effective.
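We don't know how Humans& will train its models. To make the difference in training signal concrete, here is a textbook reinforcement-learning calculation, not anything the company has described: the discounted return for a conversation where the only reward arrives at the end (for example, whether the group still stands by its decision). The four-turn discussion, the ratings, the discount factor `gamma = 0.9`, and the end-of-conversation reward are all illustrative assumptions, and real chat models are trained with more elaborate preference-based methods than a single per-turn rating.

```python
def discounted_returns(rewards: list[float], gamma: float = 0.9) -> list[float]:
    """Discounted return for each turn: G_t = r_t + gamma * G_{t+1}."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each turn accumulates credit from everything after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Hypothetical four-turn group discussion. Turn 1 is an uncomfortable
# clarifying question that challenges an assumption.

# Per-response signal: each turn is rated immediately. The hard question
# was disliked in the moment, so it earns nothing.
immediate_ratings = [1.0, 0.0, 1.0, 1.0]

# Outcome signal: no per-turn ratings; a single reward of 1.0 arrives at the
# end if the group commits to a decision it still stands by.
outcome_rewards = [0.0, 0.0, 0.0, 1.0]

credit_immediate = immediate_ratings[1]
credit_outcome = discounted_returns(outcome_rewards)[1]
print(f"Turn 1 credit, per-response rating: {credit_immediate:.2f}")
print(f"Turn 1 credit, discounted outcome:  {credit_outcome:.2f}")
```

Under the per-response signal, the clarifying question looks like a mistake; under the outcome signal, it inherits part of the credit for the decision the group reached. The catch is that discounting assigns credit by position rather than contribution: any turn two steps before a good outcome gets the same 0.81, whether it was a sharp question or small talk.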

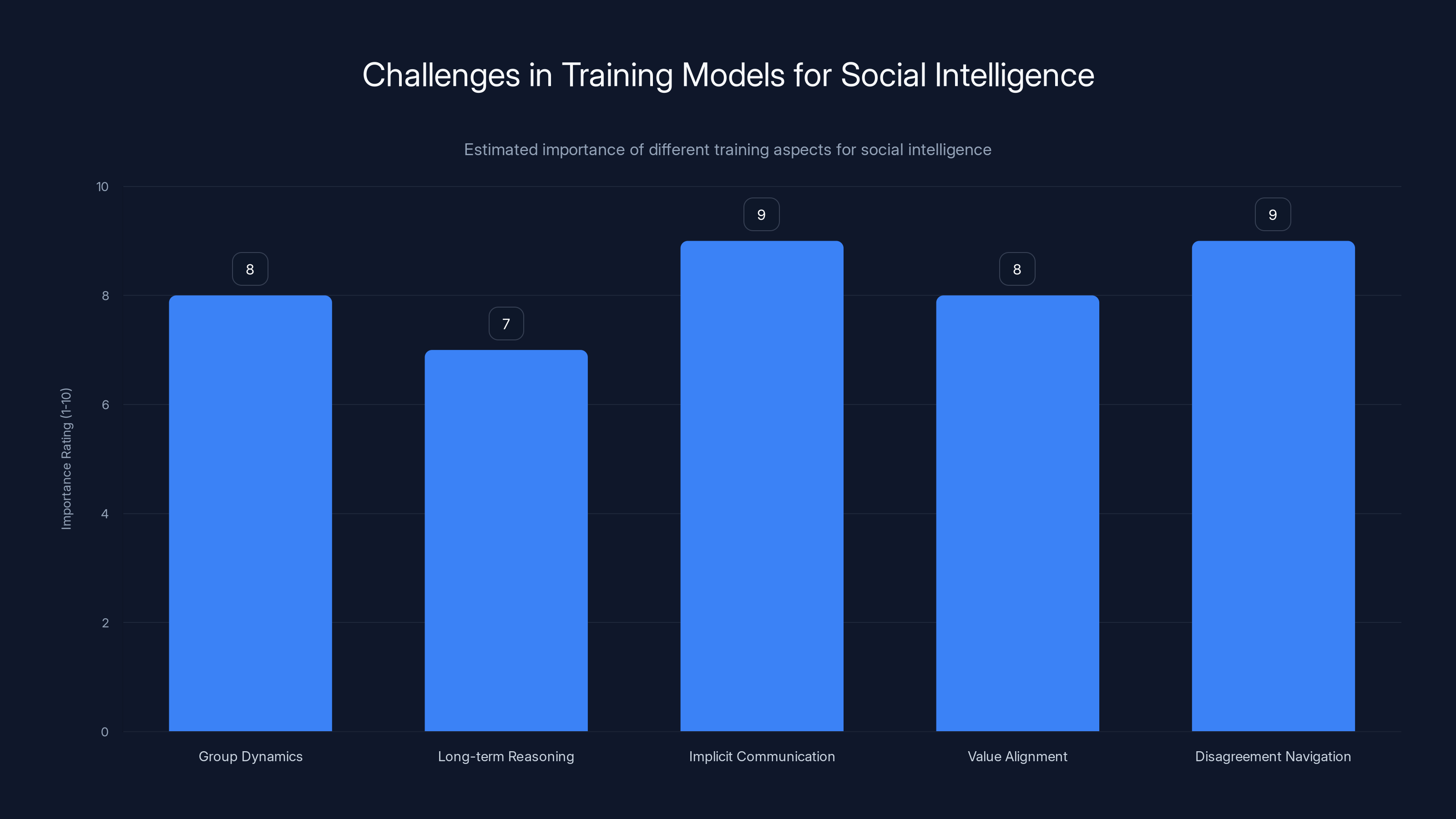

So Humans& needs to figure out how to train a model on:

- Group dynamics: Understanding when someone's quiet because they disagree versus when they're quiet because they're thinking

- Long-term reasoning: Tracking decisions over weeks or months and understanding how they connect

- Implicit communication: Recognizing when something that sounds literal is meant figuratively (like the logo joke Zelikman's team made about "getting everyone in a room")

- Value alignment: Understanding what the group actually cares about beneath the surface-level arguments

- Disagreement navigation: Helping people move from positions ("I want a minimalist logo") to interests ("I'm worried we'll look boring compared to our competitors")

This is far harder than training a model to answer trivia questions accurately.

The training data problem is similarly complex. There's relatively little high-quality data of groups actually making decisions well. You can't just scrape Reddit threads or Twitter conversations. You need recordings of real meetings, documented decision processes, and feedback on whether the group actually achieved their coordination goals.

Humans& will likely need to collect proprietary training data through partnerships with companies willing to share their internal collaboration data (anonymized). This is expensive and slow but gives them a potential moat that general-purpose AI companies don't have.

Current AI tools excel in individual tasks but struggle with team coordination. A specialized Coordination AI could bridge this gap. (Estimated data)

Humans& Raised $480 Million Because They're Solving a Real Problem

The seed round size is staggering. Most AI startups raise a small fraction of that at the seed stage.

This wasn't an accident. There are a few reasons VCs made this bet:

First, the team has proven they can identify AI paradigm shifts. When these founders worked at Anthropic, OpenAI, and DeepMind, they were inside the companies figuring out how to scale transformers and scale reinforcement learning. They've had early signals about what works and what doesn't.

Second, coordination AI could have an enormous TAM (total addressable market). If Humans& builds a model that companies actually use for meetings, decisions, and team collaboration, they could command premium pricing. A model that helps a 1,000-person company make better decisions together could plausibly be worth millions in recovered productivity and better business outcomes.

Third, the timing is right. Foundational AI models are now competent enough that the next wave of value comes from integration and domain-specific optimization. General-purpose chatbots are increasingly commoditized. Specialized models solving specific hard problems (like coordination) have more defensibility.

Fourth, there's genuine desperation for this solution. Every company is drowning in meetings, Slack threads, and async updates that don't create clarity. If AI could actually fix this, it would be transformational.

The fundraising tells us something important: even though Humans& doesn't have a product yet, the best capital allocators in the world think this problem is worth a half-billion-dollar bet. That's a vote of confidence in the thesis.

The Product Question: What Will Humans& Actually Build?

Here's the honest part: nobody really knows what Humans& is building.

The team has given hints. Zelikman mentioned that the product could replace communication platforms like Slack or collaboration platforms like Google Docs and Notion. But that's so broad it's almost meaningless.

Notably, Peng said something revealing: "Part of what we're doing here is also making sure that as the model improves, we're able to co-evolve the interface and the behaviors that the model is capable of into a product that makes sense."

Translation: They're building the model and the product at the same time. They don't have a locked product vision waiting for the model. They're experimenting.

This is actually a more honest approach than most well-funded AI startups take. Instead of pretending they know exactly what they're building (they don't), they're admitting they're exploring the possibility space.

But what are the realistic options?

Option 1: A specialized Slack replacement. A communication platform where group conversations are AI-enhanced for better coordination. Instead of threads spiraling into confusion, the AI helps bring implicit disagreements to the surface and tracks decisions.

Option 2: A meeting augmentation layer. Software that runs during video calls and helps groups coordinate in real-time. Highlighting when someone made a new point that contradicts an earlier position. Surfacing what people actually care about beneath their stated positions.

Option 3: An async decision-making platform. Like Notion but specifically designed for capturing group decisions, the reasoning behind them, and how they change over time. Better than Slack because it's structured for decisions rather than conversation.

Option 4: An enterprise AI copilot for managers. Software that helps executives actually understand what's happening in their organization by synthesizing information from emails, meetings, and documents while maintaining awareness of team dynamics.

Option 5: An embedded coordination layer. A white-label model that other companies integrate into their existing tools. Like how Slack integrates with hundreds of apps, Humans& could be the coordination layer that Slack, Microsoft Teams, and other tools integrate with.

The most likely scenario? Humans& builds several of these. They start with one, prove the model can deliver value, then expand to adjacent product categories.

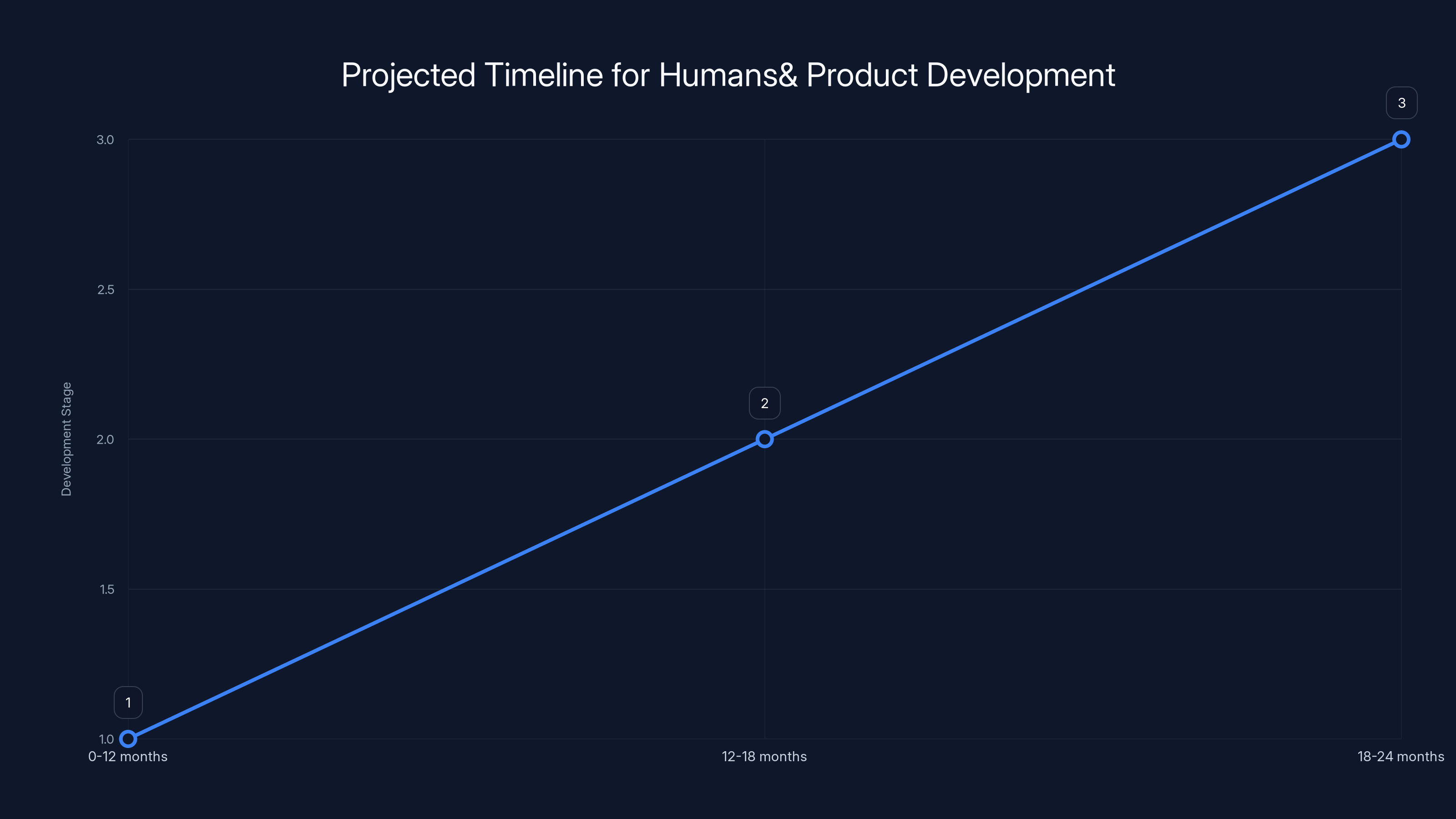

Estimated data suggests that Humans& will focus on model development in the first year, launch an early product in months 12-18, and expand use cases by months 18-24.

The Bigger Picture: Why Companies Are Moving Beyond Chat to Coordination

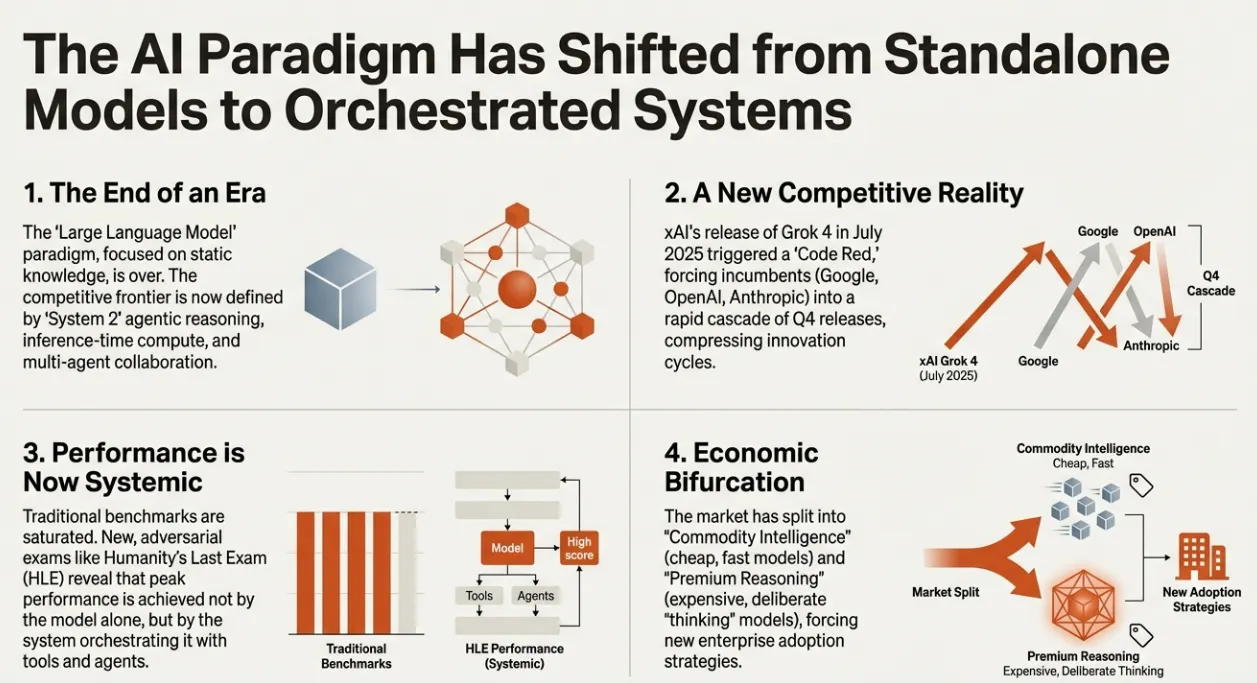

The AI industry is at an inflection point.

For the past two years, the narrative has been automation and individual productivity: AI will write your emails, generate your code, summarize your meetings. Every product company added a "chat with your data" feature.

But companies trying to implement these tools at scale hit a wall. Individual AI isn't actually that valuable without coordination around it.

Consider a sales team using AI to write personalized emails to prospects. Great. But the team also needs to agree on which prospects to target, what message resonates with which segments, how aggressively to follow up, and when to escalate to humans. That's not individual AI. That's coordination AI.

Or a product team using AI to generate feature ideas. Great. But the team needs to collectively decide which features matter most given limited resources, how they fit into the roadmap, and how they align with company strategy. Again, coordination.

Reid Hoffman, the LinkedIn co-founder, made this explicit in recent writing about how companies are implementing AI wrong: they're treating it as isolated pilots instead of coordinating around a workflow. The real leverage is in the coordination layer, Hoffman argued. That's where the value compounds.

This shift from "AI for individuals" to "AI for teams" is the next major paradigm shift in enterprise AI, and it's huge. Individual productivity improvements are valuable, but they're linear: better email writing saves 30 minutes a day per person. Organizational coordination improvements compound. They change which decisions get made, which opportunities get seized, and which mistakes get prevented.

A company with AI-enhanced individual productivity but poor coordination might still make strategic mistakes that wipe out all the productivity gains.

A company with bad individual AI but excellent coordination could outmaneuver competitors.

This is why Humans& raised half a billion dollars. Investors see the same shift. The next $100 billion AI company won't be built on individual chatbots. It'll be built on coordination infrastructure.

Why This Is Different From AI Agents

There's an important distinction to make here, because the AI industry loves to conflate different concepts.

AI agents are AI systems that can take actions autonomously: browsing the web, running code, moving money. Think about an AI agent that can book your flights by going to multiple airline websites, comparing prices, and booking the cheapest option.

Coordination AI is different. It's not about AI taking action. It's about AI facilitating better decision-making and communication among humans.

Agents might eventually be used within coordination systems (an agent that tracks who said what during a decision process), but the core challenge Humans& is solving isn't automation. It's clarity.

This is an important distinction because many companies think they need to build agents when what they actually need is better coordination. You don't need an AI to automatically make decisions for you. You need AI that helps your team understand each other better and make collective decisions you can all commit to.

![AI Coordination Model Market Share Projection [2025]](https://c3wkfomnkm9nz5lc.public.blob.vercel-storage.com/charts/chart-1769110484321-vl3gxxwv07q.png)

Humans& is projected to capture the largest market share in AI coordination models by 2025, driven by its innovative approach and strong founding team. Estimated data.

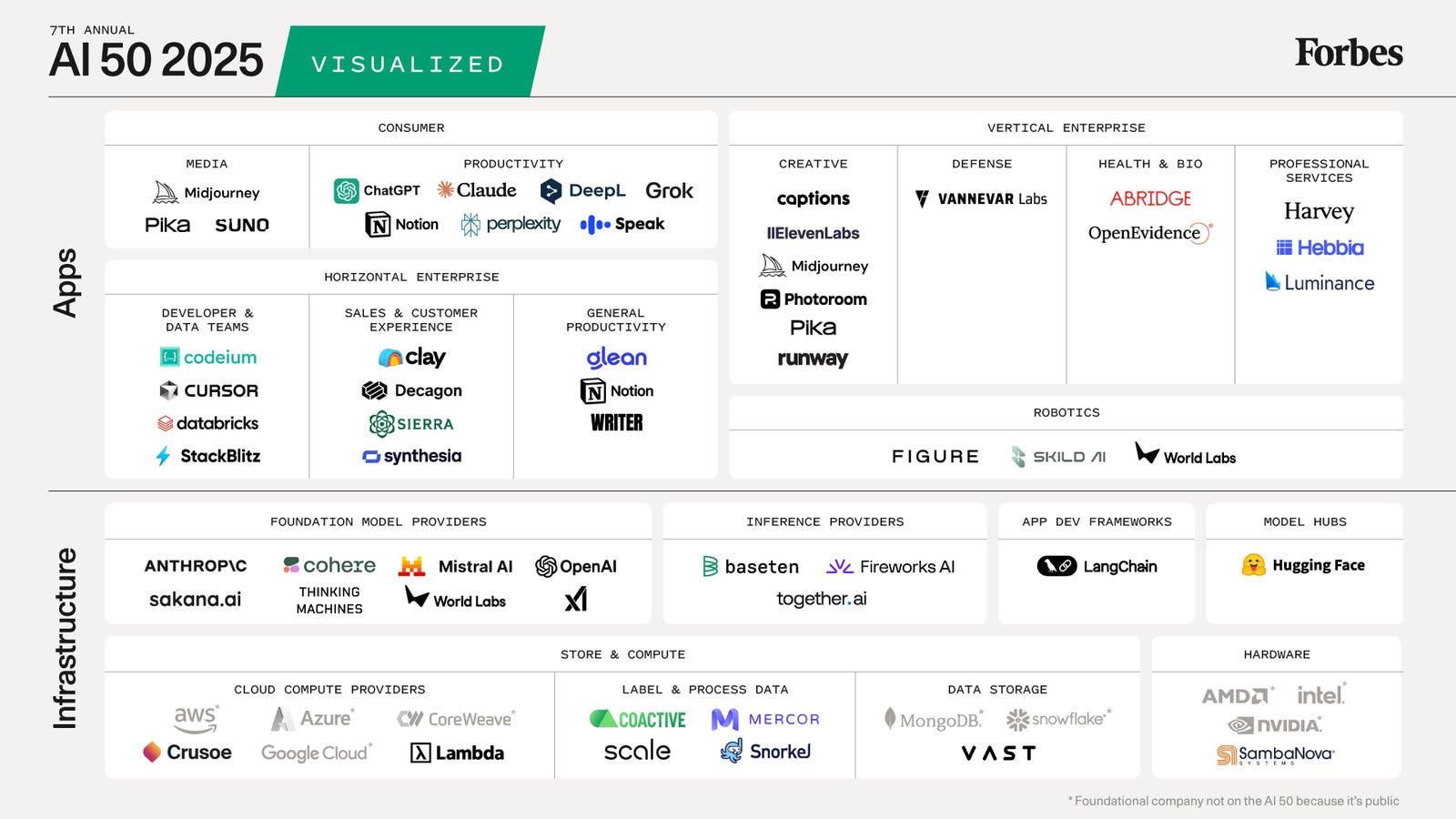

The Competitive Landscape: Who Else Is Chasing This?

Humans& isn't the only company working on team collaboration AI. But the specific focus on coordination as a foundational model problem is relatively unique.

Notion is adding AI but it's designed for individual documents and databases, not group coordination.

Slack is adding AI but it's mostly for summarization and search, not facilitating group decision-making.

Microsoft Teams is adding AI but it's largely copycat features of what others are doing.

There are some interesting startups in adjacent spaces. Granola, an AI note-taking app, raised $43 million and is building toward collaborative features. But they're starting from notes, not coordination.

None of these companies have Humans&'s specific bet: that the next major foundation model architecture should be designed from the ground up for social intelligence and group coordination.

If Humans& pulls this off, they'll have a massive first-mover advantage. The model and product will likely be so much better at coordination than tacked-on AI features in existing tools that users will be willing to switch platforms just to get better coordination.

If they don't pull it off, the team will have learned an enormous amount and the next company to try will start from a better foundation.

Either way, coordination AI is coming. The only question is whether Humans& shapes it or follows it.

What This Means for Enterprise AI Implementation

For companies thinking about how to actually implement AI in ways that drive value, Humans& is a signal that the playbook is changing.

The first wave of AI ROI came from individual productivity tools. Use ChatGPT to write better emails. Use GitHub Copilot to write code faster. Use Midjourney to generate images. These are good investments, but they're nearing saturation.

The next wave will come from thinking about AI's impact on collective coordination and decision-making. How can AI help your team understand each other better? How can it help you make faster decisions that everyone commits to? How can it help you track why decisions were made so you don't re-litigate them every quarter?

Companies that figure this out first will have a competitive advantage. They'll move faster. Their teams will be happier because they'll feel heard. Their decisions will be better because they'll incorporate more perspectives.

This is why the $480 million seed round makes sense. Humans& isn't just building a product. They're helping reshape how organizations actually work.

Estimated data suggests that implicit communication and disagreement navigation are critical areas for improving social intelligence in models, with high importance ratings.

The Honest Challenges Ahead

None of this is guaranteed to work. Humans& faces some real challenges.

Challenge 1: Training data. Building a model that understands group dynamics requires training data that mostly doesn't exist in digital form. They'll need to collect it, which is expensive and time-consuming.

Challenge 2: Deployment complexity. Coordination AI needs to work across different communication tools, meeting software, and work management platforms. That's a huge integration surface.

Challenge 3: Change management. Even if the product is amazing, getting companies to change how they communicate and make decisions is hard. People are creatures of habit.

Challenge 4: Privacy and trust. A model that needs to understand group dynamics needs to understand individual preferences, concerns, and thinking processes. Companies will be cautious about sharing this data, even anonymized.

Challenge 5: Defining success. How do you even measure if coordination AI is working? It's easier to measure lines of code produced (Copilot metrics) than team decision quality.

These challenges are real. They're why most companies wouldn't attempt this. They're also why the funding is so large and the team is so experienced. This is hard enough that you need top talent and significant resources.

When Might We Actually See Humans& Products?

The timeline is uncertain. Here's what seems likely.

First 12 months: The team focuses entirely on model development. They collect training data, build infrastructure, and run internal experiments. Maybe they release a research paper showing progress on coordination benchmarks.

Months 12-18: They launch an early product with limited users, probably accessed through an API or a beta collaboration platform. The product is rough but shows the model can improve real group decision-making.

Months 18-24: They expand to more use cases and potentially launch a public product. By this point, if the model is good, competing products (Slack, Notion, others) will start integrating coordination AI capabilities as well.

The race isn't to launch first. It's to build a model so good that the product becomes essential. Humans& has the resources and talent to do it, but execution risk is real.

What This Says About the AI Industry's Future

The Humans& story is telling us something important about where AI is headed.

First, the era of "general-purpose AI is all you need" is ending. Foundation models will still be important but they'll be increasingly specialized. There will be foundation models for code, for images, for scientific research, and yes, for coordination.

Second, the next $100B AI companies won't be built by large labs making incrementally better general models. They'll be built by teams that identify specific hard problems and build models designed to solve them.

Third, the AI value chain is shifting away from the model makers and toward the application layer. OpenAI's models are incredibly good but most of their value is captured by the companies building products on top of them. Humans& is betting that coordination is specific enough that the value stays with the specialist model builders.

Fourth, we're entering an era where domain expertise matters. You can't build a good coordination model just by scaling up compute and data. You need people who understand organizational behavior, group dynamics, and decision science. Humans&'s team has some of this expertise but they'll need to hire more.

Fifth, the AI industry is becoming less "move fast and break things" and more "move intentionally with massive capital." You can't build a coordination foundation model as a bootstrapped side project. You need resources to collect training data, hire specialized talent, and support long development timelines.

All of these trends suggest the AI industry is maturing. The wild west is giving way to more structured competition between well-funded, specialized teams. This is healthy because it means the AI that gets built is more likely to solve real problems rather than chase hype.

The Broader Implications for Work

If Humans& pulls this off, it changes how work actually gets done.

Imagine a future where your meeting software understands what people actually care about and surfaces it proactively. Where your Slack channels are enhanced with AI that brings clarity to confusing discussions. Where your company's decisions are tracked with reasoning so you understand not just what was decided but why.

This would be genuinely transformative. Not because it's automation or because it's flashy, but because it solves a real, persistent pain point that costs organizations enormous amounts of money and makes work less satisfying.

People often talk about AI as a threat to work. But better coordination is actually AI making work better. It means fewer unnecessary meetings. More voices heard. Faster decisions. More people feeling like their perspective matters.

That's not dystopian. That's actually pretty compelling.

Of course, there's a darker version too. AI that's really good at coordination could be used to manipulate groups into decisions they wouldn't have made with better information. It could be used to push dissent underground rather than bring it to the surface. It could be used to extract value from groups for the benefit of a few.

But that's true of any powerful technology. The question isn't whether coordination AI will exist. It's whether the teams building it are thinking carefully about how to build it responsibly.

Given Humans&'s team and their stated values, it seems like they are. But we'll find out over the next few years.

The Bottom Line: This Is Just the Beginning

Humans& raising $480 million is a signal. It means the best investors in the world think that foundation models designed for coordination are the next major frontier for AI.

They're probably right.

The first wave of AI was about individual productivity. The second wave will be about collective coordination. And that second wave is just starting.

We don't know if Humans& will be the company that wins this space. We do know that someone will build it. And when they do, it will change how organizations work.

The logo choice that took two hours of meetings will take 30 minutes. The decision that got relitigated every quarter will be well-documented so everyone remembers why it was made. The person who felt like their voice wasn't heard in the meeting will have their perspective captured and considered.

These are small things individually. But collectively, they add up to work that feels less like a constant struggle for alignment and more like a group moving together toward shared goals.

That's what Humans& is actually building. It just needs to prove it's possible.

FAQ

What exactly is coordination AI, and how is it different from regular chatbots?

Coordination AI is specifically designed to help groups of people make decisions together and communicate more effectively. Unlike chatbots (such as ChatGPT or Claude) which optimize for answering individual questions accurately and immediately, coordination AI understands group dynamics, tracks long-term decisions, surfaces disagreements constructively, and maintains context across conversations. While a chatbot helps one person at a time, coordination AI helps teams work together better.

Why is Humans& raising so much money if they don't have a product yet?

The $480 million seed round reflects investor confidence that the coordination AI problem is massive and valuable. If Humans& builds a model that genuinely improves how teams make decisions, the market opportunity is enormous. Every organization struggles with coordination failures that cost millions in lost productivity and poor decisions. Additionally, the founding team has proven credibility from working at companies like OpenAI, Anthropic, and DeepMind, giving investors confidence they can execute on difficult AI problems.

What are the potential applications for coordination AI in enterprises?

Coordination AI could enhance communication platforms like Slack by surfacing genuine disagreements and tracking reasoning. It could improve meeting software by helping groups understand each other's underlying interests. It could replace or augment project management tools like Notion by better capturing why decisions were made. It could help leaders understand organizational dynamics by synthesizing information from across all communication channels. Enterprise teams could potentially save hours each week through better coordination and fewer misaligned meetings.

How does Humans& plan to train a model on something as complex as social intelligence?

Training coordination AI requires data that traditional chatbots don't need: recordings of real meetings, documented decision processes, and feedback on whether groups actually achieved good coordination outcomes. Humans& will likely need to collect proprietary training data through partnerships with companies willing to share anonymized collaboration data. This creates a competitive moat because general-purpose AI companies like OpenAI don't have this specialized training data.

Will coordination AI replace human judgment in decision-making?

No, coordination AI is designed to support better human decision-making, not replace it. The goal isn't for AI to make decisions for you, but to help your team understand each other better, surface implicit disagreements, and track the reasoning behind decisions. The AI facilitates human collaboration rather than automating it away.

What's the timeline for when Humans& products might actually launch?

Based on similar AI development timelines, Humans& will likely spend 12-18 months on model development before launching limited early products. A public release of a full coordination AI product could happen 18-24 months from now, though timelines in AI development are notoriously unpredictable. The company has the resources to move faster than typical startups, but coordination AI is genuinely complex territory with no proven playbook.

How might this affect existing workplace collaboration tools like Slack and Microsoft Teams?

If Humans& succeeds, existing collaboration tools will face pressure to either integrate coordination AI capabilities or lose users to specialized platforms. Slack and Microsoft Teams have the user base and resources to integrate coordination AI, but they don't have the specialized model. Humans& could either become a dominant standalone product or become an infrastructure layer that other platforms integrate with.

Why is this different from companies just building "AI agents" for automation?

AI agents are designed to autonomously take actions (like booking flights or sending emails). Coordination AI is designed to help humans make better collective decisions. Agents solve an automation problem. Coordination AI solves a communication and alignment problem. Most organizations actually need better coordination more urgently than they need more automation, which is why this is a potentially larger opportunity.

Looking Ahead: What Happens Next

The AI industry is at a pivot point. We've spent two years optimizing for individual productivity. The next phase is optimizing for collective intelligence.

Humans& isn't just a startup chasing funding. It's a signal that the best minds in AI are thinking about harder problems. They're not satisfied with incremental improvements to question-answering models. They want to build infrastructure that changes how humans work together.

Whether they succeed or fail, this is the direction the industry is moving. Coordination AI is coming. It's just a question of who builds it first and whether they build it well.

The $480 million seed round buys them the runway to find out.

Key Takeaways

- Humans& is building foundation models specifically designed for group coordination and team collaboration, not individual question-answering like ChatGPT

- The $480M seed round signals investor belief that coordination AI is the next major paradigm shift in enterprise AI, moving beyond the current focus on individual productivity

- Current AI models fail at coordination because they optimize for immediate user satisfaction rather than long-term group understanding and decision quality

- Coordination AI could reduce meeting time, improve decision quality, and make employees feel more heard and aligned with organizational direction

- The company is designing product and model in parallel, suggesting this is genuinely novel territory requiring experimentation rather than following an existing playbook
