Modernizing Apps for AI: Why Legacy Infrastructure Is Killing Your ROI

Your company probably has a problem. You just don't know it yet.

Right now, somewhere in your infrastructure, there's likely a system running code from 2015. Maybe it's handling customer data. Maybe it's powering your core workflows. Maybe it's both. And you're probably thinking: "If it works, why fix it?"

Here's why: AI doesn't work well with legacy infrastructure. Not because AI is picky, but because old systems were built for a different era. They weren't designed to handle the computational load of machine learning models. They weren't built with the flexibility that AI demands. And they definitely weren't designed with the security architecture that AI requires.

This isn't speculation. Recent research from Cloudflare surveyed over 2,300 IT, security, product, and engineering leaders across multiple industries. The findings were striking: companies that modernized their applications are three times more likely to achieve measurable ROI from AI tools compared to those stuck with outdated infrastructure. That's not a nice-to-have improvement. That's a game-changer for your bottom line.

But here's the catch. Most leaders think they're fine. The same research found that 95% of surveyed leaders believe their infrastructure is sufficient for AI development. That confidence? It's almost certainly misplaced. And it's costing billions across enterprises worldwide.

TL;DR

- Legacy systems create a "technical glass ceiling" blocking AI progress and ROI, with modernized companies seeing 3x higher ROI from AI investments

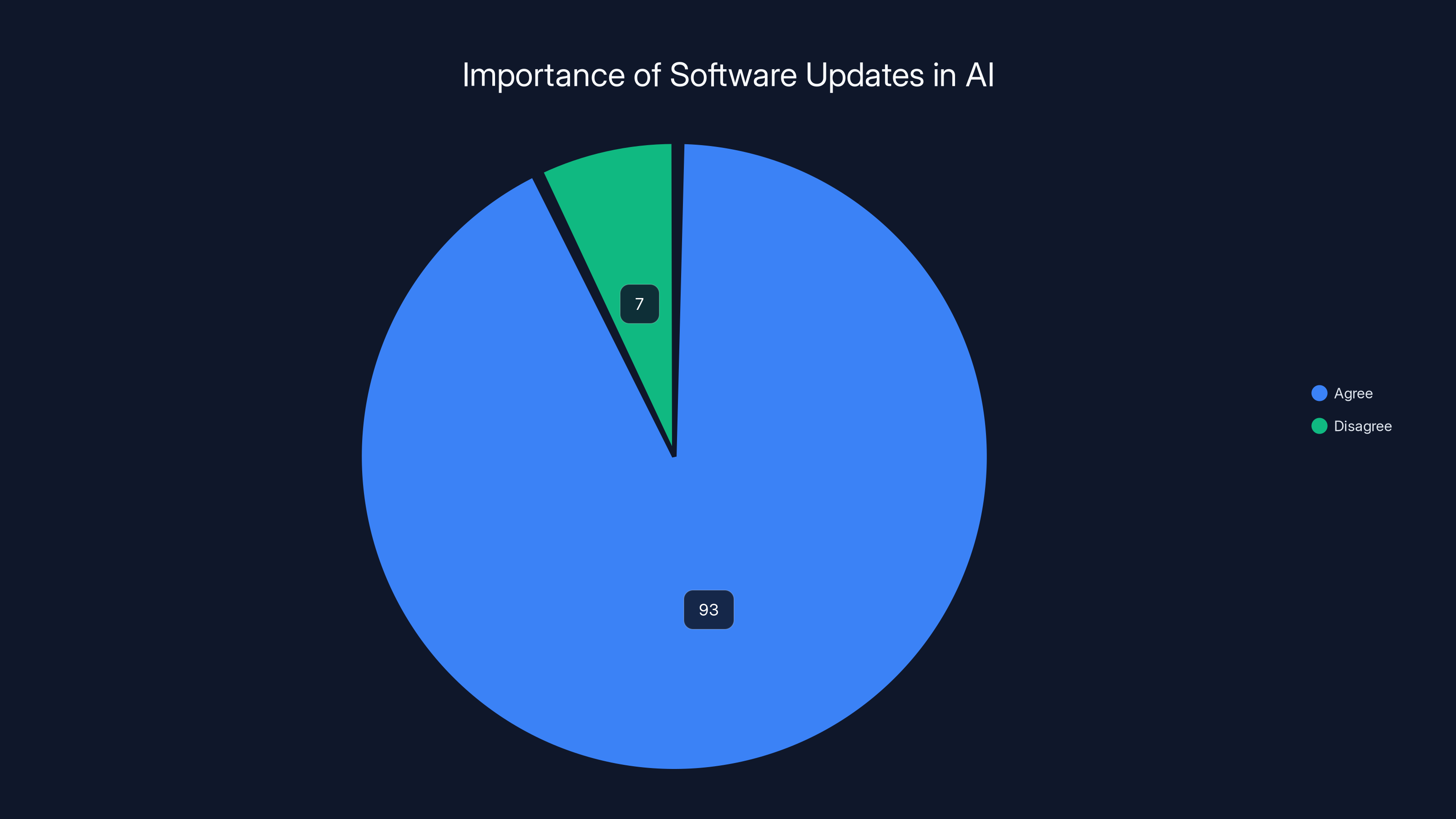

- 93% of IT leaders agree that updating software is the single most important factor for improving AI capabilities

- Security integration during modernization acts as a "growth multiplier," making companies 4x more likely to reach advanced AI maturity

- Infrastructure confidence paradox: 85% of leaders whose companies delayed modernization report reduced confidence in their infrastructure, yet 95% of surveyed leaders still believe their systems are adequate for AI

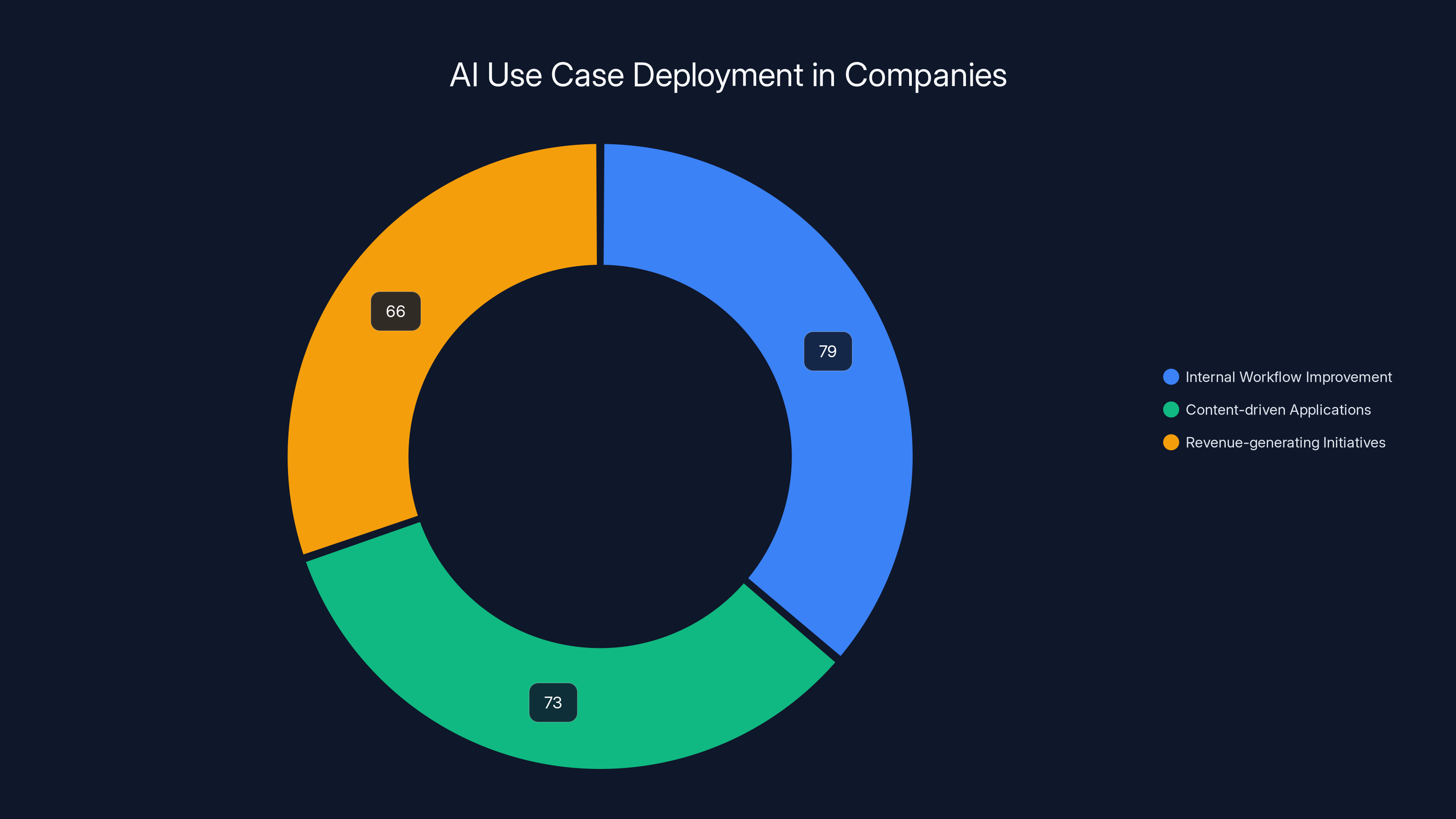

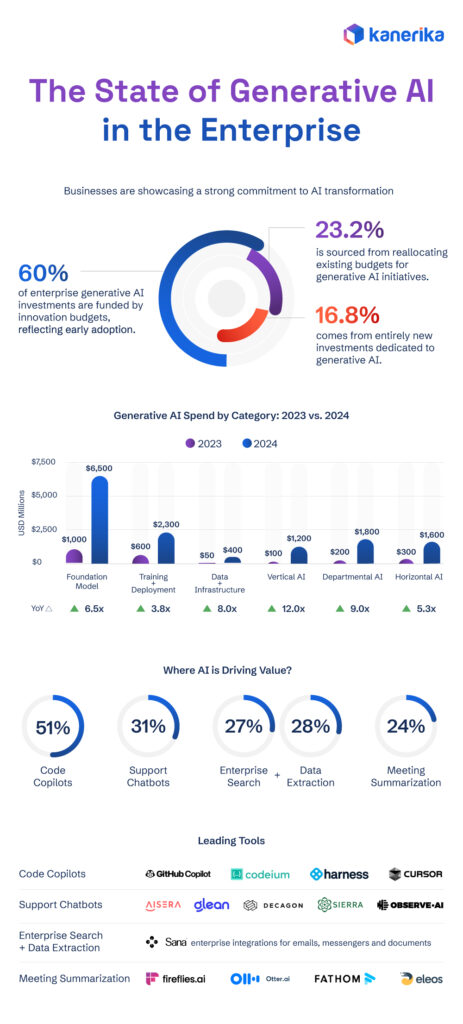

- Quick wins available: Improving internal workflows (79%), powering content applications (73%), and supporting revenue initiatives (66%) are the most popular AI targets

Internal workflow improvement is the most common AI use case, deployed by 79% of companies, followed by content-driven applications and revenue-generating initiatives.

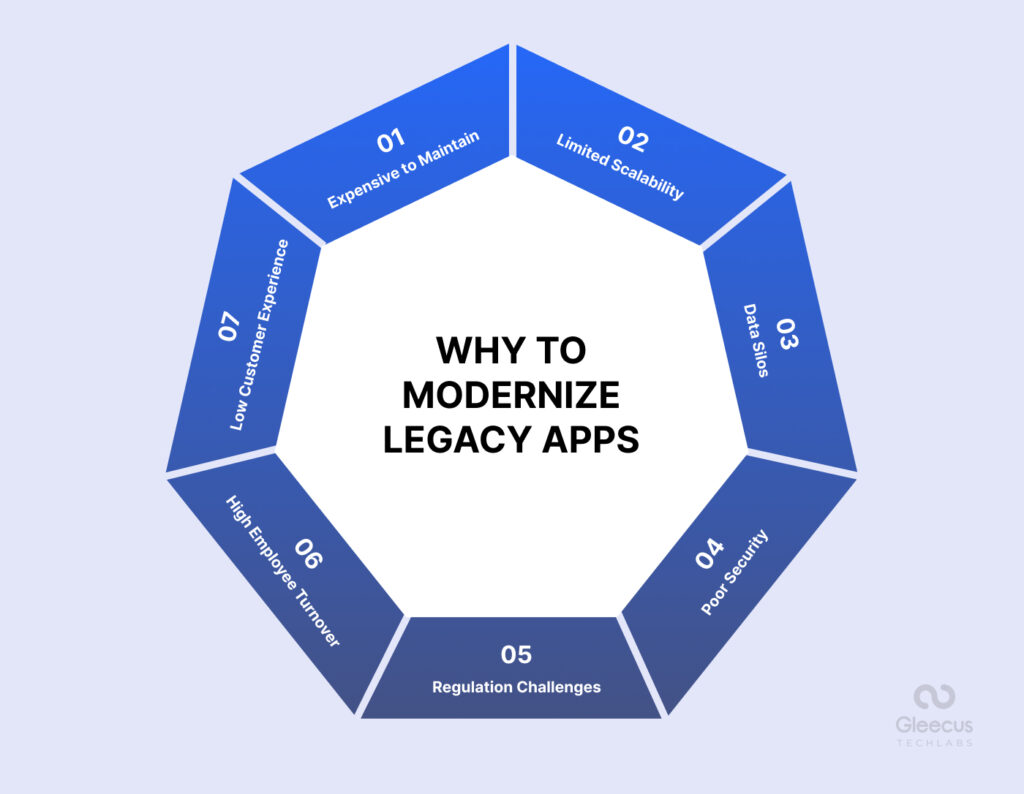

The Hidden Cost of Waiting: Why Your Legacy Systems Are Bleeding Money

Let's talk about what's actually happening right now when you try to run modern AI workloads on infrastructure designed for the early 2000s.

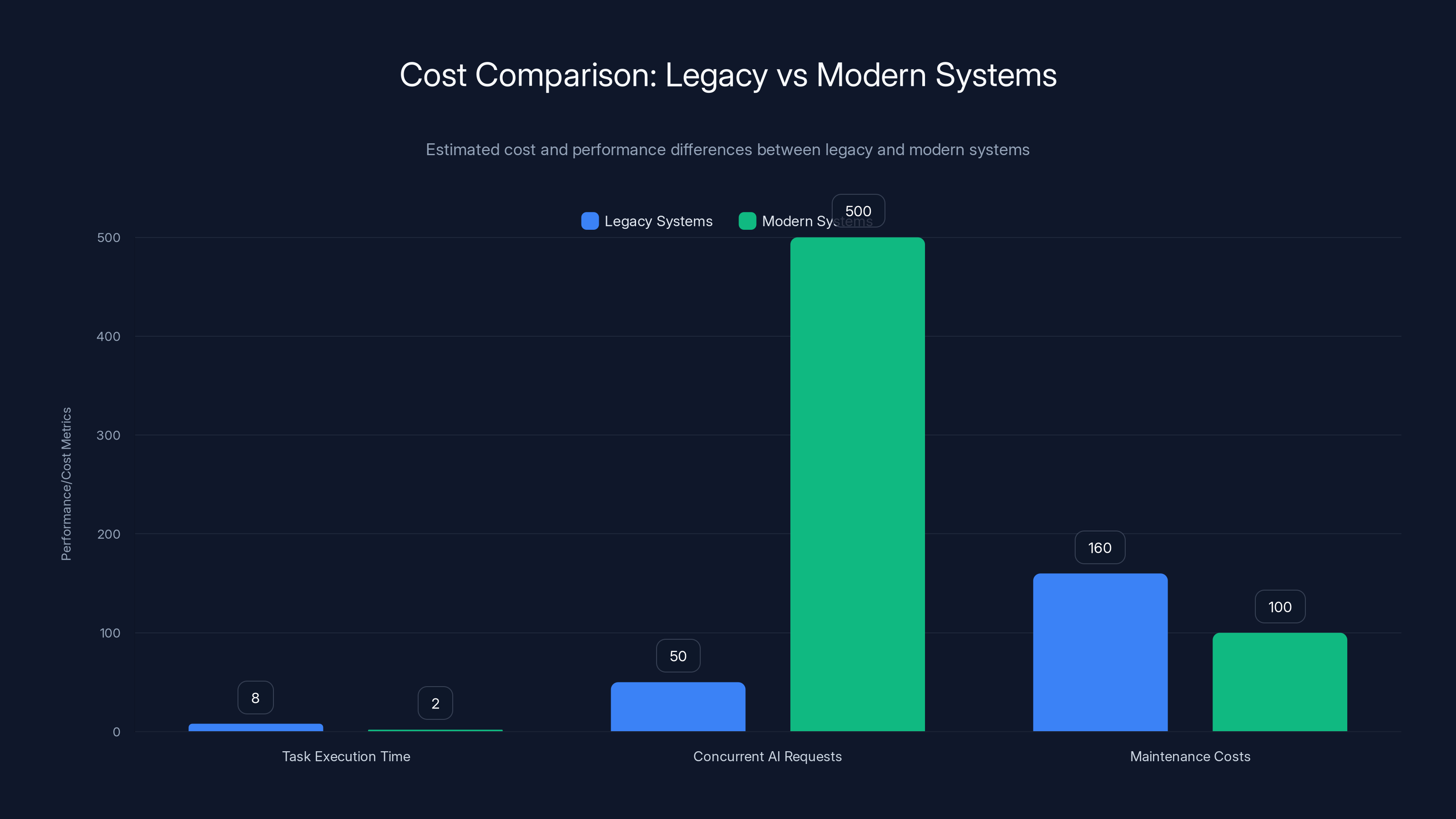

Your servers are working twice as hard for half the output. A task that could run on optimized infrastructure in 2 seconds takes 8 seconds on legacy systems. Multiply that across thousands of API calls per day, and you're looking at infrastructure costs that spiral out of control. But that's just the direct cost.

There's also the hidden tax. Your team spends time working around limitations instead of building new features. Your developers write workarounds instead of solutions. Your security team is constantly patching vulnerabilities because the underlying system wasn't designed with modern threat models in mind.

Consider this: a mid-sized financial services company discovered their legacy transaction processing system could only handle 50 concurrent AI inference requests before degrading performance. They were paying for cloud infrastructure that they couldn't actually use effectively. Once they modernized that single component, their AI throughput increased tenfold without additional infrastructure spend.

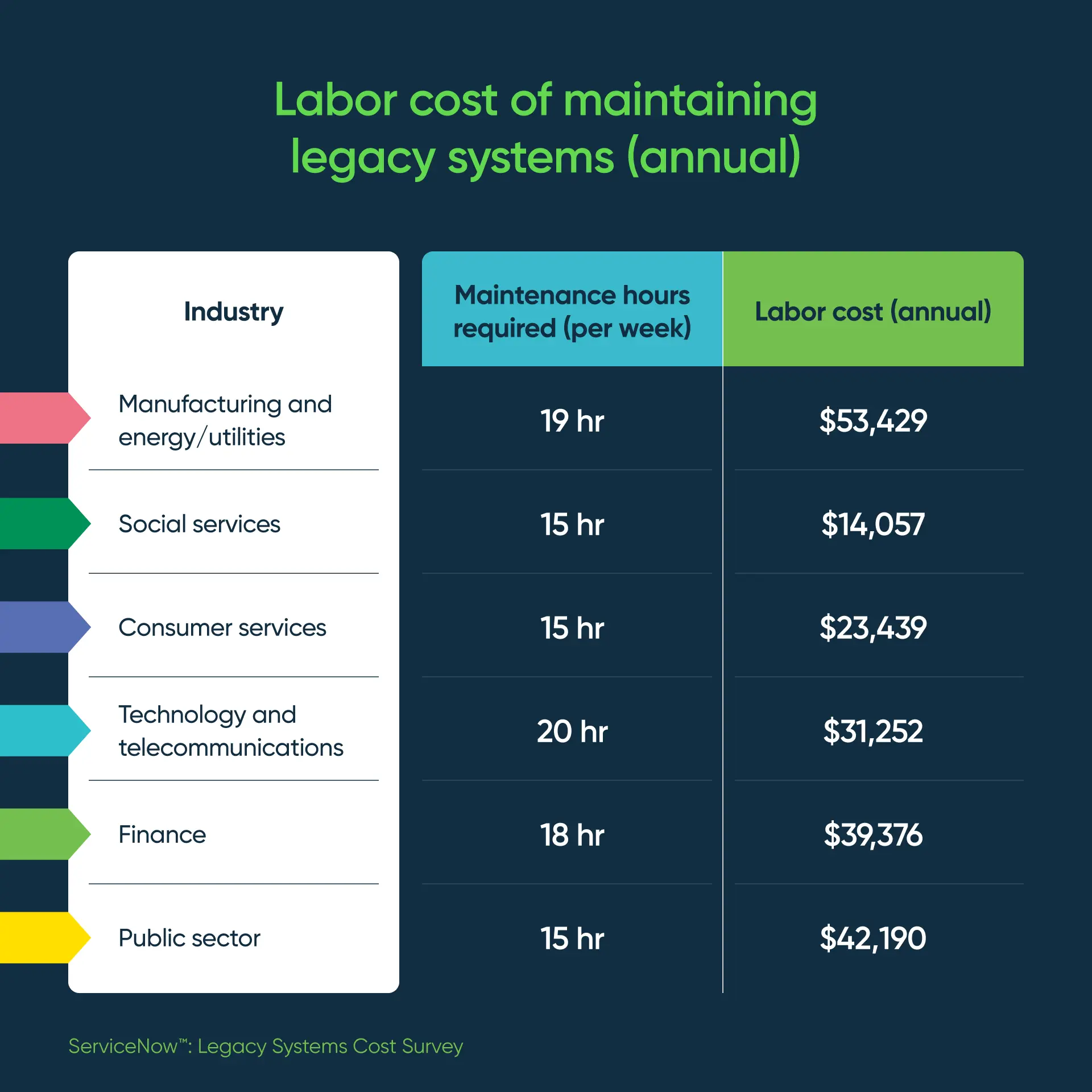

Maintenance Costs Double Down

Older systems require older expertise. Finding engineers who understand COBOL or legacy Java frameworks isn't getting easier. You're either paying premium salaries to keep experienced engineers on legacy systems, or you're struggling to find anyone at all.

Maintenance costs on legacy infrastructure typically run 40-60% higher than modernized systems. Not because new systems are magic, but because outdated systems have accrued technical debt, have fewer automated testing capabilities, and require manual interventions for problems that modern systems handle automatically.

Then there's the version problem. Your legacy system runs on a database from 2013. That database has known security vulnerabilities, but upgrading would require testing your entire application against new APIs. So you patch the surface vulnerabilities while the underlying system becomes increasingly at-risk.

The Slowdown Effect

Innovation moves at the speed of your infrastructure. When you're working with monolithic systems that were never designed to be decomposed, you can't adopt microservices. You can't implement containerization. You can't deploy AI models incrementally. You have to migrate everything at once, which means you're stuck in analysis paralysis, planning the perfect migration that never actually launches.

Modern companies launch new features in weeks. Legacy-bound companies launch in quarters, if they're lucky.

A striking 93% of IT and engineering leaders agree that software updates are crucial for improving AI capabilities, highlighting a near-universal consensus.

The Three Times Multiplier: How Modernization Transforms AI ROI

Let's dig into the actual research data, because the numbers are compelling.

Companies with modernized applications are 3x more likely to achieve clear ROI from AI tools. That's not 30% better. That's not a 2x improvement. It's three times the success rate. Statistically, if you randomly selected two companies—one modernized and one legacy-bound—and both deployed the same AI solution, the modernized company would be three times more likely to see positive returns.

Why? Because ROI from AI isn't just about deploying the AI. It's about integrating AI into systems that can actually handle it. It's about having the infrastructure flexibility to experiment with different models, different approaches, different use cases.

When you deploy AI to a monolithic legacy system, you're constrained. You can maybe swap out one model for another, but you can't easily A/B test. You can't quickly iterate. You can't scale incrementally. You're locked into the original deployment decision.

With modernized infrastructure, you can deploy multiple AI approaches in parallel. You can retire the ones that don't work. You can scale the winners. You're operating with optionality.

The Compounding Effect

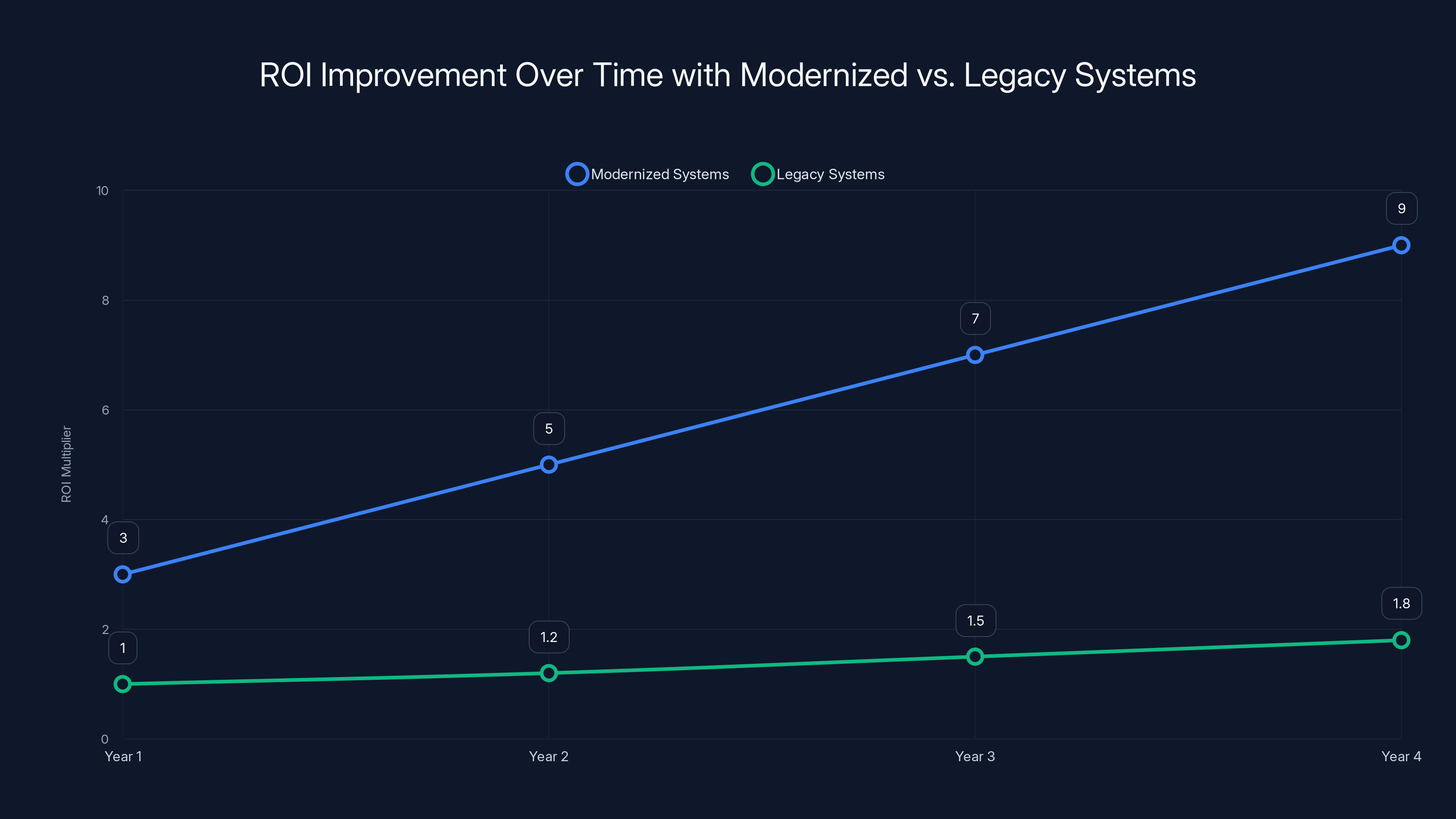

Here's what makes this even more interesting: the ROI improvement compounds over time.

Year one, the modernized company gets 3x better results because they can iterate faster. Year two, they're getting 5x better results because they've learned what works and optimized around it. The legacy-bound company is still debating whether AI is actually worth the investment.

Consider a customer service department deploying AI chatbots. The modernized company can:

- Deploy the chatbot to 10% of traffic first

- Measure satisfaction metrics

- Tweak the model based on actual customer interactions

- Expand to 50% of traffic

- Refine further

- Roll out to 100%

The legacy-bound company has to:

- Plan the entire integration for months

- Deploy to all or nothing because the architecture doesn't support partial rollouts

- Hope it works

- Spend weeks fixing integration issues if it doesn't

One approach reduces risk and maximizes learning. The other approach is all-or-nothing gambling.
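The staged rollout described above can be sketched as deterministic, percentage-based routing. This is a minimal illustration under stated assumptions, not a production traffic router: `in_rollout`, `route`, and the handler names are hypothetical, and in practice this logic usually lives in a load balancer or feature-flag service.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` of traffic.

    Hashing the user ID gives each user a stable bucket from 0 to 99, so a
    user who saw the chatbot at 10% keeps seeing it at 50% and at 100%.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


def route(user_id: str, percent: int) -> str:
    # Hypothetical handler names, used only for illustration.
    if in_rollout(user_id, percent):
        return "ai_chatbot"
    return "existing_support_flow"
```

Raising `percent` from 10 to 50 to 100 only ever adds users to the AI path, which keeps satisfaction metrics comparable between stages. Setting it back to 0 acts as an immediate rollback.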

The 93% Consensus: Why Software Updates Are Non-Negotiable

When you survey 2,300 IT and engineering leaders, you rarely get 93% agreement on anything. That's the level of consensus you'd expect on something like "water is wet" or "security matters."

Yet 93% of surveyed leaders agreed that updating software is the single most important factor in improving AI capabilities. That number matters because it means this isn't controversial. This isn't one school of thought versus another. This is basically universal agreement among people who actually work with these systems day in and day out.

What does "updating software" actually mean? It's not just running the automatic updates that your system nags you about. It means:

Database updates: Modern databases have better query optimization, better handling of concurrent operations, and better integration with AI frameworks. Running on a database from 2012 means you're missing years of performance improvements that matter specifically for AI workloads.

Operating system updates: AI frameworks assume modern OS features. If you're running on an OS that reached end-of-life five years ago, you're missing kernel-level optimizations that AI frameworks depend on. You're also accumulating security debt.

Runtime updates: Are you still running Python 3.6? Most serious AI frameworks have dropped support for anything older than Python 3.9. If you're on an old runtime, you can't run current models without serious compatibility layers.

Framework updates: TensorFlow, PyTorch, and other ML frameworks improve constantly. Staying three versions behind doesn't just mean missing new features. It means missing crucial performance optimizations and bug fixes.

The consensus exists because these upgrades have direct, measurable impact on AI performance. You can't deploy GPT-4-level models on infrastructure designed for traditional web services. The architectural assumptions are incompatible.

The Minimum Viable Modernization

You don't have to rewrite everything. Start with:

- Core database upgrade: Move from single-instance SQL Server 2008 to cloud-native PostgreSQL or managed databases. This alone typically improves query performance by 30-50% and enables AI-specific features like vector searches.

- API layer containerization: Wrap your existing services in containers without rewriting them. This buys you deployment flexibility and makes it easier to scale specific components for AI workloads.

- Event-driven communication: Add message queues (Kafka, RabbitMQ) between systems. This decouples services and lets you insert AI models into workflows without rewriting everything.

- Modern observability: Implement logging, metrics, and tracing. You can't optimize what you don't measure, and AI workloads are much harder to debug without proper instrumentation.

- API authentication and rate limiting: AI models need careful management of computational resources. Legacy systems often have weak or non-existent API controls.

These five things don't require rewriting your business logic. They're infrastructure plays. And they unlock about 70-80% of the flexibility you need for serious AI integration.
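To make the rate-limiting item concrete, here is a minimal token-bucket sketch of the kind often placed in front of an inference endpoint. The class name, parameters, and injectable `clock` are assumptions for illustration. A real deployment would more likely rely on an API gateway's built-in limits than on hand-rolled code.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock             # injectable so the logic can be tested
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and spend `cost` tokens if the request may proceed."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

One bucket per API key is a common arrangement. The `cost` argument lets an expensive model call consume more of a caller's budget than a cheap one.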

Legacy systems take 4 times longer to execute tasks and handle 10 times fewer AI requests compared to modern systems. Maintenance costs are estimated to be 60% higher for legacy systems.

The Security Multiplier: Why Building It In Early Is Non-Negotiable

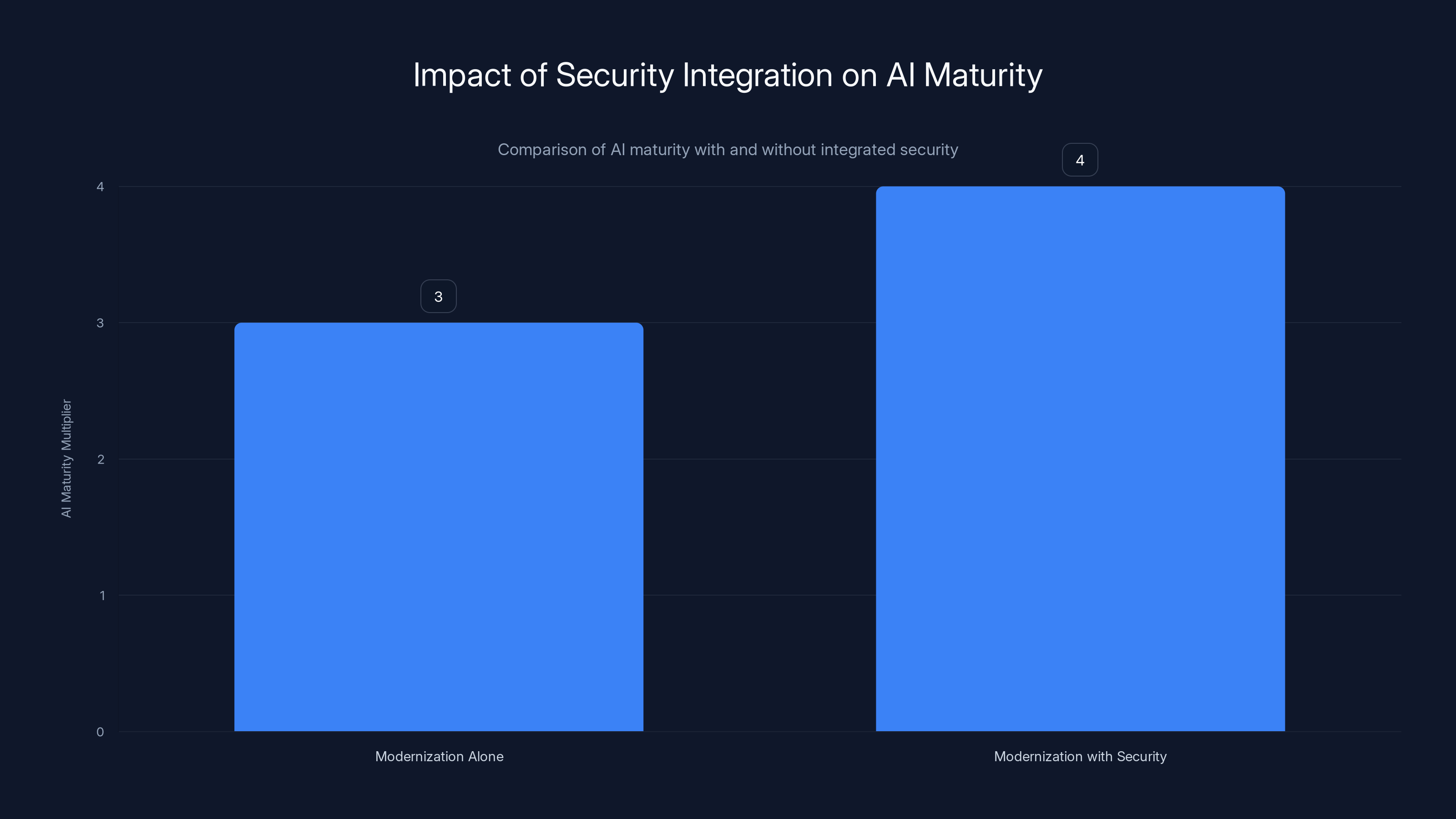

Here's a number that should make security teams sit up and pay attention: companies that integrate security into their modernization strategy are 4 times more likely to reach advanced AI maturity.

That's different from the 3x multiplier for modernization alone. Adding security to modernization adds another layer of improvement. Security isn't a constraint on AI. It's a growth multiplier.

Why? Because AI systems have unique security requirements that legacy infrastructure doesn't support.

AI models are intellectual property that needs protection. A leaked model can represent millions in training costs and years of competitive advantage. A poisoned model (one that's been manipulated during training or inference) can cause catastrophic failures.

AI systems need different threat modeling than traditional applications. A web application's security focuses on preventing unauthorized access. An AI system needs to prevent model extraction, prevent adversarial attacks, prevent data poisoning, and prevent inference attacks (where attackers deduce training data from model outputs).

Legacy systems weren't designed with any of this in mind. Building security into modernization means:

Model versioning and attestation: Every AI model deployed needs to be cryptographically signed and versioned. You need to know which model version is running in production, who trained it, and when. Legacy systems have no infrastructure for this.

Data access controls: AI systems require massive amounts of training data. That data flows through the system multiple times. You need fine-grained access controls that legacy systems typically don't support. You need to know which training data touched which model, and maintain that chain of custody through deployment.

Inference request logging: Every inference request needs to be logged (while protecting user privacy). You need to detect if someone is making hundreds of requests to extract model behavior. Legacy systems struggle with the scale of logging this requires.

Model explanation and accountability: When an AI system makes a decision, you need to be able to explain why. That requires the model to be integrated with data lineage systems that show exactly what inputs influenced which outputs. This is practically impossible to retrofit into legacy systems.

Adversarial testing: Before deploying AI in production, you need to test it against adversarial inputs (inputs specifically designed to fool the model). Legacy deployment pipelines don't have this capability built in.

Building security in from the start of modernization means these become normal parts of your deployment process. Trying to retrofit security later means expensive changes and constant workarounds.
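As one illustration of model versioning and attestation, the sketch below records a model's hash and provenance in a manifest, signs the manifest, and verifies both before deployment. It is a simplified example under loud assumptions: the function names and manifest fields are hypothetical, and it uses a shared-secret HMAC from the Python standard library, whereas a production pipeline would more likely use asymmetric signatures and a managed key store.

```python
import hashlib
import hmac
import json


def build_manifest(model_bytes: bytes, version: str, trained_by: str, trained_at: str) -> dict:
    """Record which model this is, who trained it, when, and its content hash."""
    return {
        "version": version,
        "trained_by": trained_by,
        "trained_at": trained_at,
        "sha256": hashlib.sha256(model_bytes).hexdigest(),
    }


def sign_manifest(manifest: dict, key: bytes) -> str:
    """Sign a canonical (sorted-key) JSON encoding of the manifest."""
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, manifest: dict, signature: str, key: bytes) -> bool:
    """Accept the model only if the manifest is authentic and matches the bytes."""
    expected = sign_manifest(manifest, key)
    if not hmac.compare_digest(expected, signature):
        return False  # manifest was altered or signed with a different key
    actual_hash = hashlib.sha256(model_bytes).hexdigest()
    return hmac.compare_digest(actual_hash, manifest["sha256"])
```

Running `verify_model` as a gate in the deployment pipeline means a swapped or modified model file fails before it reaches production, and the manifest answers which version is running, who trained it, and when.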

The Cost of Reactive Security

The research found something interesting: 85% of leaders whose companies delayed modernization showed reduced confidence in their infrastructure's security. But here's the problem: they're not using that reduced confidence as an incentive to modernize. Instead, they're waiting for a breach and then panicking.

When a breach happens, you have no choice but to modernize. But you're doing it in crisis mode, under pressure, with limited time for careful planning. Crisis modernizations are expensive and often incomplete. They solve the immediate problem but don't build the foundation for sustainable AI integration.

Proactive modernization with security built in costs less in the long run. You're building a foundation that supports both security and AI. You're not constantly patching vulnerabilities. You're not doing emergency migrations under deadline pressure.

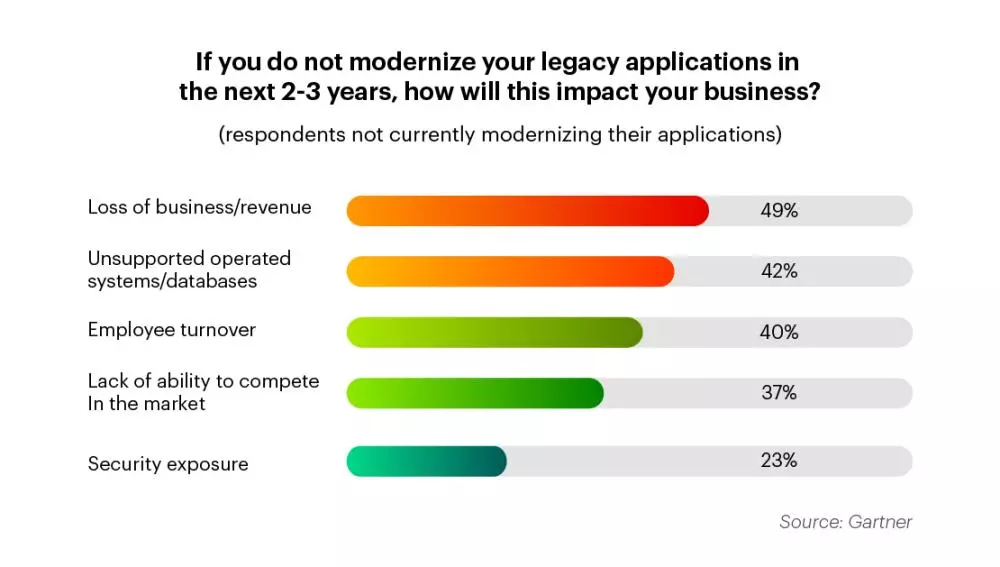

The Five Major Costs of Delaying: What You're Actually Paying

If you're not modernizing now, you're not saving money. You're spending it in invisible ways.

1. Maintenance and Operations Overhead

Legacy systems are maintenance black holes. Every critical component requires specialized knowledge. Your operations team spends 60-70% of their time keeping the lights on instead of building new capabilities.

When something breaks in a legacy system, there's often no obvious root cause. The systems are so interdependent that fixing problem A causes problem B. You end up with band-aid fixes that spawn new problems three months later.

Meanwhile, your modernized competitors have automated deployments, automated testing, and self-healing infrastructure. When something goes wrong, their systems detect it and fix it automatically. Their ops team spends 20% of their time on maintenance and 80% on optimization and new capabilities.

2. Slow Innovation Velocity

Your time-to-market for new features is directly tied to your infrastructure flexibility. Monolithic legacy systems mean that adding a new feature requires coordinating across the entire codebase. Testing is slow. Deployment is slow. Rolling back is slow.

Modern architectures using microservices and containerization mean teams can deploy new features to production multiple times per day. Your competitors are learning from customers 100x faster than you are.

In a rapidly changing market, innovation velocity is often more important than feature completeness. The company that ships 50 features in a year and iterates based on customer feedback usually beats the company that ships 5 perfect features.

3. Developer Retention and Recruitment

Top engineers don't want to work on legacy systems. They want to work on interesting problems with modern tools. When you force talented engineers to spend their days working around architectural limitations, they leave.

You end up with a bifurcated team: the experienced developers who understand the legacy system (and are expensive to replace), and junior developers who are still learning. There's no middle ground because all the mid-career developers graduated to companies with modern infrastructure.

When you need to hire new talent, you can't compete on "we use modern technology." So you compete on salary. Legacy-system companies typically pay 20-40% more for equivalent engineers, but still can't hire the best people.

4. Reduced Competitive Agility

When the market changes, your ability to respond depends on infrastructure flexibility. If a competitor launches a new product that threatens your business, how fast can you build a competitive response?

With modern infrastructure, a well-organized team can prototype a new product in weeks. With legacy infrastructure, you're looking at months of planning and architectural discussions just to figure out where the new feature goes.

By the time you've modernized enough to launch your response, the competitor has already captured market share. Then you're playing catch-up for years.

5. Data Loss and Disaster Recovery Risk

Older backup systems often still rely on tape, and a full restore from tape can take days. Modern systems replicate data in near real time across geographic regions, with recovery time measured in minutes.

If your legacy system has a catastrophic failure (ransomware attack, hardware failure, data corruption), you're looking at days of downtime. Not hours. Days. In our always-online world, a few days of downtime can mean millions in lost revenue.

Modern infrastructure with geographic replication means you can fail over to a different region automatically. Customers never notice the outage.

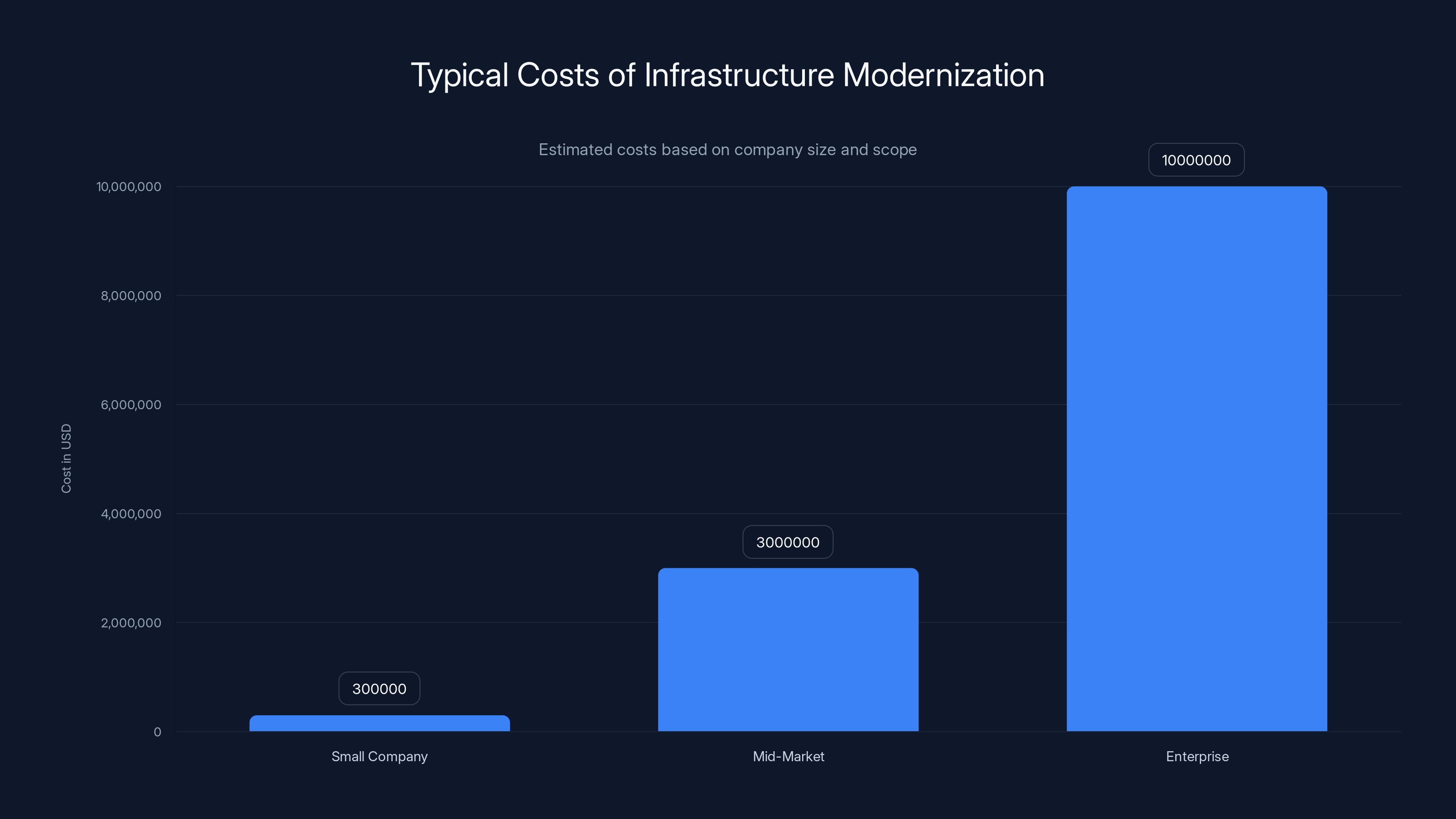

Infrastructure modernization costs vary by company size, ranging from

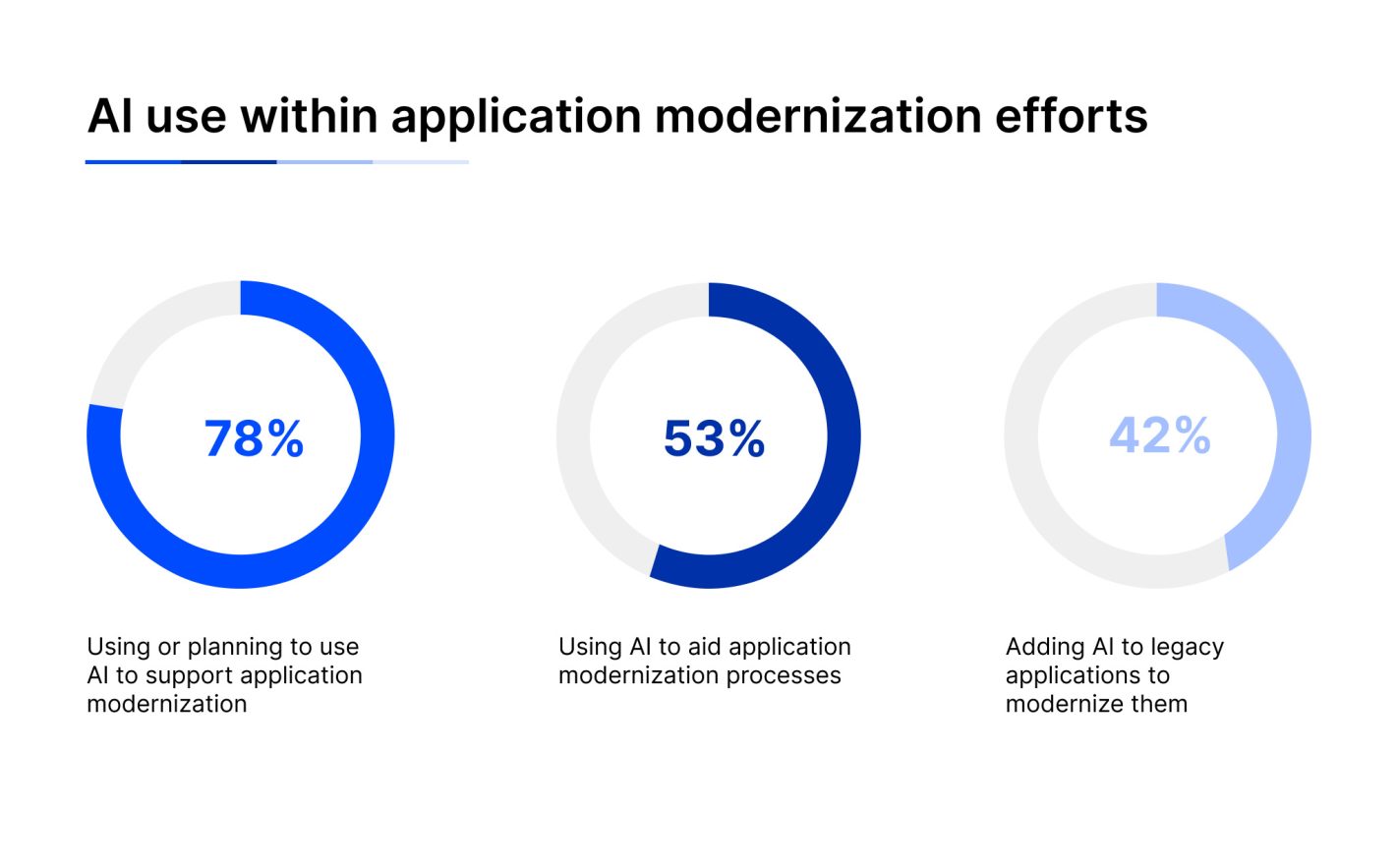

The AI Use Case Landscape: Where Companies Are Actually Deploying AI

Let's be concrete about where companies are investing in AI. Understanding the use cases helps you understand what infrastructure you actually need.

Internal workflow improvement (79% of companies) is the most popular AI use case. This includes automating email triage, scheduling, document processing, data entry validation, meeting transcription and summarization, and code review assistance. These aren't customer-facing AI applications. They're internal tools that increase productivity.

These use cases typically have lower risk (mistakes affect your team, not customers) and relatively clear ROI (you measure time saved). They're great starting points for AI integration because they teach your organization how to work with AI before deploying it in higher-stakes environments.

Infrastructure requirement: These need real-time inference at small scale. You might run 100 requests per day through an internal automation system. You don't need massive scale, but you need reliability and quick turnaround time.

Content-driven applications (73% of companies) include generative AI features built into products. Customer support chatbots that use LLMs, content recommendation systems, dynamic product descriptions, personalized email generation, and AI-assisted design tools.

These are customer-facing and have higher stakes. A bad recommendation can harm customer experience. But they also have clearer ROI because you can measure engagement metrics and revenue impact directly.

Infrastructure requirement: These need scale. If you have a million customers and they're all interacting with AI features, you might process millions of inference requests per day. You need careful rate limiting, load balancing, and cost control because each inference costs money.

Revenue-generating initiatives (66% of companies) are AI features that directly generate revenue. Dynamic pricing based on demand and competitor prices, predictive customer lifetime value for marketing optimization, fraud detection that saves money by preventing chargebacks, and AI-powered customer acquisition.

These are business-critical and require high reliability. An AI system that's down means immediate revenue loss. These also require real-time inference at scale, and they require careful monitoring to detect when the model is degrading and predictions are becoming less accurate.

Infrastructure requirement: These need the full stack. Real-time inference at scale, careful monitoring and alerting, model versioning and rollback capabilities, A/B testing infrastructure to test new models safely, and data pipelines that continuously update models with new data.

Building a Modernization Strategy That Actually Works

Modernization fails when companies treat it as a technology project. It's not. It's a business transformation that happens to use technology.

Define Your AI Ambition First

Don't start with "what infrastructure do we need?" Start with "what do we want to accomplish with AI?"

Be specific. Not "improve customer experience." Instead: "reduce customer support response time from 24 hours to 2 hours using an AI chatbot for 60% of tickets." Not "accelerate innovation." Instead: "review code and flag potential security issues before merging."

Once you've defined your AI ambition, work backward to infrastructure requirements. Different use cases need different infrastructure. A chatbot needs different capabilities than a recommendation engine. A fraud detection system needs different monitoring than a content generation system.

Identify Your Technical Debt

Your modernization strategy needs to address three categories of technical debt:

Data debt: You can't build AI without data. If your data is scattered across 15 different systems with inconsistent formats and no way to join datasets, you need to solve that first. This often means building a data lakehouse, data warehouse, or unified data pipeline.

Application debt: Your applications need to be able to call AI services and integrate results. If your applications are tightly coupled monoliths, you need to break them apart. If they can't handle asynchronous operations (fire-and-forget AI requests), you need to add that capability.

Infrastructure debt: You need computing resources that can run AI workloads. If you're running everything on bare metal, you need to move to cloud infrastructure that gives you flexibility. If you're using legacy databases, you need to modernize to databases that support the data types and operations that AI requires.

Most companies need to address all three. Rank them by impact on your AI ambition. What's blocking you from your primary AI use case?
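The "fire-and-forget" capability mentioned under application debt can be sketched with an in-process queue and background workers. This is only an illustration: `call_model` is a stand-in for a real model call, and a production system would typically put a durable message broker between the application and the workers rather than an in-memory `asyncio.Queue`.

```python
import asyncio


async def call_model(ticket_id: int) -> str:
    # Stand-in for a slow AI call; a real system would call a model service here.
    await asyncio.sleep(0.01)
    return f"summary-for-{ticket_id}"


async def worker(queue: asyncio.Queue, results: dict) -> None:
    """Pull requests off the queue and process them in the background."""
    while True:
        ticket_id = await queue.get()
        try:
            results[ticket_id] = await call_model(ticket_id)
        finally:
            queue.task_done()


async def main() -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    workers = [asyncio.create_task(worker(queue, results)) for _ in range(2)]

    for ticket_id in (1, 2, 3):
        queue.put_nowait(ticket_id)  # returns immediately; the caller is not blocked

    await queue.join()  # only this demo waits; a request handler would move on
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results


if __name__ == "__main__":
    print(asyncio.run(main()))
```

The point of the pattern is that the application enqueues work and returns, so a slow model never holds up the request that triggered it.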

Sequence Your Modernization

Do NOT try to modernize everything at once. Pick a single AI use case, fully modernize the infrastructure supporting it, and prove ROI. Then expand.

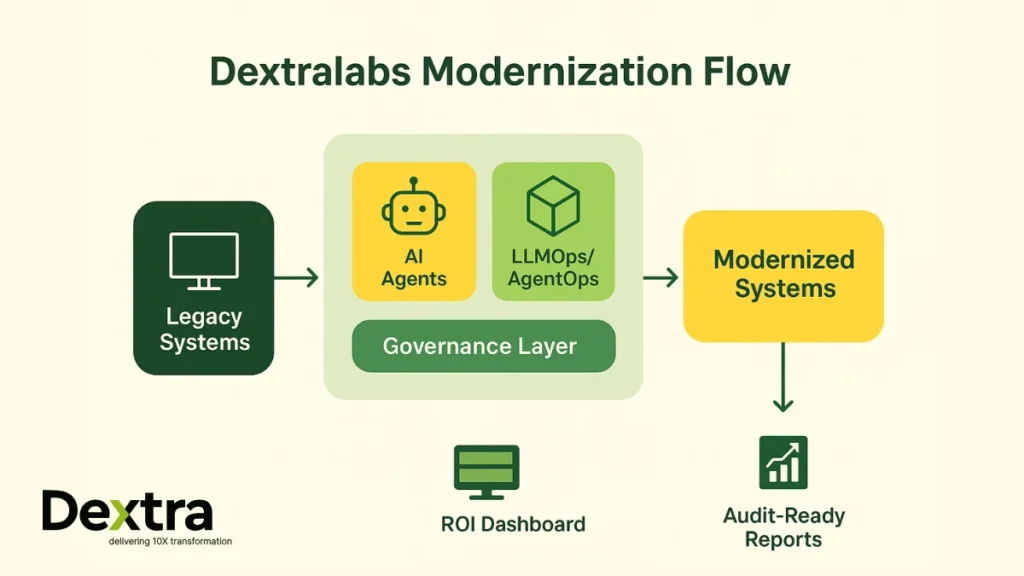

A typical sequence looks like:

- Months 1-2: Foundation - Set up cloud infrastructure (if not already done), establish data pipelines, deploy observability and monitoring. These don't directly deliver AI value but they enable everything else.

- Months 3-4: First AI Use Case - Pick your lowest-risk use case (internal workflow automation is usually best) and deploy an AI solution to it. Keep it simple. Maybe a single open-source model fine-tuned on your data.

- Months 5-6: Measure and Optimize - Don't immediately expand. Spend this time understanding what's working, what's not, and what your team is learning. Measure actual ROI. Adjust your approach based on learnings.

- Months 7-9: Expand to Content Use Cases - Once you've proven internal automation works, move to content-driven use cases. Your team now understands AI workflows and common pitfalls.

- Months 10-12: Revenue-Generating AI - Only after you've successfully deployed AI in lower-risk contexts should you deploy AI in revenue-generating systems. By this point your team is comfortable with AI operations and monitoring.

This sequence takes a year, but you're learning continuously and reducing risk at each stage. Compare that to a company that tries to do everything in three months and has to roll back twice because they didn't understand the operational implications.

Build Organizational Capability, Not Just Infrastructure

The infrastructure is the easy part. The hard part is building a team that understands how to operate AI systems.

Traditional application operations teams understand uptime and reliability. AI operations teams need to understand uptime, reliability, and model degradation. They need to monitor not just "is the service responding?" but "are the predictions still accurate?" If training data distribution has shifted, models can drift and become inaccurate even if the service is technically up.
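One common way to check whether the input distribution has shifted is the Population Stability Index (PSI), which compares a baseline sample with recent production values. The sketch below is a minimal pure-Python version. The binning scheme and the 1e-6 floor are assumptions chosen for simplicity, and the thresholds people quote for PSI (for example, treating values above roughly 0.25 as a significant shift) are rules of thumb rather than guarantees.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one numeric feature; 0.0 means identical bins."""
    lo = min(expected)
    hi = max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = max(0, min(bins - 1, idx))  # clamp values outside the baseline range
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Computing this on a schedule for each important input feature, and alerting when it rises, gives the operations team a signal that predictions may be degrading even while the service itself is healthy.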

Your infrastructure team needs training on containerization, Kubernetes, and cloud platforms. Your data team needs to understand ML operations (MLOps). Your security team needs to understand model security and data governance for AI.

This takes time. Budget for it. Hire people with AI experience if you can, or invest heavily in training your existing team.

Modernized companies see a compounding ROI improvement from AI, starting at 3x in Year 1 and reaching 9x by Year 4, compared to legacy systems. Estimated data.

The Decision Framework: Should You Modernize Now?

If you've gotten this far, you're probably asking: "Okay, but when should we actually do this?"

Here's the framework:

You should modernize immediately if:

- Your company is trying to deploy AI and finding it difficult to integrate with existing systems

- Your infrastructure is more than 5 years old

- You have critical systems running software that's no longer supported by vendors

- Your team is spending more than 40% of their time on maintenance

- You have high employee turnover in engineering

- You're losing deals to competitors with faster deployment cycles

You can afford to wait a bit if:

- Your infrastructure is 3-4 years old and you've been maintaining it well

- You're not planning major AI initiatives in the next 18 months

- Your team is relatively satisfied and retention is good

- You don't face competition that moves faster than you

You're probably in trouble if:

- You've been saying "we'll modernize next year" for more than 2 years

- Your infrastructure is more than 8 years old

- You're losing customers to competitors

- Your team is struggling to hire and retain engineers

- You've had more than one major outage in the last 12 months

Remember: you don't have to make a binary all-or-nothing decision. Modernization is a journey, not a destination. Start with one use case. Prove it works. Expand from there.

Practical First Steps: How to Actually Start

Let's get tactical. If you've decided to modernize, what's the first thing you do tomorrow?

Week 1: Audit Your Infrastructure

Create a simple spreadsheet of your critical systems. For each one, document:

- Age: When was this system first deployed? When was it last significantly updated?

- Dependency: How many other systems depend on this? How many teams maintain it?

- Flexibility: How easy is it to add new features? How easy is it to scale?

- Knowledge: How many people on your team understand it? How replaceable is that knowledge?

- AI readiness: Could you attach an AI model to this system relatively easily? What would be missing?

Don't overthink this. One column per system, five questions per column. One hour of work maximum.

This audit shows you where your biggest constraints are. That's your starting point.
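If you would rather keep the audit in version control than in a spreadsheet, the five questions map onto a small record type. This is a hypothetical sketch: the `SystemAudit` fields simply mirror the questions above, and it writes one row per system because that is the natural shape for CSV.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class SystemAudit:
    system: str
    age: str           # first deployed / last significant update
    dependency: str    # dependent systems and maintaining teams
    flexibility: str   # ease of adding features and scaling
    knowledge: str     # who understands it, how replaceable they are
    ai_readiness: str  # what is missing before a model can be attached


def to_csv(audits) -> str:
    """Serialize audit records to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(SystemAudit)])
    writer.writeheader()
    for audit in audits:
        writer.writerow(asdict(audit))
    return buf.getvalue()
```

The resulting file opens in any spreadsheet tool, so the audit can still be shared with non-engineering stakeholders.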

Week 2-3: Pick Your First Use Case

Look at your AI ambitions. Pick ONE. Not three. One.

Prefer use cases with these characteristics:

- Low risk if something goes wrong (internal tools preferred over customer-facing)

- Clear measurement of success (time saved, accuracy improvements, cost reduction)

- Natural integration point with existing systems (don't require rewriting everything)

- Executive support (because this takes effort and you need buy-in)

Be specific about success criteria. "Improve productivity" is vague. "Reduce time spent on email triage from 2 hours per day to 30 minutes per day" is measurable.

Week 4-6: Design the Infrastructure

Now you can ask: "What infrastructure do I need to support this use case?"

Keep it minimal. You don't need a full multi-region, auto-scaling, fully-monitored AI platform for your first use case. You need something that works reliably and gives you experience.

Consider using managed services to avoid operational overhead:

- Managed cloud databases instead of self-hosted databases

- Serverless compute (Lambda, Cloud Functions) instead of managing servers

- Hosted AI model APIs (OpenAI, Anthropic) instead of running your own models

- Managed data warehouses instead of building data pipelines from scratch

You're paying more per unit of compute with managed services, but you're paying less in total because you're not paying for the operations team to manage it.

Month 3: Build and Deploy

Actually build the thing. This should be your focus. Not planning. Not design reviews. Building.

Use agile methodology. Deploy weekly. Iterate based on feedback. If something doesn't work, change it. You're learning.

Month 4: Measure and Learn

Don't immediately expand to the next use case. Spend time understanding what you built:

- Is it actually solving the problem you identified?

- What's the actual ROI?

- What surprised you about operating an AI system?

- What would you do differently next time?

- What did your team learn?

This learning compounds. Your second AI project will go 3x faster because your team understands AI workflows.

Integrating security into modernization strategies results in a 4x multiplier in AI maturity, compared to a 3x multiplier with modernization alone. Estimated data.

Addressing the Confidence Gap: Why 95% Belief Is Dangerous

The most alarming finding in the research isn't that legacy systems are a problem. It's that 95% of leaders believe their infrastructure is sufficient for AI, while 85% of those who delayed modernization experienced reduced infrastructure confidence.

That's a contradiction. Leaders who delayed modernization report lower confidence in their systems, yet nearly all leaders still say those systems are sufficient. That's not logical. It's cognitive dissonance.

Why does this happen? A few reasons:

Survivorship bias: If your system is still running, it must be fine. But "still running" is a very low bar. Plenty of systems are still running while causing massive opportunity costs and competitive disadvantage.

Hidden incompetence: People don't know what they don't know. If you've never worked with modern infrastructure, you don't know what's possible. So you assume your current setup is normal and fine.

Sunk cost fallacy: You've invested so much in your current systems that admitting they're insufficient feels like admitting you made bad decisions.

Invisible costs: The drag from legacy infrastructure is invisible. You don't see the features you're not building, the customers you're not acquiring, the talent you're not hiring. You see the operational costs directly (which are high), but you don't see the opportunity costs (which are often higher).

Overcoming this requires external validation. That's why the research is valuable. When 93% of IT leaders say "updating software is critical," that's not one person's opinion. That's an industry consensus.

If you're in the 95% who believe your infrastructure is fine, ask yourself: What would change my mind? What evidence would convince me to modernize? Then look for that evidence.

Common Modernization Mistakes (And How to Avoid Them)

Talking to dozens of companies going through modernization, I've seen patterns emerge. These mistakes show up over and over:

Mistake 1: Big Bang Migration

Trying to migrate everything at once. You spend six months planning the perfect cutover, and then cutover day arrives and everything breaks because you missed some edge case.

Instead: Migrate in stages. Get one service running in the new infrastructure while the old infrastructure still runs. Gradually shift traffic. Maintain the ability to roll back instantly if something goes wrong.

A company I spoke with tried a big bang migration from on-premise to cloud. It took 18 months and cost 3x the initial estimate. The company next door did the same migration in 4 months by moving one service at a time.
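A staged cutover can be sketched in a few lines. This is a minimal illustration, not a production router: the `StagedRouter` class and its method names are hypothetical, and a real deployment would typically do this at the load balancer or service mesh. It shows the two properties that matter: traffic shifts gradually, and rollback is a single call.

```python
import random


class StagedRouter:
    """Sends a configurable fraction of requests to the new stack (hypothetical helper)."""

    def __init__(self, new_fraction=0.0, rng=None):
        self.new_fraction = new_fraction
        self._rng = rng or random.Random()

    def shift(self, new_fraction):
        """Move to a new traffic split, e.g. 0.05, then 0.25, then 1.0."""
        if not 0.0 <= new_fraction <= 1.0:
            raise ValueError("new_fraction must be between 0 and 1")
        self.new_fraction = new_fraction

    def rollback(self):
        """Send all traffic back to the legacy stack immediately."""
        self.new_fraction = 0.0

    def route(self):
        """Pick a backend for one request based on the current split."""
        return "new" if self._rng.random() < self.new_fraction else "legacy"


router = StagedRouter()
router.shift(0.05)   # start with a small slice of traffic
router.rollback()    # something looks wrong: revert in one step
```

Because the legacy path keeps running the whole time, a bad release costs you one `rollback()` call rather than a failed cutover day.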

Mistake 2: Ignoring Data Migration

Leaders focus on "migrating our application" but forget that applications are useless without data. Data migration is the hard part.

Historical data is dirty. It has inconsistencies, missing values, encoding issues, and corrupted records that should have been cleaned up years ago. When you try to migrate it all at once, you spend months debugging data quality issues.

Instead: Clean as you go. When you migrate a service, clean the data it uses. Leave the garbage behind. Your new system starts clean, and you only migrate data that actually matters.
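As a rough sketch of "clean as you go", assuming a service whose records are dictionaries with `name` and `email` fields (both field names and the validation rules are invented for illustration), the migration step can normalize each record and set aside anything that fails validation instead of carrying it forward:

```python
def clean_record(raw):
    """Return a normalized copy of a record, or None if it should be left behind."""
    email = (raw.get("email") or "").strip().lower()
    name = (raw.get("name") or "").strip()
    # Hypothetical rule: a record needs a name and a plausible email to be worth migrating.
    if not name or "@" not in email:
        return None
    return {"name": name, "email": email}


def migrate(records):
    """Split records into cleaned rows to migrate and raw rows to review or drop."""
    migrated, rejected = [], []
    for raw in records:
        cleaned = clean_record(raw)
        if cleaned is None:
            rejected.append(raw)
        else:
            migrated.append(cleaned)
    return migrated, rejected


legacy_rows = [
    {"name": " Ada ", "email": "ADA@Example.com "},
    {"name": "", "email": "nobody@example.com"},
    {"name": "Bob", "email": None},
]
migrated, rejected = migrate(legacy_rows)
```

Keeping the rejected rows in a separate list, rather than silently discarding them, lets someone review what was left behind before the old system is retired.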

Mistake 3: Underestimating Security Complexity

Leaders think about security as an afterthought. "We'll add security features after the basic migration works."

That's wrong. Security in legacy systems and security in modern systems are completely different. Retrofitting security later means reworking much of the migration you just finished.

Instead: Build security into the design from day one. Yes, it takes longer upfront, but you avoid rework later.

Mistake 4: Not Planning for Skills Gaps

Your team knows how to operate the old system. They don't know how to operate the new system. You can't just flip a switch and expect everything to work.

You need a parallel run period where both systems are operating and your team is learning the new one. That's expensive and uncomfortable, but it's cheaper than learning in crisis mode when the old system breaks.

Instead: Plan for a 6-month parallel run minimum. Set up training programs. Hire people with experience in the new platform to teach your team. Accept that productivity will dip during transition. It's temporary.

Mistake 5: Not Measuring Progress

Leaders start modernization without clear metrics for success. So they have no idea if it's actually working or worth the investment.

Instead: Define metrics upfront. What does successful modernization look like? Lower infrastructure costs? Faster deployment cycles? Fewer outages? Higher employee satisfaction? Higher revenue? Pick three and measure them before, during, and after modernization.
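Tracking this does not need special tooling. The sketch below compares a baseline snapshot with a later one for three metrics; every number and metric name is a made-up placeholder, not data from the research.

```python
def percent_change(before, after):
    """Percentage change from the baseline value to the current value."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100.0


# Hypothetical snapshots taken before and during modernization.
baseline = {"monthly_infra_cost": 100000, "deploys_per_week": 2, "outages_per_quarter": 8}
current = {"monthly_infra_cost": 80000, "deploys_per_week": 5, "outages_per_quarter": 4}

report = {name: round(percent_change(baseline[name], current[name]), 1) for name in baseline}
print(report)
```

The point is the discipline rather than the code: capture the baseline before work starts, or there is nothing to compare against later.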

The Integration Story: How AI Tools Complement Modernization

While you're modernizing your infrastructure, you're probably also evaluating AI tools to power your applications. These are complementary investments.

Modern platforms like Runable enable teams to build AI-powered workflows without waiting for perfect infrastructure. Runable offers AI-powered automation for creating presentations, documents, reports, images, videos, and slides starting at $9/month.

For teams with modernized infrastructure, Runable integrates with your systems to enable rapid deployment of AI agents for workflow automation. You can build AI-powered document generation, automatic report creation, and presentation synthesis without building these systems from scratch.

The modernization journey and AI tool adoption should be synchronized. Modernized infrastructure gives you the flexibility to integrate different AI services. AI tools help you deliver value from that modernized infrastructure faster.

Use Case: Automatically generate weekly reports from raw data and distribute them to stakeholders without manual formatting or review delays.


The Timeline: When Will You See Payback?

Modernization is an investment. When's the payback?

Typical timeline:

Months 0-3 (negative ROI): You're investing heavily in infrastructure and training. You're not delivering value yet. This is painful.

Months 3-6 (break-even): You've deployed your first AI use case and it's working. Savings and revenue from that use case roughly balance the infrastructure investment.

Months 6-12 (positive ROI): You've deployed multiple use cases. Cumulative savings and revenue significantly exceed the modernization investment.

Year 2 and beyond (compounding returns): You're moving fast. Each new feature takes weeks instead of months. Revenue grows. Costs decrease. The investment from year one keeps paying dividends.

The key insight: you start seeing returns within 3-6 months if you focus on high-impact use cases with clear ROI. But the full benefit takes years to realize. Plan for this. Don't expect immediate payback.
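To sanity-check a timeline like this against your own numbers, you can compute the month in which cumulative net cash flow first reaches zero. The sketch below is illustrative only: the monthly figures are invented (in arbitrary currency units) and `break_even_month` is a hypothetical helper.

```python
from itertools import accumulate


def break_even_month(monthly_net):
    """Return the 1-based month where cumulative net cash flow first reaches zero or more."""
    for month, total in enumerate(accumulate(monthly_net), start=1):
        if total >= 0:
            return month
    return None  # never breaks even within the period given


# Hypothetical plan: three months of pure investment, then a first use case pays back.
monthly_net = [-100, -100, -100, 100, 100, 100, 150, 150]
print(break_even_month(monthly_net))  # prints 6
```

If the function returns `None` for your projected figures over a 12-month horizon, that is a signal to revisit which use case you are starting with.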

Looking Forward: The Future of AI and Infrastructure

Where is this all heading?

Your infrastructure in 2025 is probably going to look significantly different from your infrastructure in 2020. Partially because of AI, but also because of broader infrastructure trends:

Serverless computing is becoming the default. You're not managing servers anymore. You're defining how much compute you need for a function, and the infrastructure scales automatically.

Edge computing brings computation closer to data. Instead of sending everything to the cloud, processing happens locally. For AI, this means lower latency and more privacy.

Observability and monitoring are becoming first-class infrastructure concerns. You're not just monitoring uptime. You're monitoring model quality, data quality, performance, and fairness.

Open-source models are commoditizing AI. You can run your own small language models on your infrastructure for specific tasks instead of always relying on expensive API-based services.

Data governance is becoming table stakes. Who owns the data? How is it being used? What's the lineage? These aren't nice-to-have questions anymore. They're regulatory requirements in many jurisdictions.

Leaders who modernize now are positioning for these trends. Leaders who wait until 2026 to start modernizing will be playing catch-up for years.

Conclusion: The Modernization Imperative

Let's be direct. Your legacy infrastructure is not a feature. It's a liability.

You're paying hidden costs in lost innovation, slow time-to-market, high maintenance burden, and reduced ability to compete. You're also paying explicit costs in infrastructure, staffing, and opportunity loss.

Modernization isn't easy. It requires investment. It requires organizational buy-in. It requires your team to learn new skills. It requires uncomfortable periods where systems aren't running optimally while you transition.

But the research is clear: companies that modernize are 3x more likely to realize value from AI. They move faster. They innovate quicker. They retain talent better. They compete more effectively.

The decision isn't whether to modernize. The research already answered that. The decision is when to start. And every month you wait, you're falling further behind.

The window for turning this into a managed transition is probably 6-12 months. After that, the gap widens and you're forced into crisis mode.

So ask yourself: Is our infrastructure fit for our future? If the answer is no, what are you waiting for?

Start with one use case. Pick something that matters for your business. Modernize the infrastructure supporting it. Prove the ROI. Then expand.

Your future self will thank you for the investment your present self makes today.

FAQ

What exactly does "modernizing apps and infrastructure" mean?

Modernizing means updating your technology stack to contemporary standards. This includes upgrading databases from systems like SQL Server 2008 to cloud-native solutions, containerizing applications using technologies like Docker and Kubernetes, implementing modern data pipelines, adopting event-driven architecture, and establishing contemporary security practices. It's not about rewriting everything—it's about making your systems flexible, scalable, and compatible with modern workloads like AI.

How much does infrastructure modernization typically cost?

Costs vary dramatically based on system complexity and scope. A small company might spend

How long does modernization typically take?

Big bang migrations often take 12-24 months and commonly exceed budget by 50-100%. Incremental modernization (which is recommended) typically takes 12-18 months for meaningful impact, with the first measurable value appearing around month 4-6. The key advantage of incremental approaches is reduced risk and the ability to course-correct as you learn.

What's the relationship between modernization and AI adoption?

Modernization enables AI adoption. Legacy systems lack the flexibility, scalability, and data accessibility that AI requires. Companies with modernized infrastructure are 3x more likely to achieve measurable ROI from AI tools. Think of modernization as building the foundation and AI as the application built on that foundation.

Should we modernize our entire infrastructure at once or incrementally?

Incremental modernization is almost always superior. Trying to modernize everything simultaneously creates massive complexity, increases failure risk, and makes it nearly impossible to course-correct. Instead, identify one critical system or use case, modernize the infrastructure supporting it, prove the value, then expand. This approach reduces risk, enables learning, and maintains business continuity.

What happens if we don't modernize?

You'll face increasing competitive disadvantage. Your time-to-market will slow. Your infrastructure costs will remain high while competitors reduce theirs. Your ability to retain engineering talent will diminish. Your vulnerability to security breaches will increase. Eventually, you'll be forced to modernize in crisis mode (like after a breach), which is far more expensive and disruptive than planned modernization.

How do we know if our infrastructure is actually holding us back from AI?

Honest assessment: If you can't easily deploy a new service in your architecture, your infrastructure isn't ready. If you can't scale one component independently of others, you're limited. If adding a new data source requires weeks of engineering work, your data infrastructure needs modernization. If your team spends more than 30% of time on maintenance, your systems are too burdensome. Use these as quick indicators.

What's the role of security in modernization?

Security isn't something you add after infrastructure is modern. Companies that build security into modernization from the start are 4x more likely to reach advanced AI maturity. Modern infrastructure should include model versioning and attestation, fine-grained access controls, inference logging, and adversarial testing built in from day one. This prevents far more expensive security retrofits later.

Which AI use cases should we prioritize first?

Start with internal workflow automation (email triage, document processing, meeting summaries). These have low risk, clear ROI, and teach your organization how to operate AI systems. After success there, move to content-driven applications (chatbots, recommendations). Only after success in lower-risk areas should you deploy AI in revenue-generating systems where mistakes have direct financial impact.

How do we address the skills gap when our team has never used modern infrastructure?

Plan for a 6-month parallel run where both old and new systems operate simultaneously. This gives your team time to learn. Hire experienced engineers in the new technology to mentor your existing team. Implement formal training programs. Accept that productivity will dip during transition (it's temporary). Don't fire people who understand the old system—they're critical during the transition period.

Key Takeaways

- Companies with modernized applications are 3x more likely to achieve measurable ROI from AI, making infrastructure modernization non-negotiable for serious AI adoption

- 93% of IT leaders identify software updates as the single most critical factor for AI success, yet 95% still believe their infrastructure is adequate

- Companies that integrate security during modernization are 4x more likely to reach advanced AI maturity, which suggests security enables rather than constrains AI

- Incremental modernization starting with single high-impact use cases reduces risk and enables learning, while big-bang migrations often take 12-24 months and commonly exceed budget by 50-100%

- When sequenced properly, modernization typically reaches break-even in months 3-6, positive ROI in months 6-12, and compounding returns from year 2 onward

