Telco AI Factory: Building Intelligent Communications Networks [2025]

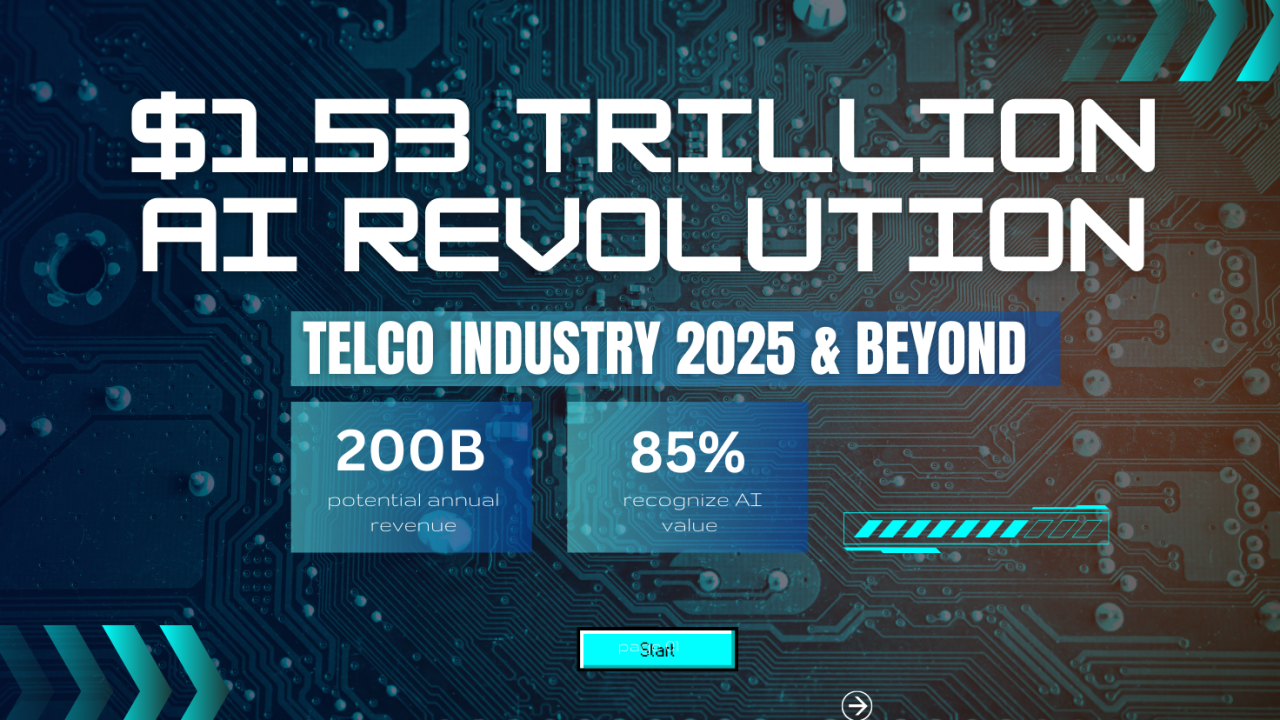

The telecommunications industry is undergoing its most significant transformation since the internet changed everything. We're not talking about incremental upgrades to 5G or minor infrastructure improvements. This is about fundamentally reimagining what telecom companies actually do.

For decades, telecom operators played a straightforward role: they moved data from point A to point B. Voice, text, video—doesn't matter. The infrastructure was designed to be a dumb pipe. Efficient, sure. But ultimately disconnected from what happened at either end of the connection.

That era is ending. The Telco AI Factory is the operating model that's making it happen. It's a collaborative framework that combines three critical elements: hardware infrastructure, telecom provider networks, and cloud communications intelligence. When these pieces work together, they create something genuinely new—a system that doesn't just move data, but understands it, acts on it, and creates value from it.

Here's why this matters right now. Enterprises are drowning in communication complexity. Customer service teams juggle voice calls, text messages, emails, and video chats across disconnected systems. Healthcare providers waste billions on administrative overhead that has nothing to do with actual patient care. Telecom operators watch hyperscalers like Amazon and Google capture the value in cloud computing while they remain stuck in connectivity. The Telco AI Factory solves all three problems simultaneously.

The shift is already happening. Operators in North America, Europe, and Asia are piloting these systems. Early results show dramatic improvements in customer service efficiency, new revenue streams from AI-driven services, and the ability to compete in markets they previously couldn't touch. But most people in enterprise IT still don't understand what's coming.

This guide breaks down exactly how the Telco AI Factory works, why telecom operators have advantages nobody else possesses, and how enterprise customers should prepare for this transformation. We'll cover the technical architecture, real-world use cases from healthcare to financial services, and the partnerships that are making it all possible.

TL;DR

- The Telco AI Factory is a collaborative model combining hardware providers, telco infrastructure, and cloud communications platforms to deploy AI at the network edge with low latency and high security

- Telecom operators possess unique advantages: sovereign AI capabilities, local data center proximity, access to vast contextual data, and inherent customer trust that hyperscalers can't replicate

- Voice AI, not just text-based generative AI, is the revenue driver, with Goldman Sachs projecting that generative AI could add nearly $7 trillion to global GDP over the next decade

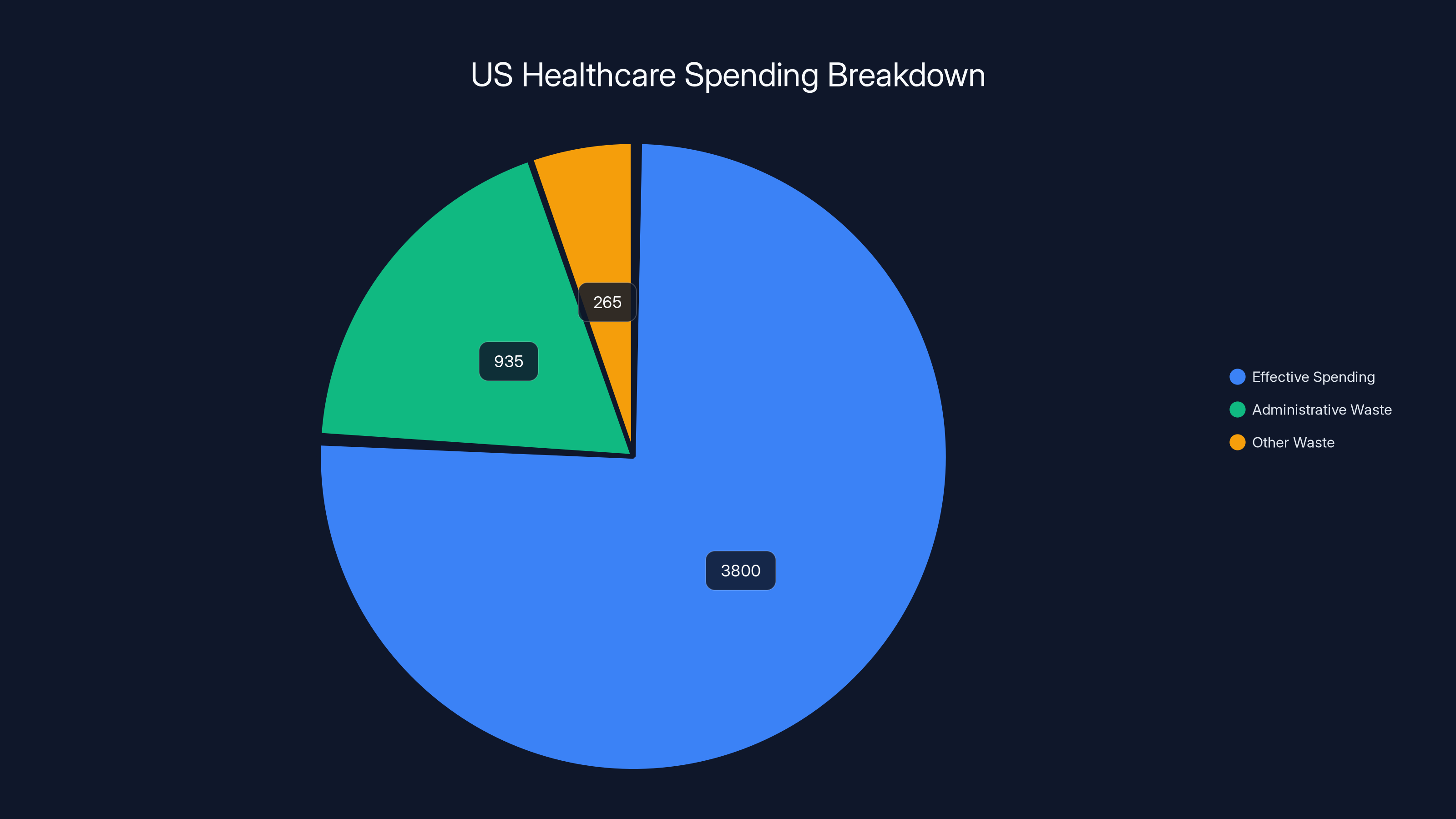

- Healthcare is the proving ground, where AI agents deployed on trusted telecom infrastructure can help reduce US healthcare waste, including administrative overhead, estimated at up to $935 billion annually

- The edge computing advantage is critical: low-latency AI inference at the network edge enables real-time personalization, security, and compliance that centralized cloud computing cannot match

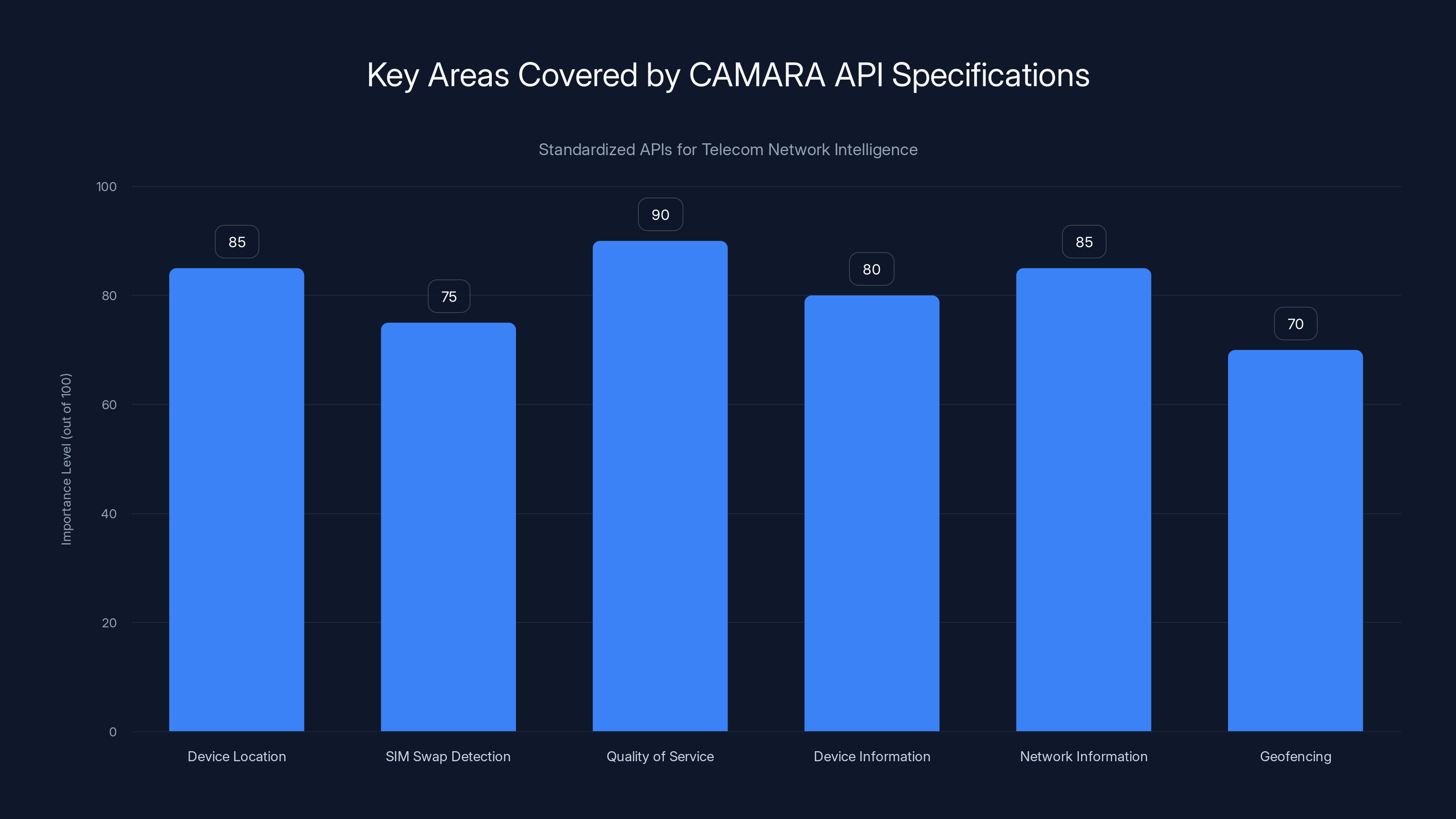

- Enterprise adoption depends on converged APIs that integrate network intelligence (device location, QoS, SIM status) with AI agents for sophisticated use cases like fraud detection and personalized services

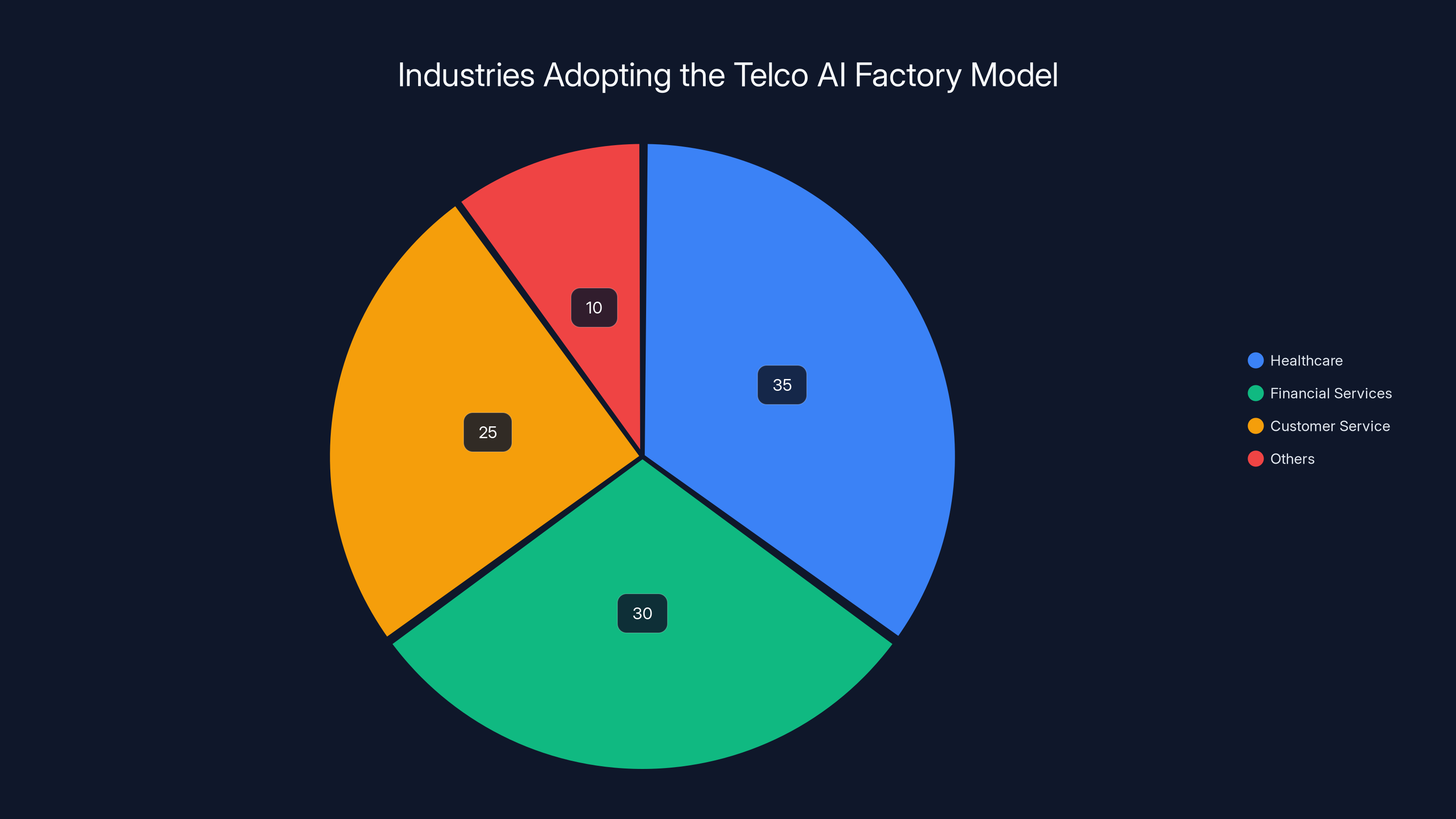

Healthcare, financial services, and customer service are leading in adopting the Telco AI Factory model, with healthcare taking the largest share. Estimated data.

Understanding the Telco AI Factory: A New Operating Model

When you hear "Telco AI Factory," it's easy to think it's just another tech buzzword. But it's actually a concrete operating model that changes how telecom networks function at a fundamental level.

Traditionally, telecom networks were built around a simple architecture: switching centers connected by fiber optic cables and radio towers. The switching centers (called Central Offices or COs) did one job incredibly well—they routed voice calls and data across the network. Everything else happened outside the network. If you wanted to add intelligence, you built it somewhere else: a cloud data center, an enterprise data center, your laptop.

The Telco AI Factory inverts this. Instead of intelligence living outside the network, it gets deployed directly into the network infrastructure itself. The Central Offices transform into what operators now call "AI hubs." These aren't just switching centers anymore. They're computing platforms that can run AI models, analyze data in real time, and make decisions at the edge of the network.

Why does this matter? Latency. Speed. An AI model running in a distant cloud data center takes 100–200 milliseconds to respond to a request. An AI model running at the network edge, five miles from you, responds in 10–20 milliseconds. For most applications, you don't notice the difference. But for real-time communications—voice calls being enhanced by AI, video streams being optimized on the fly, fraud being detected before a transaction completes—those milliseconds mean everything.
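The distance component of that gap can be estimated with simple arithmetic. The sketch below assumes light travels through optical fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and ignores routing, queuing, and inference time, so it yields only a lower bound on round-trip delay. The 2,000 km distance is an illustrative assumption, not a figure from this article.

```python
# Rough lower bound on network round-trip time from fiber distance alone.
# Assumption: signals in optical fiber propagate at ~200,000 km/s (~2/3 c).
# Routing hops, queuing, and model inference time are NOT included.

FIBER_SPEED_KM_PER_MS = 200.0  # 200,000 km/s expressed per millisecond


def propagation_rtt_ms(distance_km: float) -> float:
    """Return round-trip propagation delay in ms for a one-way path length in km."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


if __name__ == "__main__":
    edge_km = 8.0        # about five miles, as in the example above
    distant_km = 2000.0  # illustrative distance to a far-away cloud region (assumption)
    print(f"edge:    {propagation_rtt_ms(edge_km):.2f} ms")
    print(f"distant: {propagation_rtt_ms(distant_km):.2f} ms")
```

Over five miles, propagation costs well under a millisecond, so most of an edge deployment's 10–20 ms goes to processing. Over long paths, distance alone consumes a meaningful share of the budget before any model runs.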

The Telco AI Factory model also creates something new: a trusted, localized AI infrastructure that doesn't require sending sensitive data across the internet to a hyperscaler's cloud. This becomes critical when you're dealing with patient health records, financial transactions, or government communications. European enterprises dealing with GDPR requirements, Asian markets with data localization mandates, and governments everywhere concerned about sovereignty all have the same problem—they need AI capabilities, but they need them running on trusted infrastructure inside their borders.

The model works because it brings together three distinct players, each contributing what they do best. Hardware platform providers (think companies like Intel, AMD, and specialized networking equipment manufacturers) provide the computing infrastructure. Telecom operators provide the physical network, the local data centers, and the trusted relationships with enterprises and governments. Cloud communications providers provide the artificial intelligence, the conversational understanding, the sophisticated AI agents that actually create value.

None of these players could do it alone. Hardware companies understand computing but don't have the network or the trust. Telecom operators have the network and the trust but traditionally lack the AI expertise. Cloud communications providers have the AI capabilities but no physical infrastructure to run it on. Together, they create something genuinely novel.

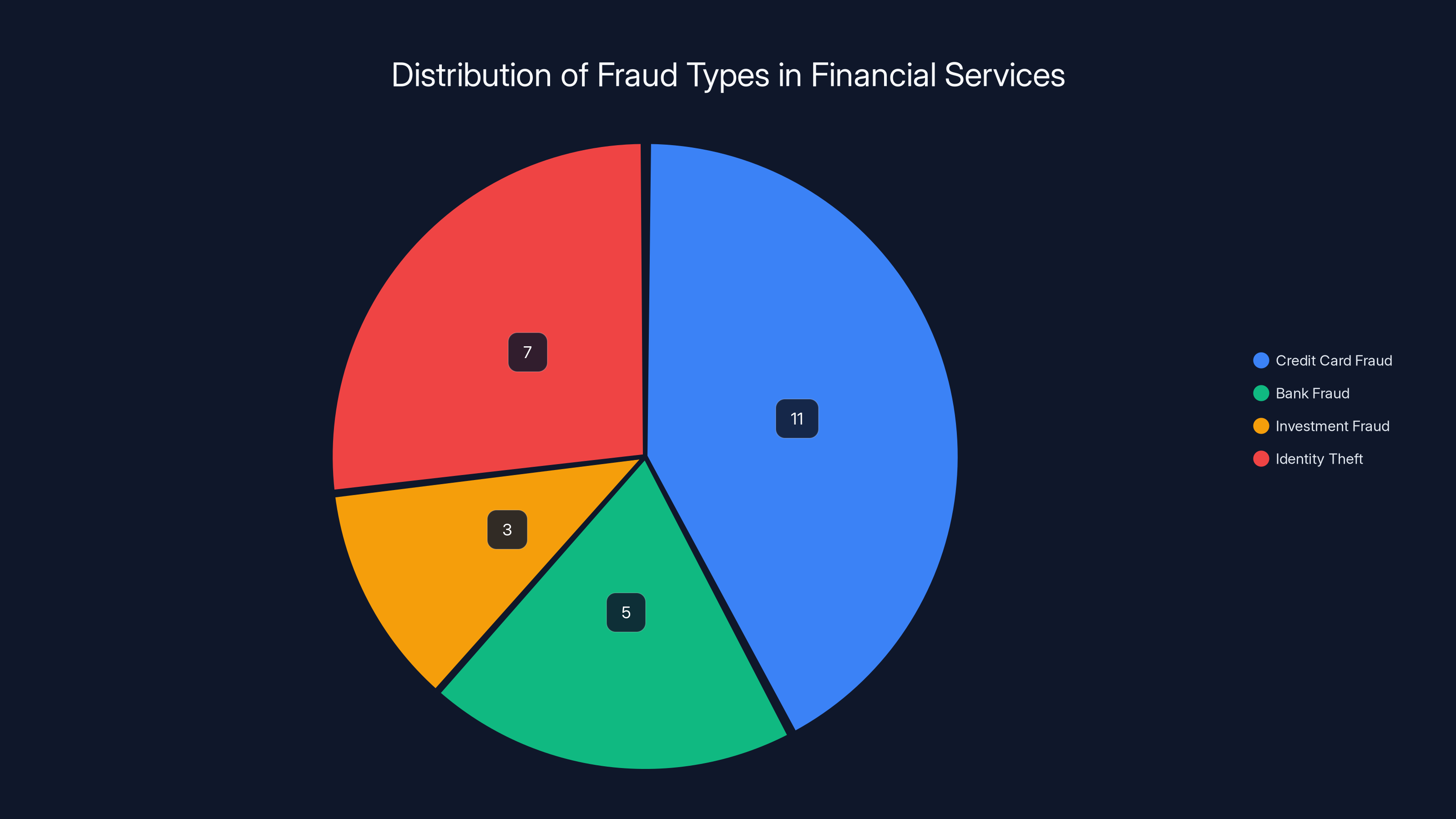

Credit card fraud is the largest contributor to financial losses, costing over $11 billion annually. Estimated data.

The Three Pillars: How the Telco AI Factory Actually Works

The Telco AI Factory operates on three interconnected pillars. Understanding each one shows why this model works and why it's difficult for competitors to replicate.

Hardware Platform Providers: The Foundation Layer

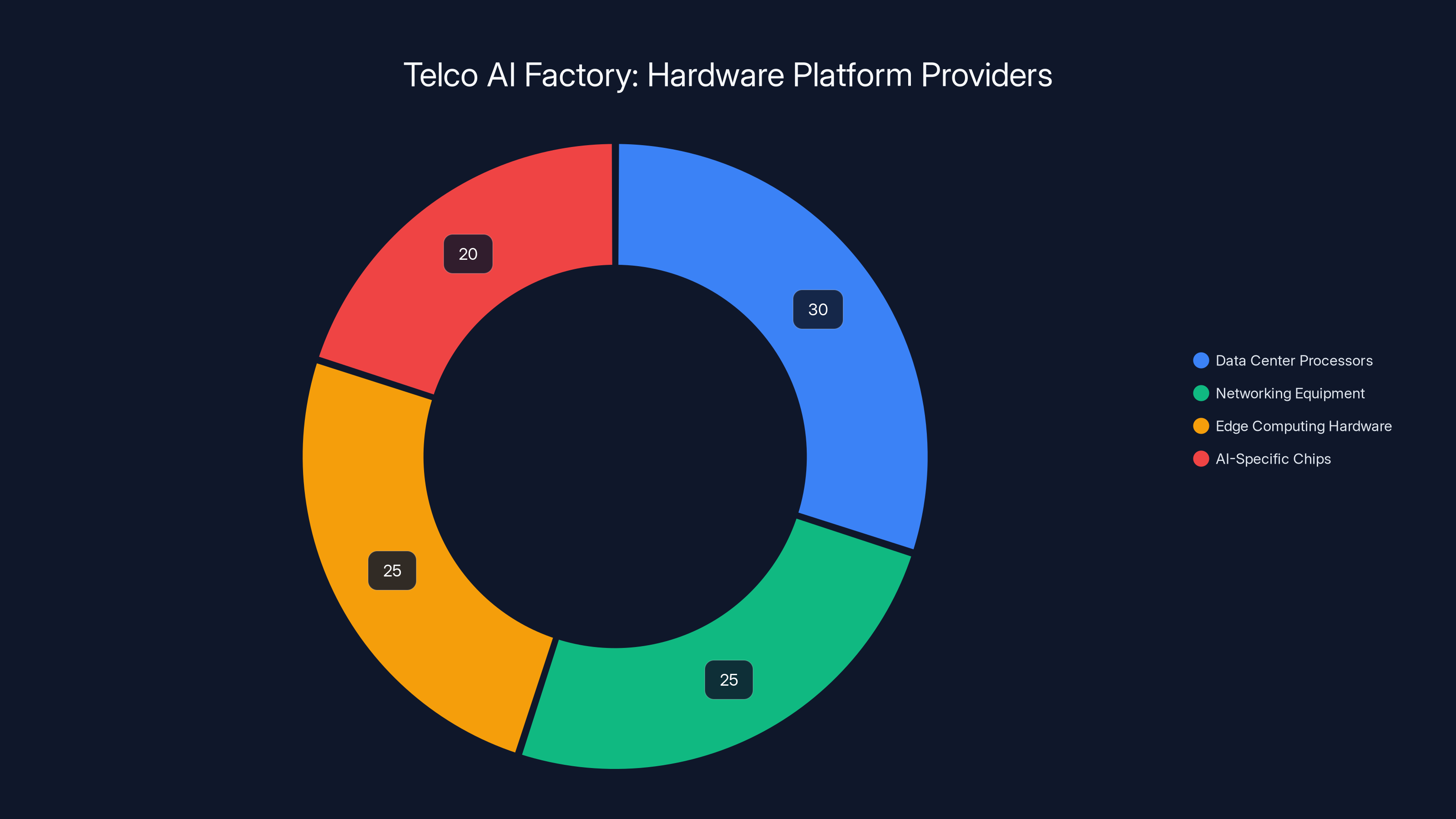

Hardware platform providers are the first pillar. These companies design and manufacture the physical computing infrastructure that makes everything else possible. We're talking about data center processors, networking equipment, edge computing hardware, and the specialized chips designed for AI inference.

Their job is straightforward in concept but complex in execution: deliver computing power that's dense enough to fit into existing Central Office spaces, efficient enough to not require massive power upgrades, and capable enough to run multiple AI models simultaneously without bottlenecks.

The hardware challenge is real. A typical Central Office building might have space constraints, existing power budgets that aren't designed for heavy computing loads, and cooling systems that were built when these buildings primarily housed mechanical switching equipment. You can't just put a hyperscale data center in every CO. You need hardware optimized for edge deployment: compact, power-efficient, and sophisticated in ways that cloud hardware doesn't need to be.

There's also the question of AI-specific hardware. Traditional CPUs work fine for many AI inference tasks, but GPU acceleration helps enormously for certain models, and specialized AI chips from companies working in this space offer even better performance for specific use cases. The hardware providers are responsible for assembling the right mix of processors, accelerators, storage, and networking to create a platform that actually works at the edge.

What's often overlooked is the importance of open standards and interoperability at this layer. The hardware platform providers need to work with multiple software vendors and multiple telecom operators. They can't create proprietary lock-in where the hardware only works with one company's software. That would defeat the entire purpose of the collaborative model. So you see companies in this space emphasizing open architectures, standard interfaces, and compatibility with industry-standard software stacks.

Telecom Operators: Trust, Infrastructure, and Data

The second pillar is the telecom operators themselves. And this is where you start to understand why this model is actually radical for the telecom industry.

Historically, telecom operators have been in a precarious position relative to tech giants. They invested enormous capital in building and maintaining networks, but most of the value in the digital economy was being captured by companies that didn't own networks. A telecom operator with millions of customers and billions in infrastructure revenue watched helplessly as Amazon and Google captured the high-margin cloud computing market. The telco's job was to provide connectivity so those companies could offer their services.

The Telco AI Factory gives operators a path to recapture some of that value. But it requires leveraging advantages that they actually have, and that hyperscalers don't.

Sovereignty and Trust. This is the first advantage. Governments and enterprises trust telecom operators in a way they don't trust cloud providers. A telecom operator is licensed by national governments, regulated by national regulators, and embedded in the infrastructure of the nation. When a government says "we need sovereign AI—all data processing must happen within our borders," a telecom operator can deliver that. They own the data centers. They control where data is processed. They're not going to route patient health records through servers in another country because there's no business reason to do so.

This trust is valuable precisely because hyperscalers have created so much anxiety around data sovereignty. European enterprises subject to GDPR regulations spend enormous effort ensuring data never leaves the EU. Asian governments are increasingly mandating that data stay within national borders. Healthcare providers are paranoid about patient data security. For all these customers, the ability to run AI workloads on a trusted local infrastructure is enormously valuable.

Physical Proximity and Low Latency. Telecom operators own regional and central data centers spread across entire countries. These facilities are often located close to population centers, which means low-latency access to edge computing resources. This is genuinely hard for hyperscalers to match. They concentrate capacity in a comparatively small number of large cloud regions, and traffic has to route to them. A telecom operator might have 20 regional data centers, and for many enterprises, there's one within a few miles.

This proximity becomes critical for real-time applications. A customer service AI agent that responds in 10 milliseconds because it's running three miles away feels instant. The same agent running in a distant cloud data center, responding in 200 milliseconds, feels sluggish. For applications like real-time video optimization, network anomaly detection, or fraud prevention, latency differences matter enormously.

Access to Contextual Data. Telecom operators have access to data about network conditions that nobody else possesses. They know what device you're using, where you're located, what your network bandwidth is, whether you're roaming internationally, whether your SIM card has been recently changed (fraud indicator), and thousands of other network parameters.

When you combine this network intelligence with AI agents, you unlock capabilities that are impossible otherwise. You can detect fraud not just by analyzing a transaction's characteristics, but by analyzing it in the context of that person's current location, their typical usage patterns, their current network conditions. You can personalize a customer service experience not just based on the text of their message, but based on whether they're on a mobile network with poor conditions or a fiber connection, whether they're roaming internationally, what their historical network usage looks like.
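A minimal sketch of that kind of network-aware personalization, assuming the agent receives a few network signals alongside the customer's message. The `NetworkContext` fields, the thresholds, and the channel names are hypothetical illustrations, not a real operator API.

```python
from dataclasses import dataclass


@dataclass
class NetworkContext:
    # Hypothetical signals an operator might expose to an AI agent.
    downlink_mbps: float
    roaming: bool
    access_type: str  # e.g. "mobile" or "fiber"


def choose_support_channel(ctx: NetworkContext) -> str:
    """Pick a support channel that fits the customer's current connection.

    Thresholds are illustrative assumptions, not recommendations.
    """
    if ctx.access_type == "fiber" and ctx.downlink_mbps >= 10:
        return "video"           # plenty of bandwidth: offer a video session
    if ctx.downlink_mbps < 1:
        return "sms"             # very poor link: fall back to text messaging
    if ctx.roaming:
        return "voice_callback"  # call back rather than use roaming data
    return "voice"


print(choose_support_channel(NetworkContext(0.5, False, "mobile")))  # sms
print(choose_support_channel(NetworkContext(50.0, False, "fiber")))  # video
print(choose_support_channel(NetworkContext(5.0, True, "mobile")))   # voice_callback
```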

Hyperscalers don't have access to this data. They see application traffic. They don't see the underlying network conditions. Telecom operators see everything.

Cloud Communications Providers: The Intelligence Layer

The third pillar is cloud communications providers. These are companies specialized in voice, video, and messaging AI. They bring the actual artificial intelligence to the model.

What distinguishes a cloud communications provider from a generic cloud company is specialization. They understand conversational AI in ways that general-purpose cloud platforms don't. They've built AI models trained specifically on voice and video data. They understand the subtleties of human communication: tone, emotion, context, cultural differences, regional language variations.

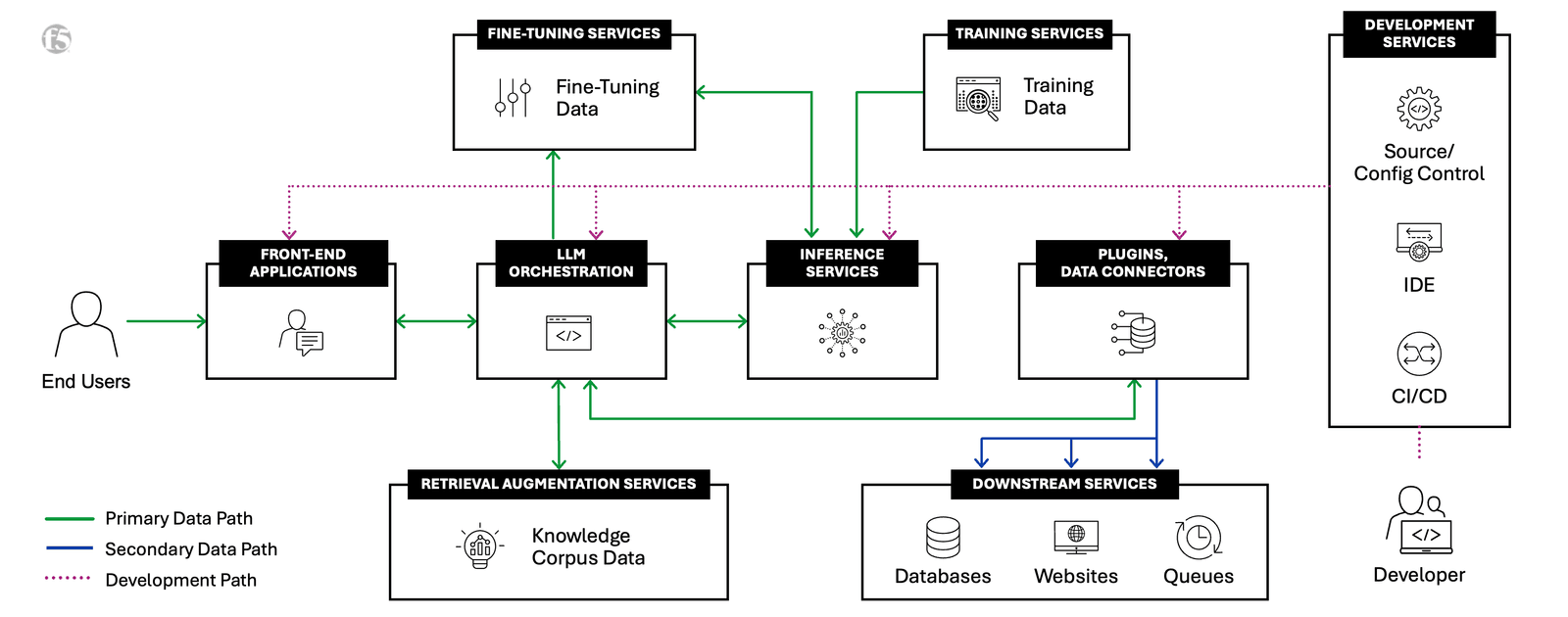

Their role is to take the infrastructure that hardware providers and telecom operators have assembled, and inject intelligence into it. This happens in several ways.

Conversational Intelligence at Scale. Cloud communications providers deploy AI engines that analyze all forms of communication: voice, video, text. These aren't simple transcription engines. They're sophisticated models that understand what people are actually saying, what they mean beyond the literal words, what emotional state they're in, what they need.

Think about a customer calling a healthcare provider with a billing question. A basic system transcribes the call and routes it to the right department. An intelligent system understands that the customer is frustrated, identifies the specific billing code that's causing confusion, cross-references it with their insurance, and potentially resolves the problem in the AI agent itself without ever needing to transfer the customer. The difference in customer experience is profound.

Domain-Specific AI Agents. Generic large language models are broad but shallow. A cloud communications provider working with healthcare builds AI agents that understand medical terminology, healthcare workflows, insurance systems, and patient communication in ways that general-purpose language models don't. This requires specialized training data, specialized model fine-tuning, and specialized validation to ensure accuracy.

These domain-specific agents actually listen and understand context. They adapt their communication style based on who they're talking to. They know when they're outside their competency and need to escalate to a human. They remember conversation history and reference it appropriately. They feel human in ways that generic chatbots don't.
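Knowing when to escalate is often implemented as a confidence gate. The sketch below assumes the agent's intent classifier returns a label and a confidence score; the set of supported intents and the 0.8 threshold are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Intents this hypothetical agent is allowed to resolve on its own.
SUPPORTED_INTENTS = {"billing_question", "appointment_scheduling", "insurance_verification"}
CONFIDENCE_THRESHOLD = 0.8  # illustrative assumption


@dataclass
class IntentResult:
    intent: str
    confidence: float  # 0.0 to 1.0, from a (hypothetical) intent classifier


def should_escalate(result: IntentResult) -> bool:
    """Escalate when the request is outside the agent's scope or the agent is unsure."""
    out_of_scope = result.intent not in SUPPORTED_INTENTS
    low_confidence = result.confidence < CONFIDENCE_THRESHOLD
    return out_of_scope or low_confidence


print(should_escalate(IntentResult("billing_question", 0.93)))     # False
print(should_escalate(IntentResult("billing_question", 0.55)))     # True
print(should_escalate(IntentResult("prior_authorization", 0.97)))  # True
```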

Converged API Integration. This is the technical magic that makes everything work together. Cloud communications providers expose APIs that let the AI agents access network intelligence from the telecom operator's infrastructure. A fraud detection agent can query QoS metrics, device location, and SIM status in real time. A personalization engine can adjust service quality based on current network conditions. A compliance system can ensure data never leaves the geographic region where it originated.

These APIs increasingly follow common specifications such as CAMARA, an open-source project hosted by the Linux Foundation that brings network operators together around shared API definitions. This means solutions built with one operator or cloud provider can potentially work with others, preventing lock-in and encouraging adoption.
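To make the SIM-status query concrete, here is a sketch of preparing a request and reading a response. The field names follow my reading of the CAMARA SIM Swap API's `check` operation (`phoneNumber`, `maxAge` in hours, and a boolean `swapped` in the response); treat them as assumptions to confirm against the spec version your operator exposes. HTTP transport, the endpoint URL, and authentication are deliberately omitted.

```python
import json
import re

# E.164: '+' followed by up to 15 digits, the first of which is non-zero.
E164_PATTERN = re.compile(r"^\+[1-9]\d{1,14}$")


def build_sim_swap_check(phone_number: str, max_age_hours: int = 240) -> str:
    """Build a JSON body for a SIM swap 'check' request.

    Field names are assumed from the CAMARA SIM Swap API; verify them
    against the version your operator exposes.
    """
    if not E164_PATTERN.match(phone_number):
        raise ValueError("phone number must be in E.164 format, e.g. +14155550100")
    if max_age_hours < 1:
        raise ValueError("maxAge must be a positive number of hours")
    return json.dumps({"phoneNumber": phone_number, "maxAge": max_age_hours})


def recent_swap(response_body: str) -> bool:
    """Interpret a check response such as '{"swapped": true}'."""
    return bool(json.loads(response_body).get("swapped", False))


body = build_sim_swap_check("+14155550100", max_age_hours=72)
print(body)                              # {"phoneNumber": "+14155550100", "maxAge": 72}
print(recent_swap('{"swapped": true}'))  # True
```

A fraud agent would typically treat `True` as one risk signal among several rather than as grounds for an automatic decline.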

Why Telecom Operators Are Uniquely Positioned for AI Leadership

At first glance, it seems surprising that telecom operators would be leading the charge into AI. For decades, they've been known as conservative, infrastructure-focused businesses. They invest in cables and towers, not cutting-edge software.

But this stereotype misses what actually makes telecom operators powerful in the AI era.

The Central Office Transformation: From Switching Center to AI Hub

The physical heart of any telecom network is the Central Office. For over a century, COs have been the switching centers where connections get routed. During the telephone era, COs were enormous mechanical installations with thousands of switches controlled by electromechanical relay systems. When digital telephony arrived, COs became computing facilities with specialized switching hardware.

Today, COs are transforming into something radically different: AI hubs. They're becoming the edge computing infrastructure where AI models run, where data gets analyzed, where decisions happen in real time.

This transformation is profound because it redefines what a telecom operator does. They're no longer just a connectivity provider. They're becoming a platform for intelligent services delivered at the network edge. A customer service AI agent running in a CO three miles from you, with access to your network data, able to understand your context, and respond in milliseconds—this is something genuinely new.

The physical proximity of these facilities is underrated. Cloud computing assumes that latency doesn't matter much, that you can afford to route everything to a distant data center. But for real-time services, this assumption breaks down. Voice quality degrades with latency above 150 milliseconds. Video optimization requires sub-100 millisecond response times. Fraud detection needs to happen in milliseconds, not seconds.

By deploying AI at the CO level, operators can offer low-latency intelligent services in ways that centralized cloud computing simply cannot match. They're not building a different kind of cloud. They're building a different paradigm entirely.

Sovereignty: The New Competitive Advantage

For the past 15 years, the competitive advantage in technology belonged to whoever could build the largest, most centralized infrastructure. Amazon's scale gave them cheaper compute. Google's data centers gave them better AI training. Microsoft's cloud computing gave them density and efficiency.

But something changed. Governments started caring about where data was processed. Europe passed GDPR and started fining companies for data violations. China mandated data localization. India required data storage on Indian soil. The US started restricting which companies could handle sensitive government data.

Suddenly, the advantage shifted. The biggest cloud operator became a liability if you couldn't guarantee that your data stayed within your country. The most centralized system became a weakness if you needed to comply with data residency requirements.

Telecom operators, by contrast, have something cloud providers struggle to replicate: they're national companies operating national infrastructure. They have the trust of national governments. They have data centers spread throughout national territories. They can credibly promise that sensitive data will never leave the country.

For healthcare providers dealing with sensitive patient records, this is invaluable. For governments building AI systems for defense or law enforcement, this is essential. For enterprises subject to strict data localization requirements, this is the only option that actually works.

The technology required to support sovereign AI is increasingly sophisticated. You need high-quality open-source AI models that you can run on your own infrastructure without relying on cloud APIs. You need local data processing that preserves privacy while extracting value. You need security systems that don't depend on remote cloud providers.

Telecom operators are building exactly this. They're investing in open-source AI infrastructure, in local deployment capabilities, and in the security systems required for truly sovereign AI. This is a multi-billion-dollar investment, but it rests on competitive advantages that cloud providers would find very difficult to replicate.

Data Advantage: Understanding the Full Context

Here's something that never gets discussed enough: telecom operators see the entire flow of information. They see the network conditions, the device types, the traffic patterns, the geographic locations. They see metadata that reveals enormous amounts about human behavior and network health.

When you combine this network intelligence with AI, you get capabilities that are otherwise impossible to achieve.

Consider fraud detection. A cloud-based fraud detection system looks at transaction characteristics: amount, merchant category, time of day, geographic location of the transaction. It uses machine learning to identify anomalies. But it's working with incomplete information.

A fraud detection system running on telecom infrastructure can see all of that, plus: the current network quality, the device type and age, whether the SIM card has been recently changed (a classic fraud indicator), whether the person is roaming internationally, their historical network usage patterns, whether their device is connecting from the same cell tower as usual.

With this additional context, fraud detection becomes dramatically more accurate. You can distinguish between legitimate travel (person visiting a different country with their phone) and fraud (someone in another country using a stolen card) by correlating with network data. You can identify compromised devices by detecting unusual network behavior patterns.
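A minimal sketch of that correlation, assuming the fraud system can see where a card transaction occurred plus a few network signals for the cardholder's registered phone. The signal names, weights, and thresholds are hypothetical; a production system would typically learn them from labeled data rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    country: str  # ISO country code where the card was used


@dataclass
class NetworkSignals:
    # Hypothetical signals for the cardholder's phone, as an operator might expose them.
    device_country: str        # country where the phone is currently attached
    sim_swapped_recently: bool


def risk_score(tx: Transaction, net: NetworkSignals, home_country: str) -> float:
    """Combine transaction and network context into a 0..1 risk score (illustrative weights)."""
    score = 0.0
    if tx.amount > 1000:
        score += 0.1                  # large purchase: small bump
    if tx.country != home_country:
        score += 0.3                  # foreign transaction: mildly suspicious on its own
        if net.device_country == tx.country:
            score -= 0.25             # phone is in the same country: looks like real travel
        else:
            score += 0.4              # card abroad, phone elsewhere: strong fraud signal
    if net.sim_swapped_recently:
        score += 0.4                  # recent SIM change: classic account-takeover indicator
    return max(0.0, min(1.0, score))


travel = risk_score(Transaction(120.0, "FR"), NetworkSignals("FR", False), home_country="US")
stolen = risk_score(Transaction(120.0, "FR"), NetworkSignals("US", False), home_country="US")
print(round(travel, 2), round(stolen, 2))  # 0.05 0.7
```

The same foreign purchase scores very differently depending on whether the cardholder's phone is in the same country, which is exactly the context a transaction-only system lacks.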

This advantage applies across every service. Customer service becomes better when the AI agent understands the customer's network situation (are they on a slow connection? roaming internationally?). Service personalization becomes more effective with network context. Quality of service optimization becomes possible because you're making decisions with full visibility into actual network conditions.

Hyperscalers don't have access to this data. They see application-level traffic. They don't see the network underneath. Telecom operators see everything.


Voice AI: The Massive Revenue Opportunity Everyone's Missing

When people talk about AI in telecom, they usually talk about chatbots. Text-based conversational AI. But that's actually a small part of the opportunity.

The real revenue driver is voice AI. And it's fundamentally different from text-based AI in ways that create unique opportunities for telecom operators.

Beyond Generative Text: Why Voice AI is Different

Generative AI got famous because of ChatGPT: large language models trained on text data that generate human-like responses to text prompts. These models are impressive and useful, but they're fundamentally limited to text.

Voice AI is different. It's not just about converting speech to text and running it through a language model. That's the simplest approach, and it's the one everyone assumes happens. But sophisticated voice AI goes much deeper.

Real voice AI understands tone, emotion, stress levels, speech patterns, and communication nuances that don't exist in text. Someone might write "I'm fine" and mean it literally. Someone might say "I'm fine" in a tone that conveys deep frustration or distress. A voice AI system that understands vocal tone learns information that a text-based system could never access.

Voice AI also handles interruptions, background noise, accents, and regional language variations in ways that text systems don't need to address. Someone speaking English with an Indian accent, sitting in a noisy coffee shop, occasionally interrupted by ambient conversations—a good voice AI system should handle all of that transparently. This requires capabilities that generative text models don't have.

The most sophisticated voice AI systems also understand the structure and flow of conversation. They know when to interrupt, when to listen, how to handle overlapping speech, how to manage turn-taking. They understand that conversation isn't just sequential text exchange; it's a complex dance of timing, interruption, and responsiveness.
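End-of-turn detection (endpointing) is one concrete piece of that turn-taking logic. The sketch below uses the simplest common approach, an energy threshold plus a minimum run of silent frames. The 20 ms frame size, the energy threshold, and the 500 ms silence window are assumptions; production systems typically rely on trained voice-activity and turn-detection models instead.

```python
from typing import Iterable, Optional

FRAME_MS = 20            # assumed audio frame length
ENERGY_THRESHOLD = 0.01  # assumed RMS level separating speech from silence
END_SILENCE_MS = 500     # assumed pause length that ends the caller's turn


def find_end_of_turn(frame_energies: Iterable[float]) -> Optional[int]:
    """Return the index of the frame where the caller's turn is judged to end.

    The turn ends once speech has been heard and is followed by
    END_SILENCE_MS of consecutive low-energy frames. Returns None if the
    caller is still talking or has not started.
    """
    needed = END_SILENCE_MS // FRAME_MS
    heard_speech = False
    silent_run = 0
    for i, energy in enumerate(frame_energies):
        if energy >= ENERGY_THRESHOLD:
            heard_speech = True
            silent_run = 0  # any speech resets the silence counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= needed:
                return i
    return None


# 10 frames of speech, a brief 200 ms pause, more speech, then a long pause.
frames = [0.2] * 10 + [0.0] * 10 + [0.2] * 5 + [0.0] * 30
print(find_end_of_turn(frames))  # 49
```

The short mid-sentence pause does not end the turn; only the sustained silence does. Tuning that window is a trade-off between cutting callers off and responding sluggishly.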

These are capabilities that telecom operators, working with cloud communications providers, are uniquely positioned to develop. They carry billions of hours of voice traffic, which, subject to consent and appropriate privacy protections, can inform voice AI development. They have the network infrastructure to deploy voice AI at scale. They understand voice communications in ways that text-based AI companies don't.

The Market Size: Trillions of Dollars at Stake

According to Goldman Sachs, generative AI alone could raise global GDP by about 7%, or nearly $7 trillion, over the next decade. That's not a small number. For context, it exceeds the entire annual GDP of Germany.

But here's what's important: most of that value isn't going to come from text-based conversational AI. It's going to come from AI applications that reduce costs, improve decisions, and enable new capabilities across virtually every industry.

For telecom operators, voice AI represents a direct path to capturing some of that value. Enterprises are desperate for AI-powered customer service solutions that actually work. Healthcare providers need AI systems that can handle patient communications. Financial services need fraud detection that's dramatically better than what they have today. All of these applications involve voice communications.

An operator that can deploy domain-specific voice AI agents (trained for healthcare, for financial services, for customer service) has access to a massive market opportunity. These aren't commodities. They're specialized services that solve real business problems.

Goldman Sachs research suggests that voice AI could actually be a larger market opportunity than text-based generative AI, because voice is the primary communication mechanism for high-value services. Customer service representatives talk to customers. Healthcare providers talk to patients. Fraud detection is most accurate when you can analyze voice patterns and emotional content.

Operators that build sophisticated voice AI capabilities will capture enormous value. This isn't a speculative opportunity 10 years in the future. Enterprises are building this technology now. The question is whether they'll build it themselves, buy it from cloud providers, or buy it from their telecom operators.

Healthcare: The First Killer Application

Healthcare is the perfect proving ground for the Telco AI Factory model. It has every ingredient necessary: complex communication needs, serious cost problems, strict data requirements, and massive potential ROI.

The Healthcare Cost Crisis and Administrative Waste

The US healthcare system spends about

Think about what happens when you call your healthcare provider. You wait on hold. You explain your situation to a receptionist. They put you on hold again. Someone in billing comes on the line. They look at your account. They might need to consult with someone else. You're on hold again. The entire interaction is designed around the constraints of human availability and sequential scheduling.

A typical healthcare provider has dozens of separate systems: electronic health records (like Cerner or Epic), billing systems, insurance verification systems, appointment scheduling systems, patient communication systems. None of these systems talk to each other effectively. So every customer interaction requires a human to manually navigate across multiple systems to find information and answer questions.

This is expensive. A healthcare provider pays customer service representatives

With millions of patient calls annually, this adds up. A health system with 5 million patient interactions per year loses

How the Telco AI Factory Solves Healthcare

Now imagine deploying an AI agent on the telecom operator's infrastructure that actually solves these problems.

The patient calls their healthcare provider. An AI agent answers the call—immediately, no waiting. The agent is trained on this specific healthcare provider's data. It understands their systems. The moment the call connects, the agent has access to the patient's entire medical record. It knows their appointment history, their current medications, their insurance status, their billing account.

The agent understands that the patient called about a billing question regarding a recent visit. It pulls up the specific charge. It verifies insurance coverage for that service. It understands why the charge appeared on the patient's bill. It explains it in language the patient understands. It resolves the issue without transferring the call to a human.

In this scenario, about 90% of calls are handled by the AI agent without human intervention.

For the 10% that does require human escalation (complex pre-authorization request, unusual insurance situation), the agent has already gathered all the necessary context and documented it. When the call gets transferred to a human specialist, that person doesn't start from zero. They're walking into an already-informed conversation.
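That handoff amounts to passing a structured summary along with the transferred call. A minimal sketch with hypothetical field names; in practice the format would depend on the contact-center platform receiving the transfer.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class HandoffSummary:
    # Hypothetical fields an AI agent might hand to a human specialist.
    patient_id: str
    reason_for_call: str
    escalation_reason: str
    facts_verified: List[str] = field(default_factory=list)
    actions_taken: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the summary so it can travel with the call transfer."""
        return json.dumps(asdict(self), indent=2)


summary = HandoffSummary(
    patient_id="P-0001",  # illustrative identifier
    reason_for_call="Question about a charge from a recent visit",
    escalation_reason="Pre-authorization request needs specialist review",
    facts_verified=["Insurance coverage active", "Charge matches visit record"],
    actions_taken=["Explained the charge", "Opened pre-authorization ticket"],
)
print(summary.to_json())
```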

The result: what previously took 10 minutes and a series of transfers now takes 2 minutes with a single interaction. The healthcare provider saves money. The patient gets faster resolution. The quality of the interaction is actually better because the AI agent doesn't get frustrated and can maintain perfect information access.

The Technical Architecture for Healthcare

Making this work requires specific technical pieces.

First, the healthcare provider's systems need to be accessible via APIs. This is increasingly happening (Epic and Cerner both have robust API ecosystems), but it's not universal. Some smaller health systems are still running on legacy systems with limited integration capabilities.

Second, the AI agent needs to be trained on healthcare-specific language and workflows. A general-purpose language model doesn't understand healthcare billing codes, insurance terminology, or medical conditions in sufficient depth. The cloud communications provider working with the telecom operator needs to fine-tune the model on healthcare data, validate it against medical accuracy, and ensure it doesn't hallucinate or guess when it doesn't know something.

Third, the entire system needs to comply with HIPAA requirements for patient data protection. Protected health information cannot simply be sent to a generic cloud API where it might be logged or used for other purposes. In this model, it runs on the telecom operator's infrastructure in a secure environment where data never leaves the healthcare provider's region.
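Keeping data in-region is ultimately enforced through infrastructure and contracts, but application code can add a guard before dispatching work. A minimal sketch, assuming each processing site is tagged with a region code; the site names and region labels are hypothetical.

```python
# Hypothetical inventory of inference sites and the region each one sits in.
PROCESSING_SITES = {
    "co-east-01": "us-east",
    "co-east-02": "us-east",
    "regional-dc-west": "us-west",
}


class ResidencyError(Exception):
    """Raised when a request would be processed outside its permitted region."""


def select_site(data_region: str, preferred_sites: list) -> str:
    """Return the first preferred site located in the same region as the data.

    Raises ResidencyError instead of silently falling back to an
    out-of-region site.
    """
    for site in preferred_sites:
        if PROCESSING_SITES.get(site) == data_region:
            return site
    raise ResidencyError(f"no processing site available in region {data_region!r}")


print(select_site("us-east", ["regional-dc-west", "co-east-02"]))  # co-east-02
```

Failing closed is the key design choice: a capacity shortage produces an error someone can act on, not a quiet cross-region transfer.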

Fourth, the system needs to handle real-time integration with multiple healthcare systems. When the patient calls about a billing question, the AI agent needs to query the patient's insurance status in real time. It needs to check their appointment history. It might need to verify pharmacy information. All of this happens during the call, in near-real-time.
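Because these lookups happen while the caller waits, they are usually issued concurrently rather than one after another. A sketch using Python's `asyncio`: the three lookup functions are hypothetical stand-ins (simulated with `asyncio.sleep`) for calls to insurance, scheduling, and pharmacy systems, and the 0.5-second budget is an assumption.

```python
import asyncio


# Hypothetical stand-ins for backend integrations; the sleeps simulate network latency.
async def fetch_insurance_status(patient_id: str) -> dict:
    await asyncio.sleep(0.05)
    return {"insurance": "active"}


async def fetch_appointments(patient_id: str) -> dict:
    await asyncio.sleep(0.08)
    return {"appointments": ["recent follow-up visit"]}


async def fetch_pharmacy_info(patient_id: str) -> dict:
    await asyncio.sleep(0.03)
    return {"pharmacy": "on file"}


async def gather_call_context(patient_id: str, budget_s: float = 0.5) -> dict:
    """Run all lookups concurrently, so total wait is roughly the slowest one, not the sum.

    Individual lookups that raise are skipped so the agent can still answer
    with partial context. If the whole batch exceeds budget_s,
    asyncio.TimeoutError is raised.
    """
    results = await asyncio.wait_for(
        asyncio.gather(
            fetch_insurance_status(patient_id),
            fetch_appointments(patient_id),
            fetch_pharmacy_info(patient_id),
            return_exceptions=True,
        ),
        timeout=budget_s,
    )
    context = {}
    for result in results:
        if isinstance(result, dict):
            context.update(result)
    return context


print(asyncio.run(gather_call_context("P-0001")))
```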

This is genuinely complex. But it's exactly the kind of problem that the Telco AI Factory architecture was designed to solve. The telecom operator provides the trusted, local infrastructure. The cloud communications provider provides the AI expertise and healthcare-specific model. The hardware provider ensures the infrastructure can handle the latency and throughput requirements.

ROI and Implementation Timeline

For a health system considering this investment, the economics are straightforward.

Assume a health system handles 10 million patient interactions annually (calls, emails, messages, chat). Assume 70% could be handled entirely by an AI agent. That's 7 million interactions handled without human involvement.
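The volume arithmetic generalizes into a small model. In the sketch below, the 10 million interactions and 70% automation rate come from the scenario above, while the per-interaction costs for human and AI handling are placeholder assumptions, not figures from this article; substitute your own measured costs.

```python
def automation_savings(annual_interactions: int,
                       automation_rate: float,
                       human_cost_per_interaction: float,
                       ai_cost_per_interaction: float) -> dict:
    """Estimate annual savings from shifting interactions to an AI agent.

    Cost inputs are placeholders: replace them with your own measured costs."""
    automated = round(annual_interactions * automation_rate)
    savings = automated * (human_cost_per_interaction - ai_cost_per_interaction)
    return {"automated_interactions": automated, "annual_savings": savings}

# Interaction volume and automation rate are from the text; the $6.00 and
# $1.00 unit costs are illustrative assumptions only.
estimate = automation_savings(10_000_000, 0.70, 6.00, 1.00)
print(estimate)  # {'automated_interactions': 7000000, 'annual_savings': 35000000.0}
```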

At an average cost of

The implementation timeline is typically 6–12 months from contract to full deployment. You start with the most common call types (billing questions, appointment scheduling, insurance verification) and expand from there. Early pilots with specific departments or patient populations help validate the approach before full rollout.

CAMARA APIs standardize key telecom areas, with Quality of Service and Device Location being highly prioritized. Estimated data based on typical industry focus.

Financial Services and Fraud Detection

If healthcare is the first killer application, financial services is the second. And here, the advantages of the Telco AI Factory model become even more apparent.

The Fraud Problem at Financial Services Scale

Financial services companies lose enormous amounts of money to fraud. Credit card fraud alone costs the US financial system over $11 billion annually. Bank fraud, investment fraud, identity theft—the numbers are staggering.

Most of the fraud prevention currently in place is rule-based. Transaction exceeds typical amount? Flag it. Transaction in unusual location? Flag it. Transaction at unusual time? Flag it. Multiple transactions in rapid succession? Flag it.
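Rules like these are simple to express, which is part of why they are so common. The Python sketch below is a hypothetical illustration, not any vendor's engine: the profile fields and thresholds (a typical maximum amount, a set of home countries, active hours, three transactions within 60 seconds) are assumptions chosen to mirror the four rules above.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class Transaction:
    amount: float
    country: str
    timestamp: datetime

@dataclass
class CardholderProfile:
    typical_max_amount: float
    home_countries: set
    active_hours: range  # e.g. range(8, 22) covers 8 AM up to 10 PM

def rule_flags(tx: Transaction, profile: CardholderProfile, recent: list,
               burst_window_s: int = 60, burst_count: int = 3) -> list:
    """Return the static rules a transaction trips. Thresholds are illustrative."""
    flags = []
    if tx.amount > profile.typical_max_amount:
        flags.append("unusual_amount")
    if tx.country not in profile.home_countries:
        flags.append("unusual_location")
    if tx.timestamp.hour not in profile.active_hours:
        flags.append("unusual_time")
    # Earlier transactions inside the burst window, plus this one.
    in_window = [t for t in recent
                 if 0 <= (tx.timestamp - t.timestamp).total_seconds() <= burst_window_s]
    if len(in_window) + 1 >= burst_count:
        flags.append("rapid_succession")
    return flags

profile = CardholderProfile(500.0, {"US"}, range(8, 22))
lunch_in_paris = Transaction(120.0, "FR", datetime(2025, 6, 1, 14, 30))
print(rule_flags(lunch_in_paris, profile, recent=[]))  # ['unusual_location']
```

Running it on a US cardholder who buys lunch in France shows the false-positive problem described next: a perfectly normal purchase trips the location rule.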

These rules catch a lot of fraud, but they also generate massive numbers of false positives. The credit card company declines your legitimate transaction because you're traveling internationally. The bank blocks your online transfer because it's a larger amount than you typically send. False declines are expensive for financial institutions because they frustrate customers and damage loyalty.

Machine learning has improved fraud detection, but it's still fundamentally limited by the data available to the fraud detection system. The system sees the transaction amount, the merchant, the timestamp, the geolocation of the transaction. It doesn't see the full context of the customer's actual behavior and network activity.

How Network Intelligence Transforms Fraud Detection

Now add telecom network intelligence to the picture.

A fraudster uses a stolen credit card. The transaction looks normal: it's for a reasonable amount, at a merchant category the cardholder typically uses. Standard fraud detection systems give it a low fraud score because it looks normal.

But the telecom operator's AI system sees more. It sees that the transaction originated from a device that just had its SIM card changed. It sees that the device is connecting to a cell tower in a country the cardholder never travels to. It sees that the transaction is happening at a time that contradicts the cardholder's typical daily activity pattern (2 AM when the cardholder is typically active between 8 AM and 10 PM). It sees that the device has moved faster than any traveler could: it was in the US 3 hours ago and is now in Europe, a trip that takes longer than that even on a transatlantic flight.

With this network context, the fraud detection system can confidently flag this transaction as fraudulent, while approving legitimate transactions that would normally trigger false-positive fraud detection.

The result: fewer false declines (more legitimate transactions approved) and more accurate fraud detection.
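The network-side signals in this scenario reduce to fairly simple checks once the operator exposes the underlying data. The sketch below assumes two location fixes (latitude, longitude, timestamp) and the time of the last SIM change are available. It flags a SIM change within the last 24 hours and any implied speed above 1,200 km/h, faster than a commercial jet's ground speed; both thresholds are illustrative assumptions. Distance uses the standard haversine great-circle formula.

```python
import math
from datetime import datetime, timedelta

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometres (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def network_signals(last_fix: tuple, current_fix: tuple, last_sim_change: datetime,
                    now: datetime, sim_swap_window: timedelta = timedelta(hours=24),
                    max_speed_kmh: float = 1200.0) -> list:
    """Derive network-side risk signals. Each fix is (lat, lon, timestamp).
    Thresholds are illustrative assumptions, not industry standards."""
    signals = []
    if now - last_sim_change <= sim_swap_window:
        signals.append("recent_sim_swap")
    (lat1, lon1, t1), (lat2, lon2, t2) = last_fix, current_fix
    hours = (t2 - t1).total_seconds() / 3600
    # Implied speed above anything a commercial flight can manage.
    if hours > 0 and haversine_km(lat1, lon1, lat2, lon2) / hours > max_speed_kmh:
        signals.append("impossible_travel")
    return signals

now = datetime(2025, 6, 1, 2, 0)
new_york = (40.71, -74.01, now - timedelta(hours=3))
paris = (48.86, 2.35, now)
print(network_signals(new_york, paris, last_sim_change=now - timedelta(hours=1), now=now))
# ['recent_sim_swap', 'impossible_travel']
```

For the New York to Paris example (about 5,800 km in 3 hours, roughly 1,950 km/h), both signals fire; a subscriber who moved a few kilometres across town with a months-old SIM produces none.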

Real-Time Risk Assessment and Decisioning

The most sophisticated approach combines multiple data sources in real-time decisioning.

When a transaction is initiated, multiple systems analyze it simultaneously: the financial institution's fraud detection system, the telecom operator's network intelligence system, the payment processor's risk assessment system. They all provide their assessments within milliseconds.

The AI system in the telecom operator's infrastructure synthesizes these inputs and makes a decision: approve, require additional authentication, or decline. This all happens in under 100 milliseconds, so the customer doesn't notice any delay.

For high-risk transactions (unusual amount, new merchant, international location), the system might require step-up authentication: a second factor like SMS verification or biometric confirmation. The AI system decides this in real-time based on risk assessment.

For confirmed fraudulent transactions (device has been reported stolen, SIM swap fraud has been detected), the system can decline the transaction immediately, potentially preventing the fraud before the damage is done.
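The three outcomes above can be sketched as a small decision function. This is a hypothetical illustration rather than a production scoring model: the source names, weights, and the 0.4 and 0.8 thresholds are assumptions. Confirmed-fraud signals short-circuit to a decline, everything else is blended into a weighted risk score, and sources that did not respond in time are simply left out of the blend.

```python
from enum import Enum

class Decision(Enum):
    APPROVE = "approve"
    STEP_UP = "step_up"   # e.g. SMS one-time code or biometric confirmation
    DECLINE = "decline"

# Signals treated as confirmed fraud: they decline outright instead of being weighed.
HARD_DECLINE_SIGNALS = {"device_reported_stolen", "sim_swap_fraud_confirmed"}

def decide(scores: dict, weights: dict, signals: set,
           step_up_at: float = 0.4, decline_at: float = 0.8) -> Decision:
    """Blend per-source risk scores (each between 0 and 1) into one action.

    `scores` holds only the sources that answered in time, so a slow source
    drops out of the weighted average instead of blocking the decision."""
    if signals & HARD_DECLINE_SIGNALS:
        return Decision.DECLINE
    total_weight = sum(weights[source] for source in scores)
    if total_weight == 0:
        return Decision.STEP_UP  # no usable evidence: ask for another factor
    risk = sum(scores[source] * weights[source] for source in scores) / total_weight
    if risk >= decline_at:
        return Decision.DECLINE
    if risk >= step_up_at:
        return Decision.STEP_UP
    return Decision.APPROVE

weights = {"bank": 0.4, "network": 0.4, "processor": 0.2}
print(decide({"bank": 0.2, "network": 0.9, "processor": 0.3}, weights, set()))
# Decision.STEP_UP
```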

This is leagues beyond current fraud detection. It's not reactive (detecting fraud after it happens); it's predictive (preventing fraud before it can occur).

Implementation in Financial Services

Financial institutions are moving aggressively on this. Banks are partnering with telecom operators specifically to integrate network intelligence into fraud detection. Payment processors are building APIs to share risk data. Regulators are encouraging this kind of cooperative fraud prevention.

The timeline for implementation is typically 3–6 months once the partnership is established. Financial institutions already have the transaction data and the fraud detection infrastructure. Telecom operators already have the network intelligence. The integration work is complex but well-defined.

The ROI is immediate. Reducing false declines by even 5% improves customer satisfaction significantly. Preventing 10% more fraud saves enormous amounts of money. For a large financial institution processing billions of transactions annually, even small improvements translate to millions of dollars.

The Technical Architecture: API Integration and Standards

All of this works because of sophisticated API integration. Let's dig into how the technical pieces connect.

CAMARA and Open Standards

One of the biggest challenges in telecom is fragmentation. Every operator has different systems, different APIs, different naming conventions. A payment processor that wanted to integrate with multiple operators would need to write custom integration code for each one.

This is where CAMARA comes in. CAMARA is an open-source project, hosted by the Linux Foundation and developed with the GSMA and network operators, that creates standardized APIs for accessing network intelligence. These aren't vague API specifications; they're detailed, documented APIs with specific data schemas.

The CAMARA specifications cover areas like:

- Device location (with privacy protections)

- SIM swap detection

- Quality of Service metrics (bandwidth, latency, packet loss)

- Device information (type, manufacturer, operating system)

- Network information (carrier type, generation, signal strength)

- Geofencing (defining geographic areas and detecting when devices enter/exit)

These APIs allow payment processors, healthcare providers, and other service providers to access network intelligence in a standardized way. Instead of custom integration with each operator, they integrate once with the CAMARA API specification, and it works across multiple operators.
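To make the "integrate once" point concrete, here is a minimal sketch of a SIM swap check written against the CAMARA SIM Swap API. The request shape (a `POST` to `/check` with a `phoneNumber` and a `maxAge` window in hours, returning a boolean `swapped`) follows the published specification as we understand it, but the version segment in the path (`v1` here), the example operator URLs, and the bearer-token handling are assumptions to verify against what each operator actually exposes. Only the root URL and credentials change from one operator to the next.

```python
import json
import urllib.request

def build_sim_swap_check(api_root: str, token: str, phone_number: str,
                         max_age_hours: int = 240) -> urllib.request.Request:
    """Build a SIM Swap "check" request in the CAMARA style.

    Assumption: the versioned path below; confirm it against the API version
    each operator actually exposes."""
    body = json.dumps({"phoneNumber": phone_number, "maxAge": max_age_hours}).encode("utf-8")
    return urllib.request.Request(
        url=f"{api_root.rstrip('/')}/sim-swap/v1/check",
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )

def parse_sim_swap_check(response_body: bytes) -> bool:
    """The check response carries a boolean `swapped` field."""
    return bool(json.loads(response_body)["swapped"])

# Integrate once: the same code serves every operator; only root URL and token differ.
OPERATOR_ROOTS = {  # hypothetical endpoints
    "operator_a": "https://api.operator-a.example",
    "operator_b": "https://api.operator-b.example",
}
requests_by_operator = {
    name: build_sim_swap_check(root, token="<token>", phone_number="+14155550100",
                               max_age_hours=24)
    for name, root in OPERATOR_ROOTS.items()
}
```

To send a request, pass it to `urllib.request.urlopen(req, timeout=...)` and feed the response body to `parse_sim_swap_check`; obtaining the token follows whatever OAuth flow the operator requires.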

This standardization is critical for adoption. Without it, each operator's network intelligence would be a proprietary advantage that only works with that operator's customers. With standardization, network intelligence becomes a broadly accessible service.

Converged Network and Application APIs

But there's a second layer of integration that's even more important: converged network and application APIs.

These are APIs that combine network intelligence with application logic. A fraud detection API doesn't just return device location; it returns fraud risk assessment based on device location combined with other factors. A personalization API doesn't just return network quality metrics; it returns recommendations for content delivery optimization based on current network conditions.

This requires the cloud communications provider's intelligence to be combined with the telecom operator's network data, in real-time, to produce actionable insights.

The architecture looks like this: A payment processor calls a fraud assessment API, passing in the transaction details. The API (running on the telecom operator's infrastructure, provided by the cloud communications provider) assembles the request. It queries the CAMARA network intelligence APIs for device location, SIM status, and device information. It simultaneously queries the cloud communications provider's AI model to assess risk based on the customer's communication patterns. It synthesizes these inputs and returns a fraud risk score and recommended action.
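The orchestration described above can be sketched with Python's `asyncio`. Everything here is hypothetical: the three upstream functions stand in for CAMARA queries and the provider's risk model, the scoring weights are invented for illustration, and the 100-millisecond figure is applied as a per-call timeout (because the calls run concurrently, the overall wait is bounded by roughly the same budget). The design choice worth noting is that a source that misses the budget does not stall the assessment; its input is recorded as missing and the transaction is routed to step-up authentication instead of being silently approved.

```python
import asyncio

# Hypothetical upstream calls. The sleeps simulate latency; the pattern
# model is deliberately slower than the budget to show the fallback path.
async def sim_swapped_recently(phone: str) -> bool:
    await asyncio.sleep(0.02)
    return False

async def device_in_country(phone: str, country: str) -> bool:
    await asyncio.sleep(0.03)
    return True

async def communication_pattern_risk(phone: str) -> float:
    await asyncio.sleep(0.5)
    return 0.1

async def within_budget(coro, budget_s: float):
    """Return the coroutine's result, or None if it misses the latency budget."""
    try:
        return await asyncio.wait_for(coro, timeout=budget_s)
    except asyncio.TimeoutError:
        return None

async def fraud_assessment(phone: str, merchant_country: str, budget_s: float = 0.1) -> dict:
    # Fan out to all sources at once; each one gets the same budget.
    swapped, in_country, pattern_risk = await asyncio.gather(
        within_budget(sim_swapped_recently(phone), budget_s),
        within_budget(device_in_country(phone, merchant_country), budget_s),
        within_budget(communication_pattern_risk(phone), budget_s),
    )
    inputs = {"sim_swap": swapped, "location": in_country, "pattern": pattern_risk}
    missing = [name for name, value in inputs.items() if value is None]

    # Illustrative weights only.
    score = 0.0
    if swapped:
        score += 0.6
    if in_country is False:
        score += 0.3
    if pattern_risk is not None:
        score += 0.1 * pattern_risk

    if score >= 0.8:
        action = "decline"
    elif score >= 0.4 or missing:
        action = "step_up"  # incomplete inputs never result in a silent approve
    else:
        action = "approve"
    return {"score": round(score, 2), "missing": missing, "action": action}

if __name__ == "__main__":
    print(asyncio.run(fraud_assessment("+14155550100", "US")))
    # {'score': 0.0, 'missing': ['pattern'], 'action': 'step_up'}
```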

All of this happens in under 100 milliseconds.

Data Governance and Compliance

A critical part of the technical architecture is governance around data access and privacy.

Telecom operators have access to vast amounts of personal data. They know your location, your contacts, your communication patterns, your financial transactions (indirectly, by seeing when you make payments). This data is incredibly valuable for understanding customer behavior and detecting anomalies.

But it's also incredibly sensitive. Using this data requires explicit customer consent, transparency about what's being used and why, and strong protections to ensure the data isn't misused.

The Telco AI Factory model addresses this through several mechanisms:

Explicit Consent. Before a telecom operator can share network intelligence with a third-party service, the customer must explicitly consent. This isn't buried in a terms of service; it's an active choice. "Do you want to enable fraud detection features that use network intelligence to identify suspicious transactions? Yes/No."

Purpose Limitation. The data can only be used for specific, stated purposes. If consent was given for fraud detection, that data cannot be used for marketing, advertising, or other purposes. If consent was given for location-based personalization, that data cannot be used for law enforcement without a court order.

Transparency. Customers can see exactly what data is being shared, when, and for what purpose. They can revoke consent at any time.

Aggregation and Anonymization. Much of the analysis that happens can be done on aggregated, anonymized data rather than individual customer data. Instead of tracking your specific location over time, the system analyzes patterns across millions of customers to identify fraud indicators.

Audit and Accountability. All access to sensitive data is logged and auditable. If a telecom operator's employee accessed customer data inappropriately, that access would be detected and investigated.
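Several of these mechanisms (consent, purpose limitation, revocation, transparency, and audit) can be illustrated with a small in-memory model. This is a toy sketch, not a compliance framework: the purpose strings such as `fraud_detection` and `marketing` are illustrative labels, and a real system would persist the log in tamper-evident storage rather than a Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class ConsentRegistry:
    """Toy model of consent, purpose limitation, revocation and audit logging."""
    grants: dict = field(default_factory=dict)     # subscriber -> set of purposes
    audit_log: list = field(default_factory=list)

    def grant(self, subscriber: str, purpose: str) -> None:
        """Explicit consent: record an opt-in for one specific purpose."""
        self.grants.setdefault(subscriber, set()).add(purpose)

    def revoke(self, subscriber: str, purpose: str) -> None:
        """Consent can be withdrawn at any time."""
        self.grants.get(subscriber, set()).discard(purpose)

    def access(self, requester: str, subscriber: str, purpose: str) -> bool:
        """Purpose limitation: allow only purposes the subscriber opted into.
        Every attempt, allowed or not, is written to the audit log."""
        allowed = purpose in self.grants.get(subscriber, set())
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "requester": requester,
            "subscriber": subscriber,
            "purpose": purpose,
            "allowed": allowed,
        })
        return allowed

    def history_for(self, subscriber: str) -> list:
        """Transparency: show a subscriber every access attempt on their data."""
        return [entry for entry in self.audit_log if entry["subscriber"] == subscriber]
```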

These governance mechanisms aren't perfect, but they're far more robust than what most cloud providers implement. Telecom operators handling personal data know they are highly regulated, and they take governance seriously.

Estimated data shows a balanced distribution of hardware components, with data center processors and edge computing hardware comprising the largest shares. This balance is crucial for efficient AI operations at the edge.

Competitive Advantages: Why Hyperscalers Can't Replicate This

At this point, you might be wondering: why don't Amazon, Google, or Microsoft just do this? They have massive computing infrastructure. They have sophisticated AI capabilities. Why does this require telecom operators?

The answer is that hyperscalers are missing critical ingredients that cannot easily be replicated.

Physical Infrastructure and Network Ownership

Hyperscalers have enormous data centers, but they don't own the networks that connect those data centers to end users. This is a fundamental limitation.

A telecom operator owns fiber optic cables, radio towers, and switching infrastructure across entire countries. This physical network infrastructure is regulated by national governments. Building a competing network would require massive capital investment, government licenses, and time.

Amazon and Google have invested billions in building private fiber networks, particularly in data center interconnects and last-mile connections. But they don't have anything approaching the nationwide, interconnected infrastructure that telecom operators have built over decades.

This matters because the Telco AI Factory's fundamental advantage is low-latency edge computing. You need computing infrastructure close to end users. Hyperscalers can't deploy computing infrastructure to every neighborhood in a country. Telecom operators already have physical presence in every neighborhood.
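A quick back-of-the-envelope calculation shows why distance matters. Light in optical fiber travels at roughly 200,000 km per second (about two-thirds of its speed in vacuum), so each 100 km of fiber adds about 1 ms of round-trip propagation delay. The distances below are illustrative assumptions, and the result is only the physical floor: real round trips also include routing hops, queuing, and model inference time.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # roughly two-thirds of the speed of light in vacuum

def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring routing, queuing and compute."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_S * 1000

for label, km in [("Central Office, 20 km", 20),
                  ("regional data center, 300 km", 300),
                  ("distant cloud region, 2,000 km", 2000)]:
    print(f"{label}: {fiber_rtt_ms(km):.1f} ms")
# Central Office, 20 km: 0.2 ms
# regional data center, 300 km: 3.0 ms
# distant cloud region, 2,000 km: 20.0 ms
```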

Data Access and Privacy Relationships

Hyperscalers have massive amounts of data about user behavior, but it's application-level data. They see what you search for, what email you send, what documents you edit, what videos you watch. They don't see the underlying network intelligence.

Telecom operators see network-level data: where you are, how you're connected, what QoS you're experiencing, what devices you're using. This data is fundamentally different and provides different insights.

More importantly, governments and enterprises trust telecom operators to handle sensitive network data in ways they don't trust hyperscalers. A hospital will give a telecom operator access to network infrastructure that it wouldn't give to Amazon or Google. A government will enable sovereign AI on a telecom operator's infrastructure that it wouldn't on a hyperscaler's infrastructure.

This trust is built over decades of regulatory relationships and is not something hyperscalers can quickly establish.

Relationships with Enterprise and Government Customers

Telecom operators have established relationships with virtually every enterprise and government entity. These relationships are based on critical infrastructure dependencies (your business needs connectivity) and regulatory oversight (governments regulate telecom operators closely).

Hyperscalers have relationships with enterprises, but they're different. Cloud customers choose hyperscalers because of specific capabilities; they could theoretically move to competitors. Switching telecom operators, by contrast, is far more disruptive for an enterprise: you can move your applications to a different cloud much more easily than you can move your connectivity to a different network.

This gives telecom operators a relationship advantage that hyperscalers simply don't have.

Challenges and Obstacles to Widespread Adoption

Despite these advantages, the Telco AI Factory model faces real challenges to widespread adoption.

Technology Integration Complexity

Getting hardware providers, telecom operators, and cloud communications providers to work together is non-trivial. These companies have different technical cultures, different priorities, and different business models.

A hardware vendor prioritizes reliability and compatibility across multiple software stacks. A telecom operator prioritizes network security and regulatory compliance. A cloud communications provider prioritizes AI model accuracy and feature richness. Getting these three to agree on a unified technical approach requires compromise.

The CAMARA standard helps, but it's still evolving. Different operators implement it differently. Compliance and validation testing is complex.

Implementation timelines are typically longer than people expect. An 18-month deployment that experts initially estimated at 12 months is not uncommon. Teams underestimate integration complexity. API specifications are clearer in principle than in practice.

Regulatory and Compliance Issues

Telecom operators are heavily regulated. Adding new services, especially services that involve AI and personal data, requires regulatory approval. This approval doesn't always come quickly.

A new AI-powered customer service system might require approval from:

- The telecom regulator (ensuring the service complies with telecom regulations)

- The privacy regulator (ensuring customer data is protected)

- The industry regulator for the customer's business (healthcare regulators, financial regulators, etc.)

- Potentially international regulators if the system processes data from multiple countries

Each approval process involves documentation, review, and potentially modification to comply with requirements. This can add 3–6 months to deployment timelines.

Operator Hesitation and Organizational Challenges

Many telecom operators haven't traditionally been software or AI companies. Building a new business around AI-powered services requires cultural change, hiring of new talent, and organizational restructuring.

Operators that have been focused on network infrastructure and connectivity services for decades don't naturally think like software companies. Decision-making processes are slower. IT governance is more conservative. Risk tolerance is lower.

Some operators are making this transition successfully. Others are moving more slowly. And some are skeptical that this new business model will ever be profitable compared to core connectivity services.

Competition from Cloud Providers and Niche Players

While hyperscalers can't replicate the entire Telco AI Factory model, they can build competing solutions in specific niches.

Amazon Alexa and Google Assistant already handle voice AI at scale. AWS and Google Cloud offer healthcare and financial services solutions. Microsoft has invested heavily in cloud communications through Microsoft Teams and its acquisition of Nuance, and in AI through its partnership with OpenAI.

These companies won't dominate the network edge, but they can be competitive in specific verticals. A healthcare provider might build on AWS instead of working with a telecom operator. A financial institution might use Google's fraud detection instead of relying on network intelligence.

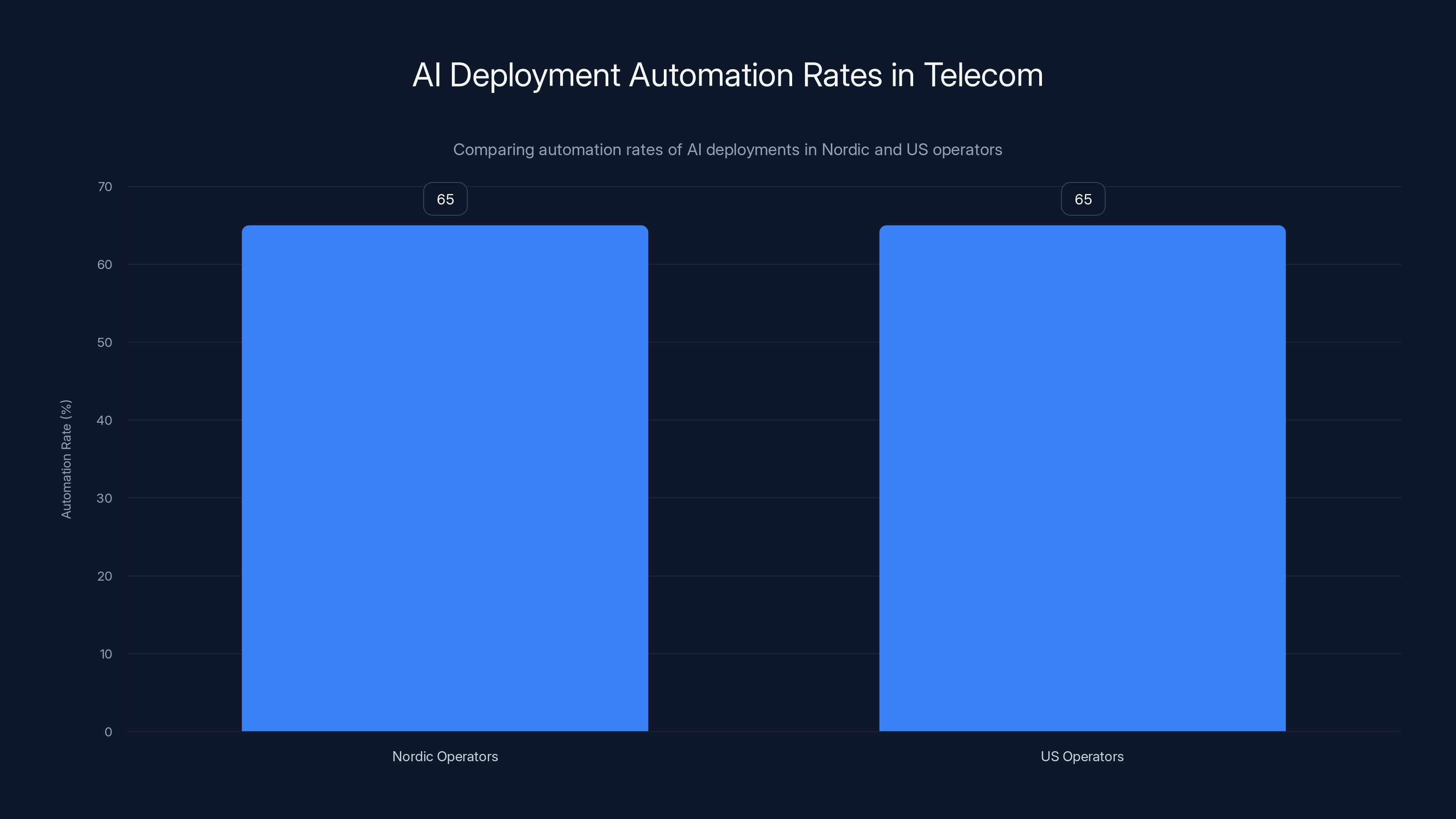

Both Nordic and US operators achieve around 65% automation in call handling with AI deployments, indicating similar efficiency levels despite different deployment speeds.

Real-World Deployments and Case Studies

While the Telco AI Factory is still relatively early-stage, real deployments are happening.

Nordic Operators and Sovereign AI

Telecom operators in the Nordic countries (Sweden, Denmark, Finland, Norway) have been aggressive in pursuing this model. These countries have strict data protection requirements and are prioritizing sovereign AI as a strategic capability.

Operators in this region have partnered with cloud communications providers to deploy voice AI agents in Central Offices. The initial use cases have been customer service (handling billing questions, appointment scheduling) and fraud detection. Early results show 60–70% automation rates for common call types, with customer satisfaction improving because calls are handled instantly rather than requiring waiting and transfers.

US and UK Operators

Operators in the US and UK are moving more slowly, but deployments are underway. Some are building internal AI capabilities. Others are partnering with cloud communications vendors.

A major US operator has deployed an AI customer service agent in selected markets. The agent handles about 65% of incoming calls without human escalation. The operator reports that customer satisfaction with AI-handled calls is comparable to human-handled calls, and satisfaction with transferred calls (where the agent has already gathered context) is actually higher because the human specialist has complete information.

A UK operator is piloting AI-powered customer service in healthcare provider partnerships. The goal is to have hospitals use the operator's infrastructure to deploy customer-facing AI agents. Early results are positive, but scaling across multiple hospitals requires solving integration challenges and regulatory approvals that are still in progress.

Enterprise Adoption Patterns

Enterprise adoption is starting with the companies that have the highest frustration with current solutions. Healthcare providers frustrated with customer service call volumes. Financial institutions looking for better fraud detection. Customer service organizations drowning in volume.

A healthcare provider with 2 million patient interactions annually piloted an AI customer service system. In pilot testing with 50,000 interactions, the system handled 72% without human intervention. For the 28% that required escalation, the AI agent had already gathered all necessary context. The provider is now rolling out more broadly.

A financial institution deployed AI-powered fraud detection combining network intelligence with transaction analysis. False decline rates decreased by 8%. Actual fraud detection accuracy improved by 23%. The ROI was immediately positive.

The Path Forward: What Enterprises Should Be Doing Now

If you're an enterprise considering these technologies, what should you actually do right now?

Assess Your Current Systems and Bottlenecks

Start by honestly assessing where your biggest pain points are. Where are you losing money to inefficiency? Where do customers experience poor service because of system limitations? Where do you need more intelligence in real-time decision-making?

For customer-facing organizations, this is usually customer service. For financial institutions, this is fraud detection. For healthcare, this is administrative overhead.

Quantify the pain. How much money are you losing? How much time are your teams spending on low-value work? What's the impact on customer satisfaction?

This quantification becomes the baseline for evaluating AI solutions. A solution that reduces administrative overhead by 30% is worth pursuing. A solution that reduces false declines by 5% in financial services is worth millions of dollars.

Evaluate Integration Requirements

Understand how well your existing systems can be integrated with new AI solutions.

Do you have APIs for your healthcare records? Can a fraud detection system access transaction data in real-time? Can you integrate with customer service systems to provide context to human specialists?

Integration complexity is usually the biggest factor in deployment timeline. Systems that were designed 10 years ago might not have APIs. Older systems might require custom integration work.

This evaluation shapes which solutions are actually viable for your organization.

Start with Pilots, Not Full Deployments

Don't jump into enterprise-wide deployment immediately. Start with a pilot in one department or one geography.

A healthcare provider might pilot in one hospital location before expanding to 10 locations. A financial institution might pilot fraud detection in one payment channel before enterprise-wide deployment. A customer service organization might pilot in one department before scaling to others.

Pilots help you understand real-world performance, identify integration issues before they become enterprise-wide problems, and build internal confidence that the solution actually works.

A good pilot program runs for 3–6 months, processes enough volume to be statistically significant, and includes feedback mechanisms from both users and customers.
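What counts as "statistically significant" depends on how precisely the pilot needs to pin down its key metric. One way to size it, sketched below, is to treat the automation rate as a proportion: a Wilson score interval shows how tight the estimate is, and the standard normal-approximation formula gives the number of interactions needed for a target margin of error. Both assume interactions are independent, which is only an approximation (repeat callers and seasonal spikes violate it).

```python
import math

Z_95 = 1.96  # two-sided 95% normal quantile

def wilson_interval(successes: int, n: int, z: float = Z_95) -> tuple:
    """Wilson score interval for a proportion such as an automation rate."""
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half

def sample_size_for_margin(expected_rate: float, margin: float, z: float = Z_95) -> int:
    """Interactions needed for a 95% interval of about +/- margin (normal approximation)."""
    return math.ceil(z * z * expected_rate * (1 - expected_rate) / (margin * margin))

low, high = wilson_interval(36_000, 50_000)
print(f"{low:.3f} to {high:.3f}")            # 0.716 to 0.724
print(sample_size_for_margin(0.70, 0.02))   # 2017
```

Applied to the healthcare pilot described earlier (72% of 50,000 interactions, i.e. 36,000 automated), the 95% interval is roughly 71.6% to 72.4%, so that pilot was comfortably large enough; about 2,000 interactions would pin a rate near 70% to within ±2 percentage points.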

Build Partnerships Thoughtfully

You don't need to go through a single vendor. You could work with a telecom operator and a cloud communications provider. You could use multiple cloud providers for different use cases. You could build some capabilities internally.

The key is making sure the pieces actually integrate. API compatibility. Data governance alignment. Support and maintenance clarity.

Asking hard questions upfront prevents painful surprises later. "What happens if this vendor goes out of business?" "Can we port our data and models if we decide to switch?" "Who owns the intellectual property if we customize the AI model?"

Plan for Organizational Change

Deploying AI is 30% technology and 70% organizational change. You're replacing manual processes with automated systems. People whose job is handling customer service calls now need to handle escalations and exceptions. People whose job is fraud investigation now need to focus on edge cases that the system can't handle.

Change management, training, and organizational restructuring are critical. Managers and employees need to understand how their jobs are changing and have confidence that their roles will still exist post-implementation.

A successful deployment includes investment in training, clear communication about why change is happening, and honest discussion about which roles are evolving versus being eliminated.

The Future: Multi-Vertical Expansion and Service Evolution

The Telco AI Factory is currently being piloted in healthcare, financial services, and customer service. But the model can be applied to virtually every industry that depends on communications.

Public Safety and Emergency Response

Imagine an emergency dispatch AI agent that understands the caller's situation in real-time. The agent listens to the description of an emergency, understands the urgency, and can access information about the nearest emergency resources. It might route the call intelligently based on current load at different stations.

For 911 centers handling overwhelming call volumes, this could significantly improve emergency response. The AI agent handles triage, ensuring that life-threatening emergencies get immediate response while lower-urgency calls wait appropriately.
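The triage behavior described here maps onto a priority queue. In the minimal sketch below, the urgency categories are illustrative, and the classification itself (which the AI agent would perform from the caller's description) is assumed to have already happened; the queue simply guarantees that life-threatening calls are served first and that calls of equal urgency are served in arrival order.

```python
import heapq
import itertools

# Lower number = more urgent. The categories are illustrative, not a dispatch standard.
URGENCY = {"life_threatening": 0, "urgent": 1, "non_urgent": 2}

class TriageQueue:
    """Serve the most urgent call first; ties are served in arrival order."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker preserving arrival order

    def add(self, call_id: str, category: str) -> None:
        heapq.heappush(self._heap, (URGENCY[category], next(self._arrival), call_id))

    def next_call(self) -> str:
        return heapq.heappop(self._heap)[2]

q = TriageQueue()
q.add("call-1", "non_urgent")
q.add("call-2", "life_threatening")
q.add("call-3", "urgent")
q.add("call-4", "life_threatening")
print([q.next_call() for _ in range(4)])  # ['call-2', 'call-4', 'call-3', 'call-1']
```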

Education and Learning

AI tutoring systems deployed at the network edge could provide 24/7 personalized learning support. Students get instant homework help, concept explanations, and personalized learning pathways. The system uses voice AI to understand where the student is struggling and provides targeted support.

This is particularly valuable in underserved communities where access to tutors is limited.

Manufacturing and Supply Chain

Manufacturers could deploy AI agents that handle supply chain communication. Real-time updates on shipment status. Automated notifications when orders are placed. Intelligent routing of requests to appropriate departments.

The network edge advantage becomes critical here; manufacturers want low-latency communication with supply chain partners.

Enterprise Communications and Internal Services

Organizations have thousands of internal support issues. HR questions, IT support, accounting inquiries, facilities requests. Currently, these get handled through ticketing systems, emails, and phone calls.

AI agents could handle the majority of these interactions instantly. When an employee asks an HR question about benefits, the AI agent understands the question, pulls relevant policy information from the HR system, and provides a clear answer. Potentially as many as 90% of these requests would not need human intervention.

For a large enterprise, this could save millions of dollars in internal support costs while improving employee experience through faster resolution.

The Investment Opportunity: Why VCs and PEs Care

If you're an investor looking at this space, the Telco AI Factory represents a significant investment opportunity.

There's venture capital flowing into cloud communications providers building the AI engines. There's private equity interest in telecom operators undertaking digital transformation. There's strategic investment from hardware vendors building edge computing infrastructure.

The market size is enormous. AI's potential economic impact is measured in trillions of dollars of GDP; if telecom operators capture even 5% of that opportunity as revenue, the uplift across the industry would be in the hundreds of billions of dollars.

But the investment thesis is more nuanced. You're not betting on a single company. You're betting on an ecosystem that includes multiple players in different categories. Success requires orchestration across partners, regulatory navigation, and cultural transformation in traditional telecom operators.

The highest-conviction bets are on pure-play cloud communications providers. These companies are building the AI engines that make the entire ecosystem work. They have limited legacy business, high growth potential, and significant margin improvement opportunities.

Secondary bets are on leading telecom operators that are executing effectively on digital transformation. These are larger deals, but with lower growth rates and higher execution risk.

Tertiary bets are on hardware vendors and systems integrators, where the upside is more limited but the risk is lower.

Conclusion: The Telecom Industry's Next Act

The Telco AI Factory represents something profound. It's not just a new revenue stream for telecom operators. It's a fundamental reshaping of what telecommunications means in the AI era.

For over a century, telecom has meant moving information from place to place. Telephony moved voice. Telegraph moved text. The internet moved all forms of data. But the telecom network itself remained a dumb pipe. The intelligence was always somewhere else.

The Telco AI Factory changes this. The network becomes intelligent. The infrastructure that moves information also understands it, analyzes it, acts on it. This transformation happens at the edge, where latency is minimal and sovereignty is guaranteed. It's enabled by partnerships between hardware providers, operators, and cloud communications companies.

For enterprises, this means access to AI services that are faster, more trustworthy, and more integrated with their communications infrastructure than cloud alternatives. For telecom operators, this means a path forward that doesn't depend entirely on connectivity revenues. For users, this means better service, faster resolution, and more intelligent communication systems.

The pilots happening now in healthcare, financial services, and customer service are just the beginning. Over the next 5–10 years, expect this model to spread across virtually every industry that depends on communications. Healthcare systems will rely on AI agents deployed in telecom infrastructure. Financial institutions will use network intelligence for fraud prevention. Governments will build sovereign AI capabilities for defense and law enforcement.

Telecom operators who successfully make this transition will evolve from connectivity providers to infrastructure platforms for intelligent services. Those that don't will continue as commodity connectivity providers, watching more valuable work happen on their networks.

The technology is ready. The partnerships are forming. The early deployments are validating the model. The question now is execution. Which operators will move fastest? Which cloud communications providers will build the most valuable AI engines? Which enterprises will pioneer adoption in new verticals?

Those questions will be answered over the next 2–3 years. But the direction is clear. The Telco AI Factory represents the future of telecommunications, and that future is coming faster than most people realize.

FAQ

What exactly is the Telco AI Factory?

The Telco AI Factory is an operating model where telecom operators, hardware providers, and cloud communications companies collaborate to deploy AI infrastructure at the network edge. Instead of running AI in distant cloud data centers, the model pushes intelligent systems into Central Offices and regional data centers spread throughout networks, enabling low-latency, sovereign, contextually aware AI services.

How does the Telco AI Factory differ from traditional cloud computing?

Traditional cloud computing centralizes computing resources in large data centers and routes all requests through them, which creates latency and requires sending data to distant servers. The Telco AI Factory distributes computing to the network edge, where latency is minimal and operators can guarantee data never leaves local infrastructure, making it ideal for real-time applications and sovereign AI requirements.

Why are telecom operators uniquely positioned to lead this transformation?

Telecom operators own vast, distributed physical infrastructure across entire countries, maintain trusted relationships with governments and enterprises, have access to network intelligence data that hyperscalers don't possess, and can guarantee data sovereignty by processing information locally rather than routing it through centralized cloud services.

What role do cloud communications providers play in the Telco AI Factory?

Cloud communications providers develop the artificial intelligence engines that make the infrastructure intelligent. They build conversational AI models, domain-specific AI agents, and integrate network intelligence APIs with AI decision-making, transforming raw data and computing resources into actual business value.

What industries are already using the Telco AI Factory model?

Healthcare, financial services, and customer service organizations are leading early adoption. Healthcare providers are deploying AI agents to handle billing questions and administrative overhead. Financial institutions are using network intelligence for fraud detection. Customer service organizations are automating interactions while maintaining context and quality.

How much latency improvement does the Telco AI Factory provide compared to cloud alternatives?

AI models running in Central Offices close to users respond in 10–20 milliseconds, compared to 100–200 milliseconds for models running in distant cloud data centers. This 5–20x latency improvement is critical for real-time applications like voice AI, fraud detection, and network optimization.
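The 5–20x range comes from pairing the extremes of the two latency bands quoted above: the slowest edge response against the fastest cloud response gives the lower bound, and the fastest edge response against the slowest cloud response gives the upper bound. A minimal Python sketch of that arithmetic, using only the figures from this answer:

```python
# Latency bands quoted above, in milliseconds: (fastest, slowest).
EDGE_MS = (10, 20)     # inference in a Central Office near the user
CLOUD_MS = (100, 200)  # inference in a distant cloud data center


def speedup_range(edge, cloud):
    """Return (min, max) speedup factors of edge over cloud inference."""
    edge_fast, edge_slow = edge
    cloud_fast, cloud_slow = cloud
    # Least favorable case for the edge: slowest edge vs. fastest cloud.
    worst = cloud_fast / edge_slow
    # Most favorable case for the edge: fastest edge vs. slowest cloud.
    best = cloud_slow / edge_fast
    return worst, best


if __name__ == "__main__":
    lo, hi = speedup_range(EDGE_MS, CLOUD_MS)
    print(f"{lo:.0f}x to {hi:.0f}x")  # prints "5x to 20x"
```

Real-world gains depend on where a given user sits relative to both the Central Office and the nearest cloud region, so the range is a bound on the quoted figures rather than a measurement.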

What is CAMARA and why does it matter for the Telco AI Factory?

CAMARA is an open-source project, hosted by the Linux Foundation and developed with network operators and the GSMA, that defines standardized APIs for exposing network intelligence (such as device location, SIM swap status, and quality-of-service controls) consistently across operators. This standardization lets third-party developers build applications that work across multiple telecom providers rather than requiring a custom integration with each operator.
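To make the idea concrete, here is a minimal sketch of how a fraud-detection service might prepare a SIM swap check, modeled loosely on the CAMARA SIM Swap API's `check` operation (a phone number plus a `maxAge` window in hours, answered with a `swapped` flag). The API root, the `v0` version segment, the bearer token, and the `is_high_risk` policy are all assumptions for illustration; confirm paths and fields against the published CAMARA specification and your operator's gateway. The request is built but never sent, and a canned response stands in for the operator's reply.

```python
import json
import urllib.request

# Hypothetical operator endpoint; each operator or aggregator publishes its own API root.
API_ROOT = "https://api.example-operator.com"
# Path modeled on the CAMARA SIM Swap "check" operation. The version segment
# differs between releases, so treat it as an assumption.
SIM_SWAP_CHECK_PATH = "/sim-swap/v0/check"


def build_sim_swap_check(phone_number: str, max_age_hours: int, token: str) -> urllib.request.Request:
    """Build (but do not send) a SIM swap check request."""
    body = json.dumps({"phoneNumber": phone_number, "maxAge": max_age_hours}).encode("utf-8")
    return urllib.request.Request(
        API_ROOT + SIM_SWAP_CHECK_PATH,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # placeholder OAuth2 access token
        },
    )


def is_high_risk(response_body: bytes) -> bool:
    """Hypothetical policy: treat a recent SIM swap as a fraud signal."""
    return bool(json.loads(response_body).get("swapped", False))


if __name__ == "__main__":
    req = build_sim_swap_check("+15555550100", 24, "demo-token")
    print(req.get_method(), req.full_url)
    # Canned response standing in for the operator's reply.
    print(is_high_risk(b'{"swapped": true}'))
```

Because the request shape is standardized, the same client code could in principle target several operators by changing only the API root and credentials, which is the portability argument made above.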

How much can the Telco AI Factory reduce customer service costs?

Organizations deploying AI agents in this model typically achieve 60–75% automation rates for common customer service interactions, reducing per-interaction costs by 70–85%. For organizations processing millions of annual interactions, this translates to tens of millions of dollars in annual savings.
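A rough way to check the "tens of millions" claim is to multiply the interaction volume by the automation rate, the baseline cost per interaction, and the cost reduction. The sketch below assumes the 70–85% reduction applies only to the automated share of interactions, and the 10 million interactions at $5.00 each are hypothetical inputs, not figures from any deployment.

```python
def annual_savings(interactions: int, cost_per_interaction: float,
                   automation_rate: float, cost_reduction: float) -> float:
    """Estimate yearly savings.

    Assumes the cost reduction applies only to automated interactions;
    interactions still handled by humans keep their original cost.
    """
    automated = interactions * automation_rate
    return automated * cost_per_interaction * cost_reduction


if __name__ == "__main__":
    # Hypothetical inputs: 10M interactions per year at $5.00 each.
    n, cost = 10_000_000, 5.00
    low = annual_savings(n, cost, automation_rate=0.60, cost_reduction=0.70)
    high = annual_savings(n, cost, automation_rate=0.75, cost_reduction=0.85)
    print(f"${low:,.0f} to ${high:,.0f} per year")
```

With these inputs the estimate lands between roughly $21 million and $32 million per year; an organization should substitute its own volumes and fully loaded cost per interaction.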

What are the main obstacles to widespread Telco AI Factory adoption?

Key challenges include integration complexity across multiple vendors, regulatory approval requirements in heavily regulated industries, the organizational and cultural change needed within telecom operators, competition from hyperscalers in specific niches, and the significant investment required to build edge computing infrastructure.

How can enterprises get started with Telco AI Factory solutions?

Enterprises should begin by assessing their biggest pain points and quantifying the impact in terms of money, time, or customer satisfaction. Next, evaluate how well existing systems can integrate with new AI solutions. Start with a 3–6 month pilot in one department or geography, build thoughtful partnerships with multiple vendors rather than depending on a single provider, and plan for significant organizational change as roles and processes evolve.

What is the expected timeline for Telco AI Factory deployment?

Pilot deployments typically take 3–6 months from initiation to completion. Full enterprise deployments usually take 6–12 months from contract to production, though complex integrations with legacy systems can extend this timeline. Regulatory approvals can add an additional 3–6 months depending on industry and geography.
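Summing these ranges gives a rough end-to-end planning window. The sketch below assumes the phases run back to back with no overlap, which is a simplification: in practice regulatory review may run in parallel with deployment work.

```python
from typing import Tuple

Months = Tuple[int, int]  # (optimistic, pessimistic) duration in months

PILOT: Months = (3, 6)
FULL_DEPLOYMENT: Months = (6, 12)
REGULATORY: Months = (3, 6)


def total_timeline(*phases: Months) -> Months:
    """Sum phase ranges, assuming phases run sequentially with no overlap."""
    return (sum(p[0] for p in phases), sum(p[1] for p in phases))


if __name__ == "__main__":
    print(total_timeline(PILOT, FULL_DEPLOYMENT))              # (9, 18)
    print(total_timeline(PILOT, FULL_DEPLOYMENT, REGULATORY))  # (12, 24)
```

Under that sequential assumption, an organization in a regulated industry should budget roughly one to two years from pilot kickoff to full production.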

How does data sovereignty work in the Telco AI Factory model?

Data sovereignty is supported because computing happens on the telecom operator's infrastructure within the country rather than on external servers. This helps organizations comply with data localization requirements, GDPR restrictions on cross-border data transfers, and government mandates for sovereign AI capabilities.
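One way to picture the routing rule behind this is a scheduler that only considers edge sites hosted in the same country as the data and refuses the request when none exists. The sketch below is hypothetical: the `EdgeSite` type, the site names, and the latency numbers are invented for illustration and do not describe any operator's actual scheduler.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class EdgeSite:
    name: str
    country: str       # ISO 3166-1 alpha-2 code of where the site is hosted
    latency_ms: float  # measured round-trip latency to the requesting user


def pick_sovereign_site(sites: Iterable[EdgeSite], data_country: str) -> Optional[EdgeSite]:
    """Return the lowest-latency site inside data_country, or None if none exists.

    Returning None instead of falling back to an out-of-country site is the
    point: the request should be refused rather than leave the jurisdiction.
    """
    in_country = [s for s in sites if s.country == data_country]
    return min(in_country, key=lambda s: s.latency_ms, default=None)


if __name__ == "__main__":
    sites = [
        EdgeSite("co-berlin-01", "DE", 12.0),
        EdgeSite("co-munich-02", "DE", 18.0),
        EdgeSite("dc-amsterdam", "NL", 9.0),
    ]
    print(pick_sovereign_site(sites, "DE").name)  # co-berlin-01
    print(pick_sovereign_site(sites, "FR"))       # None
```

Note that the Amsterdam site is the fastest overall but is never chosen for German data: in this design, sovereignty acts as a hard filter and latency only breaks ties among compliant sites.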


Key Takeaways

- The Telco AI Factory is a collaborative operating model where hardware providers, telecom operators, and cloud communications companies deploy AI infrastructure at the network edge rather than in centralized clouds

- Telecom operators possess unique advantages: sovereign AI capabilities, distributed infrastructure close to users enabling sub-20ms latency, access to network intelligence data, and inherent government trust that hyperscalers cannot replicate

- Voice AI represents a larger revenue opportunity than text-based generative AI: Goldman Sachs projects that AI could add about $7 trillion to global GDP over the next decade, and much of that value may flow through voice communications

- Healthcare and financial services are proving grounds for Telco AI Factory deployment: healthcare initiatives target an estimated $760–935 billion in annual administrative waste, while fraud detection systems improve accuracy and reduce false declines

- Data governance, CAMARA API standardization, and converged network APIs are critical infrastructure components enabling enterprises to deploy sophisticated AI services that integrate network intelligence with application logic

- Deployment timelines are typically 3–6 months for pilots and 6–12 months for full implementations, and regulatory approvals can add another 3–6 months in healthcare and financial services

