Why AI Startups Are Dying: The Wrapper and Aggregator Reckoning [2025]

Imagine building a startup for six months, shipping a product that works, and then watching the foundation crumble beneath you. That's happening right now to hundreds of AI startups. Not because their technology is bad. Not because they executed poorly. But because they built their entire company on quicksand.

The fault line runs deep through the AI startup ecosystem. In 2023 and early 2024, you could wrap a thin layer of UI around ChatGPT, call it a product, and venture capitalists would line up with checks. The magic was real. The velocity was intoxicating. But somewhere between the GPT Store launch and now, the rules changed completely.

Darren Mowry, who leads Google's global startup organization, recently sounded the alarm about two business models that are facing existential pressure: LLM wrappers and AI aggregators. His diagnosis is brutal and specific. These aren't necessarily bad companies or incompetent founders. They're just building in a market that no longer wants what they're selling.

Here's what you need to understand: the AI startup world is undergoing a ruthless culling. And if your business model looks like one of these two archetypes, your company has its check engine light on. This isn't speculation. It's not pessimism. It's the market recalibrating around fundamental economics that nobody can escape.

Let's break down what's really happening, why it matters, and most importantly, which AI startups actually have a shot at survival.

Understanding the LLM Wrapper Problem

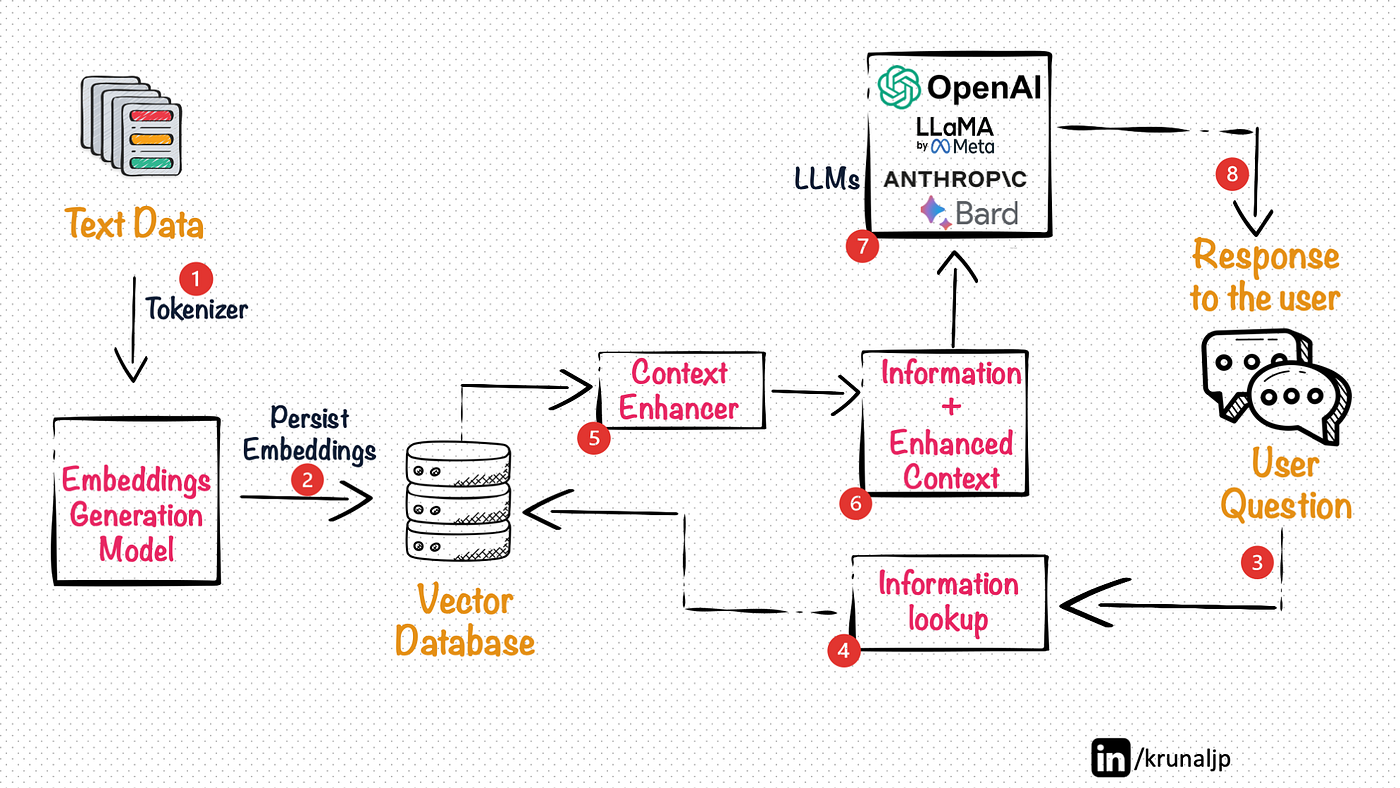

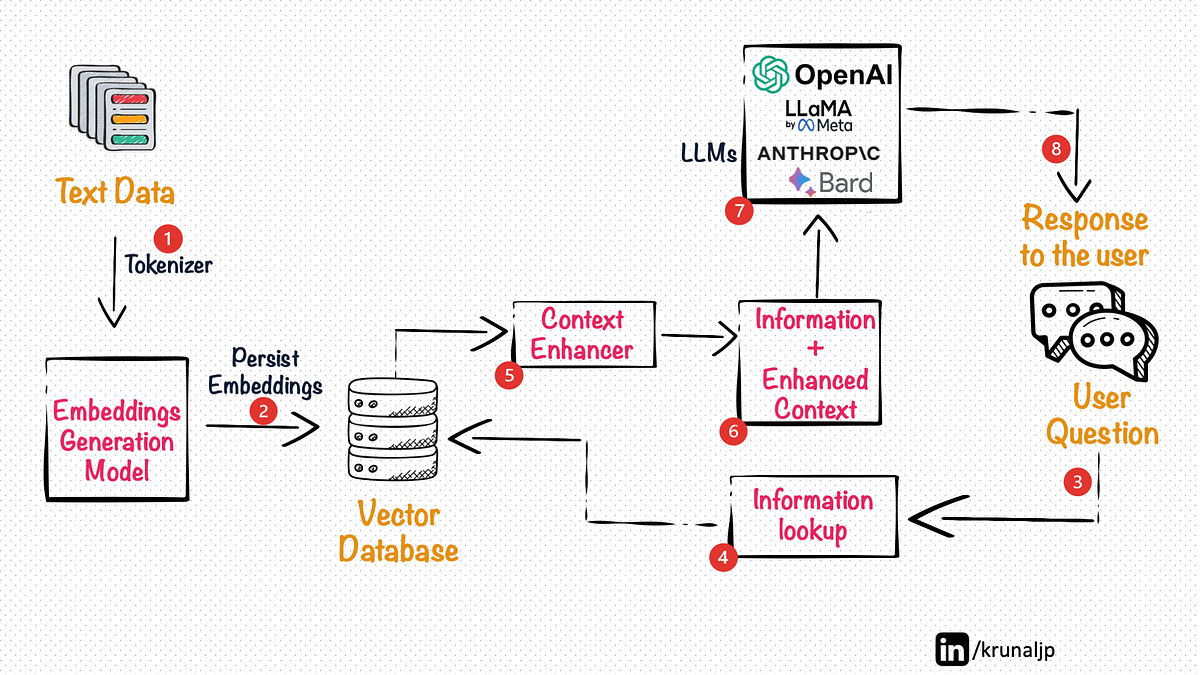

An LLM wrapper sounds benign. It's a startup that takes an existing large language model (Claude, GPT-4, Gemini, or any foundation model) and adds a product layer on top. You might use it to help students study, write code faster, generate business plans, or organize your email. The wrapper handles the UX, maybe adds some domain-specific logic, and routes queries to the underlying model.

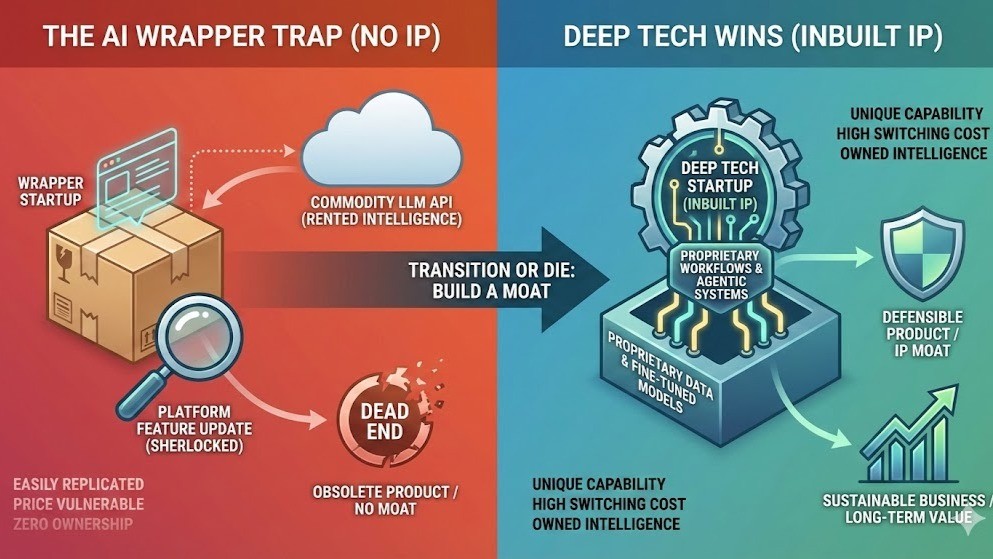

The problem isn't the concept. The problem is that for most wrappers, that's literally the entire business. They're white-labeling someone else's intelligence, slapping a different interface on it, and hoping customers will pay.

Mowry's phrasing was careful but devastating: "If you're really just counting on the back end model to do all the work and you're almost white-labeling that model, the industry doesn't have a lot of patience for that anymore."

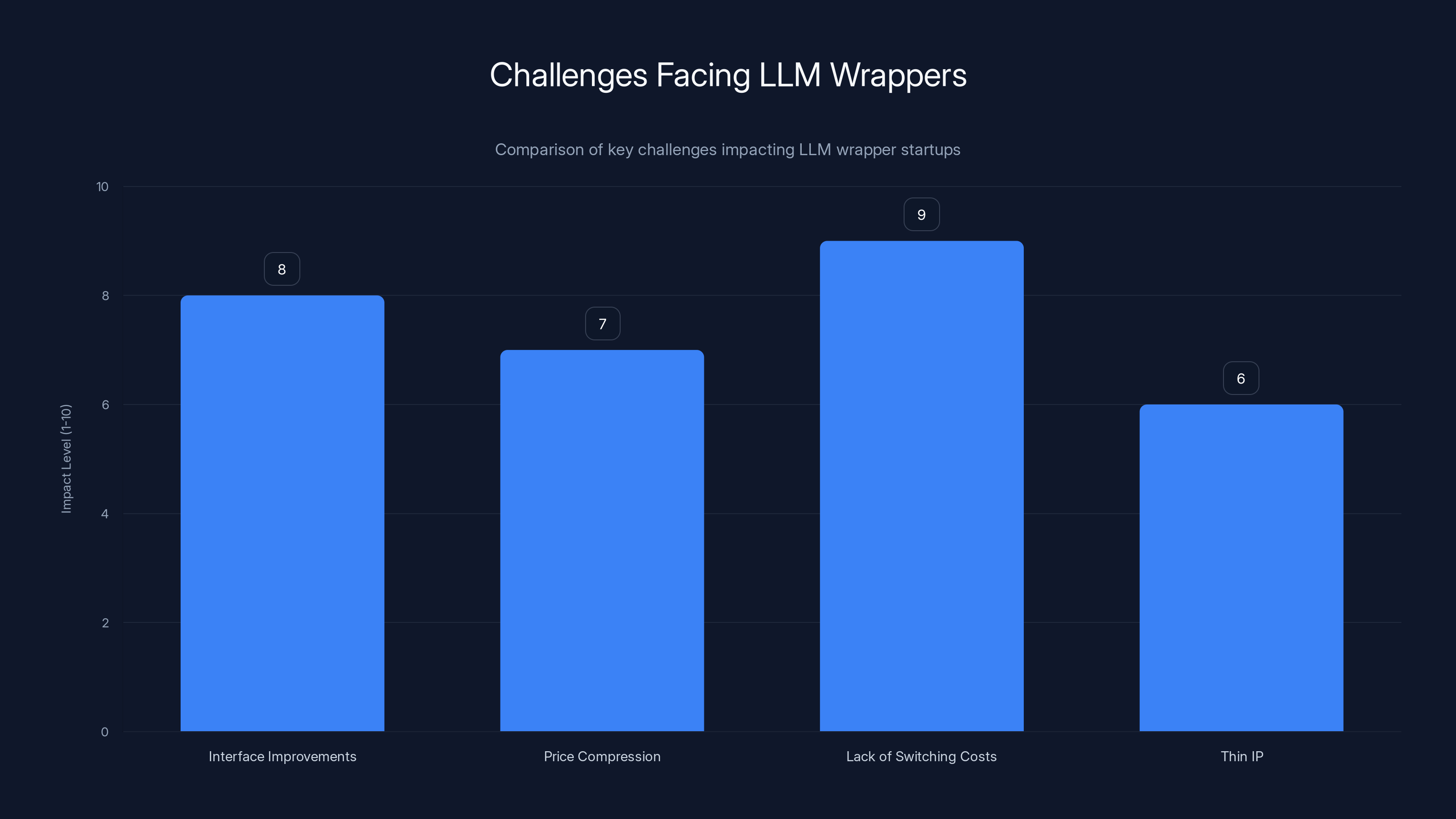

Why? Economics. When you're a wrapper, your value proposition is thin. Your customer could theoretically replicate what you're doing in an afternoon. They log into ChatGPT Plus and create a custom GPT, or they use Claude with a saved system prompt. The barrier to switching or replicating your work is measured in minutes, not months or years.

More importantly, the model providers themselves are moving upmarket. OpenAI isn't sitting idle. They're building better interfaces, more specialized tools, and enterprise features. When they do, your wrapper becomes obsolete overnight. You're not competing with OpenAI. You're competing against the next version of their product, and they move faster than you ever will.

This creates a vicious dynamic. You have to make your wrapper so good at one specific thing that it becomes irreplaceable. That requires deep, specialized knowledge about your vertical market and technical differentiation that goes far beyond "I added a better UI."

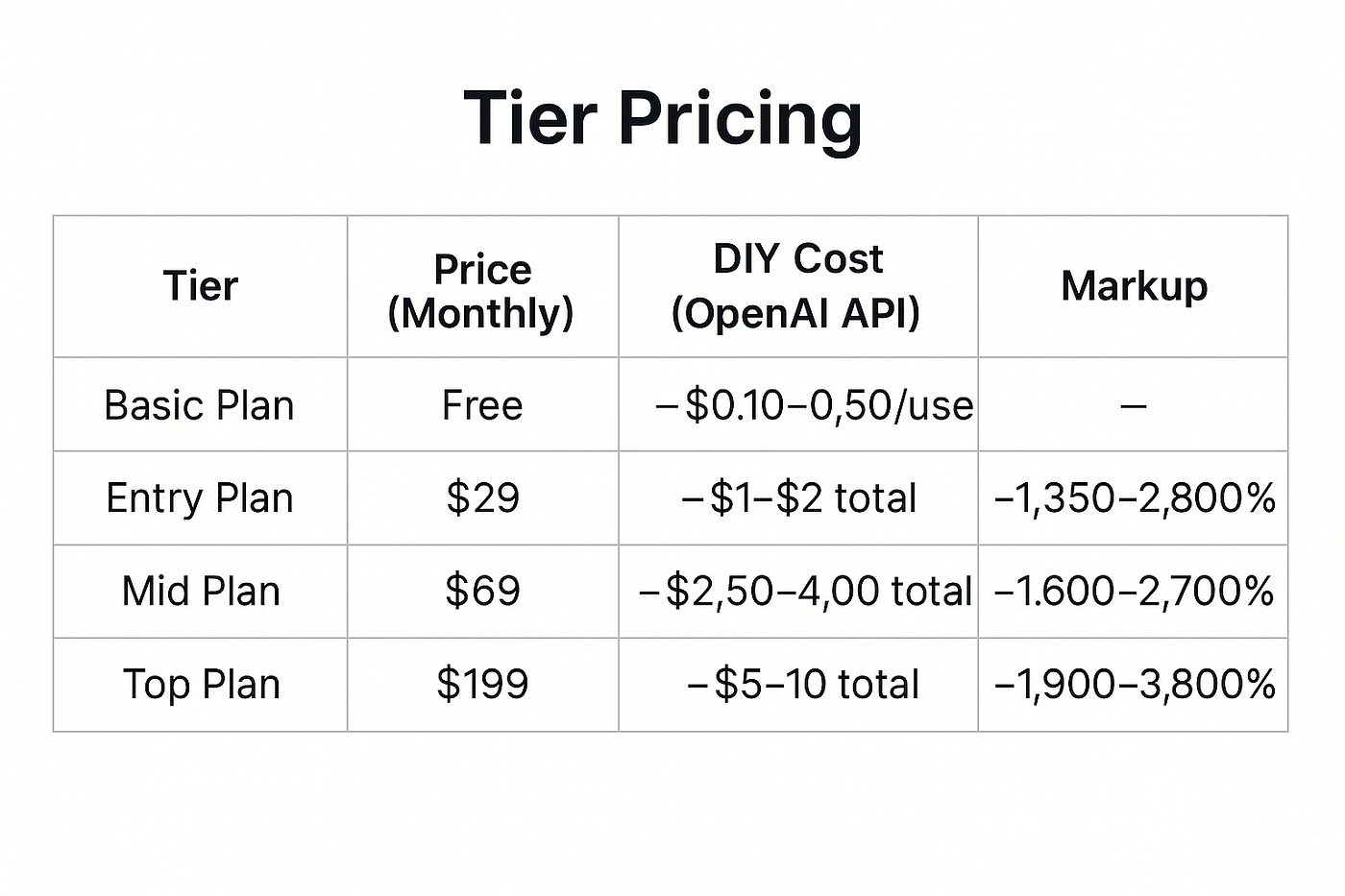

The Economics of Margin Destruction

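Let's do the math. Assume you're a wrapper startup charging customers a flat monthly subscription while paying the model provider for every request. Your gross margin is whatever is left between those two numbers.

Then Claude drops prices 20%. Now your API costs are lower, but so are the costs of every competing wrapper and of every customer who calls the model directly. Competition pushes your own price down to match, and the savings pass through to customers instead of landing in your margin.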

The model providers have economies of scale you'll never match. They serve millions of users across hundreds of use cases. When they optimize their model infrastructure, those savings are distributed across their entire user base. When you optimize your wrapper, you're squeezing yourself.
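To make the squeeze concrete, here is a minimal sketch of the arithmetic. Every figure in it is a hypothetical assumption chosen for illustration (a $20 subscription, $10 of API cost per customer, and a `pass_through` parameter for how much of a provider price cut competition forces the wrapper to hand on); none of these numbers come from Mowry or from any provider's price list.

```python
def gross_margin(price, api_cost):
    """Return (margin in dollars, margin as a fraction of price)."""
    margin = price - api_cost
    return margin, margin / price


def after_provider_cut(price, api_cost, provider_cut, pass_through):
    """Apply a provider price cut to a wrapper's unit economics.

    provider_cut: fractional cut in API prices (0.20 means 20% cheaper).
    pass_through: share of that cut the wrapper must mirror in its own
                  price (0.0 = keep price, 1.0 = match the cut fully).
    Both parameters are illustrative assumptions, not observed data.
    """
    new_cost = api_cost * (1 - provider_cut)
    new_price = price * (1 - provider_cut * pass_through)
    return new_price, new_cost


# Hypothetical wrapper: $20/month subscription, $10/month API cost per customer.
price, cost = 20.0, 10.0
before = gross_margin(price, cost)

# Provider cuts prices 20% and competition forces the wrapper to match it.
matched = gross_margin(*after_provider_cut(price, cost, 0.20, pass_through=1.0))

# Same cut, but the wrapper manages to hold its price.
held = gross_margin(*after_provider_cut(price, cost, 0.20, pass_through=0.0))

print("before:", before)
print("matched cut:", matched)
print("held price:", held)
```

Under these assumptions, a fully matched cut keeps the margin percentage at 50% but shrinks margin dollars per customer from $10 to $8, while salaries and acquisition costs stay where they were. Only a wrapper with enough differentiation to hold its price keeps the savings.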

This is the trajectory Mowry observed during the AWS reseller era. Startups emerged to simplify cloud adoption, provide billing tools, and offer better support. Then Amazon built those tools themselves. Once customers could manage their AWS accounts directly and AWS support improved, the resellers had no value prop left. The survivors weren't the ones with the best interfaces. They were the ones who pivoted to offer actual services: migration consulting, security audits, architectural guidance.

Which Wrappers Actually Survive

Some wrappers do work. They're not common, but they exist. Cursor is the canonical example. It's a GPT-powered coding assistant that wrapped the model but added something genuinely valuable: deep integration with your entire codebase, local context awareness, and AI that understands your specific code patterns. Cursor didn't just add a UI to GPT. It built infrastructure that an individual developer using ChatGPT directly could not replicate in an afternoon.

Harvey AI is another example. It built on a general-purpose foundation model but added domain-specific legal knowledge, compliance frameworks, and matter-specific context that generic LLMs simply don't have. A lawyer using a general-purpose chatbot alone would spend hours building the same context that Harvey has baked in.

These survive because they have what Mowry calls "deep, wide moats." That means:

Horizontal differentiation: You own something the underlying model can't replicate. For Cursor, it's deep IDE integration and codebase indexing. For Harvey, it's legal domain knowledge and compliance frameworks.

Vertical specialization: You understand a specific market so deeply that you can build features and optimizations that generalist tools will never prioritize. A legal AI doesn't need to be good at coding. It needs to be exceptional at law.

Network effects or data advantage: The more users you have, the better your product gets. Or you control proprietary data that gives you an unfair advantage in your vertical.

Without at least one of these, your wrapper is just a countdown to obsolescence.

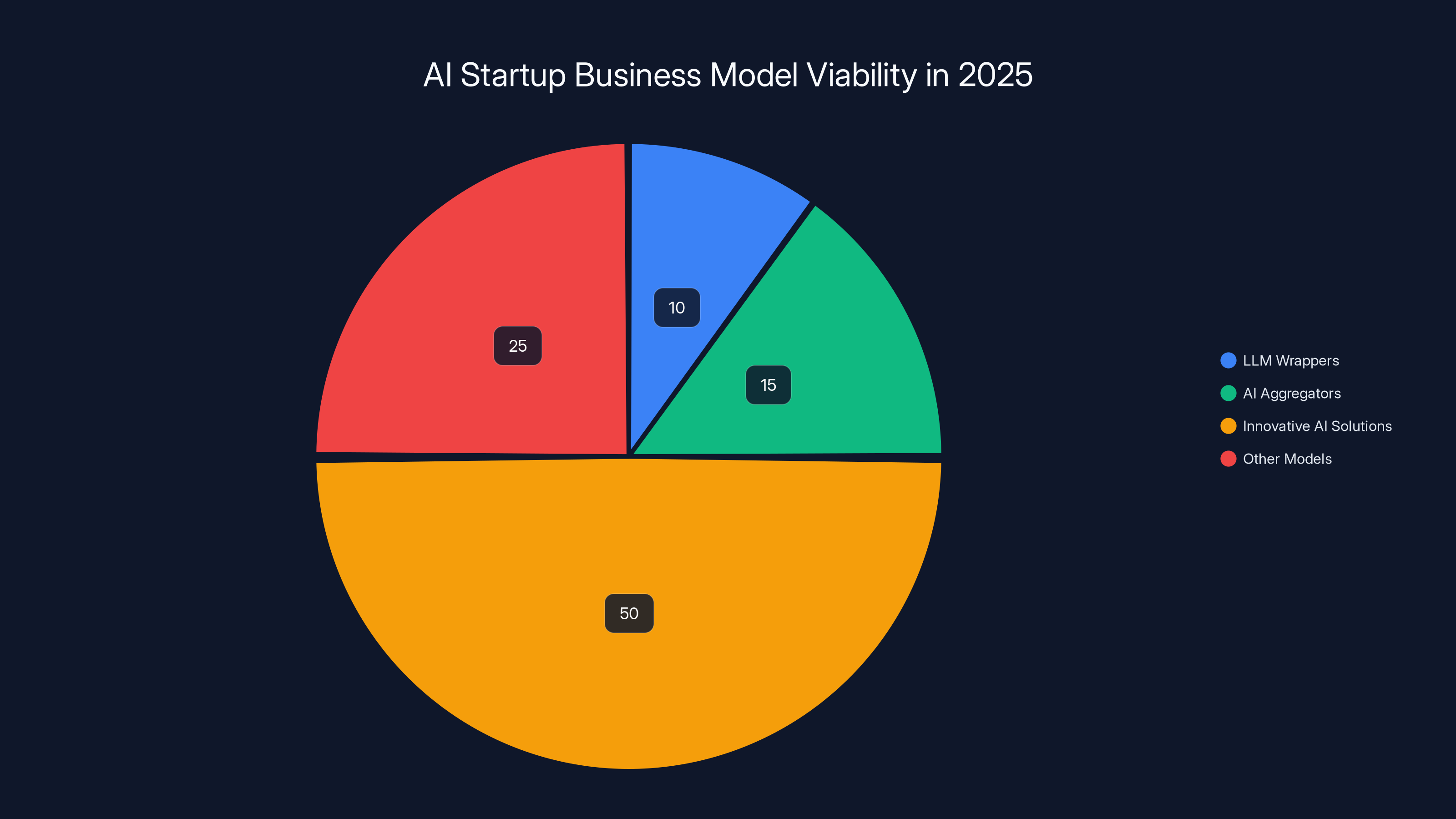

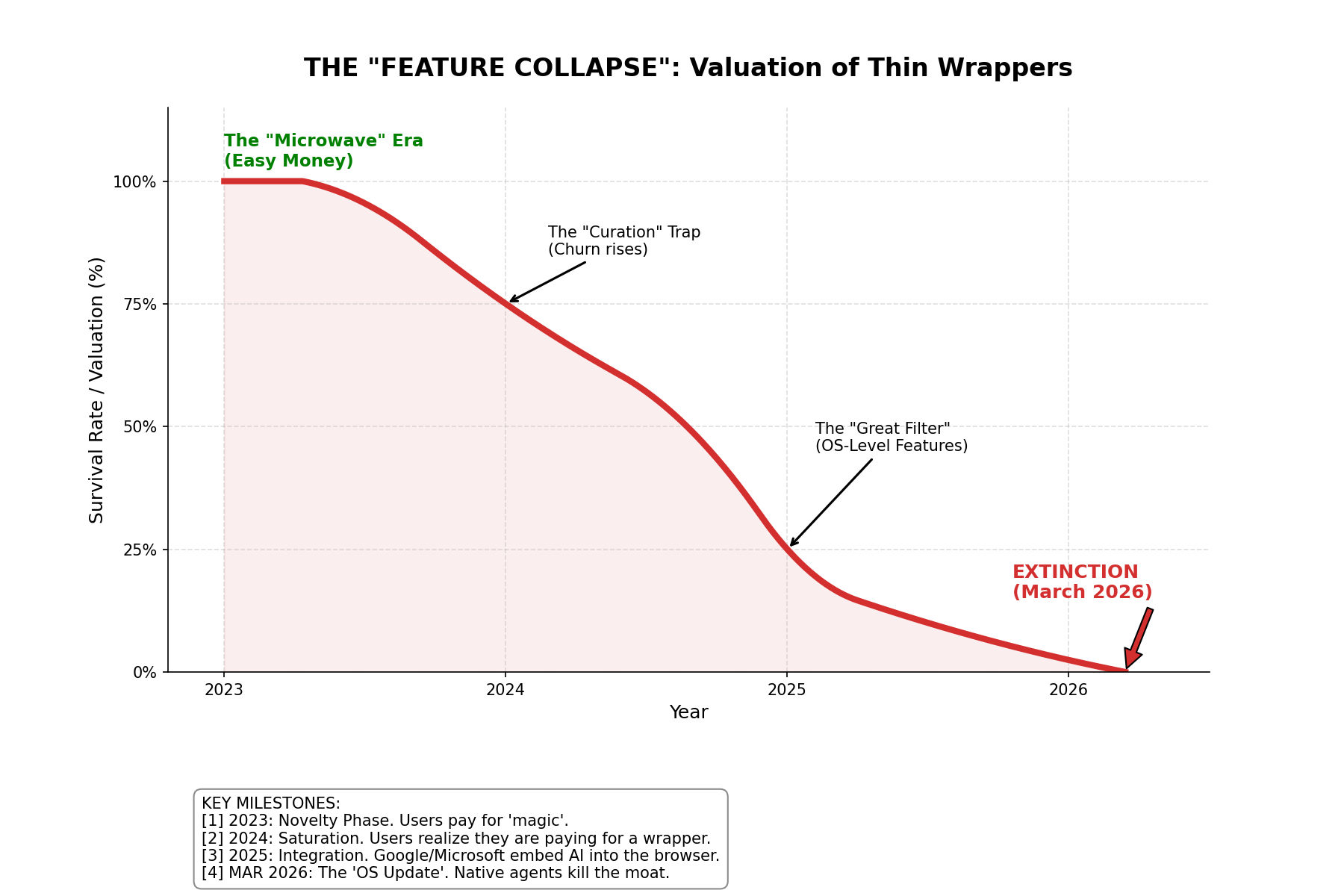

LLM wrapper startups face steep challenges in value proposition and competition, and low switching costs make customers hard to retain. (Estimated data)

The AI Aggregator Fallacy

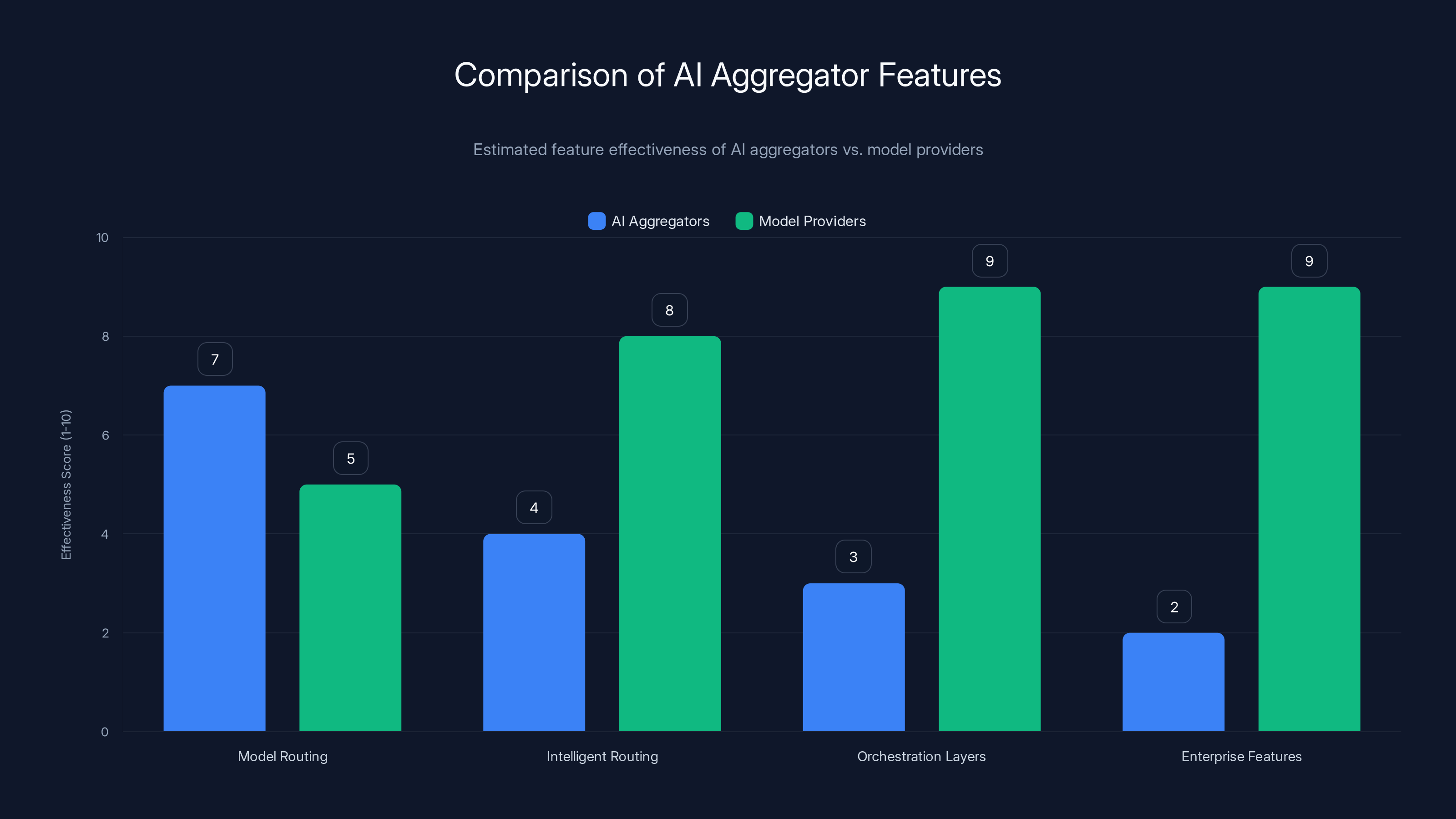

AI aggregators are wrappers on steroids. They're startups that don't just wrap one model. They wrap multiple models and promise to route your query to the optimal one. Use Perplexity and you're getting access to GPT, Claude, and other models through one interface. Use OpenRouter and you're accessing dozens of models through a single API.

The pitch sounds smart. Model prices vary. Model capabilities vary. Models have different strengths. An aggregator could theoretically route your question about code to a code-specialized model, your creative writing to Claude, and your reasoning task to a specialized reasoning model. They could play model arbitrage, buying cheap access and selling it at a premium.

The market doesn't believe in the pitch anymore.

Mowry's advice was even blunter for aggregators: "Stay out of the aggregator business."

His reasoning cuts through the clutter. Users don't want an aggregator because they need access to multiple models. They want it because they expect intelligent routing. They want the system to automatically send their query to whichever model is best suited. That requires intellectual property built into the routing logic.

For most aggregators, that IP doesn't exist. They're using rule-based routing (send code requests to one model, writing requests to another) or simple A/B testing. That's not a moat. That's a checklist item.
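To see why rule-based routing is a checklist item rather than a moat, consider how little code it takes. The sketch below is a hypothetical illustration: the model names and keyword sets are placeholders, not any real aggregator's logic or any provider's model identifiers.

```python
# Hypothetical keyword rules; a real aggregator's table might be longer,
# but the technique is the same lookup.
ROUTES = [
    ({"code", "function", "bug", "compile"}, "code-model"),
    ({"story", "poem", "essay", "rewrite"}, "writing-model"),
]
DEFAULT_MODEL = "general-model"


def route(query: str) -> str:
    """Pick a model name by matching query words against keyword sets."""
    words = set(query.lower().split())
    for keywords, model in ROUTES:
        if words & keywords:  # any keyword present in the query
            return model
    return DEFAULT_MODEL


print(route("Fix this bug in my function"))
print(route("Write a poem about rain"))
print(route("What is the capital of France?"))
```

Anything this short can be rebuilt by a customer or shipped by a model provider. Routing becomes defensible only when the decision depends on something competitors lack, such as proprietary evaluation data about which model performs best on a given class of task.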

Here's the margin pressure that kills aggregators: Model providers are already building orchestration layers themselves. They're releasing APIs that let users compare models, run A/B tests, and switch between them. OpenAI could build a simple interface tomorrow that says "here's the best model for your task." Then your aggregator has no value prop.

Moreover, every model provider is expanding into enterprise features. OpenAI, Anthropic, and Google are all building monitoring, governance, evaluation tools, and fine-tuning capabilities. When they do, they're moving beyond the commodity of LLM access into the value-added services that aggregators were supposedly providing.

The AWS Reseller Playbook Repeating

Mowry has seen this movie before. In the late 2000s and early 2010s, AWS was new and intimidating. Startups emerged to resell AWS infrastructure with friendly interfaces, consolidated billing, and customer support. They had venture backing and plausible unit economics.

Then Amazon did two things. First, they improved AWS's interface and built out features that early resellers were providing. Second, they invested heavily in enterprise support and professional services.

The resellers got caught between two forces: Amazon improving their product and customers realizing they could manage AWS directly. The economics of being a middleman compressed instantly. The survivors weren't the ones with the best interfaces or the best billing tools. They were the ones who added genuine services: security consulting, migration expertise, architectural guidance, DevOps support.

The same thing is happening with AI aggregators right now. Model providers are building better interfaces and better enterprise tooling. Customers are learning to work directly with the models. And the middlemen are getting squeezed.

The only aggregators that might survive are the ones that become something else entirely. Maybe they become consulting firms that help enterprises deploy AI. Maybe they build proprietary datasets and fine-tuning infrastructure. Maybe they specialize in a vertical market where routing logic becomes genuinely sophisticated. But as pure aggregators that just collect models and route queries? They're doomed.

[Chart: LLM wrappers face significant challenges, with the lack of switching costs and rapid interface improvements being the most impactful. Estimated data.]

The Developer Platform Renaissance

While wrappers and aggregators are facing extinction, other AI startup categories are thriving.

Developer platforms had a breakout year in 2025. Replit lets you code in the browser with AI assistance. Lovable lets you build web apps by describing them in English. Cursor became the IDE of choice for AI-assisted development. All three pulled massive funding and user adoption.

Why do these succeed where wrappers fail? Because they're not trying to be a thin layer on top of an LLM. They're building infrastructure. They're changing the fundamental workflow of how developers work.

Replit isn't just wrapping ChatGPT with a code editor. It's building a complete environment: code hosting, collaboration, deployment, and AI assistance all integrated. A developer using Replit has a completely different experience than a developer using ChatGPT in a browser tab and manually setting up infrastructure.

Lovable isn't just using GPT-4 to generate code. It's built an entire framework for turning design specs into deployed web apps. The AI is one component in a larger system that handles infrastructure, deployment, and updates.

Cursor took the GPT-4 base but built deep IDE integration, codebase awareness, and local context understanding that transformed how the AI works. Cursor's AI isn't better than GPT-4. But Cursor's experience is dramatically better than using GPT-4 in ChatGPT's interface.

These companies have moats because they own the environment. They can upgrade their AI models, but the value prop exists independent of which specific model they use. If OpenAI releases a better model, Replit can integrate it in days. The switching cost for the user remains high because Replit owns their entire development workflow.

Direct-to-Consumer AI Tools

Another category Mowry identified as having real potential is direct-to-consumer AI tools. These are applications that put AI capabilities into the hands of regular users for specific problems they face.

Google's AI video generator Veo is a perfect example. It's not an LLM wrapper. It's a specialized tool that does one thing exceptionally well: turns text descriptions into video. Mowry specifically mentioned students in film and TV using Veo to bring stories to life. That's a real workflow transformation, not a marginal improvement on an existing product.

Direct-to-consumer AI wins when it solves a complete problem end-to-end. It's not just running your prompt through an API and returning the result. It's understanding the user's intent, validating the output, and delivering something production-ready.

Consider video generation. The raw model is just one part of the solution. You need upscaling, quality assurance, format conversion, and possibly human review workflows. That's where you add value. That's where the business model works.

The key to success in this space is vertical focus combined with complete workflow automation. Pick a problem space that matters to customers, understand that space deeply, and build tools that automate the entire workflow, not just the LLM portion.

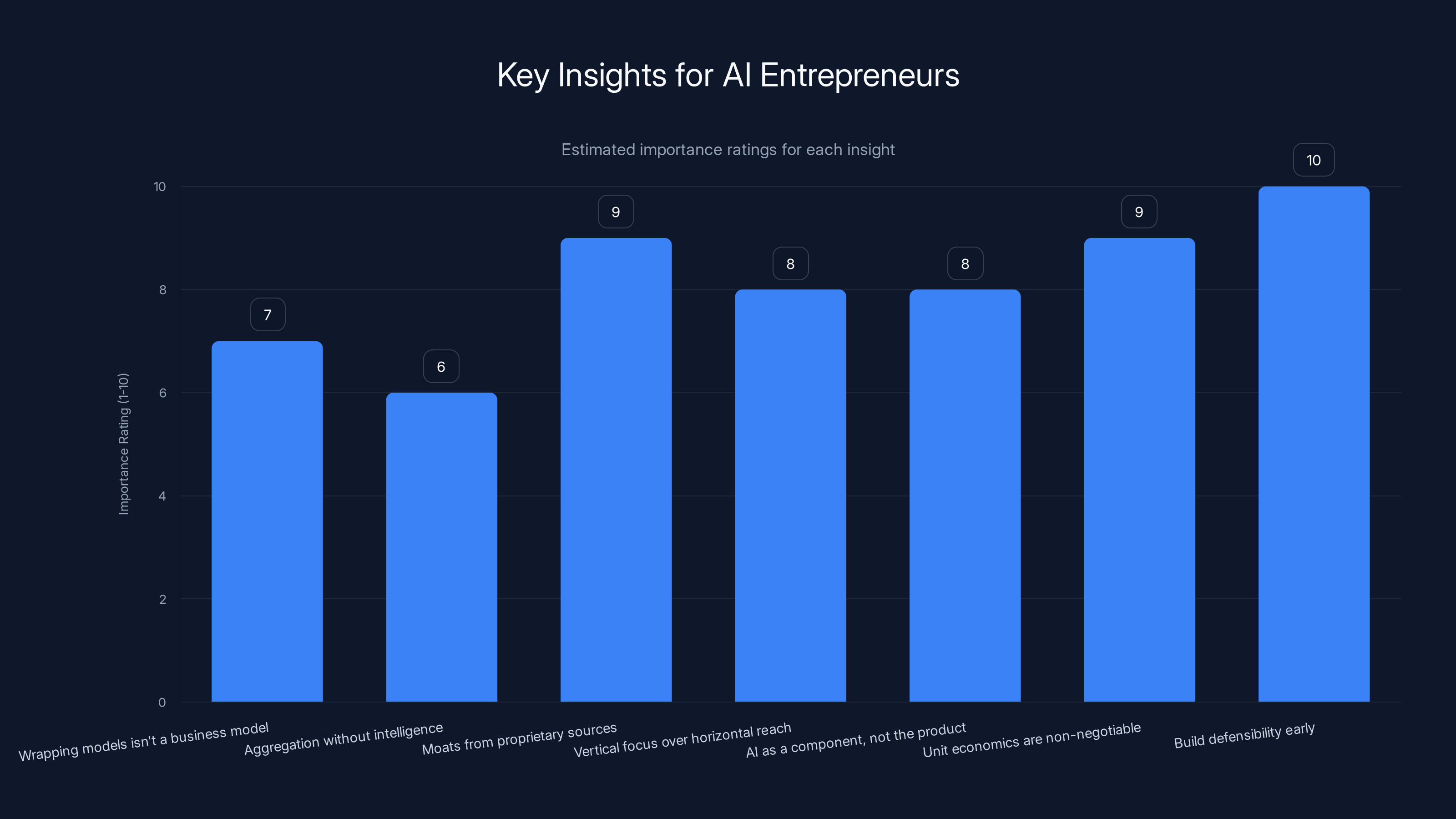

[Chart: Building defensibility early and understanding unit economics are rated as the most critical insights for AI entrepreneurs. Estimated data.]

The Biotech and Climate Tech Opportunity

Beyond AI, Mowry highlighted biotech and climate tech as categories with genuine momentum and venture attention.

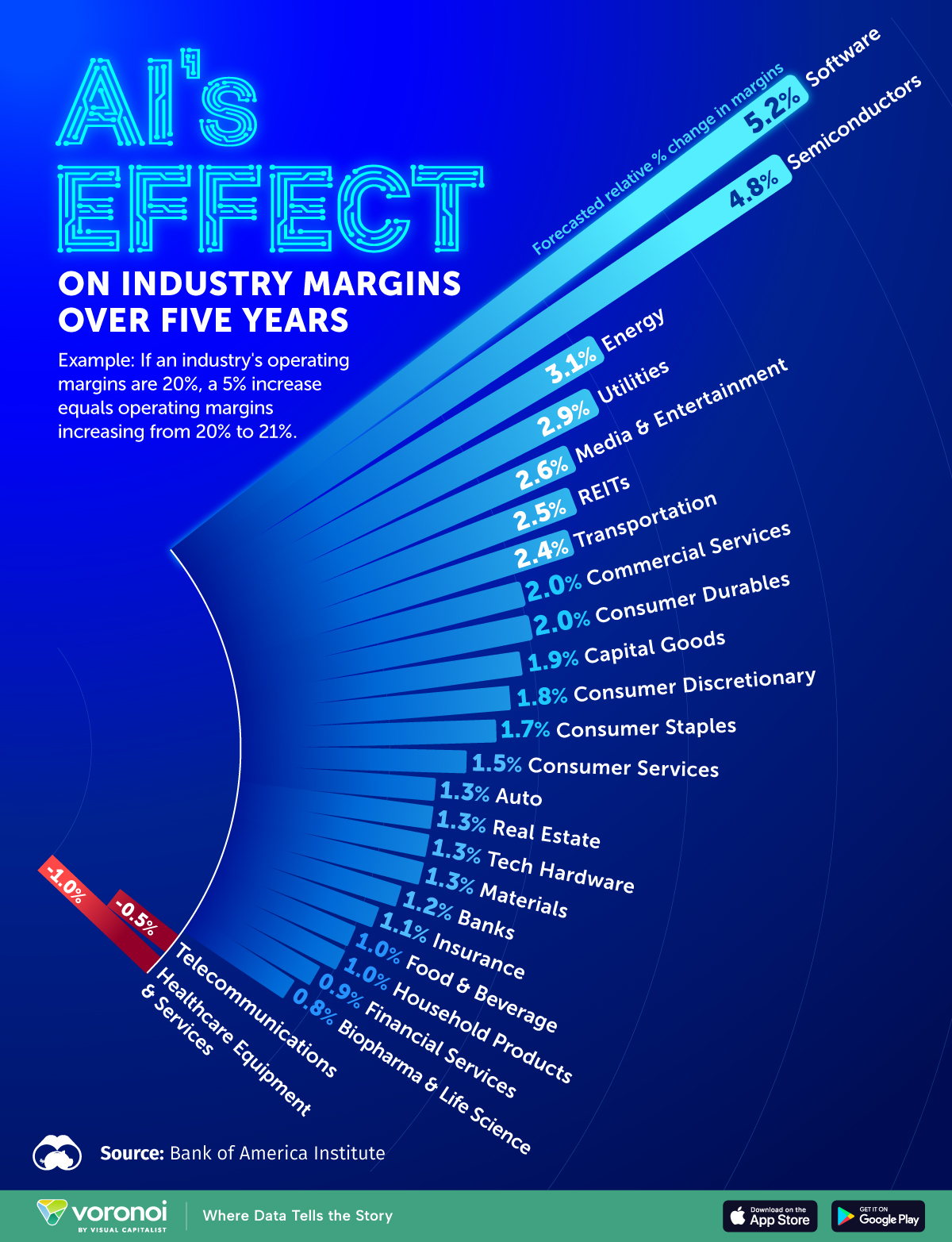

Here's why AI startups should pay attention: biotech and climate tech success requires infrastructure that AI startups have largely ignored. These aren't direct LLM wrappers. They're startups that use AI as a tool to solve hard scientific problems.

Biotech companies use AI to predict protein structures, design molecules, and accelerate drug discovery. The AI is a component in a pipeline that includes wet lab validation, regulatory work, and clinical trials. The company's value comes from the entire system, not just the AI model.

Climate tech companies use AI to optimize energy systems, predict environmental changes, and design carbon capture solutions. Again, AI is a tool, not the product.

These categories work because the AI enhances a fundamental workflow that has to happen anyway. You can't replace drug discovery with just an LLM. You can't solve climate change with just better models. The AI accelerates and optimizes existing processes.

The lesson for struggling AI startups: your AI shouldn't be your entire value prop. It should be one component in a larger system that solves a real problem end-to-end.

Why the Timing Matters

The market shift Mowry described didn't happen because AI got worse or wrappers got dumber. It happened because of market maturation.

In 2023, right after ChatGPT's late-2022 launch, the market was so new that even mediocre wrappers could gain traction. Early adopters were experimenting. Venture capitalists were allocating blindly to anything with "AI" in the pitch deck. The bar for survival was low.

By 2024, reality set in. Customers tried hundreds of AI tools and realized most of them didn't materially improve their workflows. VCs started asking harder questions about unit economics and defensibility. The easy capital dried up.

Now in 2025, we're in the cleanup phase. The startups that have defensible moats, real unit economics, and genuine workflow improvements are getting stronger. The ones that were built on hype and thin differentiation are either folding or pivoting.

This is healthy. It's uncomfortable for founders in the LLM wrapper space, but it's good for the ecosystem. It forces startups to build real value instead of surfing the hype wave.

[Chart: AI aggregators struggle with intelligent routing and enterprise features, where model providers excel. Estimated data shows aggregators lagging in key areas.]

Defensibility Frameworks for AI Startups

If you're building an AI startup and want to survive, Mowry's advice maps to three defensibility frameworks:

Moat One: Proprietary Data

If your AI application works better because you control unique, high-quality data in your vertical, you have a real moat. A legal AI that has trained on millions of court decisions and regulatory filings has an advantage that a generic LLM doesn't have. A medical AI that has trained on decades of anonymized patient data has an advantage.

The barrier to replication is high. The data is expensive or impossible to collect. The competitive advantage is durable as long as you keep your data advantage.

Moat Two: Deep Integration

If your product works better because it's deeply integrated into your users' workflow and decision-making process, that's a moat. Cursor works because it's integrated into your IDE and understands your entire codebase. Slack could become unbeatable in AI because it already understands your entire communication history and organizational structure.

The switching cost is high. A competitor would have to rebuild the entire integration from scratch.

Moat Three: Specialized Workflow

If your AI application automates an entire workflow end-to-end instead of just one component, you have a moat. You're not just running prompts through an API. You're handling validation, quality assurance, feedback loops, and potentially human review.

The barrier to replication is the complexity of the entire workflow. Competitors can copy one feature but replicating the entire experience takes months or years.
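As a rough illustration of what "end-to-end" means in code, the sketch below treats the model call as one step inside a loop of validation, retry with feedback, and escalation to a human. All names here (`run_workflow`, `Result`, the validator signature) are hypothetical, and `generate` stands in for whatever model call a product actually makes.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# A validator returns None when the output passes, or a message describing the problem.
Validator = Callable[[str], Optional[str]]


@dataclass
class Result:
    output: str
    attempts: int
    needs_human_review: bool
    issues: List[str] = field(default_factory=list)


def run_workflow(
    prompt: str,
    generate: Callable[[str, List[str]], str],  # stand-in for the model call
    validators: List[Validator],
    max_attempts: int = 3,
) -> Result:
    """Generate, validate, retry with feedback, and escalate if still failing."""
    issues: List[str] = []
    output = ""
    for attempt in range(1, max_attempts + 1):
        # Feed the previous round's issues back so the next draft can fix them.
        output = generate(prompt, issues)
        issues = []
        for check in validators:
            problem = check(output)
            if problem is not None:
                issues.append(problem)
        if not issues:
            return Result(output, attempt, needs_human_review=False)
    # Automated checks never passed: hand off to a person instead of shipping.
    return Result(output, max_attempts, needs_human_review=True, issues=issues)


# Usage with stub functions in place of a real model and real checks.
def fake_generate(prompt: str, issues: List[str]) -> str:
    return "Draft answer. Source: internal memo" if issues else "Draft answer."


def has_source(text: str) -> Optional[str]:
    return None if "Source:" in text else "missing source"


result = run_workflow("Summarize the memo", fake_generate, [has_source])
print(result)
```

The model call is a single line; the validators, the feedback loop, and the review queue are where domain knowledge accumulates, and those are the parts a competitor has to rebuild.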

The Economics of Building for Real Value

Building with these moats in mind changes everything about how you structure your business.

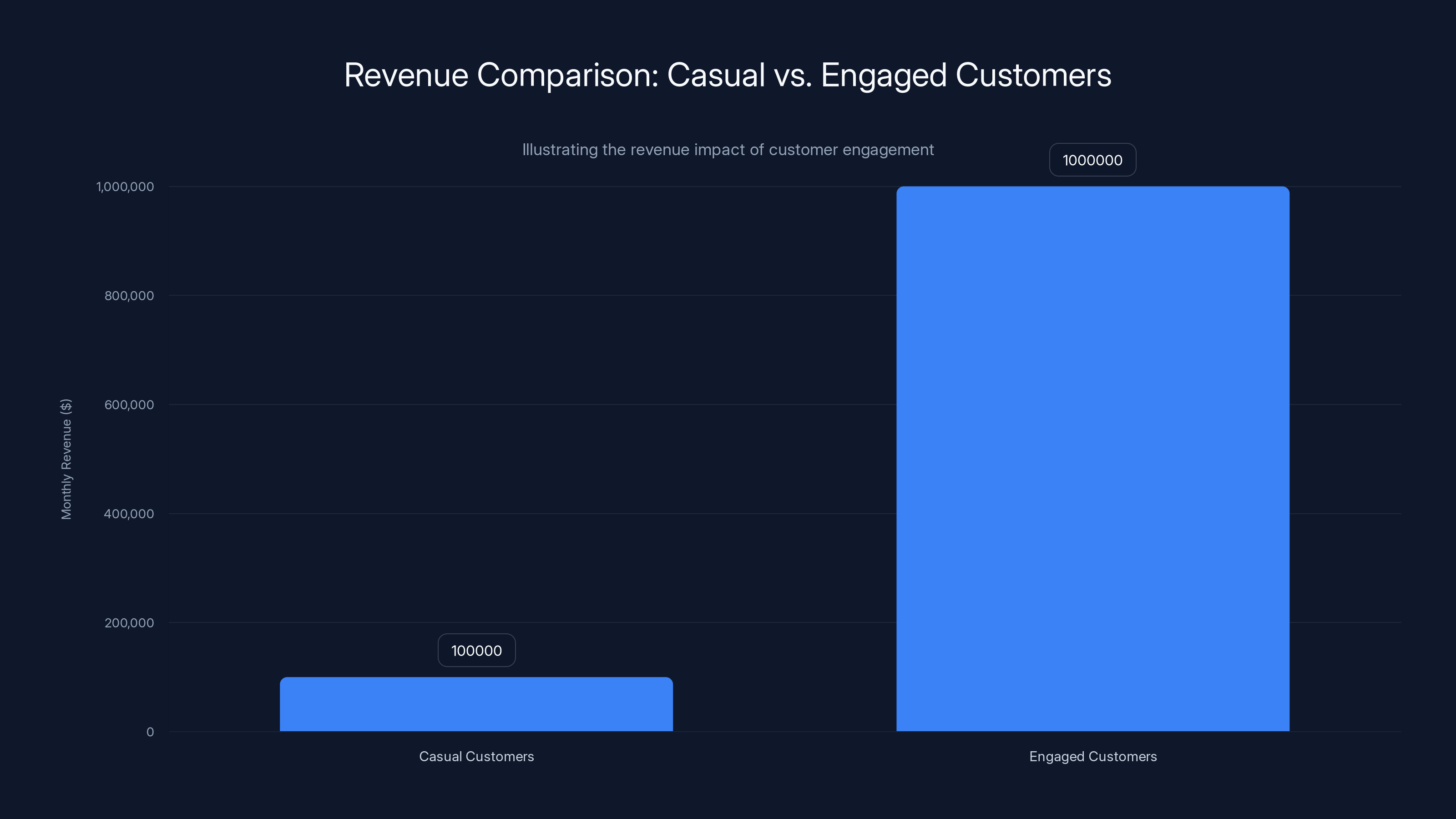

Instead of optimizing for quick initial adoption, you optimize for depth of usage. You don't care if 10,000 people use your product casually. You care if 1,000 people use it daily and can't live without it.

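Instead of chasing revenue per customer, you chase revenue per engaged customer. You'd rather have 100 customers paying a premium because they depend on the product than 10,000 paying a token amount for something they rarely open.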

Instead of assuming the underlying LLM is permanent, you assume it will change. You build your system so you can swap out the model and customers won't notice. You're building on top of AI, not dependent on specific AI.

Instead of competing on price, you compete on outcomes. "Our tool saves you 20 hours per week" beats "our tool is cheaper than ChatGPT" every single time.

[Chart: Estimated data shows that innovative AI solutions have a higher success rate in 2025, while LLM wrappers and AI aggregators face significant challenges.]

The Graveyard and the Future

What happens to the AI wrappers and aggregators that don't have moats?

Some will die. Some will pivot. Some will get acquired by larger companies that have distribution to hide their lack of differentiation.

But the broader ecosystem will be fine. Better than fine, actually. The capital and talent that goes into building thin wrappers right now will redirect toward building real infrastructure and real value.

The AI startup space is still in its infancy. But it's no longer in the "throw money at everything and see what sticks" phase. That phase produced a lot of noise and hype but not much durable value.

What's coming is better. Harder, slower, less hyped, but better. AI startups will be built to solve real problems with real defensibility. The founders will be in it for the long term, not the quick exit.

The market will be smaller than the hype suggested, but the companies that emerge will be dramatically more valuable than the 10,000 LLM wrappers that existed for six months.

Building AI Products That Last

If you're starting an AI startup right now, here's the framework that increases your odds of survival:

Start with the problem, not the AI. Identify a specific workflow that customers struggle with. Understand that workflow so deeply you can describe it in detail. Only then decide where AI fits in.

Build the entire solution, not the AI layer. Your product isn't ChatGPT with a different UI. Your product is a complete system that uses AI as one component to solve a customer's problem end-to-end.

Pick a vertical, own it. Don't try to build for everyone. Pick a specific vertical (law, construction, manufacturing, whatever) and build for that vertical so well that you become the obvious choice.

Measure what matters. Don't measure how many people use your product. Measure how much time it saves them or how much revenue it generates for them. Track retention and expansion revenue. Make sure your unit economics work.

Build data advantages into your roadmap from day one. How will you collect and leverage proprietary data? How will that data make your product better over time? If you don't have a data strategy, you're building on sand.

Plan to evolve your AI base. Assume that the models you're using today will be obsolete in 18 months. Build your architecture so you can upgrade models without rebuilding the product.

These frameworks aren't novel. They apply to any SaaS business. But they're the opposite of the LLM wrapper playbook that worked in 2023 and doesn't work anymore.
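One way to plan for model turnover is to keep every provider behind a narrow interface that the product owns. The following is a minimal sketch under that assumption: `TextModel`, `ContractSummarizer`, and both stub providers are hypothetical names invented for illustration, and a real adapter would call the vendor's SDK inside `complete`.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only surface the product depends on; each provider gets an adapter."""

    def complete(self, prompt: str) -> str: ...


class StubProviderA:
    # Placeholder for an adapter around one vendor's SDK.
    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt.splitlines()[-1]}"


class StubProviderB:
    # Placeholder for an adapter around a different vendor's SDK.
    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt.splitlines()[-1]}"


class ContractSummarizer:
    """Product logic: prompt construction and post-processing live here,
    not in the provider adapter, so swapping models leaves it untouched."""

    def __init__(self, model: TextModel) -> None:
        self._model = model

    def summarize(self, contract_text: str) -> str:
        prompt = "Summarize the key obligations:\n" + contract_text
        return self._model.complete(prompt).strip()


# Swapping the model is a one-line change at construction time.
old = ContractSummarizer(StubProviderA()).summarize("Tenant pays rent monthly.")
new = ContractSummarizer(StubProviderB()).summarize("Tenant pays rent monthly.")
print(old)
print(new)
```

The design choice is that the product depends on `TextModel`, never on a vendor's client library, so a model upgrade touches one adapter rather than the whole codebase.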

[Chart: Engaged customers generate significantly higher revenue despite a smaller customer base. Estimated data highlights the strategic focus on depth of usage.]

The Venture Capital Reckoning

Venture capitalists are finally asking the questions they should have asked from the beginning.

When you pitch an LLM wrapper to VCs in 2025, they'll ask: "What happens when OpenAI adds this feature to ChatGPT?" You need a real answer. "We'll move faster" or "our UI is better" aren't real answers.

When you pitch an AI aggregator, they'll ask: "How is your routing logic better than what the models provide natively?" Again, you need a real answer with competitive advantages that VCs can verify.

VCs are also asking harder questions about unit economics. How much does it cost you to acquire a customer? How much do they pay you? How long do they stay? At what scale are you profitable? These questions should have been table stakes from the beginning, but they weren't in 2023.
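Those questions reduce to a few lines of arithmetic. The sketch below uses a common simplified SaaS model (lifetime value as monthly contribution divided by monthly churn); the function name and every input are hypothetical, and a real model would also account for expansion revenue, discounting, and cohort effects.

```python
def unit_economics(cac, monthly_price, gross_margin, monthly_churn):
    """Simplified per-customer economics.

    cac:           cost to acquire one customer, in dollars
    monthly_price: what the customer pays per month
    gross_margin:  fraction of revenue left after API and serving costs
    monthly_churn: fraction of customers lost each month
    """
    monthly_contribution = monthly_price * gross_margin
    expected_lifetime_months = 1 / monthly_churn
    ltv = monthly_contribution * expected_lifetime_months
    return {
        "ltv": ltv,
        "ltv_to_cac": ltv / cac,
        "payback_months": cac / monthly_contribution,
    }


# Hypothetical inputs: $300 CAC, $50/month, 60% gross margin, 5% monthly churn.
metrics = unit_economics(cac=300, monthly_price=50, gross_margin=0.6, monthly_churn=0.05)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With these illustrative inputs the customer is worth about twice what it cost to acquire and takes roughly ten months to pay back. A thin wrapper tends to see gross margin fall and churn rise at the same time, and both changes push these figures in the wrong direction.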

The winners in the next cycle will be founders who anticipated this shift. They'll be the ones who built real moats while everyone else was riding the hype wave.

Alternatives to the Wrapper Model

If you realize you're building an LLM wrapper and it doesn't have real defensibility, here are exit or pivot routes that might work:

Vertical specialization: Take your wrapper and make it infinitely better for one specific vertical. Instead of a general writing tool, become the writing tool for lawyers. Instead of a general coding assistant, become the coding assistant for Rust developers. Build domain-specific knowledge and optimizations that turn your wrapper into a specialized tool.

Data play: If you have access to proprietary datasets in your space, pivot toward building a fine-tuned model or a data product. You're no longer a wrapper. You're a data infrastructure company.

Integration pivot: If your wrapper gained traction in one specific platform (Slack, Teams, Notion, etc.), become the best-in-class AI for that platform. Build integrations, workflows, and context understanding that no standalone tool can match.

Consulting services: If your wrapper found a customer base, transition toward consulting and implementation services. You're no longer selling software. You're selling expertise. This is lower growth but often more profitable.

Acquisition target: Be realistic about whether your company is fundable as an independent entity. If you've built a user base and some revenue, you're an acquisition target for a larger company. That's not failure. That's a successful exit for seed and Series A investors.

What This Means for Established Tech Companies

For Google, OpenAI, Anthropic, and other model providers, the shift is strategic.

They want startups building on top of their models, but only specific types of startups. They want startups that add real value, build defensible moats, and create a rich ecosystem. They don't want thousands of thin wrappers that provide no differentiation.

Model providers are increasingly building the tools that wrappers were supposed to provide. They're adding fine-tuning, embeddings, function calling, and other features that let advanced users build sophisticated applications.

They're also moving upmarket themselves. OpenAI released enterprise features and deployment options. Google is building AI into Workspace and Cloud extensively. These companies are becoming full-stack AI platforms, not just model providers.

For startups, this means the relationship with model providers matters less and less. You're not betting on being the best wrapper of their model. You're building with their model as infrastructure, not core value prop.

The Talent and Capital Reallocation

The pressure on LLM wrappers and AI aggregators will cause talent and capital reallocation.

Talent will move from startups with dubious futures to categories that are actually growing: developer platforms, specialized vertical tools, AI infrastructure, and biotech/climate tech companies.

Capital will follow talent. VCs will see that AI wrapper returns are compressed and redeploy capital into categories with better economics. This doesn't mean AI funding stops. It means AI funding redistributes.

The founders and engineers leaving wrapper startups will either join better-positioned companies or start new companies with better defensibility. This churn is painful in the short term but healthy long term.

The Role of Execution Speed

One trap founders fall into: assuming that execution speed saves them from having thin defensibility.

Mowry's comments suggest that's wrong. Even if you move incredibly fast, you can't outrun a model provider building the same feature. You can't outrun physics and economics that make being a middleman untenable.

Execution speed matters in markets where speed creates defensibility. It doesn't matter in markets where the underlying economics are broken.

Speed doesn't solve thin defensibility. Only the three moats we discussed solve thin defensibility: proprietary data, deep integration, or specialized workflow automation.

Looking Forward: The 2025-2026 Shakeout

The next 12 to 18 months will be brutal for AI startups in the wrapper and aggregator categories.

Funding will dry up. Customers will churn to cheaper or more convenient alternatives. Founders will face hard choices about pivoting or shutting down.

But this is necessary. The AI startup ecosystem is maturing. Hype is being wrung out. Real business models are being established.

The survivors will be stronger. The companies that emerge from this shakeout will have real defensibility, real unit economics, and real paths to scale.

That's not glamorous. It's not hype. But it's how sustainable industries are built.

Key Insights for AI Entrepreneurs

Let's synthesize the core lessons from this market shift:

- Wrapping models isn't a business model. Being a thin UX layer on top of an LLM isn't defensible. Model providers will add better UX. You'll lose.

- Aggregation without intelligence is useless. Routing queries across models requires real IP in the routing logic. Otherwise, customers can do it themselves.

- Moats come from one of three sources. Proprietary data, deep integration, or specialized workflow automation. Everything else is just noise.

- Vertical focus beats horizontal reach. Own a specific vertical so completely that you become irreplaceable for that vertical. Don't try to serve everyone.

- AI is a component, not the product. Your product should solve a complete problem. AI is one tool that helps solve it. If customers can replace the AI component and still get 80% of the value, you've failed.

- Unit economics are non-negotiable. You need to know what it costs to acquire customers, what they pay, and how long they stay. If those numbers don't work, no amount of growth hype will save you.

- The best time to build defensibility is now. Don't wait until you've gained traction. Build moats into your product from the first version.

FAQ

What is an LLM wrapper?

An LLM wrapper is a startup or product that takes an existing large language model (like ChatGPT or Claude) and adds a user interface, specialized features, or domain-specific optimizations on top of it. Examples include AI study assistants, coding helpers, or writing tools that all leverage underlying models without building their own. The core challenge with LLM wrappers is that they lack defensibility: as model providers improve their own interfaces and add specialized features, wrapper startups lose their competitive advantage.

Why are LLM wrappers facing extinction?

LLM wrappers are struggling because they lack defensible moats. When your entire business model is based on wrapping someone else's model, you face several fatal pressures: model providers can improve their own interfaces faster than you can, your margins compress as model prices drop, and there's no switching cost for customers because they could replicate your value in minutes. As Darren Mowry noted, the industry simply doesn't have patience for thin intellectual property wrapped around existing models anymore.

What is an AI aggregator?

An AI aggregator is a platform or API that consolidates access to multiple language models in one place. Services like OpenRouter or Perplexity allow users to access GPT, Claude, and other models through a single interface or API layer. Aggregators often promise intelligent routing, automatically sending queries to the best model for each task. However, without proprietary routing intelligence, aggregators are just middlemen between customers and model providers.

What moats do successful AI startups have?

Successful AI startups typically have one of three defensibility advantages. First, proprietary data that makes their AI work better in specific domains (like legal AI trained on court documents). Second, deep integration into customers' workflows that makes switching costs high (like IDE integration for coding assistants). Third, specialized workflow automation that handles an entire end-to-end process, not just the AI component. Without one of these moats, startups are vulnerable to displacement.

Which AI startup categories are actually thriving?

Developer platforms like Replit, Lovable, and Cursor had breakout growth in 2025 because they don't just wrap models—they build complete development environments. Direct-to-consumer AI tools that solve specific problems end-to-end also work well. Vertical-specific tools in biotech, climate tech, and professional services are growing because they use AI to enhance existing workflows rather than replace them. The common thread: successful startups own the entire solution, not just the AI layer.

How do I know if my AI startup will survive?

Ask yourself these questions: Can customers replace my AI with a different model without losing most of my value? If the answer is yes, you're in trouble. Do I own proprietary data, deep integrations, or workflow automation that competitors can't easily replicate? If not, you're building on sand. What happens when OpenAI or Anthropic adds the feature I'm building? If your answer is "we'll lose," you need to rethink your defensibility. If you can't answer these questions clearly, your startup has its check engine light on.

What should I build instead of an AI wrapper?

Instead of wrapping a model, build vertical-specific solutions that own an entire workflow. Instead of building for everyone, specialize deeply in one industry or use case. Instead of depending on a specific model, build architecture that works with any model. Instead of selling software, consider selling outcomes (time saved, revenue generated, problems solved). Instead of racing on features, build defensibility through data, integration, or workflow comprehensiveness that's hard for competitors to replicate.

What happened to AI startups that gained early traction in 2023-2024?

Many are facing a reckoning. In 2023-2024, the market was new and investors were allocating aggressively to anything with "AI" in the pitch. By 2025, those early advantages have eroded. Customers who tried dozens of AI tools realized most didn't meaningfully improve their work. VCs started asking harder questions about unit economics and defensibility. Startups with real defensibility are thriving. Startups that relied on hype are struggling. Some are pivoting, some are getting acquired, and some are shutting down.

Can speed to market save a wrapper startup?

Not effectively. While execution speed matters in markets where rapid movement creates defensibility, it doesn't apply to LLM wrappers. You can't move faster than OpenAI improving ChatGPT's interface. You can't outrun model providers building enterprise features. You can't outrun the fundamental economics that make being a middleman unprofitable. Speed only saves you if you're building something defensible underneath that speed.

What's the playbook for pivoting out of the wrapper business?

If you're a wrapper without moats, consider these pivots: specialize deeply in one vertical and become the obvious choice for that vertical; pivot toward data if you have access to proprietary datasets; go deep on integrations with a specific platform (Slack, Teams, Notion); transition to professional services and consulting; or position yourself as an acquisition target for larger companies. The worst option is staying in the wrapper business and hoping the market changes in your favor.

The Bottom Line

The AI startup ecosystem is undergoing a brutal but necessary correction. The easy money is gone. The hype cycle is ending. The economics of being a thin wrapper or middleman are no longer tenable.

But this doesn't mean the AI startup opportunity is dying. It's evolving. The founders and investors who understand this shift will build better companies. The ones who don't will waste years and capital chasing models that don't work.

The question for you: Are you building something that's defensible and valuable, or are you hoping the market doesn't notice your lack of moats? Because the market is finally noticing.

Key Takeaways

- LLM wrappers that only add a UI layer on top of existing models face extinction as model providers improve their own interfaces and move upmarket

- AI aggregators have little defensibility because users expect intelligent routing, and most aggregators lack the proprietary routing IP that would justify paying for a middleman

- The three defensibility moats for AI startups are proprietary data, deep integration into workflows, and end-to-end workflow automation

- Developer platforms like Cursor, Replit, and Lovable thrive because they own the entire development environment, not just the AI component

- Vertical specialization with deep domain knowledge beats horizontal generalist tools in both customer retention and long-term survival

- The AWS reseller era offers a perfect historical parallel: middlemen lose defensibility when providers build their own enterprise tools


![Why AI Startups Are Dying: The Wrapper and Aggregator Reckoning [2025]](https://tryrunable.com/blog/why-ai-startups-are-dying-the-wrapper-and-aggregator-reckoni/image-1-1771691751482.png)