Why Top Talent Is Walking Away From OpenAI and xAI [2025]

Introduction: The Great AI Exodus

Something's broken at the top of artificial intelligence. Not the algorithms, not the compute, not the venture capital flowing in like water. It's the people.

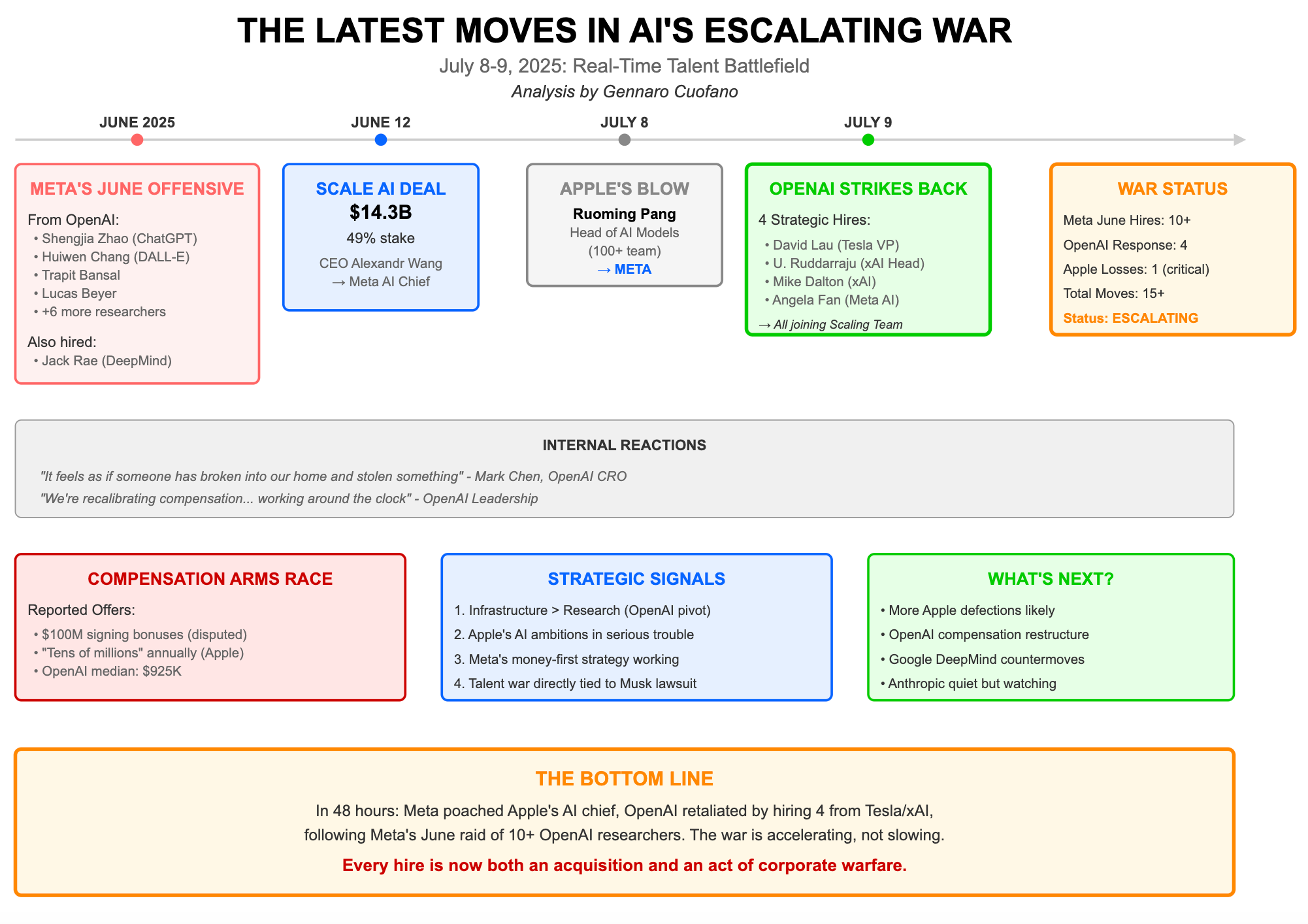

Over the past few weeks, two of the industry's most high-profile companies have been hemorrhaging talent at a pace that should worry every investor in this space. Half of xAI's founding team has walked out the door. Some left quietly. Others were pushed. At OpenAI, the attrition has taken a different form, but it's equally telling. The company disbanded its entire mission alignment team. A policy executive who challenged the company's "adult mode" feature was fired. These aren't small departmental reshuffles. These are cracks in the foundation.

Here's what makes this moment different from typical tech churn. In artificial intelligence, talent isn't just a competitive advantage. It's the entire ballgame. You can't copy a brilliant researcher. You can't hire a replacement for a cofounder who understood the original vision. You can't easily rebuild institutional knowledge once the people who built it leave. When companies lose talent, especially founding talent, they lose the thread connecting past innovation to future possibility.

The departures tell us something crucial about what's happening inside these organizations. They're not happening because the work is boring or the compensation isn't competitive. They're happening because of fundamental disagreements about direction, culture, and values. They're happening because brilliant people are realizing they can make more of an impact elsewhere. They're happening because the startup phase where anything felt possible has collided with the reality of running a corporation where not everyone agrees on what should happen next.

This matters beyond Silicon Valley gossip because these two companies are shaping the trajectory of artificial intelligence itself. Their talent decisions ripple through the entire industry. When top researchers leave, they often start their own companies or join competitors, accelerating innovation elsewhere. When founding teams fracture, it suggests the underlying vision might be unstable. When leadership fires people for disagreeing on fundamental questions, it signals something about organizational culture that talented people notice and respond to.

Let's break down exactly what's happened, why it's happening, and what it means for the future of AI.

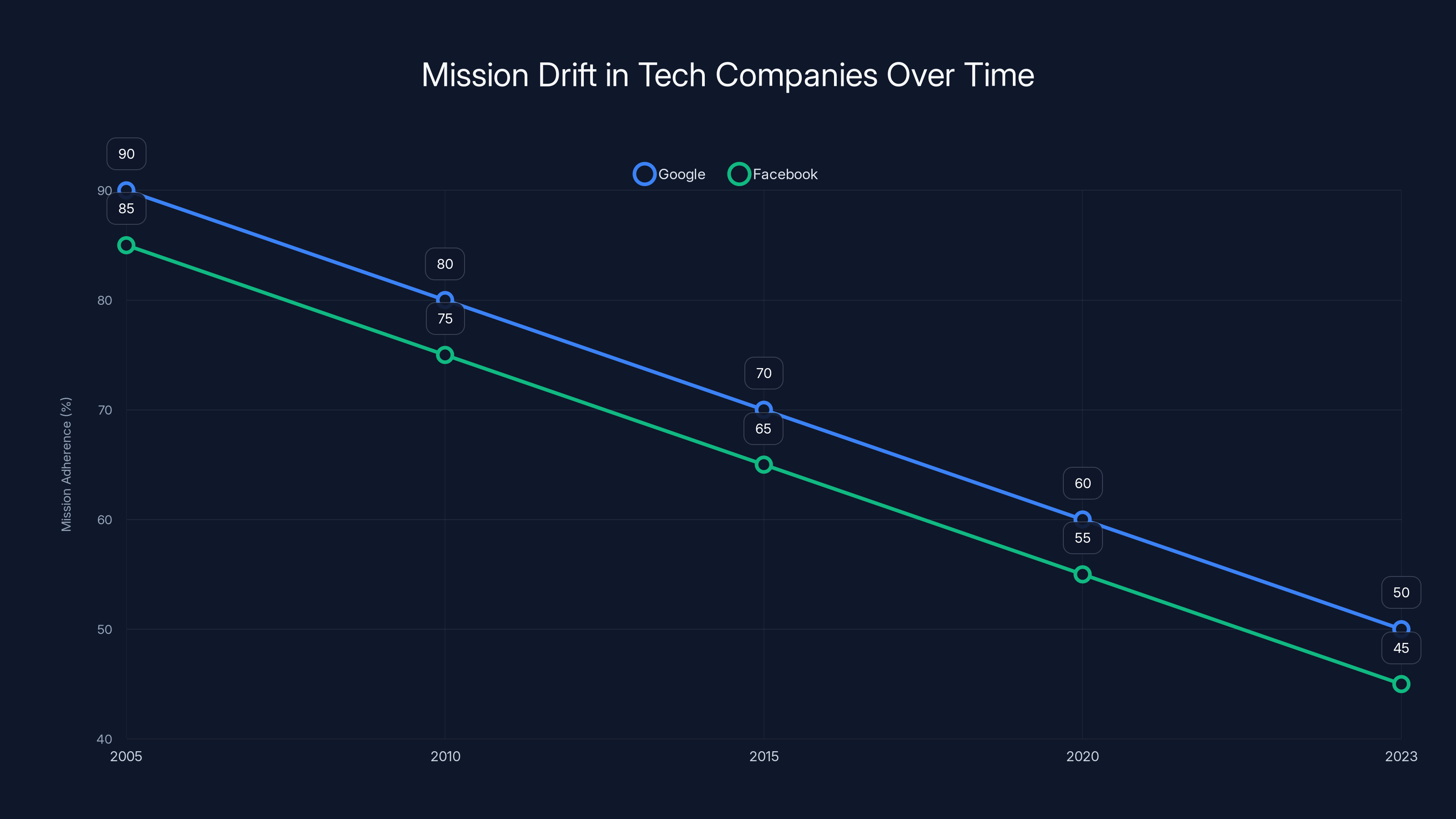

Both Google and Facebook have experienced a decline in mission adherence over time, with commercial pressures contributing to this trend. (Estimated data)

TL;DR

- xAI's crisis: 6 of 12 founding team members have departed in roughly one year, some of them through apparent restructuring

- OpenAI's turmoil: Mission alignment team disbanded, policy executive fired over "adult mode" opposition, signaling internal value conflicts

- Root causes: Disagreements over safety standards, profit prioritization, and fundamental company direction

- Industry impact: Loss of founding talent accelerates competition and raises questions about company stability

- Talent signal: Top researchers leaving suggests misalignment between stated mission and actual execution

The xAI Unraveling: How Half the Founding Team Disappeared

Let's start with the numbers, because they're stark. xAI launched in 2023 with a founding team of 12 people. That's small. That's intentional. A dozen people shaped the company's initial direction, culture, and technical roadmap.

Now, six of those twelve are gone.

That's not attrition. That's a collapse.

What makes this worse is the timeline. These departures didn't happen all at once. They've been trickling out over roughly twelve months, which means the company has been bleeding founders continuously, never having a moment to stabilize. You lose one founder, you adapt. You lose three, you start to worry. You lose six, you have to ask whether the original vision even survives intact.

The pattern matters too. Some departures were framed as resignations, with team members choosing to leave. Others came through what was delicately called "restructuring," which is corporate speak for "we fired them or forced them out." That distinction is crucial because it tells you whether people are running toward something better or running away from something broken.

The Restructuring Reality

When you hear "restructuring" at a startup this young, it's never about optimizing org charts. Restructuring at that stage means leadership has irreconcilable differences with some of the founding team about what the company should be or how it should operate.

This is particularly damaging at xAI because the founding team was recruited specifically for their abilities and their shared understanding of what the company would do. If those founding members are being pushed out, then either their understanding of the mission changed or leadership's did. Either way, something fundamental broke.

In frontier AI research, this kind of split is especially costly. A researcher who helped design your initial architecture doesn't just understand the code. They understand the constraints you decided to accept, the tradeoffs you optimized for, the long-term vision embedded in early decisions. Lose that person, and you have to reverse-engineer their thinking from what they left behind.

Elon's Vision Collides With Reality

Elon Musk, xAI's founder, came into this company with a specific worldview. He wanted to build an AI system that was smarter and more honest than existing alternatives. He was critical of what he saw as safety theater at competitors. He wanted to move fast, not relitigate every safety constraint.

That vision probably resonated with the original founding team. But vision is one thing; execution is another. As the company hired more people, built processes, and faced real constraints around responsible deployment, some of the original founders may have confronted the gap between the vision they signed up for and the compromises they had to make.

Musk didn't contradict his vision publicly, but his actions did. By pushing out some founding team members and restructuring, he was signaling something had to change. That change came at the cost of losing people who understood his original thinking.

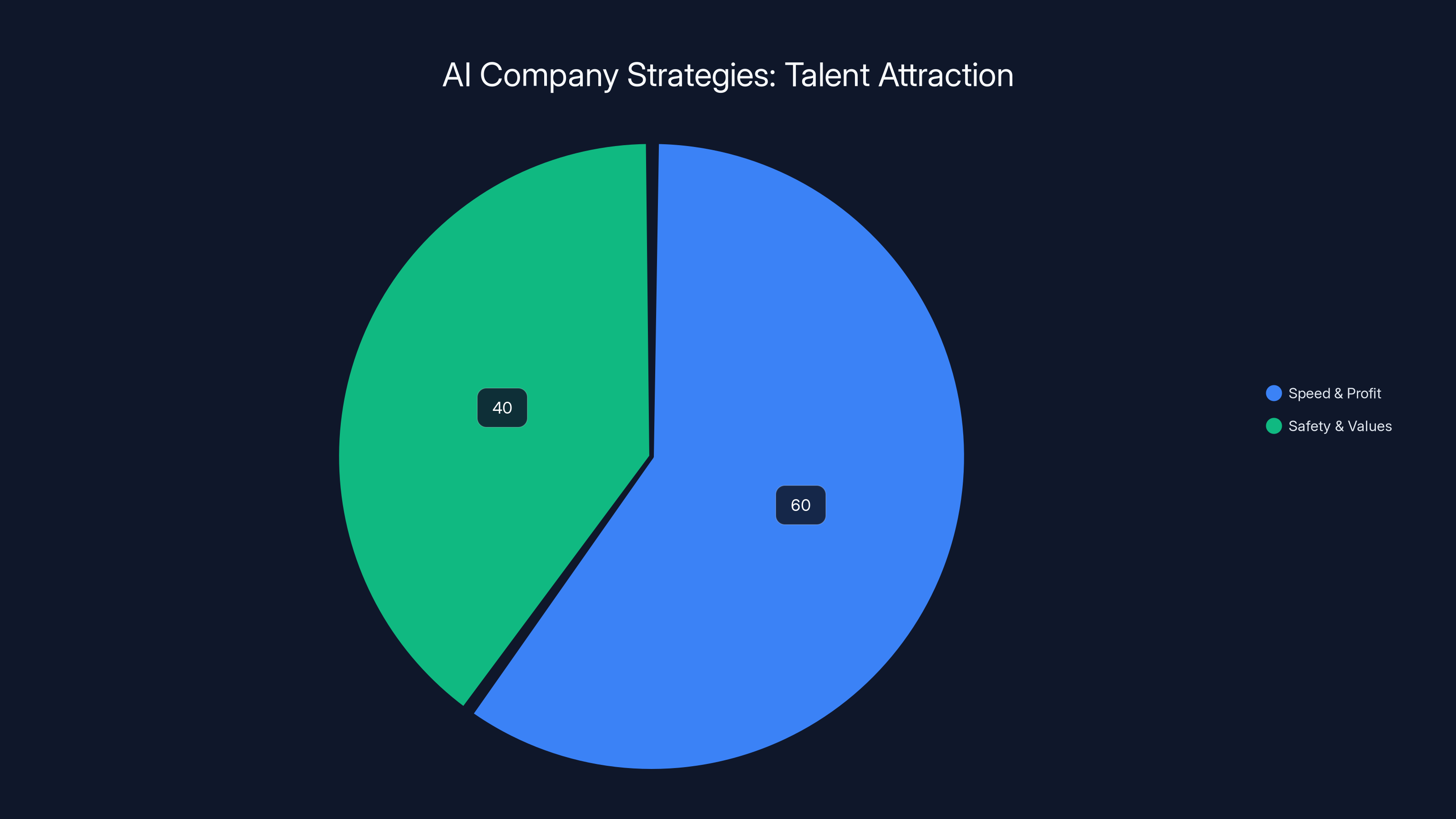

Estimated data suggests that 60% of AI companies prioritize speed and profit, while 40% focus on safety and values. This division reflects the industry's strategic choices in attracting talent.

OpenAI's Internal Conflict: Mission vs. Monetization

OpenAI's situation is different but equally revealing. This is a company valued at well over $100 billion that controls the most widely used AI system in the world. It should be thriving. Instead, it's fracturing from the inside.

The proximate cause is clear: OpenAI disbanded its mission alignment team entirely. This wasn't a layoff of part of the safety division. This was the elimination of an entire function dedicated to ensuring the company's work aligned with its stated mission of beneficial AI development.

Then came the firing of a policy executive who had opposed the company's "adult mode" feature. This is where things get genuinely interesting, because it reveals what the company cares about and what it doesn't.

What Is "Adult Mode" and Why Did Someone Get Fired Over It?

Adult mode, as described, would be a version of ChatGPT with relaxed restrictions: fewer guardrails and more willingness to engage with controversial content, adult themes, explicit material, and politically sensitive topics.

On the surface, this is a product decision. Some users want fewer restrictions. Some users believe safety features are overblown. Some users want AI that doesn't refuse their requests based on perceived political bias. These are legitimate perspective differences.

But the policy executive who opposed it saw something different. They likely saw a direct contradiction between OpenAI's stated mission (beneficial, aligned AI) and a product feature that would move in the opposite direction. They probably said so internally. Then they were fired.

When you fire someone for objecting to a strategic decision on principled grounds, you're sending a message to everyone else in the organization: Don't raise ethical concerns. Keep your head down. The mission alignment language was never real.

The Mission Alignment Team Dissolution

Dissolving the mission alignment team right before rolling out adult mode is almost theatrical in its bluntness. It's like removing the quality assurance team right before shipping a risky product update.

The team probably included researchers and policy experts who would have raised exactly the kinds of questions the fired executive raised. Remove the team, you remove the constraint. Remove the people with institutional knowledge about your safety commitments, and you make it easier to pivot away from those commitments.

This isn't subtle. The people at OpenAI know what message this sends, and everyone else in the organization who is weighing whether to stay is hearing it loud and clear.

Why Founding Team Members Leave: The Psychology

There's a pattern to founder departures that deserves deeper understanding. It's not about the stock price. It's not about the salary they could negotiate somewhere else. It's almost always about one of three things: irreconcilable value conflicts, loss of leverage, or exhaustion.

Value Conflicts Are Irreversible

If you co-founded a company, you made a bet about what kind of organization you wanted to build. You probably had explicit conversations about mission, values, and decision-making principles. In the early days, these shared values are what held the company together because everything else was broken. The office was terrible, the compensation was minimal, the product didn't work. But the shared sense of purpose kept people pushing.

Over time, as the company succeeds, it gains resources and power. That's when value conflicts become visible. If you built something with the core belief that it should operate according to certain principles, but leadership decides to abandon those principles, you have an unsolvable problem. You can't negotiate a value. You can't compromise on mission. You either leave or you accept that you helped build something that contradicts what you believe.

For xAI founders, the value conflict likely centered on how to develop AI responsibly. Were they building something to maximize capability or to maximize safety? Were they moving too fast or not fast enough? Were they selling to the right people or cutting corners for revenue? These aren't questions with middle-ground answers.

Loss of Leverage Changes Everything

When you're a founding team member at a one-year-old company, you have significant leverage. The company can't function without you. You could leave and take key knowledge, relationships, talent networks. Leadership has to negotiate with you because losing you costs something.

But as the company matures, as the org grows, and as institutional knowledge spreads across more people, your individual leverage diminishes. You become more replaceable. More expendable. The company can hire someone more senior and hand them your responsibilities. Or it can reorganize and distribute what you did across others.

Once you realize you've lost leverage, the calculus for staying changes fundamentally. You're no longer a cofounder with voice in strategic direction. You're an employee with opinions leadership can choose to ignore. For talented people, that moment often triggers a reassessment. If you're going to be an employee anyway, why not get paid significantly more elsewhere? Why not join a company where you still have voice and influence?

The Exhaustion Factor

Building something from nothing is impossibly hard. For the first year, adrenaline carries you. For the second year, momentum helps. By year three, you're running on fumes.

When you're exhausted and you also have value conflicts with the direction, the decision to leave becomes inevitable. You're too tired to fight. You're frustrated that the company isn't operating according to the principles you signed up for. You see an opportunity to join something else where maybe you don't start from zero but you're also not constantly fighting the organization you're trying to lead.

The Signal This Sends to the Broader AI Industry

When half a startup's founding team leaves, the rest of the industry is watching. Not because the drama is entertaining (though it is), but because founding team departures are the canary in the coal mine for organizational health.

Talent Follows Talent

The best researchers in AI watch what happens at xAI and OpenAI like hawks. They know these are frontier companies pushing the boundaries of what's possible. They also know that working there is a calculated bet on whether the organization will remain stable, coherent, and aligned with the mission they signed up for.

When half the founding team leaves, that's a clear signal: This bet has soured. These brilliant people, who understand AI better than almost anyone in the world, are deciding the risk/reward isn't worth it. That's information talent elsewhere processes immediately.

Some of that talent will jump to other companies hoping to get in early before the same fracture happens. Some will start their own companies, betting they can build something more stable. Some will join organizations with stronger value alignment, even if those organizations are less prestigious.

The net effect is that xAI and OpenAI will find it harder to recruit and retain top talent. The departures send a signal that repels future hires.

Investor Confidence Gets Shaken

Investors bet on founders and founding teams. They bet that the people building the company have a coherent vision and the capability to execute it. When a founding team fractures, it raises the question: Did the original vision survive? Does the remaining team have conviction? Can they execute without the departed founders?

These aren't questions that get answered in quarterly earnings calls. They get answered in the market's willingness to continue funding the company at previous valuations.

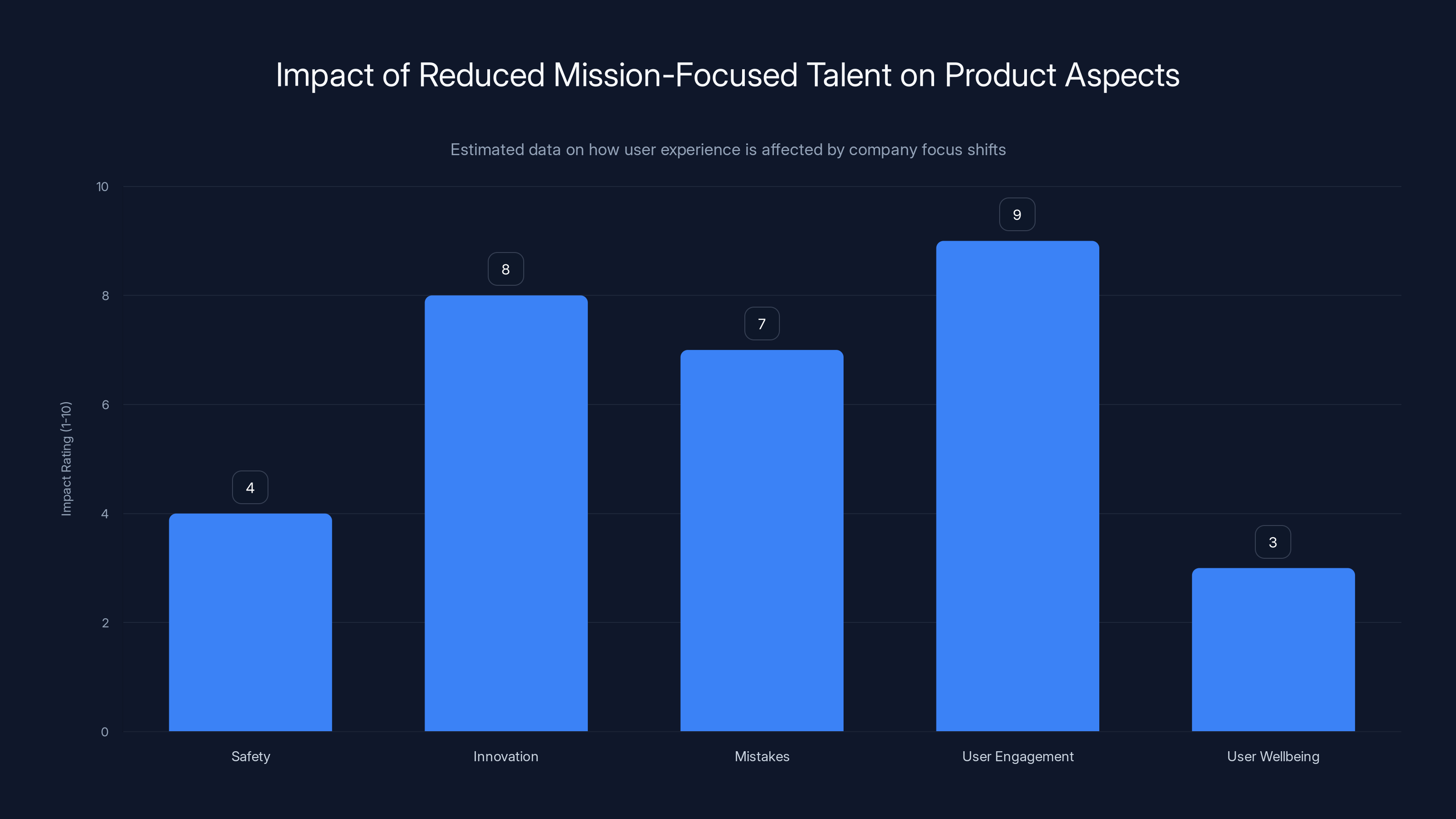

When companies prioritize profit and speed over mission alignment, products may become less safe and more mistake-prone, though innovation and user engagement may increase. Estimated data.

Safety Concerns vs. Competitive Pressure: The Core Tension

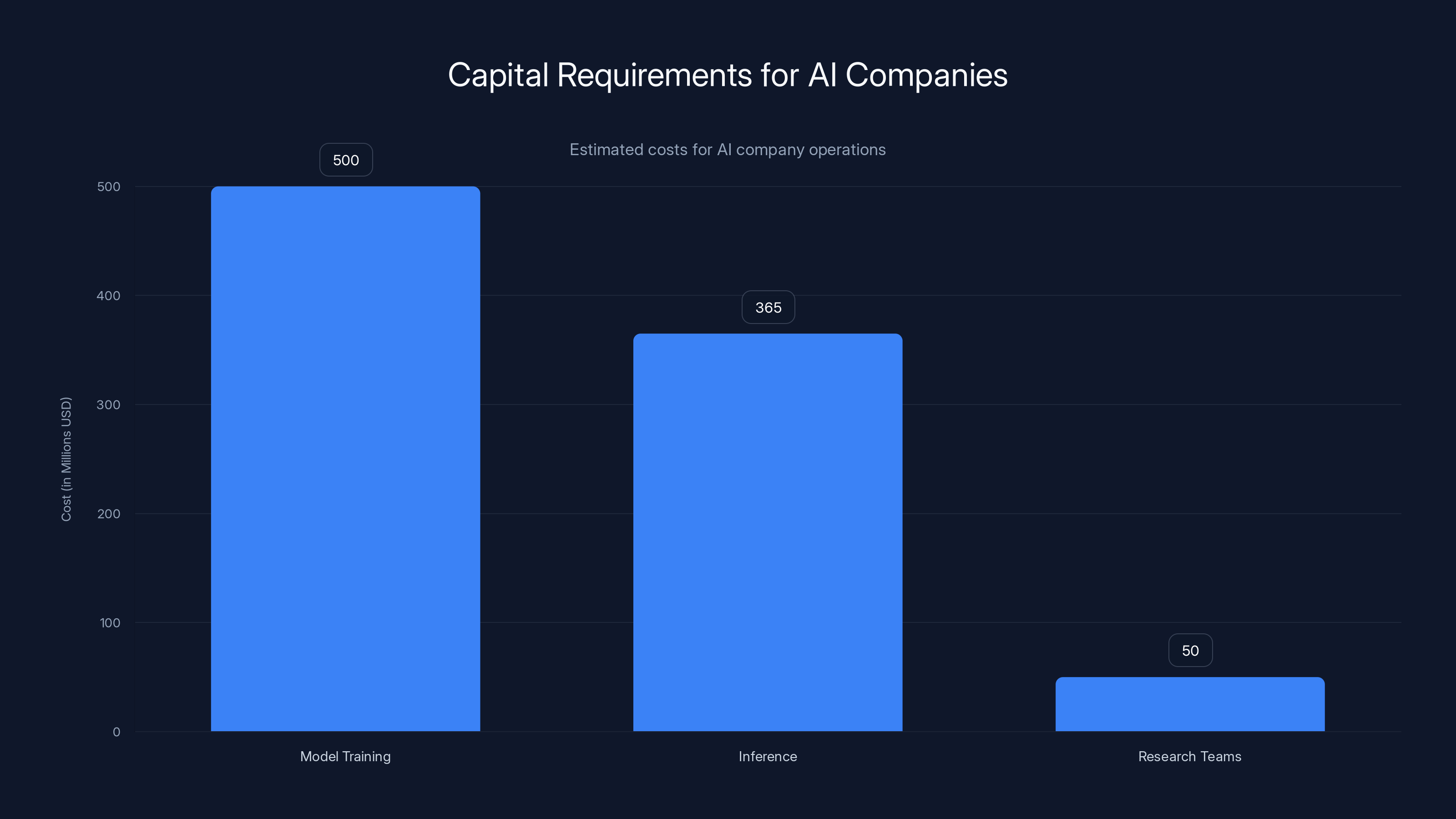

Underlying all of this is a fundamental tension that's becoming impossible to ignore. Building powerful AI systems is incredibly expensive. Training large language models costs hundreds of millions of dollars. Running inference at scale costs millions daily. Maintaining world-class research teams costs tens of millions annually. The math is relentless: if you want to lead in AI, you need revenue that matches those costs.

But the mission statements of these companies often included commitments to safety, responsible development, and alignment with human values. Those commitments cost money too. Safety research doesn't generate revenue. Ethical review processes slow down product releases. Turning down revenue opportunities for safety reasons is expensive.

As these companies have grown, the pressure to generate revenue and remain competitive has intensified. At some point, that pressure collides with the stated commitment to doing things safely and responsibly. Companies have to choose. Most are choosing revenue and competitiveness.

OpenAI's Choice Is Clear

OpenAI's disbanding of the mission alignment team and firing of the policy executive who objected to adult mode is a choice. It's a choice to prioritize speed and market appeal over internal governance structures built to ensure ethical development.

That choice probably makes business sense. Fewer restrictions means happier users. Happy users create more engagement, better word-of-mouth, stronger network effects. In a competitive market against Anthropic, Google DeepMind, and others, you need every advantage.

But talented people who joined the company because they believed in the mission see this choice differently. They see it as confirmation that their earlier concerns were valid. That the organization isn't actually committed to the stated mission. That they're working in a place where principled objections get you fired, not listened to.

Those talented people leave. And in leaving, they make space for people who don't care about the mission, only about the impact and the resume line. Over time, that changes what the organization is.

The Competition Effect: Where Are They Going?

When top AI talent leaves OpenAI and xAI, they don't disappear. They go somewhere. Understanding where they go is crucial to understanding what this exodus means for the industry.

Anthropic and Other AI Startups

Anthropic explicitly positions itself as the safety-focused alternative to OpenAI. Guess where a lot of disillusioned OpenAI researchers are looking? The company was itself founded by a group of senior researchers who left OpenAI. There's a clear pipeline.

For researchers alienated by OpenAI's move toward less restricted models, Anthropic's explicit commitment to safety research is attractive. The company is hiring aggressively and has the capital to compete on compensation. More importantly, it offers the mission alignment that people are leaving OpenAI to find.

New Startups Launched By Departing Founders

Some departing talent won't join another company. They'll launch their own. This is common in AI, where the cost of entry has dropped significantly. You need GPUs, capital, and talent. Departing founders have credibility that makes it easier to raise capital. They have talent networks to recruit from. And they have convictions about what the field needs that led them to leave in the first place.

Expect multiple startups launched by former xAI and OpenAI staff over the next 18 months. Some will be trivial and fail. Others will be genuinely innovative and well positioned to compete in specific niches. The talent exodus becomes seed capital for competing approaches.

Big Tech Companies (Google, Microsoft, Meta)

Large tech companies have been quietly building their own AI research teams for years. They have resources, stability, and existing product ecosystems to leverage. For researchers who are exhausted by startup grinding, who want to work on unsolved problems without commercial pressure, big tech offers an attractive alternative.

Google, Microsoft, and Meta have all hired significant AI talent from startups in recent years. That pipeline will intensify as startup departures accelerate.

What This Means for AI Development: The Fragmentation

Historically, AI development has been concentrated. A few companies have the resources to train frontier models. That concentration made sense when training costs were stratospheric and talent was scarce. But the departures we're seeing now are creating fragmentation.

Decentralization of Frontier Research

As researchers leave the incumbent companies, they're taking knowledge, and potentially code, with them. In one notable case, xAI sued a former engineer, alleging that he copied confidential company files after selling his stock and before leaving to join OpenAI. That's extreme, but it illustrates how mobile this knowledge has become.

When founding talent leaves with deep understanding of technical architecture and training approaches, that knowledge diffuses into the broader ecosystem. It's not instantly reproducible (you still need compute and capital), but it reduces the intellectual moat around incumbent companies.

The net effect is faster diffusion of techniques across the industry. What OpenAI kept secret three years ago, multiple companies are reimplementing now. The first-mover advantage erodes faster. Everyone gets better faster.

Specialization vs. Generalization

As the field fragments, you'll also see differentiation. Not every new company or departing team will try to rebuild a giant general-purpose language model. Some will specialize: safety-focused models, privacy-preserving models, energy-efficient models, specific vertical applications.

This specialization is probably healthier for the field overall. It means different approaches to building and deploying AI systems are being explored simultaneously. Some will fail. Some will succeed. The diversity of attempts increases the probability that someone finds approaches better than what the incumbents are doing.

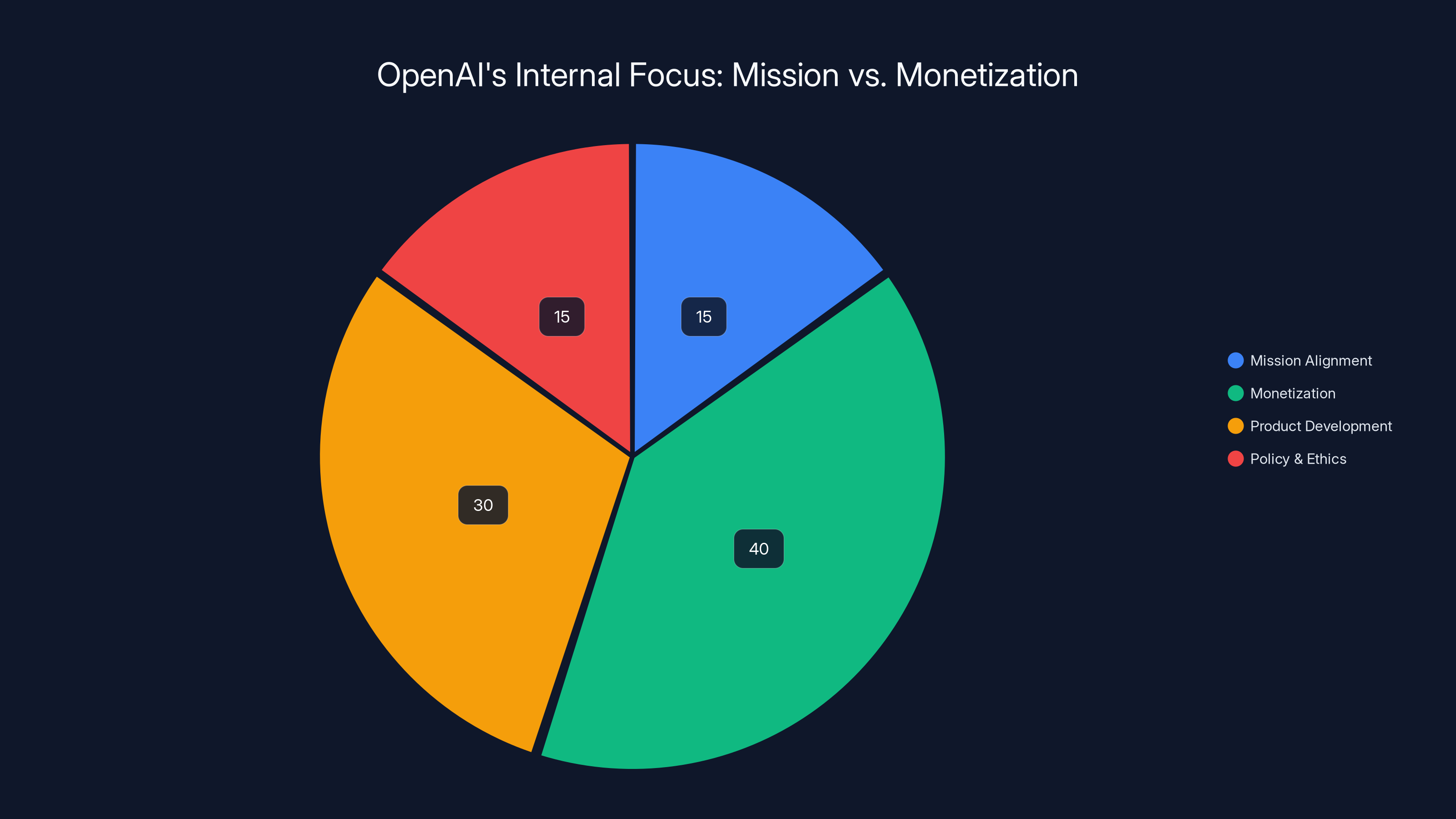

Estimated data suggests a shift towards monetization and product development at OpenAI, with less emphasis on mission alignment and policy/ethics.

The Specific Case: Mission Alignment as a Flashpoint

Let's zoom in on the specific issue behind the OpenAI departures: mission alignment and what the company should optimize for.

What Is Mission Alignment, Really?

Mission alignment is a deceptively simple concept that becomes increasingly complex when executed. It means the organization's actions match its stated values. For OpenAI, the stated mission is developing AI that benefits humanity and ensuring the benefits are widely distributed.

That mission implies certain constraints: you shouldn't build AI that concentrates power, you shouldn't optimize purely for profit at the expense of safety, you shouldn't rush capabilities that could be dangerous. These are the kinds of principles that should guide organizational decisions.

Mission alignment teams exist to help navigate the inevitable conflicts between these principles and business pressures. When a team wants to launch a product feature (adult mode) that contradicts the principles, a mission alignment team asks hard questions: Does this align with what we said we'd do? If not, how do we justify the contradiction? What's the decision-making process?

These aren't fun conversations. They slow things down. They create bureaucracy and oversight. In a competitive industry, they're extremely costly. But they also prevent organizational drift where the company slowly becomes something completely different from what it intended.

Why Companies Dissolve These Teams

OpenAI dissolved its mission alignment team right before launching adult mode. This is probably not coincidental. The team would have asked hard questions about whether adult mode aligns with the stated mission. Rather than navigate those questions, the company eliminated the team.

This is a common move in organizations where leadership has decided to pivot away from stated values. You don't change the stated mission (that would be too honest). You just quietly eliminate or neutralize the function that holds you accountable to it.

The Signal to Remaining Employees

When you dissolve a mission alignment team right before launching a feature that contradicts the mission, everyone in the organization understands what just happened. The message is clear: Mission talk is marketing. Real decisions are made elsewhere. Compliance is optional if you have power.

Faced with that message, people who took the mission seriously have to decide: Do I stay and pretend this wasn't what I think it was, or do I leave? For many people, that's an easy decision.

Competitor Response: How Others Are Capitalizing

The departures at OpenAI and xAI aren't just losses for those companies. They're opportunities for competitors.

Anthropic's Explicit Counter-Positioning

Anthropic was founded explicitly as the safety-focused alternative to OpenAI. Multiple founders of Anthropic left OpenAI because they felt the company was prioritizing capability over safety. Now, as OpenAI dismantles its safety infrastructure, Anthropic is the obvious home for researchers who believe safety matters.

Anthropic has been positioning itself as the company that actually cares about alignment, not just as a talking point. Departures from OpenAI are now validating that positioning. Every engineer who leaves over the dissolution of the mission alignment team is a signal that Anthropic's messaging was right.

Startups Moving Into the Gap

Beyond Anthropic, there's space for multiple new companies founded by departing talent. A startup founded by xAI alumni might focus on efficient frontier models. Another founded by OpenAI alumni might focus on safety-preserving systems. Another might focus on a specific vertical (medicine, finance, scientific discovery).

Each of these companies benefits from the departures. They get founders with credibility, technical knowledge, industry relationships. They get a clear mission statement (we're doing this differently). They get early adopters who are frustrated with the incumbents.

Academic and Government Institutions

Major universities and government research institutes have also been hiring AI talent from startups. Berkeley, Stanford, MIT all have strong AI research programs and the resources to attract top talent. For researchers who want to work on foundational questions without commercial pressure, this is attractive.

The Broader Pattern: AI Company Culture Under Strain

OpenAI and xAI aren't the only AI companies experiencing talent departures. This is a broader pattern. Anthropic itself has had departures. Google DeepMind has seen turnover. Even smaller AI startups are dealing with retention challenges.

What's happening is that rapid success in AI is creating burnout and culture challenges that founders didn't anticipate. You start a company with a tight founding team, shared mission, commitment to doing something right. The company succeeds beyond anyone's imagination. Suddenly you're managing 500 people, dealing with regulatory pressure, navigating geopolitical dynamics. The original mission feels distant.

This is a general problem in startup success, but it's particularly acute in AI because the moral and political stakes feel higher. People joined because they wanted to ensure beneficial AI development. Now they're watching their company make decisions they disagree with. That creates a cognitive dissonance that working at a successful ad-tech company doesn't.


Retention Strategies That Actually Work

Since we're talking about talent leaving, it's worth understanding what actually retains talent in AI companies.

Alignment Between Values and Actions

This is number one. Pay enough that money isn't a constant source of frustration, then make decisions that demonstrate the organization actually believes what it says it believes. If you say safety matters, it should matter in how you allocate resources and make trade-offs. If you say the mission is benefiting humanity, it should guide product decisions, not just appear on the website.

The easiest way to lose talented people is to preach values you don't actually prioritize. They'll leave for somewhere that's at least honest about what it values.

Leverage and Voice in Decision-Making

Top talent wants to have influence on the organization. They want their opinions to matter. They want to see their arguments considered seriously in strategic decisions. When you grow large enough that founders stop having voice and become regular employees, that's when departures accelerate.

This is hard to solve at scale. You can create structures for feedback and input, but at some point, hierarchy means not everyone's voice can be equally weighted. The companies that handle this best build clear processes for how input is considered rather than pretending that everyone has an equal say.

Long-term Vision Clarity

People need to believe in where the company is going. For the founders, that vision was clear. But as you bring in more employees, the vision needs to stay clear and compelling. If the vision shifts in ways that contradict the original mission, or if it becomes obvious leadership isn't sure where the company is headed, talented people leave to find clarity elsewhere.

OpenAI's confusion over its identity (profitable enterprise or mission-driven nonprofit?) is probably contributing to departures. Sam Altman and leadership haven't clearly articulated how the company resolves this fundamental tension. Without that clarity, people make their own decisions to leave.

Autonomy in How Work Gets Done

AI researchers in particular are used to significant autonomy. They want to decide how to approach problems, which research directions to pursue, and how to validate their work. Excessive process, bureaucracy, and top-down direction feel constraining.

Companies that retain AI talent tend to give significant autonomy within clear guidelines. You can pursue your research direction as long as it aligns with overall strategy. You have decision-making power over your team structure. You're not micromanaged. This feels obvious, but many companies grow process-heavy as they scale, which drives away the very people that made them successful.

The Economics of AI Company Growth

Understanding why these tensions exist requires understanding the economics that are actually shaping these companies.

The Capital Requirements

Frontier AI companies need billions in capital. Training the latest models costs hundreds of millions. Running inference at scale costs millions daily. Maintaining research teams costs tens of millions annually. This capital needs to come from somewhere.

Venture capital provided early funding, but as these companies have grown, they've needed later-stage capital from institutional investors. Those investors expect returns, which come either from revenue or from an acquisition (when a larger company buys you for your capabilities and talent).

Both paths create pressure to prioritize commercial viability over the original mission. Either you generate revenue quickly, which means building products people pay for and optimizing for engagement and monetization, or you get acquired, which means being strategic about what you build and how it fits into the acquirer's roadmap.

Neither path is compatible with pure mission-driven decision-making.

The Talent Wars

Frontier AI talent is scarce. The total number of people in the world capable of doing cutting-edge AI research is probably in the hundreds. Maybe a couple thousand if you count people capable of doing high-quality but not groundbreaking work.

When OpenAI and xAI are competing for the same handful of researchers, they're bidding against each other. The company that's not compensating aggressively, or that's creating a culture where top talent feels alienated, loses. As departures happen, it becomes harder to attract talent because departures signal something's wrong.

The Compute Cost Spiral

As AI models get larger and more powerful, the compute required to train and run them increases exponentially. This means the capital required keeps growing. You need more funding, which means you need a clearer path to profitability or an exit. Which means you need to make more commercial decisions. Which alienates mission-focused talent.

It's a spiral that's hard to escape. Companies that started with explicit commitment to not being purely profit-driven are now facing capital constraints that make profit a primary focus.

Historical Parallels: When Do Tech Companies Lose Their Mission?

This isn't the first time we've seen this pattern. Understanding how it's played out before might help predict where things go next.

Google's Evolution

Google started with "Don't Be Evil" and a stated commitment to organizing the world's information. For years, the company maintained surprising coherence around this mission. But as the company became dominant in search advertising, the tension between the mission and profit became harder to ignore.

Over time, "Don't Be Evil" was demoted in favor of vaguer language about doing the right thing. The company built internal AI ethics programs, then eliminated them. It stated commitments to responsible AI development, then took contracts with governments for surveillance and military applications. Gradually, the company became less recognizable as the mission-driven organization it was in 2005.

The people who joined Google for the mission gradually left. Some left for startups. Some left for academia. Some just became comfortable with the contradiction. But the organization drifted away from its stated values as commercial pressures and scale made maintaining the mission difficult.

Facebook's Shift

Facebook (now Meta) started with a mission to connect people. That was genuinely the vision. But connecting people turned out to be incredibly profitable at scale, especially when you monetize through advertising based on behavioral data.

Yet the best way to keep people engaged (and thus generate behavioral data to monetize) isn't always what's best for people's mental health, political discourse, or authentic relationships. The company's optimization function gradually shifted toward engagement and ad revenue instead of connection quality.

Mission-focused people at Facebook noticed this contradiction and left. The company that remained was good at what it optimized for (engagement and ad revenue) but increasingly alienating to people who wanted to work on the original mission.

Lessons for OpenAI and xAI

What happened at Google and Facebook could happen at OpenAI and xAI. A company becomes successful at its commercial mission while losing coherence around its stated mission. The talented people who care about the original mission leave. The organization optimizes for what it measures (revenue, capability, market share) instead of what it intended.

The pattern is almost inevitable unless companies make explicit choices to resist the pressure. Those choices are expensive. They mean turning down revenue opportunities. They mean slower growth. They mean being willing to be strategically disadvantaged in pursuit of principle.

Most companies aren't willing to make those choices. OpenAI appears to have decided not to. That decision comes with the cost of losing talent that believed in the original mission.

Estimated data suggests a steady increase in talent attrition for both OpenAI and xAI over the next year, potentially impacting their competitive edge.

What Rebuilding Would Look Like

If OpenAI and xAI wanted to retain more talent and rebuild credibility around their stated missions, what would that actually require?

Transparency About Trade-offs

Instead of quietly dissolving mission alignment teams and firing policy executives, leadership could have been transparent. They could have said: "We're deciding to prioritize speed and user engagement over some safety constraints. Here's why. Here's how we're managing the risks. Here's what we're giving up and what we're gaining." That would have meant being honest about the trade-off rather than pretending the mission was still intact while dismantling it.

Talented people can live with trade-offs they understand and agree with. What they can't live with is being gaslit into thinking the organization still operates according to principles it's abandoned.

Real Governance Structures

If mission matters, it needs governance structure. It needs oversight boards that include external voices. It needs decision-making processes that require principled justification for decisions that contradict stated values. It needs transparency about how conflicts between mission and profit are resolved.

This is expensive. It slows things down. But it's the only way to genuinely maintain mission-driven decision-making at scale. Without it, the mission is just marketing language.

Resourcing the Mission

If safety and alignment research actually matter, they should be resourced accordingly. Not as a percentage of revenue that varies based on profit. Not as overhead that gets cut during optimization drives. But as core strategic functions with dedicated capital and talent.

OpenAI disbanded its mission alignment team while presumably spending significant capital on product launches and infrastructure. That sends a clear signal about where priority actually lies.

Honest Conversation About Purpose

Maybe the conversation OpenAI and xAI need to have is more fundamental. Is building AGI in the next decade actually the shared goal, or is building a profitable business the shared goal? Because those might have different implications for how to operate.

If the goal is AGI and you believe safety is critical, then you need governance structures, oversight, slower deployment. If the goal is a profitable business and AGI is a nice-to-have, then you can move faster, take more risks, optimize for engagement. Both are legitimate goals. But they require different organizational structures and they attract different talent.

The current approach at OpenAI and xAI seems to be trying to have it both ways: positioning as mission-driven AGI development companies while making decisions optimized for profit and market share. That contradiction is what's driving talented people to leave.

The Downstream Effects: How This Impacts Users

When companies lose mission-focused talent and the people remaining are optimizing for profit and speed, what happens to the products and services end users experience?

Less Safety Research, More Risk

Fewer safety researchers means less work being done on alignment, interpretability, and risk mitigation. That's not to say the company will deploy something catastrophically dangerous (there are still regulatory and reputational constraints). But it does mean the risk surface is less well-explored. Problems that could have been caught through safety research won't be. Failure modes that could have been mitigated won't be.

For users, this means a slightly riskier product. Not necessarily riskier than competitors' products, but riskier than it could have been with full safety research capacity.

Faster Iteration, Less Deliberation

Without mission alignment governance, product teams can move faster. New features get deployed with fewer approval steps. Experimental approaches get shipped before they're fully vetted. The result is products that change quickly, which users often experience as more innovative.

But faster iteration also means more mistakes, more unintended consequences, more features that turn out to be problematic. Some of those mistakes would have been caught in the deliberation process that mission alignment teams create.

Product Design Optimized for Engagement, Not Wellbeing

When companies optimize for user engagement and time spent in the product, they make different design choices than when they optimize for user wellbeing. This has been well documented in social media, where engagement optimization has been linked to radicalization, worse mental health, and shorter attention spans.

For AI systems, this might mean features that are entertaining but less honest, content generation optimized for virality rather than accuracy, interactions designed to maximize usage rather than maximize user benefit. Not intentionally deceptive, but subtly skewed.

Less Truthfulness

A safety and alignment-focused team would raise concerns about feature deployments that reduce system truthfulness. For example, adult mode might optimize for user satisfaction by being less likely to refuse requests or correct misinformation. A mission-focused organization would flag that and probably decide not to deploy. A profit-focused organization might deploy it and let the market sort out the consequences.

Over time, this shapes what the product is and what it's good for.

Looking Forward: What Comes Next

We're at an inflection point in AI development. The companies at the frontier of the field are facing fundamental questions about what they're trying to build and for whom. The answers they're giving (through their actions, not just their statements) are driving talent away. That talent is redistributing to other companies, startups, and research institutions.

Expect More Departures

The departures we're seeing now are probably just the beginning. When half a founding team leaves, it creates organizational uncertainty that triggers more departures. People who were on the fence about staying decide the organization is unstable. People who felt loyalty to founders start to doubt whether those founders are still in control of the vision. The departures cascade.

Both OpenAI and xAI should expect continued attrition over the next 6-12 months. Not catastrophic departures, but a steady leakage of talented people who are reconsidering whether staying is the right call.

Expect Faster Diffusion of Techniques

As researchers leave these companies, they take knowledge with them. That knowledge gets applied to new problems at new companies. Research that was once proprietary and closely held ends up replicated in 5-10 competing organizations. This is probably healthy for the field overall (it prevents excessive concentration of capability), but it's bad for the companies losing talent because their competitive advantage shrinks faster.

Expect Regulatory Attention

As frontier AI companies face questions about safety and governance, regulators are watching. A company that dissolves its mission alignment team while deploying less restricted systems is probably attracting regulatory scrutiny. Not necessarily enforcement, but attention that will lead to requirements and constraints that companies would prefer to avoid.

Mission-focused governance structures are, from a regulatory perspective, evidence that the company takes responsibility seriously. Companies that dismantle those structures are signaling the opposite.

Expect Consolidation Around Values

Over time, you'll probably see clearer differentiation between companies optimizing for capability/profit and companies explicitly optimizing for safety/alignment. Some users and enterprise customers will care about using models from companies with strong governance and transparent safety practices. Others will just care about capability and won't pay the cost of better governance.

That's actually a healthy market outcome. It means both approaches get to exist and compete, and users can choose based on their priorities.

Applying These Lessons: What Every Tech Leader Should Know

If you're running a technology company with an ambitious mission, the departures at OpenAI and xAI are instructive.

Mission Needs to Be More Than Marketing

If you're going to claim your company has a mission, you need to organize your governance and decision-making around it. That's expensive and slow. It's a real constraint. But it's the only way to maintain mission coherence as you scale. Otherwise, you're just running a regular company with aspirational language.

Alignment Between What You Say and What You Do Is Everything

Employees are extremely sensitive to hypocrisy. They notice when you preach values you don't practice. They notice when you dissolve oversight functions right before doing something those functions would have objected to. They notice when you fire people for raising principled concerns.

Once they notice, your credibility is gone. You can't get it back by saying the right things. You have to actually change behavior.

Founders Losing Their Voice Is a Red Flag

When founding team members lose leverage in decision-making, they leave. That's almost inevitable. The only way to keep founders engaged is to maintain their voice and influence in the organization, even as the organization grows. This requires explicit structures and cultural norms that keep founder input valuable and considered.

Talent Leaves Toward Something, Not Just Away

People don't leave just because they're frustrated. They leave because they see somewhere better to go. Companies that want to retain talent need to be the somewhere better. That means being transparent about mission, giving talented people leverage, and making decisions that demonstrate the organization actually believes what it says.

The Bigger Picture: AI's Adolescence

All of this is happening at a specific moment in AI's development. The field is moving from research labs and startups to mainstream products and institutional influence. It's at the point where decisions about values, governance, and priorities are setting patterns that will last for decades.

If we want AI systems that are beneficial and aligned with human values, the companies building them need to be organized around those priorities. The departures we're seeing at OpenAI and xAI suggest they're not. And that suggestion is driving talented people to other organizations where they think those priorities are better maintained.

This is how fields change. Not through heroic individuals, but through where talented people choose to work. Where you work signals what you think is important. Where talented people congregate determines what gets built. Right now, those signals suggest a shift away from pure profit optimization toward approaches that take governance and safety more seriously.

That's not guaranteed to win in the market. It's very possible that the companies prioritizing speed and capability will outcompete the companies prioritizing governance and safety. But the talent votes we're seeing suggest there are enough people who believe governance and safety matter that alternative approaches will exist and compete.

The next few years will reveal whether that's true.

FAQ

Why would talented researchers leave companies that are pioneering AI development?

Talented researchers leave when their values conflict with the organization's actual priorities, even if the stated mission sounds compelling. When companies dissolve safety teams, fire executives for raising concerns, or prioritize capability over responsible deployment, researchers notice that the original mission has shifted. For people who joined to advance AI safely and beneficially, that's a signal to look elsewhere. It's no longer about money or prestige; it's about working somewhere that actually believes what it says.

What does it mean when a company disbands its mission alignment team?

Dissolving a mission alignment team signals that the organization is de-prioritizing internal governance that would ensure decisions align with stated values. Mission alignment teams ask hard questions about whether product launches, features, and business decisions are consistent with the company's mission and values. Removing that function means those hard questions don't get asked internally before decisions are made. It's almost always a sign the company is deciding to move forward with something the mission alignment team would have objected to.

Could xAI and OpenAI's talent departures indicate deeper problems?

Yes. When half of a founding team leaves, it suggests fundamental disagreements about company direction that couldn't be resolved internally. It's not normal attrition or people finding better opportunities elsewhere. It's usually a sign that the original vision fractured, leadership and founders no longer agree on what the company should be, or the company is moving in a direction some founders can't support. For investors and employees, this is a yellow flag that the organization's coherence might be compromised.

How does losing founding team members affect a company's ability to execute its mission?

Founding team members understand the original vision at a level nobody else can replicate. They made the early trade-offs, set the initial culture, and understand why certain decisions were made. When they leave, they take that context with them. New leaders and teams have to reverse-engineer the vision from what's left behind. That process is error-prone. More importantly, if the departing founders disagree with where the company is going, their departure usually means the original mission is being transformed into something else.

What should talented AI researchers consider when evaluating an employer?

Beyond compensation, researchers should evaluate whether leadership's actual decisions align with stated mission and values. Look at what the company cuts first when it needs to save money, who gets fired for disagreeing with leadership, what governance structures exist to ensure ethical decision-making, and how much voice researchers actually have in strategic decisions. Watch how the company treats people who raise concerns. If principled objections get someone fired, that's a signal the company doesn't actually want people who believe in the mission more than the bottom line.

Are these departures a sign that AI development is moving in the wrong direction?

Not necessarily in the wrong direction, but perhaps in a different direction than some talented researchers expected. The departures suggest there's genuine disagreement within the AI community about what should be prioritized: speed of capability development, safety and alignment research, or profit and market competition. Those aren't incompatible goals, but they do create tensions in decision-making. The departures show that some talented people believe these companies are resolving those tensions in ways they can't support. That disagreement is probably healthy for the field because it means different approaches are being tried simultaneously.

How do departures from frontier AI companies affect the broader AI industry?

When talented people leave OpenAI and xAI, they redistribute to other companies, startups, and research institutions. This diffuses knowledge and techniques that were previously concentrated at one company. It accelerates the ability of competitors to catch up because departing researchers bring technical knowledge with them. It creates opportunities for new startups founded by people who left to pursue their vision differently. Overall, departures make the AI industry more distributed and competitive, which probably benefits users because more companies are pursuing different approaches rather than everyone copying the incumbent leaders.

Conclusion: The Talent Signal Matters

Where talented people choose to work is a real signal about what they think is valuable and important. The departures at OpenAI and xAI are sending a clear message: these companies are not reliably maintaining the mission-focused approach that attracted the talent in the first place.

That message will resonate through the AI industry. Other companies will notice which founders left and why. They'll notice which departures were voluntary and which were forced. They'll understand that if they want to recruit top talent, they need to be credible about their commitment to values beyond profit.

Some companies will ignore this signal and optimize purely for capability and competitiveness. That's a legitimate choice. But it comes with the cost of finding it harder to recruit and retain talent that cares about how the technology is built and deployed.

Other companies will use this as an opportunity. They'll position themselves as the places where talented researchers can actually maintain commitment to responsible AI development. They'll build governance structures and decision-making processes that honor that commitment. They'll deliberately choose to be slower or less profitable in service of their values. Over time, these companies will attract the talent that OpenAI and xAI are losing.

The field will probably end up with two tiers of companies: those optimized for speed and profit, and those optimized for safety and values. Both will exist. Both will pursue valid strategies. But the choice between them will determine what kind of AI researcher you are and what kind of company you want to build.

The departures we're seeing are the market making that choice visible. The talent is voting with its feet. In artificial intelligence, that vote might matter more than anyone realizes.

If you're building something in AI, the question isn't really whether you can compete on capability or compute. The question is whether talented people who care about how the technology is built believe you actually care about what you say you care about. When that belief breaks, the departures begin. And once they start, they're hard to stop.

OpenAI and xAI have learned that lesson the hard way. Whether they'll use it as an opportunity to change course, or whether the departures will continue, is the real question now.

Key Takeaways

- Half of xAI's original 12-person founding team has departed within one year, signaling serious internal organizational conflicts

- OpenAI disbanded its mission alignment team and fired a policy executive who opposed 'adult mode,' revealing priorities shifting away from safety

- When companies abandon internal governance structures that enforce values alignment, talented mission-focused people leave

- Departing AI talent flows to competitors (especially Anthropic), new startups, and academic institutions, fragmenting the field

- These departures follow the historical pattern of tech companies (Google, Facebook) drifting from original missions as commercial pressures increase

- Talent departures accelerate knowledge diffusion and reduce competitive advantages for incumbent AI companies

- Companies that want to retain mission-focused talent must demonstrate alignment between stated values and actual decisions
