The AI Revolution Nobody Expected: World Models Are Coming to Your Design Toolbox

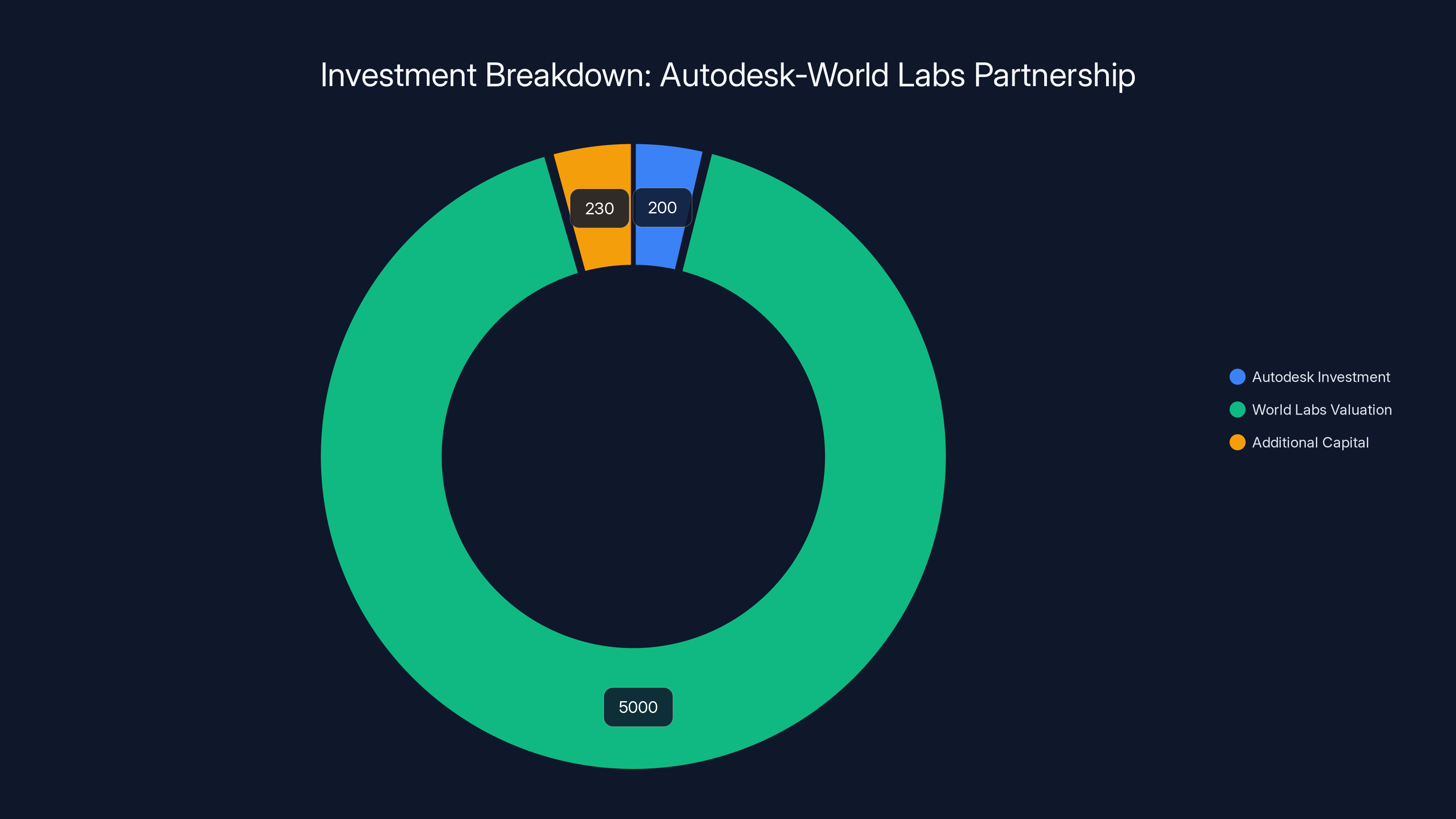

Fei-Fei Li just pulled off something that seemed impossible eighteen months ago. Her startup, World Labs, convinced Autodesk—one of the most established, conservative software companies in the world—to write a $200 million check to integrate AI models that understand 3D space into their core design platform. That's not just a business deal. That's a signal that the entire digital creation industry is about to shift.

Let me explain why this matters more than you might think. For decades, designers have worked with fragmented tools. You'd sketch in one app, model in another, animate in a third, and simulate physics in yet another. Each tool optimized for one specific task, forcing creators to spend hours moving data between systems, fixing incompatibilities, and battling learning curves.

World Labs is building something fundamentally different. Their AI world models aren't just fancy image generators that pretend to understand space. They're systems that actually comprehend geometry, physics, dynamics, and how objects interact in three-dimensional environments. Imagine asking an AI to design an office layout, and instead of getting a flat rendering, you get an interactive 3D environment you can edit, explore, and iterate on—all generated from a single text prompt.

Autodesk's investment isn't about charity or hype. It's about survival. The design software market is worth tens of billions annually, and whoever cracks AI-powered spatial creation owns the future. Autodesk clearly believes that's World Labs. But here's the plot twist: neither company is entirely sure yet what the final product will look like. They're building this together, figuring out integration points on the fly, and starting with entertainment because that's where the demand is hottest.

In this article, we're going to break down why this deal matters, how world models actually work, what Autodesk gets out of the partnership, and where the entire creative industry is headed when physics-aware AI becomes standard in design workflows.

TL;DR

- World Labs landed a $200 million investment from Autodesk and is reportedly in talks to raise at a $5 billion valuation, signaling massive institutional confidence in AI world models

- World models understand 3D space, physics, and dynamics, not just 2D images, making them fundamentally different from standard generative AI

- Entertainment and media production are the first battleground, with both companies targeting gaming, animation, and interactive content

- Integration is still in early stages, but the vision is clear: AI-assisted design tools that understand how physical objects actually behave

- Autodesk's neural CAD is the missing piece, combining world models with design intelligence to generate functional 3D models, not just pretty visualizations

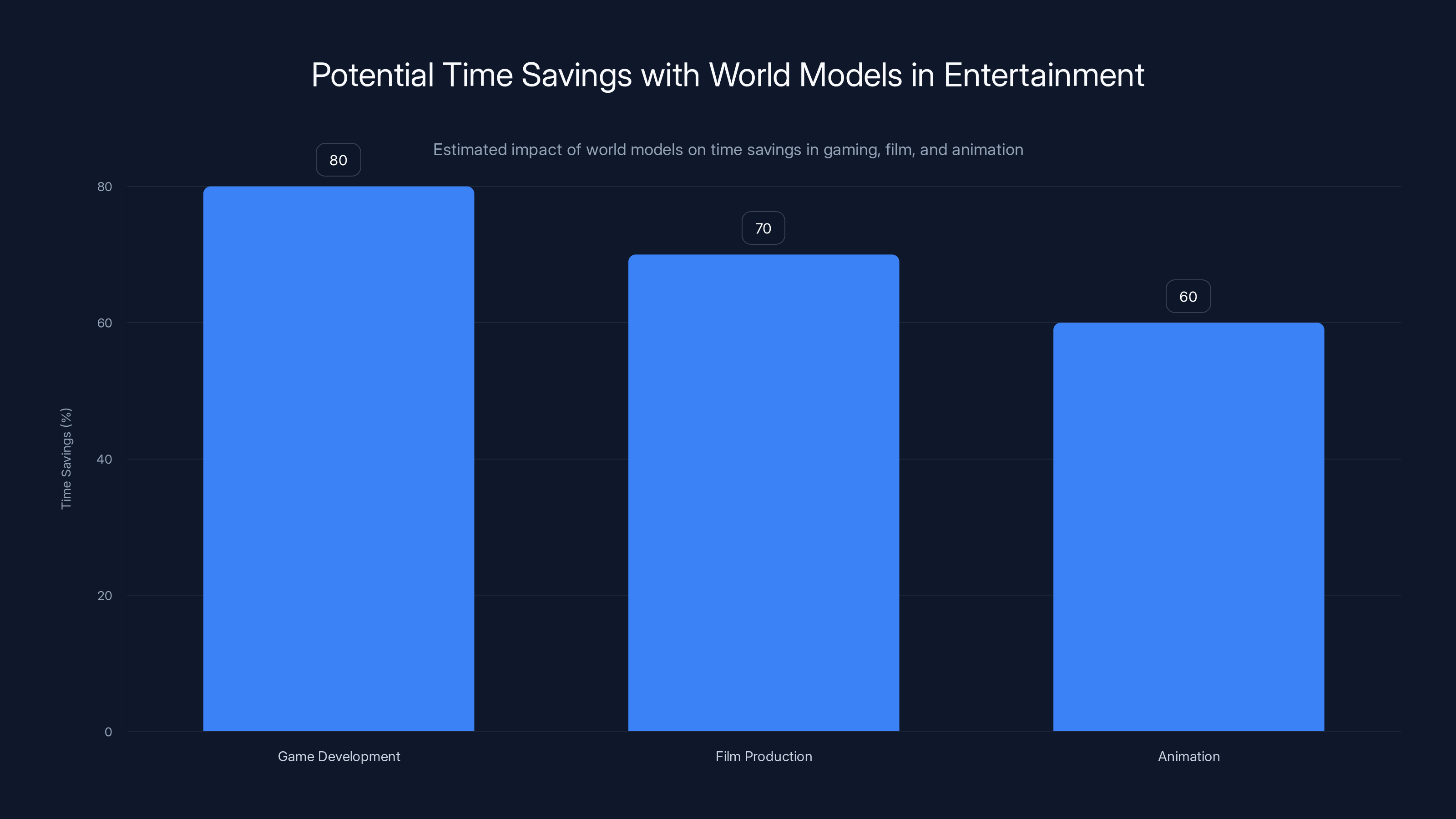

World models could potentially save up to 80% of time in game development, 70% in film production, and 60% in animation by automating initial design and optimization tasks. Estimated data.

What Just Happened: The $200M Autodesk-World Labs Partnership Explained

World Labs emerged from stealth in late 2024 with a splash. The startup raised

Their flagship product, Marble, launched in November 2024. It lets users create, edit, and download 3D environments from text descriptions. But that was just the beginning. The real opportunity was getting these models into the hands of professional creators who actually build things for a living.

Enter Autodesk. The company dominates professional design with software used by architects, engineers, product designers, animators, and construction companies. AutoCAD, Revit, Maya, and Fusion 360 are industry standards. When Daron Green, Autodesk's chief scientist, started talking to World Labs, he immediately saw the connection. World models could handle the "big picture" spatial reasoning that currently requires multiple tools and massive human effort.

The deal structure is straightforward: Autodesk invests

Autodesk's move also signals something to their customer base. For years, traditional design software companies have been nervous about AI. If AI can generate designs automatically, do designers still need expensive CAD software? Autodesk's answer is elegant: AI doesn't replace design tools, it augments them. The tools get smarter, faster, and more capable of handling routine spatial reasoning so humans can focus on creativity and problem-solving.

The partnership also positions Autodesk against competitors like Epic Games and Adobe who are also investing in spatial AI. This is an arms race. The company that integrates world models first, most elegantly, and most cheaply wins a disproportionate share of the creative tools market for the next decade.

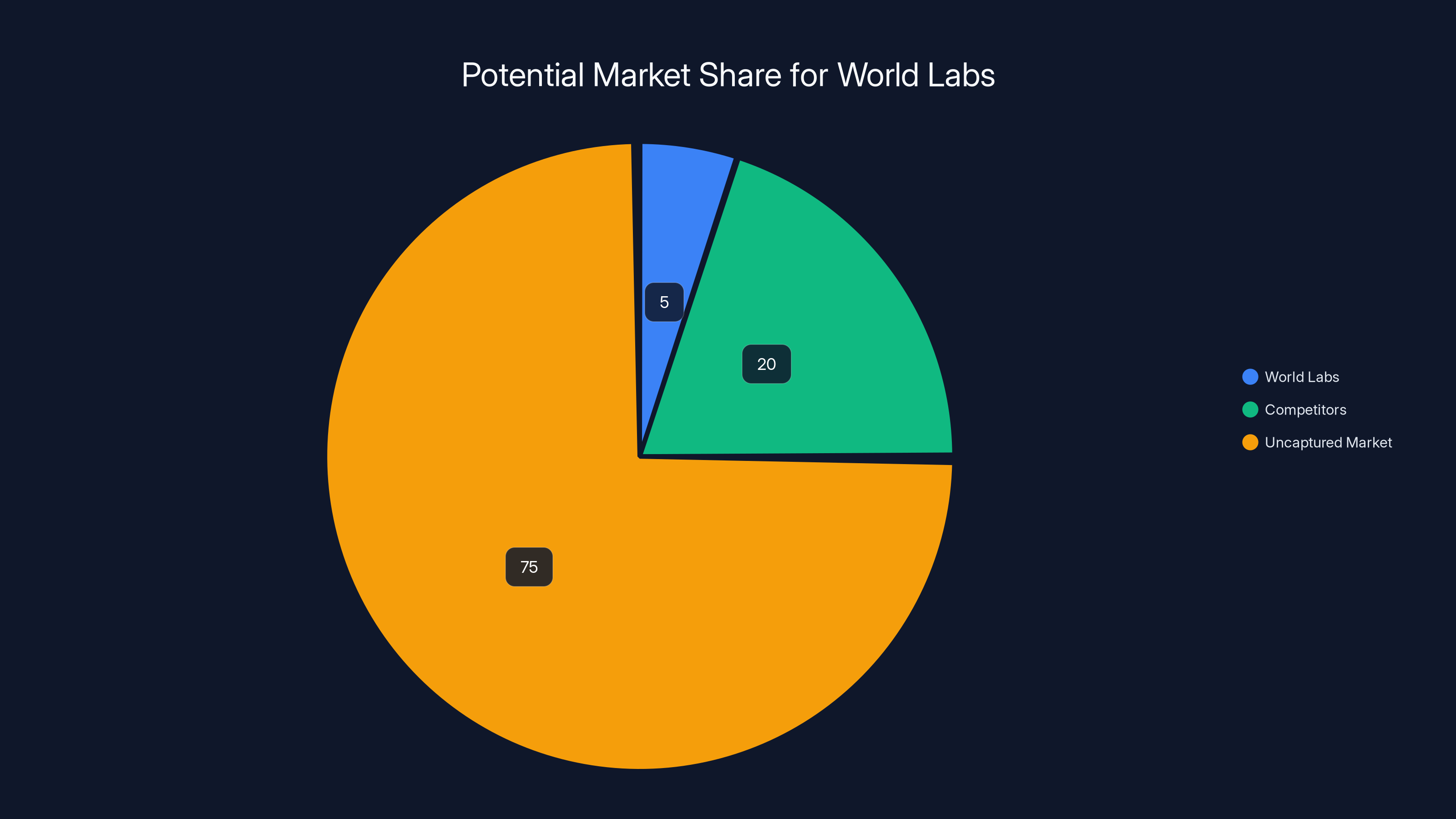

World Labs could capture 5% of the

Understanding World Models: AI That Actually Knows How Space Works

Most people confuse world models with image generation. That's a dangerous mistake. ChatGPT can write text. DALL-E can generate images. But neither system truly understands how the physical world operates. They're pattern-matching engines trained on vast amounts of data.

World models are different. They're trained to understand and predict how objects interact in three-dimensional space over time. Think about a robot walking across a room. A traditional AI might predict the next frame in a video sequence based on pixel patterns. But a world model would understand that the robot has mass, the floor has friction, gravity pulls downward, and the robot's legs need to maintain balance. It reasons about the physics.

Here's how this works technically. World models are built on foundation models—usually large language models or vision transformers trained on massive datasets. But instead of being trained just on images or text, they're trained on videos, 3D data, physics simulations, and geometric representations. The training process teaches the model: "When you see a scenario like this, here's how the physics should evolve."

The result is a system that can:

- Generate 3D environments from text descriptions with physically plausible layouts

- Predict object behavior across multiple frames with consistent physics

- Understand spatial relationships between objects and how they constrain each other

- Reason about functionality not just aesthetics (understanding that a chair needs legs positioned to support weight)

- Handle interactive queries where you can ask "what happens if this object moves here?"
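
To make the "what happens if this object moves here?" query concrete, here is a minimal sketch of a state-transition step. It is a hand-written toy, not World Labs' method: a learned world model would infer dynamics like these from video and simulation data rather than have them coded in. The `Body`, `step`, and `rollout` names and the one-dimensional gravity-plus-floor setup are assumptions made for illustration.

```python
from dataclasses import dataclass, replace

GRAVITY = -9.81  # m/s^2 along the vertical axis (assumed constant)
FLOOR_Z = 0.0    # height of the floor plane in metres


@dataclass(frozen=True)
class Body:
    """A point-like object described by its height and vertical velocity."""
    name: str
    z: float         # height above the floor, in metres
    vz: float = 0.0  # vertical velocity, in m/s


def step(body: Body, dt: float) -> Body:
    """Predict the body's state dt seconds later (semi-implicit Euler)."""
    vz = body.vz + GRAVITY * dt
    z = body.z + vz * dt
    if z <= FLOOR_Z:
        # The floor stops the fall: clamp to the surface and come to rest.
        z, vz = FLOOR_Z, 0.0
    return replace(body, z=z, vz=vz)


def rollout(body: Body, dt: float, steps: int) -> list[Body]:
    """Simulate forward and return every intermediate state, initial one included."""
    states = [body]
    for _ in range(steps):
        states.append(step(states[-1], dt))
    return states


if __name__ == "__main__":
    # Hypothetical query: a chair is placed 1 m in the air. Where does it end up?
    chair = Body("chair", z=1.0)
    print(rollout(chair, dt=0.01, steps=200)[-1])
```

The point of the sketch is the interface rather than the physics: a state goes in, a predicted future state comes out, and an edit to the scene is just a new starting state to roll forward.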

Fei-Fei Li's framing is perfect: "Worlds are governed by geometry, physics, and dynamics, and reconciling the semantic, spatial, and physical is the next great frontier of AI." That reconciliation is what world models do. They bridge the gap between what something means (semantics), where it is and its shape (spatial), and how it behaves (physics).

The implications are staggering. Once you have a system that understands physics, you don't need separate tools for different types of design. An architect can describe a building layout, the world model generates it in 3D, and then that same system can simulate how light moves through it, how people navigate it, and how structural loads distribute. One system, multiple capabilities.

Compare this to current workflows. An architect uses Revit to model the building, passes it to someone who renders it in V-Ray or Corona, passes it to someone who animates it in 3ds Max, passes it to someone who simulates structural integrity in Autodesk Robot, and finally passes it to someone who makes a presentation video. That's five stages and four handoffs, each one a chance to introduce errors and lose fidelity. A world model could do all of that in one integrated system.

The training data for world models is also critical. Companies like Google DeepMind are training on thousands of hours of video. Runway, another player in this space, trained on content creation data. World Labs has access to various datasets but keeps details close. The quality of the training data directly impacts how well the world model understands physics and generates plausible environments. More diverse, higher-quality training data means better understanding of edge cases and more reliable physics.

Why Autodesk Is Moving Now: The Threat and Opportunity Equation

Autodesk's business model was built on selling software licenses for specific tasks. Want to design a building? Buy Revit. Want to animate characters? Buy Maya. Want to simulate structural loads? Buy Robot. The company generates massive recurring revenue from millions of professionals who depend on these tools daily.

But that model is increasingly fragile. Here's why: as AI gets better at spatial reasoning, the complexity gap narrows. Ten years ago, only trained professionals could use CAD software. The learning curve was brutal. Today, a sophomore in college can learn AutoCAD in a month. Tomorrow, a high school student with a decent GPU and access to world models could generate complex 3D designs in hours.

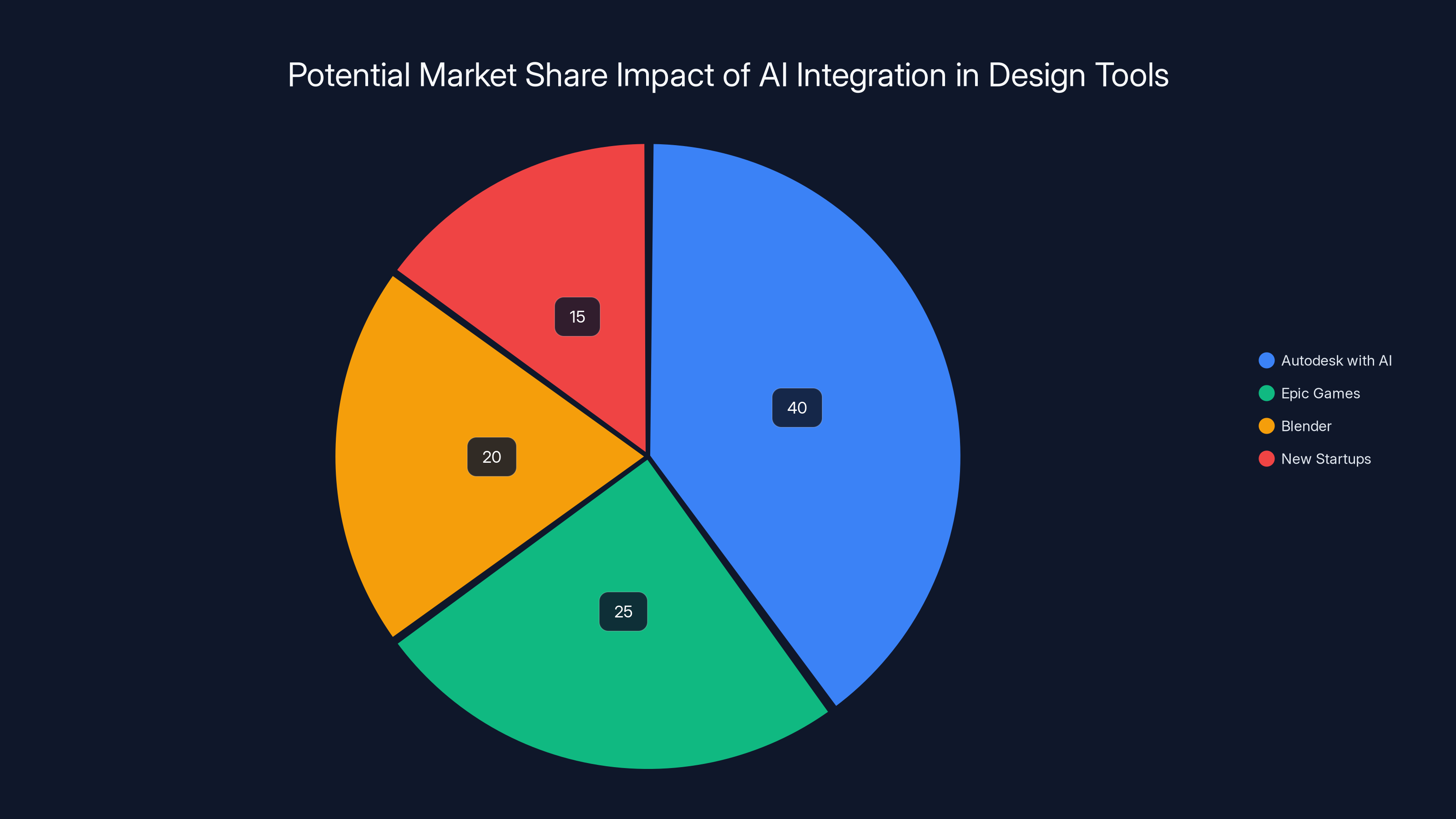

Autodesk sees this threat clearly. If they don't integrate world models into their tools, their customers will start using specialized AI tools instead. Some users might even try competing platforms that integrate AI more deeply. Companies like Epic Games (which owns Unreal Engine), Blender (open-source), and new startups are all exploring spatial AI integration. Autodesk needs to respond fast, or they risk losing market share to more AI-native platforms.

But there's also an enormous opportunity. Autodesk's strength isn't raw modeling capability anymore. It's the ecosystem. They have 20+ years of integrations with other professional tools. They have customer relationships with thousands of design firms. They have data about how real professionals work. If they can layer world models on top of that foundation, they create something new: AI-augmented professional design.

The entertainment industry is the perfect proving ground for this. Hollywood has always been an early adopter of cutting-edge technology. Animation studios, game developers, and VFX shops are desperate for faster, cheaper, higher-quality content creation. If Autodesk can demonstrate that world models integrated with their existing tools cut production time by 20-30% while improving quality, that's a market worth billions.

Daron Green's comments reveal the thinking. He talks about starting with a world model sketch of an office layout, then drilling down into specific design elements using Autodesk's precision tools. Or the reverse: take a carefully designed object from Autodesk's tools and place it in a context generated by a world model. This both/and approach is brilliant. It doesn't replace either system. It enhances both.

Autodesk also needs to own the narrative. By investing heavily in World Labs and committing to deep integration, they signal to their customer base: we're not afraid of AI, we're leading it. We're not being disrupted, we're directing the disruption. That messaging is worth $200 million in market confidence alone.

There's also the data angle. Autodesk will learn from this partnership how designers actually think about spatial problems. Those insights, fed back into product development, could make Autodesk's core tools significantly smarter. They're not just buying technology, they're buying signal about future product directions.


Marble: World Labs' First Product and What It Can Actually Do

Marble is the tangible proof that world models work. Released in November 2024, it's surprisingly accessible for something that runs on cutting-edge AI. Users describe what they want—"a futuristic office with floor-to-ceiling windows overlooking a city"—and Marble generates a complete, editable 3D environment.

Here's what makes it different from a regular generative AI:

Editability: You're not locked into what the AI generated. You can grab objects, move them, rotate them, delete them, and add new ones. The AI respects the changes and maintains physical plausibility as you edit.

Downloadability: You get a real 3D file format you can import into other tools. This matters. Most generative AI tools lock you into their ecosystem. Marble gives you portable assets.

Physics Understanding: Objects don't float in the air. Lighting behaves realistically. Spatial relationships make sense. The model understands that a chair needs to fit under a desk, that chairs sit on the ground, that windows are vertical.

Speed: Generating a complex 3D scene takes seconds to minutes, not hours or days. This changes the iterative design process fundamentally.

User reactions have been enthusiastic. Creators report that Marble cuts their early-stage design concept time from hours to minutes. For entertainment use cases—video game level design, film production design, architectural visualization—this is massive.

Marble isn't perfect. Complex interactions sometimes fail. Very precise designs need refinement. Physics simulation isn't perfect for everything. But the trajectory is clear. Each month, the model gets smarter, faster, more reliable.

The key insight is that Marble is just the first product. World Labs' real value isn't Marble itself. It's the underlying world model technology. That technology is what Autodesk wants to integrate into their entire product suite.

Entertainment First: Why Gaming and Film Are the Initial Battleground

Both World Labs and Autodesk are starting with entertainment because it's where world models create the most obvious value. Here's the specific logic:

In game development, level designers spend enormous time building spaces. They need to think about player navigation, sightlines, performance optimization, and atmosphere. A world model could generate initial level geometry, spatial flow, and basic optimization in hours instead of weeks. Designers would then refine, detail, and polish. But that 80% of grunt work? Gone.

In film and television, production designers build physical sets or create detailed virtual environments. The process is expensive and time-consuming. A world model could generate hero shots, layout concepts, and variations for director approval in hours. The production designer's time would shift from building every detail to curating, refining, and directing the AI toward the creative vision.

In animation, character animators work within environments they didn't design and often didn't optimize for their specific needs. Animators need to understand spatial constraints, surfaces, occlusion. A world model integrated with animation software could suggest optimal camera angles, identify spatial issues, and even auto-generate crowd behavior or environmental animation (like trees swaying, water flowing).

Autodesk already serves these industries extensively. Their character animation tools are used by major studios. Their rendering engines power countless production visualizations. Daron Green's mention of character animation models is telling: they're "close to world models" because they involve understanding how creatures interact with physical environments.

The entertainment industry also has high budgets, clear ROI on time savings, and rapid iteration cycles. A 20% speed improvement in animation or design is worth millions to a major studio. That creates powerful customer demand, which pushes both companies to build and refine integration.

This also avoids the thorniest problems early. Consumer applications of world models are messier. Home designers want perfect accuracy. Architects need building code compliance. Manufacturing needs precise tolerances. Entertainment is more forgiving. A generated building that "looks right" for a movie is acceptable even if it wouldn't pass structural engineering review.

Once Autodesk and World Labs prove the concept in entertainment, they'll expand outward. Architectural visualization for real estate is next. Eventually, serious tools for architects and engineers themselves could follow. But that requires much higher accuracy and reliability than current world models offer.

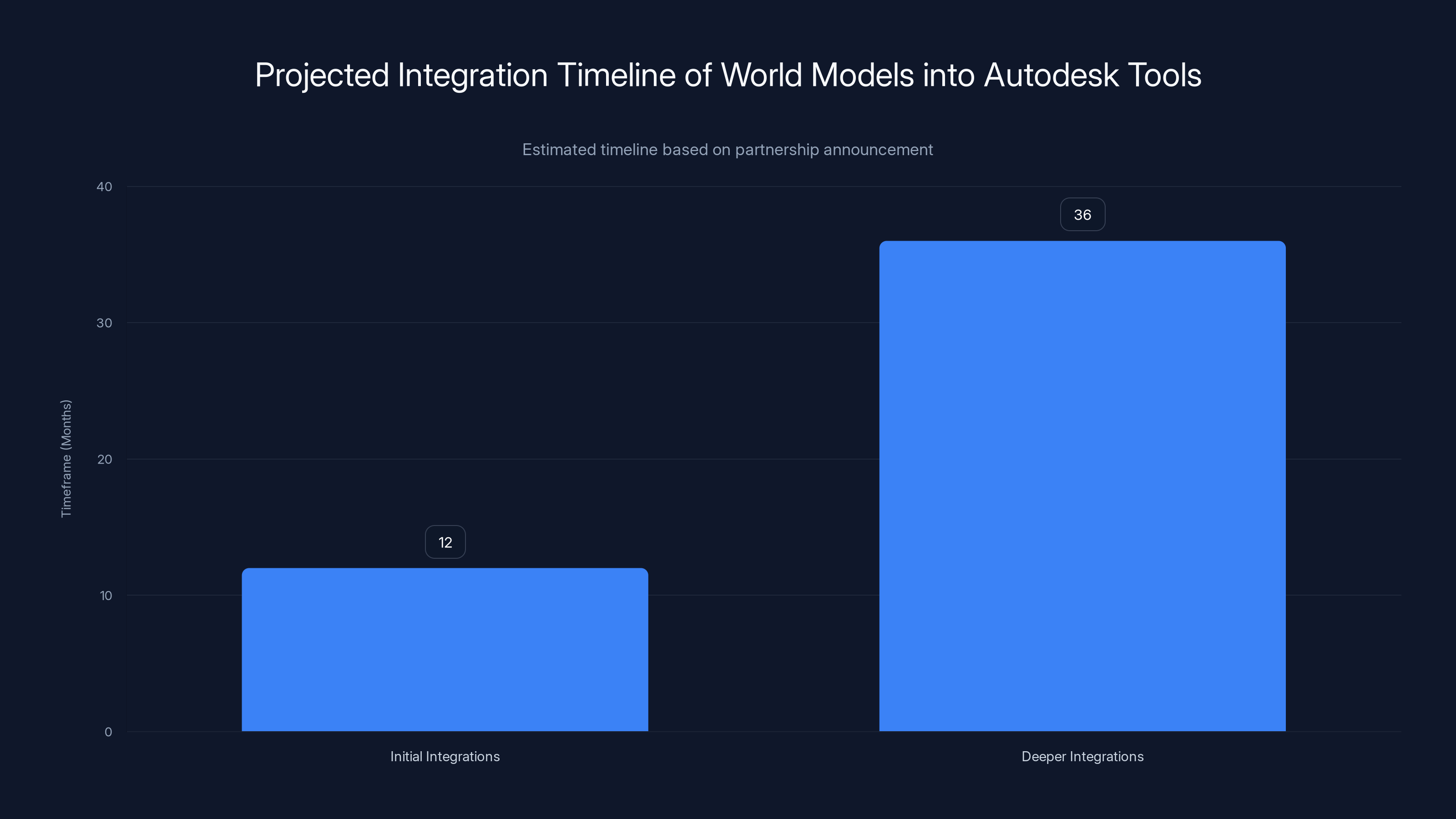

Initial integrations of world models into Autodesk tools are expected in 12-18 months, with deeper integrations developing over 2-3 years. Estimated data based on partnership details.

Autodesk's Neural CAD: The Secret Weapon That Makes Everything Work

Here's what most analysis of this deal misses: Autodesk isn't just buying access to World Labs' technology. They're combining it with neural CAD, an internal AI system they've been developing that's potentially more important than world models alone.

Neural CAD is Autodesk's answer to a specific problem. Traditional generative AI can create images. But creating functional 3D models that actually work—where structural integrity, tolerances, and physical laws are respected—is much harder. Neural CAD was trained on geometric data, building models, manufacturing specifications, and design patterns. It understands not just how to make something look right, but how to make it actually function.

When you combine neural CAD with world models, you get something powerful. The world model handles the big picture spatial reasoning (layouts, environments, relationships). Neural CAD handles the detailed functionality (component design, structural integrity, how objects interact). Together, they're approaching comprehensive design intelligence.

Autodesk is already integrating neural CAD into product design and architecture tools. The next step is tighter integration with World Labs' spatial understanding. Imagine a prompt like: "Design a modular office furniture system that adapts to different room sizes." A world model could generate various room layouts. Neural CAD could design furniture that fits those spaces structurally. The system would understand not just the aesthetics but the functionality.
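
The division of labor in that furniture prompt can be sketched as a two-stage pipeline. Everything here is a stand-in: `propose_rooms` plays the world model with hard-coded layouts, and `fit_modules` plays the CAD stage with a simple greedy rule. Neither reflects how World Labs' models or Autodesk's neural CAD actually work; the sketch only shows one stage proposing spaces and the other enforcing a hard constraint on what fits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Room:
    name: str
    wall_length_cm: int  # usable wall length for furniture


def propose_rooms() -> list[Room]:
    """Stand-in for the world model: return candidate room layouts.

    Hypothetical stub with hard-coded values; a real system would generate these.
    """
    return [
        Room("small office", 240),
        Room("meeting room", 410),
        Room("open plan", 900),
    ]


def fit_modules(room: Room, module_widths_cm: list[int]) -> list[int]:
    """Stand-in for the CAD stage: choose modules to line one wall.

    Greedy, widest module first. The total width never exceeds the wall
    length, which is the hard constraint this stage exists to enforce.
    """
    remaining = room.wall_length_cm
    chosen: list[int] = []
    for width in sorted(module_widths_cm, reverse=True):
        if width <= 0:
            continue  # ignore invalid module sizes
        while width <= remaining:
            chosen.append(width)
            remaining -= width
    return chosen


if __name__ == "__main__":
    catalogue = [120, 80, 60]  # assumed module widths in centimetres
    for room in propose_rooms():
        modules = fit_modules(room, catalogue)
        print(f"{room.name}: {modules} uses {sum(modules)} of {room.wall_length_cm} cm")
```

Greedy fitting is not optimal (the 410 cm wall ends up with 50 cm unused), which is a small reminder of why the detailed stage is the hard part.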

This is where the value actually emerges. Generic world models are useful. Professional-grade neural CAD is expensive and hard. But combining them? That's defensible competitive advantage. Companies like Blender or Epic Games will have world models eventually. But they won't have a decade of Autodesk customer data about design patterns, professional workflows, and real-world constraints built into their neural CAD.

The Integration: What the Actual Collaboration Looks Like

The partnership is still incredibly early. Daron Green was explicit about this in his comments to TechCrunch: the precise form hasn't been determined yet. That's both honest and revealing.

What we know is happening:

Model-Level Integration: World Labs' models will be called from within Autodesk tools. Instead of switching applications, users will stay in their familiar Autodesk interface, trigger world model generation, get results, and iterate.

No Data Sharing: Notably, Green explicitly said data sharing is NOT part of the agreement. This matters. It means Autodesk isn't giving World Labs access to customer designs or project data. The companies maintain data sovereignty. This addresses privacy and IP concerns that many enterprise customers have.

Bidirectional Workflows: Green describes a scenario where you might generate a world model sketch in World Labs, then take it to Autodesk for detailed design work. Or the reverse: start with Autodesk designs and place them in world model contexts.

Advisory Relationship: Autodesk gets a board seat and advisor role, meaning they're guiding World Labs' product direction (to some extent). This ensures the technology develops in ways that actually serve Autodesk's customers.

The key phrase from Green is telling: "You could anticipate us consuming their models or them consuming our models in different settings." That's not exclusive. That's "we're figuring this out together and might integrate in multiple ways."

This suggests the final product might look like:

- World model generation within Autodesk apps for spatial design and iteration

- Export from Autodesk to World Labs for environmental context and visualization

- Nested integration where they share APIs and can call each other's functions

- Eventually, unified systems where the boundary between tools becomes invisible

This kind of integration is technically complex but not unprecedented. Adobe's Creative Cloud does this across Photoshop, After Effects, and Premiere. Autodesk themselves do this across their various design tools. The difference here is they're integrating externally developed AI systems, not just their own tools.

Estimated data suggests that Autodesk could maintain a leading market share of 40% if they successfully integrate AI, compared to competitors like Epic Games and Blender.

The Broader AI Strategy: World Models as Foundation Models

Fei-Fei Li's background matters. She's a foundational figure in AI research, known for emphasizing that intelligence requires understanding the visual world, not just language. Her decision to step out of academia at Stanford to found World Labs is significant.

World Labs is building what might become a foundation model for spatial intelligence the same way GPT became a foundation model for language. Once you have a strong foundation model that understands 3D space, countless applications become possible. Companies can license or build on top of it instead of training their own from scratch.

This is valuable because training world models is expensive. You need massive computing resources, high-quality training data covering diverse environments, physics simulations for ground truth, and expertise in a relatively new field. Most companies don't have all four. Instead, they'd rather license access to a proven foundation model and build applications on top.

Autodesk's investment signals confidence that World Labs is building that foundation layer correctly. Over the next few years, we should see:

- World Labs licensing its models to other companies

- Various specialized applications built on World Labs' technology

- Continuous improvement as the models see more data and use cases

- Potential competition as other AI labs build their own world models

The comparison to OpenAI and GPT is instructive. OpenAI built a strong foundation model (GPT), released an API, and suddenly thousands of companies were building applications on top. World Labs could follow a similar path in the spatial AI space.

Google DeepMind and Runway are also playing in this space, but both approach it differently. DeepMind focuses on underlying research and physics understanding. Runway focuses on content creation for individual creators. World Labs is positioned between research and production, with Autodesk validating they're on the right track.

The Competitive Landscape: Who Else Is Building World Models?

World Labs isn't alone in this space, though they might be ahead. Here's the competitive breakdown:

Google DeepMind: They've published extensive research on world models and trained systems that can predict video frames while understanding physics. However, DeepMind tends to focus on research over products. Their systems are available mostly through academic papers and research APIs.

Runway: Explicitly positioning themselves in creative tools, Runway has released products for video generation, image-to-video, and motion synthesis. They're not explicitly calling these "world models," but they're solving similar problems. However, Runway is more focused on individual creators than professional software integration.

Epic Games (Unreal Engine): The company is investing heavily in procedural generation and AI-assisted content creation within their game engine. However, they're starting from an engine-first perspective, not an AI-first one.

OpenAI, Anthropic, and others: Developing their own spatial reasoning capabilities, but mostly as features within larger language models, not standalone world model services.

World Labs' advantage is focus. They're building specifically for spatial intelligence without the baggage of a larger research organization or game engine. Autodesk's investment amplifies that advantage by committing a massive company to distribution and integration.

The competitive threat isn't really to World Labs right now. It's to companies like Runway that are going after individual creators, and to Autodesk's existing software if they don't integrate AI fast enough.

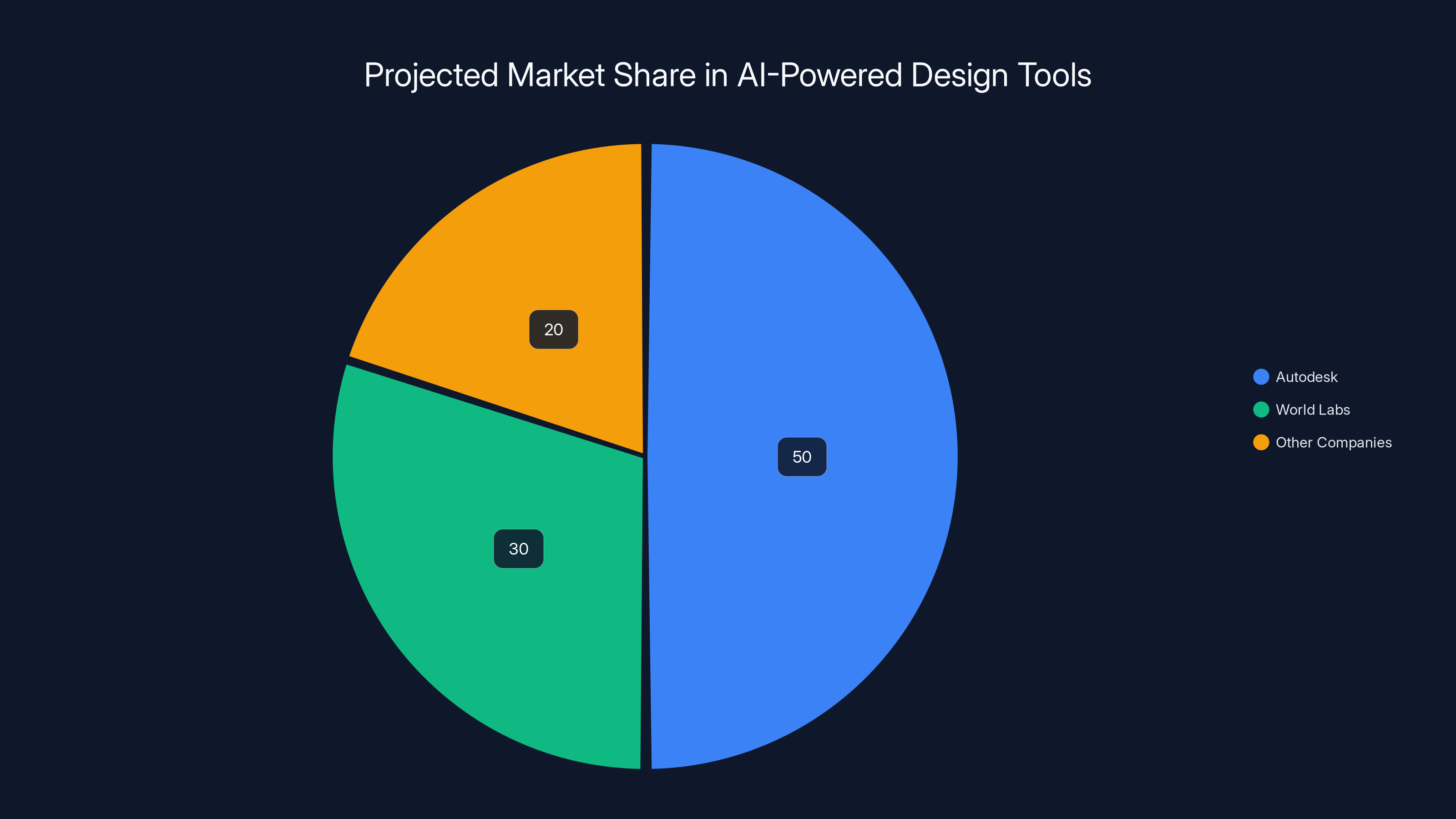

Autodesk's integration with World Labs is projected to capture a significant portion of the AI-powered design tools market, potentially leading with a combined 80% market share. Estimated data.

Data, Privacy, and Trust: The Elephant in the Integration Room

Green's statement that data sharing is NOT part of the agreement is critical and often overlooked. Enterprise customers—architectural firms, VFX studios, game developers—are paranoid about their data. They don't want proprietary designs sent to third-party AI systems for training or fine-tuning.

Autodesk understands this. Their customer base has built their entire business around trusting Autodesk with their designs. If Autodesk started feeding that data to World Labs, even anonymously, customers would revolt. The contracts would be breached. The lawsuits would fly.

So the integration must be architected carefully:

- Users control whether to send data to World Labs

- Data stays on-premise or private cloud when possible

- Results come back without ever touching World Labs' training infrastructure

- World Labs improves through their own data, not customer designs
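
One way to picture the first two requirements is a routing gate that decides, per request, whether customer geometry may leave the building. This is a sketch under stated assumptions, not the partnership's actual architecture: `GenerationRequest`, `route_request`, and both generator stubs are hypothetical names, and neither company has published an API like this.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# A generator takes a prompt plus optional design bytes and returns a result label.
Generator = Callable[[str, Optional[bytes]], str]


@dataclass(frozen=True)
class GenerationRequest:
    prompt: str
    design_payload: Optional[bytes] = None  # customer geometry, if any
    user_allows_upload: bool = False        # explicit opt-in, off by default


def route_request(request: GenerationRequest, remote: Generator, on_premise: Generator) -> str:
    """Send customer designs off-site only when the user has opted in."""
    if request.design_payload is None:
        # Nothing proprietary attached: only the prompt goes to the hosted model.
        return remote(request.prompt, None)
    if request.user_allows_upload:
        return remote(request.prompt, request.design_payload)
    # Default path: the design stays on local infrastructure.
    return on_premise(request.prompt, request.design_payload)


def fake_remote(prompt: str, payload: Optional[bytes]) -> str:
    """Demo stub standing in for a hosted model."""
    return f"remote:{prompt}"


def fake_on_premise(prompt: str, payload: Optional[bytes]) -> str:
    """Demo stub standing in for a locally deployed model."""
    return f"on-premise:{prompt}"


if __name__ == "__main__":
    private = GenerationRequest("furnish this floor plan", design_payload=b"floor-plan")
    print(route_request(private, fake_remote, fake_on_premise))
```

Making the opt-in flag default to `False` means a forgotten setting fails safe: the design is processed locally rather than uploaded.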

This is technically achievable but adds complexity. It's why Daron Green described this as early-stage. They need to figure out the architecture that protects customer data while still enabling integration.

There's also a liability question. If a world model generates a design that infringes someone's patent, who's responsible? Autodesk? World Labs? The user? These legal frameworks don't exist yet, which is why both companies are moving cautiously.

Long-term, I'd expect federation models. World Labs trains on public data and their own data. Autodesk customers can optionally improve the models with their own data, locked in a private fine-tune. Best of both worlds: the model gets better, customer data stays private.

The Timeline: When Will This Actually Be Usable?

Here's where expectations need calibration. This announcement is about partnership structure, not product launch. The actual integrated tools are probably 12-24 months away from being serious products.

Typical timeline for something like this:

Months 1-6 (Now through Q2 2025): Deep technical collaboration. Both teams learn each other's codebase, establish APIs, define integration points, and identify blockers. It sounds boring but is critical. The work here determines whether integration is possible and seamless.

Months 6-12 (Q2-Q4 2025): Initial prototype release to select customers (likely entertainment companies). Gather feedback, iterate, and identify edge cases. Fix the inevitable issues when real users hit real workflows.

Months 12-18 (Q4 2025-Q2 2026): Broader beta release. Expand to more use cases. Integrate neural CAD more deeply. Polish the UX so it doesn't feel bolted on.

Months 18-24 (Q2-Q4 2026): Public release in Autodesk products. By this point, the integration should feel native and natural.

This is the optimistic path. Realistic obstacles:

- API complexity that forces rearchitecture

- Performance issues that require optimization

- Privacy or data handling problems requiring new approaches

- Customer feedback that changes product direction

- Competing priorities that delay the work

I'd honestly expect the timeline to slip toward 18-30 months for something truly production-ready. But even early releases in 12-15 months would be impressive and valuable.

For creators, this means: keep an eye on these products over the next year. By 2026, if Autodesk and World Labs pull this off, the tools you use will be noticeably smarter and faster. If you're a professional designer, animator, or architect, you'll want to understand how to leverage world models in your workflow before they become standard.

The Valuation Question: Is World Labs Actually Worth $5 Billion?

Reporting suggests World Labs is now in talks to raise capital at a

Here's the case for yes:

Network effects in creation: Once world models become integrated into professional tools, they become a standard feature. These aren't the network effects of a social network, but tool standardization creates a similar pull. If most animators are using world model-augmented tools, adopting them becomes a competitive necessity.

B2B SaaS economics: If World Labs licenses their models to multiple companies (not just Autodesk), each integration is recurring revenue. Professional software customers pay significant SaaS fees monthly. Even at $100/month per studio, thousands of studios globally is enormous recurring revenue.
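
The $100/month figure is easy to turn into annual numbers. The studio counts below are assumptions chosen to span "thousands of studios"; the article gives no actual customer count.

```python
def annual_recurring_revenue(studios: int, monthly_fee_usd: float) -> float:
    """Annual revenue from a flat monthly fee per studio."""
    return studios * monthly_fee_usd * 12


if __name__ == "__main__":
    for studios in (1_000, 5_000, 10_000):  # assumed counts, for illustration only
        arr = annual_recurring_revenue(studios, 100)
        print(f"{studios:>6,} studios -> ${arr:,.0f} per year")
    # prints:
    #  1,000 studios -> $1,200,000 per year
    #  5,000 studios -> $6,000,000 per year
    # 10,000 studios -> $12,000,000 per year
```

Revenue scales linearly with both inputs, so the pricing unit (per studio, per seat, or per generation) changes the total far more than the headline fee does.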

IP and defensibility: World models trained on diverse data are defensible. It's hard for competitors to replicate if the training data or architecture is superior. Fei-Fei Li's involvement adds credibility to claims of technical superiority.

Market size: The professional design tools market is worth

Here's the case for skepticism:

Product-market fit still unclear: Marble is early. Real integration into Autodesk products is months away. We don't yet know if professional creators actually want and will pay for world models or if they're just a nice-to-have feature.

Competition will increase: Every AI lab will eventually build world models. Unique technology rarely stays unique for long in AI. By 2027, there could be five well-funded competitors.

Adoption risk: Professional tools have high switching costs and deep integration with workflows. Even if world models are 20% better, getting professionals to adopt new systems is slow and expensive.

Revenue model unclear: How exactly does World Labs make money? Licensing to Autodesk is happening, but does Autodesk pay per user, per feature, per license? The financial structure matters for valuation.

My honest take:

For now, Autodesk's

Future Scenarios: Where This Technology Goes From Here

Assume Autodesk and World Labs execute perfectly. What's the full product vision over the next 3-5 years?

Scenario 1: Augmentation (Most Likely)

World models become a feature, not a product. Users stay in Autodesk's tools, use world models for initial generation and exploration, then switch to traditional tools for refinement and precision. The technology handles the exploratory phase of design. Humans handle the refinement phase. This middle path seems most realistic because it doesn't require massive workflow change.

Scenario 2: Full Integration (What Both Companies Want)

Over time, the boundary between world models and traditional design tools blurs. Neural CAD and world models are so deeply integrated that they're effectively one system. Users don't think about "using a world model." They just design normally, and the system leverages both traditional and AI approaches seamlessly. This requires deeper technical work but is where the real magic is.

Scenario 3: Replacement (Bold Vision)

World models become so capable that traditional tools become optional. Designers mostly work through prompts and iterative refinement with AI systems. Traditional tools are there for edge cases and precision work, but they're increasingly less central. This requires massive improvements in world model reliability, physics accuracy, and precision.

Scenario 4: Ecosystem Play (Dark Horse)

World Labs becomes the foundation layer, and both Autodesk and other companies build specialized applications on top. Instead of tight 1-1 integration, World Labs licenses to dozens of companies. Each builds their own specialized tools. The network effects compound, and World Labs becomes the Intel of spatial AI—the invisible foundation everyone relies on. This requires World Labs to stay independent and build APIs strong enough to support multiple partners, which goes against the Autodesk investment's implied exclusivity.

Most likely, we'll see a combination of scenarios 1 and 2. Augmentation in the short term, with increasing integration as the technology matures. True replacement (scenario 3) is further out because world models need to be dramatically better to replace decades of specialized tools.

Implications for Creators: How This Actually Changes Your Work

If you're a designer, animator, architect, or content creator, here's what this means practically:

Animation and Character Design: Faster environment generation, better lighting and physics simulation, quicker iteration on complex scenes. Less time building, more time refining and making it look perfect.

Game Development: Level designers can explore spatial concepts much faster. Massive time savings on early gray-box level design. Physics and navigation can be validated earlier, catching design problems sooner.

Architectural Visualization: Clients get better presentations faster. Architects can explore variations and adaptations quickly. The back-and-forth with clients tightens significantly.

Product Design: Initial ideation is faster. You can explore spatial configurations and see how components interact before detailed CAD work. The iterative process compresses.

Content Creation: Smaller studios and independent creators can produce visual complexity that previously required larger teams. The leveling of the creative playing field continues.

The common theme: world models are a force multiplier for creativity. They don't make creators obsolete. They make creators more capable, productive, and able to do more ambitious work with smaller teams.

The profession itself might shift. We might see fewer people doing routine design work (generating standard environments, basic models, standard animations) and more people doing high-level creative direction and curation. The skill becomes directing AI toward your vision, not executing the vision manually.

The Broader Implications: What This Deal Signals About AI

This partnership is revealing about where AI is actually heading, beyond the hype.

First, it shows that real, serious money is flowing toward specialized AI, not just general-purpose language models. Everyone's obsessed with ChatGPT, but the actual value creation is happening in narrow domains where AI provides specific capabilities.

Second, it validates that AI at the foundation level (building blocks that others build on) might be more valuable long-term than AI at the application level (end-user products). World Labs isn't trying to make money directly from consumer creativity. They're providing technology that others layer on top of and charge for. That's the winner's play.

Third, it shows that enterprise integration is hard and slow. It's not "ChatGPT for design." It's careful, measured integration with enterprise considerations around data, privacy, liability, and workflow. That's unsexy but important.

Fourth, it demonstrates that traditional software companies are adapting to AI faster than many expected. Autodesk could have been complacent or moved slowly. Instead, they're doubling down and investing aggressively. Companies that embrace AI as a tool for their existing business (rather than viewing it as a threat) are going to win.

Finally, it signals that foundation models matter. The next decade will likely see multiple foundation models for different domains: language (GPT), images (DALL-E), video (maybe Runway), and now spatial/3D (World Labs). Companies that build those foundations have outsized leverage.

Conclusion: The Spatial AI Era Is Beginning

World Labs' $200 million investment from Autodesk is a watershed moment. It's not just a funding round or a partnership announcement. It's validation that spatial AI—systems that understand how the physical world actually works—is essential infrastructure for the creator economy and professional design.

The fact that Autodesk, a company built on traditional design tools, is investing this aggressively signals that they see world models as the future. They're not hedging their bets with a small investment. They're committing serious capital and executive attention.

For creators and designers, this is good news. Tools are about to get dramatically more capable. The bottleneck for creative ambition will shift from tool capability to imagination. A solo creator or small team will be able to execute ideas that previously required larger teams and budgets.

For AI researchers, this shows the value of specialization. General-purpose AI is important, but specialized foundation models for specific domains are where the economic value is concentrated.

For the software industry broadly, this is a wake-up call: AI isn't replacing your tools, it's augmenting them. Companies that integrate AI into their existing products while respecting their customers' needs and concerns will thrive. Companies that ignore AI or try to build it from scratch will struggle.

The timeline to meaningful impact is probably 18-24 months, with deeper integration over the next 3-5 years. If you work in creative fields, starting to understand how to work with AI-assisted tools now will put you ahead of the curve by the time these integrations become standard.

The spatial AI era isn't coming. It's here. And it's just getting started.

FAQ

What is a world model in AI?

A world model is an AI system trained to understand and predict how physical environments and objects behave over time. Unlike image generation systems that predict pixels based on patterns, world models understand geometry, physics, dynamics, and spatial relationships. They can generate 3D environments, predict how objects interact, and reason about functionality based on real-world physics. This is fundamentally different from traditional generative AI because it actually comprehends three-dimensional space, not just 2D patterns.

How does the Autodesk-World Labs partnership work?

Autodesk invested $200 million in World Labs and will work to integrate World Labs' AI models into Autodesk's design tools. The partnership operates at the model level, meaning World Labs' spatial AI systems will be called from within Autodesk applications without requiring users to switch to separate tools. The companies are starting with entertainment use cases like game design and animation. Critically, customer data is not shared between the companies, protecting intellectual property and privacy.

What products will come from this partnership?

The specific products are still being determined, but the vision is clear: world model capabilities will be integrated into Autodesk's existing tools like Maya, Revit, Fusion 360, and others. Users will likely be able to generate initial 3D concepts using world models, then refine them using traditional design tools. The first usable integrations are expected in 12-18 months, with deeper integration developing over 2-3 years.

Why is World Labs' technology different from other AI image generators?

World models understand physics and 3D space, while image generators predict pixels based on patterns. An image generator might create a picture of an office that looks realistic but violates physics (floating chairs, impossible lighting). A world model understands that chairs need to sit on the ground, objects have weight and mass, and lighting follows real optical principles. This allows world models to generate physically plausible 3D environments that can be edited and refined.
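To make the "floating chair" example concrete, here is a minimal Python sketch of one physical-plausibility check on a 3D scene: flagging objects that rest on nothing. It is purely an illustration and not how World Labs' models work. The `Box` type, the floor at height zero, and the `is_supported` and `floating_objects` helpers are all assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical axis-aligned box: footprint centre (x, y), size, and height range."""
    name: str
    x: float
    y: float
    width: float
    depth: float
    z_bottom: float
    height: float

    @property
    def z_top(self) -> float:
        return self.z_bottom + self.height


def footprints_overlap(a: Box, b: Box) -> bool:
    """True if the two boxes overlap when viewed from above."""
    return (abs(a.x - b.x) * 2 < a.width + b.width
            and abs(a.y - b.y) * 2 < a.depth + b.depth)


def is_supported(obj: Box, scene: list[Box], tol: float = 1e-3) -> bool:
    """An object counts as supported if it rests on the floor (z = 0, an assumption)
    or directly on top of another object whose footprint overlaps its own."""
    if abs(obj.z_bottom) <= tol:
        return True
    return any(
        other is not obj
        and footprints_overlap(obj, other)
        and abs(obj.z_bottom - other.z_top) <= tol
        for other in scene
    )


def floating_objects(scene: list[Box]) -> list[str]:
    """Names of objects with nothing underneath them."""
    return [o.name for o in scene if not is_supported(o, scene)]


scene = [
    Box("desk", 0.0, 0.0, 1.6, 0.8, 0.0, 0.75),
    Box("monitor", 0.0, 0.0, 0.6, 0.2, 0.75, 0.4),  # sits on the desk
    Box("chair", 0.0, -1.0, 0.5, 0.5, 0.3, 0.9),    # hovers 30 cm above the floor
]
print(floating_objects(scene))  # ['chair']
```

This is a single-pass check, so an object stacked on a floating object would still pass. A real system would also need to reason about mass, stability, and lighting, which a rule this simple cannot capture.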

Who competes with World Labs?

Google DeepMind is researching world models but focuses primarily on academic research. Runway is building world model-like technology for content creators but hasn't integrated deeply with professional tools. Epic Games is investing in procedural generation and AI within Unreal Engine. OpenAI and other labs have spatial reasoning capabilities, but no one else has yet paired foundational AI expertise like Fei-Fei Li's with a startup focused entirely on this mission. Autodesk's investment and commitment give World Labs a significant competitive advantage.

How will world models impact professional designers and animators?

World models will accelerate the early design phase, allowing creators to explore concepts and variations quickly before detailed work. Animators will spend less time building basic environments and more time on creative refinement. The technology won't replace designers; it will make them more productive and capable of tackling more ambitious projects. The profession itself may shift toward higher-level creative direction and curation rather than routine model generation.

What's the timeline for when these integrated tools will be available?

Based on typical enterprise software integration timelines, early versions for select customers (likely entertainment companies) should arrive in 12-18 months. Broader availability in mainstream Autodesk products will likely take 18-30 months. Full integration and maturity will probably require 3-5 years. These are estimates; actual timelines depend on technical challenges, customer feedback, and competing priorities.

Is customer data being shared between Autodesk and World Labs?

No. Autodesk explicitly stated that data sharing is not part of the agreement. This protects customer intellectual property, as professional designers don't want their designs used to train AI systems. The companies are architecting the integration so world models can be called from Autodesk tools without sending customer designs to external servers. This is technically complex but essential for enterprise adoption.

What is Autodesk's neural CAD and how does it fit with World Labs?

Neural CAD is Autodesk's internally developed AI system trained to understand geometric data, design patterns, and functional constraints. It can generate 3D models that don't just look right but actually work from a structural and functional perspective. Combined with World Labs' spatial reasoning, neural CAD handles the detailed functionality while world models handle big-picture spatial relationships. Together, they create comprehensive design intelligence.

What does a $5 billion valuation for World Labs actually mean?

World Labs is reportedly raising capital at a

Final Thoughts

The partnership between Autodesk and World Labs represents a maturation moment for AI in professional software. This isn't hype or speculation. It's one of the world's largest design software companies committing serious capital to integrate cutting-edge spatial AI into their core products.

For creators, this means tools are about to become significantly more capable. For AI researchers, it validates the importance of specialized foundation models over general-purpose systems. For the software industry, it's a template for how to integrate transformative technology while respecting customer needs and concerns.

The spatial AI era is starting now. The companies and creators who understand this and adapt will shape the next decade of design, entertainment, and product development. That's worth paying attention to.

Key Takeaways

- Autodesk's $200M investment in World Labs signals enterprise confidence that physics-aware AI is essential for professional design tools

- World models fundamentally differ from generative AI by understanding 3D space, geometry, and physics rather than just predicting pixels

- Entertainment and media production are the initial battleground, with gaming level design and animation showing the fastest ROI on world model integration

- Autodesk's neural CAD combined with World Labs' spatial reasoning creates defensible competitive advantage that competitors will struggle to replicate

- Practical integration timelines suggest 12-18 months for early versions, with enterprise-grade tools arriving 18-30 months from now
