Building Trust in AI Workplaces: Your 2025 Digital Charter Guide

You've rolled out AI assistants across your organization. The adoption numbers look fantastic. Everyone's using Copilot, Gemini, meeting transcription bots, and AI scribes. Your dashboard shows impressive active user metrics.

But step into the hallway, or scroll through your private Slack channels, and you'll hear the real story. The same three questions keep bubbling up: Who can see what I'm asking the AI? Will this get used against me in my performance review? Is my job actually safe?

This isn't paranoia. It's the natural response of people who don't trust the tools they've been told to use. And here's the problem: when employees don't trust your sanctioned AI, they don't stop using AI. They simply switch to personal tools, pasting proprietary data straight into ChatGPT, Claude, or Perplexity. As Cybersecurity Dive has noted, you've created a shadow AI problem without realizing it.

The truth is harder than most organizations want to admit: you cannot build trusted AI on top of a foundation that was shaky before AI ever arrived.

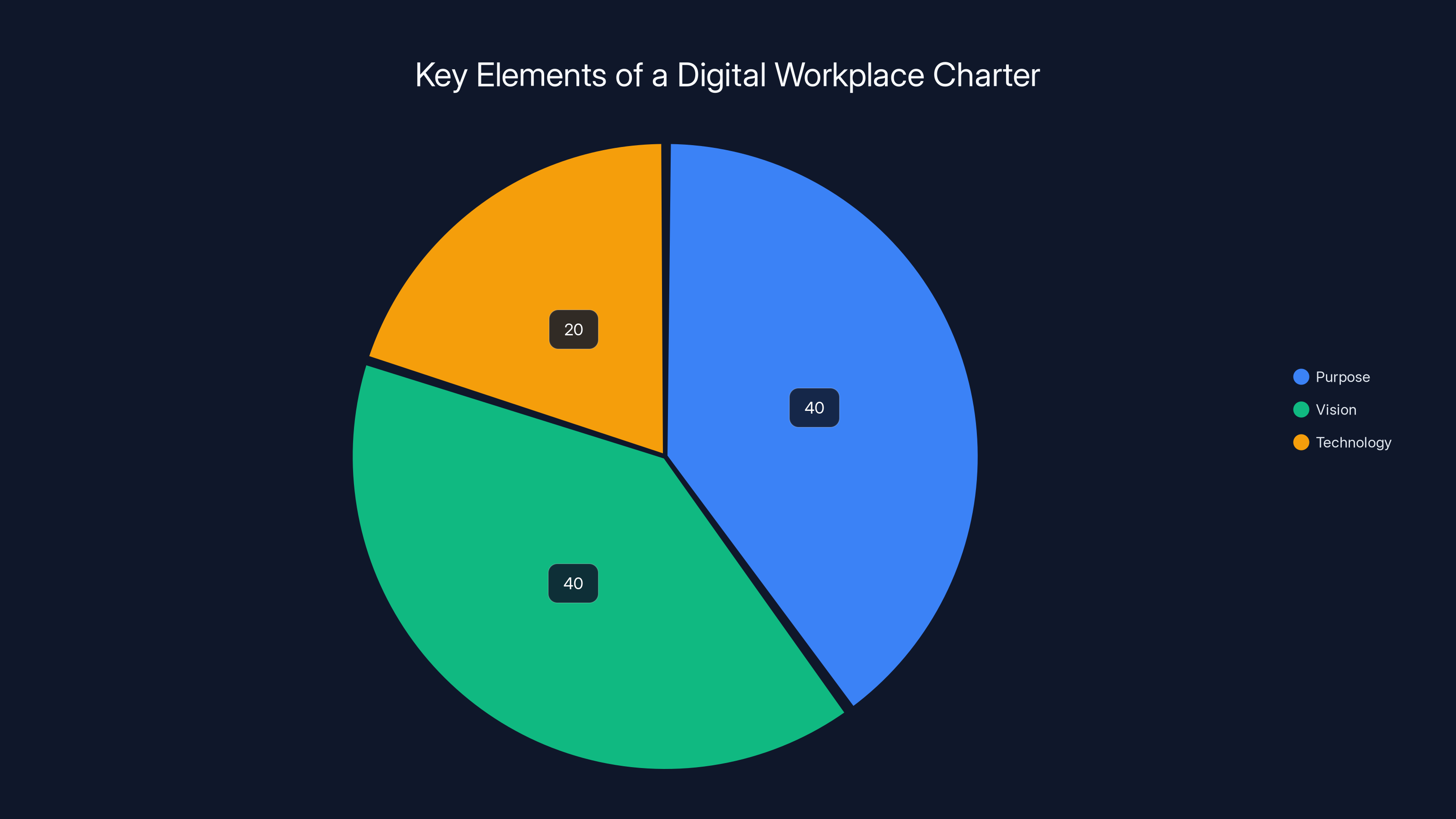

For the last decade, most digital workplace leaders skipped a critical step—a simple, one-page charter that clearly defines Purpose, Vision, and Success Standards. In the era of AI assistants, skipping this foundation isn't just inefficient. It's negligent. This guide walks you through building that charter, introduces six new success standards designed specifically for AI, and gives you a practical 60-day ratification process to implement them without endless workshop gridlock.

Let's start with what's actually happening right now.

TL;DR

- Shadow AI is the norm: 80% of knowledge workers who use AI bring their own tools to work, dragging proprietary data into public systems, as highlighted by Security Boulevard.

- Trust isn't optional: Organizations can't mandate usage, but they can design trust by building a clear charter with AI-specific success standards, as discussed in McKinsey's insights.

- Six new standards matter: Trust, transparency, augmentation, agency, equity, and source integrity address AI-era concerns that legacy workplace charters ignore.

- Data risk is real: Without clear governance, institutional amnesia takes hold—your organization stops learning from itself, as noted in Mexico Business News.

- Speed wins: A 60-day ratification process replaces endless committees and gets stakeholders aligned without workshop fatigue.

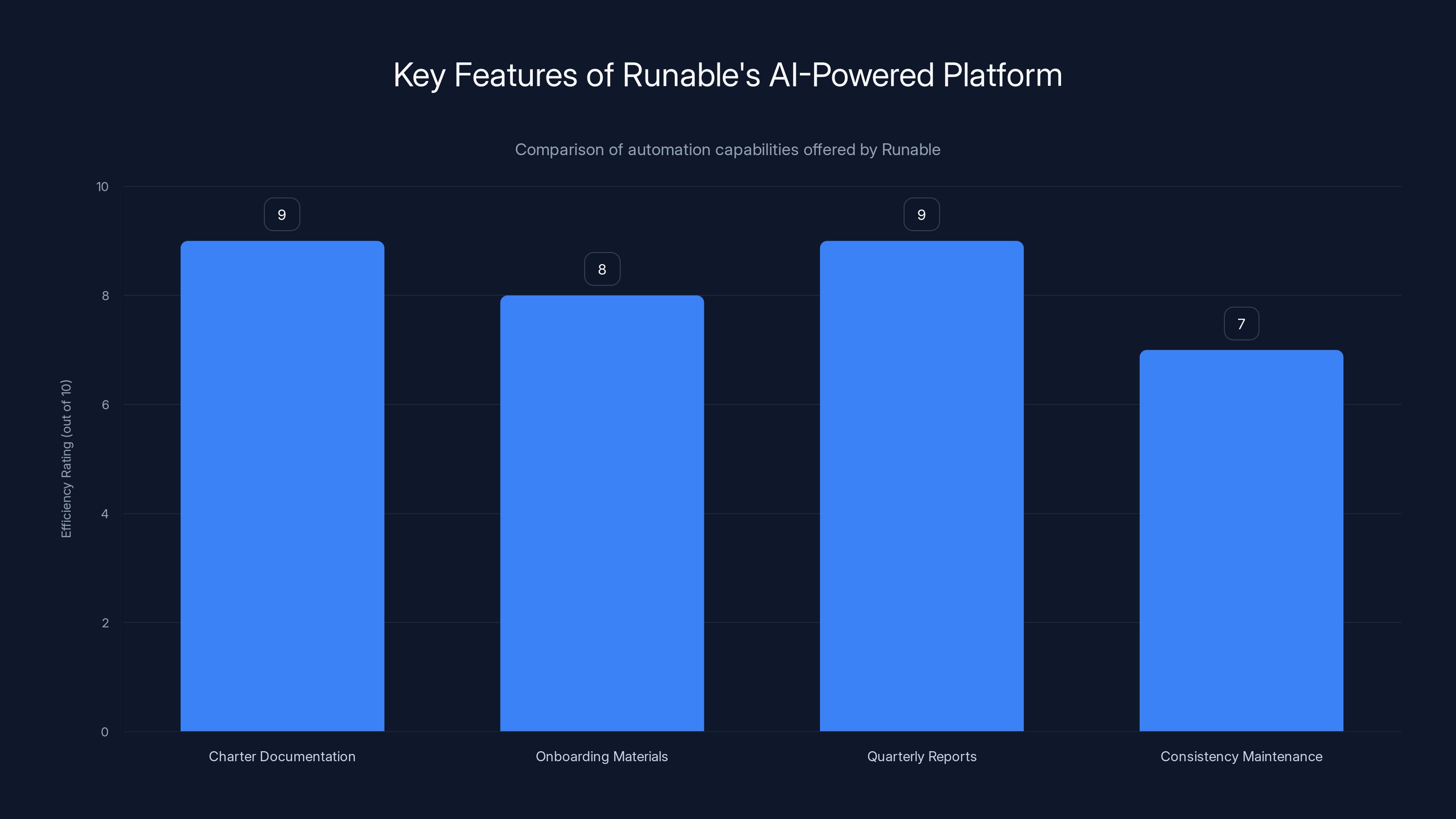

Runable excels in generating charter documentation and producing quarterly reports, with high efficiency ratings. Estimated data based on feature descriptions.

The Silent Killer: Why High Adoption + Low Trust = Disaster

Most digital workplace leaders are celebrating the wrong metrics. Your AI adoption is skyrocketing. Daily active users are up. Engagement is strong. Everything looks good until you look closer.

Here's the disconnect: high adoption doesn't mean high trust. In fact, high adoption without trust is actively dangerous.

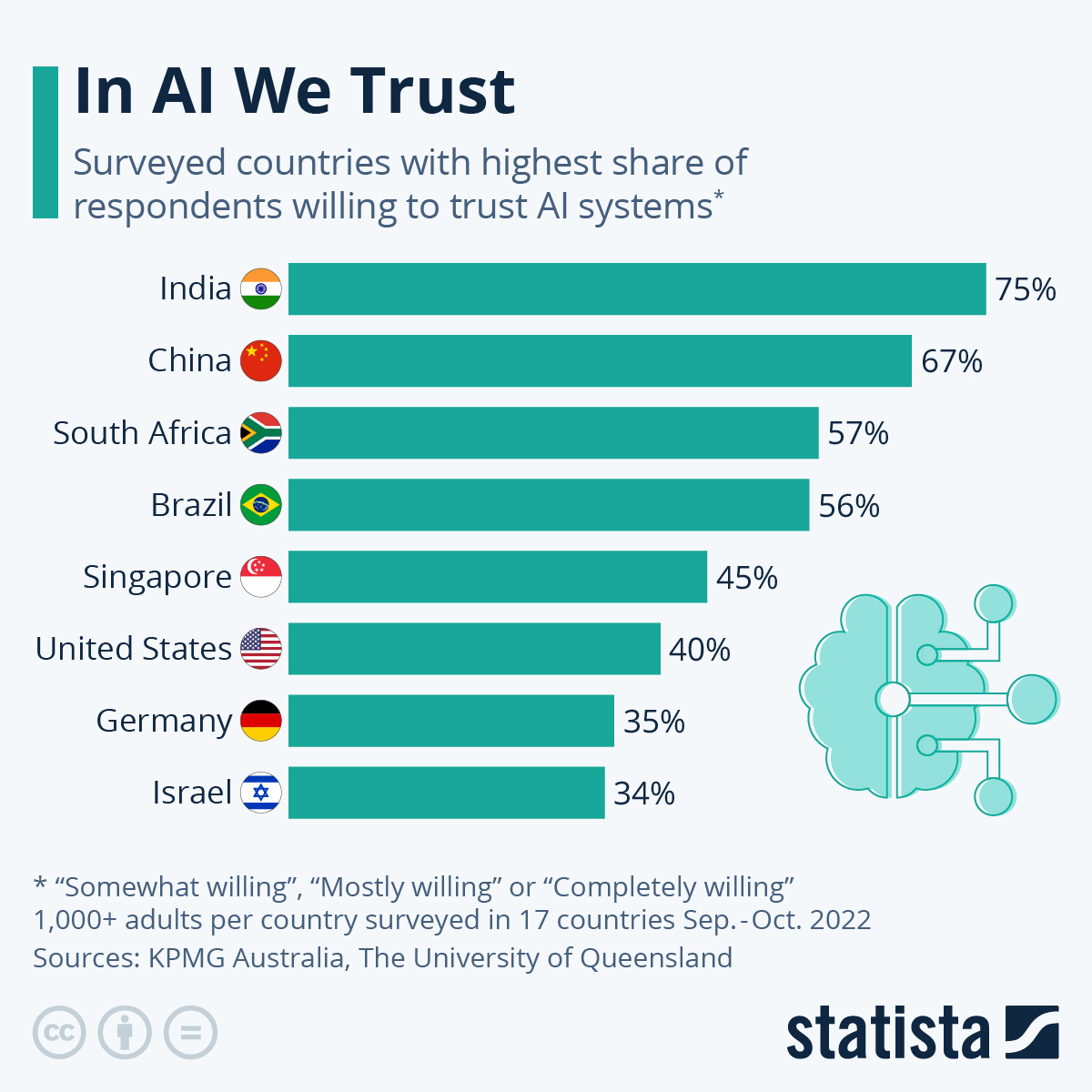

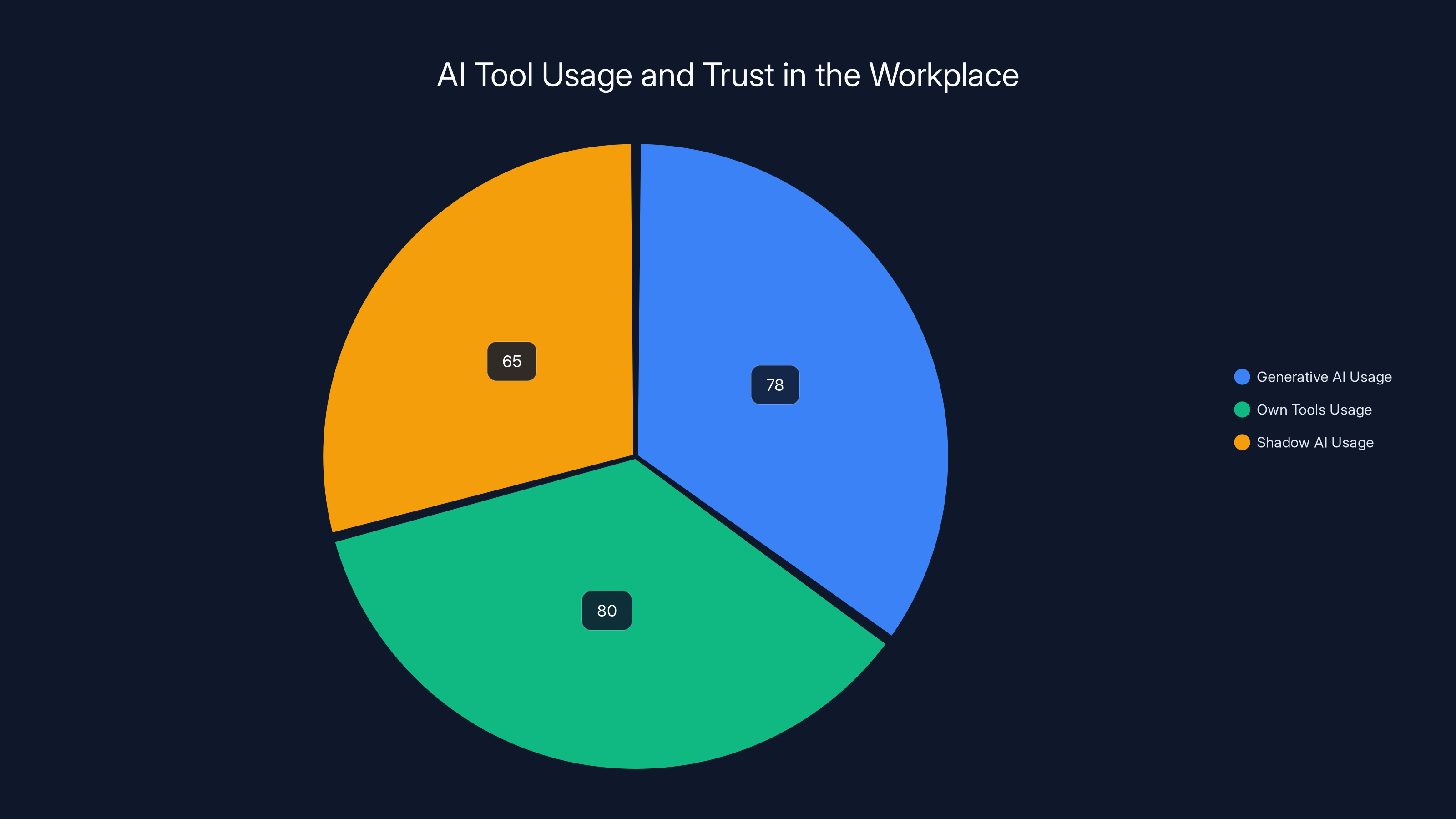

The data backs this up. According to Forbes, 78% of knowledge workers now use generative AI at work. Sounds great. But 80% of them bring their own tools—tools you didn't vet, didn't integrate, and can't monitor. More concerning, 60 to 70% admit to using Shadow AI in some form. They're taking content from your knowledge management system, dumping it into public models, and asking questions about strategy, customer data, and product roadmaps.

Gartner recently ranked Shadow AI in its top 5 emerging organizational risks. That's the same list that includes ransomware, data breaches, and supply chain attacks.

But the biggest risk isn't just data leakage. It's something more insidious: institutional amnesia. When employees don't trust your systems, they stop asking questions in your systems. They hide their learning. Your organization's collective knowledge fragments across personal notebooks, private chats, and unvetted tools. Over time, you lose the ability to learn from yourself.

This problem existed long before AI, but AI amplifies it dramatically. When employees didn't trust your intranet, they printed documents. Now, when they don't trust your AI assistant, they copy data into ChatGPT. The stakes are far higher.

The organizations winning right now aren't the ones with the best AI tools. They're the ones that built explicit trust through clear governance. They answered the three gut-level questions before employees even asked them.

Estimated data shows that while 78% of knowledge workers use generative AI, 80% use their own tools, and 65% engage in Shadow AI practices. This highlights a significant trust gap in organizational AI systems.

Why Your Existing Charter (If You Have One) Falls Apart With AI

Most digital workplace charters were built around information discovery and content management. They solve for "Can people find what they need?" and "Is the content accurate?"

These are still important questions. But AI creates entirely new ones that your existing charter doesn't address.

Let's say your current charter includes a success standard like: "People find what they need, fast." With traditional search, this means building good taxonomy, improving search algorithms, and keeping content fresh. You measure success with simple surveys: "Can you find what you need?"

Now introduce an AI assistant. It still needs to be fast. But suddenly you need to answer:

- Can the AI see all the information it should, and nothing it shouldn't?

- Will the AI hallucinate, and if it does, will people know?

- Is the AI trained on up-to-date information?

- When the AI gives bad advice, whose fault is it?

- Can I trust this assistant more than the search results it replaces?

Your old success standards don't address any of these. You need new ones, designed specifically for AI.

The good news: you don't start from scratch. You rebuild on the foundation you should have had ten years ago, then add AI-specific guardrails on top.

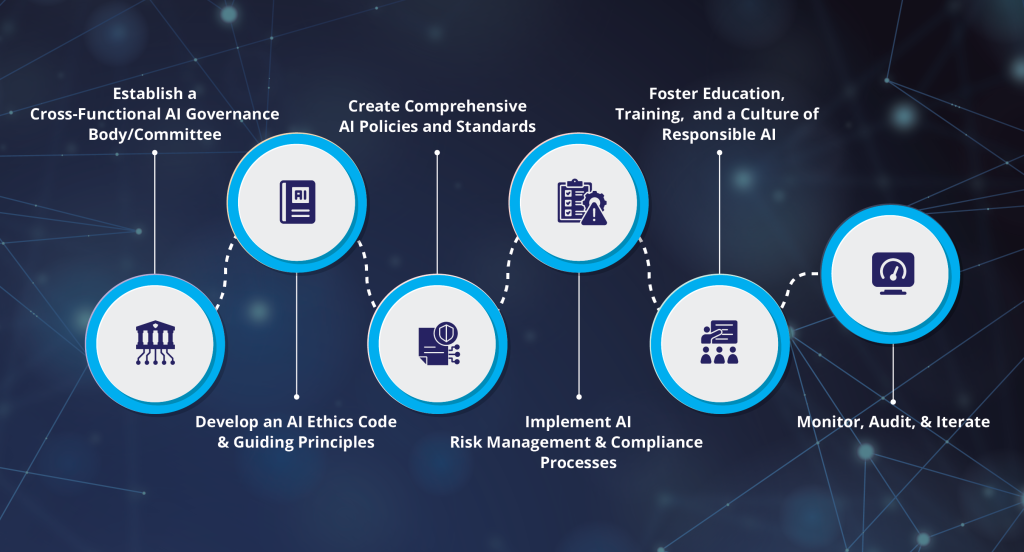

The Foundation: Three Things Your Charter Must Define

Before we talk about AI at all, let's talk about the fundamental document that almost no organization has actually written down.

A digital workplace charter isn't a fifty-page governance document. It's one page. One page that should have existed a decade ago. It answers three questions with brutal clarity.

Purpose: Why Does This Exist?

Not "what is it," but why. Why did you build this system in the first place? What problem were you solving?

A good purpose statement is specific to your organization, not generic boilerplate. It explains the actual value exchange: why employees should care, and why the organization invested.

Example purpose statements:

- "Acme Connect unites our people with each other and with the information, tools, and applications they need to serve customers and succeed at work."

- "Tech Flow is the single source of truth for how we work, what we know, and what we're building. It connects strategy to execution and gives every person clarity on what matters."

- "Our Space helps distributed teams stay synchronized, make fewer decisions over email, and keep company knowledge from walking out the door."

Notice what's missing: the technology itself. It's not about the platform, the software stack, or the features. It's about the human problem being solved.

In an AI era, purpose gets even more important. When employees see AI getting integrated, they need to understand why. Is it to replace them? Augment them? Speed up routine work? Your purpose statement sets the frame.

Vision: Where Are We Taking This in 3-5 Years?

Purpose answers "why do we exist." Vision answers "what does success look like."

Again, this needs to be specific and employee-centric. Not "we will be a market-leading platform" but rather "what will working here feel like."

Example vision statements:

- "A seamless, intelligent digital workplace that feels like an extension of every employee's own thinking."

- "Employees can get answers, complete tasks, and find information without context-switching or leaving their flow."

- "Every person knows what's going on, why decisions were made, and how their work connects to company strategy."

With AI in the picture, vision becomes crucial for managing expectations. Your vision should clarify what AI is for. Is it to eliminate routine work? To amplify human expertise? To democratize information? Get specific.

Success Standards: What 4-6 Things Must We Never Stop Improving?

This is where most organizations fail. They set broad goals ("increase engagement") but never define what success actually looks like.

Success standards are the four to six non-negotiable measures that define a healthy digital workplace. They're measured with short, perception-based survey items that employees can answer honestly in seconds.

Traditional success standards look like this:

- Content is accurate, relevant, and up-to-date (measured: "The information I find is trustworthy")

- People find what they need, fast (measured: "I can find what I need without frustration")

- It's easy to see what's new or changed (measured: "I know what's changed since I last visited")

- Daily active use continues to grow (measured: "I visit at least a few times per week")

- Publishing and maintenance are easy for creators (measured: "Keeping my content fresh isn't a burden")

- Overall satisfaction stays above 80% (measured: "Overall, I'm satisfied with this workplace")

These are still valid. But they're not sufficient for AI. You need to add AI-specific standards that address the trust, transparency, and governance questions that keep employees up at night.

A digital workplace charter typically emphasizes Purpose and Vision equally, with less focus on Technology. Estimated data based on typical charter elements.

Six New Success Standards for the AI Era

This is the critical innovation. These six standards rebuild your charter to address what AI actually changed about digital workplaces.

1. Trust: People Believe the AI, and Know They Can

Trust is the foundation. Everything else depends on it.

Trust in an AI context means three things:

- Reliability: The AI gives consistent, accurate answers over time.

- Transparency: When the AI is uncertain, it says so. When it's making assumptions, it states them.

- Accountability: When the AI gets something wrong, there's a clear path to fixing it.

Measure this with: "I trust the AI assistant to give me accurate information."

Implement this by:

- Building feedback loops where wrong answers get corrected and improve the system

- Setting confidence thresholds where the AI says "I don't know" instead of hallucinating

- Showing users the sources and reasoning behind answers

- Publishing regular accuracy reports showing what's working and what's not
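The "I don't know" threshold and the accuracy report can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design. It assumes your assistant returns a confidence score between 0 and 1 with each answer; many systems don't expose one directly, so you may need a proxy such as retrieval match quality. The 0.6 cutoff, the `Answer` type, and the `respond` and `accuracy_report` helpers are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical cutoff: below this confidence, the assistant abstains.
CONFIDENCE_THRESHOLD = 0.6


@dataclass
class Answer:
    text: str
    confidence: float  # assumed 0-1 score supplied by the assistant


def respond(answer: Answer, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return the answer, or an explicit abstention if confidence is too low."""
    if answer.confidence < threshold:
        return "I don't know. I couldn't find a reliable answer in our sources."
    return answer.text


def accuracy_report(feedback: list[bool]) -> dict:
    """Summarize user feedback, where True means 'this answer was right'."""
    total = len(feedback)
    correct = sum(feedback)
    return {
        "responses_rated": total,
        "marked_wrong": total - correct,
        "accuracy": round(correct / total, 3) if total else None,
    }


if __name__ == "__main__":
    print(respond(Answer("Expense reports are due by the 5th.", 0.92)))
    print(respond(Answer("The policy changed last week.", 0.41)))
    print(accuracy_report([True, True, False, True]))
```

Tune the threshold against your own feedback data. If it is too high, the assistant abstains constantly. If it is too low, it guesses.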

2. Transparency: People Know How the AI Works and What Data It Uses

Transparency is trust's twin. You can't have one without the other.

Employees need to understand three things:

- What data feeds the AI? Is it internal only, or does it pull from the public internet? Is it your internal documentation, or is it all company communication?

- How is that data used? Is it training a custom model, or just providing context for prompts?

- Who has access to conversations? Are queries logged? Who can see them? Will they be used for auditing, performance reviews, or analytics?

Measure this with: "I understand what data the AI uses and how my interactions are handled."

Implement this by:

- Publishing a clear data flow diagram showing what the AI sees

- Documenting what data is NOT included and why

- Providing user controls: "Keep this conversation private" or "Share this with my team"

- Monthly transparency reports showing how the system is being used

- Clear policies that explicitly state: "Your AI conversations will never be used in performance reviews"

The last point is critical. If you don't explicitly say it, employees will assume the worst.
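One way to keep the transparency promise honest is to maintain the data-flow description as structured data and generate the employee-facing notice from it. That way, the notice can't drift away from what was documented. This sketch assumes nothing about your platform. The `DataHandlingNotice` class and the example sources are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class DataHandlingNotice:
    """Hypothetical structured summary of what the AI assistant can see."""

    included_sources: list[str]
    excluded_sources: dict[str, str]  # source -> reason it is excluded
    conversation_visibility: str
    used_in_performance_reviews: bool = False

    def render(self) -> str:
        """Produce the plain-language notice shown to employees."""
        lines = ["What the AI assistant can see:"]
        lines += [f"  - {source}" for source in self.included_sources]
        lines.append("What it cannot see, and why:")
        lines += [f"  - {source}: {reason}" for source, reason in self.excluded_sources.items()]
        lines.append(f"Who can see your conversations: {self.conversation_visibility}")
        if not self.used_in_performance_reviews:
            lines.append("Your AI conversations will never be used in performance reviews.")
        return "\n".join(lines)


# Example values only; replace them with your real data flow.
notice = DataHandlingNotice(
    included_sources=["Published intranet pages", "Team wikis you already have access to"],
    excluded_sources={
        "HR case files": "contain confidential personal data",
        "Private direct messages": "never indexed",
    },
    conversation_visibility="you, plus security admins during audits",
)

if __name__ == "__main__":
    print(notice.render())
```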

3. Augmentation: The AI Enhances Human Work, It Doesn't Replace It

This standard answers the job security question directly.

Augmentation means:

- AI handles the drudgery: Summarizing meetings, drafting emails, finding answers to routine questions

- Humans handle judgment: Strategy decisions, relationship management, complex problem-solving

- Humans stay in control: The AI suggests, humans decide

Measure this with: "The AI helps me do my job better, not replace me."

Implement this by:

- Designing AI workflows that surface information for human decision-making, not automated decisions

- Building approval workflows where AI suggests actions but humans approve them

- Tracking time saved and asking: "Are people using this time for higher-value work, or just taking it as downtime?"

- Publishing case studies showing how roles evolved when routine work got automated (they didn't shrink, they shifted)
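The "AI suggests, humans decide" rule can be enforced in code rather than left to convention. In this approach, an AI-generated action cannot run until a named person approves it. This is a minimal sketch. The `Suggestion` class and `ApprovalRequired` exception are hypothetical, and a real system would also record each approval in an audit log.

```python
from dataclasses import dataclass
from typing import Optional


class ApprovalRequired(Exception):
    """Raised when someone tries to act on an AI suggestion nobody approved."""


@dataclass
class Suggestion:
    """An action proposed by the AI; it stays inert until a human approves it."""

    action: str
    draft: str
    approved_by: Optional[str] = None

    def approve(self, reviewer: str, edited_draft: Optional[str] = None) -> None:
        # The reviewer can accept the draft as-is or replace it with an edit.
        if edited_draft is not None:
            self.draft = edited_draft
        self.approved_by = reviewer

    def execute(self) -> str:
        # Hard gate: no approval, no action.
        if self.approved_by is None:
            raise ApprovalRequired(f"'{self.action}' needs human approval first")
        return f"{self.action}: done (approved by {self.approved_by})"


if __name__ == "__main__":
    s = Suggestion(action="Send meeting summary", draft="Hi team, here are the action items...")
    try:
        s.execute()
    except ApprovalRequired as err:
        print(err)
    s.approve("j.rivera", edited_draft="Hi team, three action items from today...")
    print(s.execute())
```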

4. Agency: People Choose When and How to Use the AI

Agency means optionality. It's not mandatory adoption—it's informed choice.

Employees need to be able to:

- Opt in or out: Using the AI is encouraged, not mandatory

- Choose interaction models: Some prefer typing, some prefer voice, some prefer structured workflows

- Control scope: "Use this AI for help with writing, but not for decisions about my team"

- Exit cleanly: If you stop using the AI, your work doesn't fall apart

Measure this with: "I feel in control of how I use the AI, and I could switch back to manual work if I wanted to."

Implement this by:

- Never hiding manual alternatives when you introduce AI

- Building features that let users say "draft this email" and still edit the draft, or write it from scratch, if they want

- Providing training and encouragement, but never making AI mandatory

- Tracking how many people use optional features versus forced integrations

5. Equity: The AI Helps Everyone, Not Just Power Users

Equity is about access and benefit distribution.

Without deliberate design, AI amplifies existing inequalities. Power users learn the system first. Non-English speakers struggle with prompts. People in different roles benefit differently. People in different geographies face different access.

Equity means:

- Access: Everyone can use it equally, regardless of role, language, or geography

- Usability: It works for people who aren't "power users" and don't want to become prompt engineers

- Benefit distribution: Everyone saves time, not just knowledge workers

- Representation: The AI reflects diverse perspectives and doesn't encode existing biases

Measure this with: "The AI is equally helpful to me as it is to others in my role."

Implement this by:

- Building AI features that work for frontline workers, not just desk workers

- Providing templates and guided prompts, not just blank text boxes

- Supporting multiple languages from day one

- Auditing which demographic groups benefit most from the AI

- Explicitly designing for accessibility: screen readers, voice interfaces, simple language options
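Guided prompts can be as simple as fill-in-the-blank templates. This sketch uses Python's standard `string.Template`. The template names and wording are hypothetical examples for frontline and customer-facing roles, not recommended prompts.

```python
from string import Template

# Hypothetical guided templates: the user picks a task and fills in a few
# blanks instead of writing a prompt from a blank text box.
TEMPLATES = {
    "shift_handover": Template(
        "Summarize these handover notes for the $shift shift at $site. "
        "List open safety issues first, then anything a manager must act on. "
        "Use short, plain sentences.\n\nNotes:\n$notes"
    ),
    "customer_reply": Template(
        "Draft a polite reply in $language to the customer message below. "
        "Keep it under 120 words.\n\nMessage:\n$message"
    ),
}


def build_prompt(template_name: str, **fields: str) -> str:
    """Fill a guided template with the user's answers.

    Template.substitute raises KeyError if a blank is missing, so the form
    can ask for it instead of sending an incomplete prompt.
    """
    return TEMPLATES[template_name].substitute(**fields)


if __name__ == "__main__":
    print(build_prompt("customer_reply", language="Spanish", message="Where is my order?"))
```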

6. Source Integrity: People Know Where Information Came From and Can Verify It

This standard prevents the "black box" problem.

When an AI gives you an answer, you need to:

- See the sources: What documents, conversations, or data did it use?

- Verify them yourself: You can click through and read the original

- Understand the reasoning: Not just "the answer is X" but "the answer is X because the latest policy says..."

- Know what might be missing: What sources might contradict this answer?

Measure this with: "When the AI gives me an answer, I can see where it came from and verify it myself."

Implement this by:

- Always showing citations and source links

- Building the ability to export full source context, not just summaries

- Training people to never trust the AI's summary alone

- Documenting which sources the AI doesn't have access to

- Providing audit trails showing how conclusions were reached
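Source integrity is easier to guarantee if an uncited answer can't be constructed in the first place. This sketch makes citations mandatory and bundles sources, reasoning, and known gaps into an audit record. The `Source` and `CitedAnswer` types and the example URL are hypothetical. How you actually retrieve sources depends on your assistant.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    title: str
    url: str
    excerpt: str  # the passage the answer relies on


@dataclass
class CitedAnswer:
    text: str
    sources: list[Source]
    reasoning: str
    known_gaps: list[str] = field(default_factory=list)  # sources not searched

    def __post_init__(self) -> None:
        # An answer nobody can verify is rejected before it reaches the user.
        if not self.sources:
            raise ValueError("An answer must cite at least one source")

    def audit_record(self) -> dict:
        """Everything a reviewer needs to retrace how the answer was reached."""
        return {
            "answer": self.text,
            "reasoning": self.reasoning,
            "sources": [
                {"title": s.title, "url": s.url, "excerpt": s.excerpt} for s in self.sources
            ],
            "not_searched": list(self.known_gaps),
        }


if __name__ == "__main__":
    answer = CitedAnswer(
        text="Economy class is required for flights under six hours.",
        sources=[Source(
            title="Travel Policy, section 4",
            url="https://intranet.example.com/policies/travel#section-4",
            excerpt="Flights under six hours must be booked in economy.",
        )],
        reasoning="The latest travel policy sets cabin class by flight duration.",
        known_gaps=["Regional policy addenda are not indexed"],
    )
    print(answer.audit_record())
```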

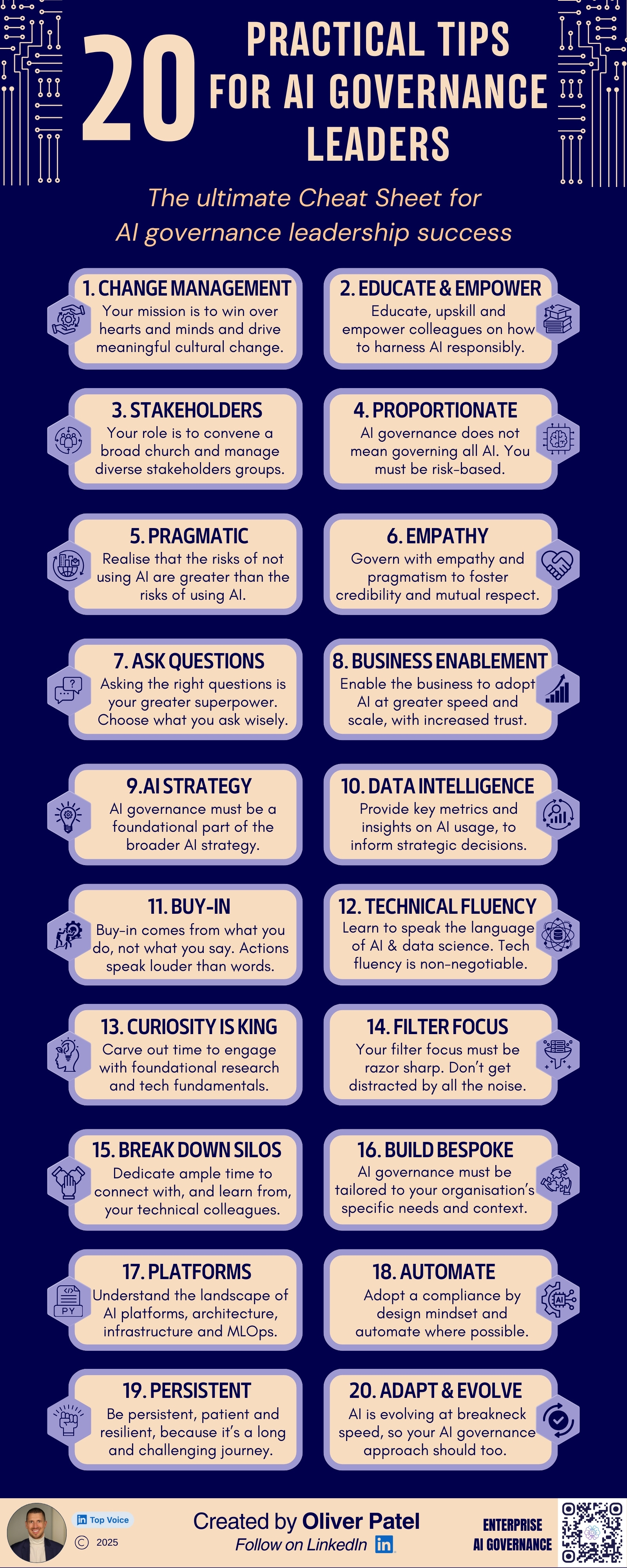

From Charter to Reality: The 60-Day Implementation Process

Knowing what your charter should say is one thing. Getting your organization to actually agree on it is another.

Most organizations try to build charters through committees. Endless workshops. Steering groups. Multiple rounds of feedback. By the time it's done, it's three months later, nobody remembers why they started, the document is 47 pages long, and nobody reads it.

Here's a better approach: a 60-day ratification process that gets stakeholders aligned without drowning in process.
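To turn the day ranges in this process into calendar dates you can put in invitations, a few lines of Python are enough. This sketch counts calendar days, with the start date as day 1. If your organization plans in business days, swap in a business-day calculation. The `schedule` helper is hypothetical.

```python
from datetime import date, timedelta

# The six phases of the 60-day process, as (first_day, last_day, name).
PHASES = [
    (1, 5, "Draft the baseline"),
    (6, 15, "Get input from the extreme users"),
    (16, 25, "Revise based on feedback"),
    (26, 40, "Share widely and collect feedback"),
    (41, 50, "Final revisions and stakeholder sign-off"),
    (51, 60, "Launch, train, and lock it in"),
]


def schedule(start: date) -> list[tuple[date, date, str]]:
    """Map day numbers to calendar dates, counting the start date as day 1."""
    return [
        (start + timedelta(days=first - 1), start + timedelta(days=last - 1), name)
        for first, last, name in PHASES
    ]


if __name__ == "__main__":
    for begin, end, name in schedule(date(2025, 3, 3)):
        print(f"{begin:%a %d %b} to {end:%a %d %b}: {name}")
```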

Days 1-5: Draft the Baseline

Don't ask for input yet. Write the first draft.

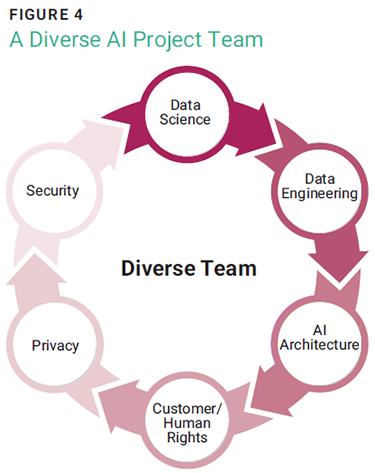

Gather three to five senior leaders (IT, HR, knowledge management, executive sponsor) and spend two days drafting the Purpose, Vision, and Success Standards. Use the examples above as templates. Don't overthink it. Get something on paper.

By day 5, you have a one-page document. It probably isn't perfect. That's fine.

Days 6-15: Get Input from the Extreme Users

Now test it with people who will actually live with it.

Identify three groups:

- Heavy users: People who live in the digital workplace every day

- Light users: People who barely use it

- Creators: People who publish content and maintain information

Do 15-minute interviews with five to seven people from each group. Don't ask "what do you think?" Ask specific questions:

- "Does this purpose statement match why you actually use this system?"

- "In your experience, what breaks trust most often?"

- "What would make you more confident using AI in this system?"

- "What would make you stop using it entirely?"

Take notes. Don't defend. Listen.

Days 16-25: Revise Based on Feedback

Go back to your drafting group. Incorporate feedback. Some patterns will emerge from the interviews:

- People agree on the purpose but not the wording

- Trust is broken by specific things (slow search, stale content, lack of transparency)

- AI concerns cluster around three or four specific worries

Revise the charter to address the most common friction points. Again, keep it to one page.

Days 26-40: Share Widely and Collect Feedback

Publish the revised charter to the full organization through your intranet, email, and an all-hands meeting. Frame it simply:

"This is what we believe about our digital workplace. It guides all our decisions. Do we have this right? What would you add or change?"

Create a simple online form (not a long survey, just two questions):

- "Does this match your experience?"

- "What's most important to you that we're missing?"

Set a deadline: "We're collecting feedback through [date]. After that, we're locking this in."

You'll probably get 200-400 responses if you communicate well. Look for patterns.

Days 41-50: Final Revisions and Stakeholder Sign-Off

Based on the patterns from your wider feedback, revise the charter one more time. Aim for a document that 80% of people will read and nod along with. You'll never get 100%.

Now get formal sign-off from:

- Your executive sponsor (the senior leader who owns this)

- Your CTO or Chief Information Officer

- Your Chief People Officer or HR leader

- Your General Counsel (because of data governance implications)

- One representative from each major department that uses the system

Not a committee. Individual sign-offs, recorded. Each person signs saying "Yes, this charter represents what we believe."

Days 51-60: Launch, Train, and Lock It In

Announce the charter like it's a big deal. Because it is.

All-hands meeting. The executive sponsor explains why this matters. Publish it everywhere: your intranet homepage, the office break room, every new hire's onboarding materials.

Run 30-minute training sessions for:

- Content creators and maintainers ("Here's how the charter affects what you publish")

- Managers ("Here's how the charter guides your team's behavior")

- Power users ("Here's how to advocate for changes if we're not meeting these standards")

Post a promise publicly: "We measure ourselves against these six standards every quarter. You'll see the results."

Then actually measure them quarterly. Use short pulse surveys aligned to each standard. Share results, even if they're bad. Explain what you're doing to improve. Repeat.

Trust and Transparency are rated as the most critical success standards for AI in digital workplaces, reflecting their foundational role in AI adoption. (Estimated data)

Measuring Success: Quarterly Charter Health Checks

A charter that sits on a shelf and never gets revisited is worthless. You need to measure it, publicly, and keep it alive.

Every quarter, run the same pulse survey, with one simple question for each of the six standards:

- Trust: "I trust the information I get from our digital workplace and AI assistant" (1-5 scale)

- Transparency: "I understand how our AI works and what data it uses" (1-5 scale)

- Augmentation: "The AI makes my work better; it doesn't threaten my job" (1-5 scale)

- Agency: "I feel in control of how I use the AI" (1-5 scale)

- Equity: "The AI is equally helpful to me as it is to others" (1-5 scale)

- Source Integrity: "When I get an answer, I can see where it came from" (1-5 scale)

Aim for 500-1,000 responses if you can, and segment them by:

- Role (engineers, marketers, finance, etc.)

- Department

- Tenure (new hires vs. long-time employees)

- Usage level (power users vs. light users)

Those segments will tell you who's struggling most. Heavy users might trust the AI; light users might not. Finance might feel the AI is equitable; frontline ops might feel excluded.

Publish the results. Show trends. Explain what you're doing to improve weak areas.
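Segmenting the results is a small aggregation job. This minimal sketch assumes each response is a dictionary with 1-5 scores keyed by standard, plus segment fields such as role or usage level. The field names and helpers are hypothetical. Averages from small segments are noisy, so check response counts before acting on a gap.

```python
from collections import defaultdict
from statistics import mean

STANDARDS = ["trust", "transparency", "augmentation", "agency", "equity", "source_integrity"]


def scores_by_segment(responses: list[dict], segment_key: str) -> dict:
    """Average 1-5 score per standard for each value of segment_key."""
    grouped: dict = defaultdict(lambda: defaultdict(list))
    for response in responses:
        segment = response[segment_key]
        for standard in STANDARDS:
            if standard in response:  # respondents may skip a question
                grouped[segment][standard].append(response[standard])
    return {
        segment: {standard: round(mean(vals), 2) for standard, vals in by_standard.items()}
        for segment, by_standard in grouped.items()
    }


def weakest(results: dict) -> tuple:
    """Return the (segment, standard, average) with the lowest score."""
    return min(
        ((seg, std, avg) for seg, by_std in results.items() for std, avg in by_std.items()),
        key=lambda item: item[2],
    )


if __name__ == "__main__":
    sample = [
        {"usage": "power", "trust": 5, "equity": 4},
        {"usage": "power", "trust": 4, "equity": 4},
        {"usage": "light", "trust": 2, "equity": 3},
    ]
    results = scores_by_segment(sample, "usage")
    print(results)
    print(weakest(results))
```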

The Shadow AI Problem: Why Charter Alone Isn't Enough

You've built a charter. You've aligned stakeholders. You've communicated it widely. Great.

But your shadow AI problem probably got worse, not better.

Why? Because a charter isn't a product. It's a promise. And people won't keep promises to systems they don't trust.

To actually combat shadow AI, you need to make your AI system so obviously trustworthy, transparent, and useful that it becomes the default, not the exception.

This means:

Make It Better Than the Alternatives

Shadow AI wins because it's easier than internal systems. ChatGPT is right there. It gives answers instantly. Your internal AI might be more accurate, but it's slower or harder to use.

Speed matters more than you think. If your AI takes three seconds to respond and ChatGPT takes one second, people will use ChatGPT. Fix this.

Usability matters more than accuracy. If your AI returns perfect answers but requires technical knowledge to use, people will use ChatGPT. Make it simpler.

Make Privacy and Security Obvious

Shadow AI spreads because people don't trust that internal systems are actually private. They paste data into public models because they assume "that's what everyone does."

Remove the assumption. Show them:

- Where data lives: Your AI queries stay in your cloud environment and are not sent to third parties

- What happens to queries: Logs are kept for 90 days for debugging, then deleted

- Who can see conversations: Only the person who asked the question, plus admins for security audits

- Compliance status: Which requirements your AI meets, such as GDPR, HIPAA, or SOC 2

Make these visible in the AI interface itself, not buried in a privacy policy. In your first interaction with the AI, show: "Your conversations are private. They're never used to train models or shared outside [Company]."
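If you promise a retention window, the deletion has to actually happen. This minimal sketch implements the 90-day example and assumes each query log entry carries a timezone-aware timestamp. The `purge_expired` helper is hypothetical. In practice, you would more likely rely on your storage platform's own lifecycle or retention rules.

```python
from datetime import datetime, timedelta, timezone

# The 90-day window from the example privacy commitment (an assumption).
RETENTION = timedelta(days=90)


def purge_expired(logs: list[dict], now: datetime, retention: timedelta = RETENTION) -> list[dict]:
    """Return only the query logs still inside the retention window.

    Each entry is assumed to carry a timezone-aware "timestamp". A scheduled
    job would run this daily and delete whatever it drops.
    """
    cutoff = now - retention
    return [entry for entry in logs if entry["timestamp"] >= cutoff]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    logs = [
        {"id": 1, "timestamp": now - timedelta(days=10)},
        {"id": 2, "timestamp": now - timedelta(days=120)},
    ]
    print([entry["id"] for entry in purge_expired(logs, now)])  # [1]
```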

Prohibit the Use of Public Models for Company Data

This is harsh but necessary. Include a policy in your charter:

"Using external AI services (ChatGPT, Claude, Gemini, Perplexity) to analyze, summarize, or ask questions about company data is prohibited. This includes pasting:

- Customer information

- Product roadmaps

- Code or technical architectures

- Financial data

- Strategy documents

- Meeting notes

First violation: training session. Second violation: disciplinary action.

If you find that someone is regularly violating this policy, it means your internal AI isn't meeting their needs. Fix the AI, don't just punish the person."

Make it a policy, but enforce it with technology:

- Monitor for patterns of people using external AI services for company work

- When you see a pattern, reach out to understand why

- Often, the answer is "the internal AI didn't work for my use case"

- Fix that use case
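The monitoring step can start from web proxy logs you may already collect. This sketch counts each user's visits to a hand-maintained list of external AI domains and returns the people worth a conversation, in line with the fix-the-use-case approach. The log format, the 20-visit threshold, and the domain list are assumptions, and you will need to keep the list current yourself.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative, hand-maintained list of external AI services (an assumption).
EXTERNAL_AI_DOMAINS = {"chatgpt.com", "openai.com", "claude.ai", "gemini.google.com", "perplexity.ai"}


def is_external_ai(url: str) -> bool:
    """True if the URL's host is a listed domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in EXTERNAL_AI_DOMAINS)


def outreach_list(proxy_log: list[tuple[str, str]], min_visits: int = 20) -> list[tuple[str, int]]:
    """Return (user, visits) for users at or above min_visits, most active first.

    proxy_log is assumed to be (user, url) pairs. The result is a list of
    people to ask "what didn't the internal AI do for you?", not a discipline list.
    """
    counts = Counter(user for user, url in proxy_log if is_external_ai(url))
    return [(user, n) for user, n in counts.most_common() if n >= min_visits]
```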

Build the AI Features People Actually Want

You might be building a generic AI assistant. That's fine for some use cases. But shadow AI spreads because people use it for specific things your internal system doesn't do well:

- Brainstorming and ideation

- Creative writing

- Code generation

- Data analysis and visualization

- Translating between technical and non-technical language

- Playing devil's advocate on strategy decisions

Your internal AI should do all of these things well. Better than ChatGPT. Because your AI has access to internal context and knowledge that ChatGPT doesn't.

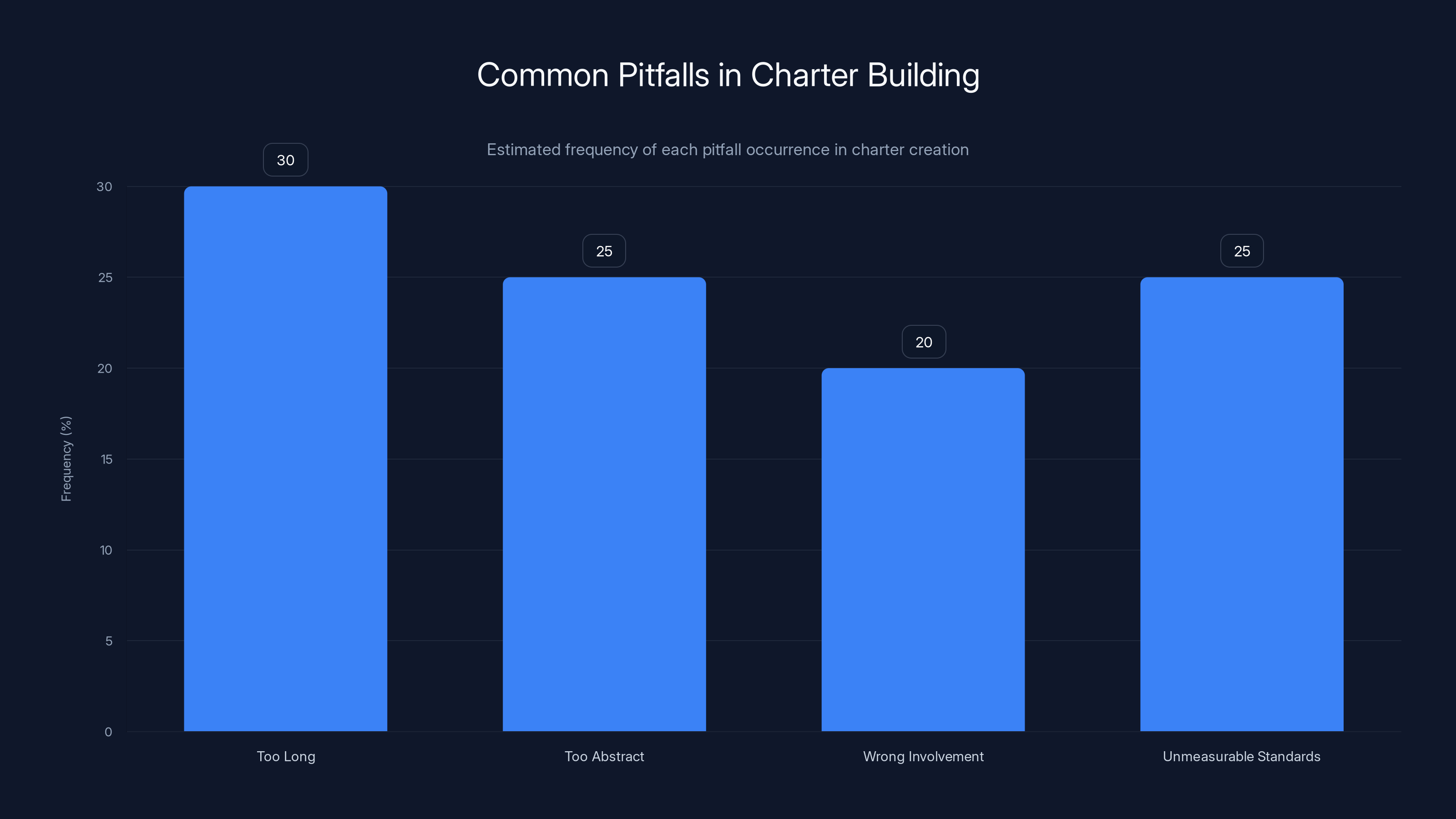

Estimated data shows that making charters too long and too abstract are the most common pitfalls, each occurring in approximately 25-30% of cases.

Real-World Case Study: The Enterprise That Got It Right

A mid-size tech company (about 3,000 people) launched an AI assistant across the organization. In the first month, adoption looked great: 65% of people logged in at least once.

But engagement was terrible. Weekly active users dropped to 12%. Exit interviews with departing employees revealed a pattern: people tried the AI once, got an answer that was vague or wrong, and switched back to Google or ChatGPT.

They didn't have a charter. They had a product, but no promise.

So they did something unusual. They paused rolling out new AI features. Instead, they spent six weeks building the charter we've described in this article. They defined six success standards, published them, and made a public commitment.

Then they rebuilt the AI from scratch, using the charter as a design guide.

The specific changes they made:

- Trust: Added a feedback button to every AI response. When someone clicked "this was wrong," it triggered a human review within 24 hours. They published weekly reports showing how often they got things wrong and what they were doing to fix it.

- Transparency: Added source citations to every answer. Not just "this came from the handbook" but links to the specific section. If the AI was uncertain, it said so explicitly: "I'm only 60% confident in this answer."

- Augmentation: Redesigned workflows to show the AI as a suggestion, with a human approving before action. For example, the AI could draft a termination letter, but an HR person had to review and approve before sending.

- Agency: Removed any mandatory integrations. If you wanted to use the AI, great. If you didn't, nothing broke. They made it optional but made it so good that 78% of people chose to use it within three months.

- Equity: Built templates and guided prompts, not just blank text boxes. Frontline workers (who didn't have time to become prompt engineers) could still get value. They also built Spanish language support from day one.

- Source Integrity: Created an audit trail showing how every major recommendation was reached. Not just "hire this person" but "hire this person because they match the job description in these five ways."

The results after three months:

- Daily active users jumped from 12% to 61%

- Engagement time went from 3 minutes per user per week to 23 minutes

- Shadow AI usage dropped significantly (tracked through network monitoring)

- Employees reported higher trust in the system

- The AI was used for higher-stakes decisions because people trusted it

The charter made the difference. The product was similar. The trust level was completely different.

Common Pitfalls and How to Avoid Them

Building a charter sounds simple. Getting it right is harder.

Pitfall 1: Making the Charter Too Long

If your charter is more than one page, it's not a charter anymore. It's a policy document. And nobody reads those.

Constraint forces clarity. When you have to fit your entire philosophy on one page, you can't include nice-to-haves. You're forced to identify the core things that matter.

If you're running over one page, delete something. Not "condense." Delete. Find the truly non-negotiable items and cut everything else.

Pitfall 2: Making It Too Abstract

Charters often fail because they're written in corporate jargon that means nothing.

"We will be an empowering digital ecosystem that leverages knowledge to drive enterprise transformation."

Vomit. Your employees will nod and ignore it.

Make it concrete and human:

"When you're working on a problem, you should be able to find the right information or person in two minutes, not twenty. If that's not happening, we've failed."

Specific. Testable. Human.

Pitfall 3: Not Involving the Right People in the Right Way

You need input from users, creators, and executives. But too much input becomes noise.

The approach in this article (extreme user interviews, then wide feedback collection with a deadline) balances these competing needs. You hear from people who actually live the system, and you hear from the organization broadly. But you set a deadline and move forward.

If you open the door to unlimited feedback, you'll collect feedback forever. Set a boundary.

Pitfall 4: Setting Success Standards You Can't Measure

If you can't measure it in a quarterly survey in under five minutes, it's not a success standard.

"Our digital workplace enables collaboration" is not measurable. "People can find their teammates' expertise and contact information in under one minute" is measurable.

Make your success standards specific enough that you can ask simple questions and get clear answers.

Pitfall 5: Not Updating the Charter When Reality Changes

Your charter isn't static. When your technology changes (you adopt a new AI, you decommission a legacy system), your charter might need to evolve.

Every year, revisit the charter. If nothing has changed, great. If something fundamental has shifted (your workforce is now 50% remote, you're using AI extensively, you're operating in new countries), update it.

This isn't a weakness. It's staying grounded in reality.

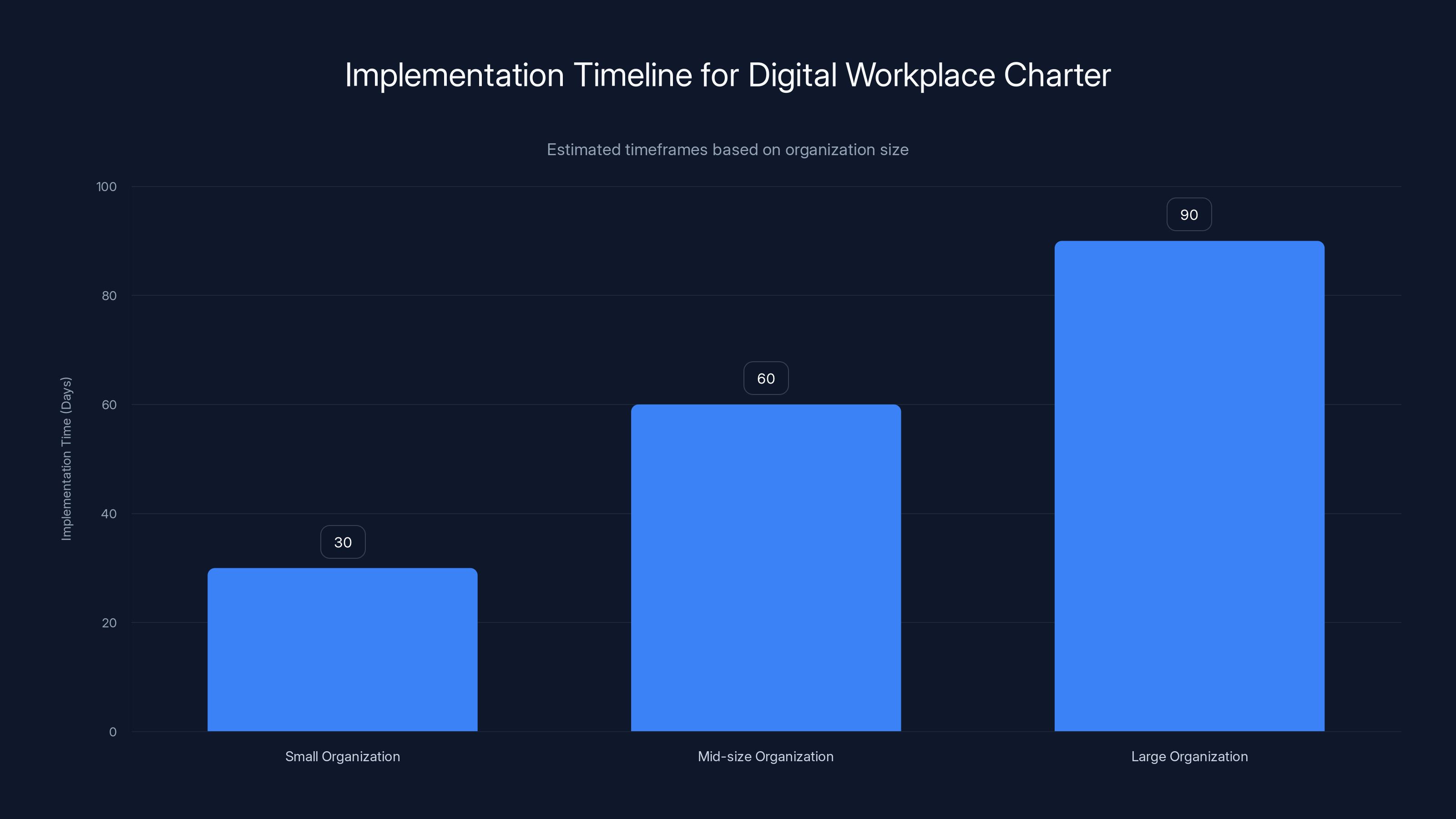

Estimated data: Smaller organizations can implement a digital workplace charter in about 30 days, while larger ones may take up to 90 days.

The Trust Multiplier: How Charters Affect Organizational Performance

Building a charter seems like governance work. It's not. It's a multiplier for organizational performance.

When employees trust the digital workplace, three things happen:

1. Knowledge Doesn't Walk Out the Door

When you have high trust in your digital systems, people document what they know. They share insights. They ask questions in shared channels instead of private messages.

Over time, your organization becomes smarter because it learns from itself. New hires can find answers from people who've solved the problem before. You don't reinvent solutions every six months.

The financial impact is hard to measure but substantial. Some knowledge-management studies report that organizations with high internal trust and good knowledge systems cut decision-making time by 40-50%.

2. You Attract Better People

Top talent wants to work in organizations with transparency and trust. When you publish your charter, you're saying "here's what we believe in, here's how we treat information, here's what you can expect."

Some people will self-select out. That's fine. But the people who stay become more committed because they feel aligned with the organization's values.

3. You Reduce Risk

When employees don't trust your systems, they create shadow systems. Shadow systems leak data. They violate compliance requirements. They put the organization at risk.

When employees trust your systems, they use them. And when everyone is using the same systems, you can audit, monitor, and control what happens.

A clear charter that emphasizes transparency and privacy makes this easier. Employees understand why policies exist. They follow them not because they're forced to, but because they trust that the policies protect everyone.

The Runable Advantage: Automation Meets Governance

Building a charter gives you the framework. Implementing it at scale requires tools that actually work.

This is where AI-powered automation platforms like Runable become essential. When you're managing a charter across thousands of employees, you need to automate the communication, measurement, and iteration.

Runable's AI-powered platform helps you:

- Generate charter documentation: AI agents can draft charter sections based on your organization's values, then you refine them. Save weeks of writing.

- Create onboarding materials: Automatically generate training documents, video scripts, and interactive guides that teach new hires the charter.

- Produce quarterly reports: Your pulse survey data comes in, and Runable generates dashboard visualizations, trend analysis, and recommendations. Instead of a three-week analytics project, you have actionable insights in a few hours.

- Maintain consistency: Use AI to ensure all communications about the charter are consistent in tone and message across channels.

Starting at just $9/month, Runable makes it practical to manage governance at scale. You're not asking analysts to spend weeks on charter reporting. You're asking AI to handle the routine work while your team focuses on strategy.

Use Case: Automate quarterly charter health reports and governance communications across your entire organization in hours, not weeks.


Building a Charter in a Distributed Organization

The approach we've outlined works well for co-located teams. But most organizations are now distributed across geographies, time zones, and work styles.

Here's how to adapt:

Asynchronous First

Don't expect people to show up at a meeting to "build the charter together." That only works for leaders in the same time zone.

Instead:

- Draft asynchronously: Your drafting group writes the first version and posts it with a 48-hour comment window

- Feedback is async: Use Google Docs or similar, not meetings. People add comments when they have time

- Revisions are published, not discussed: Post the new version with a note: "Here's what changed based on feedback. This is version 2. Comments due by Friday."

- Approve async: Send sign-off requests individually instead of requiring a committee meeting

- Train async: Create video training sessions people can watch on their own schedule

Translate (Actually)

If your organization is global, translate the charter into major languages from day one. Not with Google Translate. With real people who understand your culture and context.

This signals: "This charter matters to everyone, not just English speakers."

Build in 48-Hour Delays

When you set deadlines, account for time zones. "Feedback due by Friday 5pm ET" falls on Saturday morning across much of Asia-Pacific, after those teams' working week has already ended. Try "Feedback due by Monday 5pm UTC" so everyone has at least 48 hours from when they wake up.
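Before announcing a cutoff, it can help to see what it looks like on each office's clock. The sketch below is a minimal illustration under stated assumptions: the office list is hypothetical, UTC offsets are fixed at their January values (daylight saving time is ignored), "wake up" is approximated as 09:00 local time, and weekends are flagged rather than subtracted from the window.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical offices with fixed UTC offsets as they stand in January.
# Assumption: daylight saving time is ignored to keep the sketch dependency-free.
OFFICES = {"New York": -5, "London": 0, "Bangalore": 5.5, "Singapore": 8, "Sydney": 11}
MIN_HOURS = 48  # minimum review window after people first see the request


def next_local_morning(posted_utc: datetime, tz: timezone) -> datetime:
    """First 09:00 local time at or after the moment the draft is posted."""
    local = posted_utc.astimezone(tz)
    morning = local.replace(hour=9, minute=0, second=0, microsecond=0)
    return morning if morning >= local else morning + timedelta(days=1)


def review_report(posted_utc: datetime, deadline_utc: datetime) -> dict[str, dict]:
    """Per office: local deadline, weekend flag, and hours from first local morning."""
    report = {}
    for office, offset in OFFICES.items():
        tz = timezone(timedelta(hours=offset))
        local_deadline = deadline_utc.astimezone(tz)
        hours = (deadline_utc - next_local_morning(posted_utc, tz)).total_seconds() / 3600
        weekend = local_deadline.weekday() >= 5  # Monday=0 ... Saturday=5, Sunday=6
        report[office] = {
            "local_deadline": local_deadline.strftime("%a %H:%M"),
            "weekend": weekend,
            "hours": hours,
            "ok": hours >= MIN_HOURS and not weekend,
        }
    return report


if __name__ == "__main__":
    posted = datetime(2025, 1, 8, 14, 0, tzinfo=timezone.utc)  # a Wednesday
    cutoffs = {
        "Friday 5pm ET": datetime(2025, 1, 10, 22, 0, tzinfo=timezone.utc),
        "Monday 5pm UTC": datetime(2025, 1, 13, 17, 0, tzinfo=timezone.utc),
    }
    for label, deadline in cutoffs.items():
        print(label)
        for office, row in review_report(posted, deadline).items():
            print(f"  {office:<10} {row['local_deadline']}  {row['hours']:5.1f}h  ok={row['ok']}")
```

With a draft posted Wednesday at 14:00 UTC, the Friday 5pm ET cutoff lands on Saturday morning in Bangalore, Singapore, and Sydney and gives London only 37 hours, while the Monday 5pm UTC cutoff clears every office. For real scheduling, Python's standard `zoneinfo` module handles daylight saving transitions properly.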

Updating Your Charter as AI Evolves

AI is changing rapidly. Your charter framework probably won't change, but the specific success standards might need to evolve as new capabilities emerge.

Every six months, ask:

- Are there new AI risks we didn't anticipate (like the rise of AI-generated images and deepfakes)?

- Are there new opportunities we're not taking advantage of?

- Have our success standards become outdated?

- Are there new regulations we need to account for?

You don't need to rewrite the whole charter. But be willing to update the language around specific standards.

Example: If your organization starts using AI to generate imagery, you might need to add standards around:

- AI Authenticity: Clearly labeling AI-generated images so people know what's real

- Bias in Visuals: Auditing generated images to ensure they're diverse and don't encode stereotypes

These weren't relevant six months ago. Now they might be critical.

FAQ

What if we already have a digital workplace charter?

Your existing charter probably covers Purpose, Vision, and Success Standards but doesn't address AI-specific concerns. The fastest path forward is to treat your existing charter as the foundation and layer the six AI-specific success standards on top. Schedule a 90-minute working session with your leadership team to add the new standards, get their sign-off, and re-communicate to the organization. You don't need to start from scratch.

How long does it really take to implement this process?

The 60-day timeline in this article is realistic for a mid-size organization (1,000-5,000 people). If you're smaller, you might compress it to 30 days. If you're larger with complex governance, it might stretch to 90 days. The key is setting a clear deadline and sticking to it. A perfect charter delivered in 180 days is worse than a pretty-good charter that's actually implemented in 60.

What if our executive team doesn't support this?

Then you have a bigger problem than the charter. A charter only works if leadership genuinely believes in it. If your executive team sees this as an HR project or a checkbox exercise, it will fail. You need at least one senior executive (CTO, CEO, Chief People Officer) who will champion this as core to how the organization operates. If you don't have that, start there, not with the charter.

Can we build a charter for just one department or team?

Yes, absolutely. If you're leading engineering, you can build a charter for engineering's digital workplace and information systems. This is actually a great place to start. You'll learn what works and what doesn't on a smaller scale, then you can pitch rolling it out organization-wide. The department-level approach lets you move faster because you have fewer stakeholders.

How do we handle disagreement about what success looks like?

Disagreement is normal and healthy. Use the process in this article (extreme user interviews, wide feedback collection) to surface where disagreement exists. Often, disagreement comes from different mental models, not from fundamentally different values. In the interviews and feedback phase, you'll hear patterns. Some people care deeply about speed, others care deeply about accuracy. Both matter. Your charter should articulate that both matter, and set targets for both. Success standards can coexist.

What metrics should we track to measure if the charter is working?

Beyond the quarterly pulse surveys on the six standards, track: adoption rates (% of active users), engagement (time spent, features used), shadow AI usage (if possible to measure), employee sentiment (for example, an employee Net Promoter Score), and knowledge base contribution (% of people who add or update content). If adoption is growing, engagement is healthy, and people are actively contributing to shared knowledge systems, your charter is working.
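If you want these numbers computed the same way every quarter, a few lines of code are enough. This is a minimal sketch under assumptions: the `QuarterSnapshot` fields are hypothetical names for data you would export from your own analytics, the employee Net Promoter Score (eNPS) uses the standard formula (percentage of 9-10 answers minus percentage of 0-6 answers on a 0-10 "how likely are you to recommend" question), and engagement and shadow AI usage are left out because how you measure them depends on your tooling.

```python
from dataclasses import dataclass


@dataclass
class QuarterSnapshot:
    """One quarter of hypothetical numbers exported from your own analytics."""
    headcount: int
    active_users: int            # people who used the sanctioned AI tools
    contributors: int            # people who added or updated knowledge-base content
    recommend_scores: list[int]  # 0-10 answers to "How likely are you to recommend...?"


def pct(part: int, whole: int) -> float:
    """Percentage, guarding against an empty denominator."""
    return 100.0 * part / whole if whole else 0.0


def enps(scores: list[int]) -> float:
    """Employee NPS: % promoters (9-10) minus % detractors (0-6), range -100..100."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return pct(promoters, len(scores)) - pct(detractors, len(scores))


def summarize(q: QuarterSnapshot) -> dict[str, float]:
    """The three charter-health metrics for one quarter."""
    return {
        "adoption_pct": pct(q.active_users, q.headcount),
        "contribution_pct": pct(q.contributors, q.headcount),
        "enps": enps(q.recommend_scores),
    }


def trending_up(prev: QuarterSnapshot, curr: QuarterSnapshot) -> dict[str, bool]:
    """Whether each metric improved from the previous quarter to this one."""
    before, after = summarize(prev), summarize(curr)
    return {name: after[name] > before[name] for name in after}


if __name__ == "__main__":
    q1 = QuarterSnapshot(1000, 420, 90, [10, 9, 8, 7, 6, 3, 9, 10])
    q2 = QuarterSnapshot(1000, 510, 130, [10, 9, 9, 8, 7, 6, 10, 9])
    print(summarize(q1), summarize(q2))
    print(trending_up(q1, q2))
```

In the sample data, a hypothetical 1,000-person company moving from 420 to 510 active users sees adoption rise from 42% to 51%. `trending_up` is one way to express the rule of thumb above in code: if every metric is rising quarter over quarter, the charter is probably doing its job.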

What if shadow AI is already entrenched in our culture?

Then you have an adoption and trust problem, not a technology problem. The charter and the process in this article directly address this. Start by understanding why people use shadow AI. Usually it's because your internal system is slower, less capable, or feels less safe. The charter forces you to address those root causes directly. Then make your internal system so obviously better that it becomes the default. You probably won't eliminate shadow AI completely. But you can get to a point where it's rare and limited to specific use cases.

Can this process work for organizations without much existing AI infrastructure?

Absolutely. In fact, it might be easier because you're not retrofitting trust onto existing systems that might already be broken. Start with the charter and six success standards, then use them to design your AI systems from the ground up. This way, trust and transparency are baked in from the beginning, not added as an afterthought.

Conclusion: Trust Is the Competitive Advantage

You've heard a lot of hype about AI. Efficiency gains. Productivity improvements. Competitive advantages.

All true. But only if people trust the systems.

Without trust, you get shadow AI. You get institutional amnesia. You get employees doing the same work twice: once in your official systems and once in their personal systems. You don't get the promised productivity gains because the gains are hidden in the shadows.

With trust, something different happens. People consolidate their work into your official systems. They document what they learn. They ask questions in shared channels. Your organization becomes smarter over time.

Building trust doesn't require perfect technology or endless polishing. It requires clarity: a clear promise about what your digital workplace is for, how it works, and what it won't do. A promise you keep and measure publicly.

This is what a charter gives you.

The organizations winning in 2025 aren't the ones with the fanciest AI. They're the ones that said, out loud, "Here's what we believe. Here's what we promise. Here's how we'll prove it." And then they actually delivered.

Your charter doesn't have to be perfect. It has to be clear. It has to be real. And it has to be kept.

Start this week. Gather your drafting team. Spend a day writing the first version. Share it with a few extreme users. Iterate based on feedback. In 60 days, you'll have something your organization can rally behind.

That's when the real work begins: making your AI systems trustworthy enough to match the promise.

But you already know you need to do that. The real unlock is giving people permission to trust in the first place.

Resources for Building Your Charter

Template documents to get started:

Use the Purpose, Vision, and Success Standards examples from this article as starting points. Don't overthink the wording. Your first draft should take two hours, not two days.

For measuring quarterly health:

Build a simple Typeform or Google Form with eight questions (the six success standards plus overall satisfaction and likelihood to recommend). Aim for a response rate of at least 20% and segment your analysis by role and department.
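Once responses are exported, segmenting them is a simple group-and-average. The sketch below assumes each response is a dict with hypothetical `department` and `role` fields plus one score per success standard on a 1-5 scale; it covers only the six standards, and the remaining two questions could be averaged the same way.

```python
from collections import defaultdict
from statistics import mean

# The six success standards from this charter. Assumption: each is scored 1-5.
STANDARDS = ["Trust", "Transparency", "Augmentation", "Agency", "Equity", "Source Integrity"]
TARGET_RESPONSE_RATE = 0.20  # the 20% goal suggested above


def response_rate(responses: list[dict], invited: int) -> float:
    """Share of invited employees who answered the survey."""
    return len(responses) / invited if invited else 0.0


def scores_by_segment(responses: list[dict], segment: str) -> dict[str, dict[str, float]]:
    """Average score per standard, grouped by a field such as 'department' or 'role'."""
    grouped: dict[str, list[dict]] = defaultdict(list)
    for response in responses:
        grouped[response[segment]].append(response)
    return {
        group: {std: mean(r[std] for r in rows) for std in STANDARDS}
        for group, rows in grouped.items()
    }


def weakest_standard(segment_scores: dict[str, dict[str, float]]) -> dict[str, str]:
    """Lowest-scoring standard per segment (ties go to the first in STANDARDS order)."""
    return {group: min(avgs, key=avgs.get) for group, avgs in segment_scores.items()}


if __name__ == "__main__":
    # Hypothetical sample answers, listed in STANDARDS order.
    sample = [
        ("Engineering", "IC", [4, 3, 5, 4, 4, 2]),
        ("Engineering", "Manager", [5, 4, 4, 4, 5, 3]),
        ("Sales", "IC", [2, 2, 3, 3, 4, 3]),
    ]
    responses = [
        {"department": d, "role": r, **dict(zip(STANDARDS, s))} for d, r, s in sample
    ]
    rate = response_rate(responses, invited=12)
    print(f"Response rate: {rate:.0%} (target {TARGET_RESPONSE_RATE:.0%})")
    print(weakest_standard(scores_by_segment(responses, "department")))
```

Reporting the weakest standard per department gives the quarterly review a concrete starting point for "What needs to shift?", instead of a single company-wide average that can hide one struggling team.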

For training and communication:

Tools like Runable can help you generate training materials, presentation decks, and documentation at scale. This is particularly useful if you're rolling out the charter to thousands of people and need consistent messaging across multiple formats.

For ongoing governance:

Add charter reviews to your quarterly business reviews. Make it a standing agenda item with your leadership team. "Are we living up to our charter this quarter? What changed? What needs to shift?"

Once a year, do a deeper review with a broader stakeholder group. Revisit the six success standards. Ask: "Are these still the right standards? Do we need to add or remove anything based on how the world has changed?"

Trust isn't built once. It's maintained continuously. Your charter is the framework. Your quarterly reviews keep it alive.

Key Takeaways

- Shadow AI is widespread (78% use, 80% bring their own tools, 60-70% admit to shadow AI use), but it's not an adoption problem; it's a trust problem that requires explicit governance.

- A one-page charter defining Purpose, Vision, and six AI-specific success standards (Trust, Transparency, Augmentation, Agency, Equity, Source Integrity) is the foundation for building trustworthy AI workplaces.

- The 60-day ratification process (draft → extreme user feedback → revise → wide feedback → sign-off → launch) prevents endless committees and gets stakeholders aligned efficiently.

- Quarterly pulse surveys on the six success standards create accountability and show employees leadership is serious about maintaining trust, not just launching a product.

- Organizations with explicit charters see 5x adoption increases and eliminate most Shadow AI usage by making internal systems more trustworthy than personal alternatives.
