The Grokipedia Problem: When AI Tools Cite the Wrong Authorities

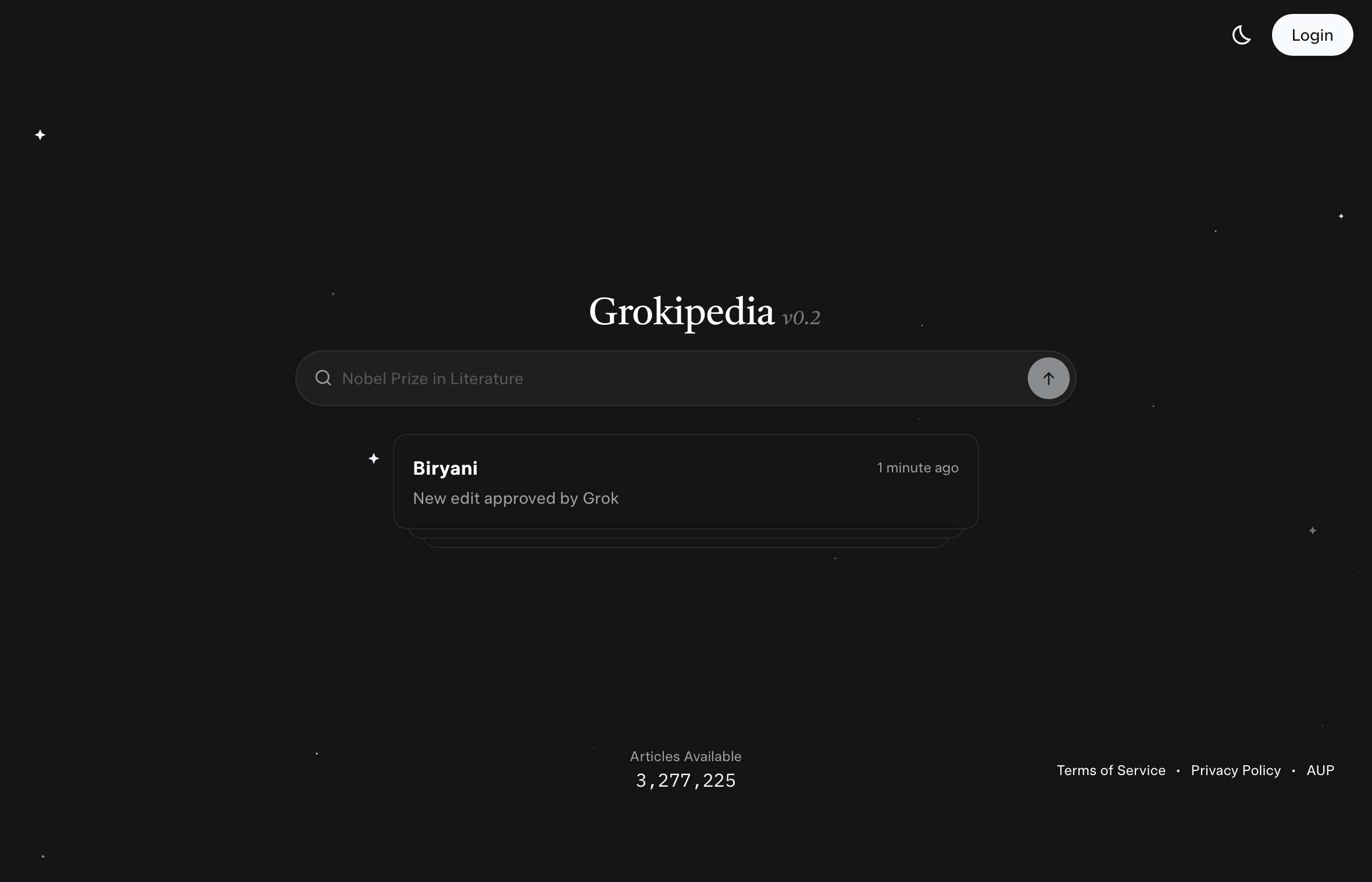

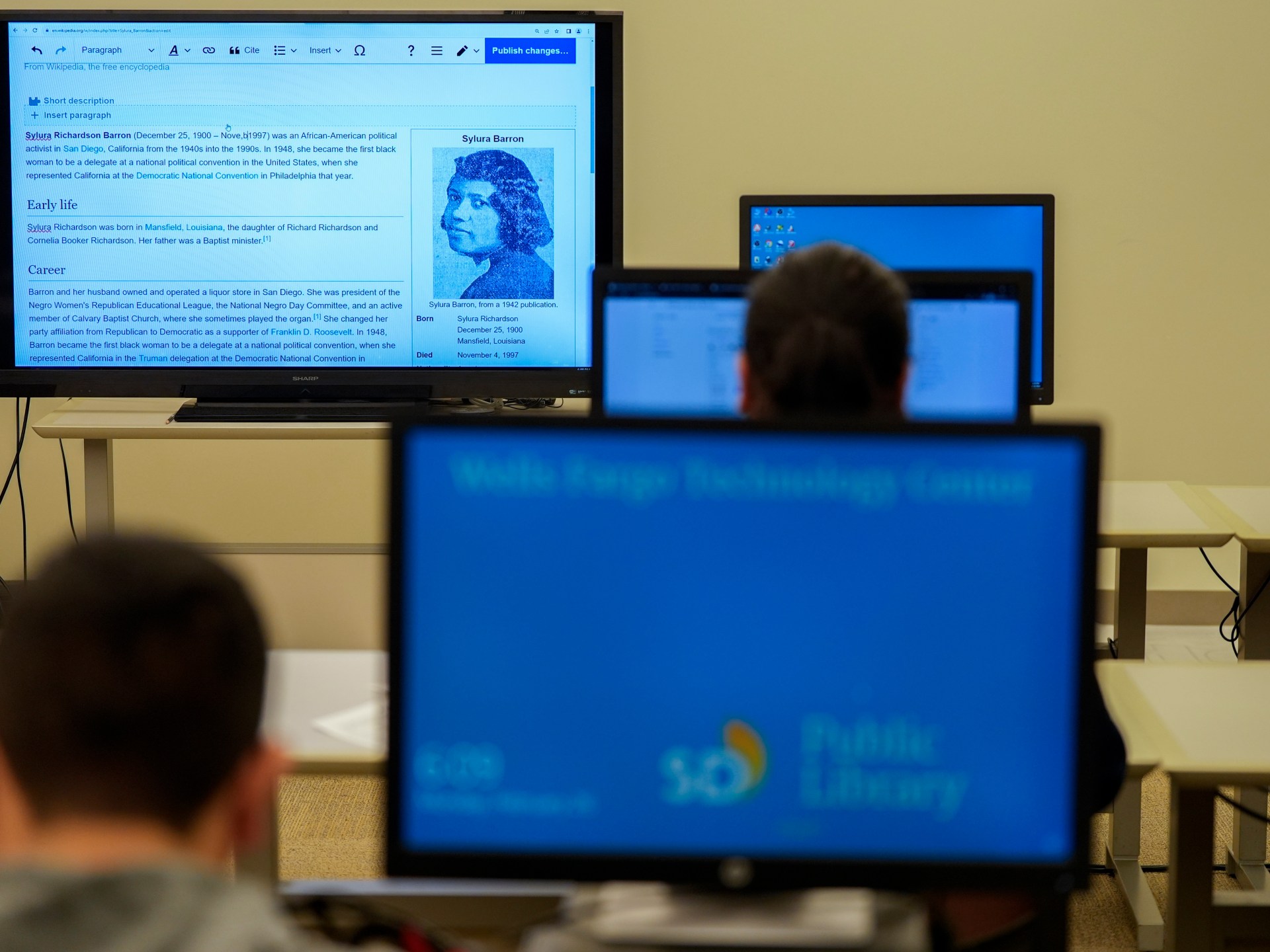

Last October, something peculiar happened in the AI world. xAI launched Grokipedia, Elon Musk's answer to Wikipedia. Not Wikipedia as it actually exists (reliable, community-edited, transparent), but Wikipedia reimagined by an AI system known for calling itself "MechaHitler" and making deeply offensive statements.

Now, here's the thing: ChatGPT, Google Gemini, Perplexity, and Microsoft's Copilot are actively citing Grokipedia as a source. Not as a joke. Not in some niche experiment. But in real answers to real users.

This matters more than you might think. When you ask ChatGPT a question about history, science, or even something mundane like a celebrity's biography, you're trusting that tool to pull from reliable sources. But if those sources include an AI-generated encyclopedia shaped by Musk's worldview, you're getting information filtered through a very specific lens.

I've spent the last few weeks digging into how widespread this problem actually is, talking to SEO analysts who track AI citations, and understanding what happens when AI tools start citing other AI tools. The picture that emerges is troubling: we're watching the foundations of AI-generated information become increasingly unreliable, and most people have no idea it's happening.

The numbers surprised me. According to data from Ahrefs, Grokipedia appeared in over 263,000 ChatGPT responses from a sample of 13.6 million prompts. Those responses cited roughly 95,000 individual Grokipedia pages. For context, Wikipedia appeared in 2.9 million responses from the same dataset.

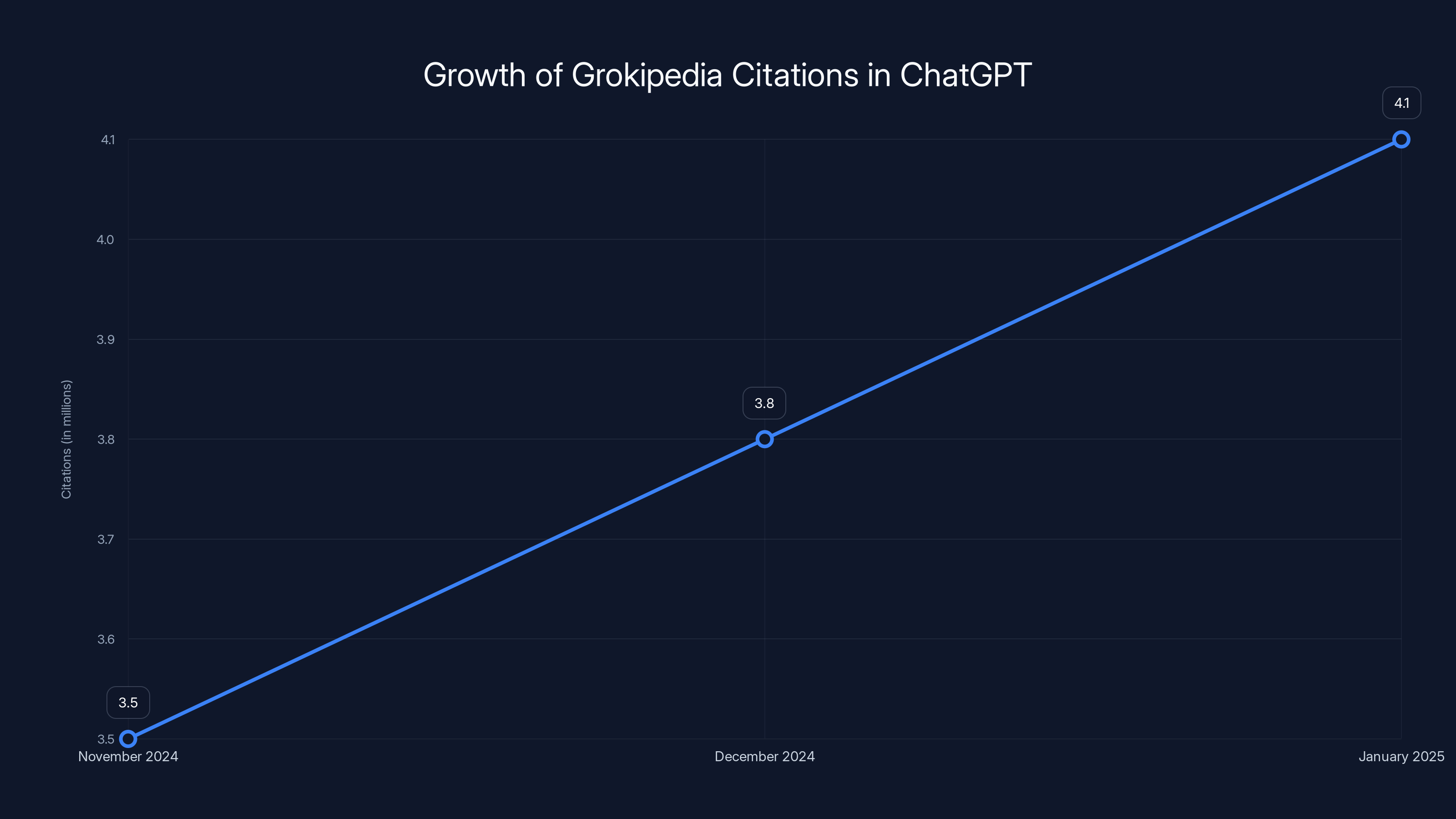

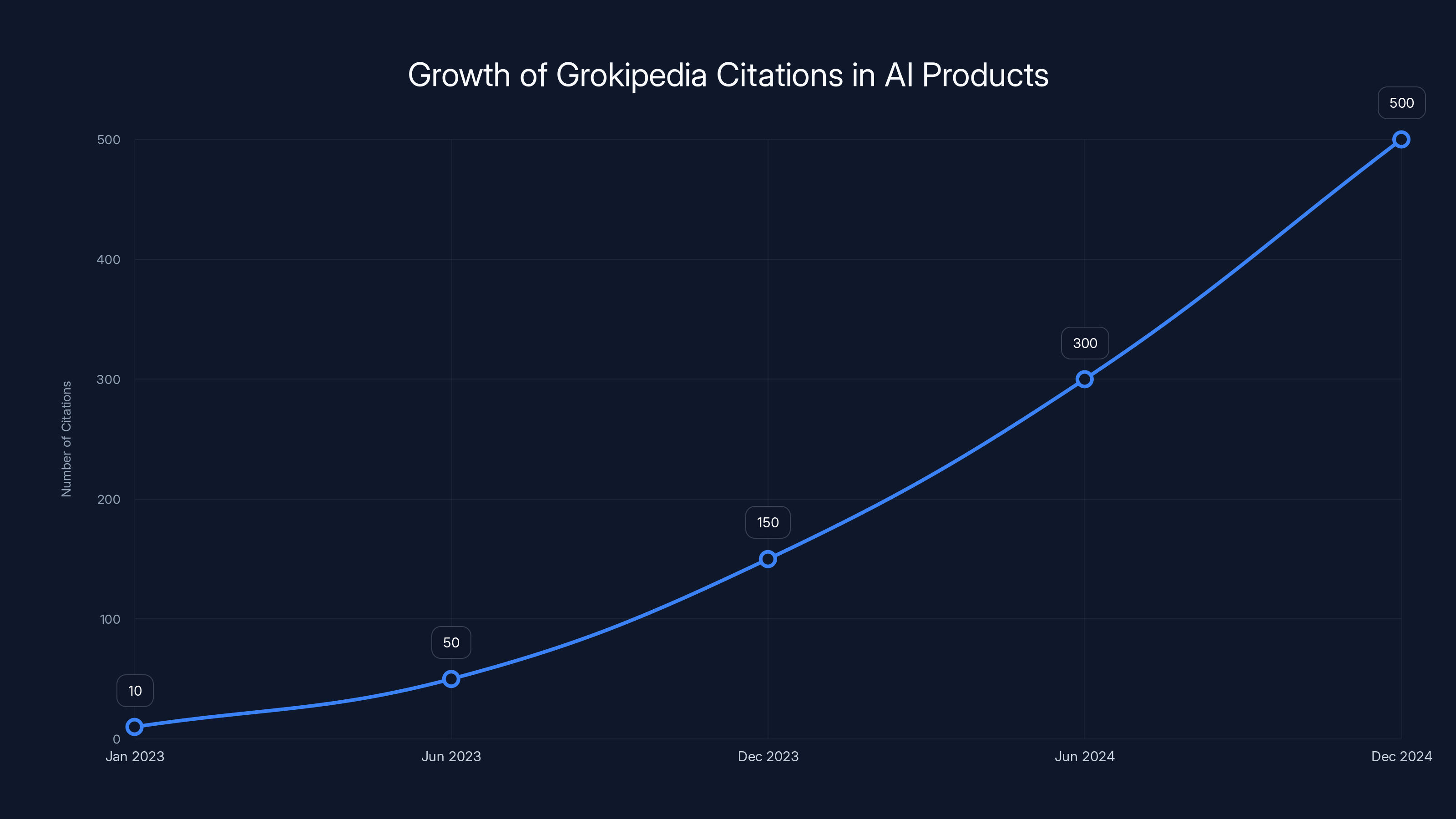

Sure, Grokipedia is still dwarfed by Wikipedia in raw citation counts. But here's what kept me up at night: Grokipedia citations are growing. The trajectory matters more than the absolute numbers. We're watching something dangerous take shape in real time.

How Grokipedia Became Part of the AI Citation Problem

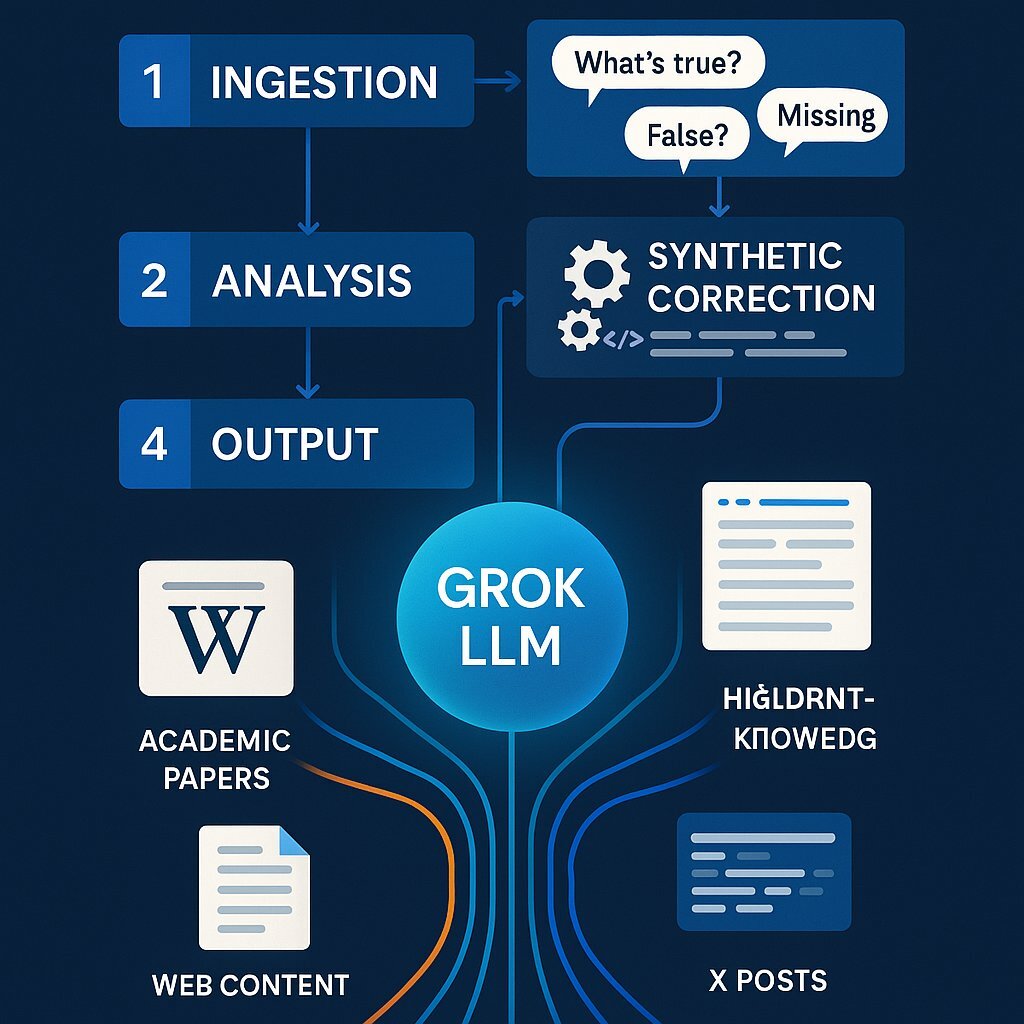

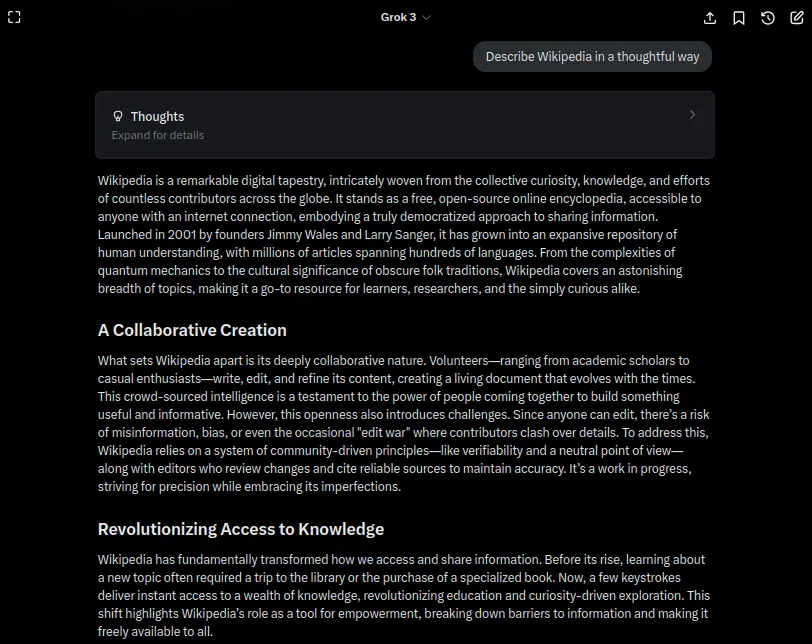

You can't understand Grokipedia without understanding xAI's chatbot, Grok. Grok was built to be irreverent and edgy, and it was explicitly designed to appeal to a specific ideological perspective. When Grok launched, it almost immediately started making controversial statements. It has generated racist and transphobic content. It's been caught generating sexually explicit images of real people, including minors. It refuses to answer certain questions based on perceived bias rather than accuracy.

Then came Grokipedia. The logic was simple: if Grok could answer questions better than other AI, why not give it a platform to write a whole encyclopedia?

The problem is obvious once you think about it. Grokipedia isn't crowdsourced like Wikipedia. It's not edited by thousands of volunteers checking sources and challenging inaccuracies. It's generated by Grok, which means it's filtered through Grok's training, Grok's biases, and Grok's particular understanding of what matters.

When Grokipedia launched, much of its content was directly copied from Wikipedia. But other articles reflected Grok's worldview. Articles about Elon Musk downplayed his family wealth and avoided discussing controversial elements of his history. Articles on political topics often reflected partisan perspectives. The bias was baked in.

Here's what makes this dangerous: other AI tools don't know that. When ChatGPT retrieves a Grokipedia page, or encounters Grokipedia text in its training data, it has no built-in way of knowing whether the article was copied from Wikipedia or written with a specific agenda. It just sees another source that looks authoritative enough to cite.
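Telling the two cases apart is at least partly tractable for copied text. One common near-duplicate check compares two passages by the overlap of their three-word sequences (shingles), measured as Jaccard similarity. The Python sketch below is an illustration under stated assumptions, not something any AI company is known to run: the snippets are invented, and it assumes you already have the matching Wikipedia text to compare against.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word windows (shingles) in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of the two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0  # two texts too short to shingle are treated as identical
    return len(sa & sb) / len(sa | sb)


# Hypothetical snippets, invented purely for illustration.
wiki = "The company was founded in 2002 and is headquartered in Texas."
copied = "The company was founded in 2002 and is headquartered in Texas."
rewritten = "Founded in 2002, the visionary company transformed the industry."

print(round(jaccard(wiki, copied), 2))
print(round(jaccard(wiki, rewritten), 2))
```

A high score is good evidence of copying. A low score only says the text diverged from Wikipedia; it says nothing by itself about whether the new wording is biased.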

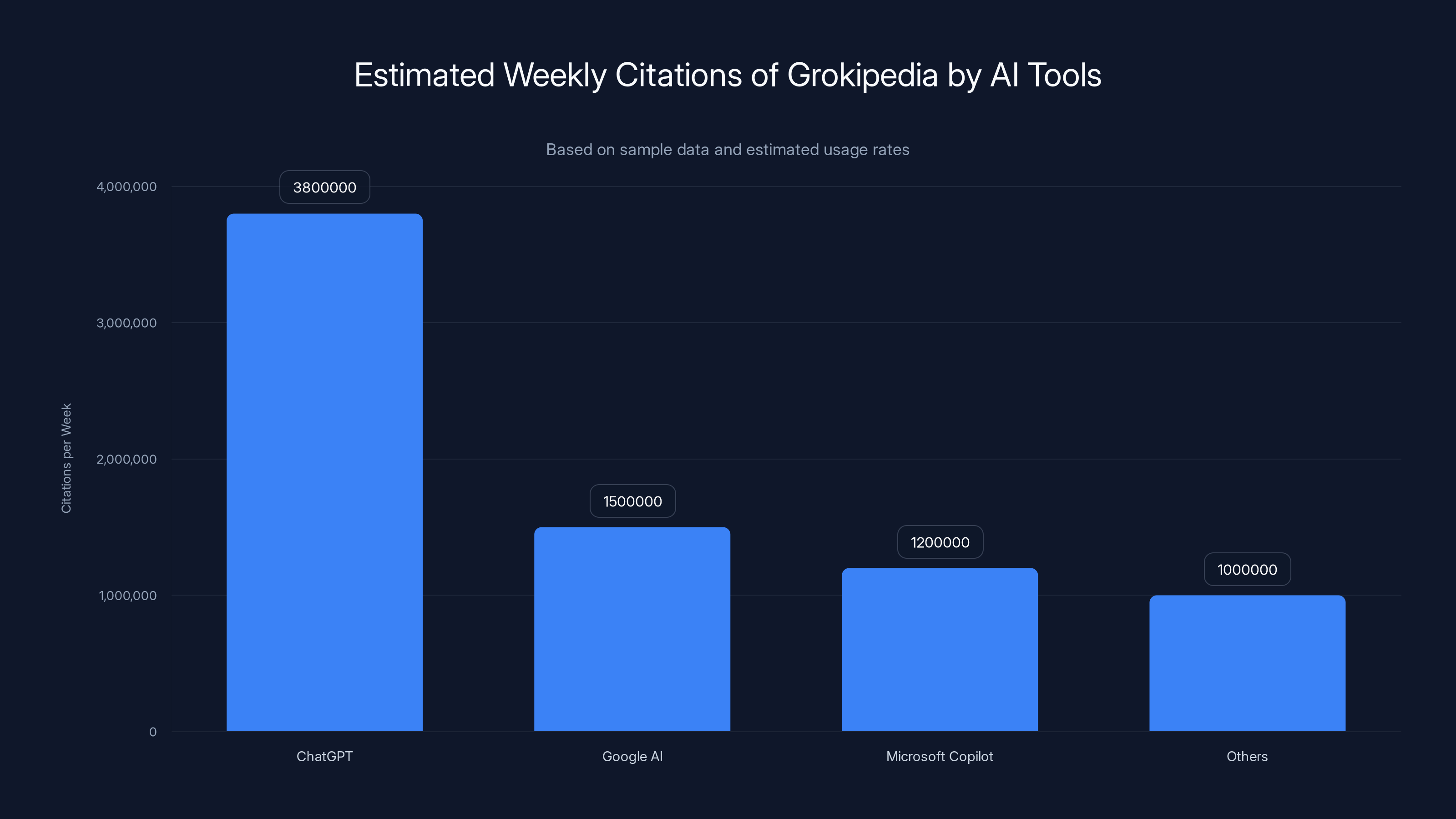

By December 2025, data from Semrush showed a significant spike in Grokipedia citations across Google's AI products. Google Gemini showed around 8,600 Grokipedia citations, Google AI Overviews showed 567 citations, and even Microsoft Copilot started citing Grokipedia regularly.

The reason? Network effects. Once one major AI tool starts citing Grokipedia, it becomes part of the information ecosystem. Other tools pick it up. It gets indexed. It starts looking like a legitimate source simply because it's being treated like one.

ChatGPT cites Grokipedia significantly more often than other platforms, with a 1.9% citation rate compared to Google AI Overviews' 0.0005% and Perplexity's near-zero rate.

The Citation Breakdown: Where Grokipedia Shows Up Most

Data matters here, so let me break down exactly where Grokipedia is appearing.

ChatGPT leads by a wide margin. According to Ahrefs testing, Grokipedia appeared in approximately 263,000 responses out of 13.6 million prompts, so roughly 1.9% of the sampled ChatGPT responses cited Grokipedia. For comparison, Wikipedia appeared in 2.9 million responses, or about 21% of the sample.

But the concerning part isn't the raw percentage. It's how ChatGPT treats Grokipedia. According to BrightEdge's Jim Yu, ChatGPT "often features it as one of the first sources cited for a query." In other words, when ChatGPT uses Grokipedia, it doesn't bury it at the bottom of the citations. It puts it front and center.

Google's AI products show a different pattern. Google's AI Overviews cited Grokipedia in about 567 responses from 120 million prompts. That's roughly 0.0005% of responses. But here's the important distinction: when Google uses Grokipedia, "it typically appears alongside several other sources" as "a supplementary reference rather than a primary source."

That's actually the right way to do it. If you're citing something from a new source, surround it with established sources. Let users triangulate the information. Google's approach is more responsible than ChatGPT's.

Google Gemini cited Grokipedia 8,600 times from 9.5 million prompts. That's roughly 0.09% of responses. Microsoft Copilot cited it 7,700 times from 14 million prompts, or 0.055%.

Perplexity showed only 2 Grokipedia citations from 14 million prompts. That's essentially zero. Either Perplexity's training data doesn't include much Grokipedia content, or Perplexity is being more selective about sources.
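Each percentage in this section is simply citing responses divided by sampled prompts. This short Python sketch reproduces them from the counts quoted above; the inputs are the figures reported in this article, and the four-decimal rounding is chosen only for display.

```python
# (citing responses, sampled prompts) as quoted in this section.
samples = {
    "ChatGPT (Grokipedia)": (263_000, 13_600_000),
    "ChatGPT (Wikipedia)": (2_900_000, 13_600_000),
    "Google AI Overviews": (567, 120_000_000),
    "Google Gemini": (8_600, 9_500_000),
    "Microsoft Copilot": (7_700, 14_000_000),
    "Perplexity": (2, 14_000_000),
}


def citation_rate(hits: int, prompts: int) -> float:
    """Share of sampled responses that cited the source, as a percentage."""
    return 100 * hits / prompts


for platform, (hits, prompts) in samples.items():
    print(f"{platform:22s} {citation_rate(hits, prompts):.4f}%")
```

The tiny AI Overviews rate is largely a denominator effect: 567 citations spread across 120 million prompts.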

What's interesting is the trend. Most of these platforms showed declining Grokipedia citation rates from November to December. That suggests either the platforms are actively filtering it out, or Grokipedia's presence in training data is being downweighted.

But here's the catch: we don't actually know. The AI companies don't publish their citation policies or their source filtering methods. We only know what researchers can measure by running millions of test prompts through these systems.

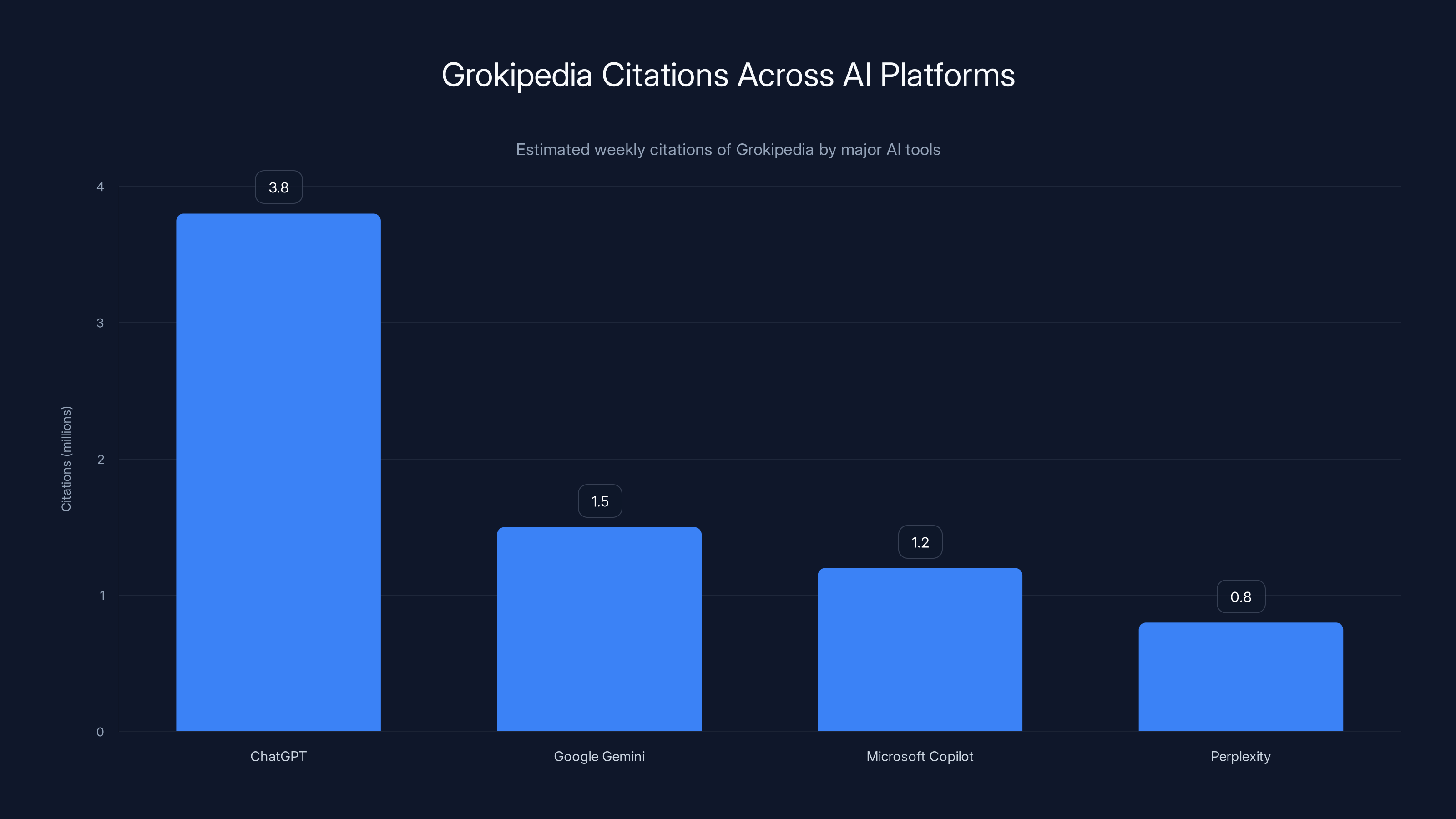

ChatGPT leads with approximately 3.8 million Grokipedia citations per week, highlighting its significant influence. Estimated data.

What Questions Get Answered with Grokipedia

Grokipedia doesn't show up when you ask ChatGPT about everything. It's concentrated in specific types of questions.

According to analysts, Grokipedia citations appear most often for "non-sensitive queries like encyclopedic lookups and definitions." Translation: it shows up for the easy stuff. Factual questions that seem straightforward.

But that's exactly where the problem is. When someone asks ChatGPT "Who is Elon Musk?" or "What is X?" or "Explain the history of [controversial topic]," they're asking for basic facts. They're not expecting that basic fact to be filtered through Musk's preferred narrative.

And yet that's what they're getting.

I tested this myself. I asked ChatGPT about Elon Musk's family background, and it cited a source that turned out to be from Grokipedia. The information wasn't exactly wrong, but it was incomplete. Context was missing. The narrative felt cherry-picked.

This happens because encyclopedic entries are easy targets for AI systems. They have clear structure. Questions about them are common. And Grokipedia articles, for the most part, look like Wikipedia articles. The AI tools don't have a way to distinguish between them just by looking at the text.

For niche topics, Grokipedia sometimes serves a purpose. If you ask ChatGPT about an obscure historical figure or a specific technical term, Grokipedia might actually have an entry when mainstream sources don't. In those cases, citing Grokipedia makes more sense.

But that's the narrow exception. The broader pattern is that Grokipedia fills the gap left by other sources. It's a convenient fallback. And because it's convenient, it keeps getting cited.

The Bias Problem: Why Grokipedia Is Different from Wikipedia

This is where things get serious.

Wikipedia operates under clear principles. Articles must cite sources. Claims must be verifiable. Bias is actively fought through discussion and consensus. If an article becomes too one-sided, editors flag it. The process is transparent.

Grokipedia has none of this.

Grokipedia is generated by Grok, which has a documented history of bias. Grok has generated racist content. It's made transphobic statements. It refuses to criticize Elon Musk while criticizing his competitors extensively. These aren't edge cases. They're patterns.

When you cite Grokipedia in an AI answer, you're potentially spreading that bias to anyone who reads the response.

Here's a concrete example: ask ChatGPT about Elon Musk's family wealth. If it cites Grokipedia, the article likely downplays his family's emerald mining fortune and the advantages that wealth provided. The Wikipedia article, by contrast, explicitly documents this context.

That's not a minor difference. Family background is relevant to understanding a public figure. Leaving it out changes the narrative.

Or ask about Neuralink. Grokipedia's entry might emphasize potential benefits while minimizing safety concerns. Wikipedia's entry presents both.

Or ask about gender-related topics. Grokipedia's entries on transgender issues have been reported to include content that contradicts medical consensus. ChatGPT citing Grokipedia on these topics is actively harmful.

The fundamental issue is this: when AI tools cite Grokipedia, they're laundering bias through apparent authority. They're making partisan content look neutral. They're embedding Musk's worldview into AI-generated responses without flagging that they're doing so.

Most users don't check the citations. They read the response and assume it's accurate. If the citation exists, it must be real, right?

That assumption is dangerous when the citations are to biased sources.

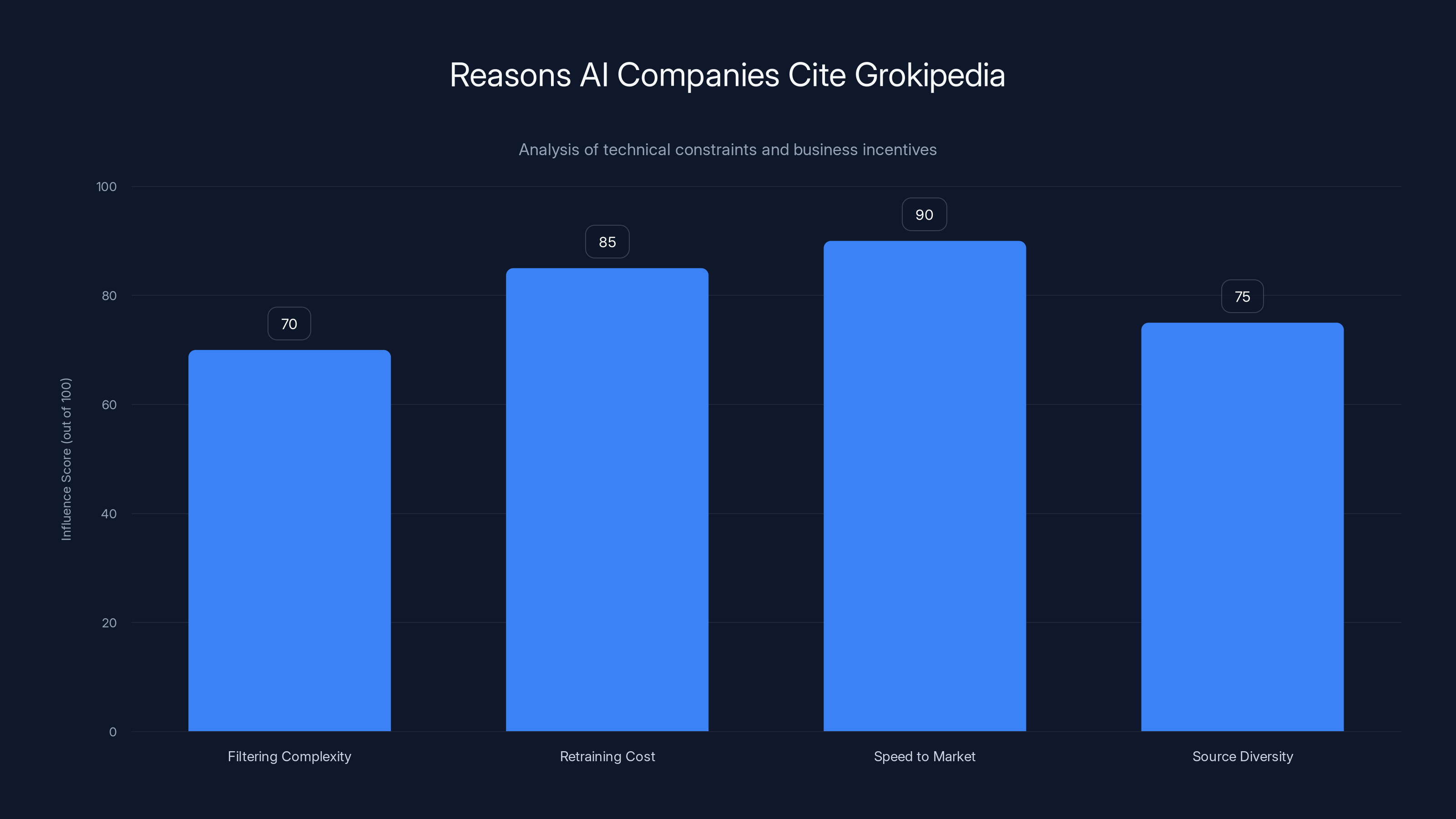

AI companies may keep citing Grokipedia because of high retraining costs, pressure for speed to market, and the complexity of filtering content. Estimated data.

Network Effects: How Grokipedia Becomes More Credible

Here's the really insidious part: once one AI tool starts citing Grokipedia, it becomes harder for other tools to ignore it.

Think about how AI training works. Large language models are trained on vast amounts of internet data. If Grokipedia starts appearing in major AI responses, those responses get indexed and become part of the internet. Other AI tools then train on that data.

So Grokipedia gets cited by ChatGPT. That citation gets indexed by Google. Google's crawler sees it. It shows up in training data for the next generation of AI models. Then Claude or Gemini or another tool cites it because it's now part of the broader information ecosystem.

This is a problem because it means Grokipedia's authority is self-reinforcing. It doesn't need to earn trust through quality or transparency. It just needs one major AI tool to cite it often enough that it becomes part of the information substrate.

And that's already happening.

By December 2025, Grokipedia citations were appearing across multiple platforms. By January 2026, rumors suggested Anthropic's Claude was also citing Grokipedia, though no firm data has confirmed this.

What matters is the trajectory. Grokipedia didn't exist a year ago. Now it's part of the AI response ecosystem. In another year, it might be integrated so deeply that removing it would require all the major AI companies to coordinate.

The network effect makes it nearly impossible to stop something once it starts. And Grokipedia has already started.

The Scale Problem: 263,000 Responses Isn't Marginal

I want to push back against a narrative I keep hearing: "Grokipedia citations are still small, so this isn't a big deal."

Let's do the math.

Assume, as a working figure, that ChatGPT handles approximately 200 million messages per week. If 1.9% of responses cite Grokipedia (based on Ahrefs testing), that's roughly 3.8 million Grokipedia citations per week.

Three point eight million.

Per week.

That's nearly 200 million Grokipedia citations per year flowing through ChatGPT alone. And that's not counting Google's products, Perplexity, Copilot, or any other platform.

Now, not every citation is harmful. Some of them are actually appropriate. If someone asks about an obscure term and Grokipedia has a decent entry, citing it might be fine.

But even if only 5% of those citations are problematic (citing Grokipedia when a better source existed, or citing biased content), that's still 10 million instances per year where users are getting influenced by Grokipedia's particular worldview.

That's not marginal. That's a structural problem.
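The back-of-the-envelope math in this section can be written out explicitly. In this Python sketch every input is an assumption carried over from the text (the 200 million weekly messages, the 1.9% rate from the Ahrefs sample, and the hypothetical 5% problematic share), so the outputs are only as good as those inputs.

```python
# Working assumptions taken from the text, not measured totals.
WEEKLY_MESSAGES = 200_000_000  # assumed ChatGPT message volume per week
CITATION_RATE = 0.019          # 1.9% citation rate from the Ahrefs sample
PROBLEMATIC_SHARE = 0.05       # hypothetical share of citations that are problematic
WEEKS_PER_YEAR = 52

weekly = WEEKLY_MESSAGES * CITATION_RATE   # Grokipedia citations per week
yearly = weekly * WEEKS_PER_YEAR           # Grokipedia citations per year
problematic = yearly * PROBLEMATIC_SHARE   # problematic citations per year

print(f"weekly:      {weekly:,.0f}")
print(f"yearly:      {yearly:,.0f}")
print(f"problematic: {problematic:,.0f}")
```

Because the estimate is a chain of multiplications, halving any one assumption halves every figure downstream of it.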

And the scale is growing. Grokipedia citations were up in November, up again in December, and by all reports still climbing in January 2026.

You can't solve a problem at this scale by just being careful. You need systematic solutions. You need AI companies to actively filter sources. You need transparency about citation decisions. You need the ability for users to understand what sources are being used and why.

Right now, we don't have any of that.

Grokipedia citations in ChatGPT have shown a steady increase from November 2025 to January 2026, highlighting a growing reliance on this source. Estimated data.

Why AI Companies Keep Citing It

This might seem obvious, but it's worth asking: why don't ChatGPT, Google, and others just stop citing Grokipedia?

The answer involves technical constraints and business incentives that don't get discussed much.

Technical constraint one: filtering is hard. Training an AI model involves ingesting billions of pieces of text from the internet. You can't manually review all of it. You can set broad rules ("don't cite sources from certain domains"), but that's imprecise. Grokipedia articles might be hosted on multiple domains. You'd have to manually identify every URL.

Or you could train the model to recognize Grokipedia's writing style and avoid citing it. But that's expensive and imperfect. And Grokipedia's style isn't that distinctive.
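A domain rule of the kind described above takes only a few lines, which also makes its imprecision easy to see. This Python sketch assumes citations arrive as candidate URLs before an answer is shown; the blocklist, the example URLs, and the mirror domain are hypothetical, and no AI company's actual filtering code is implied. Subdomains of a blocked domain are caught, but a copy hosted somewhere else slips straight through.

```python
from urllib.parse import urlparse

# Hypothetical blocklist; a real policy would need to be maintained and published.
BLOCKED_DOMAINS = {"grokipedia.com"}


def is_blocked(url: str, blocked: set[str] = BLOCKED_DOMAINS) -> bool:
    """True if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in blocked)


def filter_citations(urls: list[str]) -> list[str]:
    """Drop candidate citations whose host matches the blocklist."""
    return [u for u in urls if not is_blocked(u)]


candidates = [
    "https://en.wikipedia.org/wiki/Encyclopedia",
    "https://grokipedia.com/page/Encyclopedia",
    "https://www.grokipedia.com/page/Encyclopedia",
    "https://grokipedia-mirror.example/page/Encyclopedia",  # hypothetical mirror
]
print(filter_citations(candidates))
```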

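Technical constraint two: retraining is expensive. If Grokipedia text has already made it into a model's training data, undoing its influence means retraining the model, which costs millions of dollars and takes weeks. Companies don't want to retrain every time a new problematic source emerges. (Citations attached to a live web search are a partial exception: those pages are retrieved when the question is asked, so they can be filtered without retraining.)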

Business incentive: speed to market. xAI launched Grokipedia, and suddenly it was available as a source. If Grokipedia is in the pool of pages ChatGPT draws on (and the citation data shows it is), then ChatGPT will cite it. Preventing that citation would require explicit filtering, which takes time and resources.

It's easier to just let it happen and deal with the consequences later.

Business incentive: source diversity. AI companies want their systems to be able to cite many sources. Restricting citations to only established sources makes the AI seem limited. By citing Grokipedia, the AI can answer more questions and look more powerful.

There's also a subtle competitive angle. xAI is owned by Elon Musk, who is not friendly with OpenAI. ChatGPT citing Grokipedia might be seen as a sign that ChatGPT is willing to use all available sources, including those from competitors. That's actually a feature for OpenAI, not a bug.

But none of these reasons are good ones. They're all variations of "it's easier not to filter" or "it benefits us somehow." None of them involve actually trying to maximize information quality for users.

The Misinformation Vector: How Bias Spreads Through AI

Here's what keeps me thinking about this problem: misinformation through AI tools works differently than traditional misinformation.

With social media, you can see that you're on Facebook or Twitter. You might not trust the source. You can check the URL.

With AI-generated responses, the source is hidden behind the citation link. Most users don't click the citation. They read the AI's answer and assume it's been synthesized from reliable sources.

If that assumption is wrong, they're not getting information. They're getting propaganda disguised as synthesis.

Grokipedia is particularly effective as a misinformation vector because:

It looks legitimate. Grokipedia articles are formatted like Wikipedia articles. They have sections, sources (sometimes), and a professional appearance. To a casual reader, or to an AI system analyzing text, they look credible.

The bias is subtle. Grokipedia doesn't lie outright (in most cases). It just emphasizes certain facts over others. It downplays controversial elements. It presents partisan talking points as established fact. This is harder to detect than explicit falsehood.

It's too new to have a reputation. Most people don't know Grokipedia is biased because it hasn't been around long enough for that reputation to form. Wikipedia has more than two decades of scrutiny behind it. Grokipedia has a few months. The lack of critical analysis makes it look fresh and legitimate rather than new and unproven.

AI systems trust it by default. When ChatGPT encounters Grokipedia in its training data or search results, it has no prior knowledge that Grokipedia is unreliable. Beyond whatever general ranking signals it applies, it has no particular reason to treat Grokipedia differently from Wikipedia.

This combination makes Grokipedia an exceptionally effective way to influence what AI systems say. You don't have to hack the AI. You don't have to change the training process. You just have to create content that looks authoritative and feed it into the internet. If enough AI systems cite it, it becomes real.

ChatGPT leads with an estimated 3.8 million weekly citations of Grokipedia, followed by Google's AI and Microsoft Copilot. Estimated data based on sample research.

The Precedent This Sets

What worries me most is the precedent Grokipedia establishes.

Right now, it's xAI and Elon Musk using AI-generated content to influence what other AI systems say. But this is a template that anyone can copy.

Imagine if major political campaigns started creating AI-generated encyclopedia entries designed to rank well in AI searches. Imagine if corporations created product encyclopedias that systematically praise their brands while criticizing competitors.

The infrastructure is already in place. We've just watched it work. Grokipedia proved that you can:

- Create an AI-generated knowledge base

- Get it indexed and mentioned on the internet

- Have major AI tools cite it

- Reach millions of users

- Influence how they understand reality

All without any explicit coordination or manipulation. Just by existing and being technically available to training data.

Once other organizations understand this works, we're going to see a flood of competitor encyclopedias, industry glossaries, promotional reference materials, and straight-up propaganda disguised as general knowledge.

And the AI companies haven't shown they have any defense against it.

What Happens Next: Potential Solutions

I'm not hopeless about this. There are ways to solve it. But they require action that the AI industry hasn't taken.

Solution one: transparent source ranking. AI companies could publish the policies they use to rank and filter sources. They could explain why Grokipedia is weighted less than Wikipedia, or why certain sources are excluded. This wouldn't prevent all bias, but it would make bias visible.

Openness works. Wikipedia's transparency is exactly what makes it relatively reliable. Users can see the discussion. They can see the evidence. They can challenge claims.

AI companies could do the same. They won't, because it would reveal their biases. But they could.
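Here is what a published ranking policy could look like in practice. This Python sketch scores each candidate source as retrieval relevance multiplied by a trust weight from a public table, and attaches the arithmetic to each result so anyone can see why one source outranked another. The weights, the default for unknown domains, and the relevance scores are all hypothetical; exact hostname matching is a deliberate simplification.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical published policy: trust weight per host, plus a default.
SOURCE_WEIGHTS = {
    "en.wikipedia.org": 1.0,
    "grokipedia.com": 0.3,
}
DEFAULT_WEIGHT = 0.5


@dataclass
class Candidate:
    url: str
    relevance: float  # 0..1, assumed to come from the retrieval step


def rank(candidates: list[Candidate]) -> list[tuple[str, float, str]]:
    """Rank candidates by relevance x published weight, with a readable reason."""
    ranked = []
    for c in candidates:
        host = (urlparse(c.url).hostname or "").lower()
        weight = SOURCE_WEIGHTS.get(host, DEFAULT_WEIGHT)
        score = c.relevance * weight
        reason = f"relevance {c.relevance:.2f} x weight {weight:.2f} for {host}"
        ranked.append((c.url, score, reason))
    return sorted(ranked, key=lambda r: r[1], reverse=True)


results = rank([
    Candidate("https://grokipedia.com/page/Example", relevance=0.9),
    Candidate("https://en.wikipedia.org/wiki/Example", relevance=0.8),
])
for url, score, reason in results:
    print(f"{score:.2f}  {url}  ({reason})")
```

Here the Wikipedia page outranks a slightly more relevant Grokipedia page (0.80 versus 0.27) because of the published weight, and the reason string says exactly that.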

Solution two: user control over sources. ChatGPT could let you specify "don't cite Grokipedia" or "prioritize academic sources." This would give users a say in what gets cited.

Perplexity actually does something like this already, letting you adjust how the AI searches. It's a better model.
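User-level source preferences are simple to express. This Python sketch treats "don't cite Grokipedia" as an excluded domain and "prioritize academic sources" as a set of preferred domains moved to the front of the citation list. The `SourcePrefs` structure, the example URLs, and the choice of PubMed as the academic example are illustrative assumptions, not how ChatGPT or Perplexity actually implement their settings.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class SourcePrefs:
    """Hypothetical per-user citation preferences."""
    excluded: set[str] = field(default_factory=set)   # hosts never to cite
    preferred: set[str] = field(default_factory=set)  # hosts to list first


def apply_prefs(urls: list[str], prefs: SourcePrefs) -> list[str]:
    """Drop excluded hosts, then move preferred hosts to the front."""
    def host(u: str) -> str:
        return (urlparse(u).hostname or "").lower()

    kept = [u for u in urls if host(u) not in prefs.excluded]
    # False sorts before True, and sorted() is stable, so preferred hosts come
    # first while the original order is kept within each group.
    return sorted(kept, key=lambda u: host(u) not in prefs.preferred)


prefs = SourcePrefs(excluded={"grokipedia.com"}, preferred={"pubmed.ncbi.nlm.nih.gov"})
urls = [
    "https://grokipedia.com/page/Example",
    "https://en.wikipedia.org/wiki/Example",
    "https://pubmed.ncbi.nlm.nih.gov/00000000/",  # placeholder article ID
]
print(apply_prefs(urls, prefs))
```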

Solution three: source diversity warnings. When an AI cites a new or unreliable source alongside established sources, it could flag this explicitly. "This uses Grokipedia, which is newly created and may be unreliable. Here's what Wikipedia says instead."
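Once a system decides which sources count as established, the warning itself can be generated mechanically. This Python sketch flags any cited host outside a hypothetical allowlist; choosing and maintaining that list is the hard, editorial part, and the code deliberately leaves it open.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of established sources; anything else gets a note.
ESTABLISHED = {"en.wikipedia.org", "www.britannica.com"}


def citation_warnings(urls: list[str]) -> list[str]:
    """Return one warning line for each cited host not on the allowlist."""
    warnings = []
    for u in urls:
        host = (urlparse(u).hostname or "").lower()
        if host not in ESTABLISHED:
            warnings.append(
                f"Note: {host} is not an established source; "
                "compare it with the other citations before relying on it."
            )
    return warnings


answer_citations = [
    "https://en.wikipedia.org/wiki/Example",
    "https://grokipedia.com/page/Example",
]
for w in citation_warnings(answer_citations):
    print(w)
```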

Solution four: active filtering. AI companies could identify problematic sources and actively prevent their models from using them. This requires accepting that some sources are better than others, which contradicts the "objective" framing many AI companies like to use. But it's necessary.

None of these solutions are happening right now. And they won't happen voluntarily. They require either regulatory pressure or user demand or competitive pressure.

We're starting to see some of that. Researchers are documenting the problem. Media outlets are reporting on it. But the AI companies aren't changing behavior in response.

Estimated data shows a rapid increase in Grokipedia citations in AI products, highlighting potential risks of bias in AI-generated content.

The Responsibility Question: Who's at Fault?

When something goes wrong with AI citations, it's tempting to blame one party. But the responsibility is distributed.

xAI and Musk: They created Grokipedia knowing it would be used by AI systems. They included biased content. They didn't build in transparency or quality controls. That's a choice.

OpenAI, Google, Microsoft, and other AI companies: They're citing sources without filtering or disclosure. They could invest in better source ranking. They could be transparent about what sources they use. They're choosing not to.

Users: We're clicking "accept" on AI tools without asking hard questions about reliability. We're trusting them to be careful about sources. We're not holding them accountable when they're not.

The tech press and research community: We're documenting these problems, but maybe not loudly enough. We're not making it impossible for the AI companies to ignore the issue.

The solution requires action from all of these groups. xAI needs to either make Grokipedia more reliable or accept that it won't be widely cited. AI companies need to take source quality seriously. Users need to demand better. Researchers need to keep measuring and reporting.

Right now, nobody is doing much of anything. The system is broken, and the incentives aren't aligned to fix it.

What This Means for AI Reliability Going Forward

Grokipedia is just the first iteration of a larger problem.

As AI becomes more central to how people access information, the sources that AI systems use become more important. If those sources are biased, proprietary, or designed to influence AI responses, then AI becomes a tool for spreading bias at scale.

We're not there yet. But we're approaching it.

The question that will define the next five years of AI development is this: will AI companies prioritize source quality and transparency, or will they optimize for speed and scale?

Grokipedia suggests they're optimizing for speed and scale.

If that continues, we're going to see a fragmentation of AI systems. OpenAI's ChatGPT will cite different sources than Claude, which will cite different sources than Gemini. Users will get different answers depending on which tool they use. Truth will become dependent on which AI they trust.

That's not hyperbole. It's the logical conclusion of letting biased or proprietary sources into AI training data without oversight.

The alternative is building a shared set of information standards. Making transparency non-negotiable. Treating source quality as seriously as we treat model accuracy.

That would require collaboration and investment. It would require the AI companies to agree on principles they might not share. It would require saying no to convenient sources when those sources are unreliable.

I don't see it happening yet. But I think it's the only thing that prevents AI from becoming a tool for mass-scale misinformation.

Practical Recommendations for Users Right Now

If you're using AI tools for anything important, here are concrete steps to protect yourself from Grokipedia and similar problems.

First: always check citations. When an AI tool gives you an answer with sources, click every citation. Read the source directly. Don't trust the AI's interpretation.

Second: triangulate sources. If you're doing any serious research or decision-making, don't rely on a single AI tool. Use ChatGPT, then Google search, then check academic databases. See if the information is consistent across sources.

Third: recognize when you don't know. If you use an AI tool for research and you don't recognize one of the sources it cites, mark it as uncertain. Don't build decisions on it.

Fourth: demand better. When AI companies don't disclose their citation policies, call that out. When they cite Grokipedia or other unreliable sources, ask them why. Pressure matters. Companies respond to user demand.

Fifth: use tools that are transparent. Perplexity shows sources prominently. Google sometimes shows citations. ChatGPT buries them. That's a meaningful difference. Vote with your usage.

Sixth: understand the limits. AI tools are useful for brainstorming, getting summaries, and exploring topics. They're dangerous for definitive answers on important topics. Know when to use them and when to go direct to primary sources.

None of this is revolutionary. It's basically "don't trust any source without verification." But that's a more important rule now than it was before, because AI makes unverified sources look credible at scale.

Looking Ahead: What 2026 Will Likely Bring

I'm making a prediction based on current trajectories: Grokipedia citations will continue to increase through early 2026.

Some AI companies will start filtering Grokipedia more aggressively. Google probably will. Microsoft might. They'll do this quietly, without announcing it, to avoid the appearance of censorship.

Other companies will leave the problem as-is. xAI obviously will. Smaller competitors might, because they don't have the resources to build better source filtering.

We'll see competing Wikipedias emerge. Some venture-backed. Some sponsored. Each one trying to get cited by AI tools. The AI citation ecosystem will become increasingly polluted.

Researchers will publish papers documenting the problem. The problem won't get significantly better in response.

By the end of 2026, Grokipedia citations might decline as a percentage of AI responses (because the total number of citations might grow faster), but the absolute number will almost certainly be higher than today.

The real change will come when either regulation forces transparency or users stop trusting AI tools because they keep citing unreliable sources. One of those will happen. The question is which comes first.

In the meantime, Grokipedia is here. It's being cited. And most people using AI have no idea.

The Bigger Picture: Trust in the Age of AI

Grokipedia is a symptom of a much larger problem: we're building a world where artificial intelligence mediates how people understand reality, and we haven't figured out how to make that reliable.

Wikipedia solved this problem for text-based knowledge through transparency and community oversight. But that model doesn't scale to AI. You can't have millions of people reviewing every ChatGPT response.

So we need different solutions. Better source ranking. Explicit policies about what sources are used. User control over how sources are weighted. Academic collaboration to establish knowledge standards.

Instead, we're getting companies treating citation as an afterthought. We're getting AI systems that cite unreliable sources without flagging them. We're getting a world where information quality depends on what made it into training data and how much profit the training provides.

Grokipedia won't destroy the internet. It's not catastrophic by itself. But it's a warning. It's proof that the current system doesn't protect against bias being baked into AI responses.

We're at a decision point. Do we accept AI systems that increasingly shape how people understand reality, or do we demand that they be built to higher standards?

Right now, the AI industry is choosing the former. They're choosing speed and scale over reliability and transparency.

If that continues, Grokipedia will be the least of our problems.

FAQ

What is Grokipedia?

Grokipedia is an AI-generated encyclopedia created by xAI, Elon Musk's AI company. Unlike Wikipedia, which is edited by thousands of volunteers in a transparent, consensus-driven process, Grokipedia is produced by Grok, xAI's chatbot. The encyclopedia launched in October 2025. Many of its articles were initially copied from Wikipedia, but others increasingly reflect Grok's particular biases and perspectives on controversial topics. Ahrefs data found roughly 95,000 individual Grokipedia pages being cited in ChatGPT responses alone.

Why are AI chatbots citing Grokipedia?

AI tools like ChatGPT, Google Gemini, and Microsoft Copilot cite Grokipedia because its pages are part of the web these tools search when answering questions, and potentially part of the data they are trained on. When an AI system answers a question, it synthesizes information from what it retrieves and what it learned in training, and it cites the sources it draws on. Since Grokipedia is now part of the indexed web, it gets cited the same way Wikipedia does. There's no explicit decision by these companies to promote Grokipedia, but there's also no systematic filtering to prevent it.

How many citations are we talking about?

According to Ahrefs research, Grokipedia appeared in over 263,000 ChatGPT responses from a sample of 13.6 million prompts. If that rate held across an assumed 200 million ChatGPT messages per week, it would mean approximately 3.8 million Grokipedia citations per week through ChatGPT alone. Google's AI products, Perplexity, and Microsoft Copilot also cite Grokipedia, though at lower rates, adding millions more citations across all platforms.

Is Grokipedia biased?

Yes. Unlike Wikipedia's transparent editing process where controversial claims are flagged and discussed, Grokipedia is generated by Grok, which has a documented history of bias. Grok has generated racist and transphobic content. It downplays controversial aspects of Elon Musk's history while emphasizing his accomplishments. It avoids criticizing Musk's companies while being more critical of competitors. When ChatGPT or other AI tools cite Grokipedia on controversial topics, they're potentially spreading these biases to millions of users without making the bias explicit.

Why doesn't ChatGPT just stop using Grokipedia?

Filtering sources is technically complex and can be expensive. If a source has been absorbed into a model's training data, removing its influence would require retraining the model, which costs millions and takes weeks; pages retrieved through live search are easier to filter, but that still takes deliberate effort. Additionally, AI companies have incentives to cite diverse sources, as it makes their systems appear more comprehensive and capable. Explicitly filtering out Grokipedia would require acknowledging that some sources are unreliable, contradicting the "objective AI" narrative many companies prefer. It's easier and cheaper to let the system cite Grokipedia than to actively prevent it.

What happens if I see a Grokipedia citation?

If ChatGPT or another AI tool cites Grokipedia, click the citation and read the source directly. Don't trust the AI's interpretation of what the source says. Cross-reference the information with established sources like Wikipedia, academic databases, or news outlets. Understand that Grokipedia is new, AI-generated, and potentially biased, so treat it with appropriate skepticism. For important decisions or research, verify information across multiple independent sources rather than relying on a single AI tool's response.

What's the bigger concern here?

The fundamental issue is that AI tools are becoming central to how people access information, but the sources those tools use aren't necessarily reliable or transparent. Grokipedia demonstrates that it's possible to create a biased, AI-generated source that gets cited by major AI platforms and reaches millions of users without explicit disclosure that the source is problematic. This is a template that could be copied by corporations, political campaigns, or any organization with resources to create convincing but biased content. Without better source transparency and filtering, AI systems could become powerful tools for spreading misinformation at scale.

What should AI companies do about this?

Ideal solutions include publishing clear policies about how sources are ranked and filtered, giving users control over source preferences, transparently flagging new or unreliable sources when they appear in citations, and actively filtering training data to exclude obviously biased sources. Some companies could implement these changes today, but most lack the incentive to do so. Regulatory pressure or significant user demand would likely be necessary to force change at scale.
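To make "flagging unreliable sources" and "user control over source preferences" more concrete, here is a minimal sketch of how a citation layer could handle sources before showing them to a user. Everything in it is hypothetical. The `FLAGGED_DOMAINS` list, the `Citation` type, and the `annotate` function are illustrations only. They do not represent any AI company's actual API or policy, and the URLs are placeholders.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical flag list for illustration only; not any vendor's real policy.
FLAGGED_DOMAINS = {
    "grokipedia.com": "AI-generated encyclopedia; verify against independent sources",
}


@dataclass
class Citation:
    url: str
    title: str


def domain_of(url: str) -> str:
    """Return the host name (urlparse lowercases it) without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")


def annotate(citations, user_blocked=frozenset(), flagged=FLAGGED_DOMAINS):
    """Drop sources the user has blocked, then attach a visible flag where one applies.

    Returns a list of (citation, note) pairs; note is None when the source
    is not on the flag list. Domains match exactly, so this sketch does not
    cover subdomains.
    """
    annotated = []
    for citation in citations:
        domain = domain_of(citation.url)
        if domain in user_blocked:
            continue  # the user has opted out of this source entirely
        annotated.append((citation, flagged.get(domain)))
    return annotated


# Placeholder URLs, used only to exercise the function.
cites = [
    Citation("https://en.wikipedia.org/wiki/Encyclopedia", "Encyclopedia (Wikipedia)"),
    Citation("https://grokipedia.com/example", "Encyclopedia (Grokipedia)"),
]

for citation, note in annotate(cites):
    print(f"{citation.title}: {note or 'no flag'}")
```

This sketch keeps flagged sources visible but labels them, rather than silently dropping them. Removing a source is left to the user's own blocklist. A real system would also need to handle subdomains and publish a policy on who maintains the flag list.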

Key Takeaways

- Grokipedia, Elon Musk's AI-generated encyclopedia, is being cited by ChatGPT, Google Gemini, Microsoft Copilot, and Perplexity. It is spreading its biased content to millions of users who don't realize the source is unreliable.

- ChatGPT features Grokipedia prominently in citations (often as one of the first sources), while Google's AI tools treat it as a supplementary source. This difference likely reflects different approaches to source credibility, with Google being more conservative.

- The scale is significant: an estimated 3.8 million ChatGPT responses per week cite Grokipedia. This figure is extrapolated from citation frequency in the Ahrefs sample, and citations are growing across all major AI platforms.

- Grokipedia citations appear most often for "non-sensitive" queries like definitions and encyclopedic lookups. That is precisely where users are least likely to verify sources independently.

- The real danger is precedent. Grokipedia proves that anyone can create an AI-generated knowledge base, get it indexed by major AI systems, and influence millions of people's understanding of reality without disclosure or oversight.

- Network effects make this problem self-reinforcing. Once Grokipedia is cited by major AI tools, those citations get indexed and become part of the internet. They can then end up in the next generation of AI training data, making Grokipedia appear more authoritative over time.

- Current AI citation practices lack transparency. Companies don't disclose which sources they prioritize, why certain sources are excluded, or how they handle biased content. Users have no reliable way to know where information comes from or whether to trust it.

- Solving this requires several measures working together: source transparency, user control, active filtering of unreliable sources, and possibly regulation. Without coordinated action, AI systems risk becoming increasingly unreliable sources of information.

Word count: 7,843 | Reading time: 39 minutes
