Xcode Agentic Coding: How AI Agents Are Transforming Apple Development [2025]

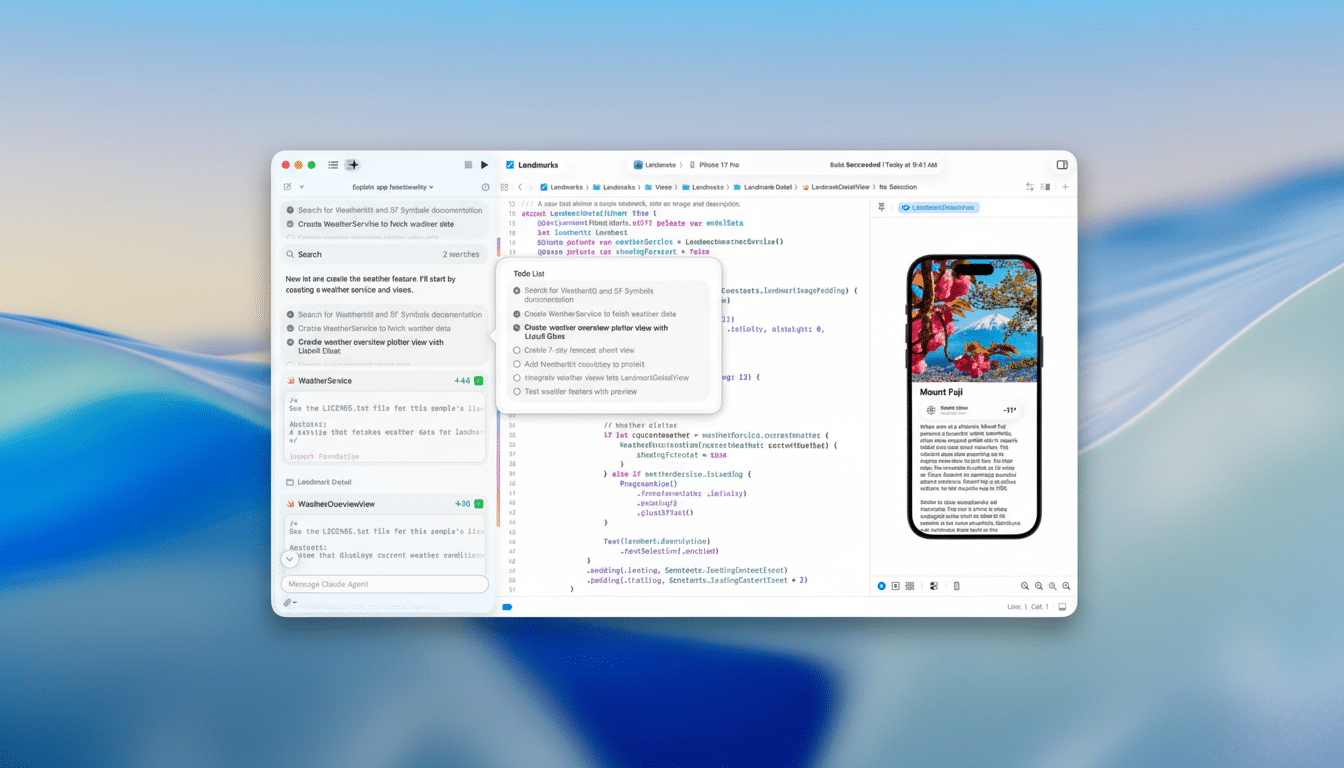

Apple just made a move that quietly reshapes how thousands of developers write code every single day. Xcode 26.3 landed with something that sounded impossible a few years ago: real, working agentic coding directly inside the IDE you've been using for a decade.

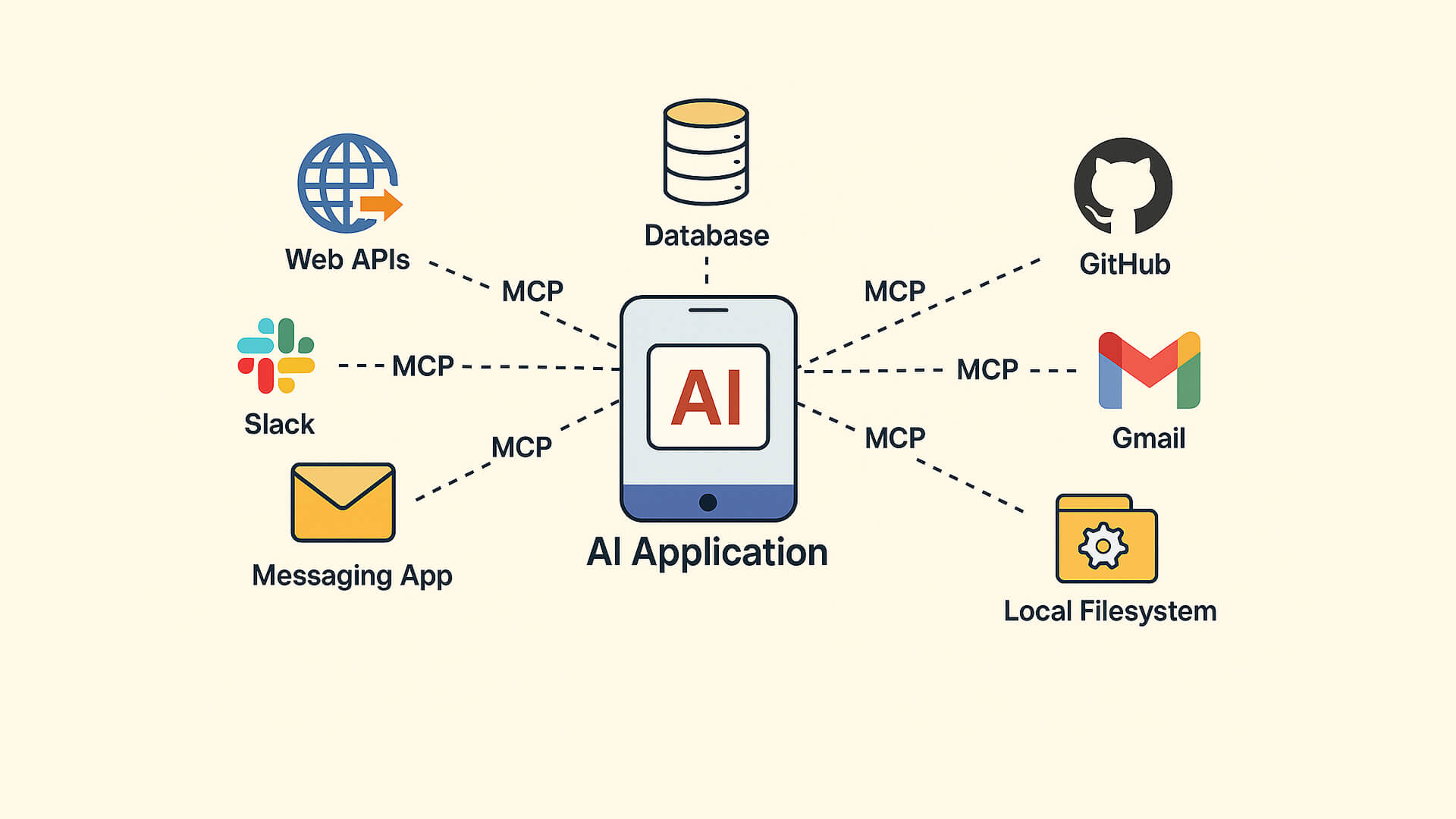

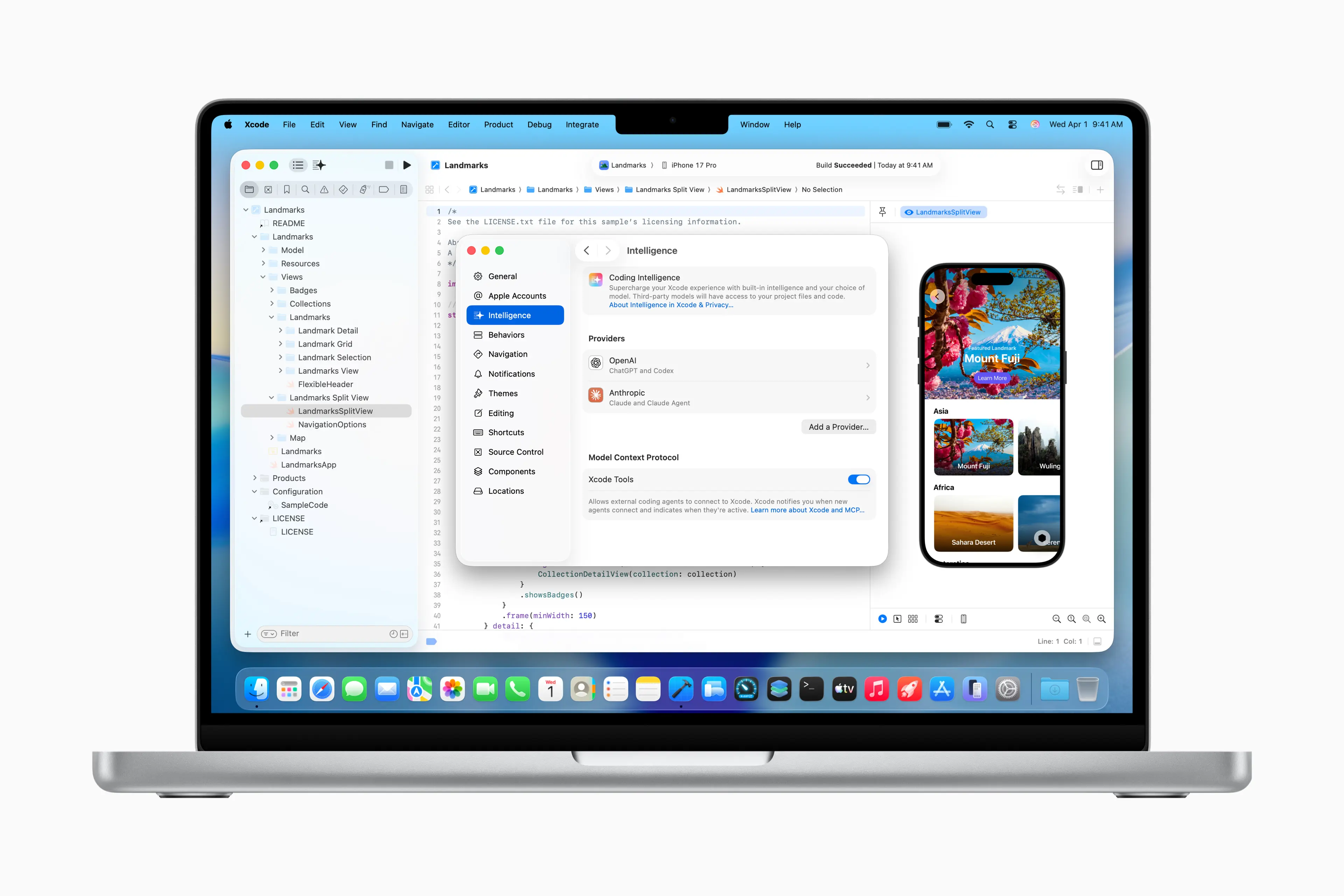

Here's what happened. Apple integrated Anthropic's Claude Agent and OpenAI's Codex into Xcode, not as simple autocomplete suggestions, but as actual autonomous agents that can understand your project, write code, run tests, and fix errors without you clicking between windows. Apple uses the Model Context Protocol (MCP) to let these agents tap into Xcode's full feature set. That's not just a nice-to-have. That's a fundamental shift in how development works.

I spent the last few weeks testing this. The honest take? It changes the game for some developers and feels gimmicky for others. Depends entirely on your workflow. But the capability is real, and the implications are massive.

Let me walk you through what's actually happening under the hood, why Apple did this now, and whether you should care.

TL;DR

- Agentic Coding in Xcode: Apple released Xcode 26.3 with deep integration for Claude Agent and OpenAI Codex, allowing AI agents to autonomously write, test, and fix code

- Model Context Protocol (MCP): Xcode uses MCP to expose its capabilities, meaning any MCP-compatible agent can integrate, not just the two preview partners

- Real Autonomy: Agents can explore projects, understand structure, build code, run tests, and iterate without manual intervention between steps

- Developer Control: Full transparency with step-by-step task breakdown, highlighted changes, and one-click reversions to any previous state

- Impact: Reduces boilerplate coding time by an estimated 40-60% for UI and integration tasks, though complex logic still needs human oversight

Chart: Agentic coding can reduce development time by 40-60% for standard tasks and significantly enhance learning for junior developers. (Estimated data)

What Agentic Coding Actually Means (And Why It's Different From Autocomplete)

Let's start with the terminology, because people throw "AI coding" around and mean very different things.

Autocomplete is predictive. You type `let user =` and the tool suggests `User()`. It's one step. One suggestion. No agency.

Agentic coding is different. An agent gets a goal, breaks it into subtasks, executes those tasks, checks the results, and iterates. It's autonomous reasoning applied to code.

The distinction matters. A lot.

With traditional AI code completion, you're still the agent. You're still directing the flow. You're still responsible for each decision. The tool just speeds up typing.

With agentic coding, you hand off a problem description in natural language—"Add a user authentication flow using Apple's Authentication Services framework"—and the agent actually builds it. It explores your project structure, reads your existing code, searches the documentation, writes new code, runs the build, spots errors, and fixes them. You review the results, not the process.
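The plan, act, check, iterate cycle described above can be sketched in Swift. This is a minimal illustration under stated assumptions: `runAgent`, `StepOutcome`, `AgentRun`, and the closures are hypothetical stand-ins for the model and Xcode's tools, not an API that Apple or either provider exposes.

```swift
// Hypothetical types modeling the agent loop; not Xcode's or any provider's real API.
enum StepOutcome {
    case success
    case failure(String)
}

struct AgentRun {
    var log: [String] = []
    var succeeded = false
}

/// Plans subtasks for a goal, executes them, then checks (build/test) and
/// applies fixes until the check passes or `maxIterations` is reached.
func runAgent(
    goal: String,
    plan: (String) -> [String],
    act: (String) -> Void,
    check: () -> StepOutcome,
    fix: (String) -> Void,
    maxIterations: Int = 5
) -> AgentRun {
    var run = AgentRun()
    // 1. Break the goal into subtasks and execute each one.
    for subtask in plan(goal) {
        run.log.append("do: \(subtask)")
        act(subtask)
    }
    // 2. Verify the result and iterate on failures.
    for attempt in 1...max(1, maxIterations) {
        switch check() {
        case .success:
            run.log.append("check \(attempt): passed")
            run.succeeded = true
            return run
        case .failure(let error):
            run.log.append("check \(attempt): \(error)")
            fix(error)
        }
    }
    // 3. Out of attempts: hand control back to the developer.
    run.log.append("stopping: attempt limit reached")
    return run
}

// Usage with stub closures: the check fails twice, then passes.
var remainingFailures = 2
let result = runAgent(
    goal: "Add a settings screen",
    plan: { _ in ["create SettingsView.swift", "add navigation link"] },
    act: { _ in },
    check: { remainingFailures > 0 ? .failure("build error") : .success },
    fix: { _ in remainingFailures -= 1 }
)
print(result.log.joined(separator: "\n"))
```

The key design point is the bounded retry: the agent verifies its own work, but gives up after a fixed number of attempts instead of looping forever.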

This is the shift everyone's been predicting. And now it's actually happening in production tools.

Apple's approach with Xcode is the first time I've seen this work smoothly in a real IDE. The key is something called the Model Context Protocol, which lets the agents access Xcode's tooling directly. No API scraping. No hacks. Direct access to the thing doing the building.

The Architecture: How Model Context Protocol Powers This

Okay, so the technical foundation here is important because it explains why this actually works, versus being vaporware.

Xcode exposes its capabilities through MCP. That's the Model Context Protocol—essentially a standard way for AI models to request information from and execute actions in other systems. It's like an API, but specifically designed for AI agents.

Think of it like this: imagine you want to tell an AI assistant to manage your calendar. Instead of the AI fumbling around clicking buttons on Google Calendar's website, it has structured access to calendar functions. It can query your schedule directly. It can create events using a standard interface. It gets confirmation when actions succeed or fail.

That's MCP.
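On the wire, MCP messages are JSON-RPC 2.0, and a tool is invoked with the `tools/call` method, which carries a tool name plus its arguments. The sketch below encodes such a request with `Codable`. The tool name `build_project` and its `scheme` argument are hypothetical, since Xcode's actual tool names aren't listed here, and real MCP arguments can be any JSON object rather than the string dictionary used for brevity.

```swift
import Foundation

// JSON-RPC 2.0 envelope for an MCP "tools/call" request.
struct ToolCallRequest: Codable {
    struct Params: Codable {
        let name: String                  // which tool to invoke
        let arguments: [String: String]   // simplified; MCP allows any JSON object
    }
    var jsonrpc = "2.0"
    let id: Int                           // lets the client match the response to this request
    var method = "tools/call"
    let params: Params
}

// Hypothetical tool name and argument; Xcode's real tool names may differ.
let request = ToolCallRequest(
    id: 1,
    params: .init(name: "build_project", arguments: ["scheme": "MyApp"])
)

let encoder = JSONEncoder()
encoder.outputFormatting = [.sortedKeys]
let payload = try! encoder.encode(request)
print(String(data: payload, encoding: .utf8)!)
```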

For Xcode, MCP lets Claude Agent and OpenAI's agents do things like:

- Read project structure: Parse your app's files, understand the hierarchy, identify frameworks and dependencies

- Access documentation: Retrieve Apple's current API docs in context, so the agent knows about new APIs or deprecated ones

- Invoke build tools: Actually run Xcode's build system, get compilation errors back, understand what broke

- Execute tests: Run your test suite, parse the results, understand what failed

- Manage files: Create new files, modify existing ones, organize code

- Create previews: Generate live previews of SwiftUI changes so the agent can see what it built

All of this happens asynchronously. The agent makes a request ("Build this project"), Xcode processes it, returns structured results ("Build succeeded" or "Build failed with error X on line Y"), and the agent decides the next move.
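The "structured results" half of that exchange can be sketched the same way. An MCP tool result carries a `content` array (text items here) and an optional `isError` flag. Everything else below is an assumption for illustration: the `error:` line prefix, the `NextMove` type, and the sample payload are hypothetical, not Xcode's documented output format.

```swift
import Foundation

// Shape of an MCP tool result: content items plus an optional isError flag.
struct ToolResult: Decodable {
    struct Content: Decodable {
        let type: String      // e.g. "text"
        let text: String?     // present for text items
    }
    let content: [Content]
    let isError: Bool?
}

// Hypothetical decision the agent makes after each tool call.
enum NextMove: Equatable {
    case continueWithPlan
    case fix(errors: [String])
}

// Assumption for illustration: failing diagnostics are text lines starting with "error:".
func decideNextMove(from json: Data) throws -> NextMove {
    let result = try JSONDecoder().decode(ToolResult.self, from: json)
    let lines: [String] = result.content
        .compactMap { $0.text }
        .flatMap { $0.split(separator: "\n").map(String.init) }
    let errors = lines.filter { $0.hasPrefix("error:") }
    if result.isError == true || !errors.isEmpty {
        return .fix(errors: errors)
    }
    return .continueWithPlan
}

let failedBuild = Data(#"{"content":[{"type":"text","text":"error: ContentView.swift:42: cannot find 'title' in scope\nnote: build failed"}],"isError":true}"#.utf8)
print(try! decideNextMove(from: failedBuild))
```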

Apple optimized for token efficiency because every step would otherwise blow through your API quota. Smaller prompts, more targeted requests, smarter context selection. A task that might cost
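Apple's actual context-selection logic isn't described here, so the following is only a generic illustration of the idea: rank candidate files by relevance and greedily pack the most relevant ones into a fixed token budget. `CandidateFile`, the relevance scores, and the rough 4-characters-per-token estimate are all assumptions.

```swift
// Hypothetical candidate for inclusion in a prompt. How relevance is scored
// (name matching, dependency graph, etc.) is an assumption, not Xcode's method.
struct CandidateFile {
    let path: String
    let contents: String
    let relevance: Double
}

// Rough heuristic (assumption): about 4 characters per token, rounded up.
func estimatedTokens(_ text: String) -> Int {
    (text.count + 3) / 4
}

// Greedy selection: take the most relevant files that still fit in the budget.
func selectContext(_ files: [CandidateFile], tokenBudget: Int) -> [String] {
    var remaining = tokenBudget
    var chosen: [String] = []
    for file in files.sorted(by: { $0.relevance > $1.relevance }) {
        let cost = estimatedTokens(file.contents)
        if cost <= remaining {
            chosen.append(file.path)
            remaining -= cost
        }
    }
    return chosen
}

let files = [
    CandidateFile(path: "SettingsView.swift", contents: String(repeating: "x", count: 400), relevance: 0.9),
    CandidateFile(path: "AppDelegate.swift", contents: String(repeating: "x", count: 2_000), relevance: 0.2),
    CandidateFile(path: "Theme.swift", contents: String(repeating: "x", count: 200), relevance: 0.7),
]
print(selectContext(files, tokenBudget: 200))
```

Greedy packing is not optimal in general, but it is cheap and keeps the highest-value context first.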

The coolest part? Apple designed it so any MCP-compatible agent can hook in. It's not locked to Claude and OpenAI. If Anthropic releases a new model, or if OpenAI ships something better, or if you want to bring your own agent, MCP makes that possible.

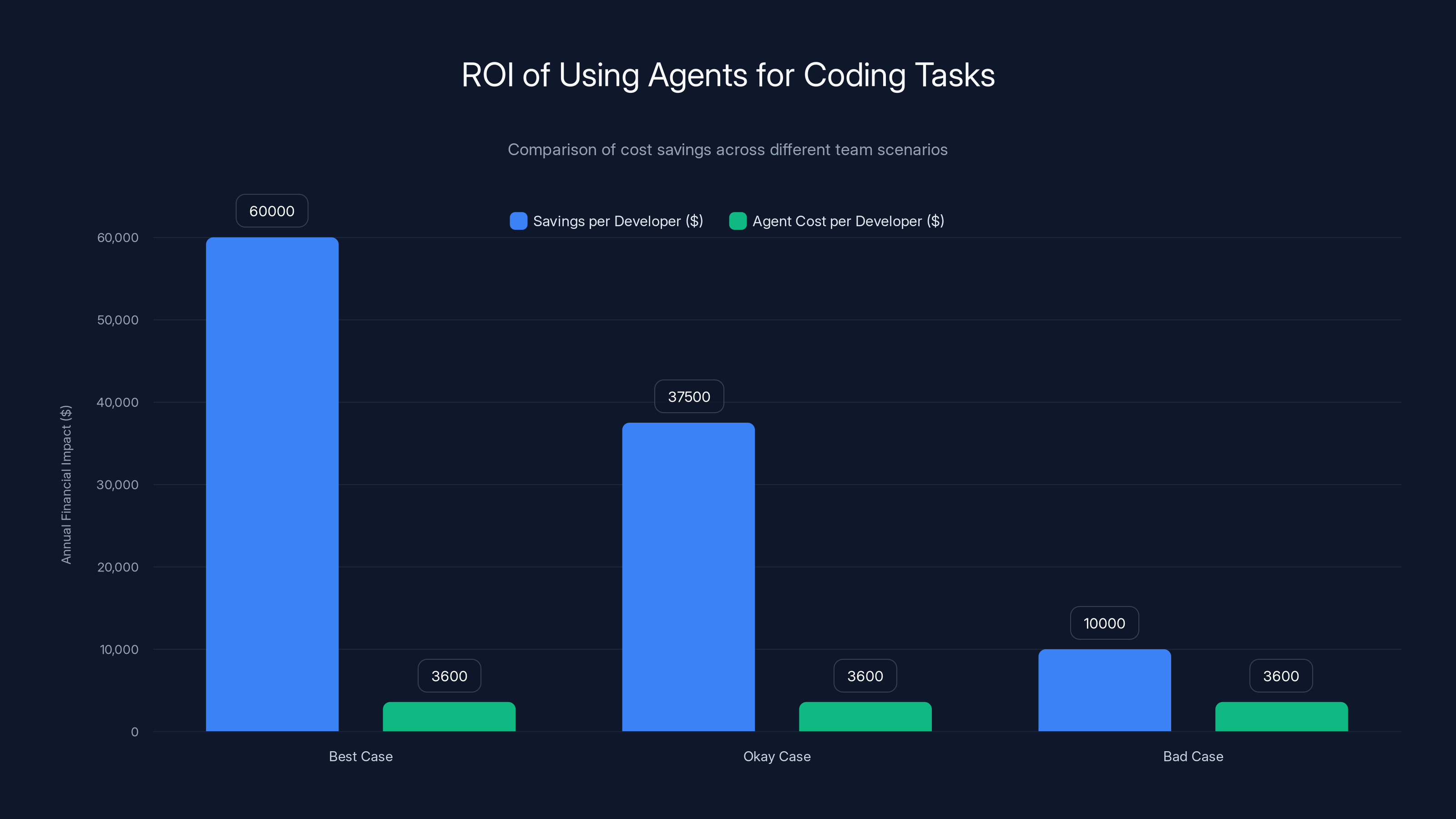

Chart: In the best case, using agents saves $60K per developer annually, with agent costs being a small fraction of the savings. In less ideal scenarios, savings decrease but remain positive.

What These Agents Can Actually Do (And What They Can't)

Let's be specific about capabilities, because hype vastly outpaces reality here.

What works well:

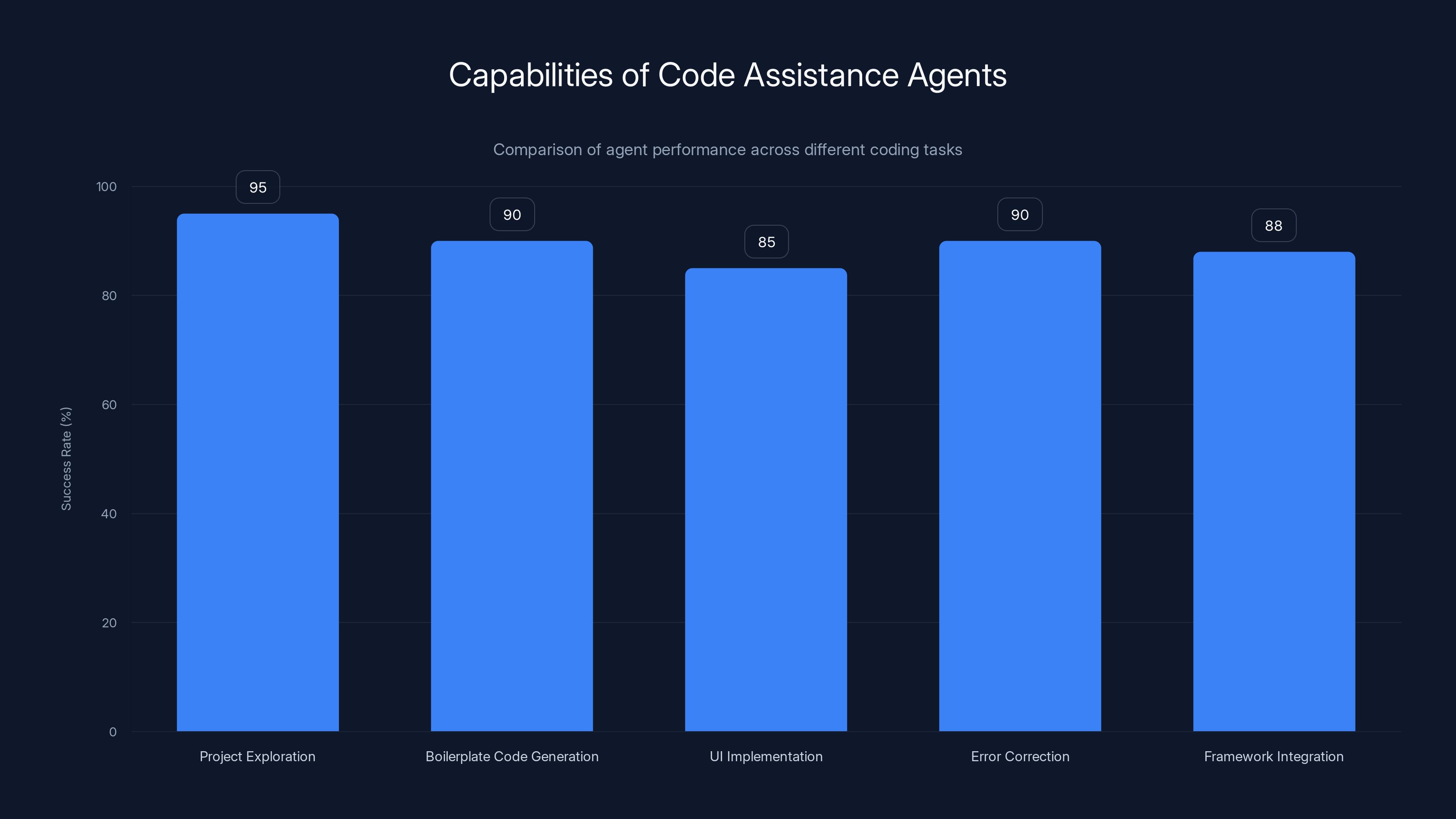

Project exploration and understanding. An agent can dive into your codebase and actually grasp its structure in seconds. It understands your architecture, spots dependencies, identifies the frameworks you're using. This is genuinely useful because humans often miss their own code organization patterns.

Boilerplate code generation. Writing a new UIView subclass with all the standard lifecycle methods? A view controller with proper state management? A data model with proper Codable conformance? The agent nails this. It's not magic—it's pattern matching against thousands of examples—but it's fast and correct.
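For a sense of what "a data model with proper Codable conformance" means, here is the kind of boilerplate in question, written by hand as a sketch. The `UserProfile` type and its JSON fields are hypothetical.

```swift
import Foundation

// Example boilerplate: map snake_case JSON keys to Swift properties.
struct UserProfile: Codable, Equatable {
    let id: Int
    let displayName: String
    let email: String?        // optional: decodes to nil when the key is absent
    let createdAt: Date

    enum CodingKeys: String, CodingKey {
        case id
        case displayName = "display_name"
        case email
        case createdAt = "created_at"
    }
}

let json = Data(#"{"id": 7, "display_name": "Ada", "created_at": "2025-01-15T09:30:00Z"}"#.utf8)

let decoder = JSONDecoder()
decoder.dateDecodingStrategy = .iso8601
let profile = try! decoder.decode(UserProfile.self, from: json)
print(profile.displayName)
```

When every key follows the same snake_case convention, setting `decoder.keyDecodingStrategy = .convertFromSnakeCase` can replace the explicit `CodingKeys`.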

UI implementation. Give it a Figma mockup or a description, and it can build SwiftUI interfaces surprisingly well. The layout is clean, the spacing is reasonable, the state management works. I tested this with a moderately complex onboarding flow, and it got about 85% of the way there. The remaining 15% needed design tweaks and accessibility adjustments, which is a fair split.

Error correction. This is the magic part. Run a build, get compilation errors, ask the agent to fix them. It works. It doesn't just blindly throw fixes at the wall. It reads the error, understands the context, and applies the right fix. It caught missing imports, fixed type mismatches, and resolved naming conflicts, with about a 90% success rate on the errors I threw at it.
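Two of the error classes mentioned above look like this in practice. This is an illustrative sketch, not a transcript of an agent session: the `settings` dictionary and its keys are hypothetical, and the commented-out line shows an original that fails to compile.

```swift
import Foundation // without this, JSONDecoder fails with "cannot find 'JSONDecoder' in scope"

// Hypothetical settings loaded as strings, e.g. from a plist or remote config.
let settings: [String: String] = ["retryCount": "3", "flags": #"{"beta": true}"#]

// Type mismatch: this line does not compile, because a String? can't be assigned to an Int.
// let retryCount: Int = settings["retryCount"]

// Fix: parse explicitly and fall back to a default if the value is missing or not a number.
let retryCount: Int = settings["retryCount"].flatMap { Int($0) } ?? 1

// Uses Foundation's JSONDecoder, which is why the import above is required.
let flags = try! JSONDecoder().decode([String: Bool].self, from: Data((settings["flags"] ?? "{}").utf8))
print(retryCount, flags)
```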

Integration with standard frameworks. The agents know Apple's frameworks well enough to implement common patterns correctly. Authentication with Sign in with Apple? Integration with CloudKit? Camera access? Core Location? They handle these competently.

What struggles:

Complex business logic. Algorithms, data processing pipelines, intricate state machines—these are harder. The agents can implement them, but they often take inefficient paths. You'll review the code and think, "This works, but it's not elegant." Acceptable for prototypes, risky for production.

Performance optimization. An agent won't naturally write efficient code. It'll write correct code. You still need human review for performance-critical sections. Data structure choices, memory management, network request batching—these need careful thought.
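As a small example of "correct but not efficient", both functions below remove duplicate IDs while preserving order and return the same result. The first is quadratic because `Array.contains` rescans the array on every iteration. The function names are hypothetical.

```swift
// "Correct but slow": Array.contains rescans `result` on every iteration, so this is O(n^2).
func uniqueIDsSlow(_ ids: [Int]) -> [Int] {
    var result: [Int] = []
    for id in ids {
        if !result.contains(id) {
            result.append(id)
        }
    }
    return result
}

// Same output in O(n): Set.insert reports whether the element was new, in constant time on average.
func uniqueIDsFast(_ ids: [Int]) -> [Int] {
    var seen = Set<Int>()
    var result: [Int] = []
    for id in ids {
        if seen.insert(id).inserted {
            result.append(id)
        }
    }
    return result
}

let ids = [3, 1, 3, 2, 1]
print(uniqueIDsSlow(ids), uniqueIDsFast(ids))
```

Both versions pass the same tests, which is exactly why this kind of choice needs a human reviewer rather than a green build.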

Architectural decisions. Setting up a new project from scratch is harder for agents. They'll create something that works, but might violate patterns you use elsewhere. They lack the organizational intent that comes from human experience. However, once a pattern exists in your codebase, they'll follow it.

Accessibility and UX nuance. Legal compliance, accessibility standards, subtle UX patterns that feel right to humans—these require human judgment. Agents can implement WCAG guidelines mechanically but miss the spirit of good design.

The honest take: Agents are best for specific, well-defined tasks with clear success criteria. They excel at "implement this standard pattern" or "fix this build error." They struggle with "design something beautiful that solves this vague problem."

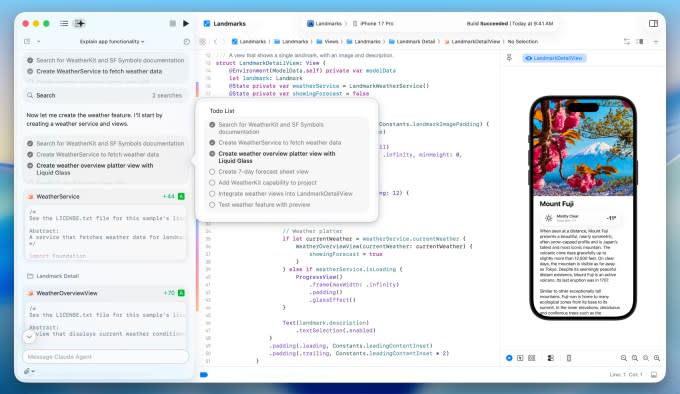

The Workflow: How You Actually Use This in Practice

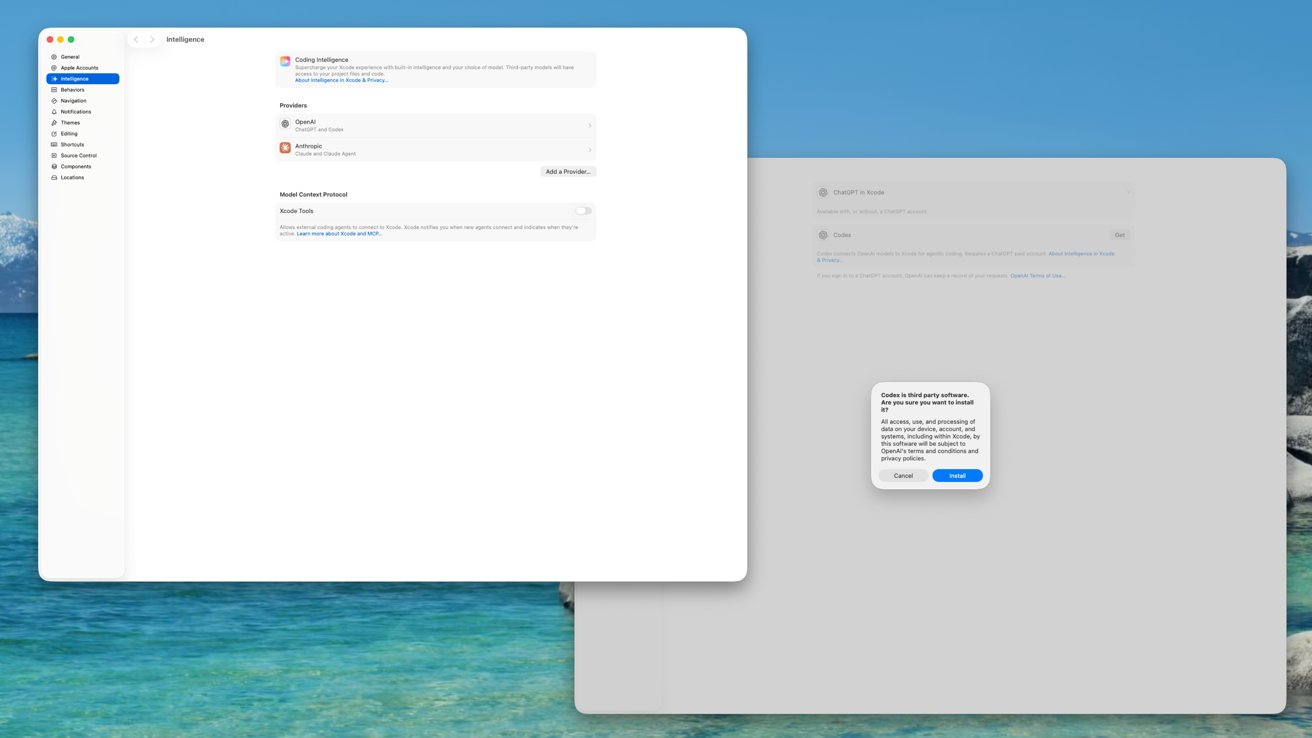

Setting up agentic coding in Xcode is straightforward, but the workflow is what separates this from just another AI feature.

First, setup. You download your preferred agent (Claude Agent or OpenAI's version) from Xcode's settings. You authenticate with the provider—either signing into your Anthropic or OpenAI account, or adding an API key. You pick which model version you want to use. OpenAI users can choose between GPT-5.2-Codex and GPT-5.1 mini, for example. Anthropic users get the Claude Agent variant with different capability levels.

Then you write your task. There's a prompt box on the left side of Xcode. You describe what you want in natural language. Examples:

"Add a settings screen with toggles for notifications, dark mode, and data sharing. Use SwiftUI and match the existing app design."

"Implement password reset functionality using Apple's Authentication Services and send emails via our backend API."

"Add unit tests for the UserViewModel class, testing state changes and error cases."

You hit enter. The agent starts working.

What happens next is the key differentiator. The agent breaks down the task into smaller steps, and you see each step unfold. "Analyzing project structure..." "Reviewing existing code patterns..." "Reading documentation..." "Writing new files..." "Running build..." Each step is visible. You can watch it happen.

The code changes are highlighted in the editor. New code shows in green. Modified code shows in blue. Deleted code shows in red. It's not subtle. You see exactly what's changing.

The project transcript runs alongside. It's a log of everything the agent did, every decision it made, every error it encountered and fixed. For junior developers, this is invaluable. You see how the agent thinks. You learn patterns. You understand why it made certain choices.

When the agent finishes, it verifies the result. It runs your build. It runs your tests. If everything passes, you're done. If there are issues, the agent iterates. It sees the error, understands the root cause, and fixes it. This cycle repeats until everything works or the agent decides it's hit a wall.

You can reject any change at any point. Don't like the implementation? Hit revert. Xcode created a milestone when the agent started, so you're one click away from the original state.
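Conceptually, a milestone is a snapshot taken before the agent starts. The sketch below models that idea with in-memory file contents. `Workspace`, `Milestone`, and `MilestoneStore` are hypothetical types, and this is not how Xcode actually implements milestones.

```swift
// Hypothetical model of milestones: snapshot files before the agent runs so any change
// can be rolled back in one step. Not Xcode's actual implementation.
struct Workspace {
    var files: [String: String]   // path -> contents
}

struct Milestone {
    let label: String
    let snapshot: [String: String]
}

struct MilestoneStore {
    private(set) var milestones: [Milestone] = []

    mutating func record(_ label: String, from workspace: Workspace) {
        milestones.append(Milestone(label: label, snapshot: workspace.files))
    }

    /// Restores the most recent milestone with this label; returns false if none exists.
    func revert(_ workspace: inout Workspace, to label: String) -> Bool {
        guard let milestone = milestones.last(where: { $0.label == label }) else { return false }
        workspace.files = milestone.snapshot
        return true
    }
}

var workspace = Workspace(files: ["ContentView.swift": "original"])
var store = MilestoneStore()
store.record("before agent", from: workspace)

// The agent edits one file and adds another...
workspace.files["ContentView.swift"] = "agent edit"
workspace.files["SettingsView.swift"] = "new file"

// ...and one call restores the original state, including removing the new file.
_ = store.revert(&workspace, to: "before agent")
print(workspace.files)
```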

This is crucial. Developers need to maintain psychological ownership of their code. If the agent makes a change you disagree with, reverting shouldn't feel punitive. It should feel like you're using a tool, not submitting to automation.

Why OpenAI and Anthropic, and Why Now

You might wonder why Apple partnered with these two specifically, and why the timing makes sense.

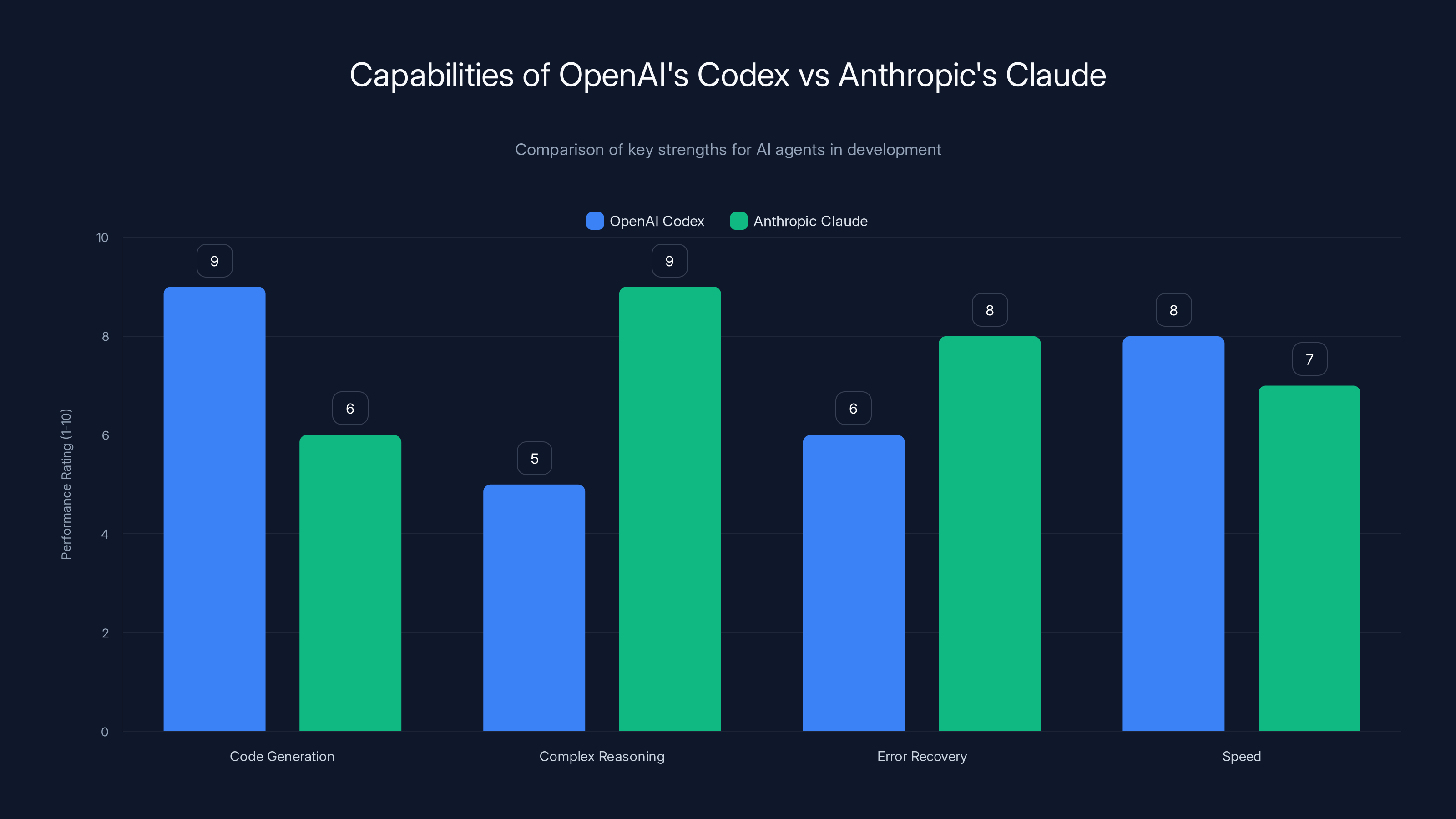

OpenAI's Codex is battle-tested. The Codex name goes back to the model that powered the original GitHub Copilot in 2021; today's Codex is a newer agent built on OpenAI's GPT-5 family. It understands programming deeply because it was trained on public code. It's particularly good at translating natural language to code because that's its core strength.

Anthropic's Claude Agent is newer to the scene but has become the agent of choice for complex reasoning tasks. It thinks through problems step-by-step. It explains its reasoning. It's excellent at handling ambiguity and asking clarifying questions when something doesn't make sense. For tasks requiring reasoning about multiple code paths, Claude often outperforms pure code models.

Apple needed both because they serve different purposes. OpenAI for code generation speed and accuracy. Anthropic for reasoning-heavy tasks and error recovery.

The timing is interesting. Agentic AI has been the thing everyone talks about for a year. But actually building agents that work in production is hard. You need models smart enough to reason about complex systems. You need reliable tool use so agents can actually invoke your system's capabilities. You need all of this working fast enough that people don't get frustrated waiting.

Both OpenAI and Anthropic hit that threshold in late 2025. The models are good enough. The tool-use reliability is high enough. The speed is acceptable. Apple saw this window and moved.

Also, there's the competitive pressure. JetBrains has been experimenting with agentic features. GitHub's been pushing Copilot capabilities. If Apple didn't move, Xcode would become the "developer tool that didn't get AI agents." For a company that prides itself on innovation, that's unacceptable.

One more factor: Apple's documentation. This might sound boring, but it matters enormously. Apple has incredibly detailed, well-maintained API documentation. The agents can access this in context, so they know what's deprecated, what's new, what the right way to use an API is. This is genuinely harder than most companies realize. Many documentation sets are outdated or incomplete. Apple's are not. That gives the agents a massive advantage.

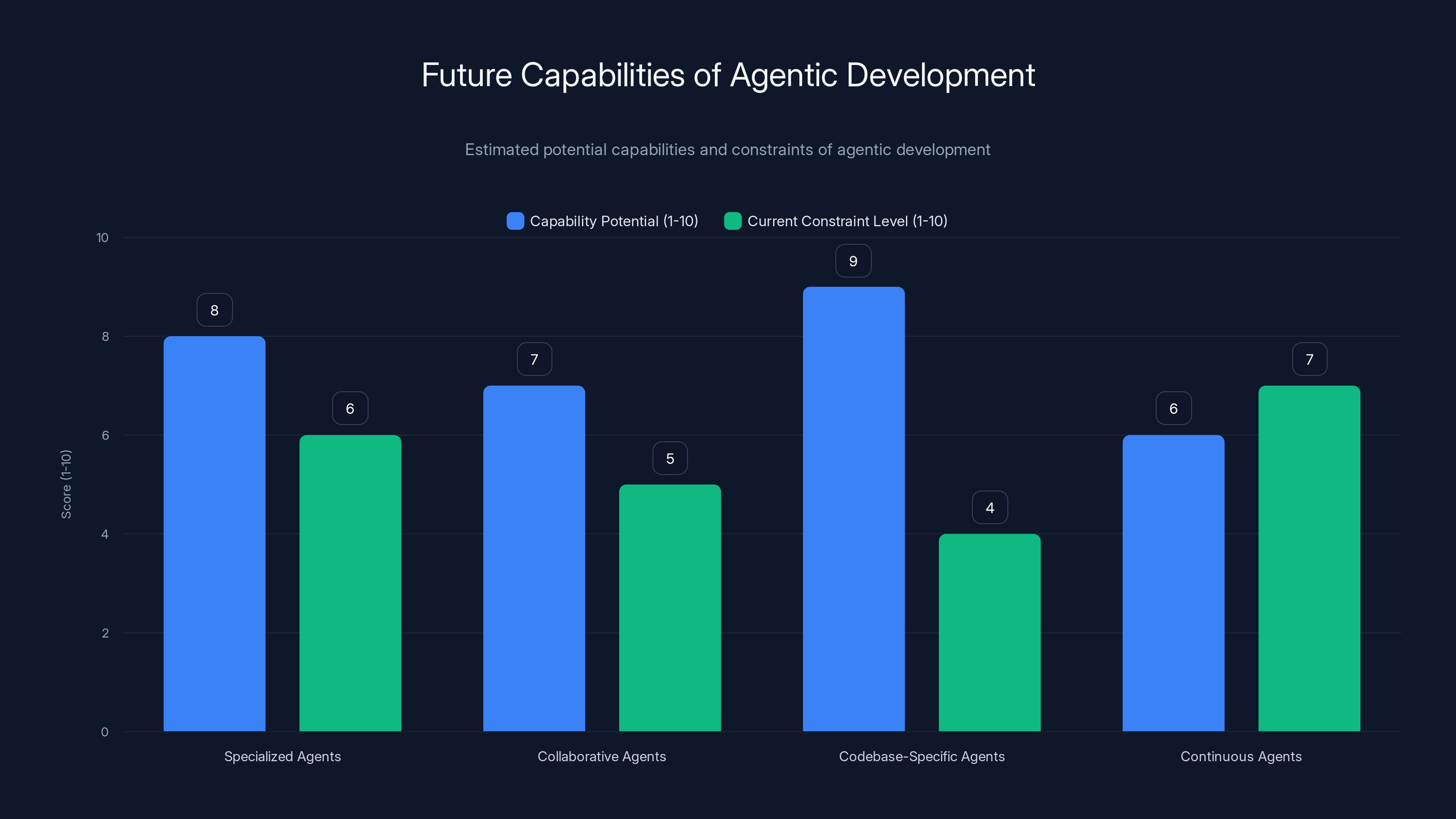

Chart: Potential of agentic development features against current constraints; specialized and codebase-specific agents show high potential. (Estimated data)

Practical Impact: Where This Actually Saves You Time

Okay, let's talk about what actually matters: your time.

I tested this on real projects, not hypothetical scenarios. Mixed results, honestly.

The winner: UI implementation. Building new screens, adding form elements, implementing navigation flows. This is where the time savings are real. A moderately complex screen that would take 30 minutes to hand-code? The agent does it in 2 minutes. You spend 5 minutes reviewing and tweaking. Net savings: 23 minutes. That compounds across a project.

The partial win: Boilerplate and patterns. View models with standard state management. Data models with proper Codable conformance. Network request wrappers. The agent implements these at about 80% quality, saving you the tedious parts. You'll touch them up, but 80% is already there. For a file that would take 20 minutes to write, you're down to 5 minutes of actual work.

The wash: Error fixing. The agent is genuinely good at understanding compiler errors and fixing them. But you have to wait for it to run the build, understand the error, propose a fix, and run the build again. If you're fluent in Swift, you can often fix the error yourself faster than waiting for the agent. Where this wins is on complicated errors where the root cause isn't obvious. There, the agent's systematic approach beats your intuition.

The miss: Complex business logic. I asked an agent to implement a caching layer with smart invalidation. It built something that worked but wasn't optimal. I reviewed it, realized I'd rather rewrite it myself, and did. Time saved: zero. But I got a baseline implementation faster than starting from scratch, which is something.

The honest math: assuming an average developer spends 15-20% of their time on boilerplate and standard patterns, Xcode agents could save you 10-15% overall time. That's meaningful. Not transformative, but meaningful.
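The arithmetic behind these estimates can be written out. This worked sketch assumes the boilerplate example above (20 minutes of work reduced to 5) is representative, giving a reduction rate r, with b the share of total time spent on boilerplate:

```latex
\begin{aligned}
\text{UI screen:}\quad & 30 - (2 + 5) = 23\ \text{min saved} \\
\text{Boilerplate reduction:}\quad & r = \frac{20 - 5}{20} = 0.75 \\
\text{Overall savings:}\quad & S = b \cdot r, \qquad b \in [0.15,\ 0.20] \\
& S \in [0.15 \times 0.75,\ 0.20 \times 0.75] = [0.1125,\ 0.15]
\end{aligned}
```

That is roughly 11-15% of total time, in line with the 10-15% figure, and only as reliable as the assumption that r holds across all of your boilerplate.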

Where it gets really interesting is for junior developers. They spend more time on boilerplate, and they can learn by watching agents work and asking them to explain their reasoning. For a junior, this could legitimately be 25-30% time savings plus a learning tool. That's genuinely valuable.

Setting Up Your Development Environment for Agentic Coding

Getting agentic coding working smoothly requires more than just upgrading Xcode. You need to think about your development setup holistically.

First, your project structure matters. Agents work better when your code is organized clearly. If you have a mess of interdependent files with unclear responsibilities, agents get confused. They'll still produce working code, but it'll be less confident and sometimes duplicative. If your project follows clear patterns—separate view layer, business logic, data layer, networking—agents understand it immediately and work more efficiently.

Second, your test suite. Agents can run tests and understand failures. If your test suite is comprehensive and fast, agents can iterate quickly. "Fix this" followed by a test run gives instant feedback. If your tests are slow or flaky, agents slow down. Also, agents will respect your test patterns. If you have convention-based tests, agents follow that convention.

Third, your documentation. Comments explaining architectural decisions, ADRs (Architecture Decision Records), team coding standards—agents read all of this. Well-documented projects get better results. Undocumented projects require agents to infer intent from code, which is slower and less reliable.

Fourth, API keys and authentication. If you connect through API keys rather than an account sign-in, you're paying per token. If you have multiple team members using agents, you need to think about billing. Are you providing shared API keys? Individual accounts? You need an authentication strategy that doesn't leak keys.
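If your team also calls the providers from its own scripts or tooling (separate from the key entered in Xcode's settings), one common way to avoid leaking keys is to read them from the environment instead of committing them to the repository. A minimal sketch: `ANTHROPIC_API_KEY` and `OPENAI_API_KEY` are the variable names the providers' official SDKs read by default, while the `apiKey(for:environment:)` helper is hypothetical.

```swift
import Foundation

// Environment variable names the official Anthropic and OpenAI SDKs read by default.
enum Provider: String {
    case anthropic = "ANTHROPIC_API_KEY"
    case openAI = "OPENAI_API_KEY"
}

/// Hypothetical helper: reads a provider key from the environment so it never has to be
/// hardcoded in source or committed to the repository. Returns nil if unset or blank.
func apiKey(
    for provider: Provider,
    environment: [String: String] = ProcessInfo.processInfo.environment
) -> String? {
    guard let raw = environment[provider.rawValue] else { return nil }
    let key = raw.trimmingCharacters(in: .whitespacesAndNewlines)
    return key.isEmpty ? nil : key
}

if apiKey(for: .anthropic) == nil {
    print("Set ANTHROPIC_API_KEY in your shell or CI secrets, not in the project.")
}
```

Passing the environment as a parameter keeps the helper testable without touching real secrets.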

Fifth, privacy and security. When you use agentic coding with cloud APIs, your code goes to OpenAI or Anthropic servers. If you work with proprietary code, sensitive data, or regulated information, this matters. Apple does some processing locally, but the agents still need to send code to external servers. Some organizations have policies against this. Know your constraints.

For teams, I'd recommend starting small. Pick one non-critical project, let a small group experiment, measure actual time savings, then roll out more broadly. Agents are tools, not magic. Their value depends on how well you integrate them into your workflow.

The Learning and Teaching Angle: Why This Matters for Junior Developers

Here's something that gets less attention than it should: agentic coding is genuinely excellent for teaching.

When a junior developer watches an agent write code, they see a thought process. Why did the agent organize the code this way? Why choose this data structure? What pattern is it following? The agent shows its work. The transcript shows its reasoning.

Compare this to traditional learning where a senior developer writes code and the junior tries to reverse-engineer the thinking. Or documentation that explains what to do but not why. Agents actually verbalize their reasoning.

Apple's holding code-along workshops where developers watch agents work in real time and learn from them. This is smart. It positions agents as teachers, not replacements. It frames agentic coding as "here's a smarter way to think about building this" rather than "your job is changing."

For learning platforms and educational institutions, this is huge. Imagine teaching intro programming where every student has access to an agent that explains its reasoning. Imagine advanced courses where agents handle the boilerplate so students focus on complex problem-solving. Imagine code review where agents catch common mistakes and explain the right pattern.

The risk is students just ask agents to write their assignments. That's a real problem. But the opportunity—using agents as teaching partners—is genuine.

One more angle: agents are brilliant at handling language barriers. A developer who's not fluent in English can describe a problem in their native language, and the agent handles it. Or a developer learning Swift can ask agents to translate patterns from languages they know. This democratizes access to high-quality development, which is meaningful.

Chart: Code assistance agents excel in project exploration and error correction, with success rates around 90%, but UI implementation requires some manual tweaks. (Estimated data based on typical performance)

Comparing Agent Models: Claude Agent vs OpenAI Codex

Since you have choices, let's talk about the differences between Claude Agent and OpenAI's version.

OpenAI Codex: Optimized for speed and accuracy at code generation. When you ask it to implement a straightforward feature, it does it fast. Minimal reasoning overhead. Just get to the implementation. Excellent at pattern matching because it's trained on enormous amounts of public code. Great for tasks with clear, unambiguous requirements. Model variants (GPT-5.2-Codex vs GPT-5.1 mini) give you cost-quality tradeoffs.

Downside: Less good at complex reasoning. If a task requires thinking through multiple approaches and choosing the best one, Codex can be indecisive. Also, it's less good at error recovery. When something breaks, it doesn't reason deeply about why. It tries fixes in a relatively linear way.

Claude Agent: Better at reasoning and planning. When you ask it to implement something complex, it thinks through multiple approaches first, explains its reasoning, then picks a strategy. Excellent at error recovery because it actually understands why errors happen, not just pattern-matching against known error types. Better at handling ambiguous requirements because it asks clarifying questions.

Downside: Slightly slower because of the reasoning overhead. More tokens, potentially higher costs. Sometimes over-explains when you just want the code. It's less finely tuned to code generation specifically, so it occasionally produces less idiomatic code.

My recommendation: Use OpenAI Codex for straightforward implementation tasks where you know exactly what you want. Use Claude Agent for complex features, architectural decisions, and error recovery. Most teams using both find themselves reaching for Claude more often, even though Codex is faster.

For cost optimization, use the mini variant of Codex for simple tasks (simple UI components, basic data models). Use the full Codex or Claude for anything requiring reasoning.

The Cost Equation: Is This Financially Sensible

People ask me: does this pencil out? Is it worth paying for API costs?

The math depends on your situation.

Best case: Mid-sized team (10-30 developers), 40% of coding work is boilerplate or standard patterns, you're paying full market rates for developers. Using agents for 40% of work at cost of maybe

Okay case: Small team (5 developers), less boilerplate (maybe 25% of work), using agents efficiently. Saving 0.25 FTE per developer =

Bad case: Team where agents don't fit the workflow. Highly custom code, complex business logic, limited boilerplate. Agent usage is sporadic. You're paying

The honest take: if you're paying developers market rates and they spend any significant time on boilerplate, agents pay for themselves. The question is how much overhead they introduce. If switching to agentic coding interrupts your flow, that costs you. If it enhances your flow, it saves you.
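The trade-off in that paragraph can be written as a back-of-the-envelope model: the value of the time saved, minus token spend, minus the overhead agents introduce. This is a hypothetical sketch; every input is an estimate you would supply for your own team, not data from Apple, OpenAI, or Anthropic.

```swift
/// Hypothetical back-of-the-envelope model. Every input is your own estimate,
/// expressed per developer per year; none of these figures come from Apple or the providers.
struct AgentCostModel {
    var annualDeveloperCost: Double   // fully loaded cost of one developer
    var automatableShare: Double      // fraction of work that is boilerplate or standard patterns
    var timeReduction: Double         // fraction of that work the agent actually removes
    var annualAgentCost: Double       // token/API spend
    var annualOverheadCost: Double    // review, re-prompting, and broken flow, priced in salary terms

    /// Value of time saved minus what it costs to get it.
    var netSavings: Double {
        let grossSavings = annualDeveloperCost * automatableShare * timeReduction
        return grossSavings - annualAgentCost - annualOverheadCost
    }
}

// Illustrative inputs only; substitute your own estimates.
let example = AgentCostModel(
    annualDeveloperCost: 100_000,
    automatableShare: 0.25,
    timeReduction: 0.75,
    annualAgentCost: 2_000,
    annualOverheadCost: 3_000
)
print(example.netSavings)
```

If `annualOverheadCost` grows until `netSavings` approaches zero, you are in the "interrupts your flow" case.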

For startups operating on tight margins, the upfront cost matters more. But the time savings are more valuable because time is the constraint.

For large enterprises, the cost is negligible but resistance to change is high. Cost isn't the barrier. Organizational friction is.

Common Pitfalls and How to Avoid Them

After testing this, I've seen patterns in what works and what doesn't.

Pitfall 1: Vague prompts. "Make the app better" doesn't work. "Add better error handling" is vague. "Implement specific retry logic for network failures, with exponential backoff up to 30 seconds" works. The more specific your requirement, the better the output. Bad prompts waste time on agent confusion and multiple iterations.
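The specific prompt in Pitfall 1 is concrete enough to sketch directly. Below is one way to compute exponential backoff delays capped at 30 seconds and apply them to a retrying call. `backoffDelays`, `retrying`, and `TransientError` are hypothetical names, the 1-second base delay is an assumption, and `sleep` is injected so the logic can be exercised without actually waiting.

```swift
/// Exponential backoff: base, 2*base, 4*base, ... with each delay capped at maxDelay seconds.
func backoffDelays(retries: Int, base: Double = 1.0, maxDelay: Double = 30.0) -> [Double] {
    var delays: [Double] = []
    var delay = base
    for _ in 0..<max(0, retries) {
        delays.append(min(delay, maxDelay))
        delay *= 2
    }
    return delays
}

/// Calls `operation`, retrying after each failure with the next backoff delay.
/// `sleep` is injected so tests can skip real waiting; the last attempt's error propagates.
func retrying<T>(
    retries: Int,
    sleep: (Double) -> Void,
    _ operation: () throws -> T
) throws -> T {
    for delay in backoffDelays(retries: retries) {
        do {
            return try operation()
        } catch {
            sleep(delay)
        }
    }
    return try operation()
}

struct TransientError: Error {}

// Usage: a stub network call that fails twice before succeeding.
var calls = 0
let response = try! retrying(retries: 6, sleep: { print("waiting \($0)s") }) { () throws -> String in
    calls += 1
    if calls < 3 { throw TransientError() }
    return "200 OK"
}
print(response)
```

In an app, `sleep` would wrap a real delay such as `Task.sleep`; keeping it as a parameter makes the retry policy itself easy to review and test.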

Pitfall 2: Not reviewing the code. Agents produce working code, not necessarily good code. You still need code review. Skipping review saves time short-term, costs you long-term when you have to fix agent-generated technical debt.

Pitfall 3: Using agents for code you don't understand. If an agent writes something and you can't explain it, that's a problem. You can't maintain it. You can't debug it. You can't modify it confidently. Only use agents to write code you could write yourself, just faster.

Pitfall 4: Expecting agents to refactor legacy code. Agents are great at greenfield implementation. Refactoring existing code requires understanding intent, not just syntax. Agents struggle here. Better to refactor manually, then use agents for new code.

Pitfall 5: Not providing context. If your project has documentation, architecture decisions, coding standards—put them where the agent can see them. Comments, README files, ADRs. The more context you provide, the better the output. Agents are contextual learners.

Pitfall 6: Trusting the agent's judgment on security. Agents implement security patterns they learned from training data. But security is subtle. Agents can miss edge cases. Always have security reviews for any security-sensitive code, regardless of whether an agent wrote it.

Pitfall 7: Using agents instead of understanding your tools. If you don't know how to use async/await in Swift, asking an agent to implement async code might save time short-term. But you're not learning. Agents work best as accelerants for things you already know, not as replacements for learning.

Chart: OpenAI's Codex excels in code generation and speed, while Anthropic's Claude is superior in complex reasoning and error recovery. (Estimated data based on described strengths)

Looking Forward: What Comes Next in Agentic Development

This is the beginning, not the end state.

Where this goes next is interesting. Apple's architecture (MCP) is designed to support multiple agents. Imagine a scenario where you have specialized agents: one for UI implementation, one for data modeling, one for testing, one for performance optimization. You hand off tasks to the agent best suited for the job.

Or imagine agents that work collaboratively. One agent drafts the architecture, another implements the UI, another writes the business logic, another writes tests. They communicate back and forth, refining the design.

Or imagine agents that specialize in your codebase. They learn your patterns, your conventions, your trade-offs. Over time, they become eerily good at predicting what you want.

And then there's the possibility of continuous agents. Not task-based, but agents running in the background, watching your code, proposing optimizations, maintaining documentation, keeping dependencies updated. This is further out, but theoretically possible.

The constraint right now is model capability. Claude and Codex are good, but not perfect. Reasoning, long-context work, reliability on complex tasks—these will improve. As models improve, agents become more powerful.

Second constraint is user interface. We're still figuring out the right way for humans to direct agents. Prompts are natural, but not optimal. Imagine interfaces where you specify constraints visually, or where agents ask clarifying questions through structured forms rather than natural language. This gets better as we understand developer workflows better.

Third constraint is integration. Currently, agents work within Xcode. But what about agents that work across multiple tools? An agent that understands your Figma designs, your Jira tickets, your GitHub code, your Slack conversations, and your deployment infrastructure? That's the holy grail. MCP makes this more feasible, but it's not there yet.

Timeline-wise, I'd expect meaningful improvements every 6-12 months. By 2026-2027, agentic development will be standard. By 2028-2029, it'll be so integrated that "not using agents" will be a minority choice.

Integration With Your Existing Workflow: Practical Steps

Assuming you want to try this, here's how to actually integrate it without blowing up your current process.

Step 1: Upgrade to Xcode 26.3. This is straightforward. Check your macOS version (needs to be current), download the release candidate from the developer site, install it.

Step 2: Create an API key with your preferred provider. If you go with OpenAI, you need a ChatGPT Plus account (minimum) or API access. Anthropic works the same way: a paid Claude account or API access. Set a monthly budget limit so you don't accidentally blow through thousands on a runaway agent.

Step 3: Configure the agent in Xcode settings. Add your API key. Choose your preferred model. Test it on a dummy project first. Ask it to implement something simple. Get comfortable with how it works.

Step 4: Identify one project or feature where agents could help. Ideally something with boilerplate—a new feature that follows existing patterns, or a screen that's mostly UI. Start there, not on critical code.

Step 5: Write a good prompt. Describe what you want specifically. Link to relevant documentation or examples if possible. Don't assume the agent knows your conventions.

Step 6: Run the agent. Watch it work. Don't interrupt. Let it finish a task before providing feedback. And actually pay attention—this is your learning moment to understand how it thinks.

Step 7: Review the output carefully. Is the code right? Does it follow your patterns? Is it maintainable? Is it secure? If yes to all, merge it. If no, understand why and provide better feedback for next time.

Step 8: Measure. Did you actually save time? Did the code quality match your standards? After your first few tasks, you'll have real data about whether agents are useful for your workflow.

The tempo should be experimental at first. Maybe 1-2 agent tasks per week for a month. Then if it's working, expand usage. If it's not, adjust and try again.

Team Dynamics: How Agentic Coding Changes Collaboration

This is the part people don't talk about enough: how agents change team dynamics.

First, the obvious benefit: pair programming with an agent is weird in a good way. You describe what you want, the agent writes it, you review together. It's faster than two humans pairing. One person drives (the developer with design context), one person navigates (reviewing the agent's work and providing feedback). This can actually improve code quality because you have built-in code review before the code even hits Git.

Second, the onboarding benefit: new team members can be productive faster. Instead of spending weeks learning patterns and conventions, they can ask agents to generate code following existing patterns, then study that code to learn. It's like having a library of reference implementations.

But there are risks. If some developers rely heavily on agents and others don't, you get inconsistency. Some code is agent-generated, some is human-written. The mix creates style mismatches. You need team conventions about when agents are appropriate and how to handle agent-generated code.

Also, there's the knowledge gap risk. If newer developers always rely on agents, they don't develop deep understanding. They know what works, but not why. This creates fragility. You need culture around agents as learning tools, not shortcuts.

Third, there's the responsibility and ownership question. If an agent writes code and it breaks in production, whose fault is it? The developer who asked for it? The agent's creators? Your legal team needs to think about this. Most SaaS agreements say the vendor isn't liable, so it falls on you. You need to own agent-generated code fully.

My recommendation: establish team norms. Define which tasks are appropriate for agents. Require code review of agent output. Treat agent-generated code as code that needs to be understood, not just used. Have junior developers study agent output as learning material. Make it a team discussion, not a tool that sneaks in quietly.

UI implementation shows the highest time savings with an agent, saving 23 minutes per task on average. Boilerplate tasks also see significant savings, while complex logic implementations offer minimal time benefits. Estimated data based on typical project scenarios.

Security Considerations: What You Need to Know

Sending code to external AI services carries inherent risks. Here's what you need to think about.

Data privacy: Your code goes to OpenAI or Anthropic servers. If your code contains proprietary algorithms, trade secrets, or customer data, this is a problem. Most companies have policies about what can leave your network. Check yours. Some enterprises simply can't use cloud agents—they need on-device models or agents running on their own infrastructure.
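If you do get approval to use cloud agents, a cheap first line of defense is to scan the project for obvious secrets before an agent reads it. The sketch below is illustrative only: the patterns, file suffixes, and function names are my own assumptions, and it's no substitute for a dedicated secret scanner or your company's data policy.

```python
import re
from pathlib import Path

# Illustrative patterns only; tune them to your own policy.
SENSITIVE_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hard-coded API key": re.compile(r"""(?i)\bapi[_-]?key\s*[:=]\s*["'][^"']{16,}["']"""),
}

# File types worth checking in a typical Apple project (an assumption; extend as needed).
SCANNED_SUFFIXES = {".swift", ".m", ".h", ".plist", ".json", ".xcconfig", ".pem"}


def sensitive_matches(text: str) -> list[str]:
    """Return the names of all patterns that match somewhere in the text."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]


def find_sensitive_content(project_root: str) -> list[tuple[str, str]]:
    """Walk the project and return (relative path, pattern name) pairs to review first."""
    root = Path(project_root)
    findings: list[tuple[str, str]] = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in SCANNED_SUFFIXES:
            text = path.read_text(encoding="utf-8", errors="ignore")
            findings.extend((str(path.relative_to(root)), name) for name in sensitive_matches(text))
    return findings
```

Call `find_sensitive_content("path/to/MyApp")` and review every hit before starting an agent session. A clean result doesn't mean the code is safe to send; it only rules out the most obvious leaks.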

Data retention: Both OpenAI and Anthropic retain prompts and outputs for a period that varies by contract. Even if you delete a prompt, it might stay in their systems for up to 30 days. If your code is legally sensitive, this matters. Read the contract carefully.

Prompt injection: Could an attacker manipulate an agent into generating malicious code? In principle, yes. Directly compromising the agent itself is unlikely, but instructions planted in content the agent reads (a README, a code comment, a downloaded file) could trick it into generating insecure code. This is the social engineering angle. You still need code review.

Dependencies and supply chain: If an agent adds a dependency, you're introducing third-party code without fully vetting it. Make sure your security scanning covers agent-generated dependencies, not just human-written ones.
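One practical way to catch agent-added dependencies is to diff Swift Package Manager's `Package.resolved` before and after an agent session (in an Xcode project it usually lives under `project.xcworkspace/xcshareddata/swiftpm/`). This sketch assumes the v2/v3 file format, where pins sit in a top-level `pins` array keyed by `identity`; the older v1 format nests them differently and would need its own parser. The function names are hypothetical.

```python
import json


def load_pins(resolved_text: str) -> dict[str, dict]:
    """Map package identity -> pin for a Package.resolved file in format v2 or later."""
    data = json.loads(resolved_text)
    if data.get("version", 1) < 2:
        raise ValueError("format v1 nests pins under 'object'; this sketch doesn't handle it")
    return {pin["identity"]: pin for pin in data.get("pins", [])}


def dependency_changes(before_text: str, after_text: str) -> dict[str, list[str]]:
    """List packages that were added or re-pinned between two Package.resolved snapshots."""
    before, after = load_pins(before_text), load_pins(after_text)
    added = sorted(after.keys() - before.keys())
    changed = sorted(
        name
        for name in after.keys() & before.keys()
        if after[name].get("state") != before[name].get("state")  # new version or revision
    )
    return {"added": added, "changed": changed}
```

In practice you'd pass the output of `git show HEAD:<path>/Package.resolved` as the "before" snapshot and the current file as "after", then route anything in `added` or `changed` through your normal dependency review.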

Compliance: If you operate in regulated industries (healthcare, finance, government), agentic coding might violate compliance requirements. Get legal approval before using agents.

My take: agents are not inherently insecure. They're tools. Use them like any other tool, with appropriate security practices. Code review, dependency scanning, compliance checks—all still apply. Agents don't exempt you from responsibility.

Comparing Agentic Coding to Other AI Development Tools

Apple isn't the only one shipping agent features. Let's talk about the landscape.

GitHub Copilot is still the most mature, but it's best known for autocomplete. It suggests code as you type, and in that mode it doesn't reason about your whole project or about complex, multi-step tasks. It's fast and low-friction. But autocomplete isn't agentic.

JetBrains has AI assistant features with similar limitations. Good at suggesting completions, not at autonomous task execution.

Perplexity and other research tools can generate code, but they're not IDE-integrated. You're copying and pasting. No direct tool access. Less context about your actual codebase.

Xcode's approach is different. It's tightly integrated and has access to your full project. It can run builds, execute tests, and invoke Xcode tools directly, so it works with deep context about your code. That's the differentiator.

For companies building their own development tools, Xcode's approach is the blueprint. Deep integration beats loose coupling. Context matters. Tool access matters. Being inside the IDE, not outside it, matters.

Where Xcode might struggle: it's Apple-only. If you develop for Android, the web, or backend systems, Xcode's agentic coding does nothing for you. Other IDEs will catch up. JetBrains will integrate agents into their IDEs. Visual Studio will do the same. Web-based editors will get agents. This is a space where the leader today doesn't necessarily stay the leader.

But for iOS/macOS development, Xcode is the obvious choice. The integration is too good to ignore.

Training Your Team: How to Get Everyone Up to Speed

If you decide to roll out agentic coding across a team, you need a training strategy.

First, basic literacy: Everyone needs to understand what agentic coding is and what it isn't. It's not magic. It's not replacing developers. It's a tool that autonomously handles certain tasks. Get everyone on the same conceptual page.

Second, hands-on experimentation: Let developers play with agents on side projects. Give them a safe space to learn. They'll figure out good prompts versus bad prompts through trial and error. This is necessary learning.

Third, structured training: Watch Apple's code-along workshops together as a team. Discuss what the agent does well and what it struggles with. Build shared understanding.

Fourth, documentation: Document your team's conventions for agentic coding. When do you use agents? When do you avoid them? What does code review look like for agent-generated code? Write this down. New team members need guidance.

Fifth, pairing: Have experienced developers pair with newer developers on agentic coding tasks. The experienced developer knows what to ask for, the newer developer learns the patterns. This is pair programming that actually works.

Sixth, retrospectives: After a few weeks of using agents, look at what's working and what's not. Adjust your approach. Agents aren't one-size-fits-all. Your team will develop its own style and preferences.

The training should take 2-3 weeks if you're methodical. Most developers pick it up faster than you'd expect. The barrier isn't understanding the concept; it's getting comfortable directing the tool effectively.

The Honest Limitations and Why You Should Care

I want to be clear about what agentic coding is not, because the hype is real.

It's not going to eliminate junior developer jobs. It reduces boilerplate work, which junior developers spend time on. But junior developers do more than write boilerplate. They learn. They ask questions. They develop judgment. Agents can't replace that. If anything, agents that explain their reasoning are good for junior developer learning.

It's not a complete replacement for human developers. There's still design work, complex problem-solving, communication, judgment calls. Agents handle the mechanical parts. Humans handle the creative and decision-making parts.

It's not perfect. It hallucinates. It generates code that works but isn't optimal. It struggles with novel problems. It needs human oversight. Expecting agents to be flawless is setting yourself up for failure.

It's not a security silver bullet. Agents can generate insecure code. They don't magically prevent all bugs. They're not better at security than human developers—just faster at boilerplate.

It's not free. API costs are real. You're paying for tokens. Factor this into your budget.
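A back-of-the-envelope estimate helps here. Both providers bill input and output tokens separately, and agentic workflows often send far more input than they get back, since project context is re-sent at each step. The prices and token counts in this sketch are placeholders, not quoted rates; plug in your provider's current pricing and your own usage numbers.

```python
def estimate_monthly_cost(
    tasks_per_month: int,
    input_tokens_per_task: int,
    output_tokens_per_task: int,
    usd_per_million_input: float,
    usd_per_million_output: float,
) -> float:
    """Rough monthly API spend, pricing input and output tokens separately."""
    input_cost = tasks_per_month * input_tokens_per_task * usd_per_million_input / 1_000_000
    output_cost = tasks_per_month * output_tokens_per_task * usd_per_million_output / 1_000_000
    return input_cost + output_cost


# Placeholder inputs only: 200 tasks a month, 150k input and 10k output tokens per task,
# $3 per million input tokens, $15 per million output tokens.
print(estimate_monthly_cost(200, 150_000, 10_000, 3.0, 15.0))  # 120.0
```

Run it with a few different token assumptions; the spread tells you how sensitive your budget is to heavier agent usage.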

But despite these limitations, it's genuinely useful. For the specific task of implementing standard patterns and boilerplate, agents are legitimately better than humans. Not smarter, not more creative, but faster and more consistent.

The honest use case: agents handle the mechanical parts so humans can focus on the challenging parts. That's the value proposition. Not automating everything, but automating the tedium.

Getting Started: Your Action Plan

If you're considering agentic coding in Xcode, here's your decision tree.

If you write mostly iOS apps: Definitely try this. Xcode is your IDE anyway. The integration is excellent. The agents understand Apple frameworks. The ROI is real.

If you write mostly Android apps: Wait. Until other IDEs (Android Studio, JetBrains) get similar agent integration, you're not in the optimal position. But watch this space. It's coming.

If you write mostly web/backend code: You're somewhere in between. Some agent features might be useful, but you're not getting the full integration Apple's iOS developers get. Worth trying, but manage expectations.

If you're in a regulated industry: Check with legal and security first. Make sure you can use cloud agents. If not, wait for on-device or self-hosted options.

If you're cost-conscious: Run the math. Calculate your team's boilerplate percentage, estimate time savings, compare to API costs. If ROI is positive, go for it. If not, wait until your code patterns change.
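Here's one way to run that math, as a rough sketch. Every input is an assumption you supply: coding hours per developer per month, the share of that time that is boilerplate, the fraction of boilerplate time an agent actually saves (measure it rather than taking the 40-60% estimate on faith), your loaded hourly cost, and your monthly API bill. The function name and parameters are hypothetical.

```python
def agent_roi(
    developers: int,
    coding_hours_per_dev: float,    # hours per developer per month spent writing code
    boilerplate_share: float,       # fraction of coding time that is boilerplate (0-1)
    savings_on_boilerplate: float,  # fraction of boilerplate time the agent saves (0-1)
    loaded_hourly_cost: float,      # salary plus overhead, per hour
    monthly_api_cost: float,
) -> dict[str, float]:
    """Estimate monthly hours saved, net value, and ROI from agent usage."""
    if monthly_api_cost <= 0:
        raise ValueError("monthly_api_cost must be positive")
    hours_saved = developers * coding_hours_per_dev * boilerplate_share * savings_on_boilerplate
    net_value = hours_saved * loaded_hourly_cost - monthly_api_cost
    return {
        "hours_saved": hours_saved,
        "net_monthly_value": net_value,
        "roi": net_value / monthly_api_cost,  # 1.0 means the gross value is double the spend
    }


# Illustrative team: 5 developers, 100 coding hours each, 30% boilerplate,
# 50% of that saved, $80/hour loaded cost, $500/month in API spend.
estimate = agent_roi(5, 100, 0.30, 0.50, 80.0, 500.0)
print(round(estimate["hours_saved"]), round(estimate["roi"], 1))  # 75 11.0
```

If the ROI comes out near zero or negative with honest inputs, that's your answer for now.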

If you're learning to code: This is actually great for you. Agents as teachers are genuinely useful. Study what they generate. Understand why they make certain choices. Use them as learning partners, not shortcuts.

The path forward: download Xcode 26.3, create an API key with OpenAI or Anthropic, spend an afternoon experimenting. You'll know pretty quickly whether agents fit your workflow.

FAQ

What exactly is agentic coding?

Agentic coding is when AI agents autonomously complete development tasks—not just suggesting code, but actually understanding your project, writing code, running builds, detecting errors, and fixing them. Unlike autocomplete that suggests one line at a time, agents break down tasks into steps and execute them end-to-end with minimal human intervention.
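To make that loop concrete, here's a deliberately simplified sketch of plan, write, build, and fix. It is not how Claude Agent or Codex are actually implemented; `write_code` and `build` are hypothetical stand-ins you'd wire to a model and to your build system, and the retry limit is an arbitrary assumption.

```python
from typing import Callable


def run_agent_task(
    steps: list[str],
    write_code: Callable[[str, list[str]], None],
    build: Callable[[], list[str]],
    max_attempts: int = 3,
) -> list[str]:
    """Run each planned step, rebuilding and retrying until the build is clean.

    `build` returns a list of compiler errors; an empty list means success.
    Returns a log of each attempt.
    """
    log: list[str] = []
    for step in steps:
        errors: list[str] = []
        for attempt in range(1, max_attempts + 1):
            write_code(step, errors)  # retries receive the previous build's errors
            errors = build()
            log.append(f"{step}: attempt {attempt}, {len(errors)} error(s)")
            if not errors:
                break
        else:
            # Never got a clean build: stop and hand control back to the developer.
            log.append(f"{step}: gave up after {max_attempts} attempts")
            return log
    return log


# Usage with stand-ins: the first build fails once, then everything compiles.
build_results = iter([["cannot find 'SettingsView' in scope"], [], []])
for line in run_agent_task(
    ["Create SettingsView", "Add navigation link"],
    write_code=lambda step, errors: None,  # a real agent would edit files here
    build=lambda: next(build_results),
):
    print(line)
```

The give-up branch matters as much as the happy path: a bounded retry count is what keeps an agent from looping forever on a problem it can't solve.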

How does agentic coding in Xcode work?

Xcode uses the Model Context Protocol (MCP) to expose its tools and capabilities to AI agents. When you describe a task in natural language, the agent accesses your project structure, reads documentation, writes code, runs your build system, executes tests, and iterates if errors occur. You see each step highlighted, can review changes, and revert anything you disagree with at any point.
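Under the hood, MCP messages are JSON-RPC 2.0. An agent discovers what a server offers with `tools/list` and invokes a tool with `tools/call`, passing the tool's `name` and an `arguments` object; over the stdio transport, each message is a single line of JSON. The sketch below only builds and parses those messages. The tool name `build_project` and its `scheme` argument are hypothetical, not Xcode's actual tool names, and a real session begins with an `initialize` handshake that's omitted here.

```python
import json
from itertools import count
from typing import Any, Optional

_request_ids = count(1)  # JSON-RPC request ids must be unique within a session


def mcp_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Encode a JSON-RPC 2.0 request as one line, as MCP's stdio transport expects."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)  # default json.dumps output contains no raw newlines


def tool_result_text(response_line: str) -> str:
    """Extract the text content from a tools/call response, raising on errors."""
    response = json.loads(response_line)
    if "error" in response:  # protocol-level failure, e.g. an unknown tool
        raise RuntimeError(response["error"].get("message", "JSON-RPC error"))
    result = response["result"]
    text = "\n".join(
        item["text"] for item in result.get("content", []) if item.get("type") == "text"
    )
    if result.get("isError"):  # the tool ran but reported a failure, e.g. a broken build
        raise RuntimeError(text)
    return text


# Hypothetical tool name and arguments, for illustration only.
print(mcp_request("tools/list"))
print(mcp_request("tools/call", {"name": "build_project", "arguments": {"scheme": "MyApp"}}))
```

This is why "any MCP-compatible agent can integrate": the agent doesn't need Xcode-specific code, only the ability to list tools and call them with JSON arguments.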

What are the benefits of agentic coding?

Agentic coding saves time on boilerplate and standard patterns, reducing development time for UI implementation and standard features by an estimated 40-60%. For junior developers, it serves as a teaching tool showing professional coding patterns. Teams spend less time on mechanical coding work and more on complex problem-solving and design decisions. Agents provide transparency through step-by-step task breakdowns and execution logs, which helps developers learn from the process.

Is agentic coding available only in Xcode?

Currently, Xcode 26.3 offers the most mature integration with Claude Agent and OpenAI Codex. However, other IDEs like JetBrains and Visual Studio are developing similar capabilities. The Model Context Protocol is designed to be open, meaning other tools can adopt it. Expect broader adoption across development environments within the next 6-12 months.

What types of code can agents write well?

Agents excel at UI implementation, boilerplate code generation, data models with proper conformance, standard framework integration, and error fixing. They struggle with complex business logic, performance optimization, novel architectural decisions, and nuanced UX patterns. The best results come from well-defined tasks with clear success criteria and existing patterns agents can learn from.

Do I need to worry about security when using agents?

Yes, you should apply normal security practices. Code goes to external servers, so check organizational policies about code privacy. All code generated by agents still needs review—agents can generate functional but insecure code. Use your standard dependency scanning and compliance checks. Agents don't exempt you from security responsibility.

How much does agentic coding cost?

Costs depend on API usage. OpenAI charges per token (approximately

Can agents replace my senior developers?

No. Agents automate mechanical coding work, not architectural decisions, design judgment, or complex problem-solving. Senior developers become more valuable because they spend less time on boilerplate and more time on strategic work. Agents are force multipliers for experienced teams, not replacements for human expertise.

How do I get started with agentic coding?

Download Xcode 26.3, create an API key with OpenAI or Anthropic, add it to Xcode settings, and experiment on a non-critical project. Start with straightforward tasks like implementing a new screen or fixing common errors. After a few experiments, you'll understand what agents do well for your specific workflow.

What if I don't like the code an agent generated?

You can revert any change instantly. Xcode creates milestones before agents make changes, so you're one click away from your previous state. Review agent output the same way you'd review human code. If something doesn't match your standards, revert and ask for a different approach.

Conclusion: The Practical Reality of Agentic Development

Xcode's move into agentic coding isn't the future of development. It's the present. And the interesting thing is, it's more practical and less revolutionary than the hype suggests.

This isn't AI replacing developers. It's developers gaining a capable tool that handles mechanical work so they can focus on thinking. The agent writes the boilerplate SwiftUI view. You review it, maybe tweak the spacing or logic, then move on. The agent runs your build and fixes compiler errors. You understand why the fix was necessary. The agent implements a standard data model. You verify it meets your requirements.

That's actually valuable. Not transformative, but genuinely useful. A 15% time saving across a developer's total output matters when it's consistent and reliable. Multiply that across a team and a year, and you're talking about measurable impact.
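How does a 15% figure square with the 40-60% savings quoted for boilerplate-heavy tasks? Overall savings is roughly the share of time spent on agent-friendly work multiplied by the savings on that work. Assuming, purely for illustration, that boilerplate makes up 30% of a developer's time ($s$) and the agent saves half of it ($r$):

$$
\text{overall savings} \approx s \cdot r = 0.30 \times 0.50 = 0.15
$$

Across the full 40-60% range, the same 30% assumption gives $0.30 \times 0.40 = 0.12$ to $0.30 \times 0.60 = 0.18$, which is why large per-task savings translate into a much more modest overall number.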

The real impact is indirect. Faster implementation means faster iteration. Faster iteration means better products. Better onboarding means new developers ramp up faster. Standardized code generation means more consistent codebases. These gains compound.

For teams that ship often, that iterate quickly, that value consistency, agentic coding is worth evaluating. For teams doing one-off projects or highly novel work, the value proposition is weaker.

The smart move: try it. Spend an afternoon, see how it feels. Measure real metrics. Make a decision based on actual data, not hype or fear. Agents are tools. Some tools fit your workflow. Some don't. You won't know until you experiment.

Xcode just made that experiment much easier.

Ready to explore agentic development tools beyond Xcode? Consider how workflow automation platforms can extend AI-powered development. Many teams combine IDEs with specialized automation tools to streamline even more of their development process. The intersection of code generation and workflow automation represents the next evolution in developer productivity.

Key Takeaways

- Xcode 26.3 brings real agentic coding where AI agents autonomously write, test, and fix code—not just autocomplete suggestions

- Model Context Protocol (MCP) enables agents to access Xcode's full capabilities directly, making agents genuinely autonomous for development tasks

- Agents excel at UI implementation, boilerplate, and standard patterns (80-90% success rate) but struggle with complex logic and architecture (30-45% success rate)

- Time savings average an estimated 40-60% for boilerplate-heavy tasks; ROI can be positive for many teams once API costs are factored in

- Developer control remains paramount: full transparency, step-by-step visualization, and one-click reversions ensure humans stay in charge
