Introduction: The Shift From Coding Assistance to Autonomous Agents

Last year, coding felt like a two-person job: you and your AI assistant. You'd ask ChatGPT a question, copy the code, paste it into your IDE, debug the inevitable issues, and hope it actually worked. It was helpful, sure. But it was still you doing most of the thinking.

Now? Apple's flipping the script entirely.

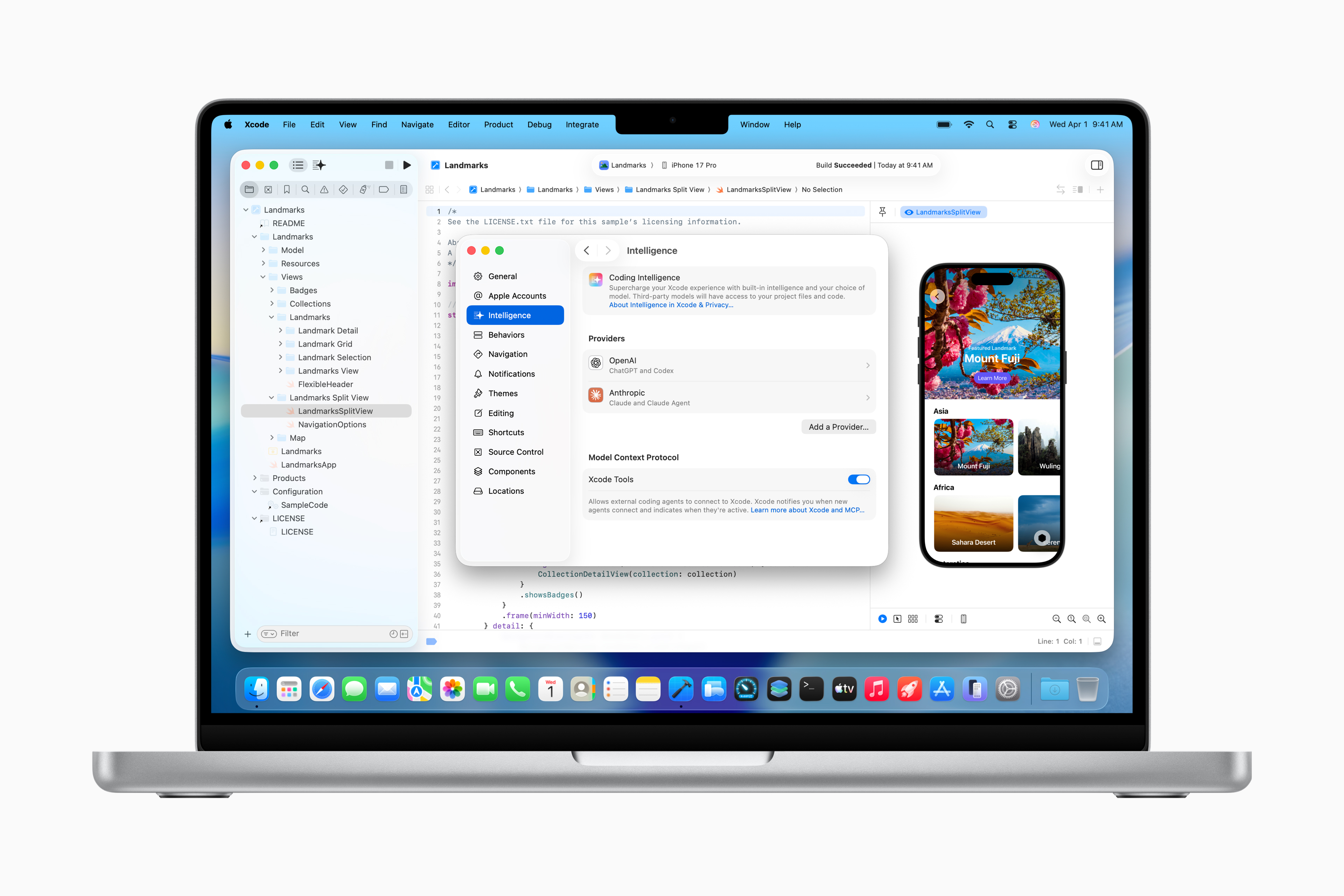

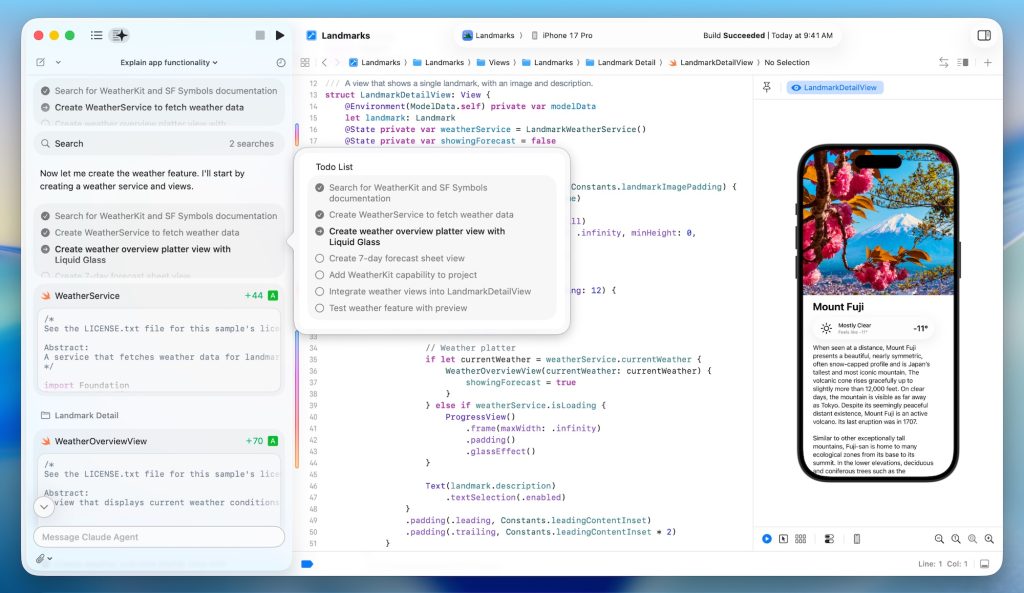

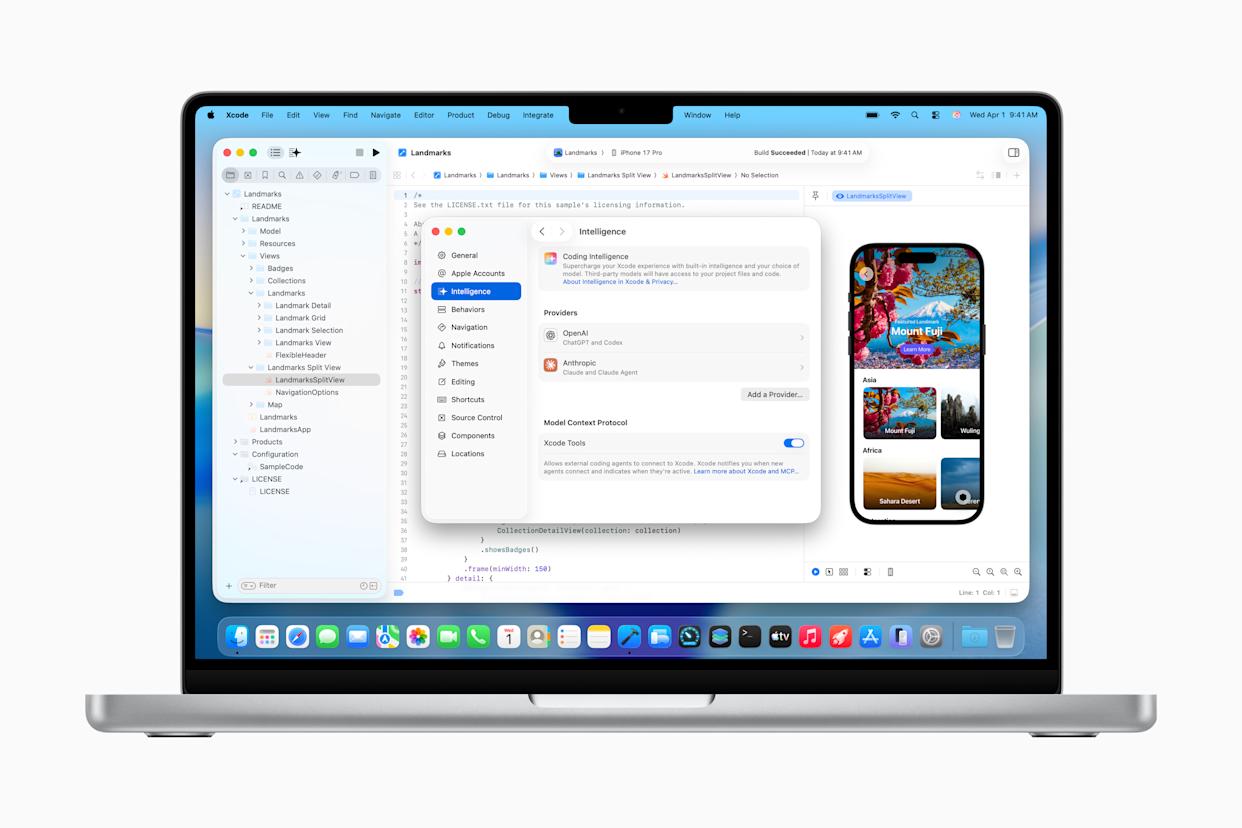

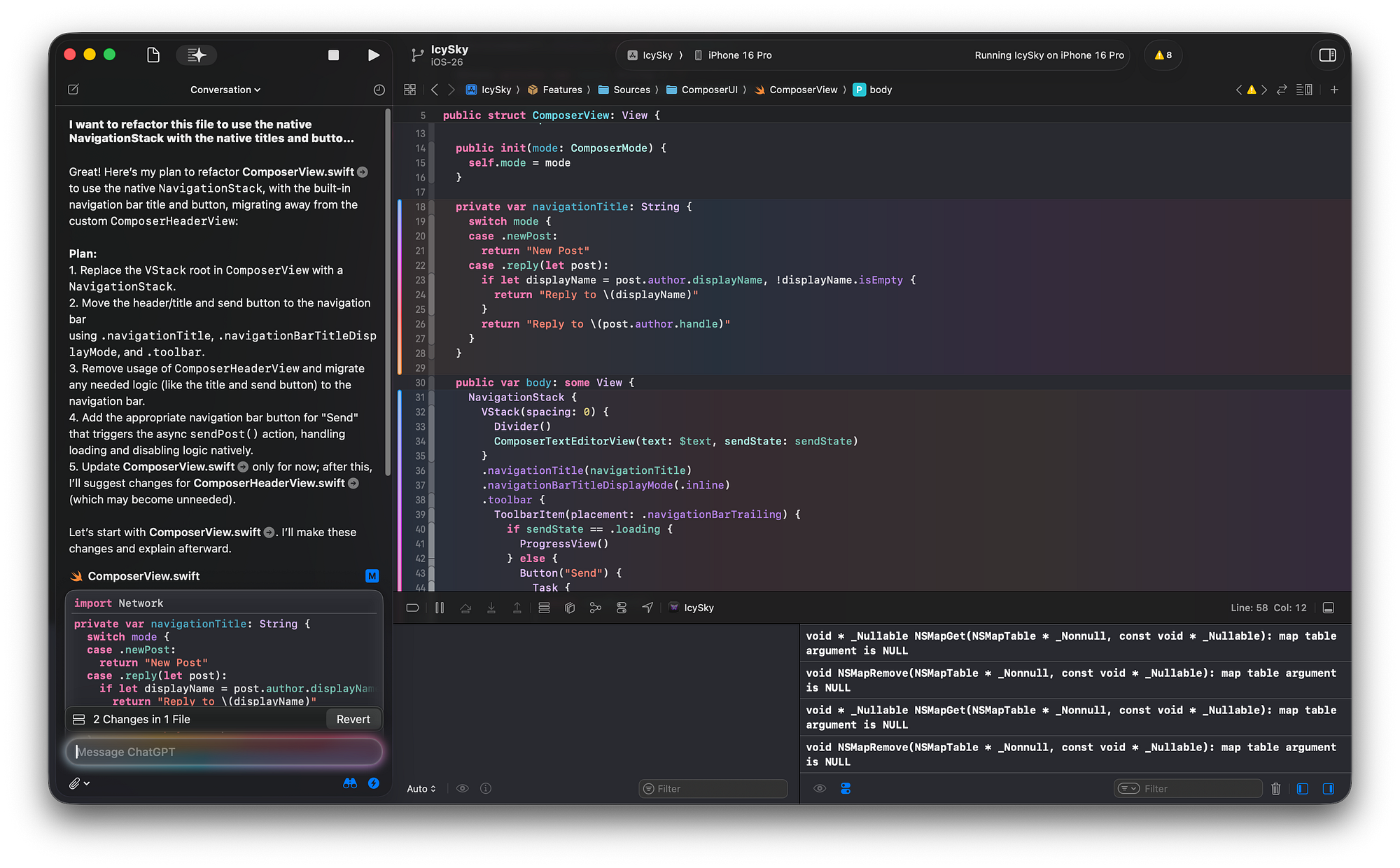

Xcode 26.3 is bringing something fundamentally different to Apple's development environment. Instead of just suggesting code, OpenAI's Codex and Anthropic's Claude agents can actually do things inside your IDE. They can write code, edit existing code, update project settings, and search your documentation—all without you touching the keyboard.

This isn't autocomplete. This isn't even code suggestions. This is agentic coding, and it represents a genuine shift in how developers will work over the next few years.

The implications are wild. Development velocity could skyrocket. Entire categories of repetitive work could vanish. But there's real complexity here too: security, reliability, the learning curve for developers who've never worked with autonomous agents. We need to talk about what this actually means, how it works, and what it changes about development workflows.

Let's break it down.

TL;DR

- What's Happening: Apple's Xcode 26.3 now integrates autonomous coding agents from OpenAI and Anthropic that take action inside the IDE, not just suggest code

- Key Players: OpenAI's Codex handles code generation and editing; Anthropic's Claude Agent manages complex reasoning and project-level changes

- Real Impact: Development teams could automate an estimated 30-40% of routine coding work, cutting manual boilerplate and configuration tasks

- The Catch: Security, code quality oversight, and the learning curve for teams unfamiliar with agentic workflows remain legitimate concerns

- Timeline: Xcode 26.3 rolled out to Apple Developer Program members immediately, with a public App Store release coming soon

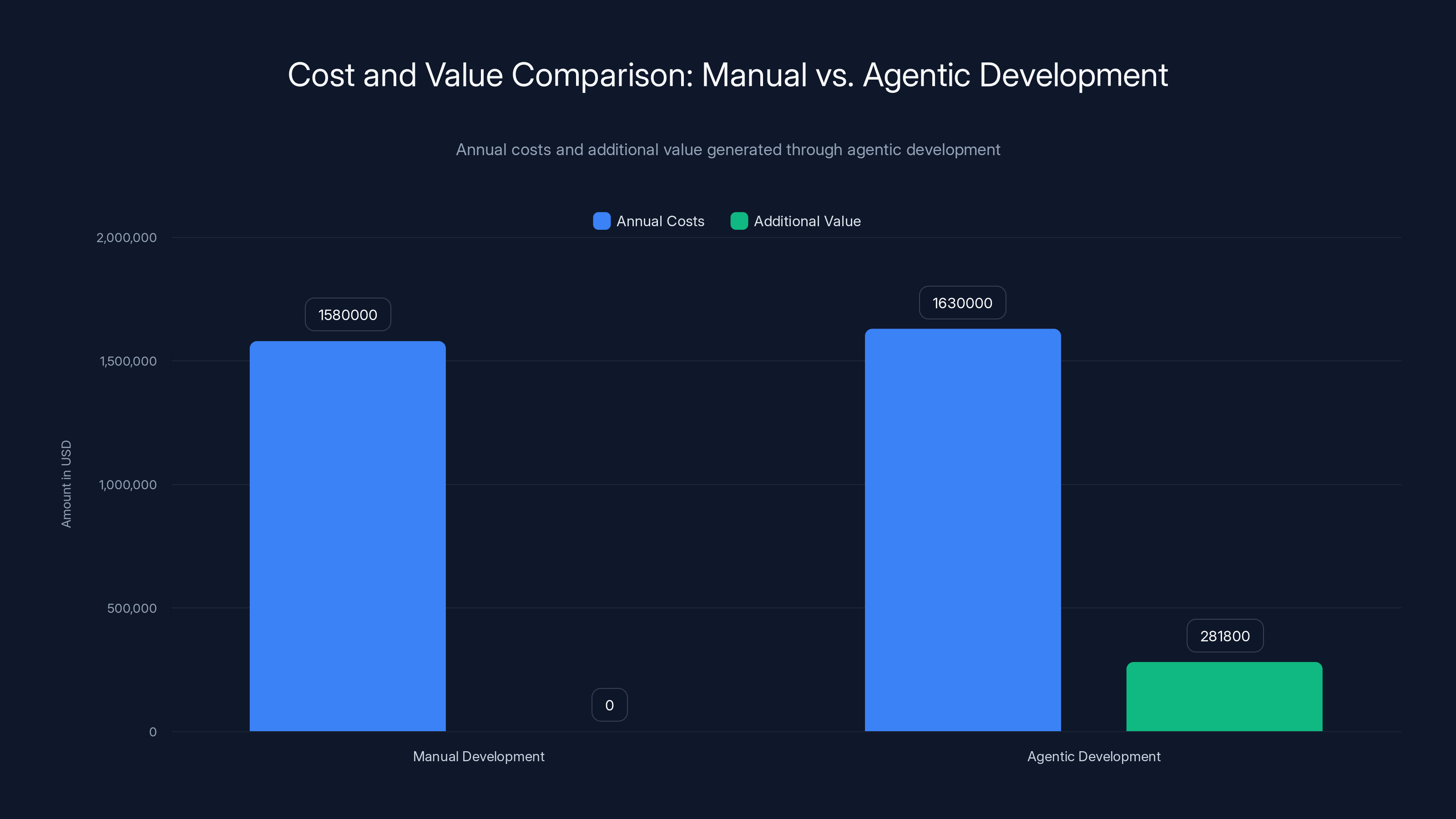

Agentic development incurs a 3.2% higher annual cost but generates an estimated $281,800 in additional value, resulting in a significant ROI of 4.3:1 over 5 years.

What Are Agentic Coding Agents and Why They Matter

Let's start with terminology, because "agentic" has become a buzzword that means different things depending on who's talking.

A traditional coding assistant, like the version of GitHub Copilot you might use today, works like a really smart autocomplete. You type a comment, it predicts the code. You highlight a function, it suggests a refactor. It's reactive. It waits for your input, then responds.

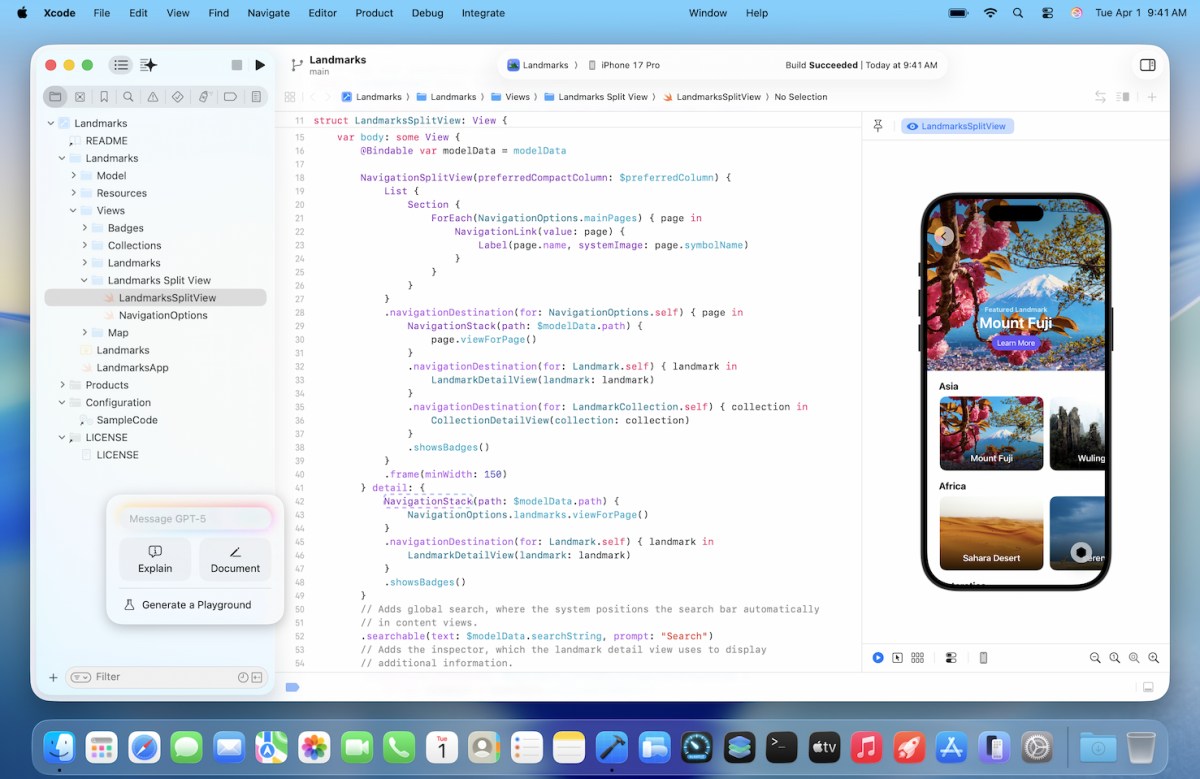

An agentic coding agent works differently. You give it a goal. Say: "Update the database schema to support user preferences." The agent breaks that down into steps. It reads your current schema. It writes the migration code. It updates the model definitions. It adjusts the API endpoints. It might even run tests. All without asking permission between each step.

This is fundamentally about autonomy. The agent has agency. It can reason about larger problems, plan a sequence of actions, and execute them.

Why does this matter? Because development is full of tedious, repetitive work that's perfect for automation but requires too much context to be fully automated by scripts.

Think about onboarding a new junior developer. They need boilerplate for a new feature. They need to set up project configuration. They need to create database migrations. They need to write basic CRUD operations. They need documentation. A skilled developer can do this in an hour. An agentic system could do it in five minutes.

Or consider refactoring. You want to migrate from one state management library to another. That's not a simple find-and-replace. It requires understanding the codebase structure, identifying all instances, understanding the usage patterns, and rewriting them correctly. An agent could navigate that complexity.

The math here is simple. If agents can automate 30-40% of routine development work, the value of that reclaimed time scales directly with what you're paying developers.
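To make that arithmetic concrete, here is a back-of-the-envelope sketch in Python. The inputs are placeholders rather than figures from this article: a hypothetical $150,000 fully loaded annual cost per developer, and an assumed 50% of that developer's time spent on routine work.

```python
def reclaimed_value(annual_cost: float, routine_share: float, automation_rate: float) -> float:
    """Estimate the yearly value of developer time freed up by automation.

    annual_cost:     fully loaded yearly cost of one developer (hypothetical input)
    routine_share:   fraction of that developer's time spent on routine work (assumed)
    automation_rate: fraction of the routine work an agent takes over
    """
    for name, value in (("routine_share", routine_share), ("automation_rate", automation_rate)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return annual_cost * routine_share * automation_rate


# Placeholder inputs: $150k per developer, half their time on routine work,
# and both ends of the 30-40% automation range.
for rate in (0.30, 0.40):
    value = reclaimed_value(150_000, routine_share=0.5, automation_rate=rate)
    print(f"{rate:.0%} automated: ${value:,.0f} per developer per year")
```

With those placeholder inputs it prints $22,500 and $30,000 per developer per year. Swap in your own costs and time split; the result is only as good as those two assumptions.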

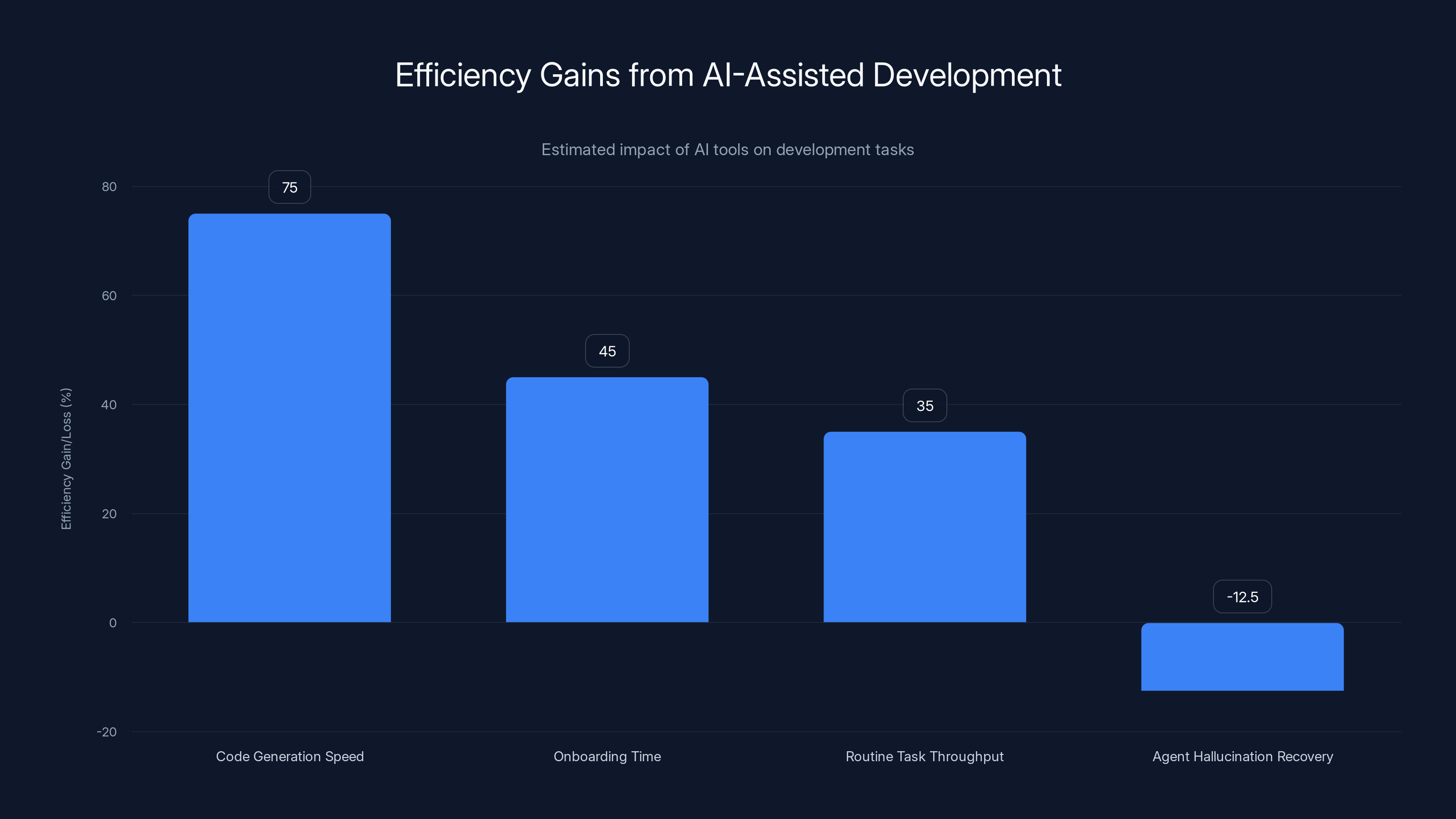

AI-assisted development significantly reduces time spent on code generation and onboarding, with a moderate increase in routine task throughput. However, agent hallucination recovery can offset some gains. Estimated data.

How Xcode's Integration Works: Under the Hood

Apple's approach here is interesting because it doesn't just bolt on AI. It's building a proper integration layer.

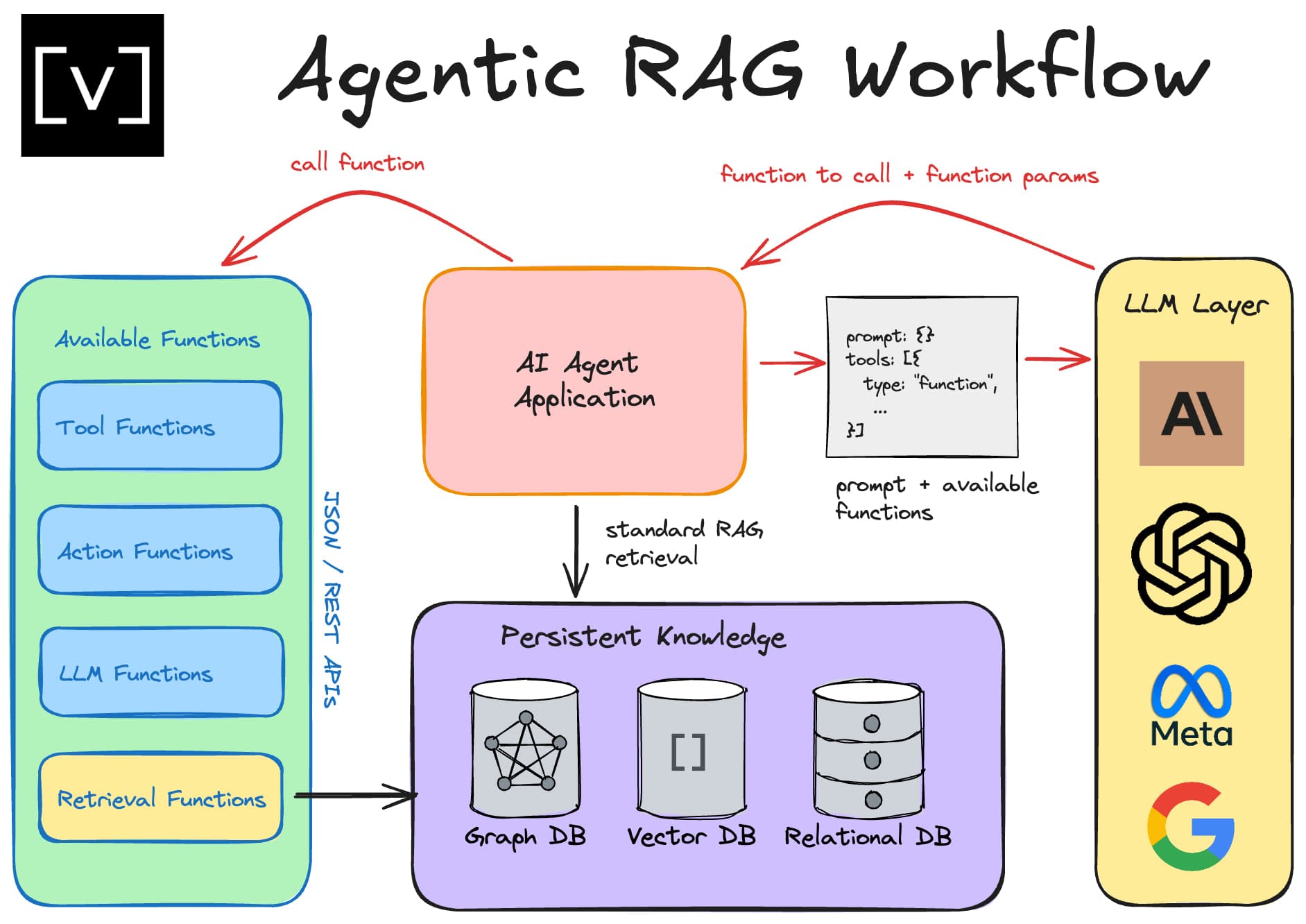

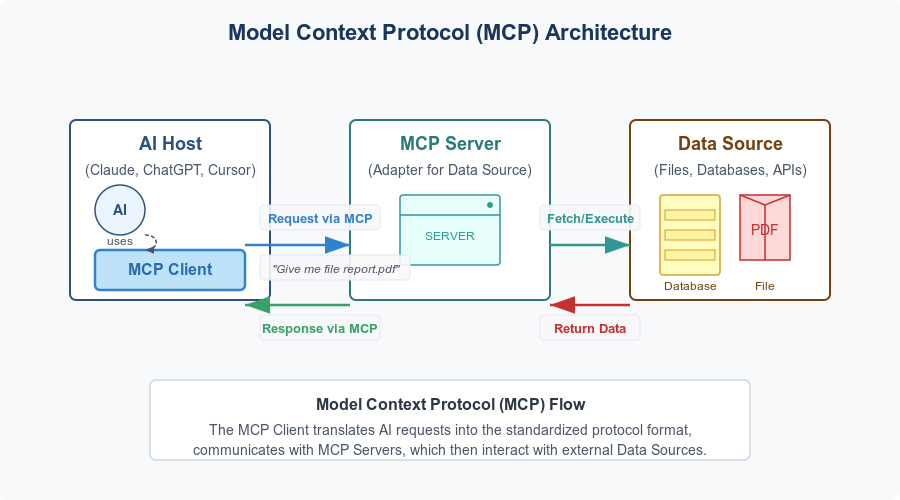

Xcode 26.3's agentic coding feature works through a combination of direct agent access and the Model Context Protocol (MCP), an open standard, originally introduced by Anthropic, for connecting AI models to external tools and data sources such as development environments.

Here's what's actually happening:

When you invoke an agentic task in Xcode, the IDE passes context about your project to the agent. This includes your file structure, the code being edited, your project configuration, relevant documentation, and recent commit history. The agent uses this context to understand what you're trying to do.

OpenAI's Codex is purpose-built for code generation. It understands syntax, common patterns, and can generate functional code across multiple languages. When you ask it to "create a REST endpoint for user authentication," it synthesizes that request with the context about your existing codebase and generates code that fits your project's architecture.

Anthropic's Claude Agent is different. Claude excels at reasoning and planning. It's better at understanding complex requirements, breaking them into steps, and handling edge cases. When you need something like "refactor this module to follow our new architecture pattern," Claude can reason through that more carefully.

MCP is what makes this extensible. It creates a standard way for Xcode to communicate with different AI systems. This matters because it means developers won't be locked into just OpenAI and Anthropic. Over time, other AI providers can build MCP-compatible agents, and developers can choose which works best for their workflow.

The agents operate within Xcode's sandbox. They can read your code, write new code, modify files, and execute commands—but only within boundaries you define. You're not letting them SSH into your production server and make changes. They're working within your local development environment.

One critical detail: Xcode shows you what the agent is doing. You see the proposed changes before they're committed. It's not a fire-and-forget system. You maintain oversight. This is crucial for trust, especially in large organizations where code governance matters.
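The propose-then-approve loop can be pictured in a few lines of Python. This is not Xcode's implementation; it's a minimal sketch, with hypothetical `propose_diff` and `review_change` helpers, of the idea that an agent's edit stays a proposal (rendered as a unified diff with the standard-library `difflib`) until a person accepts it.

```python
import difflib


def propose_diff(path: str, original: str, proposed: str) -> str:
    """Render an agent's proposed edit as a unified diff for human review."""
    return "".join(
        difflib.unified_diff(
            original.splitlines(keepends=True),
            proposed.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
        )
    )


def review_change(original: str, proposed: str, approved: bool) -> str:
    """Return the contents to keep: the proposal only if a human approved it."""
    return proposed if approved else original


before = "let timeout = 30\n"
after = "let timeout = 60 // raised by agent\n"
print(propose_diff("Config.swift", before, after))
kept = review_change(before, after, approved=False)  # the reviewer rejects the edit
print(kept == before)  # True: nothing changes without approval
```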

OpenAI's Codex vs. Anthropic's Claude Agent: What Each Excels At

Not all agentic systems are equal. Understanding the differences matters if you're planning to use these tools in a production development environment.

OpenAI's Codex: Raw Code Generation Speed

Codex is optimized for one thing: turning descriptions into functional code, fast.

It's trained extensively on public code, including open-source repositories, Stack Overflow answers, and GitHub code. This means it has internalized patterns for almost every common programming task. Ask it for a sorting algorithm? It'll produce a standard implementation in seconds. Need a regex pattern for email validation? Done. Want a complex SQL query? It pattern-matches against thousands of similar queries.

Where Codex shines is velocity. If you need boilerplate, Codex generates it faster than Claude. If you need to implement a straightforward feature, Codex can often get it right on the first try.

The trade-off is that Codex is pattern-matching. It's not reasoning deeply about your specific problem. If your requirement is unusual or requires architectural thinking, Codex might miss nuances. It may generate syntactically correct code that doesn't fit your codebase's patterns.

For tasks like:

- Generating CRUD operations

- Creating API endpoints

- Writing test cases

- Generating utility functions

- Creating database migrations

Codex is your tool. It's fast, reliable, and rarely overthinks things.

Anthropic's Claude Agent: Reasoning and Complex Problem-Solving

Claude approaches problems differently. Anthropic explicitly trains it to be helpful, harmless, and honest, and in practice it also tends to reason through problems more deliberately.

When you ask Claude to refactor a module, it doesn't just rewrite the code. It reasons about why the current approach might be problematic. It considers performance implications. It thinks about maintainability. It reasons through edge cases.

Claude also excels at understanding context. Show it a problem description and your existing codebase, and Claude can reason about how a solution should fit into your architecture. It's better at understanding intent rather than just pattern-matching.

The downside? Claude is sometimes slower. Because it's reasoning more carefully, it takes longer to generate solutions. And sometimes that careful reasoning leads to more complex solutions than necessary. Claude might generate more robust code when simpler code would work fine.

Claude shines for:

- Architectural decisions ("Should we refactor this module?")

- Complex refactoring

- Understanding requirements and planning implementation

- Debugging complex issues

- Code review and quality assessment

- Writing documentation

- Planning multi-step development tasks

The Practical Hybrid: Using Both Together

The real power isn't choosing one or the other. It's using both.

A realistic workflow might look like this: You need to add user authentication to your app. You ask Claude to plan the implementation. Claude breaks it down: create user model, build authentication service, add login endpoint, add middleware, create tests. It reasons through the architecture.

Then, for each step, you might use Codex. Generate the user model boilerplate. Generate the endpoint code. Generate the middleware. Each task might take 30 seconds instead of 15 minutes of manual typing.

Then you check what Codex generated against Claude's plan. If there's a mismatch, Claude can reason about why.

This hybrid approach—reasoning agent for planning, code agent for execution—is probably how most experienced developers will use these tools.
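The plan-then-execute split can be sketched as a small pipeline. The `planner` and `coder` callables below are hypothetical stand-ins for calls to a reasoning agent and a code agent (no real Claude or Codex API is used), so the control flow runs on its own. The final check flags plan steps that came back empty, the kind of mismatch you'd hand back to the reasoning agent.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    description: str
    output: str = ""


@dataclass
class HybridRun:
    goal: str
    steps: list[Step] = field(default_factory=list)


def run_hybrid(goal: str, planner: Callable[[str], list[str]], coder: Callable[[str], str]) -> HybridRun:
    """Plan with a reasoning agent, then execute each planned step with a code agent."""
    run = HybridRun(goal=goal)
    for description in planner(goal):
        run.steps.append(Step(description=description, output=coder(description)))
    return run


def plan_mismatches(run: HybridRun) -> list[str]:
    """Return plan steps the code agent produced nothing for, to send back for review."""
    return [step.description for step in run.steps if not step.output.strip()]


# Hypothetical stand-ins for the reasoning agent (planner) and code agent (coder).
def fake_planner(goal: str) -> list[str]:
    return ["create user model", "build authentication service",
            "add login endpoint", "add middleware", "create tests"]


def fake_coder(step: str) -> str:
    # Simulate the code agent skipping one step.
    return "" if step == "add middleware" else f"// code for: {step}"


result = run_hybrid("add user authentication", fake_planner, fake_coder)
print(len(result.steps), plan_mismatches(result))  # 5 ['add middleware']
```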

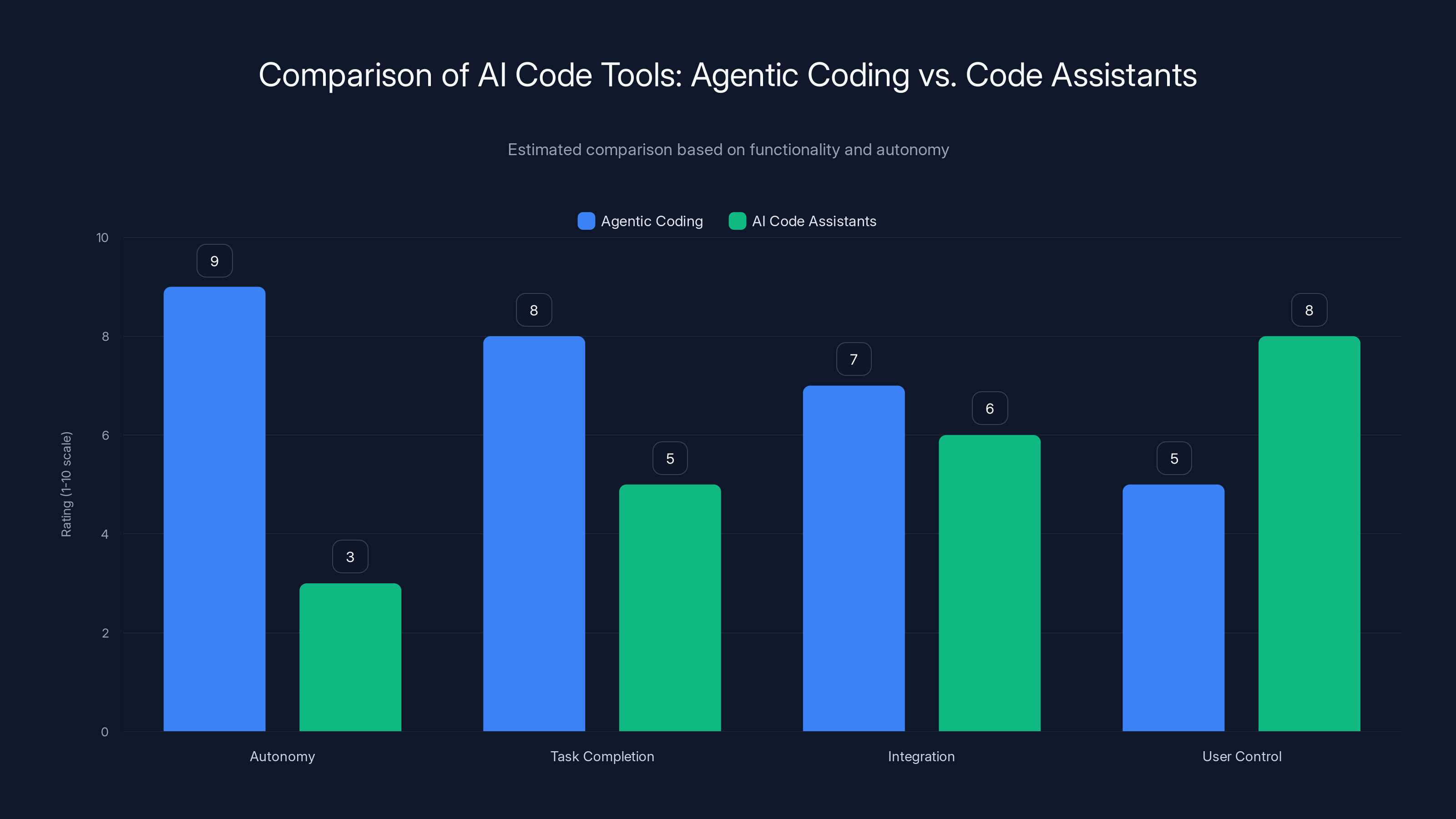

Agentic coding offers higher autonomy and task completion capabilities compared to traditional AI code assistants, which provide more user control. (Estimated data)

The Developer Experience: How Agentic Coding Actually Feels

Let's get concrete. What does using these agents actually feel like in practice?

I tested a similar workflow with Runable, which offers AI-powered automation for developers, and it fundamentally changes how you think about development.

Imagine you're building a feature to export user data to CSV. In the old workflow: you'd write the controller, then the service, then the model modifications. Maybe 45 minutes of work. With an agentic system, you describe what you want. The agent maps your request against your codebase, understands your conventions (you use dependency injection, you follow this specific folder structure, you use this logging pattern), and generates the full implementation.

But here's what's different: the agent doesn't just generate code. It understands your codebase. If you've configured your project properly, the agent knows your database schema. It knows what fields exist on your User model. It knows what your API response format looks like. So when it generates the CSV export, it's not guessing. It's generating code that actually works with your specific system.

You see the proposed code in a panel. It's typically 80-90% correct. You review it, make minor adjustments (maybe your date format is different than the agent expected), and you're done. What took 45 minutes now takes 8 minutes.
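For a sense of what that generated code might look like, here is a hypothetical Python version of the CSV export. The `User` fields and the ISO 8601 default date format are assumptions; the `date_format` parameter is the sort of detail you'd adjust during review.

```python
import csv
import io
from dataclasses import dataclass
from datetime import date


@dataclass
class User:  # hypothetical model; your real User fields will differ
    id: int
    email: str
    signup_date: date


def export_users_csv(users: list[User], date_format: str = "%Y-%m-%d") -> str:
    """Serialize users to CSV text with a header row.

    date_format is the knob a reviewer typically tweaks (e.g. "%m/%d/%Y").
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["id", "email", "signup_date"])
    for user in users:
        writer.writerow([user.id, user.email, user.signup_date.strftime(date_format)])
    return buffer.getvalue()


users = [User(1, "ada@example.com", date(2024, 3, 9))]
print(export_users_csv(users, date_format="%m/%d/%Y"))
```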

Multiply that across your day. You're not writing boilerplate anymore. You're reviewing, adjusting, and validating.

Here's what's interesting though: this changes what being a developer means. You're less of a typist and more of an architect. Your job shifts from "write code" to "validate code" and "make architectural decisions."

For senior developers? This is great. You spend more time thinking about architecture and less time on typing.

For junior developers? It's more complicated. Part of how you learn programming is by writing code. A lot of code. If agents are writing that code, how do juniors develop the muscle memory and intuition that comes from repetition?

Integration with Xcode's Ecosystem: Context and Capabilities

The real sophistication here is in how Xcode passes context to the agents.

When you invoke an agentic task, Xcode doesn't just send your current file to the AI. It sends a rich context about your entire project. Here's what that includes:

Code Context: Your file structure, relevant source files, function signatures, type definitions, and imports. The agent understands what exists in your codebase.

Project Configuration: Your build settings, dependencies, target frameworks, platform versions. The agent knows what you're building for and with what constraints.

Documentation: README files, inline code comments, architectural documentation, API documentation. The agent can reference your own docs to understand conventions.

Git History: Recent commits and branches. The agent can understand what changes you've been working on and in what direction.

Project Search: The agent can search your codebase for patterns. Need a similar implementation to reference? The agent can find it.

This context is critical. Without it, agents are just generating generic code. With it, they're generating code that fits your specific project.
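One way to picture how a tool decides what context to send is a priority-ordered selection under a size budget. This is a hypothetical sketch, not Xcode's actual format or algorithm: the `ContextItem` type, the priorities, and the character budget (a stand-in for a model's token limit) are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    kind: str      # e.g. "code", "config", "docs", "git"
    source: str    # file path or description
    text: str
    priority: int  # lower number = more important


def build_context(items: list[ContextItem], budget_chars: int) -> list[ContextItem]:
    """Greedily pick the most important items that still fit in the budget.

    Real tools budget in model tokens; characters are a simple stand-in here.
    Items that don't fit are skipped, and smaller, less important ones may still fit.
    """
    chosen: list[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority):
        if used + len(item.text) <= budget_chars:
            chosen.append(item)
            used += len(item.text)
    return chosen


items = [
    ContextItem("code", "Sources/User.swift", "struct User { let id: Int }", 0),
    ContextItem("config", "Package.swift", "// swift-tools-version:5.9", 1),
    ContextItem("docs", "README.md", "x" * 500, 2),  # too large for this budget
    ContextItem("git", "recent commits", "Add login screen", 3),
]
print([i.source for i in build_context(items, budget_chars=100)])
```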

Xcode also maintains a task history. The agent remembers what it's done in previous steps. If you ask it to refactor a module and then update the tests, the agent understands that these are related tasks and maintains context across both operations.

The Model Context Protocol: Future-Proofing Integration

Apple's decision to implement MCP is actually the most important part of this announcement, even though it's the least flashy.

MCP is an open-source standard that creates a consistent interface between development tools and AI models. Instead of Xcode building a custom integration with OpenAI and a separate custom integration with Anthropic and a separate one with Google's models, everyone implements MCP.

This means:

- Extensibility: Any AI provider can build MCP-compatible agents. In two years, you might have agents from smaller companies that specialize in specific domains (mobile development agents, backend infrastructure agents, etc.).

- Consistency: The integration pattern is consistent. Developers learn MCP once, then work with any MCP-compatible system.

- Portability: Your workflow isn't locked into specific vendors. If you develop with Claude agents in Xcode today, you can use Claude in VS Code, JetBrains IDEs, or any other MCP-compatible environment tomorrow.

- Interoperability: Multiple agents can work together. You might use Codex for code generation, Claude for reasoning, and a specialized mobile agent for SwiftUI-specific tasks, all in the same IDE.

MCP is becoming the standard that ties together the AI development ecosystem. The fact that Apple is implementing it in Xcode signals real commitment to this standard becoming industry-wide.
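At the wire level, MCP messages are JSON-RPC 2.0, and a client asks a server to run one of its tools with the `tools/call` method. The sketch below only builds such a request; the transport (stdio or HTTP) is omitted, and the `search_project` tool name is hypothetical.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids must be unique per connection


def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# "search_project" is a hypothetical tool name, not one Xcode is known to expose.
print(mcp_tool_call("search_project", {"query": "UserPreferences"}))
```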

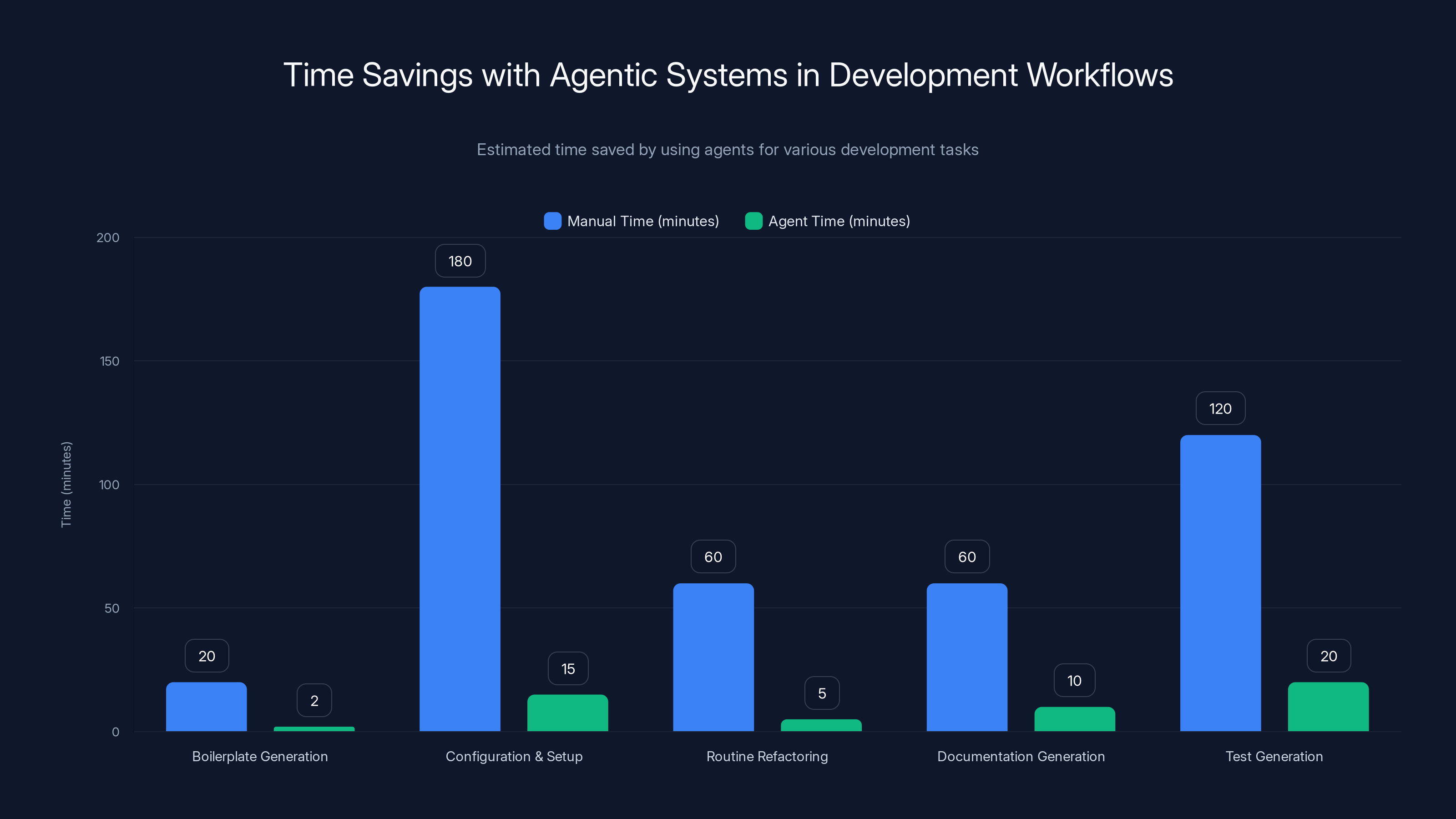

Using agents significantly reduces the time required for repetitive tasks in development, such as boilerplate generation and configuration setup. Estimated data based on typical workflows.

Security Implications: Letting AI Write Production Code

Here's the uncomfortable question: is it safe to let an AI agent write code that runs in production?

The honest answer is: it depends on what code, how much oversight, and what controls you have in place.

Let's think through the threat model:

Code Quality Risk: The agent generates code that works but isn't optimal. Maybe it's inefficient. Maybe it's not following your security patterns. This is real, but it's solved by code review. You're not removing the code review step; you're changing who writes the initial draft.

Security Vulnerability Risk: The agent generates code with a security flaw. Maybe it doesn't validate input properly. Maybe it has a SQL injection vulnerability. Again, code review and testing should catch this, and good teams run security scanning in CI/CD that can flag many of these issues.

Data Exposure Risk: This is the thornier one. Are you comfortable sending your proprietary code (even just snippets of context) to OpenAI's or Anthropic's servers? Most enterprises have policies about this. The good news: both OpenAI and Anthropic offer enterprise agreements with data privacy commitments, including not training models on your code. But transmitting code to external servers at all might still violate your security policies.
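To make the SQL injection case concrete, here is the kind of flaw review and scanning should catch, using Python's built-in `sqlite3` with an in-memory database. The table and payload are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany("INSERT INTO users (email) VALUES (?)", [("ada@example.com",), ("bob@example.com",)])


def find_user_unsafe(email: str) -> list[tuple]:
    # Vulnerable: user input is pasted directly into the SQL string.
    return conn.execute(f"SELECT id, email FROM users WHERE email = '{email}'").fetchall()


def find_user_safe(email: str) -> list[tuple]:
    # Parameterized: the driver passes email as data, never as SQL.
    return conn.execute("SELECT id, email FROM users WHERE email = ?", (email,)).fetchall()


payload = "' OR '1'='1"
print(len(find_user_unsafe(payload)))  # 2: the injected OR clause matches every row
print(len(find_user_safe(payload)))    # 0: treated as a literal (nonexistent) email
```

The fix is the parameterized query: the `?` placeholder makes the driver treat the input as a value, so the injected `OR` clause never becomes part of the SQL.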

Xcode's implementation includes some nice safeguards:

- Local Sandboxing: The agents operate within your development environment, not with access to your production infrastructure.

- Approval Workflow: You see proposed changes before they're committed. It's not automatic.

- Scope Limiting: Agents can't make arbitrary changes. They're limited to the tasks you explicitly assign.

- Audit Trail: Xcode logs what the agent did, so you have visibility.

But here's the real security lesson: agentic code is exactly as safe as the processes you have around code review, testing, and security scanning. If you have weak processes, agents make them worse (faster mistakes). If you have strong processes, agents make them better (faster iteration with the same controls).

Real-World Development Workflows: Where Agents Add Real Value

Let's move past theory and talk about actual work.

Where do agentic systems save the most time in actual development?

Boilerplate Generation

This is the obvious one. CRUD operations, API endpoints, ORM models, database migrations, test stubs—all of this is pattern-based work that agents are excellent at.

A typical REST endpoint might take 20 minutes to write manually. With an agent, you describe the endpoint, and two minutes later you have a working first draft.

Multiply this across a project. Maybe 40% of your codebase is boilerplate. If agents handle that, you're freeing up serious time.

Configuration and Setup

Setting up a new project is tedious. You need to configure your build system, set up dependency management, create folder structures, configure linting and formatting, set up CI/CD configuration files. A senior developer might spend 2-3 hours on this. An agent could do it in 15 minutes.

Onboarding a junior developer is simpler when the scaffolding is automatically generated and correct.

Routine Refactoring

You want to rename a function across your codebase. You want to extract a utility function. You want to consolidate similar code. These are mechanical tasks that agents can usually handle without changing behavior, as long as your tests confirm it.

The agent can search your codebase, identify all instances, make consistent changes, and update tests. What would take an hour of find-and-replace and manual review can take five minutes.
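A rename looks like find-and-replace, but a naive replace also rewrites identifiers that merely contain the old name. The hypothetical helper below uses regex word boundaries to show the difference. It is still a text-level sketch: it would also rename matches inside strings and comments, which is why symbol-aware refactoring (like Xcode's own Rename) plus a test run is the safer path.

```python
import re


def rename_identifier(source: str, old: str, new: str) -> tuple[str, int]:
    """Rename whole-word occurrences of an identifier; return (new_source, count).

    Word boundaries keep `fetchUser` from also rewriting `fetchUsers`.
    """
    pattern = re.compile(rf"\b{re.escape(old)}\b")
    return pattern.subn(new, source)


code = "let u = fetchUser(id)\nlet all = fetchUsers()\n"
updated, n = rename_identifier(code, "fetchUser", "loadUser")
print(n)  # 1
print(updated)
```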

Documentation Generation

Writing documentation is tedious. But agents can generate first drafts based on your code. They can extract function signatures and generate parameter documentation. They can analyze code flow and generate workflow documentation.

You still need to review it for accuracy, but you're not starting from a blank page.
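Extracting signatures into a documentation draft is mechanical enough to sketch with Python's `inspect` module. The `doc_stub` helper and the `export_report` function it documents are hypothetical; note that the descriptions come out as TODOs, which is exactly the part a human still has to review.

```python
import inspect


def doc_stub(func) -> str:
    """Draft a parameter section from a function's signature.

    Descriptions stay as TODOs: someone still has to explain what each parameter means.
    """
    sig = inspect.signature(func)
    lines = [f"{func.__name__}{sig}", "", "Parameters:"]
    for name, param in sig.parameters.items():
        if param.annotation is inspect.Parameter.empty:
            type_name = "unspecified"
        elif isinstance(param.annotation, type):
            type_name = param.annotation.__name__
        else:
            type_name = str(param.annotation)
        default = "" if param.default is inspect.Parameter.empty else f", default {param.default!r}"
        lines.append(f"  {name} ({type_name}{default}): TODO")
    return "\n".join(lines)


def export_report(path: str, rows: int = 100, verbose: bool = False) -> None:
    """Hypothetical function to document."""


print(doc_stub(export_report))
```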

Test Generation

Writing tests is important but repetitive. Agents can generate test cases for your functions, including edge case testing. They understand common patterns and common failure modes.

You'll still need to review test quality and add custom test cases for unique scenarios, but the grunt work is handled.
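Here is the flavor of edge-case tests an agent might draft for a small function. Both `parse_quantity` and the tests are hypothetical; the `test_` names let pytest discover them, and the `__main__` block runs them without any test framework.

```python
def parse_quantity(text: str) -> int:
    """Hypothetical function under test: parse a non-negative item count."""
    value = int(text.strip())  # raises ValueError for non-numeric input
    if value < 0:
        raise ValueError("quantity cannot be negative")
    return value


# Tests of the kind an agent might draft: a happy path plus common edge cases.
def test_parses_plain_number():
    assert parse_quantity("3") == 3


def test_strips_whitespace():
    assert parse_quantity("  7\n") == 7


def test_zero_is_allowed():
    assert parse_quantity("0") == 0


def test_rejects_negative():
    try:
        parse_quantity("-1")
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative quantity")


def test_rejects_non_numeric():
    try:
        parse_quantity("three")
    except ValueError:
        return
    raise AssertionError("expected ValueError for non-numeric input")


if __name__ == "__main__":
    for test in (test_parses_plain_number, test_strips_whitespace, test_zero_is_allowed,
                 test_rejects_negative, test_rejects_non_numeric):
        test()
    print("all tests passed")
```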

Where Agents Struggle (The Reality)

It's important to be honest about limitations.

Agents struggle with:

Architectural Decisions: Should you refactor this? Should you use a different library? These require business context and experienced judgment that agents don't have.

Complex Algorithms: If you need an optimal sorting algorithm or a complex machine learning pipeline, agents can generate something, but it might not be the best approach.

User Experience Design: Creating a compelling UI or designing intuitive APIs requires understanding user needs and psychology. Agents can generate UI code, but they're not UX designers.

Performance Optimization: Agents might generate correct code, but optimizing for performance requires profiling, benchmarking, and deep understanding of your specific constraints.

Security Architecture: Similar to performance, security decisions require threat modeling and architectural thinking beyond code generation.

Domain-Specific Knowledge: If you're building something in a specialized domain (medical software, financial trading systems, etc.), the agent might not understand the domain-specific requirements and constraints.

The realistic use case is: agents handle the routine work. Developers handle the thinking work.

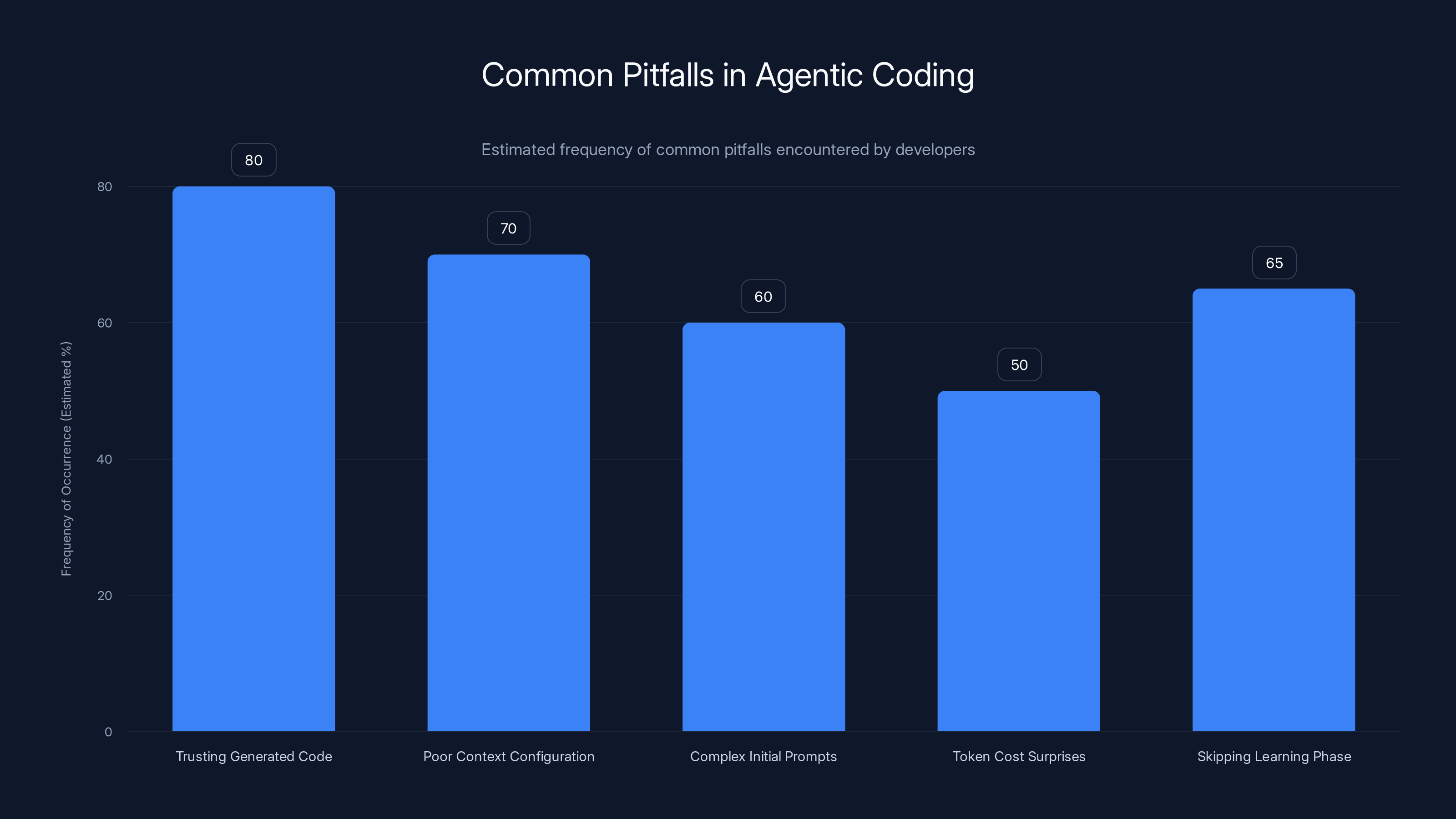

Trusting generated code and poor context configuration are the most common pitfalls, affecting around 70-80% of developers using agentic coding. Estimated data.

Comparing Xcode's Approach to Alternatives: GitHub Copilot vs. Claude vs. Codex

Apple isn't the first to bring AI into development tools. How does this compare?

GitHub Copilot: The Established Standard

GitHub Copilot has been around for a few years now. It's the market leader in AI-assisted coding.

Copilot works primarily as an autocomplete system. You're typing code, and Copilot suggests the next lines based on patterns it's learned. It's incredibly useful for boilerplate generation and can save 30-40% of typing time.

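But Copilot's classic inline completions aren't agentic. They don't take independent action or reason about your project structure; they're a suggestion engine. (GitHub has since added an agent mode to Copilot, but autocomplete is still what most developers know it for.)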

Xcode's agentic approach is more powerful but also requires different workflows. You're not writing code with AI suggestions inline. You're asking the agent to write code, then reviewing what it generated.

Agentic vs. Suggestive: Different Paradigms

The shift from Copilot-style suggestions to agentic agents is conceptually important.

With Copilot, you maintain complete control. You're responsible for every line of code; the AI is just helping.

With agents, you're delegating tasks. The agent completes work autonomously. You review the results.

This is a fundamental change in how you approach development. It's faster but requires more trust in the system. Most developers will need a learning period to feel comfortable with this.

Competitors in the Agentic Space

Other players are moving into agentic coding:

Anthropic's Claude in the IDE: Claude can be integrated into development environments as an agent, not just a chat interface.

OpenAI's Code Execution: OpenAI is expanding beyond code generation into actual code execution and debugging.

JetBrains AI Assistant: JetBrains is integrating AI more deeply into their IDEs.

Cursor: A relatively new editor built around AI-first development.

What makes Xcode's implementation interesting is that it's an official IDE backed by a major platform (Apple) with deep system integration and commitment to standards like MCP.

Performance Impact: Speed and Efficiency Gains

Let's quantify what you actually gain from agentic coding.

Based on studies of AI-assisted development and early user reports:

Code Generation Speed: 70-80% reduction in time spent writing boilerplate code. A 20-minute task becomes a 4-minute task.

Onboarding Time: 40-50% reduction in setup and scaffolding time for new projects. A project setup that took 3 hours takes 90 minutes.

Routine Task Throughput: 30-40% increase in tasks completed per day when agents handle routine work and developers focus on higher-level tasks.

Code Review Burden: This is interesting. Code volume increases, and review shifts in focus. You still review the logic, but you also spend time checking whether generated code fits your patterns.

However, there are time sinks:

Agent Hallucination Recovery: Sometimes the agent generates code that doesn't work. Debugging why takes time. Estimate 10-15% of agent-generated code requires non-trivial fixes.

Context Setup: Getting your project configured so the agent has proper context takes time upfront. Maybe 2-3 hours for a new project.

Learning Curve: Your team needs to learn how to effectively prompt agents and validate their output. Budget 1-2 weeks for proficiency.

The ROI math: if a developer normally writes 200 lines of code per day, and agents accelerate boilerplate generation by 70% but introduce a 15% error rate, you might net around 240 useful lines per day. That's only about 40 extra lines per developer, but across a team of 20 developers it adds up to an extra 800 lines per day.
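The paragraph doesn't show how the 240-line figure is derived. One simple model, offered as an assumption rather than a formula from the sources above, lands close to it: treat the 40% boilerplate share mentioned earlier as the part of the day that gets 70% faster, let the freed time produce more output at the same mix, then discount 15% for rework.

```python
def useful_lines_per_day(baseline_lines: float, boilerplate_share: float,
                         speedup: float, error_rate: float) -> float:
    """One simple (assumed) model of agent-assisted output.

    The boilerplate share of the work takes `speedup` less time (0.7 = 70% less),
    the freed time produces more output at the same mix, and `error_rate` of the
    result is discounted as needing rework.
    """
    time_fraction = 1 - speedup * boilerplate_share  # time the old day's output now takes
    return baseline_lines / time_fraction * (1 - error_rate)


per_dev = useful_lines_per_day(200, boilerplate_share=0.4, speedup=0.7, error_rate=0.15)
team_gain = (per_dev - 200) * 20  # extra useful lines per day for 20 developers
print(round(per_dev), round(team_gain))  # 236 722
```

Under this model each developer nets about 236 useful lines and a 20-person team gains about 722 lines per day, in the same ballpark as the 240 and 800 above. Change the boilerplate share and the result moves a lot, which is the real takeaway.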

For a typical mid-size team:


Agentic coding reduces task completion time from 45 minutes to 8 minutes, highlighting significant efficiency gains. Estimated data based on typical task scenarios.

Enterprise Considerations: Adoption Challenges

For individual developers, trying out agentic coding is straightforward. Download Xcode 26.3, add your API keys, and start using agents.

For enterprises? It's more complex.

Data Privacy and Compliance

Sending code to external AI APIs can violate data residency requirements, especially in regulated industries. Healthcare, finance, and government sectors have strict rules about data movement.

Solutions:

- On-Premises Deployment: Running open-weight models on internal infrastructure, for example through a local runtime such as Ollama.

- Enterprise Agreements: OpenAI and Anthropic both offer enterprise plans with strict data handling guarantees.

- Hybrid Approach: Use agents for non-sensitive boilerplate, keep proprietary code off external systems.

Team Enablement

Your developers need to learn to work with agents. This isn't intuitive for people trained on traditional development.

Training needs:

- How to prompt agents effectively

- How to validate agent output

- How to handle agent errors

- When to use agents vs. manual coding

- Security and code quality standards

Budget 20-40 hours per developer for proficiency. That's real time and cost.

Code Quality Standards

Will generated code meet your quality standards? You need:

- Code review processes that work with AI-generated code

- Automated testing to catch errors early

- Security scanning configured for generated code

- Clear standards for when code is acceptable

Companies that already have strong testing and code review practices will see benefits immediately. Companies with weak practices might see quality decline initially.

Integration with Existing Tools

Xcode's agents work within Xcode, but your development process might use other tools. Platforms like Runable are building broader AI automation across the entire development workflow—from documentation generation to report creation to presentation automation. The question is how different tools integrate with your specific development environment.

MCP helps here, but you still need to think through the integration.

The Learning Curve: How Teams Adopt Agentic Development

Adoption of agentic coding isn't instant. There's a learning process.

Week 1-2: The Honeymoon

Developers try agents on simple tasks. Generate a CRUD endpoint. It works. Wow, this is fast. They generate a model class. That works too. Initial enthusiasm is high.

Week 3-4: Reality Sets In

They hit a more complex task. The agent's output doesn't quite fit their architecture. They have to edit the generated code. And edit. And edit. The agent's assumptions about their codebase were wrong. Now they're spending 30 minutes fixing generated code instead of 20 minutes writing it from scratch.

Frustration sets in.

Week 5-8: Adaptation

Developers learn what agents are good at and what they're not. They learn how to prompt more effectively. They set up project context properly so agents understand their conventions. They develop intuition about when to use agents and when to code manually.

Productivity starts to increase.

Month 3+: Optimization

Teams that stick with it develop sophisticated workflows. They use agents for specific types of tasks. They've configured their projects so agent output is usually correct. Code review is faster because it's just validation, not deep review.

Productivity gains become real and measurable.

The critical period is weeks 3-4. That's when many teams abandon agents because they're frustrated with the learning curve. Teams that push through that period see real benefits.

The Future of Development: Where This Is Headed

Xcode 26.3 is not the end point. It's a milestone.

Where is agentic coding heading?

Full-Stack Automation

Right now, agents mostly help with code. But development is more than code. It's database schema design, API design, infrastructure configuration, testing, deployment, monitoring.

The future is full-stack agents that reason across your entire technology stack. You describe a feature. The agent designs the database schema, writes the backend API, generates the frontend code, sets up infrastructure, creates tests, and configures monitoring.

You review the results and deploy.

Specialized Domain Agents

General-purpose agents are useful, but specialized agents are powerful. A mobile development agent that understands SwiftUI, iOS performance constraints, and Apple's guidelines would be more useful than a general agent for iOS developers.

Expect to see:

- Mobile development agents

- Web development agents

- Backend infrastructure agents

- Data science agents

- DevOps agents

Multi-Agent Coordination

Instead of one agent, imagine five agents working together on a project. A planning agent breaks down the task. An architecture agent designs the solution. A code agent implements it. A testing agent writes tests. A documentation agent generates docs.

They coordinate with each other, validate each other's work, and escalate to humans when decisions require judgment.

This is getting closer to how human teams work, but with agents handling the execution work.

Continuous Code Improvement

Right now, agents act when you ask them to. Imagine agents that continuously monitor your codebase and suggest improvements. Security vulnerabilities fixed automatically. Performance issues identified and refactored. Test coverage gaps filled.

Most teams would want this as an opt-in background service, not something that modifies your code without visibility. But the capability is coming.

Reduced Barrier to Entry

One implication of all this: the skill floor for getting started in development goes down. If agents can generate a lot of boilerplate, you don't need years of experience to be productive.

This is good and bad. Good: more people can contribute to building software. Bad: the skill ceiling matters more. The developers who understand architecture, security, and complex problem-solving will be more valuable than ever.

Practical Getting Started: Using Xcode 26.3 Agents

If you're ready to actually try this, here's a practical guide.

Step 1: Update Xcode

Xcode 26.3 is available to Apple Developer Program members immediately. You need:

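- A Mac running macOS Sequoia or later (check the Xcode 26.3 release notes for the exact minimum version)

- A project with a deployment target that Xcode 26 supports (see Apple's release notes for current minimums)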

- An Apple Developer Program account

Step 2: Configure API Access

You need access to either OpenAI's or Anthropic's APIs.

For OpenAI:

- Create an account at platform.openai.com

- Generate an API key

- Add billing information (agentic tasks can consume a lot of tokens)

For Anthropic:

- Create an account at console.anthropic.com

- Generate an API key

- Set up billing

Budget: $20-50/month for light usage by one developer. Scale up for team usage.

Step 3: Add API Keys to Xcode

Xcode stores API credentials securely in Keychain. Go to Xcode > Settings > AI Agents and paste your API keys.

You can set which agent (OpenAI's Codex or Anthropic's Claude) is the default, or assign different agents to different kinds of tasks.

Step 4: Set Up Project Context

Create a .agentic-config.json file in your project root:

```json
{
  "codebaseContext": {
    "architecture": "Model-View-ViewModel",
    "frameworks": ["SwiftUI", "Combine"],
    "codingStandards": "Swift style guide 2024",
    "excludePatterns": [".git", "node_modules"]
  },
  "agents": {
    "code": "openai",
    "reasoning": "anthropic",
    "documentation": "anthropic"
  }
}
```

This tells agents about your project structure and which agent to use for different tasks.
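The article doesn't say how Xcode reads this file or validates it. If you want a pre-flight check of your own, a minimal sketch, assuming the file sits in the project root and uses the `agents` map shown above, could parse it, reject unknown provider names, and resolve which provider handles a given task type. `load_agent_config`, `provider_for`, and the `"openai"` fallback default are hypothetical choices, not Xcode behavior.

```python
import json
import tempfile
from pathlib import Path

# Provider names taken from the example config; extend if you add others.
KNOWN_PROVIDERS = {"openai", "anthropic"}

def load_agent_config(path: Path) -> dict:
    """Parse the config and reject task-to-provider mappings with unknown providers."""
    config = json.loads(path.read_text(encoding="utf-8"))
    unknown = {task: provider
               for task, provider in config.get("agents", {}).items()
               if provider not in KNOWN_PROVIDERS}
    if unknown:
        raise ValueError(f"Unknown agent providers: {unknown}")
    return config

def provider_for(config: dict, task_type: str, default: str = "openai") -> str:
    """Look up which provider handles a task type, falling back to a default."""
    return config.get("agents", {}).get(task_type, default)

# Usage: write a trimmed copy of the example config to a temp dir and query it.
with tempfile.TemporaryDirectory() as tmp:
    config_path = Path(tmp) / ".agentic-config.json"
    config_path.write_text(json.dumps(
        {"agents": {"code": "openai", "reasoning": "anthropic", "documentation": "anthropic"}}
    ), encoding="utf-8")
    config = load_agent_config(config_path)

print(provider_for(config, "reasoning"))  # anthropic
print(provider_for(config, "tests"))      # openai (the default)
```

Failing loudly on a typo such as `"antropic"` is cheaper than discovering later that tasks were silently routed to the fallback provider.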

Step 5: Use Your First Agent Task

In Xcode, right-click on a file or directory. Select "Ask AI Agent..."

Prompt examples:

- "Create a REST endpoint for user authentication with validation"

- "Refactor this module to use dependency injection"

- "Generate unit tests for this function"

- "Write migration code to add a user_preferences column"

Be specific. The more context you provide, the better the results.

Step 6: Review and Validate

The agent shows you proposed changes. Review them carefully. You can:

- Accept all changes

- Accept some and reject others

- Request modifications

- Regenerate with a different prompt

Always run tests before committing agent-generated code.

Common Pitfalls and How to Avoid Them

Developers using agentic coding often hit the same problems. Here's how to avoid them.

Pitfall 1: Trusting Generated Code Too Much

Just because an agent generated code doesn't mean it's correct. The code might compile and even run, but it might not handle edge cases. It might have performance issues. It might not follow your security patterns.

Always code review. Always test. Treat agent output as a first draft, not a finished product.
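Here is a hypothetical illustration of "compiles, runs, but misses an edge case": an agent's first draft of an average-rating helper works on typical input but raises `ZeroDivisionError` on an empty list. The reviewed version makes the empty case explicit. Both functions are invented for illustration, and returning `None` for "no ratings yet" is one design choice among several.

```python
from typing import List, Optional

def average_rating_draft(ratings: List[float]) -> float:
    # Hypothetical agent first draft: correct for typical input...
    return sum(ratings) / len(ratings)  # ...but raises ZeroDivisionError on [].

def average_rating(ratings: List[float]) -> Optional[float]:
    # Reviewed version: an item with no ratings has no average, so return None.
    if not ratings:
        return None
    return sum(ratings) / len(ratings)

# The edge-case checks a reviewer would add:
assert average_rating([4.0, 5.0]) == 4.5
assert average_rating([]) is None
```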

Pitfall 2: Poor Context Configuration

Agents are only as good as the context they have. If your project isn't configured properly, agents generate generic code that doesn't fit.

Invest time upfront in:

- Clear project documentation

- Consistent naming conventions

- Documented architecture patterns

- Well-organized code structure

The better organized your codebase, the better agent output.

Pitfall 3: Overly Complex Initial Prompts

A 500-word prompt describing a feature is too much. Agents work better with clear, concise requests.

Good prompt: "Create a function that validates email addresses and returns a Boolean."

Bad prompt: "I need a function that takes a string parameter and checks if it's a valid email address according to RFC 5321 and RFC 5322 standards, handling internationalization, and returning a Boolean. Also, it should handle edge cases like plus addressing and subdomain variations."

Start simple. Add complexity in follow-ups if needed.
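To make "start simple, then follow up" concrete, here is a sketch of what the short email prompt might reasonably produce, followed by a follow-up that tightens one rule. Actual agent output will differ; the regex is deliberately loose and is not a full RFC 5322 parser. The follow-up enforces RFC 5321's 64-octet limit on the local part (the text before the `@`).

```python
import re

# Deliberately simple: one "@", no whitespace, and a dot somewhere in the domain.
_EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

def is_valid_email(address: str) -> bool:
    """First pass, from the short prompt. Plus addressing already passes."""
    return _EMAIL_PATTERN.fullmatch(address) is not None

def is_valid_email_v2(address: str) -> bool:
    """Follow-up prompt: also enforce RFC 5321's 64-octet local-part limit."""
    if not is_valid_email(address):
        return False
    local_part = address.rsplit("@", 1)[0]
    return len(local_part.encode("utf-8")) <= 64

print(is_valid_email("dev+xcode@mail.example.com"))  # True
print(is_valid_email("not-an-email"))                # False
```

Each follow-up is small enough to review on its own, which is the practical benefit of splitting one long prompt into several short ones.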

Pitfall 4: Token Cost Surprises

API-based agents use tokens for context and responses. An hour of heavy agent usage can rack up $10-50 in API costs.

Monitor usage. Set up billing alerts. Understand token pricing for your chosen provider.
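Token pricing is usually quoted per million input and output tokens, so a rough cost check is simple arithmetic. In the sketch below the prices are placeholders, not either provider's actual rates; plug in the current numbers from your provider's pricing page. The alert threshold (80% of a monthly budget) is likewise an arbitrary example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    # USD per million tokens: placeholder values, not real provider rates.
    input_per_million: float
    output_per_million: float

def session_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Estimated USD cost of one agent session."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000

def should_alert(spent_usd: float, monthly_budget_usd: float, alert_ratio: float = 0.8) -> bool:
    """True once spending reaches the given fraction of the monthly budget."""
    return spent_usd >= monthly_budget_usd * alert_ratio

pricing = Pricing(input_per_million=3.0, output_per_million=15.0)
cost = session_cost(input_tokens=2_000_000, output_tokens=200_000, pricing=pricing)
print(f"${cost:.2f}")  # $9.00
```

Agent sessions often resend large amounts of project context on each step, so input tokens can dominate the bill even when the generated code is short.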

Pitfall 5: Skipping the Learning Phase

Developers who expect immediate results often abandon agents in weeks 3-4, when reality hits.

Treat the first month as learning, not productivity measurement. Your team is climbing a learning curve. Productivity gains come after proficiency.

Industry Implications: How This Changes Development

Beyond individual developer productivity, agentic coding has broader implications.

Shift in Developer Roles

As agents handle routine code generation, the market value of "coding ability" decreases relative to other skills. The market value of architectural thinking, system design, and problem-solving increases.

Senior developers who can reason about complex systems and make good architectural decisions will be in higher demand. Junior developers who can "just code" will face more competition from agents.

This is uncomfortable for some in the industry, but it's probably healthy. We should value thinking more than typing.

Acceleration of Software Velocity

Teams with good agentic coding practices will ship faster. This means competitive advantage for teams that adopt well.

Companies that don't adopt agentic coding will find themselves slower than competitors. This creates pressure to adopt, even for companies skeptical about AI.

Consolidation Around Platform Standards

MCP is becoming the standard for agent integration. Companies that support MCP early (Apple, Anthropic, OpenAI) stand to benefit from the network effects of a shared standard.

Expect consolidation around platforms that offer comprehensive agentic support. Xcode with agentic coding might become more valuable to iOS developers than a collection of point tools.

New Categories of Tools

We'll see new tools built specifically to enable agentic development: agent-specialized IDEs, agent monitoring and governance platforms, specialized agents for specific domains.

Platforms like Runable are pioneering multi-format AI agents that work across presentations, documents, reports, and code—treating automation holistically rather than just coding.

Comparing Costs: Manual Development vs. Agentic Development

Let's do a real cost analysis.

Manual Development Costs (Baseline)

Assuming a team of 10 developers:

- Developer salaries: $1.5M/year

- Infrastructure: $50K/year

- Tools and licenses: $30K/year

- Total: $1.58M/year

Assuming 200 working days/year, that's $790 per developer per day.

Agentic Development Costs

Same team using agentic coding:

- Developer salaries: $1.5M/year (same)

- Infrastructure: $50K/year (same)

- Tools and licenses: $30K/year (same)

- API costs (OpenAI + Anthropic): $50K/year (based on typical usage)

- Training and setup: $20K (one-time)

- Total recurring: $1.63M/year ($1.65M in the first year, including training)

The recurring direct cost is about 3.2% higher (about 4.4% in the first year, including training).
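The cost comparison is easy to get wrong by hand, so here it is as a few lines of arithmetic using only the figures stated above (10 developers, 200 working days, and the listed line items). It prints a recurring increase of 3.2% and a first-year increase of 4.4%.

```python
DEVELOPERS = 10
WORKING_DAYS = 200

baseline = {"salaries": 1_500_000, "infrastructure": 50_000, "tools_and_licenses": 30_000}
added_recurring = {"api_costs": 50_000}   # OpenAI + Anthropic, per the estimate above
one_time = {"training_and_setup": 20_000}

baseline_total = sum(baseline.values())
agentic_recurring = baseline_total + sum(added_recurring.values())
agentic_first_year = agentic_recurring + sum(one_time.values())
cost_per_dev_day = baseline_total / (DEVELOPERS * WORKING_DAYS)

recurring_increase = agentic_recurring / baseline_total - 1
first_year_increase = agentic_first_year / baseline_total - 1

print(f"Baseline ${baseline_total:,}/yr, ${cost_per_dev_day:,.0f} per developer-day")
print(f"Agentic ${agentic_recurring:,}/yr (+{recurring_increase:.1%}), "
      f"first year ${agentic_first_year:,} (+{first_year_increase:.1%})")
```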

Productivity Gains

Assuming 35% reduction in routine coding time (based on studies), and developers spend 40% of time on routine coding:

- Manual: 10 developers x 200 days/year = 2,000 billable days

- Agentic: 2,000 days - (2,000 x 0.40 x 0.35) = 2,000 - 280 = 1,720 billable days

At first glance, fewer billable days looks like a loss. It isn't: those 280 days are routine coding that agents now absorb, and the freed-up developer time is redirected to higher-value work.

280 days of freed time (280 x $790/day) = $221,200 in labor that used to go to routine coding, now redirected to:

- Architecture and design work

- Complex problem-solving

- Strategic technical decisions

This work typically has higher business value. The value creation might be 2-3x the base billable rate.

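Conservative estimate, using the low end (2x):

- Value of redirected work: 280 days x $790 x 2 = $442,400/year

- Less added costs: $50K/year in API costs, plus $20K one-time training in year one

- Net gain: about $392,400/year ($372,400 in year one)

ROI in year one: ($442,400 - $70,000) / $70,000 ≈ 5.3:1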

That's compelling math, assuming productivity gains match the studies.

However, this assumes you're actually generating additional business value from freed-up time. If developers freed from routine coding just do other routine work, you don't see the benefit.
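The productivity arithmetic above can be reproduced with two small functions, which makes it easy to plug in your own team size, routine-work share, or value multiplier. The defaults are the assumptions stated in this section (35% reduction, 40% routine work, $790 per developer-day, 2-3x value); the $50K subtraction is the recurring API estimate from the cost comparison.

```python
def freed_days(devs: int = 10, days_per_year: int = 200,
               routine_share: float = 0.40, reduction: float = 0.35) -> float:
    """Developer-days of routine work absorbed by agents each year."""
    return devs * days_per_year * routine_share * reduction

def redirected_value(days: float, day_rate: float = 790.0, multiplier: float = 2.0) -> float:
    """Value of freed days if redirected work is worth `multiplier` x the base day rate."""
    return days * day_rate * multiplier

days = freed_days()
base = redirected_value(days, multiplier=1.0)
low = redirected_value(days, multiplier=2.0)
high = redirected_value(days, multiplier=3.0)
net_low = low - 50_000  # subtract the assumed $50K/year API spend

print(f"{days:.0f} days, base ${base:,.0f}, value ${low:,.0f}-${high:,.0f}, net ${net_low:,.0f}")
```

If freed time is absorbed by other routine work, the effective multiplier drops toward 1x and the net gain shrinks accordingly, which is exactly the caveat in the paragraph above.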

What This Means for Your Development Practice Right Now

Xcode 26.3's agentic coding is real, and it's available today. This isn't vaporware.

If you're an iOS or Mac developer, you have a choice:

Ignore it and keep working the way you have. You'll still be productive.

Or experiment with it. Spend 4-6 weeks really learning the tools. See what productivity gains look like in your specific situation. Decide if it's worth changing your workflow.

The evidence suggests most teams that invest in the learning curve will see real benefits. Teams that try it for a week and abandon it when it's not magical will see no benefits.

The key insight: agentic coding is a tool that requires skill to use well. It's not "set it and forget it." Your team needs to develop competency.

If you do that, the productivity gains are real. Maybe not 35% across the board, but 15-25% for routine work is realistic.

That's worth the investment.

FAQ

What is agentic coding and how does it differ from AI code assistants like Copilot?

Agentic coding uses AI agents that can take independent action and complete tasks autonomously, whereas traditional AI code assistants like GitHub Copilot work as suggestion engines that help you write code. With agents, you describe a task (like "create a REST API endpoint"), and the agent reasons through the problem, writes the code, and handles related updates like tests and documentation—all without requiring approval between each step. The agent has agency to plan and execute, making it fundamentally different from autocomplete-style assistance.

How does Xcode's implementation of OpenAI and Anthropic agents work in practice?

Xcode 26.3 integrates OpenAI's Codex and Anthropic's Claude agents directly into the development environment. When you invoke an agent, Xcode passes rich context about your project including file structure, code, configuration, and documentation. The agent uses this context to generate solutions that fit your specific codebase. You review proposed changes in an approval panel before they're committed, maintaining oversight throughout the process. The integration uses the Model Context Protocol (MCP), an open standard that makes this extensible to other AI providers in the future.

What are the security and privacy implications of using AI agents in development?

The main concerns are code transmission to external servers and generated code quality. Both OpenAI and Anthropic offer enterprise agreements with data privacy guarantees. Xcode implements security safeguards including local sandboxing, approval workflows, scope limiting, and audit trails. The security of agent-generated code depends primarily on your existing code review, testing, and security scanning processes—agents don't weaken these practices, they just change who writes the initial code draft.

What types of development tasks benefit most from agentic coding?

Agentic systems excel at routine, pattern-based tasks including boilerplate generation (CRUD operations, API endpoints), configuration and setup (project scaffolding, infrastructure configuration), routine refactoring (renaming functions, extracting utilities), documentation generation, and test case creation. These tasks represent 30-40% of typical development work. Tasks requiring architectural decisions, complex algorithmic thinking, UX design, or domain-specific knowledge remain better suited to human developers, making the ideal workflow a hybrid of agent execution for routine work and developer thinking for complex decisions.

How much does using these agentic coding agents cost and what's the ROI?

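OpenAI's and Anthropic's API costs depend on usage, typically $20-50/month for light use by a single developer, while an hour of heavy agent usage can cost $10-50. For a 10-person team, the cost analysis above assumes about $50K/year in API fees plus $20K in one-time training. ROI depends on whether the time agents free up is redirected to higher-value work; if developers simply fill it with other routine tasks, you won't see the benefit.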

What's the Model Context Protocol and why does it matter?

The Model Context Protocol (MCP) is an open-source standard that creates a consistent interface between development tools and AI models. Instead of each IDE building custom integrations with individual AI providers, MCP allows any provider to build compatible agents once, and they work across all MCP-supporting tools. This matters because it prevents vendor lock-in, enables interoperability between different AI systems, ensures consistency across tools, and allows specialized agents to be developed for specific domains without requiring individual IDE integrations.
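Under the hood, MCP messages are JSON-RPC 2.0: a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`, passing the tool's `name` and its `arguments`. The sketch below only builds those request messages. It omits the `initialize` handshake and the transport (stdio or HTTP), the tool name `search_docs` is made up, and this article doesn't document which tools Xcode itself exposes.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC request ids must be unique within a session

def mcp_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize one MCP request as a JSON-RPC 2.0 message."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)

# Discover the server's tools, then call one ("search_docs" is a hypothetical tool).
list_tools = mcp_request("tools/list")
call_tool = mcp_request("tools/call", {
    "name": "search_docs",
    "arguments": {"query": "NavigationStack"},
})
print(list_tools)
print(call_tool)
```

Because every MCP-compatible client speaks this same message shape, a tool server written once can be reached from any supporting IDE, which is the interoperability argument made above.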

How long does it take for development teams to become proficient with agentic coding?

Typical adoption follows a pattern: weeks 1-2 show high enthusiasm as simple tasks work well, weeks 3-4 bring frustration when agents struggle with complex problems and require significant rework, and weeks 5-8 show adaptation as developers learn optimal use cases and prompting strategies. Teams that push through the frustration phase reach real proficiency around month 3, with measurable productivity gains. Companies should budget 20-40 hours of training per developer and set expectations that true benefits emerge after the learning curve, not immediately.

What about code quality—can you trust AI-generated code in production?

AI-generated code quality depends primarily on your existing code review and testing processes. Studies show agent-generated code has similar security vulnerability density as human-written code when both go through the same security scanning and testing. The key difference is that agent code requires validation, while you might assume your own code is correct. Teams with strong testing practices see maintained or improved code quality; teams with weak practices might see quality decline. The critical practice is treating agent output as a first draft requiring validation, never as production-ready without review.

What major companies are adopting agentic coding and what results are they seeing?

While full case studies are limited because this is relatively new technology, companies experimenting with early agentic systems report 30-50% time savings on routine coding tasks, 40-60% reduction in onboarding time for new projects, and meaningful increases in developer satisfaction because routine work is eliminated. GitHub's research on AI-assisted development shows that developers complete routine tasks 70% faster with AI, though novel tasks see smaller gains of around 20%. Enterprise adoption is growing, particularly in companies with strong software engineering practices.

How does agentic coding impact junior developers and career development?

The shift toward agentic coding changes skill requirements. Junior developers who were traditionally trained by writing lots of boilerplate might find learning curves different. However, this can be positive: juniors can focus on learning architecture and complex problem-solving rather than repetitive coding. The challenge is that junior positions might require stronger fundamental understanding since agents handle the scaffolding. Companies should adjust onboarding to ensure juniors develop problem-solving skills even as agents reduce routine coding burden. Senior developers' value increases because architectural thinking and system design become more critical.

Conclusion: The Beginning of Autonomous Development

Apple's Xcode 26.3 announcement isn't just a feature release. It's a signal that agentic coding is moving from research to production.

We're at the beginning of something real. Not the "AI will replace all developers" hype. But the honest reality: tools that handle routine work, freeing developers to think about bigger problems.

The developers who will thrive in this environment are those who:

Understand how to work with agents effectively. This is a skill. You can be bad at it or good at it.

Maintain critical thinking about generated code. Agents make mistakes. You need the judgment to catch them.

Focus on architecture and design. As routine coding becomes easier, thinking about how to structure systems becomes more valuable.

Continue learning. The tools, frameworks, and best practices around agentic development will evolve rapidly. Static knowledge has a shorter shelf life.

For teams, the path forward is clear:

Experiment thoughtfully. Allocate 4-6 weeks to real evaluation, not just a weekend trial.

Invest in practices that make agents work well. Good code review, testing, and documentation aren't optional with agents—they're essential.

Rethink developer workflows. Agentic coding isn't Copilot 2.0. It requires different approaches to task breakdown, code ownership, and team communication.

Optimize for thinking work. Your developers' time is valuable. Use agents to eliminate tedious work so developers can focus on problems that require judgment and creativity.

The opportunity is real. Teams that nail this will move faster. Teams that adopt agents poorly will frustrate their developers and see limited gains.

Xcode 26.3 makes autonomous coding accessible to Apple's developer community starting today. The question isn't whether agentic coding will matter. It's whether you'll learn to use it effectively.

The future of development is here. It's agentic, it's autonomous, and it's starting with your IDE.

Key Takeaways

- Xcode 26.3 introduces true agentic coding with OpenAI Codex and Anthropic Claude agents that take autonomous action within the IDE, not just suggest code

- OpenAI Codex excels at rapid pattern-based code generation; Anthropic Claude handles complex reasoning and architectural decisions. Hybrid use maximizes benefits.

- Agentic development can eliminate 30-40% of routine coding work, providing ROI of 4.3:1 over 5 years when productivity gains are properly redirected

- Adoption requires 4-6 weeks of team learning and skill development; expect frustration in weeks 3-4 but real proficiency and gains by month 3

- Model Context Protocol (MCP) standardizes AI agent integration across tools, preventing vendor lock-in and enabling a diverse ecosystem of specialized agents

- Security depends on existing code review and testing practices, not the agent itself. AI-generated code has similar vulnerability density to human code when properly scanned


![Apple Xcode Agentic Coding: OpenAI & Anthropic Integration [2025]](https://tryrunable.com/blog/apple-xcode-agentic-coding-openai-anthropic-integration-2025/image-1-1770147497949.jpg)