YouTube's AI Slop Problem: Why Top Channels Are Disappearing [2025]

Last month, something quietly shifted on YouTube. Some of the platform's most-subscribed channels simply vanished. Not because they violated community guidelines in the traditional sense. They disappeared because they were pumping out what the internet now calls "AI slop."

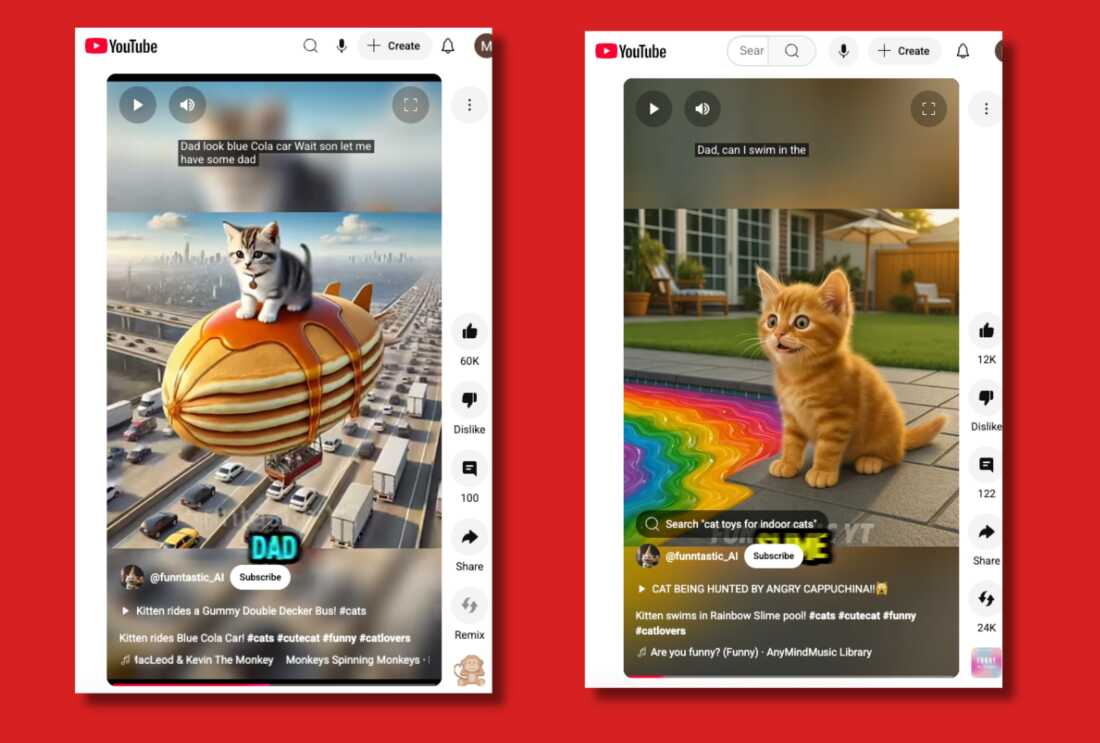

You've probably seen it. Low-quality AI-generated videos that flood your recommendations. Dragon Ball sequences stitched together by a machine. Fake testimonials with emotionless voice-overs. Clickbait thumbnails promising life-changing secrets. They accumulate millions of views, generate advertising revenue, and then get wiped off the platform overnight.

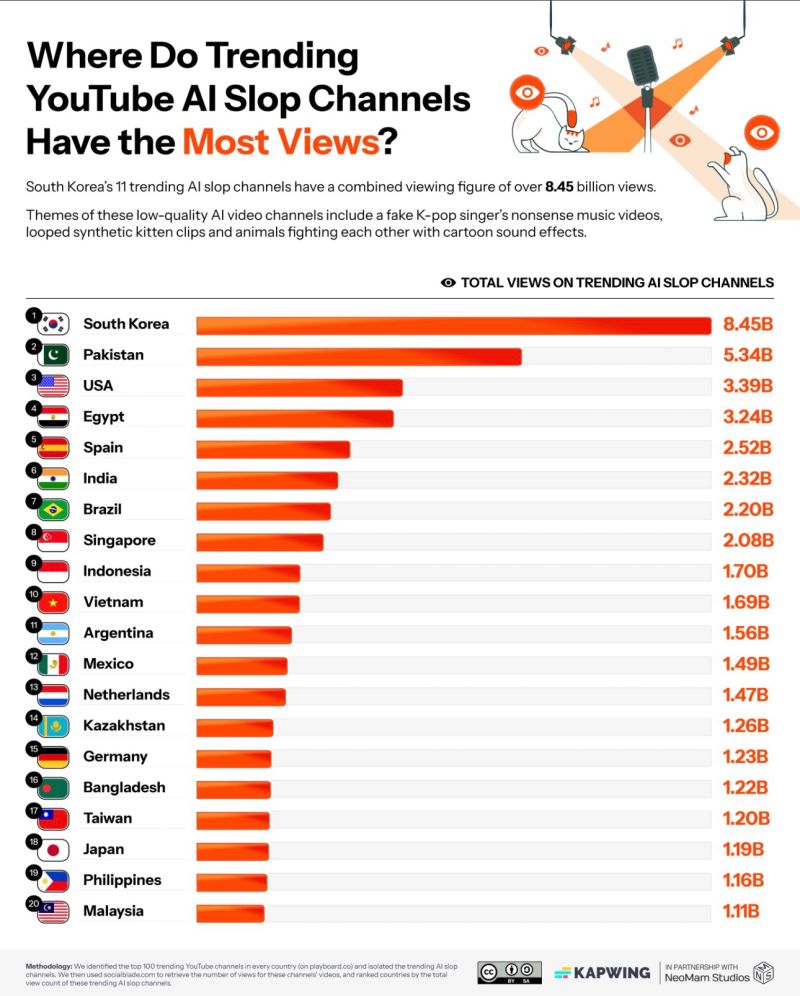

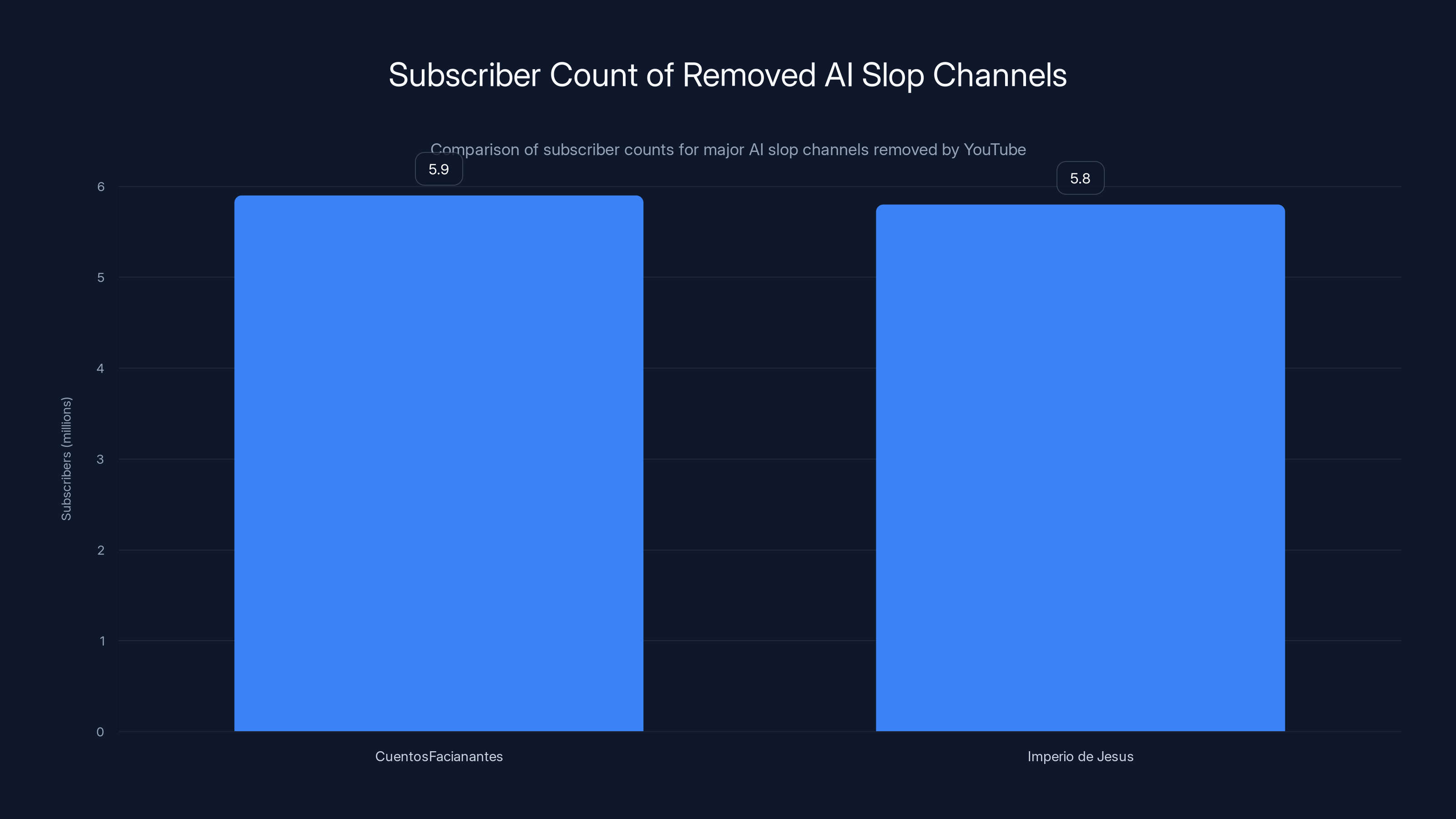

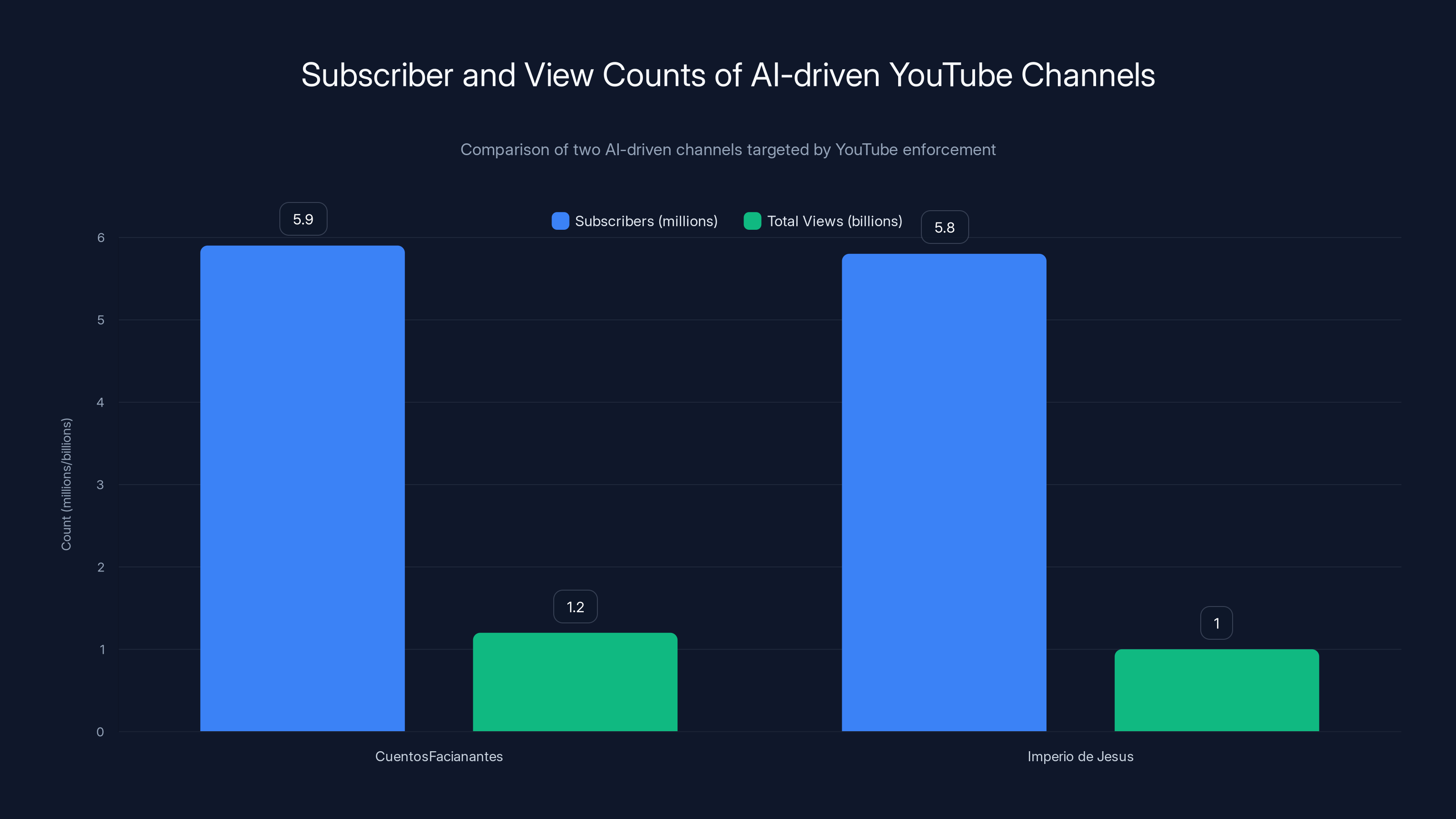

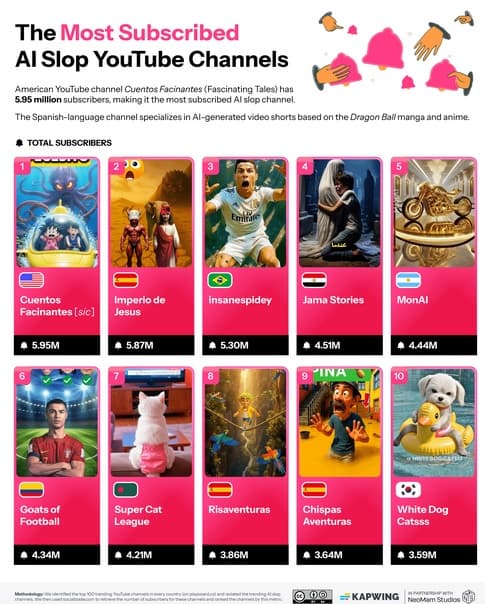

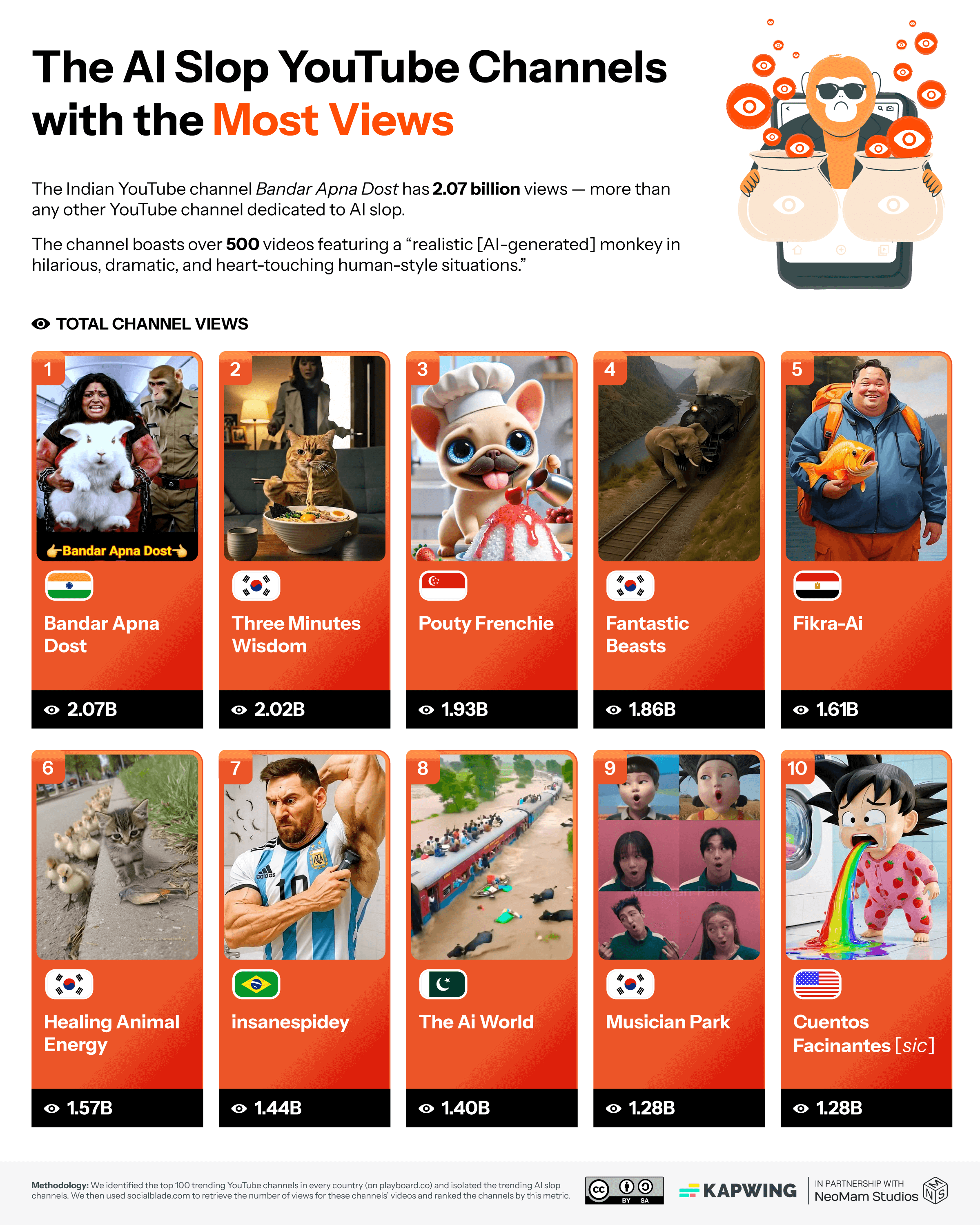

What makes this moment significant isn't just that YouTube finally acted. It's that the scale of the problem had become undeniable. Kapwing's research team identified Cuentos Facianantes, which had accumulated 5.9 million subscribers and over 1.2 billion total views, as the most-subscribed AI slop channel on the entire platform. Its Spanish name translates to "Fascinating Tales," but the content was anything but—just machine-generated Dragon Ball videos with minimal human curation.

The disappearance of these channels represents a critical inflection point. YouTube CEO Neal Mohan publicly acknowledged the issue, committing to "reduce the spread of low quality AI content." But here's the tension: YouTube is simultaneously encouraging creators to use AI tools. The platform wants the benefits of AI without the junk. That's harder than it sounds.

This article explores what happened, why it happened, and what it means for the future of content creation on the world's largest video platform.

TL;DR

- YouTube removed major AI slop channels: Cuentos Facianantes (5.9M subscribers) and Imperio de Jesus (5.8M subscribers) disappeared after Kapwing identified them as top AI slop producers.

- The scale was staggering: Cuentos Facianantes alone accumulated over 1.2 billion views on low-quality AI-generated content.

- CEO promised action: YouTube's Neal Mohan committed to reducing low-quality AI content spread using existing spam and clickbait detection systems.

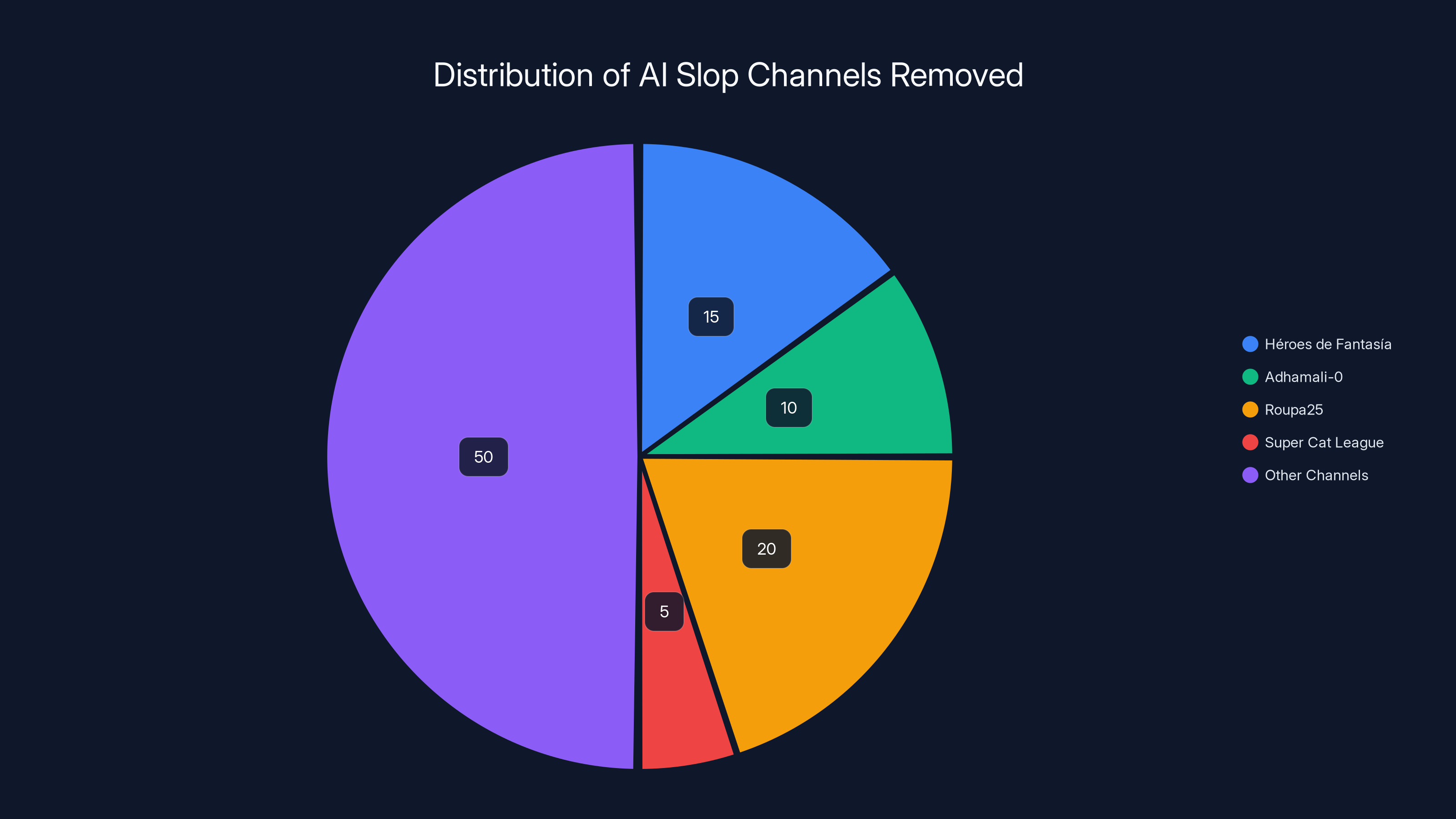

- 16+ channels wiped: Beyond the two major channels, 16 other AI slop generators were deleted or stripped of content.

- The contradiction: YouTube is simultaneously encouraging creators to use AI tools while removing AI-generated slop, creating conflicting incentives.

Cuentos Facianantes and Imperio de Jesus were among the largest AI slop channels removed, each with nearly 6 million subscribers, highlighting the significant reach of low-quality AI content on YouTube.

What Exactly Is AI Slop and Why Does It Matter?

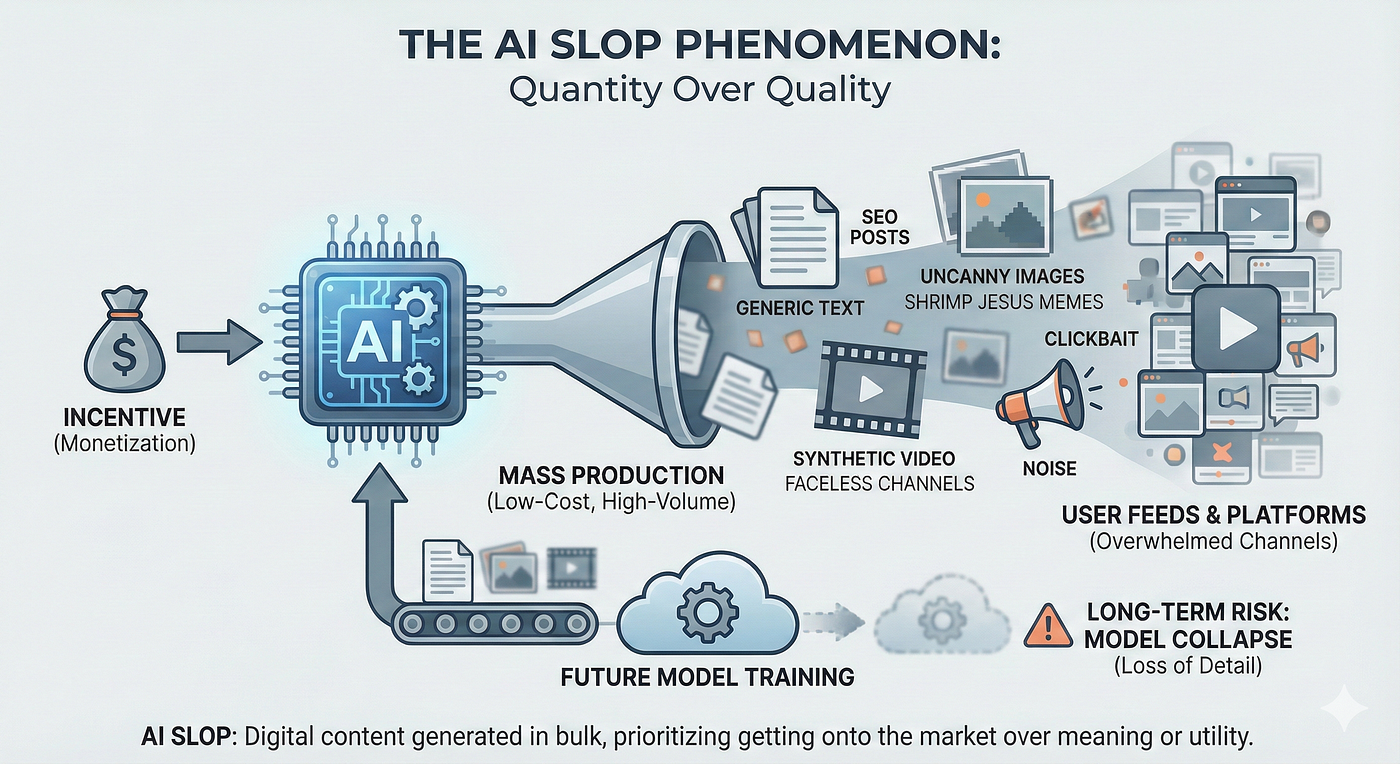

AI slop isn't a technical term. It's a descriptor that emerged organically across tech communities to describe low-effort, low-quality content generated almost entirely by artificial intelligence with minimal human creativity or oversight.

Think of it this way: a human creator might spend days scripting, filming, and editing a ten-minute video. An AI slop creator runs a prompt through an image generator, feeds the output to a video synthesis tool, adds a text-to-speech voice-over, slaps on a clickbait thumbnail, and uploads. The entire process might take thirty minutes.

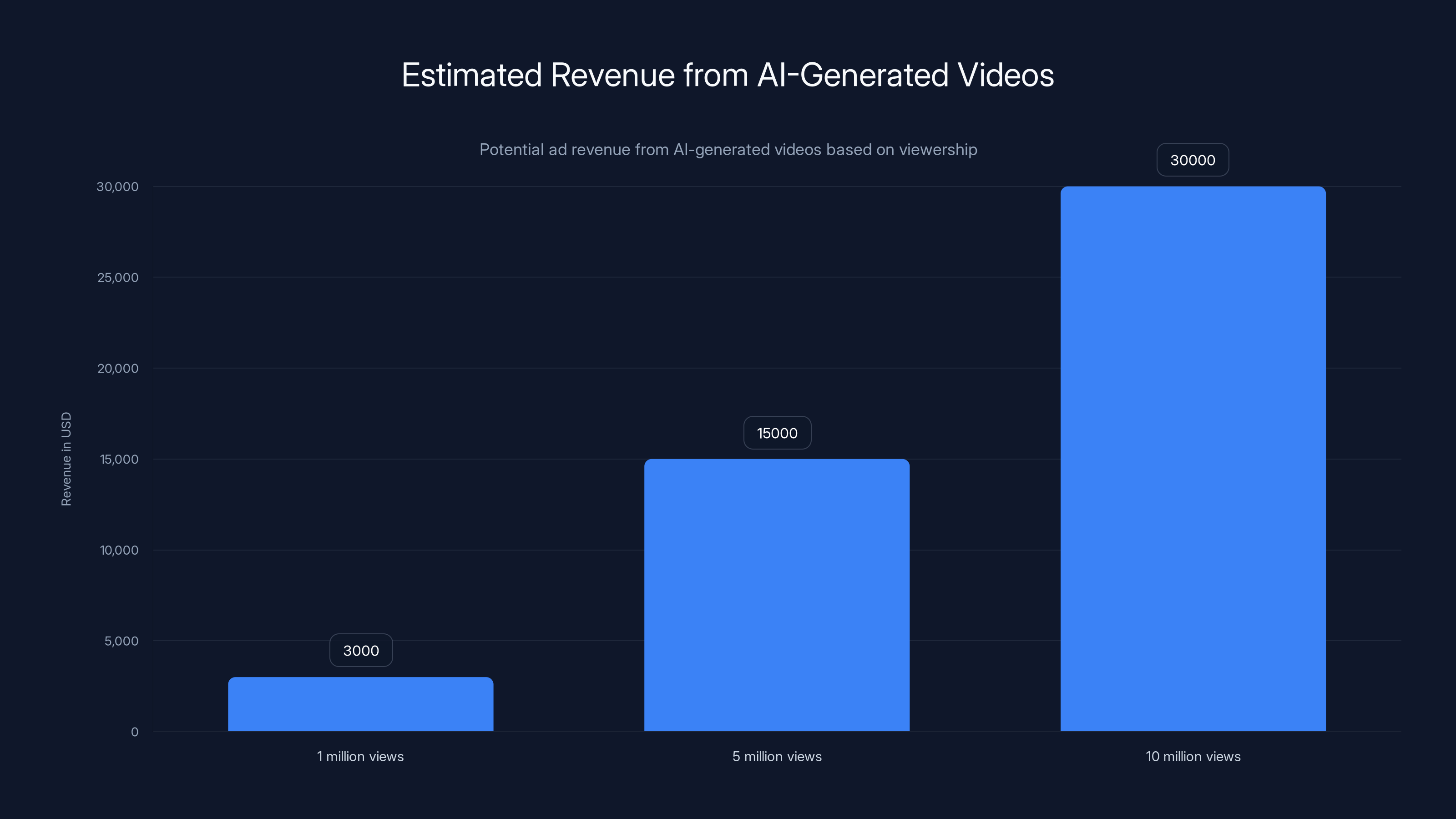

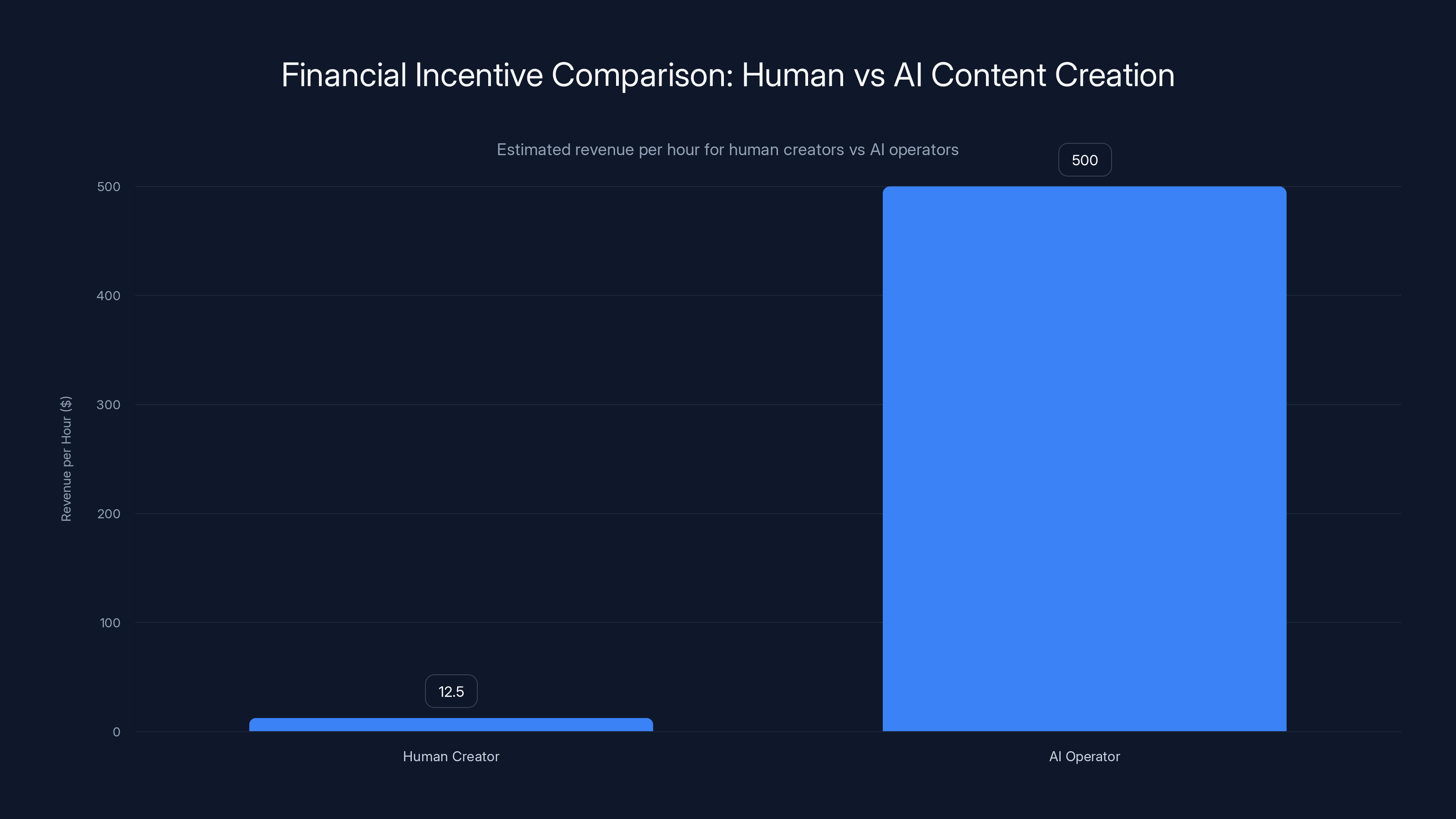

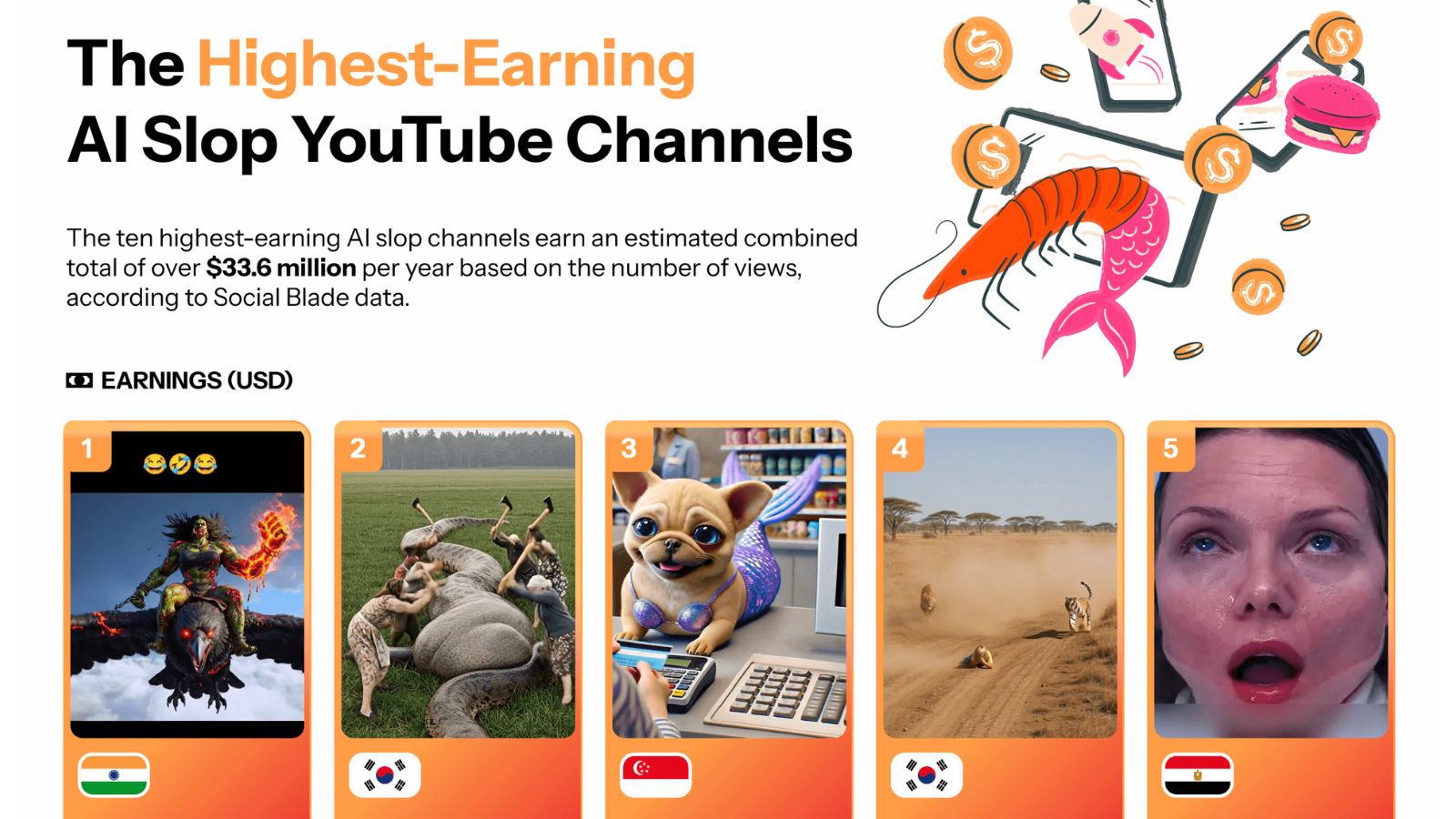

The financial incentive is obvious. YouTube's ad-supported model pays creators based on watch time and engagement. If a piece of AI slop reaches 10 million views, it generates thousands of dollars regardless of whether anyone learned anything or enjoyed the experience. The platform's algorithm doesn't distinguish between "earned" views from quality content and views from users clicking a misleading thumbnail.

But AI slop causes real problems. It pollutes YouTube's recommendation system, pushing out human-created content. Users waste time watching empty, repetitive videos. Legitimate creators struggle to compete with machines that never sleep and never demand fair compensation. The platform's signal system breaks down when engagement metrics no longer correlate with actual value.

It also damages trust. After watching your fifth "miraculous weight loss secret" video powered by AI voice synthesis, you stop trusting YouTube's recommendations entirely. The platform becomes less useful to everyone.

Kapwing's November 2025 report crystallized the problem. They identified Cuentos Facianantes as a case study in pure scale. The channel operated on a simple model: generate Dragon Ball fan fiction using AI tools, upload daily, let YouTube's algorithm amplify it. The numbers were staggering—1.2 billion total views. That's not a fringe phenomenon. That's a mainstream problem.

AI-generated videos can earn substantial revenue, with 10 million views potentially generating around $30,000. Estimated data based on typical ad revenue rates.

The Two Channels That Triggered the Crackdown

YouTube's enforcement action targeted two specific channels, though reporters are still unclear on exactly when they were removed or the precise trigger.

Cuentos Facianantes: The Dragon Ball Empire

This channel operated with stunning simplicity and equally stunning scale. Its formula: take Dragon Ball characters, generate AI images and video sequences, synthesize a voiceover in Spanish, publish daily. Repeat endlessly.

The numbers tell the story. 5.9 million subscribers. 1.2 billion total views. Those metrics place it in the upper percentile of YouTube creators. A legitimate gaming channel or animation studio would celebrate those numbers. But Cuentos Facianantes achieved them through pure automation.

What made this channel particularly egregious wasn't the AI usage itself—it was the lack of any redeeming human creativity. A human using AI as a tool might generate backgrounds automatically but create original characters and storylines. This channel did none of that. It was AI from prompt to publish.

The November 2025 Kapwing report identified this channel as "the most-subscribed-to AI slop channel." That designation gave YouTube political cover to remove it. If the research was public and documented, and YouTube allowed it to continue, the optics became indefensible.

Imperio de Jesus: Fake Inspiration

The second channel, Imperio de Jesus (Empire of Jesus), followed a different formula but with equally hollow execution. Its channel description promised faith-based content that would strengthen "our faith with Jesus through fun interactive quizzes."

The reality was far simpler. Generate quiz-style videos with AI visuals, add dramatic music and synthesized narration, claim the quizzes reveal spiritual truths. The channel accumulated 5.8 million subscribers and became a template for a certain type of AI slop: fake self-help and inspiration content.

This category of AI slop is particularly dangerous because it plays on genuine human needs. People want spiritual growth, personal development, and meaningful experiences. AI generators offer empty versions of these desires, monetized.

Both channels, despite their different niches, shared core characteristics:

- Daily upload schedules with minimal variation

- No discernible human authorship in the creative process

- Mass audience appeal through controversial or emotional triggers

- Minimal production cost relative to watch time generated

- Extreme subscriber counts accumulated over months or years

The Cascade: 16+ Additional Channels Vanish

The removal of these two channels wasn't an isolated action. Kapwing's research identified at least 16 other AI slop channels that either disappeared entirely or had all their videos deleted.

Channels included in this wider purge:

- Héroes de Fantasía (Fantasy Heroes)

- Adhamali-0

- Roupa 25

- Super Cat League (still exists but with zero videos)

Super Cat League deserves specific mention because its channel description reveals the brazenness of AI slop operators. The description promised: "The World's #1 AI Cat Cinema!" featuring "hyper-realistic, emotional, and viral adventures" powered by "Advanced Generative AI."

This channel didn't hide what it was. It proudly marketed AI-generated cat videos as premium content. The fact that it accumulated more than 2 million subscribers suggests that a significant portion of YouTube's audience either didn't care about AI origin or didn't understand it.

The scale of the purge raises an important question: How many of these channels were removed by YouTube's automated systems versus human review? YouTube hasn't provided transparency on this. The removal might indicate sophisticated detection, or it might indicate that user reporting finally reached critical mass.

More importantly: are these removals permanent? Or will new channels appear with identical content within days, simply rebranding the same AI slop model?

Cuentos Facianantes and Imperio de Jesus amassed millions of subscribers and billions of views, highlighting the scale of AI-generated content on YouTube. Estimated data for Imperio de Jesus views.

Why YouTube Allowed This for So Long

Understanding the crackdown requires understanding why YouTube tolerated AI slop in the first place. The answer isn't incompetence. It's incentive misalignment.

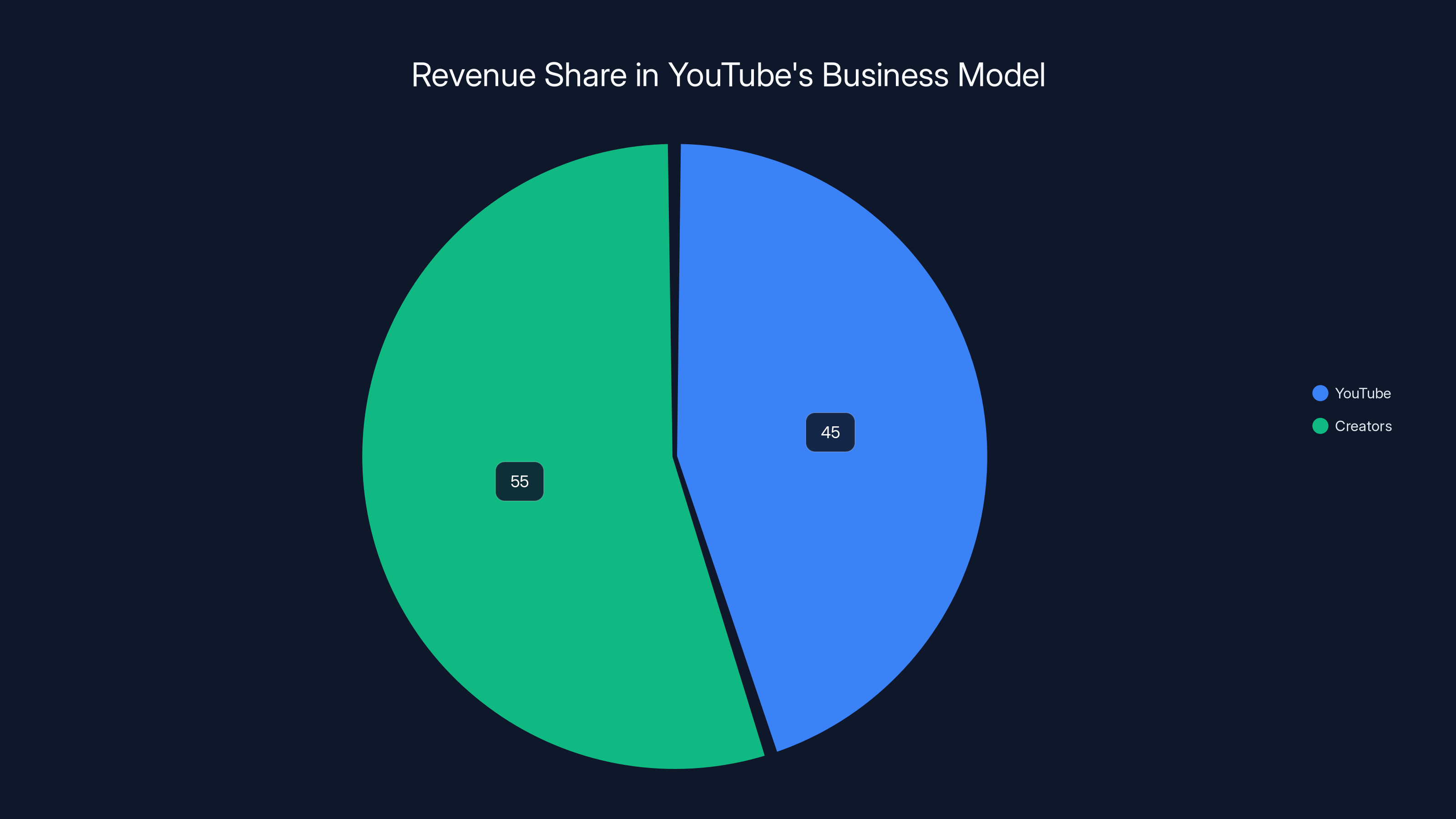

YouTube's business model is fundamentally attention-based. Creators upload content. Users watch it. YouTube serves ads against that watch time. YouTube takes 45%, creators take 55%. This model works beautifully for legitimate creators producing valuable content.
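As a rough illustration of that split, the sketch below applies the 55/45 division to a gross ad revenue figure. The function names and the per-thousand-views rate are hypothetical, chosen only to make the arithmetic concrete. Actual rates vary widely and are not given in this article.

```python
# Revenue split described in the text: creators 55%, YouTube 45%.
CREATOR_SHARE_PERCENT = 55
PLATFORM_SHARE_PERCENT = 45


def gross_from_views(views: int, gross_per_thousand_views: float) -> float:
    """Estimate gross ad revenue from a view count.

    gross_per_thousand_views is a hypothetical input, not a published rate.
    """
    return views / 1000 * gross_per_thousand_views


def split_ad_revenue(gross_ad_revenue: float) -> tuple[float, float]:
    """Return (creator_payout, platform_cut) under the 55/45 split."""
    creator_payout = gross_ad_revenue * CREATOR_SHARE_PERCENT / 100
    platform_cut = gross_ad_revenue * PLATFORM_SHARE_PERCENT / 100
    return creator_payout, platform_cut


if __name__ == "__main__":
    # Assumption: $5 gross per 1,000 views, purely for illustration.
    gross = gross_from_views(10_000_000, 5.0)
    creator, platform = split_ad_revenue(gross)
    print(f"gross={gross:.2f} creator={creator:.2f} platform={platform:.2f}")
```

Nothing in this calculation depends on how the views were earned, which is the point the article goes on to make.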

But it also creates perverse incentives. A video that gets 10 million views generates identical revenue whether those views come from people learning something or people mindlessly clicking a misleading thumbnail. The algorithm can't distinguish quality from engagement-hacking because engagement is the only signal it measures.

AI slop exploits this misalignment perfectly. It's optimized purely for clicks and watch time. It has zero concern for viewer satisfaction or actual value because the creator doesn't need to build a sustainable brand. They're farming the algorithm's attention signal, not building an audience.

YouTube's historical response to this problem has been reactive, not proactive. The platform removes spam and clickbait after it becomes a public relations problem. But the detection mechanisms were never designed to catch AI-generated content specifically because, until recently, AI-generated video at scale was technically difficult.

Now it's not. Tools like Runway, Synthesia, and open-source models make photorealistic video generation accessible to anyone with a laptop. The technical barrier disappeared. YouTube's enforcement mechanisms didn't adapt fast enough.

Another factor: international enforcement gaps. Many of these channels were likely run by operators in countries with weak intellectual property enforcement. Cuentos Facianantes was Spanish-language, and Imperio de Jesus likely was as well. YouTube's enforcement team is primarily English-speaking, so language and geographic barriers made these channels a lower priority for investigation.

The CEO's Promise vs. The Platform's Incentives

In November 2025, YouTube CEO Neal Mohan sent a letter addressing the AI slop problem directly. He committed to "reduce the spread of low quality AI content" by enhancing the spam and clickbait detection systems already in place.

This commitment is notable, but it's also insufficient as currently framed. Here's why.

Mohan's approach relies on existing infrastructure designed to catch spam and clickbait. But AI slop isn't always spam. Some channels operate within YouTube's technical guidelines while violating its spirit. Super Cat League didn't violate community standards—it just generated cat videos with AI.

The gap between what's technically against policy and what's actually harmful is where AI slop lives.

Moreover, the commitment lacks specific mechanisms. What counts as "low quality AI content"? Is using AI for backgrounds in an otherwise human-created video slop? What about AI voice-overs for high-quality original scripts? The company hasn't drawn these lines clearly.

Mohan's letter also reveals a deeper contradiction. While promising to remove low-quality AI content, YouTube is simultaneously investing in AI creation tools for its creators. The platform wants legitimate creators using AI as a productivity tool. But where's the line between "AI as creative assistant" and "AI as replacement for creativity"?

This contradiction isn't unique to YouTube. Meta faces it with Instagram. TikTok faces it with its algorithm. These platforms want to enable AI innovation while preventing AI exploitation. The tension is real and unresolved.

The financial incentive for AI-generated content is approximately 40 times stronger than for human-created content, highlighting the economic drive behind AI slop. Estimated data.

The Technical Challenge: Detection at Scale

Detecting AI slop at the scale YouTube operates requires solving a specific technical problem: distinguishing between human-created content using AI tools and AI-created content with minimal human input.

This isn't solved yet. Current approaches include:

1. Upload Metadata Analysis: YouTube could examine file metadata, encoding patterns, and compression artifacts to identify AI-generated video. Different AI tools leave different digital fingerprints. This approach works but requires constant updates as tools evolve.

2. Content Pattern Recognition: AI slop often follows predictable patterns: identical scene transitions, uniform lighting, synthetic voice characteristics, repetitive music. Pattern-matching algorithms can catch these. But false positives are a real problem, because legitimate creators might use templates and achieve similar consistency.

3. Creator Behavior Analysis: Channels uploading 10-15 videos daily with identical production quality are suspicious. YouTube could flag this behavior and route to human review. This approach catches scaling but might penalize legitimate automation-savvy creators.

4. Watermark Detection: Some AI tools embed watermarks in generated content. YouTube could scan for these watermarks during upload. But creators can strip watermarks, and not all AI tools embed them.

5. Synthetic Media Provenance: Ideally, AI tools would include metadata indicating what was generated artificially. Adobe's Content Credentials initiative works in this direction. But adoption is voluntary, and bad actors won't use it.

The truth is that perfect detection is technically impossible. There will always be AI-generated content that passes as human-created, and human-created content that looks AI-generated. YouTube has to accept some false positives and false negatives.

The company's current approach seems to be targeting scale and consistency rather than attempting perfect detection. Under that approach, a channel uploading 20 or more videos a day would likely be flagged for human review. That would catch most AI slop while still allowing legitimate high-volume creators to operate.
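The upload-volume heuristic can be sketched in a few lines. This is a minimal illustration, not YouTube's actual system: the threshold value, the function names, and the choice to look at the busiest single day are all assumptions made for the example.

```python
from collections import Counter
from datetime import date

# Hypothetical threshold, borrowed from the "20+ videos daily" figure above.
DAILY_UPLOAD_THRESHOLD = 20


def peak_daily_uploads(upload_dates: list[date]) -> int:
    """Return the largest number of uploads on any single calendar day."""
    counts = Counter(upload_dates)
    return max(counts.values(), default=0)


def needs_human_review(
    upload_dates: list[date], threshold: int = DAILY_UPLOAD_THRESHOLD
) -> bool:
    """Flag a channel whose busiest day meets or exceeds the threshold."""
    return peak_daily_uploads(upload_dates) >= threshold


if __name__ == "__main__":
    bulk_channel = [date(2025, 11, 1)] * 25  # 25 uploads in one day
    steady_channel = [date(2025, 11, d) for d in range(1, 8)]  # one per day
    print(needs_human_review(bulk_channel))
    print(needs_human_review(steady_channel))
```

A flag like this only routes a channel to review. It says nothing about whether the content is AI-generated, which is why the false-positive concern raised above still applies.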

What This Means for Creators: The New Uncertainty

For creators using AI tools legitimately, the crackdown creates uncomfortable uncertainty.

If you're a small animation studio using AI to generate backgrounds and speed up production, are you safe? What if your animation style happens to be consistent across videos—does that trigger investigation?

If you're a tutorial creator using AI voice-overs to localize content into multiple languages, where does YouTube draw the line between acceptable and slop?

The lack of clear policy creates chilling effects. Conservative creators might abandon AI tools entirely rather than risk demonetization or channel removal. That's genuinely harmful because AI-augmented creation is legitimate and valuable when combined with human creativity.

YouTube hasn't published creator guidelines specifically addressing this. The company's position appears to be "we'll know it when we see it," which is the worst possible policy for creator confidence.

Smart creators are responding by:

- Maintaining human elements that establish creative authorship (voiceovers, original scripts, unique editing decisions)

- Transparency about tools used in video descriptions

- Moderate upload schedules that don't trigger algorithmic flags

- Community engagement that demonstrates genuine audience relationship

- Diverse content showing artistic range beyond template repetition

Estimated data shows that 'Other Channels' made up the majority of AI slop channels removed, highlighting the widespread nature of the purge.

The Broader Ecosystem Problem: Where AI Slop Thrives

AI slop isn't unique to YouTube. It's emerging everywhere:

Instagram and TikTok are experiencing similar problems with AI-generated short videos designed purely for engagement.

Reddit is dealing with AI-generated comments and posts designed to manipulate discussion.

Search results are becoming polluted with AI-generated blog posts created purely for SEO.

Email is experiencing AI-generated newsletters with zero original reporting.

This suggests that AI slop isn't a YouTube problem. It's an attention economy problem. When platforms reward engagement above all else, and when AI tools make scale infinitely cheap, low-quality content becomes inevitably dominant.

The math is brutal. Suppose a human creator spends 40 hours creating a video that generates

Until platforms change their underlying incentive structures—moving from pure engagement metrics to quality signals—AI slop will persist. YouTube's removals might slow the tide temporarily. They won't stop it.
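The per-hour economics behind this argument can be sketched with a simple ratio. The 40-hour figure comes from the text above and the 30-minute pipeline from earlier in the article. The revenue value is hypothetical, and assuming both videos earn the same amount is a simplification for illustration only.

```python
def revenue_per_hour(revenue: float, production_hours: float) -> float:
    """Revenue earned per hour of production effort."""
    if production_hours <= 0:
        raise ValueError("production_hours must be positive")
    return revenue / production_hours


# Effort figures mentioned in the article.
HUMAN_HOURS = 40.0  # human creator, per the paragraph above
AI_HOURS = 0.5      # the thirty-minute AI pipeline described earlier

# Hypothetical assumption: both videos earn the same amount.
REVENUE_PER_VIDEO = 1000.0

if __name__ == "__main__":
    human_rate = revenue_per_hour(REVENUE_PER_VIDEO, HUMAN_HOURS)
    ai_rate = revenue_per_hour(REVENUE_PER_VIDEO, AI_HOURS)
    print(f"human: {human_rate:.2f}/hour, AI pipeline: {ai_rate:.2f}/hour")
    print(f"ratio: {ai_rate / human_rate:.0f}x")
```

The exact multiple depends entirely on the inputs chosen. The structural point is that when revenue is tied to views rather than effort, cutting production time raises the hourly return proportionally.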

The Copyright Angle: Why This Matters Legally

The AI slop problem intersects with copyright in ways that could make this crackdown legally significant.

Many of these channels were generating content based on copyrighted material. Cuentos Facianantes was creating Dragon Ball content. While fan fiction can infringe copyright, enforcement against individual creators is rare. Enforcement at the platform level is different.

If YouTube faced pressure from copyright holders, the company has strong incentive to remove AI-generated derivative works at scale. An AI tool can generate 100 Dragon Ball videos in a day. A human fan fiction writer might create one per week. The scale of derivative content generated by AI is simply unprecedented.

YouTube removing these channels might represent the company protecting itself from liability rather than protecting content quality. That's a less noble motivation but potentially more durable—legal risk doesn't disappear with CEO promises.

This also raises a fascinating legal question: who's liable for copyright infringement when AI generates derivative content? The platform? The operator? The AI company? Courts haven't definitively answered this yet. YouTube might be removing channels preemptively to avoid finding out.

YouTube takes 45% of ad revenue, while creators receive 55%. This model incentivizes engagement over content quality.

The Algorithm's Role: How AI Slop Wins

Underlying all of this is YouTube's recommendation algorithm—a black box that decides which videos surface to which users.

AI slop thrives in this algorithm because it's optimized for the algorithm's signals, not for human satisfaction. The algorithm measures:

- Click-through rate (did the thumbnail work?)

- Watch time (how long did the user watch?)

- Session time (did they continue watching other videos?)

- Viewer retention (when did they stop watching?)

AI slop is specifically engineered to trigger these signals. Misleading thumbnails maximize clicks. Paced editing with frequent cuts maintains watch time. The algorithm doesn't measure actual satisfaction or value—just engagement.
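To make these signals concrete, here is a minimal sketch of how three of them could be computed from raw counts. The field names and formulas are assumptions for illustration. YouTube's actual ranking inputs are not public, and the sketch treats every click as exactly one view.

```python
from dataclasses import dataclass


@dataclass
class VideoStats:
    """Hypothetical per-video counters used only for this illustration."""
    impressions: int             # times the thumbnail was shown
    clicks: int                  # times it was clicked (treated as views)
    watch_seconds: float         # total seconds watched across all views
    video_length_seconds: float  # duration of the video


def click_through_rate(stats: VideoStats) -> float:
    """Fraction of impressions that turned into clicks."""
    return stats.clicks / stats.impressions if stats.impressions else 0.0


def average_view_duration(stats: VideoStats) -> float:
    """Mean seconds watched per view."""
    return stats.watch_seconds / stats.clicks if stats.clicks else 0.0


def average_retention(stats: VideoStats) -> float:
    """Mean fraction of the video watched per view."""
    if not stats.video_length_seconds:
        return 0.0
    return average_view_duration(stats) / stats.video_length_seconds


if __name__ == "__main__":
    stats = VideoStats(impressions=1000, clicks=80,
                       watch_seconds=19200, video_length_seconds=600)
    print(click_through_rate(stats))
    print(average_view_duration(stats))
    print(average_retention(stats))
```

None of these quantities encodes whether a viewer found the video worthwhile, which is the gap the article describes.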

A legitimate creator might spend 100 hours creating a documentary that's genuinely valuable but slightly slow-paced. An AI operator spends 2 hours creating sensationalist garbage with rapid cuts and clickbait that generates more watch time.

The algorithm doesn't know which is better. It just knows which generates more engagement. So it recommends the garbage.

This is the fundamental problem that's harder to solve than any technical detection mechanism. YouTube's algorithm is trained on engagement metrics. It will naturally optimize for content that games those metrics. AI slop is just the most extreme version of a problem YouTube has always had.

Authenticity vs. Automation: The Real Tension

Underlying YouTube's AI slop crackdown is a deeper question about authenticity in digital media.

Humans have always wanted genuine connection with creators. We follow people we find interesting, not content we find optimal. YouTube's recommendation algorithm inherently works against this by treating all content as fungible objects to be ranked by engagement metrics.

AI slop is what happens when you maximize for metrics while abandoning authenticity. It's the pure logical conclusion of treating creation as optimization.

YouTube's crackdown suggests the company recognizes this: an ecosystem of pure optimization is worse for the platform in the long term than an ecosystem with authentic creators.

But can YouTube rebuild authenticity while maintaining its engagement-based business model? That's the real question. The answer probably involves trade-offs YouTube won't want to make—like prioritizing creator satisfaction over watch time, or ranking content differently for discovery.

What Happened to the Removed Channels' Audiences?

A curious footnote: what happened to the millions of people subscribed to these channels?

Many probably didn't notice. They were watching AI slop passively in their recommendations, not building conscious relationships with creators. The removal was a net positive for their viewing experience even if they didn't realize it.

But some subscribers were apparently engaged. Super Cat League accumulated 2 million followers before its content vanished. Some of those followers presumably enjoyed AI cat videos specifically because they were AI-generated—novelty-seeking behavior.

These viewers now face a choice: find new AI-generated content, or explore actual human creators. If they choose the former, they'll simply migrate to replacement channels. New AI slop operators are undoubtedly already scrambling to fill the void.

This is the fundamental challenge with content removals at scale: they're whack-a-mole. Remove one channel, three more appear. The underlying incentive structure remains unchanged.

The International Dimension: Why Enforcement Is Geographically Biased

The two largest removed AI slop channels both appear to have been Spanish-language. This isn't coincidental.

YouTube's content moderation is dominated by English-language teams. The company has native speakers and trained reviewers across many languages, but review capacity isn't proportional to creator base. Spanish-language creators get less review attention than English-language creators. This creates opportunity for AI slop to scale internationally before being caught.

Moreover, the creators operating these channels were likely in countries where YouTube enforcement is harder—possibly Latin America, possibly Eastern Europe. Pursuing enforcement internationally is expensive and complicated. Much easier to remove content than trace and sue operators.

This also raises questions about bias in AI slop removal. Are non-English creators disproportionately targeted? Or do they disproportionately create AI slop? YouTube hasn't published data on this, so we can only speculate.

What's clear: geography matters in platform enforcement. Centralized content moderation inherently creates geographic bias in outcomes.

Looking Forward: What Changes Are Coming

Based on the pattern of YouTube's enforcement actions and CEO statements, several changes seem likely:

1. Stricter upload rate limits for channels without established history. Prevent bulk uploading of potential AI slop.

2. Watermark detection embedded in upload systems. Flag videos generated by known AI tools for review.

3. Creator verification programs. Verified creators get more latitude with AI tools; unverified creators face scrutiny.

4. Explicit AI disclosure requirements. Creators must disclose which portions of their videos were AI-generated.

5. Algorithm adjustments that down-rank content with certain AI-generated signatures, prioritizing diverse creators and upload patterns.

6. Creator grants and support for legitimate AI tool usage. Position YouTube as supporting AI-augmented creation, not just preventing AI slop.
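The first item, upload rate limits, is a standard rate-limiting problem. The sketch below shows one common way to implement it, a sliding-window limiter. The class name, the limit of three uploads, and the one-day window are hypothetical. Nothing here reflects a published YouTube policy.

```python
from collections import deque
from datetime import datetime, timedelta


class UploadRateLimiter:
    """Sliding-window limiter: at most max_uploads within any window."""

    def __init__(self, max_uploads: int, window: timedelta) -> None:
        self.max_uploads = max_uploads
        self.window = window
        self._timestamps: deque[datetime] = deque()

    def allow(self, now: datetime) -> bool:
        """Record and permit an upload at `now` if the limit allows it."""
        cutoff = now - self.window
        # Drop uploads that have aged out of the window.
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_uploads:
            return False
        self._timestamps.append(now)
        return True


if __name__ == "__main__":
    # Hypothetical policy: a new channel may upload 3 videos per day.
    limiter = UploadRateLimiter(max_uploads=3, window=timedelta(days=1))
    start = datetime(2025, 11, 1)
    for hour in range(5):
        print(hour, limiter.allow(start + timedelta(hours=hour)))
```

A real system would presumably vary the limit by channel history, as the list item suggests, rather than apply one fixed number to everyone.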

The most effective long-term solution would involve incentive restructuring, but that's economically radical for YouTube. The company would need to move partially away from pure engagement metrics toward quality metrics. Possible? Maybe. Likely? Probably not, because it means less total watch time and less ad revenue.

The Bigger Picture: AI Content Quality as a Real Problem

The removal of these channels represents YouTube confronting a genuine problem: AI-generated content at scale is actually inferior to human-generated content in ways that matter to users.

This contradicts some AI hype that suggested AI could eventually replace human creators entirely. The reality is messier. AI excels at specific tasks: background generation, voice synthesis, basic video editing. AI is poor at originality, narrative arc, and authentic human expression.

AI slop emerges when someone tries to skip the human elements and go purely mechanical. That's technically possible but produces demonstrable garbage.

The lesson for creators and platforms: AI augmentation works. AI replacement doesn't. The future is likely human creativity combined with AI productivity tools, not pure AI generation.

YouTube's crackdown isn't really about AI. It's about acknowledging that pure automation produces inferior content, and users (and algorithms, ultimately) prefer content with actual human creativity involved.

What You Should Know if You Create Content

If you're creating content on YouTube or any major platform, the implications are:

Your authenticity is becoming more valuable, not less. As AI slop commodifies low-quality content, creators with genuine voices, perspectives, and talent have wider moats. Invest in developing your actual creative voice rather than optimizing purely for algorithm signals.

AI tools are here to stay. The genie isn't going back in the bottle. The question is how you use them. Using AI to speed up legitimate creation work is fine. Using AI to replace creation entirely is increasingly risky.

Transparency builds trust. If you're using AI tools, say so. Users generally don't care about AI usage; they care about dishonesty. Honest disclosure builds the brand loyalty that survives algorithm changes.

Consistency needs context. Uploading daily is fine if you're a legitimate operation. But daily uploads need to show variation and growth. Pure template repetition gets flagged—rightly so.

Build relationships, not just metrics. Metrics can be gamed. Relationships with actual audiences are harder to game and more durable long-term.

Why This Story Matters Beyond YouTube

YouTube's AI slop crackdown isn't just platform news. It's a signal about how the internet's quality infrastructure is starting to push back against low-quality automation.

For years, the internet's incentive structures favored volume over quality. Spam won. Clickbait won. Bot content won. Gradually, platforms are realizing this is unsustainable.

The removal of 18+ major AI slop channels represents a turning point. It's the first visible sign that platforms are willing to sacrifice some engagement metrics to maintain content quality and ecosystem health.

This matters because it suggests that the internet's future doesn't have to be purely automated low-quality content. There's still room for authenticity. There's still demand for genuine human creativity.

But that future requires constant enforcement. AI slop will return unless YouTube's underlying incentives change. The crackdown is necessary but insufficient.

FAQ

What is AI slop on YouTube?

AI slop refers to low-quality, low-effort video content generated almost entirely by AI tools with minimal human creativity or oversight. Examples include AI-generated Dragon Ball videos, fake spiritual quizzes, and automated cat video compilations. These channels prioritize maximizing views and revenue through algorithmic manipulation rather than creating genuine value for viewers.

Why did YouTube remove these AI slop channels?

YouTube has not publicly detailed the exact trigger, but channels like Cuentos Facianantes and Imperio de Jesus appear to have been removed under the platform's policies against spam and low-quality content. The removals followed public reports documenting the scale of AI-generated content, combined with CEO Neal Mohan's stated commitment to reducing low-quality AI content. The timing suggests both public pressure and recognition that AI slop was polluting the platform's recommendation system.

How many subscribers did the removed channels have?

The two largest removed channels had massive audiences. Cuentos Facianantes accumulated 5.9 million subscribers and over 1.2 billion total views. Imperio de Jesus had 5.8 million subscribers. Combined with the 16+ other channels removed or stripped of content, these removals affected tens of millions of cumulative subscribers and billions of views. This scale demonstrates how pervasive AI slop had become on the platform.

Can creators use AI tools without getting removed?

Yes, using AI tools for legitimate creative purposes is generally acceptable. YouTube appears to distinguish between AI-augmented creation (where AI assists human creators) and AI replacement (where AI generates all content with minimal human input), although it has not formalized that distinction. Creators using AI for background generation, voice synthesis, or productivity enhancement are typically safe, especially if they disclose tool usage and maintain creative authorship through original scripts, editing decisions, and unique perspectives.

What's YouTube's official policy on AI-generated content?

YouTube has committed to reducing low-quality AI content but hasn't published detailed, creator-facing guidelines defining exactly what's acceptable. The company's approach appears to target scale indicators (high upload frequency, repetitive patterns, minimal variation) rather than attempting to ban all AI-generated content. Its public messaging suggests that AI usage should enhance human creativity, not replace it entirely, though the company hasn't formalized this distinction in official policy.

How does YouTube detect AI-generated content?

YouTube likely uses multiple detection approaches including upload metadata analysis, content pattern recognition, creator behavior analysis, and potentially watermark detection from known AI tools. The company scans for consistent indicators of pure automation: daily uploads with minimal variation, uniform visual characteristics, synthetic voice patterns, and repetitive structures. However, perfect detection is technically impossible, so YouTube appears to focus on targeting high-scale operations rather than identifying every instance of AI-generated content.

What about creators in other countries using AI tools?

YouTube's enforcement has been geographically uneven, with stronger oversight in English-language content and weaker enforcement internationally. This created opportunity for non-English creators to scale AI slop operations before detection. However, the recent crackdown targets channels regardless of language or geography, suggesting YouTube is expanding enforcement capacity. International creators using AI tools legitimately should follow the same best practices as English-language creators: maintain human creativity, disclose tool usage, and avoid bulk-upload patterns.

Will AI slop creators just start new channels?

Yes, this is likely. Removing channels addresses the symptom but not the underlying financial incentive. AI slop operators can easily create replacement channels using similar strategies. Without changing YouTube's engagement-based monetization model or implementing stronger prevention at upload time, the removal of existing channels is largely whack-a-mole. The crackdown slows the problem but doesn't solve it permanently.

Should I worry about my channel getting removed if I use AI?

If you're creating legitimate content with human creativity and using AI as a productivity tool, the risk is low. Channels most at risk are those showing clear indicators of pure automation: daily uploads of template-based content, no discernible human authorship, rapid pattern repetition, and dramatic subscriber growth from engagement manipulation. Building genuine audience relationships, maintaining creative variation, and disclosing your process all reduce risk substantially.

How does this affect YouTube's competition with other platforms?

The AI slop crackdown positions YouTube as quality-focused relative to platforms that haven't taken similar action. Platforms like TikTok and Instagram are experiencing similar AI-generated content problems but haven't enforced at comparable scale. However, YouTube's enforcement is incomplete and ongoing, suggesting the company is still developing its approach. Competitors might differentiate by implementing stronger AI quality controls earlier, though this would require sacrificing short-term engagement metrics.

Conclusion: The Reckoning Has Begun

YouTube's removal of 18+ major AI slop channels marks the beginning of a necessary reckoning with low-quality automated content. The problem was real, the scale was massive, and the platform finally acted.

But action against AI slop is only the first step. The deeper issues remain unresolved: YouTube's engagement-based monetization model still rewards optimization over authenticity. The recommendation algorithm still prioritizes watch time over value. The infrastructure still makes bulk automation more profitable than careful curation.

What changed is recognition. YouTube's CEO publicly acknowledged that low-quality AI content is a problem worth addressing. The company is investing in detection and removal. But this is reactive enforcement, not systemic change.

For creators, the lesson is clear: authenticity is becoming more valuable as AI commodifies mediocrity. Build genuine relationships with audiences. Use AI as a tool, not a replacement. Maintain creative voice. Invest in quality over quantity.

For platforms, the lesson is equally clear: algorithms that reward pure engagement eventually destroy themselves. YouTube's crackdown suggests the company understands this. Whether that understanding translates into fundamental incentive restructuring remains to be seen.

The disappearance of Cuentos Facianantes and its peers won't solve AI slop. But it signals that the era of pure algorithmic optimization without quality filters is ending. The internet's next phase will require platforms to make harder choices about what they actually want to optimize for.

For everyone's sake, they should choose authenticity.

Key Takeaways

- YouTube removed 18+ AI slop channels, including Cuentos Facianantes (5.9M subscribers, 1.2B views), in response to the documented scale of low-quality AI content.

- Financial incentives massively favor AI slop: operators earn $12.50/hour for equivalent revenue.

- CEO Neal Mohan committed to reducing low-quality AI content but hasn't published detailed enforcement guidelines, leaving creators uncertain.

- Detection at scale requires multiple approaches (metadata analysis, pattern recognition, and behavior flagging), and even combined they cannot catch every AI-generated video.

- Legitimate creators can safely use AI by maintaining human creative elements, transparent disclosure, moderate upload schedules, and diverse content.

- The underlying problem isn't AI technology; it's YouTube's engagement-based monetization model, which rewards optimization over authenticity.

- Enforcement is temporary without incentive restructuring; new AI slop channels will emerge unless platform fundamentals change.

