The $1.7 Billion Bet That's Reshaping AI

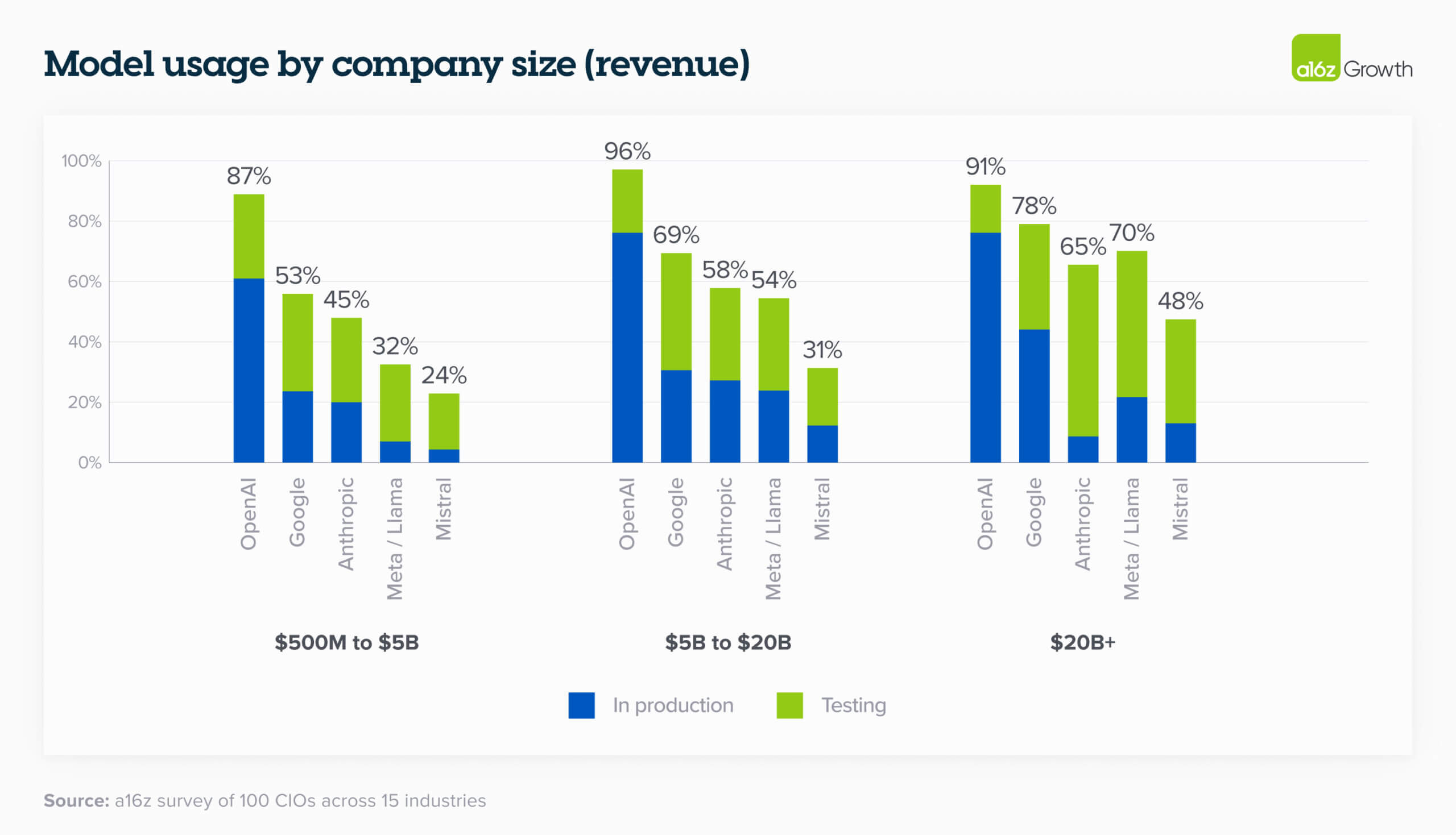

Andreessen Horowitz just did something that made the entire venture capital world stop and take notes. Out of a massive new fund, it set aside $1.7 billion specifically for AI infrastructure.

Here's why that matters: while everyone's watching the latest ChatGPT demos and debating whether Claude can write better code, the real competition is happening behind the scenes. It's happening in data centers, in search algorithms, in video processing pipelines, and in the developer tools that companies like Cursor, ElevenLabs, and Fal are building on top of.

When Jennifer Li, the general partner leading a16z's infrastructure team, talks about where this money is going, she's not talking about funding the next consumer AI toy. She's talking about the foundational layer—the stuff that makes everything else possible. This is the difference between owning a lemonade stand and owning the lemon farm. Everyone notices the stand. Nobody notices the farm until it runs out of lemons.

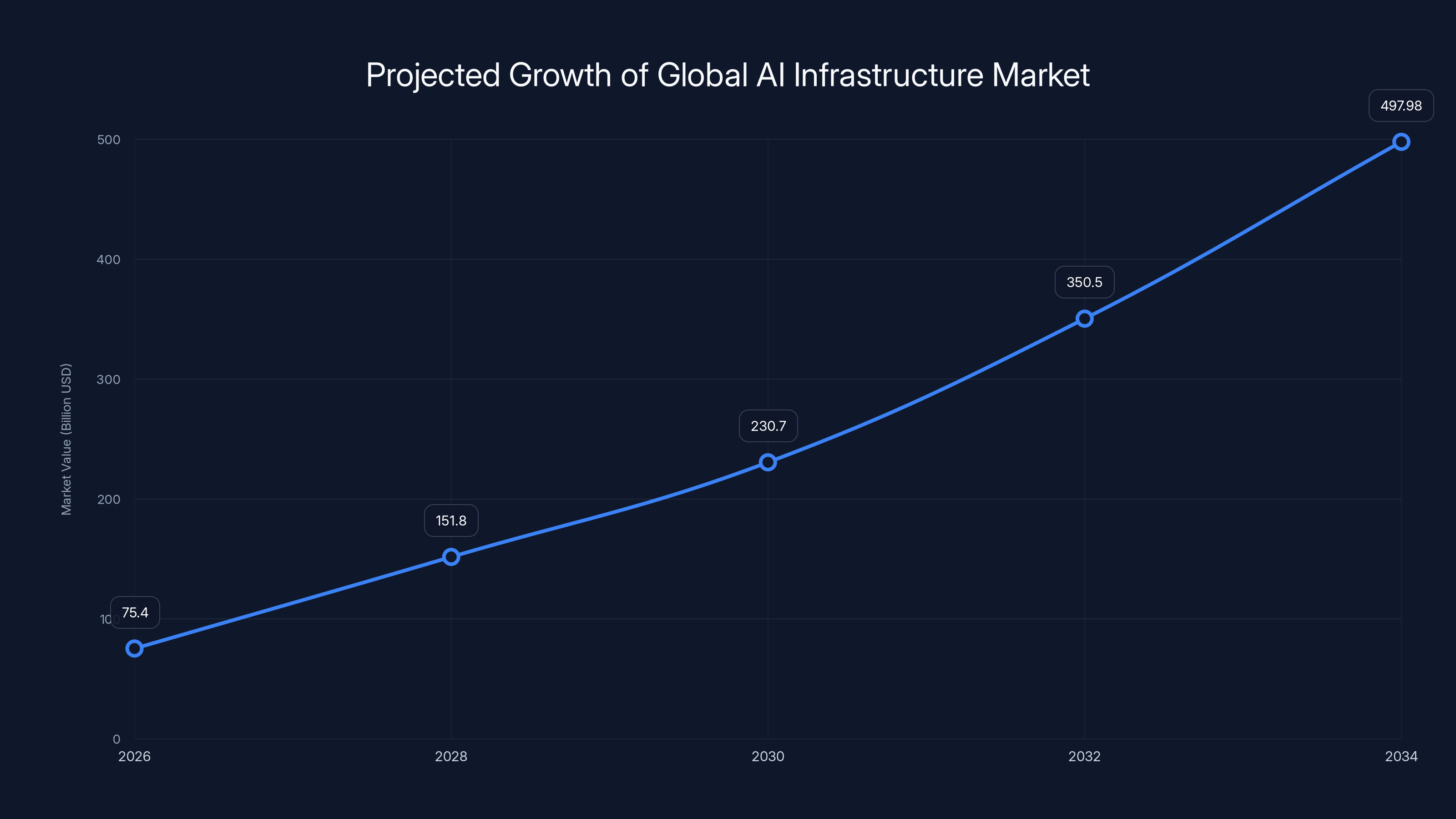

The global AI infrastructure market is projected to grow from

But here's the thing: most people don't understand what AI infrastructure actually is. They think it's just GPUs in a warehouse. It's so much more than that. It's the search systems that help AI models find relevant information. It's the APIs that let developers integrate AI into their products without reinventing the wheel. It's the optimization software that makes expensive AI models run cheaper and faster. It's the video and audio processing engines that power the next generation of content creation tools.

This article breaks down exactly where a16z sees this AI super cycle going next, which companies are actually getting funded right now, why the infrastructure layer is more critical than most people realize, and what this means for developers, startups, and everyone building on top of AI.

TL;DR

- Infrastructure gets $1.7B: a16z's infrastructure team is backing companies like ElevenLabs ($11B valuation), Ideogram, and Fal, betting on foundational technologies.

- Search infrastructure matters: The ability to retrieve and rank relevant information has become as important as the models themselves.

- Talent crunch is real: AI-native startups are competing ruthlessly for engineers, with salaries and equity packages skyrocketing, as noted by Forbes.

- Platform dominance shifts: The firms that control infrastructure will define the next era of platform winners, similar to how AWS defined cloud computing.

- The super cycle continues: Despite predictions of an AI bubble, a16z's thesis is that we're still in the early innings of AI adoption and infrastructure buildout, as discussed in the Economic Times.

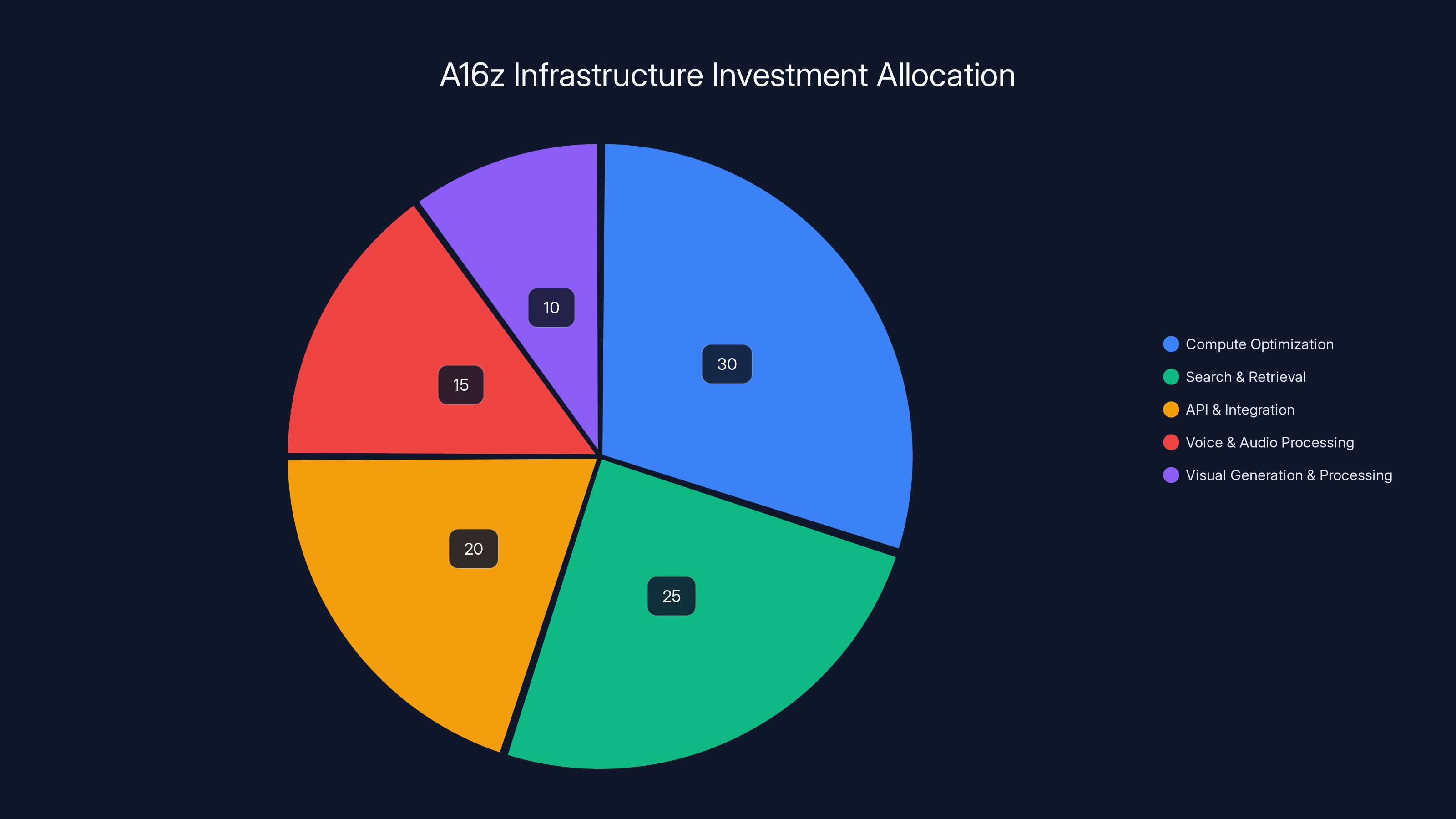

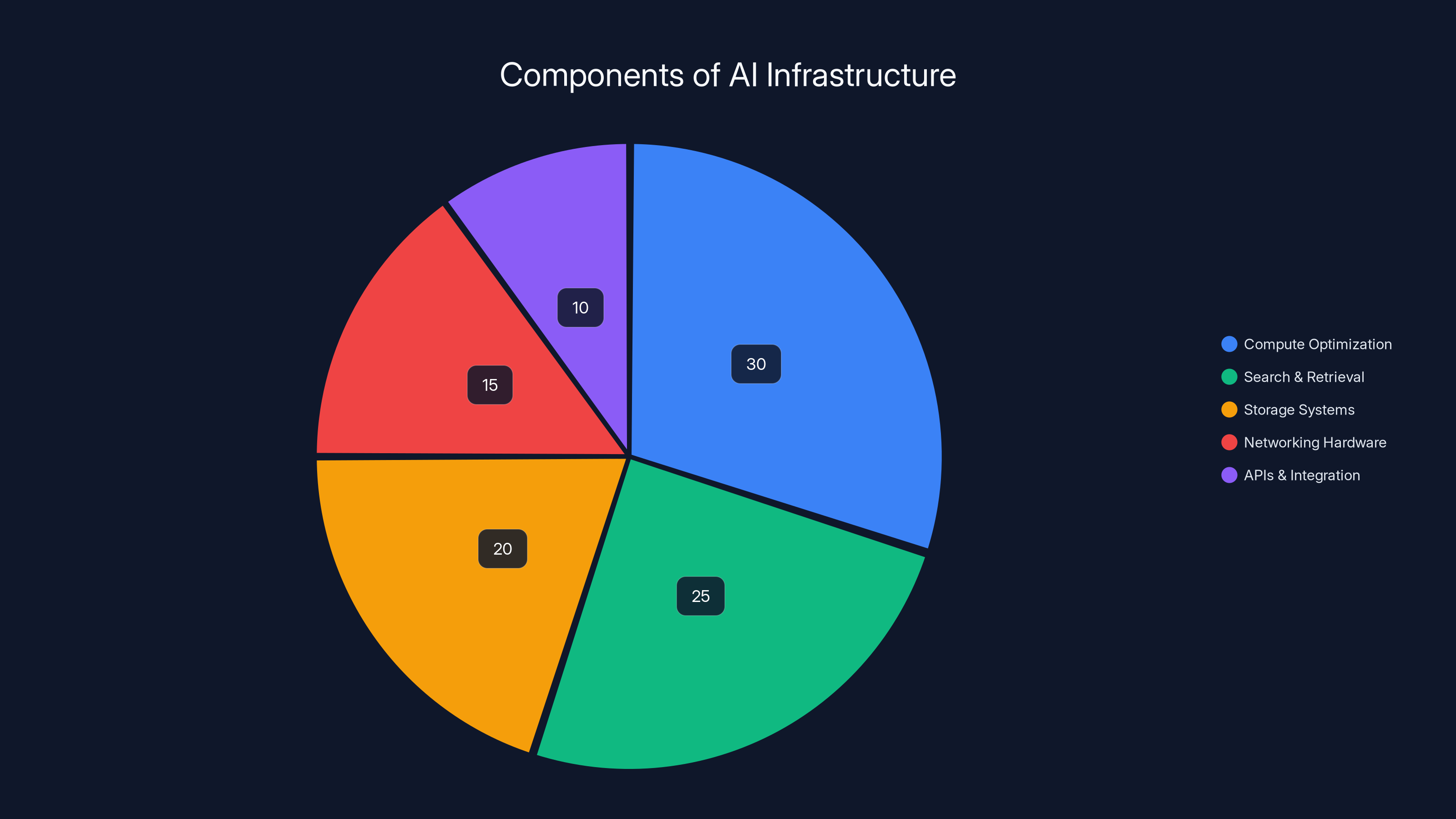

Estimated data shows a16z's focus on compute optimization and search systems, reflecting priorities in making AI models more efficient and accessible.

Understanding AI Infrastructure: It's Not What You Think

When most people hear "AI infrastructure," they picture server farms with thousands of GPUs pumping out computations. That's part of it. But the reality is far more nuanced and, frankly, more interesting.

AI infrastructure is the entire ecosystem of tools, platforms, and services that make it possible to train, deploy, and run AI models at scale. It includes compute (yes, GPUs), but also storage systems, networking hardware, databases, monitoring software, and the APIs that tie everything together.

Think of it like the difference between a car and the entire transportation system. The car is important. But so are the roads, the gas stations, the traffic lights, the repair shops, and the insurance companies. Building a car is hard. Building an entire transportation ecosystem is harder, more valuable, and ultimately more defensible as a business.

Jennifer Li's team at a16z isn't just investing in companies that build AI models. They're investing in the companies that make it possible for thousands of other companies to build AI models more efficiently, more cheaply, and more reliably.

The infrastructure layer includes several critical categories:

Compute Optimization: Getting AI models to run faster and cheaper. Companies in this space figure out how to squeeze maximum performance from expensive hardware, often reducing inference costs by 50% or more.

Search and Retrieval Systems: The ability to quickly find and rank relevant information so that AI models can make better decisions. This is why Perplexity is valuable—it's not just asking language models questions, it's building a search layer that helps those models find accurate, sourced information.

API and Integration Layers: Making it trivially easy for developers to integrate AI capabilities into their products. Fal is a great example here. Instead of forcing developers to set up their own image generation infrastructure, Fal wraps everything into clean APIs that work in seconds.

Voice and Audio Processing: ElevenLabs built the infrastructure for high-quality voice synthesis and cloning. Before ElevenLabs, voice AI was a side feature. Now it's a product category.

Visual Generation and Processing: Ideogram represents the next generation of image generation models, offering better composition, typography, and creative control than earlier tools.

Each of these areas represents a different piece of the infrastructure puzzle. And each one is generating billions of dollars in value because they enable thousands of other companies to do more with less.
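To make the compute-optimization claim concrete, here is a back-of-the-envelope cost model. It assumes a single GPU rented at a fixed hourly price, running at full utilization with a steady throughput in tokens per second; the $2.00/hour price and the 500 and 1,000 tokens/second throughputs are hypothetical placeholders, not figures from a16z or any vendor. It shows one way a "50% cost reduction" can arise: at a fixed hardware price, doubling throughput halves the cost per token.

```python
def cost_per_million_tokens(gpu_price_per_hour: float, tokens_per_second: float) -> float:
    """Dollar cost to generate one million tokens on one GPU running flat out.

    Assumes full utilization and hourly billing (a simplified, hypothetical model).
    """
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return gpu_price_per_hour / tokens_per_hour * 1_000_000


# Hypothetical numbers: the same $2.00/hour GPU before and after an
# optimization (for example, better batching) that doubles throughput.
baseline = cost_per_million_tokens(2.00, 500)
optimized = cost_per_million_tokens(2.00, 1000)
savings = 1 - optimized / baseline
print(f"baseline ${baseline:.2f}/M tokens, optimized ${optimized:.2f}/M tokens, savings {savings:.0%}")
```

Real deployments also have to account for idle time, memory limits, and latency targets, which is part of why optimization is a product category rather than a one-line fix.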


The Infrastructure Thesis: Why A16z Is Betting Big

A16z isn't throwing money at infrastructure because it's fashionable. They're doing it because they have a specific thesis about how AI markets develop, and that thesis is grounded in historical precedent.

Look back at the cloud computing revolution. In the early 2000s, everyone was excited about web applications. But the real winners weren't the first web apps—they were AWS, Azure, and Google Cloud. Those companies didn't build a better email client or a more innovative project management tool. They built the infrastructure that made it possible for thousands of other companies to build better applications.

The same pattern is playing out with AI. Right now, everyone's focused on language models and chatbots. But the real defensible value is being created in the infrastructure layer. Here's why:

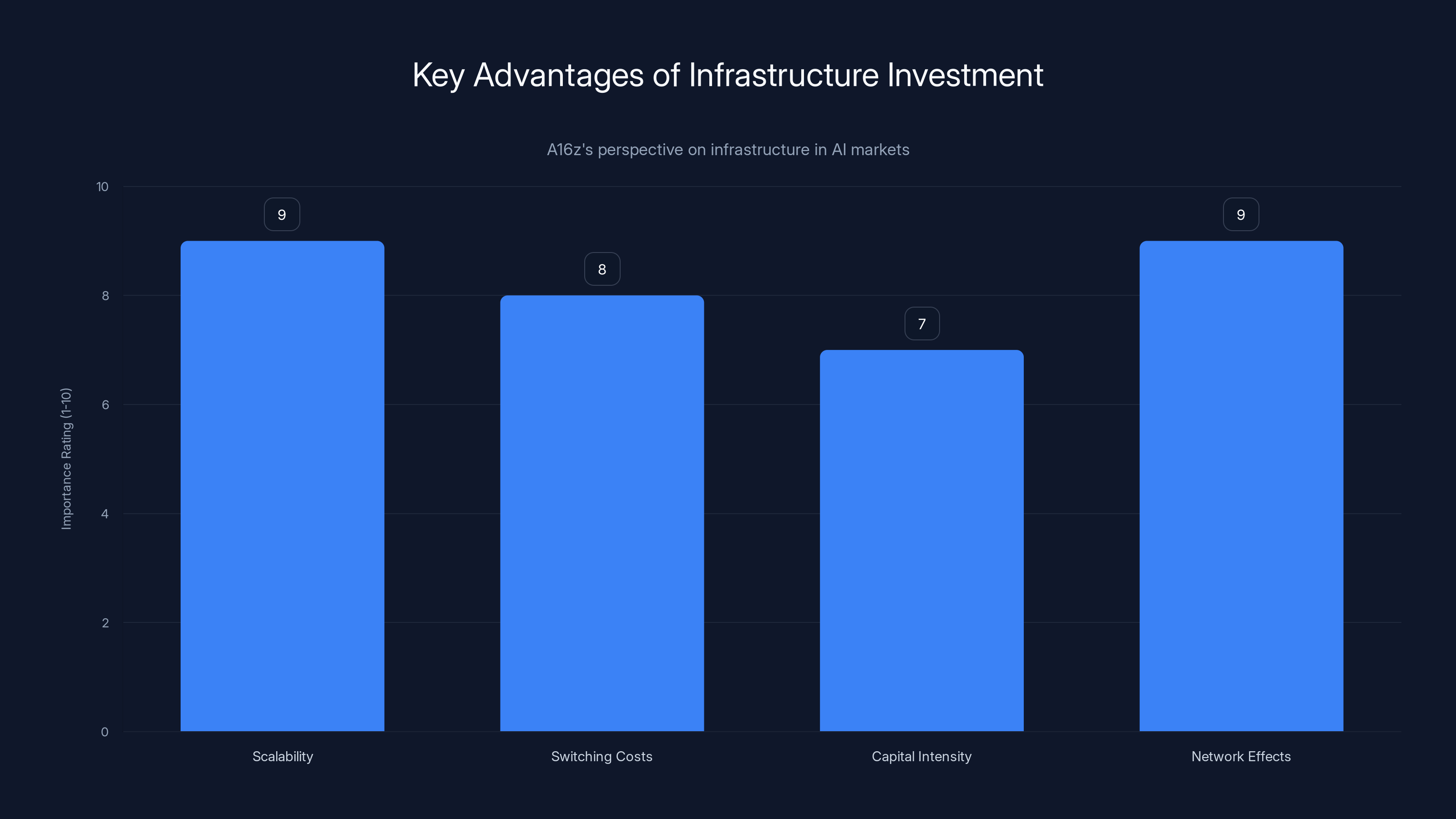

Infrastructure scales across multiple customers: When ElevenLabs builds better voice synthesis, every company that uses ElevenLabs gets better voice synthesis. That's leverage. Every customer benefits from the same investment.

Infrastructure creates switching costs: Once you build your application on top of a specific infrastructure platform, switching gets expensive. This is good business because it creates retention and defensibility.

Infrastructure is capital-intensive but headcount-light: Building a good infrastructure product requires serious, scarce engineering talent, but you don't need 10 different specialized teams. One really strong engineering team can build infrastructure that serves thousands of customers.

Infrastructure creates network effects: The more developers and companies that use a particular infrastructure platform, the better it gets. More usage means more data, more feedback, more optimization opportunities.

Jennifer Li has been explicit about this thesis. The team at a16z believes that infrastructure companies are going to define the next era of platform dominance, just like AWS, Azure, and Google Cloud defined the last one.

The Talent Crunch: Why Building AI is Getting Harder

Here's something nobody talks about enough: building AI infrastructure requires a very specific type of talent, and that talent is in short supply.

You can't just hire a smart software engineer and ask them to optimize AI inference costs. You need people who understand GPU architecture, CUDA programming, distributed systems, numerical optimization, and the specific challenges of machine learning. These people exist, but there aren't many of them, and every AI company is competing for the same pool.

Jennifer Li mentioned this explicitly: the talent crunch is hitting AI-native startups hard. We're talking about situations where a startup needs a specific expert, and there are maybe five people in the entire country who have that expertise. When you're competing against Google, OpenAI, and Anthropic for that person, the economics get brutal.

The salary numbers alone are staggering. A top-tier AI engineer with specialized infrastructure expertise can command

But here's the thing: throwing money at the talent problem doesn't solve it. You can't print specialized experts. Companies have to get creative about how they attract and retain this talent:

Equity and ownership: Early-stage infrastructure companies often offer larger equity packages than they can afford in salary. The idea is that you're betting on the outcome, not just the paycheck.

Interesting problems: Top talent in AI infrastructure is often motivated by the technical challenge. Working on a problem that hasn't been solved before is more attractive than maximizing salary.

Speed and autonomy: In a startup, you can move faster. You make decisions without 47 layers of approval. This appeals to people who are frustrated by bureaucracy at big companies.

Recruitment networks: Successful infrastructure companies create their own talent pipelines. If you're building something people care about, the next generation of engineers wants to join you.

The talent crunch also creates a moat for companies that successfully hire great people. Once you have a strong team, they become more productive, which makes the company better, which makes it easier to hire the next person. It's a virtuous cycle that's hard to break if you're starting behind.

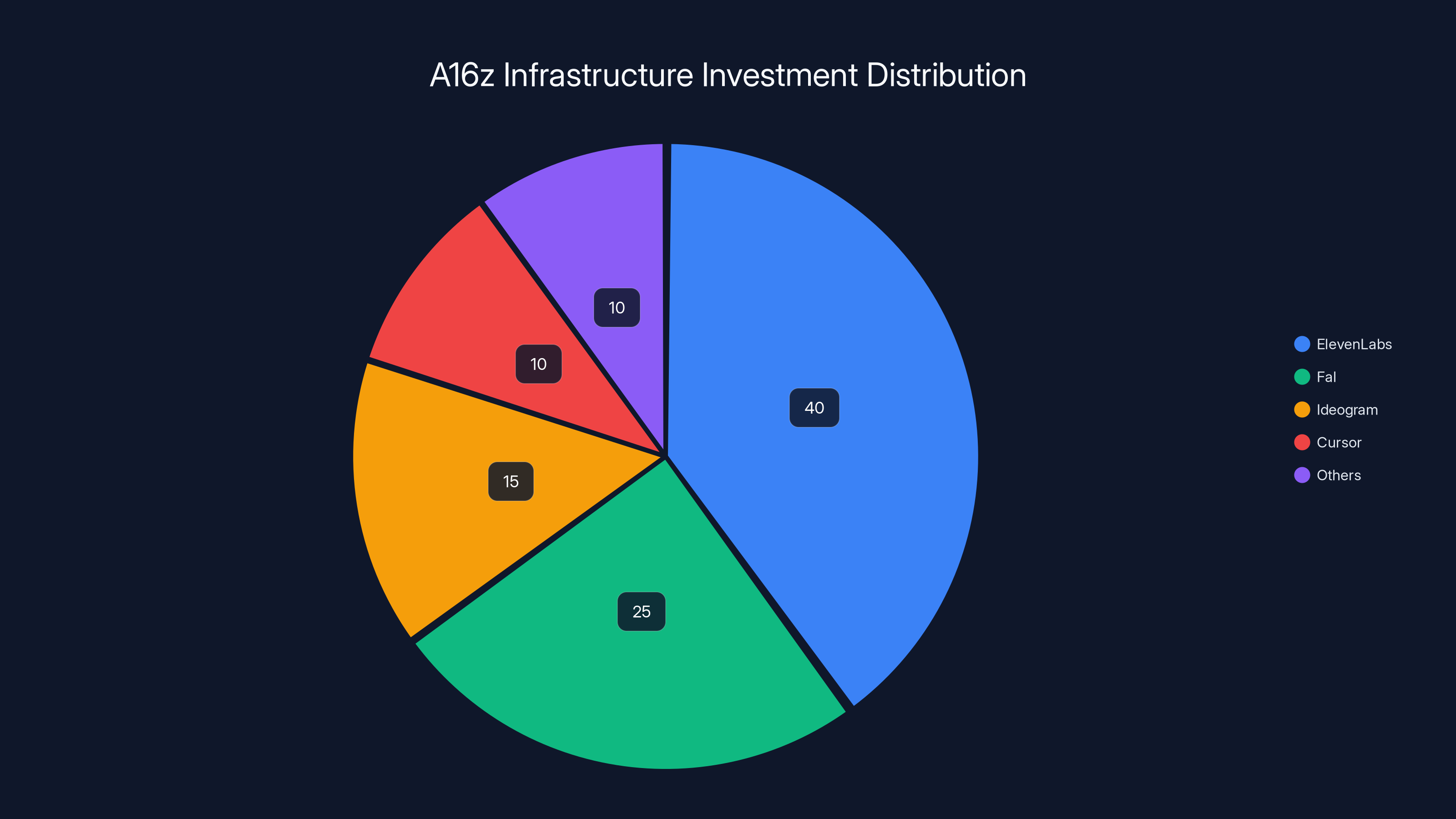

ElevenLabs receives the largest share of a16z's infrastructure investments, reflecting its leading position in voice AI technology. Estimated data.

Search Infrastructure: The Unsexy Secret Sauce

One of the less obvious insights from a16z's thesis is that search infrastructure matters far more than most people realize.

Here's the thing: modern AI models are powerful, but they're also prone to hallucinating. They'll confidently make up facts that sound plausible but are completely wrong. A major part of the solution to this problem is better search and retrieval.

If you can build a system that helps an AI model find relevant, sourced information before generating an answer, you get better outputs. You get citations. You get accuracy. You create trust.
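A minimal sketch of this retrieve-then-generate pattern (often called retrieval-augmented generation), assuming a retriever has already returned a few snippets: each snippet is numbered and placed in the prompt so the model can cite it as [1], [2], and so on. The `Snippet` type, the `build_grounded_prompt` function, and the prompt wording are illustrative choices, not any particular product's format.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # e.g. a URL or document ID returned by the retriever
    text: str


def build_grounded_prompt(question: str, snippets: list[Snippet]) -> str:
    """Assemble a prompt that asks the model to answer only from numbered sources."""
    lines = [
        "Answer the question using only the sources below.",
        "Cite sources by number, like [1]. If the sources do not contain the answer, say so.",
        "",
    ]
    for i, snip in enumerate(snippets, start=1):
        lines.append(f"[{i}] ({snip.source}) {snip.text}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


prompt = build_grounded_prompt(
    "When was the index last updated?",
    [Snippet("docs/changelog.md", "The search index was rebuilt on Monday.")],
)
print(prompt)
```

The numbering is what makes citations possible: each claim in the answer can point back to a specific source the user can check.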

This is why Perplexity is interesting. On the surface, it looks like another AI chatbot. But underneath, it's a sophisticated search and retrieval system that helps large language models provide better answers by giving them access to recent, relevant information.

The infrastructure for search and retrieval is complex. You need to:

Index information at massive scale: Processing and organizing billions of documents, images, and videos so that relevant items can be retrieved in milliseconds.

Rank results by relevance: Not all search results are equal. Your search infrastructure needs to understand what the user actually cares about and bubble up the most relevant information.

Handle multiple data types: Modern search needs to work with text, images, video, code, scientific papers, and domain-specific documents. Each requires different indexing and ranking strategies.

Optimize for speed: Search results need to come back in under 100 milliseconds, ideally much faster. Anything slower and the user experience suffers.

Maintain freshness: If your search index is stale, your AI model will have stale information. You need systems that continuously update indexes with new information.
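Several of these requirements can be illustrated with a toy inverted index: indexing maps each term to the documents containing it, ranking scores documents with TF-IDF (a classic scheme that rewards terms frequent in a document but rare across the collection), and freshness comes from documents being searchable as soon as they are added. This is an illustrative sketch, not how any named company does it; production systems typically use BM25, learned ranking models, or embedding-based retrieval, plus sharding for scale. The class name `TinyIndex` is hypothetical.

```python
import math
import re
from collections import Counter, defaultdict


class TinyIndex:
    """Toy in-memory inverted index with TF-IDF ranking (illustrative only)."""

    def __init__(self) -> None:
        self.postings: dict[str, dict[str, int]] = defaultdict(dict)  # term -> {doc_id: count}
        self.doc_lengths: dict[str, int] = {}

    @staticmethod
    def _tokenize(text: str) -> list[str]:
        return re.findall(r"[a-z0-9]+", text.lower())

    def add(self, doc_id: str, text: str) -> None:
        """Index (or re-index) a document; it is searchable immediately."""
        self.remove(doc_id)
        tokens = self._tokenize(text)
        self.doc_lengths[doc_id] = len(tokens)
        for term, count in Counter(tokens).items():
            self.postings[term][doc_id] = count

    def remove(self, doc_id: str) -> None:
        """Drop a document from every posting list (linear in vocabulary size)."""
        if doc_id not in self.doc_lengths:
            return
        for docs in self.postings.values():
            docs.pop(doc_id, None)
        del self.doc_lengths[doc_id]

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        n_docs = len(self.doc_lengths)
        scores: dict[str, float] = defaultdict(float)
        for term in set(self._tokenize(query)):
            docs = self.postings.get(term, {})
            if not docs:
                continue
            idf = math.log(1 + n_docs / len(docs))  # rarer terms weigh more
            for doc_id, count in docs.items():
                tf = count / self.doc_lengths[doc_id]  # normalize by document length
                scores[doc_id] += tf * idf
        return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]


index = TinyIndex()
index.add("a", "GPU inference cost optimization")
index.add("b", "Voice synthesis with low latency")
index.add("c", "Search ranking and retrieval latency")
print(index.search("retrieval latency"))  # "c" ranks first: it matches both terms
index.add("d", "Fresh retrieval news")  # new content is searchable right away
```

Removing a document here scans the whole vocabulary, which is fine for a toy but is exactly the kind of cost that real index designs work hard to avoid.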

Building search infrastructure is unsexy work. Nobody gets excited about lower retrieval latency or better ranking algorithms. But it's incredibly valuable because it's the layer between users and the information they need.

A16z recognizes that in the age of AI, search infrastructure becomes even more critical. The companies that can help AI models find information quickly and accurately will become essential infrastructure.

The Portfolio: Where A16z's Infrastructure Money is Actually Going

Talk is cheap. What matters is where the money actually goes. A16z's infrastructure investments tell a clear story about what the firm believes will matter in the next five years.

ElevenLabs is the crown jewel of the infrastructure portfolio. The company has reached an $11 billion valuation by building the best voice synthesis and cloning technology on the market. Before ElevenLabs, voice AI was a gimmick. The voice quality was obviously AI, the options were limited, and the latency was unacceptable.

ElevenLabs solved all of those problems. Their voices sound human. They support multiple languages and accents. The latency is low enough for real-time conversations. They've made voice AI actually useful, which means thousands of companies can now build voice features into their products.

Fal represents a different category: the API layer. Fal makes it simple for developers to access cutting-edge image generation models without having to manage their own infrastructure. You send an API request, Fal handles everything, you get back an image. That simplicity enables thousands of developers to build image features into their applications.
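To make that request/response flow concrete, here is a minimal sketch of the caller's side of a hosted image-generation API. The endpoint URL, the JSON fields (`prompt`, `width`, `height`), the `IMAGE_API_KEY` environment variable, and the response shape are all hypothetical placeholders, not Fal's actual API. The HTTP transport is injectable so the example can run offline with a fake.

```python
import json
import os
import urllib.request
from typing import Callable, Optional

# Hypothetical endpoint and payload schema, for illustration only (not Fal's real API).
API_URL = "https://api.example.com/v1/images"

Transport = Callable[[urllib.request.Request], bytes]


def _http_transport(req: urllib.request.Request) -> bytes:
    """Send the request over the network and return the raw response body."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        return resp.read()


def generate_image(prompt: str, *, width: int = 1024, height: int = 1024,
                   api_key: Optional[str] = None,
                   transport: Transport = _http_transport) -> dict:
    """Send one generation request and return the parsed JSON response."""
    key = api_key or os.environ.get("IMAGE_API_KEY", "")
    body = json.dumps({"prompt": prompt, "width": width, "height": height}).encode()
    req = urllib.request.Request(
        API_URL,
        data=body,
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )
    return json.loads(transport(req))


def fake_transport(req: urllib.request.Request) -> bytes:
    """Offline stand-in for the service: echoes the prompt and returns a fake URL."""
    sent = json.loads(req.data)
    return json.dumps({"image_url": "https://example.com/out.png", "echo": sent["prompt"]}).encode()


result = generate_image("a lighthouse at dusk", api_key="test", transport=fake_transport)
print(result["image_url"])
```

The design choice worth noting is the defaults: a developer who accepts them makes a single call with just a prompt, while everything the provider handles (GPUs, model loading, scaling) stays behind the endpoint.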

Ideogram is building better image generation models, particularly for tasks like typography and detailed composition where earlier models struggled.

Cursor represents the developer tools category—building an AI-powered code editor that understands context, predicts what you want to do next, and automates repetitive coding tasks.

Then there are the less visible investments that are equally important. Companies building:

Inference optimization: Reducing the cost of running AI models in production by 50%, 70%, or even 90%.

Training infrastructure: Making it faster and cheaper to train new models, which accelerates innovation.

Monitoring and observability: Understanding what's happening inside your AI systems, debugging problems, and optimizing performance.

Security and compliance: Ensuring that AI systems are safe, auditable, and compliant with regulations.
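As a small illustration of the monitoring category, observability tools commonly report latency percentiles rather than averages, because a few slow requests can hide behind a healthy-looking mean. The sketch below uses the nearest-rank percentile definition; the ten latency samples are hypothetical.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


# Hypothetical request latencies (milliseconds) from one monitoring window.
latencies_ms = [42, 38, 51, 47, 40, 39, 44, 250, 45, 41]
report = {f"p{p}": percentile(latencies_ms, p) for p in (50, 95, 99)}
print(report)
```

Here the mean is 63.7 ms and the median is 42 ms, but p95 surfaces the 250 ms outlier, which is the request a user would actually notice against a sub-100 ms target.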

Each of these categories serves a different need in the AI stack, but they all share the same characteristic: they enable other companies to build better AI products more efficiently.

A16z emphasizes infrastructure's scalability, network effects, and switching costs as key advantages in AI markets. Estimated data based on thematic analysis.

Why Consumer AI Apps Won't Dominate

This is perhaps the most controversial part of a16z's thesis, but it's also the most important. The firm believes that consumer-facing AI applications—think AI chatbots, AI writing assistants, AI art generators—won't be where the real value accumulates.

Here's why: consumer AI apps are incredibly expensive to build and operate. Training a competitive language model costs hundreds of millions of dollars. Running that model at scale costs tens of millions of dollars per year. Customer acquisition costs for consumer apps are high. Converting users into paying customers is hard.

Meanwhile, everyone can see the business model clearly. So when one company builds a successful consumer AI app, ten competitors immediately try to copy it. The market gets crowded fast, and prices get driven down.

Contrast that with infrastructure. Infrastructure companies have:

Higher margins: Once you've built the infrastructure, serving additional customers costs much less. Your profit margin scales with volume.

Defensibility: If you're the best at inference optimization, or the best at voice synthesis, or the best at image retrieval, that's not something a competitor can easily replicate.

Multiple revenue streams: Infrastructure vendors can sell to developers, to other startups, to enterprises, to research institutions. You're not dependent on a single customer segment.

Longer runways: Because the economics work better, infrastructure companies can last longer and invest more in R&D without running out of money.
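The margin argument can be written as a simple cost model. Assume, purely for illustration, a fixed annual cost F (engineering and R&D), a marginal cost c to serve each additional customer, and a price p per customer. At n customers, the average cost per customer and the resulting margin are:

```latex
\bar{C}(n) = \frac{F}{n} + c, \qquad
m(n) = 1 - \frac{\bar{C}(n)}{p} \;\longrightarrow\; 1 - \frac{c}{p} \quad \text{as } n \to \infty
```

With hypothetical values F = $10M, c = $2,000, and p = $10,000 per year, 1,000 customers give an average cost of $12,000 and a margin of -20%, while 10,000 customers give an average cost of $3,000 and a margin of 70%. The fixed cost is spread thinner as volume grows, which is the sense in which margin scales with volume.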

This is a contrarian view. It's tempting to believe that the next big thing will be a consumer AI app that goes viral and becomes the next ChatGPT or Midjourney. But a16z's historical track record suggests that's not where the real money is.

The real money is in the picks and shovels. It's in the companies that enable everyone else to build faster, cheaper, and better.

The Competitive Dynamics: Why This Matters Now

A16z's commitment of $1.7 billion isn't just a vote of confidence in AI infrastructure. It's a strategic move in an increasingly competitive landscape.

OpenAI, Anthropic, and Google all have their own AI infrastructure investments. But they're building these primarily for internal use. A16z recognizes that there's an opportunity to build independent infrastructure companies that serve the entire ecosystem, not just one company's needs.

This matters because it creates diversity in the AI stack. If all AI infrastructure is controlled by a handful of large labs, then startups and smaller companies have limited options. But if there are independent infrastructure companies that are best-of-breed, then the entire ecosystem gets better.

There's also a defensive strategy here. By investing in infrastructure across multiple companies, a16z is hedging against any single company dominating the AI stack. The firm is essentially saying: we don't know which infrastructure companies will win, so we're investing in a portfolio of them.

This is smart venture capital strategy. Venture capital is about finding the winners before they're obvious. By spreading capital across multiple infrastructure investments, a16z increases the probability that they'll own significant equity in whatever infrastructure layers end up mattering.

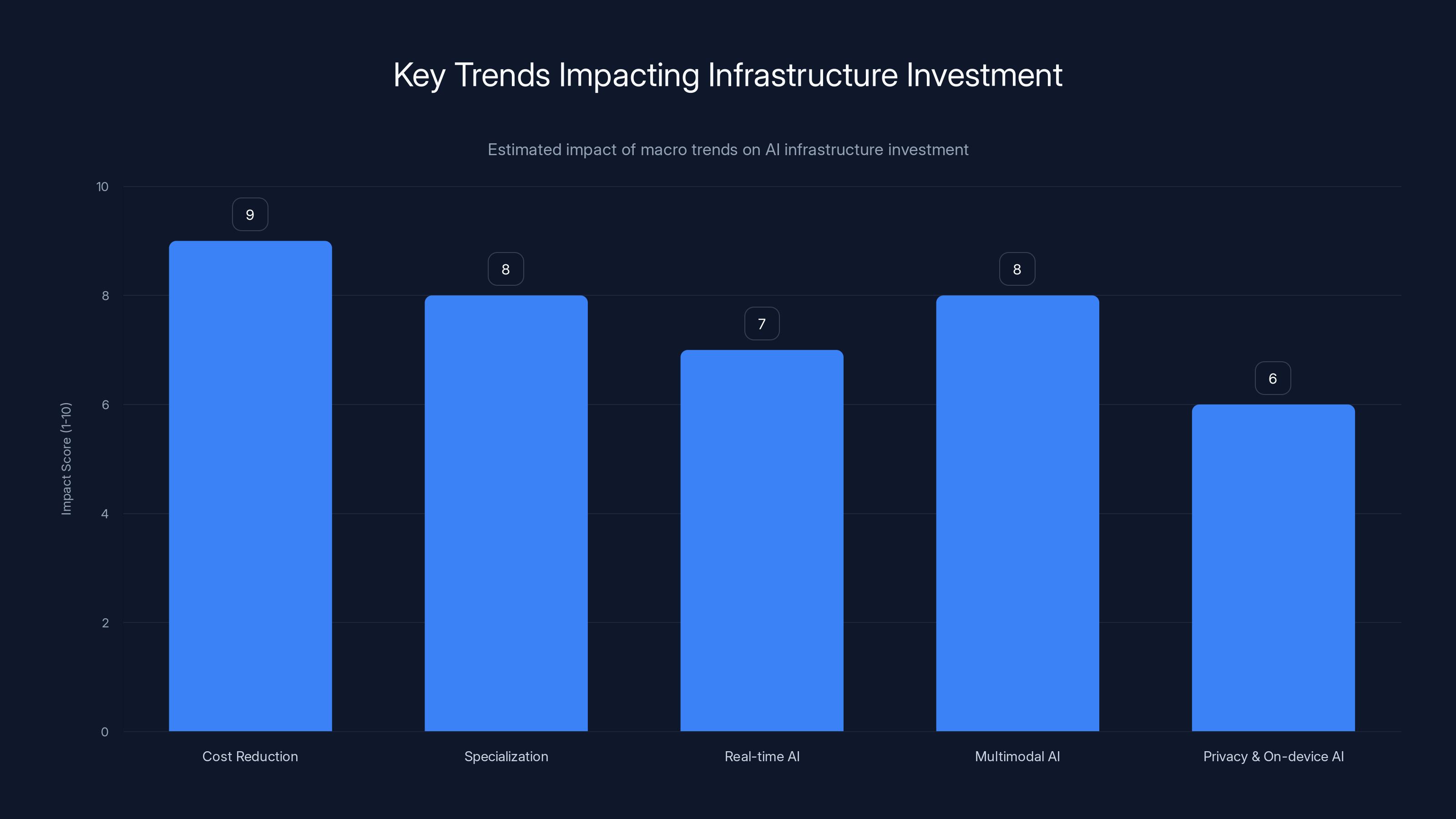

Cost reduction and specialization are leading trends driving infrastructure investment, with high impact scores. Estimated data based on industry analysis.

The Macro Trends Driving Infrastructure Investment

A16z's infrastructure bet makes sense when you look at the broader trends in AI development and deployment.

Cost reduction is critical: Right now, running large AI models is expensive. If you can reduce inference costs by 50%, every request your product serves costs half as much, which can dramatically widen margins. If you can reduce training costs by 70%, the same budget buys more than three times as many training runs, so companies can experiment with new models much more frequently. There's enormous value in cost reduction, which means there's enormous value in the companies that figure out how to reduce costs.

Specialization is inevitable: We're not going to have one model that does everything well. We're going to have many specialized models, each optimized for specific tasks. This requires infrastructure that can run multiple different models, each with different requirements and performance characteristics.

Real-time AI is becoming essential: Early AI applications were batch processes. Now, we need AI systems that respond in real-time. This requires infrastructure that can handle low-latency inference, which is technically harder and more valuable.

Multimodal AI is the future: AI systems that can understand and generate text, images, audio, and video simultaneously are far more useful than systems that only handle one modality. Multimodal AI requires more sophisticated infrastructure.

Privacy and on-device AI: Consumers care about privacy. Enterprises care about keeping data inside their networks. This drives demand for infrastructure that can run AI models locally, without sending data to external servers. On-device AI requires specialized optimization because devices have limited compute and memory.
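One standard way to fit models into limited device memory is quantization: storing each weight as an 8-bit integer (1 byte) instead of a 32-bit float (4 bytes). For a hypothetical 7-billion-parameter model, that is roughly 28 GB of float32 weights versus about 7 GB at int8. Below is a minimal sketch of symmetric per-tensor int8 quantization on a few hypothetical weights; real toolchains add per-channel scales, calibration data, and hardware-specific kernels.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map integers back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.82, -1.27, 0.05, 0.0, 0.6]  # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

Rounding to the nearest integer keeps each weight's error within half a quantization step (scale / 2), which is why well-behaved models often lose little quality at int8.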

Each of these trends creates opportunities for infrastructure companies to build valuable, defensible businesses. A16z is betting that these trends will accelerate over the next five years, which means infrastructure investments will generate outsized returns.

How Startups Can Capitalize on Infrastructure Investment

If you're building an AI startup, understanding a16z's infrastructure thesis is genuinely valuable because it tells you what venture capital will fund in the next wave of investments.

The first thing to understand is that the best time to build infrastructure is when there's already proven demand for the product or service it enables. ElevenLabs succeeded because voice AI was already a thing; it just sucked. ElevenLabs made it not suck.

So if you're thinking about building infrastructure, ask yourself: what are developers complaining about right now? What's slow, expensive, or difficult? What do they wish they could do but can't because the infrastructure doesn't exist?

Second, recognize that infrastructure plays have different unit economics than consumer plays. You're not trying to acquire millions of users at low cost. You're trying to acquire hundreds of customers at higher cost, with longer sales cycles. This means you need a business model that works with that customer acquisition profile.

Third, remember that infrastructure is only valuable if it enables other people to build better products. So you need to think constantly about your customers' experience. How do they integrate with you? How easy is it to get started? How much operational overhead does your infrastructure add to their business? The better you make those things, the faster you'll grow.

Estimated data shows that compute optimization and search & retrieval systems are major focus areas within AI infrastructure, highlighting their critical role in enhancing AI model efficiency.

The Role of Developer Experience in Infrastructure Success

One of the least appreciated factors in infrastructure success is developer experience. This might sound soft or unimportant, but it's actually critical.

Consider two companies with identical technology. Company A makes you jump through hoops to integrate their API. Company B has a five-line code example that gets you up and running in minutes. Company B wins, every time. Developer experience isn't a nice-to-have feature of infrastructure. It's the core differentiator.

This is why Fal is interesting. The company focused obsessively on making it easy for developers to use cutting-edge image generation models. The result is that thousands of developers who would never have built image generation features into their products are now doing it because Fal made it trivial.

When a16z evaluates infrastructure companies, developer experience is one of the first things they look at. How many steps does it take to get started? What's the time to first success? How obvious are best practices? What's the support experience like?

A company that gets developer experience right can grow virally because developers tell other developers. A company that ignores developer experience can have superior technology and still struggle to gain adoption because building on top of them is too painful.

The lesson here: if you're building infrastructure, invest at least as much in developer experience as you invest in the core technology. Make it trivial for developers to use your infrastructure, and they'll use it. Make it hard, and they'll use something else, even if your technology is better.

The Future of Infrastructure: What Comes Next

A16z's $1.7 billion commitment suggests that infrastructure investment will continue to be a major focus for venture capital in the next few years. But what specific areas will get the most attention?

Model compression and optimization: Making large AI models smaller and faster without losing quality. This is critical for on-device AI, for edge computing, and for cost reduction.

Fine-tuning and customization infrastructure: As companies increasingly want to customize AI models for their specific use cases, there will be enormous demand for infrastructure that makes fine-tuning easy and affordable.

AI safety and interpretability infrastructure: As AI systems become more powerful, there's increasing demand for infrastructure that helps you understand what your AI model is doing, detect issues, and ensure safety.

Multimodal model infrastructure: The next generation of AI models will seamlessly handle text, images, audio, and video. The infrastructure to train and deploy these models will be worth billions.

Real-time AI infrastructure: Inference latency matters more and more. There will be enormous value in infrastructure that can push AI models to edge devices, to local networks, and into cameras themselves, enabling real-time AI without cloud compute.

Data infrastructure for AI: Training better models requires better data. There will be infrastructure plays around data collection, labeling, curation, and management.
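To see why fine-tuning can become cheap, consider LoRA (low-rank adaptation), one widely used parameter-efficient method: it freezes a pretrained d x k weight matrix and trains only two small matrices, B (d x r) and A (r x k), whose product is added to the frozen weights. The sketch counts trainable parameters for a single matrix; the 4096 x 4096 shape and rank 8 are illustrative choices, not figures from the article.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters when a frozen d x k matrix is adapted by B (d x r) @ A (r x k)."""
    return r * (d + k)


full = 4096 * 4096  # full fine-tuning updates every entry of the matrix
lora = lora_trainable_params(4096, 4096, r=8)
print(f"full={full:,} lora={lora:,} ratio={lora / full:.2%}")
```

Training about 0.39% of the parameters for each adapted matrix shrinks optimizer state and checkpoint size accordingly, which is the kind of saving fine-tuning infrastructure can package for customers.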

Each of these represents a multi-billion-dollar opportunity, which is exactly the kind of bet venture capital loves to make.

How to Think About Infrastructure Investments

If you're an investor, operator, or entrepreneur trying to understand a16z's thesis, here are the key principles to internalize:

First, infrastructure is where the value accumulates in a new computing paradigm. This happened with cloud computing (AWS won), it happened with mobile (Apple's infrastructure won), and it's happening with AI.

Second, infrastructure wins because of network effects, defensibility, and economics. Once you're the standard infrastructure layer for something, it's hard to displace you.

Third, infrastructure investments take longer to pay off than consumer investments, but they pay off bigger and for longer. AWS took five years to generate significant revenue, but once it hit scale, the returns were extraordinary.

Fourth, the winners in infrastructure are often companies that solve a specific problem extremely well, rather than companies that try to do everything. ElevenLabs dominates voice because it focuses exclusively on voice. It doesn't try to do images or code generation or anything else.

Fifth, infrastructure companies that are successful generally have deep technical expertise and long-term thinking. You can't build great infrastructure by cutting corners or thinking about quick exits. You need people who care deeply about the problem and are willing to invest years in solving it.

The Risk: What Could Go Wrong

A16z's infrastructure thesis is compelling, but it's not without risks. Understanding these risks is important if you're going to evaluate whether this is a smart bet.

First, some infrastructure plays will be made obsolete by changes in AI architecture: What if future AI models don't need inference optimization because they're designed differently? What if search infrastructure becomes less important because models become better at reasoning? Technological disruption can make even good infrastructure plays obsolete.

Second, compute costs might drop faster than expected: If GPUs become 100 times cheaper, a lot of companies focused on cost reduction become less valuable. This is good for the ecosystem but bad for specific infrastructure investors.

Third, large AI labs might build their own infrastructure: OpenAI, Google, and Anthropic might decide to build or acquire the infrastructure they need rather than relying on independent companies. This would eliminate entire market categories.

Fourth, network effects might not develop: Infrastructure is often valuable because of network effects. But not every infrastructure company develops meaningful network effects. You can build a great product that doesn't scale because of fundamental limitations.

Fifth, regulation could reshape the entire industry: If regulators decide that AI needs to be deployed in certain ways, or restricted in other ways, it could upend infrastructure assumptions.

These risks are real. But a16z's view is that the upside of being right about infrastructure is large enough to justify the risk of being wrong about some specific bets.

The Reality Check: Are We In a Bubble?

A reasonable person might look at a16z committing $1.7 billion to AI infrastructure and wonder: aren't we in an AI bubble? Aren't valuations insane? Isn't everyone overpaying for AI startups?

A16z's argument is that we're early in a super cycle, not late. The firm points to historical precedent: in the cloud computing cycle, we had multiple boom-bust cycles, but the fundamental trend continued upward for 20 years. Companies that looked overvalued in 2010 look cheap today.

The firm also points out that infrastructure has different economics than applications. Infrastructure is easier to monetize, has better margins, and creates more defensible businesses. So even if you overpay for an infrastructure company, you're more likely to make it work than if you overpay for a consumer app.

That said, a16z is clearly hedging its bets. The firm is committing $1.7 billion to infrastructure across dozens of companies, not betting all the money on one company. This is smart portfolio strategy. It acknowledges that you'll probably get some bets wrong, but the winners will be large enough to make up for the losers.

Is a16z right that we're early in the AI cycle and that infrastructure will be the big winner? That's unknowable until we look back in ten years. But the logic is sound, the historical precedent is strong, and the bet is structured in a way that makes sense given the uncertainty.

Practical Implications for Developers and Teams

If you're a developer or running a team, what should you take away from all this?

First, understand that the AI landscape will continue to become more specialized. You won't use one AI service for everything. You'll use ElevenLabs for voice, Fal for images, a language model API for text generation, and multiple other specialized tools.

Second, think about building your product with infrastructure. Don't build everything from scratch. Use existing infrastructure to move faster and focus on what makes your product unique.

Third, recognize that cost will continue to be a competitive factor. As infrastructure companies optimize performance and cost, the cost of running AI-powered features will continue to drop. This makes it increasingly viable to add AI features to products that previously couldn't afford them.

Fourth, invest in understanding your infrastructure dependencies. Which infrastructure providers are you relying on? How locked in are you? What happens if they raise prices, or go out of business, or make breaking changes to their API?

Fifth, contribute to the infrastructure ecosystem. If you find bugs in a tool, report them. If you have feedback about developer experience, share it. The infrastructure companies that respond to feedback and iterate will be the ones that succeed.
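One way to keep specialized providers from turning into hard lock-in is to route every call through a thin interface you own, mapping each task type (voice, images, text) to a primary provider plus fallbacks. A minimal sketch, assuming each provider is wrapped as a plain function that takes a prompt and returns a result; the `Router` class and provider functions are hypothetical stand-ins, not real SDK calls. A production version would add timeouts, retries with backoff, and provider-specific error handling.

```python
from typing import Callable, Dict, List

# A provider is any callable that takes a prompt and returns a result.
Provider = Callable[[str], str]


class ProviderError(Exception):
    """Raised when no registered provider could handle a task."""


class Router:
    """Routes each task type to an ordered list of providers, falling back on failure."""

    def __init__(self) -> None:
        self.routes: Dict[str, List[Provider]] = {}

    def register(self, task: str, *providers: Provider) -> None:
        self.routes[task] = list(providers)

    def run(self, task: str, prompt: str) -> str:
        errors = []
        for provider in self.routes.get(task, []):
            try:
                return provider(prompt)
            except Exception as exc:  # in practice, catch provider-specific errors only
                errors.append(f"{getattr(provider, '__name__', provider)}: {exc}")
        raise ProviderError(f"all providers failed for {task!r}: {errors}")


def primary_voice(prompt: str) -> str:
    raise TimeoutError("primary provider unavailable")  # simulate an outage


def backup_voice(prompt: str) -> str:
    return f"audio-for:{prompt}"


router = Router()
router.register("voice", primary_voice, backup_voice)
print(router.run("voice", "hello"))  # falls back to backup_voice
```

Because application code only calls `router.run`, swapping a provider after a price increase or a breaking API change means editing one registration line rather than every call site.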

The Broader AI Market Implications

A16z's $1.7 billion bet has implications that extend far beyond venture capital returns. It's a signal about how the AI market will develop over the next five years.

What a16z is saying is that the winners in AI won't necessarily be the companies with the biggest models or the best demos. The winners will be the companies that make it easiest for everyone else to build AI products.

This is good news for startups and developers because it means you don't have to compete with Google or OpenAI directly. You can build on top of their infrastructure, or on top of smaller specialized infrastructure companies, and focus on your own unique value proposition.

It's also a signal that the AI market is maturing. We're past the phase where AI is a novelty or a party trick. We're entering the phase where AI is becoming infrastructure, integrated into everything, and the question shifts from "should we use AI?" to "which AI infrastructure should we build on top of?"

This maturation will accelerate. And as it accelerates, the infrastructure companies that a16z is investing in today will become increasingly critical to the entire AI ecosystem.

FAQ

What exactly is AI infrastructure, and how does it differ from AI models?

AI infrastructure comprises the foundational systems that enable AI models to be built, trained, and deployed efficiently. While AI models are the trained systems themselves (like GPT-4 or Claude), infrastructure is everything else: compute resources, storage systems, APIs, optimization software, and services that make using those models practical. Think of it like the difference between a car engine (the model) and the entire transportation system including roads, gas stations, and repair shops (the infrastructure). Infrastructure companies create the environment where AI models can actually be useful.

Why is a16z investing $1.7 billion specifically in infrastructure rather than in AI models or consumer applications?

A16z believes infrastructure generates higher margins, creates more defensible businesses, and serves as a foundation for thousands of other companies. Infrastructure companies benefit from network effects: as more people use their platform, it becomes more valuable. Additionally, infrastructure investments have clearer monetization paths than consumer AI apps, which face high customer acquisition costs and pricing pressure. Historically, the biggest winners in computing paradigm shifts (like cloud computing with AWS) were infrastructure companies, not consumer apps. A16z is betting that the same pattern will hold for AI.

What are the key areas where a16z's infrastructure funding is being deployed?

A16z's infrastructure investments span multiple critical categories: compute optimization (making AI models faster and cheaper), search and retrieval systems (helping AI models find relevant information), API and integration layers (like Fal for image generation), voice and audio processing (like ElevenLabs), visual generation and processing (like Ideogram), developer tools (like Cursor), and monitoring and security systems. Each category addresses a different piece of the AI infrastructure puzzle, collectively enabling thousands of companies to build better AI products.

How does the projection that the AI infrastructure market will reach $497.98B by 2034 affect these investment decisions?

This projection represents a compound annual growth rate of 26.60%, indicating that infrastructure will be one of the fastest-growing technology sectors. A16z's $1.7 billion commitment reflects confidence that capturing even a small percentage of this massive market will generate enormous returns. A 26.60% CAGR means the market more than triples every five years (1.266^5 ≈ 3.25) and doubles roughly every three years, creating a multi-year runway for infrastructure companies to mature and reach scale.
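The compound-growth arithmetic behind that answer is easy to check directly. A minimal sketch, assuming the 26.60% rate holds constant every year (real markets don't grow that smoothly):

```python
import math


def growth_multiple(cagr: float, years: float) -> float:
    """Factor by which a quantity grows over `years` at constant annual rate `cagr`."""
    return (1 + cagr) ** years


def doubling_time(cagr: float) -> float:
    """Years needed to double at constant annual rate `cagr`."""
    return math.log(2) / math.log(1 + cagr)


cagr = 0.266  # the 26.60% CAGR cited in the projection
print(f"5-year multiple: {growth_multiple(cagr, 5):.2f}x")  # 3.25x
print(f"Doubling time: {doubling_time(cagr):.1f} years")    # 2.9 years
```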

What is the talent crunch in AI infrastructure, and how does it affect startup funding and growth?

The talent crunch refers to severe competition for engineers with specialized expertise in areas like GPU optimization, distributed systems, numerical algorithms, and machine learning infrastructure. There are perhaps only hundreds of people worldwide who have world-class expertise in these areas, while hundreds of companies need them. This drives salaries to

Why does a16z emphasize that search infrastructure matters more than people think?

Search infrastructure is critical because AI models can hallucinate, producing plausible-sounding information that isn't true. By adding a search and retrieval layer, AI models can access real, relevant, sourced information before generating answers, dramatically improving accuracy and trustworthiness. This is why Perplexity is valuable: it's not just a chatbot, it's a search layer that makes AI models more reliable. As AI becomes embedded in more critical applications, the need for accurate, sourced information becomes paramount. Companies that build excellent search infrastructure for AI will have a major competitive advantage.
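The retrieve-then-generate pattern described above can be sketched in a few lines. This is a toy under loudly stated assumptions: relevance is scored by simple keyword overlap (production search systems use ranking methods such as BM25, embeddings, and rerankers), the function names are hypothetical, and the actual language model call is left out. What it shows is the shape of the idea: find sources first, then hand the model labeled context it can cite.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of up to k documents with the most query-term hits (toy ranker)."""
    query_terms = set(tokenize(query))
    scores = {}
    for doc_id, text in documents.items():
        counts = Counter(tokenize(text))
        scores[doc_id] = sum(counts[term] for term in query_terms)
    ranked = sorted(scores, key=lambda d: scores[d], reverse=True)
    # Drop documents that share no terms with the query at all.
    return [d for d in ranked[:k] if scores[d] > 0]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Prepend retrieved, labeled sources so a model can answer from them and cite them."""
    sources = retrieve(query, documents, k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in sources)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{context}\n\nQuestion: {query}"
    )


docs = {
    "a": "GPU clusters power model training.",
    "b": "Retrieval systems rank documents for a query.",
    "c": "Voice synthesis turns text into audio.",
}
print(build_prompt("How do retrieval systems rank documents?", docs))
```

The resulting prompt would then go to whichever model API you use; the retrieval step is what gives the answer something real to cite.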

How should startups think about building on top of AI infrastructure versus building their own infrastructure?

Most startups should build on top of existing infrastructure rather than building their own. Using Fal for image generation or ElevenLabs for voice allows you to launch products faster and focus on your unique value proposition. Building your own infrastructure is usually justified only if: (1) existing infrastructure doesn't meet your needs, (2) cost savings from building your own are enormous, or (3) you're building infrastructure as your core business. Most successful AI startups focus on applications or services built on top of infrastructure, not infrastructure itself.
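Criterion (2) ultimately comes down to arithmetic. A minimal break-even sketch, assuming a simplified cost model in which an API charges a flat price per request while self-hosting has a fixed monthly cost (engineers, GPUs) plus a smaller per-request cost. The function name and every number in the example are hypothetical placeholders, not real prices.

```python
import math


def break_even_requests(price_per_request: float,
                        fixed_monthly: float,
                        marginal_per_request: float) -> float:
    """Monthly request volume above which self-hosting beats paying per request.

    Returns math.inf when self-hosting never wins (its per-request cost is not lower).
    """
    savings_per_request = price_per_request - marginal_per_request
    if savings_per_request <= 0:
        return math.inf
    return fixed_monthly / savings_per_request


# Hypothetical inputs: $0.01/request via an API vs. $20,000/month fixed + $0.002/request self-hosted.
volume = break_even_requests(price_per_request=0.01, fixed_monthly=20_000, marginal_per_request=0.002)
print(f"Self-hosting pays off above {volume:,.0f} requests/month")  # 2,500,000 with these inputs
```

The model deliberately ignores opportunity cost, reliability, and the time to build, which often matter more than the raw numbers; it only makes the "savings must be enormous" test concrete.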

What risks could undermine a16z's infrastructure thesis?

Several risks could challenge this thesis. First, technological disruption could make current infrastructure obsolete if AI architecture fundamentally changes. Second, compute costs might drop faster than expected, reducing demand for optimization services. Third, large AI labs like OpenAI and Google could build their own proprietary infrastructure instead of using independent vendors. Fourth, network effects might not develop for all infrastructure plays, limiting growth. Finally, regulation could reshape the industry entirely. A16z manages these risks through portfolio diversification, investing in multiple companies across different infrastructure categories rather than betting everything on one approach.

Conclusion: The Infrastructure Era is Beginning

A16z's $1.7 billion commitment to AI infrastructure isn't just a venture capital investment. It's a statement about the future of technology. The firm is saying that the next era of computing will be defined by companies that build the foundational layers that everyone else builds on top of.

This represents a fundamental shift in how we think about AI. We're moving past the phase where AI is a novelty or a competitive advantage. We're entering the phase where AI is infrastructure, increasingly integrated into every product and service.

The companies that win in this environment won't necessarily be the ones with the biggest models or the best marketing. They'll be the ones that solve fundamental problems more efficiently than anyone else, create defensible businesses, and build products that thousands of other companies want to build on top of.

If you're a developer, entrepreneur, or investor, understanding this thesis matters because it tells you where the money will flow, which companies will thrive, and where the real opportunities lie in the AI economy.

The unsexy work of optimization, integration, and infrastructure building is where the real value is being created. A16z sees it. Smart capital is flowing toward it. And over the next five years, we'll see which infrastructure plays become the AWS of the AI era.

For everyone building in this space, that's not a threat. It's an opportunity. The infrastructure layer is being built right now, and the companies getting funded today will define how AI works for the next decade.

Key Takeaways

- A16z's $1.7B infrastructure allocation reflects a strategic bet that infrastructure companies will dominate the AI era, similar to how AWS dominated cloud computing.

- AI infrastructure includes compute optimization, search systems, APIs, voice/audio processing, and developer tools that enable thousands of other companies to build AI products.

- The global AI infrastructure market is projected to grow to $497.98B by 2034 at a 26.60% CAGR, creating enormous value for infrastructure winners.

- Infrastructure businesses have higher margins, better defensibility, and longer runways than consumer AI applications, making them more attractive to venture capital.

- The talent shortage for specialized AI infrastructure engineers (with salaries reaching $500K+) creates barriers to entry and defensibility for companies that successfully recruit teams.

- Search infrastructure is becoming critical to AI reliability and trustworthiness, helping models avoid hallucinations by accessing sourced information.

- Companies like ElevenLabs ($11B valuation), Fal, Cursor, and Ideogram represent a16z's bet on which infrastructure categories will matter most.

- Startups should generally build on top of existing infrastructure rather than building their own, reserving custom infrastructure for unique competitive advantages.


![A16z's $1.7B AI Infrastructure Bet: Where Tech's Future is Going [2025]](https://tryrunable.com/blog/a16z-s-1-7b-ai-infrastructure-bet-where-tech-s-future-is-goi/image-1-1770237447343.png)