Nvidia's $100B OpenAI Gamble: What's Really Happening Behind Closed Doors [2025]

Let's cut to the chase. Jensen Huang just told the world that reports of friction between Nvidia and OpenAI are "nonsense". But here's the thing—when a CEO has to explicitly deny rumors in Taipei, something's usually worth paying attention to.

This isn't just drama between two tech titans. It's a multi-billion-dollar relationship that literally powers the AI revolution, and right now, it's sitting at a fascinating inflection point. Huang's public statements paint a picture of unwavering support and massive investment. But privately? The conversation appears far more complicated.

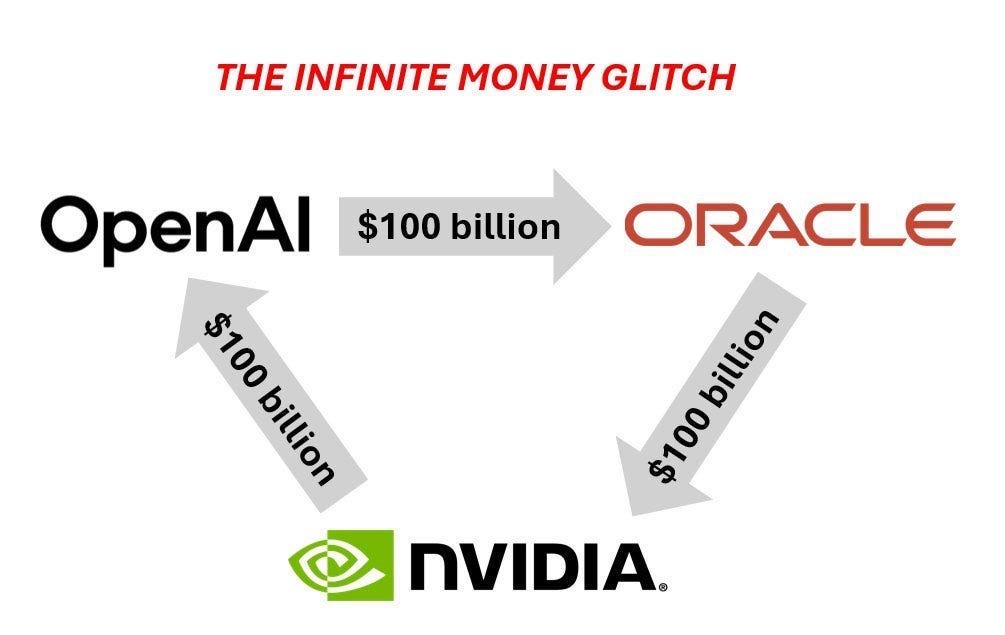

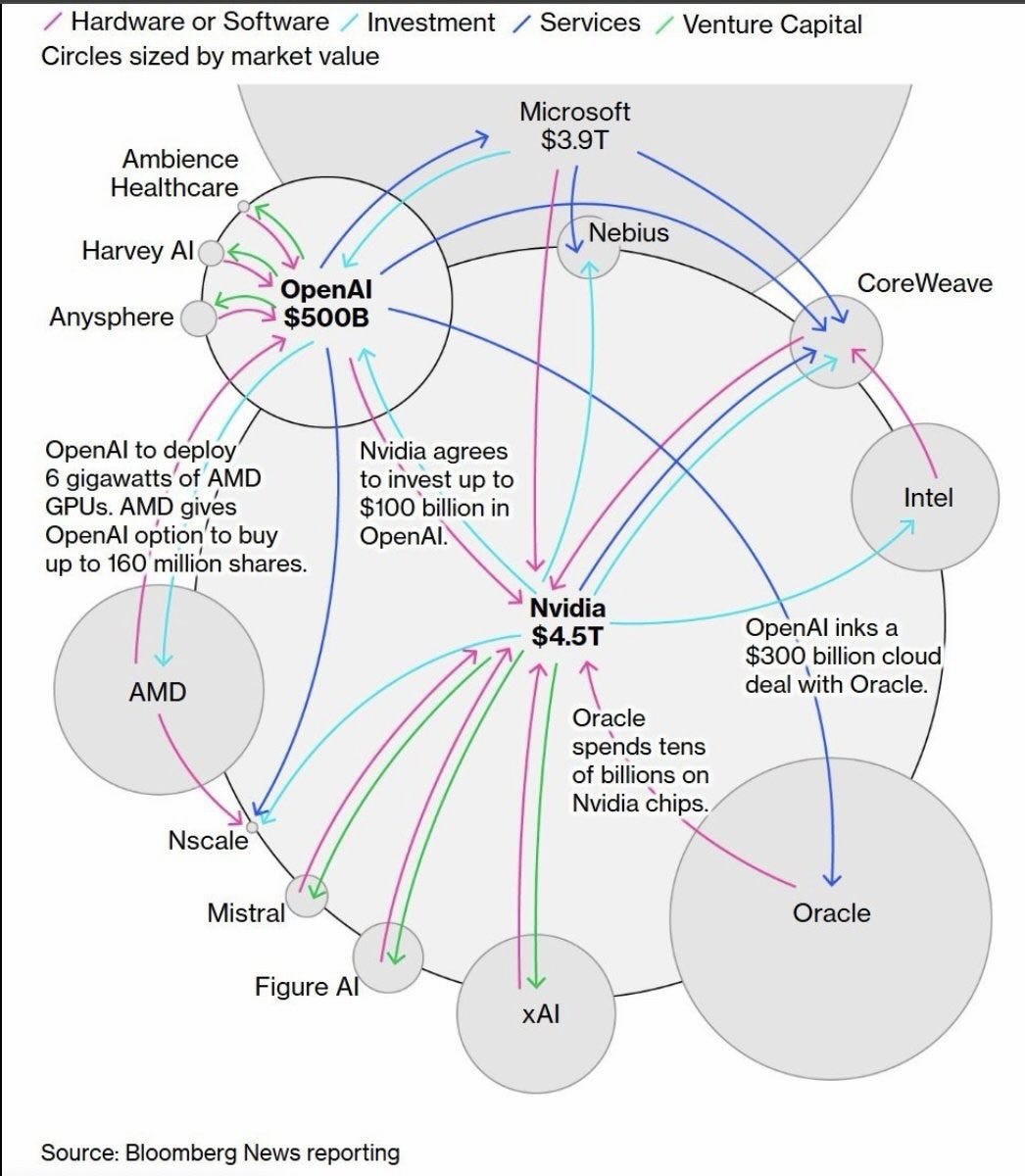

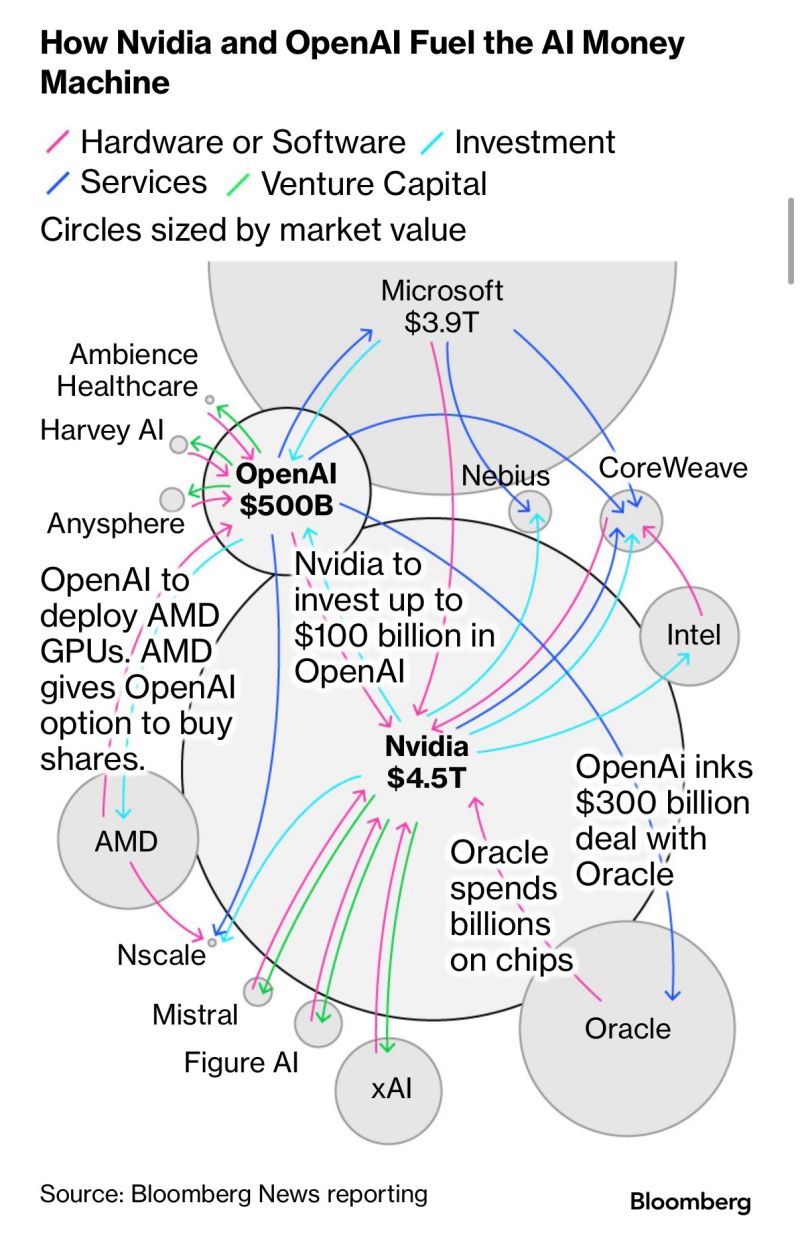

What we're really watching unfold is a case study in how corporate partnerships work when the stakes are existential. Nvidia needs OpenAI to keep buying chips. OpenAI needs Nvidia's chips to function. Both companies are trying to position themselves as the dominant force in AI. And somewhere in the middle, billions of dollars are being negotiated behind closed doors.

The Public Statement: "It's Nonsense"

Jensen Huang's dismissal of friction reports was direct and unambiguous. Speaking during a visit to Taipei, the Nvidia CEO characterized the rumors as false and doubled down on his company's commitment to OpenAI. He didn't hedge. He didn't qualify his statements. He simply said the partnership is "as strong as ever."

The timing matters here. Huang made these comments after weeks of reporting suggested Nvidia was reconsidering its massive investment commitment to OpenAI. The Wall Street Journal and other outlets had published pieces indicating that Huang was privately tempering expectations about the scale of Nvidia's participation in OpenAI's next funding round.

But Huang's public position is crystal clear: Nvidia considers OpenAI "one of the most consequential companies of our time." That's not casual language. That's the kind of statement you only make when you're genuinely betting your company's future on another company's success. Or when you need investors and the market to believe you are.

He also explicitly stated that Nvidia would "definitely participate" in the next OpenAI funding round. And he confirmed that Nvidia "will invest a great deal of money" because OpenAI is "such a good investment." Notice that language—Huang framed this in purely financial terms. It's not about partnerships or collaboration. It's about returns.

When asked for specific numbers, though, Huang punted. "Let Sam announce how much he's going to raise," he said, referring to OpenAI CEO Sam Altman. "It's for him to decide." That deflection is interesting because it creates plausible deniability. If the investment ends up being smaller than expected, Huang can point to this moment and say he never committed to a specific figure.

The public narrative, though, is clear: Nvidia and OpenAI have a strong relationship and will continue investing heavily together.

OpenAI's potential $100 billion valuation is a small fraction of the market capitalizations of tech giants like Nvidia, Microsoft, and Apple.

The Funding Round Expectations

Back in December 2024, OpenAI was exploring a $100 billion funding round. That's not a typo. One hundred billion dollars. To put that in context, that would make OpenAI one of the most valuable private companies in history, surpassing the valuation of most major public corporations.

The ambition here is staggering. OpenAI isn't just trying to build a better AI model. The company is trying to build the infrastructure needed to train and deploy AI at planetary scale. And that infrastructure costs serious money.

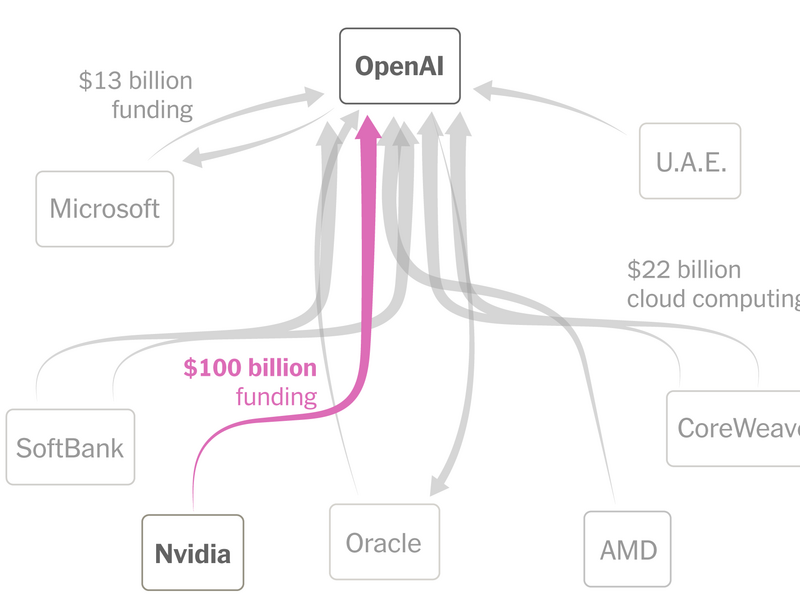

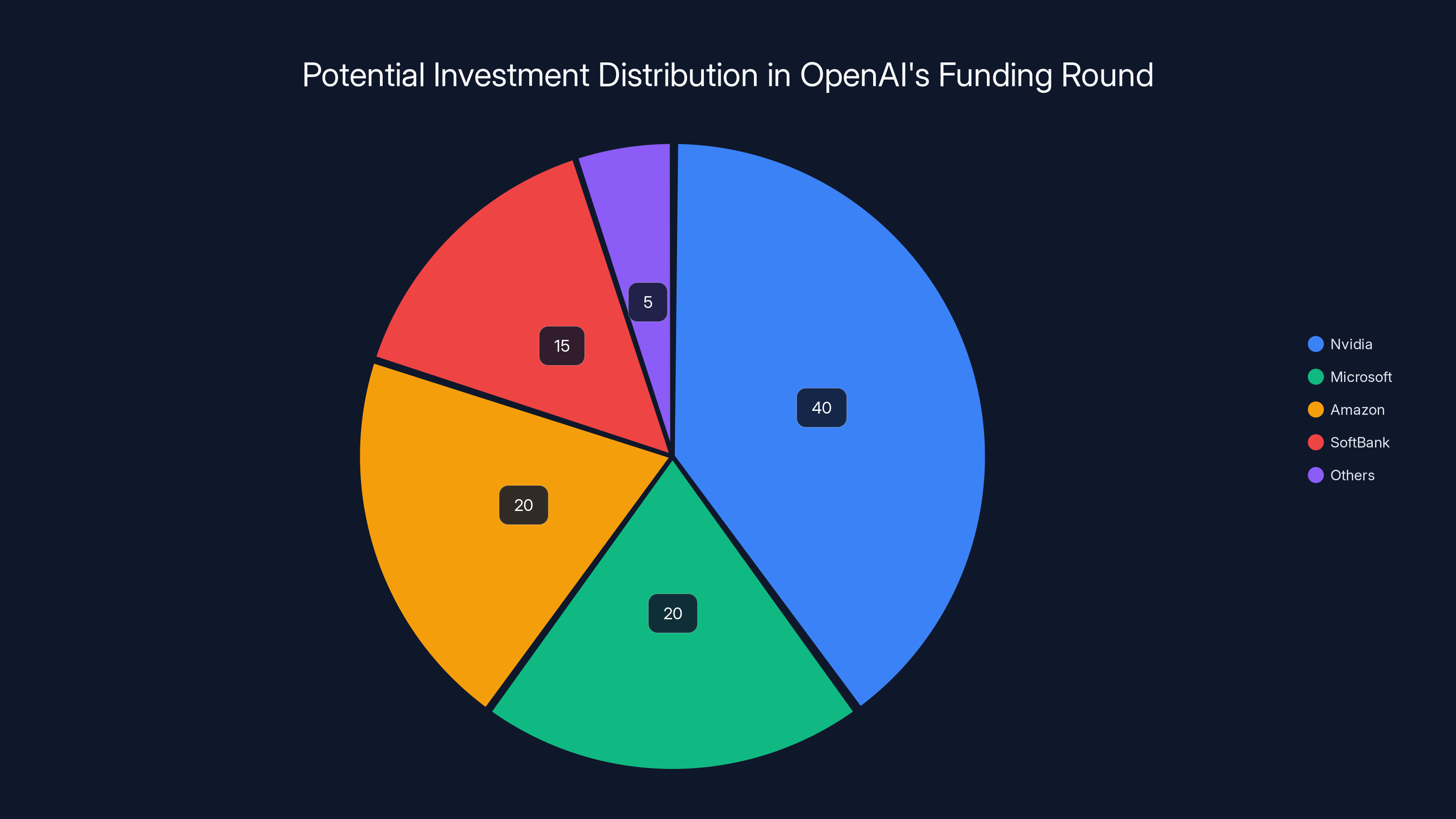

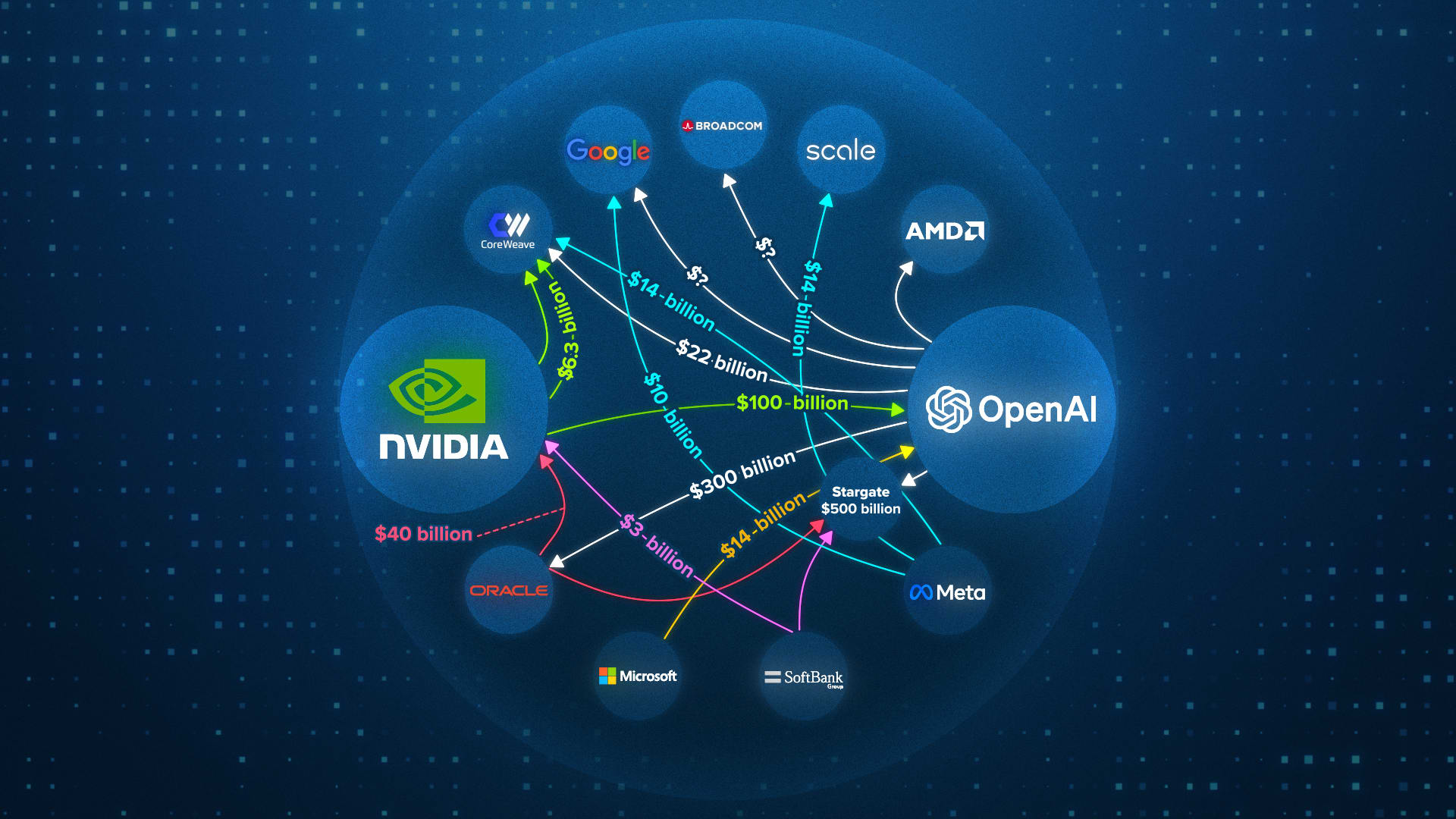

Reports at that time suggested that Nvidia, Microsoft, Amazon, and SoftBank were all discussing potential investments in this round. Nvidia's commitment was reported to be substantial, potentially up to $100 billion over time. That's in the same ballpark as the revenue Nvidia brought in over the whole of 2024. That's the kind of bet you only make when you truly believe in a company's future.

But here's where things get murky. In the weeks following those initial reports, the New York Times published a story suggesting that Huang had been emphasizing, in private conversations, that Nvidia's $100 billion commitment was "nonbinding." That's a critical distinction. Nonbinding means Nvidia can walk back the commitment if conditions change.

Why does this matter? Because it signals doubt. Even if Huang is bullish on OpenAI's long-term prospects, the fact that Nvidia is positioning its commitment as flexible suggests the company is hedging its bets. Financial commitments are typically structured one of two ways: binding or nonbinding. If you believe in something completely, you make the commitment binding. You lock yourself in. The fact that Nvidia didn't suggests caution.

The timing of these clarifications is also significant. They came after OpenAI had been consistently in the headlines for the wrong reasons. Leadership instability, board drama, and questions about the company's path to profitability had all weighed on sentiment. It's possible Nvidia looked at these headlines and thought, "Maybe we should keep our options open."

Reports also suggested that recent discussions within Nvidia have focused on "scaling the investment," which is corporate speak for "making it smaller or conditional." Some of these conversations apparently centered on an equity stake measured in the "tens of billions of dollars" rather than the $100 billion figure that had been reported earlier.

Tens of billions is still enormous, but it's a meaningful difference from $100 billion. It suggests negotiation. It suggests compromise. It suggests that the relationship, while still strong, is being calibrated based on changing circumstances.

Estimated data suggests Nvidia could contribute the largest share of OpenAI's $100 billion funding round, though its commitment is nonbinding.

The Infrastructure Dependency

Beyond the pledged funding, there's another critical dimension to the Nvidia-OpenAI relationship: infrastructure. The two companies planned to build massive computing capacity together, including tens of thousands of servers. This isn't hypothetical. This is happening right now.

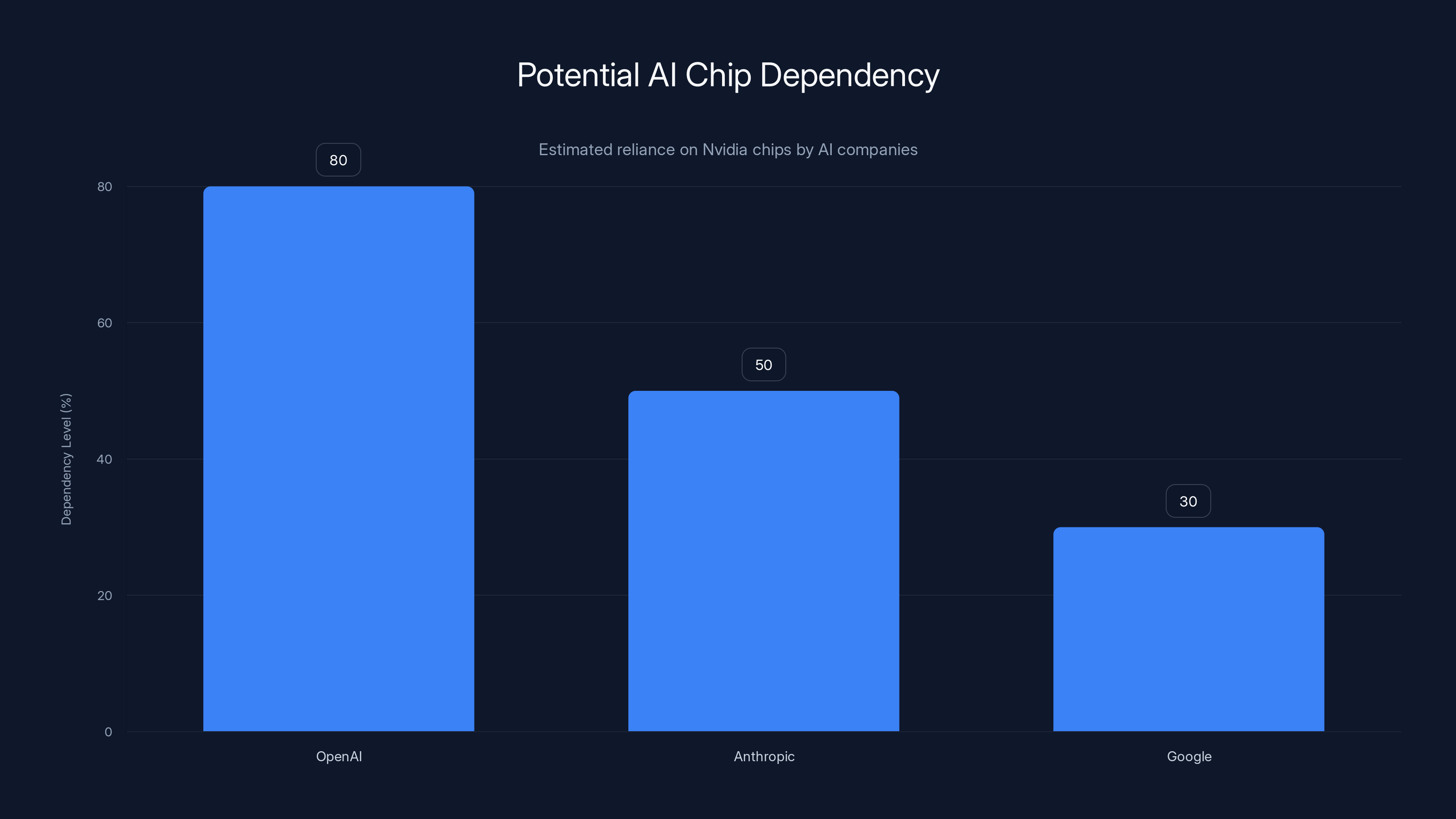

OpenAI's systems rely almost entirely on Nvidia chips. GPUs made by Nvidia power the training of their models and the inference that happens when users interact with ChatGPT. This dependence is so complete that it's almost impossible to imagine OpenAI operating without Nvidia hardware.

But it goes even deeper. OpenAI doesn't just use Nvidia chips internally. The company relies on cloud infrastructure from providers like Microsoft, which also uses Nvidia chips. When you use ChatGPT through the web, you're likely hitting infrastructure powered by Nvidia GPUs. When you use it through an API, same thing.

This creates a fascinating dynamic. OpenAI is locked into Nvidia for hardware. But Nvidia is equally locked into OpenAI for revenue. Nvidia's data center revenue has exploded in recent years, driven almost entirely by AI workloads. And OpenAI represents one of the largest and most visible of those workloads.

So even if there's internal disagreement about the level of financial investment, both companies have strong incentives to maintain a functional partnership. The alternative—fragmentation or conflict—would be catastrophic for both sides.

The infrastructure commitment also signals something about the scale of operations OpenAI is planning. If you're ordering tens of thousands of servers from Nvidia, you're not planning to stay static. You're planning to grow exponentially. You're planning to scale from millions of users to billions.

That scale requires not just chips, but also the integration of those chips into coherent systems. Custom networking, custom software optimization, custom cooling solutions. This is where Nvidia's advantage becomes almost unassailable. No other chip company has the ecosystem, the software support, or the customer relationships to deliver at that scale.

The Private Doubts

Here's where it gets interesting. Multiple reports have suggested that Huang has privately criticized aspects of OpenAI's business strategy. Specifically, he's apparently expressed concerns about competition from companies like Anthropic and Google.

Now, none of these reports have been independently verified. We're operating in the realm of "sources close to Huang" and "people familiar with his thinking." That's important context. But the consistency of these reports across multiple outlets suggests there's something to them.

Why would Huang be worried about competition from Anthropic and Google? Because those companies are also building AI models. And those models also need chips. But here's the thing—they might not need Nvidia chips exclusively.

Anthropic, for instance, has been exploring custom chip development. If Anthropic successfully builds chips optimized for their models, they reduce their dependence on Nvidia. Same with Google, which already has TPUs that power much of their internal AI work. These are existential threats to Nvidia's dominance in the AI infrastructure space.

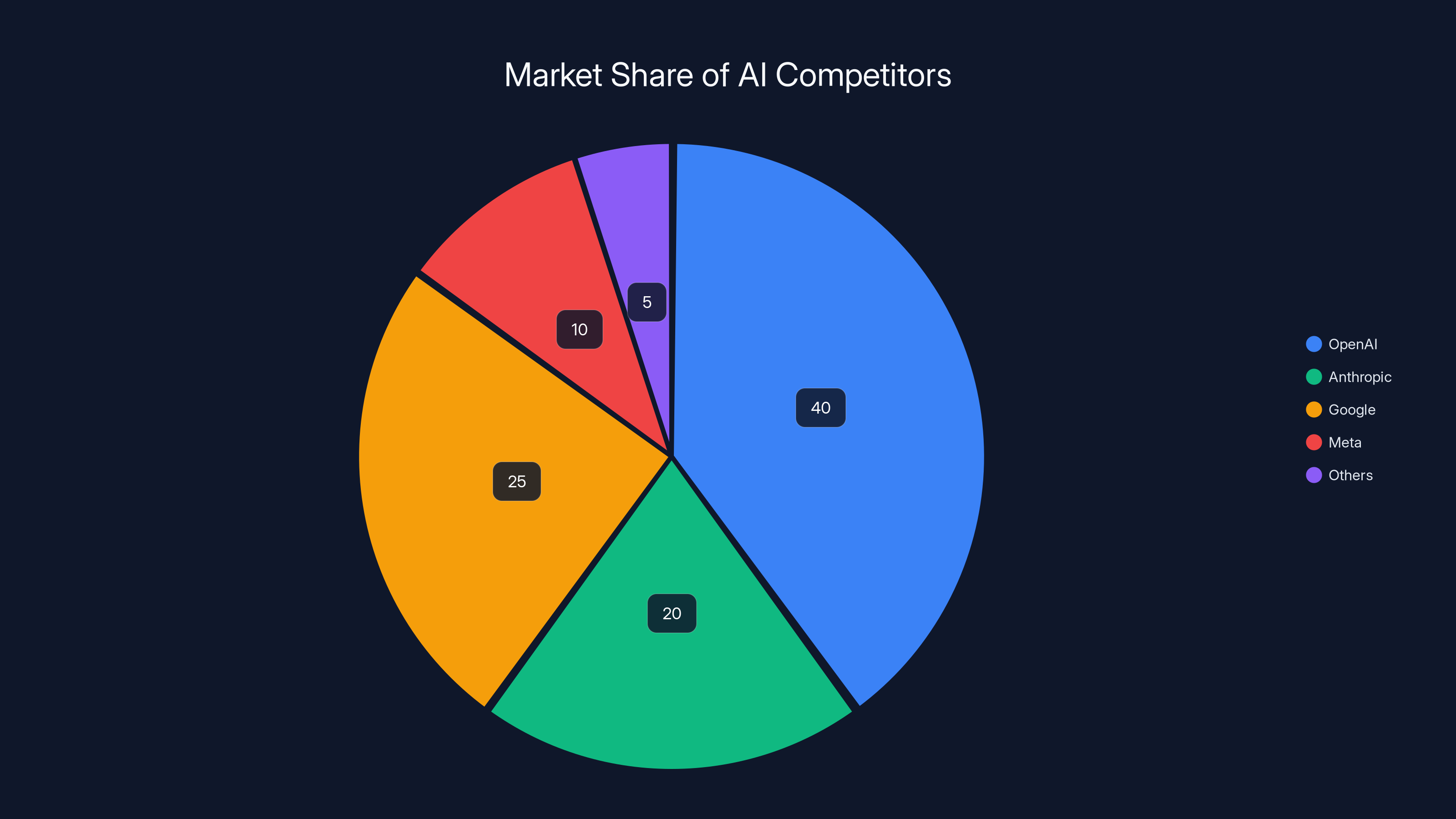

OpenAI, by contrast, has publicly committed to using Nvidia chips. But that commitment is only valuable to Nvidia if OpenAI remains the dominant AI platform. If Claude becomes more popular than ChatGPT, or if Gemini captures more enterprise adoption, then OpenAI becomes less valuable to Nvidia. And therefore, Nvidia's investment becomes less valuable.

This explains why Huang might privately harbor doubts about OpenAI's competitive position, even while publicly praising the company. He's not wrong about OpenAI being consequential. But he might be worried about whether OpenAI will remain consequential if competitive pressure increases.

There's also the question of pricing power. OpenAI charges for API access to its models. Nvidia charges for chips. If OpenAI's margins get compressed by competition, the company might reduce its infrastructure spend. That directly impacts Nvidia's business.

The contrast between Huang's confident public statements and reports of caution in private discussions is worth taking seriously. It suggests he's carefully managing both his investor relations ("Nvidia is betting big on AI") and his risk management ("But we're not putting all our eggs in one basket").

Estimated data suggests OpenAI holds a significant market share, but competitors like Google and Anthropic are also key players.

Sam Altman's Fundraising Challenge

While Huang is managing expectations, Sam Altman is in a different position. He's trying to raise capital for OpenAI's next phase. Whether it's

The fundraising environment has gotten harder in 2025. The initial euphoria around AI has given way to more sober assessment. Investors are asking harder questions: How do you monetize these models? What's your path to profitability? How do you defend against competition?

OpenAI's answers to these questions are becoming clearer, but they're not universally convincing. The company has released GPT-4 and continues to push the boundaries of what language models can do. But the path from "impressive technology" to "billion-dollar business" remains uncertain.

This is where Nvidia's investment becomes valuable not just financially, but strategically. When a major hardware provider commits to investing in your company, it sends a signal. It says, "We believe in this company's future." That signal can help Altman convince other investors.

But here's the catch: if Nvidia's commitment is nonbinding and smaller than expected, that signal becomes weaker. Other investors might think, "If Nvidia is hedging, maybe I should too." That dynamic could make it harder for Altman to close his round at the valuation he wants.

The partnership, from this angle, is as much about signaling as it is about capital. Nvidia needs to signal confidence in OpenAI to maintain its position as the inevitable hardware partner for AI. OpenAI needs to signal confidence in its own future to attract investors.

When you look at Huang's public statements from this perspective, they make more sense. He's not just making a business statement. He's helping Altman make his pitch to investors. He's saying, "Nvidia believes in OpenAI's future enough to invest a great deal of money." That's powerful messaging.

The Competitive Landscape

Anthropic, founded by former OpenAI VP of Research Dario Amodei, has emerged as OpenAI's most credible competitor. The company has released Claude, a language model that many experts consider competitive with or superior to ChatGPT in certain domains.

Claude's strength in reasoning, nuance, and detailed analysis has attracted serious interest from enterprises. More importantly, Anthropic has been more transparent about safety measures and more conservative about deployment, which has earned trust from institutional buyers who are nervous about AI risks.

Google, meanwhile, has Gemini. The company's AI offerings are integrated into Gmail, Workspace, and Search. That's a distribution advantage that OpenAI simply doesn't have. If Google accelerates Gemini development and successfully integrates AI features across its products, it could capture a huge portion of the value being created by AI.

Meta has released Llama 2, an open-source language model that's actually quite capable. The open-source approach means anyone can fine-tune Llama 2 for their own purposes. That's a different business model than OpenAI's, but it's potentially more powerful because it distributes the development effort across thousands of companies and researchers.

Then there are the custom chip players. Anthropic is exploring custom chips. Google has TPUs. Amazon is developing Trainium chips for training and Inferentia chips for inference. Intel is trying to catch up with its data center GPU efforts.

Nvidia's position is that it's the most mature and capable option, and therefore the default choice. But every new competitor, every custom chip, every alternative architecture chips away at that dominance. Huang's private doubts probably center on the question: "How long until one of these alternatives becomes good enough?"

OpenAI's competitive position is similarly under pressure. ChatGPT is still the most widely adopted AI application, but adoption growth has slowed in recent quarters. Claude is gaining market share. Google's enterprise sales team is pushing Gemini hard. The market is fragmenting.

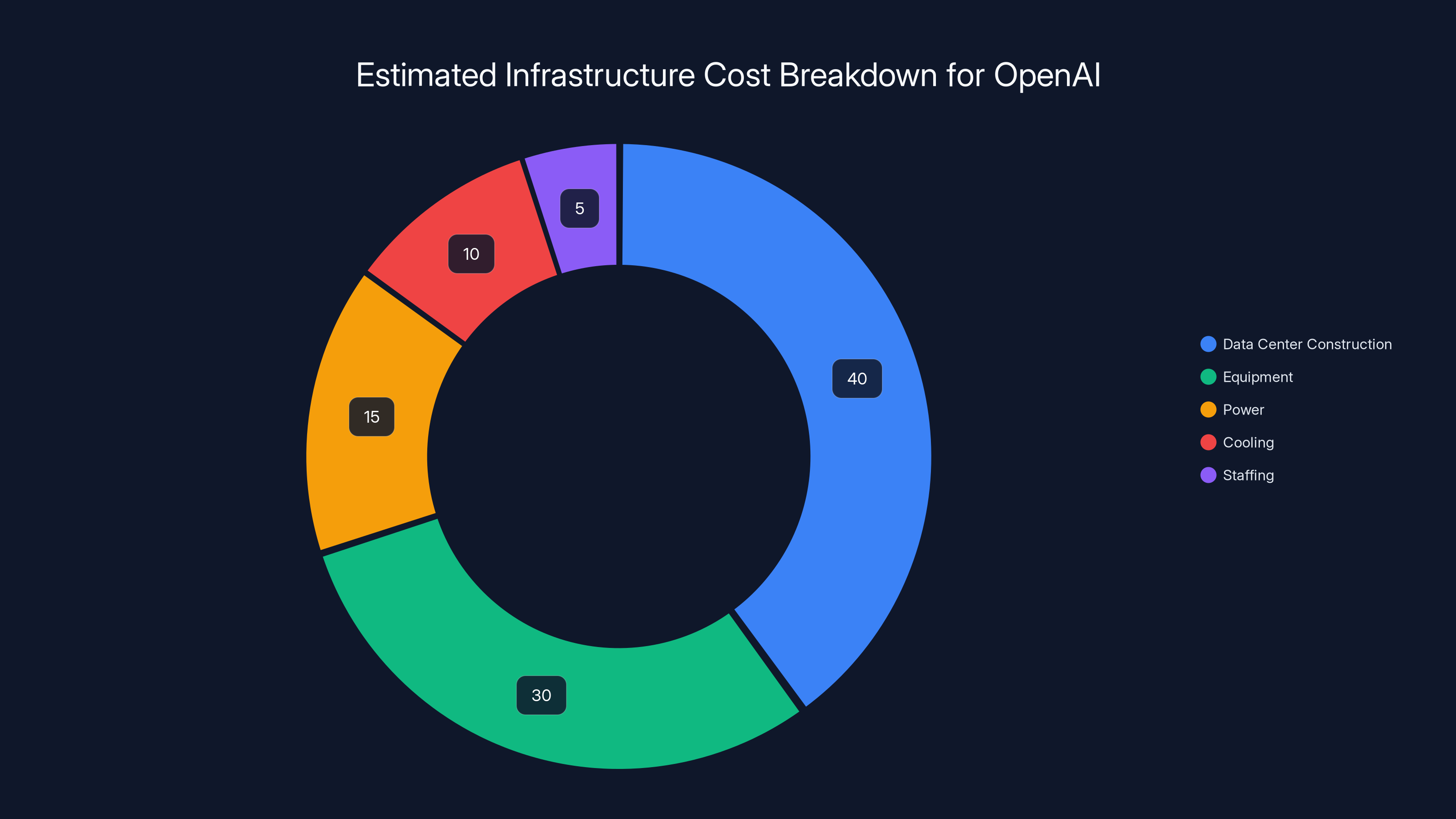

Estimated data shows that data center construction and equipment are the largest cost components in building AI infrastructure, together accounting for 70% of the total projected $100 billion investment.

The Valuation Question

The $100 billion figure for OpenAI's potential valuation is mind-bending. But let's put it in perspective. Here are approximate market capitalizations for comparison:

- Nvidia (as of early 2025): ~$3 trillion

- Microsoft: ~$2.8 trillion

- Apple: ~$2.7 trillion

- Tesla: ~$1.3 trillion

- Meta: ~$1 trillion

A $100 billion valuation for OpenAI would put it ahead of most Fortune 500 companies. But it would still sit far behind the mega-cap tech firms. The question is whether OpenAI's technology and market position justify that valuation.
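To make that gap concrete, here is a small sketch that expresses the $100 billion figure as a percentage of each market cap in the list above. The numbers are the approximate ones quoted in this article, not live market data, and the helper name `valuation_share` is made up for illustration.

```python
# Approximate market caps from the list above, in billions of USD.
# These are the article's rough figures, not live market data.
MARKET_CAPS_BN = {
    "Nvidia": 3000,
    "Microsoft": 2800,
    "Apple": 2700,
    "Tesla": 1300,
    "Meta": 1000,
}

OPENAI_VALUATION_BN = 100  # the potential valuation discussed in this article


def valuation_share(target_bn, reference_bn):
    """Return target_bn as a percentage of reference_bn."""
    return 100 * target_bn / reference_bn


for name, cap_bn in MARKET_CAPS_BN.items():
    share = valuation_share(OPENAI_VALUATION_BN, cap_bn)
    print(f"{name}: a $100B OpenAI would be {share:.1f}% of its market cap")
```

On these figures, OpenAI would be worth roughly 3 to 4 percent of Nvidia, Microsoft, or Apple, and about a tenth of Meta.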

The argument for it is straightforward: AI is the most important technology since the internet. OpenAI is the most visible AI company. Therefore, OpenAI should be extremely valuable. By that logic, $100 billion might even be conservative.

The argument against it is more nuanced: Most of OpenAI's value depends on maintaining technological leadership. But competition is intensifying. The field of AI research is moving fast, and OpenAI doesn't have a monopoly on talent or innovation. Companies like Anthropic and Google have serious researchers too. If OpenAI loses its technological edge, its valuation could fall dramatically.

There's also the question of monetization. How much revenue can OpenAI actually generate? The company has been cagey about its financials, but reports suggest annual revenue in the range of

Is that possible? Maybe. But it requires OpenAI to capture a significant share of enterprise AI spending, which is still in its infancy. It requires the company to maintain pricing power against intensifying competition. It requires OpenAI to successfully navigate regulatory scrutiny around AI safety and liability.

Huang's reluctance to commit to the full $100 billion might reflect skepticism about these projections. Or it might just reflect financial prudence. But either way, it suggests the valuation is being negotiated down from the initial targets.

Microsoft's Role

Here's a detail that doesn't get enough attention: Microsoft has already invested over $13 billion in OpenAI and has a long-term commercial partnership worth billions more. Microsoft also uses Nvidia chips in its Azure data centers and its own AI infrastructure.

Microsoft's position is interesting. The company benefits from OpenAI's success because of its partnership, but Microsoft also has its own AI ambitions with Copilot and its integration of AI across Office, Windows, and other products. Microsoft doesn't want to be purely dependent on OpenAI.

Nvidia, on the other hand, wants everyone—Microsoft, OpenAI, Google, Meta, Amazon, every startup, every company—to be dependent on Nvidia chips. The more players in the AI space, the better for Nvidia, as long as all of them need Nvidia hardware.

This creates a complex three-way dynamic. Microsoft benefits from supporting OpenAI, but not exclusively. Nvidia benefits from the entire AI ecosystem. OpenAI benefits from investment from both, but neither is its sole owner or controller.

Microsoft's position in the OpenAI funding round is crucial. If Microsoft commits significant capital alongside Nvidia, it reinforces the message that this company is worth funding. If Microsoft sits out or commits only the minimum, it could signal that even close partners see risks worth hedging.

There's also a regulatory angle. If Nvidia and Microsoft together fund OpenAI at a valuation above a certain threshold, antitrust regulators might pay closer attention. Regulators are already asking whether it's healthy for competition when a few giant companies control both the AI infrastructure and the AI applications.

Estimated data shows OpenAI has a high dependency on Nvidia chips, while Anthropic and Google are exploring alternatives, potentially reducing their reliance.

The Infrastructure Challenge

Let's talk about what it actually takes to power OpenAI at scale. Training a state-of-the-art language model requires:

- Massive GPU clusters: Thousands of high-end GPUs networked together, coordinated, and optimized

- Specialized networking: Custom interconnects to move massive amounts of data between GPUs without bottlenecks

- Power infrastructure: Enormous amounts of electricity—we're talking 100+ megawatts for a large training run

- Cooling systems: Liquid cooling, thermal management, heat dissipation

- Software optimization: Custom kernels, libraries, and algorithms to maximize utilization

- Physical facilities: Data centers designed specifically for this kind of workload

Nvidia doesn't provide all of this. But Nvidia chips are the foundation. Nvidia's CUDA software ecosystem is the standard. Nvidia's customer relationships with data center operators are crucial.
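For a rough sense of what "100+ megawatts" means in energy terms, here is a back-of-the-envelope sketch. Only the 100 MW figure comes from the list above. The run length and the electricity price are placeholder assumptions picked purely for illustration, so treat the output as an order-of-magnitude estimate and nothing more.

```python
HOURS_PER_DAY = 24


def training_energy_mwh(power_mw, days):
    """Energy used by a constant load of power_mw running for the given days."""
    return power_mw * HOURS_PER_DAY * days


def energy_cost_usd(energy_mwh, price_per_mwh):
    """Electricity cost for energy_mwh at a flat price per MWh."""
    return energy_mwh * price_per_mwh


POWER_MW = 100           # the "100+ megawatts" figure from the list above
RUN_DAYS = 90            # ASSUMPTION: hypothetical run length, not from the article
PRICE_PER_MWH_USD = 80   # ASSUMPTION: hypothetical flat electricity price

energy = training_energy_mwh(POWER_MW, RUN_DAYS)
cost = energy_cost_usd(energy, PRICE_PER_MWH_USD)
print(f"{energy:,.0f} MWh, roughly ${cost / 1e6:,.1f} million in electricity")
```

Under those assumptions the run draws 216,000 MWh. Change `RUN_DAYS` or `PRICE_PER_MWH_USD` and the totals scale linearly, which is why power supply shows up on the requirements list next to the chips themselves.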

The estimated cost to build out the infrastructure for OpenAI's next generation of models is in the range of

These are civilization-scale projects. They require government-level coordination and resources. They require a level of capital commitment that only a handful of companies can sustain.

Nvidia's investment in OpenAI is, from this perspective, an investment in the infrastructure ecosystem that keeps Nvidia at the center. If OpenAI successfully builds out $100 billion in AI infrastructure powered by Nvidia chips, that's a massive endorsement of Nvidia's technology and a powerful driver of future revenue.

But it's also a bet. If the infrastructure turns out to be underutilized, or if competitors build alternative infrastructure faster and cheaper, then it's money wasted.

Regulatory Scrutiny

The Biden administration, and now the Trump administration, have both expressed concern about concentration in AI and in the infrastructure behind it. There are ongoing discussions about whether entities like OpenAI should be required to share compute access, whether AI capabilities should be restricted, and whether the infrastructure powering AI should be treated like critical infrastructure.

A $100 billion fund for OpenAI, controlled largely by Nvidia and Microsoft, raises some of these questions. If OpenAI becomes the de facto standard for AI applications, backed by Nvidia hardware and Microsoft distribution, are we creating a choke point that's unhealthy for the industry?

Huang's hedging on the investment amount might also reflect an awareness of these regulatory risks. If Nvidia commits the full $100 billion and then regulators block it or place conditions on it, Nvidia looks foolish. By positioning the commitment as flexible and nonbinding, Huang preserves optionality.

OpenAI faces similar regulatory questions. The company hasn't disclosed a clear governance structure around safety and oversight that would satisfy all regulators. If OpenAI is raising $100 billion, regulators will certainly want a say in how that capital is deployed and what safeguards are in place.

These regulatory considerations are probably being discussed in the private conversations between Huang and others. The public statements about strong partnerships might mask more complicated negotiations with regulators, governments, and other stakeholders.

The Broader AI Infrastructure Market

Nvidia's dominance in AI chips is real but not inevitable. The market for AI infrastructure is evolving rapidly, and new alternatives emerge constantly.

In the GPU space, AMD has been improving its offerings with its CDNA architecture, which powers its Instinct data center accelerators. Intel has Arc GPUs that are competitive for certain workloads. Neither company has Nvidia's ecosystem or track record, but both are investing heavily.

In the custom chip space, Google TPUs are mature and excellent for training and inference of Google's models. Amazon's Trainium and Inferentia chips are specialized for training and inference respectively, and they're cost-effective for many workloads. Graphcore, Cerebras, and other startups are experimenting with entirely different approaches to AI computing.

The question is whether any of these alternatives become better or cheaper than Nvidia GPUs for a significant portion of AI workloads. If they do, Nvidia's pricing power and market dominance erode.

Huang's investment in OpenAI is, partly, a bet that the world will standardize on Nvidia chips as the foundation of AI infrastructure. The bigger and more visible OpenAI's success is, powered by Nvidia hardware, the more companies will trust that Nvidia is the safe bet.

But it's also a risk. If OpenAI doesn't deliver the returns investors expect, if competitive models outperform ChatGPT, or if the AI infrastructure market fragments more than expected, then Nvidia's bet hasn't paid off.

Looking Forward: 2025 and Beyond

We're at an inflection point. The initial hype cycle around AI is giving way to reality checks. Investors are asking tougher questions. Companies are facing real competition. Regulators are paying closer attention.

In this environment, the Nvidia-OpenAI partnership becomes more important, not less. Both companies need each other. But the terms of their engagement will likely shift.

Huang's public statements are setting expectations for a continued strong partnership. But his private positioning suggests Nvidia is hedging its bets. The investment will likely happen, but probably at a smaller scale than initially discussed. Equity stakes might be restructured. Conditions might be added.

OpenAI, meanwhile, will have to make peace with a more conditional relationship. The days of unlimited capital from well-intentioned partners are probably over. The company will need to demonstrate real progress toward profitability and continued technological leadership.

For everyone else watching this unfold—investors, startups, researchers, policymakers—the key lesson is this: the AI infrastructure market is being shaped by these high-stakes partnerships and negotiations. The winner will be whoever controls the most critical chokepoint in the system.

Right now, that's Nvidia with chips. But it might shift to whoever controls the most capable models (OpenAI), or whoever controls the most powerful compute (Microsoft), or whoever captures the most users (any of them).

The public statements are theater. The real negotiations happen behind closed doors. And those negotiations are determining the structure of the AI industry for the next decade.

FAQ

What is the Nvidia-OpenAI partnership exactly?

Nvidia and OpenAI have agreed to collaborate on infrastructure development and investment. Nvidia provides GPU chips and computing infrastructure that power OpenAI's models, and has committed to investing significantly in OpenAI's next funding round. The partnership extends beyond just financial investment to include joint infrastructure projects with tens of thousands of servers.

Why did Jensen Huang say reports of friction were "nonsense"?

Huang was responding to reports that suggested Nvidia was backing away from its massive commitment to OpenAI. By explicitly denying these reports and praising OpenAI as "one of the most consequential companies of our time," Huang was trying to reaffirm the strength of the partnership, likely to boost investor confidence and support OpenAI's fundraising efforts.

What's the significance of Nvidia's $100 billion commitment being "nonbinding"?

A nonbinding commitment means Nvidia can walk back or reduce its investment without legal consequences. This is significant because it suggests Nvidia is hedging its bets. It means the company believes in OpenAI but is also protecting itself against the possibility that market conditions or competitive dynamics change. A binding commitment would signal absolute conviction.

How dependent is OpenAI on Nvidia chips?

OpenAI is almost entirely dependent on Nvidia GPUs for both training its models and powering inference when users interact with ChatGPT. This includes not just Nvidia chips in OpenAI's own infrastructure, but also in the cloud providers like Microsoft Azure that OpenAI uses. This deep dependence gives Nvidia leverage in negotiations.

What are OpenAI's main competitors, and why does this matter to the Nvidia investment?

OpenAI's main competitors include Anthropic (with Claude), Google (with Gemini), and Meta (with Llama). If these competitors successfully capture market share from OpenAI, then OpenAI becomes a less valuable company, which means Nvidia's investment is worth less. This is why Huang might privately worry about competition even while publicly supporting OpenAI.

Could OpenAI actually reach a $100 billion valuation?

It's theoretically possible but ambitious. OpenAI would need to capture enormous enterprise AI spending, maintain technological leadership against fierce competition, and generate tens of billions in annual revenue. Current estimates suggest OpenAI's annual revenue is around

What happens if OpenAI doesn't secure the full funding it's seeking?

OpenAI could still operate successfully with a smaller funding round, but it might have to slow infrastructure buildout, be more selective about computing power usage, or accept a lower valuation. This would also affect Nvidia's calculus around its own investment since the opportunity to be the foundation of a $100 billion AI company would be diminished.

How does Microsoft's investment influence the Nvidia-OpenAI dynamic?

Microsoft has already invested over $13 billion in OpenAI and benefits from the partnership through its Copilot and Office AI integrations. Microsoft also uses Nvidia chips in its data centers, so there's alignment between all three companies, but each has its own competitive interests and isn't entirely dependent on the others.

Are there regulatory concerns about this partnership?

Yes. A $100 billion fund concentrated in OpenAI, powered by Nvidia hardware and distributed through Microsoft, raises questions about concentration of AI power and infrastructure. Regulators are scrutinizing whether this creates unhealthy monopolistic control over AI infrastructure that could disadvantage competitors.

What's the real timeline for this funding to be finalized?

While neither company has stated a firm deadline, these types of massive funding rounds typically take months to finalize with due diligence, negotiations, and regulatory review. Huang's comments suggest something is imminent, but the exact timing remains unclear. The fact that details are still being negotiated indicates closure isn't immediate.

Key Takeaways

The Nvidia-OpenAI partnership is one of the most consequential technology relationships of our era. On the surface, it looks like a straightforward investment: a hardware company betting on an AI company. But underneath, it's a complex negotiation about valuation, control, competitive positioning, and the future structure of the AI industry.

Jensen Huang's public statements are carefully crafted to signal confidence while preserving flexibility. OpenAI is in a precarious position where it needs external capital to fund growth but faces intensifying competition from well-funded rivals. The broader infrastructure market is fragmenting, with new competitors and alternatives emerging constantly.

The partnership will likely continue and deepen, but probably with terms that are less favorable to OpenAI than initially hoped. Nvidia will remain the default infrastructure provider, but with its dominance increasingly questioned by alternatives. And the broader AI industry will continue to consolidate around a few major players with the capital and technological chops to compete at scale.

What Huang said is true—the partnership is strong. What he didn't say is equally important: it's strong because both companies need each other, not because either one is absolutely committed to an unlimited relationship. The billions being discussed will flow, but under conditions, with guardrails, and with each party carefully managing its risks.

