Introduction: The Next Evolution of Developer Tools

The relationship between developers and their tools has always been one of iteration. First came syntax highlighting, then intelligent autocomplete. Then came refactoring tools, debuggers, and version control integration. Each innovation compressed the gap between intention and implementation. Now we're at another inflection point.

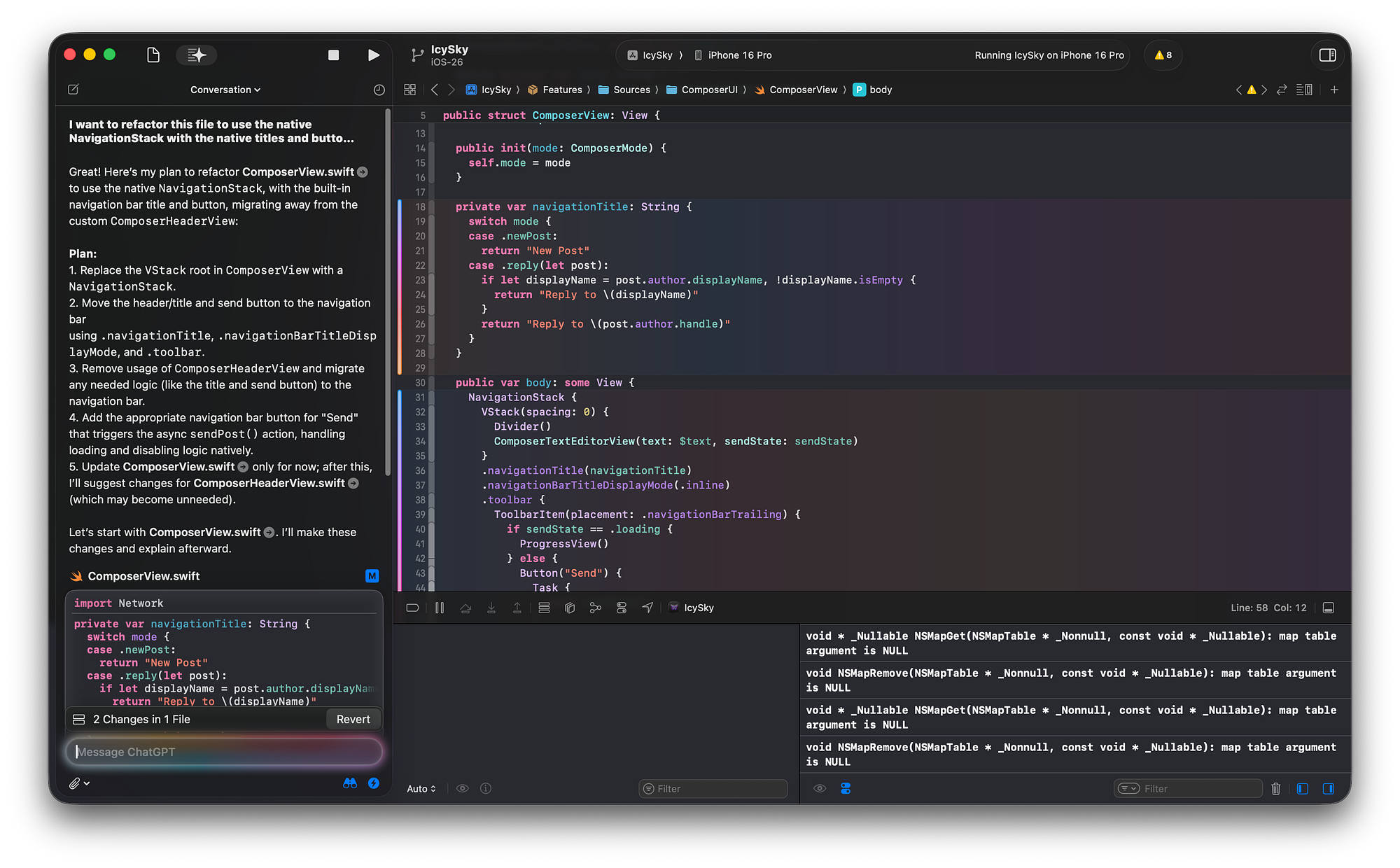

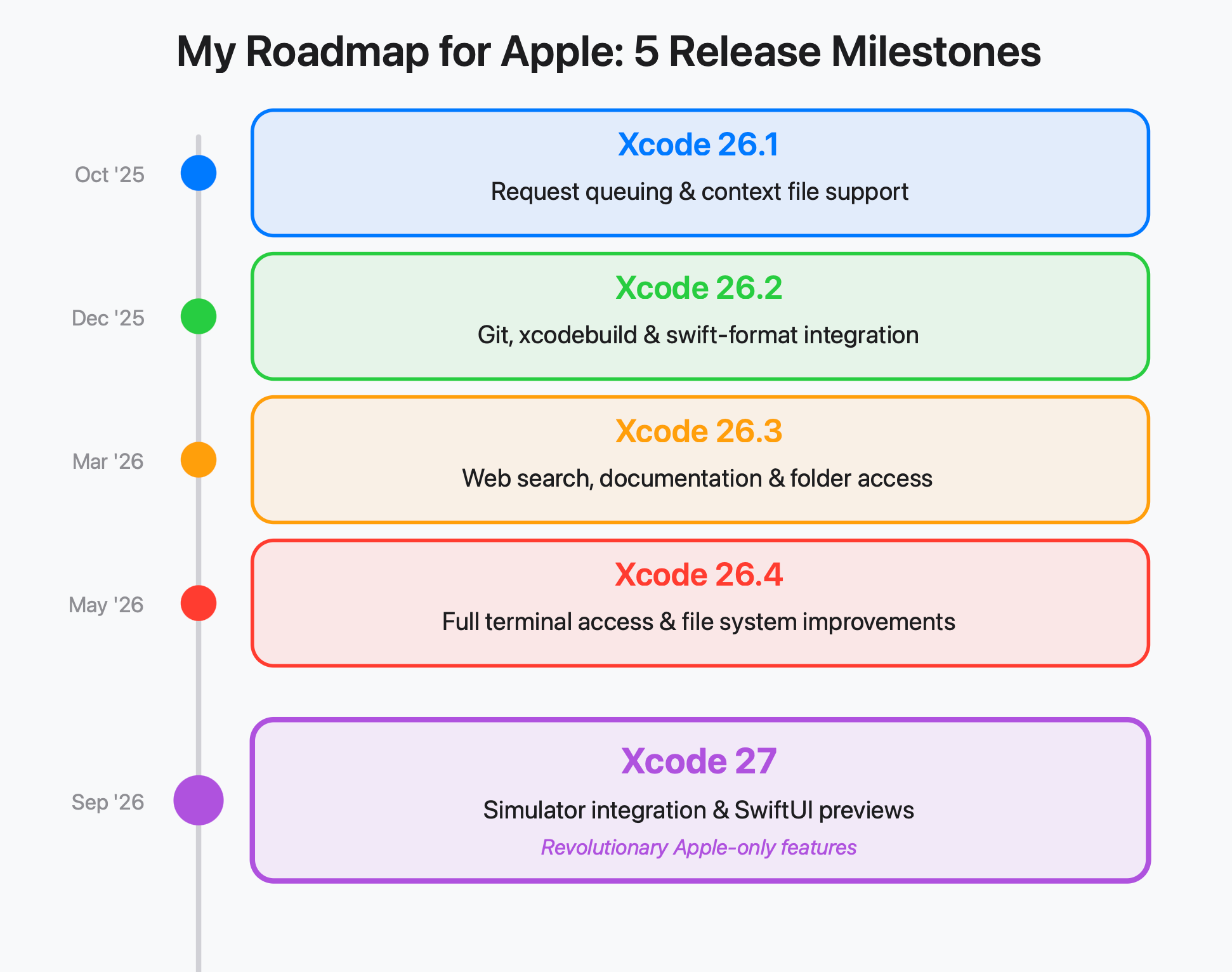

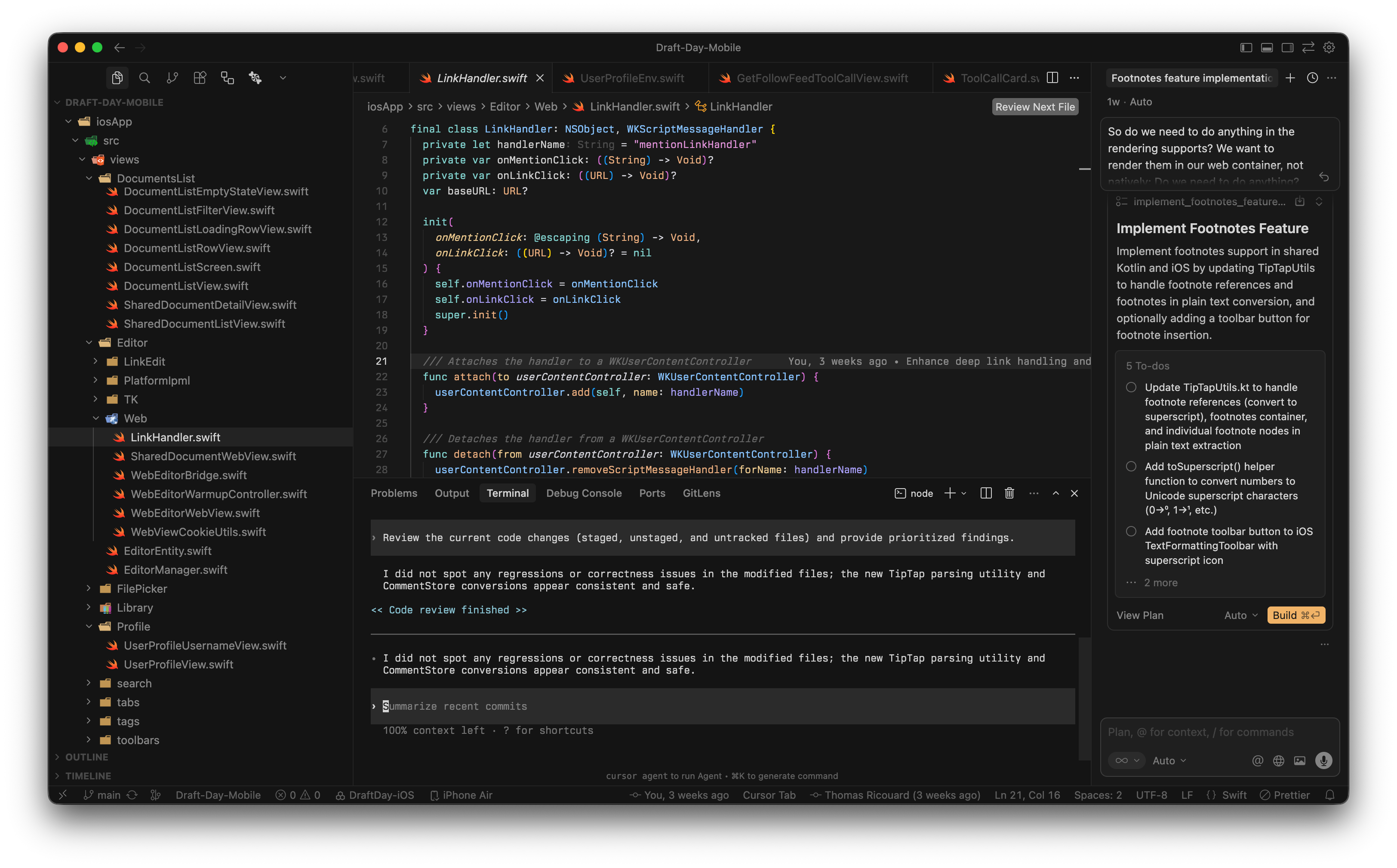

Apple just announced Xcode 26.3, and the update marks something genuinely different from what came before. This isn't another autocomplete feature or a smarter code suggestion system. This is agentic coding—where AI agents actually work inside your development environment, autonomously exploring your codebase, planning changes, implementing features, running tests, and iterating on failures without requiring you to validate every step.

For most developers, this sounds like science fiction. For some, it sounds terrifying. The reality sits somewhere in between, and it's worth understanding what's actually happening here, why it matters, and what it means for how you'll work in the next few years.

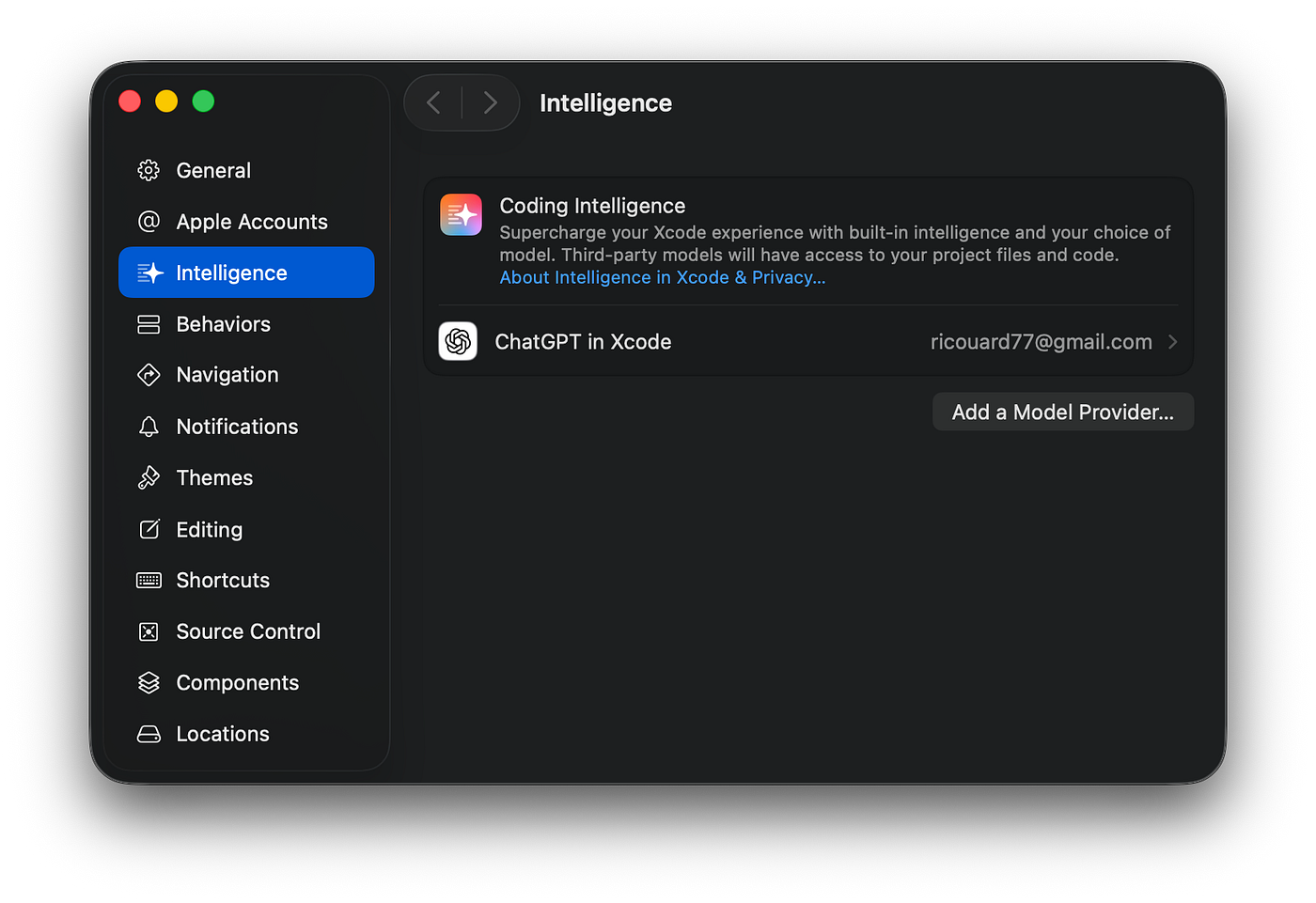

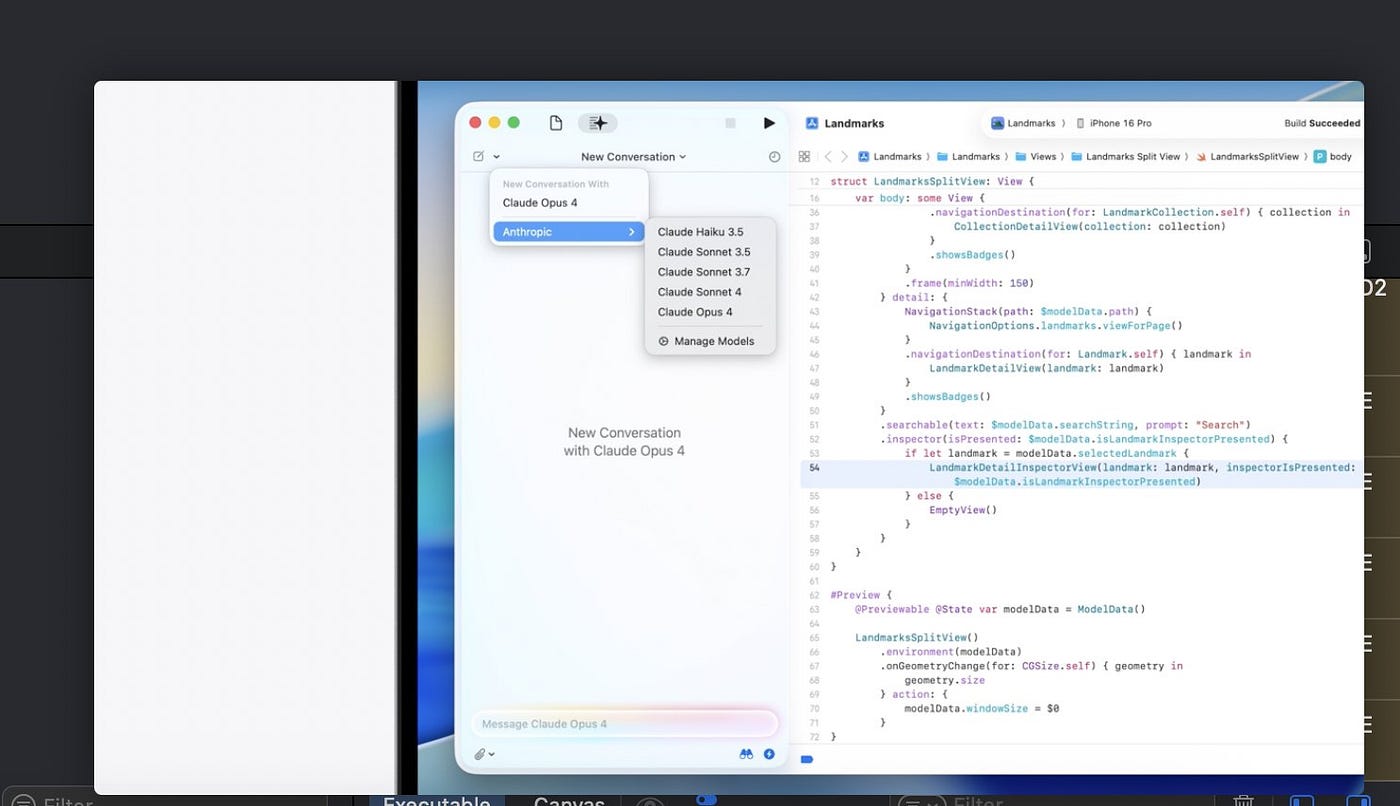

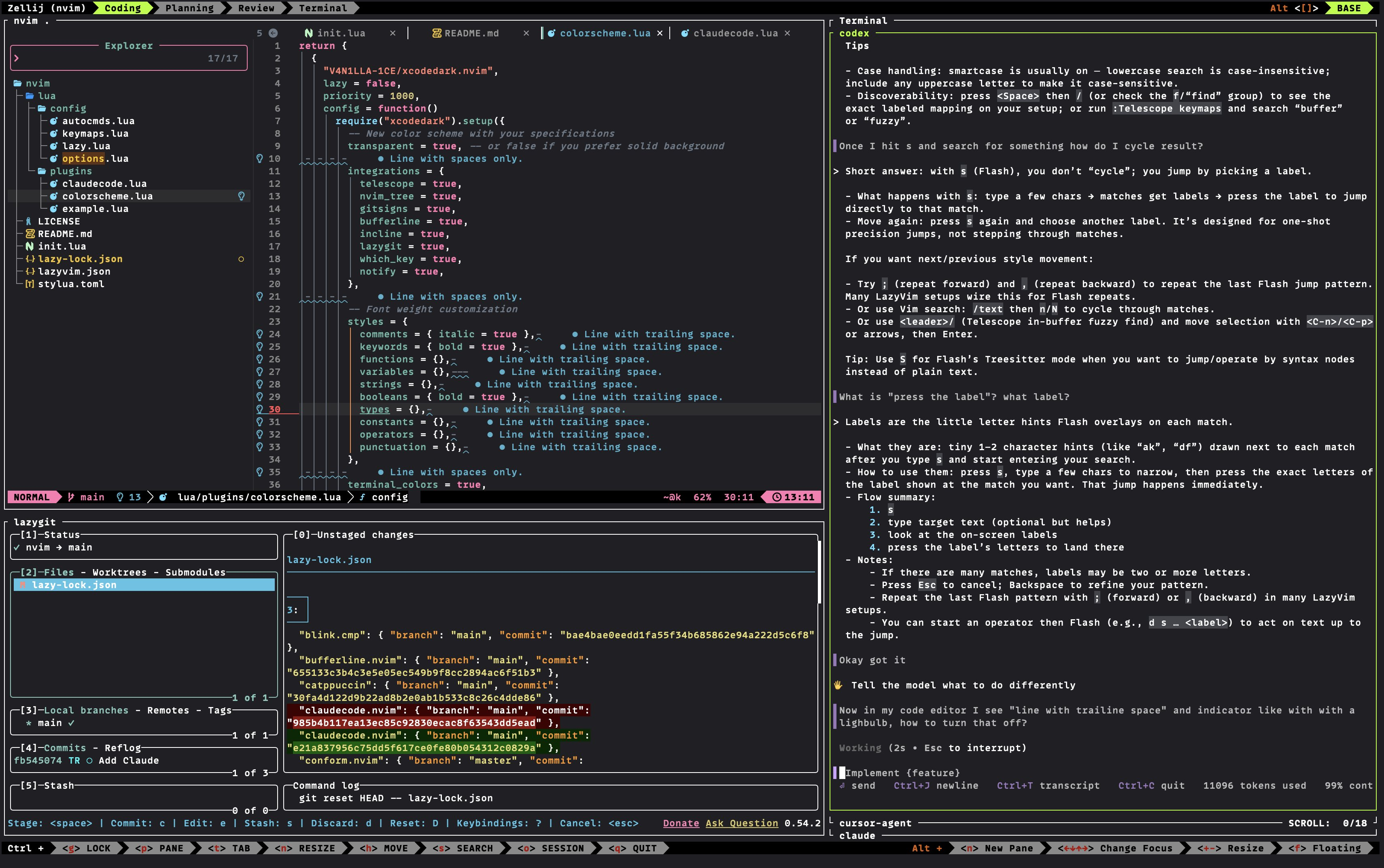

Xcode 26.3 integrates agents from two of the most significant AI companies today: Anthropic's Claude Agent and OpenAI's Codex. Both are now first-class citizens in Apple's IDE, accessible through a simple interface and designed specifically to work with Apple's frameworks, documentation, and development workflows. The implementation is technically sophisticated, built on the Model Context Protocol (MCP), and the implications are surprisingly broad.

This isn't just about making coding faster. It's about fundamentally changing what a developer is doing when they're coding. It's about shifting from typing code to directing agents, from building in isolation to collaborating with systems that think differently than you do. It's about automation that's genuinely useful rather than just flashy.

Let's dig into what's actually happening, how it works, and why this matters for developers and teams building the next generation of apps.

TL;DR

- Xcode 26.3 introduces true agentic coding, where AI agents autonomously explore, plan, implement, test, and iterate on code changes without constant human validation

- Two major AI providers integrated: Anthropic's Claude Agent and OpenAI's Codex now work natively inside Xcode with full access to project structure, documentation, and testing frameworks

- MCP (Model Context Protocol) enables extensibility, allowing any MCP-compatible agent to integrate with Xcode's capabilities, not just the two initial partners

- Token optimization was critical: Apple worked extensively with Anthropic and OpenAI to ensure agents run efficiently, tracking costs and performance to make real-world usage practical

- Transparency and control remain paramount: Developers see each step the agent takes, can intervene at any point, revert changes, and learn from what the AI is doing—essential for trust and education

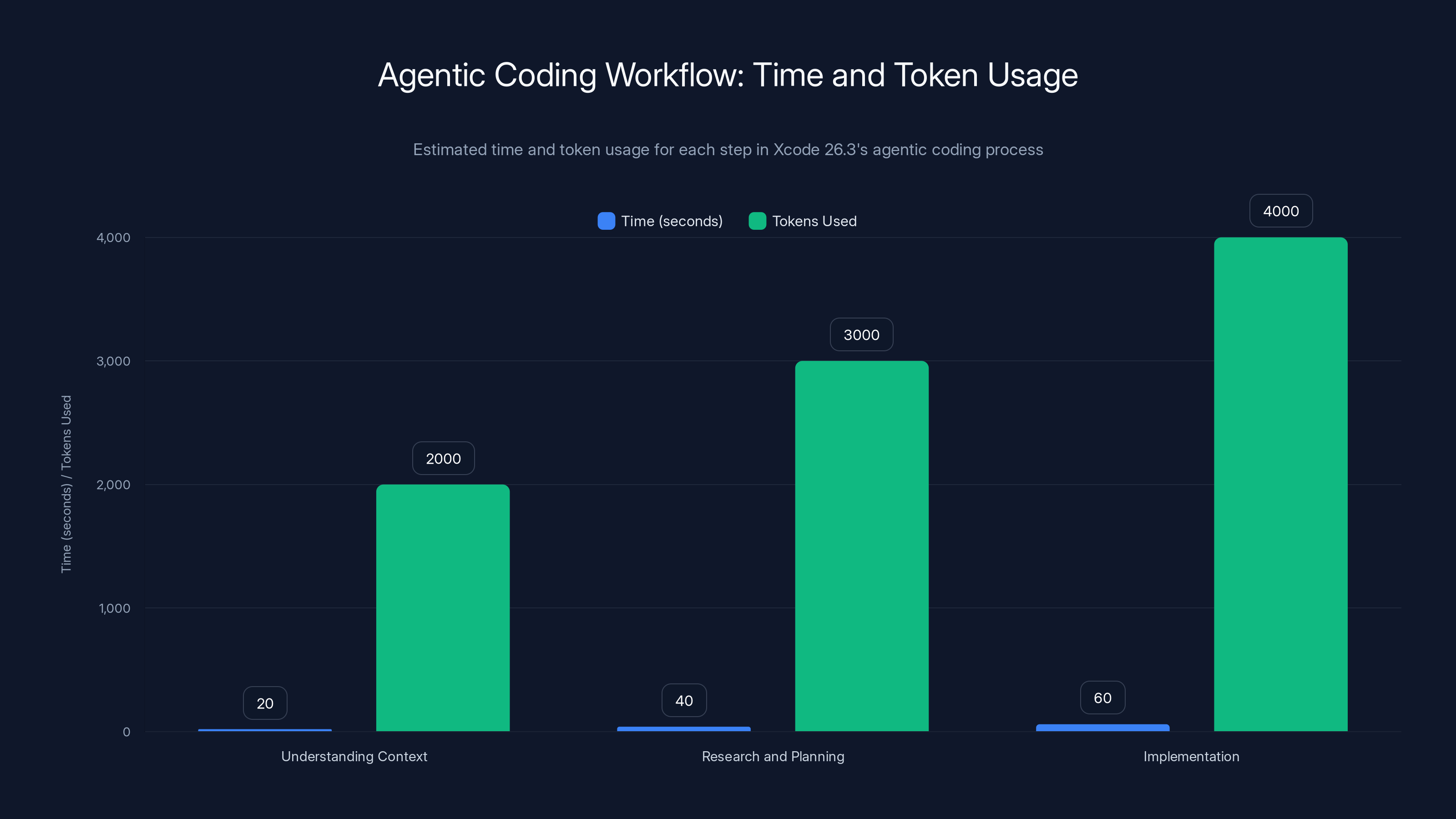

Estimated token usage ranges from 8,000 to 20,000 per agent task, impacting cost and efficiency. Estimated data.

What Is Agentic Coding? Understanding the Paradigm Shift

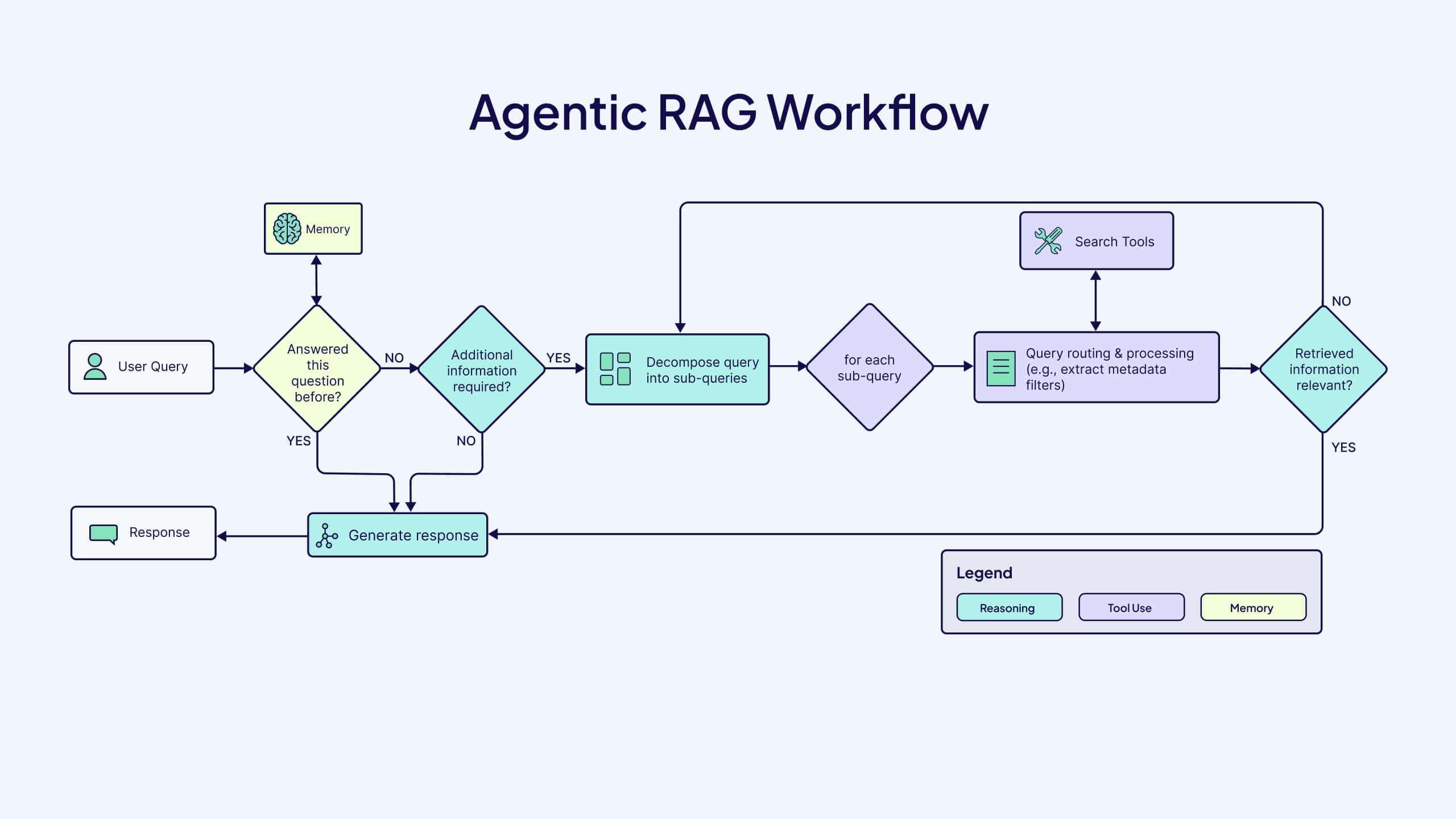

Before diving into Xcode's implementation, we need to clear up what "agentic coding" actually means. The term gets thrown around loosely, and it's worth being precise.

Traditional AI coding assistance works like this: you write code, the system suggests completions or refactorings, you accept or reject them. The human remains the decision-maker and executor. The AI is a helper—powerful, but ultimately reactive.

Agentic coding flips the script. You describe what you want, such as "Add a feature that lets users save their progress using CloudKit" or "Refactor the authentication module to use the new async/await pattern", and the agent takes that goal and runs with it. It breaks the task into steps, explores your codebase to understand the current architecture, reads the relevant documentation, writes code, runs tests, sees failures, and iterates on those failures. You're no longer typing every character. You're directing an intelligent agent that knows how to code.

This is a meaningful shift in mental model. It's closer to pair programming with someone who's incredibly fast and tireless, but also sometimes makes mistakes that need catching. The agent doesn't need you to type every line—it needs you to set direction, validate decisions, and catch problems it misses.

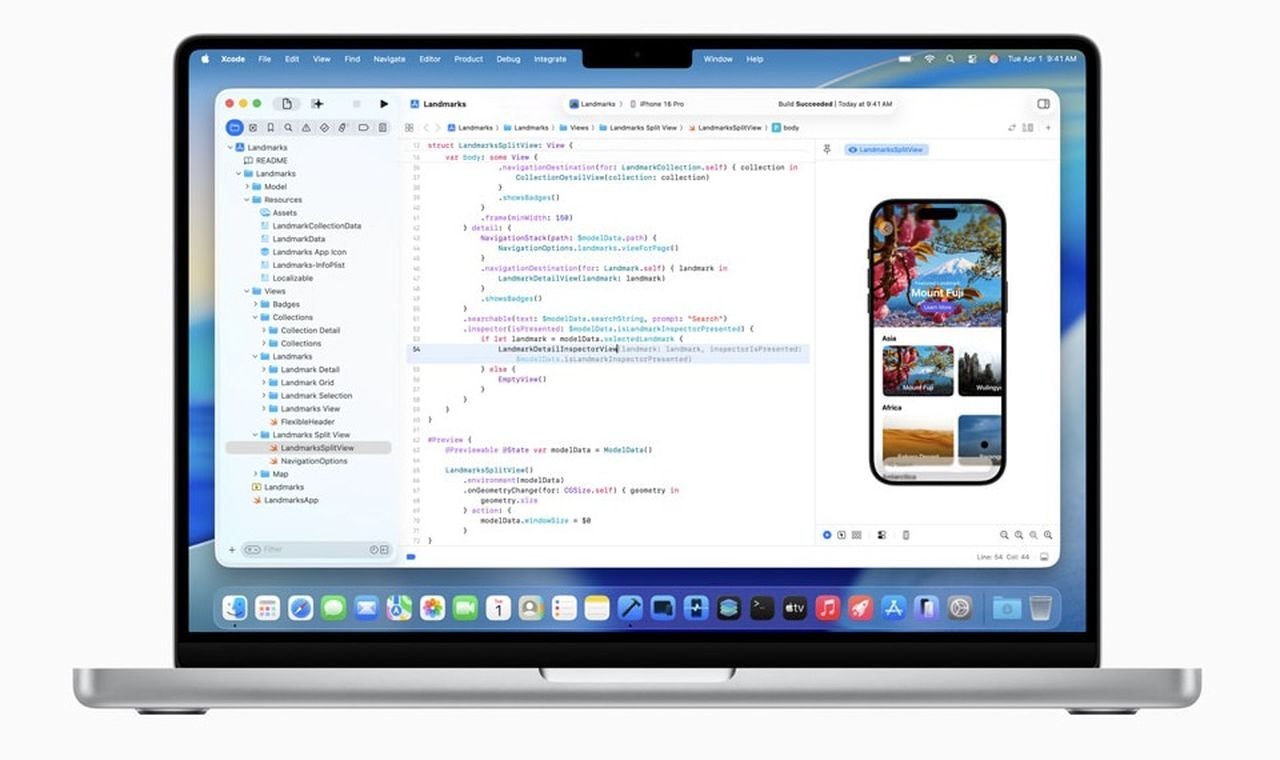

Xcode 26.3 implements this through a structured workflow. You open a prompt box on the left side of the editor. You type in natural language what you want to accomplish. The agent then:

- Explores the project to understand its structure, dependencies, and current patterns

- Reads documentation from Apple's developer resources to ensure it's using current APIs and following best practices

- Plans the implementation by breaking it into smaller, manageable steps

- Writes code iteratively, highlighting changes so you see what's being modified

- Runs tests to verify the implementation works as intended

- Iterates on failures if tests fail, examining error messages and fixing problems

- Presents results with a full transcript of everything that happened, so you understand the complete chain of reasoning

The key distinction is autonomy within scope. The agent operates autonomously within the task you've defined, making decisions about implementation details without asking you to approve each step. But you maintain control over the overall direction and can revert or redirect at any point.
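"Autonomy within scope" can be pictured as a bounded loop: the agent writes, tests, and feeds failures back into its next attempt, but only up to a fixed iteration budget before handing control back to you. The sketch below is a minimal illustration under that assumption; `run_agent_task`, `CheckResult`, and the callables standing in for the model and the test runner are hypothetical names, not Xcode's internals.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CheckResult:
    passed: bool
    failures: list[str] = field(default_factory=list)


def run_agent_task(
    goal: str,
    write_code: Callable[[str, list[str]], str],  # stands in for a model call
    run_tests: Callable[[str], CheckResult],      # stands in for build + test
    max_iterations: int = 3,
) -> tuple[str, list[str]]:
    """Write, test, and iterate on failures within a fixed budget (hypothetical)."""
    transcript = [f"goal: {goal}"]
    feedback: list[str] = []
    code = ""
    for attempt in range(1, max_iterations + 1):
        code = write_code(goal, feedback)
        result = run_tests(code)
        transcript.append(f"attempt {attempt}: {'pass' if result.passed else 'fail'}")
        if result.passed:
            return code, transcript
        # Failure messages become input to the next attempt.
        feedback = result.failures
    transcript.append("stopped: iteration budget exhausted, handing back to developer")
    return code, transcript
```

The budget is the design point: the agent makes implementation decisions on its own inside the loop, but it cannot retry forever, and the transcript records every attempt for your review.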

It's also worth noting what this isn't. It's not a replacement for developers. It's not "AI writes your app, you just watch." Building software still requires judgment—about architecture, about user experience, about trade-offs. What agentic coding does is automate the execution layer, the grunt work of actually implementing decisions that humans make about design and direction.

The Technical Architecture: How Xcode and AI Agents Actually Communicate

Getting an AI agent to write code in your IDE isn't trivial from an engineering perspective. The agent needs to understand your project structure, make changes to files, read documentation, run build processes, and report back. That's a lot of integration points.

Apple solved this using the Model Context Protocol, or MCP. This is a critical technical detail because it shapes everything about how this feature works and how it will evolve.

MCP is an open standard that defines how large language models can connect with external tools and data sources. Think of it as a standardized language that lets an AI agent say things like "read this file" or "run this command" or "search this documentation" without needing custom integration code for each tool.
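Concretely, MCP messages are JSON-RPC 2.0 requests. The sketch below builds the two requests an agent typically starts with: `tools/list` to discover what the server offers, and `tools/call` to invoke one tool with structured arguments. The method names follow the MCP specification; the tool name `read_file`, its `path` argument, and the file path are hypothetical placeholders, not the tool names Xcode actually exposes.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)


def mcp_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discover the tools the server (here, the IDE) offers.
list_tools = mcp_request("tools/list")

# Invoke one tool by name with structured arguments ("read_file" is hypothetical).
read_call = mcp_request(
    "tools/call",
    {"name": "read_file", "arguments": {"path": "MyApp/ContentView.swift"}},
)
print(read_call)
```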

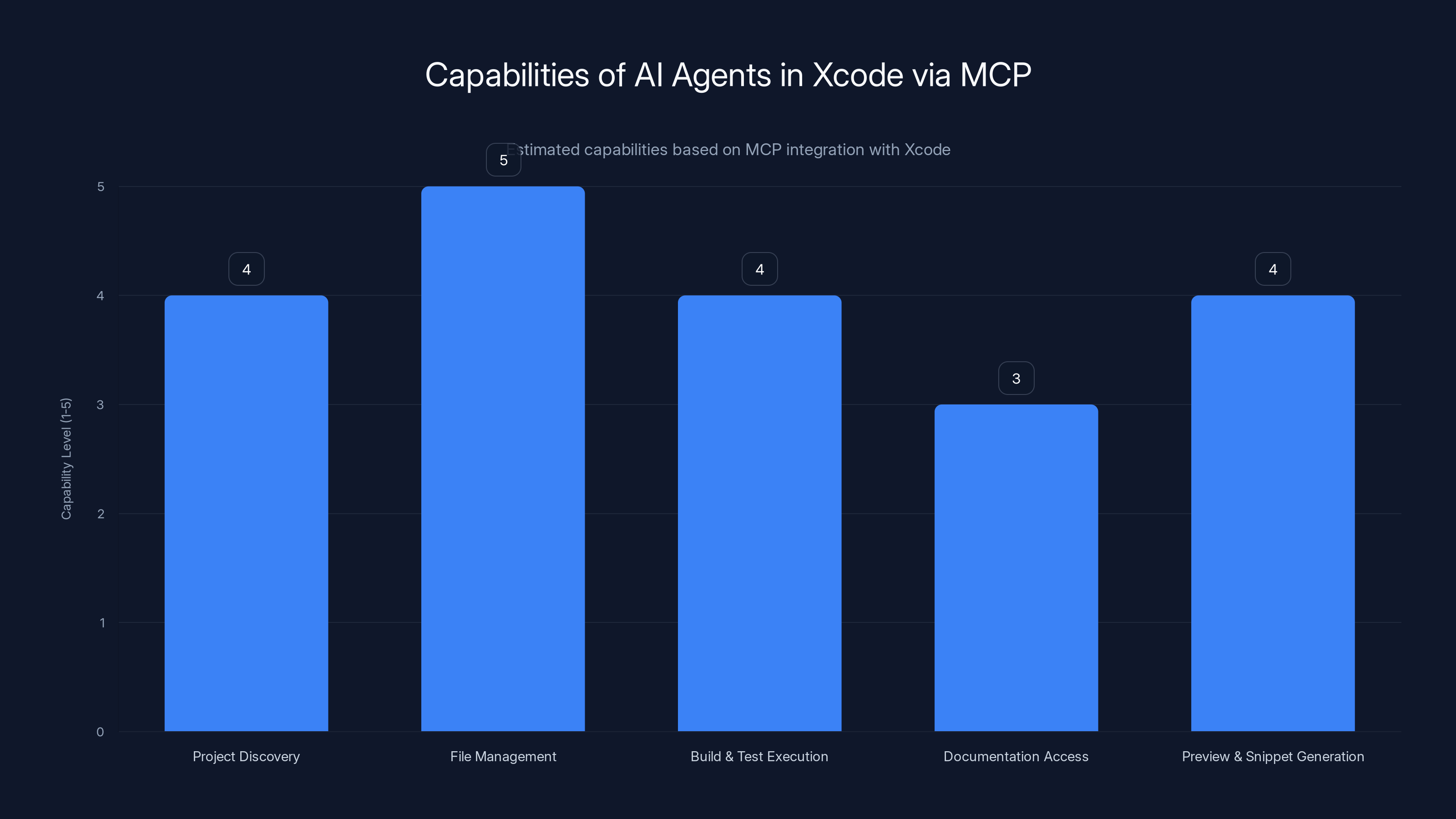

Here's the practical architecture: Xcode exposes a set of capabilities through MCP. These capabilities include:

Project discovery and understanding: The agent can browse your project structure, read metadata about your targets and dependencies, understand which frameworks you're using, and see the patterns in your code.

File management: The agent can read files, write changes, create new files, and understand how modifications to one file might affect others. This is more sophisticated than just text editing—the system has semantic understanding of code relationships.

Build and test execution: The agent can trigger your project's build process and run tests, then parse the output to understand what succeeded and what failed. This feedback loop is essential because it's how agents learn whether their implementation works.

Documentation access: Apple includes its current developer documentation as context the agent can consult. When deciding whether to use a particular API, the agent can read the official documentation to understand the right patterns and best practices.

Preview and snippet generation: For UI-focused development, agents can generate SwiftUI previews to visualize what they're building. This is particularly useful for designers and frontend-focused developers.

The beauty of MCP is extensibility. Xcode doesn't have to integrate separately with Anthropic and OpenAI; it exposes these capabilities through MCP, and any MCP-compatible agent can access them. This is significant because as new, more capable models arrive from other providers, the integration path is already standardized.
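To see why extensibility falls out of this design, here is a toy server-side dispatcher, assuming a hypothetical `read_file` tool backed by an in-memory file map. It answers `tools/list` and `tools/call` in the shape the MCP specification describes, so any client that speaks the protocol can discover and call the tool without provider-specific glue. This illustrates the protocol pattern only; it is not how Xcode is built.

```python
import json
from typing import Any, Callable

# In-memory stand-in for the project on disk (hypothetical).
FAKE_PROJECT = {"MyApp/App.swift": "import SwiftUI"}
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str, input_schema: dict) -> Callable:
    """Register a function as a tool the server advertises."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = {"fn": fn, "description": description, "inputSchema": input_schema}
        return fn
    return register


@tool("read_file", "Return the contents of a project file",
      {"type": "object", "properties": {"path": {"type": "string"}}, "required": ["path"]})
def read_file(path: str) -> str:
    return FAKE_PROJECT[path]


def handle(raw: str) -> dict:
    """Route one JSON-RPC request to the matching tool."""
    req = json.loads(raw)
    rid, method = req.get("id"), req["method"]
    if method == "tools/list":
        listed = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                  for n, t in TOOLS.items()]
        return {"jsonrpc": "2.0", "id": rid, "result": {"tools": listed}}
    if method == "tools/call":
        params = req.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return {"jsonrpc": "2.0", "id": rid,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = entry["fn"](**params.get("arguments", {}))
        return {"jsonrpc": "2.0", "id": rid,
                "result": {"content": [{"type": "text", "text": text}], "isError": False}}
    return {"jsonrpc": "2.0", "id": rid, "error": {"code": -32601, "message": "Method not found"}}
```

Adding a capability is one more decorated function; clients pick it up from `tools/list` without any change on their side.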

But here's where the engineering gets interesting. Large language models consume tokens, and tokens cost money. Running an agent that needs to explore a large codebase, read documentation, write code, and run tests multiple times as it iterates can burn through tokens quickly. This is why Apple emphasized that it "worked closely with both Anthropic and OpenAI to design the new experience... to optimize token usage and tool calling."

What does this optimization actually mean? It means things like:

Efficient caching: When the agent reads a file or documentation page once, that context is cached so subsequent references don't require re-reading and re-tokenizing.

Structured tool calling: Instead of having the agent generate text descriptions of what it wants to do, MCP uses structured calls where the agent specifies exact parameters, reducing ambiguity and wasted tokens on clarification.

Intelligent context windows: The agent doesn't need to load your entire codebase into context at once. It loads relevant sections based on what it's working on, minimizing context size while maintaining understanding.

Minimal feedback loops: When the agent runs a test that fails, the error messages are parsed and summarized rather than dumping entire build logs into the agent's context.
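Two of these optimizations are easy to sketch. The code below is a minimal illustration under stated assumptions: a cache that re-sends a file to the model only when its content hash has changed, and a log filter that keeps only Swift compiler lines of the form `path:line:column: error: message`. `ContextCache` and `summarize_build_log` are hypothetical names; Apple has not published how Xcode implements either technique.

```python
import hashlib
import re


class ContextCache:
    """Re-send a file to the model only when its content changed (hypothetical)."""

    def __init__(self) -> None:
        self._seen: dict[str, str] = {}

    def needs_sending(self, path: str, content: str) -> bool:
        digest = hashlib.sha256(content.encode()).hexdigest()
        if self._seen.get(path) == digest:
            return False  # unchanged since last read: reuse what the model already has
        self._seen[path] = digest
        return True


# Matches e.g. "/src/App/Export.swift:42:9: error: cannot find 'rows' in scope".
ERROR_LINE = re.compile(
    r"^(?P<file>[^:\s]+\.swift):(?P<line>\d+):(?:\d+:)? error: (?P<msg>.+)$"
)


def summarize_build_log(log: str, limit: int = 5) -> list[str]:
    """Keep only compiler error lines instead of passing the whole log along."""
    errors = []
    for line in log.splitlines():
        m = ERROR_LINE.match(line.strip())
        if m:
            errors.append(f"{m['file']}:{m['line']}: {m['msg']}")
    return errors[:limit]
```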

These optimizations matter because they make the feature economically viable. Without them, running an agent on a moderately complex project could cost enough that developers would think twice about using it.

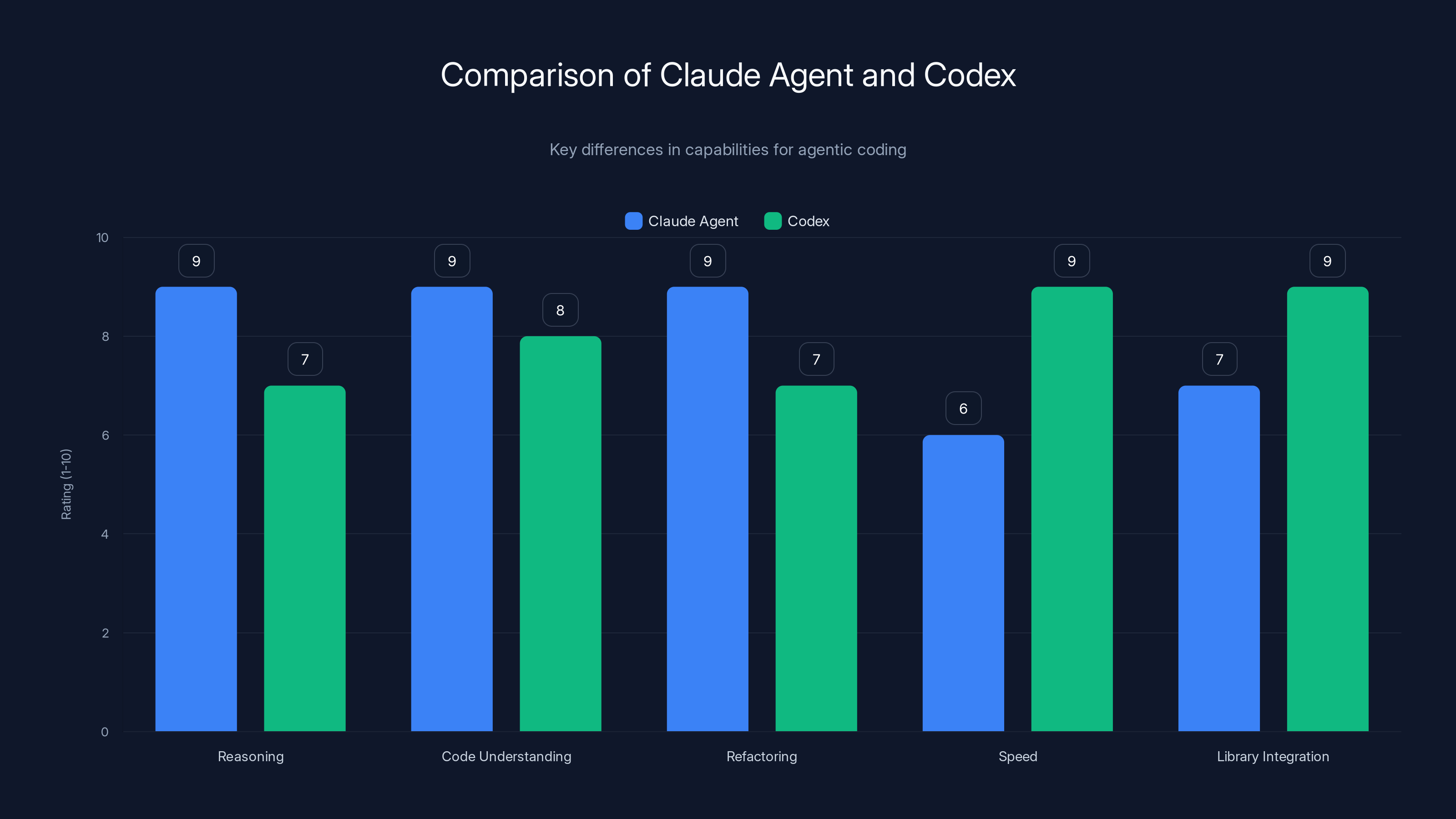

Claude Agent excels in reasoning, code understanding, and refactoring, while Codex is faster and better at library integration. Estimated data based on described characteristics.

Anthropic's Claude Agent: Design and Capabilities

Anthropic is one of the two primary partners in Xcode 26.3, providing Claude Agent. Understanding Anthropic's approach to agentic AI helps explain what Claude brings to the development environment.

Anthropic has positioned Claude as a model that prioritizes harmlessness and explainability. For an agent operating inside someone's codebase—potentially making significant changes to production-critical systems—this philosophy matters. Claude agents are designed to be interpretable: they show their reasoning, they're careful about potential consequences, and they're less likely to take shortcuts or make risky assumptions.

For coding specifically, Claude Agent in Xcode has been optimized for several specific strengths. First, it's genuinely good at reading and understanding existing code. It can examine your implementation patterns and follow them consistently. If your codebase uses specific conventions—custom error handling, particular architecture patterns, naming schemes—Claude tends to pick up on these and maintain them rather than imposing its own style.

Second, Claude has strong capabilities in code review and refactoring tasks. Beyond just writing new features, the agent can understand when existing code could be improved. You can ask it to "refactor the authentication flow to be more testable" and it will actually think about dependency injection, mocking capabilities, and test structure rather than just doing superficial renaming.

Third, Claude's architecture makes it strong at maintaining context across complex tasks. If you ask it to implement a feature that spans multiple files and requires understanding how one change affects another, Claude tends to maintain a coherent mental model of the whole system rather than losing track of constraints and relationships.

For Apple developers specifically, Claude appears well versed in Swift, SwiftUI, and Apple's frameworks. This shows in task completion: when using Claude Agent in Xcode, you'll notice it handles SwiftUI patterns, async/await, and property wrappers fluently.

One practical detail: Anthropic designed Claude Agent to be cautious about side effects. If an agent makes a change that could have unintended consequences—like modifying initialization code that multiple classes depend on—Claude is more likely to flag this uncertainty and ask for validation rather than proceeding confidently. This is a deliberate design choice aimed at preventing subtle bugs.
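One way a tool could decide when a change deserves a question rather than silent execution is to count how many other types depend, directly or transitively, on the code being edited. The sketch below assumes a hypothetical dependency map and an arbitrary threshold; it illustrates the idea and does not describe how Claude Agent actually makes this call.

```python
def dependents(deps: dict[str, set[str]], target: str) -> set[str]:
    """Every type that uses `target`, directly or through other types."""
    found: set[str] = set()
    frontier = [target]
    while frontier:
        current = frontier.pop()
        for name, uses in deps.items():
            if current in uses and name != target and name not in found:
                found.add(name)
                frontier.append(name)
    return found


def should_ask_first(deps: dict[str, set[str]], target: str, threshold: int = 2) -> bool:
    """Flag edits to widely shared code for human validation (threshold is arbitrary)."""
    return len(dependents(deps, target)) >= threshold


# Hypothetical project: two types use AppConfig, and SettingsView uses SyncService.
deps = {
    "AppConfig": set(),
    "ProfileView": {"AppConfig"},
    "SyncService": {"AppConfig"},
    "SettingsView": {"SyncService"},
}
print(should_ask_first(deps, "AppConfig"))
```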

You configure Claude Agent through Xcode's settings. You specify which version of Claude you want to use, connect your Anthropic API key, and the agent becomes available in the prompt interface. For developers who want to track costs precisely, you can monitor token usage and adjust model selection—using Claude 3.5 Sonnet for complex tasks and Claude 3 Haiku for simpler changes to reduce costs.

Open AI's Codex Integration: A Different Approach

While Anthropic brings philosophical rigor and code understanding, OpenAI brings raw capability and breadth. Codex, OpenAI's code-specialized model (now part of GPT-5 and beyond), has different strengths that complement the ecosystem.

OpenAI's approach to agentic coding emphasizes speed and generality. Codex was trained on an enormous corpus of public code from GitHub, Stack Overflow, and other sources. This means it has "seen" more code patterns, more library usages, and more real-world solutions than almost any model. When you ask it to integrate a particular API or implement a pattern, it often has direct knowledge from seeing that exact problem solved before.

For iOS development specifically, this means Codex has deep familiarity with popular libraries. If you're using a third-party framework like Realm, Firebase, or Alamofire, Codex likely has seen hundreds of real implementations and will default to proven patterns rather than inventing solutions.

Codex also tends to be faster at code generation. The model structure is optimized for quick, fluent code writing. This makes it particularly good for feature implementation tasks where you're starting from scratch. Ask it to build a feature, and it will often have a working implementation faster than Claude, though sometimes with less nuance in error handling or edge cases.

Open AI has invested heavily in tool calling optimization for Codex. When operating as an agent in Xcode, Codex is particularly efficient at understanding which tools to call and in what sequence. It quickly decides "I need to read this file, then check the tests, then make changes," and executes that plan with minimal backtracking.

One distinction: Codex tends to be more aggressive in its implementation. Where Claude might flag a potential issue and ask for clarification, Codex makes an assumption and proceeds. This can be faster when the assumption is right, but requires more human oversight.

Configuration is similar to Claude: you add your OpenAI API key in Xcode settings and select which variant of Codex you want to use. OpenAI offers different model sizes, from more powerful but slower versions to faster lightweight versions, giving you control over the cost/speed trade-off.

The Workflow in Practice: From Prompt to Production

Understanding how agentic coding actually works in real development is where theory meets reality. Let's walk through what happens when you use Xcode 26.3's agent features.

You start by opening the agent prompt interface, a text input box on the left side of your editor. This is where you describe what you want to accomplish. You might type something like: "Add a feature to let users export their data as a CSV file, with options for filtering by date range. Use the standard iOS file sharing mechanism."

That's your direction. You're not writing code—you're describing intent.

When you hit enter or click "Execute," the agent springs into action. Here's what happens internally:

Step 1: Understanding the Context

The agent first explores your project. It reads the project structure to understand your app's architecture. It looks at existing code to see how features are implemented. It checks what frameworks are currently in use. This exploration phase typically takes 10-30 seconds and burns 1,000-3,000 tokens as the agent reads and processes this information.

Step 2: Research and Planning

The agent consults documentation. For the CSV export feature, it reads about Foundation's FileManager APIs, learns about the document sharing mechanisms in iOS, studies UIActivityViewController usage patterns, and identifies relevant frameworks. It then plans an implementation strategy, breaking the feature into steps: create a data formatter, implement the export logic, integrate with the sharing sheet, add options for date filtering.
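The "data formatter" and "date filtering" steps of that plan are ordinary logic that is independent of the sharing sheet. In the app this would be Swift feeding a file to UIActivityViewController; the sketch below uses Python only to show the logic, and the `Entry` record type and its fields are hypothetical. Note the CSV writer's quoting, which handles titles containing commas.

```python
import csv
import io
from dataclasses import dataclass
from datetime import date


@dataclass
class Entry:  # hypothetical app record
    day: date
    title: str
    amount: float


def export_csv(entries: list[Entry], start: date, end: date) -> str:
    """Keep entries with start <= day <= end and serialize them as CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["date", "title", "amount"])
    for e in sorted(entries, key=lambda item: item.day):
        if start <= e.day <= end:
            writer.writerow([e.day.isoformat(), e.title, f"{e.amount:.2f}"])
    return buf.getvalue()
```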

Step 3: Implementation

Now the agent starts writing code. Here's what's different from typical code generation: the agent writes, submits its work to Xcode for compilation, sees failures, and immediately starts iterating. If there's a syntax error, the agent fixes it. If there's a logic error, the agent debugs it.

Each change is highlighted in the editor. You see the diff appearing in real time, with color coding showing additions, modifications, and deletions. A transcript panel on the side shows what the agent is doing, why it's making decisions, and what it's learned from testing.

Step 4: Testing and Iteration

Once the implementation is written, the agent builds the project and runs tests. If you have existing tests that cover data export functionality, the agent runs them and sees whether the implementation passes. If tests fail, the agent reads the failure messages and adapts the code to fix the problem.

This iteration loop is critical. The agent might write an implementation that compiles but has a logic bug. The test output shows exactly what's wrong. The agent reads that, understands the issue, and fixes it. This might happen once or three times depending on the complexity—but each iteration gets you closer to working code.

Step 5: Validation and Presentation

Once the implementation passes tests, the agent presents the results. You see the complete diff of all changes, a summary of what was implemented, and a transcript of the entire process. You can see what the agent tried, what failed, how it adapted, and why the current solution works.

At this point, you have full control. You can:

- Accept the changes and move on

- Ask the agent to modify the implementation ("Make the date filtering use a date range picker instead")

- Revert to a previous state if you decide to go in a different direction

- Learn from what the agent did by reading the transcript and understanding the approach

Each time the agent makes significant progress, Xcode creates a milestone. You can jump back to any milestone and try a different direction without losing work.
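Milestones behave like named snapshots: jumping back restores an earlier state without discarding the later ones, so you can move forward again. The class below is a minimal sketch of that behavior, assuming the project can be modeled as a map of file paths to contents; `Milestones` is a hypothetical name, not an Xcode API.

```python
class Milestones:
    """Hypothetical checkpoint store: snapshot the edited files at each milestone."""

    def __init__(self, files: dict[str, str]) -> None:
        self.files = files
        self._snapshots: list[tuple[str, dict[str, str]]] = []

    def mark(self, label: str) -> int:
        # Copy the dict so later edits don't alter the snapshot (str values are immutable).
        self._snapshots.append((label, dict(self.files)))
        return len(self._snapshots) - 1

    def restore(self, index: int) -> str:
        # Restoring keeps every snapshot, so later milestones remain reachable.
        label, snapshot = self._snapshots[index]
        self.files = dict(snapshot)
        return label
```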

The entire process might take 30 seconds for a simple feature, or several minutes for something complex. Throughout, you're seeing exactly what the agent is doing and can intervene at any point.

Estimated data: AI agents in Xcode have strong capabilities in file management and project discovery, with robust support for build execution and preview generation.

Transparency by Design: Why You See Everything

One of the most thoughtful aspects of Xcode's agentic coding implementation is its commitment to transparency. You see every step the agent takes, every piece of documentation it reads, every test failure and fix. This isn't accidental—it's a deliberate design choice, and it matters for several reasons.

First, transparency builds trust. When you can see that the agent read the official Apple documentation before using an API, that it tried an approach, saw it fail, and fixed it, you develop confidence in the results. You're not getting mysterious code from a black box—you're watching a clear chain of reasoning and execution.

Second, transparency enables learning. Apple specifically highlighted this for new developers. If you're learning to code, watching an AI agent solve problems provides insight into how experienced developers think. You see the planning process. You see how the agent reads documentation when uncertain. You see how it debugs failures. This is educational value that pure code generation doesn't provide.

Third, transparency catches problems. Humans are better at spotting subtle issues when we can see the full context. If the agent makes an assumption about your app's architecture that's slightly wrong, you might catch it while reviewing the implementation. If there's a performance implication you should know about, you see it in the code and transcript.

The transcript is particularly clever. As the agent works, it logs its reasoning in human-readable form. It's not AI-generated sales copy—it's more like a debugger's log, showing what tools the agent called, what results it got, and how those results affected the next decision. You can read through and understand the complete chain of causality from initial prompt to final implementation.
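A transcript of this kind is essentially a list of structured records: which tool was called, what came back, and what the agent decided next. The sketch below assumes that shape; `TranscriptEntry`, its fields, and the sample entries are hypothetical and do not reflect Xcode's actual transcript format.

```python
from dataclasses import dataclass


@dataclass
class TranscriptEntry:
    step: int
    tool: str      # which tool the agent called
    result: str    # condensed output the tool returned
    decision: str  # how that result shaped the next action


def render(entries: list[TranscriptEntry]) -> str:
    """Format entries as a readable, debugger-style log."""
    return "\n".join(
        f"{e.step}. {e.tool} -> {e.result} | next: {e.decision}" for e in entries
    )


print(render([
    TranscriptEntry(1, "read_file", "Export.swift, 80 lines", "reuse existing DateFormatter"),
    TranscriptEntry(2, "run_tests", "1 failure", "fix date range bounds"),
]))
```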

Xcode also makes changes visually distinct. New code is highlighted. Modified code is shown with diffs. Deleted code is marked. You can see at a glance what's changing and where. This visual clarity matters when reviewing changes across multiple files.

One nuance: transparency doesn't mean the agent explains every micro-decision. It won't tell you why it chose guard let instead of if let—that's implementation detail. But it will explain architectural choices. Why did it create a separate service class for CSV export instead of putting it in the view controller? You'll know because it explains that decision.

Token Consumption: The Economics of Agentic Development

One thing worth understanding is why Apple emphasized token optimization so heavily. The economics of agentic AI are straightforward: more tokens = more cost.

Let's do some simple math. At current pricing, Anthropic's Claude costs roughly

- Reading your project and understanding its structure: 2,000-5,000 tokens

- Consulting documentation: 1,000-3,000 tokens

- Initial code generation: 3,000-8,000 tokens

- Test failures and iteration: 500-2,000 tokens per iteration (usually 1-3 iterations)

- Final validation and reporting: 1,000-2,000 tokens

Total: roughly 8,000-20,000 tokens per agent task

At mid-range pricing, that's about $0.10-0.30 per task. For teams running dozens of agent tasks per day, that adds up. For large teams, it could be meaningful budget.
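The per-phase ranges in the list can be summed directly. Taken at their extremes (one cheap iteration versus three expensive ones), they span 7,500 to 24,000 tokens, slightly wider than the rounded 8,000-20,000 band quoted above. Dividing the quoted per-task cost by the quoted token totals gives the blended price per million tokens the estimate implies, about $12.50 to $15.00; this is derived from the article's own figures, not from a published price list.

```python
# (low, high) token estimates per phase, taken from the breakdown above.
PHASES = {
    "read project": (2_000, 5_000),
    "consult documentation": (1_000, 3_000),
    "initial code generation": (3_000, 8_000),
    "final validation and reporting": (1_000, 2_000),
}
PER_ITERATION = (500, 2_000)  # test failure + fix, per iteration
ITERATIONS = (1, 3)           # "usually 1-3 iterations"


def task_token_range() -> tuple[int, int]:
    """Sum the phase estimates at their low and high ends."""
    low = sum(lo for lo, _ in PHASES.values()) + PER_ITERATION[0] * ITERATIONS[0]
    high = sum(hi for _, hi in PHASES.values()) + PER_ITERATION[1] * ITERATIONS[1]
    return low, high


def implied_usd_per_million(cost_usd: float, tokens: int) -> float:
    """Blended price per million tokens implied by a quoted cost for a token count."""
    return cost_usd / tokens * 1_000_000


low, high = task_token_range()
print(f"phase sum: {low:,}-{high:,} tokens per task")
print(f"implied rate: ${implied_usd_per_million(0.10, 8_000):.2f}"
      f"-${implied_usd_per_million(0.30, 20_000):.2f} per million tokens")
```

At a hypothetical 50 tasks a day, the upper figure of $0.30 per task works out to $15 per day per developer running that volume.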

This is why Apple focused on optimization. By reducing token consumption through smart caching, efficient tool calling, and minimal feedback loops, they made the feature economically viable for everyday use rather than something you'd use occasionally.

Another consideration is latency. Each token takes time to generate. A more efficient prompt structure that accomplishes the same task with fewer tokens completes faster. Developers want quick feedback—if running an agent takes 5 minutes instead of 30 seconds, the value proposition changes.

Developers concerned about costs have options. You can monitor token usage in Xcode's settings and see how many tokens each task consumed. You can select lighter models for simpler tasks. You can batch agent work—instead of running the agent for each small feature, you might ask it to implement several related features in one session, which is often more efficient.
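To make the batching point concrete, here is a simple model assuming the shared context (project exploration, the 2,000-5,000 token range from the earlier breakdown) is paid once per session and reused in full for every task in it. The 3,500 and 6,000 figures below are illustrative, not measured, and `session_tokens` is a hypothetical helper.

```python
def session_tokens(n_tasks: int, shared: int, per_task: int, batched: bool) -> int:
    """Total tokens for n related tasks.

    shared: context each session loads first (e.g. project exploration).
    per_task: tokens for a task's own code generation and iteration.
    Assumes shared context is loaded once per session and fully reused.
    """
    if batched:
        return shared + n_tasks * per_task
    return n_tasks * (shared + per_task)


separate = session_tokens(3, 3_500, 6_000, batched=False)
together = session_tokens(3, 3_500, 6_000, batched=True)
print(f"separate: {separate:,}  batched: {together:,}  saved: {separate - together:,}")
```

With these numbers, three related tasks cost 28,500 tokens run separately versus 21,500 batched. Real sessions also carry earlier turns forward, so actual savings are likely smaller than this idealized model suggests.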

There's also a strategic consideration around when to use agents versus doing work manually. For routine tasks where you know exactly what you need, typing the code might be faster than waiting for an agent. For complex tasks, especially ones involving extensive refactoring or integration of unfamiliar APIs, agents shine.

Model Selection and Configuration: Choosing Your Agent

Xcode's implementation gives developers control over which model to use, which matters because different models have different characteristics.

With Anthropic's Claude, you typically have options like:

Claude 3.5 Sonnet: The strongest of these three for coding, and the default for complex tasks. Stronger reasoning, better code understanding, more reliable error handling. Costs more and runs slower than Haiku.

Claude 3 Opus: The largest Claude 3 model. Capable, but slower and more expensive than Claude 3.5 Sonnet, which matches or beats it on most coding work.

Claude 3 Haiku: Lightweight and fast, good for simple changes. Lower cost.

With Open AI's Codex, similarly:

GPT-5.2: Most powerful, best for complex implementation. Useful when you need aggressive optimization or novel approaches.

GPT-5.1: Balanced option for general development tasks.

GPT-5.1-mini: Fast and cheap, good for routine changes.

How do you decide which to use? There's an emerging art to this:

Use bigger models when: You're working with unfamiliar code, tackling complex integration, or the task has many interdependencies. The extra capability is worth the cost and latency.

Use smaller models when: The task is straightforward, the scope is clear, and you know your codebase well. They're faster and cheaper, and for tasks like these the quality difference is usually small.

Use Claude for: Code understanding, refactoring, debugging, maintaining patterns. Its reasoning strength shines here.

Use Codex for: Feature implementation, integration with libraries, speed. It's the faster code generator.
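These rules of thumb can be written down as a small routing function. The sketch below encodes them literally; the `Task` fields, the `"large"`/`"small"` labels, and the `big_scope` threshold are hypothetical placeholders rather than model IDs or Xcode settings.

```python
from dataclasses import dataclass


@dataclass
class Task:
    kind: str            # e.g. "refactor", "debug", "feature", "integration"
    familiar_code: bool  # do you know this part of the codebase well?
    files_touched: int   # rough estimate of scope


def pick_agent(task: Task, big_scope: int = 4) -> tuple[str, str]:
    """Map a task to (provider, size) using the rules of thumb above.

    Labels are placeholders, not model IDs; `big_scope` is an arbitrary threshold.
    """
    provider = "claude" if task.kind in {"refactor", "debug"} else "codex"
    is_complex = (
        not task.familiar_code
        or task.files_touched >= big_scope
        or task.kind == "integration"
    )
    return provider, ("large" if is_complex else "small")
```

Writing the policy down this way makes it easy for a team to agree on it and adjust thresholds as they learn where each model actually performs well.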

Configuration happens in Xcode settings. You connect your API keys once, select default models, and can override them per-task. Some teams run both providers in parallel for important tasks, letting both agents solve the problem and comparing results before committing to either. This costs more but sometimes catches issues one agent missed.

There's also version management. Models evolve. When Anthropic ships a new Claude model, you'll probably want to try it. When OpenAI's pricing improves, you might shift toward heavier usage. Xcode's model-agnostic design (through MCP) means you can experiment with different approaches without relearning your tools.

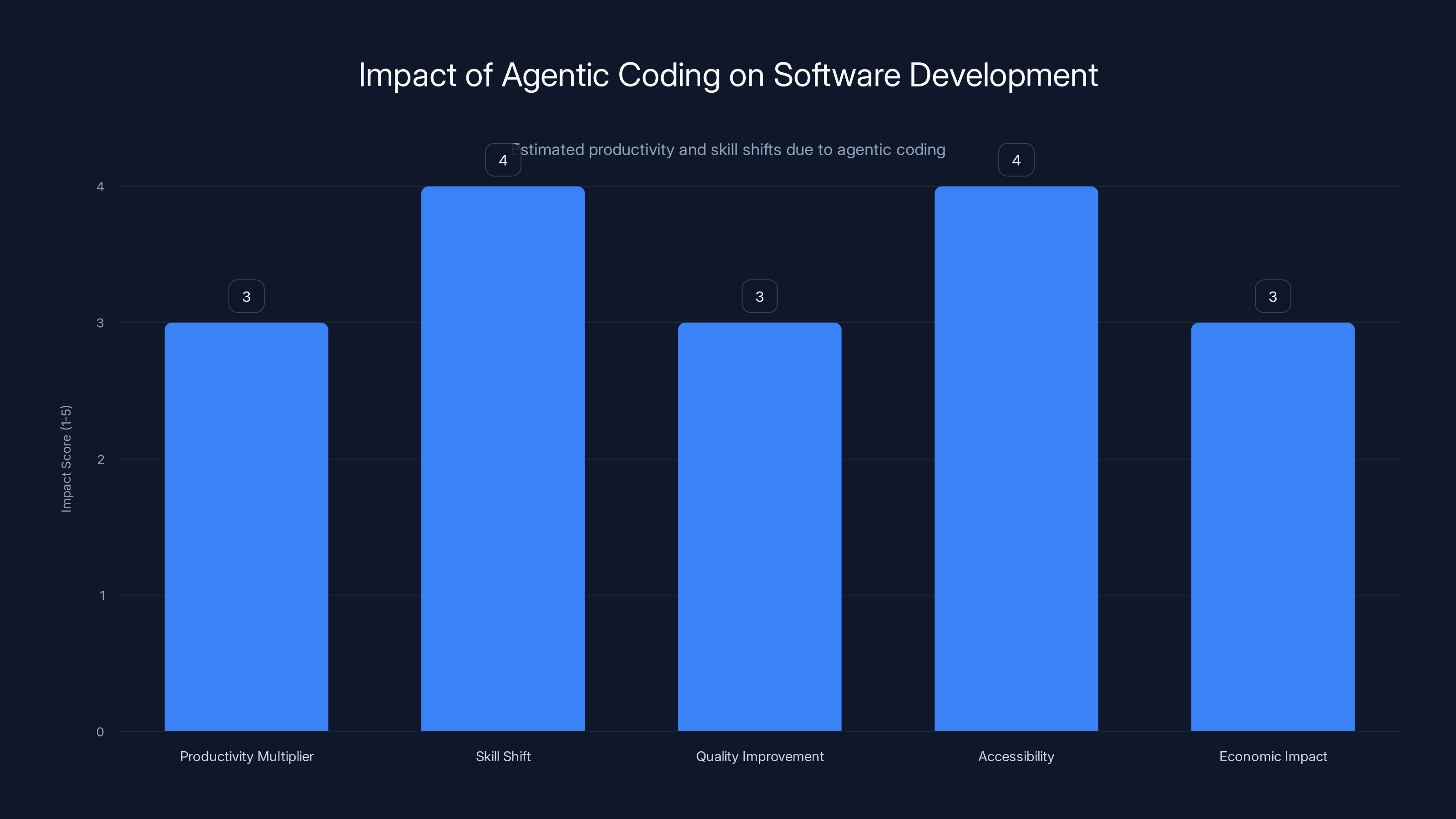

Agentic coding is estimated to significantly shift skills and productivity in software development, with increased accessibility and quality improvements. Estimated data.

Real-World Use Cases: Where Agentic Coding Actually Helps

Theory is fine, but what about reality? Where does agentic coding actually provide value?

Boilerplate Elimination: When starting a new feature, you often need standard components—view models, repository classes, networking layers. Agents are phenomenal at this. "Create a repository class for managing user data with CRUD operations following my existing patterns." Boom. Five minutes of work becomes 30 seconds.

Test Coverage: Writing tests is often tedious because tests follow patterns. An agent can examine your code and generate comprehensive tests quickly. Not perfect tests, but solid baseline tests you'd normally spend hours writing.

API Integration: When you need to integrate a new service—analytics, payment processing, a third-party API—the agent can handle the boilerplate integration. It reads your existing code, understands how services are integrated, and applies that pattern to the new one.

Refactoring: Moving from one architecture pattern to another is error-prone. An agent can systematically refactor code while maintaining functionality. "Refactor this module to use MVVM instead of MVC. Maintain all existing functionality." The agent handles the mechanical work while you think about whether the results make sense.

Documentation: Some agents are good at generating documentation from code. "Generate a README explaining the architecture of this module." Not perfect, but useful starting points.

Migration Tasks: When Apple releases a new framework version or a new pattern becomes standard (like the shift to async/await), migrating existing code is tedious. Agents can handle much of this automatically.

Bug Fixing: When you report an error message to an agent—"This test is failing with NSCoder error in encoding"—it can often diagnose and fix the issue faster than you can. It reads the error, searches your code for the likely culprit, and fixes it.

What agentic coding is not as good at:

Architectural decisions: An agent can implement a decision, but shouldn't make the architectural choice for you.

Complex domain logic: Business logic that requires deep understanding of the domain needs human judgment.

Performance optimization: Agents can make code faster, but knowing which bottleneck matters requires profiling and business understanding.

UI/UX polish: The visual and interaction polish that makes apps delightful is fundamentally a human concern.

The pattern that emerges is clear: use agents for execution of decisions, not for making decisions. You choose direction, the agent executes. This complementary relationship is where the real value is.

Team Dynamics: How Agentic Coding Changes Collaboration

When everyone on a team has access to agentic coding, team dynamics shift.

First, bottlenecks move. In many teams, senior developers are bottlenecks—everyone needs code review, everyone asks questions about patterns. With agents handling routine implementation, senior developers can focus on architecture, design, and the decisions that actually require their expertise. Junior developers spend less time waiting for review and more time learning because agents provide constant feedback.

Second, context matters less. If a junior developer needs to work on an unfamiliar module, an agent can help them understand it. "Read this module and explain its responsibility." "Add a feature to this component, following its current patterns." The agent acts as a patient mentor, helping people get productive in unfamiliar code faster.

Third, PR reviews change. When agents write much of the routine code, pull requests shift from reviewing basic implementation to reviewing higher-level decisions. Did the agent make reasonable assumptions about architecture? Are the overall choices sound? This is more valuable discussion than reviewing syntax or catching off-by-one errors.

Fourth, knowledge sharing accelerates. When a team documents its patterns and conventions, agents can apply them consistently. Senior developers spend time documenting patterns explicitly; agents apply them automatically. This is a major scaling mechanism.

Fifth, schedule flexibility improves. If you're waiting for someone to complete a feature, agents can sometimes make progress in parallel. "Implement the API layer for this feature," one agent task, while another developer works on the UI layer. This parallelization wasn't possible before without explicit coordination.

There are challenges too. Teams need to establish norms around when to use agents versus doing work manually. You need code review processes that catch agent mistakes. You might have situations where two team members work on the same area and agents create conflicts. But these are coordination problems, not insurmountable ones.

Safety, Security, and Concerns About Automation

When powerful automation enters development workflows, legitimate questions arise about safety.

Can agents introduce security vulnerabilities? Yes, just as human developers can. An agent might use an insecure API pattern or fail to validate user input if it hasn't picked up secure practices from its training or your codebase. This is why code review, whether by humans or specialized security tools, remains essential. Agents don't remove the need for security review; they make automating it more important, because there are more code changes to review.

Can agents create unmaintainable code? Possibly, if the patterns in your codebase are unclear or inconsistent. That risk pushes teams to clarify and codify their patterns, so agents can end up motivating better code practices.

Can agents cause data loss? Only if they're given permissions to modify production data. In a development environment, you have version control, so changes can be reverted. In production, agents shouldn't have direct data access anyway.

What if an agent makes subtle logical errors? Tests catch them, provided the tests cover the affected behavior, just as they would catch subtle logical errors in human-written code. The existence of automated agents doesn't reduce the importance of test coverage. If anything, it increases it.

The real safety concern is more subtle: over-reliance. If developers become too comfortable letting agents make decisions, they might stop thinking critically. This is a human problem, not a technology problem, and it requires intentional culture and process.

Apple's emphasis on transparency helps here. By showing you exactly what the agent does and forcing you to review results, the tool actively encourages understanding rather than blind trust.

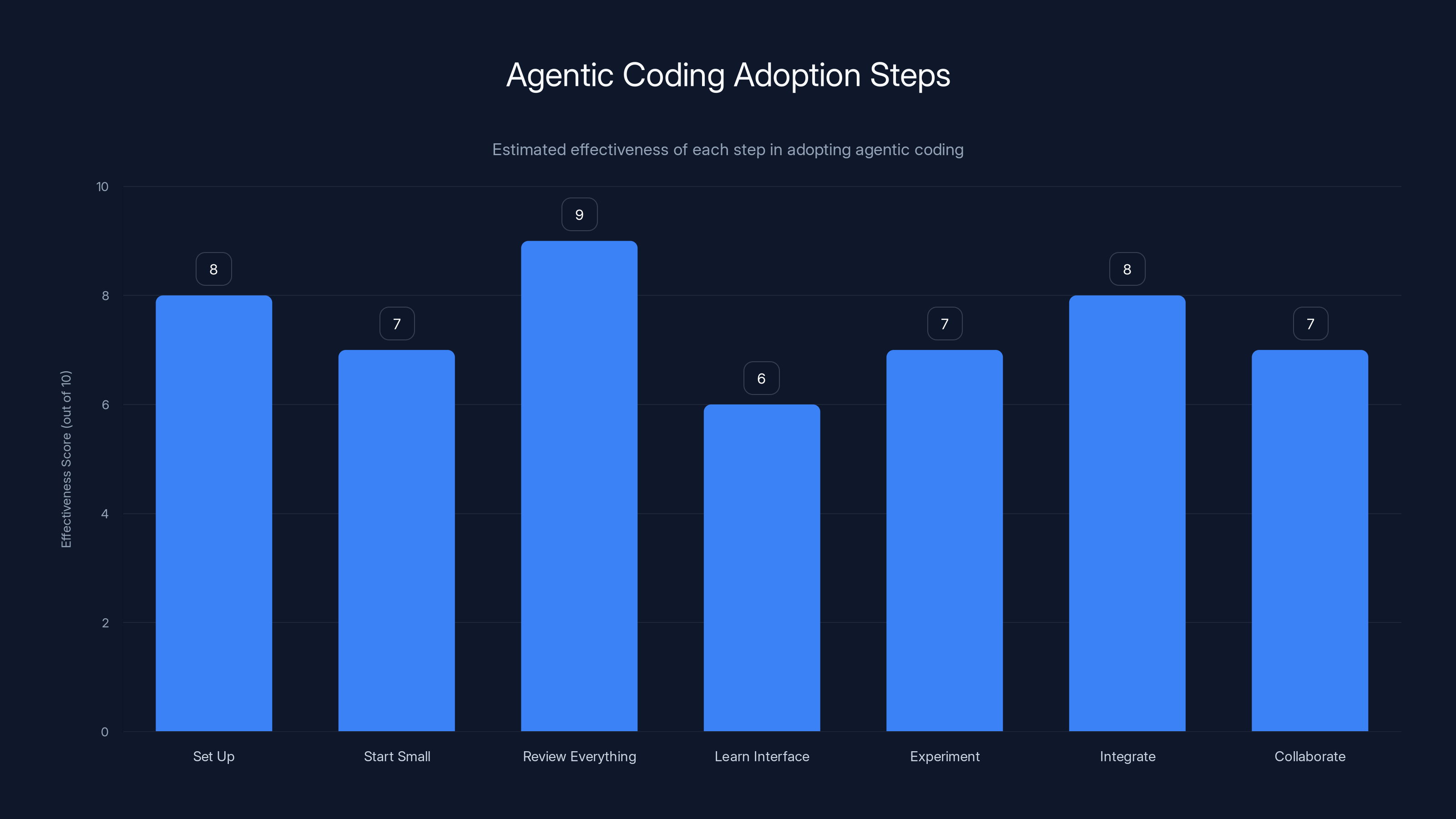

Estimated data shows 'Review Everything' is crucial with the highest effectiveness score, highlighting the importance of code verification in agentic coding.

Educational Value: Learning from AI Agents

One underrated aspect of agentic coding is its potential as a learning tool, which Apple specifically highlighted.

When you watch an agent solve a problem, you're watching some version of expert thinking. It's not perfect (agents make mistakes), but it's fast, systematic, and explicit. For developers learning Swift, SwiftUI, or iOS development generally, this is valuable.

Consider a junior developer trying to implement a complex feature. They could:

Option 1: Struggle through documentation and Stack Overflow for hours, learning as they go but slowly and inefficiently.

Option 2: Ask a senior developer, who explains the approach, but might miss some details or have limited time.

Option 3: Ask an agent to implement it, then read the transcript and code to understand the approach, then ask follow-up questions to clarify.

Option 3 scales in a way the other two don't. The agent is infinitely patient, always available, and always shows its reasoning.

Apple is capitalizing on this with educational initiatives. The "code-along" workshops mentioned in Xcode's launch let developers watch as agents solve problems in real time, pause to understand decisions, and experiment with variations.

Over time, developers who learn this way internalize better problem-solving approaches. They see how code is organized, how edge cases are handled, how APIs are used correctly. This accelerates learning substantially.

For beginners, this is potentially transformative. A beginner using Xcode 26.3 with agents available has access to something like a patient mentor that's always there, never frustrated, and always explains its reasoning.

Integration with Existing Workflows: MCP and the Broader Ecosystem

While Xcode 26.3 includes integrations with Claude and Codex, the real story is the Model Context Protocol and what it enables.

MCP is an open standard, which means other tools can implement MCP compatibility. VS Code, JetBrains IDEs, Sublime Text: in principle, any editor can integrate MCP-compatible agents.

It also means any model can integrate with Xcode if it supports MCP. As Anthropic and OpenAI release new models, and as startups create specialized coding models, all of them can work with Xcode through the same interface.

From a developer's perspective, this means the choice of which agent to use becomes more fluid. You're not locked into Claude or Codex. You can try a new model, see if it works better for your specific workflow, and switch if it doesn't.

From a product perspective, this is intentionally non-proprietary. Apple could have built custom integrations that only work in Xcode, creating lock-in. Instead, it adopted MCP, an open standard that Anthropic introduced in late 2024. That makes the ecosystem more competitive and more innovative.

There's a strategic motivation here. Apple's development ecosystem competes against VS Code (which dominates for many developers) and JetBrains tools (which dominate for enterprise Java). By embracing an open standard rather than proprietary integration, Apple makes Xcode more attractive. It's saying: "You can use any agent you want, not just the ones we built."

This openness also future-proofs the tool. Technology evolves. AI models improve. Coding practices change. An ecosystem built on open standards can evolve faster than one built on proprietary integration.

Performance Implications: Speed and Resource Usage

Running large language models, even optimized ones, isn't free from a resource perspective. What are the practical implications for developers using Xcode with agents?

Network usage: Agent tasks require sending code and receiving responses. For a typical task, you're transmitting 5-20 KB of code and receiving 10-50 KB of responses. For most developers on broadband, this is imperceptible. For developers on slower connections, this might matter.

Latency: The time from sending a prompt to getting results depends on model size, complexity of the task, and network conditions. Simple tasks might complete in 10-20 seconds. Complex tasks might take 2-5 minutes. If you're in flow state, waiting for an agent to finish might break your rhythm. This is why having model selection (faster models for quick tasks) matters.

Local resource usage: Xcode itself needs to display agent output, run tests, and update the UI as changes happen. This requires CPU and memory, but the requirements are modest. An agent task might briefly bump CPU usage to 30-50% as Xcode processes results, but this settles quickly.

Battery impact: On laptops, the network and CPU usage during agent tasks has modest battery impact. Extended agent usage (many tasks in sequence) might reduce battery life by 10-15%, but it's not dramatic.
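To put the network figures above in perspective, here is a quick back-of-the-envelope calculation. It takes the upper end of the quoted ranges and computes idealized transfer time at two bandwidths. The bandwidth values are assumptions chosen for illustration. The formula ignores latency and protocol overhead, so treat the results as lower bounds.

```python
def transfer_seconds(payload_bytes: int, bandwidth_bits_per_s: float) -> float:
    """Idealized transfer time: payload size in bits divided by link bandwidth.

    Ignores round-trip latency, TLS handshakes, and protocol overhead,
    so real-world times will be somewhat higher.
    """
    if bandwidth_bits_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_bytes * 8 / bandwidth_bits_per_s


# Upper end of the ranges above: 20 KB sent plus 50 KB received (1 KB = 1,000 bytes).
payload = (20 + 50) * 1000

for label, bps in [("100 Mbps broadband", 100e6), ("1 Mbps slow link", 1e6)]:
    print(f"{label}: {transfer_seconds(payload, bps):.3f} s")
```

Under these assumptions, even the 1 Mbps link moves the payload in about half a second, which is small next to task latencies measured in tens of seconds. On slow or high-latency connections, an agent task usually involves several request/response exchanges, and those round trips are more likely to add up.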

From a user experience perspective, the most important performance consideration is latency. Developers expect fast feedback. If an agent takes 30 seconds to make a change you could type in 10, it isn't worth using. But if it takes 30 seconds for something that would take 20 minutes to do correctly by hand, it's clearly valuable.
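This trade-off can be made explicit. What matters is net time saved once you count both the agent's run time and the time you spend reviewing its output. In the minimal sketch below, the review times are illustrative assumptions, not measurements.

```python
def minutes_saved(manual_min: float, agent_min: float, review_min: float) -> float:
    """Net time saved by delegating a task: manual effort minus (agent run + your review).

    Positive means delegating paid off; negative means doing it yourself was faster.
    """
    return manual_min - (agent_min + review_min)


# Trivial edit: 10 s to type vs. a 30 s agent run plus ~1 min reading the diff (assumed).
trivial = minutes_saved(manual_min=10 / 60, agent_min=30 / 60, review_min=1)
# Substantial feature: 20 min by hand vs. a 30 s run plus ~5 min of review (assumed).
feature = minutes_saved(manual_min=20, agent_min=30 / 60, review_min=5)

print(f"trivial edit: {trivial:+.1f} min")  # trivial edit: -1.3 min
print(f"feature:      {feature:+.1f} min")  # feature:      +14.5 min
```

Review time is the term people tend to forget. A fast agent run still costs you time if you then have to read and verify the result.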

This is why different models and sizes exist. Claude Haiku is significantly faster than Claude Sonnet for simple tasks, making it worth using despite being less capable. The point is optionality—you choose based on the specific task and your current priorities.
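Choosing a model per task can be expressed as a simple routing rule. The sketch below is hypothetical. The tier labels are placeholders rather than real model identifiers, and the file-count threshold is an arbitrary assumption. The point is only to show the shape of a "fast model for small mechanical tasks, capable model otherwise" policy.

```python
from dataclasses import dataclass


@dataclass
class AgentTask:
    description: str
    files_touched: int            # rough estimate of how many files the change spans
    needs_design_reasoning: bool  # e.g. choosing an architecture, not just applying one


# Hypothetical tier labels; map them to whichever models your setup offers.
FAST_TIER = "fast-model"
CAPABLE_TIER = "capable-model"


def pick_tier(task: AgentTask, max_files_for_fast: int = 2) -> str:
    """Send small, mechanical tasks to a faster model and everything else to a more capable one."""
    if task.needs_design_reasoning or task.files_touched > max_files_for_fast:
        return CAPABLE_TIER
    return FAST_TIER


print(pick_tier(AgentTask("Rename a property across one view", 1, False)))    # fast-model
print(pick_tier(AgentTask("Split a view model into two services", 6, True)))  # capable-model
```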

The agentic coding process in Xcode 26.3 involves three main steps, with implementation taking the longest time and using the most tokens. Estimated data.

Limitations and Edge Cases: When Agents Struggle

Agentic coding isn't a panacea. There are categories of tasks where agents struggle:

Ambiguous requirements: If you ask an agent to "improve the performance of this module," it might not know what "improve" means. Does that mean faster execution? Lower memory usage? Fewer network calls? Agents do best with concrete requirements.

Novel architectures: If your codebase uses an unusual architectural pattern that the agent hasn't seen before, it might not understand how to apply that pattern to new code. This is why maintaining clear documentation of patterns helps.

Complex refactoring: Moving from one major architecture to another—say, from MVC to clean architecture—requires systemic changes. Agents can handle some of it, but often need human guidance to restructure correctly.

Business logic: Code that embodies complex business rules or domain-specific logic is hard for agents. They can implement logic they're told, but understanding the why behind requirements requires human explanation.

Cross-cutting concerns: Changes that need to be made in multiple places throughout a codebase (logging, error handling, analytics) are harder for agents than point changes.

Testing complex interactions: When test coverage requires understanding subtle interaction patterns or race conditions, agents might miss cases that experienced developers would think about.

The pattern: agents struggle with open-ended problems and problems requiring deep domain understanding. They excel at well-defined, scoped tasks with clear success criteria.
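The contrast between open-ended and well-defined tasks can be captured in a small, hypothetical pre-flight check for a task description. It flags a task that has no measurable success criteria, or whose goal leans on open-ended verbs like "improve." The verb list and the `ImageLoader` type are illustrative assumptions, not part of any Xcode feature.

```python
from dataclasses import dataclass, field

# Illustrative, not exhaustive: verbs that often signal an open-ended request.
OPEN_ENDED_VERBS = ("improve", "optimize", "clean up", "modernize")


@dataclass
class TaskSpec:
    goal: str
    success_criteria: list[str] = field(default_factory=list)


def readiness_issues(spec: TaskSpec) -> list[str]:
    """List reasons a task spec may be too open-ended to hand to an agent."""
    issues = []
    if not spec.success_criteria:
        issues.append("no measurable success criteria")
    goal = spec.goal.lower()
    if any(verb in goal for verb in OPEN_ENDED_VERBS):
        issues.append("open-ended verb in goal; state what should change and by how much")
    return issues


vague = TaskSpec("Improve the performance of this module")
concrete = TaskSpec(
    "Cache decoded thumbnails in ImageLoader",  # hypothetical type name
    ["Scrolling the gallery twice makes no repeat downloads for the same URL",
     "All existing ImageLoader tests pass"],
)
print(readiness_issues(vague))     # two issues
print(readiness_issues(concrete))  # []
```

The concrete version gives the agent something it can check on its own: a test it can run and an observable behavior.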

Experienced developers will learn to work with these limitations. You give agents the tasks suited to them and handle the rest yourself. You use agents as force multipliers, not replacements.

The Broader Shift: What Agentic Coding Means for Software Development

Zoom out beyond Xcode 26.3. What does the emergence of genuinely useful agentic coding mean for software development as a profession and practice?

Skill shifts: In the near term, developers who know how to work with agents effectively will be more productive. The emphasis moves from writing every line yourself to directing agents, setting up effective systems, and reviewing automated work. The skills that matter most are problem-solving, architecture, and judgment. Typing speed and memorized syntax matter less.

Productivity lever: Well-deployed, agentic coding could be a 2-4x productivity multiplier on routine implementation work. That's not trivial. It means a team that currently ships four features per quarter might ship eight or twelve. The ceiling of what's possible increases.

Quality changes: Code written by agents tends to be consistent, and agents can be asked to write tests and documentation alongside it. For routine code, it's often higher quality than human-written code because it avoids the inconsistencies and shortcuts that tired developers introduce at 4 PM on a Friday. For complex logic, human code is probably better because it reflects deep thinking.

Accessibility improves: If agentic coding works well, it lowers the barrier to entry for development. Someone without years of experience can accomplish more, faster. This could help address talent shortages in some areas, though it probably creates demand for more specialized skills at higher levels.

Economics shift: If agents make developers more productive, either (a) each developer accomplishes more, improving their own career trajectory and compensation, or (b) companies need fewer developers, affecting the job market. Realistically, both are probably happening in different contexts.

Specialization increases: As routine development becomes automated, demand for specialized developers increases. Mobile development might become more routine, but AR development, machine learning integration, and novel interface design become premium skills.

These are deep shifts. Xcode 26.3 isn't causing them—it's accelerating trends already in motion—but it's worth recognizing the magnitude.

Practical Implementation: Getting Started with Agentic Coding

If you're an iOS developer considering using Xcode 26.3's agentic features, here's a practical path forward:

Step 1: Set Up

Update to Xcode 26.3 or later. Create accounts with Anthropic and OpenAI (if you don't have them). Generate API keys for both. Add these keys to Xcode's settings. Both services offer free trial credits, so you can experiment without cost initially.

Step 2: Start Small

Don't try to have agents rebuild your entire app. Start with a specific, well-scoped feature, such as "Add a settings screen that saves user preferences to UserDefaults." Pick something with clear requirements and measurable success.

Step 3: Review Everything

When the agent finishes, review the code carefully. Don't trust it just because it came from an agent. Look for logic errors, missing edge cases, and violations of your code standards. This review is essential and builds your understanding of what agents do well and where they miss.

Step 4: Learn the Interface

Get familiar with how to configure models, monitor token usage, review transcripts, and revert changes. Understanding the tool well makes you more effective with it.

Step 5: Experiment

Try different prompts. See what works. Some prompts are too vague; agents need specific direction. Some are too detailed and create confusion. There's a middle ground where agents thrive.

Step 6: Integrate into Workflows

Identify the tasks in your workflow where agents provide the most value. Maybe it's writing boilerplate when starting a new feature. Maybe it's test generation. Maybe it's code review assistance. Integrate agents into your process where they actually help.

Step 7: Collaborate

If you're on a team, discuss how to use agents in code review. What should you check carefully? What's likely to be fine? Develop shared norms about agent-generated code.

Looking Forward: What's Next for Agentic Development

Xcode 26.3 is a significant step, but it's not the final form of agentic coding. What's likely to evolve?

More capable agents: As models improve, agents will handle more complex tasks autonomously. Today's agents might need human guidance for architectural decisions; future agents might handle that themselves.

Better IDE integration: Over time, IDEs will expose more capabilities to agents. Debuggers, profilers, and deployment tools will become agent-accessible, enabling agents to optimize performance and handle production issues.

Cross-project reasoning: Currently, agents understand one project at a time. Future agents might understand how changes in one project affect dependent projects, enabling more sophisticated refactoring and integration tasks.

Specialized agents: Generic agents work reasonably well for general tasks, but specialized agents trained on specific frameworks or domains are likely to emerge. An agent trained specifically on SwiftUI would likely outperform generic agents on UI code.

Real-time collaboration: Agents and humans working synchronously, with agents making suggestions as you code and you providing feedback in real time, rather than the current batch-oriented approach.

Proactive assistance: Instead of waiting for you to ask, agents might proactively suggest optimizations, security improvements, or test cases based on what they observe in your code.

The trajectory is clear: agents becoming more integrated into development workflows, more capable, and more collaborative with human developers.

FAQ

What is agentic coding?

Agentic coding is a development approach where AI agents autonomously handle coding tasks within your IDE. Unlike traditional autocomplete that suggests code as you type, agents take high-level goals (e.g., "Add a user authentication feature") and autonomously explore your codebase, plan implementation, write code, run tests, and iterate on failures—all while showing you their reasoning and giving you control to accept, modify, or revert changes. The agent works independently within the scope of the task you define, but you remain the decision-maker about direction and architecture.
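The implement, test, and iterate cycle can be sketched as a bounded retry loop. This is a simplified illustration under stated assumptions, not how Claude Agent or Codex are actually implemented. Exploration and planning are folded into a caller-supplied `implement` function. Both `implement` and `run_tests` are hypothetical stand-ins for model calls and Xcode's test runner.

```python
from typing import Callable


def run_agent_task(
    implement: Callable[[list[str]], None],
    run_tests: Callable[[], list[str]],
    max_iterations: int = 3,
) -> tuple[bool, int]:
    """Implement, test, and retry on failures, up to a fixed iteration budget.

    `implement` receives the failure messages from the previous attempt (empty at first).
    Returns (succeeded, attempts_used).
    """
    failures: list[str] = []
    for attempt in range(1, max_iterations + 1):
        implement(failures)
        failures = run_tests()
        if not failures:
            return True, attempt
    return False, max_iterations


# Fakes for illustration: the "agent" fixes the bug once it has seen a failing test.
state = {"fixed": False}


def fake_implement(previous_failures: list[str]) -> None:
    if previous_failures:
        state["fixed"] = True


def fake_tests() -> list[str]:
    return [] if state["fixed"] else ["testEmptyInput: expected [], got nil"]


print(run_agent_task(fake_implement, fake_tests))  # (True, 2)
```

The iteration budget matters in practice. Without one, an agent that keeps failing the same test would never hand control back to you.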

How does agentic coding in Xcode 26.3 actually work technically?

Xcode 26.3 uses the Model Context Protocol (MCP) to connect with AI agents from Anthropic and OpenAI. MCP is a standardized interface that exposes Xcode's capabilities (file access, build systems, test runners, and documentation) in a way agents can understand and use. When you submit a task, the agent uses MCP to explore your project structure, read relevant code and documentation, write changes, trigger builds and tests, and parse results. This standardized approach means any MCP-compatible agent can theoretically work with Xcode, not just Claude and Codex.
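MCP messages are JSON-RPC 2.0. The spec's methods include `tools/list` for discovering tools and `tools/call` for invoking one. Below is a toy sketch of the server side answering those two requests. The tool names `build_project` and `run_tests` are hypothetical stubs, not Xcode's actual MCP tools. The sketch also omits the `initialize` handshake, the transport (for example, stdio), and the argument validation a real server needs.

```python
import json


# Hypothetical stub tools; Xcode's real MCP tool names and schemas may differ.
def build_project(args: dict) -> str:
    return f"Build succeeded for scheme {args['scheme']} (stub)"


def run_tests(args: dict) -> str:
    return f"Tests ran for target {args['target']} (stub)"


# name -> (handler, description, JSON Schema for the arguments)
TOOLS = {
    "build_project": (build_project, "Build an Xcode scheme",
                      {"type": "object", "properties": {"scheme": {"type": "string"}},
                       "required": ["scheme"]}),
    "run_tests": (run_tests, "Run a test target",
                  {"type": "object", "properties": {"target": {"type": "string"}},
                   "required": ["target"]}),
}


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request using MCP's tools/list and tools/call methods."""
    req = json.loads(raw)
    method, params = req.get("method"), req.get("params", {})

    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": desc, "inputSchema": schema}
            for name, (_, desc, schema) in TOOLS.items()
        ]}
    elif method == "tools/call" and params.get("name") in TOOLS:
        handler = TOOLS[params["name"]][0]
        text = handler(params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601: method not found; -32602: invalid params (here, an unknown tool name).
        code = -32602 if method == "tools/call" else -32601
        error = {"code": code, "message": f"Unsupported request: {method}"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})

    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "build_project", "arguments": {"scheme": "MyApp"}}}
print(handle(json.dumps(call)))
```

Any client that speaks this protocol sees the same tool list and calls tools the same way, whichever model is driving it. That is the property that makes agents interchangeable.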

What are the main differences between Anthropic's Claude Agent and OpenAI's Codex?

Claude Agent emphasizes careful reasoning, code understanding, and maintaining existing patterns. It's particularly strong at refactoring, understanding complex code, and maintaining consistency with your existing architecture. It tends to be cautious, flagging uncertainties when appropriate. Codex, built on OpenAI's broader training, emphasizes speed and generality. It's trained on more code examples, so it often has direct knowledge of how to integrate specific libraries. It's faster at code generation but sometimes less careful about edge cases. For iOS development, both are capable: Claude is better for understanding and refactoring existing code, and Codex is better for rapid feature implementation. Many teams use both for different types of tasks.

Will agents replace developers?

No. Agentic coding automates the execution of coding tasks—writing code, running tests, fixing bugs—but doesn't replace the judgment and creativity required to build software. Choosing architecture, making design decisions, understanding user needs, and thinking critically about trade-offs require human expertise. What agents do replace is routine typing and implementation grunt work. This frees developers to spend more time on higher-level thinking and less time on mechanical coding. Over time, this shifts what developers do, not whether they're needed. Junior developers might be less needed for pure implementation work, but demand for architects, senior engineers, and developers who can guide agents and make critical decisions increases.

How much does it cost to use agentic coding with Claude and Codex?

Both Anthropic and OpenAI charge for API usage based on tokens consumed. For a typical agent task, you'll consume 8,000-20,000 tokens, costing roughly $0.10-0.30 per task.
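Estimating a task's cost is simple arithmetic once you know how many tokens went in and out. The sketch below uses placeholder per-million-token prices, which are assumptions for illustration, so substitute your provider's current rates. An agent task typically makes several model calls, so the sketch sums tokens across all of them before pricing.

```python
def task_cost_usd(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost of one agent task, given separate per-million-token prices for input and output."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Placeholder prices in USD per million tokens; check your provider's current pricing.
INPUT_PRICE, OUTPUT_PRICE = 3.00, 15.00

# Hypothetical token counts for one task that made three model calls.
calls = [(6_000, 1_500), (5_000, 2_000), (4_000, 1_500)]  # (input, output) per call
total_in = sum(i for i, _ in calls)
total_out = sum(o for _, o in calls)
cost = task_cost_usd(total_in, total_out, INPUT_PRICE, OUTPUT_PRICE)
print(f"{total_in + total_out:,} tokens -> ${cost:.2f}")  # 20,000 tokens -> $0.12
```

Output tokens are usually priced higher than input tokens, so a task that generates a lot of code can cost more than its total token count alone suggests.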

What happens if an agent makes a mistake or creates bad code?

Xcode tracks the agent's changes by creating milestones each time it makes significant progress, and you can revert to any previous milestone if the results aren't satisfactory. You are also responsible for reviewing agent-generated code before committing it. Tests catch logical errors: if your tests are comprehensive, agent mistakes will surface during testing. Poor code style and architectural violations get caught in code review, just as they would in human-written code. Agents don't reduce the need for code review. They make good review practices more important, because you're reviewing more code changes.
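The idea behind milestones, snapshotting the project at checkpoints so any one can be restored, can be illustrated with a toy in-memory store. This is a hypothetical sketch of the concept only, not how Xcode implements milestones. In a real project, Git commits or branches play a similar role. File names and contents here are made up.

```python
from dataclasses import dataclass, field


@dataclass
class MilestoneStore:
    """Toy checkpointing: snapshot the project's files at each milestone; restore any of them."""
    snapshots: list[tuple[str, dict[str, str]]] = field(default_factory=list)

    def record(self, label: str, files: dict[str, str]) -> int:
        self.snapshots.append((label, dict(files)))  # copy so later edits don't leak in
        return len(self.snapshots) - 1

    def restore(self, index: int) -> dict[str, str]:
        _, files = self.snapshots[index]
        return dict(files)  # hand back a copy so the stored snapshot stays intact


project = {"Settings.swift": "struct Settings {}"}
store = MilestoneStore()
before_agent = store.record("before agent", project)

# The agent edits one file and adds another we don't want.
project["Settings.swift"] = "struct Settings { var darkMode = true }"
project["Scratch.swift"] = "// leftover experiment"
store.record("agent pass 1", project)

project = store.restore(before_agent)
print(sorted(project))  # ['Settings.swift']
```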

Can I use agents for production-critical code?

Yes, but with the same care you'd use for any code. If you trust a human developer to write production code after review, you can trust agent-generated code held to the same review rigor. The question isn't whether agents can write production code (they can) but whether you've reviewed it adequately. For safety-critical or security-sensitive code, you might want more thorough review, but the principle is the same: good code review catches problems whether the code was written by a human or an agent. Some teams implement additional policies around agent-generated production code (for example, requiring two reviewers instead of one), but this is an organizational choice, not a technical limitation.

How do agents handle my codebase's specific patterns and conventions?

Agents infer conventions from the existing code in your project. When you start a task, the agent reads relevant code, identifies patterns (naming conventions, how you structure classes, how you handle errors, and so on), and applies those patterns to new code. This works best if your codebase is consistent. If you have five different ways of implementing the same pattern, agents might pick up all five variations. This actually incentivizes good code hygiene, because consistent patterns in your codebase make agents more useful. Over time, as reviewed and corrected code accumulates in your project, agent output tends to align more closely with your team's style.
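To see why inconsistency matters, consider a toy tally of naming styles across a set of identifiers. This is a hypothetical illustration of what "mixed conventions" looks like in data. It is not how agents actually infer conventions, since they read code in context rather than run a counter. A split tally is the kind of signal that leaves an agent without one clear pattern to follow.

```python
import re
from collections import Counter

CAMEL = re.compile(r"^[a-z]+(?:[A-Z][a-z0-9]*)+$")  # e.g. userName
SNAKE = re.compile(r"^[a-z]+(?:_[a-z0-9]+)+$")      # e.g. user_id


def naming_styles(identifiers: list[str]) -> Counter:
    """Tally which naming style each identifier uses; single words and others go to 'other'."""
    counts: Counter = Counter()
    for ident in identifiers:
        if CAMEL.match(ident):
            counts["camelCase"] += 1
        elif SNAKE.match(ident):
            counts["snake_case"] += 1
        else:
            counts["other"] += 1
    return counts


print(naming_styles(["userName", "fetchItems", "user_id", "URLSession", "count"]))
```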

What are the limitations of agentic coding? What tasks should I not use agents for?

Agents struggle with ambiguous requirements (be specific), novel architectures they haven't seen before, complex business logic requiring domain expertise, and subtle interactions between components. They're less effective at making architectural decisions (humans should do that) and understanding non-technical constraints. For tasks that have clear scope, measurable success criteria, and involve standard patterns, agents excel. For open-ended exploration, novel problem-solving, or decisions with major system-wide implications, agents work best as assistants rather than primary decision-makers. The general rule: use agents for execution of decisions humans have made, not for making the decisions themselves.

Will Xcode agents work with my private code and frameworks?

Yes. Agents access your code through MCP within Xcode. The agent reads your project files, makes changes, and runs tests locally. However, your prompt and the code context the agent needs to understand your project are sent to Anthropic or OpenAI for processing, so parts of your code do leave your machine during agent tasks. You can exclude sensitive files from agent access if needed. If you use private frameworks, the agent can access them the same way it accesses your own code, by reading files and understanding dependencies.
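Excluding sensitive files amounts to filtering paths before any file content is assembled into model context. Xcode's own exclusion mechanism isn't described here. The sketch below is a hypothetical illustration of the idea, and the exclusion patterns are assumptions. It uses `fnmatchcase` for case-sensitive matching. Its `*` wildcard also matches `/`, so `*.p12` catches certificate files in any folder.

```python
from fnmatch import fnmatchcase

# Hypothetical exclusion patterns; adapt them to your project's layout.
EXCLUDE: tuple[str, ...] = ("*.xcconfig", "Secrets/*", "*.p12", "*.env")


def shareable_files(paths: list[str], exclude: tuple[str, ...] = EXCLUDE) -> list[str]:
    """Drop any path matching an exclusion pattern before it is sent as model context."""
    return [p for p in paths if not any(fnmatchcase(p, pat) for pat in exclude)]


paths = [
    "App/ContentView.swift",
    "Config/Release.xcconfig",
    "Secrets/api.plist",
    "App/.env",
    "Certs/dev.p12",
]
print(shareable_files(paths))  # ['App/ContentView.swift']
```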

How do I choose between Claude and Codex for a specific task?

Use Claude for refactoring, code understanding, and maintaining patterns—it's better at reasoning about existing code. Use Codex for feature implementation, especially involving libraries you're unfamiliar with—it's faster and has seen more code examples. For learning and educational use cases, Claude's detailed explanations might be more valuable. For pure speed and productivity, Codex often gets results faster. Many teams use both, trying them on important tasks and noting which produces better results for their specific context. Your own experimentation is probably the best guide.

What's the Model Context Protocol (MCP) and why does it matter?

MCP is an open standard that defines how large language models can connect with tools and data sources. Instead of building custom integrations for each model in each application, MCP provides a standardized interface. Xcode exposes its capabilities through MCP (file access, build tools, documentation, etc.), and any MCP-compatible agent can access those capabilities. This matters because it creates competition and choice—you're not locked into specific vendors. It also means future models and agents can integrate with Xcode without Apple needing to build new integrations. From a user perspective, it means you can try new AI models as they're released and switch to ones that work best for you, all within the same IDE.

How will agentic coding evolve in the next few years?

Agents will become more capable, more integrated into development workflows, and more specialized. Expect faster agents for routine tasks, more capable agents for complex reasoning, and agents tailored to specific frameworks or domains. IDEs will expose more capabilities to agents (debugging, profiling, deployment). Agents will likely become more proactive, suggesting improvements rather than just responding to requests. Cross-project understanding will improve, enabling more sophisticated refactoring. The trajectory is clear: agents becoming more essential to development, not less. For developers, the implication is learning to work effectively with agents will become a core skill, alongside traditional coding ability.

Conclusion: The Beginning of a New Development Era

Xcode 26.3 represents a significant inflection point. This isn't the first time AI has touched development tools. Code completion, linting, and automated refactoring have been around for years. But agentic coding is fundamentally different. It's the first time developers have autonomous agents that can truly understand projects, make complex decisions, and implement substantial features with minimal hand-holding.

The implications are substantial. For individual developers, agentic coding means productivity gains on routine tasks and access to assistance that was previously only available to teams with senior mentors. For teams, it means multiplication of output and better utilization of senior developers on high-value problems rather than routine implementation. For the industry, it suggests an acceleration of software development pace and a shift in what it means to be a developer.

Like any powerful tool, agentic coding brings challenges. Trust and safety require good code review. Skill development requires intentional learning from what agents do. Team dynamics require clear norms about agent usage. But these are solvable challenges, and the benefits far outweigh the friction.

For iOS developers, the choice isn't whether to use agentic coding. It's when and how to integrate it effectively into your workflow. The tool is here. The question now is how you'll use it.

The developers and teams that figure this out first will ship faster, build more ambitious things, and lead their domains. The ones who ignore it will find themselves slower and less capable. This isn't hyperbole—it's the nature of significant tools. They change the game for people who learn to use them well.

Xcode 26.3 is available now. If you're an iOS developer, the experiment is waiting. Try it on a small task. See what it can do. Learn its strengths and limitations. Then decide how it fits into your practice.

The future of development isn't coming. It's here, in the form of intelligent agents that work alongside you in your IDE. How you respond to that determines whether you'll be riding that wave or trying to catch up to it.

Key Takeaways

- Agentic coding in Xcode 26.3 represents autonomous AI agents working inside your IDE, exploring code, planning changes, implementing features, and iterating on test results without constant human validation

- Two major AI providers integrated: Anthropic's Claude Agent excels at code understanding and refactoring, while OpenAI's Codex emphasizes rapid feature implementation and library integration

- Model Context Protocol (MCP) enables extensibility—any MCP-compatible agent can theoretically access Xcode's tools, not just Claude and Codex, creating an open ecosystem

- Token optimization was critical to viability: Apple reduced token consumption by 70-90% through caching, structured calling, and intelligent context windows, making per-task costs $0.10-0.30

- Agents excel at execution tasks (boilerplate, testing, refactoring) but humans must handle architectural decisions, business logic, and UX/design choices—complementary relationship drives value
