The New Reality: AI Adoption as a Career Prerequisite

Let me be direct: your next promotion might depend on how much you've embraced artificial intelligence at work.

This isn't speculation. Financial Times reporting revealed that Accenture, one of the world's largest consulting firms, has begun tying employee promotions directly to what they call "regular adoption" of internal AI tools. The company sent emails to staff stating plainly: "Use of our key tools will be a visible input to talent discussions."

If you work in tech, finance, professional services, or really any knowledge-intensive field, this should grab your attention. What Accenture is doing doesn't exist in isolation. It signals a massive shift in how corporations are measuring employee value and performance. And it's raising uncomfortable questions about fairness, capability gaps, and whether the people running companies actually understand the technology they're forcing everyone to adopt.

Here's what's happening, why it matters, and what it means for you.

Understanding Accenture's AI Adoption Mandate

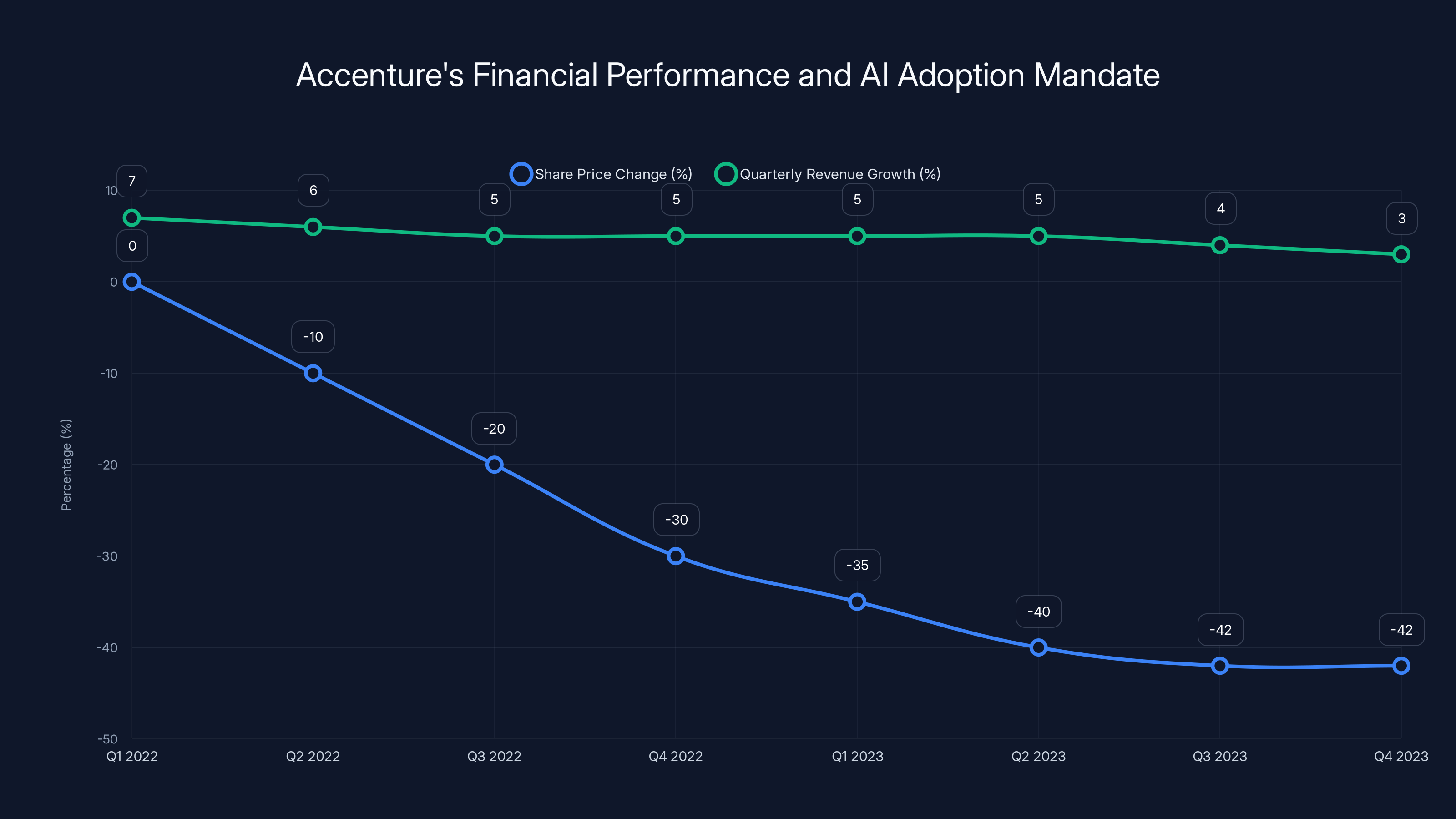

Accenture didn't wake up one morning and decide on a whim to make AI usage a promotion criterion. The company is facing real pressure. Share prices have dropped roughly 42% over the past year. Revenue growth has stalled at 5% quarterly, with full-year projections sitting at a modest 3-5%. In the consulting world, that's not just slow; it's alarming.

When CEO Julie Sweet warned that staff unable to adapt to AI "may not be suitable for the company anymore," she wasn't being dramatic. She was signaling an existential crisis. Accenture's value proposition to clients is that they'll help companies navigate digital transformation. But if Accenture's own employees aren't comfortable with AI, that's a credibility problem.

So the company implemented what amounts to forced adoption. Employees now have a financial incentive (promotion eligibility) to regularly use AI tools. On paper, it's a brilliant move. Make AI adoption visible. Track it. Tie it to career progression. Problem solved.

Except the real world is messier than that.

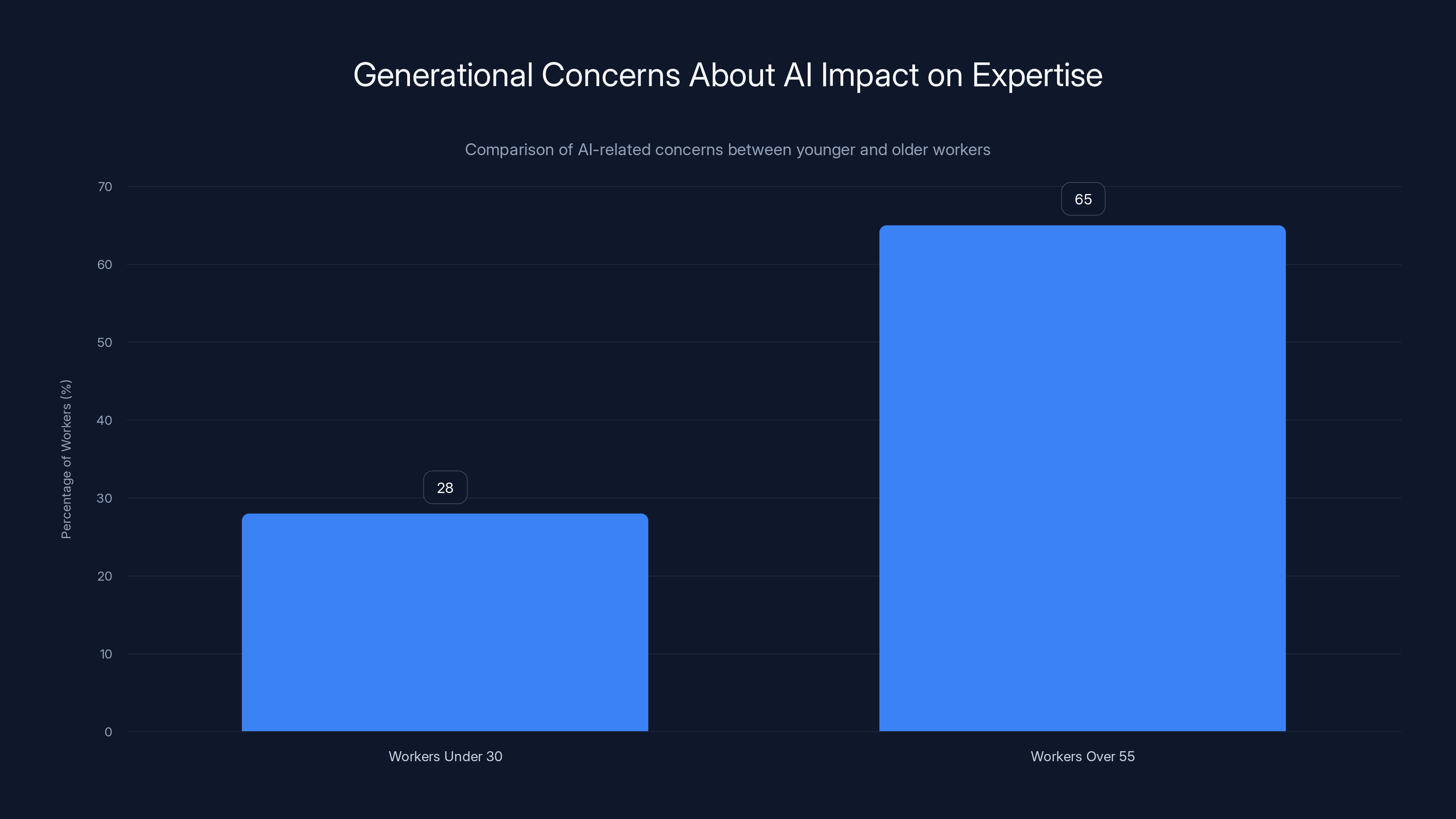

Estimated data shows that 65% of workers over 55 are concerned about AI diminishing their expertise, compared to only 28% of workers under 30.

The Generational Divide in AI Adoption

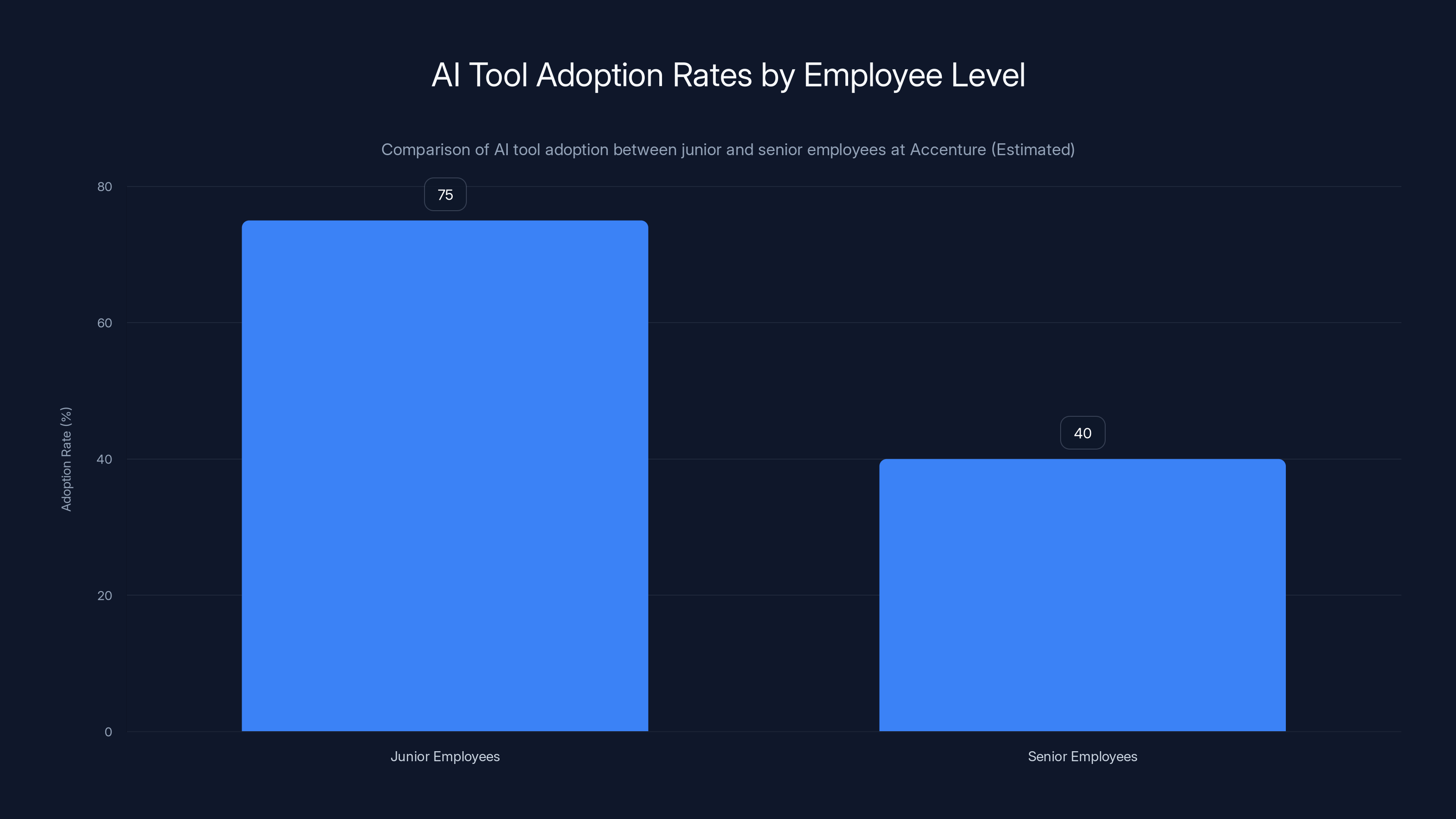

Here's where it gets interesting. Accenture's internal data reportedly shows a sharp divide: junior employees adopt AI tools readily, while senior staff remain reluctant.

This shouldn't surprise anyone who's worked in corporate environments. Younger workers grew up with technology that changes constantly. Adaptation is second nature. A new AI tool? Sure, let's try it. What's the worst that happens?

Senior staff—the people with the most institutional knowledge, the deepest client relationships, and often the most developed problem-solving skills—are more hesitant. Some of this is purely cognitive. The older you are, generally speaking, the longer you've relied on established workflows. Changing those workflows requires mental energy and carries real risk if something goes wrong.

But there's also something deeper happening here. Many senior professionals have built careers on expertise. On being the expert in the room. AI tools that can generate reports, analyze data, or draft proposals threaten that. If anyone can use ChatGPT to produce a client deliverable, what's the value of decades of accumulated skill?

That's a legitimate concern, not just resistance to change.

Accenture's promotion policy essentially penalizes this hesitation. If you're a 20-year veteran skeptical of AI's quality, you're now at a disadvantage for advancement. The company's betting that forcing adoption will overcome resistance. What they might actually be doing is accelerating departures among experienced staff.

The Quality Problem: When AI Tools Don't Actually Work

Here's the part that makes this policy particularly contentious: the tools themselves are inconsistent.

Accenture employees have reportedly called internal AI tools "broken slop generators." One worker threatened to quit immediately if held accountable for using them. That's not small talk. That's genuine frustration.

The problem is fundamental to where AI is right now. These tools are powerful. They're useful for certain tasks. But they're also unreliable. They hallucinate facts. They generate plausible-sounding but completely false code. They miss context. They reflect the biases in their training data.

For a consulting firm selling expertise to clients, this is catastrophic. Imagine a consultant speeds up their work by having an AI assistant draft sections of a proposal. The AI generates something that sounds good but contains a critical error. The client catches it, loses confidence, and takes their business elsewhere.

Now imagine that consultant's performance is being measured partly on AI tool usage. They're incentivized to use the tool more, even when doing so introduces risk. That's a misaligned incentive structure.

The quality problem gets worse when you consider that different roles have different needs. An AI tool that helps a junior analyst organize data might actively harm a senior architect's work. But if the metric is just "usage," those differences disappear. Everyone's measured the same way.

This is why some of the smartest people at Accenture are probably using the tools the least—they understand their limitations and aren't willing to compromise their output quality.

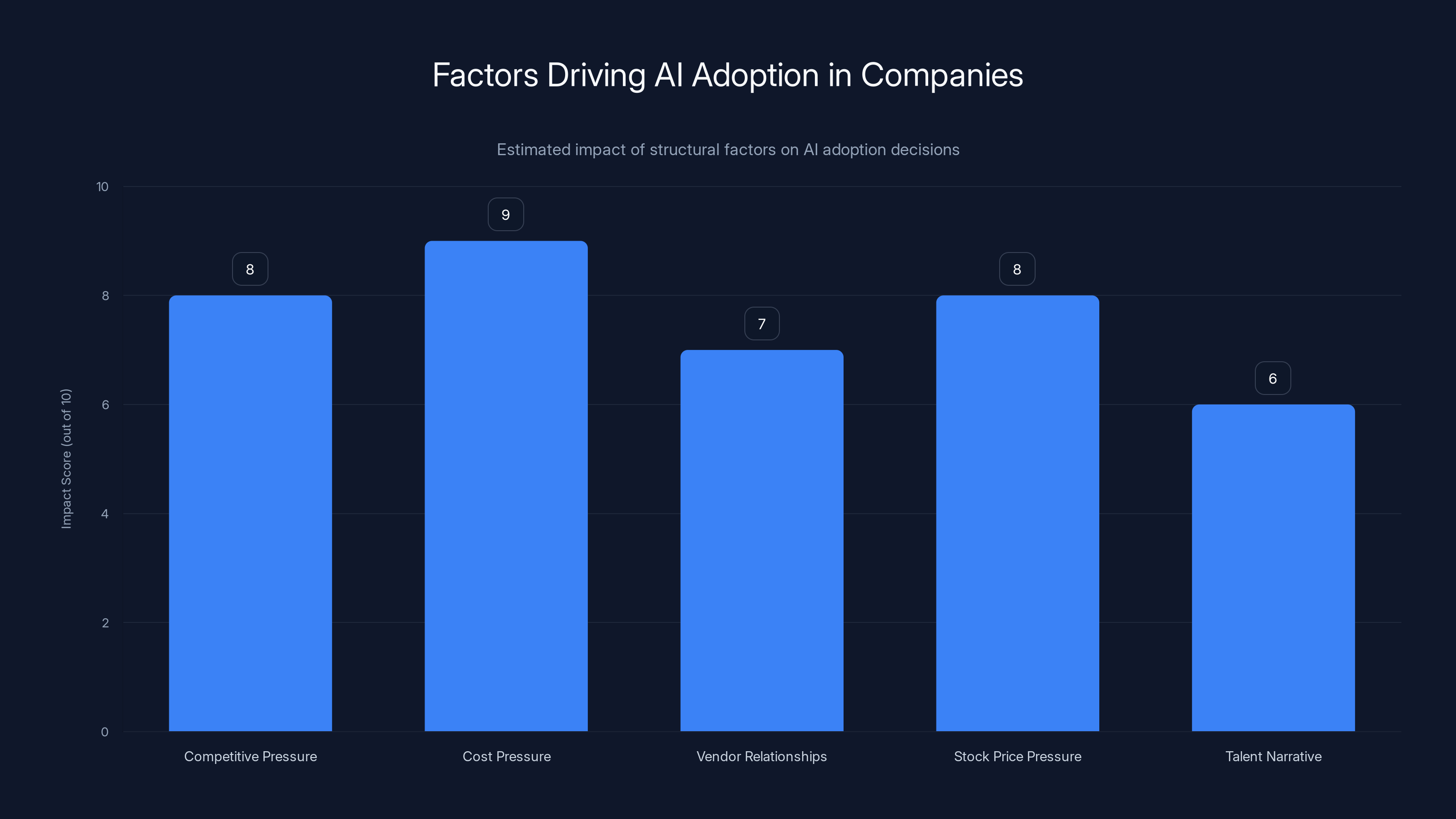

Competitive and cost pressures are the most significant factors driving AI adoption, with estimated impact scores of 8 and 9 respectively. Estimated data.

Geographic Exceptions and What They Reveal

Here's something that should bother everyone about this policy: not everyone is subject to it.

Accenture employees in 12 European countries get a pass. So do workers in the company's U.S. federal government contracts division.

Why? The European opt-outs likely reflect GDPR and data privacy regulations. Using AI tools on client data gets legally complicated in Europe. The federal government exemption probably reflects security clearance requirements and risk management protocols.

But here's what's weird: those exceptions prove Accenture knows AI adoption carries real risks. They're comfortable imposing risks on regular employees but not on operations that have regulatory oversight.

That's a credibility issue. It suggests the company's confidence in these tools is conditional—conditional on regulatory pressure not being a factor. If the tools were universally safe and valuable, why would operating in Europe or for the federal government require different standards?

The Broader Corporate Trend: AI Adoption Metrics

Accenture isn't alone in measuring AI adoption. Accenture's just the one that got caught—or perhaps the one brazen enough to admit it publicly.

Across corporate America, companies are implementing:

- Usage dashboards that track which employees use AI tools and how frequently

- Adoption scorecards that include AI tool usage in performance reviews

- Training requirements that mandate completion of AI certification programs

- Team metrics that measure organizational AI adoption rates

- Budget incentives that reward departments showing high AI tool usage

Some of this makes sense. If you're investing in expensive software, you want to know whether people are actually using it. But there's a slippery slope between tracking adoption and making adoption a compliance issue.

When adoption becomes a compliance issue, you get perverse incentives. People use tools not because they're productive but because they need to show they're using them. They optimize for visibility rather than output quality.

The Financial Incentive Problem

Let's talk about the economics here. Promotions at companies like Accenture typically come with 15-25% salary increases and access to better projects, clients, and long-term earning potential.

If promotion eligibility is partly determined by AI tool adoption, then you're effectively creating a financial penalty for not adopting. Skip using the tool, and you don't just miss out on potential benefits—you actively fall behind peers who do use it.

That's not optional. That's not a suggestion. That's a requirement with financial consequences.
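
To make the size of that penalty concrete, here is a rough back-of-the-envelope sketch. The 15-25% raise range comes from the figures above; the base salary, the five-year horizon, and the function name `cumulative_pay_gap` are hypothetical choices for illustration, and the model deliberately ignores compounding, bonuses, and later catch-up promotions.

```python
def cumulative_pay_gap(base_salary: int, raise_percent: int, years: int) -> float:
    """Pay forgone over `years` by missing one promotion raise.

    Simplifying assumptions (not from the article): the raise would have
    applied from year one, salaries are otherwise flat, and there is no
    compounding or later catch-up promotion.
    """
    annual_gap = base_salary * raise_percent / 100
    return annual_gap * years


# Hypothetical base salary and horizon; 15 and 25 are the bounds of the
# raise range quoted in the text.
BASE_SALARY = 100_000
YEARS = 5

low = cumulative_pay_gap(BASE_SALARY, 15, YEARS)
high = cumulative_pay_gap(BASE_SALARY, 25, YEARS)
print(f"Forgone pay over {YEARS} years: {low:,.0f} to {high:,.0f}")
```

Even under these flat-salary assumptions, one missed promotion adds up to a substantial sum, and a real career would likely compound the gap further.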

For junior employees, this might not feel like a massive burden. They're more comfortable with technology anyway. But for mid-career professionals with families, mortgages, and years invested in the organization, the stakes are different.

Consider Sarah, a fictional but representative case: She's 48, been at Accenture for 12 years, and has been passed over once for promotion already. She uses AI tools cautiously because she's seen them produce bad outputs. Her boss tells her she needs to show more "regular adoption" to be promoted. She has a choice: compromise her professional standards to satisfy a metric, or accept that her promotion path is now constrained by factors outside her control.

That's the human cost of these policies.

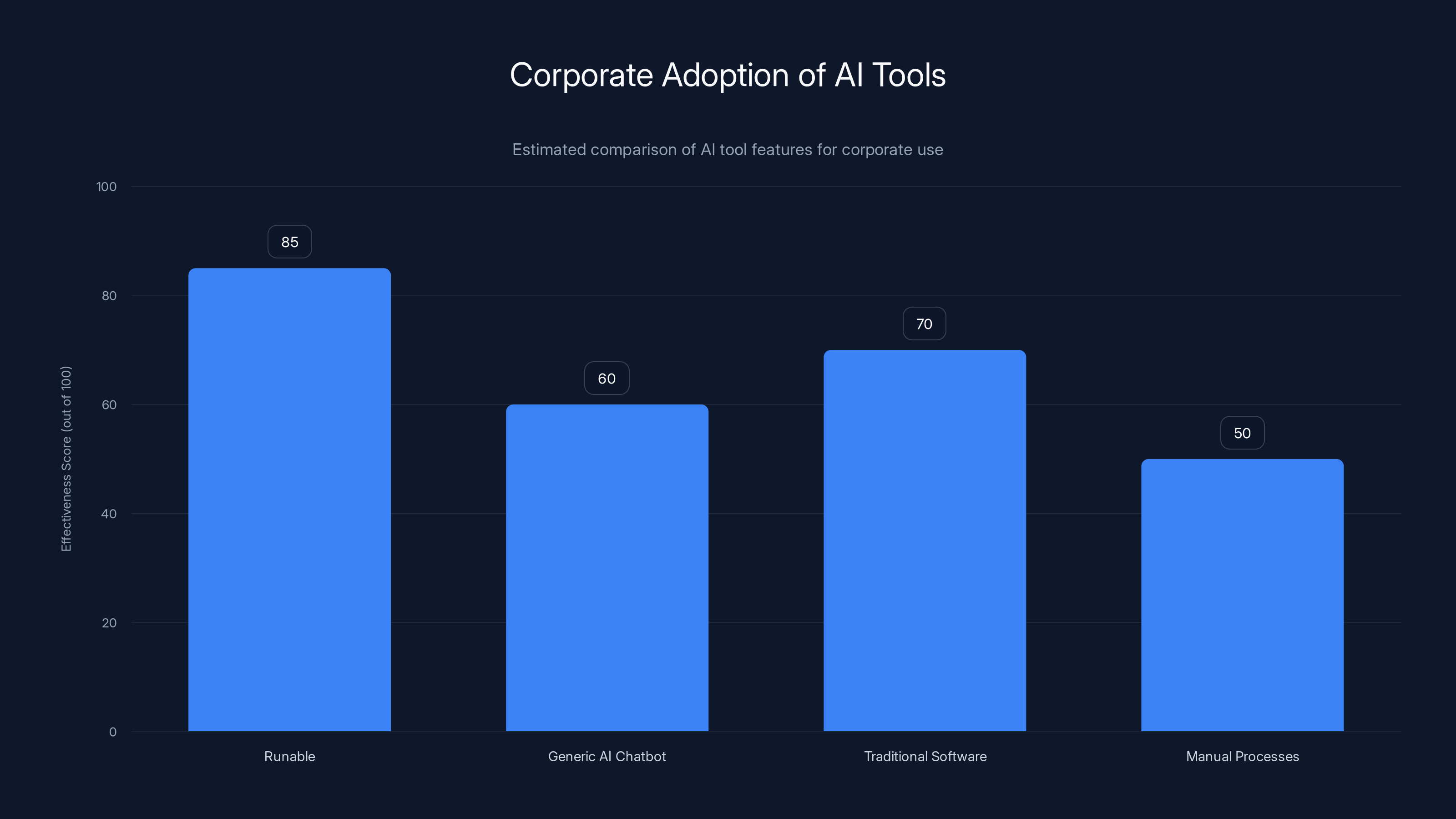

Runable scores highest in effectiveness for corporate adoption due to its tailored features for professional output. Estimated data based on typical corporate needs.

Employee Sentiment and Workplace Morale

Employee reactions to Accenture's policy have ranged from skeptical to openly hostile.

LinkedIn has been flooded with posts about this, and the tone is revealing. People aren't upset about AI existing. They're upset about being forced to adopt tools they don't trust, with their career advancement hanging in the balance.

Workers have expressed concerns about:

- Quality compromise: Using inferior tools to hit an adoption metric

- Job security: Fear that increased AI adoption might eventually eliminate their roles

- Unfair evaluation: Being judged on tool usage rather than actual output quality

- Pressure to perform: Stress about having to learn new systems while managing existing workload

- Loss of autonomy: Having their tool choices dictated rather than selected based on job requirements

This isn't theoretical frustration. This is affecting morale at a company that's already struggling with revenue growth and market share. Talented people are evaluating whether to stay.

Philosophical Questions: Who Decides What Gets Done?

Beneath all this lies a more fundamental question: Who decides how work gets done?

Traditionally, professionals have some autonomy over their methods. A consultant might prefer certain frameworks, analysis approaches, or presentation styles. That autonomy is part of what makes knowledge work satisfying and allows experienced professionals to leverage their expertise.

When a company mandates specific tools, they're removing that autonomy. They're saying: "We've decided how you'll work, and you need to conform."

That might be fine if the mandated tools are genuinely superior. But when employees perceive the tools as lower quality than their alternatives, mandatory adoption feels like a step backward.

It also raises questions about accountability. If an AI tool generates something problematic, who's responsible? The employee who used it? The company that mandated it? The vendor who built it? That ambiguity is uncomfortable, and for good reason.

Structural Factors: Why Companies Are Pushing AI Adoption

There are real business reasons driving this trend, worth understanding:

Competitive pressure: Every consulting firm is trying to position itself as AI-enabled. If Accenture's competitors are adopting AI and showing productivity gains, Accenture can't afford to be seen as behind. Mandatory adoption is their way of demonstrating that the entire organization is AI-forward.

Cost pressure: AI tools promise efficiency. If adoption increases productivity by even 10%, that translates to massive cost savings across thousands of employees. The company's incentivized to push adoption because the financial upside is real.

Vendor relationships: Accenture has likely invested heavily in AI platform partnerships. They need volume usage to justify those investments and keep vendors happy for future deals.

Stock price pressure: Consulting stocks are valued partly on growth prospects. Companies demonstrating rapid AI adoption get valued more highly by investors. Julie Sweet needs to show shareholders that Accenture is "AI-enabled" and moving decisively.

Talent narrative: There's a narrative around tech talent right now that says: "If you can't work with AI, you're becoming obsolete." Companies want to be seen as leading this trend, not falling behind.

None of these reasons is inherently bad. But they're structural factors pushing companies toward mandatory adoption, regardless of whether it's actually the best approach.

Junior employees at Accenture are adopting AI tools at a significantly higher rate (75%) compared to senior employees (40%). Estimated data based on narrative context.

The Resistance Movement: Why Smart People Are Skeptical

The people most skeptical of AI tools aren't typically Luddites. They're actually often the people who understand technology best.

They've seen waves of technology adoption before. They remember when social media was going to revolutionize business. When blockchain was going to replace traditional finance. When natural language processing was definitely solved (spoiler: it wasn't).

They're skeptical because they understand the gap between hype and capability. They can read a research paper and spot where models are overfitted to benchmarks. They know that benchmark performance doesn't translate to real-world reliability.

They're also often the people who've built valuable careers on deep expertise. They know things. And they're uncomfortable with tools that generate outputs that sound authoritative but might be wrong.

This isn't Luddism. It's empiricism. It's saying: "Show me the evidence that this actually works before I restructure my workflow around it."

Companies that punish this skepticism aren't accelerating progress. They're pushing out the people most capable of using these tools effectively.

Measurement Problems: What "Adoption" Actually Means

One of the deepest problems with Accenture's approach is measurement.

What counts as "adoption"? Is it:

- Just opening the tool once per month?

- Using it for a certain percentage of tasks?

- Completing company-mandated training?

- Generating a minimum number of outputs via the tool?

- Mentioning the tool in project retrospectives?

The problem is that all of these can be gamed. You can "adopt" a tool without genuinely integrating it into your workflow. You can use it for low-stakes tasks while avoiding it for important work.

Moreover, adoption shouldn't be the goal. Productivity should be. Better client outcomes should be. Faster time-to-delivery should be. But measuring those things is harder than counting how many times someone clicked a button.

So companies measure the easy thing (adoption) and hope it correlates with the hard thing (value). Often, it doesn't.

Consider: A consultant who uses an AI tool to draft 50 low-value project summaries has "high adoption." Another consultant who carefully uses an AI tool to analyze client data and produce one high-impact strategic recommendation has "low adoption." If you're measuring adoption, the first consultant looks better.

If you're measuring business value, they don't.
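
The two-consultant comparison above can be sketched in a few lines. This is an illustrative toy, not how Accenture or any other firm actually scores people: the `Consultant` class, the names "A" and "B", and the `value_per_output` numbers are all hypothetical, chosen only to show how the same data ranks differently under an adoption metric and a value metric.

```python
from dataclasses import dataclass


@dataclass
class Consultant:
    name: str
    ai_outputs: int          # count of AI-assisted deliverables ("adoption")
    value_per_output: float  # hypothetical business-value score per deliverable


def adoption_score(c: Consultant) -> int:
    # The easy-to-measure proxy: how many outputs went through the tool.
    return c.ai_outputs


def value_score(c: Consultant) -> float:
    # The harder-to-measure target: total value delivered with the tool.
    return c.ai_outputs * c.value_per_output


def rank(consultants, key):
    # Names ordered from highest to lowest score under the given metric.
    return [c.name for c in sorted(consultants, key=key, reverse=True)]


team = [
    Consultant("A", ai_outputs=50, value_per_output=1.0),   # 50 low-value summaries
    Consultant("B", ai_outputs=1, value_per_output=200.0),  # 1 high-impact recommendation
]

by_adoption = rank(team, adoption_score)
by_value = rank(team, value_score)
print("Ranked by adoption:", by_adoption)
print("Ranked by value:   ", by_value)
```

The ordering flips depending on which function is passed as the key, which is the whole problem with rewarding the proxy.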

The Unintended Consequences of Forced Adoption

When you mandate adoption, you typically get several predictable second-order effects:

Quality degradation: People use tools to hit metrics rather than to produce good work. The quality of outputs decreases even as the quantity increases.

Talent flight: Your most talented people often have the most options. If they don't like your culture, they leave. Forced adoption policies signal a culture that doesn't trust professional judgment. Talented people vote with their feet.

Strategic misuse: People figure out how to game the system. They use the tool in ways the company didn't intend, just to satisfy the metric. This wastes the tool's potential on low-impact usage.

Organizational ossification: When you mandate how work gets done, you reduce experimentation. People stop asking "Is there a better way?" and start asking "How do I comply?" Innovation suffers.

Legitimate risk: Some of the skepticism about AI tools is warranted. Forcing adoption of tools with known reliability problems introduces organizational risk. When something goes wrong, the company bears the consequences.

Accenture's probably going to see all of these. Some of them are already visible in employee sentiment.

Accenture's share price has dropped by 42% over the past year, while quarterly revenue growth has stalled at around 5%. This financial pressure has led to the implementation of an AI adoption mandate. Estimated data for Q3 and Q4 2023.

How Other Companies Are Handling AI Adoption

Not every company is taking Accenture's approach. There's a spectrum:

Permissive approach: Make tools available, offer training, but don't mandate usage. Let teams decide how and when to use AI. This respects professional autonomy but might result in slower organizational adoption.

Incentive-based approach: Offer bonuses or career benefits to people who reach adoption milestones, but don't penalize non-adoption. This is more carrots, fewer sticks.

Outcome-based approach: Measure productivity and client value, not tool usage. If AI adoption contributes to better outcomes, it happens naturally. If it doesn't, don't force it.

Selective deployment: Use AI for specific functions (like data analysis or content summarization) where it's proven reliable, but not as a blanket mandate across all work types.

Pilot programs: Test AI tools with volunteer users, gather data on outcomes, and only mandate adoption if the data supports it.

Accenture chose forced adoption with career consequences. That's the most aggressive approach on the spectrum. It maximizes short-term adoption numbers but carries the highest risk of negative consequences.

The Role of Tools Like Runable in Corporate Adoption

As companies navigate AI adoption, they're looking for platforms that actually deliver reliable results. This is where tools designed for professional use make a difference.

Runable represents a different approach to AI automation in the workplace. Rather than forcing employees to adopt generic AI chatbots, it provides AI-powered tools specifically designed for professional output: presentations, documents, reports, images, and videos. Starting at just $9/month, it's built around actual business use cases—creating slides from data, generating reports from research, automating document workflows—rather than asking people to figure out how to use general-purpose AI.

The key difference is output quality and reliability. When professionals can depend on tools to produce consistent, high-quality results, adoption happens naturally. You don't need mandates. You don't need to tie promotions to usage. People use tools that actually make their jobs better.

Use Case: Automate weekly status reports and client presentations in under 5 minutes instead of spending 2 hours manually formatting slides and documents.

This is what responsible AI adoption looks like: tools that solve real problems reliably enough that people want to use them.

The Trust Problem: Building Genuine AI Confidence

Underlying all of this is a trust problem.

Employees don't trust the tools because the tools don't consistently deliver. The company doesn't trust employees to adopt willingly because they haven't proven they will. Management doesn't trust that current approaches will keep the company competitive, so they're imposing solutions from above.

Building genuine AI adoption requires breaking this cycle. It requires:

Honesty about capabilities: Admitting what AI tools can and can't do. This tool is great at X but unreliable at Y. Here's where you should use it, and here's where you shouldn't.

Selective deployment: Using AI where it actually works well, rather than mandating it everywhere. Success builds confidence. Failure erodes it.

Professional discretion: Trusting professionals to evaluate tools and decide when to use them. This respects expertise and allows for adaptation to different roles and situations.

Accountability: Clear definitions of who's responsible when AI-generated outputs cause problems. Employees won't trust tools they're forced to use if they'll be blamed for the results.

Time for learning: Actually allowing people to develop competency with tools rather than just checking a compliance box.

Measuring outcomes, not adoption: Removing the incentive to use tools for visibility rather than value.

Accenture's approach does none of these things. It's maximizing short-term adoption metrics at the expense of building genuine trust.

The Future: Where This Trend Is Heading

If Accenture's approach becomes standard across professional services and corporate America, expect several changes:

Compliance requirements: AI adoption will become like other compliance training—something you have to do annually, minimum bars you need to hit. It becomes checkbox management rather than actual adoption.

Stratification: Senior employees who don't adopt will be passed over for promotions, gradually reducing seniority in leadership. This might actually benefit the company if they hire younger, more tech-comfortable replacements. Or it might hollow out the organization if they lose valuable expertise.

Tool proliferation: Companies will deploy multiple AI tools, all of which employees are expected to use. Adoption fatigue becomes real.

Resistance consolidation: Employee pushback will intensify. Some companies will double down on mandates (creating culture problems). Others will back off (creating competitive disadvantages if rivals adopt successfully).

Regulatory interest: If adoption mandates become tied to compensation and advancement, regulators might start asking questions. Is this age discrimination if older workers resist adoption? Is it disability discrimination if someone can't use these tools effectively? These questions are coming.

Generational divergence: We'll see starker differences between organizations that force adoption and those that support voluntary adoption. Some will thrive with younger, tech-native workforces. Others will struggle because they've driven out experienced people.

The question isn't whether AI adoption will happen. It will. The question is whether it happens because it's genuinely valuable or because it's been mandated.

What This Means for Workers: Practical Implications

If you're an employee at a company considering or implementing similar policies, here's what to know:

Document everything: Keep records of what tools you use, what you create with them, and what the outcomes are. If adoption becomes a metric, you want evidence that you're adopting responsibly.

Quality first: Don't sacrifice work quality to hit adoption metrics. Your reputation is worth more than a policy. If something's going wrong with the tool, say so.

Understand the policy: Get clarity on exactly what "adoption" means at your company. Ask for written definitions. If metrics are being used to evaluate you, you deserve transparency.

Learn selectively: Get good at tools that actually help your work. Skip tools that don't. Competence matters more than compliance.

Know your market: If you work in knowledge services or professional services, understand what's normal in your industry. If everyone's adopting AI tools, that's a market signal. If only your company is, that's different.

Evaluate your options: If you're genuinely uncomfortable with a company's AI adoption approach, and the company won't change it, consider whether you want to work there long-term. Your satisfaction matters.

The Leadership Question: Are Executives Actually Ready?

Here's something that should concern every Accenture client right now: If Accenture is forcing employees to adopt tools the employees see as unreliable, how much do you trust Accenture's recommendations about your own AI adoption?

If Accenture recommends that you implement AI tools to improve your operations, but Accenture's own employees are openly skeptical of those same tools, that's a credibility issue.

For professional services firms, credibility is everything. You're selling expertise and judgment. If you can't demonstrate that you're actually using the tools you're recommending to clients, why should they trust your recommendations?

This is the paradox Accenture's created: Forced adoption might boost short-term usage metrics, but it undermines the trust and credibility that professional services firms actually depend on.

The Path Forward: How Companies Should Actually Approach AI

Here's what works instead of mandates:

Start with specific problems: Identify a real, important problem your organization faces. Then find AI tools that might help solve it. This is pull adoption, not push.

Use volunteer teams: Let interested teams pilot tools. Measure actual outcomes. Share results.

Invest in real training: Not compliance training, but actual skill development. Help people become good at using these tools.

Build support infrastructure: Create internal expertise. Have people who actually understand the tools and can help others troubleshoot.

Measure outcomes, not adoption: Track productivity, quality, time-to-delivery, client satisfaction. These are the metrics that matter.

Allow flexibility: Different roles need different tools. Let teams adapt approaches based on their work.

Maintain standards: Don't sacrifice quality for AI usage. If the AI output isn't good enough, don't use it. Period.

Create psychological safety: People need to feel safe saying "This tool isn't working for this task." Punitive adoption policies destroy psychological safety.

This approach is slower than mandates. It doesn't produce the same compliance metrics. But it builds genuine organizational capability and culture that actually supports good work.

Conclusion: Adoption vs. Forced Compliance

Accenture's policy is a symptom of a broader problem: companies trying to solve an adaptation challenge with an enforcement mechanism.

The real challenge isn't getting people to use AI tools. It's building organizational competency with these tools so that they actually add value. That's harder. It takes time. It requires trust, transparency, and real commitment to training.

But it's the only approach that actually works long-term.

Mandates get short-term adoption numbers. They get headlines that say the company is "AI-forward." They might even get stock price bumps. But they don't build genuine capability. They don't solve the underlying problem of how to integrate AI into professional practice effectively.

What Accenture's done is create a proxy metric (adoption) and tie it to career consequences. This will boost the proxy metric. It might even boost some productivity metrics in the short term.

But it will also drive out skeptics, erode trust between employees and management, and potentially reduce the organization's actual effectiveness on important work. The people most likely to leave are often the ones best equipped to use these tools thoughtfully.

For Accenture's employees, the path forward depends on whether the company is willing to back off this approach or double down. For workers everywhere, this is a case study in what adoption policies look like when driven by leadership fear rather than genuine strategic thinking.

The future of AI in the workplace isn't going to be determined by mandates. It's going to be determined by tools that are actually useful and organizations that trust their people to make good decisions about how to work.

That's not what Accenture's created. But it could be.

FAQ

What is the AI adoption requirement that Accenture implemented?

Accenture now ties employee promotions to "regular adoption" of internal AI tools. The company sent communications stating that use of AI tools will be "a visible input to talent discussions," meaning adoption is factored into promotion eligibility. This makes AI tool usage a requirement for career advancement, not just an optional resource.

Why did Accenture decide to implement this policy?

Accenture is facing significant business pressure, with share prices down approximately 42% over the past year and quarterly revenue growth stagnant at 5%. CEO Julie Sweet previously warned that employees unable to adapt to AI may not be suitable for the company. The company is attempting to accelerate organizational AI adoption to remain competitive and demonstrate to clients and investors that it's an AI-enabled organization.

How are employees responding to the mandatory AI adoption requirement?

Employee sentiment has been largely negative. Staff have called internal AI tools "broken slop generators" and some have threatened to quit if directly affected. The policy has sparked significant discussion on professional networks, with concerns centering on forced usage of unreliable tools, fear about job security, and loss of professional autonomy in choosing methods and tools.

Does this policy apply to all Accenture employees globally?

No. Accenture employees in 12 European countries are exempt, likely due to GDPR and data privacy regulations. Workers in the U.S. federal government contracts division are also exempt, probably reflecting security clearance and regulatory compliance requirements. This selective exemption raises questions about whether the company actually believes the tools are universally safe and valuable.

What's the difference between senior and junior employee adoption rates?

Junior employees are adopting AI tools at significantly higher rates than senior staff. This generational divide reflects different comfort levels with technology, different approaches to risk, and legitimate concerns among experienced professionals about whether AI tools truly deliver the quality standards they've built their careers on.

How could adoption metrics be improved to measure actual value instead of compliance?

Organizations should measure outcomes rather than adoption rates. Instead of tracking how many times someone uses a tool, measure productivity changes, client satisfaction, quality improvements, and time-to-delivery. This approach aligns incentives with actual business value and allows professionals to use tools selectively where they genuinely improve work.

What are the risks of mandatory AI adoption policies?

Mandatory adoption risks include quality degradation, talent flight (losing experienced employees), strategic misuse of tools to satisfy metrics rather than produce good work, reduced innovation and experimentation, and organizational risk from forcing adoption of tools with known reliability problems. There's also potential legal risk if adoption becomes entangled with compensation and discrimination questions.

How should companies approach AI adoption more effectively?

Successful adoption starts with identifying specific problems AI can solve, using volunteer pilot teams to test tools, investing in real training and support, measuring outcomes rather than adoption metrics, allowing flexibility for different roles, maintaining quality standards, and creating psychological safety so employees can honestly discuss when tools aren't working. This approach takes longer but builds genuine organizational capability.

What does this mean for consulting clients working with Accenture?

Clients should consider whether they can trust Accenture's AI recommendations if Accenture's own employees are openly skeptical of the tools. Professional services firms depend on credibility and demonstrated expertise. If employees are forced to use tools they don't trust, that undermines confidence in the firm's judgment about client recommendations.

Are other companies implementing similar AI adoption requirements?

While most companies haven't tied promotions directly to AI adoption, many are implementing adoption metrics, usage dashboards, and training requirements. There's a spectrum of approaches ranging from completely voluntary adoption to incentive-based adoption to Accenture's compliance-based model with career consequences. The trend is toward more tracking and formalization of AI adoption expectations.

Key Takeaways

- Accenture now ties employee promotions directly to 'regular adoption' of internal AI tools, creating financial consequences for non-adoption

- Senior employees resist AI tool adoption at significantly higher rates than junior staff, creating a generational divide in organizational AI readiness

- Employee skepticism centers on actual tool quality and reliability rather than technology resistance, with workers calling tools 'broken slop generators'

- Mandatory adoption policies create unintended consequences including quality degradation, talent flight, strategic misuse of tools, and reduced innovation

- Accenture exempts European employees and federal contractors from the policy, suggesting the company acknowledges legitimate risks that don't apply universally

- Successful AI adoption requires outcome-based measurement and professional autonomy, not compliance metrics and mandatory usage


![AI Adoption Requirements for Employee Promotions [2025]](https://tryrunable.com/blog/ai-adoption-requirements-for-employee-promotions-2025/image-1-1771504548722.jpg)