The $2 Billion Race for AI Infrastructure Dominance: OpenAI's India Play

When OpenAI announced its partnership with Tata Group in early 2025, the tech world barely flinched. Another data center deal. Another expansion. Another press release in an endless stream of infrastructure announcements.

But here's what actually happened: OpenAI just secured one of the most strategically important pieces of real estate in the global AI arms race, and most people missed it entirely.

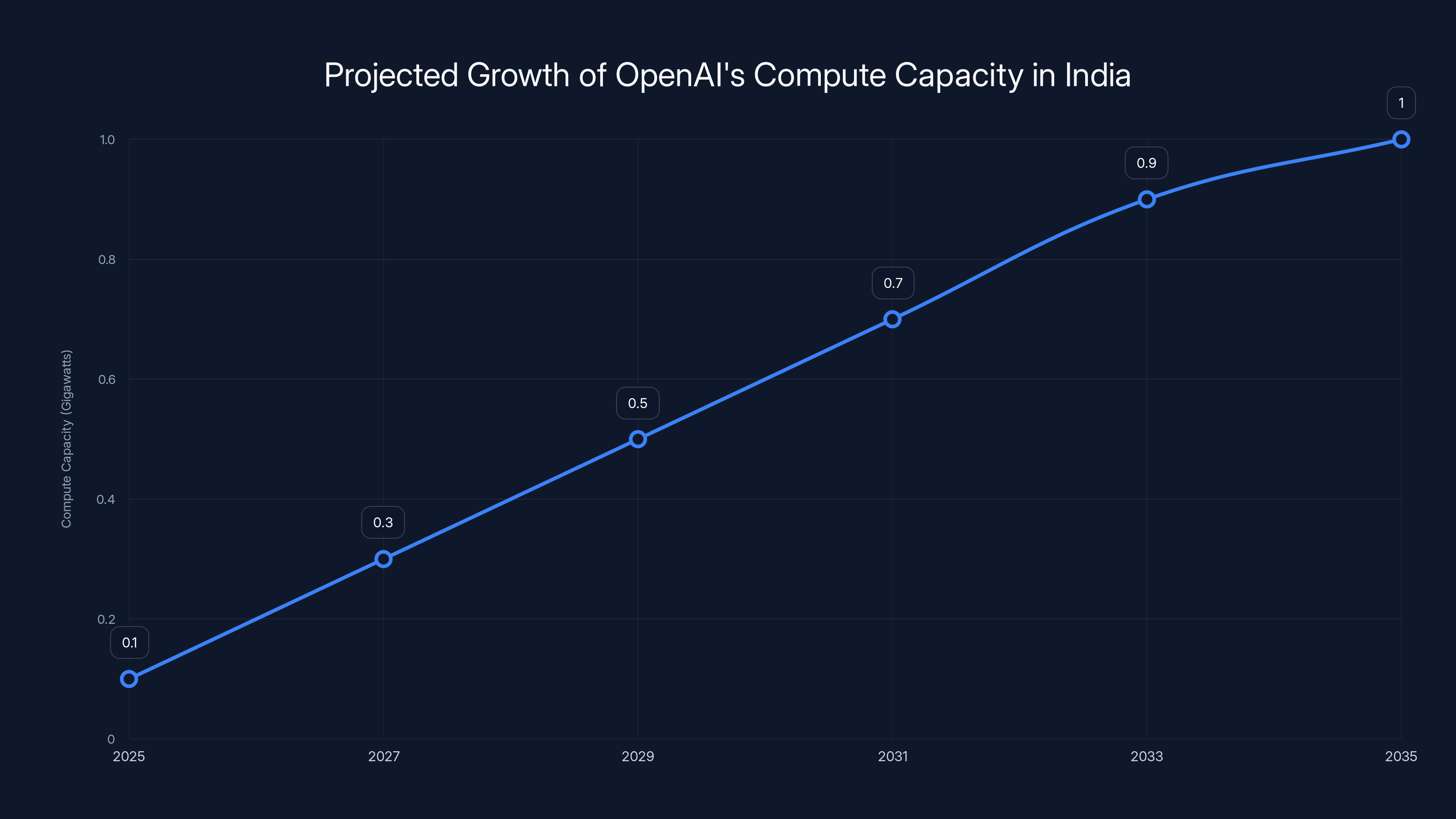

The deal is massive in scope, deceptively simple in structure, and reveals something fundamental about how the race for AI dominance is reshaping global infrastructure. A 100-megawatt initial commitment scaling to 1 gigawatt doesn't just mean more compute power in India. It means OpenAI is betting the next decade of its business on having compute close to its users, on solving regulatory bottlenecks before competitors do, and on owning the infrastructure that underpins enterprise AI adoption in what might be the world's most important emerging market.

Let's break down what's actually happening here, why it matters, and what it tells us about the future of AI infrastructure globally.

Understanding the Scale: What 100MW Actually Means

If you're not deeply embedded in data center infrastructure, "100 megawatts" sounds like a number. Just another power rating. It's not. It's a statement.

One megawatt is enough to power roughly 200 homes in the US. So 100 megawatts? That's the equivalent of powering 20,000 homes continuously. For a data center. For AI.

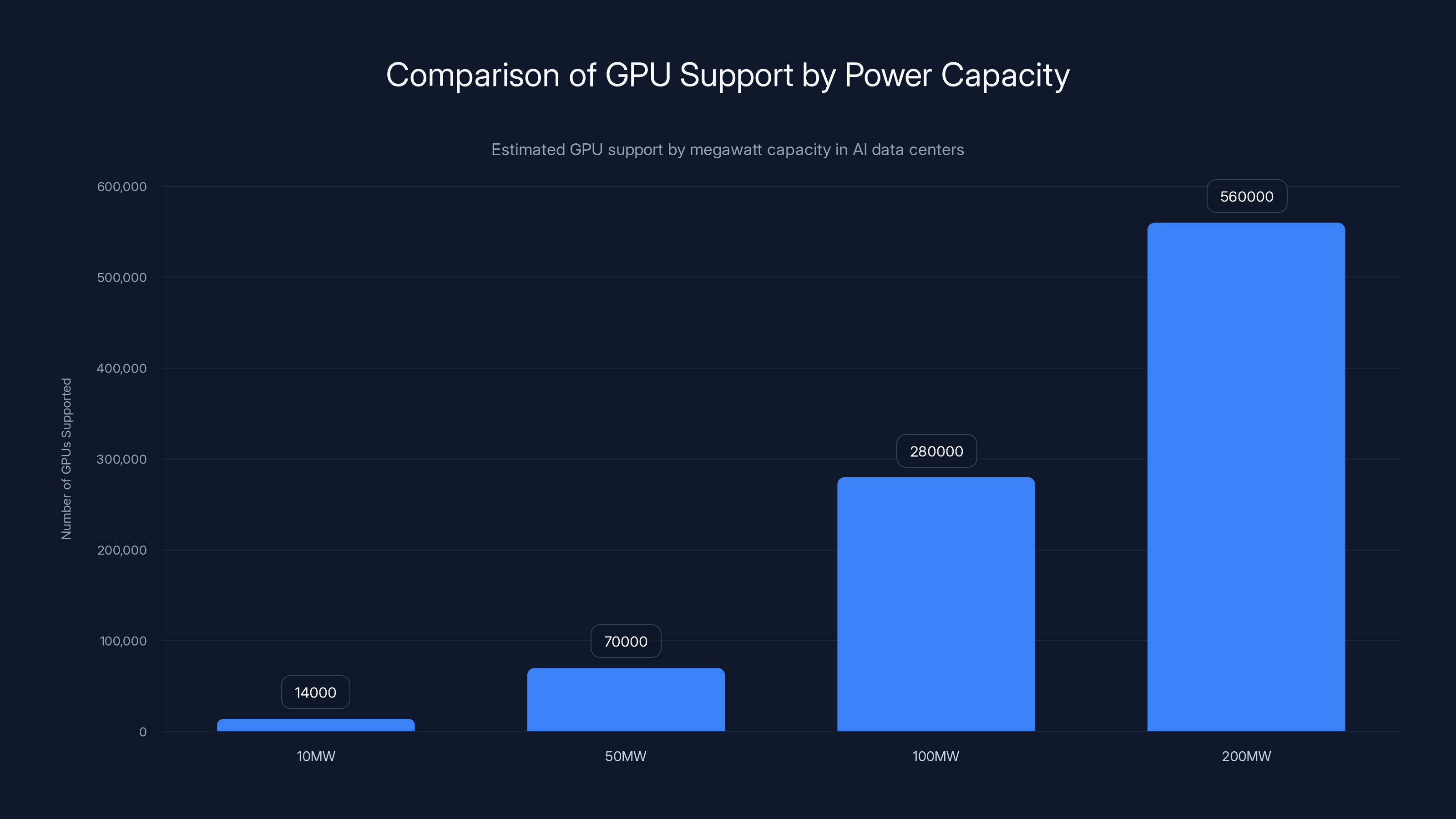

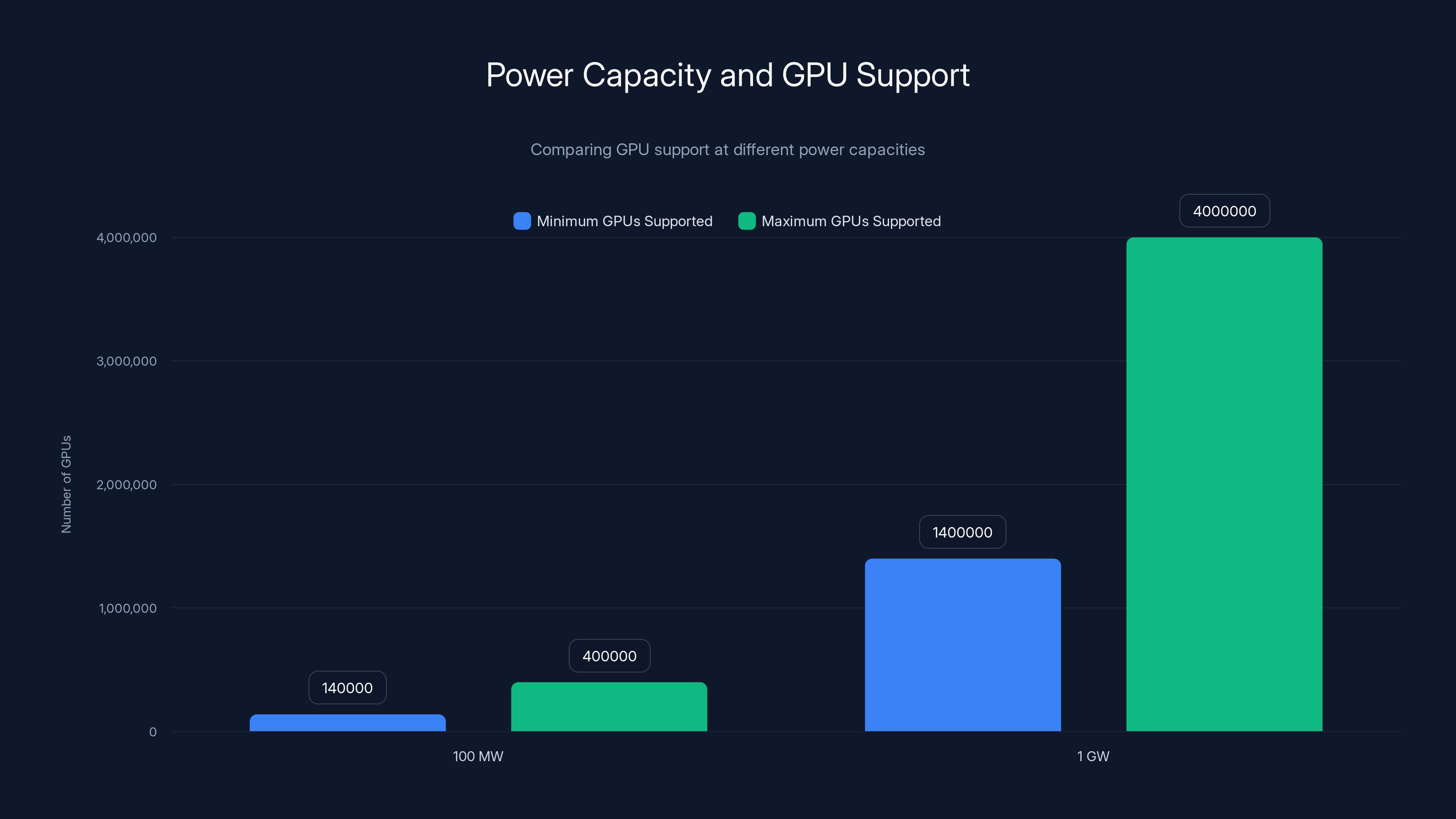

But the real way to understand scale is through the lens of GPU clusters. A modern data center GPU draws somewhere between 250 and 700 watts under load. An NVIDIA H100, which is essentially the gold standard for large-scale AI training right now, pulls about 700 watts. Dividing 100 megawatts by those figures gives a theoretical ceiling of roughly 140,000 GPUs at 700 watts each and 400,000 at 250 watts each. Real deployments land below that ceiling, because cooling, networking, and the servers that host the GPUs draw power too.
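The ceiling arithmetic can be sketched in a few lines of Python. The function name `max_gpus` and the `overhead_factor` parameter are illustrative assumptions, not figures from the announcement; the 250 and 700 watt draws are the ones quoted above.

```python
def max_gpus(facility_mw: float, watts_per_gpu: float, overhead_factor: float = 1.0) -> int:
    """Upper bound on the number of GPUs a facility's power budget can feed.

    overhead_factor is a hypothetical multiplier for non-GPU draw (cooling,
    networking, host servers). 1.0 means every watt reaches a GPU, which no
    real facility achieves.
    """
    if watts_per_gpu <= 0 or overhead_factor < 1.0:
        raise ValueError("watts_per_gpu must be positive and overhead_factor at least 1.0")
    facility_watts = facility_mw * 1_000_000
    return int(facility_watts // (watts_per_gpu * overhead_factor))


if __name__ == "__main__":
    for facility_mw in (100, 1000):      # initial commitment and the 1GW target
        for watts in (700, 250):         # H100-class draw and the low end quoted above
            count = max_gpus(facility_mw, watts)
            print(f"{facility_mw} MW at {watts} W/GPU: up to {count:,} GPUs")
    # Assumed 1.5x overhead, purely to show how quickly the ceiling drops.
    print(f"100 MW at 700 W/GPU, 1.5x overhead: {max_gpus(100, 700, 1.5):,} GPUs")
```

At 700 watts per GPU and no overhead, 100MW works out to 142,857 GPUs, which is where the rounded 140,000 figure comes from. Any realistic overhead factor pushes the count lower.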

For context, when Meta built one of its largest AI training clusters, it deployed around 16,000 GPUs. Google's largest AI clusters are rumored to be in the 100,000 to 300,000 GPU range. So 100 megawatts isn't just big. It's competitive-scale big.

The commitment to scale to 1 gigawatt changes the conversation entirely. That's ten times the initial deployment. A 1GW facility would be among the largest AI-focused data centers in the world, comparable to some of the biggest hyperscaler deployments globally.

For a company that's been burning through compute resources at an exponential rate, securing the physical infrastructure to run models domestically in India is exactly the kind of bet that shapes the next decade.

Estimated data shows that a 100MW capacity can support approximately 280,000 GPUs, highlighting the scale of AI data centers.

The Tata Advantage: Why HyperVault Matters More Than You Think

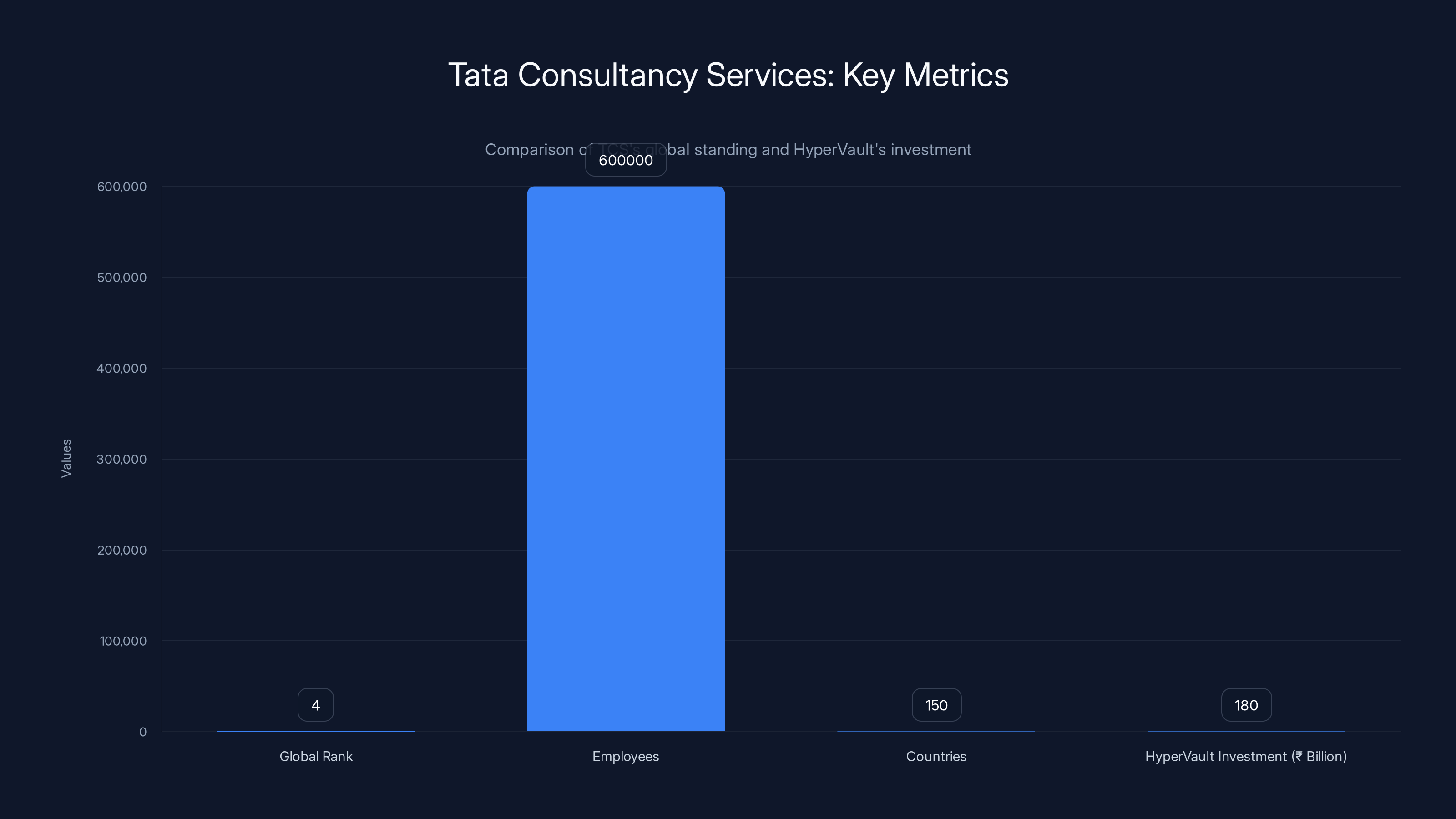

Tata Consultancy Services isn't the flashy play OpenAI could have made. You can't write headlines about TCS the way you write about hyperscalers or edge AI companies. But that's precisely why this partnership is so smart.

TCS is the fourth-largest IT services company in the world. It employs over 600,000 people across 150 countries. More importantly, it already has enormous relationships with enterprise customers who absolutely need data residency compliance for their AI workloads.

Tata Group's HyperVault is a newer initiative, backed by about ₹180 billion (approximately $2.1 billion USD) in planned investment and designed from the ground up for large-scale AI compute. The company launched HyperVault specifically to capture demand from hyperscalers and enterprises that need India-based infrastructure but want to work with local partners who understand the regulatory landscape.

OpenAI's choice to become HyperVault's first major customer does something interesting. It validates the entire HyperVault platform before it scales. Customers who were skeptical about trusting a new data center provider get reassurance from the fact that OpenAI vetted it thoroughly enough to make it its primary India infrastructure partner. That's worth genuine money in the enterprise sales cycle.

TCS also brings something else to the table: institutional knowledge of how India's data localization and compliance frameworks actually work in practice. This isn't theoretical understanding. TCS has been navigating Indian regulatory requirements for decades. When enterprises ask OpenAI whether their data is truly secure and compliant in India, being able to say "we're using infrastructure operated by Tata, which knows Indian regulation inside and out" carries substantial weight.

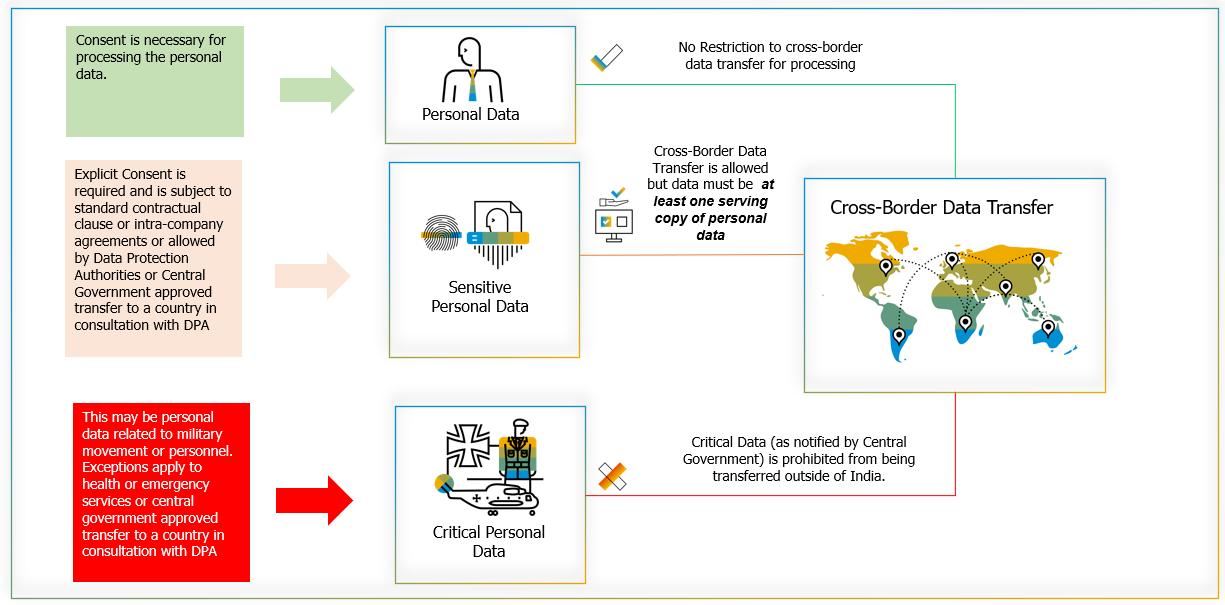

The Data Localization Problem OpenAI Is Solving

Here's a problem that doesn't make headlines but shapes enormous amounts of infrastructure investment: data localization requirements.

Indian regulation, like regulations in many countries, requires certain types of data to remain within India's borders. This applies particularly to financial services data, health records, government information, and increasingly, any personally identifiable information tied to Indian citizens.

Before the Tata deal, OpenAI faced a choice with every enterprise customer in India: either the customer had to accept running their AI workloads on infrastructure outside India, with all the compliance headaches that creates, or OpenAI had to find partners with existing India-based infrastructure.

That's a hard sell for regulated enterprises. A bank in Mumbai doesn't want to run customer financial data through servers in the US, even if technically encrypted. A hospital doesn't want patient records crossing borders. A government agency has explicit legal requirements against it.

Running OpenAI workloads on India-based infrastructure removes that obstacle. Suddenly, regulated enterprises in India become potential customers. Government workloads become an option. Sensitive data can be processed domestically.

The addressable market here is genuinely massive. India has over 600,000 registered companies, thousands of banks and financial institutions, tens of thousands of hospitals, plus all levels of government. The percentage of that market currently using enterprise AI? Single digits. The potential? Enormous.

OpenAI just opened a door that was previously locked.

A 100 MW facility can support between 140,000 and 400,000 GPUs, while a 1 GW facility can support 1.4 to 4 million GPUs, showcasing the massive scale of AI data centers. Estimated data.

The Enterprise Deployment Strategy: When Scale Meets Adoption

The partnership doesn't stop at infrastructure. OpenAI also announced plans to deploy ChatGPT Enterprise across TCS's entire workforce.

TCS has 640,000 employees globally, but the initial rollout is focused on the engineering teams at TCS. That means somewhere between 100,000 and 300,000 developers, architects, and engineers getting access to ChatGPT Enterprise integrated into their daily workflows.

This is brilliant for multiple reasons, and most of them have nothing to do with how much OpenAI makes from TCS directly.

First, it creates a massive showcase for enterprise AI adoption at scale. When one of the largest IT services companies in the world standardizes on ChatGPT Enterprise, that signals to other enterprises that this isn't experimental. It's how work gets done. Other Indian enterprises watch TCS and ask themselves why they're not doing the same thing.

Second, it creates a feedback loop. TCS engineering teams using ChatGPT Enterprise across hundreds of thousands of developers will generate extraordinary amounts of data about how enterprise AI adoption actually works. Where do people get stuck? What features do they actually use? How does AI integration affect productivity? OpenAI gets to watch an experiment at unprecedented scale play out with a partner that has deep enterprise relationships.

Third, it makes TCS a reference customer for every other enterprise AI deal OpenAI does in India. When OpenAI's sales team talks to Infosys or HCL Technologies about ChatGPT Enterprise adoption, the first thing they say is, "TCS is already doing this at scale across as many as 300,000 developers."

The deal also includes standardizing on OpenAI's Codex tools for AI-native software development. This is OpenAI essentially saying, "We're not just selling you compute and chat tools. We're helping you rebuild your entire development process around AI."

For a company like TCS that does software development at massive scale, that's a fundamental shift. If as many as 300,000 TCS developers are all building with AI-native practices using OpenAI tools, they become ambassadors for those tools. Every client project becomes an opportunity to introduce their clients to the same tools. It's a distribution advantage that echoes through the entire IT services industry.

The Certification Play: Building the Next Generation of AI Talent

Embedded in this deal is something that sounds almost boring on the surface: OpenAI is expanding its certification programs in India, with TCS becoming the first participating organization outside the United States.

But this is actually one of the most important long-term pieces of the entire partnership.

AI talent in India is in high demand and short supply. India has millions of people with software development backgrounds, but relatively few with deep experience in prompt engineering, AI system design, enterprise AI deployment, and building with LLMs.

OpenAI running certification programs through TCS means several things simultaneously:

First, it creates a pathway for Indian developers to build formal credentials in AI skills. Those certifications have value in the job market. They signal to employers that someone knows how to work with these tools. Over time, that creates a talent premium for people with OpenAI certifications.

Second, it creates institutional momentum. If TCS is officially certifying people in OpenAI tools, other companies start doing the same. Educational institutions add OpenAI-focused curriculum. A whole ecosystem forms around these skills.

Third, it's distribution disguised as education. Every person who gets certified becomes someone with practical experience using OpenAI tools. They go work at other companies. They recommend OpenAI tools to their managers. They become default choices for new projects because they're what people know.

This is how you build a moat that's almost impossible to compete against. Not by forcing adoption, but by building so much human expertise around your tools that it becomes the natural choice.

India as the Critical Growth Market: 100+ Million Users Already

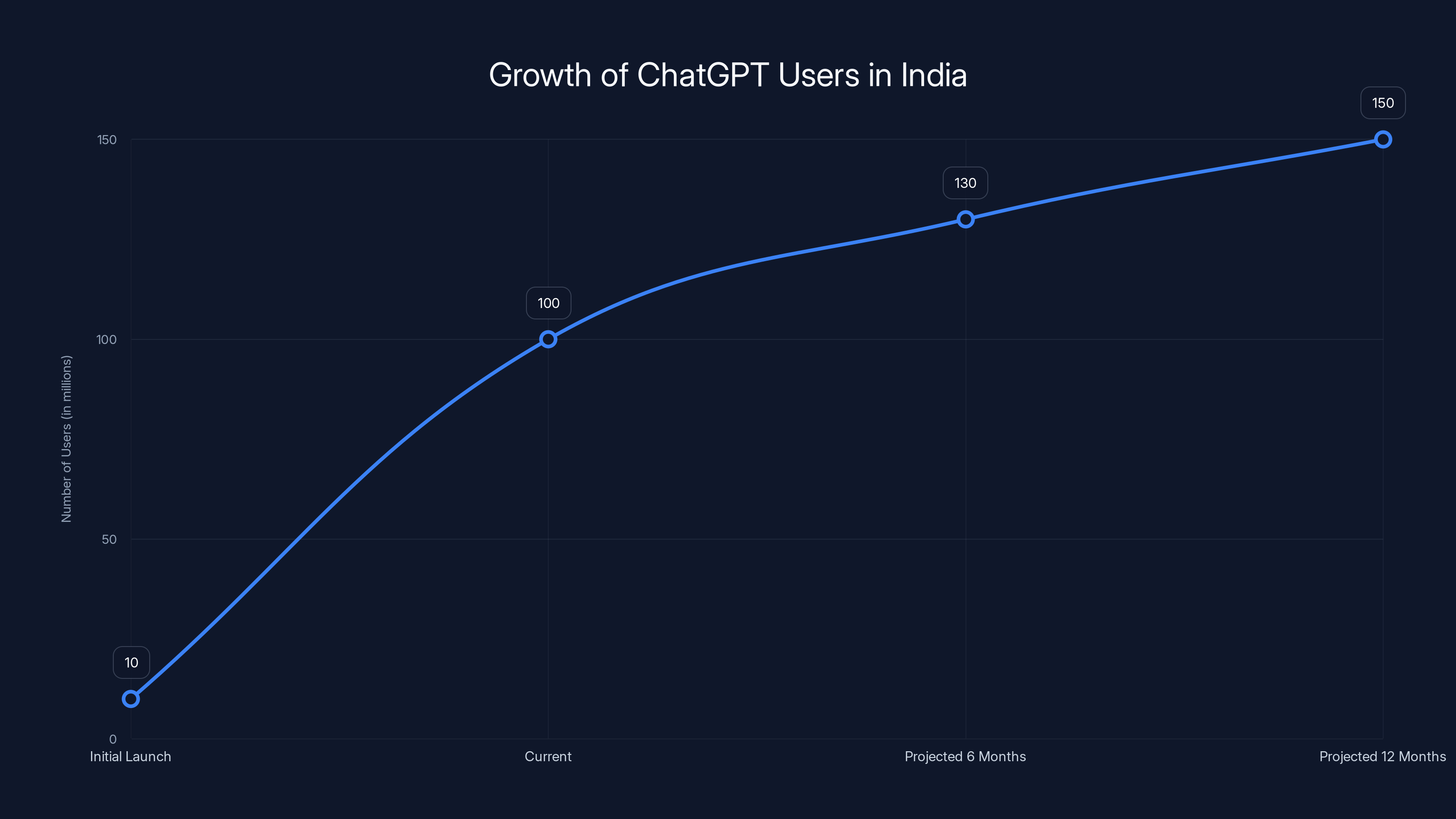

The numbers here are worth taking seriously. OpenAI's CEO has stated that India has more than 100 million weekly active ChatGPT users.

Let that sink in. That's more than the total population of most countries. That's comparable to the entire user base of many social networks.

For context, India's total internet user base is around 750 million. So roughly 13% of India's internet population already uses ChatGPT every week. That adoption rate is extraordinary for any service, let alone for something as technically complex as a large language model chatbot.

That adoption is coming from students, teachers, developers, entrepreneurs, and increasingly, enterprise users. But here's what's remarkable: that 100 million number is probably already outdated. The adoption trajectory in India is exponential. By the time this article is fully indexed in search engines, the number is probably pushing 130 or 150 million.

This is the market OpenAI is playing for. It's not just about India's current size. It's about the fact that India's internet adoption is still accelerating. India is adding more internet users every month than the total population of many countries. The ceiling on potential ChatGPT users in India is genuinely massive.

Every infrastructure investment, every enterprise partnership, every certification program is OpenAI saying: "We're going to own this market before anyone else figures out how important it is."

ChatGPT's user base in India is experiencing exponential growth, with projections suggesting up to 150 million users within a year. (Estimated data)
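The two headline figures in this section, the roughly 13% penetration and the projected climb toward 150 million users, reduce to simple arithmetic. The sketch below is illustrative: the function names are made up for this example, and the 12-month horizon is an assumption taken from the projection above, not a reported growth rate.

```python
def penetration(weekly_active_users: float, internet_users: float) -> float:
    """Share of the internet population that is weekly active."""
    return weekly_active_users / internet_users


def implied_monthly_growth(start_users: float, end_users: float, months: int) -> float:
    """Constant compound monthly rate that takes start_users to end_users."""
    if start_users <= 0 or end_users <= 0 or months <= 0:
        raise ValueError("all inputs must be positive")
    return (end_users / start_users) ** (1 / months) - 1


# Figures quoted in the article: 100M weekly users, ~750M internet users.
share = penetration(100e6, 750e6)
# Assumed horizon: 150M users reached 12 months after the 100M figure.
rate = implied_monthly_growth(100e6, 150e6, 12)

print(f"Penetration: {share:.1%}")
print(f"Implied monthly growth: {rate:.2%}")
```

Under those assumptions, reaching 150 million in a year needs compound growth of about 3.4% a month, which is fast but well short of a doubling.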

The Regulatory Arbitrage: Winning Before the Game is Defined

One of the most underrated aspects of this deal is the regulatory positioning.

AI regulation globally is still extremely nascent. The EU has the AI Act. The US is working through various regulatory frameworks. India is still in the very early stages of defining what AI regulation will look like.

By establishing deep partnerships and infrastructure commitments before those regulatory frameworks solidify, OpenAI gets a seat at the table when India starts writing those rules. When Indian regulators ask what's necessary for responsible AI deployment, OpenAI gets to answer from a position of operational knowledge and established presence.

This isn't cynical. It's actually the right thing. Companies that operate within a market do have better knowledge of what regulation is practical and what's theoretical. But it's also strategically valuable. OpenAI is positioning itself to help write the regulatory playbook in India before competitors even have operations there.

When Facebook or other social networks tried to enter China, they entered too late. The regulatory framework was already written. The game was already over.

OpenAI is making sure that doesn't happen with India. By being there early, building relationships, and establishing operational infrastructure, OpenAI gets to shape how India thinks about AI governance.

The Competitive Landscape: Who's Playing This Game?

OpenAI isn't the only company betting big on India infrastructure. But the scale and speed of OpenAI's moves are distinctive.

Google, led by Sundar Pichai, has been present in India for years. But Google's AI strategy in India has been more scattered. Partnerships with Indian startups, some API access, some integration into Google Cloud. Nothing approaching the level of integrated infrastructure and enterprise deployment OpenAI is planning.

Microsoft is tied to OpenAI through its investment and cloud partnership. But Microsoft's own infrastructure play in India is less developed than what OpenAI is building with Tata.

Anthropic, Claude's maker, has been largely absent from India infrastructure announcements.

Meta has been focused on other markets for its AI initiatives.

This means OpenAI has a window where it can establish market dominance before competitors catch up. That window is closing, but it's not closed yet. The next 18 to 24 months are critical. If OpenAI can ship the 100MW facility, scale to 500MW, and establish ChatGPT as the default enterprise AI tool across major Indian companies, that advantage becomes durable.

Competitors might build their own infrastructure eventually. But by then, OpenAI will have cemented relationships, trained an entire generation of developers, and built institutional habits that are hard to break.

The Financial Architecture: Who's Paying for What

Here's an interesting detail buried in the announcement: financial terms of the deal were not disclosed.

That's actually telling. There are a few possibilities. OpenAI might be making a capital investment in HyperVault directly, taking an equity stake in the data center business. That would align incentives long-term and give OpenAI more control over capacity planning.

Alternatively, OpenAI might be signing long-term capacity contracts, essentially guaranteeing to pay for 100MW of power and facilities over a defined period. This is how most hyperscaler data center relationships work.

Or it could be some hybrid: upfront investment in infrastructure development plus long-term capacity agreements.

The lack of disclosure could mean the structure is more complex than a simple lease agreement, though a direct equity investment would likely surface in TCS's public filings, since TCS is a listed company. The silence suggests either the deal structure is proprietary, or the terms are asymmetric in ways OpenAI doesn't want public.

There's also the question of who's paying for the build-out. TCS received backing from TPG, the private equity firm, to develop HyperVault, so TPG is helping fund the roughly $2 billion planned investment. OpenAI's willingness to be the anchor customer probably de-risks that investment substantially, making it more attractive to TPG.

From OpenAI's perspective, not disclosing financial terms is smart. It keeps competitors guessing about the actual costs and terms. If you disclosed that you're paying $X per megawatt per year, competitors know exactly what your cost structure looks like. By staying quiet, you maintain strategic ambiguity.

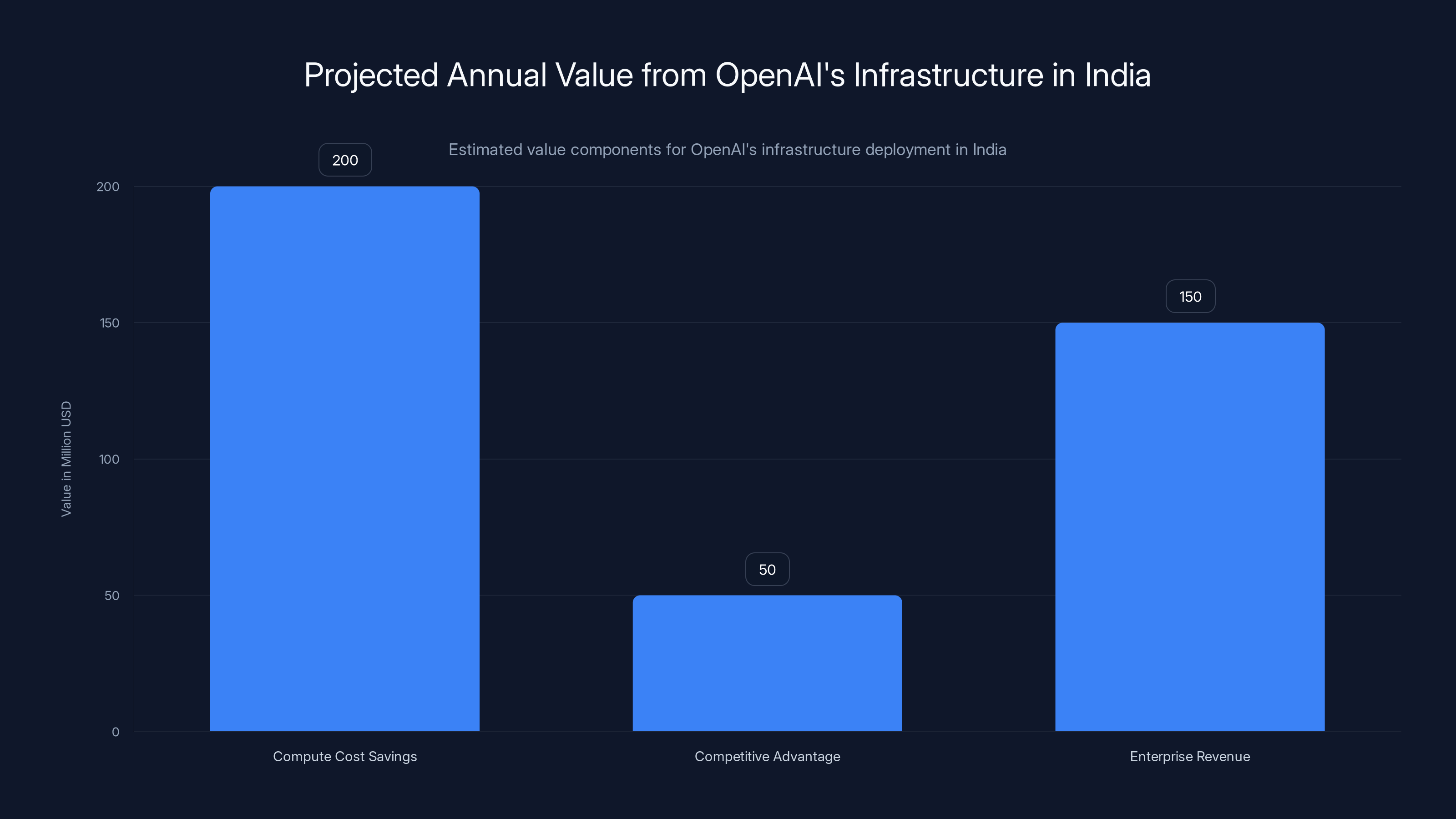

Estimated data suggests OpenAI could generate over $400 million annually from compute savings, competitive advantages, and enterprise revenue in India.

The Expansion Beyond Infrastructure: Mumbai, Bengaluru, and the Physical Presence Game

The infrastructure deal gets all the attention, but the office expansion might matter just as much.

OpenAI plans to open new offices in Mumbai and Bengaluru in 2025, adding to its existing presence in New Delhi. That means presence in three of India's most important business centers.

New Delhi is the regulatory and government center. Mumbai is finance and enterprise headquarters. Bengaluru is the tech and startup hub.

By establishing offices in all three, OpenAI is building a presence that mirrors how Indian business actually works. You need people in New Delhi to understand government procurement and regulatory requirements. You need people in Mumbai to talk to banks and large enterprises. You need people in Bengaluru to recruit engineers and work with startups.

The offices aren't just symbolic. They're operational necessity for the enterprise sales cycle. When you want to sell ChatGPT Enterprise to a major Indian bank, you need a team in Mumbai that can visit customers, understand their requirements, and provide local support. That requires local employees, local offices, local presence.

OpenAI is essentially saying, "We're not treating India as a market we service from overseas. We're treating it as a core market where we operate." That's a significant commitment.

The Partner Ecosystem Strategy: Building Network Effects

The announcement mentions that OpenAI has been expanding partnerships with companies including Pine Labs, JioHotstar, Eternal, Cars24, HCLTech, PhonePe, CRED, and MakeMyTrip.

Look at these companies closely. Pine Labs is payments infrastructure. JioHotstar is streaming and entertainment. Eternal is food delivery and quick commerce. Cars24 is used-car e-commerce. HCLTech is IT services. PhonePe is digital payments. CRED is fintech. MakeMyTrip is travel.

OpenAI is building a presence across the entire consumer and enterprise technology stack in India. The strategy is to embed ChatGPT and OpenAI tools into platforms that hundreds of millions of Indians use daily.

When you book a trip through a partner that uses ChatGPT-powered customer service, you're interacting with OpenAI's infrastructure without knowing it. When you make a payment through a partner that uses OpenAI-powered fraud detection, the same thing happens.

This is network effects through partnership. OpenAI doesn't need to build every application. It needs to be the underlying infrastructure that every application uses. By being in the payment stack, the entertainment stack, the e-commerce stack, the travel stack, OpenAI becomes invisible infrastructure that's nonetheless essential.

Competitors trying to move into India now would have to convince all of these companies to switch infrastructure providers. That's expensive, risky, and unnecessary for those partners. They've already integrated. Switching means rebuilding.

The Manufacturing and AI Connection: Why India Matters for Hardware

One angle that's not explicitly mentioned but is absolutely part of the strategic picture: India is becoming important for hardware manufacturing.

AI companies need chips. NVIDIA makes the best ones. But the supply of H100s, the GPUs powering most AI training and inference right now, has been constrained. Everyone's competing for limited capacity.

India, through partnerships with companies and increasingly through government initiatives, is trying to develop indigenous semiconductor and chip manufacturing capacity. Building relationships with major hardware manufacturers and data center operators positions OpenAI well if and when that manufacturing capacity comes online.

It's a longer-term play. India's semiconductor manufacturing is still in early stages. But the infrastructure investments today position OpenAI to be a primary customer when that capacity matures.

OpenAI's compute capacity in India is projected to grow from 0.1 gigawatts in 2025 to 1 gigawatt by 2035, reflecting strategic infrastructure expansion. Estimated data based on initial commitment.

The Timing Question: Why Now in 2025?

You could ask: why is OpenAI making this move now? Why not six months ago or six months from now?

Several factors probably align:

First, Tata's HyperVault initiative has reached the point where taking on an anchor customer makes sense. A brand-new data center platform is a hard thing to trust. With funding and a build-out plan in place by 2025, HyperVault is credible enough for a company like OpenAI to commit critical workloads to it.

Second, India's AI policy environment is crystallizing. The government has made clear that it views AI as strategically important. Companies making major infrastructure bets now get to work with policymakers on favorable terms before frameworks harden.

Third, competitor moves. Other companies making India plays probably forced OpenAI's hand. You want to be first or early in emerging markets. If you wait too long, you're always reacting to what competitors built first.

Fourth, OpenAI's own growth trajectory. The company has been raising enormous sums of capital. It has capital to deploy. Making big infrastructure bets is how you convert capital into long-term advantage.

The Implementation Question: Can Tata Actually Execute?

The deal is announced. The commitment is made. But can Tata actually build this?

TCS is an enormous company with deep infrastructure expertise. Hyper Vault is a newer initiative, but it's backed by TPG capital, which has invested in data center infrastructure before and knows what success looks like.

The main risks are technical and logistical. Building a 100MW data center takes time. You need to acquire land, secure power supply, handle environmental permitting, and test infrastructure. The timeline from announcement to first operational capacity is typically 18 to 36 months.

Then there's the question of whether India's power infrastructure can support it. Large data centers are power-hungry. India's electricity grid has gotten more reliable, but peak load issues still exist in parts of the country. A 100MW facility needs reliable, consistent power.

There's also the human element. Building and operating a world-class data center requires expertise. You need to recruit engineers who know how to manage massive GPU clusters, handle cooling at scale, and troubleshoot failures when they happen.

But Tata has advantages here. It owns significant power generation capacity through its subsidiaries. It has relationships with India's power distribution companies. It has engineering talent available. These aren't trivial advantages.

The risk isn't that the project fails entirely. The risk is that it takes longer than expected or encounters cost overruns. That delays when OpenAI can actually use the capacity, which pushes back enterprise deployments and customer implementations.

Workforce Upskilling and the Long Game

The partnership includes OpenAI certification programs in India, which signals something deeper: OpenAI is betting on India's workforce transformation.

India has over 1.4 billion people. The percentage with technical skills is still relatively small but growing rapidly. The number with AI skills is tiny.

By creating certification pathways and working with educational institutions, OpenAI is helping build the talent infrastructure that will use its tools for the next decade.

This is a patient-capital play. You're not going to see return on certification program investments immediately. But in five to ten years, when a huge fraction of Indian software developers have OpenAI certification and experience with ChatGPT and Codex, those developers become default choices for companies building with those tools.

It's empire building. And it works.

TCS is the 4th largest IT services company, with 600,000 employees in 150 countries. HyperVault's investment of ₹180 billion underscores its commitment to AI infrastructure.

Looking Ahead: What This Means for the Rest of the World

If India is the template, what does it tell us about OpenAI's global strategy going forward?

The pattern is: (1) Identify a high-growth market where the regulatory framework is still taking shape, (2) Build foundational infrastructure partnerships, (3) Deploy tools across enterprise partners, (4) Expand presence in major cities, (5) Build talent through certifications and partnerships.

Southeast Asia probably looks very attractive through this lens. Vietnam, Thailand, Indonesia, Philippines. High growth, emerging regulatory frameworks, millions of potential users.

Latin America is similar. Brazil has regulatory interest in AI governance. Mexico has deep enterprise relationships.

Europe is probably less attractive right now because the regulatory framework is already set and tilts toward local preferences. The AI Act imposes requirements that complicate OpenAI's model.

Middle East and North Africa probably come later, but will eventually follow similar patterns.

The company that builds infrastructure and partner relationships in these markets before competitors show up wins the market for a decade. OpenAI is executing that strategy systematically.

The Competitive Pressure This Creates

The India deal changes the competitive game for everyone else.

Google has to respond. It can't let OpenAI own India infrastructure while Google is relegated to being a service provider. Expect Google Cloud announcements around India infrastructure and enterprise partnerships within the next two quarters.

Microsoft's relationship with OpenAI makes this complicated. Microsoft has its own interests in India but also benefits from OpenAI's success. Still, expect more aggressive Azure infrastructure plays in India.

Anthropic, if it's serious about being more than a research lab, needs to make similar moves. It has the capital. It doesn't have the execution track record with governments and enterprises that OpenAI is building.

Meta hasn't competed for enterprise AI infrastructure in markets like India. This is probably one of the reasons why. The capital required is enormous, the regulatory complexity is high, and the competitive window moves fast.

The next 12 months of announcements from these companies will be the market responding to what OpenAI just did. That's how to read announcements going forward. Not as independent strategic choices, but as reactions to OpenAI's moves.

The Quantification Question: How Much is This Really Worth?

If you were trying to value this deal from OpenAI's perspective, what would the economics look like?

Let's start with a simple model. Assume OpenAI needs to serve 200 million users in India within five years. Assume each user needs on average 1 unit of compute annually, where 1 unit of compute costs $1 in the cloud data center market.

That's 200 million users × 1 unit × $1 per unit, or about $200 million per year in compute at market rates.

But the real value is bigger. Having domestic infrastructure means higher quality product (lower latency), better regulatory compliance (required for certain enterprise deals), and competitive advantage (competitors need to build their own infrastructure or rent from less favorable providers).

Estimating the competitive advantage value is speculative, but not zero. Having infrastructure that competitors don't have, in a market competitors are trying to enter, is worth real money.

Then there's the enterprise deployment angle. If you get ChatGPT Enterprise standardized across hundreds of companies in India, each paying tens or hundreds of thousands per year, that's recurring revenue that wouldn't exist without the infrastructure and partnership.
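The pieces of this back-of-envelope model can be put side by side in code. This is a sketch under stated assumptions, not a valuation: the user count and unit cost come from the simple model above, while the customer count and contract size are hypothetical placeholders chosen only to fall inside "hundreds of companies" and "tens or hundreds of thousands per year".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndiaDealAssumptions:
    # From the article's simple model.
    users: int = 200_000_000
    compute_units_per_user: float = 1.0
    cost_per_unit_usd: float = 1.0
    # Hypothetical placeholders, not reported figures.
    enterprise_customers: int = 300
    annual_contract_usd: float = 100_000.0


def annual_compute_spend(a: IndiaDealAssumptions) -> float:
    """Yearly compute cost at market rates for the assumed user base."""
    return a.users * a.compute_units_per_user * a.cost_per_unit_usd


def annual_enterprise_revenue(a: IndiaDealAssumptions) -> float:
    """Yearly recurring revenue from the assumed enterprise contracts."""
    return a.enterprise_customers * a.annual_contract_usd


base = IndiaDealAssumptions()
print(f"Compute spend:      ${annual_compute_spend(base):,.0f} per year")
print(f"Enterprise revenue: ${annual_enterprise_revenue(base):,.0f} per year")
```

Changing any field, for example `IndiaDealAssumptions(enterprise_customers=900)`, shows how sensitive the total is to inputs that nobody outside the deal actually knows.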

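A rough order-of-magnitude guess, in line with the estimate cited earlier: more than $400 million annually across compute savings, competitive advantages, and enterprise revenue in India.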

The Regulatory Tailwind and Headwind Risk

One thing to watch closely: India's AI governance evolution.

Right now, India is broadly supportive of AI development. The government has signaled that it wants India to be an AI leader. But sentiment can shift quickly if there are perceived harms or if concerns about data privacy escalate.

OpenAI's move to have data processing happen within India, using Tata infrastructure, probably helps protect against sudden regulatory restrictions. If India banned processing Indian citizens' data on servers abroad, OpenAI would already have domestic infrastructure in place.

But it's also possible that India, learning from China and the EU, decides it wants more control over AI infrastructure and deployment. Regulations requiring Indian data center operators to limit access to foreign companies, or restrictions on where trained models can be deployed, would materially change the value of this infrastructure.

The bet OpenAI is making is that India's AI governance will remain permissive and that early infrastructure investment provides protection against future restrictive policies. That's a reasonable bet, but not guaranteed.

Integration with OpenAI's Global Infrastructure Story

The India deal doesn't exist in isolation. It's part of a larger infrastructure story.

OpenAI has been building partnerships globally. It's in discussions about infrastructure in other markets. The Tata deal is the first major public announcement of this global infrastructure strategy, but it's probably not the last.

The pattern is becoming clear: OpenAI wants to operate infrastructure regionally, not globally. That means US infrastructure for North America, Europe infrastructure for EMEA, India infrastructure for South Asia, and similar for other regions.

That's a fundamentally different model than the pure cloud service model, where everything runs in a few hyperscaler data centers. It requires more capital, more operational complexity, more local expertise.

But it also creates defensibility. If every region has OpenAI-operated infrastructure, competitors can't easily replicate that in emerging markets. They'd have to build everywhere simultaneously, which is capital-prohibitive.

The Taiwan Semiconductor Vulnerability

One critical thing worth noting: the most advanced chips that run these data centers are largely fabricated in Taiwan by TSMC, which manufactures for NVIDIA and other suppliers.

OpenAI's infrastructure play in India, and by extension globally, depends on continued access to cutting-edge semiconductors. If geopolitical tensions around Taiwan escalate, or if US export controls on advanced chips tighten further, the entire infrastructure strategy becomes vulnerable.

This isn't a reason not to invest in India infrastructure. It's a reason to diversify across regions and to work on alternative chip sources if possible. But it's a real constraint on global infrastructure strategies that don't acknowledge the Taiwan risk.

The Employee and Recruitment Angle

Opening offices in Mumbai and Bengaluru isn't just about customer relationships. It's about hiring.

Open AI needs people who understand India's market, regulatory environment, and enterprise landscape. Those people need to work in India, or at least be able to travel there frequently.

Bengaluru specifically is attractive because it's where Indian tech talent is concentrated. Open AI can hire experienced engineers, product managers, and operations people who might otherwise work for Google, Microsoft, Amazon India, or Indian companies like Flipkart.

The salary expectations in Bengaluru are lower than in the US, but higher than five years ago. Open AI will need to pay competitive salaries to attract top talent. But there's still a cost advantage versus building the same team in the Bay Area.

Long-term, Open AI probably wants to have significant portions of its workforce in India. Not just for cost reasons, but because the people who understand India's market best are from India.

FAQ

What exactly is a megawatt and why does 100MW matter for AI?

A megawatt is a measure of power (one million watts). One megawatt can power roughly 200 US homes. For AI data centers, 100MW can theoretically support approximately 140,000 to 400,000 GPU accelerators drawing between 700 and 250 watts each (an NVIDIA H100 sits at the 700-watt end), before cooling and other facility overhead are counted. That makes it one of the largest AI-focused data center deployments globally. This scale is essential for training large language models and running inference for millions of concurrent users.
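The 140,000 to 400,000 range is simple division: facility power over per-GPU draw, using the 700-watt and 250-watt ends of the GPU range. Here is a minimal sketch of that arithmetic. The function name `max_gpus` and the `pue` parameter (power usage effectiveness, the ratio of total facility power to IT power) are illustrative assumptions, and the 1.3 figure is a placeholder rather than a number from the deal.

```python
def max_gpus(facility_watts: float, gpu_watts: float, pue: float = 1.0) -> int:
    """Upper bound on how many accelerators a facility could power.

    pue is an assumed power usage effectiveness (total power / IT power).
    A value of 1.0 means every watt reaches the GPUs, which no real
    facility achieves, so the default gives a theoretical ceiling.
    """
    if gpu_watts <= 0 or pue < 1.0:
        raise ValueError("gpu_watts must be positive and pue must be >= 1.0")
    it_watts = facility_watts / pue  # power left after cooling and other overhead
    return int(it_watts // gpu_watts)


FACILITY_WATTS = 100_000_000  # the 100MW initial commitment

# Theoretical ceiling at the two ends of the per-GPU power range.
at_700_watts = max_gpus(FACILITY_WATTS, 700)
at_250_watts = max_gpus(FACILITY_WATTS, 250)

# Same 700-watt GPU with a hypothetical PUE of 1.3 (illustrative only).
at_700_watts_with_overhead = max_gpus(FACILITY_WATTS, 700, pue=1.3)

print(at_700_watts, at_250_watts, at_700_watts_with_overhead)
```

Even this adjusted figure counts GPU power alone. Host CPUs, memory, networking, and storage draw from the same budget, so a real deployment would land lower still.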

Why did Open AI choose to partner with Tata instead of building infrastructure independently?

Tata brings several advantages: established regulatory knowledge through TCS's decades of operating in India, access to power generation infrastructure through Tata subsidiaries, and the Hyper Vault platform backed by $2 billion in investment capital. By partnering rather than building independently, Open AI reduces capital requirements, accelerates timeline to operational capacity, and gains a partner with existing enterprise relationships across India.

How do data residency requirements affect AI companies operating in India?

Indian regulation requires certain data types (financial, health, government information) to remain within Indian borders. Before this infrastructure was available, companies using Open AI's tools had to either process data internationally (creating compliance headaches) or avoid using AI tools entirely. Having domestic infrastructure means regulated enterprises can now use Chat GPT and related tools while maintaining full compliance with data localization laws.

What does Chat GPT Enterprise include that the free version doesn't?

Chat GPT Enterprise provides data privacy guarantees (messages don't train future models), admin controls for managing team access, detailed usage analytics and cost controls, higher rate limits for large teams, and dedicated account support. For enterprises deploying AI across hundreds or thousands of employees, these features are essential for security, cost management, and operational control.

How does Open AI's certification program in India create competitive advantage?

By providing AI certifications through TCS, Open AI creates a skilled workforce whose members default to Open AI tools because those are the tools they trained on. These certified developers become the default hires when companies build new projects, since they already have practical experience with the tools. Over five to ten years, this creates a moat that's difficult for competitors to overcome, even if they eventually build competing infrastructure.

What does scaling from 100MW to 1GW mean for Open AI's India strategy?

The 1GW target represents a tenfold expansion, making Hyper Vault one of the largest AI-focused facilities globally. This suggests Open AI expects India's AI usage to grow from the current 100+ million weekly users to a market many times larger. The long-term commitment signals Open AI's confidence in India as a core market for the next decade, not just an emerging opportunity.

How does this deal impact competition between Open AI and other AI companies?

Open AI has established infrastructure and enterprise partnerships in India before major competitors built a comparable local presence. This creates a first-mover advantage: competitors like Google and Microsoft now need to build competing infrastructure, negotiate partnerships, and recruit teams to match Open AI's position. Being second in a market this important creates a lasting competitive disadvantage.

What are the risks that could prevent Tata from successfully executing this project?

Potential risks include: timeline delays in data center construction (typical timeline is 18-36 months), power supply constraints in India's electricity grid, recruitment challenges for skilled data center operations staff, and potential regulatory changes that restrict operations. However, Tata's existing infrastructure and capital backing substantially reduce these risks compared to a startup attempting the same project.

Why does Open AI need regional infrastructure instead of using cloud providers like AWS or Google Cloud?

Regional infrastructure provides four advantages: (1) lower latency for local users, improving user experience; (2) regulatory compliance with data residency requirements; (3) cost efficiency, since owning infrastructure is cheaper than renting cloud capacity at massive scale; and (4) competitive differentiation, since competitors must either build competing infrastructure or rent from the same cloud providers, putting them at a disadvantage.

How does this India infrastructure play fit into Open AI's global strategy?

The India deal appears to be a template for Open AI's global expansion: identify high-growth markets with clear regulatory frameworks, build regional infrastructure partnerships, deploy enterprise tools across major companies, establish local offices, and create talent development programs. Expect similar announcements in Southeast Asia, Latin America, and potentially the Middle East over the next two to three years.

Key Takeaways for Strategic Understanding

Open AI's 100MW data center deal with Tata Group represents far more than infrastructure investment. It's a comprehensive market capture strategy that locks in competitive advantage through physical infrastructure, enterprise partnerships, regulatory positioning, and talent development across India's tech ecosystem. The move signals Open AI's commitment to building durable competitive advantage through owning infrastructure in key markets rather than depending on cloud providers. Success here establishes a template for global expansion that competitors will struggle to replicate at similar speed and scale.
