The Human Touch in Tech: Why Over-Automation Fails [2025]

Automation has become the default answer to nearly every business problem. Need to cut costs? Automate it. Want to move faster? Automate it. Trying to handle more customers with fewer people? You know the answer.

The pressure is real. Leaders watch competitors scale with smaller teams. Vendors promise AI solutions that'll transform everything overnight. Cloud platforms offer automation templates pre-built and ready to deploy. It all sounds logical, even inevitable.

But here's what's actually happening across IT departments, customer support teams, sales organizations, and security operations: efficiency is improving while trust is collapsing.

Tickets get resolved faster. Response times drop. Cost per transaction shrinks. But customer satisfaction scores fall. Prospects ignore outbound campaigns. Security teams miss context-critical anomalies. And when something unusual happens, the system breaks entirely because no human ever learned how the customer actually works.

This isn't a failure of automation technology. It's a failure of strategy. Most organizations have automated the wrong parts of their workflows, removing the very human elements that create resilience, build relationships, and catch problems before they explode.

The uncomfortable truth: your automation is making you brittle, not efficient. And that brittleness will cost you more than you're saving.

TL;DR

- Full automation without human oversight fails when it encounters exceptions, customer nuance, or context that AI can't interpret

- Customer satisfaction plummets when support feels generic and no one understands their specific situation or constraints

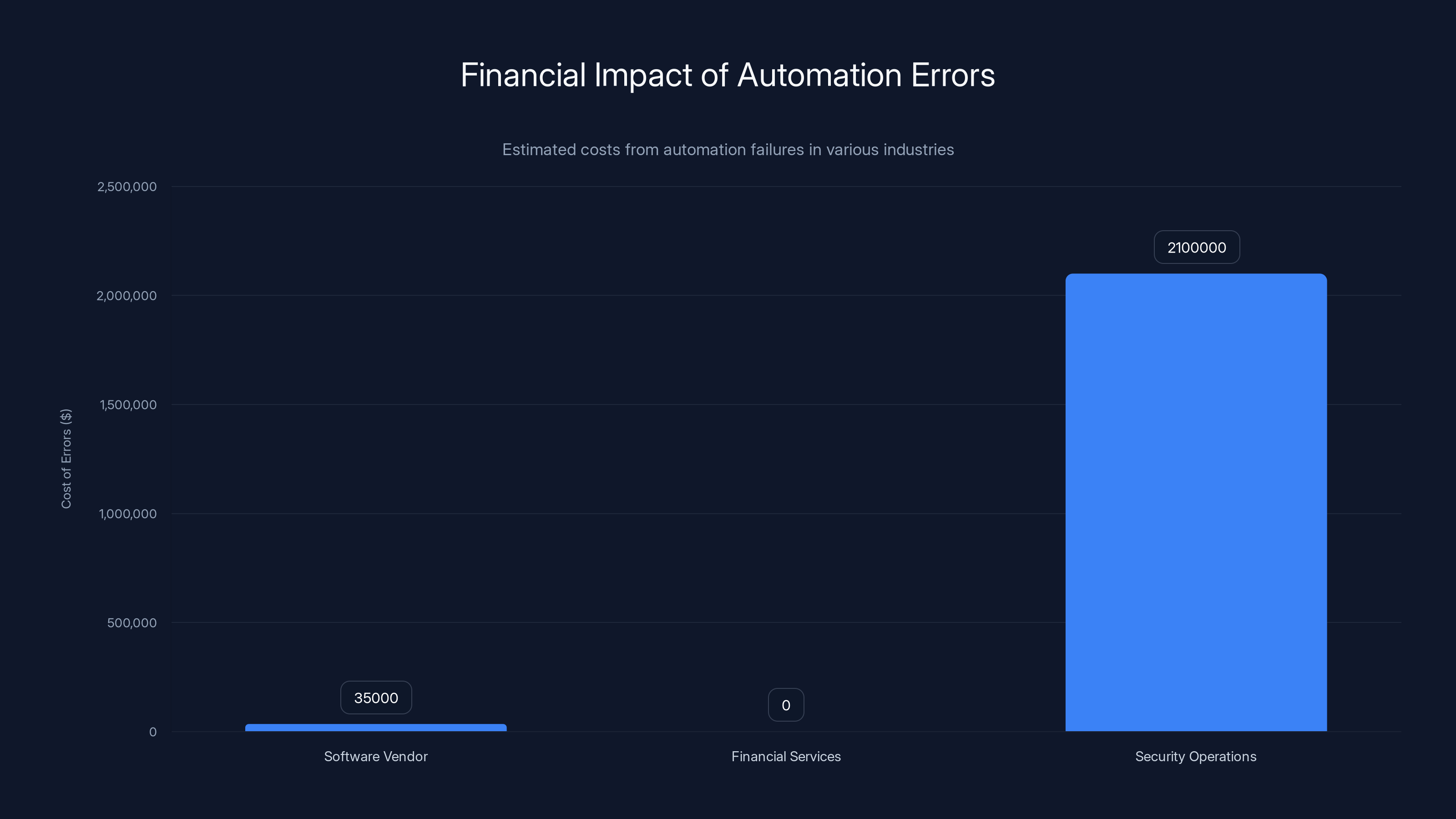

- Hidden costs emerge from misconfigured systems (like a $35K Microsoft SKU mistake), wrong decisions, and service failures

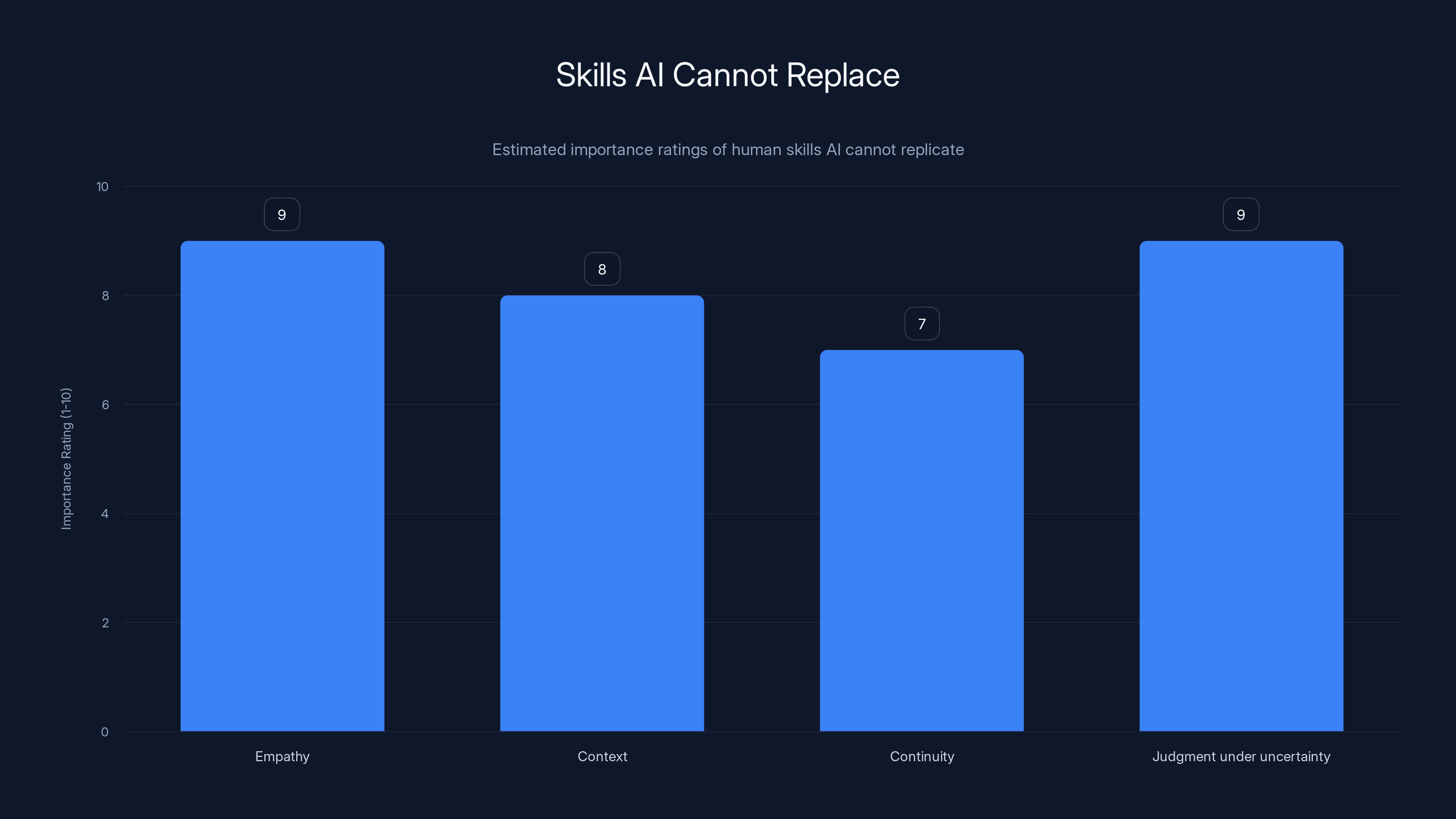

- Human skills have increased in value, not decreased, in the age of AI: empathy, context, continuity, and judgment matter more than ever

- The winning strategy is strategic automation that elevates human expertise, not replaces it—keeping humans at decision points that matter

Automation errors can lead to significant financial losses, as shown by the

The Automation Trap: Why Most Companies Get It Wrong

Automation has a seductive logic. When you look at a support queue with 500 tickets, a sales team making 200 calls a day, or a security operations center fielding dozens of alerts hourly, the instinct is obvious: remove the bottleneck.

Chatbots handle initial responses. AI triage assigns tickets to categories automatically. Machine learning flags security anomalies. Robotic process automation handles repetitive data entry. Workflow automation manages approval chains. It all gets faster.

What gets slower is everything else.

Customers notice when they've been speaking to a system for five interactions and still haven't reached anyone who understands their actual problem. They notice when a support ticket gets closed automatically even though their underlying issue wasn't addressed. They notice when they try to escalate to a human and discover there are no humans left to escalate to.

I've watched this play out across industries. A software company automated its entire support intake. Within six months, their Net Promoter Score dropped 22 points. A financial services firm used AI to route inbound calls. Call handle time improved 18%, but first-contact resolution fell from 71% to 49% because the system couldn't recognize when a customer's issue required cross-departmental context.

The problem isn't automation itself. The problem is automation without human judgment at the decision points that matter.

Most automation gets designed from the wrong angle. Organizations look at high-volume, repetitive work and assume that's where the value is. So they automate intake, triage, initial response, and first-level troubleshooting. They automate everything except the part that should never have been automated in the first place: understanding why the problem matters to the customer.

According to Forbes, 73% of customers will abandon a brand after a poor automated support experience, yet 68% of companies cite "reducing headcount" as their primary automation goal.

That disconnect is where everything breaks.

When you remove human judgment from decision-making, you lose the ability to recognize context. An automated system sees a password reset request and resets the password. A human sees a password reset request from a new user in an unusual geography and verifies identity first. An automated system sees a customer calling the third time about the same issue and closes the ticket. A human hears a customer calling the third time and realizes the first solution didn't actually work, then investigates what went wrong.

These aren't minor differences. They're the difference between a service that works and a service that frustrates people into leaving.
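
The password reset example can be written as a small routing rule. This is a minimal sketch, not a real identity product's logic: the `ResetRequest` fields, the 30-day "new user" cutoff, and the `usual_countries` signal are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ResetRequest:
    user_id: str
    account_age_days: int
    country: str                      # where the request came from
    usual_countries: frozenset[str]   # where this user normally signs in


def route_reset(req: ResetRequest, new_account_days: int = 30) -> str:
    """Return "auto_reset" or "human_verify" for a password reset request."""
    is_new_user = req.account_age_days < new_account_days  # assumed cutoff
    unusual_geo = req.country not in req.usual_countries
    # Only the combination described in the text goes to a person;
    # every other request stays fully automated.
    if is_new_user and unusual_geo:
        return "human_verify"
    return "auto_reset"
```

The routine case stays fast. The rule only decides when a person should look, and what that person does next is left to their judgment.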

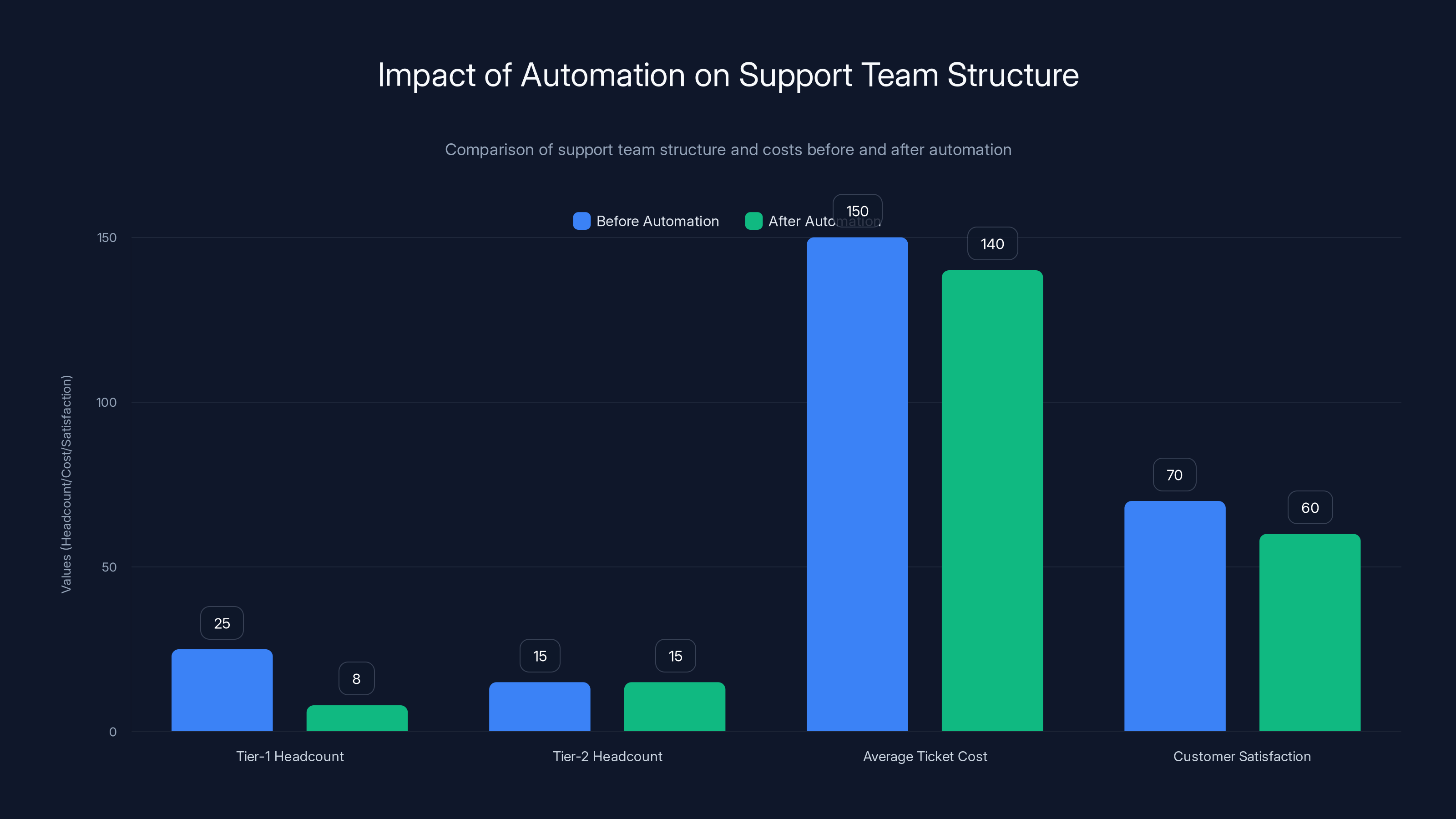

After automation, tier-1 headcount drops significantly, but average ticket cost only slightly decreases, and customer satisfaction declines. Estimated data highlights the inefficiencies of over-automation.

The Real Cost of Automation Gone Wrong

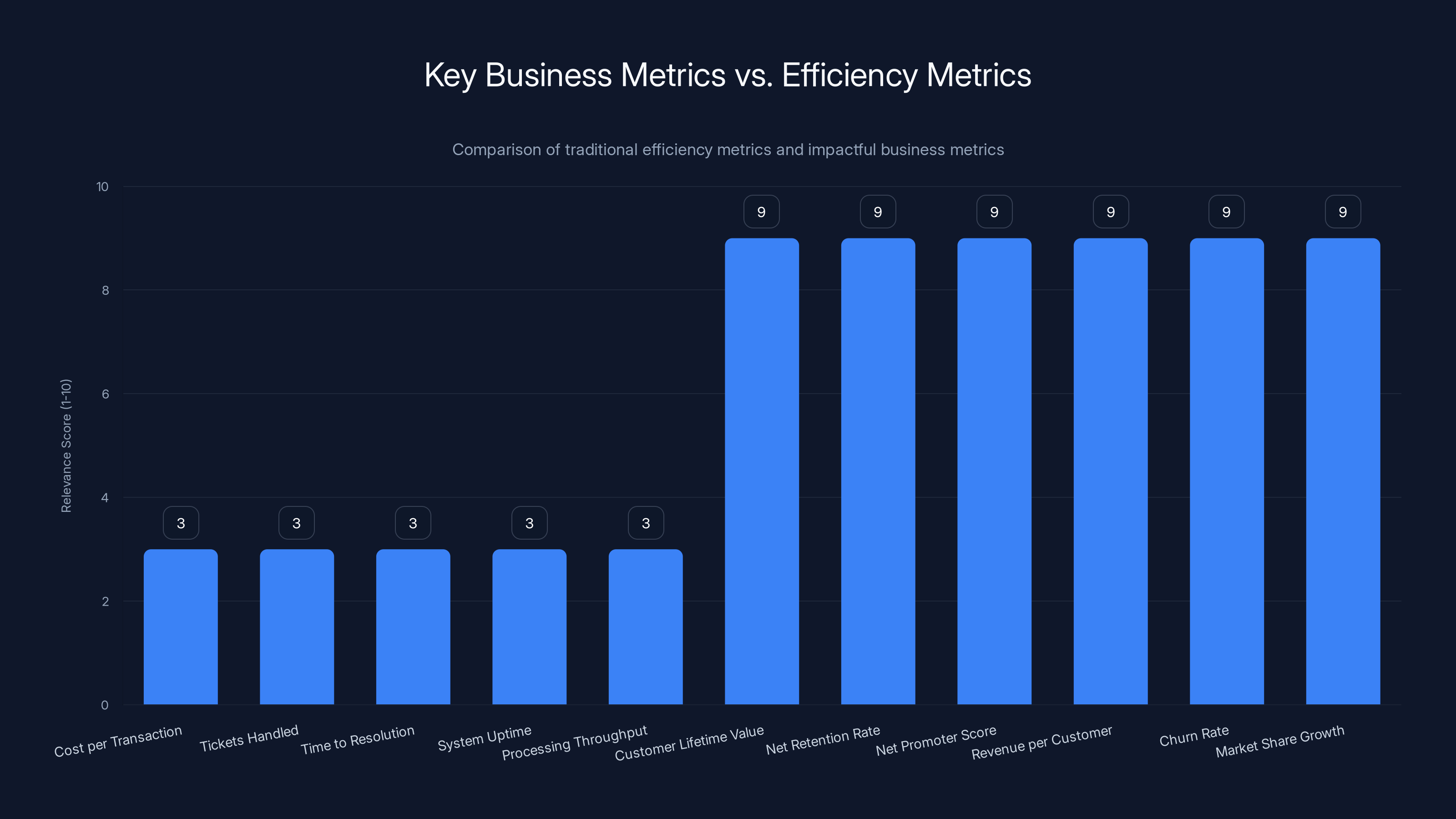

Most organizations measure automation ROI by looking at surface metrics: cost per transaction, tickets handled per agent, time to resolution. These are easy to track, easy to report to executives, and easy to celebrate.

What they don't measure is the damage.

Consider what happened at one mid-sized software vendor. They implemented an AI-assisted Copilot workflow to handle Microsoft licensing recommendations. The system was trained to suggest SKUs based on usage patterns. No human oversight. The automation was supposed to save the licensing team 15 hours per week.

Within three months, the company had incurred $35,000 in unnecessary licensing costs because the system confidently recommended the wrong SKU for one major customer account. The customer didn't catch it for two billing cycles. By the time anyone noticed, the damage was done.

That's not a unique story. It's a pattern.

A financial services firm automated loan application triaging. The system rejected 12% more applications than the previous manual process, citing policy violations. When a compliance officer finally sampled the rejections, she found the system was using outdated policy rules that had been updated six months earlier. No one had told the automation about the changes. Thousands of customers were rejected unnecessarily.

A security operations center automated alert triage. Ninety-eight percent of alerts were automatically marked as false positives based on historical data. When a real breach finally happened, it appeared as alert number 4,302 in a queue of 100,000 alerts marked the same way. It took three weeks to identify the breach. The damage cost the company $2.1 million.

These aren't edge cases. They're the inevitable result of removing human oversight from systems where judgment matters.

The financial impact of automation gone wrong typically shows up in three categories:

Direct costs. Misconfigured systems, wrong recommendations, incorrectly processed transactions. These are expensive and often take months to discover and fix.

Customer churn. When customers realize no human is actually supporting them, they leave. And they tell others. Net Promoter Score drops, retention plummets, acquisition costs rise because reputation suffers.

Operational rigidity. When exceptions happen, there's no one to handle them. A customer needs something outside the normal workflow. An employee has a unique situation. A regulatory requirement changes. The system can't adapt, so either it fails silently (and nobody notices until it's too late) or it requires emergency intervention that costs more than the automation saved in the first place.
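
One way to make these three categories concrete is to net them against the headline savings. The function below is a rough sketch. The parameter names are illustrative, and every figure in the usage lines is a hypothetical placeholder except the $35,000 licensing mistake described earlier.

```python
def net_automation_savings(
    headline_savings: float,
    direct_costs: float,
    churn_losses: float,
    rigidity_costs: float,
) -> float:
    """Headline savings minus the three hidden cost categories."""
    hidden_costs = direct_costs + churn_losses + rigidity_costs
    return headline_savings - hidden_costs


# Hypothetical inputs for illustration only (not data from any company),
# apart from the 35_000 licensing error taken from the example in the text.
result = net_automation_savings(
    headline_savings=500_000,   # hypothetical
    direct_costs=35_000,        # the SKU mistake described above
    churn_losses=300_000,       # hypothetical
    rigidity_costs=200_000,     # hypothetical
)
print(result)  # -35000: the "savings" are negative once hidden costs count
```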

Add these three up, and the supposed cost savings from automation frequently evaporate. Yet most organizations never calculate this total cost. They only see the line item: "Support agents reduced from 40 to 28. Savings:

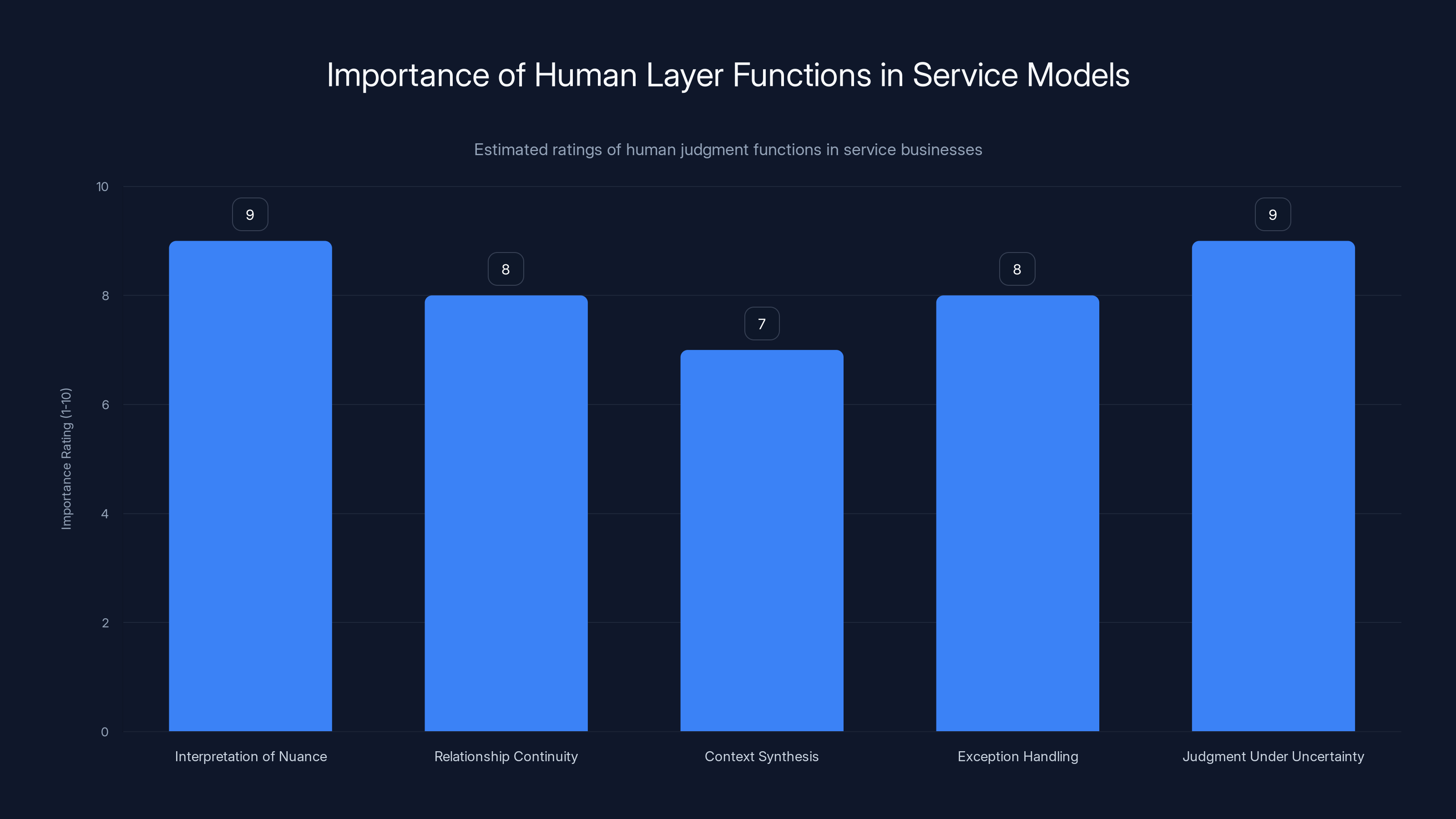

Where Service Models Break: The Human Layer Problem

Every successful service business has what I call a "human layer." It's not a group of people (though it might include one). It's a set of functions that require human judgment:

- Interpretation of nuance. Understanding why a problem matters to a specific customer, not just what the problem is.

- Relationship continuity. Remembering previous interactions, learning customer preferences, building trust over time.

- Context synthesis. Connecting a customer's stated problem to their larger business situation, their constraints, their goals.

- Exception handling. Recognizing when something doesn't fit the normal pattern and deciding what to do about it.

- Judgment under uncertainty. Making decisions when the data is incomplete, the situation is ambiguous, or the stakes are high.

That human layer is what separates a service provider from a self-serve platform. Customers don't pay for the ability to submit tickets to a machine. They pay for the ability to work with someone who understands them and will figure things out.

When organizations automate away the human layer entirely, they lose that differentiation. They become a platform. And platforms are commodity businesses with razor-thin margins and endless competition.

I've seen this happen in support, sales, security operations, and cloud management. The pattern is always the same:

Support teams automate intake and triage. The customer submits a ticket. An AI system reads it, extracts the issue category, assigns it to a queue. The system works. Tickets flow faster.

But now the person who gets the ticket has no context about why the customer chose to contact support in the first place. What did they already try? What's their technical background? Are they someone who's worked with your company for years or brand new? Is this a business-critical issue or a nice-to-have feature question? The automation stripped all of that away to optimize for speed.

The support person spends 20 minutes asking follow-up questions to rebuild context that the customer would have provided naturally to another human. The customer gets frustrated because they have to repeat themselves. And after the support person solves the immediate problem, they've built no relationship, learned nothing about the customer's broader needs, and have no basis for suggesting a better solution. The customer leaves a mediocre review, and the organization declares the automation a failure. But they don't revert it—they just hire more support people and accept that satisfaction scores will be lower.

Sales teams deploy AI outreach. An AI system identifies target accounts, researches them, and sends personalized emails. At scale, the system sends 500 emails per day. Open rates are tracked. The system learns which messages get responses.

But the emails are generic, even if they're personalized. They reference a company fact scraped from LinkedIn or Crunchbase, but they don't reflect any actual understanding of the prospect's business. A real sales conversation would explore the prospect's challenges, goals, constraints, and current approach. The AI email can't do that. So it gets ignored. Open rates stay high (because the emails are personalized) but response rates plummet (because they say nothing that matters).

The few prospects who do respond often aren't the high-value ones. They're people who respond to any sales email. The high-value prospects, the ones who would actually be good fits, never see the emails as relevant because no human ever took the time to understand their situation.

Security operations automate alert triage. An AI system receives thousands of alerts per day, marks 98% as false positives based on historical patterns. The 2% that remain are escalated to humans for investigation.

Except the system is confident in its false positive classifications only because all the previous breaches were minor ones that followed recognizable patterns. A new type of attack, or an attack that mimics normal activity, gets classified as a false positive. And because 98% of alerts are already false positives, security teams have learned to ignore the remaining 2% until something actually breaks.

This is the automation trap: the system works perfectly until it encounters something it wasn't trained to handle. And by definition, the thing that will actually hurt the organization is something it wasn't trained to handle.

According to SC World, the average time to detect a data breach is 207 days. In 89% of those cases, the breach indicator appeared in logs or alerts but was classified as benign by automated triage systems.

The human layer isn't a cost center to be minimized. It's the core value proposition. When you automate it away, you convert your business from a service to a platform, from trusted advisor to transaction processor.

And platforms compete on price. Services compete on value.

Efficiency metrics like 'Cost per Transaction' score low in relevance to business success, while metrics like 'Customer Lifetime Value' score high, highlighting the need to focus on customer-centric metrics.

The Skills That AI Cannot Replace (And Why They're More Valuable Now)

When AI and automation first became mainstream in IT, the fear was obvious: machines will replace workers. And yes, machines will do some jobs that humans used to do. But the flip side is less obvious and more important: the jobs machines can't do are becoming more valuable, not less.

Consider what it takes to actually run a successful IT organization, support a complex customer relationship, manage a security operation, or grow a sales team:

Empathy. Understanding what a customer is really trying to accomplish, and what's frustrating them about the current state. An AI system can analyze sentiment in text and respond with appropriate tone. But it can't actually understand why something matters to someone. You don't need empathy to follow a script. You do need it to figure out what the script should say.

Context. Knowing how this customer's situation connects to their larger business, their constraints, their strategy. An AI system can read a customer's website and summarize it. But it can't understand how their CTO's decision to go all-cloud affects their network architecture, which affects what they need from a vendor. A human with experience in that industry can make those connections in seconds.

Continuity. Remembering what you learned from a customer over months or years, and using that knowledge to anticipate their needs. An AI system has no memory. It processes information in real time. But customer relationships compound over time. Someone you've worked with for three years trusts you to understand their constraints without having to be told again. That trust is worth a huge amount.

Judgment under uncertainty. Making good decisions when the data is incomplete, the situation is ambiguous, or the stakes are high. An AI system will make consistent decisions based on available data. But the most important decisions often involve information that's not available, patterns that haven't happened before, or stakes that are uniquely high for that customer.

I interviewed a CIO recently who had replaced his entire infrastructure support team with a managed service provider using AI automation. Cost dropped 40%. Mean time to recovery improved 22%. He was happy.

Then a critical business system went down during his company's biggest sales event of the year. The AI system took 8 minutes to triage the issue, route it to the right queue, and escalate it. During those 8 minutes, his company lost $200K in sales. A human support person with context would have recognized the business criticality immediately and called in the senior engineer without waiting for the formal escalation procedure.

After that event, the CIO hired a human architect to sit between the managed service provider and the business. Cost went back up $300K annually. But it prevented the next catastrophe.

He didn't need to replace automation. He needed to wrap automation in human judgment.

Technical depth. Understanding the actual technology—how it works, what it can and can't do, what the real constraints are. Modern IT environments are extraordinarily complex. Cloud platforms, hybrid infrastructure, security frameworks, compliance requirements, legacy systems still in production. The surface is wide. The depth is immense.

An AI system can follow a playbook: if X happens, do Y. But it can't design the playbook if the situation is novel. And novel situations happen constantly in modern IT.

I spoke with a security director who had implemented an automated threat response system. The system detected suspicious login patterns and automatically locked accounts. It worked well—reduced the time from detection to containment from 4 hours to 2 minutes.

But then it locked 340 accounts in a single hour because a contractor's batch script had a bug and was retrying failed logins across multiple accounts. The security team spent 6 hours unlocking accounts, investigating whether the batch script was malicious, and communicating with business leaders about what had happened.

A human who understood both security protocols and the business context would have recognized the pattern (escalating attempts from a single source IP, all within minutes) as likely to be a system error, not a breach. They would have picked up the phone and asked the IT team if anyone knew about it. The whole thing would have been resolved in 15 minutes.

Automation didn't make security faster. It made security more brittle.
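
The lockout incident suggests a simple guard: when one source touches an unusually large number of accounts in a short window, stop auto-locking and hand the batch to a person. The sketch below assumes a hypothetical `FailedLogin` event shape and an arbitrary threshold of 20 accounts per source IP. It is not how any particular security product works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailedLogin:
    account: str
    source_ip: str


def plan_lockouts(events, max_accounts_per_ip=20):
    """Split accounts into an auto-lock set and a human-review map.

    `events` are failed logins seen in one short detection window.
    `max_accounts_per_ip` is an assumed threshold, to be tuned per environment.
    """
    accounts_by_ip = {}
    for event in events:
        accounts_by_ip.setdefault(event.source_ip, set()).add(event.account)

    auto_lock = set()
    needs_review = {}
    for ip, accounts in accounts_by_ip.items():
        if len(accounts) > max_accounts_per_ip:
            # One source hitting many accounts at once could be an attack
            # or a buggy batch job. A person decides which.
            needs_review[ip] = sorted(accounts)
        else:
            auto_lock |= accounts
    return auto_lock, needs_review
```

A single source hammering hundreds of accounts can still be a real attack, so the guard does not dismiss the pattern. It only routes the decision to someone who can pick up the phone.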

The skills that matter most—empathy, context, continuity, judgment, technical depth—are the skills that automation can't provide. And in a world where automation handles routine work, these skills are more valuable than they've ever been.

Yet most organizations respond to automation by reducing headcount, not upskilling it. They see automation handling 60% of the work and conclude they need 60% fewer people. But they really need the same people, focused entirely on the 40% of work that requires judgment, context, and expertise.

According to ERP Today, job postings for roles requiring "customer empathy," "business acumen," and "problem-solving" have increased 34% since 2020, while postings for routine technical roles have declined 18%.

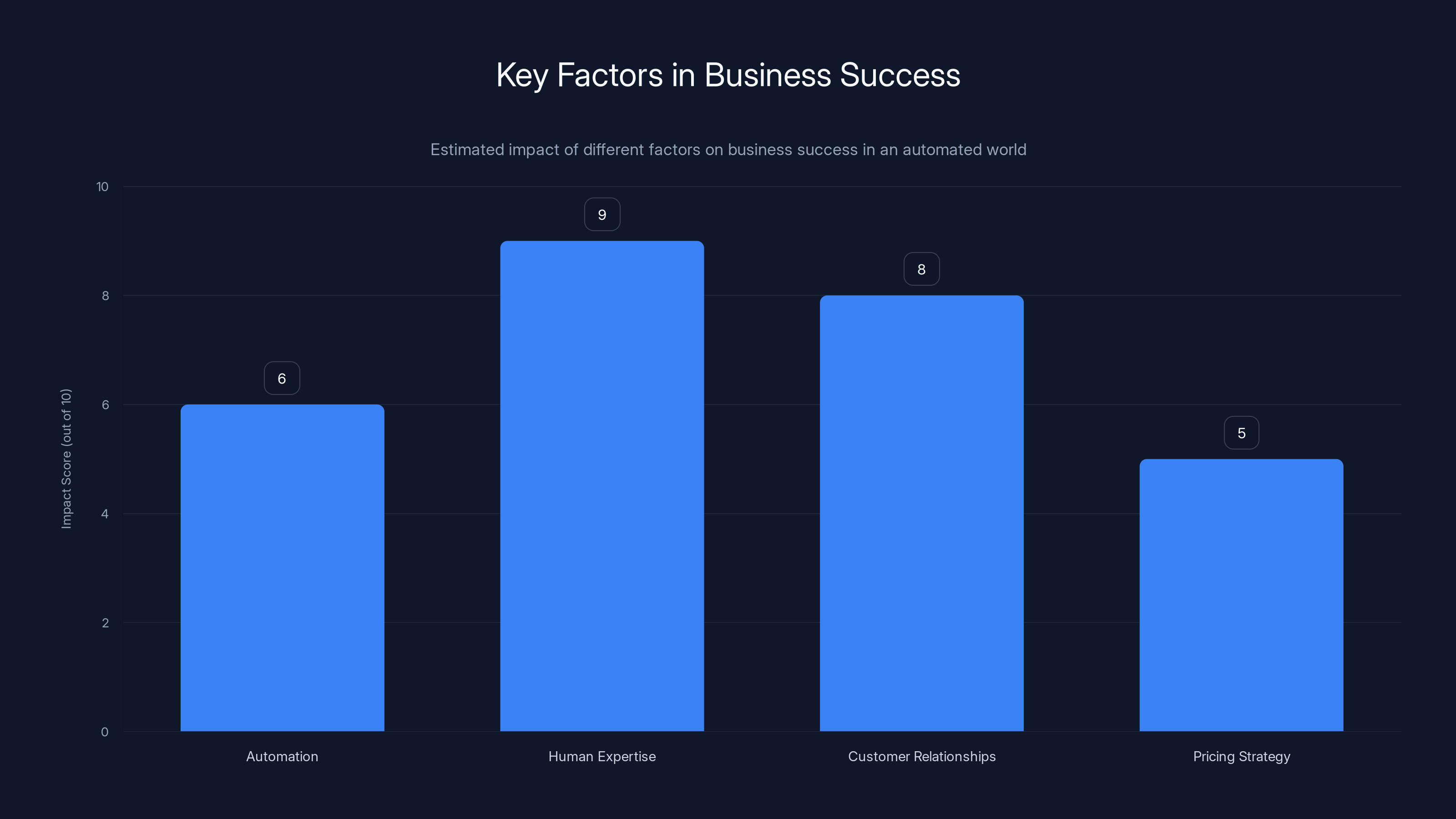

The organizations winning in the market aren't the ones with the most automation. They're the ones with the best combination of automation and human expertise. Automation handles volume. Humans provide value.

How Successful Companies Are Balancing Automation and Humanity

The companies that are winning aren't rejecting automation. They're being more strategic about where and how it's deployed.

The best pattern I've seen follows a consistent logic: Automate the gathering, accelerate the decision, keep the human accountable.

Here's what that looks like in practice:

In customer support: Automation gathers information, categorizes the issue, pulls up relevant documentation. A human specialist takes it from there. The system has done the work that takes time but doesn't require judgment. The human can focus on understanding the customer's context and figuring out a solution.

One software company restructured their support team this way. Instead of a two-tier system where first-level support handled 60% of questions and 40% escalated to experts, they moved to a single-tier system where automation handled the first 60% of work (gathering information, pulling docs, suggesting solutions) and then a single specialist took over from there.

Cost per ticket went up slightly—specialists earn more than first-level support. But first-contact resolution improved from 71% to 84%. Customer satisfaction improved. Specialists were happier because they weren't answering the same basic questions repeatedly. And total cost went down because fewer escalations and faster resolution time more than offset the higher per-ticket cost.

In sales: AI identifies prospects and researches them. Humans establish relationships and understand real needs. The system has done the work that finds potential customers. The human can do the work that actually converts them.

One B2B sales team implemented AI lead research and account intelligence. The system pulled in company information, funding announcements, job openings, technology stack changes. The sales team used this information to have smarter first conversations.

What didn't change: the sales team still made their own decisions about who to target. The system provided input, but humans decided. And the decision wasn't about which account to call. It was about which account to invest in building a real relationship with. The automation accelerated research. Humans accelerated decision-making.

In cloud operations: Automation monitors systems, identifies anomalies, suggests remediation. A human operator confirms the suggestion and executes it. The system has done the work of surveillance and pattern recognition. The human does the work of judgment and accountability.

One company automated patch management. Servers got scanned, vulnerabilities identified, patches flagged as ready for deployment. But a human security engineer reviewed each patch, understood the risk if it failed, understood the business impact of downtime, and decided whether to deploy immediately, schedule for off-hours, or defer. The automation did the surveillance. The human did the thinking.

In security operations: Automation triages thousands of alerts, groups them by pattern, escalates outliers. Humans investigate the outliers and decide whether they're breaches or false alarms. The system filters noise. Humans catch signal.

The critical difference: outliers are investigated by humans who understand the organization's technology and business context. It's not enough to flag something unusual. A human needs to understand what makes it unusual in this specific environment, given these specific systems and these specific business processes.

The pattern across all these examples is identical: automation handles high-volume, pattern-based work. Humans handle exceptions, judgment, and context. And critically, humans remain accountable.
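
In code, "automation proposes, a named human decides" can be as small as a record that cannot be acted on until a person has signed it. This sketch uses the patch management example. The `PatchProposal` fields and the three decision labels are assumptions drawn from the description above, not a real tool's schema.

```python
from dataclasses import dataclass
from typing import Optional

ALLOWED_DECISIONS = {"deploy_now", "off_hours", "defer"}


@dataclass
class PatchProposal:
    server: str
    patch_id: str
    severity: str                       # filled in by the automated scan
    decision: Optional[str] = None      # set only by a human
    decided_by: Optional[str] = None    # the accountable engineer


def decide(proposal: PatchProposal, engineer: str, decision: str) -> PatchProposal:
    """Record the engineer's call on an automated proposal."""
    if decision not in ALLOWED_DECISIONS:
        raise ValueError(f"unknown decision: {decision}")
    proposal.decision = decision
    proposal.decided_by = engineer
    return proposal


def deployable(proposal: PatchProposal) -> bool:
    """Automation may deploy only what a named human approved for now."""
    return proposal.decision == "deploy_now" and proposal.decided_by is not None
```

The scan can fill in as much as it likes. The deploy step checks `deployable` and nothing else, so an unreviewed proposal simply waits.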

Accountability is what changes everything. When a human knows they're responsible for the final decision, they think differently. They don't just execute what the system suggested. They question it. They check assumptions. They consider edge cases. And they'll escalate or make a different decision if they think the system is wrong.

When humans are removed from the equation, accountability vanishes. The system does what it was programmed to do. If the programming was wrong, nobody catches it.

One IT leader I spoke with puts it this way: "We automate tasks, not decisions. A task is something we do repeatedly with the same process every time. A decision is something we do differently based on context. We'll always have humans doing decisions."

He has a small team managing a large infrastructure. They automate everything routine. But when something unusual happens, a human is there to think about it. That human costs money. But it saves far more money than the automation generates, because it prevents the catastrophes that automation creates.

Empathy and judgment under uncertainty are rated as the most critical human skills that AI cannot replace, highlighting their increased value in the workforce. Estimated data.

The Economics of Over-Automation: Why Cutting People Backfires

Here's the business logic that usually drives over-automation: If automation can handle 80% of the work, we need 20% of the people.

It sounds mathematically sound. It's not.

The first problem is that automation isn't perfect. It handles 80% of routine work. But when it encounters exceptions, it either fails silently (creating problems that emerge later) or escalates everything up the chain (creating a bottleneck that's worse than the original).

So you never actually get down to 20% of the original headcount. You get to something like a 40% reduction, and then customer satisfaction drops, churn increases, revenue decreases, and you realize you can't scale the business with the remaining team.

The second problem is that automation handles the easy 80%. It's the 20% of exceptions, contexts, and judgments that is actually hard. That 20% is where service quality is determined. That 20% is where customer relationships are built. That 20% is where innovation happens because someone recognizes a pattern in customer problems.

When you cut headcount by 40%, you're not reducing the easy work by 40%. You're reducing the hard work by 40% too. And the hard work is what actually matters.

Let me put this in concrete terms. A support team of 40 people, handling 1,000 tickets per week:

Before automation: 25 people do tier-1 support (handling straightforward requests, closing tickets). 15 people do tier-2 support (handling complex issues, investigating root causes). Average ticket cost:

After automation (poorly implemented): Automation handles tier-1 work. You reduce tier-1 headcount to 8. Tier-2 stays at 15 because the work is still complex. Average ticket cost:

After automation (well-implemented): Automation handles tier-1 work. You reduce tier-1 headcount to 8. You redeploy your tier-1 people toward: (a) training the automation system, (b) handling escalations, (c) proactively identifying customer issues before they become problems. You add 2 new tier-2 people who focus on account health and building relationships.

Now your tier-1 people aren't answering tickets anymore. They're making sure the automation is accurate, handling edge cases, and helping customers succeed. Your tier-2 people still investigate issues, but they also proactively reach out to customers with suggestions based on patterns they're seeing.

Average ticket cost:

The difference between the second and third scenario isn't the automation. It's whether you cut people or redeploy them.

Most organizations choose the second scenario because it looks better on a spreadsheet for the first year. Cost goes down. Metrics improve. Executives celebrate. But it creates the brittleness I've been describing. And brittleness eventually creates catastrophe.

The third scenario requires faith that the freed-up capacity will actually create value. And it requires discipline to not just pocket the savings but to reinvest it in human expertise.

According to the Shreveport Times, MIT research analyzing 1,000+ companies from 2015 to 2022 found that companies that automate and redeploy workers see 3x higher profitability growth over 5 years than companies that automate and reduce headcount.

The economics are clear, but most organizations don't see it because they're measuring the wrong things. They measure cost per transaction, not customer lifetime value. They measure tickets closed, not customer relationships built. They measure efficiency, not resilience.

If you measure only efficiency, automation that removes humans always looks good. But if you measure business outcomes—revenue, retention, growth, reputation—the math changes dramatically.

Building Accountability Into Automated Systems

If you're going to automate decisions, someone has to be accountable for those decisions. Otherwise, the system becomes a black box, and when it fails, there's nobody to blame, nobody to learn from, and nothing to improve.

Accountability doesn't mean that a human has to make every decision. It means that someone is responsible for the system's outcomes, which means they have the authority to override it, modify it, or shut it down.

In practice, this looks like:

Clear ownership. Every automated workflow has a named person who is responsible for it. Not a team, not a department. A person. That person reviews the system regularly, investigates failures, and can explain why the system was designed the way it is.

Why a person, not a team? Because teams distribute accountability. Nobody ends up responsible. A person knows they'll be asked to explain why the system did what it did. That creates a very different incentive structure.

Oversight mechanisms. The accountable person reviews the system's decisions, either continuously (for high-stakes decisions) or periodically (for lower-stakes decisions). They sample the outputs to make sure the system is doing what it's supposed to do.

One IT operations team implemented this for their infrastructure automation. Every automated change was logged. Once per week, the responsible engineer reviewed a random sample of 10 changes to make sure they were correct. When they found an error (the automation had done something slightly wrong that wouldn't cause an immediate failure but would cause problems later), they understood the pattern, updated the automation, and prevented future errors.
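
A weekly review like this needs very little tooling. Here is a minimal sketch using Python's standard `random` module. The change log is assumed to be a plain list of entries, and the `seed` parameter exists only to make a review reproducible.

```python
import random


def weekly_sample(change_log, k=10, seed=None):
    """Pick up to k logged changes at random for human review."""
    entries = list(change_log)
    if len(entries) <= k:
        # Fewer changes than the sample size: review all of them.
        return entries
    rng = random.Random(seed)
    return rng.sample(entries, k)  # sampling without replacement
```

Sampling at random, instead of reviewing the most recent or most visible changes, is what lets the reviewer find the quiet errors that would not surface on their own.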

Explicit permission to override. The accountable person has the authority to override the system's decisions without going through multiple approval layers. If the system suggests a patch, and the responsible engineer thinks it's wrong, they can skip it. That doesn't mean the system is bad. It means the system is incomplete, and humans recognize situations the system can't.

Post-mortems on failures. When the automation causes a failure, there's a structured process to understand what happened, why the system didn't catch it, and what needs to change. This isn't about blame. It's about learning.

One security operations team automates a huge portion of their alert triage. But when a real threat gets missed, they don't just fix the immediate problem. They do a post-mortem: Did the system have the information to catch this? If not, why not? If yes, why did it miss it? What needs to change?

These post-mortems have revealed that the system has blind spots in detecting certain types of threats that are rarer but higher-impact. Now they have a tier of high-stakes alerts that humans review, even if the system says they're false positives.
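
The high-stakes tier can be expressed as a routing rule that runs after the classifier and ignores its verdict for certain categories. The category names below are hypothetical examples, and the `Alert` shape is an assumption for illustration.

```python
from dataclasses import dataclass

# Hypothetical categories: rare, high-impact threat types that
# post-mortems showed the classifier tends to miss.
HIGH_STAKES = frozenset({"privilege_escalation", "data_exfiltration"})


@dataclass
class Alert:
    alert_id: int
    category: str
    model_verdict: str  # "false_positive" or "suspicious"


def route_alert(alert: Alert, high_stakes=HIGH_STAKES) -> str:
    """Return "human_review" or "auto_close" for a triaged alert."""
    if alert.category in high_stakes:
        # Reviewed by a person even when the model says false positive.
        return "human_review"
    if alert.model_verdict == "suspicious":
        return "human_review"
    return "auto_close"
```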

Traceability. Every decision the system makes can be traced back to the logic that created it. The person reviewing the decision can see: Why did the system recommend this? What input data triggered this decision? What would need to change to trigger a different decision?

This traceability is important because it allows the accountable person to distinguish between "the system is broken" and "the system is working as designed but the design is wrong."

One company automated customer tier classification (determining whether a customer is an enterprise account, mid-market, or small business). The accountable person noticed the system was classifying a long-time customer with low usage as mid-market instead of enterprise, which affected support prioritization.

She traced the logic and found the system was basing tier on current annual contract value, not on historical relationship or strategic importance. The system was working as designed, but the design was wrong. They updated the algorithm to include tenure and strategic fit. Problem solved.

Without traceability, she would have just seen a misclassification and blamed the system. With traceability, she could understand the design decision, evaluate whether it made sense, and improve it.
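
Traceability mostly means returning the reason alongside the answer. The sketch below revisits the tier classification example: each result carries the rule that fired and the inputs it saw. The thresholds, field names, and rule order are illustrative assumptions, not the company's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    tier: str
    rule: str      # which rule produced the tier
    inputs: dict   # the data that rule was looking at


def classify_tier(acv, tenure_years, strategic,
                  acv_enterprise=100_000, acv_mid=20_000, tenure_enterprise=5):
    """Classify a customer and record why (all thresholds are assumed)."""
    inputs = {"acv": acv, "tenure_years": tenure_years, "strategic": strategic}
    if acv >= acv_enterprise:
        return Decision("enterprise", "acv >= enterprise threshold", inputs)
    if strategic and tenure_years >= tenure_enterprise:
        # The kind of rule added after the review: tenure and strategic fit.
        return Decision("enterprise", "strategic account with long tenure", inputs)
    if acv >= acv_mid:
        return Decision("mid_market", "acv >= mid-market threshold", inputs)
    return Decision("small_business", "default", inputs)
```

With the `rule` and `inputs` fields in hand, a reviewer can answer the three questions above directly: why this tier, which data triggered it, and what would have to change to get a different one.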

The infrastructure required for accountability is real. Someone's job is now to oversee the automation. You can't count that person's salary as a cost savings from the automation. But that person prevents the

She's not a cost. She's insurance. And she's insurance that pays for itself many times over by preventing the catastrophes that unmonitored automation creates.

The human layer functions such as interpretation of nuance and judgment under uncertainty are highly rated in importance, highlighting their critical role in differentiating service providers from automated platforms. Estimated data.

The Future: Augmentation, Not Replacement

The automation debate often frames this as a binary: either humans make decisions or machines do. That frame is wrong.

The future isn't automation. It's augmentation.

Augmentation means machines and humans working in a loop where they're each doing what they're best at:

Machines are best at: processing volume, recognizing patterns, consistency, speed, tireless execution.

Humans are best at: understanding context, making judgment calls, recognizing novel situations, communicating, building relationships.

Augmentation means automation systems that are designed from the ground up to work with human judgment, not replace it.

In customer support, that's a system that gathers all relevant customer information and suggests possible solutions, and a human who reads the information and picks the best solution given the customer's context.

In security operations, that's a system that flags anomalies and groups them by type, and humans who decide whether each group represents a real threat or a false alarm.

In cloud operations, that's a system that suggests changes and predicts their impact, and humans who make the final decision about whether to implement based on their understanding of the business.

In sales, that's a system that identifies opportunities and researches prospects, and humans who use that research to build relationships and close deals.

This is more complex than either pure automation or pure human decision-making. You need both the system and the human in the loop. But the complexity is worth it because the output is dramatically better than either could achieve alone.
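The support example above can be sketched in a few lines. This is an illustration under stated assumptions, not a reference design: `suggest_solutions` is a hard-coded stand-in for whatever model or search ranks candidate fixes, and `rep_decision` is a stand-in for a real person working in a review screen. What matters is the shape of the loop: the machine ranks, the human chooses.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Suggestion:
    text: str
    score: float  # machine confidence between 0 and 1


def suggest_solutions(ticket: str, account_notes: List[str]) -> List[Suggestion]:
    """Machine step (hypothetical): return candidate fixes, best score first."""
    candidates = [
        Suggestion("Reset the API key", 0.82),
        Suggestion("Upgrade the rate-limit plan", 0.64),
    ]
    return sorted(candidates, key=lambda s: s.score, reverse=True)


def resolve(ticket: str,
            account_notes: List[str],
            human_choose: Callable[[str, List[str], List[Suggestion]], Suggestion]
            ) -> Suggestion:
    """The system gathers and ranks; the human makes the final call."""
    suggestions = suggest_solutions(ticket, account_notes)
    return human_choose(ticket, account_notes, suggestions)


def rep_decision(ticket, account_notes, suggestions):
    """Stand-in for a rep who knows the customer is in a change freeze."""
    if any("change freeze" in note for note in account_notes):
        # Rotating credentials is off the table, whatever the score says.
        return next(s for s in suggestions if "Reset" not in s.text)
    return suggestions[0]


notes = ["Customer is in a change freeze until their audit ends"]
chosen = resolve("API calls failing with 429 errors", notes, rep_decision)
print(chosen.text)
```

The top-scored suggestion is not the one that gets sent, because the person knows something the ranking does not. Nothing is ever sent without passing through `human_choose`.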

I've been watching this pattern emerge across industries for the past few years, and it's accelerating:

In healthcare, diagnostic AI systems give doctors recommendations, but the doctors still make the diagnosis. The system catches patterns humans miss. The human prevents the system from hallucinating.

In financial services, algorithmic trading systems suggest trades based on pattern recognition, but humans still approve large trades. The system finds opportunities. The human prevents catastrophic decisions.

In legal services, document review automation identifies relevant documents and flags potential issues, but lawyers still make strategy decisions. The system accelerates the routine work. The human provides judgment.

In manufacturing, predictive maintenance systems flag equipment likely to fail, but technicians still inspect the equipment before preventive maintenance. The system identifies risks. The human confirms whether the risk is real.

The common pattern: automation is for speed and scale. Humans are for judgment and accountability.

The organizations that will succeed in the next decade aren't the ones with the most automation. They're the ones that figure out the right way to combine automation and human judgment.

According to a Stanford study, radiologists paired with AI diagnostic systems had 8.5% higher accuracy than radiologists alone, and 15% higher accuracy than AI systems alone. The best results came from human-AI collaboration, not replacement.

Building augmentation systems requires different thinking than building automation systems. Instead of asking "How do we automate this?" you ask "What would it look like if a machine did the routine work and a human did the thinking?"

Instead of designing systems that maximize efficiency, you design systems that maximize outcomes.

Instead of minimizing human involvement, you optimize human involvement.

This is harder than just deploying automation. But it's also more resilient, more adaptable, and more likely to create actual business value.

The companies that are winning—the ones with growing revenue, high customer retention, and strong reputations—are the ones that got this balance right.

The Customer Experience Equation: Why Automation Alone Isn't Enough

Here's a hard truth about customer experience: it's not determined by efficiency. It's determined by feeling known.

A customer doesn't care if their support ticket was resolved in 8 minutes. They care if the person who helped them understood their problem, knew their constraints, and actually solved something that matters to them.

Automation optimizes for efficiency. Customer experience requires something else entirely.

I've worked with companies that have 10-minute average resolution times (great efficiency) and NPS scores in the 30s (terrible experience). And I've worked with companies that have 2-hour resolution times (poor efficiency) and NPS scores in the 70s (excellent experience).

The difference isn't effort. It's attention.

When a customer talks to a human who clearly understands their business, their constraints, and what they're trying to accomplish, they feel known. That feeling is worth a longer wait time. That feeling is worth staying loyal even if a competitor is slightly cheaper.

When a customer talks to an automated system, they feel processed. They're a ticket. They're a data point. They're efficiently converted into whatever the system thinks they need.

One software company tried to improve customer satisfaction by adding more automation. Chatbots for common questions. Auto-response to tickets. Automated ticket closing for resolved issues. They invested heavily in these systems.

Customer satisfaction went down.

So they added human specialists to handle the overflow from the chatbots, the edge cases, the escalations. The humans were busier than ever because they were handling the hard stuff while the automation handled the easy stuff.

What actually fixed the problem was removing the automation and giving each customer a dedicated person whose job was to know their account and help them succeed. Cost per customer went up. Efficiency went down. Customer satisfaction went up dramatically.

They had optimized for the wrong variable.

Now, this doesn't mean automation is bad. It means automation without human connection is bad.

The same company implemented a new approach: automation handles the routine work (answering common questions, gathering information, suggesting solutions). A human takes it from there. The human can focus entirely on understanding the customer and making sure they succeed, because the routine work is handled.

Cost per customer went down compared to the pure human model (because automation is efficient). Efficiency went up compared to the previous system (because automation handles volume). And customer satisfaction went way up because the human is focused on relationship, not efficiency.

This is the pattern that works: automate the work, keep the person.

Too many companies try to automate the person away. That's when everything breaks.

Building the feeling of being known requires three things. Consistency: the same person (or a small team whose members know each other) handles the relationship. Continuity: they remember what they learned from that customer previously. Proactive help: they reach out to suggest something before the customer has to ask.

Automation can't do any of this. Only humans can.

But humans without automation are overwhelmed and can't scale. So you need both.

While automation is important, human expertise and customer relationships have a higher impact on business success in an automated world. Estimated data.

Measuring What Actually Matters: Beyond Efficiency Metrics

Most organizations measure the wrong things when evaluating automation.

They measure:

- Cost per transaction

- Tickets handled per agent

- Time to resolution

- System uptime

- Processing throughput

These are all efficiency metrics. They're useful. But on their own they don't tell you whether the business is succeeding.

The metrics that actually matter are:

- Customer lifetime value

- Net Retention Rate (how much of last year's revenue from existing customers you still have this year, counting both expansion and churn)

- Net Promoter Score (would they recommend you)

- Revenue per customer

- Churn rate

- Market share growth

I've watched companies optimize for efficiency metrics and watched these business metrics decline at the same time. The automation works perfectly. The business gets worse.

The reason is that efficiency optimization and customer experience optimization are often at odds.

If your goal is to handle the maximum number of tickets per hour, you optimize for speed. You route tickets to the fastest handler. You close tickets as soon as the immediate issue is resolved. You minimize escalations.

If your goal is to maximize customer lifetime value, you optimize for relationship. You match customers with people who understand their business. You proactively reach out. You solve underlying problems, not just immediate symptoms. You invest in the relationship knowing it will pay off over years, not just in this transaction.

These require different systems, different incentives, and different staffing.

One IT consulting firm was measured on billable hours. So they optimized to maximize billable hours. They did consulting work that required ongoing support. They created dependencies. They built systems that needed constant maintenance.

Their utilization rates were excellent. Their employees were busy. The business was dying because customers were increasingly frustrated.

When they switched to being measured on customer retention and customer satisfaction, everything changed. They started building systems that required less ongoing support. They automated things that weren't billable but were valuable to customers. They did less work, but did work that actually mattered.

Billable hours went down. Revenue went up because customers stayed and referred others.

The systems didn't change. The measurement system changed. And that changed everything.

According to Bain & Company research analyzing 500+ companies, companies that optimize for customer lifetime value grow revenue 40% faster on average than companies that optimize for transaction volume.

When implementing automation, measure:

Customer retention: What percentage of customers you keep, rather than lose to competitors or churn. If automation improves efficiency but causes retention to drop, you're losing money.

Customer expansion: What percentage of customers increase their spending with you over time. Automation should free up capacity for relationship building, which leads to expansion.

Net Promoter Score: Whether customers would recommend you. Automation that tanks NPS is automating too much.

Revenue per employee: Your total revenue divided by headcount. Automation should increase this by allowing employees to focus on higher-value work. If it stays the same or decreases, the automation isn't working.

Time to market: How long it takes to respond to new customer needs or market changes. Automation that makes you rigid and unable to adapt is expensive.

Employee satisfaction: Whether your team is happy and staying with you. Automation that eliminates the interesting work and leaves people feeling like babysitters creates turnover, which is expensive.
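Several of these metrics are simple arithmetic, and it helps to pin the formulas down before arguing about them. The sketch below uses the standard definitions of customer retention and net revenue retention; the function names and all of the numbers are made up for illustration.

```python
def retention_rate(customers_at_start: int, customers_lost: int) -> float:
    """Share of starting customers still with you at the end of the period."""
    return (customers_at_start - customers_lost) / customers_at_start


def expansion_rate(customers_at_start: int, customers_who_expanded: int) -> float:
    """Share of starting customers who increased their spending."""
    return customers_who_expanded / customers_at_start


def net_revenue_retention(starting_revenue: float, expansion: float,
                          contraction: float, churned: float) -> float:
    """Recurring revenue kept from existing customers, including upsell.

    Above 1.0 means existing customers are growing their spending overall.
    """
    return (starting_revenue + expansion - contraction - churned) / starting_revenue


def revenue_per_employee(total_revenue: float, headcount: int) -> float:
    """Total revenue divided by headcount."""
    return total_revenue / headcount


# Made-up numbers for one year.
kept = retention_rate(customers_at_start=200, customers_lost=14)
grew = expansion_rate(customers_at_start=200, customers_who_expanded=50)
nrr = net_revenue_retention(starting_revenue=1_000_000, expansion=250_000,
                            contraction=30_000, churned=70_000)
per_head = revenue_per_employee(total_revenue=1_400_000, headcount=10)

print(f"retention {kept:.0%}, expansion {grew:.0%}, NRR {nrr:.0%}")
print(f"revenue per employee {per_head:,.0f}")
```

Run the same calculation for the period before and the period after an automation rollout. If cost per ticket improved but these numbers fell, the automation is costing you money.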

These metrics require a different way of thinking about automation. Instead of "How much can we automate?" the question becomes "What should we automate to maximize business value?"

The answers are often surprising. Sometimes it's better to automate less, not more. Sometimes it's better to keep people and automate the support systems around them. Sometimes the right automation is building tools that make humans faster, not automating the humans away.

Building Human-Centered Automation Systems

If you're going to automate, do it right. Human-centered automation is different from automation-first approaches.

Start with the human workflow, not the technology. Watch someone actually doing the job. Understand what parts require judgment, what parts require context, what parts are repetitive and routine. Automate the routine parts. Keep the judgment parts for humans.

Most automation projects start the opposite way: "Here's this AI technology, how can we apply it?" That usually leads to automating the wrong things.

Design for augmentation, not replacement. Ask: "How can this automation make the human more effective?" not "How can this automation replace the human?"

One customer service team implemented automation that gathered customer information and drafted potential responses. The rep still reviewed and sent the response. Reps could handle 30% more customers because the information gathering was automated. But they were still making decisions and building relationships.

The alternative automation would have been: automatically send recommended responses. Faster for the system. Worse for the customer.

Build in override capability from day one. A human should be able to override the system's decision without special permissions. This isn't failure. This is the system working as designed.

One ops team implemented automation that scaled infrastructure based on load. But a human could still override it if they knew something the system didn't (like a planned traffic spike that hadn't shown up in historical data yet). That override capability has prevented multiple outages.
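Here is a minimal sketch of what override capability could look like in an autoscaler like that one. The `Override` record and `desired_replicas` function are hypothetical, and the sizing rule is deliberately naive. The design choice worth noticing is that the override is an ordinary input to the decision, and the output always states both what the human chose and what the system would have done.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Override:
    replicas: int
    reason: str
    set_by: str


def desired_replicas(current_rps: float,
                     rps_per_replica: float,
                     override: Optional[Override] = None) -> Tuple[int, str]:
    """Return the replica count plus a plain-language explanation."""
    # Naive sizing rule: enough replicas to cover the current request rate.
    computed = max(1, math.ceil(current_rps / rps_per_replica))
    if override is not None:
        # The human wins, and the log keeps the system's number for later review.
        note = (f"override by {override.set_by}: {override.reason} "
                f"(system computed {computed})")
        return override.replicas, note
    return computed, "computed from load"


# Normal operation: the system decides.
print(desired_replicas(current_rps=900, rps_per_replica=100))

# Someone knows a launch is coming that historical data cannot show yet.
launch = Override(replicas=30, reason="product launch at 09:00", set_by="on-call engineer")
print(desired_replicas(current_rps=900, rps_per_replica=100, override=launch))
```

Recording the system's own number next to the override matters. It lets you review later whether the human or the system was closer to right, which feeds back into improving both.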

Invest in explainability. The system should be able to explain its decisions in a way a human can understand and verify. If the decision is a black box, nobody can override it intelligently.

One hiring automation system would auto-reject candidates. When someone asked why a particular candidate was rejected, the system couldn't explain. That's a problem. You want the human to be able to look at the system's reasoning and say, "That's wrong, override." But if the reasoning is opaque, that's impossible.

Implement feedback loops. What the system learns from should be what actually happened, not what the system predicted. If the system recommends a solution and the human does something different, what happens next? Does the system learn from the outcome?

One support system would suggest solutions. Reps would implement them. The system would track whether the ticket was resolved, but not whether the suggested solution was actually the right one. Over time, its suggestions were usually wrong, and it had no feedback that would let it improve.

When they added feedback (rep marks whether the suggested solution was correct), the system improved dramatically.
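A feedback loop like that one can start very small. The sketch below is an assumption about how such tracking might work, not a description of that team's system: `SuggestionFeedback` is a hypothetical class that records the rep's verdict on each suggestion and uses the running accuracy to reorder future suggestions.

```python
from collections import defaultdict
from typing import List, Optional


class SuggestionFeedback:
    """Track whether reps marked each suggestion as actually correct."""

    def __init__(self) -> None:
        # suggestion id -> [times marked correct, times shown]
        self._counts = defaultdict(lambda: [0, 0])

    def record(self, suggestion_id: str, was_correct: bool) -> None:
        counts = self._counts[suggestion_id]
        counts[0] += int(was_correct)
        counts[1] += 1

    def accuracy(self, suggestion_id: str) -> Optional[float]:
        """Fraction marked correct, or None if there is no feedback yet."""
        if suggestion_id not in self._counts:
            return None
        correct, total = self._counts[suggestion_id]
        return correct / total

    def rank(self, candidates: List[str]) -> List[str]:
        """Order candidates by observed accuracy; unseen ones get a neutral 0.5."""
        def score(suggestion_id: str) -> float:
            observed = self.accuracy(suggestion_id)
            return 0.5 if observed is None else observed
        return sorted(candidates, key=score, reverse=True)


feedback = SuggestionFeedback()
for verdict in (False, False, True, False):
    feedback.record("restart-service", verdict)
for verdict in (True, True):
    feedback.record("clear-cache", verdict)

ranked = feedback.rank(["restart-service", "clear-cache", "new-idea"])
print(ranked)
```

The signal being recorded is the rep's judgment about the suggestion, not whether the ticket eventually closed. Those are different facts, and only the first one tells the system whether its advice was any good.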

Monitor outcomes, not just efficiency. Make sure the automation is actually making things better, not just faster.

One company automated problem classification. Tickets were classified faster, but into the wrong categories more often. Speed went up, but accuracy went down, and the wrong classifications sent work to the wrong teams, causing delays elsewhere.

Only when they started measuring end-to-end time to resolution (not just time to classification) did they realize the automation was actually making things worse.
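The gap between a stage metric and an end-to-end metric is easy to see with numbers. The sketch below uses invented ticket data and a hypothetical `summarize` helper; it shows how classification time can drop sharply while total time to resolution gets worse.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Each ticket: (minutes to classify, minutes to resolve end to end, misrouted?)
# All values are invented for illustration.
Ticket = Tuple[float, float, bool]

before_automation: List[Ticket] = [
    (10, 120, False), (12, 150, False), (8, 90, True), (10, 120, False),
]
after_automation: List[Ticket] = [
    (1, 200, True), (1, 110, False), (2, 260, True), (1, 100, False),
]


def summarize(tickets: List[Ticket]) -> Dict[str, float]:
    """Report the stage metric next to the outcome metrics."""
    return {
        "classify_min": mean(t[0] for t in tickets),
        "end_to_end_min": mean(t[1] for t in tickets),
        "misroute_rate": sum(1 for t in tickets if t[2]) / len(tickets),
    }


before = summarize(before_automation)
after = summarize(after_automation)

for name in before:
    print(f"{name}: {before[name]} -> {after[name]}")

# The check that matters: did the customer-facing outcome improve?
made_things_worse = after["end_to_end_min"] > before["end_to_end_min"]
print("automation made resolution slower:", made_things_worse)
```

A dashboard that shows only the first metric would report a big win. Putting the outcome metrics in the same summary is what exposes the regression.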

Invest in the humans who work with the system. Train them. Give them tools. Make sure they understand not just how to use the system but how to think about when the system is right and when it's wrong.

One IT team implemented automation to manage routine tasks. But nobody trained the team on when the automation could make mistakes. So the team trusted it completely, and problems went unnoticed. When they trained the team on the system's limitations, they started catching and fixing errors, and the system improved dramatically.

Build community around the system. Create a space where people using the system can share what works and what doesn't. Crowdsource improvements.

One company had reps using an automation system to draft responses. A couple of reps figured out that if you phrased your request differently, the system would give much better suggestions. That insight spread through the team and made everyone more effective. But it only worked because there was a culture of sharing improvements.

Building human-centered automation requires more upfront investment than just deploying an off-the-shelf AI system. It requires understanding your workflow, designing carefully, and maintaining ongoing investment in the human side.

But the payoff is enormous. Systems built this way are more effective, more resilient, and more aligned with business outcomes.

The Organizational Shift: From Cost Center to Value Driver

Most organizations view their service delivery team as a cost center. The goal is to minimize the cost of support, sales, operations, or security.

When you're optimizing for cost minimization, automation looks great. Every person you can eliminate is a cost reduction. Every process you can speed up is a headcount reduction opportunity.

But service delivery should be a revenue driver, not a cost center.

The companies that win aren't the ones with the lowest cost per transaction. They're the ones with the highest customer lifetime value, the highest NPS, the strongest retention.

They get there by having great service. And great service comes from having smart people with deep expertise, time to understand customers, and the authority to solve problems creatively.

That's expensive. But it drives revenue in a way that bare-bones cost-minimized service never will.

One company decided to stop viewing their support team as a cost center and start viewing it as a revenue driver. They increased support headcount by 30% but trained them deeply. They gave them authority to solve customer problems creatively. They made sure each customer had a dedicated person who understood their account.

Cost per ticket went up significantly. Customer lifetime value went up more significantly. Revenue grew. Profit grew. Stock price went up.

Cost went up. Profit went up. That's the dynamic that matters.

A traditional cost minimization approach would have tried to reduce the support headcount even more, deploy more automation, and try to maintain revenue while cutting costs. That's a race to the bottom that ends with the lowest-cost provider winning through sheer price competition. Margins collapse.

A value-driver approach invests in support as a way to increase customer lifetime value. Margins improve because customers stay longer and spend more.

This shift requires changing how you measure success, how you allocate resources, and how you think about your team.

Instead of "How do we minimize support cost?" the question becomes "How do we maximize customer value through great support?"

Instead of "How many customers can one support person handle?" the question becomes "How much revenue can we generate by having great relationships with our customers?"

Instead of "How fast can we resolve tickets?" the question becomes "How can we proactively help customers succeed?"

Automation absolutely has a role in this. But it's a role of supporting the humans who create value, not replacing them.

Organizations that get this right are the ones that will win in the next decade. Not because they have the most automation, but because they have the right combination of automation and human expertise, focused on creating value rather than minimizing cost.

According to Forbes, companies in the top quartile for customer service quality have 25-35% higher profit margins than companies in the bottom quartile, even when controlling for price and market share.

The shift is organizational, not technological. It requires leadership to decide that service is a revenue driver, not a cost center. Everything else flows from that decision.

Looking Forward: The Human Advantage in an Automated World

Automation will continue to advance. AI will get better at recognizing patterns, processing data, and executing routine tasks. This isn't speculation. It's already happening.

But the competitive advantage won't go to the companies with the most automation. It will go to the companies that figure out how to combine automation with human judgment effectively.

Here's why:

Every automation technology that matters becomes commoditized. If Chatbot A works great, Chatbot B will work almost as well within two years. If Machine Learning Algorithm X improves security by 20%, Algorithm Y will improve it by 19% six months later.

Automation differentiates you temporarily. Eventually, everybody has similar automation. Then it's not a competitive advantage anymore. It's table stakes.

What doesn't commoditize is judgment, expertise, and relationships. The person who understands your business deeply, who remembers what you said last year, who proactively helps you avoid problems, who you trust to make good decisions on your behalf—that person is hard to replace with a machine. That relationship is valuable. That expertise commands premium pricing.

So the companies that will win are the ones that invest in automation to handle routine work, freeing up human expertise to do high-value work that builds relationships and drives revenue.

They'll spend more on people than their competitors. But they'll make more from those people. Higher revenue per person. Higher customer lifetime value. Higher margins.

Lower-cost competitors will optimize for efficiency and keep losing customers to the better-service competitors.

It's already happening. The enterprise software companies winning in the market aren't the ones with the lowest price or the most efficient support model. They're the ones with the best customer success teams, the strongest customer relationships, and the highest Net Retention Rates.

They've figured out that automation + expertise = competitive advantage.

Automation alone = commodity.

Expertise alone (in an age of automation) = inefficiency.

But automation + expertise, focused on customer value = unstoppable.

According to the Shreveport Times, enterprise software companies with Net Retention Rates above 120% (meaning existing customers are growing their spending) trade at 5-10x higher multiples than companies with Net Retention Rates below 100%, even if total revenue is similar.

The future of work isn't humans vs. machines. It's humans and machines working together, each doing what they're best at, creating value that neither could create alone.

The organizations that see this clearly and act on it will win. The organizations that still frame it as "automation or people" will lose to competitors who chose "automation and people."

Making the Shift: A Practical Framework for Your Organization

If you're reading this and thinking, "Okay, but how do we actually do this?" here's a practical framework:

Step 1: Audit your current automation. For every automated system you have, ask: Is this system making humans more effective, or replacing them? If it's replacing them, what's the business impact? (Look at retention, NPS, revenue—not just efficiency metrics.)

Step 2: Identify high-value work that isn't automated yet. What work requires human judgment, context, and expertise? That work should be protected. Don't automate it. Invest in doing it better.

Step 3: Identify low-value work that should be automated. What work is routine, repetitive, and doesn't require judgment? That's what you automate. The goal is to free up capacity.

Step 4: Redeploy capacity to high-value work. Don't pocket the savings. Don't cut headcount. Redeploy people to the high-value work you identified in Step 2.

Step 5: Measure business outcomes, not efficiency. Track retention, NPS, revenue per customer, and customer expansion. These are the metrics that matter.

Step 6: Build accountability into automated systems. Assign clear ownership. Build feedback loops. Create oversight mechanisms. Make sure someone is responsible for outcomes.

Step 7: Invest in continuous improvement. As you learn what works and what doesn't, update your systems. Create feedback loops. Listen to the people using the systems and the customers experiencing them.

Step 8: Shift your culture from cost minimization to value creation. This is the hard part. It requires leadership to change what they measure and how they think about the business. But it's the foundation for everything else.

These steps won't create immediate cost savings. They'll create long-term revenue growth. They'll increase profit margins. They'll build customer loyalty. They'll create defensible competitive advantage.

But they require patience and faith that investment in people and relationships will pay off. That's harder to justify to Wall Street than automation that shows immediate cost reductions. But it's the right bet.

FAQ

What is the human touch in technology?

The human touch in technology refers to the irreplaceable role that human judgment, empathy, and expertise play in delivering technology services and solving customer problems. It's not about rejecting automation or avoiding efficiency improvements. It's about recognizing that some aspects of service delivery—understanding context, building relationships, making complex judgments, and handling exceptions—require human decision-making and cannot be fully replaced by automated systems without creating problems.

How does over-automation undermine trust in IT?

Over-automation undermines trust because customers and users stop feeling known and understood. When every interaction is with an automated system, customers notice that nobody understands their specific situation or constraints. When automated systems make decisions without human oversight, errors go undetected until they cause problems. When organizations remove the people who could escalate issues or provide judgment, users feel abandoned. Trust is built through consistent, knowledgeable relationships over time—something that machines cannot provide.

What are the hidden costs of automation without human oversight?

The hidden costs include customer churn (people leave when they don't feel understood), service failures (when exceptions occur that the automation can't handle), compliance violations (when automated systems make decisions that violate regulations or policies), and opportunity costs (when humans spend their time managing automation exceptions instead of creating value). Additionally, misconfigured automated systems can cause significant financial damage before anyone notices, as happened with the $35,000 Microsoft SKU mistake described in the article.

Why are human skills more valuable now, not less?

As automation handles routine, repetitive work, the work that remains—interpretation of nuance, understanding context, building relationships, making judgments under uncertainty, and providing technical expertise—becomes more valuable. Machines can execute consistent decisions based on available data, but they cannot understand the human context, anticipate unstated needs, or make good decisions when information is incomplete. In a world where machines handle routine work, the ability to do judgment-based work becomes a scarcer, more valuable skill.

How should organizations balance automation and human expertise?

The best approach is to automate the information gathering and pattern recognition, then keep humans in the loop for decisions and judgment. Automation handles routine work like data gathering, initial categorization, and suggesting solutions. Humans review this information, apply their expertise and understanding of context, and make final decisions. This approach gives you the efficiency benefits of automation plus the judgment benefits of human expertise. The result is better outcomes than either automation or humans alone could achieve.

What metrics should we use to measure automation success?

Instead of measuring only efficiency metrics like cost per transaction or tickets handled per hour, measure business outcomes like customer retention, customer expansion, Net Promoter Score, revenue per employee, and profit per customer. Automation should increase these metrics. If automation improves efficiency but harms these business metrics, you've automated the wrong things.

How can we maintain accountability in automated systems?

Assign clear ownership for each automated system to a specific person who is responsible for its outcomes. Build oversight mechanisms so that person reviews the system's decisions regularly. Give them the authority to override the system without special permission. Create audit trails so every decision can be traced back to the logic that created it. Conduct post-mortems when things go wrong to understand what happened and what needs to change. Accountability is what transforms automation from a black box into a trustworthy tool.

What does human-centered automation look like in practice?

Human-centered automation augments human work rather than replacing humans. In support, it gathers customer information and suggests solutions, but a human specialist still reviews the information and decides the best approach. In security operations, it flags anomalies and groups them by type, but humans still investigate to determine if they're real threats. In sales, it researches prospects and suggests outreach, but humans still make the relationship-building decisions. In all cases, the system handles the routine parts, freeing humans to focus on judgment and relationships.

The next wave of successful businesses won't be defined by how much they automated. They'll be defined by how well they combined automation with human expertise to create value that competitors can't match.

The companies winning now understand this. They're automating the routine work and investing heavily in the people who do judgment-based work. They're measuring business outcomes, not efficiency metrics. They're treating their service teams as revenue drivers, not cost centers.

That's not nostalgia for a pre-automation era. It's clear-eyed understanding of what actually drives business success in a world where automation is everywhere.

The human touch isn't the opposite of technology. It's the thing that makes technology valuable. Protect it.

Key Takeaways

- Removing human judgment entirely from automated systems creates brittleness and catastrophic failures when exceptions occur

- Customer satisfaction and retention decline sharply when automation eliminates personal relationships and contextual understanding

- Hidden costs of over-automation (churn, service failures, compliance violations) typically exceed efficiency savings within 12-24 months

- Human skills in judgment, empathy, context, and expertise increased in value during the AI era, not decreased

- Successful organizations automate routine work to free humans for high-value judgment-based work, not to eliminate human roles

- Accountability structures are essential: named owners, regular review, override authority, and audit trails prevent automation disasters

- Measuring efficiency metrics instead of business outcomes (retention, revenue, NPS) causes organizations to optimize for the wrong goals

- Companies treating service delivery as a revenue driver with human expertise win against low-cost automation-focused competitors
